Abstract

Category-specificity has been demonstrated in the human posterior ventral temporal cortex for a variety of object categories. Although object representations within the ventral visual pathway must be sufficiently rich and complex to support the recognition of individual objects, little is known about how specific objects are represented. Here, we used representational similarity analysis to determine what different kinds of object information are reflected in fMRI activation patterns and uncover the relationship between categorical and object-specific semantic representations. Our results show a gradient of informational specificity along the ventral stream from representations of image-based visual properties in early visual cortex, to categorical representations in the posterior ventral stream. A key finding showed that object-specific semantic information is uniquely represented in the perirhinal cortex, which was also increasingly engaged for objects that are more semantically confusable. These findings suggest a key role for the perirhinal cortex in representing and processing object-specific semantic information that is more critical for highly confusable objects. Our findings extend current distributed models by showing coarse dissociations between objects in posterior ventral cortex, and fine-grained distinctions between objects supported by the anterior medial temporal lobes, including the perirhinal cortex, which serve to integrate complex object information.

Keywords: object recognition, perirhinal cortex, RSA, searchlight, semantic knowledge

Introduction

A large number of studies have shown category-specificity in the posterior ventral temporal cortex (pVTC) for a variety of object categories such as faces, places, body parts, houses, tools, and animals (Kanwisher et al., 1997; McCarthy et al., 1997; Chao et al., 1999; Epstein et al., 1999; Downing et al., 2001). However, because this research focuses on similarities and differences between object categories, our understanding of how specific objects (e.g., cat, knife) are differentiated is limited. Although object representations within the ventral visual pathway must be sufficiently rich and complex to support the recognition of individual objects, there are few theories that specify how specific objects are represented and differentiated from their category neighbors. One structure that may be critical for object-specific representations is the perirhinal cortex. This region is hypothesized to enable fine-grained distinctions between objects (Buckley et al., 2001; Bussey and Saksida, 2002; Moss et al., 2005; Taylor et al., 2006, 2009; Barense et al., 2010, 2012; Mion et al., 2010; Kivisaari et al., 2012) and may code specific object relations in contrast to categorical representations in pVTC.

Here we explore the extent to which individual objects can be differentiated from their category neighbors within the ventral stream. We use a large and diverse set of common objects, rather than object categories, and a specific form of MVPA, representational similarity analysis (RSA; Kriegeskorte et al., 2008a), to analyze fMRI activation patterns evoked by single objects. Similar activation patterns are predicted for similar objects (Edelman et al., 1998; O'Toole et al., 2005; Kriegeskorte et al., 2008a), where the basis of object similarity can be defined by different properties of the stimuli, such as visual shape, object category, and object-specific semantic information. Therefore, using RSA we can test where object representations along the ventral stream are organized by object category, or by object-specific properties.

To test for object-specific representations requires calculating specific measures for each object. One type of representational scheme is provided by models in which the semantic similarity between objects is captured with semantic features (e.g., has legs, made of metal) (Tyler and Moss, 2001; Taylor et al., 2007, 2011; Tyler et al., 2013; Devereux et al., 2013). Object similarity defined by semantic features captures both category structure (as objects from the same category will have overlapping features) and additionally within-category individuation (as each member of a category will have a unique set of features).

To test for categorical and object-specific semantic representations in the ventral stream, participants were scanned with fMRI while they performed a basic-level naming task (e.g., dog, hammer) with 131 different objects from a variety of categories (Fig. 1). We tested where different forms of visual, categorical, and object-specific semantic information were expressed in the brain using a searchlight procedure (Kriegeskorte et al., 2006) that compared the observed similarity of local activation patterns to the predicted similarity defined by different theoretically derived similarity predictions (Fig. 2).

Figure 1.

The 131 object images used in the current study. The order of images matches that of the representational dissimilarity matrices presented in Figure 2.

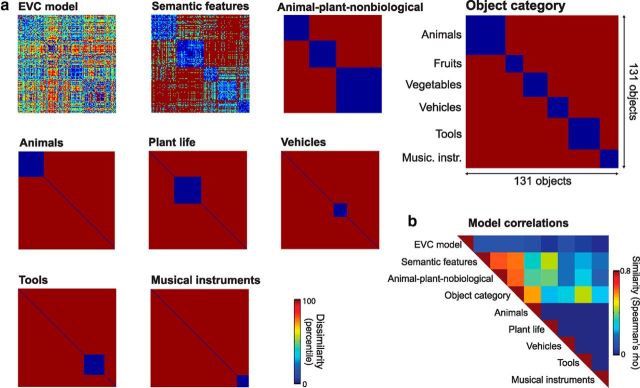

Figure 2.

Representational dissimilarity matrices. a, Dissimilarity predictions of the nine theoretical models tested. An EVC model captured the low-level visual properties in the pictorial images. Categorical models were based on grouping objects into different categories (i.e., animal-plant-nonbiological and object category) or single-category models (e.g., animals). The object-specific semantic model was based on similarity according to semantic feature information. The predicted similarity based on object category is shown enlarged to highlight the categorical partitions of the stimuli. b, Similarity of theoretical models.

Materials and Methods

Participants.

Sixteen right-handed participants took part in the study (6 male, 10 female) whose ages ranged from 19 to 29 (mean, 23 years). All participants had normal or corrected to normal vision, and gave informed consent. The study was approved by the Cambridge Research Ethics Committee.

Stimuli.

A total of 145 objects were used (73 living, 72 nonliving), where 131 of these were from one of six object categories (34 animals, 15 fruit, 21 vegetables, 27 tools, 18 vehicles, 16 musical instruments) and 14 additional objects that did not adhere to a clear category (and were not included in our analyses). Isolated colored objects were shown in the center of a white background, and normalized to a maximum visual angle of 7.5°. All objects were chosen to depict concepts from an anglicized version of the McRae production norms (McRae et al., 2005; Taylor et al., 2012) to calculate object-specific semantic similarity measures.

Procedure.

Participants performed an overt basic-level naming task (e.g., dog, hammer). Each trial consisted of a fixation cross lasting 500 ms, before an object for 500 ms followed by a blank screen lasting between 3 and 11 s. All objects were repeated six times across six different blocks. The object presentation order for each block was randomized for each participant, although a constant category order was maintained ensuring an even distribution of object category across the block. This category ordering ensures objects from the six different categories do not cluster in time, avoiding potential category clustering as a consequence of temporal proximity. On average, there were 145 objects between subsequent presentations of the same image (SD was 60 objects) with the range of distances approximating a normal distribution. The presentation and timing of stimuli were controlled with Eprime version 1 (Psychology Software Tools), and naming accuracy was recorded by the experimenter during acquisition.

fMRI acquisition.

Participants were scanned at the MRC Cognition and Brain Sciences Unit, Cambridge, in a Siemens 3-T Tim Trio MRI scanner. There were three functional scanning sessions using gradient-echo echoplanar imaging sequences, collecting 32 slices in descending order with a slice thickness of 3 mm, a between-slice gap of 0.75 mm, and an in-plane resolution of 3 × 3 mm. The field-of-view was 192 × 192 mm, matrix size 64 × 64 with a TR of 2 s, TE of 30 ms, and a flip angle of 78°. Each functional session lasted ∼9–10 min, containing two object blocks. Before functional scanning, a high-resolution structural MRI image was collected using an MPRAGE sequence with 1 mm isotropic resolution.

fMRI RSA preprocessing.

Preprocessing consisted of slice-time correction and the spatial realignment of the functional images only using SPM8 (Wellcome Institute of Cognitive Neurology, London, UK). The resulting unsmoothed, un-normalized data for each participant were analyzed using the general linear model to create a single β image for each object based on all six repetitions that were used to create single object t-statistic maps. In addition to the 145 object predictors, predictors were included to capture slow trends using 18 regressors for each session based on the basis functions of a discrete cosine transform (minimum frequency = 1/128 Hz), six head motion regressors for each session, and a global mean predictor for each scanning session.

Before fMRI scanning, participants received instructions and practice on how to name objects during the scanning session to reduce any potential motion artifacts. Further, we examined the realignment parameters to ensure head motion was not in excess of 3 mm in any direction during a session, which was the case for 13 of the participants. The remaining three participants had motion not in excess of 4 mm in any direction. Only objects named correctly on all six repetitions (86%, SE = 1.53%) were included in further analyses.

RSA searchlight mapping: theoretical model predictions.

RSA was used to determine the kinds of information reflected in spatial fMRI patterns throughout the brain. An RSA searchlight mapping procedure (Kriegeskorte et al., 2006) was implemented using the RSA toolbox (Nili et al., 2014) and custom MATLAB functions to determine whether object dissimilarity predicted by theoretical models was significantly related to dissimilarity defined by local fMRI activity patterns. A number of theoretical representational dissimilarity matrices (RDMs) were created from the predicted dissimilarity according to visual, categorical, and object-specific semantic models (Fig. 2). Each RDM was constructed by creating a vector for each object and calculating the distance between all pairs of vectors (objects).

The early visual cortex (EVC) RDM was based on the C1 responses extracted from the HMax package of the Cortical Network Simulator framework (Mutch et al., 2010; http://cbcl.mit.edu/jmutch/cns/) using the parameters of the full model described by Mutch and Lowe (2006). The C1 responses of the HMax model are proposed to reflect properties of early visual cortex (V1/V2; Riesenhuber and Poggio, 1999; Serre et al., 2007). Response vectors from the C1 layer were computed for gray-scale versions of each image and dissimilarity values were calculated as 1 − Pearson's correlation between object vectors.
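
The distance computation described above (1 − Pearson's correlation between response vectors) can be illustrated with a minimal sketch. The response values below are invented toy numbers, not HMax C1 output, and the function names `pearson` and `correlation_rdm` are hypothetical helpers rather than part of the RSA toolbox or the CNS package.

```python
import math

def pearson(x, y):
    """Pearson correlation between two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)

def correlation_rdm(vectors):
    """Symmetric matrix of 1 - Pearson r between all pairs of vectors."""
    n = len(vectors)
    rdm = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1, n):
            d = 1.0 - pearson(vectors[i], vectors[j])
            rdm[i][j] = rdm[j][i] = d
    return rdm

# Toy response vectors (hypothetical values standing in for C1 responses).
responses = [
    [0.2, 0.9, 0.4, 0.7],
    [0.3, 0.8, 0.5, 0.6],  # similar profile to the first vector
    [0.9, 0.1, 0.8, 0.2],  # roughly the reverse profile
]
rdm = correlation_rdm(responses)
```

In this toy case the first two vectors yield a small dissimilarity and the first and third a large one, which is the pattern a correlation distance is meant to capture.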

Binary categorical RDMs were constructed based on combinations of the six object categories included in the study. Two classes of categorical RDMs were defined. Single-category RDMs predict that the response patterns to all objects from a single object category will cluster together relative to objects from all other categories (that will not cluster). An RDM of this type was created for each category separately. Multicategory RDMs predict that the activation patterns to objects will cluster together according to a categorical scheme, so that the members of each category will cluster together, whereas between-category distances are predicted to be uniformly greater than within category distances (Fig. 2).
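
The two classes of binary categorical RDM can be sketched as follows. This is an illustration under stated assumptions: dissimilarity is coded as 0 for pairs predicted to cluster and 1 otherwise, the diagonal is set to 0, and the function names and the five toy labels are hypothetical.

```python
def multicategory_rdm(labels):
    """0 for same-category pairs, 1 for between-category pairs."""
    return [[0 if a == b else 1 for b in labels] for a in labels]

def single_category_rdm(labels, target):
    """0 only when both objects belong to `target`; 1 for every other pair.

    The diagonal is set to 0 (an assumption; self-distances are not used
    when only the off-diagonal entries are compared).
    """
    n = len(labels)
    return [
        [
            0 if i == j or (labels[i] == target and labels[j] == target) else 1
            for j in range(n)
        ]
        for i in range(n)
    ]

# Toy category labels (hypothetical subset of the six categories).
labels = ["animal", "animal", "tool", "tool", "fruit"]
category_model = multicategory_rdm(labels)
animal_model = single_category_rdm(labels, "animal")
```

The difference between the two schemes shows up for the two tools: they are predicted to cluster in the multicategory model but not in the single-category animal model.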

The object-specific semantic feature RDM was based on data from the anglicized McRae feature norms (McRae et al., 2005; Taylor et al., 2012). The feature norms contain lists of features associated with a large range of objects (e.g., has 4 legs, has stripes, and lives in Africa are features of a zebra; Table 1) and were collected by presenting participants with a written concept name and asking them to produce properties of the concept. As the features were originally collected from North American English speakers, the data were modified to be relevant to native British English speakers (e.g., concepts like “gopher” were removed, whereas other names were changed; Taylor et al., 2012). Based on the feature norms, each object can be represented by a binary vector indicating whether each feature is associated with the object or not. The 131 objects used in this study were associated with 794 features in total and an average of 12.9 features per object. The semantic feature RDM reflects the semantic (dis)similarity of individual objects, where dissimilarity values were calculated as 1 − the cosine of the angle between the feature vectors of each pair of objects (using a Jaccard distance gives equivalent results). Moreover, this semantic-feature model captures both categorical similarity between objects (as objects from similar categories have similar features) and within-category object individuation (as objects are composed of a unique set of features).
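
As a rough illustration of the cosine-based dissimilarity, the sketch below builds binary feature vectors from an abbreviated subset of the Table 1 features (five per concept, chosen for illustration; the full norms list more) and computes 1 − cosine similarity. The helper names are hypothetical.

```python
import math

# Abbreviated feature sets drawn from Table 1 (illustrative subset only).
features = {
    "knife": {"has a blade", "has a handle", "is shiny", "made of metal", "is sharp"},
    "saxophone": {"has a mouthpiece", "has keys", "is shiny", "made of metal", "made of brass"},
    "zebra": {"has 4 legs", "has a mane", "has a tail", "has hooves", "has stripes"},
}

# One vector dimension per distinct feature across all concepts.
vocabulary = sorted(set().union(*features.values()))

def binary_vector(concept):
    """1 if the feature is listed for the concept, else 0."""
    return [1 if f in features[concept] else 0 for f in vocabulary]

def cosine_distance(u, v):
    """1 - cosine similarity between two non-zero vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return 1.0 - dot / norm

knife_sax = cosine_distance(binary_vector("knife"), binary_vector("saxophone"))
knife_zebra = cosine_distance(binary_vector("knife"), binary_vector("zebra"))
```

With this subset, knife and saxophone share two of their five features (is shiny, made of metal), so they are closer to each other than either is to zebra, with which they share none.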

Table 1.

Semantic feature lists for example objects

| Zebra | Apple | Mushroom | Knife | Airplane | Saxophone |
|---|---|---|---|---|---|
| travels in herds | has a core | has a cap | has a blade | flies | has a mouthpiece |
| eaten by lions | has seeds | has a stem | has a handle | crashes | has keys |
| has 4 legs | has skin | is small | is shiny | is fast | has reeds |
| has a mane | is round | is brown | made of metal | has a propeller | is shiny |
| has a tail | is green | is white | is serrated | has engines | made of brass |
| has hooves | is red | grows | is sharp | has wings | made of metal |
| has stripes | tastes sweet | eaten in salads | used by butchers | is large | is gold |
| is black and white | is crunchy | eaten on pizza | used for cutting | made of metal | produces music |
| hunted by people | is juicy | is edible | found in kitchens | used for passengers | used for music |
| lives in Africa | eaten in pies | used as drug | is dangerous | used for travel | used for playing jazz |
| lives in zoos | used for cider | grows in forests | used with forks | found in airports | used in bands |
| | grows on trees | is poisonous | | requires pilots | requires air |

RSA searchlight mapping: procedure.

At each voxel, object activation values from gray matter voxels within a spherical searchlight (radius 7 mm, maximum dimensions 5 × 5 × 3 voxels) were extracted to calculate distances between all objects (using 1 − Pearson's correlation) creating an object dissimilarity matrix based on that searchlight. This fMRI RDM was then compared with each theoretical model RDM (using Spearman's rank correlation) and the resulting similarity values were Fisher transformed and mapped back to the voxel at the center of the searchlight. In an additional analysis, each fMRI RDM was also compared with each theoretical model RDM while controlling for effects of all other theoretical model RDMs (using partial Spearman's rank correlations).
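
The comparison step for a single searchlight can be sketched as below: take the off-diagonal (upper-triangle) entries of the fMRI and model RDMs, compute Spearman's rank correlation, and apply the Fisher transform. This is a minimal standard-library sketch with invented toy matrices, not the RSA toolbox implementation; all function names are hypothetical.

```python
import math

def upper_triangle(rdm):
    """Off-diagonal entries above the diagonal, row by row."""
    n = len(rdm)
    return [rdm[i][j] for i in range(n) for j in range(i + 1, n)]

def average_ranks(values):
    """Ranks starting at 1, with tied values sharing their mean rank."""
    order = sorted(range(len(values)), key=lambda k: values[k])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        for k in range(i, j + 1):
            ranks[order[k]] = (i + j) / 2 + 1
        i = j + 1
    return ranks

def pearson(x, y):
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)

def model_fit(fmri_rdm, model_rdm):
    """Fisher-transformed Spearman correlation between two RDMs."""
    x = average_ranks(upper_triangle(fmri_rdm))
    y = average_ranks(upper_triangle(model_rdm))
    return math.atanh(pearson(x, y))  # undefined if the correlation is exactly +/-1

# Toy 4-object RDMs (hypothetical values): objects 0-1 and 2-3 share a category.
fmri_rdm = [
    [0.0, 0.2, 0.9, 0.8],
    [0.2, 0.0, 0.7, 1.0],
    [0.9, 0.7, 0.0, 0.5],
    [0.8, 1.0, 0.5, 0.0],
]
model_rdm = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [1, 1, 0, 0],
    [1, 1, 0, 0],
]
z = model_fit(fmri_rdm, model_rdm)
```

In a full searchlight analysis the resulting value would be written to the voxel at the center of the sphere, and the procedure repeated for every gray matter voxel and every model.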

The similarity map for each theoretical predictor and each participant was normalized to the MNI template space and spatially smoothed using a 6 mm FWHM Gaussian kernel. The similarity maps for each participant were entered into a group-level random-effects analysis (RFX) and permutation-based statistical nonparametric mapping (SnPM; http://go.warwick.ac.uk/tenichols/snpm) was used to test for significant positive similarities between theoretical and fMRI RDMs, correcting for multiple comparisons across voxels and the number of theoretical model RDMs tested. In the follow-up partial Spearman's correlation analysis, we corrected for multiple comparisons across voxels. Variance smoothing of 6 mm FWHM and 10,000 permutations were used in all analyses. All results are presented on an inflated representation of the cortex using Caret (http://www.nitrc.org/projects/caret/), or the normalized structural image averaged over participants. We also note that our main results were conserved across a range of searchlight sizes ranging from 3 to 10 mm spheres.

Parametric analysis of semantic confusability.

We also tested whether voxelwise activation was related to the confusability of an object with all other objects. Our measure of semantic confusability was calculated from the semantic similarity matrix constructed by finding the cosine between all concept-feature vectors. Each row of this matrix contains how similar an object is to all other objects. To generate a semantic confusability score for each object we calculated a weighted mean across this row of the matrix, where the weights were defined as the exponential of the ranked similarities along the row of the matrix. The measure was calculated in this manner so that semantic confusability scores would most closely reflect the similarity of an object to its closest semantic neighbors. As the measure gives stronger weights to more semantically similar objects, high confusability scores will be generated for objects that are similar to many other objects (those objects that share many semantic features with many other things and occupy a dense area of semantic space, e.g., a lion) and those that are highly similar to a small set of objects but not similar to objects in general (e.g., an orange, lemon, grapefruit, lime). Further, the measure has a low correlation with object familiarity (r = 0.17), although we note that our measure may share some properties with an object's conceptual typicality (defined as the extent to which an object shares features with other members of its category; Patterson, 2007). Under this definition, some highly confusable objects will also be highly typical members of a category due to a high proportion of shared features, whereas some less confusable objects would be rated less typical (and more distinctive). However, typicality alone does not account for highly confusable objects that are not highly typical members of a category or capture confusability of subcategory clusters. Further, it does not take into account semantic similarities across categories. These qualities are additionally captured with the measure of semantic confusability defined above.
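
One possible reading of the confusability score is sketched below. Several details are assumptions not specified in the text: the object's similarity to itself is excluded, ranks run from 1 (least similar) upward, ties are broken by position, and the weights are normalized so the result is a weighted mean. The similarity values are invented and the function name is hypothetical.

```python
import math

def confusability(similarity_row, self_index):
    """Weighted mean of one similarity-matrix row with weights exp(rank).

    Assumptions: the self-similarity entry is dropped, and rank 1 is given
    to the least similar object so the nearest neighbors dominate the mean.
    """
    others = [s for k, s in enumerate(similarity_row) if k != self_index]
    order = sorted(range(len(others)), key=lambda k: others[k])
    ranks = [0] * len(others)
    for rank, k in enumerate(order, start=1):
        ranks[k] = rank
    weights = [math.exp(r) for r in ranks]
    return sum(w * s for w, s in zip(weights, others)) / sum(weights)

# Toy cosine-similarity matrix (hypothetical): objects 0-2 are close
# semantic neighbors, object 3 is distinctive.
similarity = [
    [1.0, 0.8, 0.7, 0.1],
    [0.8, 1.0, 0.6, 0.1],
    [0.7, 0.6, 1.0, 0.2],
    [0.1, 0.1, 0.2, 1.0],
]
scores = [confusability(row, i) for i, row in enumerate(similarity)]
plain_mean_0 = (0.8 + 0.7 + 0.1) / 3
```

Under these assumptions the score for object 0 exceeds its unweighted mean similarity, because the exponential weights pull the average toward its closest neighbors, and the distinctive object 3 receives the lowest score.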

Here, fMRI preprocessing consisted of slice-time correction, spatial realignment and normalization to the MNI template space, and spatial smoothing of the functional images using a 6 mm Gaussian kernel in SPM8 (Wellcome Institute of Cognitive Neurology, London, UK). Data for each participant were analyzed using the general linear model to show the parametric effects of semantic confusability of objects. The fMRI response to each stimulus was modeled as a single regressor with an additional modulating regressor based on the semantic confusability values. This parametric modulator captures the fMRI response to objects, modulated by how semantically confusable each object is. In addition, predictors were included to capture slow artifactual trends with a high-pass cutoff of 128 s, head motion, and a global mean predictor for each scanning session. The parameter estimate images for the semantic confusability modulator were entered into an RFX analysis using a one-sample t test against zero. Due to our a priori interest in the perirhinal cortex, we first restricted our analysis using a small volume correction based on a probabilistic perirhinal cortex mask thresholded at 10% (Holdstock et al., 2009), before also conducting a more exploratory whole-brain analysis.

Results

RSA searchlight mapping

RSA was used to determine whether the similarity of activation patterns was significantly related to the predicted similarity of any of the nine theoretical models tested, which captured image-based visual, categorical, and object-specific semantic properties (Fig. 2). RSA searchlight mapping revealed that five of the theoretical model predictions (subsequently referred to as models) showed significant similarity to patterns of fMRI activation (Fig. 3a). Patterns of activity in early visual areas showed a significant relationship with the EVC model (peak MNI coordinate in each hemisphere; −21, −91, 14 and 15, −94, 2), replicating the known properties of primary visual cortex relating to retinotopy and local edge-orientations that have been previously shown in fMRI activity patterns (Kamitani and Tong, 2005; Kay et al., 2008; Connolly et al., 2012).

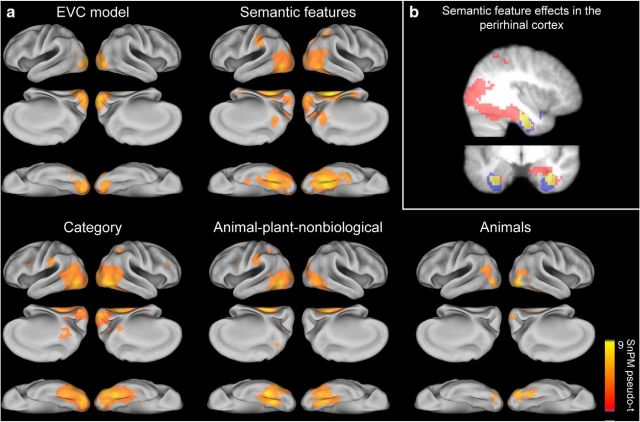

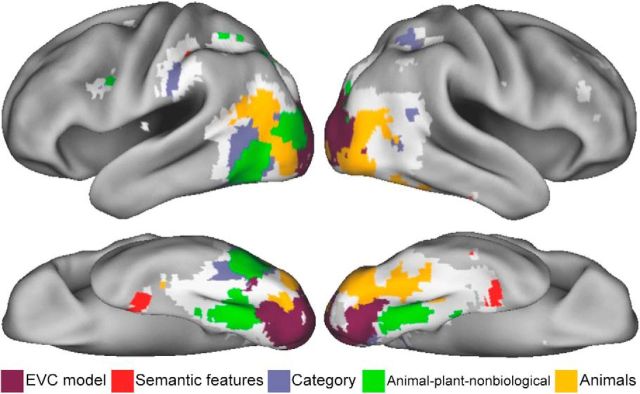

Figure 3.

RSA searchlight results. a, Showing regions where activation patterns were significantly related to each model's predicted similarity, thresholded at pFDR < 0.0056 (corrected for 9 model RDMs). b, Semantic-feature searchlight results (red) overlap with perirhinal cortex (blue; overlap in yellow). Shown at MNI slices x = 38, y = −7.

Both the object-specific semantic-feature and category models were significantly related to patterns of activation in the ventral and dorsal visual pathways. Specifically, similarity defined by semantic-features (peak MNI coordinates; 30, −49, −12 and −27, −55, −9), category (peak MNI coordinates; 33, −55, −16 and −48, −73, −5) and animal-plant-nonbiological distinctions (peak MNI coordinates; −45, −67, −5 and 30, −52, −9) were significantly related to patterns in posterior parts of the ventral stream including the lateral occipital cortex (LOC) and pVTC (including both lateral and medial regions). In contrast, the only single-category effects seen were where animal objects produced similar activity patterns in LOC and the right lateral fusiform (peak MNI coordinates; 48, −76, −5 and −45, −82, −1). The spatial extent of the effects for categorical models was largely restricted to posterior parts of the ventral stream, highlighting the coarse nature of object information represented in pVTC. Similarity based on the object-specific semantic-feature model showed the most extensive significant effects extending into the anterior medial temporal lobe, including the perirhinal cortex (Fig. 3b).

RSA searchlight mapping revealed overlapping and distinct representations throughout occipital and ventral temporal cortex. However, as some of our model RDMs are correlated (Fig. 2) and show spatially overlapping effects, it is not possible to make strong assertions about the exact nature of representations along the ventral stream. For example, the semantic feature model encompasses both category structure and object-specific semantic individuation and showed effects in both posterior and anterior parts of the ventral stream, whereas categorical models only show effects in the posterior regions. Although this suggests object representations in the ventral stream better reflect categorical groupings and representations in the anterior medial temporal lobe additionally reflect object-specific information, we require a more formal evaluation to fully test this.

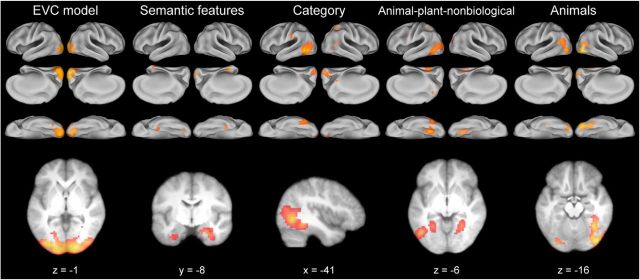

An additional searchlight analysis was performed mapping the unique effects of each model RDM by controlling for effects of the other significant models (Fig. 3) using partial correlation (Fig. 4; Table 2). Therefore, this analysis determines the effects for each model that cannot be accounted for by any other model. Patterns of activity in primary visual areas remained significantly related to the EVC model (this would be expected given the low correlation with other models; Fig. 2). Crucially, semantic feature effects remained significant in bilateral anterior medial temporal lobe and the perirhinal cortex after controlling for categorical and visual effects of objects. Finally, categorical effects were largely restricted to the pVTC and LOC. Object-category groupings were present in left LOC and overlapped with previously reported peak coordinates for the contrast of animals greater than tools (Chao et al., 2002) and a lateral object-specific region (Andrews and Ewbank, 2004). Animal-plant-nonbiological distinctions were seen in bilateral medial pVTC and left lateral pVTC which overlapped with previously reported category-specific effects for animals and tools in lateral and medial pVTC respectively (Chao et al., 2002). Activation patterns for animals clustered in bilateral LOC and the right lateral pVTC and overlapped with the coordinates reported for the LOC (Malach et al., 1995; Andrews and Ewbank, 2004), and the mean location of the FFA (Kanwisher et al., 1997) in addition to peak effects found for the contrast of animal and tool objects (Chao et al., 2002). Overall, these results show coarse, categorical representations are dominant in the posterior ventral stream, whereas the more fine-grained similarity patterns in the anterior medial temporal lobe reflect object-specific semantic similarity over and above that which is explained by categorical or visual similarity.
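
The logic of the partial correlation analysis can be sketched as follows: rank-transform the dissimilarities, then compute the correlation between the fMRI and target-model ranks after removing what is shared with the control models. The sketch uses the standard recursive formula for partial correlation and invented toy vectors of pairwise dissimilarities; it is an illustration, not the code used in the study, and the function names are hypothetical.

```python
import math

def average_ranks(values):
    """Ranks starting at 1, with tied values sharing their mean rank."""
    order = sorted(range(len(values)), key=lambda k: values[k])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        for k in range(i, j + 1):
            ranks[order[k]] = (i + j) / 2 + 1
        i = j + 1
    return ranks

def pearson(x, y):
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)

def partial_corr(x, y, controls):
    """Correlation of x and y with each control variable partialled out."""
    if not controls:
        return pearson(x, y)
    z, rest = controls[0], controls[1:]
    r_xy = partial_corr(x, y, rest)
    r_xz = partial_corr(x, z, rest)
    r_yz = partial_corr(y, z, rest)
    return (r_xy - r_xz * r_yz) / math.sqrt((1 - r_xz ** 2) * (1 - r_yz ** 2))

def partial_spearman(x, y, controls):
    """Partial correlation computed on rank-transformed data."""
    return partial_corr(
        average_ranks(x), average_ranks(y), [average_ranks(c) for c in controls]
    )

# Toy upper-triangle dissimilarities for six object pairs (hypothetical).
fmri = [0.2, 0.9, 0.8, 0.7, 1.0, 0.5]
semantic = [0.3, 0.8, 0.9, 0.6, 1.0, 0.4]
category = [0, 1, 1, 1, 1, 0]

raw = partial_spearman(fmri, semantic, [])
unique = partial_spearman(fmri, semantic, [category])
```

In this toy case the semantic model still correlates with the fMRI dissimilarities after the category model is controlled for, but less strongly than before, since part of the raw correlation was carried by category structure.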

Figure 4.

Unique effects of each theoretical similarity model. RSA searchlight results using a partial correlation analysis showing regions where activation patterns were uniquely related to each model's predicted similarity. Images presented at the same color scale as Figure 3 and thresholded at pFDR < 0.05.

Table 2.

RSA results showing unique effect for each theoretical model RDM

| Regions | Cluster extent | Voxel-level p(FDR) | Pseudo-t | x | y | z |
|---|---|---|---|---|---|---|
| EVC model | | | | | | |
| L mid occip, R calcarine | 2139 | 0.0001 | 8.55 | −21 | −91 | 14 |
| Semantic features | | | | | | |
| R PRc | 128 | 0.0150 | 4.76 | 27 | −4 | −28 |
| R calcarine | 78 | 0.0290 | 4.44 | 15 | −94 | 2 |
| L lingual | 25 | 0.0166 | 4.12 | −24 | −82 | −12 |
| L PRc | 43 | 0.0248 | 3.63 | −33 | −7 | −35 |
| Category | | | | | | |
| L IT | 619 | 0.0024 | 7.10 | −51 | −67 | −5 |
| L/R calcarine | 359 | 0.0077 | 4.27 | 15 | −91 | −1 |
| R precuneus | 23 | 0.0077 | 3.75 | 12 | −52 | 14 |
| L supramarginal | 41 | 0.0107 | 3.59 | −63 | −25 | 32 |
| Animal-plant-nonbiological | | | | | | |
| L IT, lingual, mid occip | 736 | 0.0069 | 5.71 | −48 | −64 | −5 |
| L sup occipital | 135 | 0.0069 | 5.15 | −21 | −70 | 32 |
| R lingual | 124 | 0.0069 | 4.59 | 21 | −61 | −9 |
| R mid occipital | 81 | 0.0126 | 3.28 | 30 | −82 | 29 |
| Animals | | | | | | |
| R mid temporal, fusiform | 561 | 0.0002 | 7.89 | 51 | −73 | −1 |
| R calcarine | 121 | 0.0002 | 6.24 | 21 | −97 | 2 |
| L mid temporal, mid occip, lingual | 324 | 0.0002 | 5.54 | −54 | −64 | 21 |

MNI coordinates and significance levels shown for the peak voxel in each cluster. Anatomical labels are provided for up to three peak locations in each cluster. Effects in clusters smaller than 20 voxels not shown. mid, Middle; occip, occipital; PRc, perirhinal cortex; IT, inferior temporal; sup, superior.

Decomposing semantic feature effects in the perirhinal cortex

The unique effects of the semantic feature model in bilateral perirhinal cortex show that activation patterns in these regions reflect semantic structure over that explained by category membership. However, members of some object categories are more semantically similar to one another (e.g., musical instruments are in general dark blue in the semantic feature RDM in Fig. 2), whereas other categories are on average less cohesive (e.g., tools are in general a lighter blue compared with other clusters in the semantic feature RDM). Further, the members of some object categories are more similar to some other object categories than others. As such, it is unclear whether our semantic feature effects in the perirhinal cortex are underpinned by the differential mean semantic distances within each category (and mean between-category distances) or the object-specific semantic distances after accounting for differential category distances. To address this issue, we further tested a category-mean semantic model by performing a partial correlation analysis to test for unique effects of either object-specific variability or category-mean semantic distances within the bilateral perirhinal cortex region identified in the searchlight analysis above (Fig. 4). We defined the category-mean semantic model where the distance between two objects from the same category was defined as the mean distance across all category members. The distance for objects from two different categories was defined as the mean distance between all cross-category pairs (resulting in a 131 × 131 matrix).
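
The construction of the category-mean semantic model can be sketched as below: every within-category pair receives the mean distance over all pairs in that category, and every cross-category pair receives the mean distance over all pairs spanning those two categories. The five-object RDM and labels are invented toy data, and the function name is hypothetical.

```python
def category_mean_rdm(rdm, labels):
    """Replace each pairwise distance by the mean over its category pairing."""
    n = len(labels)
    sums, counts = {}, {}
    for i in range(n):
        for j in range(i + 1, n):
            key = tuple(sorted((labels[i], labels[j])))
            sums[key] = sums.get(key, 0.0) + rdm[i][j]
            counts[key] = counts.get(key, 0) + 1
    out = [[0.0] * n for _ in range(n)]  # diagonal stays at 0
    for i in range(n):
        for j in range(n):
            if i != j:
                key = tuple(sorted((labels[i], labels[j])))
                out[i][j] = sums[key] / counts[key]
    return out

# Toy semantic distances (hypothetical) for three animals and two tools.
labels = ["animal", "animal", "animal", "tool", "tool"]
pairs = {
    (0, 1): 0.2, (0, 2): 0.4, (1, 2): 0.6,   # within animals
    (3, 4): 0.5,                             # within tools
    (0, 3): 0.9, (0, 4): 0.8, (1, 3): 1.0,   # animal-tool pairs
    (1, 4): 0.7, (2, 3): 0.9, (2, 4): 0.8,
}
semantic_rdm = [[0.0] * 5 for _ in range(5)]
for (i, j), d in pairs.items():
    semantic_rdm[i][j] = semantic_rdm[j][i] = d

category_mean = category_mean_rdm(semantic_rdm, labels)
```

The resulting matrix keeps the overall cohesiveness of each category and the mean distance between categories while discarding object-specific variability, which is what allows the two sources of semantic structure to be separated by partial correlation.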

Activation patterns in the perirhinal cortex were significantly related to the category-mean semantic model (t(15) = 3.40, p = 0.002) after controlling for categorical and visual models. Further, the results remained significant when additionally controlling for the effects of the semantic-feature model (t(15) = 3.14, p = 0.003), showing that activation patterns in the perirhinal cortex reflect broad semantic distances between categories in addition to the overall cohesiveness of each category. Crucially, the semantic feature model also showed a significant relationship to activation patterns in the perirhinal cortex (t(15) = 3.44, p = 0.002) after controlling for the category-mean semantic model, categorical, and visual models. This suggests that object-specific semantic information forms part of complex object representations in the perirhinal cortex together with more general semantic category information.

Parametric effect of semantic confusability

The object-specific RSA effects for semantic features echo studies showing the greater involvement of the anterior medial temporal lobes when more fine-grained semantic processes are required for object differentiation, especially for more semantically confusable objects (Tyler et al., 2004, 2013; Moss et al., 2005; Taylor et al., 2006; Barense et al., 2010). This suggests that, in addition to the perirhinal cortex representing fine-grained semantic information about objects (captured by our semantic feature RDM), the region also becomes increasingly engaged for objects that require the most fine-grained differentiation, i.e., those that are most semantically confusable. This implies that for highly confusable objects, the object-specific coding needs to be fully instantiated, leading to greater activation within the region (Patterson et al., 2007). To test whether the perirhinal cortex representations are increasingly engaged for more confusable objects, we performed a parametric univariate analysis looking for effects of semantic confusability. Our metric of confusability was derived from the semantic-feature similarity model, where an object's semantic confusability was defined as a weighted sum of the similarity an object has with all other objects (Table 3 shows examples of more and less confusable objects for each category).

Table 3.

Examples of the most and least confusable objects in each category

| Animals (0.51) | Fruits (1.00) | Vegetables (0.81) | Vehicles (0.43) | Tools (0.25) | Musical instrument (0.83) |
|---|---|---|---|---|---|
| More confusable objects | | | | | |
| lamb | raspberry | broccoli | van | ladle | cello |
| sheep | plum | cauliflower | lorry | knife | piano |
| lion | peach | peas | car | spoon | flute |
| Less confusable objects | | | | | |
| snake | banana | pumpkin | tractor | crowbar | harmonica |
| pig | pineapple | garlic | helicopter | corkscrew | drum |
| camel | coconut | mushroom | submarine | thermometer | bagpipes |

Examples of the most and least confusable objects in each category according to the semantic features in the McRae et al. (2005) production norms. The mean (relative) confusability score within each category is shown next to the category headings in brackets.

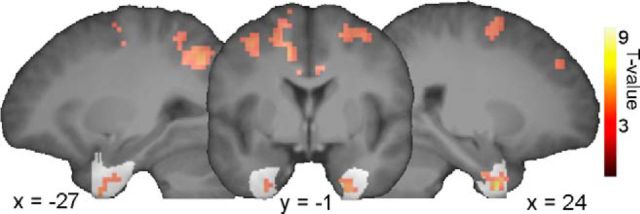

We found increased semantic confusability was associated with increased activity in bilateral perirhinal cortex (Fig. 5; voxel-level p < 0.001, cluster-level p < 0.05 FWE after small volume correction using a perirhinal cortex mask), showing that in addition to semantic similarity being reflected in multivoxel activity patterns, perirhinal cortex activity is sensitive to whether an object is highly semantically confusable with other objects, therefore requiring fine-grained differentiation processes and more complex integration of semantic information to uniquely recognize the object.

Figure 5.

Parametric effect of semantic confusability where objects that are more semantically confusable show increased activation in the perirhinal cortex (shown in white; voxelwise p < 0.001, cluster-level p < 0.05 FWE after small volume correction). Voxels outside the perirhinal cortex show increased activation with increasing semantic confusability in the whole-brain analysis (voxelwise p < 0.001, cluster-level p < 0.05 using FWE).

Finally, we performed a more exploratory whole-brain analysis, which showed that increased semantic confusability engaged a wider network beyond the perirhinal cortex, including clusters in the middle cingulum and supplementary motor area, bilateral inferior parietal lobes, and middle frontal and precentral gyrus, aspects of which are associated with the dorsal attention network (all voxel-level p < 0.001, cluster-level p < 0.05 FWE; Corbetta and Shulman, 2002). This may further indicate that more semantically confusable objects require increased spatial attention for unique recognition.

Discussion

Despite a wealth of fMRI research aiming to understand how objects are represented, there are few accounts that consider the representation of individual objects (see Kriegeskorte et al., 2008b; Mur et al., 2012; Tyler et al., 2013 for examples of research using a large and diverse set of objects). Instead, research has tended to focus on object categories. Here we asked how object-specific information is represented in the ventral stream and how this relates to categorical information. We found distinct categorical and object-specific semantic representations in the brain predicted by the similarity of theoretically motivated measures. Using RSA, we revealed object-specific semantic representations coded in the perirhinal cortex, while also showing a gradient of informational specificity along the ventral stream, from image-based visual properties in primary visual cortex to coarse, categorical representations in posterior VTC (Fig. 6). These results support distributed models of object semantics that claim a progression of object information along the ventral stream from category information in pVTC to more fine-grained semantic information in the anterior medial temporal lobe, which functions to integrate complex semantic information (Tyler and Moss, 2001; Taylor et al., 2007, 2011; Tyler et al., 2013).

Figure 6.

Summary of the main findings. Representational topographic map showing significant unique effects of each theoretical model RDM. If significant effects were seen for more than one model, the model with the highest partial Spearman's correlation is shown. Regions that showed significant effects in the original analysis (Fig. 3) that did not show effects in the partial correlation analysis are shown in white. These regions show shared effects across multiple models.

Our central finding is that the perirhinal cortex represents object-specific semantic information and that such representations are modulated depending on how semantically confusable an object is. The perirhinal cortex sits at the most anterior aspect of the ventral visual pathway and is hypothesized to represent complex conjunctions of object features enabling fine-grained distinctions between objects (Buckley et al., 2001; Murray and Richmond, 2001; Bussey and Saksida, 2002; Tyler et al., 2004, 2013; Moss et al., 2005; Taylor et al., 2006, 2009; Barense et al., 2007, 2010, 2012; Mion et al., 2010; Kivisaari et al., 2012). Here, activation patterns within the perirhinal cortex reflected semantic similarities between individual objects. Further, this effect was preserved when controlling for both categorical and low-level visual similarities between objects. This shows that although similar objects cluster in perirhinal cortex, the subtle distinctions in activation patterns between objects (and between objects within each category) covary with subtle semantic distinctions based on semantic feature information. This differentiation between objects is vital to the task of basic-level naming, because naming an object at the basic level requires differentiating between similar items to support the lexical and phonological processes involved in naming. The unique object-specific semantic effects we see in the perirhinal cortex provide the necessary object differentiation for these processes.

Moreover, if a function of the perirhinal cortex is to enable fine-grained differentiation, then it not only needs to code this information, but will also become increasingly activated when recognizing objects that are highly confusable with other objects. For example, more confusable objects can be thought of as occupying a dense area of semantic space, so individuating a confusable object from similar concepts depends on fine-grained distinctions that rely on the integrative properties of the perirhinal cortex, leading to increases in activation. Less confusable objects will have fewer semantic neighbors and are more easily differentiated, resulting in less activation. This is indeed the result we found in our analysis of semantic confusability, where activation in the perirhinal cortex parametrically increased for objects that were more semantically confusable. Both the RSA and univariate results support the region's critical involvement in object recognition when fine-grained semantic information is needed to individuate between similar objects.

This is not to claim that the perirhinal cortex represents object-specific semantics per se, but that the region codes the computations necessary for object-specific representations to be formed. As in the representational-hierarchy theory (Bussey et al., 2005; Cowell et al., 2010; Barense et al., 2012), we claim that the representations of individual objects in the perirhinal cortex are based on conjunctions of coarser information represented in pVTC, and integrated through recurrent connectivity between posterior and anterior sites in the ventral stream (Clarke et al., 2011). This may occur by a similar mechanism to pair-coding responses in pVTC underpinned by backward projections from the perirhinal cortex (Higuchi and Miyashita, 1996; Miyashita et al., 1996). Further, objects that are more semantically confusable with other objects have an increased dependence on the conjunctive properties of the perirhinal cortex. This is consistent with research showing that the perirhinal cortex plays a critical role in visual processing for tasks that cannot solely rely on simple feature information, and supports these processes by enabling the conjunction of simpler object features into complex feature conjunctions (Buckley et al., 2001; Murray and Richmond, 2001; Bussey and Saksida, 2002; Bussey et al., 2005; Barense et al., 2007, 2012). This contribution of the perirhinal cortex to complex visual object processing is underpinned by its anatomical connections with occipitotemporal regions (Suzuki and Amaral, 1994) in addition to functional connections with other sensory areas (Libby et al., 2012), making it an important site for cross-modal binding of semantic information (Taylor et al., 2006, 2009) and integrating more complex semantic information about objects (Tyler et al., 2004, 2013; Moss et al., 2005; Barense et al., 2010; Kivisaari et al., 2012).

By isolating the unique effects of each theoretical model, we showed that activation patterns in the perirhinal cortex covaried with the object-specific variability specified by the semantic feature model, but did not find evidence of object-specific semantic effects elsewhere in the ventral stream (in line with a previous report using human faces; Kriegeskorte et al., 2007). Some previous studies have reported that activation patterns in the posterior ventral stream correlate with measures of semantic similarity either based on behavioral semantic similarity judgments or semantic similarity based on word usage (Connolly et al., 2012; Carlson et al., 2014). Although we initially report semantic feature effects in pVTC, these effects were nonsignificant once we accounted for categorical and visual similarity, both of which may contribute to previously reported effects. However, it may also be that our semantic feature model does not capture some aspects of semantic similarity as feature lists tend to under-represent the most highly shared information. Future work could assess to what extent semantic feature models capture perceived semantic similarity, and whether any divergence provides additional information that accounts for object representations in the ventral stream.
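Isolating the unique effect of one model while controlling for the others can be sketched as a partial Spearman correlation between representational dissimilarity matrices (RDMs). The example below is an illustrative sketch, not the authors' analysis code: it rank-transforms the lower triangle of each RDM, regresses the control models out of both the neural and the target-model ranks, and correlates the residuals. The function names and the random toy RDMs are hypothetical.

```python
import numpy as np
from scipy.stats import rankdata


def lower_triangle(rdm):
    """Vectorise the below-diagonal entries of a square, symmetric RDM."""
    rdm = np.asarray(rdm, dtype=float)
    return rdm[np.tril_indices(rdm.shape[0], k=-1)]


def partial_spearman(neural_rdm, model_rdm, control_rdms):
    """Spearman correlation of neural and model RDMs, controlling for others.

    Computed as the Pearson correlation between rank-transformed vectors
    after regressing the rank-transformed control RDMs out of both.
    """
    y = rankdata(lower_triangle(neural_rdm))
    x = rankdata(lower_triangle(model_rdm))
    controls = [rankdata(lower_triangle(c)) for c in control_rdms]
    design = np.column_stack([np.ones_like(y)] + controls)

    def residuals(v):
        beta, *_ = np.linalg.lstsq(design, v, rcond=None)
        return v - design @ beta

    rx, ry = residuals(x), residuals(y)
    return float(rx @ ry / np.sqrt((rx @ rx) * (ry @ ry)))


def random_rdm(rng, n):
    """Toy symmetric dissimilarity matrix with a zero diagonal."""
    a = rng.random((n, n))
    rdm = (a + a.T) / 2.0
    np.fill_diagonal(rdm, 0.0)
    return rdm


rng = np.random.default_rng(1)
semantic_rdm = random_rdm(rng, 10)   # stands in for a semantic feature model
category_rdm = random_rdm(rng, 10)   # stands in for a categorical model
visual_rdm = random_rdm(rng, 10)     # stands in for a visual model
neural_rdm = 0.6 * semantic_rdm + 0.4 * category_rdm + 0.2 * random_rdm(rng, 10)
print(partial_spearman(neural_rdm, semantic_rdm, [category_rdm, visual_rdm]))
```

In a searchlight setting, a function of this kind would be evaluated at each sphere location with the locally computed neural RDM, yielding one partial correlation map per model.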

Our results also replicated object category effects robustly seen in MVPA studies (Haxby et al., 2001; Cox and Savoy, 2003; Pietrini et al., 2004; Kriegeskorte et al., 2008b; Reddy et al., 2010). Here, activation patterns in pVTC were associated with categorical similarity models; however, partial correlations showed unique category effects in different regions: object category in left lateral LOC, a category-specific representation of animals in right lateral pVTC, and the animal-plant-nonbiological distinctions in bilateral medial VTC. Lateral VTC effects for animals are consistent with a category-specific view based on previous univariate evidence that animals elicit greater activity in the lateral fusiform (Chao et al., 1999, 2002; Mahon et al., 2009), although we failed to find a medial pVTC effect for tools, which has been previously reported (Chao et al., 1999, 2002; Mahon et al., 2009).

Contrasting with animal effects in left lateral pVTC, distinctions of animate and inanimate objects according to domain (animal-plant-nonbiological) were seen in bilateral medial pVTC and right lateral pVTC. Previous studies have suggested that object animacy is a prominent factor in how activation patterns in VTC cluster (Kriegeskorte et al., 2008b), whereas animacy is further proposed as a principle of lateral-to-medial VTC organization (Connolly et al., 2012). As such, previously reported category-specific effects have been conceived as an emergent property of different underlying organizing principles, one of which may relate to object animacy. An alternative and complementary principle is that categorical effects emerge because different object categories are associated with different statistical structures of the underlying property-based semantic representations (Tyler and Moss, 2001; Tyler et al., 2013). As different categories of object tend to have different statistical properties associated with their semantic features (e.g., animals have many frequently co-occurring features that are shared by other animals), categorical effects can emerge. One promising approach to untangling potential representational principles in posterior VTC is to represent activation patterns in a high-dimensional space that may be used to test whether categorical effects are emergent from a combination of noncategory factors (Op de Beeck et al., 2008; Haxby et al., 2011).

In conclusion, we find a gradient of visual, to categorical, to object-specific semantic representations of objects along the ventral stream through occipital and temporal cortices, culminating in the perirhinal cortex. Our results show distinct semantic feature effects in the perirhinal cortex that highlight a key role for the region in representing and processing object-specific semantic information. Further, the perirhinal cortex was increasingly activated during the recognition of more semantically confusable objects. These results suggest a fundamental role of the human perirhinal cortex in representing object-specific semantic information, and provide evidence for its role in integrating complex information from posterior regions in the ventral visual pathway.

Footnotes

This work was supported by funding from the European Research Council under the European Community's Seventh Framework Programme (FP7/2007–2013)/ERC Grant agreement no. 249640 to L.K.T.

The authors declare no competing financial interests.

References

- Andrews TJ, Ewbank MP. Distinct representations for facial identity and changeable aspects of faces in the human temporal lobe. Neuroimage. 2004;23:905–913. doi: 10.1016/j.neuroimage.2004.07.060.

- Barense MD, Gaffan D, Graham KS. The human medial temporal lobe processes online representations of complex objects. Neuropsychologia. 2007;45:2963–2974. doi: 10.1016/j.neuropsychologia.2007.05.023.

- Barense MD, Rogers TT, Bussey TJ, Saksida LM, Graham KS. Influence of conceptual knowledge on visual object discrimination: insights from semantic dementia and MTL amnesia. Cereb Cortex. 2010;20:2568–2582. doi: 10.1093/cercor/bhq004.

- Barense MD, Groen II, Lee AC, Yeung LK, Brady SM, Gregori M, Kapur N, Bussey TJ, Saksida LM, Henson RN. Intact memory for irrelevant information impairs perception in amnesia. Neuron. 2012;75:157–167. doi: 10.1016/j.neuron.2012.05.014.

- Buckley MJ, Booth MC, Rolls ET, Gaffan D. Selective perceptual impairments after perirhinal cortex ablation. J Neurosci. 2001;21:9824–9836. doi: 10.1523/JNEUROSCI.21-24-09824.2001.

- Bussey TJ, Saksida LM. The organization of visual object representations: a connectionist model of effects of lesions in perirhinal cortex. Eur J Neurosci. 2002;15:355–364. doi: 10.1046/j.0953-816x.2001.01850.x.

- Bussey TJ, Saksida LM, Murray EA. The perceptual-mnemonic/feature conjunction model of perirhinal cortex function. Q J Exp Psychol B. 2005;58:269–282. doi: 10.1080/02724990544000004.

- Carlson TA, Simmons RA, Kriegeskorte N, Slevc LR. The emergence of semantic meaning in the ventral temporal pathway. J Cogn Neurosci. 2014;26:120–131. doi: 10.1162/jocn_a_00458.

- Chao LL, Haxby JV, Martin A. Attribute-based neural substrates in temporal cortex for perceiving and knowing about objects. Nat Neurosci. 1999;2:913–919. doi: 10.1038/13217.

- Chao LL, Weisberg J, Martin A. Experience-dependent modulation of category-related cortical activity. Cereb Cortex. 2002;12:545–551. doi: 10.1093/cercor/12.5.545.

- Clarke A, Taylor KI, Tyler LK. The evolution of meaning: spatiotemporal dynamics of visual object recognition. J Cogn Neurosci. 2011;23:1887–1899. doi: 10.1162/jocn.2010.21544.

- Connolly AC, Guntupalli JS, Gors J, Hanke M, Halchenko YO, Wu YC, Abdi H, Haxby JV. The representation of biological classes in the brain. J Neurosci. 2012;32:2608–2618. doi: 10.1523/JNEUROSCI.5547-11.2012.

- Corbetta M, Shulman GL. Control of goal-directed and stimulus-driven attention in the brain. Nat Rev Neurosci. 2002;3:201–215. doi: 10.1038/nrn755.

- Cowell RA, Bussey TJ, Saksida LM. Components of recognition memory: dissociable cognitive processes or just differences in representational complexity? Hippocampus. 2010;20:1245–1262. doi: 10.1002/hipo.20865.

- Cox DD, Savoy RL. Functional magnetic resonance imaging (fMRI) “brain reading”: detecting and classifying distributed patterns of fMRI activity in human visual cortex. Neuroimage. 2003;19:261–270. doi: 10.1016/S1053-8119(03)00049-1.

- Devereux BJ, Tyler LK, Geertzen J, Randall B. The centre for speech language and the brain (CSLB) concept property norms. Behav Res Methods. 2013 doi: 10.3758/s13428-013-0420-4. in press.

- Downing PE, Jiang Y, Shuman M, Kanwisher N. A cortical area selective for visual processing of the human body. Science. 2001;293:2470–2473. doi: 10.1126/science.1063414.

- Edelman S, Grill-Spector K, Kushnir T, Malach R. Toward direct visualization of the internal shape representation space by fMRI. Psychobiology. 1998;26:309–321.

- Epstein R, Harris A, Stanley D, Kanwisher N. The parahippocampal place area: recognition, navigation, or encoding? Neuron. 1999;23:115–125. doi: 10.1016/S0896-6273(00)80758-8.

- Haxby JV, Gobbini MI, Furey ML, Ishai A, Schouten JL, Pietrini P. Distributed and overlapping representations of faces and objects in ventral temporal cortex. Science. 2001;293:2425–2430. doi: 10.1126/science.1063736.

- Haxby JV, Guntupalli JS, Connolly AC, Halchenko YO, Conroy BR, Gobbini MI, Hanke M, Ramadge PJ. A common, high-dimensional model of the representational space in human ventral temporal cortex. Neuron. 2011;72:404–416. doi: 10.1016/j.neuron.2011.08.026.

- Higuchi S, Miyashita Y. Formation of mnemonic neuronal responses to visual paired associates in inferotemporal cortex is impaired by perirhinal and entorhinal lesions. Proc Natl Acad Sci U S A. 1996;93:739–743. doi: 10.1073/pnas.93.2.739.

- Holdstock JS, Hocking J, Notley P, Devlin JT, Price CJ. Integrating visual and tactile information in the perirhinal cortex. Cereb Cortex. 2009;19:2993–3000. doi: 10.1093/cercor/bhp073.

- Kamitani Y, Tong F. Decoding the visual and subjective contents of the human brain. Nat Neurosci. 2005;8:679–685. doi: 10.1038/nn1444.

- Kanwisher N, McDermott J, Chun MM. The fusiform face area: a module in human extrastriate cortex specialized for face perception. J Neurosci. 1997;17:4302–4311. doi: 10.1523/JNEUROSCI.17-11-04302.1997.

- Kay KN, Naselaris T, Prenger RJ, Gallant JL. Identifying natural images from human brain activity. Nature. 2008;452:352–355. doi: 10.1038/nature06713.

- Kivisaari SL, Tyler LK, Monsch AU, Taylor KI. Medial perirhinal cortex disambiguates confusable objects. Brain. 2012;135:3757–3769. doi: 10.1093/brain/aws277.

- Kriegeskorte N, Goebel R, Bandettini P. Information-based functional brain mapping. Proc Natl Acad Sci U S A. 2006;103:3863–3868. doi: 10.1073/pnas.0600244103.

- Kriegeskorte N, Formisano E, Sorger B, Goebel R. Individual faces elicit distinct response patterns in human anterior temporal cortex. Proc Natl Acad Sci U S A. 2007;104:20600–20605. doi: 10.1073/pnas.0705654104.

- Kriegeskorte N, Mur M, Bandettini P. Representational similarity analysis - connecting the branches of systems neuroscience. Front Syst Neurosci. 2008a;2:4. doi: 10.3389/neuro.01.016.2008.

- Kriegeskorte N, Mur M, Ruff DA, Kiani R, Bodurka J, Esteky H, Tanaka K, Bandettini PA. Matching categorical object representations in inferior temporal cortex of man and monkey. Neuron. 2008b;60:1126–1141. doi: 10.1016/j.neuron.2008.10.043.

- Libby LA, Ekstrom AD, Ragland JD, Ranganath C. Differential connectivity of perirhinal and parahippocampal cortices within human hippocampal subregions revealed by high-resolution functional imaging. J Neurosci. 2012;32:6550–6560. doi: 10.1523/JNEUROSCI.3711-11.2012.

- Mahon BZ, Anzellotti S, Schwarzbach J, Zampini M, Caramazza A. Category-specific organization in the human brain does not require visual experience. Neuron. 2009;63:397–405. doi: 10.1016/j.neuron.2009.07.012.

- Malach R, Reppas JB, Benson RR, Kwong KK, Jiang H, Kennedy WA, Ledden PJ, Brady TJ, Rosen BR, Tootell RB. Object-related activity revealed by functional magnetic resonance imaging in human occipital cortex. Proc Natl Acad Sci U S A. 1995;92:8135–8139. doi: 10.1073/pnas.92.18.8135.

- McCarthy G, Puce A, Gore JC, Allison T. Face-specific processing in the human fusiform gyrus. J Cogn Neurosci. 1997;9:605–610. doi: 10.1162/jocn.1997.9.5.605.

- McRae K, Cree GS, Seidenberg MS, McNorgan C. Semantic feature production norms for a large set of living and nonliving things. Behav Res Methods. 2005;37:547–559. doi: 10.3758/BF03192726.

- Mion M, Patterson K, Acosta-Cabronero J, Pengas G, Izquierdo-Garcia D, Hong YT, Fryer TD, Williams GB, Hodges JR, Nestor PJ. What the left and right anterior fusiform gyri tell us about semantic memory. Brain. 2010;133:3256–3268. doi: 10.1093/brain/awq272.

- Miyashita Y, Okuno H, Tokuyama W, Ihara T, Nakajima K. Feedback signal from medial temporal lobe mediates visual associative mnemonic codes of inferotemporal neurons. Brain Res Cogn Brain Res. 1996;5:81–86. doi: 10.1016/S0926-6410(96)00043-2.

- Moss HE, Rodd JM, Stamatakis EA, Bright P, Tyler LK. Anteromedial temporal cortex supports fine-grained differentiation among objects. Cereb Cortex. 2005;15:616–627. doi: 10.1093/cercor/bhh163.

- Mur M, Ruff DA, Bodurka J, De Weerd P, Bandettini PA, Kriegeskorte N. Categorical, yet graded - single-image activation profiles of human category-selective cortical regions. J Neurosci. 2012;32:8649–8662. doi: 10.1523/JNEUROSCI.2334-11.2012.

- Murray EA, Richmond BJ. The role of perirhinal cortex in object perception, memory, and associations. Curr Opin Neurobiol. 2001;11:188–193. doi: 10.1016/S0959-4388(00)00195-1.

- Mutch J, Lowe DG. Multiclass object recognition with sparse, localized features. IEEE Conference on Computer Vision and Pattern Recognition (CVPR); New York. 2006. pp. 11–18.

- Mutch J, Knoblich U, Poggio T. MIT-CSAIL-TR-2010-013/CBCL-286. Cambridge, MA: Massachusetts Institute of Technology; 2010. CNS: a GPU-based framework for simulating cortically-organized networks.

- Nili H, Wingfield C, Su L, Walther A, Marslen-Wilson W, Kriegeskorte N. A toolbox for representational similarity analysis. PLoS Comput Biol. 2014 doi: 10.1371/journal.pcbi.1003553. in press.

- Op de Beeck HP, Haushofer J, Kanwisher NG. Interpreting fMRI data: maps, modules and dimensions. Nat Rev Neurosci. 2008;9:123–135. doi: 10.1038/nrn2314.

- O'Toole AJ, Jiang F, Abdi H, Haxby JV. Partially distributed representations of objects and faces in ventral temporal cortex. J Cogn Neurosci. 2005;17:580–590. doi: 10.1162/0898929053467550.

- Patterson K. The reign of typicality in semantic memory. Philos Trans R Soc Lond B Biol Sci. 2007;362:813–821. doi: 10.1098/rstb.2007.2090.

- Patterson K, Nestor PJ, Rogers TT. Where do you know what you know? The representation of semantic knowledge in the human brain. Nat Rev Neurosci. 2007;8:976–987. doi: 10.1038/nrn2277.

- Pietrini P, Furey ML, Ricciardi E, Gobbini MI, Wu WH, Cohen L, Guazzelli M, Haxby JV. Beyond sensory images: object-based representation in the human ventral pathway. Proc Natl Acad Sci U S A. 2004;101:5658–5663. doi: 10.1073/pnas.0400707101.

- Reddy L, Tsuchiya N, Serre T. Reading the mind's eye: decoding category information during mental imagery. Neuroimage. 2010;50:818–825. doi: 10.1016/j.neuroimage.2009.11.084.

- Riesenhuber M, Poggio T. Hierarchical models of object recognition. Nat Neurosci. 1999;2:1019–1025. doi: 10.1038/14819.

- Serre T, Wolf L, Bileschi S, Riesenhuber M, Poggio T. Robust object recognition with cortex-like mechanisms. IEEE Trans Pattern Anal Mach Intell. 2007;29:411–426. doi: 10.1109/TPAMI.2007.56.

- Suzuki WA, Amaral DG. Topographic organization of the reciprocal connections between the monkey entorhinal cortex and the perirhinal and parahippocampal cortices. J Neurosci. 1994;14:1856–1877. doi: 10.1523/JNEUROSCI.14-03-01856.1994.

- Taylor KI, Moss HE, Stamatakis EA, Tyler LK. Binding crossmodal object features in perirhinal cortex. Proc Natl Acad Sci U S A. 2006;103:8239–8244. doi: 10.1073/pnas.0509704103.

- Taylor KI, Moss HE, Tyler LK. The conceptual structure account: a cognitive model of semantic memory and its neural instantiation. In: Hart J, Kraut M, editors. The neural basis of semantic memory. Cambridge: Cambridge UP; 2007. pp. 265–301.

- Taylor KI, Stamatakis EA, Tyler LK. Crossmodal integration of object features: voxel-based correlations in brain-damaged patients. Brain. 2009;132:671–683. doi: 10.1093/brain/awn361.

- Taylor KI, Devereux BJ, Tyler LK. Conceptual structure: towards an integrated neurocognitive account. Lang Cogn Process. 2011;26:1368–1401. doi: 10.1080/01690965.2011.568227.

- Taylor KI, Devereux BJ, Acres K, Randall B, Tyler LK. Contrasting effects of feature-based statistics on the categorisation and identification of visual objects. Cognition. 2012;122:363–374. doi: 10.1016/j.cognition.2011.11.001.

- Tyler LK, Moss HE. Towards a distributed account of conceptual knowledge. Trends Cogn Sci. 2001;5:244–252. doi: 10.1016/S1364-6613(00)01651-X.

- Tyler LK, Stamatakis EA, Bright P, Acres K, Abdallah S, Rodd JM, Moss HE. Processing objects at different levels of specificity. J Cogn Neurosci. 2004;16:351–362. doi: 10.1162/089892904322926692.

- Tyler LK, Chiu S, Zhuang J, Randall B, Devereux BJ, Wright P, Clarke A, Taylor KI. Objects and categories: feature statistics and object processing in the ventral stream. J Cogn Neurosci. 2013;25:1723–1735. doi: 10.1162/jocn_a_00419.