Abstract

Purpose

The overall goal of the current study was to identify an optimal level and duration of acoustic experience that facilitates language development for pediatric cochlear implant (CI) recipients—specifically, to determine whether there is an optimal duration of hearing aid (HA) use and unaided threshold levels that should be considered before proceeding to bilateral CIs.

Method

A total of 117 pediatric CI recipients (ages 5–9 years) were given speech perception and standardized tests of receptive vocabulary and language. The speech perception battery included tests of segmental perception (e.g., word recognition in quiet and noise, and vowels and consonants in quiet) and of suprasegmental perception (e.g., talker and stress discrimination, and emotion identification). Hierarchical regression analyses were used to determine the effects of speech perception on language scores, and the effects of residual hearing level (unaided pure-tone average [PTA]) and duration of HA use on speech perception.

Results

A continuum of residual hearing levels and the length of HA use were represented by calculating the unaided PTA of the ear with the longest duration of HA use for each child. All children wore 2 devices: Some wore bimodal devices, while others received their 2nd CI either simultaneously or sequentially, representing a wide range of HA use (0.03–9.05 years). Regression analyses indicate that suprasegmental perception contributes unique variance to receptive language scores and that both segmental and suprasegmental skills each contribute independently to receptive vocabulary scores. Also, analyses revealed an optimal duration of HA use for each of 3 ranges of hearing loss severity (with mean PTAs of 73, 92, and 111 dB HL) that maximizes suprasegmental perception.

Conclusions

For children with the most profound losses, early bilateral CIs provide the greatest opportunity for developing good spoken language skills. For those with moderate-to-severe losses, however, a prescribed period of bimodal use may be more advantageous for developing good spoken language skills.

For young children with severe-to-profound hearing loss (HL), there is a general consensus that maximizing hearing at each ear is best, that is, two ears/devices are better than one. Support for bilateral devices comes from both physiological and behavioral evidence suggesting that bilateral input to the auditory system, as opposed to unilateral input, may prevent bilateral auditory deprivation and ultimately facilitate binaural hearing skills (e.g., localization; Bauer, Sharma, Martin, & Dorman, 2006; Gordon, Jiwani, & Papsin, 2013; Litovsky & Gordon, 2016). Binaural hearing abilities are among a number of factors, both intrinsic (i.e., cognitive/learning potential) and extrinsic (i.e., socioeconomic, maternal education, linguistic input) to the child, that contribute to spoken language acquisition. Moreover, binaural hearing abilities are necessary for effective communication in daily listening and learning environments and ultimately for academic success (Boons et al., 2012; Litovsky & Gordon, 2016; Sarant, Harris, & Bennet, 2015; Sarant, Harris, Bennet, & Bant, 2014). Thus, for young children with a particular level of severe-to-profound HL, the most pertinent decision is not choosing between bilateral devices and a unilateral device but rather “which” bilateral devices, bilateral cochlear implants (BCIs) or bimodal devices (cochlear implant [CI] at one ear and hearing aid [HA] at the other ear), to recommend and “when.”

Most clinicians agree that children with the most profound levels of HL are suitable candidates for BCIs. However, for children with less profound bilateral HL or with asymmetric HL (e.g., profound HL at one ear and moderate-to-severe HL at the opposite ear), the decision is less clear (Peters, Wyss, & Manrique, 2010). Currently, adults and children with greater levels of residual hearing are being considered for CIs in at least one ear (Cadieux, Firszt, & Reeder, 2013; Gifford, Dorman, Shallop, & Sydlowski, 2010; Mowry, Woodson, & Gantz, 2012; Sampaio, Araújo, & Oliveira, 2011). Thus, due to these expanding CI candidacy guidelines (moderate-to-severe HL), the questions of “which” devices are best and “when” are more relevant than ever. A recent report of surgeons from CI centers in the United States (Carlson et al., 2018) revealed that 38% of those surveyed considered CI surgery for children with asymmetric HL (i.e., severe/profound in one and better hearing at the opposite ear), and for those children with bilateral profound HL, 58% preferred simultaneous implantation while 38% preferred sequential implantation. Moreover, 43% of surgeons surveyed consider cochlear implantation for children younger than 12 months of age, for which the reliance on audiometric thresholds to guide candidacy is highly likely.

For the pediatric population, audiometric thresholds are the primary criteria, although not the only ones, for determining CI candidacy, including BCIs (Dhondt, Swinnen, & Dhooge, 2018). Otological, medical, and other child and family characteristics are also considered. Speech cutoff scores, derived from comparisons of pre- and post-CI speech perception tests, have been used to guide CI candidacy (Mondain et al., 2002) but are applicable only to children who have the developmental, cognitive, and linguistic ability to complete speech testing. Thus, with the minimum age for CI surgery at 12 months or younger, pure-tone average (PTA) hearing levels are often utilized to guide device recommendations. Empirically driven hearing level (i.e., PTAs) guidelines for CI candidacy, for unilateral CIs (Blamey et al., 2001; Boothroyd & Eran, 1994; Davidson, 2006), and more recently for BCI versus bilateral HAs (Lovett, Vickers, & Summerfield, 2015) have been developed by examining the relationship between hearing level (i.e., unaided PTA) and speech perception scores in a group of children with HAs and comparing these scores to a group of children with CIs. Typically, regression models are used to determine a PTA cutoff level for recommending a CI (or CIs) or an HA (or HAs). For example, Leigh, Dettman, Dowell, and Sarant (2011) evaluated open-set speech perception scores of 80 children with CIs and of 62 children with HAs. Aided speech perception performance for the children with HAs was plotted against the three-frequency (0.5, 1, and 2 kHz) unaided PTA for the ear tested. The first quartile and median speech perception scores for children using CIs were then converted to an equivalent unaided PTA HL. Based on these analyses, children with unaided PTA hearing levels from 75 to 90 dB HL have a 75% chance of improved hearing outcomes with a CI over an HA. 
In a subsequent report, they suggest that children under 3 years with PTAs of greater than 65 dB HL bilaterally may be considered as candidates for a CI (Leigh, Dettman, & Dowell, 2016). Notably, in the aforementioned studies, the majority of children used a single CI, with only three children using bimodal devices and five using BCIs. The authors acknowledge that these models do not take into account possible benefits provided by use of a CI and HA combined (i.e., bimodal devices) and recommend that caution should be exercised when using this model to make recommendations regarding BCIs. A recent study by Lovett et al. (2015) in the United Kingdom developed audiometric candidacy guidelines by comparing speech perception scores of 28 children with bilateral CIs to 43 children with bilateral HAs (age 3–7 years). The authors suggest that children with a four-frequency (average of 0.5, 1, 2, and 4 kHz) PTA of ≥ 80 dB HL should be considered for BCIs. The authors point out that sequential BCIs are rarely recommended (over simultaneous) in the United Kingdom. These specific guidelines do not address asymmetrical hearing thresholds across ears where bimodal stimulation may be an option. Notably, in all of the studies discussed above, guidelines were based on speech perception tests, either open-set word or sentence recognition scores in quiet.

Determining the best device configuration (i.e., BCIs vs. bimodal) for the pediatric population becomes more critical as candidacy guidelines expand to include children with more residual hearing. A worldwide survey of trends in bilateral cochlear implantation revealed that, for children with hearing thresholds ≥ 100 dB HL, clinicians readily considered BCIs (Peters et al., 2010). Moreover, for this group of children, clinicians are likely to consider simultaneous BCIs and consider doing so early. For those with severe-to-profound losses (approximately 70–90 dB HL), the decision regarding “if” and “when” to choose BCIs is made with less certainty. Furthermore, conclusions regarding the best device configuration vary depending on the type of measured outcome. A recent comparison of children with bimodal versus bilateral CIs revealed no significant group differences in spoken language outcomes after accounting for various demographic variables (Ching et al., 2014). Likewise, a meta-analytic comparison of binaural listening benefits between bimodal and BCI recipients varied across outcome measures and test configurations (Schafer, Amlani, Paiva, Nozari, & Verret, 2011). In some studies that report benefits of early BCI for spoken language acquisition (Boons et al., 2012; Sarant et al., 2015, 2014), it is unclear whether the benefits are exaggerated by the lack of bilateral stimulation (e.g., lack of bimodal device use) prior to the second CI surgery. In one study, only 34% of the children used an HA during the interval between the first and second CI surgeries (Sarant et al., 2014), while in another study, HA use at the ear that received the second CI was not specified (Boons et al., 2012). By contrast, using a measure of expressive vocabulary, children with BCIs who had some bimodal experience had higher scores than those without bimodal experience, even when the BCIs were received simultaneously (Moberly, Lowenstein, & Nittrouer, 2016; Nittrouer & Chapman, 2009).
More recently, early bimodal experience was found to have a positive effect on phonological skills of pediatric CI recipients at elementary grades (Nittrouer, Lowenstein, & Holloman, 2016; Nittrouer, Muir, Tietgens, Moberly, & Lowenstein, 2018). The level of hearing and duration of HA use (in combination with the first CI) necessary for benefits were not determined in these studies.

Outcome measures other than language have produced varied conclusions. For example, physiological measures (brainstem and cortical lateralization responses) suggest that children who receive BCIs simultaneously are more similar to children with typical hearing than those who receive sequentially implanted CIs (Gordon, Wong, & Papsin, 2013). However, prior to receipt of a second CI, the sequentially implanted children were unilateral CI users with no HA use at the unimplanted (second) ear. More recent studies of pediatric CI recipients found that HA use at the unimplanted ear may protect bilateral symmetry through the brainstem (Polonenko, Papsin, & Gordon, 2015) and facilitate better localization abilities for some children receiving sequential BCIs (Grieco-Calub & Litovsky, 2010). As with the studies mentioned above, however, the level of hearing and duration of HA use required for these benefits were not determined.

In many of these studies and more importantly for those determining device candidacy guidelines, the most commonly measured outcome is speech perception, with a focus on segmental perception (phonemes, words, sentences) while usually neglecting suprasegmental perception (intonation, stress, rhythm). Yet, suprasegmental perception is extremely important. First, good suprasegmental perception serves as a foundation for spoken language and lexical development during an infant's early years (Jusczyk, Houston, & Newsome, 1999; Saffran, Aslin, & Newport, 1996; Segal, Houston, & Kishon-Rabin, 2016; Seidl & Johnson, 2008; Sidtis & Kreiman, 2012; Singh, Morgan, & Best, 2002; Swingley, 2009; Thiessen & Saffran, 2007; Werker & Yeung, 2005). Second, the acoustic cues associated with suprasegmental perception are not transmitted equally well with CIs and HAs; CIs are typically more effective for conveying segmental cues, while HAs, depending on a listener's residual hearing, may be more effective for conveying suprasegmental cues such as those associated with “voice pitch” (Carroll & Zeng, 2007; Chatterjee et al., 2015; Dorman, Gifford, Spahr, & McKarns, 2008; Gantz, Turner, Gfeller, & Lowder, 2005; Golub, Won, Drennan, Worman, & Rubinstein, 2012; Kong, Stickney, & Zeng, 2005; Most & Peled, 2007; Peng, Chatterjee, & Lu, 2012; Sheffield, Simha, Jahn, & Gifford, 2016; Zhang, Spahr, & Dorman, 2010). Thus, depending on the degree of residual hearing, continued bimodal device use or some period of early bimodal device use during critical language development ages may have positive consequences for spoken language skills of pediatric CI recipients. A specified period of bimodal device use, as would be encountered with sequential BCIs, may enable both good segmental perception and good suprasegmental perception (which underpins language development in infants with normal hearing).

The overall goal of the current study was to identify an optimal level and duration of acoustic experience that facilitates language development for pediatric CI recipients. Specifically, our aim is to determine whether there is an optimal duration of HA use and unaided threshold levels that should be considered before proceeding to BCIs. We hypothesize that children who have early access to acoustic cues through residual hearing can perceive both suprasegmental and segmental cues, which then lead to better language skills (both receptive language and vocabulary). Such benefits of acoustic residual hearing, however, are likely moderated by hearing level and length of acoustic experience via HA use.

For children with CIs, the following specific predictions were tested:

Segmental and suprasegmental speech perception skills contribute independently to receptive language and vocabulary performance.

Lower (better) unaided thresholds will be associated with higher (better) segmental and suprasegmental speech perception scores.

For speech perception and ultimately language development, the optimum period of HA use prior to a second CI will depend on unaided threshold levels; there exists a hearing loss cutoff above which acoustic experience is not beneficial for language outcomes.

Method

Participants

A total of 117 pediatric participants with CIs, ranging in age from 5 to 9 years, were recruited from multiple CI centers and schools across the United States. All children spoke English as their primary language and had typical cognitive function. Maternal education level was used as a sociodemographic variable and calculated in total years of education through college or beyond. Maternal education was categorized by academic degree achieved in the following categories: General Education Development (11 years), high school degree (12 years), associate's degree (14 years), bachelor's degree (16 years), master's degree (18 years), and doctorate degree (≥ 20 years). Some mothers reported “some college” (13 years) or “some graduate school” (17 years) for their highest level of education. The mean total maternal education was approximately 16 years (SD = 2.2). Table 1 shows the distribution of demographic variables, including age at test, gender, maternal education, and race/ethnicity for these participants.

Table 1.

Demographics of children with cochlear implants.

| Demographics (n = 117) | M | SD | Range |
|---|---|---|---|
| Age at test (years) | 7.0 | 1.3 | 4.8–9.4 |
| Maternal education (years) | 15.9 | 2.2 | 11–20 |
| | Count (%) | Count (%) | |
| Gender | Female: 54 (46) | Male: 63 (54) | |
| Race (12 DNRᵃ) | Minorityᵇ: 30 (26) | White: 75 (64) | |
| Ethnicity (1 unknown/DNRᵃ) | Hispanic: 16 (14) | Not Hispanic: 100 (85) | |

ᵃ Did not report.

ᵇ Minority: African American, 11; American Indian, 1; Asian, 8; and multiracial, 10.

Children had congenital HL or HL acquired before 20 months of age. They had received their first CI/s at ≤ 4 years 11 months. The later age at first CI for some children is due to the fact that, in this sample, some children had greater levels of residual hearing (in at least one ear) and were considered for CIs later than the typical Food and Drug Administration guidelines (approximately 12 months of age). All children used oral communication in either a mainstream or oral special education classroom. All used two devices (BCIs or bimodal). For these 117 children, 29 had bimodal devices, 23 received BCIs simultaneously, and 65 received BCIs sequentially. Table 2 shows the audiological and device variables, including age at HA/s, age at first CI/s, age at second CI, and duration of acoustic experience. Comprehensive pre-implant audiological records and HA data were obtained from medical centers, schools, and CI centers from across the United States. From these records, acoustic experience, defined by unaided thresholds (PTA at 0.5, 1, and 2 kHz) and HA use, was determined. Nine children had a progression of HL, documented through multiple audiograms. A change in hearing thresholds of ≥ 10 dB over time was considered significant. For each of these children, a single value of unaided PTA was calculated from the time-weighted average of the child's unaided PTAs for each time period (as determined by the dates of the multiple audiograms). All but one child had a permanent sensorineural HL diagnosed during infancy and used an HA from the time of diagnosis. 1
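The three-frequency PTA and the time-weighted average described above can be sketched as follows. This is a minimal illustration, not the procedure as implemented by the research team: the function names, the (PTA, months) representation of each time period, and all example values are hypothetical.

```python
def three_frequency_pta(thresholds_db_hl):
    """Average the unaided thresholds (dB HL) at 0.5, 1, and 2 kHz."""
    freqs_hz = (500, 1000, 2000)
    return sum(thresholds_db_hl[f] for f in freqs_hz) / len(freqs_hz)


def time_weighted_pta(periods):
    """Weight each period's PTA by the length of time that PTA applied.

    periods: list of (pta_db_hl, duration_months) tuples, one per interval
    between audiograms (a hypothetical representation).
    """
    total_months = sum(months for _, months in periods)
    if total_months <= 0:
        raise ValueError("total duration must be positive")
    return sum(pta * months for pta, months in periods) / total_months


# Hypothetical audiogram: thresholds in dB HL keyed by frequency in Hz.
audiogram = {500: 65, 1000: 70, 2000: 75}
pta = three_frequency_pta(audiogram)

# Hypothetical progression: 70 dB HL for 12 months, then 90 dB HL for 6 months.
weighted = time_weighted_pta([(70.0, 12), (90.0, 6)])
```

Weighting by duration means that a brief period of poorer thresholds shifts the single PTA value only slightly, which is why a child with a late-onset loss can have a low time-weighted PTA.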

Table 2.

Audiological characteristics of participants, children (n = 117) with cochlear implants (CIs).

| Variables | M | SD | Range |
|---|---|---|---|
| Age at first HA (months) | 10 | 8.4 | 1–29 |
| Age at first CI (years) | 2.1 | 1.1 | 0.67–4.9 |
| Unaided PTAᵃ,ᵇ (dB HL) | 92 | 19 | 30–125 |
| Duration of acoustic experience (years), i.e., duration of HA useᵇ,ᶜ | 2.6 | 2.3 | 0.03–9.05 |
| Age at second CI (years), n = 88 | 2.6 | 1.4 | 0.7–6.7 |
| Device configuration | Bimodal (29), sequential bilateral (65), simultaneous bilateral (23) | | |
| CI manufacturer | Cochlear (90), Advanced Bionics (23), MED-EL (4) | | |

Note. HA = hearing aid; PTA = pure-tone average; BCI = bilateral cochlear implant.

ᵃ PTA of 0.5, 1, and 2 kHz (dB HL) for the acoustic ear. The unaided PTAs associated with acoustic experience for each child were as follows: the better ear pre-implant PTA for those receiving simultaneous BCIs, pre-implant PTA for the second CI ear for those receiving sequential BCIs, and PTA for the HA ear for those using bimodal devices.

ᵇ For one participant who acquired meningitis at age 20 months, the unaided PTA, 30 dB HL, is a time-weighted average of thresholds: within normal limits through 20 months and profound thresholds for the 1 month prior to receipt of BCIs. This participant's duration of acoustic experience represents the time prior to receipt of simultaneous BCIs (21 months).

ᶜ Duration of acoustic experience was calculated from age at first HAs to age at BCIs for children using simultaneously implanted BCIs, age at second CI for children using sequential BCIs, and age at test for children using bimodal devices.

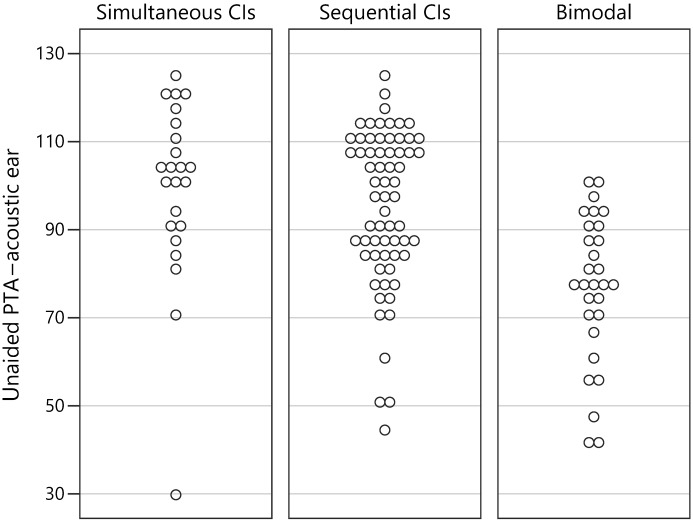

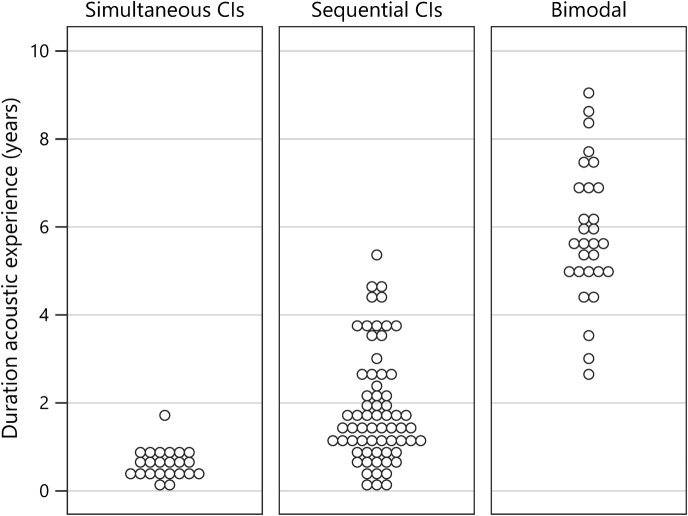

Duration of acoustic experience was calculated for each child as follows: (a) for children using simultaneously implanted BCIs, age at BCIs minus age at first HAs 2 ; (b) for children using sequential BCIs, age at second CI minus age at first HAs (86% of children who received sequential BCIs used an HA at the nonimplanted ear for some time); and (c) for children using bimodal devices, age at test minus age at first HAs. The unaided PTAs associated with acoustic experience for each child were as follows: the better ear pre-implant PTA for those receiving simultaneous BCIs, pre-implant PTA for the second CI ear for those receiving sequential BCIs, and PTA for the HA ear for those using bimodal devices. Figure 1 displays the unaided PTAs associated with the acoustic ear for each participant, separated by device configuration (simultaneous BCIs, sequential BCIs, and bimodal). Similarly, Figure 2 displays the duration of acoustic experience for each participant separated by device configuration. Note that, while there is a trend for children in the bimodal group to have better PTAs and those in the simultaneously implanted group to have poorer PTAs, there is a substantial amount of overlap in PTAs across all three groups. And, as expected, the children with simultaneous BCIs have shorter durations of acoustic experience while children with bimodal devices have longer durations of acoustic experience. However, there is overlap in the durations between the simultaneous and sequential BCI groups and an overlap in durations between the sequential BCI and bimodal groups.
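The three rules for duration of acoustic experience can be expressed compactly in code. The sketch below is illustrative only: the function name, the configuration labels, and the example ages are hypothetical and do not come from the study data.

```python
def acoustic_experience_years(config, age_first_ha, age_bci=None,
                              age_second_ci=None, age_at_test=None):
    """Duration of HA use (years) under rules (a) to (c) described above.

    All ages are in years. The end point depends on device configuration:
    simultaneous BCIs -> age at BCIs, sequential BCIs -> age at second CI,
    bimodal -> age at test.
    """
    if config == "simultaneous":
        end_age = age_bci
    elif config == "sequential":
        end_age = age_second_ci
    elif config == "bimodal":
        end_age = age_at_test
    else:
        raise ValueError(f"unknown device configuration: {config!r}")
    if end_age is None:
        raise ValueError("missing end-point age for this configuration")
    return end_age - age_first_ha


# Hypothetical children, one per configuration.
simultaneous = acoustic_experience_years("simultaneous", 0.5, age_bci=1.25)
sequential = acoustic_experience_years("sequential", 0.5, age_second_ci=3.0)
bimodal = acoustic_experience_years("bimodal", 1.0, age_at_test=7.0)
```

Because children using bimodal devices are still accruing HA experience at the time of testing, their durations are bounded only by age at test, which is consistent with the longer durations seen in that group.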

Figure 1.

Graph displays the unaided pure-tone averages (PTAs) associated with the acoustic ear for each participant, separated by device configuration (simultaneous bilateral cochlear implants, sequential bilateral cochlear implants, and bimodal). CIs = cochlear implants.

Figure 2.

Graph displays the duration of acoustic experience for each participant separated by device configuration (simultaneous bilateral cochlear implants, sequential bilateral cochlear implants, and bimodal). CIs = cochlear implants.

All children attended a day-long test session held at their CI center or school for the deaf. Testing was carried out by members of the research team as well as local certified audiologists and speech-language pathologists. The test battery was composed of measures of both segmental and suprasegmental speech perception, standardized measures of receptive language and receptive vocabulary, nonverbal intelligence, aided threshold testing, HA gain and HA output checks (for bimodal device recipients), and several research measures developed by the research team. Each test is described below.

Speech Perception

The speech perception test battery included several tests of both segmental (perception of phonemes and of words in quiet and noise) and suprasegmental perception (perception of stress, emotion, and talkers' voices). All speech perception tests were administered at 60 dBA.

Segmental Perception

The Lexical Neighborhood Test (LNT; Kirk, Pisoni, & Osberger, 1995), a monosyllabic open-set word test, was administered using prerecorded stimuli presented from a laptop computer to a loudspeaker placed at 0° azimuth and approximately 1 m from the child. List 1 (50 words) was presented in quiet, and List 2 was presented at 60 dBA in four-talker background noise (speech and noise at 0° azimuth) at a signal-to-noise ratio of +8 dB. Two percent-correct LNT word scores were calculated, one each for the quiet and noise conditions.

The On-Line Imitative Test of Speech-Pattern Contrast Perception (OlimSpac; Boothroyd, Eisenberg, & Martinez, 2010) was used to assess segmental perception at the phoneme level. Nonsense syllables, vowel–consonant–vowel (VCV) combinations of three vowels {/ɑ, i, u/} and 12 consonants {/b, d, p, t, f, v, s, ʃ, z, ʒ, ʧ, ʤ/}, were used. The child listened to and imitated each VCV presented in an auditory-alone condition, after practice in the auditory + visual condition. An examiner, who was “blinded” (by the presence of a brief masking noise) to the test presentation, listened to the child's imitation and chose one of the alternatives that best represented the child's utterance. The accuracy of six contrasts, three in each VCV, was scored: vowel height, vowel place, consonant voicing, consonant continuance, pre-alveolar consonant place, and postalveolar consonant place. Two lists of 16 nonsense syllables were administered; a total of 96 contrasts were scored automatically in the system. An overall percent correct contrast score, adjusted for chance (guessing) performance, was reported for each child.
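To make the scoring arithmetic concrete, the sketch below counts the scored contrasts and applies one common correction for guessing. The text does not state the formula or the chance level used by the OlimSpac software, so the correction `(observed - chance) / (1 - chance)`, the floor at zero, the chance value of 0.5, and the example count of correct contrasts are all assumptions made for illustration.

```python
def total_contrasts(n_lists, syllables_per_list, contrasts_per_syllable):
    """Number of contrasts scored across all lists."""
    return n_lists * syllables_per_list * contrasts_per_syllable


def chance_adjusted_percent(n_correct, n_scored, chance):
    """Percent correct rescaled so that chance maps to 0 and perfect to 100.

    Uses the standard correction for guessing; scores below chance are
    floored at 0 (an assumption, not taken from the source).
    """
    if not 0.0 <= chance < 1.0:
        raise ValueError("chance must be in [0, 1)")
    observed = n_correct / n_scored
    adjusted = (observed - chance) / (1.0 - chance)
    return 100.0 * max(adjusted, 0.0)


# Two lists of 16 syllables, three contrasts scored per syllable.
n_scored = total_contrasts(2, 16, 3)

# Hypothetical child: 84 contrasts correct, assumed chance level of 0.5.
score = chance_adjusted_percent(84, n_scored, chance=0.5)
```

Under these assumptions, a raw score of 87.5% correct corresponds to an adjusted score of 75%, which illustrates why adjusted scores are lower than raw percent correct whenever chance performance is above zero.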

Suprasegmental Perception

Emotion identification was assessed using prerecorded sentences spoken by a female talker with four emotional intentions: angry, scared, happy, and sad (Geers, Davidson, Uchanski, & Nicholas, 2013). Children listened to 36 semantically neutral sentences with simple vocabulary and chose among the four emotions pictured on the computer screen. For each child, a percent correct emotion identification score was calculated.

Talker discrimination of eight females was assessed using a subset of prerecorded sentences from the Indiana multitalker speech database (Bradlow, Torretta, & Pisoni, 1996; Geers et al., 2013; Karl & Pisoni, 1994). The child heard two different sentence scripts and indicated whether the sentences were spoken by the same female talker or by different female talkers. A total of 32 sentence pairs were presented. For each child, a percent correct talker discrimination score was calculated.

Stress discrimination was assessed using pairs of bisyllabic nonwords (CVCV; Thiessen & Saffran, 2007; Wenrich, Davidson, & Uchanski, 2017). In each trial, the pair of nonwords had the same phonetic sequence (e.g., “diga”–“diga”) and the same total duration. The stress pattern, however, in the pairs of nonwords was either the same (spondee–spondee, trochee–trochee, iamb–iamb) or different (e.g., trochee–spondee, iamb–trochee). Stress was represented acoustically by elongated duration, increased intensity, and increased fundamental frequency. After each trial presentation, the child responded whether the two nonwords had the “same” or “different” rhythms (stress patterns). A total of 30 trials were presented, and an overall percent correct stress discrimination score was calculated.

An adapted (and shortened) version of the Children's Test of Nonword Repetition (Dillon, Burkholder, Cleary, & Pisoni, 2004) was also used to assess suprasegmental perception. The test list consists of 20 nonwords, five each with two, three, four, and five syllables. Digital recordings of nonwords were played in random order in the sound field. Children were instructed that they would hear a “funny word” and should repeat it back as well as they could. Their imitation responses were recorded via a head-mounted microphone connected to a digital recorder. The children's nonword productions were transcribed by graduate student/clinicians in speech-language pathology using the International Phonetic Alphabet. The production of each nonword was transcribed and scored for stress pattern accuracy.

Language

Receptive Language

The Clinical Evaluation of Language Fundamentals–Fourth Edition (CELF-4; Semel, Wiig, & Secord, 2004) was used to assess receptive language abilities. The test protocol guidelines from the CELF-4 allow for calculation of a receptive language index (RLI) standard score. The RLI is derived using scaled scores from a combination of the following three subtests: (a) Concepts and Following Directions: evaluates the ability to interpret spoken directions of increasing length and complexity (containing concepts that require logical operations) and remember names, characteristics, and order of objects; (b) Word Classes–Receptive: evaluates the ability to understand relationships between words that are related by semantic class feature; and (c) Sentence Structure: evaluates the ability to interpret spoken sentences of increasing length and syntactic complexity.

Receptive Vocabulary

The Peabody Picture Vocabulary Test–Fourth Edition (PPVT-4; Dunn & Dunn, 2007) was administered live voice in an auditory + visual mode and was used as a measure of receptive spoken vocabulary. The examiner produced words and asked the child to point to the correct picture in a set of four pictures.

Nonverbal IQ

Performance on a block design task was used to estimate nonverbal IQ. Depending on the age of the child at the time of testing, either the Wechsler Intelligence Scale for Children–Fourth Edition (Wechsler, 2003) or the Wechsler Abbreviated Scale of Intelligence–Second Edition (Wechsler, 2011) was administered.

The CELF-4, PPVT-4, Wechsler Intelligence Scale for Children–Fourth Edition, and Wechsler Abbreviated Scale of Intelligence–Second Edition are all standardized tests that provide age-referenced standard scores based on normative samples of typically hearing children.

Data Analyses

Principal components analysis (PCA) was used to create two composite measures, one each for segmental and suprasegmental speech perception. Using composite scores reduces the number of potentially redundant statistical tests, reduces the probability of Type I errors, and increases the reliability of the measures used in the analyses. Furthermore, combining measures that load highly on a single factor has proven effective in past studies (Strube, 2003). The segmental composite score included the LNT word scores from the quiet and noise conditions and the OlimSpac total score. The suprasegmental composite score included percent correct scores for emotion identification, talker discrimination, stress discrimination, and stress pattern reproduction (from the nonword repetition test). Standardized versions of PCA scores were used in the subsequent analyses. Standardized scores are commonly used for reporting PCA scores and are the default in most statistical software packages. They are especially valuable in the type of regression analysis that examines the unique contribution of selected predictor variables on a given outcome measure. Furthermore, they permit comparisons of regression coefficients by using a common metric.
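As a rough illustration of how a standardized composite can be derived from several tests, the sketch below extracts the first principal component of the correlation matrix by power iteration and returns z-scored component scores. It is a simplified stand-in for the statistical software the authors would have used; the function names and all example scores are hypothetical.

```python
import math
import statistics


def zscores(values):
    """Standardize to mean 0 and sample standard deviation 1."""
    mean = statistics.fmean(values)
    sd = statistics.stdev(values)
    return [(v - mean) / sd for v in values]


def first_component_scores(columns, iterations=500):
    """Standardized scores on the first principal component.

    columns: list of equal-length score lists, one list per test.
    """
    z = [zscores(col) for col in columns]
    n, k = len(z[0]), len(z)
    # Correlation matrix of the standardized tests.
    corr = [[sum(z[a][i] * z[b][i] for i in range(n)) / (n - 1)
             for b in range(k)] for a in range(k)]
    # Power iteration converges to the eigenvector with the largest eigenvalue.
    vec = [1.0] * k
    for _ in range(iterations):
        nxt = [sum(corr[a][b] * vec[b] for b in range(k)) for a in range(k)]
        norm = math.sqrt(sum(x * x for x in nxt))
        vec = [x / norm for x in nxt]
    # Project each child onto the component, then standardize the scores.
    raw = [sum(vec[a] * z[a][i] for a in range(k)) for i in range(n)]
    return zscores(raw)


# Hypothetical percent-correct scores for six children on three segmental tests.
lnt_quiet = [90, 70, 85, 60, 95, 75]
lnt_noise = [70, 50, 66, 40, 80, 52]
olimspac = [85, 72, 80, 65, 90, 70]
segmental_composite = first_component_scores([lnt_quiet, lnt_noise, olimspac])
```

Because the composite is standardized, a regression coefficient for it can be read as the change in the outcome per one standard deviation of the composite, which is the common metric the paragraph above refers to.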

We used hierarchical multiple regression analyses in which sets of selected predictors are entered in stages so that the unique contribution of particular variables (e.g., unaided PTA and duration of acoustic experience) can be assessed after first controlling for the influence of other important variables such as child, family, and audiological characteristics (nonverbal IQ, maternal education, gender, age at first CI, age at test). This approach has been used in past studies to elicit meaningful and interpretable data (Davidson, 2006; Geers et al., 2013; Geers & Nicholas, 2013; Geers, Nicholas, & Sedey, 2003) and is described in detail in a 2003 publication (Strube, 2003). In the current study, hierarchical multiple regression was used (a) to examine the effects of segmental and suprasegmental speech perception on language outcomes (CELF-4 and PPVT-4) after controlling for pre-existing variables (nonverbal intelligence, age at first CI, gender, maternal education) and (b) to delineate speech perception performance in relation to acoustic hearing experience (duration of HA use and unaided PTA). As described earlier, for each child, both duration of acoustic hearing experience (i.e., duration of HA use) and unaided PTA were calculated. These were used as continuous predictor variables in the regression analysis. Regression diagnostic procedures were used to identify unusual or overly influential cases and to check that regression assumptions (e.g., normally distributed residuals, low multicollinearity) were met. The sample size necessary to conduct a multiple regression analysis for predicting speech perception and language skills for children with CIs was calculated using an alpha of .05 and a power of .80. The regression model allowed for up to eight independent variables to represent the degrees of freedom associated with the sources of variance in the model. 
The estimated total variance accounted for by the combined variables in the model was R 2 = .40 with an additional 5% added by the target predictor variable. This estimate was based on past studies that have attributed 40%–60% of the variance in language measures to speech perception, various demographic factors, device characteristics, and cognitive factors (Blamey et al., 2001; Geers, 2006; Geers, Brenner, & Davidson, 2003; Geers, Nicholas, & Sedey, 2003; Nicholas & Geers, 2006). A minimum sample size of 100 subjects was needed for the target effect size (5%; Cohen, Cohen, West, & Aiken, 2003).
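The logic of staged entry and of the sample size estimate can be sketched in a few lines. This is an illustrative example under stated assumptions, not the authors' analysis: the helper names and toy data are hypothetical, the split of the eight predictors into seven controls plus one target predictor is assumed, and the sample size function uses a large-sample normal approximation to the noncentrality parameter rather than the tables in Cohen et al. (2003).

```python
import math
from statistics import NormalDist


def solve(matrix, rhs):
    """Solve matrix @ x = rhs by Gaussian elimination with partial pivoting."""
    n = len(rhs)
    aug = [row[:] + [rhs[i]] for i, row in enumerate(matrix)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        aug[col], aug[pivot] = aug[pivot], aug[col]
        for r in range(col + 1, n):
            factor = aug[r][col] / aug[col][col]
            for c in range(col, n + 1):
                aug[r][c] -= factor * aug[col][c]
    x = [0.0] * n
    for i in reversed(range(n)):
        tail = sum(aug[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (aug[i][n] - tail) / aug[i][i]
    return x


def r_squared(predictors, y):
    """R^2 from an ordinary least squares fit of y on predictors plus intercept."""
    n = len(y)
    rows = [[1.0] + [col[i] for col in predictors] for i in range(n)]
    k = len(rows[0])
    xtx = [[sum(r[a] * r[b] for r in rows) for b in range(k)] for a in range(k)]
    xty = [sum(r[a] * yi for r, yi in zip(rows, y)) for a in range(k)]
    beta = solve(xtx, xty)
    fitted = [sum(b * v for b, v in zip(beta, r)) for r in rows]
    mean_y = sum(y) / n
    ss_res = sum((yi - fi) ** 2 for yi, fi in zip(y, fitted))
    ss_tot = sum((yi - mean_y) ** 2 for yi in y)
    return 1.0 - ss_res / ss_tot


def approx_required_n(r2_controls, delta_r2, n_controls, alpha=0.05, power=0.80):
    """Cohen's f^2 for an R^2 increment and a large-sample estimate of N."""
    f2 = delta_r2 / (1.0 - (r2_controls + delta_r2))
    z_sum = NormalDist().inv_cdf(1 - alpha / 2) + NormalDist().inv_cdf(power)
    return f2, math.ceil(z_sum ** 2 / f2 + n_controls)


# Hypothetical toy data for ten children.
nonverbal_iq = [95, 100, 105, 110, 90, 115, 102, 98, 108, 93]
perception = [-0.5, 0.3, 1.0, 0.2, -1.2, 0.8, -0.1, 0.4, 1.1, -0.9]
language = [88, 97, 106, 101, 80, 110, 96, 99, 108, 84]

step1 = r_squared([nonverbal_iq], language)              # controls only
step2 = r_squared([nonverbal_iq, perception], language)  # controls + target
delta_r2 = step2 - step1                                 # unique contribution

# Planning values from the text: R^2 = .40 for controls, 5% increment.
f2, n_needed = approx_required_n(0.40, 0.05, n_controls=7)
```

The increment in R² between the two steps is the variance uniquely attributable to the predictor entered last. With the planning values above, the approximation yields a required sample size in the mid-90s, which is in line with the reported minimum of 100 subjects.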

Results

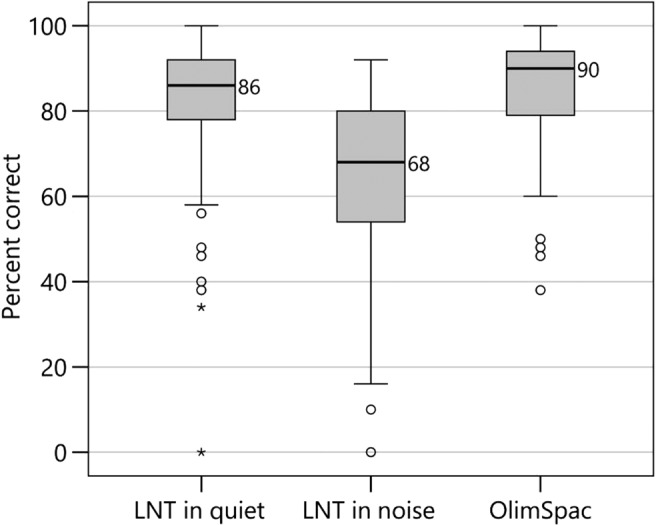

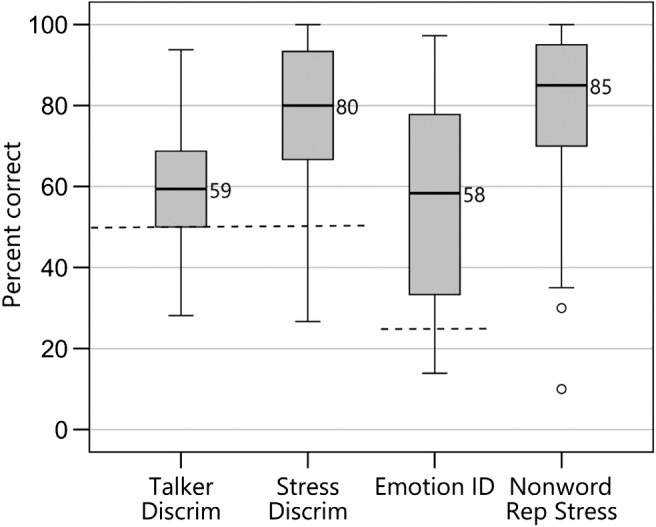

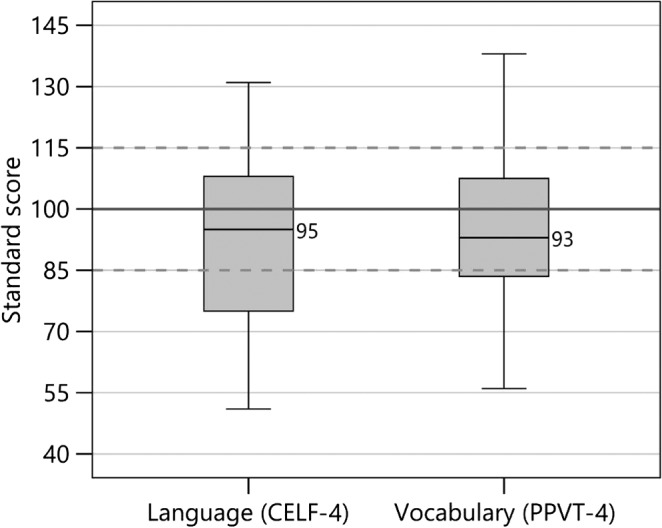

Percent correct scores for the segmental speech perception tests (LNT words in quiet and in noise and OlimSpac) are shown in Figure 3. The mean scores for the LNT in quiet, LNT in noise, and OlimSpac are 82%, 63%, and 80%, respectively. Similarly, percent correct scores for the suprasegmental speech perception tests (Talker Discrimination, Stress Discrimination, Emotion Identification, and Imitated Stress in the nonword repetition task) are displayed in Figure 4, where chance performance is also shown. Mean scores were 61%, 77%, 57%, and 81%, respectively. Children with typical hearing sensitivity of similar ages score near ceiling, 80%–100% correct, on the Talker Discrimination and Emotion Identification tests (Geers et al., 2013). Figure 5 displays standard language scores on the CELF-4 (RLI) and the PPVT-4 for these participants with CI. Both tests provide scores with a mean of 100 and a standard deviation of 15. The median scores for these children with CIs, 95 for the CELF-4 and 93 for the PPVT-4, fall within 1 SD of the normative mean for typically hearing age-mates (i.e., 85–115).

Figure 3.

Box plots from left to right show segmental speech perception scores in percent correct for children with cochlear implants for the Lexical Neighborhood Test (LNT) in Quiet, LNT in Noise, and On-Line Imitative Test of Speech-Pattern Contrast Perception (OlimSpac). The limits of the box represent the lower and upper quartile of the distribution (the difference is the interquartile range [IQR]), and the horizontal line through the box represents the median (median values are displayed next to the line). Whiskers represent the minimum and maximum scores in the distribution, excluding any outliers. Open-circle outliers are values between 1.5 and 3 IQRs from the end of a box; asterisk outliers are values more than 3 IQRs from the end of a box.

Figure 4.

Box plots from left to right show suprasegmental speech perception scores in percent correct for children with cochlear implants for talker discrimination (Talker Discrim), stress discrimination (Stress Discrim), emotion identification (Emotion ID), and nonword repetition stress pattern (Nonword Rep Stress). Dashed lines represent chance performance, applicable to the first three tests. Details about the box plots' structure are the same as those described in the caption for Figure 3.

Figure 5.

Box plots from left to right show standard scores (mean of 100 shown by solid line and standard deviation of ±15 shown by dashed lines) for children with cochlear implants for the receptive language index from Clinical Evaluation of Language Fundamentals–Fourth Edition (CELF-4) and Peabody Picture Vocabulary Test–Fourth Edition (PPVT-4). Details about the box plots' structure are the same as those described in the caption for Figure 3.

PCA Scores

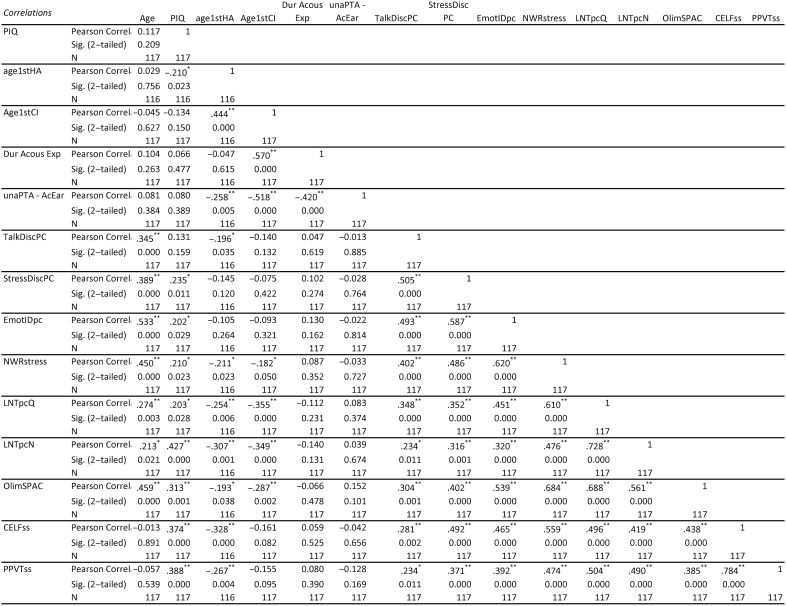

Separate segmental and suprasegmental composite scores were created using PCA. The segmental PCA score is a weighted combination of the LNT word score in quiet, LNT word score in noise, and overall OlimSpac score. The loadings of each measure on the first principal component were .92, .87, and .85, respectively, and this component accounted for 77% of the variance. The suprasegmental PCA score is a weighted combination of emotion identification, talker discrimination, stress discrimination, and nonword repetition stress pattern percent correct scores. The loadings for each measure on the first principal component were .85, .74, .81, and .79, respectively, and this component accounted for 64% of the variance. PCA scores are weighted combinations of outcome measures, with the weights being the eigenvectors from the orthogonal decomposition of the correlation matrix. Rescaling by the square root of the eigenvalues converts PCA scores to standard scores for use in further analyses. These two standardized PCA scores, with means of 0 and standard deviations of 1, are used to represent segmental and suprasegmental perception skills, respectively. The segmental and suprasegmental PCA scores are significantly correlated (r = .599, p < .05), indicating that these perceptual skills are related. A correlation table of all predictor variables is included in the Appendix.
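The construction of a standardized first-component score can be sketched as below. This is a minimal illustration on synthetic data, not the study's data or software: it standardizes each measure, forms the correlation matrix, finds the leading eigenvector by power iteration (a stand-in for whatever eigen-decomposition routine was actually used), and rescales the component scores by the square root of the eigenvalue. All names and the simulated measures are hypothetical.

```python
import math
import random

def standardize(col):
    """Convert a list of raw scores to z scores (sample SD, n - 1)."""
    n = len(col)
    m = sum(col) / n
    sd = math.sqrt(sum((x - m) ** 2 for x in col) / (n - 1))
    return [(x - m) / sd for x in col]

def first_component(columns, iters=500):
    """Return (loadings, proportion of variance, standardized scores)."""
    z = [standardize(c) for c in columns]
    n, p = len(z[0]), len(z)
    # Correlation matrix of the measures.
    corr = [[sum(a * b for a, b in zip(z[i], z[j])) / (n - 1)
             for j in range(p)] for i in range(p)]
    # Power iteration for the leading eigenvector (the component weights).
    v = [1.0 / math.sqrt(p)] * p
    for _ in range(iters):
        w = [sum(corr[i][j] * v[j] for j in range(p)) for i in range(p)]
        norm = math.sqrt(sum(x * x for x in w))
        v = [x / norm for x in w]
    eig = sum(v[i] * sum(corr[i][j] * v[j] for j in range(p)) for i in range(p))
    loadings = [x * math.sqrt(eig) for x in v]
    # Dividing by sqrt(eigenvalue) gives scores with mean 0 and SD 1.
    scores = [sum(v[j] * z[j][k] for j in range(p)) / math.sqrt(eig)
              for k in range(n)]
    return loadings, eig / p, scores

# Synthetic example: three correlated measures for 117 simulated children.
random.seed(1)
latent = [random.gauss(0, 1) for _ in range(117)]
measures = [[g + random.gauss(0, 0.5) for g in latent] for _ in range(3)]
loadings, explained, scores = first_component(measures)
score_mean = sum(scores) / len(scores)
score_sd = math.sqrt(sum((s - score_mean) ** 2 for s in scores) / (len(scores) - 1))
print([round(x, 2) for x in loadings], round(explained, 2))
```

The loadings returned here are the correlations between each measure and the component, which is the sense in which values such as .92, .87, and .85 are reported above.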

Hierarchical Regression to Predict Receptive Language and Vocabulary

Regression diagnostic procedures confirmed that no unusual or overly influential cases were present in the data and all regression assumptions (e.g., normally distributed residuals, low multicollinearity) were met. Since both segmental and suprasegmental attributes of spoken language are present in the acoustic speech signal at the same time, we were most interested in determining whether suprasegmental and segmental perception contributed significant variance to language scores when they were included simultaneously in regression models. However, we first conducted two regression analyses to examine the separate contributions of segmental perception and suprasegmental perception on language after controlling for child and demographic variables. For CELF-4 standard scores, the first stage of the regression included the following child and demographic variables: nonverbal IQ, age at first CI, gender, and maternal education. Collectively, these variables accounted for 19% of the variance in CELF-4 scores, with nonverbal IQ and maternal education emerging as significant predictors. At the second stage, either segmental perception or suprasegmental perception was added into the regression model. When segmental perception was added to the first-stage model, it accounted for an additional 14% of the variance in CELF-4 scores (total 33%). When suprasegmental perception was added to the first-stage model, it accounted for an additional 24% of variance in CELF-4 scores (total 43%). For the regression of most interest, both segmental perception and suprasegmental perception were added together at the second stage. These perceptual variables, together, accounted for an additional 25% of variance (total 44%), but only suprasegmental perception was a significant predictor in this model. 
Thus, while segmental and suprasegmental were both significant predictors when added separately to the first-stage regression model, only suprasegmental was a significant predictor when both were added simultaneously. Table 3 provides the coefficients, standard errors, and significance values for the regression model when both perceptual variables were entered simultaneously. The regression coefficient for suprasegmental perception (i.e., the suprasegmental PCA score) is 7.98 and is statistically significant, indicating that the better a child perceives suprasegmental characteristics of speech, the better that child's receptive language skills (CELF-4). The effect for segmental perception is not statistically significant.

Table 3.

Receptive language (Clinical Evaluation of Language Fundamentals–Fourth Edition).

| Stage | Variable | Coefficient estimate | SE | t | Pr(> \|t\|) |
|---|---|---|---|---|---|
| 1 | (Intercept) | 92.4 | 1.62 | 57.2 | < .001 |
| 1 | Nonverbal IQ | .403 | .102 | 3.96 | < .001 |
| 1 | Age at first CI | −1.77 | 1.49 | −1.19 | .238 |
| 1 | Gender | −3.08 | 3.31 | −0.93 | .353 |
| 1 | Maternal education | 1.54 | .740 | 2.08 | .040 |
| 2 | Segmental PCA score | 3.16 | 1.94 | 1.63 | .106 |
| 2 | Suprasegmental PCA score | 7.98 | 1.74 | 4.59 | < .001 |

Note. F(6, 110) = 14.3, p < .001. Multiple R² = .44. CI = cochlear implant; PCA = principal components analysis.
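The relation between the reported R² values and the F statistics in a staged regression can be sketched as follows. This is an illustrative check from the rounded values in the text and Table 3, so small discrepancies from the published F are expected. The F-change value for the second stage is not reported by the authors; it is computed here only to show how an increment in R² is tested. Function names are hypothetical.

```python
def overall_f(r2, n_predictors, n):
    """Overall F for a model with n_predictors fitted to n cases."""
    df_resid = n - n_predictors - 1
    return (r2 / n_predictors) / ((1.0 - r2) / df_resid)

def f_change(r2_full, r2_reduced, n_added, n_predictors_full, n):
    """F test for the R^2 increment when n_added predictors enter a stage."""
    df_resid = n - n_predictors_full - 1
    return ((r2_full - r2_reduced) / n_added) / ((1.0 - r2_full) / df_resid)

# CELF-4 model: 6 predictors, N = 117, R^2 = .44 (Table 3, rounded).
f_model = overall_f(0.44, 6, 117)

# Stage 2 adds both perception scores: R^2 rises from .19 to .44 (rounded).
f_stage2 = f_change(0.44, 0.19, 2, 6, 117)

print(round(f_model, 1), round(f_stage2, 1))
```

With these rounded inputs the overall F comes out near the reported F(6, 110) = 14.3.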

Regressions to predict receptive vocabulary (PPVT-4 standard scores) were conducted in a similar manner. At the first stage, child and demographic variables (nonverbal IQ, age at first CI, gender, and maternal education) were included in the model. Collectively, they accounted for 19% of the variance in PPVT-4 scores, with nonverbal IQ and maternal education emerging as significant predictors. When only segmental perception was added next in the model, it accounted for an additional 15% of the variance in PPVT-4 scores (total of 34%). When only suprasegmental perception was added to the model at the second stage, it accounted for an additional 14% of the variance in PPVT-4 scores (total of 33%). When both segmental and suprasegmental perception were added at the second stage, each was a statistically significant predictor (beyond the demographic variables); together, these perceptual variables contributed an additional 19% to the variance in PPVT-4 scores (total of 38%). Table 4 provides the coefficients, standard errors, and significance values for the regression model when both perceptual variables were entered simultaneously. Both segmental and suprasegmental perception are statistically significant predictors of receptive vocabulary scores (PPVT-4), with regression coefficients of similar magnitude, namely, 4.93 and 4.11, respectively. Thus, for receptive vocabulary, both segmental and suprasegmental perception are significant predictors when entered into regression models separately and when entered simultaneously.

Table 4.

Receptive vocabulary (Peabody Picture Vocabulary Test–Fourth Edition).

| Stage | Variable | Coefficient estimate | SE | t | Pr(> \|t\|) |
|---|---|---|---|---|---|
| 1 | (Intercept) | 95.1 | 1.44 | 66.1 | < .001 |
| 1 | Nonverbal IQ | 0.377 | 0.091 | 4.06 | < .001 |
| 1 | Age at first CI | −1.49 | 1.33 | −1.12 | .264 |
| 1 | Gender | −1.36 | 2.95 | −0.46 | .645 |
| 1 | Maternal education | 1.32 | 0.66 | 2.01 | .047 |
| 2 | Segmental PCA score | 4.93 | 1.83 | 2.69 | .008 |
| 2 | Suprasegmental PCA score | 4.11 | 1.64 | 2.51 | .014 |

Note. F(6, 110) = 11.0, p < .001. Multiple R² = .375. CI = cochlear implant; PCA = principal components analysis.

Hierarchical Regression to Predict Segmental and Suprasegmental Speech Perception

Regression diagnostic procedures for segmental and suprasegmental regressions confirmed that no unusual or overly influential cases were present in the data and all regression assumptions (e.g., normally distributed residuals, low multicollinearity) were met. A variance inflation factor (VIF) was calculated to assess multicollinearity among the predictor variables in the speech perception regression analyses. The most common VIF threshold for a problem with multicollinearity is 10, while more conservative thresholds are as low as 4 (O'Brien, 2007). The highest VIF for a predictor in the current model was 3.6. By either standard, the threshold for multicollinearity was not exceeded.
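The VIF thresholds above can be read in terms of shared variance among predictors. The sketch below uses the standard definition VIF = 1 / (1 − R²ⱼ), where R²ⱼ is the proportion of variance in predictor j explained by the other predictors; the function names are hypothetical.

```python
def vif_from_r2(r2_j):
    """VIF for a predictor whose variance is r2_j explained by the others."""
    return 1.0 / (1.0 - r2_j)

def r2_from_vif(vif):
    """Invert the VIF to recover the shared-variance proportion."""
    return 1.0 - 1.0 / vif

# Highest VIF reported for the current model, and the two thresholds cited.
shared_max = r2_from_vif(3.6)
shared_at_4 = r2_from_vif(4.0)
shared_at_10 = r2_from_vif(10.0)

print(round(shared_max, 2), round(shared_at_4, 2), round(shared_at_10, 2))
```

A VIF of 3.6 therefore means that about 72% of that predictor's variance is shared with the other predictors, just below the 75% implied by the conservative threshold of 4.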

The regression analysis to predict segmental speech perception (i.e., segmental PCA scores) was also conducted in stages. Duration of acoustic experience and unaided PTA (calculated for each child as described previously) served as the main predictor variables for the segmental speech perception PCA scores after controlling for demographic variables (the same set used previously), and due to a presumed moderating effect of unaided PTA, these predictors were also explored for linear and nonlinear effects (quadratic effects). At the first stage, nonverbal IQ, age at test, age at first CI, gender, and maternal education were entered. Next, the main effects of unaided PTA and duration of acoustic experience were entered to identify significant linear effects. At the third stage, the linear interaction between unaided PTA and duration of acoustic experience was entered. At the fourth stage, the quadratic effect of duration of acoustic experience was added. Finally, on the last stage, the interaction of unaided PTA and the quadratic effect of duration of acoustic experience was added. Results after the first stage indicated nonverbal IQ, age at test, age at first CI, and maternal education were significant predictors and accounted for 36% of the total variance in the segmental PCA score. At the second stage, unaided PTA and duration of acoustic experience accounted for an additional 2% of variance in the segmental PCA score, with unaided PTA emerging as a significant predictor. At the third stage, a linear interaction between unaided PTA and duration of acoustic experience was significant and added an additional 3% variance for a total explained variance of 41%. The quadratic effect of duration of acoustic hearing and the interaction of the quadratic effect of acoustic hearing and unaided PTA at the last two stages (Stages 4 and 5) did not add significantly to the model. 
The regression coefficients, standard error, and significance values for the demographic predictors at the first stage and the audiological interaction variables at each stage in the model (i.e., the model through all five stages) are shown in Table 5.

Table 5.

Segmental perception.

| Stage | Variable | Coefficient estimate | SE | t | Pr(> \|t\|) |
|---|---|---|---|---|---|
| 1 | (Intercept) | 0.00 | .076 | 0.00 | 1.00 |
| 1 | Nonverbal IQ | .015 | .005 | 3.11 | .002 |
| 1 | Age at test | .258 | .062 | 4.16 | < .001 |
| 1 | Age at first CI | −.291 | .070 | −4.15 | < .001 |
| 1 | Gender | .026 | .157 | 0.17 | .868 |
| 1 | Maternal education | .080 | .035 | 2.29 | .024 |
| 2 | Unaided PTA–acoustic ear (unaPTA-AcEar) | −.010 | .005 | −2.02 | .046 |
| 2 | Duration of acoustic experience (durAcousExp) | −.005 | .042 | −0.11 | .909 |
| 3 | Interaction (unaPTA-AcEar × durAcousExp) | −.005 | .002 | −2.01 | .047 |
| 4 | Quadratic effect (durAcousExp²) | −.016 | .017 | −0.93 | .354 |
| 4 | Interaction (unaPTA-AcEar × durAcousExp) | −.005 | .002 | −2.35 | .020 |
| 5 | Interaction (unaPTA-AcEar × durAcousExp²) | .0003 | .001 | 0.27 | .787 |
| 5 | Interaction (unaPTA-AcEar × durAcousExp) | −.005 | .002 | −2.03 | .045 |

Note. F(10, 106) = 7.4, p < .001. Multiple R² = .41. CI = cochlear implant; PCA = principal components analysis.

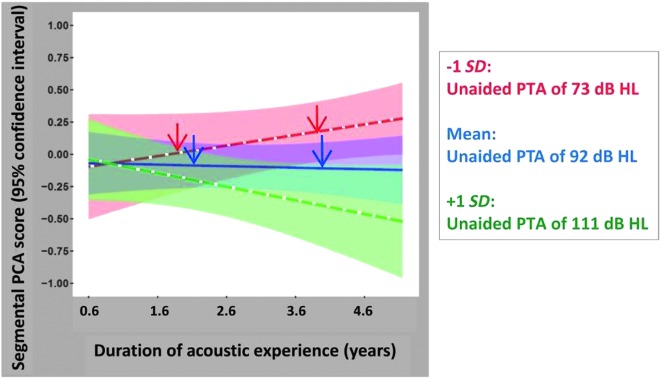

Shown in Figure 6 are the linear effects of unaided PTA and the interaction between unaided PTA and duration of acoustic experience. Predicted segmental PCA (standardized) scores, from the regression model, are plotted as a function of duration of acoustic experience (plotted over the range of 0.6–4.6 years, the mean ± 2 SDs) with unaided PTA as a parameter. The standard scores for the segmental PCA scores have a mean of 0 and a standard deviation of 1. Mean predicted segmental PCA scores are shown for three unaided PTA values indicated by three colors (mean − 1 SD: 73 dB HL [red], mean: 92 dB HL [blue], and mean + 1 SD: 111 dB HL [green]). These PTA values represent, for this sample of children, the mean and standard deviation of the unaided PTAs associated with the acoustic ear for each child. Also shown, with colored shading, are the 95% confidence intervals for these predicted regressions. The regression results may be best understood through these hypothetical examples. Two red arrows show predicted segmental PCA scores for children with unaided PTAs of approximately 73 dB HL; the left red arrow represents predicted scores for children with a total duration of acoustic experience of almost 2 years, while the right red arrow represents children with almost 4 years of acoustic experience. In this study, the shorter total duration of acoustic experience represents children who received a second CI at an earlier age, while the longer value corresponds to children who received a second CI at a later age. Both children accumulate acoustic experience by wearing an HA during the CI surgery interval. Segmental PCA scores are predicted to be greater for the child with the longer duration of acoustic experience. Predicted trends are quite different, however, for children with poorer unaided PTAs. 
Two blue arrows are shown along the predicted regression line for children with unaided PTAs of approximately 92 dB HL; again, the left blue arrow represents a total duration of acoustic experience of approximately 2 years, while the right blue arrow represents nearly 4 years of acoustic experience. For these children with poorer unaided PTAs (approximately 92 dB HL), segmental PCA scores are predicted to be the same, regardless of their duration of acoustic experience. Equivalently, there is no advantage (or disadvantage), with respect to these children's predicted segmental speech perception skills, from shorter or longer CI surgery intervals with continued use of an HA with the first CI. Finally, for children with the poorest unaided PTAs (approximately 111 dB HL), continued use of an HA (with a CI) for long periods of time (long durations of acoustic experience) is predicted to result in lower segmental scores compared to performance when HA use is short due to implantation of a second CI at an early age (green line).
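The pattern across the three PTA levels can be approximated with a simple-slopes calculation from the Table 5 coefficients. This is a rough sketch, not a reproduction of the fitted model behind Figure 6. It assumes that both predictors were mean-centered (suggested by the intercept of 0.00, but not stated here), takes 92 dB HL as the PTA mean, and uses coefficients rounded to three decimals from the stages at which they entered.

```python
def simple_slope(b_duration, b_interaction, pta, pta_mean):
    """Predicted change in segmental PCA score per year of acoustic experience
    at a given unaided PTA, in a model with a linear interaction term."""
    return b_duration + b_interaction * (pta - pta_mean)

# Rounded coefficients from Table 5 (stage 2 main effect, stage 3 interaction).
B_DURATION = -0.005
B_INTERACTION = -0.005
PTA_MEAN = 92.0  # ASSUMPTION: PTA centered at the sample mean of 92 dB HL

slopes = {pta: simple_slope(B_DURATION, B_INTERACTION, pta, PTA_MEAN)
          for pta in (73, 92, 111)}

for pta, slope in slopes.items():
    print(pta, round(slope, 3))
```

Under these assumptions the slope is positive at 73 dB HL (about +0.09 SD per year), essentially flat at 92 dB HL, and negative at 111 dB HL (about −0.10 SD per year), which matches the direction of the red, blue, and green lines described above.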

Figure 6.

Predicted segmental principal components analysis (PCA; standardized with mean at 0 and ± 1 SD) scores, using the full regression model, are plotted as a function of duration of acoustic experience with unaided pure-tone average (PTA) as a parameter. Mean segmental PCA scores and 95% confidence intervals for the mean are plotted on the graph for three unaided PTA values (mean − 1 SD, overall mean, and mean + 1 SD for the study population)—specifically, unaided PTA = 73 dB HL (red line and red shaded area), unaided PTA = 92 dB HL (blue line and blue shaded area), and unaided PTA = 111 dB HL (green line and green shaded area).

The regression analyses for suprasegmental speech perception (suprasegmental PCA scores) proceeded in the same manner as described above for segmental speech perception. At the first stage, nonverbal IQ, age at test, age at first CI, gender, and maternal education were entered. Next, the main effects of unaided PTA and duration of acoustic experience were entered to identify significant linear effects. At the third stage, the linear interaction between unaided PTA and duration of acoustic experience was entered, and at the fourth stage, the quadratic effect of duration of acoustic experience was added. Finally, on the last (fifth) stage, the interaction of unaided PTA and the quadratic effect of duration was added. After the first stage of the regression model, nonverbal IQ and age at test emerged as significant predictors and accounted for 35% of the total variance in the suprasegmental PCA scores. At the next stage, the main effects unaided PTA and duration of acoustic hearing were entered, with unaided PTA emerging as a significant predictor adding an additional 4% of the variance (for an accumulated 39% of the variance). The interaction between unaided PTA and duration of acoustic experience was not significant at the third stage but was significant at the fourth and fifth stages when the quadratic effect of duration of acoustic hearing was added. The quadratic effect of duration of acoustic experience was significant at the fourth stage. Finally, the interaction of unaided PTA and the quadratic effect of duration of acoustic experience was significant at the last stage. These significant effects in the fourth and fifth stages (nonlinear quadratic effect of duration of acoustic experience and the interaction between unaided PTA and the quadratic effect of duration of acoustic experience) contributed an additional 7% of the variance, for a total of 46% explained variance in suprasegmental PCA scores. 
The regression coefficients, standard error, and significance values for the demographic variables at the first stage and the audiological interaction variables at each stage of the model are shown in Table 6.

Table 6.

Suprasegmental perception.

| Stage | Variable | Coefficient estimate | SE | t | Pr(> \|t\|) |
|---|---|---|---|---|---|
| 1 | (Intercept) | 0.00 | .076 | 0.00 | 1.000 |
| 1 | Nonverbal IQ | .011 | .005 | 2.35 | .021 |
| 1 | Age at test | .425 | .063 | 6.78 | < .001 |
| 1 | Age at first CI | −.089 | .071 | −1.26 | .210 |
| 1 | Gender | −.210 | .158 | −1.33 | .187 |
| 1 | Maternal education | .006 | .035 | 0.16 | .873 |
| 2 | Unaided PTA–acoustic ear (unaPTA-AcEar) | −.009 | .005 | −1.96 | .053 |
| 2 | Duration of acoustic experience (durAcousExp) | .062 | .042 | 1.49 | .140 |
| 3 | Interaction (unaPTA-AcEar × durAcousExp) | −.003 | .002 | −1.51 | .135 |
| 4 | Quadratic effect (durAcousExp²) | −.043 | .017 | −2.60 | .011 |
| 4 | Interaction (unaPTA-AcEar × durAcousExp) | −.004 | .002 | −2.12 | .036 |
| 5 | Interaction (unaPTA-AcEar × durAcousExp²) | .002 | .001 | 2.00 | .048 |
| 5 | Interaction (unaPTA-AcEar × durAcousExp) | −.007 | .002 | −2.93 | .004 |

Note. F(10, 106) = 8.96, p < .001. Multiple R² = .46. CI = cochlear implant; PCA = principal components analysis.

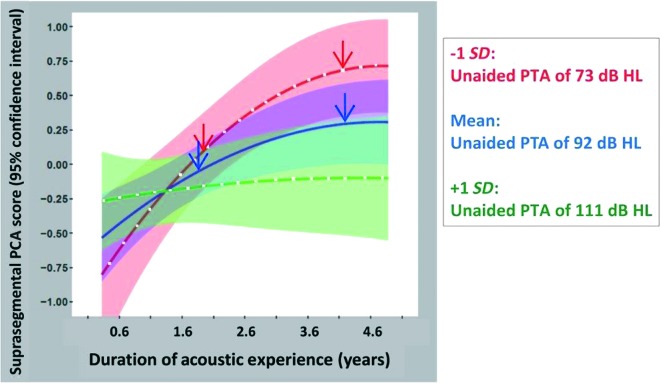

Shown in Figure 7 are the effects of unaided PTA and the interaction between unaided PTA and duration of acoustic experience. For suprasegmental perception, however, some significant main effects and interactions involve nonlinear (specifically quadratic) terms. Predicted suprasegmental PCA (standardized) scores, from the regression model, are plotted as a function of duration of acoustic experience (again, over the range of 0.6–4.6 years; mean ± 2 SDs) with unaided PTA as a parameter. Again, mean predicted suprasegmental PCA scores are shown for three unaided PTA values (mean − 1 SD: 73 dB HL [red], mean: 92 dB HL [blue], and mean + 1 SD: 111 dB HL [green]), indicated by three colors, and 95% confidence intervals for these predicted means are indicated by colored shading. Recall also that acoustic experience is accumulated primarily while using bimodal devices, such as would occur during a CI surgery interval when the nonimplanted ear is aided with an HA. As before, the regression results may be best understood through hypothetical examples. Two red arrows show predicted suprasegmental PCA scores for children with unaided PTAs of approximately 73 dB HL; the left red arrow represents children with a total duration of acoustic experience of almost 2 years, while the right red arrow represents children with about 4 years of acoustic experience. Suprasegmental PCA scores are predicted to be much greater (approximately 0.5 SD units) for children with the longer duration of acoustic experience. For children with more severe HL, unaided PTAs of approximately 92 dB HL, the prediction is similar in trend though smaller in magnitude. That is, children with longer durations of acoustic experience are predicted to have better suprasegmental PCA scores than those with shorter durations of acoustic experience (compare the left and right blue arrows; a difference of approximately 0.3 SD units). 
Finally, for children with the most profound HL, that is, unaided PTAs of approximately 111 dB HL, the suprasegmental PCA scores are roughly constant across this range of durations of acoustic experience (0.6–4.6 years; see the green line). Thus, unlike the case for children with less profound HL, longer durations of acoustic experience do not benefit children with the most profound HL with respect to their suprasegmental speech perception.

Figure 7.

Predicted suprasegmental principal components analysis (PCA; standardized with mean at 0 and ± 1 SD) scores, using the full regression model, are plotted as a function of duration of acoustic experience with unaided pure-tone average (PTA) as a parameter. Mean suprasegmental PCA scores and 95% confidence intervals for the mean are plotted on the graph for three unaided PTA values (mean − 1 SD, overall mean, and mean + 1 SD for the study population)—specifically, unaided PTA = 73 dB HL (red line and red shaded area), unaided PTA = 92 dB HL (blue line and blue shaded area), and unaided PTA = 111 dB HL (green line and green shaded area).

In addition to regression diagnostics, bootstrapping analyses were conducted to check the validity of the inferences from the original regression analyses. We were specifically interested in the linear and nonlinear interactions of unaided PTA and duration of acoustic hearing for suprasegmental and segmental speech perception. In these analyses, 10,000 bootstrap samples were generated (each of size N, sampling with replacement), and each sample was analyzed using the regression model used with the original sample. These bootstrap analyses were used to create empirical sampling distributions for the coefficients tested in the regression model. The bias-corrected and accelerated 95% confidence intervals for these bootstrap coefficients were determined. Effects whose intervals exclude zero are assumed to be robust in the face of assumption violations. The intervals for the linear interactions of PTA and duration of acoustic hearing excluded zero for both suprasegmental and segmental perception; thus, the original effect of these variables was confirmed. The interval for the nonlinear effect of these variables for suprasegmental perception included zero, indicating that this effect, and in particular the conclusion that the benefits of acoustic hearing appear to plateau, should be interpreted with caution.
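The resampling logic can be sketched as follows. This is a simplified illustration on synthetic data, not the analysis reported above: it uses a single-predictor regression, 2,000 resamples rather than 10,000, and a plain percentile interval instead of the bias-corrected and accelerated interval. All names and the simulated data are hypothetical.

```python
import random

def ols_slope(xs, ys):
    """Least-squares slope for a single predictor."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mx) ** 2 for x in xs)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    return sxy / sxx

def bootstrap_percentile_ci(xs, ys, n_boot=2000, alpha=0.05, seed=0):
    """Percentile CI for the slope from case resampling with replacement."""
    rng = random.Random(seed)
    n = len(xs)
    slopes = []
    for _ in range(n_boot):
        idx = [rng.randrange(n) for _ in range(n)]  # one bootstrap sample of size n
        slopes.append(ols_slope([xs[i] for i in idx], [ys[i] for i in idx]))
    slopes.sort()
    lo = slopes[round(alpha / 2 * n_boot)]
    hi = slopes[round((1 - alpha / 2) * n_boot) - 1]
    return lo, hi

# Synthetic data: 117 cases with a true slope of 0.5 (illustrative values only).
data_rng = random.Random(42)
xs = [data_rng.uniform(0, 5) for _ in range(117)]
ys = [0.5 * x + data_rng.gauss(0, 1) for x in xs]

slope_hat = ols_slope(xs, ys)
ci_lo, ci_hi = bootstrap_percentile_ci(xs, ys)
print(round(slope_hat, 3), round(ci_lo, 3), round(ci_hi, 3))
```

An interval that excludes zero, as in this synthetic example, is the pattern the text describes for the linear interactions; an interval that spans zero corresponds to the caution attached to the nonlinear effect.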

Discussion

Currently, clinicians' advice for families of pediatric patients with HL is for the child to receive auditory stimulation at both ears immediately through the use of bilateral HAs. For children with HLs in the range from moderately severe to profound, at least one CI is frequently recommended; early implantation of at least one CI is unquestionably of great benefit to their ultimate speech and language development (Nicholas & Geers, 2007; Niparko et al., 2010; Svirsky, Teoh, & Neuburger, 2004). The critical question facing clinicians is: “which” device, at the “other” ear (with a CI already at one ear), offers a particular child the best chance of developing typical speech and language? We approached this question by examining both segmental and suprasegmental speech skills and by examining these skills' contributions to receptive language and vocabulary. In our approach, we characterized each child's early acoustic hearing experience via two continuous variables: unaided PTA and duration of HA use.

We examined receptive language and vocabulary of 117 children with CIs at ages 4.8–9.4 years. At the time of testing, all the children in this study utilized bilateral devices, either BCIs or bimodal. On average, these participants exhibited receptive language and vocabulary skills within the normative range for typically developing children. Consistent with other studies (Geers, Nicholas, Tobey, & Davidson, 2016; Sarant et al., 2014), a large range in language (CELF-4 range: 51–131) and vocabulary (PPVT-4 range: 56–138) scores is noted. Child and family factors (nonverbal IQ, age at first CI, gender, and maternal education) account for approximately 19% of the variability observed in receptive language and vocabulary scores of these 117 bilateral device users. Consistent with other pediatric studies of children with CIs and HAs, the educational attainment of mothers in the current sample tended to be higher than that of the general population (Geers & Nicholas, 2013; Tomblin et al., 2015). Given that higher levels of maternal education have been positively associated with language development in typically developing children and children with CIs and HAs (Ching, Dillon, Leigh, & Cupples, 2018; Dollaghan et al., 1999; Geers, Moog, Biedenstein, Brenner, & Hayes, 2009; Niparko et al., 2010), the language levels of children in the current study are likely higher than would be expected from a sample with a wider and more typical range of educational attainment. Here, maternal education was accounted for in the first step of all the regression analyses. Furthermore, the conclusions of this study are based on relative language levels achieved rather than absolute levels. Presumably, even if a sample of children from families with more typical socioeconomic status were tested, the result would not change.

Segmental and suprasegmental perception, together, account for 19% additional variance in receptive vocabulary. For receptive language, segmental and suprasegmental perception, together, account for 25% additional variance, although only suprasegmental perception was statistically significant. Noting that the CELF-4 RLI is composed of tests that assess comprehension of phrase- and sentence-level language units, it is reasonable to assume that the perception of suprasegmental units may assume a more important role than segmental perception. Specifically, sentence comprehension has been shown to depend on a number of factors including lexical content at a word level and structure at a syntactic level. Perception of prosodic information (i.e., suprasegmental perception) has been shown to influence comprehension at both lexical and syntactic levels and may aid in resolving lexical and syntactic ambiguities (e.g., Ferreira, 1993; Gervain & Werker, 2008; Osterhout & Holcomb, 1993; Stoyneshka, Fodor, & Fernández, 2010). The receptive vocabulary task does not place these same demands on suprasegmental perception, and it seems that receptive vocabulary performance is also reliant on segmental perception. For this reason, it is important to obtain separate estimates of language comprehension at both the word and sentence levels.

The contribution of segmental speech perception (i.e., perception of words and sentences) to language abilities of pediatric CI recipients is expected and has been well documented (Blamey et al., 2001; Dettman et al., 2016; Dowell, Dettman, Blamey, Barker, & Clark, 2002; Geers, 2006; Geers, Brenner, & Davidson, 2003, 2016; Svirsky et al., 2004). The contribution of suprasegmental perception, however, has received relatively less attention (Geers et al., 2013). Yet, as discussed previously, suprasegmental perception (intonation, stress, rhythm) serves as a foundation for spoken language and lexical development for typically developing infants (Werker & Yeung, 2005). Infants, even before they are able to encode phonemes unique to their language, attend to the acoustic cues of intonation, stress, and rhythm (suprasegmental perception) to parse continuous speech streams into words (infants with typical hearing sensitivity in English-speaking environments). Infants selectively attend to intonation/pitch changes at the ends of clauses and within pairs of syllables that are predominantly trochaic (i.e., stress on the first syllable) to parse words in connected speech (Jusczyk et al., 1999; Seidl & Cristià, 2008; Seidl & Johnson, 2008; Swingley, 2009). Additionally, newborn infants with normal hearing are able to discriminate among at least four different vocal emotions (sad, happy, angry, and neutral; Mastropieri & Turkewitz, 1999) and within months show a preference for positive vocal affect when listening to words (Papoušek, Bornstein, Nuzzo, Papoušek, & Symmes, 1990; Singh et al., 2002; Singh, Morgan, & White, 2004). 
Given that the acoustic cues associated with suprasegmental speech properties are not transmitted equally well with CIs and HAs and that suprasegmental perception is seemingly critical to spoken language development, we argue that the effects of suprasegmental perception on the spoken language skills of pediatric CI recipients deserve strong consideration alongside the effects of segmental perception. It has been long established that children with severe-to-profound HL with CIs typically perform better on segmental perception tests (e.g., word and sentence recognition) than children with similar degrees of HL using HAs (Blamey & Sarant, 2002; Boothroyd & Eran, 1994; Osberger et al., 1991). The opposite result, however, has been found for suprasegmental perception (e.g., tests of syllable stress and sentence intonation; Most & Peled, 2007). Thus, as children present with greater levels of residual hearing, a period of HA use in conjunction with a CI may provide the greatest benefit for spoken language.

A primary goal of this study was to determine whether there is an optimal duration of HA use and unaided threshold level that should be considered before proceeding from bimodal devices to BCIs. Instead of comparing group performance for bimodal and BCI recipients, we characterized each child's early acoustic hearing using two continuous variables, namely, duration of acoustic experience and unaided hearing levels (unaided PTA). By design, our characterization of these children implicitly incorporates relations between the “variables of interest” and device configuration (e.g., duration of acoustic experience terminates when a child receives a second, sequentially implanted CI). More importantly, with our characterization (continuous variables: unaided PTA and duration of acoustic experience) and statistical model, we can compare the performance (speech perception, language) of children with similar levels of unaided PTA, but with different durations of acoustic hearing. These two variables serve as our main predictor variables for segmental and suprasegmental speech perception. The reason for this choice is that children with CI(s), despite common assignment to dichotomously named groups (e.g., BCI vs. bimodal), possess a continuum of early acoustic hearing experiences (and a continuum of bilateral CI experience, etc.). Furthermore, if children are categorized solely by their current device configuration (a “snapshot” characterization of the child), then any possible effects of hearing history (i.e., captured by duration of acoustic experience) would be simply ignored or “swept under the rug.” This study's population, with its wide ranges of residual hearing (i.e., unaided PTAs) and of duration of HA use, consequently presents a broad range of early acoustic experiences (see Figures 1 and 2).

We found that acoustic experience with an HA facilitates both segmental and suprasegmental perception. These effects, however, are dependent on hearing level (unaided PTAs). Additionally, the pattern and magnitude of the effects differ across the two perceptual measures, segmental and suprasegmental. For segmental perception, the magnitude of the effect of acoustic hearing is smaller than for suprasegmental perception, and the interaction of duration of HA use and unaided PTA is linear. For segmental speech perception, the regression model indicates that scores increase with longer HA use, but only for children with threshold levels in the approximately 73 dB HL range (see Figure 6). There is little or no increase in segmental scores as HA use increases for children with threshold levels of approximately 92 dB HL. And for children with the most profound HL (approximately 111 dB HL), segmental scores are expected to decrease with prolonged HA use (e.g., a child with 111 dB HL PTA receives a second CI at a later age, after prolonged bimodal device use). For suprasegmental perception, the regression model is somewhat different in both magnitude and pattern. In the models of both segmental and suprasegmental scores, the greatest effects of acoustic hearing are predicted for children with unaided PTAs of approximately 73 dB HL (see red lines in Figures 6 and 7). The magnitude of the effect, however, is greater for suprasegmental perception than for segmental perception. For example, over the range of acoustic experience from 1.6 to 4.6 years, there is an approximately +0.25 SD difference in segmental scores and a much larger approximately +0.75 SD difference in suprasegmental scores. For children with this level of hearing (unaided PTAs of approximately 73 dB HL), those with long durations of HA experience have higher suprasegmental scores than children with shorter durations of HA use.
This effect holds through approximately 4 years in duration of HA use but plateaus somewhat beyond this point. As noted previously, results from the bootstrapping analyses indicate that the nonlinear effects of the model should be interpreted with caution. Further longitudinal data should be collected to determine whether this regression underestimates continued benefits for bimodal users. Based on the regression analysis in Table 3, such an increase of 0.75 SD in suprasegmental scores is predicted to increase CELF-4 standard scores by 6 points (0.75 × 7.98 ≈ 6). The predicted segmental scores for children with similar levels of hearing, approximately 73 dB HL, do not plateau for longer durations of acoustic experience (compare red lines in Figures 6 and 7). The nonlinear pattern for suprasegmental perception is also seen for children with thresholds in the 92 dB HL range, albeit smaller in magnitude. Finally, for children with thresholds in the most profound range (approximately 111 dB HL), continued HA use is not associated with any increase (or decrease) in suprasegmental scores. Thus, for children with profound losses, earlier BCIs appear warranted.
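The conversion from a perception-score difference to predicted CELF-4 points quoted above can be written out as a short sketch. The function name `predicted_language_gain` is hypothetical, and the value 7.98 is taken here to be the Table 3 coefficient relating suprasegmental scores (in SD units) to CELF-4 standard scores, which is an interpretation of the parenthetical arithmetic rather than something restated from the table itself.

```python
def predicted_language_gain(delta_sd, points_per_sd):
    """Convert a change in perception score (SD units) into standard-score points."""
    return delta_sd * points_per_sd


# Values quoted in the text; the meaning of 7.98 is an assumption (see lead-in).
SUPRASEGMENTAL_DELTA_SD = 0.75
CELF4_POINTS_PER_SD = 7.98

gain = predicted_language_gain(SUPRASEGMENTAL_DELTA_SD, CELF4_POINTS_PER_SD)
print(round(gain))  # prints 6
```

The unrounded product is 5.985, which is the basis for the "approximately 6 points" reported in the text.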

As bimodal use becomes more prevalent due to consideration of CIs for individuals with greater degrees of residual hearing, a careful examination of the evidence for recommending a particular device is warranted. First, the exclusive use of segmental perception tests neglects the critical role that suprasegmental perception has in spoken language development. In previous reports, segmental speech perception, exclusively, has been used to determine PTA “cutoff” levels for hearing device recommendations (CIs vs. HAs) for pediatric patients (Leigh et al., 2011, 2016; Lovett et al., 2015). Second, since acoustic cues associated with segmental and suprasegmental speech properties are not transmitted equally well with acoustic (HAs) and electric (CIs) hearing devices, segmental and suprasegmental perception will likely depend on the type(s) of hearing devices a child uses. Suprasegmental features are generally represented in the speech signal's time-intensity envelope and fundamental frequency information, and their perception requires accurate encoding of low-frequency and/or temporal information (Carroll & Zeng, 2007; Grant, 1987; Most & Peled, 2007). Due to the restricted spectral resolution of current CI systems, listening via CI(s) only (electrical hearing only) may limit a CI user's ability to perceive suprasegmental features (Carroll & Zeng, 2007). Acoustic hearing (via HAs) may enable the perception of fundamental frequency information, although the amount of residual hearing necessary for good fundamental frequency perception is currently unspecified (Dorman et al., 2015; Illg, Bojanowicz, Lesinski-Schiedat, Lenarz, & Büchner, 2014). Third, device recommendations or guidelines are often made for an ear, independent of the level of HL and/or device at the other ear, neglecting the situation of one brain receiving spoken language information from two ears.
In fact, the “best” hearing devices for a pediatric patient with HL need not be the same at each ear even when the child's HL is approximately the same at each ear (i.e., symmetrical HL). Children with severe HL (approximately 73 dB HL) who receive bilateral CIs at very early ages may lose, during the critical language-learning years, the opportunity to perceive the complementary speech cues that are transmitted through acoustic (HA) and electric (CI) devices. During the critical early years of language development, the complementary nature of speech cues perceived via bimodal devices may provide a better language foundation than two CIs for pediatric patients with adequate residual hearing.

As clinicians strive to make the most appropriate device configuration recommendations for pediatric patients, they should keep in mind that, for certain levels of HL, CIs and HAs may provide complementary perceptual benefits that can have long-term consequences for early receptive language and vocabulary skills (i.e., at ages 5–9 years). We note here that the effects of residual hearing (unaided PTAs) on receptive language are attributed to the child's ability to perceive the speech signal (as assessed by our speech perception test battery). We analyzed the effects of unaided PTA on speech perception to be consistent with other studies that developed empirical guidelines for CI candidacy. We acknowledge that the variance attributed to receptive language through speech perception could also be attributed directly to the PTA levels, as has been found in other studies on CI recipients (Geers et al., 2016; Geers & Nicholas, 2013; Nicholas & Geers, 2006). We found that, for children with losses in the severe range (in at least one ear), a combination of HA and CI use (i.e., bimodal devices) for an extended time (approximately 3–4 years) facilitates better receptive language and vocabulary than found for children who had discontinued HA use by receiving a second CI. Based on these data, the benefits of acoustic experience for suprasegmental perception are maximal after 3–4 years and approximately plateau for children who accumulated longer durations. Note that children with longer durations of HA use are both current bimodal users and those with sequentially implanted BCIs (with, of course, a long CI surgery interval).

These data should not be construed, however, to mean that all children with bimodal devices should be considered for a second CI after approximately 3–4 years of bimodal use. We stress that, for optimal suprasegmental perception and ultimately receptive language skills, a 3- to 4-year time period, at early ages, supports the greatest benefit for those with losses in the severe range. While HA use might be interpreted as mostly providing complementary acoustic information that is suprasegmental in nature, HA use also benefited segmental perception in children with approximately 73 dB HL PTAs in the HA ear. These results, which are more specific regarding level of hearing and duration of HA use, are similar to other studies' results that have suggested some period of bimodal use (i.e., HA + CI) provides overall benefits for receptive and expressive language skills (Boons et al., 2012; Nittrouer & Chapman, 2009; Nittrouer et al., 2016). For children with unaided PTAs of approximately 92 dB HL, there are benefits, although smaller in magnitude and primarily for suprasegmental perception, to continuing HA use after the first CI. For children with HL in the most profound range (i.e., 111 dB HL), no benefits were noted for continued HA use after the first CI for either suprasegmental or segmental perception, and prolonged HA use appeared to have negative effects for segmental perception. Thus, these results are similar to other studies suggesting that earlier second CI use supports higher levels of spoken language skills for children with the most profound bilateral HL (approximately 110 dB HL; Boons et al., 2012; Sarant et al., 2014).

Ultimately, the question most critical for clinicians is not unilateral versus bilateral device use, but which bilateral devices (bimodal vs. BCI) provide the best opportunity for developing good perceptual abilities and spoken language skills for children with severe-to-profound HL. For children with the most profound losses, early BCIs provide the greatest opportunity for developing good spoken language skills. For those with moderate-to-severe losses, however, a prescribed period of bimodal use may be more advantageous than early simultaneous BCIs. Ideally, device recommendations from clinicians and decisions by parents should be made in the context of a variety of outcome measures (segmental perception, suprasegmental perception, audio-visual speech perception, binaural processing, etc.) and using evidence from long-term studies when children have well-established spoken language and reading skills. Parents concerned about multiple surgeries, implicit in sequential BCIs, may opt for simultaneous BCIs. However, for children with more residual hearing (moderately severe–profound range), there may be substantial benefits for language development from continued HA use in conjunction with a first (only) CI. Regardless of any particular CI surgery decision(s), early use of HAs prior to receiving a first and/or second CI should be encouraged; acoustic hearing experience is associated with better suprasegmental perception, even for children with profound HL (i.e., near 90 dB HL).

The current study evaluated the effects of both segmental and suprasegmental perception on receptive language skills. Their effects on other skills such as localization and listening in spatially separated noise, however, were not examined. Additionally, for pediatric CI populations, the extent to which binaural listening skills facilitate spoken language development is unknown. Future studies should be undertaken to examine the relations among segmental and suprasegmental perception, binaural listening skills, spoken language and communication skills, and academic achievement.

For children with CIs, the following specific predictions were tested, and results are summarized below:

Segmental and suprasegmental speech perception skills contribute independently to receptive vocabulary performance, and suprasegmental skills contribute to receptive language performance.

Lower (better) unaided thresholds are associated with higher (better) segmental and suprasegmental speech perception scores. The effects of unaided PTA are greater in magnitude for suprasegmental than segmental perception.

For speech perception and ultimately language development, the optimum period of HA use prior to a second CI depends on unaided threshold levels; there exists a hearing loss cutoff above which acoustic experience is not beneficial for language outcomes. For children with the most profound HL (approximately 111 dB HL), BCI surgery should be performed as early as possible. For children with severe-to-profound HL (approximately 92 dB HL), up to about 3.6 years of HA use benefits suprasegmental perception and has no effect on segmental perception. Also, for children with severe levels of HL (approximately 73 dB HL), 3.6 years or more may improve suprasegmental and segmental perception.

Acknowledgments