Abstract

Recent meta-analyses suggest there is a common brain network involved in processing emotion in music and sounds. However, no studies have directly compared the neural substrates of equivalent emotional Western classical music and emotional environmental sounds. Using functional magnetic resonance imaging we investigated whether brain activation in motor cortex, interoceptive cortex, and Broca’s language area during an auditory emotional appraisal task differed as a function of stimulus type. Activation was relatively greater to music in motor and interoceptive cortex – areas associated with movement and internal physical feelings – and relatively greater to emotional environmental sounds in Broca’s area. We conclude that emotional environmental sounds are appraised through verbal identification of the source, and that emotional Western classical music is appraised through evaluation of bodily feelings. While there is clearly a common core emotion-processing network underlying all emotional appraisal, modality-specific contextual information may be important for understanding the contribution of voluntary versus automatic appraisal mechanisms.

Keywords: music, sound, emotion, motor, interoception, language

1. Introduction

Recent meta-analyses suggest that there is a common brain network involved in processing emotion in music and in sounds. Koelsch [1] synthesized the musical neurobiological literature to explore brain regions associated with aspects of music processing and affective stimulation. Frühholz and colleagues [2] took this a step further to integrate musical emotional processing with other emotional stimuli, including speech prosody, nonverbal expressions, and non-human sounds, by generating overlapping regional maps to identify a core network that includes the amygdala, basal ganglia, auditory cortex, superior temporal cortex, insula, medial and inferior frontal cortices, and cerebellum. Both meta-analyses suggest, perhaps unsurprisingly, that there is a great deal of overlap for emotion processing, regardless of the vehicle of the information. It is also clear from these summaries that musical stimuli activate brain regions outside of the core emotion processing areas.

These studies systematically reviewed the literature focused on the brain response to music as an emotional stimulus, as well as other emotional auditory stimuli, in an attempt to isolate emotion processing within the auditory system. Each analysis was based on a thorough investigation of high-quality research, and together they provide an important snapshot into the striking similarity of brain response patterns to music and other emotional auditory stimuli. However, the studies reviewed measured response to either music or sound and did not directly compare the two in the same experiment. As no studies have directly compared the neural substrates of equivalent emotional music and emotional environmental sounds, we do not fully understand how responses to these musical stimuli are represented in the brain compared to other auditory emotional stimuli, or whether other brain systems, such as the motor or language networks, would be more or less involved in processing music versus emotional environmental sounds. While there is clearly a common core emotion-processing network underlying all emotional appraisal, modality-specific contextual information may be important for understanding the contribution of different appraisal mechanisms. Our objective was to determine whether brain activation in regions outside of the core emotion-processing areas – the motor, interoceptive, and language cortices – differed based on the context of music versus sounds.

Our previous work has compared brain responses to emotional Western classical music and to emotional environmental nonmusical auditory stimuli from the International Affective Digitized Sounds (IADS; [3]) in people with and without depression. Importantly, the stimuli had been validated as matched on both valence and arousal across stimulus type [4], allowing us to determine whether differences in emotional arousal were due to modality or valence. Individuals with depression had reduced activation to music in the anterior cingulate cortex (ACC) compared to people without symptoms of depression [5]. Across all participants, music and sounds elicited activation in different brain regions, even though participants rated the emotional quality similarly for the two stimulus types. Emotional environmental sounds activated regions associated with early emotional processing – including the thalamus, amygdala, cerebellum, and primary auditory cortex – and voluntary emotional appraisal – the lateral and dorsomedial prefrontal cortex. Western classical music activated regions of the default mode network (DMN) and reward processing regions, such as the ACC, which are associated with automatic emotional appraisal [6].

This led us to hypothesize that participants were using a different appraisal process depending on the modality of the auditory information. Based on Meyer’s metaphorizing medium hypothesis [7], music is abstract, without specific linguistic labels automatically linked during listening. On the other hand, the emotional environmental sounds in the IADS are concrete and nameable; they can be identified through language, for example: roller-coaster, children crying, glass breaking [8]. Different appraisal processes for these stimuli make sense from the perspective of embodied cognition and interoceptive inference models of emotion [9, 10]. In these models, core emotions of valence and arousal are thought to arise from the interpretation of physical body sensations. Higher order emotions are then abstracted from the integration of these core emotions with memories and schema regarding the self in top-down processing. In the brain, this abstraction from core emotions to more abstract concepts takes place largely in a network that includes the insular cortex, inferior frontal cortex, and anterior cingulate gyrus. Bodily sensations are initially processed in middle and posterior interoceptive regions of the insula and integrated with representations of the self in areas of inferior frontal cortex and ACC [11]. This has been more fully described as it relates to music by Reybrouck and Eerola [12], who argue that music as a temporal experience continuously activates the sensorimotor-cognitive integration system. Activation of this system can be observed even in the absence of overt movement [13, 14]. A recent meta-analysis supports this idea, showing that along with insula, motor areas are activated when listening to music [15]. This may in part lead to the urge to move to music, or feel the groove [16]. Together, these studies suggest that aspects of the stimulus engage different primary sensory and action systems [17–20]. 
What is still unknown is whether stimuli of equivalent subjective levels of emotionality would engage different systems during an emotional appraisal task.

We build on these findings and specifically address this gap in the current study by examining brain activation during emotional appraisal to different modalities of non-linguistic auditory stimuli: Western classical music and emotional environmental sounds. Our research question was whether emotional music and sounds activate primary motor cortex, interoceptive cortex and generative syntactic (BA 44) and semantic (BA 45) language areas of the brain differently. To address this, we conducted secondary region of interest data analysis of a within-subject fMRI study [5]. Participants with and without depression listened to emotional musical and nonmusical stimuli matched on valence and arousal during fMRI scanning. Because our primary goal was to investigate the brain response to music and nonmusical stimuli specifically in these regions, and we did not hypothesize group differences in activation in these regions as they are not typically implicated in depression, we included all data from the parent study regardless of participants’ depression status. Brain activation to these stimuli was extracted from motor cortex, interoceptive cortex, and Broca’s language area and compared. As the musical stimuli were instrumental and rhythmic and the sound stimuli could be described with language, we hypothesized that Western music would activate areas associated with physical feelings (motor and interoception) to a greater degree than emotional environmental sounds, and that environmental sounds would activate Broca’s language area to a greater degree than Western music.

2. Method

The study presented here represents a secondary analysis of data collected during a study conducted by Lepping et al. [5] investigating differences in brain activation to emotional auditory stimuli in major depressive disorder (MDD). Sample size was fixed according to the available data; however, effect sizes are reported here for all statistics. This work was carried out in accordance with the Declaration of Helsinki, and all participants provided written consent for participation in the parent trial. Imaging data are available on the OpenfMRI database, accession number ds000171, https://www.openfmri.org/dataset/ds000171.

2.1. Participants

Demographic information is described in detail in the parent study [5]. Briefly, participants were right-handed adults who either had no history of depression (N = 22; 9 males; age: M = 28.5, SD = 11.1, range = 18-59) or were currently experiencing a depressive episode (N = 20; 9 males; age: M = 34.2, SD = 13.6, range = 18-56). Two Never Depressed participants had high scores on the Beck Depression Inventory – Second Edition (BDI-II) [21] on the day of testing and were excluded (final group: N = 20). The only participant in the MDD group taking medication for depression was also excluded (final group: N = 19). Participants had all completed high school (education: M = 15.2 years, SD = 2.7 years), and had normal or above average IQ (IQ: M = 119, SD = 11.7) as assessed by the Vocabulary and Matrix Reasoning subtests of the Wechsler Abbreviated Scale of Intelligence (WASI) [22].

2.2. Materials

Emotionally evocative positive and negative musical examples from Western art music, and positive and negative nonmusical environmental sound stimuli selected from the International Affective Digitized Sounds set (IADS) [3], were used in this study. Musical examples included professional recordings of twelve different pieces, spanning musical periods from Baroque to Postmodern Minimalism. Across the pieces, musical instrumentation included solo organ, string quartet, solo instrument with orchestral accompaniment, and full orchestra. Vocal music was not included. Tempo, key, mode, dynamics, articulation, percussion, rhythmic drive, and consonance/dissonance varied across and within the pieces.

Each piece was segmented into 10-second clips, starting at phrase boundaries, and these stimuli were rated according to valence (positive/negative) and arousal (active/passive) using a bi-axial diagram. Participants could select a point anywhere within a circle to simultaneously indicate scale rating for both valence (x axis) and arousal (y axis). The most positive or negative clips were selected, and the two groups of stimuli were matched on arousal. This was followed by selecting a subset of emotional environmental sounds from the IADS that were matched on valence and arousal to the positive and negative musical stimuli and would be easily perceived over the noise of the functional MRI sequences. As the IADS stimuli are five seconds long, each was looped so that each stimulus was 10 seconds long. Musical and nonmusical stimuli were carefully selected and validated to evoke equivalent emotional responses, with similar emotional valence and arousal ratings for each stimulus type.

Pure tones, 10 seconds long, on a C major scale from A3 (220 Hz) to C5 (523.25 Hz) were created as a neutral emotional baseline comparison to control for aspects of auditory processing, such as pitch and tone, but which lacked any rhythmic or harmonic structure, complex timbre, or environmental context. These were also tested alongside the emotional stimuli described above, and with other potential baseline sounds like white noise and long instrumental tones, and were verified to be emotionally neutral and equally arousing as compared to the emotional stimuli. The final stimuli included 36 musical (18 positive, 18 negative), 24 nonmusical (12 positive, 12 negative), and 9 neutral (pure tone) stimuli. The stimuli and validation study are fully described in a separate publication [4], and are available from the corresponding author (RL).
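As an illustration of the baseline stimuli described above, the following minimal sketch synthesizes a 10-second sine tone and recovers the two endpoint frequencies quoted in the text. It assumes equal temperament with A4 = 440 Hz, which agrees with the quoted values (A3 = 220 Hz, C5 = 523.25 Hz). The sample rate and the function names are hypothetical, and the original stimulus-generation procedure is not described in the source.

```python
import math

A4_HZ = 440.0         # assumed concert pitch (not stated in the source)
SAMPLE_RATE = 44100   # assumed samples per second (not stated in the source)


def midi_to_hz(midi_note):
    """Equal-tempered frequency for a MIDI note number (A4 = note 69)."""
    return A4_HZ * 2.0 ** ((midi_note - 69) / 12.0)


def pure_tone(freq_hz, seconds, rate=SAMPLE_RATE):
    """Return samples of a unit-amplitude sine wave as a list of floats."""
    n_samples = int(seconds * rate)
    return [math.sin(2.0 * math.pi * freq_hz * i / rate) for i in range(n_samples)]


a3_hz = midi_to_hz(57)  # A3
c5_hz = midi_to_hz(72)  # C5
tone = pure_tone(a3_hz, 10.0)  # one 10-second baseline tone
```

A sine wave has a single frequency component, which is why such tones lack the complex timbre and harmonic structure of the musical stimuli.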

2.3. Procedure

2.3.1. fMRI Data Collection.

fMRI data were collected during the scanning session described in the parent study [5]. Participants had a single fMRI scanning session (3 Tesla Siemens Skyra MRI, Siemens, Erlangen, Germany) including five functional scanning runs (50 interleaved oblique axial slices at a 40° angle, repetition time/echo time [TR/TE] = 3000/25 msec, flip angle = 90°, field of view [FOV] = 220 mm, matrix = 64×64, slice thickness = 3 mm, 0 mm skip, in-plane resolution = 2.9×2.9 mm, 105 data points, 5 min: 24 sec). Stimuli were presented through MR compatible earbuds (Sensimetrics Corporation, Malden, MA) with E-Prime 2.0 software (Psychology Software Tools, Inc., Sharpsburg, PA). As the scanner creates constant high-pitched noise throughout the functional scans, a sound check procedure was employed at the beginning of the session. A musical example not used in the experimental stimuli was played through the earbuds while an fMRI sequence was running, and participants indicated with hand signals (thumbs up/down) whether the volume should be increased or decreased. The scanner was stopped, the participant confirmed verbally that they could hear the stimuli over the sound of the scanner, and the functional runs were begun.

During these functional runs, participants heard 30 second blocks of emotional musical or nonmusical stimuli alternating with 30 seconds of neutral pure tones. After each block, participants were given three seconds to press a button identifying the valence (positive or negative) of the group of stimuli just heard. This was presented as a forced choice task for all conditions, including the pure tone baseline blocks, and was primarily used to ensure participants were engaged during the task. After the scanning session, participants were asked to rate their emotional experience for each of the stimuli using the valence and arousal bi-axial scale described above. These ratings were similar to those provided by previous validation studies, and confirmed a significant difference on valence between positive and negative stimuli, but no other differences between the conditions [5].

2.3.2. fMRI Preprocessing.

Imaging data were analyzed using Analysis of Functional NeuroImages (AFNI) software [23]. Processing steps included trilinear 3D motion correction, 3D spatial smoothing to 4 mm with a Gaussian filter, high pass filter temporal smoothing, and resampling of the data to 2.5 mm³, followed by coregistration of the structural images to the functional data and normalization to Talairach space [24]. Individual frames (TRs) of the functional data were censored if motion between TRs exceeded 1 mm.
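The censoring rule can be sketched as follows. This is a minimal illustration, not the AFNI implementation: it assumes that "motion between TRs" is summarized as the Euclidean norm of the frame-to-frame change in the six rigid-body motion estimates, which is one common convention. The source does not state the exact metric, and the function name is hypothetical.

```python
import math


def censor_mask(motion, limit_mm=1.0):
    """Return one flag per TR: True = keep, False = censor.

    motion: list of per-TR motion estimates, e.g. six values per TR
            (three translations and three rotations). Assumed layout.
    limit_mm: threshold on the frame-to-frame change (1 mm in the text).
    """
    keep = [True] * len(motion)
    for t in range(1, len(motion)):
        # Change in each motion parameter relative to the previous TR.
        delta = [now - before for before, now in zip(motion[t - 1], motion[t])]
        # Assumed summary metric: Euclidean norm of the parameter changes.
        displacement = math.sqrt(sum(d * d for d in delta))
        if displacement > limit_mm:
            keep[t] = False
    return keep


# Hypothetical motion estimates for four TRs (a sudden 1.3-unit jump at TR 2).
example = [
    [0.0, 0.0, 0.0, 0.0, 0.0, 0.0],
    [0.2, 0.0, 0.0, 0.0, 0.0, 0.0],
    [1.5, 0.0, 0.0, 0.0, 0.0, 0.0],
    [1.6, 0.0, 0.0, 0.0, 0.0, 0.0],
]
flags = censor_mask(example)
```

Censored TRs are then excluded from the regression, so a brief head movement does not contaminate the condition estimates.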

2.3.3. fMRI Statistical Analysis.

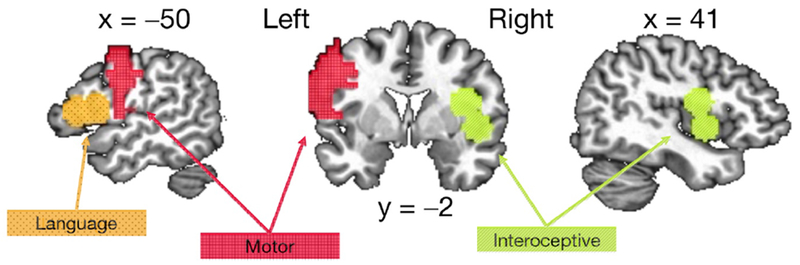

Statistical activation maps for each participant were calculated in AFNI using a voxel-wise general linear model (GLM), in which regressors representing the experimental conditions of interest were modeled with a hemodynamic response filter and entered into multiple regression analysis including motion estimates as nuisance regressors. Nonoverlapping regions of interest were defined for primary motor cortex (precentral gyrus, 1,606 voxels/25,094 mm³) and Broca’s language area (BA 44/45, 478 voxels/7,469 mm³) in the left hemisphere, defined from template masks within AFNI [25–27]. Interoceptive cortex was defined as 10 mm radius spheres around peak coordinates in the right hemisphere identified by Simmons and colleagues [28] as selective for interoceptive awareness (519 voxels/8,109 mm³). The Motor ROI was edited to remove voxels overlapping the Language ROI (Figure 1).

Figure 1.

Mean activation for each condition was extracted from the three regions of interest: Red = Left Motor Cortex, Orange = Left Broca’s Language Area, BA 44/45, Green = Right Interoceptive Cortex.
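The ROI sizes reported above can be cross-checked with simple arithmetic. The sketch below assumes that the ROIs were counted on the resampled grid of 2.5 mm isotropic voxels described in the preprocessing section. Under that assumption, each voxel occupies 15.625 mm³, and the voxel counts reproduce the reported volumes after rounding. The dictionary keys are illustrative labels only.

```python
VOXEL_EDGE_MM = 2.5                     # resampled voxel size (assumed isotropic)
VOXEL_VOLUME_MM3 = VOXEL_EDGE_MM ** 3   # 15.625 cubic millimetres per voxel

# Voxel counts as reported in the text.
roi_voxels = {"motor": 1606, "language": 478, "interoceptive": 519}


def roi_volume_mm3(n_voxels):
    """Convert a voxel count to a volume in cubic millimetres."""
    return n_voxels * VOXEL_VOLUME_MM3


# Rounded volumes for comparison with the values quoted in the text.
roi_volumes = {name: round(roi_volume_mm3(n)) for name, n in roi_voxels.items()}
```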

The mean percent signal change from baseline (Pure Tones) during each emotional stimulus condition (Positive Music, Negative Music, Positive Sounds, Negative Sounds) was extracted from each ROI for each participant and entered into IBM SPSS Statistics for Macintosh, Version 24.0 (2016, Armonk, NY: IBM Corp.). This mean percent signal change from baseline was set as the dependent variable (DV) in a repeated-measures Analysis of Variance (ANOVA) model. Our primary hypothesis was that emotional Western music and emotional environmental sounds would elicit different patterns of activation across the three brain regions. Therefore, the two primary independent variables (IV) were Stimulus Type (2 levels: Music, Sound) and Region (3 levels: Motor, Interoceptive, Language). Additionally, to examine whether this relationship was significantly impacted by depression (which is the main variable of interest in the parent study), we included depression and valence in the model.

Valence was critical for appraisal during the task and may have also had an impact on brain activation in these regions. To account fully for these variables, we ran a four-way, 2×3×2×2 repeated-measures ANOVA using the extracted percent signal change from baseline for each condition (Positive Music, Negative Music, Positive Sounds, Negative Sounds). The four independent variables (IV) included three within-subjects factors: Stimulus Type (2 levels: Music, Sound), Region (3 levels: Motor, Interoceptive, Language), and Valence (2 levels: Positive, Negative); and one between-subjects factor: Depression status (2 levels: ND, MDD). Post hoc pairwise tests were conducted for significant interactions in the model.
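For readers unfamiliar with the dependent variable, the sketch below shows one conventional definition of percent signal change relative to a baseline condition, averaged over the voxels of an ROI. It is an assumption-laden illustration: the source does not state how AFNI was configured to produce these values, and the function names and input numbers are hypothetical.

```python
def percent_signal_change(condition_mean, baseline_mean):
    """Percent change of a condition's mean signal relative to baseline."""
    if baseline_mean == 0:
        raise ValueError("baseline mean must be non-zero")
    return 100.0 * (condition_mean - baseline_mean) / baseline_mean


def roi_mean_psc(condition_voxels, baseline_voxels):
    """Average the voxel-wise percent signal change across one ROI."""
    if len(condition_voxels) != len(baseline_voxels) or not condition_voxels:
        raise ValueError("need equal-length, non-empty voxel lists")
    values = [
        percent_signal_change(cond, base)
        for cond, base in zip(condition_voxels, baseline_voxels)
    ]
    return sum(values) / len(values)


# Hypothetical signal values for a three-voxel ROI (not study data).
music = [1002.0, 1001.0, 1003.0]
tones = [1000.0, 1000.0, 1000.0]
psc = roi_mean_psc(music, tones)
```

Averaging over every voxel in a large anatomical ROI mixes responsive and unresponsive voxels, which is one reason ROI-level values of this kind tend to be small.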

3. Results

Descriptive statistics for the primary variables of interest are included in Table 1. The complete ANOVA table is presented in Table 2. Mauchly’s test of sphericity indicated a departure from sphericity for the Region factor (χ²(2) = 17.0, p < .001), so we corrected for degrees of freedom with Greenhouse-Geisser correction for tests on Region and for all interactions with Region (ε reported in Table 2).
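The Greenhouse-Geisser adjustment multiplies both the effect and the error degrees of freedom by ε. The sketch below illustrates this for a three-level within-subjects factor in a design with 39 participants in two groups, as in this study. The function name is hypothetical, and because ε is reported to two decimals, the results can differ from the tabled values in the last digit.

```python
def gg_corrected_df(epsilon, levels, n_subjects, n_groups=1):
    """Greenhouse-Geisser corrected degrees of freedom.

    epsilon:    sphericity estimate, between 1 / (levels - 1) and 1
    levels:     number of levels of the within-subjects factor
    n_subjects: total number of participants
    n_groups:   number of between-subjects groups
    """
    df_effect = levels - 1
    df_error = (levels - 1) * (n_subjects - n_groups)
    return epsilon * df_effect, epsilon * df_error


# Type * Region interaction: epsilon = 0.59, Region has 3 levels, N = 39, 2 groups.
df_effect, df_error = gg_corrected_df(0.59, levels=3, n_subjects=39, n_groups=2)
```

With these inputs the uncorrected degrees of freedom (2, 74) shrink to roughly (1.18, 43.66), in line with the values reported for the Type * Region interaction.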

Table 1.

Descriptive Statistics: Stimulus Type by Region | N = 39

Percent Signal Change from Baseline

| Type | Region | M | SD | 95% CI |
|---|---|---|---|---|
| Music | Interoceptive | 0.19 | 0.17 | [0.13, 0.25] |
| Music | Language | 0.09 | 0.29 | [−0.01, 0.18] |
| Music | Motor | 0.07 | 0.20 | [0.00, 0.13] |
| Sound | Interoceptive | 0.10 | 0.25 | [0.02, 0.18] |
| Sound | Language | 0.20 | 0.52 | [0.04, 0.37] |
| Sound | Motor | −0.03 | 0.27 | [−0.11, 0.06] |

Table 2.

Repeated Measures ANOVA: Within (Type, Region, Valence), Between (Depressed)

| Source | df | ε | F | ηp² | p |
|---|---|---|---|---|---|
| Type | 1, 37 | | 0.27 | .007 | .60 |
| Region | 1.45, 53.8 | 0.73 | 8.10 | .18 | .002** |
| Valence | 1, 37 | | 0.21 | .006 | .65 |
| Depressed | 1, 37 | | 2.55 | .06 | .12 |
| Type * Region | 1.18, 43.6 | 0.59 | 9.04 | .20 | .003** |
| Type * Valence | 1, 37 | | 6.22 | .14 | .02* |
| Type * Depressed | 1, 37 | | 0.60 | .02 | .44 |
| Region * Valence | 1.31, 48.6 | 0.66 | 0.34 | .009 | .62 |
| Region * Depressed | 1.45, 53.8 | 0.73 | 0.88 | .02 | .39 |
| Valence * Depressed | 1, 37 | | 1.07 | .03 | .31 |
| Type * Region * Valence | 1.24, 46.0 | 0.62 | 0.05 | .001 | .87 |
| Type * Region * Depressed | 1.18, 43.6 | 0.59 | 0.01 | <.001 | .94 |
| Type * Valence * Depressed | 1, 37 | | 0.81 | .02 | .37 |
| Region * Valence * Depressed | 1.31, 48.6 | 0.66 | 0.07 | .002 | .86 |
| Type * Region * Valence * Depressed | 1.24, 46.0 | 0.62 | 0.45 | .01 | .55 |

Note. Mauchly’s Test of Sphericity indicated a departure from sphericity for the Region factor. Degrees of freedom and p values have been adjusted using the Greenhouse-Geisser correction.

*Significant at the p < .05 level.

**Significant at the p < .01 level.
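The effect sizes in Table 2 can be cross-checked against the reported F values, because partial eta squared is recoverable from F and its degrees of freedom. The sketch below is a consistency check written for this purpose, not the authors' computation; the function name is hypothetical. The Greenhouse-Geisser correction scales both degrees of freedom by the same ε, so the uncorrected values (2, 74) give the same result as the corrected ones.

```python
def partial_eta_squared(f_value, df_effect, df_error):
    """Partial eta squared recovered from an F statistic and its df."""
    return (f_value * df_effect) / (f_value * df_effect + df_error)


# F values and uncorrected degrees of freedom taken from Table 2.
eta_type_by_region = partial_eta_squared(9.04, 2, 74)   # Type * Region
eta_type_by_valence = partial_eta_squared(6.22, 1, 37)  # Type * Valence
eta_region = partial_eta_squared(8.10, 2, 74)           # Region
```

Rounded to two decimals, these reproduce the tabled values of .20, .14, and .18.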

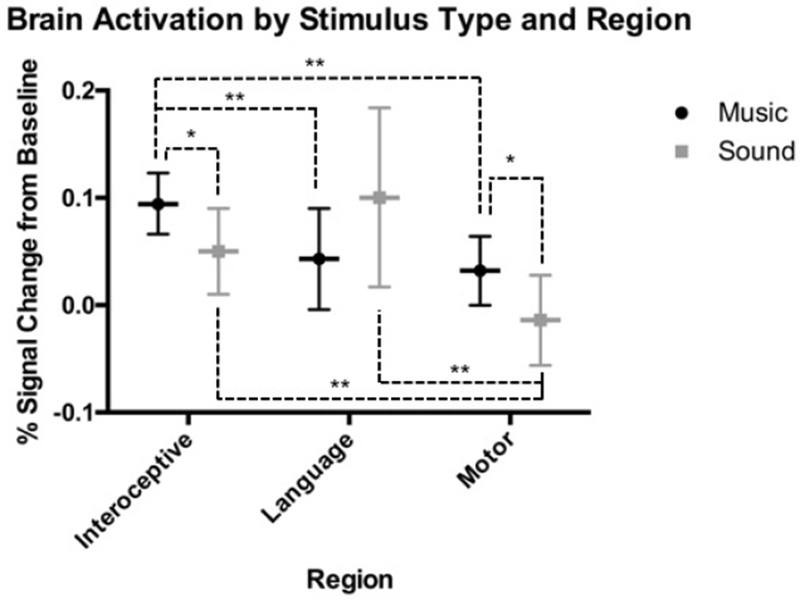

Our a priori hypothesis that Western classical music and environmental sounds would differentially activate the selected regions was supported. Results of the ANOVA revealed a significant interaction of stimulus Type and brain Region (Table 2), so we looked at each region separately. Post hoc tests revealed that Interoceptive and Motor cortices were significantly more activated during musical compared to nonmusical stimuli (Interoceptive: MDIFF = 0.09, SE = 0.03, 95% CI [0.02, 0.16], p = .012; Motor: MDIFF = 0.09, SE = 0.04, 95% CI [0.02, 0.16], p = .012). Although not statistically significant, the pattern of activation in Broca’s Language area was different from that in the other two regions, and relatively greater during nonmusical sounds compared to musical stimuli (MDIFF = 0.12, SE = 0.08, 95% CI [−0.04, 0.27], p = .15). Comparisons of each stimulus Type across the three Regions revealed that music elicited significantly greater activation in Interoceptive cortex compared to both Language (MDIFF = 0.05, SE = 0.02, 95% CI [0.02, 0.09], p = .007) and Motor (MDIFF = 0.06, SE = 0.01, 95% CI [0.04, 0.09], p < .001) areas. Emotional environmental sounds had significantly greater activation in both Interoceptive (MDIFF = 0.06, SE = 0.01, 95% CI [0.04, 0.09], p < .001) and Language (MDIFF = 0.11, SE = 0.03, 95% CI [0.05, 0.18], p = .001) areas compared to Motor cortex (Table 1, Figure 2).

Figure 2.

ANOVA results showing differential brain activation patterns across regions for music versus sound. Horizontal lines indicate estimated marginal means. Error bars display 95% CI. Significant pairwise comparisons are indicated with dashed lines. *Significant at the p < .05 level. **Significant at the p < .01 level.

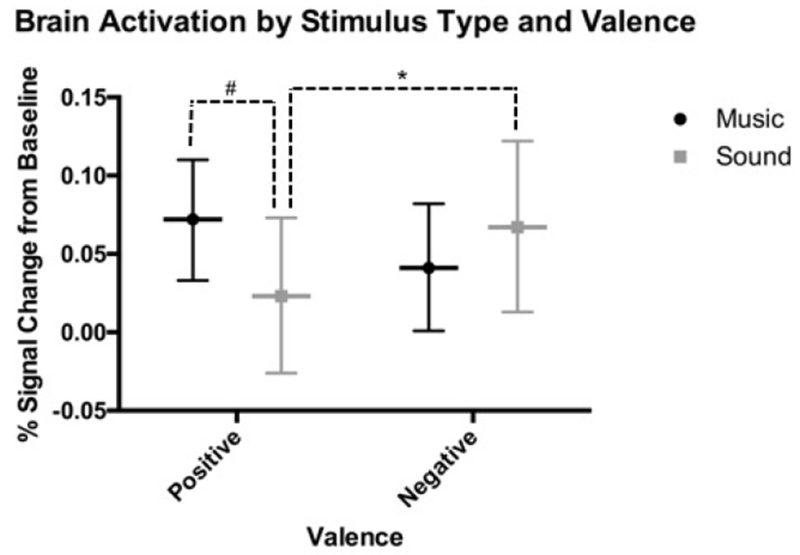

The model also revealed a significant interaction of stimulus Type and Valence. Post hoc tests revealed that across all regions, emotional environmental sounds elicited significantly greater activation for Negative compared to Positive stimuli (MDIFF = 0.04, SE = 0.02, 95% CI [0.007, 0.08], p = .02), while music was not significantly different for Positive versus Negative stimuli (MDIFF = 0.03, SE = 0.02, 95% CI [−0.02, 0.08], p = .21). There was a non-significant trend for greater activation for Positive music compared to Positive environmental sounds (MDIFF = 0.05, SE = 0.03, 95% CI [−0.003, 0.10], p = .06), but no difference was observed for Negative music versus Negative environmental sounds (MDIFF = 0.03, SE = 0.03, 95% CI [−0.03, 0.08], p = .34; Figure 3). No other interactions were significant (Table 2). As there were interactions of Type by Region and Type by Valence, we did not interpret the main effects of Type, Region, or Valence separately. Although the main effect of Depression status could be considered a trend, the lack of a significant interaction with Region, Type, or Valence indicates that depression status does not significantly change the pattern of activation for stimulus type across the regions – the primary focus of this investigation. Analyses conducted without the factors of Depression and Valence were similar.

Figure 3.

ANOVA results showing differential brain activation patterns by valence for music versus sound. Horizontal lines indicate estimated marginal means. Error bars display 95% CI. Significant pairwise comparisons are indicated with dashed lines, #NS trend at the p < .05 level. *Significant at the p < .05 level.

4. Discussion

This secondary data analysis using a region of interest approach revealed distinct patterns of relative activation in primary motor cortex, interoceptive cortex, and Broca’s language area during emotional appraisal of Western classical music and non-musical environmental sounds. Our hypothesis that different brain regions would respond to these stimuli in different ways was based on embodied cognition and interoceptive inference models of emotion [9, 10]. As an abstract metaphorizing medium, Western classical music has limited connections to linguistic processes for emotional appraisal, and therefore, is more likely to be reliant on the interpretation of bodily sensations [7]. Additionally, the sensorimotor system is engaged by Western classical music, possibly evoking thoughts of dancing [12, 13, 15, 16]. As concrete and nameable stimuli salient in the environment, emotional environmental sounds would be more likely appraised through automatic identification of the source through linguistic mechanisms [8].

Our hypothesis that music would activate areas related to physical feelings, i.e. motor and interoceptive cortices, to a greater degree than emotional environmental sounds, while environmental sounds would activate Broca’s area more than Western classical music, was largely supported. While activation within Broca’s area was not significantly greater to environmental sounds compared to Western music, the sound and musical stimuli did present with different patterns of activation across all regions. Specifically, Western music was associated with significantly greater activation in Interoceptive cortex compared with Broca’s area, and environmental sounds were associated with significantly greater activation in Broca’s area compared to Motor cortex. It is possible that the automatic labeling of the environmental sounds, evidenced by preferential activation of Broca’s area, may be unrelated to the emotional appraisal process. However, during this emotional appraisal task, participants were instructed to make a valence judgement of the stimuli, and not to identify whether they were musical or nonmusical, or to explicitly name the stimulus. Nevertheless, future studies employing both concrete and abstract emotional environmental sounds would be needed to clarify whether this finding applies to emotional environmental stimuli more broadly.

Our analysis focused on three regions of interest, identified from independent sources as likely candidates to address the specific research question presented here. Broca’s area and Motor cortex were identified from anatomic masks, and the interoceptive cortex was selected from previously published, unrelated functional imaging data. Additionally, the regions selected here were hypothesized to be activated by both stimulus types, consistent with the bulk of the literature to date [1, 2, 29, 30]. These regions are not typically thought of as emotion-processing regions; they are not part of the limbic system. Nor are they in the primary auditory cortex, which may be sensitive to other perceptual differences between the stimuli [31], or to analytic descriptors specific to music (e.g. intervals, instrumentation, tempo; [32]); however, this was not the focus of the current investigation. Both the limbic system and primary auditory cortex show robust activation to this emotional appraisal task, described in a previous publication [5].

We chose this region-of-interest approach as a conservative, hypothesis-driven confirmatory method of probing our research question. While other data-driven approaches, such as whole-brain voxelwise analysis or psychophysiological interaction analysis, may have yielded additional results outside of these regions, these exploratory analyses could have been harder to interpret. By averaging over large anatomic regions, the percent signal change we observed was small, presumably due to averaging across “activated” and “non-activated” voxels. As there is the potential that participants will show some variability in the exact location of activation, using larger regions helps to ensure that we can capture any effect that may be present. We did confirm that participants in this sample showed some activation within each region, however, by extracting the maximally activated voxel for each participant within each region. Averaged across subjects, the maximum percent signal change (in at least one voxel) in each region was 1.9% for Interoceptive cortex, 3.4% for Broca’s area and 3.9% for Motor cortex, with individual experimental conditions ranging from 1.5-4.5%. We therefore conclude that the regions were at least partially activated by the task. Also, these anatomic regions were defined based on a template rather than on subject-specific anatomy; some variability in brain structure is also expected, although these large areas should have captured that as well. Using this conservative approach, our data show that across these broad areas, both Western classical music and emotional environmental sounds activate the language and interoceptive regions, but that the relative activation between the two stimuli is different. Even so, it is possible that another analysis method could have revealed more subtle effects, including individual differences between subjects. 
Future studies could identify factors related to intersubject variability in the strength, location, and pattern of activation during emotional appraisal of Western music and environmental sounds.

Musical stimuli from Western classical music are often confounded in terms of valence and arousal. Often, Western music that has a faster tempo and is in a major key will be rated as positive, for example, while music that is slower and in a minor key will be rated as negative. This is not always the case, and we were able to separate these two dimensions in the musical stimuli used in this study. Additionally, the characteristics of Western music do not always overlap with music from other cultures, which is one of the reasons why we used only Western classical music in this research. Music from another culture that may be described as positive by those who are familiar with it may be described as negative by someone who has never been exposed to it before. The results of this study therefore cannot be generalized to all music from around the globe. Regardless, the results of the current study using stimuli carefully matched for these parameters demonstrate that activation differences between regions are not related to these dimensions.

Additionally, the selected environmental sounds from the IADS were mildly emotional, and did not include extreme stimuli such as the sounds of violence against people. While these surely would elicit strong emotional responses, they would not have matched the musical stimuli, which do not imply harm being done. Although it was not our primary question, we did find a significant Type by Valence interaction that indicated Negative emotional environmental sounds had greater activation compared to Positive environmental sounds. This was not anticipated, as previous studies have shown that brain responses to valence have a U-shaped curve, with both negative and positive stimuli evoking high levels of activation compared to neutral [33]. Our stimuli were all relatively mild in emotionality, which may have allowed the observation of this effect. This finding could be an indication that mildly negative sounds have greater salience than mildly positive ones, possibly readying the autonomic nervous system for fight or flight. The anterior insula and dorsolateral prefrontal cortex are both key parts of the salience network [34], and overlap to some degree with our regions of interest for Interoceptive cortex and Broca’s area. Future research should examine the potential variability in response for mildly emotional environmental sounds.

We used pure tones as an emotionally neutral baseline in order to examine the effects of positive and negative stimuli separately. fMRI requires that two or more conditions be compared to detect relative differences between them. By selecting pure tones as a baseline, we were able to remove the common neural signature associated with some of the basic auditory features of the stimuli. Similarly, appraisals such as positive versus negative, or active versus passive are also relative to each other. A stimulus rated as positive alone might be rated as neutral or negative when compared with another, more positive stimulus. Therefore, we tested the pure tones against other potential baseline stimuli in our previous study, and selected the pure tones as the best comparator to the emotionally positive and negative stimuli [4]. It is possible, however, that this emotionally neutral stimulus was not as relevant for this specific task, as the comparison was less about valence and more about stimulus type. The pure tones are also unlikely to be named linguistically, unless the listener has perfect pitch. A future study using a baseline that isolates aspects of the linguistic labelling or contextual meaning associated with the experimental stimuli would be needed to confirm these results.

4.1. Limitations

This study is limited by several factors. The sample size was small and fixed in this secondary data analysis. Also, the stimuli were from a limited, albeit well-characterized set. All of the musical stimuli were limited to Western classical musical examples. Future studies would need to examine whether the effects seen here would apply to music from other parts of the world. Also, the baseline used in the parent trial was developed as an emotional foil, and may not have been the most effective baseline to answer the specific question outlined by this study comparing abstract and concrete stimuli of different contexts. Because a block design was used for the fMRI data collection, the brain response to individual stimuli cannot be isolated. Even though we collected individual ratings of valence on a scale after the fMRI scan, it is not possible to regroup the stimuli for analysis by individual rating. It is also possible that the noise from the MRI scanner may have impacted the results observed in this study. However, this noise was present in all conditions, including baseline; therefore, we believe that the activation patterns observed reflect activation above and beyond what would be expected in the auditory cortex from the scanner noise. A further examination with a different measurement technique, EEG for example, could help determine whether the differences in brain response to these stimuli are confounded by the scanner noise.

By design, participants were unfamiliar with the stimuli; however, familiarity and liking have been shown to alter brain activation in certain regions, with music that is liked showing greater activation in both Broca’s area and motor cortex [35]. Familiarity with repeated exposures has also been shown to lead to increased polarization of liking by valence (positive stimuli are liked even more, negative stimuli are liked even less), and to increase facial expression responses to those stimuli as measured by electromyography [36]. As we did not measure familiarity and liking in this study, it is possible that these factors may have played a role in the patterns of activation we observed. In our earlier analysis of these data, we found that participants with depression showed positive correlations between measures of affect and mood and activation in limbic regions [37]. Future studies should incorporate measures of familiarity and liking in order to separate the effects of these individual difference variables.

Finally, it is important to note that the original parent trial was not designed or performed with the current analysis strategy in mind. While the results obtained from this analysis are interesting, some elements could be improved were a new study to be conducted. Specifically, the use of stimuli varying by concreteness/abstractness would help to isolate that variable, which we believe to be the confounded or co-occurring element with our Western musical and environmental sound stimuli that led to the pattern of brain activation we observed. Additionally, the use of a different baseline that captures the concreteness/abstractness qualities of the stimuli would further help to isolate that factor in the stimuli. Also, concurrent behavioral data, such as reaction times collected with hand or mouth response buttons as in other studies of embodied cognition, would provide convergent evidence with the fMRI data that a linguistic or bodily appraisal strategy is used [38].

4.2. Conclusion

We conclude that the appraisal of emotionality depends on the medium. Our results suggest that emotional environmental sounds are appraised through verbal identification of the content, evidenced by relatively greater activation of Broca’s area compared to motor cortex. Emotional Western classical music is appraised through bodily feelings, evidenced by greater activation of interoceptive cortex compared to Broca’s area, and sensation of movement, evidenced by greater activation in the motor cortex to music versus environmental sounds. We also identified an interaction between stimulus Type and Valence, with negative sounds and positive music having the greatest response across all regions. Further research with additional stimuli, including music from other cultures, is needed to understand whether this effect would generalize to other examples, or whether it is an artifact of an unmeasured difference between these particular stimuli.

The meta analyses performed by Koelsch [1] and Frühholz and colleagues [2] underscore the impact of music as an emotional stimulus. These meta analyses were crucial for understanding how music is similar as an emotional stimulus compared to other types of auditory stimuli. While these studies showed that there is clearly a common core emotion-processing network underlying all emotional appraisal within the auditory domain, the current study identifies that modality-specific contextual information may be important for understanding the contribution of voluntary versus automatic appraisal mechanisms.

Practical implications for this finding include the potential for music to be used as a therapeutic device for conditions like depression. Depression is often associated with verbal rumination over negative thoughts. By engaging the body and potentially bypassing the linguistic system, music might serve as a tool to break the rumination circuit. Our current investigation revealed no differences in regional brain response to these stimuli based on depression status, which further supports this as a potential therapeutic avenue. Unfortunately, the current sample did not have data on rumination symptom severity among the participants with depression. Future research is needed to investigate how depression symptoms of rumination might modulate the brain response, and whether music therapy or music listening would be effective for ameliorating those symptoms.

Highlights.

A common brain network is involved in processing emotion in Western classical music and emotional environmental sounds

Areas outside of this network differentiate the context of emotional information

Motor and interoceptive areas preferentially activate for music

Language areas preferentially activate for emotional environmental sound

Different appraisal strategies are used for music and sound

Acknowledgments

Funding Sources

This work was supported by funding to RL from The Society for Education, Music and Psychology Research (SEMPRE: Arnold Bentley New Initiatives Fund), and the University of Kansas Doctoral Student Research Fund. MR imaging was provided by pilot funding from the Hoglund Brain Imaging Center. The Hoglund Brain Imaging Center is supported by a generous gift from Forrest and Sally Hoglund, from the University of Kansas School of Medicine, and funding from the National Institutes of Health (S10 RR029577, UL1 TR000001, U54 HD090216). The content is solely the responsibility of the authors and does not necessarily represent the official views of the funding agencies.

Footnotes

Competing Interests

None of the authors have competing interests to declare.

Contributor Information

Rebecca J. Lepping, Hoglund Brain Imaging Center, University of Kansas Medical Center

Jared M. Bruce, Department of Psychology, and Department of Biomedical and Health Informatics, University of Missouri – Kansas City

Kathleen M. Gustafson, Department of Neurology, and Hoglund Brain Imaging Center, University of Kansas Medical Center

Jinxiang Hu, Department of Biostatistics, University of Kansas Medical Center

Laura E. Martin, Department of Population Health, and Hoglund Brain Imaging Center, University of Kansas Medical Center

Cary R. Savage, Department of Psychology and Center for Brain, Biology and Behavior, University of Nebraska – Lincoln

Ruth Ann Atchley, Department of Psychology, University of South Florida

References

- 1. Koelsch S, Brain correlates of music-evoked emotions. Nat Rev Neurosci, 2014. 15(3): p. 170–80. doi: 10.1038/nrn3666

- 2. Fruhholz S, Trost W, and Kotz SA, The sound of emotions-Towards a unifying neural network perspective of affective sound processing. Neurosci Biobehav Rev, 2016. 68: p. 96–110. doi: 10.1016/j.neubiorev.2016.05.002

- 3. Bradley MM and Lang PJ, Affective reactions to acoustic stimuli. Psychophysiology, 2000. 37(2): p. 204–15.

- 4. Lepping RJ, Atchley RA, and Savage CR, Development of a validated emotionally provocative musical stimulus set for research. Psychology of Music, 2016. 44(5): p. 1012–1028. doi: 10.1177/0305735615604509

- 5. Lepping RJ, et al., Neural Processing of Emotional Musical and Nonmusical Stimuli in Depression. Plos One, 2016. 11(6). doi: 10.1371/journal.pone.0156859

- 6. Phillips ML, Ladouceur CD, and Drevets WC, A neural model of voluntary and automatic emotion regulation: implications for understanding the pathophysiology and neurodevelopment of bipolar disorder. Mol Psychiatry, 2008. 13(9): p. 829, 833–57. doi: 10.1038/mp.2008.65

- 7. Meyer LB, Emotion and meaning in music. 1956, Chicago: University of Chicago Press; xi, 307 p.

- 8. Brefczynski-Lewis JA and Lewis JW, Auditory object perception: A neurobiological model and prospective review. Neuropsychologia, 2017. 105: p. 223–242. doi: 10.1016/j.neuropsychologia.2017.04.034

- 9. Seth AK, Interoceptive inference, emotion, and the embodied self. Trends Cogn Sci, 2013. 17(11): p. 565–73. doi: 10.1016/j.tics.2013.09.007

- 10. Niedenthal PM, Embodying emotion. Science, 2007. 316(5827): p. 1002–5. doi: 10.1126/science.1136930

- 11. Kleint NI, Wittchen HU, and Lueken U, Probing the Interoceptive Network by Listening to Heartbeats: An fMRI Study. PLoS One, 2015. 10(7): p. e0133164. doi: 10.1371/journal.pone.0133164

- 12. Reybrouck M and Eerola T, Music and Its Inductive Power: A Psychobiological and Evolutionary Approach to Musical Emotions. Front Psychol, 2017. 8: p. 494. doi: 10.3389/fpsyg.2017.00494

- 13. Winkielman P, Coulson S, and Niedenthal P, Dynamic grounding of emotion concepts. Philos Trans R Soc Lond B Biol Sci, 2018. 373(1752). doi: 10.1098/rstb.2017.0127

- 14. Korb S, et al., Gender differences in the neural network of facial mimicry of smiles--An rTMS study. Cortex, 2015. 70: p. 101–14. doi: 10.1016/j.cortex.2015.06.025

- 15. Gordon CL, Cobb PR, and Balasubramaniam R, Recruitment of the motor system during music listening: An ALE meta-analysis of fMRI data. PLoS One, 2018. 13(11): p. e0207213. doi: 10.1371/journal.pone.0207213

- 16. Janata P, Tomic ST, and Haberman JM, Sensorimotor coupling in music and the psychology of the groove. J Exp Psychol Gen, 2012. 141(1): p. 54–75. doi: 10.1037/a0024208

- 17. Tillmann B, Music and language perception: expectations, structural integration, and cognitive sequencing. Top Cogn Sci, 2012. 4(4): p. 568–84. doi: 10.1111/j.1756-8765.2012.01209.x

- 18. Wilson-Mendenhall CD, et al., Primary Interoceptive Cortex Activity during Simulated Experiences of the Body. J Cogn Neurosci, 2019. 31(2): p. 221–235. doi: 10.1162/jocn_a_01346

- 19. Seth AK and Friston KJ, Active interoceptive inference and the emotional brain. Philos Trans R Soc Lond B Biol Sci, 2016. 371(1708). doi: 10.1098/rstb.2016.0007

- 20. Herbert BM and Pollatos O, The body in the mind: on the relationship between interoception and embodiment. Top Cogn Sci, 2012. 4(4): p. 692–704. doi: 10.1111/j.1756-8765.2012.01189.x

- 21. Beck AT, Steer RA, and Brown GK, Manual for the Beck Depression Inventory-II. 1996, San Antonio, TX: Psychological Corporation.

- 22. Wechsler D, Wechsler Abbreviated Scale of Intelligence. 1999, San Antonio, TX: The Psychological Corporation.

- 23. Cox RW, AFNI: software for analysis and visualization of functional magnetic resonance neuroimages. Comput Biomed Res, 1996. 29(3): p. 162–73.

- 24. Talairach J and Tournoux P, Co-planar stereotaxic atlas of the human brain: 3-dimensional proportional system: an approach to cerebral imaging. 1988, Stuttgart; New York: Georg Thieme; 122 p.

- 25. Eickhoff SB, et al., Assignment of functional activations to probabilistic cytoarchitectonic areas revisited. Neuroimage, 2007. 36(3): p. 511–21. doi: 10.1016/j.neuroimage.2007.03.060

- 26. Amunts K, et al., Broca’s region revisited: cytoarchitecture and intersubject variability. J Comp Neurol, 1999. 412(2): p. 319–41.

- 27. Geyer S, et al., Two different areas within the primary motor cortex of man. Nature, 1996. 382(6594): p. 805–7. doi: 10.1038/382805a0

- 28. Simmons WK, et al., Keeping the body in mind: insula functional organization and functional connectivity integrate interoceptive, exteroceptive, and emotional awareness. Hum Brain Mapp, 2013. 34(11): p. 2944–58. doi: 10.1002/hbm.22113

- 29. Maess B, et al., Musical syntax is processed in Broca’s area: an MEG study. Nat Neurosci, 2001. 4(5): p. 540–5. doi: 10.1038/87502

- 30. Koelsch S, et al., Bach speaks: a cortical “language-network” serves the processing of music. Neuroimage, 2002. 17(2): p. 956–66.

- 31. Nan Y and Friederici AD, Differential roles of right temporal cortex and Broca’s area in pitch processing: evidence from music and Mandarin. Hum Brain Mapp, 2013. 34(9): p. 2045–54. doi: 10.1002/hbm.22046

- 32. Klein ME and Zatorre RJ, Representations of Invariant Musical Categories Are Decodable by Pattern Analysis of Locally Distributed BOLD Responses in Superior Temporal and Intraparietal Sulci. Cereb Cortex, 2015. 25(7): p. 1947–57. doi: 10.1093/cercor/bhu003

- 33. Viinikainen M, Katsyri J, and Sams M, Representation of perceived sound valence in the human brain. Hum Brain Mapp, 2012. 33(10): p. 2295–305. doi: 10.1002/hbm.21362

- 34. Seeley WW, et al., Dissociable intrinsic connectivity networks for salience processing and executive control. J Neurosci, 2007. 27(9): p. 2349–56. doi: 10.1523/JNEUROSCI.5587-06.2007

- 35. Pereira CS, et al., Music and emotions in the brain: familiarity matters. PLoS One, 2011. 6(11): p. e27241. doi: 10.1371/journal.pone.0027241

- 36. Witvliet CVO and Vrana SR, Play it again Sam: Repeated exposure to emotionally evocative music polarises liking and smiling responses, and influences other affective reports, facial EMG, and heart rate. Cognition & Emotion, 2007. 21(1): p. 3–25. doi: 10.1080/0269930601000672

- 37. Lepping RJC, Neural processing of emotional music and sounds in depression. 2013, Lawrence, KS: University of Kansas; 133 pages.

- 38. Mazzuca C, et al., Abstract, emotional and concrete concepts and the activation of mouth-hand effectors. PeerJ, 2018. 6: p. e5987. doi: 10.7717/peerj.5987