Abstract

Vision and Change challenged biology instructors to develop evidence-based instructional approaches that were grounded in the core concepts and competencies of biology. This call for reform provides an opportunity for new educational tools to be incorporated into biology education. In this essay, we advocate for learning progressions as one such educational tool. First, we address what learning progressions are and how they leverage research from the cognitive and learning sciences to inform instructional practices. Next, we use a published learning progression about carbon cycling to illustrate how learning progressions describe the maturation of student thinking about a key topic. Then, we discuss how learning progressions can inform undergraduate biology instruction, citing three particular learning progressions that could guide instruction about a number of key topics taught in introductory biology courses. Finally, we describe some challenges associated with learning progressions in undergraduate biology and some recommendations for how to address these challenges.

INTRODUCTION

Vision and Change recognized the challenge college biology instructors face when deciding what to teach undergraduates in a diverse and ever-expanding field like biology (American Association for the Advancement of Science [AAAS], 2011). To help instructors address this challenge, the report identified five core concepts and six core competencies fundamental to students’ biology literacy. Biology instructors could organize their curricula and instruction around the core concepts and competencies to help students build a deep understanding of how biological systems work rather than encyclopedic knowledge about a wide range of topics. The report also encouraged instructors to develop more interactive, “student-centered courses and curricula” that “take into account student knowledge and experiences at the start of a course” and use evidence of student learning from assessments to inform their instructional strategies (AAAS, 2011, p. 22).

This call to revise how instructors approach teaching undergraduate biology provides an opportunity to incorporate new educational tools into biology education that assist instructors in achieving the objectives set forth in Vision and Change. We advocate for learning progressions as one such educational tool, because they 1) are grounded in evidence of student thinking about core ideas of science and 2) directly inform the instructional practices of creating learning goals, developing assessments, and constructing curricula that help students develop a rich understanding of biology.

In this essay, we explore how learning progressions can be used to enrich biology instruction at the undergraduate level. To do this, we draw heavily on learning progression research from the K–12 level that has been investigating the role of learning progressions in education for more than a decade (National Research Council [NRC], 2007). We begin by describing what a learning progression is and its essential features. Next, we examine a published learning progression about carbon cycling for a practical illustration of what a learning progression looks like. Then, we describe how instructors can use learning progressions as an educational tool to inform their instructional practices. Finally, we discuss some challenges to using learning progressions in biology education and provide recommendations for how to address these challenges.

WHAT IS A LEARNING PROGRESSION?

Considerable work has been done in the cognitive and learning sciences investigating how students construct scientific knowledge (NRC, 2001, 2007, 2018). From this work, we know that 1) students often have nonscientific conceptual frameworks that they use to understand scientific phenomena (Carey, 1986; Keil, 2012; Rosengren et al., 2012); 2) students’ nonscientific conceptual frameworks and ideas can persist even as they acquire scientific ideas (Kelemen and Rosset, 2009; Shtulman and Valcarcel, 2012; Evans, 2018); and 3) students’ nonscientific conceptual frameworks can both hinder and facilitate scientific reasoning (Hammer et al., 2005; Keil, 2012; Coley and Tanner, 2015; Evans, 2018). For example, work investigating how students categorize materials and processes shows that they often base their categorizations on surface features (e.g., observable characteristics), whereas scientists rely on deep features (e.g., chemical or genetic similarity) to determine category membership (Gelman and Markman, 1986; Gelman and Davidson, 2013; Hesse and Anderson, 1992; Chi et al., 1994; Herrmann et al., 2013). This tendency can lead students to view biological materials or processes as being distinct, when a scientist would view those same processes as related (Modell, 2000). Students also draw on nonscientific strategies when describing causal relationships, such as using teleological (i.e., purpose-driven) or essentialist (i.e., attribute-driven) motives to explain biological phenomena, descriptions in conflict with scientific ideas of physical–causal interactions (Kelemen, 2012; Coley and Tanner, 2015; Lombrozo and Vasilyeva, 2017). Teleological ideas can be tenacious and persist even when students become professional scientists in ways that can significantly impact their views of biological processes like evolution (Kelemen et al., 2013). 
Students can also rely on simplifying assumptions to explain causal relations in complex systems, which can lead them to an incorrect understanding of a system’s dynamics. Examples include relying on deterministic instead of probabilistic causal mechanisms, linear instead of nonlinear relationships, or a centralized cause with a limited number of actors instead of decentralized causes with complex or emergent interactions (Grotzer and Tutwiler, 2014). However, ideas that stem from nonscientific frameworks can also give students an initial way to constrain how organisms or materials relate in a hierarchical taxonomy, providing a framework from which to build more scientifically rigorous ideas (Coley and Muratore, 2012).

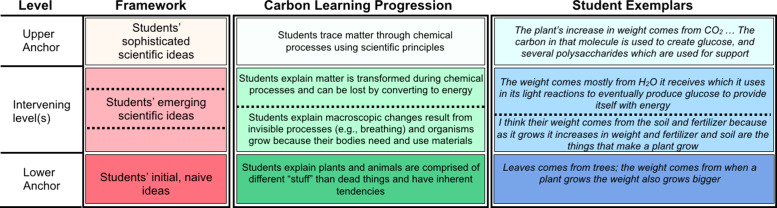

Learning progression research leverages work from the cognitive sciences by recognizing that students’ understanding of scientific phenomena evolves in a myriad of ways as they work toward mastery of a topic (NRC, 2007; Corcoran et al., 2009). This understanding often builds upon intuitive or colloquial ideas that can serve as “stepping-stones” for scientific knowledge (Mohan and Plummer, 2012; Anderson, 2015). Learning progression researchers capture this diversity of ideas by administering common assessment questions to students across the grade levels of interest (e.g., freshman to senior undergraduate students; Jin and Anderson, 2012b). From the assessment responses, learning progression researchers use qualitative methods to identify the various ways students reason about the topic of interest as they develop mastery, including nonscientific, vague, incomplete, or incorrect conceptions (Figure 1; Corcoran et al., 2009; Duschl et al., 2011). These myriad ideas are ordered into increasing levels of sophistication that represent cognitive shifts in how students conceive a topic (Gunckel et al., 2012b). The levels are bounded by an Upper Anchor, which represents the target reasoning strategy established by an instructor or experts in a field, and a Lower Anchor, which characterizes students’ incoming or base knowledge about a topic (Duschl et al., 2011; Evans et al., 2012; Mohan and Plummer, 2012). The intervening levels describe the many possible reasoning trajectories students will likely—but not inevitably—follow as they develop mature scientific thinking about a topic (Alonzo, 2012). Indeed, there is no requirement that learning proceed linearly across learning progression levels; students may hold ideas characteristic of multiple levels on the progression depending on the context of a question or their own cognitive development (NRC, 2001; Smith et al., 2006). 
When possible, the reasoning patterns described in the intervening levels draw from research in the cognitive and learning sciences on how students construct scientific knowledge (Duncan and Rivet, 2013). We recommend Jin et al. (2019b) for a more detailed discussion of how learning progressions are created.

FIGURE 1.

The alignment of a generic learning progression framework (“Framework”), an abbreviated version of Mohan et al.’s (2009) carbon learning progression (“Carbon Learning Progression”), and student exemplars for each level of the carbon learning progression (“Student Exemplars”).

By aggregating students’ diversity of ideas on a topic into one framework, learning progressions serve as a “road map” to student thinking that begins with students’ initial ideas about a topic and ends with their mature scientific ideas at the topmost level of the learning progression (Alonzo, 2011; Anderson, 2015). The intervening levels represent key “landmarks” or conceptual challenges/successes that students may encounter as they work toward the “final destination” of the topmost level (Black et al., 2011; Anderson, 2015; Wilson, 2018). Indeed, students may take different “routes” toward the highest level on the progression and may not experience all of the ideas described in the different levels. The road map also describes the proximity of different emerging ideas in the lower levels to the scientific ones articulated in the highest level, with ideas at higher levels aligning more closely with target ideas compared with the ideas expressed in lower levels (Alonzo, 2011; Black et al., 2011). Instructors can use the road map nature of learning progressions to guide their instructional practices in ways that are grounded in evidence of student thinking.

We want to emphasize that, when we use the term “learning progression,” we are referring to cognition-based learning progressions and not curriculum and instruction progressions, content progressions, or teaching sequences that describe an ordered sequence of scientific ideas developed by experts from which curricular units are constructed (Alonzo et al., 2012; Furtak, 2012). Instead, cognition-based learning progressions are developed from empirical data of student reasoning maturation through time (Alonzo and Steedle, 2008; Alonzo, 2012; Corcoran et al., 2009; Mohan et al., 2009). As such, learning progressions approach student learning from both a scientific and a developmental perspective (Gotwals and Alonzo, 2012): they are scientific, because they focus on the fundamental concepts of science that experts have identified as having broad explanatory power across multiple contexts (Duschl et al., 2011; Krajcik, 2012); they are developmental, because they describe what students actually think and do as they move toward mastery of those scientific concepts (Mohan et al., 2009; Evans et al., 2012).

According to Corcoran et al. (2009), there are five fundamental features of learning progressions:

They characterize student thinking about “big ideas” of science.

They describe how students reason when engaged in scientific practices.

They guide the development of learning goals that are connected to student thinking.

They characterize the breadth of student ideas about a central idea of science.

They are connected to a related set of assessment instruments that target key performances and track student learning.

In the following sections, we go into greater detail about each feature of learning progressions to more fully illustrate what makes them powerful educational tools.

Learning Progressions Characterize Student Thinking about “Big Ideas” of Science

“Big ideas” are fundamental concepts and principles of science that have broad explanatory power and contribute to students’ growth in conceptual understanding (Smith et al., 2006; Duschl et al., 2011; Krajcik, 2012). By focusing instruction around big ideas, instructors can help their students build conceptual coherence around multiple, seemingly discrete ideas that are governed by the same fundamental principles (Modell, 2000; Mohan et al., 2009; Cooper et al., 2017). This approach addresses the criticism that science curricula are often too focused on broad information dissemination rather than deep explorations of how systems work (AAAS, 2011; Cooper et al., 2015).

Big ideas of science are identified by experts in both scientific and science education fields (AAAS, 2011; NRC, 2012; Next Generation Science Standards [NGSS] Lead States, 2013). For example, in biology, the “overarching principles” described in the BioCore Guide (e.g., “All living organisms share a common ancestor”; Brownell et al., 2014) represent big ideas in the field of biology that could serve as the basis for a learning progression. Other examples of big ideas that have been the focus of a learning progression are matter and atomic molecular theory (Smith et al., 2006), force and motion (Alonzo and Steedle, 2008), natural selection (Furtak et al., 2012), atomic structure and electric force (Stevens et al., 2010), data representation (Lehrer and Schauble, 2012), conceptual modeling (Schwarz et al., 2009), and common ancestry of plants (Wyner and Doherty, 2017).

Learning Progressions Describe How Students Reason When Engaged in Scientific Practices

A learning progression approach treats both knowledge and skills as essential, integrated components of scientific reasoning, similar to what scientists do when they generate new ideas (AAAS, 2011; NRC, 2012; Cooper et al., 2015). For example, when scientists engage in the practice of constructing explanations, they synthesize their knowledge of observed events into theories for why phenomena occur that link specific observations (i.e., data) with mechanisms that support causal inferences (NRC, 2012). Similarly, when scientists engage in the practice of quantitative reasoning, they use mathematical tools to parameterize their understanding of how variables in systems interact and explore what happens when those parameters change (AAAS, 2011; NRC, 2012). This integration of knowledge and skills has been termed a “performance” (Smith et al., 2006; Corcoran et al., 2009; Jin et al., 2019a). Performances reveal how students use their scientific knowledge to accomplish a specific practice in a way that instructors can observe and assess to determine student progress (NRC, 2001). Indeed, instructors can use the performances described in a learning progression to develop assessments (both formal and informal) that are tightly aligned with the learning progression and locate where individual students fall across learning progression levels as they learn about a topic (Corcoran et al., 2009). For example, when students exhibit the target performance described in the Upper Anchor of a learning progression, this provides evidence that they have mastered the topic of interest; however, when students exhibit performances that are described in the lower levels of a learning progression, this provides evidence that they are still working toward mastery.

Assessing performances provides richer insight into student thinking compared with assessing content knowledge alone. Moreover, asking students to engage in specific performances on assessments reframes assessments as additional opportunities for students to engage in authentic scientific practices (NRC, 2001, 2012). To illustrate how performances can be used in assessment to reveal student thinking, we draw on Duncan et al.’s (2009) work developing a genetics learning progression. These authors were interested in understanding students’ conceptions about proteins and their “central role in the functioning of all living organisms” (p. 668). The performance they targeted was students’ proficiency in constructing an explanation (i.e., the practice) based on the principles of molecular and cellular biology (i.e., relevant content knowledge). They observed this performance by asking students to explain why people born with muscular dystrophy have difficulty walking. From students’ explanations, they identified different levels of proficiency in students’ ideas about how a genetic mutation influences the structure and/or function of a protein, and therefore the function of an organism. The various levels of proficiency from this question (and others) were used to help inform the overarching levels of their learning progression describing how students develop sophisticated ideas about genetics.

Learning Progressions Guide the Development of Learning Goals That Are Connected to Student Thinking

The Upper Anchor, or most sophisticated level, of a learning progression represents a target performance that students should demonstrate to be proficient in a topic. As such, instructors can use the Upper Anchor of a learning progression to guide the development of learning goals in their course. These learning goals would reflect key performances that experts have decided are necessary for students to be proficient in a topic (Corcoran et al., 2009). They would also be tightly aligned with student thinking by describing what students are able to do at a particular point in their learning careers (Mohan and Plummer, 2012). This alignment helps ensure that learning goals are appropriate for the level of a particular course. We provide Table 1 as an example of how to link learning goals with performances and student thinking.

TABLE 1.

Example taken from Duncan et al. (2009, Table 2) showing how learning goals inform, and are connected to, performances and assessment tasks, with the highest level in their learning progression shownᵃ

| Learning goal | Performance | Assessment task | Expected response |
| --- | --- | --- | --- |
| Proteins have particular three-dimensional shapes determined by their amino acid sequences. Proteins have many different kinds of functions that depend on their specific properties. There are different types of genetic mutations that can affect the structures, and thus the functions, of proteins, and ultimately the traits. | Students explain or predict how a genetic mutation might affect the structure and function of a protein. | Some people are born with a genetic disease called muscular dystrophy. People with this disease have great difficulty in walking or exercising. Can you explain what might be causing these problems? | Maybe their muscle cells do not move well because the proteins in these cells do not work as a result of a mutation in a gene. |

ᵃ Bold emphases are ours and indicate performances.

Learning Progressions Characterize the Breadth of Student Ideas about a Central Scientific Concept

By attending to the breadth of students’ conceptions about a topic, and organizing those conceptions into one framework, learning progressions leverage students’ misconceived, incomplete, or vague ideas to better understand how scientific knowledge develops; they also recognize that some of these emerging ideas may serve as important stepping-stones for developing more sophisticated understanding (NRC, 2007; Krajcik, 2012; Duncan and Rivet, 2013; Lehrer and Schauble, 2015). Instructors can use learning progressions to prepare themselves for—and make predictions about—the range of ideas their students are likely to express in their classes (Furtak, 2012).

Learning Progressions Are Connected to a Related Set of Assessment Instruments That Target Key Performances and Track Student Learning

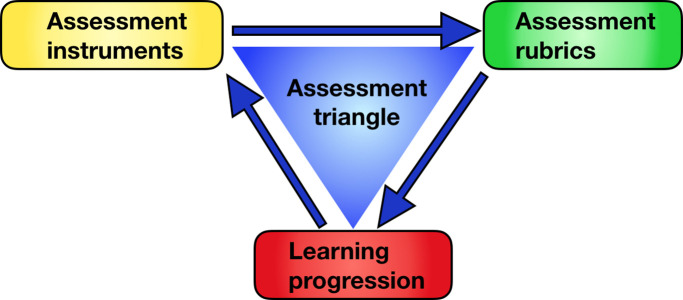

Assessment instruments are the tools that researchers use to collect student ideas in order to construct and validate the learning progression framework. The integral nature of the learning progression framework, with its associated assessment instruments and the kinds of analyses performed on collected student reasoning data, has been described as three corners of an “assessment system triangle” wherein each element (i.e., learning progression framework, assessment instruments, data analysis/assessment rubrics; Figure 2) directly informs the other two (NRC, 2001; Alonzo and Steedle, 2008). The tight relationship among these three elements ensures that the tasks students are asked to engage in on assessment instruments align with the target concepts being investigated in the learning progression (Wilson, 2018).

FIGURE 2.

An adaptation of the assessment triangle from the NRC report Knowing What Students Know (NRC, 2001, p. 44).

Learning progression–based assessment instruments also allow instructors to track student learning through time, because the assessment questions are designed to be answerable by students from multiple levels of experience (Jin and Anderson, 2012b). Consequently, learning progression–based assessment instruments measure how a student’s sense-making matures between assessment opportunities, which can help faculty evaluate the effectiveness of their instruction. This focus on tracking students’ progress across learning progression levels shifts the purpose of assessment toward creating tasks that discriminate among the different levels of student performances rather than tasks that are designed to elicit a “correct” answer (Corcoran et al., 2009; Songer et al., 2009; Wilson, 2018).

AN EXAMPLE LEARNING PROGRESSION

To exemplify how learning progressions provide insight into student thinking in ways that instructors can leverage to enrich their instruction, we examine Mohan et al.’s (2009) learning progression, which describes student thinking about the “big idea” of carbon-transforming phenomena, including plant and animal growth, cellular respiration, decomposition, and combustion. Mohan and colleagues were also interested in how students used the principle of matter conservation to inform their explanations of these phenomena. While their learning progression was based on how middle and high school students reasoned about these processes, Hartley et al. (2011), Parker et al. (2015), and Rice et al. (2014) found that college biology students from multiple institutions displayed the same kinds of reasoning about carbon-transforming processes in all but the lowest level (i.e., level 1).

Mohan et al. (2009) focused on the practice of constructing explanations in order to gain a deep understanding of how students considered carbon-transforming processes. They used open-ended assessment questions to ask students about plant and animal growth, cellular respiration, decomposition, and combustion. For example, to investigate student thinking about cellular respiration, they asked students to explain the following phenomenon: “[A person] lost a lot of weight eating a low calorie diet. Where did the mass of his fat go (how was it lost)?” To investigate students’ ideas about plant growth, they asked: “A small acorn grows into a large oak tree. a) Which of the following is FOOD for plants (circle ALL correct answers)? Soil, Air, Sunlight, Fertilizer, Water, Minerals in soil, Sugar that the plants make. b) Where do you think the plant’s increase in weight comes from?” Before collecting student data, the authors established a preliminary Upper Anchor (i.e., learning goal) that students at the highest level of their learning progression would be environmental science literate, meaning they would “have the capacity to understand and participate in evidence-based discussions about complex socio-ecological systems” (Mohan et al., 2009, p. 675).

Mohan et al.’s (2009) resulting learning progression summarized the overarching reasoning patterns students exhibited across the carbon-transforming processes they investigated. Their learning progression described four distinct levels of student reasoning (Figure 1, Carbon Learning Progression). The first and lowest level on the progression was typical of only the youngest students and described their view of carbon-transforming processes as a function of an organism’s “natural tendencies” to grow or die rather than a kind of process (Figure 1, Student Exemplars). These students also viewed living things and nonliving objects (e.g., soil) as being made of different “stuff” and focused on changes in external features of organisms and objects, like a plant increasing in mass by growing more leaves.

At the second level of the learning progression, students understood that invisible or “hidden” mechanisms were responsible for the changes they observed in an organism, rather than a foreordained tendency (as characteristic of level 1 student accounts). For example, when students described how an animal grew by eating food or a plant grew by taking up soil nutrients, they acknowledged that there were internal processes (e.g., a vague sense of digestion) involved in these changes. While imprecise, this idea represented an initial reasoning framework that tracked matter in vague ways, a prerequisite for principled reasoning with matter conservation.

At the third level of the learning progression, students replaced their “hidden mechanisms” idea with one focused on chemical transformations of matter, an idea more reminiscent of a scientific mechanism. Students explained physical changes at the macroscopic scale (e.g., plant growth, animal fat loss) by tracing matter through chemical processes, like photosynthesis or cellular respiration. However, they still struggled with the idea that gases could account for matter gains and losses. Instead, students used energy as a “‘fudge factor’…to account for materials that seemed to mysteriously appear or disappear” (Mohan et al., 2009, p. 688). For example, students would identify sunlight as a source of matter for plant growth or explain that an animal loses mass by “burning” it off through exercise.

The transition to the fourth and highest level of their learning progression occurred when students traced matter through chemical processes that included the fixation or release of gases; students also recognized that matter could not be converted into energy. This kind of reasoning was consistent with a scientific view of carbon-transforming processes and aligned with both K–12 and college-level benchmarks for student proficiency as well as the level of scientific literacy needed to interpret scientific reports aimed at the general public (AAAS, 2011; Hartley et al., 2011; NGSS Lead States, 2013).

Mohan et al. (2009) found that students have similar ideas, both accurate and misconceived, across the carbon-transforming processes they investigated. This finding provides two key affordances for instructors teaching these topics: 1) By framing plant and animal growth, cellular respiration, decomposition, and combustion as all involving carbon-transforming processes, instructors can help students build intellectual coherence around processes that appear distinct at macroscopic scales but are fundamentally related at atomic–molecular scales (i.e., they all involve the generation or transformation of organic carbon molecules). 2) Many of students’ misconceived ideas across the carbon-transforming processes were fundamentally related and could be addressed by having students consistently apply the principle of matter conservation in their reasoning (Hartley et al., 2011). For example, the misconceived ideas of “animals lose mass by burning off fat,” “sunlight contributes to a plant’s mass,” or “wood turns into heat and light energy when combusted” are all ideas that interconvert matter and energy. Having students trace carbon through these processes can address all of these misconceptions (Hartley et al., 2011; Rice et al., 2014).

Mohan et al.’s (2009) work demonstrates how learning progressions leverage a range of student ideas to create a road map that instructors can use to better understand how students construct scientific knowledge about a topic. It is important to emphasize that progress from one level to the next in a learning progression represents a cognitive shift in the way a student understands a topic and not simply the accumulation of individual knowledge pieces or vocabulary (Gotwals and Alonzo, 2012; Jin and Anderson, 2012b; Lehrer and Schauble, 2015). These cognitive shifts represent significant changes in the ways students reason about a topic as they incorporate more scientific ideas in their thinking, for example, by viewing carbon-transforming processes as matter-tracing processes (i.e., level 3) rather than inevitable events (i.e., level 1) or by reasoning consistently in a principle-based way across multiple contexts (i.e., the transition from level 3 to level 4; Mohan et al., 2009; Gunckel et al., 2012b; Wilson, 2018). Consequently, these shifts can be challenging for students to achieve. Indeed, Gunckel et al. (2012b) have suggested this process is like acquiring a whole new “discourse” in which students learn to use patterns of language, practices, and values that are consistent with, and connect them to, the scientific community. This perspective is consistent with a sociocultural perspective of knowing and learning from the learning sciences in which “one learns to participate in the practices, goals, and habits of mind of a particular community” when building knowledge (NRC, 2001, p. 63; Duncan and Rivet, 2013).

HOW LEARNING PROGRESSIONS INFORM UNDERGRADUATE BIOLOGY INSTRUCTION AND CURRICULAR DEVELOPMENT

Because learning progressions provide a conceptual road map of student thinking, they can be a powerful resource not only for informing instruction but also for guiding curricular development (Wilson, 2018). Indeed, the “vehicle” for moving students from one landmark to the next on the road map is the instruction and curriculum students interact with along the way, which significantly impact how smoothly and successfully students’ learning moves toward the final destination (Furtak, 2012; Krajcik, 2012). The potential to inform instruction and curricular development has led some researchers to create learning progression–based educational systems in which a learning progression is intrinsically tied to a specific instructional approach or curriculum (e.g., Lehrer and Schauble, 2015; Hartley et al., 2011; Anderson et al., 2018). However, other learning progressions are more broadly representative of student thinking and can be adapted and implemented for a variety of pedagogical methods (Alonzo and Steedle, 2008; Gunckel et al., 2012b). Regardless, learning progressions can help “faculty iteratively review and revise a course or curriculum on the basis of evidence that students are learning the ways of science and developing defined concepts and competencies” (AAAS, 2011, p. 23).

Currently, there are a number of published learning progressions about a variety of topics that biology instructors could use to inform their instruction and curricular development; we have highlighted these in Table 2 (for a more thorough review of published learning progressions, see Jin et al., 2019a). Many of these learning progressions were developed for primary and secondary grade levels and may provide only limited insight for undergraduate instructors. However, there are three learning progressions that we view as being appropriate for informing a number of key topics addressed in many undergraduate-level introductory biology courses: 1) Hartley et al.’s (2011) work investigating how undergraduate students use the principles of matter and energy conservation to understand carbon-cycling processes across scales (e.g., cellular respiration, photosynthesis, net primary production, food webs; based on Mohan et al., 2009, and Jin and Anderson, 2012a); 2) Furtak et al.’s (2012) learning progression about variation and differential survival/reproduction in natural selection; and 3) Todd and Romine’s (2016) work investigating undergraduate students’ understanding of modern genetics (based on Duncan et al., 2009; see also Todd and Kenyon, 2016). In the following sections, we describe how instructors and curriculum developers can use already-established learning progressions to enrich their biology instruction.

TABLE 2.

Selection of published learning progressions (LPs) about “big ideas” likely to be addressed in undergraduate biology classes, with a focus on learning progressions that covered grade bands at the secondary level or higher and links to the core concepts and/or competencies identified in Vision and Change that each learning progression addressed

| Authors | Topic | Grade band | Related concepts from Vision and Change | Notes |
| --- | --- | --- | --- | --- |
| Breslyn et al., 2016 | Sea-level rise and climate change | 7–16 | Systems | “Conditional” LP still being validated and revised |
| Cabello-Garrido et al., 2018 | Human nutrition | ∼5–12 | Pathways and transformations of energy and matter | Needs empirical validation; grade band our interpretation of the target student level |
| Duncan et al., 2009 | Modern genetics | 5–10 | Structure and function; information flow, exchange, and storage | Validated for undergraduate students (Todd and Romine, 2016) |
| Furtak et al., 2012 | Natural selection | 9–10 | Evolution | Discusses how LPs can support teaching practices (see also Furtak et al., 2018) |
| Gunckel et al., 2012a | Water in socio-ecological systems | 5–12 | Systems | See Covitt et al. (2018) for connecting LP with teaching practices |
| Jin and Anderson, 2012a | Energy in socio-ecological systems | 4–11 | Pathways and transformations of energy and matter; systems | Focused on energy-related concepts in carbon-transforming processes |
| Jin et al., 2019c | Trophic dynamics in ecosystems | 6–12 | Systems | Explores systems thinking concepts like feedback loops and energy pyramids |
| Mohan et al., 2009 | Carbon-cycling processes (cellular respiration, photosynthesis) | 6–12 | Pathways and transformations of energy and matter; systems | Validated for undergraduate students (Hartley et al., 2011) |
| Sevian and Talanquer, 2014 | Chemical thinking | 8–16+ | Structure and function; pathways and transformations of energy and matter | Focused on chemistry ideas |
| Songer et al., 2009 | Biodiversity | 4–6 | Systems; ability to apply the process of science | Concurrently developed a learning progression on inquiry practices |
| Stevens et al., 2010 | Nature of matter | 7–14 | Pathways and transformations of energy and matter | Focused on atomic structure |
| Wolfson et al., 2014 | Energy transformations from chemistry to biochemistry | 13–16 | Pathways and transformations of energy and matter | Preliminary learning progression |

Learning Progressions Help Instructors Identify Empirically Validated Learning Goals

Because the learning goals/Upper Anchors of learning progressions are grounded in both student thinking and expert expectations, instructors can be confident that these learning goals are rigorous and reasonable benchmarks for instruction: they are reasonable, because they represent goals that are aligned with what students are able to achieve at a particular stage of learning; they are rigorous, because they represent ideas experts have identified as important and have been empirically validated with student thinking.

To illustrate why instructors can use the Upper Anchor of a learning progression to develop rigorous and reasonable learning goals for students, we draw briefly on our work developing a learning progression around the big idea of flux (i.e., flux ∝ gradient/resistance) in the domain of physiology (Modell, 2000; Michael et al., 2009) to reveal the underlying processes involved in developing an Upper Anchor. The concept of flux describes how materials move in an organism through processes such as diffusion, osmosis, or bulk flow. The following example focuses on student thinking about the movement of ions.

The initial, expert-derived Upper Anchor of our learning progression stated that “students should integrate the impact of electrochemical gradients and forms of resistance when explaining ion fluxes between compartments.” After collecting data from constructed-response assessment items that asked students to explain ion fluxes into and out of cells in different contexts (e.g., neuron, heart muscle cell, plant cell), we found that this Upper Anchor failed to capture the feature of this system most challenging to students: understanding how equilibrium potential mediates ion fluxes. For example, when asked how potassium could move into a cell against its concentration gradient, a student wrote, “We could change the membrane potential to make the cell more negative so that the electrical gradient points inward and overcomes the concentration gradient.” While this answer is generally correct and “integrates the impact of electrochemical gradients” to determine ion fluxes, it neglects to specify how negative the membrane potential must become before electrical forces overcome chemical forces. If the membrane potential becomes “more negative” but is still more positive than the equilibrium potential of potassium under the given conditions, then electrical forces will not overcome chemical forces to change potassium movement. From this finding, we revised the Upper Anchor to specify that “students should integrate the impact of electrochemical gradients and forms of resistance when explaining ion fluxes between compartments AND account for threshold values (e.g., equilibrium potential),” which was more precise about the features students needed to master to deeply understand ion fluxes in physiology. This revised learning goal/Upper Anchor provides greater insight to instructors about what specific ideas they should address to help students master a specific topic.

This example shows why learning goals based on the Upper Anchor of a learning progression are rigorous—they use empirical data of student thinking to frame the key performances experts have identified as evidence of student mastery of a topic (e.g., integrating multiple gradients and considering threshold values for ion movement). They are reasonable, because the Upper Anchor describes proficiency in a performance that was observed in students (e.g., “Make the membrane potential more negative than Ek [i.e., potassium equilibrium potential] which will make the electrical gradient going into the cell larger than the concentration gradient going out. This will allow for K+ ions to flow into the cell.”).
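
The threshold reasoning in the revised Upper Anchor can be made concrete with the Nernst equation, which gives the equilibrium potential of a single ion species. The following is a minimal sketch and not part of the learning progression itself: the function names are hypothetical, and the potassium concentrations (5 mM outside, 140 mM inside) and temperature (37 °C) are assumed textbook-style values rather than data from our assessments. Under these assumptions the potassium equilibrium potential is roughly −89 mV, so a membrane potential that becomes "more negative" but stays above that value still leaves net potassium flux directed out of the cell.

```python
import math

R = 8.314462618   # gas constant, J/(mol*K)
F = 96485.33212   # Faraday constant, C/mol


def nernst_mv(conc_out_mM, conc_in_mM, valence=1, temp_c=37.0):
    """Equilibrium (Nernst) potential in millivolts for one ion species."""
    temp_k = temp_c + 273.15
    volts = (R * temp_k) / (valence * F) * math.log(conc_out_mM / conc_in_mM)
    return 1000.0 * volts


def net_cation_flux(membrane_mv, equilibrium_mv):
    """Direction of net passive flux for a positively charged ion."""
    if membrane_mv < equilibrium_mv:
        return "inward"
    if membrane_mv > equilibrium_mv:
        return "outward"
    return "none"


# Assumed illustrative K+ concentrations (not taken from the study data).
E_K = nernst_mv(conc_out_mM=5.0, conc_in_mM=140.0)

# Three hypothetical membrane potentials: resting, "more negative" but still
# above E_K, and more negative than E_K.
for v_m in (-70.0, -80.0, -95.0):
    direction = net_cation_flux(v_m, E_K)
    print(f"Vm = {v_m:6.1f} mV, E_K = {E_K:6.1f} mV -> net K+ flux {direction}")
```

Only the last case, in which the membrane potential is more negative than the equilibrium potential, reverses the direction of net potassium movement. This is the distinction the revised Upper Anchor asks students to articulate.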

Learning Progressions Help Instructors Identify Measurable Learning Goals That Integrate Scientific Knowledge and Skills

Vision and Change recommended that “student-centered courses begin with the articulation of clear, measurable learning goals” that are explicit about “what students should know and be able to do at the end of a course” (AAAS, 2011, p. 23). The performances described in the Upper Anchor of learning progressions achieve both of these recommendations: they are measurable, because they describe a task students must do that an instructor can observe, and they are explicit about what students should know and be able to do, because they ask students to engage in specific practices that reveal their understanding of key science ideas. This can help ameliorate a key challenge instructors often face in developing assessments that reliably measure the “right” ideas or skills that indicate a student has mastered a particular learning goal of interest (AAAS, 2011).

To illustrate how the Upper Anchors of learning progressions can be used as measurable learning goals that integrate skills and knowledge, we draw on Dauer et al.’s (2014) work investigating students’ use of evidence when evaluating scientific claims. These authors’ work builds upon the learning progressions of Mohan et al. (2009) and Jin and Anderson (2012a) by evaluating how students’ quantitative practices interact with their explanatory practices to critique scientific arguments about plant growth. They presented students with different assessment tasks that asked them to critique two competing claims about where a plant gets its mass. As part of these tasks, students were given a simple data table that showed a change in plant mass and corresponding change in soil mass (e.g., starting mass of a seed and soil: 1 and 80 g, respectively; final mass of plant and soil: 50 and 78 g, respectively). The top level of their preliminary learning progression explained that students must “notice the provided mass data and interpret the purpose of the data as for [matter] tracing” and “trace matter by applying principles of conservation of matter to constrain the argument,” which can be directly translated into a learning goal. The authors measured students’ achievement of this goal by whether or not students used the mass discrepancy in the data, which indicated that plants gained most of their mass from sources other than the soil, to critique the claims. They also found that when students did not use their knowledge of matter conservation when evaluating the simple data table, the students often missed the mass discrepancy and supported the claim that a plant’s mass largely comes from the soil. This highlights how integral knowledge and practices are for developing scientific understanding and why learning goals should include both features.
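
The matter-tracing step that the top level of this progression asks for amounts to a short piece of arithmetic on the data table. The sketch below works through it using the example masses quoted above; the function and variable names are hypothetical and are not drawn from Dauer et al. (2014).

```python
def mass_accounting(seed_g, plant_final_g, soil_start_g, soil_final_g):
    """Trace matter through the plant-growth data table.

    Returns the plant's gain, the soil's loss, the gain the soil cannot
    account for, and the largest share of the gain the soil could supply.
    """
    plant_gain = plant_final_g - seed_g
    soil_loss = soil_start_g - soil_final_g
    return {
        "plant_gain_g": plant_gain,
        "soil_loss_g": soil_loss,
        "gain_not_from_soil_g": plant_gain - soil_loss,
        "max_fraction_from_soil": soil_loss / plant_gain,
    }


# Example values from the data table described in the text.
result = mass_accounting(seed_g=1, plant_final_g=50, soil_start_g=80, soil_final_g=78)

for name, value in result.items():
    print(f"{name}: {value:.3g}")
```

With these values the plant gains 49 g while the soil loses only 2 g, so at most about 4% of the new mass could have come from the soil. The remaining 47 g is the discrepancy that students reasoning at the top level use to reject the claim that a plant's mass comes largely from the soil.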

Learning Progressions Inform Instructors about Key Learning Hurdles That Can Inform Instructional Activities

The road map nature of learning progressions gives instructors “advance warning” of likely learning hurdles students will face when learning about a topic (Furtak, 2012). These learning hurdles are described in the lower levels of the learning progression and can be leveraged to create class activities that specifically target those learning hurdles (Talanquer, 2009; Anderson et al., 2018). For example, Hartley et al. (2011) developed teaching activities (available at www.biodqc.org) designed to address the key reasoning challenges students faced around carbon-transforming processes that were identified by Mohan et al.’s (2009) learning progression. Similarly, Anderson et al. (2018) developed a suite of epistemic tools, like “(i) Process Tools that help students organize their writing and thinking, (ii) hands-on investigations and activities that model processes and chemical change, (iii) simulations…, and (iv) discourse routines that engage students in sharing ideas and seeking consensus” in the Carbon TIME curriculum (available at https://carbontime.bscs.org). These tools were designed to address students’ common tendency to conflate/interconvert matter and energy, identified in Mohan et al.’s (2009) and Jin and Anderson’s (2012a) learning progressions. This approach guides instructors in developing teaching activities that are targeted toward helping students achieve a learning goal (i.e., reasoning at the Upper Anchor level) and are focused on those behaviors (i.e., performances) that show evidence of proficiency (Handelsman et al., 2004). However, it expands on this approach by being rooted in student conceptions and not just an instructor’s goals for teaching.

Learning Progressions Can Be Used as Formative Assessment Tools to Help Instructors Tailor Their Instruction to the Needs of a Specific Class

Instructors can administer learning progression–based assessments during a course to identify both the range and distribution of students’ ideas (Wilson, 2018). This information enables instructors to tailor their instruction to focus on the learning hurdles most prominent in a particular class. It also helps instructors anticipate, and prepare for, different questions students may ask in class (Furtak, 2012; Alonzo and von Aufschnaiter, 2018; Furtak et al., 2018). For example, in Furtak’s (2012) work exploring student explanations about natural selection, the author worked with teachers to develop suggested feedback statements that teachers could give during formative assessments when confronted with student ideas from lower levels of the learning progression. These feedback statements were designed to help refocus students to the more sophisticated ways of viewing natural selection described in higher levels of the learning progression. For instance, when a student had an anthropomorphic idea about why changes arise in populations, such as, “The moths become darker because of bark,” the feedback statement teachers identified directed students to examine evidence from class activities that investigated the origin of variation (Furtak, 2012, p. 423).

On their website Thinking Like a Biologist (www.biodqc.org), Hartley et al. (2011) have made available diagnostic question clusters for college students that instructors can use to examine how their students’ understanding of carbon-transforming processes aligns with the reasoning patterns in Mohan et al.’s (2009) carbon-cycling learning progression. Additionally, Todd and Romine (2016) provided the revised Learning Progression-based Assessment of Modern Genetics (LPA-MG) assessment instrument that can be used to assess how college students’ ideas about genetics align with the reasoning patterns described in Duncan et al.’s (2009) genetics learning progression. Both of these assessment instruments provide college biology instructors with formative assessment tools that are linked to a learning progression “road map” they can use to better understand how their students construct scientific ideas in these domains.

Learning Progressions Enable Instructors to Measure Student Learning in a Nuanced Way

Because learning progressions describe the ways student thinking evolves across multiple levels of development, instructors can observe learning that occurs when students progress from one level to the next and not just when they reach the Upper Anchor (Alonzo and von Aufschnaiter, 2018). For example, the shift from thinking about what an organism needs to one based on chemical transformations of matter and energy that occurred between the second and third levels in Mohan et al.’s (2009) learning progression indicates significant progress in developing a scientific sense-making strategy, even though the students had yet to achieve complete mastery of the topic. This approach recognizes that not all students will become proficient in a topic at the same pace, though they can still make meaningful gains in developing scientific ideas by moving from one level to the next on the learning progression (Corcoran et al., 2009; Duncan and Rivet, 2013). Consequently, instructors can evaluate their instructional effectiveness by examining how their students progress across all levels of the learning progression and not just by how many students achieve the Upper Anchor. The assessment instruments provided by Hartley et al. (2011) and Todd and Romine (2016) can be used for this purpose if administered at multiple time points during a course. This nuanced approach to student learning provides more detailed evidence instructors can use to refine their instructional methods and to identify concepts that may need greater support during future courses to help propel students to higher levels of the learning progression.
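
As one way to picture this kind of analysis, the sketch below summarizes level codes from the same students at two time points. It is a minimal illustration under stated assumptions: the four-level scale, the function name, and the pre/post codes are all invented for the example and do not come from any published data set or from the instruments cited above.

```python
from collections import Counter

UPPER_ANCHOR = 4  # assumed top level of a hypothetical four-level progression


def summarize_progress(pre_levels, post_levels, upper_anchor=UPPER_ANCHOR):
    """Compare each student's learning progression level at two time points."""
    if len(pre_levels) != len(post_levels):
        raise ValueError("pre and post lists must describe the same students")
    n = len(pre_levels)
    shifts = [post - pre for pre, post in zip(pre_levels, post_levels)]
    return {
        "pre_distribution": dict(sorted(Counter(pre_levels).items())),
        "post_distribution": dict(sorted(Counter(post_levels).items())),
        # Share of students who moved up at least one level.
        "fraction_advanced": sum(s > 0 for s in shifts) / n,
        # Share of students at the Upper Anchor after instruction.
        "fraction_at_upper_anchor": sum(p >= upper_anchor for p in post_levels) / n,
    }


# Invented level codes for eight students (illustration only).
pre = [1, 2, 2, 2, 3, 3, 1, 2]
post = [2, 3, 3, 2, 4, 3, 2, 3]

summary = summarize_progress(pre, post)
for name, value in summary.items():
    print(f"{name}: {value}")
```

In this invented class, only one student in eight reaches the Upper Anchor, yet six of the eight advance at least one level. Counting Upper Anchor attainment alone would hide most of that progress.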

CHALLENGES AND RECOMMENDATIONS FOR LEARNING PROGRESSIONS IN UNDERGRADUATE BIOLOGY

Learning progressions represent a potentially powerful educational tool that can help instructors 1) focus their instruction around big ideas of biology; 2) bring coherence to their instruction, curricula, and assessment strategies; and 3) leverage students’ many ideas about biology to better understand how students learn to think like biologists. However, as with any tool, there are challenges associated with learning progression research in undergraduate biology education. Here, we highlight two that we view as the most significant:

There are a limited number of published learning progressions that are relevant for biology education at the undergraduate level. While Hartley et al.’s (2011), Furtak et al.’s (2012), and Todd and Romine’s (2016) learning progression work covers a number of key topics in many introductory biology courses, there is a paucity of learning progression work investigating how student thinking develops in upper-division courses around more sophisticated biology ideas. Consequently, there is much to learn about how advanced biology students develop the kinds of specialized knowledge and skills they need to be successful in their professional endeavors. This leads to challenge number two.

Developing a learning progression is time and resource intensive. A learning progression, at its inception, represents a hypothesized sequence for how students develop a sophisticated conceptual understanding of a key topic. As with any scientific hypothesis, a learning progression must be empirically validated to be accepted as a “true” representation of how student thinking develops (Shavelson and Kurpius, 2012). This involves multiple rounds of developing and revising assessment items that elicit a range of student ideas about the hypothesized learning progression; collecting and analyzing many instances of student reasoning to identify, verify, and revise the different ideas captured in the learning progression levels; and conducting psychometric analyses to evaluate how well aligned multiple assessment items are for eliciting different levels of student reasoning and for validating the appropriateness of level distinctions and ordering. For perspective, our work developing an ion flux learning progression over the past 3 years has involved creating ∼20 individual short-answer assessment items, collecting and analyzing more than 12,000 individual written student responses to the assessment items from 10 different undergraduate institutions, and conducting more than 100 interviews in which students were asked about their ion flux ideas. This work is ongoing as we continue to validate the patterns described in our learning progression.

While the task of developing new learning progressions can seem daunting, it also represents a largely untapped area for biology education researchers to explore. Additionally, this work creates opportunities for interdisciplinary collaborations between biology education researchers and researchers from both the cognitive and learning sciences. This would help ground biology education and research in a theoretical understanding of student cognition that could enrich existing work in the field. For example, many studies from biology education research identify the myriad ways students misconceive key ideas, but these studies are largely disconnected from a theoretical understanding of why students hold those ideas in the first place (e.g., see concept inventories by Marbach-Ad et al., 2009; Nehm et al., 2012; McFarland et al., 2016; Summers et al., 2018). If a learning progression approach were applied, these diverse ideas could be described in a coherent framework based on how students construct scientific conceptual frameworks, which instructors could use to tackle more fundamental reasons for students’ misconceptions beyond confronting each misconceived idea individually.

Learning progressions, a product of extensive research at the K–12 level investigating how students learn, can guide how learning progression research is implemented and used in collegiate settings. We invite biology education researchers and instructors to explore this body of work as a way to move biology education forward into largely unexplored, but potentially rich, areas that will help biology students develop the rigorous conceptual frameworks they need to be successful in the diverse professional fields of biology.

Acknowledgments

We thank Drs. Andy Anderson, Jack Cerchiara, Kevin Haudek, and Alexa Clemmons for their thoughtful comments on drafts of this article. We also appreciate the UW Biology Education Research Group’s (BERG) collective feedback. This work was supported by a National Science Foundation award (NSF DUE 1661263).

REFERENCES

- Alonzo, A. C. (2011). Learning progressions that support formative assessment practices. Measurement, 9(2/3), 124–129. doi: 10.1080/15366367.2011.599629

- Alonzo, A. C. (2012). Eliciting student responses relative to a learning progression. In Alonzo, A. C., Gotwals, A. W. (Eds.), Learning progressions in science: Current challenges and future directions (pp. 241–254). Rotterdam, Netherlands: Sense Publishers. doi: 10.1007/978-94-6091-824-7_11

- Alonzo, A. C., Neidorf, T., Anderson, C. W. (2012). Using learning progressions to inform large-scale assessment. In Alonzo, A. C., Gotwals, A. W. (Eds.), Learning progressions in science: Current challenges and future directions (pp. 211–240). Rotterdam, Netherlands: Sense Publishers. doi: 10.1007/978-94-6091-824-7_10

- Alonzo, A. C., Steedle, J. T. (2008). Developing and assessing a force and motion learning progression. Science Education, 93(3), 389–421. doi: 10.1002/sce.20303

- Alonzo, A. C., von Aufschnaiter, C. (2018). Moving beyond misconceptions: Learning progressions as a lens for seeing progress in student thinking. Physics Teacher, 56(7), 470–473. doi: 10.1119/1.5055332

- American Association for the Advancement of Science. (2011). Vision and change in undergraduate biology education. Washington, DC. Retrieved March 22, 2019, from http://visionandchange.org/finalreport/

- Anderson, C. W. (2015). Enacting rigorous and responsive teaching of college biology. Plenary presented at: Society for the Advancement of Biology Education Research (University of Minnesota, Minneapolis).

- Anderson, C. W., de los Santos, E. X., Bodbyl, S., Covitt, B. A., Edwards, K. D., Hancock, J. B., … & Welch, M. M. (2018). Designing educational systems to support enactment of the Next Generation Science Standards. Journal of Research in Science Teaching, 55(7), 1026–1052. doi: 10.1002/tea.21484

- Black, P., Wilson, M., Yao, S.-Y. (2011). Road maps for learning: A guide to the navigation of learning progressions. Measurement, 9(2/3), 71–123. doi: 10.1080/15366367.2011.591654

- Breslyn, W., McGinnis, R., McDonald, R. C., Hestness, E. (2016). Developing a learning progression for sea level rise, a major impact of climate change. Journal of Research in Science Teaching, 53(10), 1471–1499.

- Brownell, S. E., Freeman, S., Wenderoth, M. P., Crowe, A. J. (2014). BioCore Guide: A tool for interpreting the core concepts of Vision and Change for biology majors. CBE—Life Sciences Education, 13(2), 200–211. doi: 10.1187/cbe.13-12-0233

- Cabello-Garrido, A., Espana-Ramos, E., Blanco-Lopez, A. (2018). Developing a human nutrition learning progression. International Journal of Science and Mathematics Education, 16(7), 1269–1289.

- Carey, S. (1986). Cognitive science and science education. American Psychologist, 41(10), 1123–1130.

- Chi, M. T. H., Slotta, J. D., De Leeuw, N. (1994). From things to processes: A theory of conceptual change for learning science concepts. Learning and Instruction, 4(1), 27–43. doi: 10.1016/0959-4752(94)90017-5

- Coley, J. D., Muratore, T. M. (2012). Trees, fish, and other fictions: Folk biological thought and its implications for understanding evolutionary biology. In Rosengren, K., Brem, S., Evans, E. M., Sinatra, G. (Eds.), Evolution challenges: Integrating research and practice in teaching and learning about evolution (pp. 22–46). New York: Oxford University Press.

- Coley, J. D., Tanner, K. (2015). Relations between intuitive biological thinking and biological misconceptions in biology majors and nonmajors. CBE—Life Sciences Education, 14(1), ar8. doi: 10.1187/cbe.14-06-0094

- Cooper, M. M., Caballero, M. D., Ebert-May, D., Fata-Hartley, C. L., Jardeleza, S. E., Krajcik, J. S., … & Underwood, S. M. (2015). Challenge faculty to transform STEM learning. Science, 350(6258), 281–282. doi: 10.1126/science.aab0933

- Cooper, M. M., Posey, L. A., Underwood, S. M. (2017). Core ideas and topics: Building up or drilling down? Journal of Chemical Education, 94(5), 541–548. doi: 10.1021/acs.jchemed.6b00900

- Corcoran, T., Mosher, F. A., Rogat, A. D. (2009). Learning progressions in science: An evidence-based approach to reform (CPRE Research Report No. RR-63). Philadelphia, PA: Consortium for Policy Research in Education.

- Covitt, B. A., Gunckel, K. L., Caplan, B., Syswerda, S. (2018). Teachers’ use of learning progression-based formative assessment in water instruction. Applied Measurement in Education, 31(2), 128–142.

- Dauer, J. M., Doherty, J. H., Freed, A. L., Anderson, C. W., Holt, E. A. (2014). Connections between student explanations and arguments from evidence about plant growth. CBE—Life Sciences Education, 13(3), 397–409. doi: 10.1187/cbe.14-02-0028

- Duncan, R., Rogat, A. D., Yarden, A. (2009). A learning progression for deepening students’ understandings of modern genetics across the 5th–10th grades. Journal of Research in Science Teaching, 46(6), 655–674. doi: 10.1002/tea.20312

- Duncan, R. G., Rivet, A. E. (2013). Science learning progressions. Science, 339(6118), 396–397. doi: 10.1126/science.1228692

- Duschl, R., Maeng, S., Sezen, A. (2011). Learning progressions and teaching sequences: A review and analysis. Studies in Science Education, 47(2), 123–182. doi: 10.1080/03057267.2011.604476

- Evans, E. M. (2018). Bridging the gap: From intuitive to scientific reasoning—The case of evolution. In Rutten, K., Blancke, S., Soetaert, R. (Eds.), Perspectives on science and culture (pp. 131–148). West Lafayette, IN: Purdue University Press.

- Evans, E. M., Rosengren, K. S., Lane, J. D., Price, K. L. S. (2012). Encountering counterintuitive ideas: Constructing a developmental learning progression for evolution understanding. In Evolution challenges: Integrating research and practice in teaching and learning about evolution (pp. 174–199). New York: Oxford University Press.

- Furtak, E. M. (2012). Linking a learning progression for natural selection to teachers’ enactment of formative assessment. Journal of Research in Science Teaching, 49(9), 1181–1210. doi: 10.1002/tea.21054

- Furtak, E. M., Circi, R., Heredia, S. C. (2018). Exploring alignment among learning progressions, teacher-designed formative assessment tasks, and student growth: Results of a four-year study. Applied Measurement in Education, 31(2), 143–156. doi: 10.1080/08957347.2017.1408624

- Furtak, E. M., Thompson, J., Braaten, M., Windschitl, M. (2012). Learning progressions to support ambitious teaching practices. In Alonzo, A. C., Gotwals, A. W. (Eds.), Learning progressions in science: Current challenges and future directions (pp. 405–433). Rotterdam, Netherlands: Sense Publishers. doi: 10.1007/9789460918247_018

- Gelman, S. A., Davidson, N. S. (2013). Conceptual influences on category-based induction. Cognitive Psychology, 66(3), 327–353. doi: 10.1016/j.cogpsych.2013.02.001

- Gelman, S. A., Markman, E. M. (1986). Categories and induction in young children. Cognition, 23(3), 183–209. doi: 10.1016/0010-0277(86)90034-X

- Gotwals, A. W., Alonzo, A. C. (2012). Introduction. In Alonzo, A. C., Gotwals, A. W. (Eds.), Learning progressions in science: Current challenges and future directions (pp. 3–12). Rotterdam, Netherlands: Sense Publishers. doi: 10.1007/978-94-6091-824-7_1

- Grotzer, T. A., Tutwiler, M. S. (2014). Simplifying causal complexity: How interactions between modes of causal induction and information availability lead to heuristic-driven reasoning. Mind, Brain, and Education, 8(3), 97–114. doi: 10.1111/mbe.12054

- Gunckel, K. L., Covitt, B. A., Salinas, I., Anderson, C. W. (2012a). A learning progression for water in socio-ecological systems. Journal of Research in Science Teaching, 49(7), 843–868.

- Gunckel, K. L., Mohan, L., Covitt, B. A., Anderson, C. W. (2012b). Addressing challenges in developing learning progressions for environmental science literacy. In Alonzo, A. C., Gotwals, A. W. (Eds.), Learning progressions in science: Current challenges and future directions (pp. 39–75). Rotterdam, Netherlands: Sense Publishers. doi: 10.1007/978-94-6091-824-7_4

- Hammer, D., Elby, A., Scherr, R. E., Redish, E. F. (2005). Resources, framing, and transfer. In Mestre, J. P. (Ed.), Transfer of learning from a modern multidisciplinary perspective (pp. 89–119). Greenwich, CT: IAP.

- Handelsman, J., Ebert-May, D., Beichner, R., Bruns, P., Chang, A., DeHaan, R., … & Wood, W. B. (2004). Scientific teaching. Science, 304(5670), 521–522. doi: 10.1126/science.1096022

- Hartley, L. M., Wilke, B. J., Schramm, J. W., D’Avanzo, C., Anderson, C. W. (2011). College students’ understanding of the carbon cycle: Contrasting principle-based and informal reasoning. BioScience, 61(1), 65–75. doi: 10.1525/bio.2011.61.1.12

- Herrmann, P. A., French, J. A., DeHart, G. B., Rosengren, K. S. (2013). Essentialist reasoning and knowledge effects on biological reasoning in young children. Merrill-Palmer Quarterly, 59(2), 198–220. doi: 10.1353/mpq.2013.0008

- Hesse, J. J., Anderson, C. W. (1992). Students’ conceptions of chemical change. Journal of Research in Science Teaching, 29(3), 277–299.

- Jin, H., Anderson, C. W. (2012a). A learning progression for energy in socio-ecological systems. Journal of Research in Science Teaching, 49(9), 1149–1180. doi: 10.1002/tea.21051

- Jin, H., Anderson, C. W. (2012b). Developing assessments for a learning progression on carbon-transforming processes in socio-ecological systems. In Alonzo, A. C., Gotwals, A. W. (Eds.), Learning progressions in science: Current challenges and future directions (pp. 151–181). Rotterdam, Netherlands: Sense Publishers. [Google Scholar]

- Jin, H., Mikeska, J. N., Hokayem, H., Mavronikolas, E. (2019a). Toward coherence in curriculum, instruction, and assessment: A review of learning progression literature. Science Education, 103(5), 1206–1234. doi: 10.1002/sce.21525 [DOI] [Google Scholar]

- Jin, H., Rijn, P. van, Moore, J. C., Bauer, M. I., Pressler, Y., Yestness, N. (2019b). A validation framework for science learning progression research. International Journal of Science Education, 41(10), 1324–1346. doi: 10.1080/09500693.2019.1606471 [DOI] [Google Scholar]

- Jin, H., Shin, H. J., Hokayem, H., Qureshi, F., Jenkins, T. (2019c). Secondary students’ understanding of ecosystems: A learning progression approach. International Journal of Science and Mathematics Education, 17(2), 217–235. [Google Scholar]

- Keil, F. C. (2012). Does folk science develop? In Shrager, J., Carver, S. (Eds.), The journey from child to scientist: Integrating cognitive development and the education sciences (pp. 67–86). Washington, DC: American Psychological Association. doi: 10.1037/13617-003 [DOI] [Google Scholar]

- Kelemen, D. (2012). Teleological minds: How natural intuitions about agency and purpose influence learning about evolution. In Rosengren, K., Brem, S., Evans, E. M., Sinatra, G. (Eds.), Evolution challenges: Integrating research and practice in teaching and learning about evolution (pp. 66–92). New York: Oxford University Press. [Google Scholar]

- Kelemen, D., Rosset, E. (2009). The human function compunction: Teleological explanation in adults. Cognition, 111(1), 138–143. doi: 10.1016/j.cognition.2009.01.001 [DOI] [PubMed] [Google Scholar]

- Kelemen, D., Rottman, J., Seston, R. (2013). Professional physical scientists display tenacious teleological tendencies: Purpose-based reasoning as a cognitive default. Journal of Experimental Psychology: General, 142(4), 1074–1083. doi: 10.1037/a0030399

- Krajcik, J. S. (2012). The importance, cautions and future of learning progression research. In Alonzo, A. C., Gotwals, A. W. (Eds.), Learning progressions in science: Current challenges and future directions (pp. 27–36). Rotterdam, Netherlands: Sense Publishers. doi: 10.1007/978-94-6091-824-7_3

- Lehrer, R., Schauble, L. (2012). Seeding evolutionary thinking by engaging children in modeling its foundations. Science Education, 96(4), 701–724. doi: 10.1002/sce.20475

- Lehrer, R., Schauble, L. (2015). Learning progressions: The whole world is NOT a stage. Science Education, 99(3), 432–437. doi: 10.1002/sce.21168

- Lombrozo, T., Vasilyeva, N. (2017). Causal explanation. In Waldmann, M. (Ed.), Oxford library of psychology. The Oxford handbook of causal reasoning. Oxford, UK: Oxford University Press.

- Marbach-Ad, G., Briken, V., El-Sayed, N. M., Frauwirth, K., Fredericksen, B., Hutcheson, S., … & Smith, A. C. (2009). Assessing student understanding of host pathogen interactions using a concept inventory. Journal of Microbiology & Biology Education, 10(1), 43–50.

- McFarland, J., Wenderoth, M. P., Michael, J., Cliff, W., Wright, A., Modell, H. (2016). A conceptual framework for homeostasis: Development and validation. Advances in Physiology Education, 40(2), 213–222. doi: 10.1152/advan.00103.2015

- Michael, J., Modell, H., McFarland, J., Cliff, W. (2009). The “core principles” of physiology: What should students understand? Advances in Physiology Education, 33(1), 10–16. doi: 10/d8pz3t

- Modell, H. I. (2000). How to help students understand physiology? Emphasize general models. Advances in Physiology Education, 23(1), S101–S107.

- Mohan, L., Chen, J., Anderson, C. W. (2009). Developing a multi-year learning progression for carbon cycling in socio-ecological systems. Journal of Research in Science Teaching, 46(6), 675–698. doi: 10.1002/tea.20314

- Mohan, L., Plummer, J. (2012). Exploring challenges to defining learning progressions. In Alonzo, A. C., Gotwals, A. W. (Eds.), Learning progressions in science: Current challenges and future directions (pp. 139–147). Rotterdam, Netherlands: Sense Publishers. doi: 10.1007/978-94-6091-824-7_7

- National Research Council (NRC). (2001). Knowing what students know: The science and design of educational assessment. Washington, DC: National Academies Press. doi: 10.17226/10019

- NRC. (2007). Taking science to school: Learning and teaching science in grades K–8. Washington, DC: National Academies Press. doi: 10.17226/11625

- NRC. (2012). A framework for K–12 science education: Practices, crosscutting concepts, and core ideas. Washington, DC: National Academies Press. doi: 10.17226/13165

- NRC. (2018). How people learn II: Learners, contexts, and cultures. Washington, DC: National Academies Press. doi: 10.17226/24783

- Nehm, R. H., Beggrow, E. P., Opfer, J. E., Ha, M. (2012). Reasoning about natural selection: Diagnosing contextual competency using the ACORNS instrument. American Biology Teacher, 74(2), 92–98. doi: 10.1525/abt.2012.74.2.6

- Next Generation Science Standards Lead States. (2013). Next generation science standards: For states, by states. Washington, DC: National Academies Press.

- Parker, J. M., de los Santos, E. X., Anderson, C. W. (2015). Learning progressions and climate change. American Biology Teacher, 77(4), 232–238. doi: 10.1525/abt.2015.77.4.2

- Rice, J., Doherty, J. H., Anderson, C. W. (2014). Principles, first and foremost: A tool for understanding biological processes. Journal of College Science Teaching, 43(3), 74–82.

- Rosengren, K. S., Brem, S. K., Evans, E. M., Sinatra, G. M. (2012). Evolution challenges: Integrating research and practice in teaching and learning about evolution. New York: Oxford University Press.

- Schwarz, C. V., Reiser, B. J., Davis, E. A., Kenyon, L., Achér, A., Fortus, D., … & Krajcik, J. (2009). Developing a learning progression for scientific modeling: Making scientific modeling accessible and meaningful for learners. Journal of Research in Science Teaching, 46(6), 632–654. doi: 10.1002/tea.20311

- Sevian, H., Talanquer, V. (2014). Rethinking chemistry: A learning progression on chemical thinking. Chemistry Education Research and Practice, 15, 10–23.

- Shavelson, R. J., Kurpius, A. (2012). Reflections on learning progressions. In Alonzo, A. C., Gotwals, A. W. (Eds.), Learning progressions in science: Current challenges and future directions (pp. 13–26). Rotterdam, Netherlands: Sense Publishers. doi: 10.1007/978-94-6091-824-7_2

- Shtulman, A., Valcarcel, J. (2012). Scientific knowledge suppresses but does not supplant earlier intuitions. Cognition, 124(2), 209–215. doi: 10.1016/j.cognition.2012.04.005

- Smith, C. L., Wiser, M., Anderson, C. W., Krajcik, J. (2006). Implications of research on children’s learning for standards and assessment: A proposed learning progression for matter and the atomic-molecular theory. Measurement, 4(1/2), 1–98. doi: 10.1080/15366367.2006.9678570

- Songer, N. B., Kelcey, B., Gotwals, A. W. (2009). How and when does complex reasoning occur? Empirically driven development of a learning progression focused on complex reasoning about biodiversity. Journal of Research in Science Teaching, 46(6), 610–631. doi: 10.1002/tea.20313

- Stevens, S. Y., Delgado, C., Krajcik, J. S. (2010). Developing a hypothetical multi-dimensional learning progression for the nature of matter. Journal of Research in Science Teaching, 47(6), 687–715. doi: 10.1002/tea.20324

- Summers, M. M., Couch, B. A., Knight, J. K., Brownell, S. E., Crowe, A. J., Semsar, K., … & Batzli, J. (2018). EcoEvo-MAPS: An ecology and evolution assessment for introductory through advanced undergraduates. CBE—Life Sciences Education, 17(2), ar18. doi: 10.1187/cbe.17-02-0037

- Talanquer, V. (2009). On cognitive constraints and learning progressions: The case of “structure of matter.” International Journal of Science Education, 31(15), 2123–2136. doi: 10.1080/09500690802578025

- Todd, A., Kenyon, L. (2016). Empirical refinements of a molecular genetics learning progression: The molecular constructs. Journal of Research in Science Teaching, 53(9), 1385–1418. doi: 10.1002/tea.21262

- Todd, A., Romine, W. L. (2016). Validation of the learning progression-based Assessment of Modern Genetics in a college context. International Journal of Science Education, 38(10), 1673–1698. doi: 10.1080/09500693.2016.1212425

- Wilson, M. (2018). Making measurement important for education: The crucial role of classroom assessment. Educational Measurement: Issues and Practice, 37(1), 5–20. doi: 10.1111/emip.12188

- Wolfson, A. J., Rowland, S. L., Lawrie, G. A., Wright, A. H. (2014). Student conceptions about energy transformations: Progression from general chemistry to biochemistry. Chemistry Education Research and Practice, 15, 168–183.

- Wyner, Y., Doherty, J. H. (2017). Developing a learning progression for three-dimensional learning of the patterns of evolution. Science Education, 101(5), 787–817. doi: 10.1002/sce.21289