Abstract

Typically, a medical image offers spatial information on the anatomy (and pathology) modulated by imaging specific characteristics. Many imaging modalities including Magnetic Resonance Imaging (MRI) and Computed Tomography (CT) can be interpreted in this way. We can venture further and consider that a medical image naturally factors into some spatial factors depicting anatomy and factors that denote the imaging characteristics. Here, we explicitly learn this decomposed (disentangled) representation of imaging data, focusing in particular on cardiac images. We propose Spatial Decomposition Network (SDNet), which factorises 2D medical images into spatial anatomical factors and non-spatial modality factors. We demonstrate that this high-level representation is ideally suited for several medical image analysis tasks, such as semi-supervised segmentation, multi-task segmentation and regression, and image-to-image synthesis. Specifically, we show that our model can match the performance of fully supervised segmentation models, using only a fraction of the labelled images. Critically, we show that our factorised representation also benefits from supervision obtained either when we use auxiliary tasks to train the model in a multi-task setting (e.g. regressing to known cardiac indices), or when aggregating multimodal data from different sources (e.g. pooling together MRI and CT data). To explore the properties of the learned factorisation, we perform latent-space arithmetic and show that we can synthesise CT from MR and vice versa, by swapping the modality factors. We also demonstrate that the factor holding image specific information can be used to predict the input modality with high accuracy. Code will be made available at https://github.com/agis85/anatomy_modality_decomposition.

Keywords: disentangled representation learning, cardiac Magnetic Resonance Imaging, semi-supervised segmentation, multitask learning

1. Introduction

Learning good data representations is a long running goal of machine learning (Bengio et al., 2013a). In general, representations are considered “good” if they capture explanatory (discriminative) factors of the data, and are useful for the task(s) being considered. Learning good data representations for medical imaging tasks poses additional challenges, since the representation must lend itself to a range of medically useful tasks, and work across data from various image modalities.

Within deep learning research there has recently been a renewed focus on methods for learning so-called “disentangled” representations, for example in Higgins et al. (2017) and Chen et al. (2016). A disentangled representation is one in which information is represented as a number of (independent) factors, with each factor corresponding to some meaningful aspect of the data (Bengio et al., 2013a); such representations are therefore sometimes referred to as factorised representations.

Disentangled representations offer many benefits: for example, they ensure the preservation of information not directly related to the primary task, which would otherwise be discarded, whilst they also facilitate the use of only the relevant aspects of the data as input to later tasks. Furthermore, and importantly, they improve the interpretability of the learned features, since each factor captures a distinct attribute of the data, while also varying independently from the other factors.

1.1. Motivation

Disentangled representations have considerable potential in the analysis of medical data. In this paper we combine recent developments in disentangled representation learning with strong prior knowledge about medical image data: that it necessarily decomposes into an “anatomy factor” and a “modality factor”.

An anatomy factor that is explicitly spatial (represented as a multi-class semantic map) can maintain pixel-level correspondences with the input, and directly supports spatially equivariant tasks such as segmentation and registration. Most importantly, it also allows a meaningful representation of the anatomy that can be generalised to any modality. As we demonstrate below, a spatial anatomical representation is useful for various modality independent tasks, for example in extracting segmentations as well as in calculating cardiac functional indices. It also provides a suitable format for pooling information from various imaging modalities.

The non-spatial modality factor captures global image modality information, specifying how the anatomy is rendered in the final image. Maintaining a representation of the modality characteristics makes it possible, among other things, to use data from different modalities.

Finally, the ability to learn this factorisation using a very limited number of labels is of considerable significance in medical image analysis, as labelling data is tedious and costly. Thus, it will be demonstrated that the proposed factorisation, in addition to being intuitive and interpretable, also leads to considerable performance improvements in segmentation tasks when using a very limited number of labelled images.

1.2. Overview of the proposed approach

Learning a decomposition of data into a spatial content factor and a non-spatial style factor has been a focus of recent research in computer vision (Huang et al., 2018; Lee et al., 2018) with the aim being to achieve diversity in style transfer between domains. However, no consideration has been taken regarding the semantics and the precision of the spatial factor. This is crucial in medical analysis tasks in order to be able to extract quantifiable information directly from the spatial factor. Concurrently with these approaches, Chartsias et al. (2018) aimed to precisely address the need for interpretable semantics by explicitly enforcing the spatial factor to be a binary myocardial segmentation. However, since the spatial factor is a segmentation mask of only the myocardium, remaining anatomies must be encoded in the non-spatial factor, which violates the concept of explicit factorisation into anatomical and modality factors.

In this paper we instead propose the Spatial Decomposition Network (SDNet), shown schematically in Figure 1, which learns a disentangled representation of medical images consisting of a spatial map that semantically represents the anatomy, and a non-spatial latent vector containing image modality information.

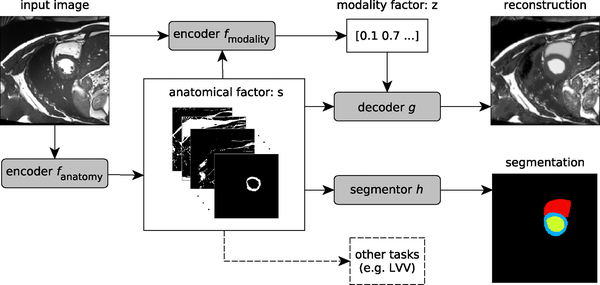

Fig. 1:

A schematic overview of the proposed model. An input image is first encoded to a multi-channel spatial representation, the anatomical factor s, using an anatomy encoder $f_{anatomy}$. Then s can be used as an input to a segmentation network h (or some other task-specific network) to produce a multi-class segmentation mask. The factor s, along with the input image, is used by a modality encoder $f_{modality}$ to produce a latent vector z representing the imaging modality. The two representations s and z are combined to reconstruct the input image through the decoder network g.

The anatomy is modelled as a multi-channel feature map, where each channel represents different anatomical substructures (e.g. myocardium, left and right ventricles). This spatial representation is categorical with each pixel necessarily belonging to exactly one channel. This strong restriction prevents the binary maps from encoding modality information, encouraging the anatomy factors to be modality-agnostic (invariant), and further promotes factorisation of the subject’s anatomy into meaningful topological regions.

On the other hand, the non-spatial factor contains modality-specific information, in particular the distribution of intensities of the spatial regions. We encode the image intensities into a smooth latent space, using a Variational Autoencoder (VAE) loss, such that nearby values in this space correspond to neighbouring values in the intensity space.

Finally, since the representation should retain most of the required information about the input (albeit in two factors), image reconstructions are possible by combining both factors.

In the literature the term “factor” usually refers to either a single dimension of a latent representation, or a meaningful aspect of the data (i.e. a group of dimensions) that can vary independently from other aspects. Here we use factor in the second sense, and we thus learn a representation that consists of a (multi-dimensional) anatomy factor, and a (multi-dimensional) modality factor. Although the individual dimensions of the factors could be seen as (sub-)factors themselves, for clarity we will refer to them as dimensions throughout the paper.

1.3. Contributions

Our main contributions are as follows:

With the use of few segmentation labels and a reconstruction cost, we learn a multi-channel spatial representation of the anatomy. We specifically restrict this representation to be semantically meaningful by imposing that it is a discrete categorical variable, such that different channels represent different anatomical regions.

We learn a modality representation using a VAE, which allows sampling in the modality space. This facilitates the decomposition, permits latent space arithmetic, and also allows us to use part of our network as a generative model to synthesise new images.

We detail design choices, such as using Feature-wise Linear Modulation (FiLM) (Perez et al., 2018) in the decoder, to ensure that the modality factors do not contain anatomical information, and prevent posterior collapse of the VAE.

We demonstrate our method in a multi-class segmentation task, and on different datasets, and show that we maintain a good performance even when training with labelled images from only a single subject.

We show that our semantic anatomical representation is useful for other anatomical tasks, such as inferring the Left Ventricular Volume (LVV). More critically, we show that we can also learn from such auxiliary tasks demonstrating the benefits of multi-task learning, whilst also improving the learned representation.

Finally, we demonstrate that our method is suitable for multimodal learning (here multimodal refers to multiple modalities and not multiple modes in a statistical sense), where a single encoder is used with both MR and CT data, and show that information from additional modalities improves segmentation accuracy.

In this paper we advance our preliminary work (Chartsias et al., 2018) in the following aspects: 1) we learn a general anatomical representation useful for multi-task learning; 2) we perform multi-class segmentation (of multiple cardiac substructures); 3) we impose a structure in the imaging factor which follows a multi-dimensional Gaussian distribution, that allows sampling and improves generalisation; 4) we formulate the reconstruction process to use FiLM normalisation (Perez et al., 2018), instead of concatenating the two factors; and 5) we offer a series of experiments using four different datasets to show the capabilities and expressiveness of our representation.

The rest of the paper is organised as follows: Section 2 reviews related literature in representation learning and segmentation. Then, Section 3 describes our proposed approach. Sections 4 and 5 describe the setup and results of the experiments performed. Finally, Section 6 concludes the manuscript.

2. Related work

Here we review previous work on disentangled representation learning, which is typically a focus of research on generative models (Section 2.1). We then review its application in domain adaptation, which is achieved by a factorisation of style and content (Section 2.2). Finally, we review semi-supervised methods in medical imaging, as well as recent literature in cardiac segmentation, since they are related to the application domain of our method (Sections 2.3 and 2.4).

2.1. Factorised representation learning

Interest in learning independent factors of variation of data distributions is growing. Several variations of VAE (Kingma and Welling, 2014; Rezende et al., 2014) and Generative Adversarial Networks (GAN) (Goodfellow et al., 2014) have been proposed to achieve such a factorisation. For example β-VAE (Higgins et al., 2017) adds a hyperparameter β to the KL-divergence constraint, whilst Factor-VAE (Kim and Mnih, 2018) boosts disentanglement by encouraging independence between the marginal distributions. On the other hand, using GANs, InfoGAN (Chen et al., 2016) maximises the mutual information between the generated image and a latent factor using adversarial training, and SD-GAN (Donahue et al., 2018) generates images with a common identity and varying style. Combinations of VAE and GANs have also been proposed, for example by Mathieu et al. (2016) and Szabó et al. (2018). Both learn two continuous factors: one dataset specific factor, in their case class labels, and one factor for the remaining information. To promote independence of the factors and prevent a degenerate condition where the decoder uses only one of the two factors, mixing techniques have also been proposed (Hu et al., 2018). These ideas also begin to see use in medical image analysis: Biffi et al. (2018) apply VAE to learn a latent space of 3D cardiac segmentations to train a model of cardiac shapes useful for disease diagnosis. Learning factorised features is also used to distinguish between (learned) features specific to a modality from those shared across modalities (Fidon et al., 2017). However, their aim is combining information from multimodal images and not learning semantically meaningful representations.

These methods rely on learning representations in the form of latent vectors. Our method is similar in concept with Mathieu et al. (2016) and Szabó et al. (2018), which both learn a factorisation into known and other residual factors. However, we constrain the known factor to be spatial, since this is naturally related to the anatomy of medical images.

2.2. Style and content disentanglement

There is a connection between our task and style transfer (in medical image analysis known as modality transformation or synthesis): the task of rendering one image in the “style” of another. Classic style transfer methods do not explicitly model the style of the output image and therefore suffer from style ambiguity, where many outputs correspond to the same style. In order to address this “many to one” problem, a number of models have recently appeared that include an additional latent variable capturing image style. For example, colouring a sketch may result in different images (depending on the colours chosen) thus, in addition to the sketch itself, a vector parameterising the colour choices is also given as input (Zhu et al., 2017).

Our approach here can be seen as similar to a disentanglement of an image into “style” and “content” (Gatys et al., 2016; Azadi et al., 2018), where we represent content (i.e. in our case the underlying anatomy) spatially. Similar to our approach, there have been recent disentanglement models that also use vector and spatial representations for the style and content respectively (Almahairi et al., 2018; Huang et al., 2018; Lee et al., 2018). Furthermore, Esser et al. (2018) expressed content as a shape estimation (using an edge extractor and a pose estimator) and combined it with style obtained from a VAE. The intricacies of medical images differentiate us by necessitating the expression of the spatial content factor as categorical in order to produce a semantically meaningful (interpretable) representation of the anatomy, which cannot be estimated and rather needs to be learned from the data. This discretisation of the spatial factor also prevents the spatial representation from being associated with a particular medical image modality. The remainder of this paper uses the terms “anatomy” and “modality”, which are associated with medical image analysis, to refer to the synonymous “content” and “style” that are most common in deep learning/computer vision terminology.

2.3. Semi-supervised segmentation

A powerful property of disentangled representations is that they can be applied in semi-supervised learning (Almahairi et al., 2018). An important application in medical image analysis is (semi-supervised) segmentation, for a recent review see Cheplygina et al. (2019). As discussed in this review, manual segmentations are a laborious task, particularly as inter-rater variation means multiple labels are required to reach a consensus, and images labelled by multiple experts are very limited. Semi-supervised segmentation has been proposed for cardiac image analysis using an iterative approach and Conditional Random Fields (CRF) post-processing (Bai et al., 2017), and for gland segmentation using GANs (Zhang et al., 2017).

More recent medical semi-supervised image segmentation approaches include Zhao et al. (2018) and Nie et al. (2018). Zhao et al. (2018) address a multi-instance segmentation task in which they have bounding boxes for all instances, but pixel-level segmentation masks for only some instances. Nie et al. (2018) approach semi-supervised segmentation with adversarial learning and a confidence network. Neither approach involves learning disentangled representations of the data.

2.4. Cardiac segmentation

We apply our model to the problem of cardiac segmentation, for which there is considerable literature (Peng et al., 2016). The majority of recent methods use convolutional networks with full supervision for multi-class cardiac segmentations, as seen for example in participants of workshop challenges (Bernard et al., 2018). Cascaded networks (Vigneault et al., 2018) are used to perform 2D segmentation by transforming the data into a canonical orientation and also by combining information from different views. Prior information about the cardiac shape has been used to improve segmentation results (Oktay et al., 2018). Spatial correlation between adjacent slices has been explored (Zheng et al., 2018) to consistently segment 3D volumes. Segmentation can also be treated as a regression task (Tan et al., 2017). Finally, temporal information related to the cardiac motion has been used for segmentation of all cardiac phases (Qin et al., 2018; Bai et al., 2018b).

In contrast to the above, in this work we focus on learning meaningful spatial representations, and on leveraging these both for improved semi-supervised segmentation results and for performing auxiliary tasks.

3. Materials and methods

Overall, our proposed model can be considered as an autoencoder, which takes as input a 2D volume slice $x \in X$, where $X \subset \mathbb{R}^{H \times W \times 1}$ is the set of all images in the data, with H and W being the image’s height and width respectively. The model generates a reconstruction through an intermediate disentangled representation. The disentangled representation comprises a multi-channel spatial map (a tensor) $s \in S := \{0, 1\}^{H \times W \times C}$, where C is the number of channels, and a multi-dimensional continuous vector factor $z \in Z := \mathbb{R}^{n_z}$, where $n_z$ is the number of dimensions. These are generated respectively by two encoders, modelled as convolutional neural networks, $f_{anatomy}$ and $f_{modality}$. The two representations are combined by a decoder g to reconstruct the input. In addition to the reconstruction cost, explicit supervision can be given in the form of auxiliary tasks, for example with a segmentation task using a network h, or with a regression task as we will demonstrate in Section 5.2. A schematic of our model can be seen in Figure 1 and the detailed architectures of each network are shown in Figure 2.
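As an illustration of the data flow only, the composition of the four networks can be sketched in plain Python. The function `sdnet_forward` and the `toy_*` stand-ins below are hypothetical names introduced for this sketch; the stand-ins are deliberately trivial rules (thresholding and per-region mean intensities), not the convolutional networks of Figure 2, and are meant only to show which factor carries which information.

```python
from typing import Callable, List, Tuple

Image = List[List[float]]        # H x W intensities
Anatomy = List[List[List[int]]]  # H x W x C binary map
Modality = List[float]           # n_z values


def sdnet_forward(
    x: Image,
    f_anatomy: Callable[[Image], Anatomy],
    f_modality: Callable[[Image, Anatomy], Modality],
    g: Callable[[Anatomy, Modality], Image],
    h: Callable[[Anatomy], List[List[int]]],
) -> Tuple[Anatomy, Modality, Image, List[List[int]]]:
    """Data flow only: encode anatomy, encode modality, reconstruct, segment."""
    s = f_anatomy(x)        # spatial anatomy factor
    z = f_modality(x, s)    # non-spatial modality factor
    x_rec = g(s, z)         # reconstruction from both factors
    m = h(s)                # segmentation from the anatomy factor alone
    return s, z, x_rec, m


# Hypothetical toy stand-ins (assumptions for illustration, not the real networks).
def toy_f_anatomy(x: Image) -> Anatomy:
    # Two channels: pixels with non-negative intensity, and everything else.
    return [[[1, 0] if v >= 0.0 else [0, 1] for v in row] for row in x]


def toy_f_modality(x: Image, s: Anatomy) -> Modality:
    # One value per channel: the mean intensity of the pixels assigned to it.
    n_channels = len(s[0][0])
    sums, counts = [0.0] * n_channels, [0] * n_channels
    for row_x, row_s in zip(x, s):
        for v, one_hot in zip(row_x, row_s):
            c = one_hot.index(1)
            sums[c] += v
            counts[c] += 1
    return [sums[c] / counts[c] if counts[c] else 0.0 for c in range(n_channels)]


def toy_g(s: Anatomy, z: Modality) -> Image:
    # "Paint" each anatomical region with the intensity stored in z.
    return [[z[one_hot.index(1)] for one_hot in row] for row in s]


def toy_h(s: Anatomy) -> List[List[int]]:
    # Read the mask of the first channel directly from the anatomy factor.
    return [[one_hot[0] for one_hot in row] for row in s]


x = [[0.5, -0.5], [0.5, -0.5]]
s, z, x_rec, m = sdnet_forward(x, toy_f_anatomy, toy_f_modality, toy_g, toy_h)
```

In this toy setting the image is recovered only when both factors are used: s says where each region is, and z says how bright it is rendered.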

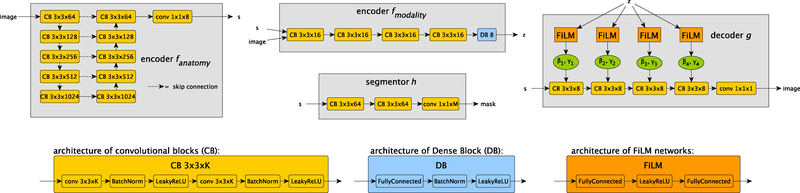

Fig. 2:

The architectures of the four networks that make up SDNet. The anatomy encoder is a standard U-Net (Ronneberger et al., 2015) that produces a spatial anatomical representation s. The modality encoder is a convolutional network (except for a fully connected final layer) that produces the modality representation z. The segmentor is a small fully convolutional network that produces the final segmentation prediction of a multi-class mask (with L classes) given s. Finally, the decoder produces a reconstruction of the input image from s with its output modulated by z through FiLM normalisation (Perez et al., 2018). The bottom of the figure details the components used throughout the four networks. The number of channels C of the anatomical factor, the size $n_z$ of the modality factor, and the number of segmentation classes L depend on the specific task and are detailed in the main text.

3.1. Input decomposition

The decomposition process yields representations for the anatomy and the modality characteristics of medical images and is achieved by two dedicated neural networks. Whilst a decomposition could also be performed with a single neural network with two separate outputs and shared layer components, as done in our previous work (Chartsias et al., 2018), we found that by using two separate networks, as also done in Huang et al. (2018) and in Lee et al. (2018), we can more easily control the information captured by each factor, and we can stabilise the behaviour of each encoder during training.

3.1.1. Anatomical representation

The anatomy encoder is a fully convolutional neural network that maps 2D images to spatial representations, fanatomy : X → S. We use a U-Net (Ronneberger et al., 2015) architecture, containing downsampling and upsampling paths with skip connections between feature maps of the same size, allowing effective fusion of important local and non-local information.

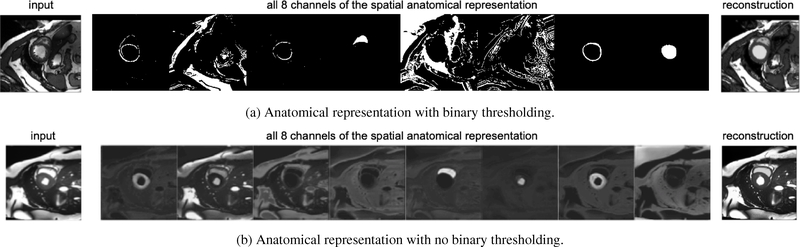

The spatial representation is a feature map consisting of a number of binary channels of the same spatial dimensions (H, W) as the input image, that is, $\sum_{c=1}^{C} s_{h,w,c} = 1$ for all $h \in \{1, \dots, H\}$, $w \in \{1, \dots, W\}$, where C is the number of channels. Some channels contain individual anatomical (cardiac) sub-structures, while the other structures, necessary for reconstruction, are freely dispersed in the remaining channels. Figure 3a shows an example of a spatial representation, where the representations of the myocardium, the left and the right ventricle, are clearly visible, and the remaining channels contain the surrounding image structures (albeit more mixed and not anatomically distinct).

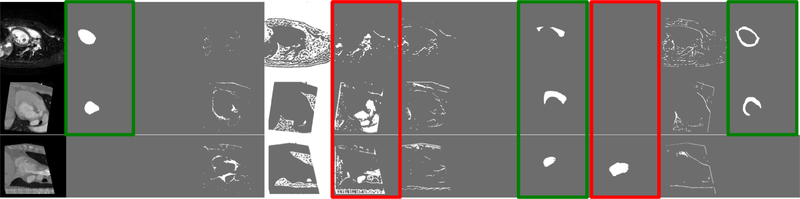

Fig. 3:

(a) Example of a spatial representation, expressed as a multi-channel binary map. Some channels represent defined anatomical parts such as the myocardium or the left ventricle, and others the remaining anatomy required to describe the input image on the left. Observe how sparse most of the informative channels are. (b) Spatial representation with no thresholding applied. Each channel of the spatial map also captures the intensity signal in different gray level variations and is not sparse, in contrast to Figure 3a. This may hinder an anatomical separation. Note that no specific channel ordering is imposed and thus the anatomical parts can appear in a different order in the anatomical representations across experiments.

The spatial representation is derived using a softmax activation function to force each pixel to have activations that sum to one across the channels. Since softmax functions encode continuous distributions, we binarise the anatomical representation via the operator s ↦ ⌊s + 0.5⌋, which acts as a threshold for the pixel values of the spatial variables in the forward pass. During back-propagation the step function is bypassed and updates are applied to the original non-binary representation, as in the straight-through operator (Bengio et al., 2013b).

Thresholding s is an integral part of the model’s design and offers two advantages. Firstly, it reduces the capacity of the spatial factor, encouraging it to be a representation of only the anatomy and preventing modality information from being encoded. Secondly, it enforces a factorisation of the spatial factor in distinct channels, as each pixel can only be active on one channel. To illustrate the importance of this binarisation, an example of a non-thresholded spatial factor is shown in Figure 3b. Observe that the channels of s are not sparse, with variations of gray level now evident. Image intensities are now encoded spatially, using different grayscale values, allowing a good reconstruction to be achieved without the need for a modality factor, which we explicitly want to avoid.
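The binarisation step can be sketched for the channel activations of a single pixel as follows. This is a minimal illustration in plain Python under stated assumptions: the function names are hypothetical, and the backward pass is written out by hand as an identity to mimic the straight-through operator, whereas a real implementation would rely on the automatic differentiation of a deep learning framework.

```python
import math
from typing import List


def softmax(logits: List[float]) -> List[float]:
    """Channel-wise softmax for one pixel (activations sum to one)."""
    peak = max(logits)  # subtract the maximum for numerical stability
    exps = [math.exp(v - peak) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


def binarise_forward(s: List[float]) -> List[int]:
    """Forward pass: s -> floor(s + 0.5), i.e. a threshold at 0.5."""
    return [int(math.floor(v + 0.5)) for v in s]


def binarise_backward(grad_wrt_binary: List[float]) -> List[float]:
    """Straight-through estimator: the step function is bypassed, so the
    gradient reaches the non-binary activations unchanged."""
    return list(grad_wrt_binary)


probs = softmax([2.0, 0.1, -1.0])   # continuous activations for one pixel
binary = binarise_forward(probs)    # binary channel assignment
grads = binarise_backward([0.3, -0.2, 0.1])
```

Because the activations sum to one, at most one channel can reach the 0.5 threshold for a given pixel (barring an exact tie between two channels), which is what keeps the channels from sharing a pixel.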

3.1.2. Modality representation

Given samples of the data $x \in X$ with their corresponding $s \in S$ (deterministically obtained by $f_{anatomy}$), we learn the posterior distribution of latent factors $q(z \mid x, s)$, with $z \in Z$.

Learning this posterior distribution follows the VAE principle (Kingma and Welling, 2014). In brief, a VAE learns a low-dimensional latent space, such that the learned latent representations match a prior distribution that is set to be an isotropic multivariate Gaussian $p(z) = \mathcal{N}(0, I)$. A VAE consists of an encoder and a decoder. The encoder, given an input, predicts the parameters of a Gaussian distribution (with diagonal covariance matrix). This distribution is then sampled, using the reparameterisation trick to allow learning through back-propagation, and the resulting sample is fed through the decoder to reconstruct the input. VAEs are trained to minimise a reconstruction error and the KL divergence of the estimated Gaussian distribution $q(z \mid x, s)$ from the unit Gaussian $p(z)$,

$$\mathcal{L}_{KL} = D_{KL}\big(q(z \mid x, s) \,\|\, p(z)\big),$$

where $D_{KL}(P \,\|\, Q) = \int P(u) \log \frac{P(u)}{Q(u)} \, du$. Once trained, sampling a vector from the unit Gaussian over the latent space and passing it through the decoder approximates sampling from the data, i.e. the decoder can be used as a generative model.

The posterior distribution is modelled with a stochastic encoder (analogous to the VAE encoder), implemented as a convolutional network that encodes the image modality, $f_{modality} : X \times S \to Z$. Specifically, the stochasticity of the encoder (for a sample x and its anatomy factor s) is achieved as in the VAE formulation: $f_{modality}(x, s)$ first produces the mean and diagonal covariance of an $n_z$-dimensional Gaussian, which is then sampled to yield the final z.
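The sampling step and the KL term can be sketched as follows, assuming (as is common practice, though not stated in the text) that the encoder outputs the mean and the logarithm of the diagonal variance. The function names are hypothetical. For a diagonal Gaussian against the unit Gaussian, the KL divergence has the standard closed form $\frac{1}{2} \sum_i (\mu_i^2 + \sigma_i^2 - \log \sigma_i^2 - 1)$.

```python
import math
import random
from typing import List


def sample_z(mu: List[float], log_var: List[float], rng: random.Random) -> List[float]:
    """Reparameterisation trick: z = mu + sigma * eps, with eps ~ N(0, 1).
    The randomness lives in eps, so z is a differentiable function of mu and sigma."""
    return [m + math.exp(0.5 * lv) * rng.gauss(0.0, 1.0) for m, lv in zip(mu, log_var)]


def kl_to_unit_gaussian(mu: List[float], log_var: List[float]) -> float:
    """Closed-form KL divergence of N(mu, diag(exp(log_var))) from N(0, I)."""
    return 0.5 * sum(m * m + math.exp(lv) - lv - 1.0 for m, lv in zip(mu, log_var))


rng = random.Random(0)
mu, log_var = [0.5, -1.0, 0.0], [0.0, -2.0, 0.1]   # illustrative encoder outputs, n_z = 3
z = sample_z(mu, log_var, rng)
kl = kl_to_unit_gaussian(mu, log_var)
```

The KL term is zero only when the predicted distribution equals the prior, and grows as the mean moves away from zero or the variance away from one.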

3.2. Segmentation

One important task for the model is to infer segmentation masks $m \in M := \{0, 1\}^{H \times W \times L}$, where L is the number of anatomical segmentation categories in the training dataset, from the spatial representation. This is an integral part of the training process because it also defines the anatomical structures that will be extracted from the image. The segmentation network is a fully convolutional network consisting of two convolutional blocks followed by a final 1 × 1 convolution layer (see Figure 2), with the goal of refining the anatomy present in the spatial maps and producing the final segmentation masks, $h : S \to M$.

When labelled data are available, a supervised cost is employed that is based on a differentiable Dice loss (Milletari et al., 2016) between a real segmentation mask m of an image sample x and its predicted segmentation $h(f_{anatomy}(x))$, with a small constant ϵ added to prevent division by 0.

In a semi-supervised scenario, where there are images with no corresponding segmentations, an adversarial loss is defined using a discriminator over masks $D_M$, based on LeastSquares-GAN (Mao et al., 2018). Networks $f_{anatomy}$ and h are trained to maximise the adversarial objective, against $D_M$ which is trained to minimise it.

The architecture of the discriminator is based on DCGAN discriminator (Radford et al., 2016), without Batch Normalization.
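The two segmentation costs can be illustrated with a short sketch. Both functions are assumptions made for illustration: `dice_loss` is one common soft Dice variant with ϵ in the denominator, and `lsgan_objective` is one least-squares form that is consistent with the discriminator minimising and the segmentor maximising; the exact expressions used in this work may differ. Masks are given as flattened lists, and discriminator outputs as lists of scores.

```python
from typing import List


def dice_loss(m_true: List[float], m_pred: List[float], eps: float = 1e-7) -> float:
    """Soft Dice loss: 1 - 2|m * m_hat| / (|m| + |m_hat| + eps).
    eps keeps the denominator non-zero when both masks are empty."""
    intersection = sum(t * p for t, p in zip(m_true, m_pred))
    denominator = sum(m_true) + sum(m_pred) + eps
    return 1.0 - 2.0 * intersection / denominator


def lsgan_objective(d_real: List[float], d_fake: List[float]) -> float:
    """Least-squares adversarial objective (assumed form).
    d_real: discriminator scores on real masks, pushed towards 1 when minimised.
    d_fake: scores on predicted masks, pushed towards 0 when minimised.
    The discriminator minimises this value; the segmentor maximises it."""
    real_term = sum((d - 1.0) ** 2 for d in d_real) / len(d_real)
    fake_term = sum(d ** 2 for d in d_fake) / len(d_fake)
    return real_term + fake_term


perfect = dice_loss([1, 0, 1, 0], [1, 0, 1, 0])    # prediction matches the label
disjoint = dice_loss([1, 1, 0, 0], [0, 0, 1, 1])   # no overlap at all
confident_d = lsgan_objective([1.0, 1.0], [0.0, 0.0])
fooled_d = lsgan_objective([0.0, 0.0], [1.0, 1.0])
```

Because the Dice term is computed on soft predictions it remains differentiable, while the adversarial term needs no labels and can therefore be evaluated on unlabelled images.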

3.3. Image reconstruction

The two factors are combined by a decoder network g to generate an image with the anatomical characteristics specified by s and the imaging characteristics specified by z, $g : S \times Z \to Y$. The fusion of the two factors acts as an inpainting mechanism where the information stored in z is used to derive the image signal intensities that will be used on the anatomical structures stored in s.

The reconstruction is achieved by a decoder convolutional network conditioned with four FiLM (Perez et al., 2018) layers. This general purpose conditioning method learns scale and offset parameters for each feature-map channel within a convolutional architecture. Thus, an affine transformation (one per channel) learned from the conditioning input is applied.

Here, a network of two fully connected layers (see Figure 2) maps z to the scale and offset values γ and β for each intermediate feature map F of the decoder. Each channel $F_c$ of F is modulated by its own pair $\gamma_c$ and $\beta_c$ as follows: $\mathrm{FiLM}(F_c \mid \gamma_c, \beta_c) = \gamma_c \odot F_c + \beta_c$, where element-wise multiplication ($\odot$) and addition are both broadcast over the spatial dimensions. The decoder and FiLM parameters are learned through the reconstruction of the input images using Mean Absolute Error,

$$\mathcal{L}_{rec} = \mathbb{E}_x\big[\lVert x - g(s, z) \rVert_1\big], \quad \text{with } s = f_{anatomy}(x) \text{ and } z = f_{modality}(x, s).$$

The design of the decoding process restricts the type of information stored in z to only affect the intensities of the produced image. This is important in the disentangling process as it pushes z to not contain spatial anatomical information.
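The channel-wise modulation can be sketched in plain Python as below. The function name `film` and the nested-list layout (channels first) are assumptions for illustration; in the model the values of γ and β would be predicted from z by the fully connected layers, whereas here they are given directly.

```python
from typing import List

FeatureMaps = List[List[List[float]]]  # C channels, each an H x W grid


def film(feature_maps: FeatureMaps, gamma: List[float], beta: List[float]) -> FeatureMaps:
    """Apply one affine transformation per channel: gamma_c * F_c + beta_c.
    The scalar pair of each channel is broadcast over all spatial positions."""
    return [
        [[g * v + b for v in row] for row in channel]
        for channel, g, b in zip(feature_maps, gamma, beta)
    ]


features = [
    [[1.0, 2.0], [0.0, 1.0]],   # channel 0
    [[3.0, 4.0], [2.0, 0.0]],   # channel 1
]
modulated = film(features, gamma=[2.0, 0.5], beta=[0.0, 1.0])
```

Since every spatial position in a channel receives the same scale and offset, the conditioning vector can change intensities but cannot move or reshape structures, which is why this form of conditioning discourages z from carrying spatial information.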

The decoder can also be interpreted as a conditional generative model, where different samples of z conditioned on a given s generate images of the same anatomical properties, but with different appearances. The reconstruction process is the opposite of the decomposition process, i.e. it learns the dependencies between the two factors in order to produce a realistic output.

3.3.1. Modality factor reconstruction

A common problem when training VAEs is posterior collapse: a degenerate condition where the decoder ignores some factors. In this case, even though the reconstruction is accurate, not all data variation is captured in the underlying factors.

In our model posterior collapse manifests when some modality information is spatially encoded within the anatomical factor. To overcome this we use a z reconstruction cost, according to which an image y produced by a random z sample should produce the same modality factor when (re-)encoded.

The faithful reconstruction of the modality factor z penalises the VAE for ignoring dimensions of the latent distribution and encourages each encoded image to produce a low variance Gaussian. This is in tension with the KL divergence cost which is optimal when the produced distribution is a spherical Gaussian of zero mean and unit variance. A perfect score of the KL divergence results in all samples producing the same distribution over z, and thus the samples are indistinguishable from each other based on z. Without the z reconstruction cost, the overall cost function can be minimised if imaging information is encoded in s, thus resulting in posterior collapse. Reconstructing the modality factor prevents this, and results in an equilibrium where a good reconstruction is possible only with the use of both factors.
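A minimal sketch of the z reconstruction cost is given below. Several details are assumptions: the distance is taken to be a mean absolute error, the re-encoder is treated as a deterministic function returning a point estimate of z, and the decoder and encoder are hypothetical toy stand-ins (one that uses z and one that ignores it), chosen only to show how the cost separates the two cases.

```python
import random
from typing import Callable, List

Image = List[List[float]]
Anatomy = List[List[List[int]]]


def z_reconstruction_cost(
    s: Anatomy,
    g: Callable[[Anatomy, List[float]], Image],
    f_modality: Callable[[Image, Anatomy], List[float]],
    n_z: int,
    rng: random.Random,
    n_samples: int = 8,
) -> float:
    """Average |z - f_modality(g(s, z), s)| over z drawn from the unit Gaussian prior."""
    total = 0.0
    for _ in range(n_samples):
        z = [rng.gauss(0.0, 1.0) for _ in range(n_z)]
        y = g(s, z)              # image synthesised from a random modality sample
        z_hat = f_modality(y, s)  # re-encode the synthesised image
        total += sum(abs(a - b) for a, b in zip(z, z_hat)) / n_z
    return total / n_samples


# Hypothetical toy stand-ins (assumptions for illustration only).
def toy_g(s: Anatomy, z: List[float]) -> Image:
    # Paint each anatomical channel with the intensity stored in z.
    return [[z[one_hot.index(1)] for one_hot in row] for row in s]


def collapsed_g(s: Anatomy, z: List[float]) -> Image:
    # A degenerate decoder that ignores z entirely.
    return [[0.0 for _ in row] for row in s]


def toy_f_modality(y: Image, s: Anatomy) -> List[float]:
    # Mean intensity of the pixels belonging to each channel.
    n_channels = len(s[0][0])
    sums, counts = [0.0] * n_channels, [0] * n_channels
    for row_y, row_s in zip(y, s):
        for v, one_hot in zip(row_y, row_s):
            c = one_hot.index(1)
            sums[c] += v
            counts[c] += 1
    return [sums[c] / counts[c] if counts[c] else 0.0 for c in range(n_channels)]


s = [[[1, 0], [0, 1]], [[1, 0], [0, 1]]]
cost_using_z = z_reconstruction_cost(s, toy_g, toy_f_modality, 2, random.Random(0))
cost_ignoring_z = z_reconstruction_cost(s, collapsed_g, toy_f_modality, 2, random.Random(0))
```

A decoder that ignores z cannot be re-encoded to the sampled value, so it incurs a positive cost, whereas a decoder that renders z into the image allows it to be recovered.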

4. Experimental setup

4.1. Data

In our experiments we use 2D images from four datasets, which have been normalised to the range [−1, 1].
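The intensity normalisation can be illustrated as follows. The text does not specify how the range [−1, 1] is reached, so the per-image min-max rescaling below, and the function name, are assumptions made for this sketch.

```python
from typing import List

Image = List[List[float]]


def normalise_to_unit_range(image: Image) -> Image:
    """Linearly rescale intensities so the minimum maps to -1 and the maximum to 1."""
    flat = [v for row in image for v in row]
    lo, hi = min(flat), max(flat)
    if hi == lo:
        # A constant image has no dynamic range; map it to the centre of the interval.
        return [[0.0 for _ in row] for row in image]
    scale = 2.0 / (hi - lo)
    return [[(v - lo) * scale - 1.0 for v in row] for row in image]


raw = [[0.0, 50.0], [100.0, 25.0]]
normalised = normalise_to_unit_range(raw)
```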

For the semi-supervised segmentation experiment (Section 5.1) and the latent space arithmetic (Section 5.5) we use data from the 2017 Automatic Cardiac Diagnosis Challenge (ACDC) (Bernard et al., 2018). This dataset contains cine-MR images acquired in 1.5T and 3T MR scanners, with resolution between 1.22 and 1.68 mm²/pixel and a number of phases varying between 28 and 40 images per patient. We resample all volumes to 1.37 mm²/pixel resolution. Images are cropped to 224 × 224 pixels. There are images of 100 patients for which manual segmentations are provided for the left ventricular cavity (LV), the myocardium (MYO) and the right ventricle (RV), corresponding to the end systolic (ES) and end diastolic (ED) cardiac phases. In total there are 1,920 images with manual segmentations (from ED and ES) and 23,530 images with no segmentations (from the remaining cardiac phases).

We also use data acquired at Edinburgh Imaging Facility QMRI with a 3T scanner. The dataset contains cine-MR images of 26 healthy volunteers each with approximately 30 cardiac phases. The spatial resolution is 1.406 mm²/pixel with a slice thickness of 6 mm, matrix size 256 × 256, a field of view of 360 mm × 303.75 mm, and image size 256 × 208 pixels. This dataset is used in the semi-supervised segmentation and multi-task experiments of Sections 5.1 and 5.2 respectively. Manual segmentations of the left ventricular cavity (LV) and the myocardium (MYO) are provided, corresponding to the ED cardiac phase. In total there are 241 images with manual segmentations (from ED) and 8,353 images with no segmentations (from the remaining cardiac phases).

To demonstrate multimodal segmentation and modality transformation (Section 5.3), as well as modality estimation (Section 5.4), we use data from the 2017 Multi-Modal Whole Heart Segmentation (MM-WHS) challenge, made available by Zhuang et al. (2010), Zhuang (2013), and Zhuang and Shen (2016). This contains 40 anonymised volumes, of which 20 are cardiac CT/CT angiography (CTA) and 20 are cardiac MRI. The CT/CTA data were acquired in the axial view at Shanghai Shuguang Hospital, China, using routine cardiac CTA protocols. The in-plane resolution is about 0.78 × 0.78mm and the average slice thickness is 1.60mm. The MRI data were acquired at St. Thomas hospital and Royal Brompton Hospital, London, UK, using 3D balanced steady state free precession (b-SSFP) sequences, with about 2mm acquisition resolution at each direction and reconstructed (resampled) into about 1mm. All data have manual segmentations of seven heart substructures: myocardium (MYO), left atrium (LA), left ventricle (LV), right atrium (RA), right ventricle (RV), ascending aorta (AO) and pulmonary artery (PA). Data pre-processing is as in Chartsias et al. (2017). The image size is 224 × 224 pixels. In total there are 3,626 MR and 2,580 CT images, all with manual segmentations.

Finally, we use cine-MR and CP-BOLD images of 10 canines to further evaluate modality estimation (Section 5.4). 2D images with an in-plane resolution of 1.25mm × 1.25mm were acquired at baseline and severe ischemia (inflicted as controllable stenosis of the left-anterior descending coronary artery (LAD)) on a 1.5T Espree (Siemens Healthcare) on the same instrumented canines. The image acquisition is at short axis view, covering the midventricle, and is performed using cine-MR and a flow and motion compensated CP-BOLD acquisition. The pixel resolution is 192×114 (Tsaftaris et al., 2013). This dataset (not publicly available) is ideal to show complex spatiotemporal effects as it images the same animal with and without disease and using two almost identical sequences with the only difference that CP-BOLD modulates pixel intensity with the level of oxygenation present in the tissue. In total there are 129 cine-MR and 264 CP-BOLD images with manual segmentations from all cardiac phases.

4.2. Model and training details

The overall cost function is a composition of the individual costs of each of the model’s components and is defined as:

The λ parameters are set to λ1 = 0.01, λ2 = 10, λ3 = 10, λ4 = 1, λ5 = 1. We adopt the value of λ1 from Zhu et al. (2017), who also train a VAE for modelling intensity variability. Separating the anatomy into segmentation masks is a difficult task, and is also in tension with the reconstruction process, which pushes parts with similar intensities to be in the same channels. This motivates our decision to increase the values of the segmentation hyperparameters λ2 and λ3.

We set the dimension of the modality factor nz=8 as in Zhu et al. (2017) across all datasets. We also set the number of channels of the spatial factor to C=8 for ACDC and QMRI and increase to C=16 for MM-WHS, to support the increased number of segmented regions (7 in MM-WHS) and the fact that CT and MR data have different contrasts and viewpoints. This additional flexibility allows the network to use some channels of s for common information across the two modalities (MR and CT) and some for unique (not common) information.

We train using Adam (Kingma and Ba, 2015) with a learning rate of 0.0001 and a decay of 0.0001 per epoch. We used a batch size of 4 and an early stopping criterion based on the segmentation cost of a validation set. All code was developed in Keras (Chollet et al., 2015). The quantitative results of Section 5 are obtained through 3-fold cross validation, where each split contains a proportion of the total volumes of 70%, 15% and 15% corresponding to training, validation and test sets. SDNet implementation will be made available at https://github.com/agis85/anatomy_modality_decomposition.

4.3. Baseline and benchmark methods

We evaluate our model’s segmentation accuracy by comparing with one fully supervised and two semi-supervised methods described below:

We use U-Net (Ronneberger et al., 2015) as a fully supervised baseline because of its effectiveness in various medical segmentation problems, and also since it is frequently used by the participants of the two cardiac challenges MM-WHS and ACDC. Its architecture follows the one proposed in the original paper, and is the same as SDNet’s anatomy encoder for fair comparison.

We add an adversarial cost using a mask discriminator to the fully-supervised U-Net, enabling its use in semi-supervision. This can also be considered as a variant of SDNet without the reconstruction cost. We refer to this method as GAN in Section 5.

We also use the self-train method of Bai et al. (2017), which proposes an iterative method of using unlabelled data to retrain a segmentation network. In the original paper a Conditional Random Field (CRF) post-processing is applied. Here, we use U-Net as a segmentation network (such that the same architecture is used by all baselines) and we do not perform any post-processing for a fair comparison with the other methods we present.

To permit comparisons, training of the baselines uses the same hyperparameters, such as learning rate decay, optimiser, batch size, and early stopping criterion, as used for SDNet.

5. Results and discussion

We here present and discuss quantitative and qualitative results of our method in various experimental scenarios. Initially, multi-class semi-supervised segmentation is evaluated in Section 5.1. Subsequently, Section 5.2 demonstrates multi-task learning with the addition of a regression task in the training objectives. In Section 5.3, SDNet is evaluated in a multimodal scenario by concurrently segmenting MR and CT data. In Section 5.4 we investigate whether the modality factor z captures multimodal information. Finally, Section 5.5 demonstrates properties of the factorisation using latent space arithmetic, in order to show how z and s interact to reconstruct images.

5.1. Semi-supervised segmentation

We evaluate the utility of our method in a semi-supervised experiment, in which we combine labelled images with a pool of unlabelled images to achieve multi-class semi-supervised segmentation. Specifically, we explore the sensitivity of SDNet and the baselines of Section 4.3 to the number of labelled examples, by training with various numbers of labelled images. Our objective is to show that we can achieve comparable results to a fully supervised network using fewer annotations.

To simulate a more realistic clinical scenario, sampling of the labelled images does not happen over the full image pool, but at a subject level: initially, a number of subjects are sampled, and then all images of these subjects constitute the labelled dataset. The number of unlabelled images is fixed and set equal to 1200 images: these are sampled at random from all subjects of the training set and from cardiac phases other than End Systole (ES) and End Diastole (ED) (for which no ground truth masks exist). The real segmentation masks used to train the mask discriminator are taken from the set of image-mask pairs from the same dataset.
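The subject-level sampling described above can be sketched as follows. This is a minimal illustration, not the released implementation: the dictionary layout, function names and toy identifiers are assumptions made only for this example.

```python
import random


def sample_labelled_images(images_by_subject, n_subjects, seed=0):
    """Pick whole subjects at random and return all of their labelled images.

    images_by_subject: dict mapping a subject id to a list of image ids
    (hypothetical layout, used only for this sketch).
    """
    rng = random.Random(seed)
    subjects = rng.sample(sorted(images_by_subject), n_subjects)
    labelled = [img for s in subjects for img in images_by_subject[s]]
    return subjects, labelled


def sample_unlabelled_images(unlabelled_pool, n_images=1200, seed=0):
    """Draw a fixed number of unlabelled images at random from the training pool."""
    rng = random.Random(seed)
    return rng.sample(unlabelled_pool, min(n_images, len(unlabelled_pool)))


# Toy data: 5 subjects with 4 labelled (ED/ES) images each.
toy = {f"subj{k}": [f"subj{k}_img{i}" for i in range(4)] for k in range(5)}
chosen, labelled = sample_labelled_images(toy, n_subjects=2)

# Toy unlabelled pool drawn from all training subjects.
pool = [f"subj{k}_phase{p}" for k in range(5) for p in range(10)]
unlabelled = sample_unlabelled_images(pool, n_images=20)
```

Sampling subjects first, rather than individual images, means that the labelled set never contains a partial annotation of a subject, which is closer to how annotations are produced in practice.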

In order to test the generalisability of all methods to different types of images, we use two cine-MR datasets: ACDC which contains masks of the LV, MYO and RV; and QMRI which contains masks of the LV and MYO. Spatial augmentations by rotating inputs up to 90° are applied to experiments using ACDC data to better simulate the orientation variability of the dataset. No augmentations are applied in experiments using QMRI data since all images maintain a canonical orientation. No further augmentations have been performed to fairly compare the effect of the different methods.

We present the average cross-validation Dice score (on held out test sets) across all labels, as well as the Dice score for each label separately, and the corresponding standard deviations. Note that images from a given subject can only be present in exactly one of the training, validation or test sets. Table 1 contains the ACDC results for all labels, MYO, LV and RV respectively, and Table 2 contains the QMRI results for all labels, MYO, and LV respectively. The test set for each fold contains 280 images of ED and ES phases, belonging to 15 subjects for ACDC, and 35 images of the ED phase belonging to 4 subjects for QMRI. The best results are shown in bold font, and an asterisk indicates statistical significance at the 5% level, compared to the second best result, computed using a paired t-test. In both tables the lowest amount of labelled data (1.5% for Table 1 and 6% for Table 2) corresponds to images selected from one subject. Segmentation examples for ACDC data using different numbers of labelled images are shown in Figure 4, where different colours are used for the different segmentation classes.
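For reference, the per-label Dice score can be computed as in the sketch below. The integer label ids, the toy masks and the convention for empty masks are assumptions of this example. The average here is a plain mean over labels, which may differ from how the reported averages were aggregated.

```python
import numpy as np


def dice_score(pred, target, label):
    """Dice overlap (in [0, 1]) between two integer label maps for one label."""
    p = (np.asarray(pred) == label)
    t = (np.asarray(target) == label)
    denom = p.sum() + t.sum()
    if denom == 0:
        return 1.0  # convention assumed here: both masks empty counts as perfect
    return 2.0 * np.logical_and(p, t).sum() / denom


# Toy 4x4 label maps: 0 = background, 1 = MYO, 2 = LV (label ids are assumptions).
target = np.array([[0, 1, 1, 0],
                   [1, 2, 2, 1],
                   [1, 2, 2, 1],
                   [0, 1, 1, 0]])
pred = target.copy()
pred[0, 1] = 0  # the prediction misses one MYO pixel

per_label = {name: dice_score(pred, target, k) for name, k in [("MYO", 1), ("LV", 2)]}
average = float(np.mean(list(per_label.values())))
```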

Table 1:

Dice score (%) on ACDC for MYO, LV, RV, and average. Standard deviations are shown after the ± sign. The models are trained with 1200 unlabelled and different fractions of labelled images (each one corresponding to a proportion of selected subjects). For each of the three components and the average separately, the best result is shown in bold font and an asterisk indicates statistical significance at the 5% level compared to the second best method in the same row/component.

| labels | U-Net MYO | U-Net LV | U-Net RV | U-Net avg | GAN MYO | GAN LV | GAN RV | GAN avg | self-train MYO | self-train LV | self-train RV | self-train avg | SDNet MYO | SDNet LV | SDNet RV | SDNet avg |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 100% | 83 ± 7 | 88 ± 6 | 79 ± 10 | 85 ± 7 | 82 ± 6 | 87 ± 6 | 75 ± 8 | 83 ± 5 | 84 ± 7 | 89 ± 5 | 82 ± 8 | 85 ± 6 | 84 ± 5 | 88 ± 4 | 78 ± 8 | 84 ± 5 |
| 50% | 83 ± 7 | 87 ± 7 | 79 ± 10 | 85 ± 7 | 81 ± 7 | 86 ± 6 | 75 ± 10 | 82 ± 7 | 80 ± 10 | 85 ± 10 | 78 ± 11 | 82 ± 8 | 83 ± 6 | 87 ± 7 | 77 ± 9 | 83 ± 6 |
| 25% | 77 ± 9 | 82 ± 9 | 67 ± 14 | 75 ± 11 | 78 ± 9 | 85 ± 8 | 72 ± 11 | 79 ± 8 | 76 ± 13 | 85 ± 10 | 70 ± 15 | 78 ± 11 | 85 ± 6 | 73 ± 11 | | |
| 12.5% | 71 ± 13 | 80 ± 13 | 61 ± 17 | 70 ± 13 | 78 ± 8 | 85 ± 6 | 69 ± 13 | 79 ± 8 | 63 ± 17 | 77 ± 13 | 57 ± 21 | 67 ± 15 | 79 ± 8 | 85 ± 7 | 69 ± 13 | 80 ± 8 |
| 6% | 63 ± 12 | 76 ± 13 | 56 ± 22 | 65 ± 13 | 75 ± 11 | 81 ± 11 | 69 ± 13 | 75 ± 12 | 46 ± 27 | 59 ± 23 | 34 ± 18 | 47 ± 23 | 77 ± 9 | 83 ± 10 | 71 ± 12 | |
| 3% | 55 ± 19 | 66 ± 20 | 46 ± 20 | 52 ± 18 | 73 ± 32 | 79 ± 10 | 67 ± 14 | 75 ± 10 | 20 ± 15 | 35 ± 20 | 22 ± 14 | 24 ± 15 | 68 ± 14 | | | |
| 1.5% | 26 ± 19 | 33 ± 21 | 35 ± 17 | 21 ± 19 | 67 ± 21 | 78 ± 11 | 63 ± 12 | 67 ± 12 | 11 ± 10 | 19 ± 14 | 25 ± 12 | 16 ± 11 | 70 ± 12 | 77 ± 13 | 64 ± 15 | |

Table 2:

Dice score (%) on QMRI for MYO, LV, and average. Standard deviations are shown after the ± sign. The models are trained with 1200 unlabelled and different fractions of labelled images (each one corresponding to a proportion of selected subjects). For each of the two components and the average separately, the best result is shown in bold font and an asterisk indicates statistical significance at the 5% level compared to the second best method in the same row/component.

| labels | U-Net MYO | U-Net LV | U-Net avg | GAN MYO | GAN LV | GAN avg | self-train MYO | self-train LV | self-train avg | SDNet MYO | SDNet LV | SDNet avg |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 100% | 72 ± 9 | 90 ± 6 | 83 ± 7 | 75 ± 7 | 93 ± 3 | 86 ± 4 | 75 ± 9 | 92 ± 5 | 86 ± 7 | 75 ± 6 | 93 ± 4 | 86 ± 4 |
| 50% | 72 ± 15 | 82 ± 18 | 74 ± 15 | 71 ± 9 | 86 ± 7 | 83 ± 5 | 62 ± 11 | 88 ± 9 | 79 ± 9 | 73 ± 6 | 90 ± 5 | 84 ± 5 |
| 25% | 54 ± 14 | 80 ± 9 | 69 ± 10 | 68 ± 7 | 86 ± 7 | 81 ± 5 | 36 ± 22 | 56 ± 29 | 49 ± 26 | 66 ± 7 | 88 ± 7 | 80 ± 8 |
| 12.5% | 52 ± 11 | 81 ± 6 | 65 ± 7 | 68 ± 8 | 88 ± 6 | 79 ± 7 | 42 ± 16 | 64 ± 14 | 58 ± 14 | 67 ± 9 | 88 ± 6 | 80 ± 7 |
| 6% | 21 ± 14 | 43 ± 28 | 43 ± 20 | 64 ± 9 | 84 ± 10 | 75 ± 10 | 8 ± 6 | 21 ± 11 | 13 ± 7 | 65 ± 7 | 87 ± 10 | 79 ± 5 |

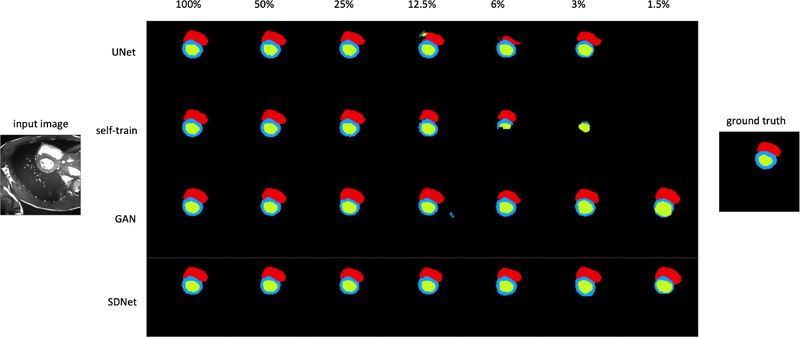

Fig. 4:

Segmentation example for different numbers of labelled images from the ACDC dataset. Blue, green and red show the model’s prediction for MYO, LV and RV respectively.

For both datasets, when the number of annotated images is high, all methods perform equally well, although our method achieves the lowest variance. In Table 1 the performance of the supervised (U-Net) and self-trained methods decreases when the number of annotated images reduces below 12.5%, since the limited annotations are not sufficiently representative of the data. When using data from one or two subjects, these two methods, which mostly rely on supervision, fail with a Dice score below 55%. On the other hand, even when the number of labelled images is small, the adversarial training used by SDNet and GAN helps maintain a good performance. The reconstruction cost used by our method further regularises training and consistently produces more accurate results, with Dice scores equal to 73%, 77% and 78% for 1.5%, 3% and 6% labels respectively, which are also significantly better, with p-values 0.0006, 0.02, and 0.002, in a paired t-test.

It is interesting to compare the performance of SDNet with our previous work (Chartsias et al., 2018). We therefore modify our previous model for multi-class segmentation and repeat the experiment for the ACDC dataset. We compute the Dice scores and standard deviations for 100%, 50%, 25%, 12.5%, 6%, 3%, and 1.5% of labelled data to be respectively 79 ± 7%, 75 ± 8%, 79±7%, 77±10%, 75±9%, 66±15%, and 59±13%. Comparing with the results of Table 1, SDNet significantly outperforms our previous model (at the 5% level, paired t-test).

On the smaller QMRI dataset, the segmentation results are seen in Table 2, and correspond to two masks instead of three. When using annotated images from just a single subject (corresponding to 6% of the data, the lowest possible), the performance of the supervised method reduces by almost 50% compared to when using the full dataset. GAN and SDNet both maintain a good performance of 75% and 79% respectively, with no significant differences between them.

5.2. Left ventricular volume

It is common for clinicians to not manually annotate all endocardium and epicardium contours for all patients if it is not necessary. Rather, a mixture of annotations and other metrics of interest will be saved at the end of the study in the electronic health record. For example, we can have a scenario with images of some patients that contain myocardium segmentations and some images with the value of their left ventricular volume. Here we test our model in such a multi-task scenario and show that we can benefit from such auxiliary and mixed annotations. We will evaluate, firstly whether our model is capable of predicting a secondary output related to the anatomy (the volume of the left ventricle), and secondly whether this secondary task improves the performance of the main segmentation task.

Using the QMRI dataset, we first calculate the ground truth left ventricular volume (LVV) for each patient as follows: for each 2D slice, we first sum the pixels of the left ventricular cavity, then multiply this sum with the pixel resolution to get the corresponding area and then multiply the result with the slice thickness to get the volume occupied by each slice. The final volume is the sum of all individual slice volumes.
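The calculation above can be written in a few lines. The sketch below assumes binary LV masks stacked per slice and a conversion of 1 mL = 1000 mm³. The function name and the toy masks are ours, while the pixel area and slice thickness are the QMRI values quoted in Section 4.1.

```python
import numpy as np


def lv_volume_ml(lv_masks, pixel_area_mm2, slice_thickness_mm):
    """Sum LV pixels per slice, convert to area, then to volume; return mL."""
    lv_masks = np.asarray(lv_masks)            # shape: (slices, H, W), binary
    pixels_per_slice = lv_masks.reshape(lv_masks.shape[0], -1).sum(axis=1)
    slice_volumes_mm3 = pixels_per_slice * pixel_area_mm2 * slice_thickness_mm
    return slice_volumes_mm3.sum() / 1000.0    # 1 mL = 1000 mm^3


# Toy stack of 3 slices with 16 LV pixels each (not real data).
masks = np.zeros((3, 8, 8), dtype=int)
masks[:, 2:6, 2:6] = 1
volume = lv_volume_ml(masks, pixel_area_mm2=1.406, slice_thickness_mm=6.0)
```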

Predicting the LVV as another output of SDNet follows a similar process to the one used to calculate the ground truth values. We design a small neural network consisting of two convolutional layers (each having a 3×3×16 kernel followed by a ReLU activation), and two fully connected layers of 16 and 1 neurons respectively, both followed by a ReLU activation. This network regresses the sum of the pixels of the left ventricle, taking as input the spatial representation. The predicted sum can then be used to calculate the LVV offline.

Using a pre-trained model of labelled images corresponding to one subject (last row in Table 2 with 6% labels), we fine-tune the whole model whilst training the area regressor using ground truth values from 17 subjects. We find the average LVV over the test volumes equal to 138.57mL (standard deviation of 8.8), and the ground truth LVV equal to 139.23mL (standard deviation of 2.26), with no statistical difference between them in a paired t-test. Both measurements agree with the normal LVV values for ED cardiac phases, which was reported as 143mL in a large population study (Bai et al., 2018a). The multi-task objective used to fine-tune the whole model also benefits test segmentation accuracy, which is raised from 75.6% to 83.2% (statistically significant at the 5% level).3 This improvement holds for both labels individually: MYO accuracy rises from 63.3% to 70.6% and LV accuracy rises from 81.9% to 89.9%. While this is for a single split, observe that using LVV as an auxiliary task effectively brought us closer to the range of having 50% annotated masks (second row in Table 2). Thus, auxiliary tasks, such as LVV prediction, which is related to the endocardial border segmentation, can be used to train models in a multi-task setting and leverage supervision present in typical clinical settings.

5.3. Multimodal learning

By design, our model separates the anatomical factor from the image modality factor. As a result, it can be trained using multimodal data, with the spatial factor capturing the common anatomical information and the non-spatial factor capturing the intensity information unique to each image’s particular modality. Here we evaluate our model using a multimodal MR and CT input to achieve segmentation (Section 5.3.1) and modality transformation (Section 5.3.2).

Both these tasks rely on learning consistent anatomical representations across the two modalities. However, it is well known that MR and CT have different contrasts that accentuate different tissue properties and may also have different views. Thus, we would expect some channels of the anatomy factor to be used in CT but not in MRI, and others to be used by both. This disentanglement of information captures both differences in tissue contrasts and differences in view when parts of the anatomy are not visible in all slice positions of a 3D volume.

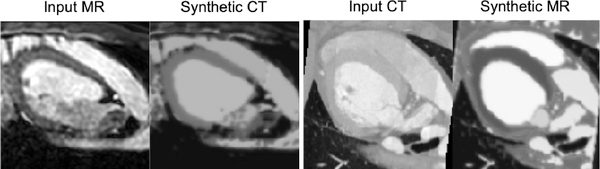

This is illustrated in Figure 5, which shows three example anatomical representations from one MR and two CT images, and specifically marks common anatomical factors that are captured in the same respective channels, and unique factors that are captured in different channels.

Fig. 5:

Example of anatomical representations from one MR and two CT images respectively. Green boxes mark common spatial information captured in the same channels, whereas red boxes mark information present in one but not the other modalities.

5.3.1. Multimodal segmentation

We train SDNet using MR and CT data with the aim to improve learning of the anatomical factor from both MR and CT segmentation masks. In fact, we show below that when mixing data from MR and CT images, we improve segmentation compared to when using each modality separately. Since the aim is to specifically evaluate the effect of multimodal training in segmentation accuracy, unlabelled images are not considered here as part of the training process, and the models are trained with full supervision only.

In Table 3 we present the Dice score over held out MR and CT test sets, obtained when training a model with differing amounts of MR and CT data. Results for 12.5% of data correspond to images obtained from one subject. Training with both modalities leads to improvements in both individual MR and CT performances. This is the case even when we add 12.5% of CT to 100% of MR, and vice versa; this improves MR performance (from 75% to 76%, not statistically significant, although the improvement becomes significant as more CT images are added), but also CT performance (from 77% to 81%, statistically significant).

Table 3:

Dice score (%) on MM-WHS (LV, RV, MYO, LA, RA, PA, AO) data, when training with different mixtures of MR and CT data. Standard deviations are shown after the ± sign.

| MR train | CT train | MR test | CT test |
|---|---|---|---|
| 100% | 100% | 78 ± 5 | 80 ± 1 |
| 100% | 12.5% | 76 ± 3 | 56 ± 6 |
| 12.5% | 100% | 39 ± 7 | 81 ± 1 |
| 12.5% | 0% | 27 ± 12 | - |
| 0% | 12.5% | - | 23 ± 7 |
| 100% | 0% | 75 ± 3 | - |
| 87.5% | 12.5% | 74 ± 5 | 65 ± 6 |
| 75% | 25% | 75 ± 2 | 69 ± 3 |
| 62.5% | 37.5% | 72 ± 2 | 69 ± 2 |
| 50% | 50% | 68 ± 5 | 73 ± 3 |
| 37.5% | 62.5% | 67 ± 4 | 73 ± 4 |
| 25% | 75% | 67 ± 6 | 74 ± 3 |
| 12.5% | 87.5% | 49 ± 7 | 73 ± 6 |
| 0% | 100% | - | 77 ± 4 |

We also train using different mixtures of MR and CT data, but keeping the total amount of training data fixed. In the CT case, we observe that Dice ranges between 77% (at 100%) and 65% (at 12.5%). This shows that CT segmentation clearly benefits from training alongside MR, since when training on CT alone with 12.5%, the corresponding Dice is 23%. In the MR case, we observe that Dice ranges between 75% (at 100%) and 49% (at 12.5%). Here, the relative reduction is larger than in the CT case, however MR training at 12.5% also benefits from the CT data, since the Dice when training on 12.5% MR alone is 27%. Furthermore, the Dice score for the other proportions of the data is relatively stable with a range of 69% to 74% for CT, and a range of 67% to 75% for MR.

In both experimental setups, whether the total number of training data is fixed or not, having additional data even when coming from another modality helps. This can have implications for current or new datasets of a rare modality, which can be augmented with data from a more common modality.

5.3.2. Modality transformation

Although our method is not specifically designed for modality transformations, when trained with multimodal data as input, we explore cross-modal transformations by mixing the disentangled factors. This mixing of factors is a special case of latent space arithmetic that we demonstrate concretely in Section 5.5. We combine different values of the modality factor with the same fixed anatomy factor to achieve representations of the anatomy corresponding to two different modalities.

To illustrate this we use the model trained with 100% of the MR and CT in the MM-WHS dataset and demonstrate transformations between the two modalities. In Figure 6 we synthesise CT images from MR (and MR from CT) by fusing a CT modality vector z with an anatomy s from an MR image (and vice versa). We can readily see how the transformed images capture intensity characteristics typical of the domain.

Fig. 6:

Modality transformation between MR and CT when a fixed anatomy is combined with a modality vector derived from each imaging modality. Specifically, let $x_{mr}$ and $x_{ct}$ be MR and CT images respectively. The left panel of the figure shows the original MR image $x_{mr}$, and a ‘reconstruction’ of $x_{mr}$ using the modality component derived from $x_{ct}$, i.e. $g(f_{anatomy}(x_{mr}), f_{modality}(x_{ct}, f_{anatomy}(x_{ct})))$. The right panel of the figure shows the original CT image $x_{ct}$, and a ‘reconstruction’ of $x_{ct}$ using the modality component derived from $x_{mr}$, i.e. $g(f_{anatomy}(x_{ct}), f_{modality}(x_{mr}, f_{anatomy}(x_{mr})))$.
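The factor swap behind this transformation can be illustrated with a toy example. The sketch below is deliberately not the SDNet decoder: `toy_decoder` is a hypothetical stand-in for g that simply paints each binary anatomy channel with one intensity, and the factors are made up rather than produced by the encoders. It only shows how a fixed anatomy s can be combined with a modality factor z taken from another image.

```python
import numpy as np


def toy_decoder(s, z):
    """Stand-in for g(s, z): paint each binary anatomy channel with one intensity.

    This is NOT the SDNet decoder; it only separates 'where' (s) from
    'how bright' (z) so that the swap is easy to follow.
    """
    return s @ z  # (H, W, C) @ (C,) -> (H, W)


# Two toy anatomies with 2 channels each (H = W = 4), made up for illustration.
s_mr = np.zeros((4, 4, 2)); s_mr[:2, :, 0] = 1; s_mr[2:, :, 1] = 1
s_ct = np.zeros((4, 4, 2)); s_ct[:, :2, 0] = 1; s_ct[:, 2:, 1] = 1

# Toy modality factors: per-channel intensities standing in for z.
z_mr = np.array([0.2, 0.9])
z_ct = np.array([-0.5, 0.4])

x_mr = toy_decoder(s_mr, z_mr)         # 'reconstruction' of the MR image
x_mr_as_ct = toy_decoder(s_mr, z_ct)   # MR anatomy with CT appearance
x_ct_as_mr = toy_decoder(s_ct, z_mr)   # CT anatomy with MR appearance
```

In this toy setting the swapped outputs keep the spatial layout of the anatomy factor and take their intensities from the other modality factor, mirroring the two panels of Figure 6.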

5.4. Modality type estimation

Our premise is that the learned modality factor z captures imaging specific information. We assess this in two different settings using multimodal MR and CT data and also cine-MR and CP-BOLD MR data.

Taking one of the trained models of Table 3 corresponding to a split with 100% MR (14 subjects of 2,837 images) and 100% CT images (14 subjects of 1,837 images)4, we learn post hoc a logistic regression classifier (using the same training data) to predict the image modality (MR or CT) from the modality factor z. The learned classifier is able to correctly classify the input images as CT or MR, on a held out test set (3 subjects of 420 images for MR and 3 subjects of 387 images for CT), 92% of the time. To find whether there is a single z dimension that best captures this binary semantic component (MR or CT), we run 8 independent experiments, training 8 single-input logistic regressors, one for each dimension of z. We find that z5 obtains an accuracy of 82%, whereas the remaining dimensions vary from 42% to 66% accuracy. Thus, a single dimension (in this case z5) captures most of the intensity differences between MR and CT, which are global and affect all areas of the image.

In a second complementary experiment we perform the same logistic regression classification to discriminate between cine-MR and CP-BOLD MR images (which are also cine, but contain additionally oxygen-level dependent contrast). Here, SDNet and the logistic regression model are trained using 95 cine-MR and 214 CP-BOLD images from 7 subjects, and evaluated on a test set of 27 and 31 images from 1 subject respectively. Unlike MR and CT which are easy to differentiate due to differences in signal intensities across the whole anatomy, BOLD and cine exhibit subtle spatially and temporally localised differences that are modulated by the amount of oxygenated blood present (the BOLD effect) and the cardiac cycle and these are most acute in the heart.5 Even here the classifier can detect BOLD presence with 96% accuracy, when all dimensions of z are used. When each z dimension is used separately, accuracy ranges between 47% and 65%, and thus no single z dimension globally captures the presence (or lack) of BOLD contrast.
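The classification protocol used in both experiments (one classifier on all of z, then one single-input classifier per dimension) can be sketched as follows. The data here are synthetic stand-ins, not encoded SDNet factors: we draw 8-dimensional vectors and shift only one dimension between the two classes. The plain gradient-descent logistic regression and all names and hyperparameters are assumptions of this example.

```python
import numpy as np


def fit_logistic(X, y, lr=0.1, steps=2000):
    """Plain gradient-descent logistic regression; returns (weights, bias)."""
    w = np.zeros(X.shape[1])
    b = 0.0
    for _ in range(steps):
        p = 1.0 / (1.0 + np.exp(-(X @ w + b)))
        w -= lr * (X.T @ (p - y)) / len(y)
        b -= lr * np.mean(p - y)
    return w, b


def accuracy(X, y, w, b):
    """Fraction of samples whose predicted class matches the label."""
    return float(np.mean(((X @ w + b) > 0) == (y == 1)))


# Synthetic stand-in for modality factors (NOT real SDNet outputs):
# only dimension index 4 differs between the two classes.
rng = np.random.default_rng(0)
n = 400
y = rng.integers(0, 2, size=n)
Z = rng.normal(size=(n, 8))
Z[:, 4] += 3.0 * (2 * y - 1)

train, test = slice(0, 300), slice(300, n)
w, b = fit_logistic(Z[train], y[train])
acc_all = accuracy(Z[test], y[test], w, b)

# One single-input classifier per z dimension.
acc_per_dim = []
for d in range(8):
    wd, bd = fit_logistic(Z[train][:, [d]], y[train])
    acc_per_dim.append(accuracy(Z[test][:, [d]], y[test], wd, bd))
best_dim = int(np.argmax(acc_per_dim))
```

In this synthetic case a single dimension carries the class information by construction. Whether that happens with real factors depends on the modalities, as the MR/CT and cine/BOLD results above show.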

These findings are revealing and have considerable implications. First they show that our modality factor z does capture modality specific information which is obtained completely unsupervised, and depending on context and complexity of the imaging modality, a single z dimension may capture it almost completely (in the case of MR/CT). This also implicitly suggests that spatial information may be captured only in s. 6

More importantly, it opens the question of how the spatial and modality factors interact to reproduce the output. We address these questions below using latent space arithmetic.

5.5. Latent space arithmetic

Herein we demonstrate the properties of the disentanglement by separately examining the effects of anatomical and modality factors on the synthetic images and how modifications of each alter the output. For these experiments we consider the model from Table 1, trained on ACDC using 100% of the labelled training images.

Arithmetic on the spatial factor s

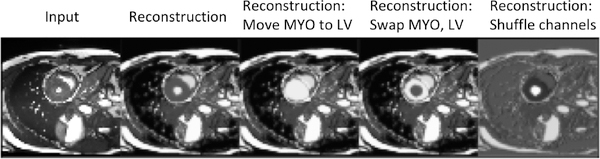

We start with the spatial factor and in Figure 7 we alter the content of the spatial channels to qualitatively see how the decoder has learned an association between the position of each channel and different signal intensities of the anatomical parts. In all these experiments the z factor remains the same. The first two images show the input and the original reconstruction. The third image is produced by adding the MYO spatial channel to the LV spatial channel and nulling (zeroing) the MYO channel. We can see that the intensity of the myocardium is now the same as the intensity of the left ventricle. In the fourth image, we swap the MYO channel with the LV channel, resulting in reversed intensities for the two substructures. Finally, the fifth image is produced by randomly shuffling the spatial channels.

Fig. 7:

Reconstructions of an input image, when re-arranging the channels of the spatial representation. The images from left to right are: the input, the original reconstruction, the reconstruction when moving the MYO to the LV channel, the reconstruction when exchanging the content of the MYO and the LV channels, and finally a reconstruction obtained after a random permutation of the channels.
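The three channel manipulations can be expressed as simple array operations on the spatial factor. The sketch below uses a made-up one-hot factor with C = 8 channels. Which channel index holds which structure, and the one-hot construction itself, are assumptions of this example. Each modified factor would then be passed to the decoder together with the unchanged z.

```python
import numpy as np

# Hypothetical channel indices, chosen only for this sketch.
MYO, LV = 0, 1

rng = np.random.default_rng(0)
labels = rng.integers(0, 8, size=(4, 4))
s = np.eye(8)[labels]            # toy spatial factor, shape (H, W, C) = (4, 4, 8)

# (a) Add the MYO channel to the LV channel, then null the MYO channel.
s_merged = s.copy()
s_merged[..., LV] = s[..., LV] + s[..., MYO]
s_merged[..., MYO] = 0

# (b) Swap the contents of the MYO and LV channels.
s_swapped = s.copy()
s_swapped[..., [MYO, LV]] = s[..., [LV, MYO]]

# (c) Randomly permute all channels.
perm = rng.permutation(s.shape[-1])
s_shuffled = s[..., perm]
```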

Arithmetic on the modality factor z

Next, we examine the information captured in each dimension of the modality factor. Since the modality factor follows a Gaussian distribution, we can draw random samples or interpolate between samples in order to generate new images. In this analysis, an image x is firstly encoded to factors s and z. Since the prior over z is an 8-dimensional unit Normal distribution, 99.7% of the probability mass of each dimension lies within three standard deviations of the mean. As a result, the probability space of each dimension is almost fully covered by values in the range [−3, 3]. By interpolating each z-dimension between −3 and 3, whilst keeping the values of the remaining dimensions and s fixed, we can decode synthetic images that show the variability induced by every z-dimension.

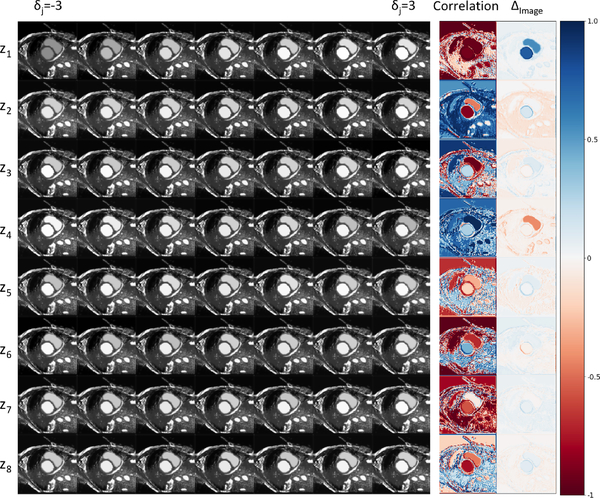

To achieve this we consider a grid where each z dimension is considered over 7 fixed steps from −3 to 3. Each row of the grid corresponds to one of the 8 z dimensions, whereas each column corresponds to a specific value in the range [−3, 3]. This grid is visualised in Figure 8.

Fig. 8:

Reconstructions when interpolating between z vectors. Each row corresponds to images obtained by changing the values of a single z-dimension. The final two columns (correlation and Δimage) indicate areas of the image mostly affected by this change in z.

Mathematically, for $i \in \{1, 2, \ldots, 8\}$ and $j \in \{1, 2, \ldots, 7\}$, the image in the $i$th row and $j$th column of the grid is $g(s,\, z \odot v_i + (1 - v_i) \odot \delta_j)$, where $\odot$ denotes element-wise multiplication, $v_i$ is a vector of length 8 with all entries 1 except for a 0 in the $i$th position, and $\delta_j = -3 + (j - 1)$, so that the 7 steps cover the values $-3, -2, \ldots, 3$.
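As a minimal sketch of how such a grid of modality vectors could be built (the function name and the example encoding are ours, and 7 evenly spaced values from −3 to 3 are assumed), each entry copies z and overwrites a single dimension with the interpolation value:

```python
import numpy as np


def interpolation_grid(z, low=-3.0, high=3.0, steps=7):
    """Return (grid, deltas) where grid has shape (len(z), steps, len(z)).

    Entry [i, j] is a copy of z whose i-th dimension is replaced by deltas[j],
    i.e. z * v_i + (1 - v_i) * delta_j with v_i zero only at position i.
    """
    z = np.asarray(z, dtype=float)
    deltas = np.linspace(low, high, steps)
    grid = np.tile(z, (len(z), steps, 1))
    for i in range(len(z)):
        grid[i, :, i] = deltas
    return grid, deltas


z = np.array([0.3, -1.2, 0.5, 0.0, 2.1, -0.7, 0.9, 1.4])  # made-up encoding
grid, deltas = interpolation_grid(z)
```

Decoding every `grid[i, j]` together with the fixed spatial factor s would give the image in row i and column j of the figure.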

In order to assess the effect of $z_i$ (the $i$th dimension of $z$) on the intensities of the synthetic results, we calculate a correlation image and a difference image (for every row of results). The value of each pixel in the correlation image is calculated using the Pearson correlation coefficient between the interpolation values of $z_i$ and the intensity values of the synthetic images for this pixel:

$$r_i^{h,w} = \frac{\sum_{j=1}^{7} (\delta_j - \bar{z}_i)\,(x_j^{h,w} - \bar{x}^{h,w})}{\sqrt{\sum_{j=1}^{7} (\delta_j - \bar{z}_i)^2}\;\sqrt{\sum_{j=1}^{7} (x_j^{h,w} - \bar{x}^{h,w})^2}},$$

where $h, w$ are the height and width position of a pixel, $x_j^{h,w}$ is the intensity of that pixel in the $j$th image of the row, $\bar{z}_i$ is the mean of the interpolation values of $z_i$, and $\bar{x}^{h,w}$ is the mean value of the pixel across the interpolated images. The difference image is calculated for each row by subtracting the image in the first column of the grid ($\delta_j = -3$) from the image in the last column ($\delta_j = 3$). 7
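Both images can be computed directly from one row of decoded results. The sketch below is an illustration on toy arrays rather than decoder outputs. Setting the correlation to zero for pixels whose intensity never changes is a convention we assume here to avoid a division by zero.

```python
import numpy as np


def correlation_image(deltas, images):
    """Per-pixel Pearson correlation between interpolation values and intensities.

    deltas: shape (J,); images: shape (J, H, W), one decoded image per delta.
    Constant pixels get a correlation of 0 (assumed convention).
    """
    d = np.asarray(deltas, dtype=float)
    x = np.asarray(images, dtype=float)
    dc = d - d.mean()
    xc = x - x.mean(axis=0)
    cov = np.tensordot(dc, xc, axes=(0, 0))
    denom = np.sqrt((dc ** 2).sum() * (xc ** 2).sum(axis=0))
    return np.divide(cov, denom, out=np.zeros_like(cov), where=denom > 0)


def difference_image(images):
    """Image decoded at the last delta minus the image decoded at the first."""
    x = np.asarray(images, dtype=float)
    return x[-1] - x[0]


# Toy 'decoded' images: the left column brightens with delta, the top-right
# pixel darkens, and the bottom-right pixel stays constant.
deltas = np.linspace(-3, 3, 7)
images = np.zeros((7, 2, 2))
images[:, :, 0] = deltas[:, None] * 0.1
images[:, 0, 1] = -deltas * 0.2
corr = correlation_image(deltas, images)
diff = difference_image(images)
```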

In Figure 8, the correlation images show large positive or negative correlation between each z dimension and most pixels of the input image, demonstrating that z mostly captures global image characteristics. However, local correlations are also evident, for example between z1 and all pixels of the heart, between z4 and the right ventricle, and between z5 and the myocardium. Different magnitudes of change are also evident, as the difference images in the last column of Figure 8 show: z1 and z4 seem to alter the local contrast significantly.

5.6. Factor sizes

While throughout the paper we used C = 8 and nz = 8, it is worthwhile discussing the effects of these important hyperparameters as they determine the capacity of the model.

We have found through experiments that when C > 8 many channels are all zero. This additional capacity is helpful when we use multimodal data, as for example in the MR/CT experiments, where C = 16. This allows the model to capture information common and unique across the two modalities in different s-channels (see Figure 5). On the other hand, making C small (C < 4) we find that the model does not have enough capacity (for example an SDNet with C = 4 trained at 100% labels has Dice performance 68.1 ± 8%, a drop compared to 84% when C = 8, that is also statistically significant at the 5% level).

We used nz = 8 inspired by related literature (Zhu et al., 2017). Experiments with similar values of nz maintain the segmentation performance, though this decreases for high values of nz. Specifically, an SDNet with 4, 32, and 128 dimensions trained at 100% labels has Dice 84±5%, 83±6%, and 82±6%, respectively. Compared to 84% when nz = 8, the results for nz = 4 and nz = 32 are similar, but the result for nz = 128 is worse (statistically significant at the 5% level), suggesting that the additional dimensions may negatively affect training and do not store extra information. To assess this we used the methodology in Burgess et al. (2018) to find the capacity of each z-dimension, which is also a measure of informativeness. This is calculated using the average variance per dimension, where a smaller variance indicates higher capacity. A variance near 1 (with a mean of 0) would indicate that this dimension encodes a Normal distribution for any datapoint, and thus, according to Burgess et al. (2018), is uninformative and points to encoding the average of the distribution mode. Using this analysis, for nz = 128 we observed that two z-dimensions each had a variance of 0.88, while the remaining 126 had an average variance of 0.91. Repeating this analysis for nz = 32, nz = 8 and nz = 4 gives the following results. For nz = 32, two dimensions have variances of 0.78 and 0.79, while the remaining 30 dimensions have an average variance of 0.81. For nz = 8, two z-dimensions have variances of 0.63 and 0.73, while the remaining 6 have an average variance of 0.75. Finally, for nz = 4, two dimensions have variances of 0.62 and 0.65, and the average variance of the other two is 0.77, which is similar to the results for nz = 8. This analysis shows that with smaller nz, more informative content is captured in the individual z-dimensions, and thus a high nz is redundant for this particular task.
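The per-dimension statistic used in this analysis can be sketched as follows. We assume the modality encoder outputs a log-variance per dimension for every image (an assumption about the parameterisation), and the numbers below are made up purely to show the computation.

```python
import numpy as np


def average_variance_per_dim(log_var):
    """Mean posterior variance of each z-dimension over a set of datapoints.

    log_var: shape (N, nz), log-variances predicted for N images
    (the log-variance parameterisation is an assumption of this sketch).
    """
    return np.exp(np.asarray(log_var, dtype=float)).mean(axis=0)


# Made-up encoder outputs for N = 2 images and nz = 4 dimensions.
log_var = np.log(np.array([[0.2, 0.9, 1.0, 0.6],
                           [0.4, 0.9, 1.0, 0.8]]))
avg_var = average_variance_per_dim(log_var)

# Dimensions sorted from most informative (smallest variance) to least.
ranking = np.argsort(avg_var)
```

A dimension whose average variance stays close to 1 would be flagged as uninformative under this criterion, while dimensions at the front of `ranking` carry most of the encoded information.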

6. Conclusion

We have presented a method for disentangling medical images into a spatial and a non-spatial latent factor, where we enforced a semantically meaningful spatial factor of the anatomy and a non-spatial factor encoding the modality information. To the best of our knowledge, maintaining semantics in the spatial factor has not been previously investigated. Moreover, through the incorporation of a variational autoencoder, we can treat our method as a generative model, which allows us to also efficiently model the intensity variability of medical data.

We demonstrated the utility of our methodology in a semi-supervised segmentation task, where we achieve high accuracy even when the amount of labelled images is substantially reduced. We also demonstrated that the semantics of our spatial representation make it suitable for secondary anatomically-based tasks, such as quantifying the left ventricular volume, which not only can be accurately predicted, but also improves the accuracy of the primary task in a multi-task training scenario. We also showed that the factorisation of the presented model can be used in multimodal learning, where both anatomical and imaging information can be encoded to create synthetic MR and CT images, using even small fractions of CT and MR input images, respectively.

The broader significance of our work is the disentanglement of medical image data into meaningful spatial and non-spatial factors. This intuitive factorisation does not require the specific network architecture choices used here, but is general in nature and thus could be applied in diverse medical image analysis tasks. Factorisation facilitates manipulations of the latent space and, as such, probing and interpreting the model. Such interpretability is considered key to advancing the translation of machine learning methods into the clinic (which is perhaps why it has recently been emphasised with dedicated MICCAI workshops, http://interpretable-ml.org/miccai2018tutorial/).

Our work has some limitations that inspire future directions. We envision that extensions to 3D (in lieu of 2D), and the explicit learning of hierarchical factors that better capture semantic information (both in terms of anatomical and modality representations), would further improve the applicability of our approach in several domains such as brain imaging (which benefits from a 3D view) and abdominal imaging. This work further encourages future extensions to improve the fidelity of reconstructed images by explicitly modelling image texture, which would benefit applications in ultrasound. This can be achieved with the design of more powerful decoders, although how best to maintain the balance between the semantics of the spatial representation and the quality of the reconstruction is an open question. Finally, future work includes extending the method to a completely unsupervised setting where no annotated examples are available.

Highlights.

Factorisation decomposes input into spatial anatomical and imaging factors.

Factors offer unique opportunity to interpret imaging data for many learning tasks.

Achieve semi-supervised segmentation using a fraction of labelled images needed.

Supervision can be obtained by combining multimodal data sources or auxiliary tasks.

Extensive results using four different cardiovascular MR and CT datasets.

Acknowledgements

This work was supported in part by the US National Institutes of Health (1R01HL136578-01) and UK EPSRC (EP/P022928/1), and used resources provided by the Edinburgh Compute and Data Facility (http://www.ecdf.ed.ac.uk/). S.A. Tsaftaris acknowledges the support of the Royal Academy of Engineering and the Research Chairs and Senior Research Fellowships scheme.

Footnotes

Conflict of Interest

We wish to confirm that there are no known conflicts of interest associated with this publication entitled “Disentangled Representation Learning in Cardiac Image Analysis” and there has been no significant financial support for this work that could have influenced its outcome.

We confirm that the manuscript has been read and approved by all named authors and that there are no other persons who satisfied the criteria for authorship which are not listed. We further confirm that the order of authors listed in the manuscript has been approved by all of us.

We understand that the corresponding author is the sole contact for the Editorial process (including Editorial Manager and direct communications with the office). He will be responsible for communicating with the other authors about submissions of revisions and final approval of proofs. We confirm that we have provided a current, correct email address which is accessible by the corresponding author and which has been configured to accept email from (agis.chartsias@ed.ac.uk).

Experimental results showed that having an additional segmentor network, instead of enforcing our spatial representation to contain the exact segmentation masks, improves the training stability of our method. Furthermore, it offers flexibility in that the same anatomical representation can be used for multiple tasks, such as in segmentation and the calculation of the left ventricular volume.

Note that while using FiLM prevents z from encoding spatial information, it does not prevent the case of posterior collapse i.e. that s encodes (all or part of) the modality information.

The multi-task objective in fact benefits the Dice score (statistically significant at the 5% level).

The results are based on a single split for ease of interpretation as between different splits we cannot relate the different z dimensions.

These subtle spatio-temporal differences can detect myocardial ischemia at rest as demonstrated in Bevilacqua et al. (2016); Tsaftaris et al. (2013).

It is possible to detect the modality from the anatomical factor alone. If there are systematic differences between the modalities, this can be exploited by a classifier for detection. However, in this case the modality information is not actually contained in the anatomy factor.

Note that in order to keep the correlation and the difference image in the same scale [−1, 1], we rescale the images from [−1, 1] to [0, 1], which does not have any effect on the results.


References

- Almahairi A, Rajeswar S, Sordoni A, Bachman P, Courville AC, 2018. Augmented CycleGAN: Learning many-to-many mappings from unpaired data, in: International Conference on Machine Learning.

- Azadi S, Fisher M, Kim V, Wang Z, Shechtman E, Darrell T, 2018. Multi-content GAN for few-shot font style transfer, in: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, p. 13.

- Bai W, Oktay O, Sinclair M, Suzuki H, Rajchl M, Tarroni G, Glocker B, King A, Matthews PM, Rueckert D, 2017. Semi-supervised learning for network-based cardiac MR image segmentation, in: Medical Image Computing and Computer-Assisted Intervention, Springer International Publishing, Cham: pp. 253–260.

- Bai W, Sinclair M, Tarroni G, Oktay O, Rajchl M, Vaillant G, Lee AM, Aung N, Lukaschuk E, Sanghvi MM, Zemrak F, Fung K, Paiva JM, Carapella V, Kim YJ, Suzuki H, Kainz B, Matthews PM, Petersen SE, Piechnik SK, Neubauer S, Glocker B, Rueckert D, 2018a. Automated cardiovascular magnetic resonance image analysis with fully convolutional networks. Journal of Cardiovascular Magnetic Resonance 20, 65. doi: 10.1186/s12968-018-0471-x.

- Bai W, Suzuki H, Qin C, Tarroni G, Oktay O, Matthews PM, Rueckert D, 2018b. Recurrent neural networks for aortic image sequence segmentation with sparse annotations, in: Frangi AF, Schnabel JA, Davatzikos C, Alberola-López C, Fichtinger G (Eds.), Medical Image Computing and Computer Assisted Intervention, Springer International Publishing, Cham: pp. 586–594.

- Bengio Y, Courville A, Vincent P, 2013a. Representation learning: A review and new perspectives. IEEE Transactions on Pattern Analysis and Machine Intelligence 35, 1798–1828. doi: 10.1109/TPAMI.2013.50.

- Bengio Y, Léonard N, Courville AC, 2013b. Estimating or propagating gradients through stochastic neurons for conditional computation. CoRR abs/1308.3432.

- Bernard O, Lalande A, Zotti C, Cervenansky F, Yang X, Heng P, Cetin I, Lekadir K, Camara O, Ballester MAG, Sanroma G, Napel S, Petersen S, Tziritas G, Grinias E, Khened M, Kollerathu VA, Krishnamurthi G, Rohé M, Pennec X, Sermesant M, Isensee F, Jäger P, Maier-Hein KH, Baumgartner CF, Koch LM, Wolterink JM, Išgum I, Jang Y, Hong Y, Patravali J, Jain S, Humbert O, Jodoin P, 2018. Deep learning techniques for automatic MRI cardiac multi-structures segmentation and diagnosis: Is the problem solved? IEEE Transactions on Medical Imaging 37, 2514–2525. doi: 10.1109/TMI.2018.2837502.

- Bevilacqua M, Dharmakumar R, Tsaftaris SA, 2016. Dictionary-driven ischemia detection from cardiac phase-resolved myocardial BOLD MRI at rest. IEEE Transactions on Medical Imaging 35, 282–293. doi: 10.1109/TMI.2015.2470075.

- Biffi C, Oktay O, Tarroni G, Bai W, De Marvao A, Doumou G, Rajchl M, Bedair R, Prasad S, Cook S, O’Regan D, Rueckert D, 2018. Learning interpretable anatomical features through deep generative models: Application to cardiac remodeling, in: Frangi AF, Schnabel JA, Davatzikos C, Alberola-López C, Fichtinger G (Eds.), Medical Image Computing and Computer Assisted Intervention, Springer International Publishing, Cham: pp. 464–471.

- Burgess CP, Higgins I, Pal A, Matthey L, Watters N, Desjardins G, Lerchner A, 2018. Understanding disentangling in β-VAE. NIPS Workshop on Learning Disentangled Representations.

- Chartsias A, Joyce T, Dharmakumar R, Tsaftaris SA, 2017. Adversarial image synthesis for unpaired multi-modal cardiac data, in: Simulation and Synthesis in Medical Imaging, Springer International Publishing, pp. 3–13.

- Chartsias A, Joyce T, Papanastasiou G, Semple S, Williams M, Newby D, Dharmakumar R, Tsaftaris SA, 2018. Factorised spatial representation learning: Application in semi-supervised myocardial segmentation, in: Medical Image Computing and Computer Assisted Intervention, Springer International Publishing, Cham: pp. 490–498.

- Chen X, Duan Y, Houthooft R, Schulman J, Sutskever I, Abbeel P, 2016. InfoGAN: Interpretable representation learning by information maximizing generative adversarial nets, in: Advances in neural information processing systems, Curran Associates, Inc. pp. 2172–2180.

- Cheplygina V, de Bruijne M, Pluim JPW, 2019. Not-so-supervised: A survey of semi-supervised, multi-instance, and transfer learning in medical image analysis. Medical Image Analysis 54, 280–296. doi: 10.1016/j.media.2019.03.009.

- Chollet F, et al., 2015. Keras. https://keras.io.

- Donahue C, Lipton ZC, Balsubramani A, McAuley J, 2018. Semantically decomposing the latent spaces of generative adversarial networks, in: International Conference on Learning Representations.

- Esser P, Sutter E, Ommer B, 2018. A variational u-net for conditional appearance and shape generation, in: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp. 8857–8866.

- Fidon L, Li W, Garcia-Peraza-Herrera LC, Ekanayake J, Kitchen N, Ourselin S, Vercauteren T, 2017. Scalable multimodal convolutional networks for brain tumour segmentation, in: Medical Image Computing and Computer-Assisted Intervention, Springer International Publishing, Cham: pp. 285–293.

- Gatys LA, Ecker AS, Bethge M, 2016. Image style transfer using convolutional neural networks, in: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp. 2414–2423. doi: 10.1109/CVPR.2016.265.

- Goodfellow I, Pouget-Abadie J, Mirza M, Xu B, Warde-Farley D, Ozair S, Courville A, Bengio Y, 2014. Generative adversarial nets, in: Advances in neural information processing systems, Curran Associates, Inc. pp. 2672–2680.

- Higgins I, Matthey L, Pal A, Burgess C, Glorot X, Botvinick M, Mohamed S, Lerchner A, 2017. beta-VAE: Learning basic visual concepts with a constrained variational framework, in: International Conference on Learning Representations.

- Hu Q, Szabo A, Portenier T, Zwicker M, Favaro P, 2018. Disentangling factors of variation by mixing them, in: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp. 3399–3407. doi: 10.1109/CVPR.2018.00358.

- Huang X, Liu MY, Belongie S, Kautz J, 2018. Multimodal unsupervised image-to-image translation, in: European Conference on Computer Vision, Springer International Publishing. pp. 179–196.

- Kim H, Mnih A, 2018. Disentangling by factorising, in: International Conference on Machine Learning, JMLR.org. pp. 2654–2663.

- Kingma DP, Ba J, 2015. Adam: A method for stochastic optimization, in: International Conference on Learning Representations.