Abstract

Understanding pathogen risks is a critically important consideration in the design of water treatment, particularly for potable reuse projects. As an extension to our published microbial risk assessment methodology for estimating infection risks associated with Direct Potable Reuse (DPR) treatment train unit process combinations, we (1) provide an updated compilation of pathogen density data in raw wastewater and dose-response models; (2) conduct a series of sensitivity analyses to consider potential risk implications of the updated data; (3) evaluate the risks associated with log credit allocations in the United States; and (4) identify the reference pathogen reductions needed to consistently meet currently applied benchmark risk levels. The sensitivity analyses illustrated changes in cumulative annual risk estimates, the significance of which depends on the pathogen group driving the risk for a given treatment train. For example, updated norovirus (NoV) raw wastewater values and a NoV dose-response approach capturing the full range of uncertainty increased risks for one of the treatment trains evaluated, but not the other. Additionally, compared with traditional log credit allocation approaches, our results indicate that the risk methodology provides more nuanced information about how consistently public health benchmarks are achieved. We estimate that viruses need to be reduced by 14 logs or more to consistently achieve currently applied benchmark levels of protection associated with DPR. The refined methodology, updated model inputs, and log credit allocation comparisons will be useful to regulators considering DPR projects and to design engineers as they consider which unit treatment processes should be employed for particular projects.

Keywords: direct potable reuse, quantitative microbial risk assessment, recycled water, reclaimed water, advanced water treatment, log credit allocations

1. Introduction

Interest in recycling water for potable purposes is growing worldwide as water resources become stressed due to population growth, urbanization, and droughts (Rice et al., 2013). Due to the nature of the source water in scenarios involving wastewater reuse, pathogen control is a critically important consideration in the design of unit treatment process combinations for both indirect potable reuse (IPR), which includes an environmental buffer (reservoir or groundwater augmentation), and direct potable reuse (DPR), which does not include an environmental buffer.

Currently no federal recommendations specifically address potable reuse in the United States. Rather, regulators are considering implementation of various unit treatment process combinations to treat wastewater for potable purposes (Dahl, 2014). For example, IPR projects in California apply the “12/10/10 Rule”, meaning viruses should be reduced by 12 logs through treatment, and Cryptosporidium and Giardia by 10 logs each (California Department of Public Health, 2011; NWRI, 2013). These log reduction values are intended to achieve a 1 infection per 10,000 people per year benchmark level of human health protection and were initially derived from the maximum reported densities of culturable enteric viruses, Giardia lamblia, and Cryptosporidium spp. found in raw sewage (Macler and Regli, 1993; U.S. EPA, 1998; Metcalf & Eddy, 2003; Sinclair et al., 2015). California is now considering the same microbial log reductions for DPR projects (Olivieri et al., 2016), which are also intended to achieve a risk benchmark of 1 infection per 10,000 people per year (NWRI, 2013; TWDB, 2014; NWRI, 2015).

We previously published a microbial risk assessment methodology to evaluate infection risks associated with various DPR treatment train combinations currently under consideration, which yielded several important findings (Soller et al., 2017a). First, there are quantitative human health-based advantages for DPR projects in which product water is introduced into the raw water supply upstream of a conventional drinking water treatment facility (raw water augmentation), compared to those in which product water is introduced directly into a potable water distribution system (drinking water augmentation). Second, cumulative annual risk estimates for any particular treatment train are driven by the highest daily risks for any of the individual reference pathogens. Thus, a single day of peak pathogen wastewater inputs can cause annual risks to exceed benchmark risk levels. Finally, proposed DPR project designs need to carefully consider reduction of both Cryptosporidium spp. and human enteric viruses, such as Norovirus (NoV) (Soller et al., 2017a). Both of these enteric pathogens are often found in high densities in raw sewage (Eftim et al., 2017), are infectious at low doses (Teunis et al., 2008), and exhibit attributes that allow them to persist in the environment and through treatment processes (Pouillot et al., 2015).

An advantage of our proposed risk methodology is that it can be iteratively refined as more information becomes available and can be applied to additional treatment train combinations. Since publication of our risk methodology, new information about NoV densities in raw wastewater (Eftim et al., 2017) and dose-response relationships for Cryptosporidium spp. (Messner and Berger, 2016), Adenovirus (AdV) (Teunis et al., 2016), and NoV (Soller et al., 2017b) have been published. The objectives of this work are: (1) to provide an updated compilation of pathogen density data in raw sewage, reductions across treatment, and dose-response functions; (2) to conduct a series of sensitivity analyses to consider potential risk implications using updated pathogen data; (3) to extend our analysis to evaluate State log reduction credit allocations, as proposed by the State of California (Mosher et al., 2016); and (4) to identify reference pathogen reductions needed to consistently meet currently applied benchmark risk levels.

2. Materials and Methods

2.1. DPR treatment trains

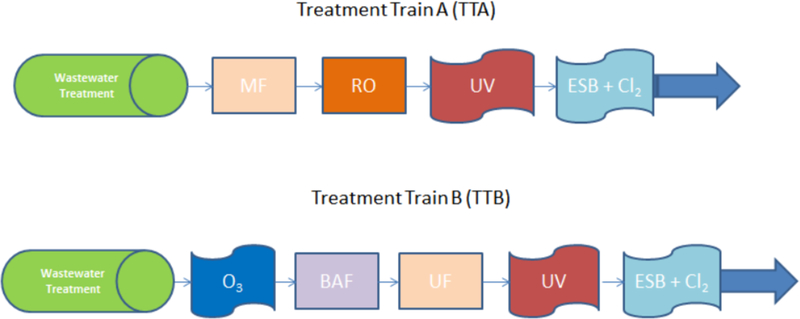

Two different DPR treatment trains were evaluated in the risk assessment sensitivity analysis (Figure 1). The first treatment train, referred to as TTA, consists of a conventional activated sludge wastewater treatment plant (WWTP) that produces non-disinfected secondary effluent feeding into an advanced water treatment facility (AWTF). The AWTF is composed of microfiltration, reverse osmosis, ultraviolet disinfection, and an engineered storage buffer with free chlorine disinfection (ESB+Cl2). Previous evaluations of TTA indicated that risks were predominantly driven by viral enteric pathogens (Soller et al., 2017a). The second treatment train, referred to as TTB, assumes identical WWTP treatment, but the AWTF consists of ozonation, biologically active filtration, ultrafiltration, ultraviolet disinfection, and an ESB+Cl2. In contrast to TTA, previous evaluations of TTB indicated that risks were predominantly driven by protozoan pathogens, such as Cryptosporidium spp. (Soller et al., 2017a).

Figure 1.

DPR treatment trains evaluated in this study. Legend: MF –Microfiltration, RO –Reverse Osmosis, UV –Ultraviolet disinfection, ESB + Cl2 –Engineered Storage Buffer with Free Chlorine, O3 –Ozonation, BAF –Biologically Active Filtration, UF –Ultrafiltration.

To identify the potential public health significance of a treatment train design choice, the base TTA and TTB configurations were also evaluated assuming a lower ultraviolet disinfection dose (12 millijoules per square centimeter, mJ/cm²) in lieu of the higher ultraviolet disinfection dose (800 mJ/cm²). The lower dose is consistent with conventional WWTP disinfection, while the higher dose is typically applied, often in combination with advanced oxidation, for the destruction of disinfection by-products (Gerrity et al., 2015). Our previous analysis indicated that the ultraviolet disinfection dose (low versus high) was the most sensitive parameter in the DPR risk model (Soller et al., 2017a).

2.2. QMRA Approach

The QMRA approach applied to the treatment train evaluations was described previously (Soller et al., 2017a). Briefly, a stochastic, static QMRA methodology was used to estimate infection risks from reference pathogens through ingestion of DPR product water for TTA and TTB (Figure 1). Using a two-step Monte Carlo simulation, we estimated a distribution of cumulative annual risks of infection due to all of the evaluated pathogens. The analysis proceeds step-wise by 1) calculating reference pathogen-specific daily risk estimates; 2) combining the pathogen-specific daily risks to generate cumulative daily risk estimates (n = 365); and 3) combining the cumulative daily risks to generate a cumulative annual risk estimate. This process is then repeated 1,000 times to generate a distribution of cumulative annual risk estimates.
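The three aggregation steps above can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: it treats pathogen-specific daily risks (and daily risks across the year) as independent, so they combine as 1 − Π(1 − p), and it replaces the full exposure and dose-response model with a hypothetical `toy_daily_risk` sampler whose parameters are arbitrary. It also does not attempt to reproduce the two-step (nested) structure of the published Monte Carlo simulation.

```python
import math
import random


def combine_independent(risks):
    """P(at least one infection) = 1 - prod(1 - p), assuming independent events."""
    log_survival = 0.0
    for p in risks:
        if p >= 1.0:
            return 1.0
        log_survival += math.log1p(-p)  # log(1 - p), accurate for very small p
    return -math.expm1(log_survival)    # 1 - exp(log_survival)


def annual_risk(pathogens, sample_daily_risk, rng, days=365):
    daily = []
    for _ in range(days):
        # Step 1: pathogen-specific daily risks for this simulated day.
        per_pathogen = [sample_daily_risk(name, rng) for name in pathogens]
        # Step 2: cumulative daily risk across all pathogens.
        daily.append(combine_independent(per_pathogen))
    # Step 3: cumulative annual risk across the 365 daily values.
    return combine_independent(daily)


def simulate(pathogens, sample_daily_risk, n_iter=1000, seed=1):
    """Repeat the annual calculation to build a distribution of annual risks."""
    rng = random.Random(seed)
    return [annual_risk(pathogens, sample_daily_risk, rng) for _ in range(n_iter)]


def toy_daily_risk(name, rng):
    # Hypothetical sampler: log10 daily risk ~ Normal(mean, 1); means are arbitrary.
    mean_log10 = {"NoV": -8.0, "Crypto": -9.0}[name]
    return 10 ** rng.gauss(mean_log10, 1.0)


annual = simulate(["NoV", "Crypto"], toy_daily_risk, n_iter=200)
share_meeting = sum(r < 1e-4 for r in annual) / len(annual)
```

Because the annual value is 1 − Π(1 − daily risk), one day with a daily risk of 10⁻⁴ is enough on its own to bring the annual risk to the 1 in 10,000 benchmark (for example, `combine_independent([1e-4] + [0.0] * 364)` is approximately 1e-4), which is why peak pathogen days dominate the annual estimates.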

2.3. Sensitivity Analysis – Raw Wastewater Pathogen Densities and Dose-Response Models

As with our previous work (Soller et al., 2017a), six reference pathogens (NoV, AdV, Cryptosporidium spp., Giardia lamblia, Campylobacter spp., and Salmonella enterica), representing the major enteric pathogen classes, were included in this study. A sensitivity analysis was conducted to investigate the impact of changing the following input parameters: 1) NoV density in raw wastewater; 2) NoV dose-response model; 3) AdV dose-response model; and 4) Cryptosporidium spp. dose-response model. Table 1 summarizes the dose-response relationships used in the previous risk analysis (Soller et al., 2017a) and the additional dose-response relationships evaluated within this study. The updated AdV dose-response model is specific to ingestion (Teunis et al., 2016), whereas the base model was developed for inhalation (Crabtree et al., 1997). The updated NoV dose-response model accounts for the full range of uncertainty in the available observed human trial data (Soller et al., 2017b). The updated Cryptosporidium spp. dose-response model allows for more uncertainty than the previously used fractional Poisson or exponential models, while also approximating the fractional Poisson model as the model parameter approaches 1 (Messner and Berger, 2016) (Table 1).

Table 1.

Reference pathogen dose-response relationships.

| Reference Pathogen | Base Dose-Response Models | Parameter Values | Additional Dose-Response Models Evaluated | Parameter Values |
|---|---|---|---|---|
| Adenovirus | Exponential (Crabtree et al., 1997) | 0.4172 | Hypergeometric (Teunis et al., 2016) | 5.11, 2.8 |
| Campylobacter jejuni | Hypergeometric (Teunis et al., 2005) | 0.024, 0.011 | NA | |
| Cryptosporidium spp. | Exponential (U.S. EPA, 2006); Fractional Poisson (Messner and Berger, 2016) | 0.09; 0.737 | Exponential with Immunity (Messner and Berger, 2016) | Values provided by M. Messner¹ |
| Giardia lamblia | Exponential (Rose et al., 1991) | 0.0199 | NA | |
| Norovirus | Fractional Poisson (Messner et al., 2014) | 0.72 | Soller et al. (2017b)² | |
| Salmonella enterica | Beta-Poisson (Haas et al., 1999) | 0.3126, 2884 | NA | |

¹ 30,000 Markov Chain Monte Carlo parameter pairs were provided and used in the analyses.

² This model uses both of the base dose-response models and randomly weights them in each iteration of the simulation.
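The closed-form models in Table 1 can be written as small functions. The sketch below is illustrative only: it covers the exponential, approximate beta-Poisson, and fractional Poisson forms with the Table 1 parameter values, assumes a mean aggregate size of 1 (disaggregated organisms) for the fractional Poisson model, and omits the hypergeometric models and the exponential-with-immunity model, which need a confluent hypergeometric function (for example SciPy's `scipy.special.hyp1f1`) or the MCMC parameter sets noted in the table footnotes.

```python
import math


def exponential(dose, r):
    """Exponential model: P = 1 - exp(-r * dose)."""
    return -math.expm1(-r * dose)


def approx_beta_poisson(dose, alpha, beta):
    """Approximate beta-Poisson model: P = 1 - (1 + dose / beta) ** -alpha."""
    return 1.0 - (1.0 + dose / beta) ** (-alpha)


def fractional_poisson(dose, p_susceptible, mean_aggregate=1.0):
    """Fractional Poisson: P = P_s * (1 - exp(-dose / mu)); mu = 1 is an assumption."""
    return p_susceptible * -math.expm1(-dose / mean_aggregate)


# Parameter values transcribed from Table 1 (closed-form models only).
MODELS = {
    "Adenovirus (exponential)": lambda d: exponential(d, 0.4172),
    "Cryptosporidium (exponential)": lambda d: exponential(d, 0.09),
    "Cryptosporidium (fractional Poisson)": lambda d: fractional_poisson(d, 0.737),
    "Giardia (exponential)": lambda d: exponential(d, 0.0199),
    "Norovirus (fractional Poisson)": lambda d: fractional_poisson(d, 0.72),
    "Salmonella (beta-Poisson)": lambda d: approx_beta_poisson(d, 0.3126, 2884.0),
}

for name, model in MODELS.items():
    print(f"{name}: P(infection | 1 organism) = {model(1.0):.4f}")
```

At the very low doses typical of treated product water, these curves are nearly linear (for example, the exponential model gives P ≈ r·d), so each additional log of treatment lowers the per-day risk by roughly a factor of ten.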

In addition to the dose-response models, we updated our previously published literature review (Soller et al., 2017a) of reference pathogen densities in raw wastewater and of reference pathogen reductions across each of the unit treatment processes under consideration, incorporating more recently published information (Table 2).

Table 2.

Pathogen densities in raw wastewater and log10 reductions across unit treatment processes.

| Process | Adenovirus | Campylobacter | Cryptosporidium | Giardia | Norovirus | Salmonella |
|---|---|---|---|---|---|---|
| Raw wastewater¹ | 1.75 to 3.84 | 2.95 to 4.60 | −0.52 to 4.38 | 0.51 to 4.95 | 4.72 (SD 1.52)² | 0.48 to 7.38 |
| CSWT³ | 0.9 to 3.2 | 0.6 to 2 | 0.7 to 1.5 | 0.5 to 3.3 | 0.8 to 3.7 | 1.3 to 1.7 |
| Ozonation | 4 | 4 | 1 | 3 | 5.4 | 4 |
| BAF | 0 to 0.6 | 0.5 to 2 | 0 to 0.85 | 0 to 3.88 | 0 to 1 | 0.5 to 2 |
| MF | 2.4 to 4.9 | 3 to 9 | 4 to 7 | 4 to 7 | 1.5 to 3.3 | 3 to 9 |
| RO | 2.7 to 6.5 | 4 | 2.7 to 6.5 | 2.7 to 6.5 | 2.7 to 6.5 | 4 |
| UF | 4.9 to 5.6 | 9 | 4.4 to 6 | 4.7 to 7.4 | 4.5 to 5.6 | 9 |
| UV, 800 mJ/cm² | 6 | 6 | 6 | 6 | 6 | 6 |
| UV, 12 mJ/cm² | 0 to 0.5 | 4 | 2 to 3.5 | 2 to 3.5 | 0.5 to 1.5 | 4 |
| CDWT³ | 1.5 to 2 | 3 to 4 | 1.4 to 3.9 | 0.3 to 4 | 1.5 to 2 | 2 to 3 |
| Cl2 | 4 to 5 | 4 | 0 | 0 to 0.5 | 1.1 to 3.9 | 4 |

¹ All values are in log10 units; raw wastewater densities are Adenovirus IU/L, Campylobacter MPN/L, Cryptosporidium oocysts/L, Giardia cysts/L, Norovirus copies/L, and Salmonella CFU/L.

² For Norovirus, the raw wastewater values shown are the mean and standard deviation of a normal distribution of log10 copies/L.

³ See legend in Figure 1 for abbreviations; CSWT = Conventional Secondary Wastewater Treatment; CDWT = Conventional Drinking Water Treatment.
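Because log reductions are additive across treatment barriers, the Table 2 ranges can be summed to bound the reduction a whole train might achieve. The sketch below does this for Norovirus through TTA. It is a simple bounds calculation, not the stochastic sampling used in the risk model, and it assumes that the train ends at ESB+Cl2 (CDWT excluded) and that the Cl2 row of Table 2 applies to the ESB+Cl2 step.

```python
# Norovirus (min, max) log10 reductions transcribed from Table 2;
# a single reported value is stored as (value, value).
NOROVIRUS = {
    "CSWT": (0.8, 3.7),
    "MF": (1.5, 3.3),
    "RO": (2.7, 6.5),
    "UV800": (6.0, 6.0),
    "UV12": (0.5, 1.5),
    "Cl2": (1.1, 3.9),
}

TTA_HIGH_UV = ["CSWT", "MF", "RO", "UV800", "Cl2"]
TTA_LOW_UV = ["CSWT", "MF", "RO", "UV12", "Cl2"]


def train_lrv_bounds(lrvs, train):
    """Sum per-process log10 reductions (logs are additive across barriers)."""
    low = sum(lrvs[step][0] for step in train)
    high = sum(lrvs[step][1] for step in train)
    return round(low, 2), round(high, 2)


print(train_lrv_bounds(NOROVIRUS, TTA_HIGH_UV))  # (12.1, 23.4)
print(train_lrv_bounds(NOROVIRUS, TTA_LOW_UV))   # (6.6, 18.9)
```

Swapping the 800 mJ/cm² step for the 12 mJ/cm² step lowers the lower bound by 5.5 logs, which is consistent with the ultraviolet disinfection dose being the dominant design choice for these trains.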

2.4. Evaluation of Log Reduction Credit Allocations

Allowable log reduction value (LRV) credits for various unit treatment processes currently employed by states in the United States were compared to log reductions found in the peer-reviewed literature. We then analyzed TTA and TTB using the allowable LRV credits (Table 3), in lieu of the range of literature-review values (Table 2). LRVs were applied as point estimates in our risk assessment simulations to obtain the cumulative annual risks for TTA and TTB. Simulations assumed that the treatment trains achieve no more reduction than the log reduction credited values. These simulations used the updated raw wastewater reference pathogen densities and newer dose-response models, as presented in Tables 1 and 2.

Table 3.

Log reduction credits for DPR unit processes currently used in California (adapted from Olivieri et al. (2016)).

| TTA Pathogen | CAS | MF | RO | High Dose UV | ESB+Cl2 | Total with High Dose UV | Total with Low Dose UV³ |
|---|---|---|---|---|---|---|---|
| Virus | 1.9 | 0 | 2 | 6 | 4 | 13.9 | 8.4 |
| Cryptosporidium | 1.2 | 4 | 2 | 6 | 0 | 13.2 | 9.2 |
| Giardia | 0.8 | 4 | 2 | 6 | 3 | 15.8 | 11.8 |
| Bacteria¹ | 1.9 | 3 | 2 | 6 | 4 | 16.9 | 14.9 |

| TTB Pathogen | CAS | O3 | BAF | UF | High Dose UV | ESB+Cl2 | Total with High Dose UV | Total with Low Dose UV³ |
|---|---|---|---|---|---|---|---|---|
| Virus | 1.9 | 6 | 0 | 0 | 6 | 4 | 17.9 | 12.4 |
| Cryptosporidium | 1.2 | 1 | 0 | 4 | 6 | 0 | 12.2 | 8.2 |
| Giardia² | 0.8 | 3 | 0 | 4 | 6 | 3 | 16.8 | 12.8 |
| Bacteria | 1.9 | 2 | 0 | 3 | 6 | 4 | 16.9 | 14.9 |

¹ Data not provided for CAS; assumed same as viruses.

² Data for BAF not provided; assumed same as Cryptosporidium.

³ Low dose UV log credits not found; assumed minimum of range from literature review (virus 0.5, Cryptosporidium and Giardia 2.0, bacteria 4.0).

CAS = Conventional Activated Sludge Secondary Treatment, MF = Microfiltration, RO = Reverse Osmosis, UV = Ultraviolet Disinfection, ESB+Cl2 = Engineered Storage Buffer with Chlorine, O3 = Ozonation, BAF = Biologically Active Filtration, UF = Ultrafiltration
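The Table 3 totals are sums of the unit-process credits, with the low dose UV column substituting the footnote 3 values for the 6-log high dose UV credit. The sketch below reproduces that bookkeeping as a consistency check; the values are transcribed from the table, and the dictionary layout is just one convenient choice.

```python
# Unit-process log reduction credits transcribed from Table 3 (high dose UV).
TTA = {
    "Virus":           {"CAS": 1.9, "MF": 0, "RO": 2, "UV": 6, "ESB+Cl2": 4},
    "Cryptosporidium": {"CAS": 1.2, "MF": 4, "RO": 2, "UV": 6, "ESB+Cl2": 0},
    "Giardia":         {"CAS": 0.8, "MF": 4, "RO": 2, "UV": 6, "ESB+Cl2": 3},
    "Bacteria":        {"CAS": 1.9, "MF": 3, "RO": 2, "UV": 6, "ESB+Cl2": 4},
}
TTB = {
    "Virus":           {"CAS": 1.9, "O3": 6, "BAF": 0, "UF": 0, "UV": 6, "ESB+Cl2": 4},
    "Cryptosporidium": {"CAS": 1.2, "O3": 1, "BAF": 0, "UF": 4, "UV": 6, "ESB+Cl2": 0},
    "Giardia":         {"CAS": 0.8, "O3": 3, "BAF": 0, "UF": 4, "UV": 6, "ESB+Cl2": 3},
    "Bacteria":        {"CAS": 1.9, "O3": 2, "BAF": 0, "UF": 3, "UV": 6, "ESB+Cl2": 4},
}
# Low dose UV credits assumed in footnote 3 of Table 3.
LOW_DOSE_UV = {"Virus": 0.5, "Cryptosporidium": 2.0, "Giardia": 2.0, "Bacteria": 4.0}


def credited_totals(train):
    """Return {pathogen: (total with high dose UV, total with low dose UV)}."""
    totals = {}
    for pathogen, credits in train.items():
        high = sum(credits.values())
        low = high - credits["UV"] + LOW_DOSE_UV[pathogen]
        totals[pathogen] = (round(high, 1), round(low, 1))
    return totals


for name, train in (("TTA", TTA), ("TTB", TTB)):
    print(name, credited_totals(train))
```

Because the credits enter the risk model as point estimates, every simulated day receives exactly these reductions, so day-to-day variability in the simulated risks comes only from the raw wastewater densities and the dose-response models.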

2.5. Evaluation of Pathogen Log Reduction Targets Needed to Consistently Meet Benchmark Risk Levels

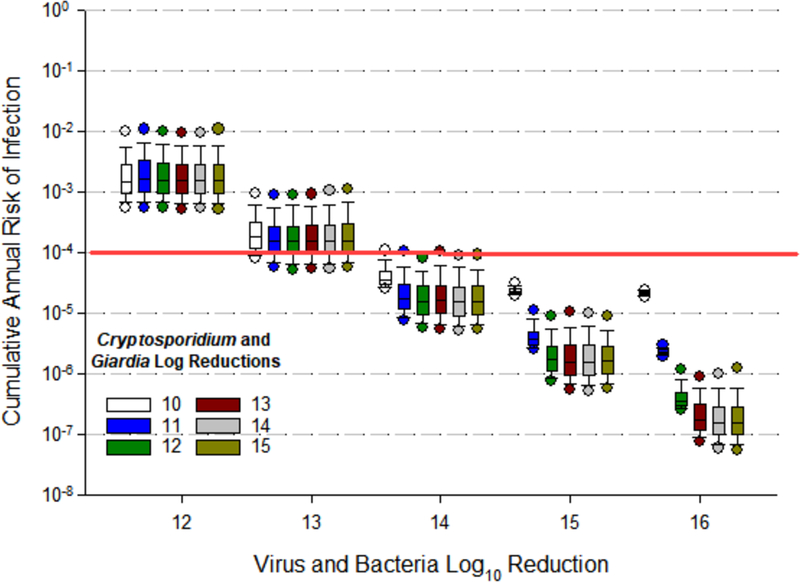

We also evaluated the amount of log reduction per pathogen class (virus, protozoa, bacteria) required to consistently achieve the benchmark risk level of 1 infection per 10,000 people per year. In this analysis, we estimated distributions of cumulative annual risks of infection (based on 1,000 simulations) associated with viral and bacterial reductions ranging from 12 to 16 logs, while also varying the log reductions for Cryptosporidium and Giardia, from 10 to 15 logs. The updated raw wastewater pathogen densities (Table 2) and dose-response relationships (Table 1) were applied in these simulations, but the log reductions for the pathogen classes were varied as point estimates.
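The log reduction sweep described above can be sketched as a grid of point-estimate reductions, each run through a small Monte Carlo loop. This is a deliberately reduced, hypothetical version: it models only NoV and Cryptosporidium, assumes 2 L/day of ingestion, draws Cryptosporidium log10 densities uniformly between the Table 2 minimum and maximum, assumes a mean aggregate size of 1 in the NoV fractional Poisson model, uses only 100 iterations, and omits bacteria, Giardia, and the other reference pathogens. Its output will therefore not reproduce Table 4.

```python
import math
import random

BENCHMARK = 1e-4   # 1 infection per 10,000 people per year
VOLUME_L = 2.0     # hypothetical daily ingestion volume (L/day)


def p_inf_nov(dose):
    # Fractional Poisson, P = 0.72 (Table 1); mean aggregate size assumed 1.
    return 0.72 * -math.expm1(-dose)


def p_inf_crypto(dose):
    # Exponential, r = 0.09 (Table 1).
    return -math.expm1(-0.09 * dose)


def annual_risk(virus_lrv, protozoa_lrv, rng, days=365):
    log_survival = 0.0
    for _ in range(days):
        # NoV: log10 density ~ Normal(4.72, 1.52) copies/L (Table 2).
        nov_dose = 10 ** (rng.gauss(4.72, 1.52) - virus_lrv) * VOLUME_L
        # Cryptosporidium: log10 density ~ Uniform(-0.52, 4.38) (assumption).
        crypto_dose = 10 ** (rng.uniform(-0.52, 4.38) - protozoa_lrv) * VOLUME_L
        for p in (p_inf_nov(nov_dose), p_inf_crypto(crypto_dose)):
            if p >= 1.0:
                return 1.0
            log_survival += math.log1p(-p)
    return -math.expm1(log_survival)


def fraction_meeting_benchmark(virus_lrv, protozoa_lrv, n_iter=100, seed=7):
    """Share of simulated years whose cumulative risk is below the benchmark."""
    rng = random.Random(seed)
    risks = [annual_risk(virus_lrv, protozoa_lrv, rng) for _ in range(n_iter)]
    return sum(r < BENCHMARK for r in risks) / n_iter


for virus_lrv in (12, 14):
    for protozoa_lrv in (10, 12):
        share = fraction_meeting_benchmark(virus_lrv, protozoa_lrv)
        print(f"virus {virus_lrv}, protozoa {protozoa_lrv}: {share:.0%} below benchmark")
```

Reusing the same seed for every grid point means each cell sees identical random draws, so differences between cells reflect only the log reduction values rather than Monte Carlo noise.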

3. Results

3.1. Updated Pathogen Densities in Raw Wastewater and Reductions Across Unit Treatment Processes

A compilation of reference pathogen densities and reductions across each of the DPR unit treatment processes is provided in Table 2. Unless described below, the values in Table 2 are consistent with those described in Soller et al. (2017a).

In raw wastewater, maximum densities of 9.0 × 10^4 cysts/L for Giardia spp. (Wallis et al., 1996), 2.4 × 10^4 oocysts/L for Cryptosporidium spp. (Robertson et al., 2006), and 2.4 × 10^7 colony forming units/L for Salmonella spp. (Jimenez-Cisneros et al., 2001) were used in our analysis. Additionally, to account for the effect of seasonality, NoV concentrations in raw wastewater are now modeled as a normal distribution of log10 densities with a mean of 4.7 and a standard deviation of 1.5 log10 copies/L (Eftim et al., 2017). Log reduction values for NoV after disinfection with free chlorine have also been updated to a minimum of 1.1 and a maximum of 3.9 logs (Pouillot et al., 2015).
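The maxima cited above can be checked against the upper bounds in Table 2 by converting them to log10, and the NoV distribution can be sampled directly. In this sketch the seed and sample size are arbitrary choices; the sampled median and 95th percentile should land near the analytic values of about 4.72 and about 7.22 log10 copies/L (4.72 + 1.645 × 1.52).

```python
import math
import random
import statistics

# Maximum raw wastewater densities cited in the text, converted to log10
# for comparison with the upper bounds in Table 2.
MAXIMA = {
    "Giardia (cysts/L)": 9.0e4,
    "Cryptosporidium (oocysts/L)": 2.4e4,
    "Salmonella (CFU/L)": 2.4e7,
}
for name, density in MAXIMA.items():
    print(f"{name}: log10 = {math.log10(density):.2f}")  # 4.95, 4.38, 7.38

# NoV: normal distribution of log10 copies/L (mean 4.72, SD 1.52; Table 2).
rng = random.Random(2017)
log10_nov = [rng.gauss(4.72, 1.52) for _ in range(100_000)]
median = statistics.median(log10_nov)
p95 = statistics.quantiles(log10_nov, n=20)[-1]  # last of 19 cut points = 95th percentile
print(f"median {median:.2f}, 95th percentile {p95:.2f} log10 copies/L")
```

On the arithmetic scale, the 95th percentile day carries roughly 300 times more NoV than the median day, which is why the spread of the distribution, and not only its center, matters for cumulative annual risk.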

3.2. Sensitivity Analyses - Raw Wastewater Pathogen Densities and Dose-Response Models

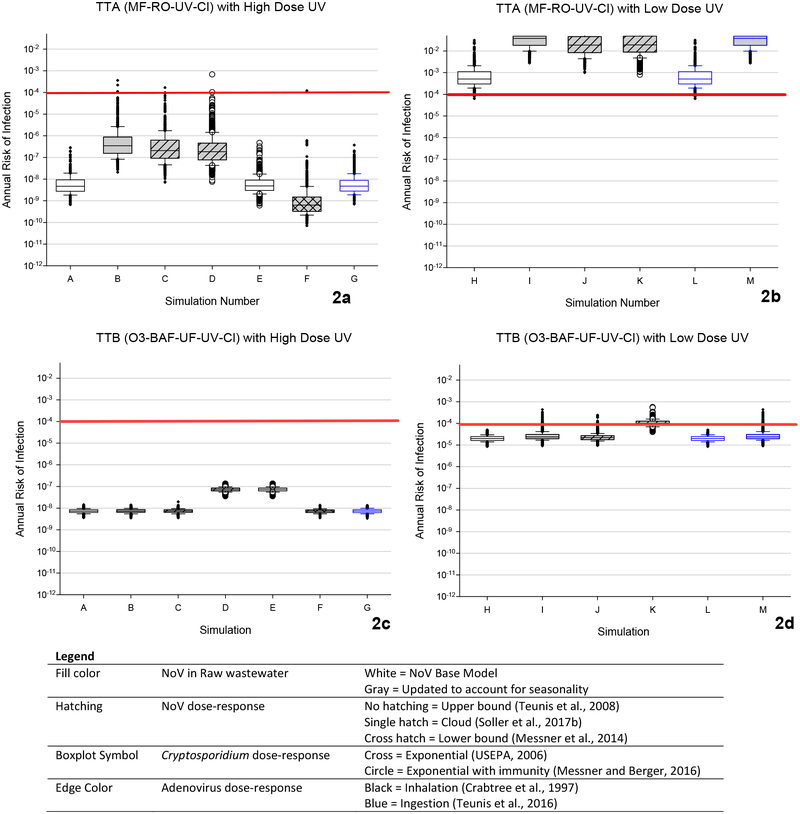

A total of thirteen simulations were run for each treatment train to evaluate the model's sensitivity to input parameters, as described in the Methods section above. Figures 2a and 2b present the estimated distributions of cumulative annual risks of infection (based on 1,000 simulations) for TTA. As shown, a median increase of approximately 0.75 logs of NoV in raw wastewater (from 10^3.76 to 10^4.7 copies/L) resulted in nearly a 2 log increase in cumulative annual risks (Figures 2a and 2b, compare simulation A to B, and H to I). Additionally, when accounting for the full uncertainty in the NoV dose-response model (simulation C), the resulting cumulative annual risk estimates were closer to those associated with the upper bound dose-response model (simulation B) than to those associated with the lower bound dose-response model (simulation F). For TTA, the model output was relatively insensitive to changes in the Cryptosporidium spp. dose-response model (Figures 2a and 2b, compare simulations C to D, and A to E) and to the AdV dose-response model (Figure 2a, compare simulations A and G). Our previous work indicated that risks in this treatment train are driven by NoV, and thus, aside from the ultraviolet disinfection dose employed, results for this treatment train were most sensitive to the updated NoV raw wastewater information (Eftim et al., 2017). All simulations that used the low dose ultraviolet disinfection configuration resulted in risks substantially above the benchmark risk level of 1 infection per 10,000 people per year (Figure 2b).

Figure 2.

Annual risk of infection for DPR treatment trains based on updated reference pathogen densities and dose-response relationships. See legend in Figure 1 for abbreviations.

The results for TTB are provided in Figures 2c and 2d. Increased NoV densities in raw wastewater have a much smaller effect on annual risks for TTB than for TTA (compare simulations A and B for both TTA and TTB). Further, the model output for TTB with the high dose ultraviolet disinfection configuration was relatively insensitive to the NoV dose-response model (Figure 2c, compare simulations B, C, and F). However, for the low dose ultraviolet disinfection configuration, risk estimates increased relative to the base simulation, and in some cases simulation results were above the benchmark risk level of 1 infection per 10,000 people per year (Figure 2d, compare simulation H to I, J, K, and M). Our previous work indicated that risks in this treatment train are driven by Cryptosporidium spp., and thus, aside from the ultraviolet disinfection dose employed, results for this treatment train were more sensitive to the Cryptosporidium spp. dose-response model than to any other parameter evaluated (Figure 2c, compare simulations C to D, and A to E).

3.3. Evaluation of Log Reduction Credit Allocations

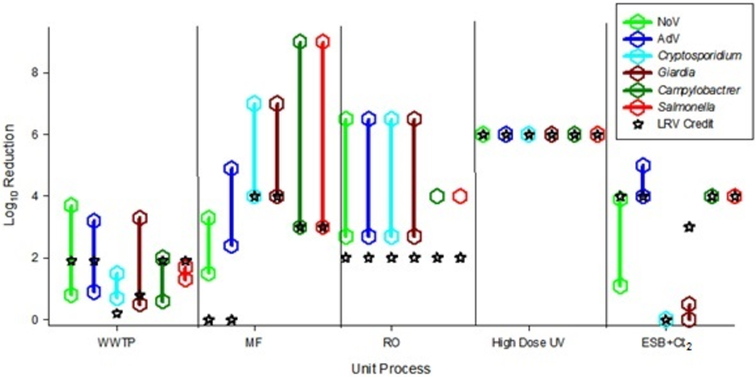

Table 3 summarizes the LRV credits currently used in California (Olivieri et al., 2016) for the unit treatment processes in TTA and TTB. These credits are compared graphically with the pathogen log reduction ranges found in our literature review in Figure 3.

Figure 3.

Comparison of log reduction values from literature review and unit process credit values. Symbols represent minimum and maximum values from literature review. See legend in Figure 1 for abbreviations.

A comparison of the LRV credit allocations (Olivieri et al., 2016) with our literature review results indicates that the LRV credit values are generally at the low end of the literature values, as would be anticipated. One notable exception is that the virus LRV credit for free chlorine disinfection is at the high end of the literature-based range (Figure 3).

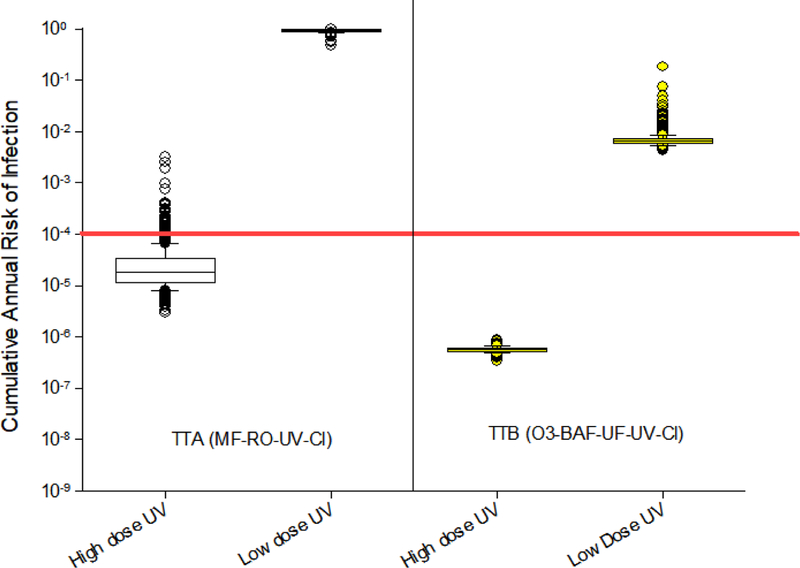

To evaluate whether treatment trains would meet benchmark risk levels when allowable LRV credits were applied to our risk methodology, we analyzed TTA and TTB using the LRV credits shown in Table 3. Reference pathogen raw wastewater densities summarized in Table 2 were applied to these simulations, along with all newer dose-response relationships (Table 1). Simulation results evaluating the cumulative annual risks associated with the allowable LRV credits are presented in Figure 4. For both TTA and TTB, the predicted cumulative annual risks associated with the allowable LRV credits are substantially higher than those associated with the literature-based log reduction values (compare Figure 4 with high dose ultraviolet disinfection to Figures 2a and 2c, and Figure 4 with low dose ultraviolet disinfection to Figures 2b and 2d). Low dose ultraviolet disinfection configurations for both TTA and TTB always exceeded the benchmark risk level of 1 infection per 10,000 people per year. With high dose ultraviolet disinfection, the benchmark risk level was exceeded approximately 20% of the time for TTA and never for TTB.

Figure 4.

Predicted cumulative annual risks of infection for DPR treatment trains assuming California LRV credit reductions. See legend in Figure 1 for abbreviations.

3.4. Pathogen Log Reduction Targets Needed to Consistently Meet Benchmark Risk Levels

The final set of simulations determined the amount of reference pathogen reduction that would be needed to consistently achieve California's IPR/DPR benchmark risk level of 1 infection per 10,000 people per year (Figure 5, Table 4). Figure 5 presents the estimated distributions of cumulative annual risks of infection (based on 1,000 simulations) associated with viral reductions ranging from 12 to 16 logs (x-axis) as a function of Cryptosporidium and Giardia log reductions ranging from 10 to 15 logs (shown as different colored boxes). Results indicate that the benchmark is consistently achieved only at viral reductions of 14 logs or greater (Table 4, Figure 5). At 13 logs of virus reduction, less than half of the simulations had annual risks below 1 infection per 10,000 people per year. At 14 logs of virus reduction and 11 or more logs of Cryptosporidium and Giardia reduction, approximately 95% of the simulations had annual risks below 1 infection per 10,000 people per year. At 15 or more logs of virus reduction and 11 or more logs of Cryptosporidium and Giardia reduction, nearly 100% of the simulations had annual risks below 1 infection per 10,000 people per year. The commonly recommended LRVs of 12 logs for virus, 10 logs for Cryptosporidium, and 10 logs for Giardia did not achieve the benchmark level in any of the simulations.

Figure 5.

Cumulative annual risks of infection associated with variable pathogen log reductions. Boxplots represent the distribution of cumulative annual risks corresponding to a specified log10 reduction of virus and bacteria (x-axis) and protozoa (Cryptosporidium and Giardia, colored boxes). For example, the farthest left blue box in the graph presents the distribution of cumulative annual risks corresponding to 12 logs of virus and bacteria reduction and 11 logs of protozoa (Cryptosporidium and Giardia) reduction. Edges of the boxes represent 25th and 75th percentiles. Circles below and above the boxes represent 5th and 95th percentiles, respectively.

Table 4.

Percent of simulations with cumulative annual infection risks less than the benchmark of 1 infection per 10,000 people per year.

| Virus and Bacteria Log10 Reduction (rows) / Giardia and Cryptosporidium Log10 Reduction (columns) | 10 | 11 | 12 | 13 | 14 | 15 |
|---|---|---|---|---|---|---|
| 12 | 0 | 0 | 0 | 0 | 0 | 0 |
| 13 | 0 | 22 | 28 | 24 | 28 | 29 |
| 14 | 5 | 94 | 95 | 95 | 95 | 95 |
| 15 | 27 | 99.7 | 99.7 | 100 | 100 | 100 |
| 16 | 36 | 100 | 100 | 100 | 100 | 100 |

4. Discussion

In this paper, we updated prior work with new information on several raw wastewater pathogen densities (Wallis et al., 1996; Jimenez-Cisneros et al., 2001; Robertson et al., 2006; Eftim et al., 2017), pathogen reductions (Pouillot et al., 2015), and the latest dose-response relationships for NoV, AdV, and Cryptosporidium spp. (Messner and Berger, 2016; Teunis et al., 2016; Soller et al., 2017b). In a sensitivity analysis, we applied the updated information to two treatment train configurations (TTA and TTB), each at low and high ultraviolet disinfection doses. TTA and TTB were chosen because they represent treatment trains with and without reverse osmosis, and because different pathogen classes drive risks for the two configurations. One important finding, consistent with our previous work, was that treatment trains employing low doses of ultraviolet disinfection (12 mJ/cm²) do not achieve the benchmark risk level of 1 infection per 10,000 people per year (Soller et al., 2017a). Rather, high dose ultraviolet disinfection is a critical unit process for meeting this benchmark. Further, ultraviolet disinfection dose was the single most important parameter in our sensitivity analyses.

In our risk analyses, high dose ultraviolet disinfection is assumed to achieve a 6 log reduction of each reference pathogen (Gerba et al., 2002; U.S. EPA, 2006). No data are currently available to better characterize the efficacy of high dose ultraviolet disinfection; it is possible that pathogen reductions are greater and that risks are overestimated in these scenarios. However, to date, greater pathogen reductions have not been demonstrated.

In our sensitivity analyses, the updated NoV densities increased cumulative annual risks by approximately two orders of magnitude for TTA with the high dose ultraviolet disinfection configuration (Figure 2a, simulations A and B). This increase was caused not only by the higher NoV mean value, but also by the greater variance (log10 SD) associated with published NoV raw wastewater densities (Eftim et al., 2017). This observation highlights the importance of accounting for the full distribution of raw wastewater pathogen densities in microbial risk assessments, not just the mean, maximum, or another point-estimate value. Additionally, the updated NoV raw wastewater densities applied to TTA and TTB account for the seasonality of NoV, which often peaks during the colder winter and spring months (Eftim et al., 2017; Gerba et al., 2017). Our results indicate that treatment train designs should also account for seasonal peaks of NoV, particularly if raw wastewater is to be used as the source water for potable reuse.
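Why the variance matters, and not just the mean: at low doses most dose-response curves are close to linear (for the exponential model, P ≈ r·d), so the expected daily risk tracks the arithmetic mean density. For a normal distribution of log10 densities with mean μ and standard deviation σ, that arithmetic mean is 10^(μ + σ² ln(10)/2). The sketch below compares two spreads around the same log10 mean; the σ = 0.5 case is a hypothetical comparison value, not a value from this study.

```python
import math


def log10_arithmetic_mean(mu_log10, sd_log10):
    """log10 of E[10**X] for X ~ Normal(mu_log10, sd_log10**2)."""
    return mu_log10 + (sd_log10 ** 2) * math.log(10) / 2


# Same log10 mean (4.72, Table 2), different spreads.
for sd in (0.5, 1.52):
    print(sd, round(log10_arithmetic_mean(4.72, sd), 2))  # 5.01, then 7.38
```

With the same log10 mean, widening the spread from 0.5 to 1.52 log10 units raises the arithmetic mean density, and therefore the expected low-dose risk, by more than two orders of magnitude.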

Our sensitivity analyses also explored the impact of newer dose-response models for NoV, AdV, and Cryptosporidium spp. For NoV, we evaluated an approach that includes the full spectrum of observed results from all available clinical trials (Soller et al., 2017b), as suggested by Van Abel et al. (2017). The simulation results indicate that this approach yields slightly lower risks than the more commonly used upper bound estimate (Teunis et al., 2008), but substantially greater risks than the lower bound dose-response model (Messner et al., 2014).

Our findings highlight that cumulative annual risks are driven by the highest daily pathogen raw wastewater values; this is especially true for NoV and Cryptosporidium spp. Evidence continues to emerge in the peer-reviewed literature regarding the importance of NoV with respect to adverse human health effects from exposure to waterborne pathogens (Soller et al., 2010; Viau et al., 2011; Arnold et al., 2017; Gerba et al., 2017), yet there is resistance among some practitioners to accounting for NoV risks in recycled water. For example, Pecson et al. (2017) cite uncertainty associated with the selection of a dose-response model, the lack of regulatory direction on the appropriate dose-response model to use, the absence of a culture method to assess NoV infectivity, and uncertainty related to the use of molecular methods to assess NoV infectivity as reasons for not including NoV in their DPR risk model. However, given that NoV is estimated to cause more illnesses annually than all other known pathogens combined (Mead et al., 1999; Scallan et al., 2011), and that newer dose-response approaches can capture the full range of uncertainty in the models (Soller et al., 2017b), we consider NoV important in the evaluation of DPR and other recycled water projects, and view the stated limitations as secondary to ensuring public health protection. This perspective is consistent with recent WHO Guidelines for potable reuse, which specifically use NoV as a reference pathogen for human enteric viruses (WHO, 2017). Although NoV is not readily culturable, qPCR to cell-culture ratios for other enteric viruses can be quite low (Francy et al., 2011). Moreover, because raw sewage contains recently excreted fecal matter, it has been suggested that most of the viruses detected, including those detected by qPCR, are infectious (Gerba et al., 2017). Additionally, Gerba et al. (2017) noted that because neither cell culture nor molecular methods can assess all of the potentially infectious viruses in wastewater, conservative estimates should be used to assess the virus load in untreated wastewater.

Our simulation results also highlight the risk management need to understand which pathogen group contributes to the greatest risk in any proposed treatment train. Results from the TTA indicate that the model output was relatively insensitive to the updated Cryptosporidium spp. dose-response and AdV dose-response models. This work illustrated that NoV was the most important pathogen in terms of risk for the TTA configuration. In contrast, the TTB configuration was somewhat more sensitive to the updated Cryptosporidium spp. dose-response model and was similarly insensitive to the AdV dose-response model. Unlike TTA, TTB was less sensitive to changes in NoV raw wastewater densities. This, and prior work, showed that Cryptosporidium spp. was the most important pathogen in terms of risk for this treatment train (Soller et al., 2017a).

An additional key finding from this work is that the currently recommended target reductions (e.g., the "12/10/10 Rule") do not appear to provide the reductions needed to achieve the targeted benchmark level of protection. Our simulations suggest that treatment trains achieving 12 logs of virus reduction and 10 logs of Cryptosporidium and Giardia reduction will not meet the benchmark of 1 infection per 10,000 people per year. In our analyses, 14 logs of virus reduction and 11 or more logs of Cryptosporidium and Giardia reduction were needed to achieve the benchmark of 1 infection per 10,000 people per year in approximately 95% of the simulations (Table 4). An additional log of virus reduction would be needed to reach the benchmark with greater confidence, in more than 95% of the simulations. We highlight that these estimates rest on a strong foundation, because they are based on the latest NoV data for raw wastewater (Pouillot et al., 2015; Eftim et al., 2017; Gerba et al., 2017) and account for the full uncertainty in the NoV dose-response relationship (Teunis et al., 2008; Atmar et al., 2014; Soller et al., 2017b; Van Abel et al., 2017). Until further information becomes available indicating that NoV genome copies in raw and treated wastewater are not infectious, we suggest that treating them as infectious and accounting for the full uncertainty associated with the NoV dose-response relationship is prudent and health protective, particularly in the case of DPR. Our findings are consistent with the conclusion of Gerba et al. (2017) that an additional 2 to 3 log reduction of viruses, above current recommendations, may be needed to ensure the safety of recycled water.

5. Conclusions

This work provides updated insights into the relative level of public health protection provided by DPR treatment trains with and without reverse osmosis. New reference pathogen raw wastewater data and dose-response model information were readily incorporated and yielded additional insights compared to previously published results (Soller et al., 2017a). This probabilistic, risk-based approach provides more nuanced information about how consistently public health benchmarks are achieved than the traditional log credit allocation approach. Annual risk estimates for any treatment train are driven by the days with the highest (peak) pathogen densities for any of the reference pathogens; thus, changes in raw wastewater pathogen densities can substantially influence cumulative annual risks, particularly if that pathogen group drives the risk for the treatment train under consideration. Finally, based on our simulations, the currently proposed pathogen reduction values of 12 logs of virus reduction and 10 logs of Giardia spp. and Cryptosporidium spp. reduction do not consistently achieve the intended benchmark level of protection associated with DPR.
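Why peak days dominate the annual estimate follows from how daily risks combine: with annual risk computed as 1 − ∏(1 − p_d), a few high-density days can account for nearly all of it when ordinary days carry much lower risk. The short sketch here uses made-up daily probabilities, not model output from this study, and compares the annual risk from all days with that from only the k highest-risk days; `annual_from_daily` and `peak_day_share` are hypothetical helper names, and independence of daily infection events is an assumption.

```python
import numpy as np


def annual_from_daily(p_daily: np.ndarray) -> float:
    """Annual infection probability from independent daily probabilities: 1 - prod(1 - p_d)."""
    return float(1.0 - np.prod(1.0 - np.asarray(p_daily, dtype=float)))


def peak_day_share(p_daily: np.ndarray, k: int) -> float:
    """Fraction of the annual risk reproduced by the k highest-risk days alone."""
    if k < 1:
        raise ValueError("k must be at least 1")
    total = annual_from_daily(p_daily)
    if total <= 0.0:
        raise ValueError("annual risk must be positive")
    top_k = np.sort(np.asarray(p_daily, dtype=float))[-k:]  # the k largest daily risks
    return annual_from_daily(top_k) / total


# Illustrative year (made-up values): 360 ordinary days and 5 peak days.
p = np.concatenate([np.full(360, 1e-9), np.full(5, 1e-5)])
print(f"annual risk: {annual_from_daily(p):.3e}")
print(f"share from 5 peak days: {peak_day_share(p, 5):.1%}")
```

In this made-up example the five peak days account for roughly 99% of the annual risk, which is why an update that raises the upper tail of a pathogen's raw wastewater densities can shift cumulative annual risk even when typical days change little.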

Acknowledgements

The research described in this article was funded by the U.S. EPA Office of Water, Office of Science and Technology under contract number EP-C-16-011 to ICF, LLC. This work has been subject to formal Agency review. The views expressed in this article are those of the authors and do not necessarily represent the views or policies of the U.S. Environmental Protection Agency. The authors gratefully acknowledge the valuable contributions of Michael Messner, Bob Bastian and Jamie Strong for their critical review of the manuscript and insightful comments.

Funding

This research did not receive any specific grant from funding agencies in the public, commercial, or not-for-profit sectors.

References

- Arnold BF, Schiff KC, Ercumen A, Benjamin-Chung J, Steele JA, Griffith JF et al. (2017) Acute illness among surfers following dry and wet weather seawater exposure. American Journal of Epidemiology 186: 866–875.

- Atmar RL, Opekun AR, Gilger MA, Estes MK, Crawford SE, Neill FH et al. (2014) Determination of the 50% human infectious dose for Norwalk virus. The Journal of Infectious Diseases 209: 1016–1022.

- California Department of Public Health (2011) Groundwater Replenishment Using Recycled Water. http://www.cdph.ca.gov/certlic/drinkingwater/Documents/Recharge/DraftRechargeReg-2011-11-21.pdf

- Crabtree KD, Gerba CP, Rose JB, and Haas CN (1997) Waterborne adenovirus: A risk assessment. Water Science and Technology 35: 1–6.

- Dahl R (2014) Advanced thinking: potable reuse strategies gain traction. Environmental Health Perspectives 122: A332–335.

- Eftim SE, Hong T, Soller J, Boehm A, Warren I, Ichida A, and Nappier SP (2017) Occurrence of norovirus in raw sewage - A systematic literature review and meta-analysis. Water Research 111: 366–374.

- Francy DS, Stelzer EA, Bushon RN, Brady AMG, Mailot BE, Spencer SK et al. (2011) Quantifying viruses and bacteria in wastewater—Results, interpretation methods, and quality control. Scientific Investigations Report. Reston, VA.

- Gerba CP, Gramos DM, and Nwachuku N (2002) Comparative inactivation of enteroviruses and adenovirus 2 by UV light. Applied and Environmental Microbiology 68: 5167–5169.

- Gerba CP, Betancourt WQ, and Kitajima M (2017) How much reduction of virus is needed for recycled water: A continuous changing need for assessment? Water Research 108: 25–31.

- Gerrity D, Pisarenko AN, Marti E, Trenholm RA, Gerringer F, Reungoat J, and Dickenson E (2015) Nitrosamines in pilot-scale and full-scale wastewater treatment plants with ozonation. Water Research 72: 251–261.

- Haas CN, Rose JB, and Gerba CP (1999) Quantitative microbial risk assessment. John Wiley & Sons.

- Jimenez-Cisneros BE, Maya-Rendon C, and Salgado-Velazquez G (2001) The elimination of helminth ova, faecal coliforms, Salmonella and protozoan cysts by various physicochemical processes in wastewater and sludge. Water Science and Technology 43: 179–182.

- Macler BA, and Regli S (1993) Use of microbial risk assessment in setting United-States drinking-water standards. International Journal of Food Microbiology 18: 245–256.

- Mead PS, Slutsker L, Dietz V, McCaig LF, Bresee JS, Shapiro C et al. (1999) Food-related illness and death in the United States. Emerging Infectious Diseases 5: 607–625.

- Messner MJ, and Berger P (2016) Cryptosporidium infection risk: results of new dose-response modeling. Risk Analysis 34: 1820–1829.

- Messner MJ, Berger P, and Nappier SP (2014) Fractional Poisson--a simple dose-response model for human norovirus. Risk Analysis 34: 1820–1829.

- Metcalf & Eddy (2003) Wastewater engineering, treatment and reuse, 4th ed. Boston, MA, USA: McGraw Hill.

- Mosher JJ, Vartanian GM, and Tchobanoglous G (2016) Potable Reuse Research Compilation: Synthesis of Findings. Project 15-01. Fountain Valley, CA: National Water Research Institute.

- NWRI (2013) Examining the Criteria for Direct Potable Reuse: Recommendations of an NWRI Independent Advisory Panel, Project 11-02. Fountain Valley, CA: National Water Research Institute.

- NWRI (2015) Framework for Direct Potable Reuse. Fountain Valley, CA: National Water Research Institute.

- Olivieri AW, Crook J, Anderson MA, Bull RJ, Drewes JE, Haas C et al. (2016) Expert Panel Final Report: Evaluation of the Feasibility of Developing Uniform Water Recycling Criteria for Direct Potable Reuse. Submitted August 2016 by the National Water Research Institute for the State Water Resources Control Board, Sacramento, CA. http://www.waterboards.ca.gov/drinking_water/certlic/drinkingwater/rw_dpr_criteria.shtml

- Pecson BM, Triolo SC, Olivieri S, Chen EC, Pisarenko AN, Yang CC et al. (2017) Reliability of pathogen control in direct potable reuse: Performance evaluation and QMRA of a full-scale 1 MGD advanced treatment train. Water Research 122: 258–268.

- Pouillot R, Van Doren JM, Woods J, Plante D, Smith M, Goblick G et al. (2015) Meta-Analysis of the reduction of norovirus and male-specific coliphage concentrations in wastewater treatment plants. Applied and Environmental Microbiology 81: 4669–4681.

- Rice J, Wutich A, and Westerhoff P (2013) Assessment of De Facto Wastewater Reuse across the US: trends between 1980 and 2008. Environmental Science & Technology 47: 11099–11105.

- Robertson LJ, Hermansen L, and Gjerde BK (2006) Occurrence of Cryptosporidium oocysts and Giardia cysts in sewage in Norway. Applied and Environmental Microbiology 72: 5297–5303.

- Rose JB, Haas CN, and Regli S (1991) Risk assessment and control of waterborne giardiasis. American Journal of Public Health 81: 709–713.

- Scallan E, Hoekstra RM, Angulo FJ, Tauxe RV, Widdowson MA, Roy SL et al. (2011) Foodborne illness acquired in the United States--major pathogens. Emerging Infectious Diseases 17: 7–15.

- Sinclair M, O’Toole J, Gibney K, and Leder K (2015) Evolution of regulatory targets for drinking water quality. Journal of Water and Health 13: 413–426.

- Soller JA, Eftim SE, Warren I, and Nappier SP (2017a) Evaluation of microbiological risks associated with direct potable reuse. Microbial Risk Analysis 5: 3–14.

- Soller JA, Bartrand T, Ashbolt NJ, Ravenscroft J, and Wade TJ (2010) Estimating the primary etiologic agents in recreational freshwaters impacted by human sources of faecal contamination. Water Research 44: 4736–4747.

- Soller JA, Schoen M, Steele JA, Griffith JF, and Schiff KC (2017b) Incidence of gastrointestinal illness following wet weather recreational exposures: Harmonization of quantitative microbial risk assessment with an epidemiologic investigation of surfers. Water Research 121: 280–289.

- Teunis P, Van den Brandhof W, Nauta M, Wagenaar J, Van den Kerkhof H, and Van Pelt W (2005) A reconsideration of the Campylobacter dose-response relation. Epidemiology and Infection 133: 583–592.

- Teunis PF, Schijven J, and Rutjes S (2016) A generalized dose-response relationship for adenovirus infection and illness by exposure pathway. Epidemiology and Infection 1: 1–13.

- Teunis PF, Moe CL, Liu P, Miller SE, Lindesmith L, Baric RS et al. (2008) Norwalk virus: How infectious is it? Journal of Medical Virology 80: 1468–1476.

- TWDB (2014) Direct Potable Reuse Resource Document. Austin, TX, USA: Texas Water Development Board.

- U.S. EPA (1998) Interim enhanced surface water treatment rule. Proposed rule. Federal Register. Washington, DC.

- U.S. EPA (2006) National primary drinking water regulations: Long term 2 enhanced surface water treatment rule (LT2ESWTR); Final Rule. 40 CFR Parts 9, 141 and 142. Federal Register 71: 654. Washington, DC: U.S. Environmental Protection Agency.

- Van Abel N, Schoen ME, Kissel JC, and Meschke JS (2017) Comparison of Risk Predicted by Multiple Norovirus Dose–Response Models and Implications for Quantitative Microbial Risk Assessment. Risk Analysis 37: 245–264.

- Viau EJ, Lee D, and Boehm AB (2011) Swimmer risk of gastrointestinal illness from exposure to tropical coastal waters impacted by terrestrial dry-weather runoff. Environmental Science & Technology 45: 7158–7165.

- Wallis PM, Erlandsen SL, Isaac-Renton JL, Olson ME, Robertson WJ, and van Keulen H (1996) Prevalence of Giardia cysts and Cryptosporidium oocysts and characterization of Giardia spp. isolated from drinking water in Canada. Applied and Environmental Microbiology 62: 2789–2797.

- WHO (2017) Potable reuse: Guidance for producing safe drinking-water. Geneva, Switzerland: World Health Organization.