Abstract

Background

Measuring care coordination in administrative data facilitates important research to improve care quality.

Objective

To compare shared patient networks constructed from administrative claims data across multiple payers.

Design

Social network analysis of pooled cross sections of physicians treating prevalent colorectal cancer patients between 2003 and 2013.

Participants

Surgeons, medical oncologists, and radiation oncologists identified from North Carolina Central Cancer Registry data linked to Medicare claims (N = 1735) and private insurance claims (N = 1321).

Main Measures

Provider-level measures included the number of patients treated, the number of providers with whom they share patients (by specialty), the extent of patient sharing with each specialty, and network centrality. Network-level measures included the number of providers and shared patients, the density of shared-patient relationships among providers, and the size and composition of clusters of providers with a high level of patient sharing.

Results

For 24.5% of providers, total patient volume rank differed by at least one quintile group between payers. Medicare claims missed 14.6% of all shared patient relationships between providers, but captured a greater number of patient-sharing relationships per provider compared with the private insurance database, even after controlling for the total number of patients (27.242 vs 26.044, p < 0.001). Providers in the private network shared a higher fraction of patients with other providers (0.226 vs 0.127, p < 0.001) compared to the Medicare network. Clustering coefficients for providers, weighted betweenness, and eigenvector centrality varied greatly across payers. Network differences led to some clusters of providers that existed in the combined network not being detected in Medicare alone.

Conclusion

Many features of shared patient networks constructed from a single-payer database differed from similar networks constructed from other payers’ data. Depending on a study’s goals, shortcomings of single-payer networks should be considered when using claims data to draw conclusions about provider behavior.

Electronic supplementary material

The online version of this article (10.1007/s11606-019-04978-9) contains supplementary material, which is available to authorized users.

KEY WORDS: network analysis, colorectal cancer

INTRODUCTION

Care coordination is defined as the organization of patient care activities between two or more participants involved in a patient’s care to facilitate the appropriate delivery of health care services.1 The Institute of Medicine has identified failures in care coordination as a potential cause of poor-quality care, including among cancer patients.2 Recognizing the importance of team-based care, policy makers are attempting to improve the structure of teams and interactions among team members through promotion of patient-centered medical homes,3, 4 survivorship care plans,5 and Accountable Care Organizations (ACOs).6

Network analysis provides a mathematical framework to study care coordination on a population level.7–15 Several studies have developed measures of coordination using patients shared among providers in administrative claims data from the Centers for Medicare and Medicaid Services (CMS).8–12, 16, 17 Shared patient networks are composed of providers as nodes and shared patients as edges between the nodes. One study found that claims-based networks represented provider communication patterns well.11 Other studies have shown such claims-based network features are correlated with better patient outcomes.8, 18

One major limitation of the literature to date is that shared patient networks have been constructed using data from only a single payer (usually Medicare) or a single health system. In the USA, no truly national, all-payer claims database exists. Many states have constructed all-payer claims databases that include Medicare, Medicaid, and private insurers. Several private companies have also aggregated national claims from private insurance companies, but these tend to lack claims from public insurance plans. Medicare claims are national and include the very policy-relevant population of elderly adults. However, there are many reasons to question how well a network constructed from one payer’s data can represent the true all-payer provider network. First, patient characteristics vary widely across payers; for example, Medicare covers mostly elderly people, while private insurance covers mostly working-age adults and their dependents, including children. These patient characteristics are known to be correlated with a host of factors influencing patient sharing among providers (e.g., access to care, health literacy). Second, not all providers accept all payers. Third, payers differ in payment structure and incentives to encourage coordination. For example, Medicare has introduced several mandatory bundled care payment models that offer fixed payments for an entire episode of care. The private insurance market has also seen several attempts to improve coordination of care through financial incentives, narrower networks of providers, and gatekeeper roles for primary care providers.19 Therefore, it is an open question how well provider networks from Medicare, or any single payer, represent relevant network features for the entire patient and provider population.

This paper compares shared-patient network features for networks constructed using Medicare and private insurance patients in North Carolina. We use as a case study the care of colorectal cancer patients in the state. These data have been used previously to associate network measures of care coordination with quality of care for colorectal cancer patients.18 As one of the first studies to compare networks across payers, we highlight how sensitive the distributions of key network variables used to assess quality of care in the literature are to the payer source of data.

METHODS

Data Sources

The primary data source was the Lineberger Comprehensive Cancer Center’s Cancer Information and Population Health Resource, which linked North Carolina Central Cancer Registry data to medical claims data from Medicare, Medicaid, and privately insured individuals.20 We focused on outpatient claims for 2003–2013. The claims data provide information on billed medical services delivered and include provider identifier and specialty, service dates, diagnoses, procedures, and treatments delivered. This study was approved by the University of North Carolina at Chapel Hill Institutional Review Board (#15-0315).

Analytic Cohort

To construct comprehensive measures of patient sharing among providers, we defined a “cohort” of prevalent colorectal cancer patients in the medical claims data. To develop the patient-sharing measures, we included any patients with at least one diagnosis code (International Classification of Diseases, 9th Revision [ICD-9]) for colorectal cancer (153 or 154) during 2003–2013. From all patient claims, we recorded the month, year, unique provider identifier (e.g., Unique Physician Identification Number or National Provider Identifier), and provider specialty for the encounter. Because a high proportion of Medicaid claims listed facility identifiers instead of provider identifiers, we restricted our analysis to the Medicare and private insurance claims.

We constructed a provider shared-patient network by first creating a list of all patient-provider encounters (i.e., a representation of a bipartite network between patients and providers). We restricted this list to physicians (surgeons, medical oncologists, and radiation oncologists) practicing in North Carolina to avoid spurious network connections from care received when traveling. We then collapsed this list to a provider-to-provider network, in which the weight of an edge between a pair of providers is the number of patients seen by both providers over the 11-year period. In sensitivity analysis, we replicated all analyses requiring at least two shared patients to create an edge between providers, a threshold that has been shown to improve specificity and sensitivity.16
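The collapse from patient-provider encounters to a weighted provider-to-provider network can be sketched as follows. This is a minimal illustration, not the authors' code: the encounter list, the function name `project_to_providers`, and the `min_shared` parameter are hypothetical, and only the Python standard library is used.

```python
from collections import Counter
from itertools import combinations

# Hypothetical (patient_id, provider_id) encounter pairs standing in for the
# claims-derived patient-provider list.
encounters = [
    ("p1", "A"), ("p1", "B"), ("p1", "C"),
    ("p2", "A"), ("p2", "B"),
    ("p3", "C"),
    ("p1", "A"),  # repeat visit; the patient must not be counted twice
]

def project_to_providers(encounters, min_shared=1):
    """Collapse patient-provider pairs into weighted provider-provider edges."""
    providers_by_patient = {}
    for patient, provider in encounters:
        providers_by_patient.setdefault(patient, set()).add(provider)

    weights = Counter()
    for providers in providers_by_patient.values():
        # Each patient adds 1 to every pair of providers who both saw them.
        for u, v in combinations(sorted(providers), 2):
            weights[(u, v)] += 1

    # Optional threshold, e.g. min_shared=2 for a sensitivity analysis.
    return {edge: w for edge, w in weights.items() if w >= min_shared}

edges = project_to_providers(encounters)
edges_min2 = project_to_providers(encounters, min_shared=2)
```

Here `edges` keeps every provider pair with at least one common patient, while `edges_min2` keeps only pairs with two or more, mirroring the threshold used in the sensitivity analysis.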

Network Statistics

We calculated payer-specific network statistics at several levels. We report edge-weighted statistics whenever feasible (e.g., degree, centrality measures), which gives more influence to patient-sharing relationships with more patients shared between providers. At the patient level, we report the total number of patients and the number of patients not shared among providers. Note that a single patient can be shared across multiple providers, therefore contributing to multiple edges. At the provider level, we report the total number of providers and the percent of providers from each specialty. We also report the following averages summarizing patient sharing at the provider level: number of times that providers from all three specialties shared at least one patient (edges including all three specialties), number of times that providers shared at least one patient with a provider of a different specialty (edges including at least two specialties), average provider degree (i.e., number of other providers with whom a provider shares patients), average provider weighted degree (i.e., number of instances of patient sharing with other providers, also known as strength), patient volume, and average fraction of a provider’s patients who are shared with any other provider (i.e., shared volume).
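As a rough illustration of the provider-level measures just defined (degree, weighted degree or strength, patient volume, and shared fraction), the sketch below computes them on toy data. The data and the function name `provider_stats` are hypothetical and are not taken from the study.

```python
from collections import defaultdict

# Hypothetical toy data: the set of providers each patient saw.
providers_by_patient = {
    "p1": {"A", "B", "C"},
    "p2": {"A", "B"},
    "p3": {"C"},
    "p4": {"A"},
}

def provider_stats(providers_by_patient):
    """Return degree, strength, volume, and shared fraction per provider."""
    neighbors = defaultdict(set)  # distinct providers sharing >= 1 patient
    strength = defaultdict(int)   # instances of patient sharing (weighted degree)
    volume = defaultdict(int)     # number of patients seen
    shared = defaultdict(int)     # patients also seen by another provider
    for providers in providers_by_patient.values():
        for u in providers:
            others = providers - {u}
            volume[u] += 1
            strength[u] += len(others)
            neighbors[u] |= others
            if others:
                shared[u] += 1
    return {
        u: {
            "degree": len(neighbors[u]),
            "strength": strength[u],
            "volume": volume[u],
            "shared_fraction": shared[u] / volume[u],
        }
        for u in volume
    }

stats = provider_stats(providers_by_patient)
# Patients seen by exactly one provider are "unshared".
n_unshared = sum(1 for p in providers_by_patient.values() if len(p) == 1)
```

Strength is accumulated per patient here; this equals the sum of edge weights over a provider's neighbors, because each shared patient contributes one unit to every edge it supports.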

A provider’s role within the network is captured by the following measures. A provider’s betweenness centrality is the percentage of shortest paths (paths with the fewest edges, calculated with and without weighting by edges) between any two providers within the network that cross through that provider. The specialty centralities are defined by the average betweenness centrality of providers within that specialty relative to the average betweenness centrality of providers outside of that specialty. Hence, the specialty centralities provide a relative comparison of how important a given specialty is to information flow across the network. We also report alternative measures of centrality including closeness centrality (reciprocal of the sum of distances between that provider and every other provider within the network taking the shortest path), eigenvector centrality (assesses the influence of a provider based on the number of neighbors a provider has and how influential each of them is), and pagerank centrality (similar to eigenvector centrality but gives each provider an amount of weight at baseline). We also examine several other metrics to characterize the different networks. For a physician sharing patients separately with two other physicians, the clustering coefficient is the fraction of these cases in which the two other physicians also share a patient (i.e., fraction of closed triangles out of all triplets incident to a provider). The global clustering coefficient represents the proportion of closed triangles in the entire network (i.e., the proportion of groupings of three physicians with at least two edges present that have three edges). The average clustering coefficient averages the clustering coefficient over all the physicians, which tends to give equal weight to lower-degree providers. The size of the largest connected component is the size of the largest set of providers such that for any pair of physicians in the set there exists a path between the pair (i.e., the largest subgraph of the network whose nodes are contiguously connected).
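The clustering and connectivity measures described above can be illustrated with a small unweighted sketch. The toy adjacency map and function names are hypothetical. Treating providers with fewer than two neighbors as having a clustering coefficient of zero is an assumption of this sketch, not something stated in the text.

```python
from itertools import combinations

# Hypothetical undirected provider network as an adjacency map.
adj = {
    "A": {"B", "C", "D"},
    "B": {"A", "C"},
    "C": {"A", "B"},
    "D": {"A"},
    "E": {"F"},
    "F": {"E"},
}

def closed_pairs(adj, u):
    """Count pairs of u's neighbors that are themselves connected."""
    return sum(1 for v, w in combinations(adj[u], 2) if w in adj[v])

def local_clustering(adj, u):
    k = len(adj[u])
    if k < 2:
        return 0.0  # assumption: no triplets means coefficient 0
    return closed_pairs(adj, u) / (k * (k - 1) / 2)

def average_clustering(adj):
    return sum(local_clustering(adj, u) for u in adj) / len(adj)

def global_clustering(adj):
    """Closed triplets divided by all connected triplets in the network."""
    triplets = sum(len(adj[u]) * (len(adj[u]) - 1) // 2 for u in adj)
    closed = sum(closed_pairs(adj, u) for u in adj)
    return closed / triplets if triplets else 0.0

def largest_component_size(adj):
    seen, best = set(), 0
    for start in adj:
        if start in seen:
            continue
        stack, size = [start], 0
        seen.add(start)
        while stack:  # depth-first traversal of one component
            node = stack.pop()
            size += 1
            for nb in adj[node]:
                if nb not in seen:
                    seen.add(nb)
                    stack.append(nb)
        best = max(best, size)
    return best
```

In this toy network the average coefficient (7/18) is lower than the global coefficient (0.6) because the average gives the low-degree providers D, E, and F the same weight as the others.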

Statistical Analysis

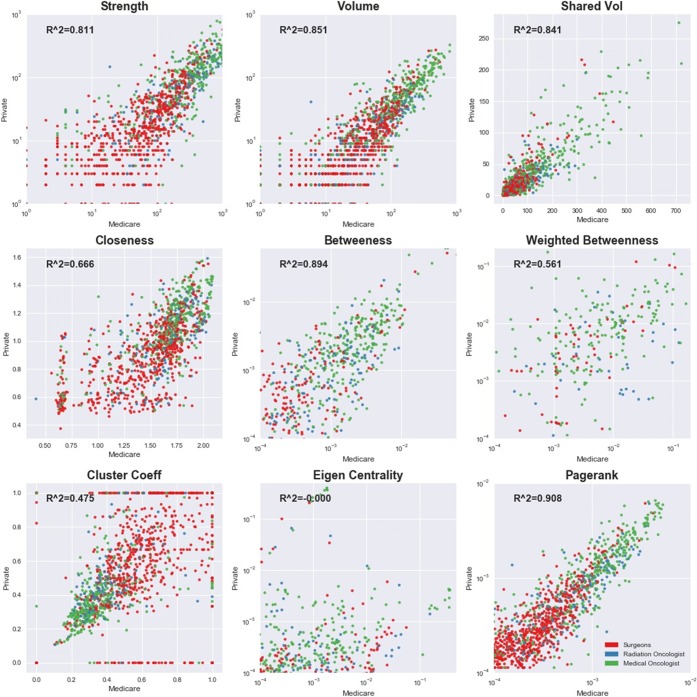

We first looked for overlap in the patient and provider populations across the Medicare and private insurance networks. We report a Venn diagram showing the number of edges in each payer database and the number of common edges appearing in both networks. We next report descriptive statistics (e.g., counts, averages) for the network measures by payer source. Some of the descriptive statistics will appear very different because the number of colorectal cancer patients is much larger in Medicare relative to private insurance. To control for the differences in the number of patients in the networks, we subsampled the Medicare patients down to the number of privately insured patients uniformly at random, including patients who were not shared. We created 10,000 iterations of the private insurance-sized Medicare network and used this distribution of values to test the null hypothesis that the statistics were equal across payer networks. We also compared the distribution of provider-level network statistics across the two payers graphically. We report a scatterplot of nine network statistics (strength, patient volume, shared volume, closeness centrality, betweenness, weighted betweenness, clustering coefficient, eigenvector centrality, and pagerank centrality) from the private insurance database against the same statistics from the Medicare database for 1163 providers who appear in both claims databases.
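The subsampling comparison can be sketched as below. The text does not say exactly how the p value was derived from the 10,000 subsampled networks, so the two-sided empirical p value used here is an assumption. The toy data and the names `subsample_distribution`, `empirical_p_value`, and `n_unshared` are also hypothetical.

```python
import random

# Hypothetical toy data: patient id -> set of providers seen.
medicare = {f"m{i}": ({"A"} if i % 3 == 0 else {"A", "B"}) for i in range(300)}

def n_unshared(patients):
    """Example statistic: number of patients seen by only one provider."""
    return sum(1 for providers in patients.values() if len(providers) == 1)

def subsample_distribution(patients, sample_size, statistic,
                           n_iter=10_000, seed=0):
    """Recompute a statistic on repeated uniform random patient subsamples."""
    rng = random.Random(seed)
    ids = sorted(patients)
    draws = []
    for _ in range(n_iter):
        sample = rng.sample(ids, sample_size)  # sampling without replacement
        draws.append(statistic({p: patients[p] for p in sample}))
    return draws

def empirical_p_value(draws, observed):
    """Assumed two-sided test: share of draws at least as far from the
    mean of the draws as the observed value (with a +1 correction)."""
    center = sum(draws) / len(draws)
    extreme = sum(1 for d in draws
                  if abs(d - center) >= abs(observed - center))
    return (extreme + 1) / (len(draws) + 1)

# Fewer iterations than the 10,000 in the text, to keep the example fast.
draws = subsample_distribution(medicare, sample_size=100,
                               statistic=n_unshared, n_iter=200)
p_value = empirical_p_value(draws, observed=50)
```

In a real application, `observed` would be the statistic from the private insurance network and `sample_size` the number of privately insured patients.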

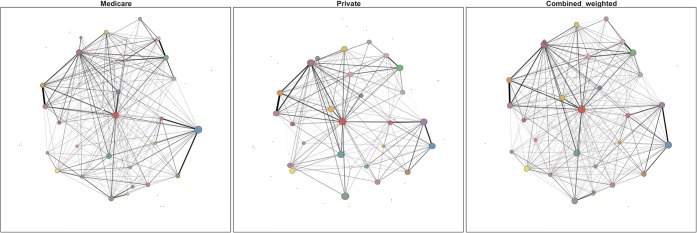

Finally, we tested whether providers would cluster into similar “communities” of connected providers in the two payer networks and a third network combining Medicare and private insurance into a single network. To identify higher-level structure within each network, we employed a well-studied community detection algorithm to optimize the quantity known as modularity.21–24 Briefly, modularity measures the strength of edges within communities in excess of what would be expected under a random null model, and the algorithm searches for the partition that maximizes this quantity. To select the appropriate resolution at which to identify community structure, we employed the Convex Hull of Admissible Modularity Partitions (CHAMP) tool.24 Community structure was compared at the broadest domains that overlapped between the networks. We conducted all analyses using Python.
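To make the modularity objective concrete, the sketch below evaluates weighted modularity with a resolution parameter for a given partition, using the standard form Q = Σ_c [W_c / (2m) − γ (d_c / (2m))²]. Here W_c is twice the within-community edge weight, d_c is the total strength of community c, and 2m is the total strength of the network. The sketch only scores a partition. It does not perform the optimization or the CHAMP resolution selection, and the function name and toy network are hypothetical.

```python
def modularity(weights, community, gamma=1.0):
    """Weighted modularity of a partition.

    weights:   {(u, v): w} for an undirected network without self-loops
    community: {node: community label}
    gamma:     resolution parameter (1.0 gives standard modularity)
    """
    strength = {}
    for (u, v), w in weights.items():
        strength[u] = strength.get(u, 0.0) + w
        strength[v] = strength.get(v, 0.0) + w
    two_m = sum(strength.values())

    within = {}  # twice the edge weight inside each community
    for (u, v), w in weights.items():
        if community[u] == community[v]:
            c = community[u]
            within[c] = within.get(c, 0.0) + 2 * w

    total = {}   # summed strength of each community
    for node, s in strength.items():
        c = community[node]
        total[c] = total.get(c, 0.0) + s

    return sum(within.get(c, 0.0) / two_m - gamma * (tot / two_m) ** 2
               for c, tot in total.items())

# Hypothetical toy network: two triangles joined by a single edge.
toy = {(1, 2): 1, (2, 3): 1, (1, 3): 1,
       (4, 5): 1, (5, 6): 1, (4, 6): 1,
       (3, 4): 1}
two_groups = {1: "a", 2: "a", 3: "a", 4: "b", 5: "b", 6: "b"}
one_group = {n: "all" for n in range(1, 7)}

q_two = modularity(toy, two_groups)
q_one = modularity(toy, one_group)
```

Splitting the toy network into its two triangles gives Q = 5/14, while lumping all providers into one community gives Q = 0, so the optimizer would prefer the split.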

RESULTS

We identified 1735 surgeons and oncologists that cared for colorectal cancer patients in North Carolina in the Medicare claims and 1321 providers in the private insurance claims. Of these providers, 1163 appeared in both networks.

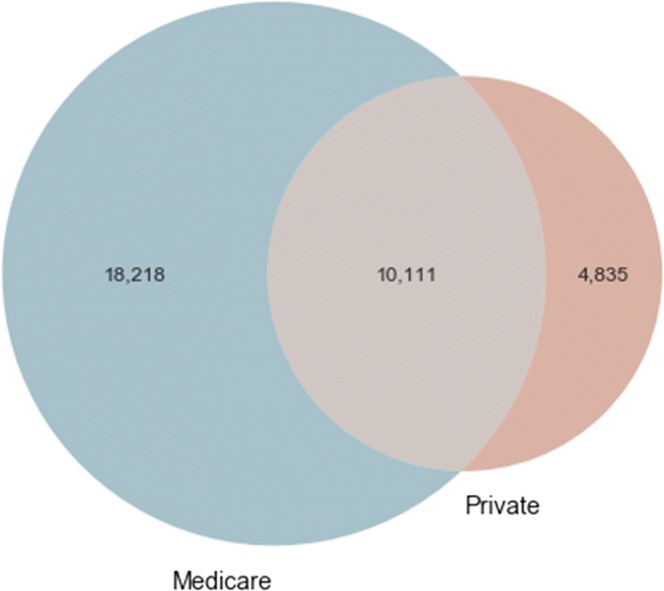

There were 33,164 total edges (i.e., patient-sharing relationships) among providers that appear in either network (Fig. 1). Only 10,111 (30.5%) of these edges appeared in both networks. Medicare claims missed 14.6% (=4835/33,164) of all shared patient relationships between providers, while private insurance claims missed 54.9% (=18,218/33,164) of all shared patient relationships.

Figure 1.

Common edges among the 1163 providers in both Medicare and private insurance claims databases.
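The edge-overlap percentages reported above follow directly from the counts in the text. The sketch below re-derives them and shows how the same quantities could be computed from two edge lists. The helper `edge_overlap` and the toy edge lists are hypothetical.

```python
def edge_overlap(edges_a, edges_b):
    """Compare two undirected edge lists, ignoring edge direction."""
    a = {frozenset(e) for e in edges_a}
    b = {frozenset(e) for e in edges_b}
    return {
        "total": len(a | b),
        "both": len(a & b),
        "missed_by_a": len(b - a),  # edges seen only in network b
        "missed_by_b": len(a - b),  # edges seen only in network a
    }

# Toy check of the helper.
toy = edge_overlap([("A", "B"), ("B", "C")], [("B", "A"), ("C", "D")])

# Counts reported in the text.
total = 33_164
counts = {
    "in_both": 10_111,
    "missed_by_medicare": 4_835,   # present only in private claims
    "missed_by_private": 18_218,   # present only in Medicare claims
}
parts_sum = sum(counts.values())
pct = {name: round(100 * n / total, 1) for name, n in counts.items()}
```

The three groups partition the 33,164 edges, and the rounded percentages reproduce the 30.5%, 14.6%, and 54.9% given in the text.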

Controlling for the total number of patients in the network, the number of unshared patients and the number of patients shared across all three specialties were not statistically significantly different between Medicare and private insurance (Table 1). However, patients in the private insurance database were more likely to be shared between at least two different specialties (6188 vs 5829, p < 0.001).

Table 1.

Network Statistics by Payer Source: Medicare Vs. Private Insurance

| Statistic (1) | Medicare (2) | Private (3) | Medicare subsample average (4) | p value for difference between (3) and (4) |
|---|---|---|---|---|
| Number of patients | | | | |
| Total | 41,167 | 13,272 | 13,026 | < 0.001 |
| Unshared | 14,328 | 4886 | 4960 | 0.052 |
| Providers | | | | |
| Total number | 1735 | 1321 | 1097 | < 0.001 |
| % surgeons | 56.369 | 55.715 | 54.333 | < 0.001 |
| % medical oncologists | 29.452 | 30.961 | 31.057 | < 0.001 |
| % radiation oncologists | 14.179 | 13.323 | 14.610 | < 0.001 |
| Provider patient sharing | | | | |
| # of patients shared across all three specialties* | 4346 | 1202 | 1196 | 0.427 |
| # of patients shared between at least two specialties | 19,615 | 6188 | 5829 | < 0.001 |
| Average degree† | 43.979 | 26.044 | 27.242 | < 0.001 |
| Average edge-weighted degree‡ | 193.911 | 80.324 | 83.259 | < 0.001 |
| Average fraction shared§ | 0.134 | 0.226 | 0.127 | < 0.001 |
| Provider-to-provider connections | | | | |
| Relative centrality‖ | | | | |
| Surgeon | 0.096 | 0.142 | 0.118 | < 0.001 |
| Medical oncologist | 3.673 | 4.683 | 3.964 | < 0.001 |
| Radiation oncologist | 2.404 | 1.340 | 1.821 | < 0.001 |
| Average clustering coefficient¶ | 0.513 | 0.535 | 0.523 | < 0.001 |
| Global clustering coefficient# | 0.278 | 0.268 | 0.297 | < 0.001 |
| Size of largest connected component** | 1659 | 1286 | 1082 | < 0.001 |

*Specialties are surgeon, medical oncologist, and radiation oncologist

†Degree is the number of other providers with whom a provider shares patients

‡Edge-weighted degree (node strength) is the number of other providers with whom a provider shares patients weighted by the number of patients shared with each provider

§Fraction shared is the fraction of a provider’s patients who are shared with any other provider

‖Provider-specific relative centrality is defined here by the average betweenness (weighted by edges) of all providers of a given specialty divided by the average betweenness of all providers not in that specialty

¶Clustering coefficient is the proportion of providers with whom a provider shares patients who also share patients with one another

#Fraction of connected triplets that form a closed triangle

**Size of the largest subgraph that is contiguously connected

The mix of provider specialties was statistically, but not practically, different across payers; surgeons represented 55% of providers, medical oncologists 30%, and radiation oncologists 14% in the combined network. The relative position of providers in the distribution of patient volumes differed across payers: for 24.5% of providers, the quintile rank in one payer network differed by one or more categories from their rank in the other network (results available upon request).
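The quintile comparison of patient volume can be sketched as follows. The text does not describe how quintiles were formed or how ties were handled, so the rank-based quintiles, the tie-breaking by provider identifier, and the restriction to providers present in both networks are all assumptions of this sketch. The function names and toy volumes are hypothetical.

```python
def quintile_ranks(volume):
    """Assign each provider a quintile (1-5) by rank of patient volume.

    Ties are broken by provider id, which is an assumption of this sketch.
    """
    ordered = sorted(volume, key=lambda p: (volume[p], p))
    n = len(ordered)
    return {p: (i * 5) // n + 1 for i, p in enumerate(ordered)}

def share_discordant(vol_a, vol_b):
    """Share of common providers whose quintile differs between payers."""
    common = sorted(set(vol_a) & set(vol_b))
    qa = quintile_ranks({p: vol_a[p] for p in common})
    qb = quintile_ranks({p: vol_b[p] for p in common})
    return sum(qa[p] != qb[p] for p in common) / len(common)

# Hypothetical volumes for ten providers under two payers; the busiest and
# least busy providers trade places in the second payer.
payer_a = {f"P{i}": i + 1 for i in range(10)}
payer_b = dict(payer_a, P0=10, P9=1)

discordant = share_discordant(payer_a, payer_b)
```

In this toy example two of ten providers change quintile, giving a discordant share of 0.2.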

Medicare providers shared patients with a greater number of other providers than providers in the private insurance database, even after controlling for the total number of patients (27.242 vs 26.044, p < 0.001). However, providers in the private insurance database shared a higher fraction of their patients with other providers (0.226 vs 0.127, p < 0.001).

Specialties differed in their centrality in the network across payers. Medical oncologists were the most central specialty in the combined network. Surgeons were more central in the private network (0.142 vs 0.118, p < 0.001) while radiation oncologists were more central in the Medicare network (1.821 vs 1.340, p < 0.001).
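The relative centrality of a specialty, as defined in the Methods and in the Table 1 footnote, is a ratio of two average betweenness values. A minimal sketch, with hypothetical betweenness values and a hypothetical function name:

```python
def relative_centrality(betweenness, specialty, target):
    """Average betweenness inside a specialty divided by the average
    betweenness of all providers outside that specialty."""
    inside = [b for p, b in betweenness.items() if specialty[p] == target]
    outside = [b for p, b in betweenness.items() if specialty[p] != target]
    return (sum(inside) / len(inside)) / (sum(outside) / len(outside))

# Hypothetical betweenness values for four providers.
betweenness = {"s1": 0.0, "s2": 0.1, "m1": 0.6, "r1": 0.3}
specialty = {"s1": "surgeon", "s2": "surgeon",
             "m1": "medical oncologist", "r1": "radiation oncologist"}

ratio_surgeon = relative_centrality(betweenness, specialty, "surgeon")
ratio_med_onc = relative_centrality(betweenness, specialty,
                                    "medical oncologist")
```

A ratio below 1 (as for surgeons in Table 1) means the specialty is less central than the rest of the network on average, and a ratio above 1 means it is more central.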

On average, approximately 52% of the providers with whom a provider shared patients also shared patients with one another (average clustering coefficient). The average clustering coefficients were similar in magnitude across payers, although they were statistically significantly different after controlling for the number of patients. The similar averages hide substantial variation in the clustering coefficient values for individual providers in the two payer networks. Figure 2 shows that although most providers’ clustering coefficient in Medicare was a good predictor of their clustering coefficient in private insurance, there were many providers with vastly different values in the two networks. A similar pattern was observed for closeness centrality, weighted betweenness, and eigenvector centrality, all of which have low R-squared values between payers (Fig. 2).

Figure 2.

Scatterplot of network statistics for 1163 providers in both Medicare and private insurance claims networks.
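The R-squared values used to compare a statistic across the two payers correspond, for a simple linear fit, to the squared Pearson correlation between the paired provider-level values. A minimal sketch with hypothetical data:

```python
def r_squared(x, y):
    """R-squared of a simple linear regression of y on x, computed as the
    squared Pearson correlation."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    return sxy * sxy / (sxx * syy)

# Hypothetical paired values of one statistic for four providers.
medicare_values = [1.0, 2.0, 3.0, 4.0]
private_aligned = [2.0, 4.0, 6.0, 8.0]   # perfectly linear in Medicare
private_noisy = [1.0, 3.0, 2.0, 4.0]     # two providers swap order

r2_aligned = r_squared(medicare_values, private_aligned)
r2_noisy = r_squared(medicare_values, private_noisy)
```

The aligned series gives an R-squared of 1, while the noisy series gives 0.64, showing how a few providers with different values across payers lower the fit.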

Figure 3 shows the results of the community detection algorithms in the two payer networks and the third combined network. Each graph shows communities as nodes (i.e., each node represents a distinct community of providers with tight-knit patient-sharing patterns) with size proportional to the number of providers included in the community. The edges between the community nodes represent the collective patient sharing between communities. Comparing the communities detected in Medicare only to the combined network that also includes private insurance, most of the same communities were detected in the Medicare-only network. However, there were two cases in which the combined network detected distinct communities of providers that the Medicare network did not. In both cases, including the information from the private insurance network led to a larger, distinct community of providers that Medicare alone would have missed. None of the results were substantively changed with the inclusion of the threshold of two or more shared patients to create an edge between providers (see Supplemental Results).

Figure 3.

Comparison of provider “communities” defined using patient sharing and the CHAMP algorithm, by payer. Note: Each node represents the center of a community’s mass; each community is a unique color. The spatial locations of individual providers are constant across payer figures. The node size is proportional to the number of providers in the community. Edges represent patients shared between communities. Key differences between Medicare and the combined network are highlighted with arrows: one is at the 3 o’clock position at the right of the combined network, and the other is the larger community at the 8 o’clock position close to the center of the combined network.

DISCUSSION

Improved team care coordination is crucial in today’s policy environment. Studies have suggested that team experience matters for patients’ quality of care, utilization, and outcomes.18 The CMS is emphasizing alternative payment models that incentivize multidisciplinary coordination (e.g., ACOs and the Oncology Care Model).25 Network analysis is a promising approach to understanding patterns of care and care delivery and to measuring quality of care. However, this study demonstrates with a population of colorectal cancer patients and providers in North Carolina that shared patient networks may differ in important ways depending on the data source. Very few studies have combined multiple payer sources into a single network (e.g., Agha et al.26).

The results of this study have key implications for the literature using shared patient networks to measure care coordination. In our application, there were substantial differences for many of the network measures used in earlier studies depending on the payer data source. For example, Landon et al.12 proposed using shared patient networks to help identify natural partners for ACOs. While still a useful approach that could improve on current practice, our results suggest that some communities might be missed by relying solely on Medicare claims. The missed communities would probably not be relevant to a Medicare ACO, but might be of significance for ACO-like innovations in the private insurance market. In another example, Pham et al.27 identified provider degree as a barrier to coordination. Our results suggest that provider degree depends heavily on the payer source used to construct the network. Finally, Pollack et al.8 correlated a metric they coined “care density” with quality-of-care measures for cancer patients. We found that the proportion of a provider’s patients shared with other providers, the building block for care density, was higher in the private insurance network than in the Medicare network.

At the same time, many of the network statistics were highly correlated between the Medicare and private payer networks. Provider strength (edge-weighted degree), patient volume, shared patient volume, (unweighted) betweenness centrality, and pagerank centrality all had R-squared values of 0.8 or higher for a linear relationship between the two payer sources. This implies that a provider’s place in the distribution of a statistic in the shared-patient network of one payer source is predictive of that provider’s network statistics in the other payer source. Thus, depending on the network feature of interest, sampling from the multi-payer network using data from one payer may be adequate for analysis.

Our study has several limitations. First, our results are only for colorectal cancer patients. There are several reasons why results might differ in payer networks composed of other patient populations. Larger, more heterogeneous patient populations might pick up more physician connections in both payer sources. It is also likely that the coordination processes required to improve quality of care will depend on the health problem being addressed. For example, some components of coordination, like interoperability of electronic health records and information sharing, might be agnostic to the disease being treated, whereas supportive care management for post-operative cancer patients might require shared experience with exactly those types of patients. Capturing information-sharing types of coordination would require shared patient networks including all types of patients; capturing treatment-specific coordination would require shared patient networks specific to select patients, similar to those analyzed here and in prior work.18 Second, our study is representative of colorectal cancer patients in North Carolina, the tenth most populous state, but may not represent areas of the country with different demographics, payer mixes, health systems, and regional patterns of care.28 Third, we were not able to include Medicaid as a third payer source due to a lack of clear provider identifiers. Nor could we include uninsured patients, as there are no payer claims filed for these patients.

CONCLUSION

This study suggests that shared patient networks constructed from a single-payer database may differ in several ways from similar networks from other payers, including the universe of all payers. The magnitude and significance of the differences depend on the network statistic, the population of interest, and whether the measure is used in aggregate or for individual provider analysis. Future work should continue to expand our knowledge about the shared patient network features most relevant for patient outcomes and how differences in these features across payers affect our ability to model the relationship between network structure and patient outcomes.

Electronic Supplementary Material

(DOCX 780 kb)

Funding Source

This study was supported by the Cancer Information and Population Health Resource (CIPHR), UNC Lineberger Comprehensive Cancer Center, with funding provided by the University Cancer Research Fund (UCRF) via the State of North Carolina.

Abbreviations

- CMS: Centers for Medicare and Medicaid Services

- ICD-9: International Classification of Diseases, 9th Revision

Author Contributions

JGT, PJM, AMM, and KBS conceptualized the work and led the design and interpretation of the data. AMM led the acquisition of the data. SS, WHW, and TMK led the analysis of the data. All authors drafted and revised the manuscript, approved the final draft for submission, and agree to be accountable for all aspects of the work.

Compliance with Ethical Standards

Financial Disclosure

No financial disclosures were reported by the authors of this paper.

Conflict of Interest

The authors declare that they do not have a conflict of interest.

Footnotes

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

References

- 1. McDonald KM, Sundaram V, Bravata DM, et al. Closing the quality gap: a critical analysis of quality improvement strategies (vol. 7: care coordination). Rockville (MD): AHRQ Technical Reviews; 2007.

- 2. Committee on Quality of Health Care in America IoM. Crossing the Quality Chasm: A New Health System for the 21st Century. Washington (DC); 2001.

- 3. Iglehart JK. No place like home--testing a new model of care delivery. N Engl J Med. 2008;359:1200–2. doi: 10.1056/NEJMp0805225.

- 4. Jackson GL, Powers BJ, Chatterjee R, et al. Improving patient care. The patient centered medical home. A systematic review. Ann Intern Med. 2013;158:169–78. doi: 10.7326/0003-4819-158-3-201302050-00579.

- 5. Salz T, Oeffinger KC, McCabe MS, et al. Survivorship care plans in research and practice. CA Cancer J Clin. 2012;62:101–17. doi: 10.3322/caac.20142.

- 6. Auerbach DI, Liu H, Hussey PS, et al. Accountable care organization formation is associated with integrated systems but not high medical spending. Health Aff (Millwood). 2013;32:1781–8. doi: 10.1377/hlthaff.2013.0372.

- 7. Hussain T, Chang HY, Veenstra CM, et al. Collaboration between surgeons and medical oncologists and outcomes for patients with stage III colon cancer. J Oncol Pract. 2015;11:e388–97. doi: 10.1200/JOP.2014.003293.

- 8. Pollack CE, Frick KD, Herbert RJ, et al. It’s who you know: patient-sharing, quality, and costs of cancer survivorship care. J Cancer Surviv. 2014;8:156–66. doi: 10.1007/s11764-014-0349-3.

- 9. Pollack CE, Lemke KW, Roberts E, et al. Patient sharing and quality of care: measuring outcomes of care coordination using claims data. Med Care. 2015;53:317–23. doi: 10.1097/MLR.0000000000000319.

- 10. Pollack CE, Weissman GE, Lemke KW, et al. Patient sharing among physicians and costs of care: a network analytic approach to care coordination using claims data. J Gen Intern Med. 2013;28:459–65. doi: 10.1007/s11606-012-2104-7.

- 11. Barnett ML, Christakis NA, O'Malley J, et al. Physician patient-sharing networks and the cost and intensity of care in US hospitals. Med Care. 2012;50:152–60. doi: 10.1097/MLR.0b013e31822dcef7.

- 12. Landon BE, Onnela JP, Keating NL, et al. Using administrative data to identify naturally occurring networks of physicians. Med Care. 2013;51:715–21. doi: 10.1097/MLR.0b013e3182977991.

- 13. Uddin S, Hossain L, Kelaher M. Effect of physician collaboration network on hospitalization cost and readmission rate. Eur J Public Health. 2012;22:629–33. doi: 10.1093/eurpub/ckr153.

- 14. Casalino LP, Pesko MF, Ryan AM, et al. Physician networks and ambulatory care-sensitive admissions. Med Care. 2015;53:534–41. doi: 10.1097/MLR.0000000000000365.

- 15. Iwashyna TJ, Christie JD, Moody J, et al. The structure of critical care transfer networks. Med Care. 2009;47:787–93. doi: 10.1097/MLR.0b013e318197b1f5.

- 16. Barnett ML, Landon BE, O'Malley AJ, et al. Mapping physician networks with self-reported and administrative data. Health Serv Res. 2011;46:1592–609. doi: 10.1111/j.1475-6773.2011.01262.x.

- 17. Landon BE, Keating NL, Barnett ML, et al. Variation in patient-sharing networks of physicians across the United States. JAMA. 2012;308:265–73. doi: 10.1001/jama.2012.7615.

- 18. Trogdon JG, Chang Y, Shai S, et al. Care coordination and multispecialty teams in the care of colorectal cancer patients. Med Care. 2018;56:430–435. doi: 10.1097/MLR.0000000000000906.

- 19. Dafny LS, Hendel I, Marone V, et al. Narrow networks on the health insurance marketplaces: prevalence, pricing, and the cost of network breadth. Health Affairs. 2017;36:1606–1614. doi: 10.1377/hlthaff.2016.1669.

- 20. Meyer AM, Olshan AF, Green L, et al. Big data for population-based cancer research: the integrated cancer information and surveillance system. N C Med J. 2014;75:265–9. doi: 10.18043/ncm.75.4.265.

- 21. Blondel VD, Guillaume JL, Lambiotte R, et al. Fast unfolding of communities in large networks. J Stat Mech Theory Exp, 2008

- 22. Newman MEJ. Modularity and community structure in networks. Proc Natl Acad Sci U S A. 2006;103:8577–8582. doi: 10.1073/pnas.0601602103.

- 23. Newman MEJ, Girvan M. Finding and evaluating community structure in networks. Phys Rev E 69, 2004

- 24. Weir W, Emmons S, Gibson R, Taylor D, Mucha P. Post-Processing Partitions to Identify Domains of Modularity Optimization. Algorithms. 2017;10(3):93. doi: 10.3390/a10030093.

- 25. Centers for Medicare & Medicaid Services. Oncology care model; 2016

- 26. Agha L, Ericson KM, Geissler KH, et al. Team formation and performance: evidence from healthcare referral networks, in Research NBoE (ed): NBER Working Paper 24338. Cambridge, MA, 2018

- 27. Pham HH, O'Malley AS, Bach PB, et al. Primary care physicians’ links to other physicians through Medicare patients: the scope of care coordination. Ann Intern Med. 2009;150:236–42. doi: 10.7326/0003-4819-150-4-200902170-00004.

- 28. Fisher ES, Wennberg DE, Stukel TA, et al. The implications of regional variations in Medicare spending. Part 2: health outcomes and satisfaction with care. Ann Intern Med. 2003;138:288–98. doi: 10.7326/0003-4819-138-4-200302180-00007.
