Abstract

Introduction:

Experiential learning, followed by debriefing, is at the heart of Simulation-Based Medical Education (SBME) and has proven effective in helping learners master several medical skills. We investigated the impact of an educational intervention, based on high-fidelity SBME, on the debriefing competence of novice simulation instructors.

Methods:

This was a prospective, randomized, quasi-experimental study with pre- and post-test evaluation. Sixty physicians without prior formal debriefing expertise attended a 5-day SBME seminar focused on debriefing. Before the seminar began, 15 randomly chosen participants each debriefed a spaghetti-and-tape tower-building team exercise. The members of each team then assessed their debriefer’s performance using the Debriefing Assessment for Simulation in Healthcare (DASH)© score. The debriefing seminar that followed (the intervention) consisted of 5 days of theoretical teaching and simulation training. Each scenario was followed by a Debriefing of the Debriefing (DOD) session conducted by the expert instructor. At the end of the course, 15 randomly chosen debriefers debriefed a second tower-building exercise and were re-evaluated with the DASH© score by their respective team members. The Wilcoxon signed-rank test was used to compare pre- and post-test scores. Statistical tests were performed using GraphPad Prism 6.0c for Mac.

Results:

A significant improvement in all items of the DASH score was noted following the seminar. The debriefers significantly improved their performance with regard to “maintaining an engaging learning environment” (Median [IQR]) (4[3-5] after the pre-test vs. 5.5[5-6] after the post-test, p<0.001); “structuring the debriefing in an organized way” (5[4-5] after the pre-test vs. 5[5-6] after the post-test, p=0.002); “provoking engaging discussion” (4[3-5.75] after the pre-test vs. 6[5-6] after the post-test, p<0.001); “identifying and exploring performance gaps” (5[4-6] after the pre-test vs. 6[5-6] after the post-test, p=0.014); and “helping trainees to achieve and sustain good future performance” (4[3-5] after the pre-test vs. 6[5-6] after the post-test, p<0.001).

Conclusion:

A simulation-based debriefing course, based mainly on DOD sessions, allowed novice simulation instructors to improve their overall debriefing skills including, more specifically, the ability to foster engagement in discussions and maintain an engaging learning environment.

Keywords: Faculty, Learning, Feedback

Introduction

Debriefing can be a major component of effective experiential learning (1,2). The debrief that follows a simulated or real experience can help explore and correct the learners’ schemes and clinical reasoning patterns and foster reflective practice (3). Experiential learning, as described in Kolb's theoretical framework, also applies to the skill of debriefing itself: it requires practicing debriefing, receiving feedback on that practice, and re-experimenting to apply and consolidate what was learned (4). Debrief-based experiential learning can help trainees acquire and strengthen their own debriefing skills (5). For this reason, a 5-day simulation-based seminar that included multiple Debriefing of the Debriefing (DOD) sessions was created to provide novice simulation instructors with adequate debriefing abilities and knowledge.

The primary goal of this report was to determine whether a 5-day seminar based on a combination of theoretical teaching and DOD sessions led by an experienced simulation trainer would affect the debriefing performance of novice instructors. We hypothesized that knowledge of the theoretical basis of debriefing, practice of debriefing as part of our specific seminar training, and feedback obtained within DOD sessions would improve the debriefing skills of novice simulation instructors.

Methods

This randomized quasi-experimental trial, which included pre- and post-training evaluation, was reviewed and approved by the Educational Ethics Board of the Faculty of Medicine of Tunis (Tunisia), and written consent was obtained from all participants. Recruitment was carried out at the simulation center of the Faculty of Medicine of Tunis (Tunisia) prior to a 5-day simulation training seminar for novice instructors, which was conducted on four occasions (August 2015, May and June 2016, and April 2017). Results were collected after each seminar and compiled in April 2017.

As a part of the Education Quality Improvement Program (Programme d’Appui à la Qualité de l’enseignement supérieur or PAQ) of the Faculty of Medicine of Tunis, a collaboration was set up with the « Centre d’Apprentissage des Attitudes et Habiletés Cliniques » (CAAHC), the medical simulation center of the University of Montreal, to conduct several simulation training courses or seminars for future instructors in simulation. The goal of this collaborative effort was also to create and foster a formal community or environment where simulation instructors of all levels of expertise could reflect on their practice in an engaging way. The seminars were led by an experienced instructor from the CAAHC.

The course, designed in a collegial way to meet the training needs of the Tunis Faculty of Medicine simulation instructors, essentially taught debriefing skills through DOD sessions. All registered participants were practicing physicians affiliated with the University of Tunis in the fields of anesthesiology, emergency medicine or intensive care medicine. Anyone with prior debriefing experience, regardless of its duration, was to be excluded.

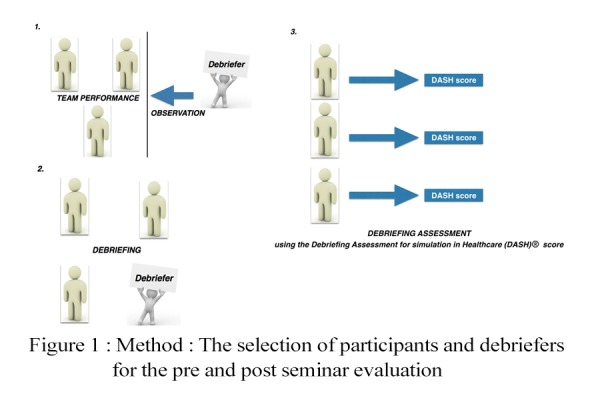

Sixty participants attended the four seminars (16 in each of courses 1, 2 and 3, and 12 in the last one). Before the course began, 15 of them were randomly selected by blind draw from a ballot box (Figure 1) to conduct a 15-minute debriefing session following a pre-seminar exercise. During the exercise, each debriefer observed a team of three randomly chosen active trainees who had 15 minutes to build the tallest possible tower from spaghetti sticks and tape. The pre-seminar debriefing skills of these 15 observers/debriefers were rated by the active participants using the Debriefing Assessment for Simulation in Healthcare (DASH)© score (6). After the 5-day seminars, the debriefing skills of 15 other trainees were evaluated again on the DASH© scale by their fellow participants in a second tower-building exercise (with paper instead of spaghetti sticks).

Figure 1. Method: selection of participants and debriefers for the pre- and post-seminar evaluation
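The allocation described above can be illustrated with a short sketch. It assumes that each seminar was split into groups of four (one debriefer observing three active trainees), an arrangement that is consistent with the reported totals of 15 debriefers and 45 raters but is not stated explicitly in the text. The participant labels, the seeds, and the function name `allocate_groups` are hypothetical, and the shuffle merely stands in for the ballot-box draw.

```python
import random

def allocate_groups(participants, group_size=4, seed=None):
    """Shuffle participants and split them into groups of `group_size`.

    The first person drawn in each group acts as debriefer; the others
    form the active team that later rates the debriefer.
    """
    if len(participants) % group_size != 0:
        raise ValueError("number of participants must be a multiple of group_size")
    rng = random.Random(seed)  # seeded only to make the sketch reproducible
    shuffled = list(participants)
    rng.shuffle(shuffled)
    groups = []
    for start in range(0, len(shuffled), group_size):
        chunk = shuffled[start:start + group_size]
        groups.append({"debriefer": chunk[0], "team": chunk[1:]})
    return groups

# Seminar sizes as reported in the text; participant labels are hypothetical.
seminar_sizes = [16, 16, 16, 12]
all_groups = []
for s, size in enumerate(seminar_sizes, start=1):
    roster = [f"S{s}-P{i:02d}" for i in range(1, size + 1)]
    all_groups.extend(allocate_groups(roster, seed=s))

n_debriefers = len(all_groups)
n_raters = sum(len(g["team"]) for g in all_groups)
print(n_debriefers, n_raters)
```

Under this assumption, the four seminars yield 4 + 4 + 4 + 3 groups, which accounts for every participant as either a debriefer or a rater.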

The «spaghetti sticks and tape tower» test is commonly used during simulation and debriefing training (7); it focuses on teamwork and does not require medical expertise. It is therefore well suited to training in all medical specialties and allows the debriefer to keep the feedback focused on teamwork alone, without being influenced by specific medical expertise (8,9).

Since the participants had no prior knowledge of the DASH© score or its purpose, the grid was presented and each item explained before the randomization preceding the pre-seminar exercise. The DASH© grid is composed of 6 questions, each rated on a seven-point Likert-type scale ranging from one (extremely ineffective/abysmal) to seven (extremely effective/outstanding). Except for the first one, which rated the quality of the introduction to the simulation course, all questions pertained to the skills exhibited by the leader of a debriefing session. Questions 2 to 6 respectively rated the ability of the debriefer to maintain an engaging learning environment, to structure the debriefing in an organized way, to provoke engaging discussion, to identify and explore performance gaps, and to help the trainees achieve and sustain good future performance.

The main objective of the study was therefore to compare the results for questions two to six of the DASH© grid obtained by the 15 debriefers before and after the seminars. Sample size was determined solely by the number of participants who freely registered for the four seminars. The DASH© scores obtained by the debriefers in the pre- and post-seminar exercises were compared using the Wilcoxon signed-rank test. A p value <0.05 was considered significant. Statistical tests were performed using GraphPad Prism 6.0c for Mac.
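For readers unfamiliar with the test, the following is a minimal sketch of a two-sided Wilcoxon signed-rank comparison. It is not the GraphPad Prism procedure used in the study: it relies on a normal approximation without continuity correction, drops zero differences, and assumes that each pre-seminar rating can be paired with a post-seminar rating (for example, by rater). The function name and the ratings are hypothetical and serve only as illustration.

```python
import math

def wilcoxon_signed_rank(pre, post):
    """Two-sided Wilcoxon signed-rank test (normal approximation).

    Zero differences are dropped; tied absolute differences share the
    average of the ranks they span. Returns (W, p), where W is the
    smaller of the positive and negative rank sums.
    """
    diffs = [b - a for a, b in zip(pre, post) if b != a]
    n = len(diffs)
    if n == 0:
        return 0.0, 1.0
    order = sorted(range(n), key=lambda i: abs(diffs[i]))
    ranks = [0.0] * n
    tie_term = 0.0
    i = 0
    while i < n:
        j = i
        # Extend j to the end of the run of tied absolute differences.
        while j + 1 < n and abs(diffs[order[j + 1]]) == abs(diffs[order[i]]):
            j += 1
        avg_rank = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = avg_rank
        t = j - i + 1
        tie_term += t ** 3 - t
        i = j + 1
    w_plus = sum(r for r, d in zip(ranks, diffs) if d > 0)
    w_minus = sum(r for r, d in zip(ranks, diffs) if d < 0)
    w = min(w_plus, w_minus)
    mean = n * (n + 1) / 4
    var = n * (n + 1) * (2 * n + 1) / 24 - tie_term / 48  # tie-corrected variance
    z = (w - mean) / math.sqrt(var)
    p = math.erfc(abs(z) / math.sqrt(2))  # two-sided p value
    return w, p

# Hypothetical paired ratings on the 1-7 DASH scale (not study data).
pre = [4, 3, 5, 4, 3, 5, 4, 4]
post = [6, 5, 5, 6, 5, 6, 6, 5]
w_stat, p_value = wilcoxon_signed_rank(pre, post)
print(f"W = {w_stat}, p = {p_value:.4f}")
```

With small samples and many ties, as is typical of Likert-type ratings, an exact or software-specific computation may give a somewhat different p value than this approximation.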

Results

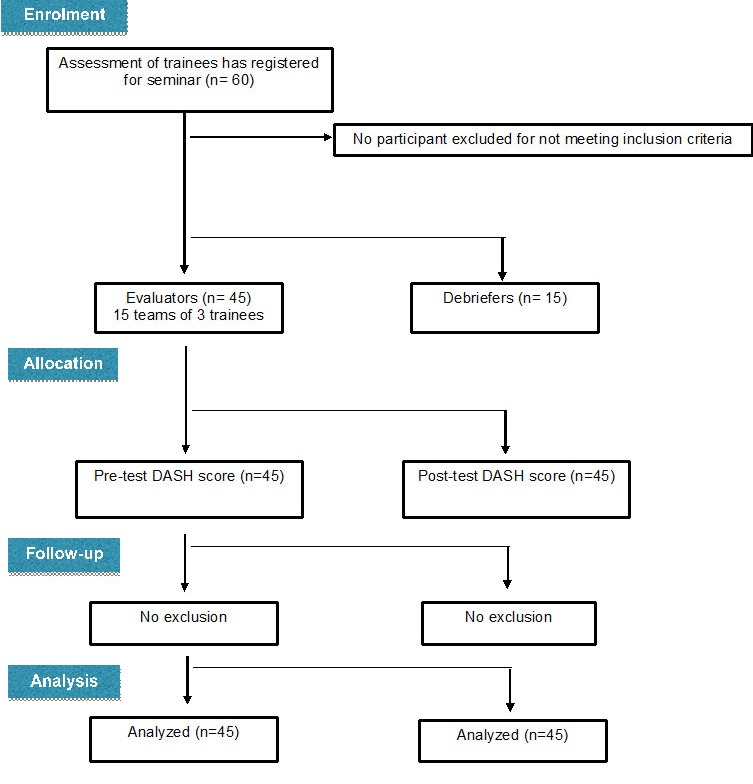

Participants (Figure 2)

Figure 2. Randomization flow chart

All 60 trainees who registered for the four seminars agreed to enroll in the study. Each specialty (anesthesiology, emergency medicine and intensive care) had 20 representatives. None of the participants had prior experience with either high-fidelity simulation or debriefing. All trainees had between three and five years of clinical experience as assistant professors within their university department. Excluding the 15 who had been selected as debriefers, the remaining 45 participants were responsible for providing the pre- and post-seminar evaluations. Since all of them did so, 45 pre-test and 45 post-test DASH© scores were analyzed.

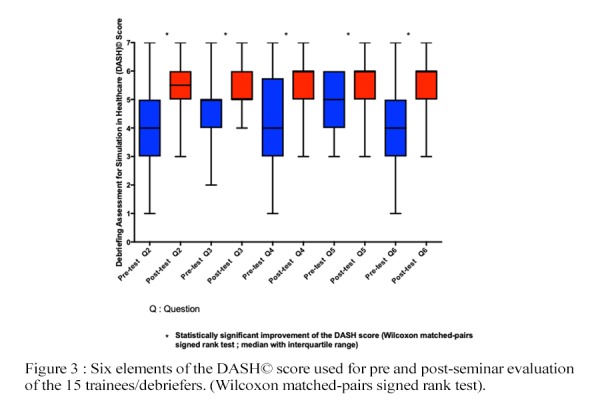

Main outcome (Figure 3)

Figure 3. Six elements of the DASH© score used for pre- and post-seminar evaluation of the 15 trainees/debriefers (Wilcoxon matched-pairs signed-rank test). Element 1: Rating of the introduction to the simulation course (not analyzed, since the introductory speech was delivered by the expert in charge of the seminars and not by the trainees/debriefers); Element 2: Maintains an engaging learning environment; Element 3: Structures the debriefing in an organized way; Element 4: Provokes engaging discussion; Element 5: Identifies and explores performance gaps; Element 6: Helps trainees achieve or sustain good future performance

The DASH© score is composed of six elements, each rated from one to seven. Since question one evaluated the introductory speech to the course, delivered by the expert in charge of the seminar, and not the skills of the trainees/debriefers, it was omitted. The 15 trainees in charge of the debriefs showed a statistically significant improvement in the 5 other elements, which pertain to debriefing skills (Figure 3). The debriefers significantly improved their performance (median [IQR]) with regard to “maintaining an engaging learning environment” (4[3-5] after the pre-test vs. 5.5[5-6] after the post-test, p<0.001); “structuring the debriefing in an organized way” (5[4-5] after the pre-test vs. 5[5-6] after the post-test, p=0.002); “provoking engaging discussion” (4[3-5.75] after the pre-test vs. 6[5-6] after the post-test, p<0.001); “identifying and exploring performance gaps” (5[4-6] after the pre-test vs. 6[5-6] after the post-test, p=0.014); and “helping the trainees to achieve and sustain good future performance” (4[3-5] after the pre-test vs. 6[5-6] after the post-test, p<0.001).
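The median [IQR] notation used above can be reproduced with a short sketch. Quartile conventions differ between statistical packages, so the interpolation chosen here (the `inclusive` method of Python's `statistics.quantiles`) is an assumption and may not match the values produced by the software used in the study. The ratings and function names are hypothetical.

```python
import statistics

def median_iqr(scores):
    """Return (median, first quartile, third quartile) of a list of ratings."""
    q1, med, q3 = statistics.quantiles(scores, n=4, method="inclusive")
    return med, q1, q3

def format_median_iqr(scores):
    """Format ratings in the 'median[Q1-Q3]' style used in the Results."""
    med, q1, q3 = median_iqr(scores)
    return f"{med:g}[{q1:g}-{q3:g}]"

# Hypothetical ratings for one DASH element (not study data).
hypothetical_ratings = [3, 4, 4, 5, 5, 6]
summary = format_median_iqr(hypothetical_ratings)
print(summary)
```

Reporting the median and interquartile range rather than the mean and standard deviation suits ordinal, Likert-type ratings such as the DASH© elements.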

Discussion

Little is known about how faculty development opportunities should be structured to maintain and enhance the quality of debriefing within simulation programs. Nonetheless, five key issues are frequently highlighted to help simulation educators shape debriefing training (5): (a) Are we teaching the appropriate debriefing methods? (b) Are we using the right methods to teach debriefing skills? (c) How can we best assess debriefing effectiveness? (d) How can peer feedback of debriefing be used to improve debriefing quality within programs? and (e) How can we individualize debriefing training opportunities for the learning needs of our educators? Although this report does not answer these questions directly, it suggests that they can provide a valuable framework when designing a comprehensive activity aimed at teaching and evaluating debriefing skills. When the PAQ program of the Faculty of Medicine of Tunis provided us with a valuable opportunity to teach debriefing, we had to select the methods that should be taught to best suit the needs of the community of learners that our trainees would have to serve. Just as important, we also needed to identify the teaching methods that would be most helpful to novice trainees hoping to quickly acquire the skills needed to start and run a budding simulation program. Although they included a few formal lectures, the seminars that were created relied mostly on simulations with debriefing performed by the trainees and on multiple DOD sessions led by a debriefing expert. These DOD sessions maximized the opportunities to address the questions of evaluation, of providing effective feedback and, because seminar participants would need to train fellow faculty members to work with them, of how to teach debriefing (10).
The pre- and post-seminar evaluations were not only means of assessing the progression of the trainees; they provided guidance regarding future seminars’ content and were a source of additional feedback to learners and expert alike.

The DOD approach used during the seminars emphasized eliciting the trainees' assumptions about their debriefing performance and their reasons for acting, speaking, or asking questions as they did (10). In keeping with the “debriefing with good judgment” approach (11) used by the seminar’s lead instructor, the trainees' «debriefing pitfalls» were treated as puzzles to be solved rather than simply as errors to be identified. When a debriefing was led by a trainee, the instructor in charge of the seminar transcribed almost all of the conversation verbatim, which could also have been achieved by video-recording the discussion. During the following DOD session, the trainee/debriefer and the other participants were debriefed together by the instructor. Treating his debriefing notes as objective observations, the instructor used a conversational technique in which these observations were paired with his subjective judgment (advocacy) to formulate a curious question aimed at illuminating the trainee/debriefer's frame in relation to his questions, speech, or behavior during the debriefing (inquiry) (12). This approach can be useful to manage the tension between the need to share critical judgments and the need to maintain a trusting relationship with the learners (13).

The DASH© scale is a behaviorally anchored debriefing assessment tool that has the potential to provide valid and reliable data. It is currently used in a wide variety of settings in simulation-based medical education.

Brett-Fleegler et al. (6) demonstrated inter-rater reliability (consistency in ratings among different raters) and internal consistency (the extent to which the items of a test measure the same construct). They also showed preliminary evidence of validity (the extent to which evidence supports the interpretations of test scores). As with other behavior rating instruments, use of the DASH© scale is limited to trained users; we therefore presented the grid and explained each item before the randomization preceding the pre-seminar exercise.

Even if this report suggests that a learning approach based largely on DOD sessions led by an experienced simulation instructor can improve the debriefing performance of novice instructors, many issues are left unresolved. Only one seminar format, which included multiple DOD sessions, was studied. It was therefore not possible to compare the impact of the DOD approach with other forms of training. Even if the DASH© scale arguably provides some measure of objectivity, asking trainees whether a fairly demanding activity has improved the skills of their fellow participants is, in all likelihood, prone to subjectivity bias. Also, the influence of a social desirability bias involving instructors and fellow participants, with whom significant bonds had probably been created during the five-day seminar, cannot be excluded.

Conclusion

This report suggests that a 5-day pedagogical intervention including mostly debriefing exercises and DOD sessions allowed novice simulation instructors to improve their debriefing skills. Despite the mentioned limitations, this type of training could be an introduction to debriefing techniques during the instructors’ training in simulation-based medical education.

Acknowledgement

The preliminary results of this study were accepted as an oral communication at the AMEE conference in 2016, and the final results were presented as an oral communication at the Tunisian Conference of Anesthesiology and intensive care in April 2017.

Conflict of Interest: None declared.

References

- 1. Paige JT, Arora S, Fernandez G, Seymour N. Debriefing 101: training faculty to promote learning in simulation-based training. Am J Surg. 2015;209(1):126–31. doi: 10.1016/j.amjsurg.2014.05.034.

- 2. Ryoo EN, Ha EH. The Importance of Debriefing in Simulation-Based Learning: Comparison Between Debriefing and No Debriefing. Comput Inform Nurs. 2015;33(12):538–45. doi: 10.1097/CIN.0000000000000194.

- 3. Levett-Jones T, Lapkin S. A systematic review of the effectiveness of simulation debriefing in health professional education. Nurse Educ Today. 2014;34(6):e58–63. doi: 10.1016/j.nedt.2013.09.020.

- 4. Healey M, Jenkins A. Kolb's Experiential Learning Theory and Its Application in Geography in Higher Education. Journal of Geography. 2000;99(5):185–95.

- 5. Cheng A, Grant V, Dieckmann P, Arora S, Robinson T, Eppich W. Faculty Development for Simulation Programs: Five Issues for the Future of Debriefing Training. Simul Healthc. 2015;10(4):217–22. doi: 10.1097/SIH.0000000000000090.

- 6. Brett-Fleegler M, Rudolph J, Eppich W, Monuteaux M, Fleegler E, Cheng A, et al. Debriefing assessment for simulation in healthcare: development and psychometric properties. Simul Healthc. 2012;7(5):288–94. doi: 10.1097/SIH.0b013e3182620228.

- 7. Workshop Tb. Marshmallow Challenge: Toastmasters International [Internet]; 2017 [updated 25 Jan 2018]. Available from: https://www.toastmasters.org/~/media/8a6a24ead731427190be0352ea2996e7.ashx

- 8. Jeanniard L. Marshmallow challenge – Build a tower, build a team [Internet]; 2014 [updated 25 June 2014]. Available from: https://sogilis.com/blog/marshmallow-challenge/

- 9. Mongin P. Monter une tour en spaghetti pour souder une équipe: Auto-apprentissage de la conduite de projet par une équipe [Internet]; 2019 [cited 3 June 2019]. Available from: https://cursus.edu/articles/42482/monter-une-tour-en-spaghetti-pour-souder-une-equipe#.XNwNHS97Rp8

- 10. Eppich W, Cheng A. Promoting Excellence and Reflective Learning in Simulation (PEARLS): development and rationale for a blended approach to health care simulation debriefing. Simul Healthc. 2015;10(2):106–15. doi: 10.1097/SIH.0000000000000072.

- 11. Rudolph JW, Simon R, Dufresne RL, Raemer DB. There's no such thing as "nonjudgmental" debriefing: a theory and method for debriefing with good judgment. Simul Healthc. 2006;1(1):49–55. doi: 10.1097/01266021-200600110-00006.

- 12. Rudolph JW, Simon R, Rivard P, Dufresne RL, Raemer DB. Debriefing with good judgment: combining rigorous feedback with genuine inquiry. Anesthesiol Clin. 2007;25(2):361–76. doi: 10.1016/j.anclin.2007.03.007.

- 13. Rudolph JW, Foldy EG, Robinson T, Kendall S, Taylor SS, Simon R. Helping without harming: the instructor's feedback dilemma in debriefing--a case study. Simul Healthc. 2013;8(5):304–16. doi: 10.1097/SIH.0b013e318294854e.