Abstract

Machine learning algorithms can help to improve the accuracy and efficiency of cancer diagnosis, selection of personalized therapies and prediction of long-term outcomes. Machine learning, a subset of artificial intelligence (AI), can identify patterns in data and take actions to reach pre-set goals without specific programming. Machine learning tools can help to identify high-risk populations, prescribe personalized screening tests and enrich patient populations that are most likely to benefit from advanced imaging tests. AI algorithms can also help to plan personalized therapies and predict the impact of genomic variations on the sensitivity of normal and tumor tissue to chemotherapy or radiation therapy. The two main bottlenecks for successful AI applications in pediatric oncology imaging to date are the need for large data sets and the need for adequate computing and memory power. With appropriate data entry and processing power, deep convolutional neural networks (CNNs) can process large amounts of imaging data, clinical data and medical literature in very short periods of time and thereby accelerate literature reviews, the search for the correct diagnosis and the selection of personalized treatments. This article provides a focused review of emerging AI applications that are relevant for the pediatric oncology imaging community.

Keywords: Artificial intelligence, Cancer, Children, Imaging, Machine learning, Oncology

Introduction

Our collective knowledge in the field of pediatric oncology is growing exponentially in terms of multi-layered diagnoses, treatment options and treatment outcomes. This rapidly growing body of information is coupled with the expectation of patients and families to receive the best possible care for their specific situation, instantly and everywhere. Machine learning algorithms can analyze large amounts of data and solve complex tasks in a very short time [1, 2]. This can help physicians to improve the accuracy and efficiency of making cancer diagnoses, selecting personalized therapies and predicting long-term outcomes.

Machine learning, a subset of artificial intelligence (AI), can identify patterns in data and take actions to reach pre-set goals without specific programming. Convolutional neural networks (CNNs) represent multiple inter-connected layers of AI algorithms that have learnable weights and can classify data with minimal pre-processing. Convolution is a mathematical operation that expresses the amount of overlap of one function as it is shifted over another function. The primary function of convolution in medical image processing is to extract features from input data. The CNN translates raw image pixels on the input end to scores at the output. When many CNN layers are stacked, they are called deep-CNNs. Deep-CNNs have several advantages over standard image reconstruction algorithms [3]. First, deep-CNNs can directly uncover features from a training data set, and hence can significantly alleviate the effort of explicit elaboration on feature extraction. The neuron-crafted features may match and even surpass the discriminative power of conventional feature extraction methods. Second, deep-CNNs can jointly exploit feature interaction and hierarchy within the intrinsic architecture of a neural network. Therefore, the feature selection process is significantly simplified and accelerated. Third, the three steps of feature extraction, selection and supervised classification can be realized within the optimization of the same deep architecture. With such a design, the performance of the analytical system can be tuned more easily and in a systematic fashion. Thus, deep-CNNs have the advantage of being more accurate, faster, vendor-independent and less prone to “hallucination artifacts” (enhanced random signal effects) compared to previously applied machine learning algorithms. Grewal et al. [4] and Huang et al. [5] showed that deep-CNNs are particularly well suited for cross-sectional imaging studies, where detailed segmentation and scan-level labels are often available.
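To make the convolution step described above concrete, the following is a minimal sketch in plain Python. The toy image, the hand-written gradient kernel and the function name `convolve2d_valid` are illustrative assumptions and do not come from any of the cited studies; in a real CNN the kernel values are learned during training rather than written by hand.

```python
from typing import List

Matrix = List[List[float]]


def convolve2d_valid(image: Matrix, kernel: Matrix) -> Matrix:
    """Slide `kernel` over `image` and sum the element-wise products.

    Only positions where the kernel fits entirely inside the image are
    evaluated ("valid" mode). The kernel is flipped along both axes, as in
    the mathematical definition of convolution. Deep learning libraries
    usually skip the flip, which makes no practical difference when the
    kernel weights are learned.
    """
    kh, kw = len(kernel), len(kernel[0])
    ih, iw = len(image), len(image[0])
    flipped = [row[::-1] for row in kernel[::-1]]
    out: Matrix = []
    for r in range(ih - kh + 1):
        out_row = []
        for c in range(iw - kw + 1):
            acc = 0.0
            for i in range(kh):
                for j in range(kw):
                    acc += image[r + i][c + j] * flipped[i][j]
            out_row.append(acc)
        out.append(out_row)
    return out


# Toy 4x5 "image": dark left part (0), bright right part (1).
image = [
    [0, 0, 0, 1, 1],
    [0, 0, 0, 1, 1],
    [0, 0, 0, 1, 1],
    [0, 0, 0, 1, 1],
]

# Hand-written horizontal-gradient kernel (illustrative, not learned).
kernel = [
    [1, 0, -1],
    [1, 0, -1],
    [1, 0, -1],
]

feature_map = convolve2d_valid(image, kernel)
print(feature_map)  # [[0.0, 3.0, 3.0], [0.0, 3.0, 3.0]]
```

The output is zero where the image is uniform and non-zero where the window overlaps the dark-to-bright edge, which is the sense in which a convolution "extracts a feature" from raw pixels.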

There are numerous potential applications of AI for pediatric oncology imaging (Table 1). The two main bottlenecks for successful AI applications in pediatric oncology imaging are the need for large data sets and the need for adequate graphics processing unit (GPU) computing and memory power. With appropriate data entry and GPU power, deep-CNNs can process large amounts of imaging data, clinical data and medical literature in very short periods of time [6, 7]. This can substantially accelerate literature reviews, finding the correct diagnosis and finding the best treatment option for individual patients [7]. Current AI algorithms have to be fed with large data sets, typically on the order of several hundreds or thousands of images [6, 7]. Sparse or non-representative data feeds can lead to unreliable outcomes. This is a particular challenge for pediatric oncology because there are relatively few children with cancer overall and nearly every case is unique. To date, deep-CNNs cannot guarantee correct diagnoses in circumstances that do not resemble the training data. However, considering the exponential progress in technical advancements of deep-CNN algorithms, this problem is likely to be solved in the near future by, for example, cross-training CNNs on data sets from adults, enabling AI to learn from sparse data and sharing CNN algorithms across multiple institutions rather than pooling multi-institutional data. It is expected to become less important to have large data sets and more important to have access to abundant computing power to operate AI algorithms. This focused review article provides an overview of emerging AI applications that are clinically relevant for pediatric oncology imaging.

Table 1.

Potential benefits of artificial intelligence for pediatric oncology imaging

| AI Application | Description |
|---|---|
| Test selection | - Use screening biomarkers to assign appropriate imaging tests |
| | - Score patients’ symptoms or genetic predisposition |
| | - Avoid duplicate scans |
| | - Flag previous and predict future contrast agent allergies |
| Image reconstruction | - Decrease radiation dose |
| | - Accelerate scan times |
| | - Augment low-resolution imaging data |
| | - Decrease contrast agent dose |
| | - Homogenize imaging data from multi-center clinical trials |
| Image quality control | - Reduce patient call backs |
| | - Reduce image noise |
| | - Reduce motion artifacts |
| | - Automated radiation exposure calculation |
| Image study triage | - Create preliminary diagnoses: normal versus abnormal |
| | - Organize worklists: Prioritize cases with critical findings |
| | - Route scans with new cancer diagnoses to cancer imaging experts |
| Tumor diagnosis | - Detect tumors early |
| | - Flag abnormal findings |
| | - Differentiate benign and malignant tumors |
| | - Flag vessel encasement or involvement |
| | - Provide automated tumor measurements, staging and restaging |
| Tumor classification | - Provide more detailed diagnoses through radio(geno)mics |
| | - Link blood biomarkers to image diagnoses |
| | - Predict survival and prognosis |
| Treatment selection | - Select most appropriate combination therapy for each patient |
| | - Automate tumor segmentation for radiation therapy planning |
| | - Predict individual tumor drug sensitivities |
| | - Predict individual drug toxicities, suggest dose adjustments |
| Tumor therapy response | - Provide automated tumor size measurements |
| | - Provide automated tumor therapy response scores |
| | - Understand tumor therapy resistance |
| | - Understand spontaneous tumor regression |
| | - Facilitate new drug development |
| | - Facilitate drug repurposing/repositioning |
| Test result communication | - Facilitate standardized reports |
| | - Inform patient and appropriate clinical specialists |
| | - Document communications and track follow-ups |

Test selection

Detecting cancers at an early stage of development can be a powerful tool for improving long-term outcomes. However, modern health care management models require creative approaches to reduce costs while maintaining quality care for every child and introducing new technology. Machine learning tools can help to identify high-risk populations, prescribe the most appropriate screening test for each child and enrich the patient populations that are most likely to benefit from advanced imaging tests. Machine learning algorithms can also help us to understand the developmental context of pediatric cancer development. For example, Marshall et al. [8] described a model of pediatric cancer development that can be linked to a defect in signaling that is crucial to embryonic development.

Various investigators have used machine learning to identify blood biomarkers of early lung cancer [9], colon cancer [10, 11], breast cancer [12] and hepatocellular carcinoma [13], among others, in adults. These blood biomarkers could be used to assign people to advanced imaging tests. Similar approaches could be used to refer high-risk pediatric patients to imaging tests and narrow tumor differential diagnoses on imaging studies based on new or existing biomarkers for pediatric cancers. Examples would be to link electronic medical record data about vanillylmandelic acid (VMA) levels with imaging diagnoses of neural crest tumors, alpha-fetoprotein (AFP) with hepatoblastoma or hepatocellular carcinoma (HCC) and lactate dehydrogenase (LDH) with lymphoma. A child with elevated urine VMA levels, detected by a smart toilet or diaper, could be referred for abdominal ultrasound, and the differential diagnosis of a mediastinal mass found incidentally on a chest radiograph could be narrowed by integrating elevated LDH into the imaging report.

In children with cancer predisposition syndromes, surveillance screening is recommended and has been shown to improve survival because of detection of early stage tumors [14]. However, there is no universal agreement to date among radiologists regarding the best-suited imaging techniques or imaging protocols [15], and providers report both false-negative and false-positive findings [14]. Machine learning algorithms could be used to identify imaging protocols that produce optimal diagnostic accuracy and integrate pertinent clinical information to improve the sensitivity and specificity of imaging tests for cancer screening and early cancer detection.

Image acquisition, reconstruction and quality control

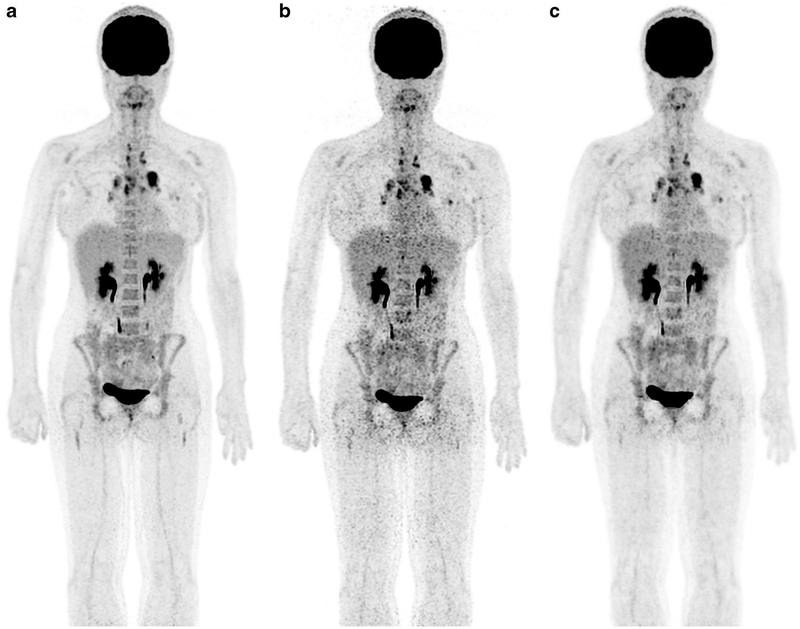

Deep-CNNs represent a powerful tool to substantially reduce ionizing radiation exposure from cross-sectional imaging scans. To achieve this, the network is first trained on paired low-dose and standard-dose images to recognize their common imaging features. In a second step, the CNN can reproduce standard-dose images from input low-dose images. Because the CNN is trained at the pixel level, training sets from one anatomical region can be applied to images from another region. Kang et al. [16] used CNNs to de-noise low-dose CT scans. Our team utilized deep-CNNs to generate standard-dose-equivalent [F-18]2-fluoro-2-deoxyglucose (FDG) positron emission tomography (PET) scans from low-dose images (Fig. 1). To train our deep-CNN, we generated low-dose 18F-FDG PET data from list mode imaging studies of 20 pediatric cancer patients by randomly selecting 25% of count events, spread uniformly over the acquisition time. We reconstructed standard-dose-equivalent PET images from the reduced-dose images, using ordered subset expectation maximization methods, convolutional de-noising auto-encoders [17] and commercially available software (Subtle Medical Inc., Palo Alto, CA) [18].
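The low-dose simulation described above (keeping a random 25% of list-mode count events, spread over the whole acquisition) can be sketched as follows. This is a minimal illustration, not the pipeline used for the study: the function name `subsample_events`, the representation of each event as a bare timestamp and the synthetic 180-second acquisition are all assumptions made for the example.

```python
import random
from typing import List, Sequence


def subsample_events(event_times: Sequence[float],
                     keep_fraction: float = 0.25,
                     seed: int = 0) -> List[float]:
    """Return a random subset of list-mode events in their original order.

    Events are drawn uniformly at random without replacement, so the kept
    events remain spread over the whole acquisition instead of coming from
    a shortened scan.
    """
    if not 0.0 < keep_fraction <= 1.0:
        raise ValueError("keep_fraction must be in (0, 1]")
    rng = random.Random(seed)
    n_keep = round(len(event_times) * keep_fraction)
    kept_idx = sorted(rng.sample(range(len(event_times)), n_keep))
    return [event_times[i] for i in kept_idx]


# Hypothetical acquisition: 10,000 count events over 180 seconds.
_rng = random.Random(42)
full_dose_events = sorted(_rng.uniform(0.0, 180.0) for _ in range(10_000))

# Simulated 25%-dose data set; the full data set stays available as the
# paired training target.
low_dose_events = subsample_events(full_dose_events, keep_fraction=0.25, seed=1)
print(len(full_dose_events), len(low_dose_events))  # 10000 2500
```

Each simulated low-dose event set and its full-count counterpart then form one training pair once both are reconstructed into images.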

Fig. 1.

18F-FDG PET scan of a 29-year-old woman with Hodgkin lymphoma and multiple FDG-avid mediastinal lymph nodes: (a) PET scan 60 min after intravenous injection of 18F-FDG at standard dose (3 MBq/kg); (b) reconstructed PET scan at 25% of the original 18F-FDG dose (0.75 MBq/kg); (c) artificial-intelligence-augmented low-dose scan, which provides image quality equivalent to that of the standard-dose scan. 18F-FDG PET: [F-18]2-fluoro-2-deoxyglucose positron emission tomography

Similar machine learning approaches can be used to substantially accelerate the acquisition time of medical imaging procedures or reduce contrast agent doses. Zhu and coworkers [19] developed a new CNN algorithm that can significantly accelerate the acquisition time of MRI scans. Gong and coworkers [20] used a CNN to reduce the dose of gadolinium-based contrast agents for brain MRI scans to 10% of the original dose by reconstructing low-dose images to standard-dose images.

Tumor detection and classification

Deep-CNNs have been used for detecting and classifying various non-neoplastic abnormalities in children, including detecting delayed bone age on hand radiographs [21] and pneumonia on chest radiographs [22]. Similar principles can be used for the detection of malignant tumors: CNNs can be trained to differentiate normal and abnormal scans. On abnormal scans, tumor lesions can be automatically identified, segmented and measured. For example, Becker et al. [23] used a CNN to detect breast tumors on ultrasound studies, Afifi and Nakaguchi [24] used a CNN for unsupervised detection of liver tumors on CT images, Soltaninejad et al. [25] used a machine learning classifier based on superpixels and extremely randomized trees for automated brain tumor detection on MRI scans and Bi et al. [26] used a deep-CNN to automatically detect FDG-avid lymphomas on FDG-PET scans. Helm et al. [27] used a computer-aided system to detect pulmonary nodules on chest CTs of pediatric patients. Linguraru et al. [28] used a deep-CNN for automatic segmentation and volume assessment of liver tumors and tumor-free liver parenchyma in adults.

Deep-CNNs have also been used to characterize tumors. For example, Tu et al. [29] and Chen et al. [30] used machine learning algorithms to differentiate benign and malignant lung nodules on chest CT scans and PET/CT scans, respectively. Perk et al. [31] developed a whole-body automatic disease classification tool to differentiate bone metastases and benign bone lesions (mostly degenerative changes) on 18F-sodium fluoride (NaF) PET/CT scans. Our team trained a deep-CNN system to differentiate alveolar and embryonal rhabdomyosarcomas on MR images [3].

Machine learning algorithms are particularly well suited to detect patterns in big data sets for radiomic and radiogenomic analyses. Deep-CNNs can associate increasingly complex genetic and molecular tumor characteristics with specific macroscopic tumor features on imaging tests. Kickingereder and coworkers [32] reported that machine learning algorithms could predict EGFR amplification status and RTK II sub-groups in adults with a moderate yet significantly greater accuracy (63% for EGFR [P<.01] and 61% for RTK II [P=.01]) than prediction by chance. Bae and co-workers [33] used machine learning algorithms to predict survival in 217 adults with glioblastoma by integrating radiomic MRI phenotyping with clinical and genetic profiles. Several other investigators used machine learning algorithms for texture analysis and classification of pediatric brain tumors [2, 32, 34].

Selecting and monitoring personalized therapies

The Children’s Oncology Group (COG) has united pediatric oncologists from hospitals across North America to assess treatment outcomes of malignant tumors in pediatric patients. To take full advantage of CNNs, pediatric radiologists need to similarly unite and pool otherwise sparse imaging data of children with cancer. Data obtained at single centers are usually too sparse and too inhomogeneous to apply AI approaches [35, 36]. To address this critical need, we recently formed a consortium of pediatric radiologists who are pooling their pediatric PET/MR imaging data in a centralized image registry [37]. The advantage over image registries established by the COG is that the imaging-specialist-driven registry includes more detailed annotations of imaging data. For example, while many clinical trials use tumor metabolic response on 18F-FDG PET scans as an outcome measure, researchers recognized that 18F-FDG PET data acquisition and processing approaches differ considerably among centers [38]. This variability makes it difficult and sometimes impossible to use quantitative measures of radiotracer standardized uptake values (SUV) as study endpoints for multi-center studies [38]. Raw imaging data from different institutions can be fed into a deep-CNN and reconstructed such that they have equivalent voxel sizes and 18F-FDG dose levels. This can help to generate more homogeneous datasets and enable quantitative data analyses for multi-center clinical trials.
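To illustrate why SUV measurements are sensitive to acquisition protocol, the sketch below computes a body-weight-normalized SUV with decay correction of the injected dose. It is a simplified illustration under stated assumptions: body-weight normalization, a tissue density of 1 g/mL and the function names are choices made for this example, and the sketch is not the processing used at any of the surveyed centers.

```python
import math

# Physical half-life of fluorine-18, in seconds (about 109.8 minutes).
F18_HALF_LIFE_S = 109.77 * 60


def decay_corrected_dose(injected_bq: float, elapsed_s: float,
                         half_life_s: float = F18_HALF_LIFE_S) -> float:
    """Activity remaining from the injected dose after `elapsed_s` seconds."""
    return injected_bq * math.exp(-math.log(2) * elapsed_s / half_life_s)


def suv_body_weight(activity_bq_per_ml: float, injected_bq: float,
                    weight_kg: float, elapsed_s: float) -> float:
    """Body-weight SUV: tissue concentration / (remaining dose per gram).

    Assumes 1 mL of tissue weighs 1 g, so the result is dimensionless.
    """
    dose_at_scan = decay_corrected_dose(injected_bq, elapsed_s)
    return activity_bq_per_ml / (dose_at_scan / (weight_kg * 1000.0))


# Hypothetical 20 kg child injected with 3 MBq/kg (60 MBq in total).
# The same measured lesion activity gives different SUVs if the recorded
# uptake time differs between centers.
for uptake_min in (45, 60, 90):
    suv = suv_body_weight(15_000.0, 60e6, 20.0, uptake_min * 60)
    print(f"uptake {uptake_min} min -> SUV {suv:.2f}")
```

Because the injected dose, patient weight, uptake time and reconstruction all enter the calculation, differences in any of them between centers change the reported value, which is the variability that harmonized reconstruction aims to reduce.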

Deep-CNNs can help clinicians to plan personalized therapies and predict the impact of genomic variations on the sensitivity of normal and tumor tissue to chemotherapy or radiation therapy [7]. For example, Men and coworkers [39] trained a CNN to provide fully automated tumor segmentation for radiation therapy planning in adults. CNNs can help to automate measures of tumor therapy response. In 1998, Glass and Reddick [40] reported the use of a neural network for automatic segmentation and quantification of necrosis in osteosarcomas on post-treatment MR images. In many pediatric tumors, chemotherapy leads to a decline in tumor metabolism before the tumor size decreases [41, 42]. Therefore, tumor therapy response is often assessed using radiotracer-based imaging tests such as 18F-FDG PET/CT [41]. Our team trained a deep-CNN in classifying responders and non-responders based on low-dose 18F-FDG PET/MRI scans.

Machine learning algorithms can also help us understand tumor therapy resistance. Cairns et al. [43] developed a network-based cancer phenotyping mapping tool that can chart drug-response networks. The authors manipulated tumor therapy response to anthracyclines and taxanes by perturbing key genes in a drug response network [43]. Most non-responders die from metastases. AI can improve our understanding of tumor spread by comparing molecular/anatomical features of primary tumors and metastases or by comparing multiple metastases in the same person. AI could also help us to uncover genetic, molecular and physiological factors that lead to spontaneous tumor regression. Thus far, no significantly different tumor signatures have been identified between regressing and non-regressing tumors.

Machine learning algorithms have been utilized to predict survival in children with cancer and improve long-term outcomes. For example, Han et al. [44] successfully applied a CNN to analyze complex multi-layered clinical data to predict survival of patients with synovial sarcoma. The survival neural network model predicted survival of people with synovial sarcoma more accurately than the Cox proportional hazards model [44]. Similarly, Cha et al. [45] reported that a CNN-based radiomics model accurately predicted stereotactic-radiosurgery responses of brain metastases. Ibragimov et al. [46] trained a CNN to predict radiation therapy-induced liver toxicity and to automatically identify anatomical regions that must be spared to reduce long-term toxic side effects.
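As background for the comparison with the Cox model mentioned above, the Cox proportional hazards model assumes that covariates act multiplicatively on a shared baseline hazard. The notation below is the standard textbook form and is not taken from Han et al. [44]:

```latex
h(t \mid \mathbf{x}) = h_0(t)\,\exp\!\left(\boldsymbol{\beta}^{\top}\mathbf{x}\right)
```

Here $h_0(t)$ is the baseline hazard, $\mathbf{x}$ is the vector of patient covariates and $\boldsymbol{\beta}$ is the vector of fitted coefficients. Neural-network survival models typically replace the linear term $\boldsymbol{\beta}^{\top}\mathbf{x}$ with a learned nonlinear function of $\mathbf{x}$, which may allow them to capture interactions between covariates that the linear form cannot.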

CNNs also have great potential to improve and accelerate the development of new cancer therapeutics, including new drug discoveries, drug repositioning (different application of drugs approved by the U.S. Food and Drug Administration) and drug repurposing (different use of drugs that failed approval for other indications) [47, 48]. Imaging data can inform all types of drug development. Imaging data are being integrated in computational analyses and mathematical modeling of drug similarity measures, efficacy calculations and toxicity assessments. Text-mining algorithms can search large publication databases to find novel drug combination therapies [47].

Facilitating communication of test results

Artificial intelligence can optimize radiology workflows by organizing work lists, prioritizing cases with critical findings, flagging abnormal findings, providing automated tumor measurements, and translating the results into appropriate staging schemes. AI algorithms can also assist in identifying normal anatomical structures on cross-sectional imaging studies, such as brain anatomy, abdominal vessels [49] and muscles. This can facilitate and accelerate the description of the mass localization. AI algorithms can also help inform radiologists with oncology imaging expertise about new cancer diagnoses and communicate results of imaging tests to providers and patients, document these communications and alert and remind oncologists and patients of necessary follow-up studies.
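The worklist prioritization described above can be sketched with a priority queue. The abnormality scores, study identifiers and names such as `WorklistEntry` and `prioritize` are hypothetical; in practice the score would come from an upstream classifier and the ordering would be one input among several for the reading radiologist.

```python
import heapq
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass(order=True)
class WorklistEntry:
    # Negative score, so that the most suspicious study is popped first
    # from Python's min-heap.
    sort_key: float
    accession: str = field(compare=False)


def prioritize(scores: Dict[str, float]) -> List[str]:
    """Return study identifiers ordered from highest to lowest score."""
    heap = [WorklistEntry(-score, accession)
            for accession, score in scores.items()]
    heapq.heapify(heap)
    ordered = []
    while heap:
        ordered.append(heapq.heappop(heap).accession)
    return ordered


# Hypothetical model outputs: probability that a scan contains a
# critical finding.
scores = {"STUDY-A": 0.12, "STUDY-B": 0.97, "STUDY-C": 0.55}
worklist = prioritize(scores)
print(worklist)  # ['STUDY-B', 'STUDY-C', 'STUDY-A']
```

A heap is used rather than a one-off sort because new studies can be pushed onto the queue as they arrive without re-sorting the whole worklist.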

Conclusion

Machine learning algorithms are revolutionizing the field of oncology imaging. While many AI approaches have been deployed for adults, dedicated applications of AI algorithms for children with cancer are limited, mostly because of the limited number of available data sets. Although it is possible to build on algorithms that have been developed for adults using transfer-learning approaches, it is important to recognize that most CNNs are not directly generalizable from adults to children. Therefore, the development of dedicated AI algorithms for pediatric oncology applications is urgently needed. Pediatric imaging datasets are not large by the standards of the deep-learning literature in medical imaging [16, 21, 50]. However, the volumetric nature of CT and MRI imaging studies coupled with our ability to leverage detailed data segmentation in addition to standard augmentation techniques [51] is expected to enable us to attain useful levels of performance, similar to recent studies on brain tumors [52, 53] and ischemic infarction [54]. Given that the deep-CNN task is essentially a pixel-level classification task, and because pediatric cancer lesions are generally sizeable, leading to a relatively large amount of training examples, CNN performance is reasonable in the realm of pediatric oncology imaging. Future developments require initiatives for standardizations and sharing of source data and new algorithms to enable cross-validations and maximize patient benefit.

Acknowledgments

This work was supported by a grant from the Eunice Kennedy Shriver National Institute of Child Health and Human Development, grant number R01 HD081123–01A1.

Footnotes

Conflicts of interest: None

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

References

- 1. Callaway E, Castelvecchi D, Cyranoski D et al. (2017) 2017 in news: the science events that shaped the year. Nature 552:304–307

- 2. Fetit AE, Novak J, Rodriguez D et al. (2018) Radiomics in paediatric neuro-oncology: a multicentre study on MRI texture analysis. NMR Biomed 31:1–13

- 3. Banerjee I, Crawley A, Bhethanabotla M et al. (2018) Transfer learning on fused multiparametric MR images for classifying histopathological subtypes of rhabdomyosarcoma. Comput Med Imaging Graph 65:167–175

- 4. Grewal M, Srivastava M, Kumar P, Varadarajan S (2017) RADNET: radiologist level accuracy using deep learning for hemorrhage detection in CT scans. https://arxiv.org/abs/1710.04934. Accessed 18 Jan 2019

- 5. Huang YH, Feng QJ (2018) Segmentation of brain tumor on magnetic resonance images using 3D full-convolutional densely connected convolutional networks. Nan Fang Yi Ke Da Xue Xue Bao 38:661–668

- 6. Erickson BJ, Korfiatis P, Akkus Z et al. (2017) Machine learning for medical imaging. Radiographics 37:505–515

- 7. Kang J, Rancati T, Lee S et al. (2018) Machine learning and radiogenomics: lessons learned and future directions. Front Oncol 8:228

- 8. Marshall GM, Carter DR, Cheung BB et al. (2014) The prenatal origins of cancer. Nat Rev Cancer 14:277–289

- 9. Fortunato O, Boeri M, Verri C et al. (2014) Assessment of circulating microRNAs in plasma of lung cancer patients. Molecules 19:3038–3054

- 10. Zhang B, Liang XL, Gao HY et al. (2016) Models of logistic regression analysis, support vector machine, and back-propagation neural network based on serum tumor markers in colorectal cancer diagnosis. Genet Mol Res 15(2). doi:10.4238/gmr.15028643

- 11. Hornbrook MC, Goshen R, Choman E et al. (2017) Early colorectal cancer detected by machine learning model using gender, age, and complete blood count data. Dig Dis Sci 62:2719–2727

- 12. Lu L, Sun J, Shi P et al. (2017) Identification of circular RNAs as a promising new class of diagnostic biomarkers for human breast cancer. Oncotarget 8:44096–44107

- 13. Patterson AD, Maurhofer O, Beyoglu D et al. (2011) Aberrant lipid metabolism in hepatocellular carcinoma revealed by plasma metabolomics and lipid profiling. Cancer Res 71:6590–6600

- 14. Kumar P, Gill RM, Phelps A et al. (2018) Surveillance screening in Li-Fraumeni syndrome: raising awareness of false positives. Cureus 10:e2527

- 15. Schooler GR, Davis JT, Daldrup-Link HE et al. (2018) Current utilization and procedural practices in pediatric whole-body MRI. Pediatr Radiol 48:1101–1107

- 16. Kang E, Min J, Ye JC (2017) A deep convolutional neural network using directional wavelets for low-dose X-ray CT reconstruction. Med Phys 44:e360–e375

- 17. Gondara L (2016) Medical image denoising using convolutional denoising autoencoders. 2016 IEEE 16th International Conference on Data Mining Workshops (ICDMW), Barcelona, Spain, pp 241–246

- 18. Xu J, Gong E, Pauly JM, Zaharchuk G (2018) 200x low-dose PET reconstruction using deep learning. NIPS Healthcare Workshop 2017. https://arxiv.org/abs/1712.04119. Accessed 18 Jan 2019

- 19. Zhu B, Liu JZ, Cauley SF et al. (2018) Image reconstruction by domain-transform manifold learning. Nature 555:487–492

- 20. Gong E, Pauly JM, Wintermark M et al. (2018) Deep learning enables reduced gadolinium dose for contrast-enhanced brain MRI. J Magn Reson Imaging 48:330–340

- 21. Larson DB, Chen MC, Lungren MP et al. (2018) Performance of a deep-learning neural network model in assessing skeletal maturity on pediatric hand radiographs. Radiology 287:313–322

- 22. Rajpurkar P, Irvin J, Zhu K et al. (2018) CheXNet: radiologist-level pneumonia detection on chest X-rays with deep learning. IEEE Computer Society Conference on Computer Vision and Pattern Recognition. https://arxiv.org/abs/1711.05225. Accessed 18 Jan 2019

- 23. Becker AS, Mueller M, Stoffel E et al. (2018) Classification of breast cancer in ultrasound imaging using a generic deep learning analysis software: a pilot study. Br J Radiol 91:20170576

- 24. Afifi A, Nakaguchi T (2015) Unsupervised detection of liver lesions in CT images. Conf Proc IEEE Eng Med Biol Soc 2015:2411–2414

- 25. Soltaninejad M, Yang G, Lambrou T et al. (2017) Automated brain tumour detection and segmentation using superpixel-based extremely randomized trees in FLAIR MRI. Int J Comput Assist Radiol Surg 12:183–203

- 26. Bi L, Kim J, Kumar A et al. (2017) Automatic detection and classification of regions of FDG uptake in whole-body PET-CT lymphoma studies. Comput Med Imaging Graph 60:3–10

- 27. Helm EJ, Silva CT, Roberts HC et al. (2009) Computer-aided detection for the identification of pulmonary nodules in pediatric oncology patients: initial experience. Pediatr Radiol 39:685–693

- 28. Linguraru MG, Richbourg WJ, Liu J et al. (2012) Tumor burden analysis on computed tomography by automated liver and tumor segmentation. IEEE Trans Med Imaging 31:1965–1976

- 29. Tu SJ, Wang CW, Pan KT et al. (2018) Localized thin-section CT with radiomics feature extraction and machine learning to classify early-detected pulmonary nodules from lung cancer screening. Phys Med Biol 63:065005

- 30. Chen S, Harmon S, Perk T et al. (2017) Diagnostic classification of solitary pulmonary nodules using dual time (18)F-FDG PET/CT image texture features in granuloma-endemic regions. Sci Rep 7:9370

- 31. Perk T, Bradshaw T, Chen S et al. (2018) Automated classification of benign and malignant lesions in (18)F-NaF PET/CT images using machine learning. Phys Med Biol 63:225019

- 32. Kickingereder P, Bonekamp D, Nowosielski M et al. (2016) Radiogenomics of glioblastoma: machine learning-based classification of molecular characteristics by using multiparametric and multiregional MR imaging features. Radiology 281:907–918

- 33. Bae S, Choi YS, Ahn SS et al. (2018) Radiomic MRI phenotyping of glioblastoma: improving survival prediction. Radiology 289:797–806

- 34. Zarinabad N, Wilson M, Gill SK et al. (2017) Multiclass imbalance learning: improving classification of pediatric brain tumors from magnetic resonance spectroscopy. Magn Reson Med 77:2114–2124

- 35. Hirsch FW, Sattler B, Sorge I et al. (2013) PET/MR in children. Initial clinical experience in paediatric oncology using an integrated PET/MR scanner. Pediatr Radiol 43:860–875

- 36. Schafer JF, Gatidis S, Schmidt H et al. (2014) Simultaneous whole-body PET/MR imaging in comparison to PET/CT in pediatric oncology: initial results. Radiology 273:220–231

- 37. Daldrup-Link H, Voss S, Donig J (2014) ACR Committee on pediatric imaging research. Pediatr Radiol 44:1193–1194

- 38. Graham MM, Badawi RD, Wahl RL (2011) Variations in PET/CT methodology for oncologic imaging at U.S. academic medical centers: an imaging response assessment team survey. J Nucl Med 52:311–317

- 39. Men K, Zhang T, Chen X et al. (2018) Fully automatic and robust segmentation of the clinical target volume for radiotherapy of breast cancer using big data and deep learning. Phys Med 50:13–19

- 40. Glass JO, Reddick WE (1998) Hybrid artificial neural network segmentation and classification of dynamic contrast-enhanced MR imaging (DEMRI) of osteosarcoma. Magn Reson Imaging 16:1075–1083

- 41. Kleis M, Daldrup-Link H, Matthay K et al. (2009) Diagnostic value of PET/CT for the staging and restaging of pediatric tumors. Eur J Nucl Med Mol Imaging 36:23–36

- 42. McCarville MB (2008) New frontiers in pediatric oncologic imaging. Cancer Imaging 8:87–92

- 43. Cairns J, Ung CY, da Rocha EL et al. (2016) A network-based phenotype mapping approach to identify genes that modulate drug response phenotypes. Sci Rep 6:37003

- 44. Han I, Kim JH, Park H et al. (2018) Deep learning approach for survival prediction for patients with synovial sarcoma. Tumour Biol 40. doi:10.1177/1010428318799264

- 45. Cha YJ, Jang WI, Kim MS et al. (2018) Prediction of response to stereotactic radiosurgery for brain metastases using convolutional neural networks. Anticancer Res 38:5437–5445

- 46. Ibragimov B, Toesca D, Chang D et al. (2018) Development of deep neural network for individualized hepatobiliary toxicity prediction after liver SBRT. Med Phys 45:4763–4774

- 47. Sun W, Sanderson PE, Zheng W (2016) Drug combination therapy increases successful drug repositioning. Drug Discov Today 21:1189–1195

- 48. Salazar BM, Balczewski EA, Ung CY, Zhu S (2016) Neuroblastoma, a paradigm for big data science in pediatric oncology. Int J Mol Sci 18. doi:10.3390/ijms18010037

- 49. Matsuzaki T, Oda M, Kitasaka T et al. (2015) Automated anatomical labeling of abdominal arteries and hepatic portal system extracted from abdominal CT volumes. Med Image Anal 20:152–161

- 50. Esteva A, Kuprel B, Novoa RA et al. (2017) Dermatologist-level classification of skin cancer with deep neural networks. Nature 542:115–118

- 51. Ratner AJ, Ehrenberg HR, Hussain Z et al. (2017) Learning to compose domain-specific transformations for data augmentation. Adv Neural Inf Process Syst 30:3239–3249

- 52. Havaei M, Davy A, Warde-Farley D et al. (2017) Brain tumor segmentation with deep neural networks. Med Image Anal 35:18–31

- 53. Havaei M, Larochelle H, Poulin P et al. (2016) Within-brain classification for brain tumor segmentation. Int J Comput Assist Radiol Surg 11:777–788

- 54. Beecy AN, Chang Q, Anchouche K et al. (2018) A novel deep learning approach for automated diagnosis of acute ischemic infarction on computed tomography. JACC Cardiovasc Imaging 11:1723–1725