Summary.

Regularization methods, including Lasso, group Lasso and SCAD, typically focus on selecting variables with strong effects while ignoring weak signals. This may result in biased prediction, especially when weak signals outnumber strong signals. This paper aims to incorporate weak signals in variable selection, estimation and prediction. We propose a two-stage procedure, consisting of variable selection and post-selection estimation. The variable selection stage involves a covariance-insured screening for detecting weak signals, while the post-selection estimation stage involves a shrinkage estimator for jointly estimating strong and weak signals selected from the first stage. We term the proposed method the covariance-insured screening based post-selection shrinkage estimator. We establish asymptotic properties for the proposed method and show, via simulations, that incorporating weak signals can improve estimation and prediction performance. We apply the proposed method to predict the annual gross domestic product (GDP) rates based on various socioeconomic indicators for 82 countries.

Keywords: high-dimensional data, Lasso, post-selection shrinkage estimation, variable selection, weak signal detection

1. Introduction

Given n independent samples, we consider a high-dimensional linear regression model

$$y = X\beta + \varepsilon, \qquad (1)$$

where y = (y1, …, yn)T is an n-vector of responses, X = (Xij)n×p is an n × p random design matrix, β = (β1, …, βp)T is a p-vector of regression coefficients and ε = (ε1, …, εn)T is an n-vector of independently and identically distributed random errors with mean 0 and variance σ2. Let β* denote the true value of β. We write X = (x(1), …, x(n))T = (x1, …, xp), where x(i) = (Xi1, …, Xip)T is the i-th row of X and xj is the j-th column of X, for i = 1, …, n and j = 1, …, p. Without the subject index i, we write y, Xj and ε as the random variables underlying yi, Xij and εi, respectively. We assume that each Xj is independent of ε. We write x as the random vector underlying x(i) and assume that x follows a p-dimensional multivariate sub-Gaussian distribution with mean zero, a given variance proxy and covariance matrix Σ. The sub-Gaussian family covers a wide class of distributions, including Gaussian, binary and all bounded random variables. Our proposed framework can therefore accommodate more data types than the conventional Gaussian assumption.

We assume that model (1) is sparse. That is, the number of nonzero components of β* is less than n. When p > n, the essential problem is to recover the set of predictors with nonzero coefficients. The past two decades have seen many regularization methods developed for variable selection and estimation in high-dimensional settings, including Lasso (Tibshirani, 1996), adaptive Lasso (Zou, 2006), group Lasso (Yuan and Lin, 2006), SCAD (Fan and Li, 2001) and MCP (Zhang, 2010), among many others. Most regularization methods assume the restrictive β-min condition, which requires that the magnitude of each nonzero β*j exceed a certain noise level (Zhang and Zhang, 2014). Hence, regularization methods may fail to detect weak signals with nonzero but small β*j's, which results in biased estimates and inaccurate predictions, especially when weak signals outnumber strong signals.

Detection of weak signals is challenging. However, if weak signals are partially correlated with strong signals which satisfy the β-min condition, they may be more reliably detected. To elaborate on this idea, first notice that the regression coefficient can be written as

$$\beta_j^{*} = \sum_{j'=1}^{p} \Omega_{jj'}\,\mathrm{cov}(X_{j'}, y), \qquad (2)$$

where Ωjj′ is the jj′-th entry of Ω = Σ−1, the precision matrix of x. Let ρjj′ be the partial correlation of Xj and Xj′, i.e. the correlation between the residuals of Xj and Xj′ after regressing them on all the other X variables. It can be shown that $\rho_{jj'} = -\Omega_{jj'}/\sqrt{\Omega_{jj}\Omega_{j'j'}}$. Hence, Xj and Xj′ are partially uncorrelated if and only if Ωjj′ = 0. Assume that Ω is sparse, with only a few nonzero entries. When the right hand side of (2) can be accurately evaluated, weak signals can be distinguished from noise. In high-dimensional settings, it is impossible to accurately evaluate this quantity directly. However, under the faithfulness condition that will be introduced in Section 3, a variable, say, indexed by j′, satisfying the β-min condition will have a nonzero cov(Xj′, y). Once we identify such strong signals, we set out to discover variables that are partially correlated with them.
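To see where (2) comes from, a short derivation may help. It uses only model (1), the mean-zero assumption on x, and the independence of x and ε; the vector notation cov(x, y) for the p-vector of marginal covariances is introduced here purely for illustration.

$$
\begin{aligned}
\mathrm{cov}(x, y) &= \mathrm{cov}\big(x,\; x^{T}\beta^{*} + \varepsilon\big) = \Sigma\beta^{*} + \mathrm{cov}(x, \varepsilon) = \Sigma\beta^{*},\\
\beta^{*} &= \Sigma^{-1}\,\mathrm{cov}(x, y) = \Omega\,\mathrm{cov}(x, y),\\
\beta^{*}_{j} &= \sum_{j'=1}^{p} \Omega_{jj'}\,\mathrm{cov}(X_{j'}, y).
\end{aligned}
$$

In particular, a variable whose own marginal covariance cov(Xj, y) is close to zero can still have β*j ≠ 0 whenever Ωjj′ ≠ 0 for some j′ with a sizeable cov(Xj′, y).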

For brevity, we term weak signals which are partially correlated with strong signals “weak but correlated” (WBC) signals. This paper aims to incorporate WBC signals in variable selection, estimation and prediction. We propose a two-stage procedure which consists of variable selection and post-selection estimation. The variable selection stage involves a covariance-insured screening for detecting weak signals, and the post-selection estimation stage involves a shrinkage estimator for jointly estimating strong and weak signals selected from the first stage. We call the proposed method the covariance-insured screening based post-selection shrinkage estimator (CIS-PSE). Our simulation studies demonstrate that by incorporating WBC signals, CIS-PSE improves estimation and prediction accuracy. We also establish the asymptotic selection consistency of CIS-PSE.

The paper is organized as follows. We outline the proposed CIS-PSE method in Section 2 and investigate its asymptotic properties in Section 3. We evaluate the finite-sample performance of CIS-PSE via simulations in Section 4, and apply the proposed method to predict the annual gross domestic product (GDP) rates based on the socioeconomic status for 82 countries in Section 5. We conclude the paper with a brief discussion in Section 6. All technical proofs are provided in the Appendix.

2. Methods

2.1. Notation

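We use scripted upper-case letters, such as $\mathcal{S}$, to denote the subsets of {1, …, p}. Denote by $|\mathcal{S}|$ the cardinality of $\mathcal{S}$ and by $\mathcal{S}^{c}$ the complement of $\mathcal{S}$. For a vector v, we denote a subvector of v indexed by $\mathcal{S}$ by $v_{\mathcal{S}}$. Let $X_{\mathcal{S}}$ be a submatrix of the design matrix X restricted to the columns indexed by $\mathcal{S}$. For the symmetric covariance matrix Σ, denote by $\Sigma_{\mathcal{S}\mathcal{S}'}$ its submatrix with the row and column indices restricted to subsets $\mathcal{S}$ and $\mathcal{S}'$, respectively. When $\mathcal{S} = \mathcal{S}'$, we write $\Sigma_{\mathcal{S}}$ for short. The notation also applies to its sample version $\hat{\Sigma}$.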

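Denote by $\mathcal{G} = (\mathcal{V}, \mathcal{E})$ the graph induced by Ω, where the node set is $\mathcal{V} = \{1, \ldots, p\}$ and the set of edges is denoted by $\mathcal{E}$. An edge is a pair of nodes, say, k and k′, with Ωkk′ ≠ 0. For a subset $\mathcal{V}_l \subset \mathcal{V}$, denote by Ωl the principal submatrix of Ω with its row and column indices restricted to $\mathcal{V}_l$ and by $\mathcal{E}_l$ the corresponding edge set. The subgraph $(\mathcal{V}_l, \mathcal{E}_l)$ is a connected component of $\mathcal{G}$ if (i) any two nodes in $\mathcal{V}_l$ are connected by edges in $\mathcal{E}_l$; (ii) for any node $k \in \mathcal{V}_l^{c}$, there exists a node $k' \in \mathcal{V}_l$ such that k, k′ cannot be connected by any edges in $\mathcal{E}$.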

For a symmetric matrix A, denote by tr(A) the trace of A, and denote by λmin(A) and λmax(A) the minimum and maximum eigenvalues of A. We define the operator norm and the Frobenius norm as $\|A\| = \lambda_{\max}^{1/2}(A^{T}A)$ and $\|A\|_F = \mathrm{tr}^{1/2}(A^{T}A)$, respectively. For a p-vector v, denote its Lq norm by $\|v\|_q = \big(\sum_{j=1}^{p} |v_j|^{q}\big)^{1/q}$ with q ≥ 1. For two real numbers a and b, denote a∨b = max(a, b).

Denote the sample covariance matrix and the marginal sample covariance between Xj and y, j = 1, …, p, by

For a vector V = (V1, …,Vp)T, denote .

2.2. Defining strong and weak signals

Consider a low-dimensional linear regression model where p < n. The ordinary least squares (OLS) estimator minimizes the prediction error, where is the empirical precision matrix. It is also known that is an unbiased estimator of β* and yields the best outcome prediction with the minimal prediction error.

However, when p > n, the sample covariance matrix becomes non-invertible, and thus β cannot be estimated using all X variables. Let be the true signal set and assume that . If were known, the predicted outcome, , would have the smallest prediction error. In practice, is unknown and some variable selection method must be applied first to identify . We define the set of strong signals as

| (3) |

and let be the set of weak signals. Then, the OLS estimator and the best outcome prediction are given by

and

where is the partitioned empirical precision matrix. We observe that the partial correlations between the variables in and contribute to the estimation of and , and to outcome prediction. Therefore, incorporating WBC signals helps reduce the estimation bias and prediction error.

We now further divide into and . Here, is the set of weak signals which have nonzero partial correlations with the signals in and is the set of weak signals which are not partially correlated with signals in . Formally, with c given in (3),

and

Thus, p predictors can be partitioned as , where . We assume that and .

2.3. Covariance-insured screening based post-selection shrinkage estimator (CIS-PSE)

Our proposed CIS-PSE method consists of the variable selection and post-shrinkage estimation steps.

Variable selection:

First, we detect strong signals by regularization methods such as Lasso or adaptive Lasso. Denote by the set of detected strong signals. To identify WBC signals, we evaluate (2) for each . When there is no confusion, we use j′ to denote a strong signal.

Though estimating cov(Xj′, y) for every 1 ≤ j′ ≤ p can be easily done, identifying and estimating nonzero entries in Ω is still challenging in high-dimensional settings. However, for identifying WBC signals, it is unnecessary to estimate the whole Ω matrix. Leveraging intra-feature correlations among predictors, we introduce a computationally efficient method for detecting nonzero Ωjj′’s.

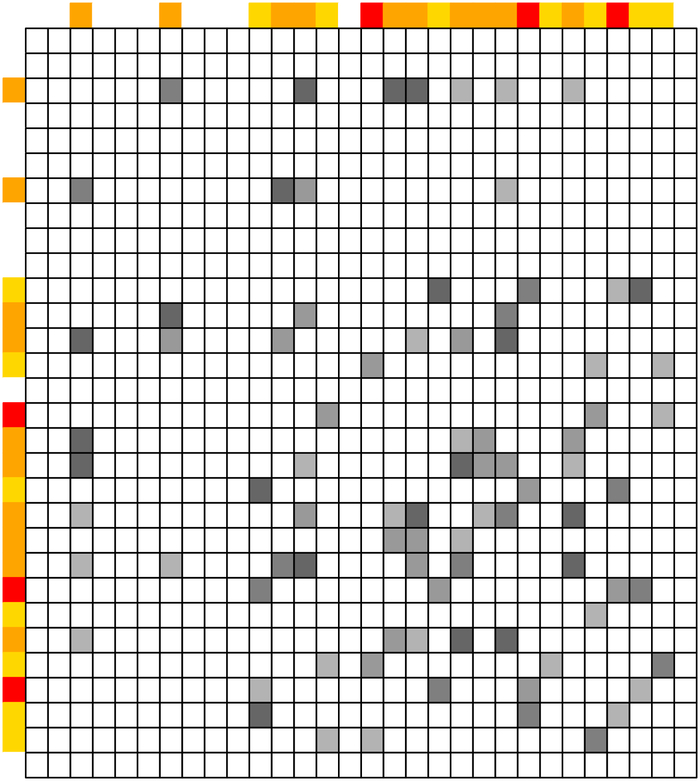

Variables that are partially correlated with signals in form the connected components of that contain at least one element of . Therefore, for detecting WBC signals, it suffices to focus on such connected components. Under the sparsity assumptions of β* and Ω, the size of such connected components is relatively small. For example, as shown in Figure 1, the first two diagonal blocks of a moderate size are relevant for detection of WBC signals.

Figure 1:

An illustrative example of marginally strong signals and their connected components in . Left panel: structure of Ω; Middle panel: structure of Ω after properly reordering the row and column indices of Ω; Right panel: the corresponding graph structure and connected components of the strong signals. Signals in are colored red. Signals in are colored orange. WBC signals in are colored yellow.

Under the sparsity assumption of Ω, the connected components of Ω can be inferred from those of the thresholded sample covariance matrix (Mazumder and Hastie, 2012; Bickel and Levina, 2008; Fan et al., 2011; Shao et al., 2011), which is much easier to estimate and can be calculated in a parallel manner. Denote by the thresholded sample covariance matrix with a thresholding parameter α, where with 1(·) being the indicator function. Denote by the graph corresponding to . For variable k, 1 ≤ k ≤ p, denote by the vertex set of the connected component in containing k. If variables k and k′ belong to the same connected component, 1 ≤ k ≠ k′ ≤ p, then . For example, in the third panel of Figure 1. Clearly, when , evaluating (2) is equivalent to estimating

| (4) |

Correspondingly, for a variable k, 1 ≤ k ≤ p, denote by the vertex set of the connected component in containing k. For a multivariate Gaussian x, Mazumder and Hastie (2012) showed that ′s can be exactly recovered from ′s with a properly chosen α. For a multivariate sub-Gaussian x, we refer to the following lemma.

Lemma 2.1. Suppose that the maximum size of a connected component in Ω containing a variable in is of order O(exp(nξ)), for some 0 < ξ < 1, then under Assumption (A7) specified in Section 3, with an and for any variable k, 1 ≤ k ≤ p, we have

| (5) |

for some positive constants C1 and C2.
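The component search described above can be sketched in a few lines of plain Python. The sketch thresholds a covariance matrix entrywise and then collects the vertex set of the connected component containing a given variable. The function names, the 1/n scaling of the sample covariance, and the use of breadth-first search (rather than any particular labeling algorithm) are assumptions made for illustration, not the authors' implementation.

```python
from collections import deque


def sample_covariance(X):
    """Return the p x p sample covariance of the rows of X (a list of n rows).

    Uses 1/n scaling; this choice is an assumption of the sketch.
    """
    n, p = len(X), len(X[0])
    means = [sum(row[j] for row in X) / n for j in range(p)]
    cov = [[0.0] * p for _ in range(p)]
    for j in range(p):
        for k in range(j, p):
            s = sum((row[j] - means[j]) * (row[k] - means[k]) for row in X) / n
            cov[j][k] = cov[k][j] = s
    return cov


def threshold(cov, alpha):
    """Keep the diagonal; zero out off-diagonal entries with |value| < alpha."""
    p = len(cov)
    return [[cov[j][k] if (j == k or abs(cov[j][k]) >= alpha) else 0.0
             for k in range(p)] for j in range(p)]


def component_of(thr, k):
    """Vertex set of the connected component of the thresholded matrix containing k."""
    seen = {k}
    queue = deque([k])
    while queue:
        u = queue.popleft()
        for v, val in enumerate(thr[u]):
            if v != u and val != 0.0 and v not in seen:
                seen.add(v)
                queue.append(v)
    return sorted(seen)


# Toy covariance with two blocks and two small spurious entries.
cov = [[1.0, 0.7, 0.05, 0.0],
       [0.7, 1.0, 0.0, 0.02],
       [0.05, 0.0, 1.0, 0.6],
       [0.0, 0.02, 0.6, 1.0]]
thr = threshold(cov, alpha=0.1)
print(component_of(thr, 0))  # [0, 1]
```

Because each component is found independently of the others, the search can be run separately for every detected strong signal, which is what makes the screening cheap compared with estimating the whole precision matrix.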

We summarize the variable selection procedure for and .

Step S1: Detection of . Obtain a candidate subset of strong signals using a penalized regression method. Here, we consider the penalized least squares (PLS) estimator from Gao et al. (2017):

| (6) |

where Penλ(βj) is a penalty on each individual βj to shrink the weak effects toward zeros and select the strong signals, with the tuning parameter λ > 0 controlling the size of the candidate subset . Commonly used penalties are Penλ(βj) = λ|βj| and Penλ(βj) = λωj |βj| for Lasso and adaptive Lasso, where ωj > 0 is a known weight.
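For illustration, the Lasso case of this strong-signal detection step can be sketched with cyclic coordinate descent. The sketch assumes the objective (1/(2n))‖y − Xβ‖² + λ Σ|βj|; this scaling and the names `lasso_cd` and `selected_set` are assumptions of the example and are not taken from Gao et al. (2017).

```python
def soft_threshold(z, lam):
    """Soft-thresholding operator: sign(z) * max(|z| - lam, 0)."""
    if z > lam:
        return z - lam
    if z < -lam:
        return z + lam
    return 0.0


def lasso_cd(X, y, lam, n_iter=200):
    """Cyclic coordinate descent for (1/(2n))||y - X b||^2 + lam * sum|b_j|."""
    n, p = len(X), len(X[0])
    beta = [0.0] * p
    resid = list(y)  # residual y - X beta, with beta = 0 initially
    col_ss = [sum(X[i][j] ** 2 for i in range(n)) / n for j in range(p)]
    for _ in range(n_iter):
        for j in range(p):
            if col_ss[j] == 0.0:
                continue  # skip all-zero columns
            # Correlation of column j with the partial residual.
            rho = sum(X[i][j] * (resid[i] + X[i][j] * beta[j]) for i in range(n)) / n
            new = soft_threshold(rho, lam) / col_ss[j]
            delta = new - beta[j]
            if delta != 0.0:
                for i in range(n):
                    resid[i] -= X[i][j] * delta
                beta[j] = new
    return beta


def selected_set(beta, tol=1e-10):
    """Indices with nonzero estimated coefficients (the candidate strong set)."""
    return [j for j, b in enumerate(beta) if abs(b) > tol]


# Orthogonal toy design: one strong coefficient (3.0) and one weak one (0.1).
X = [[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0], [0.0, -1.0]]
y = [3.0, 0.1, -3.0, -0.1]
beta_hat = lasso_cd(X, y, lam=0.1)
print(selected_set(beta_hat))  # [0]
```

In this toy example the weak coefficient is shrunk exactly to zero, which is the behaviour that motivates the additional screening for weak signals.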

Step S2: Detection of . First, for a given threshold α, construct a sparse estimate of the covariance matrix . Next, for each selected variable j′ in from Step S1, detect , the node set, corresponding to its connected component in . Let be the union of the vertex sets corresponding to those connected components detected. That is, . Then according to (4), it suffices to identify WBC signals within . Specifically, for each , let be the submatrix obtained by restricting to . We then evaluate (4) and select WBC variables by

| (7) |

for some pre-specified νn. Here denotes the entry of corresponding to variables j and j′. In our numerical studies, we rank the variables according to the magnitude of and select up to the first variables.
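A rough sketch of the ranking described above is given below. It assumes that the screening statistic for a variable j in a component is the component-restricted analogue of (2), namely the absolute value of the sum over j′ of the inverted thresholded covariance entry times the marginal covariance of Xj′ with y. That reading, the helper names, and the cutoff argument `r` are assumptions of this example.

```python
def solve(A, b):
    """Solve A x = b by Gaussian elimination with partial pivoting."""
    n = len(A)
    M = [[float(v) for v in A[i]] + [float(b[i])] for i in range(n)]
    for c in range(n):
        piv = max(range(c, n), key=lambda r: abs(M[r][c]))
        M[c], M[piv] = M[piv], M[c]
        for r in range(c + 1, n):
            f = M[r][c] / M[c][c]
            for k in range(c, n + 1):
                M[r][k] -= f * M[c][k]
    x = [0.0] * n
    for i in reversed(range(n)):
        x[i] = (M[i][n] - sum(M[i][k] * x[k] for k in range(i + 1, n))) / M[i][i]
    return x


def wbc_candidates(thr_cov, cov_xy, component, strong, r):
    """Rank non-strong variables in one component and keep the top r.

    thr_cov   : thresholded covariance matrix (list of lists)
    cov_xy    : marginal covariances between each X_j and y
    component : sorted variable indices of one connected component
    strong    : set of indices already selected as strong signals
    """
    sub = [[thr_cov[a][b] for b in component] for a in component]
    rhs = [cov_xy[a] for a in component]
    coef = solve(sub, rhs)  # equals inverse(sub) times rhs
    scored = [(abs(c), j) for c, j in zip(coef, component) if j not in strong]
    scored.sort(reverse=True)
    return [j for _, j in scored[:r]]


# Toy example: variable 0 is strong, variable 1 is weak but correlated with it.
thr_cov = [[1.0, 0.5, 0.0],
           [0.5, 1.0, 0.0],
           [0.0, 0.0, 1.0]]
cov_xy = [2.15, 1.3, 0.0]  # equals thr_cov times (2.0, 0.3, 0.0)
print(wbc_candidates(thr_cov, cov_xy, component=[0, 1], strong={0}, r=1))  # [1]
```

Solving the linear system on the component avoids forming the inverse explicitly, and only the small submatrix for that component is ever touched.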

Step S3: Detection of . To identify , we first solve a regression problem with a ridge penalty only on variables in , where . That is,

| (8) |

where is a tuning parameter controlling the overall strength of the variables selected in . Then a post-selection weighted ridge (WR) estimator has the form

| (9) |

where an is a thresholding parameter. Then the candidate subset is obtained by

| (10) |

Post-selection shrinkage estimation:

We consider the following two cases when performing the post-selection shrinkage estimation.

Case 1: . We obtain the CIS-PSE on by

| (11) |

where . Then and can be obtained by restricting to and , respectively.

Case 2: . Recall that and . We obtain the CIS-PSE of by

| (12) |

where and is as defined by

| (13) |

where and . If is singular, we replace with a generalized inverse. Then and can be obtained by restricting to and , respectively.

Set . The final CIS-PSE estimator is defined as

| (14) |

2.4. Selection of tuning parameters

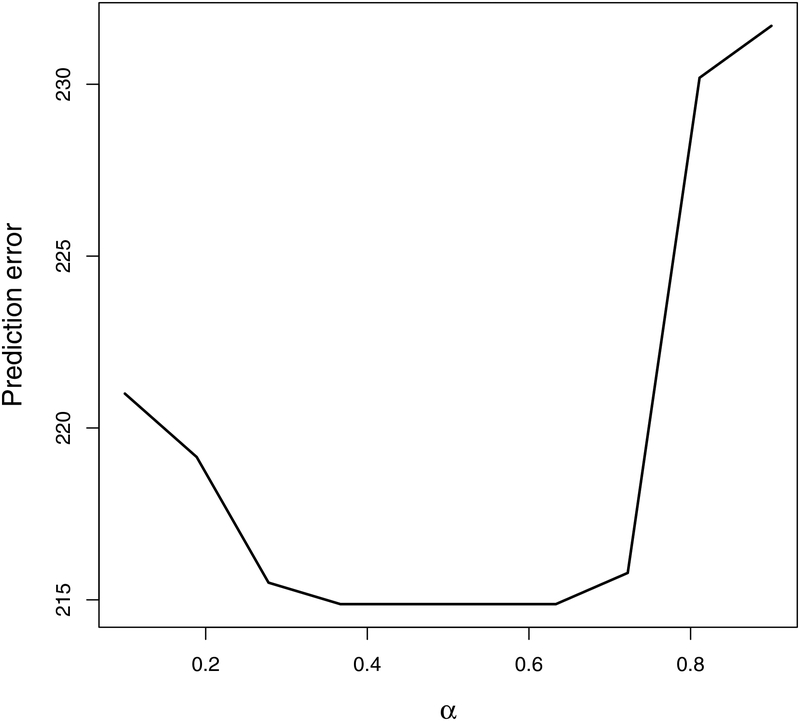

When selecting strong signals, the tuning parameter λ in Lasso or adaptive Lasso can be chosen by BIC (Zou, 2006). To choose νn for the selection of WBC signals according to (7), we rank variables according to the magnitude of and select the first variables to be . Here, r is chosen so that minimizes prediction errors on an independent validation dataset.

For tuning parameter α, we set α = c3 log(n), for some positive constant c3, as suggested in Shao et al. (2011). Our empirical experiments show that α = c3 log(n) tends to give more true positives and fewer false positives in identifying WBC variables. Figure 7 in the Appendix reveals that in order to find the optimal α that minimizes the prediction error on a validation dataset, it suffices to conduct a grid search with only a few proposed values of α. In our numerical studies, instead of thresholding the sample covariance matrix, we threshold the sample correlation matrix. As correlations range between −1 and 1, it is easier to set a target range for α.

To detect signals in , we follow Gao et al. (2017) and use cross-validation to choose and an in (8) and (9), respectively. In particular, we set and an = c2n−1/8 for some positive constants c1 and c2. In the training dataset we fix the tuning parameters and fit the model, and in the validation dataset we compute the prediction error of the model. We repeat this procedure for various c1 and c2, and choose a pair that gives the smallest prediction error on the validation dataset.
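The validation-based search over (c1, c2) can be sketched generically as follows. The callables `fit` and `validation_error` are hypothetical placeholders for fitting the model on the training data and scoring it on the validation data; only the form an = c2 n^(−1/8) is taken from the text.

```python
from itertools import product


def a_n(c2, n):
    """Thresholding parameter a_n = c2 * n^(-1/8)."""
    return c2 * n ** (-1.0 / 8.0)


def choose_by_validation(grid_c1, grid_c2, fit, validation_error):
    """Return the (c1, c2) pair with the smallest validation error.

    fit(c1, c2)             -> fitted model (hypothetical callable)
    validation_error(model) -> prediction error on the validation set
    """
    best_pair, best_err = None, float("inf")
    for c1, c2 in product(grid_c1, grid_c2):
        model = fit(c1, c2)
        err = validation_error(model)
        if err < best_err:
            best_pair, best_err = (c1, c2), err
    return best_pair, best_err


# Toy stand-ins: the "model" is just the pair, and the error is minimized at (1, 2).
pair, err = choose_by_validation(
    [0.5, 1.0, 2.0], [0.5, 1.0, 2.0],
    fit=lambda c1, c2: (c1, c2),
    validation_error=lambda m: (m[0] - 1.0) ** 2 + (m[1] - 2.0) ** 2,
)
print(pair)  # (1.0, 2.0)
```

Keeping the fitting and scoring steps as arguments means the same loop can be reused for the search over α or over the number of WBC variables r.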

Figure 7:

Sum of squared prediction error (SSPE) corresponding to different α’s.

3. Asymptotic properties

To investigate the asymptotic properties of CIS-PSE, we assume the following.

(A1) The random error ϵ has a finite kurtosis.

(A2) log(p) = O(nν) for some 0 < ν < 1.

(A3) There are positive constants κ1 and κ2 such that 0 < κ1 < λmin(Σ) ≤ λmax(Σ) < κ2 < ∞.

(A4) Sparse Riesz condition (SRC): For the random design matrix X, any with , and any vector , there exist 0 < c_* < c^* < ∞ such that holds with probability tending to 1.

(A5) Faithfulness Assumption: Suppose that

where the absolute value function | · | is applied component-wise to its argument vector. The max and min operators are with respect to all individual components in the argument vectors.

(A6) Denote by the maximum size of the connected components in graph that contain at least one signal in , where B is the number of such connected components. Assume Cmax = O(nξ) for some ξ ∈ (0,1).

(A7) Assume for some constant C > 0 and for the ξ in (A6).

(A8) For any subset with for some constant ν > 0.

(A9) Assume that for some 0 < τ < 1, where || · ||2 is the Euclidean norm.

(A1), a technical assumption for the asymptotic proofs, is satisfied by many parametric distributions such as Gaussian. The assumption is mild as we do not assume any parametric distributions for ε except that it has finite moments. (A2) and (A3) are commonly assumed in the high-dimensional literature. (A4) guarantees that can be recovered with probability tending to 1 as n → ∞ (Zhang and Huang, 2008). (A5) ensures that for all , holds with probability tending to 1 (Lemma 4 in Genovese et al., 2012). (A6) implies that the size of each connected component of a strong signal, i.e., , cannot exceed the order of exp(nξ) for some ξ ∈ (0,1). This assumption is required for estimating sparse covariance matrices. (A7) guarantees that with a properly chosen thresholding parameter α, Xk and Xk′ have non-zero thresholded sample covariances for , and have zero thresholded sample covariances for . As a result, the connected components of the thresholded sample covariance matrix and those of the precision matrix can be detected with adequate accuracy. (A8) ensures that the precision matrix can be accurately estimated by inverting the thresholded sample covariance matrix; see Shao et al. (2011) and Bickel and Levina (2008) for details. (A9), which bounds the total size of weak signals on , is required for selection consistency on (Gao et al., 2017).

We show that given a consistently selected , we have selection consistency for .

Theorem 3.1. With (A1)-(A3) and (A6)-(A8),

The following corollary shows that Theorem 3.1, together with Theorem 2 in Zhang and Huang (2008) and Corollary 2 in Gao et al. (2017), further implies selection consistency for .

Corollary 3.2. Under Assumptions (A1)-(A9), we have

Corollary 3.2 implies that CIS-PSE can recover the true set asymptotically. Thus, when , CIS-PSE gives an OLS estimator with probability going to 1 and has the minimum prediction error asymptotically, among all the unbiased estimators.

4. Simulation studies

We conduct simulations to compare the performance of the proposed CIS-PSE and the post-shrinkage estimator (PSE) by Gao et al. (2017). The key difference between CIS-PSE and PSE lies in that PSE focuses only on whereas CIS-PSE considers .

Data are generated according to (1) with

| (15) |

The random errors ϵi are independently generated from N(0,1). We consider the following examples.

Example 1: The first three variables, which belong to , are independently generated from N(0,1). The first ten, next ten and last ten signals in belong to the connected components of X1, X2 and X3, respectively. These three connected components are independent of each other. is independent of and . Each connected component within and is generated from a multivariate normal distribution with mean zero, variance 1, and a compound symmetric (CS) correlation matrix with correlation coefficient 0.7. Variables in are independently generated from N(0, 1).

Example 2: This example is the same as Example 1 except that the three connected components within and follow the first order autocorrelation (AR(1)) structure with correlation coefficient of 0.7.

Example 3: This example is the same as Example 1 except that 30 variables in (i.e., variables X64-X93) are set to be correlated with signals in . That is, X64-X73 are correlated with X1, X74-X83 are correlated with X2, and X84-X93 are correlated with X3. These three connected components within have a CS correlation structure with correlation coefficient of 0.7.

For each example, we conduct 500 independent experiments with p=200, 300, 400 and 500. We generate a training dataset of size n = 200, a test dataset of size n = 100 to assess the prediction performance, and an independent validation dataset of size n = 100 for tuning parameter selection.
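As an illustration of the compound symmetric design in Example 1, one block of correlated standard normal variables can be simulated with a shared-factor construction: each variable is √ρ times a common N(0,1) draw plus √(1−ρ) times its own independent N(0,1) draw, which gives unit variance and pairwise correlation ρ. The block size of 11 (one strong signal plus its ten WBC signals), the seed, and the function names are assumptions of this sketch.

```python
import math
import random


def cs_block(n, size, rho, rng):
    """Draw n rows of `size` N(0,1) variables with pairwise correlation rho."""
    sr, si = math.sqrt(rho), math.sqrt(1.0 - rho)
    rows = []
    for _ in range(n):
        shared = rng.gauss(0.0, 1.0)  # common factor inducing the correlation
        rows.append([sr * shared + si * rng.gauss(0.0, 1.0) for _ in range(size)])
    return rows


def sample_corr(u, v):
    """Pearson sample correlation between two equal-length sequences."""
    n = len(u)
    mu, mv = sum(u) / n, sum(v) / n
    suv = sum((a - mu) * (b - mv) for a, b in zip(u, v))
    suu = sum((a - mu) ** 2 for a in u)
    svv = sum((b - mv) ** 2 for b in v)
    return suv / math.sqrt(suu * svv)


rng = random.Random(2024)
block = cs_block(n=200, size=11, rho=0.7, rng=rng)  # training-size sample
col0 = [row[0] for row in block]
col1 = [row[1] for row in block]
print(round(sample_corr(col0, col1), 2))
```

Independent blocks for X1, X2 and X3 are obtained by calling `cs_block` three times, and the remaining independent variables by drawing plain N(0,1) values.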

First, we compare CIS-PSE and PSE in selecting under Examples 1–2. We use Lasso and adaptive Lasso to select . Since both Lasso and adaptive Lasso give similar results, we report only the Lasso results in this section and present the results of adaptive Lasso in Appendix. We report the number of correctly identified variables (TP) in and the number of incorrectly selected variables (FP) in . Table 1 shows that CIS-PSE outperforms PSE in identifying signals in . We observe that the performance of PSE deteriorates as p increases, whereas CIS-PSE selects signals consistently even when p increases.

Table 1:

The performance of variable selection on

| | | | p = 200 | p = 300 | p = 400 | p = 500 |
|---|---|---|---|---|---|---|
| Example 1 | TP | CIS-PSE | 59.6 (1.9) | 58.7 (2.1) | 57.9 (2.3) | 57.7 (2.4) |
| | | PSE | 41.2 (4.9) | 34.4 (5.1) | 25.7 (6.0) | 22.6 (5.8) |
| | FP | CIS-PSE | 3.7 (2.4) | 5.1 (2.7) | 6.9 (3.1) | 8.8 (3.3) |
| | | PSE | 13.3 (4.6) | 18.9 (5.2) | 21.7 (5.9) | 26.1 (6.0) |
| Example 2 | TP | CIS-PSE | 63.0 (0) | 62.9 (0.1) | 62.9 (0.1) | 62.9 (0.1) |
| | | PSE | 43.9 (3.9) | 37.0 (4.2) | 32.8 (5.0) | 31.5 (4.3) |
| | FP | CIS-PSE | 3.5 (2.4) | 5.0 (2.7) | 6.3 (3.3) | 8.1 (3.1) |
| | | PSE | 12.7 (4.2) | 19.5 (5.1) | 22.1 (6.3) | 27.4 (6.4) |

TP=true positive; FP= false positive.

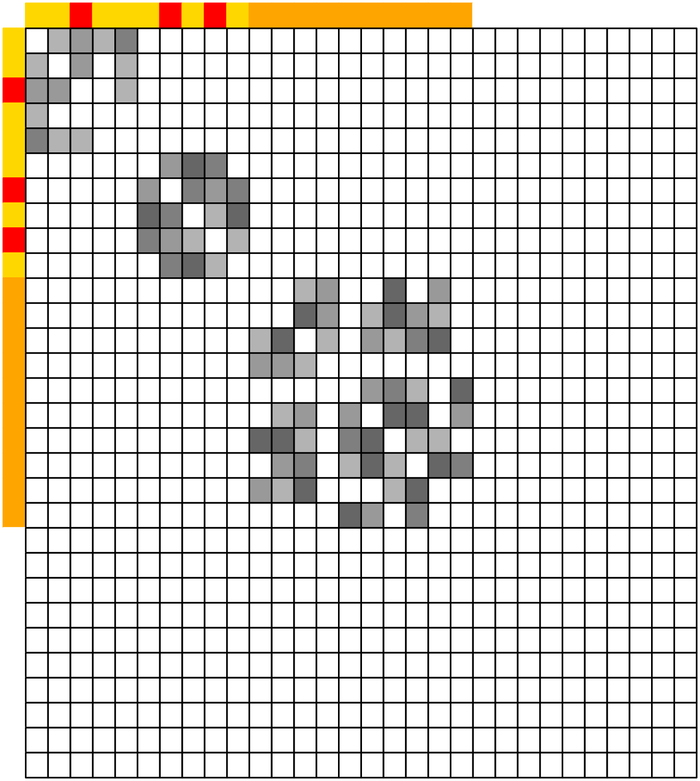

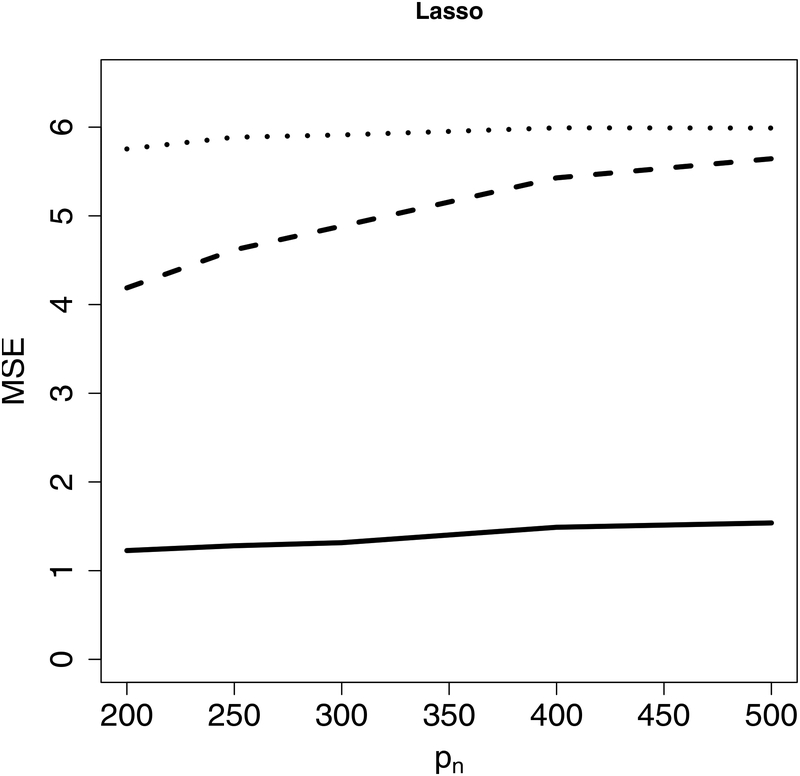

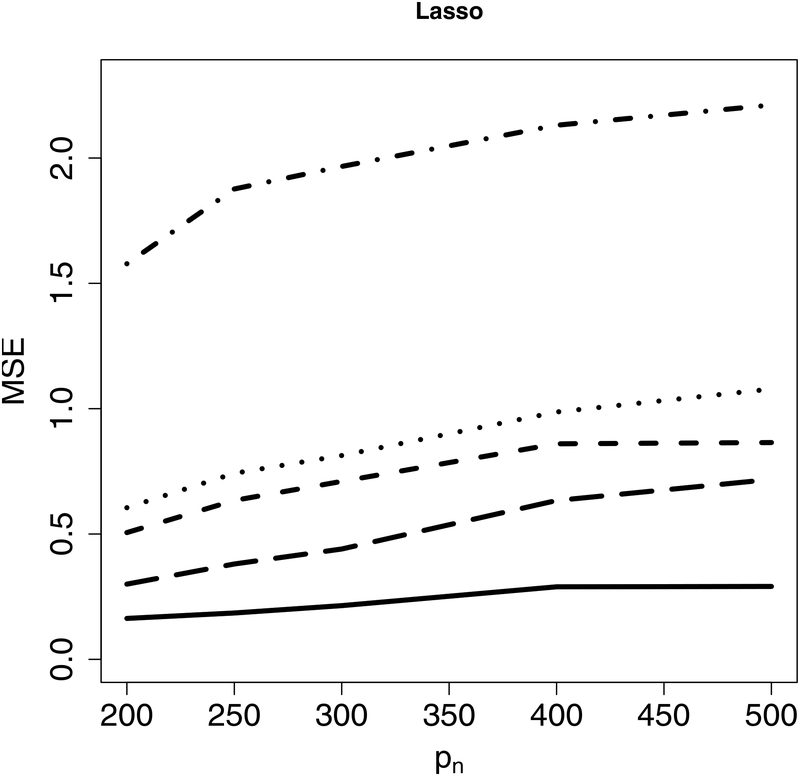

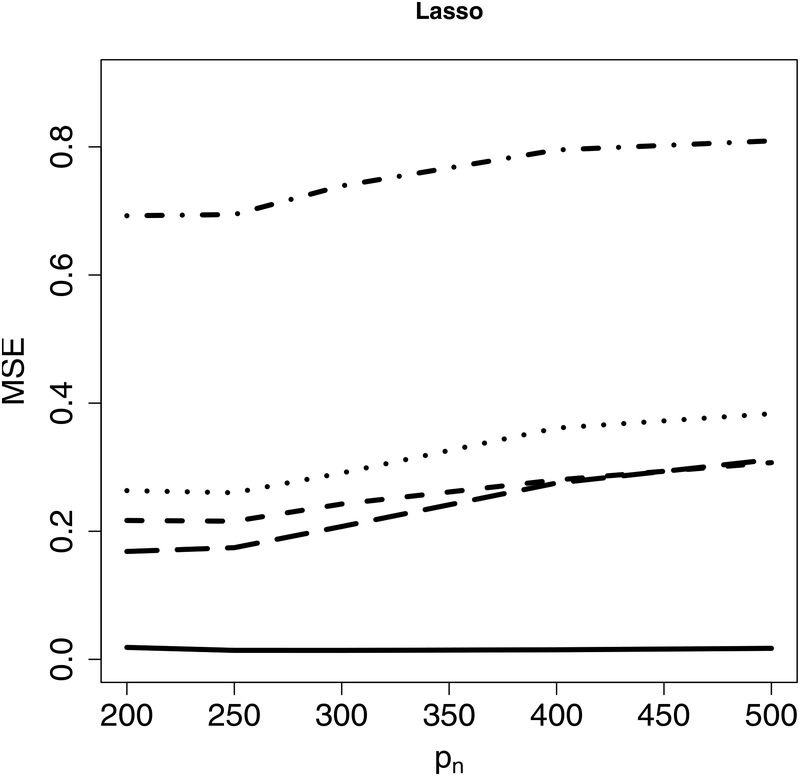

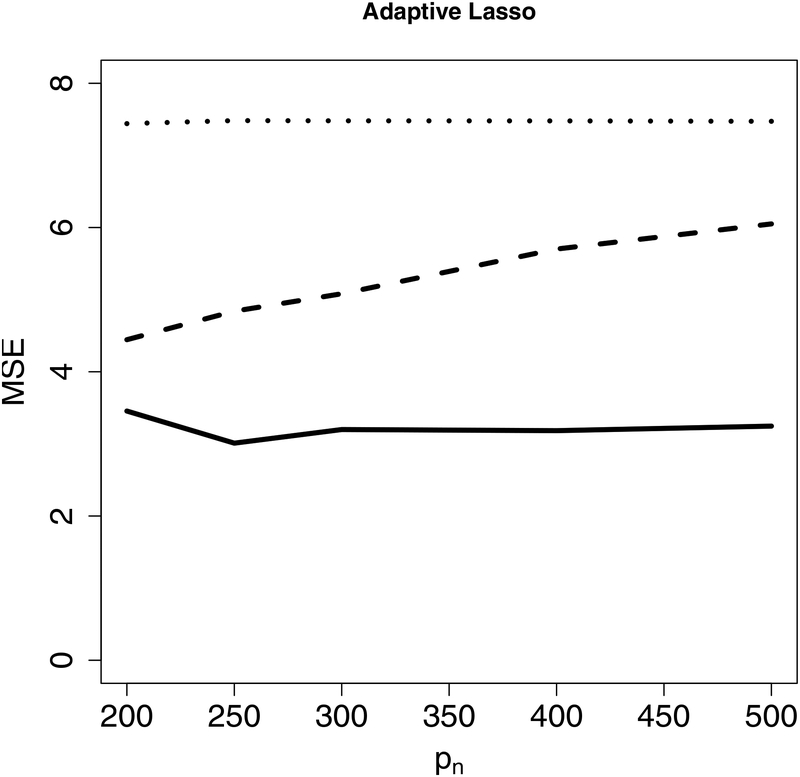

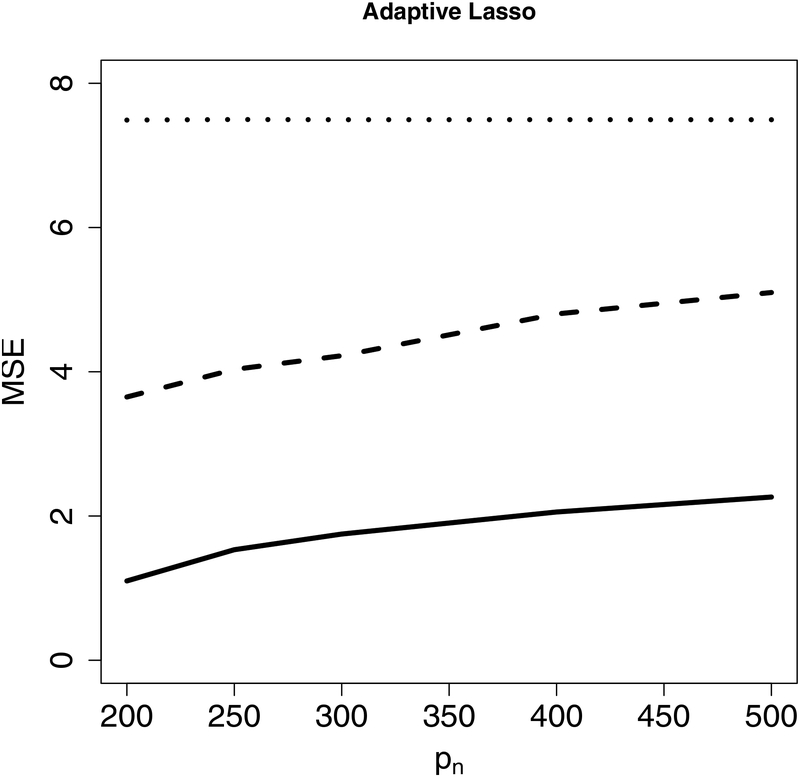

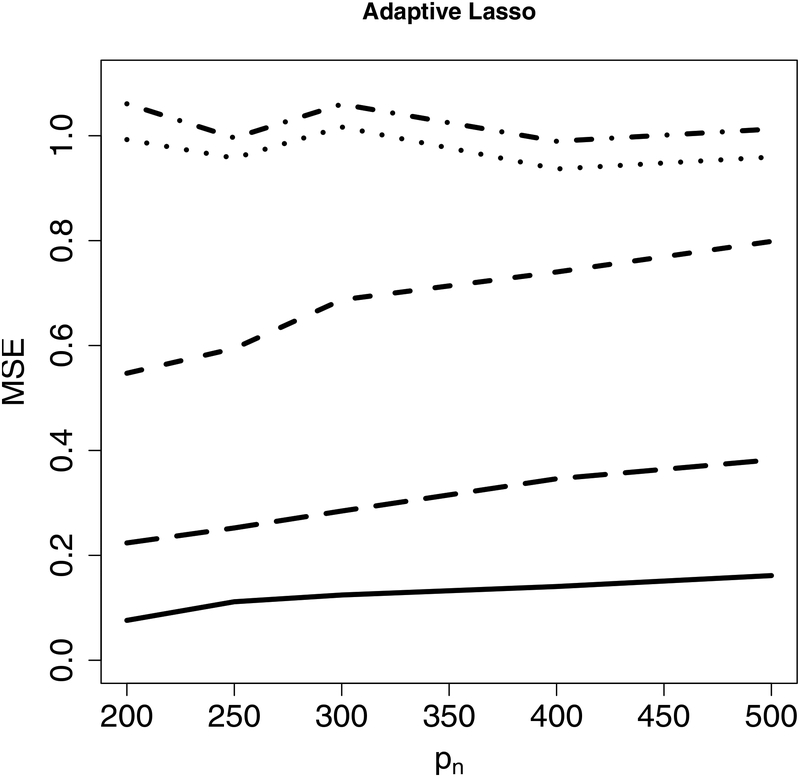

Next, we evaluate estimation accuracy on the targeted sub-model using the mean squared error (MSE) as the criterion under Examples 1–2. Figure 2 indicates that the proposed CIS-PSE detects WBC signals and provides more accurate and precise estimates. Figure 3 shows that CIS-PSE also improves the estimation of compared to PSE.

Figure 2:

The mean squared error (MSE) of for different p’s under Example 1 (Left panel) and Example 2 (Right panel). Solid lines represent CIS-PSE, dashed lines are for PSE, and dotted lines indicate Lasso.

Figure 3:

The mean squared error (MSE) of for different p’s under Example 1 (Left panel) and Example 2 (Right panel). Solid lines represent CIS-PSE, dashed lines are for PSE, dotted lines indicate Lasso RE defined as , dot-dashed lines represent Lasso, and long-dashed lines are for WR in (9).

We explore the prediction performance under Examples 1–2 using the mean squared prediction error (MSPE), defined as , where is obtained from the training data, ytest is the response variable for the test dataset, ntest is the size of test dataset, and ◊ represents either the proposed CIS-PSE or PSE. Table 2, which summarizes the results, shows that CIS-PSE outperforms PSE, suggesting that incorporating WBC signals helps to improve the prediction accuracy.
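A minimal sketch of the MSPE computation follows, assuming the usual form: the squared Euclidean distance between the test responses and the fitted values X_test β̂, divided by ntest. The argument names and the exact normalization are assumptions of the example.

```python
def mspe(X_test, y_test, beta_hat):
    """Mean squared prediction error of a linear predictor on a test set."""
    n_test = len(y_test)
    total = 0.0
    for row, yi in zip(X_test, y_test):
        pred = sum(x * b for x, b in zip(row, beta_hat))  # fitted value for this row
        total += (yi - pred) ** 2
    return total / n_test


# Two test points; the second is mispredicted by 2, so MSPE = (0 + 4) / 2.
print(mspe([[1.0, 0.0], [0.0, 1.0]], [1.0, 3.0], [1.0, 1.0]))  # 2.0
```

The coefficient vector passed in is the one estimated on the training data only, so the test set plays no role in fitting.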

Table 2:

Mean squared prediction error (MSPE) of the predicted outcomes

| | | p = 200 | p = 300 | p = 400 | p = 500 |
|---|---|---|---|---|---|
| Example 1 | CIS-PSE | 3.17 (0.80) | 3.19 (0.78) | 3.25 (0.77) | 3.32 (0.77) |
| | PSE | 4.19 (0.83) | 4.93 (1.02) | 5.28 (1.07) | 5.50 (1.16) |
| | Lasso | 10.28 (5.68) | 10.02 (5.58) | 9.77 (4.96) | 9.78 (4.54) |
| Example 2 | CIS-PSE | 0.65 (0.14) | 0.92 (0.19) | 1.30 (0.16) | 2.43 (0.64) |
| | PSE | 2.89 (0.61) | 3.55 (0.75) | 4.09 (0.68) | 4.34 (0.97) |
| | Lasso | 4.20 (0.79) | 4.50 (0.88) | 4.68 (0.90) | 4.73 (0.97) |

Lastly, we consider the setting where a subset of is correlated with a subset of ; see Example 3. Compared to Example 1, the results summarized in Table 3 show that the number of false positives increases only slightly when some variables in are correlated with variables in .

Table 4:

Estimation results of and from the growth rate data

| Strong signal | Estimate | WBC signal | Estimate | Sample correlation |
|---|---|---|---|---|
| Strong signals selected by Lasso | | | | |
| TOT | 3.73 | - | - | - |
| LFERT | 2.55 | LLIFE | 1.88 | −0.85 |
| | | NOM60 | 0.12 | 0.84 |
| | | NOF60 | −0.10 | 0.83 |
| | | LGDPNOM60 | −0.02 | 0.83 |
| PRIF60 | −0.12 | LGDPPRIF60 | 0.02 | 0.99 |
| | | LGDPPRIM60 | −0.02 | 0.93 |
| | | LGDPNOF60 | 0.02 | −0.90 |
| Strong signals selected by adaptive Lasso | | | | |
| LFERT | 2.54 | LLIFE | 1.77 | −0.85 |
| | | NOM60 | 0.08 | 0.84 |
| | | NOF60 | −0.07 | 0.83 |
| | | LGDPNOM60 | −0.01 | 0.83 |

stands for the sample correlations between variables in and .

TOT=The term of trade shock; LFERT=log of fertility rate (children per woman) averaged over 1960–1985; LLIFE=log of life expectancy at age 0 averaged over 1960–1985; NOM60=Percentage of no schooling in the male population in 1960; NOF60=Percentage of no schooling in the female population in 1960; LGDP60=log GDP per capita in 1960 (1985 price); PRIF60=Percentage of primary schooling attained in female population in 1960; PRIM60=Percentage of primary schooling attained in male population in 1960; LGDPNOM60=LGDP60×NOM60; LGDPPRIF60=LGDP60×PRIF60; LGDPPRIM60=LGDP60×PRIM60; LGDPNOF60=LGDP60×NOF60.

5. A real data example

We apply the proposed CIS-PSE method to analyze the gross domestic product (GDP) growth data studied in Gao et al. (2017) and Barro and Lee (1994). Our goal is to identify factors that are associated with the long-run GDP growth rate. The dataset includes the GDP growth rates and 45 socioeconomic variables for 82 countries from 1960 to 1985. We consider the following model:

| (16) |

where i is the country indicator, i = 1, …, 82, GRi is the annualized GDP growth rate of country i from 1960 to 1985, GDP60i is the GDP per capita in 1960, and zi are 45 socioeconomic covariates, the details of which can be found in Gao et al. (2017). The β1 and β2 represent the coefficients of log(GDP60) and socioeconomic predictors, respectively. The δ0 represents the coefficient of whether the GDP per capita in 1960 is below a threshold (=2898) or not. The δ1 represents the coefficient of log(GDP60) when GDP per capita in 1960 is below 2898. The δ2 represent the coefficients of the interactions between the GDP60i < 2898 and the socioeconomic predictors when GDP per capita in 1960 is below 2898.

We apply the proposed CIS-PSE and PSE by Gao et al. (2017) to detect . Additionally, CIS-PSE is used to further identify . Effects of covariates in are estimated by Lasso, adaptive Lasso, PSE and CIS-PSE. Effects of covariates in are estimated by CIS-PSE. The sample correlations between variables in and are also provided. Table 4 reports the selected variables and their estimated coefficients.

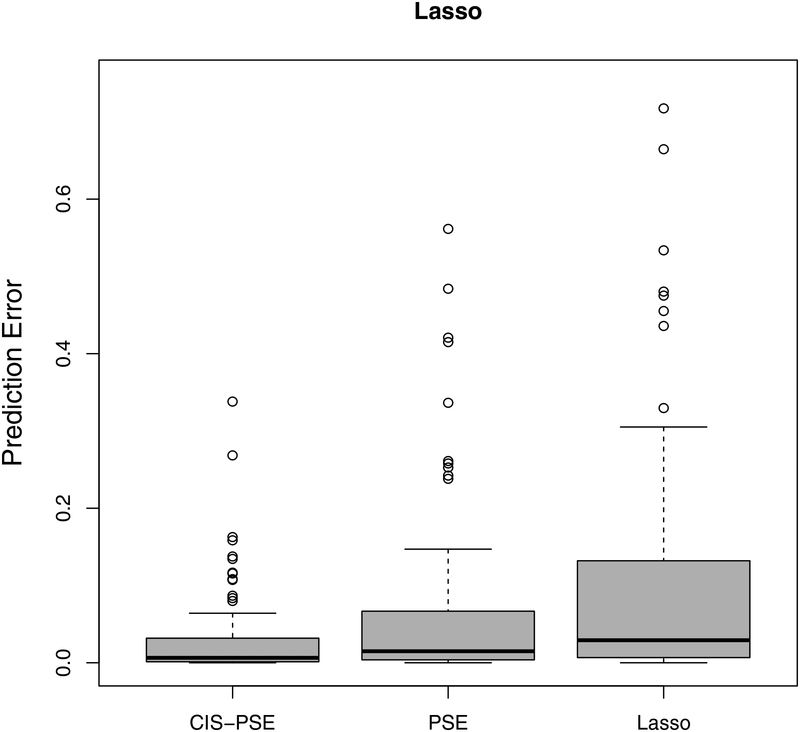

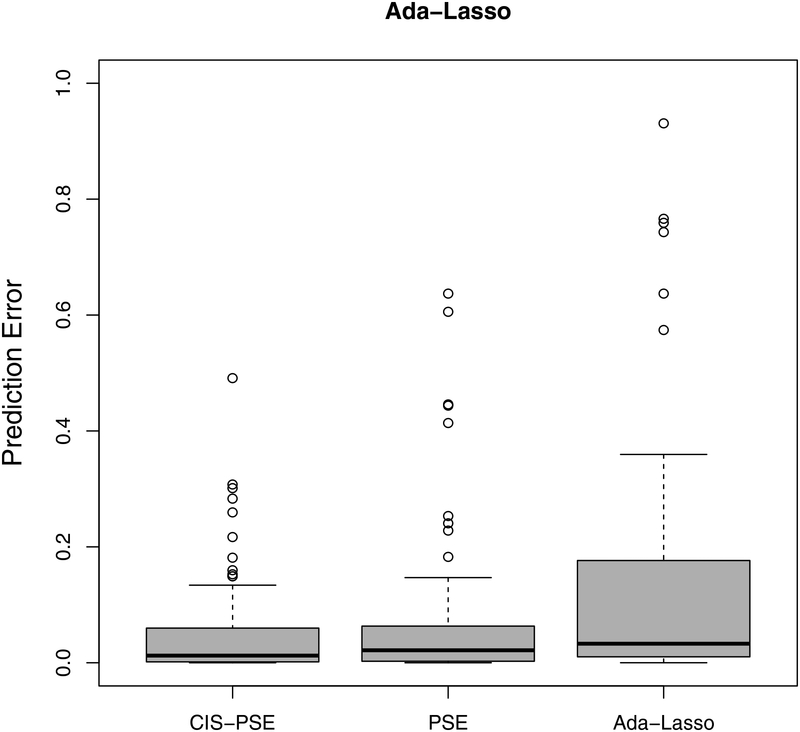

Next, we evaluate the accuracy of predicted GR using leave-one-out cross-validation. Each country in turn is treated as the test set, with all other countries used as the training set. We apply Lasso, adaptive Lasso, PSE and CIS-PSE. All tuning parameters are selected as described in Section 4. The prediction results in Figure 4 show that CIS-PSE has the smallest prediction errors compared to PSE, Lasso and adaptive Lasso, with detected by either Lasso or adaptive Lasso.
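The leave-one-out scheme can be sketched generically as below. The callables `fit` and `predict` are hypothetical placeholders for any of the estimators compared here; the toy usage replaces them with a simple mean predictor only to keep the example self-contained.

```python
def loo_errors(X, y, fit, predict):
    """Leave-one-out prediction errors y_i - yhat_i, one per observation.

    fit(X_train, y_train) -> model      (hypothetical callable)
    predict(model, x_row) -> prediction (hypothetical callable)
    """
    errors = []
    for i in range(len(y)):
        X_train = X[:i] + X[i + 1:]  # all rows except the i-th
        y_train = y[:i] + y[i + 1:]
        model = fit(X_train, y_train)
        errors.append(y[i] - predict(model, X[i]))
    return errors


# Toy stand-in estimator: predict the training mean of y, ignoring X.
X = [[0.0], [0.0], [0.0]]
y = [1.0, 2.0, 3.0]
errs = loo_errors(
    X, y,
    fit=lambda X_tr, y_tr: sum(y_tr) / len(y_tr),
    predict=lambda model, x_row: model,
)
print(errs)  # [-1.5, 0.0, 1.5]
```

With 82 countries this means 82 separate fits per method, each on 81 observations.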

Figure 4:

Prediction errors from post-selection shrinkage estimators: CIS-PSE, PSE and two penalized estimators (Lasso and adaptive Lasso). is detected by Lasso in the left panel and by adaptive Lasso in the right panel.

6. Discussion

To improve the estimation and prediction accuracy in high-dimensional linear regressions, we introduce the concept of weak but correlated (WBC) signals, which are commonly missed by the Lasso-type variable selection methods. We show that these variables can be easily detected with the help of their partial correlations with strong signals. We propose a CIS-PSE procedure for high-dimensional variable selection and estimation, particularly for WBC signal detection and estimation. We show that, by incorporating WBC signals, the procedure significantly improves the estimation and prediction accuracy.

An alternative approach to weak signal detection would be to group them according to a known group structure and then select by their grouped effects (Bodmer and Bonilla, 2008; Li and Leal, 2008; Wu et al., 2011; Yuan and Lin, 2006). However, grouping strategies require prior knowledge on the group structure, and, in some situations, may not amplify the grouped effects of weak signals. For example, as pointed out in Buhlmann et al. (2013) and Shah and Samworth (2013), when a pair of highly negatively correlated variables are grouped together, they cancel out each other’s effect. On the other hand, our CIS-PSE method is based on detecting partial correlations and can accommodate the “canceling out” scenarios. Hence, when the grouping structure is known, it is worth combining the grouping strategy and CIS-PSE for weak signal detection. We will pursue this in the future.

Table 3:

Comparison of false positives (standard deviations in parentheses) between Examples 1 and 3

| | p = 200 | p = 300 | p = 400 | p = 500 |
|---|---|---|---|---|
| Example 1 | 3.7 (2.4) | 5.1 (2.7) | 6.9 (3.1) | 8.8 (3.3) |
| Example 3 | 4.6 (3.5) | 6.1 (3.4) | 7.8 (3.8) | 10.9 (4.2) |

7. Appendix

We provide technical proofs for Theorem 3.1, Corollary 3.2 and lemmas in this section. We first list some definitions and auxiliary lemmas.

Definition 7.1. A random vector Z = (Z1, …, Zp) is a sub-Gaussian with mean vector μ and variance proxy , if for any .

Let Z be a sub-Gaussian random variable with variance proxy . The sub-Gaussian tail inequality is given as, for any t > 0,

The following Lemma 7.2 ensures that the set of signals with non-vanishing marginal sample correlations with y coincides with with probability tending to 1. Therefore, evaluating condition (4) for a covariate j is equivalent to estimating nonzero Ωjj’s for every . Let rj′ be the rank of variable j′ according to the magnitude of . Denote by the first k covariates with the largest absolute marginal correlations with y, for k = 1, …, p. Recall that .

Lemma 7.2. Under Assumption (A5), we have

Proof of Lemma 7.2. By the definition of , it suffices to show that with probability tending to 1, as n → ∞,

Since , we have

Notice that for each in probability, then as n → ∞.

It follows that when n → ∞,

Similarly, when n → ∞,

Lemma 7.2 is concluded by combining the above two inequalities with the faithfulness condition. ◻

Bickel and Levina (2008) showed that for , where with given in (A6). Furthermore, Bickel and Levina (2008) and Fan et al. (2011) showed that the estimation error for each connected component of the precision matrix is bounded by

| (17) |

Here we adopt the recursive labeling algorithm in Shapiro and Stockman (2002) to detect the connected components of the thresholded sample covariance matrix.

Without loss of generality, suppose that the strong signals in belong to distinct connected components of . We rearrange the indices in as and write the submatrix of corresponding to , as . For notational convenience, we rewrite

The following Lemma 7.3 is useful for controlling the size of .

Lemma 7.3. Under (A6)-(A7), when x is from a multivariate sub-Gaussian distribution, we have for some positive constants C1 and C2.

Lemma 7.3 is a direct conclusion of Lemma 2.1 and Assumption (A6). Next we prove Theorem 3.1.

Proof of Theorem 3.1. Notice that and . Consider a sequence of thresholding parameters νn = O(n3ξ/2) with a decreasing series of positive numbers un = 1 + n−ξ/4 such that limn→∞ un = 1,

| (18) |

Moreover, since and , we have . As a result,

| (19) |

Notice that from Lemma 2.1, for some positive constants C1 and C2 and 0 < ξ < 1. Therefore, we further have

| (20) |

By (17) and Assumption (A6), , for some 0 < M < ∞. As n → ∞, the first term in (20) can be handled as follows:

| (21) |

Notice that for some positive constant . For sufficiently large n, for some positive constant . And . Therefore, from (21), for sufficiently large n,

| (22) |

where the second-to-last step follows from applying Markov’s inequality to the nonnegative random variable yTy/n.
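For reference, the form of Markov’s inequality used in this step can be written as below. The threshold t > 0 is generic notation introduced here for illustration; the specific value of t used in (22) is not reproduced.

```latex
% Markov's inequality for the nonnegative random variable y^T y / n:
% for any fixed t > 0,
\[
  P\!\left( \frac{y^{\mathsf T} y}{n} \ge t \right)
  \;\le\; \frac{E\!\left( y^{\mathsf T} y / n \right)}{t}.
\]
```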

For the second term in (20), let , then we have

for some . Notice that . Also for some positive constant as and . Therefore, from Jensen’s inequality, . Then using Lemma 7.3 to control the size of and applying Markov’s inequality to , we have

| (23) |

Plugging (22) and (23) into (20) and then plugging (20) into (19) gives

| (24) |

By a similar argument, we also have

| (25) |

Combining (24) and (25), we have

◻

Proof of Corollary 3.2. Notice that

| (26) |

Under the SRC in (A4), by Lemma 1 in Gao et al. (2017) or Theorem 2 in Zhang and Huang (2008),

| (27) |

From Theorem 3.1,

| (28) |

Equations (27) and (28) together give . This further gives that . Then by Corollary 2 in Gao et al. (2017), we also have

| (29) |

Combining (27), (28), (29) and (26) completes the proof. ◻

The following Tables 5–6 and Figures 5–6 give the selection, estimation and prediction results under Examples 1 and 2 when is selected by adaptive Lasso.

Table 5:

The performance of variable selection on when is selected by adaptive Lasso

| Setting | Measure | Method | p = 200 | p = 300 | p = 400 | p = 500 |
|---|---|---|---|---|---|---|
| Example 1 | TP | CIS-PSE | 61.4 (2.4) | 61.1 (2.5) | 61.0 (2.6) | 61.1 (2.6) |
| | | PSE | 41.2 (5.0) | 34.4 (5.1) | 25.7 (6.1) | 22.6 (5.8) |
| | FP | CIS-PSE | 2.5 (2.1) | 4.6 (2.5) | 6.7 (3.2) | 8.6 (3.0) |
| | | PSE | 15.1 (4.9) | 19.7 (5.0) | 22.3 (5.4) | 28.0 (6.2) |
| Example 2 | TP | CIS-PSE | 62.5 (0.8) | 58.0 (2.2) | 54.6 (2.9) | 52.0 (3.5) |
| | | PSE | 43.9 (3.8) | 37 (4.2) | 32.8 (4.3) | 31.5 (4.2) |
| | FP | CIS-PSE | 3.4 (2.6) | 5.2 (3.0) | 6.0 (3.5) | 7.7 (4.1) |
| | | PSE | 13.1 (3.7) | 18.6 (4.7) | 21.9 (5.5) | 28.0 (6.1) |
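The TP and FP counts reported in Table 5 can be illustrated with a short sketch. It assumes that a true positive is a selected covariate that belongs to the true signal set and a false positive is a selected covariate that does not; the function name `count_tp_fp` and the toy index sets are hypothetical.

```python
def count_tp_fp(selected, true_signals):
    """Return (TP, FP) for a selected index set against the true signal set."""
    selected, true_signals = set(selected), set(true_signals)
    tp = len(selected & true_signals)  # selected and truly nonzero
    fp = len(selected - true_signals)  # selected but truly zero
    return tp, fp

# Toy example: covariates 0-3 are true signals; a method selects 1, 2 and 5.
tp, fp = count_tp_fp(selected=[1, 2, 5], true_signals=[0, 1, 2, 3])
```

Averaging such counts over repeated simulation runs would give summaries of the kind reported in the table.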

Table 6:

Mean squared prediction error (MSPE) of the predicted outcomes when is selected by adaptive Lasso

| Setting | Method | p = 200 | p = 300 | p = 400 | p = 500 |
|---|---|---|---|---|---|
| Example 1 | CIS-PSE | 2.16 (0.57) | 3.06 (0.63) | 3.30 (0.72) | 3.60 (0.81) |
| | PSE | 3.91 (0.71) | 4.85 (0.86) | 5.71 (1.02) | 6.27 (1.17) |
| | adaptive Lasso | 15.02 (4.37) | 14.67 (3.90) | 14.49 (4.00) | 14.74 (4.29) |
| Example 2 | CIS-PSE | 2.69 (0.58) | 2.96 (0.72) | 3.23 (0.81) | 3.31 (0.83) |
| | PSE | 3.77 (0.77) | 4.76 (1.08) | 5.50 (1.17) | 5.82 (0.18) |
| | adaptive Lasso | 4.48 (0.79) | 4.81 (0.91) | 4.94 (0.97) | 4.97 (1.01) |
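For readers who want to reproduce a quantity of this type, a mean squared prediction error can be computed as sketched below. The sketch assumes the usual definition, the average squared difference between observed and predicted outcomes on a test set; the paper's exact definition may differ in normalization, and the function name `mspe` is hypothetical.

```python
def mspe(y_true, y_pred):
    """Mean squared prediction error: average of (y_i - yhat_i)^2."""
    if len(y_true) != len(y_pred):
        raise ValueError("y_true and y_pred must have the same length")
    return sum((a - b) ** 2 for a, b in zip(y_true, y_pred)) / len(y_true)

# Toy example: only the third prediction is off, by 2.
error = mspe([1.0, 2.0, 3.0], [1.0, 2.0, 5.0])
```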

Figure 5:

The mean squared error (MSE) of for different p’s when is selected by adaptive Lasso under Example 1 (Left panel) and Example 2 (Right panel). Solid lines represent CIS-PSE, dashed lines are for PSE, and dotted lines indicate adaptive Lasso.

Figure 6:

The mean squared error (MSE) of for different p’s when is selected by adaptive Lasso under Example 1 (Left panel) and Example 2 (Right panel). Solid lines represent CIS-PSE, dashed lines are for PSE, dotted lines indicate adaptive Lasso RE, dot-dashed lines represent adaptive Lasso, and long-dashed lines are for WR in (9).

Figure 7 shows the averaged sum of squared prediction error (SSPE) on the validation datasets across 500 independent experiments for different α’s.

References

- Barro R and Lee J (1994). Data set for a panel of 139 countries. http://admin.nber.org/pub/barro.lee/.

- Bickel P and Levina E (2008). Covariance regularization by thresholding. Annals of Statistics, 36(6):2577–2604.

- Bodmer W and Bonilla C (2008). Common and rare variants in multifactorial susceptibility to common diseases. Nat. Genet, 40(6):695–701.

- Bühlmann P, Rütimann P, van de Geer S, and Zhang CH (2013). Correlated variables in regression: clustering and sparse estimation. Journal of Statistical Planning and Inference, 143:1835–1858.

- Fan J and Li R (2001). Variable selection via nonconcave penalized likelihood and its oracle properties. J. Am. Statist. Assoc, 96:1348–1360.

- Fan J, Liao Y, and Mincheva M (2011). High-dimensional covariance matrix estimation in approximate factor models. Annals of Statistics, 39(6):3320–3356.

- Gao X, Ahmed SE, and Feng Y (2017). Post selection shrinkage estimation for high-dimensional data analysis. Applied Stochastic Models in Business and Industry, 33(2):97–120.

- Li B and Leal S (2008). Methods for detecting associations with rare variants for common diseases: application to analysis of sequence data. Am. J. Hum. Genet, 83(3):311–321.

- Mazumder R and Hastie T (2012). Exact covariance thresholding into connected components for large-scale graphical lasso. Journal of Machine Learning Research, 12:723–736.

- Shah RD and Samworth RJ (2013). Discussion of ‘correlated variables in regression: clustering and sparse estimation’. Journal of Statistical Planning and Inference, 143:1866–1868.

- Shao J, Wang Y, Deng X, and Wang S (2011). Sparse linear discriminant analysis by thresholding for high-dimensional data. Annals of Statistics, 39(2):1241–1265.

- Shapiro L and Stockman G (2002). Computer Vision. Prentice Hall.

- Tibshirani R (1996). Regression shrinkage and selection via the Lasso. J. R. Statist. Soc. B, 58:267–288.

- Wu MC, Lee S, Cai T, Li Y, Boehnke M, and Lin X (2011). Rare-variant association testing for sequencing data with the sequence kernel association test. Am. J. Hum. Genet, 89(1):82–93.

- Yuan M and Lin Y (2006). Model selection and estimation in regression with grouped variables. J. R. Statist. Soc. B, 68:49–67.

- Zhang CH (2010). Nearly unbiased variable selection under minimax concave penalty. Annals of Statistics, 38(2):1567–1594.

- Zhang CH and Huang J (2008). The sparsity and bias of the LASSO selection in high dimensional linear regression. Annals of Statistics, 36:1567–1594.

- Zhang CH and Zhang S (2014). Confidence intervals for low dimensional parameters in high dimensional linear models. J. R. Statist. Soc. B, 76(1):217–242.

- Zou H (2006). The adaptive Lasso and its oracle properties. J. Am. Statist. Assoc, 101:1418–1429.