Abstract

Clinical decision support (CDS) systems delivered through the electronic health record are an important element of quality and safety initiatives within a health care system. However, managing a large CDS knowledge base can be an overwhelming task for informatics teams. Additionally, it can be difficult for these informatics teams to communicate their goals to external operational stakeholders and to define concrete steps for improvement. We aimed to develop a maturity model that describes a roadmap toward organizational functions and processes that help health care systems use CDS more effectively to drive better outcomes. We developed a maturity model for CDS operations through discussions with health care leaders at 80 organizations, iterative model development by four clinical informaticists and a business officer, and subsequent review with 19 health care organizations. We ceased iterations when feedback from three consecutive organizations did not result in any changes to the model. The proposed CDS maturity model includes three main “pillars”: “Content Creation,” “Analytics and Reporting,” and “Governance and Management.” Each pillar contains five levels; advancing along each pillar provides CDS teams a deeper understanding of the processes CDS systems are intended to improve. A “roof” represents the CDS functions that become attainable after advancing along each of the pillars. Organizations are not required to advance in order and can develop in one pillar separately from another. However, we hypothesize that optimal deployment of preceding levels and advancing in tandem along the pillars increase the value of organizational investment in higher levels of CDS maturity. In addition to describing the maturity model and its development, we also provide three case studies of health care organizations using the model for self-assessment and to determine next steps in CDS development.

Keywords: decision support, clinical informatics, health information

Background and Significance

Patient-centered clinical decision support (CDS) has the potential to contribute substantially to the quadruple aim of advancing the health of populations, enhancing patient experience, reducing costs, and caring for caregivers. Nonetheless, CDS systems have variable effectiveness at improving adherence to evidence-based practices that lead to better outcomes. 1 2 3 4 5 6 7 The “five rights” CDS framework, 8 GUIDES checklist, 9 and similar tools 10 can help identify success factors of CDS systems that are associated with effective interventions. To incorporate these success factors reliably into the creation and maintenance of CDS systems, health care systems must coordinate CDS design, measurement, and governance. Many organizations lack the health information technology (IT) resources to consistently support high-quality CDS. This gap has led to calls for a CDS maturity model to guide organizational investment in functions and processes that would help health care systems use CDS more effectively. 11

Maturity models can help drive development by providing a roadmap to organizations for more effective use of health IT. 12 For example, Knosp et al demonstrate the use of a maturity index and a deployment index to assess academic health centers' capacity to provide research IT services and determine organizational investment priorities. 13 Multiple health care IT maturity models exist that concentrate on functional capacities related to a specific technology or a set of tasks 14 15 16 17 or on an organization's acceptance and regular use of a set of methodologies. 18 19 To our knowledge, no standardized maturity model yet exists for health care organizations' CDS capabilities. 11

CDS is defined as a process for enhancing health-related decisions and actions with pertinent, organized clinical knowledge and patient information to improve health and health care delivery. 8 Nearly all CDS processes aim to change behavior in complex environments where there are multiple interacting components and stakeholders at different organizational levels. 20 Developing organizational competence in any one specific technology or methodology is likely inadequate to understand processes and intervene effectively in such complex settings. Thus, a maturity model that focuses solely on characteristics of organizational use of methodologies or on specific functional capacities may not capture the interactions between these elements that promote CDS effectiveness.

In this article, we took the perspective of an organization trying to determine how to maximize the impact of its operational investment in CDS. We aimed to describe a roadmap toward mature organizational functions and processes to help health care systems use CDS more effectively to drive better outcomes.

Objectives

After reading this article, the reader should be able to:

Identify the functions of a CDS operational team, its key stakeholders, and its interactions with other organizational entities.

Understand the uses of a maturity model to promote organizational development and discuss applicability of a proposed CDS maturity model to health care organizations.

Describe key components of a proposed CDS maturity model, the organizational benefits of maturing along each pillar, and advantages of developing capacities in tandem across the components.

Model Development

We developed a maturity model through notes taken from conversations with CDS stakeholders at U.S. health care organizations in the context of discussing interest in CDS analytics and governance. Of note, these conversations were not intended as rigorous qualitative research, but rather to synthesize lessons learned about effective CDS operations and gaps that could be addressed. Therefore, conversations were not recorded, although notes were taken and reviewed with participants for accuracy and appropriateness (member-checking). 21

Stakeholder Outreach

We identified CDS team members through internet searches for health care organization chief medical information officers and by reviewing online profiles of staff with job titles or descriptions of “head of informatics,” “knowledge manager,” “knowledge engineer,” or any mention of “clinical decision support.” We did not use explicit criteria, such as years of CDS experience, to determine whom to contact. We contacted these individuals via e-mail to discuss CDS analytics and governance, with the aim of informing potential software tools, and scheduled webinars (or in-person meetings when practical) with interested parties. Additional CDS team members were identified via snowball sampling. 22 Discussions were generally held in group settings with multiple people from a single institution.

We arranged in-person or webinar meetings with CDS team members from a total of 80 organizations ( Table 1 ). Each meeting was loosely structured in three phases: (1) current assessment of the organizations' CDS governance structure, capabilities, and practices (as defined by the stakeholders); (2) discussion of the organizations' goals and needs to further develop CDS capacity; and (3) demonstration of, and feedback on, a software application for CDS analytics and governance developed by several authors (E.W.O., N.M., D.F.F., M.D.Z., and M.C.T.) and in use at their home institution at the time (Children's Hospital of Philadelphia, CHOP). We did not use a fixed list of questions; instead, similar to grounded theory, 21 we adjusted questions over time based on learnings from prior conversations, with the goal of synthesizing organizational needs to create and maintain high-quality CDS.

Table 1. Roles of CDS team members participating in discussion.

| Role^a | N (%) |
|---|---|
| Chief medical information officer (CMIO) | 49 (39) |
| Physician informaticist (not CMIO) | 47 (37) |
| Nonphysician informaticist | 11 (9) |
| Knowledge manager/engineer | 7 (5) |
| Other | 13 (10) |

Abbreviation: CDS, clinical decision support.

^a Each individual was categorized into a single role based on online profiles.

Model Synthesis

After reviewing notes from the 80 meetings, five authors (four clinical informaticists [E.W.O., N.M., D.F.F., and M.C.T.] and one business officer [M.D.Z.]) iteratively developed the proposed CDS maturity model. First, M.D.Z. and M.C.T. synthesized the conversations into a candidate model. This candidate model was then reviewed with E.W.O., N.M., and D.F.F. alongside notes from approximately five meetings at a time. After each review session, M.C.T. and M.D.Z. adjusted the candidate model, and this process continued until all meeting notes had been reviewed by the five authors with no suggested changes. After achieving agreement, we reviewed the proposed model with 19 additional organizations and presented it with case examples at a regional informatics meeting (Penn Healthcare IT Roundtable) and two national informatics meetings (AMIA Annual Symposium 2018 23 and AMIA Clinical Informatics Conference 2019 24); the same five authors reviewed the feedback from each presentation. We ceased iterations when feedback from three consecutive organizations did not result in any changes to the model, which occurred after review with the first six organizations (and held for the subsequent 13 organizations).
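The stopping rule above can be expressed as a small function. This is a minimal illustrative sketch, not part of the study's method: the per-organization record of whether feedback changed the model (`changed`) and the function name are hypothetical, and the example sequence is invented rather than taken from the review notes.

```python
from typing import Optional, Sequence


def stopping_point(changed: Sequence[bool], run_length: int = 3) -> Optional[int]:
    """Return how many organizations had been reviewed when iteration stops.

    Iteration stops once `run_length` consecutive organizations produce no
    change to the model. Returns None if the rule is never satisfied.
    """
    consecutive_unchanged = 0
    for count, model_changed in enumerate(changed, start=1):
        # Any change to the model resets the run of unchanged reviews.
        consecutive_unchanged = 0 if model_changed else consecutive_unchanged + 1
        if consecutive_unchanged == run_length:
            return count
    return None


# Hypothetical sequence: feedback from the 3rd organization changed the model,
# while feedback from the 4th through 6th did not.
example = [True, False, True, False, False, False]
print(stopping_point(example))
```

With this invented sequence, the rule is first satisfied after the sixth organization, mirroring the pattern described in the text.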

Proposed Maturity Model

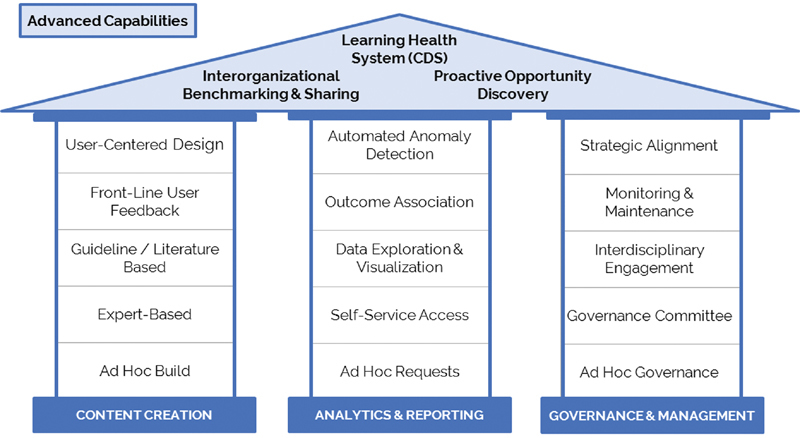

The proposed CDS maturity model ( Fig. 1 ) describes a roadmap of interdependent organizational functions and processes to help health care systems use CDS to drive better outcomes. Three “pillars” focus on distinct domains of an organization's approach to CDS: “Content Creation,” “Analytics and Reporting,” and “Governance and Management.” The “roof” represents the advanced CDS functions that become attainable after progressing along each of the three pillars. The initial state for all three pillars is “ad hoc.” For example, in content creation, ad hoc indicates that a clinician asks for a particular CDS artifact (e.g., alert, order set, documentation template) and it is created according to their wishes, without further effort to understand the problem or care process. Advancing along each pillar gives the CDS team a greater understanding of the problem to facilitate improved CDS processes.

Fig. 1. Clinical decision support operations maturity model.

Of note, the organizational effort required to advance one step likely differs at each point in the model. An organization can advance in one pillar separately from another. For example, an organization could develop advanced CDS utilization reports that associate CDS artifact use with outcomes but lack the governance needed to engage providers in using those data to design and optimize CDS processes. Similarly, an organization may develop a new capacity, such as user-centered design, but not yet apply it systematically or comprehensively across all CDS artifacts or processes. Advancing in tandem along the pillars can confer additional advantages; for example, a robust analytics platform can yield greater benefits when coupled with interdisciplinary engagement, monitoring and maintenance processes, and user-centered design.

Additionally, organizations frequently advance within an individual pillar in a different sequence than the one described in the model. While this practice may be appropriate for specific CDS initiatives, we hypothesize that advancing in order increases the value of organizational investment in higher levels of CDS maturity.
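One way to operationalize the pillar structure for self-assessment is sketched below. It is an illustrative sketch, not a validated assessment instrument. The capability names come from Tables 2 to 4; the function names, the representation of each pillar as a set of achieved levels, and the rule of suggesting the lowest unachieved level as the next step are assumptions of this sketch. They reflect the hypothesis that advancing in order maximizes value while still recording capabilities developed out of sequence.

```python
from typing import Dict, List, Optional, Set

# Capability names per level (list index 0 = level 1), as listed in Tables 2-4.
PILLARS: Dict[str, List[str]] = {
    "Content Creation": [
        "Ad hoc build",
        "Expert-based",
        "Guideline/literature based",
        "Front-line user feedback",
        "User-centered design",
    ],
    "Analytics and Reporting": [
        "Ad hoc requests",
        "Self-service access",
        "Data exploration and visualization",
        "Outcome association",
        "Anomaly detection",
    ],
    "Governance and Management": [
        "Ad hoc governance",
        "Governance committee",
        "Interdisciplinary engagement",
        "Monitoring and maintenance",
        "Strategic alignment",
    ],
}


def contiguous_level(pillar: str, achieved: Set[int]) -> int:
    """Highest level reached without skipping a lower level (0 if level 1 is absent)."""
    level = 0
    while level < len(PILLARS[pillar]) and (level + 1) in achieved:
        level += 1
    return level


def next_step(pillar: str, achieved: Set[int]) -> Optional[str]:
    """Lowest capability not yet in place, as a candidate next investment."""
    for level, capability in enumerate(PILLARS[pillar], start=1):
        if level not in achieved:
            return f"Level {level}: {capability}"
    return None  # all five levels are in place for this pillar


# Hypothetical self-assessment listing the levels each pillar reports as in place.
# Out-of-sequence capabilities (e.g., analytics level 4 without level 3) are kept.
assessment: Dict[str, Set[int]] = {
    "Content Creation": {1, 2, 3},
    "Analytics and Reporting": {1, 2, 4},
    "Governance and Management": {1, 2, 3, 5},
}

for pillar, achieved in assessment.items():
    print(pillar, contiguous_level(pillar, achieved), next_step(pillar, achieved))
```

In this hypothetical assessment, the analytics pillar reports outcome association (level 4) but its contiguous level is 2, so the suggested next step is data exploration and visualization (level 3).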

Content Creation

The “Content Creation” pillar describes advancement from a focus on CDS tools to a more comprehensive understanding of the work system 25 26 to inform CDS design ( Table 2 ). In the first three levels, specific CDS artifacts are built with increasing sophistication: initially to satisfy the ideas of individual clinicians (e.g., through personal order sets), then with expert review, and then to promote evidence-based practices endorsed by applicable guidelines. In level 4, CDS teams attempt to gain a greater understanding of work as done (not only work as imagined) by systematically incorporating front-line user feedback regarding use of the CDS artifact in context. 27 28 In level 5, decision support processes are designed based on more formal analysis of the users and their tasks. 29 Additionally, rather than showing users a CDS artifact and simply asking for their opinion, CDS teams ask front-line users to simulate their work, and data on their performance inform adjustments to the design.

Table 2. Content Creation pillar of the proposed CDS maturity model.

| Level | Capability | Description |
|---|---|---|
| 5 | User-centered design | Design based on user- and task-analysis with scenario-based testing of prototypes performed systematically for high-risk CDS prior to implementation |
| 4 | Front-line user feedback | Feedback from front-line users on each CDS artifact is systematically solicited, reviewed, and incorporated |
| 3 | Guideline/literature based | Guidelines and academic literature are consistently reviewed and incorporated |
| 2 | Expert-based | Building based on local experts' consensus |
| 1 | Ad hoc build | Human and technical resources to build and customize CDS when needed |

Abbreviation: CDS, clinical decision support.

Analytics and Reporting

The “Analytics and Reporting” pillar describes advancement from resource-intensive data requests to more rapid and more useful evaluation of CDS process and outcome measures, and finally to automated maintenance functions. In levels 2 to 4, CDS teams can answer increasingly sophisticated questions through self-service, without entering a data request and waiting on organizational resources. Level 2 represents the ability of improvement advocates in the organization to rapidly determine what CDS is being used without a dedicated data request. Level 3 represents the ability to rapidly determine how CDS is being used, as well as which stakeholders to contact to learn why it is being used a particular way. Level 4 moves from examining the CDS artifacts alone to examining the intended goals of the decision support (e.g., process or outcome metrics). Level 5 introduces automated processes to identify anomalies in CDS use or in process or outcome metrics ( Table 3 ). 30 31 32 33

Table 3. Analytics and Reporting pillar of the proposed CDS maturity model.

| Level | Capability | Description |
|---|---|---|
| 5 | Anomaly detection | Automatically monitor data to identify broken, malfunctioning, or suboptimal CDS |
| 4 | Outcome association | Systematically associate clinical measures with CDS performance |
| 3 | Data exploration and visualization | Users can easily manipulate CDS data to move from understanding what is happening to why it is happening |
| 2 | Self-service access | Basic, descriptive reports are available on demand for consumers |
| 1 | Ad hoc requests | Consumers of CDS data receive reports from another person or team after request |

Abbreviation: CDS, clinical decision support.
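The article does not prescribe a specific anomaly detection method (level 5), so the following is only a minimal sketch of one simple technique: a trailing-window z-score on daily alert firing counts. The function name, the 14-day window, the threshold of 3, and the firing counts are all hypothetical assumptions chosen for illustration.

```python
from statistics import mean, stdev
from typing import List, Sequence


def flag_anomalies(
    daily_counts: Sequence[float], window: int = 14, z_threshold: float = 3.0
) -> List[int]:
    """Return indices of days whose count departs sharply from the trailing baseline.

    Each day is compared with the mean and sample standard deviation of the
    preceding `window` days; it is flagged when |z| exceeds `z_threshold`.
    """
    if window < 2:
        raise ValueError("window must be at least 2 to estimate a standard deviation")
    flagged: List[int] = []
    for day in range(window, len(daily_counts)):
        baseline = daily_counts[day - window:day]
        mu, sigma = mean(baseline), stdev(baseline)
        if sigma == 0:
            # Perfectly flat baseline: treat any departure from it as anomalous.
            if daily_counts[day] != mu:
                flagged.append(day)
        elif abs(daily_counts[day] - mu) / sigma > z_threshold:
            flagged.append(day)
    return flagged


# Hypothetical daily firing counts for one alert; the trigger stops firing on day 16.
counts = [38, 41, 40, 39, 42, 40, 37, 41, 40, 39, 43, 40, 38, 41, 40, 39, 0, 0]
print(flag_anomalies(counts))
```

This flags days 16 and 17. Because flagged days enter the trailing baseline and inflate its standard deviation, a sustained outage may stop being flagged after a few days; a production approach would also need to handle weekday seasonality and exclude known anomalies from the baseline.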

Governance and Management

The “Governance and Management” pillar describes increasing engagement of organizational stakeholders with the CDS team to accomplish organizational goals. Progressing from level 1 to level 4 represents increasingly regular review of CDS processes. Additionally, wider representation in, and engagement with, the CDS team make it easier to identify “owners” of a CDS process who have the appropriate clinical expertise and connections to ensure adequate review. This interdisciplinary approach also helps avoid unintended consequences across a variety of clinical workflows. At level 5, strategic alignment, the organization recognizes that CDS processes are critical to accomplishing goals related to the quadruple aim, leading to increased investment in people, processes, and technology to ensure CDS team involvement in all key organizational initiatives ( Table 4 ).

Table 4. Governance and Management pillar of the proposed CDS maturity model.

| Level | Capability | Description |
|---|---|---|
| 5 | Strategic alignment | The CDS team is leveraged throughout the organization as a vital partner in helping reach organizational strategic goals |
| 4 | Monitoring and maintenance | Standardized review and change recommendation processes for all existing CDS |
| 3 | Interdisciplinary engagement | Cross-department representation in governance processes |
| 2 | Governance committee | Defined governance group and stakeholders that have established intake processes; CDS team as “gatekeepers” |
| 1 | Ad hoc governance | Governance occurs only as needed (e.g., for regulatory or safety events) |

Abbreviation: CDS, clinical decision support.

Advanced Capabilities

Achieving advanced levels across all three pillars creates new opportunities to use CDS to advance care ( Table 5 ). For example, in addition to automated anomaly detection, advanced analytics can uncover opportunities for improvement with CDS by identifying variation in care practices or through interorganizational benchmarking. However, to take advantage of these insights, the organization must also have advanced governance processes to evaluate these opportunities and adequate resources to create high-quality CDS content. Achieving all of these milestones creates a “learning health system for CDS,” in which the organization can continuously generate, interpret, and act on CDS data to promote evidence-based practices in pursuit of the quadruple aim. Of note, this “learning health system for CDS” is not equivalent to the broader vision of the Learning Healthcare System described by the Institute of Medicine, 34 which also integrates knowledge discovery, innovation, patient engagement, and other domains that are traditionally not the subject of CDS alone.

Table 5. Advanced capabilities of the proposed CDS maturity model.

| Capability | Description |
|---|---|
| Learning health system | Continuous integration of user input, clinical data, and organizational knowledge into CDS |
| Interorganizational benchmarking and sharing | Ability to compare CDS metrics with those of other organizations, both for specific processes (e.g., sepsis order set usage) and for system-wide metrics (e.g., alert burden) |
| Proactive opportunity discovery | Automated identification of potential applications for CDS based on variation in care as well as cross-department and/or cross-institution benchmarking |

Abbreviation: CDS, clinical decision support.

Case Studies: Maturity Model in Practice

Vanderbilt University Medical Center

Vanderbilt University Medical Center (VUMC) is one of the largest academic medical centers in the Southeast, managing more than 2 million patient visits each year. In November 2017, VUMC fully implemented a new electronic health record (EHR; Epic Systems) throughout all inpatient hospitals and outpatient clinics. CDS in the form of evidence-based order sets and alerts facilitates guideline-concordant care across the care continuum. Robust content creation and governance processes are in place to support lifecycle management of all deployed CDS artifacts. For CDS content creation, VUMC leverages user-centered design principles (level 5 of the “Content Creation” pillar) through an iterative approach that involves end-user feedback and is guided by Osheroff's five “rights” for CDS. 8 Specifically, design goals for the CDS interventions include simple presentation of relevant data with recommended actions, optimal workflow integration via appropriate triggers that minimize unnecessary alerting, and delivery of evidence-based guidance according to VUMC's content governance framework. Using user-centered design principles, VUMC recently deployed CDS to implement pneumonia management guidelines in the pediatric emergency department (PED). The CDS team observed clinician users (e.g., PED faculty, fellows, residents, and nurse practitioners) to investigate clinical workflows, decision-making processes, current use of technology and artifacts, and communication patterns. Structured cognitive interviews identified factors affecting decisions, care goals, contingencies and exceptions, and the strengths and limitations of proposed care algorithms. These data informed key user-interface design requirements for several candidate CDS designs. Subsequently, the CDS team conducted a usability evaluation of the candidate user-interface designs by presenting PED providers with prototypes (i.e., formative usability testing) to refine design elements. The design that scored highest among all participating providers was selected for further refinement and eventual implementation. The final version consisted of three streamlined data input forms to confirm (or obtain) the CDS algorithm inputs (patient clinical variables) that triggered the recommended actions: laboratory tests and antibiotic orders.

Despite following best practices for CDS design, analysis of usage data suggests that adoption rates for some CDS artifacts remain low. Ongoing assessment of users' compliance with CDS recommendations and rapid identification of the root causes of low adoption are vital for sustainable CDS. In addition to employing user-centered design principles for initial design, VUMC uses targeted postimplementation user feedback (level 4 of the “Content Creation” pillar), informed by periodic review of providers' CDS usage data. In addition, postdeployment interviews were conducted with PED providers to understand certain user behaviors, including reasons for not using the CDS in certain situations, and to identify modifications and solutions to resolve the related issues. Extending user-centered CDS design principles to include ongoing evaluation of CDS has been an integral part of CDS lifecycle management and ongoing CDS optimization.

Children's Healthcare of Atlanta

Children's Healthcare of Atlanta (CHOA) is the largest pediatric health system in Georgia, managing over 1 million patient visits per year as of 2018. The organization's EHR (Epic Systems) is integrated throughout the enterprise and achieved Healthcare Information and Management Systems Society (HIMSS) EMRAM Acute Care Stage 7 in 2016. CHOA has developed 90 clinical practice guidelines to standardize care and improve quality, all of which are supported by CDS artifacts such as order sets, information resources, and/or alerts. To facilitate plan-do-study-act cycles, CHOA has developed a self-service analytics application using QlikView that allows users to quickly view a set of 21 quality outcomes (e.g., length of stay, readmission rate, % intensive care unit [ICU] transfers) for populations meeting guideline criteria and to filter by whether the intended order set for that population was activated. Thus, CHOA can associate CDS with outcomes (level 4 of the “Analytics and Reporting” pillar). For example, in mid-2017, the CDS team noted a brief elevation in ICU transfer rates among patients diagnosed with croup while use of the order set was decreasing to approximately 50% of encounters meeting criteria for the croup guideline. These data informed educational interventions to increase awareness of the order set and its association with better outcomes, which led to increased utilization of 75 to 80%. Over the same time period, ICU transfers and readmissions within 7 days both decreased.
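The stratified view described above (outcomes for a guideline population, filtered by whether the intended order set was activated) can be sketched as follows. This is a hypothetical illustration, not CHOA's QlikView application: the `Encounter` record, its field names, and the data are invented, and the comparison is descriptive and unadjusted, so it shows association rather than causation.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass(frozen=True)
class Encounter:
    """Hypothetical encounter record for a population meeting guideline criteria."""
    order_set_used: bool
    icu_transfer: bool


def outcome_by_order_set(encounters: List[Encounter]) -> Dict[str, float]:
    """Order set utilization and ICU transfer rate, stratified by order set use."""
    used = [e for e in encounters if e.order_set_used]
    not_used = [e for e in encounters if not e.order_set_used]

    def rate(group: List[Encounter]) -> float:
        # Share of encounters in the group with an ICU transfer (NaN if empty).
        return sum(e.icu_transfer for e in group) / len(group) if group else float("nan")

    return {
        "utilization": len(used) / len(encounters) if encounters else float("nan"),
        "icu_rate_order_set_used": rate(used),
        "icu_rate_order_set_not_used": rate(not_used),
    }


# Hypothetical data: 10 encounters, half with the order set used.
encounters = (
    [Encounter(order_set_used=True, icu_transfer=False)] * 5
    + [Encounter(order_set_used=False, icu_transfer=True)]
    + [Encounter(order_set_used=False, icu_transfer=False)] * 4
)
print(outcome_by_order_set(encounters))
```

Tracking these three numbers over time is what allows a team to notice, for example, falling utilization alongside rising ICU transfers; confirming that the order set contributes to outcomes would require further analysis that accounts for differences between the groups.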

While the capacity to associate CDS use with outcomes has yielded some successes, the analytics tools at CHOA do not allow for more detailed exploration and visualization of CDS utilization data. For example, among patient encounters meeting criteria for the inpatient uncomplicated pneumonia guideline in 2018, the pneumonia order set was used in only 23%, limiting progress toward promoting narrow-spectrum antibiotics. Determining how users selected orders within the order set, or the rationale behind choices not to follow the guideline, would require a separate data request. Similarly, there is no systematic mechanism for obtaining and incorporating front-line user feedback, which slows investigation into why adherence might be low and, in turn, the design of more effective processes. Thus, future efforts will aim to solidify CDS data exploration and visualization capabilities (level 3 of the “Analytics and Reporting” pillar) as well as front-line user feedback (level 4 of the “Content Creation” pillar) to iterate more rapidly on CDS design to achieve its intended outcomes.

Children's Hospital of Philadelphia

CHOP is the community hospital and primary care hub for children in West and South Philadelphia and a major tertiary referral center for the Greater Delaware Valley area. Annually, CHOP has nearly 30,000 inpatient admissions, more than 81,000 emergency room visits, and approximately 1.24 million outpatient visits. CHOP has developed its CDS governance practices over multiple years after implementing its current EHR (Epic Systems) in the ambulatory care network in 2000 and in the inpatient and emergent care settings in 2011. A centralized governance structure (level 2 of the “Governance and Management” pillar) was established around the time of system roll-out, with biweekly meetings of a CDS governance committee with multidisciplinary representation (level 3) from pharmacy, nursing, physicians, and information services. Initially, this centralized governance primarily served to mitigate unintended consequences, especially given the substantial new build at the time of system implementation. Engaging a broad group of interested stakeholders, however, also provided the opportunity to develop a deep pool of informatics expertise. For example, more than 20 physicians at CHOP are currently board certified in clinical informatics.

Once this pool of experts was available, clinical informatics leadership was able to partner with other strategic initiatives in the organization and align CDS efforts with them (level 5 of “Governance and Management”). The development of a clinical pathways program was paired with the creation of order sets and other decision support tools to achieve standardized care. This led to further investment in clinical informatics resources by quality-improvement leadership and to the dissemination of quality-improvement best practices to clinical informatics. In recent work, the CHOP Sepsis Quality Improvement program aimed to reduce time to antimicrobial administration for patients with suspected sepsis in the pediatric intensive care unit (PICU). PICU sepsis leadership partnered with quality improvement and clinical informatics teams to identify this cohort of patients in real time and, when “stat” ordering was not used, prompt the clinician to change the ordering priority to “stat.” Clinical informatics helped refine the design of an EHR alert. Postintervention analysis using statistical process control charts demonstrated special cause variation, with 20% center-line shifts indicating improvement in both “stat” ordering and time to antimicrobial administration.
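The case study does not state which special cause rules were applied, so the following is only a minimal sketch of one commonly used shift rule for control charts: a run of 8 consecutive points on the same side of the center line (some references use 6 or 7). The function name, the convention of skipping points that fall exactly on the center line, and the weekly values are hypothetical assumptions.

```python
from typing import Optional, Sequence


def detect_shift(
    values: Sequence[float], center_line: float, run_length: int = 8
) -> Optional[int]:
    """Return the index completing the first run of `run_length` consecutive
    points on one side of the center line, or None if no such run occurs."""
    run, side = 0, 0
    for i, value in enumerate(values):
        if value == center_line:
            continue  # assumed convention: points on the line neither extend nor break a run
        current = 1 if value > center_line else -1
        run = run + 1 if current == side else 1
        side = current
        if run >= run_length:
            return i
    return None


# Hypothetical weekly median minutes to antimicrobial administration,
# with a baseline center line of 60 minutes; improvement appears as a downward shift.
weekly = [62, 58, 61, 59, 63, 64, 50, 48, 49, 47, 51, 46, 49, 48]
print(detect_shift(weekly, center_line=60))
```

Here the eighth consecutive point below the center line occurs at index 13. A full control chart analysis would also compute control limits and, after a confirmed shift, recalculate the center line from the new data, which is what a reported center-line shift reflects.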

CHOP only recently established system-wide review processes for CDS tools (level 4 of the “Governance and Management” pillar). While this illustrates that an organization can move out of sequence in the maturity model, the review process also revealed opportunities for improvement; had it been in place earlier, it could have enabled more effective engagement with quality improvement and patient safety (level 5).

Discussion

We developed a maturity model to guide organizations' development of data-driven CDS processes to improve population health, enhance patient experience, reduce costs, and care for caregivers. Informed by discussions with 80 organizations, the model provides a structure to assess current capacity in the domains of CDS content creation, analytics, and governance. Of note, these domains are focused on organizational capacities that support the creation and maintenance of high-quality CDS, rather than success factors for a specific CDS implementation. Within each of these domains, we describe a natural progression to help organizations identify a “zone of proximal development” 35 where investment of resources could yield immediate benefits in addition to building CDS infrastructure. Advancing in tandem across domains confers additional benefits, and a “roof” represents advanced capabilities that become possible through achievement of high levels of maturity across the three pillars. At the pinnacle of the model, organizations create a “learning health system for CDS” in which CDS designs are continuously adjusted based on a thorough understanding of the work system and the impact of the CDS on outcomes of interest.

The model as defined suggests that capabilities must be achieved serially and that each capability is either implemented or not. However, organizations may have different levels of deployment 13 for each capability described in the model; for example, an organization may be able to visualize and explore EHR use patterns for alerts but not for order sets. Additionally, as described in the case studies, many organizations develop “higher” capabilities before “lower” ones or work on multiple areas simultaneously. Nonetheless, the imposed order reflects our hypothesis that advancing in order maximizes the benefit of higher levels. For example, if an organization can perform anomaly detection but cannot associate CDS artifacts with outcomes, it cannot monitor for unexpected changes in the outcome (for example, due to changes in diagnosis codes). 36 Similarly, if an organization has optimized the process of incorporating front-line user feedback but does not base CDS processes on guidelines and literature, the resulting system may reflect what many clinicians want but diverge from evidence or established best practice, leading to poorer patient outcomes. Intentional progression through these stages can limit unnecessary disruption to existing processes while still transforming the organization positively. 37

To our knowledge, no previous studies have systematically assessed organizations' outlook on CDS maturity or synthesized insights into a CDS maturity model to support CDS operations. Many studies have evaluated the impact of specific CDS content 1 or characterized organizational variability in use and effectiveness of specific CDS tool types such as order sets 38 and computerized reminders or alerts. 39 Numerous models and textbooks describe IT governance and management strategies, 40 but these models have not been adapted for health care knowledge management. Health Catalyst has developed a maturity model for health care analytics, but the model is intended for general use across the health care enterprise and not specific to CDS systems. 41

While this maturity model was informed by discussions with CDS team members from 80 organizations, we did not employ rigorous qualitative research methods such as transcribing interviews or independently identifying themes. 21 Similarly, while we obtained feedback on the proposed model from 19 organizations as well as from participants at regional and national health informatics meetings, we did not use validated methods such as consensus mapping 42 or Delphi methods. 43 The proposed model may therefore be skewed toward a specific vision of operationally focused, data-driven CDS that may differ from many health care organizations' goals.

Conclusion

Effective CDS has the potential to transform health care and improve patient, population, and caregiver outcomes at reduced costs. However, achieving these aims requires operational coordination of CDS content creation, analytics, and governance. The proposed CDS maturity model can help organizations assess their current capacity and guide investment in CDS capabilities to accomplish organizational goals. Future efforts will include creation and validation of assessment tools that help individual health care organizations identify CDS improvement opportunities and drive policy that facilitates effective CDS implementation.

Clinical Relevance Statement

To leverage the potential of CDS to improve outcomes, health care organizations must develop the operational capacity to create high-quality decision support processes, streamline data analytics, and develop robust governance strategies. However, informatics teams with limited resources must be deliberate about how they invest time and effort in building CDS capacity. We propose a maturity model for CDS operations, based on discussions with 80 organizations, that can help CDS teams assess their current capacity, create a roadmap for development, and identify attainable next steps that yield immediate benefits while building CDS infrastructure.

Multiple Choice Questions

1. Anomaly detection is an important component of a robust clinical decision support (CDS) analytics group because:

a. It pushes otherwise hidden insights to the CDS team.

b. It identifies patients who have the best outcomes.

c. It allows a CDS team to visualize its data.

d. It aggregates the data into a single location.

Correct Answer: The correct answer is option a. Modern CDS systems generate large volumes of performance log data. CDS analytics typically follow a “pull” approach, in which an analyst manually retrieves the specific insights they are seeking. Anomaly detection instead pushes insights about malfunctions or other significant changes to the CDS team, so that the team does not need to seek them out explicitly.
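As a rough illustration of the “push” model described in this answer, the Python sketch below flags days on which an alert's firing count departs sharply from its recent baseline. It is a minimal example under stated assumptions, not the method used by any organization in this study: the function name `flag_anomalies`, the 14-day trailing window, the z-score threshold of 3, and the daily counts (loosely modeled on the venous thromboembolism prophylaxis alert scenario in a later question) are all hypothetical, and a simple z-score rule is only one of many possible anomaly detection techniques.

```python
from statistics import mean, stdev


def flag_anomalies(daily_counts, window=14, z_threshold=3.0):
    """Return indices of days whose alert count deviates from the trailing
    `window`-day baseline by more than `z_threshold` standard deviations.

    Hypothetical helper illustrating a simple z-score anomaly rule.
    """
    flagged = []
    for i in range(window, len(daily_counts)):
        baseline = daily_counts[i - window:i]
        mu = mean(baseline)
        sigma = stdev(baseline)
        if sigma == 0:
            # Perfectly flat baseline: any change at all is unusual.
            if daily_counts[i] != mu:
                flagged.append(i)
            continue
        z = (daily_counts[i] - mu) / sigma
        # abs() catches both spikes and sudden drops in firing.
        if abs(z) > z_threshold:
            flagged.append(i)
    return flagged


# Hypothetical daily firing counts for one alert: stable near 30/day, then a jump.
counts = [30, 28, 31, 29, 32, 30, 27, 33, 30, 29, 31, 28, 30, 32, 220]

for day in flag_anomalies(counts):
    # In practice this is where the CDS team would be notified.
    print(f"Day {day}: {counts[day]} firings vs. recent mean "
          f"{mean(counts[day - 14:day]):.0f}")
# prints: Day 14: 220 firings vs. recent mean 30
```

Because the rule uses the absolute z-score, it also flags an alert that suddenly stops firing, which can signal a malfunction just as a spike can.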

2. A hospital system creates order sets by convening groups of attending clinicians who explain their preferences for a given order set; the clinical decision support (CDS) team then builds the order set as described, and a representative clinician reviews it once more before it goes to production. What stage of CDS maturity in the Content Creation domain does this represent?

a. Ad hoc build.

b. Expert-based.

c. Guideline/literature based.

d. Front-line user feedback.

e. User-centered design.

Correct Answer: The correct answer is option b. In this example, the hospital system's CDS content creation is based on discussion among clinical experts. This is an improvement over ad hoc build (in which anyone in the organization can request an order set and have it built to their specifications) because it requires groups of clinicians with similar roles to reach consensus on their workflow. The system as described does not require the use of guidelines or evidence from the literature to inform order-set design. Nor does it use feedback from actual users of the order set after deployment to adjust the design. Finally, no formal user or task analysis was performed, and there was no scenario-based testing of users' interaction with the order set to inform design.

3. A health care organization assigns clinical owners to each order set and alert in its system and provides those owners with data on use patterns. A critical care physician notices that, of the 150 items in the critical care order set, only 50 have been used at least once in the last year. The physician requests removal of the extraneous items to produce a leaner, more relevant order set. What stage of CDS maturity in the Governance and Management domain does this represent?

a. Ad hoc governance.

b. Governance committee.

c. Interdisciplinary engagement.

d. Monitoring and maintenance.

e. Strategic alignment.

Correct Answer: The correct answer is option d. In this example, the health care organization has provided clinical owners of decision support artifacts with data that allow them to monitor how those artifacts are used and to make adjustments. Naming clinical owners already provides interdisciplinary engagement; equipping those owners with data additionally simplifies the monitoring and maintenance process, allowing them to adjust the design of the artifacts based on use patterns. This example does not yet demonstrate strategic alignment, because the CDS team's work is not tied directly to organizational strategic goals.
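One way to produce the use-pattern data in this example is to tally order-set item usage from an order log. The Python sketch below is a minimal illustration under hypothetical assumptions: the log is simplified to a list of item names ordered from the order set during the review period, and the function `usage_summary` and the item names are invented for illustration. Real EHR audit data are structured differently.

```python
from collections import Counter


def usage_summary(order_set_items, order_log):
    """Count uses of each order-set item and list the items never used.

    order_set_items: item names in the order set (hypothetical format).
    order_log: item names ordered from the order set during the review period.
    """
    item_set = set(order_set_items)
    # Ignore log entries that are not part of this order set.
    counts = Counter(item for item in order_log if item in item_set)
    # Preserve the order-set ordering when listing unused items.
    unused = [item for item in order_set_items if counts[item] == 0]
    return counts, unused


# Hypothetical miniature critical care order set and one year of use.
items = ["CBC", "BMP", "Lactate", "Blood culture", "Procalcitonin", "Troponin"]
log = ["CBC", "BMP", "CBC", "Lactate", "CBC", "BMP"]

counts, unused = usage_summary(items, log)
print(f"{len(items) - len(unused)} of {len(items)} items used at least once")
# prints: 3 of 6 items used at least once
print("Candidates for removal:", unused)
```

In this sketch an item with zero uses is only flagged for the clinical owner's review rather than removed automatically, consistent with the owner-driven maintenance described above.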

4. Which of the following examples demonstrates the use of outcome association to inform clinical decision support design?

a. An organization reports central line-associated bloodstream infection (CLABSI) rates to its executive board. When CLABSI rates increase, the board sends e-mails to all clinical leaders asking them to remind nurses and providers to follow hospital policy when caring for patients with central lines.

b. A critical care physician sees that, of the 150 items in their admission order set, only 50 have been used in the last year. The physician requests that extraneous items be removed, producing a leaner, easier-to-use order set that front-line users prefer.

c. An organization's anomaly detection algorithm notifies the clinical decision support (CDS) team that a venous thromboembolism prophylaxis alert, which previously fired an average of 30 times per day, is now firing 220 times per day. The CDS team investigates and finds that a change in codes is causing the alert to fire inappropriately. The team adjusts the codes, and the alert frequency returns to its previous level.

d. An organization notices that among patients admitted for community-acquired pneumonia, those for whom a pneumonia order set was used were no more likely to receive narrow-spectrum antibiotics than patients for whom the order set was not used. After the order set defaults are changed, admissions in which the order set is used achieve higher rates of narrow-spectrum antibiotic use.

Correct Answer: The correct answer is option d. In example (d), the clinical decision support (CDS) team monitors the outcome that the CDS was developed to improve, namely use of narrow-spectrum antibiotics for community-acquired pneumonia. By associating CDS use with this outcome, the organization can determine that the CDS is not producing the desired effect, take action, and achieve an improvement. Example (a) involves reporting an outcome, but the outcome is not associated with CDS. Example (b) demonstrates self-service access, data exploration and visualization, and monitoring and maintenance; however, no clinical outcome is used to inform the design of the order set. Example (c) demonstrates anomaly detection, but again no clinical outcome is used to inform the design.
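At its simplest, the outcome association in example (d) compares an outcome rate between admissions in which the order set was and was not used. The Python sketch below is a minimal illustration under hypothetical assumptions: each admission is reduced to two booleans, and the `Admission` record, the function names, and the data are invented for illustration. An unadjusted comparison like this shows association only; it does not account for differences between the two groups of patients.

```python
from dataclasses import dataclass


@dataclass
class Admission:
    """Hypothetical, simplified record for one pneumonia admission."""
    order_set_used: bool
    narrow_spectrum: bool  # received narrow-spectrum antibiotics


def narrow_spectrum_rate(admissions):
    """Fraction of admissions that received narrow-spectrum antibiotics."""
    if not admissions:
        return float("nan")
    return sum(a.narrow_spectrum for a in admissions) / len(admissions)


def outcome_by_order_set(admissions):
    """Return (rate with order set, rate without order set)."""
    with_os = [a for a in admissions if a.order_set_used]
    without_os = [a for a in admissions if not a.order_set_used]
    return narrow_spectrum_rate(with_os), narrow_spectrum_rate(without_os)


# Hypothetical data before and after changing the order set defaults.
before = [Admission(True, True), Admission(True, False),
          Admission(False, True), Admission(False, False)]
after = [Admission(True, True), Admission(True, True),
         Admission(False, True), Admission(False, False)]

print(outcome_by_order_set(before))  # prints (0.5, 0.5): no association
print(outcome_by_order_set(after))   # prints (1.0, 0.5)
```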

Acknowledgment

The authors acknowledge the many clinical decision support leaders from 80 organizations who engaged with the authors in candid discussions about the current state of their clinical decision support teams and their goals for improvement. The authors also acknowledge Dr. Gary Frank and Natalie Tillman for their critical review of the manuscript and contribution to the case example from Children's Healthcare of Atlanta.

Conflict of Interest

M.D.Z. and M.C.T. have equity in and are employees of Phrase Health, a health care governance and analytics company. E.W.O., N.M., and D.F.F. have equity in Phrase Health; they receive no direct revenue. A.O.W., E.S., and J.S. declare no competing interests.

Authors' Contributions

E.W.O., N.M., A.O.W., D.F.F., M.D.Z., J.S., E.S., and M.C.T. wrote the manuscript. N.M., M.D.Z., and M.C.T. performed primary data collection. E.W.O., N.M., D.F.F., M.D.Z., and M.C.T. analyzed the data and developed the proposed CDS maturity model.

Protection of Human and Animal Subjects

No human subjects were involved in this project.

References

1. Bright T J, Wong A, Dhurjati R et al. Effect of clinical decision-support systems: a systematic review. Ann Intern Med. 2012;157(01):29–43. doi: 10.7326/0003-4819-157-1-201207030-00450.

2. Roshanov P S, You J J, Dhaliwal J et al. Can computerized clinical decision support systems improve practitioners' diagnostic test ordering behavior? A decision-maker-researcher partnership systematic review. Implement Sci. 2011;6:88. doi: 10.1186/1748-5908-6-88.

3. Nieuwlaat R, Connolly S J, Mackay J A et al. Computerized clinical decision support systems for therapeutic drug monitoring and dosing: a decision-maker-researcher partnership systematic review. Implement Sci. 2011;6:90. doi: 10.1186/1748-5908-6-90.

4. Souza N M, Sebaldt R J, Mackay J A et al. Computerized clinical decision support systems for primary preventive care: a decision-maker-researcher partnership systematic review of effects on process of care and patient outcomes. Implement Sci. 2011;6:87. doi: 10.1186/1748-5908-6-87.

5. Sahota N, Lloyd R, Ramakrishna A et al. Computerized clinical decision support systems for acute care management: a decision-maker-researcher partnership systematic review of effects on process of care and patient outcomes. Implement Sci. 2011;6:91. doi: 10.1186/1748-5908-6-91.

6. Hemens B J, Holbrook A, Tonkin M et al. Computerized clinical decision support systems for drug prescribing and management: a decision-maker-researcher partnership systematic review. Implement Sci. 2011;6:89. doi: 10.1186/1748-5908-6-89.

7. Roshanov P S, Fernandes N, Wilczynski J M et al. Features of effective computerised clinical decision support systems: meta-regression of 162 randomised trials. BMJ. 2013;346:f657. doi: 10.1136/bmj.f657.

8. Osheroff J A, Teich J M, Levick D. Improving Outcomes with Clinical Decision Support: An Implementer's Guide. 2nd ed. Chicago, IL: HIMSS; 2012.

9. Van de Velde S, Kunnamo I, Roshanov P et al. The GUIDES checklist: development of a tool to improve the successful use of guideline-based computerised clinical decision support. Implement Sci. 2018;13(01):86. doi: 10.1186/s13012-018-0772-3.

10. Greenes R A, Bates D W, Kawamoto K, Middleton B, Osheroff J, Shahar Y. Clinical decision support models and frameworks: seeking to address research issues underlying implementation successes and failures. J Biomed Inform. 2018;78:134–143. doi: 10.1016/j.jbi.2017.12.005.

11. Millard Krause T. CMIO/CHIO Summit: managing clinical decision support and improving workflow. Healthcare Informatics Magazine; 2016. Available at: https://www.healthcare-informatics.com/article/ehr/cmiochio-summit-managing-clinical-decision-support-and-improving-workflow. Accessed November 9, 2018.

12. Carvalho J V, Rocha Á, Abreu A. Maturity models of healthcare information systems and technologies: a literature review. J Med Syst. 2016;40(06):131. doi: 10.1007/s10916-016-0486-5.

13. Knosp B M, Barnett W K, Anderson N R, Embi P J. Research IT maturity models for academic health centers: early development and initial evaluation. J Clin Transl Sci. 2018;2(05):289–294. doi: 10.1017/cts.2018.339.

14. HIMSS Analytics - North America. Electronic Medical Record Adoption Model. Available at: https://www.himssanalytics.org/emram. Accessed November 12, 2018.

15. HIMSS Analytics. Continuity of Care Maturity Model. Available at: https://www.himssanalytics.org/ccmm. Accessed November 23, 2018.

16. van de Wetering R, Batenburg R. Towards a theory of PACS deployment: an integrative PACS maturity framework. J Digit Imaging. 2014;27(03):337–350. doi: 10.1007/s10278-013-9671-y.

17. Corrigan D, McDonnell R, Zarabzadeh A, Fahey T. A multistep maturity model for the implementation of electronic and computable diagnostic clinical prediction rules (eCPRs). EGEMS (Wash DC). 2015;3(02):1153. doi: 10.13063/2327-9214.1153.

18. Staggers N, Rodney M, Alafaireet P. Promoting Usability in Health Organizations: Initial Steps and Progress Toward a Healthcare Usability Maturity Model. Chicago, IL: Health Information Management Systems Society; 2011:1–55.

19. HIMSS. Innovation Pathways Maturity Model. Available at: https://www.himss.org/library/innovation/pathways-maturity-model. Accessed November 23, 2018.

20. Lipsitz L A. Understanding health care as a complex system: the foundation for unintended consequences. JAMA. 2012;308(03):243–244. doi: 10.1001/jama.2012.7551.

21. Ash J S, Smith A C III, Stavri P Z. Performing subjectivist studies in the qualitative traditions responsive to users. New York, NY: Springer-Verlag; 2006:267–300.

22. Marshall M N. Sampling for qualitative research. Fam Pract. 1996;13(06):522–525. doi: 10.1093/fampra/13.6.522.

23. Tobias M C, Orenstein E W, Muthu N. Decision support for decision support: a novel system to prioritize improvement efforts, identify safety hazards, and measure improvement. AMIA Annu Symp Proc. 2018;2018:2246.

24. Orenstein E W, Weitkamp A O, Rosenau P, Mallozzi C, Tobias M C. Towards a maturity model for clinical decision support operations: an interactive panel. In: American Medical Informatics Association Clinical Informatics Conference; April 30–May 2, 2019; Atlanta, GA.

25. Carayon P, Schoofs Hundt A, Karsh B T et al. Work system design for patient safety: the SEIPS model. Qual Saf Health Care. 2006;15(Suppl 1):i50–i58. doi: 10.1136/qshc.2005.015842.

26. Holden R J, Carayon P, Gurses A P et al. SEIPS 2.0: a human factors framework for studying and improving the work of healthcare professionals and patients. Ergonomics. 2013;56(11):1669–1686. doi: 10.1080/00140139.2013.838643.

27. Jones B E, Collingridge D S, Vines C G et al. CDS in a learning health care system: identifying physicians' reasons for rejection of best-practice recommendations in pneumonia through computerized clinical decision support. Appl Clin Inform. 2019;10(01):1–9. doi: 10.1055/s-0038-1676587.

28. Aaron S, McEvoy D S, Ray S, Hickman T TT, Wright A. Cranky comments: detecting clinical decision support malfunctions through free-text override reasons. J Am Med Inform Assoc. 2019;26(01):37–43. doi: 10.1093/jamia/ocy139.

29. Chan J, Shojania K G, Easty A C, Etchells E E. Does user-centred design affect the efficiency, usability and safety of CPOE order sets? J Am Med Inform Assoc. 2011;18(03):276–281. doi: 10.1136/amiajnl-2010-000026.

30. Wright A, Hickman T T, McEvoy D et al. Analysis of clinical decision support system malfunctions: a case series and survey. J Am Med Inform Assoc. 2016;23(06):1068–1076. doi: 10.1093/jamia/ocw005.

31. Yoshida E, Fei S, Bavuso K, Lagor C, Maviglia S. The value of monitoring clinical decision support interventions. Appl Clin Inform. 2018;9(01):163–173. doi: 10.1055/s-0038-1632397.

32. Kassakian S Z, Yackel T R, Gorman P N, Dorr D A. Clinical decisions support malfunctions in a commercial electronic health record. Appl Clin Inform. 2017;8(03):910–923. doi: 10.4338/ACI-2017-01-RA-0006.

33. Thayer J G, Miller J M, Fiks A G, Tague L, Grundmeier R W. Assessing the safety of custom web-based clinical decision support systems in electronic health records: a case study. Appl Clin Inform. 2019;10(02):237–246. doi: 10.1055/s-0039-1683985.

34. Institute of Medicine. The Learning Healthcare System: Workshop Summary. Washington, DC: National Academies Press; 2007.

35. Lindgren H, Lu M H, Hong Y, Yan C. Applying the zone of proximal development when evaluating clinical decision support systems: a case study. Stud Health Technol Inform. 2018;247:131–135.

36. Wright A, Ai A, Ash J et al. Clinical decision support alert malfunctions: analysis and empirically derived taxonomy. J Am Med Inform Assoc. 2018;25(05):496–506. doi: 10.1093/jamia/ocx106.

37. Nielsen J. Corporate UX maturity: stages 5–8. 2006. Available at: https://www.nngroup.com/articles/ux-maturity-stages-5-8/. Accessed November 23, 2018.

38. Wright A, Feblowitz J C, Pang J E et al. Use of order sets in inpatient computerized provider order entry systems: a comparative analysis of usage patterns at seven sites. Int J Med Inform. 2012;81(11):733–745. doi: 10.1016/j.ijmedinf.2012.04.003.

39. Shojania K G, Jennings A, Mayhew A, Ramsay C R, Eccles M P, Grimshaw J. The effects of on-screen, point of care computer reminders on processes and outcomes of care. Cochrane Database Syst Rev. 2009;8(03):CD001096. doi: 10.1002/14651858.CD001096.pub2.

40. Mutafelija B, Stromberg H. Systematic Process Improvement Using ISO 9001:2000 and CMMI. Norwood, MA: Artech House; 2003.

41. Sanders D, Burton D A, Prott D. The healthcare analytics adoption model: a framework and roadmap. 2016. Available at: https://downloads.healthcatalyst.com/wp-content/uploads/2013/11/analytics-adoption-model-Nov-2013.pdf. Accessed September 2, 2019.

42. Tarakci M, Ates N Y, Porck J P, van Knippenberg D, Groenen P JF, de Haas M. Strategic consensus mapping: a new method for testing and visualizing strategic consensus within and between teams. Strateg Manage J. 2014;35:1053–1069.

43. Okoli C, Pawlowski S. The Delphi method as a research tool: an example, design considerations and applications. Inf Manage. 2004;42:15–29.