Abstract

Key features of the school environment can significantly affect teachers’ effective use of evidence-based practices (EBP), yet implementation-specific organizational constructs have rarely been studied in the education sector. This study examined three aspects of the organizational implementation context (implementation leadership, climate, and citizenship behavior) that have been conceptualized and validated in other service settings. Focus groups with central office administrators, principals, and teachers were conducted to understand the applicability and conceptual boundaries of these organizational constructs in schools. Focus group transcripts were coded, and the results indicated both similarities to and differences from the original conceptualizations of implementation leadership, climate, and citizenship behavior when applied to schools. The data indicated that: (1) implementation leadership was largely present in schools, with the addition of Distributed Leadership; (2) two implementation climate dimensions were most clearly present (i.e., Focus on EBP and Educational Support for EBP), and two additional dimensions (i.e., Existing Supports to Deliver EBP and Prioritization of EBP) emerged as part of this construct; and (3) implementation citizenship behavior (Helping Others and Keeping Informed) was consistently acknowledged across schools, and two new components emerged (i.e., Information Sharing and Observation/Feedback). Recommendations for researchers and community stakeholders are discussed.

Keywords: Schools, Implementation, Evidence-based practices, Leadership, Climate, Citizenship behavior

Introduction

Schools are the most common setting for the delivery of mental health services to youth in the USA (Costello, He, Sampson, Kessler, & Merikangas, 2014; Farmer, Burns, Phillips, Angold, & Costello, 2003; Langer et al., 2015; Lyon, Ludwig, Vander Stoep, Gudmundsen, & McCauley, 2013; Merikangas et al., 2011). Public schools face immense pressure to adopt a continuum of evidence-based practices (EBP), defined as practices that integrate the best research evidence with clinical expertise and that account for patient preferences and culture (APA Presidential Task Force on Evidence-Based Practice, 2006; Cook & Odom, 2013), across universal, targeted, and intensive levels of service to improve youth mental health (Fixsen, Blase, Metz, & Van Dyke, 2013; Odom, Cox, Brock, & National Professional Development Center on ASD, 2013). However, the successful adoption, delivery, and sustainment of EBP in schools are fraught with challenges (Owens et al., 2014). When EBP are adopted in schools, only 25–50% are implemented with fidelity (i.e., as intended) (Cook & Odom, 2013; Gottfredson & Gottfredson, 2001). EBP delivered with poor fidelity are unlikely to improve youth outcomes (Durlak & Dupre, 2008). This critical issue wastes significant resources and weakens schools’ potential to promote youth mental health.

Recent research has examined the role of organizational constructs in the successful use of EBP in other service sectors, such as specialty mental health and child welfare (e.g., Aarons et al., 2012; Beidas et al., 2013, 2014, 2016a; Bonham, Willging, Sommerfeld, & Aarons, 2014; Ehrhart, Aarons, & Farahnak, 2015; Powell et al., 2017), and these findings are also relevant to schools (Forman et al., 2013; Hoagwood & Johnson, 2003; Langley, Nadeem, Kataoka, Stein, & Jaycox, 2010; Owens et al., 2014). Organizational constructs may play a critical role in implementing school-based services intended to promote student mental health. Lyon et al. (2018) used the Exploration, Preparation, Implementation, Sustainment framework (EPIS; Aarons, Hurlburt, & Horwitz, 2011), a four-phase, prospective implementation framework that delineates the nested structure of outer (i.e., larger system-level) and inner (i.e., building-level) contextual constructs that drive EBP implementation efforts, to define the organizational implementation context (OIC). The OIC comprises malleable constructs specific to the inner context (i.e., microsystemic factors associated with a given school building) that influence successful EBP implementation in schools (Lyon et al., 2018). Because the OIC represents the immediate setting in which implementation takes place, OIC constructs are more proximal to, and likely to have a greater influence on, implementer behavior than factors farther removed from where implementation happens (Jacobs, Weiner, & Bunger, 2014; Malloy et al., 2015). The OIC captures three core organizational constructs: implementation leadership, implementation climate, and implementation citizenship behavior.
These OIC constructs have been studied less frequently in schools but are likely to serve as proximal contextual indicators of behavior change among school-based practitioners (e.g., teachers, school counselors, licensed school-based mental health providers) who are responsible for delivering different services within a multi-tiered continuum of care (Bruns et al., 2016).

Implementation leadership refers to specific behaviors that leaders perform to support the implementation of EBP (Aarons, Ehrhart, & Farahnak, 2014a). Prior research has identified five dimensions of implementation leadership that combine to influence implementation outcomes in a given organizational context: organizational leaders being knowledgeable about the identified EBP, supportive behaviors directed toward frontline providers, proactive anticipation of and problem solving around barriers that are likely to arise during the implementation process, perseverance in staying the course with implementation despite those barriers, and availability to support frontline providers and troubleshoot issues with them (Aarons et al., 2014a; Ehrhart et al., 2018). Implementation leadership can operate as a critical construct of the OIC that establishes a school climate conducive to EBP adoption, delivery, and sustainment across multiple tiers (Aarons, Ehrhart, Farahnak, & Sklar, 2014b; Aarons, Ehrhart, Torres, Finn, & Beidas, 2017).

Implementation climate is more specific than general school climate. Whereas school climate reflects how individuals within schools perceive and describe the environment in their school, implementation climate reflects staff’s shared perceptions of the policies, practices, and procedures supporting EBP implementation, as well as the kinds of behaviors that are expected, supported, and rewarded as part of the implementation process (Ehrhart, Aarons, & Farahnak, 2014). Ehrhart et al. (2014) identified six dimensions of implementation climate, including the organization’s Focus on EBP, Educational Support for EBP, Recognition for EBP, Rewards for EBP, Selection for EBP, and Selection for Openness. Organizations with low levels of implementation climate fail to demonstrate that EBP implementation is a valued endeavor, because they place limited focus on EBP, provide limited support, and/or offer few forms of recognition and acknowledgment for staff who invest in EBP implementation. Although the two constructs are distinct, implementation climate is primarily driven by implementation leadership and is supported by specific leader behaviors that communicate norms and expectations and allocate necessary resources (e.g., protected time, materials, money) to demonstrate the organization’s values within a given context (Aarons et al., 2014a). Existing research suggests that such focused or strategic climates are more strongly related to specific outcomes than are more molar or general climate measures (Schneider, Ehrhart, & Macey, 2013). Implementation climate may therefore be a critical construct to examine in schools to help support the use of EBP (Locke et al., 2016).

Lastly, organizational citizenship behavior is exhibited when employees go “above and beyond” their core job duties or standard “call of duty” to further the mission of the organization (Organ, Podsakoff, & MacKenzie, 2005). Applying this concept to the goal of EBP adoption and sustainment, implementation citizenship behaviors are those that demonstrate a commitment to EBP within the organization, whereby individuals strive to keep informed about the EBP and offer support to colleagues who are attempting to deliver the EBP with fidelity (Ehrhart et al., 2015). Ehrhart et al. (2015) posit two dimensions of implementation citizenship: Helping Others and Keeping Informed. Implementation citizenship behaviors are hypothesized to mediate the influence of implementation leadership and implementation climate on implementation success (Ehrhart, Aarons, Torres, Finn, & Roesch, 2016). In schools, this construct captures specific behavior changes among staff (e.g., helping a colleague with EBP implementation).

Application to the School Context

Implementation research in other service sectors is generally more advanced than in schools (Sanetti, Knight, Cochrane, & Minster, in preparation). The multidisciplinary field of implementation science creates opportunities for researchers and practitioners to build on existing findings and products from other service sectors, such as health care, specialty mental health, and child welfare, by adapting them and examining their applicability and generalizability in novel contexts such as schools. For example, OIC constructs may look and operate differently in schools than in the child welfare sector, yet few studies have investigated implementation leadership, climate, and citizenship behavior in the context of EBP implementation in schools (Locke et al., 2016; Lyon et al., 2018). This gap may reflect uncertainty about the relevance and appropriateness of OIC constructs to the education sector in the absence of systematic consideration of the school context (Lyon et al., 2018). Implementation leadership, climate, and citizenship may manifest in schools differently from the ways in which they are measured in other service contexts (e.g., hospitals, community mental health agencies). In addition, each OIC construct may be perceived differently by individuals in different school roles (central office administrators, principals, teachers). Understanding the perspectives of different stakeholders can provide a more nuanced understanding of OIC constructs in schools (Beidas et al., 2016b) and may point to the need for a shift in thinking about EBP implementation. Lastly, the boundaries of the OIC constructs may be more or less expansive in schools than in the settings in which they were initially conceptualized, resulting in the addition or deletion of specific subconstructs. This study may help guide future EBP implementation in schools.

Purpose of this Study

The current study occurred in the context of a larger sponsored project to adapt a suite of measures capturing OIC constructs for use in schools, with the aim of supporting data-driven continuous improvement of evidence-based universal supports targeting student social, emotional, and behavioral outcomes. The purpose of this study was to use qualitative methods to examine how each OIC construct (implementation leadership, implementation climate, and implementation citizenship behavior) is conceptualized in schools, to determine whether the constructs have different meaning and application in schools than in other service sectors in which they have been assessed (e.g., community mental health and child welfare), and to generate potentially relevant subconstructs that fall under the three broader OIC constructs. Given that school-based mental health involves the delivery of a continuum of services across universal, targeted, and intensive levels of care (Bruns et al., 2016), this study included different groups of educational stakeholders (i.e., district administrators, principals, teachers) involved in implementation in varying ways. For example, central administrators often manage and track implementation across schools, principals oversee and support implementation within their own schools, and teachers are the primary implementers of universal supports or collaborators on more intensive mental health interventions. Including different stakeholder groups also allowed us to examine whether perspectives on the OIC constructs varied by group.

Methods

Setting and Participants

This study occurred as part of a larger federally funded project examining the iterative adaptation and validation of specific measures capturing key constructs of the school OIC. The university institutional review board and each participating school district approved the study. All participants provided informed consent prior to their participation. We first contacted a central administrator from each of the two partnering school districts. Next, each central administrator provided the names of 6–8 staff for each of three stakeholder groups: central administrators, elementary school principals, and elementary school teachers. Our recruitment process resulted in a total of 37 individuals (16 central administrators, 10 elementary school principals, and 11 elementary school teachers) from two relatively large school districts in the Northwestern USA. The two school districts are socioeconomically diverse (approximately 26% of students in these districts qualify for free or reduced-price lunch) and racially/ethnically diverse (41.3% White; 26.8% Asian; 11.6% Hispanic/Latino; 9.6% Multiethnic; 8.7% African American; 0.3% American Indian or Alaskan Native; 0.2% Native Hawaiian). Together, these two school districts have 91 elementary schools. The sample was predominantly female (n = 29, 78.38%), and the most common age band was 35–44 years. Age bands were collected rather than exact ages because participants felt more comfortable reporting ranges. The ethnic backgrounds of participants were as follows: 75.68% White, 10.81% multiracial/multiethnic, 5.41% Asian, 2.70% African American, 2.70% American Indian/Alaskan Native, and 2.70% Native Hawaiian or Other Pacific Islander. Participants’ highest educational attainment was as follows: 8.11% had a doctoral degree, 86.49% had a master’s degree, and 5.41% had a bachelor’s degree. See Table 1 for demographic information.

Table 1.

Demographics

| Variable | Whole sample | Central admin | Principals | Teachers |
|---|---|---|---|---|
| Age | | | | |
| 18–24 years old | 1 (2.70%) | 0 (0.00%) | 0 (0.00%) | 1 (9.09%) |
| 25–34 years old | 8 (21.62%) | 1 (6.25%) | 1 (10.00%) | 6 (54.55%) |
| 35–44 years old | 16 (43.24%) | 6 (37.50%) | 6 (60.00%) | 4 (36.36%) |
| 45–54 years old | 7 (18.92%) | 4 (25.00%) | 3 (30.00%) | 0 (0.00%) |
| 55–64 years old | 4 (10.81%) | 4 (25.00%) | 0 (0.00%) | 0 (0.00%) |
| 65–74 years old | 1 (2.70%) | 1 (6.25%) | 0 (0.00%) | 0 (0.00%) |
| 75 years or older | 0 (0.00%) | 0 (0.00%) | 0 (0.00%) | 0 (0.00%) |
| Gender | | | | |
| Male | 8 (21.62%) | 3 (18.75%) | 5 (50.00%) | 0 (0.00%) |
| Female | 29 (78.38%) | 13 (81.25%) | 5 (50.00%) | 11 (100.00%) |
| Race/ethnicity | | | | |
| Am. Indian or Alaskan Native | 1 (2.70%) | 0 (0.00%) | 1 (10.00%) | 0 (0.00%) |
| Asian | 2 (5.41%) | 1 (6.25%) | 0 (0.00%) | 1 (9.09%) |
| Black or African Am. | 1 (2.70%) | 1 (6.25%) | 0 (0.00%) | 0 (0.00%) |
| Native Hawaiian or Other Pacific Islander | 1 (2.70%) | 0 (0.00%) | 1 (10.00%) | 0 (0.00%) |
| White or Caucasian | 28 (75.68%) | 12 (75.00%) | 8 (80.00%) | 8 (72.73%) |
| Multiethnic | 4 (10.81%) | 2 (12.50%) | 0 (0.00%) | 2 (18.18%) |
| Highest degree | | | | |
| Bachelor’s degree | 2 (5.41%) | 0 (0.00%) | 0 (0.00%) | 2 (18.18%) |
| Master’s degree | 32 (86.49%) | 13 (81.25%) | 10 (100.00%) | 9 (81.82%) |
| Doctoral degree | 3 (8.11%) | 3 (18.75%) | 0 (0.00%) | 0 (0.00%) |
| Total | 37 (100.00%) | 16 (100.00%) | 10 (100.00%) | 11 (100.00%) |

Procedures

We used the EPIS framework to guide the development of a focus group protocol (see below and the Appendix) to elicit information about the relevance and appropriateness of measuring implementation leadership, implementation climate, and implementation citizenship in schools (Aarons et al., 2011). Focus groups were selected over other qualitative methods because they allow synergistic discussion, in which individuals in the same group can elaborate on points raised by other participants, providing a deeper understanding of how the OIC constructs manifest in schools. This approach was also appropriate given the time and financial constraints of the grant award, which did not permit individual interviews. Focus groups were held at the central office of each school district to provide a convenient and accessible location for participants.

Separate focus groups were held for each stakeholder type (central office administrators, principals, and teachers) to remove obstacles to participant engagement (i.e., potential power differentials between stakeholders in supervisory/managerial roles and their staff) and to allow comparisons across stakeholder groups. The size of each focus group (6–9 participants) was consistent with recommended procedures for thematic saturation (Guest, Bunce, & Johnson, 2006), with the exception of one principal focus group, which had only four participants because two had last-minute conflicts that prevented them from attending. Each focus group session began with establishing norms and expectations for the session, introductions, and an overview of the constructs (i.e., implementation leadership, climate, citizenship behavior) that were to inform the development of a set of school-based measures (Lyon et al., 2018). Prior to the focus group discussions, facilitators defined EBP and implementation science for all participants, because participants had varying levels of understanding of and experience with EBP implementation. The focus group moderators framed the discussion of EBP around Tier 1 or universal programs that promote students’ social, emotional, or behavioral functioning, which was the focus of the larger study (Lyon et al., 2018). Participants were also given an overview of each construct (its definition and how it is described in the literature) and asked to review existing measures (e.g., Implementation Leadership Scale, Implementation Climate Scale, and Implementation Citizenship Behavior Scale). Discussion of each OIC construct followed the measure review, with specific probing questions designed to elicit feedback from participants about each construct’s manifestation in the school context. Each focus group was audio- and video-recorded and lasted 90–120 min. Participants were compensated $150 for their participation.

Measures

We developed a systematic and comprehensive focus group protocol with questions that elicited open-ended responses from participants about: (1) implementation leadership (e.g., “What steps can leaders take to support their staff in successful implementation?”); (2) implementation climate (e.g., “What specific aspects of implementation climate come to mind when you think about schools in which the adoption and implementation of evidence-based practices are high priorities for leaders and staff?”); and (3) implementation citizenship behavior (e.g., “What is it about an employee in a school that allows him/her to support colleagues in implementing evidence-based practices effectively?”).

Data Analysis

Focus groups were conducted by Lyon et al. (2018). Focus groups were transcribed and uploaded to QSR NVivo 10 for data management. The EPIS model and the OIC guided the development of the coding scheme, which was developed using a rigorous, systematic, transparent, and iterative approach with the following steps. First, Lyon et al. (2018) independently coded two initial transcripts line-by-line to identify recurring codes. Second, they met as a group to discuss recurring codes and developed a codebook using an integrated approach to coding: certain codes were conceptualized during the development of the focus group protocol (i.e., deductive approach), and other codes were developed through a close reading of the two transcripts (i.e., inductive approach; Bradley, Curry, & Devers, 2007; Neale, 2016). Next, coders and principal investigators met to discuss and select common codes interpreted from the transcripts, and the group collectively determined which codes were incorporated into the final codebook. Then, operational definitions of each code were documented, along with examples of the code from the data and guidance on when to use and not use the code. The coding scheme was applied to the data to produce a descriptive analysis of each code and was refined throughout the data analytic process (Bradley et al., 2007). A table of codes and definitions is provided in the Appendix. Lyon et al. (2018) coded all data and double-coded a randomly selected 20% of transcripts to determine inter-rater reliability. The coders met weekly to discuss, clarify, verify, and compare emerging codes to ensure consensus. Agreement was calculated based on the number of words agreed upon; agreement between raters was excellent (percent agreement = 95.05% on parent codes and 98.60% on subcodes).
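To make the word-based agreement statistic concrete, the following is a minimal Python sketch under stated assumptions. It is not the procedure used by the coders or by NVivo; it assumes that each transcript is split into words, that each coder's application of a single code is recorded as one True/False flag per word, and that words left uncoded by both raters count as agreement. The function names `percent_agreement` and `spans_to_flags` and the toy transcript are hypothetical.

```python
"""Word-level percent agreement between two coders (hypothetical sketch)."""

from typing import List, Sequence, Tuple


def percent_agreement(coder_a: Sequence[bool], coder_b: Sequence[bool]) -> float:
    """Return the percentage of words on which two coders agree for one code.

    Each sequence holds one flag per word: True if the coder applied the code
    to that word. Assumption: a word counts as agreement when both coders
    applied the code or both left it uncoded.
    """
    if len(coder_a) != len(coder_b):
        raise ValueError("both coders must rate the same words")
    if not coder_a:
        raise ValueError("transcript must contain at least one word")
    agreed = sum(a == b for a, b in zip(coder_a, coder_b))
    return 100.0 * agreed / len(coder_a)


def spans_to_flags(n_words: int, spans: Sequence[Tuple[int, int]]) -> List[bool]:
    """Convert coded spans (start inclusive, end exclusive word indices) to per-word flags."""
    flags = [False] * n_words
    for start, end in spans:
        for i in range(start, end):
            flags[i] = True
    return flags


# Hypothetical 20-word excerpt: coder A codes words 2-9, coder B codes words 3-9.
a = spans_to_flags(20, [(2, 10)])
b = spans_to_flags(20, [(3, 10)])
print(f"{percent_agreement(a, b):.2f}%")  # the coders disagree on one word: 95.00%
```

Counting jointly uncoded words as agreement tends to inflate percent agreement in long transcripts where a code is rare; the source does not say how values were aggregated across parent codes and subcodes, so this sketch computes agreement for one code at a time only.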

Results

Participants’ conceptualizations of each of the constructs indicated unique elements of the school context that may be important for successful implementation of EBP to support school mental health. However, there also were many similarities with the definitions and item content associated with the original development of the OIC measures in the implementation science literature. Overall, central administrators and principals were broader in their descriptions of how the constructs manifest in schools, whereas teachers were more likely to focus on the specifics of EBP implementation. See Table 2 for a list of codes and definitions and Table 3 for a list of OIC constructs, definitions, existing and new domains. Below we address each construct, noting which emerged as “new” and which previously “existed” in the original measures.

Table 2.

Definitions of codes

| Code | Subcodes |
|---|---|
| Implementation leadership: Refers to leadership that involves specific actions performed to support the adoption and use of EBP in the school setting. This can include proactive, supportive, knowledgeable, perseverant, and available leadership. This also includes comments about how leadership is distributed and built within a school, how leaders are held accountable across all stakeholders, or the level of leadership necessary for successful implementation and sustainment | Proactive leadership: Involves the extent to which a leader establishes clear standards surrounding implementation, develops plans to facilitate implementation, and removes obstacles to implementation |
| | Supportive leadership: Involves the degree to which leaders support employee efforts to learn more about or use EBP and recognize and appreciate employee efforts |
| | Knowledgeable leadership: Occurs when employees believe that leaders “know what they are talking about” surrounding EBP and are able to answer questions effectively |
| | Perseverant leadership: Refers to the extent to which leaders carry on through the challenges of implementation and react to critical issues surrounding implementation |
| | Available leadership: Refers to the extent to which leaders are accessible when it comes to implementation, make time to meet about implementation, are available to discuss implementation or provide help, and can be contacted with problems or concerns |
| | Distributed leadership: Comments about Distributed Leadership structures in schools, especially as they relate to implementation. May include comments about leadership teams and how principals facilitate Distributed Leadership. Distributed Leadership also includes the level of leadership that is most relevant to implementation and accountability across those levels. These comments refer to the different grade-level teams/leaders among teachers who are accountable for implementing and sustaining new practices |
| Implementation climate: Refers to educators’ shared perceptions of the importance of EBP implementation. This includes Focus on EBP, Educational Support for EBP, Recognition for EBP, Rewards for EBP, Selection for EBP, and Selection for Openness. Comments coded here include educators’ perceptions of norms/expectations of implementation, the existing structures and resources in place to support implementation, incentives for implementation, school goals/priorities, and the varying demographics of students and staff across schools | Focus on EBP: References whether the organization thinks implementation is important, a top priority, or has effective use of EBP as a primary goal |
| | Educational support for EBP: Refers to whether the organization supports EBP training, staff travel to conferences or workshops, or supplies training materials or other supports (e.g., journal articles) |
| | Recognition for EBP: Refers to whether the organization views staff with EBP experience as experts, holds them in high esteem, and is likely to promote them |
| | Rewards for EBP: Refers to financial incentives for the use of EBP, such as whether staff who use EBP are more likely to receive bonuses, raises, or accumulated compensated time. This code also includes comments about contextually appropriate incentive structures in schools that motivate educators, which may include student outcomes, leadership opportunities, or protected time |
| | Selection for EBP: Refers to the extent to which the organization prefers to hire staff who value EBP or have prior education or experience with EBP |
| | Selection for openness: Refers to the extent to which the organization prefers to hire staff who are flexible, adaptable, or open to new interventions |
| | Existing supports to deliver EBP: Existing school structures or resources that could be incorporated/repurposed to support implementation at an organizational level. May include comments about existing teams or staff interactions relevant to implementation and structures for professional development (e.g., individualized professional development plans) |
| | Prioritization of EBP: Comments about school priorities, policies, and procedures, and how they align with the implementation of social, emotional, and behavioral programming |
| Implementation citizenship behavior: Refers to the different elements of citizenship behavior, or the degree to which educators go “above and beyond” to support implementation. Educators demonstrate a commitment to EBP by keeping informed about the EBP being implemented and supporting colleagues to meet EBP standards. Includes educators’ willingness to share knowledge with peers or their community and to open their classroom for observation and feedback | Helping others: Refers to the extent to which educators assist others to make sure they implement EBP, help teach EBP and implementation procedures, or help others with responsibilities related to EBP (e.g., completing fidelity assessments) |
| | Keeping informed: Refers to whether educators keep up to date on changes in EBP policy, follow the latest news or new findings regarding EBP, and keep up with agency communications related to EBP |
| | Information sharing: Educators demonstrating citizenship by sharing knowledge and information with peers and beyond the school setting (e.g., connecting with parents and community members) to further the implementation effort; also includes the ability and openness to take that knowledge and information and adopt it into practice |
| | Observation/feedback: Educators demonstrating citizenship by offering to observe or be observed in the classroom and to provide or receive feedback on their professional practice |

Table 3.

Original OIC construct definitions, existing domains, and added domains

| Original OIC construct | Definition | Existing domains | New domains |
|---|---|---|---|
| Strategic implementation leadership | Strategic implementation leadership is a subcomponent of general leadership that involves specific behaviors that support or inhibit implementation in service organizations. These include leaders being knowledgeable and able to articulate the importance of implementation and being supportive of staff, proactive in problem solving, and perseverant in the implementation process | Proactive leadership; Supportive leadership; Knowledgeable leadership; Perseverant leadership; Available leadership | Distributed leadership |
| Strategic implementation climate | Strategic implementation climate encompasses employee perceptions of the organizational supports and practices that help to define norms and expectations with regard to the implementation of new EBP. A positive implementation climate signals what is expected, supported, and rewarded in relation to use of programs or practices | Focus on EBP; Educational support for EBP; Recognition for EBP; Rewards for EBP; Selection for EBP; Selection for openness | Existing supports to deliver EBP; Prioritization of EBP |
| Implementation citizenship behavior | Citizenship behaviors are exhibited when employees go “above and beyond” their core job aspects or standard “call of duty” to further the mission of the organization. Implementation citizenship behaviors are those that demonstrate a commitment to EBP by keeping informed about the EBP being implemented and supporting colleagues to meet EBP standards | Helping others; Keeping informed | Information sharing; Observation/feedback |

New Dimensions

Several new dimensions, unique to the school context, emerged within each of the OIC constructs. First, Distributed Leadership was identified within the implementation leadership construct. Participants from all three stakeholder groups discussed leadership distributed across multiple individuals (e.g., teacher leaders, career ladder teachers), teams (school psychologists, counselors, teacher leaders, teacher mentors/coaches), and levels (schools and central office) to support EBP implementation. Overall, many participants from each stakeholder group challenged the notion that leadership in schools is centralized at the school administrator level and highlighted the importance of a team-based leadership approach. One principal said, “There are a lot of different leaders in the building… the principal might not know everything about EBP, so we rely on our experts in the building to know those answers.” A central administrator highlighted that “the building leadership team develops a plan [for EBP implementation] versus the school administrator [often] establishes clear school standards and accountability measures for implementation.” Although the principal is often seen as the primary leader in a school, the general sentiment across all three stakeholder groups was that leadership teams (which comprise several stakeholders in school settings) share the responsibility of supporting EBP implementation.

Second, Existing Supports to Deliver EBP emerged across all stakeholder groups as a new dimension of implementation climate, capturing how existing school structures or resources could be incorporated or repurposed to support EBP implementation and build capacity across levels (individual teacher/staff, school, and central office). All participants mentioned using existing school teams (e.g., guidance teams, school improvement planning teams, career ladder teachers, grade-level teams, professional learning communities) that are naturally embedded in schools’ infrastructure to play a role in the adoption, implementation, and sustainment of EBP. One central administrator commented that “there are routines or structures established within the school [such as] coordinated professional learning communities or planning time…that communicates with people and [trains] new staff to make [EBP implementation] more feasible” in schools. Relying on existing school teams may facilitate EBP use and help advance implementation efforts at multiple levels. Another aspect of Existing Supports endorsed by all stakeholder groups was the use of peer-to-peer relationships and informal social interactions among teachers/staff to support EBP implementation by reducing the feelings of isolation that are common in schools.

Third, Prioritization of EBP was another new dimension of implementation climate; it captures district and school priorities, policies, and procedures and their alignment with EBP implementation. Participants varied in their descriptions of Prioritization of EBP. Some central administrators remarked that Prioritization of EBP is often based on district-level support and resources (e.g., funding) or district priorities, even though there is a “disconnect between what the district prioritizes and what actually happens [in practice].” Principals elaborated on the challenge teachers/staff face in managing competing priorities between academic and social-emotional EBP, which often leads to EBP fatigue. One principal said that “we are so consumed with a lot of other academic frameworks that we would like to get to the social-emotional learning standards…but, they are not a prominent feature.” Both principals and teachers expressed the need for central office and school leadership to de-prioritize competing EBP and other initiatives more broadly, and suggested that “clearly defined” school priorities around EBP and initiatives are essential to gain buy-in from teachers/staff.

Fourth, Information Sharing emerged as a new dimension of implementation citizenship behavior. The majority of principals and teachers identified Information Sharing in their discussions of implementation citizenship behavior, describing school personnel who actively shared knowledge (e.g., expertise, tips, strategies) with their colleagues, peers, and the broader community (e.g., parents). Principals also described it as “above and beyond” behavior to “put them[selves] out there” to “share knowledge or [their] expertise with others within [their] building as well as within [their] district or beyond.” Fifth, Observation/Feedback also emerged as a new dimension of implementation citizenship behavior. Most participants described this dimension as a willingness to open their practices to observation and feedback, as well as to host educational site visits from other schools/districts wishing to observe those practices. One central administrator noted that “having [staff] that [implement EBP well] model those practices for other staff and colleagues” is critical to this dimension.

Existing Dimensions

Implementation Leadership

Comments from some central administrators and principals aligned with Aarons et al.’s (2014a) definition of proactive leadership. Many central administrators and principals described proactive leadership in terms of collecting multiple sources of data to identify areas of growth and improvement and to track accountability. One central administrator indicated that “using data to show where there’s growth and where there’s room for improvement” would be helpful in increasing EBP use. Some central administrators and principals described supportive leadership similarly to Aarons et al. (2014a), saying that leaders were: (1) present and fully engaged in implementation efforts; and (2) able to provide resources (e.g., time, physical space, embedded coaching, behavioral observations, materials). One novel component described by central administrators and teachers was showing support by helping to “prioritize or de-prioritize” competing demands or initiatives through shared decision making, given the EBP fatigue or overload that often exists in schools. Some teachers described supportive leadership differently, as entailing a leader who: (1) is willing to learn about EBPs alongside teachers and implementation agents (e.g., “I would like to see administrator willingness to get involved in the learning with [teachers, where they] come to [the] classroom and do the strategy…”); (2) understands and has empathy for the implementation challenges that teachers face; and (3) is intentional about creating structures (e.g., check-ins with whole staff and individuals, troubleshooting) to ensure EBP implementation is prioritized.

Similar to Aarons et al.’s (2014a) definition, central administrators and teachers characterized knowledgeable leadership as providing “the why” to teachers and staff to increase understanding of the expectations, needs, and goals of EBP implementation. Many principals noted additional aspects of knowledgeable leadership, including the leader’s ability to “consistently model EBP” and to discern and use credible “evidence to support decision making to use EBP.” Perseverant leadership was infrequently discussed across participants. Most principals described perseverant leadership as active involvement in teacher and school staff implementation efforts to create an environment where “EBP implementation carries on even in the absence of the principal.” Consistent with Ehrhart et al. (2018), central administrators and teachers discussed available leadership in terms of being open and present to provide help and feedback, whereas principals did not mention this construct. Teachers underscored the importance of an available leader as someone they can trust to have an “open door to bring up concerns” about barriers to implementation or ways to enhance it. One teacher specifically noted that available leadership entails “physical availability… actually having [their] door open or being in the classroom and… emotional availability [where they are] willing to address something.”

Implementation Climate

Many principals mentioned having a “building focus” on EBP as well as setting “explicit goals around [adoption of] EBP” among staff carrying out implementation. Similar to Ehrhart et al. (2014), participants described educational support for EBP in the context of professional development trainings (e.g., workshops, seminars, conferences) that are initiated and supported at the district level across all schools. One administrator mentioned, “there is an underlying assumption that everybody understands what an EBP is… [but she] never [uses the term] EBP because people would freak out…and assume that it is a major effort to implement.” Many teachers discussed a lack of educational support in terms of tangible materials. One teacher noted that she has “gone to training, [but] if [she] wants to implement [the EBP, she] would have to buy it or have the materials to be able to copy and paste all the stuff.” Additional aspects of Educational Support for EBP that many principals and teachers brought up included: (1) access to experts in the field for teachers and school staff; and (2) protected time to reflect, adapt, develop implementation plans, and integrate EBP into daily practice.

Recognition for EBP was infrequently discussed. The only component of the existing subscale that participants noted was the potential for promotion. One principal described “greater access to leadership opportunities,” as opposed to “traditional promotion,” as a recognition opportunity in schools. All participants agreed that traditional Rewards for EBP (i.e., in the form of promotions or financial incentives) were not appropriate or feasible within the school context. Central administrators explained how “financial incentives [like] bonuses and raises are off the table…[due to] union contracts and involvement.” Furthermore, some principals specifically noted that financial incentives are “not a motivator” and “will divide [them] as a team” since “the heart of why [school staff] do what [they] do…” is to see “[their] kids succeed.” Interestingly, teachers articulated that they “get so much more [from] being compensated for time to plan” around EBP implementation, which would be the most favorable reward they could receive, over and above monetary incentives. Selection for EBP was mentioned only twice, both times by teachers. Teachers described a lack of understanding among school staff about how new hires are selected. One teacher said, “Unless [school staff] are on the hiring committee, [they] do not know why a person is selected.” The reasons that some people are selected and hired into their roles appear to be largely unknown to teachers in participating schools. Selection for Openness was not discussed.

Implementation Citizenship Behavior

Participants’ conceptualization of Helping Others was similar to Ehrhart et al.’s (2015) definition with respect to helping others with responsibilities for EBP implementation and helping teach EBP implementation procedures; however, none of the participants described helping to ensure proper EBP implementation (i.e., monitoring fidelity). Across participants, Helping Others was most frequently described in the context of teamwork, “equally sharing the workload among staff” via formal (e.g., professional learning communities, grade group meetings, demonstration teachers) and informal methods (e.g., distributing responsibilities, mundane daily tasks). Keeping Informed was not frequently discussed. Only one component of the Keeping Informed subscale in Ehrhart et al.’s (2015) definition, latest news regarding EBP, was discussed among teachers. Central administrators described “attending trainings [and professional development] as a key indicator” of Keeping Informed, as well as keeping up with the “latest news [such as] reading online sources,” when available, during personal off-work time. However, district- or school-wide communication related to EBP and changes in EBP policies and procedures were not mentioned.

Discussion

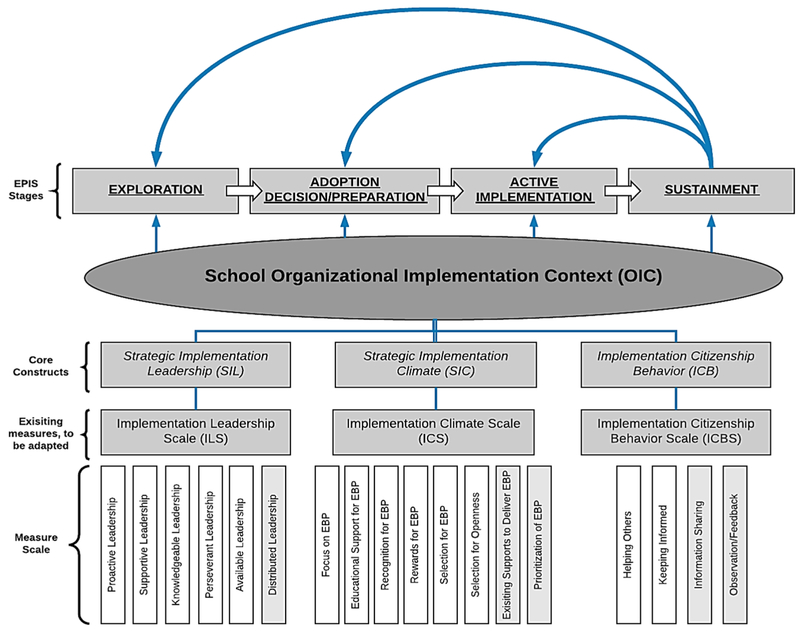

Adapting and applying existing OIC constructs and their corresponding measures to the school setting provides promising opportunities to advance school-based implementation science and practice. This study extends research led by Aarons et al. (2014a) and Ehrhart et al. (2014, 2015, 2018) by articulating key aspects of the OIC of schools that relate to EBP implementation success in two local school districts in the Northwestern USA (see Fig. 1). Specifically, this study engaged key education stakeholders (central administrators, principals, and teachers) to gather information regarding their perceptions of the appropriateness and conceptual boundaries of the OIC constructs (implementation leadership, climate, and citizenship behavior) in schools. Three general findings emerged from our focus groups. First, we found several similarities with other service sectors (e.g., community mental health and child welfare), as well as critical differences unique to the education sector, in how OIC constructs manifest; these are discussed further below. Second, all stakeholder groups indicated that greater attention to the OIC constructs offers a promising approach to improving youth mental health outcomes through the adoption and delivery of higher quality school-based mental health services and supports. Last, the OIC constructs appear to have potential utility across each stage of the implementation process (exploration, preparation, implementation, and sustainment; Aarons et al., 2011). Based on the data gleaned from this study, we offer recommendations to researchers and community stakeholders who may be interested in examining these organizational constructs in schools.

Fig. 1.

School organizational implementation context (OIC) concept map. The light gray bars depict new dimensions of the OIC constructs in schools

We note that several dimensions of the OIC that may play a critical role in successful EBP implementation in schools were infrequently discussed. For example, perseverant leadership may warrant more attention in schools, since implementation is a long and arduous process that often takes 2–5 years or more to reach full implementation (Fixsen, Naoom, Blasé, Friedman, & Wallace, 2005). A lack of perseverant leadership may contribute to the number of EBP implementation efforts that fail in schools (Gottfredson & Gottfredson, 2001) and to the frequently encountered “flavor of the month” phenomenon, in which organizations get stuck in a cycle of taking on and abandoning new programs and practices (Basch, Sliepcevich, Gold, Duncan, & Kolbe, 1985). Schools should carefully consider ways to solve implementation problems before decommissioning an EBP; this may involve de-implementing other, ineffective programs and practices (Wang, Maciejewski, Helfrich, & Weiner, 2017). In addition, participants in the current study augmented implementation leadership with an additional dimension, Distributed Leadership, in which leadership is shared across multiple individuals and roles in a school as well as the central office (Angelle, 2010). This dimension captures shared leadership responsibilities that are held across stakeholders in schools. Although participants also recognized that principals tend to be the ultimate arbiters of most school-related issues, various leaders may spearhead different tasks or content areas in schools, acting as middle leaders or middle managers, and implementation efforts ought to consider the critical role that multiple leaders may play in schools (Priestland & Hanig, 2005; Birken et al., 2015).

Our results suggest that implementation climate may manifest somewhat differently in schools. While some components (Focus on EBP; Educational Support for EBP) were conceptualized similarly to how they are in more traditional allied health-care delivery settings, other aspects of implementation climate differed. First, it was clear that selection for EBP and selection for openness may not be feasible components of implementation climate measurement in schools, as some stakeholders at the school building level often have no influence over the hiring process and so cannot select staff with a proclivity for EBP use. It is concerning that teachers described the selection and hiring process as lacking transparency. Our participants often indicated they were not aware of the district standards for hiring new employees, which may indicate either that hiring standards are not readily available in the districts in which this work was conducted or that they do not exist. Without transparent processes, it is more difficult to make changes that might support the recruitment and selection of individuals who are more open to EBP and have the knowledge, skills, and abilities related to its use. Second, our findings suggest that the reward and recognition components of implementation climate become blurred in schools, since there is universal agreement that financial rewards are inappropriate and infeasible in schools (Lyon et al., 2018), or that such rewards may receive less emphasis than the potential benefits of EBP for students. These results are consistent with Lyon et al. (2018), who quantitatively examined implementation climate in schools and found that the factor structure of most implementation climate subscales was upheld, but there was less evidence supporting the rewards subscale.
However, consistent with one item in that subscale, our stakeholders proposed non-financial incentives, such as protected time for planning or additional preparatory periods, as potential recognition or rewards for EBP use, which central offices and schools may consider to develop positive implementation climates. Third, two new dimensions (Existing Supports to Deliver EBP and Prioritization of EBP) emerged that also may capture important aspects of implementation climate in schools. Given the financial constraints that many schools face, stakeholders discussed the importance of leveraging existing school structures and supports (e.g., teams, personnel) to maximize limited resources and facilitate EBP implementation. This may help mitigate resource-related barriers to implementation. Similarly, stakeholders identified Prioritization and De-Prioritization of EBP and other initiatives as an important component of implementation climate. Schools often experience significant EBP fatigue, and prioritizing certain implementation efforts may increase the likelihood of successful EBP adoption, use, and sustainment. Finally, OIC constructs may be malleable determinants in schools that act as mechanisms through which organizationally focused implementation strategies may affect implementation outcomes. This conceptualization mirrors a growing body of implementation research focused on developing a more robust understanding of how implementation strategies exert their effects (Lewis et al., 2018). With appropriate implementation strategies deployed, it may be possible to improve these aspects of implementation climate and increase EBP use in schools (Cook, Lyon, Locke, Waltz, & Powell, submitted; Lyon, Cook, Locke, Powell, & Waltz, submitted).

Similar to implementation climate, our results suggest that aspects of implementation citizenship behavior (Helping Others and Keeping Informed) were comparably conceptualized in schools; however, stakeholders also highlighted differences unique to implementation citizenship behavior in schools. The Helping Others and Keeping Informed dimensions were mostly present in schools, with three notable differences: none of the stakeholders discussed (1) ensuring proper EBP implementation, (2) district- or school-wide communication related to EBP, or (3) changes in EBP policies and procedures, which suggests that these behaviors are rare, not salient, or nonexistent in school settings. These may be important behaviors to cultivate to support EBP implementation in schools. In addition, two new subconstructs of implementation citizenship emerged: Information Sharing and Observation/Feedback. Stakeholders highlighted the necessity of Information Sharing with their colleagues, particularly sharing their expertise with others in their building and beyond. Interestingly, stakeholders also noted the value of Observation/Feedback, that is, opportunities both to provide and to receive observation and feedback on their performance. These additions to implementation citizenship may be critical practices in the education sector. Strategies to improve opportunities for collaboration and feedback may help strengthen implementation citizenship in schools. Moreover, identifying and supporting key opinion leaders (Atkins et al., 2008) and EBP champions (Aitken et al., 2011) may serve as social strategies to influence implementation citizenship behaviors that facilitate EBP uptake, delivery, and sustainment. However, the extant literature offers little guidance on strategies to promote implementation citizenship in schools.

The results of our study also were consistent with the implementation leadership dimensions (proactive, supportive, knowledgeable, perseverant, and available) found in the broader implementation science literature (Aarons et al., 2014a; Ehrhart et al., 2018). However, some dimensions (e.g., supportive) appeared more salient and prevalent in schools than others (e.g., perseverant). Participants noted that supportive leadership encompassed the provision of tangible resources (materials) as well as emotional support for EBP use. Interestingly, participants also described the Prioritization and De-Prioritization of EBP and other school initiatives, as a way to prevent EBP fatigue, as a form of supportive leadership. This is an important point to consider, as schools often face barriers to implementation that include inadequate time, energy, effort, and resources (Forman, Olin, Hoagwood, Crowe, & Saka, 2009; Locke et al., 2015). Indeed, in a recent school-focused adaptation of the Expert Recommendations for Implementing Change (ERIC) compilation of implementation strategies in health care (Powell et al., 2015), Cook et al. (submitted) added “pruning competing initiatives” to address exactly this issue.

Limitations and Future Directions

While this study explored the qualitative nuances of implementation leadership, climate, and citizenship behavior in schools, several limitations are noted. First, these results should be interpreted with caution, as the data were gathered in only two predominantly white school districts in the Northwestern USA, which may limit their generalizability to areas with similar racial/ethnic diversity, socioeconomic status, and school characteristics. Second, the participating teachers were not self-nominated but were nominated by the districts’ central administrators, which may have introduced selection bias. Third, the sample was predominantly female, which may limit the interpretation of the results. Fourth, the current project focused primarily on Tier 1 universal supports; the OIC constructs investigated may manifest differently for more intensive social, emotional, or behavioral supports. Lastly, this study focused only on the OIC constructs and did not explore individual-level constructs, which also are important for EBP implementation (Becker-Haimes et al., 2017; Feuerstein et al., 2017).

Conclusion

Findings from this study support the assertion that the three OIC constructs (implementation leadership, climate, and citizenship) are relevant and applicable to the school context. Results also demonstrate the utility of qualitative methods to inform revisions to existing constructs from other service settings (e.g., health care, child welfare, juvenile justice) with the goal of facilitating application in novel settings, such as schools. As noted earlier, the OIC constructs also have been codified in a set of established organizational evaluation measures (Lyon et al., 2018), which may be further revised based on the information collected herein. Next steps could include validation of the expanded versions of OIC constructs in the context of school-based implementation efforts. Use of qualitative methods to adapt existing measures is one way to avoid the “home-grown measure” phenomenon, in which measures are rapidly created “in house to assess a construct in a particular study sample without engaging in proper test development procedures” (Martinez, Lewis, & Weiner, 2014, p. 4). This phenomenon has recently plagued implementation science and diminished the comparability of results across studies. Home-grown measures often are highly specific to the population in which they were used and have limited generalizability and utility for comparisons across studies (Martinez et al., 2014). Additionally, there is a need for research that carefully considers how the OIC constructs manifest across individuals at different levels (e.g., individual provider, school/organizational, and central office/district) and how to design implementation strategies that precisely target specific aspects of OIC constructs in schools.

Funding

This study was funded by R305A160114 (third and last author) awarded by the Institute of Education Sciences as well as K01MH100199 (first author) awarded by the National Institute of Mental Health. The content is solely the responsibility of the authors and does not necessarily represent the official views of the National Institutes of Health or Institute of Education Sciences.

Appendix

Structure of OASIS Study 1: Focus Group Session

Brief presentation on purpose of the focus groups (5 minutes)

Introduction to Evidence-Based Practice and Implementation Science to provide context (5–10 minutes)

- Brief overview of evidence-based practices in the schools

- “Before we jump into the focus group itself, we want to provide a little context for our discussion. This entire project is about the implementation of evidence-based practices in the schools. In particular, we are most interested in Tier 1 or universal programs that promote students’ social, emotional, or behavioral functioning.

- We define evidence-based practices as clearly specified programs, practices, and supports with demonstrated empirical support for their efficacy/effectiveness and application to target groups.

- “What does this mean to you?”

- Next consider: What is the opposite of evidence-based practices?”

- →Lead a discussion about why evidence-based practices have been established and why we have reached a point where legislation and policy emphasize the increased adoption and delivery of evidence-based practices in the school setting.

- “A key part of evidence-based practices is the active ingredients of the prevention or intervention program. Think about evidence-based practices as a good cooking recipe. If one of the ingredients is left out, such as flour or sugar left out of chocolate chip cookies, then one won’t end up with chocolate chip cookies. Evidence-based practices can be thought of similarly. There are core ingredients or components of an intervention that need to be adopted in order for them to produce the findings demonstrated in research studies. The trouble is that when attempting to transfer evidence-based practices into the school setting, active ingredients are often not fully implemented or insufficiently adopted school-wide to produce desired outcomes.”

- Explanation of school-based implementation science

- “One of the most significant issues we face is this implementation gap, which reflects the discrepancy between science and practice. It turns out that research findings don’t crawl out of journals and make their way into the everyday settings on their own.

- “Implementation science is the study of methods to promote the integration of research findings and other evidence-based practices into routine practice. We want to do this so children can actually benefit from them.

- Research has uncovered several factors – such as supportive leadership – that promote the use of evidence-based practices in the school setting. The OASIS project is about measuring key aspects of the school setting that impact the successful use of evidence-based Tier 1 practices for students’ social, emotional, and behavioral functioning.”

Importance of organizations to successful implementation (5 minutes)

Description of the organizational implementation context (OIC)

“Your handouts include this overview figure and a description of each of our key constructs.”

- “Our project focuses on ways to measure the organizational implementation context, which refers to aspects of the school setting that are closely related to actual implementation.

- For example, leadership practices that create an environment that is conducive to, or problematic for, the implementation of new innovations are a core component of the OIC. In addition, a teacher’s attitudes toward evidence-based practices are likely to impact their motivation and commitment to implement those practices once the teacher has received training.”

- What the OIC isn’t / what we aren’t talking about

- “We recognize that there is wide variation in the extent to which professional development / implementation supports are used effectively when introducing new programs. We are most interested in organizational factors that may influence implementation success whether or not professional development supports are optimal.”

- “We are most focused on the factors that influence whether programs are implemented with fidelity and have their intended effects on students.”

- Adaptation and refinement of existing measures that capture critical aspects of the OIC

- “This project benefits from previous work conducted in Child Welfare and Youth Mental Health, where measures assessing aspects of the organizational implementation context were developed and validated. Our project seeks to adapt and refine these measures for use in the school setting so that we can better understand whether a given school is ready to begin implementation and can assess key variables that serve as barriers to success during active implementation.”

- “There are four measures assessing unique, but related, components of the organizational implementation context. These are the Implementation Climate Scale, Implementation Leadership Scale, Implementation Citizenship Behavior Scale, and Evidence-Based Practice Attitudes Scale. Each of the measures captures specific aspects of the organizational implementation context that helps explain why implementation is likely to be successful within a given school or district. Each measure will be described in more detail later.”

- “Ultimately, the data from these measures are intended to provide actionable information that will enable district personnel, external consultants, or implementation coaches to pinpoint specific implementation supports that can facilitate the adoption and delivery of evidence-based practices that are being rolled out in the school.”

- “Does anybody have any questions or comments?”

Strategic Implementation Climate

- Present the Strategic Implementation Climate construct and key elements of the construct (provide a handout that outlines the construct and lists its key ideas in bullet points) (5 minutes)

- Recall that we are most interested in Tier 1 or universal programs that promote students’ social, emotional, or behavioral functioning

- “First, we are going to discuss Strategic Implementation Climate, as measured by the Implementation Climate Scale.”

- “Strategic Implementation Climate is the staff's shared perception of the importance of EBP implementation and includes staff perceptions of norms and expectations with regard to implementation.

- SIC differs from general school climate, which refers to how individuals within the system collectively experience the school as supportive. Implementation climate is therefore a sub-component of general climate that reflects people’s perceptions about the extent to which the school supports taking on and implementing new things.”

- “Schools can range from an open and positive implementation climate to a closed and negative implementation climate. The specific aspects of Strategic Implementation Climate evaluated by the Implementation Climate Scale include Focus on EBP, Educational Support for EBP, Staff Recognition for EBP, Rewards for EBP, Staff Selection for EBP and Staff Selection for Openness.”

- “Focus on EBP refers to whether the organization thinks implementation is important, makes it a top priority, or has effective use of EBP as a primary goal.”

- “Educational Support for EBP refers to whether the organization supports EBP training, staff travel to conferences or workshops, or supplies training materials or other supports (e.g., journal articles).”

- “Recognition for EBP refers to whether the organization views staff with EBP experience as experts, holds them in high esteem, and is likely to promote them.”

- “Rewards for EBP refers to financial incentives for the use of EBP, such as whether employees who use EBP are more likely to get bonuses/raises or accumulated compensated time.”

- “Selection for EBP refers to the extent to which the organization prefers to hire employees who have previously used EBP, have formal education supporting EBP, and value EBP.”

- “Selection for Openness refers to the extent to which the organization prefers to hire employees who are flexible, adaptable, or open to new interventions.”

- DO YOU HAVE ANY QUESTIONS ABOUT STRATEGIC IMPLEMENTATION CLIMATE OR ITS SUBCONSTRUCTS?

SIC ACTIVITY

- Part 1 – Individual reflection on SIC (1–2 minutes):

- GENERATE IDEAS: “First, we would like to give you each 1 minute to think privately about what information should be included in a measure of SIC and to write your ideas down on a blank piece of paper. These might be questions that you think would be important to ask or specific behaviors or perceptions that we should be sure to capture. Even though the measure is included in your packet, we ask that you refrain from reviewing it for the time being.”

- Part 2 – Review of original and adapted ICS measures (15–25 minutes):

- MEASURE REVIEW:

- “Next, we would like you to review the document in your folder that lists the original items from the Implementation Climate Scale in one column, our adapted version of the item in the next column, and a third column for your notes/comments. The form also asks for your ratings of item appropriateness to schools. After sifting through all the items, we would also like you to reflect on additional items that we should consider including that would fall under the Implementation Climate Scale. These items may include ideas you generated during the first part of this activity.”

- “We will be collecting these forms at the end of the focus group.”

- Provide 5–10 minutes for review and 10–15 minutes for discussion.

- Facilitate discussion (potential probes):

- “What feedback do you have on the measures and their ability to assess Strategic Implementation Climate in schools?”

- “What are we potentially missing with this measure that could be included to provide for a more valid and useful measure of implementation climate?”

- “Beyond general school climate, such as how much people feel included or cared about by the organization, what specific aspects of climate come to mind when you think about schools in which the adoption and implementation of evidence-based practices are high priorities for leaders and staff?”

- How would you know if your school or district values EBP?

- What are the various aspects of school environments that send the message that EBP adoption and implementation are valued in this school?

- What policies, practices, procedures, or systems would you expect to see in place in a school that prioritizes EBP implementation?

- “What indicators do you believe would capture a school building that has a positive implementation climate that would likely lead to successful evidence-based practice implementation?”

Strategic Implementation Leadership

- Present the Strategic Implementation Leadership construct and key elements of the construct (provide a handout that outlines the construct and bullet-points the key ideas associated with it) (5 minutes)

- “Next, we are going to discuss strategic implementation leadership, which is measured by the Implementation Leadership Scale.”

- “Strategic implementation leadership refers to a specific form of leadership that involves actions performed to support the adoption and use of an innovative program or practice being introduced into an organization. It differs from general leadership qualities that may help to promote a positive work climate and motivate staff to stay with an organization but do not capture what leaders can do to support implementation specifically.”

- “For example, strategic implementation leadership captures behaviors that fall under five categories: Proactive Leadership, Supportive Leadership, Knowledgeable Leadership, Perseverant Leadership, and Availability.”

- Proactive Leadership involves the extent to which a leader establishes clear standards surrounding implementation, develops plans to facilitate implementation, and removes obstacles to implementation.

- Supportive Leadership involves the degree to which leaders support employee efforts to learn more about or use EBP, and recognize and appreciate those efforts.

- Knowledgeable Leadership occurs when employees believe that leaders “know what they are talking about” surrounding EBP and are able to answer questions effectively.

- Perseverant Leadership refers to the extent to which leaders persist through implementation challenges and respond to critical issues surrounding implementation.

- Available Leadership refers to the extent to which leaders are accessible when it comes to implementation, make time to meet about implementation, are available to discuss implementation or provide help, and can be contacted with problems or concerns.

SIL ACTIVITY

- Part 1 – Individual reflection about SIL (1–2 minutes):

- GENERATE IDEAS: “First, we would like to give you each 1 minute to think privately and jot down ideas on a blank piece of paper about what information should be included in a measure of SIL. [These might be questions that you think would be important to ask or specific behaviors or perceptions that we should be sure to capture. Even though the measure is included in your packet, we ask that you refrain from reviewing it for the time being].”

- Part 2 – Review original and adapted ILS measures (15–25 minutes):

- MEASURE REVIEW: Distribute the original and adapted ILS measure form. Have each member identify words that may be confusing, items that seem irrelevant to the school context, and additional items that seem relevant to capture under the measure.

- “Next, we would like you to review the document in your folder that provides the original items from the Implementation Leadership Scale in one column, our adapted version of the item in the other column, and a third column for your notes/comments. The form also asks for your ratings of item appropriateness to schools. After sifting through all the items, we would also like you to reflect on additional items that we should consider including that would fall under the Implementation Leadership Scale. These items may include ideas you generated during the first part of this activity.”

- “We will be collecting these forms at the end of the focus group.”

- Provide 5–10 minutes for review and 10–15 minutes for discussion.

- Facilitate Discussion

- “What are we potentially missing with this measure that could be included to provide for a more valid and useful measure of strategic implementation leadership?”

- “Beyond general leadership qualities, such as caring about employees’ well-being or being organized, what specific aspects of leadership come to mind when you think about someone who would strategically support school staff to adopt and implement evidence-based practices?”

- What specific things can leaders do that send a message that implementation is a high priority in their school?

- What steps can leaders take to support their staff in successful implementation?

- “What is it about a leader that allows her to promote a positive climate for taking on and implementing new practices effectively?”

Implementation Citizenship Behavior

- Present the Implementation Citizenship Behavior construct and key elements of the construct (provide a handout that outlines the construct and bullet-points the key ideas associated with it) (2 minutes)

- “Now, we are going to discuss implementation citizenship behaviors, which are measured by the Implementation Citizenship Behavior Scale. Implementation citizenship is the extent to which employees in an organization go ‘above and beyond’ to support implementation.”

- “Implementation citizenship behaviors are those that demonstrate a commitment to EBP by keeping informed about the EBP being implemented and supporting colleagues to meet EBP standards. It includes at least two major components: Helping Others and Keeping Informed.”

- Helping Others refers to the extent to which employees assist others to make sure they implement EBP, help teach EBP implementation procedures, or help others with responsibilities related to EBP (e.g., completing fidelity assessments).

- Keeping Informed refers to whether employees keep up to date on changes in EBP policy, follow the latest news or new findings regarding EBP, and keep up with agency communications related to EBP.

ICB ACTIVITY

- Part 1 – Individual reflection on ICB (1–2 minutes):

- GENERATE IDEAS: “First, we would like to give you each 1 minute to think privately and jot down ideas on a blank piece of paper about what information should be included in a measure of ICB. [These might be questions that you think would be important to ask or specific behaviors or perceptions that we should be sure to capture. Even though the measure is included in your packet, we ask that you refrain from reviewing it for the time being].”

- Part 2 – Review of original and adapted ICBS measures (10 minutes):

- MEASURE REVIEW: Distribute the original and adapted ICBS measure form. Have each member identify words that may be confusing, items that seem irrelevant to the school context, and additional items that seem relevant to capture under the measure.

- “In your folder, you have a document that provides the original items from the Implementation Citizenship Behavior Scale in one column, our adapted version of the item in another column, and a third column for your notes/comments. The form also asks for your ratings of item appropriateness to schools. After sifting through all the items, we would also like you to reflect on additional items that we should consider including that would fall under the Implementation Citizenship Behavior Scale. These items may include ideas you generated during the first part of this activity.”

- “We will be collecting these forms at the end of the focus group.”

- Provide 5–10 minutes for review and 10–15 minutes for discussion.

- Facilitate discussion

- “What are we potentially missing with this measure that could be included to provide for a more valid and useful measure of implementation citizenship behavior?”

- “Beyond generally positive employee qualities, such as being sociable and hardworking, what specific implementation citizenship behaviors come to mind when you think about someone who would go ‘above and beyond’ to support EBP implementation?”

- “What is it about an employee in an organization that allows her to support her colleagues in implementing EBPs effectively?”

- [NOTE FOR FACILITATOR: Other dimensions in the literature include: Sportsmanship – tolerating problems and inconveniences without complaining; Organizational compliance – following rules and procedures even when no one is looking; and Individual initiative – sharing ideas to improve performance, such as voicing behaviors, giving extra effort, taking on additional responsibilities]

Footnotes

Conflict of interest

The authors declare that they have no conflict of interest.

Ethical Approval

All procedures were in accordance with the ethical standards of the institutional and/or national research committee and with the 1964 Helsinki Declaration and its later amendments or comparable ethical standards.

Informed Consent

Informed consent was obtained from all individual participants included in the study.

References

- Aarons GA, Ehrhart MG, & Farahnak LR (2014a). The implementation leadership scale (ILS): Development of a brief measure of unit level implementation leadership. Implementation Science, 9, 45.

- Aarons GA, Ehrhart MG, Farahnak LR, & Sklar M (2014b). Aligning leadership across systems and organizations to develop a strategic climate for evidence-based practice implementation. Annual Review of Public Health, 35, 255–274.

- Aarons GA, Ehrhart MG, Torres EM, Finn NK, & Beidas RS (2017). The humble leader: Association of discrepancies in leader and follower ratings of implementation leadership with organizational climate in mental health. Psychiatric Services, 68, 115–122.

- Aarons GA, Green AE, Palinkas LA, Self-Brown S, Whitaker DJ, Lutzker JR, et al. (2012). Dynamic adaptation process to implement an evidence-based child maltreatment intervention. Implementation Science, 7, 1–9.

- Aarons GA, Hurlburt M, & Horwitz SM (2011). Advancing a conceptual model of evidence-based practice implementation in public service sectors. Administration and Policy in Mental Health and Mental Health Services Research, 38, 4–23.

- Aitken LM, Hackwood B, Crouch S, Clayton S, West N, Carney D, et al. (2011). Creating an environment to implement and sustain evidence-based practice: A developmental process. Australian Critical Care, 24, 244–254.

- Angelle PS (2010). An organizational perspective of distributed leadership: A portrait of a middle school. RMLE Online, 33, 1–16.

- APA Presidential Task Force on Evidence-Based Practice. (2006). Evidence-based practice in psychology. The American Psychologist, 61, 271.

- Atkins M, Frazier S, Leathers S, Graczyk P, Talbott E, Jakobsons L, et al. (2008). Teacher key opinion leaders and mental health consultation in low-income urban schools. Journal of Consulting and Clinical Psychology, 76, 905–908.

- Basch CE, Sliepcevich EM, Gold RS, Duncan DF, & Kolbe LJ (1985). Avoiding type III errors in health education program evaluations: A case study. Health Education Quarterly, 12, 315–331.

- Becker-Haimes EM, Okamura KH, Wolk CB, Rubin R, Evans AC, & Beidas RS (2017). Predictors of clinician use of exposure therapy in community mental health settings. Journal of Anxiety Disorders, 49, 88–94.

- Beidas RS, Aarons G, Barg F, Evans A, Hadley T, Hoagwood K, et al. (2013). Policy to implementation: Evidence-based practice in community mental health—Study protocol. Implementation Science, 8, 1–9.

- Beidas RS, Edmunds J, Ditty M, Watkins J, Walsh L, Marcus S, et al. (2014). Are inner context factors related to implementation outcomes in cognitive-behavioral therapy for youth anxiety? Administration and Policy in Mental Health, 41, 788–799.

- Beidas RS, Marcus S, Aarons GA, Hoagwood KE, Schoenwald S, Evans AC, et al. (2016a). Predictors of community therapists’ use of therapy techniques in a large public mental health system. JAMA Pediatrics, 169, 374–382.