Abstract

Traditional wavefront-sensor-based adaptive optics (AO) techniques face numerous challenges that cause poor performance in scattering samples. Sensorless closed-loop AO techniques overcome these challenges by optimizing an image metric at different states of a deformable mirror (DM). This requires the continuous acquisition of a series of images for optimization, which is an arduous task in dynamic in vivo samples. We present a technique in which the different states of the DM are instead simulated using computational adaptive optics (CAO). The optimal wavefront is estimated by performing CAO on an initial volume to optimize an image metric, and the resulting pattern is then translated to the DM. In this paper, we demonstrate this technique on a spectral-domain optical coherence microscope for three applications: real-time depth-wise aberration correction, single-shot volumetric aberration correction, and extension of the depth-of-focus. Our technique overcomes the disadvantages of sensor-based AO, reduces the number of image acquisitions compared to traditional sensorless AO, and retains the advantages of both computational and hardware-based AO.

1. Introduction

Optical imaging systems have been the driving force behind numerous seminal discoveries in biology and medicine, and their ability to non-invasively probe the structural and functional properties of a sample has led to their introduction into clinical environments at various levels. Conventional microscopes are strictly bound by physical limits such as Abbe’s diffraction limit [1], and recent advances have attempted to break free of this limit using superlenses [2], unique illumination profiles [3], or fluorescence localization [4, 5]. While one can engineer the perfect microscope, the other half of the system, i.e. the biological sample, is beyond our control. At a microscopic level, the inhomogeneous nature of biological samples introduces numerous spatial variations in the refractive index, which reshape the wavefront and introduce aberrations [6]. This problem is particularly pronounced in ocular imaging and in imaging deep within tissue.

The concept of adaptive optics (AO), borrowed from astronomy, attempts to measure and correct these wavefront distortions, or aberrations [7–9]. Sample-introduced optical aberrations are unique to each sample and, therefore, warrant a closed-loop solution [10, 11]. In traditional hardware-based AO, the aberrations at the image plane are measured with a wavefront sensor and mapped onto the plane of a compensating element placed in the path of light, which then expresses the negative of this distortion. The most commonly used compensating elements are deformable mirrors (DM) and spatial light modulators (SLMs). The former have high optical efficiency and are wavelength- and polarization-independent; the latter have a larger pixel count and, therefore, a larger range of response [12].

Alternatively, these aberrations can be corrected computationally in imaging modalities such as optical coherence tomography (OCT) [13], Fourier ptychographic microscopy [14], digital holography [15], and DIC-fluorescence microscopy [16]. Digital wavefront correction is achieved either through deconvolution or with a compensating phase mask. The former is often an ill-conditioned problem whose performance depends on the sample and which is computationally very intensive [16–18]. In contrast, the latter is a well-conditioned problem [13] but requires complex-valued images [19]. Interferometric imaging modalities such as holography and OCT intrinsically generate complex-valued images. For other modalities, such as wide-field or confocal microscopy, the phase of the images can be reconstructed computationally with phase retrieval techniques [20, 21]. Additionally, rapid improvements in computational processors and tools have enabled real-time phase recovery algorithms based on deep learning and neural networks [22]. Apart from improving image quality by correcting sample-introduced wavefront errors, AO has also been used to extend the depth-of-focus by introducing aberrations such as spherical aberration [23] or astigmatism [24].

One of the major challenges in AO microscopy is wavefront sensing [7]. Traditional sensors, such as the Shack-Hartmann sensor, are highly accurate with single point sources, such as guide-stars or perfectly reflective surfaces. In biological samples, however, the multitude of scattering sources creates a superposition of different wavefronts. The sensed wavefront can be restricted to a single depth, i.e. the focal plane in the sample, by confocal [25] or coherence [26] gating, but the sensitivity of the measurements still depends on the sample structure [8, 27]. Moreover, cross-coupling between pairs of modes has been observed in the measured wavefront [28]. Additionally, a mismatch between the wavefront-sensing and detection optical paths can lead to inaccuracies known as non-common path errors [29]. Furthermore, the number of lenslets in the array and the camera used dictate a trade-off between the dynamic range and the sensitivity of the wavefront sensor. Despite these disadvantages, closed-loop AO systems with a Shack-Hartmann wavefront sensor and a DM have been widely used over the past decade for in vivo retinal imaging with AO ophthalmoscopy [30–32] and AO OCT [33, 34].

In order to overcome these challenges, sensorless wavefront sensing techniques estimate the wavefront computationally using prior knowledge of the pupil function, the phase of complex-valued images [35, 36], pupil segmentation methods [36, 37], or phase recovery and phase diversity algorithms [20, 21, 38]. Alternatively, a hill-climbing approach to sensorless AO iteratively optimizes an image metric, such as sharpness or brightness, at different states of the DM [39]. This closed-loop approach has been demonstrated in a variety of imaging modalities, including confocal microscopy [11], structured illumination microscopy [40], multiphoton imaging [41, 42], and OCT [43, 44]. These techniques directly estimate the wavefront correction at the DM plane. Such sensorless closed-loop AO (SCL-AO) techniques are more versatile than sensor-based AO. However, they require a series of images to be acquired at the same position over several seconds, which could prove difficult in dynamically varying samples, for example during in vivo ophthalmic imaging.

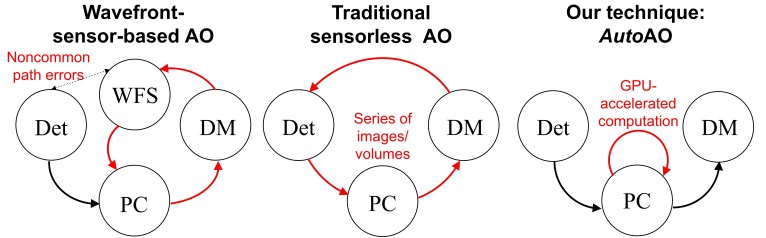

In order to avoid this bottleneck of traditional SCL-AO, our technique, AutoAO, combines hardware and computational AO (CAO) [45, 46] to enable automated, single-shot SCL-AO, exploiting the advantages of all these methods. We provide a framework for AO in which a single volume is acquired with an initial setting of the DM, the wavefront is computationally estimated by optimizing an image metric, and the result is finally mapped onto the DM. The second volume, acquired at the new state of the DM, is the desired result, as any residual aberrations can then be computationally corrected in post-processing. As only one initial volume is required, AutoAO calibrates the optimal DM state in a single shot. In contrast to previous SCL-AO techniques, in which a closed loop is formed by the DM states, image acquisition, and metric estimation, AutoAO simulates the different states of the DM using CAO. Figure 1 illustrates the differences between the three approaches to AO. AutoAO eliminates the need for multiple image acquisitions, reduces laser exposure of the sample, and dramatically improves the speed of the system, corresponding to a 300× reduction in the number of volumes required for optimization compared to previous implementations of SCL-AO OCT [43, 47] and SCL-AO laser scanning ophthalmoscopy [48]. This is demonstrated on an AO optical coherence microscope (OCM), where the wavefront error is calculated in terms of its Zernike polynomials [49] based on the method described by Pande et al. [50] and implemented on a graphics processing unit (GPU). The wavefront estimation technique used here exploits the complex-valued images inherently generated by OCM; the alternative wavefront computation techniques described above could be used for other imaging modalities.

To highlight the versatility of our technique, we independently optimized the wavefront for three different metrics, corresponding to depth-wise aberration correction, volumetric aberration correction, and volumetric extension of the depth-of-focus. The results overcome the challenges of traditional sensor-based AO, avoid the acquisition of multiple images or volumes for optimization, harness the speed of CAO to achieve real-time aberration correction for a single plane, and increase the collected signal energy, which can only be achieved by hardware-based AO (HAO). As the wavefront is inferred directly from the image, AutoAO offers greater flexibility in the choice of wavefront sampling and region-of-interest. Additionally, owing to its speed and versatility, AutoAO can be adapted to other optical imaging modalities in both laboratory and clinical environments.

Fig. 1.

Comparison of sensor-based AO, traditional sensorless AO, and AutoAO. The red lines represent the closed-loop involved in finding the optimal DM pattern for imaging. Det: Image Detector. DM: Deformable Mirror. PC: Computing Unit. WFS: Wavefront Sensor.

2. Experimental setup

2.1. Description of the OCM system

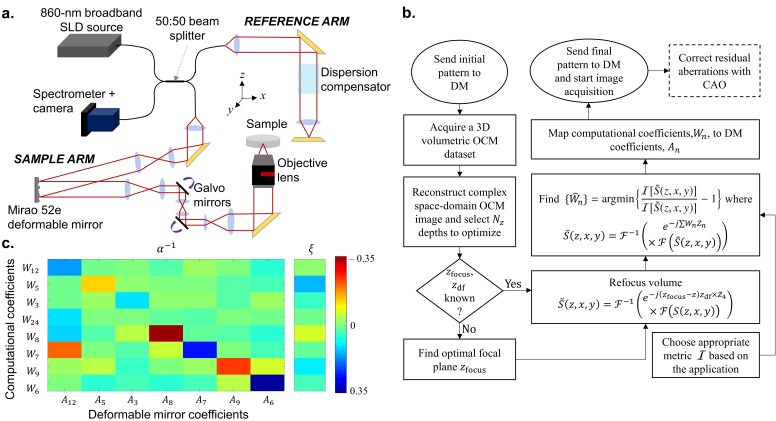

A custom-built spectral-domain AO OCM system, shown in Fig. 2(a), was used to demonstrate our technique. It was illuminated by a broadband laser source (SuperLum Inc.) with a central wavelength of 860 nm. The sample arm was built in a 4f configuration in which the DM (Mirao 52-e, Imagine Optic SA), the pivots of two galvanometer mirrors (Cambridge Technology), and the back focal plane of the objective lens (LUCPLFLN 40X, Olympus Corporation, numerical aperture = 0.6) were carefully aligned and conjugated. To compensate for the dispersion in the sample arm, a series of dispersion compensation elements was placed in the reference arm, and the residual dispersion was corrected computationally [51]. Detection was performed by a spectrometer (Cobra 800, Wasatch Photonics) and a line-scan camera (Sprint spL4096-140km, Basler AG), with a selected region-of-interest of 2048 pixels corresponding to a spectral bandwidth of 177 nm.

Fig. 2.

(a) Schematic diagram of the spectral-domain AO OCM system. (b) Flowchart of the AutoAO algorithm. The steps with dashed edges describe optional post-processing steps. (c) The Tikhonov-regularized inverse interaction matrix, α−1, mapping Wn to An, and the offset, ξ.

Data were acquired with custom LabVIEW-based software (LabVIEW, National Instruments). A modified version of the resilient backpropagation algorithm, first described by Riedmiller and Braun [52], and the real-time CAO display were run on a GPU (GeForce GTX Titan 1080 Ti, NVIDIA) using a C++-based program with the CUDA libraries. The DM was controlled by a custom C-based program linked to the LabVIEW software through TCP/IP communication.

2.2. AutoAO algorithm

CAO, described by Adie et al. [13], requires a phase mask, Φ(z, Qx, Qy), generated as a sum of weighted Zernike polynomials. When this mask is multiplied with the image in the two-dimensional (2D) Fourier space, it alters the aberration state of the image. Given an initial three-dimensional (3D) complex-valued image, S(z, x, y), the new image, S̄(z, x, y), can be described as

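$$\bar{S}(z,x,y)=\mathcal{F}^{-1}_{[Q_x,Q_y]}\left\{\mathcal{F}_{[x,y]}\left[S(z,x,y)\right]\exp\left[i\,\Phi(z,Q_x,Q_y)\right]\right\},\qquad \Phi(z,Q_x,Q_y)=\sum_{n}W_n(z)\,Z_n(Q_x,Q_y) \tag{1}$$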

where ℱ[u,v] denotes the Fourier transform operator along the dimensions u and v, Qx and Qy are the spatial frequencies corresponding to x and y, respectively, and Zn and Wn are the Zernike polynomials (with the OSA/ANSI index [53]) and their corresponding weights. Ideally, when CAO is used to improve image quality, it corrects all the aberrations in a given volume in post-processing. However, the presence of aberrations is known to cause a significant decrease in the collected signal energy, which cannot be recovered by CAO [54]. Therefore, if this phase mask were translated to the DM, both the signal energy and the image quality would be expected to improve.
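Eq. (1) can be sketched in NumPy as follows. This is a minimal illustration, not the authors' GPU implementation. It assumes that the pupil is the disk of normalized spatial frequencies with radius `q_cut` (a hypothetical parameter, set here to the Nyquist frequency), uses the ANSI normalization of the Zernike polynomials, and applies the same weights to every en face plane. The sign of the exponent is a convention. The helper names `osa_to_nm`, `zernike`, and `apply_cao` are illustrative.

```python
import numpy as np
from math import factorial


def osa_to_nm(j):
    """Convert an OSA/ANSI single index j to radial order n and azimuthal frequency m."""
    n = int(np.ceil((-3 + np.sqrt(9 + 8 * j)) / 2))
    m = 2 * j - n * (n + 2)
    return n, m


def zernike(j, rho, theta):
    """ANSI-normalized Zernike polynomial Z_j at polar pupil coordinates (rho, theta)."""
    n, m = osa_to_nm(j)
    rho = np.asarray(rho, dtype=float)
    radial = np.zeros_like(rho)
    for k in range((n - abs(m)) // 2 + 1):
        coeff = (-1) ** k * factorial(n - k) / (
            factorial(k)
            * factorial((n + abs(m)) // 2 - k)
            * factorial((n - abs(m)) // 2 - k)
        )
        radial = radial + coeff * rho ** (n - 2 * k)
    norm = np.sqrt(2 * (n + 1) / (2 if m == 0 else 1))
    angular = np.cos(m * theta) if m >= 0 else np.sin(-m * theta)
    return norm * radial * angular


def apply_cao(volume, weights, q_cut=0.5):
    """Multiply each en face plane of S(z, y, x) by exp(i * sum_n W_n Z_n) in 2D Fourier space."""
    _, ny, nx = volume.shape
    qy = np.fft.fftfreq(ny)[:, None] / q_cut  # pupil-normalized spatial frequencies
    qx = np.fft.fftfreq(nx)[None, :] / q_cut
    rho, theta = np.hypot(qx, qy), np.arctan2(qy, qx)
    phi = np.zeros((ny, nx))
    for j, w in weights.items():
        phi += w * zernike(j, rho, theta)
    phi[rho > 1] = 0.0  # no phase applied outside the assumed circular pupil
    spectrum = np.fft.fft2(volume, axes=(-2, -1))
    return np.fft.ifft2(spectrum * np.exp(1j * phi), axes=(-2, -1))


rng = np.random.default_rng(0)
S = rng.normal(size=(4, 32, 32)) + 1j * rng.normal(size=(4, 32, 32))
aberrated = apply_cao(S, {3: 0.8, 12: -0.5})         # impose astigmatism and spherical phase
restored = apply_cao(aberrated, {3: -0.8, 12: 0.5})  # the negated mask undoes it
```

Because the mask is a pure phase in the Fourier domain, the energy of each plane is unchanged, and applying the negated weights restores the original image. This is why CAO alone cannot recover signal energy lost to aberrations.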

An algorithm that automatically estimates the phase mask to improve image quality, based on a forward model, was presented by Pande et al. [50]. In that work, image quality was quantified by a sharpness metric, described as the sum of the squares of the absolute values at every pixel. More generally, the metric is chosen based on the application. The choice and number of Zernike polynomials to optimize depend on the phase sensitivity of the imaging system and the response of the DM. Generally, the first three polynomials, Z0, Z1, and Z2, are ignored because Z0 is simply a constant phase offset, and Z1 and Z2 are tilts introduced by the sample. Additionally, the presence of defocus, represented by Z4, can affect the convergence of the other polynomials. The corresponding weight is modeled as a linear function of z such that W4(z) = (zfocus − z)zdf, where zfocus is the location of the focal plane and zdf is the defocus factor. Therefore, W4(z) is optimized first, and the whole volume is refocused before the remaining polynomials are optimized. Figure 2(b) presents a flowchart of the AutoAO technique.
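The role of the sharpness metric can be illustrated with a short NumPy sketch. We read "the sum of the squares of absolute values" as the sum of squared intensities, Σ|S|⁴. This interpretation is our assumption. It is motivated by the fact that the plain energy Σ|S|² of a plane is unchanged by any Fourier-domain phase mask (Parseval's theorem) and therefore cannot rank aberration states. The random phase screen is a toy stand-in for an unknown aberration, not a model from the paper, and the function names are hypothetical.

```python
import numpy as np


def sharpness(plane, power=4.0):
    """Sum of |S|**power over an en face plane (power=4 gives squared intensity)."""
    return float(np.sum(np.abs(plane) ** power))


def random_phase_aberration(plane, strength, seed=0):
    """Multiply the 2D spectrum of a plane by a random phase screen (a toy aberration)."""
    rng = np.random.default_rng(seed)
    screen = strength * rng.uniform(-np.pi, np.pi, size=plane.shape)
    return np.fft.ifft2(np.fft.fft2(plane) * np.exp(1j * screen))


# A single point scatterer at the center of a 64 x 64 plane.
point = np.zeros((64, 64), dtype=complex)
point[32, 32] = 1.0
blurred = random_phase_aberration(point, strength=1.0)

print(sharpness(point, 2), sharpness(blurred, 2))  # energy: unchanged by the phase mask
print(sharpness(point, 4), sharpness(blurred, 4))  # squared intensity: drops when blurred
```

Spreading a fixed energy over more pixels always lowers Σ|S|⁴, so maximizing it favors compact, well-focused features.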

First, a set of linearly spaced depths, zk, is chosen within the axial region of interest. The optimal value of W4 at each depth is estimated as:

| (2) |

where

| (3) |

and

| (4) |

The image sharpness metric used to estimate defocus is based on the fifth sharpness metric proposed by Muller and Buffington [55]. As later shown by Fienup and Miller [56], this metric, with an exponent of less than 2, is appropriate for relatively sparse samples. Based on our experience with the samples used here, a relatively low exponent was chosen to accelerate convergence. The values of zfocus and zdf are obtained by linear regression of the estimated values Ŵ4(zk). The whole volume is then refocused based on Eq. (3).
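The regression step can be sketched as follows. The sketch assumes that the per-depth estimates Ŵ4(zk) are already available. The values below are synthetic, generated from the linear model rather than measured. Because W4(z) = (zfocus − z)zdf = zdf·zfocus − zdf·z, a first-order fit gives zdf as the negative of the slope and zfocus as the intercept divided by zdf. The function names are hypothetical.

```python
import numpy as np


def fit_defocus_model(z_k, w4_hat):
    """Fit W4(z) = (z_focus - z) * z_df to per-depth defocus estimates."""
    slope, intercept = np.polyfit(z_k, w4_hat, 1)
    z_df = -slope
    z_focus = intercept / z_df  # undefined if the volume shows no defocus trend (z_df = 0)
    return z_focus, z_df


def refocus_weights(z, z_focus, z_df):
    """Defocus weight W4(z) used to refocus every depth of the volume."""
    return (z_focus - np.asarray(z, dtype=float)) * z_df


# Synthetic estimates at 8 linearly spaced depths (in axial pixels).
z_k = np.linspace(0, 60, 8)
w4_hat = (30.0 - z_k) * 0.02
z_focus, z_df = fit_defocus_model(z_k, w4_hat)
w4_all = refocus_weights(np.arange(100), z_focus, z_df)
```

Fitting a line to only a handful of depths, rather than optimizing W4 independently at every depth, keeps the refocusing step cheap and smooths out noisy per-depth estimates.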

The optimization problem was then defined in terms of the image sharpness metric as

| (5) |

which was solved using a resilient backpropagation algorithm for multivariate optimization [50, 52]. In this paper, the computational phase mask was optimized for Z3, Z5, Z7, Z8, Z6, Z9, Z12, and Z24, which correspond to pairs of astigmatism, coma, trefoil, and (primary and secondary) spherical aberration terms, respectively.
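For readers unfamiliar with resilient backpropagation, the sketch below shows a basic sign-based Rprop update used to maximize a metric over a vector of Zernike weights. It is not the authors' modified GPU version. It uses the iRprop− variant, in which the stored gradient is zeroed after a sign change and no weight backtracking is performed. It also uses central finite differences in place of an analytic gradient, with illustrative default step parameters. `rprop_maximize` is a hypothetical name, and the quadratic test metric only stands in for an image sharpness metric.

```python
import numpy as np


def rprop_maximize(metric, w0, n_iter=50, step0=0.05, eta_plus=1.2, eta_minus=0.5,
                   step_min=1e-6, step_max=0.5, eps=1e-4):
    """Maximize metric(w) with sign-based iRprop- steps and finite-difference gradients."""
    w = np.asarray(w0, dtype=float).copy()
    step = np.full_like(w, step0)
    prev_grad = np.zeros_like(w)
    for _ in range(n_iter):
        grad = np.empty_like(w)
        for i in range(w.size):  # central difference for each Zernike weight
            d = np.zeros_like(w)
            d[i] = eps
            grad[i] = (metric(w + d) - metric(w - d)) / (2 * eps)
        agreement = grad * prev_grad
        # Grow the step while the gradient sign is stable; shrink it after a sign flip.
        step = np.where(agreement > 0, np.minimum(step * eta_plus, step_max), step)
        step = np.where(agreement < 0, np.maximum(step * eta_minus, step_min), step)
        grad = np.where(agreement < 0, 0.0, grad)  # after a flip, skip this update
        w += np.sign(grad) * step                  # ascend along the gradient sign only
        prev_grad = grad
    return w


target = np.array([0.3, -0.2, 0.1])


def toy_metric(w):
    """Quadratic stand-in for an image metric, with its maximum at `target`."""
    return -np.sum((w - target) ** 2)


w_opt = rprop_maximize(toy_metric, np.zeros(3), n_iter=200)
```

Rprop uses only the sign of each partial derivative. This makes the step size insensitive to the very different gradient magnitudes that different Zernike modes can produce. The default `n_iter=50` mirrors the fixed iteration count used for the timing analysis in Section 3.2.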

The phase correction mask, represented by the vector of Zernike weights W = [Wn], is related to the Zernike weights of the DM pattern, A = [An], by a linear model:

$$\mathbf{W}=\alpha\,\mathbf{A}+\xi \tag{6}$$

where α is the interaction matrix and ξ is the offset. To generate the interaction matrix, each Zernike polynomial among Z3, Z5, Z6, Z7, Z8, Z9, and Z12 was applied individually to the DM at different weights spaced between −0.35 and 0.35. Using the automatic wavefront sensing algorithm, the optimal wavefront was estimated for 8 depths selected from a 60-μm range around the focal plane. Each weight of the optimal wavefront, Wn, was fit against the applied DM pattern, and the goodness of fit was quantified by the coefficient of determination, r². If r² exceeded 0.5, the slope and the intercept were entered into the interaction matrix, α, and an offset matrix, ξ′, respectively. The offset matrix, ξ′, was then collapsed into a column vector, ξ, by taking the median value of each row, such that it satisfies Eq. (6). Finally, the interaction matrix was inverted with Tikhonov regularization to minimize the mean square error. This inverted matrix, α−1, and the offset, ξ, are shown in Fig. 2(c).
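The calibration procedure can be sketched in NumPy as below. The sketch assumes that the CAO-estimated weights for each applied level have already been reduced to a single value per mode, for example by averaging over the 8 depths. The array layout, the function names, the treatment of flat responses, and the regularization parameter `lam` are our assumptions. The final mapping from W to A simply inverts Eq. (6) algebraically, A ≈ α⁻¹(W − ξ), with α⁻¹ = (αᵀα + λ²I)⁻¹αᵀ as the Tikhonov-regularized inverse.

```python
import numpy as np


def fit_interaction_matrix(applied, measured, r2_min=0.5):
    """applied: (L,) DM weights; measured[i, j, l]: CAO weight of mode i when
    mode j was driven at applied[l]. Returns alpha (M, M) and offset xi (M,)."""
    n_modes = measured.shape[0]
    alpha = np.zeros((n_modes, n_modes))
    xi_prime = np.full((n_modes, n_modes), np.nan)
    for i in range(n_modes):
        for j in range(n_modes):
            y = measured[i, j]
            slope, intercept = np.polyfit(applied, y, 1)
            ss_tot = np.sum((y - y.mean()) ** 2)
            if ss_tot < 1e-12:
                continue  # flat response: r^2 undefined, treated as no coupling
            ss_res = np.sum((y - (slope * applied + intercept)) ** 2)
            if 1.0 - ss_res / ss_tot > r2_min:  # keep only well-fit entries
                alpha[i, j] = slope
                xi_prime[i, j] = intercept
    # Collapse the offset matrix to one value per mode (row-wise median of kept fits).
    xi = np.array([np.median(r[~np.isnan(r)]) if np.any(~np.isnan(r)) else 0.0
                   for r in xi_prime])
    return alpha, xi


def tikhonov_inverse(alpha, lam=1e-2):
    """Regularized inverse (alpha^T alpha + lam^2 I)^-1 alpha^T."""
    n = alpha.shape[1]
    return np.linalg.solve(alpha.T @ alpha + lam ** 2 * np.eye(n), alpha.T)


def dm_weights(w_cao, alpha_inv, xi):
    """DM Zernike weights A from CAO weights W by inverting Eq. (6)."""
    return alpha_inv @ (w_cao - xi)


# Synthetic, noise-free calibration data for 7 modes (not measured values).
true_alpha = -np.eye(7)
true_alpha[0, 1], true_alpha[3, 4] = 0.1, -0.08  # weak cross-coupling
true_xi = np.linspace(-0.05, 0.05, 7)
applied = np.linspace(-0.35, 0.35, 8)
measured = true_alpha[:, :, None] * applied + true_xi[:, None, None]

alpha, xi = fit_interaction_matrix(applied, measured)
alpha_inv = tikhonov_inverse(alpha, lam=1e-6)
a_true = np.array([0.1, -0.2, 0.05, 0.0, 0.3, -0.1, 0.02])
a_est = dm_weights(true_alpha @ a_true + true_xi, alpha_inv, xi)
```

With real, noisy data, a larger `lam` trades a small bias for stability when α is poorly conditioned, for example when cross-coupling makes some columns nearly dependent.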

Ideally, ξ would be equal to 0 if the microscope had no intrinsic aberrations in the absence of a sample. Additionally, in an ideal scenario, α would be a diagonal matrix. However, the number of actuators on the DM is limited, and the resulting discretization of the pattern causes non-diagonal elements to appear in α. Moreover, A4 was independently estimated as 0.0085 per pixel shift of the focal plane. The pattern sent to the DM is calculated from the Zernike weights An over the normalized 2D spatial frequency coordinates qx and qy, scaled by a constant factor κDM. Any residual aberrations may be corrected in post-processing through automated CAO, which can restore aberration-free focal-plane resolution to the entire volume. It should be noted that the calibration of α and ξ only needs to be performed once during system setup, and that the process could be completely automated.

In the next section, we illustrate three applications of AutoAO: depth-wise aberration correction, volumetric aberration correction, and extension of the depth-of-focus. To demonstrate aberration correction, both depth-wise and volumetric, a 3D image of a translucent plastic block was acquired with a transverse dimension of 256 × 256 pixels, corresponding to a transverse area of 100 × 100 μm², at a line-scan rate of 40 kHz and a frame rate of 128 Hz. A semi-transparent poly-acrylic sheet was placed at an angle in the path of light to induce aberrations in the image. Additionally, to demonstrate volumetric aberration correction in a generic biological sample, a block of muscle tissue was extracted from a defrosted salmon. This sample was imaged with the focal plane placed 1 mm deep in the tissue over a transverse area of 300 × 300 μm², corresponding to 1024 × 1024 pixels, at a line-scan rate of 60 kHz. To demonstrate extension of the depth-of-focus, the same plastic block was imaged without any additional elements in the path of light, with the same dimensions, at a line-scan rate of 20 kHz and a frame rate of 75 Hz. To observe the point spread function (PSF) during extension of the depth-of-focus, a silicone-based phantom containing sparsely distributed iron-oxide nanoparticles 50–100 nm in size was imaged with a transverse dimension of 1024 × 1024 pixels, corresponding to 75 × 75 μm².

3. Results

3.1. Depth-wise aberration correction

One important application of AO is deep-tissue imaging, where the refractive index of the sample varies with depth; consequently, the aberration state can vary from one depth to another. To demonstrate the real-time capabilities of our algorithm, a tissue-mimicking phantom consisting of a translucent plastic sheet with closely spaced point scatterers was imaged with aberrations introduced by a semi-transparent poly-acrylic sheet in the path of the imaging beam. Selected depths from the OCM volume of the plastic sheet, shown in the top row of Fig. 3(a), were optimized individually. These images show that the original OCM volume is aberrated, and the elongated shape of the features suggests a large amount of astigmatism. For this example, zfocus (the plane of least confusion) is assumed to be known. The image sharpness, ℐDW[zk, S(z, x, y)], is evaluated independently for each depth zk to find the phase mask for aberration correction. It is defined as

| (7) |

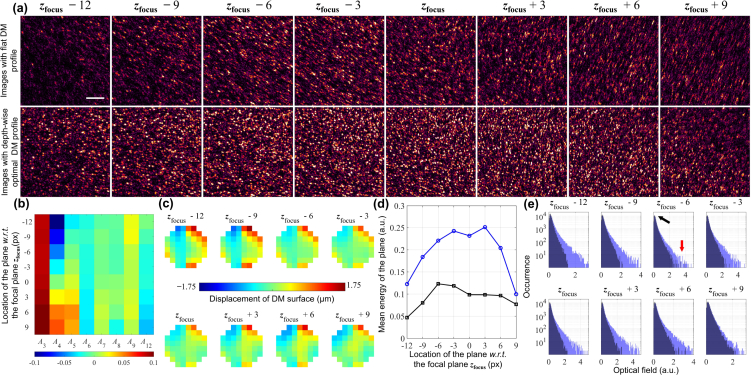

Fig. 3.

Depth-wise aberration correction. (a) En face OCM images of a tissue-mimicking phantom made from a translucent plastic sheet before (top row) and after correction (bottom row) at different depths with respect to (w.r.t.) the focal plane. The color scales are normalized to the focal plane in each row. The top row corresponds to en face images from the same volume. Each image in the bottom row is from a different volume, shown at the plane of optimization. Scale bar: 20 μm. (b) Zernike polynomial weights sent to the DM, An, corresponding to the images in the bottom row of (a). (c) Patterns sent to the DM, corresponding to the weights in (b), used to acquire the images in the bottom row of (a). (d) Energy of the acquired signals before and after optimization. The black line corresponds to the original volume; the points on the blue line are from the corresponding optimized volumes, calculated at the plane of optimization. (e) Histograms of the optical field for each plane before and after optimization, where the original signal occurrences are in dark blue and the optimized signal occurrences are in light blue. The black and red arrows represent the possible noise and signal levels of the en face images, respectively. Note that the y-axes are on a logarithmic scale.

The optimized Zernike weights for the DM pattern, An, are obtained through α−1 and ξ, whereas A4 is calculated as (zk − zfocus) × 0.0085. In order to correct all aberrations, including defocus, the optimization must be performed depth-wise; each optimization yields an independent OCM volume whose plane of optimization is aberration-free. As seen in the bottom row of Fig. 3(a), each image appears sharper and aberration-free, with focal-plane resolution at each corrected plane. The Zernike weights, An, shown in Fig. 3(b), indicate that the major aberration corrected is oblique astigmatism. Moreover, a constant trefoil is subtracted for all planes, along with depth-dependent values of other aberrations. The patterns generated with these coefficients are shown in Fig. 3(c).

To quantify the improvement numerically, we compare the signal energy collected before and after correction, estimated as |S(zk, x, y)S*(zk, x, y)| and shown in Fig. 3(d). After these aberrations are corrected, the collected signal energy improves by at least 2×. Additionally, in the histogram of the signal levels, i.e. the optical field (Fig. 3(e)), we can assume that the peak of the distribution corresponds to the noise (indicated by the black arrow), whereas the higher values correspond to the signal levels (indicated by the red arrow). While the location of the noise peak does not shift after correction, the number of occurrences of higher signal values increases, indicating an improvement in the signal-to-noise ratio of the image.
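The two quantities used above can be computed as in the following sketch. It assumes that the per-plane energy is the sum of S·S* over the transverse plane, and it takes the mode of the |S| histogram as the noise peak. The threshold of twice the noise peak for counting "signal" pixels, the synthetic data, and the function names are illustrative assumptions, not the authors' analysis.

```python
import numpy as np


def plane_energy(volume):
    """Collected signal energy per en face plane: sum over (x, y) of S * conj(S)."""
    return np.sum((volume * np.conj(volume)).real, axis=(1, 2))


def histogram_summary(plane, bins=80, value_range=(0.0, 20.0)):
    """Histogram |S| of one plane; return the noise-peak location (histogram mode)
    and the number of pixels above twice that peak (a hypothetical signal threshold)."""
    mag = np.abs(plane).ravel()
    counts, edges = np.histogram(mag, bins=bins, range=value_range)
    k = int(np.argmax(counts))
    noise_peak = 0.5 * (edges[k] + edges[k + 1])
    return noise_peak, int(np.sum(mag > 2 * noise_peak))


rng = np.random.default_rng(0)
noise = rng.normal(size=(1, 128, 128)) + 1j * rng.normal(size=(1, 128, 128))
rows, cols = np.unravel_index(rng.choice(128 * 128, 200, replace=False), (128, 128))
before, after = noise.copy(), noise.copy()
before[0, rows, cols] += 6.0   # weaker signal from sparse scatterers (aberrated)
after[0, rows, cols] += 12.0   # stronger signal after correction, identical noise
```

In this toy case, the noise peak stays in place while the energy and the count of high-magnitude pixels increase. This is the same qualitative signature read from Fig. 3(e).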

AutoAO for depth-wise aberration correction took an average of 173 ± 50 ms for optimization and for transferring the pattern to the DM. As OCM images are inherently depth-resolved, the computed wavefront is also restricted to a single depth, overcoming a major challenge of sensor-based AO techniques. A previous technique for sensorless AO OCT [43] iteratively varied the DM pattern directly to improve the image quality at a plane. That technique required a series of ∼300 OCM volumes acquired over 65 seconds, where each en face image was only one-fourth the size of those presented here. Other studies have reported similar time scales for a sensorless AO system for multiphoton microscopy with OCT-guided wavefront sensing [47]. While these techniques can vary the Zernike modes at the end of each A-scan, AutoAO can only be performed at the end of a volumetric acquisition. Nonetheless, compared to these previous methods, our technique offers a 375× improvement in speed and a 300× reduction in the number of volumes required for optimization. The key differences between these traditional SCL-AO techniques and AutoAO are illustrated schematically in Fig. 1. Compared to a recent technique for SCL-AO ophthalmoscopy that required 20 seconds for optimization [48], AutoAO shows a 110× improvement in speed.

3.2. Volumetric aberration correction

Sample-induced aberrations do not necessarily originate within the depth-of-field. For instance, in in vivo retinal imaging, the aberrations are introduced by the cornea, intraocular fluid, and lens, all of which lie before the axial depth-of-field. These aberrations severely decrease the collected signal energy and distort the image. Such cases warrant a single pattern that corrects the aberrations, except for defocus, for the entire volume. To demonstrate this capability, the same translucent plastic sheet with introduced aberrations used in the previous section was imaged. Figure 4 shows the initial OCM volume of the plastic sheet with introduced aberrations, the second OCM volume after correction with the DM, and the result of performing CAO on the latter volume. The 8 en face images, which span an axial range of 54 μm, each show a 50 × 50 μm² transverse section. The whole-volume metric chosen for optimization is

| (8) |

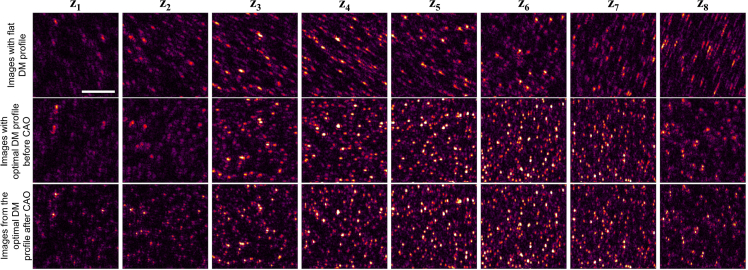

Fig. 4.

Volumetric aberration correction. En face OCM images of a tissue-mimicking phantom made from a translucent plastic sheet before correction (first row), after correction with the DM (second row), and after CAO applied to the planes in the second row (third row), at different depths spaced 3 pixels (or 9 μm) apart. Scale bar: 10 μm.

A total of 8 depths were chosen over a range of 60 μm, zfocus and zdf were estimated, and the phase correction mask and the corresponding DM pattern, both shown in Fig. 5(e), were generated. Even in the presence of non-diagonal elements in the interaction matrix, the computational mask and the DM pattern are almost negatives of each other. The defocus was left unchanged so that the focal plane of the corrected volume coincides with the plane of least confusion of the original image. The estimation of Ŵ4(z) for the entire volume took ∼1.2 seconds; the time taken to estimate the DM pattern is shown in Fig. 5(d). For a fair, sample-independent comparison, the number of iterations for the time analysis was fixed at 50.
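The depth sampling can be sketched as follows. The conversion of 3 μm per axial pixel is taken from the depth spacing stated in the Fig. 4 caption (3 pixels, or 9 μm). Modeling the volumetric metric as a sum of per-depth squared-intensity sharpness values is our assumption and may differ from Eq. (8). `select_depths` and `volumetric_metric` are hypothetical names, and the random volume is synthetic.

```python
import numpy as np

UM_PER_AXIAL_PIXEL = 3.0  # from the 3-pixel / 9-um spacing quoted for Fig. 4


def select_depths(z_focus_px, span_um, n_depths):
    """Axial pixel indices of n_depths linearly spaced planes centered on the focus."""
    half_span_px = 0.5 * span_um / UM_PER_AXIAL_PIXEL
    z = np.linspace(z_focus_px - half_span_px, z_focus_px + half_span_px, n_depths)
    return np.rint(z).astype(int)


def volumetric_metric(volume, depth_idx, power=4.0):
    """Assumed whole-volume metric: per-depth sharpness summed over the selected planes."""
    return float(sum(np.sum(np.abs(volume[k]) ** power) for k in depth_idx))


depths = select_depths(z_focus_px=100, span_um=60.0, n_depths=8)
rng = np.random.default_rng(1)
volume = rng.normal(size=(200, 64, 64)) + 1j * rng.normal(size=(200, 64, 64))
score = volumetric_metric(volume, depths)
```

Each metric evaluation touches one en face plane per selected depth, so the cost of an optimization step grows with the number of depths chosen. Restricting the computation to a few planes, or to a transverse sub-volume as in the salmon sample, keeps it short.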

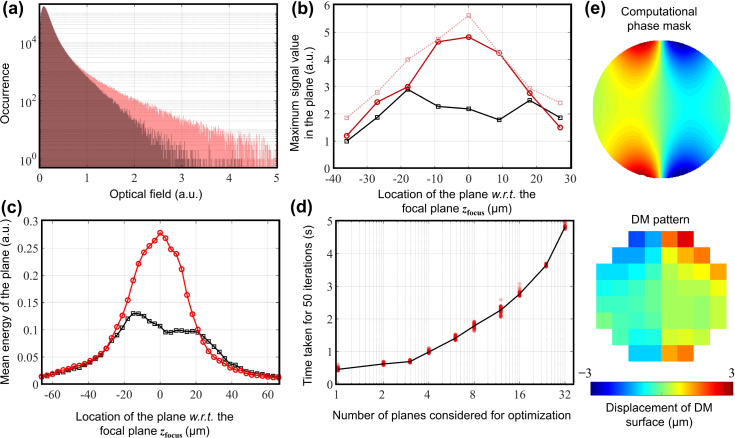

Fig. 5.

Analysis of volumetric aberration correction. (a) Histograms of the optical field for a 150-μm section before and after optimization (before correction with CAO), where the original signal occurrences are in dark red and the optimized signal occurrences are in light red. (b) The maximum signal magnitude at each pixel calculated for each of the en face images shown in Fig. 4. The black line corresponds to the aberrated image, the solid red line to the image corrected with AutoAO before correction with CAO, and the dotted red line to the metric after CAO. (c) Energy of the acquired signals before and after optimization. The black and red lines correspond to the original and optimized volumes, respectively. (d) Time taken to estimate the optimal phase correction mask for different numbers of depths chosen for optimization. The solid line passes through the median of 100 measurements. (e) Phase correction mask generated by the AutoAO algorithm (top) and the corresponding pattern sent to the DM (bottom).

First, even before correction with CAO, the second volume has better focal-plane resolution than the initial aberrated volume. Second, the signal energy collected at each plane in Fig. 5(c) shows two peaks in the original volume, indicating the presence of astigmatism. After correction, the profile appears distinctly Gaussian, with a 2× improvement in the collected signal energy. Third, the histogram of the signal, i.e. the optical field (Fig. 5(a)), suggests a dramatic improvement in the signal-to-noise ratio, similar to that of depth-wise correction. The residual aberrations are minimal and can be corrected with CAO; after this correction, the maximum value of the OCM signal in each plane increases, as seen in Fig. 5(b). Additionally, the volume can be refocused in post-processing using the methods presented by Adie et al. [13] to ensure focal-plane resolution (0.6 μm) for the entire volume [45], as shown in Fig. 4.

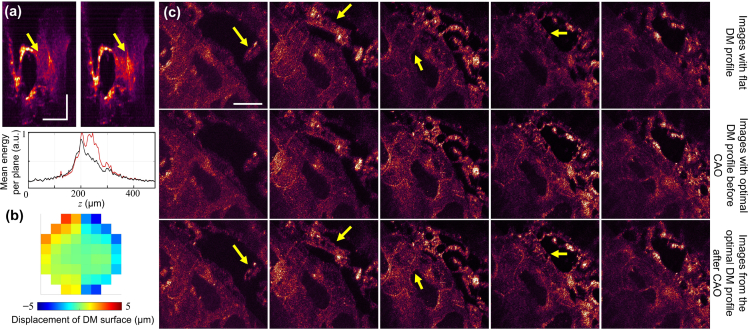

While the results shown in Section 3.1 are relevant for real-time processing, one of the major advantages of OCM is its ability to obtain 3D images through depth-resolved 2D raster scans of the transverse plane. Therefore, to demonstrate this potential of OCM in biological samples, a tissue sample from a salmon was imaged with the focal plane placed ∼1 mm below the sample surface, and volumetric aberration correction was subsequently performed. The correction was computed on a sub-volume of 256 × 256 transverse pixels. A total of 8 depths chosen over 60 axial pixels were optimized for the metric in Eq. (8), and the resulting pattern, shown in Fig. 6(b), was sent to the DM. In the cross-sectional images in Fig. 6(a), previously suppressed features appear after correction. Additionally, the plot in Fig. 6(a) shows that more signal energy per plane is collected in the region between 200 and 300 μm. Moreover, the en face images shown over a region of ∼120 μm in Fig. 6(c) show an apparent enhancement of features and signal intensity. These effects are especially pronounced in the regions indicated by the yellow arrows. In this case, AutoAO for volumetric aberration correction was sufficient to recover most of the image features. After correction with CAO, a focal-plane resolution of 0.6 μm was restored to the entire 300 × 300 × 120 μm³ volume. Because the optimization was performed on a sub-volume of 256 × 256 × 8 pixels, corresponding to 2.2% of the entire volume, the time taken was similar to that observed in tissue phantoms, while the image quality improved for the entire volume.

Fig. 6.

Volumetric aberration correction in a biological sample. (a) Cross-sectional OCM images (superposition of 10 slow-axis frames) of a tissue section from a salmon before (left) and after correction (right). The yellow arrows highlight the differences before and after correction with AutoAO. The plot below shows the mean energy per plane (for the entire 300 × 300 μm² transverse region) before (red) and after (black) correction. Scale bars: 100 μm. (b) Pattern sent to the DM to achieve correction. (c) En face OCM images before correction (top), after correction (middle), and the corrected images post-CAO (bottom), each spaced 30 μm apart along the z-axis. The yellow arrows highlight the differences before and after correction with AutoAO, where the features are aberration-free and show an apparent increase in signal. Scale bar: 100 μm.

3.3. Extension of depth-of-focus

Another application of AO is to extend the depth-of-focus by introducing aberrations. In 2012, Sasaki et al. [23] showed that adding spherical aberration to the optical system could increase the depth-of-focus with minimal loss of image resolution. In 2018, Liu et al. [24] demonstrated that the depth-of-focus could be extended further by imaging with an astigmatic beam. In the latter case, the aberration was corrected computationally in post-processing, and focal-plane resolution was restored for the entire volume. For a perfectly Gaussian beam, both the resolution and the signal energy per plane drop significantly away from the focal plane. Introducing astigmatism was shown to extend the axial range of OCM by splitting the focal plane of a Gaussian beam into two line foci. However, this results in a peculiarly shaped PSF that varies drastically with depth. Introducing spherical aberration has been shown to preserve the transverse resolution and the image PSF over a larger range of depths than a Gaussian beam. Consequently, there should exist an aberration pattern that optimally maintains a uniform transverse resolution over a large range of depths while keeping the decrease in signal energy at the focal plane reasonable. From Sections 3.1 and 3.2, it is apparent that the image sharpness metrics ℐDW and ℐVol are related to the image resolution. We therefore define the image sharpness across depth as

| (9) |

For a Gaussian beam profile, the image sharpness varies strongly along the z-axis. To maintain uniform resolution over a larger range of depths, the sharpness must vary minimally across depth. Therefore, the image metric for extending the depth-of-focus, ℐDOF[S(z, x, y)], which models the deviation of the image sharpness from a fixed value, μz, is defined as:

| (10) |

where

| (11) |

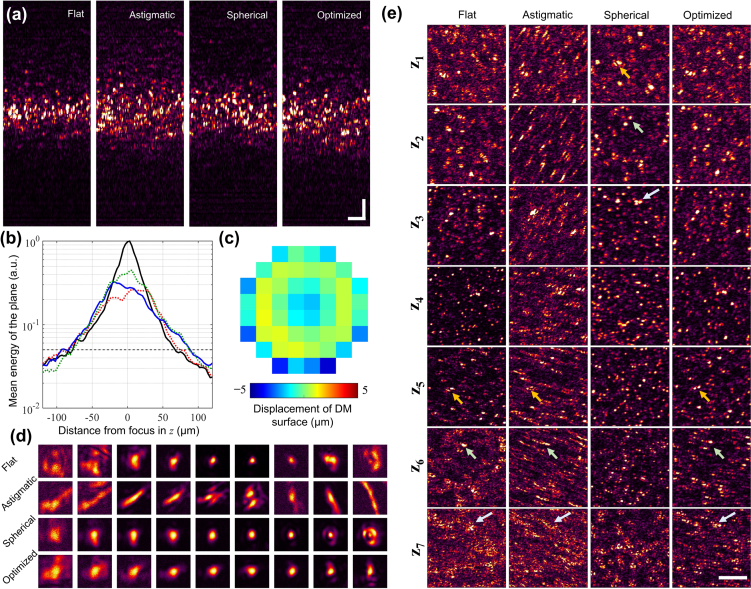

and Nz is the number of depths selected for optimization. Alternatively, if μz were chosen as the mean image sharpness over these depths, ℐDOF in Eq. (10) would correspond to the standard deviation of the image sharpness. An additional stop condition was added to keep the drop in the signal-to-noise ratio (SNR) within a reasonable limit: if the SNR drop at any of the depths, calculated using the quantile function Q of the signal, reached 23 dB, the computational wavefront estimation was terminated and the results were sent to the DM.

This pattern was compared to equivalent amounts of spherical aberration and astigmatism applied to the DM. The cross-sectional images of the tissue-mimicking phantom consisting of a plastic sheet in Fig. 7(a) show that all the aberrated beams yield a larger depth-of-focus than the flat DM profile. In the absence of sample-induced aberrations, the flat DM profile is expected to generate a Gaussian beam profile at the sample plane; accordingly, the flat-profile curve in Fig. 7(b) appears distinctly Gaussian. We define the axial depth-of-field as the range over which the signal energy is above 5% of the maximum value for the flat profile. For an astigmatic beam that causes an ∼80% drop relative to the maximum signal energy of the flat profile, the axial range expands from 144 μm to 159 μm. For a spherically aberrated beam, created by adding the Zernike polynomial Z12, this range extends further to 168 μm. For the optimized beam, created by sending the pattern shown in Fig. 7(c) to the DM, the range is 177 μm, a 22% increase over that of the flat DM profile. This pattern is dominated by the weight of Z12, followed by a contribution from Z8, with smaller contributions from other polynomials.
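We read ℐDOF as the root-mean-square deviation of the per-depth sharpness from μz, which reduces to the standard deviation when μz is the mean, as stated above. Under that reading, the metric and the axial-range criterion can be sketched as follows. The Gaussian energy profile is synthetic, the 5% threshold is the one used in the text, and the range function assumes a single contiguous region above threshold. `dof_metric` and `axial_range` are hypothetical names, and the SNR-based stop condition is not modeled.

```python
import numpy as np


def dof_metric(sharpness_per_depth, mu_z):
    """Root-mean-square deviation of per-depth sharpness from a fixed value mu_z."""
    s = np.asarray(sharpness_per_depth, dtype=float)
    return float(np.sqrt(np.mean((s - mu_z) ** 2)))


def axial_range(z_um, energy, reference_max, fraction=0.05):
    """Extent of the depths whose energy is at least fraction * reference_max."""
    z_um = np.asarray(z_um, dtype=float)
    above = z_um[np.asarray(energy) >= fraction * reference_max]
    return float(above.max() - above.min()) if above.size else 0.0


s = np.array([4.0, 5.0, 6.0, 5.0])
print(dof_metric(s, s.mean()), np.std(s))  # identical when mu_z is the mean

z = np.arange(-200.0, 201.0)               # depth axis in micrometers
flat = np.exp(-(z / 40.0) ** 2)            # toy Gaussian energy profile
print(axial_range(z, flat, flat.max()))
```

Minimizing this deviation rewards flat sharpness-versus-depth curves rather than a single sharp focus. This is why the optimizer needs the separate SNR stop condition to avoid flattening the profile at the cost of signal.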

Fig. 7.

Extension of depth-of-focus. (a) Cross-sectional OCM images of the tissue-mimicking phantom, made of a translucent plastic sheet, for different states of the DM. The color scale for each image is normalized to the maximum value in the plane. Scale bars: 20 μm. (b) Energy of the acquired signals for different aberration states of the DM. The black line corresponds to a flat DM profile, the red and green dotted lines correspond to astigmatic and spherically aberrated beams, respectively, and the blue solid line corresponds to the optimally aberrated profile generated by AutoAO. The gray dotted horizontal line corresponds to a 23 dB drop in the mean energy of the plane. From the intersections of this line with the four curves, the axial depths-of-field for the flat, astigmatic, spherically aberrated, and optimized beams are 144, 159, 168, and 177 μm, respectively. (c) The optimized pattern sent to the DM. (d) PSF at different aberration states, shown at depths spaced 9 μm apart and measured on a silicone phantom with sparsely distributed iron-oxide nanoparticles. Each tile is 7.25 × 7.25 μm². (e) En face OCM images for the flat, astigmatic, spherical, and optimized aberration profiles generated by the DM. Axial locations z1 to z7 are depths spaced 15 μm apart. The arrows point to notable features to help visually align the images in each column. Scale bar: 20 μm.
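The depth-of-field values read off Fig. 7(b) come from where each axial energy curve crosses a fixed threshold line. A minimal sketch of that readout, assuming a uniformly sampled curve, a single contiguous above-threshold region, and a threshold expressed in dB below a shared reference peak (23 dB in the caption); the function name and the linear interpolation between samples are our choices, not necessarily the authors'.

```python
import numpy as np


def axial_range(z_um, energy, reference_peak, drop_db=23.0):
    """Axial extent (um) over which `energy` exceeds reference_peak * 10**(-drop_db / 10).

    Assumes one contiguous above-threshold region. Threshold crossings are
    located by linear interpolation between neighbouring samples; if the curve
    is still above threshold at an end of the grid, that grid end is used.
    """
    z = np.asarray(z_um, dtype=float)
    e = np.asarray(energy, dtype=float)
    threshold = reference_peak * 10.0 ** (-drop_db / 10.0)
    above = np.flatnonzero(e >= threshold)
    if above.size == 0:
        return 0.0
    i0, i1 = above[0], above[-1]

    def crossing(a, b):
        # Depth at which the straight line between samples a and b hits the threshold.
        return z[a] + (threshold - e[a]) * (z[b] - z[a]) / (e[b] - e[a])

    z_start = crossing(i0 - 1, i0) if i0 > 0 else z[i0]
    z_end = crossing(i1, i1 + 1) if i1 < e.size - 1 else z[i1]
    return float(z_end - z_start)
```

Passing the same `reference_peak` (for example, the flat-profile maximum) for every curve mirrors the single horizontal threshold line used to compare the four beams.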

Apart from signal energy, the PSF profiles must also be considered when choosing the aberrations. The PSFs of the different beam profiles were observed on a silicone-based phantom containing sparsely distributed iron-oxide nanoparticles with a mean diameter of 100 nm. As seen in Fig. 7(d), the PSF for the flat DM profile varies dramatically with depth; in fact, the profiles far below focus are severely aberrated due to sample-induced aberrations. For the astigmatic beam, the PSFs are distinctly elongated and need computational correction to recover any discernible features, which could restrict the use of an astigmatic beam in real-time clinical applications. Its increase in axial range is also smaller than that of the spherically aberrated or optimized beam. While the spherically aberrated beam has a more uniform resolution over a larger range of depths than the flat DM profile, its PSFs away from focus appear irregular and may distort image features. The optimized beam has a uniform PSF over the depth range shown, with a 25% larger focal-plane resolution value (that is, a coarser resolution at focus) than the flat DM profile. This effect is reflected in the en face images in Fig. 7(e). The features in the flat DM profile appear distorted at depths z1, z6, and z7. All the images from the astigmatic beam are distorted and need computational correction in post-processing. While the spherically aberrated beam performs well above the focal plane, its profiles at depth z6 have side-lobes and the features are distorted at depth z7. These effects are minimized for the optimized beam, which was expected to have a uniform beam profile over the entire range of depths shown here. Even without computational correction, the extended depth-of-focus could be considered an acceptable trade-off for the minimal loss of resolution at the focal plane.

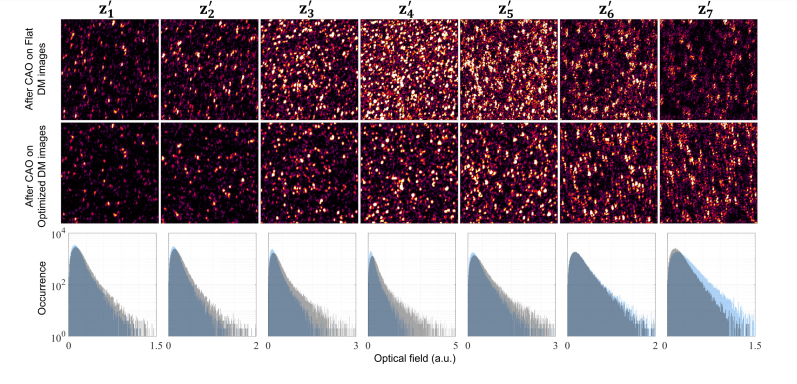

Figure 8 shows the images of the tissue-mimicking phantom acquired with the flat and optimized DM profiles after appropriate correction with CAO, which restores focal-plane resolution to the entire volume. We define the depth-of-focus as the depth range over which the image remains sufficiently in focus. In OCM, one of the factors limiting the depth-of-focus is the low signal energy collected from depths away from the focal plane. Introducing aberrations spreads the signal energy over a larger set of depths and is therefore expected to extend the depth-of-focus. At the first depth shown in Fig. 8, the signals have nearly equal values for both the flat and optimized profiles, and the en face images at this depth corroborate this observation. As expected, at the next three depths, the images obtained with the flat profile have a higher SNR than those obtained with the aberrated beam; these depths correspond to the peak of the black curve in Fig. 7(b). However, the image features are still recovered with the aberrated beam profile, with an acceptable loss of SNR at these depths. Beyond these depths, at the last three depths shown, the SNR of the images obtained with the optimized DM profile is higher than that of the flat DM profile, as is apparent when comparing the second row of Fig. 8 with the first. This demonstrates the advantage of optimizing the beam profile to extend the depth-of-field and image deeper into the sample without a significant loss of image quality at the focal plane. Additionally, instead of relying on a fixed aberration to extend the depth-of-field, AutoAO provides a way to dynamically target selected regions of interest in the sample.
This could prove especially useful in multimodal multiphoton imaging systems [57], where control over the depth-of-focus could help in choosing and co-aligning the optimal axial section.
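The depth-by-depth SNR comparison above, and the 23 dB SNR-drop stop condition used during the depth-of-focus optimization, both rely on a per-plane SNR estimate. Since the exact quantile-based formula is not reproduced in this text, the sketch below uses a hypothetical definition: the ratio, in dB, of a high quantile of |S|² (taken as the signal level) to its median (taken as the noise floor). The function names and quantile levels are illustrative assumptions.

```python
import numpy as np


def plane_snr_db(plane, signal_q=0.99, noise_q=0.5):
    """Hypothetical quantile-based SNR (dB) of one en face plane.

    Signal level: the `signal_q` quantile of |S|^2; noise floor: the `noise_q`
    quantile. Both quantile levels are illustrative assumptions.
    """
    intensity = np.abs(np.asarray(plane)) ** 2
    signal = np.quantile(intensity, signal_q)
    noise = np.quantile(intensity, noise_q)
    return float(10.0 * np.log10(signal / noise))


def snr_drop_exceeded(reference_vol, trial_vol, max_drop_db=23.0):
    """True if any depth of trial_vol has lost more than max_drop_db of SNR.

    Both volumes are iterated plane by plane along their first (depth) axis.
    """
    drops = [plane_snr_db(r) - plane_snr_db(t) for r, t in zip(reference_vol, trial_vol)]
    return max(drops) > max_drop_db
```

In an optimization loop, `snr_drop_exceeded` would be checked after each update, and the search stopped (with the current pattern sent to the DM) as soon as it returns `True`.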

Fig. 8.

Comparison of en face images of the translucent plastic sheet acquired with the flat DM profile and with the beam profile optimized for extending the depth-of-focus, after correction with CAO, at depths spaced 15 μm apart. The third row shows histograms of the signals for the planes shown, where the black and gray bars correspond to the flat and optimized DM profiles, respectively. Scale bar: 20 μm.

4. Discussion

In this paper, we have presented AutoAO, a technique for SCL-AO that combines the advantages of HAO, CAO, and previous sensorless techniques. While traditional SCL-AO techniques optimize an image metric at different states of a DM, CAO can simulate these states instead, so that only one initial volume is required for optimization. Additionally, AutoAO overcomes the challenges of sensor-based AO, and its performance is therefore independent of sample properties. Moreover, algorithms for CAO and wavefront sensing can be accelerated on a GPU, enabling real-time operation. AutoAO can be used for a variety of applications, such as depth-wise aberration correction, volumetric aberration correction, and extension of the depth-of-focus. Here, AutoAO was demonstrated on an AO-OCM system, where aberration correction was performed for a single depth in 173 ms and for a whole volume within a few seconds. Its performance was also evaluated on a generic biological sample for deep-tissue imaging, where we showed that AutoAO can be performed on a small fraction of the final volume to achieve high speeds, provided that the aberrations are transversely invariant within the field-of-view. Finally, AutoAO extended the depth-of-field of OCM by 22% with an acceptable reduction in SNR.

Because OCM intrinsically generates complex-valued images, the wavefront reconstruction problem is simplified: the image wavefront can be computationally altered simply by multiplying the Fourier transform of the image by a phase mask. For intensity-based microscopy techniques, AutoAO could be implemented with an additional phase-retrieval or phase-diversity step. Phase retrieval of confocal images has been under development since the advent of modern microscopy [58–60]. Additionally, instead of sensing the image wavefront with phase masks, alternative techniques such as pupil segmentation or phase diversity could be used [36–38]. While the applications in Sections 3.2 and 3.3 are specific to 3D volumes, Section 3.1 provides a generic framework to achieve correction for other imaging modalities, although such modalities might need additional hardware modifications to support phase reconstruction. AutoAO could also be readily implemented in multimodal OCM and multiphoton AO microscopy systems, such as the one described by Cua et al. [47]. In such a multimodal imaging system, a volumetric aberration correction pattern estimated from the OCM images as in Section 3.2 could also be used to correct the aberrations of the multiphoton imaging system at different depths simply by varying the value of A4.
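The statement that the OCM image wavefront can be altered with a Fourier-domain phase mask can be illustrated as follows. This is a minimal sketch, assuming a square complex en face plane, spatial frequencies normalized so that the Nyquist frequency maps to ρ = 1 (no pupil aperture is applied), and only two unnormalized Zernike-like terms, defocus (2ρ² − 1) and one astigmatism term (ρ² cos 2θ). The sign convention and function names are assumptions.

```python
import numpy as np


def pupil_coords(n):
    """Normalized spatial-frequency coordinates (rho, theta) on an n x n FFT grid."""
    f = np.fft.fftfreq(n) / 0.5  # in [-1, 1), with 1 at the Nyquist frequency
    fx, fy = np.meshgrid(f, f, indexing="xy")  # fx varies along axis 1, fy along axis 0
    return np.hypot(fx, fy), np.arctan2(fy, fx)


def apply_phase_mask(plane, defocus=0.0, astig=0.0):
    """Multiply the en face spectrum by exp(-i*phi) and return the modified plane.

    phi = defocus * (2 rho^2 - 1) + astig * rho^2 cos(2 theta), in radians
    (unnormalized Zernike-like terms; illustrative only).
    """
    n = plane.shape[0]
    rho, theta = pupil_coords(n)
    phi = defocus * (2 * rho**2 - 1) + astig * rho**2 * np.cos(2 * theta)
    spectrum = np.fft.fft2(plane)
    return np.fft.ifft2(spectrum * np.exp(-1j * phi))
```

Because the mask is phase-only, the total energy of the plane is unchanged; only the way that energy is distributed across the plane changes, which is what a sharpness metric responds to. Applying the opposite coefficients undoes the mask exactly.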

The concept of using digital wavefront sensing to correct the aberrations in the system has previously been demonstrated on guide-star samples in fluorescence microscopy with phase retrieval [61]. In contrast, we have shown that the performance of AutoAO is sample-independent. Furthermore, rather than using AO to improve the PSF of a single plane, our technique can be extended to a multitude of applications by choosing an appropriate metric, ℐ. Similar to our metrics for aberration correction based on Eqs. (6) and (8), numerous techniques have used an image-based sharpness metric measured at different states of a DM to optimize the correction pattern [43, 44, 47, 62–65]. Figure 1 illustrates the key difference between these traditional techniques and AutoAO. These techniques are susceptible to motion artifacts and require the acquisition of several stable images over tens of seconds, which is particularly challenging in in vivo studies. Alternatively, these techniques could employ axial tracking algorithms, which would limit the sensitivity and accuracy of the correction pattern [44, 47]. In contrast, AutoAO finds the optimal pattern from a single stable 3D volume. The phase stability that AutoAO requires for optimal performance is the same as that needed for implementing CAO on in vivo samples, and CAO has been successfully used for wavefront sensing or aberration correction in ex vivo, in vitro, and in vivo OCM volumes of various biological samples [45, 46, 66, 67]. Also, the optimal pattern can be estimated from an initial volume with a smaller size and field-of-view, thereby reducing the effect of motion artifacts. Additionally, a 300× reduction in the number of volumes acquired, compared to a previous study [43], implies a 300× reduction in laser exposure of the sample.
Our technique also improves on speed and laser exposure compared to a later study on lens-based AO-OCT for in vivo retinal imaging, which required the acquisition of 55 OCT volumes over 3 seconds at very high A-scan rates [44]. In the demonstration of AutoAO presented in this paper, the most time-consuming step was acquiring the initial volume, which took 2 seconds, whereas calculating the DM pattern took a small fraction of a second. Therefore, at the high image acquisition speeds described by Jian et al. [44], the whole AutoAO process could be completed within a fraction of a second.
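The key difference from hardware sensorless AO can be made concrete: each trial "DM state" is scored on data that were already acquired, so evaluating a state costs one FFT pair rather than one image acquisition. A minimal sketch, assuming a defocus-only phase model, the normalized sharpness Σ|S|⁴/(Σ|S|²)², and a brute-force scan over trial coefficients. The scan is chosen for clarity and is not meant to represent the authors' optimizer; all names are hypothetical.

```python
import numpy as np


def defocus_mask(n, coeff):
    """Phase-only mask exp(-i * coeff * (2 rho^2 - 1)) on an n x n FFT grid."""
    f = np.fft.fftfreq(n) / 0.5  # Nyquist frequency maps to 1
    fx, fy = np.meshgrid(f, f)
    rho2 = fx**2 + fy**2
    return np.exp(-1j * coeff * (2.0 * rho2 - 1.0))


def sharpness(plane):
    """Normalized sharpness sum(|S|^4) / (sum(|S|^2))^2; larger means sharper."""
    intensity = np.abs(plane) ** 2
    return float(np.sum(intensity**2) / np.sum(intensity) ** 2)


def estimate_defocus(plane, trial_coeffs):
    """Score every simulated DM state on the acquired plane; return the best coefficient.

    The spectrum is computed once; each trial only multiplies it by a mask and
    inverse-transforms it, so no additional image acquisition is needed.
    """
    spectrum = np.fft.fft2(plane)
    n = plane.shape[0]
    scores = [sharpness(np.fft.ifft2(spectrum * defocus_mask(n, c))) for c in trial_coeffs]
    return float(trial_coeffs[int(np.argmax(scores))])
```

For a point-like object blurred by a defocus coefficient a, the scan should return approximately −a, the correction that cancels it; that coefficient, mapped to the DM's own convention, is what would be sent to the mirror.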

Finally, in this paper, we used modal estimation of the wavefront in terms of Zernike polynomials. Because the response of the DM is restricted by its limited number of actuators, the number of Zernike polynomials applied on the DM was restricted to 8. To avoid an ill-defined or over-fitted interaction matrix, the number of polynomials for the computational wavefront estimation was restricted to 9. If the DM had a better response for higher-order Zernike polynomials, the wavefront estimation could include higher-order modes. Alternatively, some algorithms determine the wavefront zonally, in terms of its spatial distribution [36, 68, 69], and this is the preferred method in astronomical and ophthalmic AO [7, 70]. The resolution and sampling of the wavefront sensor are usually much higher than those of the compensating element, and additional discretization and regularization are needed to ensure that the translation from the estimated wavefront to the DM pattern remains well-defined.
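Translating an estimated modal wavefront into DM commands is commonly done through an interaction matrix measured for the mirror. A minimal sketch, assuming a linear DM model in which column k of a hypothetical interaction matrix holds the Zernike coefficients produced by a unit command on actuator k; singular values that are small relative to the largest one are truncated, which is one simple form of the regularization mentioned above. The function names and the `rcond` value are illustrative assumptions.

```python
import numpy as np


def command_matrix(interaction, rcond=1e-2):
    """Truncated-SVD pseudo-inverse of an (n_modes x n_actuators) interaction matrix.

    Singular values below rcond * (largest singular value) are discarded, which
    suppresses modes the mirror can barely produce.
    """
    return np.linalg.pinv(interaction, rcond=rcond)


def zernike_to_commands(coeffs, interaction, rcond=1e-2):
    """Least-squares actuator commands that best reproduce the target Zernike coefficients."""
    return command_matrix(interaction, rcond) @ np.asarray(coeffs, dtype=float)
```

Without truncation, a weakly represented mode would demand very large actuator strokes; dropping it trades a small residual error in that mode for a well-conditioned command.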

In conclusion, we have presented AutoAO, a technique that uses CAO to find a phase function optimized for a chosen metric and translates this pattern to the DM. This eliminates the need for a wavefront sensor, harnesses the high speed of CAO to enable real-time correction, avoids the acquisition of multiple volumes for closed-loop operation, and recovers both the signal energy and SNR after correction. While numerous optimized microscopes have been designed, we believe that our technique brings us a step closer to building an optimized optical imaging system.

Funding

Air Force Office of Scientific Research (AFOSR) (FA9550-17-1-0387); National Institutes of Health (NIH) (R01 CA213149).

Disclosures

Stephen A. Boppart is co-founder and Chief Medical Officer of Diagnostic Photonics, which is licensing intellectual property from the University of Illinois at Urbana-Champaign related to computational optical imaging. The other authors declare no competing interests or disclosures.

References

1. Abbe E., “Beiträge zur theorie des mikroskops und der mikroskopischen wahrnehmung,” Arch. für mikroskopische Anat. 9, 413–418 (1873). 10.1007/BF02956173

2. Böhm U., Hell S. W., Schmidt R., “4Pi-RESOLFT nanoscopy,” Nat. Commun. 7, 10504 (2016). 10.1038/ncomms10504

3. Gustafsson M. G., “Surpassing the lateral resolution limit by a factor of two using structured illumination microscopy,” J. Microsc. 198, 82–87 (2000). 10.1046/j.1365-2818.2000.00710.x

4. Rust M. J., Bates M., Zhuang X., “Stochastic optical reconstruction microscopy (STORM) provides sub-diffraction-limit image resolution,” Nat. Methods 3, 793 (2006). 10.1038/nmeth929

5. Hess S. T., Girirajan T. P., Mason M. D., “Ultra-high resolution imaging by fluorescence photoactivation localization microscopy,” Biophys. J. 91, 4258–4272 (2006). 10.1529/biophysj.106.091116

6. Wang K., Milkie D. E., Saxena A., Engerer P., Misgeld T., Bronner M. E., Mumm J., Betzig E., “Rapid adaptive optical recovery of optimal resolution over large volumes,” Nat. Methods 11, 625–628 (2014). 10.1038/nmeth.2925

7. Tyson R., Principles of adaptive optics (CRC Press, 2010), 3rd ed. 10.1201/EBK1439808580

8. Booth M. J., “Adaptive optics in microscopy,” Philos. Transactions Royal Soc. Lond. A: Math. Phys. Eng. Sci. 365, 2829–2843 (2007). 10.1098/rsta.2007.0013

9. Booth M. J., “Adaptive optical microscopy: the ongoing quest for a perfect image,” Light Sci. Appl. 3, e165 (2014). 10.1038/lsa.2014.46

10. Neil M. A., Booth M. J., Wilson T., “Closed-loop aberration correction by use of a modal Zernike wave-front sensor,” Opt. Lett. 25, 1083–1085 (2000). 10.1364/OL.25.001083

11. Booth M. J., Neil M. A., Juškaitis R., Wilson T., “Adaptive aberration correction in a confocal microscope,” Proc. Natl. Acad. Sci. 99, 5788–5792 (2002). 10.1073/pnas.082544799

12. Girkin J. M., Poland S., Wright A. J., “Adaptive optics for deeper imaging of biological samples,” Curr. Opin. Biotechnol. 20, 106–110 (2009). 10.1016/j.copbio.2009.02.009

13. Adie S. G., Graf B. W., Ahmad A., Carney P. S., Boppart S. A., “Computational adaptive optics for broadband optical interferometric tomography of biological tissue,” Proc. Natl. Acad. Sci. U. S. A. 109, 7175–7180 (2012). 10.1073/pnas.1121193109

14. Zheng G., Horstmeyer R., Yang C., “Wide-field, high-resolution Fourier ptychographic microscopy,” Nat. Photonics 7, 739 (2013). 10.1038/nphoton.2013.187

15. Colomb T., Montfort F., Kühn J., Aspert N., Cuche E., Marian A., Charrière F., Bourquin S., Marquet P., Depeursinge C., “Numerical parametric lens for shifting, magnification, and complete aberration compensation in digital holographic microscopy,” J. Opt. Soc. Am. A 23, 3177–3190 (2006). 10.1364/JOSAA.23.003177

16. Kam Z., Hanser B., Gustafsson M., Agard D., Sedat J., “Computational adaptive optics for live three-dimensional biological imaging,” Proc. Natl. Acad. Sci. U. S. A. 98, 3790–3795 (2001). 10.1073/pnas.071275698

17. Bertero M., Boccacci P., Introduction to Inverse Problems in Imaging (CRC Press, 1998). 10.1887/0750304359

18. Liu Y.-Z., South F. A., Xu Y., Carney P. S., Boppart S. A., “Computational optical coherence tomography,” Biomed. Opt. Express 8, 1549–1574 (2017). 10.1364/BOE.8.001549

19. Hillmann D., Spahr H., Hain C., Sudkamp H., Franke G., Pfäffle C., Winter C., Hüttmann G., “Aberration-free volumetric high-speed imaging of in vivo retina,” Sci. Rep. 6, 35209 (2016). 10.1038/srep35209

20. Hanser B. M., Gustafsson M. G., Agard D., Sedat J. W., “Phase-retrieved pupil functions in wide-field fluorescence microscopy,” J. Microsc. 216, 32–48 (2004). 10.1111/j.0022-2720.2004.01393.x

21. Fogel F., Waldspurger I., d’Aspremont A., “Phase retrieval for imaging problems,” Math. Program. Comput. 8, 311–335 (2016). 10.1007/s12532-016-0103-0

22. Rivenson Y., Zhang Y., Günaydın H., Teng D., Ozcan A., “Phase recovery and holographic image reconstruction using deep learning in neural networks,” Light Sci. Appl. 7, 17141 (2018). 10.1038/lsa.2017.141

23. Sasaki K., Kurokawa K., Makita S., Yasuno Y., “Extended depth of focus adaptive optics spectral domain optical coherence tomography,” Biomed. Opt. Express 3, 2353–2370 (2012). 10.1364/BOE.3.002353

24. Liu S., Mulligan J. A., Adie S. G., “Volumetric optical coherence microscopy with a high space-bandwidth-time product enabled by hybrid adaptive optics,” Biomed. Opt. Express 9, 3137–3152 (2018). 10.1364/BOE.9.003137

25. Cha J.-W., Ballesta J., So P. T., “Shack-Hartmann wavefront-sensor-based adaptive optics system for multiphoton microscopy,” J. Biomed. Opt. 15, 046022 (2010). 10.1117/1.3475954

26. Tuohy S., Podoleanu A. G., “Depth-resolved wavefront aberrations using a coherence-gated Shack-Hartmann wavefront sensor,” Opt. Express 18, 3458–3476 (2010). 10.1364/OE.18.003458

27. Rahman S. A., Booth M. J., “Direct wavefront sensing in adaptive optical microscopy using backscattered light,” Appl. Opt. 52, 5523–5532 (2013). 10.1364/AO.52.005523

28. Southwell W., “What’s wrong with cross coupling in modal wave-front estimation?” in Adaptive Optics Systems and Technology, vol. 365 (International Society for Optics and Photonics, 1983), pp. 97–105. 10.1117/12.934204

29. Hofer H., Porter J., Yoon G., Chen L., Singer B., Williams D. R., Adaptive Optics for Vision Science: Principles, Practices, Design, and Applications (Wiley, 2006), Ch. 15, pp. 395–415.

30. Liang J., Williams D. R., Miller D. T., “Supernormal vision and high-resolution retinal imaging through adaptive optics,” J. Opt. Soc. Am. A 14, 2884–2892 (1997). 10.1364/JOSAA.14.002884

31. Roorda A., Romero-Borja F., Donnelly W. J., III, Queener H., Hebert T. J., Campbell M. C., “Adaptive optics scanning laser ophthalmoscopy,” Opt. Express 10, 405–412 (2002). 10.1364/OE.10.000405

32. Roorda A., Duncan J. L., “Adaptive optics ophthalmoscopy,” Annu. Rev. Vis. Sci. 1, 19–50 (2015). 10.1146/annurev-vision-082114-035357

33. Zawadzki R. J., Jones S. M., Olivier S. S., Zhao M., Bower B. A., Izatt J. A., Choi S., Laut S., Werner J. S., “Adaptive-optics optical coherence tomography for high-resolution and high-speed 3D retinal in vivo imaging,” Opt. Express 13, 8532–8546 (2005). 10.1364/OPEX.13.008532

34. Jonnal R. S., Kocaoglu O. P., Zawadzki R. J., Liu Z., Miller D. T., Werner J. S., “A review of adaptive optics optical coherence tomography: technical advances, scientific applications, and the future,” Invest. Ophthalmol. Vis. Sci. 57, OCT51–OCT68 (2016). 10.1167/iovs.16-19103

35. South F. A., Liu Y.-Z., Bower A. J., Xu Y., Carney P. S., Boppart S. A., “Wavefront measurement using computational adaptive optics,” J. Opt. Soc. Am. A 35, 466–473 (2018). 10.1364/JOSAA.35.000466

36. Kumar A., Drexler W., Leitgeb R. A., “Subaperture correlation based digital adaptive optics for full field optical coherence tomography,” Opt. Express 21, 10850–10866 (2013). 10.1364/OE.21.010850

37. Wang C., Ji N., “Pupil-segmentation-based adaptive optical correction of a high-numerical-aperture gradient refractive index lens for two-photon fluorescence endoscopy,” Opt. Lett. 37, 2001–2003 (2012). 10.1364/OL.37.002001

38. Kner P., “Phase diversity for three-dimensional imaging,” J. Opt. Soc. Am. A 30, 1980–1987 (2013). 10.1364/JOSAA.30.001980

39. Verstraete H. R., Wahls S., Kalkman J., Verhaegen M., “Model-based sensor-less wavefront aberration correction in optical coherence tomography,” Opt. Lett. 40, 5722–5725 (2015). 10.1364/OL.40.005722

40. Débarre D., Botcherby E. J., Booth M. J., Wilson T., “Adaptive optics for structured illumination microscopy,” Opt. Express 16, 9290–9305 (2008). 10.1364/OE.16.009290

41. Débarre D., Botcherby E. J., Watanabe T., Srinivas S., Booth M. J., Wilson T., “Image-based adaptive optics for two-photon microscopy,” Opt. Lett. 34, 2495–2497 (2009). 10.1364/OL.34.002495

42. Albert O., Sherman L., Mourou G., Norris T., Vdovin G., “Smart microscope: an adaptive optics learning system for aberration correction in multiphoton confocal microscopy,” Opt. Lett. 25, 52–54 (2000). 10.1364/OL.25.000052

43. Jian Y., Xu J., Gradowski M. A., Bonora S., Zawadzki R. J., Sarunic M. V., “Wavefront sensorless adaptive optics optical coherence tomography for in vivo retinal imaging in mice,” Biomed. Opt. Express 5, 547–559 (2014). 10.1364/BOE.5.000547

44. Jian Y., Lee S., Ju M. J., Heisler M., Ding W., Zawadzki R. J., Bonora S., Sarunic M. V., “Lens-based wavefront sensorless adaptive optics swept source OCT,” Sci. Rep. 6, 27620 (2016). 10.1038/srep27620

45. Liu Y.-Z., Shemonski N. D., Adie S. G., Ahmad A., Bower A. J., Carney P. S., Boppart S. A., “Computed optical interferometric tomography for high-speed volumetric cellular imaging,” Biomed. Opt. Express 5, 2988–3000 (2014). 10.1364/BOE.5.002988

46. Shemonski N. D., South F. A., Liu Y.-Z., Adie S. G., Carney P. S., Boppart S. A., “Computational high-resolution optical imaging of the living human retina,” Nat. Photonics 9, 440–443 (2015). 10.1038/nphoton.2015.102

47. Cua M., Wahl D. J., Zhao Y., Lee S., Bonora S., Zawadzki R. J., Jian Y., Sarunic M. V., “Coherence-gated sensorless adaptive optics multiphoton retinal imaging,” Sci. Rep. 6, 32223 (2016). 10.1038/srep32223

48. DuBose T., Nankivil D., LaRocca F., Waterman G., Hagan K., Polans J., Keller B., Tran-Viet D., Vajzovic L., Kuo A. N., et al., “Handheld adaptive optics scanning laser ophthalmoscope,” Optica 5, 1027–1036 (2018). 10.1364/OPTICA.5.001027

49. Lakshminarayanan V., Fleck A., “Zernike polynomials: a guide,” J. Mod. Opt. 58, 545–561 (2011). 10.1080/09500340.2011.554896

50. Pande P., Liu Y.-Z., South F. A., Boppart S. A., “Automated computational aberration correction method for broadband interferometric imaging techniques,” Opt. Lett. 41, 3324–3327 (2016). 10.1364/OL.41.003324

51. Marks D. L., Oldenburg A. L., Reynolds J. J., Boppart S. A., “Digital algorithm for dispersion correction in optical coherence tomography for homogeneous and stratified media,” Appl. Opt. 42, 204–217 (2003). 10.1364/AO.42.000204

52. Riedmiller M., Braun H., “A direct adaptive method for faster backpropagation learning: The RPROP algorithm,” in Proceedings of IEEE International Conference on Neural Networks, (IEEE, 1993), pp. 586–591. 10.1109/ICNN.1993.298623

53. Thibos L., Applegate R. A., Schwiegerling J. T., Webb R., “Standards for reporting the optical aberrations of eyes,” J. Refract. Surg. 18, S652–S660 (2002).

54. South F. A., Kurokawa K., Liu Z., Liu Y.-Z., Miller D. T., Boppart S. A., “Combined hardware and computational optical wavefront correction,” Biomed. Opt. Express 9, 2562–2574 (2018). 10.1364/BOE.9.002562

55. Muller R. A., Buffington A., “Real-time correction of atmospherically degraded telescope images through image sharpening,” J. Opt. Soc. Am. 64, 1200–1210 (1974). 10.1364/JOSA.64.001200

56. Fienup J., Miller J., “Aberration correction by maximizing generalized sharpness metrics,” J. Opt. Soc. Am. A 20, 609–620 (2003). 10.1364/JOSAA.20.000609

57. You S., Tu H., Chaney E. J., Sun Y., Zhao Y., Bower A. J., Liu Y.-Z., Marjanovic M., Sinha S., Pu Y., et al., “Intravital imaging by simultaneous label-free autofluorescence-multiharmonic microscopy,” Nat. Commun. 9, 2125 (2018). 10.1038/s41467-018-04470-8

58. Fienup J. R., “Phase retrieval algorithms: a comparison,” Appl. Opt. 21, 2758–2769 (1982). 10.1364/AO.21.002758

59. Shechtman Y., Eldar Y. C., Cohen O., Chapman H. N., Miao J., Segev M., “Phase retrieval with application to optical imaging: a contemporary overview,” IEEE Signal Process. Mag. 32, 87–109 (2015). 10.1109/MSP.2014.2352673

60. Hanser B. M., Gustafsson M. G., Agard D. A., Sedat J. W., “Phase retrieval for high-numerical-aperture optical systems,” Opt. Lett. 28, 801–803 (2003). 10.1364/OL.28.000801

61. Kner P., Winoto L., Agard D. A., Sedat J. W., “Closed loop adaptive optics for microscopy without a wavefront sensor,” Proc. SPIE 7570, 757006 (2010). 10.1117/12.840943

62. Thayil A., Booth M. J., “Self calibration of sensorless adaptive optical microscopes,” J. Eur. Opt. Soc. 6, 11045 (2011). 10.2971/jeos.2011.11045

63. Olivier N., Débarre D., Beaurepaire E., “Dynamic aberration correction for multiharmonic microscopy,” Opt. Lett. 34, 3145–3147 (2009). 10.1364/OL.34.003145

64. Walker K. N., Tyson R. K., “Wavefront correction using a Fourier-based image sharpness metric,” Proc. SPIE 7468, 74680O (2009). 10.1117/12.826648

65. Tehrani K. F., Xu J., Zhang Y., Shen P., Kner P., “Adaptive optics stochastic optical reconstruction microscopy (AO-STORM) using a genetic algorithm,” Opt. Express 23, 13677–13692 (2015). 10.1364/OE.23.013677

66. South F. A., Liu Y.-Z., Huang P.-C., Kohlfarber T., Boppart S. A., “Local wavefront mapping in tissue using computational adaptive optics OCT,” Opt. Lett. 44, 1186–1189 (2019). 10.1364/OL.44.001186

67. Mulligan J. A., Feng X., Adie S. G., “Quantitative reconstruction of time-varying 3D cell forces with traction force optical coherence microscopy,” Sci. Rep. 9, 4086 (2019). 10.1038/s41598-019-40608-4

68. Li X., Jiang W., “Comparing zonal reconstruction algorithms and modal reconstruction algorithms in adaptive optics system,” Proc. SPIE 4825, 121–131 (2002). 10.1117/12.451985

69. Ji N., Milkie D. E., Betzig E., “Adaptive optics via pupil segmentation for high-resolution imaging in biological tissues,” Nat. Methods 7, 141 (2009). 10.1038/nmeth.1411

70. Panagopoulou S. I., Neal D. P., “Zonal matrix iterative method for wavefront reconstruction from gradient measurements,” J. Refract. Surg. 21, S563–S569 (2005).