Abstract

High spatial resolution is the goal of many imaging systems. Designing a high-resolution lens with diffraction-limited performance over a large field of view remains difficult. In contrast, a simple random diffuser readily creates a complex speckle pattern with wavelength-limited spatial features. Building on this observation and the concept of near-field ptychography, we report a new imaging modality, termed near-field Fourier ptychography, for high-resolution imaging in both microscopic and macroscopic settings. ‘Near-field’ refers to placing the object at a short defocus distance with a large Fresnel number. Instead of resolving fine spatial features directly with a high-resolution lens, we project a speckle pattern with fine spatial features onto the object. We then translate the object (or the speckle) to different positions and acquire the corresponding images with a low-resolution lens. A ptychographic phase retrieval process jointly recovers the complex object, the unknown speckle pattern, and the coherent transfer function. In a microscopic imaging setup, we use a 0.12 numerical aperture (NA) lens to achieve an NA of 0.85 in the reconstruction. In a macroscale photographic imaging setup, we achieve a ~7-fold resolution gain with a photographic lens. The final achievable resolution is set by the feature size of the speckle pattern, not by the collection optics, similar to our recent demonstration in fluorescence imaging settings (Guo et al., Biomed. Opt. Express, 9(1), 2018). The reported imaging modality can be employed in light, coherent X-ray, and transmission electron imaging systems to increase resolution and to provide quantitative absorption and phase contrast of the object.

1. Introduction

Achieving high spatial resolution is the goal of many imaging systems. In imaging system design, building a high numerical aperture (NA) lens with diffraction-limited performance over a large field of view remains difficult. Creating a complex speckle pattern with wavelength-limited spatial features, on the other hand, is effortless: a simple random diffuser is enough. In super-resolution imaging, speckle illumination has been used to modulate high-frequency object information into the low-frequency passband [1–8], similar to structured illumination microscopy [9–12]. Typical implementations achieve a two-fold resolution gain in the linear regime. With certain support constraints, a 3-fold resolution gain has also been reported [8]. More recently, we used a translated unknown speckle pattern [4] to achieve a 13-fold resolution gain for fluorescence imaging [13]. Unlike previous structured illumination demonstrations, this strategy uses a single dense speckle pattern for resolution improvement. It achieves more than an order of magnitude of resolution gain without direct access to the object plane. With the same strategy, we also improved the resolution of a 0.1-NA objective to that of a 0.4-NA objective [13], obtaining both a large field of view and high resolution in fluorescence microscopy. Along a different line, speckle illumination has also been used for phase retrieval and phase imaging. These developments include speckle-field digital holographic microscopy [14], wavefront reconstruction [15], phase retrieval from far-field speckle data [16], super-resolution ptychography [17], and near-field ptychography [18–20]. In the latter two cases, a translated speckle pattern (i.e., the probe beam) illuminates the complex object, and a phase retrieval process recovers the object from the diffraction measurements.

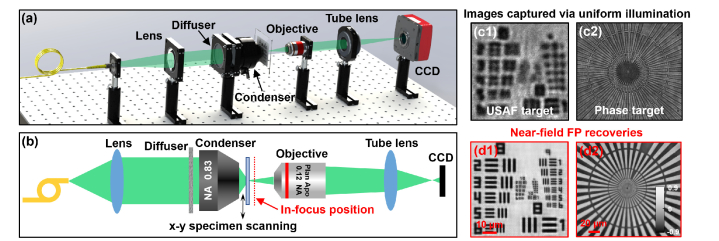

Inspired by the concepts of super-resolution ptychography [17] and near-field ptychography [18–20], we explore a new coherent imaging modality in this work. The proposed approach, termed near-field Fourier ptychography, addresses high-resolution imaging challenges in both microscopic and macroscale photographic imaging settings. ‘Near-field’ refers to placing the object at a short defocus distance, which converts phase information into intensity variations [21]. Instead of resolving fine spatial features directly with a high-NA lens, we project a speckle pattern with fine spatial features onto the object. We then translate the object (or the speckle) to different positions and acquire the corresponding intensity images with a low-NA lens. Our microscopic imaging setup is shown in Fig. 1(a)-(b). We use a piece of Scotch tape as the diffuser and project the speckle pattern through a high-NA condenser lens. From the captured images, a phase retrieval process jointly recovers the complex object, the unknown speckle pattern, and the coherent transfer function (CTF). The final achievable resolution is determined by the feature size of the speckle pattern rather than by the collection optics, similar to our recent demonstration in fluorescence imaging settings [13]. In this work, we achieve a ~7-fold resolution gain in both a microscopic and a macroscale photographic imaging setting. Figures 1(c)-(d) show the resolution improvement of near-field FP in a microscope setup.

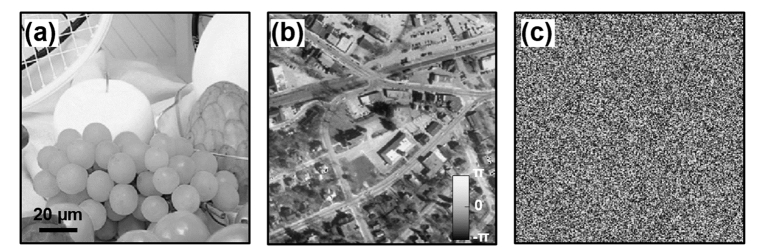

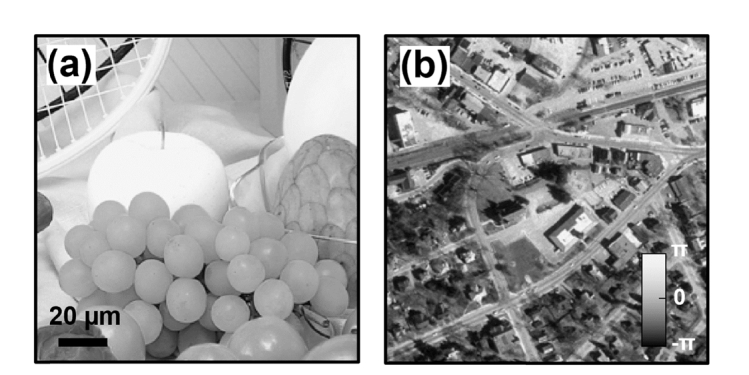

Fig. 1.

The near-field Fourier ptychography approach for super-resolution phase retrieval. (a)-(b) The experimental setup, with the object placed at a short defocus distance to convert complex amplitude into intensity variations. A translated speckle pattern illuminates the sample, and the captured images are used for super-resolution phase retrieval. (c1)-(c2) The captured images of an amplitude object (c1) and a phase object (c2) under uniform illumination. (d1)-(d2) The recovered intensity of the amplitude object (d1) and the recovered phase of the phase object (d2).

In our near-field Fourier ptychography implementation (Fig. 1(b)), the object sits at an out-of-focus position, defocused by ~40 µm. This short defocus distance converts the phase information of the object into intensity variations in the captured images, and ‘near-field’ refers to this short-distance propagation. The strategy is similar to propagation-based in-line holography, which makes transparent objects visible in intensity measurements [22–24]. It is also similar to near-field ptychography, which operates at a high Fresnel number [18–20].
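A short MATLAB simulation illustrates the phase-to-intensity conversion described above. This is a minimal sketch under assumed parameters: the grid size, the pixel size, and a smooth Gaussian phase bump as a hypothetical object. It models the defocus as free-space angular-spectrum propagation and does not simulate the full imaging system.

```matlab
% Pure-phase object: uniform intensity in focus, visible contrast after a short defocus.
N = 128;                  % grid size (assumed)
dx = 0.5e-6;              % pixel size in meters (assumed)
lambda = 532e-9;          % wavelength in meters
dz = 40e-6;               % defocus distance in meters

[x, y] = meshgrid((-N/2:N/2-1) * dx);
phi = exp(-(x.^2 + y.^2) / (2 * (5e-6)^2));   % hypothetical 1-rad Gaussian phase bump
field = exp(1i * phi);                        % transparent (pure-phase) object

% Angular-spectrum transfer function for free-space propagation over dz
fx = (-N/2:N/2-1) / (N * dx);
[FX, FY] = meshgrid(fx);
kz = 2*pi * sqrt(complex(1/lambda^2 - FX.^2 - FY.^2));
H = exp(1i * real(kz) * dz) .* exp(-abs(imag(kz)) * dz);   % evanescent waves decay

I_focus = abs(field).^2;                                            % in focus
I_defocus = abs(ifft2(ifftshift(fftshift(fft2(field)) .* H))).^2;  % after 40 um

fprintf('intensity std in focus: %.2e, defocused: %.2e\n', ...
        std(I_focus(:)), std(I_defocus(:)));
```

In focus, the intensity is uniform and the phase is invisible. After the 40-µm propagation, the same object produces intensity contrast that the recovery can exploit.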

The proposed near-field Fourier ptychography is closely related to three imaging modalities: 1) near-field ptychography [18–20], 2) super-resolution ptychography [17], and 3) Fourier ptychography (FP) [25–35]. Comparing the proposed approach with these modalities helps clarify how it operates. Near-field ptychography uses a translated speckle pattern to illuminate the object over the entire field of view. It then jointly recovers the complex object and the speckle pattern in a ptychographic phase-retrieval process. Like near-field ptychography, near-field FP captures multiple images under translated speckle illumination. Because near-field FP is implemented with a lens system, it can be viewed as the Fourier counterpart of near-field ptychography, which motivates the proposed name. In this lens-based implementation, near-field FP can bypass the resolution limit of the employed objective lens.

Super-resolution ptychography uses speckle illumination to improve the achievable resolution. However, its illumination probe is confined to a limited region of the object space, so it requires a large number of image acquisitions. With near-field FP, we illuminate the object over the entire field of view. The lens system also enables implementation in a macroscale photographic imaging setting, which may be impossible for regular ptychography approaches.

FP illuminates the object with angle-varied illumination and recovers the complex object profile. Near-field FP replaces the angle-varied illumination with a translated speckle pattern. Both FP and near-field FP can be implemented in a macroscale photographic imaging setting [26,29]. FP, however, cannot be used for fluorescence imaging because the captured fluorescence images under angle-varied illumination remain identical. The near-field FP setup, on the other hand, is able to improve the resolution of fluorescence microscopy thanks to the use of non-uniform speckle patterns [4,13]. As such, it can be used in both coherent and incoherent imaging settings to improve the achievable resolution.

In coherent X-ray imaging, generating angle-varied illumination for FP is relatively challenging [36]. Near-field FP, on the other hand, can be implemented on a regular zone-plate-based transmission X-ray microscope platform without hardware modification. It also allows the zone-plate optics to be replaced by total-reflection mirrors [37] or kinoform diffractive lenses [38], even though these are limited to lower NAs or introduce aberrations. The reported near-field FP may therefore improve the imaging performance of current synchrotron beamline setups and table-top transmission X-ray microscopes.

This paper is structured as follows. Section 2 discusses the forward modeling and recovery procedures of the reported approach. Section 3 reports our experimental results on a microscopic imaging setup, where we use a 0.12-NA lens to achieve an NA of 0.85 in the reconstruction process. Section 4 reports our experimental results on a macroscale photographic imaging setup, where we achieve a ~7-fold resolution gain using a photographic lens. Finally, Section 5 summarizes the results and discusses future directions. The open-source code is provided in the Appendix.

2. Modeling and simulations

The forward imaging model of the captured image in near-field FP can be described as

$$ I_j(x,y) = \left| \left[ O(x-x_j,\, y-y_j)\, P(x,y) \right] * \mathrm{PSF}(x,y) \right|^2 \tag{1} $$

where Ij(x,y) is the jth intensity measurement (j = 1, 2, 3, …, J), O(x,y) is the complex object, P(x,y) is the unknown speckle pattern, (xj, yj) is the jth positional shift of the specimen (or the speckle pattern), PSF(x,y) is the point spread function (PSF) of the imaging system, and ‘*’ denotes convolution.
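Equation (1) can be sketched in MATLAB as follows. For brevity, the sketch assumes a toy grid, a hypothetical object and speckle, and integer-pixel shifts, using circshift instead of a sub-pixel Fourier shift. The convolution with the PSF is applied as a multiplication in Fourier space by a defocused CTF: a circular pupil of radius NA/λ times an angular-spectrum defocus phase.

```matlab
% Sketch of the forward model in Eq. (1) for a single measurement.
N = 64; dx = 0.5e-6; lambda = 532e-9; NA = 0.12; dz = 40e-6;   % assumed parameters

O = exp(1i * 0.5 * rand(N));           % hypothetical complex (weak-phase) object
P = exp(1i * 2*pi * rand(N));          % hypothetical random-phase speckle field

% Defocused CTF: circular pupil of radius NA/lambda with a defocus phase
fx = (-N/2:N/2-1) / (N * dx);
[FX, FY] = meshgrid(fx);
pupil = double(FX.^2 + FY.^2 < (NA / lambda)^2);
kz = 2*pi * sqrt(complex(1/lambda^2 - FX.^2 - FY.^2));
CTF = pupil .* exp(1i * real(kz) * dz);

% I_j = |(O(x - xj, y - yj) .* P) * PSF|^2; circshift moves rows by yj and
% columns by xj (integer-pixel shifts only)
forward = @(O, P, xj, yj, CTF) abs(ifft2(ifftshift( ...
    fftshift(fft2(circshift(O, [yj, xj]) .* P)) .* CTF))).^2;

I1 = forward(O, P, 3, -2, CTF);        % one simulated measurement
```

With an all-pass CTF (`ones(N)`), the model reduces to |O(x - xj, y - yj) P(x,y)|^2, which makes a convenient sanity check.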

From all captured images Ij(x,y), near-field FP aims to recover the complex O(x,y), P(x,y), and PSF(x,y). We have already demonstrated a reconstruction scheme for positive O(x,y) and P(x,y) in an incoherent imaging setting [13]. In the current framework, O(x,y) and P(x,y) are complex-valued, so recovery requires a phase retrieval process. Figs. 2-3 outline the proposed recovery process. The captured intensity images are processed in a random order, and Oj(x,y) and Pj(x,y) denote the object and speckle updated with the jth captured image. We initialize the object amplitude by shifting the captured raw images back and averaging them. We initialize the speckle amplitude by averaging all measurements, and the PSF from the preset defocus distance.

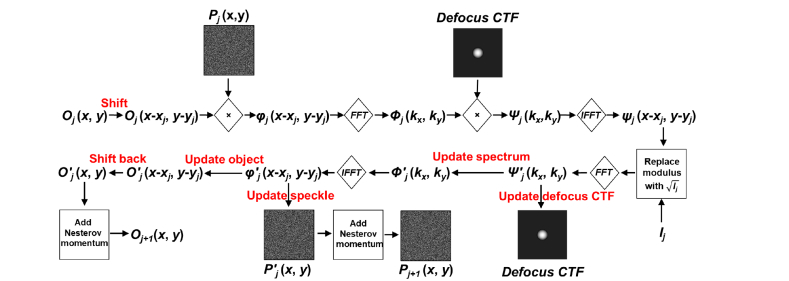

Fig. 2.

The flowchart of the recovery process.

Fig. 3.

The outline of the recovery algorithm.

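In the reconstruction process (Figs. 2-3), we first shift the updated object Oj(x, y) by (xj, yj) for the jth measurement and multiply it by the speckle pattern Pj(x, y) to form the exit wave φj(x-xj, y-yj). A Fourier transform of φj gives Φj(kx, ky), which we multiply by the defocus coherent transfer function (CTF) to obtain Ψj(kx, ky). An inverse Fourier transform of Ψj(kx, ky) then gives the complex amplitude ψj(x-xj, y-yj) on the image plane. For the magnitude projection, we replace the amplitude of ψj with the square root of the measured intensity Ij, keep its phase, and Fourier transform the result to obtain Ψ’j(kx, ky). We then use the extended ptychographical iterative engine (ePIE) [39] to update the high-resolution Fourier spectrum Φ’j(kx, ky) and the defocus CTF in the Fourier domain:

$$ \Phi'_j(k_x,k_y) = \Phi_j(k_x,k_y) + \frac{\mathrm{CTF}_j^{*}(k_x,k_y)}{\max\left|\mathrm{CTF}_j(k_x,k_y)\right|^2}\left[\Psi'_j(k_x,k_y) - \Psi_j(k_x,k_y)\right] \tag{2} $$

$$ \mathrm{CTF}_{j+1}(k_x,k_y) = \mathrm{CTF}_j(k_x,k_y) + \frac{\Phi_j^{*}(k_x,k_y)}{\max\left|\Phi_j(k_x,k_y)\right|^2}\left[\Psi'_j(k_x,k_y) - \Psi_j(k_x,k_y)\right] \tag{3} $$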
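Below is a minimal MATLAB sketch of one Fourier-domain update: the magnitude projection followed by Eqs. (2)-(3). The toy CTF (a binary pupil times a quadratic phase standing in for defocus), the grid size, and the random "measurement" are assumptions for illustration only. The centered FFTs follow the fftshift convention of the Appendix code.

```matlab
% One Fourier-domain update: magnitude projection, then Eqs. (2)-(3).
N = 32;
[KX, KY] = meshgrid(-N/2:N/2-1);
pupil = double(KX.^2 + KY.^2 < 8^2);
CTF = pupil .* exp(1i * 0.01 * (KX.^2 + KY.^2));   % toy defocused CTF, |CTF| = 1 in pupil

ft  = @(u) fftshift(fft2(u));      % centered Fourier transform
ift = @(U) ifft2(ifftshift(U));    % its inverse

phi = rand(N) .* exp(1i * 2*pi * rand(N));                         % current exit wave
I = abs(ift(CTF .* ft(rand(N) .* exp(1i * 2*pi * rand(N))))).^2;   % toy measurement

Phi = ft(phi);                     % exit-wave spectrum Phi_j
Psi = CTF .* Phi;                  % Psi_j = CTF_j .* Phi_j
psi = ift(Psi);                    % predicted image-plane field psi_j
PsiP = ft(sqrt(I) .* exp(1i * angle(psi)));   % Psi'_j: measured amplitude, kept phase

% Eq. (2): update the exit-wave spectrum
PhiNew = Phi + conj(CTF) ./ max(abs(CTF(:)).^2) .* (PsiP - Psi);
% Eq. (3): update the CTF using the pre-update spectrum
CTFNew = CTF + conj(Phi) ./ max(abs(Phi(:)).^2) .* (PsiP - Psi);
```

Here |CTF| = 1 inside the pupil, so Eq. (2) makes the updated prediction CTF .* PhiNew equal to Ψ’j within the pupil (and zero outside it). In practice, one may additionally restrict the CTF update to the pupil support. This sketch applies Eq. (3) as written.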

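An inverse Fourier transform of the updated Φ’j(kx, ky) gives φ’j(x-xj, y-yj). We then use the regularized ptychographical iterative engine (rPIE) [40] to update the object and the speckle pattern in the spatial domain:

$$ O_{j+1}(x,y) = O_j(x,y) + \frac{P_j^{*}(x+x_j,y+y_j)\,\left[\varphi'_j(x,y)-\varphi_j(x,y)\right]}{(1-\alpha_O)\left|P_j(x+x_j,y+y_j)\right|^2+\alpha_O\max\left|P_j\right|^2} \tag{4} $$

$$ P_{j+1}(x,y) = P_j(x,y) + \frac{O_j^{*}(x-x_j,y-y_j)\,\left[\varphi'_j(x-x_j,y-y_j)-\varphi_j(x-x_j,y-y_j)\right]}{(1-\alpha_P)\left|O_j(x-x_j,y-y_j)\right|^2+\alpha_P\max\left|O_j\right|^2} \tag{5} $$

where αO and αP in Eqs. (4)-(5) are the algorithm weights of rPIE. Our implementation also adds Nesterov momentum to accelerate convergence. The Appendix (Figs. 11-14) gives the detailed implementation and parameter choices.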
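The spatial-domain updates of Eqs. (4)-(5), followed by a momentum step, can be sketched in MATLAB as below. This is a hedged illustration, not the authors' code. The integer-pixel shifts via circshift, the toy arrays, and the names alphaO, alphaP (the two rPIE weights) and eta are assumptions. The momentum form shown (every T sub-iterations, v <- eta*v + (O - O_prev), then O <- O + eta*v) is one common variant, modeled on momentum-accelerated PIE; the Appendix code's exact update may differ.

```matlab
% One rPIE update of object and speckle (Eqs. (4)-(5)), then a momentum step.
N = 32; xj = 4; yj = -3;               % toy grid and integer shift (assumed)
alphaO = 0.3; alphaP = 0.3;            % hypothetical rPIE weights
eta = 0.8;                             % hypothetical momentum friction

O = rand(N) .* exp(1i * rand(N));                  % object estimate O_j
P = (0.5 + rand(N)) .* exp(1i * 2*pi * rand(N));   % speckle estimate P_j
phiNew = rand(N) .* exp(1i * rand(N));             % phi'_j from the Fourier update

Os = circshift(O, [yj, xj]);           % O_j(x - xj, y - yj)
phi = Os .* P;                         % current exit wave phi_j
dphi = phiNew - phi;

% Eq. (4): object update in the shifted frame, then shift back
OsNew = Os + conj(P) .* dphi ./ ((1 - alphaO) * abs(P).^2 + alphaO * max(abs(P(:)).^2));
ONew = circshift(OsNew, [-yj, -xj]);
% Eq. (5): speckle update using the pre-update shifted object
PNew = P + conj(Os) .* dphi ./ ((1 - alphaP) * abs(Os).^2 + alphaP * max(abs(Os(:)).^2));

% Momentum step (assumed form), applied once every T sub-iterations
vO = zeros(N);                         % momentum buffer
OPrev = O;                             % object estimate from T sub-iterations earlier
vO = eta * vO + (ONew - OPrev);
OMom = ONew + eta * vO;
```

The rPIE denominator is never smaller than |P|^2, so each object update moves the exit wave pixel by pixel toward φ’j without overshooting. The weights trade step size against stability where the speckle (or the object) is weak.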

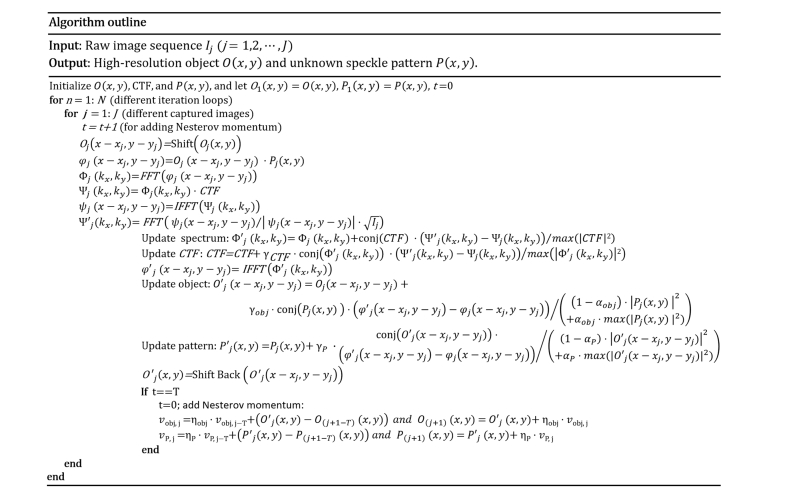

We have performed several simulations to validate the proposed approach. Figure 4 shows simulation results that demonstrate the super-resolution phase retrieval concept. The ground-truth object is shown in Fig. 4(a), and Fig. 4(b) shows the simulated captured images under speckle and uniform illumination. Figure 4(c) shows the recovered results with the object at the 40-µm defocus position. For comparison, Fig. 4(d) shows the recovered results for the in-focus position. The amplitude and phase are well resolved at the short defocus distance, which justifies the ‘near-field’ concept of the proposed approach. Figure 4(e) shows the Fourier spectrum of the recovered complex object, where the red dashed circle marks the original CTF boundary of a 0.12-NA objective lens. The resolution improvement is significant: the recovered Fourier spectrum occupies the entire Fourier space in Fig. 4(e). Processing 1681 images of 512 by 512 pixels each takes ~6 min on a Dell XPS 8930 desktop computer.

Fig. 4.

Simulation of the near-field FP for super-resolution phase retrieval. (a) The ground-truth object. (b) The simulated raw image under speckle and uniform illumination. Near-field FP recoveries by placing the object at a 40-μm defocus distance (c) and in-focus position (d). (e) The recovered Fourier spectrum for (c), where the circle indicates the original CTF.

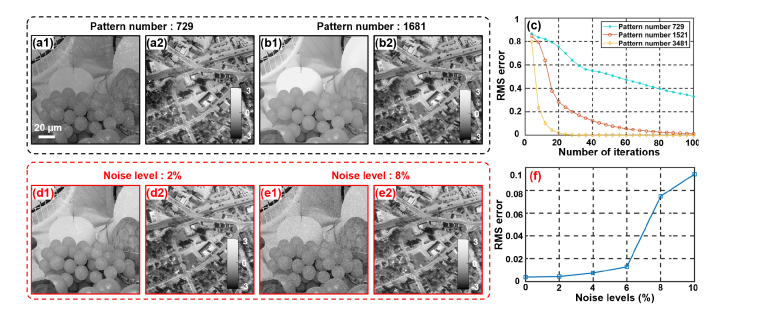

In the second simulation, we analyze the performance of the reported approach for different numbers of translated positions and different noise levels. Figures 5(a)-5(b) show the recovered complex object for different numbers of translated positions, and Fig. 5(c) quantifies the results with the root mean square (RMS) error. Figures 5(a)-5(c) show that more translated positions improve the reconstruction and speed up convergence. Figures 5(d)-5(f) analyze the effect of additive noise. Figures 5(d)-5(e) show the recovered object with different amounts of Gaussian noise added to the raw images, and Fig. 5(f) quantifies the noise performance.
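The RMS error used in Fig. 5 can be computed along the following lines. The text does not give the exact definition, so this MATLAB sketch makes an assumption. It first removes the global phase offset, which complex recoveries cannot determine, and then takes the root mean square of the complex difference. The function name rmsError is hypothetical.

```matlab
% RMS error between a recovered complex object and the ground truth,
% after aligning the global phase (assumed definition).
rmsError = @(rec, gt) sqrt(mean(abs( ...
    rec(:) * exp(-1i * angle(sum(rec(:) .* conj(gt(:))))) - gt(:)).^2));

gt = rand(16) .* exp(1i * rand(16));        % hypothetical ground truth
e0 = rmsError(gt * exp(1i * 0.7), gt);      % global phase offset only: error ~0
e1 = rmsError(gt + 0.1, gt);                % small additive error
fprintf('e0 = %.1e, e1 = %.3f\n', e0, e1);
```

The phase factor exp(-i·angle(<rec, gt>)) is the unit-modulus scalar that minimizes the difference. A pure global phase error therefore scores zero, and e1 can never exceed the unaligned error of 0.1.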

Fig. 5.

The imaging performance with different numbers of translated positions and different noise levels. (a)-(b) The recovered object with different numbers of translated positions. (c) The RMS errors are plotted as a function of iterations. (d)-(e) The recovered object with different noise levels; the performance is quantified via the RMS error in (f).

3. Near-field FP for microscopy imaging

Fig. 1(a) shows the experimental setup for microscopic imaging. The light source is a 200-mW 532-nm laser diode coupled into a single-mode fiber. A piece of Scotch tape (the diffuser) sits at the back focal plane of a 0.83-NA condenser lens and generates a dense speckle pattern on the object. Since the speckle pattern propagates along the axial direction, the condenser lens does not need to be focused. In the detection path, a low-NA objective lens (4X, 0.12 NA) and a monochrome camera (Pointgrey BFS-U3-200S6) acquire the object images with a ~0.1 ms exposure time. Two motorized stages (ASI LX-4000) move the object to different x-y positions with different, known step sizes of 0.25-0.75 µm. Acquiring ~1000 images takes ~3 min in our experiment. The captured images are then used to recover the complex object, the unknown speckle pattern, and the CTF of the microscope platform. We use a 40-µm defocus distance to convert the complex amplitude information effectively into intensity variations. A shorter distance may not be enough for the conversion, whereas a longer distance may reduce the effective field of view. In our experiment, we first bring the sample into focus under incoherent illumination and then move it by 40 µm with a motorized stage. If the preset defocus distance is reasonably close to the actual one, the CTF update can recover the actual distance numerically.

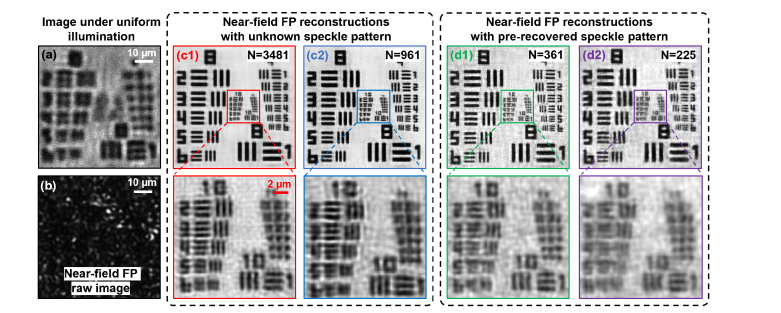

Figure 6 shows the resolution gain of the reported platform using a USAF resolution target. Figure 6(a) shows the captured image under uniform illumination, and Fig. 6(b) shows the raw near-field FP image under speckle illumination. Figure 6(c) shows the recovered object for different numbers of translated positions. The reconstruction quality improves as the number of captured raw images increases. With enough images, we resolve group 10, element 5, with a 310-nm linewidth, which corresponds to an NA of ~0.85 for coherent imaging. We can also recover the unknown speckle pattern in a calibration experiment and then reuse this pre-recovered speckle pattern to recover other unknown objects. Figure 6(d) shows the object recovered with the pre-recovered speckle pattern. In this case, the number of translated positions drops from ~1000 to ~300. Based on Figs. 6(c1) and 6(d1), we achieve a ~7.1-fold resolution gain in this experiment.
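A few lines of MATLAB check the quoted numbers. The sketch assumes two standard relations: the USAF 1951 formula (line pairs per mm = 2^(group + (element - 1)/6)) and the coherent-imaging relation linewidth ≈ λ/(2·NA). It is a consistency check, not the authors' analysis.

```matlab
% Linewidth of USAF group 10, element 5, and the corresponding coherent NA.
lambda = 532e-9;                          % laser wavelength (m)
NAobj = 0.12;                             % collection objective NA
group = 10; element = 5;
lpPerMm = 2^(group + (element - 1)/6);    % USAF 1951 line pairs per mm
linewidth = 1e-3 / (2 * lpPerMm);         % one line is half a period (m)
NAeff = lambda / (2 * linewidth);         % coherent imaging: period = lambda/NA
gain = NAeff / NAobj;
fprintf('linewidth %.0f nm, NA_eff %.2f, gain %.1f\n', linewidth*1e9, NAeff, gain);
```

The ~308-nm linewidth matches the quoted 310 nm. The resulting gain of roughly 7 agrees with the stated ~7.1-fold improvement.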

Fig. 6.

Near-field FP for microscopy imaging. The captured raw image under uniform illumination (a) and speckle illumination (b). (c) The recovered object using different numbers of translated positions, with an unknown speckle pattern. (d) The recovered object using different numbers of translated positions and with a pre-recovered speckle pattern.

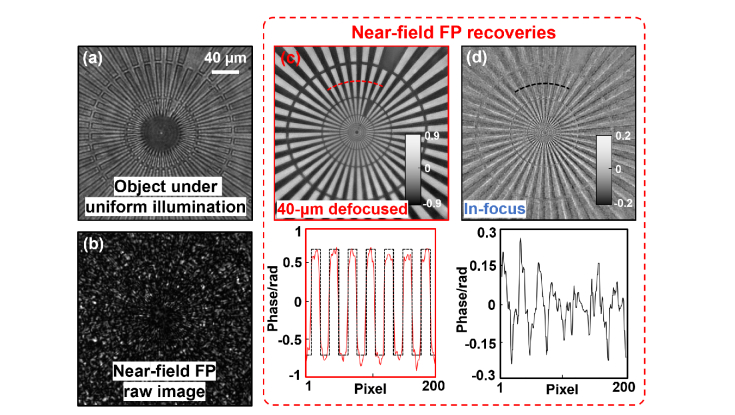

In the second experiment, we use a quantitative phase target (Benchmark QPT) as the object. Figure 7(a) shows the captured image under uniform illumination, and Fig. 7(b) shows the near-field FP raw image under speckle illumination. Figure 7(c) shows the recovered phase with the object at the 40-μm defocus distance. For comparison, Fig. 7(d) shows the recovered phase at the in-focus position. Phase profiles along the dashed lines in Figs. 7(c) and 7(d) are also plotted. In the 40-μm defocus case, the recovered phase profile agrees well with the theoretical phase value provided by the manufacturer (the dark dashed line). This experiment validates the ‘near-field’ requirement of the proposed approach.

Fig. 7.

Quantitative phase recovery via near-field FP. The captured raw image under (a) uniform illumination and (b) speckle illumination. The recovered phase with the target at the 40-μm defocus distance (c) and at the in-focus position (d). The phase profiles are plotted along the red and black dashed arcs in (c) and (d).

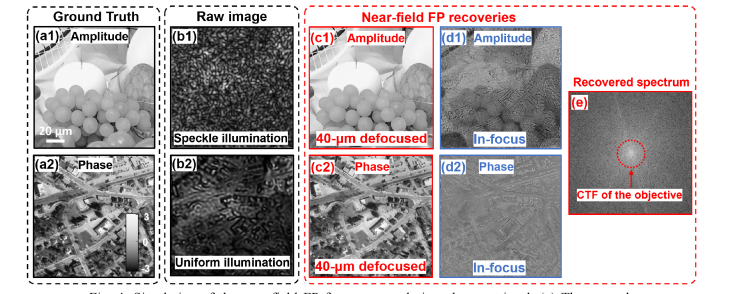

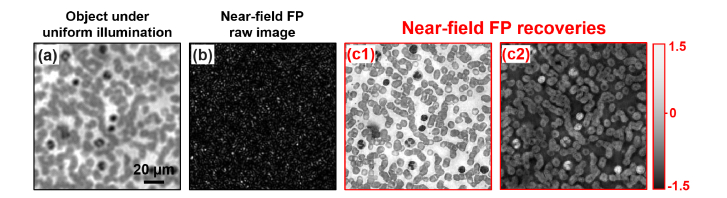

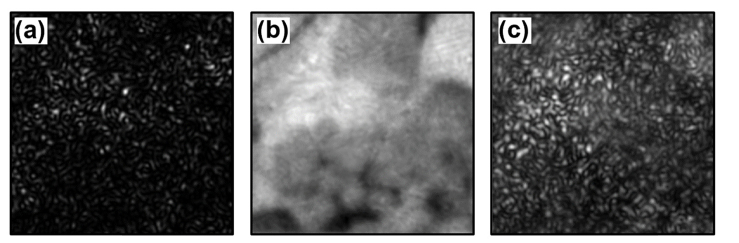

In the third experiment, we use a blood smear sample as the object. Figures 8(a) and 8(b) show the captured raw images under uniform and speckle illumination, respectively. Fig. 8(c) shows the recovered intensity and phase of the blood smear, in which the features of the blood cells are clearly resolved.

Fig. 8.

Experimental demonstration on a blood smear sample using the reported approach. The captured image of the blood smear under uniform illumination (a) and speckle illumination (b). (c) The recovered intensity and phase using near-field FP.

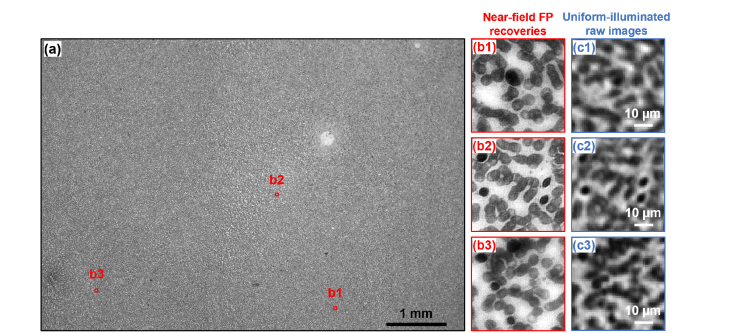

Many microscopy applications require high resolution and a wide field of view at the same time. We also performed an experiment to demonstrate this capability, using a 2X, 0.1-NA objective lens to acquire images with a large field of view. Figure 9(a) shows the recovered image of the blood smear, which covers 6.6 mm by 4.5 mm. Figure 9(b) shows magnified views of Fig. 9(a), and Fig. 9(c) shows the corresponding raw images under uniform illumination.

Fig. 9.

Wide-field, high-resolution imaging via near-field FP. (a) The recovered gigapixel intensity image of a blood smear section, with a field of view of 6.6 mm by 4.5 mm. (b1)-(b3) Three magnified views of (a). (c1)-(c3) The corresponding captured images under uniform illumination.

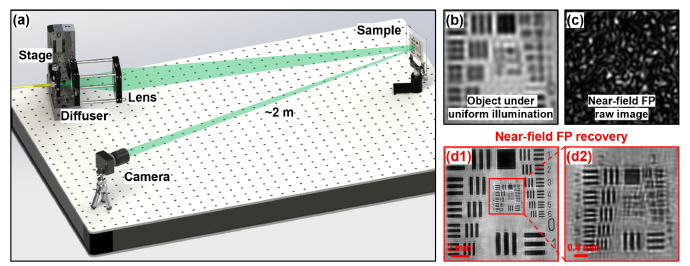

4. Near-field FP for long-range photographic imaging

The proposed near-field FP approach can also be used for macroscale photographic imaging. The key innovation is to use a large-aperture lens to project a speckle pattern with fine spatial features. Aberrations are not an issue for the large-aperture projection lens, because the speckle feature size is determined only by the aperture size of the projection lens. Therefore, a large plano-convex lens can project the fine speckle pattern.
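A toy MATLAB model illustrates the claim that projection-lens aberrations do not limit the speckle feature size. It rests on three assumptions: the diffuser is a random-phase screen, the projection lens acts as a circular pupil of radius r (in frequency pixels) on the diffuser's angular spectrum, and the aberration is an arbitrary pupil phase. The speckle intensity spectrum is the autocorrelation of the pupil-limited field spectrum, so it vanishes beyond radius 2r with or without aberration.

```matlab
% Speckle intensity spectrum with and without a projection-lens aberration.
N = 128; r = 20;                                  % grid size and aperture radius (assumed)
[KX, KY] = meshgrid(-N/2:N/2-1);
R2 = KX.^2 + KY.^2;
aperture = double(R2 <= r^2);                     % circular projection aperture
aberration = exp(1i * (0.05 * R2 + 3 * (KX / r).^3));   % arbitrary pupil phase error

D = fftshift(fft2(exp(1i * 2*pi * rand(N))));     % random-phase diffuser spectrum
speckle = @(pupil) abs(ifft2(ifftshift(D .* pupil))).^2;
Iclean = speckle(aperture);
Iaberr = speckle(aperture .* aberration);

% Peak spectrum magnitude beyond 2r, relative to the spectrum maximum
spec = @(I) abs(fftshift(fft2(I)));
outside = R2 > (2*r + 1)^2;
leakClean = max(max(spec(Iclean) .* outside)) / max(max(spec(Iclean)));
leakAberr = max(max(spec(Iaberr) .* outside)) / max(max(spec(Iaberr)));
fprintf('spectrum beyond 2r: %.1e (ideal), %.1e (aberrated)\n', leakClean, leakAberr);
```

Both ratios sit at rounding-error level. The aberration reshuffles the speckle grains but cannot create spatial frequencies beyond those the aperture passes.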

Fig. 10(a) shows our experimental setup, in which a large plano-convex lens (Thorlabs LA1050) and a diffuser (DG20-600) project the speckle pattern. On the detection side, a Nikon photographic lens (50 mm, f/1.8) with a circular aperture acquires the images. The collection NA is ~0.0006 in our implementation. We mechanically scan the speckle pattern to 41 by 41 different positions and use the captured images to recover the high-resolution object image. Figure 10(b) shows the raw object image captured under uniform illumination, and Fig. 10(c) shows the raw near-field FP image under speckle illumination. Fig. 10(d) shows the recovered object image, in which the 62-µm linewidth is resolved. Based on Figs. 10(b) and 10(d), this macroscale photographic imaging setup achieves a ~7-fold resolution gain.
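A quick MATLAB check of these numbers follows. The text does not state the illumination wavelength of this setup, so the sketch assumes 532 nm (the laser used in Section 3). It also assumes the coherent relation linewidth ≈ λ/(2·NA).

```matlab
% Lens-limited coherent linewidth versus the recovered 62-um linewidth.
lambda = 532e-9;                     % assumed wavelength (m)
NAcol = 0.0006;                      % collection NA of the photographic lens
nPositions = 41 * 41;                % scan positions
lineLens = lambda / (2 * NAcol);     % finest linewidth of the lens alone (m)
lineRecovered = 62e-6;               % linewidth resolved after recovery (m)
gain = lineLens / lineRecovered;
fprintf('%d positions, lens-limited linewidth %.0f um, gain %.1f\n', ...
        nPositions, lineLens * 1e6, gain);
```

Under this assumption, the lens alone resolves lines of roughly 440 µm. Resolving 62 µm therefore corresponds to the stated ~7-fold gain.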

Fig. 10.

Near-field FP for macroscale photographic imaging. (a) The experimental setup, where we use a diffuser and a large plano-convex lens to project the laser speckles onto the object. We use the same image-plane defocus distance as the microscope setup in Fig. 1(a). (b) The captured image of the USAF resolution target under uniform illumination. (c) The captured raw near-field FP image under speckle illumination. (d1) The high-resolution recovered object using the reported approach. (d2) The magnified view of the resolution target.

5. Summary

In summary, we have discussed a new coherent imaging modality, termed near-field Fourier ptychography, for tackling high-resolution imaging challenges in both microscopic and macroscopic imaging settings. Instead of directly resolving fine spatial features via high-resolution lenses, we project a speckle pattern with fine spatial features on the object. We then translate the object (or speckle) to different positions and acquire the corresponding images using a low-resolution lens. A Fourier ptychographic phase retrieval process is used to recover the complex object, the unknown speckle pattern, and the coherent transfer function at the same time. We achieve a ~7-fold resolution gain in both a microscopic and a macroscale photographic imaging setup. The final achievable resolution is not determined by the collection optics. Instead, it is determined by the feature size of the speckle pattern. Compared to FP, the reported approach can be readily employed in current synchrotron beamline setups and table-top transmission X-ray microscopes without major hardware modifications. It may find applications in light, coherent X-ray, and transmission electron imaging systems to increase resolution, correct aberrations, and provide quantitative absorption and phase contrast of the object.

Appendix

The following MATLAB simulation code consists of nine steps: 1) set the parameters for the coherent imaging system, 2) generate the input object image, 3) generate the CTF and the low-resolution captured image, 4) generate the speckle pattern, 5) generate the spiral sequence, 6) generate the low-resolution measurements, 7) reorder the measurement sequence for reconstruction, 8) generate the initial guess, and 9) perform the iterative phase retrieval.

In step 1, we define the parameters of the imaging system, which are the same as those of our experimental setup.

```matlab
%% Step 1: Set the parameters for the coherent imaging system
WaveLength = 0.53e-6;                % Wavelength (green)
PixelSize = 3.45e-6/6.8;             % Effective pixel size of imaging system
NA = 0.12;                           % Numerical aperture of imaging system
phasePatternIndex = 10*pi;           % Maximum phase of pattern
phaseObjectIndex = 2*pi;             % Maximum phase of object
InputImgSize = 257;                  % Size of image (assumed to be square)
DefocusZ = 40e-6;                    % Defocus distance
nIterative = 20;                     % Number of iterations
ShiftStepSize = 1;                   % Step size of each positional shift
SpiralRadius = 19;
nPattern = (SpiralRadius*2 + 1)^2;   % Number of captured images
```
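A few quantities implied by these parameters are worth checking before running the simulation. The standalone MATLAB sketch below repeats the Step 1 values so that it runs on its own. It computes the CTF radius on the Fourier grid built in Step 3 and the largest NA the grid can represent, λ/(2·PixelSize). The variable names CutoffRadiusPx and NAgridMax are hypothetical.

```matlab
% Standalone check of quantities implied by the Step 1 parameters.
WaveLength = 0.53e-6; PixelSize = 3.45e-6/6.8; NA = 0.12;
InputImgSize = 257; SpiralRadius = 19;

k0 = 2*pi/WaveLength;
kmax = pi/PixelSize;                        % Nyquist frequency of the pixel grid
dk = kmax/((InputImgSize-1)/2);             % Fourier-grid spacing used in Step 3
CutoffRadiusPx = NA*k0/dk;                  % CTF radius in Fourier-grid pixels
NAgridMax = kmax/k0;                        % equals WaveLength/(2*PixelSize)
nPattern = (SpiralRadius*2 + 1)^2;          % number of simulated measurements
fprintf('CTF radius %.1f px, max NA on grid %.3f, %d images\n', ...
        CutoffRadiusPx, NAgridMax, nPattern);
```

With these values, the 0.12-NA pupil spans about 29 Fourier pixels in radius. The grid can represent frequencies up to an NA of about 0.52, which bounds what the simulated reconstruction can recover.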

In step 2, we generate the complex input object shown in Fig. 11. The image size is 257 by 257 pixels. The amplitude of the input object is normalized to a maximum of 1, and the input phase is scaled to the range from -pi to pi. This complex object is stored in ‘InputImg’.

Fig. 11.

The simulated high-resolution amplitude (a) and phase (b) of the input object.

```matlab
%% Step 2: Generate the input object image
% imresize requires the Image Processing Toolbox
InputAmplitude = double(imread('fruits.png'));
InputAmplitude = imresize(InputAmplitude(:,:,1),[InputImgSize InputImgSize]);
InputAmplitude = InputAmplitude/max(InputAmplitude(:));            % Normalize to max 1
InputPhase = double(imread('westconcordorthophoto.png'));
InputPhase = imresize(InputPhase,[InputImgSize,InputImgSize]);
InputPhase = phaseObjectIndex*(InputPhase/max(InputPhase(:))-0.5); % Scale to [-pi, pi]
InputImg = InputAmplitude.*exp(1i.*InputPhase);                    % Complex object
figure(1); subplot(1,2,1); imshow(abs(InputImg),[]); title('Ground Truth Amplitude');
subplot(1,2,2); imshow(angle(InputImg),[]); title('Ground Truth Phase');
```

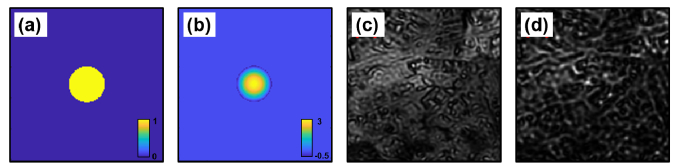

In step 3, we generate the CTF of the imaging system. A phase pupil ‘fmaskpro’ describes the effect of defocus aberration. Figures 12(a) and 12(b) show the CTF with no aberration (in focus) and with a 40-µm defocus aberration, respectively. Figures 12(c) and 12(d) show the generated in-focus and defocused low-resolution images.

Fig. 12.

The simulated CTF for low-resolution image generation. (a) The amplitude of the simulated CTF. (b) The phase of the simulated CTF with defocus aberration. The intensity of the captured low-resolution image without aberration (c) and with the defocus aberration (d).

```matlab
%% Step 3: Generate the CTF and the low-resolution image
k0 = 2*pi/WaveLength;
CutoffFreq = NA * k0;
kmax = pi/PixelSize;
[GridX, GridY] = meshgrid(-kmax:kmax/((InputImgSize-1)/2):kmax, ...
                          -kmax:kmax/((InputImgSize-1)/2):kmax);
CTFInfocus = double((GridX.^2 + GridY.^2)<CutoffFreq^2);            % Pupil function, no aberration
LRInfocusTargetImgFT = fftshift(fft2(InputImg)).*CTFInfocus;
InputInfocusImgLR = abs(ifft2(ifftshift(LRInfocusTargetImgFT))).^2; % Low-resolution in-focus image
GridZ = sqrt(k0^2-GridX.^2-GridY.^2);
fmaskpro = exp(1j.*DefocusZ.*real(GridZ)).*exp(-abs(DefocusZ).*abs(imag(GridZ)));  % Defocus phase pupil
CTFDefocus = fmaskpro.*CTFInfocus;                                  % Defocus CTF
LRDefocusTargetImgFT = fftshift(fft2(InputImg)).*CTFDefocus;
InputDefocusImgLR = abs(ifft2(ifftshift(LRDefocusTargetImgFT))).^2; % Low-resolution defocus image
figure(2); subplot(2,2,1); imagesc(abs(CTFInfocus)); title('infocus CTF');
subplot(2,2,2); imshow(InputInfocusImgLR,[]); title('LR infocus input image');
subplot(2,2,3); imagesc(angle(CTFDefocus)); title('defocus CTF phase');
subplot(2,2,4); imshow(InputDefocusImgLR,[]); title('LR defocus input image');
```

In step 4, we generate a speckle pattern whose random phase ranges from 0 to 10*pi and whose random amplitude ranges from 0 to 1.

```matlab
%% Step 4: Generate the speckle pattern
PatternAmplitude = rand(InputImgSize,InputImgSize);
PatternPhase = phasePatternIndex*rand(InputImgSize,InputImgSize);
Pattern = PatternAmplitude.*exp(1i.*PatternPhase);
```

In step 5, we generate a spiral sequence for object scanning. The object shifts along a 39-by-39-pixel spiral path, and the x- and y-positions are stored in ‘LocationX’ and ‘LocationY’.

```matlab
%% Step 5: Generate the spiral sequence
LocationX = zeros(1,nPattern);
LocationY = zeros(1,nPattern);
SpiralPath = spiral(2*SpiralRadius + 1);   % n-by-n matrix numbered 1..n^2 along a rectangular spiral
for iShift = 1:nPattern
    [iRow, jCol] = find(SpiralPath == iShift);
    LocationX(1,iShift) = ShiftStepSize*(iRow-SpiralRadius);
    LocationY(1,iShift) = ShiftStepSize*(jCol-SpiralRadius);
end
```

In step 6, we generate the low-resolution image sequence by scanning the high-resolution object to different positions. The function ‘subpixelshift()’ shifts the object by multiplying its spectrum by an equivalent linear phase factor. Applying the lowpass filter given by the defocused CTF then yields the low-resolution image sequence. The resulting measurements, a 257 by 257 by 1521 array, are stored in ‘TargetImgs’. The first generated image is shown in Fig. 13(a).

Fig. 13.

The simulated captured low-resolution image sequence. (a) The first generated low-resolution measurement. The initial guess of the high-resolution object (b) and speckle (c).

```matlab
%% Step 6: Generate the low-resolution measurements
TargetImgs = zeros(InputImgSize,InputImgSize,nPattern);
for iTargetImg = 1:nPattern
    ImgShiftTemp = subpixelshift(InputImg,LocationX(1,iTargetImg), ...
                                 LocationY(1,iTargetImg));          % Shifted object
    ImgHighTemp = ImgShiftTemp.*Pattern;                             % Exit wave under speckle illumination
    ImgHighFTTemp = fftshift(fft2(ImgHighTemp));
    ImgLowFTTemp = ImgHighFTTemp.*CTFDefocus;                        % Low-pass filter with the defocused CTF
    TargetImgs(:,:,iTargetImg) = abs(ifft2(ifftshift(ImgLowFTTemp))).^2;   % Intensity measurement
    disp(iTargetImg);
end
figure(3); imshow(TargetImgs(:,:,1),[]); title('The 1st LR captured image');

% In a MATLAB script (R2016b or later), local functions must appear at the end
% of the file; alternatively, save this function as subpixelshift.m.
function output_image = subpixelshift(input_image,xshift,yshift)
    % Shift each frame by (xshift, yshift) pixels via the Fourier shift theorem
    [m,n,num] = size(input_image);
    output_image = input_image;
    [FX,FY] = meshgrid(-floor(n/2):ceil(n/2-1),-floor(m/2):ceil(m/2-1));
    for i = 1:num
        Hs = exp(-1j*2*pi.*(FX.*xshift(1,i)/n + FY.*yshift(1,i)/m));
        output_image(:,:,i) = ifft2(ifftshift(fftshift(fft2(output_image(:,:,i))).*Hs));
    end
end
```
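A standalone check can verify the sign convention of subpixelshift(). For integer shifts, the Fourier-shift method should reproduce circshift up to rounding. The anonymous function shiftFT below is a hypothetical single-frame restatement of subpixelshift() that builds the 2D phase ramp by implicit expansion, so the check runs without the simulation script.

```matlab
% Standalone check: Fourier shift with integer shifts versus circshift.
m = 9; n = 9;
A = rand(m, n);                              % hypothetical test image
fx = -floor(n/2):ceil(n/2-1);                % column (x) frequencies, as in subpixelshift()
fy = (-floor(m/2):ceil(m/2-1)).';            % row (y) frequencies, as a column vector
shiftFT = @(A, xs, ys) ifft2(ifftshift(fftshift(fft2(A)) .* ...
    exp(-1j*2*pi*(fx*xs/n + fy*ys/m))));     % implicit expansion builds the phase ramp

B = shiftFT(A, 2, -3);                       % 2 px along x (columns), -3 px along y (rows)
err = max(max(abs(B - circshift(A, [-3, 2]))));
fprintf('max deviation from circshift: %.1e\n', err);
```

A positive xshift moves content toward larger column indices, and a positive yshift toward larger row indices, matching circshift(A, [yshift, xshift]).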

In step 7, we randomly reorder the generated low-resolution images for reconstruction. The order for reconstruction is stored in ‘reOrder’.

```matlab
%% Step 7: Reorder the image sequence for reconstruction
reOrder = randperm(nPattern);
TargetImgs_temp = TargetImgs(:,:,reOrder);
TargetImgs = TargetImgs_temp;
LocationX_temp = LocationX(reOrder); LocationY_temp = LocationY(reOrder);
LocationX = LocationX_temp; LocationY = LocationY_temp;
```

In step 8, we generate the initial guess of the object and the speckle pattern, as shown in Figs. 13(b) and 13(c).

%% Step 8: Generate the initial guess of the object and the speckle pattern
ImgShifted = zeros(InputImgSize,InputImgSize,nPattern);
PatternShifted = zeros(InputImgSize,InputImgSize,nPattern);
for iTargetImg = 1:nPattern
ImgShifted(:,:,iTargetImg) = sqrt(subpixelshift(TargetImgs(:,:,iTargetImg),-LocationX(1,iTargetImg),-LocationY(1,iTargetImg))); % shift back to align the object
PatternShifted(:,:,iTargetImg) = sqrt(TargetImgs(:,:,iTargetImg)); % unshifted, speckle stays aligned
disp(iTargetImg);
end
ImgMean = mean(ImgShifted,3); % Initial guess of the object
PatternMean = mean(PatternShifted,3); % Initial guess of the speckle pattern
figure(4); subplot(1,2,1); imshow(abs(ImgMean),[]); title('Object initial guess');
subplot(1,2,2); imshow(PatternMean,[]); title('Speckle initial guess');
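The two initial guesses computed in step 8 can be written compactly as below. The symbols are introduced here for illustration: I_j is the j-th image TargetImgs(:,:,j) after reordering, (x_j, y_j) = (LocationX(1,j), LocationY(1,j)) its shift in pixels, and J = nPattern.

```latex
O_0(x,y) = \frac{1}{J}\sum_{j=1}^{J}\sqrt{I_j(x + x_j,\; y + y_j)}, \qquad
P_0(x,y) = \frac{1}{J}\sum_{j=1}^{J}\sqrt{I_j(x,\,y)}
```

One way to read this choice: the object moves between measurements while the speckle stays fixed, so averaging the back-shifted amplitudes keeps the object registered and smears the speckle toward a smooth background, whereas averaging the raw amplitudes smears the object and leaves a low-resolution estimate of the speckle.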

In step 9, we recover the object, speckle, and CTF using the iterative phase retrieval algorithm. The variable ‘nIterative’ defined in step 1 determines the number of iterations. In each iteration, the updated object, speckle, and CTF are stored in ‘ImgRecovered’, ‘PatternRecovered’, and ‘CTFDefocus’, respectively. The recovered high-resolution complex object and the speckle pattern are shown in Figs. 14(a)-14(c).

Fig. 14. The recovered high-resolution amplitude (a), phase (b), and the speckle (c).
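The per-image updates inside the step 9 loop can be summarized as follows. This is a reading of the listing in notation introduced here rather than taken from the code: for the j-th image, O_j is the object shifted to position j (ImgRecoveredTemp), P the speckle (PatternRecovered), H the CTF (CTFDefocus), ψ_j = P O_j the exit wave (TempTargetImg) with spectrum Ψ_j (TempTargetImgFT), I_j the measured image, γ = gama, α = alpha, and primes mark updated quantities.

```latex
\begin{aligned}
\phi_j &= \mathcal{F}^{-1}\{H\,\Psi_j\}, \qquad
\Phi'_j = \mathcal{F}\left\{\sqrt{I_j}\,e^{\,i\arg\phi_j}\right\}, \qquad
\Delta_j = \Phi'_j - H\,\Psi_j, \\
\Psi'_j &= \Psi_j + \frac{H^{*}}{\max|H|^{2}}\,\Delta_j, \qquad
H' = H + \gamma\,\frac{\Psi'^{*}_j}{\max|\Psi'_j|^{2}}\,\Delta_j, \qquad
\psi'_j = \mathcal{F}^{-1}\{\Psi'_j\}, \\
P' &= P + \gamma\,\frac{O_j^{*}\,(\psi'_j - \psi_j)}{(1-\alpha)\,|O_j|^{2} + \alpha\,\max|O_j|^{2}}, \\
O'_j &= O_j + \gamma\,\frac{P'^{*}\,(\psi'_j - \psi_j)}{(1-\alpha)\,|P'|^{2} + \alpha\,\max|P'|^{2}}.
\end{aligned}
```

O'_j is then shifted back by (−x_j, −y_j) with subpixelshift to update the full object. Two details are easy to miss: the CTF update uses the already-updated spectrum Ψ'_j, and the object update uses the already-updated speckle P'. The regularized denominators have the same form as in rPIE [40]: α = 1 gives a global, ePIE-style normalization by the maximum intensity, while smaller α moves toward a pixel-wise normalization, and α > 0 keeps the denominator away from zero where the object or speckle is dark.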

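%% Step 9: Iterative reconstruction
ImgRecovered = ImgMean;
PatternRecovered = PatternMean;
t = 0; T = 100; gama = 0.2; eta = 0.8; alpha = 0.3;
% t: update counter; T: number of single-image updates between momentum steps;
% gama: step size; eta: momentum coefficient; alpha: regularization weight
vImg = zeros(InputImgSize,InputImgSize); % momentum (velocity) of the object
vP = zeros(InputImgSize,InputImgSize); % momentum (velocity) of the speckle
ImgRecoverBeforeUpdate = ImgRecovered;
PatternRecoverBeforeUpdate = PatternRecovered;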

for iterative = 1:nIterative
for iTargetImg = 1:nPattern
t = t + 1;
ImgRecoveredTemp = subpixelshift(ImgRecovered,LocationX(1,iTargetImg),LocationY(1,iTargetImg));
TempTargetImg = PatternRecovered.*ImgRecoveredTemp; % exit wave
TempTargetImgCopy = TempTargetImg;
TempTargetImgFT = fftshift(fft2(TempTargetImg));
LRTempTargetImgFT = CTFDefocus.*TempTargetImgFT;
LRTempTargetImg = ifft2(ifftshift(LRTempTargetImgFT));
LRTempTargetImg_AmpUpdated = sqrt(TargetImgs(:,:,iTargetImg)).*exp(1i.*angle(LRTempTargetImg)); % Update the amplitude and keep the phase unchanged
LRTempTargetImg_AmpUpdatedFT = fftshift(fft2(LRTempTargetImg_AmpUpdated));
TempTargetImgFT = TempTargetImgFT + conj(CTFDefocus)./(max(max((abs(CTFDefocus)).^2))) ...
.*(LRTempTargetImg_AmpUpdatedFT-LRTempTargetImgFT); % Update the exit-wave spectrum
CTFDefocus = CTFDefocus + gama.*conj(TempTargetImgFT)./(max(max((abs(TempTargetImgFT)).^2))) ...
.*(LRTempTargetImg_AmpUpdatedFT-LRTempTargetImgFT); % Update the defocus CTF
TempTargetImg = ifft2(ifftshift(TempTargetImgFT));
PatternRecovered = PatternRecovered + gama.*conj(ImgRecoveredTemp).*(TempTargetImg-TempTargetImgCopy) ...
./((1-alpha).*(abs(ImgRecoveredTemp)).^2 + alpha.*max(max(abs(ImgRecoveredTemp).^2))); % Update the speckle
ImgRecoveredTemp = ImgRecoveredTemp + gama.*conj(PatternRecovered).*(TempTargetImg-TempTargetImgCopy) ...
./((1-alpha).*(abs(PatternRecovered)).^2 + alpha.*max(max(abs(PatternRecovered).^2))); % Update the object
ImgRecovered = subpixelshift(ImgRecoveredTemp,-LocationX(1,iTargetImg),-LocationY(1,iTargetImg));
ImgRecoverAfterUpdate = ImgRecovered;
PatternRecoverAfterUpdate = PatternRecovered;
% Add momentum every T updates to accelerate convergence
if t == T
vImg = eta*vImg + (ImgRecoverAfterUpdate-ImgRecoverBeforeUpdate);
ImgRecovered = ImgRecoverAfterUpdate + eta*vImg;
ImgRecoverBeforeUpdate = ImgRecovered;
vP = eta*vP + (PatternRecoverAfterUpdate-PatternRecoverBeforeUpdate);
PatternRecovered = PatternRecoverAfterUpdate + eta*vP;
PatternRecoverBeforeUpdate = PatternRecovered;
t = 0;
end
disp([iterative iTargetImg]);
end
figure(5); set(gcf,'outerposition',get(0,'screensize'));
subplot(1,3,1); imshow(abs(ImgRecovered),[]); title('Recovered object amplitude');
subplot(1,3,2); imshow(angle(ImgRecovered),[]); title('Recovered object phase');
subplot(1,3,3); imshow(abs(PatternRecovered),[]); title('Recovered pattern amplitude');
pause(0.01);
end
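The ‘if t == T’ block in step 9 applies a momentum step after every T single-image updates (T = 100 and eta = 0.8 in the listing). Writing O and P for ImgRecovered and PatternRecovered, v_O and v_P for vImg and vP, and O_b and P_b for the estimates saved at the previous momentum step (ImgRecoverBeforeUpdate and PatternRecoverBeforeUpdate), with symbols introduced here for illustration, the block performs:

```latex
\begin{aligned}
v_O &\leftarrow \eta\,v_O + (O - O_b), & O &\leftarrow O + \eta\,v_O, & O_b &\leftarrow O, \\
v_P &\leftarrow \eta\,v_P + (P - P_b), & P &\leftarrow P + \eta\,v_P, & P_b &\leftarrow P.
\end{aligned}
```

Since O − O_b is the net change accumulated over the last T updates, each momentum step extrapolates along the recent search direction, with η setting how long earlier directions persist. This resembles the momentum-accelerated variant (mPIE) described in [40]. Because the counter t is not reset between outer iterations, momentum steps need not coincide with complete passes through the image stack.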

Funding

National Science Foundation (1510077); National Institutes of Health (R21EB022378, R03EB022144).

References

1. Mudry E., Belkebir K., Girard J., Savatier J., Le Moal E., Nicoletti C., Allain M., Sentenac A., “Structured illumination microscopy using unknown speckle patterns,” Nat. Photonics 6(5), 312–315 (2012). 10.1038/nphoton.2012.83

2. Chakrova N., Rieger B., Stallinga S., “Deconvolution methods for structured illumination microscopy,” J. Opt. Soc. Am. A 33(7), B12–B20 (2016). 10.1364/JOSAA.33.000B12

3. Yeh L.-H., Tian L., Waller L., “Structured illumination microscopy with unknown patterns and a statistical prior,” Biomed. Opt. Express 8(2), 695–711 (2017). 10.1364/BOE.8.000695

4. Dong S., Nanda P., Shiradkar R., Guo K., Zheng G., “High-resolution fluorescence imaging via pattern-illuminated Fourier ptychography,” Opt. Express 22(17), 20856–20870 (2014). 10.1364/OE.22.020856

5. Dong S., Nanda P., Guo K., Liao J., Zheng G., “Incoherent Fourier ptychographic photography using structured light,” Photon. Res. 3(1), 19–23 (2015). 10.1364/PRJ.3.000019

6. Yilmaz H., van Putten E. G., Bertolotti J., Lagendijk A., Vos W. L., Mosk A. P., “Speckle correlation resolution enhancement of wide-field fluorescence imaging,” Optica 2(5), 424–429 (2015). 10.1364/OPTICA.2.000424

7. Dong S., Guo K., Jiang S., Zheng G., “Recovering higher dimensional image data using multiplexed structured illumination,” Opt. Express 23(23), 30393–30398 (2015). 10.1364/OE.23.030393

8. Min J., Jang J., Keum D., Ryu S.-W., Choi C., Jeong K.-H., Ye J. C., “Fluorescent microscopy beyond diffraction limits using speckle illumination and joint support recovery,” Sci. Rep. 3(1), 2075 (2013). 10.1038/srep02075

9. Gustafsson M. G., “Surpassing the lateral resolution limit by a factor of two using structured illumination microscopy,” J. Microsc. 198(2), 82–87 (2000). 10.1046/j.1365-2818.2000.00710.x

10. Orieux F., Sepulveda E., Loriette V., Dubertret B., Olivo-Marin J.-C., “Bayesian estimation for optimized structured illumination microscopy,” IEEE Trans. Image Process. 21(2), 601–614 (2012). 10.1109/TIP.2011.2162741

11. Jost A., Heintzmann R., “Superresolution Multidimensional Imaging with Structured Illumination Microscopy,” Annu. Rev. Mater. Res. 43(1), 261–282 (2013). 10.1146/annurev-matsci-071312-121648

12. Li D., Shao L., Chen B.-C., Zhang X., Zhang M., Moses B., Milkie D. E., Beach J. R., Hammer J. A., 3rd, Pasham M., Kirchhausen T., Baird M. A., Davidson M. W., Xu P., Betzig E., “Extended-resolution structured illumination imaging of endocytic and cytoskeletal dynamics,” Science 349(6251), aab3500 (2015). 10.1126/science.aab3500

13. Guo K., Zhang Z., Jiang S., Liao J., Zhong J., Eldar Y. C., Zheng G., “13-fold resolution gain through turbid layer via translated unknown speckle illumination,” Biomed. Opt. Express 9(1), 260–275 (2018). 10.1364/BOE.9.000260

14. Park Y., Choi W., Yaqoob Z., Dasari R., Badizadegan K., Feld M. S., “Speckle-field digital holographic microscopy,” Opt. Express 17(15), 12285–12292 (2009). 10.1364/OE.17.012285

15. Almoro P., Pedrini G., Osten W., “Complete wavefront reconstruction using sequential intensity measurements of a volume speckle field,” Appl. Opt. 45(34), 8596–8605 (2006). 10.1364/AO.45.008596

16. Cederquist J. N., Fienup J. R., Marron J. C., Paxman R. G., “Phase retrieval from experimental far-field speckle data,” Opt. Lett. 13(8), 619–621 (1988). 10.1364/OL.13.000619

17. Maiden A. M., Humphry M. J., Zhang F., Rodenburg J. M., “Superresolution imaging via ptychography,” J. Opt. Soc. Am. A 28(4), 604–612 (2011). 10.1364/JOSAA.28.000604

18. Stockmar M., Cloetens P., Zanette I., Enders B., Dierolf M., Pfeiffer F., Thibault P., “Near-field ptychography: phase retrieval for inline holography using a structured illumination,” Sci. Rep. 3(1), 1927 (2013). 10.1038/srep01927

19. Clare R. M., Stockmar M., Dierolf M., Zanette I., Pfeiffer F., “Characterization of near-field ptychography,” Opt. Express 23(15), 19728–19742 (2015). 10.1364/OE.23.019728

20. McDermott S., Maiden A., “Near-field ptychographic microscope for quantitative phase imaging,” Opt. Express 26(19), 25471–25480 (2018). 10.1364/OE.26.025471

21. Sheppard C. J., “Defocused transfer function for a partially coherent microscope and application to phase retrieval,” J. Opt. Soc. Am. A 21(5), 828–831 (2004). 10.1364/JOSAA.21.000828

22. Xu W., Jericho M. H., Meinertzhagen I. A., Kreuzer H. J., “Digital in-line holography for biological applications,” Proc. Natl. Acad. Sci. U.S.A. 98(20), 11301–11305 (2001). 10.1073/pnas.191361398

23. Rivenson Y., Zhang Y., Günaydın H., Teng D., Ozcan A., “Phase recovery and holographic image reconstruction using deep learning in neural networks,” Light Sci. Appl. 7(2), 17141 (2018). 10.1038/lsa.2017.141

24. Greenbaum A., Luo W., Su T.-W., Göröcs Z., Xue L., Isikman S. O., Coskun A. F., Mudanyali O., Ozcan A., “Imaging without lenses: achievements and remaining challenges of wide-field on-chip microscopy,” Nat. Methods 9(9), 889–895 (2012). 10.1038/nmeth.2114

25. Zheng G., Horstmeyer R., Yang C., “Wide-field, high-resolution Fourier ptychographic microscopy,” Nat. Photonics 7(9), 739–745 (2013). 10.1038/nphoton.2013.187

26. Dong S., Horstmeyer R., Shiradkar R., Guo K., Ou X., Bian Z., Xin H., Zheng G., “Aperture-scanning Fourier ptychography for 3D refocusing and super-resolution macroscopic imaging,” Opt. Express 22(11), 13586–13599 (2014). 10.1364/OE.22.013586

27. Tian L., Li X., Ramchandran K., Waller L., “Multiplexed coded illumination for Fourier Ptychography with an LED array microscope,” Biomed. Opt. Express 5(7), 2376–2389 (2014). 10.1364/BOE.5.002376

28. Guo K., Dong S., Zheng G., “Fourier Ptychography for Brightfield, Phase, Darkfield, Reflective, Multi-Slice, and Fluorescence Imaging,” IEEE J. Sel. Top. Quantum Electron. 22(4), 77 (2016). 10.1109/JSTQE.2015.2504514

29. Holloway J., Wu Y., Sharma M. K., Cossairt O., Veeraraghavan A., “SAVI: Synthetic apertures for long-range, subdiffraction-limited visible imaging using Fourier ptychography,” Sci. Adv. 3(4), e1602564 (2017). 10.1126/sciadv.1602564

30. Yeh L.-H., Dong J., Zhong J., Tian L., Chen M., Tang G., Soltanolkotabi M., Waller L., “Experimental robustness of Fourier ptychography phase retrieval algorithms,” Opt. Express 23(26), 33214–33240 (2015). 10.1364/OE.23.033214

31. Sun J., Zuo C., Zhang L., Chen Q., “Resolution-enhanced Fourier ptychographic microscopy based on high-numerical-aperture illuminations,” Sci. Rep. 7(1), 1187 (2017). 10.1038/s41598-017-01346-7

32. Chung J., Lu H., Ou X., Zhou H., Yang C., “Wide-field Fourier ptychographic microscopy using laser illumination source,” Biomed. Opt. Express 7(11), 4787–4802 (2016). 10.1364/BOE.7.004787

33. Kuang C., Ma Y., Zhou R., Lee J., Barbastathis G., Dasari R. R., Yaqoob Z., So P. T., “Digital micromirror device-based laser-illumination Fourier ptychographic microscopy,” Opt. Express 23(21), 26999–27010 (2015). 10.1364/OE.23.026999

34. Guo K., Dong S., Nanda P., Zheng G., “Optimization of sampling pattern and the design of Fourier ptychographic illuminator,” Opt. Express 23(5), 6171–6180 (2015). 10.1364/OE.23.006171

35. Dong S., Guo K., Nanda P., Shiradkar R., Zheng G., “FPscope: a field-portable high-resolution microscope using a cellphone lens,” Biomed. Opt. Express 5(10), 3305–3310 (2014). 10.1364/BOE.5.003305

36. Wakonig K., Diaz A., Bonnin A., Stampanoni M., Bergamaschi A., Ihli J., Guizar-Sicairos M., Menzel A., “X-ray Fourier ptychography,” Sci. Adv. 5, eaav0282 (2019).

37. Matsuyama S., Yasuda S., Yamada J., Okada H., Kohmura Y., Yabashi M., Ishikawa T., Yamauchi K., “50-nm-resolution full-field X-ray microscope without chromatic aberration using total-reflection imaging mirrors,” Sci. Rep. 7(1), 46358 (2017). 10.1038/srep46358

38. Karvinen P., Grolimund D., Willimann M., Meyer B., Birri M., Borca C., Patommel J., Wellenreuther G., Falkenberg G., Guizar-Sicairos M., Menzel A., David C., “Kinoform diffractive lenses for efficient nano-focusing of hard X-rays,” Opt. Express 22(14), 16676–16685 (2014). 10.1364/OE.22.016676

39. Maiden A. M., Rodenburg J. M., “An improved ptychographical phase retrieval algorithm for diffractive imaging,” Ultramicroscopy 109(10), 1256–1262 (2009). 10.1016/j.ultramic.2009.05.012

40. Maiden A., Johnson D., Li P., “Further improvements to the ptychographical iterative engine,” Optica 4(7), 736–745 (2017). 10.1364/OPTICA.4.000736