Abstract

The aim of the present study was to explore the feasibility of using deep learning, a form of artificial intelligence (AI), to classify cervical squamous intraepithelial lesions (SIL) from colposcopy images. A total of 330 patients who underwent colposcopy and biopsy by gynecologic oncologists were enrolled in the current study. A total of 97 patients received a pathological diagnosis of low-grade SIL (LSIL) and 213 of high-grade SIL (HSIL). An original AI-classifier consisting of an 11-layer convolutional neural network was developed and trained. The accuracy, sensitivity, specificity and Youden's J index of the AI-classifier and oncologists for diagnosing HSIL were 0.823 and 0.797, 0.800 and 0.831, 0.882 and 0.773, and 0.682 and 0.604, respectively. The area under the receiver-operating characteristic curve was 0.824±0.052 (mean ± standard error), and the 95% confidence interval was 0.721–0.928. The optimal cut-off point was 0.692. Cohen's Kappa coefficient for AI and colposcopy was 0.437 (P<0.0005). The AI-classifier performed better than oncologists, although not significantly. Although further study is required, the clinical use of AI for the classification of HSIL/LSIL from colposcopy images may be feasible.

Keywords: colposcopy, cervical cancer, cervical intraepithelial neoplasia, deep learning, artificial intelligence

Introduction

With current advancements in computer science, artificial intelligence (AI) has made remarkable progress recently. The hypothetical moment in time when AI becomes so advanced that humanity undergoes a dramatic and irreversible change (1) is likely to arrive in this century. AI has already exceeded human experts in the field of games with perfect information, such as Go (2), showing us novel tactics. Therefore, since AI can recognize some information that conventional procedures cannot detect, it may provide a more precise diagnosis in practical medicine. AI may also be able to support clinicians in practical medicine, reducing the time and effort necessary. The aim of the present study was to investigate the feasibility of applying deep learning, a type of AI, for gynecological clinical practice.

Uterine cervical cancer continues to be a major public health problem. Cervical cancer is the third most common female cancer and the leading cause of cancer-related mortality among women in Eastern, Western and Middle Africa, Central America, South-Central Asia and Melanesia. New methodologies of cervical cancer prevention should be made available and accessible to women of all countries (3).

Colposcopy is a well-established tool for examining the cervix under magnification (4–6). When lesions are treated with acetic acid diluted to 3–5%, colposcopy can detect and recognize cervical intraepithelial neoplasia (CIN). Classification systems, such as the 2002 Bethesda System, are used to categorize lesions as high-grade squamous intraepithelial lesions (HSIL) or low-grade SIL (LSIL) (7,8). HSIL and LSIL were previously referred to as CIN2/CIN3 and CIN1, respectively. In clinical practice, it is important for clinicians to distinguish HSIL from LSIL in biopsy specimens, since further examination or treatment, such as conization, may be required for HSIL. Expert gynecologic oncologists spend considerable time and effort to provide precise colposcopy findings.

For these reasons, we explored whether AI can evaluate colposcopy findings as well as a gynecologic oncologist. In the present study, we applied deep learning with a convolutional neural network to develop an original classifier for predicting HSIL or LSIL from colposcopy images. Deep learning is becoming very popular among machine learning methods, which also include logistic regression (9), naive Bayes (10), nearest neighbor (11), random forest (12) and neural network (13). The classifier program was developed using supervised deep learning with a convolutional neural network (14), a type of network designed to mimic the visual cortex of the mammalian brain (15–23), in order to categorize colposcopy images as either HSIL or LSIL. The present study evaluated the AI colposcopy image classifier for predicting HSIL or LSIL by comparing its diagnoses with the colposcopic diagnoses of gynecologic oncologists.

Patients and methods

Patients

This retrospective study used fully deidentified patient data and was approved by the Institutional Review Board of Shikoku Cancer Center (approval no. 2017-81). The study was explained to the patients who underwent cervical biopsy by gynecologic oncologists at Shikoku Cancer Center from January 1, 2012 to December 31, 2017. Patients were also directed to a website with additional information, including an opt-out option for the study. The Institutional Review Board of Shikoku Cancer Center approved the opt-out option, which allowed patients to withdraw from this study. Gynecologic oncologists at the Shikoku Cancer Center performed biopsies when they judged them necessary in routine conventional practice. A total of 330 patients were enrolled in this study.

Images

Colposcopy images of lesions processed with acetic acid prior to biopsy were captured, cropped to a square and saved in JPEG format. The images were used retrospectively as the input data for deep learning. Gynecologists biopsied the most advanced lesion, the pathological results of which were revealed later.

Preparation for AI

All deidentified images stored offline were transferred to our AI-based system. Each image was cropped to a square and then saved. Twenty percent of the images were randomly selected as the test dataset, and the rest were used as the training dataset. Next, 20% of the training dataset was used as the validation dataset, and the rest was used to train the AI-classifier. Thus, the training, validation and test datasets did not overlap. In this way, the AI classifier was trained on the training dataset, validated simultaneously on the validation dataset and then tested on the test dataset. The number of training images was increased by data augmentation, as is often done in computer science. The training dataset was augmented in this study because rotating an image by an arbitrary angle yields an image that belongs to the same category but is represented by different vector data.
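The nested 20% splits described above can be sketched as follows. This is a minimal illustration in Python, not the authors' Mathematica code; the function name `split_dataset`, the fixed seed and the use of rounding to turn fractions into counts are assumptions made for the example.

```python
import random

def split_dataset(n_images, test_fraction=0.2, val_fraction=0.2, seed=0):
    """Return disjoint (train, validation, test) lists of image indices.

    Hypothetical helper: the rounding rule and the seed are assumptions.
    """
    indices = list(range(n_images))
    random.Random(seed).shuffle(indices)        # random selection of images

    n_test = round(n_images * test_fraction)    # 20% of all images
    test, rest = indices[:n_test], indices[n_test:]

    n_val = round(len(rest) * val_fraction)     # 20% of the remaining images
    validation, train = rest[:n_val], rest[n_val:]
    return train, validation, test

train_idx, val_idx, test_idx = split_dataset(310)
print(len(train_idx), len(val_idx), len(test_idx))
```

With 310 images, this rule holds out 62 images for testing, which agrees with the test-set size reported in the Results. The validation and training counts depend on the assumed rounding rule.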

AI-classifier

We developed classifier programs using supervised deep learning with a convolutional neural network (14,19). We tested many convolutional neural networks by varying the L2 regularization (24,25) and the architecture, which consisted of combinations of convolution layers with kernels (26–28), pooling layers (29–32), flattened layers (33), linear layers (34,35), rectified linear unit layers (36,37) and a softmax layer (38,39) that output the probability of LSIL or HSIL for an image (Table I). We also tested the ResNet-50 network (40), which performed very well in the ImageNet Large Scale Visual Recognition Challenge (41) and the Microsoft Common Objects in Context (MS-COCO) (42) competition. We modified the ResNet-50 by replacing its first layer with a convolutional layer with a kernel size of 4, stride size of 2, padding size of 2 and input image size of 111×111 pixels, which is the minimum size for the ResNet-50. The last layer of the ResNet-50 was also replaced with a linear layer, followed by a softmax layer with an output vector of size 2.

Table I.

Architectures of the top classifier that exhibited the highest accuracy.

| Layer | Parameters |
|---|---|
| Convolution layer | Output channels: 64; kernel size: 3×3 |
| ReLU | N/A |
| Pooling layer | Kernel size: 2×2 |
| Convolution layer | Output channels: 64; kernel size: 3×3 |
| ReLU | N/A |
| Pooling layer | Kernel size: 2×2 |
| Flatten layer | N/A |
| Linear layer | Size: 29 |
| ReLU | N/A |
| Linear layer | Size: 2 |
| Softmax layer | N/A |

The convolutional neural network structure of the top classifier consisted of 11 layers. ReLU, rectified linear unit.
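To make the layer list in Table I more concrete, the spatial size of the feature maps can be traced through the network with the standard convolution output-size formula. The sketch below is illustrative only: the text does not state the convolution stride or padding or the pooling stride, so a stride of 1 with no padding for convolutions and a stride of 2 for pooling are assumptions, and `conv_out` and `trace_table_i` are hypothetical helper names.

```python
def conv_out(size, kernel, stride=1, padding=0):
    """Output width of a convolution or pooling window along one axis."""
    return (size + 2 * padding - kernel) // stride + 1

def trace_table_i(size=70, channels=64, pool_stride=2):
    """Follow a size x size image through the layers listed in Table I.

    Assumed (not stated in the text): conv stride 1, no padding,
    pooling stride `pool_stride`.
    """
    size = conv_out(size, kernel=3)                      # convolution 3x3
    size = conv_out(size, kernel=2, stride=pool_stride)  # pooling 2x2
    size = conv_out(size, kernel=3)                      # convolution 3x3
    size = conv_out(size, kernel=2, stride=pool_stride)  # pooling 2x2
    flattened = channels * size * size                   # flatten layer
    return size, flattened

side, n_features = trace_table_i(70)
print(side, n_features)          # features feeding the linear layer of size 29
print(conv_out(111, kernel=4, stride=2, padding=2))  # modified ResNet-50 first layer
```

Under these assumptions a 70×70 input reaches the flatten layer as 64 maps of 16×16. The same formula applied to the modified ResNet-50 first layer (kernel 4, stride 2, padding 2, input 111) gives an output width of 56.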

Cross-validation (43–45), a powerful method for model selection, was applied to identify the optimal method of machine learning. The suitable number of images for the training data was investigated by evaluating the accuracy and variance using the 5-fold cross-validation method. This procedure reveals the optimal number of training data and can be used to avoid overfitting (46–51), which is a modeling error that occurs when a classifier is fit too closely to a limited set of data points. After the optimal number of training data was obtained, the classifier that showed the best accuracy was selected, as is standard practice in computer science. The conventional colposcopy diagnoses and the AI colposcopy diagnoses for the test dataset were then compared.
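The 5-fold procedure can be illustrated with a short index-partitioning sketch. It is a generic example rather than the authors' implementation: `k_fold_indices` and `mean_and_variance` are hypothetical helpers, the sample count of 100 is arbitrary, and the per-fold accuracies in the usage lines are placeholder values, not results from this study.

```python
import random

def k_fold_indices(n_samples, k=5, seed=0):
    """Yield (training, held_out) index lists for each of k folds."""
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)
    folds = [indices[i::k] for i in range(k)]   # k nearly equal parts
    for i in range(k):
        held_out = folds[i]
        training = [idx for j, fold in enumerate(folds) if j != i for idx in fold]
        yield training, held_out

def mean_and_variance(values):
    """Mean and population variance of per-fold scores."""
    mean = sum(values) / len(values)
    variance = sum((v - mean) ** 2 for v in values) / len(values)
    return mean, variance

# Each sample is held out exactly once across the five folds.
splits = list(k_fold_indices(100, k=5))

# Placeholder per-fold accuracies, for illustration only.
fold_accuracies = [0.75, 0.80, 0.85, 0.80, 0.80]
print(mean_and_variance(fold_accuracies))
```

Repeating this for several candidate training-set sizes and comparing the mean and variance of the fold accuracies is one way to carry out the selection described above.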

Development environment

The following development environment was used in the present study: A Mac running OS X 10.14.3 (Apple, Inc.) and Mathematica 11.3.0.0 (Wolfram Research).

Statistical analysis

The laboratory and AI-classifier data were compared. The proportions obtained by the gynecologic oncologists and by the deep learning classifier were compared using the two-proportion z-test. Agreement among conventional colposcopy, the AI classifier and the pathological results was evaluated using Cohen's Kappa (52) coefficient. Cohen's Kappa for two raters is calculated as follows:

$$\kappa = \frac{A_{\text{observed}} - A_{\text{expected by chance}}}{1 - A_{\text{expected by chance}}}$$

where:

$A_{\text{observed}}$ = the relative observed agreement among raters,

$A_{\text{expected by chance}}$ = the hypothetical probability of chance agreement.

Mathematica 11.3.0.0 (Wolfram Research) was used for all statistical analyses.
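As a worked instance of the Kappa formula, the following sketch computes the coefficient from a square agreement table. The function `cohens_kappa` is a hypothetical helper written for this illustration; the counts are those reported in Table VI (conventional colposcopy versus AI colposcopy on the test dataset).

```python
def cohens_kappa(table):
    """Cohen's Kappa for a square table of counts (rows: rater 1, columns: rater 2)."""
    total = sum(sum(row) for row in table)
    # Observed agreement: share of cases on the diagonal.
    observed = sum(table[i][i] for i in range(len(table))) / total
    # Chance agreement: products of the matching row and column totals.
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    expected = sum(r * c for r, c in zip(row_totals, col_totals)) / total ** 2
    return (observed - expected) / (1 - expected)

# Table VI: rows are conventional colposcopy (HSIL, LSIL),
# columns are AI colposcopy (HSIL, LSIL).
table_vi = [[32, 9],
            [7, 14]]
print(round(cohens_kappa(table_vi), 3))  # 0.437
```

Here the observed agreement is 46/62 and the chance agreement is 2,082/3,844, which reproduces the reported coefficient of 0.437.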

Results

Profiles of pathological diagnosis and colposcopy

The pathological diagnoses and corresponding number of patients were as follows: LSIL, 97; HSIL, 213; squamous cell carcinoma, 12; microinvasive squamous cell carcinoma, 1; adenocarcinoma, 5; adenocarcinoma in situ, 2. A total of 310 images of pathological LSIL and HSIL were used, owing to the limited number of available images. Among the 213 pathological HSIL cases, 177, 32, 3 and 1 received a conventional colposcopy diagnosis by gynecologists of HSIL, LSIL, invasive cancer and cervicitis, respectively. Among the 97 pathological LSIL cases, 22, 70 and 5 received a conventional colposcopy diagnosis by gynecologists of HSIL, LSIL and cervicitis, respectively (Table II). The accuracy, sensitivity, specificity, positive predictive value, negative predictive value and Youden's J index (53) for HSIL, as determined by gynecologists, were 0.797 (247/310), 0.831 (177/213), 0.773 (75/97), 0.889 (177/199), 0.686 (70/102) and 0.604, respectively (Table III).

Table II.

Characteristics of the 330 patients that underwent colposcopy and biopsy by gynecologic oncologists.

| Patient characteristics | Pathological HSIL (n=213) | Pathological LSIL (n=97) |
|---|---|---|
| Age (years) | | |
| Mean ± SD | 31.66±5.01 | 33.75±8.94 |
| Median | 32 | 33 |
| Range | 19–46 | 19–62 |
| HPV | | |
| Type 16 positive | 75 | 2 |
| Type 18 positive | 5 | 2 |
| Type 16 and 18 positive | 1 | 0 |
| Positive, but not type 16 or 18 | 123 | 33 |
| Negative | 6 | 6 |
| Not available | 3 | 54 |
| Colposcopic diagnosis | | |
| HSIL | 177 | 22 |
| LSIL | 32 | 70 |
| Cervicitis | 1 | 5 |
| Invasive cancer | 3 | 0 |

HSIL, high-grade squamous intraepithelial lesions; LSIL, low-grade squamous intraepithelial lesions; SD, standard deviation.

Table III.

Comparison between gynecologic oncologists and the top classifier using deep learning.

| Variable | Gynecologic oncologists | AI |
|---|---|---|
| Accuracy | 0.797 (247/310) | 0.823 (51/62) |
| Sensitivity | 0.831 (177/213) | 0.800 (36/45) |
| Specificity | 0.773 (75/97) | 0.882 (15/17) |
| Positive predictive value | 0.889 (177/199) | 0.947 (36/38) |
| Negative predictive value | 0.686 (70/102) | 0.625 (15/24) |
| Youden's J index | 0.604 | 0.682 |

Bracketed data indicates the number of corresponding selected cases/the number of relevant cases. AI, artificial intelligence.
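The measures in Table III follow from the four cells of a 2×2 table. The sketch below is a minimal illustration with a hypothetical helper, `diagnostic_metrics`, applied to the counts implied by the AI column of Table III (36 true positives, 9 false negatives, 2 false positives and 15 true negatives, with HSIL as the positive class).

```python
def diagnostic_metrics(tp, fn, fp, tn):
    """Standard diagnostic measures with HSIL treated as the positive class."""
    sensitivity = tp / (tp + fn)
    specificity = tn / (tn + fp)
    return {
        "accuracy": (tp + tn) / (tp + fn + fp + tn),
        "sensitivity": sensitivity,
        "specificity": specificity,
        "ppv": tp / (tp + fp),              # positive predictive value
        "npv": tn / (tn + fn),              # negative predictive value
        "youden_j": sensitivity + specificity - 1,
    }

# Counts implied by the AI column of Table III.
ai = diagnostic_metrics(tp=36, fn=9, fp=2, tn=15)
for name, value in ai.items():
    print(f"{name}: {value:.3f}")
```

Youden's J index is simply sensitivity plus specificity minus 1, so the AI values of 0.800 and 0.882 give 0.682.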

AI-classifier results

The best accuracy for HSIL was 0.823 (51/62), obtained when the augmented training dataset contained 1,488 images, the L2 regularization value was 0.175, the architecture had 11 layers (Table I) and the image size was 70×70 pixels. The sensitivity, specificity, positive predictive value, negative predictive value and Youden's J index were 0.800 (36/45), 0.882 (15/17), 0.947 (36/38), 0.625 (15/24) and 0.682, respectively (Table III). The accuracy, sensitivity, specificity, positive predictive value, negative predictive value and Youden's J index of the best modified ResNet-50 were 0.790 (49/62), 0.847 (39/46), 0.625 (10/16), 0.867 (39/45), 0.588 (10/17) and 0.472, respectively. The original convolutional neural network was better than the modified ResNet-50, although not significantly. There were no significant differences between the gynecologic oncologists and the best AI in accuracy, sensitivity, specificity, or positive or negative predictive value, as determined by the two-proportion z-test.
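A comparison of two proportions of this kind can be sketched as below. The pooled-variance form of the two-proportion z-test is assumed here, since the text does not specify which variant was used, and `two_proportion_z_test` is a hypothetical helper. The example compares the accuracy of the AI classifier (51/62) with that of the gynecologic oncologists (247/310).

```python
import math

def two_proportion_z_test(x1, n1, x2, n2):
    """Two-sided two-proportion z-test with a pooled standard error (assumed variant)."""
    p1, p2 = x1 / n1, x2 / n2
    pooled = (x1 + x2) / (n1 + n2)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n1 + 1 / n2))
    z = (p1 - p2) / se
    # Two-sided P value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value

z, p = two_proportion_z_test(51, 62, 247, 310)
print(f"z = {z:.3f}, P = {p:.3f}")
```

Under this assumed variant the P value is well above 0.05, in line with the statement that the difference in accuracy was not significant.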

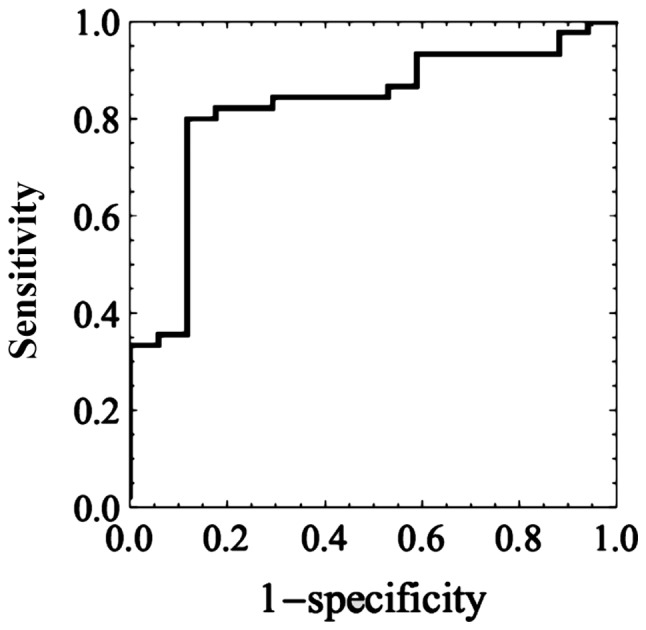

Using the confidence score, the area under the receiver-operating characteristic (ROC) curve of the best classifier for predicting HSIL was 0.824±0.052 (mean ± SE), and the 95% confidence interval was 0.721–0.928. The ROC curve is shown in Fig. 1. The optimal cut-off point was 0.692.

Figure 1.

The receiver-operating characteristic curve of the best classifier for predicting high-grade squamous intraepithelial lesions. The value of the area under the curve is 0.824±0.052 (mean ± standard error) and the 95% confidence interval ranged between 0.721–0.928.
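For readers unfamiliar with how an area under the ROC curve and a cut-off are derived from confidence scores, a small sketch follows. It uses toy scores invented for illustration, not data from this study, and it selects the cut-off by maximizing Youden's J index, which is an assumption because the text does not state the criterion used. Both function names are hypothetical.

```python
def auc_by_ranking(pos_scores, neg_scores):
    """AUC as the share of (positive, negative) pairs ranked correctly; ties count as half."""
    wins = 0.0
    for p in pos_scores:
        for n in neg_scores:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos_scores) * len(neg_scores))

def youden_cutoff(pos_scores, neg_scores):
    """Return the threshold (score >= threshold means positive) that maximizes J."""
    best_j, best_t = -1.0, None
    for t in sorted(set(pos_scores) | set(neg_scores)):
        tpr = sum(s >= t for s in pos_scores) / len(pos_scores)
        fpr = sum(s >= t for s in neg_scores) / len(neg_scores)
        j = tpr - fpr                      # sensitivity + specificity - 1
        if j > best_j:
            best_j, best_t = j, t
    return best_t, best_j

# Toy confidence scores for HSIL, for illustration only.
hsil_scores = [0.9, 0.8, 0.6, 0.4]
lsil_scores = [0.7, 0.3, 0.2]
print(auc_by_ranking(hsil_scores, lsil_scores))
print(youden_cutoff(hsil_scores, lsil_scores))
```

In this toy example 10 of the 12 positive-negative pairs are ranked correctly, and the threshold 0.4 gives the largest J.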

Comparison of AI-classifier with conventional colposcopy

The associations among conventional colposcopy, the AI classifier and the pathological results for the test dataset, which comprised 20% of the patients with pathological HSIL or LSIL diagnosed by punch biopsy, are shown in Tables IV–VI. Cohen's Kappa (52) coefficients for conventional colposcopy and pathological results, the AI classifier and pathological results, and conventional colposcopy and the AI classifier were 0.691 (P<0.0001), 0.561 (P<0.0001) and 0.437 (P<0.0005), respectively. All indicated at least moderate agreement (54). Conventional colposcopy showed better agreement with the pathological results than the AI, although the difference was not significant. Classification took less than 0.2 sec per image.

Table IV.

Conventional colposcopy diagnosis and pathological results of the test data set.

| Lesion type | Conventional colposcopy diagnosis: HSIL | Conventional colposcopy diagnosis: LSIL |
|---|---|---|
| Pathological HSIL | 39 | 6 |
| Pathological LSIL | 2 | 15 |

Cohen's Kappa coefficient was 0.691, P<0.0001. HSIL, high-grade squamous intraepithelial lesions; LSIL, low-grade squamous intraepithelial lesions; AI, artificial intelligence.

Table VI.

Conventional colposcopy diagnosis and AI colposcopy diagnosis for test data set.

| Conventional colposcopy diagnosis | AI colposcopy diagnosis: HSIL | AI colposcopy diagnosis: LSIL |
|---|---|---|
| Conventional colposcopy HSIL | 32 | 9 |
| Conventional colposcopy LSIL | 7 | 14 |

Cohen's Kappa coefficient was 0.437, P<0.0005. HSIL, high-grade squamous intraepithelial lesions; LSIL, low-grade squamous intraepithelial lesions; AI, artificial intelligence.

Discussion

We developed a classifier using deep learning with convolutional neural networks using images of cervical SILs to predict the pathological diagnosis. In the present study, the accuracy values achieved by the classifier and by gynecologic oncologists were 0.823 and 0.797, respectively (Table III). The sensitivity values of the classifier and gynecologic oncologists were 0.800 and 0.831, respectively. The specificity values of the classifier and gynecologic oncologists were 0.882 and 0.773, respectively. The accuracy and specificity of the classifier were superior to those of gynecologic oncologists, although the difference was not significant. Only moderate agreement was obtained between conventional colposcopy diagnosis and AI colposcopy diagnosis, with a Kappa value of 0.437. McHugh reported that Cohen suggested that 0.41 might be acceptable and that the Kappa result be interpreted as follows: Values ≤0 as indicating no agreement, 0.01–0.20 as none to slight, 0.21–0.40 as fair, 0.41–0.60 as moderate, 0.61–0.80 as substantial and 0.81–1.00 as almost perfect agreement (54). Thus, the Kappa value of 0.437 was acceptable. However, although AI colposcopy has potential, it should not be regarded as an alternative to conventional colposcopy without further studies.
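The interpretation bands quoted above can be written as a small lookup, shown here purely as an illustration. The function `interpret_kappa` is a hypothetical helper, and the label "almost perfect" for the top band follows the interpretation scheme cited as (54).

```python
def interpret_kappa(kappa):
    """Map a Kappa value to the agreement bands quoted in the text (54)."""
    if kappa <= 0:
        return "no agreement"
    bands = [
        (0.20, "none to slight"),
        (0.40, "fair"),
        (0.60, "moderate"),
        (0.80, "substantial"),
        (1.00, "almost perfect"),
    ]
    for upper, label in bands:
        if kappa <= upper:
            return label
    raise ValueError("Kappa cannot exceed 1")

# The three coefficients reported in the Results.
for value in (0.691, 0.561, 0.437):
    print(value, interpret_kappa(value))
```

Applied to the reported coefficients, 0.691 falls in the substantial band, while 0.561 and 0.437 fall in the moderate band.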

Several reports have used AI (55) for deep learning with convolutional neural networks in medicine (56). The accuracy values of this method with deep learning have been published and include 0.997 for the histopathological diagnosis of breast cancer (57), 0.90–0.83 for the early diagnosis of Alzheimer's disease (58), 0.83 for urological dysfunctions (59), 0.72 (60) and 0.50 (61) for colposcopy, 0.68–0.70 for localization of rectal cancer (62), 0.83 for the diagnostic imaging of orthopedic trauma (63), 0.98 for the morphological quality of blastocysts and evaluation by an embryologist (64), 0.65 for predicting live birth without aneuploidy from a blastocyst image (65) and 0.64–0.88 for predicting live birth from a blastocyst image of patients by age (66).

Several studies have reported limitations of conventional colposcopy. An investigation of the accuracy of colposcopically directed biopsy reported a total biopsy failure rate, comprising both non-biopsy and incorrect selection of biopsy site, of 0.20 in CIN1, 0.11 in CIN2 and 0.09 in CIN3 (67). The colposcopic impression of high-grade CIN had a sensitivity of 0.54 and a specificity of 0.88, as determined by 9 expert colposcopists in 100 cervigrams (68). The sensitivity of an online colpophotographic assessment of high-grade disease (CIN2 and CIN3) by 20 colposcopists was 0.39 (69). Thus, conventional colposcopy does not provide good sensitivity, even when performed by colposcopists. By contrast, the accuracy and sensitivity reported in this study for predicting HSIL from colposcopy images using deep learning were 0.823 and 0.800, respectively, which appears favorable. Since the classifier was not trained on colposcopy findings such as acetowhite epithelium, mosaic and punctation, it may recognize some features of cervical SILs by itself in high-dimensional space. It is possible that the AI-classifier may recognize features that colposcopists do not, such as complexity of the shape of the lesion, relative or absolute brightness of acetowhite, distribution of punctation density, and quantitative marginal evaluation of borders. The pathological results were obtained and defined by punch biopsy in this study, as it was not ethically recommended for patients with LSIL (CIN1) diagnosed by colposcopy to undergo conization or hysterectomy. If the pathological results had been defined by conization or hysterectomy, the most advanced lesion would have been revealed, and thus both conventional colposcopy and the AI classifier may have demonstrated different results. In the present study, we only compared the effectiveness of AI with that of conventional colposcopy for SIL. When AI is used to predict more advanced diseases, such as squamous cell carcinoma, adenocarcinoma and adenocarcinoma in situ (AIS), the pathological diagnosis should be provided not by punch biopsy but by conization or hysterectomy.

In clinical practice, it is important for clinicians to distinguish HSIL from LSIL in biopsy specimens. Further examination or treatment, such as conization, may be required for HSIL. When a reliable classifier indicates HSIL from colposcopy images in clinical practice, the clinician should consider biopsy. The accuracy values of the classifier and gynecologists for detecting HSIL were 0.823 and 0.797, respectively. The classifier might help untrained clinicians to avoid or reduce the risk of overlooking HSIL. Once the AI-classifier achieves higher accuracy, sensitivity and specificity for classifying HSIL/LSIL, clinicians will be able to practice more precisely with reference to the AI. Furthermore, a gynecologist could reduce the time and effort it takes to become a colposcopy expert and, as a result, improve in other skills, training and activities.

The architecture of the neural network has progressed. The LeNet study published in 1998 (70) consisted of 5 layers. AlexNet, published in 2012 (38), consisted of 14 layers, and GoogLeNet, published in 2014 (35), was constructed from a combination of micro networks. ResNet-50, published in 2015 (40), consisted of modules with a shortcut process. The Squeeze-and-Excitation Networks were published in 2017 (71). AI used for image recognition is still being developed. Progress in AI will allow us to achieve better results. Image size is one of the parameters that need to be investigated. In one study, images of only 15×15 pixels were used to detect cervical cancer (72). In a colposcopy study (61), it was reported that the accuracy for images of 150×150 pixels was better than that for images of 32×32 or 300×300 pixels. Hence, image size remains an issue. We used 70×70 and 111×111 pixels for our images, in order to use the original neural networks and the modified ResNet-50, respectively. The original convolutional neural network was better than the modified ResNet-50, although not significantly. We believe that an image size of 70×70 pixels falls within the acceptable range. Regularization values are also important parameters for constructing a good classifier that avoids overfitting. If the regularization value is too low, overfitting occurs. If the value is too large, the classifier will not be trained well. Choosing the appropriate number of training datasets is also very important. If the number of training datasets is too high, the accuracy will be lower and more variances will be observed. The validation dataset, as well as L2 regularization, also helps to prevent overfitting. The appropriate number of training datasets must be achieved to obtain a good classifier. More varied patterns of images may be needed for datasets. Ordinarily, 500–1,000 images are prepared for each class during image classification with deep learning (61,73). Datasets of this size are expected to improve the accuracy and specificity of a deep learning classifier.

In the present study, a classifier was developed based on deep learning, which used images of uterine cervical SILs to predict pathological HSIL/LSIL. Its accuracy was 0.823. Although further study may be required to validate the classifier, we demonstrated that AI may have a clinical use in colposcopic examinations and may provide benefits to both patients and medical personnel.

Table V.

AI colposcopy diagnosis and pathological result for test data set.

| Lesion type | AI colposcopy diagnosis: HSIL | AI colposcopy diagnosis: LSIL |
|---|---|---|
| Pathological HSIL | 36 | 9 |
| Pathological LSIL | 3 | 14 |

Cohen's Kappa coefficient was 0.561, P<0.0001. HSIL, high-grade squamous intraepithelial lesions; LSIL, low-grade squamous intraepithelial lesions; AI, artificial intelligence.

Acknowledgements

Not applicable.

Funding

No funding was received.

Availability of data and materials

The datasets generated and/or analyzed during the present study are not publicly available, since data sharing is not approved by the Institutional Review Board of Shikoku Cancer Center (approval no. 2017-81).

Authors' contributions

YM designed the current study, performed AI programming, produced classifiers by AI, performed statistical analysis and wrote the manuscript. KT performed clinical intervention, data entry and collection, designed the current study, and critically revised the manuscript. TM designed the current study and critically revised the manuscript.

Ethics approval and consent to participate

The protocol for the present retrospective study used fully deidentified patient data and was approved by the Institutional Review Board of Shikoku Cancer Center (approval no. 2017-81). The study protocol was explained to the patients who underwent cervical biopsy at the Shikoku Cancer Center from January 1, 2012 to December 31, 2017. Patients were also directed to a website with additional information, including an opt-out option, allowing them to not participate. Written informed consent was not required, according to the guidance of the Ministry of Education, Culture, Sports, Science and Technology of Japan.

Patient consent for publication

The current study was explained to the patients who underwent cervical biopsy at the Shikoku Cancer Center from January 1, 2012 to December 31, 2017. Patients were also directed to a website with additional information, including an opt-out option that let them know they had the right to refuse publication.

Competing interests

YM and TM declare that they have no competing interests. KT reports personal fees from Taiho Pharmaceuticals, Chugai Pharma, AstraZeneca, Nippon Kayaku, Eisai, Ono Pharmaceutical, Terumo Corporation and Daiichi Sankyo, outside of the submitted work.

References

- 1. Müller VC, Bostrom N. Future progress in artificial intelligence: A survey of expert opinion. In: Fundamental Issues of Artificial Intelligence. Springer; Berlin: 2016. pp. 555–572.

- 2. Silver D, Schrittwieser J, Simonyan K, Antonoglou I, Huang A, Guez A, Hubert T, Baker L, Lai M, Bolton A, et al. Mastering the game of Go without human knowledge. Nature. 2017;550:354–359. doi: 10.1038/nature24270.

- 3. Arbyn M, Castellsagué X, de Sanjosé S, Bruni L, Saraiya M, Bray F, Ferlay J. Worldwide burden of cervical cancer in 2008. Ann Oncol. 2011;22:2675–2686. doi: 10.1093/annonc/mdr015.

- 4. Garcia-Arteaga JD, Kybic J, Li W. Automatic colposcopy video tissue classification using higher order entropy-based image registration. Comput Biol Med. 2011;41:960–970. doi: 10.1016/j.compbiomed.2011.07.010.

- 5. Kyrgiou M, Tsoumpou I, Vrekoussis T, Martin-Hirsch P, Arbyn M, Prendiville W, Mitrou S, Koliopoulos G, Dalkalitsis N, Stamatopoulos P, Paraskevaidis E. The up-to-date evidence on colposcopy practice and treatment of cervical intraepithelial neoplasia: The Cochrane colposcopy and cervical cytopathology collaborative group (C5 group) approach. Cancer Treat Rev. 2006;32:516–523. doi: 10.1016/j.ctrv.2006.07.008.

- 6. O'Neill E, Reeves MF, Creinin MD. Baseline colposcopic findings in women entering studies on female vaginal products. Contraception. 2008;78:162–166. doi: 10.1016/j.contraception.2008.04.002.

- 7. Waxman AG, Chelmow D, Darragh TM, Lawson H, Moscicki AB. Revised terminology for cervical histopathology and its implications for management of high-grade squamous intraepithelial lesions of the cervix. Obstet Gynecol. 2012;120:1465–1471. doi: 10.1097/AOG.0b013e31827001d5.

- 8. Darragh TM, Colgan TJ, Cox JT, Heller DS, Henry MR, Luff RD, McCalmont T, Nayar R, Palefsky JM, Stoler MH, et al. The lower anogenital squamous terminology standardization project for HPV-associated lesions: Background and consensus recommendations from the college of American pathologists and the American society for colposcopy and cervical pathology. J Low Genit Tract Dis. 2012;16:205–242. doi: 10.1097/LGT.0b013e31825c31dd.

- 9. Dreiseitl S, Ohno-Machado L. Logistic regression and artificial neural network classification models: A methodology review. J Biomed Inform. 2002;35:352–359. doi: 10.1016/S1532-0464(03)00034-0.

- 10. Ben-Bassat M, Klove KL, Weil MH. Sensitivity analysis in Bayesian classification models: Multiplicative deviations. IEEE Trans Pattern Anal Mach Intell. 1980;2:261–266. doi: 10.1109/TPAMI.1980.4767015.

- 11. Friedman JH, Baskett F, Shustek LJ. An algorithm for finding nearest neighbors. IEEE Trans Comput. 1975;24:1000–1006. doi: 10.1109/T-C.1975.224110.

- 12. Breiman L. Random forests. Mach Learn. 2001;45:5–32. doi: 10.1023/A:1010933404324.

- 13. Rumelhart D, Hinton G, Williams R. Learning representations by back-propagating errors. Nature. 1986;323:533–536. doi: 10.1038/323533a0.

- 14. Bengio Y, Courville A, Vincent P. Representation learning: A review and new perspectives. IEEE Trans Pattern Anal Mach Intell. 2013;35:1798–1828. doi: 10.1109/TPAMI.2013.50.

- 15. Fukushima K. Neocognitron: A self-organizing neural network model for a mechanism of pattern recognition unaffected by shift in position. Biol Cybern. 1980;36:193–202. doi: 10.1007/BF00344251.

- 16. Hubel DH, Wiesel TN. Receptive fields and functional architecture of monkey striate cortex. J Physiol. 1968;195:215–243. doi: 10.1113/jphysiol.1968.sp008455.

- 17. Hubel DH, Wiesel TN. Receptive fields of single neurones in the cat's striate cortex. J Physiol. 1959;148:574–591. doi: 10.1113/jphysiol.1959.sp006308.

- 18. Schmidhuber J. Deep learning in neural networks: An overview. Neural Netw. 2015;61:85–117. doi: 10.1016/j.neunet.2014.09.003.

- 19. LeCun Y, Bottou L, Orr GB, Müller KR. Efficient BackProp. In: Neural Networks: Tricks of the Trade. Springer; Berlin: 1998.

- 20. LeCun Y, Bottou L, Bengio Y, Haffner P. Gradient-based learning applied to document recognition. Proc IEEE. 1998;86:2278–2324. doi: 10.1109/5.726791.

- 21. LeCun Y, Boser B, Denker JS, Henderson D, Howard RE, Hubbard W, Jackel LD. Backpropagation applied to handwritten zip code recognition. Neural Comput. 1989;1:541–551. doi: 10.1162/neco.1989.1.4.541.

- 22. Serre T, Wolf L, Bileschi S, Riesenhuber M, Poggio T. Robust object recognition with cortex-like mechanisms. IEEE Trans Pattern Anal Mach Intell. 2007;29:411–426. doi: 10.1109/TPAMI.2007.56.

- 23. Wiatowski T, Bölcskei H. A mathematical theory of deep convolutional neural networks for feature extraction. IEEE Trans Inf Theory. 2018;64:1845–1866. doi: 10.1109/TIT.2017.2756880.

- 24. Srivastava N, Hinton G, Krizhevsky A, Sutskever I, Salakhutdinov R. Dropout: A simple way to prevent neural networks from overfitting. J Mach Learn Res. 2014;15:1929–1958.

- 25. Nowlan SJ, Hinton GE. Simplifying neural networks by soft weight-sharing. Neural Comput. 1992;4:473–493. doi: 10.1162/neco.1992.4.4.473.

- 26. Bengio Y. Learning deep architectures for AI. Found Trends Mach Learn. 2009;2:1–127. doi: 10.1561/2200000006.

- 27. Mutch J, Lowe DG. Object class recognition and localization using sparse features with limited receptive fields. Int J Comput Vision. 2008;80:45–57. doi: 10.1007/s11263-007-0118-0.

- 28. Neal RM. Connectionist learning of belief networks. Artificial Intell. 1992;56:71–113. doi: 10.1016/0004-3702(92)90065-6.

- 29. Ciresan D, Meier U, Masci J, Gambardella LM, Schmidhuber J. Flexible, high performance convolutional neural networks for image classification. IJCAI Proc Int Joint Conf Artificial Intell. 2011;22:1237–1242.

- 30. Scherer D, Müller A, Behnke S. Evaluation of pooling operations in convolutional architectures for object recognition. In: Diamantaras K, Duch W, Iliadis LS, editors. Artificial Neural Networks (ICANN) 2010. Lecture Notes in Computer Science. Springer; Berlin: 2010. pp. 92–101.

- 31. Huang FJ, LeCun Y. Large-scale learning with SVM and convolutional nets for generic object categorization. Computer vision and pattern recognition. IEEE Comput Soc Conf. 2006;1:284–291.

- 32. Jarrett K, Kavukcuoglu K, Ranzato MA, LeCun Y. What is the best multi-stage architecture for object recognition? In: 2009 IEEE 12th International Conference on Computer Vision (ICCV 2009); Kyoto, Japan: 2009. pp. 2146–2153.

- 33. Zheng Y, Liu Q, Chen E, Ge Y, Zhao JL. Time series classification using multi-channels deep convolutional neural networks. In: Li F, Li G, Hwang S, Yao B, Zhang Z, editors. Web-Age Information Management, WAIM 2014. Springer; Cham: 2014. pp. 298–310.

- 34. Mnih V, Kavukcuoglu K, Silver D, Rusu AA, Veness J, Bellemare MG, Graves A, Riedmiller M, Fidjeland AK, Ostrovski G, et al. Human-level control through deep reinforcement learning. Nature. 2015;518:529–533. doi: 10.1038/nature14236.

- 35. Szegedy C, Liu W, Jia Y, Sermanet P, Reed S, Anguelov D, et al. Going deeper with convolutions. IEEE Conference on Computer Vision and Pattern Recognition (CVPR). 2015:1–9.

- 36. Glorot X, Bordes A, Bengio Y. Deep sparse rectifier neural networks. Proc Fourteenth Int Conf Artificial Intell Stat. 2011:315–323.

- 37. Nair V, Hinton G. Rectified linear units improve restricted Boltzmann machines. Proc Int Conf Mach Learn. 2010:807–814.

- 38. Krizhevsky A, Sutskever I, Hinton GE. Imagenet classification with deep convolutional neural networks. Adv Neural Inf Proc Syst. 2012:1097–1105.

- 39. Bridle JS. Probabilistic interpretation of feedforward classification network outputs, with relationships to statistical pattern recognition. In: Soulié FF, Hérault J, editors. Neurocomputing. Springer; Berlin: 1990.

- 40. He K, Zhang X, Ren S, Sun J. Deep residual learning for image recognition. arXiv: 1512.03385. 2015.

- 41. Russakovsky O, Deng J, Su H, Krause J, Satheesh S, Ma S, Huang Z, Karpathy A, Khosla A, Bernstein M, Berg AC. Imagenet large scale visual recognition challenge. Int J Comput Vision. 2015;115:211–252. doi: 10.1007/s11263-015-0816-y.

- 42. Lin TY, Maire M, Belongie S, Hays J, Perona P, Ramanan D, Dollár P, Zitnick CL. Microsoft COCO: Common objects in context. In: European Conference on Computer Vision. Springer; Cham: 2014. pp. 740–755.

- 43.Kohavi R. A study of cross-validation and bootstrap for accuracy estimation and model selection. Proc Int Joint Conf Artificial Intell. 1995;2:1137–1143. [Google Scholar]

- 44.Schaffer C. Selecting a classification method by cross-validation. Mach Lean. 1993;13:135–143. doi: 10.1007/BF00993106. [DOI] [Google Scholar]

- 45.Refaeilzadeh P, Tang L, Liu H. Cross-validation. In: Liu L, Özsu MT, editors. Encyclopedia of Database Systems. Springer; New York: 2009. [Google Scholar]

- 46.Yu L, Chen H, Dou Q, Qin J, Heng PA. Automated melanoma recognition in dermoscopy images via very deep residual networks. IEEE Trans Med Imaging. 2017;36:994–1004. doi: 10.1109/TMI.2016.2642839. [DOI] [PubMed] [Google Scholar]

- 47.Caruana R, Lawrence S, Giles CL. Overfitting in neural nets: Backpropagation, conjugate gradient, and early stopping. Adv Neural Inf Proc Syst. 2001:402–408. [Google Scholar]

- 48.Baum EB, Haussler D. What size net gives valid generalization? Neural Comput. 1989;1:151–160. doi: 10.1162/neco.1989.1.1.151. [DOI] [Google Scholar]

- 49.Geman S, Bienenstock E, Doursat R. Neural networks and the bias/variance dilemma. Neural Comput. 1992;4:1–58. doi: 10.1162/neco.1992.4.1.1. [DOI] [Google Scholar]

- 50.Krogh A, Hertz JA. A simple weight decay can improve generalization. Adv Neural Inf Proc Syst. 1992;4:950–957. [Google Scholar]

- 51.Moody JE. The effective number of parameters: An analysis of generalization and regularization in nonlinear learning systems. Adv Neural Inf Proc Syst. 1992;4:847–854. [Google Scholar]

- 52.Cohen J. A coefficient of agreement for nominal scales. Educ Psychol Meas. 1960;20:37–46. doi: 10.1177/001316446002000104. [DOI] [Google Scholar]

- 53.Youden WJ. Index for rating diagnostic tests. Cancer. 1950;3:32–35. doi: 10.1002/1097-0142(1950)3:1<32::AID-CNCR2820030106>3.0.CO;2-3. [DOI] [PubMed] [Google Scholar]

- 54.McHugh ML. Interrater reliability: the kappa statistic. Biochem Med (Zagreb) 2012;22:276–282. doi: 10.11613/BM.2012.031. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 55.Miyagi Y, Fujiwara K, Oda T, Miyake T, Coleman RL. Development of new method for the prediction of clinical trial results using compressive sensing of artificial intelligence. J Biostat Biometric. 2018;3:202. [Google Scholar]

- 56.Abbod MF, Catto JW, Linkens DA, Hamdy FC. Application of artificial intelligence to the management of urological cancer. J Urol. 2007;178:1150–1156. doi: 10.1016/j.juro.2007.05.122. [DOI] [PubMed] [Google Scholar]

- 57.Litjens G, Sánchez CI, Timofeeva N, Hermsen M, Nagtegaal I, Kovacs I, Hulsbergen-van de Kaa C, Bult P, van Ginneken B, van der Laak J. Deep learning as a tool for increased accuracy and efficiency of histopathological diagnosis. Sci Rep. 2016;6:26286. doi: 10.1038/srep26286. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 58.Ortiz A, Munilla J, Górriz JM, Ramírez J. Ensembles of deep learning architectures for the early diagnosis of the Alzheimer's disease. Int J Neural Syst. 2016;26:1650025. doi: 10.1142/S0129065716500258. [DOI] [PubMed] [Google Scholar]

- 59.Gil D, Johnsson M, Chamizo JMG, Paya AS, Fernandez DR. Application of artificial neural networks in the diagnosis of urological disfunctions. Expert Syst Appl. 2009;36:5754–5760. doi: 10.1016/j.eswa.2008.06.065. [DOI] [Google Scholar]

- 60.Simões PW, Izumi NB, Casagrande RS, Venson R, Veronezi CD, Moretti GP, da Rocha EL, Cechinel C, Ceretta LB, Comunello E, et al. Classification of images acquired with colposcopy using artificial neural networks. Cancer Inform. 2014;13:119–124. doi: 10.4137/CIN.S17948. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 61.Sato M, Horie K, Hara A, Miyamoto Y, Kurihara K, Tomio K, Yokota H. Application of deep learning to the classification of images from colposcopy. Oncol Lett. 2018;15:3518–3523. doi: 10.3892/ol.2018.7762. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 62.Trebeschi S, van Griethuysen JJM, Lambregts DMJ, Lahaye MJ, Parmar C, Bakers FCH, Peters NHGM, Beets-Tan RGH, Aerts HJWL. Deep learning for fully-automated localization and segmentation of rectal cancer on multiparametric MR. Sci Rep. 2017;7:5301. doi: 10.1038/s41598-017-05728-9. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 63.Olczak J, Fahlberg N, Maki A, Razavian AS, Jilert A, Stark A, Sköldenberg O, Gordon M. Artificial intelligence for analyzing orthopedic trauma radiographs. Acta Orthop. 2017;88:581–586. doi: 10.1080/17453674.2017.1344459. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 64.Khosravi P, Kazemi E, Zhan Q, Toschi M, Makmsten J, Hickman C, Meseguer M, Rosenwaks Z, Elemento O, Zaninovic N, Hajirasouliha I. Robust automated assessment of human blastocyst quality using deep learning. bioRxiv 394882. 2018 [Google Scholar]

- 65.Miyagi Y, Habara T, Hirata R, Hayashi N. Feasibility of artificial intelligence for predicting live birth without aneuploidy from a blastocyst image. Reprod Med Biol. 2019;18:204–211. doi: 10.1002/rmb2.12284. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 66.Miyagi Y, Habara T, Hirata R, Hayashi N. Feasibility of deep learning for predicting live birth from a blastocyst image in patients classified by age. Reprod Med Biol. 2019;18:190–203. doi: 10.1002/rmb2.12284. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 67.Sideri M, Garutti P, Costa S, Cristiani P, Schincaglia P, Sassoli de Bianchi P, Naldoni C, Bucchi L. Accuracy of colposcopically directed biopsy: Results from an online quality assurance programme for colposcopy in a population-based cervical screening setting in Italy. BioMed Res Int. 2015;2015:614035. doi: 10.1155/2015/614035. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 68.Sideri M, Spolti N, Spinaci L, Sanvito F, Ribaldone R, Surico N, Bucchi L. Interobserver variability of colposcopic interpretations and consistency with final histologic results. J Low Genit Tract Dis. 2004;8:212–216. doi: 10.1097/00128360-200407000-00009. [DOI] [PubMed] [Google Scholar]

- 69.Massad LS, Jeronimo J, Katki HA, Schiffman M, National Institutes of Health/American Society for Colposcopy and Cervical Pathology Research Group The accuracy of colposcopic grading for detection of high grade cervical intraepithelial neoplasia. J Low Genit Tract Dis. 2009;13:137–144. doi: 10.1097/LGT.0b013e31819308d4. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 70.LeCun Y, Haffner P, Bottou L, Bengio Y. Springer; Berlin, Heidelberg: 1999. Object recognition with gradient-based learning. In Shape, contour and grouping in computer vision. [Google Scholar]

- 71.Hu J, Shen L, Sun G. Squeeze-and-excitation networks. Proceedings of the IEEE conference on computer vision and pattern recognition. 2018:7132–7141. [Google Scholar]

- 72.Kudva V, Prasad K, Guruvare S. Automation of detection of cervical cancer using convolutional neural networks. Crit Rev Biomed Eng. 2018;46:135–145. doi: 10.1615/CritRevBiomedEng.2018026019. [DOI] [PubMed] [Google Scholar]

- 73.Esteva A, Kuprel B, Novoa RA, Ko J, Swetter SM, Blau HM, Thrun S. Dermatologist-level classification of skin cancer with deep neural networks. Nature. 2017;542:115–118. doi: 10.1038/nature21056. [DOI] [PMC free article] [PubMed] [Google Scholar]

Data Availability Statement

The datasets generated and/or analyzed during the present study are not publicly available, since data sharing is not approved by the Institutional Review Board of Shikoku Cancer Center (approval no. 2017-81).