Abstract

PURPOSE

We conducted a randomized controlled trial to compare the effectiveness of adding various forms of enhanced external support to practice facilitation on primary care practices’ clinical quality measure (CQM) performance.

METHODS

Primary care practices across Washington, Oregon, and Idaho were eligible if they had fewer than 10 full-time clinicians. Practices were randomized to practice facilitation only, practice facilitation and shared learning, practice facilitation and educational outreach visits, or practice facilitation and both shared learning and educational outreach visits. All practices received up to 15 months of support. The primary outcome was the CQM for blood pressure control. Secondary outcomes were CQMs for appropriate aspirin therapy and smoking screening and cessation. Analyses followed an intention-to-treat approach.

RESULTS

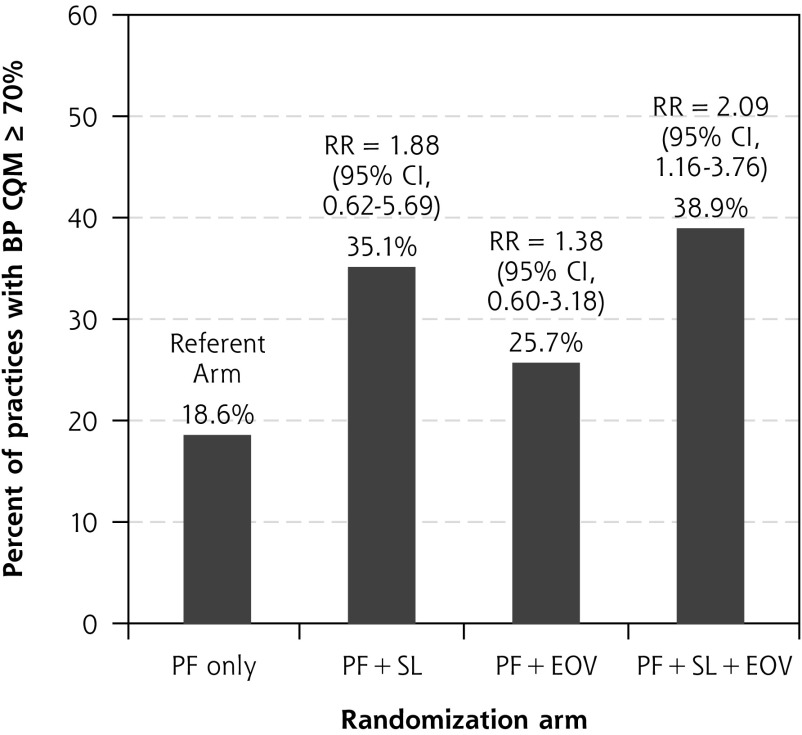

Of 259 practices recruited, 209 agreed to be randomized. Only 42% of those offered educational outreach visits and 27% of those offered shared learning participated in these enhanced supports. CQM performance improved within each study arm for all 3 cardiovascular disease CQMs. After adjusting for differences between study arms, CQM improvements in the 3 enhanced practice support arms of the study did not differ significantly from those seen in practices that received practice facilitation alone (omnibus P = .40 for blood pressure CQM). Practices randomized to receive both educational outreach visits and shared learning, however, were more likely to achieve a blood pressure performance goal in 70% of patients compared with those randomized to practice facilitation alone (relative risk = 2.09; 95% CI, 1.16-3.76).

CONCLUSIONS

Although we found no significant differences in CQM performance across study arms, the ability of a practice to reach a target level of performance may be enhanced by adding both educational outreach visits and shared learning to practice facilitation.

Key words: primary health care, cardiovascular disease, quality improvement, chronic illness, prevention, health promotion, quantitative methods, health services, organizational change, practice-based research

INTRODUCTION

Past efforts to transform primary care have included practice redesign based on medical home principles and adoption of electronic health records.1–4 More recently, primary care practices face increasing expectations to improve the quality of care they deliver to their patients.5,6 The Centers for Medicare & Medicaid Services (CMS) and others have moved to value-based reimbursement tied to improved performance on quality metrics.7 Gains in quality of care have been uneven across primary care settings and have faltered for some indicators.8 For example, from 1999 to 2016, there was no consistent improvement in blood pressure (BP) control among patients treated for hypertension.9

In 2015, the Agency for Healthcare Research and Quality launched the EvidenceNOW initiative, a $112 million national program that funded 7 implementation studies within regional cooperatives across the United States.10–12 The goal of the program was to understand how to best build and support the capacity of primary care practices to receive and incorporate new evidence into practice and thus improve their quality of care. The focus of improvement was on cardiovascular disease (CVD) risk factor control, thus aligning EvidenceNOW with the Department of Health and Human Services’ Million Hearts initiative. CVD remains the leading cause of avoidable morbidity and mortality in the United States.13 Clinical trials and population-based studies provide a strong evidence base for addressing 4 CVD risk factors in primary care settings through the corresponding ABCS interventions: aspirin therapy in high-risk patients, BP control, cholesterol management, and smoking screening and cessation counseling.14

Improving care quality requires developing the quality improvement (QI) capacity within primary care to support needed changes in how care is delivered. This requirement is especially true for smaller practices that comprise nearly one-half of all primary care settings.15 They often lack the staffing and resources to invest in the infrastructure and training required to provide essential competencies and resources needed to conduct effective QI activities.16,17 Even when these practices have resources and are committed to QI in principle, they often struggle with developing and implementing improvement strategies.18 Major disruptions such as clinician and staff turnover or changes in health information technology systems are common.19 In addition, these practices struggle with generating the performance reports needed to guide QI activities.20 Many have proposed providing external support to overcome these challenges and assist these practices in making changes required to improve care quality.11,21,22

Three specific external practice support strategies have some evidence of effectiveness in improving care quality in primary care settings: practice facilitation,23,24 shared learning, and educational outreach.22 Practice facilitation is delivered by a trained practice facilitator, usually external to the practice setting, who meets with those who work within a practice on a recurring basis over time to assist them with implementing a change in care delivery.24–27 As described by Berta and colleagues,26 “…facilitation is a concerted, social process that focuses on evidence-informed practice change and incorporates aspects of project management, leadership, relationship building, and communication.” Shared learning opportunities, where practices share information to learn QI practices from one another, can also improve care.28 Educational outreach occurs when a trained outside expert delivers brief educational content to a health care professional or clinical team.29,30 Although practice facilitation has a strong evidence base, little is known about the benefit of supplementing practice facilitation with shared learning, educational outreach, or both, to improve care quality in primary care settings.

Here we present the results of the Healthy Hearts Northwest (H2N) randomized controlled trial. The primary aim of the study was to compare the effectiveness of adding enhanced practice support interventions (shared learning opportunities, educational outreach visits, or both) to practice facilitation to improve performance on CVD risk factor management in smaller primary care practices. We hypothesized that improvement in clinical quality measures (CQMs) for CVD risk factors would be greater among practices assigned to the enhanced practice support arms of the study compared with practice facilitation alone.

METHODS

Study Design and Setting

H2N is 1 of 7 regional cooperatives funded by the Agency for Healthcare Research and Quality under the EvidenceNOW initiative.12 Details about the study protocol have been previously published.31 Briefly, a 2-by-2 factorial design was used to compare the effectiveness of adding shared learning, educational outreach visits, or both to practice facilitation. The trial therefore had 4 intervention arms: (1) practice facilitation alone, (2) practice facilitation and shared learning, (3) practice facilitation and educational outreach visits, and (4) practice facilitation with both shared learning and educational outreach visits. The study took place within smaller primary care practices across Washington, Oregon, and Idaho. To be eligible, practices were required to have fewer than 10 full-time clinicians in a single location and participate in stage 1 meaningful use federal certification for their electronic health record.32 This study was reviewed and approved by the Kaiser Permanente Washington Health Research Institute’s Institutional Review Board.

Interventions

Practice Facilitation

Practice facilitation support was provided by 2 organizations, Qualis Health in Washington and Idaho, and the Oregon Rural Practice Research Network (ORPRN) in Oregon. Sixteen facilitators provided 15 months of active support to the 209 randomized practices. The facilitation protocol included at least 5 face-to-face quarterly practice facilitation visits, with at least monthly contact (in-person visits, telephone calls, or e-mails) in between those in-person visits. Facilitators met with a QI team within each clinic to assist them in developing and testing plan-do-study-act cycles of improvement focused on the ABCS measures. Facilitators were guided in their activities by assessing and working with practices on 7 high-leverage changes adapted from prior work and experience with supporting medical home practice transformation: (1) embed clinical evidence into daily work, (2) use data to understand and improve care, (3) establish a regular QI process, (4) identify at-risk patients for outreach, (5) define roles and responsibilities for improving care, (6) deepen patient self-management support, and (7) link patients to resources outside of the clinic.33 Two separate in-person 1-day training sessions were held for facilitators from both organizations, and all facilitators participated in monthly telephone calls to harmonize their approach.

Shared Learning

It was not practical or feasible to offer a traditional learning collaborative given the geographic spread and timeline of the study. Instead, practices randomized to the shared learning arm of the study were offered the opportunity to visit an enrolled practice with a particularly strong or innovative approach to QI. We were concerned about the ability of these small practices to free up an individual to spend a day away from the practice for these visits. They were therefore also offered the opportunity to participate in 2 virtual 1-hour shared learning conference calls with such an exemplar practice. Exemplars were identified through nominations from practice facilitators and other members of the H2N study team. Shared learning focused on improvement strategies used by the exemplar practice and roles and responsibilities for improvement within the practice’s team. For those who participated in the telephone calls, each participating practice identified a promising approach or activity it was willing to try during the first call, then reported on its experience during the second call.

Educational Outreach Visits

The purpose of the educational outreach visits was to encourage use of a CVD risk calculator within patient encounters.34 The design of the educational outreach visit was based on the principles of academic detailing and is described in more detail elsewhere.35 Briefly, with input from a small advisory group of primary care clinicians, the study team developed the educational outreach visit protocol to address priority topics and issues related to implementing CVD risk calculation within daily clinic work. The advisory group of clinicians emphasized the need to keep the length of the educational outreach visit to less than an hour because of the high levels of competing demands faced by primary care clinicians. The educational outreach visit consisted of a 30-minute interactive webinar and telephone call between 1 or more clinicians and members of their care team within an enrolled clinic and a physician academic expert. The interaction focused on eliciting current practices, attitudes, and beliefs about CVD risk calculation, as well as perceived barriers, and on identifying specific strategies to overcome those barriers. A follow-up e-mail was sent to the participants and their practice facilitator documenting commitments made by the clinicians or members of their team during the call.

Randomization

Enrolled practices were categorized into 1 of 8 strata defined by their practice facilitation support organization (Qualis Health or ORPRN), prior practice experience obtaining customized data to drive QI (yes or no), and prioritization of the work of improving CVD risk factors (high or low). Within each stratum, practices were randomly assigned by a computer-generated randomization scheme to 1 of the 4 intervention arms.
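The stratified assignment described above can be sketched in a few lines. This is only an illustration under stated assumptions: the text says a computer-generated scheme was used but does not specify it, so the permuted blocks of 4, the field names (`org`, `data_experience`, `priority`), and the example practices are all hypothetical.

```python
import random
from collections import defaultdict
from itertools import product

ARMS = ["PF only", "PF + SL", "PF + EOV", "PF + EOV + SL"]

def stratum_of(practice):
    """Stratum key: (support organization, data experience, CVD priority)."""
    return (practice["org"], practice["data_experience"], practice["priority"])

def randomize(practices, seed=0):
    """Assign each practice to 1 of the 4 arms within its stratum.

    Assumption: permuted blocks of 4 keep the arms balanced within a
    stratum; the trial's actual scheme is not described in this detail.
    """
    rng = random.Random(seed)
    by_stratum = defaultdict(list)
    for p in practices:
        by_stratum[stratum_of(p)].append(p["id"])
    assignment = {}
    for stratum in sorted(by_stratum):
        block = []
        for pid in by_stratum[stratum]:
            if not block:  # start a new shuffled block of the 4 arms
                block = ARMS[:]
                rng.shuffle(block)
            assignment[pid] = block.pop()
    return assignment

# Hypothetical example: 5 practices in each of the 2 x 2 x 2 = 8 strata.
strata = list(product(["Qualis Health", "ORPRN"], ["yes", "no"], ["high", "low"]))
practices = [
    {"id": f"{i}-{j}", "org": o, "data_experience": d, "priority": q}
    for i, (o, d, q) in enumerate(strata)
    for j in range(5)
]
assignment = randomize(practices)
```

With blocks of 4, the first 4 practices in any stratum always receive 4 different arms, which is what keeps the arms balanced on the stratification factors.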

Data Collection and Measures

A practice questionnaire completed by an office manager in each practice was collected at baseline and provided information about the practice such as numbers of clinicians and staff, as well as characteristics of their patient population. Outcomes for the study, the CVD risk factor CQMs, were defined for each practice as the percent of patients in the risk factor target population who met the defined clinical quality criteria. All CQMs were endorsed by CMS.36 The primary study outcome, as stated in our published study protocol, was the CQM for BP control (CMS 165),37 defined as the percent of patients aged 18 to 85 years with previously diagnosed hypertension (denominator) from each practice who achieved adequate blood pressure control (<140/90 mm Hg) (numerator). Secondary outcomes were appropriate aspirin use (CMS 164)38 and tobacco use screening and cessation (CMS 138).39 We a priori chose BP control as the primary outcome because improvements in this measure require more marked changes in clinical care of patients than changes in workflow and documentation, which alone can sometimes result in improved rates of aspirin use or tobacco screening and cessation. The cholesterol and statin therapy measure (CMS 347) was under revision at the start of the study based on recent changes in evidence-based clinical guidelines; as a result, practices experienced considerable challenges in obtaining this measure from their electronic health record, so it is not included in the analysis. Each practice submitted numerator and denominator data on each CQM measure using a rolling 12-month look-back period. The study protocol called for practices to submit CQM data quarterly.

Analyses

The primary aim of the study was to compare the effectiveness of adding shared learning opportunities, educational outreach visits, or both to practice facilitation on the 3 CQMs. Our primary outcome was the practice-level change in the BP CQM from baseline to the postintervention follow-up. We defined baseline as the 2015 calendar year before randomization (January 1, 2015 to December 31, 2015) and follow-up as calendar year 2017 (January 1, 2017 to December 31, 2017).

Before analysis, CQM data were assessed for data quality. Two members of the coordinating center analysis team (M.L.A., E.S.O.), who were blinded to study arm, independently identified and adjudicated highly improbable values by examining trends in the data submitted by each practice. Discrepant evaluations were reviewed for consensus, and values found to be implausible were set to missing. Missing CQM data for the primary time points were imputed when possible by using values from adjacent quarters (next quarter carried backward for baseline and last value carried forward for follow-up measures). For our primary outcome, BP, data were imputed for only 6 practices in 2015 and 5 practices in 2017. As a sensitivity analysis, primary and secondary analyses were repeated using the original data as submitted; as study conclusions were unchanged, only results including imputed outcomes are reported.
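The imputation rule above (next quarter carried backward for baseline, last value carried forward for follow-up) can be illustrated as follows. This is a minimal sketch, assuming that only the immediately adjacent quarter is used and that missing or implausible values are stored as `None`; the function names and the example series are hypothetical.

```python
from typing import Optional, Sequence

def impute_baseline(values: Sequence[Optional[float]], idx: int) -> Optional[float]:
    """Next quarter carried backward: use quarter idx, else quarter idx + 1."""
    if values[idx] is not None:
        return values[idx]
    if idx + 1 < len(values):
        return values[idx + 1]
    return None  # nothing adjacent to borrow from

def impute_follow_up(values: Sequence[Optional[float]], idx: int) -> Optional[float]:
    """Last value carried forward: use quarter idx, else quarter idx - 1."""
    if values[idx] is not None:
        return values[idx]
    if idx - 1 >= 0:
        return values[idx - 1]
    return None  # nothing adjacent to borrow from

# Hypothetical quarterly BP CQM values (%) for one practice.
quarterly = [None, 60.0, 61.5, None]
baseline = impute_baseline(quarterly, 0)                     # borrows the next quarter
follow_up = impute_follow_up(quarterly, len(quarterly) - 1)  # borrows the prior quarter
```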

To assess intervention effects on the primary study outcome of BP, we fit a linear regression model at the practice level, with change in the percent of patients in the practice achieving the BP CQM target as the dependent variable and indicators for intervention groups as independent variables. Models used generalized estimating equations with a robust variance estimator, and accounted for potential correlation between practices with the same practice facilitator (cluster).40 Models adjusted for practice facilitation support organization (Qualis Health or ORPRN), baseline prior practice experience obtaining customized data reports (yes or no), baseline prioritization of QI work (high or low), and the baseline BP CQM. By adjusting for practice facilitation support organization, we intended to control for differences in history and background regarding primary care transformation efforts in Oregon vs Washington and Idaho, and other unmeasured differences between practice context in these 2 geographic areas. To assess statistical significance, we used the Fisher protected least significant difference approach, to control for multiple comparisons. We first calculated an omnibus F test to assess whether there were any significant differences between intervention groups, and considered pairwise comparisons only if that test was statistically significant.
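The gating logic of the Fisher protected least significant difference approach can be sketched as below. The function name, the 0.05 threshold, and the pairwise P values in the example are assumptions for illustration; only the omnibus P = .40 for the BP CQM comes from the reported results.

```python
def protected_lsd(omnibus_p, pairwise_p, alpha=0.05):
    """Return the pairwise comparisons that may be declared significant.

    Pairwise tests are considered only if the omnibus test is itself
    significant; otherwise no pairwise comparison is interpreted.
    """
    if omnibus_p >= alpha:
        return []
    return sorted(name for name, p in pairwise_p.items() if p < alpha)

# Hypothetical pairwise P values; omnibus P = .40 as reported for the BP CQM.
pairwise = {"PF + SL vs PF only": 0.51, "PF + EOV + SL vs PF only": 0.31}
to_report = protected_lsd(0.40, pairwise)
```

Because the omnibus test acts as a gate, a nonsignificant omnibus result ends the procedure regardless of the individual pairwise P values.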

Analyses followed an intention-to-treat approach, analyzing practices according to randomized group assignment regardless of engagement with and participation in intervention activities. We attempted to obtain outcome data for all randomized practices, including those that did not actively participate in the intervention or dropped out of the study. To account for potential bias due to missing outcome data, however, we used inverse probability weights in the final outcomes model to balance intervention groups with respect to baseline practice characteristics. To construct the weights, we fit a logistic regression model with a binary indicator if the CQM outcome was observed as the dependent variable (yes or no), and practice characteristics as independent variables. The inverse of the estimated probability that the CQM outcome was observed was used for weighting in the outcome model. Similar analyses were conducted for secondary outcomes, the aspirin therapy and smoking CQMs.
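The weighting step can be illustrated with a simplified sketch. The analysis above fit a logistic regression on several practice characteristics; here a single categorical characteristic is swapped in, because for one categorical predictor the fitted probabilities equal the observed proportion within each category. The field names (`location`, `observed`) and the example data are hypothetical.

```python
from collections import Counter

def inverse_probability_weights(practices, key):
    """Weight each practice with an observed outcome by 1 / P(observed | key)."""
    totals = Counter(p[key] for p in practices)
    observed = Counter(p[key] for p in practices if p["observed"])
    weights = {}
    for p in practices:
        if p["observed"]:
            prob_observed = observed[p[key]] / totals[p[key]]
            weights[p["id"]] = 1.0 / prob_observed
    return weights

# Hypothetical data: 8 of 10 rural and 10 of 10 urban practices reported the CQM.
practices = (
    [{"id": f"r{i}", "location": "rural", "observed": i < 8} for i in range(10)]
    + [{"id": f"u{i}", "location": "urban", "observed": True} for i in range(10)]
)
weights = inverse_probability_weights(practices, "location")
```

In this example each reporting rural practice counts for 1.25 practices, so the weighted sample again represents all 20 randomized practices.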

In addition to the primary analysis, we also assessed group differences in practices’ ability to reach the Million Hearts goal of 70% or higher on the BP CQM at follow-up. Our rationale for doing so was that practices enrolled in the study were both told about this target and provided a visual dashboard during each quarterly meeting with their practice facilitator that showed how close they were to this goal. We fit a generalized linear model with log link and robust variance estimation to estimate relative risks of achieving the 70% threshold for each intervention group relative to the practice facilitation–only group. The model adjusted for the same variables as the primary outcome model, and accounted for clustering by practice facilitator. Analyses were performed using Stata statistical software, version 15.0 (StataCorp, LLC).

RESULTS

A total of 259 smaller primary care practices enrolled in the study. Of these, 50 withdrew before randomization, resulting in 209 randomized practices (Figure 1). Overall, the practices received an average of 7.9 (SD 3.5) in-person practice facilitation visits lasting 30 minutes or more during the 15-month intervention period. The number of visits did not differ significantly by study arm, with a range of 7.6 to 8.4 visits across arms. Of the 104 practices randomized to educational outreach visits either alone or in combination with a shared learning site visit, 44 (42%) participated. Of the 104 randomized to a shared learning site visit either alone or in combination with educational outreach visit, 28 (27%) participated.

Figure 1.

CONSORT diagram of practices in the Healthy Hearts Northwest (H2N) randomized trial.

CONSORT = Consolidated Standards of Reporting Trials; EOV = educational outreach visit; PF = practice facilitation; SL = shared learning.

Practice characteristics are shown in Table 1. Just over one-half of practices (53%) had 2 to 5 clinicians, slightly more than 43% were rural, and 46% were owned by independent physicians.

Table 1.

Practice and Patient Characteristics, by Randomization Arm and Overall

| Characteristic | PF Only (n = 53) | PF + SL (n = 52) | PF + EOV (n = 52) | PF + EOV + SL (n = 52) | Overall (N = 209) |
|---|---|---|---|---|---|
| Practices | | | | | |
| Site, No. (%) | | | | | |
| Qualis Health | 28 (52.8) | 28 (53.9) | 28 (53.9) | 28 (53.9) | 112 (53.6) |
| ORPRN | 25 (47.2) | 24 (46.2) | 24 (46.2) | 24 (46.2) | 97 (46.4) |
| Location, No. (%) | | | | | |
| Rural | 27 (50.9) | 20 (38.5) | 18 (34.6) | 26 (50.0) | 91 (43.5) |
| Urban | 26 (49.1) | 32 (61.5) | 34 (65.4) | 26 (50.0) | 118 (56.5) |
| Clinicians, No. (%) | | | | | |
| 1 (solo) | 11 (20.8) | 10 (19.2) | 9 (17.3) | 8 (15.4) | 38 (18.2) |
| 2-5 | 30 (56.6) | 26 (50.0) | 27 (51.9) | 28 (53.9) | 111 (53.1) |
| ≥6 | 12 (22.6) | 16 (30.8) | 16 (30.8) | 16 (30.8) | 60 (28.7) |
| Average panel size for full-time clinician, median (IQR) | 1,100 (1,229) | 1,000 (1,025) | 1,000 (919) | 1,000 (1,000) | 1,000 (1,029) |
| Patient visits per week at practice, median No. (IQR) | 137 (180) | 160 (266) | 148 (275) | 155 (314) | 150 (266) |
| Organizational type, No. (%) | | | | | |
| FQHC | 7 (13.2) | 6 (11.5) | 3 (5.8) | 6 (11.5) | 22 (10.5) |
| Health/hospital system | 15 (28.3) | 19 (36.5) | 26 (50.0) | 21 (40.4) | 81 (38.8) |
| IHS/tribal | 3 (5.7) | 1 (1.9) | 3 (5.8) | 3 (5.8) | 10 (4.8) |
| Independent | 28 (52.8) | 26 (50.0) | 20 (38.5) | 22 (42.3) | 96 (45.9) |
| Specialty, No. (%) | | | | | |
| Family medicine | 42 (79.3) | 39 (75.0) | 47 (90.4) | 42 (80.8) | 170 (81.3) |
| Internal medicine | 2 (3.8) | 1 (1.9) | 2 (3.9) | 2 (3.9) | 7 (3.4) |
| Mixed | 9 (17.0) | 12 (23.1) | 3 (5.8) | 8 (15.4) | 32 (15.3) |
| Patients | | | | | |
| White, mean (SD), % | 81.5 (25.1) | 89.1 (11.6) | 83.9 (22.7) | 82.4 (24.6) | 84.0 (22.0) |
| Hispanic, mean (SD), % | 11.2 (16.0) | 9.3 (16.8) | 7.0 (7.3) | 9.9 (14.3) | 9.4 (14.0) |
| Female, mean (SD), % | 54.0 (6.7) | 53.6 (6.9) | 56.9 (8.0) | 53.1 (9.0) | 54.3 (7.8) |
| Age-group, mean (SD), % | | | | | |
| <17 y | 13.9 (9.5) | 10.9 (7.9) | 13.3 (9.6) | 13.7 (8.7) | 13.0 (9.0) |
| 18-39 y | 24.6 (9.7) | 22.4 (6.7) | 24.1 (9.3) | 29.4 (12.9) | 25.1 (10.1) |
| 40-59 y | 30.3 (12.3) | 29.0 (6.2) | 28.9 (10.4) | 27.2 (6.0) | 28.9 (9.3) |
| 60-75 y | 20.7 (9.7) | 28.1 (10.6) | 19.7 (7.3) | 21.2 (11.8) | 22.3 (10.3) |
| >75 y | 10.4 (8.1) | 9.5 (6.0) | 13.9 (17.8) | 8.4 (6.2) | 10.6 (10.9) |
| Insurance type, mean (SD), % | | | | | |
| Medicare | 23.6 (18.2) | 25.1 (12.9) | 26.8 (18.7) | 23.4 (16.4) | 24.6 (16.7) |
| Medicaid | 25.9 (22.3) | 22.5 (17.8) | 22.6 (17.7) | 26.0 (21.4) | 24.4 (20.0) |
| Dual (Medicare and Medicaid) | 4.1 (8.3) | 2.4 (5.4) | 5.7 (9.3) | 3.0 (5.0) | 3.8 (7.3) |
| Commercial | 32.0 (22.4) | 41.5 (22.4) | 34.6 (22.5) | 37.5 (22.0) | 36.2 (22.4) |
| Uninsured | 8.8 (17.8) | 4.4 (5.4) | 7.1 (9.8) | 8.8 (13.1) | 7.4 (12.8) |
| Other | 5.8 (12.4) | 4.0 (8.1) | 3.1 (7.7) | 1.6 (4.8) | 3.7 (8.9) |
| Baseline BP CQM | | | | | |
| Practice had achieved Million Hearts goal,ᵃ No. (%) | 10 (21.3) | 13 (25.5) | 13 (28.3) | 8 (16.7) | 44 (22.9) |

BP = blood pressure; CQM = clinical quality measure; EOV = educational outreach visit; FQHC = Federally Qualified Health Center; IHS = Indian Health Service; IQR = interquartile range; ORPRN = Oregon Rural Practice Research Network; PF = practice facilitation; QI = quality improvement; SL = shared learning.

Notes: Missing data on practice characteristics: panel size not reported for 30 practices that did not empanel patients, missing for 16 other practices; patient visits/week, 1 practice; centralized QI, 2 practices; prior experience customizing data, 5 practices; prioritization of QI work, 5 practices. Missing data on patient population: race, 65 practices; Hispanic ethnicity, 72 practices; female sex, 53 practices; age, 64 practices; insurance type, 45 practices. Missing data on baseline BP CQM: 17 practices.

ᵃ Goal is ≥70% of patients with history of hypertension achieving BP <140/90 mm Hg.

A total of 183 (87.6%) of the 209 randomized practices successfully submitted numerator and denominator outcome data for both baseline (2015) and postintervention follow-up (2017) for the BP CQM, compared with 141 (67.5%) for the aspirin CQM and 144 (68.9%) for the smoking CQM. Across all practices, performance on each CQM improved from baseline to follow-up, from 61.6% to 64.7% of patients meeting the clinical target for BP, from 67.4% to 71.3% for aspirin, and from 74.6% to 81.6% for smoking. Unadjusted baseline and follow-up CQM outcomes by study arm are shown in Table 2. For example, for practices in the practice facilitation–only arm, the mean percent of patients with a prior hypertension diagnosis who had controlled BP (<140/90 mm Hg) was 58.2% at baseline and 62.5% at the postintervention follow-up. At baseline, CQM attainment tended to be highest for the smoking CQM across study arms and lowest for the BP CQM.

Table 2.

Baseline (2015) and Follow-up (2017) CQM Outcomes by Study Arm

Values are eligible patients meeting CQM, mean (SD), %.

| CQM Outcomeᵃ | PF Only, 2015 | PF Only, 2017 | PF + SL, 2015 | PF + SL, 2017 | PF + EOV, 2015 | PF + EOV, 2017 | PF + EOV + SL, 2015 | PF + EOV + SL, 2017 |
|---|---|---|---|---|---|---|---|---|
| Blood pressure (183 practices) | 58.2 (11.0) | 62.5 (10.8) | 63.1 (12.5) | 65.8 (11.2) | 65.6 (10.4) | 65.1 (10.7) | 59.6 (14.4) | 65.1 (12.1) |
| Aspirin (141 practices) | 67.3 (16.6) | 69.3 (16.2) | 65.3 (17.0) | 71.0 (10.3) | 69.0 (16.2) | 71.1 (12.8) | 68.5 (15.6) | 73.8 (12.8) |
| Smoking (144 practices) | 68.4 (28.2) | 79.6 (22.1) | 77.4 (19.6) | 83.8 (17.7) | 75.4 (23.5) | 80.3 (22.6) | 76.7 (18.7) | 82.2 (20.3) |

CQM = clinical quality measure; CVD = cardiovascular disease; EOV = educational outreach visit; PF = practice facilitation; SL = shared learning.

ᵃ CQM outcomes are defined as the percent of the eligible target population (denominator) meeting the CQM (numerator) for each CVD quality measure. Assessed only for practices with valid observations for both 2015 and 2017.

Estimates of intervention effects on the CQM outcomes during the 2-year study period are shown in Table 3. Improvements in CQM performance over time were found within each study arm, but there were no statistically significant differences between arms (overall P values ≥.40 for all comparisons). For the BP and aspirin CQMs, the largest improvements (adjusted mean changes of greater than 4%) were seen in the arms that included shared learning, either with practice facilitation or in combination with both practice facilitation and educational outreach visits.

Table 3.

Mean Changes in CQM Outcomes From Baseline (2015) to Follow-up (2017)

Change = adjustedᵇ change,ᶜ mean (95% CI). Difference = adjustedᵇ difference in mean change vs PF only, mean difference (95% CI).

| CQM Outcomeᵃ | Change: PF Only | Change: PF + SL | Change: PF + EOV | Change: PF + EOV + SL | Difference: PF + SL | Difference: PF + EOV | Difference: PF + EOV + SL | Overall P Valueᵈ |
|---|---|---|---|---|---|---|---|---|
| Blood pressure | 2.46 (−2.03 to 6.95) | 4.80 (0.08 to 9.53) | 2.78 (−0.67 to 6.23) | 4.84 (2.53 to 7.16) | 2.34 (−4.71 to 9.39) | 0.32 (−6.59 to 7.22) | 2.38 (−2.31 to 7.08) | .40 |
| Aspirin | 2.28 (−3.02 to 7.57) | 4.02 (1.25 to 6.78) | 3.49 (0.24 to 6.74) | 5.54 (−0.16 to 11.25) | 1.74 (−4.82 to 8.30) | 1.21 (−4.74 to 7.16) | 3.26 (−6.05 to 12.58) | .90 |
| Smoking | 9.41 (3.27 to 15.55) | 7.52 (0.07 to 14.96) | 6.25 (0.37 to 12.12) | 5.87 (−0.05 to 11.79) | −1.90 (−11.64 to 7.85) | −3.17 (−12.83 to 6.50) | −3.54 (−11.69 to 4.61) | .80 |

CQM = clinical quality measure; CVD = cardiovascular disease; EOV = educational outreach visit; PF = practice facilitation; SL = shared learning.

ᵃ CQM outcomes are defined as the percent of eligible target population (denominator) meeting the clinical quality standard (numerator) for each CVD quality measure.

ᵇ Models adjusted for site, prior experience obtaining customized data, prioritization of quality improvement work, and the 2015 measure of the CQM outcome.

ᶜ Positive value for change indicates an increase (improvement) in the mean percent of the practices’ target population meeting the CQM; negative value for change indicates a decrease.

ᵈ Overall P value is a global test for whether the mean change in CQM outcome in any of the study arms receiving enhanced services (PF + SL, PF + EOV, or PF + EOV + SL) differs when compared with that in the PF-only group.

Regarding differences across arms in practices’ ability to achieve the Million Hearts performance goal of 70% on the BP CQM14 at follow-up, the likelihood of reaching this performance goal was higher among practices randomized to receive shared learning, with 35.1% (95% CI, 19.2%-51.0%) achieving this mark among practices in the practice facilitation and shared learning arm, and 38.9% (95% CI, 26.7%-51.1%) in the practice facilitation and both shared learning and educational outreach visit arm, compared with 18.6% (95% CI, 4.0%-33.3%) in the practice facilitation–only arm (Figure 2). This difference reached statistical significance for practices randomized to receive both educational outreach visits and shared learning, with roughly a doubling of the likelihood of achieving the goal compared with practice facilitation alone (relative risk, 2.09; 95% CI, 1.16-3.76), but was not significant for practice facilitation and shared learning compared with practice facilitation alone (relative risk, 1.88; 95% CI, 0.62-5.69).
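As a rough consistency check, the ratio of the reported percentages can be compared with the reported relative risks. This is a crude calculation only: the published relative risks come from an adjusted model that accounts for clustering, which this sketch does not attempt to reproduce, and the helper name `risk_ratio` is hypothetical.

```python
def risk_ratio(p_exposed, p_reference):
    """Crude ratio of two proportions (no adjustment, no clustering)."""
    return p_exposed / p_reference

pf_only = 0.186                      # PF only: share reaching the 70% goal
rr_both = risk_ratio(0.389, pf_only) # PF + EOV + SL vs PF only
rr_sl = risk_ratio(0.351, pf_only)   # PF + SL vs PF only
print(f"PF + EOV + SL vs PF only: {rr_both:.2f}")
print(f"PF + SL vs PF only: {rr_sl:.2f}")
```

Both crude ratios fall close to the adjusted relative risks reported above (2.09 and 1.88).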

Figure 2.

Achievement of Million Hearts goal of ≥70% of eligible patients with controlled blood pressure (<140/90 mm Hg) at follow-up (2017).

BP = blood pressure; CQM = clinical quality measure; EOV = educational outreach visit; PF = practice facilitation; RR = relative risk; SL = shared learning.

DISCUSSION

Smaller primary care practices provided with external support had modest improvements in their CQMs for CVD risk factors, although absolute changes in performance did not differ significantly between practices randomized to receive enhanced support (shared learning, educational outreach visits, or both) and practices randomized to receive practice facilitation alone. Those randomized to receive both educational outreach visits and shared learning in addition to practice facilitation, however, were more likely to achieve the Million Hearts BP performance goal of at least 70% of eligible patients compared with those randomized to practice facilitation alone. The change in the BP CQM from baseline to postintervention follow-up within the arms of the study that included shared learning was approximately twice as large as that seen with practice facilitation alone, but again, the observed differences between arms were not significant.

The conclusions from our intention-to-treat analysis are limited by the low rates of participation of practices in the enhanced support interventions: 42% among those offered an educational outreach visit and 27% among those offered shared learning. We redid the analysis using a per-protocol approach, that is, including only practices that participated in the interventions as described in the methods. Because of the small sample size, these per-protocol analyses were restricted to estimation of the main effects. We again did not find any significant differences (data not shown), so the results and conclusions were not altered by this analysis. In addition, we looked for evidence of participation bias by comparing participants with nonparticipants based on practice characteristics (size, ownership, rural vs urban location), their baseline BP CQM performance, and the priority they placed on improving CVD risk factors, and found no differences (data not shown).

It is possible that participating in the educational outreach visits, shared learning, or both exceeded the capacity of many practices to invest in additional improvement efforts beyond meeting with a practice facilitator. This barrier would be consistent with findings from other studies that describe primary care practice transformation as “hard work”41 with highly variable change capabilities across practices, and the development of “change fatigue.”3 One H2N facilitator noted in field notes: “Clinic feels overwhelmed by randomization arm ... even though I explained that it was simply an added learning opportunity … .” Some H2N practices had multiple QI initiatives underway at the same time as H2N. For one practice, the facilitator commented: “Single clinician site involved in 3 QI initiatives. Need to prioritize time for staff involvement in meetings and work across [other] initiatives. Not able to stretch to make this happen.” It was not uncommon for a practice to ask their facilitator for a break or some time off from working on CVD risk factor improvement: “…we are putting them on a hiatus period where they are not scheduling new visits with H2N but will continue to receive communications about the project.” In addition, many enrolled practices experienced a considerable disruption such as the departure of a clinician or office manager during the study, similar to findings from other EvidenceNOW collaboratives.19 Finally, it is worth noting that we were unable to provide any financial incentives or payments to the enrolled practices for participating in the study, limiting their ability to devote resources to study-related activities.

Why were practices randomized to both educational outreach visits and shared learning more likely to achieve a performance of at least 70% of patients with BP control, when the absolute change in this CQM was not significant? This finding may be attributable to the previously observed threshold effect in pay-for-performance evaluations.42 That is, people strive to reach a goal, but then stop further improvement once it is reached. Practices enrolled in this study were provided with feedback on their performance that included the 70% Million Hearts performance goal for BP. Given the overall baseline level of performance of 63.4% for the BP CQM, some practices may have curtailed further efforts to improve once they reached the 70% goal, limiting the absolute change in the BP CQM seen across study arms. When analyzed as a dichotomous outcome, however, this threshold resulted in a significant finding.
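The contrast between the continuous and the dichotomous outcome can be illustrated with a minimal sketch. The practice-level rates below are hypothetical toy numbers chosen only for illustration; they are not study data, and the function names are assumptions introduced here. The sketch shows how 2 arms can have the same mean change in the BP CQM while differing in the share of practices that reach the 70% goal.

```python
from statistics import mean

GOAL = 0.70  # the 70% Million Hearts BP goal named in the text


def mean_change(baseline, follow_up):
    """Average practice-level change in the BP CQM (continuous outcome)."""
    return mean(f - b for b, f in zip(baseline, follow_up))


def share_at_goal(follow_up, goal=GOAL):
    """Fraction of practices at or above the goal (dichotomous outcome)."""
    return sum(rate >= goal for rate in follow_up) / len(follow_up)


# Hypothetical practice-level BP control rates, NOT study data.
baseline = [0.63, 0.63, 0.63, 0.63]
facilitation_only = [0.60, 0.62, 0.64, 0.78]  # one practice improves a lot
both_supports = [0.70, 0.70, 0.71, 0.53]      # several stop near the goal

# Both toy arms have a mean change of about 0.03, yet the share of
# practices at goal differs, which drives the relative risk.
relative_risk = share_at_goal(both_supports) / share_at_goal(facilitation_only)

print(round(mean_change(baseline, facilitation_only), 3))
print(round(mean_change(baseline, both_supports), 3))
print(share_at_goal(facilitation_only), share_at_goal(both_supports))
print(relative_risk)
```

In this toy example, practices clustering just at the goal leave the mean change unchanged but raise the proportion at goal, which is the pattern a threshold effect would be expected to produce.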

In addition to limited participation in the shared learning and educational outreach visit interventions, a few other limitations deserve note. These interventions were “light touch” with a low dose of contact time with the practices compared with the practice facilitation intervention. Given this light touch, the results presented here may not be entirely unexpected. It is also possible that observed improvements in CVD risk factor CQMs may be attributable to external factors such as the CMS Quality Payment Program.43 Findings from studies about the influence of financial incentives on quality of care are mixed at best, however, and some have concluded that evidence is lacking.44

Although the observed changes in performance on these CVD risk factors are small, they have great potential for population-level impact on CVD events such as heart attacks and strokes.45 For example, approximately 200,000 patients (in the 183 practices that reported valid measures at both time points) had a diagnosis of hypertension. The observed increase of 3% in the BP CQM translates to 6,000 additional hypertensive patients achieving a BP of less than 140/90 mm Hg across these practices. Given that people with BP above this threshold develop cardiovascular disease 5.0 years earlier,46 these modest improvements in performance may have substantial impact on subsequent cardiovascular events and mortality.
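The arithmetic behind this estimate can be checked with a short sketch. It assumes the 3% increase is an absolute change of 3 percentage points applied to the approximate count of 200,000 hypertensive patients; the function and variable names are illustrative only.

```python
def additional_patients_at_goal(n_patients, change_in_rate):
    """Extra patients meeting the measure for a given absolute rate change."""
    return round(n_patients * change_in_rate)


hypertensive_patients = 200_000  # approximate count stated in the text
bp_cqm_increase = 0.03           # assumed absolute change (3 percentage points)

print(additional_patients_at_goal(hypertensive_patients, bp_cqm_increase))  # 6000
```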

In conclusion, smaller practices can improve their performance on CVD risk factors with external support, and reaching a target level of performance may be enhanced by adding external supports such as educational outreach visits and shared learning opportunities to practice facilitation. These practices may lack the capacity to participate in these additional external supports, however. Additional internal resources, time, and people to accept the support offered may be required to achieve significant improvements in care quality.18,47,48

Acknowledgments

We deeply appreciate the practice facilitators from Qualis Health and the Oregon Rural Practice Research Network who devoted themselves tirelessly to outreach and support activities for the practices, and all the primary care practices across Washington, Oregon, and Idaho who bravely agreed to participate in this ambitious study.

Footnotes

Conflicts of interest: authors report none.

To read or post commentaries in response to this article, see it online at http://www.AnnFamMed.org/content/17/Suppl_1/S40.

Funding support: This project was supported by grant number R18HS023908 from the Agency for Healthcare Research and Quality. Additional support was provided by the National Center for Advancing Translational Sciences of the National Institutes of Health under Award Number UL1 TR002319.

Disclaimer: The content is solely the responsibility of the authors and does not necessarily represent the official views of the Agency for Healthcare Research and Quality or the official views of the National Institutes of Health.

Ethics approval: Kaiser Permanente Washington Health Research Institute’s Institutional Review Board reviewed and approved this study.

Trial registration: This trial is registered with www.clinicaltrials.gov Identifier# NCT02839382. Registered July 18, 2016.

References

- 1.Jackson GL, Powers BJ, Chatterjee R, et al. The patient centered medical home. A systematic review. Ann Intern Med. 2013;158(3):169–178.

- 2.Reid RJ, Coleman K, Johnson EA, et al. The Group Health medical home at year two: cost savings, higher patient satisfaction, and less burnout for providers. Health Aff (Millwood). 2010;29(5):835–843.

- 3.Nutting PA, Crabtree BF, Miller WL, Stange KC, Stewart E, Jaén C. Transforming physician practices to patient-centered medical homes: lessons from the National Demonstration Project. Health Aff (Millwood). 2011;30(3):439–445.

- 4.Lynch K, Kendall M, Shanks K, et al. The Health IT Regional Extension Center Program: evolution and lessons for health care transformation. Health Serv Res. 2014;49(1 Pt 2):421–437.

- 5.Mostashari F. The paradox of size: how small, independent practices can thrive in value-based care. Ann Fam Med. 2016;14(1):5–7.

- 6.Bodenheimer T, Ghorob A, Willard-Grace R, Grumbach K. The 10 building blocks of high-performing primary care. Ann Fam Med. 2014;12(2):166–171.

- 7.Rajkumar R, Conway PH, Tavenner M. CMS—engaging multiple payers in payment reform. JAMA. 2014;311(19):1967–1968.

- 8.Levine DM, Linder JA, Landon BE. The quality of outpatient care delivered to adults in the United States, 2002 to 2013. JAMA Intern Med. 2016;176(12):1778–1790.

- 9.Dorans KS, Mills KT, Liu Y, He J. Trends in prevalence and control of hypertension according to the 2017 American College of Cardiology/American Heart Association (ACC/AHA) Guideline. J Am Heart Assoc. 2018;7(11):e008888.

- 10.Shoemaker SJ, McNellis RJ, DeWalt DA. The capacity of primary care for improving evidence-based care: early findings from AHRQ’s EvidenceNOW. Ann Fam Med. 2018;16(Suppl 1):S2–S4.

- 11.Cohen DJ, Balasubramanian BA, Gordon L, et al. A national evaluation of a dissemination and implementation initiative to enhance primary care practice capacity and improve cardiovascular disease care: the ESCALATES study protocol. Implement Sci. 2016;11(1):86.

- 12.Meyers D, Miller T, Genevro J, et al. EvidenceNOW: balancing primary care implementation and implementation research. Ann Fam Med. 2018;16(Suppl 1):S5–S11.

- 13.Murphy SL, Kochanek KD, Xu J, Arias E. Mortality in the United States, 2014. NCHS Data Brief. 2015;(229):1–8.

- 14.Frieden TR, Berwick DM. The “Million Hearts” initiative—preventing heart attacks and strokes. N Engl J Med. 2011;365(13):e27.

- 15.Liaw WR, Jetty A, Petterson SM, Peterson LE, Bazemore AW. Solo and small practices: a vital, diverse part of primary care. Ann Fam Med. 2016;14(1):8–15.

- 16.Nutting PA, Crabtree BF, McDaniel RR. Small primary care practices face four hurdles—including a physician-centric mind-set—in becoming medical homes. Health Aff (Millwood). 2012;31(11):2417–2422.

- 17.Arar NH, Noel PH, Leykum L, Zeber JE, Romero R, Parchman ML. Implementing quality improvement in small, autonomous primary care practices: implications for the patient-centred medical home. Qual Prim Care. 2011;19(5):289–300.

- 18.Taylor EF, Peikes D, Genevro J, Meyers D. Creating Capacity for Improvement in Primary Care. The Case for Developing a Quality Improvement Infrastructure. Rockville, MD: Agency for Healthcare Research and Quality; 2013. http://www.ahrq.gov/professionals/prevention-chronic-care/improve/capacity-building/pcmhqi1.html. Published Apr 2013. Accessed Aug 30, 2018.

- 19.Mold JW, Walsh M, Chou AF, Homco JB. The alarming rate of major disruptive events in primary care practices in Oklahoma. Ann Fam Med. 2018;16(Suppl 1):S52–S57.

- 20.Cohen DJ, Dorr DA, Knierim K, et al. Primary care practices’ abilities and challenges in using electronic health record data for quality improvement. Health Aff (Millwood). 2018;37(4):635–643.

- 21.Phillips RL, Jr, Kaufman A, Mold JW, et al. The primary care extension program: a catalyst for change. Ann Fam Med. 2013;11(2):173–178.

- 22.Taylor EF, Genevro J, Gennotti PD, Wang W, Meyers D. Building Quality Improvement Capacity in Primary Care: Supports and Resources. Rockville, MD: Agency for Healthcare Research & Quality; 2013. http://www.ahrq.gov/professionals/prevention-chronic-care/improve/capacity-building/pcmhqi2.html. Published 2013. Accessed Aug 30, 2018.

- 23.Wang A, Pollack T, Kadziel LA, et al. Impact of practice facilitation in primary care on chronic disease care processes and outcomes: a systematic review. J Gen Intern Med. 2018;33(11):1968–1977.

- 24.Baskerville NB, Liddy C, Hogg W. Systematic review and meta-analysis of practice facilitation within primary care settings. Ann Fam Med. 2012;10(1):63–74.

- 25.Nagykaldi Z, Mold JW, Robinson A, Niebauer L, Ford A. Practice facilitators and practice-based research networks. J Am Board Fam Med. 2006;19(5):506–510.

- 26.Berta W, Cranley L, Dearing JW, Dogherty EJ, Squires JE, Estabrooks CA. Why (we think) facilitation works: insights from organizational learning theory. Implement Sci. 2015;10:141.

- 27.Parchman ML, Noel PH, Culler SD, et al. A randomized trial of practice facilitation to improve the delivery of chronic illness care in primary care: initial and sustained effects. Implement Sci. 2013;8:93.

- 28.Lineker SC, Bell MJ, Boyle J, et al. Implementing arthritis clinical practice guidelines in primary care. Med Teach. 2009;31(3):230–237.

- 29.Yeh JS, Van Hoof TJ, Fischer MA. Key features of academic detailing: development of an expert consensus using the Delphi method. Am Health Drug Benefits. 2016;9(1):42–50.

- 30.O’Brien MA, Rogers S, Jamtvedt G, et al. Educational outreach visits: effects on professional practice and health care outcomes. Cochrane Database Syst Rev. 2007;(4):CD000409.

- 31.Parchman ML, Fagnan LJ, Dorr DA, et al. Study protocol for “Healthy Hearts Northwest”: a 2 × 2 randomized factorial trial to build quality improvement capacity in primary care. Implement Sci. 2016;11(1):138.

- 32.Office of the National Coordinator for Health Information Technology. Office-Based Physician Electronic Health Record Adoption. Washington, DC: Department of Health and Human Services; 2016. https://dashboard.healthit.gov/quickstats/pages/physician-ehr-adoption-trends.php. Published Dec 2016. Accessed Aug 30, 2018.

- 33.Parchman ML, Anderson ML, Coleman K, Michaels L, Schuttner L, Conway L, Hsu C, Fagnan LJ. Assessing quality improvement capacity in primary care practices. BMC Fam Pract. 2019;20:103.

- 34.Muntner P, Colantonio LD, Cushman M, et al. Validation of the atherosclerotic cardiovascular disease pooled cohort risk equations. JAMA. 2014;311(14):1406–1415.

- 35.Baldwin LM, Fischer MA, Powell J, et al. Virtual educational outreach intervention in primary care based on the principles of academic detailing. J Contin Educ Health Prof. 2018;38(4):269–275.

- 36.Xierali IM, Hsiao CJ, Puffer JC, et al. The rise of electronic health record adoption among family physicians. Ann Fam Med. 2013;11(1):14–19.

- 37.The Office of the National Coordinator for Health Information Technology, Centers for Medicare & Medicaid Services, Department of Health and Human Services. Controlling high blood pressure; 2016 Performance Period EP eCQMs. https://ecqi.healthit.gov/ecqm/measures/cms165v4. Accessed Aug 30, 2018.

- 38.The Office of the National Coordinator for Health Information Technology, Centers for Medicare & Medicaid Services, Department of Health and Human Services. Ischemic vascular disease (IVD): use of aspirin or another antithrombotic; 2016 Performance Period EP eCQMs. https://ecqi.healthit.gov/ecqm/measures/cms164v4. Accessed Aug 30, 2018.

- 39.The Office of the National Coordinator for Health Information Technology, Centers for Medicare & Medicaid Services, Department of Health and Human Services. Preventive care and screening: tobacco use: screening and cessation intervention; 2016 Performance Period EP eCQMs. https://ecqi.healthit.gov/ecqm/measures/cms138v6. Accessed Aug 30, 2018.

- 40.Chandler RE, Bate S. Inference for clustered data using the independence loglikelihood. Biometrika. 2007;94(1):167–183.

- 41.Crabtree BF, Nutting PA, Miller WL, et al. Primary care practice transformation is hard work: insights from a 15-year developmental program of research. Med Care. 2011;49(Suppl):S28–S35.

- 42.Van Herck P, De Smedt D, Annemans L, Remmen R, Rosenthal MB, Sermeus W. Systematic review: Effects, design choices, and context of pay-for-performance in health care. BMC Health Serv Res. 2010;10:247.

- 43.Miller P, Mosley K. Physician reimbursement: from fee-for-service to MACRA, MIPS and APMs. J Med Pract Manage. 2016;31(5):266–269.

- 44.Rosenthal MB, Frank RG. What is the empirical basis for paying for quality in health care? Med Care Res Rev. 2006;63(2):135–157.

- 45.Kottke TE, Faith DA, Jordan CO, Pronk NP, Thomas RJ, Capewell S. The comparative effectiveness of heart disease prevention and treatment strategies. Am J Prev Med. 2009;36(1):82–88.

- 46.Rapsomaniki E, Timmis A, George J, et al. Blood pressure and incidence of twelve cardiovascular diseases: lifetime risks, healthy life-years lost, and age-specific associations in 1.25 million people. Lancet. 2014;383(9932):1899–1911.

- 47.Solberg LI. Improving medical practice: a conceptual framework. Ann Fam Med. 2007;5(3):251–256.

- 48.Hroscikoski MC, Solberg LI, Sperl-Hillen JM, Harper PG, McGrail MP, Crabtree BF. Challenges of change: a qualitative study of chronic care model implementation. Ann Fam Med. 2006;4(4):317–326.