Abstract

How does developmental experience, as opposed to intrinsic physiology, shape cortical function? Naturalistic stimuli were used to elicit neural synchrony in individuals blind from birth (n = 18) and those who grew up with sight (n = 18). Blind and blindfolded sighted participants passively listened to three audio-movie clips, an auditory narrative, a sentence-shuffled version of the narrative (maintaining language but lacking a plotline), and the narrative played backward (lacking both language and plot). For both groups, early auditory cortices were synchronized to a similar degree across stimulus types, whereas higher-cognitive temporoparietal and prefrontal areas were more synchronized by meaningful, temporally extended stimuli (i.e., audio movies and narrative). “Visual” cortices were more synchronized across blind than sighted individuals, but only for audio movies and narrative. In the blind group, visual cortex synchrony was low for backward speech and intermediate for sentence shuffle. Meaningful auditory stimuli synchronize visual cortices of people born blind.

SIGNIFICANCE STATEMENT Naturalistic stimuli engage cognitive processing at many levels. Here, we harnessed this richness to investigate the effect of experience on cortical function. We find that listening to naturalistic audio movies and narrative drives synchronized activity across “visual” cortices of blind, more so than sighted, individuals. Visual cortex synchronization varies with meaningfulness and cognitive complexity: synchrony is highest for temporally extended meaningful stimuli (e.g., movies/narrative), intermediate for shuffled sentences, and lowest for time-varying complex noise (backward speech). By contrast, auditory cortex was synchronized equally by meaningful and meaningless stimuli. In congenitally blind individuals, most of the visual cortex is engaged by meaningful naturalistic stimuli.

Keywords: blindness, narrative, naturalistic, plasticity, synchrony, visual cortex

Introduction

Studies of blindness give insight into how intrinsic physiology and experience shape cortical function. Sensory loss early in life alters the response properties of sensory cortices. In blindness, “visual” cortices are active during a variety of auditory and tactile tasks, including motion detection, shape discrimination, sound localization, echolocation, Braille-reading and auditory sentence comprehension (Wanet-Defalque et al., 1988; Uhl et al., 1991; Sadato et al., 1996; Weeks et al., 2000; Bavelier and Neville, 2002; Röder et al., 2002; Merabet et al., 2004; Gougoux et al., 2005; Poirier et al., 2006; Stilla et al., 2008; Collignon et al., 2011; Thaler et al., 2011; Wolbers et al., 2011). Transiently disrupting visual cortex activity with transcranial magnetic stimulation impairs verb-generation and Braille-reading performance (Cohen et al., 1997; Amedi et al., 2004). Questions remain about the nature and extent of this repurposing.

First, does variation in the localization of activation across studies stem from individual variability or is repurposing systematic and similar across blind individuals? Second, a given task activates a small subset of visual cortices. What is the spatial extent of blindness-related plasticity? Last, are repurposed cortices engaged during everyday behaviors? Experimental paradigms often use stimuli unlike those encountered in daily life. For example, visual cortices respond to multiclause sentences with syntactic movement, but such sentences are infrequent in natural speech (Lane et al., 2015). Do deprived cortices come online only during such unusually demanding cognitive tasks, i.e., as an “overflow” processor?

An approach that is complementary to task-based studies uses naturalistic stimuli to drive brain activity during fMRI (Hasson et al., 2004). Movies and narrated stories are richly engaging: a cognitive “kitchen sink” that elicits many processes at once. The data are analyzed by correlating the activity at each cortical location across participants. In this way, each participant's brain activity serves as a model for the others'. Such intersubject correlation provides a natural measure of the degree to which the same cortical location serves a similar function across individuals (for review, see Hasson et al., 2004, 2010).

The cortical synchronization approach can also provide insight into the types of cognitive processes that colonize the occipital cortices in blindness. The type of stimuli that synchronize a given cortical area relates to the types of cognitive functions the area supports (Hasson et al., 2004, 2008). In sighted subjects, frontotemporal semantic networks are synchronized by cognitively complex naturalistic stimuli, such as movies and comedic skits. Disrupting the cognitive content and temporal structure of these stimuli (e.g., by shuffling or presenting them backward) dramatically reduces synchronization. In contrast, early auditory and visual cortices synchronize comparably to meaningful and meaningless shuffled stimuli (Hasson et al., 2008; Lerner et al., 2011; Naci et al., 2017). One interpretation of these results is that different cortical areas have different temporal receptive windows: short temporal receptive windows for early sensory areas and longer temporal receptive windows for higher-cognitive regions. Whether temporal receptive windows are an intrinsic physiological property of cortical circuits or whether they change in cases of functional reorganization is not known. In blindness, are the receptive windows of the visual cortices short, like those of other sensory areas, or long, like those of frontoparietal networks? Is higher-cognitive content required to synchronize activity in visual cortices of individuals who are blind from birth?

Congenitally blind and blindfolded sighted individuals listened to four intact naturalistic stimuli (5–7 min each): three audio movies and a spoken narrative called Pie-Man. Participants also listened to a shuffled version of the narrative that preserved sentences but lacked a coherent plotline, and to the narrative played backward, i.e., with no discernible semantic or linguistic content (Lerner et al., 2011; Naci et al., 2014, 2017). Synchronization of activity in occipital cortices was compared across blind and sighted participants, across stimulus types, and with other cortical areas.

Materials and Methods

Participants

Eighteen congenitally blind (6 male; 13 right-handed, 2 ambidextrous; age: mean = 41.87, SD = 16.41; years of education: mean = 16.72, SD = 2.52) and 18 sighted controls (3 male; 16 right-handed; age: mean = 41.23, SD = 13.19; years of education: mean = 18.39, SD = 4.26) contributed data to the current experiment (for further details, see Table S2, available at https://osf.io/r4tgb/). Blind and sighted participants were matched on average age and education level (age: t(34) = 0.13, p > 0.5; education: t(34) = 1.43, p = 0.16). All blind participants self-reported never having been able to distinguish colors, shapes, or motion. Eleven of the 18 blind participants had minimal light perception; the remainder had none. Participants had no known neurological disorders, head injuries, or brain damage. For all blind participants, the causes of blindness excluded pathology posterior to the optic chiasm (Table 1). All participants gave written consent under a protocol approved by the Institutional Review Board of Johns Hopkins University. Five additional sighted and three additional blind individuals participated in the experiment but were removed from analyses due to poor performance (see Stimuli and procedure section for details). One additional blind participant was removed from analyses because of subsequently reported temporary vision during childhood. Reported statistics refer only to participants included in analyses.
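The group-matching statistics can be checked from the summary values alone. The sketch below assumes a pooled-variance (Student's) two-sample t test, which is consistent with the reported 34 degrees of freedom; `pooled_t` is a hypothetical helper, and p-values would additionally require the t distribution (e.g., `scipy.stats.ttest_ind_from_stats`).

```python
import math


def pooled_t(mean1, sd1, n1, mean2, sd2, n2):
    """Student's two-sample t statistic (equal variances) from summary statistics."""
    df = n1 + n2 - 2
    # Pooled variance weights each group's sample variance by its degrees of freedom.
    pooled_var = ((n1 - 1) * sd1 ** 2 + (n2 - 1) * sd2 ** 2) / df
    se = math.sqrt(pooled_var * (1 / n1 + 1 / n2))
    return (mean1 - mean2) / se, df


# Blind vs sighted age, and sighted vs blind years of education (18 per group).
t_age, df = pooled_t(41.87, 16.41, 18, 41.23, 13.19, 18)
t_edu, _ = pooled_t(18.39, 4.26, 18, 16.72, 2.52, 18)
print(f"age: t({df}) = {t_age:.2f}")
print(f"education: t({df}) = {t_edu:.2f}")
```

The same helper reproduces the motion-spike comparison reported under Preprocessing: `pooled_t(0.64, 0.88, 18, 0.23, 0.58, 18)` gives t(34) ≈ 1.65.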

Table 1.

Total participants (N) for each cause of blindness

| Blindness etiology | N |
|---|---|
| Leber congenital amaurosis | 7 |
| Retinopathy of prematurity | 5 |
| Optic nerve hypoplasia | 3 |
| Retinitis pigmentosa | 1 |
| Unknown | 2 |

Experimental design and statistical analyses

Stimuli and procedure.

Participants listened to four intact and two scrambled entertainment clips while blindfolded and undergoing functional magnetic resonance imaging (stimuli are posted to Open Science Framework: https://osf.io/r4tgb/). Intact stimuli were excerpted from the audio tracks of movies (Brian De Palma's Blow Out, Pierre Morel's Taken, and James Wan's The Conjuring) and a spoken narration (Jim O'Grady's Pie-Man). To enable a shared interpretive experience across participants, we chose intact clips that were suspenseful, entertaining, and easy to follow. Non-intact stimuli were generated from the intact Pie-Man stimulus. The backward stimulus was time-reversed so that it lacked intelligible speech; the sentence shuffle stimulus was spliced together from intact, permuted sentences so that it lacked a coherent plotline. To construct the sentence shuffle stimulus, the narrative was cut into the shortest possible stand-alone sentences. Compound sentences were divided into their standalone components, which sometimes began with the word “and”. This resulted in 96 sentences (length: mean = 4.37 s, SD = 3.43 s) that were randomly reordered such that newly adjoining sentences had an original distance of at least four sentences between them. Stimulus features were as follows: backward/sentence shuffle/Pie-Man: RMS amplitude = 0.032, frequency = 1177 Hz; Blow Out: RMS amplitude = 0.054, frequency = 4414 Hz; Taken: RMS amplitude = 0.031, frequency = 1600 Hz; The Conjuring: RMS amplitude = 0.110, frequency = 2472 Hz (http://sox.sourceforge.net). We also collected a rest run in which no stimulus was presented and participants were told to relax but not to fall asleep.
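The sentence-reordering constraint can be expressed as a randomized search over orderings. This is a minimal sketch, not the procedure actually used to build the stimulus. It assumes the 96 sentences are indexed 0–95 in their original order, reads “an original distance of at least four sentences” as an index difference of at least 4 (`min_gap`), and uses a greedy random draw with restarts. `constrained_shuffle` is a hypothetical name.

```python
import random


def constrained_shuffle(n_items, min_gap=4, seed=None, max_restarts=10_000):
    """Randomly order indices 0..n_items-1 so that every pair of newly adjacent
    items was at least `min_gap` positions apart in the original order."""
    rng = random.Random(seed)
    for _ in range(max_restarts):
        remaining = list(range(n_items))
        rng.shuffle(remaining)
        order = [remaining.pop()]
        while remaining:
            # Positions in `remaining` whose item satisfies the gap w.r.t. the last placed item.
            ok = [k for k, idx in enumerate(remaining) if abs(idx - order[-1]) >= min_gap]
            if not ok:
                break  # dead end: start over with a fresh random order
            order.append(remaining.pop(rng.choice(ok)))
        if len(order) == n_items:
            return order
    raise RuntimeError("no valid ordering found within max_restarts")


order = constrained_shuffle(96, min_gap=4, seed=0)
print(order[:10])
```

Restarting on a dead end keeps the sketch simple. Dead ends can only occur when a handful of sentences remain, so restarts are cheap.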

Before each auditory clip (and scan), participants were read a 2–3 sentence contextualizing prologue to facilitate interpretation of the clip. After the entire scan session, participants completed a multiple-choice comprehension test for each intact clip, which they had been told to expect. There were five questions per clip, and the questions probed detailed information, e.g., names of characters, locations of events, and critical plot points. All data were excluded for participants who did not correctly answer at least 3 of 5 questions for at least 3 of the 4 intact clips. Additionally, data for an individual intact clip were excluded if the participant failed the comprehension assessment for that clip or reported having previously seen the movie from which it was taken. Analyses thus included 15–18 participants per stimulus, per vision group. For each stimulus, blind and sighted participants were statistically equivalent with respect to age and years of education.
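The two-level exclusion rule can be written as a short function. This sketch assumes per-clip scores are counts of correct answers out of five, with a clip counted as passed at 3 or more. `included_clips` is a hypothetical helper. An empty result stands for excluding the participant's data entirely, including the non-intact runs, which the function does not track.

```python
def included_clips(scores, seen_before, pass_mark=3, min_clips_passed=3):
    """Return the set of intact clips whose data are kept for one participant.

    scores: dict mapping clip name -> number of comprehension questions answered correctly
    seen_before: clips whose source movie the participant reported having seen
    """
    passed = {clip for clip, correct in scores.items() if correct >= pass_mark}
    # Participant-level rule: too few passed clips drops all of the participant's data.
    if len(passed) < min_clips_passed:
        return set()
    # Clip-level rule: drop clips that were failed or whose movie was seen before.
    return passed - set(seen_before)


print(included_clips({"Pie-Man": 5, "Taken": 3, "Blow Out": 4, "The Conjuring": 2}, {"Taken"}))
```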

Each auditory clip was preceded by 5 s of rest and followed by 20–22 s of rest. Stimuli were presented using Psychtoolbox (v 3.0.14) for MATLAB, and stimulus onset was triggered by the acquisition of the first volume. We subsequently discarded the first 20 s and last 18 s of each functional scan to remove volumes acquired during rest and during the response to stimulus onset (accounting for the hemodynamic lag). The durations of the stimuli, not counting the rest periods before and after each clip, were as follows: rest (7.4 min); backward, sentence shuffle, and Pie-Man (6.8 min); The Conjuring (5.1 min); Taken (5 min); and Blow Out (6.5 min). Presentation order of the six stimuli was counterbalanced across participants, with blind and sighted participants yoked to receive the same orderings. In addition to the comprehension questions, we asked three questions to probe participants' subjective experience. Each participant rated each intact clip on suspense, entertainment, and ease of following on a five-point Likert scale (for average subjective ratings of clips, see Table S3, available at https://osf.io/r4tgb/).

Auditory stimuli were presented over Sensimetrics MRI-compatible earphones (http://www.sens.com/products/model-s14/) at the maximum comfortable volume for each participant. To ensure that participants could hear the softer sounds in the auditory clips over the scanner noise, a relatively soft sound (RMS amplitude = 0.002, frequency = 3479 Hz) was played to participants during acquisition of the anatomical image; all participants indicated hearing the sound via button press.

MRI data acquisition and cortical surface analysis.

MRI structural and functional data of the whole brain were collected on a 3-tesla Philips dStream Achieva scanner. T1-weighted structural images were collected in 150 axial slices with 1 mm isotropic voxels using a magnetization-prepared rapid gradient-echo sequence. T2*-weighted functional images were collected using a gradient echo planar imaging sequence (36 sequential ascending axial slices; repetition time, 2 s; echo time, 0.03 s; flip angle, 70°; matrix, 76 × 70; slice thickness, 2.5 mm; interslice gap, 0.5 mm; slice coverage, FH 107.5 mm; voxel size, 2.53 × 2.47 × 2.50 mm; phase-encoding direction, L/R; first-order shimming). SENSE factor 2.0 was used as a parallel imaging method. The acquisition time and number of volumes collected for each auditory clip were as follows: backward, shuffled, and Pie-Man (7:42 min, 223 volumes); Taken (5:54 min, 169 volumes); Blow Out (7:26 min, 215 volumes); The Conjuring (6:00 min, 172 volumes). Data analyses were performed using FSL (v 5.0.9), FreeSurfer (v 5.3), the HCP workbench (v 1.2.0), and custom software (Dale et al., 1999; Smith et al., 2004; Glasser et al., 2013).

Preprocessing.

Functional data were motion corrected and slice-time corrected. Nuisance covariates (i.e., a linear trend and any motion spikes, defined as time points with a root-mean-squared framewise displacement >1.75 mm) were regressed out of the time series of all gray matter voxels. As a result, motion spikes were set to the run mean (number per run, sighted: mean = 0.23, SD = 0.58; blind: mean = 0.64, SD = 0.88; t(34) = 1.65, p = 0.11). The resulting time-series residuals were then high-pass filtered with a 128 s cutoff.
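A minimal NumPy sketch of the nuisance regression, assuming the framewise displacement values are already computed. The design has an intercept, a linear trend, and one indicator regressor per spike. Adding the intercept back is an assumption, made so that spike time points land exactly on the run mean and later grand-mean scaling stays well defined. FSL's high-pass filter is not reproduced here, and `regress_nuisance` is a hypothetical name.

```python
import numpy as np


def regress_nuisance(data, fd, fd_thresh=1.75):
    """Remove a linear trend and motion spikes from time series.

    data: array (n_timepoints, n_vertices); fd: framewise displacement per time point (mm).
    Returns the residuals with each column's run mean (intercept) restored.
    """
    n_t = data.shape[0]
    t = np.arange(n_t, dtype=float)
    columns = [np.ones(n_t), t - t.mean()]       # intercept + centered linear trend
    for idx in np.flatnonzero(fd > fd_thresh):   # one indicator regressor per spike
        spike = np.zeros(n_t)
        spike[idx] = 1.0
        columns.append(spike)
    X = np.column_stack(columns)
    beta, *_ = np.linalg.lstsq(X, data, rcond=None)
    residuals = data - X @ beta
    # Spike residuals are exactly 0, so adding the intercept puts them at the run mean.
    return residuals + beta[0]


rng = np.random.default_rng(0)
data = rng.normal(100, 1, size=(50, 3)) + np.linspace(0, 5, 50)[:, None]
data[10] += 20                     # simulated motion artifact
fd = np.zeros(50)
fd[10] = 2.0
clean = regress_nuisance(data, fd)
```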

Each participant's functional data were co-registered to their own anatomical scan using FSL's FEAT. Next, each participant's anatomical scan was normalized to a common surface-based template (fsaverage) using sulcal/gyral alignment (via recon-all). Finally, functional data were projected to the surface, downsampled to HCP's 32K standard cortical surface, and dilated and eroded by 2.5 mm to fill small holes. For whole-brain analyses, data were smoothed on the surface with a 12 mm FWHM Gaussian kernel (Hagler et al., 2006; Jo et al., 2007; Anticevic et al., 2008; Tucholka et al., 2012). Because smoothing was performed on the 2-dimensional surface rather than in the 3-dimensional volume, a given smoothing kernel encompasses less surrounding tissue than the same kernel applied in the volume: 12 mm of smoothing on the surface corresponds to ∼8.5 mm of smoothing in the volume (Hagler et al., 2006). Time points before and after stimulus presentation were trimmed (as noted above). Finally, so that participant time courses would have comparable intensity values, we divided each time point by the grand mean of its time series and multiplied the result by 10,000.
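With a 2 s TR, the trimming described under Stimuli and procedure removes 10 volumes at the start and 9 at the end of each run. The sketch below (hypothetical helper `trim_and_scale`) combines that trimming with grand-mean scaling. It assumes data are arranged as time points × vertices and that the grand mean is taken separately for each vertex's time series.

```python
import numpy as np

TR = 2.0  # repetition time in seconds, from the acquisition parameters


def trim_and_scale(data, tr=TR, drop_start_s=20.0, drop_end_s=18.0, scale=10_000.0):
    """Drop volumes at the start/end of a run, then grand-mean scale each vertex.

    data: array (n_timepoints, n_vertices).
    """
    n_start = int(round(drop_start_s / tr))  # 20 s / 2 s = 10 volumes
    n_end = int(round(drop_end_s / tr))      # 18 s / 2 s = 9 volumes
    kept = data[n_start:data.shape[0] - n_end]
    grand_mean = kept.mean(axis=0)           # one mean per vertex time series
    return kept / grand_mean * scale


data = np.full((223, 2), 50.0)
data[:, 1] = 200.0
out = trim_and_scale(data)   # 223 - 10 - 9 = 204 time points remain
```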

Intersubject whole-cortex correlation.

For each vertex in the cortex, we assessed the extent of stimulus-driven synchronization (i.e., correlation) with that same vertex in other people's cortices. Synchrony of brain activity was determined within vision groups. That is, each congenitally blind individual's brain was compared with the mean of all other congenitally blind individuals, and each sighted individual's brain with the mean of all other sighted individuals. For each run, we calculated vertex-wise synchrony as the average Pearson product-moment correlation coefficient (r) between each subject's time course and the average time course of the reference group (Hasson et al., 2004; Lerner et al., 2011). For example, the blind group's intersubject whole-cortex correlation (ISC) value at vertex 99 was calculated in three steps. First, Blind Participant 1's vertex-99 time course was correlated with the mean vertex-99 time course of the blind group (without Participant 1). Second, this was repeated for all blind participants. Third, the resulting ISC values were averaged across blind participants. For both groups (i.e., blind to blind and sighted to sighted), the averaged r ISC maps were transformed to Fisher's z values [i.e., arctanh(r)] to enable comparisons of correlations across different stimuli/groups. Differences in synchronization between stimuli and/or between groups were computed by subtracting the relevant z-maps (e.g., blind > sighted = blind − sighted). A mean audio-movie synchronization map was created by averaging the z-maps of the three intact audio-movie stimuli, i.e., The Conjuring, Taken, and Blow Out. The resulting z-maps were subsequently back-transformed to r-maps [i.e., tanh(z)].
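The leave-one-out ISC computation can be sketched in NumPy for one group and one run. The sketch assumes data shaped subjects × time points × vertices and computes Pearson r as the mean product of z-scored time courses. Following the order described above, it averages r across subjects and only then applies Fisher's z. `loo_isc` is a hypothetical name.

```python
import numpy as np


def zscore_time(x):
    """Z-score each vertex's time course (time is axis -2 for (..., time, vertices))."""
    return (x - x.mean(axis=-2, keepdims=True)) / x.std(axis=-2, keepdims=True)


def loo_isc(data):
    """Leave-one-out ISC for one group and one run.

    data: array (n_subjects, n_timepoints, n_vertices).
    Returns arctanh of the across-subject mean r, one value per vertex.
    """
    n_sub = data.shape[0]
    total = data.sum(axis=0)
    rs = []
    for s in range(n_sub):
        others_mean = (total - data[s]) / (n_sub - 1)  # reference: all other subjects
        # Pearson r per vertex = mean product of z-scored time courses.
        rs.append((zscore_time(data[s]) * zscore_time(others_mean)).mean(axis=0))
    return np.arctanh(np.mean(rs, axis=0))


rng = np.random.default_rng(0)
shared = rng.normal(size=200)               # stimulus-driven signal shared across subjects
data = rng.normal(size=(10, 200, 2))        # vertex 1: noise only
data[:, :, 0] += shared                     # vertex 0: shared signal + noise
z = loo_isc(data)
```

Group or stimulus contrasts are then differences of these z maps (e.g., `loo_isc(blind) - loo_isc(sighted)`), and `np.tanh` converts a z map back to r.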

Because ISC maps violate several assumptions of parametric hypothesis testing, we performed a nonparametric permutation analysis to assess the statistical significance of the intersubject correlations (Lerner et al., 2011; Regev et al., 2013). We generated a null distribution by permuting the original data. Each preprocessed time course was first Fourier transformed to obtain the amplitude and phase at each frequency. Time courses were permuted by reordering the phases of the empirical time courses and then applying an inverse Fourier transform to the permuted phases combined with the original amplitudes (Lerner et al., 2011; Regev et al., 2013). Phase randomization was independent across participants but the same for all vertices within a participant. This procedure preserved the autocorrelation of each time course and the spatial non-independence across cortex that are present in the empirical data. ISC values, for all stimuli and comparisons, were calculated on these permuted “null” time courses in the same way as for the real data. A null distribution for each stimulus and comparison was obtained by repeating the procedure 1000 times.
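The phase-permutation null can be sketched with NumPy's real FFT. Following the description above, each participant's surrogate keeps the original amplitude at every frequency and reorders the phases across frequencies. One permutation is used for all of that participant's vertices. Holding the DC and (for even lengths) Nyquist terms fixed is an implementation assumption that keeps the inverse transform real. `phase_permuted_surrogate` is a hypothetical name.

```python
import numpy as np


def phase_permuted_surrogate(data, rng):
    """Surrogate time courses with original amplitude spectra and permuted phases.

    data: array (n_timepoints, n_vertices) for one participant. The same phase
    permutation is applied to every vertex, preserving spatial dependence.
    """
    n_t = data.shape[0]
    spectrum = np.fft.rfft(data, axis=0)
    amplitude, phase = np.abs(spectrum), np.angle(spectrum)
    # Bins 1..last-1 are permuted; DC (bin 0) and, for even n_t, Nyquist stay untouched.
    last = spectrum.shape[0] - 1 if n_t % 2 == 0 else spectrum.shape[0]
    perm = rng.permutation(np.arange(1, last))
    new_spectrum = spectrum.copy()
    new_spectrum[1:last] = amplitude[1:last] * np.exp(1j * phase[perm])
    return np.fft.irfft(new_spectrum, n=n_t, axis=0)


rng = np.random.default_rng(1)
x_even = rng.normal(size=(100, 4)) + 5
x_odd = rng.normal(size=(101, 4)) + 5
y_even = phase_permuted_surrogate(x_even, rng)
y_odd = phase_permuted_surrogate(x_odd, rng)
```

Calling the function separately for each participant with a shared `np.random.default_rng` generator draws a fresh permutation per participant, keeping the randomization independent across participants.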

We implemented a “vertex-wise” correction for multiple comparisons to control the familywise error (FWE) rate across the cortex. Only the largest ISC value across the whole cortex in each of the 1000 permutations contributed to the null distribution. We rejected the null hypothesis for a particular comparison if the real data's ISC value was in the upper 5% of the 1000 values in the corresponding null distribution (Nichols and Holmes, 2002; Simony et al., 2016). The statistical test is, therefore, one-tailed. The resulting Pearson correlation thresholds for the contrasts examined ranged from 0.10 to 0.25. Differences in criteria reflect different variances of the null sampling distributions. These likely arise from differences in degrees of freedom among the stimuli (e.g., number of time points) and in the number of participants in each contrast. They also depend on the computation performed. For example, the audio movies > “backward” comparison subtracts backward ISC values from movie ISC values and therefore sums the variances of the audio-movie and backward distributions. The sighted group's ISC criteria were slightly higher than the blind group's. We therefore thresholded the sighted ISC maps (Fig. 1) with the blind group's criteria, to more conservatively test our hypothesis that the sighted group's visual cortices would not synchronize for the nonvisual stimuli. Results were qualitatively the same as those obtained using the sighted group's own criteria.
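The vertex-wise (max-statistic) FWE correction reduces to taking the maximum across vertices in each permutation and reading off the 95th percentile of those maxima. A sketch, assuming the surrogate ISC maps are stacked as permutations × vertices. The helper names are hypothetical.

```python
import numpy as np


def max_stat_threshold(null_isc, alpha=0.05):
    """FWE-corrected ISC threshold from a permutation null.

    null_isc: array (n_permutations, n_vertices) of ISC values from surrogate data.
    Only each permutation's maximum across vertices enters the null distribution.
    """
    max_null = null_isc.max(axis=1)
    return np.quantile(max_null, 1 - alpha)


def significant_vertices(real_isc, null_isc, alpha=0.05):
    """One-tailed test: vertices whose real ISC exceeds the corrected threshold."""
    return real_isc > max_stat_threshold(null_isc, alpha)


# Toy null: permutation k has ISC k/1000 at every one of 5 vertices.
null = np.tile((np.arange(1000) / 1000)[:, None], (1, 5))
mask = significant_vertices(np.array([0.96, 0.5, 0.95, 0.9, 0.99]), null)
```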

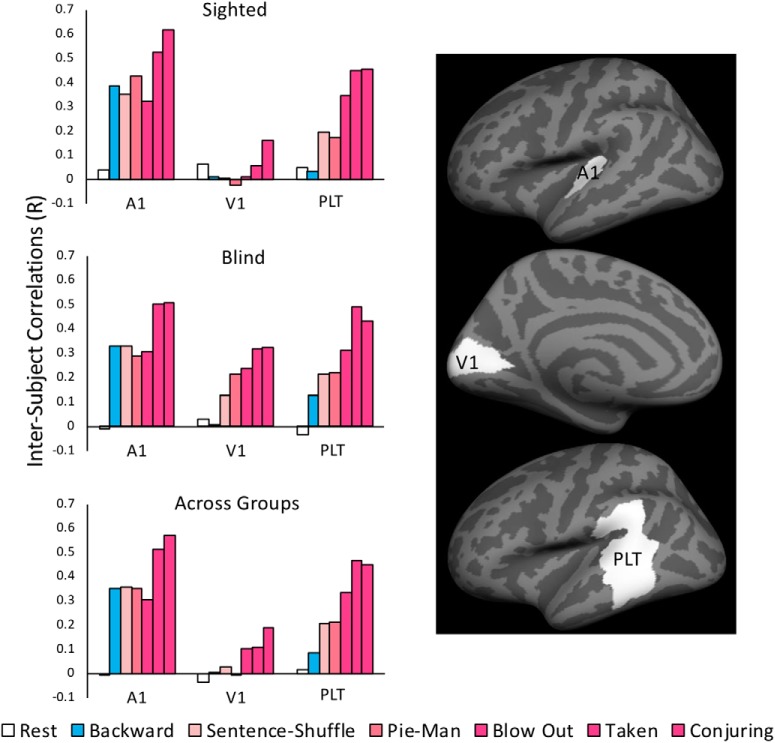

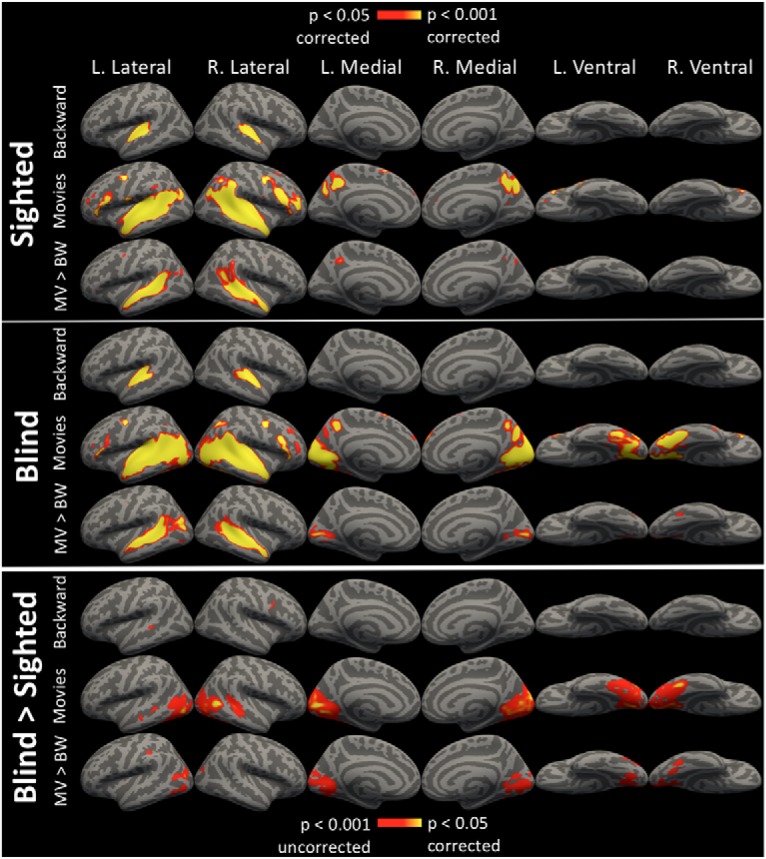

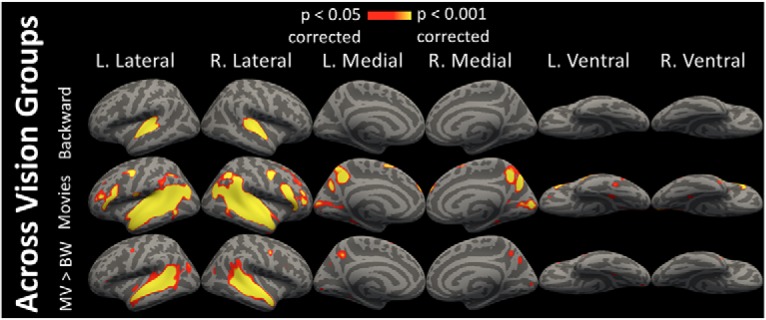

Figure 1.

Whole-cortex ISCs. ISCs for the backward stimulus, all audio-movie stimuli, and for the comparison of audio movie greater than backward (MV > BW). Synchronization is shown within the sighted group and within the blind group, vertex-wise corrected for multiple comparisons at p < 0.05. A comparison of blind group synchronization greater than sighted group synchronization (Blind > Sighted) is also shown, vertex-wise thresholded at p < 0.001 uncorrected and cluster-corrected for multiple comparisons at p < 0.05.

The vertex-wise correction for multiple comparisons described above is highly conservative, because it limits to 5% the probability of rejecting one or more true nulls across each 64,000-vertex family of statistical tests. We therefore used a “cluster-wise” correction (p < 0.05) to control the FWE rate across the cortex for two kinds of contrasts: contrasts between groups (e.g., blind ISC > sighted ISC for the backward stimulus) and contrasts between conditions that differ only subtly (e.g., Pie-Man > sentence shuffle) (Eklund et al., 2016; Kessler et al., 2017). Rather than forming a null distribution from the highest vertex-wise ISC value in each permutation, we first derived an uncorrected α criterion (p < 0.001). For each vertex, we took the r value exceeding 99.9% of the permuted values, and we then averaged this value across all vertices. Next, each phase-randomized ISC map was thresholded at this criterion, and the size of its largest cluster was recorded. Across the 1000 permutations (for each stimulus and comparison), these maximum cluster sizes formed a null distribution of cluster size. The cluster-wise criterion at p < 0.05 was set as the cluster size exceeding 95% of that distribution. Real-data ISC maps were cluster-corrected by first thresholding each vertex at the uncorrected p < 0.001 criterion and then retaining only clusters that exceeded the cluster-wise threshold of p < 0.05. Cluster criteria for reported contrasts ranged from 45 to 88 mm.
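The cluster-wise procedure needs a notion of connectivity on the cortical mesh. This sketch assumes the mesh is given as a hypothetical adjacency list (`neighbors`). It measures cluster size in vertices, whereas the criteria reported above are in mm, so a faithful version would sum vertex areas instead. The helper names are hypothetical.

```python
from collections import deque

import numpy as np


def cluster_sizes(mask, neighbors):
    """Sizes (in vertices) of connected suprathreshold clusters on a mesh.

    mask: boolean array (n_vertices,); neighbors: list of neighbor-index lists per vertex.
    """
    seen = np.zeros(mask.shape[0], dtype=bool)
    sizes = []
    for start in np.flatnonzero(mask):
        if seen[start]:
            continue
        seen[start] = True
        queue, size = deque([start]), 0
        while queue:  # breadth-first search restricted to suprathreshold vertices
            v = queue.popleft()
            size += 1
            for w in neighbors[v]:
                if mask[w] and not seen[w]:
                    seen[w] = True
                    queue.append(w)
        sizes.append(size)
    return sizes


def cluster_threshold(null_isc, neighbors, vertex_q=0.999, cluster_q=0.95):
    """Cluster-forming r criterion and cluster-size threshold from a permutation null.

    null_isc: array (n_permutations, n_vertices).
    """
    # Per-vertex 99.9th percentile of the null, averaged across vertices (p < 0.001).
    r_crit = np.quantile(null_isc, vertex_q, axis=0).mean()
    # Largest cluster in each thresholded null map (0 if nothing survives).
    max_sizes = [max(cluster_sizes(perm > r_crit, neighbors), default=0) for perm in null_isc]
    return r_crit, np.quantile(max_sizes, cluster_q)


ring = [[(i - 1) % 10, (i + 1) % 10] for i in range(10)]  # toy 10-vertex ring "mesh"
mask = np.array([1, 1, 0, 1, 0, 0, 1, 1, 1, 0], dtype=bool)
sizes = cluster_sizes(mask, ring)
```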

ISC ROI analysis.

We defined three bilateral ROIs: primary visual cortex (V1), the early auditory cortex (A1), and higher-cognitive posterior lateral temporal (PLT) cortex. We used a V1 ROI from a previously published anatomical surface-based atlas (PALS-B12; Van Essen, 2005). We defined an early auditory cortex ROI as the transverse temporal portion of a gyral-based atlas (Morosan et al., 2001; Desikan et al., 2006). For brevity, the early auditory cortex ROI will be abbreviated to A1, although it may not be strictly limited to primary auditory cortex. A higher-cognitive bilateral PLT ROI was taken from parcels that respond to high-level linguistic content in sighted subjects. The ROI was originally defined in the left hemisphere as responding more to sentences than to lists of non-words in a large sample of sighted participants (Fedorenko et al., 2010). This portion of lateral temporal cortex has shown sensitivity to a wide range of high-level linguistic information, ranging from word- and sentence-level meaning to sentence structure (Bookheimer, 2002). The ROI was mirrored to the right hemisphere for two reasons: to match it with the bilateral V1 and A1 ROIs, and because right hemisphere areas have been shown to respond to high-level aspects of language present in naturalistic stimuli, such as discourse, context, and metaphor (Vigneau et al., 2011).

ROI analyses were performed on unsmoothed functional data. For each participant, a time course was obtained for each ROI by averaging across all vertices in the bilateral ROI. From here, ISC analysis proceeded as in the whole-brain analysis. For within-group analyses, each participant's ROI time course was correlated with the average ROI time course of the other participants in the same group (leave-one-out). For across-group analyses, it was correlated with the average ROI time course of all participants in the other group.
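The ROI variant works on one averaged time course per participant. The sketch below assumes vertex data shaped subjects × time points × vertices and a boolean ROI mask. It shows both reference choices described above: leave-one-out within a group, and the full mean of the other group for across-group ISC. `roi_timecourses` and `roi_isc` are hypothetical names.

```python
import numpy as np


def roi_timecourses(data, roi_mask):
    """Average vertex time courses within an ROI: (n_subj, n_t, n_vert) -> (n_subj, n_t)."""
    return data[:, :, roi_mask].mean(axis=2)


def roi_isc(group_a, group_b=None):
    """Mean ROI ISC (r) for one run.

    group_a, group_b: arrays (n_subjects, n_timepoints) of ROI-averaged time courses.
    Within group (group_b is None): each subject vs. the mean of the other subjects.
    Across groups: each subject in group_a vs. the mean of all subjects in group_b.
    """
    rs = []
    for s in range(group_a.shape[0]):
        if group_b is None:
            reference = np.delete(group_a, s, axis=0).mean(axis=0)
        else:
            reference = group_b.mean(axis=0)
        rs.append(np.corrcoef(group_a[s], reference)[0, 1])
    return float(np.mean(rs))


t = np.linspace(0, 10, 100)
a = np.vstack([np.sin(t)] * 4)   # four subjects with identical ROI time courses
b = 2 * a + 1                    # second group: linear transform of the same signal
```

Figure 4 describes across-group ISC as both sighted-to-blind and blind-to-sighted. Averaging `roi_isc(blind, sighted)` and `roi_isc(sighted, blind)` is one plausible way to combine the two directions, but that combination is an assumption.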

All statistics for factor comparisons (i.e., ROI, group, and/or conditions) were obtained by subtracting the relevant z-transformed-r ISC values. For example, the backward-versus-rest comparison in sighted A1 equals the z-transformed backward ISC in sighted A1 minus the z-transformed rest ISC in sighted A1. Fisher's z-transformed ISC values were subsequently transformed back to r (correlation coefficient) values for reporting.

Statistical significance of ROIs was assessed as in the whole-brain analysis. Time course data were permuted 1000 times, as described in the Intersubject whole-cortex correlation section, to generate a null distribution. Critically, for ROI analysis, we permuted the ROI time course after aggregating across vertices. This generates a realistic time course signal that accounts for the lack of independence among spatially proximal vertices. Using these null ROI time courses, analysis proceeded as in the empirical ROI ISC analysis. As in the empirical ROI analysis, statistics for all factor comparisons were generated by subtracting the relevant ROI ISC values computed from the permuted time courses. Doing so over all permutations resulted in a null distribution for each statistic. Reported probabilities were calculated relative to that statistic's null distribution (formed by performing the relevant subtractions over null distribution values for each component). Probabilities reflect the proportion of null values whose magnitude is greater than or equal to the magnitude of the empirically observed value. ROI tests for statistical significance are thus two-tailed. Empirical values are considered significantly different from the null hypothesis if p < 0.05.
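Factor comparisons and their two-tailed permutation p-values can be sketched as follows. The sketch assumes the null ISC values for each component condition have already been computed from the permuted ROI time courses. A contrast is a difference of Fisher z values, back-transformed with tanh for reporting. The helper names are hypothetical.

```python
import numpy as np


def z_contrast(r1, r2):
    """Difference of Fisher z-transformed ISCs, plus its back-transform to r."""
    z = np.arctanh(r1) - np.arctanh(r2)
    return z, np.tanh(z)


def two_tailed_p(observed, null):
    """Proportion of null values whose magnitude is >= the observed magnitude."""
    null = np.asarray(null)
    return np.mean(np.abs(null) >= abs(observed))


z_same, r_same = z_contrast(0.5, 0.5)
p = two_tailed_p(0.3, [-0.4, -0.1, 0.0, 0.2, 0.35])  # two of five null values reach |0.3|
```

Applying `z_contrast` to each permutation's pair of null ISC values yields the null distribution that `two_tailed_p` compares against.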

Results

High intersubject correlation in the visual cortices of blind individuals for audio movies and narrative: whole-cortex analysis

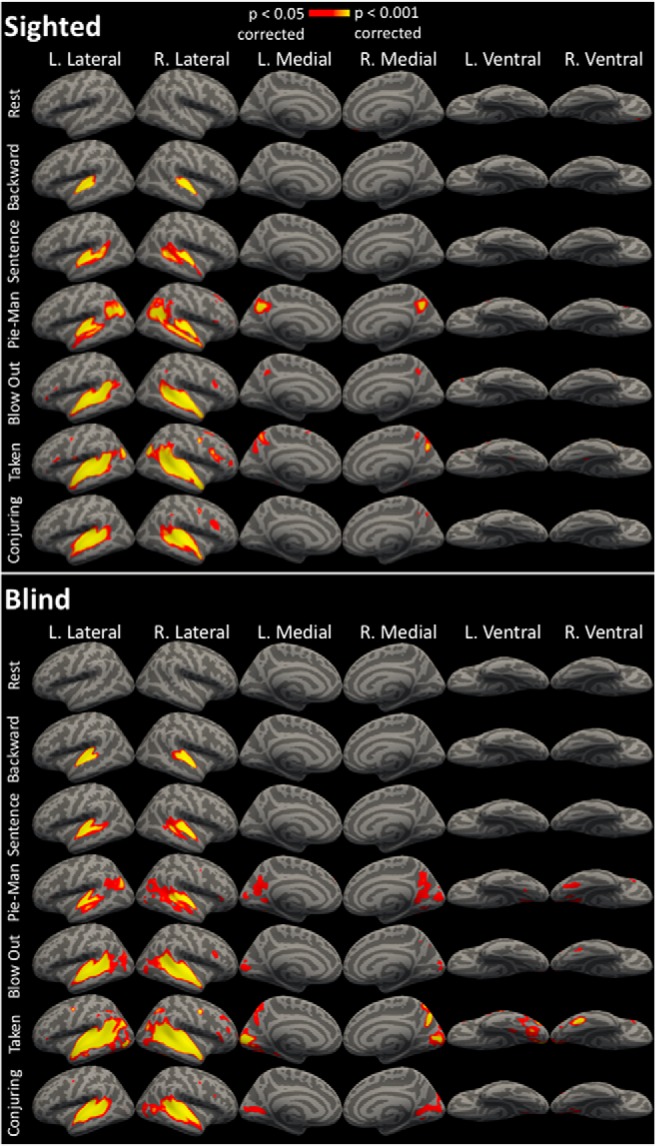

We used whole-cortex intersubject correlation analysis to compare synchrony across blind and sighted groups, and across intact and non-intact stimuli. Within the blind group, but not the sighted group, the audio-movie stimuli produced significant intersubject synchronization in the occipital cortices. This synchronization extended bilaterally over medial, lateral, and ventral occipital cortices and was absent only on the posterior occipital cortices (Fig. 1; p < 0.05, corrected). By contrast, the backward stimulus did not significantly drive synchronization within the occipital cortices of blind individuals (Fig. 1; p < 0.05, corrected). A direct comparison revealed higher intersubject synchronization for audio movies than for the backward stimulus within the primary visual cortices of the blind group (Fig. 1; p < 0.05, corrected). Overall, 65.04% of occipital cortices (occipital lobe parcel from PALS-B12 atlas; Van Essen, 2005) were significantly synchronized across blind participants during audio-movie listening. Across all four intact stimuli, blind participants reliably synchronized similar regions within visual cortices, although the degree of synchrony varied across audio movies (Fig. 2).
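The 65.04% figure is the share of the occipital parcel that survives the corrected threshold. The sketch assumes boolean vertex masks for significance and for the parcel. Whether the original percentage counts vertices or surface area is not stated, so the optional `vertex_area` weights (hypothetical) show the area-based alternative.

```python
import numpy as np


def percent_significant(sig_mask, parcel_mask, vertex_area=None):
    """Percentage of a parcel that is significant, by vertex count or by area."""
    if vertex_area is None:
        weights = np.ones(sig_mask.shape[0])
    else:
        weights = np.asarray(vertex_area, dtype=float)
    return 100.0 * weights[sig_mask & parcel_mask].sum() / weights[parcel_mask].sum()


sig = np.array([True, True, False, False, True])
parcel = np.array([True, True, True, False, False])
pct = percent_significant(sig, parcel)   # 2 of 3 parcel vertices significant
```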

Figure 2.

All stimuli, sighted and blind ISCs. ISCs for each stimulus within the sighted and within the blind group, vertex-wise corrected for multiple comparisons, p < 0.05.

A direct comparison of vision groups revealed that the audio-movie stimuli drove higher synchronization in the blind group than in the sighted group, extensively across the occipital cortices (Fig. 1; p < 0.05, corrected). An interaction contrast (blind > sighted × audio movies > backward) revealed areas along the lateral, medial, and ventral occipital cortices in which the increase in synchronization for audio movies compared with the backward stimulus was greater within the blind group than within the sighted group (Fig. 1; p < 0.05, corrected).
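The interaction contrast is a double subtraction of Fisher z maps, and the mean audio-movie map is the average of the three movie z maps. A sketch under those definitions (helper names hypothetical). Its significance would be assessed against the same permutation nulls as the other contrasts.

```python
import numpy as np


def mean_movie_z(*z_maps):
    """Average of per-movie Fisher z ISC maps (e.g., The Conjuring, Taken, Blow Out)."""
    return np.mean(z_maps, axis=0)


def interaction_z(z_blind_mv, z_blind_bw, z_sighted_mv, z_sighted_bw):
    """(blind > sighted) x (audio movies > backward) on Fisher z ISC maps."""
    return (z_blind_mv - z_blind_bw) - (z_sighted_mv - z_sighted_bw)


movies = mean_movie_z(np.array([0.3]), np.array([0.6]), np.array([0.9]))
inter = interaction_z(np.array([0.5]), np.array([0.1]), np.array([0.2]), np.array([0.15]))
```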

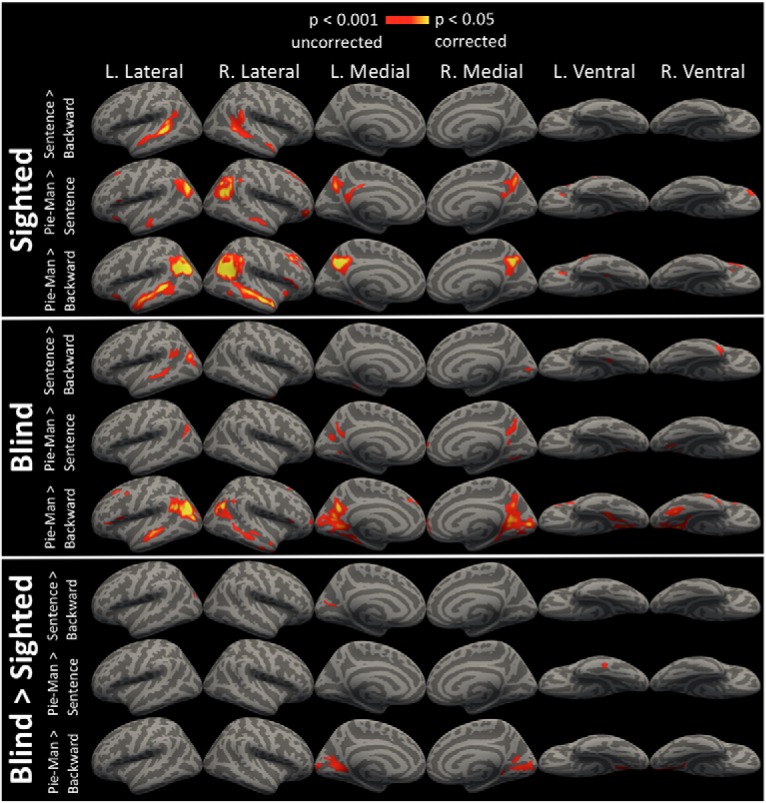

Relative to meaningful naturalistic stimuli, the backward stimulus also produced less synchrony in higher-cognitive lateral temporal, precuneus, and prefrontal areas in both sighted and blind groups. For the backward stimulus, significant intersubject synchronization was observed only in the transverse temporal gyrus (Fig. 1; p < 0.05, corrected). By contrast, intelligible audio movies, as well as Pie-Man, evoked significant additional intersubject synchronization. This extended across the superior and middle temporal gyri/sulci, angular gyrus, precuneus, inferior frontal gyrus/sulcus, and the middle frontal junction (Fig. 1; p < 0.05, corrected). For both blind and sighted groups, a direct comparison of the audio movies and backward stimuli revealed significantly more synchronization for audio movies along the superior/middle temporal gyri/sulci and precuneus (Fig. 1; p < 0.05, corrected). Similar but weaker results were obtained for Pie-Man compared with backward (Fig. 3; p < 0.05, corrected). The sentence shuffle condition produced a pattern intermediate between audio movies/Pie-Man and backward speech (Fig. 3; p < 0.05, corrected).

Figure 3.

ISC comparisons. Comparisons of ISCs for Sentence Shuffle > backward, Pie-Man > Sentence Shuffle, and Pie-Man > backward. Synchronization is shown within the sighted group and within the blind group. A comparison of blind group synchronization greater than sighted group synchronization (Blind > Sighted) is also shown. All images are vertex-wise thresholded at p < 0.001 uncorrected and cluster-corrected for multiple comparisons at p < 0.05.

For completeness, we also correlated brain activity between blind and sighted groups directly. Outside the occipital cortices, synchronization across groups resembled synchronization within groups (Fig. 4; p < 0.05, corrected). Additionally, we observed synchronization across vision groups bilaterally along the calcarine sulcus. Overall, audio movies appear to synchronize occipital cortices across groups more than backward speech does. However, the degree of synchrony in V1 was lower across vision groups than within the blind group.

Figure 4.

ISCs between vision groups. ISCs between vision groups (i.e., sighted to blind and blind to sighted), shown for the backward stimulus, the movie stimuli, and for movie > backward (i.e., MV > BW). All figures are vertex-wise corrected for multiple comparisons.

Effects of stimulus meaningfulness on correlations in V1, A1, and language-responsive PLT cortex: ROI analysis

Within group correlations

We conducted an ROI analysis to more closely examine the intersubject synchronization profiles of three ROIs (V1, A1, and PLT) across stimulus types and vision groups. Results are displayed in Figure 5. Because of the large number of possible comparisons (between 15 and 21 per group and region), we describe the observed pattern and test only the most critical comparisons.

Figure 5.

ISCs within ROIs. ISCs of the sighted group, blind group, and across vision groups. ISCs are shown for select conditions within A1, V1, and the PLT cortex. ROIs are displayed in the left hemisphere, but intersubject correlations are assessed bilaterally. Movies appear in the order listed.

In early auditory cortex of both groups, all stimuli drove high intersubject synchronization. Two audio movies, Taken and Conjuring, produced greater synchrony in A1 relative to the third audio movie (Blow Out) as well as relative to all of the other audio stimuli (Fig. 5; sighted A1: Taken vs Blow Out, r = 0.24, p < 0.001, Conjuring vs Blow Out, r = 0.37, p < 0.001; blind A1: Taken vs Blow Out, r = 0.23, p < 0.001, Conjuring vs Blow Out, r = 0.24, p < 0.001), possibly because of low-level differences between these two movies and the other stimuli. These two movies also produced higher levels of synchrony in PLT (Fig. 5; sighted PLT: Taken vs Blow Out, r = 0.13, p = 0.026, Conjuring vs Blow Out, r = 0.13, p = 0.018; blind PLT: Taken vs Blow Out, r = 0.21, p < 0.001, Conjuring vs Blow Out, r = 0.14, p = 0.009).

Overall, Pie-Man produced similar levels of synchrony to backward speech and sentence shuffle in A1, with the exception of slightly higher synchrony for Pie-Man than Sentence Shuffle (but not than backward speech) in the sighted group (Fig. 5; sighted A1: Pie-Man vs Backward, r = 0.05, p = 0.2, Pie-Man vs Sentence Shuffle, r = 0.09, p = 0.018; blind A1: Pie-Man vs Backward, r = 0.04, p = 0.308, Pie-Man vs Sentence Shuffle, r = 0.04, p = 0.333). In PLT, Pie-Man and Sentence Shuffle produced higher levels of synchrony than backward speech (Fig. 5; sighted PLT: Pie-Man vs Backward r = 0.14, p < 0.001, Sentence Shuffle vs Backward, r = 0.16, p < 0.001; blind PLT: Pie-Man vs Backward r = 0.09, p = 0.043, Sentence Shuffle vs Backward, r = 0.09, p = 0.048). Note that the Sentence Shuffle and backward speech stimuli were created from the Pie-Man stimulus and are thus matched to it (but not to the audio movies) on low-level auditory features, such as frequency and amplitude variation. Crucially, synchrony in V1 varied as a function of group and condition. The sighted showed low levels of synchrony across all auditory stimuli in V1, although, one of the audio movies that produced higher synchrony in both A1 and PLT (Conjuring) also produced some synchrony in V1 of the sighted group (Fig. 5; sighted V1: Backward vs Rest, r = 0.01, p > 0.5, Sentence Shuffle vs Rest, r = 0.06, p = 0.197, Pie-Man vs Rest, r = −0.09, p = 0.064, Blow Out vs Rest, r = 0.05, p = 0.296, Taken vs Rest, r = 0.01, p > 0.5, Conjuring vs Rest, r = 0.10, p = 0.046). In the blind group, all three movies as well as Pie-Man and to a lesser degree sentence shuffle produced high synchrony (Fig. 5; blind V1: Sentence Shuffle vs Rest, r = 0.10, p = 0.036, Pie-Man vs Rest, r = 0.19, p < 0.001, Blow Out vs Rest, r = 0.21, p < 0.001, Taken vs Rest, r = 0.29, p < 0.001, Conjuring vs Rest, r = 0.29, p < 0.001). No synchrony was observed for backward speech (Fig. 5; blind V1: Backward vs Rest, r = 0.02, p > 0.5). 
V1 of the blind group showed significantly higher synchronization than V1 of the sighted group for every stimulus except backward speech; the group difference was largest for the intact stimuli and smaller for Sentence Shuffle (Fig. 5; V1 blind vs sighted: Backward, r = 0.003, p > 0.5; Sentence Shuffle, r = 0.125, p = 0.004; Pie-Man, r = 0.24, p < 0.001; Blow Out, r = 0.18, p = 0.001; Taken, r = 0.26, p < 0.001; Conjuring, r = 0.26, p < 0.001). The blind, but not the sighted, group showed a significant difference between Pie-Man and backward speech [Fig. 5; V1: group (blind vs sighted) × condition (Pie-Man vs backward) interaction, r = 0.24, p < 0.001].
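
The group × condition interaction reported above asks whether the Pie-Man minus backward-speech difference in V1 synchrony is larger in the blind group than in the sighted group. The statistical model behind the reported r and p values is not described in this section. The sketch below instead swaps in a simple label-permutation test on Fisher z-transformed per-subject ISC differences, purely to illustrate the logic of a difference-of-differences test. It assumes per-subject ISC values as input. It also ignores the dependence among leave-one-out ISC values, which arises because each subject enters the others' reference averages. The function name is hypothetical.

```python
import numpy as np


def interaction_permutation_test(blind_a, blind_b, sighted_a, sighted_b,
                                 n_perm=10000, seed=0):
    """Group x condition interaction on per-subject ISC values (illustrative).

    blind_a, blind_b: per-subject ISC in conditions A and B, blind group.
    sighted_a, sighted_b: the same for the sighted group.
    Returns (observed difference of mean Fisher-z differences, two-sided p).
    """
    # Fisher z-transform correlations before differencing or averaging
    diff_blind = np.arctanh(blind_a) - np.arctanh(blind_b)
    diff_sighted = np.arctanh(sighted_a) - np.arctanh(sighted_b)
    observed = diff_blind.mean() - diff_sighted.mean()

    # Null distribution: shuffle group labels over the per-subject differences
    pooled = np.concatenate([diff_blind, diff_sighted])
    n_blind = len(diff_blind)
    rng = np.random.default_rng(seed)
    count = 0
    for _ in range(n_perm):
        perm = rng.permutation(pooled)
        null = perm[:n_blind].mean() - perm[n_blind:].mean()
        if abs(null) >= abs(observed):
            count += 1
    # Add-one correction keeps the permutation p-value strictly positive
    p = (count + 1) / (n_perm + 1)
    return observed, p
```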

Across-group (sighted-to-blind) ROI ISC

As in the whole-brain analysis, we assessed common functionality across vision groups by directly correlating sighted individuals with the blind group, and vice versa. We found similar levels of synchrony in A1 and PLT across, as within, groups (Fig. 5; across group A1: backward vs rest, r = 0.36, p < 0.001; Sentence Shuffle vs rest, r = 0.36, p < 0.001; Pie-Man vs rest, r = 0.35, p < 0.001; audio movies vs rest, r = 0.47, p < 0.001; PLT: backward vs rest, r = 0.07, p = 0.02; Sentence Shuffle vs rest, r = 0.19, p < 0.001; Pie-Man vs rest, r = 0.20, p < 0.001; audio movies vs rest, r = 0.41, p < 0.001; A1 audio movies: across group vs blind group, r = 0.03, p = 0.19; across group vs sighted group, r = 0.04, p = 0.13; PLT audio movies: across group vs blind group, r = 0.004, p > 0.5; across group vs sighted group, r = 0.002, p > 0.5).
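
One way to implement the across-group analysis described above, assumed here rather than taken from the methods, is to correlate each sighted participant's ROI time course with the blind group's average time course, and each blind participant's time course with the sighted average. No participant has to be left out, because the reference average always comes from the other group. `across_group_isc` is a hypothetical helper.

```python
import numpy as np


def across_group_isc(group_a, group_b):
    """Correlate each subject in one group with the mean of the other group.

    group_a, group_b: arrays of shape (n_subjects, n_timepoints) for one ROI
    and one stimulus (group sizes may differ).
    Returns (r of each group_a subject vs the group_b mean,
             r of each group_b subject vs the group_a mean).
    """
    group_a = np.asarray(group_a, dtype=float)
    group_b = np.asarray(group_b, dtype=float)
    mean_a = group_a.mean(axis=0)
    mean_b = group_b.mean(axis=0)
    a_to_b = np.array([np.corrcoef(ts, mean_b)[0, 1] for ts in group_a])
    b_to_a = np.array([np.corrcoef(ts, mean_a)[0, 1] for ts in group_b])
    return a_to_b, b_to_a
```

If the two groups' responses share a stimulus-driven component, both arrays are positive; if they share none, both hover around zero regardless of how reliable each group's responses are internally.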

In V1, we observed low but significant correlations between blind and sighted participants for the three movies, but not for the other intact stimulus, Pie-Man (Fig. 5; across group V1: backward vs rest, r = 0.04, p = 0.27; Sentence Shuffle vs rest, r = 0.06, p = 0.07; Pie-Man vs rest, r = 0.03, p = 0.4; movies vs rest, r = 0.17, p < 0.001). Overall, V1 synchrony for the movie stimuli was lower across vision groups than within the blind group (across group vs blind group: audio movies, r = 0.16, p < 0.001) and higher than within the sighted group (across group vs sighted group: audio movies, r = 0.06, p = 0.03). Also, unlike V1 synchronization within the blind group, V1 synchronization across vision groups did not systematically increase with the cognitive complexity of the stimuli (Fig. 5; across group V1: Pie-Man + Blow Out vs backward, r = 0.05, p = 0.10). The effect of cognitive complexity on V1 synchronization was significantly smaller in the across-group correlation than in the blind group and did not differ significantly from that within the sighted group [Fig. 5; V1: group (blind vs across group) × condition (Pie-Man + Blow Out vs backward) interaction, r = 0.17, p = 0.001; group (sighted vs across group) × condition (Pie-Man + Blow Out vs backward) interaction, r = 0.07, p = 0.2].
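
The comparisons of across-group with within-group V1 synchrony pair two ISC estimates per participant. As an illustrative assumption, not the authors' stated procedure, such a paired comparison could be tested by randomly flipping the sign of each participant's Fisher-z difference to build a null distribution. `paired_sign_flip_test` is a hypothetical name.

```python
import numpy as np


def paired_sign_flip_test(isc_x, isc_y, n_perm=10000, seed=0):
    """Paired comparison of two ISC estimates from the same subjects.

    isc_x, isc_y: per-subject ISC values, e.g., a subject's across-group r
    and the same subject's within-group r (illustrative pairing).
    Returns (mean Fisher-z difference, two-sided p from random sign flips).
    """
    diff = (np.arctanh(np.asarray(isc_x, dtype=float))
            - np.arctanh(np.asarray(isc_y, dtype=float)))
    observed = diff.mean()
    rng = np.random.default_rng(seed)
    # Under the null, each subject's difference is equally likely to be +/-
    signs = rng.choice([-1.0, 1.0], size=(n_perm, diff.size))
    null = (signs * diff).mean(axis=1)
    p = (np.sum(np.abs(null) >= abs(observed)) + 1) / (n_perm + 1)
    return observed, p
```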

Discussion

Visual cortices of blind individuals synchronize with one another during naturalistic listening to audio movies and a narrative. The audio tracks of the movies drove synchronized responses across 65% of visual cortex by surface area. Synchronization was bilateral and spanned both retinotopic and higher-order areas on the lateral, medial, and ventral surfaces of the occipital lobe. This figure is a lower, rather than an upper, bound on the topographical extent of visual cortex repurposing: some visual areas may have failed to synchronize simply because the particular naturalistic stimuli used in the current study did not contain cognitive content relevant to them. The current findings are consistent with the idea that, in blindness, most of the available cortical tissue undergoes systematic adaptation for everyday tasks.
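
A percentage "by surface area" weights each cortical-surface vertex by the area it represents rather than simply counting vertices, which matters because vertices on a cortical mesh need not represent equal areas. The sketch below assumes hypothetical inputs: a per-vertex area array, a boolean mask for the visual-cortex ROI, and a boolean mask of vertices that survived the ISC threshold. It is not the study's pipeline.

```python
import numpy as np


def percent_area_significant(vertex_area, in_roi, significant):
    """Percentage of an ROI's surface area covered by significant vertices.

    vertex_area: area assigned to each surface vertex (e.g., mm^2).
    in_roi: boolean mask of vertices belonging to the ROI.
    significant: boolean mask of vertices surviving the ISC threshold.
    """
    vertex_area = np.asarray(vertex_area, dtype=float)
    in_roi = np.asarray(in_roi, dtype=bool)
    significant = np.asarray(significant, dtype=bool)
    roi_area = vertex_area[in_roi].sum()
    sig_area = vertex_area[in_roi & significant].sum()
    return 100.0 * sig_area / roi_area
```

In a toy ROI of three vertices with areas 1, 1, and 2, where the first and third are significant, this returns 75.0, whereas a plain vertex count would give about 66.7%.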

A key observation is that meaningful, temporally extended naturalistic stimuli (i.e., audio movies and narratives) synchronize visual cortices of blind individuals more than meaningless stimuli do. Visual cortices of blind individuals showed little synchrony while participants listened to a nonsensical backward speech stream. This finding is consistent with a recent magnetoencephalography study that observed greater synchrony for intelligible than unintelligible speech in foveal V1 of blind participants (Van Ackeren et al., 2018). The Sentence Shuffle condition, which contains some meaning but no plot, synchronized visual cortices of the blind group (and frontotemporal cortices of both groups) to an intermediate degree. In sum, visual cortices of blind individuals synchronize to a shared experience of meaningful naturalistic stimuli.

Previous work has used differences in synchrony across stimuli of varying cognitive complexity to characterize the "temporal receptive window" of different cortical networks (Hasson et al., 2008; Lerner et al., 2011). According to this framework, cortical networks differ in the length of the temporal window over which they integrate information. Higher-cognitive areas integrate information over longer time windows and therefore synchronize only for stimuli that have structure at these long timescales. By contrast, low-level sensory areas, including early auditory cortices and early visual cortices of sighted individuals, integrate information only over short time windows, so stimulus structure at longer timescales has no effect on their level of synchrony (Hasson et al., 2008). Here we find that, in blind individuals, visual cortices exhibit a long temporal receptive window comparable to that of higher-order cognitive areas, such as the posterior lateral temporal cortex. These results suggest that the temporal receptive window of a cortical area is not fixed by its anatomy at birth.

The present results are consistent with, and complementary to, evidence from studies using task-based designs. Previous studies suggest that deafferented visual cortices are engaged in a wide range of auditory and tactile experimental tasks, including motion detection, shape discrimination, sound localization, echolocation, Braille reading, and auditory sentence comprehension (Wanet-Defalque et al., 1988; Uhl et al., 1991; Sadato et al., 1996; Weeks et al., 2000; Bavelier and Neville, 2002; Röder et al., 2002; Merabet et al., 2004; Gougoux et al., 2005; Poirier et al., 2006; Stilla et al., 2008; Collignon et al., 2011; Thaler et al., 2011; Wolbers et al., 2011). Of particular relevance, visual cortices of blind individuals are active during memory recall (e.g., when naming words from a previously memorized list) and are sensitive to linguistic meaning and structure (Röder et al., 2002; Amedi et al., 2003; Raz et al., 2005; Bedny et al., 2011; Lane et al., 2015). For example, the visual cortices of blind individuals respond more to sentences than to lists of unrelated words, and more to grammatically complex than to grammatically simple sentences (Röder et al., 2002; Bedny et al., 2011; Lane et al., 2015). Naturalistic stimuli of the type used in the current study likely engaged some of these processes as well as additional ones. Processing audio movies and narratives involves language comprehension, recall of past information, selective attention, integration across relevant plot points, and prediction of upcoming events (Naci et al., 2014). Synchrony of visual cortex activity across individuals suggests that such processes, engaged by naturalistic stimuli, colonize common parts of visual cortex across different blind individuals.

The present results leave open several important questions. The complex naturalistic stimuli used in the current study synchronized large swaths of deafferented visual cortex, extending beyond V1 into lateral and ventral occipitotemporal areas. Previous research suggests that, in blindness, visual cortex is not colonized by a single nonspecific process. Rather, visual cortices participate in an array of distinct cognitive operations, and naturalistic stimuli engage many of these processes at once (Kanjlia et al., 2016; Abboud and Cohen, 2018). Further work is needed to determine whether different portions of this synchronized network support different cognitive functions and, if so, to identify the nature of these functions.

A further open question concerns how intrinsic physiology shapes the function of visual cortex, and precisely what is shared and what differs between sighted and blind people. A key finding of the current study is greater synchrony of visual cortices across blind than across sighted adults. However, even in the sighted (blindfolded) group we observed some synchrony of visual cortices. Notably, the audio movie that produced some V1 synchrony in the sighted group (Conjuring) also produced high synchrony in A1 and PLT of both groups.

While providing evidence for functional plasticity, the present results also illustrate ways in which innate constraints shape cortical function even in blindness. First, synchronization of visual cortices across blind individuals suggests that cortical function is systematically localized across individuals even when sensory experience is atypical. Such systematicity is likely related to common anatomical constraints. Future work using techniques such as hyperalignment (Haxby et al., 2011) could reveal whether functional specialization is more variable across individuals when a cortical region receives species-atypical information from the environment.
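
Hyperalignment (Haxby et al., 2011) maps each subject's voxel responses into a common model space using orthogonal Procrustes transformations learned from responses to a shared naturalistic stimulus. The sketch below shows only the core pairwise step: an orthogonal Procrustes rotation between two subjects' time-by-voxel response matrices. The full method iterates this step over subjects to build a common space. The function name and the equal voxel counts are simplifying assumptions made for illustration.

```python
import numpy as np


def procrustes_rotation(source, target):
    """Orthogonal matrix R minimizing ||source @ R - target|| (Frobenius norm).

    source, target: (n_timepoints, n_voxels) response matrices from two
    subjects exposed to the same stimulus; equal voxel counts are assumed.
    Classic solution: R = U @ Vt, where U, S, Vt = svd(source.T @ target).
    """
    source = np.asarray(source, dtype=float)
    target = np.asarray(target, dtype=float)
    u, _, vt = np.linalg.svd(source.T @ target)
    return u @ vt
```

Applied to, say, V1 responses from two blind participants, the residual mismatch remaining after alignment could serve as one index of how idiosyncratic their functional organization is. This use is a suggestion, not an analysis performed in the study.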

Second, although synchrony of visual cortices was much lower across sighted than across blind individuals, even among the sighted, two of the three movies produced some synchronization. Furthermore, when blind and sighted data were directly correlated with each other, we observed some synchrony between foveal V1 of the sighted group and V1 of the blind group. Again, this synchrony was low relative to that observed among blind individuals, and, unlike in the blind group, it did not vary systematically with stimulus meaningfulness. The existence of some synchrony even in the sighted nevertheless suggests that nonvisual information reaches visual cortices in the sighted population. Further support comes from evidence that visual cortices are active in sighted individuals during some nonvisual tasks, although the tasks and stimuli that elicit these responses differ from those reported in studies of blindness (Sathian et al., 1997; Zangaladze et al., 1999; James et al., 2002; Facchini and Aglioti, 2003; Merabet et al., 2004, 2008; Sathian, 2005; Voss et al., 2016). Thus, exactly how the function of visual cortex changes in blindness remains to be fully understood. There may be different types and degrees of functional change across different anatomical locations within the visual system. Some occipitotemporal areas previously believed to perform modality-specific visual functions (e.g., scene perception) show preferential responses to analogous stimuli (e.g., names or sounds characteristic of places) in blind participants (He et al., 2013; Peelen et al., 2014; Striem-Amit and Amedi, 2014; van den Hurk et al., 2017). Conversely, large swaths of early visual cortices respond to abstract cognitive functions, including grammar, in people who are blind more so than in those who are sighted (Röder et al., 2002; Bedny et al., 2011; Lane et al., 2015). Precisely how the functions of these regions are similar and different across blind and sighted populations in each of these cases remains to be fully understood.

The available evidence suggests that innately determined long-range connectivity patterns guide functional specialization by constraining the types of input that a cortical area receives (O'Leary, 1989; Johnson, 2000; Dehaene and Cohen, 2007; Mahon and Caramazza, 2011; Saygin et al., 2016; Bedny, 2017; Cusack et al., 2018). Blindness nevertheless modifies what visual cortex does with incoming nonvisual information, suggesting that intrinsic cortical anatomy allows for different functional profiles depending on experience.

Footnotes

This work was supported by the National Institutes of Health (NEI, R01 EY027352-01 to M.B.), the Catalyst Award from Johns Hopkins University (M.B.), and a National Science Foundation Graduate Research Fellowship DGE-1232825 (to R.E.L.). We thank the blind and sighted individuals who participated in this research, the blind community for its support of this research, without which this work would not have been possible, and the F. M. Kirby Research Center for Functional Brain Imaging at the Kennedy Krieger Institute.

The authors declare no competing financial interests.

References

- Abboud S, Cohen L (2018) Distinctive interaction between cognitive networks and the visual cortex in early blind individuals. bioRxiv 437988.

- Amedi A, Raz N, Pianka P, Malach R, Zohary E (2003) Early visual cortex activation correlates with superior verbal memory performance in the blind. Nat Neurosci 6:758–766. 10.1038/nn1072

- Amedi A, Floel A, Knecht S, Zohary E, Cohen LG (2004) Transcranial magnetic stimulation of the occipital pole interferes with verbal processing in blind subjects. Nat Neurosci 7:1266–1270. 10.1038/nn1328

- Anticevic A, Dierker DL, Gillespie SK, Repovs G, Csernansky JG, Van Essen DC, Barch DM (2008) Comparing surface-based and volume-based analyses of functional neuroimaging data in patients with schizophrenia. Neuroimage 41:835–848. 10.1016/j.neuroimage.2008.02.052

- Bavelier D, Neville HJ (2002) Cross-modal plasticity: where and how? Nat Rev Neurosci 3:443–452. 10.1038/nrn848

- Bedny M (2017) Evidence from blindness for a cognitively pluripotent cortex. Trends Cogn Sci 21:637–648. 10.1016/j.tics.2017.06.003

- Bedny M, Pascual-Leone A, Dodell-Feder D, Fedorenko E, Saxe R (2011) Language processing in the occipital cortex of congenitally blind adults. Proc Natl Acad Sci U S A 108:4429–4434. 10.1073/pnas.1014818108

- Bookheimer S (2002) Functional MRI of language: new approaches to understanding the cortical organization of semantic processing. Annu Rev Neurosci 25:151–188. 10.1146/annurev.neuro.25.112701.142946

- Cohen LG, Celnik P, Pascual-Leone A, Corwell B, Falz L, Dambrosia J, Honda M, Sadato N, Gerloff C, Catalá MD, Hallett M (1997) Functional relevance of cross-modal plasticity in blind humans. Nature 389:180–183. 10.1038/38278

- Collignon O, Vandewalle G, Voss P, Albouy G, Charbonneau G, Lassonde M, Lepore F (2011) Functional specialization for auditory–spatial processing in the occipital cortex of congenitally blind humans. Proc Natl Acad Sci U S A 108:4435–4440. 10.1073/pnas.1013928108

- Cusack R, Wild CJ, Zubiaurre-Elorza L, Linke AC (2018) Why does language not emerge until the second year? Hear Res 366:75–81. 10.1016/j.heares.2018.05.004

- Dale AM, Fischl B, Sereno MI (1999) Cortical surface-based analysis: segmentation and surface reconstruction. Neuroimage 9:179–194. 10.1006/nimg.1998.0395

- Dehaene S, Cohen L (2007) Cultural recycling of cortical maps. Neuron 56:384–398. 10.1016/j.neuron.2007.10.004

- Desikan RS, Ségonne F, Fischl B, Quinn BT, Dickerson BC, Blacker D, Buckner RL, Dale AM, Maguire RP, Hyman BT, Albert MS, Killiany RJ (2006) An automated labeling system for subdividing the human cerebral cortex on MRI scans into gyral based regions of interest. Neuroimage 31:968–980. 10.1016/j.neuroimage.2006.01.021

- Eklund A, Nichols TE, Knutsson H (2016) Cluster failure: why fMRI inferences for spatial extent have inflated false-positive rates. Proc Natl Acad Sci U S A 113:7900–7905. 10.1073/pnas.1602413113

- Facchini S, Aglioti SM (2003) Short term light deprivation increases tactile spatial acuity in humans. Neurology 60:1998–1999. 10.1212/01.WNL.0000068026.15208.D0

- Fedorenko E, Hsieh PJ, Nieto-Castañón A, Whitfield-Gabrieli S, Kanwisher N (2010) New method for fMRI investigations of language: defining ROIs functionally in individual subjects. J Neurophysiol 104:1177–1194. 10.1152/jn.00032.2010

- Glasser MF, Sotiropoulos SN, Wilson JA, Coalson TS, Fischl B, Andersson JL, Xu J, Jbabdi S, Webster M, Polimeni JR, Van Essen DC, Jenkinson M; WU-Minn HCP Consortium (2013) The minimal preprocessing pipelines for the human connectome project. Neuroimage 80:105–124. 10.1016/j.neuroimage.2013.04.127

- Gougoux F, Zatorre RJ, Lassonde M, Voss P, Lepore F (2005) A functional neuroimaging study of sound localization: visual cortex activity predicts performance in early-blind individuals. PLoS Biol 3:e27. 10.1371/journal.pbio.0030027

- Hagler DJ Jr, Saygin AP, Sereno MI (2006) Smoothing and cluster thresholding for cortical surface-based group analysis of fMRI data. Neuroimage 33:1093–1103. 10.1016/j.neuroimage.2006.07.036

- Hasson U, Nir Y, Levy I, Fuhrmann G, Malach R (2004) Intersubject synchronization of cortical activity during natural vision. Science 303:1634–1640. 10.1126/science.1089506

- Hasson U, Yang E, Vallines I, Heeger DJ, Rubin N (2008) A hierarchy of temporal receptive windows in human cortex. J Neurosci 28:2539–2550. 10.1523/JNEUROSCI.5487-07.2008

- Hasson U, Malach R, Heeger DJ (2010) Reliability of cortical activity during natural stimulation. Trends Cogn Sci 14:40–48. 10.1016/j.tics.2009.10.011

- Haxby JV, Guntupalli JS, Connolly AC, Halchenko YO, Conroy BR, Gobbini MI, Hanke M, Ramadge PJ (2011) A common, high-dimensional model of the representational space in human ventral temporal cortex. Neuron 72:404–416. 10.1016/j.neuron.2011.08.026

- He C, Peelen MV, Han Z, Lin N, Caramazza A, Bi Y (2013) Selectivity for large nonmanipulable objects in scene-selective visual cortex does not require visual experience. Neuroimage 79:1–9. 10.1016/j.neuroimage.2013.04.051

- James TW, Humphrey GK, Gati JS, Servos P, Menon RS, Goodale MA (2002) Haptic study of three-dimensional objects activates extrastriate visual areas. Neuropsychologia 40:1706–1714. 10.1016/S0028-3932(02)00017-9

- Jo HJ, Lee JM, Kim JH, Shin YW, Kim IY, Kwon JS, Kim SI (2007) Spatial accuracy of fMRI activation influenced by volume- and surface-based spatial smoothing techniques. Neuroimage 34:550–564. 10.1016/j.neuroimage.2006.09.047

- Johnson MH (2000) Functional brain development in infants: elements of an interactive specialization framework. Child Dev 71:75–81. 10.1111/1467-8624.00120

- Kanjlia S, Lane C, Feigenson L, Bedny M (2016) Absence of visual experience modifies the neural basis of numerical thinking. Proc Natl Acad Sci U S A 113:11172–11177. 10.1073/pnas.1524982113

- Kessler D, Angstadt M, Sripada CS (2017) Reevaluating "cluster failure" in fMRI using nonparametric control of the false discovery rate. Proc Natl Acad Sci U S A 114:E3372–E3373. 10.1073/pnas.1614502114

- Lane C, Kanjlia S, Omaki A, Bedny M (2015) "Visual" cortex of congenitally blind adults responds to syntactic movement. J Neurosci 35:12859–12868. 10.1523/JNEUROSCI.1256-15.2015

- Lerner Y, Honey CJ, Silbert LJ, Hasson U (2011) Topographic mapping of a hierarchy of temporal receptive windows using a narrated story. J Neurosci 31:2906–2915. 10.1523/JNEUROSCI.3684-10.2011

- Mahon BZ, Caramazza A (2011) What drives the organization of object knowledge in the brain? Trends Cogn Sci 15:97–103. 10.1016/j.tics.2011.01.004

- Merabet L, Thut G, Murray B, Andrews J, Hsiao S, Pascual-Leone A (2004) Feeling by sight or seeing by touch? Neuron 42:173–179. 10.1016/S0896-6273(04)00147-3

- Merabet LB, Hamilton R, Schlaug G, Swisher JD, Kiriakopoulos ET, Pitskel NB, Kauffman T, Pascual-Leone A (2008) Rapid and reversible recruitment of early visual cortex for touch. PLoS One 3:e3046. 10.1371/journal.pone.0003046

- Morosan P, Rademacher J, Schleicher A, Amunts K, Schormann T, Zilles K (2001) Human primary auditory cortex: cytoarchitectonic subdivisions and mapping into a spatial reference system. Neuroimage 13:684–701. 10.1006/nimg.2000.0715

- Naci L, Cusack R, Anello M, Owen AM (2014) A common neural code for similar conscious experiences in different individuals. Proc Natl Acad Sci U S A 111:14277–14282. 10.1073/pnas.1407007111

- Naci L, Sinai L, Owen AM (2017) Detecting and interpreting conscious experiences in behaviorally non-responsive patients. Neuroimage 145:304–313. 10.1016/j.neuroimage.2015.11.059

- Nichols TE, Holmes AP (2002) Nonparametric permutation tests for functional neuroimaging: a primer with examples. Hum Brain Mapp 15:1–25. 10.1002/hbm.1058

- O'Leary DD (1989) Do cortical areas emerge from a protocortex? Trends Neurosci 12:400–406. 10.1016/0166-2236(89)90080-5

- Peelen MV, He C, Han Z, Caramazza A, Bi Y (2014) Nonvisual and visual object shape representations in occipitotemporal cortex: evidence from congenitally blind and sighted adults. J Neurosci 34:163–170. 10.1523/JNEUROSCI.1114-13.2014

- Poirier C, Collignon O, Scheiber C, Renier L, Vanlierde A, Tranduy D, Veraart C, De Volder AG (2006) Auditory motion perception activates visual motion areas in early blind subjects. Neuroimage 31:279–285. 10.1016/j.neuroimage.2005.11.036

- Raz N, Amedi A, Zohary E (2005) V1 activation in congenitally blind humans is associated with episodic retrieval. Cereb Cortex 15:1459–1468. 10.1093/cercor/bhi026

- Regev M, Honey CJ, Simony E, Hasson U (2013) Selective and invariant neural responses to spoken and written narratives. J Neurosci 33:15978–15988. 10.1523/JNEUROSCI.1580-13.2013

- Röder B, Stock O, Bien S, Neville H, Rösler F (2002) Speech processing activates visual cortex in congenitally blind humans. Eur J Neurosci 16:930–936. 10.1046/j.1460-9568.2002.02147.x

- Sadato N, Pascual-Leone A, Grafman J, Ibañez V, Deiber MP, Dold G, Hallett M (1996) Activation of the primary visual cortex by braille reading in blind subjects. Nature 380:526–528. 10.1038/380526a0

- Sathian K (2005) Visual cortical activity during tactile perception in the sighted and the visually deprived. Dev Psychobiol 46:279–286. 10.1002/dev.20056

- Sathian K, Zangaladze A, Hoffman JM, Grafton ST (1997) Feeling with the mind's eye. Neuroreport 8:3877–3881. 10.1097/00001756-199712220-00008

- Saygin ZM, Osher DE, Norton ES, Youssoufian DA, Beach SD, Feather J, Gaab N, Gabrieli JD, Kanwisher N (2016) Connectivity precedes function in the development of the visual word form area. Nat Neurosci 19:1250–1255. 10.1038/nn.4354

- Simony E, Honey CJ, Chen J, Lositsky O, Yeshurun Y, Wiesel A, Hasson U (2016) Dynamic reconfiguration of the default mode network during narrative comprehension. Nat Commun 7:12141. 10.1038/ncomms12141

- Smith SM, Jenkinson M, Woolrich MW, Beckmann CF, Behrens TE, Johansen-Berg H, Bannister PR, De Luca M, Drobnjak I, Flitney DE, Niazy RK, Saunders J, Vickers J, Zhang Y, De Stefano N, Brady JM, Matthews PM (2004) Advances in functional and structural MR image analysis and implementation as FSL. Neuroimage 23:S208–S219. 10.1016/j.neuroimage.2004.07.051

- Stilla R, Hanna R, Hu X, Mariola E, Deshpande G, Sathian K (2008) Neural processing underlying tactile microspatial discrimination in the blind: a functional magnetic resonance imaging study. J Vis 8(10):13.1–19. 10.1167/8.10.13

- Striem-Amit E, Amedi A (2014) Visual cortex extrastriate body-selective area activation in congenitally blind people "seeing" by using sounds. Curr Biol 24:687–692. 10.1016/j.cub.2014.02.010

- Thaler L, Arnott SR, Goodale MA (2011) Neural correlates of natural human echolocation in early and late blind echolocation experts. PLoS One 6:e20162. 10.1371/journal.pone.0020162

- Tucholka A, Fritsch V, Poline JB, Thirion B (2012) An empirical comparison of surface-based and volume-based group studies in neuroimaging. Neuroimage 63:1443–1453. 10.1016/j.neuroimage.2012.06.019

- Uhl F, Franzen P, Lindinger G, Lang W, Deecke L (1991) On the functionality of the visually deprived occipital cortex in early blind persons. Neurosci Lett 124:256–259. 10.1016/0304-3940(91)90107-5

- Van Ackeren MJ, Barbero FM, Mattioni S, Bottini R, Collignon O (2018) Neuronal populations in the occipital cortex of the blind synchronize to the temporal dynamics of speech. eLife 7:e31640. 10.7554/eLife.31640

- van den Hurk J, Van Baelen M, Op de Beeck HP (2017) Development of visual category selectivity in ventral visual cortex does not require visual experience. Proc Natl Acad Sci U S A 114:E4501–E4510. 10.1073/pnas.1612862114

- Van Essen DC (2005) A population-average, landmark- and surface-based (PALS) atlas of human cerebral cortex. Neuroimage 28:635–662. 10.1016/j.neuroimage.2005.06.058

- Vigneau M, Beaucousin V, Hervé PY, Jobard G, Petit L, Crivello F, Mellet E, Zago L, Mazoyer B, Tzourio-Mazoyer N (2011) What is right-hemisphere contribution to phonological, lexico-semantic, and sentence processing? Neuroimage 54:577–593. 10.1016/j.neuroimage.2010.07.036

- Voss P, Alary F, Lazzouni L, Chapman CE, Goldstein R, Bourgoin P, Lepore F (2016) Crossmodal processing of haptic inputs in sighted and blind individuals. Front Syst Neurosci 10:62. 10.3389/fnsys.2016.00062

- Wanet-Defalque MC, Veraart C, De Volder A, Metz R, Michel C, Dooms G, Goffinet A (1988) High metabolic activity in the visual cortex of early blind human subjects. Brain Res 446:369–373. 10.1016/0006-8993(88)90896-7

- Weeks R, Horwitz B, Aziz-Sultan A, Tian B, Wessinger CM, Cohen LG, Hallett M, Rauschecker JP (2000) A positron emission tomographic study of auditory localization in the congenitally blind. J Neurosci 20:2664–2672. 10.1523/JNEUROSCI.20-07-02664.2000

- Wolbers T, Zahorik P, Giudice NA (2011) Decoding the direction of auditory motion in blind humans. Neuroimage 56:681–687. 10.1016/j.neuroimage.2010.04.266

- Zangaladze A, Epstein CM, Grafton ST, Sathian K (1999) Involvement of visual cortex in tactile discrimination of orientation. Nature 401:587–590. 10.1038/44139