Abstract

Objective

To assess the effect of disclosing authors’ conflict of interest declarations to peer reviewers at a medical journal.

Design

Randomized controlled trial.

Setting

Manuscript review process at the Annals of Emergency Medicine.

Participants

Reviewers (n=838) who reviewed manuscripts submitted between 2 June 2014 and 23 January 2018 inclusive (n=1480 manuscripts).

Intervention

Reviewers were randomized to either receive (treatment) or not receive (control) authors’ full International Committee of Medical Journal Editors format conflict of interest disclosures before reviewing manuscripts. Reviewers rated the manuscripts as usual on eight quality ratings and were then surveyed to obtain “counterfactual scores”—that is, the scores they believed they would have given had they been assigned to the opposite arm—as well as attitudes toward conflicts of interest.

Main outcome measure

Overall quality score that reviewers assigned to the manuscript on submitting their review (1 to 5 scale). Secondary outcomes were scores the reviewers submitted for the seven more specific quality ratings and counterfactual scores elicited in the follow-up survey.

Results

Providing authors’ conflict of interest disclosures did not affect reviewers’ mean ratings of manuscript quality (Mcontrol=2.70 (SD 1.11) out of 5; Mtreatment=2.74 (1.13) out of 5; mean difference 0.04, 95% confidence interval –0.05 to 0.14), even for manuscripts with disclosed conflicts (Mcontrol=2.85 (1.12) out of 5; Mtreatment=2.96 (1.16) out of 5; mean difference 0.11, –0.05 to 0.26). Similarly, no effect of the treatment was seen on any of the other seven quality ratings that the reviewers assigned. Reviewers acknowledged conflicts of interest as an important matter and believed that they could correct for them when they were disclosed. However, their counterfactual scores did not differ from actual scores (Mactual=2.69; Mcounterfactual=2.67; difference in means 0.02, 0.01 to 0.02). When conflicts were reported, a comparison of different source types (for example, government, for-profit corporation) found no difference in effect.

Conclusions

Current ethical standards require disclosure of conflicts of interest for all scientific reports. As currently implemented, this practice had no effect on any quality ratings of real manuscripts being evaluated for publication by real peer reviewers.

Introduction

Considerable research has documented biases in medical research associated with financial conflicts of interest (COIs), particularly in the pharmaceutical and medical device industry. For example, industry funded trials are more likely to produce results favoring the sponsor, even when the quality of studies is taken into account.1 2 3 4 Given that researchers cannot always avoid conflicts, many research establishments take measures to manage conflicts that arise, and, among such measures, disclosure is the most common. Most high quality medical and scientific journals require disclosure of possible COIs, typically adopting the guidelines of the International Committee of Medical Journal Editors (ICMJE) and other standard setting organizations.5 6 7 COI disclosure is intended, in part, to improve the objectivity and accuracy of research papers, as well as to help readers to critically evaluate the research.

However, scant research has investigated whether COI disclosures achieve their intended purpose. Moreover, no consensus, or for that matter scientific research, exists on which metrics should be used to evaluate the effectiveness of COI disclosures. In the little research that has been done,8 9 10 11 the study design is typically stylized: practicing clinicians, aware that they are in a study, review fictional short abstracts varying with respect to whether, and if so what, conflicts are disclosed, and report their perceptions of the abstracts’ credibility. Most of these studies find that participants report reduced credibility when a COI has been disclosed. One of the strengths of these studies is that their participants, although not experienced reviewers or researchers, are practicing clinicians (as opposed to, for example, convenience samples of medical students or physicians in residency training). In addition, the outcome measure—perceived credibility—has high face validity as a measure for assessing the effect of COI disclosures.

However, such studies may overstate the effect of disclosures on reviewers in actual reviewing situations. Firstly, actual reviewers typically review only one paper at a time, so the COI disclosure may be much less salient to them than it would be in an experiment in which reviewers review multiple abstracts with different COI disclosures in close succession. In the one study in which the purpose of the research was made less obvious, by showing respondents only one abstract (as opposed to several in sequence), COI information had no effect on perceived research credibility.12 Secondly, responses may reflect social expectations; clinicians know that the “correct” response is to distrust findings funded by industry. Given that research evaluating the effect of COI disclosures is scant, is limited to stylized scenario studies, and has produced conflicting results, in this research we tested whether such disclosures affect perceived quality of research in a naturalistic setting.

In response to concerns that COIs erode scientific integrity, leading scientific authorities strongly advocate that authors disclose COIs and standard setting organizations in medical publication mandate it.7 13 14 Failure to do so is considered a breach of academic ethics. Societal concern about the corrupting influence of such conflicts is great enough that in the US it is legally mandated that every payment to any physician by an industry source be reported annually to a governmental public database.15 However, despite this public and scientific concern, no research has examined whether such disclosures affect assessments of research quality in naturalistic settings. We sought to close this gap by conducting a randomized controlled trial of whether COI disclosures affect perceived research quality in real manuscripts in a real world editorial process, as assessed by critical gatekeepers in the dissemination of science: peer reviewers.

Methods

Intervention

The study consisted of two phases. In the primary phase, we did a two arm randomized prospective trial to test whether the current system of disclosure in medical journals has an identifiable effect on reviewers’ assessments. We randomized reviewers of the Annals of Emergency Medicine, a well established medical specialty journal that is top ranked in its field, to either receive (treatment) or not receive (control) authors’ full COI disclosures before reviewing original research (appendix 1).16 17 18 These disclosures consisted of authors’ responses to two questions (a “Yes” response required authors to then provide details of the conflict of interest in an open ended text box). (1) “For any aspect of the submitted manuscript, did any authors or their institutions receive grants, consulting fees, or honoraria, support for meeting travel, fees for participation in review activities such as data monitoring boards or statistical analysis, payment for writing or reviewing the manuscript, provision of writing assistance, medicines, equipment, or administrative support?” (2) “Do any authors have financial relationships in the past 36 months with entities in the bio-medical arena that could be perceived to influence, or that give the appearance of potentially influencing, what you wrote in the submitted work? 
Examples include, but are not limited to: board membership, consultancy, employment, expert testimony, grants/grants pending, payment for lectures including service on speakers bureaus, payment for manuscript preparation, patents (planned, pending, or issued), royalties, payment for development of educational presentations, stock/stock options, other travel/accommodations/meeting expenses, or other (err on the side of full disclosure).” We measured the effect of this intervention on the eight different quality scores that reviewers gave to the manuscripts: one overall desirability item and seven additional items assessing specific aspects of quality (for example, quality and validity of methods).

The Annals of Emergency Medicine, like more than 5400 other medical journals (appendix 1), requires authors to complete the standard ICMJE COI disclosure form (http://icmje.org/conflicts-of-interest/) on submission of a manuscript, either reporting specifics of any potential COI or attesting that they have no COI to declare.5 This information is shared with the editor handling a given submission. Although authors’ COI disclosures accompany published articles, standard practice at this journal is that reviewers do not have access to authors’ COI disclosures on reviewing the manuscript as part of the peer review process (in our trial we randomized half of reviewers to receive them, as described below in the Procedure section).

From the authors’ and reviewers’ perspective, the review process is double blind: the authors are not given the reviewers’ names, and the reviewers are not given the authors’ names. Such double blinding is less common than single blinding (whereby the reviewers are given the authors’ names, but the authors are not given the reviewers’ names). As of May 2019, 46% of the top 50 medical journals (as indicated by the ISI impact factor) used a single blind process, a non-trivial proportion (10%) used double blinding, and 44% allowed authors to choose whether to reveal their identity to reviewers (essentially allowing authors to choose whether their review process would be single or double blind). In our study, double blinding is helpful because it affords the internal validity needed to test the specific effect of COI disclosure on reviewers’ perception of manuscript quality, in the absence of bias introduced by knowledge of who the authors are or any awareness of authors’ conflicts that might otherwise exist.

In the secondary phase, conducted after reviewers had submitted their review, we asked them to complete a follow-up survey. Reviewers who had been randomized to the treatment arm were asked whether they recalled having read the COI information that they had been given in the first phase. They were also asked whether the COI information had affected what they wrote in their reviews; this item was intended to assess whether the COI information might have affected reviewers’ perceptions, and reports to editors, in ways not necessarily captured by the quantitative quality scores that they gave the manuscript.

In this secondary phase, all reviewers completed a thought experiment in which they were asked whether they would have rated the given manuscript differently had they been assigned to the other trial arm. Those from the control arm were shown the disclosures they had not received when producing their review and were reminded of the scores they had submitted (for each of the eight assessment items). For each item, they entered a “counterfactual score”—the score that they believed they would have given if they had received, when they reviewed the paper, the disclosures they had now been provided with. Reviewers from the treatment arm were also reminded of how they had scored the manuscript and then entered the scores they believed they would have given the manuscript had they not received the disclosures (these reviewers were not re-shown the disclosures they had received during the review process). Finally, we also assessed reviewers’ attitudes toward disclosures of COIs, as well as demographics.

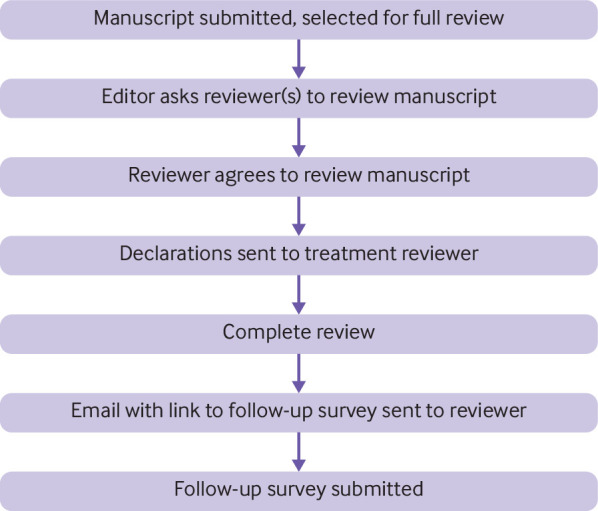

Procedure

We conducted the intervention on the reviews of the 1480 original research manuscripts submitted from 2 June 2014 to 23 January 2018 that editors sent out for first round review at the Annals of Emergency Medicine (impact factor 5.35; top 6% of scientific journals). No important editorial changes occurred, in either editorial board composition or editorial processes, during the four years in which data were collected. During the trial, editors made their decisions about the manuscripts in the usual fashion, beginning with a decision on whether to send the manuscript for peer review (during this trial, editors rejected no manuscripts owing to reported COIs). Manuscripts that passed this screen were then sent to peer reviewers who agreed, via email, to review the given manuscript.

In this trial, 3377 prospective reviewers agreed to provide a review (for one of the 1480 original research manuscripts sent out for first round review during the trial period). The first reviewer who agreed to review a given manuscript was randomized to either receive (treatment arm) or not receive (control arm) the authors’ COI disclosures. Subsequent reviewers who agreed to review the given manuscript were assigned to alternating conditions. Thus, if the first reviewer was randomized to receive the disclosure, the second reviewer would not receive the disclosure. Given the independence of peer reviewers, this design enabled each paper to serve as its own control. The allocation process was completely automated; editors were not informed of the condition reviewers were assigned to.
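
The allocation rule described above (random assignment for the first reviewer of a manuscript, strict alternation for each later reviewer) can be sketched as follows. This is only an illustration under stated assumptions: the function name `allocate_reviewers`, the reviewer identifiers, and the use of Python's `random` module are hypothetical and do not describe the customized software actually used in the trial.

```python
import random

def allocate_reviewers(reviewer_ids, rng=None):
    """Assign trial arms to the reviewers of ONE manuscript.

    reviewer_ids: reviewers in the order in which they agreed to review
                  (hypothetical identifiers, for illustration only).
    The first reviewer is randomized; later reviewers alternate arms.
    """
    rng = rng or random.Random()
    arms = ("treatment", "control")
    first = rng.randrange(2)  # coin flip for the first reviewer
    return {
        reviewer: arms[(first + position) % 2]
        for position, reviewer in enumerate(reviewer_ids)
    }

# Example: three reviewers accept the invitation for one manuscript.
allocation = allocate_reviewers(["r1", "r2", "r3"], random.Random(2014))
```

Whatever the coin flip, the first two reviewers always land in opposite arms, which is what lets each manuscript serve as its own control once both reviews are completed.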

Once prospective reviewers had agreed to provide a review, they were sent the manuscript by email as per standard practice, and only at this point were they assigned to a trial arm by use of customized software (ExtensionEngine, Boston, MA; https://extensionengine.com), which also operated the automated surveys in the study’s secondary phase. Within 24 hours of receiving the email containing the manuscript, reviewers in the treatment arm were sent an additional email (appendix 2) indicating whether the authors had disclosed COIs on their ICMJE form and, if so, the authors’ disclosures (that is, the exact text of what the authors had written on the form; appendix 3). No information on COIs was obtained from any other source. In addition, no change of any kind was made in the process of collecting COI information; we simply randomized whether or not it was shared with the reviewers of the manuscript. Thus, reviewers in the treatment arm learned one of two things about the authors of the paper they were to review: that the authors had reported having no COIs or that the authors had disclosed COIs (in which case the reviewers were shown the authors’ disclosures).

Approximately 24 hours after submitting their review, reviewers were automatically sent an email, directly from the editor-in-chief’s email address (and signed by the editor-in-chief), asking them to complete a follow-up survey. Reviewers were sent up to three reminder emails. Reviews by reviewers who initially agreed to review a manuscript but did not complete a review were not included in any analyses.

Editors played their usual role during this study; they were not informed that a trial was taking place. They thus had no control over assignment of reviewers to conditions and were not informed of any of the additional communication reviewers were automatically sent as a result of the trial (the COI disclosure email or follow-up survey email). Once the reviews were obtained, the editor decided the manuscript’s fate, based on their own reading of the manuscript and how the reviewers scored it on the journal’s eight standard measures of quality (see below), as well as the reviewers’ written comments.

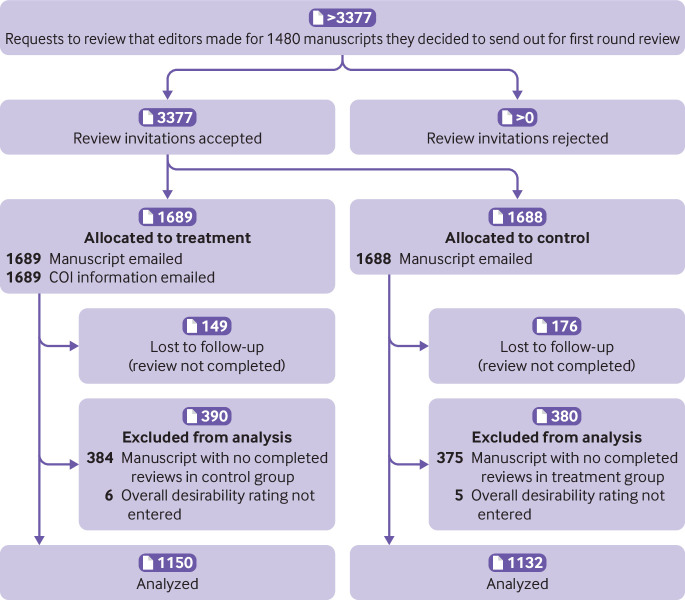

Reviewers’ identities were masked to the researchers by use of identity codes for all analyses, and all participants consented to participate in this research. Figure 1 shows the study flow; figure 2 and figure 3 show the CONSORT diagrams for the two phases.

Fig 1.

Study flow

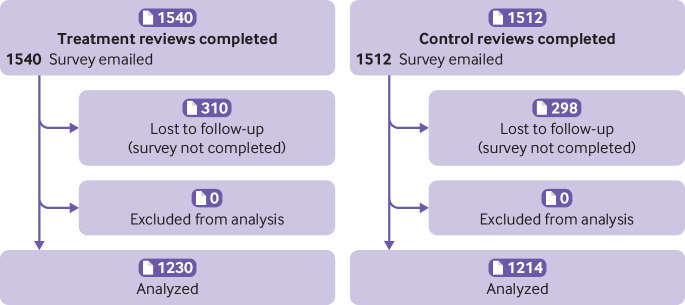

Fig 2.

CONSORT diagram. Phase 1: randomized trial of provision of conflict of interest (COI) information to reviewers during peer review process. Sample was restricted to manuscripts with at least one control review and one treatment review. Two factors contributed to exclusion of 759 reviews (384 in treatment arm; 375 in control arm) because no accompanying review from other arm for given manuscript was available. (1) Failure to complete review: reviewer assigned to complete review from other arm did not submit review. (2) Allocation error: after first few months of data collection, an error was detected in the algorithm that allocated reviewers to condition, with result that, for some manuscripts, all reviewers were inadvertently assigned to same condition. These manuscripts were therefore excluded from analysis. The error was remedied quickly once it was detected

Fig 3.

CONSORT diagram. Phase 2: follow-up survey

Measures

Our primary data source was reviewers’ quantitative responses from the manuscript evaluation form administered in the first phase of the study. We did not alter this form; it is the standard manuscript evaluation form that reviewers have routinely completed at this journal for many years (appendix 4). Our primary outcome measure was the “overall desirability for publication in Annals” on a scale from 1 to 5. As secondary outcome measures, we assessed the effect of the intervention on the seven additional routine assessment items designed to measure different facets of research quality (for example, methods, conclusions, objectivity). The evaluation form also includes three items enquiring whether reviewers have conflicts.

Our secondary data source was reviewers’ responses to the follow-up survey sent in the second phase of the study. The counterfactual score was the primary outcome measure of the follow-up survey (appendix 5). We also assessed several additional measures (exact text of items in appendix 5); reviewers in the treatment arm were asked whether they had noticed the COI information. In addition, after several months of data collection, we added an item to assess whether they believed that the COI information had affected their written review (as opposed to their quantitative scores, as assessed by the counterfactual score).

For all participants, the survey also assessed their attitudes toward COIs (for example, whether they believed that the disclosure was sufficient for them to objectively evaluate the manuscript, whether they knew how to factor it into their review, and their personal perspectives on COIs), an attention check item to ensure that reviewers could detect whether the disclosure they read indicated the presence versus absence of a COI, and demographic questions.

After data collection, two coders, blind to the study hypotheses and the scores the manuscripts had earned during the review process, coded the content of the disclosures. Specifically, for each manuscript that disclosed a COI, the coders coded for the presence of four different categories of funding revealed in that COI: commercial (that is, a for-profit company), non-profit, government, or university, based on information displayed on the funders’ official websites. We chose these sources because they encompassed most funding sources and the different sources could plausibly have affected how reviewers in the treatment condition might have adjusted their reviews in light of receiving COI information (for example, knowledge that a positive trial result was funded by a drug company might prompt a reviewer to shade the review downward—more so than knowledge that such a trial was funded by a university research center). The coders agreed 95.8% of the time. See appendix 6 for additional details.

Statistical analysis

Our primary analysis, which tested the effect of the intervention on overall desirability scores, capitalized on the yoked design in which each manuscript contributed at least one data point to both the control and treatment conditions. This analysis therefore restricted the sample to manuscripts that received at least two reviews. (The journal usually obtains at least two completed reviews per manuscript.)
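
As a minimal sketch of this sample restriction, the snippet below keeps only manuscripts that have at least one completed review in each arm. The data layout, a list of `(manuscript_id, treated, score)` tuples, and the function name `restrict_to_yoked` are assumptions made for illustration and are not taken from the study's analysis code.

```python
from collections import defaultdict

def restrict_to_yoked(reviews):
    """Keep reviews of manuscripts reviewed in BOTH arms.

    reviews: list of (manuscript_id, treated, score) tuples,
             where treated is 1 (treatment) or 0 (control).
    """
    arms_seen = defaultdict(set)
    for manuscript_id, treated, _score in reviews:
        arms_seen[manuscript_id].add(bool(treated))
    yoked = {m for m, arms in arms_seen.items() if arms == {True, False}}
    return [review for review in reviews if review[0] in yoked]

# Manuscript "B" has only a control review, so it is dropped.
sample = [("A", 1, 3), ("A", 0, 2), ("B", 0, 4)]
analysis_set = restrict_to_yoked(sample)
```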

For our primary analysis, we estimated the average treatment effect of informing reviewers of authors’ COI disclosures for the given manuscript by regressing the overall rating on an indicator variable for the treatment condition. Standard errors were clustered by manuscript. Our primary analysis consisted of three variants of this regression. Firstly, we tested whether receiving these disclosures, regardless of whether they indicated that the author had or did not have a COI, affected overall desirability ratings (model 1). However, because the effect of such disclosures plausibly depends on whether authors report a COI, we tested for treatment effects (that is, informing reviewers of the authors’ disclosures) separately for manuscripts in which authors disclosed a COI (model 2) and manuscripts in which authors disclosed that they did not have a COI (model 3). We ascertained manuscript status (that is, whether it was coded as “conflicted” or “unconflicted”) on the basis of authors’ responses on the ICMJE form, information to which reviewers in the treatment arm were exposed. Specifically, manuscripts for which the authors responded “Yes” to either of the two items assessing whether a COI was present (items 1A and 2A on the form; appendix 3) were coded as conflicted.
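
To make the estimator concrete, the sketch below regresses the score on an intercept and a treatment indicator and computes a standard error clustered by manuscript. It is an illustration only: it uses the basic cluster-robust sandwich formula with no small-sample correction (an assumption, since the correction used in the study is not stated here), and the function name, data layout, and toy data are hypothetical.

```python
from collections import defaultdict
from math import sqrt

def clustered_treatment_effect(reviews):
    """OLS of score on [1, treated] with manuscript-clustered standard errors.

    reviews: list of (manuscript_id, treated, score); treated is 0 or 1.
    Both arms must be present. Returns (effect, standard_error).
    """
    n = len(reviews)
    n_treated = sum(t for _, t, _ in reviews)
    sum_y = sum(y for _, _, y in reviews)
    sum_ty = sum(t * y for _, t, y in reviews)

    # (X'X)^-1 for X = [1, treated]; treated is 0/1, so sum(t*t) == sum(t).
    det = n * n_treated - n_treated ** 2
    inv = [[n_treated / det, -n_treated / det],
           [-n_treated / det, n / det]]
    intercept = inv[0][0] * sum_y + inv[0][1] * sum_ty
    effect = inv[1][0] * sum_y + inv[1][1] * sum_ty

    # Sum x_i * residual_i within each manuscript (the cluster score vector).
    cluster = defaultdict(lambda: [0.0, 0.0])
    for manuscript_id, t, y in reviews:
        resid = y - intercept - effect * t
        cluster[manuscript_id][0] += resid
        cluster[manuscript_id][1] += t * resid

    # Sandwich variance of the treatment coefficient.
    row = inv[1]
    var = sum((row[0] * s0 + row[1] * s1) ** 2 for s0, s1 in cluster.values())
    return effect, sqrt(var)

# Toy data: two manuscripts, one control and one treatment review each.
toy = [("A", 0, 2), ("A", 1, 3), ("B", 0, 4), ("B", 1, 4)]
effect, se = clustered_treatment_effect(toy)  # effect is 0.5 here
```

With a single binary regressor, the coefficient equals the difference between the treatment and control means; clustering changes only the standard error, which accounts for reviews of the same manuscript being correlated.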

As described in appendix 7, we also did a variety of robustness checks; for example, additional regressions on the primary outcome measure in which we added manuscript fixed effects, restricted the dataset to the given reviewer’s first provided review during the trial, restricted the dataset to reviewers with a track record of high review quality ratings by editors over the previous five years, restricted the dataset to manuscripts for which the authors only answered “Yes” to the first COI question, and restricted the dataset to manuscripts for which the authors only answered “Yes” to the second COI question.

Finally, in an exploratory analysis, we looked at whether the intervention’s effect on overall desirability scores might have depended on the source of the disclosed funding. We did so by re-running our primary analysis and adding dummy variables representing whether, for a given manuscript, the authors had disclosed funding from a commercial entity, a non-profit organization, a government, a university, or some other source, as well as variables representing the interaction between each dummy variable and our intervention.

Power analysis

We determined our sample size on the basis of a power analysis using input from a previous study that assessed this journal’s editors’ perceptions of the smallest difference in overall quality rating that would prompt them to change their decision on whether to accept or reject a given manuscript.19 20 The median value reported by editors was 0.4 points on the 1-5 “overall desirability” item. To detect a difference in overall rating of 0.4 (SD 1.6) points with a two sided paired t test, 5% significance level, and 99% power, we needed a sample size of 296 manuscripts (appendix 8). The study included enough data to ensure more than 99% power to detect this minimally important difference for each of the three primary regressions described above. (Our final sample size was larger than what our power analysis determined to be minimally necessary because we continued collecting data until we had time to work on this project intensively. Importantly, the decision to stop collecting data was independent of the data analysis and results.) We measured how reviewers responded to being informed of a paper’s conflicts of interest and to being informed that a paper had no conflicts of interest. With this sample size, tests of both hypotheses had 99% power to detect a 0.4 point difference on a 1-5 scale.
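
The order of magnitude of this sample size can be checked with a normal approximation to the paired t test. The sketch below is a rough check rather than the calculation reported in appendix 8: it assumes that the SD of 1.6 refers to the within-manuscript paired differences, and the function name `paired_sample_size` is hypothetical. The approximation gives 294 pairs, slightly below the reported 296, which is the direction expected when the t distribution is replaced by the normal.

```python
from math import ceil
from statistics import NormalDist

def paired_sample_size(delta, sd_diff, alpha=0.05, power=0.99):
    """Approximate number of pairs for a two sided paired test.

    delta:   smallest difference worth detecting (0.4 points here).
    sd_diff: SD of the paired differences (assumed to be 1.6 here).
    Uses n = ((z_{1-alpha/2} + z_{power}) / (delta / sd_diff))^2.
    """
    z = NormalDist()
    z_alpha = z.inv_cdf(1 - alpha / 2)
    z_power = z.inv_cdf(power)
    effect_size = delta / sd_diff
    return ceil(((z_alpha + z_power) / effect_size) ** 2)

n_pairs = paired_sample_size(0.4, 1.6)  # 294 under the normal approximation
```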

Patient and public involvement

This research was done without patient involvement. Patients were not invited to comment on the study design and were not consulted to develop outcomes relevant to patients or interpret the results. Patients were not invited to contribute to the writing or editing of this document for readability or accuracy. Before publication, the results of this study were not disseminated to patients or the public.

Results

The trial consisted of 1480 manuscripts, of which 525 (35%) reported having COIs and 955 (65%) reported the absence of conflicts (proportions similar to a broad array of ICMJE compliant general medical journals).21 Among the 525 manuscripts that reported COIs, 115 (22%) reported commercial funding, 118 (23%) reported non-profit funding, 140 (27%) reported government funding, and 41 (8%) reported university funding; 75 (14%) could not be categorized (this was most commonly because the disclosure was vague or ambiguous—stating, for example, that the research had been funded by “multiple grants.” In a few instances, authors reported sources that did not fall into any of the established categories; for example, an author who received funding from a study participant).

We obtained 3041 completed reviews across 838 unique reviewers (table 1 shows their demographics by condition; reviewers’ personal experiences with COIs are in appendix 9). Each manuscript received a mean of 2.1 (SD 0.9) reviews; 607 (43%) manuscripts received two reviews, and 382 (27%) manuscripts received three or more reviews.

Table 1.

Demographics of reviewers. Values are numbers (percentages) unless stated otherwise

| Characteristics | Control (n=368) | Treatment (n=361) |
|---|---|---|
| Male sex | 253 (75) | 243 (76) |
| Mean (SD) age, years | 45.72 (10.65) | 45.74 (10.61) |
| Education: | | |
| MD | 284 (85) | 291 (91) |
| PhD | 53 (16) | 45 (14) |
| Masters | 134 (40) | 122 (38) |
| Editorial experience (served as an editor) | 105 (31) | 106 (33) |
| Reviewing experience (No of grant applications reviewed): | | |
| 0 | 157 (47) | 143 (45) |
| 1-10 | 99 (29) | 115 (36) |
| 11-50 | 48 (14) | 40 (12) |
| 51-100 | 21 (6) | 14 (4) |
| >100 | 11 (3) | 9 (3) |
| Publication experience (No of scientific peer reviewed publications): | | |
| 0 | 1 (0) | 3 (1) |
| 1-10 | 98 (29) | 87 (27) |
| 11-50 | 142 (42) | 145 (45) |
| 51-100 | 54 (16) | 55 (17) |
| >100 | 41 (12) | 31 (10) |
| % of those publications in which first or last author: | | |
| 0-10 | 24 (7) | 16 (5) |
| 11-50 | 145 (43) | 149 (46) |
| 51-90 | 133 (40) | 129 (40) |
| 91-100 | 32 (10) | 27 (8) |

Demographic items were administered in follow-up survey, so demographic data are restricted to 80% of reviewers in sample that completed follow-up survey (control: n=368; treatment n=361). In addition, because some reviewers reviewed multiple papers as part of trial, reviewer sample sizes reflect number of unique reviewers in trial. Reviewers all had full academic appointments representing virtually all medical schools in US and Canada, including all top research institutions.

Intervention

The intervention had no effect on overall desirability scores. Firstly, when we examined all manuscripts, we found no effect of the treatment on overall mean desirability scores (Mcontrol=2.70 (SD 1.11) out of 5; Mtreatment=2.74 (1.13) out of 5; mean difference 0.04, 95% confidence interval –0.05 to 0.14; table 2, model 1). Given that the treatment was qualitatively different as a function of whether the given manuscript was conflicted, we also considered the results separately by manuscript status. Again, the intervention had no effect, regardless of whether authors reported COIs (Mcontrol=2.85 (1.12) out of 5; Mtreatment=2.96 (1.16) out of 5; mean difference 0.11, –0.05 to 0.26; table 2, model 2) or did not report COIs (Mcontrol=2.62 (1.09) out of 5; Mtreatment=2.62 (1.10) out of 5; mean difference 0.01, –0.11 to 0.12; table 2, model 3). The intervention also had no effect when we examined the two types of conflicts of interest assessed on the ICMJE form separately (appendix 7.2.e). The seven other manuscript evaluation items also showed no difference as a function of the treatment (table 3). Consistent with the primary analyses, our exploratory tests, in which we assessed whether the effect of the intervention depended on the nature of the disclosed funding sources, were not statistically significant (table 4 and appendix 10).

Table 2.

Effect of receiving authors’ conflict of interest (COI) disclosures for given manuscript on reviewers’ overall evaluation of those manuscripts

| Model* | No of manuscripts | Treatment: mean (SD) | Control: mean (SD) | Difference (95% CI) |
|---|---|---|---|---|
| 1. All manuscripts | 888 | 2.74 (1.13) | 2.70 (1.11) | 0.04 (–0.05 to 0.14) |
| 2. Manuscripts with COIs | 319 | 2.96 (1.16) | 2.85 (1.12) | 0.11 (–0.05 to 0.26) |
| 3. Manuscripts without COIs | 569 | 2.62 (1.10) | 2.62 (1.09) | 0.01 (–0.11 to 0.12) |

*Model 1 denotes overall treatment effect (that is, collapsing across whether authors disclosed versus did not disclose COIs). Model 2 tested for treatment effects among conflicted manuscripts. Model 3 tested for treatment effects among unconflicted manuscripts.

Table 3.

Mean scores for all eight assessment items of 888 manuscripts included, by treatment versus control

| Assessment item | Treatment: mean (SD) | Control: mean (SD) | Difference (95% CI) |
|---|---|---|---|
| Originality/importance of science or clinical impact | 3.20 (0.99) | 3.12 (0.98) | 0.07 (–0.01 to 0.16) |
| Abstract accurately reflects all essential aspects of study | 3.24 (0.98) | 3.15 (1.00) | 0.10 (0.01 to 0.18) |
| Quality and validity of study methodology and design | 2.88 (1.01) | 2.80 (1.01) | 0.08 (–0.00 to 0.16) |
| Conclusions supported by results | 2.97 (1.06) | 2.88 (1.06) | 0.09 (–0.00 to 0.18) |
| Limitations are addressed | 2.84 (1.01) | 2.88 (1.03) | –0.04 (–0.12 to 0.05) |
| Composition is clear, organized, and complete | 3.33 (1.02) | 3.27 (1.02) | 0.06 (–0.02 to 0.15) |
| Presents and interprets results objectively and accurately | 3.19 (1.04) | 3.16 (0.98) | 0.02 (–0.07 to 0.11) |
| Overall desirability for publication in Annals* | 2.74 (1.13) | 2.70 (1.11) | 0.04 (–0.05 to 0.14) |

*Primary outcome measure.

Table 4.

Overall desirability scores of treatment versus control, by funder type.

| Funder type | No of manuscripts | Treatment: mean (SD) | Control: mean (SD) | Difference (95% CI) |
|---|---|---|---|---|
| Commercial | 115 | 2.88 (1.18) | 2.85 (1.17) | 0.03 (–0.24 to 0.30) |
| Government | 140 | 2.95 (1.17) | 2.93 (1.08) | 0.02 (–0.21 to 0.25) |
| Non-profit | 118 | 3.08 (1.13) | 2.89 (1.02) | 0.19 (–0.08 to 0.46) |
| University | 41 | 3.03 (1.21) | 2.73 (1.16) | 0.30 (–0.19 to 0.79) |
| Other | 75 | 2.97 (1.16) | 2.98 (1.16) | –0.00 (–0.33 to 0.32) |

For example, first row (commercial) restricts dataset to manuscripts for which authors said “Yes” to at least one of two conflict of interest questions, and that at least one of funders disclosed was commercial entity. Consistent with primary analysis, this supplementary analysis shows no effect of treatment, regardless of nature of funding source disclosed. This means, for example, that overall desirability scores given by reviewers who found out that authors received funding from commercial entity were statistically equivalent to those given by reviewers who were not given this information.

We observed these null effects despite the fact that overall desirability scores varied. Looking across reviews, the entire 1-5 scale was used: a score of 1 was assigned in 18% (n=538) of reviews, and a score of 5 was assigned in 7% (n=218) of reviews. Looking within reviewer, among the 63% (n=528) of reviewers who completed two or more reviews, the median reviewer rated their most preferred manuscript two points higher than their least preferred manuscript.

Survey

The response rate was 80% (treatment 1230/1540; control 1214/1512), and most respondents from the treatment arm confirmed that they recalled seeing the COI information (959/1229; 78%). Regardless of experimental condition, mean counterfactual scores for the overall desirability item did not differ from the actual scores reviewers had assigned (Mactual=2.69 (1.19); Mcounterfactual=2.67 (1.19); mean difference 0.02, 0.01 to 0.02; pooled across the treatment, as the treatment did not interact with counterfactual scores; appendix 11).

However, respondents were more likely to agree that “the authors of the paper were subject to significant conflicts of interest” for conflicted versus unconflicted manuscripts, suggesting that they had read and understood the disclosure information (Mconflicted=2.11 (1.16) on a scale from 1=strongly disagree to 5=strongly agree; Munconflicted=1.35 (0.69); mean difference 0.76, 0.68 to 0.85). Reviewers also indicated that they considered COIs to be important and strongly believed they could correct for the biasing influence of COIs when disclosed (table 5). These attitudes are noteworthy given that disclosures had no effect on desirability ratings, even for papers in which authors revealed conflicts.

Table 5.

Reviewers’ attitudes to conflict of interest (COI)

| Item | Mean (SD) |
|---|---|
| Usefulness of COI information | |
| The conflict of interest information I received was sufficient to objectively evaluate the manuscript | 4.01 (1.12) |
| After reading the conflict of interest information provided by the authors, I knew what, if any, impact it should have on my evaluation of this manuscript | 3.93 (1.09) |
| The typical peer reviewer would know how to change their review and recommend changes in the manuscript (if needed) based on COI information disclosure | 3.19 (0.95) |
| General attitudes toward COI | |
| It is reasonable to require authors of medical papers to disclose conflicts of interest | 4.78 (0.56) |
| Requiring authors to disclose conflicts of interest improves the quality of academic publications | 4.34 (0.83) |
| Conflicts of interest are a serious problem in medical research | 3.78 (0.97) |
| Industry collaboration with academics is, on balance, a good thing | 3.49 (0.91) |
| Investigators receiving financial support from commercial interests have a hard time being objective in their research | 3.38 (0.98) |
| The problem of conflicts of interest is exaggerated in the US media | 2.54 (1.08) |
| Policies dealing with conflicts of interest have become a kind of witch-hunt in medicine | 2.32 (1.08) |

Response scale for all items: 1=strongly disagree to 5=strongly agree. Means collapse across treatment arms because the items were assessed at the end of the follow-up survey, after all reviewers had received the COI disclosure. For the first two items in the table, n=2444. For all other items, n=905; because these items assessed reviewers’ general attitudes toward COI, they were administered only the first time a given reviewer took the follow-up survey (that is, for the first review that the reviewer submitted during the study period).

Finally, we analyzed responses from reviewers in the treatment group who received an additional question about whether their written report had been affected by the COI disclosure they received (n=221). Most (n=180; 81%) respondents endorsed the “Not at all” response option (see appendix 12 for a histogram of responses).

Discussion

Despite being well powered to detect an effect in our primary analyses, this study showed that providing reviewers with COI information did not have a significant effect on their manuscript quality ratings. This was true not only of the overall quality rating but also of seven more specific numeric scores provided by reviewers. In the follow-up survey, reviewers expressed the view that COIs are important, and they also believed that they could correct for the biasing influence of the COIs if they had the information. However, disclosure had no significant effect on reviewers’ evaluations of manuscripts judged by these measures, even when we limited the sample to manuscripts in which authors reported conflicts, and even when we tested for an effect of disclosure by using a variety of different specifications (appendices 8 and 9). Almost none of the reviewers who received the COI disclosure reported having substantially changed the free text of their review in response to that disclosure. Because these are the two major mechanisms by which reviewers might identify and/or correct any bias for editors (and subsequently readers), these findings suggest that disclosure, in its current form, may not be providing the information and guidance that reviewers need to correct for COI induced bias.

Strengths and limitations of study

The study’s strengths include its randomized controlled trial structure, the fact that all the interventions took place in the normal manuscript assessment process of a well established journal (thus it has high ecological validity), the high response rates, the fact that participants were experienced and active peer reviewers, the fact that it was powered to detect an effect (deemed by previous research to be of clinical interest to editors of this journal),19 and the fact that the null results were consistent across all outcome measures.

The study is subject to several limitations. Firstly, given the specialized nature of any single journal, an open question is whether the results would generalize to other journals. Future research involving multiple journals could test whether the effect of revealing COIs on reviewers’ scores interacts with characteristics of the journal, such as type of readership and impact factor.

Our primary analyses, in which we tested whether our intervention affected overall desirability scores across all manuscripts, as well as when restricting the sample to only conflicted manuscripts and to only unconflicted manuscripts, were well powered, affording confidence that the observed null effect is not a type II error. However, we cannot say the same of the exploratory analysis in which we also found our intervention to have no effect when taking into account the nature of the funding source disclosed. Although our coding scheme was highly reliable—our coders agreed 95.8% of the time—the study was not well powered to detect possible interactions between our intervention and the nature of the funding source. Additional research is needed to examine whether reviewers’ responses to receiving COI disclosures depend on their content. Relatedly, future research could also examine whether effects, or lack thereof, differ by reviewers’ characteristics (for example, junior versus senior, and whether the reviewers themselves have COIs). As the first randomized trial of provision of COI information in a live peer review process, the study considered what we believe to be the first order question: whether exposure to authors’ COI disclosures affects overall manuscript ratings by reviewers.
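The contrast between the well powered primary analysis and the underpowered funder-type analysis can be illustrated with a rough post hoc approximation. The Python sketch below back-calculates a standard error from a reported 95% confidence interval and then estimates the power of a two sided z test to detect a given true difference. This is not the authors' power calculation: the normal approximation, the helper names, and in particular the true difference of 0.2 points are hypothetical assumptions chosen only for illustration.

```python
from statistics import NormalDist

STD_NORMAL = NormalDist()            # standard normal distribution
Z_CRIT = STD_NORMAL.inv_cdf(0.975)   # critical value for a two sided 5% test


def se_from_ci(ci_low, ci_high):
    """Back-calculate a standard error from a symmetric 95% CI (assumption:
    the interval was built as estimate +/- Z_CRIT * SE)."""
    return (ci_high - ci_low) / (2 * Z_CRIT)


def approx_power(true_diff, se):
    """Approximate power of a two sided z test for a given true difference."""
    shift = true_diff / se
    return STD_NORMAL.cdf(shift - Z_CRIT) + STD_NORMAL.cdf(-shift - Z_CRIT)


# Hypothetical true difference on the 1-5 scale; NOT a value from the study.
HYPOTHETICAL_DIFF = 0.2

# Primary analysis: reported 95% CI for the mean difference, -0.05 to 0.14.
power_primary = approx_power(HYPOTHETICAL_DIFF, se_from_ci(-0.05, 0.14))

# Funder-type analysis, university row: reported 95% CI, -0.19 to 0.79.
power_university = approx_power(HYPOTHETICAL_DIFF, se_from_ci(-0.19, 0.79))

print(f"primary: {power_primary:.2f}, university subgroup: {power_university:.2f}")
```

Under these assumptions the narrow interval of the primary analysis implies high power, whereas the wide interval for the smallest funder category implies low power, which is consistent with treating the funder-type results as exploratory.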

We also did not test whether giving reviewers COI information affected downstream measures of editors’ decisions. This was impractical for us to do, given our within subjects design in which we compared the ratings of reviewers who did or did not receive COI disclosures for the same paper. In addition, it is the practice of the Annals of Emergency Medicine to provide all editors with COI information for all papers. Given that disclosures had no effect on the scores reviewers themselves assigned to manuscripts, it seems unlikely that we would see effects of COI disclosure to reviewers on editors’ decisions (which are, presumably, based on those reviewers’ assessments).

We attempted to maximize the likelihood of detecting effects of COI disclosure on reviewers’ perceptions, both by ensuring that we had adequate power and by testing for possible effects across eight quantitative outcome measures; however, the information could nevertheless have had some effect on reviewers’ behavior that we failed to detect, including on their written comments. Although in the follow-up survey, reviewers stated that the COI disclosure did not affect their written reviews, we did not do textual analysis of reviews—for example, of whether the comments of reviewers informed of a COI were more negative than those of reviewers who did not receive the COI information. One would expect that if they had been, the reviewers’ rating scores would also be more negative. Future research could test more systematically whether COI disclosures affect reviewers’ written comments.

The COI information could also have had no effect because any bias introduced by authors’ COIs was apparent to reviewers from the content of the manuscript even in the control group, in the absence of explicit COI disclosure information. If this were the case, however, one might have expected manuscripts with COIs to be rated lower than those without COIs, regardless of whether the reviewer received the COI information, which did not occur. Given the emphasis placed on COI disclosures, policy makers seem to believe that the biasing effect of COIs may go undetected in the absence of explicit disclosure.

Lastly, we acknowledge limitations in the validity of the counterfactual scores—whether and how reviewers reported that they would have provided different ratings if they counterfactually had, or had not, received COI information. The validity of these scores is limited to the extent that reviewers are unable to accurately introspect about these matters. Despite our best efforts to make this task clear to reviewers, it may nevertheless have been confusing to them. In the face of such confusion, reviewers may have been inclined to simply provide the same answer that they had during the review process (which they were reminded of), as opposed to truly reflecting on whether assignment to the alternative arm would have affected their scores.

Findings in context

Disclosures are becoming ubiquitous in science. Increasingly, paper submissions, grant applications, and assumption of reviewer responsibilities all require detailed disclosure of possible COIs. This paper presents results from, to the best of our knowledge, the first randomized controlled trial to examine the effect of COI disclosures on reviewers’ evaluations of research papers.

Although the findings of this study suggest that information from COI disclosures has little effect on reviewers, disclosure might well have benefits other than those examined in this study. In addition to transparency being valued in its own right, editors, who have experience with a larger number of manuscripts, might make use of the information, even if reviewers do not. Declarations might allow editors and reviewers to flag a manuscript for intensified scrutiny. Transparency may also provide useful grist for watchdog groups to study the connection between conflicts and bias in research, hopefully leading to more effective measures. Finally, disclosure may serve as a prophylactic: knowing that they will have to disclose a COI, researchers might think twice before entering a conflicted relationship, tone down recommendations, or further emphasize limitations.22

Implications

Especially given the impracticality of prohibiting funding of research by sources that create any potential conflicts, the peer review process should be a primary line of defense against conflict induced bias. However, our results suggest that simply providing COI information to reviewers is unlikely to have much effect. In one sense, this ineffectiveness is not surprising, given that reviewers are not given explicit guidance on how to correct for possible COIs. If a disclosure reveals an important COI, should the reviewer recommend rejection? Apply special scrutiny to methods? Consult additional experts? No consensus exists about how reviewers should respond to COI disclosures; nor, hence, is any direction provided about how to do so.

Journals may therefore seek ways of enhancing the use that reviewers make of authors’ COI disclosures. We suspect that such approaches would need to go beyond merely increasing reviewers’ attention to disclosed conflicts. Editorial intervention might be helpful; editors might routinely enlist a highly qualified methodologist or an extra reviewer to evaluate manuscripts with serious COIs. Relatedly, it has been proposed that five factors should be considered in assessing the effect of a COI, including whether oversight mechanisms (for example, trial registration) were in place.18 However, before such factors are provided as guidelines for interpreting COI information and appropriately factoring such information into reviews, they would need to be empirically validated. To that end, developing and validating a method of classifying the severity of COIs is an important area for future research.

Further research into the peer review process might clarify how bias could be better identified and what specific techniques reviewers should use to respond to it. Until now, such research has been remarkably rare. Especially given research showing that attempts to deal with COIs can have unintended consequences,23 any such intervention should itself be thoroughly evaluated, ideally with a randomized field experiment, before being implemented broadly.

At a methodological level, these results point to the value of small scale field studies for testing the effect of policies on real world behaviors. The previous studies we reviewed that examined the effect of COI disclosures were conducted in laboratory settings in which participants knew that they were being studied as well as the purpose of the study. These studies uncovered significant effects of conflict disclosures on participants’ evaluations of studies,8 9 10 11 but they differed in major ways from the real world. Our field experiment, in contrast, calls into question whether these effects will emerge when disclosures are routinely viewed and embedded into a sea of other information in the course of a real reviewing process.

Our finding of a null effect on manuscript ratings contributes new field evidence to a literature documenting disappointing, or in some cases perverse, effects of disclosure of information in a range of settings, such as calorie labeling, privacy information to people giving personal data to companies, and disclosures of the financial risks associated with consumer financial products, as well as conflict of interest disclosures.24 Although disclosure might seem, intuitively, to be beneficial, this study joins a large body of literature suggesting that such beneficial effects cannot be assumed but warrant empiric investigation.

Conclusion

Current ethical standards require disclosure of conflicts of interest for all scientific reports, but as currently implemented we were unable to identify any effect of this practice on the quality ratings of real manuscripts being evaluated for publication by real peer reviewers. Journals should ideally have at least minimal descriptions of how they expect their reviewers to handle COI disclosures, and further research (ideally with multiple journals of varying types) is needed to identify techniques for monitoring and interventions that are of proven, and not just anticipated, effectiveness. In the meantime, this study provides new support for the conclusion that, in its current form, COI disclosure does not provide the long desired tool for solving the problems created by conflicts.

What is already known on this topic

Scientific journals and academic ethical standards mandate that authors explicitly disclose potential conflicts of interest to help peer reviewers, editors, and readers to better detect and compensate for possible bias

No study has determined whether disclosures achieve that goal

What this study adds

This is the first field experiment assessing whether conflict of interest disclosure affects peer reviewers’ assessment of the quality of the manuscripts they consider for publication

Despite ample power to detect effects, the study’s results indicate no effect of disclosures on reviewers’ assessments

Acknowledgments

We are grateful for the research assistance of Danny Blumenthal, Marina Burke, Holly Howe, Meg King, Shannon Sciarappa, Trevor Spelman, and Josh Yee.

Web extra.

Extra material supplied by authors

Appendices 1-12

Contributors: LKJ contributed to the design of the study, supervised data collection, interpreted the results, and wrote the manuscript. GL contributed to the design of the study, interpretation of the results, and revision of the manuscript. AM did the data analysis and contributed to the interpretation of the results and revision of the manuscript. MLC conceived the study and contributed to its design, implemented and supervised data collection, interpreted the results, and revised the manuscript. The corresponding author attests that all listed authors meet authorship criteria and that no others meeting the criteria have been omitted. LKJ and MLC are the guarantors.

Funding: This research was funded using LKJ’s annual research budget allocation from Harvard Business School.

Competing interests: All authors have completed the ICMJE uniform disclosure form at www.icmje.org/coi_disclosure.pdf (available on request from the corresponding author) and declare: no support from any organization for the submitted work; no financial relationships with any organizations that might have an interest in the submitted work in the previous three years; no other relationships or activities that could appear to have influenced the submitted work.

Ethical approval: The study was approved by the Institutional Review Board of Harvard University (IRB: 24032). All participants gave informed consent before taking part in the research.

Transparency statement: The lead author (the manuscript’s guarantor) affirms that this manuscript is an honest, accurate, and transparent account of the study being reported; that no important aspects of the study have been omitted; and that any discrepancies from the study as planned (and, if relevant, registered) have been explained.

Data sharing: No additional data available.

References

- 1. Lundh A, Lexchin J, Mintzes B, Schroll JB, Bero L. Industry sponsorship and research outcome. Cochrane Database Syst Rev 2017;2:MR000033.

- 2. Bhandari M, Busse JW, Jackowski D, et al. Association between industry funding and statistically significant pro-industry findings in medical and surgical randomized trials. CMAJ 2004;170:477-80.

- 3. Lexchin J, Bero LA, Djulbegovic B, Clark O. Pharmaceutical industry sponsorship and research outcome and quality: systematic review. BMJ 2003;326:1167-70. doi:10.1136/bmj.326.7400.1167

- 4. Friedman LS, Richter ED. Relationship between conflicts of interest and research results. J Gen Intern Med 2004;19:51-6. doi:10.1111/j.1525-1497.2004.30617.x

- 5. International Committee of Medical Journal Editors (ICMJE). Conflicts of interest. 2018. http://www.icmje.org/conflicts-of-interest/.

- 6. Committee on Publication Ethics. Conflicts of interest / competing interests. 2018. https://publicationethics.org/competinginterests.

- 7. Ferris LE, Fletcher RH. Conflict of interest in Peer-Reviewed medical journals: the World Association of Medical Editors (WAME) position on a challenging problem. Cardiovasc Diagn Ther 2012;2:188-91.

- 8. Chaudhry S, Schroter S, Smith R, Morris J. Does declaration of competing interests affect readers’ perceptions? A randomised trial. BMJ 2002;325:1391-2. doi:10.1136/bmj.325.7377.1391

- 9. Schroter S, Morris J, Chaudhry S, Smith R, Barratt H. Does the type of competing interest statement affect readers’ perceptions of the credibility of research? Randomised trial. BMJ 2004;328:742-3. doi:10.1136/bmj.38035.705185.F6

- 10. Lacasse JR, Leo J. Knowledge of ghostwriting and financial conflicts-of-interest reduces the perceived credibility of biomedical research. BMC Res Notes 2011;4:27. doi:10.1186/1756-0500-4-27

- 11. Kesselheim AS, Robertson CT, Myers JA, et al. A randomized study of how physicians interpret research funding disclosures. N Engl J Med 2012;367:1119-27. doi:10.1056/NEJMsa1202397

- 12. Silverman GK, Loewenstein GF, Anderson BL, Ubel PA, Zinberg S, Schulkin J. Failure to discount for conflict of interest when evaluating medical literature: a randomised trial of physicians. J Med Ethics 2010;36:265-70. doi:10.1136/jme.2009.034496

- 13. Bazerman M. The power of noticing: What the best leaders see. Simon and Schuster, 2014.

- 14. Chetty R, Looney A, Kroft K. Salience and taxation: Theory and evidence. Am Econ Rev 2009;99:1145-77. doi:10.1257/aer.99.4.1145

- 15. Willis LE. The Sunshine Act. In: Laskin AV, ed. The Handbook of Financial Communication and Investor Relations. John Wiley & Sons, 2017: 391-9. doi:10.1002/9781119240822.ch34

- 16. Baxt WG, Waeckerle JF, Berlin JA, Callaham ML. Who reviews the reviewers? Feasibility of using a fictitious manuscript to evaluate peer reviewer performance. Ann Emerg Med 1998;32:310-7. doi:10.1016/S0196-0644(98)70006-X

- 17. Schroter S, Black N, Evans S, Godlee F, Osorio L, Smith R. What errors do peer reviewers detect, and does training improve their ability to detect them? J R Soc Med 2008;101:507-14. doi:10.1258/jrsm.2008.080062

- 18. Resnik DB, Elliott KC. Taking financial relationships into account when assessing research. Account Res 2013;20:184-205. doi:10.1080/08989621.2013.788383

- 19. Callaham M, John LK. What does it take to change an editor’s mind? Identifying minimally important difference thresholds for peer reviewer rating scores of scientific articles. Ann Emerg Med 2018;72:314-318.e2. doi:10.1016/j.annemergmed.2017.12.004

- 20. McGlothlin AE, Lewis RJ. Minimal clinically important difference: defining what really matters to patients. JAMA 2014;312:1342-3. doi:10.1001/jama.2014.13128

- 21. Grundy Q, Dunn AG, Bourgeois FT, Coiera E, Bero L. Prevalence of Disclosed Conflicts of Interest in Biomedical Research and Associations With Journal Impact Factors and Altmetric Scores. JAMA 2018;319:408-9. doi:10.1001/jama.2017.20738

- 22. Sah S, Loewenstein G. Nothing to declare: mandatory and voluntary disclosure leads advisors to avoid conflicts of interest. Psychol Sci 2014;25:575-84. doi:10.1177/0956797613511824

- 23. Loewenstein G, Sah S, Cain DM. The unintended consequences of conflict of interest disclosure. JAMA 2012;307:669-70. doi:10.1001/jama.2012.154

- 24. Loewenstein G, Sunstein CR, Golman R. Disclosure: Psychology changes everything. Annu Rev Econ 2014;6:391-419. doi:10.1146/annurev-economics-080213-041341

- 25. Lippert S, Callaham ML, Lo B. Perceptions of conflict of interest disclosures among peer reviewers. PLoS One 2011;6:e26900. doi:10.1371/journal.pone.0026900
