Abstract

Background Neurologists perform a significant amount of consultative work. Aggregative electronic health record (EHR) dashboards may help to reduce consultation turnaround time (TAT), which may reflect time spent interfacing with the EHR.

Objectives This study aimed to measure the difference in TAT before and after the implementation of a neurological dashboard.

Methods We retrospectively studied a neurological dashboard in a read-only, web-based, clinical data review platform at an academic medical center that was separate from our institutional EHR. Using our EHR, we identified all distinct initial neurological consultations at our institution that were completed in the 5 months before, 5 months after, and 12 months after the dashboard go-live in December 2017. Using log data, we determined total dashboard users, unique page hits, patient-chart accesses, and user departments at 5 months after go-live. We calculated TAT as the difference in time between the placement of the consultation order and completion of the consultation note in the EHR.

Results By April 30, 2018, we identified 269 unique users, 684 dashboard page hits (median hits/user 1.0, interquartile range [IQR] = 1.0), and 510 unique patient-chart accesses. In the 5 months before go-live, 1,434 neurology consultations were completed with a median TAT of 2.0 hours (IQR = 2.5); this was significantly longer than in the 5 months after go-live, during which 1,672 neurology consultations were completed with a median TAT of 1.8 hours (IQR = 2.2; p = 0.001). Over the following 7 months, 2,160 consultations were completed, and median TAT remained unchanged at 1.8 hours (IQR = 2.5).

Conclusion At a large academic institution, we found a significant decrease in inpatient consult TAT 5 and 12 months after the implementation of a neurological dashboard. Further study is necessary to investigate the cognitive and operational effects of aggregative dashboards in neurology and to optimize their use.

Keywords: clinical decision support systems, data aggregation, neurology, organizational efficiency, referral and consultation, inpatients

Background and Significance

Since its introduction in 2009, the Health Information Technology for Economic and Clinical Health (HITECH) Act has been associated with widespread adoption of electronic health record (EHR) systems in hospitals across the United States. 1 2 Many physicians have reported generally positive experiences with EHRs, 3 but despite the documented benefits of such systems, 4 EHR systems are also associated with unintended increases in physician workload and documentation times, 5 6 7 8 hospital inefficiencies, 9 and decreased time spent delivering direct patient care. 10 11 12 13 EHR-related increases in physicians' documentation and billing workload are key contributors to physician dissatisfaction in the field of neurology, where physician burnout is high relative to other specialties, 14 15 16 thereby potentially leading to compromises in patient-care quality. 17 18

As a specialty, neurology entails high EHR utilization for several reasons. First, neurologists rely on unique, multimodal EHR data, including neuroimaging, video feeds, and electrophysiology waveforms, to support diagnostic and treatment decisions. 19 Second, neurology entails a significant amount of consultative work, 20 which may involve review and interpretation of multiple EHR data streams. Finally, neurological trainees are instructed to incorporate meticulous history-taking, physical examinations, and assessments into their patient evaluations, 14 which may further drive extensive use of EHRs and their data.

Dashboards that aggregate clinical data from multiple sources and present them within a single, centralized visualization platform may help neurologists with the task of reviewing extensive EHR data from multiple disparate sources. Such aggregation is relevant because clinical decision support requires considerable summarization of EHR data 21 from fragmented locations. As a form of clinical decision support, 22 23 such dashboards also have uses beyond neurology, including as tools for improving quality 24 25 or reducing cognitive burden. 26 27

Given that aggregative dashboards can potentially reduce the workload associated with data review in neurological evaluations, we sought to investigate whether such a dashboard might be associated with a reduction in time spent performing neurological consultations. We hypothesized that, among neurological trainees being taught to perform meticulous evaluations, inpatient consultation turnaround time (TAT) would be shorter after dashboard implementation than before.

Methods

Design

We conducted a retrospective analysis of consultation TAT before and after the implementation of a web-based neurological data dashboard at Columbia University Irving Medical Center (CUIMC), a large academic medical center in New York that is home to a neurology residency program. In conjunction with New York-Presbyterian (CUIMC's hospital), the Department of Biomedical Informatics at CUIMC has a clinical data repository that dates back to 1988 and maintains a web-based, display-only clinical data review platform (i-NewYork Presbyterian [iNYP]; http://inyp.nyp.org/inyp ) that is separate from the transactional EHR used by the hospital and its ancillary systems. 28 This platform is accessible via single-factor authentication from within the hospital intranet and via dual-factor authentication from outside it. Through an interface, the iNYP Clinical Information System (iNYP) ingests data from the main hospital EHR and the admission/discharge/transfer system, as well as from laboratory, radiology, ultrasound, and neurophysiology reporting systems ( Fig. 1 ). The iNYP platform also contains several aggregative “dashboard” pages that display clinical data tailored to particular clinical user groups. Because many inpatient consultations at CUIMC focus on stroke, a vascular neurology-oriented dashboard was conceived, developed, and implemented as a clinical informatics quality improvement project between August and December 2017. We chose the data review platform because it could perform dashboarding functions that the institutional EHR could not. We also expected good uptake among trainees because iNYP is the former institutional EHR: after it was replaced by the current institutional EHR, it was retained in a form with data review functionality only.

Fig. 1.

Simplified data flow/architecture diagram between ancillary and EHR systems at CUIMC, contextualizing the iNYP Clinical Information System that contains the iNYP neurology dashboard. Red arrows denote flow of result data to interface. Red arrows with two asterisks denote the flow of medication, order, and vital sign data entered by end-users from EHR to interface. Green arrows denote data flows back to presentation layer. ADT, admission/discharge/transfer; CUIMC, Columbia University Irving Medical Center; CROWN, Clinical Records Online Web Network; EEG, electroencephalogram; EHR, electronic health record; iNYP, i-NewYork Presbyterian; PACS, picture archiving and communication system; SCM, Sunrise Clinical Manager.

Between August and September 2017, one vascular neurologist completing fellowship training in clinical informatics (BRK) gathered requirements from four attending stroke neurologists in the Department of Neurology at CUIMC to finalize the dashboard's clinical content, which was divided into 13 rectangular page subsections, or “tiles.” The design was based on an active iNYP dashboard for general internal medicine and retained five tiles from it; the remaining eight tiles were tailored for stroke consultations based on input from vascular neurology domain experts ( Table 1 ; sample screenshots in Figs. 2 and 3 ). The iNYP neurology dashboard was developed between September and December 2017 and went live on December 1, 2017. In November 2017, one clinical informatics fellow gave two separate demonstrations of the dashboard and its functionality: one to CUIMC stroke neurology faculty and one to all neurology residents. A further demonstration for the neurology residents was given in January 2018.

Table 1. Dashboard tile content.

| Tile | Contents (a) | Development process |
|---|---|---|
| Clinical alerts | Patient age, sex, race, smoking status; 10-year ASCVD risk calculator and statin therapy; BMI and BP alerts (b) | Copied from different iNYP dashboard; SME recommendation |
| Visit history | 12-month visit history and locations (outpatient, specialty, faculty practice, inpatient) | Copied from different iNYP dashboard |
| Vital signs | Most recent vital signs with data source (outpatient/inpatient), current admission indicator, and no-shows | Copied from different iNYP dashboard |
| Neuroimaging (c) | PACS neuraxis reports and images (X-ray icon) | SME recommendation |
| Neurophysiology/Neurosonology | EEG, carotid and transcranial Doppler reports | SME recommendation |
| Cardiology | TTE, TEE, EKG reports | SME recommendation |
| General Laboratory | Complete blood count, serum electrolytes, renal function, coagulation profile, liver function tests | Copied from different iNYP dashboard |
| Health Issues | Two-section table of general and neurological diagnoses, grouped by ICD10 code | SME recommendation |
| Stroke Laboratory | Hemoglobin A1c, lipid panel, TSH, fingerstick glucose | SME recommendation |
| Hypercoagulable/inflammatory workup | ESR/CRP, RF, ANA, anti-dsDNA; C3, C4; ANCA; anticardiolipin Ab; lupus anticoagulant; anti-extractable nuclear Ab; lumbar puncture; protein C, protein S, anti-thrombin III levels, HIV | SME recommendation |
| Pathology | Factor V Leiden, MTHFR and prothrombin gene mutations, cryoglobulin | SME recommendation |
| Notes (d) | All available neurology notes | SME recommendation |
| Medications | Inpatient, outpatient medications with dose, route, frequency | Copied from different iNYP dashboard |

Abbreviations: Ab, antibody; ANA, antinuclear antibody; ANCA, antineutrophil cytoplasmic antibodies; ASCVD, atherosclerotic cardiovascular disease; BMI, body mass index; BP, blood pressure; CRP, C-reactive protein; CT, computed tomography; dsDNA, double-stranded DNA; EEG, electroencephalogram; EKG, electrocardiogram; ESR, erythrocyte sedimentation rate; HIV, human immunodeficiency virus; ICD10, International Classification of Diseases, 10th Revision; iNYP, i-NewYork Presbyterian; MR, magnetic resonance; MTHFR, methylenetetrahydrofolate reductase; PACS, picture archiving and communication system; RF, rheumatoid factor; SME, subject matter expert; TEE, transesophageal echocardiogram; TSH, thyroid stimulating hormone; TTE, transthoracic echocardiogram.

(a) Laboratory/test reports and notes open in separate pop-up browser windows when the hyperlinked report or note date is clicked, unless otherwise specified. The ASCVD 10-year risk is computed according to the American College of Cardiology Guideline on the Assessment of Cardiovascular Risk. 42

(b) Displays a red triangle followed by the body mass index and corresponding category (overweight, obese, or severely obese) if the body mass index is greater than 25, 30, or 40 kg/m², respectively. Also displays a red triangle if the most recent systolic or diastolic blood pressure is greater than 140 or 90 mm Hg, respectively.

(c) Displays all available CT/MR studies of the spine and head/brain, as well as CT and MR angiograms of the head/neck, and cerebral angiography.

(d) Displays any note whose title contains “Neuro,” “Neurology,” “Neuro ICU,” or “Epilepsy.”
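The display rules in the footnotes (the BMI and blood pressure alerts in the clinical alerts tile, and the title filter for the notes tile) reduce to simple threshold and keyword checks. The sketch below is a hypothetical Python rendering for illustration only; it is not the iNYP code, the function names are ours, and it assumes strict "greater than" comparisons and case-sensitive substring matching, which the footnotes imply but do not state.

```python
from typing import Optional

# Hypothetical rendering of the Table 1 display rules; not the iNYP source.


def bmi_alert(bmi: float) -> Optional[str]:
    """Category shown after the red triangle, or None if no BMI alert.

    Assumes strict comparisons: BMI > 40, > 30, > 25 kg/m^2 map to
    severely obese, obese, and overweight, respectively.
    """
    if bmi > 40:
        return "severely obese"
    if bmi > 30:
        return "obese"
    if bmi > 25:
        return "overweight"
    return None


def bp_alert(systolic_mmhg: float, diastolic_mmhg: float) -> bool:
    """Red triangle if the most recent SBP > 140 or DBP > 90 mm Hg."""
    return systolic_mmhg > 140 or diastolic_mmhg > 90


# Keywords from the notes-tile footnote. "Neuro" is a substring of
# "Neurology" and "Neuro ICU", so it alone decides most matches.
NOTE_KEYWORDS = ("Neuro", "Neurology", "Neuro ICU", "Epilepsy")


def is_neuro_note(title: str, case_sensitive: bool = True) -> bool:
    """True if the note title contains any keyword (substring match)."""
    if not case_sensitive:
        lowered = title.lower()
        return any(k.lower() in lowered for k in NOTE_KEYWORDS)
    return any(k in title for k in NOTE_KEYWORDS)


if __name__ == "__main__":
    print(bmi_alert(32.5))                       # obese
    print(bp_alert(150, 80), bp_alert(140, 90))  # True False
    titles = ["Neurology Consult Note", "Neurosurgery Op Note",
              "Cardiology Progress Note", "neuro icu daily note"]
    print([t for t in titles if is_neuro_note(t)])
    # ['Neurology Consult Note', 'Neurosurgery Op Note']
```

Note that a literal substring rule also admits titles such as "Neurosurgery Op Note", which may or may not be intended; the source does not say whether iNYP matches case-insensitively or on whole words.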

Fig. 2.

Screenshot of iNYP dashboard with only eight tiles shown. Statin and blood pressure clinical decision support alerts are pictured in red boxes.

Fig. 3.

Closeup of neuroimaging tile with picture archiving and communication system (PACS) link icon in red box.

Population and Measurements

At CUIMC, neurology house staff physicians are responsible for receiving all inpatient consultation requests and for performing the history, physical examination, and initial assessment and plan for each consultation. Using the institutional EHR, we identified all distinct, initial inpatient neurological consultations at CUIMC that were completed between July 1, 2017 and November 30, 2017, as well as those completed in the 5-month period following the dashboard go-live on December 1, 2017, ending on April 30, 2018. We also identified all inpatient neurology consultations completed in the 7-month period between May 1, 2018 and November 30, 2018. For each of these periods, we calculated consultation TAT as the time elapsed between the placement of the consultation order by the requesting provider in the EHR and the timestamp of consultation note completion by the neurological consultant in the EHR. Using log data that contained user names, titles, and departments, we counted total dashboard users, unique page hits, and unique patient chart accesses at 5 months and at 12 months after the go-live. Using the 5-month log data only, we also determined each user's title and affiliated department, and applied text mining to the user title field to determine whether the user was a member of the institutional house staff (defined as a resident or fellow).
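The TAT definition and period assignment described above can be sketched in Python as follows. This is an illustrative sketch, not the authors' R code: the function names and toy timestamps are hypothetical, and it assumes each consultation is assigned to a period by its note-completion date, as the "completed between" wording suggests. The IQR uses `statistics.quantiles(..., method="inclusive")`, which corresponds to R's default (type 7) quantile definition.

```python
from datetime import date, datetime
from statistics import median, quantiles
from typing import Dict, List, Optional, Tuple

# Study periods from the Methods, inclusive of both end dates.
PERIODS: Dict[str, Tuple[date, date]] = {
    "pre_5mo": (date(2017, 7, 1), date(2017, 11, 30)),
    "post_5mo": (date(2017, 12, 1), date(2018, 4, 30)),
    "post_6_to_12mo": (date(2018, 5, 1), date(2018, 11, 30)),
}


def tat_hours(order_placed: datetime, note_completed: datetime) -> float:
    """Consult order placement to consult note completion, in hours."""
    return (note_completed - order_placed).total_seconds() / 3600


def assign_period(note_completed: datetime) -> Optional[str]:
    """Study period containing the note-completion date, or None."""
    d = note_completed.date()
    for name, (start, end) in PERIODS.items():
        if start <= d <= end:
            return name
    return None


def median_iqr(tats: List[float]) -> Tuple[float, float]:
    """Median and IQR (Q3 - Q1), using R's default quantile definition."""
    q1, _, q3 = quantiles(tats, n=4, method="inclusive")
    return median(tats), q3 - q1


if __name__ == "__main__":
    # Toy (order placed, note completed) pairs; not study data.
    consults = [
        (datetime(2017, 8, 3, 9, 0), datetime(2017, 8, 3, 11, 0)),    # 2.0 h
        (datetime(2017, 8, 4, 14, 0), datetime(2017, 8, 4, 15, 30)),  # 1.5 h
        (datetime(2017, 9, 1, 22, 0), datetime(2017, 9, 2, 1, 0)),    # 3.0 h
        (datetime(2018, 1, 10, 8, 0), datetime(2018, 1, 10, 9, 48)),  # 1.8 h
    ]
    by_period: Dict[str, List[float]] = {}
    for placed, done in consults:
        period = assign_period(done)
        if period is not None:
            by_period.setdefault(period, []).append(tat_hours(placed, done))
    print(median_iqr(by_period["pre_5mo"]))  # (2.0, 0.75)
```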

Statistical Analysis

We counted consultation volumes, page hits, unique chart accesses, and users; we also determined the proportions of users by discipline, physician type, and department. We determined the median consult TAT and interquartile range (IQR) for each study period and compared consultation TAT between each pair of the three time periods using the Wilcoxon rank-sum test. All analyses were performed using R version 3.5 (R Foundation, Vienna, Austria). Statistical significance was set at a two-sided α of 0.05.
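For readers reproducing the comparison outside R, the sketch below implements a two-sided Wilcoxon rank-sum test using the large-sample normal approximation, with the tie and continuity corrections that R's `wilcox.test` applies when it does not compute an exact p-value (as happens with ties or samples of 50 or more). It is an illustrative stand-in, not the authors' code, and the function name is ours. In this study it would be applied to three pairs of periods: before versus 0 to 5 months after go-live, 0 to 5 months versus 6 to 12 months after, and before versus 6 to 12 months after.

```python
import math
from statistics import NormalDist
from typing import Sequence, Tuple


def rank_sum_test(x: Sequence[float], y: Sequence[float]) -> Tuple[float, float]:
    """Two-sided Wilcoxon rank-sum test (normal approximation).

    Returns (W, p), where W is the rank sum of x minus n_x(n_x + 1)/2,
    the statistic R reports. Ties receive mid-ranks; the variance is
    tie-corrected and a 0.5 continuity correction is applied.
    Both samples must be non-empty.
    """
    nx, ny = len(x), len(y)
    n = nx + ny
    pooled = sorted([(v, 0) for v in x] + [(v, 1) for v in y])

    ranks = [0.0] * n
    tie_term = 0.0  # sum of (t^3 - t) over groups of tied values
    i = 0
    while i < n:
        j = i
        while j + 1 < n and pooled[j + 1][0] == pooled[i][0]:
            j += 1
        mid_rank = (i + j) / 2 + 1  # average of 1-based ranks i+1 .. j+1
        for k in range(i, j + 1):
            ranks[k] = mid_rank
        t = j - i + 1
        tie_term += t ** 3 - t
        i = j + 1

    rank_sum_x = sum(r for r, (_, group) in zip(ranks, pooled) if group == 0)
    w = rank_sum_x - nx * (nx + 1) / 2

    diff = w - nx * ny / 2
    var_w = nx * ny / 12 * ((n + 1) - tie_term / (n * (n - 1)))
    if var_w <= 0:  # all observations identical: no evidence of a shift
        return w, 1.0
    correction = math.copysign(0.5, diff) if diff != 0 else 0.0
    z = (diff - correction) / math.sqrt(var_w)
    p = 2 * (1 - NormalDist().cdf(abs(z)))
    return w, min(p, 1.0)


if __name__ == "__main__":
    w, p = rank_sum_test([1, 2, 3], [4, 5, 6])
    print(w, round(p, 4))  # 0.0 0.0809
```

The test compares whole distributions rather than medians directly, which is why the median and IQR are reported alongside the p-value.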

Results

Consultation Volumes and Turnaround Times

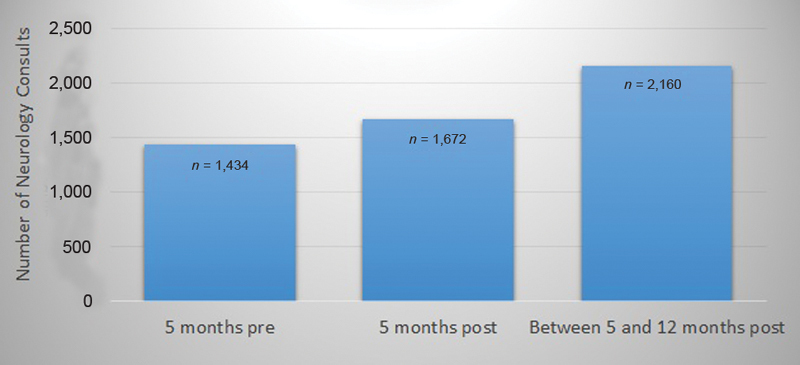

No major unexpected organizational changes that could have significantly affected neurological consultation volume or TAT, such as modifications to physician staffing models, note templates, order sets, EHR or data platform interfaces, or care pathways, occurred over the study period. In the 5-month period ending on November 30, 2017, 1,434 neurology consultations were completed, fewer than the 1,672 consultations completed over the 5-month period ending April 30, 2018, and the 2,160 consultations completed over the 7-month period between May 1, 2018 and November 30, 2018 ( Fig. 4 ). The median TAT for the 5-month period after the go-live was significantly shorter than that for the 5-month period preceding the go-live, by 0.2 hours ( p = 0.001; Fig. 5 ). The median TAT for the 7-month period ending November 30, 2018 did not differ significantly from that of the 5-month period after the go-live ( p = 0.26) but remained significantly shorter than the median TAT for the 5-month period preceding the go-live ( p = 0.02).

Fig. 4.

Neurology consultation volumes at 5 months before go-live, 5 months after go-live, and between 5 and 12 months after go-live.

Fig. 5.

Median consultation turnaround time (TAT) and interquartile range for each study period. IQR, interquartile range.

Usage Patterns

By April 30, 2018, 5 months after the dashboard go-live, we identified 269 unique users, 684 dashboard page hits (median hits/user = 1.0, IQR = 1.0), and 510 unique patient chart accesses. By November 30, 2018, 12 months after the go-live, the counts of unique users, page hits, and chart accesses had increased to 598, 1,044, and 1,336, respectively, corresponding to approximately 2.2-, 1.5-, and 2.6-fold increases ( Fig. 6 ). At 5 months after dashboard go-live, usage logs showed that 133 dashboard users (49.4%) were physicians, of whom 92 (69.2%) were house staff. Of the physician users, 35 (26.3%) were neurologists. Of the house staff users, 27 (29.3%) were neurology house staff; however, neurology house staff made up 77.1% of neurologist users, and these 27 users represented 73% of CUIMC's 37-resident training program during the 2017 to 2018 academic year ( Fig. 7 ).
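The usage counts and the house staff classification described in the Methods can be sketched as below. The log schema (`LogEntry`), the counting rules (each log row counted as one page hit, a chart access counted as a distinct user-patient pair), and the regular expression applied to titles are all assumptions for illustration; the source states only that the logs contained user names, titles, and departments and that text mining of the title field identified residents and fellows. The final lines recompute the reported percentages and fold changes from the counts given above.

```python
import re
from dataclasses import dataclass
from typing import Dict, List


# Hypothetical log row; the source states only that logs held
# user names, titles, and departments.
@dataclass
class LogEntry:
    user: str
    title: str
    department: str
    patient_id: str


# Whole-word match, so "Residency Coordinator" is not counted as house staff.
HOUSE_STAFF_PATTERN = re.compile(r"\b(resident|fellow)\b", re.IGNORECASE)


def is_house_staff(title: str) -> bool:
    return HOUSE_STAFF_PATTERN.search(title) is not None


def usage_summary(entries: List[LogEntry]) -> Dict[str, int]:
    """Assumed rules: one row = one page hit; chart access = (user, patient)."""
    title_by_user: Dict[str, str] = {}
    for e in entries:
        title_by_user.setdefault(e.user, e.title)  # first title seen per user
    return {
        "page_hits": len(entries),
        "unique_users": len(title_by_user),
        "unique_chart_accesses": len({(e.user, e.patient_id) for e in entries}),
        "house_staff_users": sum(is_house_staff(t) for t in title_by_user.values()),
    }


def pct(part: int, whole: int) -> float:
    return round(100 * part / whole, 1)


if __name__ == "__main__":
    log = [
        LogEntry("u1", "Resident, Neurology", "Neurology", "p1"),
        LogEntry("u1", "Resident, Neurology", "Neurology", "p1"),
        LogEntry("u1", "Resident, Neurology", "Neurology", "p2"),
        LogEntry("u2", "Attending Physician", "Medicine", "p1"),
    ]
    print(usage_summary(log))
    # {'page_hits': 4, 'unique_users': 2, 'unique_chart_accesses': 3, 'house_staff_users': 1}

    # Recompute reported proportions from the counts in the Results.
    print(pct(133, 269), pct(92, 133), pct(27, 92), pct(27, 35), pct(27, 37))
    # 49.4 69.2 29.3 77.1 73.0
    print([round(b / a, 1) for a, b in [(269, 598), (684, 1044), (510, 1336)]])
    # [2.2, 1.5, 2.6]
```

A keyword rule like this one will miss titles that denote trainees in other ways (for example, "Intern" or a bare postgraduate-year code), so in practice the matched titles would need manual review.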

Fig. 6.

Cumulative dashboard usage counts at 5 and 12 months after go-live.

Fig. 7.

Proportions of dashboard users at 5 months post-go-live. Clockwise from top left: physicians as proportion of all users; house staff as proportion of all physicians; neurology house staff as proportion of all house staff; neurologists as proportion of all physicians.

In aggregate, neurologists generated 223 (35.8%) of total dashboard page hits, and neurology house staff accounted for 8 (61.5%) of the 13 users who generated the top quintile of page hits. The Internal Medicine and Neurology departments had the most users in the top quintile of page hits, followed by Anesthesia, and then by Pediatrics and Pathology ( Fig. 8 ). Of the 136 nonphysician users, the most frequently represented groups, in descending order, were administrative personnel ( n = 49; 36.0%), nursing ( n = 24; 17.6%), medical students ( n = 22; 16.2%), and research staff ( n = 13; 9.6%; data not shown).

Fig. 8.

Top quintile by page hits per user, stratified by academic department.

Discussion

In a neurology department at a large academic medical center, we found that, compared with the 5 months before implementation of an aggregative dashboard, inpatient neurological consult TAT was significantly shorter, by approximately 12 minutes, in the 5-month period following implementation. This shorter TAT remained stable over the following 7 months, suggesting a sustained reduction. Further, based on log data, we found that house staff constituted more than two-thirds of all physician users, that most neurologist users were neurology house staff, and that most neurology residents at CUIMC were users.

Our findings suggest that our implementation succeeded in reaching neurology residents as dashboard users. Further, a TAT reduction of 12 minutes may be meaningful, considering the daily academic obligations of residency trainees and the small size of the CUIMC consult service team (two to three residents) that receives consultation requests and performs evaluations each day during daytime hours. In the post-go-live period, the mean daily consult volume was approximately 11.1, or approximately 3.7 to 5.5 consults/day/resident depending on consult team size. A reduction of 12 minutes per consult could therefore translate into an approximate aggregate time savings of 44 to 66 minutes per daytime resident shift. Overnight and on weekends, a single resident receives all inpatient consultations for the hospital, which increases that resident's consult volume and thus the potential time savings.
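The per-resident figures in the paragraph above follow from simple arithmetic, reproduced below as a check. Like the paragraph, this sketch assumes that post-go-live consults are spread evenly over all calendar days and divided evenly among two or three daytime residents, and it treats the 0.2-hour difference in median TAT as a per-consult saving. That last step is a simplification: a shift in the median does not imply that every consultation was shortened by the same amount.

```python
from datetime import date

consults_post = 1672  # consultations completed Dec 1, 2017 to Apr 30, 2018
days_post = (date(2018, 4, 30) - date(2017, 12, 1)).days + 1  # inclusive: 151
daily_mean = consults_post / days_post                       # about 11.1 per day
saving_min = 0.2 * 60                                         # 0.2 h = 12 min

for residents in (3, 2):
    per_resident = daily_mean / residents
    total_saved = per_resident * saving_min
    print(f"{residents} residents: {per_resident:.1f} consults/day each, "
          f"~{total_saved:.0f} min saved per resident shift")
# 3 residents: 3.7 consults/day each, ~44 min saved per resident shift
# 2 residents: 5.5 consults/day each, ~66 min saved per resident shift
```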

The specifics of the CUIMC consult service are important for contextualizing our findings and may have implications for nonacademic neurological practitioners. At CUIMC, consultation volume, patient complexity, and the logistics of the consult service all combine to produce a high EHR work burden for neurology residents, who are the front-line responders to consultation requests. An internal analysis of institutional consult volume during the 2017 to 2018 academic year demonstrated that neurology was the most frequently consulted inpatient service at CUIMC. 29 This volume is compounded by sparse off-hour staffing, which further reduces providers' ability to perform timely and thorough consultations. Furthermore, many patients are referred to CUIMC for complex conditions, or for advanced diagnostic or therapeutic modalities, which may increase the amount of EHR data to be reviewed for a neurological consultation. The complexity of EHR data, detailed neurological examinations, and high consultation volume may place time pressure on consultants, but these circumstances also create opportunities for efficiency improvements, particularly where the need for face-to-face time with the patient and for hands-on evaluation accentuates the cognitive demands of locating and consolidating data to formulate assessments and recommendations. While our results are most directly relevant to neurologists practicing at academic medical centers similar to CUIMC, the substantial amount of consultative work performed by neurologists suggests that our findings may also be relevant to neurological practitioners more broadly.

Aggregative dashboards are but one of many tools for reducing EHR workload. Others include note templates, such as those provided by Epic SmartPhrases (Epic Systems Corporation, Verona, Wisconsin, United States), 30 31 strategic menu design, 32 33 and order sets. 34 Automation-centric innovations also exist, such as automated problem list generation 35 and follow-up determination after radiographic testing, 36 as well as clinical decision support alerts for risk stratification 37 and drug–disease interactions. 38 A study by Arndt et al of ambulatory family practitioners in the United States showed that clinicians devote nearly 50% of their total daily EHR time to clinical documentation and chart review, with chart review comprising nearly 75% of EHR tasks related to direct medical care. 11 Additionally, Neri et al reported that emergency physicians struggle to integrate clinical data from disparate sources into their clinical documentation workflows, which occupy a significant portion of their work. 39 Given the importance of chart review and data integration for physicians, dashboards are well positioned to facilitate these tasks by providing tailored data aggregation and potentially reducing cognitive load. Furthermore, dashboard aggregation has been shown to reduce medication errors at the point of care, 40 suggesting that the benefits of such dashboards may extend beyond reductions in EHR work to include clinical benefits as well.

Although we introduced the dashboard through demonstrations to CUIMC neurology faculty and residents, and most of the institutional neurology residents used it, the logs suggest that, at least in the short term, the majority of users were not neurologists. This may have been because the dashboard was demonstrated too infrequently, or because dissemination relied on suboptimal venues or modalities. The high usage of the dashboard by general internal medicine residents may be related to the existence of iNYP dashboards with similar functionality for internal medicine, such as general ambulatory medicine and chronic kidney disease dashboards, whose links appear immediately above the neurology dashboard link on the iNYP web page. Finally, physicians caring for patients admitted to internal medicine services may also be managing comorbid neurological disease, such as stroke, encephalopathy, or seizure, and may therefore have plausible use for the aggregative data review functions of the iNYP neurology dashboard.

Our findings also illustrate the implementation of a process improvement solution in a system external to the institutional EHR, and may therefore highlight the relative advantages of such a development approach. It is important to note that our study did not specifically compare the ease of implementing changes in transactional systems (such as CUIMC's institutional EHR) with that in nontransactional, derivative systems (such as iNYP dashboards). Nonetheless, while modifications to transactional systems can be challenging because of their direct implications for care quality and safety, changes may be relatively easier to implement in a dashboard that serves as a separate clinical data review tool. Despite this relative ease of modification, dashboards are also susceptible to errors other than those originating in their source systems. Reliance on dashboards over source systems, as can occur with some successful dashboard implementations, may discourage use and verification of source system data, thereby allowing dashboard bugs or data issues to go unrecognized, with consequent effects on medical decision making and patient care.

Limitations

This study is limited by several important factors. First, this was a retrospective study, and we were not able to control which user groups took advantage of the dashboard to make clinical decisions. Second, because we relied on user log data alone, we could not determine which dashboard links, functions, and tiles were used, nor could we measure the exact amount of time users spent performing specific EHR tasks, the care setting in which they accessed the dashboard, or the point in the workflow at which they used it. We therefore could not definitively determine that the dashboard was used to support clinical decision making, that it was used during inpatient neurological consultations as intended, or that its use resulted in faster data review. As such, the observed reduction in consultation TAT may be related to factors independent of dashboard use, such as higher levels of patient complexity in the pre-go-live period, the relative inexperience of new residents starting consultative work in the pre-go-live period, or higher volumes in the post-go-live periods, which may have forced residents to spend less time on each consultation.

A third limitation is that our outcome measure, TAT, was calculated as the difference between consult order placement and consult note completion. Because many users were trainees, we could not exclude the possibility that they performed nonconsult-related duties, such as attending academic conferences, before completing their consultations, which would make TAT a potentially inaccurate measure of the dashboard's effect on the work involved in reviewing EHR data. However, trainees are typically taught that patient care takes precedence over academic activities, so we believe it is unlikely that academic activities inflated consult TAT. Fourth, the dashboard was heavily weighted toward vascular neurology evaluations. We did not perform an analysis stratified by patient diagnosis, which could have shown whether the dashboard was useful for evaluating specific neurological diagnoses, such as stroke, seizure, or encephalopathy. Fifth, we did not measure cognitive burden on users, quality of care, or hospital resource utilization, all of which are important outcomes to evaluate in an implementation such as this.

Additionally, we disseminated the dashboard only through demonstrations to users, rather than through a pilot program with progressive extension to a larger user group. This may have limited usage among neurology residents and limited our understanding of how to tailor the dashboard to use cases that were not identified during the initial requirements gathering. However, the majority of the neurology house staff used the dashboard during the study period, suggesting that our dissemination strategy was at least partially successful in reaching its intended audience. It should also be emphasized that the time taken to perform a consultation is distinct from the quality of the medical care provided; while our study focused on the effect of the dashboard on consult TAT, TAT is not a guideline-based outcome that might accurately reflect quality of care, such as those studied in the PRESCRIBE cluster-randomized controlled trial. 41

Despite these limitations, we found that inpatient neurological consultation TAT decreased in the 5 months following the neurological dashboard go-live at a large academic medical center, and that this decrease persisted over the following 7 months. We cannot definitively conclude that introduction of the iNYP neurology dashboard reduced TAT, decreased cognitive burden, or increased face-to-face time with patients, but we demonstrated that substantial numbers of neurological and nonneurological house staff used the dashboard. A future prospective cluster-randomized trial comparing consultation TAT, cognitive burden, measures of burnout, and hospital resource utilization between conventional data review and data review using a neurological dashboard could provide further guidance. Such a trial should collect data on time spent in, and usage of, each dashboard tile, and should adjust for patient demographics, clinical characteristics, month of evaluation, and resident training level. Additionally, further work should investigate the impact of neurological dashboards on guideline-based recommended practices, and whether aggregating neurological data into a single digital access point significantly affects cognitive overload, burnout, and time spent with patients.

Clinical Relevance Statement

In this description of the implementation of an aggregative neurological dashboard at a large academic center, the authors measure neurology consultation TAT over the 5 months before and the 5 months after dashboard implementation, as well as over the following 7 months. Compared with the 5-month period before implementation, consult TAT was significantly shorter in the 5 months after implementation, and this shorter TAT remained unchanged over the following 7 months. In addition to showing high usage among institutional neurology residents, usage logs showed that many physicians from Internal Medicine also used the dashboard over the study period. Further work is needed to determine whether such dashboards significantly affect cognitive load and turnaround time in neurology.

Multiple Choice Questions

1. Which answer best describes this study's finding when comparing in-hospital neurology consultation turnaround time in the 5-month period after, versus the 5-month period before, the implementation of a neurology dashboard?

a. Statistically significantly longer.

b. Statistically significantly shorter.

c. Longer, but not statistically significantly so.

d. Shorter, but not statistically significantly so.

Correct Answer: The correct answer is option b.

2. In this study of a neurology dashboard, approximately what percentage of the total dashboard users were physicians?

a. 25%

b. 50%

c. 75%

d. 100%

Correct Answer: The correct answer is option b.

3. Which aspect of the study design most limited the ability to associate dashboard use with turnaround time?

a. Medication orders were not included in the dashboard.

b. The usage logs did not capture sufficient numbers of users.

c. Dashboard use during consultations could not be distinguished from use outside of consultations.

d. The dashboard was only accessible via web page.

Correct Answer: The correct answer is option c.

Conflict of Interest None declared.

Protection of Human and Animal Subjects

The institutional review board of CUIMC approved the use of consultation data for this analysis, and waived the requirement for informed consent.

References

- 1. Gold M, McLaughlin C. Assessing HITECH Implementation and Lessons: 5 Years Later. Milbank Q. 2016;94(03):654–687. doi: 10.1111/1468-0009.12214.

- 2. Adler-Milstein J, Holmgren A J, Kralovec P, Worzala C, Searcy T, Patel V. Electronic health record adoption in US hospitals: the emergence of a digital “advanced use” divide. J Am Med Inform Assoc. 2017;24(06):1142–1148. doi: 10.1093/jamia/ocx080.

- 3. King J, Patel V, Jamoom E W, Furukawa M F. Clinical benefits of electronic health record use: national findings. Health Serv Res. 2014;49(1, Pt 2):392–404.

- 4. Buntin M B, Burke M F, Hoaglin M C, Blumenthal D. The benefits of health information technology: a review of the recent literature shows predominantly positive results. Health Aff (Millwood). 2011;30(03):464–471. doi: 10.1377/hlthaff.2011.0178.

- 5. Paterick Z R, Patel N J, Paterick T E. Unintended consequences of the electronic medical record on physicians in training and their mentors. Postgrad Med J. 2018;94(1117):659–661.

- 6. Poissant L, Pereira J, Tamblyn R, Kawasumi Y. The impact of electronic health records on time efficiency of physicians and nurses: a systematic review. J Am Med Inform Assoc. 2005;12(05):505–516. doi: 10.1197/jamia.M1700.

- 7. Campbell E M, Sittig D F, Ash J S, Guappone K P, Dykstra R H. Types of unintended consequences related to computerized provider order entry. J Am Med Inform Assoc. 2006;13(05):547–556. doi: 10.1197/jamia.M2042.

- 8. Carayon P, Wetterneck T B, Alyousef B et al. Impact of electronic health record technology on the work and workflow of physicians in the intensive care unit. Int J Med Inform. 2015;84(08):578–594. doi: 10.1016/j.ijmedinf.2015.04.002.

- 9. Kannampallil T G, Denton C A, Shapiro J S, Patel V L. Efficiency of emergency physicians: insights from an observational study using EHR log files. Appl Clin Inform. 2018;9(01):99–104. doi: 10.1055/s-0037-1621705.

- 10. Sinsky C, Colligan L, Li L et al. Allocation of physician time in ambulatory practice: a time and motion study in 4 specialties. Ann Intern Med. 2016;165(11):753–760. doi: 10.7326/M16-0961.

- 11. Arndt B G, Beasley J W, Watkinson M D et al. Tethered to the EHR: primary care physician workload assessment using EHR event log data and time-motion observations. Ann Fam Med. 2017;15(05):419–426. doi: 10.1370/afm.2121.

- 12. Joukes E, Abu-Hanna A, Cornet R, de Keizer N F. Time spent on dedicated patient care and documentation tasks before and after the introduction of a structured and standardized electronic health record. Appl Clin Inform. 2018;9(01):46–53. doi: 10.1055/s-0037-1615747.

- 13. Block L, Habicht R, Wu A W et al. In the wake of the 2003 and 2011 duty hours regulations, how do internal medicine interns spend their time? J Gen Intern Med. 2013;28(08):1042–1047. doi: 10.1007/s11606-013-2376-6.

- 14. Sigsbee B, Bernat J L. Physician burnout: a neurologic crisis. Neurology. 2014;83(24):2302–2306. doi: 10.1212/WNL.0000000000001077.

- 15. Josephson S A, Johnston S C, Hauser S L. Electronic medical records and the academic neurologist: when carrots turn into sticks. Ann Neurol. 2012;72(06):A5–A6. doi: 10.1002/ana.23821.

- 16. Ramos V F. Reflections: neurology and the humanities: a neurologist, an EMR, and a patient. Neurology. 2012;79(20):2079–2080. doi: 10.1212/wnl.0b013e3182749f5d.

- 17. Bernat J L. Ethical and quality pitfalls in electronic health records. Neurology. 2013;81(17):1558. doi: 10.1212/WNL.0b013e3182a9f1ea.

- 18. Bernat J L, Busis N A. Patients are harmed by physician burnout. Neurol Clin Pract. 2018;8(04):279–280. doi: 10.1212/CPJ.0000000000000483.

- 19. McCarthy L H, Longhurst C A, Hahn J S. Special requirements for electronic medical records in neurology. Neurol Clin Pract. 2015;5(01):67–73. doi: 10.1212/CPJ.0000000000000093.

- 20. Association of American Medical Colleges. Careers in Medicine. Available at: https://www.aamc.org/cim/. Accessed May 12, 2019.

- 21. Sittig D F, Wright A, Osheroff J A et al. Grand challenges in clinical decision support. J Biomed Inform. 2008;41(02):387–392. doi: 10.1016/j.jbi.2007.09.003.

- 22. Koopman R J, Kochendorfer K M, Moore J L et al. A diabetes dashboard and physician efficiency and accuracy in accessing data needed for high-quality diabetes care. Ann Fam Med. 2011;9(05):398–405. doi: 10.1370/afm.1286.

- 23. Palma J P, Brown P J, Lehmann C U, Longhurst C A. Neonatal informatics: optimizing clinical data entry and display. Neoreviews. 2012;13(02):81–85. doi: 10.1542/neo.13-2-e81.

- 24. Gordon B D, Asplin B R. Using online analytical processing to manage emergency department operations. Acad Emerg Med. 2004;11(11):1206–1212. doi: 10.1197/j.aem.2004.08.015.

- 25. Hester G, Lang T, Madsen L, Tambyraja R, Zenker P. Timely data for targeted quality improvement interventions: use of a visual analytics dashboard for bronchiolitis. Appl Clin Inform. 2019;10(01):168–174. doi: 10.1055/s-0039-1679868.

- 26. Effken J A, Loeb R G, Kang Y, Lin Z C. Clinical information displays to improve ICU outcomes. Int J Med Inform. 2008;77(11):765–777. doi: 10.1016/j.ijmedinf.2008.05.004.

- 27. Feblowitz J C, Wright A, Singh H, Samal L, Sittig D F. Summarization of clinical information: a conceptual model. J Biomed Inform. 2011;44(04):688–699. doi: 10.1016/j.jbi.2011.03.008.

- 28. New York Presbyterian Hospital. iNYP: Clinical communication and data review. Available at: https://inyp.nyp.org/inyp/. Accessed April 29, 2019.

- 29. New York Presbyterian Hospital Analytics. Consultation Tableau Dashboard. Internal website. Available at: https://nysgtableau03.sis.nyp.org/#/site/Prod/views/Consults/Overview. Accessed July 24, 2019.

- 30. Lamba S, Berlin A, Goett R, Ponce C B, Holland B, Walther S; AAHPM Research Committee Writing Group. Assessing emotional suffering in palliative care: use of a structured note template to improve documentation. J Pain Symptom Manage. 2016;52(01):1–7.

- 31. Nusrat M, Parkes A, Kieser R et al. Standardizing opioid prescribing practices for cancer-related pain via a novel interactive documentation template at a public hospital. J Oncol Pract. 2019 (e-pub ahead of print). doi: 10.1200/JOP.18.00789.

- 32. Tannenbaum D, Doctor J N, Persell S D et al. Nudging physician prescription decisions by partitioning the order set: results of a vignette-based study. J Gen Intern Med. 2015;30(03):298–304. doi: 10.1007/s11606-014-3051-2.

- 33. Kreuzthaler M, Pfeifer B, Vera Ramos J A et al. EHR problem list clustering for improved topic-space navigation. BMC Med Inform Decis Mak. 2019;19(Suppl 3):72. doi: 10.1186/s12911-019-0789-9.

- 34. McGreevey J D III. Order sets in electronic health records: principles of good practice. Chest. 2013;143(01):228–235. doi: 10.1378/chest.12-0949.

- 35. Devarakonda M V, Mehta N, Tsou C H, Liang J J, Nowacki A S, Jelovsek J E. Automated problem list generation and physicians perspective from a pilot study. Int J Med Inform. 2017;105:121–129. doi: 10.1016/j.ijmedinf.2017.05.015.

- 36. Kovacs M D, Mesterhazy J, Avrin D, Urbania T, Mongan J. Correlate: a PACS- and EHR-integrated tool leveraging natural language processing to provide automated clinical follow-up. Radiographics. 2017;37(05):1451–1460. doi: 10.1148/rg.2017160195.

- 37. Wilson F P, Shashaty M, Testani J et al. Automated, electronic alerts for acute kidney injury: a single-blind, parallel-group, randomised controlled trial. Lancet. 2015;385(9981):1966–1974.

- 38. Bubp J L, Park M A, Kapusnik-Uner J et al. Successful deployment of drug-disease interaction clinical decision support across multiple Kaiser Permanente regions. J Am Med Inform Assoc. 2019:ocz020. doi: 10.1093/jamia/ocz020.

- 39. Neri P M, Redden L, Poole S et al. Emergency medicine resident physicians' perceptions of electronic documentation and workflow: a mixed methods study. Appl Clin Inform. 2015;6(01):27–41. doi: 10.4338/ACI-2014-08-RA-0065.

- 40. Campbell A A, Harlan T, Campbell M. Using real-time data to warn nurses of medication administration errors using a nurse situational awareness dashboard. Stud Health Technol Inform. 2018;250:140–141.

- 41. Patel M S, Kurtzman G W, Kannan S et al. Effect of an automated patient dashboard using active choice and peer comparison performance feedback to physicians on statin prescribing: the PRESCRIBE cluster randomized clinical trial. JAMA Netw Open. 2018;1(03):e180818. doi: 10.1001/jamanetworkopen.2018.0818.

- 42. Goff D C Jr, Lloyd-Jones D M, Bennett G et al. 2013 ACC/AHA guideline on the assessment of cardiovascular risk: a report of the American College of Cardiology/American Heart Association Task Force on Practice Guidelines. Circulation. 2014;129(25 Suppl 2):S49–S73. doi: 10.1161/01.cir.0000437741.48606.98.