Abstract

The auditory frequency-following response (FFR) is a non-invasive index of the fidelity of sound encoding in the brain, and is used to study the integrity, plasticity, and behavioral relevance of the neural encoding of sound. In this Perspective, we review recent evidence suggesting that, in humans, the FFR arises from multiple cortical and subcortical sources, not just subcortically as previously believed, and we illustrate how the FFR to complex sounds can enhance the wider field of auditory neuroscience. Far from being of use only to study basic auditory processes, the FFR is an uncommonly multifaceted response yielding a wealth of information, with much yet to be tapped.

Subject terms: Auditory system, Learning and memory, Neural ageing, Sensory processing

The auditory frequency-following response (FFR) indexes the quality of neural sound encoding in the brain. In this Perspective, the authors discuss the potential of the FFR to provide a better understanding of sound encoding in the auditory system and its relationship to behavior.

Introduction

The auditory system must faithfully encode and process rapid variations in acoustic signals and precisely extract important features, such as frequency, amplitude modulation, and sound onsets and offsets. This task is accomplished by a complex, interconnected, and parallel system. Auditory information enters the brainstem from the cochlea via the auditory nerve and ascends via both lemniscal and nonlemniscal auditory pathways1. Neurons in the lemniscal (or “primary/classical”) pathway are thought to be the main bearers of temporally varying information, with synapses in the brainstem (cochlear nucleus and superior olivary complex), midbrain (central nucleus of the inferior colliculus), thalamus (ventral division of the medial geniculate body), and the primary auditory cortex. The fidelity of sound encoding in these ascending pathways affects all cognitive processes that use the information—and in turn, these ascending pathways are affected by cognitive processes via the vast efferent system. Consequently, sound encoding is relevant to the study of many higher-level functions central to human communication, including speech and music.

Frequency-following responses (FFRs) are recordings of phase-locked neural activity that is synchronized to periodic and transient aspects of sound. Traditionally, FFRs have been measured in humans as electrophysiological potentials evoked by sound and recorded from the scalp. For guidance on collecting FFRs, see Skoe and Kraus for a tutorial on EEG-FFR collection2, Krizman and Kraus for a tutorial on EEG-FFR analysis3, and Coffey et al. for technical details on the MEG-FFR4 (see Box 1 for key points).

Human FFRs were first measured in the 1970s5. Identified as subcortical in origin, they were viewed as a potential supplement to behavioral audiometry. Over the years, the field has moved away from treating the subcortical auditory system as a bottom-up, hardwired conduit for sound, and is increasingly recognizing the contribution of top-down influences within the context of distributed neural networks. Studies using the FFR have played an instrumental role in this evolution of thinking.

The FFR is a noninvasive means of reliably measuring the fidelity and precision with which the brain encodes sound. Measures derived from the FFR (e.g. timing, amplitude, consistency, and pitch tracking; see Fig. 1) reveal an individual’s mapping between a stimulus and the brain’s activity, which may be impaired in disease or enhanced through expertise. The FFR has proven essential to answering basic questions about how our auditory system manages complex acoustic information, how it integrates with other senses, and how both tasks are shaped by experience6–8. FFR measures are related to the ability to differentiate sounds and to hear targets in noise, and to experience with music, tonal languages, or multilingualism8–13. The FFR can reveal the plastic nature of the human auditory system, including its potential to change over short timescales and its sensitivity to enriched and impoverished experiences with sound13–22.

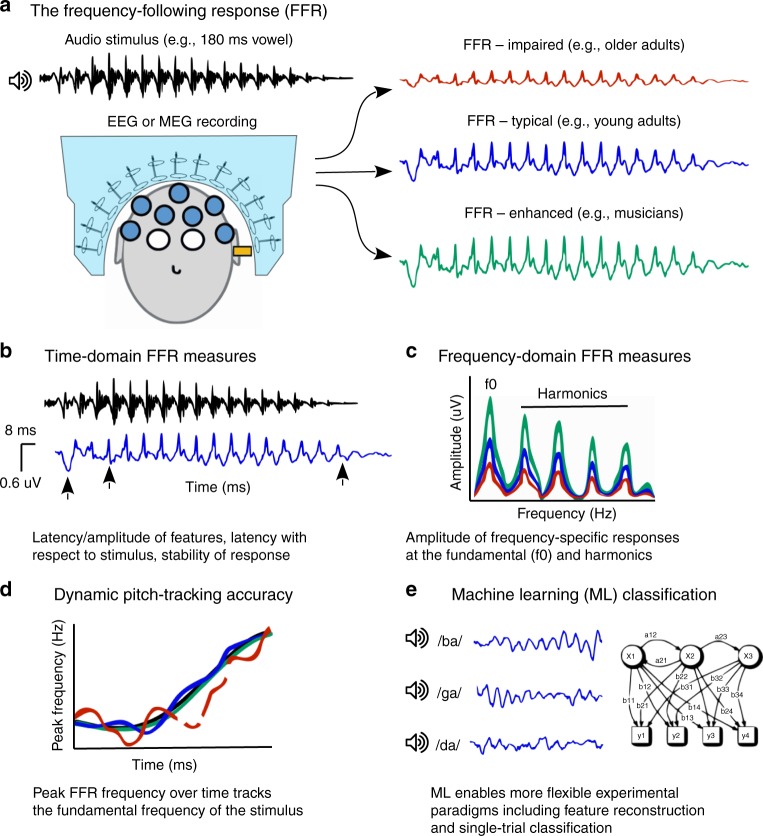

Fig. 1.

The FFR is a means of non-invasively measuring the brain’s ability to encode sound, as well as the general integrity of the auditory system. a The FFR is measured using EEG or MEG while periodic or quasi-periodic sounds such as vowels, consonant-vowel syllables, or tones are presented (see also Box 1). The morphology of the averaged evoked response differs between individuals as a function of pathology and expertise. FFRs can be visualized in b the time domain, c the frequency domain, and d in terms of how accurately the response tracks changes in frequency content over time for spectrally dynamic stimuli. e Classification accuracy derived from machine learning techniques provides an additional metric.
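Two of the measures summarized in Fig. 1 can be sketched in a few lines of Python with NumPy: spectral amplitude at the fundamental frequency (f0), and response consistency. This is a minimal illustration under stated assumptions, not the analysis pipeline of any cited study. The function names, the ±5 Hz search band, and the split-half correlation used as a consistency index are our own choices, and the data are simulated.

```python
import numpy as np

def f0_amplitude(response, fs, f0, half_width=5.0):
    """Peak single-sided spectral amplitude within +/- half_width Hz of f0."""
    n = len(response)
    spectrum = 2.0 * np.abs(np.fft.rfft(response)) / n  # amplitude in input units
    freqs = np.fft.rfftfreq(n, d=1.0 / fs)
    band = (freqs >= f0 - half_width) & (freqs <= f0 + half_width)
    return spectrum[band].max()

def split_half_consistency(trials, seed=0):
    """Correlate the averages of two random halves of the single trials
    (a hypothetical consistency index; higher = more repeatable response)."""
    trials = np.asarray(trials)
    rng = np.random.default_rng(seed)
    order = rng.permutation(len(trials))
    half = len(trials) // 2
    avg_a = trials[order[:half]].mean(axis=0)
    avg_b = trials[order[half:]].mean(axis=0)
    return np.corrcoef(avg_a, avg_b)[0, 1]

# Simulated data (assumed parameters): a 0.5-unit 100 Hz response in unit noise,
# 200 trials of 0.2 s at 10 kHz, giving a 5 Hz frequency resolution.
fs, f0 = 10000, 100.0
t = np.arange(2000) / fs
rng = np.random.default_rng(1)
trials = 0.5 * np.sin(2 * np.pi * f0 * t) + rng.standard_normal((200, t.size))
amp = f0_amplitude(trials.mean(axis=0), fs, f0)
consistency = split_half_consistency(trials)
```

The peak within a small band (rather than a single bin) tolerates slight mismatches between the nominal f0 and the FFT bin grid. The split-half correlation is only one of several ways to express consistency.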

The FFR is useful for addressing questions about auditory processing in populations with impaired cochlear function23–26, and in neurodevelopmental speech and language disorders27–32 or autism33,34. It can also be used to study maturational35,36 and aging-related changes37,38, sex differences in auditory function39, and improvements caused by interventions15,40–42. More broadly, the FFR can provide an index of neurological health, for instance in populations with acquired neurological disorders (e.g. concussion)43. For comprehensive reviews of the FFR and its role in indexing the effects of experience on the auditory brain, see refs. 44,45.

A fundamental question is what source(s) underlie the FFR in humans. This is important for basic scientific knowledge for its own sake and also because a greater understanding of the FFR’s sources can inform its translation and deployment in medicine. Methods have emerged that allow for some spatial separation of FFR sources in humans (i.e., brainstem, thalamus, cortex4,46,47). These studies have reopened questions about the degree to which activity in different subcortical and cortical centres contributes to the well-studied scalp-recorded FFR and whether sources identified using other methods generalize to the traditional, scalp-recorded response. To be clear: while many questions remain to be answered, we do not think the FFR is solely generated in the auditory cortex, nor do we exclude the possibility of cortical contributions under certain circumstances.

Here we aim to update our evolving understanding of the FFR in a way that is accessible to an interdisciplinary audience, and to outline a roadmap that promotes a more integrative understanding of the FFR and its potential for studying human auditory function.

Historical roots and changing views

To our knowledge, the term “frequency-following response” was coined in the late 1960s by Worden and Marsh48, who described the response in an animal model. Initially investigated with low-frequency pure tones (<500 Hz), FFRs were an appealing alternative or adjunct to other objective measures of auditory function available at the time (e.g., auditory brainstem responses, electrocochleograms), because the latter have poor frequency specificity and are less effective at evoking responses to stimulus frequencies below 500 Hz.

By the 1990s, however, evidence began to emerge that the FFR reflected more than mere stimulus audibility. Gary Galbraith, a pioneer in the use of richer FFR stimuli such as two-tone complexes, missing fundamental stimuli, and speech, reported that the FFR was affected by attention49 and by how a particular speech stimulus was perceived by the listener50. Galbraith’s insight that “the FFR is a unique tool for understanding the most important of all auditory capacities: the coding and processing of human language” has proven prescient as the 21st century has seen a dramatic increase in investigations into speech-evoked FFR and how response properties relate to human communication. With these discoveries has come a renewed interest in the investigation of the FFR above and beyond its ability to signal sound detection. Instead, as we detail below, the FFR is now seen as a powerful tool to understand the neurophysiological bases of complex auditory behaviors in humans, including speech and music.

Other evoked responses are also derived from EEG recordings but are typically low-pass filtered (<40 Hz). These are often referred to as “cortical auditory evoked potentials” or “late-latency responses,” and include variants such as the mismatch negativity or P300. They generally reflect the response to stimulus onset and later processing stages. What distinguishes the FFR is the precision with which it retains the morphological features of the stimulus waveform, thereby revealing how the auditory system responds to the acoustic elements of the stimulus. An uncommon wealth of analysis strategies accompanies the interpretation of this multifaceted response (see Fig. 1 and Box 2). The past 10 years have seen refinements of FFR analyses that capitalize on the richness of the response3.

Evidence for multiple sources in human scalp-recorded FFR

The biological sources of the FFR have been a topic of debate since the early days of the technique51–53. Efforts to clarify the sources of far-field responses have yielded greater understanding of how and where auditory information is integrated across auditory and non-auditory regions and timescales, and the degree to which auditory centres are subject to neuroplasticity54,55.

Our view of the FFR’s origins relies on three axioms about the auditory system.

The central auditory system is a network of intertwined structures that extend across the medulla, pons, midbrain, thalamus, and temporal lobes of the cortex. This network is intrinsically connected to other sensory systems and to motor, cognitive, and reward systems. To be sure, cells and circuits within each of the nuclei have specialized functions and properties, but none of these cells or circuits operates in a vacuum. The interactivity of the system means that even something as simple as a primary auditory cortex neuron’s tuning curve has to be considered within the broader context of an integrative and plastic system (reviewed in Kraus and White-Schwoch44). Thus, any consideration of one or more sources of the FFR also has to consider how those sources interact with each other and with non-auditory brain circuits. It is also important to bear in mind that the same auditory structure can yield different neural activity depending on the sound’s context29,56–58.

Phase-locking, the phenomenon by which neurons discharge at a particular phase within the stimulus cycle, is a common feature throughout the auditory system. Through phase-locking, the recurring, periodic elements of the stimulus (e.g., the period of the fundamental frequency, the period of the amplitude modulation frequency) are encoded in the synchronous activity of a neuronal population. As one ascends the lemniscal pathway, the upper frequency limit of phase-locking decreases (for more on auditory system phase-locking, see Box 3 and Fig. 2).

The auditory system is plastic. Neurons throughout the auditory axis exhibit rapid plasticity based on stimulus context (e.g., Carbajal and Malmierca59). The interactive nature of the auditory system also makes each centre subject to non-auditory input, which can arrive through changes in overall brain physiology or metabolism, changes in environmental input, and/or top-down cognitive input that refines sensory representation. Thus, while an FFR might measure the current functional state of stimulus representation in the auditory brain, that functional state reflects the legacy of this plasticity.
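Single-unit recordings commonly quantify the phase-locking described among the axioms above with vector strength. This is a standard metric, although the text does not name it. Each spike is converted to a phase of the stimulus cycle, and the length of the mean unit vector ranges from 1 (every spike at the same phase) to near 0 (no phase preference). The sketch assumes spike times in seconds, and the spike trains are hypothetical.

```python
import numpy as np

def vector_strength(spike_times_s, freq_hz):
    """Length of the mean unit phasor of spike phases relative to the stimulus cycle."""
    phases = 2.0 * np.pi * freq_hz * np.asarray(spike_times_s)
    return np.abs(np.mean(np.exp(1j * phases)))

# Hypothetical spike trains for a 100 Hz stimulus lasting 1 s.
rng = np.random.default_rng(0)
# One spike per cycle at a quarter-cycle phase, with 0.2 ms timing jitter.
locked = (np.arange(100) + 0.25) / 100.0 + rng.normal(0.0, 2e-4, 100)
# Spikes scattered uniformly in time, i.e. with no phase preference.
random_spikes = rng.uniform(0.0, 1.0, 1000)

vs_locked = vector_strength(locked, 100.0)
vs_random = vector_strength(random_spikes, 100.0)
```

Vector strength describes a single neuron or spike train. The scalp-recorded FFR instead reflects the summed, synchronized activity of many such neurons.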

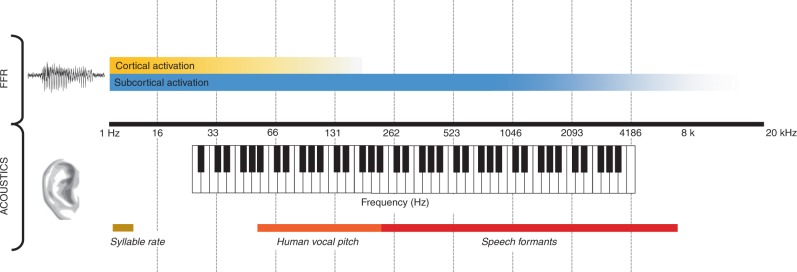

Fig. 2.

Schematic of the frequency ranges of speech and music and the relative contributions of subcortical and cortical phase-locking to the frequency-following response. The phase-locking limits of neurons and neuronal assemblies in the human auditory system are not yet known, but can be partly inferred from animal models. Despite these limits, the frequency-following response is predictive of the functionality of the entire auditory system.

What supports the conventional wisdom that the FFR has a subcortical origin?

Our current understanding of the sources of the human scalp-recorded FFR is the culmination of non-invasive studies in humans and invasive studies in animal models, each of which has advantages and limitations. The inferior colliculus (IC) has often been considered the dominant source of the FFR derived from EEG scalp recordings (EEG-FFR) (reviewed in Chandrasekaran and Kraus60). This view is based on the auditory system’s reduced capacity for high-frequency phase-locking at higher centres. Additional evidence comes from direct recordings in animal models, in which the neural sources of the FFR have been studied by selectively taking different auditory structures offline by cooling, lesioning, or pharmacological manipulation. For example, the scalp-recorded FFR was abolished or strongly reduced by cryogenic blockade of the IC in cats51, as it was in human patients with focal IC lesions52, confirming that the IC is an important FFR signal generator. While these experiments ruled out more peripheral sources, they cannot rule out thalamic or cortical sources. Because the IC is an obligatory station of the afferent pathway, blocking IC activity fails to disambiguate IC from thalamocortical contributions. Approaching this question from the other direction, studies in cats and rabbits showed that FFRs close to 100 Hz remained largely unaffected by decreased auditory cortex function, but were influenced by lesions to the inferior colliculus61. Also noteworthy is that speech-evoked FFRs and evoked responses to amplitude-modulated tones recorded directly from subcortical structures in animals strongly resemble those recorded from the brain’s surface and those recorded to the same stimuli in humans62,63.

The FFR’s short stimulus-to-response latency of ~5–9 ms is often cited as evidence of a subcortical origin (e.g. ref. 64), as the IC has a latency of 5–7 ms. However, latency-based arguments are difficult to defend. FFR latencies vary considerably with stimulus characteristics such as sound pressure level, frequency, and amplitude envelope, and some studies have reported EEG-FFR latencies much longer than 7 ms (e.g. 14.6 ms65). Furthermore, intracranial recordings from Heschl’s gyrus show that the first cortical responses to sound can occur as early as ~9 ms after stimulus onset66.
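Stimulus-to-response latency is commonly estimated by cross-correlating the stimulus with the response and taking the lag of maximal correlation. The sketch below is minimal and makes two assumptions of our own: the arrays have equal length and the same sampling rate, and the search window is 0–20 ms. It also shows one reason latency arguments are fragile. For a strictly periodic stimulus, the correlation repeats every period (every half period once absolute values are taken), so several lags in the window can score almost equally. The check below therefore uses a broadband, hypothetical stimulus.

```python
import numpy as np

def estimate_latency_ms(stimulus, response, fs, max_lag_ms=20.0):
    """Lag (ms), between 0 and max_lag_ms, at which the response best matches the stimulus.

    Assumes equal-length arrays sampled at fs (Hz). The absolute correlation is
    used so that a polarity-inverted response is matched as well."""
    stim = (stimulus - stimulus.mean()) / stimulus.std()
    resp = (response - response.mean()) / response.std()
    n = len(stim)
    lags = np.arange(0, int(max_lag_ms * fs / 1000.0) + 1)
    # Correlate each stimulus sample with the response sample `lag` samples later.
    scores = np.array([np.dot(stim[: n - lag], resp[lag:]) / (n - lag) for lag in lags])
    return 1000.0 * lags[np.argmax(np.abs(scores))] / fs

# Hypothetical check: a noise stimulus and a response that is a scaled copy
# delayed by 80 samples (8 ms at 10 kHz), plus measurement noise.
rng = np.random.default_rng(0)
fs = 10000
stim = rng.standard_normal(4000)
resp = np.zeros(4000)
resp[80:] = 0.5 * stim[:-80]
resp += 0.1 * rng.standard_normal(4000)
latency = estimate_latency_ms(stim, resp, fs)
```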

Rethinking FFR sources: The multiple generator hypothesis

Hints that the FFR comprises multiple generators have been present for a long time. We advance the hypothesis that the EEG-FFR is an aggregate response reflecting multiple auditory stations, including the auditory nerve, cochlear nucleus, inferior colliculus, thalamus, and cortex. We further hypothesize that the specific mixture of sources may vary depending on the recording technique, the stimulus, and participant demographics. This hypothesis motivates several predictions.

Prediction 1: Decomposition of a multichannel EEG signal should indicate multiple, independent components. In 1978, Stillman et al. recorded FFRs to tones with various fundamental frequencies using only two EEG channels, and concluded that the human FFR is a composite of several waveforms whose relative influence differs as a function of frequency53. Kuwada et al. recorded human EEG and electrophysiology in rabbits and concluded that surface recordings are composite responses from multiple brain generators62. Two-channel recordings and principal component analysis on multichannel EEG data have demonstrated separable FFR components that relate to stimulus properties, such as the presence or absence of energy at the fundamental frequency64,67,68.

Prediction 2: Multimodal source modeling should indicate multiple generators of the scalp-recorded signal. Coffey et al. reported that FFRs to speech (with f0 ~100 Hz) could be non-invasively recorded using MEG, which allows spatial source localization. MEG-FFR contributions included not only subcortical sources—the cochlear nucleus, inferior colliculus, and medial geniculate body (thalamus)—but also the auditory cortices (with a right-hemisphere predominance)4. Using a combination of EEG and functional magnetic resonance imaging (fMRI), a subsequent study confirmed that hemodynamic activity in the right auditory cortex was related to individual differences in the EEG-based FFR f0 strength, consistent with the hypothesis that phase-locked activity in auditory cortex has a hemodynamic signature69. Bidelman found corroborating evidence of multiple sources to the FFR, including a cortical one, using distributed source modeling techniques on multichannel EEG recordings and a speech stimulus (with f0 in the same range as in Coffey et al.). This EEG approach revealed subcortical sources contributing more than the auditory cortex46 (note that thalamic sources did not appear to be included in the analysis).

Prediction 3: Individual differences in FFR components should correlate with behavior if they are functionally relevant. Zhang and Gong used principal component analysis on multichannel EEG data and found multiple, separable components with different scalp topographies, only one of which correlated with pitch perception. They concluded that phase-locked activity at different sources differentially relates to behavior68. Coffey et al. observed significant correlations between the magnitude of the right auditory cortical MEG-FFR response and pitch perception thresholds, as well as musical training, suggesting that phase-locked activity in this region provides behaviorally relevant information4. Separately, while MEG-FFR strength at both subcortical and cortical sources was predictive of speech-in-noise (SIN) perception, the strongest correlations were observed for the right auditory cortex70. In a cross-modal attention task, Hartmann and Weisz confirmed the strong contribution of cortical regions to the MEG-FFR and found that only the right auditory cortex was significantly affected by attention71.

Prediction 4: Different stimulus frequencies will bias certain generators. Tichko and Skoe conducted an extensive investigation that measured EEG-FFR amplitude to complex tones as a function of fundamental frequency72. EEG-FFRs to stimuli with frequencies between 16.35 and 880 Hz showed generally decreasing amplitude with increasing frequency, but with local maxima at ~44, 87, 208, and 415 Hz. The local maxima suggest an EEG-FFR with multiple underlying generators whose activity interacts constructively or destructively at the scalp depending on the stimulus frequency (Fig. 3a). The EEG-FFR interference pattern that produced these local maxima was modeled by the authors as the summation of multiple phase-locked signals, all phase-locked to the stimulus frequencies but with different latencies (i.e., neural conduction times). The authors suggested that recording protocol, electrode montage, recording quality (i.e. signal-to-noise ratio), and subject demographics influence the EEG-FFR interference patterns because each one of these manipulations alters the strength of phase-locking or the degree to which this phase-locking can be detected at the scalp.

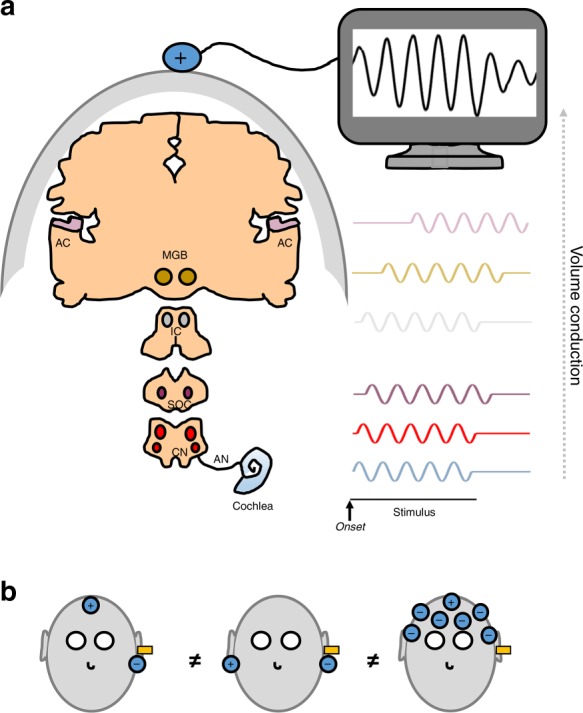

Prediction 5: Different recording techniques will differ in their sensitivity to different sources. Source-localized EEG-FFR and MEG-FFR do not show identical patterns of source strength4,46. Results from MEG should not be directly applied to EEG, because the two techniques differ in their sensitivity to radial vs. tangential currents and to superficial vs. deep sources (discussed with reference to FFR in ref. 4). Although both are sensitive to the electrochemical current flows within and between brain cells, they provide partly overlapping and partly complementary information73–75. Even within EEG-FFR, electrode placement and referencing appear to affect signal content. Coffey et al. compared two common electrode montages and found only a moderate correlation in their sensitivity to behavioral measures76. These montages, often used interchangeably, may thus differ in the combination of sources to which they are sensitive (Fig. 3b). Likewise, reaction times on an auditory task were noted to track the amplitude of the EEG-FFR in an electrode montage that favors more central subcortical sources. They did not track responses from a simultaneously recorded montage that was more peripherally biased77.

Fig. 3.

a Scalp-recorded frequency-following responses (FFRs) may reflect, in part, the summation of phase-locked activity from different sources, each with a characteristic lag relative to stimulus onset. The putative sources of the FFR include the cochlea, auditory nerve (AN), cochlear nucleus (CN), superior olivary complex (SOC), inferior colliculus (IC), medial geniculate body (MGB), and auditory cortex (AC). b Electrode montage influences the relative contribution of sources to the scalp-recorded signal. For example, the montages in the left and central panels include an electrode at the mastoid. They likely receive a greater contribution from peripheral sources than the montage on the right, which references a single vertex channel to the average of the other scalp electrodes.
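The summation in panel a can be written as a sum of phasors. If generator k phase-locks to a stimulus of frequency f with gain g_k and conduction delay τ_k, the scalp amplitude is |Σ g_k exp(−i2πfτ_k)|. The sketch below is a simplified version of this idea. It assumes frequency-independent gains and pure delays. The generator values are invented for illustration and are not fitted to any data, including the Tichko and Skoe results. Two equal generators 5 ms apart cancel at 100 Hz and add at 200 Hz. With three generators, several local maxima appear across the frequency range.

```python
import numpy as np

def summed_ffr_amplitude(freqs_hz, gains, latencies_s):
    """Amplitude at the scalp of several generators that all phase-lock to the
    stimulus frequency, each with its own gain and conduction delay.
    Generator k contributes the phasor gains[k] * exp(-i*2*pi*f*latencies_s[k])."""
    f = np.asarray(freqs_hz, dtype=float)[:, None]
    g = np.asarray(gains, dtype=float)[None, :]
    tau = np.asarray(latencies_s, dtype=float)[None, :]
    return np.abs(np.sum(g * np.exp(-2j * np.pi * f * tau), axis=1))

# Hypothetical generators (gains and delays invented for illustration only).
freqs = np.arange(20.0, 900.0, 1.0)
amps = summed_ffr_amplitude(freqs, gains=[1.0, 0.8, 0.6], latencies_s=[0.003, 0.006, 0.010])

# Frequencies where the summed amplitude is a local maximum (constructive interference).
is_peak = (amps[1:-1] > amps[:-2]) & (amps[1:-1] > amps[2:])
local_maxima_hz = freqs[1:-1][is_peak]
```

Changing the gains (e.g. to mimic a montage that favors peripheral sources) shifts where the maxima fall, which is one way to picture how montage could alter the scalp-level mixture.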

A thread through this work is that recording modalities, stimuli, and stimulus presentation paradigms all may influence the mix of sources underlying the recorded signal. One must therefore exercise caution in extrapolating conclusions from one modality or paradigm to the results of another.
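A singular value decomposition can sketch how a multichannel recording is decomposed into components, as in the principal component analyses mentioned under Prediction 1. This is a minimal illustration on simulated data, in which two hypothetical phase-locked generators are mixed into eight channels. It does not reproduce any cited analysis. Principal components are orthogonal directions of maximal variance. In this simulation the first two components capture the two-generator subspace, but they can be rotated mixtures of the generators rather than the generators themselves.

```python
import numpy as np

def pca(data):
    """PCA of a channels x samples array via SVD.

    Returns channel weights (topographies, one column per component), component
    time courses (one row per component), and the fraction of variance explained."""
    centered = data - data.mean(axis=1, keepdims=True)
    u, s, vt = np.linalg.svd(centered, full_matrices=False)
    time_courses = s[:, None] * vt
    explained = s**2 / np.sum(s**2)
    return u, time_courses, explained

# Hypothetical mixture: 100 Hz and 200 Hz "generators" projected onto 8 channels
# with random weights, plus a little independent sensor noise.
rng = np.random.default_rng(0)
fs = 10000
t = np.arange(2000) / fs
sources = np.vstack([np.sin(2 * np.pi * 100 * t), np.sin(2 * np.pi * 200 * t + 0.3)])
mixing = rng.standard_normal((8, 2))
data = mixing @ sources + 0.01 * rng.standard_normal((8, t.size))
topographies, components, explained = pca(data)
```

Methods that seek statistically independent rather than merely uncorrelated components (e.g. independent component analysis) are a common alternative when the goal is to separate generators.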

In summary, the extent to which each source contributes to the scalp-recorded EEG-FFR under different experimental conditions and in different populations is an unsettled topic. Yet the discovery that different recording techniques implicate different underlying generators increases the richness of what the FFR can tell us. We are sympathetic to the view that the EEG-FFR signal can represent a mixture of sources including the auditory nerve, CN, IC, MGB, and cortex. On this view, the contribution of each source could differ depending on where and how the response is recorded. Regardless of the “real-time” sources of an FFR, and the possibility that one source may dominate the response, we want to re-emphasize that each of these potential sources operates in concert with the others (and with non-auditory systems) to shape auditory function.

Approaches to test hypotheses about FFR origins

To make further progress on these concepts, it will be useful to employ methods in which FFR data are collected simultaneously with other data that unambiguously reflect cortical and network activity70,78–80. Functional connectivity measures that quantify the strength and direction of information transfer may also prove useful when applied to spatially resolved signals such as EEG/MEG in source space81. Combinations of different methods could be especially valuable, such as EEG-based FFR together with fMRI or functional near-infrared spectroscopy (fNIRS)69,82. fMRI and fNIRS provide means of quantifying functional networks across the brain, which could then be related to FFR variables.

Recent animal neurophysiology studies have demonstrated that an FFR similar to that of humans can be recorded in awake monkeys83, confirming previously demonstrated analogues between humans and anesthetized non-human animals37,38,84,85. Awake animal preparations could be particularly enlightening because they allow simultaneous recording from multiple sites in behaving animals. Neurophysiological studies in animals and humans86 could provide a ground-truth comparison for FFR strength estimates, establishing cellular-level correlates of observable EEG signals and their changes with plasticity. Another approach would be to combine FFR measurements with brain stimulation of the auditory cortex.

There is also still more to learn about the “old-fashioned” scalp-recorded FFR. Much work to date has focused on the lower-frequency components of the response relating to the fundamental frequency of the stimulus, even though there are approaches that bias responses toward high-frequency cues such as speech formants. A wealth of analysis techniques accompanies the interpretation of the FFR; see Krizman and Kraus3. A deeper understanding of these FFR components can enrich our understanding of complex auditory behaviors. When applied in tandem with animal research and other techniques, these analyses can also further our understanding of the generators underlying responses in these relatively simple paradigms.

Finally, new methods of collecting the FFR offer many interesting possibilities for future research. For example, an exciting future direction is to record the FFR to continuous, natural speech or other signals, instead of using the traditional paradigm of a single repeated stimulus87–90. Combined with free-field recordings91, portable FFR systems92, and/or wearable technologies93, these methods open opportunities to examine the FFR in real-world settings. On the analytical front, machine learning algorithms have recently been developed that allow single-trial FFR classification94,95. These could have many applications, for instance as neurofeedback in training paradigms.
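A deliberately simple stand-in can illustrate single-trial classification: a nearest-template classifier. It averages the training trials for each stimulus and labels each new trial by its highest correlation with a template. The cited studies do not use this algorithm. It is a hypothetical baseline on simulated trials in which the single-trial noise exceeds the response amplitude.

```python
import numpy as np

def fit_templates(trials, labels):
    """One template per stimulus class: the average of its training trials."""
    trials, labels = np.asarray(trials), np.asarray(labels)
    classes = np.unique(labels)
    templates = np.stack([trials[labels == c].mean(axis=0) for c in classes])
    return classes, templates

def classify_trial(trial, classes, templates):
    """Label a single trial with the class whose template it correlates with best."""
    r = [np.corrcoef(trial, tpl)[0, 1] for tpl in templates]
    return classes[int(np.argmax(r))]

# Hypothetical single-trial responses to two stimuli (f0 of 100 vs 150 Hz),
# each a 0.3-unit sinusoid buried in unit-variance noise.
rng = np.random.default_rng(0)
fs = 10000
t = np.arange(2000) / fs

def simulate(f0, n):
    return 0.3 * np.sin(2 * np.pi * f0 * t) + rng.standard_normal((n, t.size))

train = np.vstack([simulate(100, 100), simulate(150, 100)])
train_labels = np.repeat([100, 150], 100)
test = np.vstack([simulate(100, 50), simulate(150, 50)])
test_labels = np.repeat([100, 150], 50)

classes, templates = fit_templates(train, train_labels)
predicted = np.array([classify_trial(x, classes, templates) for x in test])
accuracy = np.mean(predicted == test_labels)
```

Averaging within each class makes the templates far less noisy than any single trial, which is what lets a single noisy trial still be matched reliably in this idealized setting.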

Network dynamics and the “functional view” of the FFR

Contemporary approaches in systems and cognitive neuroscience emphasize the concept that the nervous system functions as an integrated set of complex networks, comprising various interconnected nodes and hubs at which distinct operations take place96, and from whose interactions complex cognition emerges. This perspective strongly informs our view that the auditory nervous system exhibits extensive bidirectional cortical–subcortical and ipsilateral–contralateral connectivity (in addition to bidirectional connectivity with other sensory and cognitive systems). In turn, auditory cortex may itself be considered a hub97 for the ventral and dorsal corticocortical loops that are known to underwrite auditory cognition including auditory object recognition, localization, speech, and music98–101. Thus, we may consider the entire auditory system as consisting of a number of conjoined complex networks, each of which is of course far from fully characterized at this point.

Taking this idea of a highly interconnected nervous system as a framework, we suggest that the FFR serves as an index of the functional properties of the subcortical and early cortical parts of the auditory system. By virtue of the interconnectedness of these networks, the FFR is a snapshot of auditory processing. The FFR would also be expected to be influenced by, and hence be relevant to, the corticocortical loops. Although direct evidence for such network-level influences remains sparse, the modulation of FFR parameters associated with training-induced plasticity or with cortical dysfunction, as mentioned above, may be one instantiation of this phenomenon15,102. Similarly, the proposals that the FFR may be influenced by attention71,103–105 (but see Varghese et al.106), arousal state107, or task demands76,86,108 may constitute another example. A conceptually similar case is stimulus-specific adaptation and the mismatch negativity. Both were originally considered cortical phenomena109 but are now known to reflect an integrated auditory change-detection response56,57,110,111.

It is our view that the FFR should be thought of as an aggregate measure of the response of the auditory system, reflecting its cumulative prior history. Specific auditory brain centres may contribute differently to a measured response, but those centres function jointly, and in the context of broader neural networks. This gives us the “functional view” of the FFR—we see it as a measure of how well the entire brain is coding sound features much more than as a reflection of activity within any single nucleus, because the nuclei are embedded in complex functional networks. Distinct computations may happen at local nodes, but the functional metrics can be considered as an emergent property of the interactions between nodes. Considering the FFR in this way leads to the development of systems-level hypotheses that should encourage understanding of the relationships between the FFR and other neural features. For example, combining FFR measures with functional MRI may prove useful in delineating the interactions between auditory representations and higher-order cognitive functions (e.g., attention, memory, and even visual and motor operations) and how these interactions change with experience. Similarly, functional and structural connectivity metrics offer opportunities to explore individual differences in network properties and how they affect auditory encoding. All of these approaches can also inform questions relating to development and maturation, as well as to aging and disorders.

Box 1 FFR collection and analysis.

The FFR may be measured in its simplest form using one-channel EEG, with a reasonably high sampling rate (>2000 Hz) and open filters, while subjects are presented with a high number of repetitions (500+) of an auditory stimulus such as a complex tone or speech syllable. FFRs have commonly been measured to stimuli with fundamental frequencies above 80 Hz. Although frequency tracking occurs in the brain at much lower frequencies (e.g. ~2–4 Hz112), it remains to be seen whether these lower frequencies are tracked by the same neural mechanisms. The most commonly used electrode montages are single or averaged earlobes (or mastoids) to vertex (Cz), or hairline (~FCz) to the 7th cervical vertebra (see Fig. 3b). More recently, multichannel EEG, MEG, and complementary measures of the whole-brain hemodynamic response (BOLD fMRI)69 have been used to provide spatial information. In most studies to date, the stimulus has a fundamental frequency between 80 and 500 Hz. Stimulus duration is usually between 40 and 250 ms, representing a trade-off between ecological validity and expedience, as high numbers of repetitions are needed. However, even sentence-length stimuli have been used88.
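Averaging explains why hundreds of repetitions are needed. If background EEG noise is uncorrelated across trials, the residual noise in the average shrinks roughly as 1/√N for N trials, while the phase-locked response is preserved. The simulation below assumes a 0.1-unit, 100 Hz response in unit-variance Gaussian noise, sampled at 10 kHz (well above the >2000 Hz mentioned above). These values are illustrative, not measured.

```python
import numpy as np

def residual_noise_after_averaging(n_trials, signal_amp=0.1, noise_sd=1.0,
                                   fs=10000, f0=100.0, dur_s=0.2, seed=0):
    """Average n_trials simulated epochs (a weak f0 sinusoid in Gaussian noise)
    and return the standard deviation of what remains after removing the true signal."""
    rng = np.random.default_rng(seed)
    t = np.arange(int(round(fs * dur_s))) / fs
    signal = signal_amp * np.sin(2 * np.pi * f0 * t)
    trials = signal + noise_sd * rng.standard_normal((n_trials, t.size))
    return np.std(trials.mean(axis=0) - signal)

# Residual noise for increasing numbers of repetitions, to compare with 1/sqrt(N).
noise = {n: residual_noise_after_averaging(n) for n in (1, 100, 500)}
```

Under these assumptions, the residual noise after 500 trials is about 0.045, below the 0.1-unit response, whereas a single trial is dominated by noise. Real EEG noise is not perfectly uncorrelated across trials, so this is a best-case scaling.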

Insert tube earphones are used to avoid electromagnetic stimulus artifacts, and stimulation is generally suprathreshold (70–80 dB SPL). Stimuli may be presented monaurally (e.g. to study lateralization113) or binaurally (to maximize the amplitude of the FFR). They are often presented in alternating polarity (i.e. the digital signal is multiplied by −1 for half of the presentations), which allows for analysis of both envelope and spectral responses.
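With alternating polarity, the averages to the two polarities are commonly combined by addition and subtraction. Adding emphasizes components that do not invert with stimulus polarity (the envelope response). Subtracting emphasizes components that do invert (the temporal fine structure, or spectral, response). The sketch below shows this arithmetic on an idealized, hypothetical pair of averages. Real responses separate only approximately this cleanly.

```python
import numpy as np

def envelope_and_tfs(avg_pos, avg_neg):
    """Combine averaged responses to the two stimulus polarities.

    The sum keeps components that are the same for both polarities (envelope);
    the difference keeps components that flip sign (fine structure). Any stimulus
    artifact that follows the waveform also flips, so it cancels in the sum only."""
    avg_pos, avg_neg = np.asarray(avg_pos), np.asarray(avg_neg)
    return (avg_pos + avg_neg) / 2.0, (avg_pos - avg_neg) / 2.0

# Idealized example (hypothetical): a 100 Hz envelope component identical for both
# polarities and a 500 Hz fine-structure component that inverts with polarity.
fs = 10000
t = np.arange(2000) / fs
env_true = 0.4 * np.sin(2 * np.pi * 100 * t)
tfs_true = 0.2 * np.sin(2 * np.pi * 500 * t)
env, tfs = envelope_and_tfs(env_true + tfs_true, env_true - tfs_true)
```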

Experimental approaches should be matched to the research questions and constraints. For research questions that concern the function and contribution of specific structures, or that aim to localize individual differences, methods that provide spatiotemporal separation are necessary (e.g. MEG). For research questions concerning the identification of biomarkers, the relation to behavioral performance, or the tracking of longitudinal changes, simpler equipment with a lower cost is desirable. The single-channel EEG-FFR has repeatedly proven its worth as a sensitive measure of the fidelity with which the auditory system as a whole preserves useful sound information6,45. Intermediate approaches that make use of information from more than one channel64,114 or stimulus are also possible. For example, results from several studies measuring FFRs to a range of frequencies suggested frequency-dependent cortical contributions, thus generating hypotheses for future studies with more definitive localization methodology47,72,103.

Box 2 Terminology.

A source of confusion in the field stems from the many terms and acronyms used to refer to the FFR (e.g. ABR, sABR, cABR, AMFR, SS-EP, SSR). The auditory brainstem response (ABR) has been invoked to help ground the FFR in a known clinical technique; thus, speech-evoked ABR (or “sABR”) turns up in some circles. Kraus and colleagues promoted “cABR” for some time. The “c” stands for complex, referring to the evoking stimulus, to contrast it with the clicks and pure tones of conventional audiometric ABR. The term was also employed to avoid limiting discussion of the response to phase-locking to the stimulus periodicity (i.e., the following of the stimulus frequency). cABR was meant to include the transient onsets and offsets present in the recording. However, we no longer use the term. One reason is its potentially misleading emphasis on “brainstem.” Another is its implication that the response has the same sources and generating mechanisms as the ABR. Another term in use is “envelope-following response”115. For speech stimuli, however, the term is suboptimal because it incorrectly implies that temporal fine structure (TFS, the counterpart to the acoustic envelope) cannot be observed in the response. The acoustic envelope imparts important perceptual attributes of sound (e.g., voice pitch) and is the most widely studied facet of the FFR. Other facets that have received attention include the TFS and responses to distortion products3,116,117. We propose that terms such as envelope and temporal fine structure be subordinated as modifiers: FFR_ENV and FFR_TFS. The rarely used “amplitude modulation following response” is likewise too limiting, suggesting a narrowly defined stimulus type62. SS-EP (steady-state evoked potential)7 and SSR (steady-state response)114 have also emerged recently, adding to the alphabet soup of terminology.
The lack of standardized terminology frustrates literature searches, creates false impressions, and at times leads to unnecessary balkanization among researchers. We advocate simply calling it “FFR,” a term that avoids presupposing a single source, emphasizing one aspect of the response over another, or limiting the stimulus set. FFR, while imperfect, is a transparent name because it emphasizes that the response is neural and reflects the acoustic properties of the inducing stimulus, including its periodic and non-periodic components. From a practical standpoint, the term lends itself to easy modification based on collection technique (EEG-FFR, MEG-FFR), analysis technique (FFR_ENV, FFR_TFS), or response subcomponent of interest (FFR-F0, FFR-harmonics). And it has been the modal term for the past 50 years.
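A common way to separate the envelope and temporal-fine-structure facets is to record responses to a stimulus and to its polarity-inverted copy. The two responses are then added or subtracted. This convention is assumed here; it is not described in this Box. The toy sketch below uses half-wave rectification as a stand-in for neural transduction, which is an assumption. The stimulus is a hypothetical 500 Hz carrier that is fully amplitude-modulated at 100 Hz. Adding the two responses keeps the 100 Hz envelope and cancels the carrier. Subtracting them does the reverse.

```python
import numpy as np


def half_wave_rectify(x):
    """Toy stand-in for neural transduction: pass positive half-cycles only."""
    return np.maximum(x, 0.0)


def add_subtract_polarities(resp_pos, resp_neg):
    """Return (added, subtracted) combinations of opposite-polarity responses.

    Components that do not flip with stimulus polarity (envelope-related)
    survive addition; components that flip (fine structure) survive subtraction.
    """
    return (resp_pos + resp_neg) / 2.0, (resp_pos - resp_neg) / 2.0


def amplitude_at(x, fs_hz, freq_hz):
    """Single-sided FFT amplitude of x at the bin nearest freq_hz."""
    n = len(x)
    freqs = np.fft.rfftfreq(n, d=1.0 / fs_hz)
    k = int(np.argmin(np.abs(freqs - freq_hz)))
    return 2.0 * np.abs(np.fft.rfft(x)[k]) / n


fs = 10_000.0
t = np.arange(2000) / fs  # 0.2 s: whole numbers of 100 Hz and 500 Hz cycles
# Hypothetical stimulus: 500 Hz carrier, fully amplitude-modulated at 100 Hz
stim = (1.0 + np.cos(2 * np.pi * 100.0 * t)) * np.cos(2 * np.pi * 500.0 * t)

ffr_env, ffr_tfs = add_subtract_polarities(half_wave_rectify(stim),
                                           half_wave_rectify(-stim))
for name, resp in (("added", ffr_env), ("subtracted", ffr_tfs)):
    print(name, round(amplitude_at(resp, fs, 100.0), 3),
          round(amplitude_at(resp, fs, 500.0), 3))
```

With this rectifier, the added response is exactly half the magnitude of the stimulus and the subtracted response is exactly half the stimulus. Real neural responses are not this clean. For example, distortion products and cochlear nonlinearities can leak across the two combinations.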

Box 3 Phase-locking in the auditory system.

As a general principle, as you ascend the auditory system, neural response latency increases, phase-locking (i.e., neurons discharging at a particular phase within the stimulus cycle) becomes weaker at higher stimulus frequencies, and the frequency range over which phase-locking occurs becomes narrower. Single-neuron recordings show that although the upper limit of stimulus synchronization declines progressively along the ascending pathway from 5000 Hz in the auditory nerve, synchronization is still present up to 800 Hz in the medial geniculate body118–120, with some reports suggesting thalamic phase-locking as high as 1000 Hz in cats121. While the upper limit of phase-locking of individual cortical neurons is often quoted as ~100 Hz, this figure may be an underestimate, as it has not been clearly established in humans. Intracranial recording directly from the auditory cortex is possible in neurosurgical epilepsy patients; using this method, clear phase-locked near-field responses have been reported to click trains up to 200 Hz122,123 and to speech stimuli with fundamental frequencies around 120 Hz86. The volley principle124, in which multiple neurons each contribute to a subdivision of a period, could in theory enable even higher frequencies to be coded by neural populations. Indeed, multiple-neuron activity recorded from the monkey primary auditory cortex shows phase-locking at 250 Hz (but not at 500 Hz)125, and in the guinea pig some auditory cortex units phase-locked up to 500 Hz (with some units tuned specifically to the fundamental frequency range of the species’ 270 Hz alarm calls, suggesting behavioral importance126). The overlap in the phase-locking ranges observed across the auditory neuraxis, together with the contribution of responses to transient stimulus components, creates the physiological conditions for the FFR to any given stimulus frequency to have multiple potential underlying generators.
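A toy simulation can illustrate the volley principle. In the sketch below, each model neuron fires near a fixed phase of a 500 Hz tone. An imposed refractory period limits each neuron to at most one spike every three cycles, so no single neuron follows every cycle. The pooled spikes, however, mark nearly every cycle and stay tightly phase-locked, as measured by vector strength. All parameters are illustrative assumptions and are not estimates for any auditory structure: 20 neurons, 0.1 ms spike-time jitter, the firing probability, and the refractory period.

```python
import numpy as np


def simulate_volley(freq_hz=500.0, duration_s=1.0, n_neurons=20, p_fire=0.5,
                    refractory_cycles=2, jitter_s=1e-4, seed=0):
    """Spike times for a toy population illustrating the volley principle.

    Each model neuron can fire at most once per stimulus cycle, near a fixed
    phase (phase 0 here). After a spike it must sit out `refractory_cycles`
    cycles, so no single neuron can follow every cycle.
    """
    rng = np.random.default_rng(seed)
    period = 1.0 / freq_hz
    n_cycles = int(round(duration_s * freq_hz))
    population = []
    for _ in range(n_neurons):
        times, last = [], -(refractory_cycles + 1)
        for c in range(n_cycles):
            if c - last > refractory_cycles and rng.random() < p_fire:
                times.append(c * period + rng.normal(0.0, jitter_s))
                last = c
        population.append(np.array(times))
    return population, period, n_cycles


def vector_strength(spike_times, freq_hz):
    """Length of the mean phase vector: 1 = perfect phase-locking, ~0 = none."""
    phases = 2.0 * np.pi * freq_hz * np.asarray(spike_times)
    return float(np.abs(np.mean(np.exp(1j * phases))))


def cycle_coverage(spike_times, period, n_cycles):
    """Fraction of stimulus cycles containing at least one spike."""
    cycles = np.rint(np.asarray(spike_times) / period).astype(int)
    in_range = cycles[(cycles >= 0) & (cycles < n_cycles)]
    return np.unique(in_range).size / n_cycles


population, period, n_cycles = simulate_volley()
pooled = np.concatenate(population)
print("one neuron: spikes in 1 s =", len(population[0]),
      "coverage =", round(cycle_coverage(population[0], period, n_cycles), 2))
print("population: coverage =", round(cycle_coverage(pooled, period, n_cycles), 2),
      "vector strength =", round(vector_strength(pooled, 500.0), 2))
```

Vector strength here is the length of the mean resultant of spike phases, a standard phase-locking index in single-unit work. The cycle-coverage helper is a hypothetical convenience measure introduced only for this example.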
The presence of multiple sources creates complex far-field waveforms that, because of the phase relationships of the sources, can lead to false conclusions about the number and latency of the sources52,72,127.
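One simple way to see how phase relationships can mislead is to sum two phase-locked generators that differ only in latency. The sketch below assumes two hypothetical sources with latencies of 7 and 12 ms, with the later one 60% as strong. These values are illustrative, not estimates from the cited studies. At 100 Hz the two contributions are half a cycle apart and largely cancel. At 200 Hz they are a full cycle apart and reinforce each other. Latency can be estimated from the slope of phase versus frequency (group delay) over a narrow band. Near 100 Hz this estimate comes out shorter than either generator's true latency, and in this configuration it is even slightly negative. Near 200 Hz it falls between the two true latencies.

```python
import numpy as np


def summed_response(freqs_hz, latencies_s, amplitudes):
    """Complex far-field response: sum of sources that differ only in delay and gain."""
    f = np.asarray(freqs_hz, dtype=float)[:, None]
    tau = np.asarray(latencies_s, dtype=float)[None, :]
    a = np.asarray(amplitudes, dtype=float)[None, :]
    return (a * np.exp(-2j * np.pi * f * tau)).sum(axis=1)


def apparent_latency(freqs_hz, response):
    """Latency implied by a straight-line fit to unwrapped phase vs. frequency."""
    phase = np.unwrap(np.angle(response))
    slope_rad_per_hz = np.polyfit(freqs_hz, phase, 1)[0]
    return -slope_rad_per_hz / (2.0 * np.pi)


# Hypothetical generators: 7 ms and 12 ms latency, the later one 60% as strong.
lat, amp = (0.007, 0.012), (1.0, 0.6)
print("amplitude at 100, 200 Hz:", np.abs(summed_response([100.0, 200.0], lat, amp)))
for center in (100.0, 200.0):
    band = np.arange(center - 5.0, center + 6.0)  # center +/- 5 Hz in 1 Hz steps
    est = apparent_latency(band, summed_response(band, lat, amp))
    print(f"apparent latency near {center:.0f} Hz: {est * 1e3:.2f} ms")
```

For a single source, the same fit recovers the true latency exactly. The distortion therefore comes entirely from the interference between generators, not from the estimation method.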

Box 4 Questions for future research.

Why do FFRs, which cover only a relatively narrow band of frequencies compared to the entire hearing range (Fig. 2a), nonetheless predict such a wide range of auditory behaviors?

How does the FFR relate to other measures that are sensitive to quasi-periodic or aperiodic fluctuations in the signal that allow for reconstruction of other aspects of sound content (refs. 55,128,129)?

What accounts for large inter-individual differences in several parameters of FFR sound encoding even in a homogeneous population of healthy, normal-hearing adults76? What are the neural origins and behavioral correlates of these differences?

How does lateralization of cortical and subcortical FFR, observed in several studies4,69,113, emerge in the system, and what functional relevance does it have?

What kinds of circuit dynamics do we expect as different structures interact, and can these be observed using FFR methods that allow for source separation? Can bottom-up and top-down influences be definitively separated using the FFR?

To what extent do FFR fundamental frequencies and harmonics reflect activity from different cortical and subcortical sources, and under which conditions of measurement (e.g. MEG vs. EEG, stimulus properties)? How and where is this information integrated in the brain?

What are the neural origins of group differences and experience-related changes in the FFR? How does the relative contribution of brainstem, thalamic, and cortical sources vary as a function of age or experience with sound? Do some pathologies affect certain FFR generators more than others?

Can we integrate predictions stemming from theoretical models of oscillatory activity (ref. 116) with physiological FFR data to achieve a better understanding of the functional properties of the whole system?

Is the FFR the product of feed-forward transmission of phase-locked spiking, or alternatively, active oscillatory circuits?

Conclusions

Auditory neuroscience is now more attuned to the significance of top-down influences and the role of neuroplasticity in auditory processing; the auditory system is rightly viewed as part of an interconnected circuitry that involves cognitive, sensorimotor, and limbic systems. In many ways, the FFR is an ideal way to access this complex circuit precisely because it is not a monolithic response reflecting only a single stimulus component or a single source. Rather, the FFR reveals how the auditory system responds to multiple acoustic elements throughout an entire sound, enabling a wealth of analysis strategies. Germane to this Perspective, the FFR can be measured with a number of different techniques, each of which provides a distinct window into auditory processing. Because the FFR is so rich and complex, much more remains to be learned from it (Box 4). Fully understanding and successfully harnessing its potential will require agreement on terminology, a concerted effort to avoid over-generalization about its generation, and careful matching of techniques to research questions. We hope this Perspective serves both to inform readers and to inspire them to embrace the complexity of the FFR while remaining grounded in best practices and interpretation as research into the brain mechanisms underlying this response proceeds.

Acknowledgements

The authors gratefully acknowledge the support of their respective institutions.

Author contributions

E.B.J.C., T.N., T.W.-S., B.C., J.K., E.S., R.J.Z., and N.K. contributed equally to this work.

Competing interests

The authors declare no competing interests.

Footnotes

Peer review information Nature Communications thanks Manuel Malmierca and the other, anonymous, reviewer(s) for their contribution to the peer review of this work.

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

References

- 1. Schnupp J, Nelken I, King A. Auditory Neuroscience: Making Sense of Sound. MIT Press; 2011.

- 2. Skoe E, Kraus N. Auditory brain stem response to complex sounds: a tutorial. Ear Hear. 2010;31:302–324. doi: 10.1097/AUD.0b013e3181cdb272.

- 3. Krizman J, Kraus N. Analyzing the FFR: a tutorial for decoding the richness of auditory function. Hear. Res. 2019;382:107779. doi: 10.1016/j.heares.2019.107779.

- 4. Coffey EBJ, Herholz SC, Chepesiuk AMP, Baillet S, Zatorre RJ. Cortical contributions to the auditory frequency-following response revealed by MEG. Nat. Commun. 2016;7:11070. doi: 10.1038/ncomms11070.

- 5. Moushegian G, Rupert AL, Stillman RD. Scalp-recorded early responses in man to frequencies in the speech range. Electroencephalogr. Clin. Neurophysiol. 1973;35:665–667. doi: 10.1016/0013-4694(73)90223-X.

- 6. Kraus N, Nicol T. The power of sound for brain health. Nat. Hum. Behav. 2017;1:700–702. doi: 10.1038/s41562-017-0201-7.

- 7. Nozaradan S, Schönwiesner M, Caron-Desrochers L, Lehmann A. Enhanced brainstem and cortical encoding of sound during synchronized movement. Neuroimage. 2016;142:231–240. doi: 10.1016/j.neuroimage.2016.07.015.

- 8. Musacchia G, Sams M, Skoe E, Kraus N. Musicians have enhanced subcortical auditory and audiovisual processing of speech and music. Proc. Natl. Acad. Sci. USA. 2007;104:15894–15898. doi: 10.1073/pnas.0701498104.

- 9. Thompson EC, Woodruff Carr K, White-Schwoch T, Otto-Meyer S, Kraus N. Individual differences in speech-in-noise perception parallel neural speech processing and attention in preschoolers. Hear. Res. 2017;344:148–157. doi: 10.1016/j.heares.2016.11.007.

- 10. Marmel F, et al. Subcortical neural synchrony and absolute thresholds predict frequency discrimination independently. J. Assoc. Res. Otolaryngol. 2013;14:757–766. doi: 10.1007/s10162-013-0402-3.

- 11. Omote A, Jasmin K, Tierney A. Successful non-native speech perception is linked to frequency following response phase consistency. Cortex. 2017;93:146–154. doi: 10.1016/j.cortex.2017.05.005.

- 12. Zhao TC, Kuhl PK. Linguistic effect on speech perception observed at the brainstem. Proc. Natl. Acad. Sci. USA. 2018;115:8716–8721. doi: 10.1073/pnas.1800186115.

- 13. Krishnan A, Xu Y, Gandour J, Cariani P. Encoding of pitch in the human brainstem is sensitive to language experience. Cogn. Brain Res. 2005;25:161–168. doi: 10.1016/j.cogbrainres.2005.05.004.

- 14. Wong PCM, Skoe E, Russo NM, Dees T, Kraus N. Musical experience shapes human brainstem encoding of linguistic pitch patterns. Nat. Neurosci. 2007;10:420–422. doi: 10.1038/nn1872.

- 15. Reetzke R, Xie Z, Llanos F, Chandrasekaran B. Tracing the trajectory of sensory plasticity across different stages of speech learning in adulthood. Curr. Biol. 2018;28:1419–1427.e4. doi: 10.1016/j.cub.2018.03.026.

- 16. Skoe E, Krizman J, Spitzer E, Kraus N. The auditory brainstem is a barometer of rapid auditory learning. Neuroscience. 2013;243:104–114. doi: 10.1016/j.neuroscience.2013.03.009.

- 17. Parbery-Clark A, Anderson S, Hittner E, Kraus N. Musical experience offsets age-related delays in neural timing. Neurobiol. Aging. 2012;33:1483.e1–1483.e4. doi: 10.1016/j.neurobiolaging.2011.12.015.

- 18. Krizman J, Marian V, Shook A, Skoe E, Kraus N. Subcortical encoding of sound is enhanced in bilinguals and relates to executive function advantages. Proc. Natl. Acad. Sci. USA. 2012;109:7877–7881. doi: 10.1073/pnas.1201575109.

- 19. Colella-Santos MF, Donadon C, Sanfins MD, Borges LR. Otitis media: long-term effect on central auditory nervous system. Biomed. Res. Int. 2019;2019:1–10. doi: 10.1155/2019/8930904.

- 20. Elmer S, Hausheer M, Albrecht J, Kühnis J. Human brainstem exhibits higher sensitivity and specificity than auditory-related cortex to short-term phonetic discrimination learning. Sci. Rep. 2017;7:7455. doi: 10.1038/s41598-017-07426-y.

- 21. Jafari Z, Malayeri S. Effects of congenital blindness on the subcortical representation of speech cues. Neuroscience. 2014;258:401–409. doi: 10.1016/j.neuroscience.2013.11.027.

- 22. Jeng FC, et al. Cross-linguistic comparison of frequency-following responses to voice pitch in American and Chinese neonates and adults. Ear Hear. 2011;32:699–707. doi: 10.1097/AUD.0b013e31821cc0df.

- 23. Presacco A, Simon JZ, Anderson S. Speech-in-noise representation in the aging midbrain and cortex: effects of hearing loss. PLoS One. 2019;14:e0213899. doi: 10.1371/journal.pone.0213899.

- 24. Daly DM, Roeser RJ, Moushegian G. The frequency-following response in subjects with profound unilateral hearing loss. Electroencephalogr. Clin. Neurophysiol. 1976;40:132–142. doi: 10.1016/0013-4694(76)90158-9.

- 25. Zhong Z, Henry KS, Heinz MG. Sensorineural hearing loss amplifies neural coding of envelope information in the central auditory system of chinchillas. Hear. Res. 2014;309:55–62. doi: 10.1016/j.heares.2013.11.006.

- 26. Shaheen LA, Valero MD, Liberman MC. Towards a diagnosis of cochlear neuropathy with envelope following responses. J. Assoc. Res. Otolaryngol. 2015;16:727–745. doi: 10.1007/s10162-015-0539-3.

- 27. Hornickel J, Kraus N. Unstable representation of sound: a biological marker of dyslexia. J. Neurosci. 2013;33:3500–3504. doi: 10.1523/JNEUROSCI.4205-12.2013.

- 28. White-Schwoch T, et al. Auditory processing in noise: a preschool biomarker for literacy. PLoS Biol. 2015;13:1–17. doi: 10.1371/journal.pbio.1002196.

- 29. Chandrasekaran B, Hornickel J, Skoe E, Nicol T, Kraus N. Context-dependent encoding in the human auditory brainstem relates to hearing speech in noise: implications for developmental dyslexia. Neuron. 2009;64:311–319. doi: 10.1016/j.neuron.2009.10.006.

- 30. Basu M, Krishnan A, Weber-Fox C. Brainstem correlates of temporal auditory processing in children with specific language impairment. Dev. Sci. 2010;13:77–91. doi: 10.1111/j.1467-7687.2009.00849.x.

- 31. Billiet CR, Bellis TJ. The relationship between brainstem temporal processing and performance on tests of central auditory function in children with reading disorders. J. Speech Lang. Hear. Res. 2010;54:228–242. doi: 10.1044/1092-4388(2010/09-0239).

- 32. Rocha-Muniz CN, Befi-Lopes DM, Schochat E. Investigation of auditory processing disorder and language impairment using the speech-evoked auditory brainstem response. Hear. Res. 2012;294:143–152. doi: 10.1016/j.heares.2012.08.008.

- 33. Otto-Meyer S, Krizman J, White-Schwoch T, Kraus N. Children with autism spectrum disorder have unstable neural responses to sound. Exp. Brain Res. 2018;236:733–743. doi: 10.1007/s00221-017-5164-4.

- 34. Russo N, Nicol T, Trommer B, Zecker S, Kraus N. Brainstem transcription of speech is disrupted in children with autism spectrum disorders. Dev. Sci. 2009;12:557–567. doi: 10.1111/j.1467-7687.2008.00790.x.

- 35. Musacchia G, et al. Effects of noise and age on the infant brainstem response to speech. Clin. Neurophysiol. 2018;129:2623–2634. doi: 10.1016/j.clinph.2018.08.005.

- 36. Ribas-Prats T, et al. The frequency-following response (FFR) to speech stimuli: a normative dataset in healthy newborns. Hear. Res. 2019;371:28–39. doi: 10.1016/j.heares.2018.11.001.

- 37. Lai J, Bartlett EL. Masking differentially affects envelope-following responses in young and aged animals. Neuroscience. 2018;386:150–165. doi: 10.1016/j.neuroscience.2018.06.004.

- 38. Parthasarathy A, Datta J, Torres JAL, Hopkins C, Bartlett EL. Age-related changes in the relationship between auditory brainstem responses and envelope-following responses. J. Assoc. Res. Otolaryngol. 2014;15:649–661. doi: 10.1007/s10162-014-0460-1.

- 39. Krizman J, Bonacina S, Kraus N. Sex differences in subcortical auditory processing emerge across development. Hear. Res. 2019;380:166–174. doi: 10.1016/j.heares.2019.07.002.

- 40. Anderson S, White-Schwoch T, Parbery-Clark A, Kraus N. Reversal of age-related neural timing delays with training. Proc. Natl. Acad. Sci. USA. 2013;110:4357–4362. doi: 10.1073/pnas.1213555110.

- 41. Song J, Skoe E, Wong P, Kraus N. Plasticity in the adult human auditory brainstem following short-term linguistic training. J. Cogn. Neurosci. 2008;20:1892–1902. doi: 10.1162/jocn.2008.20131.

- 42. Tierney AT, Krizman J, Kraus N. Music training alters the course of adolescent auditory development. Proc. Natl. Acad. Sci. USA. 2015;112:1–6. doi: 10.1073/pnas.1505114112.

- 43. Kraus N, et al. The neural legacy of a single concussion. Neurosci. Lett. 2017;646:21–23. doi: 10.1016/j.neulet.2017.03.008.

- 44. Kraus N, White-Schwoch T. Unraveling the biology of auditory learning: a cognitive-sensorimotor-reward framework. Trends Cogn. Sci. 2015;19:642–654. doi: 10.1016/j.tics.2015.08.017.

- 45. Kraus N, Anderson S, White-Schwoch T. The frequency-following response: a window into human communication. In: The Frequency-Following Response. Cham: Springer International Publishing; 2017. pp. 1–15.

- 46. Bidelman GM. Subcortical sources dominate the neuroelectric auditory frequency-following response to speech. Neuroimage. 2018;175:56–69. doi: 10.1016/j.neuroimage.2018.03.060.

- 47. Zhang X, Gong Q. Frequency-following responses to complex tones at different frequencies reflect different source configurations. Front. Neurosci. 2019;13:130. doi: 10.3389/fnins.2019.00130.

- 48. Worden F, Marsh J. Frequency-following (microphonic-like) neural responses evoked by sound. Electroencephalogr. Clin. Neurophysiol. 1968;25:42–52. doi: 10.1016/0013-4694(68)90085-0.

- 49. Galbraith G, Doan B. Brainstem frequency-following and behavioral responses during selective attention to pure tone and missing fundamental stimuli. Int. J. Psychophysiol. 1995;19:203–214.

- 50. Galbraith GC, Jhaveri SP, Kuo J. Speech-evoked brainstem frequency-following responses during verbal transformations due to word repetition. Electroencephalogr. Clin. Neurophysiol. 1997;102:46–53. doi: 10.1016/S0013-4694(96)96006-X.

- 51. Smith JC, Marsh JT, Brown WS. Far-field recorded frequency-following responses: evidence for the locus of brainstem sources. Electroencephalogr. Clin. Neurophysiol. 1975;39:465–472. doi: 10.1016/0013-4694(75)90047-4.

- 52. Sohmer H, Pratt H, Kinarti R. Sources of frequency following responses (FFR) in man. Electroencephalogr. Clin. Neurophysiol. 1977;42:656–664. doi: 10.1016/0013-4694(77)90282-6.

- 53. Stillman RD, Crow G, Moushegian G. Components of the frequency-following potential in man. Electroencephalogr. Clin. Neurophysiol. 1978;44:438–446. doi: 10.1016/0013-4694(78)90028-7.

- 54. Herdman AT, et al. Intracerebral sources of human auditory steady-state responses. Brain Topogr. 2002;15:69–86. doi: 10.1023/A:1021470822922.

- 55. Linden RD, Picton TW, Hamel G, Campbell KB. Human auditory steady-state evoked potentials during selective attention. Electroencephalogr. Clin. Neurophysiol. 1987;66:145–159. doi: 10.1016/0013-4694(87)90184-2.

- 56. Pérez-González D, Malmierca MS, Covey E. Novelty detector neurons in the mammalian auditory midbrain. Eur. J. Neurosci. 2005;22:2879–2885. doi: 10.1111/j.1460-9568.2005.04472.x.

- 57. Shiga T, et al. Deviance-related responses along the auditory hierarchy: combined FFR, MLR and MMN evidence. PLoS One. 2015;10:e0136794. doi: 10.1371/journal.pone.0136794.

- 58. Skoe E, Krizman J, Spitzer E, Kraus N. Prior experience biases subcortical sensitivity to sound patterns. J. Cogn. Neurosci. 2015;27:124–140. doi: 10.1162/jocn_a_00691.

- 59. Carbajal GV, Malmierca MS. The neuronal basis of predictive coding along the auditory pathway: from the subcortical roots to cortical deviance detection. Trends Hear. 2018;22:2331216518784822. doi: 10.1177/2331216518784822.

- 60. Chandrasekaran B, Kraus N. The scalp-recorded brainstem response to speech: neural origins and plasticity. Psychophysiology. 2010;47:236–246. doi: 10.1111/j.1469-8986.2009.00928.x.

- 61. Kiren T, Aoyagi M, Furuse H, Koike Y. An experimental study on the generator of amplitude-modulation following response. Acta Otolaryngol. Suppl. 1994;511:28–33.

- 62. Kuwada S, et al. Sources of the scalp-recorded amplitude-modulation following response. J. Am. Acad. Audiol. 2002;13:188–204.

- 63. White-Schwoch T, Nicol T, Warrier CM, Abrams DA, Kraus N. Individual differences in human auditory processing: insights from single-trial auditory midbrain activity in an animal model. Cereb. Cortex. 2017;27:5095–5115. doi: 10.1093/cercor/bhw293.

- 64. King A, Hopkins K, Plack CJ. Differential group delay of the frequency following response measured vertically and horizontally. J. Assoc. Res. Otolaryngol. 2016;17:133–143. doi: 10.1007/s10162-016-0556-x.

- 65. Akhoun I, et al. The temporal relationship between speech auditory brainstem responses and the acoustic pattern of the phoneme /ba/ in normal-hearing adults. Clin. Neurophysiol. 2008;119:922–933. doi: 10.1016/j.clinph.2007.12.010.

- 66. Brugge JF, et al. Functional localization of auditory cortical fields of human: click-train stimulation. Hear. Res. 2008;238:12–24. doi: 10.1016/j.heares.2007.11.012.

- 67. Galbraith GC. Two-channel brain-stem frequency-following responses to pure tone and missing fundamental stimuli. Electroencephalogr. Clin. Neurophysiol. Evoked Potentials Sect. 1994;92:321–330. doi: 10.1016/0168-5597(94)90100-7.

- 68. Zhang X, Gong Q. Correlation between the frequency difference limen and an index based on principal component analysis of the frequency-following response of normal hearing listeners. Hear. Res. 2017;344:255–264. doi: 10.1016/j.heares.2016.12.004.

- 69. Coffey EBJ, Musacchia G, Zatorre RJ. Cortical correlates of the auditory frequency-following and onset responses: EEG and fMRI evidence. J. Neurosci. 2016;37:830–838. doi: 10.1523/JNEUROSCI.1265-16.2016.

- 70. Coffey EBJ, Chepesiuk AMP, Herholz SC, Baillet S, Zatorre RJ. Neural correlates of early sound encoding and their relationship to speech-in-noise perception. Front. Neurosci. 2017;11:479. doi: 10.3389/fnins.2017.00479.

- 71. Hartmann T, Weisz N. Auditory cortical generators of the frequency following response are modulated by intermodal attention. Neuroimage. 2019;203:116185. doi: 10.1016/j.neuroimage.2019.116185.

- 72. Tichko P, Skoe E. Frequency-dependent fine structure in the frequency-following response: the byproduct of multiple generators. Hear. Res. 2017;348:1–15. doi: 10.1016/j.heares.2017.01.014.

- 73. Lin F-H, et al. Assessing and improving the spatial accuracy in MEG source localization by depth-weighted minimum-norm estimates. Neuroimage. 2006;31:160–171. doi: 10.1016/j.neuroimage.2005.11.054.

- 74. Baillet S. Magnetoencephalography for brain electrophysiology and imaging. Nat. Neurosci. 2017;20:327–339. doi: 10.1038/nn.4504.

- 75. Gross J, et al. Good practice for conducting and reporting MEG research. Neuroimage. 2013;65:349–363. doi: 10.1016/j.neuroimage.2012.10.001.

- 76. Coffey EBJ, Colagrosso EMG, Lehmann A, Schönwiesner M, Zatorre RJ. Individual differences in the frequency-following response: relation to pitch perception. PLoS One. 2016;11:e0152374. doi: 10.1371/journal.pone.0152374.

- 77. Galbraith GC, et al. Putative measure of peripheral and brainstem frequency-following in humans. Neurosci. Lett. 2000;292:123–127. doi: 10.1016/S0304-3940(00)01436-1.

- 78. Bidelman GM, Davis MK, Pridgen MH. Brainstem-cortical functional connectivity for speech is differentially challenged by noise and reverberation. Hear. Res. 2018;367:149–160. doi: 10.1016/j.heares.2018.05.018.

- 79. Musacchia G, Strait DL, Kraus N. Relationships between behavior, brainstem and cortical encoding of seen and heard speech in musicians and non-musicians. Hear. Res. 2008;241:34–42. doi: 10.1016/j.heares.2008.04.013.

- 80. Presacco A, Simon JZ, Anderson S. Effect of informational content of noise on speech representation in the aging midbrain and cortex. J. Neurophysiol. 2016;116:2356–2367. doi: 10.1152/jn.00373.2016.

- 81. Bastos AM, Schoffelen J-M. A tutorial review of functional connectivity analysis methods and their interpretational pitfalls. Front. Syst. Neurosci. 2016;9:1–23. doi: 10.3389/fnsys.2015.00175.

- 82. Chandrasekaran B, Kraus N, Wong PCM. Human inferior colliculus activity relates to individual differences in spoken language learning. J. Neurophysiol. 2012;107:1325–1336. doi: 10.1152/jn.00923.2011.

- 83. Ayala YA, Lehmann A, Merchant H. Monkeys share the neurophysiological basis for encoding sound periodicities captured by the frequency-following response with humans. Sci. Rep. 2017;7:16687. doi: 10.1038/s41598-017-16774-8.

- 84. Warrier CM, Abrams DA, Nicol TG, Kraus N. Inferior colliculus contributions to phase encoding of stop consonants in an animal model. Hear. Res. 2011;282:108–118. doi: 10.1016/j.heares.2011.09.001.

- 85. Abrams DA, Nicol T, White-Schwoch T, Zecker S, Kraus N. Population responses in primary auditory cortex simultaneously represent the temporal envelope and periodicity features in natural speech. Hear. Res. 2017;348:31–43. doi: 10.1016/j.heares.2017.02.010.

- 86. Behroozmand R, et al. Neural correlates of vocal production and motor control in human Heschl’s gyrus. J. Neurosci. 2016;36:2302–2315. doi: 10.1523/JNEUROSCI.3305-14.2016.

- 87. Puschmann S, Baillet S, Zatorre RJ. Musicians at the cocktail party: neural substrates of musical training during selective listening in multispeaker situations. Cereb. Cortex. 2018;29:3253–3265. doi: 10.1093/cercor/bhy193.

- 88. Forte AE, Etard O, Reichenbach T. The human auditory brainstem response to running speech reveals a subcortical mechanism for selective attention. eLife. 2017;6:1–12.

- 89. Maddox RK, Lee AKC. Auditory brainstem responses to continuous natural speech in human listeners. eNeuro. 2018;5:ENEURO.0441-17.2018.

- 90. Etard O, Kegler M, Braiman C, Forte AE, Reichenbach T. Decoding of selective attention to continuous speech from the human auditory brainstem response. Neuroimage. 2019;200:1–11. doi: 10.1016/j.neuroimage.2019.06.029.

- 91. Gama N, Peretz I, Lehmann A. Recording the human brainstem frequency-following-response in the free-field. J. Neurosci. Methods. 2016;280:47–53. doi: 10.1016/j.jneumeth.2017.01.016.

- 92. Kraus N, Hornickel J, Strait DL, Slater J, Thompson E. Engagement in community music classes sparks neuroplasticity and language development in children from disadvantaged backgrounds. Front. Psychol. 2014;5:1403. doi: 10.3389/fpsyg.2014.01403.

- 93. Wiegers JS, Bielefeld EC, Whitelaw GM. Utility of the Vivosonic Integrity™ auditory brainstem response system as a hearing screening device for difficult-to-test children. Int. J. Audiol. 2015;54:282–288. doi: 10.3109/14992027.2014.979374.

- 94.Yi HG, Xie Z, Reetzke R, Dimakis AG, Chandrasekaran B. Vowel decoding from single-trial speech-evoked electrophysiological responses: a feature-based machine learning approach. Brain Behav. 2017;7:e00665. doi: 10.1002/brb3.665. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 95.Xie Z, Reetzke R, Chandrasekaran B. Machine learning approaches to analyze speech-evoked neurophysiological responses. J. Speech Lang. Hear. Res. 2019;62:587–601. doi: 10.1044/2018_JSLHR-S-ASTM-18-0244. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 96.Mišić B, Sporns O. From regions to connections and networks: New bridges between brain and behavior. Curr. Opin. Neurobiol. 2016;40:1–7. doi: 10.1016/j.conb.2016.05.003. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 97.Griffiths TD, Warren JD. The planum temporale as a computational hub. Trends Neurosci. 2002;25:348–353. doi: 10.1016/S0166-2236(02)02191-4. [DOI] [PubMed] [Google Scholar]

- 98.Hickok G, Poeppel D. The cortical organization of speech processing. Nat. Rev. Neurosci. 2007;8:393–402. doi: 10.1038/nrn2113. [DOI] [PubMed] [Google Scholar]

- 99.Rauschecker J, Scott S. Maps and streams in the auditory cortex: nonhuman primates illuminate human speech processing. Nat. Neurosci. 2009;12:718–724. doi: 10.1038/nn.2331. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 100.Zatorre RJ, Chen J, Penhune V. When the brain plays music: auditory–motor interactions in music perception and production. Nat. Rev. Neurosci. 2007;8:547–558. doi: 10.1038/nrn2152. [DOI] [PubMed] [Google Scholar]

- 101.Feng Gangyi, Yi Han Gyol, Chandrasekaran Bharath. The Role of the Human Auditory Corticostriatal Network in Speech Learning. Cerebral Cortex. 2018;29(10):4077–4089. doi: 10.1093/cercor/bhy289. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 102. Bidelman GM, Villafuerte JW, Moreno S, Alain C. Age-related changes in the subcortical–cortical encoding and categorical perception of speech. Neurobiol. Aging. 2014;35:2526–2540. doi: 10.1016/j.neurobiolaging.2014.05.006.

- 103. Holmes E, Purcell DW, Carlyon RP, Gockel HE, Johnsrude IS. Attentional modulation of envelope-following responses at lower (93–109 Hz) but not higher (217–233 Hz) modulation rates. J. Assoc. Res. Otolaryngol. 2018;19:83–97. doi: 10.1007/s10162-017-0641-9.

- 104. Hoormann J, Falkenstein M, Hohnsbein J. Effects of spatial attention on the brain stem frequency-following potential. Neuroreport. 2004;15:1539–1542. doi: 10.1097/01.wnr.0000134932.89313.3b.

- 105. Lehmann A, Schönwiesner M. Selective attention modulates human auditory brainstem responses: relative contributions of frequency and spatial cues. PLoS One. 2014;9:1–9. doi: 10.1371/journal.pone.0085442.

- 106. Varghese L, Bharadwaj HM, Shinn-Cunningham BG. Evidence against attentional state modulating scalp-recorded auditory brainstem steady-state responses. Brain Res. 2015;1626:146–164. doi: 10.1016/j.brainres.2015.06.038.

- 107. Mai G, Schoof T, Howell P. Modulation of phase-locked neural responses to speech during different arousal states is age-dependent. Neuroimage. 2019;189:734–744. doi: 10.1016/j.neuroimage.2019.01.049.

- 108. Hairston WD, Letowski TR, McDowell K. Task-related suppression of the brainstem frequency following response. PLoS One. 2013;8:1–9. doi: 10.1371/journal.pone.0055215.

- 109. Ulanovsky N, Las L, Nelken I. Processing of low-probability sounds by cortical neurons. Nat. Neurosci. 2003;6:391–398. doi: 10.1038/nn1032.

- 110. King C, McGee T, Rubel EW, Nicol T, Kraus N. Acoustic features and acoustic change are represented by different central pathways. Hear. Res. 1995;85:45–52. doi: 10.1016/0378-5955(95)00028-3.

- 111. Parras GG, et al. Neurons along the auditory pathway exhibit a hierarchical organization of prediction error. Nat. Commun. 2017;8:2148. doi: 10.1038/s41467-017-02038-6.

- 112. Nozaradan S. Exploring how musical rhythm entrains brain activity with electroencephalogram frequency-tagging. Philos. Trans. R. Soc. B. 2014;369:20130393. doi: 10.1098/rstb.2013.0393.

- 113. Hornickel J, Skoe E, Kraus N. Subcortical laterality of speech encoding. Audiol. Neurootol. 2009;14:198–207. doi: 10.1159/000188533.

- 114. Bharadwaj HM, Shinn-Cunningham BG. Rapid acquisition of auditory subcortical steady state responses using multichannel recordings. Clin. Neurophysiol. 2014;125:1878–1888. doi: 10.1016/j.clinph.2014.01.011.

- 115. Aiken SJ, Picton TW. Envelope and spectral frequency-following responses to vowel sounds. Hear. Res. 2008;245:35–47. doi: 10.1016/j.heares.2008.08.004.

- 116. Lerud KD, Almonte FV, Kim JC, Large EW. Mode-locking neurodynamics predict human auditory brainstem responses to musical intervals. Hear. Res. 2014;308:41–49. doi: 10.1016/j.heares.2013.09.010.

- 117. Luo L, Wang Q, Li L. Neural representations of concurrent sounds with overlapping spectra in rat inferior colliculus: comparisons between temporal-fine structure and envelope. Hear. Res. 2017;353:87–96. doi: 10.1016/j.heares.2017.06.005.

- 118. Joris PX, Schreiner CE, Rees A. Neural processing of amplitude-modulated sounds. Physiol. Rev. 2004;84:541–577. doi: 10.1152/physrev.00029.2003.

- 119. Moller HJ, Devins GM, Shen J, Shapiro CM. Sleepiness is not the inverse of alertness: evidence from four sleep disorder patient groups. Exp. Brain Res. 2006;173:258–266. doi: 10.1007/s00221-006-0436-4.

- 120. Wang X, Lu T, Bendor D, Bartlett E. Neural coding of temporal information in auditory thalamus and cortex. Neuroscience. 2008;154:294–303. doi: 10.1016/j.neuroscience.2008.03.065.

- 121. Rouiller E, de Ribaupierre Y, de Ribaupierre F. Phase-locked responses to low frequency tones in the medial geniculate body. Hear. Res. 1979;1:213–226. doi: 10.1016/0378-5955(79)90015-7.

- 122. Brugge JF, et al. Coding of repetitive transients by auditory cortex on Heschl’s gyrus. J. Neurophysiol. 2009;102:2358–2374. doi: 10.1152/jn.91346.2008.

- 123. Nourski KV, et al. Coding of repetitive transients by auditory cortex on posterolateral superior temporal gyrus in humans: an intracranial electrophysiology study. J. Neurophysiol. 2013;109:1283–1295. doi: 10.1152/jn.00718.2012.

- 124. Irvine DRF. The auditory brainstem: a review of the structure and function of auditory brainstem processing mechanisms. In: Ottoson D, editor. Progress in Sensory Physiology, Vol. 7. Berlin: Springer-Verlag; 1986.

- 125. Steinschneider M, Arezzo J, Vaughan HG. Phase-locked cortical responses to a human speech sound and low-frequency tones in the monkey. Brain Res. 1980;198:75–84. doi: 10.1016/0006-8993(80)90345-5.

- 126. Wallace MN, Shackleton TM, Palmer AR. Phase-locked responses to pure tones in the primary auditory cortex. Hear. Res. 2002;172:160–171. doi: 10.1016/S0378-5955(02)00580-4.

- 127. Batra R, Kuwada S, Maher VL. The frequency-following response to continuous tones in humans. Hear. Res. 1986;21:167–177. doi: 10.1016/0378-5955(86)90037-7.

- 128. Anumanchipalli GK, Chartier J, Chang EF. Speech synthesis from neural decoding of spoken sentences. Nature. 2019;568:493–498. doi: 10.1038/s41586-019-1119-1.

- 129. Ding N, Simon JZ. Emergence of neural encoding of auditory objects while listening to competing speakers. Proc. Natl Acad. Sci. USA. 2012;109:11854–11859. doi: 10.1073/pnas.1205381109.