Abstract

Data‐driven science is heralded as a new paradigm in materials science. In this field, data is the new resource, and knowledge is extracted from materials datasets that are too big or complex for traditional human reasoning—typically with the intent to discover new or improved materials or materials phenomena. Multiple factors, including the open science movement, national funding, and progress in information technology, have fueled its development. Such related tools as materials databases, machine learning, and high‐throughput methods are now established as parts of the materials research toolset. However, there are a variety of challenges that impede progress in data‐driven materials science: data veracity, integration of experimental and computational data, data longevity, standardization, and the gap between industrial interests and academic efforts. In this perspective article, the historical development and current state of data‐driven materials science, building from the early evolution of open science to the rapid expansion of materials data infrastructures are discussed. Key successes and challenges so far are also reviewed, providing a perspective on the future development of the field.

Keywords: artificial intelligence, databases, data science, machine learning, materials, materials science, open innovation, open science

The current state of data‐driven materials science is reviewed, with a focus on materials data infrastructures and stakeholders from academia, the public, government, and industry. From a historical perspective, the field has matured greatly, however, key challenges—relevance, completeness, standardization, acceptance, and longevity—that still need to be resolved to create the Materials Ultimate Search Engine (MUSE) are identified.

1. Introduction

In this perspective article, we review the current state of data‐driven materials science with a focus on materials data infrastructures. Data‐driven invokes associations with big data, data management, open data and artificial intelligence (e.g., machine learning). The public debate of these terms is currently dominated by internet giants like Google, Amazon, and Facebook who also lead the technological development of data infrastructures, algorithms, and analysis tools. Compared to these e‐commerce and social media developments, the field of data‐driven materials science is still under construction. By way of analogy, it is nonetheless still instructive to imagine a Materials “Google”—the Materials Ultimate Search Engine (MUSE). In this article, we address what it takes to develop such a search tool for materials.

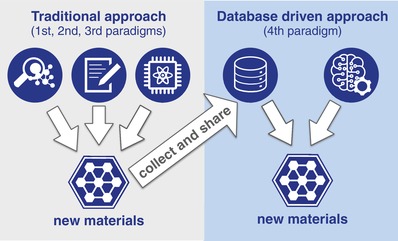

Materials science, the study of the characteristics and applications of materials is a well established discipline that combines chemistry, physics, and engineering research. Materials scientists frequently dream of designing new materials from scratch for use in society.1 However, instead of finding new materials using the MUSE, they discover new materials through conventional experimental, theoretical, or computational research (see left panel of Figure 1 ). This pipeline through which new materials are discovered, designed, developed, manufactured, and deployed remains slow, costly, and highly inefficient: By the time a new material comes to market, the patent protection of the original invention is at the end of its tenure, and proprietary advantage is lost2 (see also ref. 3). By applying data science to materials research, we now have a way to accelerate the materials value chain from discovery to deployment.

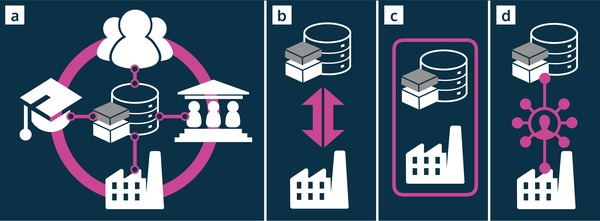

Figure 1.

Materials discovery schematic. In the traditional approach, new materials are discovered by experimentation, theory, or computation (also referred to as 1st, 2nd, and 3rd paradigms and symbolized by the three icons at the top of the left panel). In the 4th paradigm of data‐driven materials science, available data is gathered in data infrastructures, and machine learning approaches discover new materials.

Data science has developed out of the growing demand for open science combined with the meteoric rise of AI and machine learning. As these innovative technologies allow ever‐larger datasets to be processed and hidden correlations to be unveiled, data‐driven science is emerging as the fourth scientific paradigm4, 5 (cf. Figure 1) following the first three eras of experimentally, theoretically, and computationally propelled scientific discoveries. Often connected to the fourth industrial revolution6 or the second machine age,7 such data‐driven approaches permeate science, business, politics, and even social life. Since materials innovation is a critical, well‐recognized driver of economic development and societal progress, it is important that new trends, such as data science, are embraced if they have the potential to advance the field.

Data‐driven materials science and materials informatics are umbrella terms for the scientific practice of systematically extracting knowledge from materials datasets. This practice differs from traditional scientific approaches in materials research by the volume of processed data and the more automated way information is extracted (cf. Figure 1), for example, through the use of machine learning (see refs. 5, 8, 9, 10, 11, 12, 13, 14, 15, 16, 17, 18, 19, 20, 21, 22, 23 for recent review articles on machine learning in materials science). In our MUSE analogy, this would be the search and find part. In addition to data processing and data analysis tools, data‐driven materials science also requires physical infrastructures that host and preserve that data. These would be the data storage part of our MUSE example, which, as physical infrastructures, require dedicated community efforts and sustained investment to become and remain operational.

Stakeholders in academia, industry, governments, and the public attach different meanings and expectations to data‐driven materials science. The actual material science is carried out in academia and research and development (R&D) departments in industry. Scientists at universities and companies not only produce materials data that could then be stored in data facilities, they are also the primary user group of materials data infrastructures. In the wake of digitalization, industry has a further interest in digitizing materials data and incorporating data‐driven materials science into their value chain. Policy makers and governmental or private funding agencies may have an interest in promoting open science data and can stir scientific developments through policy and funding decisions. The general public benefits from materials science by quality‐of‐life enhancement through new products and technologies. They have an indirect interest in data‐driven materials science as a means to accelerate innovations and follow developments in science and open data in the media. Together these stakeholders form an ecosystem of mutual benefit. The vitality of this ecosystem is crucial for the success and the longevity of data‐driven materials science.

In this article, we embed our perspective in the emerging field of data‐driven materials science in the context of the open science movement, which has shaped the philosophy and design of several materials science data infrastructures. We discuss how these infrastructures grew historically from simple databases into data centers that then progressed into materials discovery platforms, and we detail the current state of data infrastructure. A list of current challenges provides the gateway to the second part of this article, in which we delve deeper into data organization, acquisition, quality, and machine learning. We conclude with an industrial perspective that addresses the future and longevity of materials data infrastructures.

2. Open Science Movement

Many of the fundamental aspects of data‐driven materials science are built upon the key elements of the Open Science movement. The European Commission outlines24 Open Science as “…a new approach to the scientific process based on cooperative work and new ways of diffusing knowledge by using digital technologies and new collaborative tools.” Here we reflect on those aspects of Open Science that are particularly relevant to the birth and future of data‐driven materials science.

Openness in science was initially curtailed by the prestige wars between the patrons of early scientists and their associated, convoluted encryption schemes.25 Once more professional scientific practice developed, scientists embraced the idea of accessibility of research as a cornerstone of progress, and this has been generally mandated by public policy. As early as 1710 in the UK, the Copyright Act endowed the ownership of copyright to authors rather than publishers, encouraging authors to deposit manuscripts into national libraries to make them publicly accessible. In addition to accessibility, public accountability and scientific reproducibility have remained powerful driving forces in the way science has been conducted and disseminated, and significant deviations from these norms are of great concern to the community.26 More recently in the 1990s, the development of the internet transformed this debate as it became possible to make nearly all aspects of the scientific research process easily accessible, from preliminary data to final publications. While arguments over fair allocation of rewards for scientific achievement versus full and early research dissemination remain challenging,27 and intellectual property management regularly introduces conflicts,28 the era of Open Science29, 30 (or indeed Open Innovation31) is here to stay and contributes to scientific advancement overall.32 Open‐access journals and data, and open‐source software have significant impacts on the Open Science movement.

2.1. The Rise of Open Access Publishing

Building on the foundations of the very first online journals, websites like arXiv (established in 1991) took the first steps in providing Open Access to scientific publications. As more content became available online, and the need for physical copies of journals in libraries rapidly diminished, many expected a significant reduction in the cost of journal subscriptions. When this did not happen, it catalyzed the Open Access movement and other alternatives to conventional scientific publishing practices. At present, over 50% of newly published articles are Open Access, and conservative estimates place achievement of complete Open Access by 2040.33, 34 Current Open Access approaches tend to fall into two classes (or hybrids thereof34): gold, where the article is freely available at the point of publication; and green, where the authors can deposit the article in a public repository, for example, at their home institution. Some publishers require an embargo period before deposition in a public repository, but there is little evidence in terms of publisher income to support the existence of such embargoes.27 Many funding agencies have embraced Open Access publishing as a way to improve public transparency and accountability, and these agencies have made it a condition for support—this includes all European Union funding for 2020 and beyond.35 As such, Open Access is at the heart of the Open Science movement and certainly overlaps with one of the critical developments in data‐driven science, Open Data.

2.2. Open Access Data

The initiative to make data Open Access can be traced to efforts to establish scientific global data centers in the 1950s,36 largely as a way to store data long term and make it internationally accessible—all data was fully available for the cost of printing and delivery. Following this change, demands for scientific data sharing continued to rise, especially after the development of the internet and the tantalizing prospect of easy upload and download of data globally.

While many scientists were quick to embrace this, it took a decade for Open Data to appear as a clear objective and topic for scientific policy. In 2004, science ministers of most developed countries signed an agreement that all publicly funded archive data should be made available, with the guidelines for this following in 2007.37 As is often the case, the scientific communities themselves were ahead of policy changes, and many bespoke scientific databases had already proliferated, providing data repositories in almost every field across the globe. There are now thousands of them, and finding useful ways to search for a relevant repository, let alone data within it, requires serious effort.38

Motivation to make this effort is increasing rapidly, with many journals and funding agencies demanding the availability of data tied to publication or grants. Contributing to many aspects of the Open Science initiative, the Public Library of Science39 has pioneered this development, with a clear policy on data sharing for its publications and likely rejection if policies are not followed. Other major publishers have also been active, with at least the creation of specific Open Data journals,40 policies,41 and collaboration with Open Data initiatives.42 Many funding agencies now insist on a data management plan with all submissions, and this plan must give a detailed account of how data will be stored, secured, and shared—with particular attention to the Open Science rules of the agency in question.

In an attempt to provide unifying guidelines for the widely varying groups interested in Open Data and to aid in data management development, the FAIR Data Principles were established.43, 44 These principles have been adopted by several major players in global data management (see Table 1 ). The ideas behind making data searchable, accessible, flexible, and reusable at the core of FAIR are also the concepts that make the power of data‐driven science actually attainable.

Table 1.

List of current major materials data infrastructures. The entries are divided into non‐commercial (top) and commercial (bottom). Note that some platforms are named after the leading research project and may host multiple services under different names. As contact person we listed the director(s) of each infrastructure, in such cases, where they were clearly identifiable. Data volume numbers reflect the state in April 2019

| Name | Website | Contact | Overview | Ref. |

|---|---|---|---|---|

| AFLOW | aflowlib.org | Stefano Curtarolo, Duke University | Computational data consisting of 2 118 033 material compounds and 281 698 389 calculated properties with focus on inorganic crystal structures. Incorporates multiple computational modules for automating high‐throughput first principles calculations. | 83, 91 |

| Computational Materials Repository | cmr.fysik.dtu.dk | Kristian Thygesen and Karsten Jacobsen, DTU | Computational datasets from a diverse set of applications. Data creation and analysis with the Atomic Simulation Environment (ASE). | 92, 93, 94 |

| Crystallography Open Database | crystallography.net | Open‐access collection of crystal structures of organic, inorganic, metal–organic compounds and minerals, excluding biopolymers. | 95, 96 | |

| HTEM | htem.nrel.gov | Caleb Phillips and Andriy Zakutayev, NREL | Properties of thin films synthesized using combinatorial methods. Contains 57 597 thin film samples, across a wide range of materials (oxides, nitrides, sulfide, intermetallics). | 97, 98 |

| Khazana | khazana.gatech.edu | Rampi Ramprasad, Georgia Institute of Technology | Platform to store structure and property data created by atomistic simulations, and tools to design materials by learning from the data. Tools include Polymer Genome and AGNI. | 99, 100, 101 |

| MARVEL NCCR | nccr‐marvel.ch | Nicola Marzari, EPFL | Materials informatics platform for data‐driven high‐throughput quantum simulations. Data available at materialscloud.org, powered by the AiiDA‐infrastructure. | 85 |

| Materials Data Facility (MDF) | materialsdatafacility.org | Ben Blaiszik and Ian Foster, University of Chicago | Data publication network for computational and experimental datasets. Data exploration through the Forge python package. | 102, 103 |

| Materials Project | materialsproject.org | Kristin Persson, LBNL | Online platform for materials exploration containing data of 86 680 inorganic compounds, 21 954 molecules and 530 243 nanoporous materials. Develops various open‐source software libraries, including pymatgen, custodian, FireWorks, and atomate. | 84, 104 |

| MatNavi/NIMS | mits.nims.go.jp | Yibin Xu, NIMS | An integrated material database system comprising ten databases, four application systems and the NIMS Structural Datasheet Online. | 105 |

| NOMAD CoE | nomad‐coe.eu | Matthias Scheffler, FHI/Max Planck Society | Provides storage for full input and output files of all important computational materials science codes, with multiple big‐data services built on top. Contains over 50 236 539 total energy calculations. | 106, 107 |

| Organic Materials Database | omdb.mathub.io | Alexander Balatsky, Nordita | Open access electronic structure database for 3‐dimensional organic crystals. Contains approximately 24 000 materials. | 108, 109 |

| Open Quantum Materials Database | oqmd.org | Chris Wolverton, Northwestern University | Database of DFT‐calculated thermodynamic and structural properties with focus on inorganic crystal structures. Contains 563 247 entries with support for full download and advanced usage through the qmpy python package. | 90, 110 |

| Open Materials Database | openmaterialsdb.se | Rickard Armiento, Linköping University | Computational database primarily based on structures from the Crystallography Open Database. Data creation and analysis with High‐Throughput Toolkit (httk). | 111, 112 |

| SUNCAT | suncat.stanford.edu | Thomas Francisco Jaramillo, SLAC/Stanford University | Materials informatics center for atomic‐scale design of catalysts. Online tools and computational results for 112 157 surface reactions and barriers available at catalysis‐hub.org. | 89, 113 |

| Citrine Informatics | citrine.io | Bryce Meredig and Greg Mulholland | A materials informatics platform combining data infrastructure and AI. Open database and analytics platform for material and chemical information available at the Citrination platform: citrination.com. | 114, 115 |

| Exabyte.io | exabyte.io | Timur Bazhirov | Cloud‐based modelling platform for materials informatics. | 116, 117 |

| Granta Design | grantadesign.com | Mike Ashby and David Cebon | R&D organization offering data, tools and expertise for materials design. | 118 |

| Materials Design | materialsdesign.com | Clive M. Freeman, Erich Wimmer and Stephen J. Mumby | Software products and services for chemical, metallurgical, electronic, polymeric, and materials science research applications. | 119, 120, 121 |

| Materials Platform for Data Science | mpds.io | Evgeny Blokhin | Online edition of the PAULING FILE with focus on curated experimental data for inorganic materials. | 122, 123 |

| MaterialsZone | materials.zone | Assaf Anderson and Barak Sela | Provides a notebook‐based materials informatics environment together with experimental data. | 124 |

| SpringerMaterials | materials.springer.com | Michael Klinge | Curated data covering multiple material classes, property types, and applications. A set of advanced functionalities for visualizing and analyzing data provided through SpringerMaterials Interactive. | 125 |

2.3. Open‐Source Software for Science

The development of open‐source software entails the final element of the Open Science movement. Its development started in parallel with the earliest computing hardware efforts, with nearly all software freely available in the public domain as part of large academic and corporate collaborations. Since the relative cost of software compared to hardware has increased, this openness began to steadily decline until the early 1980s with the launch of the GNU project and the parallel explosion of Linux and the internet in the early 1990s. This provided a powerful platform and toolset for the collaborative development of software that could then be freely downloaded, culminating in the active open‐source movement in 1997.45 In particular, it suited the kind of focused, rapidly changing software that characterizes nearly all scientific applications.

In 2005, the creation (by Linus Torvalds) and rapid adoption of Git as a distributed revision control system, closely followed by hosting site GitHub, put the seal on the standard approach for open‐source scientific software development that remains to this day. It became possible to manage updates to codes from a large development team, while providing a platform for feedback, bug notification, and feature requests from users. It is now possible to find Open Source software for nearly every aspect of a scientific project,46 from electronic lab notebooks,47 experimental toolsets,48 and simulation packages,49 to machine learning libraries50 and online collaborative writing sites.51 With freely accessible data, Open Access publications explaining the science behind it, and a wealth of open‐source software to mine it, the way is clear for innovative data‐driven science.

3. Materials Data Infrastructures

Having established the context for Open Science, we next review the emergence of materials data infrastructures that collect, host, and provide materials data to stakeholders. We first reflect on early digital materials infrastructures before discussing the current state.

3.1. Development of Materials Infrastructures

The increasing capabilities of first‐principles methods—and the increasing capabilities of computational science in general—have accelerated materials researchers interest for new, computer‐based pathways to materials discovery and design—better, faster, and cheaper than ever before. Perhaps one of the first attempts to use materials information in a different and more efficient way was the development of the Calculation of Phase Diagrams (CALPHAD, 1970s) method and database, in which multiple calculations of phase diagrams were put in a centralized database to speed up the design and development of new alloys.52 In the 1990s, the increasing capability to collect, store and analyze “big data” led researchers to explore the potential of data‐science in scientific research (for more information, see ref. 4). With these innovative ideas up in the air, material scientists at the Massachusetts Institute of Technology (MIT) developed tools to predict the properties of materials from datasets.53 Around the same time, researchers at the Technical University of Denmark demonstrated the potential of evolutionary algorithms in finding materials with specific properties,54 or to use high‐throughput screening for candidate materials with key parameters to narrow down the number of required experiments.55, 56, 57 The researchers at MIT even envisioned how with such computational tools a “virtual materials laboratory” could be build, in which new materials are designed and tested based on computer calculations.53 These ideas eventually led to the launch of a curated database that is now called the Materials Project.58, 59 This Open Access (see Section 2.2) database would use high‐throughput computing to uncover the properties of all known inorganic materials and enable future researchers to find appropriate materials through interactive exploration and data mining.59, 60

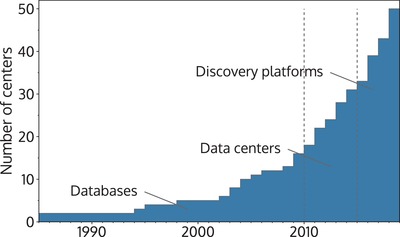

As big data and data science became increasingly fashionable, the US government announced the launch of the Materials Genome Initiative (MGI) in 2011.61 This initiative emphasized the usefulness of data informatics for materials discovery and design. As similar efforts were launched around the world promoting the availability and accessibility of digital data in science, a trend was set and a new paradigm of materials science emerged:5 data‐driven materials science. Set to reduce time and investment needed to support the typical 10–20 year research‐development‐commercialization cycle for new materials, more and more Open Access materials data initiatives opened worldwide, as illustrated by Figures 2 and 3 .

Figure 2.

Timeline and geographic distribution of materials data infrastructures and companies. The colour of the dots represents the time of establishment. The map shows that historically more centers have emerged in the U.S. and Europe, with Asia catching up over time. In addition, the U.S. has a higher renewal rate than Europe, as can be seen in the larger number of ligher colored dots. CSD: Cambridge Structural Database, ICSD: Inorganic Crystal Structure Database, ESP: Electronic Structure Project, AFLOW: Automatic‐Flow for Materials Discovery, AIST: National Institute of Advanced Industrial Science and Technology Databases, COD: Crystallography Open Database, MatDL: Materials Digital Library, CMR: Computational Materials Repository, NREL CID: NREL Center for Inverse Design, CEPDB: The Clean Energy Project Database, MGI: Materials Genome Initiative, CMD: Computational Materials Network, OQMD: Open Quantum Materials Database, NOMAD: Novel Materials Discovery Laboratory, MaX: Materials Design at the Exascale, MICCOM: Midwest Integrated Center for Computational Materials, MPDS: Materials Platform for Data Science, CMI2: Center for Materials Research by Information Integration, HTEM: High Throughput Experimental Materials Database, JARVIS: Joint Automated Repository for Various Integrated Simulations, OMDB: Organic Materials Database, QCArchive: The Quantum Chemistry Archive.

Figure 3.

Number of materials informatics projects and infrastructures as function of time (see Figure 2 and Table 1 for details on individual projects and infrastructures). We divide the time axis into three periods that reflect the evolution of the data infrastructures (see text for details).

Most of the early materials data initiatives started as databases that hosted data and offered search functionality with the idea to encourage materials scientists to share their data with a larger community. The launch of the Materials Genome Initiative became a defining moment in data‐driven materials science (see Figure 3) as databases evolved into data centers that offered rudimentary materials and data analysis services. The emerging interest around data mining and AI made materials scientists increasingly eager to use such algorithms in their research. As a result, the focus of most centers transitioned to developing workflows that would enable scientists to search, mine, and query the databases. This marks another turning point in the history of data‐driven materials science, with infrastructures becoming materials discovery platforms (see Figure 3), whose self‐declared mission is to facilitate the discovery of novel materials.

The distinction between databases, data centers and materials discovery platforms introduced in the previous paragraph is based on the loose definitions given in the paragraph. The terminology reflects our impression of the evolution of materials data infrastructures and provides a simple classification scheme to distinguish different infrastructure types. For the remainder of the article, we will use materials data infrastructure as the most general and encompassing term to refer to either of the three types.

The spillover effect from data science to materials science is currently boosting the emerging field of data driven materials science or materials informatics.5 The computational possibilities of machines to analyze and detect patterns in data has created a new feedback loop in the relationship between hypothesis and experiment, which facilitates the next step to mix human trial‐and‐error experimental and computational research with “artificial intuition” (or to use data mining tools to approach human‐like intuition to suggest candidate materials that are further refined via computational and experimental research).

Big data and data science are also prevalent in other scientific fields. In chemistry, databases emerged earlier than in materials science,62, 63, 64 as exemplified by Chemical Abstracts Service (CAS), the principal chemical database provider65, 66 whose first database was created in 1965.66, 67 Carefully produced and curated datasets were essential for developments in quantum chemistry.68, 69, 70, 71 In particle physics, the CERN Open Data Portal72 offers more than 1 petabyte of open data for research conducted at their facilities. In biology, a variety of databases and metadatabases store biological information, for example, ConsensusPathDB73 for human protein–protein, genetic, metabolic, signaling, gene regulatory, and drug–target interactions; the protein data bank74 that houses 3D structural data of large biological molecules; and the International Nucleotide Sequence Database Collaboration75, 76 that collects and disseminates DNA and RNA sequences. In this perspective article, our focus is on materials science, but it is clear that the “4th scientific paradigm”4 is emerging in other fields as well.

3.2. Overview of Current Materials Infrastructures

Figure 3 depicts a clear rise of active materials infrastructures, many of which have developed into very mature and stable services used in everyday research processes.77, 78, 79, 80 Table 1 shows a summary of the most prominent materials discovery platforms in existence today. As these platforms have matured, the range of different services they provide has grown (for another perspective on the components of materials data infrastructures see ref. 21), and Table 2 shows their features. Data infrastructures that have emerged at the time of submission of this article, such as, e.g., QCArchive81 have not yet been included in Tabels 1 and 2.

Table 2.

Services provided by the selected materials data infrastructures. Open Access: provides partial or full free access to data. Computational data: contains data originating from software simulations. Experimental data: contains data originating from experiments. Data upload: allows upload of own data, with the possibility of issuing Digital Object Identifiers (DOIs). Workflow management tools: provide or collaborate in the development of open‐source software tools for workflow management. Web API: data can be accessed remotely with automated scripts. Data analysis tools: provide online or offline data analysis tools, including machine learning

| Open access | Comp. data | Exp. data | Data upload (DOIs) | Workflow management tools | Web API | Data analysis tools | |

|---|---|---|---|---|---|---|---|

| AFLOW | ✓ | ✓ | ✓ | ✓ | ✓ | ||

| Computational Materials Repository | ✓ | ✓ | ✓ | ✓ | |||

| Crystallography Open Database | ✓ | ✓ | ✓ | ✓ | |||

| HTEM | ✓ | ✓ | ✓ | ✓ | ✓ | ||

| Khazana | ✓ | ✓ | ✓ | ✓ | |||

| MARVEL NCCR | ✓ | ✓ | ✓ | ✓ | ✓ | ||

| Materials Data Facility (MDF) | ✓ | ✓ | ✓ | ✓ (DOI) a) | ✓ | ||

| Materials Project | ✓ | ✓ | ✓ | ✓ | ✓ | ||

| MatNavi/NIMS | ✓ | ✓ | ✓ | ✓ | |||

| NOMAD CoE | ✓ | ✓ | ✓ (DOI) | ✓ | ✓ | ||

| Organic Materials Database | ✓ | ✓ | ✓ | ||||

| Open Quantum Materials Database | ✓ | ✓ | ✓ | ||||

| Open Materials Database | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | |

| SUNCAT | ✓ | ✓ | ✓ | ✓ | |||

| Citrine Informatics | ✓ b) | ✓ | ✓ | ✓ | ✓ | ✓ | |

| Exabyte.io | ✓ | ✓ | |||||

| Granta Design | ✓ | ✓ | ✓ | ||||

| Materials Design | ✓ | ✓ | ✓ | ||||

| Materials Platform for Data Science | ✓ c) | ✓ | ✓ | ✓ | ✓ | ||

| Materials Zone | ✓ | ✓ | |||||

| Springer Materials | ✓ | ✓ |

Upload requires access to private/institutional storage space

Open access to a subset of data

Open access to limited set of materials properties.

Perhaps the most important service that a data platform has to offer is an efficient distribution channel for the data stored within. Often the data is accessible through a webpage that its clients can access online. This has the lowest adoption barrier since no additional software is needed and the data can be explored visually through a browser. Examples of such services include the Novel Materials Discovery Laboratory (NOMAD) Encyclopedia,82 AFLOWlib,83 the Materials Project,84 and the Materials Cloud.85 A browser‐based method is, however, rarely useful for materials informatics applications, which require automated access to large volumes of data. To facilitate access to large data volumes, it is typical to offer an application programming interface (API) to users to enable automatic data crawling. This is often done by defining a Representational State Transfer (REST) or GraphQL interface to the data.86, 87, 88, 89 These interfaces allow automated access through programmable queries. Another way, as adopted by OQMD,90 for example, is to offer an offline version of the database as a direct download to users. Offline access provides the most flexibility and performance but typically requires knowledge on how to interact with the underlying database with Structured Query Language (SQL) or object‐relational‐mapping (ORM). That said, a full download is not practical for large data volumes.

As the amounts of data produced by materials science increases, a practical concern over long‐term storage of this data is emerging. There is also increasing pressure from funding agencies and other institutions to ensure the correct and safe long‐term storage of data. To answer this demand, some data infrastructures now provide data storage services for materials data. Currently, Springer‐Nature lists two recommended data repositories for materials science:126 the NOMAD Repository107 and the Materials Cloud.127 Both of these free services are for computational materials data, accept uploads from any source, and guarantee data storage for at least 10 years after data deposition. Often the data volumes in experimental studies, especially in imaging, far outnumber computational efforts. For instance, electron microscopes can easily generate tens of gigabytes of data in a day of operation.128 Because of this higher volume, it is much more challenging to organize central and free data storage for experimental data. Instead the storage space is provided by the host university or laboratory, as in the case of the Materials Data Facility,102 which is a collaboration between US universities and research centers. In addition to the materials‐science‐specific storage solution, there are also free solutions to store generic scientific data, such as Zenodo,129 Dryad,130 Figshare,131 and Dataverse.132

The online analytics tools85, 117, 133 provided by data infrastructures are fairly modern additions that have emerged from the rise and popularity of interactive browser content and notebook‐based environments, such as the Jupyter notebook.134 These online tools range from simple tutorials to realistic materials property prediction and materials discovery through machine learning. They can be used without local hardware or software resources and have therefore become an important channel for dissemination and learning. Some platforms also participate in the development of Open Source software (see Section 2.3) libraries for performing offline data analysis on materials data.135, 136, 137, 138 Such libraries have high reuse value for scientists working with materials data and, through Open Source distribution and contribution mechanisms, can remain in active use beyond the lifetime of individual projects.

The value of materials data has also been recognized by materials informatics companies. We have included a selection of these companies in Tables 1 and 2. A major selling point of these companies is the access they provide to privately owned, highly curated materials property data that is inherently valuable in R&D. In sufficiently large quantities, this kind of materials data can help firms immensely in selecting optimal materials for products, without having to spend additional expenses on building their own research infrastructure. Another recently emerging business model revolves around selling access to software environments with a Software as a Service (SaaS) model. In this model, companies offer on‐demand access to preconfigured cloud‐based environments for materials informatics. Such services can be valuable for companies and research laboratories because they can be used according to current demand, do not require large one‐off investments in hardware, and do not require specialized skills in software configuration and system management.

3.3. Current Challenges

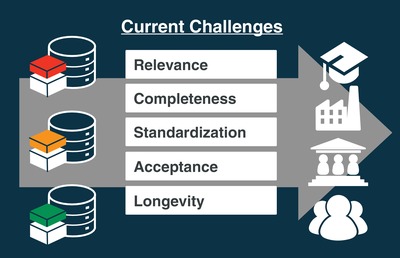

Having reviewed the current state of data infrastructures in materials science, we now return to the MUSE analogy. The previous two sections illustrated that despite enormous process in data‐driven materials science, several challenges need to be overcome before a powerful materials search engine and discovery tool takes shape. The challenges are depicted in Figure 4 and are raised here briefly before being discussed in detail in corresponding sections.

Figure 4.

Challenges faced by materials data infrastructures (on the left) on the way to increase the adoption by stakeholders from academia, industry, governments and the public (depicted on the right).

3.3.1. Relevance and Adoption

Materials data infrastructures must provide relevant data and information to be adopted by stakeholders, be it scientific communities, industries, governments, or the public. Relevance is determined by data volume, data type, and data quality, and entails data completeness, and data homogeneity. Different communities will have different specifications of these terms, which makes it challenging to develop general and interdisciplinary infrastructures that can be adopted. Relevance and adoption are therefore closely related to the subsequent challenges of completeness, standardization, and acceptance.

Relevance also includes tools that operate on the data and help users to classify, analyze, and correlate data. Machine learning has gained the most prominence in this regard and is reviewed in Section 7. Since machine learning is always data hungry, it makes sense to integrate machine learning applications directly into materials data infrastructures. Challenges to such one‐stop‐shop solutions, which would increase the acceptance of materials infrastructures, include the wide variety of available machine learning approaches and data diversity. For data to be informative for machine learning algorithms, its features and properties need to be relevant and complete.

3.3.2. Completeness

Completeness is “the quality of being whole or perfect and having nothing missing.” While ideal completeness is hard to attain in practice, data infrastructures today suffer from a real, severe completeness problem: they contain mostly computational and almost no experimental data (cf. Table 2). This state of affairs is rooted in the historic development of data‐driven materials science presented in Section 3.1. Since computational data comes in a digital format, computational scientists were early adopters of database platforms. Moreover computational data is currently more homogeneous and easier to curate than experimental data.139 Facilitating a seamless comparison between computational and experimental data is, however, an important step toward validating theoretical predictions and in driving materials discovery and development efforts:139 materials that are identified as promising still require further evaluation, selection, and experimentation. Building synergies among computational and experimental databases thus remains an important challenge for the future of data‐driven materials science, which we address in Sections 4 and 5.

3.3.3. Standardization

Some form of standardization is essential in the widespread adoption of a new paradigm or technology.140, 141 Stakeholders can only participate in the development of a technology if they speak a common language. The language analog in data‐driven materials science is metadata. Metadata provides relations (the grammar) between data items (the words). Developing standardized metadata for materials science that is informative, exhaustive, and adaptable is an outstanding challenge. We address the first steps toward creating a materials ontology in Section 4. Such an ontology, or classification system, would be the foundation for materials science metadata and the evolution of different materials science dialects into a common language.

3.3.4. Acceptance and Ecosystems

Materials data infrastructures will only be useful if they are accepted as a useful tool by various stakeholders. Apart from being relevant and complete, data infrastructures have to be user friendly to be widely adopted. User friendliness includes easy upload and download of data. Easy data upload also facilitates completeness since it reduces hurdles to data sharing. Widespread acceptance furthermore requires trust in the stored data, and this can only be achieved through data curation. Data curation is the management and quality control of data throughout its lifecycle, from creation and initial storage to the time when it is archived for posterity or becomes obsolete and is deleted. We address the challenges pertaining to data creation and curation in Sections 5 and 6.

Infrastructure acceptance is different for different stakeholders. Current infrastructures are predominantly built and used by scientists in academia, as detailed in the previous section. Industry interest and participation has not been systematically studied, and it is dependent mostly on anecdotal evidence. Some materials companies leverage the value of reference databases (e.g., IBM142 and ASM International143), while others contract the services of intermediaries (cf. Table I and Table II). Apart from this, industry seems to still be exploring the opportunities and potential benefits of materials informatics144 without full engagement with academia.

The disconnect between academic and corporate R&D in many fields makes industry involvement more difficult in this specific case. A hurdle to widespread industry adoption is the materials gap—the fact that industry requires other data than what is currently stored in the available materials data platforms. Ecosystems that facilitate the interaction between academic, corporate, governmental, and public stakeholders are a potential solution that we discuss further in Section 9.

3.3.5. Longevity and Diffusion

With increasing awareness for open and data‐driven science, national, and international funding for the development of Open Science (see Section 2) is rising, and new materials data platforms are emerging in their wake. However, longevity and diffusion of innovations and new technologies are rarely considered by funding agencies, and long‐term financial support for sustained operation is not guaranteed. The initial wave of digital materials infrastructures were built predominantly by materials scientists whose main focus lies in basic science. The long‐term maintenance and usability of infrastructure is often only a secondary priority for most scientists. As a result, these digital infrastructures are in danger of becoming digital ruins of the expansion of Open Science. We discuss potential solutions in Section 9.

Next we explore central topics and applications around data‐driven materials science to provide insight into these challenges and into the successes of data‐driven materials science.

4. Materials Ontology

We begin our more detailed review sections with the relevance, completeness, and standardization challenges. One of the first decisions in the planning of a materials data infrastructure is which types of materials will be relevant to its intended user base. The most complete representation of these materials will then have to be stored in the database of the infrastructure. The storage requires standardization and the development of metadata formats. Storing only the raw materials data without any metadata would be futile because raw data is neither searchable nor suitable for machine learning.

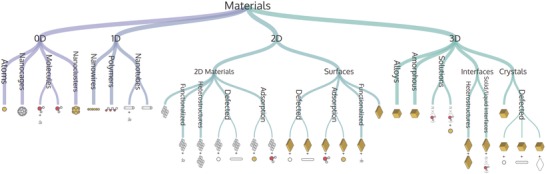

The development of a metadata framework requires materials classification schemes from which metadata entries and relations can be derived. Crude classification schemes group materials by their functional properties (electronic, optical, mechanical), topological characteristics (bulk, surface, nanotube, polymer, see Figure 5 ), or by material type (ceramics, metals, glasses, polymers, or composites). More sophisticated classification schemes are clearly needed to facilitate data‐driven materials science. In addition, the origin of the raw data needs to be encoded in the metadata. For real samples, these would be the synthesis and processing conditions and the history of the sample since creation. For virtual samples, the generating computer code and the computational settings need to be known. This clearly demands the classification and organization of materials data in a materials ontology.

Figure 5.

Example of an ontological hierarchy for the structural characterization of materials: a materials tree of life. Adapted under the terms of the Creative Commons Attribution 4.0 International License.145 Copyright 2018, the Authors, Published by Springer Nature.

Ontology is originally a field of philosophy defined as the study of properties, events, processes, and relations of existence.146 In computer science, the term ontology has been co‐opted to more specifically mean a formal collection of entities, relationships between those entities, and inference rules that are shared by a community. In materials science, a materials ontology would be a classification scheme for materials, their properties, units, and limits, and their interrelations. Defining an ontology is conceptually important for the purpose of establishing a standard that can be shared by different people working with the same data, and it is a practical necessity in database design. The ontology concept is also closely linked to the ability to search data: the ontology defines the available search terms and facilitates semantic reasoning, which then facilitates complex searches.

Creating the necessary machinery for ontologies in materials science is a tremendous task. An ontology structure has to be developed, suitable formats and standards for encoding meaning have to be defined, and wide‐spread adoption of the ontology has to be ensured. For example, a substantial part of information available on the internet today consists of human written text. To interpret the information contained within this text requires human reasoning or sophisticated natural language processing software. But there is a complementary standard by the World Wide Web Consortium called Semantic Web that defines an explicit, machine‐readable format (Resource Description Framework, RDF) to organize information on the web. It provides an ontology language (Web Ontology Language, OWL) to describe ontologies for sharing concepts across content creators.147 If this semantic web standard were embraced by the web community, it would significantly boost information sharing across the internet and unleash the power of automated semantic reasoning by artificial intelligence.148 As of now, this powerful idea remains largely unrealized.

Similarly in materials science, a standardized ontology that ensures a complete representation of materials has not yet emerged. Currently various ontologies and less‐than‐formal standards compete. NOMAD Meta‐info,86, 149 ESCDF,86 and OpenKIM150 are the first attempts to categorize computational results in atomistic materials science. PLINIUS151 is used in the field of ceramics, ONTORULE152 in the steel industry, SLACKS153 for laminated composites, and PIF,154 Ashino,155 EMMO,156 MatOnto,157 Premap,158 and MatOWL159 represent general materials science data. Although the development of these materials ontologies has accelerated, they are not nearly as mature as in other fields, for example, the biosciences.160 Especially for industrial purposes, these publicly available ontologies are typically insufficient, forcing companies to create their own internal, domain‐specific ontologies.156

The lack of standardization aggravates data sharing. Computational science, for example, still relies on file‐based data exchanges between different codes. Such file‐based data exchange requires interfacing software, significant human resources, and expertise on how the data is structured. Moreover, incompatible standards lead to conversion errors and data loss. These interoperability problems could be solved by a common ontology and a standardized representation of knowledge within this ontology. Fitting existing and novel data into such an ontological framework would still be a tedious and error‐prone task for humans. Existing tools and techniques could, however, be used to simplify and automate this process. Data curation services161 help in organizing and annotating data, natural language processing can be used to mine data from scientific literature,162, 163 and automated structural classification helps in categorizing the contents.145, 164, 165

The ontologies themselves could also be constructed semiautomatically by observing the nomenclature and the relation of concepts used in the literature.166, 167 If widely adopted in materials infrastructures, this standardization would enable the vision of a powerful search platform for materials science. Once defined and filled, AI solutions would benefit from it. One could envision virtual AI agents helping scientists to answer complex questions related to material performance and synthesis by analyzing materials databases and scientific literature. Such AI agents would not only aid basic research, they would also help businesses that could then more effectively leverage existing scientific knowledge in their R&D.

Recently there have been promising efforts in trying to unify the nomenclature and standards in materials science by the European Materials Modelling Council,156 the Research Data Alliance (RDA),168 and by a collaboration between NOMAD scientists and the Centre Européen de Calcul Atomique et Moléculaire (CECAM).86 A concrete example of such collaboration is the Open Databases Integration for Materials Design (OPTiMaDe) consortium.169 OPTiMaDe is building a common interface for accessing data from multiple materials platforms. The diversity of subfields and stakeholders in materials science might make it impossible to define one universal materials ontology. We, however, recommend that unifying ontologies whenever possible and disseminating these efforts to the materials science community are key steps in making the most out of the rich body of materials data created with the modern experimental and computational methods discussed next.

In summary, standardization facilitates data sharing. A materials ontology is a classification scheme for materials that enables standardization. Ontologies also ensure completeness of materials data since everything that falls outside of an ontology by definition indicates a lack of completeness in the ontology. Attempts at constructing materials ontologies are underway. However, more needs to be done to ensure that relevant materials and relevant materials properties are incorporated into existing materials infrastructures. Otherwise our MUSE will return irrelevant information when queried.

5. Data Creation

We now stay with the challenges of relevance and completeness and address how enough relevant data can be generated to feed a materials infrastructure. Once again, we encounter standardization but this time in the context of standards for generating data. We briefly review techniques and recent improvements in data creation methodology—so‐called high‐throughput methods—that are enabling experimental and computational scientists to efficiently create data for data‐hungry repositories and applications.

For experimental materials data, the introduction and refinement of deposition and analysis methods has had perhaps the greatest impact on data creation efficiency. In 1965, the first composition gradients could be achieved in thin‐film material codeposition,170 offering a more efficient replacement for the one‐by‐one creation and study of materials. Since then, multiple improved materials synthesis and characterization techniques have been introduced.171, 172, 173, 174, 175 They have enabled the rapid generation of composition–structure–property relationships.176, 177, 178, 179, 180 State‐of‐the‐art deposition techniques, such as combinatorial laser‐molecular beam epitaxy (CLMBE)173 introduced around the year 2000, can be used to create temperature and composition gradients across the sample and provide control of the deposition in three dimensions.

These new methods facilitate a finer and more complete sampling of structural phases and chemical compositions in a single experiment. They efficiently create materials libraries—experimental samples with one or more composition or phase gradients. Each library represents part of a well‐defined materials space. Measurements from such materials libraries are now made accessible through Open Access online services, such as the High Throughput Experimental Materials (HTEM) database.97

In contrast, and perhaps surprisingly, the high‐throughput creation of computational materials data has only become common practice in the 21st century.90, 181, 182 Thanks to Moore's law and massively parallel computing architectures, available computational power has increased steadily, and computational data creation has quickly taken advantage of this power, even surpassing experimental efforts. For example, the Open Quantum Materials Database (OQMD) performs virtual high‐throughput materials synthesis by decorating known crystal structure prototypes with new elements. It has now grown from the initial set of roughly 30,000 experimentally known crystal structures from Inorganic Crystal Structure Database (ICSD) to more than 560,000 computationally predicted materials.90, 110 High‐throughput workflows have now matured and are increasingly applied to screen also complex properties such as coupling and reorganization energies in organic crystals.183, 184

In the creation of such massive datasets, it is increasingly important to adhere to computational standards. This standardization has been pioneered by the Materials Project and the Automatic‐Flow for Materials Discovery (AFLOW)‐consortium (see Table 1), with comprehensive specifications for the methodological details, such as k‐point grid densities, cutoff energies, pseudopotentials, and convergence criteria, related to density functional theory (DFT) calculations.185, 186, 187 This standardization ensures that data can be made cross‐compatible within a database or even across databases.

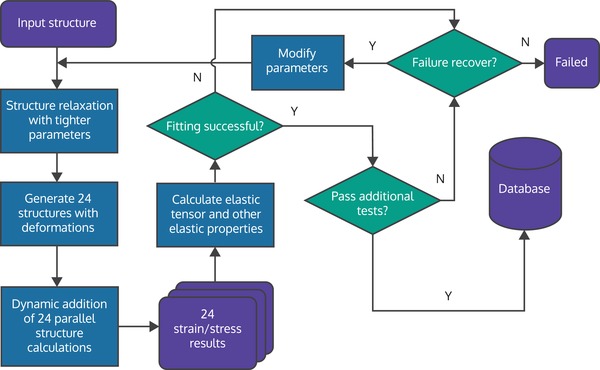

Relatively recent additions to computational materials science are workflow management tools like FireWorks,188 atomate,189 AiiDa,190 and AFLOWπ.191 These tools enable researchers to build automated and robust workflows for creating consistent datasets. Workflows connect different computational steps and checks into a single computational graph. The computational steps generate data, and checks aid with automated recovery from errors that might occur in a computational step, for instance due to incorrect computational settings or hardware failures. An example of a workflow graph from FireWorks is given in Figure 6 .

Figure 6.

A computational workflow used in creating a dataset of elastic tensors with the FireWorks workflow manager. The indigo boxes correspond to inputs or results, lighter blue boxes correspond to actions, and green diamonds correspond to decisions. Adapted with permission.188 Copyright 2019, Wiley.

In summary, materials data needs to be created in sufficient volumes for materials infrastructures to be relevant and complete enough. To fulfill this need, high‐throughput experimental and computational methods have emerged. The level of automation and efficiency provided by these methods ensures that the bandwidth at which materials data can be created should not be an issue for the MUSE of the future.

6. Data Quality

From data generation, we move on to data quality. As already alluded to in the previous section, the quality of data is related to standardization in the generation of data and considerably affects the acceptance of data infrastructures.

Big data is often characterized in terms of four Vs: volume, velocity, variety, and veracity. Each V poses a challenge, although the volume challenge could, in principle, be solved by more storage space, and the velocity challenge of faster data generation could be addressed by faster computer processing and accelerated measurement and fabrication techniques. Increased variety is more a challenge for standardization and ontology integration, yet it is also a benefit for machine learning and materials discovery algorithms. Veracity, however, is the most problematic because it is a softer measure of how to quantify the degree of trust in data and how to improve its trustworthiness.

Data veracity has two aspects: bias and variance. In an experiment or a calculation, the bias of the result is quantified as the offset of the average result from the ground truth, whereas variance is quantified by its probabilistic definition as the spread of the results over identical runs. Note that the variance and bias discussed here originate from approximations in theoretical models or experimental uncertainties, not from stochastic processes in the experiment or calculations, for example in molecular dynamics simulations. We also disambiguate the use of bias and variance here from the same terminology commonly used in machine learning.

For users of data infrastructures, it is important to know the quality of available datasets. However both data bias and its veracity may be hard to quantify.

In the computational realm, variance can be caused by different computational environments or differences in implementation but it is typically negligible even between different software implementations.192 Computational variance is also often one or several orders of magnitude lower than the corresponding experimental variance.193 The veracity of computational data is thus dominated by bias—offset from the experimental ground truth. The estimation of this bias depends on access to experimental data or comparison to results from a higher‐level theory.194 The bias also depends on the types of chemical elements in the materials. Some computational approaches or approximations may break down for specific groups of elements, such as dispersion‐governed compounds, magnetic materials, strongly correlated materials, and relativistic effects in heavy elements, leading to much larger errors for these groups of materials.193

While computational scientists have full control over their simulations, experimental scientists often face errors that are beyond the control of their experimental setup. Bias in measurements can be due to incomplete knowledge of a sample's content and history, as well as interactions between the sample and the environment. Variance can be caused by material imperfections and contaminants, and experimental uncertainties introduced by the equipment. As such, it is typically difficult to discriminate between bias and variance in experimental errors. If there are no comparable experimental facilities, this can also make it hard to assess the data quality. In some cases, quality‐controlled commercial equipment and widely accepted standard procedures are available when performing measurements. However, there is often a need to use custom‐built equipment or to measure materials for which the standard procedures are not applicable, making it harder to reproduce and validate results. This difficulty of controlling more elaborate experimental setups means that reliable experimental data exists for simple systems, such as small molecules195 or elemental crystals,193 but for more complex systems and measurements, the data quality may be harder to determine.

The combination of high bias in computational results and the difficulty of controlling errors in experiments makes the overall estimation of data veracity in materials science particularly hard. One example is given by the formation energies of crystals for which computational and experimental values notably differ. This discrepancy is caused by both systematic errors in the computational methodology and experimental uncertainties.196 Due to the species‐specific nature of the computational error and the vastness of compositional and structural space, it is impossible to make an exhaustive brute‐force comparison between experiment and computation. However intelligent error extrapolation schemes are being developed. In one such scheme, the computational error of nonconverged energies for crystals with two different chemical species—compared to fully converged energies—can be estimated by using a linear combination of errors from solids with only one chemical species.197 Such schemes could also be extended to estimate the error between experimental and computational data. This will require systematic data collection from both experiment and computation but it may prove to be fertile for practical error estimation.

In summary, data quality is important to ensure standardization of data and to increase acceptance of data infrastructures, but it is challenging to quantify. Two indicators of quality are bias and variance. Systematic data collection and new extrapolation schemes would facilitate bias and variance assessments in the future.

7. Data Analytics

To increase the adoption of data infrastructures, developers are adding tools and apps to their data platforms that operate directly on the data in the infrastructure's database. These tools add value to the data and enhance its relevance. Many tools now include machine learning. Here we briefly review the main types of machine learning and illustrate how they could add value to material infrastructures.

7.1. Introduction to Machine Learning

Machine learning is the scientific study of how to construct computer programs that automatically improve with experience.198 More specifically, machine learning algorithms use statistical models and optimization algorithms to reveal patterns in training data to make predictions or decisions without being explicitly programmed to perform a certain task. The advantage over human learning is that computers can often handle much larger and higher dimensional data, and suitable approximations can be automatically found by monitoring how well the models generalize to unseen data.

Many of the statistical methods in machine learning have been around for decades. For example, Marvin Minsky built the first hardware implementation of a neural network199 in 1951. Our current AI boom has been facilitated by the rapid hardware development for information storage and processing, the conscious effort of data gathering and curation, as well as increased developments of machine learning methods and libraries by private companies, the public sector and academia, driven by the potential that machine learning can unlock from previously untapped data resources. Today machine learning is a key ingredient of materials informatics, as showcased by various reviews on the topic.5, 8, 9, 10, 13, 14, 17, 18, 22, 23

Machine learning can be divided into different subfields that are characterized by the available data. Supervised learning is the most mature and powerful of these subfields and is used in the majority of machine learning studies in the physical sciences.17 Supervised learning applies in situations where a machine learning model is trained on input–output pairs from a real process to produce optimal outputs for unseen inputs. Typical applications are predictions of physical properties (like formation energies200, 201, 202 or molecular properties203, 204, 205, 206, 207) given the input features of a material or process (e.g., geometry, physical properties, external conditions).

In unsupervised learning, only input data is given to a model but no output. The machine is then tasked with a learning objective, for example to find rankings or patterns for this input. Unsupervised learning is often used to preprocess input data, such as dimensionality reduction by principal component analysis (PCA),208, 209 or aiding the analysis of complex output data like visualization of high‐dimensional data with T‐distributed stochastic neighbor embedding (T‐SNE)97, 210 or sketchmap.211

Finally reinforcement learning is a rapidly emerging field with promising applications in tasks that require machine creativity. In reinforcement learning, a model is given a task of choosing a set of actions to optimize a long‐term goal. As such, it differs from supervised learning because no correct input–output pairs are presented for individual actions, but the training is a mixture of exploration and exploitation guided by a long‐term reward.212 This mode of learning can be useful in the exploration of compound and material spaces like exploration of grain‐boundary structures with evolutionary algorithms213 and the search for new molecules with objective‐reinforced generative adversarial networks.214

The knowledge contained in a machine learning model is encoded in the parameters of the model, and it is, in principle, tractable. However the number of parameters can reach into the millions, which makes these models quite opaque to human interpretation. This is different from the scientific approach thus far, which has relied on deriving and discovering physical laws that are encoded in humanly readable equations. For commercial applications, the transparency of the models is not as important as their performance, but for advancing scientific understanding and wider acceptance, better human interpretability would be beneficial. Recent examples of approaches that analyze machine learning models to reveal their mechanisms include the analysis of input feature importance,200 explicit formulation of the input in algebraic form,215, 216 and analysis of convolutional neural network filters.217

7.2. Specifics of Machine Learning in Materials Science

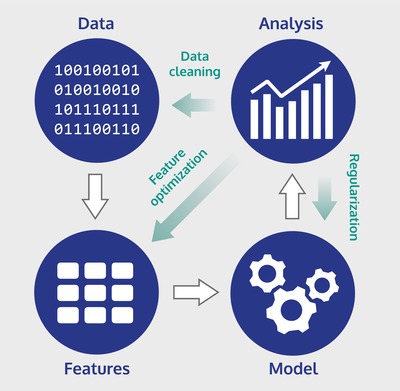

Currently the applications of machine learning in materials science are rich and diverse, ranging from catalyst design,19, 80 exploring the mechanisms of high‐temperature superconductivity,218, 219 to predicting excitation spectra.206 Building such applications can generally be broken down to four key steps: data acquisition, feature engineering, model building, and analysis. These steps are illustrated in Figure 7 . They are, however, interdependent and often multiple iterations of each step are required to create a successful machine learning system. Specialized software frameworks220, 221, 222 have been developed to aid the set‐up and build and management of machine learning models.

Figure 7.

Key steps in building a machine learning model. The white arrows indicate the flow of data, green arrows indicate actions that can be identified and performed after analysis to improve the performance of the model.

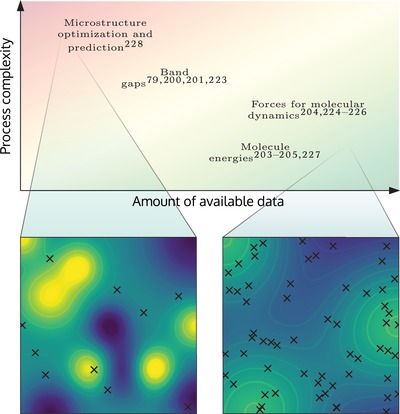

While machine learning generally requires data, the amount of data depends on the specific problem. Figure 8 illustrates the trade‐off between the available data volume and the complexity of the underlying process for different machine learning approaches. Problems in the top‐left corner are not suitable for machine learning due to the low amount of available data. The further to the right a problem sits, the more suitable it becomes for machine learning. In practice, it is often difficult to place machine learning methods and new problems in this diagram. Thus rapid prototyping of the problem is frequently a key to successful machine learning. Since we have control over only one of these parameters—the amount of data—the importance of open data access, materials databases, efficient data creation, and data veracity is paramount for the success of machine learning.

Figure 8.

The machine learning domain in terms of data volume and the complexity of the physical process, with selected examples placed in this domain. The complexity of a physical process here means the complex, nonlinear structures present in the data. Two opposing learning scenarios, a hard and an easy one, are illustrated in the lower panel. In these two cases, the underlying physical process is represented by a colored contour map, and the sampling of this process is represented by black crosses.223, 224, 225, 226, 227, 228

Machine learning models expect input data in alpha‐numerical form, typically as an array of letters or more often as numbers. Raw data, however, is usually unsuitable for machine learning. The first task in building a machine learning model is therefore to extract informative features from the raw data (cf. Figure 7). Feature engineering refers to the act of introducing domain expertise to the learning model by affecting which features are used. It can be beneficial to apply problem‐specific feature transformations, called feature extraction,212 which exploit known symmetries in the input for example, making it easier for the model to learn a unique mapping. This can be especially important for input features that encode atomic geometries. Physical properties exhibit symmetries with respect to translation, rotation, and index permutation in the Cartesian coordinates representing a geometry. Using a transformation that makes the input invariant with respect to these symmetries will help the learning process by creating a unique mapping from an atomic geometry to its properties. Such structural descriptors have been successfully applied in the prediction of molecular and crystalline properties,203, 205, 229 and there their development has exploded in recent years.202, 203, 205, 229, 230, 231, 232, 233, 234, 235, 236, 237, 238 To facilitate easier navigation through descriptor choices, application‐neutral software libraries for descriptors are being developed.239, 240 In contrast to human‐driven feature engineering, the optimal features can also be discovered more systematically by learning them directly from the data with feature learning. In the simplest form this can be achieved by methods like principal component analysis (PCA),208 which is based on the variance of the input features. In the other extreme, the features may be formed by an encoder as a nonlinear latent space within an autoencoder neural network.241 Analyzing the features used by the machine learning model in making a decision forms the basis of understanding and verifying the correctness of the model. Integrating such analysis into the workflow of building machine learning models is still lacking in many cases, hindering their acceptance and interpretability.

After feature selection (cf. Figure 7), the machine learning algorithm must be chosen (see different machine learning types discussed at the beginning of this section). Each algorithm has its own application domain, and there is currently no algorithm that is optimal for all problems.10 This conundrum is also known as the “no free lunch theorem.”242 Some common choices include feed‐forward neural networks,243 decision trees,244 kernel ridge regression,245 support vector regression,245 and Gaussian processes.246 These approaches are common in computer science and are available in generic software packages that help select the best model for a task.50, 247, 248, 249, 250, 251, 252, 253, 254 Apart from such generic approaches and packages, machine learning models are often customized to materials science. One example is the creation of custom neural network architectures201, 204, 206, 255, 256 that have been designed specifically for atomistic geometries, reducing the need for feature engineering.

One practical concern in model selection is the amount of data that is available for training the model. Methods like kernel ridge regression require an inversion of a matrix whose size is proportional to the number of training samples. This restricts their usability for large datasets because the time taken by a brute force inversion scales as with the dataset size n. Other models like neural networks can handle larger datasets since they can be trained by using small batches of the training data, and their performance can be monitored during training. At the other end of the spectrum we find powerful tools for small datasets such as, regression with Gaussian processes and Bayesian optimization,18, 257 the extraction of effective materials descriptors with subgroup discovery258 or compressed sensing as done in, e.g., the least absolute shrinkage and selection operator (LASSO) or the sure independence screening and sparsifying operator (SISSO).215, 216 Also some forms of input are better suited for certain models. For example, images exhibit a high degree of correlation between adjacent input points. Models that exploit such correlations, like convolutional neural networks,259 may then be the best choice. In other cases, the input features have no apparent correlations or have completely different numerical ranges, and decision trees may exhibit the best performance.244

The final step in the machine learning workflow is the performance assessment (cf. Figure 7). This analysis guides all other aspects of learning ‐ from excluding corrupt learning samples to optimizing the features and the model itself. The analysis step is general for all application areas of machine learning. For a more in‐depth introduction, we refer the reader to existing literature.198, 212 The goal of this step is to ensure that the model generalizes well to unseen data. Two common problems are over‐ and underfitting. In over‐fitting, the model becomes too specific. It reproduces the training data very well but performs poorly on new data. In underfitting, the model learns rules that are too general and averages through training and through new data. The balancing act between over‐ and underfitting is called the bias‐variance trade‐off, and it is typically controlled with cross‐validation and careful dataset design. The whole dataset is usually split into a training set, from which a further validation set for hyperparameter optimization can be split off, and a test set. Model performance is then evaluated on the test set. The dataset contents and the exact way the data is split into training and test sets can affect the reported performance and in some cases lead to unrealistic results. Often the sampling of training examples is not very even in the input space, as the samples can exhibit high levels of clustering—for example, the dataset may comprise of multiple clustered material types that have very similar properties. In such cases the model is able to interpolate very well even if it has only been trained on one representative of each cluster, but its performance will start to deteriorate for unseen material types, which are hard to leave out of the training set with purely random selection. Due to this effect randomly split training and test sets can offer unrealistic performance metrics and alternative cross‐validation strategies like leave‐one‐cluster‐out cross‐validation (LOCO CV)260 offer more realistic performance metrics.

All the key elements for successfully applying machine learning in materials science are in place, as illustrated also by the applications showcased in the next section. However, several challenges prevail. For example, selecting the optimal combination of data, features, machine learning models and analysis tools can be a formidable task, especially because the field is advancing so rapidly and practices become outdated quickly. Careful curation and standardization of both data and machine learning models can to some degree mitigate the problem, but not enough benchmark sets have been established in the community. Also, available data volumes are often still too small to apply machine learning tools that have been successful in other domains, e.g., commerce or social media.

Another challenge is the exchange of pre‐trained models. Projects such as OpenML261 and DLHub262 are first examples for model‐and‐data sharing platforms that enable transfer learning, but more could be done. Metric assessment is a further challenge. The reported performance for machine learning models is an important selection criterion for adopting certain models or features. However, performance metrics are not yet standardized. Standardized datasets help, but more attention should be devoted to the selection of test and training sets to obtain more realistic error bars.

We have already discussed the challenge of interpretability. As the exact way input data informs the machine learning model is often blindly guided by the model optimization and hidden behind internal parameters, better methods for interpreting the decisions made by machine learning models are required. Although the natural sciences rarely have to worry about ethical consequences—unlike the social sciences that are now adopting AI into their decision making263 —a critical evaluation of the decision mechanisms is important for understanding the shortcomings of machine learning models and to advance scientific understanding.

In summary, machine learning is a powerful concept for data analysis and materials informatics. Machine learning is a field undergoing very active development, and a plethora of suitable machine learning methods has been applied to materials science. Increasingly such machine learning tools are incorporated directly into data infrastructures. In our MUSE analogy, they will provide meaningful answers to our “searches.”

8. Applications

Staying with relevance and adoption, we now briefly present areas in which data‐driven materials science has been applied successfully. Success stories are important for the development of any field as they inspire trust and commitment in stakeholders. We have identified three major research objectives for which we think data‐driven approaches have the largest impact on materials science: materials discovery, understanding materials phenomena, and advancing materials modelling. We review these three areas briefly and present relevant studies.