Abstract

Cancer Care Ontario (CCO) is the clinical advisor to the Ontario Ministry of Health and Long-Term Care for the funding and delivery of cancer services. Data contained in radiology reports are inaccessible for analysis without significant manual cost and effort. Synoptic reporting includes highly structured reporting and discrete data capture, which could unlock these data for clinical and evaluative purposes. To assess the feasibility of implementing synoptic radiology reporting, a trial implementation was conducted at one hospital within CCO’s Lung Cancer Screening Pilot for People at High Risk. This project determined that it is feasible to capture synoptic data with some barriers. Radiologists require increased awareness when reporting cases with a large number of nodules due to lack of automation within the system. These challenges may be mitigated by implementation of some report automation. Domains such as pathology and public health reporting have addressed some of these challenges with standardized reports based on interoperable standards, and radiology could borrow techniques from these domains to assist in implementing synoptic reporting. Data extraction from the reports could also be significantly automated to improve the process and reduce the workload in collecting the data. RadLex codes aided the difficult data extraction process, by helping label potential ambiguity with common terms and machine-readable identifiers.

Keywords: Synoptic Reporting, Structured Reporting, Structured Data Capture, RadLex

Background

Radiology reports are most commonly stored and transmitted in narrative form, or with minimal structure by using high-level headings, such as “Clinical History,” “Findings,” and “Summary” [5]. As a result, narrative reports prevent access to important clinical patient data, without either time-consuming and costly manual work or the use of natural language processing (NLP) applications, both of which can be subject to inaccuracies [2, 6]. This becomes a barrier to efficient patient information exchange, patient management, patient follow-up, public health surveillance, cancer surveillance, quality improvement, data mining, and research [5]. Synoptic reporting is the use of a structured report with coded concepts, supporting the discrete input and storage of clinical data and enabling direct extraction in machine-readable format from the radiology report [11]. The codes in synoptic data can help machines identify specific clinical content more quickly and accurately. In the case of disparate data sets from two different origins, RadLex can be used to disambiguate synonyms into a common code set. This specificity is referred to as semantic clarity, which is the ultimate goal of these standardized terminologies [9].

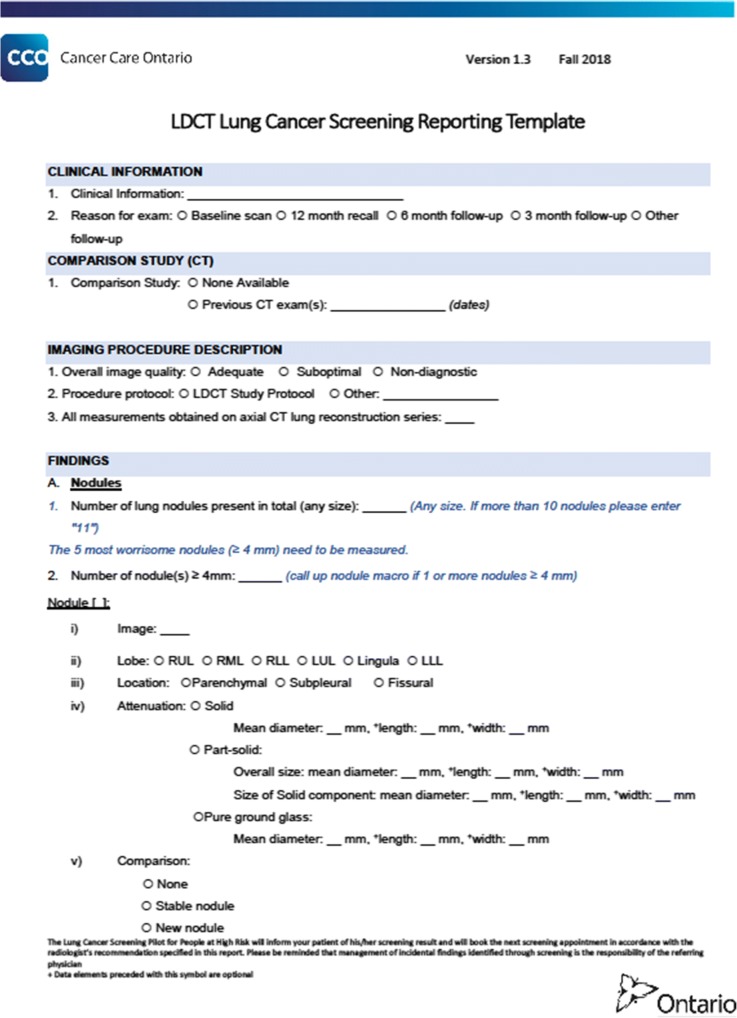

Cancer Care Ontario (CCO) is a provincial advisor to the Ontario Ministry of Health and Long-Term Care (MOHLTC), responsible for the delivery of health services to the citizens of Ontario. In June 2017, CCO launched the High-Risk Lung Cancer Screening Pilot (HR LCSP), a provincial screening program designed to screen participants at a high risk for lung cancer. The pilot is in phase 1 and is being operated at three hospitals in Ontario: Lakeridge Health, Health Sciences North, and The Ottawa Hospital, and will run until 2020. Standardized radiology reporting of the low-dose computed tomography (LDCT) performed as part of an organized lung cancer screening program has been indicated as a key component for organized screening and was a requirement for all pilot sites. The evaluation also requires information contained in the LDCT reports for metrics, such as the number of follow-up appointments, different levels of severity of patients and their outcomes, and service utilization within the catchment area. This evaluation process will help inform on the future implementation and expansion of the program. Due to this need for clinical data, there was an interest in implementing synoptic radiology reporting at one or more sites to explore current-state feasibility and reduce the data burden on sites. Otherwise, sites need to manually extract key indicator fields from each report for separate, secure transmission to CCO. As part of an early feasibility assessment, each site’s existing technology was reviewed to assess its ability to deliver synoptic reports without any upgrades or software enhancements. At the time of program launch, only Lakeridge Health was able to collect synoptic data using the current version of their radiology voice recognition (VR) reporting system.

This paper describes our experience implementing a highly structured radiology reporting template into current state systems at Lakeridge Health, one of Ontario’s largest community hospitals serving approximately 650,000 people and one of the three pilot sites for HR LCSP, to achieve synoptic radiology reporting.

Methods

The development and implementation of the LDCT Lung Cancer Screening template in the HR LCSP were done through a series of iterative processes. First, the template was developed by a clinical group that worked iteratively to define the content through a discussion and consensus process. Second, the template was implemented with radiologists, who provided feedback on the implementation, after which appropriate changes were made. Third, data extraction was tested to ensure the data being received was of high quality. This final step was conducted iteratively, to correct different issues as they appeared for data quality improvement. Through these three iterative processes, we were able to develop, implement, and ultimately extract data from the reports.

Development of the Reporting Template

A Low-Dose (LD) CT Lung Cancer Screening Reporting Template was developed by a clinical working group to standardize the reporting of the lung cancer screening for nodules and incidental findings. The group chose a set of standardized terminology to maintain consistency between reports. The standardized terminology supports complete and succinct reporting that is more readily usable for data collection, because it creates semantic clarity within the data set. The working group included ten radiologists, three surgeons, two family physicians, two nurse navigators, two epidemiologists, one pathologist, and one oncologist from a diverse set of hospitals and regions in Ontario. This diversity supported the development of a report template that covered the various needs of each specialty, ensuring the final report output is clear to both the user of the reporting template and the end user of the screening report. Discussions were supported by available evidence, and the American College of Radiology (ACR) Lung-RADS™ Assessment Categories were leveraged to standardize nodule reporting and patient management recommendations, with an adaptation made (with permission) to account for patient care in the Ontario context [1]. The group worked iteratively, with consensus agreement of half the group required to define each data element and the structure of the report. Where there were difficulties reaching consensus on a concept or term, the RadLex ontology was referenced to identify the standardized term for a concept most appropriately applied in the radiology domain. The RadLex ontology is a comprehensive set of standardized terminology and codes maintained by the Radiological Society of North America (RSNA) and the National Center for Biomedical Ontology (NCBO). The group was presented with the term, and it helped guide them toward the Ontario standardized term. This process informed the development of the reporting template and explanatory notes.

Iterative Template Design Process for Implementation

Current radiology reporting standards, such as DICOM Structured Report and IHE Management of Radiology Reporting Templates, have not been widely adopted by vendors for digital sharing of templates and pre-population of reports between systems. Picture Archiving and Communication System (PACS) administrators and radiologists were responsible for developing a VR reporting template in their own existing VR reporting systems based on the paper version of the CCO LDCT Lung Cancer Screening Reporting Template. CCO is unable to produce an electronic version of the reporting template that can be easily imported into all PACS in the province because of the variety of capabilities that exist in the province, i.e., the number of different VR solutions and versions of VR solutions. This lack of reporting template interoperability and sharing between VR systems and VR system versions would demand significant CCO resources to update and maintain all of the different versions of the electronic reporting template for the province.

An iterative design approach was used to develop the most usable reporting template in each of the VR systems at the pilot sites. The PACS administrators developed multiple versions of the reporting template based on the original paper copy of the CCO LDCT Lung Cancer Screening Reporting Template to determine which version had the least impact on the radiologist workflow. The final versions were adopted for use in each of the VR systems. To ensure adoption of the VR reporting template, the pilot site lead radiologists and other selected radiologists were engaged on the efficacy of the template. Areas for improvement were determined through serial testing and were then addressed by the PACS administrators. Repeating this iterative process resulted in the finalization of a more usable template. This process was helpful for all three HR LCSP sites and, with CCO facilitation, led to improved consistency between all sites, in particular in the final PDF reports, which were almost identical between the three sites. However, to achieve data extraction, the Lakeridge implementation required additional time and effort from the PACS administrators to build a usable version of the reporting template in the VR system. Despite the challenges at Lakeridge, the improved consistency could ease report reading for referring physicians by enabling them to quickly find the information they require.

Challenges with this process included issues with template version control, as changes between template versions could result in issues with data extraction upon delivery of data to CCO. Therefore, an essential success factor in template development was robust communication between teams in order to maintain consistency and data integrity. If a change was made without communication, the data integrity could be compromised, as the data would appear to be missing when received by CCO. The missing data resulted in the additional work of trying to find when the change was made, so that it could be accounted for in the larger data set and tracked.
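A lightweight check at the receiving end could flag unannounced template changes before they are mistaken for missing data. The following is a minimal sketch, assuming the extract arrives with a header row of field names; the field names and the `diff_fields` helper are hypothetical and are not part of the pilot's tooling.

```python
def diff_fields(expected, received):
    """Compare the field names expected for a template version with those received."""
    expected_set, received_set = set(expected), set(received)
    return {
        # Fields the agreed template version defines but the extract lacks.
        "missing": sorted(expected_set - received_set),
        # Fields present in the extract that the agreed version does not define.
        "unexpected": sorted(received_set - expected_set),
    }


# Hypothetical field names, for illustration only.
expected_fields = ["comparison_study", "comparison_date", "nodule_1_size_mm"]
received_fields = ["comparison", "nodule_1_size_mm"]
report = diff_fields(expected_fields, received_fields)
```

A non-empty result would prompt a conversation with the site rather than an automatic correction, since only the site knows when and why the template changed.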

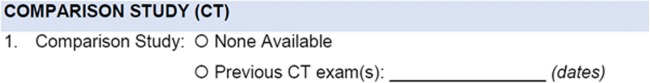

One of the initial adjustments to the template was combining the comparison study section into a single field instead of a picklist and a conditional field for dates. Figure 1 shows the section before it was adjusted to a single free text field in the VR implementation. CCO did not make this change on the official version of the template, but it was made as a compromise at Lakeridge for improved usability. Instead, radiologists were instructed to report “none available” or “none” for previous CT exam(s) since choosing from the picklist of none available and previous CT exam(s) took additional time, as shown in the single free text field in Fig. 2. By eliminating an answer field, radiologists were able to move through this section more quickly; however, it required sacrificing data quality as there are a variety of responses for “Previous CT exam(s).” Some of these responses include “No,” “None,” “Nil,” “No previa,” a response that may have been unintentional, “No previous,” “Not visible,” “No prior,” “No prior CT Thorax,” and “None available.”
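The variety of free-text responses listed above illustrates the kind of cleaning the receiving side must do. The following is a minimal sketch of such a normalization step, assuming that the listed variants (other than “Not visible,” whose meaning is less certain) all indicate that no prior exam was available; the category labels and the function name are hypothetical.

```python
# Variants taken from the responses observed in the pilot data.
# Assumption: each of these means "no prior exam available".
NO_PRIOR = {
    "no", "none", "nil", "no previa", "no previous",
    "no prior", "no prior ct thorax", "none available",
}


def normalize_comparison(raw):
    """Map a free-text comparison-study entry to a coarse category."""
    # Lower-case, drop periods, and collapse runs of whitespace.
    text = " ".join(raw.lower().replace(".", " ").split())
    if not text:
        return "MISSING"
    if text in NO_PRIOR:
        return "NONE_AVAILABLE"
    # Anything else (for example a dated prior exam, or "Not visible")
    # is left for a person to review rather than guessed at.
    return "NEEDS_REVIEW"
```

An exact-match list is deliberately conservative: an unrecognized response is routed to manual review instead of being silently classified.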

Fig. 1.

Example from template of Comparison Study field

Fig. 2.

Example from template of Clinical Information field

After some discussion within the Lakeridge Radiology department, further changes were suggested. The first suggestion was to pre-populate the clinical information section of the template with the text “Screening for Lung Cancer.” This change saved time, but also increased the potential for error if radiologists did not change the pre-populated text and if it was not applicable to the patient they were reporting. This risk was considered acceptable since it had minimal impact on the patient’s care.

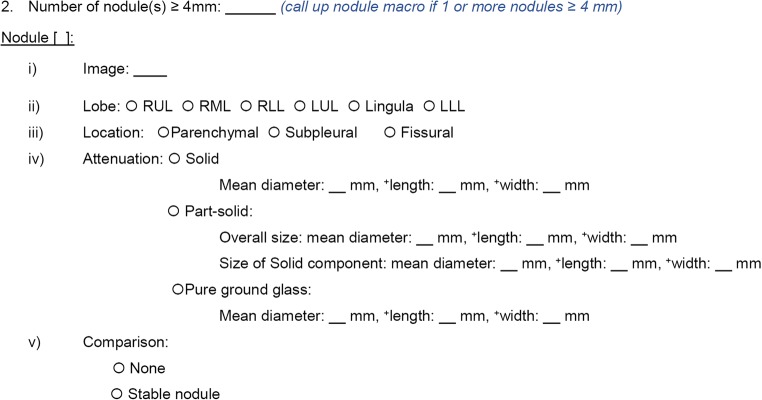

A more significant change to the clinical content of the reporting template was the decision to reduce the maximum number of reported nodules. Originally, nodules greater than or equal to 4 mm needed to be reported, up to a maximum of 10 nodules in total, regardless of the number of nodules observed. An example of the nodule section is shown in Fig. 3. After periodic evaluation with pilot site radiologists and clinician advisors, it was determined that a reduction in the number of nodules reported on the LDCT Lung Cancer Screening Reporting Template would not have an impact on the patient management of Lung Cancer Screening, and the maximum number reported was reduced to the 5 most concerning nodules, ≥ 4 mm in diameter. Since the nodule section of the LDCT Lung Cancer Screening Reporting Template is the most content-rich part of the reporting template, this greatly decreased the amount of potential work, though sites reported that 2–3 nodules were more typical of an LDCT Lung Cancer Screening exam.

Fig. 3.

Example from template of Repeating Nodule section

Other changes that had to be made were due to system limitations, not usability. For example, fields that collected data could not be pre-populated in the report template, i.e., the use of “canned text.” Each of these fields had to be manually filled. If these fields were picklists, the choices were alphabetically sorted and presented by default and customization of the list was not available, preventing the most frequently chosen option from being listed first. The technical limitations on these fields thus resulted in inefficient radiologist workflow.
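To illustrate the picklist ordering that was not available in the VR system, the following minimal sketch orders options by how often they were chosen in the past, falling back to alphabetical order for ties. The function name and the sample selection history are hypothetical.

```python
from collections import Counter


def order_picklist(options, past_selections):
    """Return options sorted by descending past usage, then alphabetically."""
    counts = Counter(past_selections)
    # A Counter returns 0 for options that were never selected.
    return sorted(options, key=lambda option: (-counts[option], option))


options = ["Part-solid", "Pure ground glass", "Solid"]
history = ["Solid", "Solid", "Part-solid"]
ordered = order_picklist(options, history)
```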

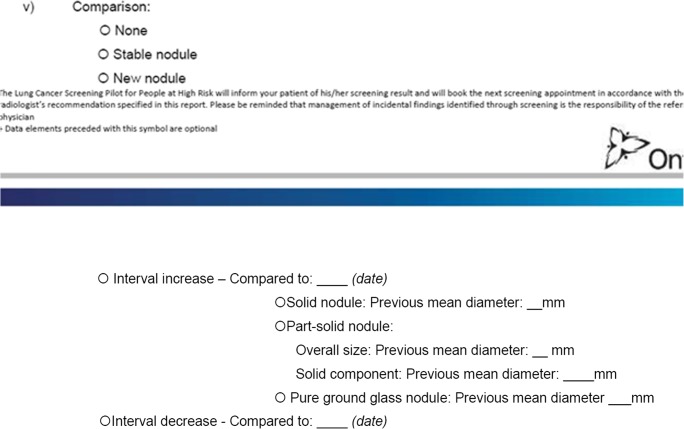

The reporting template contains mostly required fields; however, some of these required fields could not be implemented due to the lack of nested question logic, also known as a parent-child question relationship, as shown in Fig. 4. If answers by the radiologist meant that the child question was not applicable for the report, the system would continue to look for an answer to that question if the child question was labeled in the system as mandatory. For example, if the nodule was solid but all measurements, regardless of attenuation, were mandatory, the system would prompt the user for a measurement in each field for solid, part-solid, and pure ground glass. This would lead to radiologists being unable to submit reports without putting measurements in each field. Therefore, the measurement fields and all other typically conditional questions are not bound by a required-to-answer rule in the VR system. The lack of nested questions can lead to error because questions that may be required may be skipped. If these conditional questions were shown only when the appropriate parent answer is given, rather than all the time, it would reduce the perceived length of the report and improve usability [10]. These conditional questions also clutter the user interface, as they are shown regardless of whether the condition has been met.
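The parent-child behavior described above can be expressed as a simple rule: a child question is required only when its parent has the answer that activates it. The following is a minimal sketch of that rule, not a description of any VR system; the `Field` structure and the field names are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Field:
    name: str
    required: bool = True
    parent: Optional[str] = None      # name of the parent question, if any
    show_when: Optional[str] = None   # parent answer that activates this field


def missing_required(fields, answers):
    """List required fields that are active but unanswered."""
    missing = []
    for field in fields:
        # A field with no parent is always active; a child is active only
        # when the parent's answer matches its activation value.
        active = field.parent is None or answers.get(field.parent) == field.show_when
        if active and field.required and not answers.get(field.name):
            missing.append(field.name)
    return missing


# Hypothetical nodule fields, for illustration only.
NODULE_FIELDS = [
    Field("attenuation"),
    Field("solid_size_mm", parent="attenuation", show_when="Solid"),
    Field("part_solid_size_mm", parent="attenuation", show_when="Part-solid"),
    Field("ground_glass_size_mm", parent="attenuation", show_when="Pure ground glass"),
]
```

With this rule, a solid nodule would require only the solid measurement, and the part-solid and ground glass measurements would neither be shown nor block submission.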

Fig. 4.

Example of comparison of nodules questions in the Repeating Nodule section

After we collect feedback in our Fall 2019 review period, we plan to make further adjustments to the template for usability, and to use the captured synoptic data to identify opportunities to improve the quality of reporting and patient management in the Lung Cancer Screening pilot. We will once again use an iterative design process when making these adjustments.

Data Extraction

The built-in data collection capabilities of the VR system were used to collect most of the fields within the LDCT Lung Cancer Screening Report template (Appendix) when used as part of Lakeridge screening participant reporting. However, data submission still requires a significant amount of manual effort to send this data from Lakeridge Health to CCO. Canned text was used in some fields to aid with reporting speed. We were unable to automatically collect data from fields that had canned text. The data collection questions had picklist options sorted alphabetically and could not be pre-populated automatically from the Electronic Medical Record (EMR) or with canned text. All of the data from the fields collected were saved in the VR system and are extracted in a CSV-formatted report. Once it is in the database, analytics and spot checks of the data can be performed. The PACS administrators submit this CSV file monthly along with other data sets to CCO as part of the HR LCSP. The fields in the file are then mapped to a database where the information is extracted and saved automatically at CCO. Once at CCO, another round of manual data integrity checks is performed to ensure the clarity of the data.
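The mapping of CSV fields into a database can be sketched as follows. This is a minimal illustration using an in-memory SQLite database, not the system used at CCO; the column headers, the `COLUMN_MAP` table, and the `reports` schema are all hypothetical.

```python
import csv
import io
import sqlite3

# Hypothetical mapping from CSV column headers to database columns.
COLUMN_MAP = {
    "Exam Date": "exam_date",
    "Lung-RADS Category": "lung_rads",
    "Nodule 1 Size (mm)": "nodule_1_size_mm",
}


def load_csv(csv_text, conn):
    """Insert mapped CSV columns into the database; report unmapped headers."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS reports "
        "(exam_date TEXT, lung_rads TEXT, nodule_1_size_mm TEXT)"
    )
    reader = csv.DictReader(io.StringIO(csv_text))
    # Headers with no mapping often signal an unannounced template change.
    unmapped = [h for h in (reader.fieldnames or []) if h not in COLUMN_MAP]
    rows = 0
    for record in reader:
        values = {db_col: record.get(src, "") for src, db_col in COLUMN_MAP.items()}
        conn.execute(
            "INSERT INTO reports (exam_date, lung_rads, nodule_1_size_mm) "
            "VALUES (:exam_date, :lung_rads, :nodule_1_size_mm)",
            values,
        )
        rows += 1
    conn.commit()
    return rows, unmapped
```

Values are stored as text here on purpose, so that type and format checks can be applied as a separate, auditable step.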

Results

The lack of data validation on the front and back ends introduces the potential for a fair amount of data quality issues and results in a significant amount of data management of the data received. We have several examples of missed fields such as comparison date, measurements, and other important fields; however, we are waiting to collect a complete set of data before we conduct further investigation. Another common issue is differently formatted dates. This requires extra effort to process when the data is received and means that some of the dates need to be manually checked and validated. It also makes it more challenging to tell what format the date is in, such as MM/DD/YYYY or DD/MM/YYYY. Other inconsistencies require smaller adjustments that are easier to manage, such as measurement units and decimal format. Given the limitations of our current technology, we were only able to make adjustments and compromises to the template and radiologist training on the template in order to address these challenges. Report data captured show inconsistencies in how radiologists report, which requires investigation into how it impacts the patient, but we can say with certainty that it has a high impact on data collection. Having data validation rules could help prevent issues with submitting data and prevent radiologist errors, because such rules would prevent data integrity issues by showing questions conditionally and ensuring that correct data types are placed in the correct fields. For example, data validation can ensure that dates, decimals, and text are consistently used between multiple reports. Ideally, these rules should be applied at the Graphical User Interface (GUI) level, when the information is being entered by the radiologist, and at the back end when the data is sent to CCO. While the lack of data validation poses challenges, with manual cleaning, the data is fairly usable for analysis of trends in patients screened for lung cancer and reporting. These trends might include population analysis, risk assessment, and outcomes analyses.
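The date ambiguity described above can be made explicit in a back-end validation rule. The following minimal sketch accepts a date only when its interpretation is unambiguous and flags the rest for manual checking; the accepted formats and the status labels are assumptions made for illustration.

```python
from datetime import date, datetime
from typing import Optional, Tuple


def parse_report_date(raw: str) -> Tuple[Optional[date], str]:
    """Return (parsed date or None, status), where status is OK, AMBIGUOUS, or INVALID."""
    text = raw.strip()
    # ISO dates (YYYY-MM-DD) have a single interpretation.
    try:
        return datetime.strptime(text, "%Y-%m-%d").date(), "OK"
    except ValueError:
        pass
    # Try both slash-separated conventions and collect distinct results.
    candidates = set()
    for fmt in ("%m/%d/%Y", "%d/%m/%Y"):
        try:
            candidates.add(datetime.strptime(text, fmt).date())
        except ValueError:
            continue
    if len(candidates) == 1:
        return candidates.pop(), "OK"
    if len(candidates) > 1:
        # For example 02/03/2018 could be 3 February or 2 March.
        return None, "AMBIGUOUS"
    return None, "INVALID"
```

Applying the same rule at the point of entry would avoid the ambiguity altogether, for example by offering a date picker that always stores one format.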

We were able to use RadLex codes to identify questions and answers in the template that may be ambiguous. We are able to ensure semantic clarity of reporting content by mapping question and answer fields to appropriate RadLex codes. By creating a database and a mapping table incorporating RadLex codes, we can run analytics to better relate questions and answers.
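A mapping table of this kind can be sketched as a lookup from a normalized question and answer pair to a code. The identifiers below are placeholders and are not real RadLex IDs; the synonym list and the `to_code` helper are likewise hypothetical.

```python
# Placeholder identifiers only: these are NOT real RadLex IDs.
TERM_MAP = {
    ("nodule attenuation", "solid"): "RID_PLACEHOLDER_SOLID",
    ("nodule attenuation", "part-solid"): "RID_PLACEHOLDER_PART_SOLID",
    ("nodule attenuation", "pure ground glass"): "RID_PLACEHOLDER_GROUND_GLASS",
}

# Hypothetical synonyms that should resolve to one preferred term.
SYNONYMS = {
    "ggo": "pure ground glass",
    "ground glass": "pure ground glass",
    "part solid": "part-solid",
}


def to_code(question, answer):
    """Resolve a question/answer pair to a code, or None if it is unmapped."""
    q = " ".join(question.lower().split())
    a = " ".join(answer.lower().split())
    a = SYNONYMS.get(a, a)  # collapse synonyms onto the preferred term
    return TERM_MAP.get((q, a))
```

Returning `None` for unmapped pairs keeps gaps in the mapping visible, so they can be reviewed rather than assigned a guessed code.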

As we approach our 2-year review period in Fall 2019, we are making adjustments to the reporting template. We are unsure how these changes will affect the data integrity and data use. Maintaining the highest standard of patient management takes priority over data quality, and adjustments may be made to the summary section of the template containing the Lung-RADS™ to better match the outcomes we are experiencing; for example, we may adjust patient management recommendations to better fit the Ontario context. Changes to the form need to be accommodated in our database and can affect data quality, as we may lose fields we previously had and create new ones. These changes could potentially limit the analysis we can do on the data. We will need to accommodate previous versions of the template in our data sets and find ways to relate the two data sets.
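One way to relate data sets collected under different template versions is to map each version's fields onto a shared set of canonical fields, leaving fields that a version did not collect explicitly empty. The following is a minimal sketch under that assumption; the version labels and field names are hypothetical.

```python
# Hypothetical canonical fields and per-version field mappings.
CANONICAL_FIELDS = ["comparison", "lung_rads", "recommendation"]
VERSION_MAPS = {
    "v1": {"comparison_study": "comparison", "lung_rads": "lung_rads"},
    "v2": {
        "comparison": "comparison",
        "lung_rads": "lung_rads",
        "management": "recommendation",
    },
}


def harmonize(record, version):
    """Translate a version-specific record into the canonical field set."""
    mapping = VERSION_MAPS[version]
    # Start with every canonical field empty so missing data stays visible.
    out = {name: None for name in CANONICAL_FIELDS}
    for source_name, value in record.items():
        if source_name in mapping:
            out[mapping[source_name]] = value
    return out
```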

Discussion

The limitations of the current reporting solution, such as the lack of front-end data validation, usability challenges, and the lack of conditional logic, prevented a fully synoptic reporting solution and required many manual interventions and workarounds. Despite the limitations, we were able to achieve our goals within the current-state technology. A number of enhancements could have improved the implementation, including the ability to customize the sequence of answers, pre-populating applicable fields, and the use of conditional logic within sections and individual questions of the reporting template [10]. These enhancements would all ease the burden on the radiologists using this template and ensure that the final radiology report is clear, concise, and machine-readable.

The inability to scale the solution easily to other sites is due to the lack of interoperability standards for synoptic reporting. Other sites wishing to replicate the LDCT Reporting Template from Lakeridge Health may not be able to simply import a digital copy of the template, unless their vendor solution is the same type and version. This limits sharing between sites that have different VR systems, because any new sites would need to go through the same iterative process to adapt the template to their VR system and local needs. While any new sites could leverage lessons learned, they would most likely need to recreate the template in their site-specific VR system.

These limitations could be handled by improving the use of interoperability standards such as IHE Structured Data Capture (SDC). SDC is an XML-based interoperable standard based on the creation of forms [7]. SDC is currently being used for pathology and public health reporting. In pathology, the College of American Pathologists is currently working to convert all of their forms to SDC format [3], while the CDC is using it for the Digital Bridge electronic Case Reporting (eCR) initiative, which aims to achieve live electronic case reporting with multi-directional information exchange [4].

SDC forms can be loaded into any electronic medical record (EMR) system that has implemented SDC. CCO could curate and host a number of SDC forms for various purposes, such as specific procedures. Data could then be extracted automatically through the use of the unique identifiers required by all SDC forms. These IDs allow the data to be discretely captured, extracted, and then discretely stored in a database. These SDC forms can also be tagged with standardized terminology such as SNOMED-CT, ICD codes, LOINC codes, or RadLex, directly in the form, rather than via a mapping table as we did in the screening pilot.
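The role of unique identifiers in extraction can be illustrated with a deliberately simplified XML document. The element and attribute names below are hypothetical and do not follow the actual IHE SDC schema; the sketch only shows that, once every question carries a stable ID, extraction reduces to a lookup by ID.

```python
import xml.etree.ElementTree as ET

# Simplified, hypothetical XML; this is NOT the IHE SDC schema.
SAMPLE_FORM = """
<form id="ldct-demo">
  <question id="Q1" title="Lung-RADS category"><answer>2</answer></question>
  <question id="Q2" title="Nodule size (mm)"><answer>4.5</answer></question>
</form>
"""


def extract_answers(xml_text):
    """Return a mapping of question ID to answer text."""
    root = ET.fromstring(xml_text.strip())
    return {
        question.get("id"): (question.findtext("answer") or "").strip()
        for question in root.iter("question")
    }


answers = extract_answers(SAMPLE_FORM)
```

Because the IDs rather than the display titles are used as keys, rewording a question in a later form version would not break the extraction.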

CCO has developed a number of forms in SDC format; however, we have not been able to implement the technology at any sites for data capture to date. We aim to use this technology to reduce the amount of manual work required to capture the data, while maintaining governance of our forms. With SDC, CCO can provide the forms to vendors who have adopted the standard, and the forms can be transformed from XML to HTML or other technologies for display to clinicians. This method ensures that accurate data is captured at the source at which it is entered without the need for any manual transcription for that data to reach the EMR or CCO’s data warehouse.

Forms can also be aligned to clinical guidelines such as Lung-RADS and TNM. This enables better clinical content that is consistently produced by various radiologists. Guidelines also provide clear next steps for physicians and in many cases can provide clarity for patients reading their reports. Synoptic reporting lends itself well to these guidelines [8].

Conclusion

During this experience, we have discovered that the iterative design approach can be effective for reducing the barriers to adopting structured and synoptic reporting by clinicians. Our teams were able to create more effective templates by working with radiologists and making frequent adjustments based on their feedback. This approach helped manage some of the technical limitations we encountered. We were successful in capturing discrete data using the current-state technology, despite limitations, improving the template, and determining the effectiveness of the HR LCSP to date. It is unclear, given the amount of effort and workarounds, whether this solution can be expanded to other sites to collect synoptic data without technology changes. Currently, implementation and maintenance costs are very high, as weeks of preparation and testing are required by template designers, PACS administrators, radiologists, IT support teams, and CCO data analytics staff. The methodology used ensured that the synoptic template was able to collect data while maintaining its usability for the radiologists; however, the amount of rework and testing required is extensive and requires excellent communication between all parties involved in the template creation and data collection process. Future uses of synoptic data include intelligent templating that reacts to user inputs, assisted staging, and other clinical decision support and predictive analytics to make projections on patient populations and identify those at highest risk before they step into a clinic. Using standards, such as IHE SDC, could enable this reporting future.

Acknowledgments

We would like to acknowledge the Emerging Programs Team at CCO, the Lakeridge Health Team, and the Cancer Imaging Program at CCO.

Appendix. LDCT lung cancer screening form version 1.3

Footnotes

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Contributor Information

Alexander K. Goel, Email: Alexander.goel@cancercare.on.ca

Debbie DiLella, Email: ddilella@lakeridgehealth.on.ca.

Gus Dotsikas, Email: gdotsikas@lakeridgehealth.on.ca.

Maria Hilts, Email: mhilts@lakeridgehealth.on.ca.

David Kwan, Email: David.Kwan@cancercare.on.ca.

Lindsay Paxton, Email: lpaxton@lakeridgehealth.on.ca.

References

- 1.American College of Radiology: Lung Rads. 2018. Retrieved May 16, 2018, from https://www.acr.org/Clinical-Resources/Reporting-and-Data-Systems/Lung-Rads

- 2.Barchard KA, Pace LA. Preventing human error: The impact of data entry methods on data accuracy and statistical results. Comput Hum Behav. 2011;27(5):1834–1839. doi: 10.1016/j.chb.2011.04.004.

- 3.College of American Pathologists: CAP eCC. 2019. Retrieved December 3, 2018, from https://www.cap.org/laboratory-improvement/proficiency-testing/cap-ecc

- 4.Digital Bridge: Digital Bridge eCR functional requirements statements. Digital Bridge, 2017. Retrieved from http://www.digitalbridge.us/db/wp-content/uploads/2017/02/Digital-Bridge-eCR-Functional-Requirements.pdf

- 5.Dobranowski J: Structured reporting in Cancer imaging: Reaching the quality dimension in communication. Health Manag 15(4), 2015. Retrieved from https://healthmanagement.org/c/healthmanagement/issuearticle/structured-reporting-in-cancer-imaging-reaching-the-quality-dimension-in-communication

- 6.Harvey H: Synoptic reporting makes better radiologists, and algorithms. 2018. Retrieved July 16, 2018, from https://towardsdatascience.com/synoptic-reporting-makes-better-radiologists-and-algorithms-9755f3da511a

- 7.Integrating the Healthcare Enterprise (IHE): Structured Data Capture (SDC) Rev. 2.1 – Trial Implementation. 2016. Retrieved December 17, 2018, from https://ihe.net/uploadedFiles/Documents/QRPH/IHE_QRPH_Suppl_SDC.pdf

- 8.Johnson AJ, Chen MYM, Swan JS, Applegate KE, Littenberg B. Cohort study of structured reporting compared with conventional dictation. Radiology. 2009;253(1):74–80. doi: 10.1148/radiol.2531090138.

- 9.National Center for Biomedical Ontology: Radiology lexicon - summary | NCBO BioPortal. 2018. Retrieved July 9, 2018, from http://bioportal.bioontology.org/ontologies/RADLEX

- 10.Segall N, Saville JG, L’Engle P, Carlson B, Wright MC, Schulman K, Tcheng JE. Usability Evaluation of a Personal Health Record. AMIA Annu Symp Proc. 2011;2011:1233–1242.

- 11.Srigley JR, McGowan T, MacLean A, Raby M, Ross J, Kramer S, Sawka C. Standardized synoptic cancer pathology reporting: A population-based approach. J Surg Oncol. 2009;99(8):517–524. doi: 10.1002/jso.21282.