Abstract

In the era of personalized medicine, the emphasis of health care is shifting from populations to individuals. Artificial intelligence (AI) is capable of learning without explicit instruction and has emerging applications in medicine, particularly radiology. Whereas much attention has focused on teaching radiology trainees about AI, here our goal is to instead focus on how AI might be developed to better teach radiology trainees. While the idea of using AI to improve education is not new, the application of AI to medical and radiological education remains very limited. Based on the current educational foundation, we highlight an AI-integrated framework to augment radiology education and provide use case examples informed by our own institution’s practice. The coming age of “AI-augmented radiology” may enable not only “precision medicine” but also what we describe as “precision medical education,” where instruction is tailored to individual trainees based on their learning styles and needs.

Introduction

Applications of artificial intelligence (AI) in medicine will change radiology practice in myriad ways. There is growing consensus that the education of radiology post-graduate trainees and medical students should now include an understanding of AI.1,2 While the field has discussed educating radiologists on the use of AI,3 the purpose of this review is instead to focus on the use of AI for “precision education” in radiology.

We first define “precision medical education” and provide a brief overview of radiology education. Next, we introduce challenges in modern radiology education and propose AI-based solutions for both students and educators. Then, we illustrate use cases of AI in radiology education from our institution, namely an interactive online system built for radiology education and a digital neuroradiology teaching file. Integrating such tools may improve models of augmented radiology education. Finally, we explore new challenges facing AI in radiology education.

Artificial Intelligence and precision medical education

Generally, AI has the potential to improve efficiency and productivity throughout medicine.4–11 AI techniques are computational models able to emulate human performance on a task, often without being explicitly programmed for that task.12 Deep learning models are one class of AI that has found recent success, although AI more broadly refers to a much larger set of computational techniques that perform complex operations previously thought to require human intelligence. Current successful radiological applications of AI include (i) abnormality detection, (ii) anatomic segmentation, (iii) image quality assessment, (iv) natural language processing (NLP) and (v) improvement of protocols and worklists, among others.13 Further discussion of AI applications in radiology is beyond the scope of this text and is reviewed elsewhere.12–14 Often, the goal of AI is to understand some aspect of an individual’s health by taking a multitude of variables into account. As such, AI is closely tied to the concept of “precision medicine”, a healthcare framework focused on “prevention and treatment strategies that take individual variability into account”.15

In contrast, AI applications to medical education are relatively underexplored. Individual variability is thought to contribute substantially to learning styles.16 Although a tremendous amount of research has focused on precision medicine, very little has focused on analogous personalized medical education. Exploiting AI technologies for education in general is not a new concept, and was indeed presented as a “grand challenge” for AI in 2013.17 AI has been utilized to forecast student performance, dropout rates and adverse academic events.18,19 Additionally, several AI methods use e-learning modules to identify the learning styles of individual students.20,21 There are, however, legitimate concerns about didactic applications of AI, given that AI has not yet been widely implemented in clinical practice and the majority of published papers have been retrospective, single-institution studies.12 Nonetheless, the potential instructive applications of AI warrant further discussion and research.

Here, we focus on the potential use of AI in radiology education. Much of the excitement about AI in education centers around the use of AI for taking into account unique individual needs. Therefore, we propose the term “precision medical education” to illustrate the use of tools and diagnostics to personalize medical education to individual learners. We introduce and analyze the potential utility of AI in radiology education, AI-based solutions, and new challenges in an era of precision medical education.

Precision radiology education framed by learning theories

Radiology education requires acquiring the skills to analyze and extract imaging features to find patterns, generate a differential diagnosis that matches those patterns, and correlate the imaging features and differential with clinical findings to select the most probable diagnosis.22 To acquire and apply these skills, trainees learn and integrate diverse knowledge sources. Radiology training relies on the traditional apprenticeship model. Because this model depends on trainees’ relationships with staff radiologists and on the limited time available to review preliminary reports with them, gains in knowledge and skills can vary between trainees.23–25 Moreover, this learning is dictated by the number and diversity of cases encountered, which vary with practice setting and patient mix. Hence, the apprenticeship model is challenged by ever-increasing workload demands on both attending/staff physicians and trainees, and it can be improved by better understanding the relationships between humans and tools.1

Radiology affords tremendous opportunity to leverage technological advances for education, given its inherently digital nature and early technology adoption. To put a proposed model for future radiology education into context, we briefly review three learning theories (behaviorist, cognitive and constructivist) (Table 1) that are relevant to radiology trainees and medical students.26 The behaviorist theory emphasizes whether the best gamut and diagnosis were made. Cognitive theory focuses on how the diagnosis was made, with proper reasoning and algorithms. Finally, constructivist theory highlights with whom and with what tools the diagnosis was made, allowing analysis of trainees’ collaborations with peers, supervisors, AI tools, etc.

Table 1.

Learning theories address questions that form the basis of how we understand radiology and differential diagnosis26

| Learning Theory | Question Addressed | Description |
|---|---|---|
| Behaviorist theory | Is the best diagnosis made? | The study of behavior focuses on questions including whether radiology trainees formulate the most accurate differential, and whether significant radiologic features or lesions are distinguished from artefact. This perspective emphasizes the results demonstrated by trainees but treats trainees as a “black box.” |
| Cognitive theory | How was the best diagnosis made, and from what reasoning? | Cognitive processes highlight the reasoning involved in deciding on diagnoses. What features did the trainee compile, and how did he or she rank them to determine a differential? The focus becomes how we can allow trainees to develop the skills necessary for obtaining correct responses. This perspective also identifies cognitive biases (errors in memory recall, reasoning or decision-making) that are salient in practice. |
| Constructivist theory | With whom and with what tools was the best diagnosis made? | Learning is recognized to be a social process wherein trainees rely on and actively participate in interactions with other trainees, technologists, clinical colleagues and staff physicians. Trainees also use tools to facilitate their education. Relationships between trainees, their mentors and supervisors, and study resources create a greater understanding of how radiology is learnt and practiced. |

As an illustrative case of the learning theories, imagine a trainee on her neuroradiology rotation. She encounters a real (or simulated test) case of a patient presenting with acute neurological deficits. The brain MRI depicts a T2 hyperintense lesion with restricted diffusion in the basal ganglia. She generates a differential diagnosis from the radiologic and clinical features and ultimately arrives at the diagnosis of acute infarction. In assessing her performance, the behaviorist theory evaluates whether she generated the best diagnosis. Cognitive theory examines her reasoning in correlating radiologic features with the provided clinical context. Constructivist theory focuses on how she used resources, such as textbooks, colleagues and supervisors. Together, these theories contribute to a holistic understanding, although not with equal weight at every point in time; parts of each theory are more relevant to certain learners at certain stages of their training. AI may offer improvements to current educational practice corresponding to any of these perspectives (Table 2).

Table 2.

Learning theories address challenges that form the foundation to improve radiology precision education with AI

| Learning theory | Challenge addressed | Description |
|---|---|---|
| Behaviorist theory | How can we improve perceptual and diagnostic accuracy of trainees and medical students? | Experience is a gateway between apprenticeship and autonomy, empowering trainees with greater accuracy.17,26 Patient care can improve with increased trainee experience. |
| Cognitive theory | How can we improve radiology education and its use of textbooks and lectures as the main access points to knowledge and diagnostic thinking? | With growing knowledge requirements in medicine, and definitions of pathology ever-evolving, the knowledge gap between trainee and attending continues to widen. AI can build knowledge efficiently and highlight and correct individual cognitive biases. |
| Constructivist theory | How can we optimize and maximize the time spent between trainees and their teachers? | One-on-one time at workstations is essential to radiology education environments,17 but this is not always achievable in busy reading rooms, with ever-increasing work-loads.1,23 AI can also automate “learning profiles” (discussed later).24 |

Now, imagine the trainee is learning with an AI network that has already determined a potential diagnosis. Such a network allows her to check whether the elements of her own differential diagnosis are correct. Accuracy, measured as the percentage of correct diagnoses, reflects the behaviorist view of trainee performance. Based on her differential, AI can individualize its recommendations and prompt her to identify or corroborate her observations of T2 hyperintensity or diffusion restriction. In addition to image interpretation, AI can assist in clinical recommendations for this patient with acute stroke. This individualized learning guides her reasoning, which may improve measures of proper lesion characterization and potentially reduce complications, consistent with the cognitive theory. After interacting with the human-machine interface, she can discuss the case with other radiologists. The quality of these interactions might be assessed by turnaround time or time spent using the AI, measures central to the constructivist theory.
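The behaviorist measure mentioned above is simple arithmetic. The Python sketch below assumes that each case attempt is stored as the supervisor-confirmed diagnosis plus the trainee’s ranked differential; the names `CaseAttempt`, `top1_accuracy` and `differential_hit_rate` are hypothetical and are not part of any system described here. It reports both the percentage of cases with a correct first-ranked diagnosis and the percentage in which the correct diagnosis appears anywhere in the top k of the differential.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class CaseAttempt:
    """One trainee read (hypothetical record format)."""
    reference_diagnosis: str  # diagnosis confirmed by the supervising radiologist
    differential: list[str]   # trainee's differential, most likely first


def top1_accuracy(attempts: list[CaseAttempt]) -> float:
    """Percentage of cases in which the first-ranked diagnosis was correct."""
    if not attempts:
        return 0.0
    hits = sum(
        1 for a in attempts
        if a.differential and a.differential[0] == a.reference_diagnosis
    )
    return 100.0 * hits / len(attempts)


def differential_hit_rate(attempts: list[CaseAttempt], k: int = 3) -> float:
    """Percentage of cases in which the correct diagnosis appeared in the top k."""
    if not attempts:
        return 0.0
    hits = sum(1 for a in attempts if a.reference_diagnosis in a.differential[:k])
    return 100.0 * hits / len(attempts)


# Hypothetical example reads, for illustration only
attempts = [
    CaseAttempt("acute infarction", ["acute infarction", "demyelination"]),
    CaseAttempt("high-grade glioma", ["metastasis", "high-grade glioma"]),
    CaseAttempt("abscess", ["high-grade glioma"]),
]
print(round(top1_accuracy(attempts), 1))          # 33.3
print(round(differential_hit_rate(attempts), 1))  # 66.7
```

Keeping the two figures separate distinguishes a trainee who considers the right entity but ranks it poorly from one who misses it entirely.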

Challenges in radiology education and solutions offered by AI

Radiology education faces inherent challenges. Some are unique to the field, including lack of high-fidelity simulation training, the immersion of trainees into realistic situations mimicking real-life encounters.25 Some are common across medicine, including evolving apprenticeships.27 Herein, we introduce challenges and discuss potential solutions offered by AI. Naturally, some solutions overlap between learning theories, so they are listed under the “best matched” theory (Figure 1). The applications presented here are not yet validated. Hence, we provide a critical overview of the challenges of implementing such solutions and possible evaluation methods in the “New Challenges” section.

Figure 1.

Triangular approach to learning theories and integration of AI into radiology precision education. Behaviorist, cognitive and constructivist learning theories each present challenges and solutions.

Automated measurement and case flow assignment: Adding AI to post-graduate education may directly help trainees build caseloads with the necessary breadth and depth of cases. Automated segmentation and measurement of lesions, sometimes already at or above human accuracy,4,11,28,29 can improve the efficiency of study interpretation, leading to more educational cases for trainees. At a systems level, “intelligent” allocation and assignment of cases to trainees and staff radiologists could produce an efficient distribution of resources and education.14 Indeed, radiology trainee performance increases with optimal case exposure and volume.30 Specific “must-see” cases can be assigned based on rotations to help minimize inconsistency in individual trainee experiences. This frees time for trainees to review resources critical for proper case interpretation, which may improve diagnostic accuracy and allow more interaction between educators and students. Importantly, for “educated consumerism” of AI technologies, instruction on the technology should be added to core radiology and medical school curricula to help trainees identify the strengths and weaknesses of AI,31 reinforced by its use in trainee education.

Case-based learning (Bottom-up approach): Interactive and problem/case-based learning are “bottom-up approaches,” in which students gain firsthand experience interpreting cases themselves. Conversely, “top-down approaches” expect students to learn diagnostic processes from educators first and practice afterwards. AI can complement existing bottom-up platforms used to teach radiology.32 It has been argued that case-based learning should be implemented because it is more effective than traditional top-down approaches and is preferred by radiology educators and students.33–35 Similar to how weekly interesting-case discussions are prepared, bottom-up cases for trainee learning may be selected by attending physicians based on criteria such as the rarity of the diagnosis, the difficulty of differentiating diagnoses, or the opportunity to integrate multiple lines of evidence. Eventually, AI may select the cases itself as it detects rare radiologic or clinical features. AI offers opportunities to deliver case-based learning through tools such as AI-curated teaching files that are presented at relevant times and adapt to individual performance. Such systems, often termed “intelligent tutoring systems”,36 have existed for many years, but AI is now reaching the computational capacity for broader application. In radiology education, an intelligent tutor might similarly create modules or virtual curricula for rotating trainees, track learning style and performance, and reinforce challenging topics. To assess a trainee’s knowledge base, the supervising physician and/or such an AI-based personal tutor can evaluate how the trainee performs with and without assistance.
This approach of case-based milestones encourages both individualization of trainee learning through AI tools and standardization of radiology graduate and continuing education, in line with the Accreditation Council for Graduate Medical Education (ACGME).37 On-demand, case-based, standardized curricula may guide program directors and supervisors toward more thorough, equitable radiology training and education.
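One simple way an intelligent tutor could track performance and choose which topic to reinforce is sketched below in Python. It is a hypothetical illustration, not a description of an existing tutoring system: `TopicTracker` keeps separate counts for assisted and unassisted reads, estimates accuracy with Laplace (add-one) smoothing so that topics with no reads start at 0.5, and proposes the topic with the lowest unassisted estimate. The gap between assisted and unassisted accuracy mirrors the “with and without assistance” comparison described above.

```python
from collections import defaultdict


class TopicTracker:
    """Hypothetical per-trainee performance record for a rotation's topics."""

    def __init__(self, topics):
        self.topics = list(topics)  # curriculum order is used to break ties
        # (topic, assisted) -> [correct, attempted]
        self.counts = defaultdict(lambda: [0, 0])

    def record(self, topic, was_correct, assisted=False):
        counts = self.counts[(topic, assisted)]
        counts[1] += 1
        if was_correct:
            counts[0] += 1

    def accuracy(self, topic, assisted=False):
        """Laplace-smoothed accuracy; a topic with no reads scores 0.5."""
        correct, attempted = self.counts.get((topic, assisted), (0, 0))
        return (correct + 1) / (attempted + 2)

    def assistance_gap(self, topic):
        """Positive values suggest the trainee still leans on AI assistance."""
        return self.accuracy(topic, assisted=True) - self.accuracy(topic, assisted=False)

    def next_topic(self):
        """Suggest the topic with the lowest unassisted accuracy estimate."""
        return min(self.topics, key=lambda t: self.accuracy(t, assisted=False))


tracker = TopicTracker(["acute stroke", "demyelination", "neoplasm"])
tracker.record("acute stroke", True)
tracker.record("acute stroke", True)
tracker.record("demyelination", False)
tracker.record("demyelination", True, assisted=True)
print(tracker.next_topic())  # demyelination
```

Smoothing keeps a single early miss or hit from dominating the estimate; a real tutor would likely also weight recent reads more heavily.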

Guided decisions: AI algorithms have the potential to directly teach cognitive processes related to diagnostic decisions. For example, decision-tree methods search the space of possible decision points for the simplest combination of points that yields the highest accuracy. Other AI tools, such as saliency maps, can highlight the anatomical regions an AI model relied on to reach a result. When applied to different diagnoses, decision trees and saliency maps can allow trainees to peer “inside the black box” to learn radiological decision-making.4 These guided decisions may serve to demystify AI tools and allow trainees to derive diagnostically meaningful information that might otherwise be hidden. This also shows how precision radiology education differs from precision medical education more broadly, which may use networks to highlight clinically visible signs, symptoms or laboratory results. Additionally, salient case features can be identified and cross-referenced with records or teaching files to recommend additional cases for review. This database can be curated and adapted by supervising physicians to guide trainees on how to make clinical decisions. Educators can analyze trainee decisions and either reinforce or discourage them in future situations. As educators implement AI tools, cognitive biases may be reduced by presenting trainees with forgotten information, strengthening proper reasoning and providing examples of correct decision-making. Finally, AI can help balance the relative contributions of “thinking slow” and “thinking fast”.38 “Slow,” logical decisions, like analyzing studies for planning stereotactic radiosurgery, can be augmented by systematic AI-based decisions, which may improve the quality of trainee reasoning. “Fast” thinking, like interpreting acute infarcts on head CT, requires quick heuristics developed as individuals become familiar with cases.
By streamlining workflow with automated quantification, such as characterizing lesions in multiple sclerosis,39 AI can reduce time-to-diagnosis and increase the number of cases studied, which may improve quick decision-making. Hence, guided decisions may assist individual trainees at multiple levels of decision-making and improve both the quantity of training cases and the quality of personal time spent learning from them. This is not yet proven, and we discuss it further in the “New Challenges” section. Nonetheless, just as graphing calculators can guide students to understand calculus, AI tools may be able to guide trainees in radiological interpretation. While there is concern that use of AI for clinical and diagnostic decisions will supersede the role of human radiologists, AI may allow augmented radiologists to take on new roles in data science and assessment.40
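To make the decision-tree idea concrete, the Python sketch below shows the greedy step that common tree learners (such as ID3-style algorithms) use in practice: choosing the single finding that most reduces uncertainty about the diagnosis, measured by information gain. Greedy splitting approximates, rather than guarantees, the simplest high-accuracy tree. The findings, labels and function names are hypothetical toy data for illustration, not results from any model discussed here.

```python
import math
from collections import Counter


def entropy(labels):
    """Shannon entropy (bits) of a list of diagnosis labels."""
    n = len(labels)
    if n == 0:
        return 0.0
    return -sum((c / n) * math.log2(c / n) for c in Counter(labels).values())


def information_gain(cases, labels, finding):
    """Entropy reduction from splitting cases on presence/absence of one finding."""
    present = [y for x, y in zip(cases, labels) if finding in x]
    absent = [y for x, y in zip(cases, labels) if finding not in x]
    n = len(labels)
    remainder = (len(present) / n) * entropy(present) + (len(absent) / n) * entropy(absent)
    return entropy(labels) - remainder


def best_split(cases, labels):
    """Greedy choice of the first decision point, as in ID3-style tree induction."""
    findings = sorted(set().union(*cases))  # sorted so ties resolve deterministically
    return max(findings, key=lambda f: information_gain(cases, labels, f))


# Hypothetical toy cases: each is the set of findings a reader recorded
cases = [
    {"restricted_diffusion", "acute_onset"},
    {"restricted_diffusion", "mass_effect"},
    {"mass_effect", "enhancement"},
    {"mass_effect", "acute_onset"},
]
labels = ["infarct", "infarct", "glioma", "glioma"]
print(best_split(cases, labels))  # restricted_diffusion
```

Applied recursively to each branch, this step builds a full tree whose splits a trainee can read as a sequence of diagnostic questions.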

High and low-level supervision: One solution to the constructivist challenge is to support human-machine interactions and extend human interactions to enrich learning experiences. In one study, US residents spent about 24 minutes reviewing a brain MRI and drafting a report, and greater supervision may help optimize that time.41 AI can be used for low-level education while attending/staff physicians still provide high-level supervision. Previous AI tools have matched students with preferred learning styles,20,27 and AI can identify and customize low-level learning experiences for individual trainees. Trainees working on draft reports may be directed by AI algorithms to review example case reports with similar radiologic or clinical features, relevant clinical literature and additional quantitative measures, such as the size, volume, lesion count or change over multiple time-points. AI-driven search queries and recommendations have already been implemented in biomedical databases for “intelligent navigation”. Such tools may enable intelligent triangulation and the creation of trainee competency profiles, akin to the online logs of skills and experiences required by surgical training programs; such profiles are currently in development in radiology.24,42 For high-level training, students will still have direct face time with attending/staff physicians but may now focus on synthesizing and refining information. The attending can review a trainee’s activity on cases and use of AI networks to address errors in reasoning and discuss more abstract considerations, from clinical best practices to management decisions. We later discuss potential metrics of performance.
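Retrieval of example cases with similar features could, in its simplest form, rank teaching-file cases by the overlap between their recorded findings and those of the current study. The Python sketch below assumes each case is represented as a set of coded findings and uses Jaccard similarity; the case IDs, finding codes and `similar_cases` function are hypothetical, and a production system would likely use richer representations such as report text or image embeddings.

```python
from __future__ import annotations


def jaccard(a: set[str], b: set[str]) -> float:
    """Overlap between two finding sets: size of intersection over size of union."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def similar_cases(query: set[str], teaching_file: dict[str, set[str]], k: int = 3) -> list[str]:
    """Return up to k case IDs ranked by Jaccard similarity to the query findings."""
    scored = [(jaccard(query, findings), case_id) for case_id, findings in teaching_file.items()]
    # Highest similarity first; case ID breaks ties so results are reproducible
    scored.sort(key=lambda item: (-item[0], item[1]))
    return [case_id for score, case_id in scored[:k] if score > 0]


# Hypothetical teaching-file entries keyed by case ID
teaching_file = {
    "TF-001": {"flair_hyperintense", "restricted_diffusion", "basal_ganglia"},
    "TF-002": {"flair_hyperintense", "mass_effect", "enhancement"},
    "TF-003": {"restricted_diffusion", "basal_ganglia"},
}
draft_findings = {"flair_hyperintense", "restricted_diffusion", "basal_ganglia"}
print(similar_cases(draft_findings, teaching_file, k=2))  # ['TF-001', 'TF-003']
```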

Flipped learning: AI techniques may promote interactive flipped learning for personalized education. Traditional classrooms provide supervised (yet passive) instruction, while trainees practice unsupervised. In contrast, flipped classrooms have trainees review cases and draft reports while supervised by educators and gather information from knowledge sources while unsupervised. Flipped learning has been tested in, and preferred by, medical students,43 dental students44 and radiology trainees.1,35,45 In fact, many medical schools have implemented flipped classrooms in which lessons are introduced at home and ideas are synthesized and applied during class time in place of lecture; similarly, workstations and reading rooms can become flipped “precision learning classrooms.” Supervisors and trainees may spend less time reviewing basic ideas and more time on deeper discussions of radiologic and clinical principles, and may hold review/question-and-answer sessions. Students can use AI to supplement unsupervised learning during personal time. This change would allow human educators to spend more time observing trainees’ practice, correcting errors made in unsupervised learning and tailoring training methods and lesson content to their students’ strengths and weaknesses, promoting bi-directional information exchange and learning. Thus, AI may enable precision education platforms that balance a standardized curriculum with inherently individualized learning.

High-fidelity simulation training: High-fidelity simulations (HFS) allow trainees to practice for clinical encounters. Radiology uses highly advanced technology, but compared with other medical fields, it lacks HFS and situational learning that can provide real-time feedback tailored to specific learning skills or modules.25 In a survey of US radiology residency program directors, department chairs and chief residents, one third of respondents highlighted issues such as lack of faculty time and training, cost of equipment and lack of support staff as barriers to HFS in diagnostic radiology; many of these barriers might be overcome with AI. In fact, AI clinical learning tools, such as models for sepsis, pediatric asthma management and breast cancer risk prediction, are currently being developed to potentially improve clinical management.46–48 Such models must be rigorously validated by different investigators across different populations of training data before clinical adoption. While such tools have not yet been approved for widespread clinical practice, they might be helpful in training clinicians on how, or how not, to act.49 Furthermore, the construct validity of whether certain AI tools actually “learn” has been debated, and the use of such tools in medical education and simulation has not been thoroughly tested. Nevertheless, it is worth determining whether such models may one day be applied to simulation training to prepare students to manage clinical scenarios. An AI-integrated call preparation platform might allow trainees to better simulate mini-call conditions, expeditiously extract information from patient charts and increase autonomy under faculty supervision.
These initiatives are cost-effective and may prevent morbidity associated with unexpected situations or life-threatening events faced during traditional post-graduate medical education and training.50 Precision education can promote a better learning environment in which trainees are tested with simulated calls and assessed on performance. Real-time feedback and situational learning tailored to unique events can be provided by AI networks and supervisors, who can track progress and proficiency from simulations. Based on performance, simulations can be adapted to fit the learning needs of trainees, as discussed further in the use cases. Moreover, learning environments might be adapted to individual learners on a case-by-case basis, leveraging previous research on applying AI to match students’ learning styles and provide feedback.20,21,51

Use cases for radiology precision education

Given these potential solutions from AI, we now examine two use cases and propose a model for AI in radiology precision education.

Bayesian networks

Textbooks and lectures in radiology generally focus on the knowledge and important imaging findings required for image interpretation. Additionally, years of training and experience with many exemplars, augmented by direct workstation teaching, equip trainees with reasoning skills and a basic understanding of probabilistic, rank-ordered differential diagnosis. Clinical Decision Support (CDS) systems that integrate with electronic health records are currently being developed to improve diagnosis and management.52,53

Rather than having trainees memorize lists and mnemonics, a system that supports development of a differential diagnosis may be a more effective educational tool. The Adaptive Radiology Interpretation and Education System (ARIES) is open-source software (https://github.com/jeffduda/aries-app) developed at our institution as a tool for integrating imaging and clinical features to formulate a differential diagnosis, as well as for teaching probabilistic reasoning in forming these differential diagnoses.54,55 Specifically, ARIES is a web-based interface to a Bayesian network that allows users to select observed features for any imaging examination. It computes probabilities of specific diseases based on reported combinations of imaging and/or clinical features. Real-time probabilities for a pre-defined list of differential diagnoses are calculated according to a specified Bayesian network with expert-derived conditional probabilities from specialists or published findings.54,55 Further validation is underway to determine whether systems such as ARIES are effective in improving educational outcomes and diagnostic performance of trainees. While such networks have yet to be validated for clinical practice, they hold promise for streamlining education, given that the current alternatives are inefficient textbook and literature searches.
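The probability calculation described above can be illustrated with a naïve Bayes model, the form named in the Figure 2 caption. The Python sketch below is not ARIES code; the priors, conditional probabilities and the `posterior` function are hypothetical placeholders for expert-derived values. Each diagnosis is scored as its prior times the probability of each observed finding (present or absent) given that diagnosis, and the scores are normalized to sum to one. Findings the user has not answered are simply left out, which under the naïve Bayes assumption is equivalent to marginalizing over them.

```python
import math


def posterior(priors, likelihoods, observed):
    """Naive Bayes posterior over diagnoses.

    priors:      {diagnosis: P(diagnosis)}
    likelihoods: {diagnosis: {finding: P(finding present | diagnosis)}}
    observed:    {finding: True if present, False if absent}; unanswered findings omitted
    All probabilities are assumed to lie strictly between 0 and 1.
    """
    log_scores = {}
    for dx, prior in priors.items():
        score = math.log(prior)
        for finding, present in observed.items():
            p = likelihoods[dx][finding]
            score += math.log(p if present else 1.0 - p)
        log_scores[dx] = score
    # Normalize with the log-sum-exp trick to avoid underflow with many findings
    top = max(log_scores.values())
    z = sum(math.exp(s - top) for s in log_scores.values())
    return {dx: math.exp(s - top) / z for dx, s in log_scores.items()}


# Hypothetical values, NOT taken from ARIES
priors = {"high-grade glioma": 0.6, "tumefactive MS": 0.4}
likelihoods = {
    "high-grade glioma": {"mass_effect": 0.9, "young_adult": 0.1},
    "tumefactive MS": {"mass_effect": 0.3, "young_adult": 0.8},
}
imaging_only = posterior(priors, likelihoods, {"mass_effect": True})
with_clinical = posterior(priors, likelihoods, {"mass_effect": True, "young_adult": True})
print({dx: round(p, 2) for dx, p in imaging_only.items()})   # {'high-grade glioma': 0.82, 'tumefactive MS': 0.18}
print({dx: round(p, 2) for dx, p in with_clinical.items()})  # {'high-grade glioma': 0.36, 'tumefactive MS': 0.64}
```

In this toy example, adding a single clinical finding reverses the rank order, which is the kind of shift between imaging-only and clinical differentials that the use cases below describe.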

ARIES is a Bayesian network rather than a deep learning-based AI algorithm, and it has specific networks developed for several organ systems (including preliminary MRI subnetworks for the musculoskeletal system, spine, lung and renal lesions), each with a tailored differential diagnosis. Specific subnetworks may be used by trainees during individual rotations or selected based on the indication or clinical description of the imaging study. Further description of the methods is found in references 54,55 and the Supplementary Material. While deep learning methods often demonstrate high performance, integrating Bayesian networks into digital platforms offers the advantage of access to internal features “under the hood.”

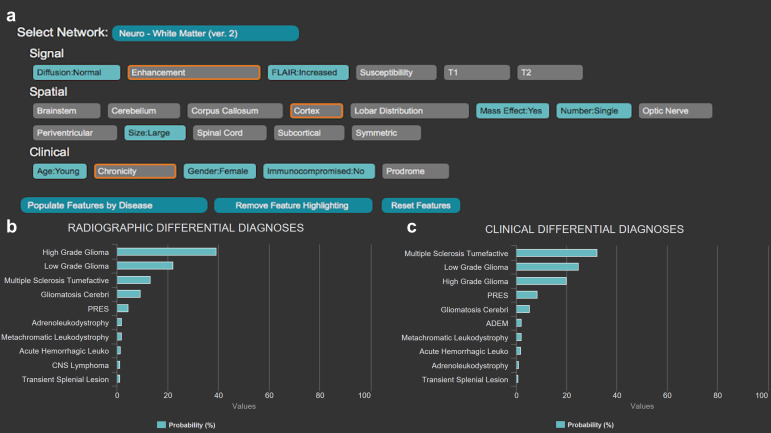

ARIES assesses features to predict diagnoses ranging in frequency from common to uncommon to rare. To better illustrate the utility of such software for education, consider a trainee on a neuroradiology rotation. A patient presenting with nonspecific acute neurological manifestations undergoes a brain MRI, which the trainee reviews using ARIES for diagnostic assistance. The trainee can select relevant imaging/clinical features (for example, a single large lesion with mass effect, increased FLAIR signal, absence of reduced diffusion and heterogeneous contrast enhancement) (Figure 2a). Clinical information (age, immune status, gender) can also be specified to refine the differential diagnosis. These features are integrated by the Bayesian network to calculate probabilities for diagnoses relevant to the organ system. Two sets of probabilistic diagnoses are provided: one accounting only for imaging findings (“Radiographic Differential Diagnoses”) (Figure 2b), and a second calculating disease probabilities given the imaging findings in the specified clinical setting (“Clinical Differential Diagnoses”) (Figure 2c). In addition, ARIES can highlight (in orange in Figure 2a) the most relevant diagnostic features for differentiating the items in the current differential diagnosis. This feature can guide a radiology trainee in assessing the most relevant imaging features for a particular diagnosis.
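The text does not state how ARIES chooses which unanswered features to highlight. One standard criterion, assumed here purely for illustration, is expected information gain: the feature whose answer is predicted to reduce the entropy of the current differential the most. The self-contained Python sketch below re-declares a small hypothetical naïve Bayes `posterior` helper and ranks candidate features this way; `most_informative_finding`, the diagnoses and all probabilities are invented for the example.

```python
import math


def posterior(priors, likelihoods, observed):
    """Hypothetical naive Bayes posterior, re-declared so this sketch runs on its own."""
    scores = {}
    for dx, prior in priors.items():
        score = prior
        for finding, present in observed.items():
            p = likelihoods[dx][finding]
            score *= p if present else 1.0 - p
        scores[dx] = score
    total = sum(scores.values())
    return {dx: s / total for dx, s in scores.items()}


def entropy(dist):
    """Uncertainty (bits) of a differential diagnosis."""
    return -sum(p * math.log2(p) for p in dist.values() if p > 0)


def most_informative_finding(priors, likelihoods, observed, candidates):
    """Return (finding, expected entropy reduction) for the best unanswered finding."""
    current = posterior(priors, likelihoods, observed)
    best, best_gain = None, -1.0
    for finding in candidates:
        if finding in observed:
            continue  # already answered by the trainee
        # Predicted probability that the finding is present, given current evidence
        p_present = sum(current[dx] * likelihoods[dx][finding] for dx in current)
        expected = 0.0
        for present, p_answer in ((True, p_present), (False, 1.0 - p_present)):
            if p_answer > 0:
                updated = posterior(priors, likelihoods, {**observed, finding: present})
                expected += p_answer * entropy(updated)
        gain = entropy(current) - expected
        if gain > best_gain:
            best, best_gain = finding, gain
    return best, best_gain


# Hypothetical probabilities for illustration only
priors = {"diagnosis A": 0.5, "diagnosis B": 0.5}
likelihoods = {
    "diagnosis A": {"enhancement": 0.9, "age_over_50": 0.5},
    "diagnosis B": {"enhancement": 0.1, "age_over_50": 0.5},
}
finding, gain = most_informative_finding(priors, likelihoods, {}, ["age_over_50", "enhancement"])
print(finding)  # enhancement
```

Because the answer to `age_over_50` is equally likely under both diagnoses, asking it cannot change the differential, so its expected gain is zero and the sketch prefers `enhancement`.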

Figure 2.

The Adaptive Radiology Interpretation and Education System (ARIES) distinguishes between a high-grade glioma and tumefactive multiple sclerosis in the white matter neuroradiology network. (a) Features based on signal, spatial and clinical information are selected by the trainee in blue; unselected features are gray. The most differentiating unanswered features are highlighted in orange and updated in real-time. Differential diagnosis by (b) imaging features only versus (c) a combination of clinical and imaging features derived from the features selected in (a). Probabilities of diagnoses are calculated by a naïve Bayes network with prior probabilities based on expert consensus.

In the provided example, querying the neuroradiology white matter network with the features described above points towards a diagnosis of high-grade glioma. Adding clinical findings suggests an alternative diagnosis of tumefactive multiple sclerosis. This difference in the rank order of the differential diagnosis teaches the importance of clinical presentation in imaging contexts.

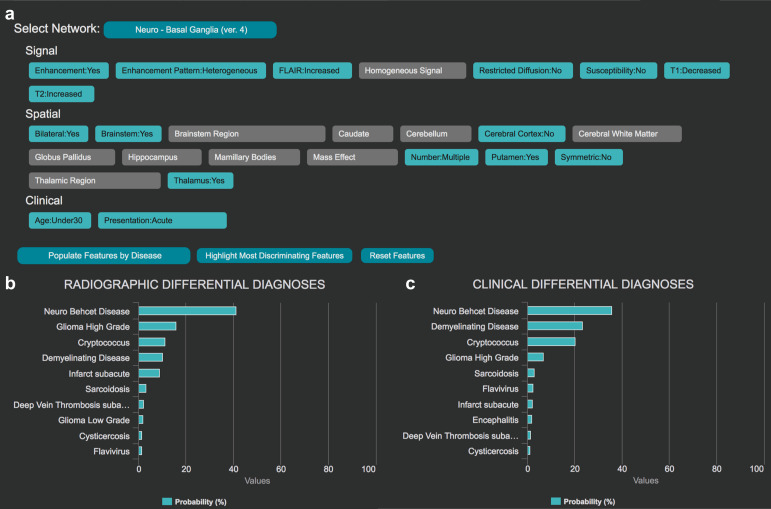

Platforms like ARIES are also applicable to rare diagnoses. For the basal ganglia network (Figure 3a), a trainee may encounter a young adult with acute neurological symptoms and increased FLAIR signal in the deep gray nuclei, brain stem and white matter, with focal heterogeneous enhancement. The differential diagnosis may include neoplastic, demyelinating, infectious and inflammatory etiologies. Yet the diagnosis with the highest probability according to ARIES is Neuro-Behçet’s disease (Figure 3b–c). This specific imaging pattern in a young individual with an acute presentation preferentially suggests this rare condition.

Figure 3.

The Adaptive Radiology Interpretation and Education System (ARIES) distinguishes between Neuro-Behçet's disease and other diagnoses in the basal ganglia neuroradiology network. (a) Features based on signal, spatial and clinical information are selected by the trainee in blue; unselected features are gray. Differential diagnosis by (b) imaging features only versus (c) a combination of clinical and imaging features derived from the features selected in (a).

Learning tools like ARIES empower trainees with the capacity to develop and test hypotheses with interactive analysis by adding, removing, or changing features to see how they affect the differential diagnosis. Real-time probabilistic feedback from authenticated, proven cases supports direct education in radiological decision-making, integrating all learning theories and radiology triangulation. For another neuroradiology example, see Supplementary Video 1. This demonstrates how dynamic a differential diagnosis can be. Localization of signal abnormality to a certain thalamic subregion can change probabilities for the top diagnosis from Wilson disease (anterolateral thalamic involvement) to flavivirus encephalitis (posteromedial thalamic involvement). This illustrates how incomplete input into systems such as ARIES may lead to erroneous interpretations, and hence careful consideration of each important feature is required by both network designers and end users to determine a complex diagnosis. When utilized thoughtfully, this dynamic diagnostic process may assist trainees to better understand key features that distinguish disorders of similar clinical and/or radiological presentations.

Such interactive systems have the potential to augment radiology and medical education. As we will discuss in the next use case, platforms such as ARIES can be augmented with aspects of machine learning to create a truly personalized learning experience. After identifying important features and formulating differential diagnoses, trainees can consult AI platforms for probabilistic differential diagnoses, with specific questions highlighting clinical decision points for review. This system leverages existing Bayesian AI and can be integrated with teaching file databases to further enhance aspects of learning.

Comprehensive digital teaching file databases

Teaching files are an important component of radiology education; collections were originally assembled in printed film form but now take advantage of the digital environment.56–59 Typically, teaching file cases are presented digitally within the native picture archiving and communication system (PACS), but case curation remains labor-intensive. Moreover, the onus of deciding which cases are most valuable falls on trainees. Instead, one might imagine large collections of automatically curated cases presented to trainees according to their own “learning profile,” which accounts for both their individual educational needs and their subspecialty interests, an instance of precision education. Such profiles are a logical extension of the experiential logs already required by many post-graduate programs, including radiology.24
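To make the idea of a learning profile concrete, the sketch below treats a profile as an experiential case log plus a set of subspecialty interests, and ranks teaching-file cases by how far the trainee still is from a target number of exposures per diagnosis. The class names, the target of three exposures and the one-point interest bonus are hypothetical choices for illustration only.

```python
from collections import Counter
from dataclasses import dataclass, field


@dataclass
class LearningProfile:
    """Hypothetical learning profile: an experiential log plus interests."""
    trainee: str
    case_log: Counter = field(default_factory=Counter)  # diagnosis -> cases read
    interests: set = field(default_factory=set)         # subspecialties of interest

    def record(self, diagnosis):
        """Log one more case of this diagnosis, as an experiential log would."""
        self.case_log[diagnosis] += 1


@dataclass(frozen=True)
class TeachingCase:
    case_id: str
    diagnosis: str
    subspecialty: str


def recommend(profile, cases, target_exposures=3, k=5):
    """Return up to k cases the trainee still needs, most needed first.

    need  = exposures remaining before the (assumed) target is reached
    bonus = 1 if the case matches one of the trainee's subspecialty interests
    Cases whose diagnosis already meets the target are skipped.
    """
    def score(case):
        need = target_exposures - profile.case_log[case.diagnosis]
        bonus = 1 if case.subspecialty in profile.interests else 0
        return need + bonus

    needed = [c for c in cases if profile.case_log[c.diagnosis] < target_exposures]
    # Sort by descending score; case_id breaks ties deterministically.
    return sorted(needed, key=lambda c: (-score(c), c.case_id))[:k]


if __name__ == "__main__":
    profile = LearningProfile("resident A", interests={"neuro"})
    for _ in range(3):
        profile.record("glioblastoma")
    cases = [
        TeachingCase("c1", "glioblastoma", "neuro"),
        TeachingCase("c2", "Wilson disease", "neuro"),
        TeachingCase("c3", "appendicitis", "body"),
    ]
    print([c.case_id for c in recommend(profile, cases)])
```

Here the glioblastoma case is skipped because the assumed target has been met, and the unseen neuroradiology diagnosis outranks the unseen body case because of the interest bonus. A learned model could replace this hand-written score.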

At our institution, we developed a neuroradiology teaching file of over 1000 anonymized cases in our PACS. These cases cover over 100 common and rare neurological diagnoses, with up to eight examples per diagnosis, and are classified by clinical diagnosis and by the brain subregions involved. The cases were collected using semi-automated random searches and selection criteria (Supplementary Material). Thus, the file's distribution of diagnoses reflects the underlying distribution of diagnoses in our health system's patient population, and its cases, with their varying imaging appearances, are representative training cases. In the future, NLP algorithms applied to reports or health records may populate such collections automatically over time.60,61
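A simplified version of the collection step, random selection of up to eight cases per diagnosis from search hits followed by indexing by brain subregion and diagnosis, might look like the sketch below. The input format (tuples of case identifier, diagnosis and involved subregions) and the function name are assumptions; the actual semi-automated search criteria are described in the Supplementary Material and are not reproduced here.

```python
import random
from collections import defaultdict

MAX_PER_DIAGNOSIS = 8  # "up to eight examples per diagnosis", as stated in the text


def build_teaching_file(candidates, max_per_dx=MAX_PER_DIAGNOSIS, seed=0):
    """Randomly select up to max_per_dx cases per diagnosis and index them.

    candidates: iterable of (case_id, diagnosis, subregions) tuples, for example
    hits from a hypothetical report search. Returns (selected, index), where
    selected maps diagnosis -> chosen cases and index maps
    subregion -> diagnosis -> list of case ids.
    """
    rng = random.Random(seed)  # fixed seed so the selection is reproducible
    by_dx = defaultdict(list)
    for case_id, dx, subregions in candidates:
        by_dx[dx].append((case_id, tuple(subregions)))

    selected = {}
    for dx, cases in by_dx.items():
        # Random sampling within each diagnosis avoids hand-picking only classic cases.
        selected[dx] = rng.sample(cases, min(max_per_dx, len(cases)))

    index = defaultdict(lambda: defaultdict(list))
    for dx, cases in selected.items():
        for case_id, subregions in cases:
            for region in subregions:
                index[region][dx].append(case_id)
    # Convert to plain dicts so lookups of missing keys raise KeyError.
    return selected, {region: dict(ids) for region, ids in index.items()}
```

Indexing by subregion as well as diagnosis is what lets a trainee browse, for example, every thalamic case regardless of final diagnosis.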

Through integration of teaching files with AI platforms, ranging from novel deep learning architectures to more intuitive Bayesian networks, the potential for real-time searchability and delivery of clinical cases and knowledge increases dramatically. Here, we propose a model for AI-based supervision of case selection, report drafting, and live teaching file cataloguing (Figure 4). The model depends on the creation of a trainee learning profile, based on a standard curriculum of diagnoses or clinical scenarios in which trainees should be proficient before program completion. After exposure to a new diagnosis with satisfactory performance, as judged by a supervisor, a trainee may be considered proficient in that item. Our model uses AI to facilitate the introduction and learning of novel diagnoses or scenarios, either in real time or through standardized teaching file cases, and gives attendings the opportunity to fine-tune trainees' skills.

Suppose a patient is scanned and the imaging study is added to the task list. The AI system may perform a cursory lesion segmentation sweep, such as segmentation of FLAIR lesions,62 to identify potential diagnoses. It then compares the putative diagnosis with the profiles of trainees unfamiliar with this diagnosis and assigns the case to the trainee predicted to benefit most from it, with or without informing the trainee of the putative diagnosis. These options may be customized to individual learning styles identified by AI or used for deliberate performance assessments, promoting opportunities for high-fidelity case simulations. The trainee identifies important clinical and radiologic features in the study and formulates a tentative differential diagnosis within a draft report. The diagnosis can be generated completely unassisted, with supplementation from AI quantitation tools, or with AI-guided decision making and sharing of the putative diagnosis. The trainee and supervising radiologist then collaborate on the final report and sign off.
The AI system then uses NLP to anonymize the case, add it to the teaching file, extract and categorize the diagnosis, and update the trainee's experiential learning profile. As trainees read similar cases, the AI delivers additional cases from the teaching file, akin to “suggested readings.” This integrated, AI-augmented radiological education promotes greater independence under attending supervision, HFS and real-time situational feedback. Platforms developed to track trainees' activity and case logs demonstrate the utility of virtual tools for post-graduate education24,52 and can be integrated with AI as a tool for supervisors to assess individual strengths and weaknesses. Such approaches have the potential to improve efficiency in radiology education and practice by targeting specific trainee needs.
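The cataloguing loop just described (anonymize, extract the diagnosis, file the case, update the learning profile, suggest related cases) can be sketched as follows. Keyword and regular-expression matching stand in for a real NLP pipeline here; the term lists, regular expressions and function names are hypothetical, and masking two identifier patterns is nowhere near sufficient for real de-identification. Related cases are ranked by Jaccard overlap of extracted findings, so cases with different diagnoses but similar findings can still be suggested.

```python
import re

# Hypothetical identifier patterns; real de-identification needs far more than this.
PHI_PATTERNS = [
    (re.compile(r"\bMRN[:#]?\s*\d+\b", re.IGNORECASE), "[MRN]"),
    (re.compile(r"\b\d{1,2}/\d{1,2}/\d{2,4}\b"), "[DATE]"),
]

# Hypothetical vocabularies standing in for an NLP model.
DIAGNOSIS_TERMS = {
    "Wilson disease": ["wilson disease", "wilson's disease"],
    "Flavivirus encephalitis": ["flavivirus", "west nile", "japanese encephalitis"],
}
FINDING_TERMS = ["thalamus", "basal ganglia", "t2 hyperintensity",
                 "restricted diffusion", "enhancement"]


def anonymize(text):
    """Mask identifiers that match the hypothetical patterns above."""
    for pattern, token in PHI_PATTERNS:
        text = pattern.sub(token, text)
    return text


def extract_diagnosis(text):
    """Return the first vocabulary diagnosis mentioned in the report, if any."""
    lowered = text.lower()
    for dx, terms in DIAGNOSIS_TERMS.items():
        if any(term in lowered for term in terms):
            return dx
    return None


def extract_findings(text):
    """Return the set of vocabulary findings mentioned in the report."""
    lowered = text.lower()
    return frozenset(term for term in FINDING_TERMS if term in lowered)


def jaccard(a, b):
    """Jaccard similarity of two sets (0.0 when both are empty)."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def catalog(case_id, report, teaching_file, case_log):
    """Anonymize and file a signed-off report, then update the trainee's case log."""
    text = anonymize(report)
    dx = extract_diagnosis(text)
    teaching_file[case_id] = {"text": text, "diagnosis": dx,
                              "findings": extract_findings(text)}
    if dx is not None:
        case_log[dx] = case_log.get(dx, 0) + 1
    return dx


def suggested_readings(case_id, teaching_file, k=3):
    """Rank other cases by finding overlap, regardless of their diagnosis."""
    target = teaching_file[case_id]["findings"]
    scored = []
    for other_id, entry in teaching_file.items():
        if other_id == case_id:
            continue
        similarity = jaccard(target, entry["findings"])
        if similarity > 0:
            scored.append((similarity, other_id))
    scored.sort(key=lambda pair: (-pair[0], pair[1]))
    return [other_id for _, other_id in scored[:k]]
```

Keeping diagnosis extraction and finding extraction separate mirrors the distinction between linking cases of identical diagnosis and linking cases with similar findings.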

Figure 4.

Future model for radiology precision education (spanning low- to high-level supervision) with an AI-augmented team and teaching file. (Step 1) The patient study is catalogued by the AI system, which quantitatively segments lesions, assesses images, and detects a potential rare diagnosis. (Step 2) The AI assigns the study to a third-year trainee who has never seen this proposed diagnosis in practice. (Step 3) The trainee uses an AI system such as ARIES, along with measurement tools, to supplement her differential diagnosis as needed and draft a comprehensive quantitative report. (Step 4) The trainee reviews the case with the attending/staff radiologist, and the report is signed off. (Step 5) After confirmation by the attending, NLP detects the rare disease description; the case is anonymized and added to the teaching file for other trainees to review or for teaching conferences. (Step 6) This case is now linked not only with other cases of the identical diagnosis but also with other teaching file cases that have similar findings (but potentially different diagnoses), which the AI can recommend to interpreting radiologists or to other trainees viewing cases for learning.
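Steps 1 and 2 of this model, routing a study with an AI-proposed diagnosis to a trainee who has not yet been signed off on that diagnosis, could be prototyped as below. The selection rule (fewest prior exposures, then lightest current worklist) and all class and function names are hypothetical; the text does not specify how "predicted to benefit most" would be computed, and a learned model could replace this rule.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Trainee:
    """Hypothetical trainee record derived from a learning profile."""
    name: str
    exposures: dict = field(default_factory=dict)  # diagnosis -> cases read
    proficient: set = field(default_factory=set)   # items signed off by a supervisor
    open_cases: int = 0                            # current worklist size


@dataclass
class Assignment:
    study_id: str
    trainee: str
    putative_dx: Optional[str]  # None when the AI's suggestion is withheld


def assign_study(study_id: str, putative_dx: str, trainees: List[Trainee],
                 reveal_putative_dx: bool = False) -> Optional[Assignment]:
    """Route a study to the non-proficient trainee predicted to benefit most.

    Assumed proxy for benefit: fewest prior exposures to the putative
    diagnosis, then the lightest worklist, then name as a stable tie-break.
    Returns None when every trainee is already proficient, in which case the
    study would fall back to routine worklist assignment.
    """
    candidates = [t for t in trainees if putative_dx not in t.proficient]
    if not candidates:
        return None
    best = min(candidates,
               key=lambda t: (t.exposures.get(putative_dx, 0), t.open_cases, t.name))
    best.open_cases += 1
    # Withholding the putative diagnosis supports unassisted practice or assessment.
    shown = putative_dx if reveal_putative_dx else None
    return Assignment(study_id, best.name, shown)
```

The `reveal_putative_dx` flag corresponds to the choice, described in the text, of assigning a case with or without informing the trainee of the putative diagnosis.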

New challenges

AI-augmented radiology has the potential to greatly advance radiology precision education, yet certain obstacles must be surmounted to reach this goal. Because AI techniques are still in the early stages of investigation, they require significant research, development and real-world validation, which will be rate-limiting. For generating and maintaining AI-empowered teaching files, the quality of input data segmentation will influence the accuracy of AI networks in delivering an initial estimated diagnosis.4,63 Currently, many high-quality clinical imaging data sets for training and validation are not easily accessible. We believe that an international consortium for collecting and curating heterogeneous, high-quality labeled imaging data covering a wide range of clinical conditions would critically support this modern model of radiology education. Integration of existing image platforms into AI networks will improve radiology through standardization and personalization, from academic centers to communities worldwide.37,64–66

For successful implementation, precision education will need to overcome other challenges. Use of AI will require agreement from all parties, including trainees and program directors. There will be a significant learning curve for physicians, technologists and engineers. In a 2018 survey, 71% of responding radiologists did not use AI/ML. Yet buy-in may not be a substantial hurdle, as 87% of respondents planned to learn about AI and 67% were willing to help develop and train such algorithms.3 Learning to use AI will require some pre-clinical and on-the-job training: end-user training for physicians can be introduced in medical school and deepened in post-graduate training. Importantly, the role of attending physicians in precision education is strengthened, not reduced. As described above, supervisors can use AI platforms as a first-pass didactic tool and save time. AI can help trainees learn simpler concepts and indicate which topics trainees may need attending physicians to teach. Integrated platforms also allow supervisors to monitor trainees' progress. Such changes may occur more seamlessly at academic centers that have existing infrastructure for teaching files and a cohort of trainees and educators with a vested interest in reform. Collaborations between academia and industry may yield user-ready AI tools. The major incentive for AI-augmented radiology precision education is the opportunity to improve performance through individualized learning.

There have been few, if any, head-to-head comparisons between traditional learning and AI-augmented medical or radiology education. To determine and verify the utility of AI in precision education, trainee performance must be assessed through reliable and valid measures. If AI demonstrates utility in improving trainee performance on previously discussed assessments such as simulated mini-calls, then such assessments can be used to track trainee performance longitudinally. Quality measures are integral to the improvement of radiology education, especially with the use of AI.67,68 The learning theories frame several outcomes that can be evaluated to improve radiology training and may serve as metrics of the successes and failures of AI in implementation and practice. For example, quality-in-practice and outcome measures can assess how well behaviorist standards are met, through metrics such as the percentage of cases correctly diagnosed during simulated mini-calls. Cognitive and constructivist learning may be difficult to quantify directly, although metrics may still assess them indirectly. From a cognitive perspective, the ability of trainees to integrate reasoning in formulating a differential diagnosis and clinical management could be evaluated, potentially by the percentage of cases with correct qualitative and quantitative lesion characterization. From a constructivist perspective, metrics can illuminate how networks of care providers collaborate productively: trainees may log the number of colleagues or resources consulted for each report and receive metrics such as report turnaround time or referring physician satisfaction. Wide-scale adoption in community practices will follow only after such AI didactic methods are assessed and proven beneficial at pioneering centers.
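As an illustration of the behaviorist and constructivist metrics mentioned above, the sketch below computes the percentage of correctly diagnosed simulated mini-call cases, mean report turnaround time and mean number of consultations per report, and compares two sessions to track a trainee longitudinally. The record fields and the case-insensitive exact-match scoring rule are assumptions; real scoring would likely need graded credit for partially correct differentials.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class MiniCallCase:
    """One simulated mini-call case (hypothetical record format)."""
    trainee_dx: str
    reference_dx: str
    turnaround_min: float  # minutes from case open to preliminary report
    consults: int          # colleagues or resources consulted for this report


def summarize(cases):
    """Return session-level metrics for a non-empty list of cases."""
    if not cases:
        raise ValueError("need at least one case")
    correct = sum(
        c.trainee_dx.strip().lower() == c.reference_dx.strip().lower() for c in cases
    )
    return {
        "percent_correct": 100.0 * correct / len(cases),
        "mean_turnaround_min": mean(c.turnaround_min for c in cases),
        "mean_consults": mean(c.consults for c in cases),
    }


def change(before, after):
    """Longitudinal change in each metric between two sessions."""
    return {name: after[name] - before[name] for name in before}
```

Keeping each metric as a separate key makes it possible to watch, for example, accuracy rise while turnaround time falls across successive mini-call sessions.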

There may be unintended consequences of integrating AI into diagnostic medicine. When guiding diagnosis, AI does not necessarily improve human interpretation; in mammography, for example, some forms of computer-assisted diagnosis can increase false-positive and false-negative errors.69 Hence, continual refinement of AI tools is required to improve sensitivity and specificity, and care must be taken not to detract from trainee learning opportunities. Additionally, part of the curriculum of AI in precision medical education is understanding the strengths, weaknesses, applications and limitations of AI in radiology. AI may save time and support flipped learning, which emphasizes low-level supervision, for tasks such as comparing scans over time. Trainees can learn which types of imaging features are or are not detected as lesions by AI, compared with attending physicians. Conversely, AI can identify which features trainees tend to detect or miss as lesions.70 In this way, we envision that radiology trainees and the AI network can maintain a system of “checks and balances” on each other. Therefore, AI may support some but not all aspects of diagnosis, and its use in education and diagnosis should be balanced with a holistic understanding of its pearls and pitfalls.
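The "checks and balances" idea can be made measurable by scoring AI and trainee lesion calls against the attending's reference reads and flagging regions where the two disagree. The per-region binary labels and function names below are assumptions for illustration; the paragraph above does not prescribe a specific scoring scheme.

```python
import math


def sensitivity_specificity(pred, truth):
    """Sensitivity and specificity of binary calls against reference labels.

    Returns NaN for a metric whose denominator is zero (no positives or no
    negatives in the reference).
    """
    if len(pred) != len(truth):
        raise ValueError("pred and truth must have the same length")
    tp = sum(1 for p, t in zip(pred, truth) if p and t)
    fn = sum(1 for p, t in zip(pred, truth) if not p and t)
    tn = sum(1 for p, t in zip(pred, truth) if not p and not t)
    fp = sum(1 for p, t in zip(pred, truth) if p and not t)
    sens = tp / (tp + fn) if tp + fn else math.nan
    spec = tn / (tn + fp) if tn + fp else math.nan
    return sens, spec


def review_queue(ai, trainee, truth):
    """List regions where AI and trainee disagree, noting which one was right.

    Each entry is (region_index, "ai" or "trainee"), where the label names the
    reader whose call agreed with the attending's reference.
    """
    queue = []
    for i, (a, r, t) in enumerate(zip(ai, trainee, truth)):
        if bool(a) != bool(r):
            queue.append((i, "ai" if bool(a) == bool(t) else "trainee"))
    return queue
```

Entries labeled "ai" point the trainee to lesions they missed, while entries labeled "trainee" point developers to AI errors, so the same queue serves both sides of the check.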

Conclusion

Recent developments in AI demonstrate its viability for augmenting radiology education. The next generation of radiology trainees may learn how AI technologies are used in practice, and similar tools will also be used to enrich their education. A reference frame built on the behaviorist, cognitive, and constructivist aspects of radiology education highlights current challenges and potential solutions for tailoring the allocation of resources to individual trainees according to their personal learning needs. Although radiology precision education is approaching, it will encounter significant challenges and will require measures to assess its effectiveness. Hence, integrating AI into radiology precision education requires dynamic collaboration across research, clinical, and educational perspectives.

Footnotes

Acknowledgements: We thank members of the Department of Radiology at the University of Pennsylvania, especially Dr. Linda J. Bagley and Dr. Jeffrey T. Duda, for support and insightful discussions on clinical education and practice.

Patient consent: This review discusses various roles of AI in augmenting radiology medical education. This “AI-empowered precision education” will help teach trainees and trainers to improve teaching interactions as well as overall work-flow efficiency and diagnostic accuracy, leading to better patient outcomes.

Disclosure: RNB is founder and chairman of Galileo CDS. SM has research grants from Galileo CDS and Novocure.

Contributor Information

Michael Tran Duong, Email: mduong@sas.upenn.edu.

Andreas M. Rauschecker, Email: andreas.rauschecker@gmail.com.

Jeffrey D. Rudie, Email: jeff.rudie@gmail.com.

Po-Hao Chen, Email: sleepy.howard@gmail.com.

Tessa S. Cook, Email: tessa.cook@uphs.upenn.edu.

R. Nick Bryan, Email: nick.bryan@austin.utexas.edu.

Suyash Mohan, Email: suyash.mohan@uphs.upenn.edu.

REFERENCES

1. Slanetz PJ, Reede D, Ruchman RB, Catanzano T, Oliveira A, Ortiz D, et al. Strengthening the radiology learning environment. J Am Coll Radiol 2018; 15: 1016–8. doi: 10.1016/j.jacr.2018.04.013

2. Pietrzak B, Ward A, Cheung MK, Schwendimann BA, Mollaoglu G, Duong MT, et al. Education for the future. Science 2018; 360: 1409–12. doi: 10.1126/science.aau3877

3. Collado-Mesa F, Alvarez E, Arheart K. The role of artificial intelligence in diagnostic radiology: a survey at a single radiology residency training program. J Am Coll Radiol 2018; 15: 1753–7. doi: 10.1016/j.jacr.2017.12.021

4. Erickson BJ, Korfiatis P, Akkus Z, Kline TL. Machine learning for medical imaging. Radiographics 2017; 37: 505–15. doi: 10.1148/rg.2017160130

5. Erickson BJ, Korfiatis P, Kline TL, Akkus Z, Philbrick K, Weston AD. Deep learning in radiology: does one size fit all? J Am Coll Radiol 2018; 15(3 Pt B): 521–6. doi: 10.1016/j.jacr.2017.12.027

6. Choy G, Khalilzadeh O, Michalski M, Do S, Samir AE, Pianykh OS, et al. Current applications and future impact of machine learning in radiology. Radiology 2018; 288: 318–28. doi: 10.1148/radiol.2018171820

7. Kohli M, Prevedello LM, Filice RW, Geis JR. Implementing machine learning in radiology practice and research. AJR Am J Roentgenol 2017; 208: 754–60. doi: 10.2214/AJR.16.17224

8. Hainc N, Federau C, Stieltjes B, Blatow M, Bink A, Stippich C. The bright, artificial intelligence-augmented future of neuroimaging reading. Front Neurol 2017; 8: 489. doi: 10.3389/fneur.2017.00489

9. Vieira S, Pinaya WHL, Mechelli A. Using deep learning to investigate the neuroimaging correlates of psychiatric and neurological disorders: methods and applications. Neurosci Biobehav Rev 2017; 74(Pt A): 58–75. doi: 10.1016/j.neubiorev.2017.01.002

10. Hassabis D, Kumaran D, Summerfield C, Botvinick M. Neuroscience-inspired artificial intelligence. Neuron 2017; 95: 245–58. doi: 10.1016/j.neuron.2017.06.011

11. Lao Z, Shen D, Liu D, Jawad AF, Melhem ER, Launer LJ, et al. Computer-assisted segmentation of white matter lesions in 3D MR images using support vector machine. Acad Radiol 2008; 15: 300–13. doi: 10.1016/j.acra.2007.10.012

12. Kahn CE. Artificial intelligence in radiology: decision support systems. Radiographics 1994; 14: 849–61. doi: 10.1148/radiographics.14.4.7938772

13. Lakhani P, Prater AB, Hutson RK, Andriole KP, Dreyer KJ, Morey J, et al. Machine learning in radiology: applications beyond image interpretation. J Am Coll Radiol 2018; 15: 350–9. doi: 10.1016/j.jacr.2017.09.044

14. Kim DW, Jang HY, Kim KW, Shin Y, Park SH. Design characteristics of studies reporting the performance of artificial intelligence algorithms for diagnostic analysis of medical images: results from recently published papers. Korean J Radiol 2019; 20: 405–10. doi: 10.3348/kjr.2019.0025

15. Collins FS, Varmus H. A new initiative on precision medicine. N Engl J Med 2015; 372: 793–5. doi: 10.1056/NEJMp1500523

16. Hart SA. Precision education initiative: moving toward personalized education. Mind, Brain, and Education 2016; 10: 209–11. doi: 10.1111/mbe.12109

17. Woolf BP, Lane HC, Chaudhri VK, Kolodner JL. AI grand challenges for education. AI Magazine 2013; 34: 66–84. doi: 10.1609/aimag.v34i4.2490

18. Lakkaraju H, Aguiar E, Shan C, Miller D, Bhanpuri N, Ghani R, et al. A machine learning framework to identify students at risk of adverse academic outcomes. Proceedings of the 21th ACM SIGKDD International Conference on Knowledge Discovery and Data Mining 2015: 1909–18.

19. Kotsiantis SB. Use of machine learning techniques for educational proposes: a decision support system for forecasting students’ grades. Artif Intell Rev 2012; 37: 331–44. doi: 10.1007/s10462-011-9234-x

20. Bernard J, Chang T-W, Popescu E, Graf S. Learning style identifier: improving the precision of learning style identification through computational intelligence algorithms. Expert Syst Appl 2017; 75: 94–108. doi: 10.1016/j.eswa.2017.01.021

21. Truong HM. Integrating learning styles and adaptive e-learning system: current developments, problems and opportunities. Comput Human Behav 2016; 55: 1185–93. doi: 10.1016/j.chb.2015.02.014

22. Reeder MM, Felson B. Gamuts in Radiology. 4th ed. New York: Springer-Verlag; 2003. xvii.

23. Wildenberg JC, Chen P-H, Scanlon MH, Cook TS. Attending radiologist variability and its effect on radiology resident discrepancy rates. Acad Radiol 2017; 24: 694–9. doi: 10.1016/j.acra.2016.12.004

24. Chen P-H, Loehfelm TW, Kamer AP, Lemmon AB, Cook TS, Kohli MD. Toward data-driven radiology education: early experience building multi-institutional academic trainee interpretation log database (MATILDA). J Digit Imaging 2016; 29: 638–44. doi: 10.1007/s10278-016-9872-2

25. Cook TS, Hernandez J, Scanlon M, Langlotz C, Li C-DL. Why isn't there more high-fidelity simulation training in diagnostic radiology? Results of a survey of academic radiologists. Acad Radiol 2016; 23: 870–6. doi: 10.1016/j.acra.2016.03.008

26. Williamson KB, Gunderman RB, Cohen MD, Frank MS. Learning theory in radiology education. Radiology 2004; 233: 15–18. doi: 10.1148/radiol.2331040198

27. Lam CZ, Nguyen HN, Ferguson EC. Radiology residents' satisfaction with their training and education in the United States: effect of program directors, teaching faculty, and other factors on program success. AJR Am J Roentgenol 2016; 206: 907–16. doi: 10.2214/AJR.15.15020

28. Korfiatis P, Kline TL, Erickson BJ. Automated segmentation of hyperintense regions in FLAIR MRI using deep learning. Tomography 2016; 2: 334–40. doi: 10.18383/j.tom.2016.00166

29. Liew C. The future of radiology augmented with artificial intelligence: a strategy for success. Eur J Radiol 2018; 102: 152–6. doi: 10.1016/j.ejrad.2018.03.019

30. Agarwal V, Bump GM, Heller MT, Chen L-W, Branstetter BF, Amesur NB, et al. Resident case volume correlates with clinical performance: finding the sweet spot. Acad Radiol 2019; 26: 136–40. doi: 10.1016/j.acra.2018.06.023

31. Wartman SA, Combs CD. Medical education must move from the information age to the age of artificial intelligence. Acad Med 2018; 93: 1107–9. doi: 10.1097/ACM.0000000000002044

32. Maleck M, Fischer MR, Kammer B, Zeiler C, Mangel E, Schenk F, et al. Do computers teach better? A media comparison study for case-based teaching in radiology. Radiographics 2001; 21: 1025–32. doi: 10.1148/radiographics.21.4.g01jl091025

33. Kumar V, Gadbury-Amyot CC. A case-based and team-based learning model in oral and maxillofacial radiology. J Dent Educ 2012; 76: 330–7.

34. Terashita T, Tamura N, Kisa K, Kawabata H, Ogasawara K. Problem-based learning for radiological technologists: a comparison of student attitudes toward plain radiography. BMC Med Educ 2016; 16: 236. doi: 10.1186/s12909-016-0753-7

35. Welter P, Deserno TM, Fischer B, Günther RW, Spreckelsen C. Towards case-based medical learning in radiological decision making using content-based image retrieval. BMC Med Inform Decis Mak 2011; 11: 68. doi: 10.1186/1472-6947-11-68

36. Anderson JR, Boyle CF, Reiser BJ. Intelligent tutoring systems. Science 1985; 228: 456–62. doi: 10.1126/science.228.4698.456

37. McLoud TC. Trends in radiologic training: national and international implications. Radiology 2010; 256: 343–7. doi: 10.1148/radiol.10091429

38. Kahneman D. Thinking, fast and slow. New York: Farrar, Straus and Giroux; 2011.

39. Bilello M, Arkuszewski M, Nucifora P, Nasrallah I, Melhem ER, Cirillo L, et al. Multiple sclerosis: identification of temporal changes in brain lesions with computer-assisted detection software. Neuroradiol J 2013; 26: 143–50. doi: 10.1177/197140091302600202

40. Jha S, Topol EJ. Adapting to artificial intelligence: radiologists and pathologists as information specialists. JAMA 2016; 316: 2353–4. doi: 10.1001/jama.2016.17438

41. Al Yassin A, Salehi Sadaghiani M, Mohan S, Bryan RN, Nasrallah I. It is about "time": academic neuroradiologist time distribution for interpreting brain MRIs. Acad Radiol 2018; 25: 1521–5. doi: 10.1016/j.acra.2018.04.014

42. Fiorini N, Leaman R, Lipman DJ, Lu Z. How user intelligence is improving PubMed. Nat Biotechnol 2018; 36: 937–45. doi: 10.1038/nbt.4267

43. Belfi LM, Bartolotta RJ, Giambrone AE, Davi C, Min RJ. "Flipping" the introductory clerkship in radiology: impact on medical student performance and perceptions. Acad Radiol 2015; 22: 794–801. doi: 10.1016/j.acra.2014.11.003

44. Ramesh A, Ganguly R. Interactive learning in oral and maxillofacial radiology. Imaging Sci Dent 2016; 46: 211–6. doi: 10.5624/isd.2016.46.3.211

45. Flanders AE. What is the future of electronic learning in radiology? Radiographics 2007; 27: 559–61. doi: 10.1148/rg.272065192

46. Komorowski M, Celi LA, Badawi O, Gordon AC, Faisal AA. The artificial intelligence clinician learns optimal treatment strategies for sepsis in intensive care. Nat Med 2018; 24: 1716–20. doi: 10.1038/s41591-018-0213-5

47. Ross MK, Yoon J, van der Schaar A, van der Schaar M. Discovering pediatric asthma phenotypes on the basis of response to controller medication using machine learning. Ann Am Thorac Soc 2018; 15: 49–58. doi: 10.1513/AnnalsATS.201702-101OC

48. He T, Puppala M, Ezeana CF, Huang Y-S, Chou P-H, Yu X, et al. A deep learning-based decision support tool for precision risk assessment of breast cancer. JCO Clin Cancer Inform 2019; 3: 1–12. doi: 10.1200/CCI.18.00121

49. Jeter R, Shashikumar S, Nemati S. Does the “Artificial Intelligence Clinician” learn optimal treatment strategies for sepsis in intensive care? Available from: https://arxiv.org/abs/1902.03271.

50. Petscavage JM, Wang CL, Schopp JG, Paladin AM, Richardson ML, Bush WH. Cost analysis and feasibility of high-fidelity simulation based radiology contrast reaction curriculum. Acad Radiol 2011; 18: 107–12. doi: 10.1016/j.acra.2010.08.014

51. Khumrin P, Ryan A, Judd T, Verspoor K. Diagnostic machine learning models for acute abdominal pain: towards an e-learning tool for medical students. Stud Health Technol Inform 2017; 245: 447–51.

52. Shortliffe EH, Sepúlveda MJ. Clinical decision support in the era of artificial intelligence. JAMA 2018; 320: 2199–200. doi: 10.1001/jama.2018.17163

53. Romano MJ, Stafford RS. Electronic health records and clinical decision support systems: impact on national ambulatory care quality. Arch Intern Med 2011; 171: 897–903. doi: 10.1001/archinternmed.2010.527

54. Chen PH, Botzolakis E, Mohan S, Bryan RN, Cook T. Feasibility of streamlining an interactive Bayesian-based diagnostic support tool designed for clinical practice. In: Zhang J, Cook TS, eds. Proceedings of SPIE Medical Imaging; 2016. pp 97890C.

55. Duda JT, Botzolakis E, Chen P-H, et al. Bayesian network interface for assisting radiology interpretation and education. In: Zhang J, Chen P-H, eds. Proceedings of SPIE Medical Imaging; 2018. pp 26.

56. Wiggins RH, Davidson HC, Dilda P, Harnsberger HR, Katzman GL. The evolution of filmless radiology teaching. J Digit Imaging 2001; 14(S1): 236–7. doi: 10.1007/BF03190352

57. Rosset A, Ratib O, Geissbuhler A, Vallée J-P. Integration of a multimedia teaching and reference database in a PACS environment. Radiographics 2002; 22: 1567–77. doi: 10.1148/rg.226025058

58. Henderson B, Camorlinga S, DeGagne J-C. A cost-effective web-based teaching file system. J Digit Imaging 2004; 17: 87–91. doi: 10.1007/s10278-004-1001-y

59. Dashevsky B, Gorovoy M, Weadock WJ, Juluru K. Radiology teaching files: an assessment of their role and desired features based on a national survey. J Digit Imaging 2015; 28: 389–98. doi: 10.1007/s10278-014-9755-3

60. Do BH, Wu A, Biswal S, Kamaya A, Rubin DL. Informatics in radiology: RADTF: a semantic search-enabled, natural language processor-generated radiology teaching file. Radiographics 2010; 30: 2039–48. doi: 10.1148/rg.307105083

61. Pons E, Braun LMM, Hunink MGM, Kors JA. Natural language processing in radiology: a systematic review. Radiology 2016; 279: 329–43. doi: 10.1148/radiol.16142770

62. Duong MT, Rudie JD, Wang J, Xie L, Mohan S, Gee JC, et al. Convolutional neural network for automated FLAIR lesion segmentation on clinical brain MR imaging. AJNR Am J Neuroradiol 2019. doi: 10.3174/ajnr.A6138

63. Rachmadi MF, Valdés-Hernández MDC, Agan MLF, Di Perri C, Komura T; Alzheimer's Disease Neuroimaging Initiative. Segmentation of white matter hyperintensities using convolutional neural networks with global spatial information in routine clinical brain MRI with none or mild vascular pathology. Comput Med Imaging Graph 2018; 66: 28–43. doi: 10.1016/j.compmedimag.2018.02.002

64. Neill DB. Using artificial intelligence to improve hospital inpatient care. IEEE Intell Syst 2013; 28: 92–5. doi: 10.1109/MIS.2013.51

65. Chan KS, Zary N. Applications and challenges of implementing artificial intelligence in medical education: integrative review. JMIR Med Educ 2019; 5: e13930. doi: 10.2196/13930

66. Ghogawala Z, Dunbar MR, Essa I. Lumbar spondylolisthesis: modern registries and the development of artificial intelligence. J Neurosurg 2019; 30: 729–35. doi: 10.3171/2019.2.SPINE18751

67. Sarwar A, Boland G, Monks A, Kruskal JB. Metrics for radiologists in the era of value-based health care delivery. Radiographics 2015; 35: 866–76. doi: 10.1148/rg.2015140221

68. Walker EA, Petscavage-Thomas JM, Fotos JS, Bruno MA. Quality metrics currently used in academic radiology departments: results of the QUALMET survey. Br J Radiol 2017; 90: 20160827. doi: 10.1259/bjr.20160827

69. Fenton JJ, Taplin SH, Carney PA, Abraham L, Sickles EA, D'Orsi C, et al. Influence of computer-aided detection on performance of screening mammography. N Engl J Med 2007; 356: 1399–409. doi: 10.1056/NEJMoa066099

70. Zhang J, Lo JY, Kuzmiak CM, Ghate SV, Yoon SC, Mazurowski MA. Using computer-extracted image features for modeling of error-making patterns in detection of mammographic masses among radiology residents. Med Phys 2014; 41: 091907. doi: 10.1118/1.4892173