Abstract

Background

Patient-reported outcomes (PROs) are captured within cancer trials to help future patients and their clinicians make more informed treatment decisions. However, variability in standards of PRO trial design and reporting threatens the validity of these endpoints for application in clinical practice.

Methods

We systematically investigated a cohort of randomized controlled cancer trials that included a primary or secondary PRO. For each trial, an evaluation of protocol and reporting quality was undertaken using standard checklists. General patterns of reporting were also explored.

Results

Protocols were sourced for 101 of 228 trials (44.3%) and included a mean (SD) of 10 (4) of 33 (range = 2–19) PRO protocol checklist items. Recommended items frequently omitted included the rationale and objectives underpinning PRO collection and approaches to minimize or address missing PRO data. Of 160 trials with published results, 61 (38.1%, 95% confidence interval = 30.6% to 45.7%) failed to include their PRO findings in any publication (mean follow-up 6.43 years); these trials included 49 568 participants. Although nearly two-thirds of trials with published results reported PRO findings, reporting standards were often inadequate according to international guidelines (mean [SD] inclusion of 3 [3] of 14 [range = 0–11] CONSORT PRO Extension checklist items). More than one-half of trials publishing PRO results in a secondary publication (12 of 22, 54.5%) took 4 or more years to do so following trial closure, with eight (36.4%) taking 5–8 years and one trial publishing after 14 years.

Conclusions

PRO protocol content is frequently inadequate, and nonreporting of PRO findings is widespread, meaning patient-important information may not be available to benefit patients, clinicians, and regulators. Even where PRO data are published, there is often considerable delay and reporting quality is suboptimal. This study presents key recommendations to enhance the likelihood of successful delivery of PROs in the future.

Patient-reported outcomes (PROs) are increasingly captured within cancer trials to provide the patient perspective on the physical, functional, psychological, and social consequences of disease and treatment (1). This information is important in supporting patients to make more informed treatment decisions at the point of cancer diagnosis and beyond (2,3).

The utility of such data has been recognized by patients, clinicians, funders, regulators, and policy makers (4–8). Despite this, emerging evidence suggests that important PRO information may be omitted from protocols (9,10), potentially impairing data collection (11,12), and that PRO results are poorly reported in trial publications (5,13–21) or may not be reported at all (22). This represents a waste of limited health-care and research resources and may restrict the effective uptake of PRO trial findings in practice.

The American Society of Clinical Oncology, United Kingdom National Institute for Health and Care Excellence, and European Medicines Agency have all outlined the need to improve the quality of PRO trial results to better inform technology appraisals and licensing decisions (8,23,24). Most importantly, patients with cancer have called for greater availability of high-quality PRO trial data to help them gain insight into what their life will actually be like during and after a certain therapy as well as how long they may survive (25).

It has been hypothesized that omission of key PRO protocol components may be an important contributor to suboptimal PRO reporting (26). To our knowledge, however, only one study has examined this relationship in a small (n = 26) sample of ovarian cancer trials (10). Furthermore, a recent study has assessed the issue of availability of PRO trial data across Germany, Switzerland, and Canada but did not evaluate PRO protocol quality, so the relationship between the two could not be determined (22). To investigate these issues, we conducted a systematic evaluation of PRO protocol content and reporting across a cohort of completed international cancer trials.

Methods

Search Strategy and Extraction

We identified randomized controlled cancer trials in the National Institute for Health Research (NIHR) Portfolio that included a PRO primary or secondary outcome [study protocol available (26)]. The NIHR is the largest UK public funding stream, comparable to the National Institutes of Health in the United States. Trials were eligible if they were listed as closed on the database by March 2014 (scheduled to allow time for reporting to occur) and/or had published results by the time of our final publication search in June 2017. We excluded trials lacking random allocation to one of two or more groups, or a control arm, and those that terminated early.

For each trial, we attempted to source the trial protocol (final ethically approved version), published articles reporting final results, and secondary publications reporting PRO results. We defined a primary publication as the first or principal publication of the trial results regarding the primary outcome(s) and secondary publications as those published following/in support of the primary article. Abstracts and reports of preliminary results were excluded. Protocol retrieval was attempted via direct contact with research teams and by searching trial registries, databases, and websites (see Supplementary Box 1, available online). Publications were obtained via direct author contact or by searching MEDLINE, Embase, Cinahl+, PsycINFO, Cochrane Controlled Trials Register, or the Patient-Reported Outcome Measures Over Time In Oncology Registry (27). Full search details are provided in the Supplementary Methods (available online).

All searching, sourcing, and extraction were conducted by two independent investigators (TK and KA), with a third researcher (DK, MC, or AR) involved where required. Investigators extracted trial characteristics and determined the availability of PRO trial results. Unreported PROs were defined as those that were prespecified in the NIHR Portfolio database, trial registry, or trial protocol but that were not reported in either a primary or secondary publication. The University of Birmingham (Ref: ERN_17–0085A) gave ethical approval for this study.

Data Analysis

Investigators evaluated the completeness of general protocol sections using the Standard Protocol Items: Recommendations for Interventional Trials (SPIRIT) 2013 checklist (28). Completeness of PRO-specific content was evaluated using a PRO protocol checklist (9). For publications, general reporting standards were evaluated using the 2010 Consolidated Standards of Reporting Trials (CONSORT) checklist (29). PRO-specific aspects were reviewed using the 2013 CONSORT-PRO Extension (30). For each trial protocol or publication assessed, individual checklist items were described as “present” or “absent,” and one point was assigned for each item “present” giving a total score. Protocol and reporting standards did not make a distinction between study phases; however, investigators noted where a checklist item was deemed “not applicable” according to the study design (eg, SPIRIT item 17a on blinding for a nonblinded study), and the denominator was adjusted accordingly during the analysis. Inter-rater agreement was calculated for each checklist based on the proportion of matching item-level decisions. A full breakdown of checklists is provided in Supplementary Figures 1 and 2 (available online). It should be noted that many included trials would have been developed before the existence of the SPIRIT/PRO protocol and CONSORT PRO standards used in this study. Although developed recently, they present consolidated criteria drawn from many preceding years of published research outlining commonly considered good practice; thus, they remain a useful metric by which to assess the quality of PRO trial design and reporting.
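The scoring and agreement rules above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the authors' code: it assumes each checklist item is coded as "present", "absent", or "na" (not applicable), and every item identifier other than SPIRIT item 17a is a hypothetical placeholder.

```python
from typing import Dict

# Item-level codes: "present", "absent", or "na" (not applicable to the design).
def adjusted_score(items: Dict[str, str]) -> float:
    """Percentage of applicable checklist items coded as present."""
    applicable = [code for code in items.values() if code != "na"]
    if not applicable:
        raise ValueError("no applicable checklist items")
    present = sum(1 for code in applicable if code == "present")
    return 100.0 * present / len(applicable)


def percent_agreement(rater_a: Dict[str, str], rater_b: Dict[str, str]) -> float:
    """Proportion of items on which two raters made the same decision."""
    if rater_a.keys() != rater_b.keys():
        raise ValueError("both raters must code the same items")
    matches = sum(1 for item in rater_a if rater_a[item] == rater_b[item])
    return matches / len(rater_a)


# Hypothetical codings of one nonblinded protocol by two raters.
# "17a" (blinding) is not applicable, so the denominator drops from 5 to 4.
rater_a = {"17a": "na", "item_1": "present", "item_2": "present", "item_3": "absent", "item_4": "absent"}
rater_b = {"17a": "na", "item_1": "present", "item_2": "absent", "item_3": "absent", "item_4": "absent"}

print(adjusted_score(rater_a))              # 50.0
print(percent_agreement(rater_a, rater_b))  # 0.8
```

Dropping "not applicable" items from the denominator, rather than scoring them as absent, keeps a nonblinded trial from being penalized for a blinding item it could never satisfy.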

Statistical Analysis

Descriptive data are reported as numbers and percentages and, where appropriate, are summarized using means (SDs) or 95% confidence intervals (CIs). We fitted three prespecified exploratory regression models, restricted to trials for which both a matching protocol and a publication had been retrieved. In all models, variables were selected by backward elimination, removing covariates with P greater than .05. All tests were 2-sided. All analyses were conducted in STATA version 12 (StataCorp, College Station, TX).
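Backward elimination of this kind can be sketched as a loop that refits the model and drops the least significant covariate until every remaining P value is .05 or below. The `fit_pvalues` callable is a hypothetical stand-in for whatever routine fits the model (the analyses here used STATA), and the toy P values are invented for illustration only; they are not study results.

```python
from typing import Callable, Dict, List


def backward_eliminate(
    covariates: List[str],
    fit_pvalues: Callable[[List[str]], Dict[str, float]],
    p_remove: float = 0.05,
) -> List[str]:
    """Drop the covariate with the largest P value above p_remove, refit, repeat."""
    kept = list(covariates)
    while kept:
        pvalues = fit_pvalues(kept)  # refit on the current covariate set
        worst = max(kept, key=lambda name: pvalues[name])
        if pvalues[worst] <= p_remove:  # all remaining covariates are retained
            break
        kept.remove(worst)
    return kept


# Hypothetical P values, fixed for simplicity; a real refit would update them.
toy_pvalues = {"pro_primary": 0.001, "protocol_year": 0.02, "specialty": 0.40, "funding": 0.09}
selected = backward_eliminate(list(toy_pvalues), lambda cs: {c: toy_pvalues[c] for c in cs})
print(selected)  # ['pro_primary', 'protocol_year']
```

Removing one covariate per iteration and refitting matters in practice, because P values for the remaining covariates can change once a correlated variable leaves the model.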

Model A investigated protocol inclusion of PRO protocol checklist items. The dependent variable was the PRO protocol checklist score (adjusted for denominator variation), and the independent variables were year of the protocol, whether the PRO was named as a primary or secondary outcome, cancer specialty, trial sample size, funding source, and the SPIRIT checklist score (adjusted for denominator variation).

Model B used logistic regression to determine factors associated with the reporting of PRO trial results. The dependent variable was “PRO trial results reported in the principal trial publication (yes/no).” Covariates included year of the protocol, whether the PRO was named as a primary or secondary outcome, cancer specialty, trial sample size, funding source, the SPIRIT checklist score (adjusted for denominator variation), whether the primary outcome of the trial was statistically significant, and the PRO protocol checklist score (adjusted for denominator variation).

Model C explored factors associated with publication adherence to the CONSORT-PRO Extension. The dependent variable was the CONSORT-PRO Extension score (adjusted for denominator variation). Covariates included the year of publication, whether the PRO was named as a primary or secondary outcome, whether there were single or multiple reports, trial sample size, funding source, journal impact factor, the CONSORT 2010 checklist score (adjusted for denominator variation), and the PRO protocol checklist score (adjusted for denominator variation). Full model details are provided in the Supplementary Methods (available online).

Results

Data Screening and Sourcing

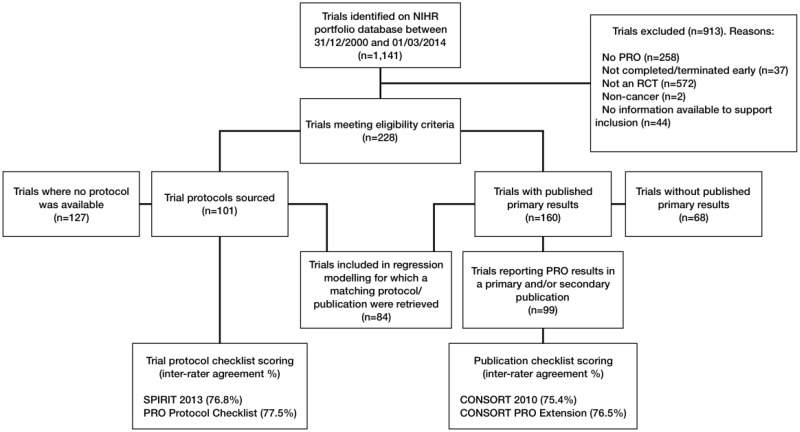

The NIHR Portfolio included 1141 trials up to March 1, 2014, of which 913 were excluded because they were not randomized controlled trials, did not include a PRO, or had not been completed by the cutoff date (Figure 1). The final sample included 228 trials, recruiting across 72 countries, which used 262 different measures to collect PRO data (see Table 1 for trial characteristics and Supplementary Tables 1–3 [available online] for full sample details and checklist scoring results).

Figure 1.

Study flow diagram. CONSORT = Consolidated Standards of Reporting Trials; NIHR = National Institute for Health Research; PRO = patient-reported outcome; RCT = randomized controlled trial; SPIRIT = Standard Protocol Items: Recommendations for Interventional Trials.

Table 1.

Characteristics of included trials (n = 228)

| Characteristic | Trials, No. (%) |
|---|---|
| Trial phase | |
| II | 32 (14.0) |
| II/III | 17 (7.5) |
| III | 151 (66.2) |
| Other | 28 (12.3) |
| Recruitment regions* | |
| United Kingdom | 221 (96.9) |
| Spain | 65 (28.5) |
| Italy | 63 (27.6) |
| Canada | 62 (27.2) |
| France | 62 (27.2) |
| Germany | 58 (25.4) |
| United States | 55 (24.1) |
| Belgium | 54 (23.7) |
| Australia | 51 (22.4) |
| Poland | 47 (20.6) |
| Russian Federation | 43 (18.9) |
| Netherlands | 41 (18.0) |
| Korea, Republic of | 34 (14.9) |
| Brazil | 30 (13.2) |
| Austria | 29 (12.7) |
| Sweden | 29 (12.7) |
| Argentina | 28 (12.3) |
| Israel | 28 (12.3) |
| Czech Republic | 26 (11.4) |
| Hungary | 25 (11.0) |
| China | 24 (10.5) |
| Japan | 24 (10.5) |
| Taiwan | 24 (10.5) |
| Turkey | 24 (10.5) |
| Cancer type | |
| Breast | 37 (16.2) |
| Lung | 28 (12.3) |
| Prostate | 21 (9.2) |
| Colorectal | 15 (6.6) |
| Ovarian | 14 (6.1) |
| Other | 115 (50.4) |
| Source of funding† | |
| Industry only | 79 (34.6) |
| Public only | 34 (14.9) |
| Charity only | 85 (37.3) |
| Mixed | 30 (13.2) |
| PRO | |
| Primary outcome | 42 (18.4) |
| Secondary outcome only | 186 (81.6) |
| Both | 28 (12.3) |
| PROs measured | |
| Quality of life | 163 (71.5) |
| Symptom burden | 128 (56.1) |
| Anxiety and depression | 24 (10.5) |
| Other | 12 (5.3) |
| PRO questionnaires used | |
| EORTC QLQ-C30 | 95 (41.7) |
| EQ-5D | 54 (23.7) |
| HADS | 21 (9.2) |
| Other | 92 (40.4) |
| Year of trial closure | |
| 2001 | 2 (0.9) |
| 2002 | 5 (2.2) |
| 2003 | 4 (1.8) |
| 2004 | 3 (1.3) |
| 2005 | 12 (5.3) |
| 2006 | 9 (3.9) |
| 2007 | 8 (3.5) |
| 2008 | 20 (8.8) |
| 2009 | 23 (10.1) |
| 2010 | 32 (14.0) |
| 2011 | 34 (14.9) |
| 2012 | 34 (14.9) |
| 2013 | 38 (16.7) |
| 2014 | 4 (1.8) |

*Additional recruitment regions included in less than 10% of trials: Greece, Switzerland, New Zealand, Ireland, Mexico, Singapore, Hong Kong, India, Thailand, Denmark, Romania, Portugal, South Africa, Finland, Chile, Norway, Peru, Slovak Republic, Ukraine, Bulgaria, Colombia, Croatia, Estonia, Puerto Rico, Philippines, Latvia, Egypt, Guatemala, Luxembourg, Panama, Serbia, Slovenia, Bosnia and Herzegovina, Costa Rica, Cyprus, Lebanon, Lithuania, Malaysia, Saudi Arabia, Bahamas, Belarus, Ecuador, Indonesia, Macedonia, Pakistan, Tunisia, and Uruguay. See Supplementary Appendix (available online) for additional information. EORTC QLQ = European Organization for the Research and Treatment of Cancer Quality of Life Questionnaire; EQ-5D = EuroQol Five Dimension preference-based health status measure; HADS = Hospital Anxiety and Depression Scale; NIHR = National Institute for Health Research; PRO = patient-reported outcome.

†As listed on the NIHR Portfolio Database.

We were able to source 101 of 228 protocols (44.3%): 73 from the named trial contact, 13 as a supplementary journal file, 1 from the trial website, 5 from the sponsor/funder, and 9 using a Google search. Eighty percent of sourced protocols were associated with trials closing in 2008 or later, which was comparable to the overall sample. In addition, the demographics of trials where we were able to source the protocol vs those where the protocol was unavailable were broadly similar (Table 2). There were, however, some exceptions. Compared with the overall sample, studies for which we retrieved the protocol were less likely to be industry funded and included slightly fewer breast and prostate cancer trials, but slightly more lung, colorectal, and ovarian cancer trials. Finally, interrater agreement for all checklists was high (≥75%).
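As an arithmetic check on the "Eighty percent" figure, the year-of-closure counts in Tables 1 and 2 can be tallied directly. The snippet only transcribes those published counts; `share_from` is a hypothetical helper name introduced for illustration.

```python
# Year-of-closure counts transcribed from Table 2 (protocol sourced) and Table 1 (all trials).
sourced = {2001: 1, 2002: 1, 2003: 2, 2004: 1, 2005: 6, 2006: 5, 2007: 5,
           2008: 10, 2009: 12, 2010: 13, 2011: 15, 2012: 15, 2013: 14, 2014: 1}
overall = {2001: 2, 2002: 5, 2003: 4, 2004: 3, 2005: 12, 2006: 9, 2007: 8,
           2008: 20, 2009: 23, 2010: 32, 2011: 34, 2012: 34, 2013: 38, 2014: 4}


def share_from(counts, first_year):
    """Return (trials closing in or after first_year, total trials, percentage)."""
    total = sum(counts.values())
    later = sum(n for year, n in counts.items() if year >= first_year)
    return later, total, 100.0 * later / total


for label, counts in (("Protocol sourced", sourced), ("All trials", overall)):
    later, total, pct = share_from(counts, 2008)
    print(f"{label}: {later} of {total} ({pct:.1f}%)")
```

The tally gives 80 of 101 sourced protocols (79.2%) vs 185 of 228 trials overall (81.1%), consistent with the comparison stated above.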

Table 2.

Trial demographics stratified by availability of protocol

| Characteristic | Protocol sourced, No. (%) | No protocol available, No. (%) |
|---|---|---|
| Total | 101 (100.0) | 127 (100.0) |
| Trial phase | | |
| II | 14 (13.9) | 18 (14.2) |
| II/III | 8 (7.9) | 9 (7.1) |
| III | 66 (65.3) | 85 (66.9) |
| Other | 13 (12.9) | 15 (11.8) |
| Cancer type | | |
| Breast | 12 (11.9) | 25 (19.7) |
| Lung | 16 (15.8) | 12 (9.4) |
| Prostate | 8 (7.9) | 13 (10.2) |
| Colorectal | 11 (10.9) | 4 (3.1) |
| Ovarian | 8 (7.9) | 6 (4.7) |
| Other | 46 (45.5) | 67 (52.8) |
| Source of funding* | | |
| Industry only | 19 (18.8) | 60 (47.2) |
| Public only | 18 (17.8) | 16 (12.6) |
| Charity only | 45 (44.6) | 40 (31.5) |
| Mixed | 19 (18.8) | 11 (8.7) |
| PRO | | |
| Primary outcome | 19 (18.8) | 23 (18.1) |
| Secondary outcome only | 82 (81.2) | 104 (81.9) |
| Both | 15 (14.9) | 13 (10.2) |
| Year of trial closure | | |
| 2001 | 1 (1.0) | 1 (0.8) |
| 2002 | 1 (1.0) | 4 (3.1) |
| 2003 | 2 (2.0) | 2 (1.6) |
| 2004 | 1 (1.0) | 2 (1.6) |
| 2005 | 6 (5.9) | 6 (4.7) |
| 2006 | 5 (5.0) | 4 (3.1) |
| 2007 | 5 (5.0) | 3 (2.4) |
| 2008 | 10 (9.9) | 10 (7.9) |
| 2009 | 12 (11.9) | 11 (8.7) |
| 2010 | 13 (12.9) | 19 (15.0) |
| 2011 | 15 (14.9) | 19 (15.0) |
| 2012 | 15 (14.9) | 19 (15.0) |
| 2013 | 14 (13.9) | 24 (18.9) |
| 2014 | 1 (1.0) | 3 (2.4) |

*As listed on the National Institute for Health Research Portfolio Database. PRO = patient-reported outcome.

PRO Protocol Content

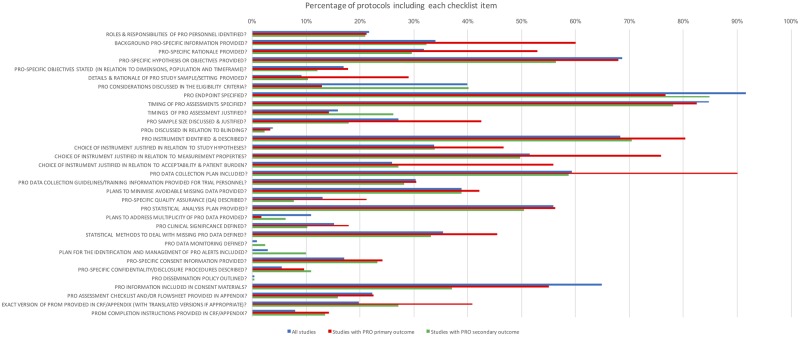

Trial protocols (n = 101) included a mean (SD) of 32 (6) of 51 (range = 11–43) SPIRIT 2013 recommendations (66.2% adjusted for denominator variation) and 10 (4) of 33 (range = 2–19) PRO protocol checklist items (31.9% adjusted). Several PRO items deemed important in the literature (31) were frequently omitted, including the rationale for PRO collection (missing in 68.2% of protocols), description of PRO-specific objectives (missing in 83.1%), justification of the choice of PRO instrument with regard to the study hypothesis (missing in 66.2%) and its measurement properties (missing in 48.5%), information regarding PRO data collection plans (missing in 40.7%), and methods to reduce avoidable missing PRO data (missing in 61.1%) (Figure 2). Where a PRO was the primary outcome, protocols included an adjusted mean of 62.4% of SPIRIT recommendations and 38.3% of PRO protocol checklist items. Where a PRO was a secondary outcome, protocols included an adjusted mean of 67.0% of SPIRIT recommendations and 30.4% of PRO protocol checklist items.

Figure 2.

Percentage of protocols (n = 101) including each patient-reported outcome (PRO) protocol checklist item (adjusted for denominator variation). PROM = patient-reported outcome measure; CRF = case report form.

Reporting of PRO Trial Results

With a mean of 6.43 years of follow-up from trial closure, 160 trials had published their primary results by the time of our final publication search (Figure 1). Eighty-five trials included their PRO findings in the primary publication. Eight trials published their PRO data in both a primary and secondary publication and 14 solely in a secondary publication. More than one-third, 61 of 160 (38.1%, 95% CI = 30.6% to 45.7%), failed to include their PRO findings in any publication; these trials included 49 568 participants. More than one-half of trials publishing their PRO results in a secondary publication (12 of 22, 54.5%) took 4 or more years to do so following trial closure, with eight (36.4%) taking 5–8 years and one trial publishing after 14 years.
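The article does not state how the 95% CI for this proportion was computed. The normal-approximation (Wald) interval for a binomial proportion, sketched below, reproduces the reported bounds, so it is a plausible assumption rather than a confirmed method; `wald_ci` is a hypothetical helper name.

```python
import math


def wald_ci(events: int, n: int, z: float = 1.96):
    """Normal-approximation (Wald) CI for a binomial proportion."""
    p = events / n
    half_width = z * math.sqrt(p * (1 - p) / n)
    return p, p - half_width, p + half_width


p, lower, upper = wald_ci(61, 160)
print(f"{100 * p:.1f}% (95% CI = {100 * lower:.1f}% to {100 * upper:.1f}%)")
# 38.1% (95% CI = 30.6% to 45.7%)
```

With 61 events in 160 trials the Wald interval behaves reasonably; for very small counts or proportions near 0% or 100%, a Wilson or exact interval would usually be preferred.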

Where a PRO was a primary outcome, 27 of 32 (84.4%) trials included PRO findings in the primary publication. Two trials (6.3%) published their PRO data in both a primary and secondary publication, two (6.3%) solely in a secondary publication, and three (9.4%) failed to include their PRO findings in any publication. Mean time from trial closure to publication of PRO results in a primary publication was 3 years vs 8 years for a secondary publication.

Where a PRO was a secondary outcome, 58 of 128 (45.3%) trials included PRO findings in the primary publication. Six trials (4.7%) published their PRO data in both a primary and secondary publication, 12 (9.4%) solely in a secondary publication, and 58 (45.3%) failed to include their PRO findings in any publication. Mean time from trial closure to publication of PRO results in a primary publication was 4 years vs 5 years for a secondary publication.
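The subgroup counts in the two preceding paragraphs can be reconciled with the overall totals reported above. Trials publishing in both a primary and a secondary publication are already counted among those reporting PROs in the primary publication, so they are not added again. The dictionary keys are hypothetical labels.

```python
# Counts transcribed from the text, split by the role of the PRO in the trial.
# "primary_pub" already includes the trials that also had a secondary publication ("both").
by_role = {
    "PRO primary outcome": {"n": 32, "primary_pub": 27, "both": 2, "secondary_only": 2, "unreported": 3},
    "PRO secondary outcome": {"n": 128, "primary_pub": 58, "both": 6, "secondary_only": 12, "unreported": 58},
}

for role, row in by_role.items():
    # Each trial falls into exactly one of these three mutually exclusive groups.
    assert row["primary_pub"] + row["secondary_only"] + row["unreported"] == row["n"], role
    print(f"{role}: {100 * row['unreported'] / row['n']:.1f}% unreported")

totals = {key: sum(row[key] for row in by_role.values())
          for key in ("n", "primary_pub", "both", "secondary_only", "unreported")}
print(totals)
```

The totals recover the overall figures of 160 trials, 85 with PRO findings in the primary publication, 8 with both, 14 with a secondary publication only, and 61 unreported, and 8 + 14 gives the 22 trials with a secondary publication.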

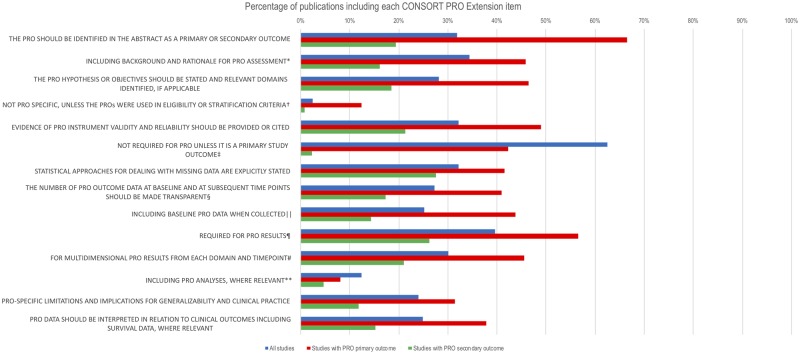

Publications included a mean (SD) of 23 (4) of 37 (range = 13–32) CONSORT 2010 items (63.0% adjusted for denominator variation) and 3 (3) of 14 (range = 0–11) CONSORT-PRO Extension checklist items (21.7% adjusted). Commonly omitted CONSORT-PRO Extension items included description of the PRO hypothesis/objectives (missing in 71.8% of publications), evidence of the validity and reliability of the PRO instrument(s) (missing in 67.8%), the amount of PRO data available at baseline and subsequent time points (missing in 72.8%), and description of the statistical approaches used to deal with missing PRO data (missing in 67.8%) (Figure 3). Where a PRO was the primary outcome, publications included an adjusted mean of 62.1% of CONSORT 2010 items and 41.1% of CONSORT-PRO items. Where a PRO was a secondary outcome, publications included an adjusted mean of 63.3% of CONSORT 2010 items and 16.9% of CONSORT-PRO checklist items.

Figure 3.

Percentage of publications (n = 99) including each Consolidated Standards of Reporting Trials (CONSORT) patient-reported outcomes (PRO) Extension Checklist item (adjusted for denominator variation). *PRO elaboration to CONSORT checklist item 2a: “Scientific background and explanation of rationale.” †PRO elaboration to CONSORT checklist item 4a: “Eligibility criteria for participants.” ‡PRO elaboration to CONSORT checklist item 7a: “How sample size was determined.” §PRO elaboration to CONSORT checklist item 13a: “For each group, the numbers of participants who were randomly assigned, received intended treatment, and were analyzed for the primary outcome.” ‖PRO elaboration to CONSORT checklist item 15: “A table showing baseline demographic and clinical characteristics for each group.” ¶PRO elaboration to CONSORT checklist item 16: “For each group, number of participants (denominator) included in each analysis and whether the analysis was by original assigned groups.” #PRO elaboration to CONSORT checklist item 17a: “For each primary and secondary outcome, results for each group, the estimated effect size, and its precision (such as 95% confidence interval).” **PRO elaboration to CONSORT checklist item 18: “Results of any other analyses performed, including subgroup analyses and adjusted analyses, distinguishing pre-specified from exploratory.”

Factors Associated with PRO Protocol Content and Reporting

Eighty-four trials were included in the prespecified exploratory regression analyses. Full details of each model are presented in Supplementary Tables 4–6 (available online).

For model A, statistically significant predictors of the protocol inclusion of PRO protocol checklist items included presence of the PRO as a primary outcome (coef. = 10.93, 95% CI = 4.46 to 17.41), later year of the protocol (coef. = −0.82, 95% CI = −1.52 to −0.12), a higher adjusted SPIRIT 2013 checklist score (coef. = 0.41, 95% CI = 0.20 to 0.62), and larger sample size (reference category <100; n = 100–499, coef. = 9.77, 95% CI = 1.84 to 17.71; n = 500–999, coef. = 14.14, 95% CI = 5.04 to 23.24; n > 1000, coef. = 6.50, 95% CI = −2.70 to 15.70). Statistically nonsignificant covariates included cancer specialty and funding source.

For model B, increased odds of publishing PRO results were associated with inclusion of the PRO as a primary outcome (odds ratio [OR] = 5.68, 95% CI = 1.09 to 29.5). With charity funding as the reference category, industry funding (OR = 0.24, 95% CI = 0.07 to 0.87) and mixed funding (OR = 0.17, 95% CI = 0.04 to 0.66) were associated with decreased odds of publishing PRO results. Statistically nonsignificant covariates included year of the protocol, cancer specialty, trial sample size, adjusted SPIRIT checklist score, whether the primary outcome of the trial was statistically significant, and the adjusted PRO protocol checklist score.
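Because logistic regression estimates effects on the log-odds scale, a reported odds ratio and its 95% CI can be converted back to an approximate coefficient, standard error, and Wald P value, assuming the interval is symmetric on the log scale. This is a generic back-calculation for reading the results, not part of the authors' analysis; `log_scale_from_or` is a hypothetical helper, and the P values it returns are approximate because the published bounds are rounded.

```python
import math


def log_scale_from_or(odds_ratio: float, lower: float, upper: float, z: float = 1.96):
    """Approximate log-odds coefficient, SE, and two-sided Wald P from an OR and its CI."""
    beta = math.log(odds_ratio)
    se = (math.log(upper) - math.log(lower)) / (2 * z)
    z_stat = beta / se
    p_value = math.erfc(abs(z_stat) / math.sqrt(2))  # equals 2 * (1 - Phi(|z|))
    return beta, se, p_value


# Odds ratios and 95% CIs as reported for model B.
reported = {
    "PRO as primary outcome": (5.68, 1.09, 29.5),
    "Industry funding vs charity": (0.24, 0.07, 0.87),
    "Mixed funding vs charity": (0.17, 0.04, 0.66),
}
for label, (or_, lo, hi) in reported.items():
    beta, se, p = log_scale_from_or(or_, lo, hi)
    print(f"{label}: beta = {beta:.2f}, SE = {se:.2f}, P ~ {p:.3f}")
```

The large SE recovered for the primary-outcome term (roughly 0.84 on the log-odds scale) is what produces the wide 1.09 to 29.5 interval.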

For model C, a higher adjusted PRO protocol checklist score was a statistically significant predictor of reporting quality, as measured by the CONSORT PRO Extension (coef. = 0.44, 95% CI = 0.01 to 0.87). Statistically nonsignificant covariates included year of publication, whether the PRO was named as a primary or secondary outcome, whether there were single or multiple reports, trial sample size, funding source, journal impact factor, and the adjusted CONSORT 2010 checklist score.

Discussion

In this study evaluating PRO protocol quality and reporting in cancer clinical trials, several key messages emerged. Nonreporting of PRO trial results was widespread, PRO protocol components were often inadequate, and where published PRO data were available, there was often considerable delay and standards of reporting were poor.

More than one-third of trials failed to include their PRO findings in either a primary or secondary publication. Thus, valuable information that may have an important impact on treatment decision-making and outcomes may not be available to patients and their clinicians or to researchers undertaking meta-analyses. This represents a waste of limited health-care research resources. Moreover, it devalues the considerable contribution of trial participants who spend time and effort providing PRO information in the belief that the data will be used for the benefit of future patients. Worryingly, we found almost 50 000 patients were involved in studies that failed to publish their PRO data. Nonreporting of these important patient data is unethical.

Our results concur with findings from a previous smaller study that reviewed 90 cancer trials collecting quality-of-life PROs conducted in Switzerland, Germany, and Canada between 2000 and 2003 and a recent study evaluating PRO reporting across 11 major journals (22,32). Our methodology has the added value of being able to evaluate the quality of included PRO protocols and publications and the association between the PRO protocol quality and reporting as well as to track the time from trial closure to publication of PRO results.

Our results identify a failure to include comprehensive PRO information in many trial protocols and publications. These findings concur with previous studies evaluating the quality of PRO protocols and publications in both cancer and noncancer settings (9–11,13–21,33). Rudimentary design elements were consistently omitted from protocols reviewed in this study, including a clear PRO rationale or objectives, justification for the choice of measure, guidance on data collection, and, crucially, aspects around prevention or analysis of missing PRO data, which has been identified as a particular problem in trials collecting PROs (34). These omissions may impair PRO-specific trial conduct, reduce data quality (11,35,36), and threaten the validity of these endpoints for application in clinical practice. Our exploratory regression analysis suggested an association between PRO-specific protocol completeness and reporting, which supports our a priori hypothesis (26). We postulate that the inclusion of “good-quality” PRO protocol components facilitates more robust data collection, lower rates of avoidable missing data, and more informative data with which to generate meaningful, publishable, PRO reports. The publication of the SPIRIT-PRO Extension in 2018 provides consensus recommendations regarding items that should be included in trial protocols in which PROs are a primary or key secondary outcome (37). In addition, open access international reporting guidelines are available via the 2013 CONSORT PRO Extension (30). It is hoped the existence of these standards will help improve the completeness and homogeneity of PRO design and reporting in the future.

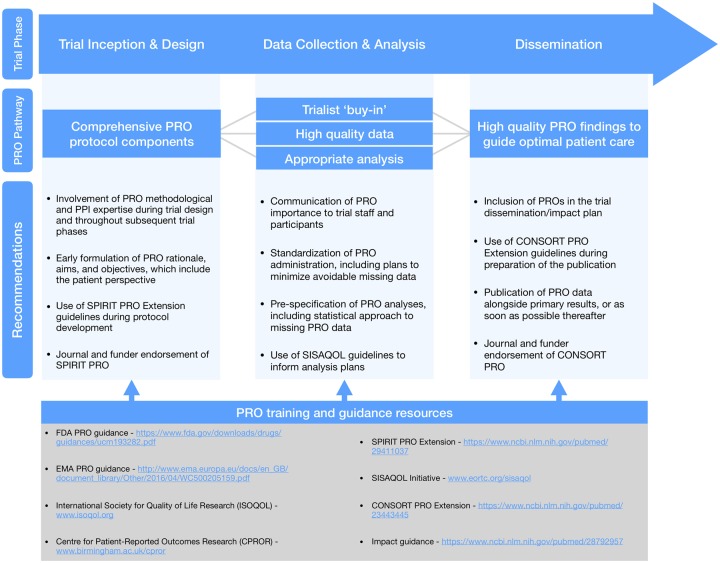

Alongside the current study, we conducted 44 follow-up qualitative interviews (Retzer A, Calvert M, Ahmed K, Keeley T, Armes J, Brown JM, Calman L, Gavin G, Glaser AW, Greenfield DM, Lanceley A, Taylor RM, Velikova G, Brundage M, Efficace F, Mercieca-Bebber R, King MT, Turner G, Kyte D. unpublished data) with journal editors, funder representatives, international PRO methodology experts, people with lived experience of cancer, and trialists based in Austria, Canada, Belgium, the Netherlands, Spain, the United States, and the United Kingdom. The protocol is available (38), and results will be published elsewhere. The qualitative data suggest the reasons underpinning our concerning findings are multifactorial, aligning with related research in this area (11,12,35,39). In summary, interviewees suggested that future trials collecting PROs should include more comprehensive PRO trial design and protocol development involving PRO expertise and patient input, with a focus on standardized administration, minimizing burden, preventing/addressing missing data, development of a priori PRO analyses and dissemination plans, and training of all staff involved. We concur with these suggestions and propose several further methodological recommendations below (summarized in Figure 4).

Figure 4.

Summary of key recommendations and resources. CONSORT = Consolidated Standards of Reporting Trials; CPROR = Centre for Patient-Reported Outcomes Research; FDA = Food & Drug Administration; EMA = European Medicines Agency; ISOQOL = International Society for Quality of Life Research; PPI = patient and public involvement; PRO = patient-reported outcome; SISAQOL = Setting International Standards in Analysing Patient-Reported Outcomes and Quality of Life Endpoints Data; SPIRIT = Standard Protocol Items: Recommendations for Interventional Trials.

Our study had limitations. We were unable to source all protocols in our sample, either because database or registry information was out of date or because researchers refused to provide the document. The PRO protocol checklist we used was a precursor to the internationally endorsed SPIRIT-PRO Extension, which was published after our study had taken place. However, SPIRIT-PRO represents minimum standards, whereas the PRO protocol checklist is more comprehensive and was developed by experts in the field following a large-scale systematic review (31). Most included studies were developed before the publication of the PRO protocol and CONSORT-PRO checklists. However, as no other internationally endorsed PRO-specific consensus guidelines or checklists existed at the time of our study, we believe their use is justified. Moreover, they provide a useful benchmark that may help leverage improvements in future trials collecting PROs. Although the criteria for publication and reporting of phase II and III trials can differ, the reporting standards we employed did not distinguish between these study designs. To mitigate this, investigators agreed where a checklist item was deemed “not applicable” according to the study design, and the denominator was adjusted accordingly during the analysis. NIHR Portfolio trials are predominantly United Kingdom-led; thus, replication of our study results in other countries is needed to demonstrate generalizability. The confidence intervals for predictors in our exploratory regression models were quite wide, reflecting the spectrum of quality observed in protocol content and reporting; this should be considered when interpreting the results. It should be noted that the most recent trials included in our sample closed at least 3 years before our final literature search in June 2017. Some studies may have gone on to publish their PRO data after this cutoff, which should also be considered when interpreting our results. We would, however, argue that even reporting delays of this magnitude may impair the uptake of PRO trial results in practice and contravene recent regulatory and funder requirements mandating publication of results within 12 months of trial completion (40,41).

Our findings suggest that nonreporting of PRO trial findings is widespread, and concerns surrounding standards of PRO protocol content and reporting in cancer clinical trials appear valid. Thus, valuable patient-centered information may not be available to aid the decision-making of patients, clinicians, and regulators. These deficiencies must be urgently addressed to ensure these data are made available to enhance clinical outcomes for the benefit of future patients.

We therefore recommend that researchers utilize the recently published SPIRIT-PRO Extension (37) alongside the original SPIRIT 2013 statement (28,42) when developing protocols for trials including PROs. For reporting, we encourage the use of the CONSORT-PRO (30) Extension alongside CONSORT (43). Evidence suggests that the use of such checklists may be valuable in driving up standards of PRO research (44). We urge funders and journals to endorse and enforce the use of SPIRIT-PRO and CONSORT-PRO and to promote and facilitate prompt publication of PRO findings, preferably as part of the main trial report. Finally, we encourage all stakeholders to utilize the growing range of suitable open access PRO training resources and guidelines to support high-quality PRO research and dissemination.

Funding

This work was supported by project funding from Macmillan Cancer Support (grant number 5592105). The study funder did not have any role in the study design; the collection, analysis, and interpretation of data; the writing of the report; or the decision to submit the article for publication.

Notes

Affiliations of authors: Centre for Patient-Reported Outcomes Research, Institute of Applied Health Research, University of Birmingham, Birmingham, UK (DK, AR, KA, TK, GT, MC); UK National Cancer Research Institute (NCRI) Psychosocial Oncology and Survivorship CSG subgroup: Understanding and Measuring the Consequences of Cancer and Its Treatment, UK (DK, JA, LC, AG, AWG, DMG, AL, RMT); School of Health Sciences, University of Surrey, Guildford, UK (JA); Clinical Trials Research Unit, University of Leeds, Leeds, UK (JMB); Macmillan Survivorship Research Group, Health Sciences, University of Southampton, Highfield Campus, Southampton, UK (LC); N. Ireland Cancer Registry, Centre for Public Health, Queen’s University Belfast, Belfast, Northern Ireland (AG); Leeds Institute of Cancer and Pathology, University of Leeds, Leeds, UK (AWG, GV); Sheffield Teaching Hospital NHS Foundation Trust and University of Sheffield, Sheffield, UK (DMG); UCL Elizabeth Garrett Anderson Institute for Women’s Health, Medical School Building, University College London, London, UK (AL); Cancer Clinical Trials Unit, University College London Hospitals NHS Foundation Trust, London, UK (RMT); Queen’s Department of Oncology School of Medicine, Queen’s Cancer Research Institute, Kingston, Ontario, Canada (MB); Italian Group for Adult Hematologic Diseases (GIMEMA), Health Outcomes Research Unit, Rome, Italy (FE); Sydney Medical School, University of Sydney and Psycho-oncology Co-operative Research Group, School of Psychology, Faculty of Science, University of Sydney, Sydney, Australia (RMB, MTK); NHMRC Clinical Trials Centre, University of Sydney, Sydney, Australia (RMB); NIHR Birmingham Biomedical Research Centre, University Hospitals Birmingham NHS Foundation Trust and University of Birmingham, Birmingham, UK (DK, MC).

The funders had no role in design of the study; the collection, analysis, and interpretation of the data; the writing of the manuscript; and the decision to submit the manuscript for publication. All authors had full access to all of the data in the study and take responsibility for the integrity of the data and the accuracy of the data analysis. The lead author affirms that this manuscript is an honest, accurate, and transparent account of the study being reported; that no important aspects of the study have been omitted; and that any discrepancies from the study as planned have been explained.

DK, AR, KA, TK, and MC were supported by project funding from Macmillan Cancer Support. JA, LC, AGa, AGl, DK, AL, RT, and DG are all members of the National Cancer Research Institute Psychosocial Oncology and Survivorship CSG subgroup: Understanding and measuring the consequences of cancer and its treatment. FE reports personal fees from Bristol-Myers Squibb, grants and personal fees from TEVA, grants from Amgen, personal fees from Orsenix, and personal fees from Incyte outside the submitted work. GV and JB report grants from the National Institute for Health Research (NIHR) and Yorkshire Cancer Research; in addition, GV reports personal fees from Roche, Genentech, Eisai, and Novartis. MC reports grants from Macmillan Cancer Support, Innovate UK, the NIHR, NIHR Birmingham Biomedical Research Centre, and NIHR Surgical Reconstruction and Microbiology Research Centre (SRMRC) at the University of Birmingham and University Hospitals Birmingham NHS Foundation Trust, and personal fees from Astellas, Takeda, and Merck outside the submitted work. DK reports grants from Macmillan Cancer Support, Innovate UK, the NIHR, NIHR Birmingham Biomedical Research Centre, and NIHR SRMRC at the University of Birmingham and University Hospitals Birmingham NHS Foundation Trust, and personal fees from Merck outside the submitted work.

The study was initially conceived by members of the UK National Cancer Research Institute (NCRI) Psychosocial Oncology and Survivorship CSG subgroup: Understanding and measuring the consequences of cancer and its treatment (JA, LC [chair], AGa, AGl, DG, DK, AL, and RT). DK, MC, JA, LC, AGa, AGl, DG, AL, and RT acquired funding. DK and MC designed the study. KA, TK, AR, and GT undertook data extraction and analysis with input from DK and MC. DK drafted the first version of the manuscript, with all authors contributing to subsequent iterations. All authors provided critical input on the manuscript and approved the final version for publication. DK or MC is guarantor.

We thank the researchers who responded to our requests for information and trial documentation. We also thank Anita Walker for her administrative support and Dr Richard Lehman for his comments on the manuscript.

Supplementary Material

References

- 1. Kluetz PG, Slagle A, Papadopoulos EJ, et al. Focusing on core patient-reported outcomes in cancer clinical trials: symptomatic adverse events, physical function, and disease-related symptoms. Clin Cancer Res. 2016;22(7):1553–1558.

- 2. Basch E. Toward patient-centered drug development in oncology. N Engl J Med. 2013;369(5):397–400.

- 3. Basch E, Jia X, Heller G, et al. Adverse symptom event reporting by patients vs clinicians: relationships with clinical outcomes. J Natl Cancer Inst. 2009;101(23):1624–1632.

- 4. Ahmed S, Berzon RA, Revicki DA, et al. The use of patient-reported outcomes (PRO) within comparative effectiveness research: implications for clinical practice and health care policy. Med Care. 2012;50(12):1060–1070.

- 5. Brundage M, Leis A, Bezjak A, et al. Cancer patients’ preferences for communicating clinical trial quality of life information: a qualitative study. Qual Life Res. 2003;12(4):395–404.

- 6. FDA. Guidance for Industry: Patient-Reported Outcome Measures: Use in Medical Product Development to Support Labeling Claims. 2009. http://www.fda.gov/downloads/Drugs/GuidanceComplianceRegulatoryInformation/Guidances/UCM193282.pdf. Accessed March 2019.

- 7. Ouwens M, Hermens R, Hulscher M, et al. Development of indicators for patient-centred cancer care. Support Care Cancer. 2010;18(1):121–130.

- 8. European Medicines Agency. Appendix 2 to the guideline on the evaluation of anticancer medicinal products in man: the use of patient-reported outcome (PRO) measures in oncology studies. 2016. https://www.ema.europa.eu/en/documents/other/appendix-2-guideline-evaluation-anticancer-medicinal-products-man_en.pdf. Accessed March 2019.

- 9. Kyte D, Duffy H, Fletcher B, et al. Systematic evaluation of the patient-reported outcome (PRO) content of clinical trial protocols. PLoS One. 2014;9(10):e110229.

- 10. Mercieca-Bebber R, Friedlander M, Kok PS, et al. The patient-reported outcome content of international ovarian cancer randomised controlled trial protocols. Qual Life Res. 2016;25(10):2457–2465.

- 11. Kyte D, Ives J, Draper H, et al. Inconsistencies in quality of life data collection in clinical trials: a potential source of bias? Interviews with research nurses and trialists. PLoS One. 2013;8(10):e76625.

- 12. Mercieca-Bebber R, Kyte D, Calvert M, et al. Administering patient-reported outcome questionnaires in Australian cancer trials: the roles, experiences, training received and needs of site coordinators. Trials. 2017;18(suppl 1):O30.

- 13. Bylicki O, Gan HK, Joly F, et al. Poor patient-reported outcomes reporting according to CONSORT guidelines in randomized clinical trials evaluating systemic cancer therapy. Ann Oncol. 2015;26(1):231–237.

- 14. Dirven L, Taphoorn MJ, Reijneveld JC, et al. The level of patient-reported outcome reporting in randomised controlled trials of brain tumour patients: a systematic review. Eur J Cancer. 2014;50(14):2432–2448.

- 15. Efficace F, Fayers P, Pusic A, et al. Quality of patient-reported outcome reporting across cancer randomized controlled trials according to the CONSORT patient-reported outcome extension: a pooled analysis of 557 trials. Cancer. 2015;121(18):3335–3342.

- 16. Efficace F, Feuerstein M, Fayers P, et al. Patient-reported outcomes in randomised controlled trials of prostate cancer: methodological quality and impact on clinical decision making. Eur Urol. 2014;66(3):416–427.

- 17. Efficace F, Jacobs M, Pusic A, et al; EORTC Quality of Life Group. Patient-reported outcomes in randomised controlled trials of gynaecological cancers: investigating methodological quality and impact on clinical decision-making. Eur J Cancer. 2014;50(11):1925–1941.

- 18. Joly F, Vardy J, Pintilie M, et al. Quality of life and/or symptom control in randomized clinical trials for patients with advanced cancer. Ann Oncol. 2007;18(12):1935–1942.

- 19. Mercieca-Bebber RL, Perreca A, King M, et al. Patient-reported outcomes in head and neck and thyroid cancer randomised controlled trials: a systematic review of completeness of reporting and impact on interpretation. Eur J Cancer. 2016;56:144–161.

- 20. Smith AB, Cocks K, Parry D, et al. Reporting of health-related quality of life (HRQOL) data in oncology trials: a comparison of the European Organization for Research and Treatment of Cancer Quality of Life (EORTC QLQ-C30) and the Functional Assessment of Cancer Therapy-General (FACT-G). Qual Life Res. 2014;23(3):971–976.

- 21. Weingärtner V, Dargatz N, Weber C, et al. Patient reported outcomes in randomized controlled cancer trials in advanced disease: a structured literature review. Expert Rev Clin Pharmacol. 2016;9(6):821–829.

- 22. Schandelmaier S, Conen K, von Elm E, et al. Planning and reporting of quality-of-life outcomes in cancer trials. Ann Oncol. 2015;26(9):1966–1973.

- 23. ASCO. ASCO Value Framework Update. 2016. http://www.asco.org/advocacy-policy/asco-in-action/asco-value-framework-update. Accessed August 2017.

- 24. Keetharuth A, Dixon S, Winter M, et al. Effects of cancer treatment on quality of life (ECTQOL): final results. Decision Support Unit, University of Sheffield; 2014. http://www.nicedsu.org.uk/DSU_Cancer_utilities_ECTQoL_final_report_SEPT_2014.pdf. Accessed August 2015.

- 25. Wilson R. Patient led PROMs must take centre stage in cancer research. Res Involv Engagem. 2018;4(1):7.

- 26. Ahmed K, Kyte D, Keeley T, et al. Systematic evaluation of patient-reported outcome (PRO) protocol content and reporting in UK cancer clinical trials: the EPiC study protocol. BMJ Open. 2016;6(9):e012863.

- 27. Efficace F, Rees J, Fayers P, et al. Overcoming barriers to the implementation of patient-reported outcomes in cancer clinical trials: the PROMOTION registry. Health Qual Life Outcomes. 2014;12(1):86.

- 28. Chan AW, Tetzlaff JM, Altman DG, et al. SPIRIT 2013 statement: defining standard protocol items for clinical trials. Ann Intern Med. 2013;158(3):200–207.

- 29. Schulz KF, Altman DG, Moher D, et al. CONSORT 2010 statement: updated guidelines for reporting parallel group randomized trials. Jpn Pharmacol Ther. 2015;43(6):883–891.

- 30. Calvert M, Blazeby J, Altman DG, et al. Reporting of patient reported outcomes in randomised trials: the CONSORT PRO extension. JAMA. 2013;309(8):814–822.

- 31. Calvert M, Kyte D, Duffy H, et al. Patient-reported outcome (PRO) assessment in clinical trials: a systematic review of guidance for trial protocol writers. PLoS One. 2014;9(10):e110216.

- 32. Marandino L, La Salvia A, Sonetto C, et al. Deficiencies in health-related quality-of-life assessment and reporting: a systematic review of oncology randomized phase III trials published between 2012 and 2016. Ann Oncol. 2018;29(12):2288–2295.

- 33. Brundage M, Bass B, Davidson J, et al. Patterns of reporting health-related quality of life outcomes in randomized clinical trials: implications for clinicians and quality of life researchers. Qual Life Res. 2011;20(5):653–664.

- 34. Fairclough D, Peterson HF, Chang V. Why are missing quality of life data a problem in clinical trials of cancer therapy? Stat Med. 1998;17(5–7):667–677.

- 35. Kyte D, Ives J, Draper H, et al. Current practices in patient-reported outcome (PRO) data collection in clinical trials: a cross-sectional survey of UK trial staff and management. BMJ Open. 2016;6(10):e012281.

- 36. Kyte D, Ives J, Draper H, et al. Management of patient-reported outcome (PRO) alerts in clinical trials: a cross sectional survey. PLoS One. 2016;11(1):e0144658.

- 37. Calvert M, Kyte D, Mercieca-Bebber R, et al. Guidelines for inclusion of patient-reported outcomes in clinical trial protocols: the SPIRIT-PRO extension. JAMA. 2018;319(5):483–494.

- 38. Retzer A, Keeley T, Ahmed K, et al. Evaluation of patient-reported outcome protocol content and reporting in UK cancer clinical trials: the EPiC study qualitative protocol. BMJ Open. 2018;8(2):e017282.

- 39. Friedlander M, Mercieca-Bebber R, King M. Patient-reported outcomes (PRO) in ovarian cancer clinical trials: lost opportunities and lessons learned. Ann Oncol. 2016;27(suppl 1):i66–i71.

- 40. EU. Posting of Clinical Trial Summary Results in European Clinical Trials Database (EudraCT) to Become Mandatory for Sponsors as of 21 July 2014. 2014. https://www.ema.europa.eu/en/news/posting-clinical-trial-summary-results-european-clinical-trials-database-eudract-become-mandatory. Accessed December 2018.

- 41. MRC. Written Evidence Submitted by the Medical Research Council (RES0041): Research Integrity and Clinical Trials Transparency. 2018. http://data.parliament.uk/writtenevidence/committeeevidence.svc/evidencedocument/science-and-technology-committee/research-integrity/written/77043.html. Accessed December 2018.

- 42. Chan AW, Tetzlaff JM, Gøtzsche PC, et al. SPIRIT 2013 explanation and elaboration: guidance for protocols of clinical trials. BMJ. 2013;346:e7586.

- 43. Schulz KF, Altman DG, Moher D. CONSORT 2010 statement: updated guidelines for reporting parallel group randomised trials. PLoS Med. 2010;7(3):e1000251.

- 44. Mercieca-Bebber R, Rouette J, Calvert M, et al. Preliminary evidence on the uptake, use and benefits of the CONSORT-PRO extension. Qual Life Res. 2017;26(6):1427–1437.
