Abstract

Recent studies have reported the existence of rich non-spatial visual object representations in both human and monkey posterior parietal cortex (PPC), similar to those found in occipito-temporal cortex (OTC). Despite this similarity, we recently showed that visual object representations still differ between OTC and PPC in two aspects. In one study, by manipulating whether object shape or color was task relevant, we showed that visual object representations were under greater top-down attention and task control in PPC than in OTC (Vaziri-Pashkam & Xu, 2017, J Neurosci). In another study, using a bottom-up data-driven approach, we showed that there exists a large separation between PPC and OTC regions in the representational space, with OTC regions lining up hierarchically along an OTC pathway and PPC regions lining up hierarchically along an orthogonal PPC pathway (Vaziri-Pashkam & Xu, 2019, Cereb Cortex). To understand the interaction between goal-directed visual processing and the two-pathway structure in the representational space, here we performed a set of new analyses of the data from the three experiments of Vaziri-Pashkam and Xu (2017) and directly compared the two-pathway separation of OTC and PPC regions when object shapes were attended and task relevant and when they were not. We found that in all three experiments the correlation of visual object representational structure between superior IPS (a key PPC visual region) and lateral and ventral occipito-temporal regions (higher OTC visual regions) was greater when object shapes were attended than when they were not. This modified the two-pathway structure: when shapes were attended, PPC regions moved closer to higher OTC regions and the PPC pathway was compressed towards the OTC pathway in the representational space.
Consistent with this observation, the correlation between neural and behavioral measures of visual representational structure was also higher in superior IPS when shapes were attended than when they were not. By comparing representational structures across experiments and tasks, we further showed that, despite noise and stimulus differences across the experiments, attention to object shape made object representations in superior IPS more similar across experiments than between the two tasks within the same experiment. Overall, these results demonstrate that, despite the separation of the OTC and PPC pathways in the representational space, the visual representational structure of PPC is flexible and can be modulated by task demands. This reaffirms the adaptive nature of visual processing in PPC and further distinguishes it from the more invariant nature of visual processing in OTC.

Keywords: Object representation, Attention, Task, Posterior parietal cortex, Occipito-temporal cortex

Introduction

Overview

Although the primate posterior parietal cortex (PPC) is largely known for its role in spatial, attentional and action-related processing, over the last two decades a growing number of monkey neurophysiology and human imaging studies have reported direct representations of a variety of action-independent non-spatial visual information in PPC, both in perception and during the visual working memory (VWM) delay (see Xu, 2018a for a recent review; see also Xu, 2018b). For example, by correlating behavioral performance and fMRI response amplitudes across the whole brain, research in VWM, including our own, has identified a region in the superior part of the human intraparietal sulcus (IPS; henceforth referred to as superior IPS for simplicity) that tracks the amount of visual information stored in VWM (Todd & Marois 2004 & 2005; Xu & Chun 2006; Xu 2007; see also Sheremata et al., 2010). Our further fMRI decoding studies have revealed that the superior IPS region can represent a variety of visual information such as oriented gratings, object shapes, object categories and viewpoint-invariant object identity (Xu & Jeong, 2015; Bettencourt & Xu, 2016a; Jeong & Xu, 2016 & 2017; Vaziri-Pashkam & Xu, 2017 & 2019; Vaziri-Pashkam et al., 2019; see also Liu et al. 2011; Hou & Liu, 2012; Christophel et al. 2012, 2015, 2018; Ester et al. 2015, 2016; Weber et al. 2016; Bracci et al. 2017; Freud et al., 2017; Yu & Shim 2017). Representations in superior IPS also exhibit tolerance to changes in position, size and spatial frequency, similar to those found in OTC regions (Vaziri-Pashkam & Xu, 2019; Vaziri-Pashkam et al., 2019). These results echo an earlier fMRI adaptation study documenting position-, size- and viewpoint-tolerant object representations in IPS topographic areas IPS1 and IPS2 (Konen & Kastner 2008; see also Sawamura et al., 2005). In monkey studies, action-independent non-spatial visual responses have been reported largely from the macaque lateral intraparietal (LIP) area.
Because superior IPS partially overlaps with IPS1/IPS2 (Bettencourt & Xu 2016b), and macaque LIP is considered the homolog of human IPS1/IPS2 (Silver & Kastner 2009), roughly the same visual processing region appears to exist in both macaques and humans.

Despite these similarities, two differences in visual representation have been reported between PPC and OTC. The first one is that visual representations in PPC are in general under greater attention and task control and exhibit greater distractor resistance than those in OTC (e.g., Xu, 2010; Jeong & Xu, 2013; Bettencourt & Xu, 2016a; Bracci & Op de Beeck, 2017; Vaziri-Pashkam & Xu, 2017). The second difference is that there is an information-driven two-pathway separation between OTC and PPC regions in the representational space, with OTC regions arranging hierarchically along one pathway and PPC regions along another pathway (Vaziri-Pashkam & Xu, 2019). The goal of this study is to understand the relationship between these two differences in visual representation between OTC and PPC. We do so by reporting several new analyses of the data from Vaziri-Pashkam and Xu (2017).

Some human fMRI studies have examined the entire PPC as a single brain region, whereas others have reported visual representations from multiple PPC regions. However, representations in topographic areas IPS1 and IPS2 exhibit the greatest tolerance to low-level visual feature changes, and those in the VWM-defined superior IPS exhibit greater task modulation and resistance to distraction than those in other IPS regions (e.g., Konen & Kastner 2008; Jeong & Xu, 2013; Xu & Jeong 2015; Bettencourt & Xu 2016a). Given the overlap between superior IPS and IPS1 and IPS2 (Bettencourt & Xu, 2016b), superior IPS appears to be a key visual processing region in the human PPC. As such, we focused our PPC investigation on superior IPS in this study.

PPC visual representations are under greater attention and task modulation than those in OTC

To understand whether visual representations differ between PPC and OTC, in a recent study (Vaziri-Pashkam & Xu, 2017), we examined how goal-directed visual processing may impact visual representations in these two brain regions. We asked human participants to view colored exemplars from eight object categories while either shape or color was attended and task relevant. We additionally varied the strength of feature conjunction across three experiments, making color and shape partially overlapping, overlapping but on separate objects, or fully integrated (Figures 1A & 1B). Using fMRI and multi-voxel pattern analysis (MVPA), we examined object category decoding in topographically and functionally defined regions in PPC and OTC (Figure 1C). We first extracted the averaged fMRI response from a block of exemplars as the fMRI response pattern for that object category. We then obtained pairwise fMRI MVPA decoding accuracy in both OTC and PPC for the eight object categories used in the experiment. Although there was an overall effect of task, with higher object category decoding in the shape than in the color task in both OTC and PPC, the effect was significantly greater in PPC than in OTC. This task effect was additionally modulated by the strength of feature conjunction: it was strongest when features were less conjoined and diminished when features were fully conjoined, reflecting the well-known object-based feature encoding effect (e.g., Duncan 1984; Luck & Vogel 1997; O’Craven et al. 1999). These decoding results were seen both in the direct decoding of object categories within each task and in cross-decoding, in which the classifier was trained with the categories from one task and tested with those from the other task.
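The pairwise decoding described above can be sketched as follows. This is a minimal illustration under stated assumptions and is not the analysis code of Vaziri-Pashkam and Xu (2017). It assumes one block-averaged pattern per category per run and uses leave-one-run-out cross-validation. It also substitutes a simple correlation-based nearest-centroid classifier for whatever classifier the original study used. The function names are hypothetical. Passing the other task's patterns as `test_patterns` gives a cross-task (cross-decoding) variant; in that case run indices are simply paired across the two tasks, which is a simplification.

```python
import itertools

import numpy as np


def _corr_rows(a, b):
    """Pearson correlation between every row of `a` and every row of `b`."""
    a = (a - a.mean(axis=1, keepdims=True)) / a.std(axis=1, keepdims=True)
    b = (b - b.mean(axis=1, keepdims=True)) / b.std(axis=1, keepdims=True)
    return a @ b.T / a.shape[1]


def pairwise_decoding(train_patterns, test_patterns=None):
    """Leave-one-run-out pairwise decoding accuracy for every pair of categories.

    train_patterns: array (n_runs, n_categories, n_voxels) of block-averaged responses.
    test_patterns: same shape; defaults to train_patterns (within-task decoding).
    """
    if test_patterns is None:
        test_patterns = train_patterns
    n_runs, n_cat, _ = train_patterns.shape
    acc = np.full((n_cat, n_cat), np.nan)  # diagonal stays undefined
    for i, j in itertools.combinations(range(n_cat), 2):
        correct = 0
        for held_out in range(n_runs):
            train_runs = [r for r in range(n_runs) if r != held_out]
            # Category centroids estimated from the training runs only: shape (2, n_voxels)
            centroids = train_patterns[train_runs][:, [i, j]].mean(axis=0)
            test = test_patterns[held_out][[i, j]]
            # Assign each held-out pattern to the more strongly correlated centroid
            predicted = _corr_rows(test, centroids).argmax(axis=1)
            correct += int(predicted[0] == 0) + int(predicted[1] == 1)
        acc[i, j] = acc[j, i] = correct / (2 * n_runs)
    return acc


# Synthetic example: 16 runs, 8 categories, 200 voxels
rng = np.random.default_rng(0)
signal = rng.normal(size=(8, 200))
data = signal + rng.normal(scale=2.0, size=(16, 8, 200))
accuracy = pairwise_decoding(data)
print(round(float(np.nanmean(accuracy)), 2))
```

The resulting 8 × 8 accuracy matrix is the kind of input that the representational similarity and MDS analyses below operate on.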

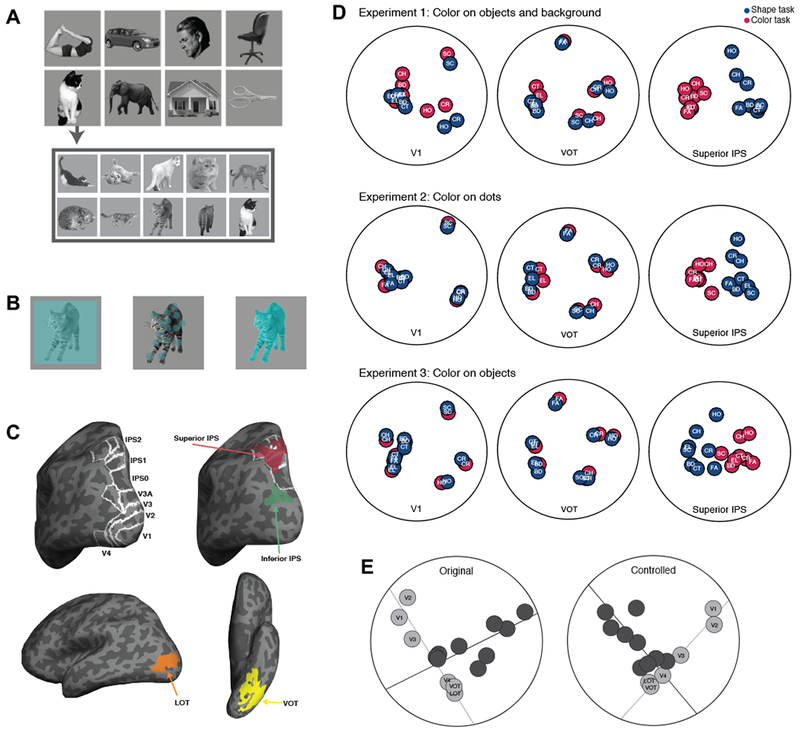

Figure 1.

Stimuli, regions of interest (ROIs) and previous findings. A. Example stimuli used in the experiments. The same 10 distinctive exemplars from 8 object categories were used in each of the three experiments. B. The relationship between the shape and color features in each experiment. In Experiment 1, color appeared over the entire image square such that both the objects and the background were colored (leftmost image). In Experiment 2, color appeared on a set of dots superimposed on the objects and shared a similar spatial envelope with the objects (middle image). In Experiment 3, color appeared on the objects only (rightmost image). C. Inflated brain surface from a representative participant showing the ROIs examined: topographic ROIs in OTC and PPC, superior IPS and inferior IPS ROIs, with white outlines showing the overlap between these two PPC ROIs and PPC topographic ROIs, and LOT and VOT ROIs. D. Category representational structures across the two tasks in V1, VOT and superior IPS for Experiments 1-3 as reported in Vaziri-Pashkam and Xu (2017). Using the pairwise decoding accuracy for each pair of object categories as input, we performed an MDS analysis and projected the similarity between each pair of object categories as the distance on a 2D surface. Each colored circle denotes an object category in a task, with blue circles representing those in the shape task and red circles those in the color task. BD: body, CT: cat, CH: chair, CR: car, EL: elephant, FA: face, HO: house, and SC: scissors. E. A two-pathway separation of visual information representation in OTC and PPC regions as reported in Vaziri-Pashkam and Xu (2019). Here participants viewed uncolored exemplars from the same 8 object categories as those shown in Figure 1A and performed a 1-back object repetition detection task. The exemplars were either shown unedited (original) or with contrast, luminance and spatial frequency equalized (controlled).
Using pairwise object decoding accuracy, we constructed a category-wise similarity matrix for each ROI. We then correlated these matrices across ROIs to construct a region-wise similarity matrix. Using MDS, we projected the ROIs onto a 2D surface, with the distances between the ROIs reflecting the differences in their object category representational structures. For both the original (left panel) and controlled (right panel) images, we saw the emergence of a two-pathway structure in the representational space, with OTC regions lining up hierarchically along an OTC pathway and PPC regions lining up hierarchically along an orthogonal PPC pathway. A total least squares regression line through the OTC regions (the light gray line) and another through the PPC regions (the dark gray line) accounted for 79% and 61% of the total variance of the region-wise differences in visual object representation for the original and the controlled object images, respectively. Figures used here are modified from those that appeared in Vaziri-Pashkam and Xu (2017) and Vaziri-Pashkam and Xu (2019).
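The line fits in the caption can be illustrated with a total least squares (orthogonal regression) fit to each group of ROI coordinates. The sketch below rests on two assumptions. First, the inputs are the 2D MDS coordinates of the OTC and PPC ROIs. Second, "variance accounted for" is taken to mean one minus the ratio of two quantities: the summed squared perpendicular residuals of the two lines, and the total squared deviation of all ROIs from their common centroid. This definition and the function names are assumptions, not the original study's code.

```python
import numpy as np


def tls_line(points):
    """Total-least-squares line through 2-D points of shape (n, 2).

    Returns (centroid, unit direction, sum of squared perpendicular residuals).
    """
    points = np.asarray(points, dtype=float)
    centroid = points.mean(axis=0)
    # The first right-singular vector of the centred points minimizes the summed
    # squared orthogonal distances; the second singular value gives that residual.
    _, s, vt = np.linalg.svd(points - centroid, full_matrices=False)
    residual_ss = float(s[1] ** 2) if len(s) > 1 else 0.0
    return centroid, vt[0], residual_ss


def two_line_variance_explained(group_a, group_b):
    """Fraction of the total variance of all points captured by two separate TLS lines."""
    all_points = np.vstack([np.asarray(group_a, float), np.asarray(group_b, float)])
    total_ss = float(((all_points - all_points.mean(axis=0)) ** 2).sum())
    residual_ss = tls_line(group_a)[2] + tls_line(group_b)[2]
    return 1.0 - residual_ss / total_ss


# Hypothetical MDS coordinates for a few OTC and PPC ROIs
otc = [[0.0, 0.0], [1.0, 0.1], [2.0, -0.1], [3.0, 0.05]]
ppc = [[0.5, 1.0], [0.4, 2.0], [0.6, 3.0]]
print(round(two_line_variance_explained(otc, ppc), 3))
```

Orthogonal rather than ordinary regression is used here because neither MDS axis is a dependent variable, so residuals are measured perpendicular to each line.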

These results may be best illustrated using representational similarity analysis (Kriegeskorte & Kievit, 2013) and multidimensional scaling (MDS; Shepard, 1980). In this approach, the two dimensions that capture most of the representational variance among the eight categories in a brain region are projected onto a 2D surface, with the distance between each pair of categories on this surface reflecting how dissimilar they are (Vaziri-Pashkam & Xu 2017). In these MDS plots (Figure 1D), object categories from the two attention conditions appeared to spread out to a similar extent in two representative OTC regions: V1 and the ventral occipito-temporal area (VOT, which largely overlaps with the posterior fusiform area but extends further into the temporal cortex in our effort to include as many object-selective voxels as possible in OTC regions). However, the spread was much greater in superior IPS when shape, rather than color, was attended and when the two features were less integrated. This shows that task and attention play a more dominant role in determining the distinctiveness of object representation in superior IPS than in V1 or VOT. Additionally, whereas object representations from the two attention conditions overlapped extensively in V1 and VOT, they were completely separated in superior IPS regardless of the amount of feature integration. Thus, whereas the representational structures of V1 and VOT predominantly reflect the differences among objects, that of superior IPS reflects both the differences among objects and the demands of visual processing. Object representation therefore appears to be more adaptive and task sensitive in PPC than in OTC.
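The projection step can be sketched with classical (Torgerson) MDS. This choice is an assumption: the section does not say which MDS variant was used, and Shepard's (1980) framework also covers nonmetric MDS. In this sketch, pairwise decoding accuracy (with the diagonal set to zero) is treated directly as a dissimilarity, so categories that are easier to tell apart end up farther apart. The function name is hypothetical.

```python
import numpy as np


def classical_mds(dissimilarity, n_components=2):
    """Classical (Torgerson) MDS: place items so that their Euclidean distances
    approximate the given symmetric dissimilarities."""
    d = np.asarray(dissimilarity, dtype=float)
    n = d.shape[0]
    centering = np.eye(n) - np.ones((n, n)) / n
    # Double-centred matrix of squared dissimilarities (an inner-product matrix)
    b = -0.5 * centering @ (d ** 2) @ centering
    eigvals, eigvecs = np.linalg.eigh(b)
    order = np.argsort(eigvals)[::-1][:n_components]  # largest eigenvalues first
    scale = np.sqrt(np.clip(eigvals[order], 0.0, None))
    return eigvecs[:, order] * scale


# Example: recover a 2-D layout of 8 "categories" from their distances
rng = np.random.default_rng(1)
points = rng.normal(size=(8, 2))
distances = np.sqrt(((points[:, None] - points[None]) ** 2).sum(axis=-1))
embedding = classical_mds(distances)
```

For truly Euclidean input, the recovered layout reproduces the original distances up to rotation and reflection. For decoding accuracies, which are not guaranteed to be Euclidean, negative eigenvalues are clipped to zero.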

Based on the above results and a detailed review of other studies comparing visual representations between PPC and OTC, we recently argued the following (Xu, 2018a). OTC visual representation is largely dedicated to the detailed analysis of the visual world, allowing us to form an invariant/stable representation and understanding of the world. PPC visual representation, by contrast, is largely adaptive/dynamic/flexible, tracking the demands of goal-directed visual processing and enabling us to interact flexibly with the world. After all, visual processing serves two complementary goals. On the one hand, it is essential to comprehend the richness of the visual input, both to be well informed about our environment and to create stable/invariant representations independent of our specific interactions with the world. On the other hand, at any given moment, only a fraction of the visual input may be useful to guide our thoughts and actions. Having the entire visual input available could be distracting and disruptive. To extract the most useful information, visual processing needs to be selective and adaptive. Understanding the nature of visual representations in OTC and PPC from the perspective of invariant vs. adaptive visual processing thus captures the two complementary goals of visual information processing in the primate brain.

An information-driven two-pathway characterization of OTC and PPC visual representations

To examine whether visual objects are represented similarly in OTC and PPC, in a separate recent study (Vaziri-Pashkam & Xu, 2019), we used the pairwise fMRI decoding accuracies of multiple visual object categories to construct the representational structure of these categories in each brain region. When we correlated these representational structures among OTC and PPC brain regions and then used MDS to project these brain regions onto a 2D surface according to how similar they are in visual object representation, we found a large separation between OTC and PPC in the representational space. Specifically, while OTC regions were arranged hierarchically along one pathway (the OTC pathway), PPC regions were arranged hierarchically along an orthogonal pathway (the PPC pathway) (Figure 1E). This two-pathway distinction was independently replicated ten times across seven experiments and accounted for 58 to 81% of the total variance of the region-wise differences in visual representations. Visual representations thus differ between OTC and PPC despite the existence of rich visual information in both. This separation was unlikely to have been driven by fMRI noise correlation between adjacent brain regions. This is because areas that are cortically next to each other, such as V3 and V4, are more separated in the representational space than areas that are cortically apart, such as V4 and V3A (see Figures 1C & 1E). The latter reflects a shared representation likely enabled by the vertical occipital fasciculus, a recently rediscovered tract that directly connects V4 and V3A (Yeatman et al. 2014; Takemura et al. 2016). Nor could the two-pathway distinction be driven by higher category decoding accuracy in OTC than in PPC. Such a difference would have been normalized when the object category representational dissimilarity matrices (RDMs) were correlated between brain regions.
The separation between OTC and PPC was not driven by differences in tolerance to changes in low-level visual features, as similar amounts of tolerance were seen in OTC and PPC regions. Neither was it driven by an increasingly rich category-based representational landscape along the OTC pathway. The two-pathway distinction was equally strong for natural object categories, which vary in category features such as animate/inanimate, action/non-action and small/large, and for artificial object categories, in which none of these differences existed. Finally, although PPC visual representations show greater attention and task modulation than those in OTC (Bracci & Op de Beeck, 2017; Vaziri-Pashkam & Xu, 2017), the two-pathway distinction was present in Vaziri-Pashkam and Xu (2017) whether or not object shape was task relevant. At present, it remains unknown what drives this two-pathway separation. It is likely that different neural coding schemes are used for object representations in the two pathways. Alternatively, PPC neurons could be selective for a different set of object features than those in OTC.
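The region-wise analysis described above can be sketched as a second-order comparison of RDMs. The sketch assumes the following: each ROI has an 8 × 8 decoding-accuracy RDM; only the off-diagonal upper triangle is compared; the comparison uses Pearson correlation; and 1 − r serves as the region-wise distance passed to MDS. The function names and synthetic data are hypothetical. The example also illustrates the normalization point made above. Because Pearson correlation ignores differences in mean and scale, an ROI with uniformly lower accuracies but the same structure correlates perfectly with the original.

```python
import numpy as np


def rdm_vector(rdm):
    """Off-diagonal upper-triangle entries of a square category RDM."""
    rdm = np.asarray(rdm, dtype=float)
    return rdm[np.triu_indices(rdm.shape[0], k=1)]


def region_similarity(rdms):
    """rdms: dict mapping ROI name -> (n_categories x n_categories) decoding-accuracy RDM.

    Returns the ROI names and the ROI x ROI Pearson correlation matrix.
    """
    names = list(rdms)
    vectors = np.array([rdm_vector(rdms[name]) for name in names])
    return names, np.corrcoef(vectors)


rng = np.random.default_rng(2)
base = rng.uniform(0.5, 1.0, size=(8, 8))
base = (base + base.T) / 2
rdms = {
    "V1": base,
    "VOT": 0.2 + 0.5 * base,  # same structure, lower overall accuracy
    "sIPS": rng.uniform(0.5, 1.0, size=(8, 8)),
}
names, similarity = region_similarity(rdms)
region_distance = 1.0 - similarity  # input for projecting ROIs with MDS
```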

The present study

We thus have two pieces of evidence describing how visual object representation may differ between OTC and PPC. One shows that visual object representation is under greater top-down attention and task control in PPC than in OTC. The other, using a bottom-up data-driven approach, shows a difference in visual object representational structure between the two brain regions. How can we understand these two findings together? On the one hand, the two-pathway structure could be rigid and completely immune to task modulation; on the other hand, it may not be completely fixed and could be modulated by task. To answer this question, in this study we performed a set of new analyses of the data from the three experiments of Vaziri-Pashkam and Xu (2017). We directly compared the two-pathway separation of OTC and PPC when object shapes were attended and task relevant and when they were not. We found that in all three experiments attention and task significantly modulated the two-pathway structure. PPC was positioned closer to higher OTC regions when object shapes were attended, resulting in a compression of the PPC pathway towards the OTC pathway in the visual object representational space. We quantified this observation by showing a significantly higher correlation of visual representational structure between superior IPS and the lateral and ventral occipito-temporal regions (LOT/VOT) when object shapes were attended than when they were not. We also found a significantly higher correlation between neural and behavioral measures of visual representational structure in superior IPS when object shapes were attended than when they were not.
By comparing representations across tasks and experiments in superior IPS, we further showed that, despite noise and stimulus differences across the experiments, object representations were more similar within the shape task across different experiments than between the shape and color tasks within the same experiment. Thus, attention to object shapes resulted in the formation of similar object representations across experiments. These results reaffirm the adaptive nature of visual processing in PPC.
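The comparisons summarized above can be sketched as follows, although this section does not specify the statistics used. Two parts are assumptions. First, the task effect on the superior IPS to LOT/VOT correlation is tested with a paired t statistic on Fisher-z-transformed per-participant correlations. Second, the cross-experiment comparison averages the RDM correlations over pairs of experiments. All function names are hypothetical, and the p-value step (for example, with a t distribution with n − 1 degrees of freedom) is left out.

```python
import itertools

import numpy as np


def upper(rdm):
    """Off-diagonal upper-triangle entries of a square RDM."""
    rdm = np.asarray(rdm, dtype=float)
    return rdm[np.triu_indices(rdm.shape[0], k=1)]


def rdm_r(a, b):
    """Pearson correlation between two RDMs (off-diagonal entries only)."""
    return float(np.corrcoef(upper(a), upper(b))[0, 1])


def paired_task_effect(r_shape, r_color):
    """Mean Fisher-z difference (shape minus color) and paired t with df = n - 1.

    r_shape / r_color: per-participant correlations, e.g. between the superior IPS
    and LOT/VOT RDMs in each task.
    """
    diff = np.arctanh(np.asarray(r_shape, dtype=float)) - np.arctanh(np.asarray(r_color, dtype=float))
    t = diff.mean() / (diff.std(ddof=1) / np.sqrt(diff.size))
    return float(diff.mean()), float(t), diff.size - 1


def across_vs_within(rdms, task="shape", other="color"):
    """rdms[experiment][task] -> RDM for one ROI (e.g. superior IPS).

    Returns (mean r of `task` RDMs across pairs of experiments,
             mean r between `task` and `other` RDMs within each experiment).
    """
    experiments = sorted(rdms)
    across = [rdm_r(rdms[a][task], rdms[b][task])
              for a, b in itertools.combinations(experiments, 2)]
    within = [rdm_r(rdms[e][task], rdms[e][other]) for e in experiments]
    return float(np.mean(across)), float(np.mean(within))
```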

Materials and Methods

The design and some of the analysis details of the three experiments reported here have been described in two previous publications (Vaziri-Pashkam & Xu, 2017 and 2019) and are reproduced here for the readers’ convenience. The three main analyses reported here, however, have not been published before.

Participants

A total of 7 healthy adults (4 females), aged 18 to 35, with normal color vision and normal or corrected-to-normal visual acuity participated in all three main fMRI experiments. An additional 8 adults from the same subject pool (6 females) participated in the behavioral search experiment; one of them (female) also participated in the fMRI experiments. All participants gave their informed consent prior to the experiments and received payment for their participation. The experiments were approved by the Committee on the Use of Human Subjects at Harvard University.

Experimental Design and Procedures

Main experiments

Experiment 1: Color on the object and background.

In this experiment, we used gray-scaled object images from eight object categories (faces, bodies, houses, cats, elephants, cars, chairs and scissors). These categories were chosen because they covered a good range of real-world object categories and were some of the typical categories used in previous investigations of object category representations in ventral visual cortex (e.g., Haxby et al., 2001; Kriegeskorte et al., 2008). For each object category, 10 unique object exemplars were selected (Figure 1A). These exemplars varied in identity, pose (for cats and elephants), expression (for faces) and viewing angle to reduce the likelihood that object category decoding would be driven by the decoding of any particular exemplar. Objects were placed on a light gray background and covered with a semi-transparent colored square subtending 9.24° of visual angle (Figure 1B, leftmost image). Thus, both the object and the background surrounding the object were colored. On each trial, the color of the square was selected from a list of 10 different colors (blue, red, light green, yellow, cyan, magenta, orange, dark green, purple and brown). Participants were instructed to view the images while fixating a centrally presented red dot subtending 0.46° of visual angle. To ensure proper fixation throughout the experiment, eye movements were monitored in all the experiments using an SR Research EyeLink 1000 eye tracker.

In each block, 10 colored exemplars from the same object category were presented sequentially, each for 200 ms followed by a 600 ms fixation period. In half of the runs, participants attended to the object shapes, ignored the colors, and pressed a response button whenever the same object repeated back to back. Two of the objects in each block were randomly selected to repeat. In the other half of the runs, participants attended to the colors, ignored the object shapes, and detected a one-back repetition of the colors, which occurred twice in each block.

Each experimental run consisted of 1 practice block at the beginning of the run and 8 experimental blocks, 1 for each of the 8 object categories. The stimuli in the practice block were chosen randomly from one of the 8 categories, and data from the practice block were removed from further analysis. Each block lasted 8 s. There was a 2 s fixation period at the beginning of the run and an 8 s fixation period after each stimulus block. The presentation order of the object categories was counterbalanced across runs for each task. To balance the presentation order of the two tasks, the task changed every other run, with the order reversed halfway through the session, so that for each participant neither task was presented earlier on average than the other. Each participant completed one session of 32 runs, with 16 runs for each of the two tasks. Each run lasted 2 min 26 s.
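The block and run timings above can be checked with simple arithmetic, and the task-order scheme can be written out explicitly. This is a sketch under one reading of the description: two consecutive runs per task, with the first-half sequence mirrored in the second half. The function names are hypothetical.

```python
def block_duration_ms(n_images=10, image_ms=200, fixation_ms=600):
    """Each block: 10 exemplars, each shown for 200 ms followed by 600 ms of fixation."""
    return n_images * (image_ms + fixation_ms)


def run_duration_s(n_blocks=9, block_s=8, fixation_after_block_s=8, lead_in_s=2):
    """Initial fixation plus 1 practice + 8 experimental blocks, each followed by fixation."""
    return lead_in_s + n_blocks * (block_s + fixation_after_block_s)


def task_order(n_runs=32, tasks=("shape", "color")):
    """Task switches every other run; the second half mirrors the first (AABB... then ...BBAA)."""
    first_half = [tasks[(i // 2) % 2] for i in range(n_runs // 2)]
    return first_half + first_half[::-1]


order = task_order()
# Mean (1-based) run position of each task; equal means neither task comes earlier on average
mean_position = {task: sum(i for i, t in enumerate(order, 1) if t == task) / order.count(task)
                 for task in ("shape", "color")}
print(block_duration_ms(), run_duration_s(), mean_position)
```

Under this reading, both tasks have the same mean run position, which is the balancing property the design aims for.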

Experiment 2: Color on the dots over the object.

The stimuli and paradigm used in this experiment were similar to those of Experiment 1 except that, instead of both the object and the background being colored, a set of 40 semi-transparent colored dots, each with a diameter subtending 0.93° of visual angle, was placed on top of the object, covering the same spatial extent as the object (Figure 1B, middle image). This ensured that participants attended to approximately the same spatial envelope whether they attended to the object shape or the color. Other details of the experiment were identical to those of Experiment 1.

Experiment 3: Color on the object.

The stimuli and paradigm used in this experiment were similar to those of Experiment 1 except that only the objects were colored (Figure 1B, rightmost image), making color more integrated with shape in this experiment than in the previous two. Participants thus attended different features of the same object when doing the two tasks. Other details of the experiment were identical to those of Experiment 1.

Localizer experiments

A number of independently defined human OTC and PPC regions were examined in this study (see Figure 1C). In OTC, we included early visual areas V1 to V4 and areas involved in visual object processing in lateral occipito-temporal (LOT) and ventral occipito-temporal (VOT) regions. LOT and VOT loosely correspond to the location of the lateral occipital (LO) and posterior fusiform (pFs) areas (Malach et al., 1995; Grill-Spector et al., 1998) but extend further into the temporal cortex in our effort to include as many object-selective voxels as possible in OTC. Responses in these regions have previously been shown to correlate with successful visual object detection and identification (e.g., Grill-Spector et al., 2000; Williams et al., 2007), and lesions to these areas have been linked to visual object agnosia (Goodale et al., 1991; Farah, 2004). Previous studies have combined pFs and LO to form a larger ROI, the lateral occipital complex (LOC; e.g., Grill-Spector et al., 2000), as a single higher-level visual processing region. Here we averaged responses from LOT and VOT to form a corresponding larger ROI, LOT/VOT. In PPC, we included regions previously shown to exhibit robust visual encoding along the IPS. Specifically, we included topographic regions V3A, V3B and IPS0-4 along the IPS (Sereno et al., 1995; Swisher et al., 2007; Silver & Kastner, 2009). We also included two functionally defined object-selective regions, one located in the inferior and one in the superior part of the IPS (henceforth referred to as inferior IPS and superior IPS, respectively). Inferior IPS has previously been shown to be involved in visual object selection and individuation, while superior IPS is associated with visual representation and VWM storage (Todd & Marois, 2004 & 2005; Xu & Chun, 2006 and 2009; Xu & Jeong, 2015; Jeong & Xu, 2016; Bettencourt & Xu, 2016a).

Topographic visual regions.

Topographic visual regions were mapped with flashing checkerboards using standard techniques (Sereno et al., 1995; Swisher et al., 2007), with parameters optimized following Swisher et al. (2007) to reveal maps in PPC. Specifically, a polar angle wedge with an arc of 72° swept across the entire screen (23.4° × 17.5° of visual angle). The wedge had a sweep period of 55.467 s, flashed at 4 Hz, and swept for 12 cycles in each run (for more details, see Swisher et al., 2007). Participants completed 4 to 6 runs, each lasting 11 mins 56 secs. The task varied slightly across participants. All participants were asked to detect a dimming in the visual display. For some participants, the dimming occurred only at fixation, for some it occurred only within the polar angle wedge, and for others it could occur in both locations, commensurate with the various methodologies used in the literature (Bressler & Silver, 2010; Swisher et al., 2007). No differences were observed in the maps obtained through each of these methods.
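The "standard techniques" for phase-encoded polar angle mapping typically estimate, for each voxel, the phase of the response at the wedge rotation frequency. The sketch below rests on two assumptions: the analysed time series spans exactly 12 wedge cycles, and the phase and a coherence-like ratio are read from a discrete Fourier transform. For example, 12 cycles of 55.467 s sampled at TR = 2.6 s give roughly 256 volumes. The function name is hypothetical. Converting phase into polar angle also requires correcting for hemodynamic delay, wedge direction and starting position, which this sketch does not attempt.

```python
import numpy as np


def polar_angle_phase(timecourse, n_cycles=12):
    """Phase (radians, 0..2*pi) and coherence-like ratio of a voxel's response at the
    stimulus frequency. Assumes the series spans exactly `n_cycles` wedge rotations."""
    x = np.asarray(timecourse, dtype=float)
    x = x - x.mean()
    spectrum = np.fft.rfft(x)
    amplitude = np.abs(spectrum)
    phase = np.angle(spectrum[n_cycles]) % (2 * np.pi)
    # Amplitude at the stimulus frequency relative to the total (non-DC) spectral amplitude
    coherence = amplitude[n_cycles] / np.sqrt((amplitude[1:] ** 2).sum())
    return phase, coherence


# Synthetic voxel: 256 volumes, 12 cycles, response phase 1.0 rad, plus noise
rng = np.random.default_rng(4)
volumes = np.arange(256)
voxel = np.cos(2 * np.pi * 12 * volumes / 256 + 1.0) + 0.3 * rng.normal(size=256)
phase, coherence = polar_angle_phase(voxel)
```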

Superior IPS.

To identify the superior IPS ROI previously shown to be involved in VWM storage (Todd & Marois, 2004; Xu & Chun, 2006), we followed the procedures developed by Xu and Chun (2006) and implemented by Xu and Jeong (2015). In an event-related object VWM experiment, participants viewed a brief sample display of 1 to 4 everyday objects. After a short delay, they judged whether a new probe object in the test display matched the category of the object shown in the same position in the sample display. A match occurred in 50% of the trials. Gray-scaled photographs of objects from four categories (shoes, bikes, guitars, and couches) were used. Objects could appear above, below, to the left, or to the right of the central fixation. Object locations were marked by white rectangular placeholders that were always visible during the trial. The placeholders subtended 4.5° × 3.6° and were 4.0° away from the fixation (center to center). The entire display subtended 12.5° × 11.8°. Each trial lasted 6 sec and contained the following: fixation (1,000 msecs), sample display (200 msecs), delay (1,000 msecs), test display/response (2,500 msecs), and feedback (1,300 msecs). With a counterbalanced trial history design (Todd & Marois, 2004; Xu & Chun, 2006), each run contained 15 trials for each set size and 15 fixation trials in which only the fixation dot was present for 6 seconds. Two filler trials, which were excluded from the analysis, were added at the beginning and end of each run, respectively, for practice and trial history balancing purposes. Participants were tested with two runs, each lasting 8 min.
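One way to build a counterbalanced trial history sequence is sketched below; it is not necessarily the construction used in the cited studies. It assumes five conditions (set sizes 1 to 4 plus fixation) and first-order counterbalancing, meaning every condition is preceded equally often by every condition, itself included. With 15 trials per condition there are 75 trials, and the 25 ordered condition pairs can each occur 3 times. The sequence is taken as an Eulerian circuit, found with Hierholzer's algorithm, of the complete directed graph with self-loops in which each edge appears three times. The first element serves only as the trial history for the first analysed trial, playing the role of the leading filler trial. The function name and condition labels are hypothetical.

```python
import random
from collections import Counter


def counterbalanced_sequence(n_conditions=5, reps_per_pair=3, seed=None):
    """Sequence in which every ordered pair of conditions (including (a, a)) occurs
    exactly `reps_per_pair` times as consecutive elements.

    Built as an Eulerian circuit (Hierholzer's algorithm) of the complete directed
    graph with self-loops, each edge repeated `reps_per_pair` times.
    """
    rng = random.Random(seed)
    # Outgoing edges of each condition: every condition, reps_per_pair times, shuffled
    out_edges = {}
    for a in range(n_conditions):
        targets = [b for b in range(n_conditions) for _ in range(reps_per_pair)]
        rng.shuffle(targets)
        out_edges[a] = targets
    stack, circuit = [rng.randrange(n_conditions)], []
    while stack:
        node = stack[-1]
        if out_edges[node]:
            stack.append(out_edges[node].pop())  # follow an unused edge
        else:
            circuit.append(stack.pop())          # dead end: add node to the circuit
    return circuit[::-1]


# Hypothetical labels: 0 = fixation trial, 1-4 = set sizes 1-4
sequence = counterbalanced_sequence(seed=7)
filler, trials = sequence[0], sequence[1:]
print(len(trials), Counter(trials))
```

The circuit exists because every node has equal in- and out-degree (15) and the graph is strongly connected, so each ordered pair is used exactly three times.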

Inferior IPS.

Following the procedure developed by Xu and Chun (2006) and implemented by Xu and Jeong (2015), participants viewed blocks of objects and noise images. The object images were similar to the images in the superior IPS localizer, except that in all trials, four images were presented on the display. For the noise images, we took the object images but phase-scrambled each component object. Each block lasted 16 secs and contained 20 images, each appearing for 500 msecs followed by a 300 msecs blank display. Participants were asked to detect the direction of a slight spatial jitter (either horizontal or vertical), which occurred randomly once in every ten images. Each run contained eight object blocks and eight noise blocks. Each participant was tested with two or three runs, each lasting 4 mins 40 secs.

Lateral and ventral occipito-temporal regions (LOT and VOT).

To identify LOT and VOT ROIs, following Kourtzi and Kanwisher (2000), participants viewed blocks of face, scene, object and scrambled object images (all subtending approximately 12.0° × 12.0°). The images were photographs of gray-scaled male and female faces, common objects (e.g., cars, tools, and chairs), indoor and outdoor scenes, and phase-scrambled versions of the common objects. Participants monitored for a slight spatial jitter, which occurred randomly once in every ten images. Each run contained four blocks of each of scenes, faces, objects, and phase-scrambled objects. Each block lasted 16 secs and contained 20 unique images, with each appearing for 750 msecs and followed by a 50 msecs blank display. Besides the stimulus blocks, 8-sec fixation blocks were included at the beginning, middle, and end of each run. Each participant was tested with two or three runs, each lasting 4 mins 40 secs.
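The localizer runs above are block designs, and a voxel's selectivity in such designs is commonly assessed with a general linear model contrast. This section does not state the contrasts or analysis software used. The sketch assumes an objects > scrambled contrast for LOT/VOT, which is the conventional LOC-style contrast. It also assumes a canonical double-gamma HRF with SPM-like shape parameters, ordinary least squares fitting, and a hypothetical block order. The timing reproduces the described run: 16 blocks of 16 s plus three 8 s fixation blocks gives 280 s, that is, 4 mins 40 secs or 140 volumes at TR = 2 s. All function names are hypothetical.

```python
import math

import numpy as np


def double_gamma_hrf(tr, length_s=32.0):
    """Double-gamma HRF (peak shape 6, undershoot shape 16, ratio 1/6) sampled every `tr` s."""
    t = np.arange(0.0, length_s, tr)

    def gamma_pdf(x, shape):
        # Gamma density with unit scale
        return x ** (shape - 1) * np.exp(-x) / math.gamma(shape)

    h = gamma_pdf(t, 6) - gamma_pdf(t, 16) / 6.0
    return h / h.sum()  # unit sum, so a sustained block plateaus near 1


def block_regressor(onsets_s, block_s, n_vols, tr):
    """Boxcar for one condition's blocks, convolved with the HRF."""
    box = np.zeros(n_vols)
    for onset in onsets_s:
        box[int(onset // tr):int((onset + block_s) // tr)] = 1.0
    return np.convolve(box, double_gamma_hrf(tr))[:n_vols]


def contrast_t(y, design, contrast):
    """Ordinary least squares fit and t statistic for a contrast vector."""
    beta, *_ = np.linalg.lstsq(design, y, rcond=None)
    resid = y - design @ beta
    dof = design.shape[0] - np.linalg.matrix_rank(design)
    sigma2 = resid @ resid / dof
    c = np.asarray(contrast, dtype=float)
    var = sigma2 * (c @ np.linalg.pinv(design.T @ design) @ c)
    return float(c @ beta / np.sqrt(var))


# Hypothetical run: 8 s fixation, 8 blocks, 8 s fixation, 8 blocks, 8 s fixation
tr, n_vols = 2.0, 140
conditions = ["face", "scene", "object", "scrambled"]
onsets = {c: [] for c in conditions}
clock = 8.0
for k, cond in enumerate(conditions * 4):
    if k == 8:
        clock += 8.0  # middle fixation block
    onsets[cond].append(clock)
    clock += 16.0
design = np.column_stack([block_regressor(onsets[c], 16.0, n_vols, tr) for c in conditions]
                         + [np.ones(n_vols)])
rng = np.random.default_rng(5)
voxel = 2.0 * design[:, 2] + 0.5 * design[:, 3] + rng.normal(scale=0.5, size=n_vols)
t_objects = contrast_t(voxel, design, [0, 0, 1, -1, 0])  # objects > scrambled
```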

Behavioral visual search experiment

In the visual search experiment, performed outside the scanner, participants searched for a target object category embedded among images of a distractor category. Each participant completed eight blocks of trials. Each of the eight categories in Experiments 1-3 served as the target category for one block, and the remaining seven categories served as the distractor categories for that block. Each block began with an instruction display showing all images of the target category for that block. Participants then viewed ten images appearing in a circular array around fixation. A target category image was present in 50% of the trials and was shown equally often in each of the ten possible locations across trials. In the target-present trials, the target image was pseudo-randomly chosen from the ten possible images of the target category, and the nine distractor images were chosen from the 10 possible exemplar images of the distractor category. The distractor category for each trial was pseudo-randomly chosen from the seven possible distractor categories with equal probability. In the target-absent trials, all the images were from the same distractor category.

Each of the eight blocks contained 16 practice and 140 experimental trials (seven distractor categories × ten locations × two target conditions, i.e., target present and target absent). The inter-trial interval was 0.5 s. To give participants feedback about their accuracy, an additional 2 s was added to the inter-trial interval on incorrect trials, during which the fixation dot flashed on and off. Incorrect trials were repeated at the end of each block until correct responses were obtained for all trials in that block. Reaction times were calculated from all correct trials, and only the reaction times, not the accuracies, were included in further analyses.
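A minimal sketch of how the 140 experimental trials of one search block could be laid out, assuming a fully crossed design of distractor category × target location × target presence. The helper `make_block`, sampling distractor exemplars without replacement, and the simple shuffle are illustrative assumptions, not the original Psychtoolbox code; practice trials and the repetition of incorrect trials are omitted.

```python
import itertools
import random

CATEGORIES = ["face", "house", "body", "cat", "elephant", "car", "chair", "scissors"]
N_LOCATIONS = 10   # positions in the circular search array
N_EXEMPLARS = 10   # exemplar images per category


def make_block(target, rng):
    """Hypothetical helper: the 140 experimental trials for one target category.

    Fully crosses 7 distractor categories x 10 locations x target present/absent.
    Each trial lists the (category, exemplar) shown at each of the 10 positions.
    """
    distractors = [c for c in CATEGORIES if c != target]
    trials = []
    for distractor, location, present in itertools.product(
            distractors, range(N_LOCATIONS), (True, False)):
        # On absent trials `location` only sets the trial count (10 per distractor).
        n_distractor_images = N_LOCATIONS - 1 if present else N_LOCATIONS
        # Assumption: distractor exemplars are sampled without replacement.
        images = [(distractor, i) for i in rng.sample(range(N_EXEMPLARS), n_distractor_images)]
        if present:
            # The target exemplar is drawn at random and placed at `location`.
            images.insert(location, (target, rng.randrange(N_EXEMPLARS)))
        trials.append({"distractor": distractor,
                       "target_location": location if present else None,
                       "images": images})
    rng.shuffle(trials)  # pseudo-random trial order
    return trials


rng = random.Random(0)
block = make_block("cat", rng)
print(len(block))  # prints 140 (7 distractor categories x 10 locations x 2)
```

Crossing the factors, rather than drawing each trial independently, guarantees that every distractor category and every target location occurs equally often within a block.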

MRI methods

MRI data were collected using a Siemens MAGNETOM Trio, A Tim System 3T scanner, with a 32-channel receiver array head coil, at the Center for Brain Science, Harvard University (Cambridge, Massachusetts, USA). Participants lay on their backs inside the scanner and viewed the back-projected display through an angled mirror mounted inside the head coil. The display was projected using an LCD projector at a refresh rate of 60 Hz and a spatial resolution of 1024 × 768. An Apple MacBook Pro laptop was used to generate the stimuli and collect the motor responses. All stimuli were created using MATLAB and Psychtoolbox (Brainard 1997), except for the topographic mapping stimuli, which were created using VisionEgg (Straw, 2008).

A high-resolution T1-weighted structural image (1.0 × 1.0 × 1.3 mm) was obtained from each participant for surface reconstruction. For all functional scans, T2*-weighted gradient-echo, echo-planar sequences were used. For the three main experiments, 33 axial slices parallel to the AC-PC line (3 mm thick, 3 × 3 mm in-plane resolution with 20% skip) were collected covering the whole brain (TR = 2 s, TE = 29 ms, flip angle = 90°, matrix = 64 × 64). For the LOT/VOT and inferior IPS localizer scans, 30-31 axial slices parallel to the AC-PC line (3 mm thick, 3 × 3 mm in-plane resolution with no skip) were collected covering the occipital, parietal and posterior temporal lobes (TR = 2 s, TE = 30 ms, flip angle = 90°, matrix = 72 × 72). For the superior IPS localizer scans, 24 axial slices parallel to the AC-PC line (5 mm thick, 3 × 3 mm in-plane resolution with no skip) were collected covering most of the brain except the anterior temporal and frontal lobes (TR = 1.5 s, TE = 29 ms, flip angle = 90°, matrix = 72 × 72). For topographic mapping, 42 slices (3 mm thick, 3.125 × 3.125 mm in-plane resolution with no skip) just off parallel to the AC-PC line were collected covering the whole brain (TR = 2.6 s, TE = 30 ms, flip angle = 90°, matrix = 64 × 64). Different slice prescriptions were used for the different localizers to be consistent with the parameters used in our previous studies. Because the localizer data were projected into the volume view and then onto individual participants’ flattened cortical surfaces, the exact slice prescriptions had minimal impact on the final results.

Data Analysis

FMRI data were analyzed using FreeSurfer (surfer.nmr.mgh.harvard.edu), fsfast (Dale et al., 1999) and in-house MATLAB code. LibSVM software (Chang & Lin 2011) was used for support vector machine (SVM) analysis. FMRI data preprocessing included 3D motion correction, slice timing correction, and linear and quadratic trend removal. No spatial smoothing was applied.

ROI Definitions

Topographic maps.

Following the procedure outlined in Swisher et al. (2007), topographic maps in both ventral and dorsal regions were defined. We identified V1, V2, V3, V3a, V3b, V4, IPS0, IPS1, IPS2, IPS3 and IPS4 separately in each participant (Figure 1C). Activations from IPS3 and IPS4 were in general less robust than those from other IPS regions. Consequently, the localization of these two IPS ROIs was less reliable. Nonetheless, we decided to include these two ROIs here to have a more extensive coverage of the PPC regions along the IPS.

Superior IPS.

The superior IPS ROI was defined, following Todd and Marois (2004) and Xu and Chun (2006), as the collection of voxels whose responses tracked each participant’s behavioral VWM capacity. To identify this ROI (Fig. 2B), fMRI data from the superior IPS localizer were analyzed using a linear regression to find voxels whose responses correlated with a given participant’s behavioral VWM capacity, estimated using Cowan’s K (Cowan 2001). In this parametric design, each stimulus presentation was weighted by the estimated Cowan’s K for that set size. After the stimulus presentation boxcars (lasting 6 s) were convolved with a hemodynamic response function, a linear regression with two parameters (a slope and an intercept) was fitted to the data from each voxel. Superior IPS was defined as a region in parietal cortex showing a significantly positive slope in the regression analysis and overlapping or lying near the Talairach coordinates previously reported for this region (Todd and Marois 2004) (see Figure 1C). The statistical threshold for selecting superior IPS voxels was set to p < 0.001 (uncorrected) for two of the participants. To obtain at least 100 voxels across the two hemispheres, this threshold was relaxed to p < 0.05 (uncorrected) in three participants and to p < 0.1 (uncorrected) in two participants. The resulting ROIs ranged from 150 to 423 voxels, with an average of 234 voxels across participants.
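The parametric regression can be sketched as follows. Cowan’s K is computed as set size × (hit rate − false alarm rate) (Cowan, 2001); each 6-s presentation boxcar is weighted by K for its set size, convolved with an HRF, sampled at the 1.5-s TR of the superior IPS localizer, and a slope-plus-intercept model is fitted by least squares. The double-gamma HRF, the set sizes, the hit and false alarm rates, and the onsets below are assumptions for illustration; the original analysis was run in fsfast, and the voxel thresholding step is not reproduced.

```python
import math
import numpy as np


def cowan_k(set_size, hit_rate, false_alarm_rate):
    """Cowan's K = N x (H - FA) (Cowan, 2001)."""
    return set_size * (hit_rate - false_alarm_rate)


def double_gamma_hrf(t):
    """Assumed HRF: gamma density peaking ~5 s minus a scaled undershoot peaking ~15 s."""
    peak = t ** 5 * np.exp(-t) / math.gamma(6)
    undershoot = t ** 15 * np.exp(-t) / math.gamma(16)
    return peak - undershoot / 6.0


def parametric_regressor(onsets_s, weights, n_scans, tr=1.5, dur_s=6.0, dt=0.1):
    """K-weighted 6-s boxcars convolved with the HRF and sampled once per TR."""
    step = int(round(tr / dt))
    t_fine = np.arange(n_scans * step) * dt
    boxcar = np.zeros_like(t_fine)
    for onset, w in zip(onsets_s, weights):
        boxcar[(t_fine >= onset) & (t_fine < onset + dur_s)] = w
    hrf = double_gamma_hrf(np.arange(0.0, 32.0, dt))
    bold = np.convolve(boxcar, hrf)[: len(t_fine)] * dt
    return bold[::step]


def fit_slope_intercept(y, x):
    """OLS fit y = slope * x + intercept; returns slope, intercept and the slope's t value."""
    X = np.column_stack([x, np.ones_like(x)])
    beta, *_ = np.linalg.lstsq(X, y, rcond=None)
    resid = y - X @ beta
    sigma2 = resid @ resid / (len(y) - 2)
    se_slope = np.sqrt(sigma2 * np.linalg.inv(X.T @ X)[0, 0])
    return beta[0], beta[1], beta[0] / se_slope


# Usage with hypothetical behavior and a simulated voxel.
k_by_set_size = {1: cowan_k(1, 0.98, 0.02), 2: cowan_k(2, 0.95, 0.05),
                 4: cowan_k(4, 0.85, 0.10), 6: cowan_k(6, 0.70, 0.20)}
set_sizes = [1, 2, 4, 6] * 5                                 # 20 trials
onsets = [6.0 + 15.0 * i for i in range(len(set_sizes))]     # one trial every 15 s
reg = parametric_regressor(onsets, [k_by_set_size[s] for s in set_sizes], n_scans=210)
rng = np.random.default_rng(5)
voxel = 10.0 + 2.0 * reg + 0.1 * rng.normal(size=reg.size)
slope, intercept, t_slope = fit_slope_intercept(voxel, reg)
print(f"slope = {slope:.2f}, intercept = {intercept:.2f}, t = {t_slope:.1f}")
```

A voxel whose response scales with the participant’s K yields a positive slope; thresholding the slope’s statistic across voxels would then give the candidate superior IPS voxels.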

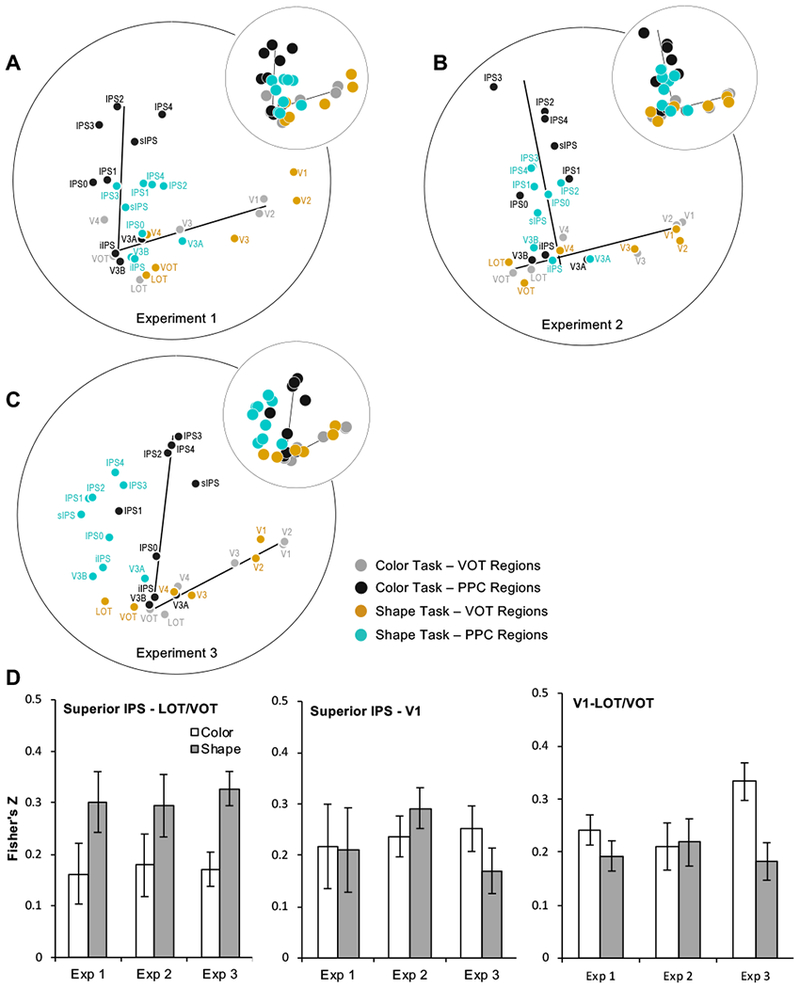

Figure 2.

The representational structures for all the ROIs examined in the two tasks for A. Experiment 1, B. Experiment 2, and C. Experiment 3. The larger plots show the brain regions with their names labeled. The small inset at the top right of each large plot provides a cleaner view of the shift of brain regions across the two attentional tasks. Although the OTC pathway had a similar extent and overlapped between the two attentional tasks, the PPC pathway was compressed towards the OTC pathway when shape, rather than color, was attended. D. Fisher’s z-transformed correlation coefficients for the superior IPS–LOT/VOT, superior IPS–V1, and V1–LOT/VOT object representational structure correlations across the two attentional tasks in the three experiments. Consistent with the MDS plots (A to C), object representational structures were more correlated between superior IPS and LOT/VOT when shapes were attended than when they were not. Such an effect was not found for the superior IPS–V1 or V1–LOT/VOT correlations. Error bars indicate within-subject errors.

Inferior IPS.

This ROI (Figure 1C) was defined as a cluster of contiguous voxels in the inferior part of IPS that responded more (p < 0.001 uncorrected) to the original than to the scrambled object images in the inferior IPS localizer and that did not overlap with the superior IPS ROI.

LOT and VOT.

These two ROIs (Figure 1C) were defined as clusters of contiguous voxels in the lateral and ventral occipital cortex, respectively, that responded more (p < 0.001 uncorrected) to the original than to the scrambled object images. LOT and VOT loosely correspond to the locations of LO and pFs (Malach et al., 1995; Grill-Spector et al., 1998; Kourtzi & Kanwisher, 2000) but extend further into the temporal cortex in an effort to include as many object-selective voxels as possible in OTC.

Because no hemisphere effects were found in these ROIs in our previous studies, the left and right hemisphere voxels of a given ROI were combined by concatenating their voxel values into a single, larger response pattern.

MVPA classification analysis

For each experiment and each task, we first performed a general linear model analysis in each participant and obtained the average beta value for a block of trials for each category in each voxel of the brain and in each run. We then used the beta values from all the voxels in each ROI as the fMRI response pattern for that ROI in that run. To remove response amplitude differences between categories, runs, and ROIs, we z-transformed the beta values across all the voxels in an ROI for each category in each run, so that the normalized data had a mean of 0 and a standard deviation of 1. Following Kamitani and Tong (2005), we used a linear support vector machine (SVM) and a leave-one-run-out cross-validation procedure to calculate pairwise category decoding accuracy. Because pattern decoding results can vary with the total number of voxels in an ROI, it is important, when comparing results across ROIs, to equate the number of voxels in each ROI. To do this, we selected the 75 most informative voxels from each ROI using a t-test analysis. Specifically, following Mitchell et al. (2004), within each SVM training and testing iteration, we used n − 1 runs as the training set and, using only these training data (excluding the test data), selected the 75 voxels with the lowest p values for discriminating between the two conditions of interest. An SVM was then trained on these voxels only, using the n − 1 training runs, and tested on the left-out run. This training and testing procedure was rotated so that every run served once as the test run, with the remaining runs as the training runs. Because voxel selection was based only on the training data and decoding accuracy was calculated from the test data, there was no non-independence confound in our analysis.
We used this voxel selection method to boost the signal-to-noise ratio of our data, as the localization of some of the parietal regions was noisy in some participants. This procedure yielded decoding accuracies for all 28 pairs of object category comparisons (the pairwise combinations of the eight categories) in each ROI for each task.
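The pairwise decoding procedure (z-scoring across voxels, leave-one-run-out cross-validation, and selection of the 75 most discriminative voxels from the training runs only) can be sketched in NumPy. To keep the example self-contained, a small full-batch Pegasos subgradient solver for a linear SVM stands in for LibSVM, and voxels are ranked by |t| from an equal-variance two-sample t-test; with a fixed number of training runs this orders voxels the same way as ranking by p value. The synthetic data and all function names are hypothetical.

```python
import itertools
import numpy as np

N_SELECT = 75  # voxels kept in each training fold, as in the text


def zscore_patterns(betas):
    """betas: (runs, categories, voxels). z-score across voxels for each category in each run."""
    mu = betas.mean(axis=2, keepdims=True)
    sd = betas.std(axis=2, keepdims=True)
    return (betas - mu) / sd


def select_voxels(xa, xb, k=N_SELECT):
    """Indices of the k voxels with the largest |t| (equal-variance two-sample t-test)."""
    na, nb = len(xa), len(xb)
    pooled = ((na - 1) * xa.var(axis=0, ddof=1) + (nb - 1) * xb.var(axis=0, ddof=1)) / (na + nb - 2)
    t = (xa.mean(axis=0) - xb.mean(axis=0)) / (np.sqrt(pooled * (1 / na + 1 / nb)) + 1e-12)
    return np.argsort(-np.abs(t))[:k]


def train_linear_svm(X, y, lam=0.01, n_iter=200):
    """Full-batch Pegasos subgradient descent on the L2-regularized hinge loss.
    A constant column supplies the bias (which is also regularized here)."""
    Xb = np.column_stack([X, np.ones(len(X))])
    w = np.zeros(Xb.shape[1])
    for t in range(1, n_iter + 1):
        eta = 1.0 / (lam * t)
        viol = y * (Xb @ w) < 1                     # samples inside the margin
        grad = lam * w - (y[viol, None] * Xb[viol]).sum(axis=0) / len(y)
        w -= eta * grad
    return w


def predict(w, X):
    return np.sign(np.column_stack([X, np.ones(len(X))]) @ w)


def pairwise_decoding(betas):
    """Leave-one-run-out accuracy for every category pair: symmetric matrix, NaN diagonal."""
    z = zscore_patterns(betas)
    n_runs, n_cat, _ = z.shape
    acc = np.full((n_cat, n_cat), np.nan)
    for a, b in itertools.combinations(range(n_cat), 2):
        correct = 0
        for test_run in range(n_runs):
            train = [r for r in range(n_runs) if r != test_run]
            vox = select_voxels(z[train, a], z[train, b])   # training runs only
            X = np.vstack([z[train, a][:, vox], z[train, b][:, vox]])
            y = np.r_[np.ones(len(train)), -np.ones(len(train))]
            w = train_linear_svm(X, y)
            X_test = np.vstack([z[test_run, a, vox], z[test_run, b, vox]])
            correct += int(np.sum(predict(w, X_test) == np.array([1.0, -1.0])))
        acc[a, b] = acc[b, a] = correct / (2 * n_runs)
    return acc


# Usage with synthetic data: 8 runs x 8 categories x 300 voxels.
rng = np.random.default_rng(0)
patterns = rng.normal(size=(8, 300))                       # hypothetical category patterns
betas = patterns[None] + 0.5 * rng.normal(size=(8, 8, 300))
acc = pairwise_decoding(betas)
print(f"mean pairwise accuracy over 28 pairs: {np.nanmean(acc):.2f}")
```

Because `select_voxels` sees only the training runs of each fold, the held-out run never influences which voxels the classifier uses.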

Representational similarity analysis

To determine how object category representational similarity varied across brain regions and the two attentional tasks, we first constructed, for each region in each task, an 8 × 8 category-wise similarity matrix from the pairwise object category decoding accuracies. We then concatenated all the off-diagonal values of this matrix to create an object category similarity vector. Lastly, we correlated these vectors between every pair of brain regions across the two tasks to form a 30 × 30 region-task-wise similarity matrix (i.e., 15 brain regions × 2 attentional tasks). This region-task-wise similarity matrix was first calculated for each participant and then averaged across participants to obtain the group-level region-task-wise similarity matrix. To visualize the representational similarity of the different brain regions in the two attentional tasks, we used the correlation values in the group-level region-task-wise similarity matrix as input to MDS and projected the first two dimensions onto a 2D space, with the distance between regions reflecting their relative similarity to each other in each task.
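A sketch of the representational similarity steps, assuming each region × task condition is summarized by an 8 × 8 decoding-accuracy matrix. Using only the 28 upper-triangle entries gives the same Pearson correlations as using all off-diagonal entries, because the matrix is symmetric and duplicating every value leaves the correlation unchanged. Converting correlations to distances as 1 − r and using classical (Torgerson) MDS are assumptions; the text does not specify the MDS variant. The simulated matrices are purely illustrative.

```python
import numpy as np


def similarity_vector(acc):
    """The 28 upper-triangle entries of an 8 x 8 pairwise decoding-accuracy matrix."""
    return acc[np.triu_indices(acc.shape[0], k=1)]


def region_task_matrix(acc_matrices):
    """acc_matrices: one (8, 8) matrix per region x task condition (30 here).
    Returns the condition-by-condition matrix of Pearson correlations."""
    vectors = np.array([similarity_vector(m) for m in acc_matrices])
    return np.corrcoef(vectors)


def classical_mds(dist, n_dims=2):
    """Classical (Torgerson) MDS: double-center squared distances, keep top eigenvectors."""
    n = dist.shape[0]
    J = np.eye(n) - np.ones((n, n)) / n
    B = -0.5 * J @ (dist ** 2) @ J
    evals, evecs = np.linalg.eigh(B)
    top = np.argsort(evals)[::-1][:n_dims]
    return evecs[:, top] * np.sqrt(np.maximum(evals[top], 0.0))


# Usage with synthetic data: 7 participants, 15 regions x 2 tasks = 30 conditions.
rng = np.random.default_rng(1)
per_participant = []
for _ in range(7):
    mats = []
    for _ in range(30):
        m = rng.uniform(0.5, 1.0, size=(8, 8))
        m = (m + m.T) / 2                      # decoding accuracy is symmetric
        np.fill_diagonal(m, np.nan)
        mats.append(m)
    per_participant.append(region_task_matrix(mats))
group = np.mean(per_participant, axis=0)       # group-level 30 x 30 matrix
coords = classical_mds(1.0 - group)            # assumed distance: 1 - r
print(group.shape, coords.shape)

# Sanity check: classical MDS recovers the distances of points that truly lie in a plane.
pts = rng.normal(size=(6, 2))
d_true = np.linalg.norm(pts[:, None] - pts[None], axis=-1)
emb = classical_mds(d_true)
d_mds = np.linalg.norm(emb[:, None] - emb[None], axis=-1)
```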

In addition to visualizing with MDS how representational similarity varied across brain regions and the two attentional tasks, we quantified our observations by directly comparing representational similarity among representative brain regions in PPC and OTC. In PPC, superior IPS was chosen as a representative region because it is a key visual processing region in PPC (Xu & Chun, 2006; Xu and Jeong, 2015; Bettencourt and Xu, 2016a; Jeong and Xu, 2016). In OTC, we have previously chosen VOT as a representative visual object processing region. However, the choice between VOT and LOT as a representative higher OTC region is somewhat arbitrary. Both LOT and VOT have been shown to play a role in visual shape processing and detection (e.g., Grill-Spector et al., 2000), and lesions to this general area have been linked to visual object agnosia (Goodale et al. 1991; Farah 2004). Therefore, to sample higher OTC regions contributing to object processing in an unbiased manner and to increase power, we averaged the responses from LOT and VOT to form a larger LOT/VOT region as our representative OTC object processing region. In addition to superior IPS and LOT/VOT, V1 was chosen as a representative brain region because it is the first cortical stage of visual information processing. To assess whether object category representational structure became more similar between a pair of brain regions in the shape than in the color task, we obtained the Fisher’s z-transformed correlation coefficient between the object category similarity vectors of each pair of brain regions in each attentional task for each participant, and then evaluated the difference between tasks at the group level using statistical tests.
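The region-pair comparison can be sketched as follows: per participant and task, correlate the two regions’ 28-value similarity vectors, Fisher z-transform the result (z = arctanh r), and compare tasks with a paired t-test across participants. Forming the LOT/VOT vector by averaging the LOT and VOT similarity vectors is an assumption about how the two regions were combined, and the z values in the usage example are made up for illustration.

```python
import numpy as np
from scipy import stats


def fisher_z(r):
    """Fisher's z-transform, z = arctanh(r)."""
    return np.arctanh(r)


def region_pair_z(vec_a, vec_b):
    """Fisher z of the Pearson correlation between two 28-value similarity vectors."""
    return fisher_z(np.corrcoef(vec_a, vec_b)[0, 1])


def lot_vot_vector(lot_vec, vot_vec):
    """Assumed construction of the combined LOT/VOT region: average of the two vectors."""
    return (np.asarray(lot_vec) + np.asarray(vot_vec)) / 2.0


def compare_tasks(z_shape, z_color):
    """Two-tailed paired t-test across participants (shape vs color task)."""
    res = stats.ttest_rel(z_shape, z_color)
    return res.statistic, res.pvalue


# Usage with made-up superior IPS-LOT/VOT z values for 7 participants.
z_color = np.array([0.20, 0.35, 0.10, 0.28, 0.15, 0.30, 0.22])
z_shape = z_color + np.array([0.25, 0.30, 0.35, 0.28, 0.32, 0.27, 0.33])
t, p = compare_tasks(z_shape, z_color)
print(f"t(6) = {t:.2f}, p = {p:.4f}")
```

Comparing the task effect between two region pairs (e.g., superior IPS–LOT/VOT vs. V1–LOT/VOT) would be a further paired test on the per-participant (shape − color) differences.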

We also compared object category representations in the two attentional tasks across the three experiments to understand how they varied with the different stimulus manipulations in these experiments. For each representative brain region (i.e., superior IPS, LOT/VOT and V1), we performed pairwise correlations across tasks and experiments and Fisher’s z-transformed the resulting correlation coefficients. We visualized the similarities among the object category representations across the different tasks and experiments using MDS, and we averaged the results across experiments and performed group-level statistical tests to verify the observations made from the MDS plots.
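One way to summarize the cross-experiment comparison for a given region and participant is to average the Fisher z values of (a) shape versus color within each experiment and (b) shape versus shape across each pair of experiments; the two summaries can then be compared with a paired t-test across participants. The helper below, its dictionary input format, and the simulated vectors are hypothetical.

```python
import itertools
import numpy as np


def within_vs_between(vectors):
    """vectors: dict mapping (experiment, task) -> 28-value similarity vector for one
    region and one participant, with task in {"shape", "color"}.
    Returns mean Fisher z for (shape vs color within an experiment,
    shape vs shape across experiments)."""
    def z(a, b):
        return np.arctanh(np.corrcoef(a, b)[0, 1])

    experiments = sorted({exp for exp, _ in vectors})
    within = [z(vectors[(e, "shape")], vectors[(e, "color")]) for e in experiments]
    between = [z(vectors[(e, "shape")], vectors[(f, "shape")])
               for e, f in itertools.combinations(experiments, 2)]
    return float(np.mean(within)), float(np.mean(between))


# Usage with synthetic data resembling the superior IPS pattern: a shared
# shape-task structure across experiments, unrelated color-task structure.
rng = np.random.default_rng(2)
shape_structure = rng.normal(size=28)
vectors = {}
for exp in (1, 2, 3):
    vectors[(exp, "shape")] = shape_structure + 0.3 * rng.normal(size=28)
    vectors[(exp, "color")] = rng.normal(size=28)
within_z, between_z = within_vs_between(vectors)
print(f"within-experiment z = {within_z:.2f}, between-experiment z = {between_z:.2f}")
```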

Correlation between neural and behavioral object category representational similarities

To construct a behavioral category-wise similarity matrix for the 8 object categories, we obtained the search speed for each category paired with each of the other 7 categories. We then averaged all trials containing the same pairing of two categories, regardless of which category was the target and which was the distractor, as search speed from both types of trials reflects the similarity between the two categories. This left us with a total of 28 category pairs. Additionally, search speeds for target-present and target-absent trials were combined, as we previously showed that they produce similar results (Jeong & Xu, 2016). Search speeds were extracted separately for each participant and then averaged across participants to form the group-level behavioral category-wise similarity matrix for the 8 categories. We then concatenated all the off-diagonal values of this matrix to create a group-level behavioral object category similarity vector.
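A sketch of how the behavioral category-wise matrix could be built for one participant, pooling correct trials over target/distractor assignment and over target-present and target-absent trials. The `(target, distractor, rt, correct)` trial format is an assumption; group-level matrices would then be the element-wise mean across participants.

```python
from collections import defaultdict
import itertools
import numpy as np

CATEGORIES = ["face", "house", "body", "cat", "elephant", "car", "chair", "scissors"]


def behavioral_matrix(trials):
    """trials: iterable of (target, distractor, rt_seconds, correct) for one participant.
    Returns an 8 x 8 matrix of mean correct RTs per unordered category pair
    (longer RT = more similar); the diagonal is NaN."""
    sums, counts = defaultdict(float), defaultdict(int)
    for target, distractor, rt, correct in trials:
        if not correct:
            continue                              # only correct trials enter the RT means
        key = frozenset((target, distractor))     # ignore which category was the target
        sums[key] += rt
        counts[key] += 1
    n = len(CATEGORIES)
    mat = np.full((n, n), np.nan)
    for (i, a), (j, b) in itertools.combinations(enumerate(CATEGORIES), 2):
        key = frozenset((a, b))
        mat[i, j] = mat[j, i] = sums[key] / counts[key]
    return mat


# Usage with made-up RTs for every ordered (target, distractor) pair.
trials = [(a, b, 0.5 + 0.01 * (i + j), True)
          for (i, a), (j, b) in itertools.permutations(enumerate(CATEGORIES), 2)]
trials.append(("face", "cat", 10.0, False))      # incorrect trial, ignored
mat = behavioral_matrix(trials)
vec = mat[np.triu_indices(8, k=1)]               # 28-value behavioral similarity vector
```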

To document how attention may modulate the correspondence between neural and behavioral object category similarity measures, we correlated the group-level behavioral object category similarity vector with the neural object category similarity vector from a given brain region for each fMRI participant in each of the two attentional tasks. The resulting correlation coefficients were Fisher’s z-transformed, and the difference in correlation between the two attentional tasks was evaluated using group-level statistical comparisons.
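The behavioral-neural comparison can be sketched as follows: the group-level RT vector is correlated with each participant’s decoding-accuracy vector, the coefficients are Fisher z-transformed, and the two tasks are compared with a paired t-test. Because long RTs and low decoding accuracies both indicate similarity, agreement between the two structures appears as negative z values. The simulated vectors are purely illustrative.

```python
import numpy as np
from scipy import stats


def behavior_neural_z(behavior_vec, neural_vecs):
    """Fisher z of r(group behavioral RT vector, each participant's decoding-accuracy vector).
    Negative values mean agreement: long RT <-> low decoding accuracy."""
    return np.array([np.arctanh(np.corrcoef(behavior_vec, v)[0, 1]) for v in neural_vecs])


# Usage with simulated data for 7 participants and 28 category pairs.
rng = np.random.default_rng(3)
behavior = rng.uniform(0.6, 1.2, size=28)                  # hypothetical group mean RTs (s)
shape_task = [1.5 - behavior + 0.05 * rng.normal(size=28) for _ in range(7)]
color_task = [1.5 - 0.2 * behavior + 0.1 * rng.normal(size=28) for _ in range(7)]
z_shape = behavior_neural_z(behavior, shape_task)
z_color = behavior_neural_z(behavior, color_task)
res = stats.ttest_rel(z_shape, z_color)
print(f"t(6) = {res.statistic:.2f}, p = {res.pvalue:.4f}")
```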

Results

In this study, we showed human participants exemplars from the following eight object categories: faces, houses, bodies, cats, elephants, cars, chairs and scissors (Figure 1A). These categories were chosen because they cover a good range of real-world object categories and are among those typically used in previous investigations of object category representations in OTC (e.g., Haxby et al., 2001; Kriegeskorte et al., 2008). We presented colors along with object shapes and, across three experiments, manipulated how color and shape were integrated: from partially overlapping, to overlapping but on separate objects, to fully integrated (Figure 1B). Participants viewed blocks of images containing different exemplars from the same category in different colors. In different runs of the experiment, they either attended to object shapes and detected an immediate repetition of the same exemplar, or attended to colors and detected an immediate repetition of the same color. Using the average fMRI response pattern from a block of trials as input, we examined object category decoding in topographically and functionally defined regions in PPC and OTC (Figure 1C). The exemplars from a given category varied in identity, pose (for cats and elephants), expression (for faces) and viewing angle, which reduced the likelihood that object category decoding would be driven by the decoding of any particular exemplar. Because all exemplars in a category still shared a set of defining shape features (e.g., the large round outline of a face/head, the protrusion of the limbs in animals), object category decoding here was likely driven mostly by shape.

The impact of task on object category representational structure across brain regions within an experiment

To determine how object category representational similarity varied across brain regions and the two attentional tasks, for each region in each task, from the pairwise object category decoding accuracy, we first constructed a category-wise similarity matrix. We then correlated these matrices between every pair of brain regions across the two tasks to form a region-task-wise similarity matrix separately for each participant and then averaged across participants to obtain the group level region-task-wise similarity matrix. Using MDS, we projected the first two dimensions of the region-task-wise similarity matrix onto a 2D space with distance between brain regions determined by their relative similarities to each other in each task (Figures 2A to C).

These MDS plots show the prominent presence of the two-pathway structure in each experiment and in each attentional task, as documented previously (Vaziri-Pashkam & Xu, 2019). Importantly, although the OTC pathway had a similar extent and overlapped between the two attentional tasks, the PPC pathway was compressed toward the OTC pathway when shape, rather than color, was attended. This suggests that PPC visual object representations became more similar to those in higher OTC regions when object shape was attended. This effect was not apparent in Vaziri-Pashkam and Xu (2019), where the representational structures were plotted separately for each task in each experiment. By plotting the representational structures for the two tasks together in each experiment, the impact of task on the two-pathway structure could be directly visualized here.

To quantify the above observation, we examined the correlation of the category-wise similarity matrix between representative object processing regions in PPC and higher OTC across the two attentional tasks. In PPC, superior IPS was chosen because it is a key visual processing region in PPC (see Introduction). In OTC, LOT/VOT (reflecting the averaged responses of LOT and VOT) was chosen because both LOT and VOT play a role in visual shape processing and detection (e.g., Grill-Spector et al., 2000) and because lesions to this general area have been linked to visual object agnosia (Goodale et al. 1991; Farah 2004). For comparison, we also examined responses from V1 among the early visual areas, as it is the first cortical stage of visual information processing. We measured the correlation of the representational structure between superior IPS and LOT/VOT in all three experiments and in both attentional tasks, and entered the resulting Fisher’s z-transformed correlation coefficients into a two-way repeated measures ANOVA with experiment and task as factors (see Figure 2D). We found a significant main effect of task (F(1,6) = 9.16, p = 0.023), but no main effect of experiment and no interaction between task and experiment (Fs < 1, ps > 0.96). No main effects or interactions were found for the correlations between superior IPS and V1 (Fs < 0.34, ps > 0.71) or between V1 and LOT/VOT (Fs < 3.10, ps > 0.12). Because there was no main effect of experiment and no interaction between experiment and task, we averaged the change in correlation between tasks across the three experiments. This change was significantly greater for the superior IPS–LOT/VOT correlation than for the V1–LOT/VOT correlation (t(6) = 3.61, p = 0.01, two-tailed), indicating a greater modulation of visual representational structure between the two attentional tasks in the PPC than in the OTC pathway.
The change in correlation between tasks did not differ significantly between the superior IPS–LOT/VOT and the superior IPS–V1 correlations (t(6) = 1.55, p = 0.17, two-tailed). This is not unexpected: as the PPC regions were compressed towards the OTC regions, their representational distances to both higher OTC regions and early visual areas could decrease. Overall, these results confirmed the observations made from the MDS plots and showed that visual object representational structure became more similar between PPC and higher OTC regions when object shape was attended and task relevant, resulting in a compression of the PPC pathway towards the OTC pathway in the region-wise representational space.
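For readers who wish to reproduce the experiment × task analysis, a two-way repeated measures ANOVA can be written directly from the sums-of-squares partition. This sketch assumes complete data and applies no sphericity correction (the text does not state whether one was used); with only two task levels, the F for task equals the squared paired t statistic on task differences averaged over experiments, which offers a simple sanity check. The simulated data are hypothetical.

```python
import numpy as np
from scipy import stats


def rm_anova_2way(Y):
    """Two-way repeated measures ANOVA (no sphericity correction).

    Y: array of shape (subjects, tasks, experiments), e.g. Fisher z values (7, 2, 3).
    Returns ({effect: (F, df_effect, df_error, p)}, {source: sum of squares}).
    """
    n, a, b = Y.shape
    g = Y.mean()
    m_task = Y.mean(axis=(0, 2))
    m_exp = Y.mean(axis=(0, 1))
    m_cell = Y.mean(axis=0)            # task x experiment
    m_subj = Y.mean(axis=(1, 2))
    m_subj_task = Y.mean(axis=2)       # subject x task
    m_subj_exp = Y.mean(axis=1)        # subject x experiment
    ss = {
        "task": n * b * np.sum((m_task - g) ** 2),
        "experiment": n * a * np.sum((m_exp - g) ** 2),
        "task x experiment": n * np.sum((m_cell - m_task[:, None] - m_exp[None, :] + g) ** 2),
        "subject": a * b * np.sum((m_subj - g) ** 2),
        "task x subject": b * np.sum((m_subj_task - m_subj[:, None] - m_task[None, :] + g) ** 2),
        "experiment x subject": a * np.sum((m_subj_exp - m_subj[:, None] - m_exp[None, :] + g) ** 2),
    }
    resid = (Y - m_subj_task[:, :, None] - m_subj_exp[:, None, :] - m_cell[None]
             + m_subj[:, None, None] + m_task[None, :, None] + m_exp[None, None, :] - g)
    ss["task x experiment x subject"] = np.sum(resid ** 2)
    df = {"task": a - 1, "experiment": b - 1, "task x experiment": (a - 1) * (b - 1)}
    error_term = {"task": "task x subject", "experiment": "experiment x subject",
                  "task x experiment": "task x experiment x subject"}
    results = {}
    for effect, err in error_term.items():
        df_err = df[effect] * (n - 1)
        F = (ss[effect] / df[effect]) / (ss[err] / df_err)
        results[effect] = (F, df[effect], df_err, stats.f.sf(F, df[effect], df_err))
    return results, ss


rng = np.random.default_rng(4)
Y = rng.normal(size=(7, 2, 3))   # hypothetical Fisher z values: subjects x task x experiment
results, ss = rm_anova_2way(Y)
for effect, (F, df1, df2, p) in results.items():
    print(f"{effect}: F({df1},{df2}) = {F:.2f}, p = {p:.3f}")
```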

In Experiment 3, there appeared to be a rotation of the axis along the parietal areas (compare the blue and dark gray circles in Figure 2C). One way to characterize this rotation is to directly compare the correlation between superior IPS and V1 across tasks. A rotation of the axis away from the early visual areas in the shape task would predict a greater correlation between superior IPS and V1 in the color than in the shape task. This difference, although in the predicted direction (see Figure 2D middle), was far from significant, t(6) = 0.93, p = 0.39. In Experiment 3, LOT/VOT also appeared to be more strongly correlated with V1 in the color than in the shape task (Figure 2D right); this difference was marginally significant (t(6) = 2.13, p = 0.078). Color and shape were more integrated in Experiment 3 than in Experiments 1 and 2. It is interesting that in Experiment 3, attention to shape appeared to push both PPC regions and higher OTC regions further away from early visual areas in the shape representational space. Further research, however, is needed to test whether these are replicable effects or merely statistical fluctuations in the data.

The impact of task on behavioral and neural correlation of object category representational structure within an experiment

To provide convergent evidence that the object representational structure of PPC can be modulated by task, in this analysis, we compared the similarity between behavioral and neural object representations across the two tasks by correlating neural object category representational structure with behavioral object category representational structure obtained from a visual search task. Because the behavioral object representational structure was measured with reaction times (RTs) with longer RTs indicating greater similarity, and the neural object representational structure in an ROI was measured from pairwise object category decoding accuracy with lower accuracy indicating greater similarity, a more negative correlation would indicate a greater similarity between behavioral and neural object representations. Using Fisher’s z-transformed correlation coefficients as the measure, in a two-way repeated measures ANOVA with experiment and task as factors, in superior IPS, we found a significant main effect of task (F(1,6) = 17.95, p = 0.0054), but no main effect of experiment or interaction (Fs < 1, ps > 0.66). In V1 and LOT/VOT, no main effects or interactions reached significance (Fs < 1.83, ps > 0.22 for V1 and Fs < 0.70, ps > 0.51 for LOT/VOT) (see Figure 3). Further comparisons revealed that the change in the behavioral and neural correlation between tasks averaged across the three experiments (since there was no main effect of experiment and no interaction between task and experiment) was significantly greater for superior IPS than for LOT/VOT (t(6) = 3.62, p = 0.01, two-tailed) and significantly greater for superior IPS than for V1 (t(6) = 3.38, p = 0.015, two-tailed).

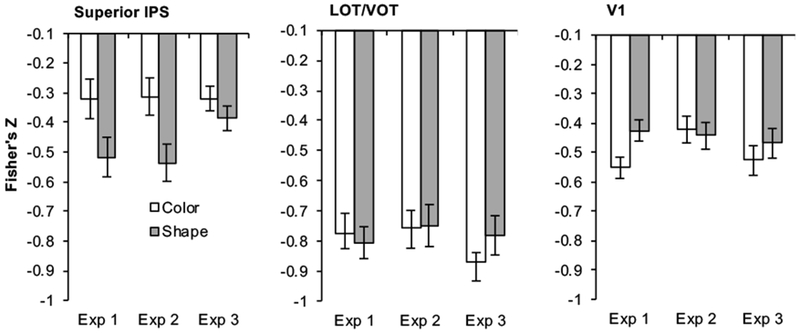

Figure 3.

Fisher’s z-transformed correlation coefficients for correlations between behavioral and neural object representational structures for superior IPS, LOT/VOT and V1 across the two attentional tasks in the three experiments. A more negative correlation indicates a greater similarity between behavioral and neural object representations. Across the three experiments, the behavioral and neural correlation was stronger (more negative) in superior IPS when shapes were attended than when they were not. Such an effect was not found in LOT/VOT or V1. Error bars indicate within-subject errors.

We also directly compared the strength of behavioral and neural correlation of visual representation among the three representative brain regions. Within the shape task, we found that this correlation averaged across the three experiments was greater in LOT/VOT than in either superior IPS or V1 (ts > 7.38, ps < .001, two-tailed), with the latter two being no different from each other (t(6) < 1, p = 0.63, two-tailed).

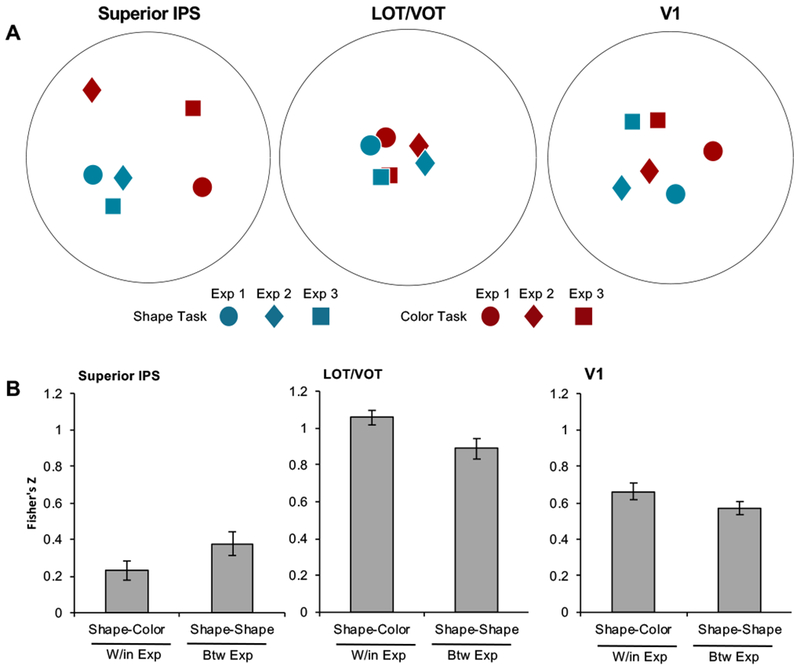

The impact of task on neural correlation of object category representational structure across experiments

To further document how object category representational structure may differ between PPC and OTC regions, we compared object category representations in the two attentional tasks across the three experiments to understand how they varied with the different stimulus manipulations in these experiments. For each representative brain region (i.e., superior IPS, LOT/VOT and V1), we performed pairwise correlations across tasks and experiments and Fisher’s z-transformed the resulting correlation coefficients. We visualized the similarities among the object category representations across tasks and experiments using MDS (Figure 4 left), and we averaged the results across experiments and performed group-level statistical tests to verify the observations made from the MDS plots (Figure 4 right). In superior IPS, pairwise comparisons revealed that the similarity of object category representations differed within versus across experiments (t(6) = 4.05, p = 0.0067, two-tailed): category representations in the shape task were more similar to each other across the three experiments than they were to those from the color task within the same experiment. Thus, even though stimulus appearance and noise differed across experiments, attention to object shape resulted in the formation of similar object category representations across experiments in superior IPS. In comparison, attending to color and to shape within the same experiment resulted in less similar object category representations in this brain region.

Figure 4.

MDS plots (A) and Fisher’s z-transformed correlation coefficients of the correlations of visual object representational structures across the two attentional tasks and the three experiments (B) in superior IPS, LOT/VOT, and V1. These MDS plots and correlation coefficients show that visual object representational structures in superior IPS were more similar in the shape task across the different experiments than between the two tasks within the same experiment, despite noise and stimulus differences across the experiments. The opposite was true in LOT/VOT. W/in Exp: within the same experiment; Btw Exp: between different experiments. Error bars indicate within-subject errors.

In LOT/VOT, the similarity of object category representations also differed within versus across experiments (t(6) = 2.74, p = 0.034, two-tailed), but in the opposite direction: category representations were more similar across the two tasks within an experiment than within the shape task across experiments. In V1, object category representations did not differ significantly within versus across experiments (t(6) = 1.59, p = 0.16, two-tailed). Two-way repeated measures ANOVAs with brain region and comparison type (within vs. across experiments) as factors revealed significant interactions between these two factors for superior IPS versus LOT/VOT and for superior IPS versus V1 (Fs > 16.91, ps < 0.0063), but not for LOT/VOT versus V1 (F(1,6) = 1.69, p = 0.24).

Discussion

Recent studies have reported the existence of rich non-spatial visual object representations in PPC, similar to those found in OTC. Focusing on PPC regions along the IPS that have previously been shown to exhibit robust visual encoding, our recent work provided two pieces of evidence describing how visual object representations may differ between OTC and PPC. In one study, by manipulating whether object shape or color was task relevant, we showed that visual object representations are under greater top-down attention and task control in PPC than in OTC (Vaziri-Pashkam & Xu, 2017). These results support a division of labor of visual information processing in the primate brain, with OTC and PPC involved in the invariant and adaptive aspects of visual processing, respectively (Xu, 2018a). In another study, using a bottom-up data-driven approach, we showed that there is a large separation between PPC and OTC regions in the representational space, with OTC regions lining up hierarchically along an OTC pathway and PPC regions lining up hierarchically along an orthogonal PPC pathway (Vaziri-Pashkam & Xu, 2019).

To understand the relationship between these two differences in visual processing between PPC and OTC, here we presented a set of new analyses of the data from the three experiments of Vaziri-Pashkam and Xu (2017) and directly compared the two-pathway separation of OTC and PPC when object shape was attended and task relevant and when it was not. Unlike Vaziri-Pashkam and Xu (2017), which focused on object category representation within and between tasks, here we focused on how the object category representational similarity structure changed across tasks and experiments. We found that in all three experiments, the correlation of visual representational structure between superior IPS and LOT/VOT became greater when object shapes were attended than when they were not. This modulated the two-pathway structure, positioning PPC closer to higher OTC regions when object shape was attended and compressing the PPC pathway towards the OTC pathway in the representational space. Consistent with this observation, the correlation between neural and behavioral measures of visual representational structure was also higher in superior IPS when object shapes were attended than when they were not. By comparing representations across experiments and tasks, we further showed that, in superior IPS, attention to object shapes resulted in representations that were more similar across experiments than between the two tasks within the same experiment, despite noise and stimulus differences across the experiments.

It is worth noting that these results cannot be explained by object representational structure simply being noisier across all brain regions when color was attended (producing a weaker superior IPS–LOT/VOT correlation) and crisper when shape was attended (producing a stronger superior IPS–LOT/VOT correlation). Under that account, the V1–LOT/VOT correlation should also have changed between tasks, but it did not. Additionally, the behavioral and neural correlation of representational structure did not change between tasks for V1 or LOT/VOT. Thus, only the representational structure of superior IPS changed according to the demands of the task, further supporting the adaptive nature of visual representation in superior IPS.

The present results showed that object representational structure did not change with attention and task in OTC regions (see both Figures 2 and 3), consistent with the role of these regions in the invariant and stable aspects of visual processing and in more faithfully tracking the visual input in a manner that is general as well as context and task invariant (Op de Beeck & Baker, 2010). While our previous study reported that the representational space of visual objects was separated by task in superior IPS (Figure 1D), it did not tell us whether the representational structure itself was fixed or varied with the demands of visual processing (Vaziri-Pashkam & Xu, 2017). For example, the representational structure could be identical for each task yet separable by task in the representational space. By analyzing the two tasks together within an experiment, we were able to show here that the object representational structure of superior IPS was not fixed and identical across the two tasks, but was variable and became more aligned with that of LOT/VOT when the explicit processing of object shape was task relevant.

Given that both noise and the amount of shape-color conjunction differed across the experiments, object representational structure was expected to differ across experiments. Indeed, in VOT, we found that object representational structures were more similar between tasks within the same experiment than within the shape task across experiments. In superior IPS, however, we found that object representational structures were more similar within the shape task across experiments than between the two tasks within the same experiment. Thus, goal-directed visual processing resulted in the formation of similar object category representations in superior IPS despite differences in noise and stimulus manipulations across experiments.
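
The contrast in this paragraph compares two quantities. The first is the similarity between tasks within an experiment. The second is the similarity within the shape task across experiments. It can be sketched as two averages of pairwise correlations between RDMs. This is a minimal sketch under three assumptions:

- each (experiment, task) condition is summarized by a vector of pairwise category dissimilarities;
- similarity is a Pearson correlation averaged over the relevant comparisons;
- the dictionary `rdms`, the helper `pearson` and all numbers are hypothetical, chosen to mimic the superior IPS pattern described in the text rather than taken from the data.

```python
from itertools import combinations
from math import sqrt
from statistics import mean


def pearson(x, y):
    """Pearson correlation between two equal-length numeric sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    vx = sum((a - mx) ** 2 for a in x)
    vy = sum((b - my) ** 2 for b in y)
    return cov / sqrt(vx * vy)


# Hypothetical superior IPS RDM vectors (6 category pairs), keyed by (experiment, task).
rdms = {
    (1, "shape"): [0.62, 0.58, 0.55, 0.60, 0.57, 0.66],
    (2, "shape"): [0.64, 0.57, 0.56, 0.61, 0.55, 0.67],
    (3, "shape"): [0.61, 0.59, 0.54, 0.62, 0.56, 0.65],
    (1, "color"): [0.55, 0.60, 0.58, 0.52, 0.61, 0.54],
    (2, "color"): [0.57, 0.56, 0.60, 0.55, 0.58, 0.53],
    (3, "color"): [0.54, 0.61, 0.57, 0.56, 0.59, 0.55],
}
experiments = sorted({exp for exp, _ in rdms})

# Same experiment, different task: shape vs. color within each experiment.
between_task = mean(pearson(rdms[(e, "shape")], rdms[(e, "color")]) for e in experiments)

# Same (shape) task, different experiments: every pair of experiments.
across_experiment = mean(
    pearson(rdms[(a, "shape")], rdms[(b, "shape")]) for a, b in combinations(experiments, 2)
)
print(f"between tasks, within experiment: {between_task:.2f}")
print(f"shape task, across experiments:   {across_experiment:.2f}")
```

For VOT, the text reports the reverse ordering: similarity between tasks within an experiment exceeds shape-task similarity across experiments. A real analysis would presumably also require a statistical test across participants, which this sketch omits.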

Direct comparisons between brain regions revealed that the correlation between behavioral and neural representations in the shape task was lower in superior IPS than in LOT/VOT (Figure 3). This indicated that the shape information used in the behavioral visual search task was overall more robustly represented in LOT/VOT than in superior IPS. Higher OTC regions such as VOT and LOT are critical for visual object representation. fMRI responses from these regions have been shown to correlate with successful visual object detection and identification (e.g., Grill-Spector et al., 2000; Williams et al., 2007), and lesions to these regions have been linked to visual object agnosia (Goodale et al., 1991; Farah, 2004). In this regard, it was not surprising to find robust visual object representations in LOT/VOT in the present study. Two factors could have contributed to the comparatively less robust visual object representations in superior IPS. First, SNR was lower in superior IPS. This ROI contained fewer voxels, and we could localize it less precisely because it was localized with an event-related design (as opposed to the block design used to localize LOT/VOT). Second, because the shape task only required the detection of a back-to-back shape repetition, object category representation was never directly task relevant. This could have led to a relatively weak object category representation in superior IPS. Nonetheless, compared to LOT/VOT and V1, object category representational structure in superior IPS exhibited the greatest amount of task modulation, affirming the adaptive nature of visual representation in this brain region.

Despite the existence of studies arguing for unique PPC visual representations in action, 3D shape processing and tool representation, a recent detailed analysis and review of the literature showed that these PPC representations likely originate from OTC rather than being computed entirely independently within PPC (Xu, 2018a). This is consistent with the extensive anatomical connections between OTC and PPC in both monkey and human brains, which allow for rapid information exchange between these regions. The present results further support this view by directly demonstrating that the visual representational structure of PPC is flexible and tracks that of a higher OTC region according to the demands of the task. This reaffirms the adaptive nature of visual processing in PPC. It further supports a division of labor in visual information processing in the primate brain, with OTC and PPC involved in the invariant and adaptive aspects of visual information processing, respectively.

In the present study, 10 colored exemplars from the same object category were presented sequentially in each trial block, and the average response from the entire block was extracted for further analysis. This design allowed us to perform pairwise object category decoding. However, because multiple colors appeared in each trial block, we could not extract fMRI response patterns for individual colors in the two task conditions. This prevented us from examining how PPC color representation may be modulated by task. Previous neurophysiology and fMRI studies have shown that color representations in PPC are task dependent (e.g., Toth & Assad, 2002; Yu & Shim, 2017). We would thus expect a task effect for PPC color representation similar to the one we found for PPC object category representation. Further research is needed to determine whether a two-pathway separation exists for color representation and how task may modulate color representation across OTC and PPC.

To conclude, despite a large separation between PPC and OTC regions in the visual representational space, with OTC regions lining up hierarchically along one pathway and PPC regions lining up hierarchically along an orthogonal pathway, this representational structure is not entirely rigid but can be modulated by task to track the demands of visual processing.

Highlights.

Visual representations are known to be under greater task control in PPC than in OTC

There also exists a two-pathway separation between PPC and OTC in visual processing

We show that this two-pathway separation can be modulated by task demand

Task also changes the correlation between PPC visual representations and behavior

Task leads to the formation of similar PPC object representations across experiments

Acknowledgements:

We thank Katherine Bettencourt for her help in selecting parietal topographic maps, and Michael Cohen for sharing some of the real object images with us. This research was supported by NIH grant 1R01EY022355 to Y.X. and by the Intramural Research Program of the NIMH (ZIA MH002035-39) to M.V-P. Data collection and part of the data analysis were performed at Harvard University, and part of the data analysis was performed at Yale University.


Contributor Information

Yaoda Xu, Yale University.

Maryam Vaziri-Pashkam, National Institute of Mental Health.

References

- Bettencourt KC, Xu Y. 2016a. Decoding the content of visual short-term memory under distraction in occipital and parietal areas. Nat Neurosci 19:150–157.

- Bettencourt KC, Xu Y. 2016b. Understanding location- and feature-based processing along the human intraparietal sulcus. J Neurophysiol 116:1488–1497.

- Bracci S, Daniels N, Op de Beeck HP. 2017. Task context overrules object- and category-related representational content in the human parietal cortex. Cereb Cortex 27:310–321.

- Brainard DH. 1997. The psychophysics toolbox. Spat Vis 10:433–436.

- Bressler DW, Silver MA. 2010. Spatial attention improves reliability of fMRI retinotopic mapping signals in occipital and parietal cortex. Neuroimage 53:526–533.

- Chang CC, Lin CJ. 2011. LIBSVM: a library for support vector machines. ACM Trans Intell Syst Technol 2:27.

- Christophel TB, Allefeld C, Endisch C, Haynes J-D. 2018. View-independent working memory representations of artificial shapes in prefrontal and posterior regions of the human brain. Cereb Cortex 28:2146–2161.

- Christophel TB, Hebart MN, Haynes JD. 2012. Decoding the contents of visual short-term memory from human visual and parietal cortex. J Neurosci 32:12983–12989.

- Christophel TB, Cichy RM, Hebart MN, Haynes JD. 2015. Parietal and early visual cortices encode working memory content across mental transformations. Neuroimage 106:198–206.

- Cowan N. 2001. Metatheory of storage capacity limits. Behav Brain Sci 24:154–185.

- Dale AM, Fischl B, Sereno MI. 1999. Cortical surface-based analysis. I. Segmentation and surface reconstruction. Neuroimage 9:179–194.

- Duncan J. 1984. Selective attention and the organization of visual information. J Exp Psychol Gen 113:501–517.

- Ester EF, Sprague TC, Serences JT. 2015. Parietal and frontal cortex encode stimulus-specific mnemonic representations during visual working memory. Neuron 87:893–905.

- Ester E, Sutterer D, Serences J, Awh E. 2016. Feature-selective attentional modulations in human frontoparietal cortex. J Neurosci 36:8188–8199.

- Farah MJ. 2004. Visual agnosia. Cambridge, Mass.: MIT Press.

- Freud E, Culham JC, Plaut DC, Behrmann M. 2017. The large-scale organization of shape processing in the ventral and dorsal pathways. eLife 6:e27576.

- Goodale MA, Milner AD, Jakobson LS, Carey DP. 1991. A neurological dissociation between perceiving objects and grasping them. Nature 349:154–156.

- Grill-Spector K, Kushnir T, Hendler T, Malach R. 2000. The dynamics of object-selective activation correlate with recognition performance in humans. Nat Neurosci 3:837–843.

- Grill-Spector K, Kushnir T, Hendler T, Edelman S, Itzchak Y, Malach R. 1998. A sequence of object-processing stages revealed by fMRI in the human occipital lobe. Hum Brain Mapp 6:316–328.

- Haxby JV, Gobbini MI, Furey ML, Ishai A, Schouten JL, Pietrini P. 2001. Distributed and overlapping representations of faces and objects in ventral temporal cortex. Science 293:2425–2430.

- Hou Y, Liu T. 2012. Neural correlates of object-based attentional selection in human cortex. Neuropsychologia 50:2916–2925.

- Jeong SK, Xu Y. 2013. Neural representation of targets and distractors during object individuation and identification. J Cogn Neurosci 25:117–126.

- Jeong SK, Xu Y. 2016. Behaviorally relevant abstract object identity representation in the human parietal cortex. J Neurosci 36:1607–1619.

- Jeong SK, Xu Y. 2017. Task-context-dependent linear representation of multiple visual objects in human parietal cortex. J Cogn Neurosci 29:1–12.

- Kamitani Y, Tong F. 2005. Decoding the visual and subjective contents of the human brain. Nat Neurosci 8:679–685.

- Konen CS, Kastner S. 2008. Two hierarchically organized neural systems for object information in human visual cortex. Nat Neurosci 11:224–231.

- Kourtzi Z, Kanwisher N. 2000. Cortical regions involved in perceiving object shape. J Neurosci 20:3310–3318.

- Kriegeskorte N, Kievit RA. 2013. Representational geometry: integrating cognition, computation, and the brain. Trends Cogn Sci 17:401–412.

- Kriegeskorte N, Mur M, Ruff DA, Kiani R, Bodurka J, Esteky H, Tanaka K, Bandettini PA. 2008. Matching categorical object representations in inferior temporal cortex of man and monkey. Neuron 60:1126–1141.

- Liu T, Hospadaruk L, Zhu DC, Gardner JL. 2011. Feature-specific attentional priority signals in human cortex. J Neurosci 31:4484–4495.

- Luck SJ, Vogel EK. 1997. The capacity of visual working memory for features and conjunctions. Nature 390:279–281.

- Malach R, Reppas JB, Benson RR, Kwong KK, Jiang H, Kennedy WA, Ledden PJ, Brady TJ, Rosen BR, Tootell RB. 1995. Object-related activity revealed by functional magnetic resonance imaging in human occipital cortex. Proc Natl Acad Sci USA 92:8135–8139.

- Mitchell TM, Hutchinson R, Niculescu RS, Pereira F, Wang XR, Just M, Newman S. 2004. Learning to decode cognitive states from brain images. Mach Learn 57:145–175.

- O’Craven KM, Downing PE, Kanwisher N. 1999. fMRI evidence for objects as the units of attentional selection. Nature 401:584–587.

- Op de Beeck HP, Baker CI. 2010. The neural basis of visual object learning. Trends Cogn Sci 14:22–30.

- Sawamura H, Georgieva S, Vogels R, Vanduffel W, Orban GA. 2005. Using functional magnetic resonance imaging to assess adaptation and size invariance of shape processing by humans and monkeys. J Neurosci 25:4294–4306.

- Sereno MI, Dale AM, Reppas JB, Kwong KK, Belliveau JW, Brady TJ, Rosen BR, Tootell RB. 1995. Borders of multiple visual areas in humans revealed by functional magnetic resonance imaging. Science 268:889–893.

- Shepard RN. 1980. Multidimensional scaling, tree-fitting, and clustering. Science 210:390–398.

- Sheremata SL, Bettencourt KC, Somers DC. 2010. Hemispheric asymmetry in visuotopic posterior parietal cortex emerges with visual short-term memory load. J Neurosci 30:12581–12588.

- Silver MA, Kastner S. 2009. Topographic maps in human frontal and parietal cortex. Trends Cogn Sci 13:488–495.