Abstract

Objective

Cancer and its treatment are associated with long-term cognitive deficits. However, most studies of cancer patients have used traditional, office-based cognitive evaluations instead of assessing patients in their daily lives. Recent research in cognitive aging suggests that variability in performance may be a sensitive indicator of cognitive decline. Using Ecological Momentary Assessment (EMA), we examined cognitive variability among breast cancer survivors and evaluated whether ratings of fatigue and depressed mood were associated with cognition.

Methods

Participants were 47 women (M age = 53.3 years) who had completed treatment for early-stage breast cancer 6 to 36 months earlier. Smartphones were preloaded with cognitive tests measuring processing speed, executive functioning and memory, as well as rating scales for fatigue and depressed mood. Participants were prompted five times per day over a 14-day period to complete the EMA cognitive tasks and the fatigue and depressed mood ratings.

Results

Cognitive variability was observed across all three EMA cognitive tasks. Processing speed responses were slower at times when women rated themselves as more fatigued than their own average (p<.001). Ratings of depressed mood were not associated with cognition.

Conclusions

This study is the first to report cognitive variability in the daily lives of women treated for breast cancer. Performance was worse on a measure of processing speed at times when a woman rated her fatigue as greater than her own average. The ability to identify moments when cognition is most vulnerable may allow for personalized interventions to be applied at times when they are most needed.

Keywords: Breast cancer, cognition, fatigue, depression, ecological momentary assessment, oncology, memory, survivorship, quality of life

It is increasingly clear that cancer and its treatment are associated with short- and long-term cognitive deficits (1–3). Patients often report that cancer-associated cognitive deficits make it difficult to resume work, social, and family activities after treatment (4). Ahles and Hurria (2) recommended a number of methodological improvements for the study of cancer-associated cognitive decline (CACD). For example, they advocated the development of more sensitive and reliable instruments for measuring neurocognitive functioning, including instruments informed by cognitive neuroscience (5). We would add another recommendation, one that has been a focus of research on detecting cognitive change in aging and Alzheimer’s disease (6): the assessment of objective cognition in the naturalistic context of daily life. Typical lab-based neurocognitive testing evaluates cognition under optimal conditions (e.g., quiet, free from distractions) that may not generalize to the complex conditions of daily life. As such, lab-based neurocognitive assessment likely captures peak rather than typical performance. Compensatory cognitive resources that are available to patients in an optimal, controlled lab environment may break down when a patient is fatigued or feeling depressed in daily life. Environmental demands may thus produce variability in neurocognitive performance that is reflected in the daily cognitive failures patients often report (7).

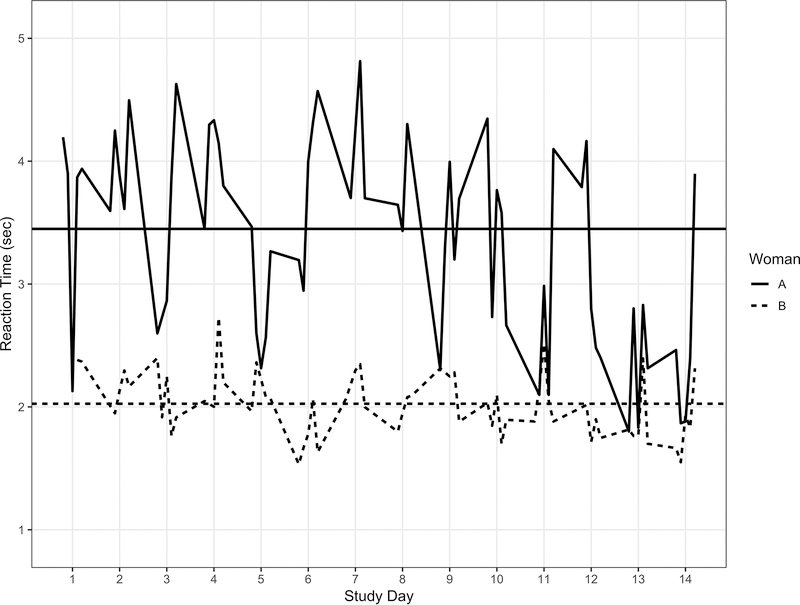

Ecological Momentary Assessment (EMA) has been used to assess self-reported behavioral and contextual factors in real time in participants’ natural environments (8). In the current study, we used smartphones to conduct objective neurocognitive assessments throughout the day in patients’ daily lives. One advantage of this approach is that it allows us to distinguish how cognitive performance varies between persons (e.g., one patient consistently performs worse than another) from how performance varies within an individual who is measured repeatedly (e.g., a patient performs worse on some occasions relative to her own average) (9). Figure 1 shows the response times of two women in the current study on a processing speed task (Symbol Search) at each of the five daily prompted assessments across the 14-day study period. The horizontal lines represent each woman’s average response latency across the assessment period and illustrate between person differences: Patient A, on average, responds more slowly than Patient B. By contrast, within person variability is illustrated by the degree to which each assessment deviates from an individual’s average. Patient A, for example, performed more slowly than usual throughout Day 7 but faster than her average on Day 12 (indeed, her scores on that day look more like Patient B’s than her own average). Thus, a woman can vary relative to other women, but she may also experience “good days” and “bad days” relative to her own typical performance.

Figure 1:

Reaction time (sec) for the Symbol Search test for two women across prompts and days of assessment.

Our EMA approach also enables identification of co-occurring factors (e.g., fatigue) that may affect cognitive performance. Thus, we are able to link, in real time, reports of risk factors to concurrent cognitive performance in order to identify periods of vulnerability among cancer patients. Returning to Figure 1, Patient A may respond more slowly on average than Patient B because she experiences greater fatigue or depressed mood on average across the study period (between person differences). For either woman, the times when she responds more slowly than her own average may reflect times when she reports more fatigue or depressed mood than usual (within person differences). This information may facilitate the development of interventions directed to the right patients at the right time (10).

Much of the previous research on CACD has examined factors that differ between persons, such as treatment modality (11), cognitive reserve (12), symptoms (e.g., fatigue, depression) (13), and genetic polymorphisms (14). These between person differences in risk factors, however, are only inconsistently associated with performance on traditional neuropsychological tests. To our knowledge, no studies to date have assessed the relationship between symptoms and cognitive variability.

In the current paper, we report the results of an EMA study of cognitive performance in breast cancer patients. Participants completed three EMA cognitive tasks and rated their depressed mood and fatigue up to five times a day for two weeks. We hypothesized that: 1) cognitive performance would vary both between patients and within persons across the two-week period; and 2) concurrent ratings of fatigue and depressed mood would be related to this cognitive variability.

Methods

Participants

Participants were recruited from Moffitt Cancer Center. Eligible women: a) were ≥18 years of age; b) had no documented or observable psychiatric or neurological disorders (e.g., dementia, psychosis); c) were capable of speaking and reading standard English; d) had no history of cancer other than breast cancer or non-melanoma skin carcinoma; e) had been treated for Stage I-II breast cancer with a minimum of four cycles of chemotherapy; f) had completed treatment between 6 and 36 months previously; g) had no recurrence of breast cancer; and h) were able to provide written informed consent. Participants were identified through review of medical records and appointment schedules in compliance with HIPAA guidelines.

Procedure and Measures

In-person assessment

After signing the informed consent form at recruitment, participants completed several questionnaires and office-based neuropsychological tests. The self-report questionnaires assessed demographic characteristics, depressive symptoms using the Center for Epidemiologic Studies Depression Scale (CES-D; 15), and fatigue using the Fatigue Symptom Inventory (FSI; 16). The neuropsychological testing consisted of a standard battery that we have previously used with breast cancer patients (14) and included measures corresponding to several of the domains evaluated by the EMA cognitive tasks. The specific tests included the Hopkins Verbal Learning Test (17), Visual Reproduction from the Wechsler Memory Scale (18), Digit Span (19), Digit Symbol (19), and Color Trails (20). Clinical variables were extracted from medical chart review and included date of breast cancer diagnosis, disease stage, dates of chemotherapy treatment, hormonal therapy, and prescribed medications.

Finally, participants were trained to use the study-provided smartphones (Samsung Galaxy S5 running the Android operating system), which had the study’s custom EMA app preloaded. Participants were allowed to practice, and situations in which they should not respond were described (e.g., while driving). Participants were instructed to begin the next day and were provided with a help line for questions. Compliance was monitored via the study data servers and check-up calls on days 1 and 7.

EMA Phase

The smartphones prompted participants 5 times a day to complete a survey about their current states and recent experiences, after which they completed the EMA cognitive tests. Prompts occurred around 9am, 12pm, 3pm, 6pm, and 9pm, but their exact timing appeared quasi-random to participants so that they would not anticipate them. A 14-day assessment period was selected based on previous studies indicating that assessing cognitive performance for at least 10 days allows practice effects to be disentangled from cognitive performance (21). At the end of the EMA phase, participants were contacted and instructed to return their smartphones in the postage-paid mailer.
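The prompt schedule described above can be sketched as follows. This is a minimal illustration, not the study app's logic: it assumes each prompt is drawn uniformly within a hypothetical ±30-minute window around the five stated anchor times, and the function names and start date are ours.

```python
import random
from datetime import datetime, timedelta

# Anchor hours stated in the Methods: about 9am, 12pm, 3pm, 6pm, and 9pm.
ANCHOR_HOURS = [9, 12, 15, 18, 21]


def daily_schedule(day, rng, jitter_minutes=30):
    """Return five prompt times for one calendar day.

    Each prompt is placed uniformly at random within +/- jitter_minutes of
    its anchor hour. The 30-minute window is a hypothetical choice; the
    study does not report how its quasi-random timing was generated.
    """
    midnight = day.replace(hour=0, minute=0, second=0, microsecond=0)
    return [
        midnight + timedelta(hours=h, minutes=rng.randint(-jitter_minutes, jitter_minutes))
        for h in ANCHOR_HOURS
    ]


def study_schedule(start, n_days=14, seed=0):
    """Full EMA schedule: 5 prompts per day for n_days (70 prompts for 14 days)."""
    rng = random.Random(seed)  # seeded so the schedule is reproducible
    return [t for d in range(n_days)
            for t in daily_schedule(start + timedelta(days=d), rng)]


# Usage with a hypothetical start date.
schedule = study_schedule(datetime(2019, 3, 1))
print(len(schedule), schedule[0], schedule[-1])
```

Because the anchors are 3 hours apart, any jitter window narrower than 90 minutes keeps the five daily prompts in their stated order.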

Fatigue and Depressed Mood

At each prompted survey, participants responded to questions about depressed mood and fatigue using a visual analog slider bar (i.e., “slide your finger along the bar”). The depressed mood rating (i.e., “How depressed/blue do you feel right now?” – “Not at all” to “Extremely”) was scaled from 0 to 100. The average rating of depressed mood across the 14 days of assessment was just over 10 points (M = 10.67, SD = 11.85); it was correlated with CES-D scores from the in-person assessment (r = .66, p < .001) and exhibited excellent reliability (22; ρ = .98).

The fatigue rating (i.e., “How fatigued do you feel right now?” - “No fatigue” to “Worst possible fatigue”) was scaled from 0 to 100. The average rating of fatigue across the 14 days was over 30 (M = 31.58, SD = 19.95), exhibited a significant correlation with FSI scores from the in-person questionnaire assessment (r = .60, p < .001), and excellent reliability (22; ρ = .98).

EMA Cognitive Tasks

The EMA cognitive tasks have been shown to exhibit excellent reliability and convergent validity with traditional neuropsychological instruments (21). The tasks mapped onto abilities shown to decline among breast cancer patients (23) in the domains of processing speed (i.e., Symbol Search), episodic memory (i.e., Dot Memory), and attention (i.e., Card Matching).

Symbol Search is based on the Digit Symbol task (19). This task presented participants with three pairs of symbols at the top of the screen. Participants were instructed to find a pair of matching symbols as quickly as possible from two choices at the bottom of the screen. Participants completed 12 trials at each EMA. The outcome used in these analyses was the prompt-level median response latency for correct trials.
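The Symbol Search outcome, the prompt-level median latency of correct trials, can be computed as in the sketch below. It assumes a hypothetical record layout of one (latency in seconds, correct flag) pair per trial. Using the median rather than the mean presumably limits the influence of occasional very slow responses, such as those caused by interruptions in daily life.

```python
from statistics import median


def symbol_search_score(trials):
    """Median response latency (seconds) over the correct trials at one prompt.

    `trials` holds one (latency_seconds, correct) tuple per trial (12 per
    prompt in the study); this layout is an assumption for illustration.
    Returns None when no trial at the prompt was answered correctly.
    """
    correct_latencies = [rt for rt, correct in trials if correct]
    return median(correct_latencies) if correct_latencies else None


# Hypothetical prompt: the slow error trial (3.5 s) is excluded before the median.
print(symbol_search_score([(2.0, True), (3.5, False), (1.8, True), (2.4, True)]))  # 2.0
```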

Dot Memory presented participants with a 5 × 5 grid with three cells containing red dots. The grid and dots remained on the screen for 3 seconds, and participants were told to remember which boxes contained the red dots. Then, in a distractor task, participants had to touch all the F’s on a screen of E’s and F’s that was presented for 8 seconds. Finally, participants were instructed to touch, on an empty grid, the three boxes that had contained the red dots. Participants completed two trials at each prompted assessment. The outcome was the Euclidean distance score at each prompted assessment; larger distance scores indicate worse performance.
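The text does not spell out how the Euclidean distance score is computed. One plausible version is sketched below, assuming cells are indexed by (row, column) on the 5 × 5 grid, each target dot is paired with one touched cell so that the total distance is as small as possible, and the two trials at a prompt are averaged. All three choices, and the function names, are assumptions rather than the study's documented scoring.

```python
from itertools import permutations
from math import dist  # Python 3.8+


def trial_error(targets, responses):
    """Total Euclidean distance between the 3 target cells and the 3 touched
    cells, using the target-to-response pairing with the smallest total."""
    return min(
        sum(dist(t, r) for t, r in zip(targets, order))
        for order in permutations(responses)
    )


def prompt_error(trials):
    """Mean error across the (targets, responses) trials at one prompt."""
    errors = [trial_error(targets, responses) for targets, responses in trials]
    return sum(errors) / len(errors)


targets = [(0, 0), (2, 2), (4, 4)]
near_miss = [(4, 4), (0, 0), (2, 3)]  # one dot recalled one column too far right
print(trial_error(targets, near_miss))                           # 1.0
print(prompt_error([(targets, near_miss), (targets, targets)]))  # 0.5
```

A perfectly recalled trial scores 0, and larger values mean worse recall, matching the direction described above.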

Card Matching is a version of the traditional n-back task (24). Participants were presented with images of three playing cards in a row and asked to indicate whether the first and third cards were identical. The cards then shifted one place to the left, and a new card was introduced in the third position. After this practice, the first two cards were turned face down, so participants had to remember the value of the card that had shifted into the first position in order to compare it with the visible card in the third position. Participants completed 16 trials at each prompted assessment, and higher scores indicated better performance.
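Because each decision compares the card in the first position with the card two places later in the sequence, the task can be modeled as a 2-back comparison. The sketch below derives the correct answer for each decision and scores a prompt as the proportion of decisions answered correctly; the card codes, function names, and proportion-correct scoring are assumptions for illustration.

```python
def answer_key(cards):
    """Correct answer for each decision: does the card entering the third
    position match the card now in the first position (two places back)?"""
    return [cards[i] == cards[i - 2] for i in range(2, len(cards))]


def card_matching_accuracy(cards, responses):
    """Proportion of decisions answered correctly at one prompt."""
    key = answer_key(cards)
    if len(responses) != len(key):
        raise ValueError("expected one response per decision")
    return sum(r == k for r, k in zip(responses, key)) / len(key)


# Hypothetical six-card sequence gives four decisions (16 trials would need 18 cards).
cards = ["7H", "KS", "7H", "2D", "KS", "2D"]
print(answer_key(cards))                                          # [True, False, False, True]
print(card_matching_accuracy(cards, [True, False, True, True]))  # 0.75
```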

Statistical Analysis

The sample size was based upon power analysis for EMA studies (25). Each participant contributed up to 70 data points (i.e., 5 prompts per day for 14 days) and sufficient between person and day-to-day variability was found in a previous study with fewer observations using a computer-based version of these EMA tasks (26).

Daily variation in cognitive performance was evaluated using a series of three-level (persons, days, prompts) multilevel models (27). Unconditional multilevel models were first used to evaluate the extent to which cognitive performance varied between persons and within persons. Multilevel models were then estimated to evaluate whether cognitive performance was related to ratings of fatigue and depressed mood. Analyses also evaluated whether study design (i.e., daily prompt, study day), demographic (i.e., age, years of education), and clinical (i.e., hormone status, disease stage, time since treatment) measures were related to cognitive performance. Because depressed mood and fatigue were assessed repeatedly across the study, two predictor variables were created for each in order to distinguish two effects: the effect of being an individual who was more depressed or fatigued than the sample average on her typical level of cognitive performance across the study (i.e., between person effect), and the effect of the individual currently feeling more depressed or fatigued than her usual state on her cognitive performance at that prompt (i.e., within person effect). For the between person predictor, each participant’s person mean was used; person means were grand mean centered so that coefficients could be interpreted as how an individual differs from the sample average. For the within person predictor, each time-varying prompted response (28) was person mean centered so that coefficients could be interpreted as the effect of deviations from the person’s average or typical state.
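The between/within decomposition described above can be sketched in a few lines. This minimal example assumes prompt-level ratings stored as (participant, rating) pairs and takes the sample average as the mean of the person means; the function name and data are hypothetical. Each rating yields a grand-mean-centered person mean (the between person predictor) and a person-mean-centered deviation (the within person predictor).

```python
from collections import defaultdict
from statistics import mean


def split_between_within(records):
    """Decompose a repeatedly measured predictor (e.g., fatigue, 0-100).

    records: list of (person_id, rating) pairs, one per answered prompt.
    Returns (person_id, between, within) for each record, where
      between = person mean - sample average of person means
      within  = rating - person mean
    """
    ratings_by_person = defaultdict(list)
    for pid, rating in records:
        ratings_by_person[pid].append(rating)
    person_mean = {pid: mean(r) for pid, r in ratings_by_person.items()}
    sample_average = mean(person_mean.values())
    return [(pid, person_mean[pid] - sample_average, rating - person_mean[pid])
            for pid, rating in records]


# Hypothetical fatigue ratings: A averages 50, B averages 20, sample average 35.
data = [("A", 40), ("A", 60), ("B", 10), ("B", 20), ("B", 30)]
for row in split_between_within(data):
    print(row)
```

Because the within person values sum to zero for each participant, they are uncorrelated with the between person values, which helps keep the two effects from being confounded when both enter the multilevel model.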

Data Availability Statement

In accordance with the NIH Data Sharing Policy, deidentified data collected as part of this study and supporting documentation will be made available to other researchers who contact the Principal Investigator directly and complete a material transfer agreement.

Results

Demographic Characteristics

The sample consisted of 47 breast cancer survivors. On average, participants were over 50 years of age (M = 53.3 years, SD = 6.5), were predominantly White (79% White, 21% non-White), and had completed 15 years of education (M = 15.0 years, SD = 2.5). Clinically, the women were a mean of 17 months (SD = 7) post-treatment for early-stage breast cancer (26% Stage I, 74% Stage II). At the time of testing, 64% (n = 30) of women were receiving hormonal therapy. Participants showed excellent compliance with the EMA protocol, with an average response rate of 88% to the daily prompts (62 of a possible 70 prompts).

EMA Cognitive Tasks: Variability, Reliability and Construct Validity

Regarding Symbol Search, 46% of the variation in scores was attributable to differences between persons and 54% to variation within persons. Reliability was excellent (22; ρ = .97), and scores were significantly correlated with Digit Symbol (19) completion (r = −.50, p < .001). For Dot Memory, 31% of the variation in scores was attributable to between person differences and 69% to within person differences. Reliability was high (22; ρ = .95), and performance was significantly correlated with delayed recall from the Hopkins Verbal Learning Test-Revised (17; r = −.36, p = .009) and Wechsler Memory Scale Visual Reproduction II scores (18; r = −.30, p = .038). Regarding Card Matching, 17% of the variation in scores was attributable to between person differences and 83% to within person differences. Matching accuracy exhibited excellent reliability (22; ρ = .89) and was significantly correlated with Color Trails B completion time (20; r = −.37, p = .011).
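The percentages above partition total variance from the unconditional three-level models. A minimal sketch of that calculation follows, assuming variance components for persons, days within persons, and prompts within days; the function name and input values are hypothetical, not the study's estimates.

```python
def variance_shares(person_var, day_var, prompt_var):
    """Share of total variance between persons vs. within persons.

    person_var: between person variance
    day_var:    within person variance across days
    prompt_var: within person variance across prompts within a day
    """
    total = person_var + day_var + prompt_var
    return {
        "between_person": person_var / total,
        "within_person": (day_var + prompt_var) / total,
    }


# Hypothetical components summing to 8.0.
print(variance_shares(2.0, 0.5, 5.5))  # {'between_person': 0.25, 'within_person': 0.75}
```

Applying the same formula to the conditional estimates in Table 1 would give somewhat different shares, because those models include predictors.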

EMA: Relation to Fatigue and Depressed Mood

Table 1 displays the results of the multilevel models for the study design, demographic, and clinical predictors, as well as for fatigue and depressed mood. Dot Memory performance was worse at prompts later in the day, whereas time of day was unrelated to Symbol Search and Card Matching performance. Significant effects of days in study were observed for each cognitive outcome, with performance improving across the 14-day assessment period. Age, years of education, cancer stage, time since treatment, and receipt of hormonal therapy were unrelated to between person differences in any of the cognitive outcomes.

Table 1:

Multilevel Model Results for Symbol Search, Dot Memory and Card Matching

| Variable | Symbol Search β | SE | p | Dot Memory β | SE | p | Card Matching β | SE | p |
|---|---|---|---|---|---|---|---|---|---|
| Demographic and Clinical Characteristics | | | | | | | | | |
| Age | .023 | .013 | .077 | .011 | .022 | .621 | −.001 | .001 | .917 |
| Years of education | −.016 | .029 | .576 | −.048 | .049 | .331 | .004 | .003 | .140 |
| Hormonal treatment | .022 | .165 | .895 | −.429 | .277 | .129 | .014 | .016 | .371 |
| Disease stage | .143 | .174 | .416 | .157 | .292 | .593 | .017 | .017 | .333 |
| Time since treatment | −.001 | .009 | .898 | −.009 | .017 | .591 | .001 | .001 | .145 |
| Study Design | | | | | | | | | |
| Prompt | .004 | .006 | .481 | .052 | .015 | <.001 | −.001 | .001 | .321 |
| Days in study | −.084 | .009 | <.001 | −.078 | .021 | <.001 | .007 | .002 | <.001 |
| Days in study² | .003 | .001 | <.001 | .002 | .002 | .201 | −.003 | .001 | .051 |
| EMA Patient Reported Outcomes | | | | | | | | | |
| Person mean fatigue | −.001 | .086 | .993 | −.131 | .147 | .377 | −.003 | .009 | .710 |
| Daily fluctuation in fatigue | .067 | .015 | <.001 | .054 | .034 | .117 | −.004 | .003 | .184 |
| Person mean depressed mood | .078 | .087 | .378 | .166 | .147 | .263 | −.001 | .009 | .878 |
| Daily fluctuation in depressed mood | −.032 | .017 | .064 | −.046 | .040 | .248 | −.003 | .003 | .403 |
| Variance Estimates (estimate, SE, p) | | | | | | | | | |
| Between person differences | .199 | .047 | <.001 | .553 | .132 | <.001 | .002 | .001 | <.001 |
| Within person, across days | .015 | .004 | <.001 | .021 | .019 | .145 | .001 | .001 | .009 |
| Within person, within day | .204 | .007 | <.001 | 1.171 | .037 | <.001 | .009 | .001 | <.001 |

Results are from 47 women assessed up to 5 times a day (prompt; 1–5) across the two-week assessment period (days in study: 1–14). EMA patient reported outcomes reflect differences between breast cancer patients (person mean) and within person variation (daily fluctuation).

Among the EMA patient-reported outcomes, only the within person fatigue predictor was significantly associated with cognitive performance, specifically Symbol Search response time. At times when survivors rated their fatigue as higher than their own average, they demonstrated longer response latencies. Between person differences in average levels of fatigue and depressed mood in daily life were unrelated to EMA cognitive performance.

Discussion

Ours is, to our knowledge, the first study to apply EMA to evaluate CACD. Results indicated that EMA provided highly reliable and valid estimates of objective cognitive performance. Considerable variability was observed both between persons and within persons. Finally, processing speed was significantly slower at times when patients rated themselves as more fatigued than usual.

Although fatigue is a common symptom among cancer survivors (29), evidence for an association with worse cognitive performance has been mixed: some studies have observed an association (30), whereas others have reported that fatigue was associated with subjective reports of cognitive functioning but not with objective measures (31). Our results indicate that a patient’s average level of fatigue was not related to her processing speed performance, but when she felt more fatigued than usual, she responded significantly more slowly. That is, being a “highly fatigued” survivor did not predict differences in performance, but experiencing more fatigue right now was related to performing more slowly right now. One reason we observed an association between greater fatigue and worse objective cognitive performance may be our within person approach: rather than comparing a person’s rating of fatigue with others’ ratings, we compared her current rating with her own average rating. Another important contribution of this study is that fatigue was assessed at the same time as cognitive performance, rather than by asking patients to recall their average fatigue over the past week or longer, as most questionnaire-based instruments do. Retrospective measures ask persons to think back over long periods of time and are subject to peak (32) and end (33) effects; that is, survivors may report their worst or most recent levels of fatigue, respectively.

Our results also showed that over half of the variability in objective cognitive performance could be attributed to within person fluctuations across the 5 daily prompts and the 14-day assessment period. The extent to which cognitive performance varies from prompt to prompt and day to day may reflect lapses in attentional control and inhibitory processes (34), both of which are theorized mechanisms of CACD (2, 35). Recently, Yao and colleagues (36) compared women diagnosed with breast cancer and non-cancer controls on overall accuracy and speed of Stroop test performance, as well as on how much response speed varied across trials. No statistically significant group differences were observed for overall accuracy or speed, but women with breast cancer were significantly more variable across trials than healthy controls. Thus, variability in functioning, even in the absence of significant differences at the mean level, may serve as a more sensitive measure of current or future CACD (37).

Clinical Implications

The results of the present study have implications for our understanding of CACD. For example, assessing cancer patients’ cognitive performance a single time, as in a traditional neuropsychological assessment, may overestimate or underestimate the extent to which they are cognitively impaired relative to normative values or the performance of others. Whether a given individual’s visit to the research lab for traditional assessment falls on a cognitively “good” or “bad” day may yield a very different picture of CACD.

Our results also showed that we were able to evaluate cognitive performance in everyday life using highly reliable (all above .85) and valid (significantly correlated with traditional neuropsychological instruments) measures. By contrast, Andreotti and colleagues (38) reported very poor longitudinal test-retest reliability of standard neuropsychological tests. Poor reliability of existing tools greatly hampers attempts to identify individual difference predictors of cognitive functioning (39). Finally, the results indicated that cognitive performance generally improved across days of assessment. Practice effects are commonly observed in traditional neurocognitive testing (40), and these findings underscore the utility of the EMA approach, which allows performance to be statistically separated from gains associated with repeated exposure.

Study Limitations

There are a number of limitations in the current study. First, women without a history of cancer were not included, so we are unable to draw conclusions regarding differences in the level of cognitive performance or variability between patients and non-cancer controls. Second, study participants tended to be White, non-Hispanic, and well educated, which may limit the generalizability of the findings. Finally, all participants were assessed months to years after the end of treatment, so objective information on changes from pretreatment levels of functioning is not available. This matters because cognitive deficits have increasingly been observed in cancer patients before treatment begins (2).

In summary, the current study introduces a new paradigm for studying the cognitive performance of cancer survivors in real-life, naturalistic settings. Our results showed that cancer survivors exhibit substantial variability between persons, as well as within days and across the 14-day assessment period. Furthermore, within survivors, processing speed was slower at times when fatigue ratings were higher than average. The ability to identify moments when cognition is most vulnerable may allow personalized interventions to be applied at times when they are most needed.

Acknowledgements

This study was supported by R03CA191712 (MPI: Scott/Small) from the National Cancer Institute and an Institutional Investigator Research Grant from the American Cancer Society to the Moffitt Cancer Center (PI: Scott). Dr. Jim has been a consultant for RedHill Biopharma and Janssen Scientific Affairs.

Footnotes


Disclaimer: The views expressed in this article are those of the authors and do not necessarily reflect the official views of the National Cancer Institute.

References

1. Wefel JS, Kesler SR, Noll KR, Schagen SB. Clinical characteristics, pathophysiology, and management of noncentral nervous system cancer-related cognitive impairment in adults. CA Cancer J Clin. 2015;65(2):123–38.

2. Ahles TA, Hurria A. New Challenges in Psycho-Oncology Research IV: Cognition and cancer: Conceptual and methodological issues and future directions. Psychooncology. 2018;27(1):3–9.

3. Bernstein LJ, McCreath GA, Komeylian Z, Rich JB. Cognitive impairment in breast cancer survivors treated with chemotherapy depends on control group type and cognitive domains assessed: A multilevel meta-analysis. Neurosci Biobehav Rev. 2017;83:417–28.

4. Von Ah D, Habermann B, Carpenter JS, Schneider BL. Impact of perceived cognitive impairment in breast cancer survivors. Eur J Oncol Nurs. 2013;17(2):236–41.

5. Horowitz TS, Suls J, Trevino M. A Call for a Neuroscience Approach to Cancer-Related Cognitive Impairment. Trends Neurosci. 2018;41(8):493–6.

6. Koo BM, Vizer LM. Mobile Technology for Cognitive Assessment of Older Adults: A Scoping Review. Innov Aging. 2019;3(1):igy038.

7. Costa DSJ, Fardell JE. Why Are Objective and Perceived Cognitive Function Weakly Correlated in Patients With Cancer? J Clin Oncol. 2019;37(14):1154–8.

8. Shiffman S, Stone AA, Hufford MR. Ecological momentary assessment. Annu Rev Clin Psychol. 2008;4:1–32.

9. Curran PJ, Bauer DJ. The disaggregation of within-person and between-person effects in longitudinal models of change. Annu Rev Psychol. 2011;62(1):583–619.

10. Meinlschmidt G, Lee JH, Stalujanis E, Belardi A, Oh M, Jung EK, et al. Smartphone-Based Psychotherapeutic Micro-Interventions to Improve Mood in a Real-World Setting. Front Psychol. 2016;7:1112.

11. Phillips KM, Jim HS, Small BJ, Laronga C, Andrykowski MA, Jacobsen PB. Cognitive functioning after cancer treatment: a 3-year longitudinal comparison of breast cancer survivors treated with chemotherapy or radiation and noncancer controls. Cancer. 2012;118(7):1925–32.

12. Ahles TA, Saykin AJ, McDonald BC, Li Y, Furstenberg CT, Hanscom BS, et al. Longitudinal assessment of cognitive changes associated with adjuvant treatment for breast cancer: impact of age and cognitive reserve. J Clin Oncol. 2010;28(29):4434–40.

13. Ehlers DK, Aguinaga S, Cosman J, Severson J, Kramer AF, McAuley E. The effects of physical activity and fatigue on cognitive performance in breast cancer survivors. Breast Cancer Res Treat. 2017;165(3):699–707.

14. Small BJ, Rawson KS, Walsh E, Jim HS, Hughes TF, Iser L, et al. Catechol-O-methyltransferase genotype modulates cancer treatment-related cognitive deficits in breast cancer survivors. Cancer. 2011;117(7):1369–76.

15. Radloff LS. The CES-D Scale: a self-report depression scale for research in the general population. Appl Psychol Meas. 1977;1(3):385–401.

16. Hann DM, Jacobsen PB, Azzarello LM, Martin SC, Curran SL, Fields KK, et al. Measurement of fatigue in cancer patients: development and validation of the Fatigue Symptom Inventory. Qual Life Res. 1998;7(4):301–10.

17. Brandt J. The Hopkins Verbal Learning Test: Development of a new memory test with six equivalent forms. Clin Neuropsychol. 1991;5:125–42.

18. Wechsler D. Wechsler Memory Scale-IV manual. San Antonio, TX: Psychological Corporation; 1999.

19. Wechsler D. Wechsler Adult Intelligence Scale-III (WAIS-III). San Antonio, TX: The Psychological Corporation; 1999.

20. D’Elia LF, Satz P, Uchiyama CL, White T. Color Trails Test. Odessa, FL: Psychological Assessment Resources; 1996.

21. Sliwinski MJ, Mogle JA, Hyun J, Munoz E, Smyth JM, Lipton RB. Reliability and Validity of Ambulatory Cognitive Assessments. Assessment. 2018;25(1):14–30.

22. Raykov T, Marcoulides GA. On multilevel model reliability estimation from the perspective of structural equation modeling. Struct Equ Modeling. 2006;13(1):130–41.

23. Jim HS, Phillips KM, Chait S, Faul LA, Popa MA, Lee YH, et al. Meta-analysis of cognitive functioning in breast cancer survivors previously treated with standard-dose chemotherapy. J Clin Oncol. 2012;30(29):3578–87.

24. Smith EE, Jonides J. Storage and executive processes in the frontal lobes. Science. 1999;283(5408):1657–61.

25. Bolger N, Laurenceau J-P. Intensive longitudinal methods: An introduction to diary and experience sampling research. New York, NY: The Guilford Press; 2013.

26. Stawski RS, Sliwinski MJ, Hofer SM. Between-person and within-person associations among processing speed, attention switching, and working memory in younger and older adults. Exp Aging Res. 2013;39(2):194–214.

27. Hoffman L. Longitudinal analysis: Modeling within-person fluctuation and change. New York, NY: Routledge; 2015.

28. Hoffman L, Stawski RS. Persons as Contexts: Evaluating Between-Person and Within-Person Effects in Longitudinal Analysis. Res Hum Dev. 2009;6(2–3):97–120.

29. Jacobsen PB, Donovan KA, Small BJ, Jim HS, Munster PN, Andrykowski MA. Fatigue after treatment for early stage breast cancer: a controlled comparison. Cancer. 2007;110(8):1851–9.

30. Gullett JM, Cohen RA, Yang GS, Menzies VS, Fieo RA, Kelly DL, et al. Relationship of fatigue with cognitive performance in women with early-stage breast cancer over 2 years. Psychooncology. 2019;28(5):997–1003.

31. Klemp JR, Myers JS, Fabian CJ, Kimler BF, Khan QJ, Sereika SM, et al. Cognitive functioning and quality of life following chemotherapy in pre- and peri-menopausal women with breast cancer. Support Care Cancer. 2018;26(2):575–83.

32. Banthia R, Malcarne VL, Roesch SC, Ko CM, Greenbergs HL, Varni JW, et al. Correspondence between daily and weekly fatigue reports in breast cancer survivors. J Behav Med. 2006;29(3):269–79.

33. Sobel-Fox RM, McSorley AM, Roesch SC, Malcarne VL, Hawes SM, Sadler GR. Assessment of daily and weekly fatigue among African American cancer survivors. J Psychosoc Oncol. 2013;31(4):413–29.

34. MacDonald SW, Nyberg L, Bäckman L. Intra-individual variability in behavior: links to brain structure, neurotransmission and neuronal activity. Trends Neurosci. 2006;29(8):474–80.

35. Ahles TA, Root JC. Cognitive Effects of Cancer and Cancer Treatments. Annu Rev Clin Psychol. 2018;14:425–51.

36. Yao C, Rich JB, Tannock IF, Seruga B, Tirona K, Bernstein LJ. Pretreatment Differences in Intraindividual Variability in Reaction Time between Women Diagnosed with Breast Cancer and Healthy Controls. J Int Neuropsychol Soc. 2016;22(5):530–9.

37. Diehl M, Hooker K, Sliwinski MJ. Handbook of intraindividual variability across the life span. New York, NY: Routledge; 2015.

38. Andreotti C, Root JC, Schagen SB, McDonald BC, Saykin AJ, Atkinson TM, et al. Reliable change in neuropsychological assessment of breast cancer survivors. Psychooncology. 2016;25(1):43–50.

39. Hedge C, Powell G, Sumner P. The reliability paradox: Why robust cognitive tasks do not produce reliable individual differences. Behav Res Methods. 2018;50(3):1166–86.

40. Duff K, Beglinger LJ, Moser DJ, Paulsen JS, Schultz SK, Arndt S. Predicting cognitive change in older adults: the relative contribution of practice effects. Arch Clin Neuropsychol. 2010;25(2):81–8.
