Abstract

It is widely accepted that optimization of medical imaging system performance should be guided by task-based measures of image quality (IQ). Task-based measures of IQ quantify the ability of an observer to perform a specific task such as detection or estimation of a signal (e.g., a tumor). For binary signal detection tasks, the Bayesian Ideal Observer (IO) sets an upper limit of observer performance and has been advocated for use in optimizing medical imaging systems and data-acquisition designs. Except in special cases, determination of the IO test statistic is analytically intractable. Markov-chain Monte Carlo (MCMC) techniques can be employed to approximate IO detection performance, but their reported applications have been limited to relatively simple object models. In cases where the IO test statistic is difficult to compute, the Hotelling Observer (HO) can be employed. To compute the HO test statistic, potentially large covariance matrices must be accurately estimated and subsequently inverted, which can present computational challenges. This work investigates supervised learning-based methodologies for approximating the IO and HO test statistics. Convolutional neural networks (CNNs) and single-layer neural networks (SLNNs) are employed to approximate the IO and HO test statistics, respectively. Numerical simulations were conducted for both signal-known-exactly (SKE) and signal-known-statistically (SKS) signal detection tasks. The considered background models include the lumpy object model and the clustered lumpy object model. The measurement noise models considered are Gaussian, Laplacian, and mixed Poisson-Gaussian. The performances of the supervised learning methods are assessed via receiver operating characteristic (ROC) analysis and the results are compared to those produced by use of traditional numerical methods or analytical calculations when feasible. 
The potential advantages of the proposed supervised learning approaches for approximating the IO and HO test statistics are discussed.

Keywords: Imaging system optimization, numerical observers, Bayesian Ideal Observer, Hotelling Observer, task-based image quality, supervised learning, deep learning

I. INTRODUCTION

Medical imaging systems commonly are assessed, validated, and optimized using task-specific measures of image quality that quantify the ability of an observer to perform a specific task [1]–[5]. When optimizing imaging systems for signal detection tasks (e.g., detection of a tumor), it has been advocated to use the performance of the Bayesian Ideal Observer (IO) as a figure-of-merit (FOM). In this way, the imaging system is optimized so that the amount of task-specific information in the measurement data is maximized. The IO for a binary signal detection task implements a test statistic given by the likelihood ratio and maximizes the area under the receiver operating characteristic (ROC) curve [6]. The IO has also been employed to assess the efficiency of human observers on signal detection tasks [7].

The IO test statistic is generally a non-linear function of the image data and, except in some special cases, cannot be determined analytically. Because of this, sampling-based methods that employ Markov-chain Monte Carlo (MCMC) techniques have been developed to approximate the IO test statistic for medical imaging applications [2], [8]. However, current applications of these methods have been limited to relatively simple object models that include parameterized torso phantoms [9], lumpy background models [2], and a binary texture model [8]. To the best of our knowledge, applications of MCMC methods to approximate the IO test statistic for more sophisticated object models—such as the clustered lumpy background (CLB) model that has been used to synthesize mammographic images—have not been reported to date.

When the IO is intractable, the Hotelling Observer (HO) can be employed to optimize imaging systems for signal detection tasks [10]–[13]. The HO employs the Hotelling discriminant, which is the population equivalent of the Fisher linear discriminant [1], and is optimal among all linear observers in the sense that it maximizes the signal-to-noise ratio of the test statistic [1], [14], [15]. However, implementing the HO also poses challenges. Specifically, it requires the estimation and inversion of a covariance matrix whose dimension equals the number of image pixels, which can be enormous (e.g., 16,384 × 16,384 for a 128 × 128 image) [16]. Different strategies for circumventing this difficulty exist [10]. For use in detection tasks where background variability is considered and the measurement noise covariance matrix is known, methods for the estimation and inversion of these large covariance matrices by use of a covariance matrix decomposition are available [1]. It has been demonstrated, however, that in certain situations the use of the covariance decomposition can result in a significant bias in the HO performance [17]. Alternatively, to avoid an explicit inversion of the covariance matrix, an iterative algorithm can be employed to estimate the Hotelling test statistic [1]. Finally, a variety of channelized HOs that utilize efficient channels have been proposed for approximating the HO in a computationally tractable way [4], [18], [19].

Supervised learning-based approaches hold significant promise for the design and implementation of model observers for optimizing imaging systems [20]–[23]. Recent efforts have primarily focused on training anthropomorphic model observers using deep learning [22], [24], [25]. The extent to which deep learning-based methods can benefit such applications remains a topic of investigation due to the difficulty of acquiring large amounts of labeled data in medical imaging applications. When optimizing imaging systems and data-acquisition designs, computer-simulated data can sometimes be employed [2]. In such applications, large amounts of labeled data can be generated and it can be feasible to train complicated inference models to be employed as model observers for assessing task-based measures of image quality.

Artificial neural networks (ANNs) with sufficiently complex architectures are known to be able to approximate any continuous function on a compact domain to arbitrary accuracy [26]. Accordingly, in principle, ANNs can be trained to approximate functions that represent test statistics of model observers. For example, Kupinski et al. investigated the use of fully-connected neural networks (FCNNs) to approximate the test statistic of an IO that acted on low-dimensional vectors of extracted image features [27]. More recently, Zhou and Anastasio employed convolutional neural networks (CNNs) to approximate the IO test statistic that acted directly on images for a simple signal-known-exactly and background-known-exactly (SKE/BKE) binary signal detection task, and demonstrated the use of modern deep learning technologies for approximating IOs [28].

In this work, supervised learning-based methods that employ ANNs for approximating the IO test statistic are explored systematically for binary signal detection tasks in which the observer acts on 2D image data. The detection tasks considered are of varying difficulty, and address both background and signal randomness in combination with different measurement noise models. In order to approximate the generally nonlinear IO test statistic, CNNs are employed. For the HO, an alternative supervised learning methodology is proposed that employs single-layer neural networks (SLNNs) for learning the Hotelling template without the need for explicitly estimating and inverting covariance matrices. The signal detection performance is assessed via receiver operating characteristic (ROC) analysis [1], [29]. The results produced by the proposed supervised learning methods are compared to those produced by use of traditional numerical methods or analytical calculations when feasible. The potential advantages of the proposed supervised learning approaches for approximating the IO and HO test statistics are discussed.

The remainder of this article is organized as follows. In Sec. II, the salient aspects of binary signal detection theory are reviewed and previous works on approximating the IO test statistic by use of ANNs are summarized. A novel methodology that employs SLNNs to approximate the HO test statistic is developed in Sec. III. The numerical studies and results of the proposed methods for approximating the IO and HO for signal detection tasks with different object models and noise models are provided in Sec. IV and Sec. V. Finally, the article concludes with a discussion of the work in Sec. VI.

II. BACKGROUND

Consider a linear digital imaging system that is described as:

$$\mathbf{g} = \mathcal{H} f(\mathbf{r}) + \mathbf{n}, \tag{1}$$

where $\mathbf{g} \in \mathbb{R}^M$ is a vector that describes the measured image data, $f(\mathbf{r})$ is the object function with a spatial coordinate $\mathbf{r}$, $\mathcal{H}$ denotes a continuous-to-discrete (C-D) imaging operator that maps the object function $f(\mathbf{r})$ to the $M$-dimensional image space, and $\mathbf{n} \in \mathbb{R}^M$ is the measurement noise. Because n is a random vector, so is the measured image data g. Below, the object function f(r) will be viewed as being either deterministic or stochastic, depending on the specification of the signal detection task. When its spatial dependence is not important to highlight, the notation f will be employed to denote f(r). The same notation will be employed with other functions.

A. Formulation of binary signal detection tasks

A binary signal detection task requires an observer to classify an image as satisfying either a signal-present hypothesis (H1) or a signal-absent hypothesis (H0). The imaging processes under these two hypotheses can be described as:

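$$H_0:\ \mathbf{g} = \mathcal{H} f_b + \mathbf{n} = \mathbf{b} + \mathbf{n}, \tag{2a}$$

$$H_1:\ \mathbf{g} = \mathcal{H}(f_b + f_s) + \mathbf{n} = \mathbf{b} + \mathbf{s} + \mathbf{n}, \tag{2b}$$

where fb and fs represent the background and signal functions, respectively, $\mathbf{b} = \mathcal{H} f_b$ is the background image and $\mathbf{s} = \mathcal{H} f_s$ is the signal image. In a signal-known-exactly (SKE) detection task, fs is non-random, whereas in a signal-known-statistically (SKS) detection task it is a random process. Similarly, in a background-known-exactly (BKE) detection task, fb is non-random, whereas in a background-known-statistically (BKS) detection task it is a random process. Let bm and sm denote the mth (1 ≤ m ≤ M) component of b and s, respectively. When $\mathcal{H}$ is a linear operator, as in the numerical studies presented later, these quantities are defined as:

$$b_m = \int d\mathbf{r}\, h_m(\mathbf{r}) f_b(\mathbf{r}), \tag{3a}$$

$$s_m = \int d\mathbf{r}\, h_m(\mathbf{r}) f_s(\mathbf{r}), \tag{3b}$$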

where hm(r) is the point response function of the imaging system associated with the mth measurement [1].

To perform a binary signal detection task, an observer computes a test statistic t(g) that maps the measured image g to a real-valued scalar variable, which is compared to a predetermined threshold τ to classify g as satisfying H1 (when t(g) > τ) or H0 (otherwise). By varying the threshold τ, an ROC curve can be plotted to depict the trade-off between the false-positive fraction (FPF) and the true-positive fraction (TPF) [1], [29]. The area under the ROC curve (AUC) can subsequently be calculated to quantify the signal detection performance.
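The empirical counterpart of this threshold sweep is easy to sketch. The Python/NumPy example below (function names are illustrative) declares H1 when t(g) > τ, returns (FPF, TPF) pairs for a list of thresholds, and estimates the AUC nonparametrically as the Wilcoxon-Mann-Whitney statistic. This is a swapped-in empirical estimator; the studies reported later fit ROC curves with the proper binormal model instead.

```python
import numpy as np

def roc_points(t0, t1, thresholds):
    """(FPF, TPF) pairs for the rule 'declare H1 when t(g) > tau'.

    t0: test statistics of signal-absent images; t1: of signal-present images."""
    t0 = np.asarray(t0, dtype=float)
    t1 = np.asarray(t1, dtype=float)
    fpf = np.array([np.mean(t0 > tau) for tau in thresholds])
    tpf = np.array([np.mean(t1 > tau) for tau in thresholds])
    return fpf, tpf

def empirical_auc(t0, t1):
    """Nonparametric AUC: Pr(t1 > t0) + 0.5 * Pr(t1 == t0) (Mann-Whitney)."""
    t0 = np.asarray(t0, dtype=float)
    t1 = np.asarray(t1, dtype=float)
    # Compare every signal-present score with every signal-absent score.
    diff = t1[:, None] - t0[None, :]
    return float(np.mean(diff > 0) + 0.5 * np.mean(diff == 0))
```

For example, `empirical_auc([0, 1], [2, 3])` evaluates to 1.0, because every signal-present score exceeds every signal-absent score.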

B. Bayesian Ideal Observer and Hotelling Observer

Among all observers, the IO sets an upper performance limit for binary signal detection tasks. The IO test statistic is defined as the likelihood ratio ΛLR(g), or any strictly increasing transformation of it, where ΛLR(g) is defined as [1], [2], [27]:

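$$\Lambda_{LR}(\mathbf{g}) = \frac{p(\mathbf{g} \mid H_1)}{p(\mathbf{g} \mid H_0)}. \tag{4}$$

Here, $p(\mathbf{g} \mid H_0)$ and $p(\mathbf{g} \mid H_1)$ are conditional probability density functions that describe the measured data g under hypotheses H0 and H1, respectively. It will prove useful to note that one monotonic transformation of ΛLR(g) is the posterior probability $\Pr(H_1 \mid \mathbf{g})$:

$$\Pr(H_1 \mid \mathbf{g}) = \frac{\Lambda_{LR}(\mathbf{g}) \Pr(H_1)}{\Lambda_{LR}(\mathbf{g}) \Pr(H_1) + \Pr(H_0)}, \tag{5}$$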

where Pr(H0) and Pr(H1) are the prior probabilities associated with the two hypotheses.
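Equation (5) can be evaluated directly once the likelihood ratio is known. The short sketch below (illustrative names) maps ΛLR(g) to Pr(H1|g) for a given prior; because the map is strictly increasing in ΛLR(g), thresholding either quantity produces the same ROC curve.

```python
def posterior_h1(likelihood_ratio, prior_h1=0.5):
    """Pr(H1 | g) from Eq. (5), given Lambda_LR(g) and the prior Pr(H1)."""
    prior_h0 = 1.0 - prior_h1
    return likelihood_ratio * prior_h1 / (likelihood_ratio * prior_h1 + prior_h0)

# With equal priors, Lambda_LR = 1 gives 0.5 and Lambda_LR = 3 gives 0.75.
print(posterior_h1(1.0), posterior_h1(3.0))
```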

When the IO test statistic cannot be determined analytically, the HO is sometimes employed to assess task-based measures of image quality. The HO employs the Hotelling discriminant that is the population equivalent of the Fisher linear discriminant [1]. The HO test statistic tHO(g) is computed as:

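$$t_{HO}(\mathbf{g}) = \mathbf{w}_{HO}^T \mathbf{g}, \tag{6}$$

where $\mathbf{w}_{HO} \in \mathbb{R}^M$ is the Hotelling template. Let $\bar{\mathbf{g}}(f) = \langle \mathbf{g} \rangle_{\mathbf{g} \mid f}$ denote the conditional mean of the image data given an object function f. Similarly, let $\bar{\bar{\mathbf{g}}}_j = \langle \bar{\mathbf{g}}(f) \rangle_{f \mid H_j}$ denote the conditional mean averaged with respect to object randomness associated with Hj (j = 0,1). The Hotelling template wHO is defined as [1]:

$$\mathbf{w}_{HO} = \left[\tfrac{1}{2}(\mathbf{K}_0 + \mathbf{K}_1)\right]^{-1} \Delta\bar{\bar{\mathbf{g}}}. \tag{7}$$

Here, $\mathbf{K}_j = \langle (\mathbf{g} - \bar{\bar{\mathbf{g}}}_j)(\mathbf{g} - \bar{\bar{\mathbf{g}}}_j)^T \rangle_{\mathbf{g} \mid H_j}$ is the covariance matrix of the measured data g under the hypothesis Hj (j = 0,1), and $\Delta\bar{\bar{\mathbf{g}}} = \bar{\bar{\mathbf{g}}}_1 - \bar{\bar{\mathbf{g}}}_0$ is the difference between the means of the measured data g under the two hypotheses. It is useful to note that the covariance matrix Kj can be decomposed as [1]:

$$\mathbf{K}_j = \langle \mathbf{K}_{\mathbf{n}}(f) \rangle_{f \mid H_j} + \mathbf{K}_{\bar{\mathbf{g}},j}, \qquad \mathbf{K}_{\bar{\mathbf{g}},j} = \left\langle \left(\bar{\mathbf{g}}(f) - \bar{\bar{\mathbf{g}}}_j\right)\left(\bar{\mathbf{g}}(f) - \bar{\bar{\mathbf{g}}}_j\right)^T \right\rangle_{f \mid H_j}. \tag{8}$$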

In Eq. (8), the first term is the mean of the noise covariance matrix averaged over f under the hypothesis Hj. The second term is the covariance matrix associated with the object f under the hypothesis Hj.

The signal-to-noise ratio associated with a test statistic t, denoted as SNRt, is defined as:

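$$\mathrm{SNR}_t = \frac{\langle t \rangle_1 - \langle t \rangle_0}{\sqrt{\tfrac{1}{2}\sigma_0^2 + \tfrac{1}{2}\sigma_1^2}}, \tag{9}$$

where $\langle t \rangle_j$ and $\sigma_j^2$ are the mean and variance of t under the hypothesis Hj (j = 0,1). Similar to the AUC, SNRt is a commonly employed FOM of signal detectability that can be employed to guide the optimization of imaging systems. Whereas the IO maximizes the AUC among all observers, the HO maximizes SNRt among all linear observers, and the maximum value is [1], [15]:

$$\mathrm{SNR}_{HO} = \sqrt{\Delta\bar{\bar{\mathbf{g}}}^T \left[\tfrac{1}{2}(\mathbf{K}_0 + \mathbf{K}_1)\right]^{-1} \Delta\bar{\bar{\mathbf{g}}}}. \tag{10}$$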
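Equations (7), (9), and (10) translate directly into linear algebra when K0, K1, and the mean difference are available. The sketch below (NumPy; function names and toy matrices are illustrative) solves a linear system rather than forming an explicit inverse, and checks that the template of Eq. (7) attains the SNR of Eq. (10). It assumes the matrices fit comfortably in memory, which is precisely what stops being true for large images.

```python
import numpy as np

def hotelling_template(k0, k1, delta_g):
    """Eq. (7): solve [0.5 (K0 + K1)] w = delta_g instead of inverting."""
    return np.linalg.solve(0.5 * (k0 + k1), delta_g)

def snr_linear(w, k0, k1, delta_g):
    """Eq. (9) for the linear statistic t = w^T g (mean difference w^T delta_g)."""
    var0 = w @ k0 @ w
    var1 = w @ k1 @ w
    return (w @ delta_g) / np.sqrt(0.5 * var0 + 0.5 * var1)

def snr_hotelling(k0, k1, delta_g):
    """Eq. (10): sqrt(delta_g^T [0.5 (K0 + K1)]^{-1} delta_g)."""
    return float(np.sqrt(delta_g @ hotelling_template(k0, k1, delta_g)))

# Toy check with random symmetric positive-definite covariances (M = 6).
rng = np.random.default_rng(0)
m = 6
a0, a1 = rng.normal(size=(m, m)), rng.normal(size=(m, m))
k0 = a0 @ a0.T + m * np.eye(m)
k1 = a1 @ a1.T + m * np.eye(m)
delta_g = rng.normal(size=m)
w_ho = hotelling_template(k0, k1, delta_g)
print(snr_linear(w_ho, k0, k1, delta_g), snr_hotelling(k0, k1, delta_g))  # equal up to rounding
```

By the Cauchy-Schwarz inequality, any other linear template yields an SNR whose magnitude is no larger than SNR_HO.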

C. Previous works on approximating the IO test statistic by use of ANNs

A feed-forward ANN is a system of connected artificial neurons that are computational units described by adjustable real-valued parameters called weights [30], [31]. A sufficiently complex ANN can approximate any continuous function on a compact domain to arbitrary accuracy [26]. Accordingly, ANNs can be trained to approximate functions that represent test statistics of model observers. Previously published results indicate the feasibility of using ANNs to approximate IOs [27], [28]. For example, Kupinski et al. [27] applied fully-connected neural networks (FCNNs), which are a conventional type of feed-forward ANN, to approximate the test statistic for an IO acting on low-dimensional vectors of extracted image features. It was demonstrated [27] that, given sufficient training data and an ANN of sufficient representation capacity, the test statistic of the IO acting on a low-dimensional vector of image features could be accurately approximated. However, ordinary ANNs, such as FCNNs, do not scale well to high-dimensional data (e.g., images) because each neuron in an FCNN is fully connected to all neurons in the previous layer, which limits the dimension of the input layer and the depth of the models that can be trained effectively. As such, FCNNs are not well suited for use as numerical observers that act directly on image data.

Modern deep learning approaches that employ convolutional neural networks (CNNs) have been developed to address this limitation [31]–[34]. A comprehensive review of CNNs for image classification can be found in [35]. Recently, motivated by the success of CNNs in image classification tasks, Zhou and Anastasio [28] investigated a supervised learning-based method to approximate the test statistic of an IO that acts directly on 2D images by using CNNs. The basic idea is to identify a CNN that can approximate the posterior probability Pr(H1|g), which, as described by Eq. (5), is a monotonic transformation of the likelihood ratio. In that preliminary work, the feasibility of using CNNs to approximate an IO for a simple SKE/BKE object model was explored. As an extension of that preliminary study, supervised learning-based methods that employ CNNs and SLNNs for approximating test statistics of the IO and HO acting on 2D measured images with various object and noise models are systematically explored in this work.

D. Maximum likelihood estimation of CNN weights for approximating the IO test statistic

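To train a CNN for approximating the posterior probability Pr(H1|g), the sigmoid function is employed in the last layer of the CNN; in this way, the output of the CNN can be interpreted as a probability. Let the set of all weights of neurons in a CNN be denoted by the vector Θ and denote the output of the CNN as $q(H_1 \mid \mathbf{g}, \Theta)$. It should be noted that the vertical bar in $q(H_1 \mid \mathbf{g}, \Theta)$ has two usages: to denote that the probability of H1 is conditioned on g and to denote that the function is parameterized by the nonrandom weight vector Θ. The goal of training the CNN is to determine a vector Θ such that the difference between the CNN-approximated posterior probability $q(H_1 \mid \mathbf{g}, \Theta)$ and the actual posterior probability Pr(H1|g) is small. The posterior Pr(H0|g) can subsequently be approximated by $1 - q(H_1 \mid \mathbf{g}, \Theta)$. A supervised learning-based method can be employed to approximate the maximum likelihood (ML) estimate of Θ [27]. Let $y \in \{0, 1\}$ denote the image label, where y = 0 and y = 1 correspond to the hypotheses H0 and H1, respectively. The ML estimate of Θ can be obtained by minimizing the generalization error, defined as the ensemble average of the cross-entropy over the distribution p(g, y) [2]:

$$\Theta_{ML} = \arg\min_{\Theta} \; -\left\langle y \ln q(H_1 \mid \mathbf{g}, \Theta) + (1 - y) \ln\left[1 - q(H_1 \mid \mathbf{g}, \Theta)\right] \right\rangle_{p(\mathbf{g}, y)}, \tag{11}$$

where $\langle \cdot \rangle_{p(\mathbf{g}, y)}$ denotes the mean over the probability density p(g, y). When $q(H_1 \mid \mathbf{g}, \Theta)$ can represent any functional form, $q(H_1 \mid \mathbf{g}, \Theta_{ML}) = \Pr(H_1 \mid \mathbf{g})$ when Eq. (11) is minimized [2]. To see this, one can rewrite the negative cross-entropy as:

$$\left\langle y \ln q(H_1 \mid \mathbf{g}, \Theta) + (1 - y) \ln\left[1 - q(H_1 \mid \mathbf{g}, \Theta)\right] \right\rangle_{p(\mathbf{g}, y)} = \left\langle \Pr(H_1 \mid \mathbf{g}) \ln q(H_1 \mid \mathbf{g}, \Theta) + \Pr(H_0 \mid \mathbf{g}) \ln\left[1 - q(H_1 \mid \mathbf{g}, \Theta)\right] \right\rangle_{p(\mathbf{g})}. \tag{12}$$

When the CNN is sufficiently complex to represent any functional form, the task of finding ΘML becomes finding the optimal $q(H_1 \mid \mathbf{g}, \Theta)$ that maximizes Eq. (12). Consider the gradient of the integrand of Eq. (12) with respect to $q(H_1 \mid \mathbf{g}, \Theta)$, evaluated pointwise in g:

$$\frac{\partial}{\partial q}\Big[\Pr(H_1 \mid \mathbf{g}) \ln q + \Pr(H_0 \mid \mathbf{g}) \ln(1 - q)\Big] = \frac{\Pr(H_1 \mid \mathbf{g})}{q} - \frac{\Pr(H_0 \mid \mathbf{g})}{1 - q}, \qquad q \equiv q(H_1 \mid \mathbf{g}, \Theta). \tag{13}$$

For $q \in (0, 1)$, Eq. (13) equals zero only when $q = \Pr(H_1 \mid \mathbf{g})$. Because the bracketed expression is strictly concave in q (its second derivative, $-\Pr(H_1 \mid \mathbf{g})/q^2 - \Pr(H_0 \mid \mathbf{g})/(1 - q)^2$, is negative), this stationary point is the maximum, from which $q(H_1 \mid \mathbf{g}, \Theta_{ML}) = \Pr(H_1 \mid \mathbf{g})$.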

Given a set of independent labeled training data $\{(\mathbf{g}_i, y_i)\}_{i=1}^{N}$, ΘML can be estimated by minimizing the empirical error, which is the average of the cross-entropy over the training dataset:

$$\hat{\Theta}_{ML} = \arg\min_{\Theta} \; -\frac{1}{N} \sum_{i=1}^{N} \left[ y_i \ln q(H_1 \mid \mathbf{g}_i, \Theta) + (1 - y_i) \ln\left(1 - q(H_1 \mid \mathbf{g}_i, \Theta)\right) \right], \tag{14}$$

where $\hat{\Theta}_{ML}$ is the empirical estimate of ΘML. The IO test statistic is subsequently approximated as $q(H_1 \mid \mathbf{g}, \hat{\Theta}_{ML})$. However, if the training dataset is small, directly minimizing the empirical error can cause overfitting and large generalization errors [36]. To reduce the rate at which overfitting happens, mini-batch stochastic gradient descent algorithms can be employed [36]. In online learning, these mini-batches are drawn on-the-fly from the joint distribution p(g, y) [36].
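A minimal illustration of Eqs. (11) to (14), assuming a hypothetical one-dimensional task in place of images: g ~ N(0, 1) under H0 and g ~ N(μ, 1) under H1 with equal priors, for which the true posterior is Pr(H1|g) = sigmoid(μg - μ²/2). A two-parameter logistic model stands in for the CNN, every mini-batch is drawn on the fly from p(g, y) as in online learning, and plain mini-batch gradient descent replaces Adam to keep the sketch short.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def train_online_logistic(mu=1.0, n_iter=3000, batch=500, lr=0.1, seed=0):
    """Minimize the mean cross-entropy of q(H1|g) = sigmoid(a*g + c) by
    mini-batch gradient descent, drawing a fresh mini-batch at every step."""
    rng = np.random.default_rng(seed)
    a, c = 0.0, 0.0
    for _ in range(n_iter):
        y = rng.integers(0, 2, size=batch)       # labels with Pr(H1) = 0.5
        g = rng.normal(loc=mu * y, scale=1.0)    # g ~ N(mu * y, 1)
        q = sigmoid(a * g + c)
        # Gradient of the mean cross-entropy with respect to (a, c).
        a -= lr * np.mean((q - y) * g)
        c -= lr * np.mean(q - y)
    return a, c

a, c = train_online_logistic()
print(a, c)   # should be near (mu, -mu**2 / 2) = (1.0, -0.5)
```

Because the logistic family contains the true posterior for this toy task, minimizing the cross-entropy recovers the IO test statistic up to sampling noise, which is the property established by Eqs. (12) and (13).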

III. APPROXIMATION OF THE HO TEST STATISTIC BY USE OF SLNNs

Below, a novel supervised learning-based method is proposed for learning the HO test statistic.

A. Training the HO by use of supervised learning

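As described by Eq. (6), the HO test statistic is a linear function of the measured image g. Linear functions can be modeled by a single-layer neural network (SLNN) that possesses only a single fully connected layer. Denote the vector of weight parameters in the SLNN as $\mathbf{w} \in \mathbb{R}^M$. The output of an SLNN can be computed as:

$$t_{SLNN}(\mathbf{g}) = \mathbf{w}^T \mathbf{g}. \tag{15}$$

To approximate tHO(g) by tSLNN(g), an SLNN can be trained to maximize SNRt. Because SNRt is unchanged when w is multiplied by a positive constant, this is equivalent to minimizing the averaged variance of the test statistic while holding the difference of its means fixed:

$$\mathbf{w}^* = \arg\min_{\mathbf{w}} \tfrac{1}{2}\left[\sigma_0^2(\mathbf{w}) + \sigma_1^2(\mathbf{w})\right] \quad \text{subject to} \quad \langle t_{SLNN} \rangle_1 - \langle t_{SLNN} \rangle_0 = C, \tag{16}$$

where C is any positive number, $\sigma_j^2(\mathbf{w}) = \mathbf{w}^T \mathbf{K}_j \mathbf{w}$, and $\langle t_{SLNN} \rangle_1 - \langle t_{SLNN} \rangle_0 = \mathbf{w}^T \Delta\bar{\bar{\mathbf{g}}}$. The Lagrangian function associated with this constrained optimization problem is:

$$L(\mathbf{w}, \lambda) = \tfrac{1}{2}\mathbf{w}^T (\mathbf{K}_0 + \mathbf{K}_1) \mathbf{w} - \lambda \left(\mathbf{w}^T \Delta\bar{\bar{\mathbf{g}}} - C\right). \tag{17}$$

The optimal solution w* satisfies the Lagrange multiplier conditions:

$$\nabla_{\mathbf{w}} L(\mathbf{w}^*, \lambda^*) = (\mathbf{K}_0 + \mathbf{K}_1)\mathbf{w}^* - \lambda^* \Delta\bar{\bar{\mathbf{g}}} = \mathbf{0}, \tag{18a}$$

$$\mathbf{w}^{*T} \Delta\bar{\bar{\mathbf{g}}} - C = 0, \tag{18b}$$

where λ* is the Lagrange multiplier. According to Eq. (18):

$$\mathbf{w}^* = \lambda^* (\mathbf{K}_0 + \mathbf{K}_1)^{-1} \Delta\bar{\bar{\mathbf{g}}}, \tag{19a}$$

$$\lambda^* = \frac{C}{\Delta\bar{\bar{\mathbf{g}}}^T (\mathbf{K}_0 + \mathbf{K}_1)^{-1} \Delta\bar{\bar{\mathbf{g}}}}. \tag{19b}$$

Because $L(\mathbf{w}, \lambda^*)$ in Eq. (17) is a convex function of w (K0 + K1 is positive definite), w* is its global minimizer, and the constrained optimization problem defined in Eq. (16) can be solved by minimizing L(w, λ*) with respect to w. Since the term λ*C does not depend on w, this is equivalent to minimizing $\tfrac{1}{2}(\sigma_0^2 + \sigma_1^2) - \lambda^* (\langle t_{SLNN} \rangle_1 - \langle t_{SLNN} \rangle_0)$ with respect to w. Hence, the generalization error to be minimized is defined as:

$$\varepsilon(\mathbf{w}) = \tfrac{1}{2}\left[\sigma_0^2(\mathbf{w}) + \sigma_1^2(\mathbf{w})\right] - \lambda^* \left(\langle t_{SLNN} \rangle_1 - \langle t_{SLNN} \rangle_0\right). \tag{20}$$

In order to have w* = wHO, λ* is set to 2: by Eq. (19a), this gives $\mathbf{w}^* = 2(\mathbf{K}_0 + \mathbf{K}_1)^{-1}\Delta\bar{\bar{\mathbf{g}}} = [\tfrac{1}{2}(\mathbf{K}_0 + \mathbf{K}_1)]^{-1}\Delta\bar{\bar{\mathbf{g}}}$, which corresponds to choosing $C = \Delta\bar{\bar{\mathbf{g}}}^T [\tfrac{1}{2}(\mathbf{K}_0 + \mathbf{K}_1)]^{-1}\Delta\bar{\bar{\mathbf{g}}}$ in Eq. (16).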

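Given N labeled images, half of which are signal-absent ($\{\mathbf{g}^0_i\}_{i=1}^{N/2}$) and half signal-present ($\{\mathbf{g}^1_i\}_{i=1}^{N/2}$), the empirical error to be minimized is:

$$\hat{\varepsilon}(\mathbf{w}) = \frac{1}{2}\sum_{j=0}^{1} \frac{1}{N/2 - 1}\sum_{i=1}^{N/2}\left(\mathbf{w}^T\mathbf{g}^j_i - \hat{t}_j\right)^2 - 2\left(\hat{t}_1 - \hat{t}_0\right), \tag{21}$$

where $\hat{t}_j = \frac{2}{N}\sum_{i=1}^{N/2} \mathbf{w}^T\mathbf{g}^j_i$ is the sample mean of the test statistic under Hj. Any gradient-based algorithm can be employed to minimize Eq. (21) to learn the empirical estimate of the Hotelling template, which is equivalent to the template employed by the Fisher linear discriminant. Because this method does not require estimation and inversion of a covariance matrix, it can scale well to large images.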
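A minimal sketch of Eq. (21), assuming full-batch gradient descent on a small synthetic dataset (names and data are hypothetical). The gradient is built from centered images and test statistics only, so no covariance matrix is estimated or inverted during training; sample covariances appear only in the final check against the closed-form empirical Hotelling (Fisher) template.

```python
import numpy as np

def empirical_error_eq21(w, g0, g1):
    """Eq. (21): 0.5*(var0 + var1) - 2*(mean1 - mean0) of t = w^T g.

    g0, g1: (N/2, M) arrays whose rows are signal-absent / signal-present images."""
    t0, t1 = g0 @ w, g1 @ w
    return 0.5 * (t0.var(ddof=1) + t1.var(ddof=1)) - 2.0 * (t1.mean() - t0.mean())

def gradient_eq21(w, g0, g1):
    """Gradient of Eq. (21) computed from the images; no covariance matrix is formed."""
    grad = -2.0 * (g1.mean(axis=0) - g0.mean(axis=0))
    for g in (g0, g1):
        gc = g - g.mean(axis=0)                   # centered images
        grad += gc.T @ (gc @ w) / (len(g) - 1)    # 0.5 * d(var_j)/dw
    return grad

def train_slnn_ho(g0, g1, lr=0.05, n_iter=2000):
    """Full-batch gradient descent; a mini-batch optimizer such as Adam could replace it."""
    w = np.zeros(g0.shape[1])
    for _ in range(n_iter):
        w -= lr * gradient_eq21(w, g0, g1)
    return w

# Toy data (hypothetical): correlated Gaussian "images" with M = 5 pixels.
rng = np.random.default_rng(1)
mix = 0.5 * rng.normal(size=(5, 5))
g0 = rng.normal(size=(400, 5)) @ mix + rng.normal(size=(400, 5))
g1 = rng.normal(size=(400, 5)) @ mix + rng.normal(size=(400, 5)) + 0.3
w = train_slnn_ho(g0, g1)

# Reference: the empirical Hotelling template from sample covariances.
s_avg = 0.5 * (np.cov(g0, rowvar=False) + np.cov(g1, rowvar=False))
w_ref = np.linalg.solve(s_avg, g1.mean(axis=0) - g0.mean(axis=0))
print(np.max(np.abs(w - w_ref)))   # close to zero
```

The step size must stay below 2 divided by the largest eigenvalue of the summed sample covariances; for real images an adaptive optimizer sidesteps tuning this by hand.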

B. Training the HO by use of a covariance-matrix decomposition

Methods have been developed previously to estimate and invert empirical covariance matrices by use of a covariance-matrix decomposition [1], [17]. As stated in Eq. (8), the covariance matrix Kj can be decomposed into the component associated with the object randomness, $\mathbf{K}_{\bar{\mathbf{g}},j}$, and that associated with the noise randomness, $\langle \mathbf{K}_{\mathbf{n}}(f) \rangle_{f \mid H_j}$. To invert the full covariance matrix for computing the HO test statistic, $\langle \mathbf{K}_{\mathbf{n}}(f) \rangle_{f \mid H_j}$ is assumed known and $\mathbf{K}_{\bar{\mathbf{g}},j}$ needs to be estimated from samples of background and signal images. When uncorrelated noise is considered, $\langle \mathbf{K}_{\mathbf{n}}(f) \rangle_{f \mid H_j}$ is a diagonal matrix. For applications where detectors introduce correlations in the measurements, it is banded and may be a nearly diagonal matrix [1]. In this subsection, an alternative method is provided to approximate the HO test statistic by use of a covariance-matrix decomposition.

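According to the covariance-matrix decomposition stated in Eq. (8), the variance of the test statistic can be computed as:

$$\sigma_j^2(\mathbf{w}) = \mathbf{w}^T \mathbf{K}_j \mathbf{w} = \mathbf{w}^T \langle \mathbf{K}_{\mathbf{n}}(f) \rangle_{f \mid H_j} \mathbf{w} + \mathbf{w}^T \mathbf{K}_{\bar{\mathbf{g}},j} \mathbf{w}. \tag{22}$$

Denote $\bar{\mathbf{K}}_{\mathbf{n}} = \tfrac{1}{2}\left(\langle \mathbf{K}_{\mathbf{n}}(f) \rangle_{f \mid H_0} + \langle \mathbf{K}_{\mathbf{n}}(f) \rangle_{f \mid H_1}\right)$, which is assumed known. With λ* = 2, the generalization error defined in Eq. (20) can be reformulated as:

$$\varepsilon(\mathbf{w}) = \mathbf{w}^T \bar{\mathbf{K}}_{\mathbf{n}} \mathbf{w} + \tfrac{1}{2}\left[\sigma_{\bar{\mathbf{g}},0}^2(\mathbf{w}) + \sigma_{\bar{\mathbf{g}},1}^2(\mathbf{w})\right] - 2\,\mathbf{w}^T \Delta\bar{\bar{\mathbf{g}}}, \tag{23}$$

where $\sigma_{\bar{\mathbf{g}},j}^2(\mathbf{w}) = \mathbf{w}^T \mathbf{K}_{\bar{\mathbf{g}},j} \mathbf{w}$ is the variance of the noiseless test statistic $\mathbf{w}^T \bar{\mathbf{g}}(f)$ under Hj.

Given N background images $\{\mathbf{b}_i\}_{i=1}^{N}$ and N signal images $\{\mathbf{s}_i\}_{i=1}^{N}$, the empirical error to be minimized is:

$$\hat{\varepsilon}(\mathbf{w}) = \mathbf{w}^T \bar{\mathbf{K}}_{\mathbf{n}} \mathbf{w} + \frac{1}{2(N-1)}\sum_{i=1}^{N}\left[\left(\mathbf{w}^T(\mathbf{b}_i - \hat{\mathbf{b}})\right)^2 + \left(\mathbf{w}^T(\mathbf{b}_i + \mathbf{s}_i - \hat{\mathbf{b}} - \hat{\mathbf{s}})\right)^2\right] - 2\,\mathbf{w}^T \hat{\mathbf{s}}, \tag{24}$$

where $\hat{\mathbf{b}} = \frac{1}{N}\sum_{i=1}^{N}\mathbf{b}_i$ and $\hat{\mathbf{s}} = \frac{1}{N}\sum_{i=1}^{N}\mathbf{s}_i$ are sample means, and $\hat{\mathbf{s}}$ serves as the estimate of $\Delta\bar{\bar{\mathbf{g}}}$.

To approximate the Hotelling template, any gradient-based algorithm can be employed to minimize Eq. (24). This method also does not require the inversion of a covariance matrix.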
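A sketch of gradient descent on this decomposed error, assuming noiseless background and signal images are available as arrays and that the averaged noise covariance is known (here, hypothetically, the identity, i.e., white noise of unit variance). The noise covariance is only multiplied by w, never inverted; the closed-form solve at the end serves purely as a check of the minimizer.

```python
import numpy as np

def train_slnn_ho_decomposed(b, s, kn_bar, lr=0.05, n_iter=2000):
    """Gradient descent on w^T Kn w + 0.5*(var(w^T b) + var(w^T (b + s))) - 2 w^T mean(s).

    b, s:   (N, M) arrays of noiseless background and signal images (rows).
    kn_bar: (M, M) known averaged noise covariance; only multiplied, never inverted."""
    s_mean = s.mean(axis=0)                                  # estimate of the mean difference
    centered = [b - b.mean(axis=0), (b + s) - (b + s).mean(axis=0)]
    w = np.zeros(b.shape[1])
    for _ in range(n_iter):
        grad = 2.0 * (kn_bar @ w) - 2.0 * s_mean
        for xc in centered:                                  # object-variability terms
            grad += xc.T @ (xc @ w) / (len(xc) - 1)
        w -= lr * grad
    return w

# Toy data (hypothetical): correlated backgrounds and weakly random signals, M = 5.
rng = np.random.default_rng(2)
m, n = 5, 300
mix = 0.5 * rng.normal(size=(m, m))
b = rng.normal(size=(n, m)) @ mix + 2.0
s = 0.2 * rng.normal(size=(n, m)) + np.linspace(0.1, 0.5, m)
kn_bar = np.eye(m)
w = train_slnn_ho_decomposed(b, s, kn_bar)

# Closed-form minimizer, used here only as a check.
k_obj = 0.5 * (np.cov(b, rowvar=False) + np.cov(b + s, rowvar=False))
w_ref = np.linalg.solve(kn_bar + k_obj, s.mean(axis=0))
```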

IV. NUMERICAL STUDIES

Computer-simulation studies were conducted to investigate the proposed methods for learning the IO and HO test statistics. Four different binary signal detection tasks were considered. A signal-known-exactly and background-known-exactly (SKE/BKE) signal detection task was considered in which the IO and HO can be analytically determined. A signal-known-exactly and background-known-statistically (SKE/BKS) detection task and a signal-known-statistically and background-known-statistically (SKS/BKS) detection task that both employed a lumpy background object model [37] were also considered. For these two BKS signal detection tasks, computations of the IO test statistic by use of MCMC methods have been accomplished [2], [38]. Finally, a SKE/BKS detection task employing a clustered lumpy background (CLB) object model [39] was addressed. To the best of our knowledge, current MCMC applications to the CLB object model have not been reported [8]. For all considered signal detection tasks, ROC curves were fit by use of the Metz-ROC software [40] that utilized the “proper” binormal model [41], [42].

The imaging system in all studies was simulated by a linear C-D mapping with a Gaussian kernel that was motivated by an idealized parallel-hole collimator system [2], [43]:

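$$h_m(\mathbf{r}) = \frac{h}{2\pi w^2} \exp\left(-\frac{\|\mathbf{r} - \mathbf{r}_m\|^2}{2 w^2}\right), \tag{25}$$

where $\mathbf{r}_m$ denotes the location of the mth pixel, the height h = 40, and the width w = 0.5. The details for each signal detection task and the training of neural networks are given in the following subsections.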

A. SKE/BKE signal detection task

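Both the signal and background were non-random for this case. The image size was 64 × 64 (i.e., M = 4096) and the background image was specified as b = 0. The signal function fs(r) was a 2D symmetric Gaussian function:

$$f_s(\mathbf{r}) = A \exp\left(-\frac{\|\mathbf{r} - \mathbf{r}_c\|^2}{2 w_s^2}\right), \tag{26}$$

where A = 0.2 is the amplitude, rc = [32,32]T is the coordinate of the signal location, and ws = 3 is the width of the signal. The signal image s can be computed as:

$$s_m = \frac{A h w_s^2}{w^2 + w_s^2} \exp\left(-\frac{\|\mathbf{r}_m - \mathbf{r}_c\|^2}{2(w^2 + w_s^2)}\right). \tag{27}$$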
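The closed form of Eq. (27) follows from convolving two Gaussians. As a sanity check, the sketch below compares it with a direct Riemann-sum evaluation of Eq. (3b), assuming the kernel of Eq. (25) is a normalized 2D Gaussian of height h and width w centered at the pixel location r_m; the pixel positions used in the check are hypothetical.

```python
import numpy as np

H, W = 40.0, 0.5                  # kernel height and width, Eq. (25)
A, WS = 0.2, 3.0                  # signal amplitude and width, Eq. (26)
RC = np.array([32.0, 32.0])       # signal center

def s_closed_form(r_m):
    """Eq. (27): Gaussian kernel convolved with the Gaussian signal."""
    d2 = np.sum((np.asarray(r_m, dtype=float) - RC) ** 2)
    return A * H * WS**2 / (W**2 + WS**2) * np.exp(-d2 / (2 * (W**2 + WS**2)))

def s_quadrature(r_m, half_width=5.0, step=0.02):
    """Riemann sum of s_m = integral of h_m(r) f_s(r) dr over a box around r_m."""
    r_m = np.asarray(r_m, dtype=float)
    x = np.arange(-half_width, half_width + step / 2, step)
    dx, dy = np.meshgrid(x, x, indexing="ij")               # offsets from r_m
    h_m = H / (2 * np.pi * W**2) * np.exp(-(dx**2 + dy**2) / (2 * W**2))
    f_s = A * np.exp(-((r_m[0] + dx - RC[0]) ** 2 + (r_m[1] + dy - RC[1]) ** 2) / (2 * WS**2))
    return float(np.sum(h_m * f_s) * step**2)

print(s_closed_form((33.0, 31.0)), s_quadrature((33.0, 31.0)))   # should agree closely
```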

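Independent and identically distributed (i.i.d.) Laplacian noise, which can describe histograms of filtered natural images [44], was employed: $n_m \sim \mathrm{Laplace}(0, c)$, where $\mathrm{Laplace}(0, c)$ denotes a Laplacian distribution with probability density $\exp(-|n|/c)/(2c)$, i.e., with exponential decay scale c. The value of c was set so that the noise standard deviation was 30 (c = 30/√2 for this parameterization).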

Because the randomness in the measurements was only from the Laplacian noise, the IO test statistic can be computed as [44]:

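$$t_{IO}(\mathbf{g}) = \sum_{m=1}^{M}\left(|g_m| - |g_m - s_m|\right), \tag{28}$$

which is a positive multiple of the log-likelihood ratio. The Hotelling template can be computed by analytically inverting the covariance matrix $\mathbf{K} = \tfrac{1}{2}(\mathbf{K}_0 + \mathbf{K}_1)$, which is diagonal because the noise is i.i.d. with standard deviation $\sigma_n = 30$:

$$[\mathbf{K}]_{mn} = \sigma_n^2\,\delta_{mn}, \qquad \mathbf{w}_{HO} = \mathbf{K}^{-1}\mathbf{s} = \frac{\mathbf{s}}{\sigma_n^2}, \tag{29}$$

where $[\mathbf{K}]_{mn}$ denotes the component at the mth row and the nth column (1 ≤ m, n ≤ M) of K and δmn is the Kronecker delta. The performances of the proposed learning-based methods were compared to those produced by these analytical computations for this case.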
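A sketch of both analytical statistics for this task, assuming the Laplacian density is parameterized by a scale c as exp(-|n|/c)/(2c), so that the standard deviation is c√2 (this is also the parameterization of NumPy's `Generator.laplace`). The function names are illustrative; `t_io_laplace` is the form of Eq. (28), and `t_ho_white` uses the diagonal covariance of Eq. (29).

```python
import numpy as np

NOISE_STD = 30.0
C = NOISE_STD / np.sqrt(2.0)   # Laplacian scale giving a standard deviation of 30

def t_io_laplace(g, s):
    """IO statistic for b = 0 and i.i.d. Laplacian noise: sum_m (|g_m| - |g_m - s_m|).
    Dividing by C gives the log-likelihood ratio; works on one image or a batch."""
    return np.sum(np.abs(g) - np.abs(g - s), axis=-1)

def t_ho_white(g, s, noise_std=NOISE_STD):
    """HO statistic when K = noise_std**2 * I, so that w_HO = s / noise_std**2."""
    return g @ s / noise_std**2

# Check the noise scale empirically.
rng = np.random.default_rng(0)
noise = rng.laplace(0.0, C, size=200_000)
print(noise.std())   # approximately 30
```

The IO statistic is nonlinear (it saturates for large |g_m|), which is why a linear template such as the HO's cannot match it for Laplacian noise.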

B. SKE/BKS signal detection task with a lumpy background model

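In this case, the image size was 64 × 64 and a non-random signal described by Eq. (26) was employed. The background was random and described by a stochastic lumpy object model [37]:

$$f_b(\mathbf{r}) = \sum_{n=1}^{N_b} l(\mathbf{r} - \mathbf{r}_n \mid a, s), \tag{30}$$

where Nb is the number of lumps, sampled from a Poisson distribution with mean $\bar{N}$: $N_b \sim \mathrm{Pois}(\bar{N})$, where $\mathrm{Pois}(\bar{N})$ denotes a Poisson distribution with mean $\bar{N}$, which was set to 5, and $l(\mathbf{r} \mid a, s)$ is the lumpy function modeled by a 2D Gaussian function with amplitude a and width s:

$$l(\mathbf{r} \mid a, s) = a \exp\left(-\frac{\|\mathbf{r}\|^2}{2 s^2}\right). \tag{31}$$

Here, a was set to 1, s was set to 7, and rn is the location of the nth lump, sampled from a uniform distribution over the field of view. The background image b was analytically computed as:

$$b_m = \sum_{n=1}^{N_b} \frac{a h s^2}{w^2 + s^2} \exp\left(-\frac{\|\mathbf{r}_m - \mathbf{r}_n\|^2}{2(w^2 + s^2)}\right). \tag{32}$$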
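A sketch of Eqs. (30) to (32), assuming (hypothetically) that pixel (i, j) is located at r_m = (i, j), that lump centers are uniform over [0, 64)², and that the kernel of Eq. (25) is a normalized Gaussian of height h and width w, so that each blurred lump is again Gaussian with variance w² + s². The last lines add i.i.d. Gaussian noise with δ = 20, as described below for this task.

```python
import numpy as np

H, W = 40.0, 0.5            # imaging kernel height and width, Eq. (25)
LUMP_A, LUMP_S = 1.0, 7.0   # lump amplitude a and width s
N_PIX = 64

def lumpy_background(centers, n_pix=N_PIX):
    """Eq. (32): noiseless background image for lump centers given as a (K, 2) array."""
    # Hypothetical convention: pixel (i, j) is located at r_m = (i, j).
    i, j = np.meshgrid(np.arange(n_pix), np.arange(n_pix), indexing="ij")
    var = W**2 + LUMP_S**2
    gain = LUMP_A * H * LUMP_S**2 / var
    b = np.zeros((n_pix, n_pix))
    for cx, cy in np.asarray(centers, dtype=float).reshape(-1, 2):
        b += gain * np.exp(-((i - cx) ** 2 + (j - cy) ** 2) / (2 * var))
    return b

def sample_lumpy_background(rng, mean_lumps=5.0, n_pix=N_PIX):
    """Eq. (30): N_b ~ Poisson(mean_lumps); lump centers uniform over the field of view."""
    n_b = rng.poisson(mean_lumps)
    centers = rng.uniform(0.0, n_pix, size=(n_b, 2))
    return lumpy_background(centers, n_pix)

rng = np.random.default_rng(0)
b = sample_lumpy_background(rng)
g = b + rng.normal(0.0, 20.0, size=b.shape)   # i.i.d. Gaussian measurement noise
```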

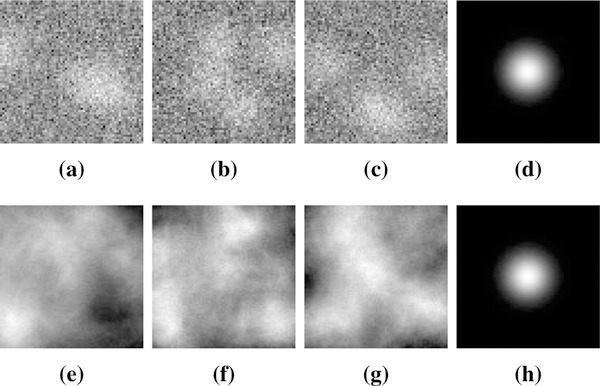

The measurement noise was i.i.d. Gaussian noise that models electronic noise: $n_m \sim \mathcal{N}(0, \delta^2)$, where $\mathcal{N}(0, \delta^2)$ denotes a Gaussian distribution with mean 0 and standard deviation δ, which was set to 20. Examples of signal-present images are shown in the top row of Fig. 1.

Fig. 1:

(a)-(c) Samples of the signal-present measurements for the SKE/BKS detection task with the lumpy background model. (d) An image showing the signal contained in (a)-(c). (e)-(g) Samples of the signal-present measurements for the SKE/BKS detection task with the CLB model. (h) An image showing the signal contained in (e)-(g).

The IO and HO test statistics cannot be analytically determined because of the background randomness. To serve as a surrogate for ground truth, the MCMC method was employed to approximate the IO test statistic. In one Markov chain, 200,000 background images were sampled according to the proposal density and the acceptance probability defined in [2]. The traditional HO test statistic was calculated by use of the covariance-matrix decomposition [1] with an empirical background covariance matrix that was estimated by use of 100,000 background images.

C. SKS/BKS signal detection task with a lumpy background model

This case employed the same stochastic lumpy background model that was specified in the SKE/BKS case described above. The signal was random and modeled by a 2D Gaussian function with a random location and a random shape, which can be mathematically represented as:

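$$f_s(\mathbf{r}) = A \exp\left(-\tfrac{1}{2}(\mathbf{r} - \mathbf{r}_c)^T \mathbf{R}_\theta^T \mathbf{D}^{-1} \mathbf{R}_\theta (\mathbf{r} - \mathbf{r}_c)\right). \tag{33}$$

Here, $\mathbf{R}_\theta$ is a rotation matrix that rotates a vector through an angle θ in Euclidean space, and $\mathbf{D} = \mathrm{diag}(w_1^2, w_2^2)$ determines the width of the Gaussian function along each coordinate axis. The signal image s was analytically computed as:

$$s_m = \frac{A h w_1 w_2}{\sqrt{(w_1^2 + w^2)(w_2^2 + w^2)}} \exp\left(-\tfrac{1}{2}(\mathbf{r}_m - \mathbf{r}_c)^T \mathbf{R}_\theta^T (\mathbf{D} + w^2\mathbf{I})^{-1} \mathbf{R}_\theta (\mathbf{r}_m - \mathbf{r}_c)\right), \tag{34}$$

where $\mathbf{I}$ is the 2 × 2 identity matrix. The value of A was set to 0.2, θ was drawn from a uniform distribution, w1 and w2 were sampled from a uniform distribution, and rc was uniformly distributed over the image field of view. The measurement noise was Gaussian with zero mean and a standard deviation of 10.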
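A sketch of the noiseless SKS signal image, assuming f_s(r) = A exp(-½ (r - r_c)^T R_θ^T D^{-1} R_θ (r - r_c)) with D = diag(w1², w2²), and the hypothetical pixel convention that pixel (i, j) sits at (i, j). Under these assumptions the blurred signal is Gaussian with per-axis variances w1² + w² and w2² + w². The sampling ranges of θ, w1, and w2 are not restated here, so the function takes them as arguments rather than drawing them.

```python
import numpy as np

H, W = 40.0, 0.5   # imaging kernel height and width, Eq. (25)

def rotation(theta):
    """2x2 matrix that rotates a vector through the angle theta."""
    c, s = np.cos(theta), np.sin(theta)
    return np.array([[c, -s], [s, c]])

def sks_signal_image(amp, theta, w1, w2, rc, n_pix=64):
    """Noiseless image of a rotated elliptical Gaussian signal blurred by the kernel.

    Blurring by the Gaussian kernel adds W**2 to each variance w1**2 and w2**2."""
    i, j = np.meshgrid(np.arange(n_pix), np.arange(n_pix), indexing="ij")
    d = np.stack([i - rc[0], j - rc[1]], axis=-1).astype(float)   # r_m - r_c
    u = d @ rotation(theta).T                                      # R_theta (r_m - r_c)
    v1, v2 = w1**2 + W**2, w2**2 + W**2
    gain = amp * H * w1 * w2 / np.sqrt(v1 * v2)
    return gain * np.exp(-0.5 * (u[..., 0] ** 2 / v1 + u[..., 1] ** 2 / v2))

# Example with illustrative parameter values.
img = sks_signal_image(0.2, 0.3, 2.0, 4.0, (32, 32))
```

When w1 = w2, the rotation has no effect and the center value reduces to A h w_s² / (w² + w_s²), the same factor as in the isotropic SKE signal.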

The MCMC method was employed to provide a surrogate for ground truth for the IO. In each Markov chain, 400,000 background images were sampled according to the proposal density and the acceptance probability described in [38]. The traditional HO test statistic was calculated by use of the covariance-matrix decomposition [1] with an empirical object covariance matrix that was estimated by use of 100,000 background images and 100,000 signal images.

Because linear observers typically are unable to detect signals with random locations, the HO was expected to perform poorly. Multi-template model observers [45]–[47] and the scanning HO [48], [49] can be employed to detect variable signals. In this paper, we do not provide a method for training these observers. The approximation of multi-template observers and the scanning HO by use of a supervised learning method represents a topic for future investigation.

D. SKE/BKS signal detection task with a clustered lumpy background model

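A second SKE/BKS detection task associated with a more sophisticated stochastic background model, the clustered lumpy background (CLB), was also considered. The CLB model can be employed to synthesize mammographic images [39]. In this study, the image size was set to 128 × 128 and a CLB realization was simulated as:

$$f_b(\mathbf{r}) = \sum_{k=1}^{K} \sum_{n=1}^{N_k} l\left(\mathbf{r} - \mathbf{r}_{kn} \mid \mathbf{R}_{\theta_{kn}}\right), \tag{35}$$

where K is the number of clusters, Nk is the number of blobs in the kth cluster, rk is the location of the kth cluster, and rkn is the location of the nth blob in the kth cluster. K and Nk were sampled from Poisson distributions with means $\bar{K}$ and $\bar{N}$, respectively. Here, rk was sampled from a uniform distribution over the image field of view, rkn was sampled from a Gaussian distribution with standard deviation σ and center rk, and $l(\mathbf{r} \mid \mathbf{R}_\theta)$ is the blob function:

$$l(\mathbf{r} \mid \mathbf{R}_\theta) = a \exp\left(-\alpha \frac{\|\mathbf{R}_\theta \mathbf{r}\|^\beta}{L(\mathbf{R}_\theta \mathbf{r})}\right), \tag{36}$$

where a, α, and β are adjustable parameters. The rotation matrix $\mathbf{R}_{\theta_{kn}}$ is associated with the angle θkn, and L(r) is the "radius" of the ellipse with half-axes Lx and Ly:

$$L(\mathbf{r}) = \frac{L_x L_y}{\sqrt{L_y^2 \cos^2\phi + L_x^2 \sin^2\phi}}, \tag{37}$$

where $\cos\phi = r_x / \|\mathbf{r}\|$ and $\sin\phi = r_y / \|\mathbf{r}\|$. Here, rx and ry denote the components of r. The parameters employed for generating the CLB images are summarized in Table I.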
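A sketch of the blob function of Eqs. (36) and (37), assuming φ is the polar angle of r (computed with `arctan2`) and that the rotation is applied as u = R_θ r before both the norm and the ellipse radius are evaluated. All shape parameters are function arguments; the values in the example call are illustrative.

```python
import numpy as np

def ellipse_radius(rx, ry, lx, ly):
    """Eq. (37): distance from the origin to the ellipse (half-axes lx, ly) along r."""
    phi = np.arctan2(ry, rx)
    return lx * ly / np.sqrt((ly * np.cos(phi)) ** 2 + (lx * np.sin(phi)) ** 2)

def clb_blob(rx, ry, theta, lx, ly, alpha, beta, a):
    """Eq. (36): a * exp(-alpha * |R r|**beta / L(R r)) for a blob rotated by theta."""
    c, s = np.cos(theta), np.sin(theta)
    ux, uy = c * rx - s * ry, s * rx + c * ry        # u = R_theta r
    return a * np.exp(-alpha * np.hypot(ux, uy) ** beta / ellipse_radius(ux, uy, lx, ly))

# Evaluate one blob on a 64 x 64 grid of offsets (illustrative parameter values).
x = np.arange(-32.0, 32.0)
rx, ry = np.meshgrid(x, x, indexing="ij")
blob = clb_blob(rx, ry, theta=0.4, lx=5.0, ly=2.0, alpha=2.1, beta=0.5, a=100.0)
```

A full CLB realization would sum such blobs, each shifted to its sampled location r_kn and rotated by its own θ_kn, and then pass the result through the imaging operator.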

TABLE I:

Parameters for generating CLB images.

| K̄ | N̄ | Lx | Ly | α | β | σ | a |
|---|---|---|---|---|---|---|---|
| 150 | 20 | 5 | 2 | 2.1 | 0.5 | 12 | 100 |

The signal image was generated as a 2D symmetric Gaussian function centered in the image with an amplitude of 500 and a width of 12. Mixed Poisson-Gaussian noise that models both photon noise and electronic noise was employed. The standard deviation of Gaussian noise was set to 10. Examples of signal-present images are shown in the bottom row of Fig. 1.

To the best of our knowledge, current MCMC methods have not been applied to the CLB object model and the mixed Poisson-Gaussian noise model. To provide a surrogate for ground truth for the HO, the traditional HO was computed by use of the covariance-matrix decomposition, with the empirical background covariance matrix estimated from 400,000 background images.

E. Details of training neural networks

Here, details regarding the implementation of the supervised learning-based methods for approximating the IO and HO for the tasks above are described.

The train-validation-test scheme [36] was employed to evaluate the proposed supervised learning approaches. Specifically, the CNNs and SLNNs were trained on a training dataset, the networks were then selected based on a validation dataset, and their detection performances were finally assessed on a testing dataset. To prepare training datasets for the BKS detection tasks, 100,000 lumpy background [37] images and 400,000 CLB images [39] were generated. When training the CNNs for approximating IOs, a “semi-online learning” method was proposed and employed to mitigate the overfitting that can be caused by insufficient training data. In this approach, the measurement noise was generated on-the-fly and added to noiseless images drawn from the finite datasets. The validation dataset and testing dataset each comprised 200 images per class.
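A sketch of a semi-online mini-batch generator, assuming the noiseless signal-absent and signal-present images are held in memory as arrays. The Gaussian noise here is a placeholder for whichever noise model the task uses (for mixed Poisson-Gaussian noise, one could instead draw `rng.poisson(clean)` and then add Gaussian noise). Names and the stand-in images are hypothetical.

```python
import numpy as np

def semi_online_batches(clean_h0, clean_h1, noise_std, per_class, rng):
    """Yield (images, labels): noiseless images are resampled from finite sets,
    while the measurement noise is regenerated for every mini-batch."""
    labels = np.concatenate([np.zeros(per_class), np.ones(per_class)])
    while True:
        i0 = rng.integers(0, len(clean_h0), size=per_class)
        i1 = rng.integers(0, len(clean_h1), size=per_class)
        clean = np.concatenate([clean_h0[i0], clean_h1[i1]])
        noisy = clean + rng.normal(0.0, noise_std, size=clean.shape)   # fresh noise
        yield noisy, labels.copy()

# Example: 200 signal-absent and 200 signal-present images per mini-batch.
rng = np.random.default_rng(0)
backgrounds = rng.uniform(0.0, 50.0, size=(100, 64, 64))   # stand-in for b_i
signal = np.zeros((64, 64))
signal[30:34, 30:34] = 5.0                                  # stand-in for s
batches = semi_online_batches(backgrounds, backgrounds + signal, 20.0, 200, rng)
g_batch, y_batch = next(batches)
```

Because the noise is redrawn every time, the network never sees exactly the same noisy image twice, even though the set of noiseless images is finite.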

To approximate the HO test statistic, SLNNs that represent linear functions were trained by use of the proposed method employing the covariance-matrix decomposition described in Sec. III-B. This was possible because the noise models for the considered detection tasks were known. At each training iteration, the parameters of the SLNNs were updated by minimizing the error function in Eq. (24) on mini-batches drawn from the training dataset. Specifically, when training the SLNN for the SKE/BKE detection task, the exactly known signal and background were employed, and each mini-batch contained the fixed signal image and background image. When training the SLNNs for the SKE/BKS detection tasks, the known signals were employed and each mini-batch contained 200 background images and the fixed signal image. For training the SLNN for the SKS/BKS detection task, each mini-batch contained 200 background images and 200 signal images. The weight vector w that produced the maximum SNRt value evaluated on the validation dataset was selected to approximate the Hotelling template. The feasibility of the proposed methods for approximating the HO from a reduced number of images was also investigated. Specifically, the SLNNs were trained for the SKE/BKS detection task with the CLB model by minimizing Eq. (21) and Eq. (24) on datasets comprising 2000 labeled measurements (1000 signal-present and 1000 signal-absent images) and 2000 background images, respectively.
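A sketch of the validation-based selection step, assuming the candidates are weight vectors saved at different training iterations (a hypothetical bookkeeping choice) and that Eq. (9) is estimated with unbiased sample variances.

```python
import numpy as np

def empirical_snr(t0, t1):
    """Eq. (9) estimated from validation test statistics of each class."""
    t0, t1 = np.asarray(t0, dtype=float), np.asarray(t1, dtype=float)
    return (t1.mean() - t0.mean()) / np.sqrt(0.5 * t0.var(ddof=1) + 0.5 * t1.var(ddof=1))

def select_template(candidates, g0_val, g1_val):
    """Return the candidate weight vector with the largest validation SNR_t."""
    snrs = [empirical_snr(g0_val @ w, g1_val @ w) for w in candidates]
    best = int(np.argmax(snrs))
    return candidates[best], snrs[best]
```

Selecting on held-out data guards against templates that fit noise in the training images, which matters most when the training set is small.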

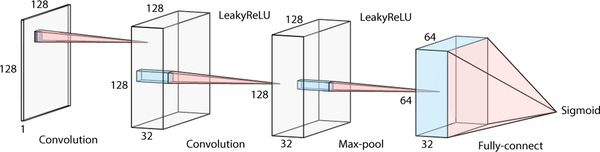

Unlike the HO approximation, where the network architecture is known to be linear, specifying the CNN architecture for approximating the IO required exploring a family of CNNs that possess different numbers of convolutional (CONV) layers. Specifically, an initial CNN having one CONV layer was first trained by minimizing the cross-entropy described in Eq. (14). Subsequently, CNNs having additional CONV layers were trained according to Eq. (14) until adding layers no longer significantly decreased the cross-entropy on a validation dataset. The cross-entropy was considered to be significantly decreased if the decrement was at least 1.0% of the value produced by the previous CNN. Finally, the CNN having the minimum validation cross-entropy was selected as the optimal CNN in the explored architecture family. For all CNN architectures in this family, each CONV layer comprised 32 filters with 5×5 spatial support and was followed by a LeakyReLU activation function [50]. A max-pooling layer [51] following the last CONV layer subsampled the feature maps, and a final fully connected (FC) layer with a sigmoid activation function computed the posterior probability. It should be noted that these architecture parameters were determined heuristically and may not be optimal for many signal detection tasks. One instance of the implemented CNN architecture is illustrated in Fig. 2. These CNNs were trained by minimizing the error function defined in Eq. (14) on a mini-batch at each iteration, where each mini-batch contained 200 signal-absent images and 200 signal-present images. Because HO detection performance is a lower bound on IO detection performance, a selected CNN that approximates the IO should not perform worse than the SLNN-approximated HO (SLNN-HO) on the corresponding signal detection task.
If the selected CNN does perform worse, the architecture parameters need to be re-specified and a different family of CNN architectures should be considered.
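The depth-selection rule above can be sketched in plain Python. In this sketch, `train_and_validate` is a hypothetical stand-in for training a CNN with a given number of CONV layers and returning its validation cross-entropy. The 1.0% threshold follows the text, and the depth increments are supplied by the caller, since the results sections use increments such as 1, 3, 5, 7. This is an illustration of the stopping logic only, not the study's training code.

```python
def select_depth(train_and_validate, depths, rel_tol=0.01):
    """Greedy CONV-depth search.

    train_and_validate(n_layers) -> validation cross-entropy of a trained CNN.
    Deeper CNNs are trained in order until the cross-entropy decrement falls
    below rel_tol times the previous CNN's value; the CNN with the minimum
    validation cross-entropy among those trained is returned."""
    history = {}
    prev = None
    for n_layers in depths:
        ce = train_and_validate(n_layers)
        history[n_layers] = ce
        if prev is not None and (prev - ce) < rel_tol * prev:
            break  # decrement not significant: stop adding layers
        prev = ce
    best = min(history, key=history.get)
    return best, history

# Hypothetical validation cross-entropies standing in for trained CNNs.
fake_ce = {1: 0.40, 3: 0.33, 5: 0.300, 7: 0.299, 9: 0.20}
best, history = select_depth(fake_ce.get, depths=[1, 3, 5, 7, 9])
print(best, sorted(history))  # 7 [1, 3, 5, 7]
```

As in the SKE/BKS lumpy-background case, the search stops once the last increment is insignificant. The deepest trained network is nonetheless selected if it has the lowest validation cross-entropy, and the 9-layer value is never evaluated.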

Fig. 2:

One instance of the CNN architecture employed for approximating the IO test statistic.

The Adam algorithm [52], a stochastic gradient-based optimization algorithm, was employed in TensorFlow [53] to minimize the error functions for approximating the IO and HO. All networks were trained on a single NVIDIA TITAN X GPU.

V. RESULTS

A. SKE/BKE signal detection task

1). HO approximation:

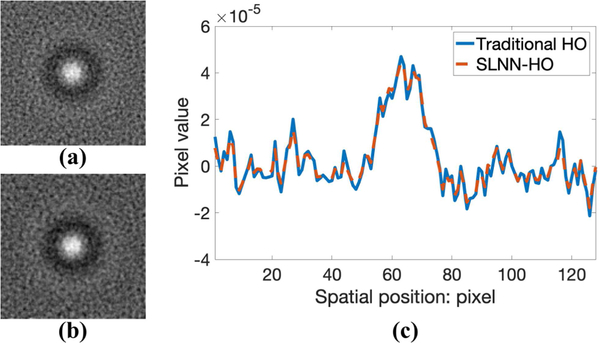

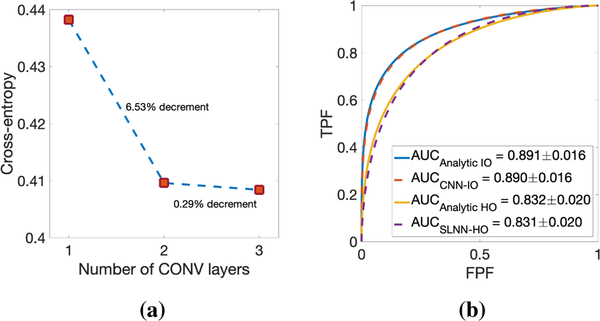

A linear SLNN was trained for 1000 mini-batches, and the weight vector w that produced the maximum SNRt value evaluated on the validation dataset was selected to approximate the Hotelling template. The linear templates employed by the SLNN-HO and the analytical HO are shown in Fig. 3; the SLNN-HO template closely approximates the analytical Hotelling template. The ROC curve produced by the SLNN-HO (purple dashed curve) is compared to that produced by the analytical HO (yellow curve) in Fig. 4 (b). The two curves nearly overlap.

Fig. 3:

Comparison of the Hotelling template in the SKE/BKE case: (a) Analytical Hotelling template; (b) SLNN-HO template; (c) Center line profiles in (a) and (b). The estimated templates are nearly identical.

Fig. 4:

(a) Validation cross-entropy values of CNNs having one to three CONV layers; (b) Testing ROC curves for the IO and HO approximations.

2). IO approximation:

The CNNs having one to three CONV layers were trained for 100,000 mini-batches, and the corresponding validation cross-entropy values are plotted in Fig. 4 (a). Adding the third CONV layer did not significantly decrease the validation cross-entropy. Therefore, we stopped adding CONV layers and selected the CNN having the minimum validation cross-entropy, which was the CNN with 3 CONV layers. The detection performance of this selected CNN was evaluated on the testing dataset, and the resulting AUC value was 0.890, which was greater than that of the SLNN-HO (0.831). Subsequently, the selected CNN was employed to approximate the IO. The testing ROC curve of the CNN-approximated IO (CNN-IO) (red dashed curve) is compared to that of the analytical IO (blue curve) in Fig. 4 (b). The efficiency of the CNN-IO, computed as the squared ratio of the detectability index [54] of the CNN-IO to that of the IO, was 99.14%. The mean squared error (MSE) between the posterior probabilities computed by the analytical IO and the CNN-IO was 0.30%. These quantities were evaluated on the testing dataset.
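The figures of merit quoted above can be computed from per-image test statistics, as in the following Python sketch. It is an illustration, not the study's evaluation code. It assumes the detectability index of [54] is the AUC-based d_A = √2·Φ⁻¹(AUC), and it estimates AUC nonparametrically with the Wilcoxon-Mann-Whitney statistic rather than the fitted ROC models of [41], [42]. It also assumes equal class priors, so that an IO likelihood ratio Λ maps to the posterior probability Λ/(1 + Λ). Function names and toy numbers are hypothetical.

```python
import math
from statistics import NormalDist

def auc_mann_whitney(t_absent, t_present):
    """Nonparametric AUC: P(t_present > t_absent), counting ties as 1/2."""
    wins = 0.0
    for a in t_absent:
        for p in t_present:
            wins += 1.0 if p > a else 0.5 if p == a else 0.0
    return wins / (len(t_absent) * len(t_present))

def detectability_index(auc):
    """AUC-based detectability index d_A = sqrt(2) * Phi^{-1}(AUC)."""
    return math.sqrt(2.0) * NormalDist().inv_cdf(auc)

def efficiency(auc_observer, auc_ideal):
    """Squared ratio of detectability indices, observer relative to the IO."""
    return (detectability_index(auc_observer) / detectability_index(auc_ideal)) ** 2

def posterior_from_likelihood_ratio(likelihood_ratio):
    """IO posterior P(H1 | g) from the likelihood ratio, assuming equal priors."""
    return likelihood_ratio / (1.0 + likelihood_ratio)

def mse(p, q):
    """Mean squared error between two sequences of posterior probabilities."""
    return sum((a - b) ** 2 for a, b in zip(p, q)) / len(p)

# Toy usage with hypothetical numbers.
print(auc_mann_whitney([0.1, 0.4, 0.35], [0.8, 0.4, 0.9]))
print(round(efficiency(0.88, 0.89), 4))
io_post = [posterior_from_likelihood_ratio(x) for x in (0.5, 1.0, 3.0)]
print(mse(io_post, [0.35, 0.5, 0.74]))
```

Expressing efficiency through d_A puts observers on a scale where, for Gaussian test statistics with equal variances, efficiency equals the squared ratio of their SNRs.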

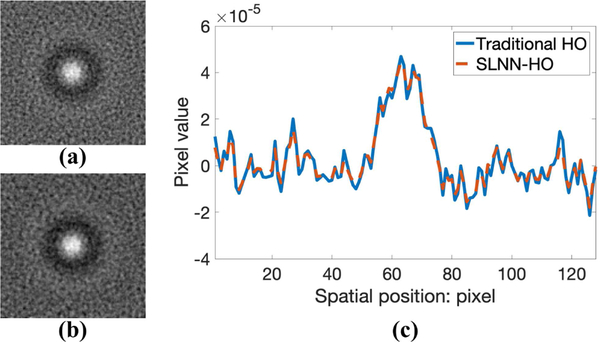

B. SKE/BKS signal detection task with lumpy background

1). HO approximation:

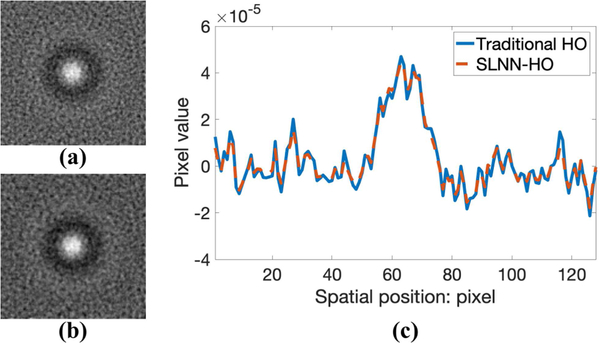

The SLNN was trained for 1000 mini-batches (i.e., 2 epochs), and the weight vector w that produced the maximum SNRt value evaluated on the validation dataset was selected to approximate the Hotelling template. The linear templates employed by the SLNN-HO and the traditional HO are shown in Fig. 5; the SLNN-HO template closely approximates the traditional Hotelling template. The ROC curves corresponding to the traditional HO (yellow curve) and the SLNN-HO (purple dashed curve) are compared in Fig. 6 (b). The two ROC curves nearly overlap.

Fig. 5:

Comparison of the Hotelling template in the SKE/BKS case: (a) Traditional Hotelling template; (b) SLNN-HO template; (c) Center line profiles in (a) and (b). The estimated templates are nearly identical.

Fig. 6:

(a) Validation cross-entropy values of CNNs having one to seven CONV layers; (b) Testing ROC curves for the IO and HO approximations.

2). IO approximation:

The CNNs having 1, 3, 5, and 7 CONV layers were trained for 100,000 mini-batches (i.e., 200 epochs), and the corresponding validation cross-entropy values are plotted in Fig. 6 (a). There was no significant difference in the validation cross-entropy between the CNNs having 5 and 7 CONV layers. Therefore, we stopped adding CONV layers and selected the CNN having the minimum validation cross-entropy, which was the CNN with 7 CONV layers. The selected CNN was evaluated on the testing dataset, and the resulting AUC value was 0.907, which was greater than that of the SLNN-HO (0.808). Subsequently, the selected CNN was employed to approximate the IO. The testing ROC curve of the CNN-IO (red dashed curve) is compared to that of the MCMC-computed IO (MCMC-IO) (blue curve) in Fig. 6 (b). The efficiency of the CNN-IO with respect to the MCMC-IO was 94.64%, and the MSE between the posterior probabilities computed by the CNN-IO and the MCMC-IO was 0.84%. These quantities were evaluated on the testing dataset.

C. SKS/BKS signal detection task with lumpy background

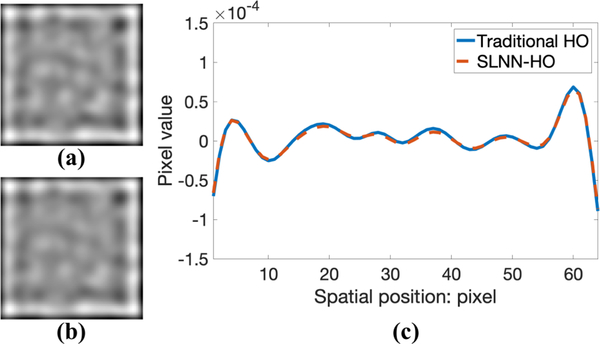

1). HO approximation:

A linear SLNN was trained for 1000 mini-batches (i.e., 2 epochs), and the weight vector w that produced the maximum SNRt value evaluated on the validation dataset was selected to approximate the Hotelling template. The linear templates employed by the SLNN-HO and the traditional HO are shown in Fig. 7; the SLNN-HO template closely approximates the traditional Hotelling template. The ROC curves corresponding to the SLNN-HO (purple dashed curve) and the traditional HO (yellow curve) are compared in Fig. 8 (b). The two ROC curves nearly overlap. As expected, the HO performed nearly at the level of random guessing for this task.

Fig. 7:

Comparison of the Hotelling template in the SKS/BKS case: (a) Traditional Hotelling template; (b) SLNN-HO template; (c) Center line profiles in (a) and (b). The estimated templates are nearly identical.

Fig. 8:

(a) Validation cross-entropy values produced by CNNs having 1 to 13 CONV layers; (b) Testing ROC curves for the IO and HO approximations.

2). IO approximation:

Convolutional neural networks having 1, 5, 9, and 13 CONV layers were trained for 300,000 mini-batches (i.e., 600 epochs), and the corresponding validation cross-entropy values are plotted in Fig. 8 (a). Because adding 4 CONV layers to the CNN having 9 CONV layers did not significantly decrease the validation cross-entropy, we stopped adding CONV layers and selected the CNN having the minimum validation cross-entropy, which was the CNN with 13 CONV layers. The selected CNN was evaluated on the testing dataset, and the resulting AUC value was 0.853, which was greater than that of the SLNN-HO (0.508). Subsequently, the selected CNN was employed to approximate the IO. The testing ROC curve produced by the CNN-IO (red dashed curve) is compared to that produced by the MCMC-IO (blue curve) in Fig. 8 (b). The efficiency of the CNN-IO with respect to the MCMC-IO was 95.14%, and the MSE between the posterior probabilities computed by the CNN-IO and the MCMC-IO was 1.46%. These quantities were evaluated on the testing dataset.

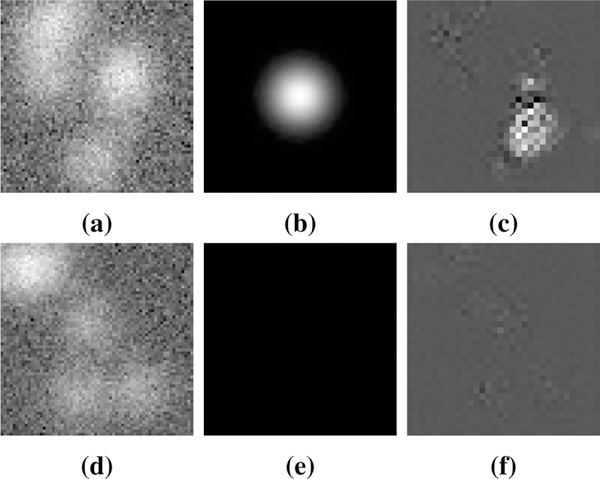

3). CNN visualization:

The feature maps extracted by the CONV layers help reveal how CNNs extract task-specific features for performing signal detection tasks. Here, the 32 subsampled feature maps output by the max-pooling layer were weighted by the weight parameters of the last FC layer and then summed to produce a single 2D image for visualization. This image, referred to as the signal feature map, is shown in Fig. 9. The signal to be detected was nearly invisible in the signal-present measurements but is readily observed in the signal feature map. This illustrates the ability of CNNs to extract signal-related features when performing signal detection tasks.
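The construction of the signal feature map can be sketched in NumPy. For illustration, the sketch assumes that the max-pooling output of one image has shape (H, W, 32) in TensorFlow's default channels-last layout. It also assumes that the FC layer acts on this output flattened in row-major order, so that the FC weight vector can be reshaped to the same shape. The tensor sizes and random values are hypothetical stand-ins for a trained network. Because the FC layer is linear before its sigmoid, summing the map's pixels and adding the FC bias recovers the network's pre-sigmoid output. This is why bright regions of the map indicate where evidence for signal presence accumulates.

```python
import numpy as np

def signal_feature_map(pooled, fc_weights):
    """Weight each pooled feature map by its FC weights and sum over channels.

    pooled: (H, W, C) max-pooling output for one image (channels-last).
    fc_weights: (H*W*C,) weights of the final FC unit, in row-major flatten order.
    Returns an (H, W) map."""
    w = fc_weights.reshape(pooled.shape)      # align weights with feature positions
    return (w * pooled).sum(axis=-1)          # weighted sum over the C feature maps

# Hypothetical stand-ins for a trained network's tensors.
rng = np.random.default_rng(0)
pooled = rng.random((16, 16, 32))
fc_w = rng.normal(scale=0.01, size=16 * 16 * 32)
fc_b = 0.1
fmap = signal_feature_map(pooled, fc_w)
logit = pooled.reshape(-1) @ fc_w + fc_b      # FC pre-activation
posterior = 1.0 / (1.0 + np.exp(-logit))      # sigmoid output
print(fmap.shape, np.isclose(fmap.sum() + fc_b, logit))
```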

Fig. 9:

(a) Signal-present measurements; (b) Image showing the signal contained in (a); (c) The signal feature map corresponding to (a); (d) Signal-absent measurements; (e) Image showing that the signal is absent in (d); (f) The signal feature map corresponding to (d). In the signal feature maps, the regions around the signals were activated by the CNN.

D. SKE/BKS signal detection task with clustered lumpy background

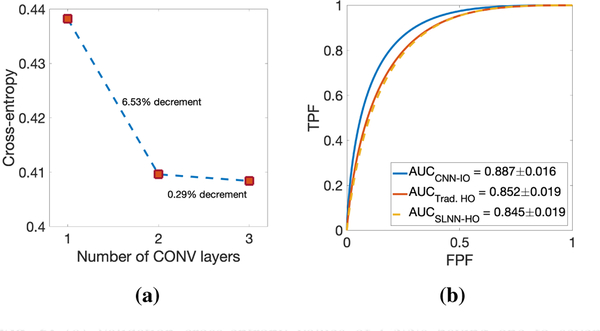

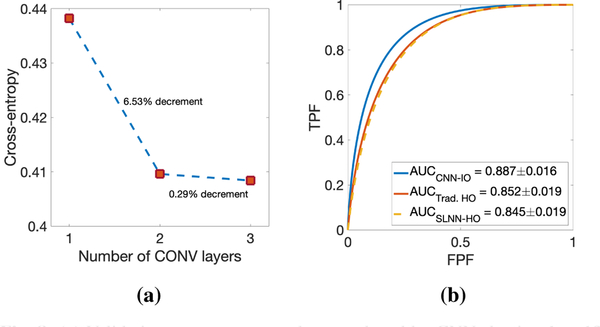

1). HO approximation:

The SLNN was trained for 40,000 mini-batches (i.e., 20 epochs), and the weight vector w that produced the maximum validation SNRt was selected to approximate the Hotelling template. The traditional HO template and the SLNN-HO template are compared in Fig. 10; the SLNN-HO template closely approximates the traditional Hotelling template. The ROC curve of the SLNN-HO (yellow dashed curve) is compared to that of the traditional HO (red curve) in Fig. 11 (b). The two curves nearly overlap.

Fig. 10:

Comparison of the Hotelling template: (a) Traditional Hotelling template; (b) SLNN-HO template; (c) Center line profiles in (a) and (b). The estimated templates are nearly identical.

Fig. 11:

(a) Validation cross-entropy values of CNNs having one to three CONV layers; (b) Testing ROC curves for the IO and HO approximations.

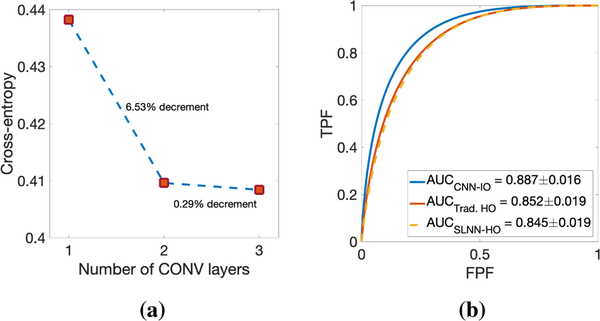

2). IO approximation:

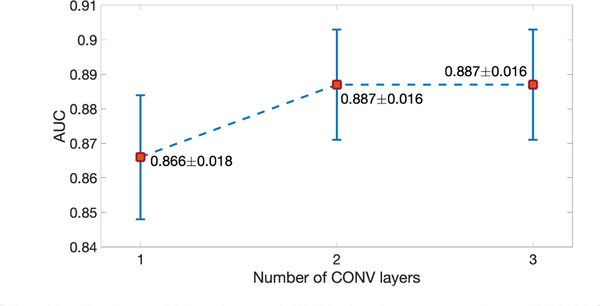

Convolutional neural networks having one to three CONV layers were trained for 100,000 mini-batches (i.e., 50 epochs), and the corresponding validation cross-entropy values are plotted in Fig. 11 (a). Because adding the third CONV layer did not significantly decrease the validation cross-entropy, we stopped adding CONV layers and selected the CNN having the minimum validation cross-entropy, which was the CNN with three CONV layers. The detection performance of this selected CNN was evaluated on the testing dataset, and the resulting AUC value was 0.887, which was greater than that of the SLNN-HO (0.845). Subsequently, the selected CNN was employed to approximate the IO. The CNN-IO was evaluated on the testing dataset, and the resulting ROC curve is plotted in Fig. 11 (b). To show how signal detection performance varied as the number of CONV layers increased, the testing AUC values of the CNNs with one to three CONV layers are shown in Fig. 12. These AUC values were estimated by use of the "proper" binormal model [41], [42]. The AUC increased as more CONV layers were employed, until it converged. Because MCMC applications to the CLB object model have not been reported to date, the IO approximation could not be validated against a reference in this case. To the best of our knowledge, this is the first approximation of the IO test statistic for the CLB object model.

Fig. 12:

Testing AUC values of CNNs having one to three CONV layers.

3). HO approximation from a reduced number of images:

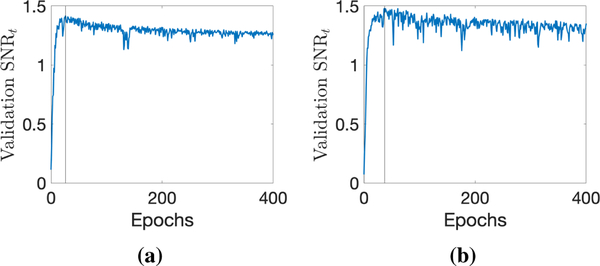

To address the dimensionality problem of inverting a large covariance matrix when computing the Hotelling template, the matrix-inversion lemma has been employed with a covariance matrix approximated from a small number of images [1]. However, this method can introduce a significant positive bias in the estimate of SNRHO [17]. To investigate the ability of the proposed methods to approximate HO performance when a small dataset is employed, linear SLNNs were trained for 400 epochs by minimizing Eq. (21) on 2000 noisy measurements and Eq. (24) on 2000 background images. Overfitting occurred during training, as revealed by the curves of validation SNRt versus the number of epochs shown in Fig. 13. However, an early-stopping strategy can be employed in which training is stopped at the epoch having the maximum validation SNRt. The values of SNRHO computed according to Eq. (10) at the 400th epoch and at the epoch having the maximum validation SNRt are shown in Table II. These data reveal that overfitting caused a significant positive bias in SNRHO, whereas the early-stopping strategy accurately approximated the reference SNRHO, which was computed by use of the traditional Hotelling template shown in Fig. 10 (a). The Hotelling template was also computed by use of the matrix-inversion lemma [1] on the 2000 background images, and the corresponding SNRHO exhibited a significant positive bias, as shown in Table II and as observed by others [17].
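For reference, the matrix-inversion-lemma baseline can be sketched in NumPy. For illustration, the sketch assumes that the covariance is modeled as a known white-noise variance sigma2 plus the sample covariance of N background images, K = sigma2·I + UUᵀ, where U is the centered background matrix divided by √(N − 1). The Woodbury identity then replaces the M×M inverse with an N×N solve, which is cheap when N ≪ M. The exact formulation in [1] may differ, and the array sizes are hypothetical toy values. Because K is estimated from only N images, the SNRHO estimate computed from such a template can be positively biased, as noted above.

```python
import numpy as np

def hotelling_template_woodbury(backgrounds, s, sigma2):
    """Compute K^{-1} s for K = sigma2*I + U U^T without an M x M inverse.

    backgrounds: (N, M) array of N background images (N << M).
    Woodbury: K^{-1} = (I - U (sigma2*I_N + U^T U)^{-1} U^T) / sigma2."""
    N = backgrounds.shape[0]
    U = (backgrounds - backgrounds.mean(axis=0)).T / np.sqrt(N - 1)  # (M, N)
    small = sigma2 * np.eye(N) + U.T @ U                             # (N, N)
    return (s - U @ np.linalg.solve(small, U.T @ s)) / sigma2

# Toy check against a direct M x M solve (hypothetical sizes).
rng = np.random.default_rng(0)
M, N, sigma2 = 400, 50, 0.3
bgs = rng.normal(size=(N, M))
s = rng.normal(size=M)
w_lemma = hotelling_template_woodbury(bgs, s, sigma2)
U = (bgs - bgs.mean(axis=0)).T / np.sqrt(N - 1)
w_direct = np.linalg.solve(sigma2 * np.eye(M) + U @ U.T, s)
print(np.allclose(w_lemma, w_direct))
```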

Fig. 13:

Curves of validation SNRt versus the number of epochs. (a) Validation SNRt curve of the SLNN trained on labeled noisy measurements. (b) Validation SNRt curve of the SLNN trained on background images by use of the covariance-matrix decomposition. The vertical gray line indicates the epoch having the maximum validation SNRt value. Overfitting occurs once the validation SNRt curves begin to decrease overall.

TABLE II:

SNRHO computed from both background images b and measurements g. A Hotelling template computed from a small number of images can cause a significant positive bias. However, when SLNNs were trained by use of the proposed methods, an early-stopping strategy that selects the epoch having the maximum validation SNRt closely approximated the HO performance.

VI. DISCUSSION AND CONCLUSION

The proposed supervised learning-based method that employs CNNs to approximate the IO test statistic represents an alternative to conventional numerical approaches, such as MCMC methods, for use in optimizing medical imaging systems and data-acquisition designs. Although MCMC methods have theoretical convergence properties, practical issues such as the design of the proposal densities from which object samples are drawn must be addressed for each considered object model. Current applications of MCMC methods have been limited to specific object models that include parameterized torso phantoms [9], lumpy background models [2], and a binary texture model [8]. Supervised learning-based approaches may be easier to deploy with sophisticated object models than are MCMC methods. To demonstrate this, in the numerical study we applied the proposed supervised learning method to a CLB object model, for which the IO computation has not been addressed by MCMC methods to date [8]. A practical advantage of the proposed method is that supervised learning-based methods are becoming widespread, and many researchers are gaining experience in training feed-forward ANNs.

A challenge in approximating the IO by use of CNNs is specifying the collection of model architectures to be systematically explored. In this study, we explored a family of CNNs that possess different numbers of CONV layers. Adding CONV layers increases the representational capacity of the network, which can allow the test statistic to be approximated more accurately. This study does not provide methods for determining other architecture parameters, such as the number of FC layers and the size of the convolutional filters. Recent work [55] proposed a method that optimizes the network architecture during training. This represents a possible approach for jointly optimizing the network architecture and weights to approximate the IO test statistic.

We also proposed a supervised learning-based method that uses a simple linear SLNN to approximate the HO, which is the optimal linear observer and whose performance is a lower bound on IO performance. The proposed methodology learns the Hotelling template directly, without estimating and inverting covariance matrices; accordingly, it can scale well to large images. When approximating the HO test statistic, selecting the network architecture is not an issue, because the HO test statistic is a linear function of the input image and can therefore be represented by a linear SLNN. We also provided an alternative method for learning the HO by use of a covariance-matrix decomposition. The feasibility of both methods for learning the HO from a reduced number of images was investigated. When 2000 clustered lumpy images of dimension 128 × 128 were employed to approximate the HO, the proposed learning-based methods still produced accurate estimates of SNRHO by incorporating an early-stopping strategy.

Numerous topics remain for future investigation. With regard to approximating IOs by use of experimental images, there is a need to investigate methods for training large CNN models on limited training data. To accomplish this, one may investigate transfer learning [56] or domain adaptation methods [57] that learn features of images in the target domain (e.g., experimental images) by use of images in the source domain (e.g., computer-simulated images). One may also employ the method proposed by Kupinski et al. [43] or train a generative adversarial network [58] to estimate a stochastic object model (SOM) from experimental images in order to produce large datasets. Finally, it will be important to extend the proposed learning-based methods to more complicated tasks, such as joint detection and localization of a signal.

ACKNOWLEDGMENT

This research was supported in part by NIH awards EB020168 and EB020604 and NSF award DMS1614305.

Contributor Information

Weimin Zhou, Department of Electrical and Systems Engineering, Washington University in St. Louis, St. Louis, MO, 63130 USA.

Hua Li, Department of Bioengineering, University of Illinois at Urbana-Champaign, and the Carle Cancer Center, Carle Foundation Hospital, Urbana, IL, 61801 USA.

Mark A. Anastasio, Department of Bioengineering, University of Illinois at Urbana-Champaign, Urbana, IL, 61801 USA

REFERENCES

- [1] Barrett HH and Myers KJ, Foundations of Image Science. John Wiley & Sons, 2013.

- [2] Kupinski MA, Hoppin JW, Clarkson E, and Barrett HH, "Ideal-Observer computation in medical imaging with use of Markov-Chain Monte Carlo techniques," JOSA A, vol. 20, no. 3, pp. 430–438, 2003.

- [3] Park S, Barrett HH, Clarkson E, Kupinski MA, and Myers KJ, "Channelized-Ideal Observer using Laguerre-Gauss channels in detection tasks involving non-Gaussian distributed lumpy backgrounds and a Gaussian signal," JOSA A, vol. 24, no. 12, pp. B136–B150, 2007.

- [4] Park S and Clarkson E, "Efficient estimation of Ideal-Observer performance in classification tasks involving high-dimensional complex backgrounds," JOSA A, vol. 26, no. 11, pp. B59–B71, 2009.

- [5] Shen F and Clarkson E, "Using Fisher information to approximate Ideal-Observer performance on detection tasks for lumpy-background images," JOSA A, vol. 23, no. 10, pp. 2406–2414, 2006.

- [6] Wagner RF and Brown DG, "Unified SNR analysis of medical imaging systems," Physics in Medicine & Biology, vol. 30, no. 6, p. 489, 1985.

- [7] Liu Z, Knill DC, Kersten D, et al., "Object classification for human and Ideal Observers," Vision Research, vol. 35, no. 4, pp. 549–568, 1995.

- [8] Abbey CK and Boone JM, "An Ideal Observer for a model of X-ray imaging in breast parenchymal tissue," in International Workshop on Digital Mammography. Springer, 2008, pp. 393–400.

- [9] He X, Caffo BS, and Frey EC, "Toward realistic and practical Ideal Observer (IO) estimation for the optimization of medical imaging systems," IEEE Transactions on Medical Imaging, vol. 27, no. 10, pp. 1535–1543, 2008.

- [10] Barrett HH, Myers KJ, Hoeschen C, Kupinski MA, and Little MP, "Task-based measures of image quality and their relation to radiation dose and patient risk," Physics in Medicine & Biology, vol. 60, no. 2, p. R1, 2015.

- [11] Reiser I and Nishikawa R, "Task-based assessment of breast tomosynthesis: Effect of acquisition parameters and quantum noise," Medical Physics, vol. 37, no. 4, pp. 1591–1600, 2010.

- [12] Sanchez AA, Sidky EY, and Pan X, "Task-based optimization of dedicated breast CT via Hotelling observer metrics," Medical Physics, vol. 41, no. 10, 2014.

- [13] Glick SJ, Vedantham S, and Karellas A, "Investigation of optimal kVp settings for CT mammography using a flat-panel imager," in Medical Imaging 2002: Physics of Medical Imaging, vol. 4682. International Society for Optics and Photonics, 2002, pp. 392–403.

- [14] Barrett HH, Gooley T, Girodias K, Rolland J, White T, and Yao J, "Linear discriminants and image quality," Image and Vision Computing, vol. 10, no. 6, pp. 451–460, 1992.

- [15] Barrett HH, Yao J, Rolland JP, and Myers KJ, "Model observers for assessment of image quality," Proceedings of the National Academy of Sciences, vol. 90, no. 21, pp. 9758–9765, 1993.

- [16] Barrett HH, Myers KJ, Gallas BD, Clarkson E, and Zhang H, "Megalopinakophobia: its symptoms and cures," in Medical Imaging 2001: Physics of Medical Imaging, vol. 4320. International Society for Optics and Photonics, 2001, pp. 299–308.

- [17] Kupinski MA, Clarkson E, and Hesterman JY, "Bias in Hotelling observer performance computed from finite data," in Medical Imaging 2007: Image Perception, Observer Performance, and Technology Assessment, vol. 6515. International Society for Optics and Photonics, 2007, p. 65150S.

- [18] Barrett HH, Abbey CK, Gallas BD, and Eckstein MP, "Stabilized estimates of Hotelling-observer detection performance in patient-structured noise," in Medical Imaging 1998: Image Perception, vol. 3340. International Society for Optics and Photonics, 1998, pp. 27–44.

- [19] Gallas BD and Barrett HH, "Validating the use of channels to estimate the ideal linear observer," JOSA A, vol. 20, no. 9, pp. 1725–1738, 2003.

- [20] Brankov JG, Yang Y, Wei L, El Naqa I, and Wernick MN, "Learning a channelized observer for image quality assessment," IEEE Transactions on Medical Imaging, vol. 28, no. 7, p. 991, 2009.

- [21] Wernick MN, Yang Y, Brankov JG, Yourganov G, and Strother SC, "Machine learning in medical imaging," IEEE Signal Processing Magazine, vol. 27, no. 4, pp. 25–38, 2010.

- [22] Massanes F and Brankov JG, "Evaluation of CNN as anthropomorphic model observer," in Medical Imaging 2017: Image Perception, Observer Performance, and Technology Assessment, vol. 10136. International Society for Optics and Photonics, 2017, p. 101360Q.

- [23] Alnowami M, Mills G, Awis M, Elangovan P, Patel M, Halling-Brown M, Young K, Dance DR, and Wells K, "A deep learning model observer for use in alterative forced choice virtual clinical trials," in Medical Imaging 2018: Image Perception, Observer Performance, and Technology Assessment, vol. 10577. International Society for Optics and Photonics, 2018, p. 105770Q.

- [24] Kopp FK, Catalano M, Pfeiffer D, Rummeny EJ, and Noël PB, "Evaluation of a machine learning based model observer for X-ray CT," in Medical Imaging 2018: Image Perception, Observer Performance, and Technology Assessment, vol. 10577. International Society for Optics and Photonics, 2018, p. 105770S.

- [25] Kopp FK, Catalano M, Pfeiffer D, Fingerle AA, Rummeny EJ, and Noël PB, "CNN as model observer in a liver lesion detection task for X-ray computed tomography: A phantom study," Medical Physics, 2018.

- [26] Hornik K, Stinchcombe M, and White H, "Multilayer feedforward networks are universal approximators," Neural Networks, vol. 2, no. 5, pp. 359–366, 1989.

- [27] Kupinski MA, Edwards DC, Giger ML, and Metz CE, "Ideal Observer approximation using Bayesian classification neural networks," IEEE Transactions on Medical Imaging, vol. 20, no. 9, pp. 886–899, 2001.

- [28] Zhou W and Anastasio MA, "Learning the Ideal Observer for SKE detection tasks by use of convolutional neural networks," in Medical Imaging 2018: Image Perception, Observer Performance, and Technology Assessment, vol. 10577. International Society for Optics and Photonics, 2018, p. 1057719.

- [29] Metz CE, "ROC methodology in radiologic imaging," Investigative Radiology, vol. 21, no. 9, pp. 720–733, 1986.

- [30] Schmidhuber J, "Deep learning in neural networks: An overview," Neural Networks, vol. 61, pp. 85–117, 2015.

- [31] LeCun Y, Bengio Y, and Hinton G, "Deep learning," Nature, vol. 521, no. 7553, p. 436, 2015.

- [32] Lawrence S, Giles CL, Tsoi AC, and Back AD, "Face recognition: A convolutional neural-network approach," IEEE Transactions on Neural Networks, vol. 8, no. 1, pp. 98–113, 1997.

- [33] Cireşan D, Meier U, Masci J, and Schmidhuber J, "Multi-column deep neural network for traffic sign classification," Neural Networks, vol. 32, pp. 333–338, 2012.

- [34] Garcia C and Delakis M, "Convolutional face finder: A neural architecture for fast and robust face detection," IEEE Transactions on Pattern Analysis and Machine Intelligence, vol. 26, no. 11, pp. 1408–1423, 2004.

- [35] Rawat W and Wang Z, "Deep convolutional neural networks for image classification: A comprehensive review," Neural Computation, vol. 29, no. 9, pp. 2352–2449, 2017.

- [36] Goodfellow I, Bengio Y, and Courville A, Deep Learning. Cambridge: MIT Press, 2016.

- [37] Rolland J and Barrett HH, "Effect of random background inhomogeneity on observer detection performance," JOSA A, vol. 9, no. 5, pp. 649–658, 1992.

- [38] Park S, Kupinski MA, Clarkson E, and Barrett HH, "Ideal-Observer performance under signal and background uncertainty," in Biennial International Conference on Information Processing in Medical Imaging. Springer, 2003, pp. 342–353.

- [39] Bochud FO, Abbey CK, and Eckstein MP, "Statistical texture synthesis of mammographic images with clustered lumpy backgrounds," Optics Express, vol. 4, no. 1, pp. 33–43, 1999.

- [40] Metz C, "Rockit user's guide," Chicago, Department of Radiology, University of Chicago, 1998.

- [41] Metz CE and Pan X, "'Proper' binormal ROC curves: theory and maximum-likelihood estimation," Journal of Mathematical Psychology, vol. 43, no. 1, pp. 1–33, 1999.

- [42] Pesce LL and Metz CE, "Reliable and computationally efficient maximum-likelihood estimation of 'proper' binormal ROC curves," Academic Radiology, vol. 14, no. 7, pp. 814–829, 2007.

- [43] Kupinski MA, Clarkson E, Hoppin JW, Chen L, and Barrett HH, "Experimental determination of object statistics from noisy images," JOSA A, vol. 20, no. 3, pp. 421–429, 2003.

- [44] Clarkson E and Barrett HH, "Approximations to Ideal-Observer performance on signal-detection tasks," Applied Optics, vol. 39, no. 11, pp. 1783–1793, 2000.

- [45] Eckstein MP and Abbey CK, "Model observers for signal-known-statistically tasks (SKS)," in Medical Imaging 2001: Image Perception and Performance, vol. 4324. International Society for Optics and Photonics, 2001, pp. 91–103.

- [46] Zhang Y, Pham BT, and Eckstein MP, "Automated optimization of JPEG 2000 encoder options based on model observer performance for detecting variable signals in X-ray coronary angiograms," IEEE Transactions on Medical Imaging, vol. 23, no. 4, pp. 459–474, 2004.

- [47] Castella C, Eckstein M, Abbey C, Kinkel K, Verdun F, Saunders R, Samei E, and Bochud F, "Mass detection on mammograms: influence of signal shape uncertainty on human and model observers," JOSA A, vol. 26, no. 2, pp. 425–436, 2009.

- [48] Barrett HH, Myers KJ, Devaney N, and Dainty C, "Objective assessment of image quality. IV. Application to adaptive optics," JOSA A, vol. 23, no. 12, pp. 3080–3105, 2006.

- [49] Gifford HC, King MA, Pretorius PH, and Wells RG, "A comparison of human and model observers in multislice LROC studies," IEEE Transactions on Medical Imaging, vol. 24, no. 2, pp. 160–169, 2005.

- [50] Springenberg JT, Dosovitskiy A, Brox T, and Riedmiller M, "Striving for simplicity: The all convolutional net," arXiv preprint arXiv:1412.6806, 2014.

- [51] Scherer D, Müller A, and Behnke S, "Evaluation of pooling operations in convolutional architectures for object recognition," in Artificial Neural Networks-ICANN 2010. Springer, 2010, pp. 92–101.

- [52] Kingma DP and Ba J, "Adam: A method for stochastic optimization," arXiv preprint arXiv:1412.6980, 2014.

- [53] Abadi M, Barham P, Chen J, Chen Z, Davis A, Dean J, Devin M, Ghemawat S, Irving G, Isard M, et al., "TensorFlow: A system for large-scale machine learning," in OSDI, vol. 16, 2016, pp. 265–283.

- [54] Park S, Clarkson E, Kupinski MA, and Barrett HH, "Efficiency of the human observer detecting random signals in random backgrounds," JOSA A, vol. 22, no. 1, pp. 3–16, 2005.

- [55] Cortes C, Gonzalvo X, Kuznetsov V, Mohri M, and Yang S, "AdaNet: Adaptive structural learning of artificial neural networks," arXiv preprint arXiv:1607.01097, 2016.

- [56] Qiu J, Wu Q, Ding G, Xu Y, and Feng S, "A survey of machine learning for big data processing," EURASIP Journal on Advances in Signal Processing, vol. 2016, no. 1, p. 67, 2016.

- [57] Ganin Y and Lempitsky V, "Unsupervised domain adaptation by backpropagation," arXiv preprint arXiv:1409.7495, 2014.

- [58] Goodfellow I, Pouget-Abadie J, Mirza M, Xu B, Warde-Farley D, Ozair S, Courville A, and Bengio Y, "Generative adversarial nets," in Advances in Neural Information Processing Systems, 2014, pp. 2672–2680.