Abstract

Transparency in health economic decision modelling is important for engendering confidence in the models and in the reliability of model-based cost-effectiveness analyses. The Mount Hood Diabetes Challenge Network has taken a lead in promoting transparency through validation, with biennial conferences in which diabetes modelling groups meet to compare simulated outcomes of pre-specified scenarios, often based on the results of pivotal clinical trials. Model registration is a potential method for promoting transparency, while also reducing the duplication of effort. An important network initiative is the ongoing construction of a diabetes model registry (https://www.mthooddiabeteschallenge.com). Following the 2012 International Society for Pharmacoeconomics and Outcomes Research and the Society for Medical Decision Making (ISPOR-SMDM) guidelines, we recommend that modelling groups provide technical and non-technical documentation sufficient to enable model reproduction, but not necessarily provide the model code. We also request that modelling groups upload documentation on the methods and outcomes of validation efforts, and run reference case simulations so that model outcomes can be compared. In this paper, we discuss conflicting definitions of transparency in health economic modelling, describe the ongoing development of a registry of economic models for diabetes through the Mount Hood Diabetes Challenge Network, its objectives and potential further developments, and highlight the challenges in its construction and maintenance. The support of stakeholders such as decision-making bodies and journals is key to ensuring the success of this and other registries. In the absence of public funding, the development of a network of modellers is of huge value in enhancing transparency, whether through registries or other means.

Key Points

- Improving the transparency of health economic decision modelling will enhance the reliability of model-based cost-effectiveness analyses.

- The Mount Hood Diabetes Challenge Network has established a registry of health economic models of diabetes containing structured information about each model and outcomes for reference simulations.

- The development of modelling networks is of huge value in promoting transparency in economic modelling.

- The support of stakeholders including decision-making bodies and journals and public funding is key to ensuring the success of model registries.

Introduction

There is growing recognition that a lack of transparency in medical research may be a barrier to good decision making in health care [1–3]. This may result directly, for instance, from publication bias (i.e. the non-reporting of negative results), which can bias the known evidence base that informs decisions. In addition, poor transparency may cause stakeholders to have less confidence in the evidence generated by medical research, for example, because of misplaced concerns about publication bias, an inability to interpret complex evidence or to reproduce the evidence, or the absence of evidence on the validity of research methods. Calls for increased transparency in medical research have inspired many responses, including the AllTrials movement [4], which seeks to ensure that all clinical trials are prospectively registered and reported, and the open data movement [5], which maintains that providing data and analysis programming code permits greater scrutiny and promotes the replication of analyses and results.

Cost-effectiveness analysis, which formally compares the relative costs and relative benefits of two or more treatment options, is an important component of resource allocation in health care. Many governmental and private bodies worldwide make reimbursement decisions and develop clinical and public health guidelines using such evidence [6]. Clinical trials can provide valuable data on the costs and effects of alternative technologies. For chronic and progressive diseases, however, trial durations are routinely too short to capture all health and economic consequences resulting from an intervention. Additionally, trials rarely contain all outcomes and all comparators needed for economic evaluation and, in many cases, the analysis must be translated to a different setting (e.g. with different populations, treatment patterns, and/or unit costs) [7]. Decision analytic models, formal mathematical frameworks that synthesise clinical and economic data from a range of sources and extrapolate from these data over time and/or setting, are therefore routinely used to generate estimates of lifetime cost-effectiveness, and some health technology assessment bodies, for example, the National Institute for Health and Care Excellence (NICE) in the UK, require modelling for many technology appraisals [8].
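As a brief illustration of the comparison that such analyses formalise, the standard summary measure is the incremental cost-effectiveness ratio (ICER). The notation below is illustrative and is not taken from any of the cited guidelines: $C_1$ and $E_1$ denote the expected lifetime cost and health effect (e.g. quality-adjusted life-years) of a new option, $C_0$ and $E_0$ those of the comparator, and $\lambda$ a willingness-to-pay threshold chosen by the decision maker.

$$
\mathrm{ICER} = \frac{C_1 - C_0}{E_1 - E_0} = \frac{\Delta C}{\Delta E}
$$

When $\Delta E > 0$, the new option would typically be judged cost-effective if $\mathrm{ICER} < \lambda$. Because both $\Delta C$ and $\Delta E$ are usually model outputs extrapolated over a lifetime, modelling choices that shift either quantity can change the conclusion.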

Health economic decision models are often complex, particularly for chronic and progressive diseases like diabetes, and modellers are required to make numerous decisions during model development including in the structure of the model and in the selection and processing of underlying clinical and economic data. Each decision, individually or collectively, can affect the resulting estimate of cost-effectiveness [9]. The importance of transparently reporting modelling efforts is now widely recognised, and some guidelines have been developed to support it [10]. Nevertheless, the Second Panel on Cost-Effectiveness in Health and Medicine identified increasing model transparency as a key area of future research in order to enhance the reliability and validity of health economics research, and ultimately the use of such research in decision making [11]. The purpose of this paper is to describe the role of the Mount Hood Diabetes Challenge Network in promoting model transparency through a model registry including the results of standardised validation exercises [12–15]. We discuss the opportunities and challenges associated with the development and maintenance of a registry of economic models for diabetes.

What is Model Transparency and How Can it be Promoted?

While there is almost universal agreement that enhancing transparency in health economic decision modelling is important [11, 16], there are competing definitions of transparency and disagreements about how it should best be operationalised; for example, whether transparency necessitates the sharing of model code [17]. A report by the International Society for Pharmacoeconomics and Outcomes Research and the Society for Medical Decision Making (ISPOR-SMDM) provides an informative and pragmatic guide to enhance the understanding of model transparency and validation [16]. Importantly, ISPOR-SMDM recognise that transparency is not an end in itself, but rather a means to enhancing model credibility: a transparent model does not guarantee validity, while a valid model may not be transparent [16, 18].

According to the authors of this report, transparency involves the provision of sufficient information to enable readers, whether they are specialists or non-specialists, to understand a model’s accuracy, limitations, and potential applications [16]. This requires both non-technical and technical documentation. Non-technical documentation should provide details about the model structure and its potential applications, though not at the level of detail required to support replication. Technical documentation should provide substantial details about model structure, equations and data input such that model reproduction is, in theory, possible, and can, at the modeller’s discretion, include access to the model code itself.

Demonstrating the validity of a model increases the confidence in its use for health care decision making. The ISPOR-SMDM report defines validation as a set of methods for judging a model’s accuracy in making relevant predictions [16]. Others have contended that a focus on accuracy is misplaced in health economic decision modelling given the inherent uncertainties involved in such work, and instead recommend a focus on the extent to which a model is a proper and sufficient representation of the system it is intended to represent [19]. Validation encompasses many methods, including face validity, internal validity, cross-validity, external validity, and predictive validity. Checklists such as the AdViSHE validation-assessment tool provide guidance on reporting validation exercises, with the aim of promoting transparency in assessing model validity for the benefit of decision makers and other model users [20].

There is also value in subjecting all models used for similar applications (e.g. in a particular disease area) to a common set of simulation scenarios. These could include replication exercises, such as reproducing the results of clinical trials [14], as well as ‘reference case simulations’ involving a pre-defined simulation for a representative patient or population. Comparing models against observed data (i.e. external validation) and against each other (i.e. cross-validation) provides valuable information about model validity and promotes transparency. Reporting the results of reference simulations would also provide information about changes to individual models over time. It is also important that information on methods and outcomes is well documented and ideally presented in a standardised structure [12–15, 20–22]. This would also help limit the selective reporting of validation studies, since modelling groups would have to pre-commit to undertaking validations prior to knowledge of the results.
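The two comparisons described above can be sketched in a few lines of Python. This is a minimal illustration only: the model names, the predicted values, and the observed value are invented for demonstration, and the error measures are one simple choice among many rather than the method used at any Mount Hood meeting.

```python
from statistics import mean

# Hypothetical 10-year cumulative incidence of an event predicted by three
# illustrative models, plus a hypothetical observed trial result.
# All numbers are made up for demonstration.
predicted = {"model_a": 0.12, "model_b": 0.15, "model_c": 0.10}
observed = 0.13


def external_validation(predicted, observed):
    """Absolute and relative error of each model against observed data."""
    return {
        name: {
            "abs_error": round(value - observed, 4),
            "rel_error": round((value - observed) / observed, 4),
        }
        for name, value in predicted.items()
    }


def cross_validation(predicted):
    """Deviation of each model from the mean prediction across all models."""
    centre = mean(predicted.values())
    return {name: round(value - centre, 4) for name, value in predicted.items()}


external = external_validation(predicted, observed)
cross = cross_validation(predicted)
```

Reporting both tables in a fixed format for every model would make it straightforward to see whether a model that sits far from its peers is also far from the observed data.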

The provision of model code is often considered an integral part of promoting transparency [17]. Researchers, however, may be concerned that the provision of code could result in others using their model without appropriate attribution or amending the model in a way that impairs its validity. The ISPOR-SMDM guidelines on model transparency and validation accordingly suggest that model code should be provided at the discretion of the modellers in order to protect intellectual property [16]. Moreover, while model code constitutes a comprehensive account of the model, it is often highly complex (particularly for chronic conditions like diabetes), which makes it extremely difficult for ordinary users to understand and use, and may result in misuse of the model (whether intentional or not). There are several alternatives to providing code, which may be more useful. First, structured technical and non-technical documentation can, as noted above, provide useful and easily comprehensible information about model data and structure. Second, components of models can be made available, like the risk equations in the United Kingdom Prospective Diabetes Study (UKPDS) Outcomes Model [23], which are currently used in many other diabetes models [15]. Third, user interfaces can be created to allow users to vary values of parameters, and potentially structural assumptions, and to derive outcomes. An open-access example is the Study of Heart and Renal Protection Chronic Kidney Disease–Cardiovascular Disease (SHARP CKD-CVD) model [24], which is available at http://dismod.ndph.ox.ac.uk/kidneymodel/app/.

Modellers can follow the ISPOR-SMDM and AdViSHE recommendations by publishing technical and non-technical documents and reporting validation efforts on publicly available websites, in scientific journals, or in other venues. The quality of reporting of economic evaluations is, however, known to vary widely [10]. Moreover, even if such information were routinely provided, individuals or organisations that required access to it would nevertheless face barriers in identifying the appropriate information and ensuring it is current, complete, and valid for the model application at hand. As a consequence, the status quo can lead to the duplication of effort, whether in the construction of new models or in assessing the validity of existing models [20, 25]. In addition, the lack of consistent (and consistently reported) validation exercises limits the confidence that a model user can have in model results.

Model registration has been proposed as a solution to these and other problems [25–27]. Sampson and Wrightson [25] argued that a registry combined with a linked database of model-based economic evaluations could help overcome publication bias (though the feasibility of pre-registration of all analysis plans in the context of model-based economic evaluations is less than in clinical trials), and may promote collaborative validation exercises. Model registration, so envisaged, provides a single repository or linked repositories for models and their applications, potentially containing relevant technical and non-technical documentation, ideally in a standardised format so as to ensure consistent and complete representation of all models. It can also act as a repository for validation tests, ensuring the consistent reporting of validation methods and outcomes [15, 20]. Another benefit of a registry would be to facilitate collaboration between researchers, and engagement with other stakeholders (such as reimbursement bodies, providers, and industry).

The Mount Hood Diabetes Challenge Network

In 2000, two diabetes modelling groups met at Mount Hood in Oregon, USA, and jointly designed and ran several simulations with their models [12]. The predictions of the cumulative incidence of various clinical events were then compared to provide insights into model reliability. This meeting inspired the Mount Hood Diabetes Challenge Network, which has assembled on a further eight occasions, and biennially since 2010, with an increasing number of modelling groups and participants present (Tables 1 and 2). The most recent meeting in October 2018, at the German Diabetes Centre (Deutsches Diabetes-Zentrum, Düsseldorf, Germany), involved 13 modelling teams and 70 participants.

Table 1.

Information on previous and forthcoming Mount Hood Challenge meetings

| Meeting number | Year | Location | Number of models | Number of delegates |
|---|---|---|---|---|
| 1 | 2000 | Mount Hood, Oregon, United States | 2 | 6 |
| 2 | 2002 | San Francisco, United States | 6 | 62 |
| 3 | 2003 | Oxford, United Kingdom | 6 | 52 |
| 4 | 2004 | Basel, Switzerland | 8 | ~50 |
| 5 | 2010 | Lund, Sweden | 8 | 77 |
| 6 | 2012 | Baltimore, United States | 8 | 79 |
| 7 | 2014 | Palo Alto, United States | 11 | 77 |
| 8 | 2016 | St Gallen, Switzerland | 10 | 57 |
| 9 | 2018 | Düsseldorf, Germany | 13 | 70 |
| 10 | 2020 | Chicago, United States | – | – |

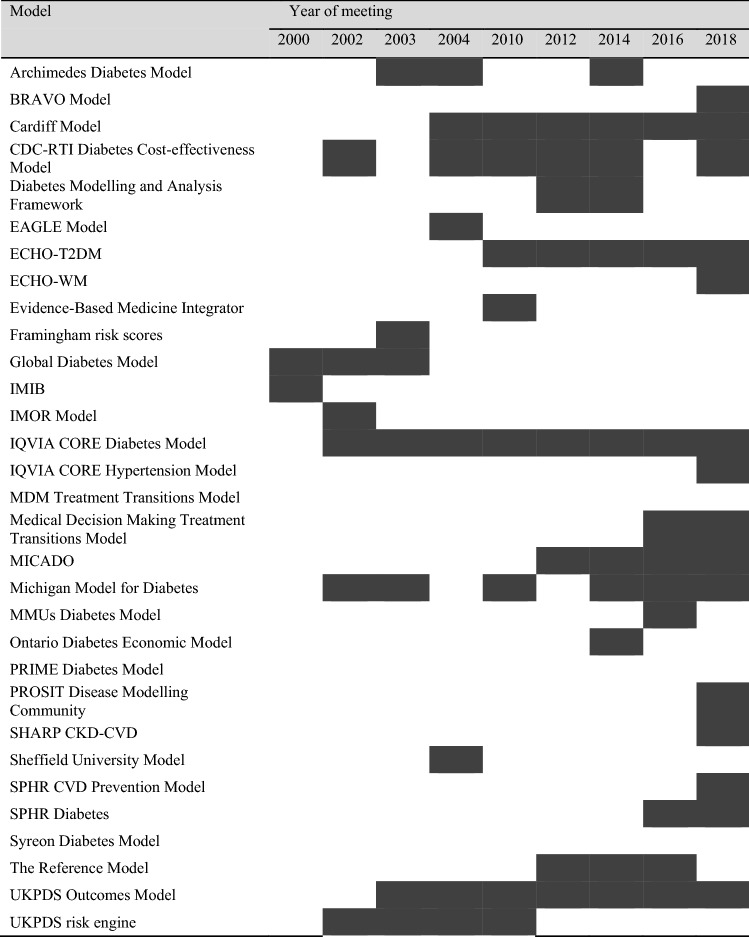

Table 2.

Participation of models at Mount Hood Challenge meetings

More information on many of these models can be obtained from the register of diabetes models, listed on www.mthooddiabeteschallenge.com

Shaded cells indicate that the corresponding model participated in the challenges

SHARP CKD-CVD Study of Heart and Renal Protection Chronic Kidney Disease–Cardiovascular Disease, UKPDS United Kingdom Prospective Diabetes Study

Modelling teams participating in the challenges receive a series of instructions, typically describing either clinical trials that have been undertaken in diabetes or reference simulations. They are asked to submit model results prior to the conference in a pre-circulated template, which also requires them to specify any additional assumptions they had to make. Each meeting has a somewhat different focus, e.g. subgroup results, health-related quality of life, the handling of uncertainty, or the reproducibility of results. For example, for challenge #2 of the 2018 meeting, modelling teams were requested to run simulations to investigate the impact of utility values and stipulated changes in common diabetes risk factors (glycated haemoglobin [HbA1c], systolic blood pressure, low-density lipoprotein cholesterol, and body mass index) on life expectancy and quality-adjusted life-years in hypothetical patients with pre-specified characteristics. As part of the 2016 Mount Hood Diabetes Challenge Network meeting, modelling groups defined a minimum set of reporting standards for describing and reporting model inputs in diabetes health economic studies [15]; these incorporated information on the simulation cohort, the treatment interventions, costs, health state utilities, and other model characteristics that may have a substantive impact on model transparency (Table 3). A comprehensive record of all Mount Hood Diabetes Challenge Network challenges is provided on the network’s website (https://www.mthooddiabeteschallenge.com/archive).

Table 3.

Diabetes modelling input checklist.

Reproduced from Palmer et al. (2018) [15]

| Model input | Checkbox | Comments (e.g. justification if not reported) |
|---|---|---|
| Simulation cohort | | |
| Baseline age | | |
| Ethnicity/race | | |
| BMI/weight | | |
| Duration of diabetes | | |
| Baseline HbA1c, lipids, and blood pressure | | |
| Smoking status | | |
| Comorbidities | | |
| Physical activity | | |
| Baseline treatment | | |
| Treatment intervention | | |
| Type of treatment | | |
| Treatment algorithm for HbA1c evolution over time | | |
| Treatment algorithm for other conditions (e.g. hypertension, dyslipidaemia, and excess weight) | | |
| Treatment initial effects on baseline biomarkers | | |
| Rules for treatment intensification (e.g. the cut-off HbA1c level to switch the treatment, the type of new treatment, and whether the rescue treatment is an addition or substitution to the standard treatment) | | |
| Long-term effects, adverse effects, treatment adherence and persistence, and residual effects after the discontinuation of the treatment | | |
| Trajectory of biomarkers, BMI, smoking, and any other factors that are affected by treatment | | |
| Cost | | |
| Differentiated by acute event in the first year and subsequent years | | |
| Cost of intervention and other costs (e.g. managing complications, adverse events, and diagnostics) | | |
| Please report unit prices and resource use separately and give information on discount rates applied | | |
| Health state utilities | | |
| Operational mechanics of the assignment of utility values (i.e. utility- or disutility-oriented) | | |
| Management of multihealth conditions | | |
| General model characteristics | | |
| Choice of mortality table and any specific event-related mortality | | |
| Choice and source of risk equations | | |
| If microsimulation, number of Monte-Carlo simulations conducted and justification | | |
| Components of model uncertainty being simulated (e.g. risk equations, risk factor trajectories, costs, and treatment effect); number of simulations and justification | | |

BMI body mass index, HbA1c glycated haemoglobin
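A checklist of this kind lends itself to automated completeness checks when submissions are collected in a structured form. The following Python sketch assumes a hypothetical submission format (a dictionary keyed by checklist item, with `reported` and `comment` fields) and uses only a handful of the items from Table 3; neither the format nor the function name comes from the checklist itself.

```python
# A few items taken from the checklist in Table 3. The grouping into a
# dictionary and the submission format below are assumptions of this sketch.
CHECKLIST = {
    "Simulation cohort": ["Baseline age", "Duration of diabetes", "Smoking status"],
    "Cost": ["Cost of intervention and other costs"],
    "General model characteristics": ["Choice and source of risk equations"],
}


def unreported_items(submission):
    """Return (group, item) pairs that are neither reported nor justified."""
    missing = []
    for group, items in CHECKLIST.items():
        for item in items:
            entry = submission.get(item, {})
            # An item passes if it is reported, or if a comment explains
            # why it was not reported.
            if not entry.get("reported") and not entry.get("comment"):
                missing.append((group, item))
    return missing


# Hypothetical submission from a modelling group.
submission = {
    "Baseline age": {"reported": True},
    "Duration of diabetes": {"reported": False, "comment": "Incident cohort"},
    "Smoking status": {"reported": True},
    "Choice and source of risk equations": {"reported": True},
}

missing = unreported_items(submission)
```

In this example the only flagged item is the cost entry, which is absent from the submission altogether; an item with a justification in the comments column is treated as complete.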

At the meetings, the outcomes from participating models are compared to each other (i.e. cross-validation) and to observed data if applicable (i.e. internal and/or external validation), and the proceedings are often published in a peer-reviewed journal [12–15]. The Mount Hood Diabetes Challenge Network has spawned similar exercises in other disease areas including chronic obstructive pulmonary disease [22]. Other initiatives also exist, for instance, the Cancer Intervention and Surveillance Modelling Network (CISNET) funded with multiple grants by the National Cancer Institute in the United States [21].

Plans for a Mount Hood Diabetes Challenge Network Model Registry

There are several registries pertaining to specific components of health economics [25], including published cost-effectiveness analyses [28], mapping algorithms (e.g. mapping from disease-specific quality-of-life measures to EQ-5D) [29], resource use measurement [30], and health state utility values [31]. There is, however, no comprehensive registry for health economic decision models or model applications, and only rare examples of disease-specific ones, e.g. the CISNET registry of cancer models (https://resources.cisnet.cancer.gov/registry) [21].

In an attempt to extend the efforts of the Mount Hood Diabetes Challenge Network in promoting transparency in diabetes modelling, and in response to recent calls for a registry of economic models [25, 27], we established a registry of models in the area of diabetes. The Mount Hood Diabetes Challenge Network is well-positioned to push this agenda given its large and established network of researchers in diabetes modelling from around the world representing both academia and industry. Furthermore, diabetes is an area where such an exercise may be most valuable, as most models are used and updated repeatedly for many different applications. An initial model registry is located on the Mount Hood Diabetes Challenge Network website: https://www.mthooddiabeteschallenge.com/registry. Currently, the registry is limited to non-technical descriptions of each model, with links to technical descriptions and published reports. The Mount Hood Diabetes Challenge Network collaborators are currently in the process of deciding on a structured form for the technical and non-technical descriptions of contributing models. Modelling groups should provide sufficient information to allow evaluation of a model’s validity and, in theory, the reproduction of the model. The registry could also serve as a repository for model programming code, should modelling groups elect to provide it.

An important component of the proposed registry is the inclusion of validation exercises. This is expected to take two forms. First, modelling groups would be expected to report the methods and outcomes from their own validation efforts in a structured form, for instance, using the AdViSHE validation-assessment tool [20]. Second, all model versions would be encouraged to run and provide results for several pre-defined scenarios (i.e., reference simulations), and report their methods (Table 3) and results in a structured format. The final set of reference case simulations will be decided by consultation with the various participating modelling groups, and would be subject to changes or supplementation over time. The reference case simulations should reflect different aspects of the models and different patient characteristics, and may even provide something akin to outcome tables which simulate outcomes such as life expectancy over a wide range of possible risk factors and levels of risk factors [32].

Challenges to Constructing and Maintaining a Registry

Most diabetes models are designed for multiple applications, and so many undergo continual or periodic development over time and must often be adapted to evaluate particular interventions. One way to document model changes would be to require the modellers to register any substantive changes to model structure that produce variations to the outcomes reported in the reference case. This would place the responsibility on modellers to periodically update entries in the register (just as it is important for trials to register amendments to protocols [33]). Like computer software, models would ideally be provided in defined versions and variants; this would strengthen the ability to reproduce previously computed or published model results.
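One way to picture this versioning requirement is to store the reference case outcomes with each registered version and flag outcomes that shift by more than a tolerance between versions. The sketch below is hypothetical throughout: the class, the field names, the 1% tolerance, and the outcome values are illustrative assumptions, not part of the Mount Hood registry.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelVersion:
    """Hypothetical registry entry for one version of a model."""
    model: str
    version: str
    reference_outcomes: dict  # outcome name -> value from the reference case


def changed_outcomes(old, new, tolerance=0.01):
    """Outcomes whose relative change between versions exceeds the tolerance."""
    changes = {}
    for name, old_value in old.reference_outcomes.items():
        new_value = new.reference_outcomes.get(name)
        if new_value is None:
            continue  # outcome not reported in the new version
        if old_value == 0:
            # Relative change is undefined at zero; fall back to absolute change.
            differs = abs(new_value) > tolerance
        else:
            differs = abs(new_value - old_value) / abs(old_value) > tolerance
        if differs:
            changes[name] = (old_value, new_value)
    return changes


# Invented values for two versions of an illustrative model.
v1 = ModelVersion("example_model", "1.0", {"life_expectancy": 12.4, "qalys": 8.1})
v2 = ModelVersion("example_model", "1.1", {"life_expectancy": 12.45, "qalys": 8.6})

flagged = changed_outcomes(v1, v2)
```

Here only the quality-adjusted life-year outcome is flagged, since life expectancy changes by less than 1%. A flagged outcome would prompt the modelling group to register the change alongside a description of what was altered.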

Including reference simulations places an onus on the modellers. Ideally, a large number of conceptually varied reference simulations would be defined to reflect the complexity of modelling in diabetes and the importance of various parameters and assumptions for cost-effectiveness, and to account for the fact that some models will perform well in some simulations but not in others. At the same time, the number of simulations should not overburden the contributors or complicate review by independent researchers or organisations, which would risk poor compliance and underutilisation. There are also challenges inherent in the definition of any single reference simulation: standardisation must be sufficient to enable comparison, while allowing each model to retain its defining characteristics.

A major challenge is overcoming inertia and the absence of general infrastructure to enable health economic decision models to be registered. The Mount Hood Diabetes Challenge Network is at an advantage here because of its long-established network of modellers in diabetes and a website that can host a registry for diabetes simulation models. Nevertheless, as participation is currently voluntary, such a registry will only attract a subset of models. It is therefore important to engage key stakeholders, including both medical journal editors and decision-making bodies. Here we can draw on the experience of clinical trial registries, which have been assisted by public funding for infrastructure (e.g. ClinicalTrials.gov was set up by the National Institutes of Health in the US following the Food and Drug Administration Modernization Act of 1997 [33]) and by journal editors mandating registration of clinical trials [34]. It is hard to envision how a comprehensive register of health economics models can be achieved without similar support.

Conclusions

The complexity and scale of many health economic decision models necessitate a move beyond the usual methods of peer review in order to enhance transparency. The development of a modelling network is a way to promote transparency by bringing together health economists and computer simulation modellers and engaging in an ongoing process of review. The Mount Hood Diabetes Challenge Network provides a working example of how to develop and maintain a health-economics modelling community. For example, past meetings of the network have used challenges to promote external model validation of a large number of diabetes models [14] and an examination of transparency in reporting of simulations used in economic evaluations of therapies for diabetes [15]. A network not only promotes these aspects of transparency, but also provides a regular forum where models can be reviewed by peers. It can also facilitate the development of bespoke modelling and reporting guidelines [15], which complement more general modelling guidelines by taking into account specific modelling challenges in a particular disease area like diabetes.

Another reason for developing networks is that they are a way to maintain model registries containing systematic technical and non-technical information about models. We have argued that such a registry could be enhanced by reporting the results from several reference case simulations, as this helps improve transparency and confidence in the use of models for decision making, and ultimately, in the decisions themselves. The Mount Hood Diabetes Challenge Network has established one of the first registries to provide information on a wide range of health economic decision models in a standard format. Although this is just the first step, it is nevertheless a positive one on a long road to developing a general register of health economic models. The support of major stakeholders such as decision-making bodies and journals, for example, by encouraging or even mandating model registration, and public funding is key to furthering this venture. In the absence of funding, the establishment of modelling networks like the Mount Hood Diabetes Challenge Network is essential.

Acknowledgements

The Mount Hood Diabetes Challenge Network receives no sponsorship from any organisation. We would like to thank all the participants in the Mount Hood Diabetes Challenge Network meetings over the past two decades.

Author Contributions

PC had the original idea for the manuscript. SK, FB, and PC prepared the initial draft. All other co-authors commented on at least one subsequent revision of the manuscript.

Compliance with Ethical Standards

Funding

No explicit funding was received for work involved in the preparation of this article or for participation in the Mount Hood Diabetes Challenge Network. SK, PC and AG were partly funded by the National Institute for Health Research Biomedical Research Centre at the University of Oxford (grant number NIHR-BRC-1215-20008).

Conflict of interest

MW acknowledges employment by the Swedish Institute for Health Economics, which created and owns the ECHO-T2DM model and provides consulting services for its use. PC acknowledges payments for workshops on diabetes simulation modelling held alongside Mount Hood meetings. The Universities of Oxford and Melbourne have received royalties for the UKPDS Outcomes Model. PM is the managing director of Health Economics and Outcomes Research Ltd. TF developed the AdViSHE tool. All other authors (SK, FB, AT-D, IS, MT, PZ, WY, SL, WH, WS, AG, JL, ML, and AJP) report no conflicts of interest.

References

- 1.Goldacre B. The WHO joint statement from funders on trials transparency. BMJ. 2017;357:j2816. doi: 10.1136/bmj.j2816. [DOI] [PubMed] [Google Scholar]

- 2.Easterbrook PJ, Gopalan R, Berlin JA, et al. Publication bias in clinical research. Lancet. 1991;337:867–872. doi: 10.1016/0140-6736(91)90201-Y. [DOI] [PubMed] [Google Scholar]

- 3.Ioannidis JPA. Why most clinical research is not useful. PLoS Med. 2016;13:e1002049. doi: 10.1371/journal.pmed.1002049. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 4.Chalmers I, Glasziou P, Godlee F. All trials must be registered and the results published. BMJ. 2013;346:f105. doi: 10.1136/bmj.f105. [DOI] [PubMed] [Google Scholar]

- 5.Watson M. When will ‘open science’ become simply ‘science’? Genome Biol. 2015;16:101. doi: 10.1186/s13059-015-0669-2. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 6.Hill S, Velazquez A, Tay-Teo K, et al. 2015 Global Survey on Health Technology Assessment by National Authorities. WHO 2015.

- 7.Buxton MJ, Drummond MF, Van Hout BA, et al. Modelling in economic evaluation: an unavoidable fact of life. Health Econ. 1997;6:217–227. doi: 10.1002/(SICI)1099-1050(199705)6:3<217::AID-HEC267>3.0.CO;2-W. [DOI] [PubMed] [Google Scholar]

- 8.National Institute for Health and Clinical Excellence Guide to the methods of technology appraisal. Nice. 2013 doi: 10.2165/00019053-200826090-00002. [DOI] [PubMed] [Google Scholar]

- 9.Goodacre S. Being economical with the truth: how to make your idea appear cost effective. Emerg Med J. 2002;19:301–304. doi: 10.1136/emj.19.4.301. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 10.Husereau D, Drummond M, Petrou S, et al. Consolidated Health Economic Evaluation Reporting Standards (CHEERS) statement. Eur J Health Econ. 2013;14:367–372. doi: 10.1007/s10198-013-0471-6. [DOI] [PubMed] [Google Scholar]

- 11.Neumann PJ, Kim DD, Trikalinos TA, et al. Future directions for cost-effectiveness analyses in health and medicine. Med Decis Mak. 2018;38:767–777. doi: 10.1177/0272989X18798833. [DOI] [PubMed] [Google Scholar]

- 12.Brown JB, Palmer AJ, Bisgaard P, et al. The Mt. Hood challenge: cross-testing two diabetes simulation models. Diabetes Res Clin Pract. 2000;50:S57–S64. doi: 10.1016/s0168-8227(00)00217-5. [DOI] [PubMed] [Google Scholar]

- 13.Palmer AJ, Mount Hood 5 Modeling Group. Clarke P, et al. Computer modeling of diabetes and its complications: a report on the Fourth Mount Hood Challenge Meeting (ADA WORKGROUP REPORT) (Conference notes) Diabetes Care. 2007;30:1638–1646. doi: 10.2337/dc07-9919. [DOI] [PubMed] [Google Scholar]

- 14.Palmer AJ. Computer modeling of diabetes and its complications: a report on the fifth Mount Hood challenge meeting. Value Health. 2013;16:670–685. doi: 10.1016/j.jval.2013.01.002. [DOI] [PubMed] [Google Scholar]

- 15.Palmer AJ, Si L, Tew M, et al. Computer modeling of diabetes and its transparency: a report on the eighth Mount Hood challenge. Value Health. 2018;21:724–731. doi: 10.1016/j.jval.2018.02.002. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 16.Eddy DM, Hollingworth W, Caro JJ, et al. Model transparency and validation: a report of the ISPOR-SMDM modeling good research practices task force-7. Med Decis Mak. 2012;32:733–743. doi: 10.1177/0272989X12454579. [DOI] [PubMed] [Google Scholar]

- 17.Cohen JT, Wong JB. Can economic model transparency improve provider interpretation of cost-effectiveness analysis? A response. Med Care. 2017;55:912–914. doi: 10.1097/MLR.0000000000000811. [DOI] [PubMed] [Google Scholar]

- 18. Eddy DM. Accuracy versus transparency in pharmacoeconomic modelling: finding the right balance. Pharmacoeconomics. 2006;24:837–844. doi: 10.2165/00019053-200624090-00002.

- 19. Vemer P, Krabbe PFM, Feenstra TL, et al. Improving model validation in health technology assessment: comments on guidelines of the ISPOR-SMDM Modeling Good Research Practices Task Force. Value Health. 2013;16:1106–1107. doi: 10.1016/j.jval.2013.06.015.

- 20. Vemer P, Corro Ramos I, van Voorn GAK, et al. AdViSHE: a validation-assessment tool of health-economic models for decision makers and model users. Pharmacoeconomics. 2016;34:349–361. doi: 10.1007/s40273-015-0327-2.

- 21. Kuntz KM, Lansdorp-Vogelaar I, Rutter CM, et al. A systematic comparison of microsimulation models of colorectal cancer: the role of assumptions about adenoma progression. Med Decis Mak. 2011;31:530–539. doi: 10.1177/0272989X11408730.

- 22. Hoogendoorn M, Feenstra TL, Asukai Y, Briggs AH, Hansen RN, Leidl R, Risebrough N, Samyshkin Y, Wacker MRMM. External validation of health economic decision models for chronic obstructive pulmonary disease (COPD): report of the Third COPD Modeling Meeting. Value Health. 2017;20:397–403. doi: 10.1016/j.jval.2016.10.016.

- 23. Hayes AJ, Leal J, Gray AM, et al. UKPDS Outcomes Model 2: a new version of a model to simulate lifetime health outcomes of patients with type 2 diabetes mellitus using data from the 30 year United Kingdom Prospective Diabetes Study: UKPDS 82. Diabetologia. 2013;56:1925–1933. doi: 10.1007/s00125-013-2940-y.

- 24. Schlackow I, Kent S, Herrington W, et al. A policy model of cardiovascular disease in moderate-to-advanced chronic kidney disease. Heart. 2017;103:1880–1890. doi: 10.1136/heartjnl-2016-310970.

- 25. Sampson CJ, Wrightson T. Model registration: a call to action. Pharmacoecon Open. 2017;1:73–77. doi: 10.1007/s41669-017-0019-2.

- 26. Arnold RJG, Ekins S. Time for cooperation in health economics among the modelling community. Pharmacoeconomics. 2010;28:609–613. doi: 10.2165/11537580-000000000-00000.

- 27. Arnold RJG, Ekins S. Ahead of our time: collaboration in modeling then and now. Pharmacoeconomics. 2017;35:975–976. doi: 10.1007/s40273-017-0532-2.

- 28. Neumann PJ, Fang CH, Cohen JT. 30 years of pharmaceutical cost-utility analyses: growth, diversity and methodological improvement. Pharmacoeconomics. 2009;27:861–872. doi: 10.2165/11312720-000000000-00000.

- 29. Dakin H. Review of studies mapping from quality of life or clinical measures to EQ-5D: an online database. Health Qual Life Outcomes. 2013;11:151. doi: 10.1186/1477-7525-11-151.

- 30. Ridyard CH, Hughes DA. Development of a database of instruments for resource-use measurement: purpose, feasibility, and design. Value Health. 2012;15:650–655. doi: 10.1016/j.jval.2012.03.004.

- 31. Rees A, Paisley S, Brazier J, et al. Development of the Scharr HUD (Health Utilities Database). Value Health. 2013;16:A580. doi: 10.1016/j.jval.2013.08.1585.

- 32. Leal J, Gray AM, Clarke PM. Development of life-expectancy tables for people with type 2 diabetes. Eur Heart J. 2009;30:834–839. doi: 10.1093/eurheartj/ehn567.

- 33. Dickersin K, Rennie D. The evolution of trial registries and their use to assess the clinical trial enterprise. JAMA. 2012;307:1861–1864. doi: 10.1001/jama.2012.4230.

- 34. Zarin DA, Tse T, Ide NC. Trial registration at ClinicalTrials.gov between May and October 2005. N Engl J Med. 2005;353:2779–2787. doi: 10.1056/nejmsa053234.