Abstract

Background

The diagnosis of cancer in primary care is complex and challenging. Electronic clinical decision support tools (eCDSTs) have been proposed as an approach to improve GP decision making, but no systematic review has examined their role in cancer diagnosis.

Aim

To investigate whether eCDSTs improve diagnostic decision making for cancer in primary care and to determine which elements influence successful implementation.

Design and setting

A systematic review of relevant studies conducted worldwide and published in English between 1 January 1998 and 31 December 2018.

Method

Preferred Reporting Items for Systematic Reviews and Meta-Analyses (PRISMA) guidelines were followed. MEDLINE, EMBASE, and the Cochrane Central Register of Controlled Trials were searched, reference lists were consulted, and citation tracking was carried out. Studies were excluded if the eCDST was used in asymptomatic populations or if the support was not delivered to the GP. The most relevant Joanna Briggs Institute Critical Appraisal Checklists were applied according to the study design of each included paper.

Results

Of the nine studies included, three showed improvements in decision making for cancer diagnosis, three demonstrated positive effects on secondary clinical or health service outcomes such as prescribing, quality of referrals, or cost-effectiveness, and one study found a reduction in time to cancer diagnosis. Barriers to implementation included trust, the compatibility of eCDST recommendations with the GP’s role as a gatekeeper, and impact on workflow.

Conclusion

eCDSTs have the capacity to improve decision making for a cancer diagnosis, but the optimal mode of delivery remains unclear. Although such tools could assist GPs in the future, further well-designed trials of all eCDSTs are needed to determine their cost-effectiveness and the most appropriate implementation methods.

Keywords: cancer, clinical decision support tool, early diagnosis, general practitioners, primary health care

INTRODUCTION

A timely diagnosis of cancer is critical, as delays are associated with poorer patient outcomes and survival rates.1,2 GPs play a key role in early cancer diagnosis, with 75–85% of cases first presenting symptomatically in primary care.3–5

The primary care interval describes the time from first symptomatic presentation to the GP, through to referral to a specialist.6 The length of this interval varies, with many patients presenting to their GP three or more times before referral.7 Consequently, interventions that assist GPs’ clinical decision making have the potential to improve the timeliness of cancer diagnosis and improve cancer outcomes.

Electronic clinical decision support tools (eCDSTs) are electronic systems that assist clinical decision making.8 Patient-specific information is entered into the eCDST by the GP or can be automatically populated from the patient’s electronic health record. Using validated algorithms, the eCDST produces recommendations, prompts, or alerts for the GP to consider. eCDSTs can be actively used during a GP consultation or may be designed to continuously mine data in the background.

The development of eCDSTs has been driven by the complex nature of a cancer diagnosis. Often, patients present to the GP with non-specific symptoms that have a low diagnostic value.9 Algorithms have been designed to apply epidemiological data on combinations of symptoms and test results, and prompt consideration of a cancer diagnosis based on cancer risk thresholds.10,11 eCDSTs have been proposed as a solution for cancers that are more challenging to diagnose in primary care because of their variable symptomatic presentation and limited specific features.12

eCDSTs have been shown to improve both practitioner performance11 and diagnostic accuracy in simulated patients for a range of conditions, such as dementia, osteoporosis, and HIV.13 The effects of eCDSTs on referral behaviours have been summarised in a previous systematic review,14 but it did not investigate cancer diagnosis specifically; consequently, the role of eCDSTs in cancer diagnosis has not been adequately addressed.

This systematic review aimed to summarise existing evidence on the effects of eCDSTs on decision making for cancer diagnosis in primary care, and determine factors that influence their successful implementation.

How this fits in

Electronic clinical decision support tools (eCDSTs) improve practitioner performance and patient care, but their role in cancer diagnosis has not been adequately addressed. This review outlines the effectiveness of eCDSTs for cancer diagnosis and factors affecting their implementation. Decision support tools have been proposed as an approach to reduce delays in diagnosis, particularly for cancers with non-specific symptom signatures. To the authors’ knowledge, this is the first systematic review of available publications to inform eCDST implementation in primary care for the diagnosis of cancer.

METHOD

A mixed-methods narrative review was conducted. The review was registered on PROSPERO (registration ID: CRD42018107219) and the Preferred Reporting Items for Systematic Reviews and Meta-Analyses (PRISMA) criteria were followed.15

Search strategy

Electronic searches were run across three databases: MEDLINE, EMBASE (Ovid), and the Cochrane Central Register of Controlled Trials (CENTRAL). The search strategy (available from the authors on request) included MeSH headings and word variations for three terms: ‘general practitioner’, ‘cancer’, and ‘electronic decision support’. All studies from 1 January 1998 until 31 December 2018 were included. Titles and abstracts were screened independently by two reviewers and any disagreements were resolved with a third researcher. To identify studies not found via the electronic searches, reference lists were manually checked, citation tracking was performed, and experts in the field were contacted. The corresponding authors from all the included studies were contacted via email to identify further studies or unpublished research.

Inclusion and exclusion criteria

Studies investigating an eCDST designed to aid decision making for a potential cancer diagnosis were selected. For inclusion in the review, the study had to report on a:

cancer diagnosis;

cancer referral; or

cancer investigation.

Healthcare utilisation and cost, practitioner performance, and other educational outcomes were also included as outcomes. As the mode and delivery of eCDSTs vary, studies using any form of electronic support that included algorithm-based prompts or recommendations were eligible. Tools that applied risk markers for prevalent undiagnosed cancer were included, as were qualitative studies if they evaluated barriers and facilitators to implementing eCDSTs for cancer diagnoses in primary care.

Exclusion criteria for qualitative and quantitative studies included:

decision support used for cancer screening in asymptomatic populations, including tools that incorporated risk factors to predict future incident risk of cancer;

studies that did not involve decision support designed for use in primary care;

articles not in English;

unpublished work;

editorials; and

academic theses.

Assessment of bias

Risk of bias was assessed using the Joanna Briggs Institute (JBI) Critical Appraisal Checklist that matched the design of each included study.16 The authors used the following JBI Critical Appraisal Checklists:

Checklist for Quasi-Experimental Studies (non-randomised experimental studies);

Checklist for Qualitative Research;

Checklist for Diagnostic Test Accuracy Studies; and

Checklist for Randomized Controlled Trials.

Studies with a percentage score of >80% were considered to have a low risk of bias; those with a percentage score of 60–80% were considered to have a moderate risk of bias.
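The scoring rule above can be sketched as a small function. This is a minimal illustration rather than the authors' tooling: the function name and the checklist item counts in the usage lines are hypothetical, and treating scores below 60% as high risk is an assumption inferred from the 'High risk' labels used in the results tables.

```python
def risk_of_bias(items_met: int, items_applicable: int) -> str:
    """Classify a study from the share of JBI checklist items it satisfies.

    Thresholds follow the text: >80% is low risk and 60-80% is moderate risk.
    Anything below 60% is treated as high risk (an assumption, see lead-in).
    """
    if items_applicable <= 0:
        raise ValueError("items_applicable must be positive")
    if not 0 <= items_met <= items_applicable:
        raise ValueError("items_met must lie between 0 and items_applicable")

    percentage = 100 * items_met / items_applicable
    if percentage > 80:
        return "low"
    if percentage >= 60:
        return "moderate"
    return "high"


# Hypothetical item counts, for illustration only.
print(risk_of_bias(9, 10))  # 90% of items met
print(risk_of_bias(8, 10))  # exactly 80% falls in the 60-80% band
print(risk_of_bias(5, 10))  # 50% of items met
```

Note that a score of exactly 80% falls in the moderate band, because the low-risk threshold in the text is strictly greater than 80%.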

Study design

Quantitative and qualitative data were extracted from the included studies and analysed separately, before all results were combined into an extensive narrative synthesis. Segregated methodology was used to synthesise the evidence while maintaining the standard distinction between quantitative and qualitative research, in line with recommendations.17,18

Data extraction and synthesis

Data extraction was performed and cross-checked by the two reviewers who screened the articles. For quantitative studies, data extraction was based on an adapted version of the Cochrane Effective Practice and Organisation of Care data-collection checklist.19 For qualitative studies, data extraction was performed by a reviewer who screened the original articles, together with a third author, guided by the approach of Noblit and Hare.20 This involved identifying the major themes from primary papers, determining how they are related, and building on themes to interpret overarching theories and understandings.20

Categorisation of extracted themes was based on the normalisation process theory (NPT) framework,21 a theory used to describe factors and actions that promote or impede the embedding of new technologies into an existing practice. NPT uses four constructs to explain the processes that affect the integration and adoption of new technologies:

coherence;

cognitive participation;

collective action; and

reflexive monitoring.

Using these constructs, the barriers and facilitators identified in the qualitative studies were mapped onto the NPT framework to explain the results.20

RESULTS

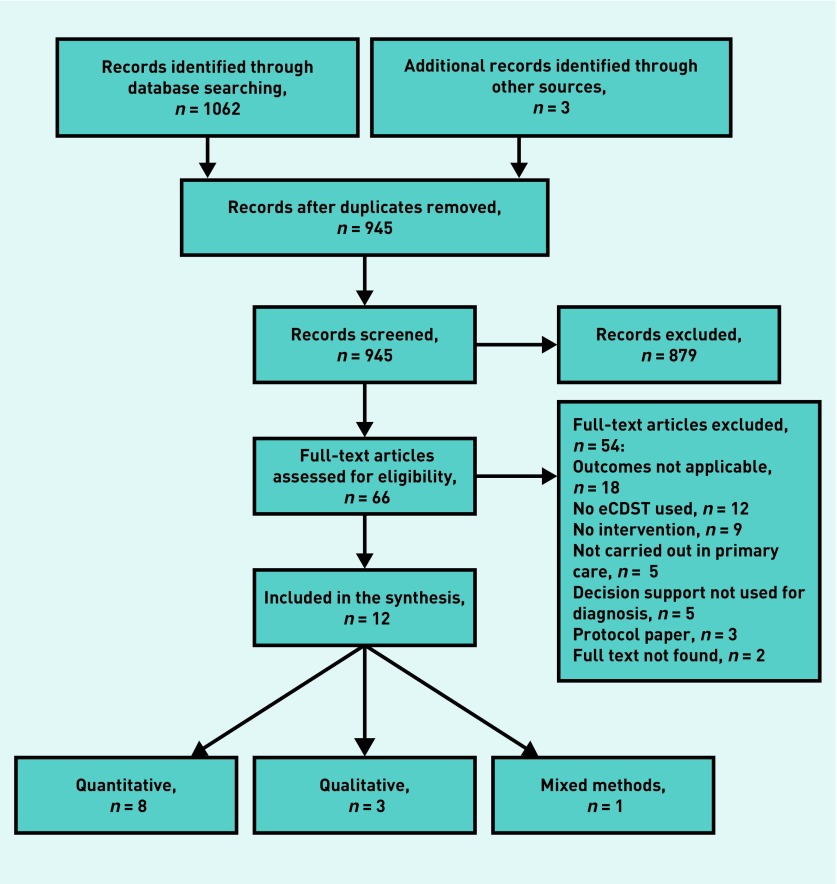

In total, 1065 titles were identified and 66 full-text papers reviewed for eligibility (Figure 1). Twelve articles, reporting on nine individual studies, fulfilled the selection criteria: eight quantitative,24–31 three qualitative,32–34 and one mixed methods.35 Characteristics of the included studies are summarised in Table 1.

Figure 1.

PRISMA flow diagram of literature search. eCDST = electronic clinical decision support tool. PRISMA = Preferred Reporting Items for Systematic Reviews and Meta-Analyses.

Table 1.

Characteristics of included studies

| Reference | Country | Study type | Cancer type | GPs, n | Patients, n |
|---|---|---|---|---|---|
| Gerbert et al (2000)24 | US | Before and after, pilot study | Non-melanoma skin cancer | 20 | 30 images of skin lesions (no contact with patients) |
| Jiwa et al (2006)35,a | UK | Mixed methods: cluster RCT and qualitative | Colorectal | 180 (8 qualitative) | 514 |
| Kidney et al (2015)25,b Kidney et al (2017)34 | UK | Mixed methods: cross-sectional and qualitative | Colorectal | 20 practices (number of GPs not given); qualitative: 18 GPs (+12 practice managers) | 809 |
| Logan et al (2002)26 | UK | Cluster RCT | Colorectal/gastric/oesophageal and other cancers (not specified) | 89 practices (number of GPs not given) | 431 |
| Murphy et al (2015)27,b Meyer et al (2016)30 | US | Cluster RCT | Colorectal, prostate and lung | 72 | 733 |
| Walter et al (2012)28,b Wilson et al (2013)31 | UK | RCT | Melanoma | 28 | 1297 |
| Winkelmann et al (2015)29 | US | Diagnostic accuracy | Melanoma | 34 | 12 images of skin lesions (no contact with patients) |
| Dikomitis et al (2015)32 | UK | Qualitative | All | 23 | n/a |
| Chiang et al (2015)33 | Australia | Qualitative simulated patient study | All | 15 | n/a |

a Mixed-methods publication, contributing to both quantitative and qualitative synthesis.

b Trial described in two publications. RCT = randomised controlled trial.

The design of each eCDST and key results are summarised in Table 2. Outcomes included:

appropriateness of care (n = 5);

diagnostic accuracy (n = 1);

time to diagnosis (n = 1);

cost-effectiveness (n = 1);

process measures (n = 1); and

qualitative (n = 4).

Table 2.

Quantitative study descriptions and results

| Reference | Description of decision support | Primary outcome | Primary outcome results | Secondary outcomes | Secondary outcome results | Risk of bias score (a) |
|---|---|---|---|---|---|---|
| Gerbert et al (2000)24 | The eCDST comprised a clinical information form, decision tree, and support features. An algorithm embedded in the software uses clinical information entered by the GP to provide triage recommendations through a decision tree | Appropriateness of decision to triage skin lesion | Intervention: 86.7%; Control: 63.3% | Appropriateness of triage decision for cancerous lesions | Intervention: 96.4%; Control: 77.9% | High risk |
| | | | | Appropriateness of triage decision for non-cancerous lesions | Intervention: 83.7%; Control: 65.6% | |
| | | | | Mean change in physician performance (range = −15 to 15) | 3.15, P<0.001 | |
| Jiwa et al (2006)35 | An interactive pro forma requested information, through drop-down menus, for 15 clinical signs and symptoms identified as being significant in diagnosing CRC. Once the clinical data were entered in the pro forma, the interactive software offered the GP guidance on which cases needed urgent referral based on UK Department of Health guidelines. A referral letter was then automatically produced, seeking an appropriate appointment at a hospital clinic | Appropriateness of referral | Intervention: 14.2%; Control: 19.4%; RR 0.73 (95% CI = 0.46 to 1.15), P = 0.18 | Assessment score of letters | Intervention: 2.4; Control: 2.1; Mean difference 0.3 (95% CI = 0.17 to 0.42), P<0.0001 | Low risk |
| | | | | Percentage of GPs who used the eCDST in intervention arm | 18.1% | |
| Kidney et al (2015)25 | Clinical management software was modified to incorporate an algorithm that identifies patients who meet NICE (2005) urgent referral criteria for suspected CRC. The algorithm flagged up patients aged 60–79 years, who had diarrhoea or rectal bleeding for >6 months, or increased haemoglobin accompanied by iron deficiency anaemia. Patients without a previous diagnosis of CRC, whose records indicated that they met urgent referral criteria up to 2 years before the date of the search, were flagged. GPs reviewed the records of patients who were flagged and decided on further clinical management | Patients flagged and needing further review | 34% | Percentage of CRC diagnosis in patients who were flagged | 1.2% | Moderate risk |
| Logan et al (2002)26 | Laboratory computers were programmed to print a decision prompt based on blood indices on the FBC report received by GPs. The intervention prompt stated ‘consistent with iron deficiency-? cause. Suggest treat with ferrous sulphate, 200 mg tds [three times a day] for 4 months, but check response in 3–4 weeks. Simultaneously investigate cause. Consider barium enema to exclude colorectal problems.’ | Appropriateness of referral | Intervention: 45%; Control: 49%; OR 0.88 (95% CI = 0.60 to 1.29), P = 0.52 | Oral iron prescribed | OR 2.19 (95% CI = 1.27 to 3.77), P = 0.005 | Low risk |
| | | | | Adequate dose of iron | OR 1.96 (95% CI = 1.24 to 3.10), P = 0.004 | |
| | | | | Adequate course of iron | OR 1.26 (95% CI = 0.77 P= 0.36 | |
| | | | | FBC repeated within 6 weeks | OR 0.85 (95% CI = 0.57 to 1.27), P = 0.43 | |
| | | | | Normal haemoglobin within 1 year | OR 1.16 (95% CI = 0.74 to 1.80), P = 0.52 | |
| Murphy et al (2015)27 Meyer et al (2016)30 | Electronic triggers were applied to electronic health record data repositories twice a month over a 15-month period to identify records of patients with potential delays in diagnostic evaluation of CRC, prostate cancer, or lung cancer. Electronic triggers identified ‘red-flag’ symptoms as: a positive FOBT, elevated PSA, iron deficiency anaemia, or hematochezia (blood in the stool). All trigger-positive records were initially considered to be high risk for delayed diagnostic evaluation. The records were then manually checked by study clinicians to determine whether delayed diagnostic evaluation had occurred. The patient’s GP was then contacted and given information about the patient’s red flags. This information was communicated to GPs in three escalating steps: first, secure emails were sent; if the GP did not follow up within 1 week, up to three telephone calls were made to either the GP or their nurse; if no one could be reached, clinic directors were informed | Median time to diagnostic evaluation27 | Intervention versus control: Colorectal: 104 versus 200 days, P = 0.001; Prostate: 144 versus 192 days, P = 0.001; Lung: 65 versus 93 days, P = 0.59 | Diagnostic evaluation at 7 months | Intervention: 73.4%; Control: 52.2%; RR 1.41 (95% CI = 1.25 to 1.58), P<0.001 | Low risk |
| | | Use of secure emails (cumulative response rate)30 | 11.1% | Response rates (by role) | GP: 67.9%; Nurse: 69.7%; P = 0.82 | |
| | | Telephone calls (cumulative response rate)30 | 72.1% | | | |
| | | Contacting clinic directors (cumulative response rate)30 | 73.4% | | | |
| Walter et al (2012)28 | MoleMate is a computerised diagnostic tool that utilises spectrophotometric intracutaneous analysis (SIAscopy) integrated with a primary care scoring algorithm. Clinicians used the MoleMate system to assist their assessment and management of the suspicious lesion, deciding whether to refer patients through the fast-track skin cancer pathway or manage them in primary care | Appropriateness of referral28 | Intervention: 56.8%; Control: 64.5%; Percentage difference: −8.1% (95% CI = −18.0 to 1.8), P = 0.12 | Appropriate management of skin lesions in primary care | Percentage difference 0.5 (95% CI = −0.6 to 2.0) | Low risk |
| Wilson et al (2013)31 | The results of the RCT were used to estimate the expected long-term cost and health gain of the MoleMate system versus best practice | Incremental cost-effectiveness ratio31 | £1896/QALY | Sensitivity | 2.8 (95% CI = −1.8 to 7.4) | |
| | | | | Specificity | −6.2 (95% CI = −9.9 to −2.6) | |
| Winkelmann et al (2015)29 | MSDSLA analyses pigmented skin lesions and generates a ‘classifier score’. Participants were first asked if they would biopsy the lesion based on clinical images, then asked again after observing high-resolution dermoscopy images, and once more when subsequently shown MSDSLA probability information | Diagnostic accuracy | Intervention: 73%; Control: 54%; P<0.0001 | Sensitivity | Intervention: 95%; Control: 66%; P<0.0001 | High risk |
| | | | | Specificity | Intervention: 55%; Control: 46%; P<0.0001 | |

a Risk of bias score calculated according to Joanna Briggs Institute Critical Appraisal Checklists.16 CI = confidence interval. CRC = colorectal cancer. eCDST = electronic clinical decision support tool. FBC = full blood count. FOBT = faecal occult blood test. MSDSLA = multispectral digital skin lesion analysis. NICE = National Institute for Health and Care Excellence. OR = odds ratio. PSA = prostate-specific antigen. QALY = quality-adjusted life year. RCT = randomised controlled trial. RR = relative risk.

Appropriateness of referral was defined by the proportion of patients referred who were diagnosed with cancer. The approach to implement an eCDST within GP workflow varied, but the tools were designed to be used in real time, during consultation, or applied outside of the consultation to flag up potential cases of cancer.
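The definition of appropriateness of referral given above can be illustrated with a short sketch that computes it as a simple proportion, alongside a crude relative risk taken from two arm-level percentages. The function names and the example counts are hypothetical. The crude ratios of the percentages reported for Jiwa et al and Murphy et al in Table 2 agree with the published RRs to two decimal places, but the trial analyses may have accounted for clustering or other factors, so this should not be read as the authors' method.

```python
def appropriateness_of_referral(cancers_diagnosed: int, patients_referred: int) -> float:
    """Proportion of referred patients who were diagnosed with cancer."""
    if patients_referred <= 0:
        raise ValueError("patients_referred must be positive")
    return cancers_diagnosed / patients_referred


def crude_relative_risk(intervention_pct: float, control_pct: float) -> float:
    """Unadjusted relative risk: intervention proportion over control proportion."""
    if control_pct <= 0:
        raise ValueError("control_pct must be positive")
    return intervention_pct / control_pct


# Hypothetical counts: 10 cancers among 50 referred patients.
print(appropriateness_of_referral(10, 50))

# Percentages as reported in Table 2.
print(round(crude_relative_risk(14.2, 19.4), 2))  # Jiwa et al, appropriateness of referral
print(round(crude_relative_risk(73.4, 52.2), 2))  # Murphy et al, diagnostic evaluation at 7 months
```

A relative risk below 1 for appropriateness of referral means that a smaller share of referred patients in the intervention arm were diagnosed with cancer; the confidence intervals in Table 2 are needed to judge whether such a difference is distinguishable from chance.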

Quantitative synthesis of the included studies was not possible because of significant methodological and clinical heterogeneity. Results of the risk of bias assessment for each included study are given in Table 2. In summary, four quantitative studies had a low risk of bias, including the quantitative component of the mixed-methods study,26–28,35 one had a moderate risk,25 and two had a high risk.24,29 Of the qualitative studies, three had a low risk of bias32–34 and one, the qualitative component of the mixed-methods paper, had a high risk.35 High risk of bias did not influence the inclusion of articles in the review.

Quantitative

eCDSTs used during GP consultation

Three studies26,28,35 examined eCDSTs that were designed to be used during the GP consultation with patients. Jiwa et al35 assessed whether an electronic referral pro forma used when patients present with bowel symptoms improved the appropriateness of referral for colorectal cancer (CRC). Control practices received an educational outreach visit by a local colorectal surgeon. The pro forma had no impact on the appropriateness of referral; however, it did improve the information and quality of the referral in comparison with the standard referral used by the control group.

Logan et al26 used a computer-generated prompt that recommended further investigations to rule out CRC when full blood-count results indicated iron deficiency anaemia. Control practices received laboratory results as per ‘usual care’. The prompts had no effect on the appropriateness of referral or investigation for CRC but did lead to more prescriptions of oral iron and to more patients receiving an adequate dose.

Walter et al28 assessed MoleMate, a diagnostic tool for melanoma, which incorporates a scoring algorithm with spectrophotometric intracutaneous analysis (also known as SIAscopy) of pigmented skin lesions. MoleMate did not improve the appropriateness of referral, due in part to the high sensitivity and relatively low specificity set for the eCDST.28 Despite this, a health economic analysis found that, in UK practice, MoleMate was likely to be cost-effective compared with current best practice with an incremental cost-effectiveness ratio of £1896 per quality-adjusted life year gained.31

eCDSTs used outside the GP consultation

Two studies assessed eCDSTs designed to be applied outside of the consultation, identifying patients at increased risk of an undiagnosed cancer. Murphy et al27 applied electronic triggers to identify ‘red-flag’ symptoms in patients who had presented to their GP in the previous 90 days, without documented follow-up. There was a statistically significant reduction in time to diagnostic evaluation for CRC and prostate cancer in the intervention arm.

Kidney et al25 evaluated an eCDST that searched the patient’s electronic medical record and created a list of patients at increased risk of an undiagnosed CRC based on National Institute for Health and Care Excellence guidelines for urgent referrals. A third of all patients flagged by the algorithm were judged to need further review by their GP; 1.2% were subsequently diagnosed with CRC.
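A record search of this kind can be sketched as a simple rule over structured patient data. The sketch below is a loose illustration based only on the description in Table 2, not the software used in the study: the `PatientRecord` fields, the `should_flag` function, and the 730-day approximation of the 2-year look-back are all hypothetical, and applying the 60–79 years age band to every patient is an assumption, because the description leaves the grouping of the criteria ambiguous.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional

# "Up to 2 years before the date of the search", approximated in days (assumption).
LOOKBACK_DAYS = 2 * 365


@dataclass
class PatientRecord:
    """Hypothetical, simplified view of an electronic medical record."""
    age: int
    previous_crc_diagnosis: bool
    # Most recent date on which the record met an urgent referral criterion
    # (for example a coded symptom or iron deficiency anaemia), if any.
    criteria_met_on: Optional[date]


def should_flag(record: PatientRecord, search_date: date) -> bool:
    """Return True if the record should be listed for GP review."""
    if record.previous_crc_diagnosis:
        return False  # only patients without a previous CRC diagnosis are flagged
    if not 60 <= record.age <= 79:
        return False  # age band applied to all patients (assumption)
    if record.criteria_met_on is None:
        return False
    days_ago = (search_date - record.criteria_met_on).days
    return 0 <= days_ago <= LOOKBACK_DAYS


search = date(2015, 1, 1)
print(should_flag(PatientRecord(65, False, date(2014, 6, 1)), search))  # within look-back
print(should_flag(PatientRecord(65, False, date(2012, 1, 1)), search))  # outside look-back
```

In the study the flag was only a starting point: GPs reviewed each flagged record and decided on further management, so a rule like this produces a review list rather than a referral decision.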

Clinical images

Two studies,24,29 both of low quality, tested eCDSTs to support GPs’ assessment of clinical images of melanoma and non-melanoma skin cancer. Both studies showed an improvement in decision making for cancerous and non-cancerous lesions.

Overall, three studies showed improvements in decision making related to cancer diagnosis,24,25,29 one showed reduced time to diagnosis,27 and three demonstrated positive effects on secondary clinical or health service outcomes such as prescribing,26 quality of referrals,35 or cost-effectiveness.31

Qualitative

Jiwa et al35 and Kidney et al34 each conducted a qualitative sub-study within their quantitative evaluation of the eCDST, both involving semi-structured interviews with GPs. Chiang et al33 and Dikomitis et al32 conducted exploratory qualitative studies of GPs’ experiences using eCDSTs in practice, one of which used simulated consultations. Both studies used NPT as a framework. The themes and constructs extracted from each qualitative study are outlined in Table 3.

Table 3.

Qualitative results: normalisation process theory

| Reference | Coherence | Cognitive participation | Collective action | Reflexive monitoring | Risk of bias (a) |
|---|---|---|---|---|---|
| Dikomitis et al (2015)32 | | | | Not addressed | Low risk |
| Chiang et al (2015)33 | | | | No monitoring or resources to adapt or evaluate the intervention | Low risk |
| Jiwa et al (2006)35 | Not addressed | | | Not addressed | High risk |
| Kidney et al (2017)34 | GPs were more likely to refer if the recommendation fit with current guidelines | | | Not addressed | Low risk |
| Summary | In three of the four studies, GPs described the eCDSTs as valuable, suggesting the role of these tools in assisting with diagnosis has a place in primary care. They reported that the eCDSTs raised their awareness of symptoms indicative of cancer. In contrast, GPs had different interpretations of how and when the eCDSTs should be used, with some using them with patients who were asymptomatic | Clinician buy-in was identified as one of the limiting factors to successful implementation; disconnection and mistrust of the eCDST occurred when the recommendations conflicted with the GP’s intuition or clinical judgement. Buy-in was stronger if recommendations were in line with clinical knowledge and aligned with the GP’s judgement | Compatibility of the eCDST with current practice, including at an organisational level, was a common barrier across all four studies. The barriers occurred at the interpersonal, clinical, and health-system levels. Introducing the tool disrupted the consultation, with GPs reporting feeling a loss of control. At the clinical level, additional tasks and time pressures impacted clinical flow. At a health-system level, the prompts for referral placed extra pressure on secondary care | There was limited evidence about how GPs monitored and adapted the new interventions over time. No study reported any system in place to monitor and adapt the eCDST to ensure sustainability over time. This important construct is not being considered at the point of implementation | |

a Risk of bias score calculated according to Joanna Briggs Institute Critical Appraisal Checklists.16 eCDST = electronic clinical decision support tool.

Overarching themes

Three core constructs were identified in the synthesis:

trust;

the GP’s role as a gatekeeper; and

the impact on workflow.

Mistrust of the eCDST was driven by the disagreement between the tool’s recommendations and the GP’s assessment, ambiguity of underlying guidelines embedded within the eCDST, and a desire to understand the evidence that underpinned the clinical recommendation.32,33,35

The GP’s role as a gatekeeper was identified as a barrier due to conflicting referral thresholds between the eCDST and the GP, with GPs concerned about potential over-referral of patients at low risk.32,34,35 Finally, for eCDSTs designed to be used during consultation, there were challenges due to disruption of the usual workflow and the generation of additional tasks in an already-busy appointment.32–34

DISCUSSION

Summary

This systematic review evaluated the efficacy of eCDSTs used for cancer diagnosis in primary care, and described factors that influence effective implementation. Three studies showed improvements in decision making related to cancer diagnosis,24,25,29 one showed reduced time to diagnosis,27 and three demonstrated positive effects on secondary clinical or health service outcomes such as prescribing,26 quality of referrals,35 or cost-effectiveness.31 Key qualitative findings related to issues of trust in the tool, the impact on a GP’s role as gatekeeper, and potential negative effects on GP workflow.

eCDSTs that were used outside of GP consultations appeared to be more effective than tools used in real-time during consultation; they seemed to have the ability to detect patients at an increased risk of an undiagnosed cancer, leading to improvements in clinical assessment and time to diagnostic assessment. However, the implementation issues shifted from a disruption of the GP workflow during consultation to the ability to successfully convey the results of the eCDST to GPs outside of the consultation and ensure they acted on the information.25,27 Communicating this information to GPs did not always lead to follow-up of the patient.

Strengths and limitations

To the authors’ knowledge, this is the first review to evaluate the efficacy of eCDSTs used for cancer diagnosis in primary care and examine factors influencing their effective implementation. Rigorously conducted, it provides a summary of available findings to inform eCDST implementation in primary care for the diagnosis of cancer.

However, there are some limitations. This review is limited by the small number of included studies and the scarcity of large-scale randomised controlled trials: eCDSTs are relatively under-utilised for cancer diagnosis. Further, none of the included studies looked at outcomes such as survival rates and only one evaluated time to diagnosis;27 this highlights the challenges of conducting trials of diagnostic interventions for relatively rare conditions in primary care. Much larger implementation trials with long-term follow-up of cancer diagnoses, stage, and survival are required to determine the magnitude of effect of eCDSTs on cancer outcomes.

Comparison with existing literature

As with this work, a 2011 systematic review by Mansell et al36 did not identify any studies that examined a delay in referral of cancer as a primary outcome; all 22 included studies used a proxy measurement, such as GP knowledge or quality of referrals.

Mistrust of the eCDST was driven by the disagreement between the tool’s suggestion and the GP’s assessment, ambiguity of guidelines, and a desire to understand the underlying research underpinning the clinical recommendation.32,33 GPs reported that the eCDST compromised their autonomy, with the eCDST recommendation being perceived as ‘the final word’ rather than support at the time of decision making.33 This is consistent with recent evidence from the GUIDES implementation guidelines for eCDSTs.37 These guidelines comprise a checklist of factors that were found by patient and healthcare users to influence the effectiveness of eCDST implementation. The GUIDES checklist highlighted that the most important factor for successful implementation is ‘trustworthy evidence-based information’.37

As gatekeepers, GPs are under considerable pressure to balance the use of limited and costly referrals for tests against potentially missing a cancer diagnosis.38 The threshold at which an eCDST recommends referral can conflict with the GP’s ability to refer everyone for whom referral is recommended.32–34 The International Cancer Benchmarking Partnership, a collaboration between six countries, identified that a stronger gatekeeper role, and different cancer-risk thresholds for referral, were associated with poorer cancer survival.39 Concerns about resource constraints and unwillingness to refer differed by country, but were found to play a large role in decision making in Australia and the UK.39

The usability and acceptability of eCDSTs were dependent on several competing issues, such as disruption of workflow, prompt fatigue, and time. There is a growing recognition in the literature that the technology being developed must seamlessly integrate into the current work practices of those using eCDSTs.37 Problems with an eCDST’s functionality and its effect on workflow could be mitigated using a consistent feedback loop between GPs and tool designers.40 In the included studies, there were no practices in place to monitor and adapt the eCDSTs for use in consultation, and no opportunity for the GPs to critically appraise how the tool affected workflow.

Implications for research and practice

The diagnostic algorithms in the eCDSTs included in this review were of a limited nature, but diagnostic and clinical utility could increase with more sophisticated algorithms that combine a larger number of factors such as symptoms, abnormal test results, and patterns over time. With the advances in artificial intelligence (AI) and machine learning in clinical practice, future developments will likely drive the next wave of eCDSTs. The use of AI has had promising preliminary results in areas such as visual image analysis in dermatology41 and radiology;42 however, further research using large primary care datasets is required before it can be known whether this approach will have utility to improve diagnoses of symptomatic cancers in general practice.

The available evidence in this review suggests that eCDSTs have the capacity to improve decision making for a cancer diagnosis, but the optimal mode of delivery remains unclear. Given the complex nature of a cancer diagnosis, the advancement and sustainability of eCDSTs in primary care rely on a continuous loop of practitioner feedback and refinement. The findings of the review presented here indicate that improvements in their design and implementation are needed to ensure they can be embedded in normal general practice workflows and alter professional decision making as intended. Strategies for effectively communicating eCDST results to GPs need to be better explored.

Funding

This work was supported by the Cancer Australia Primary Care Cancer Collaborative Clinical Trials Group (PC4). Jon D Emery is supported by a National Health and Medical Research Council Practitioner Fellowship and is a member of the senior faculty of the multi-institutional CanTest Collaborative, which is funded by Cancer Research UK (C8640/A23385).

Ethical approval

Ethical approval was not required for this study.

Provenance

Freely submitted; externally peer reviewed.

Competing interests

The authors have declared no competing interests.

REFERENCES

- 1. Tørring ML, Frydenberg M, Hansen RP, et al. Time to diagnosis and mortality in colorectal cancer: a cohort study in primary care. Br J Cancer. 2011;104(6):934–940. doi: 10.1038/bjc.2011.60.

- 2. Neal RD, Tharmanathan P, France B, et al. Is increased time to diagnosis and treatment in symptomatic cancer associated with poorer outcomes? Systematic review. Br J Cancer. 2015;112(Suppl 1):S92–S107. doi: 10.1038/bjc.2015.48.

- 3. Hamilton W. Five misconceptions in cancer diagnosis. Br J Gen Pract. 2009.

- 4. Emery JD. The challenges of early diagnosis of cancer in general practice. Med J Aust. 2015;203(10):391–393. doi: 10.5694/mja15.00527.

- 5. Allgar VL, Neal RD. Delays in the diagnosis of six cancers: analysis of data from the National Survey of NHS Patients: Cancer. Br J Cancer. 2005;92(11):1959–1970. doi: 10.1038/sj.bjc.6602587.

- 6. Bergin RJ, Emery J, Bollard RC, et al. Rural–urban disparities in time to diagnosis and treatment for colorectal and breast cancer. Cancer Epidemiol Biomarkers Prev. 2018;27(9):1036–1046. doi: 10.1158/1055-9965.EPI-18-0210.

- 7. Lacey K, Bishop JF, Cross HL, et al. Presentations to general practice before a cancer diagnosis in Victoria: a cross-sectional survey. Med J Aust. 2016;205(2):66–71. doi: 10.5694/mja15.01169.

- 8. Moja L, Kwag KH, Lytras T, et al. Effectiveness of computerized decision support systems linked to electronic health records: a systematic review and meta-analysis. Am J Public Health. 2014;104(12):e12–e22. doi: 10.2105/AJPH.2014.302164.

- 9. Astin M, Griffin T, Neal RD, et al. The diagnostic value of symptoms for colorectal cancer in primary care: a systematic review. Br J Gen Pract. 2011.

- 10. Usher-Smith J, Emery J, Hamilton W, et al. Risk prediction tools for cancer in primary care. Br J Cancer. 2015;113(12):1645–1650. doi: 10.1038/bjc.2015.409.

- 11. Garg AX, Adhikari NK, McDonald H, et al. Effects of computerized clinical decision support systems on practitioner performance and patient outcomes: a systematic review. JAMA. 2005;293(10):1223–1238. doi: 10.1001/jama.293.10.1223.

- 12. Lyratzopoulos G, Wardle J, Rubin G. Rethinking diagnostic delay in cancer: how difficult is the diagnosis? BMJ. 2014;349:g7400. doi: 10.1136/bmj.g7400.

- 13. Kostopoulou O, Rosen A, Round T, et al. Early diagnostic suggestions improve accuracy of GPs: a randomised controlled trial using computer-simulated patients. Br J Gen Pract. 2015.

- 14. Roshanov PS, You JJ, Dhaliwal J, et al. Can computerized clinical decision support systems improve practitioners’ diagnostic test ordering behavior? A decision-maker-researcher partnership systematic review. Implement Sci. 2011;6(1):88. doi: 10.1186/1748-5908-6-88.

- 15. Moher D, Liberati A, Tetzlaff J, et al. Preferred reporting items for systematic reviews and meta-analyses: the PRISMA statement. Ann Intern Med. 2009;151(4):264–269. doi: 10.7326/0003-4819-151-4-200908180-00135.

- 16. Joanna Briggs Institute. JBI reviewers’ manual: 2014 edition. https://wiki.joannabriggs.org/display/MANUAL/JBI+Reviewer%27s+Manual (accessed 5 Nov 2019).

- 17. Sandelowski M, Voils CI, Barroso J. Defining and designing mixed research synthesis studies. Res Sch. 2006;13(1):29.

- 18. Joanna Briggs Institute. Methodology for JBI mixed methods systematic reviews. https://wiki.joannabriggs.org/display/MANUAL/8.1+Introduction+to+mixed+methods+systematic+reviews (accessed 5 Nov 2019).

- 19. Cochrane Effective Practice and Organisation of Care (EPOC). Screening, data extraction and management. EPOC resources for review authors. 2017. http://epoc.cochrane.org/resources/epoc-resources-review-authors (accessed 8 Nov 2019).

- 20. Noblit GW, Hare RD. Meta-ethnography: synthesizing qualitative studies. Thousand Oaks, CA: Sage Publications; 1988.

- 21. Murray E, Treweek S, Pope C, et al. Normalisation process theory: a framework for developing, evaluating and implementing complex interventions. BMC Med. 2010;8(1):63. doi: 10.1186/1741-7015-8-63.

- 22. Henderson EJ, Rubin GP. The utility of an online diagnostic decision support system (Isabel) in general practice: a process evaluation. JRSM Short Rep. 2013;4(5):31. doi: 10.1177/2042533313476691.

- 23. Kanagasundaram NS, Bevan MT, Sims AJ, et al. Computerized clinical decision support for the early recognition and management of acute kidney injury: a qualitative evaluation of end-user experience. Clin Kidney J. 2016;9(1):57–62. doi: 10.1093/ckj/sfv130.

- 24. Gerbert B, Bronstone A, Maurer T, et al. Decision support software to help primary care physicians triage skin cancer: a pilot study. Arch Dermatol. 2000;136(2):187–192. doi: 10.1001/archderm.136.2.187.

- 25. Kidney E, Berkman L, Macherianakis A, et al. Preliminary results of a feasibility study of the use of information technology for identification of suspected colorectal cancer in primary care: the CREDIBLE study. Br J Cancer. 2015;112(Suppl 1):S70–S76. doi: 10.1038/bjc.2015.45.

- 26. Logan EC, Yates JM, Stewart RM, et al. Investigation and management of iron deficiency anaemia in general practice: a cluster randomised controlled trial of a simple management prompt. Postgrad Med J. 2002;78(923):533–537. doi: 10.1136/pmj.78.923.533.

- 27. Murphy DR, Wu L, Thomas EJ, et al. Electronic trigger-based intervention to reduce delays in diagnostic evaluation for cancer: a cluster randomized controlled trial. J Clin Oncol. 2015;33(31):3560–3567. doi: 10.1200/JCO.2015.61.1301.

- 28. Walter FM, Morris HC, Humphrys E, et al. Effect of adding a diagnostic aid to best practice to manage suspicious pigmented lesions in primary care: randomised controlled trial. BMJ. 2012;345:e4110. doi: 10.1136/bmj.e4110.

- 29. Winkelmann RR, Yoo J, Tucker N, et al. Impact of guidance provided by a multispectral digital skin lesion analysis device following dermoscopy on decisions to biopsy atypical melanocytic lesions. J Clin Aesthet Dermatol. 2015;8(9):21–24.

- 30. Meyer AN, Murphy DR, Singh H. Communicating findings of delayed diagnostic evaluation to primary care providers. J Am Board Fam Med. 2016;29(4):469–473. doi: 10.3122/jabfm.2016.04.150363.

- 31. Wilson EC, Emery JD, Kinmonth AL, et al. The cost-effectiveness of a novel SIAscopic diagnostic aid for the management of pigmented skin lesions in primary care: a decision-analytic model. Value Health. 2013;16(2):356–366. doi: 10.1016/j.jval.2012.12.008.

- 32. Dikomitis L, Green T, Macleod U. Embedding electronic decision-support tools for suspected cancer in primary care: a qualitative study of GPs’ experiences. Prim Health Care Res Dev. 2015;16(6):548–555. doi: 10.1017/S1463423615000109.

- 33. Chiang PP, Glance D, Walker J, et al. Implementing a QCancer risk tool into general practice consultations: an exploratory study using simulated consultations with Australian general practitioners. Br J Cancer. 2015;112(Suppl 1):S77–S83. doi: 10.1038/bjc.2015.46.

- 34. Kidney E, Greenfield S, Berkman L, et al. Cancer suspicion in general practice, urgent referral, and time to diagnosis: a population-based GP survey nested within a feasibility study using information technology to flag-up patients with symptoms of colorectal cancer. BJGP Open. 2017.

- 35. Jiwa M, Skinner P, Coker AO, et al. Implementing referral guidelines: lessons from a negative outcome cluster randomised factorial trial in general practice. BMC Fam Pract. 2006;7(1):65. doi: 10.1186/1471-2296-7-65.

- 36. Mansell G, Shapley M, Jordan JL, Jordan K. Interventions to reduce primary care delay in cancer referral: a systematic review. Br J Gen Pract. 2011.

- 37. Van de Velde S, Kunnamo I, Roshanov P, et al. The GUIDES checklist: development of a tool to improve the successful use of guideline-based computerised clinical decision support. Implement Sci. 2018;13(1):86. doi: 10.1186/s13012-018-0772-3.

- 38. Vedsted P, Olesen F. Are the serious problems in cancer survival partly rooted in gatekeeper principles? An ecologic study. Br J Gen Pract. 2011.

- 39. Rose PW, Rubin G, Perera-Salazar R, et al. Explaining variation in cancer survival between 11 jurisdictions in the International Cancer Benchmarking Partnership: a primary care vignette survey. BMJ Open. 2015;5(5):e007212. doi: 10.1136/bmjopen-2014-007212.

- 40. Kawamoto K, Houlihan CA, Balas EA, Lobach DF. Improving clinical practice using clinical decision support systems: a systematic review of trials to identify features critical to success. BMJ. 2005;330(7494):765. doi: 10.1136/bmj.38398.500764.8F.

- 41. Haenssle HA, Fink C, Schneiderbauer R, et al. Man against machine: diagnostic performance of a deep learning convolutional neural network for dermoscopic melanoma recognition in comparison to 58 dermatologists. Ann Oncol. 2018;29(8):1836–1842. doi: 10.1093/annonc/mdy166.

- 42. Hosny A, Parmar C, Quackenbush J, et al. Artificial intelligence in radiology. Nat Rev Cancer. 2018;18(8):500–510. doi: 10.1038/s41568-018-0016-5.