Abstract

Objectives: It has been recommended that clinical trials of Traditional Chinese Medicine (TCM) would be more ecologically valid if its characteristic mode of diagnostic reasoning were integrated into their design. In that context, however, it is also widely held that demonstrating a high level of agreement on initial TCM diagnoses is necessary for the replicability that the biomedical paradigm requires for the conclusions from such trials. Our aim was to review, summarize, and critique quantitative experimental studies of inter-rater agreement in TCM, and some of their underlying assumptions.

Design: Systematic electronic searches were conducted for articles that reported a quantitative measure of inter-rater agreement across a number of rating choices based on examinations of human subjects in person by TCM practitioners, and published in English language peer-reviewed journals. Publications in languages other than English were not included, nor those appearing in other than peer-reviewed journals. Predefined categories of information were extracted from full texts by two investigators working independently. Each article was scored for methodological quality.

Outcome measures: Design features across all studies and levels of inter-rater agreement across studies that reported the same type of outcome statistic were compared.

Results: Twenty-one articles met inclusion criteria. Fourteen assessed inter-rater agreement on TCM diagnoses, two on diagnostic signs found upon traditional TCM examination, and five on novel rating schemes derived from TCM theory and practice. Raters were students of TCM colleges or graduates of TCM training programs with 3 or more years of experience and licensure. The type of outcome statistic varied. Mean rates of pairwise agreement averaged 57% (median 65, range 19–96) across the nine studies reporting them. Mean Cohen's kappa averaged 0.34 (median 0.34, range 0.07–0.59) across the seven studies reporting them. Meta-analysis was not possible due to variations in study design and outcome statistics. High risks of bias and confounding, and deficits in statistical reporting were common.

Conclusions: With a few exceptions, the levels of agreement were low to moderate. Most studies had significant deficits of both methodology and reporting. Results overall suggest a few design features that might contribute to higher levels of agreement. These should be studied further with better experimental controls and more thorough reporting of outcomes. In addition, methods of complex systems analysis should be explored to more adequately model the relationship between clinical outcomes and the series of diagnoses and treatments that are the norm in actual TCM practice.

Keywords: inter-rater agreement, Traditional Chinese Medicine, diagnosis, diagnostic signs

Introduction

The diagnostic and clinical reasoning practices of Traditional Chinese Medicine (TCM) guide therapeutic intervention. It has been recommended that clinical trials of TCM would more faithfully reflect actual clinical practice if that reasoning were incorporated into their design.1–4 One attempt to do that has been the individualized treatment trial in which subjects receive different treatments according to their diagnoses by TCM practitioners. It is also widely held that a high level of agreement on initial diagnosis and treatment planning must be demonstrated to satisfy the scientific standard of experimental replicability.2,5 In addition, the validity of investigations of associations between TCM descriptors and various physiologic and genetic variables depends on the level of agreement with which those descriptors are assigned to individual patients.6–8 The same is true of studies that explore initial TCM diagnoses as baseline predictors of clinical outcomes or of subgroups of biomedically defined conditions.9

Studies of the inter-rater agreement of TCM diagnostic practices have consequently become an important aspect of its current encounter with biomedical science. In response to these concerns, a number of studies have assessed various methods of producing acceptable inter-rater agreement among TCM practitioners on their initial assessments, and these have been surveyed in four previous reviews.2,10–12 Given the importance currently accorded to this issue and the possibility that additional studies might have appeared, an updated systematic review was warranted. In addition to comparing outcomes, we examined features of study design and analysis that were intended to increase inter-rater agreement, and the levels of agreement they achieved. Our conclusion includes a brief critique of the assumptions underlying these experiments.

Materials and Methods

Selection criteria

We included English language, peer-reviewed publications that reported experimental studies of inter-rater agreement among TCM practitioners in which human subjects were examined in person, and that reported a mean or range of some measure of inter-rater agreement across all or a subset of the categories that raters endorsed, or provided tabular data that enabled a mean or range to be calculated. Years of publication ranged from the earliest dated entry in each database to the final searches on October 2, 2018.

Articles in languages other than English, those appearing in non-peer-reviewed publications, studies of inter-rater reliability in other traditional East Asian medicines such as Korean or Japanese, studies that compared practitioners' ratings with those produced by machines or computer programs, studies that reported the frequency with which some diagnoses were assigned but not levels of agreement across a range,13–15 and studies in which ratings were based on photographs,16,17 questionnaire responses, reviews of textbooks,18,19 clinical records,20 or survey data were excluded.

Search strategy

Searches were conducted in PubMed, AltHealthWatch, AMED, EMBASE, CINAHL Plus, and Google Scholar using the Boolean string ((Chinese medicine) or acupuncture or (oriental medicine)) and (diagnosis or rating or classification) and (reliability or variation or variability) and English[Language]. Apart from the language restriction, searches were conducted on “all fields,” or when that option was absent on “title,” “abstract,” and “keyword” or “subject word” if available. In PubMed, an additional search was conducted using Medical Subject Headings (MeSH), a National Library of Medicine search vocabulary, using the closest available approximation of the search string above: (“medicine, Chinese, traditional” or “acupuncture therapy”) and (diagnosis or classification) and (“reproducibility of results” or “observer variation”).

Final searches of each database were conducted on October 2, 2018. The Google Scholar search returned 270,000 records, of which the first 100 were examined. In addition, the bibliographies of articles that were reviewed in full text were examined for references to other studies that might qualify (E.J.).

Vetting process

The references returned from searches were first assessed on the basis of their titles. Abstracts of selected titles were then obtained and vetted against the inclusion criteria. Full texts selected on that basis were then assessed and final decisions as to inclusion were made (E.J.). Previously determined categories of information were summarized from included articles by two extractors (E.J. and either L.C., M.S., or D.T.) who worked independently and recorded information on a previously drafted form. Disparities were resolved by joint examination of the full texts by the relevant pair of raters. The extracted information was then condensed into the categories given in Supplementary Appendix SA1, and the studies were compared on each of those categories.

Although several of the included articles also presented data on agreement as to the most valuable methods of examination, acupuncture point selection, or herbal prescriptions, we only extracted information that was pertinent to inter-rater agreement on diagnosis, diagnostic signs, or other novel rating schemes. Our methods largely conformed to the PRISMA guidelines.21,22

Quality scoring

To compare variations in risk of bias and confounding, and the adequacy of statistical reporting, a quality score was assigned to each article. A subset of criteria was selected from the Guidelines for Reporting Reliability and Agreement Studies23,24 and was further refined and augmented by criteria that differentiated the studies reviewed here. One point from a maximum of 12 was deducted for failure to report each of the following: (1) number of subjects included in the analysis of outcomes and the criteria for their selection, (2) characteristics of the subjects actually enrolled, (3) number and criteria for raters, (4) blinding of raters, (5) blinding of analysts, (6) limitation on time between examinations of each subject, (7) randomization of the order in which raters examined subjects, (8) rationale for sample sizes and/or number of data points, (9) description of statistical methods, (10) number of options on rating recording forms, (11) reporting central tendency of outcomes, and (12) reporting variability of outcomes. When any of those features was only partially implemented, a fractional point was deducted for each respect in which that feature was deficient. Lower scores, therefore, indicate greater risk of some combination of bias, confounding, and inadequate reporting.

A point was not deducted for failure to randomize for studies in which all raters examined each subject simultaneously, nor for failure to report the number of rating choices for studies that did not use rating forms, nor for post hoc revision of analytic plans. Our reasoning in this latter regard is that the majority of these trials were not tests of specific hypotheses, but experimental efforts to develop methods of achieving more satisfactory levels of agreement. From that point of view, post hoc modifications of analysis are another dimension of experimentation. When an included publication did not provide a central tendency or range for an outcome but did include tabular data allowing us to calculate one or both, we did so and included those values in square brackets [] in the outcomes column of Supplementary Appendix SA1, and Tables 3 and 4, but still deducted from the quality score for the publication's failure to state the value explicitly.
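The scoring rule described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the reviewers' own tooling: the function name `quality_score` and the list layout are hypothetical, and the item credits are transcribed from the Hogeboom 2001 and Zhang 2004 rows of Table 4, where 1 means a criterion was fully reported, 0 that it was not, and a fraction that it was partially met.

```python
from typing import Sequence

MAX_SCORE = 12  # one point per criterion (1)-(12) listed in the text


def quality_score(credits: Sequence[float]) -> float:
    """Return 12 minus the summed deductions.

    `credits` holds one value in [0, 1] per criterion, in the order
    (1)-(12); the deduction for an item is 1 minus its credit.
    """
    if len(credits) != MAX_SCORE:
        raise ValueError(f"expected {MAX_SCORE} item credits, got {len(credits)}")
    if any(not 0 <= c <= 1 for c in credits):
        raise ValueError("each credit must lie between 0 and 1")
    deductions = sum(1.0 - c for c in credits)
    # Round to suppress floating-point noise from fractional deductions
    return round(MAX_SCORE - deductions, 2)


# Item credits transcribed from Table 4 (assumed column order = criteria order)
hogeboom_2001 = [1, 1, 1, 1, 0, 1, 1, 1, 1, 1, 0, 1]
zhang_2004 = [1, 1, 1, 1, 0, 1, 1, 0, 0.7, 1, 0.5, 1]

print(quality_score(hogeboom_2001))  # 10.0
print(quality_score(zhang_2004))     # 9.2
```

Both results match the total scores listed for those publications in Table 4.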

Table 3.

Group I—Agreement on Traditional Chinese Medicine Pattern Differentiation: Rank Order of Mean Cohen's Kappaa

| Trial | Kappa mean (range, CI or p-value) | Q score | Prior group discussion and practice | Outcome of post hoc analysis | Dx prompts on rating form | Rating categories included in analysis | Raters rating each subject | Subjects included in analysis |

|---|---|---|---|---|---|---|---|---|

| Zhang 2008b,c | [0.59] (0.49 to 0.76, p > 0.05) | 8.7 | * | * | * | 9 | 3 | 42 |

| Grant Primary | 0.56 (CI 0.25 to 0.81, p < 0.001) | 8.5 | * | 3 | 2 | 27 | ||

| MacPherson Secondary | [0.48] (0.25 to 0.67) | 8 | * | * | 3 | 2 | 48 | |

| Tangb | 0.472 (p = 0.003) | 5 | * | * | * | 3 | 2 | 22 |

| Sung Phase IIIb | [0.36] (0.27 to 0.51) | 6.7 | * | * | 4 | 4 | 65 | |

| MacPherson Primary | [0.31] (0 to 0.59) | 8 | * | * | 3 | 2 | 87 | |

| Zhang 2004 More stringentb,c | [0.26] (0.23 to 0.30) | 9.2 | * | * | * | [10] | 3 | 39 |

| Birkeflet 4 categories | [0.19] (0.13 to 0.51) | 8.5 | * | 4 | 2 | 54 | ||

| Sung Phase Ib | [0.11] (0.03 to 0.32) | 6.7 | * | 4 | 4 | 39 | ||

| Birkeflet 12 categories | [0.07] (−0.01 to 0.22) | 8.5 | * | 12 | 2 | 54 | ||

| Mean | 0.34 | |||||||

| Median | 0.34 |

[] Values calculated from tabular data in the publication (E.J.); p-values are those reported from chi square tests; the median falls between the ranks of Sung Phase III and MacPherson Primary; Dx, diagnosis; * indicates that the trial had the feature named at the head of the column.

<sup>a</sup> The range of kappa values for agreement by “at least two” raters reported in O'Brien 2009a28 is omitted because its calculation is not described.

<sup>b</sup> Kappa assumed to be Cohen's.

<sup>c</sup> Kappa values “weighted by number of pairs involved.”

Table 4.

Quality Scores for Controls on Bias, Confounding, and Statistical Reporting

Column groups: Subjects; Raters; Controls; Statistical reporting.

| Publication | Total score | n Subjects, criteria | Enrolled cohort characteristics | n Raters, criteria | Raters blind | Analyst blind | Limited time for examinations of same subject | Randomized order of examinations | Rationale for sample sizes, data points | Statistical methods | Rating options on data collection form | Outcomes: central tendency | Outcomes: variability |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Group I: Agreement on TCM pattern differentiation | | | | | | | | | | | | | |
| Hogeboom 2001 | 10 | 1 | 1 | 1 | 1 | 0 | 1 | 1 | 1 | 1 | 1 | 0 | 1 |
| MacPherson 2003 | 8 | 1 | 1 | 1 | 1 | 1 | 0 | 0 | 0 | 1 | 1 | 0 | 1 |
| Sung 2004 | 6.7 | 1 | 1 | 1 | 1 | 0 | 0 | 0 | 0 | 0.7 | 1 | 0 | 1 |
| Zhang 2004 | 9.2 | 1 | 1 | 1 | 1 | 0 | 1 | 1 | 0 | 0.7 | 1 | 0.5 | 1 |
| Zhang 2005 | 7.3 | 1 | 1 | 1 | 0 | 0 | 1 | 1 | 0 | 0.7 | 1 | 0.3 | 0.3 |
| Zhang 2008 | 8.7 | 1 | 1 | 1 | 1 | 1 | 1 | 0 | 0 | 0.7 | 1 | 0 | 1 |
| O'Brien 2009a<sup>a</sup> | 8.7 | 1 | 1 | 1 | 1 | 1 | 1 | 0 | 0 | 0.7 | 1 | 0<sup>b</sup> | 1 |
| Mist 2009 | 7.5 | 1 | 0 | 1 | 1 | 0 | 1 | 0 | 0 | 1 | 1 | 0.8 | 0.7 |
| Mist 2011<sup>c,d</sup> | 8.5 | 1 | 1 | 1 | 1 | 0 | 1 | 1 | 0 | 1 | 1 | 0.5 | 0 |
| Birkeflet 2011<sup>c,d</sup> | 8.5 | 1 | 1 | 1 | 1 | 0 | 1 | 1 | 0 | 0.5 | 1 | 0 | 1 |
| Grant 2013 | 8.5 | 1 | 1 | 1 | 1 | 0 | 1 | 0 | 0 | 1 | 1 | 0.75 | 0.75 |
| Tang 2013 | 5 | 1 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 0.5 | 1 | 1 | 0.5 |
| Xu 2015 | 3.5 | 1 | 0 | 0.5 | 0 | 0 | 0 | 0 | 0 | 1 | 1 | 0 | 0 |
| Popplewell 2018 | 5.5 | 1 | 1 | 1 | 0 | 0 | 0 | 0.5 | 0 | 1 | 0 | 1 | 0 |
| Mean: | 7.5 | | | | | | | | | | | | |
| Median: | 8.3 | | | | | | | | | | | | |
| Group II: Agreement on TCM examination findings | | | | | | | | | | | | | |
| O'Brien 2009b | 8.5 | 1 | 1 | 1 | 1 | 1 | 1 | 0 | 0 | 0.5 | 1 | 0.5<sup>b</sup> | 1 |
| Hua 2012 | 8 | 1 | 1 | 1 | 1 | 0 | 1 | 0 | 0 | 1 | 1 | 0 | 1 |
| Mean: | 8.25 | | | | | | | | | | | | |
| Median: | 8.25 | | | | | | | | | | | | |
| Group III: Agreement on novel rating schemes | | | | | | | | | | | | | |
| Walsh 2001 | 8 | 1 | 0.5 | 1 | 0 | 1 | 1 | 1 | 0 | 1 | 1 | 0 | 0.5 |
| King 2002<sup>c</sup> | 9 | 1 | 1 | 1 | 1 | 0 | 1 | 1 | 0 | 1 | 1 | 0.5 | 0.5 |
| Langevin 2004 | 8 | 1 | 0.5 | 1 | 1 | 0 | 1 | 0 | 0 | 1 | 1 | 1 | 0.5 |
| King 2006<sup>c</sup> | 7.5 | 1 | 0.5 | 1 | 0 | 0 | 1 | 1 | 0 | 1 | 1 | 1 | 0 |
| Bilton 2010 | 7 | 1 | 0.5 | 1 | 1 | 0 | 1 | 0 | 0 | 0.5 | 1 | 1 | 0 |
| Mean: | 7.9 | | | | | | | | | | | | |
| Median: | 8 | | | | | | | | | | | | |

<sup>a</sup> Data collection form did not prompt dx categories of pattern dx; did require endorsement of eight guiding principles that were listed.

<sup>b</sup> The publication states that Cohen's kappa was used to compare agreement across all three raters to agreement expected by chance, but the literature suggests that this statistic is valid only for agreement between two raters.51 It also provides outcomes based on agreement by “at least two” raters, but this may not be comparable with rates or kappa for true pairwise agreement.

<sup>c</sup> Studies with two raters in which both examined each subject simultaneously; consequently, points were not deducted for failure to limit time between exams or to randomize.

<sup>d</sup> Did not use a form to collect ratings data; consequently, no point was deducted for failure to report number of rating options prompted.

TCM, Traditional Chinese Medicine.

Results

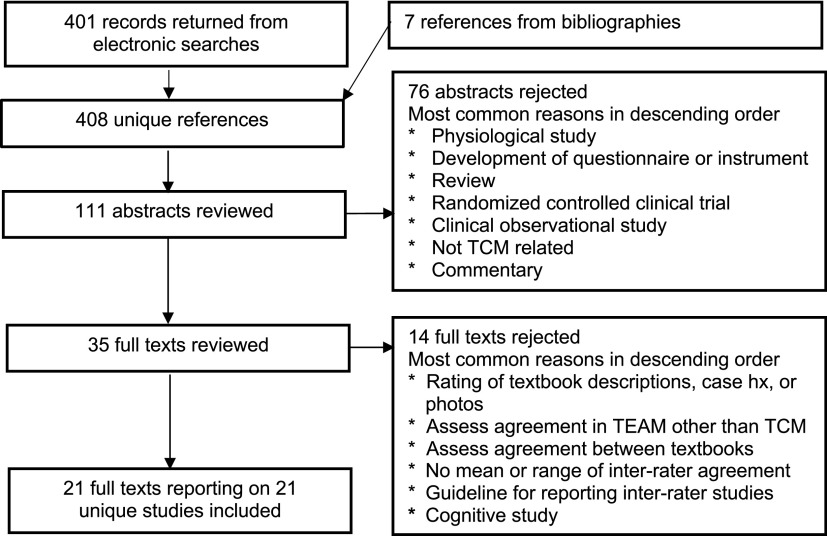

To the 401 records returned by electronic searches, 7 references found in bibliographies were added, for a total of 408 references. On the basis of the publication titles, 111 abstracts were selected for review, and on that basis, 35 full texts were selected. Twenty-one of the reviewed texts met eligibility criteria and were included (E.J.) (Fig. 1).

FIG. 1.

Flowchart of publication selection.

Each included article reported a different study and was published during the period 2001–2018. Eight studies were conducted in Australia,25–32 eight in the United States,33–40 three in China,41–43 and one each in Norway44 and the United Kingdom.45 In the following we occasionally refer to an individual publication by the last name of its first author and, when necessary to differentiate multiple publications with the same first author, by the year of publication.

Because of differences in the biomedical status of subjects, rating schemes, analytic methods, and other factors, a meta-analysis of outcomes was not feasible. To facilitate the comparison of studies of similar design, they were divided into three groups according to the type of rating scheme used. Group I studied agreement on TCM pattern differentiation (bian zheng), Group II on diagnostic signs found by the traditional methods of examination, and Group III on novel rating schemes based on TCM concepts (Supplementary Appendix SA1).

Group I—agreement on TCM pattern differentiation

This group includes 14 studies in which raters endorsed one or more TCM pattern diagnoses (bian zheng).28,31–33,35–39,41–45 These publications reported the outcomes of 17 trials, and multiple outcomes were reported for some trials. In this section, individual trial outcomes are identified by the name of first author, year of publication when necessary, and the name given to the relevant trial in the publication (e.g., Zhang 2008 Phase I). To identify the outcomes of trials in which multiple types of rating were reported, the name given the type of rating is appended (e.g., MacPherson Primary), and for cases in which outcomes based on multiple criteria for scoring agreement were reported, the name given the criterion is appended (e.g., Zhang 2004 More stringent) (Table 1).

Table 1.

Designation of Trials and Outcomes in Group I: Traditional Chinese Medicine Pattern Differentiation

| Publications | Trial and outcome designations | ||

|---|---|---|---|

| Trial | Type of rating | Agreement criteria | |

| Hogeboom 2001 | Hogeboom | ||

| MacPherson 2004 | MacPherson | Primary | |

| Secondary | |||

| Sung 2004a | Sung Phase I | ||

| Sung Phase III | |||

| Zhang 2004b | Zhang 2004 | More stringent | |

| Less stringent | |||

| Zhang 2005 | Zhang 2005 | ||

| Zhang 2008 | Zhang 2008 | ||

| O'Brien 2009a | O'Brien 2009a | Eight guiding principles | All three raters |

| Zang Fu | At least two raters | ||

| Mist 2009 | Mist 2009 Phase I | Exact | |

| Whole system | |||

| Mist 2009 Phase II Tucson | Exact | ||

| Whole system | |||

| Mist 2009 Phase II Portland | Exact | ||

| Whole system | |||

| Mist 2011 | Mist 2011 | ||

| Birkeflet 2011 | Birkeflet | 12 Categories | |

| 4 Categories | |||

| Grant 2013 | Grant | Primary | |

| Combined primary and secondary | |||

| Tang 2013 | Tang | ||

| Xu 2015 | Xu | ||

| Popplewell 2018 | Popplewell | Pattern agreement | |

| Weighted agreement | |||

<sup>a</sup> A group training designated “Phase II” was conducted but no outcomes are reported.

<sup>b</sup> A “Training” trial was described before the principal trial but no outcomes are reported.

Across those 17 trials, the sample size of subjects averaged 168 (median 40, range 4–2218). Each of those trials except one32 enrolled subjects qualified for a biomedical disorder: rheumatoid arthritis in three (Zhang 2004, 2005, 2008),35–37 temporomandibular joint disorder (TMJD) and its symptoms in three (Mist 2009 Phase I & Phase II Tucson and Portland),38 low-back pain in two (Hogeboom and MacPherson),33,45 irritable bowel syndrome in two (Sung Phases I and III),41 and hypercholesterolemia, fibromyalgia, prediabetes, ketamine abuse, infertility, and a variety of cardiovascular diseases in one each (O'Brien 2009a, Mist 2011, Grant, Tang, Birkeflet, and Xu).28,31,39,42–44 Birkeflet enrolled two cohorts of women, one qualified as infertile and the other as previously fertile, and Mist 2009 enrolled subjects with TMJD diagnoses in Phase I, then with TMJD symptoms but not diagnoses in Phase II, both Tucson and Portland.38,44

Within this group of trials, the study by Popplewell et al. is an exception in its enrollment of subjects who were not selected for a specific biomedical diagnosis, and their data collection form prompted an unusually large number of rating choices (56), in both features approximating the conditions of clinical practice rather than those of a clinical trial.32 Similarly Xu et al. included subjects with a wide range of cardiovascular conditions (9) and drew raters from an apparently large pool of staff across several hospitals, thus approximating conditions of practice in a cardiology department.43

Across trials, the number of raters examining each subject averaged 3 (median 2, range 2–6). Rater qualifications were similar across studies: graduation from an academic program in TCM, 3 or more years of clinical experience, and often licensure in TCM or acupuncture. In all except one study, the manner in which subjects were examined was specified as all or a subset of the traditional “four methods” (interrogation, observation, smelling-listening, and palpation). The exception again was the study of Popplewell et al. in which raters were directed to use their “normal diagnostic strategies.”32 Hogeboom specified three of the traditional methods (interrogation, pulse palpation, and tongue inspection) but also allowed raters to reach their conclusions “as they would if patients were coming into their private office.”33

Features intended to raise levels of agreement, including some combination of group discussion, review of reference literature, and/or practice diagnosis, preceded 35% of the trials reported in this group (MacPherson, Sung Phase III, Zhang 2008, Mist 2009 Phase I, Tang, and Xu).37,38,41–43,45 In Mist 2009 Phase II, group discussions of ratings were also conducted intermittently during the trial.38

Returning from the level of trials to that of studies, various strategies were implemented to reduce the large number of distinct ratings that are possible within the overall scheme of TCM pattern diagnosis to a smaller number for which more favorable outcomes might arise. Eighty-six percent of studies used written prompts or verbal instructions to suggest or require a limited number of diagnostic choices, although the option to write in nonlisted diagnoses was provided by most.28,31–33,35–38,41–43,45 The number of prompted or allowed diagnoses averaged 15 (median 13, range 4–56). The two studies that did not use data collection forms (Mist 2011, Birkeflet) each standardized some other aspect of the examination or rating processes.39,44

A second criterion for agreement, more liberal than the first, was adopted in 36% of studies.28,32,35,38,44 In 10 studies (71%), the analytic procedure was altered post hoc. In seven of those studies, the categories prompted on the data collection form were collapsed to a smaller number for analysis.31,33,35–37,44,45 Three studies calculated agreement post hoc for only the most frequently endorsed diagnoses.28,33,42

Levels of agreement reported: Among the 17 trials, 9 mean rates of pairwise agreement were reported (Zhang 2004 Less and More stringent, Zhang 2005, Mist 2011, Grant Primary and Combined Primary and Secondary, Tang, Popplewell Pattern agreement and Weighted agreement).31,32,35,36,39,42 Another five trial outcomes were reported only as ranges of mean rates across pairs of raters (MacPherson Primary and Secondary, Sung Phases I and III, Zhang 2008), but each of those publications included tabular data that allowed us to calculate mean rates37,41,45 (E.J.). These are displayed in square brackets [] in Supplementary Appendix SA1 and Table 2.

Table 2.

Group I—Agreement on Traditional Chinese Medicine Pattern Differentiation: Rank Order of Mean Pairwise Percentage Rates

| Trial | Percentage rate mean (range) | Q score | Prior group discussion and practice | Outcome of post hoc analysis | Dx prompts on rating form | Rating categories included in analysis | Raters rating each subject | Subjects included in analysis |

|---|---|---|---|---|---|---|---|---|

| Mist 2011 | 96.40% | 8.5 | * | 6 | 2 | 56 | ||

| Sung Phase III | [80%] (77.8–84.4) | 6.7 | * | * | 4 | 4 | 65 | |

| Zhang 2008a | [73%] (64.3–85.7) | 8.7 | * | * | * | 9 | 3 | 42 |

| Grant Primary | 70% (25–100) | 8.5 | * | 3 | 2 | 27 | ||

| MacPherson Secondary | [68.0%] (56–80) | 8 | * | * | 3 | 2 | 48 | |

| MacPherson Primary | [65.4%] (47–75) | 8 | * | * | 3 | 2 | 87 | |

| Zhang 2004 less stringenta | 64.8% (46.7–84.7) | 9.2 | * | * | * | [10] | 3 | 39 |

| Tang | 59% (0–50) | 5 | * | * | * | 5 | 2 | 22 |

| Sung Phase I | [57.4%] (46.2–70.6) | 6.7 | * | 4 | 4 | 39 | ||

| Grant combined primary and secondary | 41% (range n/r) | 8.5 | * | n/r | 2 | 27 | ||

| Zhang 2005 | 31.7% (27.5–35) | 7.3 | * | * | [7] | 3 | 40 | |

| Zhang 2004 more stringenta | 28.2% (26.5–33.3) | 9.2 | * | * | * | [10] | 3 | 39 |

| Popplewell pattern agreement | 23% (range n/r) | 5 | * | 18 | 2 for 16 | 35 | ||

| 3 for 19 | ||||||||

| Popplewell weighted agreementb | 19% (range n/r) | 5 | * | 18 | 2 for 16 | 35 | ||

| 3 for 19 | ||||||||

| Mean: | 56.7% | |||||||

| Median: | 64.8% |

[] Values calculated from tabular data in publication (E.J.); median between ranks occurs between Zhang and Tang; Dx, diagnosis; * indicates that the trial had the feature named at the head of the column.

Mean rates reported in O'Brien 2009a28 for agreement by “at least two” raters may not be comparable to pairwise rates, and are omitted.

<sup>a</sup> Outcomes weighted by number of pairs agreeing per dx category.

<sup>b</sup> Outcome weighted by similarity of severity scores.

Table 2 lists all 14 mean rate outcomes in rank order. Examining the relationship between outcomes and study features, the seven outcomes above the median have quality scores only slightly higher than those below, and a much higher incidence of training raters before the trial. Outcomes above the median generally included fewer rating categories in the analysis. The rank order does not appear to be correlated with post hoc analyses, nor with the number of subjects or raters.

We assumed that the “pairwise” kappa in Sung et al., the “two category” kappa in the three studies by Zhang et al., and the “between two” kappa in Birkeflet et al. are all Cohen's kappa. On that basis, two outcomes were reported as mean values of Cohen's kappa averaged across rater pairs (Grant Primary and Tang) and another nine only as ranges of that statistic (MacPherson Primary and Secondary, Sung Phases I and III, Zhang 2004 More and Less stringent, Zhang 2008, Birkeflet 4 and 12 categories).31,35,37,41,42,44,45 Eight of the nine outcomes reported only as ranges were accompanied by tabular data that allowed us to calculate mean values (Zhang 2004 Less stringent was the exception), and these too are displayed in brackets [] in Supplementary Appendix SA1, and Tables 2 and 3 (E.J.).
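For readers unfamiliar with the statistic, the sketch below shows one way a pairwise Cohen's kappa and its mean across rater pairs can be computed. It is a minimal illustration, not the procedure used in any of the included studies: the function names and the three raters' labels are hypothetical, and each rater is assumed to assign exactly one category per subject.

```python
from collections import Counter
from itertools import combinations
from statistics import mean


def percent_agreement(a, b):
    """Proportion of subjects given the same label by both raters."""
    if len(a) != len(b) or not a:
        raise ValueError("rating lists must be non-empty and of equal length")
    return sum(x == y for x, y in zip(a, b)) / len(a)


def cohens_kappa(a, b):
    """Cohen's kappa for two raters assigning one category per subject."""
    n = len(a)
    p_obs = percent_agreement(a, b)
    freq_a, freq_b = Counter(a), Counter(b)
    # Chance agreement: product of the two raters' marginal proportions,
    # summed over every category either rater used
    p_exp = sum(freq_a[c] * freq_b[c] for c in freq_a.keys() | freq_b.keys()) / n**2
    if p_exp == 1:
        raise ValueError("kappa is undefined when expected agreement is 1")
    return (p_obs - p_exp) / (1 - p_exp)


# Hypothetical pattern diagnoses from three raters for the same six subjects
ratings = {
    "rater_1": ["A", "A", "B", "B", "C", "A"],
    "rater_2": ["A", "B", "B", "B", "C", "A"],
    "rater_3": ["A", "A", "B", "C", "C", "B"],
}

# One kappa per rater pair, then the mean and range across pairs
pairwise = {
    (r1, r2): cohens_kappa(ratings[r1], ratings[r2])
    for r1, r2 in combinations(ratings, 2)
}
mean_kappa = mean(pairwise.values())
kappa_range = (min(pairwise.values()), max(pairwise.values()))

for pair, kappa in pairwise.items():
    print(pair, round(kappa, 3))
print("mean:", round(mean_kappa, 3), "range:", kappa_range)
```

Averaging over pairs in this way yields a single summary value plus a range, which is the form in which the outcomes discussed here are tabulated.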

Table 3 ranks these 10 mean Cohen's kappa values together with their associated ranges, p-values, or confidence intervals (CIs) where reported. On visual examination, twice as many outcomes above the median were from trials in which raters received prior training, there was a mild predominance of rating forms that prompted a list of diagnoses, and in general fewer rating categories were included in analyses. The rank order does not appear to be correlated with quality scores, with post hoc alterations of analytic procedure, nor with the number of subjects or raters.
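The summary row of Table 3 can be checked with a short calculation. The snippet below is an illustrative sketch only: the dictionary name `table3_kappa` is hypothetical, and the values are the ten mean kappa figures transcribed from Table 3.

```python
from statistics import mean, median

# Mean Cohen's kappa per outcome, transcribed from Table 3 in rank order
table3_kappa = {
    "Zhang 2008": 0.59,
    "Grant Primary": 0.56,
    "MacPherson Secondary": 0.48,
    "Tang": 0.472,
    "Sung Phase III": 0.36,
    "MacPherson Primary": 0.31,
    "Zhang 2004 More stringent": 0.26,
    "Birkeflet 4 categories": 0.19,
    "Sung Phase I": 0.11,
    "Birkeflet 12 categories": 0.07,
}

values = list(table3_kappa.values())

print(f"n = {len(values)}")                       # n = 10
print(f"mean = {mean(values):.2f}")               # mean = 0.34
print(f"median = {median(values):.3f}")           # median = 0.335
print(f"range = {min(values)} to {max(values)}")  # range = 0.07 to 0.59
```

With an even number of outcomes, the median is the midpoint of the fifth and sixth ranked values (Sung Phase III and MacPherson Primary), which rounds to the 0.34 shown in the table.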

Looking again at Table 2 it is noteworthy that the two lowest ranked outcomes—Popplewell Pattern agreement and Weighted agreement—were reported from a study that had among the lowest quality scores in this entire review, but also included features designed to approximate the conditions of actual clinical practice, that is, subjects not qualified for any specific biomedical condition, no prior training of raters, the largest number of rating categories in its analysis, and no post hoc modifications of analysis.32

Similarly the study by Xu et al., which is not included in Table 3 because its outcome was averaged across diagnostic categories instead of raters, reported data giving a weighted mean Cohen's kappa of 0.06, the lowest such value found in this review, and also had the lowest quality score. It appears to have approximated conditions of practice in a hospital cardiology department by including patients with a range of conditions and pairs of raters drawn from a very large pool of clinical staff.43 Setting aside their deficits of quality, the poor outcomes in both of these studies suggest that levels of agreement in clinical practice might be significantly lower than those in clinical trials that use various devices to raise them.

Comparisons of agreement pre- versus post-training: three studies in this group compared levels of agreement before versus after group trainings that combined discussion of diagnostic standards with practice examinations and ratings. Sung reported a significant improvement in agreement pre- versus post-training from Mann–Whitney U tests (p = 0.002 for mean rates, and p = 0.015 for kappa).41 Zhang et al. 2008 replicated the design of their two earlier studies with minor changes but added group training of raters before the agreement trial, and reported significantly higher levels compared with each of their earlier studies per chi square tests (p < 0.001 vs. Zhang et al. 2004, and vs. Zhang et al. 2005).35–37 Mist et al. 2009 reported significant improvements in Fleiss' kappa (intraclass correlation coefficient [ICC] p < 0.01) across two trials conducted pre- versus post-group training.38

Reasons given for low levels of agreement: the most common factors cited to account for low levels of agreement were uncontrolled variations in the prior training and clinical experience of the raters,28,33,35,41,43,45 that the findings of some traditional methods of examination are inherently more subjective than others (often specified as observation and palpation),28,35–37 that agreement on diagnostic conclusions from a single clinical meeting might underestimate the levels that would be reached over multiple consultations as in actual clinical practice,33,35,36,41 and that subjects might give different verbal information to different raters.28,31,33

Quality scores: the quality scores in this group varied widely from 3.5 to 10 (mean 7.5, median 8.3). The most common risks of bias were failure to provide a statistical rationale for sample sizes of subjects or raters (93%), to blind statistical analysts (79%), to report central tendencies for all outcomes (65%), and to randomize the order in which raters examined subjects (57%). Failure to limit the time elapsed between examinations of the same subject constituted a risk of confounding in many (36%). Deficits of statistical reporting were very common, with every publication failing to provide values either for central tendency or variability for at least one of the outcomes reported, and 57% failing to explicitly identify the type of kappa used. As already mentioned, we did not deduct quality points for post hoc revision of analytic plans, which occurred in 11 of the studies in this group28,31,33,35–39,42,44,45 (Table 4).

Group II—agreement on TCM examination findings

This group consists of two studies that assessed agreement on diagnostic signs endorsed after traditional TCM methods of examination. Each collected rating data on forms that presented multiple descriptors for each type of sign found by each mode of examination and multiple rating choices for each descriptor.

In O'Brien et al. 2009b subjects with hypercholesterolemia were assessed by three raters who endorsed multiple choices for findings from inspection, auscultation, and palpation. Mean Cohen's kappa and 95% CI were reported for groups of signs found by each examination method, first across all three raters, and then for instances of agreement by “at least two” raters.29

In Hua et al.'s study, 40 subjects with osteoarthritis of the knee were assessed by two raters using all four traditional modes of examination. Their data collection form presented multiple choices for signs covering the findings of each method. Pairwise rates and Cohen's kappa were reported for each type of sign, allowing us to summarize ranges of agreement, but an overall mean kappa was not reported30 (Supplementary Appendix SA1).

In each of these studies, agreement varied greatly across the different types of signs, with pairwise rates ranging from slightly >0% to 100%, and kappa from slightly <0 to 1.00.

As reasons given for low levels of agreement on some signs, both studies cited variability in the education and clinical experience of practitioners, and the “inherently subjective” nature of inspection and assessments of pulse force and location.

Quality scores were 8.5 for O'Brien and 8 for Hua et al. Neither randomized the order in which raters examined subjects, provided a rationale for sample sizes, or reported mean values of agreement across all rated categories (Table 4).

Group III—agreement on novel rating schemes

This group includes five studies in which radial pulse characteristics were rated according to novel schemes based on traditional TCM pulse palpation.25–27,34,40 Each enrolled subjects who were free of acute illnesses or under a stable regimen of treatment for any chronic condition, thus allowing a generalization of outcomes to the population at large. The number of subjects averaged 26 (median 13.5, range 6–66), and the number of raters examining each subject averaged 7 (median 5, range 2–17) (Supplementary Appendix SA1).

Walsh et al. compared rates of agreement among students during the first week, immediately after, and 1 year after completion of an initial course in pulse reading at a TCM college, using a rating scheme of 12 “basic pulse parameters,” rather than traditional pulse categories. Nested chi-square tests seem to have been used to compare the proportion of raters agreeing versus chance, first for each descriptor × subject combination, then for each time point overall. That would seem to compound p-values from one level of nesting to the next. Only the data collected immediately after the conclusion of the class were reported to have agreement significantly greater than chance (p = 0.046), and the data combined from all three time points did not have agreement significantly greater than chance (p > 0.05). A decay of agreement at 1 year post-training was attributed to the graduates' exposure through TCM literature to multiple, often conflicting accounts of how pulses should be assessed.25

In two later studies using an apparently more evolved version of the same “operational” methodology, 16 pulse descriptors were rated on multiple choice. King et al. 2002 reported a mean rate of pairwise agreement of 80% across two trials, and chi-square greater than chance (p < 0.05) for each rated descriptor. Using the same method, King et al. 2006 found 86% pairwise agreement for rating overall pulse strength in left versus right radial arteries. The high rates in each of these later studies were attributed to the novel operationalized scheme. However, the disparity between those outcomes and Walsh's test of an earlier, simpler version of a very similar scheme suggests that the high levels found in King's trials may have been due more to those two raters' long collaboration and joint practice than to the novel methodology itself. It may be that the Walsh–King system achieves high levels of agreement only when employed by experienced practitioners who have spent extensive time practicing together.26,27

In Langevin et al., pulses for yin and for yang were rated on separate graphic Likert scales with lines indicating integer values, and raters were free to employ their “customary means of examination.” Those data were used to estimate Intraclass Correlation (ICC) for numbers of raters ranging from 1 to 6. ICC for one rater was 0.77 for yin and 0.78 for yang, and six raters approximated a standard of “good agreement” (ICC >0.80), outcomes that were comparable with the three highest mean pairwise rates reported in Group I.34

Bilton assessed pairwise agreement among raters who were highly trained in Contemporary Chinese Pulse Diagnosis (CCPD)—another novel method purporting to detect “operationally defined” pulse characteristics.12,46 Pulse descriptors were rated for female subjects at two time points separated by 28 days to control for the possible effects of menses. Each pair of raters was scored for agreement both within each time point and across time points in both orders.

The validity of combining synchronic and diachronic rates of agreement in that way rests on the assumption that subjects' discernible pulse qualities did not vary significantly across the 28 days. The possibility that they did raises suspicion of a significant confound, without which the overall rate might have been higher because some disagreements across time points might have been due to changes in the subjects' pulses rather than to differences in raters' assessments. If the values calculated for agreement across the two time points are omitted, the average kappas reported within time point 1 (0.52) and time point 2 (0.56) are free from that suspicion. That this outcome does not compare with levels in other studies that provided intensive training in a novel method of pulse rating might also be due to the very large number (87) of descriptive categories that were rated.40

The most common reasons given for low levels of agreement in this group were lack of consistent or clear definitions of pulses and/or palpation technique in the TCM or CCPD literature.26,27,40

Quality scores averaged 7.9 (median 8, range 7–9). The most common risks of bias were failure to provide a rationale for sample sizes (100%), to blind analysts (80%), to report both gender distribution and mean age for the subjects (80%), and to randomize the order in which raters examined subjects (40%). Each publication failed to report at least one variability statistic (Table 4).

Discussion

This systematic review expanded on earlier ones by including new studies and facilitated their comparison by organizing them into three groups—studies of agreement on TCM pattern diagnosis, on findings of traditional TCM methods of examination, and on novel schemes of rating based on TCM concepts. A quantitative comparison of outcomes reported by two subsets of studies within the first group is also provided, and some apparent associations between a few design features and outcomes above versus below the medians are observed. In this section, our findings regarding outcomes, methods that might facilitate high levels of agreement, relevance to clinical practice, quality, and implications for clinical trials of TCM are summarized. In conclusion we critique some of the assumptions that underlie these experiments, and note some limitations of this review.

Overall the levels of agreement reported were low to moderate and generally reflected the findings of prior reviews. However, there were a few exceptionally high levels of agreement: in Group I—studies of agreement on TCM pattern diagnosis, the trial Mist 2011 Primary reported a mean rate of 96.4%, and Sung Phase III achieved a mean rate of 80% after group training of raters.39,41 In Group III—studies of agreement on novel rating schemes, the outcomes reported for trials by King (80% and 86%) and Langevin (ICC = 0.80) were also among the highest in this review.26,27,34

There were preliminary indications that a few of the methods of increasing agreement that were tested in these experiments might contribute to higher than median levels: training raters as a group and prolonged practice between raters before a trial were associated with higher or improved outcomes in both Groups I and III.25–27,37,38,41 In Group I, studies that included a smaller number of rating categories in their analyses tended to produce higher pairwise rates or Cohen's kappa,31,42,45 and in Group III, a simple graphical rating form produced a high ICC34 (Tables 2 and 3).

Permitting raters to use their accustomed modes of clinical reasoning was not consistently associated with outcomes above group medians, not even when combined with data collection forms that were designed to guide reasoning or required a large number of variables to be rated.32,33 The high rate achieved in Langevin et al. may have been due to the combination of such permission with an unusually simple graphic rating scale.34

With respect to implications for clinical practice, the restriction to or prompting for a limited number of rating choices may not reflect the much larger number of diagnostic options considered by practitioners in the clinic. Nor do the other methods that might increase agreement seem adaptable to clinical practice: frequent group training of a large number of practitioners is probably not feasible, and forms designed to guide diagnostic reasoning did not consistently produce high levels of agreement. Simple graphic rating forms such as those used in Langevin et al. might be useful for simpler diagnostic constructs such as the eight guiding principles, but would be difficult to adapt to pattern diagnosis.34

Across all three groups, the most frequent deficits were the failure to provide a rationale for sample size, to blind analysts, to randomize the order of examinations, and to adequately report statistical tests and outcomes. The sample sizes of subjects and raters were with few exceptions so small that one doubts they reflected the heterogeneity of diagnostic signs that would appear in the larger samples typical of clinical trials.

As has been long noted, there are serious questions concerning the use of kappa in studies of inter-rater agreement.48 Kappa formulae incorporate certain assumptions regarding rates of chance agreement that may not reflect the actual features of some studies.49,50 Values also vary with prevalence of the rating categories in the actual population and with the number of possible rating categories.51,52 One review advises that kappa is an appropriate measure of inter-rater agreement only when the rated categories are truly independent in their occurrence,53 but this is not the case for TCM pattern differentiation in which some combinations of diagnostic components are more closely associated with one another than others.54,55 Despite frequent references to publications that characterize values in the range 0.6–0.8 as “good,” that seems insufficient to support the standard of replicability of clinical trial results that most authors cite as the goal of this type of study.52,56
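The dependence of kappa on the prevalence of the rated categories can be made concrete with a small calculation. The sketch below is illustrative only: the 2 × 2 counts are hypothetical, the function name `cohen_kappa` is our own, and it implements the standard two-rater, two-category Cohen's kappa, kappa = (p_o − p_e) / (1 − p_e), rather than any of the specific variants used in the reviewed studies.

```python
def cohen_kappa(both_yes, yes_no, no_yes, both_no):
    """Return (observed agreement, Cohen's kappa) for a 2 x 2 table.

    both_yes: subjects for whom both raters endorsed the category
    yes_no:   rater 1 endorsed, rater 2 did not
    no_yes:   rater 1 did not endorse, rater 2 did
    both_no:  neither rater endorsed
    """
    n = both_yes + yes_no + no_yes + both_no
    p_o = (both_yes + both_no) / n            # observed agreement
    p1_yes = (both_yes + yes_no) / n          # rater 1 endorsement rate
    p2_yes = (both_yes + no_yes) / n          # rater 2 endorsement rate
    # agreement expected by chance, given each rater's marginal rates
    p_e = p1_yes * p2_yes + (1 - p1_yes) * (1 - p2_yes)
    return p_o, (p_o - p_e) / (1 - p_e)

# Hypothetical tables with identical observed agreement (80%).
balanced = cohen_kappa(40, 10, 10, 40)   # category endorsed for about half of subjects
skewed = cohen_kappa(80, 10, 10, 0)      # category endorsed for about 90% of subjects

print(f"balanced: agreement {balanced[0]:.2f}, kappa {balanced[1]:.2f}")
print(f"skewed:   agreement {skewed[0]:.2f}, kappa {skewed[1]:.2f}")
```

In this example both tables show 80% raw agreement, yet kappa is about 0.60 when the category is endorsed for half of the subjects and about −0.11 when it is endorsed for nearly all of them. A pattern or sign that is very common in a study sample can therefore yield a low or even negative kappa despite a high pairwise rate.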

These problems suggest caution in interpreting the outcomes of these studies, and raise the possibility that the methods of increasing agreement for which some provide suggestive evidence might not produce the same effects in actual individualized trials. Nevertheless, if conducted with more thorough controls for bias and confounding, and reported more adequately, studies of the effects of prior group training or prolonged collaboration of raters, fewer rating prompts or possibilities, and simple graphic rating forms in combination with allowing raters to use their customary mode of diagnostic reasoning would be valuable tests of whether those features might reliably produce high levels of agreement. Whether or not the levels achieved would be adequate to support scientifically acceptable coefficients of association between TCM diagnosis and trial outcomes is a separate question. In addition, establishing inter-rater agreement on initial diagnosis would not solve the problem of reproducibility in clinical trials because the link between specific diagnosis and subsequent treatment decisions has also been shown to be highly variable.33

Finally, the premise that high inter-rater reliability of initial diagnoses is crucial to scientifically valid assessment of the efficacy of TCM might not be the most useful approach to understanding the relationship between diagnosis and clinical efficacy. The reassessment of diagnoses and adjustment of treatment plans on successive clinical encounters is an essential feature of TCM clinical practice, and the course of reassessment across sessions might be an equal or more significant predictor of outcome than the initial diagnosis, as noted by several of the articles reviewed here.33,36,41 In addition, the complexity of the TCM model of psychophysiology, which includes organs and systems that are inter-related through multiple logics, suggests that there might be more than one sequence of diagnostic assessments and treatments that would be effective for some patients. This is plausible on the principle that some complex dynamic systems can be impelled into similar pathways of change by more than one type of input. If that were true for TCM, then individual variation among practitioners in their initial diagnoses and treatments would not be an obstacle to reliably good clinical outcomes. Accounting for replicability would simply require more sophisticated modeling. Taking that possibility seriously would be a substantial conceptual challenge to studies of the relationship between diagnosis and outcome. The various ways of modeling the behavior of complex systems might be valuable resources for responding to that challenge.57–59

This review is limited in that only English language publications in peer-reviewed journals were searched, and this may have excluded Chinese language reports of similar studies. We did not attempt to include studies that appeared only as theses or dissertations which might have met the inclusion criteria if they had been published in a peer-reviewed journal. The heterogeneity of design, rating schemes, and outcome measures of the studies that were included rendered meta-analysis infeasible.

Conclusions

The studies reviewed here experimented with various procedures for producing biomedically acceptable levels of agreement on initial diagnoses or other types of ratings by TCM practitioners when made under some of the controlled conditions that are typical of clinical trials. With few exceptions, outcomes were inadequate to that standard. As a whole, these studies are beset by serious risks of bias and confounding, by deficits in statistical reporting, and by a lack of consensus on key issues of analysis.

Bearing those limitations in mind, they do suggest a few strategies that might contribute to higher levels of inter-rater agreement on initial assessment: providing opportunities for group discussion and practice diagnosis among raters immediately before a trial, extended prior collaboration by raters, using rating collection forms that prompt for fewer endorsement choices, and allowing raters to use their accustomed modes of diagnostic reasoning while responding to simple graphic rating forms. Future investigations with more thorough experimental controls and statistical reporting should assess the reliability with which those devices can raise levels of agreement. However, the premise that high inter-rater reliability of initial diagnoses is crucial to valid assessment of the efficacy of TCM is questionable, and methods from the field of complex systems analysis might be usefully applied to investigate the empirical relationship between multiple diagnoses across serial clinical encounters and outcomes.

Supplementary Material

Acknowledgments

The authors thank the New England School of Acupuncture and the Massachusetts College of Pharmacy and Allied Health Sciences for access to electronic databases at their libraries.

Disclaimer

The contents of this study are solely the responsibility of the authors and do not represent the official views of the NCCIH or the NIH.

Author Disclosure Statement

No competing financial interests exist.

Funding Information

This research was supported by grants from the National Center for Complementary and Integrative Health (NCCIH), at the National Institutes of Health (NIH): K01AT004916, U19AT002022, and K24AT009282.

References

- 1. Birch S. Issues to consider in determining an adequate treatment in a clinical trial of acupuncture. Complement Ther Med 1997;5:8–12

- 2. Birch S. Testing traditionally based systems of acupuncture. Clin Acupunct Orient Med 2003;4:84–87

- 3. Hammerschlag R. Methodological and ethical issues in clinical trials of acupuncture. J Altern Complement Med 1998;4:159–171

- 4. Birch S, Alraek T. Traditional East Asian Medicine: How to understand and approach diagnostic findings and patterns in a modern scientific framework? Chin J Integr Med 2014;20:336–340

- 5. Lewith G, Vincent C. On the evaluation of the clinical effects of acupuncture: A problem reassessed and a framework for further research. J Altern Complement Med 1996;2:79–90

- 6. Lu Y. The relationship between the insulin levels of IGT patients and TCM syndrome differentiation. Zhejiang J Trad Chinese Med 2003;5:220

- 7. Yu E. Association between disease patterns in Chinese medicine and serum ECP in allergic rhinitis. Chinese Med J 1999;8:19–26

- 8. Seca S, Giovanna F. Understanding Chinese medicine patterns of rheumatoid arthritis and related biomarkers. Medicines 2018;5:17

- 9. Tan S, Tillisch K, Bolus R, et al. Traditional Chinese Medicine based subgrouping of Irritable Bowel Syndrome patients. Am J Chinese Med 2005;33:365–379

- 10. Zaslawski C. Clinical reasoning in Traditional Chinese Medicine: Implications for clinical research. Clin Acupunct Oriental Med 2003;4:94–101

- 11. O'Brien KA, Birch S. A review of the reliability of traditional East Asian medicine diagnoses. J Altern Complement Med 2009c;15:353–366

- 12. Bilton K, Zaslawski C. Reliability of manual pulse diagnosis methods in Traditional East Asian Medicine: A systematic narrative literature review. J Altern Complement Med 2016;22:599–609

- 13. Zell B, Hirata J, Marcus A, et al. Diagnosis of symptomatic post menopausal women by traditional Chinese medicine practitioners. Menopause 2000;7:129–134

- 14. Kalauokalani D, Sherman KJ, Cherkin DC. Acupuncture for chronic low back pain: Diagnosis and treatment patterns among acupuncturists evaluating the same patients. South Med J 2001;94:486–492

- 15. Coeytaux RR, Chen W, Lindemuth CE, et al. Variability in the diagnosis and point selection for persons with frequent headache by Traditional Chinese Medicine acupuncturists. J Altern Complement Med 2006;12:863–872

- 16. Kim M, Cobbin D, Zaslawski C. Traditional Chinese Medicine tongue inspection: An examination of the inter- and intrapractitioner reliability of specific tongue characteristics. J Altern Complement Med 2008;14:527–536

- 17. Lo L-C, Cheng T-L, Huang Y-C, et al. Analysis of agreement on traditional Chinese medical diagnostics for many practitioners. Evid Based Complement Alternat Med 2012;2012:5

- 18. Birch S, Sherman K, Zhong Y. Acupuncture and low-back pain: Traditional Chinese medical acupuncture differential diagnoses and treatments for chronic lumbar pain. J Altern Complement Med 1999;5:415–425

- 19. Alvim DT, Ferreira AS. Inter-expert agreement and similarity analysis of traditional diagnoses and acupuncture prescriptions in textbook and pragmatic-based practices. Complement Ther Clin Pract 2018;30:38–43

- 20. Birkeflet O, Laake P, Vøllestad NK. Poor multi-rater reliability in TCM pattern diagnoses and variation in the use of symptoms to obtain a diagnosis. Acupunct Med 2014;32:325–332

- 21. Moher D, Liberati A, Tetzlaff J, et al. Preferred reporting items for systematic reviews and meta-analyses: The PRISMA Statement. PLoS Med 2009;6:e1000097

- 22. Booth A, Clarke M, Dooley G, et al. The nuts and bolts of PROSPERO: An international prospective register of systematic reviews. Syst Rev 2012;1:2

- 23. Kottner J, Audige L, Brorson S, et al. Guidelines for reporting reliability and agreement studies (GRRAS) were proposed. Int J Nurs Stud 2011;48:661–671

- 24. Gerke O, Moller S, Debrabant B, et al. Experience applying the Guidelines for Reporting Reliability and Agreement Studies (GRRAS) indicated five questions should be addressed in the planning phase from a statistical point of view. Diagnostics 2018;8:69

- 25. Walsh S, Cobbin D, Bateman K, Zaslawski C. Feeling the pulse: Trial to assess agreement level among TCM students when identifying basic pulse characteristics. Eur J Orient Med 2001;3:25–31

- 26. King E, Cobbin D, Walsh S, Ryan D. The reliable measurement of radial pulse characteristics. Acupunct Med 2002;20:150–159

- 27. King E, Walsh S, Cobbin D. The testing of classical pulse concepts in Chinese medicine: Left- and right-hand pulse strength discrepancy between males and females and its clinical implications. J Altern Complement Med 2006;15:727–734

- 28. O'Brien KA, Abbas E, Zhang J, et al. An investigation into the reliability of Chinese medicine diagnosis according to Eight Guiding Principles and Zang-Fu theory in Australians with hypercholesterolemia. J Altern Complement Med 2009a;15:259–266

- 29. O'Brien K, Abbas E, Zhang J, et al. Understanding the reliability for diagnostic variables in a Chinese medicine examination. J Altern Complement Med 2009b;15:727–734

- 30. Hua B, Abbas E, Hayes A, et al. Reliability of Chinese medicine diagnostic variables in the examination of patients with osteoarthritis of the knee. J Altern Complement Med 2012;18:1028–1037

- 31. Grant SJ, Schnyer RA, Chang DH-T, et al. Inter-rater reliability of Chinese medicine diagnosis in people with prediabetes. Evid Based Complement Alternat Med 2013;2013:1–8

- 32. Popplewell M, Reizes J, Zaslawski C. Consensus in Traditional Chinese Medical diagnosis in open populations. J Altern Complement Med 2018;1–6

- 33. Hogeboom CJ, Sherman KJ, Cherkin DC. Variation in the diagnosis and treatment of chronic low back pain by Traditional Chinese Medicine acupuncturists. Complement Ther Med 2001;9:154–166

- 34. Langevin HM, Badger G, Povolny BK, et al. Yin scores and Yang scores: A new method for quantitative diagnostic evaluation in Traditional Chinese Medicine research. J Altern Complement Med 2004;10:389–395

- 35. Zhang GG, Bausell B, Lao L, et al. The variability of TCM pattern diagnosis and herbal prescription on rheumatoid arthritis patients. Altern Ther Health Med 2004;10:58–63

- 36. Zhang GG, Lee W, Bausell B, et al. Variability in the Traditional Chinese Medicine (TCM) diagnoses and herbal prescriptions provided by three TCM practitioners for 40 patients with rheumatoid arthritis. J Altern Complement Med 2005;11:415–421

- 37. Zhang GG, Singh B, Lee W, et al. Improvement of agreement in TCM diagnosis among TCM practitioners for persons with the conventional diagnosis of rheumatoid arthritis: Effect of training. J Altern Complement Med 2008;14:381–386

- 38. Mist S, Ritenbaugh C, Aickin M. Effects of questionnaire-based diagnosis and training on inter-rater reliability among practitioners of Traditional Chinese Medicine. J Altern Complement Med 2009;15:703–709

- 39. Mist SD, Wright CL, Jones KD, Carson JW. Traditional Chinese Medicine diagnoses in a sample of women with fibromyalgia. Acupunct Med 2011;29:266–269

- 40. Bilton K, Smith N, Walsh S, Hammer L. Investigating the reliability of contemporary Chinese pulse diagnosis. Aust J Acupunct Chin Med 2010;5:3–13

- 41. Sung JJY, Leung WK, Ching JYL, et al. Agreements among Traditional Chinese Medicine practitioners in the diagnosis and treatment of irritable bowel syndrome. Aliment Pharmacol Ther 2004;20:1205–1210

- 42. Tang W LM, Leung W, Sun W, et al. Traditional Chinese Medicine diagnoses in persons with ketamine abuse. J Trad Chin Med 2013;33:164–169

- 43. Xu Z-X, Xu J, Yan J-J, et al. Analysis of the diagnostic consistency of Chinese medicine specialists in cardiovascular disease cases and syndrome identification based on the relevant feature for each label learning method. Chin J Integr Med 2015;21:217–222

- 44. Birkeflet O, Laake P, Vøllestad N. Low inter-rater reliability in Traditional Chinese Medicine for female infertility. Acupunct Med 2011;29:51–57

- 45. MacPherson H, Thorpe L, Thomas K, Campbell M. Acupuncture for low back pain: Traditional diagnosis and treatment of 148 patients in a clinical trial. Complement Ther Med 2003;12:38–44

- 46. Hammer L. Chinese Pulse Diagnosis: A Contemporary Approach. Seattle: Eastland Press, 2005

- 47. Bilton K. Investigating the Reliability of Contemporary Chinese Pulse Diagnosis as a Diagnostic Tool in Oriental Medicine. Sydney, Australia: University of Technology, 2012

- 48. Maclure M, Willett WC. Misinterpretation and misuse of the kappa statistic. Am J Epidemiol 1987;126:161–169

- 49. Steinijans VW, Dilette E, Bomches B, et al. Interobserver agreement: Cohen's kappa coefficient does not necessarily reflect the percentage of patients with congruent classifications. Int J Clin Pharmacol Ther 1997;35:93–95

- 50. Feinstein AR, Cicchetti DV. High agreement but low kappa: I The problems of two paradoxes. J Clin Epidemiol 1990;43:543–549

- 51. Donner A, Klar N. The statistical analysis of kappa statistics in multiple samples. J Clin Epidemiol 1996;43:543–549

- 52. Altman DG. Practical Statistics for Medical Research. London: Chapman & Hall/CRC, 1999

- 53. Tooth L, Ottenbacher KJ. The kappa statistic in rehabilitation research: An examination. Arch Phys Med Rehabil 2004;85:1371–1376

- 54. Maciocia G. The Practice of Chinese Medicine. London: Churchill Livingstone, 1994

- 55. Schnyer R, Allen JB, Hitt SK, Manber R. Acupuncture in the Treatment of Major Depression: A Manual for Research and Practice. London: Churchill Livingstone, 2001

- 56. Landis JR, Koch GG. The measurement of observer agreement for categorical data. Biometrics 1977;33:159–174

- 57. Bell IR, Koithan M. Models for the study of whole systems. Integr Cancer Ther 2006;5:293–307

- 58. Langevin HM, Wayne PM, MacPherson H, et al. Paradoxes in acupuncture research: Strategies for moving forward. Evid Based Complement Alternat Med 2011;2011

- 59. Zhang A, Sun H, Yan G, et al. Systems biology approach opens door to essence of acupuncture. Complement Ther Med 2013;21:253–259
