Abstract

Mental imagery and visual perception rely on the same content‐dependent brain areas in the high‐level visual cortex (HVC). However, little is known about dynamic mechanisms in these areas during imagery and perception. Here we disentangled local and inter‐regional dynamic mechanisms underlying imagery and perception in the HVC and the hippocampus (HC), a key region for memory retrieval during imagery. Nineteen healthy participants watched or imagined a familiar scene or face during fMRI acquisition. The neural code for familiar landmarks and faces was distributed across the HVC and the HC, although with a different representational structure, and generalized across imagery and perception. However, different regional adaptation effects and inter‐regional functional couplings were detected for faces and landmarks during imagery and perception. The left PPA showed opposite adaptation effects, with activity suppression following repeated observation of landmarks, but enhancement following repeated imagery of landmarks. Also, functional coupling between content‐dependent brain areas of the HVC and HC changed as a function of task and content. These findings provide important information about the dynamic networks underlying imagery and perception in the HVC and shed some light upon the thin line between imagery and perception which has characterized the neuropsychological debates on mental imagery.

Keywords: carry‐over effect, functional connectivity, imagery, mental images, multivariate pattern analysis, perception, places, psychophysiological interaction

1. INTRODUCTION

Mental imagery concerns the human ability to access previously encoded perceptual information from memory (Farah, 1989; Kosslyn, 1980) to create a complex and sophisticated mental experience of objects, people, or places. How the brain allows for such a complex mechanism is one of the most fascinating issues in modern neuroscience. To date it has been repeatedly demonstrated that imagery and perception yield roughly overlapping activations in content‐dependent brain areas in occipito‐temporal high‐level visual cortex (HVC), with activations depending on the object category. For example, imagining a face leads to the activation of the fusiform face area (FFA), a brain area related to face perception, whereas imagining a scene leads to the activation of the parahippocampal place area (PPA), a brain area related to place and scene perception (O'Craven & Kanwisher, 2000).

However, neuropsychological evidence of severe visual agnosia in the absence of imagery deficits (Aglioti, Bricolo, Cantagallo, & Berlucchi, 1999; Behrmann, Winocur, & Moscovitch, 1992; Riddoch & Humphreys, 1987), as well as evidence for a selective acquired (Guariglia, Padovani, Pantano, & Pizzamiglio, 1993; Trojano & Grossi, 1994) or congenital deficit in generating mental images (Fulford et al., 2017; Jacobs, Schwarzkopf, & Silvanto, 2017; Keogh & Pearson, 2017; Watkins, 2017; Zeman, Dewar, & Della Sala, 2015, 2016), points toward a dissociation between imagery and perception. Neuroimaging studies show that information about the object category can be decoded from the activity patterns within the HVC during both imagery and perception, but from the activity patterns of the low‐level visual cortex (LVC; i.e., the retinotopic cortex/lower visual areas) only during perception (Reddy, Tsuchiya, & Serre, 2010). The activity patterns in the visual cortex can also be used to decode the individual perceived or imagined exemplar, both in the case of objects (Lee, Kravitz, & Baker, 2012) and places (Boccia et al., 2015; Boccia et al., 2017; Johnson & Johnson, 2014), although the distribution of information across LVC and HVC is strikingly different during imagery and perception: whereas the amount of information about the perceived object is higher in LVC, the amount of information about the imagined object is higher in HVC (Lee et al., 2012).

Thus, even if imagery and perception share the same neural substrates, they may be subtended by different regional, and possibly inter‐regional, neural dynamics. Two sets of neuroimaging studies support this hypothesis.

First, studies based on neural adaptation show that mental imagery and perception produce opposite adaptation effects on early brain potentials (Ganis & Schendan, 2008). Regional hemodynamic responses also show different patterns of adaptation effects as a function of the perceptual category: category repetition results in a suppression of neural signal in the left superior parietal lobule, the right V1, and the bilateral retrosplenial complex (RSC) during mental imagery of geographical space, and in an enhancement of activation during imagery of both objects and familiar places in several brain areas, including the right fusiform gyrus and the right RSC for familiar places and the bilateral inferior parietal lobule, the right inferior temporal lobe, and the right superior parietal lobule for objects (Boccia et al., 2015).

Second, studies of effective connectivity—the effect of experimental conditions on the functional coupling between brain regions—point at different dynamic interactions across brain areas subtending imagery and perception: perception originates from bottom‐up mechanisms arising from LVC, whereas imagery originates from top‐down mechanisms arising from prefrontal cortex (Ishai, 2010). A reversal of the predominant direction of cortical signal flow between parietal and occipital cortices occurs during mental imagery, with increased top‐down signal flow during imagery as compared with perception (Dentico et al., 2014). In the same vein, while an increase in both bottom‐up and top‐down coupling has been reported during perception, only a modulation of backward connections has been found during imagery, with a stronger increase in coupling than during perception (Dijkstra, Zeidman, Ondobaka, van Gerven, & Friston, 2017). Interestingly, the functional coupling among HVC areas also changes during imagery and perception. In the right hemisphere, the RSC and the PPA are more connected during perception than during imagery of familiar environments, whereas the PPA and the hippocampus (HC), a key region for memory formation and retrieval (Moscovitch et al., 2005), are more connected during imagery (Boccia et al., 2017).

Thus, even if object‐ and category‐related information is re‐instantiated during imagery and perception in the HVC, different local and inter‐regional dynamic interactions subtend imagery and perception. Investigating the dynamic interaction among the content‐dependent brain areas of the HVC and the HC during imagery may disclose the mechanisms that allow previously encoded perceptual information to be accessed from memory to create the mental image. Here we aimed to provide, for the first time, a comprehensive investigation of this issue, assessing the dynamic contribution of HVC and HC to imagery and perception by means of different and complementary data‐analysis approaches. We addressed three main questions concerning the thin line between imagery and perception within the HVC: (a) Are individual faces and landmarks represented in the HVC and the HC, and does this neural representation generalize across imagery and perception? (b) Do different neural mechanisms underlie visual perception and visual imagery in the HVC? (c) Does the functional coupling among these areas dynamically change as a function of task (imagery vs. perception) and content (faces vs. landmarks)?

To these aims, we developed a functional magnetic resonance imaging (fMRI) paradigm using stimuli from two perceptual categories known to be well‐represented in HVC, namely faces and landmarks, and a carry‐over design (Figure 1), which allows different data analysis approaches to be combined: (a) we used a multivariate pattern classification analysis to study the existence of neural representations of faces and landmarks and their generalization across imagery and perception; (b) we analyzed neural adaptation effects to disentangle whether different local regional dynamics subtended imagery and perception in the HVC; and (c) we used generalized psychophysiological interactions (gPPI) to assess whether functional connectivity between regions within the HVC was affected by task (imagery vs. perception) and content (faces vs. landmarks). Thus, moving from the local neural representation of landmarks and faces within the HVC and HC to their functional coupling, we assessed the dynamic regional and inter‐regional mechanisms that allow information to be re‐instantiated in service of imagery and perception. Based on previous findings, we expected the neural representation of faces and landmarks, assessed by means of multivariate pattern classification analysis, to generalize across imagery and perception in the content‐dependent areas of the HVC and HC. We also expected different local regional dynamic mechanisms (that is, different adaptation effects), as well as different inter‐regional dynamics across the HVC and the HC, assessed by means of gPPI, to underlie imagery and perception as a function of task and content.

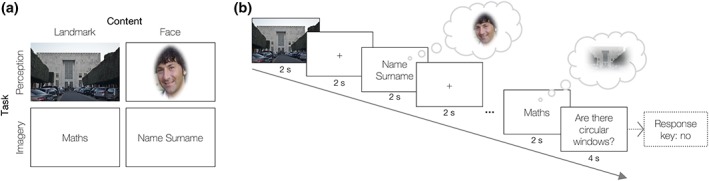

Figure 1.

Experimental design and timeline. (a) The experiment was framed as a 2 by 2 factorial design with a task (imagery vs. perception) and a content (faces vs. scenes) factor. (b) The fMRI event‐related paradigm was designed as a continuous carry‐over design, in which each stimulus preceded and followed every other stimulus. Each of the five scans consisted of 60 perceptual (photos) and 60 imagery (labels) trials (half were faces), plus 5 null trials and 9 question trials. With the exception of the question trials, which lasted 4 s, trials lasted 2 s and were followed by a fixation point of the same duration [Color figure can be viewed at http://wileyonlinelibrary.com]

2. MATERIALS AND METHODS

2.1. Participants details

Nineteen healthy right‐handed individuals (mean age: 24.947 years; SD: 1.840; 8 women) with no history of neurological or psychiatric disorders participated in the fMRI study. All participants were students at the Sapienza University of Rome and, thus, very familiar with the university campus. Campus knowledge was assessed with a preliminary questionnaire in which participants were asked to locate 15 campus landmarks on an outline map (average performance 92.281%; SD 10.003%). All participants were also familiar with the famous faces selected for the study and none suffered from developmental prosopagnosia, as confirmed by an informal interview and by performance on a preliminary questionnaire in which participants were asked to match the faces and names of 12 famous people (average performance 93.421%; SD 11.973%). All participants gave their written informed consent to participate in the study. The study was designed in accordance with the principles of the Declaration of Helsinki and was approved by the ethical committee of Fondazione Santa Lucia, Rome.

2.2. Stimuli

We conceived our fMRI experiment as a 2 by 2 factorial design (Figure 1a), where imagery and perception trials (Task factor) related to faces and landmarks (Content factor), two perceptual categories known to be well‐represented in HVC, were alternated in a pseudo‐random sequence (Figure 1b). We used six familiar landmarks within the Sapienza University campus, which have been validated and used in three previous studies (Boccia, Piccardi, et al., 2014; Boccia et al., 2015; Boccia et al., 2017), as well as six famous people's faces, selected for the purpose of this study by means of a pilot study as follows. First, we asked six individuals to name all the famous people who came to mind (e.g., actors, anchor‐men/women, showmen/women, reporters). We then selected the 12 most frequently named famous people and asked an independent sample of six participants to match their names and faces. For the fMRI study, we selected six faces that received 100% correct answers.

In the present study landmarks and faces could be presented either as photos, in the perception trials, or as written labels, in the imagery trials, resulting in six photos and six labels of familiar landmarks within the university campus (i.e., Literature, Orthopedics, Hygiene, Chapel, Maths, Law) and six photos and six names of famous Italian people (i.e., Raffaella Carrà, Gerry Scotti, Paolo Bonolis, Paola Cortellesi, Luciana Littizzetto, Carlo Verdone). Famous people's photos were derived from the web and represented faces in a canonical position (i.e., front view).

2.3. Procedure

Participants observed either the photo of the familiar landmark/famous face (during perceptual trials) or its name (during imagery trials) and were instructed to watch the landmark/face during perception or imagine the indicated landmark/face during imagery (see Figure 1). Participants were also informed that questions could randomly appear (question trials), to which they had to respond by using one of two buttons on the fMRI‐compatible keypad. Question trials were introduced to ensure that participants maintained their attention and executed the task; they concerned a perceptual detail of the last imagined/perceived item, for example, “Are there plants in front of it?” or “Has she got blond hair?” On average, participants answered 83.79% of the question trials correctly (SD = 6.94).

We developed the fMRI event‐related paradigm as a continuous carry‐over design (Aguirre, 2007). This allowed us to estimate both the mean difference in neural activity between stimuli and the interactive effects of two consecutive stimuli on brain activations, that is the modulatory effect of the previously presented stimulus on the neural response to the current stimulus (i.e., carry‐over effect), namely neural adaptation effect (Aguirre, 2007). During five fMRI scans, stimuli were presented in an unbroken sequential manner in five serially balanced sequences in which each stimulus preceded and followed every other stimulus (Nonyane & Theobald, 2007). Each item was presented 5 times in each fMRI scan and each scan thus consisted of 60 perceptual (half were faces) and 60 imagery (half were faces) trials, plus 5 null trials and 9 question trials. With the exception of the question trials, which lasted 4 s, trials lasted 2 s and were followed by a fixation point of the same duration.
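The balancing property described above can be checked mechanically. The following is a minimal sketch, not the authors' sequence generator: it counts first-order transitions and tests whether every ordered pair of conditions occurs equally often. The function names and the toy sequence are hypothetical, and counting a condition followed by itself as a pair is an assumption made here because the adaptation analysis relies on repetitions.

```python
from collections import Counter
from itertools import product


def transition_counts(sequence):
    """Count how often each ordered pair (previous, current) occurs."""
    return Counter(zip(sequence, sequence[1:]))


def is_serially_balanced(sequence):
    """True if every ordered pair of conditions occurs equally often.

    Assumption: self-transitions (a condition followed by itself) are
    treated as pairs that must be balanced too.
    """
    conditions = sorted(set(sequence))
    counts = transition_counts(sequence)
    per_pair = [counts[pair] for pair in product(conditions, repeat=2)]
    return min(per_pair) > 0 and len(set(per_pair)) == 1


# Toy example with three hypothetical conditions (not the study's stimuli).
toy_sequence = list("AABBCCACBA")
print(is_serially_balanced(toy_sequence))
```

In the toy sequence each of the nine ordered pairs occurs exactly once, so the check passes; a plain repeating cycle such as A, B, C, A, B, C would fail because most pairs never occur.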

2.4. Image acquisition

A Siemens Allegra scanner (Siemens Medical Systems, Erlangen, Germany), operating at 3 T and equipped for echo‐planar imaging, was used to acquire functional magnetic resonance images. Head movements were minimized with mild restraint and cushioning. Stimuli were generated by a control computer located outside the MR room, running in‐house software implemented in MATLAB. An LCD video projector projected stimuli to a back‐projection screen mounted inside the MR tube and visible through a mirror mounted inside the head coil. Presentation timing was controlled and triggered by the acquisition of fMRI images.

Participants underwent an fMRI acquisition session consisting of five scans for the main experiment and two localizer scans. For the main experiment, functional MR images were acquired for the entire cortex using blood‐oxygen‐level dependent (BOLD) contrast imaging (30 slices, in‐plane resolution = 3 × 3 mm, slice spacing = 4.5 mm, repetition time [TR] = 2 s, echo time [TE] = 30 ms, flip angle = 70°). For each scan, 281 functional MR volumes were acquired. Localizer scans were aimed at identifying scene‐ and face‐responsive regions in the HVC. In each imaging run, participants passively viewed eight alternating blocks (16 s) of photographs of faces and places/scenes presented for 300 ms every 500 ms, interleaved with fixation periods of 15 s on average (Boccia et al., 2017; Sulpizio, Committeri, & Galati, 2014; Sulpizio, Committeri, Lambrey, Berthoz, & Galati, 2013). During each localizer scan, we acquired 234 functional MR volumes. We also acquired a three‐dimensional high‐resolution T1‐weighted structural image for each subject (Siemens MPRAGE, 176 slices, in‐plane resolution = 0.5 × 0.5 mm, slice thickness = 1 mm, TR = 2 s, TE = 4.38 ms, flip angle = 8°).

Image analyses were performed using SPM12 (http://www.fil.ion.ucl.ac.uk/spm). The first four volumes of each scan were discarded to allow for T1 equilibration. All images were corrected for head movements (realignment) using the first volume as reference. The images of each participant were then coregistered onto their T1‐weighted image and normalized to the standard MNI‐152 EPI template using the T1 image as a source. Images for univariate analysis were then spatially smoothed using a 6‐mm full‐width at half‐maximum (FWHM) isotropic Gaussian kernel for the main experiment and a 4‐mm FWHM isotropic Gaussian kernel for the localizer images. Multivariate pattern analysis (MVPA) was performed on unsmoothed images. The set of analyses was conducted on six independently defined, theoretically motivated, regions of interest (ROIs), defined on the basis of localizer scans and automatic segmentation procedure of T1‐weighted structural image (see below for further details).

2.5. ROI definition

ROIs of the HVC were defined in each individual participant by analyzing the localizer imaging runs. For localizer runs, place/scene and face blocks were modeled as box‐car functions, convolved with a canonical hemodynamic response function. Scene‐ and face‐responsive ROIs were defined on each individual's brain surface (automatically reconstructed with the FreeSurfer software package). The scene‐responsive regions were defined as the regions responding more strongly to places/scenes than to faces in the posterior parahippocampal cortex (i.e., PPA), in the retrosplenial cortex/parieto‐occipital sulcus (i.e., RSC), and in the lateral occipital cortex (i.e., Occipital Place Area, OPA). The face‐responsive regions were defined as the regions responding more strongly to faces than to places/scenes in the fusiform gyrus (i.e., FFA) and inferior occipital cortex (i.e., Occipital Face Area, OFA). Individual ROIs were created by selecting the 60 most activated nodes within suprathreshold clusters of activation (p < 0.05 FDR corrected at the cluster level, with a cluster‐forming threshold of p < 0.001 uncorrected). This procedure allowed us to obtain HVC ROIs of the same size, comparable across univariate and multivariate analyses, which were therefore entered in the analyses as repeated measures (see below). The HC, instead, was defined on an anatomical basis in each participant, based on the automatic segmentation provided by FreeSurfer (Van Leemput et al., 2009). In light of the functional specialization along the long axis of the HC (Poppenk, Evensmoen, Moscovitch, & Nadel, 2013; Ranganath & Ritchey, 2012), we also subdivided the HC into an anterior (aHC) and a posterior (pHC) portion for the gPPI described below. To this aim we cut the HC axially at z = −10 (Morgan, MacEvoy, Aguirre, & Epstein, 2011).
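The node-selection step lends itself to a short illustration. This is a sketch under stated assumptions rather than the authors' pipeline: `stat_by_node` (a mapping from surface node id to localizer contrast statistic) and `cluster_nodes` (the node ids of one suprathreshold cluster) are hypothetical inputs, and thresholding is assumed to have been done beforehand.

```python
def select_top_nodes(stat_by_node, cluster_nodes, n_nodes=60):
    """Return the n_nodes nodes of a cluster with the highest statistic.

    stat_by_node: dict mapping node id -> contrast statistic (hypothetical).
    cluster_nodes: iterable of node ids in one suprathreshold cluster.
    """
    ranked = sorted(cluster_nodes, key=lambda node: stat_by_node[node], reverse=True)
    return ranked[:n_nodes]


# Toy example: 100 nodes whose statistic equals their id; cluster = nodes 20..99.
toy_stats = {node: float(node) for node in range(100)}
roi = select_top_nodes(toy_stats, range(20, 100))
print(len(roi), min(roi), max(roi))
```

Fixing the number of nodes, rather than the threshold, is what gives every ROI the same size regardless of how large the underlying cluster is.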

2.6. Multivariate pattern analysis

As a preliminary step for MVPA, we used a general linear model on unsmoothed time series, in which trials related to each of the six landmarks/faces in the perceptual and imagery conditions were modeled by separate regressors, in order to estimate the magnitude of the response at each voxel for each exemplar and task separately. Multi‐voxel patterns of activity for each item in each ROI were then extracted from the resulting parameter estimate images. MVPAs were performed with a linear classifier (Support Vector Machine, SVM) implemented in libSVM (Chang & Lin, 2011), using either a leave‐one‐session‐out cross‐validation procedure (i.e., decoding) or a cross‐decoding procedure between imagery and perception trials (i.e., cross‐decoding). The most informative voxels were identified through recursive feature elimination (De Martino et al., 2008). As a sanity check, we first performed a cross‐decoding analysis on the content: we trained the classifier to decode the category (i.e., landmarks vs. faces) from the activity pattern related to each pair of imagined items and computed the mean classification accuracies in the perceptual domain for each participant. The results of this preliminary analysis are provided in Supporting Information (Table S1 and Figure S1). Then, we tested whether multi‐voxel patterns of activity allowed us to decode the identity of individual landmarks and faces in imagery and perception separately; to do so, we trained the classifier to decode the landmark/face identity from the activity pattern related to each pair of perceived or imagined items on N‐1 scans and computed the mean classification accuracies on the remaining scan for each participant (i.e., a leave‐one‐out cross‐validation decoding procedure). Finally, we tested whether the neural encoding of the individual landmark or face identity generalized across imagery and perception; to this aim we trained the classifier to decode the landmark/face identity from the activity pattern related to each pair of imagined items and computed the mean classification accuracies in the perceptual domain for each participant (i.e., a cross‐decoding procedure), and vice versa. For each classification analysis, we compared the between‐subject distribution of classification accuracies with chance level (i.e., 0.5) by means of one‐sample t tests, applying Bonferroni's correction for multiple comparisons.
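The two validation schemes can be sketched in a few lines. To stay self-contained, the sketch swaps the linear SVM and recursive feature elimination for a plain nearest-centroid classifier, so it illustrates only the train/test logic, not the classifier used in the study. The data layout (`data[session][label]` holding one pattern per item and session) and all function names are assumptions.

```python
from itertools import combinations


def centroid(patterns):
    """Voxel-wise mean of a list of equal-length activity patterns."""
    return [sum(values) / len(values) for values in zip(*patterns)]


def squared_distance(a, b):
    return sum((x - y) ** 2 for x, y in zip(a, b))


def predict(train, pattern):
    """train maps label -> list of patterns; return the nearest-centroid label."""
    return min(train, key=lambda lab: squared_distance(centroid(train[lab]), pattern))


def accuracy(hits):
    return sum(hits) / len(hits)


def decode_pair(data, a, b):
    """Leave-one-session-out accuracy for the pair of items a and b."""
    hits = []
    for held_out in data:
        # Train on every session except the held-out one.
        train = {lab: [data[s][lab] for s in data if s != held_out] for lab in (a, b)}
        hits += [predict(train, data[held_out][lab]) == lab for lab in (a, b)]
    return accuracy(hits)


def cross_decode_pair(train_data, test_data, a, b):
    """Train on all sessions of one task, test on all sessions of the other."""
    train = {lab: [train_data[s][lab] for s in train_data] for lab in (a, b)}
    hits = [predict(train, test_data[s][lab]) == lab for s in test_data for lab in (a, b)]
    return accuracy(hits)


def mean_pairwise(score, labels):
    """Average a pairwise score over every pair of items (chance level 0.5)."""
    pairs = list(combinations(labels, 2))
    return sum(score(a, b) for a, b in pairs) / len(pairs)
```

For example, `mean_pairwise(lambda a, b: cross_decode_pair(imagery, perception, a, b), labels)` would give one cross-decoding accuracy per participant and ROI, which could then be compared with 0.5 at the group level.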

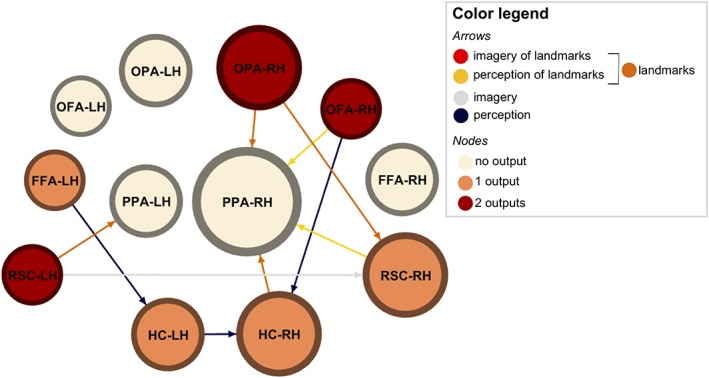

2.7. Representational similarity analysis

In order to characterize the neural representation underlying imagery and perception of familiar landmarks and faces, we also performed a representational similarity analysis (Kriegeskorte, Mur, & Bandettini, 2008). We first built, for each region, a representational dissimilarity matrix (RDM) by computing multivariate (Euclidean) distances between the activity patterns associated with each pair of stimuli (see Figure 5). We then averaged the RDM elements, within subjects, depending on whether they were computed on pairs of stimuli belonging to the same task or to different tasks (imagination vs. perception), and to the same category or to different categories (faces vs. landmarks). Pairs of same‐category stimuli related to the same exemplar were excluded from such averages.

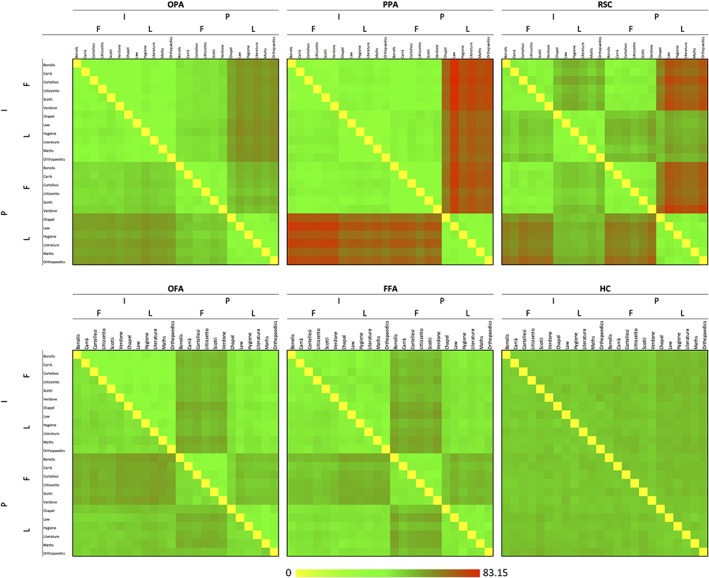

Figure 5.

Representational dissimilarity matrices. Mean Euclidean distances between pairs of exemplars are plotted in green‐to‐red patches, for each ROI and hemisphere. Matrix elements below the main diagonals represent the left hemisphere results; those above the main diagonals represent the right hemisphere results. Note. F = faces; I = imagery; L = landmarks; P = perception [Color figure can be viewed at http://wileyonlinelibrary.com]

For each task (i.e., perception and imagery), we computed a dissimilarity index between categories as follows:

where $C_t$ is the index of between‐categories dissimilarity relative to task $t$, $FL_t$ is the mean Euclidean distance between face and landmark exemplars in task $t$, and $FF_t$ and $LL_t$ are the mean Euclidean distances between different exemplars of the face category, and between different exemplars of the landmark category, respectively. We tested whether $C_t$ was higher than zero for imagery and perception using one‐sample t tests. We also directly compared the two $C_t$ indexes, by using a 2‐tailed t test. Bonferroni's correction for multiple comparisons was applied (p < 0.0042).
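The terms defined above suggest an index that contrasts the between‐category distance with the average within‐category distance. One form consistent with these definitions, and with testing the index against zero, is given below; it is a reconstruction offered as an assumption, not necessarily the authors' exact expression.

```latex
C_t = FL_t - \frac{FF_t + LL_t}{2}
```

Under this form, $C_t > 0$ means that, within task $t$, exemplars of different categories are on average farther apart than exemplars of the same category.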

Dissimilarity index between tasks was also computed for each category as follows:

where $T_c$ is the index of between‐tasks dissimilarity relative to category $c$, $IP_c$ is the mean Euclidean distance between imagined and perceived exemplars of category $c$, and $II_c$ and $PP_c$ are the mean Euclidean distances between different imagined exemplars, and between different perceived exemplars, respectively. Again, we tested whether $T_c$ was higher than zero for landmarks and faces using one‐sample t tests. We also directly compared the two $T_c$ indexes, by using a 2‐tailed t test. Bonferroni's correction for multiple comparisons was applied (p < 0.0042). Supplemental analyses on regional differences in $T_c$ and $C_t$ are reported in the Supporting Information.
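As an illustration of how such indices could be derived from activity patterns, the sketch below averages pairwise Euclidean distances by task and category. It assumes that each index is the between‐group mean distance minus the average of the two within‐group mean distances, which is an assumption about the form of the indices rather than the authors' stated formula. The key layout `(task, category, exemplar)` and the function name are hypothetical.

```python
from itertools import combinations
from math import dist
from statistics import mean


def dissimilarity_indices(patterns):
    """Return (C per task, T per category) from activity patterns.

    patterns maps (task, category, exemplar) -> list of floats.
    Assumed form: between-group mean distance minus the average of the
    two within-group mean distances.
    """
    groups = {}
    for (key_a, pat_a), (key_b, pat_b) in combinations(patterns.items(), 2):
        (task_a, cat_a, ex_a), (task_b, cat_b, ex_b) = key_a, key_b
        if cat_a == cat_b and ex_a == ex_b:
            continue  # same exemplar in the two tasks: excluded from averages
        cell = (frozenset((task_a, task_b)), frozenset((cat_a, cat_b)))
        groups.setdefault(cell, []).append(dist(pat_a, pat_b))
    m = {cell: mean(values) for cell, values in groups.items()}

    tasks = sorted({key[0] for key in patterns})
    cats = sorted({key[1] for key in patterns})
    both_tasks, both_cats = frozenset(tasks), frozenset(cats)
    c_index = {
        t: m[frozenset([t]), both_cats]
        - mean(m[frozenset([t]), frozenset([c])] for c in cats)
        for t in tasks
    }
    t_index = {
        c: m[both_tasks, frozenset([c])]
        - mean(m[frozenset([t]), frozenset([c])] for t in tasks)
        for c in cats
    }
    return c_index, t_index
```

The function expects exactly two tasks and two categories, each with at least two exemplars, matching the 2 by 2 design; one value per participant and ROI would then enter the group‐level t tests.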

2.8. fMR adaptation analysis

Each trial was modeled as a canonical hemodynamic response function time‐locked to the trial onset. We defined separate regressors for each experimental condition, by labeling each trial by the Task (perception vs. imagery), Task repetition (task‐repetition vs. task‐change) and Content (landmark vs. face). The first trial of each fMRI session, the question trials and the trials following question trials, as well as all trials following a content switch (i.e., not‐repeated content), were modeled as separate conditions and were not considered in the group analysis. For each participant and region, we computed a regional estimate of the amplitude of the hemodynamic response in each experimental condition by entering a spatial average (across all voxels in the region) of the preprocessed time series into the individual general linear models. Regional hemodynamic responses were analyzed with a 2 × 2 × 2 ANOVA, with Task, Task Repetition and Content as factors. Post hoc pairwise comparisons were performed applying Bonferroni's correction for multiple comparisons.
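The trial‐labeling rules above can be written out explicitly. This is a minimal sketch with a hypothetical trial representation (dicts with `kind`, `task`, and `content` keys); treating null trials like question trials, that is, excluding them and the trials that follow them, is an assumption, since the text does not say how null trials were handled.

```python
def label_trials(trials):
    """Assign each trial to an adaptation condition or exclude it.

    trials: list of dicts with 'kind' ('stim', 'question' or 'null') and,
    for stimulus trials, 'task' and 'content'.
    Returns a (task, repetition, content) tuple for trials entering the
    group analysis and None for trials modeled as separate conditions.
    """
    labels = []
    for i, trial in enumerate(trials):
        prev = trials[i - 1] if i > 0 else None
        excluded = (
            prev is None                              # first trial of the scan
            or trial["kind"] != "stim"                # question (or null) trial
            or prev["kind"] != "stim"                 # follows a question/null trial
            or prev["content"] != trial["content"]    # content switch
        )
        if excluded:
            labels.append(None)
            continue
        same_task = prev["task"] == trial["task"]
        repetition = "task-repetition" if same_task else "task-change"
        labels.append((trial["task"], repetition, trial["content"]))
    return labels
```

The eight possible labels correspond to the cells of the 2 × 2 × 2 ANOVA (Task × Task Repetition × Content).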

2.9. Psychophysiological interaction analysis

We assessed whether the effective connectivity among the ROIs changed as a function of Task and Content by using a generalized form of psychophysiological interaction analysis (gPPI). As a first step, we computed seed‐to‐seed partial correlation coefficients between the averaged ROIs of the present study by using a previous resting state data set on 47 participants (for a similar procedure and data set see Boccia, Sulpizio, Nemmi, Guariglia, & Galati, 2016). We calculated partial correlation coefficients on this resting state data set for each pair of ROIs within each hemisphere and between each ROI and its contralateral homologue. After transforming correlation coefficients to z values using the Fisher transform, we used one‐sample one‐tailed t tests to assess whether correlation coefficients were significantly higher than zero. We thus selected 23 pairs of significant couplings (p < 0.0009; see Table 1). This allowed us to restrict PPI to seed‐to‐target couplings known to be part of the same functional network. We then performed generalized PPIs for each pair of ROIs that was significantly correlated at rest.

PPI offers the opportunity to understand how brain regions interact in a task‐dependent manner (McLaren, Ries, Xu, & Johnson, 2012) by modeling BOLD responses in one target brain region in terms of the interaction between a psychological process and the neural signal from a source region. Thus, PPI makes it possible to test whether experimental conditions (here Task and Content) modulate the functional connectivity between a source and a target region. Here, we modeled the BOLD signal in the target region as a combination of (a) the effects of the experimental conditions (perception vs. imagery and face vs. landmark), modeled through canonical hemodynamic functions, (b) a regressor containing the BOLD time course of the source region, which modeled the intrinsic functional connectivity between the source and the target region, and (c) regressors expressing the interaction between trial‐induced activation in each of the four conditions and the neural signal in the source region (PPI terms). PPI terms were built according to McLaren et al. (2012). The resulting parameter estimates are expressed as percent signal changes in BOLD signal in the target region as a function of percent signal changes in the source region. We tested whether the percent signal change in the target regions was significant for all possible combinations of target and seed regions by means of one‐sample t tests (Di & Biswal, 2015), applying Bonferroni's correction for multiple comparisons. We also tested whether effective connectivity between the highlighted couplings differed according to Task and Content by means of 2 × 2 ANOVAs. We restricted our analyses to couplings showing significant PPIs for the investigated effects (Table 2). For example, even though the OFA and the OPA were significantly correlated during resting state (seed‐to‐seed partial correlation coefficients in Table 1), no PPIs were significant between these regions (Table 2), that is, their coupling did not change during any experimental condition vs. rest. Thus, they were not entered in the 2 × 2 ANOVAs. This procedure allowed us to focus only on psychophysiological interactions significantly associated with experimental conditions, avoiding spurious effects not directly linked to experimental purposes and conditions.
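The resting‐state screening step (Fisher transform followed by a one‐sample t test against zero) can be sketched with the standard library alone. The function names and the per‐participant correlation values are hypothetical, and the sketch stops at the t statistic: converting it to a one‐tailed p value requires the t distribution from a statistics package.

```python
from math import atanh, sqrt
from statistics import mean, stdev


def fisher_z(r):
    """Fisher transform of a correlation coefficient (inverse hyperbolic tangent)."""
    return atanh(r)


def one_sample_t(values, popmean=0.0):
    """Return the t statistic and degrees of freedom of a one-sample t test."""
    n = len(values)
    t_stat = (mean(values) - popmean) / (stdev(values) / sqrt(n))
    return t_stat, n - 1


# Hypothetical partial correlations for one ROI pair, one value per participant.
toy_r = [0.30, 0.42, 0.25, 0.38, 0.33]
t_stat, df = one_sample_t([fisher_z(r) for r in toy_r])
print(round(t_stat, 2), df)
```

The transform is applied before testing because correlation coefficients are bounded between −1 and 1, whereas the z values are approximately normally distributed.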

Table 1.

Seed‐to‐seed partial correlation coefficients on resting state data set

| LH \ RH | FFA | OFA | OPA | PPA | RSC | HC |
|---|---|---|---|---|---|---|
| FFA | | 0.457 | 0.002 | 0.072 | −0.040 | 0.296 |
| OFA | 0.332 | | 0.257 | 0.093 | −0.007 | 0.228 |
| OPA | −0.012 | 0.331 | | 0.112 | 0.109 | 0.220 |
| PPA | 0.134 | 0.052 | 0.203 | | 0.205 | 0.122 |
| RSC | −0.017 | −0.077 | 0.039 | 0.262 | | 0.301 |
| HC | 0.283 | 0.271 | 0.237 | 0.083 | 0.356 | |

Note. Significant correlations are shown in italics (p < 0.0009).

Table 2.

Significant PPIs


3. RESULTS

3.1. Network definition: HVC and hippocampus

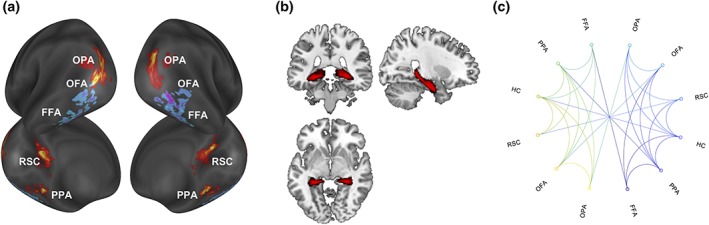

Scene‐responsive regions were successfully identified in the lateral occipital cortex (OPA; left hemisphere = 17/19 participants; right hemisphere = 15/19 participants), in the posterior parahippocampal cortex (PPA; left hemisphere = 19/19 participants; right hemisphere = 19/19 participants), and in the retrosplenial cortex/parieto‐occipital sulcus (RSC; left hemisphere = 13/19 participants; right hemisphere = 16/19 participants). Face‐responsive regions were successfully identified in the inferior occipital cortex (OFA; left hemisphere = 11/19 participants; right hemisphere = 10/19 participants) and in the fusiform gyrus (FFA; left hemisphere = 17/19 participants; right hemisphere = 17/19 participants). The average location of all regions is shown in Figure 2a. The HC was defined in all participants (19/19) (Figure 2b). Significant functional couplings between pairs of regions are reported in Table 1 and summarized in Figure 2c.

Figure 2.

Network definition. Averaged participants' ROIs in the HVC and HC and their resting state functional connectivity. (a) Scene‐ and face‐responsive ROIs are plotted on an average brain from the Conte69 atlas (Van Essen, Glasser, Dierker, Harwell, & Coalson, 2012). ROIs were created on each individual surface by selecting the 60 most activated nodes within suprathreshold clusters of activation (p < 0.05 FDR corrected at the cluster level and peak p < 0.001 uncorrected). The scene‐responsive regions were defined as the regions responding more strongly to places/scenes than to faces, whereas face‐responsive regions were defined as the regions responding more strongly to faces than to places/scenes. (b) Averaged participants' HC resulting from the automatic segmentation procedure. Axial, sagittal and coronal views are provided. (c) Significant seed‐to‐seed resting state functional couplings between ROIs (more details about seed‐to‐seed partial correlations are available in Table 1) [Color figure can be viewed at http://wileyonlinelibrary.com]

3.2. Neural representation of faces and landmarks generalizes across imagery and perception in HVC and hippocampus

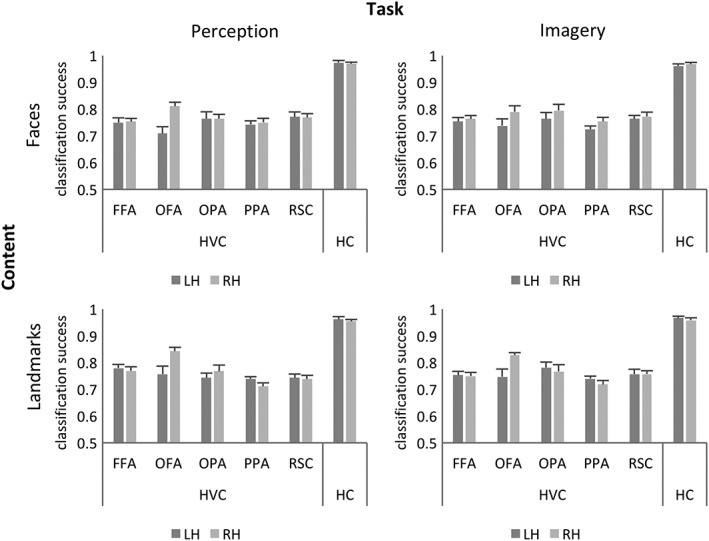

The first step of our analysis pipeline was aimed at clarifying whether the neural representation of individual landmarks and faces in the HVC and HC generalizes across imagery and perception, and whether preferred and nonpreferred categories are processed in category‐selective regions with a similar representational structure. These analyses allowed us to clarify where and how information underlying imagery and perception is stored in the HVC. To this aim we performed a multivariate pattern analysis (MVPA) with a combination of decoding and cross‐decoding procedures. As a first step, we tested whether it was possible to decode the specific landmark or face participants were imagining or perceiving, separately for each of the four combinations of Task and Content. We found that multi‐voxel patterns of activity in all the investigated regions allowed us to decode, with supra‐chance accuracy (Figure 3), the individual face or landmark participants were perceiving or imagining (t(9 < df < 18) ≥ 8.277; p ≤ 0.000009). Statistics are fully reported in Supporting Information Table S2.

Figure 3.

Neural representation of faces and landmarks within the HVC and hippocampus. Classification accuracies as they result from decoding classification procedures, computed for each ROI of the HVC and the HC (mean classification accuracy ± SEM)

Then, we tested whether neural patterns coding for specific landmarks or faces generalized across imagery and perception. We found that, in all the investigated regions, the multi‐voxel pattern of activity associated with a specific landmark or face in imagery trials predicted which face or landmark participants observed in perception trials with supra‐chance accuracy (t(9 < df < 18) ≥ 4.708026; p ≤ 0.000175; Figure 4a,c). Similarly, the multi‐voxel pattern of activity associated with a specific landmark or face in perception trials predicted which face or landmark participants imagined (t(9 < df < 18) ≥ 6.378677; p ≤ 0.000018; Figure 4b,d). Statistics are fully reported in Supporting Information Table S3.

Figure 4.

Neural representation of faces and landmarks generalizes across imagery and perception in HVC and hippocampus. Classification accuracies as they result from cross‐decoding classification procedures, computed for each ROI of the HVC and the HC (mean classification accuracy ± SEM)
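The logic of the cross‐decoding procedure (train on one task, test on the other) can be illustrated with a minimal sketch. The classifier used here, a nearest‐centroid rule based on Pearson correlation, is an assumption chosen for brevity and is not necessarily the classifier used in the study; the data are simulated, and all names and parameters (`simulate`, `gain`, `noise_sd`, the number of items and voxels) are hypothetical.

```python
import math
import random


def pearson(x, y):
    """Pearson correlation between two equally long lists of numbers."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def fit_centroids(patterns, labels):
    """Average the training patterns of each item into one centroid per item."""
    grouped = {}
    for pattern, label in zip(patterns, labels):
        grouped.setdefault(label, []).append(pattern)
    return {
        label: [sum(col) / len(col) for col in zip(*group)]
        for label, group in grouped.items()
    }


def predict(centroids, pattern):
    """Return the item whose centroid correlates best with the test pattern."""
    return max(centroids, key=lambda label: pearson(centroids[label], pattern))


def cross_decode(train_x, train_y, test_x, test_y):
    """Train on one task, test on the other; return the proportion correct."""
    centroids = fit_centroids(train_x, train_y)
    hits = sum(predict(centroids, x) == y for x, y in zip(test_x, test_y))
    return hits / len(test_y)


def simulate(rng, prototypes, gain, noise_sd, n_trials):
    """Simulated trials: a scaled item prototype plus Gaussian voxel noise."""
    patterns, labels = [], []
    for item, proto in enumerate(prototypes):
        for _ in range(n_trials):
            patterns.append([gain * v + rng.gauss(0, noise_sd) for v in proto])
            labels.append(item)
    return patterns, labels


rng = random.Random(0)
n_items, n_voxels = 4, 50  # hypothetical numbers of items and ROI voxels
prototypes = [[rng.gauss(0, 1) for _ in range(n_voxels)] for _ in range(n_items)]

# Assumption: imagery evokes a weaker copy of the item pattern seen in perception.
perc_x, perc_y = simulate(rng, prototypes, gain=1.0, noise_sd=0.5, n_trials=8)
imag_x, imag_y = simulate(rng, prototypes, gain=0.5, noise_sd=0.5, n_trials=8)

acc_imag_to_perc = cross_decode(imag_x, imag_y, perc_x, perc_y)
acc_perc_to_imag = cross_decode(perc_x, perc_y, imag_x, imag_y)
chance = 1 / n_items
print(f"imagery -> perception: {acc_imag_to_perc:.2f} (chance {chance:.2f})")
print(f"perception -> imagery: {acc_perc_to_imag:.2f} (chance {chance:.2f})")
```

In this toy setting, above‐chance accuracy in both directions arises only because the two simulated tasks share item‐specific patterns, which is the property the cross‐decoding analysis is designed to detect.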

In order to characterize whether the neural representations underlying investigated conditions were different across regions as a function of category and task, we computed for each region the representation dissimilarity matrices (RDM) between stimuli (Figure 5), that is, the multivariate distances between the neural activation patterns evoked by each stimulus, taken as indices of the diversity between the involved neural representations (Kriegeskorte et al., 2008). The elements of the matrix were grouped according to whether the two stimuli in the pair belonged to the same category or not, and to the same task or not.

We first searched for significant distances associated with the same category versus different categories within each task: we found that the dissimilarity index (Ct) was higher than zero in all the ROIs for the perception task (t(9 < df < 18) ≥ 4.721; ps < 0.0011), and in the bilateral FFA (LH: t(16) = 4.390; p = 0.0005; RH: t(16) = 3.346; p = 0.0041) and RSC (LH: t(12) = 4.785; p = 0.0004; RH: t(15) = 5.038; p = 0.0001) for the imagery task. The direct comparisons between the two dissimilarity indexes revealed significant differences in all the ROIs (t(9 < df < 18) ≥ 4.118; ps < 0.0026), with the exception of the bilateral HC: differences were higher during perception than during imagery (Figure 5).

We then searched for differences between distances associated with the same task versus different tasks within the same category: we found that the dissimilarity index (Tc) was higher than zero in the bilateral FFA (LH: t(16) = 5.474; p = 0.0001; RH: t(16) = 8.000; p < 0.0001), OFA (LH: t(10) = 4.190; p = 0.0019; RH: t(9) = 5.771; p = 0.0003), OPA (LH: t(16) = 3.363; p = 0.0040; RH: t(14) = 4.766; p = 0.0003) and PPA (LH: t(18) = 3.312; p = 0.0039; RH: t(18) = 5.520; p < 0.0001) for faces; and in all the investigated ROIs, with the exception of the left OFA (t(9 < df < 18) ≥ 3.910; ps < 0.0012), for landmarks. The direct comparisons between the two dissimilarity indexes revealed significant differences in all the ROIs (t(9 < df < 18) ≥ 3.708; ps < 0.0041): distances were higher for faces than landmarks in the bilateral OFA and FFA; instead, differences were higher for landmarks than faces in the bilateral HC, OPA, PPA, and RSC (Figure 5).
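As an illustration of how an RDM and a grouping‐based dissimilarity index can be computed, the following sketch uses correlation distance (1 minus Pearson r) and defines the index as the mean distance between stimuli from different groups minus the mean distance between stimuli from the same group. Both choices are assumptions made for the example; the exact distance measure and index definition used in the study are given in its Methods. The toy patterns and the helper name `between_minus_within` are hypothetical.

```python
import math
from itertools import combinations


def pearson(x, y):
    """Pearson correlation between two equally long lists of numbers."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def rdm(patterns):
    """Symmetric matrix of correlation distances between all pattern pairs."""
    n = len(patterns)
    d = [[0.0] * n for _ in range(n)]
    for i, j in combinations(range(n), 2):
        d[i][j] = d[j][i] = 1.0 - pearson(patterns[i], patterns[j])
    return d


def between_minus_within(d, groups):
    """Mean between-group distance minus mean within-group distance."""
    within, between = [], []
    for i, j in combinations(range(len(groups)), 2):
        (within if groups[i] == groups[j] else between).append(d[i][j])
    return sum(between) / len(between) - sum(within) / len(within)


# Toy activation patterns (hypothetical): four voxels per stimulus.
patterns = [
    [1.0, 2.0, 3.0, 4.0],  # face A
    [1.0, 2.0, 3.0, 5.0],  # face B
    [4.0, 3.0, 2.0, 1.0],  # landmark A
    [5.0, 3.0, 2.0, 1.0],  # landmark B
]
category = ["face", "face", "landmark", "landmark"]

d = rdm(patterns)
category_index = between_minus_within(d, category)
print(f"category index: {category_index:.3f}")
```

A positive index indicates that stimuli from different categories evoke more dissimilar patterns than stimuli from the same category; the same function can be applied with task labels in place of category labels.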

In sum, multi‐voxel patterns of activity in all the investigated regions allowed us to decode the individual landmark and face participants were imagining or perceiving. Besides highlighting significant within‐format accuracy, we found that training the classifier on the imagery condition allowed us to decode items in the perceptual domain, and vice versa. This result suggests that patterns generalized across imagery and perception. However, the RDMs suggest that the neural representation in most of the investigated ROIs is different for faces and landmarks across the two tasks. Thus, information about faces and landmarks is widely distributed across these regions, but different neural representations underlie the investigated conditions.

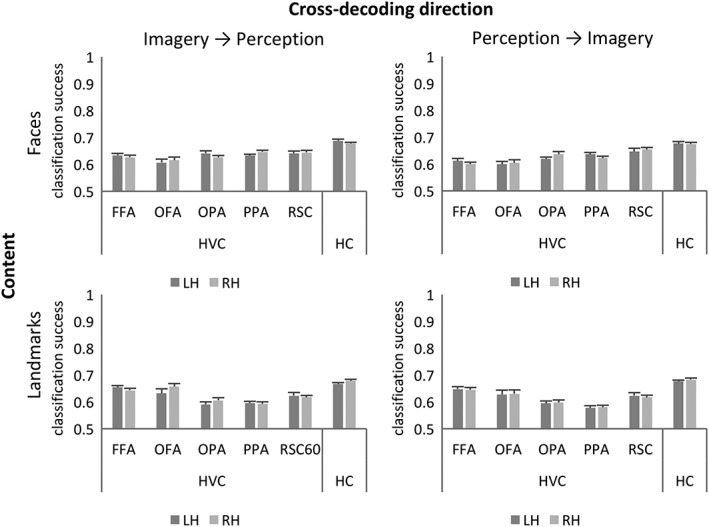

3.3. Different fMR adaptation mechanisms for imagery and perception in the HVC

The first step of our analysis pipeline demonstrates that information about landmarks and faces is widely coded in the HVC and HC and generalizes across imagery and perception. The second step was aimed at demonstrating that although this information is widely represented and generalizes across tasks, different local regional dynamics underlie imagery and perception of faces and landmarks in the HVC. The analysis of previous literature reported above reveals that different adaptation effects underlie imagery and perception. Thus, the study of the adaptation effect may shed light upon the thin line between imagery and perception within the HVC. In order to assess whether different adaptation effects underlie imagery and perception in the HVC, we performed a neural adaptation analysis assessing whether repeated exposure to the imagery and/or perceptual task led to different adaptation effects. Here we only considered trials following a trial of the same Content (i.e., a face or landmark preceded by another face or landmark) and modeled the fMRI response as a function of Task (perception vs. imagery), Content (landmark vs. face), and Task repetition (repeated‐task vs. task‐change). For example, within the imagery Task and the face Content, a face imagery trial preceded by a face imagery trial counted as a repeated task trial, while a face imagery trial preceded by a face perception trial counted as a task‐change trial.
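The trial classification described above can be sketched as follows. This is a minimal illustration of the labeling rule stated in the text, not the authors' analysis code; the `Trial` structure, the function name, and the example sequence are hypothetical.

```python
from typing import List, NamedTuple, Optional


class Trial(NamedTuple):
    task: str     # "imagery" or "perception"
    content: str  # "face" or "landmark"


def label_task_repetition(trials: List[Trial]) -> List[Optional[str]]:
    """Label each trial by its relation to the immediately preceding trial.

    "repeated-task": previous trial had the same Content and the same Task.
    "task-change":   previous trial had the same Content but a different Task.
    None:            first trial, or previous trial had a different Content;
                     such trials are left out of the adaptation analysis.
    """
    labels: List[Optional[str]] = [None] if trials else []
    for prev, curr in zip(trials, trials[1:]):
        if prev.content != curr.content:
            labels.append(None)
        elif prev.task == curr.task:
            labels.append("repeated-task")
        else:
            labels.append("task-change")
    return labels


sequence = [
    Trial("imagery", "face"),
    Trial("imagery", "face"),         # same Content, same Task
    Trial("perception", "face"),      # same Content, different Task
    Trial("perception", "landmark"),  # Content changed: excluded
    Trial("imagery", "landmark"),     # same Content, different Task
]
labels = label_task_repetition(sequence)
print(labels)
```

The labeled trials can then be entered into a model with Task, Content, and Task repetition as factors.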

In scene‐responsive regions (Figure 6a,c), Task repetition yielded neural adaptation in the bilateral RSC (left hemisphere: F(1,12) = 4.968; p = 0.046; right hemisphere: F(1,15) = 4.325; p = 0.055), with suppression of activity in repeated‐task trials (Figure 6c). The left PPA (Figure 6b) showed a main effect of Task repetition (F(1,18) = 6.184; p = 0.023), as well as a Task by Task repetition interaction (F(1,18) = 12.996; p = 0.002) and a three‐way interaction (F(1,18) = 9.645; p = 0.006). This area showed suppression of activity during repeated perception of landmarks and enhancement of activity during repeated imagery of landmarks (Figure 6b).

Figure 6.

Different fMR adaptation mechanisms for imagery and perception in the HVC. Neural adaptation effect is plotted for each region and hemisphere as bar (mean ± SEM). Significant effects are marked with an asterisk (see legend of the effects) and fully described in the text. (a) Left and right OPA; (b) left and right PPA; (c) left and right RSC; (d) left and right OFA; (e) left and right FFA; (f) right HC. Note. F = faces; I = imagery; L = landmarks; P = perception

Among face‐responsive regions (Figure 6d–e), a Task by Task repetition interaction was detected in the bilateral FFA (left hemisphere: F(1,16) = 4.646; p = 0.047; right hemisphere: F(1,16) = 7.119; p = 0.017): this area showed neural adaptation only during imagery (regardless of the content), with suppressed activity during repeated‐task trials (Figure 6e). The right HC showed a trend toward significance for Task repetition (F(1,18) = 4.288; p = 0.053), with suppression of activity for repeated‐task trials (Figure 6f).

Additional effects were detected as follows (for ease of exposition, detailed statistics are summarized in Table 3). All scene‐responsive regions (Figure 6a–c) showed the expected preference for landmarks and a main effect of Task, with higher activation for perception than imagery. However, all scene‐responsive regions also showed a Content‐by‐Task interaction: in the bilateral OPA and PPA, as well as in the left RSC, the difference between perception and imagery (i.e., higher activation during perception) was larger for landmarks than for faces (Figure 6a,b), while perception and imagery differed only for landmarks in the right RSC (Figure 6c). Note that in the bilateral RSC only landmarks (in both tasks) led to significant activation. Concerning face‐responsive regions (Figure 6d,e), all of them showed the expected preference for faces. The right OFA and the bilateral FFA also showed a main effect of Task and a Content‐by‐Task interaction: these regions were generally more activated during perception than imagery, but in the right hemisphere this difference was significant only for faces. Instead, in the left FFA, the difference between imagery and perception was larger for faces than for landmarks (Figure 6e). Finally, the right HC showed a significant effect of Content, being more activated for landmarks than faces (Figure 6f).

Table 3.

Statistics

| ROI | Hem | Content | Task | Content‐by‐task interaction |
|---|---|---|---|---|
| Scene‐responsive regions | | | | |
| OPA | LH | F(1,16) = 28.333; p < 0.001 | F(1,16) = 37.755; p < 0.001 | F(1,16) = 19.647; p < 0.001 |
| | RH | F(1,14) = 130.149; p < 0.001 | F(1,14) = 73.836; p < 0.001 | F(1,14) = 109.985; p < 0.001 |
| PPA | LH | F(1,18) = 73.393; p < 0.001 | F(1,18) = 154.487; p < 0.001 | F(1,18) = 131.882; p < 0.001 |
| | RH | F(1,18) = 70.877; p < 0.001 | F(1,18) = 68.188; p < 0.001 | F(1,18) = 63.187; p < 0.001 |
| RSC | LH | F(1,12) = 142.757; p < 0.001 | F(1,12) = 6.330; p = 0.027 | F(1,12) = 36.333; p < 0.001 |
| | RH | F(1,15) = 55.962; p < 0.001 | F(1,15) = 41.036; p < 0.001 | F(1,15) = 57.154; p < 0.001 |
| Face‐responsive regions | | | | |
| OFA | LH | F(1,10) = 16.040; p = 0.002 | | |
| | RH | F(1,9) = 29.640; p < 0.001 | F(1,9) = 48.695; p < 0.001 | F(1,9) = 57.545; p < 0.001 |
| FFA | LH | F(1,16) = 27.011; p < 0.001 | F(1,16) = 17.363; p = 0.001 | F(1,16) = 16.204; p = 0.001 |
| | RH | F(1,16) = 79.746; p < 0.001 | F(1,16) = 33.657; p < 0.001 | F(1,16) = 23.724; p < 0.001 |
| Memory‐related region | | | | |
| HC | LH | | | |
| | RH | F(1,18) = 10.168; p = 0.005 | | |

Note. Hem = hemisphere.

In sum, different adaptation effects may be detected in the HVC as a function of the task to be performed and the content of the task. Interestingly, imagery and perception of landmarks lead to opposite adaptation trends in the left PPA.

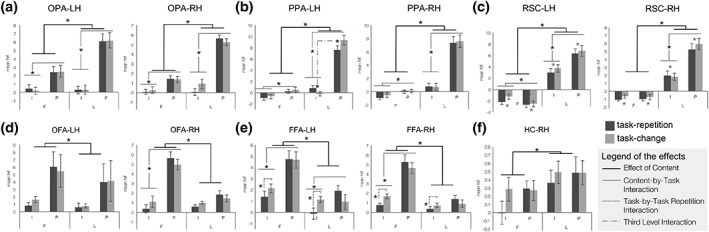

3.4. Different functional couplings underpin imagery and perception in HVC

Differences between imagery and perception in the HVC are not limited to local regional dynamics: different inter‐regional functional couplings have been tentatively demonstrated for imagery and perception. Here we aimed at providing evidence for dynamic couplings within the HVC and HC, as a function of task (imagery vs. perception) and content (faces vs. landmarks). Thus, we set out to study whether and how HVC and HC regions interact in a task‐ and content‐dependent manner, that is, whether the functional coupling among regions of the HVC and HC changed as a function of task and content. As a first step, we provide a general picture of task‐ and content‐related effective connectivity relative to resting‐state connectivity. To this aim, one‐sample t tests were used to test whether PPIs (namely, effective connectivity during the different experimental conditions) were significant. Significant PPIs (p < 0.002083) are reported in Table 2 for each possible pair of source and target regions, as they emerged from the seed‐to‐seed resting‐state correlations described above (Figure 2c).

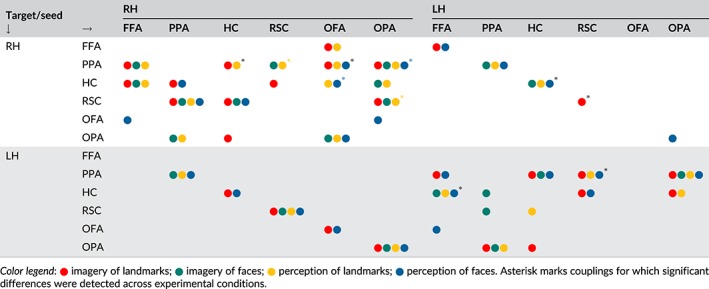

This general picture was used to frame our following analyses, aimed at assessing differences in PPIs according to Task and Content. For each combination of source and target regions showing at least one significant PPI (Table 2), we performed a 2 × 2 ANOVA with Content (landmark vs. face) and Task (perception vs. imagery) as factors. Couplings showing significant effects are summarized in Figure 7 and described below.

Figure 7.

Different functional couplings underpin imagery and perception in HVC. PPIs showing significant effects are schematically represented by directional arrows. Arrows identify seed‐to‐target direction. Node size is proportional to the number of inputs, whereas color represents the number of outputs from that ROI (color legend: nodes). Arrow color summarizes the experimental level (color legend: arrows)
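For readers less familiar with 2 × 2 within‐subject designs, the sketch below illustrates how the F statistics of such an ANOVA can be obtained. It relies on a standard equivalence: each single‐degree‐of‐freedom effect in a fully within‐subject 2 × 2 design equals the squared one‐sample t statistic of the corresponding per‐participant contrast, with (1, n − 1) degrees of freedom. The PPI values, the condition keys (`LP`, `LI`, `FP`, `FI`), and the function names are hypothetical and do not reproduce the study's data or software.

```python
import math
from statistics import mean, stdev


def f_from_contrast(scores):
    """F(1, n-1) of a 1-df within-subject effect from per-participant contrasts."""
    n = len(scores)
    t = mean(scores) / (stdev(scores) / math.sqrt(n))
    return t * t, (1, n - 1)


def anova_2x2_within(ppi):
    """Content x Task repeated-measures ANOVA on one source-target coupling.

    ppi: one dict per participant with PPI estimates for the four conditions,
         keyed LP (landmark/perception), LI (landmark/imagery),
         FP (face/perception), FI (face/imagery).
    """
    contrasts = {
        "Content": [(s["LP"] + s["LI"]) / 2 - (s["FP"] + s["FI"]) / 2 for s in ppi],
        "Task": [(s["LP"] + s["FP"]) / 2 - (s["LI"] + s["FI"]) / 2 for s in ppi],
        "Content x Task": [(s["LP"] - s["LI"]) - (s["FP"] - s["FI"]) for s in ppi],
    }
    return {name: f_from_contrast(values) for name, values in contrasts.items()}


# Hypothetical PPI estimates for five participants (illustration only).
ppi_values = [
    {"LP": 0.6, "LI": 0.4, "FP": 0.2, "FI": 0.1},
    {"LP": 0.5, "LI": 0.5, "FP": 0.1, "FI": 0.2},
    {"LP": 0.7, "LI": 0.3, "FP": 0.3, "FI": 0.1},
    {"LP": 0.4, "LI": 0.4, "FP": 0.2, "FI": 0.0},
    {"LP": 0.8, "LI": 0.5, "FP": 0.2, "FI": 0.2},
]

results = anova_2x2_within(ppi_values)
for effect, (f_value, df) in results.items():
    print(f"{effect}: F({df[0]},{df[1]}) = {f_value:.3f}")
```

Obtaining p values additionally requires the F distribution, which is not part of the Python standard library and is therefore left out of this sketch.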

In the right hemisphere, the OPA was more connected with the PPA and RSC during processing of landmarks than faces (PPA: F(1,14) = 7.680; p = 0.015; RSC: F(1,13) = 10.699; p = 0.006), regardless of the task participants were performing. This was also true for the HC, which was more connected with the PPA during landmark processing (F(1,18) = 4.923; p = 0.040). The RSC was specifically connected with the PPA during perception of landmarks, as suggested by the presence of a Content by Task interaction (F(1,12) = 8.264; p = 0.014). The OFA was more connected with the HC during perception, without preference for the Content (F(1,7) = 6.998; p = 0.033), as well as with the PPA, with a preference for perception (F(1,7) = 10.526; p = 0.014) and landmark processing (F(1,7) = 19.190; p = 0.003).

In the left hemisphere, we found that the RSC and the HC were more connected with their right homologous region during imagery (F(1,12) = 5.816; p = 0.033) and perception (F(1,18) = 8.457; p = 0.009), respectively. The RSC was also more connected with the PPA during landmark processing (F(1,12) = 5.257; p = 0.041). The FFA was more connected with the HC during perception (F(1,16) = 8.917; p = 0.009).

In light of the functional specialization along the long axis of the HC (Poppenk et al., 2013; Ranganath & Ritchey, 2012), we also split the HC into an anterior (aHC) and a posterior (pHC) portion, and performed additional PPI analyses for the hippocampal couplings we found to be differently affected by Task and Content (Figure 7). ANOVAs with the same factorial structure as those reported above revealed that the observed inter‐hemispheric coupling in the HC during perception was driven by the left aHC (F(1,18) = 4.739; p = 0.043) but not by the left pHC (F(1,18) = 0.149; p = 0.704). Also, the observed HC versus PPA coupling in the right hemisphere during landmark processing was explained only by the aHC (F(1,18) = 4.452; p = 0.049), not by the pHC (F(1,18) = 0.615; p = 0.443). Similarly, in the left hemisphere the FFA versus HC coupling observed during perception was driven by the aHC (F(1,16) = 9.658; p = 0.007) but not by the pHC (F(1,16) = 3.265; p = 0.090). Conversely, in the right hemisphere both the aHC (F(1,7) = 5.609; p = 0.050) and the pHC (F(1,7) = 6.822; p = 0.035) contributed to the above‐reported higher functional coupling between the OFA and HC observed during perception.

In sum, the results of the PPI analysis suggest that functional couplings among the investigated regions change according to Task and Content.

4. DISCUSSION

This is the first study aimed at providing a comprehensive picture of the dynamic mechanisms underlying imagery and perception in the HVC and the HC. Although previous neuroimaging studies found that imagery and perception share the same neural substrates in the HVC, neuropsychological evidence points toward a dissociation between them. Recent neuroimaging evidence suggests that the key difference between imagery and perception lies in different network dynamics. For these reasons, a systematic assessment of the dynamic neural mechanisms of perception and imagery would help to resolve the tension between previous neuroimaging and neuropsychological studies (Lee et al., 2012), besides disclosing the way in which imagery and perception coexist in the HVC. With this aim, we investigated task‐dependent and content‐dependent mechanisms of imagery and perception of familiar places and faces, following three main theoretical questions.

4.1. Are faces and landmarks represented in the HVC and the HC, and does this neural representation generalize across imagery and perception?

Concerning our first key point, we found that multi‐voxel patterns of activity in all the investigated regions allowed us to decode the landmark and the face participants were imagining or perceiving. Also, such patterns were re‐instantiated during imagery and perception, supporting the idea that the neural representation of familiar faces and landmarks generalizes across imagery and perception in the HVC and HC. It has been previously shown that HVC areas represent information about the category and place of both perceived and imagined objects (Boccia et al., 2015; Cichy, Heinzle, & Haynes, 2012), and that item‐specific information about places is also represented in the HVC during mental imagery (Johnson & Johnson, 2014). Here we expand upon these results by showing that the identity of imagined individual faces can also be decoded in the HVC, contributing important new insights into how fine‐grained information about an item's identity is represented during imagery. Indeed, while there is evidence that the identity of imagined exemplars from different categories can be decoded from activation patterns in the HVC during imagery (Johnson & Johnson, 2014), here we show for the first time that information about the identity of very similar instances of two categories (i.e., different landmarks within the same environmental context, and different faces) is re‐instantiated in the HVC during imagery. These results deserve some consideration. On the one hand, they demonstrate that information about familiar faces and places is coded within the HVC and the HC and is independent of bottom‐up retinal input. On the other hand, they suggest that such information is widely distributed across the HVC and the HC. Indeed, all the investigated regions significantly decoded items from both the preferred and the nonpreferred perceptual category (i.e., Content).
This is somewhat surprising, since one would expect item‐specific information about landmarks and faces to be selectively encoded in respective content‐dependent regions. However, the present results are consistent with those of previous studies in which content‐dependent regions of the HVC (including the PPA, the FFA, and the OFA) were found to share the representations of the perceptual category (i.e., place or faces) about both preferred and nonpreferred categories during perception (Haxby et al., 2001; O'Toole et al., 2005) and imagery (Cichy et al., 2012). Theoretically, such a result ties well with the principle of distributed encoding (Haxby et al., 2001), which posits that objects are encoded in the ventral visual stream in terms of attributes that may be shared among different perceptual categories. However, we found that dissimilar representations underlie investigated conditions, supporting also the idea that the shared neural code we detected encodes different aspects of the stimulus, rather than a redundant representation per se (O'Toole et al., 2005). In this vein, results from the representational similarity analysis deserve further attention. Dissimilarity matrices of content‐dependent regions of the HVC were clearly structured in macro‐blocks along the category and task boundaries, reflecting their perceptual preference (Figure 5). Instead, the dissimilarity matrix of the HC showed little structure, although the HC was shown to contain enough representational information to allow single perceived or imagined exemplars to be decoded. This result suggests that the HC, compared with the HVC, is less sensitive to within‐category similarities and between‐category differences, and rather encodes single exemplars, even within the same category, in a more unique, distinguishable fashion. This interpretation ties well with the general role of the HC in episodic memory formation and retrieval (Moscovitch et al., 2005).

Finding that item‐specific information about faces and landmarks is widely distributed across the HVC and HC, besides expanding on previous results, may have some important clinical consequences. Indeed, it may play a role in the frequently reported association between navigational deficits and developmental (Iaria & Barton, 2010; Klargaard, Starrfelt, Petersen, & Gerlach, 2016; Piccardi et al., 2017) or acquired (Corrow et al., 2016) prosopagnosia. However, it has to be noted that not all of those who show deficits in topographical orientation also show deficits in face processing. An alternative hypothesis is that this shared neural code, even if coding for different attributes of the stimuli, may act as a resilience mechanism explaining why some individuals with developmental topographical disorientation have no deficit in face processing (Bianchini et al., 2014; Piccardi et al., 2017); in this light, the association between navigational deficits and prosopagnosia would arise from a wider alteration in the HVC, which prevents any resilience mechanism. Even if this compelling hypothesis is phylogenetically plausible, further investigations are needed.

4.2. Do the HVC and the HC show different local dynamics as a function of the perceptual category and the task that was repeated?

Concerning our second key point, we studied how brain activity in these areas adapted to Task repetition. As reported above, previous evidence suggested that different adaptation effects underlie imagery and perception (Boccia et al., 2015; Ganis & Schendan, 2008). Here we found that the left PPA showed opposite adaptation effects for imagery and perception of landmarks. Specifically, this area showed the expected adaptation effect (namely, suppression of activity as a consequence of repeated exposure to the same condition) during repeated perception of landmarks, but the opposite trend (namely, enhancement of activity as a consequence of repeated exposure to the same condition) during repeated imagery of landmarks (hereafter called cross‐task adaptation shift). These findings are consistent with previous evidence that imagery and perception produce opposite adaptation effects in occipitotemporal neurons (Ganis & Schendan, 2008), suggesting that both tasks recruit similar neural populations, but they involve different dynamic regional and inter‐regional mechanisms. Furthermore, this result expands on previous ones, pointing toward the existence of a specific mechanism for mental imagery of places, which is likely located in the left PPA. In this vein, the finding that the left PPA shows reduced activity for repeated landmark perception, and enhanced activity for imagery of the same category, is consistent with suggestions that imagining landmarks entails the reactivation of a memory trace through top‐down interactions (Ganis & Schendan, 2008). We also found task‐related adaptation effects in the bilateral FFA: this area showed neural adaptation only during imagery (regardless of the content), with suppressed activity during task‐repetition as compared to task‐change.
A main effect of the Task repetition was detected in the bilateral RSC, with this region showing suppression of activity for task‐repetition as compared to task‐change regardless of Content and Task. Taken together, these results support the idea that, even if perception and imagery share widely distributed representations in the HVC, different regional dynamic mechanisms underpin the use of such information, to create a mental image of familiar landmarks and faces (during imagery) or to recognize them (during perception). It remains to clarify whether these regions, with their different contributions, interact to give rise to the mental imagery experience or the perceptual processing of different Content. We answered this question with gPPI analysis discussed below.

4.3. Does the functional coupling among these areas dynamically change as a function of task and content?

Previous studies on effective connectivity during perception and mental imagery of different categories of objects have mainly focused on the top‐down versus bottom‐up direction of the information flow between frontal/parietal regions and LVC (Dentico et al., 2014; Dijkstra et al., 2017). Thus, the way in which the functional coupling within content‐dependent HVC areas and between these areas and the HC is modulated by task and content is still largely unknown. To address this issue, we performed a gPPI analysis that allowed us to test whether experimental conditions modulate the functional connectivity between a source and a target region. It is apparent from Figure 7 that the arrangement of functional couplings among the investigated regions changes according to Task and Content. First, most of the interhemispheric connections originated in the left hemisphere (source regions in the PPI) and targeted the right one (target regions in the PPI). This is intriguing in view of the long‐standing debate about the hemispheric contribution to mental imagery. Seminal studies of the past century suggested that perception and imagery yielded different patterns of hemispheric activation, with the HVC more activated in the right hemisphere during perception and more activated in the left hemisphere during imagery (Farah, Weisberg, Monheit, & Peronnet, 1989). More recent studies support the idea that both hemispheres contribute to imagery and perception, with specialized and complementary contributions, namely categorical (left hemisphere) and coordinate (right hemisphere) processing (Kosslyn et al., 1989; Kosslyn, Maljkovic, Hamilton, Horwitz, & Thompson, 1995; Laeng, 1994; Palermo, Bureca, Matano, & Guariglia, 2008). The idea that the two hemispheres contribute in a complementary manner to imagery and perception is consistent with the present data on the neural adaptation effects, which we found lateralized in the left PPA.
Here we found that during imagery the left and the right RSC were more connected than during perception, regardless of the Content. This finding is of particular interest because the disconnection of the splenium of the corpus callosum has been linked to the presence of representational neglect (Rode et al., 2010). The present data, taken together with the neuropsychological findings on representational neglect, suggest that the left RSC, targeting the right RSC by means of the splenium of the corpus callosum, may be the brain hub that allows the complementary contributions of the left and the right hemisphere to be integrated. Interestingly, the left RSC is also more connected with the left PPA during landmark processing, regardless of Task. As reported above, the left PPA shows a peculiar adaptation effect, with a cross‐task adaptation shift. The PPI results, taken together with the results on the neural adaptation effect, support the pivotal role of the left PPA in differentiating the HVC contribution to imagery and perception. In the right hemisphere, by contrast, perception of landmarks characterized the within‐hemisphere coupling between the RSC and the PPA. It has to be noted that the PPA is the main target of the right‐hemisphere intrahemispheric connections (from the OPA, OFA, RSC, and HC); indeed, it has the greatest number of psychophysiological interactions as a target region. The nature of these connections (which is mainly due to landmark processing and/or perception) is consistent with the well‐known contribution of the PPA to spatial navigation (Boccia, Nemmi, & Guariglia, 2014). Also, the stronger connection between the RSC and the PPA in the right hemisphere during perception of landmarks is consistent with our previous investigation (Boccia et al., 2017).
Although we found that during processing of landmarks the HC and the PPA were more connected in the right hemisphere (Table 2 and Figure 7), differently from our previous study (Boccia et al., 2017), there was no significant difference between imagery and perception. This is probably due to the nature of the task adopted here, which required, in the case of imagery of landmarks, mentally retrieving the perceptual features of landmarks rather than their relative positions in the environment. It has to be noted that, during imagery, hippocampal activation is modulated parametrically by a spatial index of the imagined scene, namely the number of enclosing boundaries in the scene (Bird, Capponi, King, Doeller, & Burgess, 2010). Thus, the functional coupling between the HC and the PPA may be strictly linked to the retrieval of spatial information about familiar places, which was not required here. Additional PPI results on hippocampal subregions (i.e., aHC vs. pHC) tie in well with the long‐axis specialization proposed by Poppenk et al. (2013). Indeed, with the exception of the right OFA, whose activity interacted with both the aHC and the pHC, we found that Task and Content modulate the interhemispheric effective connectivity of the aHC as well as its intrahemispheric connectivity with the PPA and FFA. This result is consistent with the coarse‐grained (global) functional specialization of the aHC and the fine‐grained (local) information processing in the pHC. In this light, the aHC, biased toward pattern completion and global processing, interacts with more anterior areas of the HVC during imagery and perception. Instead, the pHC, biased toward pattern separation and fine‐grained processing of information, interacts with the OFA, an earlier content‐dependent area of the HVC. With the exception of the functional coupling with the right PPA, which is higher during landmark processing, hippocampal coupling with the FFA and OFA is stronger during perception, regardless of the category.
This is consistent with the idea that the HC generally contributes to successful cued recall, regardless of the stimulus to be recalled (Staresina, Cooper, & Henson, 2013).

5. CONCLUSIONS

In conclusion, mental imagery is an emergent property of the dynamic interaction between key regions of the HVC. Information about familiar faces and places is widely distributed in the HVC and HC and generalizes across imagery and perception; however, dynamic local and inter‐regional mechanisms allow this information to be re‐instantiated as a function of the task to be performed. This evidence is consistent with classical neuropsychological evidence of severe visual agnosia in the absence of imagery deficit (Behrmann et al., 1992) as well as of representational neglect in the absence of extrapersonal perceptual neglect (Guariglia, Palermo, Piccardi, Iaria, & Incoccia, 2013). These findings also open fascinating new possibilities for understanding newly discovered disorders of mental imagery such as congenital aphantasia (Fulford et al., 2017; Jacobs et al., 2017; Keogh & Pearson, 2017; Watkins, 2017; Zeman et al., 2015, 2016).

Supporting information

Table S1 Statistics about the one‐sample t tests performed on classification accuracies

Figure S1 Mean classification accuracies and standard errors of preliminary cross‐decoding analysis on content. * p < 0.004

Table S2 Results of the one‐sample t tests performed on classification accuracies of decoding

Table S3 Results of the one‐sample t tests performed on classification accuracies of cross‐decoding

Boccia M, Sulpizio V, Teghil A, et al. The dynamic contribution of the high‐level visual cortex to imagery and perception. Hum Brain Mapp. 2019;40:2449–2463. 10.1002/hbm.24535

REFERENCES

- Aglioti, S. , Bricolo, E. , Cantagallo, A. , & Berlucchi, G. (1999). Unconscious letter discrimination is enhanced by association with conscious color perception in visual form agnosia. Current Biology, 9(23), 1419–1422. [DOI] [PubMed] [Google Scholar]

- Aguirre, G. K. (2007). Continuous carry‐over designs for fMRI. NeuroImage, 35, 1480–1494. 10.1016/j.neuroimage.2007.02.005 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Behrmann, M. , Winocur, G. , & Moscovitch, M. (1992). Dissociation between mental imagery and object recognition in a brain‐damaged patient. Nature, 359, 1. [DOI] [PubMed] [Google Scholar]

- Bianchini, F., Palermo, L., Piccardi, L., Incoccia, C., Nemmi, F., Sabatini, U., & Guariglia, C. (2014). Where am I? A new case of developmental topographical disorientation. Journal of Neuropsychology, 8(1), 107–124. 10.1111/jnp.12007

- Bird, C. M., Capponi, C., King, J. A., Doeller, C. F., & Burgess, N. (2010). Establishing the boundaries: The hippocampal contribution to imagining scenes. The Journal of Neuroscience, 30, 11688–11695. 10.1523/JNEUROSCI.0723-10.2010

- Boccia, M., Nemmi, F., & Guariglia, C. (2014). Neuropsychology of environmental navigation in humans: Review and meta‐analysis of fMRI studies in healthy participants. Neuropsychology Review, 24(2), 236–251. 10.1007/s11065-014-9247-8

- Boccia, M., Piccardi, L., Palermo, L., Nemmi, F., Sulpizio, V., Galati, G., & Guariglia, C. (2014). One's own country and familiar places in the mind's eye: Different topological representations for navigational and non‐navigational contents. Neuroscience Letters, 579, 52–57. 10.1016/j.neulet.2014.07.008

- Boccia, M., Piccardi, L., Palermo, L., Nemmi, F., Sulpizio, V., Galati, G., & Guariglia, C. (2015). A penny for your thoughts! Patterns of fMRI activity reveal the content and the spatial topography of visual mental images. Human Brain Mapping, 36(3), 945–958. 10.1002/hbm.22678

- Boccia, M., Sulpizio, V., Nemmi, F., Guariglia, C., & Galati, G. (2016). Direct and indirect parieto‐medial temporal pathways for spatial navigation in humans: Evidence from resting‐state functional connectivity. Brain Structure and Function, 222, 1945–1957. 10.1007/s00429-016-1318-6

- Boccia, M., Sulpizio, V., Palermo, L., Piccardi, L., Guariglia, C., & Galati, G. (2017). I can see where you would be: Patterns of fMRI activity reveal imagined landmarks. NeuroImage, 144(Pt A), 174–182. 10.1016/j.neuroimage.2016.08.034

- Chang, C.‐C., & Lin, C.‐J. (2011). A library for support vector machines. ACM Transactions on Intelligent Systems and Technology (TIST), 2, 39–27. 10.1145/1961189.1961199

- Cichy, R. M., Heinzle, J., & Haynes, J. D. (2012). Imagery and perception share cortical representations of content and location. Cerebral Cortex, 22, 372–380. 10.1093/cercor/bhr106

- Corrow, J. C., Corrow, S. L., Lee, E., Pancaroglu, R., Burles, F., Duchaine, B., … Barton, J. J. (2016). Getting lost: Topographic skills in acquired and developmental prosopagnosia. Cortex, 76, 89–103. 10.1016/j.cortex.2016.01.003

- De Martino, F., Valente, G., Staeren, N., Ashburner, J., Goebel, R., & Formisano, E. (2008). Combining multivariate voxel selection and support vector machines for mapping and classification of fMRI spatial patterns. NeuroImage, 43, 44–58. 10.1016/j.neuroimage.2008.06.037

- Dentico, D., Cheung, B. L., Chang, J. Y., Guokas, J., Boly, M., Tononi, G., & Van Veen, B. (2014). Reversal of cortical information flow during visual imagery as compared to visual perception. NeuroImage, 100, 237–243. 10.1016/j.neuroimage.2014.05.081

- Di, X., & Biswal, B. B. (2015). Characterizations of resting‐state modulatory interactions in the human brain. Journal of Neurophysiology, 114(5), 2785–2796. 10.1152/jn.00893.2014

- Dijkstra, N., Zeidman, P., Ondobaka, S., van Gerven, M. A. J., & Friston, K. (2017). Distinct top‐down and bottom‐up brain connectivity during visual perception and imagery. Scientific Reports, 7, 5677. 10.1038/s41598-017-05888-8

- Farah, M. J. (1989). The neuropsychology of mental imagery. In F. Boller & J. Grafman (Eds.), The handbook of neuropsychology: Disorders of visual behaviour (pp. 395–413). Amsterdam: Elsevier.

- Farah, M. J., Weisberg, L. L., Monheit, M., & Peronnet, F. (1989). Brain activity underlying mental imagery: Event‐related potentials during mental image generation. Journal of Cognitive Neuroscience, 1(4), 302–316. 10.1162/jocn.1989.1.4.302

- Fulford, J., Milton, F., Salas, D., Smith, A., Simler, A., Winlove, C., & Zeman, A. (2017). The neural correlates of visual imagery vividness—An fMRI study and literature review. Cortex, 105, 26–40. 10.1016/j.cortex.2017.09.014

- Ganis, G., & Schendan, H. E. (2008). Visual mental imagery and perception produce opposite adaptation effects on early brain potentials. NeuroImage, 42, 1714–1727. 10.1016/j.neuroimage.2008.07.004

- Guariglia, C., Padovani, A., Pantano, P., & Pizzamiglio, L. (1993). Unilateral neglect restricted to visual imagery. Nature, 364(6434), 235–237. 10.1038/364235a0

- Guariglia, C., Palermo, L., Piccardi, L., Iaria, G., & Incoccia, C. (2013). Neglecting the left side of a city square but not the left side of its clock: Prevalence and characteristics of representational neglect. PLoS One, 8(7), e67390. 10.1371/journal.pone.0067390

- Haxby, J. V., Gobbini, M. I., Furey, M. L., Ishai, A., Schouten, J. L., & Pietrini, P. (2001). Distributed and overlapping representations of faces and objects in ventral temporal cortex. Science, 293, 2425–2430. 10.1126/science.1063736

- Iaria, G., & Barton, J. J. (2010). Developmental topographical disorientation: A newly discovered cognitive disorder. Experimental Brain Research, 206(2), 189–196. 10.1007/s00221-010-2256-9

- Ishai, A. (2010). Seeing faces and objects with the "mind's eye". Archives Italiennes de Biologie, 148(1), 1–9.

- Jacobs, C., Schwarzkopf, D. S., & Silvanto, J. (2017). Visual working memory performance in aphantasia. Cortex, 105, 61–73. 10.1016/j.cortex.2017.10.014

- Johnson, M. R., & Johnson, M. K. (2014). Decoding individual natural scene representations during perception and imagery. Frontiers in Human Neuroscience, 8, 59. 10.3389/fnhum.2014.00059

- Keogh, R., & Pearson, J. (2017). The blind mind: No sensory visual imagery in aphantasia. Cortex, 105, 53–60. 10.1016/j.cortex.2017.10.012

- Klargaard, S. K., Starrfelt, R., Petersen, A., & Gerlach, C. (2016). Topographic processing in developmental prosopagnosia: Preserved perception but impaired memory of scenes. Cognitive Neuropsychology, 33(1–9), 405–413. 10.1080/02643294.2016.1267000

- Kosslyn, S. M. (1980). Image and mind. Cambridge, MA: Harvard University Press.

- Kosslyn, S. M., Koenig, O., Barrett, A., Cave, C. B., Tang, J., & Gabrieli, J. D. (1989). Evidence for two types of spatial representations: Hemispheric specialization for categorical and coordinate relations. Journal of Experimental Psychology: Human Perception and Performance, 15(4), 723–735.

- Kosslyn, S. M., Maljkovic, V., Hamilton, S. E., Horwitz, G., & Thompson, W. L. (1995). Two types of image generation: Evidence for left and right hemisphere processes. Neuropsychologia, 33(11), 1485–1510.

- Kriegeskorte, N., Mur, M., & Bandettini, P. (2008). Representational similarity analysis—Connecting the branches of systems neuroscience. Frontiers in Systems Neuroscience, 2, 4. 10.3389/neuro.06.004.2008

- Laeng, B. (1994). Lateralization of categorical and coordinate spatial functions: A study of unilateral stroke patients. Journal of Cognitive Neuroscience, 6(3), 189–203. 10.1162/jocn.1994.6.3.189

- Lee, S. H., Kravitz, D. J., & Baker, C. I. (2012). Disentangling visual imagery and perception of real‐world objects. NeuroImage, 59, 4064–4073. 10.1016/j.neuroimage.2011.10.055

- McLaren, D. G., Ries, M. L., Xu, G., & Johnson, S. C. (2012). A generalized form of context‐dependent psychophysiological interactions (gPPI): A comparison to standard approaches. NeuroImage, 61(4), 1277–1286. 10.1016/j.neuroimage.2012.03.068

- Morgan, L. K., MacEvoy, S. P., Aguirre, G. K., & Epstein, R. A. (2011). Distances between real‐world locations are represented in the human hippocampus. The Journal of Neuroscience, 31, 1238–1245. 10.1523/JNEUROSCI.4667-10.2011

- Moscovitch, M., Rosenbaum, R. S., Gilboa, A., Addis, D. R., Westmacott, R., Grady, C., … Nadel, L. (2005). Functional neuroanatomy of remote episodic, semantic and spatial memory: A unified account based on multiple trace theory. Journal of Anatomy, 207(1), 35–66. 10.1111/j.1469-7580.2005.00421.x

- Nonyane, B. A. S., & Theobald, C. M. (2007). Design sequences for sensory studies: Achieving balance for carry‐over and position effects. The British Journal of Mathematical and Statistical Psychology, 60, 339–349. 10.1348/000711006X114568

- O'Craven, K. M., & Kanwisher, N. (2000). Mental imagery of faces and places activates corresponding stimulus‐specific brain regions. Journal of Cognitive Neuroscience, 12(6), 1013–1023.

- O'Toole, A. J., Jiang, F., Abdi, H., & Haxby, J. V. (2005). Partially distributed representations of objects and faces in ventral temporal cortex. Journal of Cognitive Neuroscience, 17, 580–590.

- Palermo, L., Bureca, I., Matano, A., & Guariglia, C. (2008). Hemispheric contribution to categorical and coordinate representational processes: A study on brain‐damaged patients. Neuropsychologia, 46(11), 2802–2807. 10.1016/j.neuropsychologia.2008.05.020

- Piccardi, L., De Luca, M., Di Vita, A., Palermo, L., Tanzilli, A., Dacquino, C., & Pizzamiglio, M. R. (2017). Evidence of taxonomy for developmental topographical disorientation: Developmental landmark agnosia case 1. Applied Neuropsychology: Child, 1–12. 10.1080/21622965.2017.1401477

- Poppenk, J., Evensmoen, H. R., Moscovitch, M., & Nadel, L. (2013). Long‐axis specialization of the human hippocampus. Trends in Cognitive Sciences, 17(5), 230–240. 10.1016/j.tics.2013.03.005

- Ranganath, C., & Ritchey, M. (2012). Two cortical systems for memory‐guided behavior. Nature Reviews Neuroscience, 13, 713–726. 10.1038/nrn3338

- Reddy, L., Tsuchiya, N., & Serre, T. (2010). Reading the mind's eye: Decoding category information during mental imagery. NeuroImage, 50, 818–825. 10.1016/j.neuroimage.2009.11.084

- Riddoch, M. J., & Humphreys, G. W. (1987). A case of integrative visual agnosia. Brain, 110(Pt 6), 1431–1462.

- Rode, G., Cotton, F., Revol, P., Jacquin‐Courtois, S., Rossetti, Y., & Bartolomeo, P. (2010). Representation and disconnection in imaginal neglect. Neuropsychologia, 48(10), 2903–2911. 10.1016/j.neuropsychologia.2010.05.032

- Staresina, B. P., Cooper, E., & Henson, R. N. (2013). Reversible information flow across the medial temporal lobe: The hippocampus links cortical modules during memory retrieval. The Journal of Neuroscience, 33(35), 14184–14192. 10.1523/JNEUROSCI.1987-13.2013

- Sulpizio, V., Committeri, G., Lambrey, S., Berthoz, A., & Galati, G. (2013). Selective role of lingual/parahippocampal gyrus and retrosplenial complex in spatial memory across viewpoint changes relative to the environmental reference frame. Behavioural Brain Research, 242, 62–75. 10.1016/j.bbr.2012.12.031

- Sulpizio, V., Committeri, G., & Galati, G. (2014). Distributed cognitive maps reflecting real distances between places and views in the human brain. Frontiers in Human Neuroscience, 8, 716. 10.3389/fnhum.2014.00716

- Trojano, L., & Grossi, D. (1994). A critical review of mental imagery defects. Brain and Cognition, 24(2), 213–243. 10.1006/brcg.1994.1012

- Van Essen, D. C., Glasser, M. F., Dierker, D. L., Harwell, J., & Coalson, T. (2012). Parcellations and hemispheric asymmetries of human cerebral cortex analyzed on surface‐based atlases. Cerebral Cortex, 22(10), 2241–2262. 10.1093/cercor/bhr291

- Van Leemput, K., Bakkour, A., Benner, T., Wiggins, G., Wald, L. L., Augustinack, J., … Fischl, B. (2009). Automated segmentation of hippocampal subfields from ultra‐high resolution in vivo MRI. Hippocampus, 19, 549–557. 10.1002/hipo.20615

- Watkins, N. W. (2017). (A)phantasia and severely deficient autobiographical memory: Scientific and personal perspectives. Cortex, 105, 41–52. 10.1016/j.cortex.2017.10.010

- Zeman, A., Dewar, M., & Della Sala, S. (2015). Lives without imagery—Congenital aphantasia. Cortex, 73, 378–380. 10.1016/j.cortex.2015.05.019

- Zeman, A., Dewar, M., & Della Sala, S. (2016). Reflections on aphantasia. Cortex, 74, 336–337. 10.1016/j.cortex.2015.08.015
