Abstract

Dynamic facial expressions of emotions constitute natural and powerful means of social communication in daily life. A number of previous neuroimaging studies have explored the neural mechanisms underlying the processing of dynamic facial expressions, and indicated the activation of certain social brain regions (e.g., the amygdala) during such tasks. However, the activated brain regions were inconsistent across studies, and their laterality was rarely evaluated. To investigate these issues, we measured brain activity using functional magnetic resonance imaging in a relatively large sample (n = 51) during the observation of dynamic facial expressions of anger and happiness and their corresponding dynamic mosaic images. The observation of dynamic facial expressions, compared with dynamic mosaics, elicited stronger activity in the bilateral posterior cortices, including the inferior occipital gyri, fusiform gyri, and superior temporal sulci. The dynamic facial expressions also activated bilateral limbic regions, including the amygdalae and ventromedial prefrontal cortices, more strongly than did the mosaics. The same contrast revealed activation in the right inferior frontal gyrus (IFG) and left cerebellum. Laterality analyses comparing original and flipped images revealed right hemispheric dominance in the superior temporal sulcus and IFG and left hemispheric dominance in the cerebellum. These results indicated that the neural mechanisms underlying processing of dynamic facial expressions include widespread social brain regions associated with perceptual, emotional, and motor functions, and include a clearly lateralized (right cortical and left cerebellar) network like that involved in language processing.

Keywords: amygdala, dynamic facial expression, functional magnetic resonance imaging (fMRI), inferior frontal gyrus, laterality, superior temporal sulcus

1. INTRODUCTION

Dynamic facial expressions of emotions constitute natural and powerful means of social communication in daily life, because emotional facial expressions represent a primary source of information (Mehrabian, 1971) and normal facial expressions are dynamic (Darwin, 1872). Psychological studies have revealed that dynamic facial expressions trigger multiple strong psychological responses compared with dynamic control stimuli, such as mosaics, or static facial expressions. For example, previous studies showed that dynamic facial expressions boost perceptual awareness of the expression (Ceccarini & Caudek, 2013; Yoshikawa & Sato, 2008), subjective emotional responses (Sato & Yoshikawa, 2007a), and spontaneous facial mimicry (i.e., facial muscular responses interpretable as mimicking behaviors) (Rymarczyk, Biele, Grabowska, & Majczynski, 2011; Rymarczyk, Zurawski, Jankowiak‐Siuda, & Szatkowska, 2016a, 2016b; Sato, Fujimura, & Suzuki, 2008; Sato & Yoshikawa, 2007b; Weyers, Muhlberger, Hefele, & Pauli, 2006). The emotional effects of dynamic facial expressions are processed rapidly, even before conscious awareness of the face (Sato, Kubota, & Toichi, 2014).

A number of neuroimaging studies using functional magnetic resonance imaging (fMRI) and positron emission tomography have been performed to gain insight into the neural mechanisms underlying the processing of dynamic facial expressions (Arnold, Iaria, & Goghari, 2016; Arsalidou, Morris, & Taylor, 2011; Badzakova‐Trajkov, Haberling, Roberts, & Corballis, 2010; De Winter et al., 2015; Faivre, Charron, Roux, Lehéricy, & Kouider, 2012; Foley, Rippon, Thai, Longe, & Senior, 2012; Fox, Iaria, & Barton, 2009; Furl, Henson, Friston, & Calder, 2013; Grosbras & Paus, 2006; Johnston, Mayes, Hughes, & Young, 2013; Kessler et al., 2011; Kilts, Egan, Gideon, Ely, & Hoffman, 2003; Kret, Pichon, Grezes, & de Gelder, 2011a; LaBar, Crupain, Voyvodic, & McCarthy, 2003; Pelphrey, Morris, McCarthy, & Labar, 2007; Pentón et al., 2010; Polosecki et al., 2013; Rahko et al., 2010; Reinl & Bartels, 2014; Rymarczyk, Zurawski, Jankowiak‐Siuda, & Szatkowska, 2018; Sato, Kochiyama, Uono, & Yoshikawa, 2010; Sato, Kochiyama, Yoshikawa, Naito, & Matsumura, 2004; Sato, Toichi, Uono, & Kochiyama, 2012; Schobert, Corradi‐Dell'Acqua, Frühholz, van der Zwaag, & Vuilleumier, 2018; Schultz, Brockhaus, Bülthoff, & Pilz, 2013; Schultz & Pilz, 2009; Trautmann, Fehr, & Herrmann, 2009; van der Gaag, Minderaa, & Keysers, 2007; for reviews, see Arsalidou et al., 2011; Zinchenko, Yaple, & Arsalidou, 2018). These studies contrasted brain activation during observation of dynamic emotional facial expressions with that during observation of control stimuli matched for visual motion or form with the dynamic expressions, such as dynamic mosaics, dynamic objects, nonemotional facial movements, and static emotional facial expressions.
Of these 28 studies, 23 indicated that dynamic facial expressions activated the superior temporal sulcus (STS) region, which consists of the STS and its adjacent middle and superior temporal gyri (Allison, Puce, & McCarthy, 2000) (e.g., Kilts et al., 2003; LaBar et al., 2003; Sato et al., 2004), and 17 studies found that dynamic facial expressions activated the fusiform gyrus (FG) (e.g., Kilts et al., 2003; LaBar et al., 2003; Sato et al., 2004). In addition to these posterior cortical regions, 11 studies reported activation in limbic system regions, such as the amygdala (e.g., LaBar et al., 2003; Pelphrey et al., 2007; Sato et al., 2004), and 11 reported activation in the inferior frontal gyrus (IFG) (e.g., LaBar et al., 2003; Sato et al., 2004; van der Gaag et al., 2007), which contains motor‐related parts (Binkofski & Buccino, 2006). The activation of these regions in response to dynamic facial expressions was also demonstrated in a recent meta‐analysis that analyzed the coordinates reported by 14 articles (Zinchenko et al., 2018). Substantial neuroimaging and neuropsychological evidence suggested that activation of these brain regions was consistent with their information‐processing functions, such as visual analysis of the dynamic aspects of faces involving the STS region (Allison et al., 2000), emotional processing involving the amygdala (Calder, Lawrence, & Young, 2001), and motor mimicry as a form of social interaction involving the IFG (Iacoboni, 2005). Based on such evidence, these regions have been called “social brain regions” (Adolphs, 2003; Brothers, 1990; Emery & Perrett, 2000). Taken together, these neuroscientific data provide valuable information regarding the manner in which human brains process dynamic facial expressions associated with emotion to engage in social cognition.

However, some issues remain unsettled. First, the results of previous studies have been inconsistent regarding the activated brain regions. For example, although 11 studies reported amygdala activity in response to dynamic facial expressions (e.g., LaBar et al., 2003), studies that failed to find such activity outnumbered those that found it (i.e., 17 studies; e.g., Grosbras & Paus, 2006; Kilts et al., 2003; Pentón et al., 2010). One study suggested that increased activity in the amygdala is more likely to be found in studies using dynamic facial expressions generated with computer morphing techniques, compared to those using stimuli with natural dynamic facial expressions (Reinl & Bartels, 2014; however, see Badzakova‐Trajkov et al., 2010; Foley et al., 2012; Fox et al., 2009; Rahko et al., 2010; Trautmann et al., 2009; van der Gaag et al., 2007). Additionally, it should be noted that numerous other regions were reportedly activated in a small number of studies. For example, three previous studies (Badzakova‐Trajkov et al., 2010; Fox et al., 2009; Sato et al., 2012) indicated that observation of dynamic facial expressions activated the dorsomedial prefrontal cortex (dmPFC), which could be involved in inferring the intentions and other mental states of others from their faces (Frith & Frith, 2003). One possible reason for these inconsistent findings is sample size, which was less than 30 in all previous individual experiments with only a few exceptions (Badzakova‐Trajkov et al., 2010; Kessler et al., 2011; Rymarczyk et al., 2018), because studies involving small sample sizes may have low statistical power and a reduced chance of detecting effects (Button et al., 2013).
This issue could be relevant even to meta‐analyses (Arsalidou et al., 2011; Zinchenko et al., 2018) because these studies employed coordinate‐based meta‐analytical methods, which assess the convergence of the locations of activation foci reported in individual studies and have difficulty detecting small effects with underpowered individual studies (Acar, Seurinck, Eickhoff, & Moerkerke, 2018). Another possible reason for these inconsistencies is that a majority of previous studies have compared dynamic with static facial expressions. Due to the contrasts of strong versus weak social stimuli, some social brain regions might have been activated under both conditions. Based on these data, we hypothesized that the activity of social brain regions during the observation of dynamic facial expressions would be robustly elicited by testing a relatively large sample size and comparing dynamic facial expressions with nonsocial dynamic control stimuli.

Second, few studies performed statistical analyses of lateralized brain activation during the processing of dynamic facial expressions. Laterality has been proposed to be a key feature of the human brain, and some psychological functions, such as language, have clearly lateralized neural substrates (Hopkins, Misiura, Pope, & Latash, 2015). Some previous studies reported that the observation of dynamic facial expressions induced more widespread activation in the posterior cortices of the right hemisphere than the left hemisphere (e.g., Pentón et al., 2010; Rahko et al., 2010; Sato et al., 2004). However, to our knowledge, only one study performed statistical comparison of regional brain activities in the left versus right hemisphere during the processing of dynamic facial expressions (De Winter et al., 2015). In this study, the researchers conducted analyses for some regions of interest and found that observation of dynamic faces versus dynamic scrambled images yielded right‐dominant activation in the STS region. However, other studies reported lateralized activity in some other regions. Specifically, some studies reported IFG activity in response to dynamic facial expressions only in the right hemisphere (Fox et al., 2009; Sato et al., 2004, 2010). Based on these observations, we hypothesized that the use of a larger sample would more clearly reveal lateralized social brain region activity during the processing of dynamic facial expressions.

Third, the modulatory effects of stimulus emotion and participant sex on activity in social brain regions during processing of dynamic facial expressions remain unclear. Although numerous studies have investigated the effects of emotion (Arsalidou et al., 2011; Faivre et al., 2012; Foley et al., 2012; Furl et al., 2013; Kessler et al., 2011; Kilts et al., 2003; LaBar et al., 2003; Rahko et al., 2010; Sato et al., 2004, 2010, 2012; Trautmann et al., 2009; van der Gaag et al., 2007), the results were largely inconsistent. For example, some studies statistically or descriptively identified more evident amygdala activity in response to facial expressions associated with negative emotions compared with those associated with positive emotions (Arsalidou et al., 2011; Sato et al., 2004; Trautmann et al., 2009), whereas the majority of the studies did not find such differences. Similarly, although some studies analyzed effects of sex (Kret, Pichon, Grezes, & de Gelder, 2011b; Rahko et al., 2010; Sato et al., 2012), none reported sex differences in activity in the abovementioned social brain regions in response to dynamic facial expressions compared with control stimuli. Based on these data and the ongoing debate regarding the evaluation of these effects using different stimuli (cf. Filkowski, Olsen, Duda, Wanger, & Sabatinelli, 2017; Fusar‐Poli et al., 2009; García‐García et al., 2016; Lindquist, Wager, Kober, Bliss‐Moreau, & Barrett, 2012; Sergerie, Chochol, & Armony, 2008), we made no predictions pertaining to the effects of emotion and sex during the processing of dynamic facial expressions.

To investigate these issues, we measured brain activity using fMRI in a relatively large sample of healthy individuals (n = 51) while they viewed dynamic natural facial expressions. We presented facial expressions depicting anger and happiness because (a) these emotions represent negative and positive emotional valences, respectively; (b) these emotions were frequently assessed in previous neuroimaging studies evaluating dynamic facial expression processing (e.g., LaBar et al., 2003); and (c) behavioral studies provided ample evidence of facial mimicry of the facial expressions associated with these emotions but not other emotions (Hess & Fischer, 2014; Rymarczyk et al., 2016a). For comparison with dynamic facial expressions, we presented dynamic mosaic images created using the frames of dynamic facial expressions, because (a) they provide no social information and allow for the performance of a social versus nonsocial contrast; (b) it is possible to control for low‐level visual properties, such as luminance and motion; and (c) dynamic mosaic images or similar nonsocial dynamic images (e.g., abstract pattern motions, noise patterns, and phase‐scrambled images) have been used in multiple studies (e.g., Sato et al., 2004). The participants passively viewed the stimuli with dummy target detection so that the automatic processing of facial expressions could be evaluated. To acquire robust findings, we conducted conservative statistical analyses using whole brain, family wise error rate corrected height thresholds. By comparing brain activity between dynamic facial expression and dynamic mosaic image conditions, we identified the regions involved in the processing of dynamic facial expressions. Furthermore, to statistically test the laterality of brain activity, we generated flipped images of brain activity that were then contrasted with the original images (Baciu et al., 2005). We also explored the effects of emotion and sex in these analyses.

2. METHODS

2.1. Participants

The study population consisted of 51 volunteers (26 females and 25 males; mean ± SD overall age: 22.5 ± 4.5 years; age of the females: 22.4 ± 5.6 years; age of the males: 22.6 ± 3.3 years). A previous methodological study indicated that a total of 45 participants is recommended to achieve a statistical power of 80% with a standard effect size (Cohen's d = 1) using a voxel‐wise whole‐brain correction (Wager et al., 2009). The participants were administered the Mini‐International Neuropsychiatric Interview (Sheehan et al., 1998), a short structured diagnostic interview, by a psychiatrist or psychologist. The interview revealed no neuropsychiatric conditions in any participant. All participants were right‐handed as assessed by the Edinburgh Handedness Inventory (Oldfield, 1971) (the left–right laterality quotients, scored from −100 to 100, were >0; mean ± SD: 81.1 ± 23.3). All provided informed consent after receiving a detailed explanation of the experimental procedure. Our study was approved by the Ethics Committee of the Primate Research Institute, Kyoto University. The study was conducted in accordance with the Declaration of Helsinki.

2.2. Experimental design

The experiment had a within‐subject two‐factor design, with factors of stimulus type (dynamic expression, dynamic mosaic) and emotion (angry, happy). For further analyses, we added the between‐subject factor of participant sex (male, female).

2.3. Stimuli

Angry and happy facial expressions of four women and four men were presented as video clips (Figure 1). These stimuli were selected from our video database of facial expressions of emotion that includes 65 Japanese models. The stimulus model looked straight ahead. All of the faces were unfamiliar to the participants. These specific stimulus expressions were selected because they were considered to represent theoretically appropriate facial expressions, which was confirmed by coding analyses performed by a trained coder using the Facial Action Coding System (Ekman & Friesen, 1978) and the Facial Action Coding System Affect Interpretation Dictionary (Ekman et al., 1998). Additionally, the speeds of dynamic changes in these expressions were within a natural range for the observers (Sato & Yoshikawa, 2004), and the stimuli have been validated in a number of previous behavioral studies. Specifically, the stimuli were appropriately recognized as angry or happy expressions (Sato et al., 2010), and they elicited appropriate subjective emotional responses (Sato & Yoshikawa, 2007a) and spontaneous facial mimicry (Sato & Yoshikawa, 2007b; Sato et al., 2008; Yoshimura et al., 2015).

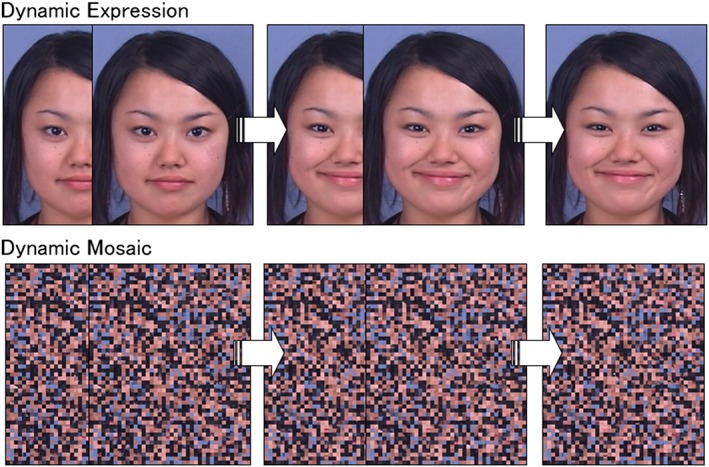

Figure 1.

Illustrations of dynamic facial expression and dynamic mosaic stimuli. Under the dynamic condition, clips consisting of 38 frames ranging from neutral to emotional (angry or happy) expressions were shown. Each frame was shown for 40 ms, and each clip was presented for 1,520 ms.

The dynamic expression stimuli were composed of 38 frames ranging from neutral to emotional expressions. Each frame was presented for 40 ms and each clip for 1,520 ms. The stimuli subtended a visual angle of approximately 15° vertical × 12° horizontal. An example of the stimulus sequence is shown in Figure 1, which includes data from a model who provided consent to the use of her image in scientific publications.

For the dynamic mosaic image stimuli, all of the dynamic facial expression frames were divided into 50 vertical × 40 horizontal squares and reordered using a fixed randomization algorithm (Figure 1). This rearrangement rendered each image unrecognizable as a face. A set of these 38 frames corresponding to the original dynamic face images (without changing frame orders) was serially presented as a moving clip at the same speed as that for the dynamic expression stimuli. The resultant dynamic mosaic stimuli were presented in unrecognizable but smooth motion that was comparable with that of the natural dynamic facial expressions.
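The mosaic construction described above can be sketched in a few lines. This is a minimal illustration under stated assumptions, not the authors' stimulus-generation code: each frame is taken to be a 2-D grid of pixel values whose height and width are divisible by 50 and 40, and the function name `make_mosaic`, the seed value, and the use of Python's `random` module are hypothetical choices. The key point is that one tile permutation is drawn once and reused for every frame.

```python
import random

def make_mosaic(frames, n_rows=50, n_cols=40, seed=0):
    """Scramble every frame with one fixed tile permutation.

    frames: list of 2-D lists (height x width) of pixel values.
    n_rows, n_cols: tile grid (50 vertical x 40 horizontal in the text).
    seed: hypothetical seed that fixes the permutation.
    """
    height, width = len(frames[0]), len(frames[0][0])
    if height % n_rows or width % n_cols:
        raise ValueError("frame size must be divisible by the tile grid")
    th, tw = height // n_rows, width // n_cols  # tile height and width in pixels

    # Draw the tile permutation once; it is reused for all frames.
    order = list(range(n_rows * n_cols))
    random.Random(seed).shuffle(order)

    scrambled = []
    for frame in frames:
        out = [[None] * width for _ in range(height)]
        for dst, src in enumerate(order):
            dr, dc = divmod(dst, n_cols)  # destination tile (row, column)
            sr, sc = divmod(src, n_cols)  # source tile (row, column)
            for i in range(th):
                for j in range(tw):
                    out[dr * th + i][dc * tw + j] = frame[sr * th + i][sc * tw + j]
        scrambled.append(out)
    return scrambled
```

Because the permutation is identical across frames, each tile keeps its own temporal sequence, which is consistent with the description of motion that is smooth but no longer recognizable as a face.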

2.4. Presentation apparatus

The experiments were controlled using Presentation 16.0 software (Neurobehavioral Systems, Albany, CA). The stimuli were projected from a liquid crystal projector (DLA‐HD10K; Japan Victor Company, Yokohama, Japan) at a refresh rate of 60 Hz to a mirror positioned in front of the participants. Responses were obtained using a response box (Response Pad; Current Designs, Philadelphia, PA).

2.5. Procedure

Each participant completed a single fMRI scan, which consisted of 20 epochs of 20 s each separated by 20 rest periods (a blank screen) of 10 s each. A block design was employed in the present study because ample evidence indicates that, relative to an event‐related design, this design has the advantage of high statistical power (Bennett & Miller, 2013; Friston et al., 1999). Although the block design has inherent disadvantages, such as anticipatory or preparatory processes (D'Esposito et al., 1999; Friston et al., 1999) and task‐related motion (Birn et al., 1999; Johnstone et al., 2006), these factors were considered to have less of an impact on the present dummy‐target detection task (see below). Each of the four stimulus conditions was presented in different epochs within each run, and the order of epochs was pseudorandomized. The order of stimuli within each epoch was randomized. Each epoch consisted of eight trials, and a total of 160 trials were completed by each participant. Stimulus trials were replaced by target trials in eight trials (two each of the angry dynamic facial expression, happy dynamic facial expression, angry dynamic mosaic, and happy dynamic mosaic conditions).

During each stimulus trial, a fixation point (a small gray “+” on a white background the same size as the stimulus) was presented in the center of the screen for 980 ms. The stimulus was then presented for 1,520 ms. During each target trial, a large red cross (1.2 × 1.2°) was presented instead of the stimulus. Participants were instructed to press a button using their right forefinger as quickly as possible when a red cross appeared and to fixate on the fixation point in each trial; they did not receive any other information (e.g., stimulus type). These dummy tasks confirmed that the participants attended to the stimuli but did not implement either the controlled processing of the stimuli or stimulus‐related motor responses. Performance on the dummy target‐detection task was perfect (correct identification rate = 100.0%).
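The timing figures reported in this section are mutually consistent, which can be checked with simple arithmetic. The variable names below are illustrative only and are not taken from the original experiment scripts; the run length assumes that epochs and rest periods are the only components of the run.

```python
FRAME_MS = 40          # duration of one video frame
N_FRAMES = 38          # frames per clip
FIXATION_MS = 980      # fixation point shown before each stimulus
TRIALS_PER_EPOCH = 8
N_EPOCHS = 20
REST_S = 10            # blank-screen rest period paired with each epoch

stimulus_ms = N_FRAMES * FRAME_MS                 # clip duration in ms
trial_ms = FIXATION_MS + stimulus_ms              # one full trial in ms
epoch_s = TRIALS_PER_EPOCH * trial_ms / 1000      # epoch duration in s
total_trials = N_EPOCHS * TRIALS_PER_EPOCH        # trials per participant
run_s = N_EPOCHS * (epoch_s + REST_S)             # assumed run length in s

print(stimulus_ms, trial_ms, epoch_s, total_trials, run_s)
```

Each trial therefore lasts 2,500 ms, eight trials fill one 20-s epoch, and 20 epochs yield the 160 trials stated above.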

To confirm that the brain activation in response to dynamic facial expressions versus dynamic mosaic images was not accounted for by eye‐movement artifacts when viewing versus not viewing the stimuli, a preliminary assessment of the eye movements of 12 participants who did not take part in the imaging study was performed outside of the scanner. The same stimuli were presented at the same visual angle on a 19‐in. CRT monitor (HM903D‐A; Iiyama, Tokyo, Japan), and eye movements were tracked using the Tobii X2‐60 system (Tobii Tech, Stockholm, Sweden). The results revealed few horizontal eye movements exceeding 6° (i.e., outside of the stimuli) under both stimulus type conditions (mean ± SD number of eye movements: 1.2 ± 1.5 and 1.5 ± 1.6 during each epoch of the dynamic expression and dynamic mosaic conditions, respectively) and showed no significant differences between the conditions (p > .1, t test).

2.6. MRI acquisition

Image scanning was performed on a 3‐T scanning system (MAGNETOM Trio, A Tim System; Siemens, Malvern, PA) at the ATR Brain Activity Imaging Center using a 12‐channel head coil. The head position was fixed using lateral foam pads. The functional images consisted of 40 consecutive slices parallel to the anterior–posterior commissure plane, and covered the whole brain. A T2*‐weighted gradient‐echo echo‐planar imaging sequence was used with the following parameters: repetition time (TR) = 2,500 ms; echo time (TE) = 30 ms; flip angle = 90°; matrix size = 64 × 64; and voxel size = 3 × 3 × 4 mm. The order of slices was ascending. After acquisition of functional images, a T1‐weighted high‐resolution anatomical image was obtained using a magnetization‐prepared rapid‐acquisition gradient‐echo sequence (TR = 2,250 ms; TE = 3.06 ms; inversion time = 1,000 ms; flip angle = 9°; field of view = 256 × 256 mm; and voxel size = 1 × 1 × 1 mm).

2.7. Image analysis

Image analyses were performed using the statistical parametric mapping package SPM12 (http://www.fil.ion.ucl.ac.uk/spm), implemented in MATLAB R2018 (MathWorks, Natick, MA).

For preprocessing, first, functional images of each run were realigned using the first scan as a reference to correct for head motion. The realignment parameters revealed only a small (<2 mm) motion correction. Next, all functional images were corrected for slice timing. Then, the functional images were coregistered to the anatomical image. Subsequently, all anatomical and functional images were normalized to Montreal Neurological Institute space using the anatomical image‐based unified segmentation‐spatial normalization approach (Ashburner & Friston, 2005). Finally, the spatially normalized functional images were resampled to a voxel size of 2 × 2 × 2 mm and smoothed with an isotropic Gaussian kernel of 8‐mm full‐width at half‐maximum to compensate for anatomical variability among participants.
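For readers less familiar with smoothing kernels, the 8-mm full-width at half-maximum (FWHM) can be converted into the standard deviation of the Gaussian using the standard relation sigma = FWHM / (2 * sqrt(2 * ln 2)). The sketch below only illustrates this conversion; the function name is hypothetical and the code is not part of the SPM12 pipeline.

```python
import math

def fwhm_to_sigma(fwhm):
    """Convert a Gaussian FWHM to its standard deviation (same units)."""
    return fwhm / (2.0 * math.sqrt(2.0 * math.log(2.0)))

VOXEL_MM = 2.0                        # resampled isotropic voxel size
sigma_mm = fwhm_to_sigma(8.0)         # roughly 3.4 mm
sigma_vox = sigma_mm / VOXEL_MM       # roughly 1.7 voxels

print(round(sigma_mm, 2), round(sigma_vox, 2))
```

With 2-mm voxels, the kernel's standard deviation spans a little under two voxels in each direction.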

We used random‐effects analyses to identify significantly activated voxels at the population level (Holmes & Friston, 1998). First, a single‐subject analysis was performed (Friston et al., 1995). The task‐related regressors for the stimulus conditions and the target condition were modeled by boxcar and delta functions, respectively, each convolved with a canonical hemodynamic response function, for each participant. The realignment parameters were used as covariates to account for motion‐related noise signals. We used a high‐pass filter with a cutoff period of 128 s to eliminate the artifactual low‐frequency trend. Serial autocorrelation was accounted for using a first‐order autoregressive model. For the second‐level random‐effects analysis, contrast images of the main effect of stimulus type (dynamic expression vs. dynamic mosaic) were first entered into a one‐sample t test. Next, contrast images of the simple main effect of stimulus type (dynamic expression vs. dynamic mosaic under each emotion condition) were subjected to a two‐way analysis of variance (ANOVA) model with emotion (anger, happiness) as a within‐subject factor and participant sex (male, female) as a between‐subject factor. Voxels were deemed to be significant if they reached a height threshold of p < .05 with family wise error correction for the whole brain, which is recommended as an appropriate inference method (Woo et al., 2014). Additionally, activations were reported with a minimum cluster size of nine voxels (i.e., 72 mm³) because biologically plausible hemodynamic responses tend to be expressed on a spatial scale of 5–8 mm (i.e., spherical approximation, 65–268 mm³) (cf. Friston et al., 1996).
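The construction of a block regressor can be illustrated with a short sketch. It assumes a double-gamma response function (a gamma density with shape 6 minus one sixth of a gamma density with shape 16), which is a common approximation of a canonical hemodynamic response and is not guaranteed to match SPM12's implementation exactly. The onsets, the number of scans, and all function names are hypothetical; the delta-function regressor for target trials and the motion covariates are omitted.

```python
import math

TR = 2.5          # s, repetition time reported in the acquisition section
EPOCH_S = 20.0    # s, epoch duration

def double_gamma_hrf(t):
    """Assumed double-gamma HRF: peak (shape 6) minus scaled undershoot (shape 16)."""
    if t <= 0:
        return 0.0
    peak = t ** 5 * math.exp(-t) / math.gamma(6)
    undershoot = t ** 15 * math.exp(-t) / math.gamma(16)
    return peak - undershoot / 6.0

def boxcar(onsets_s, n_scans):
    """1.0 for scans acquired inside an epoch, 0.0 otherwise."""
    return [1.0 if any(on <= i * TR < on + EPOCH_S for on in onsets_s) else 0.0
            for i in range(n_scans)]

def convolve(signal, kernel):
    """Causal discrete convolution truncated to the signal length."""
    return [sum(signal[i - k] * kernel[k] for k in range(min(i + 1, len(kernel))))
            for i in range(len(signal))]

# HRF sampled once per TR over roughly 32 s.
hrf = [double_gamma_hrf(i * TR) for i in range(int(32 / TR) + 1)]

# Hypothetical onsets: two epochs of one condition, 60 s apart.
onsets = [0.0, 60.0]
regressor = convolve(boxcar(onsets, 48), hrf)
```

In a full model, one such regressor per stimulus condition would enter the design matrix, and the expression versus mosaic contrast would be computed on the estimated weights.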

For laterality analysis, we conducted a comparison between the original and flipped images (Baciu et al., 2005), as described in several previous studies investigating language processing (e.g., Hernandez et al., 2013). The analysis was modified to incorporate the recent methodological developments (Kurth et al., 2015). For this analysis, preprocessing was repeated after slice timing correction in the same way as described above, except for the use of a symmetrical template provided by the VBM8 software (http://dbm.neuro.uni-jena.de/vbm8/TPM_symmetric.nii). Single‐subject analysis was performed similarly except that the analysis was applied both to the original and flipped images. Finally, the second‐level random‐effects analysis was conducted with an inclusive mask of the right (unilateral) hemisphere. For this analysis, contrast images of the main effect of stimulus type were first subjected to a paired t test comparing the hemispheres. Next, contrast images of the simple main effect of stimulus type were analyzed using a three‐way ANOVA model with hemisphere, emotion, and participant sex as factors. Thresholds were identical to those used in the analysis outlined above.
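The logic of the original versus flipped comparison can be sketched as follows. This is a simplified illustration, not the SPM12 procedure used in the study: contrast images are represented as nested lists indexed `[x][y][z]`, the x index is assumed to run from left to right, and the images are assumed to be in a symmetric space so that mirroring pairs each voxel with its homolog. All function names and the toy data are hypothetical.

```python
import math
import statistics

def flip_lr(image):
    """Mirror an [x][y][z] image across the midsagittal plane."""
    return image[::-1]

def paired_t(original_vals, flipped_vals):
    """Paired t statistic (df = n - 1) for one voxel across participants.

    Raises ZeroDivisionError if all paired differences are identical.
    """
    diffs = [o - f for o, f in zip(original_vals, flipped_vals)]
    n = len(diffs)
    return statistics.mean(diffs) / (statistics.stdev(diffs) / math.sqrt(n))

def laterality_t_map(images):
    """Voxel-wise paired t map of original minus flipped contrast images."""
    flipped = [flip_lr(img) for img in images]
    nx, ny, nz = len(images[0]), len(images[0][0]), len(images[0][0][0])
    return [[[paired_t([img[x][y][z] for img in images],
                       [flp[x][y][z] for flp in flipped])
              for z in range(nz)] for y in range(ny)] for x in range(nx)]

# Toy data: three participants, four voxels along x, stronger signal on the right.
toy_images = [
    [[[1.0]], [[2.0]], [[3.0]], [[5.0]]],
    [[[1.5]], [[2.5]], [[3.0]], [[6.0]]],
    [[[1.0]], [[2.0]], [[3.5]], [[4.0]]],
]
toy_t = laterality_t_map(toy_images)
```

Under these assumptions, a positive t value at a right-hemisphere voxel indicates right-dominant activity, and the map is antisymmetric about the midline, which is why restricting inference to one hemisphere loses no information.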

Brain structures were labeled anatomically and identified according to Brodmann's areas using the Automated Anatomical Labeling atlas (Tzourio‐Mazoyer et al., 2002) and Brodmann Maps (Brodmann.nii), respectively, with MRIcron (http://www.mccauslandcenter.sc.edu/mricro/mricron/).

3. RESULTS

3.1. Regional brain activity

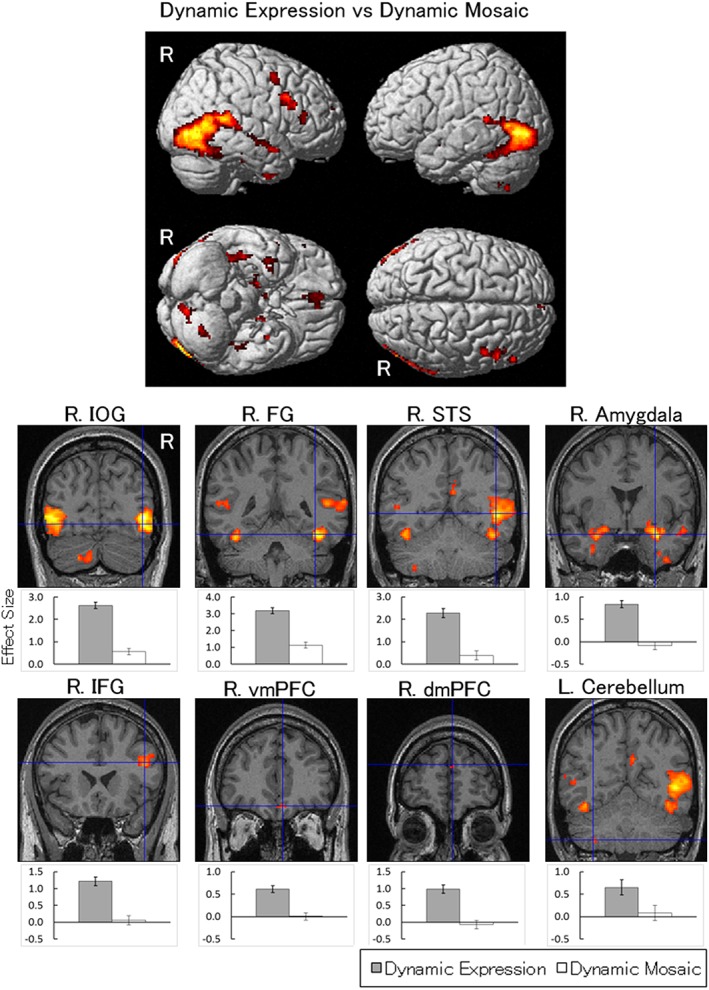

The contrast of dynamic facial expressions versus dynamic mosaic images was analyzed using one‐sample t tests. As reported in numerous studies, the results revealed significant activation in bilateral posterior regions, including the STS regions and FG (Table 1; Figure 2). The cluster covered the inferior occipital gyrus (IOG) as well as the ascending limb of the inferior temporal sulcus corresponding to the V5/MT area (Tootell et al., 1997). Also similar to numerous previous studies, the contrast revealed significant activity in the bilateral amygdalae and the right IFG. Additionally, significant activation to dynamic expressions was observed in the bilateral ventromedial prefrontal cortices (vmPFC), dmPFC, precuneus, and temporal poles in the neocortices. In subcortical regions, significant activation was elicited in the bilateral thalamus and brainstem, which covered the pulvinar (x = 16, y = −33, z = 3; cf. Fischer & Whitney, 2009) and superior colliculus (x = 6, y = −33, z = 0; cf. Limbrick‐Oldfield et al., 2012), and the left cerebellum.

Table 1.

Brain regions that exhibited significant activation for original image analyses

| Side | Region | BA | Coordinate x | Coordinate y | Coordinate z | t‐Value (df = 50) | p‐Value (FWE) | Cluster size (voxel) |
|---|---|---|---|---|---|---|---|---|
| Stimulus type (expression > mosaic) | | | | | | | | |
| R | IOG | 19 | 42 | −76 | −10 | 14.39 | .000 | 3,136 |
| R | FG | 37 | 38 | −46 | −20 | 12.20 | .000 | |
| R | Middle temporal gyrus | 37 | 46 | −56 | 0 | 12.11 | .000 | |
| R | Inferior temporal gyrus | 37 | 50 | −66 | −10 | 10.18 | .000 | |
| R | Superior temporal gyrus | 21 | 46 | −32 | 4 | 9.54 | .000 | |
| R | Middle temporal gyrus | 21 | 50 | −48 | 14 | 8.88 | .000 | |
| R | Superior temporal gyrus | 42 | 50 | −38 | 14 | 8.81 | .000 | |
| L | IOG | 19 | −44 | −82 | −4 | 12.72 | .000 | 1,687 |
| L | FG | 37 | −42 | −54 | −20 | 10.30 | .000 | |
| L | Middle temporal gyrus | 37 | −44 | −68 | 2 | 9.38 | .000 | |
| L | Middle temporal gyrus | 22 | −56 | −44 | 10 | 7.59 | .000 | |
| L | Middle temporal gyrus | 51 | −48 | −52 | 8 | 7.56 | .000 | |
| L | Inferior temporal gyrus | 20 | −44 | −30 | −20 | 6.76 | .002 | |
| L | FG | 20 | −38 | −22 | −26 | 6.60 | .003 | |
| R | Hippocampus | – | 20 | −6 | −14 | 11.70 | .000 | 507 |
| R | Amygdala | – | 28 | 0 | −20 | 11.23 | .000 | |
| R | Temporal pole | 38 | 32 | 8 | −24 | 6.39 | .006 | |
| R | Thalamus | – | 16 | −30 | 0 | 11.26 | .000 | 102 |
| R | Brainstem | – | 12 | −26 | −6 | 6.77 | .002 | |
| L | Hippocampus | – | −20 | −8 | −14 | 10.82 | .000 | 390 |
| L | Amygdala | – | −30 | 0 | −20 | 8.68 | .000 | |
| L | Temporal pole | 34 | −28 | −6 | −20 | 6.15 | .015 | |
| L | Thalamus | – | −16 | −32 | 0 | 9.46 | .000 | 36 |
| R | Precentral gyrus | 6 | 46 | 4 | 52 | 8.57 | .000 | 67 |
| R | IFG | 48 | 44 | 20 | 24 | 8.56 | .000 | 317 |
| R | IFG | 44 | 36 | 10 | 30 | 7.90 | .000 | |
| L | Cerebellum crus I | – | −12 | −70 | −26 | 8.50 | .000 | 68 |
| R | Middle temporal gyrus | 21 | 54 | 4 | −18 | 8.44 | .000 | 122 |
| R | Middle temporal gyrus | 22 | 50 | −12 | −12 | 6.83 | .001 | |
| R | Superior temporal gyrus | 48 | 50 | −14 | −6 | 6.68 | .002 | |
| L | Cerebellum crus II | – | −10 | −78 | −38 | 8.24 | .000 | 118 |
| R | Thalamus | – | 10 | −14 | 10 | 7.86 | .000 | 73 |
| R | Precuneus | 23 | 4 | −54 | 20 | 7.76 | .000 | 64 |
| R | Inferior temporal gyrus | 20 | 38 | −2 | −44 | 7.69 | .000 | 59 |
| R | FG | 38 | 30 | 2 | −38 | 6.68 | .002 | |
| R | Rectus gyrus | 11 | 6 | 40 | −18 | 7.53 | .000 | 95 |
| L | Rectus gyrus | 11 | −6 | 46 | −16 | 7.47 | .000 | |
| R | IFG | 45 | 52 | 32 | 8 | 7.48 | .000 | 47 |
| L | Inferior temporal gyrus | 36 | −34 | −2 | −36 | 7.47 | .000 | 21 |
| L | Cerebellum lobule VIII | – | −32 | −58 | −50 | 7.36 | .000 | 59 |
| R | Superior frontal gyrus (medial) | 10 | 4 | 56 | 22 | 6.41 | .006 | 15 |
| Stimulus type (expression > mosaic) × emotion | | | | | | | | |
| None | | | | | | | | |
| Stimulus type (expression > mosaic) × sex | | | | | | | | |
| None | | | | | | | | |
| Stimulus type (expression > mosaic) × emotion × sex | | | | | | | | |
| None | | | | | | | | |

Abbreviations: BA = Brodmann's area; FG = fusiform gyrus; FWE = family wise error corrected; IFG = inferior frontal gyrus; IOG = inferior occipital gyrus; L = left; R = right.

Figure 2.

Statistical parametric maps indicating regions that were significantly more activated in response to dynamic expressions versus dynamic mosaics. Areas of activation are rendered on the spatially normalized brain (upper) and the spatially normalized magnetic resonance images of a representative participant (lower). The blue crosses indicate the activation foci in the comparison of dynamic expressions with dynamic mosaics. Effect sizes are indicated by the mean (±SE) beta values of these regions at the sites of activation foci. dmPFC = dorsomedial prefrontal cortex; FG = fusiform gyrus; IFG = inferior frontal gyrus; IOG = inferior occipital gyrus; R = right; STS = superior temporal sulcus; vmPFC = ventromedial prefrontal cortex

When the effects of emotion and participant sex were analyzed by an ANOVA model, no significant main effects or interactions were observed (Figure S1).

3.2. Laterality

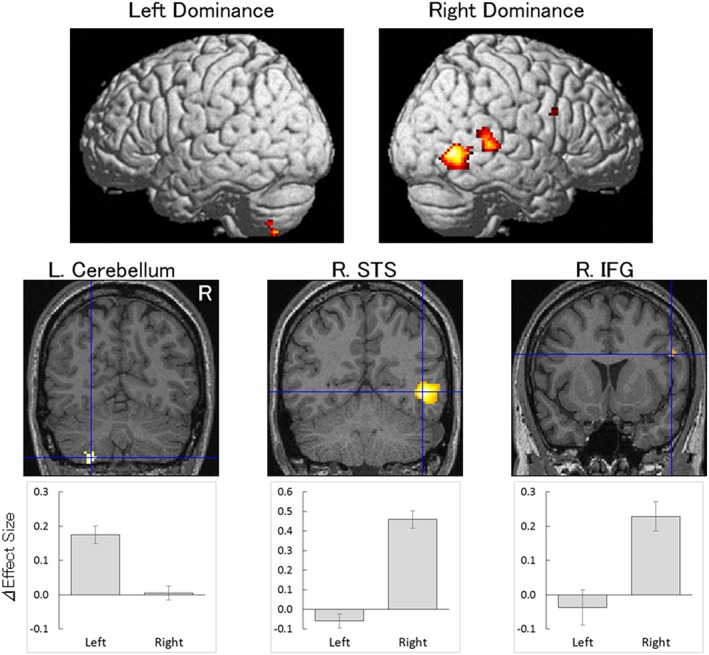

Contrast images of dynamic expressions versus dynamic mosaics were compared between the original and flipped image conditions using paired t tests. The results revealed a significant right‐hemispheric dominance in activity in the STS region (two clusters), IFG, and superior occipital gyrus (Table 2; Figure 3). Significant left‐hemispheric dominance was shown in cerebellum activity.
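The logic of this original-versus-flipped comparison can be illustrated with a minimal sketch. It is not the pipeline used in the study: it assumes NumPy, synthetic data in place of real contrast images, and an array layout in which the first spatial axis is the left-right (x) axis. The function names `flip_left_right` and `paired_t_map` are hypothetical.

```python
import numpy as np

def flip_left_right(volume):
    """Mirror a 3-D volume across the midsagittal plane (x assumed to be axis 0)."""
    return volume[::-1, :, :]

def paired_t_map(original, flipped):
    """Voxel-wise paired t statistic across participants.

    original, flipped: arrays of shape (n_participants, x, y, z).
    The resulting t values have df = n_participants - 1.
    """
    diff = original - flipped
    n = diff.shape[0]
    mean = diff.mean(axis=0)
    sd = diff.std(axis=0, ddof=1)  # sample standard deviation of the differences
    with np.errstate(divide="ignore", invalid="ignore"):
        t = mean / (sd / np.sqrt(n))
    # Voxels with zero variance in the differences are set to 0
    return np.where(sd > 0, t, 0.0)

# Synthetic contrast images (expression minus mosaic); NOT the study's data
rng = np.random.default_rng(0)
n_participants, shape = 51, (8, 6, 4)
contrasts = rng.normal(size=(n_participants, *shape))
contrasts[:, 6, 2, 1] += 2.0  # hypothetical lateralized effect at one voxel

flipped = np.stack([flip_left_right(c) for c in contrasts])
t_map = paired_t_map(contrasts, flipped)  # df = 50 for 51 participants
```

In this sketch, a positive t value at a voxel means that activity there exceeds activity at the mirrored location in the opposite hemisphere, so the map is antisymmetric about the midline. In practice, such an analysis also depends on normalization to a symmetric template and on correction for multiple comparisons, which the sketch omits.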

Table 2.

Brain regions that exhibited significant activation for original and flipped image analyses

| Dominance | Region | BA | Coordinate x | Coordinate y | Coordinate z | t‐Value (df = 50) | p‐Value (FWE) | Cluster size (voxels) |
|---|---|---|---|---|---|---|---|---|
| Stimulus type (expression > mosaic) × laterality | | | | | | | | |
| R | Middle temporal gyrus | 37 | 46 | −56 | 4 | 8.96 | .000 | 467 |
| | Middle temporal gyrus | 21 | 58 | −50 | 0 | 5.56 | .029 | |
| | Superior temporal gyrus | 21 | 46 | −32 | 4 | 8.41 | .000 | 392 |
| | IFG | 44 | 50 | 10 | 26 | 5.90 | .011 | 10 |
| | Superior occipital gyrus | 19 | 26 | −68 | 34 | 5.77 | .016 | 17 |
| L | Cerebellum lobule VIII | – | −24 | −64 | −56 | 6.59 | .001 | 146 |
| Stimulus type (expression > mosaic) × emotion × laterality | | | | | | | | |
| None | | | | | | | | |
| Stimulus type (expression > mosaic) × sex × laterality | | | | | | | | |
| None | | | | | | | | |
| Stimulus type (expression > mosaic) × emotion × sex × laterality | | | | | | | | |
| None | | | | | | | | |

Abbreviations: BA = Brodmann's area; FWE = family wise error corrected; IFG = inferior frontal gyrus; L = left; R = right.

Figure 3.

Statistical parametric maps indicating regions with significantly more left‐dominant (left) and right‐dominant (right) activation in response to dynamic expressions versus dynamic mosaics. Areas of activation are rendered on the glass brain (top) and the brain of a representative participant (bottom). The blue crosses indicate the activation foci in the comparison between original and flipped images of dynamic expressions versus dynamic mosaics. ΔEffect size indicates mean (±SE) beta value differences between dynamic expressions and dynamic mosaics of these regions at the sites of activation foci. IFG = inferior frontal gyrus; L = left; R = right; STS = superior temporal sulcus

When the effects of emotion and participant sex were analyzed by ANOVA using the factors of hemisphere, emotion, and sex for the contrast images of dynamic expressions versus dynamic mosaics, there was no significant activation in terms of main effects or interactions related to the factors of emotion or sex (Figure S2).

4. DISCUSSION

4.1. Regional brain activity

The results of the regional brain activity analyses performed herein showed that dynamic facial expressions were associated with greater activation in distributed brain regions such as the posterior cortices, including the bilateral FG and STS regions, limbic regions, including the bilateral amygdalae, and motor regions, including the right IFG, compared with dynamic mosaic images. The present findings of activation in these regions are consistent with those of at least 11 previous studies (e.g., LaBar et al., 2003; for a review, see Zinchenko et al., 2018). However, a similar number of previous studies have also reported null findings regarding activities in these brain regions, probably due to a lack of statistical power and/or a relatively less clear‐cut contrast (e.g., the contrast between dynamic and static facial expressions). Additionally, the present results demonstrated that dynamic facial expressions, compared with dynamic mosaic images, activated a number of other regions that were reported in fewer than 10 previous studies, including the IOG (Badzakova‐Trajkov et al., 2010; De Winter et al., 2015; Fox et al., 2009; Reinl & Bartels, 2014; Sato et al., 2004, 2010; Schultz et al., 2013; Schultz & Pilz, 2009), V5/MT area (Furl et al., 2013; Kessler et al., 2011; Kilts et al., 2003; Pentón et al., 2010; Schobert et al., 2018; Schultz et al., 2013; Schultz & Pilz, 2009; Trautmann et al., 2009), vmPFC/orbitofrontal cortex (Faivre et al., 2012; Pentón et al., 2010; Trautmann et al., 2009), cerebellum (Kilts et al., 2003; van der Gaag et al., 2007), dmPFC (Badzakova‐Trajkov et al., 2010; Fox et al., 2009; Sato et al., 2012), precuneus (Badzakova‐Trajkov et al., 2010; Fox et al., 2009; Trautmann et al., 2009), and temporal pole (LaBar et al., 2003; Pentón et al., 2010). Thus, the present results obtained using a relatively large sample provide the first evidence that these widely distributed social brain regions are jointly involved in the processing of dynamic facial expressions.

Activation of these regions would provide a mechanistic explanation for psychological processing of dynamic facial expressions. With regard to the psychological correlates of posterior cortices, previous neuroimaging studies have shown that the STS region is involved in visual analysis of the dynamic or changeable aspects of faces (e.g., Hoffman & Haxby, 2000; Puce et al., 1998; Wheaton et al., 2004). The V5/MT area is also known to be involved in visual processing of dynamic facial signals (Puce et al., 1998; Schobert et al., 2018; Wheaton et al., 2004), which could result in the feed‐forward of facial motion information to the STS regions in the dorsal visual stream (Puce et al., 1998). In contrast, the FG has been shown to be related to the visual analysis of invariant aspects of faces (i.e., features and spaces among the features specifying identity) (e.g., Guntupalli et al., 2017; Hoffman & Haxby, 2000; Visconti di Oleggio Castello et al., 2017) and/or subjective perceptions of faces (e.g., Tong et al., 1998; Madipakkam et al., 2015). The IOG is similarly involved in the visual processing of the invariant aspects of faces (e.g., Liu et al., 2010; Sergent et al., 1992; Strother et al., 2011) and may send information on facial form to the FG in the ventral visual stream (Gschwind et al., 2012). Together with these data, our results suggest that the dynamic presentation of facial expressions strongly activates visual processing of the motion and form of faces. Therefore, activation of these visual cortices could explain why humans can efficiently detect (Ceccarini & Caudeka, 2013; Yoshikawa & Sato, 2008) and recognize (Bould et al., 2008) dynamic facial expressions associated with emotion.

Limbic regions, including the amygdala and vmPFC, have been shown to be involved in emotional processing (e.g., Breiter et al., 1996; Winston et al., 2003). The activity of these regions can therefore account for the elicitation of subjective and physiological emotional responses to dynamic facial expressions (Anttonen et al., 2009; Sato & Yoshikawa, 2007a). At the same time, as these regions are known to be involved in several other social functions, such as the evaluation of trustworthiness (Winston et al., 2002) and perception of social support (Sato et al., 2016), it is possible that the observation of dynamic facial expressions elicits other, currently untested, psychological processes through activity in the amygdala and vmPFC.

Previous neuroimaging studies have reported greater IFG activation not only when participants passively observed dynamic facial actions (Buccino et al., 2001, 2004), but also when they imitated such expressions (Lee et al., 2006; Leslie et al., 2004). These findings are consistent with theories suggesting that the IFG contains mirror neurons (Gallese et al., 2004; Rizzolatti et al., 2001), which are activated in response to both the observation and the execution of facial expressions. This is particularly true for the pars opercularis of the IFG (Brodmann area 44), which has been proposed as the human homolog of the monkey ventral premotor area F5 (Petrides, 2005; Petrides & Pandya, 1994). We found activation in response to dynamic facial expressions in this region, which did not overlap with the more anteroventral part of the IFG that is typically activated in emotional expression labeling (e.g., Hariri et al., 2000) or person identification (e.g., Visconti di Oleggio Castello et al., 2017). There is a great deal of evidence that the cerebellum is also involved in motor processing (Manto et al., 2012) and some studies suggested its involvement in mirror neuron functioning (e.g., Leslie et al., 2004; for a review, see Van Overwalle et al., 2014). Specifically, we found activation in response to dynamic facial expressions versus dynamic mosaic images in the crus I and II, which were reportedly activated during motor mirror tasks (for a review, see Van Overwalle et al., 2014) and social and emotional processing tasks (Guell et al., 2018). Anatomical studies showed that the cerebellum forms networks with the contralateral frontal cortex (Palesi et al., 2015). These data suggest that the right IFG and left cerebellum activity seen in response to dynamic facial expressions in the present study may also be involved in the observation–execution matching of facial expressions. Therefore, these regions may be the neural substrates for strong facial mimicry in response to dynamic facial expressions (e.g., Weyers et al., 2006).

The dmPFC, precuneus, and temporal pole, as well as the STS region, have been shown to be activated when participants attributed mental states to others (i.e., mentalizing or theory of mind; e.g., Gallagher et al., 2000; for a review, see Frith & Frith, 2003). Interestingly, a previous psychological study indicated that the attribution of mental states was facilitated by dynamic facial stimuli compared with static stimuli (Back et al., 2009). Together, the activation of these brain regions may underlie mentalizing triggered by observation of dynamic facial expressions.

Some studies have shown that emotional facial expressions were unconsciously processed through the subcortical visual pathway to the amygdala, which includes the superior colliculus and pulvinar (Morris et al., 1999; Pasley et al., 2004). These data may shed light on the neural pathway for unconscious processing of dynamic facial expressions (Kaiser et al., 2016; Sato et al., 2014).

4.2. Laterality

The results of the present laterality analyses revealed that activity in the STS region, IFG, and superior occipital gyrus was right‐hemispheric dominant, whereas that in the cerebellum was left‐hemispheric dominant during the observation of dynamic facial expressions. The right‐hemispheric dominance in the STS region during processing of dynamic facial expressions is consistent with the results of a previous investigation (De Winter et al., 2015). Among the STS regions showing widespread activation in response to dynamic facial expressions versus dynamic mosaic images, the laterality analyses showed two clusters separated by a nonsignificant region. This result suggests the presence of functionally lateralized and nonlateralized subregions in the STS region. This idea is consistent with data showing functionally segregated STS subregions that process social signals (Engell & Haxby, 2007; Pelphrey et al., 2005; Redcay et al., 2016; Schobert et al., 2018; Wheaton et al., 2004). The right‐dominant activity in the IFG and occipital region corroborates the findings of some previous studies although they did not statistically test for lateralized activity (e.g., Sato et al., 2004). Although left‐dominant activity in the cerebellum during the processing of dynamic facial expressions has yet to be reported, it is consistent with anatomical and functional evidence showing that the connections between the neocortex and cerebellum are contralateral, and that functional asymmetries of the cortex are often reflected in cerebellar function (Häberling & Corballis, 2016). The findings related to these multiple lateralized regions are consistent with the theoretical proposal that it is the distributed and interactive regional network, rather than a single region, that is lateralized for processing facial information (Behrmann & Plaut, 2013).

Cortical right hemispheric dominance in the processing of dynamic facial expressions is consistent with the findings of lesion studies indicating that patients with right hemisphere damage are less emotionally reactive during face‐to‐face communication than those with left hemisphere damage (Blonder et al., 1993; Borod et al., 1985; Langer et al., 1998; Ross & Mesulam, 1979). The results were also consistent with several findings indicating that patients with right, compared with left, hemisphere damage are more severely impaired in the recognition of emotions in dynamic facial expressions (Benowitz et al., 1983; Karow et al., 2011; Schmitt et al., 1997). Although many studies also reported that patients with damage to the right hemisphere were impaired in the recognition of static facial expressions compared with those with left hemisphere damage (e.g., Cicone et al., 1980; DeKosky et al., 1980), some studies did not show clear results (e.g., Cancelliere & Kertesz, 1990; Prigatano & Pribram, 1982; Young et al., 1993; for a review, see Yuvaraj et al., 2013). We speculate that dynamic presentation of facial expressions could more clearly reveal the hemispheric functional asymmetry of facial expression processing.

Interestingly, the right cortical (specifically, the STS region and IFG) and left cerebellar dominance in dynamic expression processing found in the present study mirrored the neural network for language processing. Numerous functional neuroimaging studies have reported activation of the left STS region, left IFG, and right cerebellum during language or speech tasks (e.g., Häberling & Corballis, 2016; Hubrich‐Ungureanu et al., 2002; Jansen et al., 2005; Seghier et al., 2011). Based on anatomical evidence (Palesi et al., 2015), it is highly plausible that these regions constitute a functional network. Although other ventral occipitotemporal regions are also involved in language processing, such as visual word‐form recognition (for a review, see Price, 2012), these regions do not appear to be universally lateralized (e.g., Bolger et al., 2005; Liu et al., 2007; Nelson et al., 2009). Neuroscientific findings showing commonalities between the processing of dynamic facial expressions and that of language may corroborate the findings of empirical and theoretical studies indicating that common pathways exist between these two types of processing. For example, it has been noted that, as in the case of emotional facial expressions, vocalizations produce concomitant facial movements around the mouth and other features, which could be processed simultaneously with auditory components (Ghazanfar & Takahashi, 2014). Psychological studies revealed that facial expressions are used as grammatical markers in face‐to‐face communication (Pfau & Quer, 2010; Benitez‐Quiroz et al., 2016). Language studies also indicated that both mouth movements and the acoustic envelope of speech mainly exhibit a theta rhythm (2–7 Hz), which corresponds to the typical rhythm of dynamic facial expressions (Chandrasekaran et al., 2009). Taken together, these observations suggest similarity in the psychological and neural computations involved in the processing of dynamic facial expressions and language. Based on such data, one evolutionary theory suggested that the speech rhythm may have evolved through modification of rhythmic facial movements, such as lip smacks, in ancestral primates (MacNeilage, 1998). Because facial expressions may have appeared earlier than language in our evolutionary history (Darwin, 1872), and because right‐hemispheric dominance in the processing of emotional facial expressions is found even in nonhuman primates (Vermeire & Hamilton, 1998), it is possible that our ancestors adapted these computations for nonverbal, emotional facial expressions in right‐cortical and left‐cerebellar circuits to the processing of verbal facial movements and speech in the mirrored left‐cortical and right‐cerebellar circuits.

4.3. Emotional and sex effects

The present data did not reveal clear effects or hemispheric differences in terms of emotion or participant sex on the processing of dynamic facial expressions. These results are consistent with some previous studies in which these factors exerted no significant effect on such processing (e.g., van der Gaag et al., 2007). Other studies reported effects of emotion on the activity of certain brain regions (e.g., Kilts et al., 2003), but the reported effects were inconsistent across studies. A meta‐analysis of studies investigating factors other than dynamic facial expression processing also did not identify different brain activation patterns across emotions (Lindquist et al., 2012). Although some meta‐analyses reported sex effects on emotional brain activity, these results were inconsistent across studies (Filkowski et al., 2017; Fusar‐Poli et al., 2009; García‐García et al., 2016; Sergerie et al., 2008).

Regardless, it should be noted that the present results are not necessarily indicative of the absence of emotion and sex effects related to dynamic facial expression processing. It is possible that there were null findings because emotion and sex effects were small and might have required a sample larger than the one used in this study. The present results only suggest that the differences in neural activity associated with emotion and sex are relatively weak compared with the activity in response to dynamic facial expressions versus dynamic mosaic images.

4.4. Implications and limitations

In addition to the theoretical implications discussed above, the present results have practical implications. First, these results indicate that the observation of dynamic facial expressions automatically activates almost all of the proposed social brain regions, including the amygdala and vmPFC (e.g., Brothers, 1990). Also, as some previous studies suggested (e.g., Fox et al., 2009), our results demonstrate that the presentation of dynamic facial expressions is useful for activating the distributed neural system for face perception, which includes the core (e.g., the STS region) and extended (e.g., the amygdala) system regions (Haxby et al., 2000). Although tasks employing images of static faces are widely used, several studies have reported that these tasks sometimes do not sufficiently activate important regions, such as the STS region (e.g., Kanwisher et al., 1997), in clear contrast to our results. Thus, the task used in the present study may be useful for testing clinical populations with deficits in social functioning or facial information processing, such as those with autism spectrum disorder or schizophrenia.

Second, our results point to a clearly lateralized neural network for processing dynamic facial expressions, which suggests the necessity for presurgical assessment of such nonverbal laterality. Surgical operations could be applied for pharmacologically intractable focal epilepsy. Although the regions associated with language have been carefully assessed (Bookheimer, 2007; Loring & Meador, 2000), the brain regions associated with facial expression processing are not routinely assessed before such surgery because of the paucity of empirical data regarding lateralized neural networks, and the lack of appropriate assessment tools. Our data suggest that this type of assessment may be needed for evaluation of lateralized brain networks associated with the processing of dynamic facial expressions.

The present study has several limitations that should be considered. First, dynamic mosaic images were employed as control stimuli to clearly detect the social brain regions associated with dynamic facial expression processing. Due to the use of these stimuli as controls, the types of social processing that activate these brain regions remain unclear. To specify the psychological functions of these particular brain regions, the use of other control stimuli will be necessary. One possible candidate for such control stimuli is static facial expressions, which would allow investigation of motion‐related visual processing and enhanced emotional and motor processing (e.g., Sato & Yoshikawa, 2007a, 2007b) of dynamic facial expressions. Because numerous neuroimaging studies have reported that static facial expressions also activate widespread social brain regions (e.g., Kesler‐West et al., 2001; for a review, see Fusar‐Poli et al., 2009), it would be interesting to investigate qualitative and quantitative differences in social brain activity during the processing of dynamic versus static facial expressions in samples with high statistical power. Another possible candidate for control stimuli might be nonemotional dynamic facial expressions to investigate the effects of the emotional meaning of expressions while controlling for visual properties. Among such expressions, language‐related facial expressions may be particularly interesting because several previous neuroimaging studies have reported that the observation of dynamic speaking faces elicits left‐lateralized IFG activity (e.g., Buccino et al., 2004; Campbell et al., 2001; Paulesu et al., 2003), which is in contrast to the right‐lateralized IFG activity observed in the present study. Thus, investigations of commonalities and differences during the processing of language‐related and emotional facial movements may deepen our understanding of the neural processing of dynamic facial expressions.

Second, participants were asked to passively observe the stimuli (with a dummy task) to investigate the automatic processing of dynamic facial expressions. Thus, it remains unclear whether controlled psychological processing would enhance or inhibit activity in social brain regions during observation of dynamic facial expressions. A number of studies investigating the processing of static facial expressions based on the effects of explicit (e.g., emotion recognition) versus implicit (e.g., sex identification) emotional tasks have reported increased activation in some social brain regions, including the amygdala (e.g., Habel et al., 2007; for a review, see Fusar‐Poli et al., 2009). In contrast, only one previous study tested this issue with regard to the processing of dynamic facial expressions; this study reported increased activity in social brain regions, such as the STS region, during the explicit compared with the implicit emotional task (Johnston et al., 2013). Further studies assessing the effects of controlled processing of dynamic facial expressions would be valuable; studies with increased statistical power and/or different types of tasks would be particularly useful.

5. CONCLUSION

In conclusion, regional brain activity analysis showed that the observation of dynamic facial expressions, compared with dynamic mosaics, elicited stronger activity in the bilateral posterior cortices, including the IOG, FG, and STS. The dynamic facial expressions also activated bilateral limbic regions more strongly, including the amygdalae and vmPFC. In the same manner, activation was noted in the right IFG and left cerebellum. Laterality analyses contrasting original and flipped images revealed right‐hemispheric dominance in the STS and IFG and left‐hemispheric dominance in the cerebellum. These results indicate that the neural mechanisms underlying the processing of dynamic facial expressions include widespread social brain regions associated with perceptual, emotional, and motor functions, including a clearly lateralized cortico‐cerebellum network like that involved in language processing.

CONFLICT OF INTEREST

The authors declare no conflict of interest.

Supporting information

Figure S1 Mean (± SE) beta values of brain regions exhibiting significant activation in response to dynamic expressions versus dynamic mosaics. R = right; IOG = inferior occipital gyrus; FG = fusiform gyrus; STS = superior temporal sulcus; IFG = inferior frontal gyrus; vmPFC = ventromedial prefrontal cortex; dmPFC = dorsomedial prefrontal cortex; F = female; M = male; AN = anger; HA = happiness; DE = dynamic expression; DM = dynamic mosaic

Figure S2 Mean (± SE) beta value differences (dynamic expressions – dynamic mosaics) of brain regions indicating significant hemispheric dominance in response to dynamic expressions versus dynamic mosaics. L = left; R = right; STS = superior temporal sulcus; IFG = inferior frontal gyrus; F = female; M = male; AN = anger; HA = happiness

ACKNOWLEDGMENTS

The authors thank the ATR Brain Activity Imaging Center for support in data acquisition and Akemi Inoue, Emi Yokoyama, and Kazusa Minemoto for their technical support. This study was supported by funds from the Japan Society for the Promotion of Science Funding Program for Next Generation World‐Leading Researchers (LZ008), Research Complex Program from Japan Science and Technology Agency (JST), and JST CREST (JPMJCR17A5).

Sato W, Kochiyama T, Uono S, et al. Widespread and lateralized social brain activity for processing dynamic facial expressions. Hum Brain Mapp. 2019;40:3753–3768. 10.1002/hbm.24629

Wataru Sato and Takanori Kochiyama contributed equally to this study.

Funding information Japan Science and Technology Agency, Grant/Award Numbers: CREST (JPMJCR17A5), Research Complex Program; Japan Society for the Promotion of Science, Grant/Award Number: LZ008

Data Availability: The data that support the findings of this study are available on request from the corresponding author. The data are not publicly available due to privacy or ethical restrictions.

REFERENCES

- Acar, F., Seurinck, R., Eickhoff, S. B., & Moerkerke, B. (2018). Assessing robustness against potential publication bias in activation likelihood estimation (ALE) meta‐analyses for fMRI. PLoS One, 13, e0208177.

- Adolphs, R. (2003). Cognitive neuroscience of human social behaviour. Nature Reviews Neuroscience, 4, 165–178.

- Allison, T., Puce, A., & McCarthy, G. (2000). Social perception from visual cues: Role of the STS region. Trends in Cognitive Sciences, 4, 267–278.

- Anttonen, J., Surakka, V., & Koivuluoma, M. (2009). Ballistocardiographic responses to dynamic facial displays of emotion while sitting on the EMFi chair. Journal of Media Psychology, 21, 69–84.

- Arnold, A. E., Iaria, G., & Goghari, V. M. (2016). Efficacy of identifying neural components in the face and emotion processing system in schizophrenia using a dynamic functional localizer. Psychiatry Research: Neuroimaging, 248, 55–63.

- Arsalidou, M., Morris, D., & Taylor, M. J. (2011). Converging evidence for the advantage of dynamic facial expressions. Brain Topography, 24, 149–163.

- Ashburner, J., & Friston, K. J. (2005). Unified segmentation. NeuroImage, 26, 839–851.

- Baciu, M., Juphard, A., Cousin, E., & Bas, J. F. (2005). Evaluating fMRI methods for assessing hemispheric language dominance in healthy subjects. European Journal of Radiology, 55, 209–218.

- Back, E., Jordan, T. R., & Thomas, S. M. (2009). The recognition of mental states from dynamic and static facial expressions. Visual Cognition, 17, 1271–1286.

- Badzakova‐Trajkov, G., Haberling, I. S., Roberts, R. P., & Corballis, M. C. (2010). Cerebral asymmetries: Complementary and independent processes. PLoS One, 5, e9682.

- Behrmann, M., & Plaut, D. C. (2013). Distributed circuits, not circumscribed centers, mediate visual recognition. Trends in Cognitive Sciences, 17, 210–219.

- Benitez‐Quiroz, C. F., Wilbur, R. B., & Martinez, A. M. (2016). The not face: A grammaticalization of facial expressions of emotion. Cognition, 150, 77–84.

- Bennett, C. M., & Miller, M. B. (2013). fMRI reliability: Influences of task and experimental design. Cognitive, Affective, & Behavioral Neuroscience, 13, 690–702.

- Benowitz, L. I., Bear, D. M., Rosenthal, R., Mesulam, M. M., Zaidel, E., & Sperry, R. W. (1983). Hemispheric specialization in nonverbal communication. Cortex, 19, 5–11.

- Binkofski, F., & Buccino, G. (2006). The role of ventral premotor cortex in action execution and action understanding. Journal of Physiology, Paris, 99, 396–405.

- Birn, R. M., Bandettini, P. A., Cox, R. W., & Shaker, R. (1999). Event‐related fMRI of tasks involving brief motion. Human Brain Mapping, 7, 106–114.

- Blonder, L. X., Burns, A. F., Bowers, D., Moore, R. W., & Heilman, K. M. (1993). Right hemisphere facial expressivity during natural conversation. Brain and Cognition, 21, 44–56.

- Bolger, D. J., Perfetti, C. A., & Schneider, W. (2005). Cross‐cultural effect on the brain revisited: Universal structures plus writing system variation. Human Brain Mapping, 25, 92–104.

- Bookheimer, S. (2007). Pre‐surgical language mapping with functional magnetic resonance imaging. Neuropsychology Review, 17, 145–155.

- Borod, J. C., Koff, E., Lorch, M. P., & Nicholas, M. (1985). Channels of emotional expression in patients with unilateral brain damage. Archives of Neurology, 42, 345–348.

- Bould, E., Morris, N., & Wink, B. (2008). Recognising subtle emotional expressions: The role of facial movements. Cognition & Emotion, 22, 1569–1587.

- Breiter, H. C., Etcoff, N. L., Whalen, P. J., Kennedy, W. A., Rauch, S. L., Buckner, R. L., … Rosen, B. R. (1996). Response and habituation of the human amygdala during visual processing of facial expression. Neuron, 17, 875–887.

- Brothers, L. (1990). The social brain: A project for integrating primate behavior and neurophysiology in a new domain. Concepts in Neuroscience, 1, 27–51.

- Buccino, G. , Binkofski, F. , Fink, G. R. , Fadiga, L. , Fogassi, L. , Gallese, V. , … Freund, H. J. (2001). Action observation activates premotor and parietal areas in a somatotopic manner: An fMRI study. The European Journal of Neuroscience, 13, 400–404. [PubMed] [Google Scholar]

- Buccino, G. , Lui, F. , Canessa, N. , Patteri, I. , Lagravinese, G. , Benuzzi, F. , … Rizzolatti, G. (2004). Neural circuits involved in the recognition of actions performed by nonconspecifics: An fMRI study. Journal of Cognitive Neuroscience, 16, 114–126. [DOI] [PubMed] [Google Scholar]

- Button, K. S. , Ioannidis, J. P. , Mokrysz, C. , Nosek, B. A. , Flint, J. , Robinson, E. S. , & Munafò, M. R. (2013). Power failure: Why small sample size undermines the reliability of neuroscience. Nature Reviews. Neuroscience, 14, 365–376. [DOI] [PubMed] [Google Scholar]

- Calder, A. J. , Lawrence, A. D. , & Young, A. W. (2001). Neuropsychology of fear and loathing. Nature Reviews. Neuroscience, 2, 352–363. [DOI] [PubMed] [Google Scholar]

- Campbell, R. , MacSweeney, M. , Surguladze, S. , Calvert, G. , McGuire, P. , Suckling, J. , … David, A. S. (2001). Cortical substrates for the perception of face actions: An fMRI study of the specificity of activation for seen speech and for meaningless lower‐face acts (gurning). Brain Research. Cognitive Brain Research, 12, 233–243. [DOI] [PubMed] [Google Scholar]

- Cancelliere, A. E., & Kertesz, A. (1990). Lesion localization in acquired deficits of emotional expression and comprehension. Brain and Cognition, 13, 133–147.

- Ceccarini, F., & Caudek, C. (2013). Anger superiority effect: The importance of dynamic emotional facial expressions. Visual Cognition, 21, 498–540.

- Chandrasekaran, C., Trubanova, A., Stillittano, S., Caplier, A., & Ghazanfar, A. A. (2009). The natural statistics of audiovisual speech. PLoS Computational Biology, 5, e1000436.

- Cicone, M., Wapner, W., & Gardner, H. (1980). Sensitivity to emotional expressions and situations in organic patients. Cortex, 16, 145–158.

- Darwin, C. (1872). The expression of the emotions in man and animals. London, England: Murray.

- De Winter, F. L., Zhu, Q., Van den Stock, J., Nelissen, K., Peeters, R., de Gelder, B., … Vandenbulcke, M. (2015). Lateralization for dynamic facial expressions in human superior temporal sulcus. NeuroImage, 106, 340–352.

- DeKosky, S. T., Heilman, K. M., Bowers, D., & Valenstein, E. (1980). Recognition and discrimination of emotional faces and pictures. Brain and Language, 9, 206–214.

- D'Esposito, M., Zarahn, E., & Aguirre, G. K. (1999). Event‐related functional MRI: Implications for cognitive psychology. Psychological Bulletin, 125, 155–164.

- Ekman, P., & Friesen, W. V. (1978). Facial action coding system. Palo Alto, CA: Consulting Psychologists Press.

- Ekman, P., Hager, J., Irwin, W., & Rosenberg, E. (1998). Facial Action Coding System Affect Information Database (FACSAID). http://www.face-and-emotion.com/dataface/facsaid/description.jsp.

- Emery, N. J., & Perrett, D. I. (2000). How can studies of the monkey brain help us understand "theory of mind" and autism in humans? In S. Baron‐Cohen, H. Tager‐Flusberg, & D. J. Cohen (Eds.), Understanding other minds: Perspectives from developmental cognitive neuroscience (2nd ed., pp. 274–305). Oxford, England: Oxford University Press.

- Engell, A. D., & Haxby, J. V. (2007). Facial expression and gaze‐direction in human superior temporal sulcus. Neuropsychologia, 45, 3234–3241.

- Faivre, N., Charron, S., Roux, P., Lehéricy, S., & Kouider, S. (2012). Nonconscious emotional processing involves distinct neural pathways for pictures and videos. Neuropsychologia, 50, 3736–3744.

- Filkowski, M. M., Olsen, R. M., Duda, B., Wanger, T. J., & Sabatinelli, D. (2017). Sex differences in emotional perception: Meta analysis of divergent activation. NeuroImage, 147, 925–933.

- Fischer, J., & Whitney, D. (2009). Precise discrimination of object position in the human pulvinar. Human Brain Mapping, 30, 101–111.

- Foley, E., Rippon, G., Thai, N. J., Longe, O., & Senior, C. (2012). Dynamic facial expressions evoke distinct activation in the face perception network: A connectivity analysis study. Journal of Cognitive Neuroscience, 24, 507–520.

- Fox, C. J., Iaria, G., & Barton, J. J. (2009). Defining the face processing network: Optimization of the functional localizer in fMRI. Human Brain Mapping, 30, 1637–1651.

- Friston, K. J., Holmes, A., Poline, J. B., Price, C. J., & Frith, C. D. (1996). Detecting activations in PET and fMRI: Levels of inference and power. NeuroImage, 4, 223–235.

- Friston, K. J., Holmes, A. P., Poline, J. B., Grasby, P. J., Williams, S. C., Frackowiak, R. S. J., & Turner, R. (1995). Analysis of fMRI time‐series revisited. NeuroImage, 2, 45–53.

- Friston, K. J., Zarahn, E., Josephs, O., Henson, R. N., & Dale, A. M. (1999). Stochastic designs in event‐related fMRI. NeuroImage, 10, 607–619.

- Frith, U., & Frith, C. D. (2003). Development and neurophysiology of mentalizing. Philosophical Transactions of the Royal Society B: Biological Sciences, 358, 459–473.

- Furl, N., Henson, R. N., Friston, K. J., & Calder, A. J. (2013). Top‐down control of visual responses to fear by the amygdala. The Journal of Neuroscience, 33, 17435–17443.

- Fusar‐Poli, P., Placentino, A., Carletti, F., Landi, P., Allen, P., Surguladze, S., … Politi, P. (2009). Functional atlas of emotional faces processing: A voxel‐based meta‐analysis of 105 functional magnetic resonance imaging studies. Journal of Psychiatry & Neuroscience, 34, 418–432.

- Gallagher, H. L., Happe, F., Brunswick, N., Fletcher, P. C., Frith, U., & Frith, C. D. (2000). Reading the mind in cartoons and stories: An fMRI study of 'theory of mind' in verbal and nonverbal tasks. Neuropsychologia, 38, 11–21.

- Gallese, V., Keysers, C., & Rizzolatti, G. (2004). A unifying view of the basis of social cognition. Trends in Cognitive Sciences, 8, 396–403.

- García‐García, I., Kube, J., Gaebler, M., Horstmann, A., Villringer, A., & Neumann, J. (2016). Neural processing of negative emotional stimuli and the influence of age, sex and task‐related characteristics. Neuroscience and Biobehavioral Reviews, 68, 773–793.

- Ghazanfar, A. A., & Takahashi, D. Y. (2014). The evolution of speech: Vision, rhythm, cooperation. Trends in Cognitive Sciences, 18, 543–553.

- Grosbras, M. H., & Paus, T. (2006). Brain networks involved in viewing angry hands or faces. Cerebral Cortex, 16, 1087–1096.

- Gschwind, M., Pourtois, G., Schwartz, S., Van De Ville, D., & Vuilleumier, P. (2012). White‐matter connectivity between face‐responsive regions in the human brain. Cerebral Cortex, 22, 1564–1576.

- Guell, X., Schmahmann, J. D., Gabrieli, J., & Ghosh, S. S. (2018). Functional gradients of the cerebellum. eLife, 7, e36652.

- Guntupalli, J. S., Wheeler, K. G., & Gobbini, M. I. (2017). Disentangling the representation of identity from head view along the human face processing pathway. Cerebral Cortex, 27, 46–53.

- Habel, U., Windischberger, C., Derntl, B., Robinson, S., Kryspin‐Exner, I., Gur, R. C., & Moser, E. (2007). Amygdala activation and facial expressions: Explicit emotion discrimination versus implicit emotion processing. Neuropsychologia, 45, 2369–2377.

- Häberling, I. S., & Corballis, M. C. (2016). Cerebellar asymmetry, cortical asymmetry and handedness: Two independent networks. Laterality, 21, 397–414.

- Hariri, A. R., Bookheimer, S. Y., & Mazziotta, J. C. (2000). Modulating emotional responses: Effects of a neocortical network on the limbic system. Neuroreport, 11, 43–48.

- Haxby, J. V., Hoffman, E. A., & Gobbini, M. I. (2000). The distributed human neural system for face perception. Trends in Cognitive Sciences, 4, 223–233.

- Hernandez, N., Andersson, F., Edjlali, M., Hommet, C., Cottier, J. P., Destrieux, C., & Bonnet‐Brilhault, F. (2013). Cerebral functional asymmetry and phonological performance in dyslexic adults. Psychophysiology, 50, 1226–1238.

- Hess, U., & Fischer, A. (2014). Emotional mimicry: Why and when we mimic emotions. Social and Personality Psychology Compass, 8, 45–57.

- Hoffman, E. A., & Haxby, J. V. (2000). Distinct representations of eye gaze and identity in the distributed human neural system for face perception. Nature Neuroscience, 3, 80–84.

- Holmes, A. P., & Friston, K. J. (1998). Generalisability, random effects and population inference. NeuroImage, 7, S754.

- Hopkins, W. D., Misiura, M., Pope, S. M., & Latash, E. M. (2015). Behavioral and brain asymmetries in primates: A preliminary evaluation of two evolutionary hypotheses. Annals of the New York Academy of Sciences, 1359, 65–83.

- Hubrich‐Ungureanu, P., Kaemmerer, N., Henn, F. A., & Braus, D. F. (2002). Lateralized organization of the cerebellum in a silent verbal fluency task: A functional magnetic resonance imaging study in healthy volunteers. Neuroscience Letters, 319, 91–94.

- Iacoboni, M. (2005). Neural mechanisms of imitation. Current Opinion in Neurobiology, 15, 632–637.

- Jansen, A., Flöel, A., Van Randenborgh, J., Konrad, C., Rotte, M., Förster, A. F., … Knecht, S. (2005). Crossed cerebro‐cerebellar language dominance. Human Brain Mapping, 24, 165–172.

- Johnston, P., Mayes, A., Hughes, M., & Young, A. W. (2013). Brain networks subserving the evaluation of static and dynamic facial expressions. Cortex, 49, 2462–2472.

- Johnstone, T., Ores Walsh, K. S., Greischar, L. L., Alexander, A. L., Fox, A. S., Davidson, R. J., & Oakes, T. R. (2006). Motion correction and the use of motion covariates in multiple‐subject fMRI analysis. Human Brain Mapping, 27, 779–788.

- Kaiser, J., Davey, G. C., Parkhouse, T., Meeres, J., & Scott, R. B. (2016). Emotional facial activation induced by unconsciously perceived dynamic facial expressions. International Journal of Psychophysiology, 110, 207–211.

- Kanwisher, N., McDermott, J., & Chun, M. M. (1997). The fusiform face area: A module in human extrastriate cortex specialized for face perception. The Journal of Neuroscience, 17, 4302–4311.

- Karow, C. M., Marquardt, T. P., & Marshall, R. C. (2001). Affective processing in left and right hemisphere brain‐damaged subjects with and without subcortical involvement. Aphasiology, 15, 715–729.

- Kesler‐West, M. L., Andersen, A. H., Smith, C. D., Avison, M. J., Davis, C. E., Kryscio, R. J., & Blonder, L. X. (2001). Neural substrates of facial emotion processing using fMRI. Brain Research. Cognitive Brain Research, 11, 213–226.

- Kessler, H., Doyen‐Waldecker, C., Hofer, C., Hoffmann, H., Traue, H. C., & Abler, B. (2011). Neural correlates of the perception of dynamic versus static facial expressions of emotion. Psycho‐Social Medicine, 8, Doc03.

- Kilts, C. D., Egan, G., Gideon, D. A., Ely, T. D., & Hoffman, J. M. (2003). Dissociable neural pathways are involved in the recognition of emotion in static and dynamic facial expressions. NeuroImage, 18, 156–168.

- Kret, M. E., Pichon, S., Grezes, J., & de Gelder, B. (2011a). Similarities and differences in perceiving threat from dynamic faces and bodies. An fMRI study. NeuroImage, 54, 1755–1762.

- Kret, M. E., Pichon, S., Grezes, J., & de Gelder, B. (2011b). Men fear other men most: Gender specific brain activations in perceiving threat from dynamic faces and bodies: An FMRI study. Frontiers in Psychology, 2, 3.

- Kurth, F., Gaser, C., & Luders, E. (2015). A 12‐step user guide for analyzing voxel‐wise gray matter asymmetries in statistical parametric mapping (SPM). Nature Protocols, 10, 293–304.

- LaBar, K. S., Crupain, M. J., Voyvodic, J. T., & McCarthy, G. (2003). Dynamic perception of facial affect and identity in the human brain. Cerebral Cortex, 13, 1023–1033.

- Langer, S. L., Pettigrew, L. C., & Blonder, L. X. (1998). Observer liking of unilateral stroke patients. Neuropsychiatry, Neuropsychology, and Behavioral Neurology, 11, 218–224.

- Lee, T. W., Josephs, O., Dolan, R. J., & Critchley, H. D. (2006). Imitating expressions: Emotion‐specific neural substrates in facial mimicry. Social Cognitive and Affective Neuroscience, 1, 122–135.

- Leslie, K. R., Johnson‐Frey, S. H., & Grafton, S. T. (2004). Functional imaging of face and hand imitation: Towards a motor theory of empathy. NeuroImage, 21, 601–607.

- Limbrick‐Oldfield, E. H., Brooks, J. C., Wise, R. J., Padormo, F., Hajnal, J. V., Beckmann, C. F., & Ungless, M. A. (2012). Identification and characterisation of midbrain nuclei using optimised functional magnetic resonance imaging. NeuroImage, 59, 1230–1238.

- Lindquist, K. A., Wager, T. D., Kober, H., Bliss‐Moreau, E., & Barrett, L. F. (2012). The brain basis of emotion: A meta‐analytic review. The Behavioral and Brain Sciences, 35, 121–143.

- Liu, J., Harris, A., & Kanwisher, N. (2010). Perception of face parts and face configurations: An FMRI study. Journal of Cognitive Neuroscience, 22, 203–211.

- Liu, Y., Dunlap, S., Fiez, J., & Perfetti, C. (2007). Evidence for neural accommodation to a writing system following learning. Human Brain Mapping, 28, 1223–1234.

- Loring, D. W., & Meador, K. J. (2000). Pre‐surgical evaluation for epilepsy surgery. Neurosciences, 5, 143–150.

- MacNeilage, P. F. (1998). The frame/content theory of evolution of speech production. The Behavioral and Brain Sciences, 21, 499–511.

- Madipakkam, A. R., Rothkirch, M., Guggenmos, M., Heinz, A., & Sterzer, P. (2015). Gaze direction modulates the relation between neural responses to faces and visual awareness. The Journal of Neuroscience, 35, 13287–13299.