Abstract

During face perception, we integrate facial expression and eye gaze to take advantage of their shared signals. For example, fear with averted gaze provides a congruent avoidance cue, signaling both threat presence and its location, whereas fear with direct gaze sends an incongruent cue, leaving threat location ambiguous. It has been proposed that the processing of different combinations of threat cues is mediated by dual processing routes: reflexive processing via magnocellular (M) pathway and reflective processing via parvocellular (P) pathway. Because growing evidence has identified a variety of sex differences in emotional perception, here we also investigated how M and P processing of fear and eye gaze might be modulated by observer's sex, focusing on the amygdala, a structure important to threat perception and affective appraisal. We adjusted luminance and color of face stimuli to selectively engage M or P processing and asked observers to identify emotion of the face. Female observers showed more accurate behavioral responses to faces with averted gaze and greater left amygdala reactivity both to fearful and neutral faces. Conversely, males showed greater right amygdala activation only for M‐biased averted‐gaze fear faces. In addition to functional reactivity differences, females had proportionately greater bilateral amygdala volumes, which positively correlated with behavioral accuracy for M‐biased fear. Conversely, in males only the right amygdala volume was positively correlated with accuracy for M‐biased fear faces. Our findings suggest that M and P processing of facial threat cues is modulated by functional and structural differences in the amygdalae associated with observer's sex.

Keywords: amygdala, emotion perception, eye gaze, fMRI, gender, sex differences

1. INTRODUCTION

Face perception, particularly assessment of facial emotion during social interactions, is critical for adaptive social behavior. It enables both observers and expressers of facial cues to communicate nonverbally about the social environment. For example, a happy facial expression implies to an observer that either the expresser or the environment surrounding the observer is safe and friendly, and thus approachable, whereas a fearful facial expression can imply the existence of a potential threat to an expresser or even to an observer. Prior work has investigated how observers read such signals from a facial expression combined with direct or averted eye gaze, which imparts different meanings. For example, a fearful face tends to be perceived as more fearful when presented with an averted eye gaze because the combination of fearful facial expression and averted gaze provides a congruent social signal (both the expression and the gaze direction signal avoidance), leading to facilitated processing of the congruent signals (e.g., Adams et al., 2012; Adams & Kleck, 2003, 2005; Cushing et al., 2018; Hadjikhani, Hoge, Snyder, & de Gelder, 2008; Im et al., 2017a). Furthermore, in a fearful face this “pointing with the eyes” (Hadjikhani et al., 2008) to the source of threat disambiguates whence the threat is coming. When a fearful expression is combined with direct gaze, however, it tends to look less fearful due to the incongruity that direct gaze (an approach signal) creates in combination with the fearful expression (an avoidance signal), requiring more reflective processing to resolve the ambiguity inherent in the conflicting signal and the source of threat. 
Such interactions between gaze direction and a specific emotional facial expression (e.g., fear, joy, or anger) have been reported in many studies (e.g., Adams & Kleck, 2003, 2005; Adams, Gordon, Baird, Ambady, & Kleck, 2003; Akechi et al., 2009; Bindemann, Burton, & Langton, 2008; Hess, Adams, & Kleck, 2007; Milders, Hietanen, Leppänen, & Braun, 2011; Sander, Grandjean, Kaiser, Wehrle, & Scherer, 2007), suggesting that perceiving an emotional face involves integration of different types of social cues available in the face.

Recent work proposes that visual threat stimuli may differentially engage the major visual streams—the magnocellular (M) and parvocellular (P) pathways. An emerging hypothesis posits that reflexive processing of clear threat cues may be predominantly associated with the more primitive, coarse, and action‐oriented M pathway, while reflective, sustained processing of threat ambiguity may preferentially engage the slower, analysis‐oriented P pathway (Adams et al., 2012; Adams & Kveraga, 2015; Kveraga, 2014). Indeed, recent fMRI studies compared M versus P pathway involvement in threat perception and supported this hypothesis by showing that congruent threat and incongruent threat signals in both face (Cushing et al., 2018; Im et al., 2017a) and scene images (Kveraga, 2014) were processed preferentially by the M and P visual pathways, respectively. Moreover, Im et al. (2017a) showed that observers’ trait anxiety levels differentially modulated M and P processing of clear and ambiguous facial threat cues such that higher anxiety facilitated processing of averted‐gaze fear projected to M pathway, whereas higher anxiety impaired perception of direct‐gaze fear projected to P pathway. These findings are consistent with the hypothesis that the M pathway may be more involved in responding to clear and congruent threat cues, and the P‐pathway may play a greater role in assessing threat ambiguity (Adams et al., 2012; Kveraga, 2014).

Moreover, further evidence suggests that the left and right amygdalae show differential attunement to P and M processing, respectively. We had previously observed greater right amygdala activation for M‐biased object stimuli than for P‐biased object stimuli (Kveraga, Boshyan, & Bar, 2007). Using fearful face stimuli with direct or averted eye gaze, we had also observed that stimuli depicting clear threat (averted‐gaze fear) tended to activate the right amygdala more, particularly with brief stimulus exposure, whereas ambiguous threat stimuli (direct‐gaze fear) activated the left amygdala more, particularly with longer stimulus exposures (Adams et al., 2012; Cushing et al., 2018). These findings were also replicated and extended in a recent study in which we showed that higher observer anxiety was associated with increased right amygdala activity with facilitated processing of M‐biased clear‐threat stimuli, whereas higher anxiety was associated with increased left amygdala activity with impaired processing of P‐biased ambiguous threat stimuli (Im et al., 2017a). Taken together, processing of clear vs. ambiguous threat cues from emotional expression and eye gaze of faces appears to differentially engage the M and P pathways, with differential hemispheric dominance in the right and the left amygdala.

Another factor that is known to modulate emotional perception is sex‐specific facial cues (Becker, Kenrick, Neuberg, Blackwell, & Smith, 2007; Hess, Adams, & Kleck, 2004, 2005; Zebrowitz, Kikuchi, & Fellous, 2010). For example, anger is more readily perceived in male faces whereas joy is more readily perceived in female faces (Becker et al., 2007; Hess et al., 2004; see Adams, Hess, & Kleck, 2015 for review); and the sex of the expresser also modulates the interaction between facial expression and gaze direction (Slepian, Weisbuch, Adams, & Ambady, 2011). The sex of the perceiver, as a key biological factor, has also been shown to modulate a variety of human brain and behavioral functions. In addition to cognitive differences including language (Shaywitz et al., 1995), navigational ability (Grön, Wunderlich, Spitzer, Tomczak, & Riepe, 2000), defensiveness (Kline, Allen, & Schwartz, 1998), mathematical ability (Haier & Benbow, 1995), and attention (Mansour, Haier, & Buchsbaum, 1996), females and males appear to differ markedly in processing of affective stimuli (Cahill, 2006; Campbell et al., 2002; Collignon et al., 2010; Hall, 1978; Hampson, van Anders, & Mullin, 2017; Stevens & Hamann, 2012). Behaviorally, females tend to be more emotionally expressive than males (Kring & Gordon, 1998), possibly as a result of differences in socialization (Grossman & Wood, 1993). Moreover, females tend to be more emotionally reactive than males (Birnbaum & Croll, 1984; Shields, 1991) and tend to show stronger psychophysiological responses to emotional stimuli (Kring & Gordon, 1998; Orozco & Ehlers, 1998) and greater efficiency in using audio‐visual, multisensory emotional information (Collignon et al., 2010) in order to recognize subtle facial emotions more accurately (Hoffmann, Kessler, Eppel, Rukavina, & Traue, 2010) than males. 
Despite sex‐related differences reported in a variety of social, affective, and cognitive functions, however, underlying neural mechanisms have not been fully characterized. For example, how the two major visual streams along the M and P pathways process visual affective information differentially in female versus male observers is not yet understood. Addressing this question will provide us with better neural and behavioral foundations of sex differences in social interactions and affective processing.

The current study tested how female and male observers’ brains differentially respond to threat cues extracted from emotional expression and eye gaze of faces presented in the stimuli selectively projected to M and P pathway. Here we focused on the activation of bilateral amygdalae, because the amygdala has been known to play a critical role in the processing of affective information in general (Costafreda, Brammer, David, & Fu, 2008; Kober et al., 2008; Sergerie, Chochol, & Armony, 2008), as well as threat vigilance (Davis & Whalen, 2001). The amygdala has also been a frequent subject of sex differences research. One of the consistent findings on the sex‐related differences in amygdala activation is a different pattern of hemispheric lateralization. In male observers’ brains, the right amygdala was found to be dominant while in female observers’ brains, the left amygdala was found to be more involved in affective processing (Cahill et al., 1996; Cahill et al., 2001; Canli, Zhao, Brewer, Gabrieli, & Cahill, 2000; Canli, Zhao, Desmond, Glover, & Gabrieli, 1999; Hamann, Ely, Grafton, & Kilts, 1999; Killgore, Oki, & Yurgelun‐Todd, 2001; Wager, Phan, Liberzon, & Taylor, 2003). Given that the amygdala's involvement in facial expression and eye gaze interaction (Adams et al., 2012; Hadjikhani et al., 2008; Im et al., 2017a; Sato, Kochiyama, Yoshikawa, Naito, & Matsumura, 2004), as well as in processing each of them separately (Hoffman, Gothard, Schmid, & Logothetis, 2007; Kawashima et al., 1999; Sergerie et al., 2008), has been well established, the current study examined functional and anatomical differences in the bilateral amygdalae between female vs. male observers during processing of face stimuli that convey different emotional expressions and gaze directions. 
We chose to use two facial expressions (fearful and neutral) and two eye gaze directions (direct and averted) as in many previous studies on facial threat cue perception (e.g., Adams et al., 2003; Adams & Kleck, 2005; Adams et al., 2012; Ewbank, Fox, & Calder, 2010; Im et al., 2017a). Comparing fearful vs. neutral faces rather than fearful vs. happy faces would allow us to examine the effects of perceived threat cues from the face when paired with different eye gaze direction, instead of examining the effects of emotional valence per se. Moreover, neutral faces have also been reported to be perceived as somewhat fearful when paired with averted gaze compared to direct gaze (e.g., Adams & Kleck, 2005; Ewbank et al., 2010). Thus, testing both fearful and neutral faces with different eye gazes would also allow us to investigate the modulatory effects of eye gaze on ambiguous threat perception from neutral faces.

Given the sexually dimorphic amygdala activations, with the right amygdala more dominant in male brains and the left amygdala more dominant in female brains, we expected to observe sex‐related differences in bilateral amygdala function and structure that would also be associated with different behavioral responses to facial threat cues. Based on previous findings showing the differential attunement of the left and right amygdalae to processing of P‐biased ambiguous threat cues (e.g., fearful face with direct eye gaze) and M‐biased clear threat cues (e.g., fearful face with averted eye gaze), respectively, we hypothesized that males and females would show lateralized amygdala activation differences, with males showing greater right amygdala activity to clear threat cues, particularly when presented to the M pathway, and females showing greater left amygdala activity to ambiguous threat cues, particularly in the P‐biased form.

2. METHOD

2.1. Participants

One hundred and eight participants (64 females and 44 males) from the Massachusetts General Hospital (MGH) and surrounding communities participated in this study. Descriptive statistics for age, STAI‐State and STAI‐Trait are reported in Table 1 separately for males and females. The state and trait anxiety scores (STAI: Spielberger, 1983) of the female and male participants were not significantly different (STAI‐state: t(106) = .925, p = .357; STAI‐trait: t(106) = .364, p = .717). All had normal or corrected‐to‐normal visual acuity and normal color vision, as verified by the Snellen chart (Snellen, 1862), the Mars letter contrast sensitivity test (Arditi, 2005), and the Ishihara color plates (Ishihara, 1917). Informed consent was obtained from the participants in accordance with the Declaration of Helsinki. The experimental protocol was approved by the Institutional Review Board of MGH. The participants were compensated with $50 for their participation in this study.

Table 1.

Participants’ descriptive statistics for age, STAI‐State and STAI‐Trait

| | Mean Age (SD) | Mean STAI‐S (SD) | Mean STAI‐T (SD) |
|---|---|---|---|
| Female | 36.39 (16.41) | 33.53 (9.92) | 35.3 (10.54) |
| Male | 37.69 (16.71) | 31.71 (8.45) | 32.55 (7.98) |

2.2. Apparatus and stimuli

The stimuli were generated using MATLAB (Mathworks Inc., Natick, MA), together with the Psychophysics Toolbox extensions (Brainard, 1997; Pelli, 1997). The stimuli consisted of a face image presented in the center of a gray screen, subtending 5.79° × 6.78° of visual angle. We utilized a total of 24 face identities (12 female), 8 identities selected from the Pictures of Facial Affect (Ekman & Friesen, 1976), 8 identities from the NimStim Emotional Face Stimuli database (Tottenham et al., 2009), and the other 8 identities from the FACE database (Ebner, Riediger, & Lindenberger, 2010). The face images displayed either a neutral or fearful expression with either a direct gaze or averted gaze, and were presented as M‐biased, P‐biased, or Unbiased stimuli, resulting in 288 unique visual stimuli in total. Faces with an averted gaze had the eyes pointing either leftward or rightward.
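
The stimulus count above follows from crossing the factors named in the text. The sketch below is only an illustrative check of that arithmetic, not the authors' stimulus-generation code (which was written in MATLAB); all variable names are hypothetical, and it assumes that leftward and rightward averted gaze are not counted as separate stimuli in the total of 288.

```python
from itertools import product

# Factor levels taken from the text; the names are illustrative only.
N_IDENTITIES = 24
EXPRESSIONS = ("fearful", "neutral")
GAZES = ("direct", "averted")  # assumption: left/right averted not counted separately
BIASES = ("unbiased", "M-biased", "P-biased")


def enumerate_stimuli():
    """Return one (identity, expression, gaze, bias) tuple per unique stimulus."""
    return list(product(range(N_IDENTITIES), EXPRESSIONS, GAZES, BIASES))


stimuli = enumerate_stimuli()
n_conditions = len(EXPRESSIONS) * len(GAZES) * len(BIASES)

# Unique stimuli, design cells, and stimuli per cell.
print(len(stimuli), n_conditions, len(stimuli) // n_conditions)
```

Under these assumptions each of the 12 design cells contains one stimulus per face identity.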

Each face image was first converted to a two‐tone image (black–white; termed the Unbiased stimuli from here on). From the two‐tone image, low‐luminance contrast (< 5% Weber contrast), achromatic, grayscale stimuli (M‐biased stimuli), and chromatically defined, isoluminant red‐green stimuli (P‐biased stimuli) were generated. Examples of the M‐biased and P‐biased stimuli are shown in Figure 3a. Such manipulation is based on basic properties of the M and P systems: The M‐cells are almost color‐blind and respond not at all or very poorly to chromatic borders that are isoluminant, but are very sensitive to luminance contrasts (Cheng, Eysel, & Vidyasagar, 2004; Hicks, Lee, & Vidyasagar, 1983; Solomon, White, & Martin, 2002). On the other hand, the P‐cells are able to resolve fine details, edges, and isoluminant, red‐green stimuli (Kaplan & Shapley, 1986; Steinman, Steinman, & Lehmkuhle, 1997). Finally, red light is reported to suppress the M‐cells (e.g., Breitmeyer & Breier, 1994; Chapman, Hoag, & Giaschi, 2004; Okubo & Nicholls, 2005; West, Anderson, Bedwell, & Pratt, 2010). This method has been employed successfully in many previous studies that investigated different processing of M‐ and P‐pathways (e.g., Awasthi, Williams, & Friedman, 2016; Cheng et al., 2004; Denison, Vu, Yacoub, Feinberg, & Silver, 2014; Im et al., 2017a; Kveraga et al., 2007; Schechter et al., 2003; Steinman et al., 1997; Thomas, Kveraga, Huberle, Karnath, & Bar, 2012). The foreground‐background luminance contrast for achromatic M‐biased stimuli and the isoluminance values for chromatic P‐biased stimuli vary somewhat across individual observers. Therefore, these values were established for each participant in separate test sessions, with the participant positioned in the scanner, before commencing functional scanning. This ensured that the exact viewing conditions were subsequently used during functional scanning in the main experiment. Following the procedure in Kveraga et al. (2007), Thomas et al. (2012), and Im et al. (2017a), the overall stimulus brightness was kept lower for M stimuli (the average value of 115.88 on the scale of 0–255) than for P stimuli (146.06) to ensure that any processing advantages for M‐biased stimuli were not due to greater overall brightness of the M stimuli, as described in detail below.

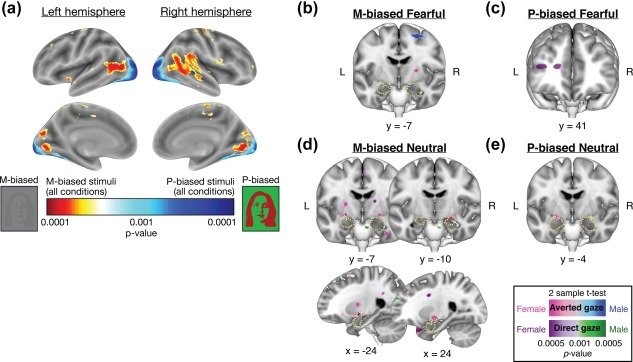

Figure 3.

(a) Different patterns of activations of the whole brain for all the M‐biased versus P‐biased stimuli, collapsed across all the conditions (emotions and eye gazes). (b)‐(e) Different patterns of left and right amygdala activations in female and male participants when they viewed: (b) M‐biased fearful faces, (c) P‐biased fearful faces, (d) M‐biased neutral faces, and (e) P‐biased neutral faces. The yellow broken outlines indicate anatomical masks for the bilateral amygdala, created by using SPM8 Anatomical Toolbox.

2.3. Procedure

Before the fMRI session, participants completed the Spielberger State‐Trait Anxiety Inventory (STAI: Spielberger, 1983), followed by vision tests using the Snellen chart (Snellen, 1862), the Mars letter contrast sensitivity test (Arditi, 2005), and the Ishihara color plates (Ishihara, 1917). Participants were then positioned in the fMRI scanner and asked to complete the two pretests to identify the luminance values for M stimuli and chromatic values for P stimuli that were then used in the main experiment. The visual stimuli containing a face image were rear‐projected onto a mirror attached to a 32‐channel head coil in the fMRI scanner, located in a dimly lit room.

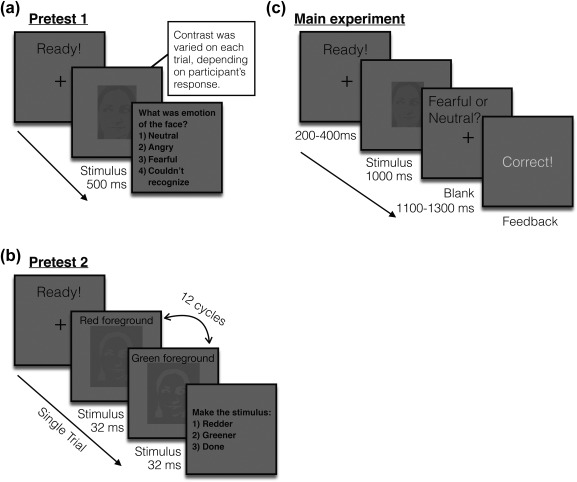

2.4. Pretest 1: Measuring luminance threshold for M‐biased stimuli

The appropriate luminance contrast was determined by finding the luminance threshold via a multiple staircase procedure. Figure 1a illustrates a sample trial of Pretest 1. Participants were presented with visual stimuli for 500 ms and instructed to make a key press to indicate the facial expression of the face that had been presented. They were required to choose one of the four options: (1) neutral, (2) angry, (3) fearful, or (4) did not recognize the image. One‐fourth of the trials were catch trials in which the stimulus did not appear. To find the threshold for foreground‐background luminance contrast, our algorithm computed the mean of the turnaround points above and below the gray background ([120 120 120] RGB value on the 8‐bit scale of 0–255). From this threshold, the appropriate luminance (∼3.5% Weber contrast) value was computed for the face images to be used in the low‐luminance‐contrast (M‐biased) condition. As a result, the average foreground RGB values for M‐biased stimuli were [116.71 116.71 116.71] ± 2.02 (SD) for female participants and [116.08 116.08 116.08] ± 2.19 (SD) for male participants.
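
As a rough illustration of the two quantities involved in this pretest, the sketch below averages the turnaround (reversal) points of a single staircase track and computes a Weber contrast from foreground and background luminances. It is a simplified stand-in, not the authors' algorithm: the function names and the example track are hypothetical, only one track is shown rather than tracks above and below the background, and Weber contrast is defined on luminance, so RGB values would first have to be converted using a display calibration that is not given here.

```python
from statistics import mean


def reversal_points(levels):
    """Return the levels at which a staircase track changes direction."""
    points = []
    prev_step = 0
    for before, after in zip(levels, levels[1:]):
        step = after - before
        if step == 0:
            continue  # no movement, so no direction information
        if prev_step != 0 and (step > 0) != (prev_step > 0):
            points.append(before)  # direction flipped at this level
        prev_step = step
    return points


def weber_contrast(foreground_luminance, background_luminance):
    """Weber contrast: (L_foreground - L_background) / L_background."""
    return (foreground_luminance - background_luminance) / background_luminance


# Hypothetical staircase track (arbitrary units), for illustration only.
track = [110, 112, 114, 116, 115, 114, 116, 118, 117]
threshold = mean(reversal_points(track))
print(reversal_points(track), threshold)

# A foreground 3.5% darker than its background, in luminance units.
print(weber_contrast(96.5, 100.0))
```

The sign of the Weber contrast is negative when the foreground is darker than the background; the magnitude is what the text reports as a percentage.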

Figure 1.

Sample trials of the pretests and the main experiment. (a) A sample trial of pretest 1 to measure the participants’ threshold for the foreground‐background luminance contrast for achromatic M‐biased stimuli. (b) A sample trial of pretest 2 to measure the participants’ threshold for the isoluminance values for chromatic P‐biased stimuli. (c) A sample trial of the main experiment

2.5. Pretest 2: Measuring red‐green iso‐luminance value for P‐biased stimuli

Figure 1b illustrates a sample trial of Pretest 2. For the chromatically defined, isoluminant (P‐biased) stimuli, each participant's isoluminance point was determined using heterochromatic flicker photometry with two‐tone face images displayed in rapidly alternating colors, between red and green. The alternation frequency was ∼14 Hz, because in our previous studies (Kveraga et al., 2007; Kveraga, 2014; Thomas et al., 2012) we obtained the best estimates for the isoluminance point (e.g., narrow range within‐subjects and low variability between‐subjects; Kveraga et al., 2007) at this frequency. The isoluminance point was defined as the color values at which the flicker caused by luminance differences between red and green colors disappeared and the two alternating colors fused, making the image look steady. On each trial, participants were required to report via a key press whether the stimulus appeared flickering or steady. Depending on the participant's response, the value of the red gun in [r g b] was adjusted up or down in a pseudorandom manner for the next cycle. The average of the values in the narrow range when a participant reported a steady stimulus became the isoluminance value for the subject used in the experiment. Thus, isoluminant stimuli were defined only by chromatic contrast between foreground and background, which appeared equally bright to the observer. On the background with green value of 140, the resulting foreground red value was 151.34 ± 3.86 (SD) on average for female participants and 150.79 ± 4.33 (SD) on average for male participants. Therefore, the isoluminant P‐biased stimuli were objectively brighter than the low‐luminance contrast, M‐biased, stimuli. This was done to ensure that any performance advantages for the M‐biased stimuli over the P‐biased stimuli (as found in Kveraga et al., 2007) were due to pathway‐biasing and not stimulus brightness.
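
The final averaging step of this pretest can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: the function name, the data layout, and the example responses are hypothetical, and the pseudorandom adjustment of the red gun between cycles is not modeled.

```python
from statistics import mean


def isoluminant_red(trials):
    """Average the red-gun values on trials the observer reported as steady.

    `trials` is a list of (red_value, response) pairs, where response is
    either "steady" or "flicker".
    """
    steady = [red for red, response in trials if response == "steady"]
    if not steady:
        raise ValueError("no steady reports; isoluminance point undefined")
    return mean(steady)


# Hypothetical responses against a fixed green background, for illustration.
example_trials = [
    (140, "flicker"),
    (148, "flicker"),
    (150, "steady"),
    (152, "steady"),
    (154, "steady"),
    (160, "flicker"),
]
print(isoluminant_red(example_trials))
```

Only the values reported as steady contribute, which mirrors the description of averaging over the narrow range in which flicker disappeared.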

2.6. Main experiment

Figure 1c illustrates a sample trial of the main experiment. After a variable pre‐stimulus fixation period (200–400 ms), a face stimulus was presented for 1,000 ms, followed by a blank screen (1,100–1,300 ms). Participants were required to indicate whether a face image looked fearful or neutral, as quickly as possible. Key‐target mapping was counterbalanced across participants: One half of the participants pressed the left key for neutral and the right key for fearful and the other half pressed the left key for fearful and the right key for neutral. Feedback was provided on every trial. The accuracy (proportion correct) of participants’ responses and the response time (RT) were recorded and analyzed as behavioral measurement.
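
The trial timeline described above implies a bounded trial duration, which the sketch below makes explicit. The durations come from the text; the uniform sampling at 1 ms resolution and all names are assumptions made for illustration, since the distribution of the jittered intervals is not stated.

```python
import random

FIXATION_MS = (200, 400)   # variable pre-stimulus fixation
STIMULUS_MS = 1000         # face presentation
BLANK_MS = (1100, 1300)    # post-stimulus blank screen


def trial_duration_range():
    """Return the shortest and longest possible trial duration in ms."""
    shortest = FIXATION_MS[0] + STIMULUS_MS + BLANK_MS[0]
    longest = FIXATION_MS[1] + STIMULUS_MS + BLANK_MS[1]
    return shortest, longest


def sample_trial(rng):
    """Draw one trial timeline; uniform jitter is an assumption."""
    fixation = rng.randint(*FIXATION_MS)
    blank = rng.randint(*BLANK_MS)
    return {
        "fixation": fixation,
        "stimulus": STIMULUS_MS,
        "blank": blank,
        "total": fixation + STIMULUS_MS + blank,
    }


print(trial_duration_range())
print(sample_trial(random.Random(0)))
```

The midpoint of the possible range is 2,500 ms.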

2.7. fMRI data acquisition and analysis

fMRI images of brain activity were acquired using a 1.5 T scanner (Siemens Avanto) with a 32‐channel head coil. High‐resolution anatomical MRI data were acquired using T1‐weighted images for the reconstruction of each subject's cortical surface (TR = 2,300 ms, TE = 2.28 ms, flip angle = 8°, FoV = 256 × 256 mm², slice thickness = 1 mm, sagittal orientation). The functional scans were acquired using simultaneous multislice, gradient‐echo echoplanar imaging with a TR of 2,500 ms, three echoes with TEs of 15, 33.83, and 52.66 ms, flip angle of 90°, and 58 interleaved slices (3 × 3 × 2 mm resolution). Scanning parameters were optimized by manual shimming of the gradients to fit the brain anatomy of each subject, and tilting the slice prescription anteriorly 20°–30° up from the AC–PC line as described in the previous studies (Deichmann, Gottfried, Hutton, & Turner, 2003; Kveraga et al., 2007; Wall, Walker, & Smith, 2009), to improve signal and minimize susceptibility artifacts in the subcortical brain regions. For each participant, the first 15 s of each run were discarded, followed by acquisition of 96 functional volumes per run (lasting 4 min). There were four successive functional runs, providing 384 functional volumes per subject in total, including the 96 null, fixation trials and the 288 stimulus trials. During the null trials, which served as the baseline condition, only a fixation cross was presented on the gray background for 1,000 ms, without any face stimuli. In our 2 (Emotion: Fear vs. Neutral) × 2 (Eye gaze direction: Direct gaze vs. Averted gaze) × 3 (Bias: Unbiased, M‐biased, and P‐biased) design, each condition had 24 trials, and the sequence of all 384 trials was optimized for hemodynamic response estimation efficiency using the optseq2 software (https://surfer.nmr.mgh.harvard.edu/optseq/).
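
The run and trial counts in this paragraph can be cross-checked with simple arithmetic. The sketch below only restates numbers given in the text; the constant names are illustrative.

```python
TR_S = 2.5                  # repetition time in seconds
VOLUMES_PER_RUN = 96
N_RUNS = 4
N_CONDITIONS = 2 * 2 * 3    # Emotion x Eye gaze x Bias
TRIALS_PER_CONDITION = 24
NULL_TRIALS = 96

run_minutes = TR_S * VOLUMES_PER_RUN / 60
total_volumes = VOLUMES_PER_RUN * N_RUNS
stimulus_trials = N_CONDITIONS * TRIALS_PER_CONDITION
total_trials = stimulus_trials + NULL_TRIALS

print(run_minutes, total_volumes, stimulus_trials, total_trials)
```

The number of trials equals the number of retained volumes, which would be consistent with one trial per TR; that reading is an inference from the counts rather than something stated explicitly.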

The acquired functional images were pre‐processed using SPM8 (Wellcome Department of Cognitive Neurology). The functional images were corrected for differences in slice timing, realigned, corrected for movement‐related artifacts, coregistered with each participant's anatomical data, normalized to the Montreal Neurological Institute (MNI) template, and spatially smoothed using an isotropic 8‐mm full width half‐maximum (FWHM) Gaussian kernel. Outliers due to movement or signal from preprocessed files, based on thresholds of 3 SD from the mean, 0.75 mm for translation and 0.02 radians rotation, were removed from the data sets, using the ArtRepair software (Mazaika, Hoeft, Glover, & Reiss, 2009).
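
To make the outlier thresholds concrete, the sketch below flags volumes using the three criteria quoted above. It is not the ArtRepair algorithm: the function and argument names are hypothetical, and it assumes that the motion thresholds apply to scan-to-scan displacement and that the 3 SD criterion applies to a per-volume global signal, neither of which is specified in the text.

```python
from statistics import mean, pstdev


def flag_outlier_volumes(global_signal, translation_step_mm, rotation_step_rad,
                         sd_thresh=3.0, trans_thresh=0.75, rot_thresh=0.02):
    """Return indices of volumes exceeding any of the three thresholds.

    All three inputs are per-volume lists of equal length. The motion inputs
    are assumed to be absolute scan-to-scan changes.
    """
    mu = mean(global_signal)
    sd = pstdev(global_signal)
    flagged = []
    for i, signal in enumerate(global_signal):
        signal_out = sd > 0 and abs(signal - mu) > sd_thresh * sd
        trans_out = translation_step_mm[i] > trans_thresh
        rot_out = rotation_step_rad[i] > rot_thresh
        if signal_out or trans_out or rot_out:
            flagged.append(i)
    return flagged


# Hypothetical 20-volume run: one signal spike, one translation, one rotation.
signal = [100.0] * 20
signal[5] = 130.0
translation = [0.0] * 20
translation[10] = 1.0
rotation = [0.0] * 20
rotation[15] = 0.03

print(flag_outlier_volumes(signal, translation, rotation))
```

How flagged volumes are then handled (removed, interpolated, or modeled) is a separate choice that this sketch does not address.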

2.8. Whole brain analysis

For whole brain analyses, subject‐specific contrasts were estimated using a fixed‐effects model. The resulting contrast images, which provide subject‐specific estimates for each effect, were then entered into a second‐level analysis treating participants as a random effect, using one‐sample t tests at each voxel. Age and anxiety of participants were included as covariates. One‐sample t tests were first conducted across all subjects for each of the conditions of our interest, compared to the baseline (Null trials). In order to examine the sex difference in the pattern of whole brain activation, we then conducted two‐sample t tests between female and male participants for each condition. For illustration purposes, the resulting t test images showing the difference between female and male participants were overlaid onto a group average brain of the 108 participants, using the Multi‐image Analysis GUI (Mango: http://rii.uthscsa.edu/mango/index.html) software. Table 2 reports all the significant clusters from the whole brain analysis using the formal threshold of p < .05, FWE whole‐brain corrected. For the left and right amygdalae, the regions of our main interest based on the previous studies (Adams et al., 2003; Adams et al., 2012; Cushing et al., 2018; Im et al., 2017a), significant activation was reported after small volume correction at the threshold of p < .05, FWE‐corrected. The small volume correction was done by using the anatomically defined masks for the left and right amygdala that were automatically segmented and labeled by SPM Anatomy Toolbox (Eickhoff et al., 2005; Eickhoff, Heim, Zilles, & Amunts, 2006; Eickhoff et al., 2007). For visualization of the contrasts, we used the threshold of p < .001 (uncorrected) with a minimal cluster size of five voxels. 
These parameters are more conservative than those that have been argued to optimally balance between Type 1 and Type 2 errors (Lieberman & Cunningham, 2009; height p < .005, uncorrected, extent: 10 voxels, see also Adams et al., 2012; Kveraga et al., 2011).
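
For readers less familiar with the second‐level comparison, the sketch below shows a pooled‐variance two‐sample t statistic computed on per‐participant contrast values at a single voxel. It is a simplified stand‐in for the SPM analysis, not a reproduction of it: the function name and example values are hypothetical, and the age and anxiety covariates and the multiple‐comparison correction are omitted.

```python
from math import sqrt
from statistics import mean, variance


def pooled_t(group_a, group_b):
    """Return (t, df) for a two-sample t test assuming equal variances."""
    na, nb = len(group_a), len(group_b)
    df = na + nb - 2
    pooled_var = ((na - 1) * variance(group_a)
                  + (nb - 1) * variance(group_b)) / df
    standard_error = sqrt(pooled_var * (1 / na + 1 / nb))
    t = (mean(group_a) - mean(group_b)) / standard_error
    return t, df


# Hypothetical contrast values for two small groups, for illustration only.
t_value, dof = pooled_t([1.0, 2.0, 3.0, 4.0, 5.0], [2.0, 3.0, 4.0, 5.0, 6.0])
print(t_value, dof)
```

With group sizes of 64 and 44, the same formula gives 106 degrees of freedom.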

Table 2.

BOLD activations from group analysis, thresholded at p < .05, FWE corrected, based on cluster‐defining threshold of p < .001 and k (extent; number of voxels) = 5

| Region label | Coordinates [x y z] | p FWE‐corr | p unc | T | Z | k |
|---|---|---|---|---|---|---|
| M‐biased direct fear: none | | | | | | |
| M‐biased averted fear | | | | | | |
| Female > Male: none | | | | | | |
| Male > Female | | | | | | |
| R amygdala | [24 −7 −14] | 0.014 | 0.002 | 3.02 | 2.95 | * |
| P‐biased direct fear | | | | | | |
| Female > Male | | | | | | |
| L anterior prefrontal cortex | [−33 41 14] | 0.032 | 0.002 | 4.17 | 4 | 102 |
| | [−39 56 22] | 0.032 | 0.002 | 3.88 | 3.75 | † |
| Male > Female: none | | | | | | |
| P‐biased averted fear: none | | | | | | |
| M‐biased direct neutral | | | | | | |
| Female > Male | | | | | | |
| L amygdala | [−24 −7 −12] | 0.043 | 0.003 | 2.79 | 2.73 | * |
| Male > Female: none | | | | | | |
| M‐biased averted neutral | | | | | | |
| Female > Male | | | | | | |
| L posterior superior temporal sulcus | [−42 −76 18] | 0.011 | 0.001 | 4.26 | 4.09 | 126 |
| | [−45 −64 18] | 0.011 | 0.001 | 4.04 | 3.88 | † |
| | [−39 −58 10] | 0.011 | 0.001 | 3.82 | 3.69 | † |
| L amygdala | [−24 −7 −12] | 0.048 | 0.005 | 2.93 | 2.78 | * |
| R amygdala | [24 −10 −12] | 0.033 | 0.004 | 2.74 | 2.69 | * |
| Male > Female: none | | | | | | |
| P‐biased direct neutral: none | | | | | | |
| P‐biased averted neutral | | | | | | |
| Female > Male | | | | | | |
| L amygdala | [−21 −4 −14] | 0.02 | 0.001 | 3.06 | 2.99 | * |
| Male > Female: none | | | | | | |

†indicates that this cluster is part of a larger cluster immediately above.

* indicates that this cluster is based on small‐volume correction using an anatomically‐defined mask for amygdala.

2.9. Region‐of‐interest (ROI) analysis

For ROI analyses, we used the rfxplot toolbox (http://rfxplot.sourceforge.net) for SPM and extracted the beta weights from the left and right centromedial amygdalae. The bilateral centromedial amygdalae were defined by using anatomical masks created using Anatomy Toolbox for SPM8 (Eickhoff et al., 2005; Eickhoff et al., 2006; Eickhoff et al., 2007). The extracted beta weights from the anatomical masks were subjected to a mixed repeated‐measures ANOVA, conducted separately for Fearful and Neutral face stimuli.

2.10. Estimation of amygdala volume

To assess amygdala volumes, we performed quantitative morphometric analysis of T1–weighted MRI data using an automated segmentation and probabilistic ROI labeling technique (FreeSurfer, http://surfer.nmr.mgh.harvard.edu). This procedure has been widely used in volumetric studies and was shown to be comparable in accuracy to that of manual labeling (Bickart, Wright, Dautoff, Dickerson, & Barrett, 2011; Fischl et al., 2002). Because males have 8%–13% larger brains than females, it was important for us to control for brain size and examine proportional, not absolute, size of the amygdala (see Ruigrok et al., 2014). Therefore, the estimated amygdala volumes for each individual participant were divided by total intracranial volume of the participant in order to adjust for individual differences in head size, as performed in the prior work (e.g., O'Brien et al., 2006).
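
The adjustment for head size described above amounts to a simple ratio. The sketch below illustrates it with made‐up volumes; the function name and the numbers are hypothetical and are not taken from the study's data.

```python
def proportional_volume(amygdala_mm3, intracranial_mm3):
    """Return amygdala volume as a proportion of total intracranial volume."""
    if intracranial_mm3 <= 0:
        raise ValueError("intracranial volume must be positive")
    return amygdala_mm3 / intracranial_mm3


# Two hypothetical participants with the same absolute amygdala volume
# but different head sizes (values are illustrative only).
smaller_head = proportional_volume(1600.0, 1_400_000.0)
larger_head = proportional_volume(1600.0, 1_600_000.0)
print(smaller_head, larger_head)
```

With equal absolute volumes, the participant with the smaller intracranial volume has the larger proportional amygdala volume, which is why absolute and proportional comparisons between groups can differ.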

3. RESULTS

3.1. Behavioral results: Accuracy

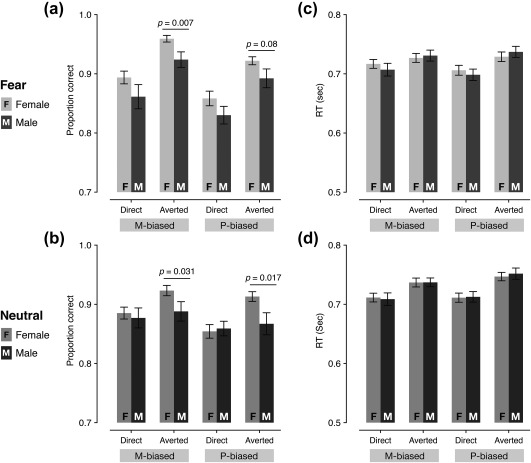

Figure 2a,c show the average accuracy (proportion correct) of female and male participants, separately plotted for fearful face stimuli and neutral face stimuli, respectively. For behavioral responses to fearful faces, a mixed repeated measures ANOVA with Sex (Female and Male) as a between‐subject factor (our main interest) and with Bias (two levels: M‐biased and P‐biased) and Eye gaze (two levels: Direct gaze and Averted gaze) as within‐subject factors revealed three main effects. First, there was a significant main effect of Sex (F(1,106) = 5.658, p = .019) with female participants being more accurate than male participants for fear stimuli overall. Second, there was also a significant main effect of Bias (F(1,106) = 28.81, p < .001) with M‐biased fear stimuli being recognized more accurately than P‐biased fear stimuli. Greater accuracy for M‐biased fear than for P‐biased fear faces, despite its lower contrast than that of the P‐stimuli, suggests that M‐pathway is superior to P‐pathway in detecting facial threat cues. Third, there was a significant main effect of Eye gaze (F(1,106) = 57.39, p < .001), with averted gaze fear expressions being recognized more accurately than direct eye gaze fear expressions. Neither the two‐way interactions between the factors nor the three‐way interaction of all the factors was significant (all p's > .481). Greater accuracy for averted fear than direct fear faces overall was also observed in the previous studies showing that fearful faces with averted eye gaze tended to be perceived as more intense compared to fearful faces with direct eye gaze (e.g., Adams & Kleck, 2005). 
Since our main interest was to test sex differences in perception of emotional face with different eye gaze directions and pathway biases, we further conducted planned comparisons between female and male participants for each condition, and found that the M‐biased averted fear (t(106) = 2.76, p = .007) and P‐biased averted fear (t(106) = 1.757, p = .08, marginally significant) yielded significantly higher accuracy for female than male participants.

Figure 2.

Behavioral results. (a) The mean accuracy for fearful faces paired with direct or averted eye gazes, presented in M‐ or P‐biased stimuli. The error bars indicate the SEM. (b) The median RT for fearful faces paired with direct or averted eye gazes, presented in M‐ or P‐biased stimuli. The error bars indicate the SEM. (c) The mean accuracy for neutral faces paired with direct or averted eye gazes, presented in M‐ or P‐biased stimuli. The error bars indicate the SEM. (d) The median RT for neutral faces paired with direct or averted eye gazes, presented in M‐ or P‐biased stimuli. The error bars indicate the SEM

For neutral face stimuli, a mixed repeated measures ANOVA with Sex (Female and Male) as a between‐subject factor (our main interest) and with Bias (two levels: M‐biased and P‐biased) and Eye gaze (two levels: Direct gaze and Averted gaze) as within‐subject factors showed a marginally significant main effect of Sex, with female participants being more accurate than male participants (F(1,106) = 3.618, p = .060), a significant main effect of Bias (F(1,106) = 8.181, p = .005), with M‐biased stimuli being recognized more accurately than P‐biased stimuli, and a significant main effect of Eye gaze (F(1,106) = 8.306, p = .005), with averted‐gaze faces being recognized more accurately than direct‐gaze faces. Although the two‐way interactions of Bias × Sex (F(1,106) = 0.006, p = .938) and Bias × Eye gaze (F(1,106) = 0.459, p = .50) were not significant, the two‐way interaction of Eye gaze × Sex was marginally significant (F(1,106) = 3.743, p = .056). We assessed the nature of the Eye gaze × Sex interaction using post hoc pairwise comparisons (Bonferroni corrected) and found that female participants recognized neutral faces with averted eye gaze more accurately than those with direct eye gaze (p = .024 for M‐biased and p = .015 for P‐biased), while male participants did not show any difference in accuracy between neutral faces with direct and averted eye gaze (p's > .841). The three‐way interaction among the factors was not significant (F(1,106) = 0.815, p = .369). To test the sex difference in accuracy for each condition, we also conducted planned comparisons between female and male participants for each of the four conditions, and found that female participants were more accurate than male participants at recognizing neutral faces with averted gaze, both in M‐biased (p = .031) and in P‐biased (p = .017) stimuli.

3.2. Behavioral results: Response time (RT)

Figure 2c shows the median RT of female and male participants for fearful face stimuli. Only the RTs from correct trials were used for the analyses, and outliers (more than 3 SD above the group mean) within each condition were excluded. As a result, 1.03% of the data points on average were excluded from further analyses. The mixed repeated measures ANOVA with Sex (Female and Male) as a between‐subject factor (our main interest) and with Bias (two levels: M‐biased and P‐biased) and Eye gaze (two levels: Direct gaze and Averted gaze) as within‐subject factors showed no significant main effects of Sex (F(1,106) = 0.014, p = .906) or Bias (F(1,106) = 0.960, p = .329), although the main effect of Eye gaze was significant, with direct‐gaze fear faces recognized faster than averted‐gaze fear faces (F(1,106) = 38.142, p < .001). The two‐way interaction of Eye gaze × Sex was marginally significant (F(1,106) = 3.671, p = .058), and the interaction of Bias × Eye gaze was significant (F(1,106) = 7.029, p = .009). To assess the nature of these two‐way interactions, we conducted post hoc pairwise comparisons (Bonferroni corrected) and found that male participants were significantly faster for P‐biased fearful faces with direct gaze than with averted gaze (p = .027), whereas female participants did not show significant differences in RT between direct and averted fear. Moreover, P‐biased fearful faces were recognized significantly faster with direct gaze than with averted gaze (p = .031), while M‐biased fearful faces did not show any significant eye gaze effects (p > .761). Thus, the significant main effect of Eye gaze seemed to be driven mainly by faster RTs for P‐biased direct‐gaze fear faces. The three‐way interaction of all the factors was not significant (F(1,106) = 0.047, p = .828).
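The RT preprocessing described above (correct trials only, slow outliers removed, median taken) can be sketched as follows. This is an illustrative Python sketch rather than the authors' pipeline: the function name and input layout are hypothetical, the sample SD is an assumption, and the mean and SD are computed over whatever RTs are passed in, since the text does not specify whether the cutoff was derived from trials or from participant-level values.

```python
from statistics import mean, median, stdev


def trimmed_median_rt(rts_ms, correct, n_sd=3.0):
    """Median RT over correct trials after dropping slow outliers.

    rts_ms  : response times for one condition (hypothetical input layout)
    correct : booleans marking which trials were answered correctly
    n_sd    : cutoff in SD units above the mean (3 in the text)
    """
    kept = [rt for rt, ok in zip(rts_ms, correct) if ok]
    if len(kept) < 2:
        return median(kept) if kept else None
    cutoff = mean(kept) + n_sd * stdev(kept)  # sample SD is an assumption
    return median([rt for rt in kept if rt <= cutoff])


# Hypothetical trials: 20 typical RTs, one very slow response, one error trial.
rts = [600, 620, 580, 610, 590] * 4 + [3000, 400]
correct = [True] * 21 + [False]
print(trimmed_median_rt(rts, correct))
```

Only values above the cutoff are removed, matching the one-sided criterion in the text. Using the median as the summary statistic further limits the influence of any remaining slow responses.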

Figure 2d shows the RT of female and male participants for neutral face stimuli. The mixed repeated measures ANOVA with Sex (Female and Male) as a between‐subject factor (our main interest) and with Bias (two levels: M‐biased and P‐biased) and Eye gaze (two levels: Direct gaze and Averted gaze) as within‐subject factors showed that the main effect of Sex was not significant (F(1,106) = 0.009, p = .926), although the main effects of Bias and Eye gaze were significant, with M‐biased neutral faces being recognized faster than P‐biased neutral faces (F(1,106) = 6.842, p = .010) and neutral faces with direct gaze being recognized faster than those with averted gaze (F(1,106) = 8.814, p = .004). None of the two‐way or three‐way interactions was significant (p's > .221). Together, the behavioral results revealed the following sex difference in the perception of faces: compared to male observers, female observers showed more accurate perception of both fearful and neutral faces with averted gaze, suggesting that they are more sensitive to covert information conveyed by a non‐emotional facial cue, such as eye gaze.

3.3. fMRI results

We first ensured that our manipulation for M‐ and P‐biased stimuli preferentially engaged different visual pathways (M and P). From the contrast between all the M‐biased stimuli vs. all the P‐biased stimuli (with all the emotions and eye gazes collapsed), we observed that the M‐biased stimuli preferentially activated brain regions including posterior superior temporal sulcus, inferior frontal gyrus, and parietal areas, whereas the P‐biased stimuli preferentially activated brain regions including occipital and inferior temporal areas (Figure 3a). Because the same image sets were used, the different patterns of brain activation most likely result from our manipulation for M‐ and P‐biased stimuli. In our previous work, we had observed similar patterns of brain activation for stimuli comprising groups of multiple faces (e.g., crowds), which are thought to rely more on global, low spatial frequency information than single face stimuli do (Im et al., 2017b): The posterior superior temporal sulcus, inferior frontal gyrus, and parietal areas showed greater activation during perception of crowd emotion from multiple faces of different emotional expressions, whereas occipital and inferior temporal regions showed relatively greater activation during perception of a single, individual face (Im et al., 2017b). Moreover, non‐face grating stimuli also revealed similar patterns of brain activation from the contrast between gray‐scale gratings with low spatial frequency (M‐biased stimuli) and isoluminant, red‐green gratings with high spatial frequency (P‐biased stimuli; Im et al., 2017b). The parallels observed across different studies with different types of visual stimuli and groups of participants provide consistent evidence that our stimuli in the current study selectively engaged different visual pathways.

Figure 3b‐e shows different patterns of left and right amygdala activations in female and male participants when they viewed fear and neutral faces with direct and averted eye gazes in M‐ and P‐biased stimuli. Using small volume correction (FWE) at p < .05, we found that male participants showed greater right amygdala activation (dorsal amygdala/SI, peak: [x = 24, y = −7, z = −14], p = .032, small volume, FWE‐corrected) than female participants for M‐biased fearful faces with averted eye gaze (Figure 3b). For M‐biased neutral faces with averted eye gaze (Figure 3d), however, we found that female participants showed greater activation in both the left and right amygdalae (dorsal amygdalae/SI, peaks: [x = −24, y = −7, z = −12], p = .048 for left and [x = 24, y = −10, z = −12], p = .033 for right, both small volume, FWE‐corrected). We also found greater left amygdala activation for M‐biased neutral faces with direct eye gaze in female participants compared to male participants ([x = −24, y = −7, z = −12], p = .043, small volume, FWE‐corrected). Although we did not find significant activations in the amygdala, P‐biased fearful faces with direct eye gaze evoked significantly greater activation of the left anterior prefrontal cortex in female participants than in male participants ([x = −33, y = 41, z = 14], p = .032, FWE‐corrected; Figure 3c). Finally, we found greater left amygdala activation in female participants than in male participants for P‐biased neutral faces with averted eye gaze ([x = −21, y = −4, z = −14], p = .02, small volume, FWE‐corrected; Figure 3e). These results suggest that compound cues of emotional expression and eye gaze direction of face stimuli projected to the M and P pathways are processed differentially in the left and right amygdala of female versus male observers. The complete list of the brain areas that showed significant differences between female and male participants for each contrast (whole‐brain FWE‐corrected p < .05) is shown in Table 2.

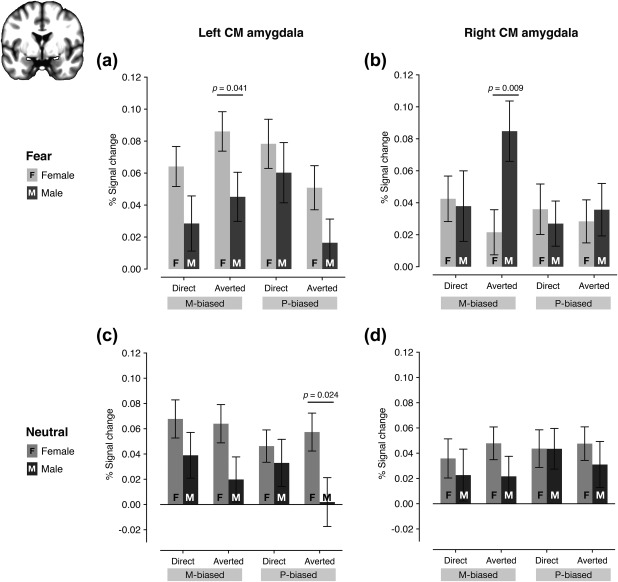

Figure 4.

The percentage signal change resulting from the ROI analyses. (a) The % signal change of the left amygdala for fearful faces with direct or averted eye gaze in M‐biased or P‐biased stimuli. (b) The percentage signal change of the right amygdala for fearful faces with direct or averted eye gaze in M‐biased or P‐biased stimuli. (c) The percentage signal change of the left amygdala for neutral faces with direct or averted eye gaze in M‐biased or P‐biased stimuli. (d) The percentage signal change of the right amygdala for neutral faces with direct or averted eye gaze in M‐biased or P‐biased stimuli

3.4. ROI analysis: Sex‐specific differences in amygdala reactivity

We next ran ROI analyses to directly compare the amygdala responsivity to different combinations of emotional expression, eye gaze direction, and pathway bias in female vs. male participants. Because recent evidence has shown that the centromedial (CM) amygdala is involved in sex‐specific neural circuits of emotional regulation (Wu et al., 2016) and in defensive responses associated with fear and threat‐related cues (e.g., Adams et al., 2012; Davis & Whalen, 2001; Cheng, Knight, Smith, & Helmstetter, 2006, 2007; Kim, Somerville, Johnstone, Alexander, & Whalen, 2003; Knight, Nguyen, & Bandettini, 2005; Whalen et al., 1998; Whalen et al., 2001), we focused on the centromedial amygdala as our ROI. Figure 4a shows the percentage signal change in the left CM amygdala for the four fear conditions (two biases: M and P, by two eye gaze directions: direct and averted), plotted side by side for female and male participants. The mixed repeated measures ANOVA with Sex (Female and Male) as a between‐subject factor (our main interest) and with Bias (two levels: M‐biased and P‐biased) and Eye gaze (two levels: Direct gaze and Averted gaze) as within‐subject factors showed a significant main effect of Sex (F(1,106) = 5.487, p = .021), with female participants showing greater left CM amygdala activation than male participants. Neither the main effect of Bias (F(1,106) = 0.213, p = .646) nor the main effect of Eye gaze (F(1,106) = 0.663, p = .417) was significant. We also found a significant two‐way interaction between Bias and Eye gaze (F(1,106) = 10.478, p = .002), although the other two‐way interactions and the three‐way interaction were not significant (p's > .539).
We further tested the sex difference by using planned comparisons between female and male participants for each of the four conditions, and found that female participants showed significantly greater left CM amygdala activation for the M‐biased averted fear than male participants (p = .041), although the other conditions did not reach significant difference between sex groups (p's > .089).

Figure 4b shows the right CM amygdala activation. The mixed repeated measures ANOVA with Sex (Female and Male) as a between‐subject factor (our main interest) and with Bias (two levels: M‐biased and P‐biased) and Eye gaze (two levels: Direct gaze and Averted gaze) as within‐subject factors showed no statistically significant main effects of Sex, Bias, or Eye gaze (p's > .093). Only the two‐way interaction between Eye gaze and Sex was significant (F(1,106) = 4.647, p = .033), although two‐way interactions between Bias and Sex (F(1,106) = 2.927, p = .090) or between Bias and Eye gaze (F(1,106) = 0.528, p = .192) were not significant. Further contrast analyses conducted separately for female and male participants revealed that the right CM amygdala activation was greater for M‐biased fearful faces with averted eye gaze than any of the other three conditions (using the contrast weight of [−1 +3 −1 −1]) in male participants (p = .015), but not in female participants (p = .400). Finally, further planned comparisons between female and male participants for each of the four conditions showed significantly greater right CM amygdala activation for M‐averted fear in male participants than in female participants (p = .009).
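The contrast weights [−1 +3 −1 −1] used above can be illustrated with a short Python sketch. The condition order (M‐direct, M‐averted, P‐direct, P‐averted), the function names, and the use of a one‐sample t statistic on the per‐participant contrast scores are all assumptions made for illustration; the text does not specify these details.

```python
from math import sqrt
from statistics import mean, stdev

# Assumed condition order (not stated in the text):
# [M-direct, M-averted, P-direct, P-averted]
WEIGHTS = (-1, 3, -1, -1)


def contrast_scores(signal_by_participant, weights=WEIGHTS):
    """Weighted sum of the four condition values for each participant."""
    if sum(weights) != 0:
        raise ValueError("contrast weights should sum to zero")
    return [sum(w * x for w, x in zip(weights, row))
            for row in signal_by_participant]


def one_sample_t(scores):
    """t statistic (df = n - 1) testing the mean contrast score against zero."""
    return mean(scores) / (stdev(scores) / sqrt(len(scores)))


# Hypothetical % signal change values for three participants.
data = [(0.1, 0.5, 0.1, 0.2), (0.0, 0.3, 0.1, 0.1), (0.2, 0.4, 0.2, 0.1)]
scores = contrast_scores(data)
print(scores, one_sample_t(scores))
```

Because the weights sum to zero, a participant with identical responses in all four conditions receives a score of zero, so a positive mean score indicates that the M‐biased averted condition exceeds the average of the other three.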

The left CM amygdala showed greater activation for neutral face stimuli overall in female participants than in male participants (Figure 4c). A mixed repeated measures ANOVA with Sex (Female and Male) as a between‐subject factor (our main interest) and with Bias (two levels: M‐biased and P‐biased) and Eye gaze (two levels: Direct gaze and Averted gaze) as within‐subject factors showed a significant main effect of Sex (F(1,106) = 5.114, p = .026), supporting this observation. None of the other main effects or interactions were significant (p's > .151). Planned comparisons between female and male participants for each of the four conditions showed significantly greater left amygdala activation for P‐biased averted neutral (p = .024) in female participants than in male participants. For the right amygdala responses to neutral face stimuli (Figure 4d), however, none of the main effects or the interactions was significant (p's > .355). Therefore, the fMRI results suggest that male participants show greater right amygdala activation for M‐biased averted fear face stimuli (e.g., clear threat cue; Adams et al., 2012; Im et al., 2017a) indicating greater attunement of the right amygdala to clear threat conveyed by the magnocellular pathway, whereas female participants show greater left amygdala involvement in processing of faces (both fearful and neutral), compared to male participants.

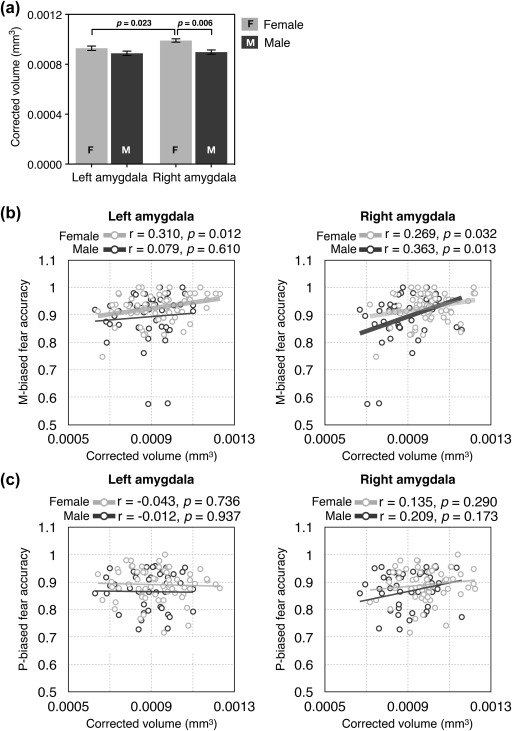

3.5. ROI analysis: Sex‐specific differences in amygdala volumes

In addition to the differences in the BOLD responses, we also found differences in the volume (divided by total intracranial volume to correct for differences in cranium size) of the left and right amygdala between female and male participants (Figure 5a). A mixed repeated measures ANOVA with Sex (Female and Male) as a between‐subject factor (our main interest) and with Hemisphere (two levels: Left and Right) as a within‐subject factor showed a significant main effect of Sex (F(1,106) = 9.519, p = .003), with the amygdala volume (corrected for brain size) being proportionately greater in female than in male participants, a significant main effect of Hemisphere (F(1,106) = 13.119, p < .001), with the right amygdala volume being greater than the left amygdala volume, and a significant interaction (F(1,106) = 7.161, p = .009). Further t tests (Bonferroni corrected) revealed the nature of the interaction: in female participants the amygdala volume was significantly greater in the right than in the left hemisphere (p = .023), whereas male participants did not show a significant difference between the volumes of the left and right amygdala (p = .988); lastly, the right amygdala volume was proportionately greater in female participants than in male participants (p = .006).

Figure 5.

The results of the analyses of amygdala volumes. (a) The volume of the left and right amygdala for female and male participants. (b) The correlation between the left and the right amygdala volume and the behavioral accuracy for M‐biased fear. The dots with brighter gray indicate female participants and the dots with darker gray indicate male participants. The thicker regression lines indicate the statistically significant correlations. (c) The correlation between the left and the right amygdala volume and the behavioral accuracy for P‐biased fear. The dots with brighter gray indicate female participants and the dots with darker gray indicate male participants

Finally, the measures of the left and right amygdala volume were found to correlate with observers’ behavioral accuracy for fearful faces differentially in female versus male participants. In female participants (dots and regression lines with brighter gray in Figure 5b), both the left and right amygdala volumes showed significant positive correlation with their accuracy for M‐biased fear (averaged across direct and averted eye gaze), with slightly stronger correlation for the left amygdala than for the right amygdala (left amygdala: r = .310, p = .012; right amygdala: r = .269, p = .032). In male participants (dots and regression lines with darker gray in Figure 5b), however, only the right amygdala showed significant correlation with their behavioral accuracy for M‐biased fear (r = .363, p = .013), but not the left amygdala (r = .079, p = .610). Unlike the accuracy for M‐biased fear, neither the accuracy for P‐biased fear faces (Figure 5c) nor M‐ and P‐biased neutral faces showed correlation with the amygdala volumes (all p's > .173). Together, our findings suggest that female and male participants show differences in perception of emotion from compound cues of facial expressions and eye gaze directions and in hemispheric lateralization of amygdala responsivity and volumes.
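The volume‐accuracy relationships reported above are Pearson correlations. A minimal Python sketch of the computation is given below, together with the standard conversion of r to a t statistic with n − 2 degrees of freedom. The function names and the example values are hypothetical and do not reproduce the study's data.

```python
from math import sqrt


def pearson_r(x, y):
    """Pearson correlation between two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)


def r_to_t(r, n):
    """t statistic (df = n - 2) for testing r against zero."""
    return r * sqrt((n - 2) / (1 - r * r))


# Hypothetical proportional volumes and accuracies for five observers.
volumes = [0.00100, 0.00105, 0.00110, 0.00120, 0.00125]
accuracy = [0.70, 0.74, 0.72, 0.80, 0.83]
r = pearson_r(volumes, accuracy)
print(round(r, 3), round(r_to_t(r, len(volumes)), 3))
```

Computing the correlation separately within each sex group and each hemisphere, as in the text, yields four coefficients per accuracy measure that can then be compared across groups.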

4. DISCUSSION

The goal of this study was to explore behavioral and neural differences between female and male observers during perception of faces with potential threat cues conveyed by the combination of facial expression (fearful or neutral) and eye gaze direction (direct or averted). Previous studies (Cahill et al., 2001; Canli et al., 2000, 1999) have shown lateralization in amygdala responses, with males showing greater right amygdala activity, and larger left amygdala activation in females. Recent findings by us (Adams et al., 2012) suggested that right and left amygdala are engaged preferentially by clear and ambiguous threat, respectively, and that this lateralization is also driven additionally by whether the stimuli are presented to the magnocellular or parvocellular pathway (Im et al., 2017a). Lastly, Hoffmann et al. (2010) reported that females outperform males mainly in recognizing subtle rather than more intense expressions. Therefore, here we wanted to test the hypothesis that males and females will show different behavioral and amygdala lateralization patterns, driven by the type of threat cue (clear or ambiguous) and the pathway (M or P) to which it is presented.

We had four novel findings of sex differences in this study: (1) Female participants recognized the facial expressions with averted gaze more accurately than did male participants, indicating better ability in females to integrate eye gaze with facial expression, (2) Male participants showed greater right amygdala activation for M‐biased averted‐gaze fear (clear threat) faces, whereas female participants showed greater involvement of the left amygdala when they viewed both fearful and neutral faces, (3) Female participants had proportionately greater amygdala volumes than male participants, with the difference being more pronounced in the right amygdala, and (4) both the left and right amygdala volumes positively correlated with behavioral accuracy for M‐biased fear in female participants, whereas only the right amygdala volume correlated with accuracy for M‐biased fear in male participants.

When facial expression signals the emotional state of an expresser, eye gaze direction can indicate the source or target of that emotion (Adams & Kleck, 2003; Adams & Kleck, 2005; Adams et al., 2012; Hadjikhani et al., 2008; Im et al., 2017a). The social signals conveyed by facial expression and eye gaze can be integrated to facilitate an observer's processing of emotion of the face when they convey congruent information. For example, processing of approach‐oriented facial expressions such as anger or joy can be facilitated with direct eye gaze, which also signals approach motivation, while processing of avoidance‐oriented facial expressions, such as fear or sadness, can be facilitated with averted eye gaze which likewise signals avoidance (Adams & Kleck, 2003, 2005; Sander et al., 2007). Therefore, the meaning and intensity of an observed facial expression is driven not only by the expression itself, but also by the changes in an expresser's eye gaze (Benton, 2010; Fox, Mathews, Calder, & Yiend, 2007; Hadjikhani et al., 2008; Im et al., 2017a; Milders et al., 2011; N'Diaye, Sander, & Vuilleumier, 2009; Rigato, Menon, Farroni, & Johnson, 2013; Sander et al., 2007; Sato et al., 2004). The outcome of the present work further provides new evidence that this integration of facial expression and eye gaze is also modulated by the observer's sex.

Better recognition of facial expressions with averted eye gaze in female than in male participants suggests that females are more sensitively attuned to reading and integrating eye gaze with facial expression. Previous studies of sex‐related differences in affective processing have also reported female participants outperforming males in facial detection tasks (recognition of a face as a face) or facial identity discrimination and showing stronger face pareidolia, the tendency to perceive non‐face stimuli (e.g., food‐plate images resembling faces) as faces (Pavlova, Scheffler, & Sokolov, 2015). The superiority of female participants, however, seems to be more pronounced when the task or stimulus involves processing of subtle facial cues, and reduced when highly expressive and obvious stimuli are presented (Hoffmann et al., 2010). Females were also shown to have superior skills in other types of integrative processing during visual social cognition, such as body language reading (e.g., understanding emotions, intentions, motivations, and dispositions of others through their body motion). Specifically, females were faster in discriminating emotional biological motion from neutral and more accurate in recognizing point‐light neutral body motion (e.g., walking or jumping on the spot; Alaerts, Nackaerts, Meyns, Swinnen, & Wenderoth, 2011). Our current finding of more accurate integration of facial expression and eye gaze in female observers is in line with these previous findings that showed female observers’ greater ability in integrative and detailed processing of affective stimuli.

In addition to behavioral responses, we also found differences in amygdala activity between female and male observers. Male participants showed greater right amygdala responses, but only to M‐biased averted‐gaze fear faces (congruent, clear threat cues) whereas the female participants showed greater left amygdala responses to both fearful and neutral faces. Prior work suggests that a fearful face with averted eye gaze tends to be perceived as a clear threat (“pointing with the eyes” to the threat; Hadjikhani et al., 2008) because both emotional expression and eye gaze direction signal congruent avoidance motivation (Adams et al., 2012; Cushing et al., 2018; Im et al., 2017a). Moreover, we previously showed that the right amygdala is highly responsive to such a clear threat cue (averted‐gaze fear; e.g., Adams et al., 2012; Cushing et al., 2018), especially when presented to the magnocellular pathway (Im et al., 2017a). Thus, greater right amygdala reactivity to M‐biased averted‐gaze fear faces in male participants suggests that processing of facial expression by eye gaze interactions in male observers is tuned more to detection of a clear, congruent threat cue from face stimuli, compared to female observers. Conversely, female observers showed more involvement of the left amygdala, which has been suggested to play a role in more detailed analysis and reflective processing (Adams et al., 2012; Cushing et al., 2018; Im et al., 2017a). Together, our findings suggest that brain activations elicited by interactions of facial expression and eye gaze direction are modulated by the observer's sex. While sex‐specific modulation of fMRI activity for interaction of threatening facial and bodily expressions has been reported (Kret, Pichon, Grèzes, & de Gelder, 2011), our study presents the first behavioral and neural evidence of sex‐specific modulation of integration of facial expression and eye gaze direction.

It is worth noting that the significant activations from the whole‐brain analyses were mostly observed in dorsal region of the amygdalae. Similarly, a previous study (Adams et al., 2012) has reported significant activation differentiating direct‐gaze fear from averted‐gaze fear in gray‐scale face images in dorsal amygdala (which they labeled as dorsal amygdala/substantia innominata (SI)). The dorsal amygdala contains the central nucleus (Mai, Assheuer, & Paxinos, 1997), an output region that projects to brain stem, hypothalamic and basal forebrain targets (Paxinos, 1990). In rodents, the central nucleus in the dorsal amygdala has been reported to be essential for the basic species‐specific defensive responses associated with fear (Davis & Whalen, 2001). In humans, this area has been also implicated in threat vigilance, action preparedness, and arousal in response to acute threat (e.g., Cheng et al., 2006, 2007; Kim et al., 2003; Knight et al., 2005; Whalen et al., 2001; Whalen et al., 1998). Together, the dorsal amygdala seems to be an important subdivision of the amygdala that is involved in threat processing via larger circuits fed by M‐ and P‐pathways.

Our findings of the differential attunement of female and male observers toward the left and right amygdala are also in line with the previous findings that showed sex‐specific hemispheric lateralization of the amygdalae. For example, the two previous studies that involved only male participants showed either exclusive (Cahill et al., 1996) or predominant (Hamann et al., 1999) right lateralization of the amygdala. On the other hand, the two studies that involved only female participants reported left lateralized amygdala activation (Canli et al., 2000, 1999). By directly comparing the amygdala activity between female and male observers in identical conditions, researchers (Cahill et al., 2001) also found a clear difference in hemispheric lateralization of the amygdala in which increased recall of the emotional, compared with neutral, films was significantly predicted by the right amygdala activity in male observers and by the left amygdala activity in female observers. Given the putatively different emphases of the left and right amygdalae in reflective and reflexive processing of compound facial cues (Adams et al., 2012; Cushing et al., 2018; Im et al., 2017a), such sex‐related differences in amygdala activity may reflect different cognitive and processing styles in female and male participants, such as better integration of incongruent eye gaze and facial expression cues in females, and a greater bias toward perceiving clear, congruent threat cues in males.

Along with the sex differences in the functional reactivity of the amygdala, we also observed sex‐related differences in amygdala volumes. Previous clinical studies have reported that the amygdala volume was correlated with observers’ ability to recognize happy facial expression in Huntington's Disease (Kipps, Duggins, McCusker, & Calder, 2007) and with observers’ anxiety or depression level both in children and in adults (Barrós‐Loscertales et al., 2006; De Bellis et al., 2000; Frodl et al., 2002; MacMillan et al., 2003). A recent study of healthy participants has also reported that amygdala volume correlated with individuals’ social network size and complexity (Bickart et al., 2011). Using a large sample of healthy participants, we found that their amygdala volumes also positively correlated with observers’ accuracy for M‐biased averted‐gaze fear faces (clear threat). This is consistent with previously reported evidence of magnocellular projections to the amygdala for fast, but coarse, threat‐related signals (e.g., Méndez‐Bértolo et al., 2016), and enhanced right amygdala activation for M‐biased neutral stimuli (object drawings), compared with P‐biased stimuli (Kveraga et al., 2007). Furthermore, we also observed sex‐related lateralization differences in that both the left and right amygdala volumes predicted the behavioral accuracy in recognizing M‐biased averted‐gaze fear faces in female observers, whereas only the right amygdala showed such a relationship in male observers.

5. CONCLUSIONS

The current study found that an observer's sex affects behavioral responses to facial expression and eye gaze interaction, and predicts both functional reactivity and volume of the amygdala. Many diseases related to impairments in visual and affective social cognition show sex differences in rates of affliction. For example, females are more often affected by anxiety disorders (McLean, Asnaani, & Litz, 2011) and depression (Abate, 2013), which are associated with deficits in face processing and emotion perception. On the other hand, males have a higher risk of developing autism spectrum disorders (Werling & Geschwind, 2013), which involve impaired emotional perception, and are more vulnerable to attention deficit hyperactivity disorder (ADHD; Ramtekkar, Reiersen, Todorov, & Todd, 2010), which is related to deficits in attention, working memory, and executive functioning (Kibby et al., 2015) and to reduced perception of anger (Manassis, Tannock, Young, & Francis‐John, 2007). Thus, investigating sex‐related differences in behavioral and neural responses, as well as brain structure, in larger samples of healthy observers will have important implications for identifying such sex differences in these psychiatric disorders. The present findings demonstrate that neural mechanisms underlying affective visual processing can differ between healthy men and women. The current findings further indicate that theories of behavioral and neural mechanisms underlying the perception of affective stimuli should take into account participants’ sex.

DECLARATION OF CONFLICTING INTERESTS

The authors declared that they had no conflicts of interest with respect to their authorship or the publication of the article.

AUTHOR CONTRIBUTIONS

R. B. Adams and K. Kveraga developed the study concept and designed the study. Testing and data collection were performed by H. Y. Im, N. Ward, C. A. Cushing, and J. Boshyan. H. Y. Im analyzed the data, and all the authors contributed to the writing of the manuscript.

ACKNOWLEDGMENTS

This work was supported by the National Institutes of Health R01MH101194 to K. K. and to R. B. A. Jr.

Im HY, Adams RB Jr., Adams RB, et al. Sex‐related differences in behavioral and amygdalar responses to compound facial threat cues. Hum Brain Mapp. 2018;39:2725–2741. 10.1002/hbm.24035

Funding information: National Institutes of Health, Grant/Award Number: R01MH101194

REFERENCES

- Abate, K. H. (2013). Gender disparity in prevalence of depression among patient population: A systematic review. Ethiopian Journal of Health Sciences, 23(3), 283–288.

- Adams, R. B., Jr., Gordon, H. L., Baird, A. A., Ambady, N., & Kleck, R. E. (2003). Effects of gaze on amygdala sensitivity to anger and fear faces. Science, 300, 1536.

- Adams, R. B., Jr., Hess, U., & Kleck, R. E. (2015). The intersection of gender‐related facial appearance and facial displays of emotion. Emotion Review, 7(1), 5.

- Adams, R. B., Jr., & Kleck, R. E. (2003). Perceived gaze direction and the processing of facial displays of emotion. Psychological Science, 14(6), 644–647.

- Adams, R. B., Jr., & Kleck, R. E. (2005). Effects of direct and averted gaze on the perception of facially communicated emotion. Emotion, 5(1), 3–11.

- Adams, R. B., Jr., Franklin, R. G., Kveraga, K., Ambady, N., Kleck, R. E., Whalen, P. J., & Nelson, A. J. (2012). Amygdala responses to averted vs direct gaze fear vary as a function of presentation speed. Social Cognitive and Affective Neuroscience, 7, 568–577.

- Adams, R. B., Jr., & Kveraga, K. (2015). Social vision: Functional forecasting and the integration of compound social cues. Review of Philosophy and Psychology, 6(4), 591–610.

- Akechi, H., Senju, A., Kikuchi, Y., Tojo, Y., Osanai, H., & Hasegawa, T. (2009). Does gaze direction modulate facial expression processing in children with autism spectrum disorder? Child Development, 80(4), 1134–1146.

- Alaerts, K., Nackaerts, E., Meyns, P., Swinnen, S. P., & Wenderoth, N. (2011). Action and emotion recognition from point light displays: An investigation of gender differences. PLoS ONE, 6(6), e20989.

- Arditi, A. (2005). Improving the design of the letter contrast sensitivity test. Investigative Ophthalmology & Visual Science, 46(6), 2225–2229.

- Awasthi, B., Williams, M. A., & Friedman, J. (2016). Examining the role of red background in magnocellular contribution to face perception. PeerJ, 4, e1617.

- Barrós‐Loscertales, A., Meseguer, V., Sanjuán, A., Belloch, V., Parcet, M. A., Torrubia, R., & Ávila, C. (2006). Behavioral inhibition system activity is associated with increased amygdala and hippocampal gray matter volume: A voxel‐based morphometry study. NeuroImage, 33(3), 1011–1015.

- Becker, D. V., Kenrick, D. T., Neuberg, S. L., Blackwell, K. C., & Smith, D. M. (2007). The confounded nature of angry men and happy women. Journal of Personality and Social Psychology, 92(2), 179–190.

- Benton, C. P. (2010). Rapid reactions to direct and averted facial expressions of fear and anger. Visual Cognition, 18(9), 1298–1319.

- Bickart, K. C., Wright, C. I., Dautoff, R. J., Dickerson, B. C., & Barrett, L. F. (2011). Amygdala volume and social network size in humans. Nature Neuroscience, 14(2), 163–164.

- Bindemann, M., Burton, A. M., & Langton, S. R. H. (2008). How do eye gaze and facial expression interact? Visual Cognition, 16(6), 708–733.

- Birnbaum, D. W., & Croll, W. L. (1984). The etiology of children's stereotypes about sex differences in emotionality. Sex Roles, 10, 677–691.

- Brainard, D. H. (1997). The Psychophysics Toolbox. Spatial Vision, 10(4), 433–436.

- Breitmeyer, B. G., & Breier, J. I. (1994). Effects of background color on reaction time to stimuli varying in size and contrast: Inferences about human M channels. Vision Research, 34(8), 1039–1045.

- Cahill, L. (2006). Why sex matters for neuroscience. Nature Reviews Neuroscience, 7(6), 477–484.

- Cahill, L., Haier, R. J., Fallon, J., Alkire, M. T., Tang, C., Keator, D., … McGaugh, J. L. (1996). Amygdala activity at encoding correlated with long‐term, free recall of emotional information. Proceedings of the National Academy of Sciences, 93(15), 8016–8021.

- Cahill, L., Haier, R. J., White, N. S., Fallon, J., Kilpatrick, L., Lawrence, C., … Alkire, M. T. (2001). Sex‐related difference in amygdala activity during emotionally influenced memory storage. Neurobiology of Learning and Memory, 75(1), 1–9.

- Campbell, R., Elgar, K., Kuntsi, J., Akers, R., Terstegge, J., Coleman, M., & Skuse, D. (2002). The classification of “fear” from faces is associated with face recognition skill in women. Neuropsychologia, 40(6), 575–584.

- Canli, T., Zhao, Z., Brewer, J., Gabrieli, J. D. E., & Cahill, L. (2000). Event‐related activation in the human amygdala associates with later memory for individual emotional experience. The Journal of Neuroscience, 20(19), RC99.

- Canli, T., Zhao, Z., Desmond, J. E., Glover, G., & Gabrieli, J. D. E. (1999). fMRI identifies a network of structures correlated with retention of positive and negative emotional memory. Psychobiology, 27(4), 441–452.

- Chapman, C., Hoag, R., & Giaschi, D. (2004). The effect of disrupting the human magnocellular pathway on global motion perception. Vision Research, 44, 2551–2557.

- Cheng, A., Eysel, U. T., & Vidyasagar, T. R. (2004). The role of the magnocellular pathway in serial deployment of visual attention. European Journal of Neuroscience, 20(8), 2188–2192.

- Cheng, D. T., Knight, D. C., Smith, C. N., & Helmstetter, F. J. (2006). Human amygdala activity during the expression of fear responses. Behavioral Neuroscience, 120(6), 1187–1195.

- Cheng, D. T., Richards, J., & Helmstetter, F. J. (2007). Activity in the human amygdala corresponds to early, rather than late period autonomic responses to a signal for shock. Learning and Memory, 14, 485–490.

- Collignon, O., Girard, S., Gosselin, F., Saint‐Amour, D., Lepore, F., & Lassonde, M. (2010). Women process multisensory emotion expressions more efficiently than men. Neuropsychologia, 48(1), 220–225.

- Costafreda, S. G., Brammer, M. J., David, A. S., & Fu, C. H. Y. (2008). Predictors of amygdala activation during the processing of emotional stimuli: A meta‐analysis of 385 PET and fMRI studies. Brain Research Reviews, 58(1), 57–70.

- Cushing, C. A., Im, H. Y., Adams, R. B., Jr., Ward, N., Albohn, N. D., Steiner, T. G., & Kveraga, K. (2018). Neurodynamics and connectivity during facial fear perception: The role of threat exposure and signal congruity. Scientific Reports, 8, 2776.

- Davis, M., & Whalen, P. J. (2001). The amygdala: Vigilance and emotion. Molecular Psychiatry, 6(1), 13–34.

- De Bellis, M. D., Casey, B. J., Dahl, R. E., Birmaher, B., Williamson, D. E., Thomas, K. M., & Ryan, N. D. (2000). A pilot study of amygdala volumes in pediatric generalized anxiety disorder. Biological Psychiatry, 48(1), 51–57.

- Deichmann, R., Gottfried, J. A., Hutton, C., & Turner, R. (2003). Optimized EPI for fMRI studies of the orbitofrontal cortex. NeuroImage, 19(2 Pt 1), 430–441.

- Denison, R. N., Vu, A. T., Yacoub, E., Feinberg, D. A., & Silver, M. A. (2014). Functional mapping of the magnocellular and parvocellular subdivisions of human LGN. NeuroImage, 102(P2), 358–369.

- Ebner, N. C., Riediger, M., & Lindenberger, U. (2010). FACES – A database of facial expressions in young, middle‐aged, and older women and men: Development and validation. Behavior Research Methods, 42(1), 351–362.

- Eickhoff, S., Stephan, K. E., Mohlberg, H., Grefkes, C., Fink, G. R., Amunts, K., & Zilles, K. (2005). A new SPM toolbox for combining probabilistic cytoarchitectonic maps and functional imaging data. NeuroImage, 25, 1325–1335.

- Eickhoff, S. B., Heim, S., Zilles, K., & Amunts, K. (2006). Testing anatomically specified hypotheses in functional imaging using cytoarchitectonic maps. NeuroImage, 32, 570–582.

- Eickhoff, S. B., Paus, T., Caspers, S., Grosbras, M. H., Evans, A., Zilles, K., & Amunts, K. (2007). Assignment of functional activations to probabilistic cytoarchitectonic areas revisited. NeuroImage, 36, 511–521.

- Ekman, P., & Friesen, W. V. (1976). Pictures of facial affect. Palo Alto, CA: Consulting Psychologists Press.

- Ewbank, M. P., Fox, E., & Calder, A. J. (2010). The interaction between gaze and facial expression in the amygdala and extended amygdala is modulated by anxiety. Frontiers in Human Neuroscience, 4, 56.

- Fischl, B., Salat, D. H., Busa, E., Albert, M., Dieterich, M., Haselgrove, C., … Dale, A. M. (2002). Whole brain segmentation. Neuron, 33(3), 341–355.

- Fox, E., Mathews, A., Calder, A. J., & Yiend, J. (2007). Anxiety and sensitivity to gaze direction in emotionally expressive faces. Emotion, 7(3), 478–486.

- Frodl, T., Meisenzahl, E., Zetzsche, T., Bottlender, R., Born, C., Groll, C., … Möller, H.‐J. (2002). Enlargement of the amygdala in patients with a first episode of major depression. Biological Psychiatry, 51(9), 708–714.

- Grön, G., Wunderlich, A. P., Spitzer, M., Tomczak, R., & Riepe, M. W. (2000). Brain activation during human navigation: Gender‐different neural networks as substrate of performance. Nature Neuroscience, 3(4), 404–408.

- Grossman, M., & Wood, W. (1993). Sex differences in intensity of emotional experience: A social role interpretation. Journal of Personality and Social Psychology, 65(5), 1010–1022.

- Hadjikhani, N., Hoge, R., Snyder, J., & de Gelder, B. (2008). Pointing with the eyes: The role of gaze in communicating danger. Brain and Cognition, 68(1), 1–8.

- Haier, R. J., & Benbow, C. P. (1995). Sex differences and lateralization in temporal lobe glucose metabolism during mathematical reasoning. Developmental Neuropsychology, 11(4), 405–414.

- Hall, J. A. (1978). Gender effects in decoding nonverbal cues. Psychological Bulletin, 85(4), 845–857.

- Hamann, S. B., Ely, T. D., Grafton, S. T., & Kilts, C. D. (1999). Amygdala activity related to enhanced memory for pleasant and aversive stimuli. Nature Neuroscience, 2(3), 289–293.

- Hampson, E., van Anders, S. M., & Mullin, L. I. (2017). A female advantage in the recognition of emotional facial expressions: Test of an evolutionary hypothesis. Evolution and Human Behavior, 27(6), 401–416.

- Hess, U., Adams, R. B., Jr., & Kleck, R. E. (2004). Facial appearance, gender, and emotion expression. Emotion, 4(4), 378–388.

- Hess, U., Adams, R. B., Jr., & Kleck, R. E. (2005). Who may frown and who should smile? Dominance, affiliation, and the display of happiness and anger. Cognition and Emotion, 19, 515–536.

- Hess, U., Adams, R. B., Jr., & Kleck, R. E. (2007). Looking at you or looking elsewhere: The influence of head orientation on the signal value of emotional facial expressions. Motivation and Emotion, 31(2), 137–144.

- Hicks, T. P., Lee, B. B., & Vidyasagar, T. R. (1983). The responses of cells in the macaque lateral geniculate nucleus to sinusoidal gratings. Journal of Physiology, 337, 183–200.

- Hoffman, K. L., Gothard, K. M., Schmid, M. C., & Logothetis, N. K. (2007). Facial‐expression and gaze‐selective responses in the monkey amygdala. Current Biology, 17(9), 766–772.

- Hoffmann, H., Kessler, H., Eppel, T., Rukavina, S., & Traue, H. C. (2010). Expression intensity, gender and facial emotion recognition: Women recognize only subtle facial emotions better than men. Acta Psychologica, 135(3), 278–283.

- Im, H. Y., Adams, R. B., Jr., Boshyan, J., Ward, N., Cushing, C., & Kveraga, K. (2017a). Observer's anxiety facilitates magnocellular processing of clear facial threat cues, but impairs parvocellular processing of ambiguous facial threat cues. Scientific Reports, 7, 15151.

- Im, H. Y., Albohn, N. D., Steiner, T. G., Cushing, C., Adams, R. B., Jr., & Kveraga, K. (2017b). Ensemble coding of crowd emotion: Differential hemispheric and visual stream contributions. Nature Human Behaviour, 1, 828–842.

- Ishihara, S. (1917). Tests for color‐blindness. Handaya, Tokyo: Hongo Harukicho.

- Kaplan, E., & Shapley, R. M. (1986). The primate retina contains two types of ganglion cells, with high and low contrast sensitivity. Proceedings of the National Academy of Sciences of the United States of America, 83, 2755–2757.

- Kawashima, R., Sugiura, M., Kato, T., Nakamura, A., Hatano, K., Ito, K., … Nakamura, K. (1999). The human amygdala plays an important role in gaze monitoring. Brain, 122(4), 779–783.

- Kibby, M. Y., Dyer, S. M., Vadnais, S. A., Jagger, A. C., Casher, G. A., & Stacy, M. (2015). Visual processing in reading disorders and attention‐deficit/hyperactivity disorder and its contribution to basic reading ability. Frontiers in Psychology, 6, 1635.