Abstract

The spatial location of an object can be represented in two frames of reference: egocentric (relative to the observer's body or body parts) and allocentric (relative to another object, independent of the observer). The positions of an object relative to the two frames can be either congruent (e.g., both left or both right) or incongruent (e.g., one left and one right). Most previous studies, however, did not discriminate between the two types of spatial conflict. To investigate the common and specific neural mechanisms underlying the spatial congruency effect induced by the two reference frames, we adopted a 3 (type of task: allocentric, egocentric, and color) × 2 (spatial congruency: congruent vs. incongruent) within‐subject design in this fMRI study. The spatial congruency effect in the allocentric task was induced by the task‐irrelevant egocentric representations, and vice versa in the egocentric task. The nonspatial color task was introduced to control for differences in bottom‐up stimuli between the congruent and incongruent conditions. Behaviorally, a significant spatial congruency effect was found in both the egocentric and the allocentric task. Neurally, the dorsal‐medial visuoparietal stream was commonly involved in the spatial congruency effect induced by the task‐irrelevant egocentric and allocentric representations. The right superior parietal cortex and the right precentral gyrus were specifically involved in the spatial congruency effect induced by the irrelevant egocentric and allocentric representations, respectively. Taken together, these results suggest that different subregions of the parieto‐frontal network play different functional roles in the spatial interaction between the egocentric and allocentric reference frames. Hum Brain Mapp 38:2112–2127, 2017. © 2017 Wiley Periodicals, Inc.

Keywords: egocentric, allocentric, spatial congruency effect, parieto‐frontal network, fMRI

INTRODUCTION

The spatial location of an object can be represented either in the egocentric (relative to the observer's body parts) or the allocentric (relative to another object or a background independent of the observer) reference frame. Egocentric representations are coded in the brain to guide goal‐directed actions [Andersen et al., 1997; Goodale and Milner, 1992; Milner and Goodale, 2008], while allocentric representations are coded to support conscious perception of the external world [Andersen and Buneo, 2002; Goodale and Milner, 1992; Rolls, 1999; Rolls and Xiang, 2006].

It is well documented that the egocentric and allocentric reference frames interact rather than operate independently. First, neuropsychological evidence from patients with visuospatial neglect showed that egocentric and allocentric manifestations of neglect can occur not only in isolation in different patients but also together in the same patient [Bisiach et al., 1985; Driver and Halligan, 1991; Marshall and Halligan, 1993; Walker, 1995], indicating that the egocentric and allocentric reference frames may have both shared and specific neural correlates in the brain. Accordingly, studies in humans have revealed that the two spatial reference frames have not only specific mechanisms in the temporal and occipital cortices and the hippocampus, but also common neural mechanisms in the frontal and parietal lobes [Chen et al., 2012; Fink et al., 1997; Galati et al., 2000; Zaehle et al., 2007].

Moreover, recent studies have directly investigated the mutual influences between the two spatial frames of reference in a variety of behavioral tasks [Neggers et al., 2006; Thaler and Todd, 2010; Zhang et al., 2013]. Upon the appearance of an object, people immediately form multiple representations of it. Importantly, the allocentric and egocentric positions of the same object can be either congruent or incongruent. Specifically, a behavioral target could be on the left or right side of the participant's midsagittal line and, at the same time, on the left or right side of another reference object in the background. The egocentric and allocentric positions are therefore congruent if the target is on the same side in both frames, and incongruent if it is on different sides (Fig. 1A). Accordingly, egocentric judgments have been shown to be interfered with by irrelevant allocentric positions, and allocentric judgments by irrelevant egocentric positions [Zhang et al., 2013].

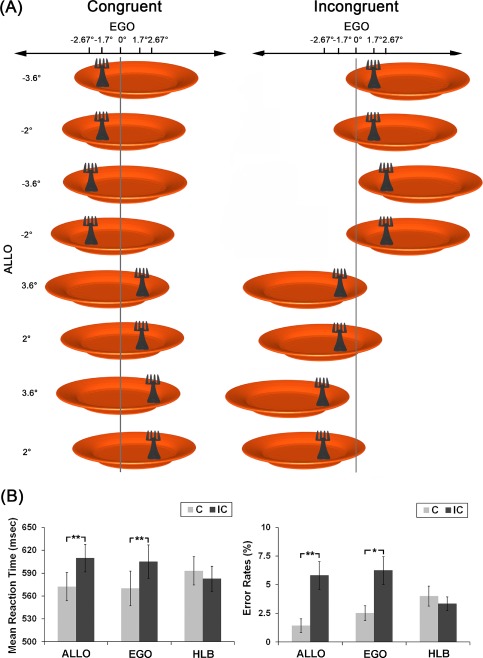

Figure 1.

A: Experimental stimuli. The visual stimuli consisted of a fork on top of a plate. Participants were asked to judge the position of the fork with reference either to the midsagittal line of the plate or to the midsagittal line of their body. The fork could appear at one of four egocentric positions (−2.67°, −1.7°, 1.7°, 2.67°). For each of the four egocentric locations, the location of the plate was independently varied, forming four allocentric positions of the fork (−3.6°, −2°, 2°, 3.6°). The stimulus set could be classified into two types according to the spatial congruency between the egocentric and allocentric positions of the target: congruent (left panel) and incongruent (right panel). The visual angles of the eccentricities for the egocentric and allocentric positions are shown at the top and on the left side of the figure, respectively. The vertical gray line indicates the egocentric midsagittal line. B: Behavioral results. Mean RTs (left panel) and error rates (right panel) in the six experimental conditions (**P < 0.01; *P < 0.05). The error bars represent standard errors. [Color figure can be viewed at http://wileyonlinelibrary.com]

However, the common and specific neural correlates underlying the spatial congruency effects caused by the egocentric and the allocentric representations, respectively, remain unknown. In this study, we asked participants to alternately perform allocentric and egocentric spatial judgments on the same stimulus set. Thus, the target positions relative to one spatial reference frame were task‐relevant, while the target positions relative to the other frame were task‐irrelevant. In this way, spatial judgments based on the task‐relevant frame of reference could be either facilitated or interfered with by the spatial representations in the task‐irrelevant frame, depending on the spatial congruency between the two types of representations. In addition, since the bottom‐up stimuli inevitably differed between the congruent and incongruent conditions (Fig. 1A), a nonspatial high‐level baseline (HLB, color) task was adopted to control for potential differences induced by the bottom‐up stimuli: participants performed a nonspatial color discrimination task on the same set of stimuli as in the spatial tasks. Including this nonspatial control task as the HLB allowed us to exclude the differences in bottom‐up stimuli between the congruent and incongruent conditions and thus to isolate the spatial congruency effect in the spatial tasks.

Since the spatial congruency effect in the allocentric task was caused by the irrelevant egocentric representations, we predicted that neural correlates specifically involved in representing egocentric positions would be activated by the spatial congruency effect in the allocentric task. Similarly, since the spatial congruency effect in the egocentric task was caused by the irrelevant allocentric representations, neural correlates specifically involved in representing allocentric positions would be activated by the spatial congruency effect in the egocentric task.

MATERIALS AND METHODS

Participants

Nineteen right‐handed participants (7 males and 12 females, 18–25 years old) took part in this study and were paid for their participation. All had normal or corrected‐to‐normal vision and no history of neurological or psychiatric disorders. All participants gave written informed consent prior to the experiment in accordance with the Declaration of Helsinki. This study was approved by the Academic Committee of the School of Psychology, South China Normal University.

Stimuli and Experimental Design

The stimuli consisted of a fork on top of a plate on a gray background. The diameter of the plate was 15° of visual angle, and the closer end of the fork was 2.5° of visual angle. The fork was drawn in one of two gray levels: RGB (64, 64, 64) or RGB (192, 192, 192) (24‐bit color coding). The fork could be located at four different positions with respect to the participants' midsagittal line (−2.67°, −1.7°, 1.7°, and 2.67°), forming four egocentric locations of the fork. Its position with respect to the midsagittal line of the plate was varied as well (−3.6°, −2°, 2°, and 3.6°), forming four allocentric locations of the fork (Fig. 1A). There were therefore 4 (egocentric locations) × 4 (allocentric locations) × 2 (luminance of the fork) = 32 types of stimuli in total. The 32 stimulus types were classified into congruent and incongruent conditions according to the spatial congruency between the egocentric and allocentric locations of the fork. In the congruent condition, the allocentric and egocentric positions of the fork were both left or both right (Fig. 1A, left); in the incongruent condition, the allocentric position of the fork was on the opposite side of the egocentric position (Fig. 1A, right).
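To make the factorial structure concrete, the following Python sketch enumerates the 32 stimulus types and labels each as congruent or incongruent. It assumes, consistent with Fig. 1A, that negative eccentricities denote positions left of the relevant midline; all names are illustrative and not taken from the original experiment code.

```python
from itertools import product

# Eccentricities in degrees of visual angle, from the Methods; negative = left (assumed).
EGO_POSITIONS = (-2.67, -1.7, 1.7, 2.67)      # fork relative to the participant's midsagittal line
ALLO_POSITIONS = (-3.6, -2.0, 2.0, 3.6)       # fork relative to the plate's midsagittal line
LUMINANCES = ((64, 64, 64), (192, 192, 192))  # dark vs. light gray fork (24-bit RGB)


def side(position):
    """Return 'left' for negative eccentricities and 'right' for positive ones."""
    return "left" if position < 0 else "right"


def build_stimulus_set():
    """Enumerate all 4 x 4 x 2 stimulus types and label their spatial congruency."""
    stimuli = []
    for ego, allo, rgb in product(EGO_POSITIONS, ALLO_POSITIONS, LUMINANCES):
        congruent = side(ego) == side(allo)  # same side in both frames
        stimuli.append({"ego": ego, "allo": allo, "rgb": rgb,
                        "condition": "C" if congruent else "IC"})
    return stimuli


stimuli = build_stimulus_set()
n_congruent = sum(s["condition"] == "C" for s in stimuli)
print(len(stimuli), n_congruent, len(stimuli) - n_congruent)  # 32 16 16
```

Because each frame has two left and two right positions, exactly half of the stimulus types are congruent, so the two conditions are balanced by construction.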

Participants performed three types of task on the same set of stimuli (Fig. 1A), i.e., allocentric, egocentric, and nonspatial color discrimination tasks. In the allocentric judgment task (ALLO), participants judged whether the fork was on the left or the right side of the plate. In the egocentric judgment task (EGO), participants judged whether the fork was on the left or right side of the midsagittal line of their own body. In both spatial tasks, participants pressed the left button on the response pad with the left thumb for “left” responses and the right button with the right thumb for “right” responses. In the nonspatial HLB task (i.e., the color discrimination task), participants judged whether the fork was light gray or dark gray by pressing the left or the right button on the response pad with the left or right thumb. The mapping between fork color and response button was counterbalanced across participants. The experiment therefore followed a 3 (type of task: ALLO, EGO, and HLB) × 2 (spatial congruency between the egocentric and allocentric positions: congruent vs. incongruent) within‐subject design.
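The three tasks differ only in which stimulus attribute determines the correct button. A small sketch of that mapping follows; the `dark_is_left` flag is a hypothetical stand-in for the counterbalanced color-to-button assignment, and the function name is illustrative.

```python
def correct_button(task, ego, allo, rgb, dark_is_left=True):
    """Return the correct button ('left' or 'right') for one trial.

    task: 'ALLO' (fork vs. plate midline), 'EGO' (fork vs. body midline) or 'HLB' (color).
    ego, allo: eccentricities in degrees (negative = left, assumed); rgb: fork color.
    dark_is_left: hypothetical per-participant counterbalancing flag.
    """
    if task == "ALLO":
        return "left" if allo < 0 else "right"
    if task == "EGO":
        return "left" if ego < 0 else "right"
    if task == "HLB":
        is_dark = rgb == (64, 64, 64)
        return "left" if is_dark == dark_is_left else "right"
    raise ValueError(f"unknown task: {task}")


# An incongruent stimulus: left of the body midline but right of the plate midline.
print(correct_button("EGO", -1.7, 2.0, (64, 64, 64)))   # left
print(correct_button("ALLO", -1.7, 2.0, (64, 64, 64)))  # right
```

For an incongruent stimulus, the task-irrelevant frame points to the opposite button, which is the source of the conflict measured in the spatial tasks; in the HLB task neither spatial frame determines the response.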

Procedures

Stimuli were presented in a hybrid fMRI design in which the type of task was blocked and congruent and incongruent trials were randomly intermixed within each block. Each block started with a 3 s visual introduction informing participants of the task in the upcoming block. Participants performed the three types of task alternately. On each trial, the target was presented for 250 ms; this short presentation time was chosen to minimize eye movements [Findlay, 1997; Findlay et al., 2001]. To prevent participants from using a central fixation point, rather than the midsagittal line of their body, as the reference for judging the egocentric position of the target, no central fixation was presented at any time during the experiment. The egocentric and allocentric tasks each comprised 48 congruent and 48 incongruent trials, and the nonspatial HLB task comprised 96 congruent and 96 incongruent trials, resulting in 512 trials in total (384 experimental trials and 128 null trials). In null trials, only a blank screen was displayed. There were 6 egocentric blocks, 6 allocentric blocks, and 12 nonspatial HLB blocks, each consisting of 16 experimental trials and 5–6 null trials; within each block, congruent, incongruent, and null trials were randomly intermixed. The intertrial interval within a block was jittered between 2000 and 3000 ms in 250 ms steps (2000, 2250, 2500, 2750, or 3000 ms). All participants completed a 3 min practice session before scanning.
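The block and trial counts above can be checked with a small schedule generator. This is a sketch under assumptions not stated in the text: 8 congruent and 8 incongruent trials per block (consistent with the per-task totals), the 8 blocks that receive a sixth null trial chosen at random, a fixed EGO, HLB, ALLO, HLB cycle as one possible way of alternating tasks, and intertrial intervals drawn uniformly from the five jitter values.

```python
import random

ITIS_MS = (2000, 2250, 2500, 2750, 3000)    # jittered intertrial intervals (Methods)
TASK_CYCLE = ("EGO", "HLB", "ALLO", "HLB")  # hypothetical alternation pattern
N_CYCLES = 6                                # 6 x 4 = 24 blocks: 6 EGO, 6 ALLO, 12 HLB
N_NULL_TOTAL = 128


def make_schedule(seed=0):
    """Return a list of (task, [(trial_type, iti_ms), ...]) blocks."""
    rng = random.Random(seed)
    tasks = list(TASK_CYCLE) * N_CYCLES
    n_blocks = len(tasks)
    # 128 null trials over 24 blocks: 8 blocks get 6 nulls, the other 16 get 5.
    n_six = N_NULL_TOTAL - 5 * n_blocks
    null_counts = [6] * n_six + [5] * (n_blocks - n_six)
    rng.shuffle(null_counts)
    schedule = []
    for task, n_null in zip(tasks, null_counts):
        trials = ["C"] * 8 + ["IC"] * 8 + ["NULL"] * n_null  # assumed 8/8 split per block
        rng.shuffle(trials)  # trial types randomly intermixed within the block
        schedule.append((task, [(trial, rng.choice(ITIS_MS)) for trial in trials]))
    return schedule


schedule = make_schedule(seed=1)
```

Summing the trial labels over blocks reproduces the totals in the text: 48 + 48 trials for each spatial task, 96 + 96 for the HLB task, and 128 null trials, i.e., 512 trials in total.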

Data Acquisition and Preprocessing

A Siemens 3T Trio system with a standard head coil at the MRI Center of South China Normal University was used to acquire T2*‐weighted EPIs with blood oxygenation level‐dependent (BOLD) contrast. The matrix size was 64 × 64, and the voxel size was 3.4 × 3.4 × 3 mm³. Thirty‐six interleaved transverse slices of 3 mm thickness with a 0.75 mm gap, covering the whole brain, were acquired (repetition time = 2.2 s, echo time = 30 ms, field of view = 132 mm, flip angle = 90°). Functional data were collected in a single run of 23.5 min comprising 640 EPI volumes. The first five volumes were discarded to allow for T1 equilibration effects. After functional scanning, high‐resolution anatomical images were acquired using a standard T1‐weighted 3D MPRAGE sequence (voxel size = 1 × 1 × 1 mm³).
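As a quick consistency check, the stated run length follows from the repetition time and the number of volumes. The sketch below uses only numbers given in this paragraph; the count of analyzed volumes is simple arithmetic, not a value reported by the authors.

```python
TR_S = 2.2       # repetition time (s)
N_VOLUMES = 640  # EPI volumes in the single functional run
N_DUMMIES = 5    # initial volumes discarded for T1 equilibration

run_minutes = TR_S * N_VOLUMES / 60.0  # 1408 s
n_analysed = N_VOLUMES - N_DUMMIES
print(f"{run_minutes:.2f} min, {n_analysed} volumes analysed")  # 23.47 min, 635 volumes analysed
```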

Data were preprocessed with Statistical Parametric Mapping software SPM8 (Wellcome Department of Imaging Neuroscience, London, http://www.fil.ion.ucl.ac.uk). Images were realigned to the first volume to correct for interscan head movements. The mean EPI image of each participant was then computed and spatially normalized to the MNI single‐subject template using the “unified segmentation” function in SPM8. This algorithm is based on a probabilistic framework that enables image registration, tissue classification, and bias correction to be combined within the same generative model. The resulting parameters of a discrete cosine transform, which define the deformation field necessary to move individual data into the space of the MNI tissue probability maps, were combined with the deformation field transforming between the latter and the MNI single participant template. The ensuing deformation was subsequently applied to individual EPI volumes. All images were thus transformed into standard MNI space and resampled to a 2 × 2 × 2 mm³ voxel size. The data were then smoothed with a Gaussian kernel of 8 mm full‐width half‐maximum to accommodate interparticipant anatomical variability.
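The 8 mm FWHM smoothing kernel corresponds to a Gaussian standard deviation of roughly 3.4 mm, or about 1.7 voxels at the 2 mm resampled resolution. The sketch below only illustrates this conversion and a normalized 1‑D kernel (an isotropic 3‑D Gaussian is separable into three such passes); it is not SPM8's own smoothing code, and the truncation at ±3σ is an assumption.

```python
import math

FWHM_MM = 8.0   # smoothing kernel from the Methods
VOXEL_MM = 2.0  # voxel size after normalization and resampling


def fwhm_to_sigma(fwhm):
    """Convert a Gaussian full-width at half-maximum to its standard deviation."""
    return fwhm / (2.0 * math.sqrt(2.0 * math.log(2.0)))


def gaussian_kernel_1d(sigma_vox, half_width=None):
    """Normalized 1-D Gaussian weights, truncated at about +/- 3 sigma by default."""
    if half_width is None:
        half_width = int(math.ceil(3 * sigma_vox))
    weights = [math.exp(-0.5 * (i / sigma_vox) ** 2)
               for i in range(-half_width, half_width + 1)]
    total = sum(weights)
    return [w / total for w in weights]


sigma_mm = fwhm_to_sigma(FWHM_MM)  # about 3.40 mm
sigma_vox = sigma_mm / VOXEL_MM    # about 1.70 voxels
kernel = gaussian_kernel_1d(sigma_vox)
```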

Statistical Analysis of Behavioral Data

For each of the six experimental conditions, omissions, incorrect responses, and outlier trials with reaction times (RTs) longer than the mean RT plus three standard deviations (SDs) or shorter than the mean RT minus three SDs were excluded from further analysis. The proportion of excluded outlier trials in each condition was calculated as the ratio of the number of outlier trials to the total number of trials in that condition. Error rates were calculated as the proportion of omissions and incorrect trials in each condition. Mean RTs and error rates were each submitted to a 3 (type of task: ALLO, EGO, and HLB) × 2 (spatial congruency between the egocentric and allocentric positions: congruent vs. incongruent) repeated‐measures ANOVA.
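The exclusion rules can be summarized in a short function applied to one participant's trials in one condition. Two points are assumptions rather than statements from the text: the mean and SD used for the ±3 SD cutoff are computed on correct trials only, and the rules are applied separately per participant. The function name and trial format are hypothetical.

```python
from statistics import mean, stdev


def clean_condition(trials, n_sd=3.0):
    """Apply the exclusion rules to one participant's trials in one condition.

    trials: list of (rt_ms, status) with status in {'correct', 'incorrect', 'omission'}.
    Assumption: the mean and SD for the +/- n_sd cutoff are computed on correct trials.
    Returns (retained_rts, error_rate, outlier_rate), rates relative to all trials.
    """
    n_total = len(trials)
    correct_rts = [rt for rt, status in trials if status == "correct"]
    n_errors = n_total - len(correct_rts)  # omissions + incorrect responses
    m, sd = mean(correct_rts), stdev(correct_rts)
    low, high = m - n_sd * sd, m + n_sd * sd
    retained = [rt for rt in correct_rts if low <= rt <= high]
    n_outliers = len(correct_rts) - len(retained)
    return retained, n_errors / n_total, n_outliers / n_total


# 20 typical trials, one very slow response, and one omission.
example = [(500.0, "correct")] * 20 + [(5000.0, "correct"), (None, "omission")]
retained, error_rate, outlier_rate = clean_condition(example)
```

Here the 5000 ms response lies about 4.4 SDs above the mean and is removed, while the omission counts toward the error rate.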

Statistical Analysis of Imaging Data

Data were high‐pass filtered at 1/290 Hz and modeled using the general linear model (GLM) as implemented in SPM8. At the individual level, a multiple regression design matrix was constructed that included the six experimental conditions, that is, “ALLO_C”, “ALLO_IC”, “EGO_C”, “EGO_IC”, “HLB_C”, and “HLB_IC”. The six event types were time‐locked to target onset and modeled with a canonical synthetic hemodynamic response function (HRF) and its first‐order temporal derivative, with an event duration of 0 s. To account for variance caused by RTs varying within each condition, the RT on each trial was entered into the GLM as a parametric regressor for each of the six experimental conditions. This parametric regressor modeled the variance in the BOLD signal that varied linearly with RT, i.e., how strongly the BOLD response in a brain region scaled with RT. In addition, all 3 s visual introductions preceding the blocks and the six head movement parameters derived from the realignment procedure were included as confounds. Error trials and outlier trials were modeled as a separate regressor of no interest. Parameter estimates were then calculated for each voxel using weighted least‐squares analysis, providing maximum likelihood estimators that take the temporal autocorrelation of the data into account.
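A minimal sketch of how one condition's HRF regressor and RT parametric regressor could be built. It assumes a double-gamma HRF with SPM-like default parameters (gamma shapes 6 and 16, undershoot ratio 1/6), zero-duration events as in the text, mean-centered RTs (roughly what SPM does when it orthogonalizes a single modulator with respect to the unmodulated onsets), and regressors sampled at scan onsets. It omits the temporal derivative, SPM's microtime settings, and high-pass filtering; all function names are hypothetical.

```python
import math


def canonical_hrf(dt, length_s=32.0):
    """Double-gamma HRF sampled every dt seconds (approximation, not SPM's spm_hrf)."""
    h = []
    for i in range(int(length_s / dt)):
        t = i * dt
        peak = t ** 5 * math.exp(-t) / math.gamma(6)          # gamma pdf, shape 6
        undershoot = t ** 15 * math.exp(-t) / math.gamma(16)  # gamma pdf, shape 16
        h.append(peak - undershoot / 6.0)
    total = sum(h)
    return [v / total for v in h]


def convolve(signal, kernel):
    """Causal convolution truncated to the length of the signal."""
    out = [0.0] * len(signal)
    for i, x in enumerate(signal):
        if x == 0.0:
            continue
        for j, k in enumerate(kernel):
            if i + j >= len(out):
                break
            out[i + j] += x * k
    return out


def condition_regressors(onsets_s, rts_ms, n_scans, tr_s, dt=0.1):
    """Main (stick) regressor and mean-centered RT regressor for one condition."""
    step = int(round(tr_s / dt))
    n = n_scans * step
    mean_rt = sum(rts_ms) / len(rts_ms)
    sticks, modulated = [0.0] * n, [0.0] * n
    for onset, rt in zip(onsets_s, rts_ms):
        k = int(round(onset / dt))
        if k < n:
            sticks[k] += 1.0                # event duration 0 s
            modulated[k] += rt - mean_rt    # mean-centered parametric value
    hrf = canonical_hrf(dt)
    main = convolve(sticks, hrf)[::step]    # sample at scan onsets
    pmod = convolve(modulated, hrf)[::step]
    return main, pmod


main, pmod = condition_regressors([10.0, 30.0, 50.0], [550.0, 600.0, 650.0], n_scans=40, tr_s=2.2)
```

Because the RT values are mean-centered, the parametric regressor captures only trial-to-trial RT variation and leaves the average response to the HRF regressor; with identical RTs it is zero everywhere.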

For each participant, simple main effects for each of the six experimental conditions were computed by applying “1 0” baseline contrasts, that is, contrasts of each experimental condition versus the implicit baseline (null trials). Specifically, for each condition, “1” was assigned to the corresponding HRF regressor and “0” to all other regressors. The six first‐level contrast images were then entered into a 3 × 2 within‐subject ANOVA at the second (group) level using a random‐effects model (i.e., the flexible factorial design in SPM8, including an additional factor modeling subject means). In modeling the variance components, violations of sphericity were accommodated by modeling nonindependence across parameter estimates from the same subject and by allowing unequal variances between conditions and between participants, using the standard implementation in SPM8.

For the parametric modulation effect, the positive parametric modulation effect of RTs in each of the six experimental conditions was computed at the first (individual) level by assigning “1” to the corresponding parametric regressor and “0” to all other regressors. The six first‐level positive parametric modulation contrast images were then entered into a within‐participant ANOVA at the second (group) level, where the positive parametric modulation effect of RTs in each condition was again calculated by assigning “1” to the corresponding regressor and “0” to all others. For both the HRF‐based neural contrasts and the parametric modulation effects of RTs, activations were considered significant only if they passed a conservative threshold of P < 0.001, family‐wise error (FWE) corrected for multiple comparisons at the cluster level, with an underlying voxel‐level threshold of P < 0.001 uncorrected [Poline et al., 1997].
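The first-level “1 0” contrasts amount to a weight vector with a single 1 over the design-matrix columns. The column layout below is hypothetical and simplified (SPM's actual column names and order differ, and SPM also expands regressors of no interest and modulators by basis function); the implicit baseline has no column of its own, since unmodeled null-trial periods are absorbed by the constant.

```python
CONDITIONS = ("ALLO_C", "ALLO_IC", "EGO_C", "EGO_IC", "HLB_C", "HLB_IC")


def design_columns():
    """Hypothetical, simplified first-level column layout."""
    columns = []
    for cond in CONDITIONS:
        # HRF, temporal derivative, and RT parametric regressor per condition
        columns += [f"{cond}_hrf", f"{cond}_hrf_deriv", f"{cond}_rt"]
    columns += ["instructions", "errors_outliers"]   # regressors of no interest
    columns += [f"motion_{i}" for i in range(1, 7)]  # six realignment parameters
    columns.append("constant")
    return columns


def one_zero_contrast(columns, target):
    """'1 0' contrast: 1 on the target column, 0 on every other column."""
    if target not in columns:
        raise KeyError(f"no such column: {target}")
    return [1.0 if name == target else 0.0 for name in columns]


cols = design_columns()
hrf_contrast = one_zero_contrast(cols, "EGO_IC_hrf")  # EGO_IC vs. implicit baseline
rt_contrast = one_zero_contrast(cols, "EGO_IC_rt")    # positive RT modulation in EGO_IC
```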

Definition of Neural Contrasts

For the main effect of type of task (collapsed over the spatial congruency factor), each of the two spatial tasks was first compared with the nonspatial HLB task, and the two spatial tasks were then directly contrasted with each other. Specifically, “EGO > HLB” was calculated for the main effect of the egocentric task, “ALLO > HLB” for the main effect of the allocentric task, and “EGO > ALLO” for the differential activations between the two spatial tasks. In addition, a statistical conjunction of the “EGO > HLB” and “ALLO > HLB” contrasts was performed to localize activations common to the two spatial tasks.
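The task contrasts can be written as weight vectors over the six condition regressors of the group-level model, and a conjunction can be sketched as a minimum-statistic test: a voxel counts as commonly activated only if every contrast individually exceeds the threshold (a test against the conjunction null). The text does not state which SPM conjunction option was used, and the t threshold in the example is arbitrary; names are illustrative.

```python
CONDITIONS = ("ALLO_C", "ALLO_IC", "EGO_C", "EGO_IC", "HLB_C", "HLB_IC")


def weights(**w):
    """Expand a {condition: weight} mapping into a vector ordered as CONDITIONS."""
    return [float(w.get(c, 0.0)) for c in CONDITIONS]


# Task main effects, collapsed over congruency.
EGO_GT_HLB = weights(EGO_C=1, EGO_IC=1, HLB_C=-1, HLB_IC=-1)
ALLO_GT_HLB = weights(ALLO_C=1, ALLO_IC=1, HLB_C=-1, HLB_IC=-1)
EGO_GT_ALLO = weights(EGO_C=1, EGO_IC=1, ALLO_C=-1, ALLO_IC=-1)


def conjunction_mask(t_maps, t_threshold):
    """Minimum-statistic conjunction over voxels: min t across contrasts must exceed threshold."""
    return [min(ts) > t_threshold for ts in zip(*t_maps)]


# Three voxels, t values for "EGO > HLB" and "ALLO > HLB".
mask = conjunction_mask([[4.0, 2.0, 5.0], [3.5, 6.0, 1.0]], t_threshold=3.1)
print(mask)  # [True, False, False]
```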

For the main effect of spatial congruency (collapsed over the two spatial tasks), the neural contrast “(EGO_IC + ALLO_IC) > (EGO_C + ALLO_C)” was calculated. The spatial congruency effects in the egocentric and allocentric tasks were defined by the contrasts “EGO (IC > C)” and “ALLO (IC > C)”, respectively. Because the bottom‐up stimuli differed between the incongruent and congruent conditions, in the spatial tasks and in the nonspatial HLB task alike (Fig. 1A), a clean spatial congruency effect was further calculated by removing these stimulus differences. Specifically, since the “IC > C” contrast in the nonspatial HLB task reflects only physical stimulus differences and no spatial congruency effect, the clean spatial congruency effect commonly induced by egocentric and allocentric representations in the spatial tasks was defined by the contrast “[EGO (IC > C) + ALLO (IC > C)]/2 > HLB (IC > C)”, in which the potential effect of physical stimulus differences was excluded by subtracting the “IC > C” contrast of the nonspatial HLB task. Similarly, the spatial conflict effect specifically induced by the egocentric representations was defined by the contrast “ALLO (IC > C) > [EGO (IC > C) + HLB (IC > C)]/2”, which excluded both the potential differences in bottom‐up stimuli and the spatial conflict effect between the incongruent and congruent conditions of the egocentric task. Following the same logic, the spatial conflict effect specifically induced by the allocentric representations was defined by the contrast “EGO (IC > C) > [ALLO (IC > C) + HLB (IC > C)]/2”, which excluded both the potential differences in bottom‐up stimuli and the spatial conflict effect between the incongruent and congruent conditions of the allocentric task.
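Written out as weights over the six condition regressors, the congruency contrasts defined above look as follows. The sketch only translates the bracketed expressions into weights (each vector sums to zero); the helper names are hypothetical, and the condition order simply follows the regressor list in the GLM description.

```python
CONDITIONS = ("ALLO_C", "ALLO_IC", "EGO_C", "EGO_IC", "HLB_C", "HLB_IC")


def congruency(task, scale=1.0):
    """Weights for 'task (IC > C)' multiplied by scale, as a {condition: weight} dict."""
    w = dict.fromkeys(CONDITIONS, 0.0)
    w[f"{task}_IC"] = scale
    w[f"{task}_C"] = -scale
    return w


def combine(*parts):
    """Sum partial weight dicts into one vector ordered as CONDITIONS."""
    return [sum(p[c] for p in parts) for c in CONDITIONS]


# (EGO_IC + ALLO_IC) > (EGO_C + ALLO_C)
MAIN_CONGRUENCY = combine(congruency("EGO"), congruency("ALLO"))
# [EGO (IC > C) + ALLO (IC > C)]/2 > HLB (IC > C): common, stimulus-controlled effect
COMMON_SPATIAL = combine(congruency("EGO", 0.5), congruency("ALLO", 0.5), congruency("HLB", -1.0))
# ALLO (IC > C) > [EGO (IC > C) + HLB (IC > C)]/2: effect of irrelevant egocentric positions
EGO_INDUCED = combine(congruency("ALLO"), congruency("EGO", -0.5), congruency("HLB", -0.5))
# EGO (IC > C) > [ALLO (IC > C) + HLB (IC > C)]/2: effect of irrelevant allocentric positions
ALLO_INDUCED = combine(congruency("EGO"), congruency("ALLO", -0.5), congruency("HLB", -0.5))

print(COMMON_SPATIAL)  # [-0.5, 0.5, -0.5, 0.5, 1.0, -1.0]
```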

Regions of Interest (ROI) Analysis

Based on the activations from the second‐level analysis, six ROIs were defined: (1) the right middle frontal gyrus (MNI: x = 30, y = 12, z = 48) and (2) the right superior parietal cortex (−14, −72, 54), which were conjointly activated by the egocentric and allocentric tasks compared with the nonspatial HLB task; (3) the left precuneus (−2, −38, 72), which was commonly involved in the spatial conflict effect induced by the egocentric and allocentric representations; (4) the right superior parietal cortex (18, −58, 48), which was specifically activated by the spatial congruency effect induced by the egocentric representations; and (5) the right precentral gyrus (36, −22, 60) and (6) the left inferior occipital gyrus (−24, −98, −6), which were specifically activated by the spatial congruency effect induced by the allocentric representations. Mean parameter estimates in the six experimental conditions were then extracted from a sphere of 4 mm radius (twice the voxel size) around the individual peak voxel of each of the six ROIs, using MarsBar 0.43 (http://sourceforge.net/projects/marsbar).
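On a 2 mm grid, a 4 mm sphere spans two voxels in each direction. The sketch below lists the voxel offsets whose centers fall within the radius and averages a parameter-estimate image over them. It is an illustration only: MarsBar's own voxel-inclusion rule may differ, and the nested-list volume and function names are hypothetical.

```python
import math


def sphere_offsets(radius_mm=4.0, voxel_mm=2.0):
    """Integer voxel offsets whose centers lie within radius_mm of the peak voxel center."""
    r_vox = radius_mm / voxel_mm
    n = int(math.floor(r_vox))
    return [(i, j, k)
            for i in range(-n, n + 1)
            for j in range(-n, n + 1)
            for k in range(-n, n + 1)
            if i * i + j * j + k * k <= r_vox * r_vox]


def roi_mean(volume, peak_index, offsets):
    """Average a 3-D nested-list image over a sphere, skipping out-of-bounds voxels."""
    x0, y0, z0 = peak_index
    values = []
    for di, dj, dk in offsets:
        x, y, z = x0 + di, y0 + dj, z0 + dk
        if 0 <= x < len(volume) and 0 <= y < len(volume[0]) and 0 <= z < len(volume[0][0]):
            values.append(volume[x][y][z])
    return sum(values) / len(values)


offsets = sphere_offsets()  # 33 voxels for a 4 mm radius on a 2 mm grid
```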

Furthermore, to avoid the problem of double dipping [Kriegeskorte et al., 2009], no further ANOVA was performed on the parameter estimates extracted from ROIs defined by main‐effect contrasts; these plots are shown for illustration only (Figs. 2 and 3). For the interaction contrasts, however, the contrasts themselves do not reveal which specific difference between the key experimental conditions drives the interaction. We therefore performed planned paired t‐tests, rather than ANOVAs, on the key experimental conditions to clarify the specific pattern of each interaction (Figs. 4 and 5).
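A planned paired t‐test compares two conditions within participants by testing whether the mean of the per‐participant differences departs from zero. A minimal sketch of the statistic follows; obtaining a P value additionally requires the t distribution with n − 1 degrees of freedom (for example via `scipy.stats.ttest_rel`), which is omitted here to keep the example dependency‐free.

```python
import math
from statistics import mean, stdev


def paired_t(a, b):
    """Paired-samples t statistic and degrees of freedom for matched samples a and b."""
    if len(a) != len(b) or len(a) < 2:
        raise ValueError("need two equal-length samples with at least two pairs")
    diffs = [x - y for x, y in zip(a, b)]
    t = mean(diffs) / (stdev(diffs) / math.sqrt(len(diffs)))
    return t, len(diffs) - 1


# Differences of 1..5 give a mean of 3 and a standard error of about 0.707.
t_value, df = paired_t([2, 3, 4, 5, 6], [1, 1, 1, 1, 1])
print(round(t_value, 3), df)  # 4.243 4
```

With the 19 participants of this study, each such test has 18 degrees of freedom, matching the t (18) values reported in the Results.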

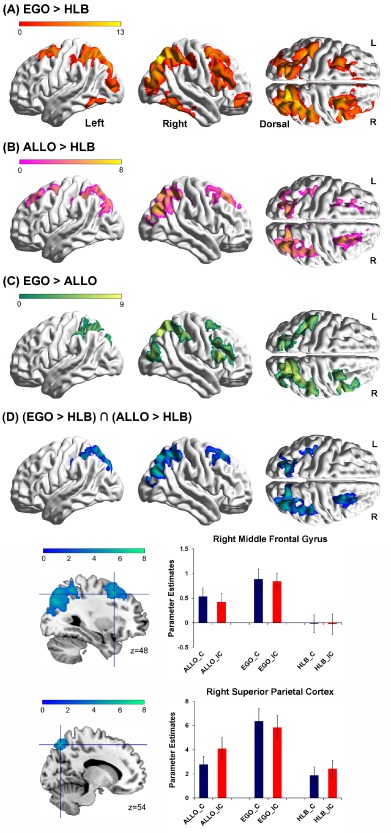

Figure 2.

Main effect of the type of task. A: Brain regions activated by egocentric judgment tasks (EGO) as compared with the nonspatial HLB task. B: Brain regions activated by allocentric judgment tasks (ALLO) as compared with the nonspatial HLB task. C: Brain regions activated by egocentric judgment tasks as compared with allocentric judgment tasks. D: Common brain regions activated by both egocentric and allocentric judgment tasks, as depicted by the conjunction analysis of the two contrasts “EGO > HLB” and “ALLO > HLB” (collapsed across the congruency factor). Mean parameter estimates in the right middle frontal gyrus (upper panel) and in the right superior parietal cortex (lower panel) are shown as a function of the six experimental conditions, respectively. The error bars represent standard errors. [Color figure can be viewed at http://wileyonlinelibrary.com]

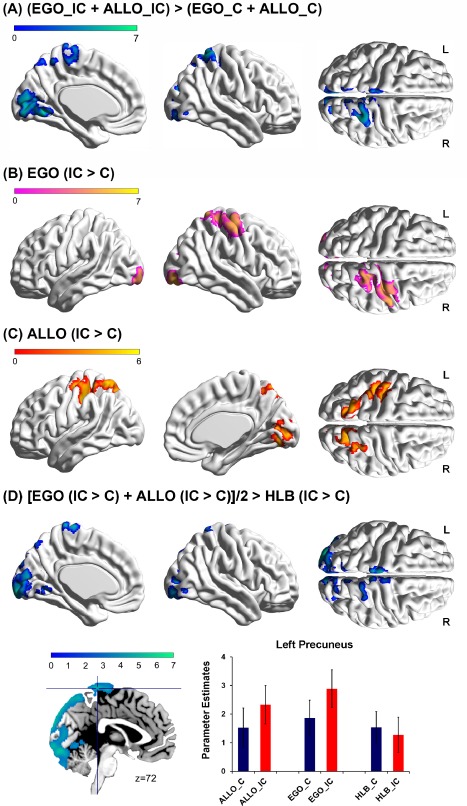

Figure 3.

A: Brain regions activated in the incongruent condition as compared to the congruent condition (collapsed over the allocentric and egocentric tasks). B: Brain regions activated in the incongruent condition compared with the congruent condition in the egocentric task. C: Brain regions activated in the incongruent condition compared to the congruent condition in the allocentric task. D: Common neural correlates underlying the spatial congruency effect caused by the egocentric and the allocentric representations, with differences in the physical stimuli being excluded. Mean parameter estimates in the left precuneus are shown as a function of the six experimental conditions. The error bars indicate standard errors. The pattern of neural activity in the other regions was similar to that in the left precuneus. [Color figure can be viewed at http://wileyonlinelibrary.com]

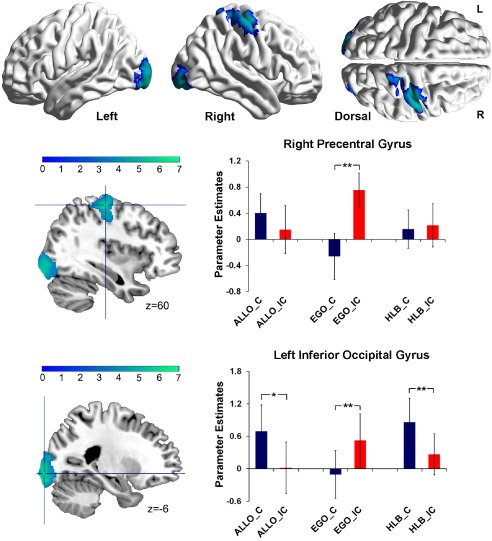

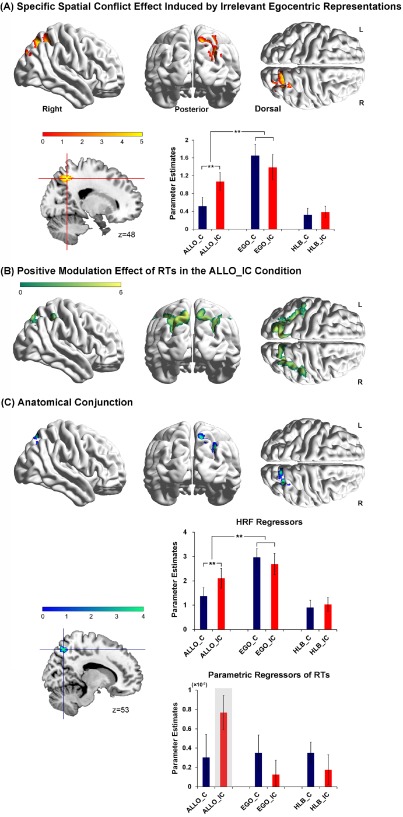

Figure 4.

Specific neural correlates underlying the spatial congruency effect caused by the irrelevant allocentric representations in the egocentric judgment task. The bilateral inferior occipital gyrus and the right precentral gyrus were significantly activated by the contrast “EGO (IC > C) > [ALLO (IC > C) + HLB (IC > C)]/2”. Mean parameter estimates in the right precentral gyrus and the left inferior occipital gyrus are shown as a function of the six experimental conditions, respectively (**P < 0.01; *P < 0.05). The error bars represent standard errors. The pattern of neural activity in the right inferior occipital gyrus was similar to that in the left inferior occipital gyrus. [Color figure can be viewed at http://wileyonlinelibrary.com]

Figure 5.

A: Specific neural correlates underlying the spatial congruency effect caused by the irrelevant egocentric representations in the allocentric judgment task. The right superior parietal cortex was significantly activated by the neural interaction contrast “ALLO (IC > C) > [EGO (IC > C) + HLB (IC > C)]/2”. Mean parameter estimates in the right superior parietal cortex are shown as a function of the six experimental conditions (**P < 0.01). The error bars indicate standard errors. B: Neural regions activated by the positive parametric modulation effect of RTs in the ALLO_IC condition. C: Anatomical conjunction between (A) and (B) showed overlapping activation in the right superior parietal cortex. Mean parameter estimates on the HRF regressors (upper panel) and on the parametric regressors of RTs (lower panel) were extracted from the conjointly activated superior parietal cortex and are shown as a function of the six experimental conditions, respectively (**P < 0.01). The error bars indicate standard errors. [Color figure can be viewed at http://wileyonlinelibrary.com]

RESULTS

Behavioral Results

The mean proportion of outlier trials was below 2% in all experimental conditions (mean ± SD: ALLO_C: 1.64 ± 1.49%; ALLO_IC: 1.54 ± 1.36%; EGO_C: 1.75 ± 1.43%; EGO_IC: 1.54 ± 1.36%; HLB_C: 1.32 ± 0.97%; HLB_IC: 1.64 ± 1%), and paired t‐tests (with Bonferroni correction) showed no significant differences among the six experimental conditions, all Ps > 0.05.

For RTs, the main effect of spatial congruency between the egocentric and allocentric positions was significant, F (1, 18) = 31.52, P < 0.001: responses were slower in the incongruent condition (599 ms) than in the congruent condition (578 ms), indicating a significant spatial congruency effect between the egocentric and allocentric reference frames. The main effect of type of task was not significant, F < 1. The two‐way interaction was significant, F (1, 18) = 21.34, P < 0.001. Planned t‐tests on simple effects showed that participants responded significantly more slowly in the incongruent than in the congruent condition in both the egocentric, t (18) = 5.58, P < 0.001, and the allocentric judgment tasks, t (18) = 5.65, P < 0.001, whereas there was no significant difference between the congruent and incongruent conditions in the nonspatial HLB task, P > 0.05 (Fig. 1B, left). We further calculated the size of the spatial congruency effect (incongruent minus congruent) separately for the egocentric and allocentric judgment tasks; it did not differ significantly between the two tasks, P > 0.05.

For error rates, the main effect of congruency between the egocentric and allocentric positions was significant, F (1, 18) = 12.98, P < 0.01, indicating more errors in the incongruent condition (5.14%) than in the congruent condition (2.65%). The main effect of task was not significant, F (1, 18) = 1.05, P > 0.05. The two‐way interaction was significant, F (1, 18) = 4.53, P < 0.05. Planned t‐tests on simple effects revealed that participants made more errors in the incongruent condition than in the congruent condition during both the egocentric, t (18) = 2.56, P < 0.05, and the allocentric judgment tasks, t (18) = 3.22, P < 0.01, but there was no significant difference between the congruent and incongruent conditions in the nonspatial HLB task, P > 0.1 (Fig. 1B, right). Furthermore, there was no significant difference between the size of the spatial congruency effect in the egocentric and the allocentric tasks, P > 0.05.

Imaging Results

Main effect of tasks

We first identified neural activations associated with the egocentric and allocentric judgments (collapsed over the congruent and incongruent conditions). Compared with the nonspatial HLB task, egocentric (“EGO > HLB”, Fig. 2A and Table 1) and allocentric judgments (“ALLO > HLB”, Fig. 2B and Table 1) conjointly activated the right middle frontal gyrus and the bilateral parietal cortex extending to the bilateral middle occipital gyrus (Fig. 2D and Table 1). Mean parameter estimates extracted from the right middle frontal gyrus and the right superior parietal cortex are shown in Figure 2D as a function of the six experimental conditions. In both regions, and in both the congruent and incongruent conditions, neural activity was higher in the allocentric and egocentric tasks than in the nonspatial HLB task, and higher in the egocentric than in the allocentric task. Directly compared with the allocentric task, the egocentric task specifically activated dorsal parieto‐frontal areas including the right middle and inferior frontal gyrus, the right superior parietal cortex, and the left inferior parietal cortex (Fig. 2C and Table 1). No significant activation was found in the reverse contrast, i.e., “ALLO > EGO”.

Table 1.

Brain regions showing significant increases of BOLD response associated with the egocentric and allocentric judgment tasks

| Anatomical region | Side | Cluster peak, MNI x, y, z (mm) | t score | kE (voxels) |
|---|---|---|---|---|
| A. EGO > HLB | | | | |
| Superior parietal cortex | R | 24, −72, 52 | 12.28 | 15,370 |
| *Middle occipital gyrus* | L | −26, −76, 32 | 10.22 | |
| Middle frontal gyrus | R | 30, 2, 58 | 9.14 | 6,662 |
| Inferior temporal gyrus | R | 58, −52, −14 | 8.35 | 1,640 |
| Inferior temporal gyrus | L | −60, −54, −16 | 6.13 | 623 |
| Middle orbital frontal gyrus | R | 44, 52, −4 | 4.89 | 549 |
| B. ALLO > HLB | | | | |
| Middle occipital gyrus | R | 34, −74, 34 | 7.72 | 5,216 |
| *Superior parietal cortex* | R | 20, −72, 54 | 6.27 | |
| Middle occipital gyrus | L | −26, −76, 32 | 6.31 | 2,070 |
| Middle frontal gyrus | R | 26, 30, 46 | 6.03 | 1,469 |
| Superior frontal gyrus | L | −20, 34, 46 | 4.44 | 523 |
| C. EGO > ALLO | | | | |
| Superior parietal cortex | R | 20, −64, 60 | 8.39 | 5,612 |
| Middle frontal gyrus | R | 32, 0, 60 | 6.31 | 623 |
| Inferior parietal cortex | L | −40, −40, 38 | 6.08 | 2,533 |
| Inferior frontal gyrus | R | 54, −52, 12 | 5.21 | 582 |
| D. (EGO > HLB) ∩ (ALLO > HLB) | | | | |
| Middle occipital gyrus | R | 34, −74, 34 | 7.72 | 4,251 |
| *Superior parietal cortex* | R | 20, −72, 54 | 5.81 | |
| Middle occipital gyrus | L | −26, −76, 32 | 6.31 | 1,833 |
| Middle frontal gyrus | R | 30, 12, 48 | 5.98 | 1,107 |
| *Superior parietal cortex* | L | −14, −72, 54 | 5.76 | |

Coordinates (x, y, z) are in MNI space. For each significant cluster, the coordinates of the maximally activated voxel are listed together with the cluster size (k_E); rows without a cluster size are relevant local maxima within a significant cluster.

Main effect of spatial congruency

We then identified the pattern of neural activity associated with the main effect of spatial congruency. Compared with the congruent condition (collapsed over the egocentric and allocentric tasks), the left precuneus, the right superior parietal cortex, and the bilateral calcarine sulcus were significantly more active in the incongruent condition (main‐effect contrast “(EGO_IC + ALLO_IC) > (EGO_C + ALLO_C)”; Fig. 3A and Table 2). In the egocentric task, the right precentral gyrus, the left calcarine sulcus, the right lingual gyrus, and the right inferior occipital gyrus showed significantly higher neural activity in the incongruent than in the congruent condition, i.e., “EGO (IC > C)” (Fig. 3B and Table 2). In the allocentric task, the left superior parietal cortex and the right precuneus, extending to the right calcarine sulcus, showed enhanced neural activity in the incongruent compared with the congruent condition, i.e., “ALLO (IC > C)” (Fig. 3C and Table 2).
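The three congruency contrasts can be written as weight vectors in the same way. This is a minimal sketch assuming the hypothetical regressor order EGO_C, EGO_IC, ALLO_C, ALLO_IC, HLB_C, HLB_IC; the helper name `congruency_contrast` is illustrative and not part of any package. Note that the main effect, as described above, sums over the egocentric and allocentric tasks only, so the HLB columns receive zero weight.

```python
import numpy as np

# Hypothetical column order of the six condition regressors (an assumption)
CONDITIONS = ["EGO_C", "EGO_IC", "ALLO_C", "ALLO_IC", "HLB_C", "HLB_IC"]


def congruency_contrast(tasks: list[str]) -> np.ndarray:
    """Weights for 'incongruent > congruent', summed over the listed tasks."""
    w = np.zeros(len(CONDITIONS))
    for i, name in enumerate(CONDITIONS):
        task, congruency = name.split("_")
        if task in tasks:
            w[i] = 1.0 if congruency == "IC" else -1.0
    return w


# (EGO_IC + ALLO_IC) > (EGO_C + ALLO_C)
main_effect = congruency_contrast(["EGO", "ALLO"])
# EGO (IC > C) and ALLO (IC > C)
ego_ic_gt_c = congruency_contrast(["EGO"])
allo_ic_gt_c = congruency_contrast(["ALLO"])
```

Each vector sums to zero, so the contrast compares conditions rather than testing overall activation against baseline.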

Table 2.

Brain regions showing significant increases of BOLD response associated with the spatial congruency effect

| Anatomical region | Side | Peak coordinates x, y, z (mm) | t score | k_E (voxels) |
|---|---|---|---|---|
| A. (EGO_IC + ALLO_IC) > (EGO_C + ALLO_C) | | | | |
| Calcarine sulcus | R | 8, −88, 0 | 7.24 | 4,361 |
| Calcarine sulcus | L | −6, −90, 12 | 5.57 | |
| Superior parietal cortex | R | 30, −52, 60 | 5.22 | 1,532 |
| Precuneus | L | −2, −54, 54 | 4.23 | |
| B. EGO (IC > C) | | | | |
| Precentral gyrus | R | 36, −22, 60 | 6.28 | 2,285 |
| Lingual gyrus | R | 22, −88, −10 | 6.12 | 3,895 |
| Calcarine sulcus | L | 8, −88, 0 | 6.10 | |
| Inferior occipital gyrus | R | 34, −88, −6 | 5.67 | |
| C. ALLO (IC > C) | | | | |
| Superior parietal cortex | L | −30, −56, 60 | 5.78 | 1,802 |
| Precuneus | R | 14, −62, 50 | 5.66 | 1,035 |
| Lingual gyrus | R | 14, −78, −4 | 4.97 | 550 |
| Calcarine sulcus | R | 10, −86, 2 | 4.53 | |

Coordinates (x, y, z) are in MNI space. For each significant cluster, the coordinates of the maximally activated voxel are listed together with the cluster size (k_E); rows without a cluster size are relevant local maxima within a significant cluster.

Common neural mechanisms underlying the spatial conflict effect induced by the egocentric and allocentric representations

Brain regions commonly involved in the spatial congruency effect induced by the egocentric and allocentric representations were localized with the contrast “[EGO (IC > C) + ALLO (IC > C)]/2 > HLB (IC > C)”. Subtracting the congruency effect of the nonspatial HLB task removes the differences in the physical stimuli between the incongruent and congruent conditions, so that only areas commonly involved in the spatial conflict effect induced by the egocentric and allocentric representations are localized. An extended cluster, comprising the bilateral calcarine sulcus and extending medially to the left precuneus, was significantly activated (Fig. 3D and Table 3). Parameter estimates extracted from the left precuneus in the six experimental conditions showed higher neural activity in the incongruent than in the congruent condition during both the allocentric and the egocentric judgments, but not during the nonspatial HLB task (Fig. 3D).
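The weighting behind this contrast can be written out explicitly. For illustration only, let $\beta_{T,k}$ denote the parameter estimate for task $T \in \{\text{EGO}, \text{ALLO}, \text{HLB}\}$ and congruency $k \in \{\text{C}, \text{IC}\}$, and let $\Delta_T = \beta_{T,\text{IC}} - \beta_{T,\text{C}}$ (notation introduced here, not taken from the source):

$$
\frac{\Delta_{\text{EGO}} + \Delta_{\text{ALLO}}}{2} - \Delta_{\text{HLB}}
= -\tfrac{1}{2}\beta_{\text{EGO,C}} + \tfrac{1}{2}\beta_{\text{EGO,IC}}
- \tfrac{1}{2}\beta_{\text{ALLO,C}} + \tfrac{1}{2}\beta_{\text{ALLO,IC}}
+ \beta_{\text{HLB,C}} - \beta_{\text{HLB,IC}}
$$

In an assumed condition order EGO_C, EGO_IC, ALLO_C, ALLO_IC, HLB_C, HLB_IC, the weights are (−0.5, 0.5, −0.5, 0.5, 1, −1), which sum to zero. Because the HLB task uses the same stimuli, $\Delta_{\text{HLB}}$ carries the stimulus‐driven part of the incongruent‐minus‐congruent difference. A positive contrast value alone does not guarantee that both $\Delta_{\text{EGO}}$ and $\Delta_{\text{ALLO}}$ are positive, which is why the condition‐wise estimates extracted from the left precuneus are informative.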

Table 3.

The common and specific spatial conflict effects induced by the irrelevant allocentric and egocentric representations in the egocentric and allocentric judgment tasks

| Anatomical region | Side | Peak coordinates x, y, z (mm) | t score | k_E (voxels) |
|---|---|---|---|---|
| A. Common: [EGO (IC > C) + ALLO (IC > C)]/2 > HLB (IC > C) | | | | |
| Middle occipital gyrus | L | −28, −92, 12 | 6.40 | 7,708 |
| | L | −18, −94, 12 | 6.13 | |
| Cuneus | R | 12, −88, 16 | 5.59 | |
| Calcarine sulcus | L | −2, −88, −12 | 5.44 | |
| | L | −10, −98, −2 | 5.37 | |
| Precuneus | L | −2, −38, 72 | 4.89 | |
| B. EGO‐specific: ALLO (IC > C) > [EGO (IC > C) + HLB (IC > C)]/2 | | | | |
| Superior parietal cortex | R | 18, −58, 48 | 5.08 | 664 |
| | R | 28, −84, 38 | 3.88 | |
| C. ALLO‐specific: EGO (IC > C) > [ALLO (IC > C) + HLB (IC > C)]/2 | | | | |
| Inferior occipital gyrus | L | −24, −98, −6 | 6.15 | 4,205 |
| Inferior occipital gyrus | R | 34, −88, −6 | 5.41 | |
| Precentral gyrus | R | 36, −22, 60 | 6.02 | 1,490 |

Coordinates (x, y, z) are in MNI space. For each significant cluster, the coordinates of the maximally activated voxel are listed together with the cluster size (k_E); rows without a cluster size are relevant local maxima within a significant cluster.

Specific neural mechanisms underlying the spatial conflict effect induced by the irrelevant allocentric representations in the egocentric task

The bilateral inferior occipital gyrus and the right precentral gyrus were significantly activated in the contrast “EGO (IC > C) > [ALLO (IC > C) + HLB (IC > C)]/2” (Fig. 4 and Table 3). For the right precentral gyrus, planned t‐tests on the extracted mean parameter estimates revealed significantly higher neural activity in the EGO_IC than in the EGO_C condition, t (18) = 4.45, P < 0.01, whereas there was no significant difference between the congruent and incongruent conditions in either the allocentric, t (18) = 1.47, P = 0.16, or the nonspatial HLB task, t (18) = 0.42, P = 0.68 (Fig. 4, upper panel). For the left inferior occipital gyrus, neural activity was significantly higher in the EGO_IC than in the EGO_C condition, t (18) = 2.96, P < 0.01, whereas the pattern was significantly reversed in the allocentric, t (18) = 2.83, P < 0.05, and the nonspatial HLB, t (18) = 3.63, P < 0.01, tasks, with higher neural activity in the congruent than in the incongruent condition (Fig. 4, lower panel). The right inferior occipital gyrus showed a similar pattern of neural activity to the left inferior occipital gyrus.
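The planned comparisons above are paired t‐tests on condition‐wise parameter estimates extracted for each participant. A minimal sketch of the t statistic follows; the function name `paired_t` and the toy values are illustrative. P‐values would additionally require the t distribution, for instance via `scipy.stats.ttest_rel`, which returns the same statistic together with a p‐value. In a paired design, df = n − 1, so the reported t (18) corresponds to 19 participants.

```python
import math
from statistics import mean, stdev


def paired_t(incongruent: list[float], congruent: list[float]) -> tuple[float, int]:
    """Paired-samples t statistic and degrees of freedom (IC minus C).

    Each list holds one parameter estimate per participant, in the same order.
    """
    if len(incongruent) != len(congruent) or len(incongruent) < 2:
        raise ValueError("need two equal-length samples with at least 2 participants")
    diffs = [ic - c for ic, c in zip(incongruent, congruent)]
    n = len(diffs)
    # Mean within-participant difference divided by its standard error
    t = mean(diffs) / (stdev(diffs) / math.sqrt(n))
    return t, n - 1


# Toy estimates for three hypothetical participants (not data from this study)
t_val, df = paired_t([1.0, 2.0, 3.0], [0.0, 1.0, 1.0])
```

Working on within‐participant differences is what makes the test paired: between‐participant offsets in overall signal level cancel out.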

Specific neural mechanisms underlying the spatial conflict effect induced by the irrelevant egocentric representations in the allocentric task

Neural correlates of the spatial conflict effect specific to the allocentric task were localized with the contrast “ALLO (IC > C) > [EGO (IC > C) + HLB (IC > C)]/2”. The right superior parietal cortex was significantly activated (Fig. 5A and Table 3). Mean parameter estimates extracted from the right superior parietal cortex are shown in Figure 5A as a function of the six experimental conditions. Planned t‐tests on the extracted parameter estimates revealed significantly higher neural activity in the ALLO_IC than in the ALLO_C condition, t (18) = 2.96, P < 0.01, but no significant difference between the congruent and incongruent conditions in either the egocentric, t (18) = 1.57, P = 0.13, or the nonspatial HLB task, t (18) = 0.59, P = 0.56 (Fig. 5A). Moreover, collapsed over the congruent and incongruent conditions, neural activity was significantly higher in the egocentric task than in the allocentric, t (18) = 3.24, P < 0.01, and the nonspatial HLB tasks, t (18) = 5.24, P < 0.01.
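Written with the shorthand $\Delta_T = \beta_{T,\text{IC}} - \beta_{T,\text{C}}$ for task $T$ (notation introduced here for illustration, not from the source), the two task‐specific contrasts of Table 3 are:

$$
c_{\text{EGO-specific}} = \Delta_{\text{ALLO}} - \frac{\Delta_{\text{EGO}} + \Delta_{\text{HLB}}}{2},
\qquad
c_{\text{ALLO-specific}} = \Delta_{\text{EGO}} - \frac{\Delta_{\text{ALLO}} + \Delta_{\text{HLB}}}{2}
$$

In an assumed condition order EGO_C, EGO_IC, ALLO_C, ALLO_IC, HLB_C, HLB_IC, the EGO‐specific contrast has weights (0.5, −0.5, −1, 1, 0.5, −0.5) and the ALLO‐specific contrast has weights (−1, 1, 0.5, −0.5, 0.5, −0.5). Either contrast can be positive because the congruency effect of the target task is large or because the other two effects are negative; in the left inferior occipital gyrus, for example, the ALLO‐specific effect was accompanied by reversed (congruent > incongruent) effects in the allocentric and HLB tasks. The planned t‐tests on each task's incongruent‐versus‐congruent difference separate these cases.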

Parametric modulation effects of RTs

A significant positive parametric modulation effect of RTs was found only in the ALLO_IC condition, not in the other experimental conditions. In this condition, the bilateral superior parietal cortex was significantly activated by the positive parametric modulation of RTs: the higher the neural activity in the bilateral superior parietal cortex, the slower the RTs (Fig. 5B). Importantly, the superior parietal regions showing this positive parametric modulation in the ALLO_IC condition (Fig. 5B) overlapped with the superior parietal region specifically involved in the spatial conflict effect evoked by the irrelevant egocentric representations in the allocentric task (Fig. 5A). An anatomical conjunction analysis between these two effects further confirmed that the right superior parietal cortex was conjointly activated by both (Fig. 5C, upper panel). Mean parameter estimates on the HRF regressors and on the parametric RT regressors were extracted from this conjointly activated superior parietal region and are shown as a function of the six experimental conditions (Fig. 5C, lower panel). The parameter estimates on the HRF regressors revealed a pattern similar to that in Figure 5A: neural activity in the right superior parietal cortex was not only higher in the incongruent than in the congruent condition of the allocentric judgment task, but also higher during the egocentric than during the allocentric judgment task (Fig. 5C, upper panel). Meanwhile, the parameter estimates on the parametric RT regressors showed that the positive correlation between neural activity in the right superior parietal cortex and RTs was significant only in the ALLO_IC condition (Fig. 5C, lower panel).

DISCUSSION

In this fMRI study, we manipulated the spatial congruency between the allocentric and egocentric positions (congruent vs. incongruent) and asked participants to perform egocentric, allocentric, and nonspatial HLB tasks on the same set of stimuli, in order to investigate the common and specific neural mechanisms underlying the spatial congruency effect induced by egocentric and allocentric representations. At the behavioral level, a significant spatial congruency effect was observed in both the egocentric and the allocentric tasks, i.e., responses were significantly slower in the incongruent than in the congruent condition (Fig. 1B). These behavioral results replicate previous evidence that spatial positions relative to the task‐irrelevant frame of reference are not only represented but also interfere with spatial judgments relative to the task‐relevant frame of reference [Zhang et al., 2013].

At the neural level, the main effect of task type replicated previous evidence that the parieto‐frontal network is more strongly involved in the egocentric than in the allocentric task (Fig. 2) [Chen et al., 2012; Committeri et al., 2004; Fink et al., 1997, 2003; Neggers et al., 2006; Vallar et al., 1999; Zaehle et al., 2007]. The current results extend these findings by showing that the involvement of the parieto‐frontal network in coding egocentric and allocentric representations is independent of the spatial congruency between the two frames (Fig. 2). In contrast, the main effect of spatial congruency revealed that the dorsal visual stream along the medial wall is involved in the spatial congruency effect between the two frames, irrespective of the type of spatial task (Fig. 3). Moreover, the right superior parietal cortex (Fig. 5A) and the right precentral gyrus (Fig. 4) were specifically involved in the spatial congruency effect induced by the irrelevant egocentric and allocentric representations, respectively. Notably, in the present study the spatial conflicts between the allocentric and egocentric reference frames implicated parieto‐occipital areas (Fig. 3) rather than the prefrontal cognitive control areas (anterior cingulate cortex and/or dorsolateral prefrontal cortex) typically associated with classical executive control tasks [e.g., Fan et al., 2003; Peterson et al., 2002]. It has been suggested that different neural regions are engaged by different kinds of conflict, depending on the task demands [Banich et al., 2000; Liu et al., 2004; Niendam et al., 2012]. For example, a variety of neuroimaging evidence has demonstrated that parieto‐occipital areas (including the superior parietal cortex, the precuneus, and visual processing areas) show enhanced activity in attention‐demanding tasks, especially when spatial information is task‐relevant [Banich et al., 2000; Fan et al., 2003; Liu et al., 2004; Peterson et al., 2002]. The spatial relationship between the allocentric and egocentric positions in this study may increase the attentional demands on spatial information and accordingly recruit parieto‐occipital rather than prefrontal regions.

In the following paragraphs, we focus on the novel findings of this study, that is, the common and specific neural correlates of the spatial congruency effect induced by task‐irrelevant allocentric representations (in the egocentric judgment task) and by task‐irrelevant egocentric representations (in the allocentric judgment task).

Common Neural Correlates Underlying the Spatial Conflict Effect Induced by the Allocentric and Egocentric Representations

The spatial conflict effect commonly induced by the task‐irrelevant allocentric and egocentric representations, irrespective of the type of spatial task, was associated with enhanced neural activity in the bilateral calcarine sulcus, extending medially to the left precuneus, in the incongruent compared with the congruent conditions. Moreover, this conflict effect was present only in the two spatial judgment tasks, not in the nonspatial HLB task (Fig. 3D), indicating that it was not driven by differences in the bottom‐up stimuli.

It has been suggested that the medial parietal cortex and the dorsal visual association cortex are generally involved in spatial conflict tasks, irrespective of the type of task‐irrelevant information [Fan et al., 2003; Peterson et al., 2002; Wittfoth et al., 2006]. For example, in the Simon effect, a typical spatial conflict effect, the precuneus and the dorsal visuospatial association cortex showed significantly enhanced neural activity when the task‐irrelevant target position was incongruent, rather than congruent, with the task‐relevant response coding [Fan et al., 2003; Liu et al., 2004; Peterson et al., 2002; Wittfoth et al., 2006]. Another classical spatial conflict effect is observed in the flanker task, which requires responding to a central stimulus surrounded by flankers [Eriksen and Eriksen, 1974]. In one version of the flanker task, a central target arrow points in one direction and two flanker arrows point in either the same or a different direction. Previous evidence suggested that the spatial conflict caused by the task‐irrelevant flanker arrows was also associated with enhanced neural activity in the precuneus and the visual association areas [Fan et al., 2003]. It has been proposed that when the irrelevant target position is incongruent with the required response in the classical Simon effect, the demands on spatial processing increase, which might enhance neural activity in brain regions associated with processing spatial information [Cieslik et al., 2010; Liu et al., 2004; Peterson et al., 2002].

Consistent with previous findings, in this study the dorsal‐medial visuoparietal stream showed enhanced activity whenever the two spatial reference frames were in conflict, irrespective of the type of irrelevant spatial information. It has been suggested that the dorsal visual stream consists of two substreams: the dorso‐dorsal (d‐d) stream and the ventro‐dorsal (v‐d) stream [Rizzolatti and Matelli, 2003]. Anatomically, the medial part of the dorsal visual stream, the d‐d stream, projects from early visual areas to the superior parietal cortex, whereas the v‐d stream is formed by area MT and the inferior parietal lobule. Functionally, the d‐d stream is involved in transforming information about the spatial location of target objects into the coordinate frames of the effectors used to perform actions, whereas the v‐d stream is responsible for the organization of grasping and manipulation actions [Goodale, 2014; Goodale and Milner, 1992; Murata et al., 2000; Rizzolatti and Matelli, 2003]. Together with previous evidence, these results suggest that when spatial information from the two frames of reference is in conflict, the d‐d stream might be recruited to expedite access to sensorimotor representations of spatial information from the task‐relevant frame of reference.

Specific Neural Correlates Underlying the Spatial Conflict Effect Induced by the Irrelevant Allocentric Representations

The bilateral inferior occipital gyrus and the right precentral gyrus were significantly involved in the spatial conflict effect induced by the irrelevant allocentric representations during the egocentric judgment task (Fig. 4). We hypothesized that the specific neural correlates of the spatial conflicts induced by task‐irrelevant allocentric representations would be found in regions specifically coding allocentric representations. Contrary to this hypothesis, however, neither the bilateral inferior occipital gyrus nor the right precentral gyrus showed significantly higher neural activity during the allocentric than during the egocentric judgment task, indicating that these areas were not specifically involved in coding allocentric representations.

The right precentral gyrus showed significantly higher neural activity in the incongruent than in the congruent condition of the egocentric task, and no significant difference between the congruent and incongruent conditions of the other two tasks (Fig. 4). These results indicate that the right precentral gyrus is involved in the spatial conflict effect per se, rather than in processing differences in the bottom‐up stimuli. Owing to its anatomical organization, the precentral gyrus is considered a critical area for the planning, selection, and execution of responses [Murray et al., 2000; Riehle et al., 1997; Schluter et al., 1998; Simon et al., 2002; Ugur et al., 2005]. Moreover, the precentral gyrus has previously been shown to be involved in various spatial compatibility tasks [Crammond and Kalaska, 1994; Dassonville et al., 2001; Koski et al., 2005]. For example, in stimulus‐response compatibility tasks, in which participants were instructed to press a response button on the same side as the stimulus in the compatible condition and on the opposite side in the incompatible condition, the precentral gyrus showed enhanced activity in the incompatible compared with the compatible condition [Dassonville et al., 2001; Koski et al., 2005]. TMS over the precentral gyrus could facilitate responses in the incompatible condition, indicating its functional role in conflict resolution [Koski et al., 2005]. In addition, evidence from both human and nonhuman primate studies indicates that the precentral gyrus is involved in multisensory integration, during which sensory inputs are transformed into sensorimotor representations to maintain the egocentric coordinate frame [Desmurget et al., 2014; Galati et al., 2001; Lemon, 2008; Pouget and Snyder, 2000]. For example, in the rod‐and‐frame illusion, a square frame tilted from the vertical distorts the observer's sense of straight ahead, so that a vertical rod within the frame is perceived as tilted in the direction opposite to the frame [Zoccolotti et al., 1997]. In this illusion, visual information must be integrated with somatosensory information and internal egocentric representations to orient the rod correctly despite the influence of the tilted frame [Barra et al., 2010; Fiori et al., 2015]. Recent evidence indicated that the precentral gyrus is involved in this illusion by coding accurate egocentric representations despite the influence of background (allocentric) information [Baier et al., 2012]. In this study, especially in the incongruent condition of the egocentric task, participants were required to maintain unbiased egocentric representations of the fork despite the contextual (allocentric) influence of the background plate. Accordingly, the precentral gyrus might be involved in coding unbiased egocentric representations by resolving conflicts arising from the contextual information.

Specific Neural Correlates Underlying the Spatial Conflict Effect Induced by the Task‐Irrelevant Egocentric Representations

We hypothesized that the specific neural correlates of the spatial conflicts induced by task‐irrelevant egocentric representations would be found in regions specifically coding egocentric representations. Furthermore, the more active the task‐irrelevant egocentric representations, the higher the neural activity expected in these egocentric regions, the stronger the spatial conflict caused by the task‐irrelevant egocentric representations, and the slower the RTs, specifically in the ALLO_IC condition. Consistent with this hypothesis, the right superior parietal cortex showed not only higher neural activity in the incongruent than in the congruent condition of the allocentric judgment task (Fig. 3C), but also significantly higher neural activity during the egocentric than during the allocentric judgment task (Fig. 5A). Moreover, the same right superior parietal area was significantly involved in the positive parametric modulation effect of RTs specifically in the ALLO_IC condition (Fig. 5B,C). These parametric modulation results further confirmed our predictions: the higher the neural activity in the superior parietal cortex coding the irrelevant egocentric representations, the more active these representations, the stronger the spatial conflict, and the slower the RTs in the ALLO_IC condition (Fig. 5C).

Evidence from previous neuropsychological studies suggests that the right superior parietal cortex plays an important role in forming egocentric representations. For example, patients with damage to the right hemisphere show a dysfunction of the egocentric reference frame, displacing the midsagittal plane toward the side of the lesion. Such egocentric disorders are associated with damage to the right parietal cortex, especially the right superior parietal cortex [Levine et al., 1978; Perenin and Vighetto, 1988; Vallar, 1998]. Moreover, a large body of brain imaging literature has provided evidence for the involvement of the superior parietal cortex in egocentric coding [Chen et al., 2012; Fink et al., 1997, 2003; Galati et al., 2000; Neggers et al., 2006]. In this study, the higher neural activity in the right superior parietal cortex in the egocentric than in the allocentric task is consistent with a critical role of this region in coding egocentric representations (Fig. 5A). Furthermore, the right superior parietal cortex was specifically activated by the spatial conflicts caused by the irrelevant egocentric representations, indicating that task‐irrelevant egocentric spatial information was processed during the allocentric judgment task (Fig. 5A–C). Upon the onset of a behavioral target in the visual scene, both its allocentric and egocentric positions are represented and interact with each other [Zhang et al., 2013]. In the present allocentric task, the covariation of neural activity in the superior parietal cortex with RTs specifically in the ALLO_IC condition, but not in the ALLO_C condition, might indicate that the automatically coded task‐irrelevant egocentric representations became more active in the incongruent than in the congruent condition (Fig. 5).

CONCLUSION

To summarize, by manipulating the spatial congruency between the allocentric and egocentric reference frames, we found both shared and specific neural correlates of the spatial congruency effect induced by allocentric and egocentric representations in the human parieto‐frontal network. The dorso‐dorsal visual stream was generally involved in spatial conflicts, irrespective of the type of spatially distracting information. The right superior parietal cortex and the right precentral gyrus, however, were specifically involved in the spatial conflicts induced by the egocentric and the allocentric positions, respectively. Taken together, our results reveal, for the first time, the different functional roles played by different subregions of the human parieto‐frontal network in processing the spatial congruency effects between allocentric and egocentric representations.

REFERENCES

- Andersen RA, Buneo CA (2002): Intentional maps in posterior parietal cortex. Annu Rev Neurosci 25:189–220.

- Andersen RA, Snyder LH, Bradley DC, Xing J (1997): Multimodal representation of space in the posterior parietal cortex and its use in planning movements. Annu Rev Neurosci 20:303–330.

- Baier B, Suchan J, Karnath HO, Dieterich M (2012): Neural correlates of disturbed perception of verticality. Neurology 78:728–735.

- Banich MT, Milham MP, Atchley R, Cohen NJ, Webb A, Wszalek T, Kramer AF, Liang ZP, Wright A, Shenker J, Magin R (2000): fMRI studies of Stroop tasks reveal unique roles of anterior and posterior brain systems in attentional selection. J Cogn Neurosci 12:988–1000.

- Barra J, Marquer A, Joassin R, Reymond C, Metge L, Chauvineau V, Perennou D (2010): Humans use internal models to construct and update a sense of verticality. Brain 133:3552–3563.

- Bisiach E, Capitani E, Porta E (1985): Two basic properties of space representation in the brain: Evidence from unilateral neglect. J Neurol Neurosurg Psychiatry 48:141–144.

- Chen Q, Weidner R, Weiss PH, Marshall JC, Fink GR (2012): Neural interaction between spatial domain and spatial reference frame in parietal‐occipital junction. J Cogn Neurosci 24:2223–2236.

- Cieslik EC, Zilles K, Kurth F, Eickhoff SB (2010): Dissociating bottom‐up and top‐down processes in a manual stimulus‐response compatibility task. J Neurophysiol 104:1472–1483.

- Committeri G, Galati G, Paradis AL, Pizzamiglio L, Berthoz A, Le Bihan D (2004): Reference frames for spatial cognition: different brain areas are involved in viewer‐, object‐, and landmark‐centered judgments about object location. J Cogn Neurosci 16:1517–1535.

- Crammond DJ, Kalaska JF (1994): Modulation of preparatory neuronal activity in dorsal premotor cortex due to stimulus–response compatibility. J Neurophysiol 71:1281–1284.

- Dassonville P, Lewis SM, Zhu XH, Ugurbil K, Kim SG, Ashe J (2001): The effect of stimulus‐response compatibility on cortical motor activation. Neuroimage 13:1–14.

- Desmurget M, Richard N, Harquel S, Baraduc P, Szathmari A, Mottolese C, Sirigu A (2014): Neural representations of ethologically relevant hand/mouth synergies in the human precentral gyrus. Proc Natl Acad Sci USA 111:5718–5722.

- Driver J, Halligan PW (1991): Can visual neglect operate in object‐centred co‐ordinates? An affirmative single‐case study. Cogn Neuropsychol 8:475–496.

- Eriksen BA, Eriksen CW (1974): Effects of noise letters upon the identification of a target letter in a nonsearch task. Percept Psychophys 16:143–149.

- Fan J, Flombaum JI, McCandliss BD, Thomas KM, Posner MI (2003): Cognitive and brain consequences of conflict. Neuroimage 18:42–57.

- Findlay JM (1997): Saccade target selection during visual search. Vision Res 37:617–631.

- Findlay JM, Brown V, Gilchrist ID (2001): Saccade target selection in visual search: The effect of information from the previous fixation. Vision Res 41:87–95.

- Fink GR, Dolan RJ, Halligan PW, Marshall JC, Frith CD (1997): Space‐based and object‐based visual attention: Shared and specific neural domains. Brain 120:2013–2028.

- Fink GR, Marshall JC, Weiss PH, Stephan T, Grefkes C, Shah NJ, Zilles K, Dieterich M (2003): Performing allocentric visuospatial judgments with induced distortion of the egocentric reference frame: An fMRI study with clinical implications. Neuroimage 20:1505–1517.

- Fiori F, Candidi M, Acciarino A, David N, Aglioti SM (2015): The right temporo‐parietal junction plays a causal role in maintaining the internal representation of verticality. J Neurophysiol 114:2983–2990.

- Galati G, Committeri G, Sanes JN, Pizzamiglio L (2001): Spatial coding of visual and somatic sensory information in body‐centred coordinates. Eur J Neurosci 14:737–746.

- Galati G, Lobel E, Vallar G, Berthoz A, Pizzamiglio L, Le Bihan D (2000): The neural basis of egocentric and allocentric coding of space in humans: A functional magnetic resonance study. Exp Brain Res 133:156–164.

- Goodale MA (2014): How (and why) the visual control of action differs from visual perception. Proc Biol Sci 281.

- Goodale MA, Milner AD (1992): Separate visual pathways for perception and action. Trends Neurosci 15:20–25.

- Koski L, Molnar‐Szakacs I, Iacoboni M (2005): Exploring the contributions of premotor and parietal cortex to spatial compatibility using image‐guided TMS. Neuroimage 24:296–305.

- Kriegeskorte N, Simmons WK, Bellgowan PS, Baker CI (2009): Circular analysis in systems neuroscience: The dangers of double dipping. Nat Neurosci 12:535–540.

- Lemon RN (2008): Descending pathways in motor control. Annu Rev Neurosci 31:195–218.

- Levine DN, Kaufman KJ, Mohr JP (1978): Inaccurate reaching associated with a superior parietal lobe tumor. Neurology 28:555–561.

- Liu X, Banich MT, Jacobson BL, Tanabe JL (2004): Common and distinct neural substrates of attentional control in an integrated Simon and spatial Stroop task as assessed by event‐related fMRI. Neuroimage 22:1097–1106.

- Marshall JC, Halligan PW (1993): Visuo‐spatial neglect: a new copying test to assess perceptual parsing. J Neurol 240:37–40.

- Milner AD, Goodale MA (2008): Two visual systems re‐viewed. Neuropsychologia 46:774–785.

- Murata A, Gallese V, Luppino G, Kaseda M, Sakata H (2000): Selectivity for the shape, size and orientation of objects in the hand‐manipulation‐related neurons in the anterior intraparietal (AIP) area of the macaque. J Neurophysiol 83:2580–2601.

- Murray EA, Bussey TJ, Wise SP (2000): Role of prefrontal cortex in a network for arbitrary visuomotor mapping. Exp Brain Res 133:114–129.

- Neggers SF, Van der Lubbe RH, Ramsey NF, Postma A (2006): Interactions between ego‐ and allocentric neuronal representations of space. Neuroimage 31:320–331.

- Niendam TA, Laird AR, Ray KL, Dean YM, Glahn DC, Carter CS (2012): Meta‐analytic evidence for a superordinate cognitive control network subserving diverse executive functions. Cogn Affect Behav Neurosci 12:241–268.

- Perenin MT, Vighetto A (1988): Optic ataxia: A specific disruption in visuomotor mechanisms. I. Different aspects of the deficit in reaching for objects. Brain 111:643–674.

- Peterson BS, Kane MJ, Alexander GM, Lacadie C, Skudlarski P, Leung HC, May J, Gore JC (2002): An event‐related functional MRI study comparing interference effects in the Simon and Stroop tasks. Brain Res Cogn Brain Res 13:427–440.

- Poline JB, Worsley KJ, Evans AC, Friston KJ (1997): Combining spatial extent and peak intensity to test for activations in functional imaging. Neuroimage 5:83–96.

- Pouget A, Snyder LH (2000): Computational approaches to sensorimotor transformations. Nat Neurosci 3:1192–1198.

- Riehle A, Kornblum S, Requin J (1997): Neuronal correlates of sensorimotor association in stimulus‐response compatibility. J Exp Psychol Hum Percept Perform 23:1708–1726.

- Rizzolatti G, Matelli M (2003): Two different streams form the dorsal visual system: anatomy and functions. Exp Brain Res 153:146–157.

- Rolls ET (1999): Spatial view cells and the representation of place in the primate hippocampus. Hippocampus 9:467–480.

- Rolls ET, Xiang JZ (2006): Spatial view cells in the primate hippocampus and memory recall. Rev Neurosci 17:175–200.

- Schluter ND, Rushworth MF, Passingham RE, Mills KR (1998): Temporary interference in human lateral premotor cortex suggests dominance for the selection of movements. A study using transcranial magnetic stimulation. Brain 121:785–799.

- Simon SR, Meunier M, Piettre L, Berardi AM, Segebarth CM, Boussaoud D (2002): Spatial attention and memory versus motor preparation: Premotor cortex involvement as revealed by fMRI. J Neurophysiol 88:2047–2057.

- Thaler L, Todd JT (2010): Evidence from visuomotor adaptation for two partially independent visuomotor systems. J Exp Psychol Hum Percept Perform 36:924.

- Ugur HC, Kahilogullari G, Coscarella E, Unlu A, Tekdemir I, Morcos JJ, Elhan A, Baskaya MK (2005): Arterial vascularization of primary motor cortex (precentral gyrus). Surg Neurol 64:48–52.

- Vallar G (1998): Spatial hemineglect in humans. Trends Cogn Sci 2:87–97.

- Vallar G, Lobel E, Galati G, Berthoz A, Pizzamiglio L, Le Bihan D (1999): A fronto‐parietal system for computing the egocentric spatial frame of reference in humans. Exp Brain Res 124:281–286.

- Walker R (1995): Spatial and object‐based neglect. Neurocase 1:371–383.

- Wittfoth M, Buck D, Fahle M, Herrmann M (2006): Comparison of two Simon tasks: neuronal correlates of conflict resolution based on coherent motion perception. Neuroimage 32:921–929.

- Zaehle T, Jordan K, Wustenberg T, Baudewig J, Dechent P, Mast FW (2007): The neural basis of the egocentric and allocentric spatial frame of reference. Brain Res 1137:92–103.

- Zhang M, Tan X, Shen L, Wang A, Geng S, Chen Q (2013): Interaction between allocentric and egocentric reference frames in deaf and hearing populations. Neuropsychologia 54:68–76.

- Zoccolotti P, Antonucci G, Daini R, Martelli ML, Spinelli D (1997): Frame‐of‐reference and hierarchical‐organisation effects in the rod‐and‐frame illusion. Perception 26:1485–1494.