Abstract

The processes underlying perceptual decision making are diverse and typically engage a distributed network of brain areas. Establishing a sensory‐to‐motor functional hierarchy in such networks is a particular challenge, because single‐cell recordings mostly study the nodes of decision networks in isolation and seldom simultaneously. Moreover, imaging methods, which give simultaneous access to information from entire networks, typically lack either the temporal or the spatial resolution necessary to establish a detailed functional hierarchy in terms of a sequential recruitment of areas during a decision process. Here we report a novel analytical approach to work around these latter limitations: using temporal differences in human fMRI activation profiles during a tactile discrimination task with immediate versus experimentally delayed behavioral responses, we could derive a linear functional gradient across task‐related brain areas in terms of their relative dependence on sensory input versus motor output. The gradient was established by comparing peak latencies of activation between the two response conditions. The resulting time differences described a continuum that ranged from zero time difference, indicative of areas that process information related to the sensory input and are thus invariant to the response delay instruction, to time differences corresponding to the delayed response onset, indicating motor‐related processing. Taken together with our previous findings (Li Hegner et al. [2015]: Hum Brain Mapp 36:3339–3350), our results suggest that the anterior insula reflects the ultimate perceptual stage within the uncovered sensory‐to‐motor gradient, likely translating sensory information into a categorical abstract (non‐motor) decision. Hum Brain Mapp 38:1172–1181, 2017. © 2016 Wiley Periodicals, Inc.

Keywords: anterior insula, fMRI, functional gradient, intraparietal sulcus, somatosensory, BOLD, human brain

INTRODUCTION

A simple perceptual decision involves complex neuronal dynamics within a distributed cortical network. So far there is only a limited understanding of how our brain represents the different processes underlying perceptual decisions, and of how the various related neuronal representations form a functional sensory‐to‐motor hierarchy when making a choice. Electrophysiological studies in animals have provided important clues about the flow of information within the perceptual decision network [Gold and Shadlen, 2007; Guo et al., 2014; Hernandez et al., 2010; Romo et al., 2006; Siegel et al., 2015]. In particular, a serial flow of information has become evident, from primary sensory cortex at the beginning of a perceptual decision process to motor cortex, which demarcates the ultimate behavioral reflection of a perceptual decision [Guo et al., 2014]. However, the interposed processing stages and their interactions during the decision process remain unclear.

Various studies highlight a fronto‐parietal network as the crucial interposed nodes for decision formation. Extracellular recordings performed sequentially in multiple areas in monkeys have shown that various fronto‐parietal areas engage to a different extent in the representation of a sensory stimulus. This information is gradually fed‐forward to more frontal regions to reach a decision, and finally, to motor cortex triggering the execution of a corresponding action [Hernandez et al., 2010]. Yet, to fully capture the functional sensory‐to‐motor hierarchy and to even discriminate bi‐directional information flow (bottom‐up vs. top‐down) one would require simultaneous recordings from multiple regions of the brain. Using such an approach, Siegel and colleagues simultaneously recorded single cell activities across six brain areas in two monkeys while they were performing a rule‐dependent visual discrimination task [Siegel et al., 2015]. Their study revealed a sensory information flow from visual to parietal to prefrontal cortices, as well as a task‐rule related flow of information from fronto‐parietal to visual cortices. Decision‐related information thereby arose simultaneously in frontoparietal regions and this information was then propagated forward to motor execution areas and backward to sensory areas. These above‐mentioned animal studies offered intriguing insights into the cortical information flow during perceptual decision making. It remains unclear, however, how these findings and in particular the suggested functional cortical hierarchy would translate to the human brain. We, therefore, adopted a non‐invasive imaging method, namely functional magnetic resonance imaging (fMRI), to investigate perceptual decision processes in the human brain. 
While fMRI is only a slow and indirect measure of neural activity, it offers the tremendous advantage that in contrast to single‐cell electrophysiological recordings, it can assess activity estimates from the whole brain and thus provide an unbiased view on the involvement of different decision‐related brain regions.

Similar to the animal work reported above, previous human fMRI studies have also revealed a fronto‐parietal decision network, emphasizing the role of intraparietal sulcus (IPS) [Kayser et al., 2010], inferior frontal cortex [Filimon et al., 2013] and anterior insula [aINS, Binder et al., 2004; Ho et al., 2009; Li Hegner et al., 2015; Liu and Pleskac, 2011] in perceptual decision making. Converging evidence from these previous studies [Binder et al., 2004; Ho et al., 2009; Li Hegner et al., 2015; Liu and Pleskac, 2011] further highlights the aINS as a domain‐general perceptual decision area that reflects categorical perceptual decisions, irrespective of the modality of either motor response or sensory input. Yet, it still remains a challenge to detail the “position” of these (and other) areas in a sensory‐to‐motor functional hierarchy within the set of areas contributing to perceptual decisions. This is exemplified by a human fMRI study of our group, in which we uncovered a distributed set of cortical areas contributing to perceptual decision making during tactile spatial pattern discrimination [Li Hegner et al., 2015]. In brief, in this study we identified multiple task‐related areas in which fMRI activity was positively correlated with decision difficulty and subjects' reaction times. To further isolate those areas in which activity could not be explained by motor processing (i.e., reaction times), we additionally included an experimental condition in which the perceptual decision could not be signaled immediately but only after a 3 s experimental delay, because the response options were provided only after this delay. Importantly, the delay also enabled subjects to complete their perceptual decision even before an appropriate response could be prepared. As a consequence, subjects were able to signal their decision without any difference in reaction time, irrespective of decision difficulty. 
The areas that still exhibited a modulation of fMRI activity as a function of decision difficulty despite the absence of changes in motor behavior were SI in the postcentral sulcus contralateral to the stimulated hand, contralateral IPS, and bilateral aINS. The presence of decision‐related activity in the latter two areas was further confirmed by our ability to successfully decode subjects' perceptual decision within trials of identical difficulty using multivariate pattern analysis. On the one hand, our approach allowed us to highlight the aINS and the IPS as decision areas, while their activity could not be explained by differences in sensory input or motor output [Li Hegner et al., 2015]. On the other hand, our previous analysis could not detail whether processing in these and other decision‐related brain areas relates to the sensory input they require or to the motor response, which they ultimately cause.

To further detail the actual stage of our “decision areas” in the transition from a somatosensory input to a decision‐related motor output (and with respect to the overall set of decision‐related brain regions), we here devised a novel analytical approach. This approach was applied to our previously recorded dataset and enabled us to uncover a stable functional somatosensory‐to‐motor gradient. The aINS and the IPS reflected an interposed stage within this functional continuum, while the aINS was significantly further downstream than the IPS, potentially representing the ultimate categorical decision, before being translated into a corresponding motor response.

MATERIALS AND METHODS

Experimental Task

Fifteen right‐handed healthy volunteers (seven females, mean age 25 ± 2.8 years) took part in this study. They gave their written informed consent before the experiment. All subjects had normal or corrected‐to‐normal vision. The local ethics committee approved the protocol of this human study, which was conducted in accordance with the Declaration of Helsinki. A detailed description of the experimental paradigm used in this study can be found in our earlier article [Li Hegner et al., 2015].

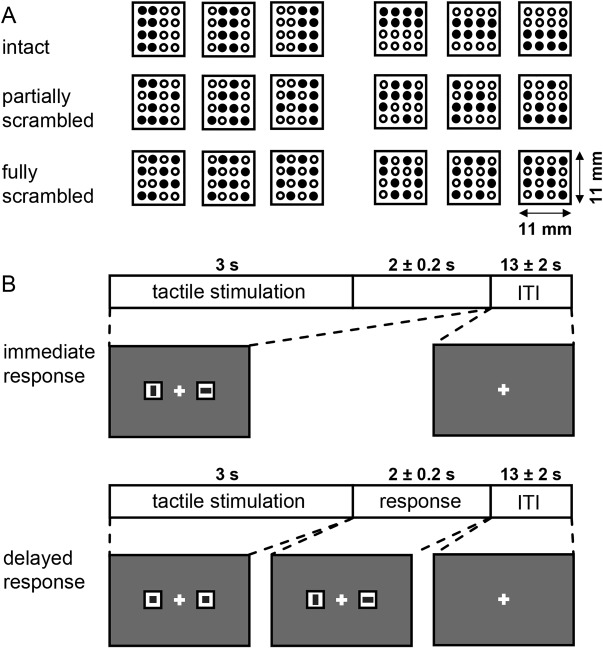

In brief, subjects were instructed to perform a vibrotactile pattern discrimination task (Fig. 1, previously published in Li Hegner et al., 2015): they experienced a vibrotactile bar‐stimulus for 3 s on the tip of the left middle finger and had to indicate its perceived orientation relative to the finger axis (horizontal bar or vertical bar) by performing a saccadic eye movement to a response cue that corresponded to their perceptual decision. The response cues were two visual icons (with either a vertical or a horizontal bar) placed horizontally beside the central fixation cross with 4.2° eccentricity. Tactile patterns were generated by driving designated little plastic rods (Ø 1.1 mm) simultaneously in (beneath the panel surface) and out (1.5 mm above the surface) at 24 Hz. Except for the saccadic response, subjects were asked to maintain visual fixation at the central cross (Fig. 1B) throughout the experiment. Eye movements were continuously monitored using an MRI‐compatible infrared camera system (Resonance Technology) positioned over the right eye, in combination with an eye‐tracking system from Arrington Research (Monocular PC‐60 integrator system, PCI framegrabber and ViewPoint eye‐tracking software). For each subject, we collected 144 trials in total, equally divided into four scanning sessions.

Figure 1.

Tactile stimulus and trial structure. A. There were three quality levels in the tactile pattern discrimination task, namely intact, partially scrambled and fully scrambled patterns representing either a vertical (left columns) or horizontal object (right columns). In intact patterns (top row), objects were defined by eight vibrating pins (filled circles; empty circles represent inactive pins that remain retracted beneath the panel surface). In partially scrambled (middle row) and fully scrambled patterns (bottom row), 2 and 4 object pins were inactive, respectively, while the same number of non‐object pins were vibrating and served as distracters. The task was to discriminate whether the pattern reflected a horizontal or a vertical bar relative to the finger axis. B. There were two task conditions. In both conditions subjects initially received a tactile stimulation for 3 s in order to perform the discrimination task. In the immediate response condition, subjects were instructed to report the perceived orientation by making a quick saccade toward the corresponding icon (horizontal or vertical bar, randomly placed left or right) as soon as a decision was made. The response cues were present at the onset of the tactile stimulation and lasted 5 ± 0.2 s. In contrast, in the delayed response condition, subjects could only respond when the neutral icons (squares), presented during stimulation (3 s), turned into the response options (lasting 2 ± 0.2 s) at the offset of the tactile stimulus. Both types of trials were presented pseudo‐randomly interleaved and were counterbalanced within and across sessions. Figure was originally published in Li Hegner et al. [2015], Human Brain Mapping.

Importantly, half of the trials in our experiment were so‐called “immediate response” trials and the other half so‐called “delayed response” trials. In immediate response trials, the assignment of a perceptual decision to a specific saccadic response was instructed through the response cues immediately at the onset of the tactile stimulus, and subjects were supposed to respond with a saccade as soon as they reached a decision. In contrast, delayed response trials started with two neutral cues (two squares instead of a horizontal and a vertical bar), and the actual response cues appeared only after a delay of 3 s, at the offset of the tactile stimulus (compare Fig. 1B). The delayed response condition thereby served to temporally separate sensory processes related to perceptual decision making from decision‐specific motor preparation and execution. Hence, prior to the disclosure of the response cues, subjects could make a perceptual decision but could not prepare a specific saccade, because the locations of the vertical and horizontal cues were pseudorandomly exchanged between the right and left positions and were thus unknown.

Within each of these two response conditions we additionally varied decision difficulty by manipulating spatial pattern quality over three levels. There were 24 trials for each of the three different spatial pattern qualities (intact patterns [easy], partially scrambled patterns [medium difficulty], and fully scrambled patterns [difficult]), respectively (compare Fig. 1A). Decision difficulty was reflected by the time subjects needed to initiate a saccade in the immediate response condition: across subjects the average reaction times significantly varied between 845 ± 200 ms, 1151 ± 292 ms, and 1347 ± 492 ms (mean ± standard deviation) for the intact, partially scrambled and fully scrambled patterns in the immediate response condition. Instead, in the delayed response condition, reaction times were mostly identical and amounted to 437 ± 99 ms, 443 ± 127 ms, and 445 ± 125 ms, respectively (for details please refer to [Li Hegner et al., 2015]). This suggests that—across the varying difficulty levels—the decision process was completed before subjects planned a corresponding saccade in the delayed response condition.

The order of presentation of trials of different response conditions (immediate and delayed), of varying decision difficulty (easy, medium, or difficult) and of pattern type (horizontal or vertical) was pseudorandomized across the experiment and counterbalanced within and across sessions. After each trial there was an inter‐trial interval of 13 ± 2 s (randomly jittered in 200 ms steps) during which subjects were to maintain central fixation. This inter‐trial interval served as a baseline period for fMRI analysis. Because of the random jitter of ±2 s in 200 ms steps, we could also effectively sample fMRI activity across trials at a higher temporal resolution than the TR of 2 s (compare next paragraph) [Amaro and Barker, 2006].
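The effect of the jitter on temporal sampling can be illustrated with a minimal Python sketch. It is not the original stimulus‐presentation code: the fixed trial duration of 5 s, the assumption that timing is locked to the volume acquisitions, and all names are hypothetical choices made for illustration. The sketch only shows that trial onsets fall at several different offsets within one TR, so that averaging across trials samples the response on a grid finer than 2 s.

```python
import random

TR_MS = 2000            # repetition time (TR = 2 s)
ITI_CENTER_MS = 13000   # mean inter-trial interval
ITI_JITTER_MS = 2000    # jitter range of +/- 2 s
STEP_MS = 200           # jitter step size
TRIAL_MS = 5000         # ASSUMPTION: fixed trial duration (3 s stimulus + ~2 s response window)


def simulate_onset_phases(n_trials, seed=0):
    """Return each trial onset's offset (ms) relative to the preceding volume acquisition."""
    rng = random.Random(seed)
    # All allowed inter-trial intervals: 11.0 s, 11.2 s, ..., 15.0 s
    iti_choices = list(range(ITI_CENTER_MS - ITI_JITTER_MS,
                             ITI_CENTER_MS + ITI_JITTER_MS + STEP_MS,
                             STEP_MS))
    onset = 0
    phases = []
    for _ in range(n_trials):
        phases.append(onset % TR_MS)
        onset += TRIAL_MS + rng.choice(iti_choices)
    return phases


phases = simulate_onset_phases(36)  # 36 trials per session (144 trials / 4 sessions)
distinct = sorted(set(phases))
print(f"{len(distinct)} distinct onset phases within one TR: {distinct}")
```

Because every interval is a multiple of 200 ms, at most ten distinct phases can occur within a 2 s TR under these assumptions, which corresponds to an effective sampling grid of 200 ms.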

Imaging Procedures

The experiments were performed in a 3 Tesla MR scanner (Siemens Trio, Max Planck Institute of Biological Cybernetics, Tuebingen, Germany) with a 12‐channel head coil. The functional scan was acquired with an echo‐planar imaging sequence (TR = 2 s, TE = 35 ms, flip angle 90°, FoV read 192 mm, FoV phase 100.0%, fat saturation included), consisting of 30 axial slices (thickness: 3.2 mm) covering the whole brain in a descending order (interleaved multislice mode). The planar resolution was 3 × 3 mm² and the inter‐slice gap was 0.8 mm. For every subject we also acquired a high‐resolution (1 mm, isotropic) T1‐weighted structural image with an MPRAGE sequence directly after the functional imaging sessions.

Image Preprocessing and GLM Analysis

Our image preprocessing and GLM analysis are described in detail in our previous article [Li Hegner et al., 2015]. In brief, preprocessing of the fMRI data included slice scan time correction, 3D rigid‐body motion correction, and temporal high‐pass filtering (0.0045 Hz, including linear trend removal). No spatial smoothing was performed. In a first step we analyzed our fMRI data using a multisubject random‐effects general linear model (GLM) with two main predictors (immediate and delayed response conditions). These two main predictors were modeled with a boxcar function (starting at the onset of the tactile stimulation and ending at the offset of the response cues), which was then convolved with a two‐gamma hemodynamic response function (peak at 5 s). For each main predictor, we included a parametric modulation predictor varying with pattern quality. The weight of this parametric modulation predictor was defined separately for each individual subject and for each experimental session, namely according to the subject's z‐scored average reaction times for each pattern quality level in the immediate response condition (serving as a proxy for decision difficulty in both the interleaved immediate and delayed response conditions).
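How such predictors can be constructed is sketched below in Python with NumPy. This is an illustration under stated assumptions, not the analysis software used in the study: only the 5 s peak of the two‐gamma function is given in the text, so the undershoot peak (15 s), the response‐to‐undershoot ratio (6) and the unit dispersion are assumed values, and the function names, trial onsets and durations are hypothetical. The reaction times are the group means reported above and serve purely as example weights.

```python
import math
import numpy as np


def two_gamma_hrf(t, peak=5.0, undershoot=15.0, ratio=6.0):
    """Two-gamma HRF with unit dispersion (undershoot and ratio are ASSUMED values)."""
    t = np.asarray(t, dtype=float)
    a1, a2 = peak + 1.0, undershoot + 1.0  # gamma shapes; each gamma peaks at (shape - 1) s
    g1 = t ** (a1 - 1) * np.exp(-t) / math.gamma(a1)
    g2 = t ** (a2 - 1) * np.exp(-t) / math.gamma(a2)
    return g1 - g2 / ratio


def build_predictors(onsets, durations, weights, n_scans, tr=2.0, dt=0.1):
    """Return (main, parametric) predictors, each sampled once per TR."""
    n_fine = int(round(n_scans * tr / dt))
    main = np.zeros(n_fine)
    param = np.zeros(n_fine)
    for onset, dur, w in zip(onsets, durations, weights):
        start = int(round(onset / dt))
        stop = int(round((onset + dur) / dt))
        main[start:stop] = 1.0  # boxcar from stimulus onset to response-cue offset
        param[start:stop] = w   # same boxcar, scaled by the z-scored reaction time
    hrf = two_gamma_hrf(np.arange(0.0, 30.0, dt))
    step = int(round(tr / dt))

    def convolve(x):
        # Convolve on the fine grid, then keep one sample per TR
        return np.convolve(x, hrf)[:n_fine][::step] * dt

    return convolve(main), convolve(param)


# Example weights: z-scored mean reaction times (ms) for the three pattern qualities
rts = np.array([845.0, 1151.0, 1347.0])
rt_z = (rts - rts.mean()) / rts.std()

# HYPOTHETICAL timing: three trials of 5 s each within a 35-volume run
main, param = build_predictors([10.0, 28.0, 46.0], [5.0, 5.0, 5.0], rt_z, n_scans=35)
print(main.shape, param.shape)
```

The parametric predictor shares the timing of the main predictor and differs only in its trial‐wise amplitude, which is why it can capture variance related to decision difficulty over and above the mean task response.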

Regions of Interest

The analyses that we focus on here were performed on various decision‐related regions‐of‐interest (ROIs) as defined in our earlier study [Li Hegner et al., 2015]. We considered those areas as decision‐related ROIs that exhibited task‐related increases in blood‐oxygenation‐level‐dependent (BOLD) activity and, in addition, exhibited a significant parametric modulation by decision difficulty. In brief, the more difficult a decision, the longer the process of decision making, as was signified by subjects' reaction times in the immediate response condition (see above). Areas that are related to the decision process will therefore exhibit a characteristic pattern of BOLD‐signal amplitudes, which will be higher the longer the decision process lasts (see Supporting Information Fig. S1 for illustration). The following areas showed such parametrically modulated activation in the immediate response condition (P corrected = 0.05): the right calcarine sulcus (visual cortex, VC), left (ipsilateral to the stimulated hand) dorsal premotor cortex (dPM), left medial intraparietal sulcus (mIPS); bilateral postcentral sulcus (PCS), anterior intraparietal sulcus (aIPS), lateral intraparietal sulcus (ℓIPS), superior parietal lobule (SPL), ventral premotor cortex (vPM), anterior insula (aINS) spilling over to the frontal operculum (FO), pre‐supplementary motor area (pre‐SMA), supplementary motor area (SMA), and anterior cingulate cortex (ACC). Only a subset of these regions also exhibited parametrically modulated activation in the delayed response condition, namely, the right PCS, right IPS and bilateral aINS/FO. Importantly, the modulation of the BOLD response in these areas during the delayed response condition could not be explained by differences in motor behavior (see above). To clearly identify these “decision areas” among our ROIs, we labeled them with an extra apostrophe ('), which also distinguishes them from the corresponding areas mapped in the immediate condition. 
Although the ROIs of the delayed response condition greatly overlapped with the ROIs identified in the immediate condition, we analyzed them separately because as was mentioned before, decision‐related activity in these ROIs could not be explained by the motor response [compare Li Hegner et al., 2015]. A separate analysis was also done because overlapping ROIs might entail varying subdivisions of the same cortical area and thus have different functions, as was for instance illustrated for the case of the anterior insula/frontal operculum during perceptual decision making [Rebola et al., 2012].

ROI‐Based Temporal‐Difference Analysis

Building on our previously collected dataset we here set out to examine potential temporal differences of BOLD activation profiles between immediate and delayed response conditions across our ROIs. In each ROI and in each subject we extracted average BOLD‐signal time courses for each pattern quality category (intact, partially‐scrambled and fully‐scrambled) and for each response condition (immediate and delayed) calculated across corresponding subsets of trials with an interpolated temporal resolution of 1 s (percent BOLD signal change was calculated by subtracting the mean signal of −2 to 0 s relative to tactile stimulus onset). Our ROI‐based analysis explicitly focused on putative differences in the peak latencies of the average BOLD response between these delayed and immediate response conditions, while peaks were identified by the maximum BOLD amplitude within 2–10 s after tactile stimulus onset. For every subject and for each difficulty level we separately calculated the temporal difference of the mean peak BOLD responses between the immediate and the delayed response condition.
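The peak‐latency measure described above can be sketched in a few lines of Python with NumPy. This is a minimal illustration, not the original analysis code: the function names are hypothetical, linear interpolation is an assumption (the interpolation method is not specified in the text), and the two time courses are synthetic toy curves rather than measured data.

```python
import numpy as np


def peak_latency(times, signal, grid_step=1.0, window=(2.0, 10.0), baseline=(-2.0, 0.0)):
    """Return the latency (s) of the maximum baseline-corrected signal inside `window`."""
    times = np.asarray(times, dtype=float)
    signal = np.asarray(signal, dtype=float)
    # Subtract the mean signal in the -2 to 0 s baseline period
    in_base = (times >= baseline[0]) & (times <= baseline[1])
    corrected = signal - signal[in_base].mean()
    # Resample to a 1 s grid (ASSUMPTION: linear interpolation)
    grid = np.arange(times[0], times[-1] + grid_step / 2, grid_step)
    resampled = np.interp(grid, times, corrected)
    # Peak = maximum amplitude within 2-10 s after tactile stimulus onset
    in_win = (grid >= window[0]) & (grid <= window[1])
    return float(grid[in_win][np.argmax(resampled[in_win])])


def latency_difference(times, immediate, delayed):
    """Peak latency in the delayed condition minus peak latency in the immediate condition."""
    return peak_latency(times, delayed) - peak_latency(times, immediate)


# Synthetic toy time courses, sampled once per TR (2 s) relative to stimulus onset
times = np.arange(-2.0, 17.0, 2.0)
immediate = np.exp(-((times - 6.0) ** 2) / 8.0)  # toy response peaking at 6 s
delayed = np.exp(-((times - 8.0) ** 2) / 8.0)    # toy response peaking at 8 s
print(latency_difference(times, immediate, delayed))  # 2.0 for these toy curves
```

In the study this difference was computed per subject and per difficulty level; the sketch takes a single pair of already averaged time courses.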

This temporal‐difference analysis was driven by the following rationale: if processing within a given ROI related exclusively to the tactile stimuli, which were identical in the immediate and the delayed response condition, we would not expect any temporal difference in peak activity across these response conditions (i.e., the difference should equal 0 s; see Supporting Information Fig. S1 for illustration). In turn, the peak latency difference in a “pure” motor area (or an area driven by the sensory consequences of a motor act, here VC) should amount to roughly 2.35 s (i.e., the 3 s delay minus the average difference in saccade reaction times between immediate and delayed response conditions, which amounted to roughly 0.65 s; compare Li Hegner et al., 2015). This is because, as compared with the immediate response condition, motor processes in the delayed response condition are postponed by the experimentally instructed delay. Accordingly, the smaller the latency difference in a ROI, the closer its function should be related to sensory processing, while the larger this difference, the closer its function should relate to motor preparation and execution. This interdependence is further illustrated in Supporting Information Figure S1. Hence, using these mean peak BOLD latency differences we derived a linear functional index that presumably captured the cortical somatosensory‐to‐motor transition as it unfolded during our tactile decision task. Regression analyses on our ROIs' peak BOLD latency differences, calculated between pairs of difficulty levels, were performed to test the robustness of using such a temporal‐difference parameter of BOLD activation profiles to establish a functional gradient. 
Two‐factorial (factor ROI: two levels; factor difficulty: three levels) repeated‐measures analyses of variance (ANOVAs) were further performed to assess whether the peak BOLD latency differences differed significantly between pairs of decision‐related ROIs, as defined by the delayed response condition (see “Regions of Interest”).
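The expected latency difference at the motor end of the gradient follows from simple arithmetic, which the Python sketch below makes explicit. It uses the group‐mean reaction times reported in the Experimental Task section; averaging these three condition means gives a reaction time difference of about 0.67 s, close to the roughly 0.65 s quoted above. The normalised index `motor_fraction` is a hypothetical convenience for illustration and is not a quantity defined in the study.

```python
DELAY_S = 3.0  # experimentally instructed response delay

# Group-mean saccade reaction times (ms), ordered intact, partially scrambled, fully scrambled
RT_IMMEDIATE_MS = [845, 1151, 1347]
RT_DELAYED_MS = [437, 443, 445]


def mean(values):
    return sum(values) / len(values)


def expected_motor_difference(delay_s, rt_immediate_ms, rt_delayed_ms):
    """Expected peak-latency difference (s) for an area time-locked to the saccade."""
    rt_gap_s = (mean(rt_immediate_ms) - mean(rt_delayed_ms)) / 1000.0
    return delay_s - rt_gap_s


def motor_fraction(latency_difference_s, motor_difference_s):
    """HYPOTHETICAL normalised index: 0 = stimulus-locked, 1 = response-locked."""
    return min(max(latency_difference_s / motor_difference_s, 0.0), 1.0)


motor_diff = expected_motor_difference(DELAY_S, RT_IMMEDIATE_MS, RT_DELAYED_MS)
print(round(motor_diff, 2))  # 2.33
```

An area whose latency difference is near 0 s would thus receive an index near 0, and an area whose difference approaches the expected motor value would receive an index near 1, under the simplifying assumption that the measure scales linearly between these two ends.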

RESULTS

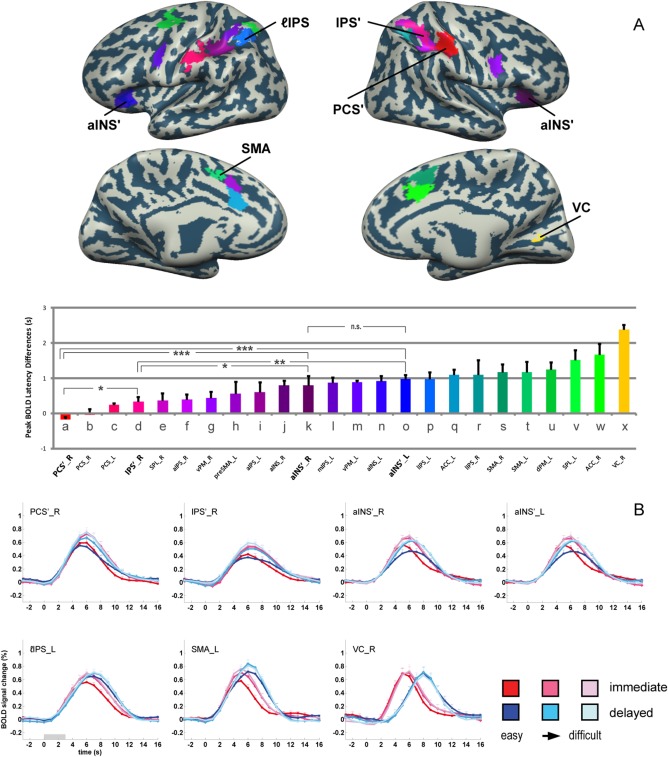

Our ROI‐based temporal‐difference analysis of peak latencies in BOLD signal time courses revealed a linear gradient of time differences across the various cortical areas considered (see Fig. 2). Time differences ranged from around 0 s, indicative of an area's exclusive engagement in processes related to the sensory stimulus rather than motor output, to about 2.4 s, indicative of motor‐related processing.

Figure 2.

Overview of mean differences in BOLD peak latencies across difficulty levels. A. Mean BOLD peak latency differences were color‐coded to reveal the gradient of latency differences across ROIs. Color‐coded ROIs are overlaid on the cortical surface reconstruction of a representative subject. Note that the larger the latency difference, the closer the processing within a ROI should relate to the motor response, while smaller values rather imply an engagement in sensory‐related processing. Pairwise (between two ROIs) repeated measures ANOVA (factor difficulty: 3 levels; factor ROI: 2 ROIs) were additionally performed on the peak BOLD latency differences between the candidate perceptual decision areas. Significant influences of factor ROI are indicated (***: P < 0.001; **: P < 0.01; *: P < 0.05). B. BOLD signal time courses are shown for representative ROIs. Candidate decision areas are marked with an apostrophe (') to allow differentiation from the corresponding areas mapped in the immediate condition. Error bars denote standard errors.

To visualize this decision‐related gradient from sensory processing to motor execution we sorted the ROIs according to their mean peak BOLD latency differences calculated across the three difficulty levels (see Fig. 2A). As expected, tactile areas in the postcentral sulcus (PCS) designate the sensory end of this somatosensory‐to‐motor gradient. Specifically, our results suggest that during our tactile pattern discrimination task, this gradient starts at the right PCS (contralateral to the stimulated finger), continuing to the left (ipsilateral) PCS, then to the right IPS', SPL_R, aIPS_R, and vPM_R, then to the left preSMA and aIPS_L, to the right aINS, left mIPS, vPM_L, aINS_L, and ℓIPS_L, then to the left ACC, ℓIPS_R, bilateral SMA, and dPM_L, and then to the left SPL and the right ACC. Finally, area VC is found at the motor end of our sensory‐to‐motor gradient, as it showed the largest latency differences, which were probably caused by the processing of re‐afferent visual input due to the saccadic response. Hence, VC should not be considered to play a driving role in the reported decision‐related functional gradient.

Averages of subjects' BOLD signal time‐courses underlying our temporal‐difference analysis are additionally depicted in Figure 2B for a subset of ROIs. While, for instance, in PCS'_R peak response times were rather identical across conditions and difficulty levels (signifying its chief role in sensory processing), the peak response in SMA_L occurred at later times in the delayed response conditions (signifying motor processing) (also compare respective model predictions, which are illustrated in Supporting Information Fig. S1). Importantly, the temporal differences between the signal peaks in the immediate vs. the delayed response condition were stable across the depicted ROIs, irrespective of the actual difficulty level. We will focus on this point in detail further below.

The ROIs depicted in the upper row of Figure 2B are regions that we previously identified as decision‐related, that is, their activity varied with decision difficulty in the delayed response condition and thus was independent of any contribution to decision‐related motor responses [Li Hegner et al., 2015]. These areas were PCS'_R, IPS'_R, aINS'_R, and aINS'_L (also see Fig. 2A). Focusing on these ROIs, we next wanted to assess the stage of each of these areas within the somatosensory‐to‐motor gradient revealed by our new analytical approach. For this purpose we performed six two‐factorial (factor ROI: pair of ROIs; factor difficulty: three levels) repeated‐measures ANOVAs, one for each pair of these four ROIs, on the temporal difference estimates obtained for these putative “decision areas.” While for the left and right aINS' there was no significant peak BOLD latency difference, all other pairwise comparisons were significant (PCS'_R < IPS'_R < aINS'_R/aINS'_L, P < 0.05), suggesting that the bilateral aINS' is at the top of our functional gradient within this subset of ROIs (see lower panel of Fig. 2A).

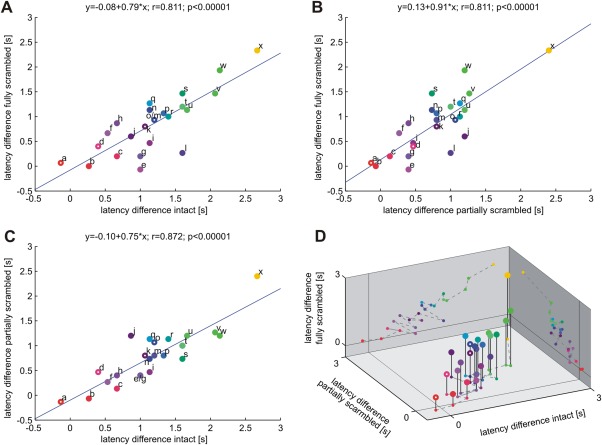

In order to further investigate the robustness of the functional gradient derived from the peak BOLD latency differences between the immediate and the delayed response conditions across ROIs, we tested whether this gradient is stable when estimated separately for each difficulty level. Toward this end we performed three pair‐wise regression analyses to examine how similar the ROIs' peak BOLD latency differences were across the three tactile pattern qualities (Fig. 3). Indeed, very high correlation was found between intact and fully scrambled patterns (r = 0.811, P < 0.00001; Fig. 3A), between partially and fully scrambled patterns (r = 0.811, P < 0.00001; Fig. 3B), as well as between intact and partially scrambled patterns (r = 0.872, P < 0.00001; Fig. 3C). We additionally visualized the full interrelation in a 3D graph, plotting our ROIs' latency differences of the different difficulty levels against each other (Fig. 3D). Thus, the hierarchical order of ROIs (according to their peak BOLD latency differences between the immediate and delayed response conditions) was very stable across different difficulty levels.
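The logic of this robustness check can be sketched in Python with NumPy. The latency differences below are synthetic placeholder values generated from a shared gradient plus noise; they are not the study's data, and the noise level, random seed and function name are assumptions made purely for illustration. The sketch computes Pearson's r across ROIs for every pair of difficulty levels.

```python
import itertools
import numpy as np

rng = np.random.default_rng(0)
n_rois = 24  # number of ROI labels (a to x) listed in the Figure 3 caption

# SYNTHETIC placeholder data, NOT the study's values: one latency difference (s)
# per ROI and difficulty level, built from a shared gradient plus random noise.
gradient = np.linspace(0.0, 2.4, n_rois)
levels = ["intact", "partially scrambled", "fully scrambled"]
latency_diff = {name: gradient + rng.normal(0.0, 0.3, n_rois) for name in levels}


def pairwise_correlations(data):
    """Pearson r between every pair of difficulty levels, computed across ROIs."""
    return {(a, b): float(np.corrcoef(data[a], data[b])[0, 1])
            for a, b in itertools.combinations(data, 2)}


for pair, r in pairwise_correlations(latency_diff).items():
    print(pair, round(r, 3))
```

If the ordering of ROIs were driven by difficulty‐specific effects rather than by a common gradient, the correlations between difficulty levels would be expected to be low; high values are consistent with a gradient that is shared across levels.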

Figure 3.

Regression analysis on peak BOLD latency differences between immediate and delayed response conditions across decision difficulty levels. High correlation coefficients suggest similar and stable sensory‐to‐motor gradients across ROIs during easy (intact patterns), medium (partially scrambled patterns) and difficult (fully scrambled patterns) tactile pattern decisions. Unfilled circles denote those decision candidate areas revealed in the delayed response condition. Area labels and color codes correspond to those defined in Figure 2 (a: PCS'_R, b: PCS_R, c: PCS_L, d: IPS'_R, e: SPL_R, f: aIPS_R, g: vPM_R, h: preSMA_L, i: aIPS_L, j: aINS_R, k: aINS'_R, l: mIPS_L, m: vPM_L, n: aINS_L, o: aINS'_L, p: ℓIPS_L, q: ACC_L, r: ℓIPS_R, s: SMA_R, t: SMA_L, u: dPM_L, v: SPL_L, w: ACC_R, x: VC_R).

Finally, we refer the interested reader to our supplemental results and Supporting Information Figure S2, in which we report the corresponding results for additional brain regions that were task‐related but did not modulate their BOLD activity with decision difficulty (neither in the immediate nor in the delayed response condition) and, for this reason, were not included as ROIs. We still report our temporal difference measure for these areas, namely bilateral SII at the parietal operculum, bilateral dorsolateral prefrontal cortices and contralateral medial temporal area, because of their general relevance in decision tasks.

DISCUSSION

In this study we performed a novel ROI‐based BOLD signal time course analysis on the dataset of our previously published work on the “cortical correlates of perceptual decision making during tactile spatial pattern discrimination” [Li Hegner et al., 2015]. In this fMRI study subjects performed perceptual decisions during interleaved trials with either immediate or delayed responses that varied in decision difficulty. While in our previous work we were solely interested in identifying “decision areas” in which BOLD activity was modulated by decision difficulty while motor preparation and execution were kept identical (in delayed response trials), we here focused on how these decision areas and other task‐related ROIs form a functional somatosensory‐to‐motor gradient. Toward this end, we compared the BOLD activation profiles between the two instructed response conditions in these areas. We inferred the functional gradient from an index that reflected the temporal difference in peak activation between trials with an experimentally instructed delay in subjects' motor responses and immediate response trials. We observed a gradient of latency differences across brain regions that ranged from a 0 s time difference, indicative of processes tightly coupled to the sensory input, to a time difference of up to 2.4 s, indicative of processes tightly coupled to the delayed motor response. This linear functional processing gradient derived from BOLD peak latency differences was highly consistent across the three decision difficulty levels, suggesting a similar cortical “flow” of information during a perceptual decision despite varying difficulty.

We would first like to stress that our approach of delineating a linear functional gradient from somatosensory‐ to motor‐related processing is only one of various ways of trying to “order” the processing performed in cerebral cortex. Unlike other techniques such as stepwise functional connectivity analyses [Sepulcre et al., 2012; for a recent review, see Sepulcre, 2014] or anatomical work [Felleman and Van Essen, 1991], our approach does not aim to infer an absolute hierarchy present in cortical networks. Rather, it reveals a gradient of functional processing across brain areas. This functional gradient will certainly depend on the hierarchical organization of the respective network. Still, this “hardware” typically comprises various parallel feed‐forward and feed‐back projections that are differentially engaged depending on the task at hand. Functional indices that characterize the functional status of an area relative to all other nodes in a network are therefore a small but helpful step in the attempt to unravel the principles of cortical information processing. More specifically, our novel method to infer a sensory‐to‐motor gradient could complement existing techniques that do uncover network hierarchies but that neglect differences in task‐related functional processing within a network.

Our approach used differences in the latency of the peak BOLD response between delayed and immediate response conditions to characterize an area's relative relation to either the sensory input or the decision‐related motor preparation and response (also compare Supporting Information Fig. S1). We assumed that the larger this latency difference (that is, the closer it is to the difference in onset of the actual responses between conditions), the higher the area's share of neurons contributing to motor output‐related rather than sensory input‐related processing. By sensory input‐related processing (0 s time difference) we refer not only to the processes that arise in primary sensory areas during the initial processing of afferent information but also to processes such as sensory evidence accumulation and decision making, which likewise operate time‐locked to the sensory input. The other end of our functional spectrum (a delay of 2.4 s, corrected for RT differences between immediate and delayed response tasks) reflects motor‐related processes such as action preparation and action execution proper. Processing of the sensory consequences of a motor response is of course also time‐locked to the motor behavior (here saccades). Such motor‐related processing of the sensory reafference is nicely illustrated by the 2.4 s time difference in visual cortex. Most areas probably host both sensory input‐related and motor output‐related processing [compare, e.g., Bennur and Gold, 2011; Rizzolatti et al., 2014]. As a consequence, the temporal difference in peak BOLD activation between delayed and immediate response trials will lie between these extremes (0 and 2.4 s), expressing the relative contribution of sensory‐ versus motor‐related processing.
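The logic of this index can be illustrated with a minimal numerical sketch. It is not the analysis code used in the study: the Gaussian response shape, its width and peak delay, the 200 ms time grid, the function names, and the `motor_share` mixing weight are all assumptions made for illustration, and the RT correction is ignored. An area's response is modeled as a weighted sum of a sensory‐locked and a motor‐locked component, and only the motor‐locked component shifts by 2.4 s in the delayed condition.

```python
import numpy as np

MAX_SHIFT = 2.4  # s, difference in response onset between conditions (from the text)


def toy_response(t, onset, peak_delay=5.0, width=2.5):
    """Gaussian bump standing in for a BOLD response (assumed shape, not a real HRF)."""
    return np.exp(-0.5 * ((t - onset - peak_delay) / width) ** 2)


def peak_latency(t, y):
    """Time (s) at which the time course y reaches its maximum."""
    return float(t[int(np.argmax(y))])


def latency_difference(t, immediate, delayed):
    """Index: peak latency in delayed trials minus peak latency in immediate trials."""
    return peak_latency(t, delayed) - peak_latency(t, immediate)


def simulate_delayed(t, motor_share, max_shift=MAX_SHIFT):
    """Delayed-trial response: the sensory-locked part stays, the motor-locked part shifts."""
    sensory = (1.0 - motor_share) * toy_response(t, 0.0)
    motor = motor_share * toy_response(t, max_shift)
    return sensory + motor


t = np.arange(0.0, 20.0, 0.2)        # hypothetical 200 ms sampling grid
immediate = toy_response(t, 0.0)     # both components are locked to trial onset

for label, share in [("sensory-like", 0.0), ("mixed", 0.5), ("motor-like", 1.0)]:
    diff = latency_difference(t, immediate, simulate_delayed(t, share))
    print(f"{label}: latency difference = {diff:.1f} s")
```

In this toy model the two components merge into a single peak because their separation (2.4 s) is small relative to the assumed response width, so the index moves from 0 s toward 2.4 s as the motor share grows. The mapping from share to latency difference depends on the assumed response shape and need not be linear.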

Our approach was inspired by, and applied to, our previously published work on tactile decisions [Li Hegner et al., 2015]. In that study we combined immediate and delayed response conditions with a variation of task difficulty in order to identify decision areas whose activity could not be explained by motor responses but was still modulated by decision difficulty. Areas that fulfilled these criteria were right PCS, right IPS, and bilateral aINS. Furthermore, using multi‐voxel pattern analysis (MVPA) we could decode perceptual decisions in right IPS and bilateral aINS, with decisions being decodable earlier in aINS. At a later point we developed the idea of deriving the functional index described above, which would allow us to assess how activity in these decision areas relates to sensory input versus motor output. Specifically, our current findings show that bilateral aINS exhibited latency differences that were significantly larger than those of the other putative decision areas, namely PCS and IPS. Thus, bilateral aINS occupies a later stage within our somatosensory‐to‐motor gradient than the latter two areas. Combining this information with our decoding results, it appears likely that the perceptual decision is first formed in aINS and is then projected back to more sensory‐related areas (here IPS). This is in line with a recent animal study with simultaneous extracellular recordings in several brain areas, which demonstrated that decision signals travel not only forward to motor areas but also backward to sensory areas [Siegel et al., 2015]. Taken together with previous human imaging studies indicating a key role of aINS in various decision tasks [Ho et al., 2009; Liu and Pleskac, 2011], we suggest that aINS could be the neural structure in which a categorical decision is represented.

On a more general note, our current analytical approach further demonstrates that, although fMRI does not have high temporal resolution, a fine‐scaled somatosensory‐to‐motor functional gradient in decision‐related cortical processing can still be retrieved using our experimental paradigm and our analytical strategy. This sensory‐to‐motor functional gradient originated in primary sensory areas (PCS) and then extended both posteriorly along the IPS and anteriorly to the anterior insula, ventral premotor cortex, and the pre‐SMA. It ultimately culminated in anterior (SMA, PMd, ACC) and posterior (SPL/LIP) saccade‐related areas and in VC (supposedly reflecting processing of the saccade‐related visual reafference). Hence, the reported functional gradient does not merely follow an arbitrary axis through the brain but rather mirrors the sensory‐to‐motor functional hierarchy that has been suggested by previous research [Hernandez et al., 2010; Siegel et al., 2015]. Our analytical approach thus provides an alternative way to characterize decision‐related human brain areas using fMRI, complementing other experimental designs such as the artificial slowing of evidence accumulation [Ploran et al., 2007] and other analytical methods [e.g., Kayser et al., 2010; White et al., 2012]. Importantly, this approach is only applicable to areas that exhibit task‐related activation (and thus a signal peak). In addition, it requires short response delays, for which, due to the long time constant of the BOLD response, processes time‐locked to either sensory input or motor output do not produce bimodal (or multimodal) activation profiles but instead shift a single peak of the overall activation (e.g., compare Fig. 2B). Finally, our approach requires good temporal resolution (1 s or better), which here was obtained through a temporally jittered experimental design (200 ms steps). Ultimately, fMRI recordings with shorter TRs would be preferable. While our novel approach and our results appear plausible at first glance, the approach clearly needs to be validated further, for instance with perceptual decision tasks that engage different modalities and different effectors. Moreover, the same basic task design and analytical approach could help to further delineate retrospective (sensory input‐related) from prospective (motor output‐related) processing during action planning [compare Lindner et al., 2010] and action perception [Rizzolatti et al., 2014]. These few examples may help to stress the relevance of our approach for a broad spectrum of research questions.
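How a temporally jittered design can yield an event‐related time course that is sampled more finely than the TR can be sketched as follows. This is an illustrative toy example, not the procedure used in the study: the TR of 2 s, the 30 s trial spacing, the Gaussian response shape, and the function names are assumptions; only the 200 ms jitter step is taken from the text. Samples are pooled across trials according to their latency relative to trial onset, so that trials with different jitter offsets fill different latency bins.

```python
import numpy as np


def toy_response(latency, peak_delay=5.0, width=2.5):
    """Gaussian bump standing in for a BOLD response (assumed shape, not a real HRF)."""
    return np.exp(-0.5 * ((latency - peak_delay) / width) ** 2)


def pooled_time_course(sample_times, onsets, signal, bin_width=0.2, window=16.0):
    """Average samples by their latency to each trial onset, in bins of bin_width."""
    n_bins = int(round(window / bin_width))
    sums = np.zeros(n_bins)
    counts = np.zeros(n_bins)
    for onset in onsets:
        # Nearest latency bin for every sample, relative to this trial's onset
        idx = np.round((sample_times - onset) / bin_width).astype(int)
        keep = (idx >= 0) & (idx < n_bins)
        np.add.at(sums, idx[keep], signal[keep])
        np.add.at(counts, idx[keep], 1)
    mean = np.full(n_bins, np.nan)
    filled = counts > 0
    mean[filled] = sums[filled] / counts[filled]
    return np.arange(n_bins) * bin_width, mean, counts


TR = 2.0       # s, hypothetical repetition time
JITTER = 0.2   # s, jitter step (from the text)
SPACING = 30.0 # s, hypothetical trial spacing, long enough to avoid overlap

sample_times = np.arange(0.0, 320.0, TR)
# Ten trials, each starting 200 ms later relative to the scanner clock than the last
onsets = np.array([k * (SPACING + JITTER) for k in range(10)])
# Noise-free signal: one toy response per trial
signal = sum(toy_response(sample_times - onset) for onset in onsets)

bin_times, time_course, counts = pooled_time_course(sample_times, onsets, signal)
print("effective resolution:", bin_times[1] - bin_times[0], "s")
print("peak latency:", bin_times[np.nanargmax(time_course)], "s")
```

With ten jitter offsets that together span one TR, every 200 ms latency bin receives a sample, even though each individual trial is sampled only every 2 s. Real data would additionally require averaging over many trials per offset and handling of noise and overlapping responses.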

In conclusion, our present findings reveal a detailed gradient of functional processing during a perceptual decision task. This gradient was stable across cortical areas despite varying decision difficulty. While our previous work demonstrated that right IPS and bilateral aINS are involved in forming tactile decisions, our novel analysis method further revealed that aINS lies further downstream within the cortical cascade of somatosensory‐to‐motor processing. This suggests that bilateral aINS could represent the final categorical perceptual decision. Assessing an area's relation to the processing of sensory input versus motor output in this way is of interest well beyond our task. Our approach can also be applied to delineate functional gradients in perceptual decision problems engaging other modalities and effectors, as well as in other cognitive tasks such as action planning or action perception.

Supporting information

ACKNOWLEDGMENTS

We thank Juergen Dax for technical support, and Fabienne Kernhof and Manuel Roth for help with data collection. We are grateful to the Max Planck Institute of Biological Cybernetics and especially to Frank Muehlbauer for the support of the fMRI measurement.

REFERENCES

- Amaro E, Barker GJ (2006): Study design in fMRI: Basic principles. Brain Cogn 60:220–232.

- Bennur S, Gold JI (2011): Distinct representations of a perceptual decision and the associated oculomotor plan in the monkey lateral intraparietal area. J Neurosci 31:913–921.

- Binder JR, Liebenthal E, Possing ET, Medler DA, Ward BD (2004): Neural correlates of sensory and decision processes in auditory object identification. Nat Neurosci 7:295–301.

- Felleman DJ, Van Essen DC (1991): Distributed hierarchical processing in the primate cerebral cortex. Cereb Cortex 1:1–47.

- Filimon F, Philiastides MG, Nelson JD, Kloosterman NA, Heekeren HR (2013): How embodied is perceptual decision making? Evidence for separate processing of perceptual and motor decisions. J Neurosci 33:2121–2136.

- Gold JI, Shadlen MN (2007): The neural basis of decision making. Annu Rev Neurosci 30:535–574.

- Guo ZV, Li N, Huber D, Ophir E, Gutnisky D, Ting JT, Feng G, Svoboda K (2014): Flow of cortical activity underlying a tactile decision in mice. Neuron 81:179–194.

- Hernandez A, Nacher V, Luna R, Zainos A, Lemus L, Alvarez M, Vazquez Y, Camarillo L, Romo R (2010): Decoding a perceptual decision process across cortex. Neuron 66:300–314.

- Ho TC, Brown S, Serences JT (2009): Domain general mechanisms of perceptual decision making in human cortex. J Neurosci 29:8675–8687.

- Kayser AS, Buchsbaum BR, Erickson DT, D'Esposito M (2010): The functional anatomy of a perceptual decision in the human brain. J Neurophysiol 103:1179–1194.

- Li Hegner Y, Lindner A, Braun C (2015): Cortical correlates of perceptual decision making during tactile spatial pattern discrimination. Hum Brain Mapp 36:3339–3350.

- Lindner A, Iyer A, Kagan I, Andersen RA (2010): Human posterior parietal cortex plans where to reach and what to avoid. J Neurosci 30:11715–11725.

- Liu T, Pleskac TJ (2011): Neural correlates of evidence accumulation in a perceptual decision task. J Neurophysiol 106:2383–2398.

- Ploran EJ, Nelson SM, Velanova K, Donaldson DI, Petersen SE, Wheeler ME (2007): Evidence accumulation and the moment of recognition: Dissociating perceptual recognition processes using fMRI. J Neurosci 27:11912–11924.

- Rebola J, Castelhano J, Ferreira C, Castelo‐Branco M (2012): Functional parcellation of the operculo‐insular cortex in perceptual decision making: An fMRI study. Neuropsychologia 50:3693–3701.

- Rizzolatti G, Cattaneo L, Fabbri‐Destro M, Rozzi S (2014): Cortical mechanisms underlying the organization of goal‐directed actions and mirror neuron‐based action understanding. Physiol Rev 94:655–706.

- Romo R, Hernandez A, Zainos A, Lemus L, de Lafuente V, Luna R, Nacher V (2006): Decoding the temporal evolution of a simple perceptual act. Novartis Found Symp 270:170–186.

- Sepulcre J (2014): Integration of visual and motor functional streams in the human brain. Neurosci Lett 567:68–73.

- Sepulcre J, Sabuncu MR, Yeo TB, Liu H, Johnson KA (2012): Stepwise connectivity of the modal cortex reveals the multimodal organization of the human brain. J Neurosci 32:10649–10661.

- Siegel M, Buschman TJ, Miller EK (2015): Cortical information flow during flexible sensorimotor decisions. Science 348:1352–1355.

- White CN, Mumford JA, Poldrack RA (2012): Perceptual criteria in the human brain. J Neurosci 32:16716–16724.
