Abstract

Face perception is essential for daily and social activities. Neuroimaging studies have revealed a distributed face network (FN) consisting of multiple regions that exhibit preferential responses to invariant or changeable facial information. However, our understanding of how these regions work collaboratively to facilitate facial information processing is limited. Here, we focused on changeable facial information processing and investigated how the functional integration of the FN is related to the performance of facial expression recognition. To do so, we first defined the FN as voxels that responded more strongly to faces than to objects, and then used a voxel‐based global brain connectivity method based on resting‐state fMRI to characterize the within‐network connectivity (WNC) of each voxel in the FN. By relating the WNC to performance on the “Reading the Mind in the Eyes” Test across participants, we found that individuals with stronger WNC in the right posterior superior temporal sulcus (rpSTS) were better at recognizing facial expressions. Further, the resting‐state functional connectivity (FC) between the rpSTS and the right occipital face area (rOFA), early visual cortex (EVC), and bilateral STS was positively correlated with facial expression recognition ability, and the FCs of EVC‐pSTS and OFA‐pSTS contributed independently to facial expression recognition. In short, our study highlights the behavioral significance of intrinsic functional integration of the FN in facial expression processing and provides evidence for the hub‐like role of the rpSTS in facial expression recognition. Hum Brain Mapp 37:1930–1940, 2016. © 2016 Wiley Periodicals, Inc.

Keywords: facial expression recognition, posterior superior temporal sulcus, face network, functional connectivity, early visual cortex, occipital face area

Abbreviations

- AMG: Amygdala
- ATC: Anterior temporal cortex
- EVC: Early visual cortex
- FC: Functional connectivity
- FFA: Fusiform face area
- FN: Face network
- FRA: Face‐specific recognition ability
- FWHM: Full‐width at half‐maximum
- GBC: Global brain connectivity
- GLM: General linear model
- GRE‐EPI: Gradient‐echo echo‐planar‐imaging
- IFG: Inferior frontal gyrus
- MFG: Medial frontal gyrus
- MNI: Montreal Neurological Institute
- OFA: Occipital face area
- PAM: Probabilistic activation map
- PCG: Precentral gyrus
- RMET: “Reading the Mind in the Eyes” Test
- rOFA: Right occipital face area
- rpSTS: Right posterior superior temporal sulcus
- SD: Standard deviation
- SFG: Superior frontal gyrus
- WNC: Within‐network connectivity

INTRODUCTION

A human face is a complex source of information and conveys a wealth of social signals, such as an individual's identity and expression. Neuroimaging studies have revealed a distributed face network (FN) that responds preferentially to faces over other object categories, commonly comprising the fusiform face area (FFA), occipital face area (OFA), posterior superior temporal sulcus (pSTS), and other regions [for reviews, see Gobbini and Haxby, 2007; Haxby et al., 2000; Ishai, 2008; Pitcher et al., 2011b]. The dominant models of face processing propose dissociable processing of invariant and changeable facial information in the FN, with the FFA preferentially responsive to invariant facial aspects (e.g., facial identity) [Haxby et al., 2000; Kanwisher et al., 1997] and the pSTS preferentially responsive to changeable facial aspects (e.g., facial expression) [Allison et al., 2000; Engell and Haxby, 2007; Haxby et al., 2000], although there is also evidence suggesting a relative rather than absolute dissociation of identity and expression processing [e.g., Calder, 2011; Calder and Young, 2005; Fox et al., 2009]. However, our understanding of how these face‐selective regions work collaboratively to facilitate facial information processing is limited.

Frequently co‐activated regions in the FN may interact with each other to facilitate facial information processing [Ishai, 2008]. Indeed, recent fMRI studies have shown that some face‐selective regions are strongly connected (e.g., OFA‐FFA, FFA‐pSTS, EVC‐FFA, EVC‐pSTS, and OFA‐pSTS) both during tasks and at rest [Davies‐Thompson and Andrews, 2012; Fairhall and Ishai, 2007; Furl et al., 2014; O'Neil et al., 2014; Turk‐Browne et al., 2010; Yang et al., 2015; Zhang et al., 2009; Zhu et al., 2011]. In addition, the connectivity between face‐selective regions is modulated by task: for example, effective connectivity between the OFA and FFA is increased by a facial identity task, whereas a facial expression task enhances OFA‐pSTS connectivity [Kadosh et al., 2011]. Furthermore, emerging evidence shows that resting‐state functional connectivity (FC) between face‐selective regions is related to behavioral performance in face tasks. For example, stronger OFA‐FFA resting‐state FC is related to better performance in a variety of face recognition tasks [Zhu et al., 2011], and stronger resting‐state FC between the FFA and a face region in the perirhinal cortex is associated with a larger face inversion effect [O'Neil et al., 2014]. However, these findings mainly concern the relation between FC and recognition of invariant facial aspects; no study has yet investigated whether FC between FN regions relates to recognition of changeable facial aspects. Therefore, the present study addressed the association between the functional integration of the FN and facial expression recognition.

In this study, we characterized the functional integration of the FN in a large sample of participants (N = 268) with a voxel‐based global brain connectivity (GBC) method [Cole et al., 2012] using resting‐state fMRI (rs‐fMRI), and then examined whether this functional integration was related to behavioral performance in facial expression recognition. Because resting‐state FC in the FN can predict individual differences in the performance of face tasks, it provides an ideal tool for testing the behavioral significance of the FN's functional integration in facial expression recognition. Specifically, the FN was defined with a standard functional localizer as the set of voxels that respond selectively to faces over objects. For each voxel in the FN, the within‐network connectivity (WNC) was calculated as the average resting‐state FC between that voxel and the rest of the FN voxels. Next, we used the “Reading the Mind in the Eyes” Test (RMET) [Baron‐Cohen et al., 2001] to measure individuals' facial expression recognition ability. Finally, by correlating the WNC of each voxel in the FN with facial expression recognition ability across participants, we characterized the behavioral relevance of the FN's functional integration. As previous studies have consistently implicated the pSTS in facial expression processing [e.g., Haxby et al., 2000; Pitcher, 2014a], we hypothesized that the pSTS may play a central role in the intrinsic functional integration of the FN that contributes to facial expression recognition.

MATERIALS AND METHODS

Participants

There were two groups of participants in the present study. One group (N = 202; 124 females; 182 self‐reported right‐handed; mean age = 20.3 years, standard deviation [SD] = 0.9 years) participated in the functional localizer scan, and the other group of 268 participants (162 females; 248 self‐reported right‐handed; mean age = 20.4 years, SD = 0.9 years; 180 of whom had also participated in the functional localizer scan) participated in the rs‐fMRI scan and the behavioral session. All participants were college students recruited from Beijing Normal University, Beijing, China, had no history of medication and neurological or psychiatric disorders, and reported normal or corrected‐to‐normal vision. This study is part of an ongoing project (Gene, Environment, Brain, and Behavior) [e.g., Huang et al., 2014; Kong et al., 2014a, 2014b; Kong et al., 2015; Wang et al., 2012, 2014; Zhang et al., 2015a, 2015b; Zhen et al., 2015]. Both behavioral and MRI protocols were approved by the Institutional Review Board of Beijing Normal University. Written informed consent was obtained from all participants before the experiment.

Functional Localizer for FN

A standard dynamic face localizer was used to define the FN [Pitcher et al., 2011a]. Specifically, three blocked‐design functional runs were conducted for each participant. Each run lasted 3 min 18 s, containing two block sets intermixed with three 18 s fixation blocks at the beginning, middle, and end of the run. Each block set consisted of four blocks with each stimulus category (faces, objects, scenes, and scrambled objects) presented in an 18 s block that contained six 3 s movie clips. During the scanning, participants were instructed to passively view the movie clips [for more details on the paradigm, see Zhen et al., 2015].
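The run timing above is internally consistent: two block sets of four 18 s blocks plus three 18 s fixation blocks give 198 s. The sketch below reproduces that arithmetic and lists block onsets. The order of categories within each block set is not reported here, so the fixed order used is a hypothetical assumption; only the durations come from the text.

```python
# Block timing of one localizer run, using the durations given in the text.
# ASSUMPTION: the category order within each block set is hypothetical.
FIX_DUR = 18.0                    # fixation block duration (s)
CLIP_DUR, N_CLIPS = 3.0, 6        # six 3 s movie clips per block
BLOCK_DUR = CLIP_DUR * N_CLIPS    # 18 s stimulus block
CATEGORIES = ["faces", "objects", "scenes", "scrambled objects"]


def block_onsets(order=CATEGORIES, n_sets=2):
    """Return per-category block onsets (s) and the total run duration (s)."""
    onsets = {cat: [] for cat in order}
    t = FIX_DUR                   # fixation block at the beginning of the run
    for _ in range(n_sets):
        for cat in order:
            onsets[cat].append(t)
            t += BLOCK_DUR
        t += FIX_DUR              # fixation in the middle, then at the end
    return onsets, t


onsets, duration = block_onsets()
print(onsets["faces"], duration)  # [18.0, 108.0] 198.0  (3 min 18 s)
```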

Image Acquisition

MRI scanning was conducted on a Siemens 3T scanner (MAGNETOM Trio, a Tim system) with a 12‐channel phased‐array head coil at the Beijing Normal University Imaging Center for Brain Research, Beijing, China. In total, three data sets were acquired. Specifically, the task‐state fMRI (ts‐fMRI) for the functional localizer was acquired using a T2*‐weighted gradient‐echo echo‐planar‐imaging (GRE‐EPI) sequence (TR = 2,000 ms, TE = 30 ms, flip angle = 90°, number of slices = 30, voxel size = 3.125 × 3.125 × 4.8 mm). The rs‐fMRI was acquired using a GRE‐EPI sequence (TR = 2,000 ms, TE = 30 ms, flip angle = 90°, number of slices = 33, voxel size = 3.125 × 3.125 × 3.6 mm, 240 volumes). During the 8 min scan, participants were instructed to relax without engaging in any specific task, to remain still, and to keep their eyes closed. In addition, a high‐resolution T1‐weighted magnetization‐prepared rapid gradient echo (MPRAGE) anatomical scan (TR/TE/TI = 2,530/3.39/1,100 ms, flip angle = 7°, matrix = 256 × 256, number of slices = 128, voxel size = 1 × 1 × 1.33 mm) was acquired for registration and for more precise localization of functional activations. Earplugs were used to attenuate scanner noise, and a foam pillow and extendable padded head clamps were used to restrain participants' head motion.

Image Preprocessing

ts‐fMRI data preprocessing

The ts‐fMRI data were preprocessed with FEAT (FMRI Expert Analysis Tool, version 5.98), part of FSL (FMRIB's Software Library, http://www.fmrib.ox.ac.uk/fsl). The first‐level analysis was conducted separately on each run for each participant. Preprocessing included five steps: head motion correction, brain extraction, spatial smoothing with a 6 mm full‐width at half‐maximum (FWHM) Gaussian kernel, mean‐based intensity normalization, and high‐pass temporal filtering (120 s cutoff). Statistical analysis of the ts‐fMRI data was performed using FILM (FMRIB's Improved Linear Model) with local autocorrelation correction. Predictors (faces, objects, scenes, and scrambled objects) were convolved with a gamma hemodynamic response function to generate four main explanatory variables. The temporal derivatives of these explanatory variables were also modeled to improve the sensitivity of the general linear model (GLM). Motion parameters were included in the GLM as confounding variables of no interest to account for the effect of residual head motion. Two face‐selective contrasts were calculated for each run of each participant: (1) faces versus objects and (2) faces versus fixation.
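The first‐level model described above can be illustrated with a simplified NumPy sketch: boxcar predictors convolved with a gamma HRF, their temporal derivatives, and t‐statistics for the two face contrasts. This is not FSL's implementation. It assumes a gamma HRF with a 6 s mean lag and 3 s SD, uses ordinary least squares instead of FILM prewhitening, omits the motion regressors, and uses hypothetical block onsets and a simulated voxel.

```python
import math

import numpy as np

TR, N_VOLS = 2.0, 99  # 198 s run sampled every 2 s
# ASSUMPTION: hypothetical onsets (s); the real block order is not reported.
ONSETS = {"faces": [18, 108], "objects": [36, 126],
          "scenes": [54, 144], "scrambled": [72, 162]}


def gamma_hrf(tr=TR, mean_lag=6.0, sd=3.0, length=30.0):
    """Gamma-shaped HRF sampled at the TR, scaled to unit sum."""
    shape, scale = (mean_lag / sd) ** 2, sd ** 2 / mean_lag
    t = np.arange(0.0, length, tr)
    h = t ** (shape - 1) * np.exp(-t / scale) / (math.gamma(shape) * scale ** shape)
    return h / h.sum()


def design_matrix(onsets=ONSETS, dur=18.0):
    """One convolved boxcar plus its temporal derivative per condition, then an intercept."""
    hrf, cols = gamma_hrf(), []
    for cond_onsets in onsets.values():
        box = np.zeros(N_VOLS)
        for on in cond_onsets:
            box[int(on // TR): int((on + dur) // TR)] = 1.0
        reg = np.convolve(box, hrf)[:N_VOLS]
        cols += [reg, np.gradient(reg)]
    cols.append(np.ones(N_VOLS))  # fixation acts as the implicit baseline
    return np.column_stack(cols)


def contrast_t(X, y, c):
    """OLS t-statistic for contrast vector c."""
    beta, *_ = np.linalg.lstsq(X, y, rcond=None)
    resid = y - X @ beta
    dof = X.shape[0] - np.linalg.matrix_rank(X)
    var_c = (resid @ resid / dof) * (c @ np.linalg.pinv(X.T @ X) @ c)
    return float(c @ beta / np.sqrt(var_c))


X = design_matrix()  # columns: faces, d(faces), objects, d(objects), ..., intercept
c_face_obj = np.zeros(X.shape[1])
c_face_obj[[0, 2]] = [1.0, -1.0]  # faces > objects
c_face_fix = np.zeros(X.shape[1])
c_face_fix[0] = 1.0               # faces > fixation
rng = np.random.default_rng(0)
y = 100 + 2.0 * X[:, 0] + 0.5 * X[:, 2] + rng.normal(0, 0.2, N_VOLS)  # toy voxel
t_obj, t_fix = contrast_t(X, y, c_face_obj), contrast_t(X, y, c_face_fix)
print(t_obj, t_fix)
```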

A second‐level analysis was performed to combine all runs within each participant. Specifically, the parameter images from the first‐level analysis were first aligned to the individual's anatomical image with FLIRT (FMRIB's Linear Image Registration Tool) using six degrees of freedom and then warped to Montreal Neurological Institute (MNI) standard space with FNIRT (FMRIB's Nonlinear Image Registration Tool) using the default parameters. The spatially normalized parameter images were resampled to 2 mm isotropic voxels and then combined across runs within each participant using a fixed‐effects model. The parameter estimates from this second‐level analysis were then used in the higher‐level group analysis.
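A fixed‐effects combination across runs is commonly an inverse‐variance weighted average of the per‐run contrast estimates. The sketch below shows that standard form for a single voxel; it is an illustration under that assumption, not FSL's exact code path.

```python
import numpy as np


def fixed_effects(copes, varcopes):
    """Inverse-variance weighted combination of per-run contrast estimates.

    copes, varcopes: arrays with runs along axis 0 (any trailing voxel shape).
    Returns the combined estimate, its variance, and the corresponding z value.
    """
    copes = np.asarray(copes, float)
    varcopes = np.asarray(varcopes, float)
    w = 1.0 / varcopes                       # more precise runs get more weight
    cope = (w * copes).sum(axis=0) / w.sum(axis=0)
    var = 1.0 / w.sum(axis=0)
    return cope, var, cope / np.sqrt(var)


# Three runs with equal variance reduce to a plain average.
cope, var, z = fixed_effects([1.0, 2.0, 3.0], [1.0, 1.0, 1.0])
print(cope, var, z)
```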

rs‐fMRI data preprocessing

The rs‐fMRI data were also preprocessed with FSL. Preprocessing included removal of the first four volumes, head motion correction (aligning each volume to the middle volume of the 4‐D image with MCFLIRT), spatial smoothing (6 mm FWHM Gaussian kernel), mean‐based intensity normalization, and removal of the linear trend. A band‐pass temporal filter (0.01–0.1 Hz) was then applied to reduce low‐frequency drift and high‐frequency noise.
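The temporal steps above (dropping the first four volumes, linear detrending, and 0.01–0.1 Hz band‐pass filtering) can be sketched for a single voxel time series. The ideal FFT filter is an assumption made for brevity; the text does not say which filter implementation was used.

```python
import numpy as np

TR = 2.0


def preprocess_ts(ts, tr=TR, n_drop=4, band=(0.01, 0.1)):
    """Drop initial volumes, remove the linear trend, and band-pass with an ideal FFT filter."""
    ts = np.asarray(ts, float)[n_drop:]
    t = np.arange(ts.size)
    slope, intercept = np.polyfit(t, ts, 1)
    ts = ts - (slope * t + intercept)           # linear detrending
    freqs = np.fft.rfftfreq(ts.size, d=tr)      # Hz
    spec = np.fft.rfft(ts)
    spec[(freqs < band[0]) | (freqs > band[1])] = 0.0
    return np.fft.irfft(spec, n=ts.size)


t = TR * np.arange(240)                         # 240 volumes (8 min)
slow = np.sin(2 * np.pi * 0.05 * t)             # inside the pass band
fast = np.sin(2 * np.pi * 0.2 * t)              # above 0.1 Hz
clean = preprocess_ts(slow + fast + 0.01 * t)   # plus a linear drift
print(clean.shape, np.corrcoef(clean, slow[4:])[0, 1])
```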

To further reduce noise, such as fluctuations caused by head motion and cardiac and respiratory cycles, 18 nuisance signals were regressed out: signals from cerebrospinal fluid and white matter, the global brain average signal, the six motion correction parameters, and the first derivatives of these nine signals, following methods described in previous studies [Biswal et al., 2010; Fox et al., 2005]. The 4‐D residual time series obtained after regressing out these nuisance covariates were used for the FC analyses. The strength of the intrinsic FC between two voxels was estimated as the Pearson's correlation between their residual rs‐fMRI time series.
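The nuisance regression and voxel‐pair FC described above can be sketched with simulated signals. The derivative convention (a backward difference with a zero first row) is an assumption, as the text does not specify it.

```python
import numpy as np


def build_nuisance(csf, wm, global_sig, motion):
    """9 base signals (CSF, WM, global, 6 motion params) plus their first derivatives = 18."""
    base = np.column_stack([csf, wm, global_sig, motion])           # (T, 9)
    deriv = np.vstack([np.zeros((1, base.shape[1])), np.diff(base, axis=0)])
    return np.hstack([base, deriv])                                 # (T, 18)


def regress_out(data, nuisance):
    """Residualize each column of data (T x V) against nuisance (T x K) plus an intercept."""
    X = np.column_stack([np.ones(len(nuisance)), nuisance])
    beta, *_ = np.linalg.lstsq(X, data, rcond=None)
    return data - X @ beta


def pearson_fc(a, b):
    """FC between two voxels: Pearson r of their residual time series."""
    return float(np.corrcoef(a, b)[0, 1])


rng = np.random.default_rng(1)
T = 236                                           # 240 volumes minus the first 4
csf, wm, gs = rng.normal(size=(3, T))
motion = rng.normal(size=(T, 6))
nuis = build_nuisance(csf, wm, gs, motion)
data = rng.normal(size=(T, 10)) + 2.0 * gs[:, None]  # toy voxels sharing the global signal
resid = regress_out(data, nuis)
print(nuis.shape, pearson_fc(resid[:, 0], resid[:, 1]))
```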

Registration of each participant's rs‐fMRI data to the anatomical image was carried out with FLIRT to produce a 6 degree‐of‐freedom (rigid‐body) transformation matrix. Registration of each participant's anatomical image to MNI space was accomplished with FLIRT to calculate a 12 degree‐of‐freedom affine matrix [Jenkinson et al., 2002; Jenkinson and Smith, 2001].

Behavioral Measurements

Participants completed two computer‐based tasks, the RMET and an old/new recognition task, in a separate session outside the MRI scanner.

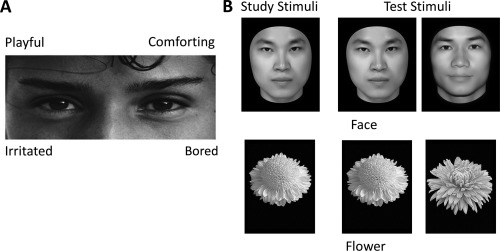

“Reading the mind in the eyes” test

In our study, the RMET, a widely used and reliable measure of emotional expression recognition, was used to assess participants' ability to recognize facial expressions [Baron‐Cohen et al., 2001]. Each trial started with a 500 ms fixation, followed by a photo of the eye region surrounded by four words (Fig. 1A). The English words in the original RMET were translated into Chinese. Participants were asked to choose the word that best described the feelings of the person in the photo, and the photo remained on screen until they responded. In total, there were 37 trials: the first was a practice trial, and the remaining 36 made up the formal test. The original test defined the target word for each item as the word selected by the majority of participants [Baron‐Cohen et al., 2001]. To account for possible effects of cultural difference and translation, we calculated the percentage of our participants selecting each word for each item and compared it with the original version. Of the 36 items, the majority of our participants (more than 50%) chose the original target word in 35, indicating that the words were understood in a similar way to those in the original version. The exception was item 17: 69.3% of participants recognized the expression as “affectionate,” whereas only 24.6% selected the original target word “doubtful.” Therefore, the target word for item 17 was changed from “doubtful” to “affectionate.” Each participant's score was the number of correct trials on the RMET, with a higher score indicating a greater ability to recognize facial expressions.
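The target‐word check and scoring described above can be sketched as follows. The revision rule (replace a target when it is chosen by no more than 50% of participants and another word is chosen by more than 50%) is an assumed formalization of how item 17 was handled. The words for item 1 are hypothetical placeholders, not the real test key.

```python
from collections import Counter


def endorsement(responses):
    """Percentage of participants choosing each word, for each item."""
    return {item: {w: 100.0 * n / len(ans) for w, n in Counter(ans).items()}
            for item, ans in responses.items()}


def revise_key(key, responses, majority=50.0):
    """Replace a target word when the original target lacks a majority but another word has one."""
    pct = endorsement(responses)
    revised = dict(key)
    for item, target in key.items():
        top_word, top_pct = max(pct[item].items(), key=lambda kv: kv[1])
        if pct[item].get(target, 0.0) <= majority and top_pct > majority:
            revised[item] = top_word
    return revised


def rmet_score(answers, key):
    """Number of correct formal-test items (the practice trial is excluded beforehand)."""
    return sum(answers.get(item) == word for item, word in key.items())


key = {1: "target_1", 17: "doubtful"}          # item 1 words are placeholders
responses = {1: ["target_1", "target_1", "other"],
             17: ["affectionate", "affectionate", "doubtful"]}
new_key = revise_key(key, responses)
print(new_key)  # {1: 'target_1', 17: 'affectionate'}
print(rmet_score({1: "target_1", 17: "affectionate"}, new_key))  # 2
```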

Figure 1.

Example stimuli and trial types. (A) Stimulus exemplar in the “reading the mind in the eyes” test (RMET). The participants were asked to choose the word that best described the feelings of the person in the photo. (B) Stimulus exemplars and trial types in the old/new recognition task. In the old/new recognition task, participants studied images of one category (faces or flowers). They were then shown a series of individual images of the same category and asked to indicate whether each image had been shown in the study stage.

Four participants were excluded from this test: one because of a computer program failure, two because their scores were not significantly higher than chance (i.e., score ≤ 12), and one for careless performance (RTs < 200 ms on eight trials).

In addition, we recruited a new cohort of 50 participants (21 females; mean age = 21.7 years) and administered both the RMET and the MSCEIT [Mayer–Salovey–Caruso Emotional Intelligence Test; Mayer et al., 2003]. RMET scores were positively correlated with scores on the emotional expression perception subtest of the MSCEIT (r = 0.317, P = 0.02), further supporting the validity of the translated RMET used in our study.

Old/new recognition task

To assess the specificity of the WNC‐RMET correlation, we also examined participants' facial identity recognition ability as a control variable. Here, an old/new recognition task was used to measure the face‐specific recognition ability (FRA) of the participants [Wang et al., 2012; Zhu et al., 2011]. Thirty face images and 30 flower images were used (Fig. 1B). Face images were gray‐scale pictures of adult Chinese faces with the external contours removed (leaving a roughly oval shape with no ears or hair), and flower images were gray‐scale pictures of common flowers with leaves and background removed. There were two blocks in this task, a face block and a flower block, whose order was counterbalanced across participants. Each block consisted of one study stage and one test stage. In the study stage, 10 images of the relevant category were shown for 1 s each with an inter‐stimulus interval of 0.5 s, and the set of studied images was shown twice. In the test stage, the 10 studied images were shown twice, randomly intermixed with 20 new images from the same category. On each trial, participants indicated whether the image had been shown in the study stage. For each participant, a recognition score was calculated as the recognition accuracy for each category (faces and flowers). The FRA was calculated as the normalized residual of the face recognition score after regressing out the object (i.e., flower) recognition score.
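The FRA computation described above (regress face accuracy on flower accuracy across participants, then normalize the residuals) can be sketched as follows. Normalizing with the sample SD (ddof = 1) is an assumption, and the accuracies are simulated.

```python
import numpy as np


def fra(face_acc, flower_acc):
    """Face-specific recognition ability: z-scored residual of face accuracy after
    regressing out flower accuracy across participants."""
    face = np.asarray(face_acc, float)
    flower = np.asarray(flower_acc, float)
    X = np.column_stack([np.ones_like(flower), flower])
    beta, *_ = np.linalg.lstsq(X, face, rcond=None)
    resid = face - X @ beta
    return (resid - resid.mean()) / resid.std(ddof=1)


rng = np.random.default_rng(2)
flower = rng.uniform(0.6, 0.9, 264)                    # simulated flower accuracy
face = 0.5 * flower + rng.normal(0.35, 0.05, 264)      # simulated face accuracy
scores = fra(face, flower)
print(scores.mean(), scores.std(ddof=1))
```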

WNC Analysis for the FN

Definition of the FN

A voxel was considered face‐selective if it satisfied two criteria [Kanwisher et al., 1997]. First, its response to faces must be significantly higher than to fixation. Second, its response to faces must be significantly higher than to non‐face objects. The first criterion ensures that the voxel responds to faces, and the second ensures that its response is selective for faces. We therefore combined the contrasts of faces versus fixation and faces versus objects to define face‐selective activation in individual participants. The conjunction activation was defined voxel‐wise as the smaller Z value of the two contrasts, and each participant's conjunction map was thresholded at Z > 2.3 (P < 0.01, uncorrected). Thus, only voxels significantly activated in both contrasts were included in the individual face‐selective activation map. To capture inter‐individual variability in face‐selective activation, a probabilistic activation map (PAM) was created, in which the value of each voxel was the proportion of participants showing face‐selective activation at that voxel. Finally, the FN was defined as the voxels with a probability of activation larger than 0.2 in the PAM [Engell and McCarthy, 2013]. The resulting group‐level FN, derived from the first group of participants, was applied to each individual in the second group, who participated in the rs‐fMRI and behavioral sessions. All subsequent FC measures for participants in the second group were calculated within this FN.
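The FN definition above (a minimum‐Z conjunction per participant, a probabilistic map across participants, and a 0.2 threshold) can be sketched on toy four‐voxel data. The Z values and masks are invented for illustration.

```python
import numpy as np


def individual_face_mask(z_face_vs_obj, z_face_vs_fix, z_thr=2.3):
    """Conjunction: the smaller Z of the two contrasts must exceed the threshold."""
    return np.minimum(z_face_vs_obj, z_face_vs_fix) > z_thr


def define_fn(individual_masks, p_thr=0.2):
    """PAM = proportion of participants activated at each voxel; FN = voxels with PAM > p_thr."""
    pam = np.mean(np.stack(individual_masks).astype(float), axis=0)
    return pam, pam > p_thr


# Toy participant: voxel 2 passes faces > objects but fails faces > fixation.
mask = individual_face_mask(np.array([3.1, 2.0, 2.8, 0.5]),
                            np.array([4.0, 5.0, 2.2, 3.0]))
masks = [mask,
         np.array([True, True, False, False]),
         np.array([False, False, False, False]),
         np.array([True, False, False, False]),
         np.array([False, False, True, False])]
pam, fn = define_fn(masks)
print(mask, pam, fn)
```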

WNC estimation

A GBC method, a recently developed approach for analyzing rs‐fMRI data, was used to examine the integration of each voxel within the FN [Cole et al., 2012]. In general, the GBC value of a voxel is defined as the average FC between that voxel and all other voxels in the whole brain or in a predefined region. Rather than computing connectivity between pairs of regions, this method allows us to examine each voxel's full range of FC within the whole FN. Specifically, the WNC of each voxel in the FN was computed as the average FC between that voxel and all other voxels within the FN. Participant‐level WNC maps were then converted with Fisher's r‐to‐z transformation to yield normally distributed WNC values [Cole et al., 2012; Gotts et al., 2013].
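The WNC definition above can be computed without forming the full voxel‐by‐voxel correlation matrix. After each voxel time series is standardized, the sum of a voxel's correlations is one dot product with the summed network signal. The sketch follows the text's order (average r, then Fisher z). The simulated data are illustrative, and constant voxels would need to be removed beforehand.

```python
import numpy as np


def wnc(ts):
    """Within-network connectivity of every voxel.

    ts: (T, V) residual rs-fMRI time series of the V FN voxels for one participant.
    Returns the Fisher z-transformed mean Pearson r of each voxel with the other V - 1 voxels.
    """
    ts = np.asarray(ts, float)
    T, V = ts.shape
    z = (ts - ts.mean(axis=0)) / ts.std(axis=0)   # standardize each voxel (ddof = 0)
    # r_ij = z_i . z_j / T, so sum_j r_ij = z_i . (sum_j z_j) / T, including r_ii = 1
    row_sums = z.T @ z.sum(axis=1) / T
    mean_r = (row_sums - 1.0) / (V - 1)            # drop the self-correlation
    return np.arctanh(mean_r)


rng = np.random.default_rng(3)
shared = rng.normal(size=(236, 1))
ts = shared + rng.normal(size=(236, 50))           # 50 toy FN voxels with a common signal
w = wnc(ts)
print(w[:5])
```

The O(T·V) trick matters because an FN with thousands of voxels would otherwise require a very large correlation matrix per participant.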

WNC‐RMET correlation analysis

Correlation analysis was conducted to relate the FN's functional integration to individual differences in RMET scores. Specifically, a GLM was fitted for each voxel, with the WNC of the voxel as the dependent variable, the RMET score as the independent variable, and gender and FRA as confounding covariates. Multiple comparison correction was performed on the resulting statistical map using the 3dClustSim program in AFNI (http://afni.nimh.nih.gov). Based on Monte Carlo simulations within the FN mask, a threshold of voxel‐level P < 0.01 and cluster‐level P < 0.01 (cluster size > 102 voxels) was used.
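The voxel‐wise GLM above fits the same design (intercept, RMET, gender, FRA) at every FN voxel, so all voxels can be solved in one least‐squares call. The sketch returns the t‐statistic for the RMET coefficient on simulated data. It does not reproduce the 3dClustSim cluster correction.

```python
import numpy as np


def voxelwise_glm_t(Y, rmet, gender, fra):
    """t-statistic of the RMET coefficient at every voxel.

    Y: (N, V) WNC values (N participants, V FN voxels).
    Model per voxel: WNC = b0 + b1*RMET + b2*gender + b3*FRA + error.
    """
    N = Y.shape[0]
    X = np.column_stack([np.ones(N), rmet, gender, fra])
    beta, *_ = np.linalg.lstsq(X, Y, rcond=None)   # (4, V): all voxels at once
    resid = Y - X @ beta
    dof = N - X.shape[1]
    sigma2 = (resid ** 2).sum(axis=0) / dof
    xtx_inv_11 = np.linalg.inv(X.T @ X)[1, 1]      # variance factor for b1
    return beta[1] / np.sqrt(sigma2 * xtx_inv_11)


rng = np.random.default_rng(4)
N, V = 264, 20
rmet = rng.normal(23.6, 3.5, N)
gender = rng.integers(0, 2, N)
fra = rng.normal(0.0, 1.0, N)
Y = rng.normal(0.0, 1.0, (N, V))
Y[:, 0] += 0.1 * (rmet - rmet.mean())              # only voxel 0 carries an RMET effect
t = voxelwise_glm_t(Y, rmet, gender, fra)
print(t[:3])
```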

Seed‐based FC‐RMET correlation analysis

Because the WNC of a voxel averages its FC with all other FN voxels, we further investigated which specific regions drove the association, that is, with which regions the FC of the cluster identified in the WNC‐RMET correlation analysis correlated with RMET scores. First, a seed‐based FC analysis was performed with the identified cluster as the seed, and each FN voxel's FC (Fisher's z‐transformed) with the seed was correlated with RMET scores, controlling for gender and FRA. Based on Monte Carlo simulations within the FN mask, a threshold of voxel‐level one‐tailed P < 0.01 and cluster‐level P < 0.01 (cluster size > 125 voxels) was used. The significant clusters from this post hoc seed‐based FC‐RMET correlation analysis were defined as target regions of interest (ROIs). Then, to assess the specificity of the FC‐RMET associations, the pairwise FCs (Fisher's z‐transformed) between the seed and each target ROI were correlated with FRA.
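The two steps above are (1) a seed FC map per participant and (2) across‐participant correlations with RMET controlling for gender and FRA. They can be sketched with a mean seed time series and partial correlation by residualization. Using the mean time series of the seed voxels is an assumption, and all data are simulated.

```python
import numpy as np


def seed_fc(ts, seed_mask):
    """Fisher z-transformed r between the mean seed time series and every voxel.

    ts: (T, V) residual time series; seed_mask: boolean (V,) marking seed voxels.
    """
    seed = ts[:, seed_mask].mean(axis=1)
    zs = (seed - seed.mean()) / seed.std()
    zv = (ts - ts.mean(axis=0)) / ts.std(axis=0)
    r = zv.T @ zs / len(seed)
    return np.arctanh(np.clip(r, -0.999999, 0.999999))  # clip guards against |r| = 1


def partial_corr(x, y, covars):
    """Pearson r between x and y after regressing both on covariates plus an intercept."""
    C = np.column_stack([np.ones(len(x)), covars])
    rx = x - C @ np.linalg.lstsq(C, x, rcond=None)[0]
    ry = y - C @ np.linalg.lstsq(C, y, rcond=None)[0]
    return float(np.corrcoef(rx, ry)[0, 1])


rng = np.random.default_rng(5)
ts = rng.normal(size=(236, 30))
seed_mask = np.zeros(30, dtype=bool)
seed_mask[:4] = True
fc_map = seed_fc(ts, seed_mask)                    # one participant's seed FC map

N = 264
fc, gender, fra = rng.normal(size=N), rng.integers(0, 2, N), rng.normal(size=N)
rmet = 0.3 * fc + 2.0 * gender + rng.normal(size=N)
print(partial_corr(fc, rmet, np.column_stack([gender, fra])))
```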

RESULTS

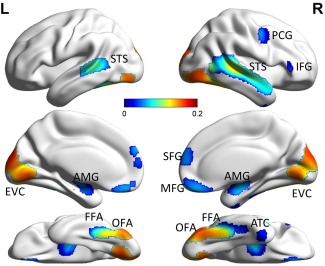

In the present study, we defined the FN based on a probabilistic map for face‐selective activation, which includes the bilateral FFA, bilateral OFA, bilateral STS, bilateral amygdala (AMG), bilateral superior frontal gyrus (SFG), bilateral medial frontal gyrus (MFG), right precentral gyrus (PCG), right inferior frontal gyrus (IFG), right anterior temporal cortex (ATC), and bilateral early visual cortex (EVC) (Fig. 2). The regions in the FN were in agreement with the face‐selective regions identified in previous studies [Engell and McCarthy, 2013; Gobbini and Haxby, 2007; Haxby et al., 2000; Ishai, 2008; Kanwisher and Yovel, 2006; Pitcher et al., 2011a; Zhen et al., 2013].

Figure 2.

Group average within‐network connectivity (WNC) map of the identified face network (FN) overlaid on brain surface. FFA, fusiform face area; OFA, occipital face area; STS, superior temporal sulcus; AMG, amygdala; SFG, superior frontal gyrus; MFG, medial frontal gyrus; PCG, right precentral gyrus; IFG, right inferior frontal gyrus; ATC, right anterior temporal cortex; EVC, early visual cortex. The visualization was provided by BrainNet Viewer (http://www.nitrc.org/projects/bnv/). [Color figure can be viewed in the online issue, which is available at http://wileyonlinelibrary.com.]

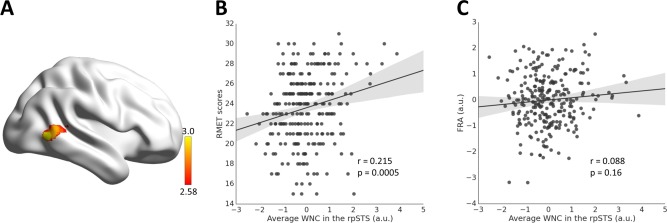

After identifying the FN, we investigated whether its functional integration is relevant to individuals' facial expression recognition ability. To do so, facial expression recognition ability was measured with the RMET (mean = 23.61, SD = 3.48), and the functional integration of the FN was characterized by the WNC of each FN voxel, computed as the average FC between that voxel and the rest of the voxels within the FN (Fig. 2). A voxel‐wise GLM analysis was then conducted with the WNC of each FN voxel as the dependent variable, the RMET score as the independent variable, and gender and FRA (mean = 0, SD = 1.00) as confounding covariates. As shown in Figure 3, a cluster in the right pSTS (rpSTS) showed a significant positive correlation between voxel‐wise WNC and RMET scores (103 voxels, voxel‐level P < 0.01, cluster‐level P < 0.01, corrected; r = 0.215, P = 0.0005; MNI coordinates: 52 −62 2), indicating that individuals with stronger within‐network integration in the rpSTS performed better in recognizing facial expressions. In addition, the WNC‐RMET correlation in the rpSTS did not differ significantly between males and females (Fisher's Z = 1.29, P = 0.2), and a condition‐by‐covariate interaction analysis, with gender as the condition and RMET score as the covariate of interest, showed no gender‐by‐RMET interaction in the rpSTS (F = 2.55, P = 0.1).
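The male‐versus‐female comparison above uses Fisher's r‐to‐z test for two independent correlations. A minimal sketch is below. The per‐gender correlations and sample sizes are not reported in this section, so the usage values are hypothetical.

```python
import math


def compare_independent_r(r1, n1, r2, n2):
    """Fisher r-to-z test of H0: rho1 == rho2 for correlations from independent samples.

    Returns (Z, two-tailed P).
    """
    z = (math.atanh(r1) - math.atanh(r2)) / math.sqrt(1.0 / (n1 - 3) + 1.0 / (n2 - 3))
    p = math.erfc(abs(z) / math.sqrt(2.0))   # two-tailed normal P value
    return z, p


# Hypothetical values for illustration only.
z, p = compare_independent_r(0.30, 160, 0.12, 104)
print(z, p)
```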

Figure 3.

Correlation between WNC and RMET scores. (A) The WNC in the right posterior superior temporal sulcus (rpSTS) was significantly correlated with the RMET scores. (B) Scatter plot between the RMET scores and average WNC in the rpSTS across participants is shown for illustration purposes. (C) Scatter plot showing correlation between the face‐specific recognition ability (FRA) and average WNC in the rpSTS. [Color figure can be viewed in the online issue, which is available at http://wileyonlinelibrary.com.]

Recent studies have shown that resting‐state FC is strongly affected by head motion [Kong et al., 2014c; Power et al., 2012; Satterthwaite et al., 2012; Van Dijk et al., 2012]. To rule out this confound, we calculated for each participant the mean framewise displacement of each brain volume relative to the previous volume as a measure of head motion (mean = 0.044, SD = 0.018, range = 0.013–0.115) [Van Dijk et al., 2012], and reanalyzed the WNC‐RMET correlation controlling for head motion. The average WNC in the rpSTS cluster remained significantly correlated with RMET scores when head motion was controlled (r = 0.213, P = 0.0005), indicating that the WNC‐RMET correlation is not an artifact of head motion.
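A per‐participant head‐motion summary of the kind described above can be sketched as the mean Euclidean displacement between consecutive volumes. Using only the x/y/z translation estimates is an assumption about the exact definition; whether rotations were also included is not stated here.

```python
import numpy as np


def mean_relative_displacement(translations):
    """Mean Euclidean displacement of each volume relative to the previous one.

    translations: (T, 3) x/y/z translation estimates from motion correction.
    """
    step = np.diff(np.asarray(translations, float), axis=0)
    return float(np.linalg.norm(step, axis=1).mean())


# Constant drift of (0.03, 0.04, 0) per volume gives a displacement of about 0.05 per volume.
trans = np.cumsum(np.tile([0.03, 0.04, 0.0], (236, 1)), axis=0)
print(mean_relative_displacement(trans))
```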

Next, we assessed the specificity of the rpSTS WNC‐RMET correlation. First, the average WNC in the rpSTS cluster was not correlated with facial identity recognition as measured by FRA (r = 0.088, P = 0.16; Fig. 3C), and this correlation was significantly smaller in magnitude than the correlation with RMET (Steiger's Z = 1.71, P = 0.04), indicating that the association was specific to facial expression recognition. To further examine whether the WNC‐RMET association was specific to the FN, we performed the same analysis for the object network, defined by the conjunction of the objects versus fixation and objects versus faces contrasts. No significant cluster emerged when the WNC of each voxel in the object network was correlated with RMET scores, suggesting that the WNC‐RMET association was specific to the FN.
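Steiger's (1980) test compares two dependent correlations that share one variable, here rpSTS WNC correlated with RMET and with FRA. A minimal sketch of the commonly used pooled‐r form is below. The RMET‐FRA correlation the test requires is not reported in this section, so the usage values are hypothetical.

```python
import math


def steiger_z(r_jk, r_jh, r_kh, n):
    """Steiger (1980) Z for H0: rho_jk == rho_jh, where j is the shared variable.

    Returns (Z, one-tailed P for H1: rho_jk > rho_jh).
    """
    rbar = (r_jk + r_jh) / 2.0
    psi = r_kh * (1 - 2 * rbar ** 2) - 0.5 * rbar ** 2 * (1 - 2 * rbar ** 2 - r_kh ** 2)
    s = psi / (1 - rbar ** 2) ** 2          # covariance of the two Fisher z values
    z = (math.atanh(r_jk) - math.atanh(r_jh)) * math.sqrt(n - 3) / math.sqrt(2 - 2 * s)
    p_one = 0.5 * math.erfc(z / math.sqrt(2.0))
    return z, p_one


# j = WNC, k = RMET, h = FRA; r_kh = 0.15 is a hypothetical placeholder.
z, p = steiger_z(0.215, 0.088, 0.15, 264)
print(z, p)
```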

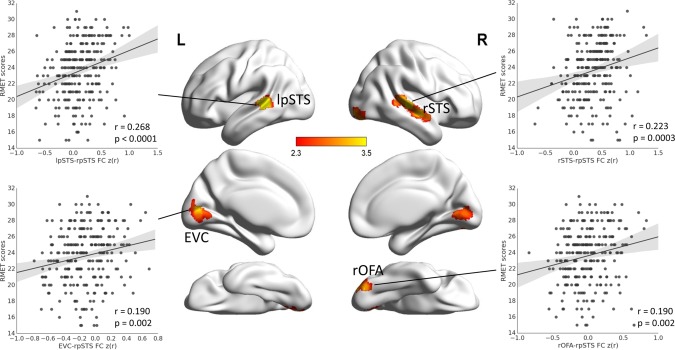

Further, we identified the source of the association between WNC in the rpSTS and facial expression recognition. First, we examined whether the rpSTS WNC‐RMET association originated from connectivity among voxels within the rpSTS (within‐region connectivity) or from connectivity between rpSTS voxels and voxels in other FN regions (between‐region connectivity). The average between‐region connectivity of the rpSTS (r = 0.209, P = 0.0007), but not the within‐region connectivity (r = 0.079, P = 0.2), was significantly correlated with RMET scores. Thus, the WNC‐RMET association in the rpSTS resulted largely from connectivity between rpSTS voxels and voxels in other FN regions. We then examined which specific FN regions contributed, through their FC with the rpSTS, to facial expression recognition ability. The seed‐based voxel‐wise FC‐RMET correlation analysis with the rpSTS as the seed region showed that the FCs between the rpSTS and four clusters were positively correlated with RMET scores (voxel‐level one‐tailed P < 0.01, cluster‐level P < 0.01, corrected): the right OFA (rOFA, r = 0.190, P = 0.002, 170 voxels, MNI coordinates: 32 −82 −18), EVC (r = 0.190, P = 0.002, 444 voxels, MNI coordinates: 2 −70 −4), left pSTS (lpSTS, r = 0.268, P < 0.0001, 152 voxels, MNI coordinates: −54 −38 6), and right STS (rSTS, r = 0.223, P = 0.0003, 310 voxels, MNI coordinates: 56 −26 2) (Fig. 4). Note that the OFA and STS clusters obtained here were located within the respective regions of a face‐selective probabilistic atlas based on individual delineations of the OFA and STS [Zhen et al., 2015], indicating that these clusters correspond to the commonly described OFA and STS.
Moreover, the FCs between these regions and the rpSTS were not related to facial identity recognition (r (rOFA‐rpSTS, FRA) = −0.002, P = 0.98; r (EVC‐rpSTS, FRA) = 0.090, P = 0.15; r (rSTS‐rpSTS, FRA) = 0.055, P = 0.38; r (lpSTS‐rpSTS, FRA) = 0.074, P = 0.23). Three of the four FC‐FRA correlations were significantly smaller in magnitude than the corresponding FC‐RMET correlations (rOFA‐rpSTS: Steiger's Z = 2.57, P = 0.005; rSTS‐rpSTS: Steiger's Z = 2.26, P = 0.01; lpSTS‐rpSTS: Steiger's Z = 2.64, P = 0.004), whereas the difference for EVC‐rpSTS did not reach significance (Steiger's Z = 1.34, P = 0.09). These results indicate that the associations were largely specific to facial expression recognition. To further confirm the seed‐based results, we also calculated the ROI‐based FC between the rpSTS cluster and ROIs delineated from the FN with a watershed algorithm [Meyer, 1994], including the rOFA, EVC, and bilateral STS. The FCs of EVC‐rpSTS (r = 0.130, P = 0.04), rOFA‐rpSTS (r = 0.135, P = 0.03), and lpSTS‐rpSTS (r = 0.125, P = 0.04) were positively correlated with RMET scores, largely consistent with the results of the seed‐based FC‐RMET analysis. The correlation between rSTS‐rpSTS FC and RMET did not reach significance (r = 0.081, P = 0.2), although the seed‐based analysis identified a larger number of right STS voxels (Fig. 4). This is possibly because the significant voxels were diluted by non‐significant voxels in the whole rSTS ROI (Fig. 2). Taken together, these results suggest that the rpSTS interacts with the rOFA, EVC, and bilateral STS at rest to facilitate facial expression recognition.
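The within‐region versus between‐region split of rpSTS connectivity used above can be sketched for one participant. Given a boolean mask of cluster voxels inside the FN, the code averages correlations among cluster voxels (excluding self‐pairs) and between cluster voxels and the remaining FN voxels. Applying Fisher z to the averages mirrors the WNC convention and is an assumption here. The data are simulated.

```python
import numpy as np


def split_connectivity(ts, in_cluster):
    """Within- and between-region connectivity of a cluster inside the FN.

    ts: (T, V) FN residual time series; in_cluster: boolean (V,) cluster mask.
    Returns Fisher z-transformed (within, between) average correlations.
    """
    r = np.corrcoef(ts, rowvar=False)
    inside = np.flatnonzero(in_cluster)
    outside = np.flatnonzero(~in_cluster)
    n = len(inside)
    block = r[np.ix_(inside, inside)]
    within = (block.sum() - n) / (n * (n - 1))       # exclude the n self-correlations
    between = r[np.ix_(inside, outside)].mean()
    return float(np.arctanh(within)), float(np.arctanh(between))


rng = np.random.default_rng(6)
shared = rng.normal(size=(200, 1))
ts = np.hstack([shared + 0.5 * rng.normal(size=(200, 5)),   # 5 cluster voxels
                rng.normal(size=(200, 15))])                # 15 other FN voxels
in_cluster = np.array([True] * 5 + [False] * 15)
within, between = split_connectivity(ts, in_cluster)
print(within, between)
```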

Figure 4.

Correlation between seed‐based resting‐state functional connectivity (FC) and RMET scores. The FCs between the rpSTS and the right occipital face area (rOFA), early visual cortex (EVC), left pSTS (lpSTS), and right STS were positively correlated with the RMET scores. Scatter plots between four pairwise FCs (rOFA‐rpSTS, EVC‐rpSTS, lpSTS‐rpSTS, and rSTS‐rpSTS) and RMET scores across participants are shown for illustration purposes only. [Color figure can be viewed in the online issue, which is available at http://wileyonlinelibrary.com.]

According to current models of the face perception network [Haxby et al., 2000], the EVC conveys expression information to the pSTS solely through the OFA. Alternatively, a recent study showed that expression information from the EVC and rOFA may reach the rpSTS via two dissociable pathways [Pitcher et al., 2014b]. Here we examined whether the two pathways (EVC‐rpSTS and rOFA‐rpSTS) contribute independently to facial expression recognition. First, we performed a path analysis to estimate the path coefficients of the EVC‐rpSTS and rOFA‐rpSTS paths for each individual, and then correlated these path coefficients with RMET scores across participants. The path coefficients of both the EVC‐rpSTS and rOFA‐rpSTS paths were positively correlated with RMET scores (r (EVC‐rpSTS, RMET) = 0.149, P = 0.02; r (rOFA‐rpSTS, RMET) = 0.159, P = 0.01). Further, we performed a second path analysis with the FCs of EVC‐rpSTS and rOFA‐rpSTS as predictors of RMET scores. Both path coefficients were significant (from EVC‐rpSTS to RMET: path coefficient = 0.136, P = 0.04; from rOFA‐rpSTS to RMET: path coefficient = 0.137, P = 0.04). These results suggest that the two pathways may contribute independently to facial expression recognition.
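For the second path analysis above, a path model with two correlated exogenous predictors (EVC‐rpSTS FC and rOFA‐rpSTS FC) and one outcome (RMET) gives path coefficients equal to the standardized multiple‐regression coefficients. The sketch below computes those point estimates on simulated data. It assumes that model structure, and it does not compute the significance tests, which dedicated path‐analysis software would provide.

```python
import numpy as np


def zscore(v):
    """Standardize a 1-D array with the sample SD."""
    v = np.asarray(v, float)
    return (v - v.mean()) / v.std(ddof=1)


def standardized_paths(x1, x2, y):
    """Standardized path coefficients for x1 -> y and x2 -> y, with x1 and x2 correlated."""
    X = np.column_stack([zscore(x1), zscore(x2)])
    beta, *_ = np.linalg.lstsq(X, zscore(y), rcond=None)
    return beta


rng = np.random.default_rng(7)
N = 264
evc_fc = rng.normal(size=N)                          # simulated EVC-rpSTS FC
ofa_fc = 0.5 * evc_fc + rng.normal(size=N)           # correlated rOFA-rpSTS FC
rmet = 0.2 * evc_fc + 0.2 * ofa_fc + rng.normal(size=N)
paths = standardized_paths(evc_fc, ofa_fc, rmet)
print(paths)
```

Each coefficient estimates one pathway's contribution with the other held constant. This is why both paths being non‐zero is read as evidence of independent contributions.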

DISCUSSION

In the current study, we investigated the behavioral significance of the FN's functional integration in facial expression recognition by correlating the WNC of the FN with RMET scores across participants. First, we found that individuals with stronger WNC in the rpSTS performed better in the facial expression recognition task. Second, the resting‐state FCs between the rpSTS and the rOFA, EVC, and bilateral STS contributed to facial expression recognition, and the contributions of the EVC‐pSTS and OFA‐pSTS FCs were dissociable. Our study highlights the behavioral significance of intrinsic functional integration of the FN in facial expression recognition, and provides empirical evidence for the hub‐like role of the rpSTS within the FN for facial expression recognition.

The resting‐state FC strength of the rpSTS with other face‐selective voxels in the FN was correlated with RMET scores, suggesting that the rpSTS may serve as a hub that integrates face‐selective voxels for facial expression recognition. This result is in line with previous FC studies showing that the pSTS is widely connected to other co‐activated face‐selective regions, such as the OFA, FFA, and AMG [e.g., Davies‐Thompson and Andrews, 2012; Turk‐Browne et al., 2010; Yang et al., 2015]. Crucially, our study extends these findings by directly investigating the behavioral relevance of the full‐range FC of the pSTS with all other face‐selective voxels. Thus, the present study provides direct evidence supporting the influential model of face perception in which the pSTS presumably plays a central role in processing changeable facial information, with the coordinated participation of additional regions in the core and extended FN [Allison et al., 2000; Calder and Young, 2005; Engell and Haxby, 2007; Haxby et al., 2000]. Resting‐state FC can be modulated by recent or ongoing experience in functionally related networks [Albert et al., 2009; Gordon et al., 2014; Hasson et al., 2009; Lewis et al., 2009; Stevens et al., 2015], consistent with the hypothesis that co‐activation during tasks leads to Hebbian strengthening of connections among co‐activated neurons or regions [Hebb, 1949]. Therefore, one possible explanation for the WNC‐RMET association is that a history of co‐activation in facial expression tasks strengthens the intrinsic connectivity between the pSTS and other regions, and that these regions maintain behaviorally meaningful interactions even during the resting state [Fair et al., 2007; Fair et al., 2008; Fair et al., 2009].
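The WNC measure discussed above is a voxel‐wise global brain connectivity index restricted to the FN [cf. Cole et al., 2012]. The minimal Python sketch below assumes that WNC is the mean Fisher‐z‐transformed Pearson correlation between a voxel's resting‐state time series and those of all other FN voxels. It also assumes that preprocessing (motion correction, nuisance regression, filtering) has already been applied. Neither the exact definition nor any thresholding used in the study is specified in this section, and the function names and synthetic sizes are hypothetical. A second helper correlates each voxel's WNC with behavioral scores across participants, analogous to the WNC‐RMET analysis.

```python
import numpy as np


def within_network_connectivity(ts: np.ndarray) -> np.ndarray:
    """ts: (n_timepoints, n_voxels) resting-state time series of FN voxels.

    Returns one WNC value per voxel: mean Fisher-z FC with all other FN voxels.
    """
    r = np.corrcoef(ts, rowvar=False)  # voxel-by-voxel Pearson correlation
    np.fill_diagonal(r, 0.0)  # drop self-connections (arctanh(0) = 0)
    z = np.arctanh(np.clip(r, -0.999999, 0.999999))  # Fisher r-to-z, guarded at |r| = 1
    return z.sum(axis=1) / (ts.shape[1] - 1)  # average over the other voxels


def wnc_behavior_map(wnc_by_subject: np.ndarray, scores: np.ndarray) -> np.ndarray:
    """wnc_by_subject: (n_subjects, n_voxels); scores: (n_subjects,).

    Returns the across-participant Pearson r between WNC and behavior for each voxel.
    """
    w = wnc_by_subject - wnc_by_subject.mean(axis=0)
    s = scores - scores.mean()
    return (w * s[:, None]).sum(axis=0) / np.sqrt((w**2).sum(axis=0) * (s**2).sum())


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    n_subj, n_tp, n_vox = 30, 200, 50  # hypothetical sizes
    wnc = np.array([within_network_connectivity(rng.normal(size=(n_tp, n_vox)))
                    for _ in range(n_subj)])
    rmet = rng.normal(size=n_subj)  # placeholder behavioral scores
    r_map = wnc_behavior_map(wnc, rmet)
    print(r_map.shape)  # one across-participant r per FN voxel
```

In practice the resulting voxel‐wise map would still need correction for multiple comparisons before a cluster such as the rpSTS is reported.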

In current influential models of face perception, the pSTS receives information from the OFA for the recognition of changeable facial aspects [Calder and Young, 2005; Haxby et al., 2000; Ishai, 2008]. Our study provides the first empirical evidence that the FC between the OFA and pSTS contributes to facial expression recognition. This result dovetails with a recent study showing that connectivity between the OFA and pSTS was selectively increased by a facial expression task [Kadosh et al., 2011]. OFA‐pSTS connectivity is modulated not only by task but also by stimulus type; for example, effective connectivity from the OFA to the pSTS was stronger for dynamic than for static facial expressions [Foley et al., 2012]. These findings suggest that OFA‐pSTS connectivity is involved in facial expression recognition, and our study provides direct evidence for this hypothesis.

In addition, we found that the FC between the EVC and pSTS also contributed to facial expression recognition. This result is in line with the previous finding that effective connectivity between the EVC and STS is modulated by the type of facial expression stimulus (dynamic versus static) [Foley et al., 2012]. Interestingly, in the present study, the FCs of rOFA‐rpSTS and EVC‐rpSTS contributed separately to facial expression recognition. That is, the rpSTS may interact directly with the EVC during the resting state, independently of the rOFA, and this interaction may support better expression recognition. In influential models of face perception, the OFA is the sole gateway for conveying expression information from the EVC to the pSTS [Calder and Young, 2005; Haxby et al., 2000]. In contrast, recent lesion and “virtual” lesion findings suggest that facial expression information may reach the pSTS via a direct pathway from the EVC, independent of the OFA pathway [Pitcher et al., 2014b; Rezlescu et al., 2012; Steeves et al., 2006]. For example, theta‐burst transcranial magnetic stimulation (TBS) disruption of the rOFA does not reduce responses to dynamic faces in the rpSTS [Pitcher et al., 2014b]. Likewise, neuropsychological patients with lesions in the OFA still show face‐evoked activation in the pSTS [Rezlescu et al., 2012]. Our results are more consistent with the latter account and suggest that direct input from the EVC to the pSTS during facial expression tasks may lead to their direct interaction during the resting state.

In sum, the present study found that the intrinsic functional integration of the rpSTS within the FN was related to behavioral performance in facial expression recognition. Several issues remain important topics for future research. First, the present study focused on the pSTS's integration within the FN; however, the RMET relies not only on facial expression recognition but also on other cognitive functions, including theory of mind. Previous studies have shown that the STS is also implicated in theory‐of‐mind tasks [Gallagher and Frith, 2003; Saxe, 2006; Zilbovicius et al., 2006], and a recent meta‐analysis shows that the pSTS plays a central role both in the neural system underlying facial expression recognition and in that underlying theory of mind [Yang et al., 2015]. Future studies should investigate how RMET performance is related to the pSTS's functional interactions within and between these two neural systems. Second, given the central role of the pSTS within the FN in facial expression recognition, future studies could investigate whether the pSTS can serve as a target region for interventions designed to improve social functioning in disorders characterized by social deficits, especially autism spectrum disorder, which is characterized by difficulties in inferring others’ emotional facial expressions [Baron‐Cohen et al., 2001; Chung et al., 2014]. Third, the participants in the present study were young adults; developmental studies with a wider age range are needed to explore how the association between the FN's functional integration and facial expression recognition changes with age. Finally, previous studies have shown that resting‐state FC analyses are more specific at the individual level than at the group level [e.g., Laumann et al., 2015; Stevens et al., 2015]. Therefore, future studies should define the FN at the individual level and then examine how its connectivity pattern relates to behavioral performance in facial expression recognition.

REFERENCES

- Albert NB, Robertson EM, Miall RC (2009): The resting human brain and motor learning. Curr Biol 19:1023–1027.

- Allison T, Puce A, McCarthy G (2000): Social perception from visual cues: Role of the STS region. Trends Cogn Sci 4:267–278.

- Baron‐Cohen S, Wheelwright S, Hill J, Raste Y, Plumb I (2001): The “Reading the Mind in the Eyes” Test revised version: A study with normal adults, and adults with Asperger syndrome or high‐functioning autism. J Child Psychol Psychiatry 42:241–251.

- Biswal BB, Mennes M, Zuo XN, Gohel S, Kelly C, Smith SM, Beckmann CF, Adelstein JS, Buckner RL, Colcombe S, Dogonowski AM, Ernst M, Fair D, Hampson M, Hoptman MJ, Hyde JS, Kiviniemi VJ, Kotter R, Li SJ, Lin CP, Lowe MJ, Mackay C, Madden DJ, Madsen KH, Margulies DS, Mayberg HS, McMahon K, Monk CS, Mostofsky SH, Nagel BJ, Pekar JJ, Peltier SJ, Petersen SE, Riedl V, Rombouts SA, Rypma B, Schlaggar BL, Schmidt S, Seidler RD, Siegle GJ, Sorg C, Teng GJ, Veijola J, Villringer A, Walter M, Wang L, Weng XC, Whitfield‐Gabrieli S, Williamson P, Windischberger C, Zang YF, Zhang HY, Castellanos FX, Milham MP (2010): Toward discovery science of human brain function. Proc Natl Acad Sci U S A 107:4734–4739.

- Calder AJ (2011): Does facial identity and facial expression recognition involve separate visual routes? In: Calder AJ, Rhodes G, Johnson M, Haxby JV, editors. The Oxford Handbook of Face Perception. Oxford, UK: Oxford University Press.

- Calder AJ, Young AW (2005): Understanding the recognition of facial identity and facial expression. Nat Rev Neurosci 6:641–651.

- Chung YS, Barch D, Strube M (2014): A meta‐analysis of mentalizing impairments in adults with schizophrenia and autism spectrum disorder. Schizophr Bull 40:602–616.

- Cole MW, Yarkoni T, Repovs G, Anticevic A, Braver TS (2012): Global connectivity of prefrontal cortex predicts cognitive control and intelligence. J Neurosci 32:8988–8999.

- Davies‐Thompson J, Andrews TJ (2012): Intra‐ and interhemispheric connectivity between face‐selective regions in the human brain. J Neurophysiol 108:3087–3095.

- Engell AD, Haxby JV (2007): Facial expression and gaze‐direction in human superior temporal sulcus. Neuropsychologia 45:3234–3241.

- Engell AD, McCarthy G (2013): Probabilistic atlases for face and biological motion perception: An analysis of their reliability and overlap. Neuroimage 74:140–151.

- Fair DA, Dosenbach NU, Church JA, Cohen AL, Brahmbhatt S, Miezin FM, Barch DM, Raichle ME, Petersen SE, Schlaggar BL (2007): Development of distinct control networks through segregation and integration. Proc Natl Acad Sci U S A 104:13507–13512.

- Fair DA, Cohen AL, Dosenbach NU, Church JA, Miezin FM, Barch DM, Raichle ME, Petersen SE, Schlaggar BL (2008): The maturing architecture of the brain's default network. Proc Natl Acad Sci U S A 105:4028–4032.

- Fair DA, Cohen AL, Power JD, Dosenbach NU, Church JA, Miezin FM, Schlaggar BL, Petersen SE (2009): Functional brain networks develop from a “local to distributed” organization. PLoS Comput Biol 5:e1000381.

- Fairhall SL, Ishai A (2007): Effective connectivity within the distributed cortical network for face perception. Cereb Cortex 17:2400–2406.

- Foley E, Rippon G, Thai NJ, Longe O, Senior C (2012): Dynamic facial expressions evoke distinct activation in the face perception network: A connectivity analysis study. J Cogn Neurosci 24:507–520.

- Fox MD, Snyder AZ, Vincent JL, Corbetta M, Van Essen DC, Raichle ME (2005): The human brain is intrinsically organized into dynamic, anticorrelated functional networks. Proc Natl Acad Sci U S A 102:9673–9678.

- Fox CJ, Moon SY, Iaria G, Barton JJ (2009): The correlates of subjective perception of identity and expression in the face network: An fMRI adaptation study. Neuroimage 44:569–580.

- Furl N, Henson RN, Friston KJ, Calder AJ (2014): Network interactions explain sensitivity to dynamic faces in the superior temporal sulcus. Cereb Cortex 25:2876–2882.

- Gallagher HL, Frith CD (2003): Functional imaging of 'theory of mind'. Trends Cogn Sci 7:77–83.

- Gobbini MI, Haxby JV (2007): Neural systems for recognition of familiar faces. Neuropsychologia 45:32–41.

- Gordon EM, Breeden AL, Bean SE, Vaidya CJ (2014): Working memory‐related changes in functional connectivity persist beyond task disengagement. Hum Brain Mapp 35:1004–1017.

- Gotts SJ, Jo HJ, Wallace GL, Saad ZS, Cox RW, Martin A (2013): Two distinct forms of functional lateralization in the human brain. Proc Natl Acad Sci U S A 110:E3435–E3444.

- Hasson U, Nusbaum HC, Small SL (2009): Task‐dependent organization of brain regions active during rest. Proc Natl Acad Sci U S A 106:10841–10846.

- Haxby JV, Hoffman EA, Gobbini MI (2000): The distributed human neural system for face perception. Trends Cogn Sci 4:223–233.

- Hebb DO (1949): The Organization of Behavior: A Neuropsychological Theory. New York: Wiley. xix, 335 p.

- Huang L, Song Y, Li J, Zhen Z, Yang Z, Liu J (2014): Individual differences in cortical face selectivity predict behavioral performance in face recognition. Front Hum Neurosci 8:483.

- Ishai A (2008): Let's face it: It's a cortical network. Neuroimage 40:415–419.

- Jenkinson M, Smith S (2001): A global optimisation method for robust affine registration of brain images. Med Image Anal 5:143–156.

- Jenkinson M, Bannister P, Brady M, Smith S (2002): Improved optimization for the robust and accurate linear registration and motion correction of brain images. Neuroimage 17:825–841.

- Kadosh KC, Kadosh RC, Dick F, Johnson MH (2011): Developmental changes in effective connectivity in the emerging core face network. Cereb Cortex 21:1389–1394.

- Kanwisher N, Yovel G (2006): The fusiform face area: A cortical region specialized for the perception of faces. Philos Trans R Soc Lond B Biol Sci 361:2109–2128.

- Kanwisher N, McDermott J, Chun MM (1997): The fusiform face area: A module in human extrastriate cortex specialized for face perception. J Neurosci 17:4302–4311.

- Kong F, Ding K, Yang Z, Dang X, Hu S, Song Y, Liu J (2014a): Examining gray matter structures associated with individual differences in global life satisfaction in a large sample of young adults. Soc Cogn Affect Neurosci 10:952–960.

- Kong F, Liu L, Wang X, Hu S, Song Y, Liu J (2014b): Different neural pathways linking personality traits and eudaimonic well‐being: A resting‐state functional magnetic resonance imaging study. Cogn Affect Behav Neurosci 15:299–309.

- Kong XZ, Zhen Z, Li X, Lu HH, Wang R, Liu L, He Y, Zang Y, Liu J (2014c): Individual differences in impulsivity predict head motion during magnetic resonance imaging. PLoS One 9:e104989.

- Kong F, Hu S, Wang X, Song Y, Liu J (2015): Neural correlates of the happy life: The amplitude of spontaneous low frequency fluctuations predicts subjective well‐being. Neuroimage 107:136–145.

- Laumann TO, Gordon EM, Adeyemo B, Snyder AZ, Joo SJ, Chen MY, Gilmore AW, McDermott KB, Nelson SM, Dosenbach NU, Schlaggar BL, Mumford JA, Poldrack RA, Petersen SE (2015): Functional system and areal organization of a highly sampled individual human brain. Neuron 87:657–670.

- Lewis CM, Baldassarre A, Committeri G, Romani GL, Corbetta M (2009): Learning sculpts the spontaneous activity of the resting human brain. Proc Natl Acad Sci U S A 106:17558–17563.

- Mayer JD, Salovey P, Caruso DR, Sitarenios G (2003): Measuring emotional intelligence with the MSCEIT V2.0. Emotion 3:97–105.

- Meyer F (1994): Topographic distance and watershed lines. Signal Process 38:113–125.

- O'Neil EB, Hutchison RM, McLean DA, Kohler S (2014): Resting‐state fMRI reveals functional connectivity between face‐selective perirhinal cortex and the fusiform face area related to face inversion. Neuroimage 92:349–355.

- Pitcher D (2014a): Facial expression recognition takes longer in the posterior superior temporal sulcus than in the occipital face area. J Neurosci 34:9173–9177.

- Pitcher D, Dilks DD, Saxe RR, Triantafyllou C, Kanwisher N (2011a): Differential selectivity for dynamic versus static information in face‐selective cortical regions. Neuroimage 56:2356–2363.

- Pitcher D, Walsh V, Duchaine B (2011b): The role of the occipital face area in the cortical face perception network. Exp Brain Res 209:481–493.

- Pitcher D, Duchaine B, Walsh V (2014b): Combined TMS and FMRI reveal dissociable cortical pathways for dynamic and static face perception. Curr Biol 24:2066–2070.

- Power JD, Barnes KA, Snyder AZ, Schlaggar BL, Petersen SE (2012): Spurious but systematic correlations in functional connectivity MRI networks arise from subject motion. Neuroimage 59:2142–2154.

- Rezlescu C, Pitcher D, Duchaine B (2012): Acquired prosopagnosia with spared within‐class object recognition but impaired recognition of degraded basic‐level objects. Cogn Neuropsychol 29:325–347.

- Satterthwaite TD, Wolf DH, Loughead J, Ruparel K, Elliott MA, Hakonarson H, Gur RC, Gur RE (2012): Impact of in‐scanner head motion on multiple measures of functional connectivity: Relevance for studies of neurodevelopment in youth. Neuroimage 60:623–632.

- Saxe R (2006): Uniquely human social cognition. Curr Opin Neurobiol 16:235–239.

- Steeves JK, Culham JC, Duchaine BC, Pratesi CC, Valyear KF, Schindler I, Humphrey GK, Milner AD, Goodale MA (2006): The fusiform face area is not sufficient for face recognition: Evidence from a patient with dense prosopagnosia and no occipital face area. Neuropsychologia 44:594–609.

- Stevens WD, Buckner RL, Schacter DL (2010): Correlated low‐frequency BOLD fluctuations in the resting human brain are modulated by recent experience in category‐preferential visual regions. Cereb Cortex 20:1997–2006.

- Stevens WD, Tessler MH, Peng CS, Martin A (2015): Functional connectivity constrains the category‐related organization of human ventral occipitotemporal cortex. Hum Brain Mapp 36:2187–2206.

- Turk‐Browne NB, Norman‐Haignere SV, McCarthy G (2010): Face‐specific resting functional connectivity between the fusiform gyrus and posterior superior temporal sulcus. Front Hum Neurosci 4:176.

- Van Dijk KR, Sabuncu MR, Buckner RL (2012): The influence of head motion on intrinsic functional connectivity MRI. Neuroimage 59:431–438.

- Wang R, Li J, Fang H, Tian M, Liu J (2012): Individual differences in holistic processing predict face recognition ability. Psychol Sci 23:169–177.

- Wang X, Xu M, Song Y, Li X, Zhen Z, Yang Z, Liu J (2014): The network property of the thalamus in the default mode network is correlated with trait mindfulness. Neuroscience 278:291–301.

- Yang DY, Rosenblau G, Keifer C, Pelphrey KA (2015): An integrative neural model of social perception, action observation, and theory of mind. Neurosci Biobehav Rev 51:263–275.

- Zhang H, Tian J, Liu J, Li J, Lee K (2009): Intrinsically organized network for face perception during the resting state. Neurosci Lett 454:1–5.

- Zhang J, Liu J, Xu Y (2015a): Neural decoding reveals impaired face configural processing in the right fusiform face area of individuals with developmental prosopagnosia. J Neurosci 35:1539–1548.

- Zhang L, Liu L, Li X, Song Y, Liu J (2015b): Serotonin transporter gene polymorphism (5‐HTTLPR) influences trait anxiety by modulating the functional connectivity between the amygdala and insula in Han Chinese males. Hum Brain Mapp.

- Zhen Z, Fang H, Liu J (2013): The hierarchical brain network for face recognition. PLoS One 8:e59886.

- Zhen Z, Yang Z, Huang L, Kong XZ, Wang X, Dang X, Huang Y, Song Y, Liu J (2015): Quantifying interindividual variability and asymmetry of face‐selective regions: A probabilistic functional atlas. Neuroimage 113:13–25.

- Zhu Q, Zhang J, Luo YL, Dilks DD, Liu J (2011): Resting‐state neural activity across face‐selective cortical regions is behaviorally relevant. J Neurosci 31:10323–10330.

- Zilbovicius M, Meresse I, Chabane N, Brunelle F, Samson Y, Boddaert N (2006): Autism, the superior temporal sulcus and social perception. Trends Neurosci 29:359–366.