Abstract

Several brain regions are involved in the processing of emotional stimuli; however, the contribution of specific regions to emotion perception is still under debate. To investigate this issue, we combined behavioral testing, structural and resting state imaging in patients diagnosed with behavioral variant frontotemporal dementia (bvFTD) and age‐matched controls, with task‐based functional imaging in young, healthy volunteers. As expected, bvFTD patients were impaired in emotion detection as well as emotion categorization tasks, which used dynamic emotional body expressions as stimuli. Interestingly, their performance in the two tasks correlated with gray matter volume in two distinct brain regions, the left anterior temporal lobe for emotion detection and the left inferior frontal gyrus (IFG) for emotion categorization. Confirming this observation, multivoxel pattern analysis in healthy volunteers demonstrated that both ROIs contained information for emotion detection, but that emotion categorization was only possible from the pattern in the IFG. Furthermore, functional connectivity analysis showed reduced connectivity between the two regions in bvFTD patients. Our results illustrate that the mentalizing network and the action observation network perform distinct tasks during emotion processing. In bvFTD, communication between the networks is reduced, indicating one possible cause underlying the behavioral symptoms. Hum Brain Mapp 37:4472–4486, 2016. © 2016 Wiley Periodicals, Inc.

Keywords: connectivity, emotion, frontotemporal dementia, functional imaging, mentalizing

INTRODUCTION

One of the most critical components of social behavior is the recognition of the emotional states of others. Consequently, numerous cortical and subcortical brain regions are involved in the processing of emotional signals from conspecifics [Barrett and Satpute, 2013; Fusar‐Poli et al., 2009; Jastorff et al., 2015; Lindquist et al., 2012]. They are components of various intrinsic brain networks such as the salience network [Seeley et al., 2007], comprising anterior cingulate cortex and anterior insula, the default or mentalizing network [Buckner et al., 2008], including precuneus, temporo‐parietal junction, medial prefrontal cortex, and the temporal poles, the action observation network [Grafton, 2009], including occipito‐temporal, parietal and premotor areas, and the limbic network [Yeo et al., 2011]. The above regions have been identified using primarily functional imaging, contrasting emotional with neutral stimuli. To gain insights into the specific functions that individual brain regions perform during emotion perception, we investigated emotion processing in patients diagnosed with the behavioral variant of frontotemporal dementia (bvFTD). This condition is characterized by progressive deterioration of personality, behavior, and cognition, with atrophy of anterior temporal, mesio‐frontal and subcortical areas [Seeley et al., 2008; Whitwell et al., 2009]. The affected regions include several brain areas involved in emotion processing, located in frontal and anterior temporal cortex. 
Furthermore, numerous behavioral studies have shown impaired emotion recognition in bvFTD patients, using faces [Baez et al., 2014; Bediou et al., 2009; Bertoux et al., 2015; Couto et al., 2013; Diehl‐Schmid et al., 2007; Fernandez‐Duque and Black, 2005; Keane et al., 2002; Kumfor et al., 2011; Lavenu et al., 1999; Lough et al., 2006; Miller et al., 2012; Rosen et al., 2002; Snowden et al., 2008; Virani et al., 2013], bodies [Van den Stock et al., 2015] and music [Downey et al., 2013; Omar et al., 2011] as test stimuli.

In the present study, we were specifically interested in two related tasks during emotion processing: emotion detection and emotion categorization. As stimuli, we used neutral and emotionally expressive (angry, happy, fearful, and sad) gaits, presented as avatars animated with 3D motion‐tracking data. These stimuli have been used in our previous study, where they elicited widespread fMRI signals in midline structures, the dorsolateral and ventrolateral prefrontal cortex, and the temporal lobes [Jastorff et al., 2015]. Our hypothesis was that these two tasks, although linked, might tap into different sub‐systems. Intuitively, one might be able to distinguish whether a specific body motion appears emotional, but not able to identify the exact emotion expressed. Two brain networks are of particular importance in this process, the mentalizing network and the action observation network. The mentalizing network has been shown to be activated in tasks involving a judgment of the emotional state of another [Ochsner et al., 2004]. The action observation network, conversely, is involved in the perception of others' motor actions [Abdollahi et al., 2013; Jastorff et al., 2010]. Regions within both networks are involved in emotion processing, and both networks have been shown to be functionally impaired in bvFTD patients [Filippi et al., 2013; Whitwell et al., 2011; Zhou et al., 2010].

Because task‐based functional imaging in bvFTD patients has proved difficult, given their behavioral symptoms [but see Virani et al., 2013], we opted for a combination of behavioral testing with structural and resting‐state imaging in patients and age‐matched controls, together with task‐based functional imaging in young, healthy volunteers. Starting with the prediction that bvFTD patients would be impaired in emotion detection as well as emotion categorization, we intended to answer four specific questions: (1) Does an individual's impairment correlate with gray matter atrophy in distinct regions involved in emotion processing? (2) Is there an anatomical distinction in the correlation between emotion detection and emotion categorization impairment? (3) Do the localized brain regions show differences in functional connectivity between patients and controls after correcting for whole‐brain, voxelwise gray matter concentrations? And finally (4), do those regions indeed contain information for emotion detection and categorization at the multi‐voxel response pattern level in young healthy volunteers?

MATERIALS AND METHODS

Study I: Investigation of bvFTD Patients and Age Matched Controls

Participants

Fourteen patients diagnosed with bvFTD and nineteen age‐matched controls participated in our study. Patients were recruited from the memory clinic and the old age psychiatry department of the University Hospitals Leuven (Leuven, Belgium) as well as from the neurology department at the regional Onze‐Lieve‐Vrouw Ziekenhuis Aalst‐Asse‐Ninove (Aalst, Belgium). Diagnoses were made by experienced neurologists or old age psychiatrists through clinical assessment, medical history and cognitive neuropsychological testing, complemented with imaging results consistent with bvFTD, that is, typical patterns of atrophy on structural MRI. For 11 patients, diagnosis was also based on a typical pattern of hypometabolism on [18F]FDG‐PET scan. Patients initially presented with changes in behavior and personality, displaying disinhibition, apathy and/or loss of empathy. One patient fulfilled the revised diagnostic criteria of “behavioral variant FTD with definite FTLD Pathology,” based on a C9orf72 pathogenic mutation, while the other patients fulfilled the criteria for “Probable bvFTD” [Rascovsky et al., 2011]. Language difficulty was not the most prominent clinical feature in any of the patients, nor was aphasia the most prominent deficit at symptom onset or during the initial phase of the disease. These phenotypes therefore do not meet the current diagnostic criteria for primary progressive aphasia [Gorno‐Tempini et al., 2011]. Additional information on individual patients is presented in Supporting Information Table 1.

Age‐matched control subjects were recruited through a database of elderly volunteers as well as through advertisements in a local newspaper. Exclusion criteria were present or past neurological or psychiatric disorders, including substance abuse, as well as use of medication likely to affect the central nervous system.

The study was approved by the Ethical Committee of University Hospitals Leuven and all volunteers gave their written informed consent in accordance with the Helsinki Declaration prior to the experiment. Demographic data and neuropsychological test results of all participants are presented in Table 1.

Table 1.

Demographic and neuropsychological test results

| Test | Subtest | bvFTD Mean | bvFTD (STD) | Controls Mean | Controls (STD) | t (χ2) | P |
|---|---|---|---|---|---|---|---|
| Age | | 67.2 | (8.4) | 66.5 | (6.3) | 0.3 | 0.79 |
| Sex (M/F) | | 7/7 | | 11/8 | | 0.2 | 0.65 |
| MMSE | | 26.6 | (1.5) | 29.3 | (0.7) | 6.6 | 0.001 |
| RAVLT | A1‐A5 | 28.6 | (9.1) | 50.9 | (7.5) | 7.7 | 0.001 |
| | % recall | 49.3 | (29.4) | 80.4 | (17.7) | 3.8 | 0.001 |
| | Recognition | 7.2 | (6.7) | 14.0 | (1.4) | 4.3 | 0.001 |
| BNT | | 41.1 | (11.7) | 54.3 | (3.0) | 4.7 | 0.001 |
| AVF | | 15.1 | (5.9) | 22.5 | (5.8) | 3.6 | 0.001 |
| TMT | A (secs) | 66.3 | (49.7) | 32.6 | (9.7) | 2.9 | 0.007 |
| | B (secs) (a) | 195.4 | (152.3) | 90.5 | (43.3) | 2.7 | 0.01 |
| BORB | Length | 88.3 | (7.9) | 90.2 | (4.5) | 0.9 | 0.39 |
| | Size | 85.2 | (7.2) | 88.9 | (6.3) | 1.6 | 0.13 |
| | Orientation | 80.5 | (9.9) | 86.1 | (6.0) | 2.0 | 0.05 |
| RCPMT | | 16.1 | (4.0) | 20.7 | (2.8) | 3.9 | 0.001 |
| AAT | Comprehension | 94.5 | (12.2) | 109.2 | (5.3) | 4.7 | 0.001 |

(a) Twelve patients.

Abbreviations: MMSE: Mini‐Mental‐State Examination; RAVLT: Rey Auditory Verbal Learning Test (A1–A5: Sum of scores on trials A1 to A5 of the RAVLT; Recognition: Difference between number of hits and false alarms on the recognition trial); BNT: Boston Naming Test; AVF: Animal Verbal Fluency; TMT: Trail Making Test; BORB: Birmingham Object Recognition Battery; RCPMT: Raven Colored Progressive Matrices Test; AAT: Aachen Aphasia Test.

Stimuli

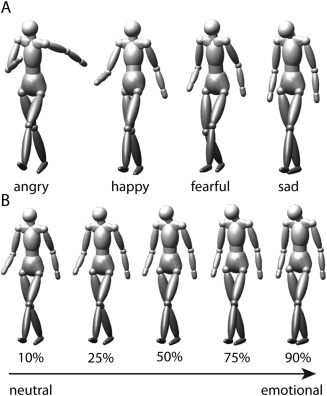

The stimuli used in the study were identical to the ones of our earlier imaging study [Jastorff et al., 2015]. In short, we recorded motion‐capture data of lay actors performing emotionally neutral gaits and four emotionally expressive gaits after a mood induction procedure (angry, happy, fearful, and sad). This motion‐capture data was used to animate a custom‐built volumetric puppet model rendered in MATLAB (Fig. 1). To eliminate translational movement of the stimulus, the horizontal, but not the vertical, translation at the midpoint between the two hip joints was removed, resulting in a natural‐looking walk as performed on a treadmill. Extended psychophysical testing ensured that the effect of the final stimulus could be easily identified [Roether et al., 2009]. These stimuli served as prototypes for subsequent motion morphing (Fig. 1A).

Figure 1.

Stimuli. (A) Example frames taken from 4 prototypical stimuli displaying the emotions angry, happy, fearful, and sad used in the functional imaging experiment. (B) Illustration of the morphed stimuli indicating different morph levels between neutral and emotional (sad) gaits tested during the behavioral experiment.

We generated a continuum of expressions ranging from almost neutral (90% neutral prototype and 10% emotional) to almost emotional (10% neutral prototype and 90% emotional) by means of motion morphing [Giese and Poggio, 2000; Jastorff et al., 2006]. Each continuum between neutral and emotional was represented by nine stimuli with the weights of the neutral prototype set to the values of 0.9, 0.75, 0.65, 0.57, 0.5, 0.43, 0.35, 0.25, and 0.1. The weight of each emotional prototype was always chosen such that the sum of the morphing weights was equal to one (Fig. 1B). Stimuli were presented dynamically throughout the experiments.
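The weighting scheme described above can be illustrated with a minimal sketch. It assumes that the two prototypes are already available as time‐aligned trajectories of equal length and blends them linearly; the actual motion‐morphing procedure [Giese and Poggio, 2000] additionally establishes spatiotemporal correspondence between prototypes, which is not reproduced here. The function names are hypothetical.

```python
# Neutral-prototype weights for the nine morph steps, as listed in the text.
NEUTRAL_WEIGHTS = [0.9, 0.75, 0.65, 0.57, 0.5, 0.43, 0.35, 0.25, 0.1]


def morph_weights(neutral_weights=NEUTRAL_WEIGHTS):
    """Return (neutral, emotional) weight pairs that sum to one."""
    return [(w, round(1.0 - w, 2)) for w in neutral_weights]


def morph_trajectory(neutral, emotional, w_neutral):
    """Linearly blend two time-aligned trajectories (lists of floats).

    Assumption: both trajectories have the same length and are already
    in temporal correspondence; real motion morphing does more than this.
    """
    w_emotional = 1.0 - w_neutral
    return [w_neutral * n + w_emotional * e for n, e in zip(neutral, emotional)]
```

For example, `morph_weights()` yields nine pairs, from the almost neutral morph `(0.9, 0.1)` to the almost emotional morph `(0.1, 0.9)`.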

Behavioral assessment

Stimuli were displayed on an LCD screen (60 Hz frame rate; 1,600 × 1,200 pixels resolution) that was viewed binocularly from a distance of 40 cm, producing a stimulus size of about 7 degrees visual angle. Stimulus presentation and recording of the participants' responses was implemented with the MATLAB Psychophysics Toolbox [Brainard, 1997]. The stimuli were shown as puppet models (Fig. 1) on a uniform gray background.

The experiment started with a demonstration session where subjects were allowed to familiarize themselves with the stimuli for 10 trials without feedback. A single trial consisted of the presentation of a motion morph at the center of the screen for 10 s. No fixation requirements were imposed. The subject first had to answer whether the stimulus was emotional or neutral and, if the answer was “emotional,” categorize the emotion as happy, angry, fearful, or sad. Subjects were told to respond as soon as they had made their decisions, but we did not emphasize responding quickly. If the subject answered within the 10 s, stimulus presentation was stopped immediately; otherwise, it halted after 10 s and a uniform gray screen was shown until the subject entered a response. After a 1.5 s intertrial interval, the next trial started. No feedback regarding performance was provided during the behavioral testing. In total, the experiment contained 144 trials (4 actors per emotion × 4 emotions × 9 morphing steps) that were shown in random order.
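As an illustration of the trial structure, the following sketch enumerates the 144 trials and shuffles them. The actor labels and the function name are hypothetical, and the original experiment was implemented in MATLAB rather than Python.

```python
import itertools
import random

EMOTIONS = ["angry", "happy", "fearful", "sad"]
ACTORS = [1, 2, 3, 4]  # hypothetical labels for the four actors per emotion
NEUTRAL_WEIGHTS = [0.9, 0.75, 0.65, 0.57, 0.5, 0.43, 0.35, 0.25, 0.1]


def build_trials(seed=None):
    """Return all emotion x actor x morph-step trials in random order."""
    trials = [
        {"emotion": emotion, "actor": actor, "neutral_weight": weight}
        for emotion, actor, weight in itertools.product(
            EMOTIONS, ACTORS, NEUTRAL_WEIGHTS
        )
    ]
    # A seeded generator makes the random order reproducible if desired.
    random.Random(seed).shuffle(trials)
    return trials
```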

We analyzed the responses of every subject independently for each emotion and morph level, averaged over the four actors. During the behavioral experiment, subjects had to categorize not only whether the stimulus was “neutral” or “emotional,” but also, where they responded “emotional,” which emotion was expressed. The answer to the first question was used to determine the subjects' emotion detection performance, independently of whether they subsequently correctly identified the emotion. Their answer to the second question served to determine their emotion categorization performance. Here, we were interested in whether the participant correctly identified the emotion. Behavioral data were fitted using psychometric curves as described by Wichmann and Hill [2001]. The goal of this experiment was to determine each subject's ambiguity point (AP): for the emotion detection task, the morph level at which they answered “neutral” and “emotional” equally often, and for the emotion categorization task, the morph level at which they correctly identified the emotion in half of the trials. We also determined their “discrimination sensitivity,” indicating their ability to discriminate between neutral and emotional stimuli or between emotions, respectively, which is given by the slope of the psychometric function. Subsequently, the APs were used as covariates in regression analyses with voxel‐wise gray matter volume. In cases where the data satisfied the normality assumption (Shapiro–Wilk W Test), statistical comparisons between patients and controls were performed using two sample t‐tests. Otherwise, Mann–Whitney U tests were used.
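To make the notion of an ambiguity point concrete, the sketch below fits a two‐parameter logistic function to response counts by maximum likelihood, using a simple grid search. This is a simplification under stated assumptions: the logistic form, the parameter grid, and the function names are illustrative choices, and the procedure of Wichmann and Hill [2001] additionally models lapse rates and uses bootstrap methods. Here `ap` is the morph level giving 50% “emotional” (or correct) responses, and `spread` is inversely related to the slope, so a larger value means a shallower curve.

```python
import math


def logistic(x, ap, spread):
    """Probability of an 'emotional' response at emotional weight x."""
    return 1.0 / (1.0 + math.exp(-(x - ap) / spread))


def neg_log_likelihood(ap, spread, levels, n_yes, n_total):
    """Binomial negative log-likelihood of the observed counts."""
    nll = 0.0
    for x, k, n in zip(levels, n_yes, n_total):
        # Clip probabilities so that log() never receives 0.
        p = min(max(logistic(x, ap, spread), 1e-6), 1.0 - 1e-6)
        nll -= k * math.log(p) + (n - k) * math.log(1.0 - p)
    return nll


def fit_psychometric(levels, n_yes, n_total):
    """Grid-search maximum-likelihood fit; returns (ap, spread).

    Assumption: ap is searched in 0.01..0.99 and spread in 0.01..1.00,
    both in steps of 0.01.
    """
    best = None
    for i in range(1, 100):
        ap = i / 100
        for j in range(1, 101):
            spread = j / 100
            nll = neg_log_likelihood(ap, spread, levels, n_yes, n_total)
            if best is None or nll < best[0]:
                best = (nll, ap, spread)
    return best[1], best[2]
```

The inputs are the emotional weights of the nine morph steps, the number of “emotional” (or correct) responses at each step, and the number of trials at each step.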

Structural and resting state imaging and analysis

Scanning was performed using a 32‐channel head coil and a 3T MR scanner (Achieva; Philips Medical Systems) located at the University Hospitals Leuven. In total, 250 resting state images (7 min) were acquired using gradient‐echo planar imaging with the following parameters: 31 horizontal slices (4 mm slice thickness; 0.3‐mm gap), repetition time (TR), 1.7 s; echo time (TE), 33 ms; flip angle, 90°; 64 × 64 matrix with 3.59 × 3.59 mm in plane resolution, and SENSE reduction factor of 2. During acquisition, subjects were asked to close their eyes and not to think of anything in particular. A structural, high resolution, T1‐weighted image covering the entire brain was used for voxel‐based morphometry analysis and anatomical reference (TE/TR, 4.6/9.7 ms; inversion time, 900 ms; slice thickness, 1.2 mm; 256 × 256 matrix; 182 coronal slices; SENSE reduction factor, 2.5).

Structural images of patients and controls were tissue‐classified into gray and white matter using SPM8. Subsequently, we applied the DARTEL toolbox to warp all images into a common space for normalization. The warped gray matter segmentations were modulated to account for local shape differences, re‐sliced to an isotropic voxel size of 1.5 mm and smoothed using a Gaussian kernel of 8 mm FWHM. To investigate regional differences between patients and controls, we performed a two sample t‐test comparing the amount of gray matter per voxel across groups. Gray matter maps used for the regression analysis with emotion detection/categorization performance were generated likewise; however, the template was generated using only the 14 bvFTD patients. We confined the regression analysis to the patient group, as the primary focus of this analysis was to investigate the neural substrates of impaired emotion processing in bvFTD patients, not those of emotion processing per se, which we have already addressed in our earlier study [Jastorff et al., 2015]. This procedure, while reducing statistical power, prevented contamination of the results by non‐bvFTD data.

Preprocessing of functional images was performed using software packages SPM8 and FSL. Resting state scans were acquired in 12 out of the 14 bvFTD patients and the scans of two patients could not be analyzed further due to excessive head motion. After slice‐time correction (SPM), realignment (SPM) and co‐registration (FSL), seed‐based functional connectivity maps were generated using the REST toolbox and DPARSF for every participant [Song et al., 2011]. The Friston 24‐parameter model (i.e., 6 head motion parameters, 6 head motion parameters one time point before, and the 12 corresponding squared items) as well as the signals from WM and CSF served as nuisance covariates to remove head motion and potential effects of physiological processes. Linear trends were removed to account for scanner drift. In addition, volumes exceeding a framewise displacement of 0.8 (derived with Jenkinson's relative root mean square algorithm [Jenkinson et al., 2002]) were excluded from the analysis by introducing an additional regressor for each “bad” volume. We also included a regressor for the one volume before and the two volumes following a “bad” one. The number of excluded volumes did not differ between groups (t = 0.14, P = 0.89) and averaged 12 volumes for the bvFTD patients and 13 volumes for the control subjects, that is, about 5% of the total volumes. Finally, Fisher's z‐transformed connectivity maps were normalized to MNI space using DARTEL and smoothed with a Gaussian kernel of 6 mm FWHM. To control for the confounding influences of gray matter atrophy on resting‐state BOLD signal, modulated gray matter volumes obtained from the VBM analysis were included as nuisance covariates in all tests comparing functional connectivity between patients and controls [Oakes et al., 2007].
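The structure of the motion‐related nuisance regressors can be sketched as follows. This is not the REST/DPARSF implementation; it assumes that the six realignment parameters and the framewise displacement values have already been computed, and that the "one time point before" parameters of the first volume are set to zero. All function names are hypothetical.

```python
def friston24(motion):
    """Expand T rows of 6 motion parameters into T rows of 24 regressors.

    Column order: current (6), previous time point (6),
    current squared (6), previous squared (6).
    """
    rows = []
    for t, current in enumerate(motion):
        # Assumption: zeros stand in for the missing volume before the first.
        previous = motion[t - 1] if t > 0 else [0.0] * 6
        rows.append(
            list(current)
            + list(previous)
            + [v * v for v in current]
            + [v * v for v in previous]
        )
    return rows


def scrub_indices(fd, threshold=0.8, before=1, after=2):
    """Indices of 'bad' volumes plus one volume before and two after."""
    n = len(fd)
    flagged = set()
    for t, value in enumerate(fd):
        if value > threshold:
            for k in range(t - before, t + after + 1):
                if 0 <= k < n:
                    flagged.add(k)
    return sorted(flagged)


def scrub_regressors(fd, threshold=0.8, before=1, after=2):
    """One indicator (one-hot) regressor per flagged volume."""
    flagged = scrub_indices(fd, threshold, before, after)
    return [[1.0 if t == k else 0.0 for t in range(len(fd))] for k in flagged]
```

Each indicator regressor absorbs the signal of exactly one volume, which effectively removes that volume from the estimation of the connectivity measure.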

Cluster size thresholding was performed using AlphaSim, as implemented in the REST toolbox. Monte Carlo simulations were carried out 1,000 times within the search volume. The search volume encompassed the entire brain in the VBM comparison between bvFTD and controls (1,448,985 voxels, Gaussian filter width ∼ 6 mm). For the comparison of the connectivity of the anterior temporal lobe (ATL) across groups, the search volume was restricted to the resting state connectivity network of the ATL with the rest of the brain in controls, thresholded at P < 0.001 uncorrected (35,995 voxels, Gaussian filter width ∼ 13 mm). For the comparison of the connectivity of the inferior frontal gyrus (IFG) across groups, the search volume was restricted to the resting state connectivity network of the IFG in controls, thresholded at P < 0.001 uncorrected (27,955 voxels, Gaussian filter width ∼ 13 mm).

Study II: Task‐Based Functional Imaging of Young Healthy Volunteers

Participants

Sixteen volunteers (8 females, mean age 25 years, range 23–32 years) participated in the experiment. All participants were right‐handed, had normal or corrected‐to‐normal visual acuity and no history of mental illness or neurological diseases. The study was approved by the Ethical Committee of KU Leuven Medical School and all volunteers gave their written informed consent in accordance with the Helsinki Declaration prior to the experiment.

Stimuli

Instead of the morphed stimuli used in the behavioral assessment of Study I, we presented the prototypical stimuli (Fig. 1A) in the task‐based fMRI experiment. The complete stimulus set contained 30 stimuli, six examples of neutral prototypes (6 different actors) and six angry, happy, fearful, and sad prototypes, respectively.

Procedure

Details concerning the scanning procedure and data processing are outlined in Jastorff et al. [2015]. In short, prototypical neutral or emotional stimuli were shown in an event‐related design. The stimulus set was composed of 30 stimuli, comprising 5 different conditions (4 emotions × 6 actors + 1 neutral × 6 actors), presented at two different sizes within a single run. Subjects were required to fixate a small red square superimposed on all stimuli and asked to respond as to whether the preceding stimulus was emotional or neutral by pressing a button on an MR‐compatible button box during the ISI. A single run lasted 200 s and 8 runs were acquired in a single session.

Classification of the emotion based on support vector machines

MVPA analyses were performed in each subject's native space. We used a linear support vector machine (SVM) [Cortes and Vapnik, 1995] to assess performance in classification between emotional and neutral stimuli, as well as across the four emotions, based on the t‐scores. To this end, every stimulus was modeled as a separate condition (30 t‐images; 4 emotions × 6 actors + 1 neutral × 6 actors). The t‐scores of all voxels of a given ROI for a particular stimulus were concatenated across subjects, resulting in a single activation vector per stimulus and per ROI across subjects [Caspari et al., 2014]. The length of this vector was given by the sum of the voxels included in the ROI over subjects. The activations of four stimuli from each condition were used for training the SVM. The remaining two stimuli in each condition were used as test set. This analysis was repeated each time with differently composed training and test sets for all possible combinations. The SVM analysis was carried out using the CoSMoMVPA toolbox. As a control, the analysis was performed with shuffled category labels (10,000 permutations), where all stimuli were randomly assigned to the five conditions, assuring that the shuffling did not exchange labels between subjects.
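The cross‐validation scheme can be illustrated with a short sketch that enumerates training and test sets and builds the concatenated activation vector. It makes one assumption that the text does not state: the same 4/2 split of actor indices is applied to all five conditions, which gives 15 splits. The classifier itself (a linear SVM in CoSMoMVPA) is not reproduced here, and the function names are hypothetical.

```python
from itertools import combinations

CONDITIONS = ["neutral", "angry", "happy", "fearful", "sad"]
N_ACTORS = 6


def train_test_splits(n_actors=N_ACTORS, n_train=4):
    """Yield every way of choosing n_train training actors; the rest test."""
    actors = range(n_actors)
    for train in combinations(actors, n_train):
        test = tuple(a for a in actors if a not in train)
        yield train, test


def split_stimuli(train, test, conditions=CONDITIONS):
    """Expand an actor split into (condition, actor) stimulus lists.

    Assumption: the same actor indices are held out in every condition.
    """
    train_set = [(c, a) for c in conditions for a in train]
    test_set = [(c, a) for c in conditions for a in test]
    return train_set, test_set


def concatenate_across_subjects(per_subject_vectors):
    """Join the ROI t-score vectors of all subjects for one stimulus."""
    combined = []
    for vector in per_subject_vectors:
        combined.extend(vector)
    return combined
```

With six stimuli per condition, each split trains on 20 stimuli and tests on the remaining 10, and the concatenated vector has as many entries as there are ROI voxels summed over subjects.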

RESULTS

Study I: Investigation of bvFTD Patients and Age Matched Controls

VBM comparison between bvFTD patients and controls

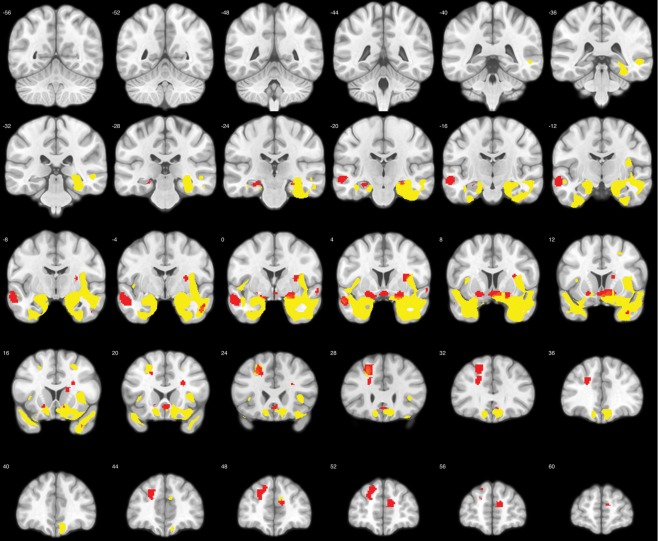

Using VBM analysis, we determined regions with significant gray matter atrophy in our population of bvFTD patients compared to age‐matched controls. In Figure 2, significantly atrophic voxels (P < 0.001 uncorrected at P < 0.05 FWE cluster size correction) are highlighted in yellow, confirming the typical pattern of atrophy in bvFTD [Seeley et al., 2008; Whitwell et al., 2009].

Figure 2.

Comparison of gray matter volumes in bvFTD patients and controls. Shown in yellow are significantly atrophic voxels in the bvFTD group (P < 0.001 uncorrected at P < 0.05 FWE cluster size correction). Red voxels indicate the regions involved in emotion processing as defined in Jastorff et al. [2015], containing significantly less gray matter in patients compared to controls. [Color figure can be viewed at http://wileyonlinelibrary.com]

In our earlier study [Jastorff et al., 2015], we identified 65 distinct brain regions located in midline structures, the dorsolateral and ventrolateral prefrontal cortex, and the temporal lobes that were involved in the visual processing of emotional gaits. These regions showed reliable brain‐behavior correlations between fMRI activation and sensitivity for emotion categorization in a group of 16 young, healthy volunteers. The stronger the fMRI activation was in these regions for the contrast emotional versus neutral stimuli, the more sensitive the subject was in a behavioral emotion‐categorization experiment identical to the one used in the present study. To investigate whether any of these regions were atrophic in our population of bvFTD patients, we compared gray matter volume between patients and controls within the 65 ROIs. The 18 ROIs showing significantly lower gray matter volume in patients (two‐sample t‐test, P < 0.05) are highlighted in red (Fig. 2). These ROIs were located in dorsolateral prefrontal cortex, ventral striatum, hippocampus, insula, amygdala, anterior cingulate cortex, and the ATL. This validated our approach of studying bvFTD patients to gain insights into the contributions made by individual brain regions involved in emotion processing to emotion recognition.

Behavioral results

Emotion task

During a psychophysical session, participants had to first indicate whether the presented stimulus was emotional or neutral (emotion detection). If the response was “emotional,” they were then asked to categorize the stimulus as angry, happy, fearful, or sad (emotion categorization). This design was intended to tap into two potentially different processing mechanisms. Participants could answer “emotional” to the first question, if they had the “feeling” that the stimulus expressed some emotion, even if unsure which exact emotion it might be. We, therefore, expected the APs for emotion detection to be lower than the APs for the correct identification of the emotion. APs and discrimination sensitivity (i.e., slope of the function/ability to discriminate between the two conditions) were determined by fitting the behavioral data using psychometric curves as described by Wichmann and Hill [2001].

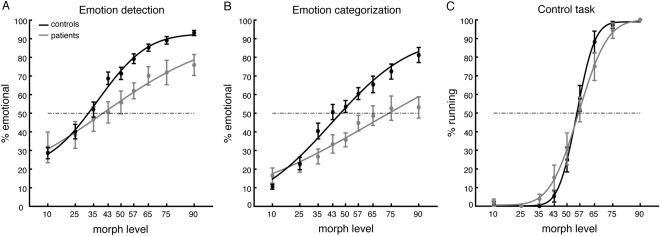

Behavioral results for the emotion detection task are shown in Figure 3A. The average APs for the group of bvFTD patients and the control group were 0.40 and 0.32, respectively. Interestingly, this difference was not significant across groups (P = 0.27; Mann–Whitney U test), indicating that patients and controls required similar levels of the emotional prototype to be added to the morph to judge the stimulus as emotional in 50% of the trials. Thus, their “intuitive feelings” about whether a stimulus was emotional did not differ significantly. Nevertheless, both groups differed with respect to discrimination sensitivity (P < 0.001; Mann–Whitney U test). The higher discrimination sensitivity obtained for patients (average: 0.47) compared to controls (average: 0.21) indicates a shallower slope fit and signifies that a greater change in stimulus is required to affect performance. In other words, to increase the number of “emotion” responses by the same amount as in the controls, patients required a higher morph level.

Figure 3.

Behavioral performance. Percent emotional responses (± SEM) as a function of morph level for the emotion detection task (A), the emotion categorization task (B), and the control task (C). Solid lines indicate the fit of the psychometric curve.

Behavioral differences between the groups became more apparent in the emotion categorization task. When tested for correct identification (Fig. 3B), not only did discrimination sensitivity differ across groups (P < 0.001; Mann–Whitney U test) but also the respective APs (P < 0.01; Mann–Whitney U test). Thus, patients required higher contributions from the emotional prototype to the morph to correctly identify the emotion than controls. To test whether identifications of all or only some of the emotions were impaired in patients, we also compared APs for correct identification per emotion between the groups. We obtained significantly higher APs for angry (z = −3.85, P < 0.001), fearful (z = −2.09, P < 0.05), and sad (z = −2.64, P < 0.01) expressions in patients compared to controls. Interestingly, APs for happy expressions were not significantly different (z = −1.77, P = 0.08). Nevertheless, the difference in APs between the average of the negative emotions (angry, fearful, and sad) and happy across groups was not significant (z = −1.58, P = 0.11). This indicates that happy was the least impaired emotion, but that performance was not significantly better compared to negative emotions in general.

Control task

In the control task, participants had to categorize motion morphs derived from neutral walking and neutral running prototypes as either running or walking. We chose this task because it was very similar to the emotion task in terms of stimuli, task instruction, display parameters and overall complexity. Our reasoning was that if the processing deficit observed in patients were specific to emotional stimuli, then threshold and sensitivity for our control task should not differ between groups. This was indeed what we found (Fig. 3C). Neither APs (z = 0.63, P = 0.53), nor discrimination sensitivity (z = −0.10, P = 0.92) differed significantly between patients and controls. Direct comparison of the APs of the emotion and control tasks using a factorial ANOVA confirmed our interpretation, showing a significant interaction between group (patients/controls) and task (emotion/control) (F (1,60) = 14.37, P < 0.001).

Imaging results: VBM

The bvFTD patients showed a reduced sensitivity in the emotion detection task (Fig. 3A) and were significantly impaired in the emotion categorization task (Fig. 3B). To investigate potential neural correlates of these impairments, we entered APs as covariates in an analysis of gray matter volume in the group of bvFTD patients. We limited this analysis to the patient group because our intention was to link impaired emotion processing with gray matter volume. As controls differed with regard to both behavioral and structural imaging variables, combining the two groups might have led to results without significant within‐group brain‐behavior correlations. We performed two independent correlation analyses, one using the APs for emotion detection and another using the APs for emotion categorization. In both analyses, APs obtained from the control task were included as variables of no interest, because we assumed that this task was equally prone to any influences arising from demographic and disease‐related factors.

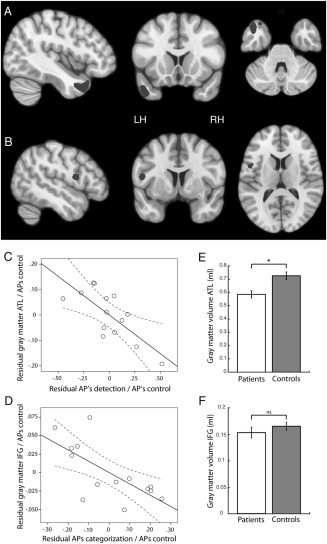

Results of the regression analysis are presented in Figure 4. Gray matter volume in the left ATL [−45, 13, −37] correlated significantly (P < 0.001 uncorrected) with APs obtained from the emotion detection task (Fig. 4A). Conversely, gray matter volume in the left opercular part of the IFG [−52, 2, 14] correlated significantly (P < 0.001 uncorrected) with the APs obtained for the emotion categorization task (Fig. 4B). The latter region is part of ventral premotor cortex, comprising areas BA 44 and ventral BA 6. These correlations remained significant (both P < 0.05 FWE small volume correction, sphere of 5 mm radius) after adding the categorization APs to the detection regression model as a nuisance variable and vice versa. As our VBM analyses were performed using parametric statistics, we validated that the unstandardized residuals of the regression fulfilled the normality assumption. This was the case in both regions as indicated by non‐significant Shapiro–Wilk tests (ATL: P = 0.21; IFG: P = 0.35).

Figure 4.

Brain regions showing a significant correlation between (A) patients' APs in the emotion detection task and gray matter volume and (B) patients' APs in the emotion categorization task and gray matter volume. Volumes are shown at P < 0.01 uncorrected for illustration purposes. (C) Partial correlation between gray matter volume of the ATL ROI and the detection APs, when correcting for the influence of the APs obtained in the control task. (D) Partial correlation between gray matter volume of the IFG ROI and the categorization APs, when correcting for the influence of the APs obtained in the control task. (E) Comparison of gray matter volume in the ATL ROI (P < 0.01) between patients and controls. (F) Comparison of gray matter volume in the IFG ROI (P = 0.33) between patients and controls.

Panels C and D of Figure 4 plot the partial correlation between gray matter volume and the APs for emotion detection and emotion categorization respectively, after correcting for the influence of the APs obtained from the control task. Panels E and F compare the gray matter volume between patients and controls in left ATL and the left IFG, respectively. Whereas gray matter volume in the temporal lobe was significantly reduced in the patient group (t (31) = 3.44, P < 0.01), the IFG showed no difference between groups (t (31) = 1.0, P = 0.33).
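The partial correlations plotted in panels C and D can be illustrated with a minimal sketch. It assumes the standard residual-based definition: both variables are regressed on the covariate (here, the control-task APs) and the residuals are then correlated. The function names and the toy numbers are hypothetical; they are not the study's data and do not reproduce the VBM pipeline.

```python
import math


def pearson(x, y):
    """Pearson correlation between two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def residuals(y, z):
    """Residuals of y after a simple linear regression on covariate z."""
    n = len(y)
    mz, my = sum(z) / n, sum(y) / n
    slope = (sum((a - mz) * (b - my) for a, b in zip(z, y))
             / sum((a - mz) ** 2 for a in z))
    intercept = my - slope * mz
    return [b - (intercept + slope * a) for a, b in zip(z, y)]


def partial_correlation(x, y, z):
    """Correlation between x and y after removing the linear effect of z."""
    return pearson(residuals(x, z), residuals(y, z))


# Hypothetical toy values (NOT study data):
# ROI gray matter volume, task APs, and control-task APs for eight subjects.
gm_volume = [0.42, 0.51, 0.38, 0.60, 0.47, 0.55, 0.35, 0.58]
task_ap = [0.61, 0.70, 0.52, 0.83, 0.66, 0.74, 0.50, 0.79]
control_ap = [0.30, 0.34, 0.27, 0.36, 0.29, 0.31, 0.33, 0.35]

r_partial = partial_correlation(gm_volume, task_ap, control_ap)
print(f"partial r = {r_partial:.2f}")
```

Correlating residuals is algebraically equivalent to the closed-form expression for a first-order partial correlation, so either formulation could be used for a single covariate.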

Imaging results: resting state connectivity

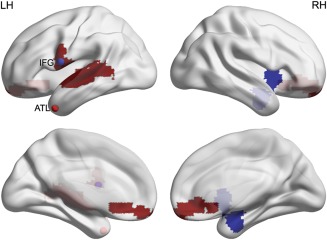

ATL and IFG are nodes in different functional brain networks, with the ATL involved in the mentalizing network and the IFG in the action observation network. To investigate whether the two regions showed differences in functional connectivity in our sample of bvFTD patients, we directly compared resting state functional connectivity between the groups, using a sphere of 5 mm radius around the peak coordinates of the ROIs as seeds. Indeed, connectivity between the left ATL (Fig. 5, red sphere) and the mid‐ and posterior temporal cortex was significantly reduced in the patient population, as was connectivity between ATL and IFG and between ATL and medial orbito‐frontal cortex (Fig. 5, red colored voxels). The left IFG (Fig. 5, blue sphere), conversely, showed significantly reduced connectivity with the contralateral anterior insula, the contralateral amygdala and the contralateral ATL in the bvFTD patients (Fig. 5, blue colored voxels). For both ROIs, results were thresholded at P < 0.001 with a FWE cluster size correction of P < 0.05 (see Methods). The analyses included modulated gray matter volumes obtained from the VBM analysis to control for confounding influences of gray matter atrophy on resting‐state BOLD signal [Oakes et al., 2007]. At a more liberal threshold (P < 0.001, no cluster size correction), left IFG also showed reduced functional connectivity with the ipsilateral anterior insula, amygdala, and ATL.
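As an illustration of the seed-based approach described above, the sketch below computes functional connectivity as the Pearson correlation between a seed time course and a target time course, followed by the Fisher z-transform that is commonly applied before group comparisons. This is a minimal sketch under stated assumptions: the time series are synthetic, the helper names are hypothetical, and whether the present analysis used a Fisher transform is not stated in this section. Preprocessing, the voxelwise gray matter covariate, and cluster-level correction are not modeled.

```python
import math
import random


def pearson(x, y):
    """Pearson correlation between two equal-length time courses."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def fisher_z(r):
    """Fisher r-to-z transform (inverse hyperbolic tangent)."""
    return math.atanh(r)


rng = random.Random(0)
n_timepoints = 200

# Synthetic shared fluctuation standing in for a common resting-state signal.
shared = [rng.gauss(0.0, 1.0) for _ in range(n_timepoints)]


def noisy_copy(signal, noise_sd):
    """Return the signal plus independent Gaussian noise."""
    return [s + rng.gauss(0.0, noise_sd) for s in signal]


seed_ts = noisy_copy(shared, 0.5)        # mean time course of the seed sphere
strong_target = noisy_copy(shared, 0.5)  # strongly coupled target region
weak_target = noisy_copy(shared, 3.0)    # weakly coupled target region

z_strong = fisher_z(pearson(seed_ts, strong_target))
z_weak = fisher_z(pearson(seed_ts, weak_target))
print(f"z (strong coupling) = {z_strong:.2f}, z (weak coupling) = {z_weak:.2f}")
```

In a group comparison, one such z value per subject and target voxel would enter the between-group test; reduced connectivity in patients corresponds to lower values in the patient group.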

Figure 5.

Resting state analysis. Red and blue spheres illustrate the ATL and IFG ROIs shown in Figure 4. Red and blue voxels exhibit significantly (P < 0.001 uncorrected at P < 0.05 FWE cluster size correction) reduced functional connectivity between the ATL ROI and the rest of the brain, and the IFG ROI and the rest of the brain, respectively. [Color figure can be viewed at http://wileyonlinelibrary.com]

Study II: Task‐Based Functional Imaging of Young Healthy Volunteers

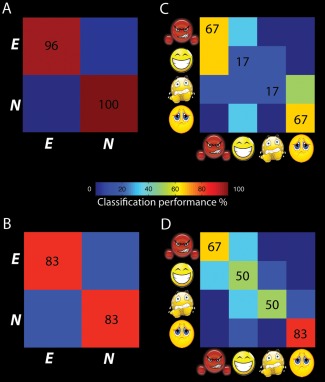

Our results indicate that the left ATL and the left IFG play different roles during emotion processing, with the ATL involved in emotion detection and the IFG in emotion categorization. To test this specific hypothesis, we re‐investigated the fMRI results obtained in our earlier study [Jastorff et al., 2015]. In that study, healthy volunteers observed prototypical neutral and emotional (angry, happy, fearful and sad) stimuli in the fMRI scanner in an event‐related design. The stimuli were identical to the prototypes used to generate the morphed stimuli in the present study. Using the ATL and IFG as a priori regions of interest (ROIs), we investigated whether the multi‐voxel fMRI response pattern within these ROIs contained sufficient information to reliably discriminate between emotional and neutral stimuli or between the four emotional stimuli. This was accomplished by means of an SVM, trained on 2/3 of the data and tested on the remaining 1/3 (see Methods). ROIs were back‐projected to each subject's native space for analysis.

Figure 6A,B shows classification results for emotional and neutral stimuli in the ATL and IFG, respectively. For this classification, all emotional stimuli were treated as one category and the SVM was trained to discriminate the fMRI response patterns obtained for those stimuli from those of the neutral stimuli. Classification performance was almost perfect in the ATL ROI (A) and reached 83% correct in the IFG ROI (B). Both ROIs contained information to reliably discriminate between neutral and emotional stimuli, as determined by permutation testing with 1,000 permutations (both P < 0.001, chance level: 50%). Panels C and D illustrate the classification performance when the SVM was trained to discriminate between the four emotion categories. Here, average classification performance for the ATL was not significantly better than chance level (P = 0.07). In contrast, average classification performance in the IFG was highly significant (P < 0.001; chance level in both cases: 25%).
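The decoding logic described above (train on 2/3 of the data, test on the remaining 1/3, and assess significance by label permutation) can be sketched as follows. To remain dependency-free, this sketch swaps the SVM for a simple nearest-centroid classifier, uses synthetic two-class "patterns", and relies on hypothetical helper names. It illustrates the permutation logic only and is not the pipeline used in the study.

```python
import random


def centroid(rows):
    """Mean pattern across a list of equal-length patterns."""
    return [sum(col) / len(rows) for col in zip(*rows)]


def sq_dist(a, b):
    """Squared Euclidean distance between two patterns."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def holdout_accuracy(patterns, labels, n_train):
    """Train a nearest-centroid classifier (stand-in for the SVM) on the
    first n_train samples and return accuracy on the remaining samples."""
    train_x, train_y = patterns[:n_train], labels[:n_train]
    centroids = {
        lab: centroid([x for x, y in zip(train_x, train_y) if y == lab])
        for lab in sorted(set(train_y))
    }
    correct = 0
    for x, y in zip(patterns[n_train:], labels[n_train:]):
        pred = min(centroids, key=lambda lab: sq_dist(x, centroids[lab]))
        correct += pred == y
    return correct / (len(patterns) - n_train)


# Synthetic multi-voxel patterns (NOT study data): two classes, 20 "voxels".
rng = random.Random(42)
n_voxels, n_per_class = 20, 30
patterns, labels = [], []
for _ in range(n_per_class):
    patterns.append([rng.gauss(0.0, 1.0) for _ in range(n_voxels)])
    labels.append("neutral")
    patterns.append([rng.gauss(1.0, 1.0) for _ in range(n_voxels)])
    labels.append("emotional")

n_train = (2 * len(patterns)) // 3  # 2/3 for training, 1/3 for testing
observed = holdout_accuracy(patterns, labels, n_train)

# Null distribution: repeat the analysis with randomly shuffled labels.
n_perm = 1000
exceed = 0
for _ in range(n_perm):
    shuffled = labels[:]
    rng.shuffle(shuffled)
    if holdout_accuracy(patterns, shuffled, n_train) >= observed:
        exceed += 1

# Permutation p-value with the common (b + 1) / (m + 1) convention.
p_value = (exceed + 1) / (n_perm + 1)
print(f"accuracy = {observed:.2f}, permutation p = {p_value:.4f}")
```

Under the (b + 1) / (m + 1) convention assumed here, the smallest p-value attainable with 1,000 permutations is 1/1001, which is just below 0.001. Whether the study used this convention is not stated in this section.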

Figure 6.

Classification performance levels of the ATL ROI (A, C) and the IFG ROI (B, D) discriminating emotional and neutral stimuli and identifying the four emotions in young healthy volunteers. [Color figure can be viewed at http://wileyonlinelibrary.com]

Taken together, classification results obtained from reanalyzing fMRI data obtained in an earlier study confirmed our hypothesis inspired by the patient data. Whereas the fMRI signal in both areas contained information that could discriminate emotional from neutral stimuli, only the ventral premotor cortex also contained information capable of categorizing the four different emotions.

DISCUSSION

The main goal of our study was to investigate the contributions of regions involved in emotion processing to emotion detection and emotion categorization from dynamic body expressions. To this end, we studied behavioral performance for emotion detection and categorization in bvFTD patients, who exhibited significant gray matter atrophy in several of the anterior brain regions involved in emotion processing. Such regions included the dorsolateral prefrontal cortex, ventral striatum, hippocampus, insula, amygdala, anterior cingulate cortex, and the ATL. In agreement with earlier studies, patients were found to be impaired in emotion processing compared to controls (see Kumfor and Piguet, 2012 for review). Interestingly, this impairment was more pronounced for emotion categorization than for detection per se. In the following section, we will discuss our results for the two tasks independently and subsequently summarize the implications for emotion processing in general and with respect to bvFTD in particular.

Emotion Detection

Gray matter volume in the left ATL correlated with individual emotion detection performance in bvFTD patients. That is, the more sensitive a patient was in detecting an emotion, the less atrophy his/her left ATL presented. Consistent with the general pattern of atrophy in bvFTD, the ATL of patients contained, on average, significantly less gray matter than that of controls. Our results also showed reduced resting‐state connectivity between the ATL and middle temporal regions, between ATL and IFG, and between ATL and medial orbito‐frontal cortex in these patients. Whereas altered resting‐state connectivity within the salience, default mode, and fronto‐parietal network has been reported in the case of bvFTD [Filippi et al., 2013; Whitwell et al., 2011; Zhou et al., 2010], no study has specifically investigated altered connectivity with the ATL in bvFTD. Nonetheless, the reduced connectivity of the ATL with sensory areas, motor association regions and areas involved in decision‐making observed in our study matches well with its anatomical connections. The ATL is connected to middle and posterior temporal cortex through the inferior longitudinal fasciculus, providing access to a wide variety of primarily visual and auditory information. Moreover, it is closely connected to the amygdala and medial orbito‐frontal cortex through the uncinate fasciculus and to lateral prefrontal cortex through the inferior fronto‐occipital fasciculus [Blaizot et al., 2010; Morecraft et al., 1992; Thiebaut de Schotten et al., 2011]. This connectivity pattern establishes the ATL as an important node within a network often referred to as the mentalizing network or “social brain,” that allows us to make predictions about people's actions based on their mental states [Frith, 2007].

Numerous neuropsychological studies support the role of the ATL in social behavior. Bilateral resection of anterior temporal cortices in monkeys led to withdrawal and inappropriate responses to social behaviors of other monkeys [Franzen and Myers, 1973]. bvFTD patients having only limited frontal damage still exhibit social behavioral changes such as lack of empathy, social awkwardness or disinhibition [Edwards‐Lee et al., 1997; Liu et al., 2004; Miller et al., 2003; Rankin et al., 2005]. Patients with semantic dementia, the variant of FTD that is associated with mainly temporal atrophy, exhibit important social cognitive deficits on verbal as well as non‐verbal tasks [Duval et al., 2012; Kumfor et al., 2016]. Although right temporal variant FTD has been proposed as a distinct subtype characterized by personality change and inappropriate behaviors [Chan et al., 2009; Josephs et al., 2009], left‐sided anterior temporal hypo‐perfusion and atrophy in FTD has been associated with interpersonal changes and specific emotion recognition deficits as well [Kumfor et al., 2013; McMurtray et al., 2006]. Also, patients with ATL epilepsy have problems with identifying facial expressions and attributing mental states to others [Amlerova et al., 2014; Wang et al., 2015].

Among other tasks, the ATL is frequently activated in neuroimaging experiments involving emotion processing (see Olson et al., 2007 for review). In our earlier study testing healthy participants [Jastorff et al., 2015], the left ATL showed the highest correlation between fMRI activation and emotion sensitivity. In that study, participants performed a behavioral task identical to that in the current study and subsequently viewed the same stimuli in the MRI scanner. Analogous to the present study, the more sensitive a participant was in detecting an emotion in the behavioral task, the higher the fMRI activation for emotional compared to neutral stimuli proved to be in the left ATL. Even though the relationship between cortical thickness and fMRI signal is not straightforward [Hegarty et al., 2012], reanalysis of our 2015 data showed that the ATL ROI determined in the present study, located slightly more anterior than in our earlier study, contained information sufficient to reliably discriminate between emotional and neutral stimuli in healthy volunteers. The mentalizing system has been implicated in tasks requiring an understanding of why a person behaves in a particular way [Spunt and Lieberman, 2012]. The fact that participants in our studies were not required to integrate action and context suggests that the ATL is also involved in a more general processing of social stimuli.

Emotion Categorization

Gray matter volume in the left posterior IFG correlated significantly with individual APs for emotion categorization in bvFTD patients. The more sensitive the patient was in identifying the emotion presented, the less atrophic his/her IFG gray matter was found to be. Interestingly, even though average gray matter volume was reduced, it was not significantly lower than in controls. Nevertheless, the left IFG showed reduced resting state connectivity with the anterior insula, the amygdala and the ATL in bvFTD patients, mainly contralaterally. This fits with the reported connectivity pattern, showing that the base of the opercular part of the IFG is connected to the limbic system [Anwander et al., 2007], whereas the lateral components are connected to the superior frontal gyrus, parietal and posterior temporal regions [Bernal et al., 2015].

The posterior IFG is part of ventral premotor cortex, including BA44 and the ventral aspect of BA6. This region has been implicated in language functions such as semantic retrieval [Binder et al., 2009]. With respect to emotion perception, language might help to acquire and use emotion concept knowledge by binding different sensorimotor representations of a single emotion. Thus, two different representations of the same emotion category might be linked by one common label [Borghi and Binkofski, 2014; Lindquist et al., 2015].

Conversely, the IFG is also presumed to house “mirror neurons” [Rizzolatti and Sinigaglia, 2010]. In the monkey, these neurons become active when the monkey performs a particular movement, as well as when he observes that same movement. This holds true not only for manual actions [Gallese et al., 1996] but also for communicative actions such as lip smacking [Ferrari et al., 2003]. Furthermore, it has recently been shown that mirror neurons in monkey ventral premotor cortex are modulated by social cues such as gaze direction [Coude et al., 2016]. Human fMRI experiments report ventral premotor cortex activation for the visual presentation and imitation of emotional facial expressions [Leslie et al., 2004; Wicker et al., 2003] and have suggested that it acts as a relay station for observed movements to limbic areas [Carr et al., 2003]. Moreover, Shamay‐Tsoory et al. [2009] showed that patients with lesions in the IFG are selectively impaired in emotional empathy. The proposed link between observation and execution in the ventral premotor cortex has culminated in the theory of embodied simulation, a mechanism that may allow us to directly decode the emotions and sensations experienced by others [Bastiaansen et al., 2009; Gallese, 2007].

Average gray matter volume in the left IFG of our population of bvFTD patients was not significantly reduced compared to controls. This agrees with previous findings indicating a correlation between gray matter volume and emotion‐matching in the left IFG of bvFTD patients [Van den Stock et al., 2015] and reduced fMRI activation for emotional faces in the same region of patients with Alzheimer's disease [Lee et al., 2013]. In both cases, gray matter volume of the left IFG was not significantly reduced compared to controls. A possible interpretation here is that, apart from reduced functionality in the area itself, altered communication between the IFG, coding motor information, and the anterior insula, receiving affective information from the rest of the insula and the limbic system, leads to behavioral impairments in emotion categorization. This possibility fits well with our observed reduced resting state connectivity between IFG and anterior insula, amygdala, and ATL. Thus, our results are in line with the hypothesis that nominally motor regions also play an important role in emotion perception.

Functional Implications

Our results showed dissociation between brain regions primarily involved in emotion detection and emotion categorization, providing support for the existence of two processing streams for the analysis of emotional body expressions. The first may involve regions of the social brain, with the left ATL playing an important role in representing and retrieving social knowledge. This route may provide an intuitive feeling that could be used to infer, for example, the valence or the level of arousal induced by the stimulus. The second pathway may recruit regions of the action observation network for precise analysis of the movement pattern and for subsequent integration of this information with the limbic system. This analysis may help to discriminate between different emotions with similar valences. Enhanced communication between the IFG and the ATL during social inference has been previously described [Spunt and Lieberman, 2012]. Dissociation between ATL and IFG is also supported by our functional imaging results from young, healthy controls. Whereas the ATL almost perfectly distinguished between emotional and neutral stimuli, but did not contain information sufficient to discriminate between emotions, the IFG ROI performed significantly above chance in both tasks. In young, healthy participants, with intact communication between the two processing streams, APs for emotion detection and emotion categorization were highly correlated [Jastorff et al., 2015]. This implies that when healthy participants judged a stimulus as emotional, they were generally correct in identifying the emotion. While this was also the case for our control population in the present study (r = 0.67), emotion detection and emotion categorization APs did not correlate significantly in bvFTD patients (r = 0.37). 
This, together with the finding of reduced resting‐state connectivity between ATL, limbic regions and IFG, may indicate that communication between the two streams is impaired in the case of bvFTD. Support for this interpretation comes from a study by Virani et al. [2013], reporting reduced functional fMRI activation in bvFTD patients compared to controls in the IFG, insula and amygdala for viewing emotional facial expressions.
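As a rough plausibility check on the patient correlation reported above, a Pearson r can be converted to a t statistic with n − 2 degrees of freedom. The sketch below assumes that all 14 patients mentioned under Possible Limitations entered this correlation and that a two-tailed test at α = 0.05 applies; both are assumptions rather than details given in this section. The critical value 2.179 is the standard tabulated two-tailed value for 12 degrees of freedom, and the names used are hypothetical.

```python
import math


def r_to_t(r, n):
    """t statistic for a Pearson correlation r based on n observations."""
    return r * math.sqrt(n - 2) / math.sqrt(1.0 - r * r)


N_PATIENTS = 14      # assumption: all 14 patients contributed to the correlation
T_CRIT_DF12 = 2.179  # tabulated two-tailed critical t for df = 12, alpha = 0.05

t_patients = r_to_t(0.37, N_PATIENTS)
print(f"t({N_PATIENTS - 2}) = {t_patients:.2f}, critical value = {T_CRIT_DF12}")
```

Under these assumptions, t(12) is approximately 1.38, which is below the critical value and therefore consistent with the non-significant correlation reported for the patient group.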

Interestingly, our behavioral data showed that bvFTD patients were less impaired for emotion detection than for emotion categorization. This result suggests that bvFTD patients may be able to detect that the other person is in some kind of emotional state, but have difficulties correctly identifying exactly what state it is. Nevertheless, this interpretation should be taken with caution, as our method of single stimuli used to determine perceptual sensitivity does not allow us to investigate a possible response bias. However, our finding is in line with Lavenu et al. [1999], who noticed that FTD patients were able to detect an emotional face when shown next to a neutral one; however, patients were strongly impaired when they had to label the emotion. A similar dissociation was also reported by Lindquist et al. [2014] in the case of semantic dementia. The fact that the ATL was significantly atrophic in our patient sample, whereas the IFG was not, might indicate a possible compensation mechanism in the IFG, also containing information for emotion detection in healthy volunteers.

Possible Limitations

Our results should be interpreted with some degree of caution because of the small sample size. Nevertheless, given the behavioral symptoms of bvFTD patients, many of the studies investigating emotion processing in FTD rely on sample sizes between 6 and 13 patients, even fewer than the 14 participants tested here [Bediou et al., 2009; Fernandez‐Duque and Black, 2005; Keane et al., 2002; Kessels et al., 2007; Rosen et al., 2002; Snowden et al., 2008; Zhou et al., 2010].

The atrophy pattern in our patient sample indicates strong temporal rather than frontal atrophy. This may reflect the possibility that temporal dominant bvFTD patients [Whitwell et al., 2009] are more likely to comply with requirements related to imaging protocols. This limitation may have led to an inclusion bias with a higher proportion of temporal dominant bvFTD patients.

Conclusion

In conclusion, our results indicate that the mentalizing network as well as the action observation network are involved in processing emotional body expressions and that both networks perform distinct tasks in such operations. In the case of bvFTD, communication between the two pathways seems to be reduced, which might be one deficit underlying the behavioral symptoms observed in this disease.

Supporting information

Supporting Information Table 1.

ACKNOWLEDGMENT

The authors declare no competing financial interests. Author Contributions: JJ and MV designed the experiment, MAG provided material for stimulus generation, JJ generated the stimuli, FLD, JVDS and JJ acquired the data, JVDS, RVDB and MV recruited the patients, JJ analyzed the functional data, FLD analyzed the structural data, JJ wrote the paper. All authors discussed the results and implications and commented on the manuscript.

REFERENCES

- Abdollahi RO, Jastorff J, Orban GA (2013): Common and segregated processing of observed actions in human SPL. Cereb Cortex 23:2734–2753.

- Amlerova J, Cavanna AE, Bradac O, Javurkova A, Raudenska J, Marusic P (2014): Emotion recognition and social cognition in temporal lobe epilepsy and the effect of epilepsy surgery. Epilepsy Behav 36:86–89.

- Anwander A, Tittgemeyer M, von Cramon DY, Friederici AD, Knosche TR (2007): Connectivity‐based parcellation of Broca's area. Cereb Cortex 17:816–825.

- Baez S, Manes F, Huepe D, Torralva T, Fiorentino N, Richter F, Huepe‐Artigas D, Ferrari J, Montanes P, Reyes P, Matallana D, Vigliecca NS, Decety J, Ibanez A (2014): Primary empathy deficits in frontotemporal dementia. Front Aging Neurosci 6:262.

- Barrett LF, Satpute AB (2013): Large‐scale brain networks in affective and social neuroscience: Towards an integrative functional architecture of the brain. Curr Opin Neurobiol 23:361–372.

- Bastiaansen JA, Thioux M, Keysers C (2009): Evidence for mirror systems in emotions. Philos Trans R Soc Lond B Biol Sci 364:2391–2404.

- Bediou B, Ryff I, Mercier B, Milliery M, Henaff MA, D'Amato T, Bonnefoy M, Vighetto A, Krolak‐Salmon P (2009): Impaired social cognition in mild Alzheimer disease. J Geriatr Psych Neur 22:130–140.

- Bernal B, Ardila A, Rosselli M (2015): Broca's area network in language function: A pooling‐data connectivity study. Front Psychol 6:687.

- Bertoux M, de Souza LC, Sarazin M, Funkiewiez A, Dubois B, Hornberger M (2015): How preserved is emotion recognition in Alzheimer disease compared with behavioral variant frontotemporal dementia? Alzheimer Dis Assoc Dis 29:154–157.

- Binder JR, Desai RH, Graves WW, Conant LL (2009): Where is the semantic system? A critical review and meta‐analysis of 120 functional neuroimaging studies. Cereb Cortex 19:2767–2796.

- Blaizot X, Mansilla F, Insausti AM, Constans JM, Salinas‐Alaman A, Pro‐Sistiaga P, Mohedano‐Moriano A, Insausti R (2010): The human parahippocampal region: I. Temporal pole cytoarchitectonic and MRI correlation. Cereb Cortex 20:2198–2212.

- Borghi AM, Binkofski F (2014): Words as social tools: An embodied view on abstract concepts. Berlin; New York, NY: Springer.

- Brainard DH (1997): The psychophysics toolbox. Spat Vis 10:433–436.

- Buckner RL, Andrews‐Hanna JR, Schacter DL (2008): The brain's default network: Anatomy, function, and relevance to disease. Ann N Y Acad Sci 1124:1–38.

- Carr L, Iacoboni M, Dubeau MC, Mazziotta JC, Lenzi GL (2003): Neural mechanisms of empathy in humans: A relay from neural systems for imitation to limbic areas. Proc Natl Acad Sci USA 100:5497–5502.

- Caspari N, Popivanov ID, De Maziere PA, Vanduffel W, Vogels R, Orban GA, Jastorff J (2014): Fine‐grained stimulus representations in body selective areas of human occipito‐temporal cortex. Neuroimage 102(Pt 2):484–497.

- Chan D, Anderson V, Pijnenburg Y, Whitwell J, Barnes J, Scahill R, Stevens JM, Barkhof F, Scheltens P, Rossor MN, Fox NC (2009): The clinical profile of right temporal lobe atrophy. Brain 132:1287–1298.

- Cortes C, Vapnik V (1995): Support‐vector networks. Mach Learn 20:273–297.

- Coudé G, et al. (2016): Mirror neurons of ventral premotor cortex are modulated by social cues provided by others' gaze. J Neurosci 36:3145–3156.

- Couto B, Manes F, Montanes P, Matallana D, Reyes P, Velasquez M, Yoris A, Baez S, Ibanez A (2013): Structural neuroimaging of social cognition in progressive non‐fluent aphasia and behavioral variant of frontotemporal dementia. Front Hum Neurosci 7:467.

- Diehl‐Schmid J, Pohl C, Ruprecht C, Wagenpfeil S, Forstl H, Kurz A (2007): The Ekman 60 Faces Test as a diagnostic instrument in frontotemporal dementia. Arch Clin Neuropsych 22:459–464.

- Downey LE, Blezat A, Nicholas J, Omar R, Golden HL, Mahoney CJ, Crutch SJ, Warren JD (2013): Mentalising music in frontotemporal dementia. Cortex 49:1844–1855.

- Duval C, Bejanin A, Piolino P, Laisney M, de La Sayette V, Belliard S, Eustache F, Desgranges B (2012): Theory of mind impairments in patients with semantic dementia. Brain 135:228–241.

- Edwards‐Lee T, Miller BL, Benson DF, Cummings JL, Russell GL, Boone K, Mena I (1997): The temporal variant of frontotemporal dementia. Brain 120:1027–1040.

- Fernandez‐Duque D, Black SE (2005): Impaired recognition of negative facial emotions in patients with frontotemporal dementia. Neuropsychologia 43:1673–1687.

- Ferrari PF, Gallese V, Rizzolatti G, Fogassi L (2003): Mirror neurons responding to the observation of ingestive and communicative mouth actions in the monkey ventral premotor cortex. Eur J Neurosci 17:1703–1714.

- Franzen EA, Myers RE (1973): Neural control of social behavior: Prefrontal and anterior temporal cortex. Neuropsychologia 11:141–157.

- Filippi M, Agosta F, Scola E, Canu E, Magnani G, Marcone A, Valsasina P, Caso F, Copetti M, Comi G, Cappa SF, Falini A (2013): Functional network connectivity in the behavioral variant of frontotemporal dementia. Cortex 49:2389–2401.

- Frith CD (2007): The social brain? Philos Trans R Soc Lond B Biol Sci 362:671–678.

- Fusar‐Poli P, Placentino A, Carletti F, Landi P, Allen P, Surguladze S, Benedetti F, Abbamonte M, Gasparotti R, Barale F, Perez J, McGuire P, Politi P (2009): Functional atlas of emotional faces processing: A voxel‐based meta‐analysis of 105 functional magnetic resonance imaging studies. J Psychiatry Neurosci 34:418–432.

- Gallese V (2007): Before and below 'theory of mind': Embodied simulation and the neural correlates of social cognition. Philos Trans R Soc Lond B Biol Sci 362:659–669.

- Gallese V, Fadiga L, Fogassi L, Rizzolatti G (1996): Action recognition in the premotor cortex. Brain 119:593–609.

- Giese MA, Poggio T (2000): Morphable models for the analysis and synthesis of complex motion pattern. Int J Comput Vis 38:59–73.

- Gorno‐Tempini M, Hillis A, Weintraub S, Kertesz A, Mendez M, Cappa SF, Ogar J, Rohrer J, Black S, Boeve BF (2011): Classification of primary progressive aphasia and its variants. Neurology 76:1006–1014.

- Grafton ST (2009): Embodied cognition and the simulation of action to understand others. Ann N Y Acad Sci 1156:97–117.

- Hegarty CE, Foland‐Ross LC, Narr KL, Townsend JD, Bookheimer SY, Thompson PM, Altshuler LL (2012): Anterior cingulate activation relates to local cortical thickness. Neuroreport 23:420–424.

- Jastorff J, Kourtzi Z, Giese MA (2006): Learning to discriminate complex movements: Biological versus artificial trajectories. J Vis 6:791–804.

- Jastorff J, Begliomini C, Fabbri‐Destro M, Rizzolatti G, Orban GA (2010): Coding observed motor acts: Different organizational principles in the parietal and premotor cortex of humans. J Neurophysiol 104:128–140.

- Jastorff J, Huang YA, Giese MA, Vandenbulcke M (2015): Common neural correlates of emotion perception in humans. Hum Brain Mapp 36:4184–4201.

- Jenkinson M, Bannister P, Brady M, Smith S (2002): Improved optimization for the robust and accurate linear registration and motion correction of brain images. Neuroimage 17:825–841.

- Josephs KA, Whitwell JL, Knopman DS, Boeve BF, Vemuri P, Senjem ML, Parisi JE, Ivnik RJ, Dickson DW, Petersen RC, Jack CR Jr (2009): Two distinct subtypes of right temporal variant frontotemporal dementia. Neurology 73:1443–1450.

- Keane J, Calder AJ, Hodges JR, Young AW (2002): Face and emotion processing in frontal variant frontotemporal dementia. Neuropsychologia 40:655–665.

- Kessels RP, Gerritsen L, Montagne B, Ackl N, Diehl J, Danek A (2007): Recognition of facial expressions of different emotional intensities in patients with frontotemporal lobar degeneration. Behav Neurol 18:31–36.

- Kumfor F, Piguet O (2012): Disturbance of emotion processing in frontotemporal dementia: A synthesis of cognitive and neuroimaging findings. Neuropsychol Rev 22:280–297.

- Kumfor F, Miller L, Lah S, Hsieh S, Savage S, Hodges JR, Piguet O (2011): Are you really angry? The effect of intensity on facial emotion recognition in frontotemporal dementia. Soc Neurosci 6:502–514.

- Kumfor F, Irish M, Hodges JR, Piguet O (2013): Discrete neural correlates for the recognition of negative emotions: Insights from frontotemporal dementia. PLoS One 8:e67457.

- Kumfor F, Landin‐Romero R, Devenney E, Hutchings R, Grasso R, Hodges JR, Piguet O (2016): On the right side? A longitudinal study of left‐ versus right‐lateralized semantic dementia. Brain 139:986–998. [DOI] [PubMed] [Google Scholar]

- Lavenu I, Pasquier E, Lebert F, Petit H, Van der linden M (1999): Perception of emotion in frontotemporal dementia and Alzheimer disease. Alzheimer Dis Assoc Dis 13:96–101. [DOI] [PubMed] [Google Scholar]

- Lee TM, Sun D, Leung MK, Chu LW, Keysers C (2013): Neural activities during affective processing in people with Alzheimer's disease. Neurobiol Aging 34:706–715. [DOI] [PubMed] [Google Scholar]

- Leslie KR, Johnson‐Frey SH, Grafton ST (2004): Functional imaging of face and hand imitation: Towards a motor theory of empathy. Neuroimage 21:601–607. [DOI] [PubMed] [Google Scholar]

- Lindquist KA, Wager TD, Kober H, Bliss‐Moreau E, Barrett LF (2012): The brain basis of emotion: A meta‐analytic review. Behav Brain Sci 35:121–143. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Lindquist KA, Gendron M, Barrett LF, Dickerson BC (2014): Emotion perception, but not affect perception, is impaired with semantic memory loss. Emotion 14:375–387. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Lindquist KA, MacCormack JK, Shablack H (2015): The role of language in emotion: Predictions from psychological constructionism. Front Psychol 6:444. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Liu W, Miller BL, Kramer JH, Rankin K, Wyss‐Coray C, Gearhart R, Phengrasamy L, Weiner M, Rosen HJ (2004): Behavioral disorders in the frontal and temporal variants of frontotemporal dementia. Neurology 62:742–748. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Lough S, Kipps CM, Treise C, Watson P, Blair JR, Hodges JR (2006): Social reasoning, emotion and empathy in frontotemporal dementia. Neuropsychologia 44:950–958. [DOI] [PubMed] [Google Scholar]

- McMurtray AM, Chen AK, Shapira JS, Chow TW, Mishkin F, Miller BL, Mendez MF (2006): Variations in regional SPECT hypoperfusion and clinical features in frontotemporal dementia. Neurology 66:517–522. [DOI] [PubMed] [Google Scholar]

- Miller BL, Diehl J, Freedman M, Kertesz A, Mendez M, Rascovsky K (2003): International approaches to frontotemporal dementia diagnosis: From social cognition to neuropsychology. Ann Neurol 54:S7–10. [DOI] [PubMed] [Google Scholar]

- Miller LA, Hsieh S, Lah S, Savage S, Hodges JR, Piguet O (2012): One size does not fit all: Face emotion processing impairments in semantic dementia, behavioural‐variant frontotemporal dementia and Alzheimer's disease are mediated by distinct cognitive deficits. Behav Neurol 25:53–60.

- Morecraft RJ, Geula C, Mesulam MM (1992): Cytoarchitecture and neural afferents of orbitofrontal cortex in the brain of the monkey. J Comp Neurol 323:341–358.

- Oakes TR, Fox AS, Johnstone T, Chung MK, Kalin N, Davidson RJ (2007): Integrating VBM into the General Linear Model with voxelwise anatomical covariates. Neuroimage 34:500–508.

- Ochsner KN, Knierim K, Ludlow DH, Hanelin J, Ramachandran T, Glover G, Mackey SC (2004): Reflecting upon feelings: An fMRI study of neural systems supporting the attribution of emotion to self and other. J Cogn Neurosci 16:1746–1772.

- Olson IR, Plotzker A, Ezzyat Y (2007): The enigmatic temporal pole: A review of findings on social and emotional processing. Brain 130:1718–1731.

- Omar R, Henley SM, Bartlett JW, Hailstone JC, Gordon E, Sauter DA, Frost C, Scott SK, Warren JD (2011): The structural neuroanatomy of music emotion recognition: Evidence from frontotemporal lobar degeneration. Neuroimage 56:1814–1821.

- Rankin KP, Kramer JH, Miller BL (2005): Patterns of cognitive and emotional empathy in frontotemporal lobar degeneration. Cogn Behav Neurol 18:28–36.

- Rascovsky K, Hodges JR, Knopman D, Mendez MF, Kramer JH, Neuhaus J, van Swieten JC, Seelaar H, Dopper EG, Onyike CU, Hillis AE, Josephs KA, Boeve BF, Kertesz A, Seeley WW, Rankin KP, Johnson JK, Gorno‐Tempini ML, Rosen H, Prioleau‐Latham CE, Lee A, Kipps CM, Lillo P, Piguet O, Rohrer JD, Rossor MN, Warren JD, Fox NC, Galasko D, Salmon DP, Black SE, Mesulam M, Weintraub S, Dickerson BC, Diehl‐Schmid J, Pasquier F, Deramecourt V, Lebert F, Pijnenburg Y, Chow TW, Manes F, Grafman J, Cappa SF, Freedman M, Grossman M, Miller BL (2011): Sensitivity of revised diagnostic criteria for the behavioural variant of frontotemporal dementia. Brain 134:2456–2477.

- Rizzolatti G, Sinigaglia C (2010): The functional role of the parieto‐frontal mirror circuit: Interpretations and misinterpretations. Nat Rev Neurosci 11:264–274.

- Roether CL, Omlor L, Christensen A, Giese MA (2009): Critical features for the perception of emotion from gait. J Vis 9:32–31.

- Rosen HJ, Perry RJ, Murphy J, Kramer JH, Mychack P, Schuff N, Weiner M, Levenson RW, Miller BL (2002): Emotion comprehension in the temporal variant of frontotemporal dementia. Brain 125:2286–2295.

- Seeley WW, Menon V, Schatzberg AF, Keller J, Glover GH, Kenna H, Reiss AL, Greicius MD (2007): Dissociable intrinsic connectivity networks for salience processing and executive control. J Neurosci 27:2349–2356.

- Seeley WW, Crawford R, Rascovsky K, Kramer JH, Weiner M, Miller BL, Gorno‐Tempini ML (2008): Frontal paralimbic network atrophy in very mild behavioral variant frontotemporal dementia. Arch Neurol 65:249–255.

- Shamay‐Tsoory SG, Aharon‐Peretz J, Perry D (2009): Two systems for empathy: A double dissociation between emotional and cognitive empathy in inferior frontal gyrus versus ventromedial prefrontal lesions. Brain 132:617–627.

- Snowden JS, Austin NA, Sembi S, Thompson JC, Craufurd D, Neary D (2008): Emotion recognition in Huntington's disease and frontotemporal dementia. Neuropsychologia 46:2638–2649.

- Song XW, Dong ZY, Long XY, Li SF, Zuo XN, Zhu CZ, He Y, Yan CG, Zang YF (2011): REST: A toolkit for resting‐state functional magnetic resonance imaging data processing. PLoS One 6:e25031.

- Spunt RP, Lieberman MD (2012): An integrative model of the neural systems supporting the comprehension of observed emotional behavior. Neuroimage 59:3050–3059.

- Thiebaut de Schotten M, Ffytche DH, Bizzi A, Dell'Acqua F, Allin M, Walshe M, Murray R, Williams SC, Murphy DG, Catani M (2011): Atlasing location, asymmetry and inter‐subject variability of white matter tracts in the human brain with MR diffusion tractography. Neuroimage 54:49–59.

- Van den Stock J, De Winter FL, de Gelder B, Rangarajan JR, Cypers G, Maes F, Sunaert S, Goffin K, Vandenberghe R, Vandenbulcke M (2015): Impaired recognition of body expressions in the behavioral variant of frontotemporal dementia. Neuropsychologia 75:496–504.

- Virani K, Jesso S, Kertesz A, Mitchell D, Finger E (2013): Functional neural correlates of emotional expression processing deficits in behavioural variant frontotemporal dementia. J Psychiatry Neurosci 38:174–182.

- Wang WH, Shih YH, Yu HY, Yen DJ, Lin YY, Kwan SY, Chen C, Hua MS (2015): Theory of mind and social functioning in patients with temporal lobe epilepsy. Epilepsia 56:1117–1123.

- Whitwell JL, Przybelski SA, Weigand SD, Ivnik RJ, Vemuri P, Gunter JL, Senjem ML, Shiung MM, Boeve BF, Knopman DS, Parisi JE, Dickson DW, Petersen RC, Jack CR, Josephs KA (2009): Distinct anatomical subtypes of the behavioural variant of frontotemporal dementia: A cluster analysis study. Brain 132:2932–2946.

- Whitwell JL, Josephs KA, Avula R, Tosakulwong N, Weigand SD, Senjem ML, Vemuri P, Jones DT, Gunter JL, Baker M, Wszolek ZK, Knopman DS, Rademakers R, Petersen RC, Boeve BF, Jack CR Jr (2011): Altered functional connectivity in asymptomatic MAPT subjects: A comparison to bvFTD. Neurology 77:866–874.

- Wichmann FA, Hill NJ (2001): The psychometric function: I. Fitting, sampling, and goodness of fit. Percept Psychophys 63:1293–1313.

- Wicker B, Keysers C, Plailly J, Royet JP, Gallese V, Rizzolatti G (2003): Both of us disgusted in my insula: The common neural basis of seeing and feeling disgust. Neuron 40:655–664.

- Yeo BT, Krienen FM, Sepulcre J, Sabuncu MR, Lashkari D, Hollinshead M, Roffman JL, Smoller JW, Zollei L, Polimeni JR, Fischl B, Liu H, Buckner RL (2011): The organization of the human cerebral cortex estimated by intrinsic functional connectivity. J Neurophysiol 106:1125–1165.

- Zhou J, Greicius MD, Gennatas ED, Growdon ME, Jang JY, Rabinovici GD, Kramer JH, Weiner M, Miller BL, Seeley WW (2010): Divergent network connectivity changes in behavioural variant frontotemporal dementia and Alzheimer's disease. Brain 133:1352–1367.

Supplementary Materials

Supporting Information Table 1.