Abstract

Facial color is important information for social communication as it provides important clues to recognize a person's emotion and health condition. Our previous EEG study suggested that N170 at the left occipito‐temporal site is related to facial color processing (Nakajima et al., [2012]: Neuropsychologia 50:2499–2505). However, because of the low spatial resolution of EEG, the brain region involved in facial color processing remains controversial. In the present study, we examined the neural substrates of facial color processing using functional magnetic resonance imaging (fMRI). We measured brain activity from 25 subjects during the presentation of natural‐ and bluish‐colored faces and their scrambled images. The bilateral fusiform face area (FFA) and occipital face area (OFA) were localized by the contrast of natural‐colored faces versus natural‐colored scrambled images. Moreover, region of interest (ROI) analysis showed that the left FFA was sensitive to facial color, whereas the right FFA and the right and left OFA were insensitive to facial color. In combination with our previous EEG results, these data suggest that the left FFA may play an important role in facial color processing. Hum Brain Mapp 35:4958–4964, 2014. © 2014 Wiley Periodicals, Inc.

Keywords: facial color, fMRI, FFA, OFA

INTRODUCTION

Facial color provides useful clues for estimation of mental or physical condition of another person. Changizi et al. [2006] proposed that color vision in primates was selected for discriminating skin color modulations, presumably for the purpose of discriminating emotional states, socio‐sexual signals, and threat displays. Moreover, several behavioral studies have shown that facial skin color is related to the perception of age, sex, health condition, and attractiveness of the face [Bruce and Langton, 1994; Fink et al., 2006; Jones et al., 2004; Matts et al., 2007; Stephen et al., 2009; Tarr et al., 2001]. Facial skin color distribution, independent of facial shape‐related features, has a significant influence on the perception of female facial age, attractiveness, and health [Fink et al., 2006]. Stephen et al. [2009] instructed participants to manipulate the skin color of face photographs to enhance healthy appearance, and found that participants increased skin redness, suggesting that facial color may play a role in perception of health in human faces. Similarly, increased skin redness enhances the appearance of dominance, aggression, and attractiveness in male faces viewed by female participants, which suggests that facial redness is perceived as conveying similar information about male qualities [Stephen et al., 2012]. Thus, facial color is important facial information as it facilitates social communication.

In addition to these behavioral data, recent EEG studies have investigated the influence of facial color on the face‐selective ERP component, such as N170 [Minami et al., 2011; Nakajima et al., 2012]. The N170, a negative ERP component observed at occipito‐temporal sites with a latency of ∼170 ms, clearly distinguishes face from non‐facial stimuli [Itier and Taylor, 2004; Rossion et al., 2003]. Therefore, the N170 has been regarded as a face‐selective component [Botzel and Grusser, 1989; Jeffreys and Tukmachi, 1992]. Minami et al. [2011] demonstrated that the N170 was larger in amplitude for atypical‐colored (bluish‐colored) faces than for natural‐colored faces, which suggests that face color is important for face detection. Nakajima et al. [2012] investigated modulation of the N170 while subjects viewed face images with eight different color hues, and found that the N170 amplitude recorded in the left occipito‐temporal site was modulated by facial color, but not in the homologous site of the right hemisphere. These findings suggest that facial color information is processed in the early stage of face processing, and the processing occurs in the left occipito‐temporal site.

In neuroimaging studies, face‐selective regions that show higher activity to faces than other category objects were identified in the occipito‐temporal cortex [Haxby et al., 2000; Yovel and Kanwisher, 2005]. These regions were the “fusiform face area” (FFA) found in the middle fusiform gyrus [Kanwisher et al., 1997], the “occipital face area” (OFA) found in the inferior occipital gyrus [Gauthier et al., 2000] and the lateral fusiform gyrus, and the superior temporal sulcus (STS) [Ishai et al., 2005]. In the neural model for face perception, these regions compose a core system and have different functions [Haxby et al., 2000]. The FFA is assumed to mediate the invariant aspects of faces related to identity. By contrast, the STS is assumed to process changeable aspects of faces such as eye gaze, expression, and lip movement. The OFA is suggested to be related to early perception of facial features, and provide a major input to both the FFA and STS.

From our previous results [Minami et al., 2011; Nakajima et al., 2012], we assumed that these face‐selective regions in the occipito‐temporal cortex are related to facial color processing. Thus, the aim of the present study was to identify the brain region involved in facial color processing. Here, we measured the response of the face‐selective regions to the natural‐ and unnatural‐colored face and their phase‐scrambled images with fMRI. We used a bluish color as an unusual facial color following the EEG study of Minami et al. [2011].

MATERIALS AND METHODS

Participants

Twenty‐five healthy subjects (five females, mean age = 25.24 years, S.D. = 5.25) participated in the present study. Subjects had normal or corrected‐to‐normal visual acuity. Twenty‐three subjects were right‐handed and two were left‐handed according to the Edinburgh handedness inventory [Oldfield, 1971]. No subjects had a history of neurological or psychiatric illness. Experimental protocols were approved by the ethical committee of the National Institute for Physiological Sciences, Okazaki, Japan and the Committee for Human Research of Toyohashi University of Technology. The experiments were undertaken in compliance with national legislation and the Code of Ethical Principles for Medical Research Involving Human Subjects of the World Medical Association (Declaration of Helsinki). All subjects provided written informed consent.

Stimuli

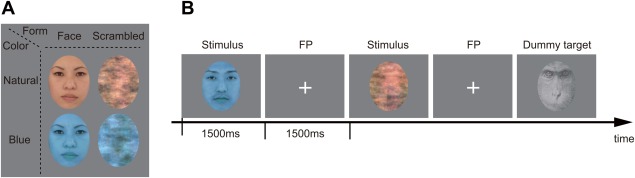

The stimuli were based on color images of 20 Asian faces (10 females) with neutral expressions (provided and approved by Softopia Japan Foundation). Face images were ovalized using Photoshop CS2 (Adobe Systems, San Jose, CA) to remove external features (neck, ears, and hairline). All stimuli were 158 × 205 pixels in size (2.9° × 3.8° of visual angle). The faces were edited into bluish‐colored faces (the opponent color of the natural color), and the natural‐ and bluish‐colored faces were phase‐scrambled using a Fourier phase randomization procedure, which preserves global low‐level properties of the original image [Jacques and Rossion, 2006; Nasanen, 1999], with MATLAB software (MathWorks, Natick, MA). The total set of stimuli consisted of natural‐ and bluish‐colored faces, natural‐ and bluish‐colored scrambled images (Fig. 1), and a monkey image as a target. To dissipate color similarity with the human face images, the monkey image was converted to gray scale. The mean luminance and root mean square (RMS) contrast were adjusted to be equal across all images. All stimuli were presented in the center of a neutral gray background.
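The Fourier phase randomization step can be sketched as follows. This is a minimal illustration in Python with NumPy, not the MATLAB code used in the study; the function name `phase_scramble`, the choice to apply one shared random phase map to every color channel, and the random stand‐in image are all assumptions made for the example.

```python
import numpy as np

def phase_scramble(image, rng):
    """Randomize Fourier phase while keeping each channel's amplitude spectrum."""
    image = np.asarray(image, dtype=float)
    h, w = image.shape[:2]
    # The phase of a real-valued noise image is antisymmetric, so adding it
    # keeps the spectrum Hermitian and the inverse transform real-valued.
    random_phase = np.angle(np.fft.fft2(rng.random((h, w))))
    channels = image.reshape(h, w, -1)
    out = np.empty_like(channels)
    for c in range(channels.shape[2]):
        spectrum = np.fft.fft2(channels[:, :, c])
        shifted = np.abs(spectrum) * np.exp(1j * (np.angle(spectrum) + random_phase))
        out[:, :, c] = np.fft.ifft2(shifted).real
    return out.reshape(image.shape)

rng = np.random.default_rng(0)
face = rng.random((205, 158, 3))  # random stand-in for a 158 x 205 pixel RGB image
scrambled = phase_scramble(face, rng)
```

Because the amplitude spectrum of each channel is unchanged, the channel mean and standard deviation are preserved, which is the sense in which global low‐level properties survive scrambling. Pixel values can leave the displayable range after scrambling, so a real pipeline would still need the luminance and contrast adjustment described above.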

Figure 1.

Stimuli and experimental procedure. (A) Examples of stimuli: the 2 × 2 design (form: face or scrambled image; color: a natural‐colored or a bluish‐colored). (B) Each session comprised ∼200 stimuli (40 natural‐ and bluish‐colored facial stimuli, 40 natural and bluish‐colored scrambled stimuli, and 26–31 monkey images). Each stimulus was presented for 1,500 ms followed by a blank interval (including FP) of 1,500 ms. The participants were asked to press a button when the target monkey was presented and silently count the number of target monkey images to concentrate on the experiment. [Color figure can be viewed in the online issue, which is available at http://wileyonlinelibrary.com.]

Procedure

The experiment involved four sessions. Each session comprised ∼200 stimuli, with 40 natural‐ and bluish‐colored facial stimuli, 40 natural‐ and bluish‐colored scrambled stimuli, and 26–31 monkey images. Each stimulus was presented for 1,500 ms followed by a blank interval (including FP) of 1,500 ms. To maintain their attention during the experiment, the participants were asked to press a button when the target monkey image was presented and to silently count the number of target monkey images. The sequence of the four conditions was calculated by a genetic algorithm [Wager and Nichols, 2003] to maximize contrast detection efficiency for the main effects of form and color and their interaction.
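To make "contrast detection efficiency" concrete, the sketch below scores a trial sequence with the commonly used measure 1 / trace(C (X'X)⁻¹ C'). It is only an illustration: the double‐gamma response shape, the count of 30 targets, the helper names, and the use of a plain random search in place of the genetic algorithm are all assumptions, not details taken from the study.

```python
import math
import numpy as np

TR = 3.0  # seconds; one trial (1.5 s stimulus + 1.5 s blank) spans one volume

def hrf(t):
    """Double-gamma response shape (assumed; not taken from the paper)."""
    t = np.asarray(t, dtype=float)
    peak = t**5 * np.exp(-t) / math.gamma(6)
    undershoot = t**15 * np.exp(-t) / math.gamma(16)
    return peak - undershoot / 6.0

def design_matrix(labels, n_conditions):
    """One HRF-convolved regressor per condition plus a constant column."""
    labels = np.asarray(labels)
    kernel = hrf(np.arange(0.0, 30.0, TR))
    columns = [
        np.convolve((labels == c).astype(float), kernel)[: len(labels)]
        for c in range(n_conditions)
    ]
    return np.column_stack(columns + [np.ones(len(labels))])

def efficiency(X, contrasts):
    """1 / trace(C (X'X)^-1 C'); larger values mean lower contrast variance."""
    C = np.asarray(contrasts, dtype=float)
    return 1.0 / np.trace(C @ np.linalg.pinv(X.T @ X) @ C.T)

# Columns: natural face, bluish face, natural scrambled, bluish scrambled,
# target, constant.
CONTRASTS = [
    [1, 1, -1, -1, 0, 0],   # main effect of form
    [1, -1, 1, -1, 0, 0],   # main effect of color
    [1, -1, -1, 1, 0, 0],   # form x color interaction
]

rng = np.random.default_rng(0)
base = np.repeat(np.arange(5), [40, 40, 40, 40, 30])  # 30 targets is illustrative
best_seq, best_eff = None, -np.inf
for _ in range(200):  # random search stands in for the genetic algorithm
    seq = rng.permutation(base)
    eff = efficiency(design_matrix(seq, 5), CONTRASTS)
    if eff > best_eff:
        best_seq, best_eff = seq, eff
```

A genetic algorithm explores the same search space more systematically by recombining and mutating high‐scoring sequences rather than drawing each candidate independently.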

Scanning Procedures and Data Analysis

Functional and structural MRI images were acquired using a 3‐Tesla MR scanner (Allegra; Siemens, Erlangen, Germany). For functional imaging during the sessions, the scanning parameters were: repetition time (TR), 3,000 ms; echo time (TE), 30 ms; flip angle, 83°; field of view, 192 mm; slice thickness, 3 mm; 64 × 64 matrix; voxel dimensions, 3.0 × 3.0 × 3.0 mm³; number of slices, 45. Four sessions of ∼10 min (200 images) were acquired. A high‐resolution, T1‐weighted three‐dimensional image was also acquired for each subject using a magnetization‐prepared rapid‐acquisition gradient‐echo (MPRAGE) sequence (TR, 2,500 ms; TE, 4.38 ms; flip angle, 8°; field of view, 256 mm; slice thickness, 1 mm; voxel dimensions, 1.0 × 1.0 × 1.0 mm³).
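As a quick consistency check on the functional acquisition parameters, the reported numbers can be related by simple arithmetic. The variable names below are illustrative only; every value is taken from the text.

```python
# Values taken from the acquisition and procedure descriptions.
fov_mm = 192.0
matrix = 64
slice_thickness_mm = 3.0
tr_s = 3.0
volumes_per_session = 200

# In-plane voxel size is the field of view divided by the matrix size.
in_plane_mm = fov_mm / matrix
voxel_mm = (in_plane_mm, in_plane_mm, slice_thickness_mm)

# Session length follows from the number of volumes and the TR.
session_min = volumes_per_session * tr_s / 60.0

# Each trial lasts 1.5 s of stimulus plus 1.5 s of blank interval.
trial_s = 1.5 + 1.5
volumes_per_trial = trial_s / tr_s

print(voxel_mm, session_min, volumes_per_trial)
```

The check reproduces the stated 3.0 × 3.0 × 3.0 mm voxels and the ∼10 min session length, and shows that one trial spans exactly one volume.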

Preprocessing and statistical analysis of fMRI data were conducted using SPM8 version 4290 (Wellcome Department of Imaging Neuroscience, London, UK, http://www.fil.ion.ucl.ac.uk/spm) implemented in MATLAB 2011b. Functional scans were realigned to correct for head motion, then corrected for differences in slice timing within each volume. The whole‐head MPRAGE image volume was coregistered with the mean EPI volume of session 1. The whole‐head image volume was normalized to the Montréal Neurological Institute T1 image template using a nonlinear basis function. The same parameters were applied to all the EPI volumes, which were spatially smoothed in three dimensions using an 8‐mm full‐width at half‐maximum Gaussian kernel.
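For readers more used to thinking of a Gaussian kernel in terms of its standard deviation, the 8‐mm full‐width at half‐maximum (FWHM) can be converted with the standard relation FWHM = 2√(2 ln 2)·σ. The helper name below is illustrative.

```python
import math

def fwhm_to_sigma(fwhm_mm):
    """Standard deviation of a Gaussian with the given full-width at half-maximum."""
    return fwhm_mm / (2.0 * math.sqrt(2.0 * math.log(2.0)))

sigma_mm = fwhm_to_sigma(8.0)

# Sanity check: the kernel falls to half its peak at FWHM / 2 = 4 mm from the center.
half_height = math.exp(-(4.0 ** 2) / (2.0 * sigma_mm ** 2))

print(round(sigma_mm, 2), round(half_height, 3))
```

An 8‐mm FWHM therefore corresponds to a standard deviation of roughly 3.4 mm, slightly more than one acquired voxel.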

Face‐selective regions of interest such as the FFA, OFA, and STS were defined separately for each participant, as follows. First, we identified the group‐level face‐selective regions by performing a group‐level analysis with the contrast natural‐colored face vs. natural‐colored scrambled image (P < 10⁻³, uncorrected for multiple comparisons). Second, we defined the group ROIs on functional grounds as spherical regions (radius = 10 mm) centered on the peak activation coordinates in the group‐level face‐selective regions using the MarsBaR toolbox version 0.43 (http://marsbar.sourceforge.net/). Finally, we defined the individual ROIs using the group ROIs as a mask. We searched for the peak activation in each subject's face‐selective regions (natural‐colored face vs. natural‐colored scrambled image, P < 0.05, uncorrected for multiple comparisons) masked with the group ROIs, and defined spherical regions (radius = 10 mm) centered on those coordinates as the individual ROIs. If a subject had no activation within a search region, the group ROI was used instead for the ROI analysis. Percent signal change data of the ROIs were extracted using the MarsBaR toolbox. A two‐way repeated measures analysis of variance (ANOVA) was performed with form (face or scrambled) and color (natural or bluish) as factors to identify the area related to facial color processing using SPSS software (IBM, Armonk, NY).
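The ROI construction described above (a 10‐mm sphere around a peak, with a fallback to the group peak) can be sketched in a few lines. This is not MarsBaR code: the function names are hypothetical, the 2‐mm isotropic grid is an assumption because the normalized voxel size is not stated, and the example subject is invented. The group peak is the left FFA coordinate from Table 1.

```python
def sphere_points(center_mm, radius_mm, voxel_mm):
    """Grid points (in mm) within radius_mm of center_mm on an isotropic grid."""
    reach = int(radius_mm // voxel_mm)
    points = []
    for i in range(-reach, reach + 1):
        for j in range(-reach, reach + 1):
            for k in range(-reach, reach + 1):
                if (i * i + j * j + k * k) * voxel_mm ** 2 <= radius_mm ** 2:
                    points.append((
                        center_mm[0] + i * voxel_mm,
                        center_mm[1] + j * voxel_mm,
                        center_mm[2] + k * voxel_mm,
                    ))
    return points

def roi_center(individual_peak, group_peak):
    """Use the subject's own peak, or the group peak when none was found."""
    return group_peak if individual_peak is None else individual_peak

GROUP_LEFT_FFA = (-44, -56, -20)  # group-level peak from Table 1
subject_peak = None               # hypothetical subject with no peak in the search region
center = roi_center(subject_peak, GROUP_LEFT_FFA)
roi = sphere_points(center, radius_mm=10.0, voxel_mm=2.0)  # 2 mm grid is an assumption
```

The percent signal change for a condition would then be averaged over the voxels in `roi` before entering the ANOVA.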

RESULTS

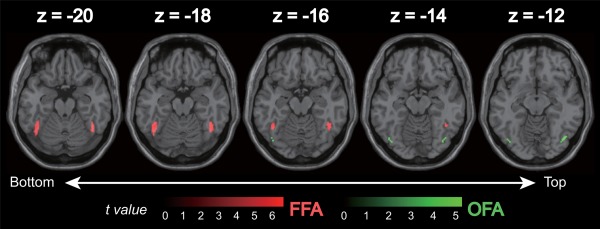

The bilateral FFA and OFA were identified as group‐level face‐selective regions (Fig. 2 and Table 1). The right FFA was successfully localized in 23 subjects, the left FFA in 22 subjects, the right OFA in 23 subjects, and the left OFA in 21 subjects. The right STS was found as a group‐level face‐selective region only at a liberal significance threshold (P < 0.05, uncorrected for multiple comparisons), and it was localized in fewer than half of the subjects (12/25). The left STS was not found as a group‐level face‐selective region even at this liberal threshold (P < 0.05, uncorrected for multiple comparisons). Therefore, we did not include the bilateral STS in the ROI analysis. No other area was found as a group‐level face‐selective region (P < 10⁻³, uncorrected for multiple comparisons).

Figure 2.

Location of face‐selective regions [bilateral (A) FFA: fusiform face area, (B) OFA: occipital face area] showing significantly higher responses to the natural‐colored face compared to the natural‐colored scrambled image (P < 10⁻³, uncorrected for multiple comparisons). [Color figure can be viewed in the online issue, which is available at http://wileyonlinelibrary.com.]

Table 1.

Group‐level face‐selective regions identified by the group‐level analysis with the contrast natural‐colored face vs. natural‐colored scrambled image

| Brain area | MNI x (mm) | MNI y (mm) | MNI z (mm) | Cluster size (mm³) | t max |
|---|---|---|---|---|---|
| Left fusiform gyrus (left FFA) | −44 | −56 | −20 | 768 | 6.6 |
| Right fusiform gyrus (right FFA) | 44 | −54 | −20 | 728 | 5.87 |
| Right inferior occipital gyrus (right OFA) | 42 | −72 | −12 | 144 | 5.27 |
| Left inferior occipital gyrus (left OFA) | −42 | −74 | −14 | 88 | 5.07 |

Threshold P < 10⁻³, uncorrected for multiple comparisons.

The face‐selective ROIs were defined on the basis of these coordinates.

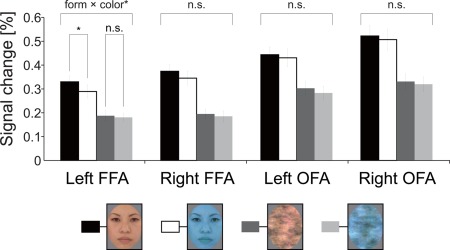

Figure 3 shows the mean percent signal change in the face‐selective ROIs. Two‐way ANOVA revealed a significant main effect of form (face > scrambled) in all ROIs [right FFA: F(1, 24) = 98.876, P < 10⁻⁴; left FFA: F(1, 24) = 74.035, P < 10⁻⁴; right OFA: F(1, 24) = 80.705, P < 10⁻⁴; left OFA: F(1, 24) = 55.701, P < 10⁻⁴]. The main effect of color was statistically significant in the bilateral FFA [right FFA: F(1, 24) = 5.543, P < 0.05; left FFA: F(1, 24) = 14.868, P < 0.001]. There was no main effect of color in the right and left OFA [right OFA: F(1, 24) = 1.749, P > 0.1; left OFA: F(1, 24) = 4.164, P > 0.05]. Importantly, a form × color interaction was significant only in the left FFA [F(1, 24) = 5.577, P < 0.05]. Post‐hoc tests revealed a simple main effect of form for both colors, such that the face image elicited a larger response than the scrambled image (natural color: P < 0.0001; bluish color: P < 0.0001). More importantly, there was a simple main effect of color only for the face, such that natural‐colored faces elicited a larger response than bluish‐colored faces [face: P < 0.05; scrambled image: P > 0.4]. There was no significant form × color interaction in the right FFA or the right and left OFA [right FFA: F(1, 24) = 2.133, P > 0.1; right OFA: F(1, 24) = 0.063, P > 0.8; left OFA: F(1, 24) = 0.193, P > 0.6].
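The F(1, 24) statistics above follow from the structure of a 2 × 2 within‐subject design with 25 subjects: each effect has one degree of freedom and can be tested as a one‐sample t‐test on a per‐subject contrast score, with F = t². The sketch below illustrates this equivalence. It is not the SPSS analysis, the function names are hypothetical, and the percent signal change values are randomly generated placeholders rather than study data.

```python
import math
import random
import statistics

def contrast_F(scores):
    """F(1, n-1) for a 1-df within-subject effect, as a squared one-sample t."""
    n = len(scores)
    t = statistics.mean(scores) / (statistics.stdev(scores) / math.sqrt(n))
    return t * t, (1, n - 1)

def rm_anova_2x2(nat_face, blu_face, nat_scr, blu_scr):
    """Main effects and interaction from per-subject contrast scores."""
    rows = list(zip(nat_face, blu_face, nat_scr, blu_scr))
    form = [(a + b) - (c + d) for a, b, c, d in rows]
    color = [(a + c) - (b + d) for a, b, c, d in rows]
    interaction = [(a - b) - (c - d) for a, b, c, d in rows]
    return {
        "form": contrast_F(form),
        "color": contrast_F(color),
        "form x color": contrast_F(interaction),
    }

# Made-up percent signal changes for 25 hypothetical subjects (not study data).
rng = random.Random(0)

def toy(mean):
    return [rng.gauss(mean, 0.2) for _ in range(25)]

result = rm_anova_2x2(toy(1.0), toy(0.8), toy(0.3), toy(0.3))
for effect, (F, df) in result.items():
    print(f"{effect}: F{df} = {F:.2f}")
```

With 25 subjects, every effect is tested on 1 and 24 degrees of freedom, matching the F(1, 24) values reported for each ROI.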

Figure 3.

Results of the ROI analyses. Percent signal change in left and right FFA, and the left and right OFA. A significant form × color interaction was found only in the left FFA. There was a simple main effect of color only for the face, such that natural‐colored faces elicited a larger response than bluish‐colored faces. The error bars show the standard error of the mean of the percent signal change across subjects. *P < 0.05. [Color figure can be viewed in the online issue, which is available at http://wileyonlinelibrary.com.]

DISCUSSION

The present study examined the brain regions involved in facial color processing using fMRI, with a particular focus on areas that show sensitivity for faces (FFA and OFA). We demonstrated that the bilateral FFA was sensitive to color difference, whereas the bilateral OFA was insensitive to color. Most importantly, there was a significant form × color interaction only in the left FFA, showing that natural‐colored images elicited a larger response than bluish‐colored images only for face images (not for scrambled‐images). These results suggest that the left FFA may reflect facial color processing rather than simple color differences.

Our previous EEG study demonstrated that the face‐selective N170 component recorded on the left occipito‐temporal site was modulated by facial color, whereas that on the right site was not [Nakajima et al., 2012]. Source analysis of ERP studies has shown that the source of the N170 was localized in the fusiform gyrus [Deffke et al., 2007; Rossion et al., 2003]. Moreover, a recent simultaneous ERP‐fMRI measurement study showed that the FFA and the STS were associated with N170 face‐selectivity, while the OFA was not [Sadeh et al., 2010]. Although our previous EEG data and the present fMRI data were not acquired simultaneously, our EEG finding that the left N170 is sensitive to facial color supports the view that the left FFA is sensitive to facial color difference.

Several studies suggest that the right and left FFAs may play different roles in face processing. The right FFA is more related to configural face processing [Maurer et al., 2007; Rossion et al., 2000], whereas the left FFA seems to be more involved in parts‐based processing [Harris and Aguirre, 2010; Rossion et al., 2000]. In self‐face recognition, the left FFA plays an important role in the low level (physical properties) processing of self‐face, while the right FFA is involved in registration of self‐face identity [Ma and Han, 2012]. Some studies examining other‐race effects have shown that the activation difference between own‐ and other‐race faces in the left FFA was correlated with the behavioral difference of own‐ and other‐race face categorization accuracy [Feng et al., 2011; Golby et al., 2001].

In our study, the FFA was sensitive to color difference, whereas the OFA was insensitive to color. Jiang et al. [2009] suggested that face‐sensitive areas (FFA and OFA) of the right hemisphere are more sensitive to shape than to surface reflectance of faces, while the left FFA and OFA appear to process both kinds of cues. Steeves et al. [2006] investigated face processing in patient D.F., who has acquired brain damage overlapping with the OFA bilaterally. The patient demonstrated impairment in higher‐level face processing such as the identification of faces, gender, and emotional expression. However, she showed higher FFA activation for faces and was able to differentiate faces from non‐faces using texture information. Importantly, she can discriminate among hues and name colors appropriately [Milner and Heywood, 1989]. These observations suggest that, although the left OFA is related to the processing of surface reflectance information including color and texture, it is not essential for processing surface information, particularly color. In a color vision study, some regions related to color judgment were found in the fusiform gyrus [McKeefry and Zeki, 1997]. Although surface information on the face is processed in both the left FFA and OFA, our results suggest that facial color is processed in the left FFA.

Single‐cell studies in monkeys have suggested a strong role for color in the recognition of familiar objects, including faces. Achromatic or falsely (bluish) colored face images were found to evoke significantly reduced responses in comparison with naturally colored face images in the majority of face‐selective cells in the inferotemporal (IT) cortex [Edwards et al., 2003]. Our results showed facial color sensitivity in the human FFA. A recent report comparing face recognition in monkeys and humans suggested that the face‐selective area in the anterior IT of monkeys has similar functions to the human FFA [Yovel and Freiwald, 2013].

Some neuroimaging studies suggest that the left fusiform gyrus is related to processing of emotional stimuli along with the left amygdala [Hardee et al., 2008; Talmi et al., 2008]. For example, the left fusiform gyrus showed a significantly greater response to fearful eyes compared with other eye conditions (gaze, happy, neutral eyes), which is similar to that observed in the left amygdala [Hardee et al., 2008]. The left fusiform gyrus and left amygdala form a functional network for emotional picture processing, and the left fusiform gyrus is involved in emotion‐dependent enhancement of attention to pictures [Talmi et al., 2008]. In addition, studies of virtual facial image synthesis show that facial color is effective for the affect display of the face [Yamada and Watanabe, 2004, 2007]. Thus, facial color information contributes to the recognition of emotion from faces. In fact, we routinely use phrases linking facial color with emotion, such as “he turns red with anger” or “she turns white with fear.” The present study used natural‐ and bluish‐colored face images, and showed facial color selective responses in the left FFA. Given that the left fusiform gyrus is suggested to be related to emotional processing [Hardee et al., 2008; Talmi et al., 2008], the bluish‐colored faces may have evoked a negative emotion such as fear or sadness.

One limitation of the present study is that there is a risk of “double‐dipping” because we used the same data for the ROI selection and ROI analysis. To test the effect of double‐dipping, we performed an additional analysis. In this analysis, we defined the ROIs using odd sessions only (sessions 1 and 3), and we analyzed the percent signal change extracted from the even sessions only (sessions 2 and 4). In this way, the data extracted from the ROI are independent from the data used to select the ROI [Kriegeskorte et al., 2009]. The bilateral FFA and OFA were again found as group‐level face‐selective regions, and we defined ROIs for each participant using the same procedure as reported above. ROI analysis revealed an interaction between form and color that approached significance for the left FFA [F(1, 24) = 3.85, P = 0.06]. The interaction was due to a differential response only for facial color, with a larger response for natural‐colored faces than for bluish‐colored faces [face: P < 0.05; scrambled image: P > 0.9]. In contrast, the interactions were far from significant for the other face‐selective ROIs [right FFA: F(1, 24) = 0.06, P > 0.8; right OFA: F(1, 24) = 0.46, P > 0.5; left OFA: F(1, 24) = 0.42, P > 0.5]. Thus, although the facial color effect only approached significance in the additional analysis, it was observed only in the left FFA, in line with the main analysis. The reduced statistical robustness may be due to the smaller amount of data available after splitting the sessions.
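The logic of the split‐half control can be sketched as follows: the ROI is chosen from one half of the sessions and the response is measured in the other half, so that selection noise cannot inflate the measured effect. The data layout, voxel labels, numbers, and function names below are invented for illustration and do not come from the study.

```python
from statistics import mean

def split_sessions(session_ids):
    """Odd-numbered sessions select the ROI; even-numbered sessions test it."""
    odd = [s for s in session_ids if s % 2 == 1]
    even = [s for s in session_ids if s % 2 == 0]
    return odd, even

def independent_estimate(data):
    """data[session][voxel] holds a toy face-vs-scrambled response."""
    select, test = split_sessions(sorted(data))
    voxels = data[select[0]].keys()
    # Pick the peak voxel using the selection half only.
    peak = max(voxels, key=lambda v: mean(data[s][v] for s in select))
    # Measure that voxel using the held-out half only.
    return peak, mean(data[s][peak] for s in test)

# Toy responses for two hypothetical voxels across four sessions.
toy_data = {
    1: {"a": 0.2, "b": 0.9},
    2: {"a": 0.1, "b": 0.5},
    3: {"a": 0.4, "b": 0.7},
    4: {"a": 0.3, "b": 0.3},
}
peak, estimate = independent_estimate(toy_data)
print(peak, estimate)
```

In this toy case the held‐out estimate for the selected voxel is lower than its value in the selection half, which is the kind of shrinkage that an independent test is meant to expose.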

In summary, the present study provides novel evidence for facial color processing. We found that activation in the face‐selective left FFA reflected a facial color difference. Our findings indicate the possibility that the left FFA processes facial color information related to processing such as familiarity, sociality, and emotion.

REFERENCES

- Botzel K, Grusser OJ (1989): Electric brain potentials evoked by pictures of faces and non‐faces: A search for “face‐specific” EEG‐potentials. Exp Brain Res 77:349–360.

- Bruce V, Langton S (1994): The use of pigmentation and shading information in recognising the sex and identities of faces. Perception 23:803–822.

- Changizi MA, Zhang Q, Shimojo S (2006): Bare skin, blood and the evolution of primate colour vision. Biol Lett 2:217–221.

- Deffke I, Sander T, Heidenreich J, Sommer W, Curio G, Trahms L, Lueschow A (2007): MEG/EEG sources of the 170‐ms response to faces are co‐localized in the fusiform gyrus. Neuroimage 35:1495–1501.

- Edwards R, Xiao D, Keysers C, Földiák P, Perrett D (2003): Color sensitivity of cells responsive to complex stimuli in the temporal cortex. J Neurophysiol 90:1245–1256.

- Feng L, Liu J, Wang Z, Li J, Li L, Ge L, Tian J, Lee K (2011): The other face of the other‐race effect: An fMRI investigation of the other‐race face categorization advantage. Neuropsychologia 49:3739–3749.

- Fink B, Grammer K, Matts PJ (2006): Visible skin color distribution plays a role in the perception of age, attractiveness, and health in female faces. Evol Hum Behav 27:433–442.

- Gauthier I, Tarr MJ, Moylan J, Skudlarski P, Gore JC, Anderson AW (2000): The fusiform "face area" is part of a network that processes faces at the individual level. J Cogn Neurosci 12:495–504.

- Golby AJ, Gabrieli JD, Chiao JY, Eberhardt JL (2001): Differential responses in the fusiform region to same‐race and other‐race faces. Nat Neurosci 4:845–850.

- Hardee JE, Thompson JC, Puce A (2008): The left amygdala knows fear: Laterality in the amygdala response to fearful eyes. Social Cogn Affect Neurosci 3:47–54.

- Harris A, Aguirre GK (2010): Neural tuning for face wholes and parts in human fusiform gyrus revealed by fMRI adaptation. J Neurophysiol 104:336–345.

- Haxby JV, Hoffman EA, Gobbini MI (2000): The distributed human neural system for face perception. Trends Cogn Sci 4:223–233.

- Ishai A, Schmidt CF, Boesiger P (2005): Face perception is mediated by a distributed cortical network. Brain Res Bull 67:87–93.

- Itier RJ, Taylor MJ (2004): N170 or N1? Spatiotemporal differences between object and face processing using ERPs. Cereb Cortex 14:132–142.

- Jeffreys DA, Tukmachi ES (1992): The vertex‐positive scalp potential evoked by faces and by objects. Exp Brain Res 91:340–350.

- Jiang F, Dricot L, Blanz V, Goebel R, Rossion B (2009): Neural correlates of shape and surface reflectance information in individual faces. Neuroscience 163:1078–1091.

- Jones BC, Little AC, Burt DM, Perrett DI (2004): When facial attractiveness is only skin deep. Perception 33:569–576.

- Kanwisher N, McDermott J, Chun MM (1997): The fusiform face area: A module in human extrastriate cortex specialized for face perception. J Neurosci 17:4302–4311.

- Kriegeskorte N, Simmons WK, Bellgowan PS, Baker CI (2009): Circular analysis in systems neuroscience: The dangers of double dipping. Nat Neurosci 12:535–540.

- Ma Y, Han S (2012): Functional dissociation of the left and right fusiform gyrus in self‐face recognition. Hum Brain Mapp 33:2255–2267.

- Matts PJ, Fink B, Grammer K, Burquest M (2007): Color homogeneity and visual perception of age, health, and attractiveness of female facial skin. J Am Acad Dermatol 57:977–984.

- Maurer D, O'Craven KM, Le Grand R, Mondloch CJ, Springer MV, Lewis TL, Grady CL (2007): Neural correlates of processing facial identity based on features versus their spacing. Neuropsychologia 45:1438–1451.

- McKeefry DJ, Zeki S (1997): The position and topography of the human colour centre as revealed by functional magnetic resonance imaging. Brain 120:2229–2242.

- Milner AD, Heywood CA (1989): A disorder of lightness discrimination in a case of visual form agnosia. Cortex 25:489–494.

- Minami T, Goto K, Kitazaki M, Nakauchi S (2011): Effects of color information on face processing using event‐related potentials and gamma oscillations. Neuroscience 176:265–273.

- Nakajima K, Minami T, Nakauchi S (2012): The face‐selective N170 component is modulated by facial color. Neuropsychologia 50:2499–2505.

- Oldfield RC (1971): The assessment and analysis of handedness: The Edinburgh inventory. Neuropsychologia 9:97–113.

- Rossion B, Dricot L, Devolder A, Bodart JM, Crommelinck M, De Gelder B, Zoontjes R (2000): Hemispheric asymmetries for whole‐based and part‐based face processing in the human fusiform gyrus. J Cogn Neurosci 12:793–802.

- Rossion B, Joyce CA, Cottrell GW, Tarr MJ (2003): Early lateralization and orientation tuning for face, word, and object processing in the visual cortex. Neuroimage 20:1609–1624.

- Sadeh B, Podlipsky I, Zhdanov A, Yovel G (2010): Event‐related potential and functional MRI measures of face‐selectivity are highly correlated: A simultaneous ERP‐fMRI investigation. Hum Brain Mapp 31:1490–1501.

- Steeves JKE, Culham JC, Duchaine BC, Pratesi CC, Valyear KF, Schindler I, Humphrey GK, Milner AD, Goodale MA (2006): The fusiform face area is not sufficient for face recognition: Evidence from a patient with dense prosopagnosia and no occipital face area. Neuropsychologia 44:594–609.

- Stephen ID, Smith MJL, Stirrat MR, Perrett DI (2009): Facial skin coloration affects perceived health of human faces. Int J Primatol 30:845–857.

- Stephen ID, Oldham FH, Perrett DI, Barton RA (2012): Redness enhances perceived aggression, dominance and attractiveness in men's faces. Evol Psychol 10:562–572.

- Talmi D, Anderson AK, Riggs L, Caplan JB, Moscovitch M (2008): Immediate memory consequences of the effect of emotion on attention to pictures. Learn Mem 15:172–182.

- Tarr MJ, Kersten D, Cheng Y, Rossion B (2001): It's Pat! Sexing faces using only red and green. J Vis 1:337.

- Wager TD, Nichols TE (2003): Optimization of experimental design in fMRI: A general framework using a genetic algorithm. Neuroimage 18:293–309.

- Yamada T, Watanabe T (2004): Effects of facial color on virtual facial image synthesis for dynamic facial color and expression under laughing emotion. In: Robot and Human Interactive Communication, 2004. 13th IEEE International Workshop on IEEE, pp 341–346.

- Yamada T, Watanabe T (2007): Virtual facial image synthesis with facial color enhancement and expression under emotional change of anger. In: Robot and Human interactive Communication, 2007. The 16th IEEE International Symposium on IEEE, pp 49–54.

- Yovel G, Freiwald WA (2013): Face recognition systems in monkey and human: Are they the same thing? F1000prime Rep 5.

- Yovel G, Kanwisher N (2005): The neural basis of the behavioral face‐inversion effect. Curr Biol 15:2256–2262.