Abstract

Sedation has a graded effect on brain responses to auditory stimuli: perceptual processing persists at sedation levels that attenuate more complex processing. We used fMRI in healthy volunteers sedated with propofol to assess changes in neural responses to spoken stimuli. Volunteers were scanned awake, sedated, and during recovery, while making perceptual or semantic decisions about nonspeech sounds or spoken words respectively. Sedation caused increased error rates and response times, and differentially affected responses to words in the left inferior frontal gyrus (LIFG) and the left inferior temporal gyrus (LITG). Activity in LIFG regions putatively associated with semantic processing was significantly reduced by sedation despite sedated volunteers continuing to make accurate semantic decisions. In contrast, the greater LITG response to words than to nonspeech sounds was preserved and may therefore be associated with persistent semantic processing during the deepest levels of sedation. These results suggest functionally distinct contributions of frontal and temporal regions to semantic decision making. These results have implications for functional imaging studies of language, for understanding mechanisms of impaired speech comprehension in postoperative patients with residual levels of anesthetic, and may contribute to the development of frameworks against which EEG‐based monitors could be calibrated to detect awareness under anesthesia. Hum Brain Mapp 35:2935–2949, 2014. © 2013 Wiley Periodicals, Inc.

Keywords: semantic processing, propofol, inferior frontal gyrus, sedation, functional MRI

INTRODUCTION

Although apparently effortless, understanding the meaning of a spoken word is a complex cognitive process, underpinned by coordinated activity in multiple brain regions over the duration of a typical spoken word lasting just a few hundred milliseconds [Davis and Gaskell, 2009; Friederici, 2002; Marslen‐Wilson and Tyler, 1980; O'Rourke and Holcomb, 2002]. Such processing commences with acoustic analysis in Heschl's Gyrus (common to all auditory stimuli) followed by identification of speech elements (phonemes, syllables, etc.) in peri‐auditory regions of the Superior and Middle Temporal Gyri (STG, MTG) [Binder et al., 2000; Davis and Johnsrude, 2003; Jacquemot et al., 2003]. Current accounts of the neuroanatomical basis of speech perception propose two or more hierarchically organized processing streams, dorsal and ventral to these peri‐auditory regions [Poeppel and Hickok, 2004; Saur et al., 2008; Scott and Johnsrude, 2003]. More anatomically distant regions of the ventral processing pathway in anterior temporal [Rauschecker et al., 2008] and posterior inferior temporal regions [Hickok and Poeppel, 2007] may play a critical role in supporting successful access to word meaning (semantic processing, see [Binder et al., 2009]). In addition to these regions, parts of the anterior LIFG (Brodmann's area 47, located in the junction between the pars triangularis and pars orbitalis) are also considered to be involved in semantic processing [Binder et al., 2009]. Several fMRI studies identify BA 47 activation during semantic tasks [Kapur et al., 1994], and implanted electrode [Abdullaev and Bechtereva, 1993] and lesion studies [Swick and Knight, 1996] provide converging evidence on the role of the LIFG in semantic processing.

Coordinated activity among these multiple regions may be critical in supporting comprehension of and behavioural responses to spoken stimuli, thus contributing to conscious awareness for speech. This conclusion is supported by the differential sensitivity of these regions to alterations in the degree of awareness of speech seen, for example, with auditory masking, or administration of varying doses of anesthetic drugs. Findings from graded sedation can both complement and extend results from behavioural methods for studying neural responses to spoken language in the absence of explicit perceptual awareness [e.g., divided attention, Sabri et al., 2008; or masked auditory priming, Kouider et al., 2010]. It is important to note, however, that the state of awareness as modulated by anesthetic drugs is different from the level of perceptual awareness as modulated by divided attention or masking strategies. Controlled sedation with anesthetic drugs thus provides an important additional method for studying the hierarchy of auditory and speech processing at varying levels of awareness.

Graded conscious sedation provides a controlled method for manipulating level of awareness. Relatively light sedation impairs complex auditory processing (for example, of verbal or musical stimuli) [Davis et al., 2007; Heinke et al., 2004; Plourde et al., 2006], whilst simple auditory processing (for example, processing of pure tones) may remain intact even during surgical anesthesia [Dueck et al., 2005; Kerssens et al., 2005]. In a previous fMRI study, we have shown that activation of the LIFG in response to semantic ambiguity in spoken sentences is sensitive to the effects of sub‐anesthetic doses of the anesthetic agent propofol [Davis et al., 2007]. Even light sedation was associated with reduced responses to semantic manipulations (high vs. low ambiguity sentences) in the LIFG. However, since this study only included a retrospective assessment of comprehension based on subsequent memory for sentence content, it was impossible to determine whether altered LIFG responses under sedation were associated with decreased processing of semantic content, or merely impaired subsequent memory for spoken material.

The success of semantic processing can be measured using behavioural responses (e.g., decisions), but also using neural measures (e.g., differential activity for high‐low ambiguity sentences or N400 responses to semantically anomalous words). In this study we investigated additional neural responses to spoken words (compared with matched nonspeech sounds) during graded sedation in volunteers performing a behavioral task involving semantic decisions (compared with matched auditory decisions for nonspeech sounds). Semantic decision‐making refers to the underlying processes involved in making decisions about the meaning of words. This involves processes in addition to accessing meaning, and in the context of this experiment involves making (button‐press) decisions about the meanings of single spoken words heard in the scanner. This method provides a direct test of whether the regionally selective and condition‐specific attenuation of frontal responses to spoken stimuli during propofol sedation is associated with impaired semantic processing. More specific hypotheses that we tested include whether the decreased LIFG activation produced by propofol is directly associated with impaired semantic processing of single spoken words, as well as how activation in other, posterior, regions involved in perceptual and semantic processing of spoken language is modulated by sedation. Concurrent assessment of behavioral responses and functional imaging was employed to assess whether intact comprehension of spoken words can be established by the presence of preserved patterns of neural activity known to be associated with speech comprehension.

MATERIAL AND METHODS

Ethical approval for these studies was obtained from the Cambridgeshire 2 Regional Ethics Committee. Twenty participants were recruited to the study; all were fit, healthy, native English speakers (11 male), and their mean (range) age was 35 (19–53) years. Two senior anesthetists were present during scanning sessions and observed the subjects throughout the study from the MRI control room and on a video link that showed the subject in the scanner. Electrocardiography and pulse oximetry were performed continuously, and measurements of heart rate, noninvasive blood pressure, and oxygen saturation were recorded at regular intervals.

Sedation

Propofol was administered intravenously as a “target controlled infusion” (plasma concentration mode), using an Alaris PK infusion pump (Carefusion, Basingstoke, UK) programmed with the Marsh pharmacokinetic model [Absalom et al., 2009; Marsh et al., 1991]. With such a system the anesthesiologist inputs the desired (“target”) plasma concentration, and the system then determines the infusion rates required to achieve and maintain the target concentration (using the patient characteristics which are covariates of the pharmacokinetic model). The Marsh model is routinely used in clinical practice to control propofol infusions for general anesthesia and for sedation. In this study three target plasma levels were used: no drug (baseline), 0.6 µg/ml (low sedation), and 1.2 µg/ml (moderate sedation). A period of 10 min was allowed for equilibration of plasma and effect‐site propofol concentrations before cognitive tests were commenced. Blood samples (for plasma propofol levels) were drawn towards the end of each titration period and before the plasma target was altered. In total, 6 blood samples were drawn during the study. The level of sedation was assessed verbally immediately before and after each of the scanning runs. Following cessation of infusion, plasma propofol concentrations decline exponentially and would theoretically never reach zero (approaching it asymptotically). Computer simulations with TIVATrainer (a pharmacokinetic simulation software package, available at http://www.eurosiva.org) revealed that plasma concentration would approach zero by 15 minutes; hence we performed the recovery scan at 20 minutes following cessation of sedation. Three participants were initially unresponsive after the deepest sedation scanning run, but were easily roused by loud commands. At each level and after cessation of drug administration, participants made semantic and auditory decisions while undergoing fMRI scanning. The mean (SD) measured plasma propofol concentration was 304.8 (141.1) ng/ml during light sedation, 723.3 (320.5) ng/ml during moderate sedation and 275.8 (75.42) ng/ml during recovery. Mean (SD) total mass of propofol administered was 210.15 (33.17) mg, equivalent to 3.0 (0.47) mg/kg.
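
The multi‐exponential decline after the infusion stops can be illustrated with a minimal three‐compartment simulation. This is only a sketch under stated assumptions: the rate constants are the values commonly quoted for the Marsh model and should be checked against Marsh et al. [1991]; the controller is idealized and is not the algorithm of the Alaris pump or of TIVATrainer; and the 20 min spent at each target is a hypothetical duration chosen for illustration.

```python
# Illustrative three-compartment propofol model, per kg of body weight.
# ASSUMPTION: rate constants are the values commonly quoted for the Marsh
# model; check them against Marsh et al. (1991) before any real use.
V1 = 0.228                              # central volume, L/kg
K10, K12, K13 = 0.119, 0.112, 0.0419    # elimination / distribution, 1/min
K21, K31 = 0.055, 0.0033                # redistribution, 1/min
STEPS_PER_MIN = 60
DT = 1.0 / STEPS_PER_MIN                # Euler step, min


def step(amounts):
    """Advance drug amounts (mg/kg) in the three compartments by one step."""
    a1, a2, a3 = amounts
    d1 = -(K10 + K12 + K13) * a1 + K21 * a2 + K31 * a3
    d2 = K12 * a1 - K21 * a2
    d3 = K13 * a1 - K31 * a3
    return (a1 + d1 * DT, a2 + d2 * DT, a3 + d3 * DT)


def simulate(schedule, washout_min):
    """Hold each plasma target (ug/ml) for its duration (min), then stop.

    The controller is idealized: after every step it tops the central
    compartment back up to the target, ignoring pump rate limits.
    Returns the plasma concentration after each step and the total dose
    delivered in mg/kg.
    """
    amounts, total_dose, trace = (0.0, 0.0, 0.0), 0.0, []
    for target, duration in schedule + [(0.0, washout_min)]:
        for _ in range(duration * STEPS_PER_MIN):
            a1, a2, a3 = step(amounts)
            top_up = max(0.0, target * V1 - a1)
            amounts = (a1 + top_up, a2, a3)
            total_dose += top_up
            trace.append(amounts[0] / V1)   # mg/L is the same as ug/ml
    return trace, total_dose


def concentration_at(trace, minute):
    """Plasma concentration (ug/ml) at a whole number of minutes (>= 1)."""
    return trace[minute * STEPS_PER_MIN - 1]


# HYPOTHETICAL timing: 20 min at each target, then 20 min of washout.
trace, dose = simulate([(0.6, 20), (1.2, 20)], washout_min=20)
for label, minute in [("at cessation", 40), ("15 min later", 55), ("20 min later", 60)]:
    print(f"{label}: {concentration_at(trace, minute):.3f} ug/ml")
```

Because the peripheral compartments return drug to the plasma after the infusion stops, the simulated concentration falls quickly at first and then more slowly, which is the qualitative behavior described above.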

Semantic and Auditory Decision Tasks

Prior to the scanning session, all participants underwent a practice session in which they were presented with a set of eight words and eight nonspeech stimuli not repeated in the main tasks. This session was intended to familiarize participants with the semantic decision task such that they learnt the nature of the task and response categories during the practice session, but not the specific stimuli that would be presented in the scanner. All participants underwent four scanning runs; each run lasted 5.5 min and comprised alternating 30 s blocks of words and acoustically matched nonspeech (buzz/noise) stimuli presented with the Cognition and Brain Sciences Unit Audio Stimulation Tool (CAST) (Fig. 1). Stimuli were presented with a stimulus onset asynchrony (SOA) of 3 s in the silent intervals between scans. Words used in each of the four scanning runs were pseudo‐randomly drawn from a set of 280 items (140 living items, e.g., tiger, birch, and 140 nonliving items, e.g., table, stone) in subsets of 40 items (20 living, 20 nonliving), matched for relevant psycholinguistic variables (word frequency, length, imageability, acoustic amplitude, and familiarity). Participants heard 4 of the 7 groups of spoken words in the four scanning runs with assignment of items to sedation levels counter‐balanced over participants. Buzz/noise stimuli were generated from word stimuli by extracting the amplitude envelope of a spoken word and using that envelope to modulate either a broad band noise (noise) or a harmonic complex with a 150 Hz fundamental frequency (buzz). These sounds were filtered to match the average spectral profile of the source word. This process, implemented with a custom Praat script (http://www.praat.org), generates complex, non‐speech stimuli matched to the spoken words for relevant acoustic characteristics (i.e., spectral composition and amplitude envelope).
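
The envelope‐modulation step can be sketched as follows. This is not the authors' Praat script: the sampling rate, the moving‐average envelope, the number of harmonics, and the synthetic "word" are all assumptions made for illustration, and the final spectral‐matching filter is omitted.

```python
import math
import random

FS = 8000  # sampling rate in Hz (ASSUMED for this sketch)


def amplitude_envelope(signal, window=160):
    """Rectify the signal and smooth it with a moving average (20 ms at 8 kHz)."""
    rectified = [abs(s) for s in signal]
    envelope, running = [], 0.0
    for i, value in enumerate(rectified):
        running += value
        if i >= window:
            running -= rectified[i - window]
        envelope.append(running / min(i + 1, window))
    return envelope


def harmonic_complex(n, f0=150.0, n_harmonics=20):
    """'Buzz' carrier: equal-amplitude harmonics of a 150 Hz fundamental."""
    return [
        sum(math.sin(2.0 * math.pi * f0 * k * i / FS) for k in range(1, n_harmonics + 1))
        / n_harmonics
        for i in range(n)
    ]


def broadband_noise(n, seed=0):
    """'Noise' carrier: Gaussian white noise."""
    rng = random.Random(seed)
    return [rng.gauss(0.0, 1.0) for _ in range(n)]


def modulate(carrier, envelope):
    """Impose the word's amplitude envelope on a carrier."""
    return [c * e for c, e in zip(carrier, envelope)]


def synthetic_word(n=4000, start=1000, stop=3000):
    """HYPOTHETICAL stand-in for a recorded word: a 300 Hz tone burst."""
    word = []
    for i in range(n):
        if start <= i < stop:
            gain = 0.5 * (1.0 - math.cos(2.0 * math.pi * (i - start) / (stop - start)))
            word.append(gain * math.sin(2.0 * math.pi * 300.0 * i / FS))
        else:
            word.append(0.0)
    return word


word = synthetic_word()
envelope = amplitude_envelope(word)
buzz = modulate(harmonic_complex(len(word)), envelope)
noise = modulate(broadband_noise(len(word)), envelope)
```

Both outputs share the temporal envelope of the source signal but carry no phonetic content, which is the property the matched nonspeech baseline relies on.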

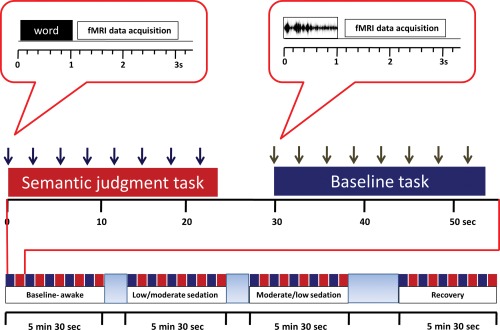

Figure 1.

Experimental design and fMRI protocol. Each sedation period comprised 5 blocks of words and 5 blocks of nonspeech stimuli. Semantic tasks alternated in 24 s blocks (presentation of 8 stimuli interspersed with silence) in separate scanning runs with a nonspeech buzz/noise baseline task. [Color figure can be viewed in the online issue, which is available at http://wileyonlinelibrary.com.]

Blocks of 8 stimuli were presented with an additional 6 s of silence between blocks to allow estimation of resting activity. Participants were asked to respond with a button press to indicate whether presented words referred to living or nonliving items and whether nonspeech stimuli were buzz‐type or noise‐type items.

fMRI Acquisition

Scanning was performed on a Siemens Trio Tim 3 Tesla MRI system (Erlangen, Germany) equipped with a 12‐channel head matrix transmit‐receive coil, using a fast‐sparse 32 slice axial oblique sequence, with a repetition time of 3 s, and acquisition time of 2 s, leaving a 1 s silent period for stimulus presentation (TE 30 ms, flip angle 78°, voxel size 3.0 × 3.0 × 3.0 mm³, matrix size 64 × 64, field of view 192 mm × 192 mm, slice thickness 3.0 mm, 0.8 mm gap between slices, bandwidth 2,442 Hz/Px). One hundred and ten EPI images were acquired during the total acquisition time for each scanning run. At the start of each run, eight dummy scans were discarded to allow for T1 equilibrium effects.
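
The timing figures given for the task and for the acquisition can be cross‐checked with a few lines of arithmetic. All values below are taken from the text; only the variable names are introduced here.

```python
# Values reported in the task and acquisition descriptions.
TR_S = 3.0                    # repetition time
ACQUISITION_S = 2.0           # time spent acquiring each volume
VOLUMES_PER_RUN = 110
STIMULI_PER_BLOCK = 8
SOA_S = 3.0                   # stimulus onset asynchrony
INTER_BLOCK_SILENCE_S = 6.0
BLOCKS_PER_CONDITION = 5      # 5 word blocks and 5 nonspeech blocks per run

silent_gap_s = TR_S - ACQUISITION_S                       # window for each stimulus
run_duration_min = VOLUMES_PER_RUN * TR_S / 60.0          # length of one run
block_duration_s = STIMULI_PER_BLOCK * SOA_S + INTER_BLOCK_SILENCE_S
words_per_run = BLOCKS_PER_CONDITION * STIMULI_PER_BLOCK  # size of one word subset
task_time_s = 2 * BLOCKS_PER_CONDITION * block_duration_s

print(silent_gap_s, run_duration_min, block_duration_s, words_per_run, task_time_s)
```

The results agree with the 1 s silent period, the 5.5 min run length, the 30 s blocks, and the 40‐item word subsets described in the text.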

Behavioral Data Analysis

Behavioral responses were categorized into correct responses, incorrect responses, and time‐outs (no response to stimuli within 3 s of presentation). Response times (RTs) were analyzed for correctly classified stimuli (measured from word onset); the number of incorrect responses excluding “time‐outs,” and number of “time‐outs” were averaged over trials in each condition and scanning run for each participant and reported as error rates and “time‐out” proportions. The resulting mean response times, error rates and time‐out proportions for each condition were analyzed in SPSS 16 (SPSS Inc, Chicago, IL) to detect behavioral differences between levels of sedation. The data were analyzed to check for outliers; when present, data were subjected to a statistical bootstrap procedure to test the reliability of the slope estimate. Bootstrapping generates a random “bootstrap” sample with replacement from the original dataset. The statistic under investigation is then computed using the bootstrap sample. This process of resampling and computing statistics was repeated multiple times to create a good approximation of the unknown true probability function underlying the statistic [Efron and Tibshirani, 1993]. Bootstrapping was performed in SPSS and data were analyzed using linear regression in SPSS.
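
The resampling logic described above can be sketched for a regression slope. This is a generic percentile bootstrap, which may differ from the procedure SPSS applies; the data are synthetic and purely hypothetical, standing in for plasma concentration and response time.

```python
import random


def ols_slope(x, y):
    """Ordinary least-squares slope of y on x."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    return sxy / sxx


def bootstrap_slope_ci(x, y, n_boot=2000, alpha=0.05, seed=0):
    """Percentile confidence interval for the slope from resampled (x, y) pairs."""
    rng = random.Random(seed)
    n = len(x)
    slopes = []
    while len(slopes) < n_boot:
        idx = [rng.randrange(n) for _ in range(n)]   # sample with replacement
        xs = [x[i] for i in idx]
        if max(xs) == min(xs):                       # skip degenerate resamples
            continue
        slopes.append(ols_slope(xs, [y[i] for i in idx]))
    slopes.sort()
    lower = slopes[int((alpha / 2.0) * n_boot)]
    upper = slopes[int((1.0 - alpha / 2.0) * n_boot) - 1]
    return lower, upper


# HYPOTHETICAL data: concentration in ug/ml, response time in ms.
rng = random.Random(1)
conc = [rng.choice([0.0, 0.3, 0.7]) + rng.uniform(0.0, 0.1) for _ in range(80)]
rt = [900.0 + 200.0 * c + rng.gauss(0.0, 100.0) for c in conc]

slope = ols_slope(conc, rt)
lower, upper = bootstrap_slope_ci(conc, rt)
print(f"slope = {slope:.1f} ms per ug/ml, 95% CI {lower:.1f} to {upper:.1f}")
```

An interval that excludes zero indicates that the association does not depend on a handful of outlying observations, which is the purpose the bootstrap serves in this analysis.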

fMRI Pre‐Processing and Analysis

Imaging data were pre‐processed and analyzed with SPM5 (Wellcome Institute of Imaging Neuroscience, London, UK, http://www.fil.ion.ucl.ac.uk/spm) implemented in MATLAB version 6.5 (Mathworks, Natick, MA). Pre‐processing involved movement correction using within subject realignment. The mean image of the realigned scans was computed during realignment and was spatially normalized to the Montreal Neurological Institute (MNI) standard space. Normalization parameters calculated in this manner were applied to all EPI images. Data were then spatially smoothed with an 8 mm full‐width half‐maximum isotropic Gaussian kernel. The time series in each voxel were high pass‐filtered to 1/128 Hz to remove low frequency noise, and corrected for temporal autocorrelation.

Data were analyzed with SPM5 using the general linear model and event‐onsets convolved with the canonical hemodynamic response function. We modeled conditions of interest for correct responses only, in order to assess the neuroanatomical correlates of successful semantic processing. In addition to the conditions of interest (words and nonspeech sounds), we also modeled incorrect and time‐out responses as a separate event type. Movement parameters from the realignment preprocessing step were included as covariates of no interest in the design matrix to account for any residual movement artifacts. Parameter estimates from the least mean square fit of this model in each participant were used to calculate linear contrasts between words and non‐speech sounds separately for each phase of sedation. A further contrast was used to assess additional activity for responses to non‐speech sounds compared to the inter‐block rest periods. Contrast images obtained from single subject analyses were combined in group‐level random effects analyses. Results were thresholded at a voxel‐level of P < 0.001 uncorrected, and reported at random field corrected P < 0.05 for cluster extent adjusted for the entire brain, unless otherwise stated [Friston, 1995]. Peak activation clusters are reported in MNI coordinates (which were converted to Talairach space such that anatomical and cytoarchitectonic nomenclature could be assigned to peak voxels) [Lancaster et al., 2007; Talairach and Tournoux, 1988].
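
The construction of a task regressor from event onsets can be sketched as below. This is an illustration rather than SPM's implementation: the double‐gamma function only approximates the canonical hemodynamic response, the block order (words first) is assumed, stimuli are placed at multiples of the SOA, and every trial is treated as correct.

```python
import math

TR_S = 3.0
N_SCANS = 110


def canonical_hrf(t):
    """Double-gamma response: peak near 5 s, undershoot near 15 s."""
    if t <= 0:
        return 0.0

    def gamma_pdf(shape):
        return t ** (shape - 1) * math.exp(-t) / math.gamma(shape)

    return gamma_pdf(6.0) - gamma_pdf(16.0) / 6.0


def block_onsets(first_block, n_blocks=10, block_s=30.0, soa_s=3.0, n_stimuli=8):
    """Stimulus onsets (s) for the condition occupying every second block."""
    return [
        block * block_s + k * soa_s
        for block in range(first_block, n_blocks, 2)
        for k in range(n_stimuli)
    ]


def build_regressor(onsets_s, n_scans=N_SCANS, tr_s=TR_S, hrf_len_s=32.0):
    """Sum of HRFs shifted to each onset, sampled once per scan."""
    regressor = []
    for scan in range(n_scans):
        t_scan = scan * tr_s
        regressor.append(
            sum(
                canonical_hrf(t_scan - onset)
                for onset in onsets_s
                if 0 < t_scan - onset <= hrf_len_s
            )
        )
    return regressor


# ASSUMED order: word blocks are blocks 0, 2, 4, ...; nonspeech blocks 1, 3, 5, ...
words_reg = build_regressor(block_onsets(first_block=0))
nonspeech_reg = build_regressor(block_onsets(first_block=1))
```

In a general linear model each regressor receives its own parameter estimate, and the words versus nonspeech contrast is the difference between the two estimates.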

To test whether decreased LIFG activation is associated with impaired semantic processing, we employed a region of interest (ROI) analysis approach to assess the influence of sedation on the LIFG. We also performed similar ROI analyses on the left middle temporal gyrus (LMTG) and LITG, regions which are also important for semantic processing of spoken words [Binder et al., 2009]. Spherical ROIs (radius 8 mm) were created, centered on the peak co‐ordinates from the LIFG, LMTG and LITG from the group level words‐sounds contrast averaged across all levels of sedation (Table 1). Since all sedation conditions contributed equally to the definition of these ROIs, the choice of ROI was not influenced by the level of sedation. This is considered a valid approach to ROI selection rather than “double‐dipping” [see Kriegeskorte et al., 2009, Supporting Information]. MarsBaR version 0.41 (http://marsbar.sourceforge.net/index.html) was used to extract time series data from each of the ROIs, and repeated measures ANOVA implemented in MarsBaR was used to test differences between the means.
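
The definition of a spherical ROI can be sketched as a list of voxel centers lying within 8 mm of a peak. This is not MarsBaR code; the 2 mm isotropic voxel grid is an assumption, and the peak coordinates are those reported for the three ROIs.

```python
def spherical_roi(center_mm, radius_mm=8, voxel_mm=2):
    """Voxel centers (mm) within radius_mm of center_mm on a regular grid."""
    cx, cy, cz = center_mm
    steps = radius_mm // voxel_mm
    offsets = range(-steps * voxel_mm, steps * voxel_mm + 1, voxel_mm)
    return [
        (cx + dx, cy + dy, cz + dz)
        for dx in offsets
        for dy in offsets
        for dz in offsets
        if dx * dx + dy * dy + dz * dz <= radius_mm ** 2
    ]


# Peak coordinates reported for the words versus sounds contrast.
PEAKS = {
    "LIFG": (-38, 28, -14),
    "LMTG": (-62, -18, -4),
    "LITG": (-48, -52, -12),
}

rois = {name: spherical_roi(center) for name, center in PEAKS.items()}
for name, voxels in rois.items():
    print(name, len(voxels), "voxels")
```

Averaging the parameter estimates over the voxels of each sphere gives one value per participant and sedation level, which is the input to the repeated measures ANOVA.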

Table 1.

Areas showing a significant response to words compared to signal correlated noise averaged across all levels of sedation

| Cluster p (cor) | Cluster size (vox) | Voxel (T) | Talairach x | Talairach y | Talairach z | Brain region |
|---|---|---|---|---|---|---|
| 0.000 | 2837 | 8.95 | −62 | −18 | −4 | L Middle Temporal G BA21 |
| | | 8.32 | −58 | −8 | −8 | L Middle Temporal G BA21 |
| | | 8.06 | −48 | −52 | −12 | L Inferior Temporal G BA37 |
| | | 7.36 | −38 | 28 | −14 | L Inferior Frontal G BA47 |
| | | 6.94 | −38 | −36 | −24 | L Fusiform G BA20 |
| | | 6.17 | −36 | −40 | −26 | L Cerebellum |
| 0.000 | 414 | 6.71 | 58 | −26 | −2 | R Middle Temporal G BA21 |
| | | 5.56 | 68 | −18 | 0 | R Superior Temporal G BA22 |
| | | 5.38 | 64 | −6 | −8 | R Superior Temporal G BA21 |

RESULTS

Behavioral Data

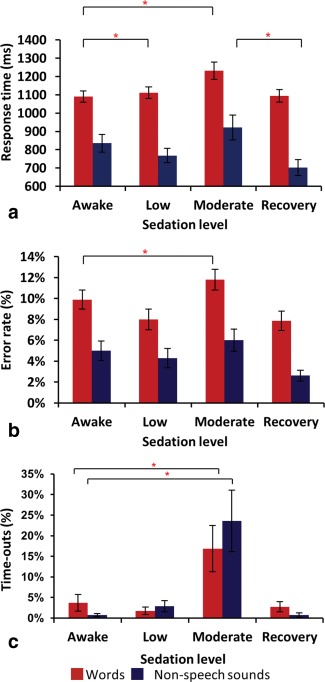

Volunteers under low sedation displayed normal to slowed responses to conversation (Fig. 2). Moderately sedated volunteers displayed delayed or occasionally no conversational response, but were always rousable by loud commands. Repeated measures ANOVAs performed on error rates and RT data for words and sounds during the semantic task showed concordant changes with sedation. RTs to words were significantly slower during light and moderate sedation than at baseline (main effect of sedation condition: P < 0.0001, F[3,76]=6.631). Errors in categorizing word stimuli were also significantly increased during moderate sedation (P < 0.0001, F[3,76]=4.256). In contrast, RTs to nonspeech sounds were significantly increased during moderate sedation only when compared with the recovery stage (P < 0.0003, F[3,76]=5.842). There was no change in error rates to sounds across sedation levels.

Figure 2.

Behavioral results. (a) RTs vs. sedation level (b) Error rates vs. sedation level and (c) Time‐outs vs. sedation level. * denotes significance at P < 0.05. Error bars show standard error. [Color figure can be viewed in the online issue, which is available at http://wileyonlinelibrary.com.]

“Time‐outs” were non‐normally distributed and subjected to Friedman's test (with posthoc Dunn's multiple comparison test). Moderate sedation induced a 17% increase in “time‐outs” to words (P = 0.0016, Friedman statistic 15.26) and a 24% increase to sounds (P = 0.0011, Friedman statistic 16.07). Nonetheless, over 75% of trials were completed successfully at all stages of the study (including the moderate level of sedation). There was also a significantly greater change in “time‐outs” to speech stimuli compared to “time‐outs” to nonspeech stimuli with increasing sedation (P = 0.005, F[3,76]= 7.23).
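
The Friedman statistic and its P value can be sketched as follows. The time‐out counts are hypothetical, Dunn's post hoc test is not implemented, and the closed‐form tail probability assumes 3 degrees of freedom (four conditions: awake, low, moderate, recovery). Under that assumption, the reported statistics of 15.26 and 16.07 give tail probabilities close to the reported P values.

```python
import math


def average_ranks(values):
    """Rank values from 1 to k, giving tied values their average rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        for idx in order[i:j + 1]:
            ranks[idx] = (i + j) / 2.0 + 1.0
        i = j + 1
    return ranks


def friedman_statistic(rows):
    """Tie-corrected Friedman chi-square; rows holds one list per participant."""
    n, k = len(rows), len(rows[0])
    rank_sums = [0.0] * k
    tie_term = 0.0
    for row in rows:
        for j, rank in enumerate(average_ranks(row)):
            rank_sums[j] += rank
        for value in set(row):
            t = row.count(value)
            tie_term += t ** 3 - t
    q = 12.0 / (n * k * (k + 1)) * sum(r * r for r in rank_sums) - 3.0 * n * (k + 1)
    return q / (1.0 - tie_term / (n * k * (k * k - 1)))


def chi2_sf_df3(x):
    """Upper-tail probability of a chi-square variable with 3 degrees of freedom."""
    return math.erfc(math.sqrt(x / 2.0)) + math.sqrt(2.0 * x / math.pi) * math.exp(-x / 2.0)


# HYPOTHETICAL time-out counts per participant: awake, low, moderate, recovery.
toy_counts = [
    [0, 1, 4, 2],
    [1, 2, 6, 3],
    [0, 2, 5, 3],
    [2, 3, 7, 4],
    [1, 3, 5, 4],
]
q_toy = friedman_statistic(toy_counts)
print(f"toy statistic = {q_toy:.2f}, P = {chi2_sf_df3(q_toy):.4f}")
print(f"P for 15.26 = {chi2_sf_df3(15.26):.4f}; P for 16.07 = {chi2_sf_df3(16.07):.4f}")
```

Because the test works on within‐participant ranks, it is insensitive to the skewed distribution of time‐out counts that motivated its use here.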

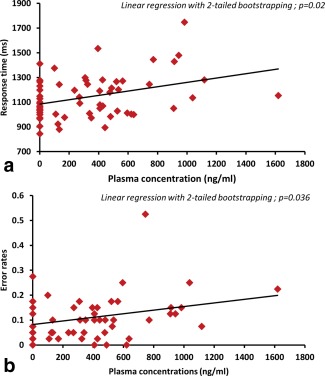

Behavioral Data and Plasma Propofol Levels

A linear regression analysis showed a significant association between RT to words and plasma propofol concentrations (r² = 0.1288, P = 0.0049) (Fig. 3), and between error rates and plasma propofol concentrations (r² = 0.086, P = 0.023). Since there were outlier data points in both these analyses, data were further analyzed using linear regression after bootstrapping in SPSS. This analysis revealed a significant association between response times and plasma propofol concentrations (bootstrapped significance P[2‐tailed]=0.02; 95% CI=0.051 to 0.334) and between error rates and plasma propofol concentrations (bootstrapped significance P[2‐tailed]=0.036; 95% CI=3.15 × 10⁻⁶ to 0.000).

Figure 3.

Relationship between plasma concentration and (a) Response times (b) Error rates. Increasing response times (top panel) and error rates (bottom panel) in response to increasing propofol plasma concentrations (awake is modeled as zero). [Color figure can be viewed in the online issue, which is available at http://wileyonlinelibrary.com.]

Imaging Results

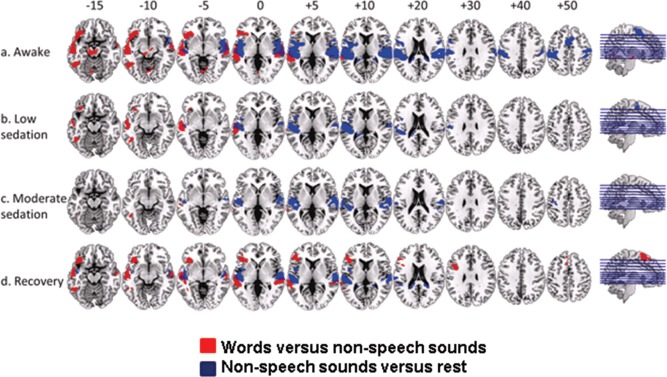

Activation persisted in bilateral primary auditory cortex to both words and nonspeech buzz and noise stimuli compared to inter‐block silent periods at all levels of sedation. Additional cortical and subcortical activations were present during the awake state, which did not persist with sedation (Fig. 4).

Figure 4.

Group average responses to words vs. nonspeech sounds at awake (a), low (b), and moderate (c) levels of sedation and recovery (d), shown superimposed on a standard T1 weighted structural image. Talairach and Tournoux z coordinates are shown. Red color in the brain slices represents responses to words vs. nonspeech sounds and blue color represents responses to nonspeech sounds vs. rest. We report clusters that survived a voxel threshold of P ≤ 0.001 uncorrected and a random field cluster threshold of P ≤ 0.05 corrected for the entire brain. [Color figure can be viewed in the online issue, which is available at http://wileyonlinelibrary.com.]

BOLD signal changes in response to the speech specific contrast (words > nonspeech) showed significant activation along bilateral STG and MTG, fusiform gyri, LIFG (orbitalis and triangularis), LITG and left insula when participants were awake (Fig. 4a and Table 2). A mask created from these activations obtained in the awake state was used for small volume correction of data obtained during sedation (though not for ROI analyses looking for differences between different levels of sedation, see methods). We report results which achieved significance within this mask because the regions comprising the mask are recognized to be crucial in semantic processing, and also because they were significantly active in the awake comparison. Conclusions concerning differential responses to sedation, however, will only be drawn from ROI analyses. With deepening sedation, the same contrast revealed activation in the LMTG, LITG and LIFG (orbitalis) (during light sedation, Fig. 4b and Table 3). Activation in the LITG and a trend towards activation in the posterior LMTG persisted at the deepest level of sedation tested (Fig. 4c). Recovery from sedation was associated with robust activations to words and non‐speech stimuli in primary auditory cortical regions, superior and middle temporal regions similar to the awake state. Similarly, the contrast between words and sounds resulted in activation along bilateral STG and MTG, LIFG (all three subdivisions), and the left insula, left fusiform gyrus and LITG (Fig. 4d and Table 3).

Table 2.

Areas showing a significant response to words compared to signal correlated noise in awake volunteers

| Cluster p (cor) | Cluster size (vox) | Voxel (T) | Talairach x | Talairach y | Talairach z | Brain region |
|---|---|---|---|---|---|---|
| 0.000 | 2465 | 10.33 | −62 | −18 | −6 | L Middle Temporal G BA21 |
| | | 9.46 | −58 | −10 | −8 | L Middle Temporal G BA21 |
| | | 8.83 | −42 | 26 | −14 | L Inferior Frontal G BA47 |
| 0.000 | 651 | 8.07 | 66 | −8 | −4 | R Superior Temporal G BA21 |
| | | 6.85 | 60 | −24 | −2 | R Superior Temporal G BA21 |
| | | 5.06 | 48 | −26 | −2 | R Superior Temporal G BA22 |
| 0.000 | 1087 | 7.9 | −36 | −36 | −24 | L Fusiform G BA20 |
| | | 6.99 | −48 | −54 | −14 | L Fusiform G BA37 |
| | | 6.25 | −36 | −12 | −34 | L Uncus BA20 |
| 0.002 | 250 | 6.03 | −10 | −22 | −14 | L Substantia Nigra |
| | | 5.32 | −2 | −22 | −16 | L Red Nucleus |
| | | 4.25 | 8 | −12 | −12 | R Substantia Nigra |
| 0.004 | 227 | 5.64 | 36 | −36 | −28 | R Culmen |
| | | 4.09 | 38 | −24 | −24 | R Parahippocampal G BA36 |
| | | 3.66 | 40 | −30 | −18 | R Parahippocampal G BA36 |
| 0.028 | 151 | 4.93 | 8 | −62 | −8 | R Culmen |
| | | 4.35 | −4 | −70 | −6 | L Culmen |
| | | 4.2 | −10 | −68 | −16 | L Culmen |
| 0.009 | 195 | 4.74 | 26 | −76 | −38 | R Pyramis |
| | | 4.15 | 16 | −80 | −34 | R Uvula |
| | | 3.87 | 12 | −72 | −28 | R Declive |

Table 3.

Areas showing a significant response to words compared to signal correlated noise under sedation and during recovery from sedation

| Cluster p (cor) | Cluster size (vox) | Voxel (T) | Talairach x | Talairach y | Talairach z | Brain region |
|---|---|---|---|---|---|---|
| Low sedation | | | | | | |
| 0.000 | 632 | 7.43 | −58 | −20 | −4 | L Middle Temporal G BA21 |
| | | 6.85 | −60 | −4 | −12 | L Middle Temporal G BA21 |
| | | 5.68 | −52 | −16 | −10 | L Middle Temporal G BA21 |
| 0.000 | 255 | 6.33 | −44 | −56 | −10 | L Inferior Temporal G BA19 |
| | | 4.69 | −42 | −52 | −18 | L Fusiform G BA37 |
| | | 4.66 | −36 | −40 | −24 | L Fusiform G BA20 |
| 0.023 | 58 | 6.24 | −34 | −8 | −36 | L Uncus BA20 |
| | | 6.13 | −38 | −16 | −30 | L Fusiform G BA20 |
| Moderate sedation | | | | | | |
| 0.059 | 34 | 5.69 | −46 | −52 | −10 | L Inferior Temporal G BA37 |
| 0.061 | 33 | 5.09 | −36 | −28 | −26 | L Parahippocampal G BA36 |
| 0.069 | 30 | 6.5 | −64 | −36 | 4 | L Middle Temporal G BA22 |
| Recovery from sedation | | | | | | |
| 0.000 | 982 | 8.18 | −56 | −12 | −8 | L Middle Temporal G BA21 |
| | | 6.4 | −60 | −38 | 4 | L Middle Temporal G BA22 |
| | | 5.79 | −48 | −38 | 0 | L Superior Temporal G BA22 |
| 0.000 | 506 | 7.94 | 66 | −28 | 2 | R Superior Temporal G BA22 |
| | | 4.41 | 46 | −36 | 2 | R Superior Temporal G BA41 |
| | | 4.4 | 60 | −10 | −6 | R Middle Temporal G BA21 |
| 0.000 | 387 | 5.96 | −56 | −50 | −14 | L Middle Temporal G BA37 |
| | | 5.26 | −42 | −40 | −22 | L Fusiform G BA20 |
| | | 5 | −48 | −48 | −16 | L Fusiform G BA37 |
| 0.000 | 1157 | 5.62 | −42 | 40 | 4 | L Inferior Frontal G BA46 |
| | | 5.35 | −38 | 30 | −6 | L Inferior Frontal G BA47 |
| | | 5.32 | −40 | 22 | −22 | L Inferior Frontal G BA47 |
| 0.001 | 312 | 5.37 | −44 | 14 | 24 | L Inferior Frontal G BA9 |
| | | 5.04 | −38 | 6 | 30 | L Inferior Frontal G BA9 |
| 0.000 | 556 | 5.13 | −6 | 10 | 64 | L Superior Frontal G BA6 |
| | | 4.65 | −2 | 32 | 58 | L Superior Frontal G BA8 |
| | | 4.53 | 0 | 12 | 72 | L Superior Frontal G BA6 |

Region of Interest (ROI) Analyses

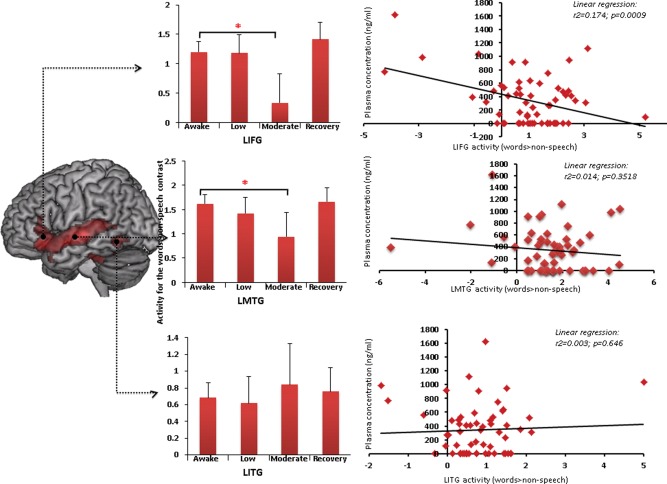

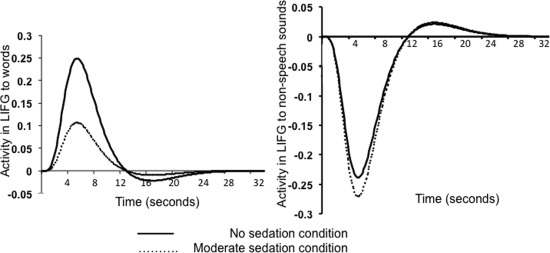

A group ROI analysis of the LIFG revealed a significant effect of sedation on LIFG activation for the words versus non‐speech contrast (8 mm spherical ROI centered on the peak LIFG co‐ordinate [−38 28 −14]; MarsBar ANOVA; F = 3.15 P = 0.03; awake>moderate sedation: t = 2.01 P = 0.024; recovery>moderate sedation: t = 1.69 P = 0.048) (Figs. 5 and 6). ROI analysis of the LMTG also revealed a significant effect of sedation on LMTG activity for the words>nonspeech contrast (8 mm radius spherical ROI centered on the peak LMTG co‐ordinate [−62 −18 −4]; MarsBar ANOVA; F = 2.85 P = 0.045, awake>moderate sedation: t = 1.83 P = 0.036). However, sedation did not have a significant effect on LITG activity for the same contrast (8 mm spherical ROI centered on the peak LITG co‐ordinate [−48 −52 −12]; MarsBar ANOVA; F = 1.84 P = 0.15). Figure 6 shows the fitted hemodynamic response function profiles of voxels in the LIFG showing activation and deactivation to the task (relative to inter‐stimulus rest periods) in the awake state and under moderate sedation. Comparing the ROIs for a region specific difference in speech vs. nonspeech activity revealed a significant difference between the LIFG and the LITG ROIs (2 × 4 ANOVA; F = 3.47 P = 0.04). To test the hypothesis that the effect of sedation is specific to responses to words in these regions, we also performed an ROI analysis of these three regions for the nonspeech versus rest contrast. Sedation did not affect activity in any of these three ROIs for this contrast (LIFG: F = 2.11 P = 0.11; LITG: F = 0.72 P = 0.55; LMTG: F = 0.66 P = 0.58). Thus, the region by sedation interaction seen for speech vs. nonspeech responses confirms the differential impact of sedation on speech‐specific processes in frontal and temporal lobe regions.

Figure 5.

ROI analyses with MarsBar showing changes in activity in the LIFG (top panel), LMTG (middle panel) and LITG (lower panel) in response to increasing sedation. * denotes significance at P < 0.05. Error bars show standard error. The relationship between changes in activity in the LIFG and plasma propofol concentrations is shown in the correlation plots to the right. Activity in the LIFG negatively correlates with plasma propofol concentration (P = 0.0009). [Color figure can be viewed in the online issue, which is available at http://wileyonlinelibrary.com.]

Figure 6.

Fitted hemodynamic response function profiles of voxels in the LIFG to words (left panel) and nonspeech sounds (right panel) with changes in sedation.

Correlational Analyses

Behavioral data and imaging data

No significant correlation could be demonstrated between activity in the three ROIs (from all levels of sedation) and behavior (RT and error rates); however, there was a trend towards an increase in RT with decreasing activity in the LIFG (P = 0.07).

Plasma propofol levels and imaging data

Plasma propofol concentrations from the different levels of sedation were correlated against the parameter estimates extracted from the three ROIs; there was a significant negative correlation between plasma levels and activity in the LIFG ROI (r² = 0.174, P = 0.0009). This association was confirmed with a statistical bootstrapping technique in SPSS (P[2‐tailed]= 0.036; 95%CI= −0.003 to −0.006).

DISCUSSION

The results presented here show a graded, brain‐region and condition‐specific effect of sedation on BOLD responses to speech and nonspeech sounds. This is demonstrated by the persistence of primary auditory cortical activation to both words and non‐speech stimuli during sedation, suggesting that basic auditory information processing persists during sedation. Our findings of region by sedation level interactions of LIFG and LITG BOLD responses to speech vs. nonspeech suggest, conversely, that higher level information processing is impaired in both a region‐specific and dose‐dependent manner. This is in agreement with findings from previous fMRI studies [Dueck et al., 2005; Kerssens et al., 2005], and the widely held view that during propofol sedation, higher cognitive functions are impaired earlier and to a greater degree than early cortical sensory processing [Bonhomme et al., 2001; Fiset et al., 1999; Heinke and Schwarzbauer, 2001; Veselis et al., 2002]. However, before we explore these results, we must first rule out the possibility that condition‐specific effects of sedation are purely hemodynamic in the absence of any impact on neural activity. Propofol has been demonstrated to have no major direct effects on cerebrovascular tone and to preserve flow‐metabolism coupling [Johnston et al., 2003]. Studies by Veselis et al. and Engelhard et al. [Engelhard et al., 2001; Veselis et al., 2009] provide good evidence that any observed changes in activation patterns with sedative concentrations of propofol are likely to represent effects on brain activity itself. Notwithstanding some disagreement in animal experiments [Franceschini et al., 2010] and occasional human studies [Klein et al., 2011; Masamoto and Kanno, 2012], there is broad consensus on this issue in the context of human fMRI studies. Further, at the sedative doses used in our study, propofol did not cause significant changes in mean arterial pressure, and hence the cerebral autoregulatory response is likely to have been preserved. We therefore believe that the observed changes in BOLD contrast are likely to reflect propofol‐induced changes in neural activity and not changes in neuro‐vascular or flow‐metabolism coupling.

The present findings therefore build on previous results in showing regional, condition‐specific effects of sedation and anesthesia on neural responses to complex auditory stimuli. However, none of these previous investigations collected concurrent behavioral measures of auditory and speech‐specific processing during sedation. Our data, in addition to demonstrating decreases in fMRI activation in response to speech‐specific stimuli in the LIFG and LMTG, allow assessment of the impact of reduced activation in these regions on semantic decisions to spoken words and auditory decisions to nonspeech sounds. Sedation was associated with an increase in error rates and response times; this behavioral effect persisted even when outlier data were accounted for using bootstrapping analyses. The increased error rates for semantic judgments with sedation might suggest that the decrease in LIFG activity we observed is associated with impaired semantic processing. However, since participants remained able to respond rapidly (less than 20% slower than when fully awake) and with a high degree of accuracy (mean 88% correct where chance is 50%), it is clear that successful semantic processing of spoken words remains possible even during moderate sedation. Instead, the most pronounced changes are observed in the number of time‐out or no‐response trials, which increased significantly both for semantic decisions to words and for auditory decisions to nonspeech sounds. During moderate sedation, therefore, it appears that participants are able to access semantic information about spoken words up to the point at which overt responses cease. This leads us to consider in more detail whether frontal and/or temporal lobe responses are necessarily associated with semantic processing of spoken words.

Fronto‐Temporal Contributions to Semantic Processing

Our analysis of changes in speech‐specific activity during sedation focused on three ROIs in left inferior frontal and lateral and inferior temporal regions. During moderate sedation we observed region‐ and condition‐specific effects of sedation in that both the LIFG and LMTG showed a significant reduction in activation for semantic (vs. auditory) decision making, whereas activation in the LITG remained significant and unchanged. This difference between LITG and LIFG responses to sedation was confirmed by a significant brain‐region by sedation‐condition interaction. This finding suggests that the LIFG may not play quite such a key role in supporting intact semantic processing during sedation. We instead propose that preserved activity in the LITG is more directly associated with successful semantic retrieval and this implies persistent semantic processing at moderate levels of sedation.
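
The region by sedation interaction described above can be made concrete for the simplest case. As a minimal sketch, assuming only two regions (e.g., LIFG and LITG) and two conditions (awake and moderately sedated), the interaction reduces to a per‐participant difference of differences in the semantic vs. auditory contrast estimates, tested against zero. The function name `interaction_t` and all numbers are hypothetical; the design reported here involves more regions and sedation levels, and this is not necessarily the analysis that was run.

```python
from math import sqrt
from statistics import mean, stdev


def interaction_t(a_awake, a_sedated, b_awake, b_sedated):
    """One-sample t statistic on per-participant difference of differences.

    Each argument holds one contrast estimate per participant, in the same
    participant order. Returns (mean difference of differences, t, df).
    """
    dod = [
        (aa - asd) - (ba - bsd)
        for aa, asd, ba, bsd in zip(a_awake, a_sedated, b_awake, b_sedated)
    ]
    n = len(dod)
    se = stdev(dod) / sqrt(n)  # standard error of the mean
    return mean(dod), mean(dod) / se, n - 1


# Hypothetical contrast estimates for four participants (NOT study data)
lifg_awake, lifg_sedated = [1.0, 1.2, 0.9, 1.1], [0.4, 0.5, 0.5, 0.3]
litg_awake, litg_sedated = [0.8, 0.9, 0.7, 1.0], [0.8, 0.8, 0.7, 0.9]
effect, t_stat, df = interaction_t(lifg_awake, lifg_sedated, litg_awake, litg_sedated)
print(f"mean difference of differences = {effect:.3f}, t({df}) = {t_stat:.2f}")
```

A positive value indicates a larger sedation‐related drop in the first region than in the second. For a 2 x 2 within‐participant design, the square of this t statistic equals the interaction F.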

How then can we explain participants' ability to make accurate semantic decisions despite sedation‐induced reduction in BOLD responses in inferior frontal regions? We propose that making semantic judgments during experimental tasks involves several diverse processes in addition to word recognition and access to semantic representations. Before the first judgments can be made, participants must attend to specific elements of their amodal semantic representations in order to map particular words onto semantic decisions (e.g., deciding that “tree” is a living thing, whereas “desk” is nonliving despite being composed of the same material, i.e., wood). This requires frontal systems for flexible, goal‐directed behavior [Duncan, 2010] to ensure that appropriate aspects of word meaning are activated and mapped onto the two response alternatives in order to make semantic decisions. This executive element in guiding semantic retrieval is therefore crucial for successful task‐relevant behavior. Jefferies and Lambon Ralph [2006] proposed a distinction between these executive functions (performed by the left prefrontal region) and accessing semantic content and representations (performed by both the left prefrontal and lateral temporal regions) in patients with semantic dementia and stroke, two populations with superficially similar deficits in word comprehension. Consistent with this proposal, Thompson‐Schill et al. [1997] have argued that activity in the LIFG is driven by selection of information among competing alternatives from semantic memory, rather than retrieval of semantic knowledge per se. Studies involving target detection across a range of domains (not just semantic) point to LIFG involvement in task‐specific decision making that reduces with increased practice [Hampshire et al., 2008].

On this basis, then, we argue that a critical part of our experimental design is that participants have already practiced the semantic task (though not the specific words) prior to performing the actual experiment. This practice period suffices for participants to learn the two response alternatives for the task, and to understand that their goal is to make living/nonliving decisions for spoken words. Given evidence for frontal contributions to flexible, goal‐directed behavior, we would anticipate that making their first few semantic decisions requires inferior frontal regions. However, if living/nonliving decisions have been practiced previously, then successful decisions could be made without frontal contributions to semantic processing. Even during a practiced task, these frontal regions may still contribute to performance (e.g., in monitoring for errors, or adjusting response criteria to ensure optimal responding). Had we used an unpracticed task, sedated participants could have struggled not only due to a failure of semantic processing, but also due to a failure of flexible, goal‐directed behavior. Yet, for the practiced task used here, participants make successful semantic decisions and thereby show that they are able to process the meanings of spoken words. The present findings can therefore be taken to imply that access to the meaning of spoken words can be achieved without these frontal processes. Thus, our use of a well‐practiced task is a vital part of our experimental design; it allows us to show that semantic processing remains possible during moderate sedation (albeit with reduced efficiency) despite reduced frontal activity.

It is important to note, however, that the reduced activation under sedation cannot be attributed to practice alone. Activation (and behavioral performance) returned to supra‐baseline levels in the recovery phase. Furthermore, this order confound was eliminated in the experimental design through counterbalancing of the order of the light and moderate levels of sedation (there was no difference between the two groups, and hence the decrease in activity during moderate sedation occurred irrespective of order).

These frontal contributions to establishing and maintaining task‐relevant behavior may go some way towards explaining inconsistencies in the existing literature. For example, our previous findings [Davis et al., 2007] linked decreased LIFG activity with impaired processing of semantic ambiguity during sedation. Ambiguity resolution is a controlled, frontal process that changes from sentence to sentence and hence requires flexible, frontal computations [Rodd et al., 2010]. Any decrease in frontal activity will therefore be associated with impairment in resolving semantic ambiguity in sentences, whilst preserving participants' ability to retrieve meanings from isolated words for a well‐practiced task. A similar explanation can apply to results showing impaired comprehension, especially for more complex sentences, following chronic damage to anterior portions of the LIFG (BA47) [Dronkers et al., 2004]. Previous studies in healthy volunteers [Tyler et al., 2010] have shown that reduced volume of gray matter in LIFG is also associated with increased right IFG recruitment during sentence comprehension. These studies suggest an LIFG contribution to semantic processing in the context of sentence comprehension that might involve other component processes (e.g., syntactic processing or working memory). One piece of counter‐evidence, however, comes from transcranial magnetic stimulation (TMS) studies which show slower and more error‐prone responses following TMS to inferior frontal regions [Gough et al., 2005]. Further studies exploring the impact of task familiarity and decision components would be valuable in establishing whether inferior‐frontal TMS disrupts semantic processing per se, rather than semantic decision making. The present results, however, demonstrate persistent successful semantic decisions with concurrent significant reductions in LIFG activity, a surprising and novel finding.

A second brain area previously associated with semantic retrieval is the left posterior inferior temporal lobe [Binder et al., 2009; Dronkers et al., 2004; Rodd et al., 2005]. In this study, subjects activated the LITG for spoken words compared to non‐speech sounds and these activations were not significantly reduced even during moderate sedation. However, despite successful meaning access during sedation, we observed that semantic decisions were slower and less accurate under sedation than when awake. Activity in the LITG alone is thus insufficient to respond to semantic stimuli with maximal efficiency. One question that should drive future research is whether these ITG activations (and perhaps semantic access) can also be observed during deeper levels of sedation. Such a finding would provide (troubling) evidence of semantic processing even in the absence of overt behavioral responses and motivate further research to monitor ITG activity during anesthesia.

A third area that showed activation differences between speech and nonspeech stimuli in our study was the peri‐auditory region of the LMTG. There is considerable debate regarding the functional contribution of the LMTG to semantic processing. However, converging evidence suggests that both the anterior‐most and the posterior parts of the LMTG contribute to accessing abstract, semantic properties of spoken materials [Davis and Johnsrude, 2003; Okada et al., 2010; Peelle et al., 2010; Scott et al., 2006]. Our study, however, focused on a central portion of the LMTG (ventral to Heschl's Gyrus, an anatomical landmark for primary auditory cortex), hence a region that may contribute as much to perceptual as to semantic processing of spoken materials. Previous studies have observed contributions of this region to perception of categorical differences in speech stimuli [Jacquemot et al., 2003; Raizada and Poldrack, 2007]. For this reason, then, we suggest that the LMTG region activated in our study, which shows a sedation‐induced reduction in activity, is likely not involved in semantic processing, but rather in speech‐associated perceptual processes that are critical for word identification. Previous work from our group reported a progressive decline in LMTG activity with increased sedation, and showed that activity in LMTG along with the LIFG was predictive of subsequent sentence memory [Davis et al., 2007]. Hence, the present demonstration of sedation‐induced reduction in LMTG activity provides further evidence of the effects of sedation/anesthesia on perceptual processing of speech sounds in the left temporal lobe, perhaps due to withdrawal of top‐down support from frontal regions [Davis et al., 2007]. However, since the comparisons of activity in the LIFG vs. LMTG, and LMTG vs. LITG were not statistically supported in the current study, we refrain from making specific conclusions about the LMTG response and whether it contributes specifically to intact or impaired semantic decisions during sedation.

Conscious awareness of speech in awake individuals may thus involve activity in specific frontal systems and coherent or concurrent activity in distributed frontotemporal regions [Davis and Johnsrude, 2007; Hickok and Poeppel, 2007; Rauschecker and Scott, 2009]. Our observation of persistent activation in the LITG, associated with significant reduction in LIFG activity, may therefore arise through disruption of long‐range functional interactions by sedation [Liu et al., 2012, 2013; Stamatakis et al., 2010; Supp et al., 2011]. This may result in less efficient and accurate semantic decisions, but residual semantic activation in isolated cortical regions. Further research could therefore test disruptions of these functional interactions by sedation.

In summary, these results suggest that LIFG activation traditionally associated with accurate semantic judgments might not be a reliable marker of successful semantic processing. In contrast, the LITG does not appear to be as strongly affected by sedation, and may account for the persistence of semantic processing of spoken language under sedation. These results suggest an account in which multiple fronto‐temporal brain regions contribute to persistent semantic processing, and motivate exploring connectivity patterns that explain the potential persistence of semantic processing during sedation.

Implications for Anesthetic Monitoring

Understanding the processing of speech during anesthesia has important clinical implications. Anesthetic awareness (defined as inadvertent return of consciousness during general anesthesia with subsequent explicit recall of intra‐operative events) occurs in ∼0.2% of patients; most of these patients also receive muscle relaxant drugs that prevent behavioral motor responses during the period of awareness [Sandin et al., 2000]. Up to 80% of such patients develop severe long‐term psychological sequelae, including post‐traumatic stress disorder [Lennmarken et al., 2002]. However, use of this definition of anesthetic awareness probably underestimates the true number of patients who experience it, since explicit postoperative recall of intraoperative events is only possible if memory was intact during the period of awareness. Since anesthetic agents are powerful amnesics, episodes of awareness without explicit recall may occur even more frequently than this 0.2% figure, and may be associated with implicit processing which, although not reported by patients, could contribute to post‐anesthetic psychological morbidity [Osterman and van der Kolk, 1998] and modulate clinical course and outcome.

The measured plasma propofol concentrations in this study were variable, but scaled concordantly with behavioral and imaging findings (which were also variable), and thus it was possible to include them as a regressor, which we believe has enhanced the imaging and behavioral analysis. Pharmacokinetic model predictions are subject to error (as are all models of all biological systems), and in particular the model predictions were of little value during the period after the drug infusion was stopped. Since the residual plasma concentration in the recovery stage was higher than predicted by the model, we refrain from commenting on or drawing conclusions about the recovery data from this study. The biological variability present in all of nature is also present in the relationships between anesthetic doses and clinical effects, which show considerable inter‐ and intra‐individual variability. Among apparently similar individuals, the same dose will achieve a range of plasma concentrations (pharmacokinetic variability), and among apparently similar individuals, the same plasma concentrations will result in a spectrum of clinical effects (pharmacodynamic variability). This variability is, as expected, present too in the data we report here. Thus, during the conduct of anesthesia, we cannot assume that any given dose, or blood concentration, of a drug is sufficient to prevent some patients from inadvertently regaining consciousness during surgery.

At present we do not have a signal that reliably indicates return of consciousness in patients who have been administered muscle relaxants (and thus cannot move to indicate that they are suffering). Commonly used depth of anesthesia monitoring devices (such as the Bispectral Index) analyze scalp recordings of the spontaneous electroencephalogram and provide an output that correlates with anesthetic depth, but cannot detect whether consciousness is present or absent [Russell, 2013]. Furthermore, use of such monitors has been shown to be no more effective at preventing anesthetic awareness than real‐time measurements of exhaled anesthetic concentrations (which correlate with plasma concentrations) [Mashour et al., 2012].

Given this context, we believe that detection of conscious awareness during anesthesia requires techniques that directly detect neural responses to intra‐operative stimuli, rather than inferring them from drug doses, drug concentrations, spontaneous EEG activity, behavioral outputs, or subsequent memory. Detection of covert speech processing is important since victims of awareness commonly recall conversations heard during surgery, and there are currently no reliable methods of detecting higher order auditory processing when behavioral outputs are not accessible. The availability of validated fMRI techniques to detect such covert cognitive processing holds great promise in this setting [Davis et al., 2007; Monti et al., 2010]. Our finding of preserved ITG activity during sedation thus has potential implications for anesthetic monitoring. A clear definition of the functional neuroanatomy of covert cognitive processing could provide a robust framework against which EEG‐based monitors could be calibrated to develop endpoints that indicate that cognitive processes, rather than their behavioral consequence, have been obtunded.

Finally, the cognitive impact of graded sedation on semantic processing in apparently awake patients is also clinically relevant. It is important to understand the mechanisms and extent of preserved language processing in order to optimize the verbal instructions given to subjects as they undergo surgical procedures under conscious sedation. For example, a simple instruction to “lie still”, or “don't cough” may be readily understood and performed, whereas more complex or novel verbal instructions may be followed more effectively if they have been practiced prior to sedation. Further, patients recovering from full surgical anesthesia may have low levels of circulating anesthetic drug, and impaired speech comprehension. The comprehension of (sometimes complex) instructions given to such individuals is of both clinical and medicolegal significance. Understanding and calibrating the effect of sedation on language processing allows a rational basis to approach these issues.

ACKNOWLEDGMENTS

The authors thank their volunteers and the radiographers at the Wolfson Brain Imaging Centre for their assistance.

REFERENCES

- Abdullaev YG, Bechtereva NP (1993): Neuronal correlate of the higher‐order semantic code in human prefrontal cortex in language tasks. Int J Psychophysiol 14:167–177.

- Absalom AR, Mani V, De Smet T, Struys MM (2009): Pharmacokinetic models for propofol: Defining and illuminating the devil in the detail. Br J Anaesth 103:26–37.

- Binder JR, Desai RH, Graves WW, Conant LL (2009): Where is the semantic system? A critical review and meta‐analysis of 120 functional neuroimaging studies. Cereb Cortex 19:2767–2796.

- Binder JR, Frost JA, Hammeke TA, Bellgowan PS, Springer JA, Kaufman JN, Possing ET (2000): Human temporal lobe activation by speech and nonspeech sounds. Cereb Cortex 10:512–528.

- Bonhomme V, Fiset P, Meuret P, Backman S, Plourde G, Paus T, Bushnell MC, Evans AC (2001): Propofol anesthesia and cerebral blood flow changes elicited by vibrotactile stimulation: A positron emission tomography study. J Neurophysiol 85:1299–1308.

- Davis MH, Coleman MR, Absalom AR, Rodd JM, Johnsrude IS, Matta BF, Owen AM, Menon DK (2007): Dissociating speech perception and comprehension at reduced levels of awareness. Proc Natl Acad Sci USA 104:16032–16037.

- Davis MH, Gaskell MG (2009): A complementary systems account of word learning: Neural and behavioural evidence. Philos Trans R Soc Lond B Biol Sci 364:3773–3800.

- Davis MH, Johnsrude IS (2003): Hierarchical processing in spoken language comprehension. J Neurosci 23:3423–3431.

- Davis MH, Johnsrude IS (2007): Hearing speech sounds: Top‐down influences on the interface between audition and speech perception. Hear Res 229(1–2):132–147.

- Dronkers NF, Wilkins DP, Van Valin RD Jr, Redfern BB, Jaeger JJ (2004): Lesion analysis of the brain areas involved in language comprehension. Cognition 92(1–2):145–177.

- Dueck MH, Petzke F, Gerbershagen HJ, Paul M, Hesselmann V, Girnus R, Krug B, Sorger B, Goebel R, Lehrke R, et al. (2005): Propofol attenuates responses of the auditory cortex to acoustic stimulation in a dose‐dependent manner: A FMRI study. Acta Anaesthesiol Scand 49:784–791.

- Duncan J (2010): How Intelligence Happens. Yale University Press: New Haven, CT.

- Efron B, Tibshirani RJ (1993): An Introduction to the Bootstrap. New York; London: Chapman & Hall.

- Engelhard K, Werner C, Mollenberg O, Kochs E (2001): S(+)‐ketamine/propofol maintain dynamic cerebrovascular autoregulation in humans. Can J Anaesth 48:1034–1039.

- Fiset P, Paus T, Daloze T, Plourde G, Meuret P, Bonhomme V, Hajj‐Ali N, Backman SB, Evans AC (1999): Brain mechanisms of propofol‐induced loss of consciousness in humans: A positron emission tomographic study. J Neurosci 19:5506–5513.

- Franceschini MA, Radhakrishnan H, Thakur K, Wu W, Ruvinskaya S, Carp S, Boas DA (2010): The effect of different anesthetics on neurovascular coupling. Neuroimage 51:1367–1377.

- Friederici AD (2002): Towards a neural basis of auditory sentence processing. Trends Cogn Sci 6:78–84.

- Friston KJ, Holmes AP, Worsley KJ, Poline J‐B, Frith CD, Frackowiak RSJ (1995): Statistical parametric maps in functional imaging: A general linear approach. Hum Brain Mapp 2:189–210.

- Gough PM, Nobre AC, Devlin JT (2005): Dissociating linguistic processes in the left inferior frontal cortex with transcranial magnetic stimulation. J Neurosci 25:8010–8016.

- Hampshire A, Thompson R, Duncan J, Owen AM (2008): The target selective neural response: Similarity, ambiguity, and learning effects. PLoS One 3:e2520.

- Heinke W, Fiebach CJ, Schwarzbauer C, Meyer M, Olthoff D, Alter K (2004): Sequential effects of propofol on functional brain activation induced by auditory language processing: An event‐related functional magnetic resonance imaging study. Br J Anaesth 92:641–650.

- Heinke W, Schwarzbauer C (2001): Subanesthetic isoflurane affects task‐induced brain activation in a highly specific manner: A functional magnetic resonance imaging study. Anesthesiology 94:973–981.

- Hickok G, Poeppel D (2007): The cortical organization of speech processing. Nat Rev Neurosci 8:393–402.

- Jacquemot C, Pallier C, LeBihan D, Dehaene S, Dupoux E (2003): Phonological grammar shapes the auditory cortex: A functional magnetic resonance imaging study. J Neurosci 23:9541–9546.

- Jefferies E, Lambon Ralph MA (2006): Semantic impairment in stroke aphasia versus semantic dementia: A case‐series comparison. Brain 129(Part 8):2132–2147.

- Johnston AJ, Steiner LA, Chatfield DA, Coleman MR, Coles JP, Al‐Rawi PG, Menon DK, Gupta AK (2003): Effects of propofol on cerebral oxygenation and metabolism after head injury. Br J Anaesth 91:781–786.

- Kapur S, Craik FI, Tulving E, Wilson AA, Houle S, Brown GM (1994): Neuroanatomical correlates of encoding in episodic memory: levels of processing effect. Proc Natl Acad Sci USA 91:2008–2011.

- Kerssens C, Hamann S, Peltier S, Hu XP, Byas‐Smith MG, Sebel PS (2005): Attenuated brain response to auditory word stimulation with sevoflurane: A functional magnetic resonance imaging study in humans. Anesthesiology 103:11–19.

- Klein KU, Fukui K, Schramm P, Stadie A, Fischer G, Werner C, Oertel J, Engelhard K (2011): Human cerebral microcirculation and oxygen saturation during propofol‐induced reduction of bispectral index. Br J Anaesth 107:735–741.

- Kouider S, de Gardelle V, Dehaene S, Dupoux E, Pallier C (2010): Cerebral bases of subliminal speech priming. Neuroimage 49:922–929.

- Kriegeskorte N, Simmons WK, Bellgowan PS, Baker CI (2009): Circular analysis in systems neuroscience: The dangers of double dipping. Nat Neurosci 12:535–540.

- Lancaster JL, Tordesillas‐Gutierrez D, Martinez M, Salinas F, Evans A, Zilles K, Mazziotta JC, Fox PT (2007): Bias between MNI and Talairach coordinates analyzed using the ICBM‐152 brain template. Hum Brain Mapp 28:1194–1205.

- Lennmarken C, Bildfors K, Enlund G, Samuelsson P, Sandin R (2002): Victims of awareness. Acta Anaesthesiol Scand 46:229–231.

- Liu X, Lauer KK, Ward BD, Li SJ, Hudetz AG (2012): Differential effects of deep sedation with propofol on the specific and nonspecific thalamocortical systems: A functional magnetic resonance imaging study. Anesthesiology 118:59–69.

- Liu X, Lauer KK, Ward BD, Rao SM, Li SJ, Hudetz AG (2013): Propofol disrupts functional interactions between sensory and high‐order processing of auditory verbal memory. Hum Brain Mapp 33:2487–2498.

- Marsh B, White M, Morton N, Kenny GN (1991): Pharmacokinetic model driven infusion of propofol in children. Br J Anaesth 67:41–48.

- Marslen‐Wilson W, Tyler LK (1980): The temporal structure of spoken language understanding. Cognition 8:1–71.

- Masamoto K, Kanno I (2012): Anesthesia and the quantitative evaluation of neurovascular coupling. J Cereb Blood Flow Metab 32:1233–1247.

- Mashour GA, Shanks A, Tremper KK, Kheterpal S, Turner CR, Ramachandran SK, Picton P, Schueller C, Morris M, Vandervest JC, Lin N, Avidan MS (2012): Prevention of intraoperative awareness with explicit recall in an unselected surgical population: A randomized comparative effectiveness trial. Anesthesiology 117:717–725.

- Monti MM, Vanhaudenhuyse A, Coleman MR, Boly M, Pickard JD, Tshibanda L, Owen AM, Laureys S (2010): Willful modulation of brain activity in disorders of consciousness. N Engl J Med 362:579–589.

- O'Rourke TB, Holcomb PJ (2002): Electrophysiological evidence for the efficiency of spoken word processing. Biol Psychol 60(2–3):121–150.

- Okada K, Rong F, Venezia J, Matchin W, Hsieh IH, Saberi K, Serences JT, Hickok G (2010): Hierarchical organization of human auditory cortex: Evidence from acoustic invariance in the response to intelligible speech. Cereb Cortex 20:2486–2495.

- Osterman JE, van der Kolk BA (1998): Awareness during anesthesia and posttraumatic stress disorder. Gen Hosp Psychiatry 20:274–281.

- Peelle JE, Johnsrude IS, Davis MH (2010): Hierarchical processing for speech in human auditory cortex and beyond. Front Hum Neurosci 4:51.

- Plourde G, Belin P, Chartrand D, Fiset P, Backman SB, Xie G, Zatorre RJ (2006): Cortical processing of complex auditory stimuli during alterations of consciousness with the general anesthetic propofol. Anesthesiology 104:448–457.

- Poeppel D, Hickok G (2004): Towards a new functional anatomy of language. Cognition 92(1–2):1–12.

- Raizada RD, Poldrack RA (2007): Selective amplification of stimulus differences during categorical processing of speech. Neuron 56:726–740.

- Rauschecker AM, Pringle A, Watkins KE (2008): Changes in neural activity associated with learning to articulate novel auditory pseudowords by covert repetition. Hum Brain Mapp 29:1231–1242.

- Rauschecker JP, Scott SK (2009): Maps and streams in the auditory cortex: Nonhuman primates illuminate human speech processing. Nat Neurosci 12:718–724.

- Rodd JM, Johnsrude IS, Davis MH (2010): The role of domain‐general frontal systems in language comprehension: Evidence from dual‐task interference and semantic ambiguity. Brain Lang 115:182–188.

- Rodd JM, Davis MH, Johnsrude IS (2005): The neural mechanisms of speech comprehension: fMRI studies of semantic ambiguity. Cereb Cortex 15:1261–1269.

- Russell IF (2013): The ability of bispectral index to detect intra‐operative wakefulness during total intravenous anaesthesia compared with the isolated forearm technique. Anaesthesia 68:502–511.

- Sabri M, Binder JR, Desai R, Medler DA, Leitl MD, Liebenthal E (2008): Attentional and linguistic interactions in speech perception. Neuroimage 39:1444–1456.

- Sandin RH, Enlund G, Samuelsson P, Lennmarken C (2000): Awareness during anaesthesia: A prospective case study. Lancet 355:707–711.

- Saur D, Kreher BW, Schnell S, Kummerer D, Kellmeyer P, Vry MS, Umarova R, Musso M, Glauche V, Abel S, et al. (2008): Ventral and dorsal pathways for language. Proc Natl Acad Sci USA 105:18035–18040.

- Scott SK, Johnsrude IS (2003): The neuroanatomical and functional organization of speech perception. Trends Neurosci 26:100–107.

- Scott SK, Rosen S, Lang H, Wise RJ (2006): Neural correlates of intelligibility in speech investigated with noise vocoded speech: A positron emission tomography study. J Acoust Soc Am 120:1075–1083.

- Stamatakis EA, Adapa RM, Absalom AR, Menon DK (2010): Changes in resting neural connectivity during propofol sedation. PLoS One 5:e14224.

- Supp GG, Siegel M, Hipp JF, Engel AK (2011): Cortical hypersynchrony predicts breakdown of sensory processing during loss of consciousness. Curr Biol 21:1988–1993.

- Swick D, Knight RT (1996): Is prefrontal cortex involved in cued recall? A neuropsychological test of PET findings. Neuropsychologia 34:1019–1028.

- Talairach J, Tournoux P (1988): Co‐Planar Stereotaxic Atlas of the Human Brain: 3‐Dimensional Proportional System: An Approach to Cerebral Imaging. Stuttgart: Thieme.

- Thompson‐Schill SL, D'Esposito M, Aguirre GK, Farah MJ (1997): Role of left inferior prefrontal cortex in retrieval of semantic knowledge: A reevaluation. Proc Natl Acad Sci USA 94:14792–14797.

- Tyler LK, Shafto MA, Randall B, Wright P, Marslen‐Wilson WD, Stamatakis EA (2010): Preserving syntactic processing across the adult life span: The modulation of the frontotemporal language system in the context of age‐related atrophy. Cereb Cortex 20:352–364.

- Veselis RA, Pryor KO, Reinsel RA, Li Y, Mehta M, Johnson R Jr (2009): Propofol and midazolam inhibit conscious memory processes very soon after encoding: An event‐related potential study of familiarity and recollection in volunteers. Anesthesiology 110:295–312.

- Veselis RA, Reinsel RA, Feshchenko VA, Dnistrian AM (2002): A neuroanatomical construct for the amnesic effects of propofol. Anesthesiology 97:329–337.