Abstract

Whether neuroimaging findings support discriminable neural correlates of emotion categories is a longstanding controversy. Two recent meta‐analyses arrived at opposite conclusions, with one supporting (Vytal and Hamann [2010]: J Cogn Neurosci 22:2864–2885) and the other opposing this proposition (Lindquist et al. [2012]: Behav Brain Sci 35:121–143). To obtain direct evidence regarding this issue, we compared activations for four emotions within a single fMRI design. Angry, happy, fearful, sad, and neutral stimuli were presented as dynamic body expressions. In addition, observers categorized motion morphs between neutral and emotional stimuli in a behavioral experiment to determine their relative sensitivities. Brain–behavior correlations revealed a large brain network that was identical for all four tested emotions. This network consisted predominantly of regions located within the default mode network and the salience network. Although these nodes showed brain–behavior correlations for all emotions, multi‐voxel pattern analyses indicated that several nodes of this emotion‐general network contained information capable of discriminating between individual emotions. However, significant discrimination was not limited to the emotional network, but was also observed in several regions within the action observation network. Taken together, our results favor the position that one common emotional brain network supports the visual processing and discrimination of emotional stimuli. Hum Brain Mapp 36:4184–4201, 2015. © 2015 Wiley Periodicals, Inc.

Keywords: human, fMRI, emotion, action observation, bodies, perception, visual

INTRODUCTION

Basic emotion theory assumes the existence of a limited set of emotions that are universal, biologically inherited, and each associated with a distinctive physiological pattern [Ekman, 1992; Tracy and Randles, 2011]. Indeed, initial neuroimaging research investigating the processing of anger, fear, happiness, or sadness suggested that their respective neural correlates could be localized in distinct anatomical locations or networks in the brain [Blair et al., 1999; Morris et al., 1996; Phillips et al., 1997, 1998], which was taken as support for basic emotion theory. More recently, a competing model, termed the conceptual act theory of emotion, has been put forward. In contrast to the basic emotion view, this model hypothesizes that the same brain networks are engaged during a variety of emotions, and that discrete emotions are constructed from these networks, which are not in themselves specific to any one emotion [Barrett, 2006; Lindquist and Barrett, 2012]. Neuroimaging evidence for one or the other theory is contradictory. While one recent meta‐analysis supported the view that different emotions involve distinct arrays of cortical and subcortical structures [Vytal and Hamann, 2010], others have reported mixed results regarding specificity [Murphy et al., 2003; Phan et al., 2002], and another concluded that brain regions demonstrate remarkably consistent increases in activity across a variety of emotional states [Lindquist et al., 2012].

In any event, meta‐analyses alone are inadequate for testing the predictions of the two emotion models outlined above, because they rely on absolute differences in activation between conditions. By contrast, brain–behavior correlation studies, as well as multivariate pattern classification approaches, can reveal aspects of neural function even in the absence of a main effect. Therefore, to obtain direct support for one of the two hypotheses, studies need to investigate the processing of several emotions within the same design, allowing for a detailed analysis of commonalities and differences. At present, there is little direct evidence regarding emotion‐specific neural correlates, because the very few investigations using within‐study designs have focused on specific brain regions [van der Gaag et al., 2007], did not contrast activations for different emotions against one another [Damasio et al., 2000; Tettamanti et al., 2012], or did not address the question of specificity [Kim et al., 2015; Peelen et al., 2010].

To bridge this gap, we investigated the processing of neutral and emotionally expressive (angry, happy, fearful, sad) gaits within the same event‐related fMRI study. The stimuli were presented as avatars, animated with 3D motion‐tracking data. Numerous studies have shown that human observers readily recognize the emotions expressed in body movement [see de Gelder, 2006 for review], even with impoverished stimuli such as point‐light displays or avatars [Atkinson et al., 2004; Dittrich et al., 1996; Pollick et al., 2001; Roether et al., 2009]. These stimuli allowed us to search for emotion‐specific signals within regions commonly associated with the processing of emotional stimuli such as the amygdala, insula, orbitofrontal, or cingulate cortex, but also within the more general action observation network (AON), comprising regions in occipito‐temporal, parietal, and premotor cortex [Grafton, 2009; Rizzolatti and Craighero, 2004].

Recent results indicate that accounting for individual differences in emotion processing can reveal aspects of neural function that are not detectable using standard subtraction methods [see Calder et al., 2011 for review]. Therefore, instead of focusing on group differences in absolute brain activation between emotional and neutral stimuli, we correlated brain activation with each subject's perceptual sensitivity to emotional stimuli, as determined in a behavioral experiment using morphed stimuli. Using this design, we localized an emotional brain network composed of regions showing reliable brain–behavior correlations. Subsequently, we investigated whether some regions correlate more strongly with one emotion than with the others, whether the fine‐grained fMRI activation patterns within these regions allow discrimination between emotions, and how these regions are connected with the AON.

METHODS

Participants

Sixteen volunteers (eight females, mean age 25 years, range 23–32 years) participated in the experiment. All participants were right‐handed, had normal or corrected‐to‐normal visual acuity, and no history of mental illness or neurological diseases. The study was approved by the Ethical Committee of KU Leuven Medical School and all volunteers gave their written informed consent in accordance with the Helsinki Declaration prior to the experiment.

Stimuli

Stimuli were generated from motion‐capture data of lay actors performing emotionally neutral gaits and four emotionally expressive gaits after a mood induction procedure (angry, happy, fearful, sad). A single, complete gait cycle was selected from the recording, defined as the interval between two successive heel strikes of the same foot. Details about the recording process can be found in the work by Roether et al. [2009]. The motion‐capture data were used to animate a custom‐built volumetric puppet model rendered in MATLAB. The model was composed of three‐dimensional geometric shape primitives (Fig. 1). The puppet's anatomy was actor‐specific, but scaled to a common height. To eliminate translational movement of the stimulus, the horizontal, but not the vertical, translation of the center of the hip joint was removed, resulting in a natural‐looking walk as though performed on a treadmill. Extensive psychophysical testing ensured that the affect of the final stimuli could be easily identified [Roether et al., 2009]. These stimuli will subsequently be referred to as prototypical neutral or emotional stimuli and were used in the fMRI experiment. The complete stimulus set for the fMRI experiment contained 30 stimuli: six neutral prototypes (six different actors) and six prototypes each for angry, happy, fearful, and sad.

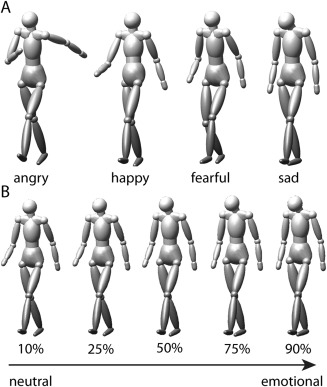

Figure 1.

Stimuli. A: Example frames taken from four prototypical stimuli displaying the emotions angry, happy, fearful, and sad used in the functional imaging experiment. B: Illustration of the morphed stimuli indicating different morph levels between neutral and emotional (sad) gaits tested during the behavioral experiment.

The stimuli used in the behavioral experiment were motion morphs between the neutral and emotional prototypes used in the fMRI experiment. By morphing, we created a continuum of expressions ranging from almost neutral (90% neutral prototype and 10% emotional prototype) to almost emotional (10% neutral prototype and 90% emotional prototype). Morphing was based on spatio‐temporal morphable models [Giese and Poggio, 2000], a method that generates morphs by linearly combining prototypical movements after establishing spatio‐temporal correspondence between them. The method has previously been shown to produce morphs with a high degree of realism even for rather dissimilar movements [Jastorff et al., 2006]. Each continuum between neutral and emotional was represented by nine different stimuli, with the weight of the neutral prototype set to 0.9, 0.75, 0.65, 0.57, 0.5, 0.43, 0.35, 0.25, and 0.1. The weight of each emotional prototype was always chosen such that the sum of the morphing weights was equal to one (Fig. 1B).
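The weighting scheme can be illustrated with a minimal Python sketch. It assumes that the two prototypes have already been brought into spatio‐temporal correspondence and resampled to the same number of frames, so that each is a list of frames of flattened joint coordinates. The correspondence step itself, which the morphable‐model method also uses to interpolate the timing of the two movements, is not shown, and the function names are hypothetical.

```python
from typing import List, Sequence

# Neutral-prototype weights for the nine morph levels described in the text.
NEUTRAL_WEIGHTS = [0.9, 0.75, 0.65, 0.57, 0.5, 0.43, 0.35, 0.25, 0.1]

Frame = Sequence[float]  # flattened joint coordinates for one time step


def morph(neutral: Sequence[Frame], emotional: Sequence[Frame], w_neutral: float) -> List[List[float]]:
    """Linearly blend two time-aligned trajectories; the two weights sum to one."""
    if len(neutral) != len(emotional):
        raise ValueError("prototypes must be time-aligned to the same number of frames")
    w_emotional = 1.0 - w_neutral
    return [
        [w_neutral * a + w_emotional * b for a, b in zip(frame_n, frame_e)]
        for frame_n, frame_e in zip(neutral, emotional)
    ]


def morph_continuum(neutral, emotional):
    """Return the nine morphs, ordered from almost neutral to almost emotional."""
    return [morph(neutral, emotional, w) for w in NEUTRAL_WEIGHTS]
```

Because each morph pairs a neutral weight w with an emotional weight 1 − w, the sum constraint holds by construction.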

Procedure

Behavioral testing

Motion morphs between prototypical neutral and emotional stimuli were used for behavioral testing. The full stimulus set included 216 stimuli (nine morph levels × four emotions × six actors). This set was shown twice during the experiment, resulting in 432 trials. The presentation order of the stimuli was randomized for each subject.

Stimuli were displayed on an LCD screen (60 Hz frame rate; 1600 × 1200 pixels resolution) that was viewed binocularly from a distance of 40 cm, leading to a stimulus size of about 7 degrees visual angle. Stimulus presentation and recording of the participants' responses were implemented with the MATLAB Psychophysics Toolbox [Brainard, 1997]. The stimuli were shown as puppet models (Fig. 1) on a uniform gray background.

The experiment started with a demonstration session in which subjects familiarized themselves with the stimuli for 10 trials. A single trial consisted of the presentation of a motion morph at the center of the screen for 10 s. No fixation requirements were imposed. The subject first had to answer whether the stimulus was emotional or neutral and, depending on this answer, categorize the emotion as happy, angry, fearful, or sad. Subjects were told to respond as soon as they had made their decision, but we did not emphasize speed. If the subject answered within 10 s, stimulus presentation stopped immediately; otherwise, it halted after 10 s and a uniform gray screen was shown until the subject entered a response. After a 1.5 s intertrial interval, the next trial started. No feedback regarding performance was provided during the behavioral testing.

Functional imaging

Only prototypical neutral or emotional stimuli were shown during imaging. The stimulus set was composed of 30 stimuli, belonging to five different conditions (four emotions × six actors + one neutral × six actors) presented on a black background. This set was shown twice within a single run, once at 6 and once at 4.5 degrees visual angle. Two different sizes were chosen to render low‐level features, i.e. retinal position, less informative with regard to categorization between the conditions. One run contained 60 stimulus events (six stimuli × two sizes × four emotions + six stimuli × two sizes × one neutral) and 12 baseline fixation events (condition 6), presented in a rapid event‐related design. The six conditions were shown in a pseudo‐random order with controlled history, so that each condition was preceded equally often by an exemplar of all other conditions within any given run [Jastorff et al., 2009]. A small red square (0.2°) was superimposed onto all individual stimuli. This fixation dot remained constant at the center of the display, but the center of mass of the puppet was randomly offset up to 1 degree from the fixation point to reduce low‐level retinotopic effects. For any given movement, the offset was constant throughout the video.
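The controlled‐history constraint can be met in several ways, and the original procedure [Jastorff et al., 2009] is not detailed here. One standard construction, sketched below in Python as an illustration rather than as the method used, treats the conditions as nodes of a directed multigraph that contains every ordered pair of conditions (including repetitions of the same condition) equally often, and walks an Eulerian circuit through it. With six conditions (four emotions, neutral, and fixation; the codes 0 to 5 are hypothetical) and two copies of each pair, this yields 72 events, 12 per condition, matching the 60 stimulus events plus 12 fixation events of one run. The sketch counts transitions cyclically, so the first event is treated as preceded by the last, and it does not assign specific actors or stimulus sizes to positions.

```python
import random
from collections import Counter


def counterbalanced_sequence(n_conditions, repeats, seed=None):
    """Sequence in which every ordered pair (previous, current) occurs `repeats`
    times when the sequence is read cyclically.

    Builds a directed multigraph with `repeats` copies of every edge a -> b
    (self-loops included) and follows an Eulerian circuit using Hierholzer's
    algorithm, so every edge (transition) is used exactly once.
    """
    rng = random.Random(seed)
    successors = {
        a: [b for b in range(n_conditions) for _ in range(repeats)]
        for a in range(n_conditions)
    }
    for targets in successors.values():
        rng.shuffle(targets)  # randomize which circuit is found

    stack = [rng.randrange(n_conditions)]
    circuit = []
    while stack:
        node = stack[-1]
        if successors[node]:
            stack.append(successors[node].pop())
        else:
            circuit.append(stack.pop())
    circuit.reverse()
    # The circuit starts and ends on the same node; dropping the duplicate
    # end leaves a sequence that is balanced when read cyclically.
    return circuit[:-1]


def transition_counts(sequence):
    """Count (previous, current) pairs, wrapping from the last event to the first."""
    return Counter((sequence[i - 1], sequence[i]) for i in range(len(sequence)))
```

For example, `counterbalanced_sequence(6, 2, seed=1)` returns 72 condition codes in which each of the 36 ordered pairs occurs exactly twice.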

Each walking pattern was presented for two gait cycles. Depending on the given stimulus, the presentation lasted between ∼2 and ∼4 s. Fixation events showed the fixation dot on an otherwise black screen and lasted 3 s. For the subject, these fixation events were indistinguishable from the interstimulus interval (ISI). The ISI varied between 2,300 ms and 5,000 ms and was determined by an exponential function [Dale, 1999]. During this period, subjects were asked to indicate whether the preceding stimulus was emotional or neutral by pressing a button on one of two MR‐compatible button boxes, one held in each hand. Half of the subjects used their right thumb to indicate an emotional stimulus; the other half used their left thumb. Importantly, subjects were not asked to identify the specific emotion shown. No response was required after the fixation condition. A single run lasted 200 s, and eight runs were scanned in one session. Every run started with the acquisition of four dummy volumes to ensure that the MR signal had reached its steady state.
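The text specifies only the ISI range and that ISIs followed an exponential function [Dale, 1999]. A minimal Python sketch, assuming inverse‐transform sampling from an exponential distribution truncated to 2,300 to 5,000 ms, is shown below; the mean parameter `scale_ms` is a hypothetical value that is not reported in the study.

```python
import math
import random

ISI_MIN_MS = 2300.0
ISI_MAX_MS = 5000.0


def sample_isi(rng, scale_ms=1000.0, lo=ISI_MIN_MS, hi=ISI_MAX_MS):
    """Draw one ISI (ms) from an exponential distribution truncated to [lo, hi].

    scale_ms is a hypothetical mean of the untruncated exponential: short
    ISIs are most frequent and long ones progressively rarer.
    """
    span = hi - lo
    # Inverse CDF of an exponential restricted to [0, span].
    u = rng.random()
    x = -scale_ms * math.log(1.0 - u * (1.0 - math.exp(-span / scale_ms)))
    return lo + x


rng = random.Random(0)
isis = [sample_isi(rng) for _ in range(1000)]
```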

In addition to the eight experimental runs, we also acquired one resting state fMRI scan lasting 425 s. During acquisition, subjects were asked to close their eyes and not to think of anything in particular.

Presentation and Data Collection

The stimuli were presented using a liquid crystal display projector (Barco Reality 6400i; 1024 × 768, 60 Hz refresh frequency; Barco) illuminating a translucent screen positioned in the bore of the magnet at a distance of 36 cm from the point of observation. Participants viewed the stimuli through a mirror tilted at 45 degrees that was attached to the head coil. Throughout the scanning session, participants' eye movements were monitored with an ASL eye tracking system 5000 (60 Hz; Applied Science Laboratories).
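For reference, the physical stimulus sizes implied by the reported visual angles follow from θ = 2·arctan(s / 2d), where s is the stimulus extent and d the viewing distance. The short Python sketch below computes them; the resulting centimeter values are derived here and are not reported in the study, and the function names are hypothetical.

```python
import math


def size_for_visual_angle(angle_deg, distance_cm):
    """Physical extent (cm) that subtends angle_deg at the given viewing distance."""
    return 2.0 * distance_cm * math.tan(math.radians(angle_deg) / 2.0)


def visual_angle(size_cm, distance_cm):
    """Inverse relation: visual angle (deg) subtended by an object of size_cm."""
    return math.degrees(2.0 * math.atan(size_cm / (2.0 * distance_cm)))


# Behavioral setup: about 7 deg at 40 cm; scanner: 6 and 4.5 deg at 36 cm.
behavioral_cm = size_for_visual_angle(7.0, 40.0)
scanner_cm = [size_for_visual_angle(a, 36.0) for a in (6.0, 4.5)]
```

With these inputs, the behavioral stimuli come to about 4.9 cm on screen, and the two scanner sizes to about 3.8 cm and 2.8 cm.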

Scanning was performed at the University Hospital of KU Leuven with a 3 T MR scanner (Intera; Philips Medical Systems) using a 32‐channel head coil. Functional images were acquired using gradient‐echo planar imaging with the following parameters: 37 horizontal slices (3 mm slice thickness; 0.3 mm gap), repetition time (TR), 2 s; echo time (TE), 30 ms; flip angle, 90°; 80 × 80 matrix with 2.75 × 2.75 mm in‐plane resolution, and SENSE reduction factor of 2. The resting state scan was performed with slightly different parameters: 31 horizontal slices (4 mm slice thickness; 0.3 mm gap), TR 1.7 s; TE 33 ms; flip angle 90°; 64 × 64 matrix with 3.59 × 3.59 mm in‐plane resolution, and SENSE reduction factor of 2.

A three‐dimensional, high resolution, T1‐weighted image covering the entire brain was also acquired during the scanning session and used for anatomical reference (TE/TR 4.6/9.7 ms; inversion time 900 ms; slice thickness 1.2 mm; 256 × 256 matrix; 182 coronal slices; SENSE reduction factor 2.5).

Data Analysis

To investigate brain–behavior correlations, we first determined the perceptual sensitivity of each individual subject for our emotional body expressions. Next, we performed a random effects group analysis to determine brain regions whose activation reliably correlated with perceptual sensitivity across the group. After having determined this “general emotion network” (GEN), we used multi‐voxel pattern analyses (MVPA) to investigate whether regions within this network contain information that could reliably discriminate between the presented emotions. This analysis was carried out in each subject's native (i.e., non‐normalized) space to maximize sensitivity. To investigate discrimination performance outside the GEN, we also performed a searchlight analysis [Kriegeskorte et al., 2006], taking into account all voxels in the brain. Finally, we performed a resting‐state analysis to investigate connections within the GEN and between the GEN and the action observation network (AON).

Behavioral data

We analyzed the responses of every subject separately for each emotion and morph level, averaged over the six actors. During the behavioral experiment, subjects had to categorize not only whether the stimulus was “neutral” or “emotional,” but also, if they responded “emotional,” which emotion was expressed. The goal of this experiment was to determine each subject's ambiguity point (AP), the morph level at which they answered equally often “neutral” and “emotional.” To ensure that the AP corresponded to the morph level at which subjects correctly identified the emotion on 50% of trials, we took their response to the second question into account when determining APs: whenever they classified the emotion incorrectly, their response was counted not as emotional, but as neutral. We opted for this procedure because we wanted to maximize our sensitivity for identifying emotion‐specific processing. Had we taken only the first answer into account, we would not have been able to determine the AP for “angry,” but only that for “emotional.” Nevertheless, APs calculated with and without taking the second answer into account were highly correlated (r = 0.94). APs were determined by fitting sigmoid functions to the data. These values formed the basis of our brain–behavior correlation analyses.
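The scoring rule and the AP estimate can be sketched in Python as follows. The study does not state which sigmoid or fitting routine was used; this sketch assumes a two‐parameter logistic fitted by least squares over a coarse parameter grid, a deliberately simple stand‐in for a proper optimizer. The trial tuple format and the function names are hypothetical.

```python
import math

# Emotional-prototype weight at each of the nine morph levels (1 - neutral weight).
MORPH_LEVELS = [0.1, 0.25, 0.35, 0.43, 0.5, 0.57, 0.65, 0.75, 0.9]


def is_emotional_response(said_emotional, chosen, shown):
    """Scoring rule from the text: a wrong emotion label counts as 'neutral'."""
    return said_emotional and chosen == shown


def proportion_emotional(trials):
    """trials: iterable of (level, said_emotional, chosen, shown) tuples.

    Returns {level: proportion of trials scored as emotional}.
    """
    hits, totals = {}, {}
    for level, said, chosen, shown in trials:
        totals[level] = totals.get(level, 0) + 1
        hits[level] = hits.get(level, 0) + int(is_emotional_response(said, chosen, shown))
    return {lvl: hits[lvl] / totals[lvl] for lvl in totals}


def logistic(x, midpoint, slope):
    return 1.0 / (1.0 + math.exp(-slope * (x - midpoint)))


def fit_ambiguity_point(props):
    """Least-squares grid fit of a two-parameter logistic; returns its midpoint.

    The midpoint is the morph level yielding 50% 'emotional' responses (the AP).
    """
    best = None
    for i in range(1001):
        midpoint = i / 1000
        for slope in range(1, 61):
            sse = sum((p - logistic(x, midpoint, slope)) ** 2 for x, p in props.items())
            if best is None or sse < best[0]:
                best = (sse, midpoint)
    return best[1]
```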

Functional imaging

Data analysis was performed in two processing streams using the SPM12b software package (Wellcome Department of Cognitive Neurology, London, UK) running under MATLAB (The Mathworks, Inc., Natick, MA). For the random effects group analyses, the preprocessing steps involved: (1) slice time correction, (2) realignment of the images, (3) coregistration of the anatomical image and the mean functional image, (4) spatial normalization of all images to a standard stereotaxic space (MNI) with a voxel size of 2 mm × 2 mm × 2 mm, and (5) smoothing of the resulting images with an isotropic Gaussian kernel of 8 mm. For the native space analyses, the first three steps were identical, followed by smoothing with a 3 mm kernel. A 3 mm kernel was chosen because it is in the range of our native resolution and helps reduce interpolation errors resulting from the realignment process and from the 0.3 mm gap between slices during acquisition.

For every participant, the onsets and durations of each condition were modeled with a General Linear Model (GLM). The design matrix was composed of six regressors modeling the six conditions (four emotions + neutral + baseline) plus six regressors obtained from the motion correction in the realignment process. The latter were included to account for voxel intensity variations due to head movement. To exclude variance related to the subjects' responses, two additional regressors were included, modeling the button presses during the ISIs. All regressors were convolved with the canonical hemodynamic response function. Subsequently, we calculated contrast images for each participant for every stimulus condition versus baseline fixation, for each of the four emotional conditions versus the neutral condition, and for the average of all four emotional conditions versus the neutral condition. Brain–behavior correlations (“emotion network”) at the group level (normalized data) were determined by multiple regression analyses using the subjects' APs as covariate.
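The convolution step can be illustrated with a small Python sketch. It builds a double‐gamma HRF from gamma densities with shape parameters 6 and 16 (scale 1 s), with the undershoot scaled by 1/6, following SPM's default canonical HRF parameters. It is not a reimplementation of SPM: the unit‐sum normalization and the sampling at the start of each TR are simplifying assumptions, and the function names are hypothetical.

```python
import math


def gamma_pdf(t, shape, scale=1.0):
    """Gamma probability density at time t (s); zero for t <= 0."""
    if t <= 0:
        return 0.0
    return t ** (shape - 1) * math.exp(-t / scale) / (math.gamma(shape) * scale ** shape)


def double_gamma_hrf(dt=0.1, length=32.0):
    """Positive gamma (shape 6) minus 1/6 of an undershoot gamma (shape 16)."""
    n = int(round(length / dt)) + 1
    hrf = [gamma_pdf(i * dt, 6.0) - gamma_pdf(i * dt, 16.0) / 6.0 for i in range(n)]
    total = sum(hrf)
    return [h / total for h in hrf]  # unit sum, so a long block plateaus near 1


def condition_regressor(onsets, durations, n_scans, tr=2.0, dt=0.1):
    """Boxcar for one condition convolved with the HRF, sampled once per TR."""
    n_fine = int(round(n_scans * tr / dt))
    boxcar = [0.0] * n_fine
    for onset, dur in zip(onsets, durations):
        start = int(round(onset / dt))
        stop = min(n_fine, int(round((onset + dur) / dt)))
        for i in range(start, stop):
            boxcar[i] = 1.0
    hrf = double_gamma_hrf(dt)
    conv = [0.0] * n_fine
    for i, b in enumerate(boxcar):
        if b:
            for k, h in enumerate(hrf[: n_fine - i]):
                conv[i + k] += b * h
    step = int(round(tr / dt))
    return [conv[s * step] for s in range(n_scans)]
```

For example, a single 3 s walking stimulus at 10 s, `condition_regressor([10.0], [3.0], n_scans=20)`, stays at zero until the event and peaks a few scans later, reflecting the delayed hemodynamic response.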

Regions of interest (ROIs), dividing the emotion network into separate clusters, were defined in an unsupervised way using a watershed image segmentation algorithm [Meyer, 1991]. This algorithm finds local maxima and “grows” regions around them, incorporating neighboring voxels one at a time in decreasing order of voxel intensity (i.e., t‐value), as long as all labeled neighbors of a given voxel have the same label. To increase the number of voxels within each ROI for the multi‐voxel pattern analyses, we used a more liberal threshold of P < 0.01 uncorrected for the brain–behavior correlation. Prior to applying the watershed algorithm, we smoothed the t‐map with a 4 mm Gaussian kernel, which reduced the number of partitions from 231 (without smoothing) to 65. Subsequent ROI analyses within the emotion network were carried out in each subject's native space; to this end, we mapped the group ROIs back to native space using the deformations utility of SPM12b.
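The growing rule described above can be sketched in Python as follows. The sketch assumes face‐adjacent (6‐connected) neighbors, takes the thresholded t‐map as a dictionary of voxel coordinates, and omits the 4 mm pre‐smoothing and the mapping back to native space; the function name is hypothetical.

```python
def watershed_labels(tmap, threshold):
    """Flooding-order segmentation of a thresholded statistical map.

    tmap: dict mapping (x, y, z) voxel coordinates to t-values.
    Voxels above threshold are visited in decreasing t order. A voxel with
    no labeled neighbor seeds a new region (typically at a local maximum);
    a voxel whose labeled neighbors all share one label joins that region;
    a voxel touching two or more regions is left unlabeled as a boundary.
    """
    offsets = [(1, 0, 0), (-1, 0, 0), (0, 1, 0), (0, -1, 0), (0, 0, 1), (0, 0, -1)]
    labels = {}
    next_label = 1
    supra = [v for v, t in tmap.items() if t > threshold]
    for voxel in sorted(supra, key=lambda v: tmap[v], reverse=True):
        x, y, z = voxel
        neighbor_labels = {
            labels[n]
            for n in ((x + dx, y + dy, z + dz) for dx, dy, dz in offsets)
            if n in labels
        }
        if not neighbor_labels:
            labels[voxel] = next_label
            next_label += 1
        elif len(neighbor_labels) == 1:
            labels[voxel] = neighbor_labels.pop()
        # Otherwise: boundary voxel between regions, left unlabeled.
    return labels
```

On a one‐dimensional profile with peaks of 5 and 3 separated by a dip, the two peaks seed separate regions and the dip voxel between them stays unlabeled.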

Classification of the Emotion Based on Support Vector Machines

In addition to the model with a single regressor per emotion, we performed an analysis in each subject's native space in which each emotional stimulus was modeled as a separate condition (24 t‐images; four emotions × six actors). A linear support vector machine (SVM) [Cortes and Vapnik, 1995] was used to assess classification performance across the four emotions based on these t‐scores.

For the ROI‐based SVM analysis, the t‐scores of all voxels of a given ROI for a particular stimulus were concatenated across subjects, resulting in a single activation vector per stimulus and ROI [Caspari et al., 2014]. The length of this vector equaled the total number of ROI voxels summed over subjects. The activations of four stimuli of each emotion were used for training the SVM (training set, 16 stimuli), and the remaining two stimuli of each emotion were used as a test set (eight stimuli). This analysis was repeated with differently composed training and test sets for all possible combinations. The SVM analysis was run using the CoSMoMVPA toolbox. As a control, the analysis was repeated with shuffled category labels (10,000 permutations), in which all stimuli were randomly assigned to the four emotions.
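The structure of this cross‐validation and permutation scheme can be sketched in Python. Two assumptions are labeled explicitly: first, that each fold holds out the stimuli of the same two actors for all four emotions (one reading of “all possible combinations,” giving 15 folds); second, that a nearest‐centroid classifier with Euclidean distance stands in for the linear SVM, purely to keep the example dependency‐free. This is not the CoSMoMVPA implementation, and the function names are hypothetical.

```python
import itertools
import math
import random


def concat_subjects(per_subject_vectors):
    """ROI pattern for one stimulus: t-scores of all subjects laid end to end."""
    return [t for vec in per_subject_vectors for t in vec]


def centroids(train):
    """Mean pattern per label (stand-in classifier in place of a linear SVM)."""
    sums, counts = {}, {}
    for label, vec in train:
        acc = sums.setdefault(label, [0.0] * len(vec))
        for i, v in enumerate(vec):
            acc[i] += v
        counts[label] = counts.get(label, 0) + 1
    return {lab: [s / counts[lab] for s in acc] for lab, acc in sums.items()}


def cv_accuracy(stimuli, labels, n_actors=6, n_test=2):
    """stimuli: list of (actor, pattern); labels: emotion of each stimulus.

    Each fold holds out the stimuli of `n_test` actors and trains on the
    rest; accuracy is pooled over all C(n_actors, n_test) folds.
    """
    correct = total = 0
    for held_out in itertools.combinations(range(n_actors), n_test):
        data = list(zip(stimuli, labels))
        train = [(lab, pat) for (actor, pat), lab in data if actor not in held_out]
        test = [(lab, pat) for (actor, pat), lab in data if actor in held_out]
        cents = centroids(train)
        for lab, pat in test:
            pred = min(cents, key=lambda c: math.dist(cents[c], pat))
            correct += pred == lab
            total += 1
    return correct / total


def permutation_p(stimuli, labels, n_perm=1000, seed=0):
    """Shuffle emotion labels across all stimuli to build a null distribution."""
    rng = random.Random(seed)
    observed = cv_accuracy(stimuli, labels)
    shuffled = list(labels)
    exceed = 0
    for _ in range(n_perm):
        rng.shuffle(shuffled)
        exceed += cv_accuracy(stimuli, shuffled) >= observed
    return observed, (exceed + 1) / (n_perm + 1)
```

The (exceed + 1) / (n_perm + 1) form of the p‐value is a common convention and is an assumption here; chance accuracy for four emotions is 0.25.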

For the searchlight analysis, SVM analyses were performed for each subject separately using a searchlight radius of 3 voxels. After chance level (0.25) was subtracted from each voxel of the resulting classification images, these images were normalized to MNI space and smoothed with a 4 mm kernel. Subsequently, we performed a random effects analysis over the 16 subjects. To identify regions with significant classification performance within the AON, the final searchlight map was masked with the group result of the contrast all stimuli versus fixation baseline at P < 0.01 uncorrected. Clusters showing significant classification within the AON were determined with 3dClustSim (AFNI), correcting for multiple comparisons using Monte Carlo simulations. Similarly, significant clusters within the GEN were determined by masking the searchlight group image with the GEN at P < 0.01 uncorrected, followed by correction for multiple comparisons using 3dClustSim.
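Two ingredients of the searchlight analysis lend themselves to a brief Python sketch: the set of voxel offsets forming a sphere of radius 3 voxels (here assumed to include voxels at exactly that distance, giving 123 voxels), and the group statistic applied to chance‐corrected accuracies, which for a random‐effects analysis of one image per subject amounts to a one‐sample t‐test at each voxel. Normalization, smoothing, and cluster correction are not shown, and the function names are hypothetical.

```python
import math


def sphere_offsets(radius):
    """Integer voxel offsets within `radius` voxels of the searchlight center."""
    r = int(math.floor(radius))
    return [
        (dx, dy, dz)
        for dx in range(-r, r + 1)
        for dy in range(-r, r + 1)
        for dz in range(-r, r + 1)
        if dx * dx + dy * dy + dz * dz <= radius * radius
    ]


def group_t(accuracies, chance=0.25):
    """One-sample t-statistic of (accuracy - chance) across subjects at one voxel."""
    d = [a - chance for a in accuracies]
    n = len(d)
    mean = sum(d) / n
    sd = math.sqrt(sum((x - mean) ** 2 for x in d) / (n - 1))
    return mean / (sd / math.sqrt(n))
```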

Resting‐State fMRI Analysis

Spatial and temporal preprocessing of resting‐state data was performed using SPM12b together with the REST toolbox (Beijing Normal University, Beijing, China). Spatial preprocessing steps involved: (1) slice time correction, (2) realignment of the images, (3) coregistration of the anatomical image and the mean functional image, and (4) spatial normalization of all images to MNI space with a voxel size of 2 mm × 2 mm × 2 mm. Temporal preprocessing steps involved: (1) detrending, (2) band‐pass filtering covering the frequency band from 0.01 Hz to 0.1 Hz, (3) linear regression to remove the covariate signals, including cerebrospinal fluid signal, white matter signal, and six rigid‐body motion parameters.
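The temporal preprocessing chain can be sketched with NumPy as follows. The filter here is an ideal FFT band‐pass, which may differ from the REST toolbox implementation, and applying the same detrending and filtering to the nuisance regressors before regressing them out is an assumption made to keep the regression consistent; the function names are hypothetical. With a TR of 1.7 s, the 425 s resting‐state scan corresponds to 250 volumes.

```python
import numpy as np


def detrend(x):
    """Remove a least-squares linear trend along time (axis 0)."""
    t = np.arange(x.shape[0], dtype=float)
    design = np.column_stack([np.ones_like(t), t])
    beta, *_ = np.linalg.lstsq(design, x, rcond=None)
    return x - design @ beta


def bandpass(x, tr, low=0.01, high=0.1):
    """Ideal band-pass: zero all FFT bins outside [low, high] Hz along time."""
    n = x.shape[0]
    freqs = np.fft.rfftfreq(n, d=tr)
    spectrum = np.fft.rfft(x, axis=0)
    spectrum[(freqs < low) | (freqs > high)] = 0
    return np.fft.irfft(spectrum, n=n, axis=0)


def regress_out(y, confounds):
    """Residuals of y after least-squares regression on an intercept plus confounds."""
    design = np.column_stack([np.ones(y.shape[0]), confounds])
    beta, *_ = np.linalg.lstsq(design, y, rcond=None)
    return y - design @ beta


def preprocess(y, confounds, tr=1.7):
    """Detrend, band-pass, then remove nuisance signals filtered the same way."""
    y_f = bandpass(detrend(y), tr)
    c_f = bandpass(detrend(confounds), tr)
    return regress_out(y_f, c_f)
```

Here `confounds` would hold the cerebrospinal fluid, white matter, and six motion time courses as columns of a (time points × regressors) array.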

Seed ROIs for the correlation analysis were defined as spheres of 5 mm radius, each centered on the voxel with the highest t‐score in the group searchlight SVM analysis. We defined 34 seed ROIs. Fifteen of these corresponded to main nodes of the AON showing significant classification in the searchlight analysis. Nineteen were derived from the GEN by inclusively masking the group searchlight classification results with the ROIs of the GEN. After extracting the time courses of the 34 seed ROIs, we calculated the pairwise Pearson correlation coefficients between all seed regions independently for each subject. The correlations were then Fisher z‐transformed, and significant functional connectivity was assessed by performing t‐tests on the pairwise correlations across subjects.
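A minimal NumPy sketch of the seed‐based connectivity statistics is shown below. It assumes that the seed time courses have already been averaged within each 5 mm sphere and stacked into an array of shape (subjects, time points, seeds); thresholding of the resulting t‐values is not shown, and the function name is hypothetical.

```python
import numpy as np


def seed_connectivity_t(timecourses):
    """Group t-map of Fisher-z functional connectivity.

    timecourses: array (n_subjects, n_timepoints, n_seeds) of seed-averaged
    BOLD signals. Returns an (n_seeds, n_seeds) array of one-sample t-values
    across subjects, with the diagonal set to 0.
    """
    n_subj, _, n_seeds = timecourses.shape
    z = np.empty((n_subj, n_seeds, n_seeds))
    for s in range(n_subj):
        r = np.corrcoef(timecourses[s], rowvar=False)  # seeds are columns
        np.fill_diagonal(r, 0.0)  # avoid arctanh(1) = inf on the diagonal
        z[s] = np.arctanh(r)  # Fisher z-transform
    mean = z.mean(axis=0)
    sem = z.std(axis=0, ddof=1) / np.sqrt(n_subj)
    return np.divide(mean, sem, out=np.zeros_like(mean), where=sem > 0)
```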

We also performed hierarchical clustering (Ward's method) on the final functional connectivity matrix to group the 34 seed regions into separate clusters according to their connectivity profiles. The distance between two seeds was defined as the maximum t‐score across all seed pairs minus the t‐score of their pairwise correlation. In other words, seeds with high correlations (high t‐values) have small distances, and seeds with low correlations (low t‐values) have large distances.
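The clustering step can be sketched in pure Python using the Lance–Williams update for Ward linkage on squared distances, with the distance between two seeds taken as the maximum t‐score minus their pairwise t‐score. In practice a library routine such as `scipy.cluster.hierarchy.linkage` with `method='ward'` would normally be used; note that Ward's criterion formally assumes Euclidean distances, which these t‐derived distances are not guaranteed to be. The function name is hypothetical.

```python
def ward_clusters(t, n_clusters):
    """Agglomerate seeds with Ward linkage on distance = max(t) - t.

    t: symmetric list-of-lists of pairwise connectivity t-values.
    Uses the Lance-Williams update on squared distances and stops when
    n_clusters clusters remain; returns sorted lists of seed indices.
    """
    n = len(t)
    t_max = max(t[i][j] for i in range(n) for j in range(n) if i != j)
    d2 = {(i, j): (t_max - t[i][j]) ** 2 for i in range(n) for j in range(i + 1, n)}
    members = {i: [i] for i in range(n)}
    next_id = n

    def get(a, b):
        return d2[(a, b) if a < b else (b, a)]

    while len(members) > n_clusters:
        # Merge the closest pair of current clusters.
        a, b = min(
            ((x, y) for x in members for y in members if x < y),
            key=lambda pair: get(*pair),
        )
        na, nb = len(members[a]), len(members[b])
        for k in members:
            if k in (a, b):
                continue
            nk = len(members[k])
            d2[(k, next_id)] = (
                (na + nk) * get(k, a) + (nb + nk) * get(k, b) - nk * get(a, b)
            ) / (na + nb + nk)
        members[next_id] = members.pop(a) + members.pop(b)
        next_id += 1
    return sorted(sorted(m) for m in members.values())
```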

Between‐network hubs were identified following previous work [Sporns et al., 2007]. A seed region had to fulfill two criteria to be defined as a between‐network hub: first, its number of significant connections (P < 0.00001) had to exceed the mean number of connections in the network by one standard deviation; second, its participation index had to exceed 0.3. The participation index of seed region j is defined as $P(j) = 1 - \left(\frac{K_i(j)}{K_t(j)}\right)^2 - \left(\frac{K_o(j)}{K_t(j)}\right)^2$, where $K_i(j)$ is the number of within‐network connections of seed region j, $K_o(j)$ is its number of between‐network connections, and $K_t(j) = K_i(j) + K_o(j)$ is its total number of connections.
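The two hub criteria can be sketched in Python as follows. Because K_i(j) + K_o(j) = K_t(j), the participation index simplifies to P(j) = 2x(1 − x) with x = K_i(j)/K_t(j); it therefore ranges from 0 to 0.5, and the 0.3 criterion requires roughly 18% to 82% of a seed's connections to lie within its own network. The sketch assumes that the significant connections (P < 0.00001) have already been determined and that the degree criterion uses the mean and sample standard deviation of degree across all seeds; the edge format and function names are hypothetical.

```python
import statistics


def participation_index(k_within, k_between):
    """P(j) = 1 - (K_i/K_t)^2 - (K_o/K_t)^2 with K_t = K_i + K_o."""
    k_total = k_within + k_between
    if k_total == 0:
        return 0.0
    return 1.0 - (k_within / k_total) ** 2 - (k_between / k_total) ** 2


def between_network_hubs(edges, module, p_min=0.3):
    """edges: set of frozenset({a, b}) significant connections;
    module: dict seed -> network (cluster) label.

    A hub has degree > mean + 1 SD (across all seeds) and P > p_min.
    """
    degree = {s: 0 for s in module}
    within = {s: 0 for s in module}
    for edge in edges:
        a, b = tuple(edge)
        for s, other in ((a, b), (b, a)):
            degree[s] += 1
            within[s] += module[s] == module[other]
    degrees = list(degree.values())
    cutoff = statistics.mean(degrees) + statistics.stdev(degrees)
    hubs = []
    for s in module:
        p = participation_index(within[s], degree[s] - within[s])
        if degree[s] > cutoff and p > p_min:
            hubs.append(s)
    return hubs
```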

RESULTS

Behavioral Results

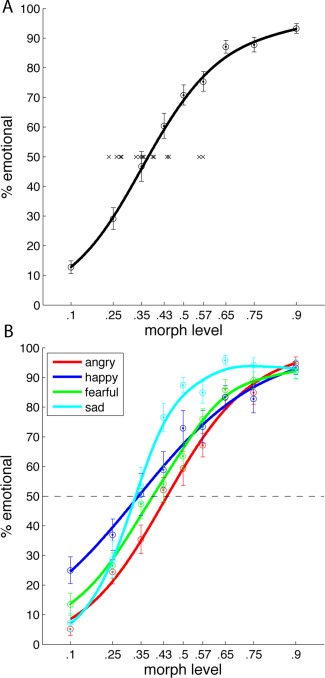

Prior to the fMRI scanning session, subjects categorized test stimuli morphed between “neutral” and “emotional” in a psychophysical session. Each trial consisted of the presentation of one test stimulus, after which the subject first answered whether the stimulus was emotional or neutral and, depending on this answer, then categorized the emotion as happy, angry, fearful, or sad. By parametrically varying the contribution of the emotional prototype to the morph, we tested categorization at nine different morph levels (Fig. 1). Figure 2A shows the average responses across subjects at the different morph levels, fitted by a sigmoid curve. This curve represents the proportion of “emotional” responses as a function of the morphing weight of the emotional prototype. Average reaction times across subjects and emotion categories ranged from 2.1 s to 3.3 s; they were slowest for the 10%, 25%, and 35% morph levels and fastest for the 90% morph level. We also fitted the response curves for each subject individually to determine the “ambiguity point” (AP), i.e., the morph level at which the subject gave neutral and emotional responses equally often. The 16 crosses in Figure 2A illustrate the individual APs. Whereas the AP for the group was at the 37% morph level, the individual APs varied considerably, from a minimum of 23% to a maximum of 57%. The individual values were subsequently used for correlation with fMRI activation.

Figure 2.

Behavioral results. A: Average “emotional” responses across subjects and across emotions at the different morph levels (± sem) fitted by a sigmoid curve. Crosses indicate the individual ambiguity points of the 16 subjects. B: Average “emotional” responses across subjects separate for each emotion at the different morph levels (±sem). [Color figure can be viewed in the online issue, which is available at http://wileyonlinelibrary.com.]

Figure 2B plots the response curves for the individual emotions. The APs varied significantly across the four emotions (1‐way repeated measures ANOVA: F (4,12) = 75, P < 0.001), with the lowest average AP for sad (33%), followed by happy (34%), fearful (39%), and angry (44%). Nevertheless, differences in APs across emotions are not very informative, as they depend on the prototypes of the four emotions used for morphing. More interesting is whether a subject who is more sensitive to angry walks than the group is also more sensitive to the other emotions than the group. Pairwise tests for positive correlations between the thresholds of the four emotions showed significant correlations for five of the six comparisons (all P < 0.05, Bonferroni corrected); only the correlation between the thresholds for fearful and sad walks was not significant (P = 0.09). Thus, subjects more sensitive to one emotion were in general also more sensitive to the other emotions, relative to the entire group.

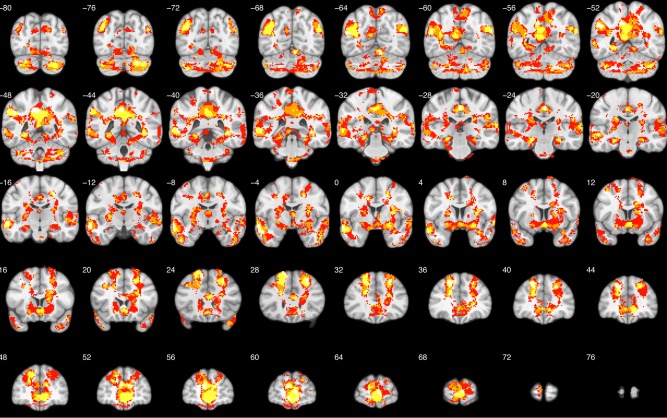

Correlation Between Brain Activation and Emotion Sensitivity

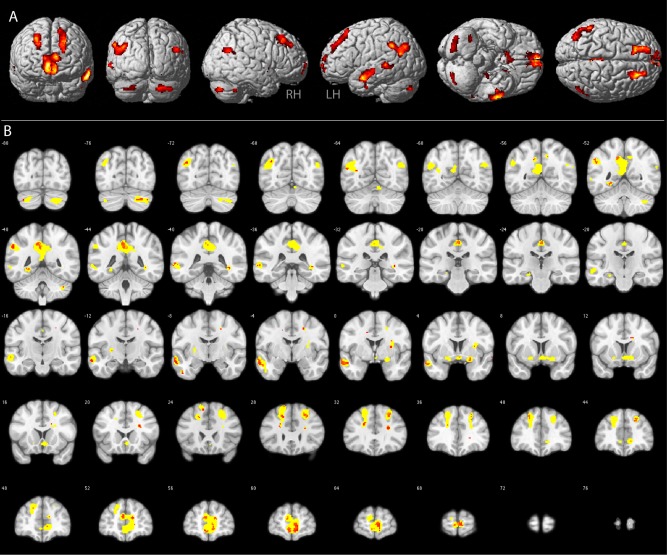

Unlike most previous studies, our aim was not to investigate where in the brain emotional stimuli lead to significantly increased activation compared to neutral stimuli. Rather, we wanted to identify brain regions in which activation correlated with individual perceptual thresholds for emotion recognition. Our reasoning was that subjects more sensitive to the emotional content might exhibit stronger fMRI activation for an emotional stimulus compared to a neutral one, whereas this difference might be smaller for subjects less sensitive to the emotional content. Having determined the ambiguity point for each subject psychophysically, we correlated brain activation for the contrast emotional versus neutral stimuli with the subjects' individual average AP (crosses in Fig. 2A). Figure 3 illustrates brain regions that were more strongly activated in the contrast emotional versus neutral for subjects with low AP values and more weakly activated in this contrast for subjects with high AP values. In other words, in these regions, the activation difference between emotional and neutral stimuli increased with the subject's sensitivity in the emotion recognition task. For illustration purposes, t‐maps in Figure 3A are thresholded at P < 0.01 uncorrected with a cluster extent threshold of 30 voxels. These correspond to the yellow voxels in Figure 3B. The red voxels in Figure 3B reach significance at P < 0.001 uncorrected. Significant correlations were observed in cortical, subcortical, and cerebellar regions (see Table 1). The most prominent correlations were present bilaterally in the temporo‐parietal junction, the precuneus, along the superior frontal sulcus, and in the medial orbitofrontal cortex, as well as in the left medial and anterior temporal lobe, the left lingual gyrus, the right parahippocampal gyrus, the right amygdala, and the right putamen.
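At each voxel, the brain–behavior correlation amounts to regressing the subjects' emotional‐versus‐neutral contrast values on their APs and testing the slope. A minimal Python sketch of this per‐voxel statistic is given below (the function name is hypothetical). Regions of the GEN correspond to negative slopes, since lower APs (higher sensitivity) go with larger contrast values.

```python
import math


def slope_t(x, y):
    """OLS slope of y on x (with intercept) and its t-statistic, n - 2 df.

    x: subjects' APs; y: their contrast values at one voxel.
    """
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxx = sum((a - mx) ** 2 for a in x)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    slope = sxy / sxx
    intercept = my - slope * mx
    sse = sum((b - intercept - slope * a) ** 2 for a, b in zip(x, y))
    se = math.sqrt(sse / (n - 2) / sxx)
    return slope, slope / se
```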

Figure 3.

GEN. Group results of the brain–behavior correlation analysis between the fMRI contrast all emotions versus neutral stimuli and the average perceptual ambiguity point determined in the behavioral experiment. Results are displayed on the rendered MNI brain template (A) and respective coronal sections (B). Yellow voxels in B: P < 0.01, red voxels in B: P < 0.001. See Table 1 for anatomical locations and respective t‐scores of the red voxels. [Color figure can be viewed in the online issue, which is available at http://wileyonlinelibrary.com.]

Table 1.

Main nodes of the emotion network

| Region | Hem | x (MNI) | y (MNI) | z (MNI) | t‐score |
|---|---|---|---|---|---|
| Post. cerebellum | R | 22 | −76 | −36 | 4.3 |
| Temporoparietal junction | L | −40 | −72 | 32 | 4.3 |
| Precuneus | R | 14 | −54 | 44 | 4.2 |
| Parahippocampal gyrus | L | −28 | −50 | −6 | 4.7 |
| Temporoparietal junction | L | −56 | −50 | 36 | 5.1 |
| Post. cingulate | L | −6 | −44 | 42 | 5.5 |
| Mid. middle temporal gyrus | L | −66 | −40 | 2 | 4.1 |
| Mid. cingulate | L | 2 | −26 | 44 | 5.0 |
| Ant. inferior temporal gyrus | L | −50 | −8 | −38 | 4.8 |
| Post. mid. frontal gyrus | R | 22 | −6 | 44 | 5.1 |
| Ant. superior temporal sulcus | L | −62 | −2 | −20 | 6.8 |
| Putamen | R | 30 | 4 | 12 | 4.6 |
| Amygdala | R | 22 | 4 | −12 | 4.3 |
| Nucleus accumbens | R | 2 | 6 | −8 | 4.0 |
| Mid. frontal gyrus | L | −18 | 26 | 42 | 4.1 |
| Post. sup. frontal gyrus | L | −16 | 28 | 60 | 4.7 |
| Ant. cingulate | R | 22 | 32 | 22 | 6.4 |
| Ant. mid. frontal gyrus | R | 26 | 32 | 46 | 5.5 |
| Ant. sup. frontal gyrus | L | −24 | 40 | 44 | 4.1 |
| Ant. sup. frontal gyrus | R | 22 | 44 | 36 | 4.2 |
| Medial prefrontal cortex | R | 14 | 54 | 14 | 4.9 |
| Medial prefrontal cortex | L | −6 | 54 | 14 | 4.8 |
| Medial prefrontal cortex | R | 8 | 62 | 0 | 5.7 |

Anatomical locations and respective t‐scores for regions showing significant brain–behavior correlations (general emotion network, red voxels in Fig. 3). Regions are ordered from posterior to anterior.

Distinct Networks for Individual Emotions?

Our correlation analysis used the individual APs averaged across emotions, as well as the average brain activation for all emotional stimuli compared to neutral stimuli. Thus, the network shown in Figure 3 might be a general network for emotion, as outlined in the introduction. On the other hand, emotion‐specific nodes, showing strong brain–behavior correlations for only one of the emotions, might be present within subparts of the GEN or even outside it. To test this hypothesis, we correlated the individual APs for each emotion with the brain activation for the same emotion versus neutral stimuli. As this procedure results in only four maps, one per emotion, we could not compare them statistically at the second level. Rather, to identify emotion‐specific nodes, we thresholded one of the maps at P < 0.001 and exclusively masked the resulting image with the other three maps thresholded at P < 0.05. Any remaining voxels would thus show strong brain–behavior correlations for one emotion, but only weak or no correlations for the other emotions.
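The exclusive‐masking rule can be written as a boolean operation on four p‐value maps. A minimal sketch, assuming each map holds voxelwise p‐values for the hypothesized correlation direction; the function name and the toy four‐voxel maps are hypothetical.

```python
import numpy as np

def emotion_specific_mask(p_maps, target, p_strict=0.001, p_lenient=0.05):
    """Voxels passing p_strict for `target` and p_lenient for no other emotion.

    p_maps: dict emotion -> array of voxelwise p-values (same shape for all).
    """
    keep = p_maps[target] < p_strict          # strong correlation for the target emotion
    for name, p in p_maps.items():
        if name != target:
            keep &= ~(p < p_lenient)          # exclusive mask: drop voxels significant elsewhere
    return keep

# Toy p-value maps (hypothetical), four voxels each
p_maps = {
    "angry":   np.array([0.0005, 0.2, 0.01, 0.5]),
    "happy":   np.array([0.0001, 0.0002, 0.3, 0.9]),
    "fearful": np.array([0.3, 0.4, 0.02, 0.6]),
    "sad":     np.array([0.2, 0.6, 0.5, 0.0009]),
}
happy_only = emotion_specific_mask(p_maps, "happy")
```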

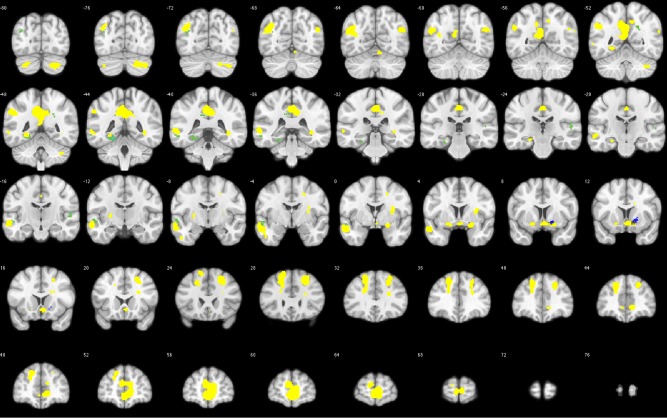

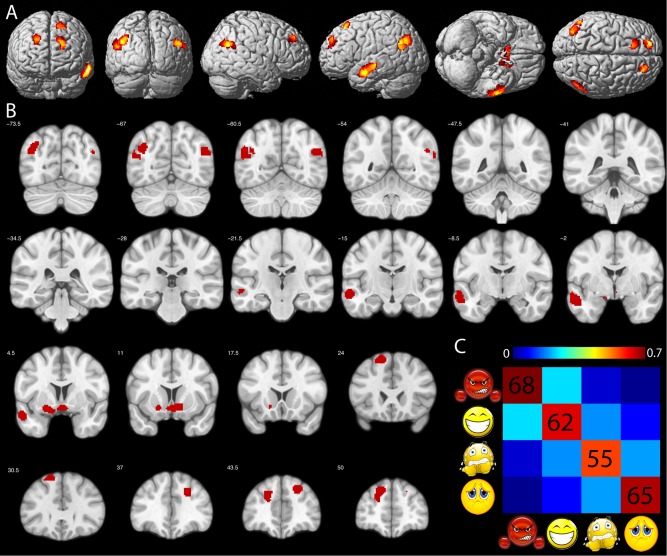

Figure 4 shows the GEN in yellow (same as Fig. 3), together with voxels whose brain–behavior correlations were stronger for happy stimuli (caudate nucleus, blue) or for sad stimuli (left parahippocampal gyrus and left medial temporal lobe, green) than for the other emotions. At a cluster extent threshold of 15 voxels, we obtained no voxels correlating predominantly with angry or fearful stimuli. This threshold was chosen because a cluster of fewer than 15 voxels seemed unlikely to play a significant role in emotion processing. This result indicates that the GEN is indeed involved in the processing of all four emotions, as none of its nodes showed a predominant correlation with only one of the emotions. At the same time, voxels correlating predominantly with happy or sad stimuli were located in close proximity to this general network, extending it somewhat.
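The 15‐voxel cluster extent criterion keeps only connected groups of surviving voxels that reach that size. A minimal sketch, assuming face (6‐)connectivity between voxels; the connectivity rule used in the study is not stated here, and fMRI packages may default to a larger neighborhood. The function name and toy mask are hypothetical.

```python
import numpy as np
from collections import deque

def cluster_extent_filter(mask, min_size=15):
    """Keep 6-connected clusters of True voxels with at least min_size voxels."""
    mask = np.asarray(mask, dtype=bool)
    visited = np.zeros_like(mask)
    out = np.zeros_like(mask)
    offsets = [(1, 0, 0), (-1, 0, 0), (0, 1, 0), (0, -1, 0), (0, 0, 1), (0, 0, -1)]
    for start in zip(*np.nonzero(mask)):
        if visited[start]:
            continue
        # Breadth-first search collects one connected cluster
        cluster, queue = [], deque([start])
        visited[start] = True
        while queue:
            v = queue.popleft()
            cluster.append(v)
            for dx, dy, dz in offsets:
                nb = (v[0] + dx, v[1] + dy, v[2] + dz)
                inside = all(0 <= nb[i] < mask.shape[i] for i in range(3))
                if inside and mask[nb] and not visited[nb]:
                    visited[nb] = True
                    queue.append(nb)
        if len(cluster) >= min_size:
            for v in cluster:
                out[v] = True
    return out

# Toy mask (hypothetical): an 18-voxel block, a single voxel, and a 4-voxel line
mask = np.zeros((10, 10, 10), dtype=bool)
mask[0:3, 0:3, 0:2] = True
mask[8, 8, 8] = True
mask[5, 5, 5:9] = True
filtered = cluster_extent_filter(mask, min_size=15)
```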

Figure 4.

Emotion‐specific voxels. Yellow voxels indicate the GEN (same as Fig. 3). Green voxels show stronger brain–behavior correlations for sad, and blue voxels show stronger brain–behavior correlations for happy compared to the other emotions. [Color figure can be viewed in the online issue, which is available at http://wileyonlinelibrary.com.]

To confirm this interpretation, we also plotted the conjunction map of all four brain–behavior correlation maps thresholded at P < 0.05 uncorrected. In Figure 5, white indicates spatial overlap for all four emotion maps. Yellow indicates spatial overlap for three of the four maps. Orange indicates spatial overlap for two of the maps, and red indicates no spatial overlap. Indeed, most of the GEN is depicted in white/yellow, illustrating that these regions show significant brain–behavior correlations for the majority of emotions tested.
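The conjunction map amounts to counting, for each voxel, how many of the four thresholded maps it survives. A minimal sketch, assuming voxelwise p‐value maps; the function name, the color lookup (mirroring the scheme described for Figure 5), and the toy maps are hypothetical.

```python
import numpy as np

def overlap_count(p_maps, p_threshold=0.05):
    """Number of emotion maps (0-4) in which each voxel passes p_threshold."""
    stacked = np.stack([np.asarray(p) < p_threshold for p in p_maps.values()])
    return stacked.sum(axis=0)

# Color scheme as described for Figure 5; count 0 is not displayed
COLOR_CODES = {4: "white", 3: "yellow", 2: "orange", 1: "red"}

# Toy p-value maps (hypothetical), three voxels each
p_maps = {
    "angry":   [0.01, 0.2, 0.01],
    "happy":   [0.01, 0.01, 0.3],
    "fearful": [0.01, 0.5, 0.02],
    "sad":     [0.04, 0.9, 0.7],
}
counts = overlap_count(p_maps)
```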

Figure 5.

Conjunction map. Conjunction of all four brain–behavior correlation maps. White indicates spatial overlap for all four maps. Yellow indicates spatial overlap for three of the four maps. Orange indicates spatial overlap for two of the maps and red indicates no spatial overlap. [Color figure can be viewed in the online issue, which is available at http://wileyonlinelibrary.com.]

Information About Individual Emotions Within the General Network?

If we assume that the general network is involved in the processing of emotional body expressions regardless of the emotion displayed, then how are the subjects able to discriminate between the emotions? In other words, does the network, or specific nodes of the network, contain information to discriminate between the four emotions?

To address this question, we performed multi‐voxel pattern analysis (MVPA) with the nodes of the network as ROIs. By design, this network was not biased toward any of the emotions, either in terms of fMRI activation or with respect to the task or the motor response: it was determined by correlating each subject's average AP across emotions with his/her fMRI activation averaged across emotions compared to neutral stimuli. Also, within each run, a specific emotion was shown at two different sizes, rendering retinal position unreliable as a means of classification. Finally, the subjects' task was to categorize the stimuli as neutral or emotional, not to discriminate between emotions, and the button‐press response was identical for all emotions.

Sixty‐five ROIs were identified from the GEN in an unsupervised manner using a watershed image segmentation algorithm (see Methods section). To investigate whether fMRI activation patterns within the ROIs contained reliable information about the specific emotion presented, we determined how well a stimulus could be classified as belonging to one of the four emotion categories. By training SVMs with 2/3 of the stimuli and subsequently applying the trained model to classify the remaining 1/3, we explicitly tested for stimulus generalization, an essential feature of categorization. Significant classification was determined by permutation testing (10,000 permutations) and results were corrected for 65 comparisons.

Results of the SVM analysis are shown in Figure 6. Highlighted are those 10 ROIs that showed significant (P < 0.05 Bonferroni corrected) classification performance averaged over the four emotions and, at the same time, significant classification performance for each of the four emotions (P < 0.05 uncorrected). Thus, fMRI activation patterns within these ROIs contained information that could reliably discriminate among all four emotions. The average classification matrix over the 10 ROIs is displayed in Figure 6C. Average correct classification along the diagonal ranged between 55% (fear) and 68% (anger), all clearly above the 25% expected by chance. The main confusions occurred between angry and happy (22%), happy and fearful (17%), and fearful and sad (19%).
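A classification matrix like that in Figure 6C reports, for each presented emotion (row), the percentage of test trials assigned to each emotion (column), so the diagonal holds correct classification. A minimal sketch, assuming integer labels 0–3 in the order angry, happy, fearful, sad (the order given for Figure 6C); the toy predictions are invented, and averaging over ROIs would be the element‐wise mean of the per‐ROI matrices.

```python
import numpy as np

EMOTIONS = ["angry", "happy", "fearful", "sad"]

def confusion_percent(y_true, y_pred, n_classes=4):
    """Rows = presented emotion, columns = predicted emotion, percent of each row."""
    counts = np.zeros((n_classes, n_classes))
    for t, p in zip(y_true, y_pred):
        counts[t, p] += 1
    return 100.0 * counts / counts.sum(axis=1, keepdims=True)

# Toy labels and predictions (hypothetical), 4 test trials per emotion
y_true = [0, 0, 0, 0, 1, 1, 1, 1, 2, 2, 2, 2, 3, 3, 3, 3]
y_pred = [0, 0, 0, 1, 1, 1, 0, 1, 2, 2, 3, 1, 3, 3, 3, 2]
m = confusion_percent(y_true, y_pred)
diagonal = dict(zip(EMOTIONS, np.diag(m)))
```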

Figure 6.

SVM classification. Colored voxels in (A) and (B) indicate ROIs of the GEN showing significant SVM classification performance. C: Average percent correct classification across the 10 ROIs highlighted in (A) and (B). Chance level = 25%. Order of conditions from left to right and top to bottom: angry, happy, fearful, and sad. [Color figure can be viewed in the online issue, which is available at http://wileyonlinelibrary.com.]

We also investigated whether some of the ROIs would contain information that could correctly classify only one emotion, but not the other three. This was tested by requiring significant (Bonferroni corrected) classification performance for one emotion and non‐significant (uncorrected) classification performance for the other three emotions. However, none of our ROIs met these criteria.
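The specificity criterion can be expressed as a simple filter over per‐ROI, per‐emotion p‐values. A minimal sketch with hypothetical names and toy values; because a Bonferroni‐corrected p is never smaller than the uncorrected one, at most one emotion per ROI can pass this rule.

```python
def emotion_specific_rois(p_corrected, p_uncorrected, alpha=0.05):
    """ROIs significant (corrected) for one emotion and non-significant
    (uncorrected) for all other emotions.

    p_corrected / p_uncorrected: dict ROI -> dict emotion -> p-value
    """
    hits = {}
    for roi, p_corr in p_corrected.items():
        for emo, p in p_corr.items():
            others = [q for e, q in p_uncorrected[roi].items() if e != emo]
            if p < alpha and all(q >= alpha for q in others):
                hits.setdefault(roi, []).append(emo)
    return hits

# Toy p-values (hypothetical) for two ROIs
p_corr = {
    "A": {"angry": 0.01, "happy": 0.3, "fearful": 0.9, "sad": 0.9},
    "B": {"angry": 0.02, "happy": 1.0, "fearful": 1.0, "sad": 1.0},
}
p_unc = {
    "A": {"angry": 0.0002, "happy": 0.004, "fearful": 0.2, "sad": 0.4},
    "B": {"angry": 0.0003, "happy": 0.3, "fearful": 0.6, "sad": 0.7},
}
specific = emotion_specific_rois(p_corr, p_unc)
```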

Taken together, the MVPA results indicated that several nodes within the GEN contain information capable of reliably discriminating all four emotions from one another, even though these ROIs showed similar brain–behavior correlations for all four emotions.

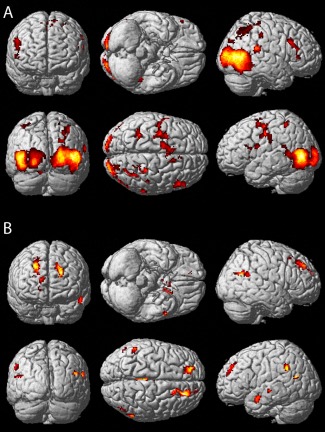

Are Regions Outside the General Emotion Network Sensitive to the Specific Emotion Presented?

To our surprise, the GEN was almost entirely composed of areas outside the classical action observation network (AON). As the stimuli presented were dynamic full body movements, we expected that the AON might process information of value for emotion categorization. To test this, we performed a whole brain searchlight analysis within each subject and investigated above chance classification at the second level (see Methods section). We also determined the AON by contrasting the average of all dynamic body stimuli with fixation baseline at the second level and thresholded the resulting t‐map at P < 0.01 uncorrected to obtain an AON mask. Subsequently, we inclusively masked the second level classification map with the AON mask to identify regions within the AON showing significant classification performance. Figure 7A illustrates brain regions of the AON showing significant (P < 0.05 familywise error (FWE) cluster level correction) above chance level classification across participants. Main clusters were observed bilaterally in the posterior inferior temporal sulcus, the middle temporal gyrus and the superior temporal sulcus, the parahippocampal gyrus, the intraparietal sulcus, the precentral sulcus, and the insula. Predominantly right‐hemispheric clusters were located in the cuneus, the fusiform gyrus, the posterior cingulate cortex, and the inferior frontal sulcus.
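A whole‐brain searchlight moves a small sphere across the brain and scores the multi‐voxel pattern inside each sphere. A minimal sketch, assuming data arranged as samples × X × Y × Z; the function names and the sphere radius are assumptions (the radius used in the study is given in the Methods section), and in practice `score_fn` would be the cross‐validated classification accuracy. The toy score below simply counts voxels per sphere to make edge effects visible.

```python
import numpy as np

def sphere_offsets(radius):
    """Integer voxel offsets within `radius` voxels of the center."""
    r = int(np.floor(radius))
    grid = np.mgrid[-r:r + 1, -r:r + 1, -r:r + 1].reshape(3, -1).T
    return grid[(grid ** 2).sum(axis=1) <= radius ** 2]

def searchlight(data, brain_mask, score_fn, radius=2.0):
    """Apply score_fn to the pattern around every voxel in brain_mask.

    data: (n_samples, X, Y, Z); score_fn maps (n_samples, n_voxels) -> float.
    Returns a map of scores at each sphere center (NaN outside the mask).
    """
    shape = brain_mask.shape
    offsets = sphere_offsets(radius)
    out = np.full(shape, np.nan)
    for center in np.argwhere(brain_mask):
        coords = center + offsets
        # Keep neighbors inside the volume and inside the brain mask
        inside = np.all((coords >= 0) & (coords < shape), axis=1)
        coords = coords[inside]
        coords = coords[brain_mask[tuple(coords.T)]]
        patterns = data[:, coords[:, 0], coords[:, 1], coords[:, 2]]
        out[tuple(center)] = score_fn(patterns)
    return out

# Toy example (hypothetical): count voxels per radius-1 sphere in a 4x4x4 volume
data = np.zeros((5, 4, 4, 4))
mask = np.ones((4, 4, 4), dtype=bool)
counts = searchlight(data, mask, lambda patterns: patterns.shape[1], radius=1.0)
```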

Figure 7.

Searchlight analysis. A: Regions of the AON with significant classification performance in the SVM searchlight analysis rendered on the MNI brain template. B: Regions of the GEN with significant classification performance. Searchlight results confirm the results of the ROI‐based classification indicated by the similarity of Figures 6A and 7B. [Color figure can be viewed in the online issue, which is available at http://wileyonlinelibrary.com.]

To investigate whether the searchlight analysis would also confirm our initial ROI classification analysis, we inclusively masked the searchlight results with the GEN thresholded at P < 0.01 uncorrected. As expected, significant voxels (P < 0.05 FWE cluster level correction) were located predominantly within the ROIs showing significant classification performance in our earlier ROI analysis, as indicated by the close correspondence of the maps shown in Figures 6A and 7B. However, whereas our ROI classification analysis indicated significant classification performance in 10 out of the 65 ROIs of the GEN, the searchlight analysis revealed 19 local maxima (Table 2). This difference might arise because, in the ROI classification analysis, all voxels of a given ROI contributed to the classification, whereas the searchlight analysis performed a separate test for each voxel, including only its immediate neighborhood.

Table 2.

Resting state connectivity between emotion network and action observation network

| ROI | Region | Hem. | X | Y | Z | AON/GEN | Network |
|---|---|---|---|---|---|---|---|
| 1 | Temporoparietal junction | L | −52 | −50 | 36 | GEN | 2 |
| 2 | Temporoparietal junction | R | 44 | −60 | 30 | GEN | 2 |
| 3 | Temporoparietal junction | L | −40 | −64 | 36 | GEN | 2 |
| 4 | Precuneus | R | 8 | −50 | 36 | GEN | 2 |
| 5 | Parahippocampal gyrus | L | −28 | −52 | −4 | GEN | 3 |
| 6 | Parahippocampal gyrus | R | 32 | −42 | −8 | GEN | 3 |
| 7 | Mid. middle temporal sulcus | L | −60 | −32 | 4 | GEN | 4 |
| 8 | Ant. superior temporal sulcus | L | −58 | −12 | −16 | GEN | 2 |
| 9 | Putamen | L | −26 | −10 | 4 | GEN | 2 |
| 10 | Ant. insula | R | 36 | 4 | 12 | GEN | 1 |
| 11 | Amygdala | R | 18 | 2 | −16 | GEN | 3 |
| 12 | Amygdala | L | −24 | −6 | −12 | GEN | 3 |
| 13 | Nucleus accumbens | L | −2 | 4 | −8 | GEN | 3 |
| 14 | Post. superior frontal gyrus | R | 20 | 20 | 50 | GEN | 2 |
| 15 | Post. superior frontal gyrus | L | −14 | 24 | 58 | GEN | 2 |
| 16 | Ant. superior frontal gyrus | L | −18 | 38 | 28 | GEN | 2 |
| 17 | Ant. superior frontal gyrus | R | 22 | 40 | 40 | GEN | 2 |
| 18 | Medial prefrontal cortex | R | 14 | 44 | −4 | GEN | 3 |
| 19 | Medial prefrontal cortex | L | −8 | 54 | 18 | GEN | 2 |
| 20 | Fusiform gyrus | R | 50 | −46 | −14 | AON | 3 |
| 21 | Fusiform gyrus | L | −36 | −54 | −14 | AON | 4 |
| 22 | Post. superior temporal sulcus | L | −46 | −48 | 10 | AON | 4 |
| 23 | Post. superior temporal sulcus | R | 40 | −50 | 8 | AON | 4 |
| 24 | Extrastriate Body Area | L | −46 | −74 | 8 | AON | 4 |
| 25 | Extrastriate Body Area | R | 48 | −74 | 8 | AON | 4 |
| 26 | Precentral sulcus | L | −26 | −6 | 46 | AON | 3 |
| 27 | Precentral sulcus | R | 30 | −12 | 48 | AON | 3 |
| 28 | Inferior frontal sulcus | R | 34 | 6 | 16 | AON | 1 |
| 29 | Supplementary motor area | R | 14 | 6 | 48 | AON | 3 |
| 30 | Ant. cingulate | R | 10 | 20 | 32 | AON | 1 |
| 31 | Ant. insula | R | 32 | 20 | 10 | AON | 1 |
| 32 | Ant. insula | L | −32 | 14 | 14 | AON | 1 |
| 33 | Inferior orbitofrontal | L | −30 | 28 | −4 | AON | 2 |
| 34 | Inferior orbitofrontal | R | 30 | 28 | −10 | AON | 3 |

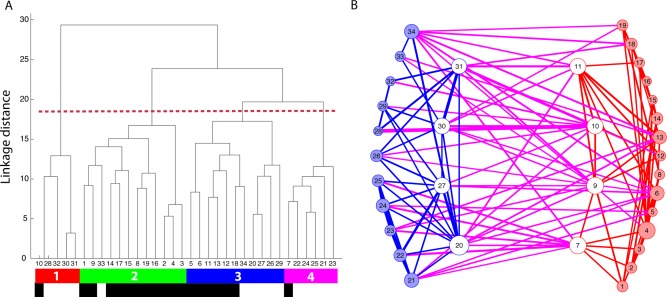

Connectivity Between the Action Observation Network and the General Emotion Network

Presumably, our dynamic body expressions were initially processed within the AON. In a final analysis step, we investigated how information about the different walking patterns might be relayed to the areas of the GEN. To this end, we tested connectivity between main nodes of the AON showing significant classification (Fig. 7A) and the local maxima within the GEN displaying significant classification (Fig. 7B) using resting‐state fMRI. We selected 15 seed regions from the AON and 19 seed regions from the GEN (see Methods section and Table 2). The centers of the seed regions were the voxels showing the highest group classification performance in the searchlight analysis.
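Seed‐to‐seed resting‐state connectivity is commonly summarized as the matrix of pairwise Pearson correlations between seed time courses, often Fisher z‐transformed. A minimal sketch, assuming preprocessed time series have already been averaged within each of the 34 seeds; the function name, the Fisher transform, and the random toy data are assumptions, not details taken from the Methods.

```python
import numpy as np

def seed_connectivity(timeseries):
    """Pairwise Pearson correlations between seeds, Fisher z-transformed.

    timeseries: (n_timepoints, n_seeds), e.g. 34 seeds (19 GEN + 15 AON).
    """
    r = np.corrcoef(timeseries, rowvar=False)
    np.fill_diagonal(r, 0.0)  # drop self-correlations (r = 1 would give infinite z)
    return np.arctanh(r)

# Toy data (hypothetical): 200 volumes, 34 seeds of independent noise
rng = np.random.default_rng(0)
ts = rng.normal(size=(200, 34))
z = seed_connectivity(ts)
```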

We assessed relative similarities among the 34 seed regions using hierarchical clustering (Fig. 8A). This analysis resulted in four main clusters. The first cluster showed partial overlap with the salience network [Seeley et al., 2007] and comprised seeds in the right anterior cingulate cortex, the right inferior frontal sulcus, and bilateral anterior insula. The second cluster contained predominantly seed regions from the GEN and showed overlap with the default mode or mentalizing network [Buckner et al., 2008]. Regions included were the medial prefrontal cortex, bilateral temporo‐parietal junction, right precuneus, and the anterior superior temporal sulcus. The third cluster was probably the most interesting one regarding information flow between regions of the AON and the GEN, as it contained six emotional seed regions and five action observation seed regions. This “mixed” network consisted of seeds in the parahippocampal gyrus (bilateral), the amygdala (bilateral), the left anterior cingulate cortex, and the right medial orbitofrontal cortex from the GEN and seeds in the right fusiform gyrus, bilateral precentral sulcus, the right supplementary motor area, and the right inferior orbitofrontal cortex from the AON. The fourth cluster contained mainly seeds from the AON, including the bilateral superior and inferior posterior temporal sulci, left fusiform gyrus, and left middle superior temporal sulcus, and showed overlap with the somatomotor and dorsal attention networks [Corbetta et al., 2008]. Similarities between the clusters and their respective resting state networks as defined by Yeo et al. [2011] are illustrated in the Supporting Information figures.
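The clustering step groups seeds whose resting‐state time courses are similar. The sketch below assumes a distance of 1 − r and average linkage; both are assumptions, as this section does not state the distance measure or linkage rule, and a library routine such as SciPy's hierarchical clustering would normally replace this hand‐written loop. The function name and toy data are hypothetical.

```python
import numpy as np

def average_linkage(dist, n_clusters):
    """Agglomerative clustering with average linkage on a distance matrix.

    Returns a list of clusters, each a sorted list of item indices.
    """
    clusters = [[i] for i in range(dist.shape[0])]
    while len(clusters) > n_clusters:
        best, pair = np.inf, None
        for a in range(len(clusters)):
            for b in range(a + 1, len(clusters)):
                # Average linkage: mean distance over all cross-cluster pairs
                d = dist[np.ix_(clusters[a], clusters[b])].mean()
                if d < best:
                    best, pair = d, (a, b)
        a, b = pair
        clusters[a] = sorted(clusters[a] + clusters[b])
        del clusters[b]  # b > a, so index a is unaffected
    return clusters

# Toy data (hypothetical): seeds 0-2 share one signal, seeds 3-5 another
rng = np.random.default_rng(0)
signals = rng.normal(size=(300, 2))
ts = np.repeat(signals, 3, axis=1) + 0.5 * rng.normal(size=(300, 6))
dist = 1.0 - np.corrcoef(ts, rowvar=False)
clusters = average_linkage(dist, n_clusters=2)
```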

Figure 8.

Resting‐state fMRI analysis. A: Results of the clustering analysis based on the pairwise correlation between seed regions from the GEN and the AON. Numbers 1–34 refer to the numbers in Table 2 and indicate the location of the seed region. We obtained four main clusters, color‐coded in red, green, blue, and pink, respectively. Black labels indicate seeds from the GEN and white labels indicate seeds from the AON. B: Illustration of significant functional connections within the AON (blue) and within the GEN (red). Significant functional connections between these two networks are shown in purple. White circles signal between‐group hubs. Numbers within each circle refer to the location of the seed region defined in Table 2. [Color figure can be viewed in the online issue, which is available at http://wileyonlinelibrary.com.]

In addition, we also assessed which seed regions showed strong connectivity between the emotion‐ and the action observation network. Such between‐group hubs were defined in a manner analogous to that of previous studies [see Methods section; Sporns et al., 2007]. Figure 8B illustrates the seed regions from the AON in blue and the GEN in red. Seeds shown with white markers fulfill the criteria for a between‐group hub. The sum of the connections is illustrated by the size of the marker. Blue lines represent connections within the AON, red lines connections within the GEN, and pink lines connections between the networks. The thickness of the lines indicates the strength of the correlation. Four seeds from the emotion network were defined as between‐group hubs: right amygdala, right insula, the left putamen, and the left middle STS. Also, four seeds from the AON fulfilled the definition of a between‐group hub: right anterior insula, right precentral sulcus, right anterior cingulate cortex, and right fusiform gyrus.
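One common way to quantify how evenly a node's connections are spread across networks is the participation coefficient, P_i = 1 − Σ_s (k_is / k_i)², where k_is is node i's connection strength to network s and k_i its total strength. The exact hub criteria of this study are given in the Methods section; the sketch below treats GEN and AON as the two networks, and its hub rule (above‐mean strength and P above 0.3) is a hypothetical illustration. Function names and the toy adjacency matrix are assumptions.

```python
import numpy as np

def participation_coefficient(adj, labels):
    """Participation coefficient of each node given a network label per node.

    adj: symmetric (n, n) weighted adjacency with zero diagonal
    labels: length-n network labels, e.g. "GEN" / "AON"
    """
    adj = np.asarray(adj, dtype=float)
    labels = np.asarray(labels)
    k = adj.sum(axis=1)
    p = np.ones_like(k)
    for s in np.unique(labels):
        k_s = adj[:, labels == s].sum(axis=1)
        p -= np.divide(k_s, k, out=np.zeros_like(k), where=k > 0) ** 2
    p[k == 0] = 0.0  # isolated nodes participate in nothing
    return p

def between_group_hubs(adj, labels, p_min=0.3):
    """Hypothetical rule: above-mean strength and connections spread across networks."""
    k = np.asarray(adj, dtype=float).sum(axis=1)
    p = participation_coefficient(adj, labels)
    return np.flatnonzero((k > k.mean()) & (p > p_min))

# Toy network (hypothetical): nodes 0 and 2 link the two networks
adj = [[0, 1, 1, 0], [1, 0, 0, 0], [1, 0, 0, 1], [0, 0, 1, 0]]
labels = ["GEN", "GEN", "AON", "AON"]
p = participation_coefficient(adj, labels)
hubs = between_group_hubs(adj, labels)
```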

DISCUSSION

This work investigated the processing of neutral and emotionally expressive (angry, happy, fearful, sad) gaits within a single functional imaging study to directly test the predictions of the basic emotion and conceptual act theories of emotion with respect to distinct or shared neural correlates of emotions. In agreement with the conceptual act theory, we obtained significant brain–behavior correlations for all four emotions within the same network. Moreover, none of the nodes of this network seemed to be preferentially involved in the processing of any single emotion. Nevertheless, multi‐voxel activity patterns within several nodes of this common network contained reliable information about the emotion category presented. Emotion category information was not limited to the localized emotion network, but was also present in several regions of the action observation network (AON). Finally, functional connectivity analysis revealed strong functional links between regions of the AON and the localized emotional network.

Two recent meta‐analyses summarizing the available literature on emotion processing arrived at opposite conclusions regarding whether emotions are associated with both consistent and discriminable regional brain activations. Vytal and Hamann [2010] argued in favor of this view, whereas Lindquist et al. [2012] argued against it. One of the main differences between the two studies was that the first performed pairwise comparisons between emotion categories (e.g., happy vs. sad, happy vs. fear, etc.), whereas the second compared activation for one emotion with the average across all other emotions (e.g., fear perception vs. perception of all other emotion categories). Our criterion for emotion specificity was that a region should show a strong brain–behavior correlation for one emotion and only weak correlations for the remaining three. In our view, such a region would qualify as having a consistent relationship with that emotion, as its activation relative to neutral stimuli would correlate with the subject's perceptual sensitivity for that emotion. It would also qualify as having a discriminable role for that emotion, as this would be the only emotion with which the region showed brain–behavior correlations. Because none of the nodes of the GEN met this criterion, our results are more compatible with the conclusions drawn by Lindquist et al. [2012].

Instead of discriminable emotion circuits, we obtained brain–behavior correlations common to all four of the emotions investigated, in a large brain network spanning cortical and subcortical areas. This result is compatible with the conceptual act theory of emotion [Barrett, 2006; Lindquist and Barrett, 2012], which would predict that (a) multiple brain regions belonging to different brain networks support the perception of a single emotion and that (b) one network supports the perception of multiple emotion categories. Our emotion network, comprising regions similar to those of the “neural reference space” for emotion [Lindquist et al., 2012], showed strong overlap with two previously defined functional networks, the default mode or mentalizing network and the salience network. The default mode network comprises regions along the anterior and posterior midline, the lateral parietal cortex, prefrontal cortex, and the medial temporal lobe and has been implicated in, among other functions, theory of mind and affective decision making [Buckner et al., 2008; Ochsner et al., 2004; Schilbach et al., 2008]. The salience network involves anterior cingulate and fronto‐insular cortices and has extensive connections with subcortical and limbic structures such as the putamen or amygdala [Seeley et al., 2007]. Alterations in functional connectivity within both networks have been associated with diseases featuring social–emotional deficits such as autism, schizophrenia, or behavioral variant frontotemporal dementia [von dem Hagen et al., 2013; Woodward et al., 2011; Zhou et al., 2010].

One of the main findings that distinguishes our work from previous studies testing the predictions of different emotion theories using meta‐analyses [Lindquist et al., 2012; Murphy et al., 2003; Phan et al., 2002; Vytal and Hamann, 2010] or analysis of resting state data [Touroutoglou et al.] is that we obtained emotion‐specific activity patterns at a finer level in several regions within the emotion network. This indicates that, despite the involvement of these regions in the processing of all emotions, individual emotions elicit distinct distributed activation patterns. These differences, however, do not manifest themselves as significant activation differences between emotions and are thus not picked up by univariate methods or meta‐analyses. It is conceivable that this finer‐grained organization relates to emotion‐specific subnetworks within the general network. Alternatively, it could indicate that the regions involved interact in distinct spatial [Tettamanti et al., 2012] or temporal [Costa et al., 2014] patterns during the perception of one emotion compared to another. This might lead to emotion‐specific activation patterns that the brain could use to categorize emotions. Such patterns, even though possibly different across individuals, could explain why we are able to interpret our perceptions or feelings despite such broad activation within distributed networks. Whereas the existence of subnetworks would be in line with basic emotion theory, qualifying as distinctive neural correlates for each emotion, the flexible interaction of regions, depending on the perceived stimulus, would be more in line with the conceptual act theory of emotion. Future studies specifically comparing the functional connectivity patterns across different emotions might help to shed light on this question.

Our results are consistent with two previous studies investigating supramodal representations of emotions using whole‐brain searchlight analyses [Kim et al., 2015; Peelen et al., 2010]. Together, these studies highlighted five brain regions (medial prefrontal cortex, posterior parietal cortex, precuneus, temporo‐parietal junction, and superior temporal sulcus (STS)) that contained information concerning several emotions, independent of presentation modality (face, body, sound, or abstract pattern). All these regions (apart from the STS) are located within our GEN and contain information sufficient to discriminate between our stimuli (Table 2). We thus believe that the network localized in the present study is not specific to body processing, but is involved in the processing of emotions in general.

We did not obtain significant brain–behavior correlations within the AON [Grafton, 2009; Rizzolatti and Craighero, 2004]. This is in agreement with a recent meta‐analysis concluding that the action observation (mirror) network and the mentalizing network are rarely activated concurrently [Van Overwalle and Baetens, 2009]. Nevertheless, several studies propose that the AON contributes to emotion perception through a mechanism termed embodied simulation [Blakemore and Decety, 2001; Gallese et al., 2004; Niedenthal et al., 2010]. Undoubtedly, identification of body posture or specific kinematics of the emotionally expressive gaits, as subserved by the AON, provides valuable information for emotion perception. Our MVPA searchlight results demonstrate that this information is available in several nodes of the AON, allowing reliable discrimination between emotion categories.

Central to interactions between regions involved in action observation and mentalizing would be areas with a high degree of connectivity between the two networks. Our resting‐state analysis identified four such hubs within the AON: the right anterior insula, the right anterior cingulate cortex, the right precentral sulcus, and the right fusiform gyrus. These findings agree with previously published imaging data investigating links between action observation and social cognition. Studies of the direct experience and observation of pain or emotion showed overlapping activations within the anterior insula and the anterior cingulate cortex [Carr et al., 2003; Singer et al., 2004]. Modulation of activity in the fusiform gyrus by observation of emotional bodies has been consistently reported [de Gelder et al., 2004; Grosbras and Paus, 2006] and was proposed to be induced by discrete projections from the amygdala [Peelen et al., 2007]. Future studies investigating connectivity in more detail may shed light on the directionality of functional connections between hubs of the action observation and the mentalizing network.

Our study differed in one other aspect from most previous functional imaging work on visual emotion perception: we investigated brain–behavior correlations instead of absolute differences in brain activation. From the recent literature investigating individual differences in emotion processing, it is apparent that correlation analyses can reveal aspects of neural function that are not detectable using standard subtraction methods [see Calder et al., 2011 for review]. The standard univariate approach tests whether neural activation in response to one condition is significantly higher than the activation associated with another. Significant correlation with a behavioral measure, however, can occur even in the absence of such a group effect. This can happen when lower and higher scores on the behavioral dimension are associated with relative reductions and increases, respectively, in the neural response to the contrast of interest, producing an overall effect that does not statistically differ from zero [Calder et al., 2011]. We are confident that correlating individual perceptual sensitivity to emotionally expressive gaits with neural activation contrasting emotional and neutral gaits provides a valid method for investigating emotion circuits in the brain. Our results, highlighting an emotion network similar to those reported in recent meta‐analyses and reviews [Barrett et al., 2007; Lindquist et al., 2012; Phan et al., 2002; Vytal and Hamann, 2010], support this view.
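The point attributed to Calder et al. [2011] can be illustrated with a toy simulation: if less sensitive subjects show a negative and more sensitive subjects a positive contrast, the group mean can be zero while the brain–behavior correlation is strong. The numbers below are invented for illustration only, and the noise is centered so that the toy group mean is exactly zero.

```python
import numpy as np

rng = np.random.default_rng(0)
n = 20
sensitivity = rng.normal(0.0, 1.0, n)  # toy behavioral scores
noise = rng.normal(0.0, 0.1, n)
noise -= noise.mean()                  # center the noise so the toy group mean is exactly zero
# Low scorers get negative, high scorers positive contrast values
contrast = 0.5 * (sensitivity - sensitivity.mean()) + noise

# Univariate group effect: one-sample t-test of the contrast against zero
t_group = contrast.mean() / (contrast.std(ddof=1) / np.sqrt(n))
# Brain-behavior effect: Pearson correlation across subjects
r = np.corrcoef(sensitivity, contrast)[0, 1]
print(f"group t = {t_group:.3f}, r = {r:.2f}")
```

A subtraction analysis would find nothing here, whereas the correlation analysis would flag the region.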

We used an explicit task, in which subjects had to respond as to whether the stimulus presented was emotional or neutral. Studies directly comparing explicit vs. implicit emotional processing reported mixed results. In one case, explicit processing elicited greater temporal activation whereas implicit processing increased activation in the amygdala [Critchley et al., 2000]. Other studies, however, reported the opposite, with stronger amygdalar and hippocampal activity during the explicit task [Gur et al., 2002; Habel et al., 2007]. We believe that our specific task requirements did not significantly affect our results, as at no point during the scanning session did the subjects have to decide which emotion was presented. They were asked only to categorize the stimuli as neutral or emotional. Therefore, our MVPA analyses, focusing only on emotional trials, were not influenced by task requirements, as the responses of the subjects were identical for all trials. For the same reason, semantic processing cannot have affected our results, as the semantic label of the stimuli was “emotion,” irrespective of whether the given stimulus was angry, happy, fearful, or sad. Nevertheless, the behavioral data showed that subjects reached almost 100% correct performance for the 90% emotional morph. Thus, they were well able to categorize the stimuli presented in the scanner. Moreover, our choice of correlation analyses instead of absolute subtraction methods makes it unlikely that unspecific task effects drove parts of the emotion network described. For this to be the case, the degree of task involvement would need to correlate with perceptual sensitivity for emotional stimuli.

Taken together, our data favor the existence of a single, common brain network supporting the visual processing of emotional stimuli. Nevertheless, several nodes within this network contain information about the category of the emotion processed at the level of multi‐voxel response patterns. Whether this finding results from emotion‐specific subnetworks within the general network, compatible with basic emotion theory, or from changes in connectivity strength specific to each emotion, compatible with the conceptual act theory of emotion, awaits further clarification. In general, neuroimaging research on emotions can only establish associations with brain activations. To gain evidence for the necessity of a certain brain region or network for emotion recognition, additional neuropsychological research is needed.

Supporting information


ACKNOWLEDGMENTS

JJ and MV designed the experiment; MAG provided material for stimulus generation; JJ generated the stimuli, acquired the data, and analyzed the functional data; YH analyzed the resting state data; JJ wrote the paper. All authors discussed the results and implications and commented on the manuscript. JJ is a postdoctoral fellow supported by FWO Vlaanderen.

REFERENCES

- Atkinson AP, Dittrich WH, Gemmell AJ, Young AW (2004): Emotion perception from dynamic and static body expressions in point‐light and full‐light displays. Perception 33:717–746.

- Barrett LF (2006): Solving the emotion paradox: Categorization and the experience of emotion. Pers Soc Psychol Rev 10:20–46.

- Barrett LF, Mesquita B, Ochsner KN, Gross JJ (2007): The experience of emotion. Annu Rev Psychol 58:373–403.

- Blair RJ, Morris JS, Frith CD, Perrett DI, Dolan RJ (1999): Dissociable neural responses to facial expressions of sadness and anger. Brain 122 (Part 5):883–893.

- Blakemore SJ, Decety J (2001): From the perception of action to the understanding of intention. Nat Rev Neurosci 2:561–567.

- Brainard DH (1997): The psychophysics toolbox. Spat Vis 10:433–436.

- Buckner RL, Andrews‐Hanna JR, Schacter DL (2008): The brain's default network: Anatomy, function, and relevance to disease. Ann NY Acad Sci 1124:1–38.

- Calder AJ, Ewbank M, Passamonti L (2011): Personality influences the neural responses to viewing facial expressions of emotion. Philos Trans R Soc Lond B Biol Sci 366:1684–1701.

- Carr L, Iacoboni M, Dubeau MC, Mazziotta JC, Lenzi GL (2003): Neural mechanisms of empathy in humans: A relay from neural systems for imitation to limbic areas. Proc Natl Acad Sci USA 100:5497–5502.

- Caspari N, Popivanov ID, De Maziere PA, Vanduffel W, Vogels R, Orban GA, Jastorff J (2014): Fine‐grained stimulus representations in body selective areas of human occipito‐temporal cortex. Neuroimage 102P2:484–497.

- Corbetta M, Patel G, Shulman GL (2008): The reorienting system of the human brain: From environment to theory of mind. Neuron 58:306–324.

- Cortes C, Vapnik V (1995): Support‐vector networks. Mach Learn 20:273–297.

- Costa T, Cauda F, Crini M, Tatu MK, Celeghin A, de Gelder B, Tamietto M (2014): Temporal and spatial neural dynamics in the perception of basic emotions from complex scenes. Soc Cogn Affect Neurosci 9:1690–1703.

- Critchley H, Daly E, Phillips M, Brammer M, Bullmore E, Williams S, Van Amelsvoort T, Robertson D, David A, Murphy D (2000): Explicit and implicit neural mechanisms for processing of social information from facial expressions: A functional magnetic resonance imaging study. Hum Brain Mapp 9:93–105.

- Dale AM (1999): Optimal experimental design for event‐related fMRI. Hum Brain Mapp 8:109–114.

- Damasio AR, Grabowski TJ, Bechara A, Damasio H, Ponto LL, Parvizi J, Hichwa RD (2000): Subcortical and cortical brain activity during the feeling of self‐generated emotions. Nat Neurosci 3:1049–1056.

- de Gelder B (2006): Towards the neurobiology of emotional body language. Nat Rev Neurosci 7:242–249.

- de Gelder B, Snyder J, Greve D, Gerard G, Hadjikhani N (2004): Fear fosters flight: A mechanism for fear contagion when perceiving emotion expressed by a whole body. Proc Natl Acad Sci USA 101:16701–16706.

- Dittrich WH, Troscianko T, Lea SE, Morgan D (1996): Perception of emotion from dynamic point‐light displays represented in dance. Perception 25:727–738.

- Ekman P (1992): An argument for basic emotions. Cogn Emot 6:169–200.

- Gallese V, Keysers C, Rizzolatti G (2004): A unifying view of the basis of social cognition. Trends Cogn Sci 8:396–403.

- Giese MA, Poggio T (2000): Morphable models for the analysis and synthesis of complex motion patterns. Int J Comput Vis 38:59–73.

- Grafton ST (2009): Embodied cognition and the simulation of action to understand others. Ann NY Acad Sci 1156:97–117.

- Grosbras MH, Paus T (2006): Brain networks involved in viewing angry hands or faces. Cereb Cortex 16:1087–1096.

- Gur RC, Schroeder L, Turner T, McGrath C, Chan RM, Turetsky BI, Alsop D, Maldjian J, Gur RE (2002): Brain activation during facial emotion processing. Neuroimage 16:651–662.

- Habel U, Windischberger C, Derntl B, Robinson S, Kryspin‐Exner I, Gur RC, Moser E (2007): Amygdala activation and facial expressions: Explicit emotion discrimination versus implicit emotion processing. Neuropsychologia 45:2369–2377.

- Jastorff J, Kourtzi Z, Giese MA (2006): Learning to discriminate complex movements: Biological versus artificial trajectories. J Vis 6:791–804.

- Jastorff J, Kourtzi Z, Giese MA (2009): Visual learning shapes the processing of complex movement stimuli in the human brain. J Neurosci 29:14026–14038.

- Kim J, Schultz J, Rohe T, Wallraven C, Lee SW, Bülthoff HH (2015): Abstract representations of associated emotions in the human brain. J Neurosci 35:5655–5663.

- Kriegeskorte N, Goebel R, Bandettini P (2006): Information‐based functional brain mapping. Proc Natl Acad Sci USA 103:3863–3868.

- Lindquist KA, Barrett LF (2012): A functional architecture of the human brain: Emerging insights from the science of emotion. Trends Cogn Sci 16:533–540.

- Lindquist KA, Wager TD, Kober H, Bliss‐Moreau E, Barrett LF (2012): The brain basis of emotion: A meta‐analytic review. Behav Brain Sci 35:121–143.

- Meyer F (1991): Un algorithme optimal pour la ligne de partage des eaux [An optimal algorithm for the watershed line]. In: 8me congrès de reconnaissance des formes et intelligence artificielle, Lyon, France. pp 847–857.

- Morris JS, Frith CD, Perrett DI, Rowland D, Young AW, Calder AJ, Dolan RJ (1996): A differential neural response in the human amygdala to fearful and happy facial expressions. Nature 383:812–815.

- Murphy FC, Nimmo‐Smith I, Lawrence AD (2003): Functional neuroanatomy of emotions: A meta‐analysis. Cogn Affect Behav Neurosci 3:207–233.

- Niedenthal PM, Mermillod M, Maringer M, Hess U (2010): The Simulation of Smiles (SIMS) model: Embodied simulation and the meaning of facial expression. Behav Brain Sci 33:417–433; discussion 433‐80.

- Ochsner KN, Knierim K, Ludlow DH, Hanelin J, Ramachandran T, Glover G, Mackey SC (2004): Reflecting upon feelings: An fMRI study of neural systems supporting the attribution of emotion to self and other. J Cogn Neurosci 16:1746–1772.

- Peelen MV, Atkinson AP, Andersson F, Vuilleumier P (2007): Emotional modulation of body‐selective visual areas. Soc Cogn Affect Neurosci 2:274–283.

- Peelen MV, Atkinson AP, Vuilleumier P (2010): Supramodal representations of perceived emotions in the human brain. J Neurosci 30:10127–10134.

- Phan KL, Wager T, Taylor SF, Liberzon I (2002): Functional neuroanatomy of emotion: A meta‐analysis of emotion activation studies in PET and fMRI. Neuroimage 16:331–348.

- Phillips ML, Young AW, Senior C, Brammer M, Andrew C, Calder AJ, Bullmore ET, Perrett DI, Rowland D, Williams SC, Gray JA, David AS (1997): A specific neural substrate for perceiving facial expressions of disgust. Nature 389:495–498.

- Phillips ML, Bullmore ET, Howard R, Woodruff PW, Wright IC, Williams SC, Simmons A, Andrew C, Brammer M, David AS (1998): Investigation of facial recognition memory and happy and sad facial expression perception: An fMRI study. Psychiatry Res 83:127–138.

- Pollick FE, Paterson HM, Bruderlin A, Sanford AJ (2001): Perceiving affect from arm movement. Cognition 82:B51–B61.

- Rizzolatti G, Craighero L (2004): The mirror‐neuron system. Annu Rev Neurosci 27:169–192.

- Roether CL, Omlor L, Christensen A, Giese MA (2009): Critical features for the perception of emotion from gait. J Vis 9:32–31.

- Schilbach L, Eickhoff SB, Rotarska‐Jagiela A, Fink GR, Vogeley K (2008): Minds at rest? Social cognition as the default mode of cognizing and its putative relationship to the “default system” of the brain. Conscious Cogn 17:457–467.

- Seeley WW, Menon V, Schatzberg AF, Keller J, Glover GH, Kenna H, Reiss AL, Greicius MD (2007): Dissociable intrinsic connectivity networks for salience processing and executive control. J Neurosci 27:2349–2356.

- Singer T, Seymour B, O'Doherty J, Kaube H, Dolan RJ, Frith CD (2004): Empathy for pain involves the affective but not sensory components of pain. Science 303:1157–1162.

- Sporns O, Honey CJ, Kötter R (2007): Identification and classification of hubs in brain networks. PLoS One 2:e1049.

- Tettamanti M, Rognoni E, Cafiero R, Costa T, Galati D, Perani D (2012): Distinct pathways of neural coupling for different basic emotions. Neuroimage 59:1804–1817.

- Touroutoglou A, Lindquist KA, Dickerson BC, Barrett LF (2015): Intrinsic connectivity in the human brain does not reveal networks for ‘basic’ emotions. Soc Cogn Affect Neurosci, Epub ahead of print 12 Feb 2015, pii: nsv013.

- Tracy JL, Randles D (2011): Four models of basic emotions: A review of Ekman and Cordaro, Izard, Levenson, and Panksepp and Watt. Emot Rev 3:397–405.

- van der Gaag C, Minderaa RB, Keysers C (2007): The BOLD signal in the amygdala does not differentiate between dynamic facial expressions. Soc Cogn Affect Neurosci 2:93–103.

- Van Overwalle F, Baetens K (2009): Understanding others' actions and goals by mirror and mentalizing systems: A meta‐analysis. Neuroimage 48:564–584.

- von dem Hagen EA, Stoyanova RS, Baron‐Cohen S, Calder AJ (2013): Reduced functional connectivity within and between ‘social’ resting state networks in autism spectrum conditions. Soc Cogn Affect Neurosci 8:694–701.

- Vytal K, Hamann S (2010): Neuroimaging support for discrete neural correlates of basic emotions: A voxel‐based meta‐analysis. J Cogn Neurosci 22:2864–2885.

- Woodward ND, Rogers B, Heckers S (2011): Functional resting‐state networks are differentially affected in schizophrenia. Schizophr Res 130:86–93.

- Yeo BT, Krienen FM, Sepulcre J, Sabuncu MR, Lashkari D, Hollinshead M, Roffman JL, Smoller JW, Zöllei L, Polimeni JR, Fischl B, Liu H, Buckner RL (2011): The organization of the human cerebral cortex estimated by intrinsic functional connectivity. J Neurophysiol 106:1125–1165.

- Zhou J, Greicius MD, Gennatas ED, Growdon ME, Jang JY, Rabinovici GD, Kramer JH, Weiner M, Miller BL, Seeley WW (2010): Divergent network connectivity changes in behavioural variant frontotemporal dementia and Alzheimer's disease. Brain 133:1352–1367.

Supplementary Materials

Supporting Information