Abstract

The orienting of attention to the spatial location of sensory stimuli in one modality based on sensory stimuli presented in another modality (i.e., cross‐modal orienting) is a common mechanism for controlling attentional shifts. The neuronal mechanisms of top‐down cross‐modal orienting have been studied extensively. However, the neuronal substrates of bottom‐up audio‐visual cross‐modal spatial orienting remain to be elucidated. Therefore, behavioral and event‐related functional magnetic resonance imaging (FMRI) data were collected while healthy volunteers (N = 26) performed a spatial cross‐modal localization task modeled after the Posner cuing paradigm. Behavioral results indicated that although both visual and auditory cues were effective in producing bottom‐up shifts of cross‐modal spatial attention, reorienting effects were greater for the visual cues condition. Statistically significant evidence of inhibition of return was not observed for either condition. Functional results also indicated that visual cues with auditory targets resulted in greater activation within ventral and dorsal frontoparietal attention networks, visual and auditory “where” streams, primary auditory cortex, and thalamus during reorienting across both short and long stimulus onset asynchronies. In contrast, no areas of unique activation were associated with reorienting following auditory cues with visual targets. In summary, current results question whether audio‐visual cross‐modal orienting is supramodal in nature, suggesting instead that the modality in which the cue is presented heavily influences both behavioral and functional results. In the context of localization tasks, reorienting effects accompanied by activation of the frontoparietal reorienting network are more robust for visual cues with auditory targets than for auditory cues with visual targets. Hum Brain Mapp 35:964–974, 2014. © 2013 Wiley Periodicals, Inc.

Keywords: cross‐modal, bottom‐up, attention, functional magnetic resonance imaging, vision, audition

INTRODUCTION

Humans derive most of their information about the external environment through the auditory and visual senses. However, the auditory and visual modalities are associated with very different spatial maps. Specifically, the localization of auditory information depends on the synthesis of interaural time differences and interaural level differences, which converge across frequency channels to create a coarse but spatially complete (i.e., 360°) map of auditory space (King, 2009; Spierer et al., 2009). In contrast, the point‐to‐point (retinotopic) mapping of the retina onto visual cortex results in a detailed map of extrapersonal space whose resolution is highest at the fovea and degrades with increasing eccentricity. Despite these differences, responding to sensory stimuli in one modality based on information presented in another modality (i.e., cross‐modal orienting) is a common mechanism for controlling the focus of attention, and it occurs through both bottom‐up (i.e., exogenous) and top‐down (i.e., endogenous) attentional mechanisms (Wright and Ward, 2008; Lupiáñez, 2010; Spence, 2010). Although the neuronal mechanisms of top‐down shifts of cross‐modal attention have been documented using electrophysiological (Martin‐Loeches et al., 1997; Eimer and Driver, 2001; Eimer and Van Velzen, 2002; Eimer et al., 2003; Green et al., 2005; Green and McDonald, 2006) and hemodynamic (Macaluso et al., 2002; Macaluso et al., 2003; Shomstein and Yantis, 2004; Santangelo et al., 2008a; Krumbholz et al., 2009; Smith et al., 2010) techniques, the neuronal mechanisms of bottom‐up cross‐modal attention have been examined primarily with electrophysiological techniques (McDonald and Ward, 2000; McDonald et al., 2001) or in studies of tactile and visual stimulation (Macaluso et al., 2000). Consequently, a detailed hemodynamic examination of the neuronal substrates of bottom‐up audio‐visual spatial orienting has not yet been conducted.

During bottom‐up orienting paradigms, a cue, such as a luminance change (visual) or a sudden sound (auditory), is briefly presented in either the left or right hemifield and correctly (i.e., valid trials) or incorrectly (i.e., invalid trials) indicates the location of a subsequent target; because cue validity is at chance, the cue does not predict target location. During both auditory and visual intramodal cueing paradigms, validly cued trials usually result in faster response times than invalid trials (i.e., facilitation) at stimulus onset asynchronies (SOAs) of 250 ms or less (Mueller and Rabbitt, 1989). In contrast, at longer cue‐target intervals (400–3,000 ms), response times become faster for invalidly cued trials, a pattern operationally defined as inhibition of return (IOR; Posner et al., 1985; Klein, 2000). Although there is convincing evidence of cross‐modal facilitation during bottom‐up orienting (Spence et al., 2004; Wright and Ward, 2008; Spence, 2010), IOR effects are typically much less robust in cross‐modal cue‐target paradigms (Spence and Driver, 1997; Schmitt et al., 2000; Ward et al., 2000; Santangelo et al., 2006; Mazza et al., 2007).

The reorienting of auditory and visual attention is heavily influenced by experimental manipulations: auditory but not visual cues produce cross‐modal shifts of attention during speeded suprathreshold detection and orthogonal spatial‐cuing tasks (Spence and Driver, 1997; Schmitt et al., 2000; Mazza et al., 2007). In contrast, visual cues are typically more effective in engendering cross‐modal attention shifts during speeded implicit spatial discrimination or localization tasks (Ward, 1994; Ward et al., 1998; Schmitt et al., 2000; Ward et al., 2000). Finally, other research suggests that spatial congruence between the cue and target strongly influences visual (Prime et al., 2008) but not auditory (Santangelo and Spence, 2007; Santangelo et al., 2008b; Koelewijn et al., 2009) cross‐modal cueing.

Intramodal shifts of auditory and visual attention have been associated with activation of similar dorsal and ventral frontal and parietal regions (Mayer et al., 2004, 2006, 2007), and some authors suggest that bottom‐up orienting is more closely associated with activation of a right‐lateralized ventral fronto‐parietal network whose core regions are the ventral frontal cortex (VFC) and temporoparietal junction (TPJ) (Corbetta and Shulman, 2002; Corbetta et al., 2008). Several electrophysiological studies have examined bottom‐up audio‐visual facilitation effects during speeded implicit spatial discrimination (McDonald and Ward, 2000), speeded frequency discrimination (McDonald et al., 2001), simple detection (McDonald et al., 2003), and perceptual quality judgment (McDonald et al., 2005; Stormer et al., 2009) tasks. The behavioral facilitation observed on cued versus uncued trials was mirrored by a negative shift in event‐related potentials (ERPs) on cued trials, indexed by a negative difference (Nd) wave (valid minus invalid). These studies generally suggest that facilitation effects are observed in the primary sensory cortices of the target modality, with supramodal fronto‐parietal control areas mediating cross‐modal attentional shifts.

To date, no functional magnetic resonance imaging (FMRI) study has directly examined the neuronal mechanisms of bottom‐up shifts of attention across the auditory and visual modalities. Other FMRI studies have used conflicting or congruent audio‐visual cues during orthogonal spatial cueing tasks to determine the effects of a task‐irrelevant, nonpredictive auditory cue on top‐down visual orienting (Santangelo et al., 2008a). Whereas task‐irrelevant, nonpredictive visual cues do not affect top‐down visual attention (Berger et al., 2005), task‐irrelevant auditory cues both interact with the voluntary deployment of visual spatial attention and modulate activity within the fronto‐parietal attention network (Santangelo et al., 2008a). Therefore, regardless of whether the paradigm is intramodal or cross‐modal, the fronto‐parietal network appears to be activated during bottom‐up attention shifts and to serve as the supramodal mechanism of attentional control (Wright and Ward, 2008).

The current study used event‐related FMRI to investigate the neuronal correlates of bottom‐up audio‐visual orienting during a spatial localization paradigm. Based on previous behavioral studies (Ward, 1994; Ward et al., 1998; Schmitt et al., 2000; Ward et al., 2000), we predicted that visual cues would produce larger cross‐modal shifts of attention than auditory cues during a localization task, resulting in increased activation within the fronto‐parietal attention network during invalid trials. In addition, we predicted that IOR (faster response times for invalid trials) would be weak or absent in both conditions, resulting in no IOR‐related differences in activation between valid and invalid trials in any brain region.

METHODS

Participants

Thirty adult volunteers completed the study. Of the 30 participants, two were identified as outliers due to excessive head motion (above three standard deviations on more than two motion parameters), and another two were identified as outliers due to poor behavioral performance (accuracy below chance or reaction time (RT) above three standard deviations in more than two conditions). These participants were excluded from the final analysis, leaving a final sample of 26 participants (15 males; mean age = 27.7 ± 6.0 years; Edinburgh Handedness Inventory score = 97.0 ± 8.4%). None of the participants were taking psychoactive prescription medications or reported a history of major neurological, psychiatric, or substance abuse disorders. Informed consent was obtained from all participants according to institutional guidelines at the University of New Mexico.
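The head‐motion exclusion rule above can be sketched as a group z‐score check. This is a minimal illustration under stated assumptions, not the authors' pipeline: each participant is assumed to be summarized by one value per rigid‐body motion parameter (for example, mean absolute displacement), z‐scores are assumed to be computed against the full sample, and the function name `flag_motion_outliers` is hypothetical.

```python
import numpy as np


def flag_motion_outliers(motion_summary, z_cut=3.0, max_extreme=2):
    """Return a boolean mask of participants to exclude for head motion.

    motion_summary: (n_participants, 6) array with one summary value per
    rigid-body parameter (hypothetically, mean absolute displacement).
    A participant is flagged when more than `max_extreme` parameters lie
    more than `z_cut` group standard deviations above the group mean.
    """
    motion_summary = np.asarray(motion_summary, dtype=float)
    mean = motion_summary.mean(axis=0)
    sd = motion_summary.std(axis=0, ddof=1)
    z = (motion_summary - mean) / sd
    n_extreme = (z > z_cut).sum(axis=1)  # number of extreme parameters per participant
    return n_extreme > max_extreme
```

The same "more than two extreme values" logic would carry over to the RT criterion by replacing the six motion parameters with per‐condition RTs.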

Procedure

Participants performed two event‐related cross‐modal spatial attention tasks while undergoing FMRI. Participants lay supine in the scanner with their heads secured by chin and forehead straps and foam padding to limit head motion within the head coil. One task used auditory spatial cues followed by visual targets [hereafter referred to as auditory cues with visual targets (ACVT)], whereas the other used visual spatial cues followed by auditory targets [hereafter referred to as visual cues with auditory targets (VCAT)]. For both tasks, the basic visual display consisted of a black background with two white boxes (visual eccentricity = 5.15°) flanking a central fixation box (visual eccentricity = 0.71°). Visual stimuli were rear‐projected using a Sharp XG‐C50X LCD projector onto an opaque white Plexiglas projection screen. Auditory stimuli were delivered to headphones through an Avotec SS‐3100 system (see Fig. 1A for the basic experimental setting and approximate stimulus angles). Presentation software was used to control stimulus presentation, synchronize stimulus events with the MRI scanner, and collect accuracy and RT data for offline analyses.

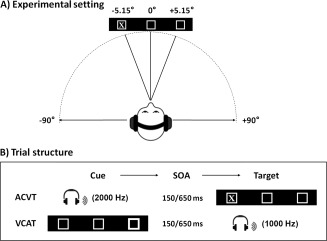

Figure 1.

This figure presents a cartoon representation of the basic experimental setting (A) as well as a representation of the trial structure (B) for the two cross‐modal orienting tasks: auditory cues with visual targets (ACVT) and visual cues with auditory targets (VCAT). The basic visual display consisted of a black background with two white boxes flanking a central fixation box. Auditory stimuli were delivered to the headphones at approximately 90° from the central meridian, whereas visual stimuli were delivered at a visual eccentricity of 5.15°. The stimulus onset asynchrony (SOA) between cues and targets was 150 or 650 ms.

Four ACVT and four VCAT imaging series were collected. Task order was counterbalanced, with participants randomly assigned to a group that determined whether the ACVT or the VCAT condition was presented first. Spatial orienting trials (320 trials; 76% of total trials) consisted of a 100 ms cue followed by a target at a pseudo‐random SOA of either 150 or 650 ms. The inter‐trial interval was randomly varied between 4 and 10 s to permit modeling of the hemodynamic response (Burock et al., 1998) and to minimize the likelihood of nonlinear summation of hemodynamic responses (Glover, 1999). In ACVT (Fig. 1B), cues consisted of a unilaterally presented tone pip (2,000 Hz pure tone with a 10 ms linear rise and fall) delivered to one side of the headphones, whereas targets consisted of the letter X appearing in one of the two flanking boxes for 100 ms. In VCAT (Fig. 1B), cues consisted of a shape change (i.e., increased line width of the box) in either of the flanking boxes, and targets consisted of a monaural tone pip (1,000 Hz pure tone of 100 ms duration with a 10 ms linear rise and fall) delivered to one side of the headphones. For both the ACVT and VCAT spatial orienting trials, cues correctly predicted target location (valid trials) on 50% of the trials and incorrectly predicted it (invalid trials) on the remaining 50%.
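The trial structure described above can be expressed as a small sequence generator. This is a hypothetical sketch: the article does not specify the randomization scheme, so the sketch assumes full crossing of cue side × validity × SOA within a run, a simple shuffle, and a uniform 4–10 s inter‐trial interval; `make_run`, `Trial`, and the per‐run trial counts are illustrative names and values, not the authors' code.

```python
import random
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Trial:
    kind: str                # "spatial" or "catch"
    cue_side: Optional[str]  # "left"/"right"; None for catch trials (no cue)
    target_side: str         # "left"/"right"; "center" for catch trials
    soa_ms: Optional[int]    # 150 or 650; None for catch trials
    iti_s: float             # jittered inter-trial interval (s)


def make_run(n_spatial: int, n_catch: int, seed: int = 0) -> List[Trial]:
    """Hypothetical run generator: cue side x validity x SOA fully crossed."""
    rng = random.Random(seed)
    cells = [(side, valid, soa)
             for side in ("left", "right")
             for valid in (True, False)
             for soa in (150, 650)]
    if n_spatial % len(cells) != 0:
        raise ValueError("n_spatial must be a multiple of 8 for full balance")
    trials = []
    for side, valid, soa in cells * (n_spatial // len(cells)):
        opposite = "right" if side == "left" else "left"
        target = side if valid else opposite  # 50% valid by construction
        trials.append(Trial("spatial", side, target, soa, 0.0))
    trials += [Trial("catch", None, "center", None, 0.0) for _ in range(n_catch)]
    rng.shuffle(trials)
    for trial in trials:
        trial.iti_s = rng.uniform(4.0, 10.0)  # jitter aids HRF deconvolution
    return trials
```

With the reported totals, 320 of 420 trials (about 76%) were spatial orienting trials and 100 (about 24%) were catch trials, matching the stated percentages.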

In addition to the spatial orienting trials, catch trials (100 trials; 24% of total trials) were presented in both conditions as a sensory‐motor control. In ACVT, the letter X was presented in the central fixation box without any cue, and participants were asked to press both buttons. In VCAT, a binaural 1,000 Hz tone was presented as a central target, and participants were asked to press both buttons. Catch trial analyses will be presented in a separate publication. On spatial orienting trials, participants were required to respond to the spatial location of the target by pressing a button with either the index finger (left target) or the middle finger (right target) of the right hand as quickly and as accurately as possible. A brief behavioral practice session was administered before scanning to ensure that participants understood the task.

FMRI

FMRI data were acquired on a 1.5 T Siemens scanner. At the beginning of the scanning session, a high‐resolution T1‐weighted scan [echo time (TE) = 4.76 ms, repetition time (TR) = 12 ms, 20° flip angle, number of excitations = 1, slice thickness = 1.5 mm, field of view (FOV) = 256 mm, resolution = 256 × 256] was acquired. Echo‐planar images were collected using a single‐shot, gradient‐echo, echo‐planar pulse sequence [TE = 36 ms, TR = 2,000 ms, 90° flip angle, slice thickness = 5 mm, FOV = 256 mm, matrix size = 64 × 64]. Twenty‐eight sagittal slices (acquired in interleaved order) provided coverage of the entire brain (voxel size: 4 × 4 × 5 mm³). For each of the eight imaging series, 190 sequential echo‐planar images were collected. In addition to dummy scans, the first image of each run was discarded to account for T1 equilibrium effects, leaving a total of 1,512 images for the final analyses.
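The reported acquisition parameters are internally consistent, which a few lines of arithmetic confirm. The sketch assumes that the 190 images per run already exclude the dummy scans, as the reported total of 1,512 images implies.

```python
# Acquisition parameters reported above.
TR_S = 2.0              # EPI repetition time (s)
FOV_MM = 256            # field of view (mm)
MATRIX = 64             # in-plane matrix size
SLICE_MM = 5            # slice thickness (mm)
N_RUNS = 8              # four ACVT + four VCAT runs
IMAGES_PER_RUN = 190    # assumed to exclude the dummy scans
DROPPED_PER_RUN = 1     # first image of each run discarded

in_plane_mm = FOV_MM / MATRIX                               # 4.0 mm
voxel_mm3 = in_plane_mm * in_plane_mm * SLICE_MM            # 80.0 mm^3
run_duration_s = IMAGES_PER_RUN * TR_S                      # 380.0 s per run
total_images = N_RUNS * (IMAGES_PER_RUN - DROPPED_PER_RUN)  # 1,512 images

print(in_plane_mm, voxel_mm3, run_duration_s, total_images)
```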

Image Processing and Statistical Analyses

Functional images were generated using the Analysis of Functional NeuroImages (AFNI) software package (Cox, 1996). Time‐series images were spatially registered to the second echo‐planar image of the first run in both two‐dimensional (2D) and 3D space to minimize the effects of head motion, temporally interpolated to correct for slice‐timing differences, and despiked. These images were then resampled to 3 mm³ voxels, converted to a standard stereotaxic coordinate space (Talairach and Tournoux, 1988), and blurred using a 6 mm full‐width at half‐maximum Gaussian kernel. A deconvolution analysis was performed on a voxel‐wise basis to generate one hemodynamic response function (HRF) for each condition of interest. Because the sluggish blood oxygen level dependent (BOLD) response makes it difficult to model cue‐ and target‐related activation separately at typical SOAs, the entire trial (cue plus target) was modeled with a single regressor for each trial type (e.g., valid and invalid trials) in the current experiment. The six rigid‐body motion parameters were entered as regressors of no interest to reduce the impact of head motion on patterns of functional activation in this event‐related design (Johnstone et al., 2006). Each HRF was derived relative to the baseline state (visual fixation plus baseline gradient noise) and based on the first 16 s after stimulus onset. The images acquired 4.0–8.0 s after stimulus onset, corresponding to the peak of the HRF (Cohen, 1997), were then averaged and divided by the baseline to obtain an estimate of percent signal change (PSC).
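The deconvolution and PSC steps can be illustrated for a single voxel with a finite impulse response (FIR) model fit by ordinary least squares. This is a simplified sketch, not AFNI's implementation: it assumes event onsets fall on the TR grid, models the baseline as a constant plus a linear drift, uses one unconstrained FIR regressor per 2 s lag from 0 to 16 s, and interprets the 4.0–8.0 s window as the lags at 4, 6, and 8 s. The function names `fir_design` and `estimate_psc` are hypothetical.

```python
import numpy as np

TR = 2.0  # repetition time in seconds, as reported for the EPI runs


def fir_design(onsets_tr, n_vols, n_lags):
    """FIR regressors: column `lag` is 1 at volume (onset + lag) for each event."""
    X = np.zeros((n_vols, n_lags))
    for onset in onsets_tr:
        for lag in range(n_lags):
            if onset + lag < n_vols:
                X[onset + lag, lag] += 1.0
    return X


def estimate_psc(y, onsets_by_cond, motion, window_s=(4.0, 8.0), hrf_len_s=16.0):
    """Fit FIR HRFs per condition and return percent signal change per condition.

    y: (n_vols,) voxel time series; onsets_by_cond: {condition: onsets in TR units};
    motion: (n_vols, 6) rigid-body motion parameters used as nuisance regressors.
    """
    n_vols = len(y)
    n_lags = int(hrf_len_s / TR) + 1           # lags at 0, 2, ..., 16 s
    drift = np.linspace(-1.0, 1.0, n_vols)     # centred, so the intercept is the mid-run baseline
    nuisance = np.column_stack([np.ones(n_vols), drift, motion])
    conds = list(onsets_by_cond)
    X = np.hstack([nuisance] + [fir_design(onsets_by_cond[c], n_vols, n_lags) for c in conds])
    beta, *_ = np.linalg.lstsq(X, y, rcond=None)

    baseline = beta[0]
    lag_times = np.arange(n_lags) * TR
    in_window = (lag_times >= window_s[0]) & (lag_times <= window_s[1])
    psc = {}
    for i, cond in enumerate(conds):
        start = nuisance.shape[1] + i * n_lags
        hrf = beta[start:start + n_lags]       # estimated response at each lag
        psc[cond] = 100.0 * hrf[in_window].mean() / baseline
    return psc
```

Because the intercept is estimated jointly with the drift and motion regressors, dividing the windowed HRF amplitude by it expresses the response relative to the fitted baseline; multiplying by 100 converts the ratio to percent.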

For each run type (i.e., ACVT and VCAT), a voxel‐wise 2 × 2 (Validity × SOA) repeated‐measures ANOVA was then performed on the spatially normalized PSC data. For all voxel‐wise analyses, a voxel‐wise threshold of P < 0.005 was combined with a minimum cluster size of 1.280 ml (16 native voxels) to reduce false positives, yielding a corrected P value of 0.05 based on 10,000 Monte Carlo simulations (Forman et al., 1995).
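The cluster‐size correction can be sketched as a Monte Carlo simulation in the spirit of Forman et al. (1995): generate smoothed Gaussian noise volumes, threshold each at the voxel‐wise P value, record the largest surviving cluster, and choose the smallest cluster size that occurs by chance in no more than 5% of simulations. This is an illustrative sketch, not the software used in the study; the grid size, smoothness expressed in voxels, face connectivity, two‐sided thresholding, and iteration count are assumptions, and `cluster_size_threshold` is a hypothetical name.

```python
import numpy as np
from scipy import ndimage, stats


def cluster_size_threshold(shape=(40, 48, 40), fwhm_vox=2.0, p_voxel=0.005,
                           alpha=0.05, n_iter=1000, seed=0):
    """Smallest cluster size (voxels) whose chance of appearing anywhere in a
    pure-noise volume is <= alpha, given the voxel-wise threshold p_voxel."""
    rng = np.random.default_rng(seed)
    sigma = fwhm_vox / (2.0 * np.sqrt(2.0 * np.log(2.0)))  # convert FWHM to Gaussian sigma
    z_thr = stats.norm.isf(p_voxel / 2.0)                  # two-sided voxel-wise threshold
    max_sizes = np.zeros(n_iter, dtype=int)
    for i in range(n_iter):
        field = ndimage.gaussian_filter(rng.standard_normal(shape), sigma)
        field = (field - field.mean()) / field.std()       # restore unit variance after smoothing
        for mask in (field > z_thr, field < -z_thr):
            labels, n_clusters = ndimage.label(mask)       # face-connected clusters by default
            if n_clusters:
                largest = int(np.bincount(labels.ravel())[1:].max())
                max_sizes[i] = max(max_sizes[i], largest)
    # Family-wise rate for size k = fraction of noise volumes whose largest cluster >= k.
    for k in range(1, int(max_sizes.max()) + 2):
        if np.mean(max_sizes >= k) <= alpha:
            return k
```

As a unit check on the reported threshold, one native voxel is 4 × 4 × 5 = 80 mm³, so 16 native voxels occupy 1,280 mm³, or 1.280 ml.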

RESULTS

Behavioral Results

Behavioral accuracy was very high and approached ceiling for both conditions (VCAT: 96.8%; ACVT: 98.8%), suggesting that participants had little difficulty distinguishing cues from targets. Because of the resulting low variability across participants, accuracy data were not analyzed further.

Because RT distributions tend to be positively skewed, each participant's median RT was used as the measure of central tendency in all behavioral analyses. A 2 × 2 × 2 × 2 mixed‐design ANOVA with condition (ACVT, VCAT), cue validity (valid, invalid), and SOA (150 or 650 ms) as within‐subjects factors and order (ACVT first, VCAT first) as the between‐subjects factor was first conducted to rule out effects (main effects or interactions) of condition order. Neither the main effect of order nor any interaction involving order was significant (P > 0.10), so all remaining effects were investigated with a reduced model that omitted order. A significant condition × validity × SOA interaction was observed for the reduced model [F(1, 25) = 34.9, P < 0.001]. To decompose this interaction, separate 2 × 2 (validity × SOA) repeated‐measures ANOVAs were performed for the VCAT and ACVT conditions.
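The behavioral analysis can be sketched in two steps: take each participant's median RT per cell, then test each 1‐df effect of a 2 × 2 within‐subjects design. For such effects, the repeated‐measures F(1, n − 1) equals the square of a one‐sample t statistic on the per‐participant contrast scores, which the sketch uses in place of a general ANOVA routine. The data layout and the names `cell_medians` and `rm_anova_2x2` are assumptions for illustration, and the between‐subjects order factor is omitted.

```python
import numpy as np
from scipy import stats


def cell_medians(trial_rts):
    """trial_rts maps (subject, validity, soa) -> list of RTs in ms, where validity
    and soa are 0/1 indices. Returns an (n_subjects, 2, 2) array of median RTs."""
    subjects = sorted({subj for subj, _, _ in trial_rts})
    medians = np.empty((len(subjects), 2, 2))
    for i, subj in enumerate(subjects):
        for v in (0, 1):
            for s in (0, 1):
                medians[i, v, s] = np.median(trial_rts[(subj, v, s)])
    return medians


def rm_anova_2x2(cells):
    """F(1, n-1) and p for both main effects and the interaction of a 2 x 2
    within-subjects design; axis 1 is factor A (validity), axis 2 factor B (SOA)."""
    n = cells.shape[0]
    a0b0, a0b1 = cells[:, 0, 0], cells[:, 0, 1]
    a1b0, a1b1 = cells[:, 1, 0], cells[:, 1, 1]
    contrasts = {
        "A": (a0b0 + a0b1) - (a1b0 + a1b1),
        "B": (a0b0 + a1b0) - (a0b1 + a1b1),
        "AxB": (a0b0 - a0b1) - (a1b0 - a1b1),
    }
    results = {}
    for name, d in contrasts.items():
        t = d.mean() / (d.std(ddof=1) / np.sqrt(n))  # one-sample t on contrast scores
        F = t ** 2
        results[name] = (F, stats.f.sf(F, 1, n - 1))
    return results
```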

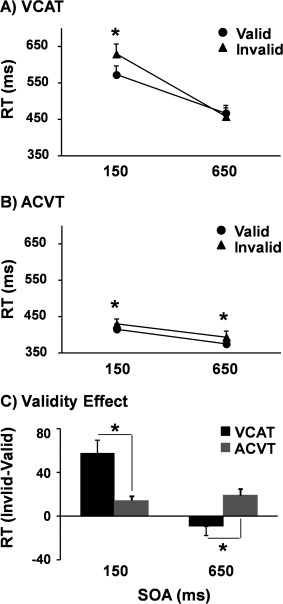

For the VCAT condition (Fig. 2A), the validity × SOA interaction was significant [F(1,25) = 34.0, P < 0.001], with simple‐effects tests indicating that RTs were faster for valid (573.2 ± 119.6 ms) than invalid (630.5 ± 135.3 ms) trials at the 150 ms SOA [t(25) = 4.7, P < 0.001] and did not differ at the 650 ms SOA (P > 0.10). The main effects of validity [F(1,25) = 7.6, P < 0.05] and SOA [F(1,25) = 84.2, P < 0.001] were also significant, with RTs faster for valid than invalid trials (520.7 ± 108.7 vs. 544.7 ± 116.6 ms) and for the 650 ms than the 150 ms SOA (463.5 ± 109.7 vs. 601.8 ± 123.9 ms).

Figure 2.

Graphs A and B show reaction time (RT) in milliseconds (ms) for visual cues/auditory targets (VCAT: Panel A) and auditory cues/visual targets (ACVT: Panel B). In both panels, RTs for valid (solid circles) and invalid (solid triangles) trials are plotted as a function of stimulus onset asynchrony (SOA). Graph C depicts the validity effect score (RT: invalid–valid trials) for the VCAT (black bars) and ACVT (gray bars) tasks at each SOA. Error bars correspond to the standard error of the mean. Asterisks (*) denote significant results.

For the ACVT condition (Fig. 2B), the validity × SOA interaction was not significant (P > 0.10). The main effects of validity [F(1,25) = 20.5, P < 0.001] and SOA [F(1,25) = 29.3, P < 0.001] were significant, with RTs faster for valid (395.8 ± 69.1 ms) than invalid trials (412.6 ± 71.8 ms) and for the 650 ms (385.1 ± 72.1 ms) than the 150 ms SOA (423.3 ± 72.1 ms). Because inspection of Figure 2B suggested that the validity effect was relatively small, post hoc tests were conducted; they confirmed that valid trials were significantly faster than invalid trials at both the short [t(25) = 3.6, P < 0.001] and long [t(25) = 3.3, P < 0.005] SOAs.

To compare the magnitude of cuing effects across conditions, an additional 2 × 2 repeated‐measures ANOVA was conducted on the validity effect score (RT: invalid–valid trials) with condition and SOA as within‐subjects factors (Fig. 2C). Results indicated a significant condition × SOA interaction [F(1,25) = 34.9, P < 0.001], with follow‐up tests indicating that the validity effect was larger in VCAT (57.3 ± 61.8 ms) than in ACVT (14.4 ± 20.5 ms) trials at the 150 ms SOA [t(25) = 3.4, P < 0.01]. The validity effect also differed significantly between conditions at the 650 ms SOA [t(25) = 2.7, P < 0.05], reflecting a small, nonsignificant reversal suggestive of IOR in VCAT (−9.2 ± 40.5 ms) alongside continued facilitation during ACVT (19.0 ± 29.8 ms).
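The validity effect score and the between‐condition comparisons can be sketched as follows. The names `validity_effects` and `compare_conditions` are hypothetical; the sketch assumes per‐participant median RTs as input and uses paired t‐tests, consistent with the t(25) statistics reported above.

```python
import numpy as np
from scipy import stats


def validity_effects(valid_ms, invalid_ms):
    """Per-participant validity effect (invalid - valid RT, ms).
    Positive values indicate facilitation; negative values an IOR-like reversal."""
    return np.asarray(invalid_ms, dtype=float) - np.asarray(valid_ms, dtype=float)


def compare_conditions(ve_cond1, ve_cond2):
    """Paired t-test comparing validity effects of two conditions at one SOA."""
    result = stats.ttest_rel(ve_cond1, ve_cond2)
    return result.statistic, result.pvalue
```

Because the mean of differences equals the difference of means, the reported group means reproduce the VCAT validity effect at the 150 ms SOA: 630.5 − 573.2 = 57.3 ms. Likewise, the condition × SOA interaction on validity scores tests the same contrast as the condition × validity × SOA interaction in the reduced model, which is why both analyses yield F(1, 25) = 34.9.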

Functional Results

Primary analyses

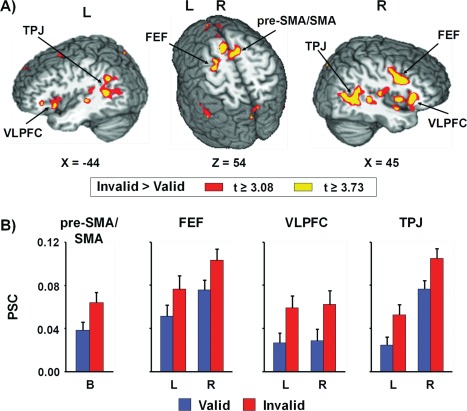

To investigate the patterns of brain activation associated with exogenous cross‐modal attention for both visually and aurally cued targets, two 2 × 2 (Validity × SOA) repeated‐measures ANOVAs were performed on the spatially normalized PSC measure for ACVT and VCAT separately. For VCAT, a large network of bilateral cortical and subcortical areas exhibited greater activation for invalidly cued trials (main effect of validity; Fig. 3 and Supporting Information Table 1). These areas included the pre‐supplementary/supplementary motor area (pre‐SMA/SMA) and cingulate gyrus [Brodmann areas (BAs) 6/8/24/32], precentral and middle frontal gyrus (BAs 6/9/46) corresponding to the frontal eye fields (FEFs), cingulate and posterior cingulate gyrus (BAs 23/24/29/31), ventrolateral prefrontal cortex and insula (BAs 13/45/47) extending into the temporal pole (BAs 20/21/22/38), TPJ extending into insula and secondary visual cortex (BAs 13/19/21/22/37/39/40/41), cerebellum, thalamus, basal ganglia, and pons. Increased activation for invalidly cued trials was also observed within the right medial prefrontal cortex (BAs 9/10), left primary and secondary auditory cortex (BAs 21/22/41), and left precuneus extending into cingulate gyrus and cuneus (BAs 7/19/31). This increase was consistent across both short and long SOAs. Although no areas demonstrated greater activation during validly cued trials, deactivation within medial frontopolar cortex and anterior cingulate cortex (BAs 8/9/10/24/32) was observed during validly cued trials.

Figure 3.

Panel A presents regions showing a significant main effect of validity for the visual cues with auditory targets condition (VCAT). The magnitude and direction of the contrast t‐scores are represented by warm (invalid > valid; red or yellow representing P < 0.005 or P < 0.001, respectively) or cool (valid > invalid; blue or cyan representing P < 0.005 or P < 0.001, respectively) colors. Locations of the sagittal (X) and axial (Z) slices are given according to the Talairach atlas (L = left and R = right). Greater activation for invalid than valid trials was observed within bilateral (B) pre‐supplementary and supplementary motor area (pre‐SMA/SMA), frontal eye fields (FEF), ventrolateral prefrontal cortex (VLPFC), and temporoparietal junction (TPJ). Panel B presents the percent signal change (PSC) values for valid (blue bars) and invalid (red bars) trials within these selected regions. Error bars correspond to the standard error of the mean.

Activation was greater for the 150 ms than the 650 ms SOA VCAT trials within bilateral cuneus (BAs 17/18), left lingual gyrus (BAs 17/18), left medial temporal lobe (hippocampus and parahippocampal gyrus, BAs 27/28/35), and left subcortical areas including basal ganglia and thalamus (Supporting Information Table 2). No areas demonstrated greater activation at the 650 ms SOA. Several regions exhibited a significant validity × SOA interaction, including bilateral precuneus (BA 7) extending into the left paracentral lobule, and bilateral thalamus. Follow‐up simple‐effects tests indicated that activation was greater for valid than invalid trials at the 150 ms SOA, whereas activation was greater for invalid than valid trials at the 650 ms SOA (all P < 0.05).

For ACVT, neither the main effect of validity nor the validity × SOA interaction was significant. However, several ACVT regions did exhibit a main effect of SOA (Supporting Information Table 3). Specifically, activation was greater for 650 ms than 150 ms SOA trials within the bilateral pre‐SMA/SMA and cingulate gyrus (BAs 6/24/32), frontal eye fields (BA 6), and inferior frontal gyrus (BA 9). Lateralized areas included the left primary somatosensory and motor areas (BAs 2/3/4), the superior and inferior parietal lobules across the intraparietal sulcus (BAs 7/40), and the supramarginal gyrus. In addition, 650 ms SOA trials showed greater deactivation than 150 ms trials within the left superior frontal gyrus (BAs 8/9), bilateral medial frontal gyrus (BAs 10/11/32), and right anterior cingulate (BA 24).

Supplementary analyses

In addition to the primary analyses, two 2 × 2 (Laterality × SOA) repeated‐measures ANOVAs were conducted for ACVT and VCAT separately to examine regions whose activation differed as a function of target laterality (i.e., right or left hemifield). Laterality on each trial was operationally defined by the location of the target, consistent with conventions established in previous cueing studies (Mangun et al., 1994; Corbetta et al., 2000; Shulman et al., 2010). Analyses were restricted to valid trials (cue and target in the same hemifield) because, on invalid trials (cue and target in opposite hemifields), the temporal coarseness of the BOLD response makes it impossible to disambiguate activity related to the laterality of the cue from that of the target. Effects of SOA are not discussed to avoid redundancy with the primary analyses.

For VCAT (Supporting Information Fig. 1A), activation was greater in the left middle occipital gyrus extending into the cuneus (BAs 18/19) and in the left lateral temporo‐occipital cortex extending into the declive (BAs 19/37/39) for validly cued targets in the right hemifield. In contrast, activation was greater in the right primary and secondary auditory cortex (BAs 13/22/41) for validly cued targets in the left hemifield. In addition, a significant laterality × SOA interaction was observed within right primary and secondary visual cortex (BAs 17/18) (Supporting Information Fig. 2), with follow‐up tests indicating that activation was greater for stimuli in the right than the left hemifield at the short SOA (P < 0.05) and that this pattern reversed (left hemifield > right hemifield) at the longer SOA (P < 0.001).

During ACVT (Supporting Information Fig. 1B), increased activity was observed in the left primary/secondary auditory cortex extending into the pulvinar nucleus of the thalamus (BAs 13/40/41/42) and in the left lateral temporo‐occipital cortex extending into the declive (BAs 18/19/31/37) when validly cued targets occurred in the right hemifield. In contrast, increased activity was observed in the right lateral temporo‐occipital cortex extending into the declive (BAs 18/19/37) when validly cued targets occurred in the left hemifield.

DISCUSSION

This study examined the behavioral and neuronal correlates of bottom‐up, cross‐modal spatial attention using event‐related FMRI. Behavioral results indicated that although facilitation (RT: valid < invalid) was present at the 150 ms SOA for both VCAT and ACVT, the effect was larger for visual cues. These findings replicate previous studies that used a localization task with a relatively simple trial design (Schmitt et al., 2000) as well as an implicit spatial discrimination task with a complex cue/target environment (Ward, 1994; Ward et al., 1998, 2000). Collectively, these results suggest that visual cues may be more effective than auditory cues in capturing spatial attention under certain orienting paradigms, although other task conditions may favor auditory cues (Spence and Driver, 1997; Schmitt et al., 2000; Mazza et al., 2007). In addition, the requirement for strict spatial co‐localization between cues and targets to observe cross‐modal facilitation for visual cues with auditory targets may not hold as previously suggested (Prime et al., 2008), or may be limited to certain task conditions (localization versus stimulus characterization). Specifically, even though visual and auditory stimuli were offset by approximately 85° (Fig. 1A), both forms of cross‐modal cueing still resulted in facilitation.

In contrast to the behavioral evidence for supramodal mechanisms of facilitation, there was only weak (visual cues/auditory targets) or no (auditory cues/visual targets) evidence of supramodal mechanisms for IOR at the 650 ms SOA. These findings are generally consistent with previous results on exogenous cross‐modal spatial orienting (Spence and Driver, 1997; Schmitt et al., 2000; Ward et al., 2000; Santangelo et al., 2006; Mazza et al., 2007) and suggest that cross‐modal cues may not be sufficient for the development of ocular inhibition to the cue location (Klein, 2000), for maintaining spatial selectivity in the environment (Posner et al., 1985), or for activating an object representation for target processing (Lupiáñez, 2010). However, as previously noted, cross‐modal cues were presented at spatial positions different from the target in the current experiment, which may have reduced IOR because of the increased angular distance (Maylor and Hockey, 1985; Posner et al., 1985). Alternatively, in contrast to the relatively rapid development of IOR during both visual and auditory intramodal cueing (225 ms), IOR may be delayed during cross‐modal orienting. Specifically, previous studies have not reported significant IOR during cross‐modal orienting at SOAs of 575 (Schmitt et al., 2000), 600 (Santangelo et al., 2006), or 700 (Mazza et al., 2007) ms. The evidence for IOR in the current study was minimal (i.e., a nonsignificant reversal in RT) at the 650 ms SOA and was present only for visual cues with auditory targets. Future studies employing longer SOAs (800–1,000 ms) are needed to determine whether IOR is delayed in cross‐modal orienting and whether this pattern is modality‐specific.

It is well established that the frontoparietal reorienting network is activated during the reorienting of attention to intramodal (e.g., visual, auditory, and tactile) cues (Corbetta and Shulman, 2011), as well as during top‐down cross‐modal cueing (Eimer and Van Velzen, 2002; Macaluso et al., 2002; Eimer et al., 2003; Shomstein and Yantis, 2004; Green et al., 2005; Green and McDonald, 2006; Krumbholz et al., 2009). Previous FMRI studies have also demonstrated bottom‐up cross‐modal cueing across the visual and tactile modalities (Macaluso et al., 2000). Consistent with these studies, the current finding of greater activation in the frontoparietal attention network, visual and auditory “where” streams, primary auditory cortex, and thalamus during reorienting supports previous suggestions that higher‐order multisensory regions (the frontoparietal network) coordinate multimodal shifts of attention regardless of whether attention is controlled exogenously or endogenously.

However, there is one important caveat to the claim that the frontoparietal network is supramodal in nature. Specifically, activation in the attentional reorienting network was present only when cues were presented in the visual modality (auditory targets) and was absent when cues occurred in the auditory modality. This finding was somewhat surprising given that the behavioral results from the current experiment indicated a truly supramodal mechanism (i.e., auditory to visual and visual to auditory) for facilitatory cueing effects. One possible explanation for this null effect is that auditory cross‐modal cuing effects were not robust enough to engage the reorienting network. This hypothesis is supported by the smaller validity effect (facilitation) produced by auditory relative to visual cross‐modal cues. Moreover, auditory cues are relatively weak compared with visual cues in modulating spatial orienting during both line bisection (Sosa et al., 2011) and perceived location (i.e., spatial ventriloquism) (Bertelson, 1999). Therefore, the current results provide additional support for the hypothesis that visual stimuli are generally more effective in spatial localization tasks, whereas auditory stimuli may be more effective in temporal tasks (Aschersleben and Bertelson, 2003; Witten and Knudsen, 2005).

Previous electrophysiological studies have reported increased activation in both visual and auditory cortex for validly versus invalidly cued targets during bottom‐up audio‐visual cueing (McDonald et al., 2001, 2003). Thus, our observation of greater activation within the auditory cortex during invalid compared with valid trials was somewhat unexpected. This discrepancy may be attributable to differences in experimental paradigms (ACVT in the previous studies versus VCAT in the current study) or in methodology. Alternatively, current results may be consistent with the role of the auditory cortex in deviance (i.e., novelty or expectation) detection (Grimm and Escera, 2012). Specifically, previous studies of mismatch negativity have reported increased signal amplitude within the human auditory cortex 100–250 ms after the occurrence of a rare, irregular sound (Alho et al., 1998). Although invalid and valid trials occurred equally often in the current experiment, the appearance of the target in an unexpected location may have violated participants' expectancies during invalid trials. However, given the potential discrepancies with previous electrophysiological work, this finding will require replication in an independent sample.

A prominent model of visual spatial orienting suggests that the frontoparietal network can be segregated into dorsal and ventral subsystems (Corbetta and Shulman, 2002) that work collaboratively to control the bottom‐up “reorienting” response (Corbetta et al., 2008). Current results showed that visual cues with auditory targets co‐activated both the dorsal (i.e., FEFs and precuneus) and ventral (i.e., VFC and TPJ) networks, suggesting that this model may be extended to certain cross‐modal situations. Previous lesion studies have also reported right‐hemisphere lateralization of visual spatial neglect, which might reflect a corresponding asymmetry for spatial attention (Corbetta and Shulman, 2011). Right‐hemisphere dominance in spatial attention has been further supported by FMRI studies that assessed hemispheric asymmetries in healthy participants using direct voxel‐wise comparisons for both intramodal visual (Shulman et al., 2010) and auditory (Teshiba et al., 2012) attentional shifts. Qualitative evidence from the current study indicates that although activation of the frontoparietal network was largely bilateral, the volume and degree of activation were greater within the right hemisphere. Future studies are needed to directly compare intramodal and cross‐modal orienting to determine differences in hemispheric asymmetries.

Several factors may contribute to the discrepancy between bilateral (auditory intramodal and cross‐modal) and right‐lateralized (intramodal visual) activation, including sensory modality and the magnitude of attention shifts across the central meridian. Unlike the visual system, in which spatial information is encoded through a direct mapping of extrapersonal space onto the retina that is topographically projected to higher visual cortex (Kollias, 2004), the brain must compute the location of a sound source from the time and intensity differences between the two ears (Spierer et al., 2009). Thus, it is possible that localizing sounds, whether as cues or as targets, requires resources from both hemispheres, even though activation of the right frontoparietal network may be greater (Teshiba et al., 2012). Alternatively, activation of the left ventral network may depend on the magnitude of attention shifts across the central meridian. For invalidly cued visual trials, attention is shifted across the meridian, but over a much smaller angle of extrapersonal space (∼10° in the current experimental setting). In contrast, cross‐modal shifts between the auditory and visual modalities can span a much greater angular distance, given that the auditory system provides coarse coverage of all of extrapersonal space.
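To make the binaural computation mentioned above more concrete, the sketch below uses Woodworth's spherical‐head approximation for the interaural time difference, ITD(θ) = (r/c)(θ + sin θ), for a distant source at azimuth θ between 0° and 90°. This is a standard textbook approximation, not a model used in the current study; the head radius (8.75 cm) and speed of sound (343 m/s) are assumed values, and interaural intensity differences are not modeled.

```python
import math

HEAD_RADIUS_M = 0.0875       # assumed average adult head radius (m)
SPEED_OF_SOUND_M_S = 343.0   # assumed speed of sound in air at about 20 °C (m/s)


def woodworth_itd_us(azimuth_deg):
    """Approximate interaural time difference in microseconds.

    Uses Woodworth's spherical-head formula ITD = (r / c) * (theta + sin(theta)),
    where theta is the source azimuth in radians (0 = straight ahead,
    90 degrees = directly lateral). Valid only for 0 to 90 degrees.
    """
    if not 0.0 <= azimuth_deg <= 90.0:
        raise ValueError("formula applies to azimuths between 0 and 90 degrees")
    theta = math.radians(azimuth_deg)
    return (HEAD_RADIUS_M / SPEED_OF_SOUND_M_S) * (theta + math.sin(theta)) * 1e6


for az in (0, 10, 45, 90):
    print(f"{az:>2} deg -> {woodworth_itd_us(az):6.1f} us")
```

Under these assumptions even a fully lateral source yields an ITD well under one millisecond, which illustrates how small the binaural cues are from which sound location has to be computed.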

We have previously observed an interaction effect in the frontoparietal‐cerebellar network for intramodal auditory orienting: reorienting of attention during facilitation was associated with greater activation during invalid relative to valid trials (Mayer et al., 2007, 2009), followed by a reversal (valid > invalid) or equalization of activity in several of these structures during auditory IOR (Mayer et al., 2007). In the current study, a validity by SOA interaction was present only in the bilateral precuneus and thalamus rather than in the frontoparietal‐cerebellar network, and only during the visual cues/auditory target condition. In addition, rather than mirroring the direction of effects in the RT data, activation was greater for valid trials at the shorter SOA and for invalid trials at the longer SOA. One important difference between current results and previous intramodal findings (Lepsien and Pollmann, 2002; Mayer et al., 2004, 2007) is the relative lack of behavioral evidence for IOR in both conditions. As with our null findings in the auditory cue condition, the weak magnitude of the IOR response may also explain the absence of a reversal or equalization of activation within the frontoparietal network for validly and invalidly cued trials at the longer SOA.
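A validity by SOA interaction of the kind described above can be expressed as a difference of differences: the invalid‐minus‐valid effect at the long SOA minus the same effect at the short SOA. The sketch below uses hypothetical percent signal change (PSC) values for a single region, chosen only to mimic the reported direction of effects (valid > invalid at the short SOA, invalid > valid at the long SOA); the function names and numbers are illustrative assumptions, not the statistical model used in the study.

```python
# Hypothetical percent signal change (PSC) for one region in the VCAT
# condition, keyed by (cue validity, SOA). Values are illustrative only.
PSC = {
    ("valid", "short"): 0.30,
    ("invalid", "short"): 0.20,
    ("valid", "long"): 0.15,
    ("invalid", "long"): 0.25,
}


def psc_validity_effect(psc, soa):
    """Invalid-minus-valid PSC at one SOA (positive = invalid > valid)."""
    return psc[("invalid", soa)] - psc[("valid", soa)]


def validity_by_soa_interaction(psc):
    """Difference of differences: validity effect at the long SOA minus the
    validity effect at the short SOA. A non-zero value means the validity
    effect changes with SOA."""
    return psc_validity_effect(psc, "long") - psc_validity_effect(psc, "short")


short_effect = psc_validity_effect(PSC, "short")  # negative: valid > invalid
long_effect = psc_validity_effect(PSC, "long")    # positive: invalid > valid
interaction = validity_by_soa_interaction(PSC)

print(f"short SOA: {short_effect:+.2f}, long SOA: {long_effect:+.2f}, "
      f"interaction: {interaction:+.2f}")
```

This single number is only a descriptive summary; a formal test would evaluate it against across‐subject variability (e.g., in a repeated‐measures ANOVA), which the sketch omits.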

Current results indicated a strong contralateral bias within primary and/or secondary sensory cortex during valid trials. Specifically, with the exception of the right visual cortex at the earlier SOA, activation in both hemispheres was greater when auditory/visual cues and visual/auditory targets were presented in the contralateral hemifield. Previous imaging studies have also reported increased activation within unimodal cortex for stimuli appearing in the contralateral relative to the ipsilateral hemifield (Rinne, 2010; Prado and Weissman, 2011). Similarly, animal models suggest that a contralateral bias would be the expected default model of functioning for both visual (Hubel and Wiesel, 1977; Ramoa et al., 1983) and auditory (Moore et al., 1984; Irvine, 1986) cortex in the absence of higher‐order attentional modulation. Because the current paradigm mixed stimuli from both modalities and activation was observed in both auditory and visual cortex, the contralateral bias is likely to be strongly supramodal in nature. Moreover, the contralateral bias was present in a condition with minimal behavioral or functional effects (i.e., ACVT), suggesting that the bias does not depend on higher‐order attentional involvement.
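The contralateral bias described above amounts to a simple per‐hemisphere contrast: PSC for stimuli in the opposite hemifield minus PSC for stimuli in the same hemifield. The sketch below shows that contrast; the function, dictionary layout, and PSC values are hypothetical and chosen only for illustration, not taken from the supplementary figures.

```python
# Map each hemisphere to its contralateral (opposite) hemifield.
CONTRALATERAL_FIELD = {"left": "right", "right": "left"}


def contralateral_bias(psc_by_field, hemisphere):
    """Contralateral-minus-ipsilateral PSC for a region in one hemisphere.

    psc_by_field maps the hemifield in which the stimulus appeared
    ("left" or "right") to the mean PSC measured in that region.
    Positive values indicate a contralateral bias.
    """
    contra_field = CONTRALATERAL_FIELD[hemisphere]
    ipsi_field = hemisphere  # hemifield on the same side as the region
    return psc_by_field[contra_field] - psc_by_field[ipsi_field]


# Hypothetical PSC values for left and right auditory cortex (AC) regions.
left_ac = {"left": 0.20, "right": 0.35}
right_ac = {"left": 0.40, "right": 0.22}

left_bias = contralateral_bias(left_ac, "left")
right_bias = contralateral_bias(right_ac, "right")
print(f"left AC bias: {left_bias:+.2f}, right AC bias: {right_bias:+.2f}")
```

Positive values in both hemispheres, as in this made‐up example, correspond to the pattern described in the paragraph above.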

Some potential limitations of the present experiment should be considered. First, cues and targets were not presented in the same spatial location because of the physical constraints of the FMRI environment (i.e., the necessity of using headphones), which may have reduced the likelihood of observing IOR, as suggested earlier by Posner et al. (1985) and Maylor and Hockey (1985). Second, eye movements were not monitored in the current study, so the activation within the frontal oculomotor network during reorienting for visual cues/auditory targets may reflect overt eye movements. However, this is not a compelling explanation, as it is unlikely that subjects would have made more eye movements during invalid trials only. Furthermore, previous studies using visual orienting tasks have shown that healthy participants are capable of maintaining fixation in the FMRI environment and rarely execute eye movements (Arrington et al., 2000; Thiel et al., 2004). Third, the coarse temporal resolution of the blood oxygen level dependent response did not permit disambiguation of neuronal responses to cues versus targets at typical SOAs. Fourth, participants responded to target location with a lateralized motor response (i.e., right index and middle fingers for targets appearing on the left and right side, respectively), which may have confounded attentional and motor responses.

In summary, current behavioral results demonstrated that whereas both visual and auditory cues were effective in producing bottom‐up shifts of cross‐modal spatial attention, the classical biphasic response pattern (greater magnitude of facilitation with weak evidence of IOR) was stronger for visual cues with auditory targets. Similarly, functional results indicated that visual cues with auditory targets resulted in greater activation within the frontoparietal reorienting network across both short and long SOAs, providing direct evidence that this network of multisensory regions may direct attention across different modalities. However, this highly reproducible pattern of activation (secondary to reorienting) was absent during ACVT, indicating that the reorienting network does not exhibit purely supramodal properties (i.e., a similar response regardless of the modality of information). The cross‐modal links in bottom‐up spatial attention therefore appear to be more robust (i.e., larger behavioral and functional effects) during spatial localization for VCAT than for ACVT.

Supporting information

Supplemental figure 1: This figure presents regions that exhibited a significant effect of laterality for the visual cues with auditory targets condition (Panel A: VCAT) and for the auditory cues with visual targets condition (Panel B: ACVT). The magnitude and direction of the contrast t‐scores are represented by either warm (right > left; red or yellow representing p < 0.005 or p < 0.001, respectively) or cool (left > right; blue or cyan representing p < 0.005 or p < 0.001, respectively) coloring. Locations of the axial (Z) slices are given according to the Talairach atlas (L = left and R = right). Differential effects of laterality were observed in the left middle occipital gyrus (MOG), left lateral temporo‐occipital cortex (LTO), and right auditory cortex (AC) during VCAT. During the ACVT condition, the left AC and bilateral LTO showed differential activation dependent on stimulus laterality. For both conditions, results indicated increased activity for contralateral stimuli. The percent signal change (PSC) values for left (blue bars) and right (red bars) trials within these regions are displayed, with error bars equal to the standard error of the mean.

Supplemental figure 2: This figure presents a significant laterality by stimulus onset asynchrony (SOA) interaction effect within the visual cortex (VC) during the visual cues with auditory targets condition (VCAT). The magnitude of the effect is represented by warm coloring (red or yellow representing p < 0.005 or p < 0.001 respectively). Locations of the axial (Z) slices are given according to the Talairach atlas (L = left and R = right). Percent signal change (PSC) values for each of the four main conditions of interest are displayed, with error bars corresponding to the standard error of the mean.

Supporting Information Tables

ACKNOWLEDGMENTS

Special thanks to Josef Ling for his assistance with data analysis.

REFERENCES

- Alho K, Winkler I, Escera C, Huotilainen M, Virtanen J, Jaaskelainen IP, Pekkonen E, Ilmoniemi RJ (1998): Processing of novel sounds and frequency changes in the human auditory cortex: Magnetoencephalographic recordings. Psychophysiology 35:211–224.

- Arrington CM, Carr TH, Mayer AR, Rao SM (2000): Neural mechanisms of visual attention: Object‐based selection of a region in space. J Cogn Neurosci 12 (Suppl 2):106–117.

- Aschersleben G, Bertelson P (2003): Temporal ventriloquism: Crossmodal interaction on the time dimension. 2. Evidence from sensorimotor synchronization. Int J Psychophysiol 50:157–163.

- Berger A, Henik A, Rafal R (2005): Competition between endogenous and exogenous orienting of visual attention. J Exp Psychol Gen 134:207–221.

- Bertelson P (1999): Ventriloquism: A case of cross‐modal perceptual grouping. In: Aschersleben G, Bachmann T, Musseler J, editors. Cognitive Contributions to the Perception of Spatial and Temporal Events. Amsterdam: Elsevier; pp 347–362.

- Burock MA, Buckner RL, Woldorff MG, Rosen BR, Dale AM (1998): Randomized event‐related experimental designs allow for extremely rapid presentation rates using functional MRI. Neuroreport 9:3735–3739.

- Cohen MS (1997): Parametric analysis of fMRI data using linear systems methods. Neuroimage 6:93–103.

- Corbetta M, Kincade JM, Ollinger JM, McAvoy MP, Shulman GL (2000): Voluntary orienting is dissociated from target detection in human posterior parietal cortex. Nat Neurosci 3:292–297.

- Corbetta M, Patel G, Shulman GL (2008): The reorienting system of the human brain: From environment to theory of mind. Neuron 58:306–324.

- Corbetta M, Shulman GL (2002): Control of goal‐directed and stimulus‐driven attention in the brain. Nat Rev Neurosci 3:201–215.

- Corbetta M, Shulman GL (2011): Spatial neglect and attention networks. Annu Rev Neurosci 34:569–599.

- Cox RW (1996): AFNI: Software for analysis and visualization of functional magnetic resonance neuroimages. Comput Biomed Res 29:162–173.

- Eimer M, Driver J (2001): Crossmodal links in endogenous and exogenous spatial attention: Evidence from event‐related brain potential studies. Neurosci Biobehav Rev 25:497–511.

- Eimer M, Van Velzen J (2002): Crossmodal links in spatial attention are mediated by supramodal control processes: Evidence from event‐related potentials. Psychophysiology 39:437–449.

- Eimer M, van Velzen J, Forster B, Driver J (2003): Shifts of attention in light and in darkness: An ERP study of supramodal attentional control and crossmodal links in spatial attention. Brain Res Cogn Brain Res 15:308–323.

- Forman SD, Cohen JD, Fitzgerald M, Eddy WF, Mintun MA, Noll DC (1995): Improved assessment of significant activation in functional magnetic resonance imaging (fMRI): Use of a cluster‐size threshold. Magn Reson Med 33:636–647.

- Glover GH (1999): Deconvolution of impulse response in event‐related BOLD fMRI. Neuroimage 9:416–429.

- Green JJ, McDonald JJ (2006): An event‐related potential study of supramodal attentional control and crossmodal attention effects. Psychophysiology 43:161–171.

- Green JJ, Teder‐Salejarvi WA, McDonald JJ (2005): Control mechanisms mediating shifts of attention in auditory and visual space: A spatio‐temporal ERP analysis. Exp Brain Res 166:358–369.

- Grimm S, Escera C (2012): Auditory deviance detection revisited: Evidence for a hierarchical novelty system. Int J Psychophysiol 85:88–92.

- Hubel DH, Wiesel TN (1977): Ferrier lecture. Functional architecture of macaque monkey visual cortex. Proc R Soc Lond B Biol Sci 198:1–59.

- Irvine DRF (1986): A Review of the Structure and Function of Auditory Brainstem Processing Mechanisms. Berlin: Springer Verlag; pp 1–279.

- Johnstone T, Ores Walsh KS, Greischar LL, Alexander AL, Fox AS, Davidson RJ, Oakes TR (2006): Motion correction and the use of motion covariates in multiple‐subject fMRI analysis. Hum Brain Mapp 27:779–788.

- King AJ (2009): Visual influences on auditory spatial learning. Philos Trans R Soc Lond B Biol Sci 364:331–339.

- Klein RM (2000): Inhibition of return. Trends Cogn Sci 4:138–147.

- Koelewijn T, Bronkhorst A, Theeuwes J (2009): Competition between auditory and visual spatial cues during visual task performance. Exp Brain Res 195:593–602.

- Kollias SS (2004): Investigations of the human visual system using functional magnetic resonance imaging (FMRI). Eur J Radiol 49:64–75.

- Krumbholz K, Nobis EA, Weatheritt RJ, Fink GR (2009): Executive control of spatial attention shifts in the auditory compared to the visual modality. Hum Brain Mapp 30:1457–1469.

- Lepsien J, Pollmann S (2002): Covert reorienting and inhibition of return: An event‐related fMRI study. J Cogn Neurosci 14:127–144.

- Lupiáñez J (2010): Inhibition of return. In: Nobre AC, Coull JT, editors. Attention and Time. Oxford: Oxford University Press; pp 17–34.

- Macaluso E, Eimer M, Frith CD, Driver J (2003): Preparatory states in crossmodal spatial attention: Spatial specificity and possible control mechanisms. Exp Brain Res 149:62–74.

- Macaluso E, Frith CD, Driver J (2000): Modulation of human visual cortex by crossmodal spatial attention. Science 289:1206–1208.

- Macaluso E, Frith CD, Driver J (2002): Supramodal effects of covert spatial orienting triggered by visual or tactile events. J Cogn Neurosci 14:389–401.

- Mangun GR, Luck SJ, Plager R, Loftus W (1994): Monitoring the visual world: Hemispheric asymmetries and subcortical processes in attention. J Cogn Neurosci 6:267–275.

- Martin‐Loeches M, Barcelo F, Rubia FJ (1997): Sources and topography of supramodal effects of spatial attention in ERP. Brain Topogr 10:9–22.

- Mayer AR, Franco AR, Harrington DL (2009): Neuronal modulation of auditory attention by informative and uninformative spatial cues. Hum Brain Mapp 30:1652–1666.

- Mayer AR, Harrington D, Adair JC, Lee R (2006): The neural networks underlying endogenous auditory covert orienting and reorienting. Neuroimage 30:938–949.

- Mayer AR, Harrington DL, Stephen J, Adair JC, Lee RR (2007): An event‐related fMRI study of exogenous facilitation and inhibition of return in the auditory modality. J Cogn Neurosci 19:455–467.

- Mayer AR, Seidenberg M, Dorflinger J, Rao SM (2004): An event‐related fMRI study of exogenous orienting: Supporting evidence for the cortical basis of inhibition of return? J Cogn Neurosci 16:1262–1271.

- Maylor EA, Hockey R (1985): Inhibitory component of externally controlled covert orienting in visual space. J Exp Psychol Hum Percept Perform 11:777–787.

- Mazza V, Turatto M, Rossi M, Umilta C (2007): How automatic are audiovisual links in exogenous spatial attention? Neuropsychologia 45:514–522.

- McDonald JJ, Teder‐Salejarvi WA, Di Russo F, Hillyard SA (2003): Neural substrates of perceptual enhancement by cross‐modal spatial attention. J Cogn Neurosci 15:10–19.

- McDonald JJ, Teder‐Salejarvi WA, Di Russo F, Hillyard SA (2005): Neural basis of auditory‐induced shifts in visual time‐order perception. Nat Neurosci 8:1197–1202.

- McDonald JJ, Teder‐Salejarvi WA, Heraldez D, Hillyard SA (2001): Electrophysiological evidence for the “missing link” in crossmodal attention. Can J Exp Psychol 55:141–149.

- McDonald JJ, Ward LM (2000): Involuntary listening aids seeing: Evidence from human electrophysiology. Psychol Sci 11:167–171.

- Moore DR, Semple MN, Addison PD, Aitkin LM (1984): Properties of spatial receptive fields in the central nucleus of the cat inferior colliculus. I. Responses to tones of low intensity. Hear Res 13:159–174.

- Mueller HJ, Rabbitt PM (1989): Reflexive and voluntary orienting of visual attention: Time course of activation and resistance to interruption. J Exp Psychol Hum Percept Perform 15:315–330.

- Posner M, Rafal RD, Choate L, Vaughn L (1985): Inhibition of return: Neural mechanisms and function. Cogn Neuropsychol 2:211–228.

- Prado J, Weissman DH (2011): Spatial attention influences trial‐by‐trial relationships between response time and functional connectivity in the visual cortex. Neuroimage 54:465–473.

- Prime DJ, McDonald JJ, Green J, Ward LM (2008): When cross‐modal spatial attention fails. Can J Exp Psychol 62:192–197.

- Ramoa AS, Rocha‐Miranda CE, Mendez‐Otero R, Josua KM (1983): Visual perception fields in the superficial layers of the opossum's superior colliculus: Representation of the ipsi and contralateral hemifields by each eye. Exp Brain Res 49:373–380.

- Rinne T (2010): Activations of human auditory cortex during visual and auditory selective attention tasks with varying difficulty. Open Neuroimag J 4:187–193.

- Santangelo V, Belardinelli MO, Spence C, Macaluso E (2008a): Interactions between voluntary and stimulus‐driven spatial attention mechanisms across sensory modalities. J Cogn Neurosci 21:2384–2397.

- Santangelo V, Ho C, Spence C (2008b): Capturing spatial attention with multisensory cues. Psychon Bull Rev 15:398–403.

- Santangelo V, Spence C (2007): Multisensory cues capture spatial attention regardless of perceptual load. J Exp Psychol Hum Percept Perform 33:1311–1321.

- Santangelo V, Van der Lubbe RH, Belardinelli MO, Postma A (2006): Spatial attention triggered by unimodal, crossmodal, and bimodal exogenous cues: A comparison of reflexive orienting mechanisms. Exp Brain Res 173:40–48.

- Schmitt M, Postma A, De Haan E (2000): Interactions between exogenous auditory and visual spatial attention. Q J Exp Psychol A 53:105–130.

- Shomstein S, Yantis S (2004): Control of attention shifts between vision and audition in human cortex. J Neurosci 24:10702–10706.

- Shulman GL, Pope DL, Astafiev SV, McAvoy MP, Snyder AZ, Corbetta M (2010): Right hemisphere dominance during spatial selective attention and target detection occurs outside the dorsal frontoparietal network. J Neurosci 30:3640–3651.

- Smith DV, Davis B, Niu K, Healy EW, Bonilha L, Fridriksson J, Morgan PS, Rorden C (2010): Spatial attention evokes similar activation patterns for visual and auditory stimuli. J Cogn Neurosci 22:347–361.

- Sosa Y, Clarke AM, McCourt ME (2011): Hemifield asymmetry in the potency of exogenous auditory and visual cues. Vision Res 51:1207–1215.

- Spence C (2010): Crossmodal spatial attention. Ann N Y Acad Sci 1191:182–200.

- Spence C, Driver J (1997): Audiovisual links in exogenous covert spatial orienting. Percept Psychophys 59:1–22.

- Spence C, McDonald JJ, Driver J (2004): Exogenous spatial cuing studies of human crossmodal attention and multisensory integration. In: Spence C, Driver J, editors. Crossmodal Space and Crossmodal Attention. Oxford: Oxford University Press; pp 277–320.

- Spierer L, Bellmann‐Thiran A, Maeder P, Murray MM, Clarke S (2009): Hemispheric competence for auditory spatial representation. Brain 132:1953–1966.

- Stormer VS, McDonald JJ, Hillyard SA (2009): Cross‐modal cueing of attention alters appearance and early cortical processing of visual stimuli. Proc Natl Acad Sci USA 106:22456–22461.

- Talairach J, Tournoux P (1988): Co‐Planar Stereotaxic Atlas of the Human Brain. New York: Thieme.

- Teshiba TM, Ling J, Ruhl DA, Bedrick BS, Pena A, Mayer AR (2012): Evoked and intrinsic asymmetries during auditory attention: Implications for the contralateral and neglect models of functioning. Cereb Cortex doi: 10.1093/cercor/bhs039.

- Thiel CM, Zilles K, Fink GR (2004): Cerebral correlates of alerting, orienting and reorienting of visuospatial attention: An event‐related fMRI study. Neuroimage 21:318–328.

- Ward LM (1994): Supramodal and modality‐specific mechanisms for stimulus‐driven shifts of auditory and visual attention. Can J Exp Psychol 48:242–259.

- Ward LM, McDonald JJ, Golestani N (1998): Cross‐modal control of attention shifts. In: Wright R, editor. Visual Attention. New York: Oxford University Press; pp 232–268.

- Ward LM, McDonald JJ, Lin D (2000): On asymmetries in cross‐modal spatial attention orienting. Percept Psychophys 62:1258–1264.

- Witten IB, Knudsen EI (2005): Why seeing is believing: Merging auditory and visual worlds. Neuron 48:489–496.

- Wright R, Ward LM (2008): Crossmodal attention shifts. In: Wright R, Ward LM, editors. Orienting of Attention. New York: Oxford University Press; pp 199–217.
