Abstract

During face‐to‐face communication, body orientation and coverbal gestures influence how information is conveyed. The neural pathways underpinning the comprehension of such nonverbal social cues in everyday interaction are still partly unknown. During fMRI data acquisition, 37 participants were presented with video clips showing an actor speaking short sentences. The actor produced speech‐associated iconic gestures (IC) or no gestures (NG) while he was visible either from an egocentric (ego) or from an allocentric (allo) position. Participants were asked to indicate via button press whether they felt addressed or not. We found a significant interaction of body orientation and gesture in addressment evaluations, indicating that participants evaluated IC‐ego conditions as most addressing. The anterior cingulate cortex (ACC) and left fusiform gyrus were more strongly activated for egocentric versus allocentric actor position in the gesture context. Activation increase in the ACC for IC‐ego>IC‐allo further correlated positively with increased addressment ratings in the egocentric gesture condition. Gesture‐related activation increase in the supplementary motor area, left inferior frontal gyrus and right insula correlated positively with gesture‐related increase of addressment evaluations in the egocentric context. Results indicate that gesture use and body orientation contribute to the feeling of being addressed and together influence neural processing in brain regions involved in motor simulation, empathy and mentalizing. Hum Brain Mapp 36:1925–1936, 2015. © 2015 Wiley Periodicals, Inc.

Keywords: gesture, social addressment, body orientation, fMRI, second person

INTRODUCTION

Everyday conversations with other individuals account for the most important part of our social communicative life, allowing us to exchange ideas and discuss thoughts. In these situations, speaker and addressee usually talk to each other in a natural face‐to‐face position. Under certain circumstances, however, the speaker is visible from varying perspectives, for example, in multiparty communication situations. Thus, from the perspective of one specific addressee, the interlocutor may change from an egocentric to an allocentric position to involve all present recipients equally. The neural correlates of this orientation change during social communication have already been investigated in a study using virtual characters [Schilbach et al., 2006]. The characters were presented either looking straight at the participant or facing a supposed third person while producing either facial expressions or arbitrary facial movements. For the conditions addressing the participant, in contrast to the side‐facing conditions, the authors report an activity increase in the left posterior cerebellar lobe, right medial prefrontal, and left insular cortex. Neural responses were attributed to interpersonal processes related to self‐awareness and mentalizing.

Another study looked at the influence of orientation on the processing of communicative or private intentions [Ciaramidaro et al., 2014]. For this purpose, the authors used video material of actors presenting objects either to themselves (from a frontal or lateral view), a second person (frontal view), or a third person (lateral view). Their results revealed a significant interaction between self‐ or other‐directed intentions and orientation. For this contrast they report increased activity in medial prefrontal and bilateral premotor cortices. A further psycho‐physiological interaction (PPI) analysis identified a stronger functional connectivity between medial prefrontal cortex (MPFC) and mentalizing as well as mirror neuron areas for objects presented in second person perspective.

Both studies mentioned so far support and implement a “second‐person neuroscience,” as put forward by Schilbach et al. [2013]. The authors argue that social interactions and the cognitive processes they evoke are largely affected by the participants' feelings of engagement and emotional responses, which in turn are engendered only by self‐involvement instead of the sole observation of others interacting. The authors' concept is thus “based on the premise that social cognition is fundamentally different when we are in interaction with others rather than merely observing them” [Schilbach et al., 2013, p. 393], an aspect that is not captured with commonly used “isolation paradigms” which do not allow for participation. Schilbach et al. [2013] hence point out that it is still unknown how activity in mirror neuron and mentalizing networks is influenced by the extent to which a person feels involved in an interaction. They propose the simulation of a true reciprocal interaction, for example, using a responsive virtual character. In this vein, this study aims to further elucidate the neural underpinnings of social addressment and self‐involvement by presenting subjects with an actor either directly facing them (egocentric) or facing an additional third interlocutor (allocentric).

Besides the change of direct “physical” addressment, the speaker in a natural communication context will not only use facial expressions and actions, but will also clarify or underpin the content of a verbal utterance using gestures. However, the neural correlates of the presence or absence of co‐speech gesture for social addressment with respect to varying speaker orientations have not been directly investigated. Gestures per se play an important social communicative role [Holler et al., 2012, 2013], rather than being a mere epiphenomenon of human speech production [Goldin‐Meadow and Alibali, 2013]. Iconic gestures (IC) particularly refer to the semantic content of a message, mostly providing additional information about a specific form, size, or shape of an object, for example, spreading the arms to indicate the size of a fish while verbalizing, “He caught a huge fish.” Thus, ICs are rather concrete by nature and are often used to deliver additional information to the listener, in particular with respect to visuospatial aspects [Wu and Coulson, 2007]. The processing of ICs and their integration with speech is mostly found to evoke increased activity in the left inferior frontal and posterior middle temporal gyrus [Dick et al., 2014; Straube, Green, Bromberger, et al., 2011]. There is further evidence for a common or shared neural network for semantic information conveyed in speech comprehension and brain activations evoked during co‐speech gesture comprehension [Straube et al., 2012; Xu et al., 2009; for review see Andric and Small, 2012].

To date, it is unknown to what extent the exposure to gesture information perceived from either an egocentric or an allocentric speaker orientation influences the degree to which the recipient feels directly addressed by an utterance. In a previous study [Straube et al., 2010], we investigated the neural basis of different gesture categories (person‐related vs. object‐related) and different actor orientations (egocentric vs. allocentric). The main effect for egocentric versus allocentric actor orientation revealed enhanced brain responses in occipital, inferior frontal, medial frontal, right anterior temporal, and left hemispheric parietal regions. Most of these areas have been previously associated with mentalizing or theory of mind (ToM) processes [Spunt et al., 2011]. These data supported the assumption that social cognitive processes are specifically activated when audio‐visual information is conveyed in a direct face‐to‐face situation.

Another imaging study also investigated the neural effects of actor orientation on gesture processing [Saggar et al., 2014]. Apart from the manipulation of face visibility (visible vs. blurred), the authors also compared actor orientation toward (egocentric) and away (allocentric) from the participant while presenting social or nonsocial gestures. Participants performed an attentional cover task by pressing a button when a red dot appeared near the eyes and nose of the actor. In this study, faster reaction times for detecting the dot were found in egocentric conditions. On a neural level, egocentric as compared to allocentric orientation elicited enhanced neural responses in the bilateral occipital pole and the left fusiform gyrus. The inverse contrast revealed blood oxygen level dependent (BOLD) enhancements in the “where‐pathway,” encompassing the occipito‐parietal areas as well as the parahippocampal, pre‐ and postcentral gyrus, right insula, cingulate cortex, and bilateral operculum. However, in this experiment no direct behavioral addressment measures were collected.

A further fMRI study with a similar design was conducted by Holler et al. [2014]. They presented participants with prerecorded videos of an actor speaking short sentences of which half were accompanied by ICs. The subjects, however, were made to believe that they were taking part in a live triadic interaction, taking place during scanning and involving a second addressee. In contrast to the study by Saggar et al. [2014], the actor's body remained in the same position throughout the experiment while addressing either the real or fictive recipient. Thus, addressment was achieved solely by a change in eye gaze direction. Furthermore, the authors used an attentional task aimed at 16 filler trials but did not record any direct behavioral measurements concerning the social content of the videos. For addressing in contrast to nonaddressing trials they found increased neural activity in the right inferior occipital gyrus and another activation cluster in the right middle temporal gyrus, which was specific to the addressment condition with gesture. The authors take this finding to indicate a modulation of the semantic integration of speech and gesture evoked by a social cue signaling addressment.

Two further studies by Holler et al. investigated an eye gaze paradigm as a subtle social cue of addressment. For this purpose, the authors used uni‐ and bimodal conditions of speech and ICs while participants had to either match words or objects to the content of the previous utterance. The first study reported slower reaction times for word‐gesture matching in the bimodal condition when participants were unaddressed [Holler et al., 2012]. In a more recent study, a speed advantage for addressed subjects in the object matching task was found only after the unimodal speech condition, while reaction times did not differ for speech and gesture combined [Holler et al., 2014]. As the authors acknowledge, the inconsistent results can be traced back to the differing tasks, which focused either on verbal or on visual information. Taken together, both studies indicate that even a social cue such as a subtle shift in eye gaze can significantly affect the processing of speech and gesture.

There is an increasing interest in the neural correlates of the addressing nature of gestures per se and its relation to contextual factors such as the body orientation or eye gaze of a speaker. However, the possible interactions of these factors are currently not well understood, neither on the behavioral nor on the neural level. Thus, this study focused on the question of to what extent the presence or absence of gesture, presented from either an egocentric or an allocentric view, contributes to social addressment and its neural correlates. Behaviorally, differences in addressment evaluations were expected to reveal a preference for egocentrically conveyed utterances combined with coverbal gestures (IC‐ego). Moreover, sentences spoken to an invisible audience (allocentric; IC‐allo, NG‐allo) or spoken without accompanying gesture (NG‐ego, NG‐allo) were expected to be less addressing. Regarding brain activation, we expected BOLD enhancements for the main effect of gesture in the bilateral posterior temporal regions as well as in prefrontal brain areas [Green et al., 2009; Kircher et al., 2009; Straube, Green, Bromberger, et al., 2011]. For the main effect of actor orientation (ego>allo), we expected parietal, medial and inferior frontal areas to be involved, as found in our previous experiment [Straube et al., 2010]. The inverse contrast (allo>ego) was hypothesized to highlight occipital and anterior/middle cingulate regions. Furthermore, an interaction between gesture and actor orientation was hypothesized to involve brain regions that are sensitive to mentalizing and self‐referential processing, including the anterior cingulate cortex (ACC) and other medial frontal brain regions [Straube, Green, Chatterjee, et al., 2011].

METHODS

Participants

Thirty‐seven healthy right‐handed and native German students were recruited (23 female, mean age = 25.13, SD = 3.90, range = 20–39). None of the participants had either impairments of vision or hearing, or any medical, psychiatric, or neurological illnesses. Participants gave written informed consent and received 30 Euro for the participation in the study. The study was approved by the local Ethics Committee.

Stimuli

The stimuli consisted of 20 German sentences presented to the participants as short video clips with four different visual contexts: (1) Egocentric actor position with a coverbal iconic gesture (IC‐ego), (2) Egocentric actor position without gesture (no gesture; NG‐ego), (3) Allocentric actor position with a coverbal iconic gesture (IC‐allo), and (4) Allocentric actor position without gesture (NG‐allo). The grammatical structure (subject‐predicate‐object) was consistent across stimuli. The sentences and co‐speech gestures were presented in a natural way, for example, “The man caught a big fish” while the actor indicated the size of the fish with his hands; “The grandma has got a crooked back” while the actor indicated the crooked form with his hands; “The coach stretches the elastic band” while the actor indicated the stretching procedure with his hands; “The gym has got an arched roof” while the actor indicated the roundish shape with his hands (please note that the examples are translated from German into English).

The male actor neither spoke nor moved for 0.5 s at the beginning and end of each clip. With respect to the egocentric versus allocentric distinction, two cameras simultaneously filmed the actor while speaking or gesturing, so that only the viewpoint differed between these two conditions.

To counteract sequence effects, four different versions of the stimulus set were created. Each of these four pseudorandomized sets started with the same sentence but in a different condition, and no sentence appeared twice in the same condition within a set. Each stimulus set comprised 80 video clips in total (40 egocentric, 40 allocentric), each lasting exactly 5 s. Half of the egocentric and half of the allocentric videos were presented with speech but no gesture (NG‐ego and NG‐allo); the other half contained speech accompanied by iconic gestures (IC‐ego and IC‐allo).
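
The clip counts given above follow from crossing the 20 sentences with the two gesture levels and the two actor orientations. The following is a minimal sketch of that arithmetic only; the variable names are illustrative and it does not model the pseudorandomized ordering of the four sets, which the text does not specify in detail.

```python
from collections import Counter
from itertools import product

N_SENTENCES = 20                 # number of sentences, from the text
GESTURES = ("IC", "NG")          # iconic gesture / no gesture
ORIENTATIONS = ("ego", "allo")   # egocentric / allocentric camera view

# One clip per sentence x gesture x orientation combination
# (hypothetical data structure, for illustration only).
clips = [
    {"sentence": s, "condition": f"{g}-{o}", "orientation": o}
    for s, g, o in product(range(1, N_SENTENCES + 1), GESTURES, ORIENTATIONS)
]

per_condition = Counter(c["condition"] for c in clips)
per_orientation = Counter(c["orientation"] for c in clips)

print(len(clips))             # total clips in one stimulus set
print(dict(per_condition))    # clips per experimental condition
print(dict(per_orientation))  # clips per actor orientation
```

Under these assumptions each of the four conditions contributes 20 clips, which matches the 40 egocentric and 40 allocentric clips per set.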

Behavioral Pre‐Study/Rating Experiment

In a prior behavioral experiment, a separate sample of 20 healthy native German‐speaking subjects performed a rating to evaluate the study material. First, the addressing, viewer‐related character of the audiovisual material was evaluated on a scale from 1 to 7, where 7 corresponded to a highly addressing degree (“To what degree do you feel addressed by the speaker's message?”). Second, participants were asked to rate the comprehensibility of the message, again on a scale from 1 to 7 (“To what degree is the message comprehensible to you?”). Participants were instructed to evaluate the whole video information.

Comprehension was found to be equally high (>6) in all conditions. Moreover, the results from the behavioral prestudy showed that speech with ICs was significantly more addressing (F=28.12, P<0.001) than NG. Furthermore, egocentrically presented sentences were rated more addressing (F=29.73, P<0.001) than those presented with an allocentric actor position. With respect to the addressment ratings, there was also a significant interaction between gesture and body posture (F=6.40, P<0.05), showing highest addressment scores for egocentrically presented gestures.

Experimental Design and Procedure

Prior to the fMRI scanning procedure, participants underwent four practice‐training trials to make sure that the task was understood properly. Stimuli used for this purpose were not part of the experimental material presented subsequently in the scanner.

For the scanner experiment, MRI‐compatible headphones together with earplugs were used to optimize scanner noise reduction. Stimuli were presented in the middle of the video screen using Presentation software (Neurobehavioral Systems). The 20 videos for each of the four conditions were presented in a pseudorandomized and counterbalanced order across subjects. Subsequent to the presentation of each video, a low‐level baseline with a variable duration of 3,750 to 6,750 ms (M = 5,000 ms) followed. This baseline consisted of a blank grey screen. A similar experimental procedure was used in a previous study of ours [for details see e.g., Straube et al., 2010].

While being scanned, participants were asked to decide for each stimulus whether they felt addressed by it or not. Subjects were instructed to take all presented information in the video into account rather than focusing on the content of the verbal message alone. To evaluate the addressing nature of the videos, participants were asked to press a button for “yes” or “no” on an MR‐compatible response device fixed to their left leg. Feeling addressed was indicated by a button press with the left middle finger, and not feeling addressed by a button press with the left index finger. Participants were further instructed to respond immediately after the video had disappeared from the screen.

Subsequent to the fMRI experiment, subjects filled in a questionnaire with statements concerning the task (e.g., “I understood the addressing task properly”) on a Likert scale from 1 (low agreement) to 7 (high agreement), as well as a questionnaire about gesture‐specific issues (e.g., “I like it when people use gestures to underpin their verbal statement.”) on a Likert scale from 1 to 5.

fMRI Data Acquisition

MRI data were collected on a Siemens 3 Tesla MR Magnetom Trio Trim scanner. To minimize head motion artefacts, subjects' heads were fixated using foam pads, in addition to the MR‐compatible headphones.

Subsequent to the acquisition of functional data, T1‐weighted high‐resolution anatomical images were acquired for each subject. Functional data were acquired using a T2‐weighted echo planar image (EPI) sequence [repetition time (TR) = 2,000 ms; echo time (TE) = 30 ms; flip angle = 90°]. The volume included 33 transversal slices [slice thickness = 3.6 mm; interslice gap = 0.36 mm; field of view (FoV) = 230 mm; voxel resolution = 3.6 mm²]. In total, 411 functional volumes were acquired in each subject.

fMRI Data Analysis

Functional imaging data were analyzed with the statistical parametric mapping software (SPM8, http://www.fil.ion.ucl.ac.uk/spm/software/spm8/). To countervail magnetic field inhomogeneities and saturation effects, the experiment started with the sixth image; the first five images were therefore discarded from the analysis.
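
As a rough plausibility check, the reported acquisition parameters can be compared with the trial timing. The sketch below uses only numbers stated in the text (TR, number of volumes, discarded volumes, 80 clips of 5 s, mean baseline of 5 s); how the remaining seconds were distributed over the run is not reported, so the leftover time is merely computed, not interpreted.

```python
TR_S = 2.0              # repetition time in seconds (from the text)
N_VOLUMES = 411         # functional volumes per subject (from the text)
N_DISCARDED = 5         # initial volumes excluded from the analysis
N_TRIALS = 80           # video clips per stimulus set
VIDEO_S = 5.0           # duration of each clip in seconds
MEAN_BASELINE_S = 5.0   # mean duration of the jittered baseline in seconds

run_s = N_VOLUMES * TR_S                             # total scanning time
analysed_s = (N_VOLUMES - N_DISCARDED) * TR_S        # time covered by analysed volumes
trials_s = N_TRIALS * (VIDEO_S + MEAN_BASELINE_S)    # expected time for all trials
slack_s = analysed_s - trials_s                      # time not accounted for by trials

print(run_s, analysed_s, trials_s, slack_s)
```

With these values the analysed volumes cover 812 s, which is slightly more than the 800 s expected for 80 trials, so the reported volume count is consistent with the described trial structure.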

A slice‐time correction was performed to correct for fluctuations in slice acquisition timing. For this purpose, the middle slice (16th slice) was used as reference slice. Images were then realigned to the sixth image and normalized into standard stereotaxic anatomical space using the transformation matrix (mean image) calculated from the first EPI‐scan for each subject and the EPI‐template created by the Montreal Neurological Institute (MNI). The normalized data (resliced voxel size: 2 mm³) were then smoothed with an 8 mm³ Gaussian kernel to correct for intersubject variance in brain anatomy.

An event‐related design with a duration of 1 s was used to measure differences in BOLD‐responses with respect to the four different experimental conditions (NG‐ego, NG‐allo, IC‐ego, IC‐allo). Individual integration points for each video thus formed one event [for further information see Green et al., 2009]. Movement parameters of each subject were implemented as multiple regressors into the data analyses to correct for head movement during data acquisition.

A full factorial analysis was conducted with the four baseline‐contrast images (one for each condition) from each participant. In this group analysis, specific contrasts of interest were defined (see below).

We chose to use Monte‐Carlo simulation of the brain volume to establish an appropriate voxel contiguity threshold [Slotnick and Schacter, 2004; see also Green et al., 2009; Kircher et al., 2009; Straube, Green, Bromberger, et al., 2011]. This correction has the advantage of higher sensitivity to smaller effect sizes, while still correcting for multiple comparisons across the whole brain volume. Assuming an individual voxel type I error of P < 0.05, a cluster extent of 211 contiguous resampled voxels was indicated as necessary to correct for multiple voxel comparisons at P < 0.05. This cluster threshold (based on the whole brain volume) has been applied to all contrasts. The reported voxel coordinates of activation peaks are located in MNI space.
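
The logic of a Monte Carlo cluster‐extent threshold can be illustrated with a small simulation. The following is a simplified sketch, not the procedure of Slotnick and Schacter [2004] as actually applied here: it uses a hypothetical 12 × 12 × 12 grid, independent (unsmoothed) noise, and 6‐connectivity, all of which are assumptions made for brevity. It therefore does not reproduce the 211‐voxel threshold reported above, which depends on the real volume dimensions and smoothness.

```python
import random

# Face-sharing (6-connectivity) neighbour offsets in a 3D grid.
NEIGHBOURS = ((1, 0, 0), (-1, 0, 0), (0, 1, 0), (0, -1, 0), (0, 0, 1), (0, 0, -1))


def max_cluster_size(active):
    """Return the size of the largest 6-connected cluster in a set of voxels."""
    seen = set()
    largest = 0
    for start in active:
        if start in seen:
            continue
        seen.add(start)
        stack = [start]
        size = 0
        while stack:  # depth-first flood fill over supra-threshold voxels
            x, y, z = stack.pop()
            size += 1
            for dx, dy, dz in NEIGHBOURS:
                nb = (x + dx, y + dy, z + dz)
                if nb in active and nb not in seen:
                    seen.add(nb)
                    stack.append(nb)
        largest = max(largest, size)
    return largest


def cluster_extent_threshold(shape=(12, 12, 12), voxel_p=0.05, alpha=0.05,
                             n_sim=300, seed=0):
    """Smallest extent k such that pure-noise volumes contain a cluster of
    at least k voxels in fewer than `alpha` of the simulations."""
    rng = random.Random(seed)
    nx, ny, nz = shape
    maxima = []
    for _ in range(n_sim):
        # Each voxel exceeds the voxel-wise threshold with probability voxel_p.
        active = {
            (x, y, z)
            for x in range(nx) for y in range(ny) for z in range(nz)
            if rng.random() < voxel_p
        }
        maxima.append(max_cluster_size(active))
    k = 1
    while sum(m >= k for m in maxima) / n_sim >= alpha:
        k += 1
    return k


k_threshold = cluster_extent_threshold()
print(k_threshold)
```

Because the simulated noise is unsmoothed and the grid is small, the resulting extent is far smaller than in real data; spatial smoothing and a whole‐brain volume both increase the size of chance clusters considerably.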

Contrasts of interest

To identify neural networks sensitive to orientation and gesture processing, main effects were calculated for ego>allo as well as for IC>NG. Conjunction analyses were applied to test for brain regions sensitive to both gesture and orientation (ego>allo ∩ IC>NG). Interaction analyses between gesture presence and orientation were used to reveal the specific effects of gesture in egocentric versus allocentric actor orientations.
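
The main effects and interactions of a 2 × 2 design correspond to simple weight vectors over the four condition cells. The sketch below writes these weights out under an assumed condition order (IC‐ego, IC‐allo, NG‐ego, NG‐allo); the order and scaling used in the actual SPM8 design are not stated in the text. Purely for illustration, the weights are applied to the mean addressment counts reported in Table 1 rather than to imaging data. The conjunction (ego>allo ∩ IC>NG) is not a single linear contrast and is therefore not included.

```python
# Assumed order of the four condition cells (illustrative, not from SPM output).
CONDITIONS = ("IC-ego", "IC-allo", "NG-ego", "NG-allo")

CONTRASTS = {
    "gesture: IC > NG": (1, 1, -1, -1),
    "orientation: ego > allo": (1, -1, 1, -1),
    "interaction 1: (IC-ego > IC-allo) > (NG-ego > NG-allo)": (1, -1, -1, 1),
    "interaction 2: (IC-allo > NG-allo) > (IC-ego > NG-ego)": (-1, 1, 1, -1),
}


def apply_contrast(weights, cell_values):
    """Weighted sum of the cell values, i.e. the contrast estimate."""
    return sum(w * v for w, v in zip(weights, cell_values))


# Mean number of clips rated as addressing (out of 20), taken from Table 1,
# in the same order as CONDITIONS.
ADDRESSMENT_MEANS = (18.41, 9.24, 9.24, 0.70)

results = {}
for name, weights in CONTRASTS.items():
    # Each contrast compares conditions, so its weights sum to zero.
    assert sum(weights) == 0
    results[name] = apply_contrast(weights, ADDRESSMENT_MEANS)
    print(f"{name}: {results[name]:+.2f}")
```

Note that the two interaction contrasts are sign‐flipped versions of each other, which is why they identify different sets of regions in a one‐sided test.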

In a further step, we investigated the relation between BOLD signal changes in relevant regions identified through the interaction analysis (gesture × orientation) and the addressment evaluations. For this purpose, differential scores for both BOLD signal changes and addressment ratings were calculated. By subtracting either allocentric from egocentric or NG from IC, possible relations between brain activations and individual addressment evaluations were explored.
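
The differential‐score approach can be sketched as follows. All per‐subject values in this example are hypothetical and chosen only to show the computation for the IC‐ego minus IC‐allo difference; the function names are illustrative, and the use of a Pearson correlation is an assumption, since the text does not name the correlation coefficient.

```python
from math import sqrt


def difference_scores(a, b):
    """Per-subject difference a - b (e.g., egocentric minus allocentric)."""
    return [x - y for x, y in zip(a, b)]


def pearson_r(x, y):
    """Pearson product-moment correlation of two equally long sequences."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    syy = sum((yi - mean_y) ** 2 for yi in y)
    return sxy / sqrt(sxx * syy)


# Hypothetical values for five subjects (NOT data from the study).
bold_ic_ego = [0.42, 0.10, 0.35, 0.51, 0.20]    # BOLD signal change, IC-ego
bold_ic_allo = [0.30, 0.12, 0.15, 0.25, 0.18]   # BOLD signal change, IC-allo
rating_ic_ego = [19, 17, 20, 20, 18]            # clips rated addressing, IC-ego
rating_ic_allo = [10, 15, 6, 4, 12]             # clips rated addressing, IC-allo

bold_diff = difference_scores(bold_ic_ego, bold_ic_allo)
rating_diff = difference_scores(rating_ic_ego, rating_ic_allo)
r = pearson_r(bold_diff, rating_diff)
print(round(r, 3))
```

The same two functions apply unchanged to the gesture‐related differences (IC minus NG) within each orientation.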

RESULTS

Behavioral Results (fMRI‐Study)

The addressment rating results are presented in Table 1. Participants consistently rated allocentrically presented speech without gesture (NG‐allo) as least addressing (4%, 0.70 out of 20) and egocentrically presented gesture (IC‐ego) as most addressing (92%, 18.41 out of 20). The behavioral results of the other two conditions (NG‐ego, IC‐allo), by contrast, were nondistinctive.

Table 1.

Addressment evaluations and reaction times across the four experimental conditions (NG‐ego=No Gesture egocentric; NG‐allo=No Gesture allocentric; IC‐ego=Iconic Gesture egocentric; IC‐allo=Iconic Gesture allocentric): number of stimuli rated as addressing (out of 20)

| Condition | Addressment (Mean) | Addressment (SD) | Reaction Time (Mean) | Reaction Time (SD) |
|---|---|---|---|---|
| NG‐ego | 9.24 | 7.8 | 5.90 | 0.24 |
| NG‐allo | 0.7 | 1.24 | 5.89 | 0.18 |
| IC‐ego | 18.41 | 2.33 | 5.83 | 0.19 |
| IC‐allo | 9.24 | 7.42 | 5.94 | 0.23 |

Reaction times for the evaluation of addressment were measured in seconds, from the beginning of each video (duration 5 s)

The shortest reaction times (RTs) were found for egocentrically presented iconic gestures (IC‐ego), whereas the longest RTs were found for the IC‐allo condition (see Table 1). Significant differences were found between NG‐ego and IC‐ego (repeated‐measures ANOVA, pair‐wise comparisons; P=0.01) as well as between NG‐allo and IC‐ego (P=0.02) and between IC‐ego and IC‐allo (P<0.001). No effect of order was found.

Main BOLD Effects

Main effect of gesture

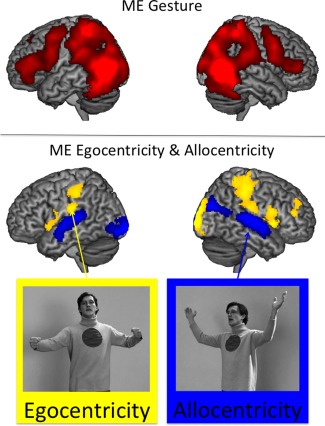

The main effect of gesture (IC>NG) revealed activations in a widespread neural network, including the bilateral motor cortical areas, posterior occipito‐temporal cortices as well as parietal regions (see Table 2, Fig. 1 upper panel). Moreover, bilateral inferior frontal regions were found to be involved in gesture processing. The inverse contrast (NG>IC) revealed no significant results.

Table 2.

Activations for the main effect of Gesture (IC>NG)

| Contrast | Anatomical Region | Hem. | No. Voxels | T value | x | y | z |
|---|---|---|---|---|---|---|---|
| IC>NG | Middle Occipital Gyrus | L | 47,470 | 12.17 | −50 | −72 | 4 |
| | | | | 11.18 | 46 | −66 | 0 |
| | | | | 6.60 | −42 | −62 | −14 |
| | Precentral Gyrus | R | 4,579 | 4.62 | 56 | 10 | 38 |
| | | | | 3.92 | 54 | 10 | 22 |
| | | | | 3.50 | 54 | 42 | 6 |
| | Hippocampus | L | 1,077 | 3.85 | −24 | −28 | −8 |
| | | | | 2.28 | −8 | −22 | −12 |
| | | | | 2.25 | −10 | −20 | 8 |
| | Superior Medial Gyrus | L | 389 | 2.45 | −4 | 36 | 46 |

Figure 1.

Upper panel: Main effect (ME) for Gesture ((IC‐ego + IC‐allo) > (NG‐ego + NG‐allo), red). Lower panel: Main effect (ME) for Egocentricity (f) ((IC‐ego + NG‐ego) > (IC‐allo + NG‐allo), yellow) and Allocentricity (l) ((IC‐allo + NG‐allo) > (IC‐ego + NG‐ego), blue). Note: the actor was simultaneously speaking while producing gestures (IC‐ego, IC‐allo). [Color figure can be viewed in the online issue, which is available at http://wileyonlinelibrary.com.]

Main effects of egocentric and allocentric actor orientation

Egocentric (ego) as opposed to allocentric (allo) orientation elicited activations in a neural network encompassing the right‐hemispheric supramarginal gyrus, the inferior parietal gyrus and the postcentral gyrus (see Table 3, ego>allo; Fig. 1 lower panel). Another cluster of activation was found in the right cuneus extending to the superior occipital gyrus. Furthermore, enhanced neural responses were found in the right inferior frontal gyrus (IFG) (p. Opercularis) and the middle frontal gyrus. A cluster of significant activation was found in the right supplementary motor area (SMA) extending to the left SMA and the bilateral middle cingulate cortex. Enhanced BOLD responses were further found in the left IFG (p. Opercularis), rolandic operculum, and the precentral gyrus. Both the left postcentral gyrus and the left cerebellum were found to be significantly more activated for the egocentric conditions.

Table 3.

Activations for the main effects of egocentric (ego) versus allocentric (allo) actor orientation

| Contrast | Anatomical Region | Hem. | No. Voxels | T value | x | y | z |
|---|---|---|---|---|---|---|---|
| ego > allo | Superior Occipital Gyrus | R | 1,478 | 4.38 | 18 | −98 | 6 |
| | | | | 3.51 | 12 | −96 | 22 |
| | | | | 2.56 | 18 | −92 | 34 |
| | IFG | R | 3,669 | 3.17 | 38 | 0 | 8 |
| | | | | 2.95 | 46 | 10 | 4 |
| | | | | 2.65 | 58 | 8 | 20 |
| | SMA | R | 2,149 | 2.98 | 8 | 6 | 50 |
| | | | | 2.90 | 0 | 4 | 50 |
| | | | | 2.75 | 14 | 14 | 42 |
| | Supramarginal Gyrus | R | 2,850 | 2.84 | 50 | −32 | 46 |
| | | | | 2.79 | 42 | −24 | 52 |
| | | | | 2.78 | 54 | −30 | 54 |
| | Supramarginal Gyrus | L | 298 | 2.80 | −52 | −22 | 24 |
| | Postcentral Gyrus | L | 619 | 2.65 | −42 | −32 | 44 |
| | Lingual Gyrus | L | 595 | 2.60 | −8 | −82 | −4 |
| | | | | 2.36 | −22 | −74 | 0 |
| | | | | 2.18 | −32 | −60 | −2 |
| | Middle Frontal Gyrus | R | 254 | 2.52 | 38 | 42 | 16 |
| | | | | 2.08 | 30 | 46 | 32 |
| | Cerebellum | L | 283 | 2.30 | −14 | −46 | −26 |
| | | | | 2.00 | −22 | −60 | −34 |
| | | | | 1.97 | −22 | −48 | −30 |
| allo > ego | Inferior Occipital Gyrus | L | 1,220 | 3.91 | −16 | −94 | −8 |
| | | | | 2.35 | −38 | −84 | −6 |
| | | | | 1.75 | −32 | −88 | 8 |
| | Middle Occipital Gyrus | R | 664 | 3.35 | 44 | −76 | 26 |
| | | | | 2.03 | 50 | −54 | 16 |
| | | | | 1.87 | 38 | −80 | 44 |
| | Superior Temporal Gyrus | L | 2,658 | 3.26 | −60 | −28 | 10 |
| | | | | 2.97 | −50 | −10 | −2 |
| | | | | 2.84 | −48 | −20 | 2 |
| | Superior Temporal Gyrus | R | 2,328 | 3.16 | 60 | −24 | 10 |
| | | | | 2.98 | 52 | −4 | −4 |
| | Middle Cingulate Cortex | L | 225 | 2.34 | −16 | −10 | 34 |
| | Middle Cingulate Cortex | L/R | 388 | 2.26 | −2 | −46 | 48 |
| | | | | 2.12 | 0 | −62 | 58 |
| | | | | 1.87 | 14 | −50 | 44 |

Note: anatomical regions refer to peak voxel activations.

The opposite contrast (allo>ego) revealed activations in the bilateral superior temporal gyri. Allocentric as opposed to egocentric actor orientation further elicited activations in the left middle and inferior occipital gyri (see Table 3, allo>ego; Fig. 1 lower panel). Clusters of activation were also found in bilateral middle and superior occipital gyri and in a further cluster of activation extending from the middle cingulate cortex to the bilateral precuneus.

Conjunction Analysis

Commonalities of main effects: gesture ∩ egocentricity

The conjunction analysis between the two main effects for Gesture and Egocentricity (IC>NG ∩ ego>allo) revealed activations in the right inferior parietal lobe extending to the postcentral and supramarginal gyrus (MNI: x = 54, y = −30, z = 54; t = 2.78; cluster extension (k E) = 2,143 voxels). Moreover, enhanced activations were found in the right cuneus and superior occipital gyrus (MNI: x = 18, y = −100, z = 12; t = 3.58; k E = 854 voxels). The left precentral gyrus (MNI: x = −42, y = −32, z = 44; t = 2.65; k E = 609 voxels) as well as the right precentral gyrus (MNI: x = 58, y = 8, z = 20; t = 2.65; k E = 226 voxels) were also found to be jointly involved for both main effects. Right precentral activation included the inferior frontal as well as the rolandic opercular region.

Interaction Analyses

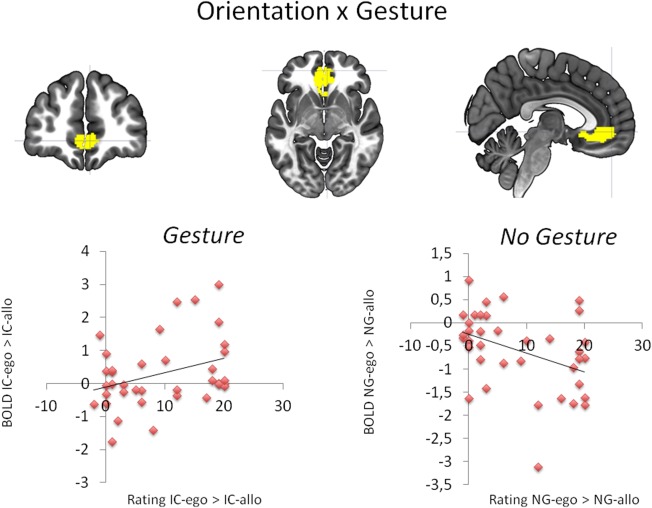

Interaction 1: the effect of egocentricity in a gesture versus no‐gesture context (IC‐ego>IC‐allo)>(NG‐ego>NG‐allo)

Two clusters of enhanced neural responses were found for the effect of egocentricity in a gesture versus no‐gesture context (see Fig. 2, Table 4). The first cluster encompassed the bilateral ACC as well as the left mid orbital gyrus. The second cluster encompassed the left cerebellum, extending to the left fusiform gyrus. All areas were most strongly activated in the IC‐ego condition.

Figure 2.

Effect of egocentricity in a gesture versus no‐gesture context (IC‐ego>IC‐allo)>(NG‐ego>NG‐allo): Upper panel: BOLD signal changes in the ACC. Lower panel: Association between BOLD signal changes in the ACC specific for egocentric actor orientation (ego>allo) either with gesture (IC; left) or without gesture (NG; right) and the corresponding rating results. [Color figure can be viewed in the online issue, which is available at http://wileyonlinelibrary.com.]

Table 4.

Activations for interaction 1: The effect of egocentricity in a gesture versus no‐gesture context (IC‐ego>IC‐allo)>(NG‐ego>NG‐allo)

| Contrast | Anatomical Region | Hem. | No. Voxels | T value | x | y | z |
|---|---|---|---|---|---|---|---|
| (IC‐ego>IC‐allo)>(NG‐ego>NG‐allo) | Anterior Cingulate | L/R | 903 | 2.69 | 2 | 20 | −10 |
| | | | | 2.34 | −6 | 38 | −6 |
| | | | | 2.15 | 4 | 36 | −6 |
| | Cerebellum | L | 294 | 2.57 | −24 | −34 | −28 |
| | | | | 2.57 | −32 | −32 | −18 |

Individual differences in addressment ratings correlated with activation in the ACC. Only in the gesture conditions, subjects sensitive to body orientation in their ratings (IC‐ego>IC‐allo) showed BOLD enhancements in the ACC region (positive correlation between BOLD signal changes for IC‐ego>IC‐allo and addressment evaluations for IC‐ego>IC‐allo; r=0.340; P=0.039; see Fig. 2). A negative association was found for the absence of gesture presented from the egocentric position (negative correlation between BOLD signal changes for NG‐ego>NG‐allo and addressment evaluations for NG‐ego>NG‐allo; r = −0.397; P = 0.015; see Fig. 2). These data indicate that the ACC is relevant for the feeling of addressment induced by body‐orientation. However, the ACC involvement is crucially dependent on the presence of gesture, leading to correlations in opposite directions.

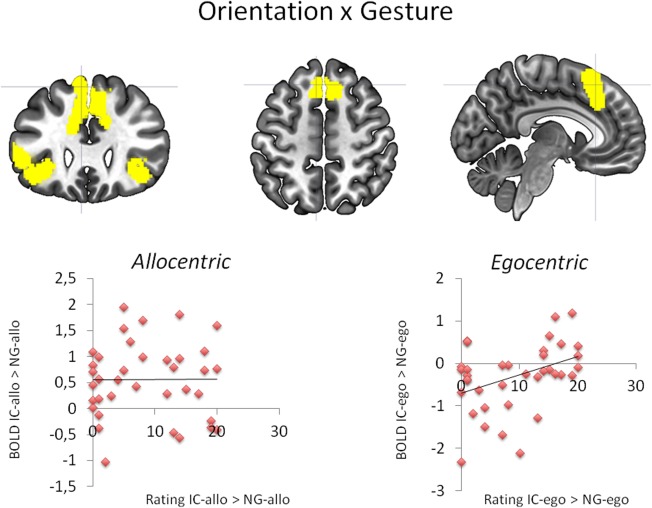

Interaction 2: the effect of gesture in an egocentric versus allocentric context (IC‐allo>NG‐allo)>(IC‐ego>NG‐ego)

The interaction analysis testing for the effect of gesture in an egocentric versus allocentric context revealed enhanced neural responses in the bilateral SMA, extending to the left superior medial gyrus and the right middle cingulate cortex (see Fig. 3, Table 5). A second cluster of activation was found in the left IFG, encompassing the p. Triangularis as well as the p. Orbitalis. This cluster extended dorsally to the left insula. The third cluster of enhanced BOLD responses was found in the right insula lobe, encompassing the right IFG (p. Triangularis and p. Orbitalis). BOLD signal changes showed the strongest activation increase in the NG‐ego condition relative to all other conditions.
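To make the relation between the two interaction analyses explicit, the contrasts can be written as weight vectors over the four conditions. This is a minimal sketch: the condition order and the helper functions are assumptions for illustration, since the design matrix itself is not given in this section.

```python
# Hypothetical regressor order; the original design matrix is not shown in the text.
CONDITIONS = ["IC-ego", "IC-allo", "NG-ego", "NG-allo"]

def difference(a, b):
    """Weights for the simple contrast a > b (+1 on a, -1 on b, 0 elsewhere)."""
    return [(c == a) - (c == b) for c in CONDITIONS]

def subtract(u, v):
    """Element-wise difference of two weight vectors."""
    return [x - y for x, y in zip(u, v)]

# Interaction 1: (IC-ego > IC-allo) > (NG-ego > NG-allo)
interaction_1 = subtract(difference("IC-ego", "IC-allo"),
                         difference("NG-ego", "NG-allo"))

# Interaction 2: (IC-allo > NG-allo) > (IC-ego > NG-ego)
interaction_2 = subtract(difference("IC-allo", "NG-allo"),
                         difference("IC-ego", "NG-ego"))

print(interaction_1)  # [1, -1, -1, 1]
print(interaction_2)  # [-1, 1, 1, -1]
```

The second vector is the sign‐flipped first vector, so under this coding the two analyses test opposite directions of the same 2 × 2 interaction term. This fits the observation that the regions of interaction 1 responded most strongly in the IC‐ego condition, whereas those of interaction 2 showed the strongest increase in the NG‐ego condition.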

Figure 3.

The effect of gesture in an egocentric versus allocentric context (IC‐allo>NG‐allo)>(IC‐ego>NG‐ego): Upper panel: BOLD signal changes in the SMA, left IFG and right insula lobe. Lower panel: Association between BOLD signal changes in the SMA specific for gesture presence (IC>NG) either in allocentric (allo; left) or egocentric actor position (ego; right) and the corresponding rating results. [Color figure can be viewed in the online issue, which is available at http://wileyonlinelibrary.com.]

Table 5.

Activations for interaction 2: the effect of gesture in an egocentric versus allocentric context (IC‐allo>NG‐allo)>(IC‐ego>NG‐ego)

| Contrast | Anatomical Region | Hem. | No. Voxels | T value | x | y | z |
|---|---|---|---|---|---|---|---|
| (IC‐allo>NG‐allo)>(IC‐ego>NG‐ego) | SMA | L/R | 1,781 | 3.04 | −8 | 22 | 60 |
| | | | | 2.94 | 8 | 22 | 48 |
| | | | | 2.77 | −6 | 28 | 42 |
| | IFG | L | 1,333 | 2.96 | −54 | 22 | 6 |
| | | | | 2.95 | −32 | 24 | −8 |
| | | | | 2.60 | −44 | 40 | −8 |
| | Insula Lobe | R | 465 | 2.27 | 32 | 26 | −4 |
| | | | | 2.10 | 48 | 42 | −10 |
| | | | | 2.07 | 40 | 28 | −12 |

Individual differences in addressment ratings correlated with activation in the SMA, left IFG and the insula. Only in the egocentric context subjects sensitive to gestures in their ratings (IC>NG) showed BOLD enhancements in these regions (positive correlations between BOLD signal changes for IC‐ego>NG‐ego and addressment evaluations for IC‐ego>NG‐ego; SMA r=0.395; P=0.015; IFG r=0.375; P=0.022; Insula r=0.510; P=0.001; see Fig. 3). No significant association was found in the allocentric context (correlation BOLD signal changes for IC‐allo>NG‐allo and addressment evaluations for IC‐allo>NG‐allo; all P>0.25, see Fig. 3). These data indicate that the SMA, left IFG and the insula are relevant for the feeling of addressment in an egocentric communication context only.

DISCUSSION

Natural, personal communication goes far beyond mere verbal information exchange and involves nonverbal cues such as gestures and body orientation. For example, communication within a larger group of people, as in multiparty conversations, goes along with varying body postures and speaker positions. This study investigated the neural networks involved in the processing of co‐speech gestures presented from an egocentric versus an allocentric position, using naturalistic video clips. To isolate gesture (IC) “addressment effects” in either egocentric (ego) or allocentric (allo) actor orientation, additional control conditions containing no gestures (NG) were used. Identical speech material was presented across all conditions, allowing for direct comparisons and interaction analyses and thereby avoiding potentially confounding factors such as differences in semantic content. In addition, no independent control conditions were necessary, as the participants were asked to evaluate their addressment after each video clip. Since the actor was filmed from two positions simultaneously (egocentric and allocentric), differences in arm and hand movements between the orientation conditions cannot have influenced the imaging data.

Extending previous research indicating that body or eye gaze orientation as well as the presence of gesture influence the processing of socially relevant utterances [Holler et al., in press; Saggar et al., 2014; Straube et al., 2010], we here demonstrated that both gestures and body orientation contribute to feeling addressed by communication partners and together influence neural processing in brain regions previously found to be involved in motor simulation, empathy and mentalizing processes. Together, these findings highlight the human ability to use and integrate multiple cues to evaluate the social relevance of a communicative process.

Behavioral Results

As expected, participants evaluated the egocentric iconic gesture (IC‐ego) stimuli as most addressing, whereas the no gesture‐allocentric (NG‐allo) condition was found to be least addressing. No difference became evident between the no gesture‐egocentric (NG‐ego) and the IC allocentric (IC‐allo) condition. These results are in line with our behavioral pre‐study rating and are also supported by a recent investigation of the neural correlates of gesture and actor orientation [Saggar et al., 2014]. In that study, an interaction between social gestures and body orientation was reported in the behavioral measures. However, the dependent variable was the reaction time in an attentional cover task (red dot detection) that was not directly related to the rationale of the experiment. This subtle evidence (the dot being detected faster in a social, egocentric context) limits the conclusion that the reported effect resulted from the social relevance of the information. In contrast, we directly measured addressment evaluations and related them directly to the imaging results (see below).

Main Effect of Gesture

On the neural level, the main effect of gesture (IC>NG) resulted in activations encompassing bilateral motor cortical areas, posterior occipito‐temporal cortices as well as parietal regions. In addition, bilateral inferior frontal regions were activated during gesture processing. These regions are part of the well‐known network associated with movement observation as well as with the integration and interpretation of bimodal gesture and speech information [Kircher et al., 2009] and are thus in line with our hypotheses. These findings further correspond to our previous comparisons of iconic coverbal gestures and corresponding speech [Green et al., 2009; Straube, Green, Bromberger, et al., 2011]. The opposite contrast (NG>IC) revealed no effects, indicating that comparatively fewer neural resources were recruited when only speech was presented.

Egocentric Versus Allocentric Actor Orientation

The egocentric orientation, irrespective of gesture presence, is a marker of social communicative intent and evokes self‐involvement of the addressee. For egocentric (ego) as opposed to allocentric (allo) orientation, activations were found in a neural network encompassing bilateral frontoparietal brain regions as well as medial structures such as the cingulate gyrus and the SMA. In line with previous results [Straube et al., 2010], it can be assumed that “mentalizing” and ToM, that is, the process of inferring the mental state or social communicative intention of a speaker, resulted in the recruitment of these frontoparietal neural pathways. In particular, the involvement of the frontoparietal network was highlighted in previous imaging studies directly investigating perspective‐taking tasks in a social environment [Ramsey et al., 2013]. Ramsey et al. found that another person's perspective is computed automatically. The authors moreover claim that we not only compute what other people see, but do so before we are explicitly aware of our own perspective [Ramsey et al., 2013]. With respect to the interpretation of our main effects, however, the mechanisms underlying IC gesture processing, which might have driven BOLD signal changes in regions associated with “social communicative intentions,” should be taken into account. We return to this issue when discussing the results of the interaction analyses.

Allocentric Versus Egocentric Actor Orientation

The inverse contrast (allo>ego) resulted in enhanced neural responses in occipital as well as middle cingulate regions. Moreover, allo>ego elicited activations in the bilateral superior temporal areas. These results suggest that enhanced effort in understanding and interpreting the verbal information in particular led to the recruitment of bilateral temporal regions. As participants evaluated the allocentric positions as least addressing, social communicative intentions are assumed to play a minor role in the neural processing of these videos.

The Processing of Gesture and Egocentricity

To identify common neural networks for gesture processing (main effect) and egocentric actor orientation (main effect) in general, a conjunction analysis was performed.

Patterns of enhanced neural responses were found in the right inferior parietal lobe including the postcentral and supramarginal gyrus, the right cuneus and the superior occipital gyrus. Moreover, the left and the right precentral gyrus were jointly recruited for both main effects, and an additional activation was found in the right inferior frontal region, extending to the rolandic operculum. As both factors had a significant effect on these regions, they are likely candidates for providing an integrative function and might contribute to the fact that both gestures and body orientation were used for addressment evaluation. Thus, these mainly right‐hemispheric neural networks might be recruited in connection with the contextual factors gesture and orientation, resulting in the observed interaction effects.
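The logic of such a conjunction can be illustrated with a minimum‐statistic rule, under which a voxel counts as jointly activated only if it exceeds the threshold in both main‐effect maps. This is a sketch of one common approach and not necessarily the procedure used in this study; the t values, the threshold and the function name are hypothetical.

```python
def conjunction_mask(t_map_a, t_map_b, threshold):
    """Mark voxels whose smaller t value still exceeds the threshold."""
    return [min(a, b) > threshold for a, b in zip(t_map_a, t_map_b)]

# Hypothetical t values for five voxels; not taken from the study.
gesture_t = [3.1, 0.4, 2.8, 1.9, 4.0]      # main effect of gesture (IC > NG)
orientation_t = [2.9, 3.5, 0.2, 2.1, 3.3]  # main effect of orientation (ego > allo)
THRESHOLD = 2.0  # illustrative cutoff only

mask = conjunction_mask(gesture_t, orientation_t, THRESHOLD)
print(mask)  # [True, False, False, False, True]
```

Only the first and last voxel survive, because every other voxel falls below the cutoff in at least one of the two maps.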

Interaction Analyses

Effect of egocentricity in a gesture versus no‐gesture context (IC‐ego>IC‐allo)>(NG‐ego>NG‐allo)

First, we analyzed the effect of the actor's body orientation in a gesture versus NG context (IC‐ego>IC‐allo)>(NG‐ego>NG‐allo). This interaction analysis revealed two clusters of activation, one in the ACC extending to the mid orbital frontal regions and another in the left cerebellum encompassing the fusiform region. Correlation analyses indicated a connection between egocentricity‐related increases in addressment ratings (IC‐ego>IC‐allo) and enhanced neural responses for egocentric versus allocentric IC gesture conditions (IC‐ego>IC‐allo) in the aforementioned regions. Conversely, a decrease of BOLD signal changes was found during the NG‐ego condition (NG‐ego>NG‐allo) being related to addressment evaluations (NG‐ego>NG‐allo). The ACC, as “an integrative hub for human socially‐driven interactions” [Lavin et al., 2013], is involved in socially relevant information processing as well as in decision making. Thus, in our experiment it might be assumed that the activation of the ACC for IC‐ego versus IC‐allo conditions was mainly driven by social cognitive processes. Moreover, the ACC was found to be generally recruited during decision making. Here, the task‐dependent (potentially) facilitated demands on decision making, reflective reasoning and attention might have resulted in the reduced inhibition [Regenbogen et al., 2013]. This hypothesis is supported by the reduced RTs for the IC‐ego condition, giving rise to the assumption that ACC activations are related to quick decision making processes.

Moreover, mentalizing processes, which require the interlocutor to “read the mind” of their counterpart when being directly addressed [Straube et al., 2010], might explain the modulatory effects in the ACC. Here, both socially relevant factors together, the egocentric body orientation and gesture use, indicate the speaker's wish to communicate, which evoked increased feelings of addressment and mentalizing processes in the listener. Further support for this claim comes from our previous research on recognizing the intention of another person's communicative gestures [Mainieri et al., 2013]. That study investigated the neural substrates of observation and execution of gestures, focusing on the role of the mentalizing and the mirror neuron system in gesture perception and production. An interaction between the observation versus execution task (imitation vs. motor control condition) and stimulus condition (social vs. nonsocial) was found in the right medial orbitofrontal cortex. Activations in this region were interpreted in terms of enhanced self‐referential processes in the context of socially relevant gestures, which is in line with the current results on social addressment evaluations and enhanced neural responses in prefrontal areas.

Effect of gesture in an ego‐ versus allocentric context (IC‐allo>NG‐allo)>(IC‐ego>NG‐ego)

Secondly, we investigated the specific effect of gesture use in an allocentric versus egocentric context (IC‐allo>NG‐allo)>(IC‐ego>NG‐ego). On the neural level, we found an involvement of the bilateral (pre‐) SMA, the right middle cingulate cortex, and the left IFG including the left insula. Moreover, enhanced BOLD responses were found in the right insula lobe encompassing the right IFG. With respect to ratings, we found a positive correlation between gesture‐related addressment evaluations (IC>NG) and enhanced BOLD responses in the egocentric (ego) context (IC‐ego>NG‐ego) only. By contrast, no such association was found in the allocentric (allo) context, pointing to a specific relevance of gestures as social cues only in an addressing egocentric communicative context. Thus, the presence of gesture in the egocentric actor position may have increased the neural processing of socially relevant cues. Furthermore, a previous fMRI study found enhanced activations in the right IFG during a visual perspective taking task [Mazzarella et al., 2013]. Similar to our experimental approach, the authors investigated differences in the neural processing of either allocentric (actor's perspective) or egocentric (own perspective) body positions, examining another person's viewpoint and actions in visual perspective judgments [Mazzarella et al., 2013]. Interestingly, Mazzarella et al. also report enhanced BOLD responses in the dorsomedial prefrontal cortex (dmPFC) for the allocentric condition, a region that was also highlighted in our experiment, albeit during the NG‐ego condition. These results generally suggest that both dmPFC and IFG recruitment were based on differential demands on visual judgment processes.
The potential conflict between gesture presence or absence and socially relevant egocentric body orientation for the judgment might have highlighted brain regions primarily involved in both perspective taking and “conflict resolution.” It can be further hypothesized that IC‐ego > NG‐ego (interaction contrast: orientation x gesture) particularly involves enhanced decision making processes as compared to the allocentric conditions. In the latter manipulation, the allocentric orientation of the actor per se may have facilitated decision making and voluntary action control with respect to addressment ratings. As Nachev et al. [2005] stated, a conflict between different action plans may be greatest when no single action is preferable to another (free‐choice paradigms), which may have resulted in the pre‐SMA activations. In other words, this conflict may be driven by the egocentric context: people who feel addressed by gesture and actor orientation to a similar degree may require more effort in decision making, response competition (selection between different responses) and conflict processing. The latter was found to be particularly associated with the rostral part of the pre‐SMA, which fits well with the results for the orientation x gesture contrast [Nachev, 2006; Nachev et al., 2005; Picard and Strick, 1996].

Whereas our results are in line with Holler et al. [2014] regarding the main effect of gesture (Speech+Gesture>Speech), we found no interaction effect in the right middle temporal gyrus (MTG). However, several differences between the two imaging investigations could account for this. First, we used an explicit social judgment task, whereas Holler et al. used a passive viewing task. Second, we manipulated the whole body perspective, whereas Holler et al. varied the eye gaze. Finally, differences in syntactic structure and semantic content could account for the absent interaction in the MTG. These factors might also have contributed to the observed effect in the network including ACC, SMA and insula, which was directly associated with social addressment judgments in our study. Thus, our active task might have more strongly induced neural processing in brain regions involved in motor simulation, empathy and mentalizing.

Limitations

Despite the obvious advantages of the current approach, it is important to mention its disadvantages, specifically the relatively restricted stimulus material and the repetition of contents across conditions. However, despite potential habituation or carryover effects, we found significant and plausible differences between conditions, supporting the assumption that the advantages of the applied design outweigh the potential disadvantages. Furthermore, the reported findings are based solely on ICs and might be specific to those. Future studies should examine the interaction of body orientation and other types of gestures such as deictic or beat gestures. Moreover, the inclusion of a gesture‐only condition could clarify the interaction of body orientation and gesture independent of spoken language.

CONCLUSIONS

Here, we demonstrated for the first time that both gesture and body orientation contribute to feeling addressed by communication partners and together influence neural processing in brain regions previously found to be involved in motor simulation, empathy and mentalizing processes. Neural as well as behavioral results highlight the human ability to use and integrate multiple social cues to evaluate the communicative act. These results corroborate the importance of “second‐person neuroscience” [Schilbach et al., 2013], which emphasizes the relevance of self‐involvement in social interactions, and may further provide a novel basis for investigating the dysfunctional use and neural processing of socially relevant cues during natural communication in patients with psychiatric disorders such as schizophrenia or autism spectrum disorders.

Conflict of interest: The authors declare that they have no conflict of interest.

This research project is supported by a grant from the “Deutsche Forschungsgemeinschaft” (project no. DFG: Ki 588/6‐1) and the “Von Behring‐Röntgen‐Stiftung” (project no. 59‐0002).

REFERENCES

- Andric M, Small SL (2012): Gesture's neural language. Front Psychol 3:1–12.

- Ciaramidaro A, Becchio C, Colle L, Bara BG, Walter H (2014): Do you mean me? Communicative intentions recruit the mirror and the mentalizing system. Soc Cogn Affect Neurosci 9:909–916.

- Dick A, Mok E, Beharelle A, Goldin‐Meadow S, Small SL (2014): Frontal and temporal contributions to understanding the iconic co‐speech gestures that accompany speech. Hum Brain Mapp 35:900–917.

- Goldin‐Meadow S, Alibali MW (2013): Gesture's role in speaking, learning, and creating language. Annu Rev Psychol 64:257–283.

- Green A, Straube B, Weis S, Jansen A, Willmes K, Konrad K, Kircher T (2009): Neural integration of iconic and unrelated coverbal gestures: A functional MRI study. Hum Brain Mapp 30:3309–3324.

- Holler J, Kelly S, Hagoort P, Özyürek A (2012): When gestures catch the eye: The influence of gaze direction on co‐speech gesture comprehension in triadic communication. Proceedings of the 34th Annual Meeting of the Cognitive Science Society, 467–472.

- Holler J, Kokal I, Toni I, Hagoort P, Kelly SD, Özyürek A (2014): Eye'm talking to you: Speakers' gaze direction modulates co‐speech gesture processing in the right MTG. Soc Cogn Affect Neurosci. doi: 10.1093/scan/nsu047. [Epub ahead of print]

- Holler J, Schubotz L, Kelly S, Hagoort P, Schuetze M, Özyürek A (2014): Social eye gaze modulates processing of speech and co‐speech gesture. Cognition 133:692–697.

- Holler J, Turner K, Varcianna T (2013): It's on the tip of my fingers: Co‐speech gestures during lexical retrieval in different social contexts. Lang Cogn Process 28:1509–1518.

- Kircher T, Straube B, Leube D, Weis S, Sachs O, Willmes K, Konrad K, Green A (2009): Neural interaction of speech and gesture: Differential activations of metaphoric co‐verbal gestures. Neuropsychologia 47:169–179.

- Lavin C, Melis C, Mikulan E, Gelormini C, Huepe D, Ibañez A (2013): The anterior cingulate cortex: An integrative hub for human socially‐driven interactions. Front Neurosci 7:64.

- Mainieri AG, Heim S, Straube B, Binkofski F, Kircher T (2013): Differential role of mentalizing and the mirror neuron system in the imitation of communicative gestures. NeuroImage 81:294–305.

- Mazzarella E, Ramsey R, Conson M, Hamilton A (2013): Brain systems for visual perspective taking and action perception. Soc Neurosci 8:248–267.

- Nachev P (2006): Cognition and medial frontal cortex in health and disease. Curr Opin Neurol 19:586–592.

- Nachev P, Rees G, Parton A, Kennard C, Husain M (2005): Volition and conflict in human medial frontal cortex. Curr Biol 15:122–128.

- Picard N, Strick PL (1996): Motor areas of the medial wall: A review of their location and functional activation. Cereb Cortex 6:342–353.

- Ramsey R, Hansen P, Apperly I, Samson D (2013): Seeing it my way or your way: Frontoparietal brain areas sustain viewpoint‐independent perspective selection processes. J Cogn Neurosci 25:670–684.

- Regenbogen C, Habel U, Kellermann T (2013): Connecting multimodality in human communication. Front Hum Neurosci 7:1–10.

- Saggar M, Shelly EW, Lepage J‐F, Hoeft F, Reiss AL (2014): Revealing the neural networks associated with processing of natural social interaction and the related effects of actor‐orientation and face‐visibility. NeuroImage 84:648–656.

- Schilbach L, Wohlschlaeger AM, Kraemer NC, Newen A, Shah NJ, Fink GR, Vogeley K (2006): Being with virtual others: Neural correlates of social interaction. Neuropsychologia 44:718–730.

- Schilbach L, Timmermans B, Reddy V, Costall A, Bente G, Schlicht T, Vogeley K (2013): Toward a second‐person neuroscience. Behav Brain Sci 36:393–414.

- Slotnick SD, Schacter DL (2004): A sensory signature that distinguishes true from false memories. Nat Neurosci 7:664–672.

- Spunt R, Satpute A, Lieberman M (2011): Identifying the what, why, and how of an observed action: An fMRI study of mentalizing and mechanizing during action observation. J Cogn Neurosci 23:63–74.

- Straube B, Green A, Jansen A, Chatterjee A, Kircher T (2010): Social cues, mentalizing and the neural processing of speech accompanied by gestures. Neuropsychologia 48:382–393.

- Straube B, Green A, Bromberger B, Kircher T (2011): The differentiation of iconic and metaphoric gestures: Common and unique integration processes. Hum Brain Mapp 32:520–533.

- Straube B, Green A, Chatterjee A, Kircher T (2011): Encoding social interactions: The neural correlates of true and false memories. J Cogn Neurosci 23:306–324.

- Straube B, Green A, Weis S, Kircher T (2012): A supramodal neural network for speech and gesture semantics: An fMRI study. PLoS One 7:e51207.

- Wu YC, Coulson S (2007): How iconic gestures enhance communication: An ERP study. Brain Lang 101:234–245.

- Xu J, Gannon PJ, Emmorey K, Smith JF, Braun AR (2009): Symbolic gestures and spoken language are processed by a common neural system. Proc Natl Acad Sci USA 106:20664–20669.