Abstract

In the predictive coding framework, mismatch negativity (MMN) is regarded as a correlate of the prediction error that occurs when top–down predictions conflict with bottom–up sensory inputs. Expression‐related MMN is a relatively novel construct thought to reflect a prediction error specific to emotional processing, which, however, has not yet been tested directly. Our paradigm includes both neutral and emotional deviants, thereby allowing us to investigate whether expression‐related MMN is emotion‐specific or arises unspecifically from violations of a given sequence. Twenty healthy participants completed a visual sequence oddball task in which they were presented with (1) sequence deviants, (2) emotional sequence deviants, and (3) emotional deviants. Mismatch components were assessed at ventral occipitotemporal scalp sites and analyzed regarding their amplitudes, spatiotemporal profiles, and neuronal sources. Expression‐related MMN could be clearly separated from its neutral counterpart in all investigated aspects. Specifically, expression‐related MMN showed enhanced amplitude, shorter latency, and different neuronal sources. Our results, therefore, provide converging evidence for a quantitative specificity of expression‐related MMN and seem to provide an opportunity to study prediction error during preattentive emotional processing. Our neurophysiological evidence ultimately suggests that a basic cognitive operator, the prediction error, is enhanced at the cortical level by processing of emotionally salient stimuli. Hum Brain Mapp 36:3641–3652, 2015. © 2015 Wiley Periodicals, Inc.

Keywords: predictive coding, emotion, mismatch negativity, expression, face

INTRODUCTION

Predictive coding describes the assumption that the brain does not receive inputs passively but actively predicts what is going to happen next on the basis of patterns and regularities of previous inputs [Friston, 2005]. The predictive coding framework assumes that the brain constantly compares top–down expectations to bottom–up sensory inputs. Prediction errors can be seen as the discrepancy between predictions made by higher cortical areas and the information that the brain receives from its sensory inputs. From the computational perspective, the brain then aims at creating a model that minimizes prediction error, thereby creating an accurate representation of the environment [Friston, 2005].

An elegant route to assessing predictive coding is to study the phenomenon of mismatch negativity (MMN). MMN is an event‐related potential (ERP) component that—in the well‐studied auditory modality—occurs approximately 150 to 200 ms after stimulus presentation but only if the stimulus qualitatively deviates from former stimuli, for example, in sound intensity, duration, or pitch [Näätänen et al., 2007]. The MMN response is computed by subtracting the response to standard stimuli from the response to deviant stimuli and is thus thought to directly represent the residual variance between higher cortical predictions and sensory information, that is, the prediction error [Friston, 2005]. This thought has recently been experimentally confirmed by Wacongne et al. [2012] who showed that MMN complies with assumptions derived from the predictive coding model rather than the habituation model, at least in the auditory modality. Another paper by Rentzsch et al. [2015] further corroborates the generative model by demonstrating a strong correlation of auditory MMN with a repetition suppression measure, which is thought to rely on minimization of prediction error [Friston, 2005].

Most studies on MMN have focused on the auditory modality exclusively [Näätänen et al., 2007]. While MMN in the visual modality has received considerably less attention, researchers have found similar responses, albeit with a different spatiotemporal profile, that is, at around 300 ms over posterior cortex areas [Kimura et al., 2012; Neuhaus et al., 2013]. The visual mismatch negativity (vMMN) is evoked when a deviant visual stimulus violates the inherent rule of a series of preceding visual standard stimuli, thus complying with the predictive coding hypothesis [Kimura, 2012; Stefanics et al., 2014, 2015].

A few studies have focused on the vMMN in response to faces with different emotional expressions. Zhao and Li [2006] found that an MMN is evoked when deviant happy and sad faces followed a neutral face expression in a classic oddball paradigm. The phenomenon was consequently dubbed expression‐related MMN and was the first evidence to suggest that MMN relates to facial information. Since expression‐related MMN was first discovered, several other researchers have reproduced these findings using more sophisticated techniques. One goal of this line of research is to establish that expression‐related MMN occurs specifically in response to emotional content and not merely in response to the violation of a learned sequence. In the literature thus far, all studies that have focused on expression‐related MMN compared a neutral standard to one or two emotional deviants or two emotionally salient faces (i.e., fearful and happy) with each other [Astikainen et al., 2013; Astikainen and Hietanen, 2009; Kimura et al., 2012; Stefanics et al., 2012; Zhao and Li, 2006]. None of the studies, however, have presented both neutral and emotional deviants in the same study design. Doing so would have provided evidence that expression‐related MMN is emotion‐specific and does not occur due to deviations from a previously established sequence, which constitutes a regular MMN. A study by Gayle et al. [2012] did apply a classical MMN paradigm with both neutral and emotional deviants; however, they neither quantitatively differentiated neutral from emotional MMN components nor analyzed their respective neuronal sources.

The present study thus compares MMN waveforms evoked by neutral and emotional, that is, fearful, deviants and investigates whether the MMN component evoked by emotionally salient deviants is different from its neutral counterpart in terms of amplitude, spatiotemporal profile, and neuronal sources. We apply a visual sequence oddball paradigm, where a sequence of two faces with neutral expression is established as the standard (70% of trials) that is intermittently interrupted by insertion of a second face that deviates in identity (sequence deviant; 10%), in emotion (emotional deviant; 10%), or both (emotional sequence deviant; 10%). In the predictive coding context, this study design may help answer the questions of whether and how basic cortical computations are modified by processing of emotional stimuli. Interactions with emotion are well characterized for memory and learning, for example, where emotionally salient stimuli and hence emotionally tagged memory contents are processed more in‐depth and are retrieved more accurately than emotionally neutral contents [see LaBar and Cabeza, 2006, for a review]. Identifying emotion–cognition interactions is thus at the foundation of cognitive neuroscience. Advancing our understanding of the role of emotional processing may thus open new vistas on predictive coding mechanisms relevant to cognitive neuroscience and neighboring disciplines.

MATERIALS AND METHODS

Participants

Twenty‐two healthy participants (16 males, 6 females) were recruited for this study using Internet advertisements. Two participants had to be excluded from the study because of technical artifacts and poor behavioral performance. The remaining 20 participants (15 males, 5 females) were between 21 and 43 years old (30.5 ± 6.3 years) and had no history of psychiatric illness or any neurological condition. Prior to commencement of the study, participants were asked to sign a standard written consent form and to fill out a battery of questionnaires. A complete list of questionnaires is specified under Neuropsychological Profile. All participants were right‐handed and had normal or corrected‐to‐normal vision. They received a reimbursement of 30 € for their efforts. The study was approved by the local ethics committee of the Charité University Medicine Berlin.

Neuropsychological Profile

The participants were asked to complete a battery of tests and questionnaires. The descriptives of the 20 participants on those tests and questionnaires are summarized in Table 1. The Fagerström Test for Nicotine Dependence (FTND) is a measure designed to assess nicotine dependency [Heatherton et al., 1991]. It consists of six questions and classifies nicotine dependency ranging between “very low” and “very high.” The Edinburgh Handedness Inventory (EHI) is a 10‐item questionnaire that assesses the participant's preferences for using his/her left or right hand in daily situations such as writing and throwing [Oldfield, 1971]. It allows calculation of a laterality score for the participant's dominant side, with 100 being the maximum. The Interpersonal Reactivity Index (IRI) was designed to assess empathy on four different subscales: fantasizing (FS), perspective taking (PT), empathic concern (EC), and personal distress (PD) [Davis, 1983]. The Digit Symbol Test (DST) was designed to assess memory functioning and processing speed [Wechsler, 1997]. In this test, the digits 1–9 are assigned to different geometrical symbols. The participant's task is to complete the table by drawing the corresponding symbol under the presented digits. In total, 100 substitutions are possible and the participant is required to work as fast as possible. As a behavioral measurement, substitutions are counted after 120 sec. In previous research the DST has been shown to be sensitive to brain damage and lowered IQ [Glosser et al., 1977; van der Elst et al., 2006]. The Mehrfachwortschatztest (multiple choice vocabulary test) is a German measurement of crystallized intelligence. This test consists of 37 multiple‐choice items of which only one of five actually reflects a German word. The others are pseudo words and the participant's task is to mark the real word [Lehrl, 1999]. The Leistungsprüfsystem (performance testing system) is a nonverbal intelligence test of which subscale three contains irregularities in geometric figures. It is used to assess the participant's ability to reason [Horn, 1983].

Table 1.

Sample description

| Measure | Mean ± SD |
|---|---|
| Age (years) | 30.5 ± 6.3 |
| Education (years) | 17.1 ± 2.55 |
| Proportion of tale questions correct | 0.76 ± 0.17 |
| Accuracy button click | 0.99 ± 0.02 |
| FTND | 0.95 ± 1.93 |
| EHI (laterality index) | 82.7 ± 15.93 |
| Estimated verbal IQ | 110.8 ± 13.12 |
| Estimated nonverbal IQ | 113.45 ± 13.08 |
| DST (2 min) | 78.75 ± 12.54 |
| IRI‐PT | 18.35 ± 3.5 |
| IRI‐FS | 15.9 ± 4.23 |
| IRI‐EC | 19.55 ± 3 |
| IRI‐PD | 11.85 ± 5.98 |

FTND, Fagerström Test for Nicotine Dependence; EHI, Edinburgh Handedness Inventory; DST, Digit Symbol Test; IRI, Interpersonal Reactivity Index (PT, perspective taking; FS, fantasizing; EC, empathic concern; PD, personal distress).

Procedure and Study Design

Participants were seated 100 cm in front of a 22″ widescreen monitor for this EEG experiment and a headrest was used to minimize head movements during recording. Similar to earlier studies on MMN in the visual and auditory modality, the participant's attention was maintained in a task‐independent modality. According to Duncan et al. [2009], the optimal way to study MMN is in a passive paradigm where participants ignore the stimuli that are being presented to eliminate other cognitive components that would be active during active attention. Following these guidelines, participants were instructed to focus on a tale that was read to them via headphones while observing the stimuli appearing on the screen. The tale “Garden of Paradise” by the Danish author Hans Christian Andersen had been translated into German and was read by a volunteer for the online audiobook database http://librivox.org. Participants were instructed to watch the faces that were appearing on screen but to attend to the story because after the experiment they would be asked to answer 10 multiple‐choice questions about the content of the tale. Participants who answered 50% or more of the questions incorrectly were excluded from the study to ensure that included participants were attending to the story. Participants who already knew the tale were also excluded from the study. As a behavioral control to ensure that participants were looking toward the screen, participants were instructed to press the mouse button whenever they saw a face with a large and salient red star on it. Ten percent of the trials were behavioral control trials, where a salient red star was clearly visible in the top left corner of a presented face. If the accuracy of an individual was below 80%, he or she was excluded from the study. The faces database was provided by the Max‐Planck Institute of Biological Cybernetics in Tuebingen, Germany [Blanz and Vetter, 1999; Troje and Bülthoff, 1996].
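The behavioral exclusion rules described above can be written as a short check. This is a minimal sketch in Python, not the procedure actually used in the study; the function name `is_included` and its parameters are hypothetical and chosen only for illustration.

```python
def is_included(tale_correct, n_questions, target_accuracy, knew_tale=False):
    """Return True if a participant passes all behavioral inclusion checks.

    tale_correct    -- number of correctly answered questions about the tale
    n_questions     -- total number of questions (10 in the text)
    target_accuracy -- proportion of red-star control trials answered correctly
    knew_tale       -- True if the participant already knew the tale
    """
    if knew_tale:
        return False
    # Exclude when 50% or more of the questions were answered incorrectly.
    n_incorrect = n_questions - tale_correct
    if 2 * n_incorrect >= n_questions:
        return False
    # Exclude when button-press accuracy on control trials is below 80%.
    if target_accuracy < 0.80:
        return False
    return True
```

For example, a participant with 8 of 10 correct answers and a control-trial accuracy of 0.99 would be retained under these rules.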

Paradigm

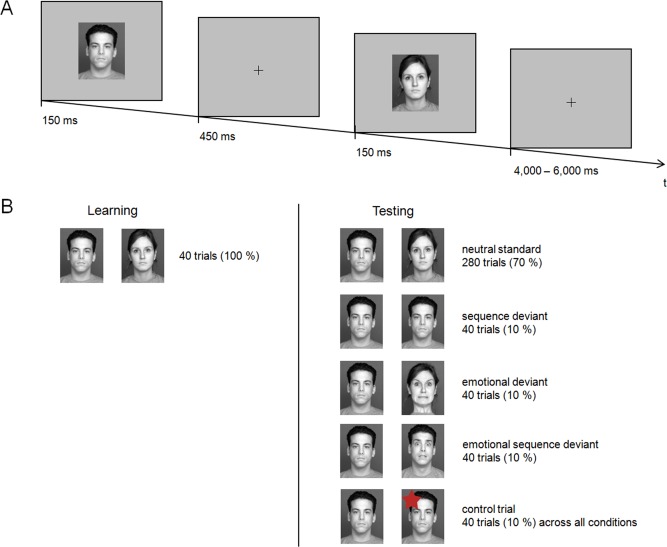

The EEG paradigm consisted of two major parts, a learning phase and a test phase. In the learning phase, participants were presented with 40 repetitions of the standard sequence that consisted of a combination of two neutral faces (male, female; same stimuli throughout the experiment) with a stimulus onset asynchrony of 600 ms and a stimulus presentation time of 150 ms. The purpose of the learning phase was to establish the standard sequence, so that participants were able to detect sequence violations in the subsequent test phase. The combination of a male followed by a female face served as the standard condition in the test phase and was shown 70% of the time. During the test phase, the other three conditions were sequence deviant (neutral male face, neutral male face), emotional sequence deviant (neutral male face, fearful male face), and emotional deviant (neutral male face, fearful female face), each of which was presented 10% of the time. The trial condition (i.e., standard vs. deviant) was always defined by the second stimulus. Control trials (10% of all trials; see Procedure and Study Design) were evenly distributed across blocks and were only used for behavioral analysis of response accuracy. Figure 1 gives an overview of the task. All conditions were randomized over all 400 test trials. After 100 trials, the participants were given a short break. The experiment lasted for approximately 45 min.

Figure 1.

Schematic of the applied task. (A) Illustration of a neutral standard trial. (B) The learning phase (left) consisted of a total of N = 40 standard trials that were presented to establish this sequence as the standard sequence. The test phase consisted of a total N = 400 trials including standard trials (N = 280) and three deviant conditions (N = 40 each). Arithmetically, every tenth trial served as a behavioral control trial (N = 40); control trials were distributed across experimental conditions proportionate to their overall probability. [Color figure can be viewed in the online issue, which is available at http://wileyonlinelibrary.com.]
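To make the trial structure concrete, the following Python sketch builds a randomized test phase with the trial counts given in the Figure 1 caption. It is an illustration only: the condition labels, the stimulus names, and the function `build_test_phase` are hypothetical, and the block structure and breaks are not modeled.

```python
import random

# Trial counts for the test phase, taken from the Figure 1 caption.
COUNTS = {
    "standard": 280,
    "sequence_deviant": 40,
    "emotional_sequence_deviant": 40,
    "emotional_deviant": 40,
}

# Second stimulus per condition; the first stimulus is always the neutral male face.
SECOND_FACE = {
    "standard": "neutral_female",
    "sequence_deviant": "neutral_male",
    "emotional_sequence_deviant": "fearful_male",
    "emotional_deviant": "fearful_female",
}


def build_test_phase(seed=0, control_rate=0.10):
    """Return a shuffled list of trial dicts for the test phase.

    Within each condition, a share of trials equal to control_rate is flagged
    as a behavioral control trial (red star), so that control trials are
    proportionate to the overall probability of each condition.
    """
    rng = random.Random(seed)
    trials = []
    for condition, n in COUNTS.items():
        n_control = round(n * control_rate)
        for i in range(n):
            trials.append({
                "condition": condition,
                "first": "neutral_male",
                "second": SECOND_FACE[condition],
                "control": i < n_control,
            })
    rng.shuffle(trials)  # randomize all conditions over the whole test phase
    return trials
```

With the default settings this yields 400 trials, of which 40 are flagged as control trials.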

EEG Acquisition and Analysis

EEG data were collected using WaveGuard electrode caps with 64 Ag/AgCl electrodes, ASA software, and a DC amplifier (ANT Neurosystems, Enschede, The Netherlands). To analyze the EEG data, BrainVision Analyzer 2 (Brain Products, Munich, Germany) was used. During recording, electrode impedances were kept below 5 kΩ and all channels were referenced to the Cz electrode.

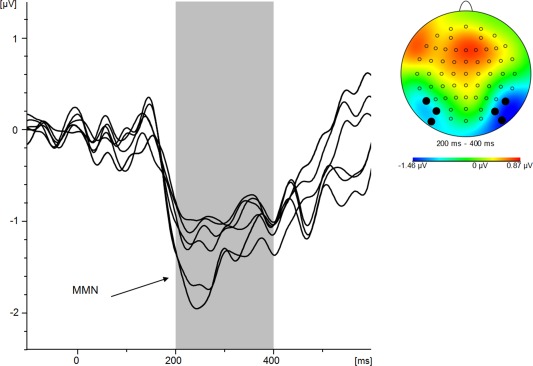

For offline analysis, the data were filtered with a 0.5 Hz high‐pass and a 20 Hz low‐pass filter. EEG signals were then re‐referenced to common average. Ocular artifact correction was performed using BrainVision Analyzer's independent component analysis approach [Jung et al., 2000]. After automated ocular artifact correction, artifacts (amplitude criterion: 80 μV at every channel) were tagged for later whole‐segment removal. The EEG was then segmented according to the experimental conditions. After baseline correction, ERPs were averaged for all four conditions, for each individual separately; this step also involved removal of artifact‐contaminated segments. At this point, mean amplitude values pooled across all electrodes were extracted to confirm the presence of a significant negativity in the deviant conditions compared with the standard condition. Next, MMN components were calculated by subtracting the ERP in response to the neutral standard from the ERPs in response to the three deviant conditions, thus leaving difference waveforms that are hypothesized to contain different MMN components, depending on the experimental condition: (1) MMN elicited by sequence deviants; (2) MMN elicited by emotional sequence deviants; and (3) MMN elicited by emotional deviants. For those three components, six electrodes were selected that represent the underlying regions of interest: P7, PO7, PO9 (left hemisphere) and P8, PO8, PO10 (right hemisphere). Electrode selection was based on our own previous research [Neuhaus et al., 2013] and on visual inspection of topographical maps to confirm the validity of electrode selection for MMN signals (see Figure 2). MMN signals were visually inspected for all three deviant conditions using a butterfly view of grand averaged signals, that is, across all participants. Based on this, peak detection for the MMN components was performed in the interval of 200 to 400 ms and peak information on voltage and latency was exported to SPSS.

Figure 2.

Overlay of the difference potential collapsed across deviants minus neutral standard that indicates an average negativity between 200 and 400 ms. First, a butterfly plot was constructed. Next, corresponding topographical maps revealed confined negative potentials of the involved ERP components over ventral occipital cortical areas, which led to the inclusion of electrodes P7, PO7, PO9 (left hemisphere) and P8, PO8, PO10 (right hemisphere). Electrodes not selected for further analysis were then removed from the butterfly plot, thus leaving included difference waveforms. [Color figure can be viewed in the online issue, which is available at http://wileyonlinelibrary.com.]

On average, the analysis was based on the following numbers of artifact‐free segments per condition: 235.1 ± 12.0 (standards); 33.4 ± 2.3 (sequence deviant); 34.25 ± 2.5 (emotional deviant); and 33.8 ± 1.7 (emotional sequence deviant).
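The two core steps of the ERP analysis, computing the deviant-minus-standard difference waveform and detecting the most negative peak between 200 and 400 ms, can be sketched as follows. This is a simplified Python illustration on plain lists and not the BrainVision Analyzer procedure used in the study; the function names and the toy values are hypothetical.

```python
def difference_wave(deviant, standard):
    """Subtract the standard ERP from the deviant ERP, sample by sample."""
    return [d - s for d, s in zip(deviant, standard)]


def negative_peak(wave, times_ms, window=(200, 400)):
    """Return (amplitude, latency) of the most negative sample in the window."""
    start, end = window
    candidates = [(v, t) for v, t in zip(wave, times_ms) if start <= t <= end]
    return min(candidates)  # smallest voltage first; ties go to the earlier sample


# Toy example with made-up voltages (microvolts) sampled every 100 ms.
times_ms = [0, 100, 200, 300, 400, 500]
standard = [0.0, 1.0, 2.0, 3.0, 2.0, 1.0]
deviant = [0.0, 1.0, 1.5, 0.5, 1.5, -4.0]

mmn = difference_wave(deviant, standard)
amplitude, latency = negative_peak(mmn, times_ms)
print(amplitude, latency)  # -2.5 300
```

The large negative value at 500 ms is ignored because it lies outside the 200 to 400 ms search window, which is the point of restricting peak detection to a predefined interval.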

Source Localization Analysis

The cortical distribution of electrical activity recorded from scalp electrodes in response to averaged adapters was computed with Standardized Low Resolution Brain Electromagnetic Tomography (sLORETA) [Pascual‐Marqui, 2002]. sLORETA uses a realistic three‐shell head model registered to the Talairach atlas of the human brain [Talairach and Tournoux, 1988] with a three‐dimensional solution space that is restricted to the cortical gray matter and the hippocampus. The intracerebral volume comprises a total of 6,239 voxels at 5 mm spatial resolution. Without a priori assumptions on number and location of active sources, this solution to the inverse problem computes the standardized current density at each voxel as the weighted sum of the scalp electric potentials. The time frames of interest for statistical imaging were selected on a data‐driven basis following ERP analysis, that is, the time frames were identical to those used for peak detection.

Statistical Analysis

SPSS 20.0 (IBM, Armonk) was used for statistical computations. First, to demonstrate the presence of a significant negativity in the time frame of interest, we analyzed waveforms elicited by all experimental conditions, that is, standard, sequence deviant, emotional deviant, and emotional sequence deviant, with a 4‐level repeated measures analysis of variance (ANOVA). After having established that a significant overall negativity is present in the time frame of interest, the difference ERPs were spatiotemporally analyzed using another ANOVA in a 3 × 3 × 2 design using the within‐subjects factors “Condition” (three levels: sequence deviant, emotional sequence deviant, emotional deviant); “Electrode” (three levels: P7/P8, PO7/PO8, PO9/PO10); and “Hemisphere” (two levels: left, right). Post hoc, significant main effects were analyzed using pairwise comparisons; all post hoc tests were corrected using the Bonferroni method. Correlation analyses were done using Pearson rank correlations with Bonferroni correction. Alpha was set at P < 0.05 for all tests.

Statistical imaging of current density differences was done based on nonparametric voxel‐by‐voxel t‐tests [Holmes et al., 1996]. This maximum t‐statistic offers a procedure of bootstrap resampling (5,000 randomly created groups across conditions) and produces noncorrected threshold values for single voxel P's.
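The general idea behind a maximum t‐statistic randomization test can be illustrated with a small Python sketch. It rests on assumptions that are not taken from the text: a within‐subject design in which each participant contributes one difference value (condition minus baseline) per voxel, and random sign flipping of these differences as the resampling scheme. It is not the sLORETA implementation, and the function names are hypothetical.

```python
import random
import statistics


def paired_t(diffs):
    """One-sample t statistic for a list of within-subject differences."""
    n = len(diffs)
    return statistics.mean(diffs) / (statistics.stdev(diffs) / n ** 0.5)


def max_t_threshold(data, n_perm=1000, alpha=0.05, seed=0):
    """Critical |t| from the resampling distribution of the maximum |t|.

    data[s][v] is the difference value of subject s at voxel v. In each
    resample the sign of every subject's data is flipped at random, the t
    statistic is computed per voxel, and the largest absolute value is kept.
    """
    rng = random.Random(seed)
    n_voxels = len(data[0])
    max_stats = []
    for _ in range(n_perm):
        signs = [rng.choice((-1, 1)) for _ in data]
        flipped = [[sign * value for value in row]
                   for sign, row in zip(signs, data)]
        max_stats.append(max(
            abs(paired_t([row[v] for row in flipped]))
            for v in range(n_voxels)
        ))
    max_stats.sort()
    # Upper (1 - alpha) quantile of the max-statistic distribution.
    return max_stats[min(int((1 - alpha) * n_perm), n_perm - 1)]
```

A voxel whose observed absolute t value exceeds the returned threshold would be considered significant under this sketch. Because the threshold comes from the distribution of the maximum across voxels, a single value applies to the whole volume.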

RESULTS

Behavioral Results

Mean accuracy of the button click in response to interspersed target trials for the 20 participants was 0.99 ± 0.02 and the mean proportion of correct answers on the 10‐item multiple‐choice questionnaire regarding the content of the tale was 0.76 ± 0.12.

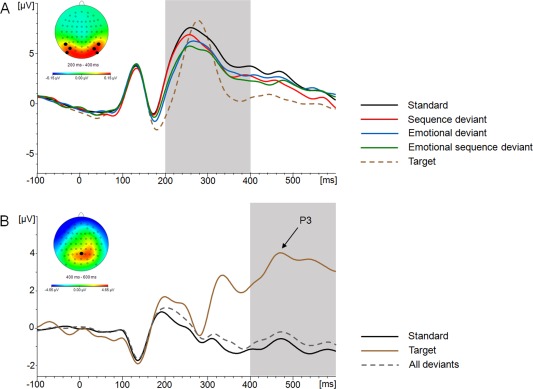

ERP Results

In a first step, we sought to determine the presence of a vMMN by analyzing the waveforms elicited by all experimental conditions, that is, standard and all deviants, in a 4‐level ANOVA model. We found a significant main effect of “Condition” (F (3,57) = 8.193; P < 0.001; η² = 0.301), where the standard condition was associated with a significantly more positive mean amplitude (5.27 ± 2.6 µV) compared with the sequence deviant (4.44 ± 3.0 µV; T (19) = 3.243; P = 0.004), the emotional deviant (4.12 ± 2.6 µV; T (19) = 4.842; P < 0.001), and the emotional sequence deviant condition (3.77 ± 3.1 µV; T (19) = 4.392; P < 0.001), thus demonstrating the presence of a negativity in the postulated time frame (see also Fig. 3A). Differences between deviants were not significant. Figure 3B illustrates the ERP at parietal midline electrode Pz in response to target trials.

Figure 3.

(A) Grand average ERPs for different conditions pooled across parieto‐occipital electrodes P7/8, PO7/8, and PO9/10. The neutral standard condition (black line) serves as baseline condition that is later subtracted from the deviant conditions (colored lines). The target ERP (broken line) is morphologically different from standard and deviant ERPs. (B) Grand average ERP at parietal midline electrode Pz. The target ERP (brown line) indicates a late positive deflection, the P3 component, which is absent in non‐target trials, that is, standard (black line) and deviant trials (broken line, pooled across conditions). [Color figure can be viewed in the online issue, which is available at http://wileyonlinelibrary.com.]

In the next step, we spatiotemporally analyzed the difference waveforms associated with deviant processing, that is, deviant minus standard condition.

Voltage

Repeated measures ANOVA of all MMN components revealed significant main effects of “Condition” (F (2,38) = 3.436; P = 0.043; η² = 0.153) and of “Hemisphere” (F (1,19) = 5.878; P = 0.025; η² = 0.236) but not an interaction thereof.

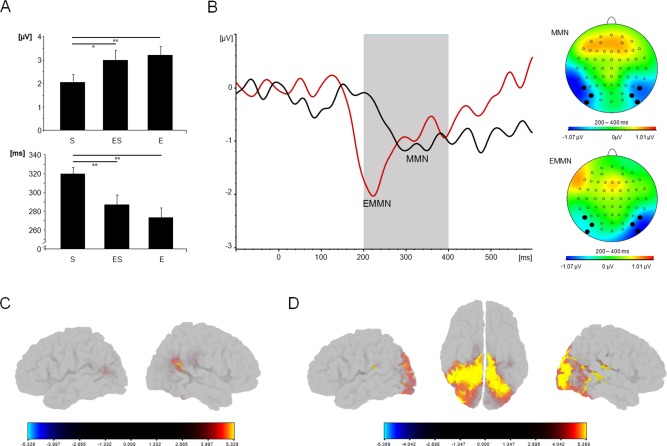

Post hoc analyses of the main effect of “Condition” showed that amplitudes of MMN components evoked by emotional deviants (−2.97 ± 1.59 µV) were significantly more negative compared with those evoked by sequence deviants (−1.91 ± 1.23 µV; T (19) = −2.99; P = 0.024). Combined emotional sequence deviants evoked an intermediate MMN amplitude (−2.78 ± 1.75 µV) that showed a trend‐level difference from sequence MMN (T (19) = 2.005; P = 0.059; see also Fig. 4A). Figure 4B illustrates the ERP traces for the significantly different MMN conditions, that is, sequence deviant versus emotional deviant. The amplitude effect size of sequence versus emotional deviant was d = (M_E − M_S)/SD_pooled = (−2.9718 − (−1.9066))/1.17306 = −0.91, corresponding to a large effect size.

Figure 4.

(A) Differences between sequence (S), emotional sequence (ES), and emotional (E) deviants in amplitude (rectified microvolts) and latency (milliseconds) across electrodes. * indicates P < 0.1; ** indicates P < 0.05. (B) ERP traces for emotional mismatch negativity (expression‐related MMN, EMMN, red) and MMN (black) across electrodes along with topographical maps for MMN (top) and expression‐related MMN (bottom). (C) Neuronal source estimation with significant clusters of cortical current density activation in response to sequence deviants during the time interval of sequence‐related MMN from 300 to 400 ms. (D) Neuronal source estimation with significant clusters of cortical current density activation in response to emotional deviants during the time interval of the expression‐related MMN from 200 to 300 ms. [Color figure can be viewed in the online issue, which is available at http://wileyonlinelibrary.com.]

Post hoc analysis of the main effect of “Hemisphere” revealed that right‐hemispheric amplitudes (−2.82 ± 1.23 µV) were significantly more negative compared with MMN responses of the left hemisphere (−2.29 ± 1.08 µV; T (19) = 2.424; P = 0.025).

Latency

Repeated measures ANOVA revealed a significant main effect of “Condition” (F (2,38) = 7.122; P = 0.002; η² = 0.273), where emotional deviants (271.73 ± 43.91 ms) were associated with a significantly earlier MMN response compared with those evoked by sequence deviants (316.43 ± 29.88 ms; T (19) = −3.225; P = 0.012; see also Fig. 4A). Combined emotional sequence deviants had an intermediate latency (285.26 ± 43.24 ms) that significantly differed from MMN latency evoked by sequence deviants (T (19) = 2.443; P = 0.024). The latency effect size of sequence versus emotional deviant was d = (M_E − M_S)/SD_pooled = (271.733 − 316.433)/21.21214 = −2.11, corresponding to a very large effect size.
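The two effect sizes can be recomputed from the reported means and pooled standard deviations. The short Python sketch below simply reproduces this arithmetic, taking the pooled SD values as given in the text; the function name `cohens_d` is chosen for illustration.

```python
def cohens_d(mean_emotional, mean_sequence, sd_pooled):
    """Effect size d = (M_E - M_S) / SD_pooled."""
    return (mean_emotional - mean_sequence) / sd_pooled


# Values as reported for emotional versus sequence deviants.
amplitude_d = cohens_d(-2.9718, -1.9066, 1.17306)   # microvolts
latency_d = cohens_d(271.733, 316.433, 21.21214)    # milliseconds

print(round(amplitude_d, 2), round(latency_d, 2))  # -0.91 -2.11
```

Both values are negative because the emotional deviant produced the more negative amplitude and the shorter latency.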

We exploratively computed correlation analyses between MMN components and neuropsychological measures, especially scores of the IRI. None of the neuropsychological measures, however, was significantly correlated with MMN.

Source Localization Analysis

Significant clusters of cortical current density activation in response to sequence deviants during the time interval of the sequence‐related MMN (300 to 400 ms) relative to baseline (−700 to −600 ms) were located in the temporal cortex bilaterally (see also Table 2). Current density sources in response to emotional deviants during the time interval of the expression‐related MMN (200 to 300 ms) relative to baseline were widespread and included activations in the temporal cortex, occipital cortex, cingulate, medial temporal lobe, and insula (see also Table 3). Source estimation results are illustrated in Figure 4C and D.

Table 2.

Brain regions with significant activation for MMN in response to sequence deviants

| Region (BA) | R/L | x | y | z | t value |
|---|---|---|---|---|---|
| Temporal | | | | | |
| Middle temporal gyrus (22) | L | −35 | −57 | 17 | 6.14 |
| Superior temporal gyrus (13) | R | 50 | −43 | 21 | 5.47 |

Table 3.

Brain regions with significant activation for expression‐related MMN in response to emotional deviants

| Region (BA) | R/L | x | y | z | t value |
|---|---|---|---|---|---|
| Temporal | | | | | |
| Superior temporal gyrus (22) | R | 50 | −29 | 6 | 10.58 |
| Superior temporal gyrus (41) | L | −35 | −33 | 15 | 6.67 |
| Inferior temporal gyrus (20) | R | 50 | −49 | −6 | 6.81 |
| Insula (13) | R | 30 | −33 | 20 | 8.16 |
| Occipital | | | | | |
| Transverse temporal gyrus (18) | R | 20 | −53 | 7 | 7.27 |
| | L | −20 | −63 | 3 | 7.66 |
| Middle occipital gyrus (19) | R | 30 | −77 | 22 | 6.36 |
| | L | −30 | −77 | 18 | 5.75 |
| Inferior occipital gyrus (19) | R | 45 | −83 | −4 | 5.53 |
| Middle temporal gyrus (19) | L | −35 | −57 | 17 | 5.47 |
| Paralimbic | | | | | |
| Posterior cingulate (30) | R | 5 | −48 | 16 | 8.51 |
| Posterior cingulate (31) | L | −20 | −42 | 25 | 9.09 |
| Fusiform gyrus (37) | R | 45 | −49 | −10 | 7.03 |
| Fusiform gyrus (19) | L | −30 | −68 | −9 | 6.15 |
| Parahippocampal gyrus (30) | R | 25 | −53 | 3 | 6.96 |
| | L | −10 | −48 | 2 | 6.38 |

DISCUSSION

In this study, we sought to investigate the effect of emotion on predictive coding on the level of the vMMN, a correlate of the prediction error. We compared MMNs evoked by sequence deviants, emotional deviants, and emotional sequence deviants in terms of peak amplitude and spatiotemporal profile. Converging results from amplitude, latency, topography, and generator analyses provide robust evidence for the specificity of expression‐related MMN to emotionally salient stimuli, that is, a strong facilitation of prediction error computation by emotional processing. Expression‐related MMN elicited by emotional deviants develops about 100 ms earlier with a highly pronounced amplitude compared with MMNs evoked by sequence deviants. Moreover, the expression‐related MMN component shows a relatively strong right‐hemispheric dominance and is generated by a considerably larger neuronal network compared with the vMMN evoked by sequence deviants. The present work thus extends prior knowledge derived from previous expression‐related MMN studies [Astikainen et al., 2013; Astikainen and Hietanen, 2009; Kimura et al., 2012; Stefanics et al., 2012; Zhao and Li, 2006] that did not use neutral deviant conditions and, therefore, do not allow for deciding whether expression‐related MMN components were specific to emotional contexts or were evoked by physical differences between stimuli in a strict sense. Our study also extends the work by Gayle et al. [2012] by estimating underlying neuronal generator patterns and by directly comparing neutral and emotional MMN components, which allows for quantitatively estimating the effect sizes of the investigated features. Here, large to very large effect sizes were found for predictive signaling amplitude increase (d = −0.91) and latency decrease (d = −2.11), respectively. We did not observe any correlation between MMN components and neuropsychological scores, especially the Interpersonal Reactivity Index.

The shift toward earlier and stronger predictive signaling in response to emotional stimuli is in good accordance with established findings in emotional face processing [see Eimer and Holmes, 2007; Vuilleumier and Pourtois, 2007, for reviews]. Processing of emotional stimuli has consistently been shown to deviate from processing of neutral stimuli within 200 ms after stimulus presentation [Batty and Taylor, 2003; Eimer and Holmes, 2003] and to enhance ERP components associated with face processing, which seems to be especially true for fearful faces [Ashley et al., 2004; Batty and Taylor, 2003; Leppänen et al., 2007; Pourtois et al., 2005]. Facilitated processing of emotionally salient face expressions is also consistent with their importance in social interactions via the extraction of meaning and extrapolation of motivation and future behavior from this nonverbal form of communication [Adolphs, 2003]. Here, we demonstrate a comparable pattern of prediction error facilitation by emotional processing compared to neutrally valenced processing. In this context, it may be important to note that peak and average amplitude comparisons can lead to slightly different statistical results; while the use of average amplitudes might be statistically more reliable, it precludes analysis of peak latency data, which may contribute valuable information, as is the case in the present data.

Topographically, the right hemispheric dominance, especially in temporal cortex areas, during emotional deviant processing is highly consistent with evidence derived from early studies on face processing [Bentin et al., 1996; Clark et al., 1996; Haxby et al., 1994; Kanwisher et al., 1997; McCarthy et al., 1997; Sergent et al., 1992] and with the right hemisphere hypothesis of emotional processing [Adolphs et al., 1996; Alves et al., 2008]. Neuronal source localization revealed activation of bilateral temporal cortex, occipital areas, posterior cingulate, fusiform and parahippocampal gyri, and right insula during emotionally salient deviants. This activation pattern is in good agreement with earlier expression‐related MMN source localization studies [Kimura et al., 2012; Li et al., 2012; but see Csukly et al., 2013, for discrepant results] with only very few discrepancies across studies: while the results of Kimura et al. [2012] additionally showed frontal areas involved in the processing of deviant fearful faces, Li et al. [2012] described additional parietal areas activated during processing of deviant sad faces, none of which was seen in the present data. Regarding concordant areas, however, activation of the visual cortex including extrastriate areas (Brodmann Areas 18 and 19) is in line with earlier expression‐related MMN studies [e.g., Kimura et al., 2012], can be expected during a visual task, and has been reported in many studies on the neural mechanisms of the vMMN component in nonemotional contexts [Kimura et al., 2010; Urakawa et al., 2010; Yucel et al., 2007]. Activation of the fusiform face area is associated with the perception of emotion in facial expressions [Haxby et al., 2000; Radua et al., 2010]. Insula activation has been associated, for example, with the emotional content of facial expression [Haxby et al., 2000], experience and mirroring of disgust [Wicker et al., 2003], perception of angry faces [Fusar‐Poli et al., 2009], and perception of fearful faces [Klumpp et al., 2012].

In contrast to the widespread activation associated with emotionally salient deviants, emotionally neutral deviants were associated with confined activations of the left middle temporal gyrus (BA 22) and the right superior temporal gyrus (BA 13) in close proximity to the superior temporal sulcus. This activation pattern is well in line with the commonly observed activation of posterior temporal cortex during face perception processes [Haxby et al., 2000]. Moreover, several studies have reported temporal area activation when presenting a critical stimulus in a visual oddball design [see Pazo‐Alvarez et al., 2003, for a review; but see Kimura et al., 2010; Susac et al., 2014]. Strikingly, however, sequence deviants were associated with quantitatively less activation compared with emotional deviants, which can—at least partially—be explained by the lack of emotional context and associated cortical processes. The fact that sequence deviants were produced by combining identical stimuli makes it likely that decreases of neuronal activity after repeated presentation of identical stimuli are involved [Grill‐Spector et al., 2006; Kristjansson and Campana, 2010]. This repetition suppression effect is observed in a variety of measurement methods and paradigms, spanning from single‐cell recordings in monkeys [Desimone, 1996] to human neuronal network activation during face processing assessed via functional neuroimaging [Henson et al., 2000] and ERPs [Kühl et al., 2013]. The neuronal response reduction via repetition suppression may also explain the failure to replicate occipital cortex activation observed in other source localization studies on vMMN [Kimura et al., 2010; Susac et al., 2014], especially in the light of a plausibly high degree of extrastriate visual cortex responsivity to repetition effects in visual tasks [de Gardelle et al., 2013; Ewbank et al., 2013].

Regarding the predictive coding framework, our study design provides direct evidence that basic cortical prediction error signals are enhanced by the processing of emotional stimuli. It has recently been shown that reward prediction errors in the ventral striatum, defined as the difference between expected and received reward, are also increased by emotional context. Specifically, and in good accordance with our results, reward prediction error signals increased when experimental trials were preceded by a fearful compared to a neutral face in a probabilistic learning task [Watanabe et al., 2013]. In another study, participants performed a cue‐outcome task under stressful and nonstressful conditions, where fearful faces indicated an aversive outcome; stressful conditions were associated with increased prediction errors for aversive stimuli [Robinson et al., 2013]. Our results extend these findings by generalizing the interaction between predictive coding and emotion to basic cortical computational operations outside the reward processing network.

A few limitations of the present study have to be acknowledged. Regarding the paradigm, as discussed above, repetition suppression and prediction error effects are difficult to disentangle here, because the sequence deviant consists of the unexpected presentation of the same stimulus, whereas the emotional deviant consists of the unexpected presentation of a different stimulus. Both conditions, however, evoked a clear‐cut mismatch response, which indicates sufficient recruitment of neuronal resources in both conditions. Moreover, repetition suppression effects may partially explain amplitude and signal strength of neuronal source activity, but cannot account for differences in neuronal response latency and lateralization. Regarding the source estimation approach, it is important to note that various factors can affect the spatial resolution of sLORETA, so that information on the activation of specific brain regions should only be regarded as an approximation of the actual distribution of activation [Pizzagalli, 2007]. A common factor that limits LORETA's spatial resolution is the estimation of cortical activation on a model of an average brain that cannot account for individual differences between participants [Ding et al., 2005], which, however, is a disadvantage common to the large majority of functional neuroimaging techniques. The relatively coarse spatial resolution, in turn, is a disadvantage that can be addressed by the future use of fMRI to verify the findings obtained here. Last, we used both an auditory and a visual distractor task. While the use of a modality‐independent distractor task is recommended, using a task in the experimental sensory modality increases the risk of confounding results with undesired attention effects. Although the visual task was very easy, as shown by the mean accuracy rate, and although clear‐cut differential effects between experimental conditions were observed that cannot be explained by the evenly distributed visual control task, an eye‐tracking method might have been preferable to a visual control task.

In conclusion, the present study demonstrates that expression‐related MMN as evoked by emotional deviants is clearly separable from MMN evoked by sequence deviants in terms of voltage, latency, topography, and neuronal sources. This study provides evidence that emotion amplifies and accelerates those mechanisms that underlie the phenomenon of MMN, that is, prediction error. Thus, we here provide evidence that MMN is not only determined by the physical properties of applied stimuli or their sequential order, but also by the emotional content of applied stimuli. Further investigations into predictive coding and its modulation by emotional salience and affective valence provide fascinating research opportunities for future studies.

ACKNOWLEDGMENT

The authors thank Philipp Sterzer for helpful discussions and all participants for their contribution to this study.

Conflict of interest: There is no conflict of interest, financial or otherwise, related to this work for any of the authors.

REFERENCES

- Adolphs R (2003): Cognitive neuroscience of human social behaviour. Nat Rev Neurosci 4:165–178.

- Adolphs R, Damasio H, Tranel D, Damasio AR (1996): Cortical systems for the recognition of emotion in facial expressions. J Neurosci 16:7678–7687.

- Alves NT, Fukusima SS, Aznar‐Casanova A (2008): Models of brain asymmetry in emotional processing. Psychol Neurosci 1:63–66.

- Ashley V, Vuilleumier P, Swick D (2004): Time course and specificity of event‐related potentials to emotional expressions. Neuroreport 15:211–216.

- Astikainen P, Hietanen JK (2009): Event‐related potentials to task‐irrelevant changes in facial expressions. Behav Brain Funct 5:30.

- Astikainen P, Cong F, Ristaniemi T, Hietanen JK (2013): Event‐related potentials to unattended changes in facial expressions: Detection of regularity violations or encoding of emotions? Front Hum Neurosci 7:557.

- Batty M, Taylor MJ (2003): Early processing of the six basic facial emotional expressions. Cogn Brain Res 17:613–620.

- Bentin S, Allison T, Puce A, Perez E, McCarthy G (1996): Electrophysiological studies of face perception in humans. J Cogn Neurosci 8:551–565.

- Blanz V, Vetter T (1999): A morphable model for the synthesis of 3D faces. In: Proceedings of 26th Annual Conference Computer Graphics Interactive Techniques ‐ SIGGRAPH ’99. pp 187–194.

- Clark VP, Keil K, Maisog JM, Courtney S, Ungerleider LG, Haxby JV (1996): Functional magnetic resonance imaging of human visual cortex during face matching: A comparison with positron emission tomography. Neuroimage 4:1–15.

- Csukly G, Stefanics G, Komlósi S, Czigler I, Czobor P (2013): Emotion‐related visual mismatch responses in schizophrenia: Impairments and correlations with emotion recognition. PLoS One 8:e75444.

- Davis MH (1983): Measuring individual differences in empathy: Evidence for a multidimensional approach. J Pers Soc Psychol 44:113–126.

- de Gardelle V, Waszczuk M, Egner T, Summerfield C (2013): Concurrent repetition enhancement and suppression responses in extrastriate visual cortex. Cereb Cortex 23:2235–2244.

- Desimone R (1996): Neural mechanisms for visual memory and their role in attention. Proc Natl Acad Sci USA 93:13494–13499.

- Ding L, Lai Y, He B (2005): Low resolution brain electromagnetic tomography in a realistic geometry head model: A simulation study. Phys Med Biol 50:45–56.

- Duncan CC, Barry RJ, Connolly JF, Fischer C, Michie PT, Näätänen R, Polich J, Reinvang I, Van Petten C (2009): Event‐related potentials in clinical research: Guidelines for eliciting, recording, and quantifying mismatch negativity, P300, and N400. Clin Neurophysiol 120:1883–1908.

- Eimer M, Holmes A (2003): An ERP study on the time course of emotional face processing. Neuroreport 13:427–431.

- Eimer M, Holmes A (2007): Event‐related brain potential correlates of emotional face processing. Neuropsychologia 45:15–31.

- Ewbank MP, Henson RN, Rowe JB, Stoyanova RS, Calder AJ (2013): Different mechanisms within occipitotemporal cortex underlie repetition suppression across same and different‐size faces. Cereb Cortex 23:1073–1084.

- Friston K (2005): A theory of cortical responses. Philos Trans R Soc Lond B Biol Sci 360:815–836.

- Fusar‐Poli P, Placentino A, Carletti F, Landi P, Allen P, Surguladze S, Benedetti F, Abbamonte M, Gasparotti R, Barale F, Perez J, McGuire P, Politi P (2009): Functional atlas of emotional faces processing: A voxel‐based meta‐analysis of 105 functional magnetic resonance imaging studies. J Psychiatry Neurosci 34:418–432.

- Gayle LC, Gal DE, Kieffaber PD (2012): Measuring affective reactivity in individual with autism personality traits using the visual mismatch negativity event‐related brain potential. Front Hum Neurosci 6:334.

- Glosser G, Butters N, Kaplan E (1977): Visuoperceptual processes in brain damaged patients on the digit symbol substitution test. Int J Neurosci 7:59–66.

- Grill‐Spector K, Henson R, Martin A (2006): Repetition and the brain: Neural models of stimulus‐specific effects. Trends Cogn Sci 10:14–23.

- Haxby JV, Horwitz B, Ungerleider LG, Maisog JM, Pietrini P, Grady CL (1994): The functional organization of human extrastriate cortex: A PET‐rCBF study of selective attention to faces and locations. J Neurosci 14:6336–6353.

- Haxby JV, Hoffman EA, Gobbini MI (2000): The distributed human neural system for face perception. Trends Cogn Sci 4:223–233.

- Heatherton TF, Kozlowski LT, Frecker RC, Fagerstrom KO (1991): The Fagerstrom test for nicotine dependence: A revision of the Fagerstrom Tolerance Questionnaire. Br J Addict 86:1119–1127.

- Henson R, Shallice T, Dolan R (2000): Neuroimaging evidence for dissociable forms of repetition priming. Science 287:1269–1272.

- Holmes AP, Blair RC, Watson JD, Ford I (1996): Nonparametric analysis of statistic images from functional mapping experiments. J Cereb Blood Flow Metab 16:7–22.

- Horn W (1983): Leistungsprüfsystem: LPS. Göttingen: Hogrefe.

- Jung TP, Makeig S, Humphries C, Lee TW, McKeown MJ, Iragui V, Sejnowski TJ (2000): Removing electroencephalographic artifacts by blind source separation. Psychophysiology 37:163–178.

- Kanwisher N, McDermott J, Chun MM (1997): The fusiform face area: A module in human extrastriate cortex specialized for the perception of faces. J Neurosci 17:4302–4311.

- Kimura M (2012): Visual mismatch negativity and unintentional temporal‐context‐based prediction in vision. Int J Psychophysiol 83:144–155.

- Kimura M, Ohira H, Schröger E (2010): Localizing sensory and cognitive systems for pre‐attentive visual deviance detection: An sLORETA analysis of the data of Kimura et al. 2009. Neurosci Lett 485:198–203.

- Kimura M, Kondo H, Ohira H, Schröger E (2012): Unintentional temporal context‐based prediction of emotional faces: An electrophysiological study. Cereb Cortex 22:1774–1785.

- Klumpp H, Angstadt M, Phan KL (2012): Insula reactivity and connectivity to anterior cingulate cortex when processing threat in generalized social anxiety disorder. Biol Psychol 89:273–276.

- Kristjansson A, Campana G (2010): Where perception meets memory: A review of repetition priming in visual search tasks. Atten Percept Psychophys 72:5–18.

- Kühl LK, Brandt ESL, Hahn E, Dettling M, Neuhaus AH (2013): Exploring the time course of N170 repetition suppression: A preliminary study. Int J Psychophysiol 87:183–188.

- LaBar KS, Cabeza R (2006): Cognitive neuroscience of emotional memory. Nat Rev Neurosci 7:54–64.

- Lehrl S (1999): Mehrfachwahl‐Wortschatz‐Intelligenztest: MWT‐B. Balingen: Spitta.

- Leppänen JM, Moulson MC, Vogel‐Farley VK, Nelson CA (2007): An ERP study of emotional face processing in the adult and infant brain. Child Dev 78:232–245.

- Li X, Lu Y, Sun G, Gao L, Zhao L (2012): Visual mismatch negativity elicited by facial expressions: New evidence from the equiprobable paradigm. Behav Brain Funct 8:7.

- McCarthy G, Puce A, Gore JC, Allison T (1997): Face‐specific processing in the human fusiform gyrus. J Cogn Neurosci 9:605–610.

- Näätänen R, Paavilainen P, Rinne T, Alho K (2007): The mismatch negativity (MMN) in basic research of central auditory processing: A review. Clin Neurophysiol 118:2544–2590.

- Neuhaus AH, Brandt ES, Goldberg TE, Bates JA, Malhotra AK (2013): Evidence for impaired visual prediction error in schizophrenia. Schizophr Res 147:326–330.

- Oldfield RC (1971): The assessment and analysis of handedness: The Edinburgh inventory. Neuropsychologia 9:97–113.

- Pascual‐Marqui RD (2002): Standardized low‐resolution brain electromagnetic tomography (sLORETA): Technical details. Methods Find Exp Clin Pharmacol 24:5–12.

- Pazo‐Alvarez P, Cadaveira F, Amenedo E (2003): MMN in the visual modality: A review. Biol Psychol 63:199–236.

- Pizzagalli DA (2007): Electroencephalography and high‐density electrophysiological source localization. In: Cacioppo JT, Tassinary LG, Berntson GG, editors. Handbook of Psychophysiology, 3rd ed. pp 56–84.

- Pourtois G, Dan ES, Grandjean D, Sander D, Vuilleumier P (2005): Enhanced extrastriate visual responses to bandpass spatial frequency filtered fearful faces: Time course and topographic evoked‐potentials mapping. Hum Brain Mapp 26:65–79.

- Radua J, Phillips ML, Russell T, Lawrence N, Marshall N, Kalidindi S, El‐Hage W, McDonald C, Giampietro V, Brammer MJ, David AS, Surguladze SA (2010): Neural response to specific components of fearful faces in healthy and schizophrenic adults. Neuroimage 49:939–946.

- Rentzsch J, Shen C, Jockers‐Scherübl MC, Gallinat J, Neuhaus AH (2015): Auditory mismatch negativity and repetition suppression in schizophrenia explained by irregular computation of prediction error. PLoS One 10:e0126775.

- Robinson OJ, Overstreet C, Charney DR, Vytal K, Grillon C (2013): Stress increases aversive prediction error signal in the ventral striatum. Proc Natl Acad Sci USA 110:4129–4133.

- Sergent J, Ohta S, MacDonald B (1992): Functional neuroanatomy of face and object processing. A positron emission tomography study. Brain 115:15–36.

- Stefanics G, Csukly G, Komlósi S, Czobor P, Czigler I (2012): Processing of unattended facial emotions: A visual mismatch negativity study. Neuroimage 59:3042–3049.

- Stefanics G, Kremlacek J, Czigler I (2014): Visual mismatch negativity: A predictive coding view. Front Hum Neurosci 8:666.

- Stefanics G, Astikainen P, Czigler I (2015): Visual mismatch negativity (vMMN): A prediction error signal in the visual modality. Front Hum Neurosci 8:1074.

- Susac A, Heslenfeld DJ, Huonker R, Supek S (2014): Magnetic source localization of early visual mismatch response. Brain Topogr 27:648–651.

- Talairach J, Tournoux P (1988): Co‐Planar Stereotaxic Atlas of the Human Brain: 3‐Dimensional Proportional System: An Approach to Cerebral Imaging. New York: Thieme Medical Publishers.

- Troje NF, Bülthoff HH (1996): Face recognition under varying poses: The role of texture and shape. Vision Res 36:1761–1771.

- Urakawa T, Inui K, Yamashiro K, Kakigi R (2010): Cortical dynamics of the visual change detection process. Psychophysiology 47:905–912.

- Van der Elst W, van Boxtel MP, van Breukelen GJP, Jolles J (2006): The Letter Digit Substitution Test: Normative data for 1,858 healthy participants aged 24–81 from the Maastricht Aging Study (MAAS): influence of age, education, and sex. J Clin Exp Neuropsychol 28:998–1009.

- Vuilleumier P, Pourtois G (2007): Distributed and interactive brain mechanisms during emotion face perception: Evidence from functional neuroimaging. Neuropsychologia 45:174–194.

- Wacongne C, Changeux JP, Dehaene S (2012): A neuronal model of predictive coding accounting for the mismatch negativity. J Neurosci 32:3665–3678.

- Watanabe N, Sakagami M, Haruno M (2013): Reward prediction error signal enhanced by striatum‐amygdala interaction explains the acceleration of probabilistic reward learning by emotion. J Neurosci 33:4487–4493.

- Wechsler D (1997): Wechsler Memory Scale, 3rd ed. San Antonio: The Psychological Corporation.

- Wicker B, Keysers C, Plailly J, Royet JP, Gallese V, Rizzolatti G (2003): Both of us disgusted in my insula: The common neural basis of seeing and feeling disgust. Neuron 40:655–664.

- Yucel G, McCarthy G, Belger A (2007): fMRI reveals that involuntary visual deviance processing is resource limited. Neuroimage 34:1245–1252.

- Zhao L, Li J (2006): Visual mismatch negativity elicited by facial expressions under non‐attentional condition. Neurosci Lett 410:126–131.