Abstract

Facial happiness is consistently recognized faster than other expressions of emotion. In this study, to determine when and where in the brain such a recognition advantage develops, EEG activity during an expression categorization task was subjected to temporospatial PCA analysis and LAURA source localizations. Happy, angry, and neutral faces were presented either in whole or bottom‐half format (with the mouth region visible). The comparison of part‐ versus whole‐face conditions served to examine the role of the smile. Two neural signatures underlying the happy face advantage emerged. One peaked around 140 ms (left N140) and was source‐located at the left IT cortex (MTG), with greater activity for happy versus non‐happy faces in both whole and bottom‐half face format. This suggests an enhanced perceptual encoding mechanism for salient smiles. The other peaked around 370 ms (P3b and N3) and was located at the right IT (FG) and dorsal cingulate (CC) cortices, with greater activity specifically for bottom‐half happy versus non‐happy faces. This suggests an enhanced recruitment of face‐specific information to categorize (or reconstruct) facial happiness from diagnostic smiling mouths. Additional differential brain responses revealed a specific “anger effect,” with greater activity for angry versus non‐angry expressions (right N170 and P230; right pSTS and IPL); and a coarse “emotion effect,” with greater activity for happy and angry versus neutral expressions (anterior P2 and posterior N170; vmPFC and right IFG). Hum Brain Mapp 36:4287–4303, 2015. © 2015 Wiley Periodicals, Inc.

Keywords: facial expression, smile, temporospatial PCA, brain sources, lateralization

INTRODUCTION

Facial expressions convey information about emotional and mental states (feelings and motives, intentions, and action tendencies), and thus play a significant communicative and adaptive role. Among the basic expressions of emotion [Ekman, 1992; anger, fear, disgust, sadness, happiness, and surprise], facial happiness is special for several reasons. First, happy faces fulfil important functions in social interaction, by facilitating cooperation and influence [Johnston et al., 2010]; and also at an intrapersonal level, by improving psychological and physiological well‐being [Kraft and Pressman, 2012]. Second, people believe they have seen happy faces in their daily life more frequently [Somerville and Whalen, 2006], and actually display happy faces in social environments more often [Calvo et al., 2014a], relative to any other emotional expression. And, third, across cultural contexts and in laboratory research, happy facial expressions are consistently recognized more accurately and faster than other emotional faces [see Nelson and Russell, 2013; see below]. Accordingly, the mechanisms involved in the processing of happy faces deserve special attention.

Processing of Happy Faces: Behavioral and Neurophysiological Research

Behavioral research using explicit categorization tasks has shown a recognition advantage for happy faces over all the other basic expressions, both in accuracy and efficiency, across different response modalities (manual, verbal, and saccadic) and with different face databases [Beaudry et al., 2014; Calder et al., 2000; Calvo and Lundqvist, 2008; Calvo and Nummenmaa, 2009; Elfenbein and Ambady, 2003; Palermo and Coltheart, 2004; 2002], under low‐threshold or masking conditions [Milders et al., 2008; Svärd et al., 2012; Sweeny et al., 2013], in parafoveal or peripheral vision [Calvo et al., 2014b; Goren and Wilson, 2006], and in dynamic format [Recio et al., 2013, 2014]. In addition, affective priming studies have demonstrated that affective content—not only semantic or categorical information—is obtained automatically from happy faces [Calvo et al., 2012; Lipp et al., 2009; McLellan et al., 2010].

A large body of research has revealed ERP modulations by facial expressions [see George, 2013]. Nevertheless, results have been less consistent than in behavioral research. Some studies have found an earlier activation by angry faces [e.g., Rellecke et al., 2012; Schupp et al., 2004; Willis et al., 2010] or fearful faces [e.g., Frühholz et al., 2009; Luo et al., 2010; Williams et al., 2006], while others have reported similar patterns for all six basic expressions [Eimer et al., 2003]. In three experiments, happy faces elicited ERP responses earlier than other expressions. Rellecke et al. [2011] observed an increased positivity at parieto‐occipital regions and negativity at frontal regions, between 50 and 100 ms, for happy (but not for angry) relative to neutral faces. Batty and Taylor [2003] reported an earlier latency (at 140 ms) of N170 evoked by happy relative to fearful, disgusted, and sad faces, although the amplitudes were equivalent. Schacht and Sommer [2009] noted an enhanced fronto‐central positivity between 128 and 144 ms, and parieto‐occipital negativity between 144 and 172 ms, for happy relative to angry faces. In most of these ERP studies, however, task instructions did not ask for explicit expression encoding, and categorization performance was not assessed, which makes the results not directly comparable with those in behavioral research. Such a comparison will thus benefit from an experimental design that combines expression categorization with continuous EEG recording.

Role of the Smile

The explicit recognition advantage of happy faces can be attributed to their having a semantically distinctive feature, i.e., the smiling mouth, which is diagnostic of the facial happiness category. This means that the smile is systematically and uniquely associated with this category, whereas other facial features overlap to some extent across different expressions [Calvo and Marrero, 2009; Kohler et al., 2004]. As a consequence, the smile can be used as a shortcut for a quick categorization of a face as happy [Leppänen and Hietanen, 2007]. In contrast, discrimination of non‐happy expressions would require the processing of particular combinations of facial features, which would make the recognition process less accurate and efficient. In addition, the smile is more visually salient [in terms of physical image properties such as contrast, luminance, and spatial orientation; see Borji and Itti, 2013; Itti and Koch, 2001] than any other region of the various expressions [Calvo and Nummenmaa, 2008]. Presumably, visual saliency would enhance sensory gain [Calvo et al., 2014c], followed by selective initial attention to the smile, thus securing the allocation of processing resources to the most informative facial cue. As a consequence, facial happiness could be recognized quickly and accurately from a single cue, without the need of whole‐face integration. In contrast, for non‐happy faces, the lower saliency of the respective diagnostic features would allow for more attentional competition and require configural information processing.

Evidence supporting this conceptualization has been obtained with behavioral measures. First, regarding the smile diagnostic value or distinctiveness, the recognition of facial happiness is as accurate and fast when only the mouth region is shown as when the whole face is displayed, whereas recognition of the other expressions generally declines when only one region is visible [Calder et al., 2000; Calvo et al., 2014b]. The smiling mouth is necessary and sufficient for categorizing faces as happy, while the eye region makes only a modest contribution [Beaudry et al., 2014; Calder et al., 2000; Calvo et al., 2014b; Leppänen and Hietanen, 2007; Nusseck et al., 2008; Wang et al., 2011]. In addition, when a smile is placed in composite faces with non‐happy (e.g., fearful) eyes, there is a bias towards judging the face as happy [Calvo et al., 2012]. Second, regarding visual saliency, not only is the smile more salient than any other region in happy and non‐happy faces, but such a high saliency remains even when the smile is placed in a face with non‐happy eyes [Calvo et al., 2012]. Correspondingly, the smile also attracts more attention than any other region of expressions, as indicated by eye fixations (particularly, the first fixation on the face) during recognition [Beaudry et al., 2014; Bombari et al., 2013; Calvo and Nummenmaa, 2008; Eisenbarth and Alpers, 2011]; and this happens even when the smile is placed in a face with non‐happy eyes [Calvo et al., 2013].

ERP studies on the role of the smile have been scarce. An approach to address this issue involves comparing the effects of an isolated face region (e.g., only the mouth or the eyes visible) with those of the whole face [e.g., Calder et al., 2000, using behavioral measures]. Generally, only whole‐face stimuli, rather than face parts, have been used in ERP research on facial expressions [but see Leppänen et al., 2008, for fearful and neutral faces; and Weymar et al., 2011, with schematic rather than photographic faces]. Calvo and Beltrán [2014] investigated ERP activity in response to the eye and the mouth regions, relative to the whole face, of happy, angry, surprised, and neutral expressions. Results indicated that the mouth region of happy faces enhanced left temporo‐occipital activity (150–180 ms) and also LPC centro‐parietal activity (350–450 ms, P3b) earlier than other face regions did (e.g., the angry eyes, 450–600 ms). Importantly, computational modeling revealed that the smiling mouth was visually salient by 150 ms following stimulus onset. This suggests that analytical processing of the salient smile occurs early (150–180 ms) and is subsequently used as a shortcut to identify facial happiness (350–450 ms). This is consistent with the happy face recognition advantage explanation as a function of the smile saliency and distinctiveness.

The Current Study: Brain Signatures of the Smile

We aimed to establish a more detailed spatiotemporal profile of neural activity in the recognition of happy faces and the role of the smile. That is, we sought to determine where in the brain (source localization) and when (time course) each of the various mechanisms is engaged. To this end, we presented facial expressions (happy, angry, and neutral) either in whole‐face format or bottom‐face‐half format (i.e., with the mouth region visible, but the upper‐face‐half masked), for explicit recognition. The upper half was scrambled, rather than simply being removed, to preserve the perceptual shape of the face and low‐level image properties equivalent to those of the whole face. The comparison of the part versus whole conditions allowed us to examine how much the smiling mouth, relative to the angry and the neutral mouths, engages the neural mechanisms of facial expression encoding. The visibility of the upper face half with the eye region was not manipulated because that condition was already investigated by Calvo and Beltrán [2014], and here we wanted to focus on the role of the smiling mouth. Beyond our previous study addressing this issue [Calvo and Beltrán, 2014], the current study makes a major contribution by assessing the neural sources and brain structures involved, in combination with the time course of neural processes. The temporal and spatial distribution of face‐locked ERP activity was investigated by using a data‐driven approach that coupled two‐step temporospatial PCA and LAURA source localizations.

This approach allowed us to determine when, i.e., the temporal stages, the smile alone versus the whole facial configuration modulated ERP activity at successive processing stages (and thus when expression recognition begins and how it develops). The face stimuli were displayed for 150 ms, followed by a 650‐ms interval, at the end of which participants explicitly categorized the expression. If the smile facilitates recognition as a function of visual saliency, the activity of early neural components involving perceptual processes (e.g., N1 or N170) will be enhanced for happy faces, relative to angry or neutral faces. In addition, if the smile facilitates recognition as a function of diagnostic value or distinctiveness, the activity of middle‐range components involving semantic categorization (e.g., P3b) will be enhanced for happy faces, particularly in the bottom‐half format condition. This would reveal whether the happy face mental template can be accessed and the expression reconstructed from the smiling mouth region alone, in the absence of the whole face.

Finally, we aimed to localize the neural source(s) of the smile effects on surface ERP activity at each temporal stage. Multiple studies using fMRI measures have explored the brain network that is sensitive to facial expressions [see the meta‐analyses conducted by Vytal and Hamann, 2010, and Fusar‐Poli et al., 2009]. Nevertheless, the fMRI measures do not assess temporal dynamics. To examine how the brain structures responsible for emotional face processing vary across temporal stages, EEG/MEG‐based source localization techniques have been employed [e.g., Carretié et al., 2013a; Pegna et al., 2008; Pourtois et al., 2004; Morel et al., 2012; Williams et al., 2006]. We extended such an approach to specifically investigate the role of the smile. If the recognition of happy faces benefits from an early part‐based, featural encoding of the smile because of its visual saliency, activity in brain areas subtending analytic perceptual processing [e.g., Maurer et al., 2007] will be increased for both whole and bottom‐half happy faces relative to non‐happy faces. Also, if facial happiness recognition is facilitated by the smile diagnostic value, activity in brain regions known to support stimulus categorization [e.g., structures within infero‐temporal cortex and the dorsal fronto‐parietal network, Bledowski et al., 2004, 2006] will be enhanced for bottom‐half happy faces relative to non‐happy faces.

METHOD

Participants

Thirty‐one psychology undergraduates (23 females; all between 18 and 25 years of age) gave informed consent and either received course credit or were paid (7 Euro per hour) for their participation. All were right‐handed and reported normal or corrected‐to‐normal vision and no neurological or neuropsychological disorder. The study was conducted in accordance with the WMA Declaration of Helsinki 2008.

Stimuli

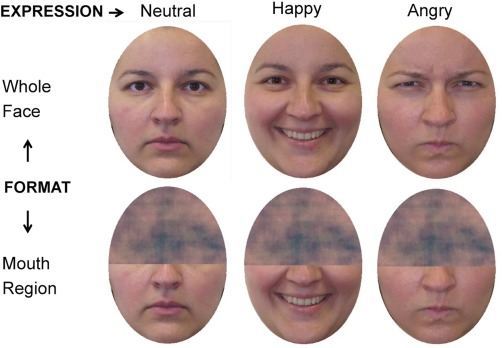

We selected 60 digitized color photographs from the KDEF [Lundqvist et al., 1998] stimulus set, for the current whole‐face condition. The experimental face stimuli portrayed 20 individuals (10 females: KDEF no. 01, 07, 09, 11, 14, 19, 20, 26, 29, and 31; and 10 males: KDEF no. 05, 10, 11, 12, 13, 22, 23, 25, 29, and 31), each posing three expressions (neutral, happiness, and anger). Nonfacial areas (e.g., hair) were removed by applying an ellipsoidal mask. The faces were presented against a black background. Each face stimulus was 11.5 cm high by 8.5 cm wide, equalling a visual angle of 9.40° (vertical) × 6.95° (horizontal) at 70‐cm viewing distance. In addition, for a half‐face (mouth format) condition, we generated 60 half‐visible faces, one for each of the whole‐face KDEF stimuli. For these new stimuli, only the lower half of each face was visible, while the top half was subjected to Fourier‐phase scrambling, and therefore masked (for an illustration, see Fig. 1). An average scrambled mask on the top half of the face was used for all the faces, so that they were comparable in the covered half while their visible bottom half remained different.
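The reported visual angles follow from the stimulus size and the viewing distance. The short sketch below illustrates the standard formula, angle = 2·atan(size / (2·distance)); the function name `visual_angle_deg` is a hypothetical helper introduced here, not part of the original procedure.

```python
import math

def visual_angle_deg(size_cm: float, distance_cm: float) -> float:
    """Full visual angle (in degrees) subtended by a stimulus of a given size."""
    return math.degrees(2 * math.atan(size_cm / (2 * distance_cm)))

# Stimulus dimensions and viewing distance as stated in the text
height_deg = visual_angle_deg(11.5, 70.0)  # vertical extent
width_deg = visual_angle_deg(8.5, 70.0)    # horizontal extent
print(f"{height_deg:.2f} deg (vertical) x {width_deg:.2f} deg (horizontal)")
```

Under these inputs the formula gives values that agree with the reported 9.40° × 6.95° to within rounding.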

Figure 1.

Sample of face stimuli for each stimulus format condition. For copyright reasons, a different face is shown in the figure, instead of the original KDEF pictures. [Color figure can be viewed in the online issue, which is available at http://wileyonlinelibrary.com.]

Apparatus and Procedure

The stimuli were presented on a 24” computer monitor. Stimulus presentation and response collection were controlled by means of Presentation software (version 15.1, Neurobehavioral Systems, Inc.). On each trial, after a 500‐ms central fixation cross, a face was displayed for 150 ms in the centre of the screen, followed by a black screen for 650 ms, and then a probe word (“happy,” “angry,” or “neutral”) appeared. In an expression categorization task, participants responded whether or not the word represented the expression conveyed by the face, by pressing one of two keys (labeled as “Yes” or “No”). The probe words represented the actually displayed facial expression on 50% of the trials (correct responses), and a different expression on the other 50% (25% for each of the two non‐valid probe words). Response latencies were time‐locked to the presentation of the probe word. There was a 2‐s intertrial interval. Participants were told to look at the centre of the screen and to blink only during the interval. A short, 150‐ms stimulus display was used to avoid eye movements. A 150‐ms display has otherwise proved to allow for an average 87% recognition accuracy of similar face stimuli in expression categorization tasks [Calvo et al., 2014b].

Following 24 practice trials, each participant was presented with 40 experimental trials of each of the three expressions and each of the two stimulus formats (i.e., whole face, and bottom‐half visible), in four blocks. Each block consisted of a total of 60 trials, with 10 different faces of each expression in each format. Each stimulus was presented twice to each participant, in different blocks. The probe word (happy, angry, or neutral) represented the actually displayed facial expression on one presentation of each stimulus, and a different expression on the other presentation. Within each block, trial order was randomly established for each participant. Recognition performance measures of accuracy and correct response reaction times were collected. Each experimental session lasted approximately 20 to 25 min, plus the time required for the application of the EEG cap (30 min, on average).
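The trial structure described above (240 trials in four blocks of 60, with each stimulus shown once with a valid and once with an invalid probe) can be sketched as follows. How individual faces were assigned to blocks is not specified in the text, so the split used here (half of the models in blocks 0 and 2, the other half in blocks 1 and 3) is an assumption made only for illustration, as are all names in the code.

```python
import random
from itertools import product

EXPRESSIONS = ["happy", "angry", "neutral"]
FORMATS = ["whole", "bottom_half"]
N_MODELS = 20  # KDEF individuals
N_BLOCKS = 4

def build_blocks(seed=0):
    """Return four lists of 60 trial dicts each (hypothetical assignment scheme)."""
    rng = random.Random(seed)
    blocks = [[] for _ in range(N_BLOCKS)]
    for expression, fmt in product(EXPRESSIONS, FORMATS):
        models = list(range(N_MODELS))
        rng.shuffle(models)
        invalid_probes = [e for e in EXPRESSIONS if e != expression]
        for i, model in enumerate(models):
            # Assumed split: first 10 models -> blocks 0 and 2, last 10 -> blocks 1 and 3
            block_pair = [i // 10, i // 10 + 2]
            rng.shuffle(block_pair)  # decides which block carries the valid probe
            # One valid probe and one invalid probe per stimulus; the two
            # invalid words alternate so that each covers 25% of the trials
            probes = [expression, invalid_probes[i % 2]]
            for block, probe in zip(block_pair, probes):
                blocks[block].append({
                    "model": model, "expression": expression, "format": fmt,
                    "probe": probe, "valid": probe == expression,
                })
    for block in blocks:
        rng.shuffle(block)  # random trial order within each block
    return blocks

blocks = build_blocks()
print([len(b) for b in blocks])
```

Each block then contains 10 different faces per expression and format, and half of all probes match the displayed expression.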

EEG Recording

EEG and EOG signals were recorded using Ag/AgCl electrodes mounted in elastic Quick‐caps (Neuromedical Supplies, Compumedics Inc., Charlotte). EOG signal was measured from two bipolar channels: One was formed by two electrodes placed at the outer canthus of each eye; another, by two electrodes below and above the left eye. EEG signal was recorded from 60 electrodes arranged according to the standard 10–20 system. All EEG electrodes were referenced online to an electrode at vertex, and recomputed off‐line against the average reference. EEG and EOG signals were amplified and sampled at 500 Hz using a Synamp2 amplifier (Neuroscan, Compumedics Inc., Charlotte), with high‐ and low‐pass filters set at 0.05 and 100 Hz, respectively. EEG electrode impedance was kept below 5 kΩ.

EEG data pre‐processing was conducted using Edit 4.5 (Neuroscan, Compumedics Inc., Charlotte). The following transforms were applied to each participant's dataset. Data were initially down‐sampled to 250 Hz and low‐pass filtered at 30 Hz. EEG segments were then extracted with an interval of 200 ms preceding and 800 ms following the face onset. On these segments, artifact rejection was performed in two steps. First, trials containing activity exceeding a threshold of ±70µV at vertical and horizontal EOG and EEG channels were automatically detected and rejected. Second, nonautomatically rejected artifacts were manually removed, including trials with saccades identified over the horizontal EOG channel. For the computation of ERPs, artifact‐free segments (including both correct and incorrect responses) were averaged separately per subject (31) and condition (6). A total of 9.5% of trials were excluded because of artifacts (mainly, eye blinks, drifts, and saccades). Baseline correction of averaged data was carried out using the 200‐ms period preceding face onset.
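The automatic part of this pipeline (amplitude-based rejection, averaging, and baseline correction of the averaged data) can be illustrated with a minimal NumPy sketch. This is not the Neuroscan Edit implementation; the function name, array layout, and synthetic data are assumptions, and the manual second rejection step is not modeled.

```python
import numpy as np

def reject_and_average(epochs_uv, sfreq=250.0, baseline_s=0.2, threshold_uv=70.0):
    """Average artifact-free epochs and baseline-correct the average.

    epochs_uv: array (n_trials, n_channels, n_samples) in microvolts,
    each epoch starting `baseline_s` seconds before face onset.
    """
    # Keep a trial only if no channel exceeds +/- threshold at any sample
    keep = np.all(np.abs(epochs_uv) <= threshold_uv, axis=(1, 2))
    erp = epochs_uv[keep].mean(axis=0)
    # Subtract the mean of the pre-stimulus period, channel by channel
    n_base = int(round(baseline_s * sfreq))
    erp = erp - erp[:, :n_base].mean(axis=1, keepdims=True)
    return erp, keep

# Synthetic demonstration data (not real EEG): 5 trials, 3 channels, 1 s at 250 Hz
rng = np.random.default_rng(0)
demo = rng.normal(scale=5.0, size=(5, 3, 250))
demo[2, 1, 100] = 100.0  # inject an artifact into the third trial
erp, keep = reject_and_average(demo)
print(keep, erp.shape)
```

In the demonstration, only the trial with the injected 100 µV deflection is discarded.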

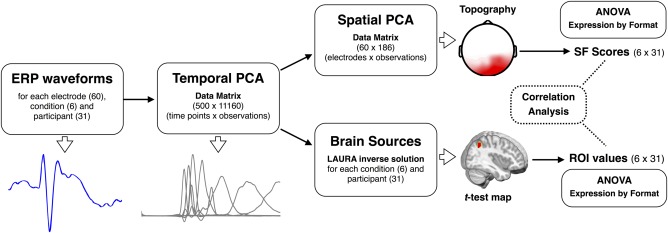

Scalp ERP Analysis: Two‐Step PCA

As illustrated in Figure 2, averaged ERP waveforms were analyzed by means of two‐step, temporospatial principal component analyses (PCA) [for similar procedures, see Albert et al., 2013; Foti et al., 2011]. First, a temporal PCA was computed to determine the ERP variance across time in a data matrix that included all ERP waveform time points (500) as variables and the combination of participants (31), conditions (6), and electrode sites (60) as observations (11,160). Kaiser‐normalization and Promax rotation were applied, with no restriction on the number of orthogonal factors that were extracted and retained for rotation [Kayser and Tenke, 2003]. This first step produces distinctive temporal components (factor loadings) and corresponding weighting coefficients (factor scores), which describe the contribution of temporally overlapping ERP components. Factor loadings represent the weights that a given temporal component has in every time point (variable), and hence the time course of that component. Instead, factor scores reflect the contribution of the same temporal component to each observation, and result from multiplying the factor loadings by the normalized original data matrix [Kayser and Tenke, 2003]. Well‐known advantages of the application of temporal PCA are that it allows for an objective “data‐driven” reduction of ERP data dimensionality, separates temporally overlapped ERP components, and helps to improve the localization in the brain of the ERP sources [Carretié et al., 2004; Dien and Frishkoff, 2005; Foti et al., 2009].
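To make the layout of the temporal PCA concrete, the sketch below arranges averaged ERPs so that time points are variables and every participant × condition × electrode combination is an observation (31 × 6 × 60 = 11,160 in this study). It is a simplified illustration only: it runs an unrotated, covariance-based PCA in NumPy and omits the Kaiser normalization and Promax rotation used in the study, so the 2% criterion is applied to unrotated components. All names and the synthetic data are assumptions.

```python
import numpy as np

def temporal_pca(erps, min_var=0.02):
    """Unrotated temporal PCA (illustrative; no Promax rotation).

    erps: array (n_participants, n_conditions, n_electrodes, n_timepoints).
    Returns loadings (time x components), scores (observations x components),
    and the proportion of variance explained by each retained component.
    """
    n_time = erps.shape[-1]
    X = erps.reshape(-1, n_time)        # observations x time points
    Xc = X - X.mean(axis=0)             # center each time point
    eigvals, eigvecs = np.linalg.eigh(np.cov(Xc, rowvar=False))
    order = np.argsort(eigvals)[::-1]   # sort components by variance, descending
    eigvals, eigvecs = eigvals[order], eigvecs[:, order]
    explained = eigvals / eigvals.sum()
    keep = explained >= min_var
    loadings = eigvecs[:, keep] * np.sqrt(eigvals[keep])  # time course per component
    scores = Xc @ eigvecs[:, keep]                        # weight per observation
    return loadings, scores, explained[keep]

# Small synthetic example (not real data): 4 participants, 6 conditions,
# 5 electrodes, 20 time points
rng = np.random.default_rng(0)
demo = rng.normal(size=(4, 6, 5, 20))
loadings, scores, explained = temporal_pca(demo)
print(loadings.shape, scores.shape)
```

The loadings give the time course of each component, and the scores give one value per observation, which is what the second (spatial) step operates on.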

Figure 2.

Pipeline of the strategy followed to analyze averaged ERP waveforms in both surface and source spaces (for details, see Methods section). ANOVA: analysis of variance. SF: spatial factors. ROI: region of interest.

In the second step, a spatial PCA was computed for each of the temporal factors that accounted for at least 2% of the ERP variance, by using the scores in every electrode site as variables (60) and the combination of participants and trial type as observations (186). As was the case for the temporal PCA, Kaiser‐normalization and Promax rotation were applied, with no restriction on the number of orthogonal factors that were extracted and retained for rotation. This spatial PCA decomposes the temporal components obtained in the first step by extracting a set of spatial factors, which account for the contribution of spatially overlapping ERP components. Thus, the resulting factor loadings represent the underlying topographies of the temporal factors and presumably reflect the activity of spatially separated neural processes, which otherwise show a similar time course.
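The change of data layout between the two PCA steps can be sketched as follows: the scores of one temporal factor are rearranged so that the 60 electrode sites become variables and the 31 × 6 = 186 participant-by-condition combinations become observations. The sketch assumes that the scores are ordered by participant, then condition, then electrode, and it again uses an unrotated PCA in place of the Promax-rotated solution; the function name and synthetic data are hypothetical.

```python
import numpy as np

def spatial_pca(tf_scores, n_participants=31, n_conditions=6,
                n_electrodes=60, min_var=0.02):
    """Unrotated spatial PCA on the scores of a single temporal factor."""
    # Assumed ordering: participant > condition > electrode (row-major)
    X = np.asarray(tf_scores, dtype=float).reshape(
        n_participants * n_conditions, n_electrodes)
    Xc = X - X.mean(axis=0)
    eigvals, eigvecs = np.linalg.eigh(np.cov(Xc, rowvar=False))
    order = np.argsort(eigvals)[::-1]
    eigvals, eigvecs = eigvals[order], eigvecs[:, order]
    explained = eigvals / eigvals.sum()
    keep = explained >= min_var
    topographies = eigvecs[:, keep] * np.sqrt(eigvals[keep])  # electrodes x factors
    factor_scores = Xc @ eigvecs[:, keep]  # one value per participant x condition
    return X, topographies, factor_scores

# Synthetic scores for one temporal factor (not real data)
rng = np.random.default_rng(1)
X, topographies, factor_scores = spatial_pca(rng.normal(size=31 * 6 * 60))
print(X.shape, topographies.shape[0], factor_scores.shape[0])
```

Each column of `factor_scores` then holds a single value per participant and condition, which is the quantity submitted to the ANOVAs.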

One important advantage of this two‐step PCA procedure is that the resulting factor scores provide a single parameter for each whole topography (spatial factor), which can be directly submitted to statistical analysis with no need for time window and/or electrode selections on the basis of visual inspection or multiple testing approaches [e.g., Carretié et al., 2013b]. Accordingly, for the spatial factors accounting for at least 2% of the variance in each temporal factor, we analyzed factor scores (the unique value for each topography) by means of 3 (Expression: happy vs. angry vs. neutral) × 2 (Format: whole face vs. bottom‐half) repeated‐measures ANOVAs. In addition, separate one‐way ANOVAs with expression as a factor were conducted to decompose significant interactions between expression and format. Greenhouse‐Geisser corrections were applied to account for possible violations of sphericity assumptions in these and all the ANOVAs in this study.

Brain Sources Analysis

As illustrated in Figure 2, the factor scores resulting from the temporal PCA (first PCA step) that showed reliable expression‐related effects (main effect of expression and/or an interaction) were submitted to distributed source analyses using the LAURA approach [Local Auto‐Regressive Average, Grave de Peralta et al., 2001; for a comparison of inverse solution methods, see Michel et al., 2004], implemented in Cartool software [Brunet et al., 2011]. The solution space was calculated on a realistic head model that included 4,026 solution points, defined at regular distances within the gray matter of a standard MRI (Montreal Neurological Institute's average brain). Current density magnitudes (ampere per square millimeter) at each solution point were calculated per subject and condition, and submitted to statistical analyses using paired t‐tests. These source t‐test maps were estimated only for those pairs of conditions that yielded significant differences on surface ERP analyses. In addition, only the t‐test maps that showed differences below the statistical threshold of 0.005 for at least 15 nearby solution points were selected for further analysis. For these statistically reliable t‐test maps, regions of interest (ROI) were formed from the solution points showing the strongest differences (as defined by t‐values), and their current density magnitudes were submitted to a repeated‐measures two‐way (expression by format) ANOVA. In addition, to test the relationship between the significant brain sources and the distinctive topographies extracted following the temporospatial PCA procedure, source‐scalp partial correlations (nonparametric Spearman rank‐order partial coefficients) were computed between ROI current density magnitudes (31 participants × 6 conditions = 186) and the topographies (spatial factors) that accounted for at least 2% of the variance in temporal factor scores.

As noted, the topographical configuration of a given temporal PCA component reflects the contribution of several spatially distributed neural processes, rather than the activity produced in a single brain region [e.g., Dien and Frishkoff, 2005]. Spatial PCA helps to separate these processes at the topographical level, while source localization analysis estimates their distribution in the brain. Thus, these approaches help to spatially delimit the neural processes that co‐occur in a given time window (temporal factor). Brain source analyses complement the surface analyses by providing anatomical localizations that can be related more specifically to particular neural mechanisms. Finally, although EEG source estimation should be interpreted with caution due to potential error of the algorithms, the use of temporal PCA factor scores, source t‐test maps, and a relatively large sample size (n = 31) reduced the margin of error.

RESULTS

Behavioral Data

Response accuracy and reaction times of correct responses were analyzed by means of 3 (Expression: happy vs. angry vs. neutral) × 2 (Format: whole face vs. bottom‐half) repeated‐measures ANOVAs. Bonferroni adjustments (P < 0.05) were performed for post‐hoc multiple comparisons. For response accuracy, effects of expression, F(2,60) = 34.79, P < 0.0001, $\eta_p^2$ = 0.667, and format, F(1,30) = 59.97, P < 0.0001, $\eta_p^2$ = 0.706, emerged. Happy expressions were recognized more accurately (M = 98.8%) than the others (angry: 91.7%; neutral: 94.6%), which did not differ from each other. Accuracy was higher in the whole‐face (M = 96.7%) than in the bottom‐half (93.4%) condition. Similarly, for reaction times, effects of expression, F(2,60) = 38.09, P < 0.0001, $\eta_p^2$ = 0.559, and format, F(1,30) = 16.61, P < 0.0001, $\eta_p^2$ = 0.356, revealed that happy faces were recognized faster (M = 750 ms) than the others (angry: 885 ms; neutral: 840 ms), and responses were faster in the whole‐face (M = 804 ms) than in the bottom‐half (846 ms) condition.
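For readers who wish to relate the reported F ratios to the effect sizes, partial eta squared can be recovered from an F value and its degrees of freedom through the general identity partial η² = (F · df_effect) / (F · df_effect + df_error). The sketch below applies it to the reaction time effects; the function name is a hypothetical helper, and the identity assumes that F and the effect size derive from the same sums of squares.

```python
def partial_eta_squared(f_value: float, df_effect: int, df_error: int) -> float:
    """Partial eta squared recovered from an F ratio and its degrees of freedom."""
    return (f_value * df_effect) / (f_value * df_effect + df_error)

# Reaction time effects reported in the text
rt_expression = partial_eta_squared(38.09, 2, 60)
rt_format = partial_eta_squared(16.61, 1, 30)
print(round(rt_expression, 3), round(rt_format, 3))
```

For the two reaction time effects, the identity reproduces the reported values of 0.559 and 0.356.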

Scalp ERP Results

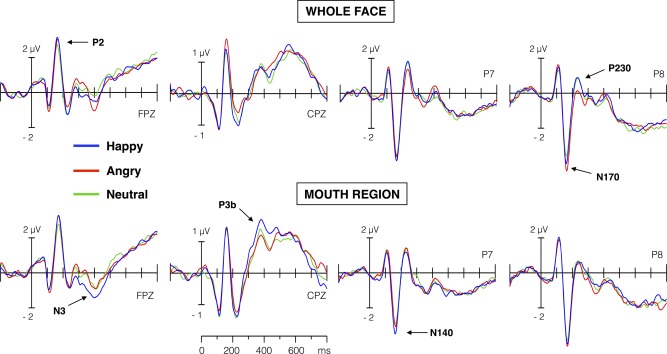

Figure 3 shows averaged ERP waveforms at prototypical scalp sites for ERP components that were sensitive to expression‐related effects (see below). ERP waveforms were analyzed using the “data‐driven” procedure described in the Method section (Scalp ERP Analysis: Two‐Step PCA section), which involved a reduction of data dimensionality by means of a two‐step PCA (temporal and spatial). In the first step, the application of the temporal PCA yielded seven temporal factors (TFs) that accounted for at least 2% of the ERP variance. In the second step, the spatial PCA decomposed each TF into a set of underlying topographies (spatial factors, SFs), which were submitted to further statistical analyses only if they explained at least 2% of the corresponding TF variance. Below we report the results for those topographies that were sensitive to expression‐related effects (main effect of expression and/or the format by expression interaction), ordered according to the latency of the peak amplitude of the corresponding TF.

Figure 3.

Averaged ERP waveforms elicited at frontal (FPZ), centro‐parietal (CPZ), and left and right temporo‐occipital electrodes (P7 and P8), for whole and bottom‐half face conditions. Labels in bold (e.g., N170) indicate the ERP components that were extracted and showed significant differences in the temporospatial PCA.

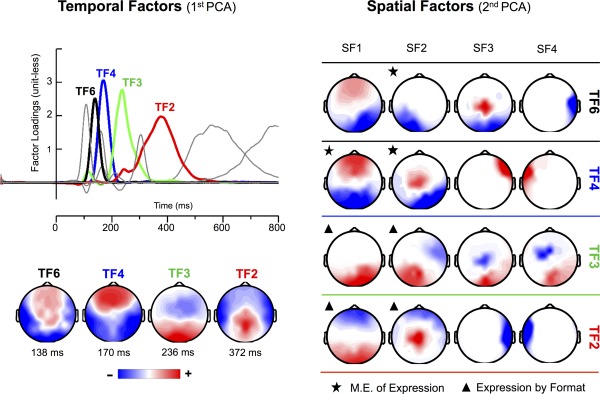

As Figure 4 illustrates, the first factor that accounted for at least 2% of ERP variance was TF6 (5% of unique variance explained). This factor peaked around 138 ms poststimulus, with maximal positive amplitudes at fronto‐central scalp sites (P140) and maximal negative amplitudes at left temporal and occipital sites (N140). The application of the spatial PCA decomposed TF6 into four underlying topographies. Of them, only the topography SF2 (negative amplitudes at left posterior sites, i.e., left N140) was sensitive to expression‐related effects. In particular, this left N140 topography yielded effects of format, F(1,30) = 8.81, P < 0.001, $\eta_p^2$ = 0.227, and expression, F(2,60) = 10.3, P < 0.0005, $\eta_p^2$ = 0.255, but not an interaction. Post‐hoc comparisons revealed larger left N140 amplitudes for whole than for bottom‐half faces, and for happy than for both angry (P < 0.0005) and neutral (P < 0.01) expressions.

Figure 4.

Left side (temporal PCA): time course (upper left half) and topography (lower left half) of temporal factors (TFs) extracted from ERPs. Numbers below brain maps indicate the peak latency of the factor loadings. Topographies are shown only for the temporal factors with statistically significant effects of expression and/or the interaction between expression and format. Right side (spatial PCA): topography of the first four spatial factors extracted for each of the temporal factors represented in the left side of the figure. A star over a map shows a main effect of expression; the triangle, an interaction effect between expression and face format.

The next significant factor was TF4 (9.6% variance explained), which peaked at 170 ms poststimulus and showed a topographical distribution that was entirely consistent with the typical anterior P2 (maximal positive amplitudes at frontal sites) and N170 (maximal negative amplitudes at temporal and occipital sites) (Fig. 4, left side). For this temporal factor, format‐independent effects of expression were obtained in two topographies. The topography SF1 (maximal amplitude at anterior and posterior sites; anterior P2 and posterior N170) showed effects of format, F(1,30) = 21.5, P < 0.0001, $\eta_p^2$ = 0.417, and expression, F(2,60) = 13.2, P < 0.0001, $\eta_p^2$ = 0.305, but not an interaction. Posterior, non‐lateralized N170 amplitudes were smaller for whole than for bottom‐half faces, and for neutral than for angry (P < 0.0001) and happy (P < 0.005) expressions. The second significant topography, SF2 (maximal amplitude at right posterior sites; right N170), was sensitive only to expression, F(2,60) = 9.67, P < 0.0005, $\eta_p^2$ = 0.244, reflecting larger amplitudes for angry than for both neutral and happy (Ps < 0.0005) expressions. Format‐independent effects of both emotion (emotional vs. neutral expressions) and anger (angry vs. non‐angry expressions) thus coincided temporally (TF4) but diverged topographically (SF1 and SF2).

The factor TF3 (10% variance explained) peaked at 236 ms poststimulus and showed a topography characterized by fronto‐central negative (consistent with an anterior N250 component) and occipital positive (consistent with a posterior P230) amplitudes (Fig. 4). For this factor, spatial PCA identified two topographies with format‐selective effects. For the topography SF1 (maximal amplitudes at right parietal and occipital sites; right P230), the interactive effect, F(2,60) = 3.31, P < 0.05, ηp² = 0.099, was accompanied by an effect of expression, F(2,60) = 3.28, P < 0.05, ηp² = 0.098. Separate one‐way ANOVAs revealed expression differences in the whole face format, F(2,60) = 5.16, P < 0.01, ηp² = 0.147, but not in the bottom‐half format (F < 1) condition. Right P230 (SF1) amplitudes were smaller for angry than for happy (P < 0.01) and neutral (P < 0.025) whole faces. Similarly, for the topography SF2 (maximal amplitudes at left parietal and occipital sites; left P230), the interaction reached significance, F(1,30) = 4.63, P < 0.05, ηp² = 0.134. Further analyses did not detect reliable differences among expressions in any format. Thus, right‐lateralized P230 activity showed the earlier format‐selective effect, which consisted of a diminished activity for angry relative to non‐angry (happy and neutral) expressions in whole face format.

Finally, the factor TF2 (11% variance explained) peaked around 370 ms poststimulus and showed a topography that corresponds to the typical posterior P3b and anterior N3 components (Fig. 4). The spatial decomposition of this factor identified two topographies that were modulated by expression‐related effects (Fig. 4). The topography SF1 (maximal amplitudes at anterior sites: anterior N3) yielded effects of expression, F(1,30) = 4.64, P < 0.025, ηp² = 0.134, and the interaction, F(2,60) = 4.32, P < 0.025, ηp² = 0.127. In the bottom‐half format, F(2,60) = 4.66, P < 0.025, ηp² = 0.135, happy expressions showed larger N3 than both angry (P < 0.01) and neutral expressions (P < 0.05). The second topography, SF2 (maximal amplitudes at parietal sites: P3b), was sensitive to the effects of format, F(1,30) = 14.01, P < 0.001, ηp² = 0.319, expression, F(2,60) = 3.83, P < 0.05, ηp² = 0.113, and the interaction, F(2,60) = 3.94, P < 0.025, ηp² = 0.117. Separate one‐way ANOVAs for each format revealed expression differences in the bottom‐half format only, F(2,60) = 6.34, P < 0.005, ηp² = 0.175. There were larger P3b amplitudes for happy than for non‐happy expressions in bottom‐half format (angry, P < 0.001; and neutral, P < 0.025). Thus, a format‐selective “happiness effect” was evident for the temporal factor TF2 (≈370 ms), both in N3 and P3b topographies.
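As a reading aid, the partial eta squared values that accompany each F test can be related to the F ratio and its degrees of freedom through the standard identity ηp² = (F × df_effect) / (F × df_effect + df_error). The short Python sketch below applies this identity to the expression effect on the right N170 topography (TF4‐SF2). The function name is a hypothetical helper introduced here for illustration, and because the reported F values are rounded, recovered effect sizes can differ from the printed ones in the third decimal.

```python
def partial_eta_squared(f_value: float, df_effect: int, df_error: int) -> float:
    """Recover partial eta squared from an F ratio and its degrees of freedom.

    Uses the standard identity eta_p^2 = F * df_effect / (F * df_effect + df_error).
    """
    return (f_value * df_effect) / (f_value * df_effect + df_error)


# Expression effect on the right N170 topography (TF4-SF2): F(2,60) = 9.67.
tf4_sf2_expression = partial_eta_squared(9.67, 2, 60)
print(round(tf4_sf2_expression, 3))  # 0.244, matching the reported effect size
```

The same call can be used to spot-check any other F test in this section, keeping in mind the rounding caveat above.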

Neural Sources of Scalp ERP Results

For each participant and condition, distributed LAURA brain sources were estimated using the scores corresponding to the temporal factors that showed reliable expression‐related effects in the two‐step (temporal and spatial) PCA analysis (Fig. 2). Next, source t‐test maps were computed for the pairwise comparisons that were significant in the above analysis. Regions of interest (ROIs) were defined following the solution points showing the maximal statistical difference, and then submitted to two‐way (3: Expression by 2: Format) ANOVAs (for details, see Brain Sources Analysis section). In this section, we will report results for the ROIs that provided evidence of reliable expression‐related effects in the two‐way ANOVA (expression and/or interaction effects). Figure 5 illustrates the brain sources related to the happy expression effects (“happiness effect”); Figure 6, the sources related to “anger” and “emotion” effects.
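The logic of this step (a paired source t‐test map, followed by an ROI defined around the solution point with the maximal statistical difference) can be sketched in a few lines of Python. This is only an illustrative sketch on simulated data, not the analysis pipeline used in the study: the number of solution points, the 10 mm radius, the planted effect, and the function names are all assumptions made for the example, and the sample size of 31 is merely inferred from the error degrees of freedom reported above.

```python
import numpy as np


def paired_t_map(cond_a, cond_b):
    """Paired t statistic at every solution point.

    cond_a, cond_b: arrays of shape (n_participants, n_points) holding
    current density estimates for two conditions.
    """
    diff = np.asarray(cond_a, dtype=float) - np.asarray(cond_b, dtype=float)
    n = diff.shape[0]
    return diff.mean(axis=0) / (diff.std(axis=0, ddof=1) / np.sqrt(n))


def roi_around_peak(t_map, coords, radius_mm=10.0):
    """Return the peak index and all points within radius_mm of it.

    The peak is the solution point with the largest absolute t value.
    The radius is a hypothetical choice for this sketch.
    """
    peak = int(np.argmax(np.abs(t_map)))
    dist = np.linalg.norm(coords - coords[peak], axis=1)
    return peak, np.flatnonzero(dist <= radius_mm)


# Simulated example (all values are made up for illustration).
rng = np.random.default_rng(0)
n_participants, n_points = 31, 200
coords = rng.uniform(-60, 60, size=(n_points, 3))   # pseudo x, y, z in mm
happy = rng.normal(size=(n_participants, n_points))
angry = rng.normal(size=(n_participants, n_points))
happy[:, 42] += 3.0                                  # planted condition difference

t_map = paired_t_map(happy, angry)
peak, roi = roi_around_peak(t_map, coords)

# Per-participant ROI means, i.e. the values that would enter the
# 3 (Expression) by 2 (Format) ANOVA for each condition.
roi_happy = happy[:, roi].mean(axis=1)
roi_angry = angry[:, roi].mean(axis=1)
```

Averaging within the ROI before the ANOVA keeps the inferential test separate from the point‐wise t map that was used only to locate the region.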

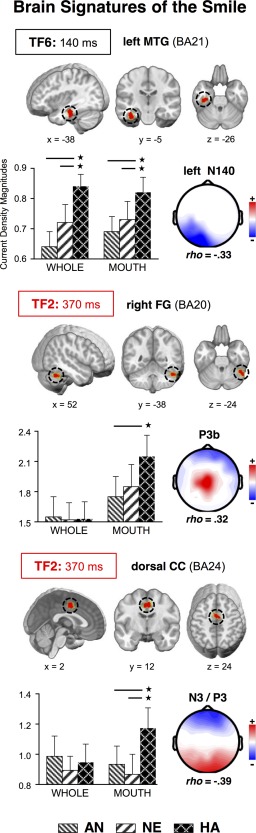

Figure 5.

Brain sources for smile‐related effects. Location (brain images), mean current density magnitudes (bar graph), and the topography with maximal partial correlations for regions of interest (ROIs) sensitive to the distinction between happy and non‐happy faces. Dotted circles enclose the location of the ROI; x, y, z point out the coordinates at Talairach space. Bars represent current density means and SEM for each stimulus condition. Asterisks and lines indicate significant differences between the conditions at each end of the line. WHOLE: whole‐face format. MOUTH: bottom‐face‐half format.

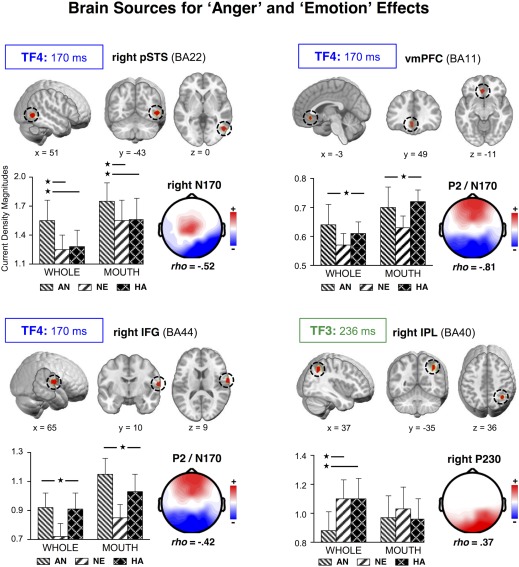

Figure 6.

Brain sources for anger and emotion effects. Location (brain images), mean values (bar graph), and the topography with maximal partial correlations for ROIs sensitive to the distinction between angry and non‐angry whole faces, and between emotional and non‐emotional faces. Dotted circles surround the location of the ROI; x, y, z, for coordinates at Talairach space. Bars represent the mean and SEM of the current density magnitudes for each stimulus condition. Asterisks and lines indicate significant differences between the conditions at each end of the line. WHOLE: whole‐face format. MOUTH: bottom‐face‐half format.

TF6: Left MTG

For the factor TF6 (Fig. 5), a ROI defined from the source t‐tests between happy and angry expressions resulted in a main effect of expression, F(2,60) = 7.93, P < 0.001, ηp² = 0.184. This source was located at the anterior part of the left inferior temporal cortex [MTG; Talairach coordinates: x = −38, y = −5, z = −26; Talairach and Tournoux, 1988; corresponding to BA21], and revealed that, regardless of face format, there was higher activation for happy than for angry (P < 0.005) and neutral (P < 0.025) expressions. Partial correlation analyses yielded maximal significant relationships between the left MTG source and the left N140 topography (TF6‐SF2: ρ = −0.33, P < 0.0001), thereby confirming that left infero‐temporal (IT) sources are the major contributor to the format‐independent “happiness effect” observed in this topography.
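For readers less familiar with partial correlations, the idea behind the source‐topography analyses can be illustrated with a minimal Python sketch: the relationship between a source and one topography is computed after removing, from both, the variance shared with the remaining topographies. The sketch assumes a Pearson correlation on regression residuals and uses simulated scores; the variable names, the choice of covariates, and the data are hypothetical and are not taken from the study.

```python
import numpy as np


def partial_correlation(x, y, covariates):
    """Pearson correlation between x and y after regressing the
    covariates (plus an intercept) out of both variables."""
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    z = np.column_stack([np.ones(len(x)), np.asarray(covariates, dtype=float)])
    res_x = x - z @ np.linalg.lstsq(z, x, rcond=None)[0]
    res_y = y - z @ np.linalg.lstsq(z, y, rcond=None)[0]
    return float(np.corrcoef(res_x, res_y)[0, 1])


# Simulated scores: a source and a topography that are related only
# through a third variable (another topography).
rng = np.random.default_rng(1)
n_obs = 500
other_topography = rng.normal(size=n_obs)
source_activity = other_topography + rng.normal(size=n_obs)
target_topography = other_topography + rng.normal(size=n_obs)

raw_r = float(np.corrcoef(source_activity, target_topography)[0, 1])
partial_r = partial_correlation(source_activity, target_topography, other_topography)
```

In this simulated case the raw correlation is substantial while the partial correlation is close to zero, which is the situation the partial approach is meant to expose; a source that still correlates with a topography after partialling is a more specific contributor to it.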

TF4: Right pSTS, vmPFC, and IFG

Two ROIs were defined for TF4 from source t‐tests between neutral and emotional (happy and angry) expressions (Fig. 6). These ROIs were located at the ventro‐medial prefrontal cortex (vmPFC: x = −3, y = 49, z = −11; BA11) and the right inferior frontal gyrus (IFG: x = 65, y = 10, z = 9; BA44), and showed main effects of both format, Fs(2,60) = 21.8 and 6.12, Ps < 0.0001 and 0.025, ηp² = 0.421 and 0.169, and expression, Fs(2,60) = 4.28 and 9.42, Ps < 0.025 and 0.0005, ηp² = 0.125 and 0.239. The effects were characterized by larger activation for bottom‐half than for whole faces, and greater activation for happy (Ps < 0.005 and 0.025) and angry (Ps < 0.0001 and 0.005) than for neutral expressions. Source‐scalp correlations yielded maximal (and negative) relationships of both sources with the TF4‐SF1 topography (P2 and posterior N170: for vmPFC, ρ = −0.81, P < 0.0001, and for right IFG, ρ = −0.42, P < 0.0001).

Another expression‐related ROI emerged at the right posterior superior temporal sulcus (right pSTS: x = 51, y = −43, z = 0; BA22) for the t‐test contrast between happy and angry expressions (Fig. 6). This ROI yielded effects of both format, F(1,30) = 5.9, P < 0.025, ηp² = 0.164, and expression, F(2,60) = 10.4, P < 0.0005, ηp² = 0.257. Right pSTS activation was larger for bottom‐half than for whole faces, and for angry than for non‐angry expressions (happy, P < 0.0005; and neutral, P < 0.005). This ROI showed maximal (and negative) correlations with the right N170 topography (TF4‐SF2: ρ = −0.52, P < 0.0001).

TF3: Right IPL

For the factor TF3 (Fig. 6), there was one ROI involving significant effects of expression, F(2,60) = 4.41, P < 0.025, ηp² = 0.128, and the interaction between format and expression, F(2,60) = 3.65, P < 0.05, ηp² = 0.110. This source was located at the right inferior parietal lobe (right IPL: x = 37, y = −35, z = 36; BA40) and emerged from the t‐tests between angry and non‐angry expressions in whole face format. The interaction reflected differences between expressions in the whole face format only, F(2,60) = 6.66, P < 0.005, ηp² = 0.182. Angry whole faces produced smaller activations than neutral and happy whole faces (Ps < 0.025 and 0.005, respectively). Partial source‐topography correlations indicated that right IPL activation correlated maximally (and positively) with the TF3‐SF1 topography (right P230, ρ = 0.37, P < 0.0001).

TF2: Right FG and dorsal CC

For TF2 (anterior N3/P3b; Figs. 5 and 6), source analyses identified two significant ROIs. First, from the source t‐test between happy and angry bottom halves (Fig. 5), there was a source located at the right lateral fusiform gyrus (FG: x = 52, y = −38, z = −24; BA20), involving an expression by format interaction, F(2,60) = 3.87, P < 0.05, ηp² = 0.110. Separate one‐way (Expression) ANOVAs for each format revealed significant effects only in the bottom‐half conditions, F(2,60) = 5.43, P < 0.01, ηp² = 0.153, with happy expressions eliciting larger activation than angry expressions (P < 0.005). The source‐topography correlation was maximal (and positive) for the TF2‐SF6 topography (P3b, ρ = 0.32, P < 0.0005).

The other source (Fig. 5), which was obtained from the t‐tests between happy and neutral expressions in bottom‐half format, emerged at the dorsal cingulate cortex (BA24: x = 2, y = 12, z = 24). For this source, there were effects of expression, F(2,60) = 4.31, P < 0.025, ηp² = 0.126, format, F(1,30) = 7.12, P < 0.025, ηp² = 0.192, and the interaction, F(2,60) = 4.61, P < 0.025, ηp² = 0.133. Separate one‐way ANOVAs revealed a “happiness effect” in the bottom‐half format condition, F(2,60) = 7.09, P < 0.005, ηp² = 0.191, with stronger dorsal CC activation for happy than for neutral and angry expressions (Ps < 0.005). The maximal correlation (negative) of the dorsal CC activation was with the TF2‐SF1 topography (ρ = −0.39, P < 0.0001), which suggests that this source made a major contribution to anterior N3 activity.

DISCUSSION

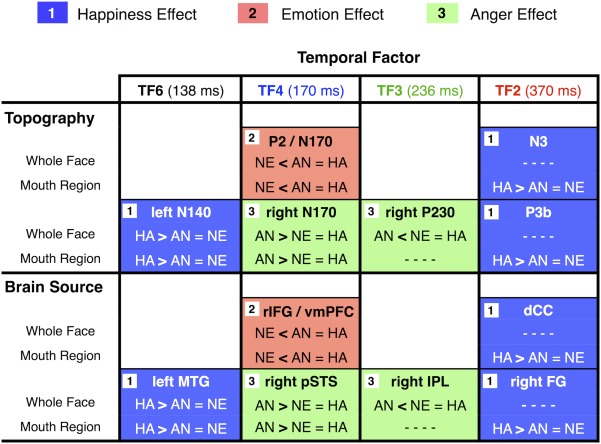

By means of temporospatial PCA and LAURA source localization of EEG activity in response to bottom‐half (mouth regions) and whole faces (happy, angry, and neutral) in an expression categorization task, we investigated the time course and locus of neural processes underlying the role of the smile in the typical (also found here) happy face recognition advantage. The major results revealed two temporospatial profiles of differential brain responses to happy faces (“happiness effect”): One was sensitive to both whole and bottom‐half faces, thus reflecting format‐independent effects, and involved an early temporospatial PCA factor (≈140 ms after face onset, left N140 hereafter); the other was elicited later only by bottom‐half faces (≈370 ms, N3 and P3b), thereby showing a format‐selective effect (see Figs. 3 and 4). Sources in the left inferior temporal (IT) cortex (MTG, BA21) were the greatest contributors to the format‐independent neural signature, with an enhanced left N140 to happy faces (see Fig. 5). Sources in the right IT cortex (FG, BA20) and the dorsal cingulate cortex (CC, BA24) contributed most to the format‐selective signature, with an enhanced P3b and anterior N3 for happy bottom‐half faces (see Fig. 5). A more detailed integrative overview of all the findings to be discussed next (including also a specific “anger effect” and a general “emotion effect”) is presented in Figure 7.

Figure 7.

Summary of neurophysiological findings. Comparisons of happiness, emotion, and anger effects on scalp field potentials (surface topography) and brain sources, for whole face and mouth region conditions. AN, angry face; HA, happy face; NE, neutral face.

Enhanced Left N140 and IT Cortex Activity: Perceptual Encoding of Salient Smiles

ERP activity around 140 ms (TF6) from face onset was sensitive to the distinction between happy and non‐happy (angry and neutral) expressions, regardless of face format. This early brain response revealed, first, an ERP topography characterized by negative amplitudes at left temporo‐occipital scalp sites (N140) for smiling relative to non‐smiling faces; and, second, a neural source for the ERP surface effect that was located at the left IT cortex (MTG), which, in addition, correlated maximally with the left N140 topography.

Recently, we have reported an augmented ERP response (150–180 ms) at left temporo‐occipital scalp sites for smiling faces [Calvo and Beltrán, 2014]. The current left N140 results are in agreement with this finding, but extend it by suggesting that the left IT cortex could be at the basis of the early brain discrimination between smiling and nonsmiling faces. This is also consistent with a recent ERP study in which, using a similar PCA‐based source reconstruction approach, early differences between happy and non‐happy faces were source‐located at a more posterior area of the left IT cortex (fusiform gyrus) [Carretié et al., 2013a]. In this prior source localization study, however, only whole emotional faces were investigated, and a task not requiring explicit expression categorization was used. Here, in contrast, we presented both whole and bottom‐half faces during an expression categorization task. Importantly, we found that early activity in left IT distinguished between smiling and non‐smiling faces independently of the displayed format, i.e., also when the smile appeared separately from the whole facial configuration of a happy face.

Accordingly, the current left IT sources presumably index the activity of an early part‐based, analytical perceptual mechanism from which the smile, as a single feature, benefits because of its high visual saliency [Calvo and Nummenmaa, 2008]. As already noted, the visual saliency of single features in a face, especially the smiling mouth, strongly affects early perceptual processes [see Borji and Itti, 2013]. More specifically, high visual saliency has been found to be associated with the faster detection of the smile through sensory gain and early attentional capture mechanisms [Calvo and Beltrán, 2014; Calvo et al., 2014c]. The current study adds to this research by relating visual saliency to an enhanced neural response at higher‐order visual areas of the left hemisphere, which are thought to support part‐based, analytical encoding of faces, albeit not being specific to them [Meng et al., 2012; Renzi et al., 2013; Rossion et al., 2000; Scott and Nelson, 2006].

Enhanced P3b and Right IT Cortex Activity: Facilitated Expression Categorization From Distinctive Smiles

The bottom half of happy faces (with the smiling mouth) elicited larger ERP activity than non‐happy mouth regions on the topography of the temporal factor TF2 (≈370 ms), which was coincident with the P3b component. P3b activity is thought to reflect the amount of resources invested to categorize or evaluate task relevant stimuli [Folstein and Van Petten, 2011; Kok, 2001; Polich, 2011]. In our expression categorization task, all the face stimuli were task‐relevant, thus implying that P3b effects arose from differences in categorization demands of the various expressions.

As already noted, two smile properties, i.e., visual saliency and categorical distinctiveness (or diagnostic value), are thought to facilitate the happy face recognition advantage [Calvo et al., 2012]. While saliency has been proposed to affect earlier perceptual encoding, distinctiveness is proposed to account for the later categorization effects. Distinctiveness refers to the specific or unique categorical information (i.e., the smile diagnostic value) conveyed by a facial feature, and therefore it is expected to influence brain activity related to categorization by drawing processing resources, as indexed by P3b [Calvo and Beltrán, 2014]. Furthermore, the smile distinctiveness is expected to produce greater differences among bottom‐half faces than among whole faces. The reason is that a distinctive feature is particularly important for recognition when the face configuration is broken down due to only part of the face being visible [Calvo et al., 2014c; Goren and Wilson, 2006]. Accordingly, the larger P3b amplitudes observed in the current study for happy relative to non‐happy bottom‐half faces may be seen as driven by the higher diagnostic value of the smile: A distinctive smile alone, even in the absence of the whole face configuration, could elicit the cognitive representation or reconstruction of the happy expression category, hence enhancing use of processing resources, while the other (less distinctive) mouth regions of non‐happy faces could not.

Our source localization analyses further clarify the meaning of the larger P3b amplitudes for smiling mouths. The right inferior temporal (IT) cortex was the greatest contributor to P3b effects. This suggests that a large amount of the brain resources allocated to recognize emotions from bottom‐half faces involves differential responses within modality‐specific areas [e.g., Haxby and Gobbini, 2011; Kanwisher and Yovel, 2006; Rangarajan et al., 2014]. This finding is consistent with prior source localization research showing modality‐specific generators of P3b activity [Bledowski et al., 2004, 2006; Morgan et al., 2008, 2010]. Increased activity in modality‐specific visual areas (e.g., IT cortex) is associated with evaluation mechanisms, which are thought to operate by linking a stimulus perceptual representation to the task‐relevant conceptual category [e.g., Bledowski et al., 2006]. This is in line with the proposal that categorization is established through the link between perceptual and memory systems [Kok, 2001]. Accordingly, in the current study, activity in the right IT cortex can be interpreted as playing a key role in the categorical evaluation (i.e., expression recognition) of bottom‐half faces. The more active the right IT cortex is, the more likely the evaluation of a feature, i.e., the smile, as belonging to an emotional category (i.e., facial happiness) would be, and hence the larger the P3b amplitudes reflecting recruitment of neural resources. The activity in the right IT cortex can be seen as helping to close the gap between the visible facial feature and the expression category, a process that is more likely to be accomplished for highly diagnostic features such as the smile.

Beyond the parietal P3b modulation, TF2 analyses also showed differences between happy and non‐happy bottom‐half faces over N3 topography. Thus, at the same time as the right IT cortex was contributing to generate the P3b at parietal sites, other neural sources were mostly responsible for a different pattern of effects on surface ERP activity. The maximal contributor to these scalp effects was located at the dorsal cingulate cortex (CC), which is consistent with prior source localization evidence [Polich, 2011]. The dorsal and posterior cingulate cortex, as well as surrounding parietal areas, have in fact been associated with ERP activity at the time window of the P300 complex, thus suggesting that these sources reflect attentional allocation mechanisms during stimulus evaluation [Bledowski et al., 2004, 2006; Clayson and Larson, 2013]. Consistently, in the current study, both TF2 sources (i.e., IT and dCC) converged in showing an enhanced allocation of processing resources to expression categorization of the smiling mouth.

P2/N170, Right N170, and Right P230: Perceptual Encoding of Anger and Emotion Encoding

In addition to the neural profile for the happy face recognition advantage, the current study revealed two further ERP modulations: one distinguished between angry and non‐angry expressions (“anger effect”), while the other distinguished between emotional and non‐emotional faces (“emotion effect”). The “anger effect” was evident in PCA equivalents of the right‐lateralized N170 (TF4: ≈170 ms) and the posterior P230 (TF3: ≈230 ms), involving sources at the right posterior superior temporal sulcus (pSTS) and the right inferior parietal lobe (IPL), respectively. The effect at right N170 and pSTS was format‐independent, thus reflecting stronger activity for angry than for the two non‐angry (neutral and happy) expressions regardless of face format. In contrast, the right P230 and IPL effects were format‐selective, and showed reduced activity for angry relative to non‐angry expressions only in whole face format. The “emotion effect” (≈170 ms) was format‐independent. It emerged in a topography corresponding to anterior P2 and posterior N170 ERP activity, and coincided in time with the right‐lateralized N170. This effect was source‐located at more anterior regions of the brain: the ventromedial prefrontal cortex (vmPFC) and the right inferior frontal gyrus (IFG).

Remarkably, the temporal overlap of “emotion” and “anger” effects at the time window of the N170 component (≈170 ms) reveals converging but dissociable influences of facial expressions on brain activity. Regarding the source localization of the “emotion effect,” the vmPFC and right IFG are believed to underlie affective experience and evaluation [Fusar‐Poli et al., 2009; Haxby and Gobbini, 2011; Williams et al., 2006]. The larger activation in such brain sources for both happy and angry expressions than for neutral faces suggests that there is early, albeit rather global or coarse, emotional processing (i.e., discrimination of emotional versus non‐emotional faces), which might even arise at earlier stages [e.g., Andino et al., 2009; Krolak‐Salmon et al., 2004; Pourtois et al., 2010]. In contrast, the localization of the “anger effect” at the right pSTS cortex, which is consistent with other source‐localizations of the N170 component [Itier and Taylor, 2004; Nguyen and Cunnington, 2014], points to a more fine‐grained, yet perceptually driven discrimination [e.g., Haxby and Gobbini, 2011]. In particular, the right pSTS is thought to be a core structure in the brain network for the encoding of facial expressions, with contributions at early processing stages [e.g., Pitcher, 2014], and involving the computation of continuous rather than categorical (or holistic) representations of expressions [e.g., Flack et al., 2015; Harris et al., 2012]. Accordingly, the current “anger effect” might be interpreted as indexing the differential recruitment by angry faces of this perceptual mechanism. Thus, in agreement with the prevailing neural model for face processing [Haxby and Gobbini, 2011], the current study shows that neural traces of perceptual and emotional processing of faces co‐occur from relatively early stages.

In a further ERP component (right P230), only the format‐selective (whole faces) “anger effect” remained, subtended by right‐lateralized posterior sources (IPL). Prior research has related occipital P2 activity to a second stage in the structural or configural processing of faces [Latinus and Taylor, 2006; Mercure et al., 2008]. Thus, in line with the interpretation given to the right N170‐pSTS effect, the smaller posterior P230 observed for angry whole faces relative to non‐angry faces could be seen also as perceptually driven, although possibly revealing a distinct encoding mechanism. In support of this interpretation, there is also some evidence relating activation in IPL and nearby structures to facial expression processing [Carretié et al., 2013a; Haxby and Gobbini, 2011].

CONCLUSIONS

The well‐known behavioral recognition advantage for happy facial expressions relies on two differential brain processes: one, at a perceptual stage; the other, at a categorization stage. The first neural signature peaks around 140 ms (left N140) and is source‐located at the left IT cortex, as reflected by greater activity for happy than for non‐happy faces, both when they appear in whole format and also when only the mouth region is displayed. This suggests an enhanced perceptual encoding mechanism for salient smiles that is similarly engaged whenever the smile is visible. The second neural signature peaks around 370 ms (P3b) and is source‐located at the right IT cortex, with greater activity specifically for the mouth region of happy faces than for non‐happy faces. This suggests an enhanced recruitment of face‐specific information to categorize (or reconstruct) facial happiness from distinctive or diagnostic configurations of smiling mouths. This neuro‐cognitive conceptualization is represented in Figure 8.

Figure 8.

Neural dynamics and underpinnings for the role of the smile in happy face processing, at two critical stages (perceptual encoding and expression categorization) and three levels (objective stimulus, brain activity, and cognitive representation).

REFERENCES

- Albert J, López‐Martín S, Hinojosa JA, Carretié L (2013): Spatiotemporal characterization of response inhibition. Neuroimage 76:272–281. doi: 10.1016/j.neuroimage.2013.03.011

- Andino SL, Menendez RG, Khateb A, Landis T, Pegna AJ (2009): Electrophysiological correlates of affective blindsight. Neuroimage 44:581–589. doi: 10.1016/j.neuroimage.2008.09.002

- Batty M, Taylor MJ (2003): Early processing of the six basic facial emotional expressions. Brain Res Cogn Brain Res 17:613–620. doi: 10.1016/S0926-6410(03)00174-5

- Beaudry O, Roy‐Charland A, Perron M, Cormier I, Tapp R (2014): Featural processing in recognition of emotional facial expressions. Cogn Emot 28:416–432. doi: 10.1080/02699931.2013.833500

- Bledowski C, Prvulovic D, Hoechstetter K, Scherg M, Wibral M, Goebel R, Linden DE (2004): Localizing P300 generators in visual target and distractor processing: A combined event‐related potential and functional magnetic resonance imaging study. J Neurosci 24:9353–9360. doi: 10.1523/JNEUROSCI.1897-04.2004

- Bledowski C, Cohen Kadosh K, Wibral M, Rahm B, Bittner RA, Hoechstetter K, Scherg M, Maurer K, Goebel R, Linden DE (2006): Mental chronometry of working memory retrieval: A combined functional magnetic resonance imaging and event‐related potentials approach. J Neurosci 26:821–829. doi: 10.1523/JNEUROSCI.3542-05.2006

- Bombari D, Schmid PC, Schmid‐Mast M, Birri S, Mast FW, Lobmaier JS (2013): Emotion recognition: The role of featural and configural face information. Q J Exp Psychol 66:2426–2442. doi: 10.1080/17470218.2013.789065

- Borji A, Itti L (2013): State‐of‐the‐art in visual attention modeling. IEEE Trans Pattern Anal Mach Intell 35:185–207. doi: 10.1109/TPAMI.2012.89

- Brunet D, Murray MM, Michel CM (2011): Spatiotemporal analysis of multichannel EEG: CARTOOL. Comput Intell Neurosci 2011:1–15. doi: 10.1155/2011/813870

- Calder AJ, Young AW, Keane J, Dean M (2000): Configural information in facial expression perception. J Exp Psychol Hum Percept Perform 26:527–551. doi: 10.1037/0096-1523.26.2.527

- Calvo MG, Beltrán D (2014): Brain lateralization of holistic versus analytic processing of emotional facial expressions. Neuroimage 92:237–247. doi: 10.1016/j.neuroimage.2014.01.048

- Calvo MG, Lundqvist D (2008): Facial expressions of emotion (KDEF): Identification under different display‐duration conditions. Behav Res Methods 40:109–115. doi: 10.3758/BRM.40.1.109

- Calvo MG, Marrero H (2009): Visual search of emotional faces: The role of affective content and featural distinctiveness. Cogn Emot 23:782–806. doi: 10.1080/02699930802151654

- Calvo MG, Nummenmaa L (2008): Detection of emotional faces: Salient physical features guide effective visual search. J Exp Psychol Gen 137:471–494. doi: 10.1037/a0012771

- Calvo MG, Nummenmaa L (2009): Eye‐movement assessment of the time course in facial expression recognition: Neurophysiological implications. Cogn Affect Behav Neurosci 9:398–411. doi: 10.3758/CABN.9.4.398

- Calvo MG, Fernández‐Martín A, Nummenmaa L (2012): Perceptual, categorical, and affective processing of ambiguous smiling facial expressions. Cognition 125:373–393. doi: 10.1016/j.cognition.2012.07.021

- Calvo MG, Gutiérrez‐García A, Avero P, Lundqvist D (2013): Attentional mechanisms in judging genuine and fake smiles: Eye‐movement patterns. Emotion 13:792–802. doi: 10.1037/a0032317

- Calvo MG, Gutiérrez‐García A, Fernández‐Martín A, Nummenmaa L (2014a): Recognition of facial expressions of emotion is related to their frequency in everyday life. J Nonverbal Behav 38:549–567. doi: 10.1007/s10919-014-0191-3

- Calvo MG, Fernández‐Martín A, Nummenmaa L (2014b): Facial expression recognition in peripheral versus central vision: Role of the eyes and the mouth. Psychol Res 78:180–195. doi: 10.1007/s00426-013-0492-x

- Calvo MG, Beltrán D, Fernández‐Martín A (2014c): Processing of facial expressions in peripheral vision: Neurophysiological evidence. Biol Psychol 100:60–70. doi: 10.1016/j.biopsycho.2014.05.007

- Carretié L, Tapia M, Mercado F, Albert J, López‐Martin S, de la Serna JM (2004): Voltage‐based versus factor score‐based source localization analyses of electrophysiological brain activity: A comparison. Brain Topogr 17:109–115. doi: 10.1007/s10548-004-1008-1

- Carretié L, Kessel D, Carboni A, López‐Martín S, Albert J, Tapia M, Mercado F, Capilla A, Hinojosa JA (2013a): Exogenous attention to facial vs non‐facial emotional visual stimuli. Soc Cogn Affect Neurosci 8:764–773. doi: 10.1093/scan/nss068

- Carretié L, Albert J, López‐Martín S, Hoyos S, Kessel D, Tapia M, Capilla A (2013b): Differential neural mechanisms underlying exogenous attention to peripheral and central distracters. Neuropsychologia 51:1838–1847. doi: 10.1016/j.neuropsychologia.

- Clayson PE, Larson MJ (2013): Adaptation to emotional conflict: Evidence from a novel face emotion paradigm. PLoS One 8:e75776. doi: 10.1371/journal.pone.0075776

- Dien J, Frishkoff GA (2005): Principal components analysis of ERP data. In: Handy TC, editor. Event‐Related Potentials: A Methods Handbook. Cambridge: The MIT Press; pp 189–207.

- Eimer M, Holmes A, McGlone F (2003): The role of spatial attention in the processing of facial expression: An ERP study of rapid brain responses to six basic emotions. Cogn Affect Behav Neurosci 3:97–110. doi: 10.3758/CABN.3.2.97

- Eisenbarth H, Alpers GW (2011): Happy mouth and sad eyes: Scanning emotional facial expressions. Emotion 11:860–865. doi: 10.1037/a0022758

- Ekman P (1992): Are there basic emotions? Psychol Rev 99:550–553. doi: 10.1037/0033-295X.99.3.550

- Elfenbein HA, Ambady N (2003): When familiarity breeds accuracy: Cultural exposure and facial emotion recognition. J Pers Soc Psychol 85:276–290. doi: 10.1037/0022-3514.85.2.276

- Flack TR, Andrews TJ, Hymers M, Al‐Mosaiwi, M , Marsden SP, Marsden SP, Strachan JW, Trakukpipat C, Wang L, Wu T, Young AW (2015): Responses in the right posterior superior temporal sulcus show a feature‐based response to facial expression. Cortex, 69:14–23. doi: 10.1016/j.cortex.2015.03.002 [DOI] [PubMed] [Google Scholar]

- Folstein JR, van Petten C (2011): After the P3: Late executive processes in stimulus categorization. Psychophysiology 48:825–841. doi: 10.1111/j.1469-8986.2010.01146.x

- Foti D, Hajcak G, Dien J (2009): Differentiating neural responses to emotional pictures: Evidence from temporal‐spatial PCA. Psychophysiology 46:521–530. doi: 10.1111/j.1469-8986.2009.00796.x

- Foti D, Weinberg A, Dien J, Hajcak G (2011): Event‐related potential activity in the basal ganglia differentiates rewards from nonrewards: Temporospatial principal components analysis and source localization of the feedback negativity. Hum Brain Mapp 32:2207–2216. doi: 10.1002/hbm.21182

- Frühholz S, Fehr T, Herrmann M (2009): Early and late temporospatial effects of contextual interference during perception of facial affect. Int J Psychophysiol 74:1–13. doi: 10.1016/j.ijpsycho.2009.05.010

- Fusar‐Poli P, Placentino A, Carletti F, Landi P, Allen P, Surguladze S, Benedetti F, Abbamonte M, Gasparoti R, Barale F, Perez J, McGuire P, Politi P (2009): Functional atlas of emotional faces processing: A voxel‐based meta‐analysis of 105 functional magnetic resonance imaging studies. J Psychiatry Neurosci 34:418–432.

- George N (2013): The facial expression of emotions. In: Armony J, Vuilleumier P, editors. The Cambridge Handbook of Human Affective Neuroscience. New York: Cambridge University Press; pp 171–196.

- Goren D, Wilson HR (2006): Quantifying facial expression recognition across viewing conditions. Vision Res 46:1253–1262. doi: 10.1016/j.visres.2005.10.028

- Grave de Peralta R, Andino S, Lantz G, Michel CM, Landis T (2001): Noninvasive localization of electromagnetic epileptic activity. I. Method descriptions and simulations. Brain Topogr 14:131–137. doi: 10.1023/A:1012944913650

- Harris RJ, Young AW, Andrews TJ (2012): Morphing between expressions dissociates continuous from categorical representations of facial expression in the human brain. Proc Natl Acad Sci USA 109:21164–21169. doi: 10.1073/pnas.1212207110

- Haxby JV, Gobbini MI (2011): Distributed neural systems for face perception. In: Rhodes G, Calder A, Johnson M, Haxby JV, editors. Oxford Handbook of Face Perception. New York: Oxford University Press; pp 93–110.

- Itier RJ, Taylor MJ (2004): Source analysis of the N170 to faces and objects. Neuroreport 15:1261–1265.

- Itti L, Koch C (2001): Computational modeling of visual attention. Nat Rev Neurosci 2:194–203. doi: 10.1038/35058500

- Johnston L, Miles L, Macrae C (2010): Why are you smiling at me? Social functions of enjoyment and non‐enjoyment smiles. Br J Soc Psychol 49:107–127. doi: 10.1348/014466609X412476

- Kanwisher N, Yovel G (2006): The fusiform face area: A cortical region specialized for the perception of faces. Philos Trans R Soc Lond B Biol Sci 361:2109–2128. doi: 10.1098/rstb.2006.1934

- Kayser J, Tenke CE (2003): Optimizing PCA methodology for ERP component identification and measurement: Theoretical rationale and empirical evaluation. Clin Neurophysiol 114:2307–2325. doi: 10.1016/S1388-2457(03)00241-4

- Kohler CG, Turner T, Stolar NM, Bilker WB, Brensinger CM, Gur RE, Gur RC (2004): Differences in facial expressions of four universal emotions. Psychiatry Res 128:235–244. doi: 10.1016/j.psychres.2004.07.003

- Kok A (2001): On the utility of P3 amplitude as a measure of processing capacity. Psychophysiology 38:557–577. doi: 10.1017/S0048577201990559

- Kraft TL, Pressman SD (2012): Grin and bear it: The influence of manipulated facial expression on the stress response. Psychol Sci 23:1372–1378. doi: 10.1177/0956797612445312

- Krolak‐Salmon P, Hénaff MA, Vighetto A, Bertrand O, Mauguière F (2004): Early amygdala reaction to fear spreading in occipital, temporal, and frontal cortex: A depth electrode ERP study in human. Neuron 42:665–676. doi: 10.1016/S0896-6273(04)00264-8

- Latinus M, Taylor MJ (2006): Face processing stages: Impact of difficulty and the separation of effects. Brain Res 1123:179–187. doi: 10.1016/j.brainres.2006.09.031

- Leppänen J, Hietanen JK (2007): Is there more in a happy face than just a big smile? Vis Cogn 15:468–490. doi: 10.1080/13506280600765333

- Leppänen JM, Hietanen JK, Koskinen K (2008): Differential early ERPs to fearful versus neutral facial expressions: A response to the salience of the eyes? Biol Psychol 78:150–158. doi: 10.1016/j.biopsycho.2008.02.002

- Lipp O, Price SM, Tellegen CL (2009): No effect of inversion on attentional and affective processing of facial expressions. Emotion 9:248–259. doi: 10.1037/a0014715

- Lundqvist D, Flykt A, Öhman A (1998): The Karolinska Directed Emotional Faces – KDEF. CD‐ROM from Department of Clinical Neuroscience, Psychology section, Karolinska Institutet, Stockholm, Sweden. ISBN 91-630-7164-9.

- Luo W, Feng W, He W, Wang NY, Luo YJ (2010): Three stages of facial expression processing: ERP study with rapid serial visual presentation. Neuroimage 49:1856–1867. doi: 10.1016/j.neuroimage.2009.09.018

- McLellan T, Johnston L, Dalrymple‐Alford J, Porter R (2010): Sensitivity to genuine vs. posed emotion specified in facial displays. Cogn Emot 24:1277–1292. doi: 10.1080/02699930903306181

- Maurer D, O'Craven KM, Le Grand R, Mondloch CJ, Springer MV, Lewis TL, Grady CL (2007): Neural correlates of processing facial identity based on features versus their spacing. Neuropsychologia 45:1438–1451. doi: 10.1016/j.neuropsychologia.2006.11.016

- Meng M, Cherian T, Singal G, Sinha P (2012): Lateralization of face processing in the human brain. Proc Biol Sci 279:2052–2061. doi: 10.1098/rspb.2011.1784

- Mercure E, Dick F, Johnson MH (2008): Featural and configural face processing differentially modulate ERP components. Brain Res 1239:162–170. doi: 10.1016/j.brainres.2008.07.098

- Michel CM, Murray MM, Lantz G, Gonzalez S, Spinelli L, Grave de Peralta R (2004): EEG source imaging. Clin Neurophysiol 115:2195–2222. doi: 10.1016/j.clinph.2004.06.001

- Milders M, Sahraie A, Logan S (2008): Minimum presentation time for masked facial expression discrimination. Cogn Emot 22:63–82. doi: 10.1080/02699930701273849

- Morel S, Beaucousin V, Perrin M, George N (2012): Very early modulation of brain responses to neutral faces by a single prior association with an emotional context: Evidence from MEG. Neuroimage 61:1461–1470. doi: 10.1016/j.neuroimage.2012.04.016

- Morgan HM, Klein C, Boehm SG, Shapiro KL, Linden DE (2008): Working memory load for faces modulates P300, N170, and N250r. J Cogn Neurosci 20:989–1002. doi: 10.1162/jocn.2008.20072

- Morgan HM, Jackson MC, Klein C, Mohr H, Shapiro KL, Linden DE (2010): Neural signatures of stimulus features in visual working memory–a spatiotemporal approach. Cereb Cortex 20:187–197. doi: 10.1093/cercor/bhp094

- Nelson NL, Russell JA (2013): Universality revisited. Emot Rev 5:8–15. doi: 10.1177/1754073912457227

- Nguyen VT, Cunnington R (2014): The superior temporal sulcus and the N170 during face processing: Single trial analysis of concurrent EEG‐fMRI. Neuroimage 86:492–502. doi: 10.1016/j.neuroimage.2013.10.047

- Nusseck M, Cunningham DW, Wallraven C, Bülthoff HH (2008): The contribution of different facial regions to the recognition of conversational expressions. J Vis 8:1–23. doi: 10.1167/8.8.1

- Palermo R, Coltheart M (2004): Photographs of facial expression: Accuracy, response times, and ratings of intensity. Behav Res Methods Instrum Comput 36:634–638. doi: 10.3758/BF03206544

- Pegna AJ, Landis T, Khateb A (2008): Electrophysiological evidence for early non‐conscious processing of fearful facial expressions. Int J Psychophysiol 70:127–136. doi: 10.1016/j.ijpsycho.2008.08.007

- Pitcher D (2014): Facial expression recognition takes longer in the posterior superior temporal sulcus than in the occipital face area. J Neurosci 34:9173–9177. doi: 10.1523/JNEUROSCI.5038-13.2014

- Polich J (2011): Neuropsychology of P300. In: Luck SJ, Kappenman ES, editors. The Oxford Handbook of Event‐Related Potential Components. New York: Oxford University Press; pp 159–188.

- Pourtois G, Grandjean D, Sander D, Vuilleumier P (2004): Electrophysiological correlates of rapid spatial orienting towards fearful faces. Cereb Cortex 14:619–633. doi: 10.1093/cercor/bhh023

- Pourtois G, Spinelli L, Seeck M, Vuilleumier P (2010): Modulation of face processing by emotional expression and gaze direction during intracranial recordings in right fusiform cortex. J Cogn Neurosci 22:2086–2107. doi: 10.1162/jocn.2009.21404

- Rangarajan V, Hermes D, Foster BL, Weiner KS, Jacques C, Grill‐Spector K, Parvizi J (2014): Electrical stimulation of the left and right human fusiform gyrus causes different effects in conscious face perception. J Neurosci 34:12828–12836. doi: 10.1523/JNEUROSCI.0527-14.2014

- Recio G, Schacht A, Sommer W (2013): Classification of dynamic facial expressions of emotion presented briefly. Cogn Emot 27:1486–1494. doi: 10.1080/02699931.2013.794128

- Recio G, Schacht A, Sommer W (2014): Recognizing dynamic facial expressions of emotion: Specificity and intensity effects in event‐related brain potentials. Biol Psychol 96:111–125. doi: 10.1016/j.biopsycho.2013.12.003

- Rellecke J, Palazova M, Sommer W, Schacht A (2011): On the automaticity of emotion processing in words and faces: Event‐related brain potentials evidence from a superficial task. Brain Cogn 77:23–32. doi: 10.1016/j.bandc.2011.07.001

- Rellecke J, Sommer W, Schacht A (2012): Does processing of emotional facial expressions depend on intention? Time‐resolved evidence from event‐related brain potentials. Biol Psychol 90:23–32. doi: 10.1016/j.biopsycho.2012.02.002

- Renzi C, Schiavi S, Carbon CC, Vecchi T, Silvanto J, Cattaneo Z (2013): Processing of featural and configural aspects of faces is lateralized in dorsolateral prefrontal cortex: A TMS study. Neuroimage 74:45–51. doi: 10.1016/j.neuroimage.2013.02.015

- Rossion B, Dricot L, Devolder A, Bodart JM, Crommelinck M, De Gelder B, Zoontjes R (2000): Hemispheric asymmetries for whole‐based and part‐based face processing in the human fusiform gyrus. J Cogn Neurosci 12:793–802.

- Schacht A, Sommer W (2009): Emotions in word and face processing: Early and late cortical responses. Brain Cogn 69:538–550. doi: 10.1016/j.bandc.2008.11.005

- Schupp H, Öhman A, Junghöfer M, Weike AI, Stockburger J, Hamm AO (2004): The facilitated processing of threatening faces: An ERP analysis. Emotion 4:189–200. doi: 10.1037/1528-3542.4.2.189

- Scott LS, Nelson CA (2006): Featural and configural face processing in adults and infants: A behavioral and electrophysiological investigation. Perception 35:1107–1128. doi: 10.1068/p5493

- Somerville LH, Whalen PJ (2006): Prior experience as a stimulus category confound: An example using facial expressions of emotion. Soc Cogn Affect Neurosci 1:271–274. doi: 10.1093/scan/nsl040

- Svärd J, Wiens S, Fischer H (2012): Superior recognition performance for happy masked and unmasked faces in both younger and older adults. Front Psychol 3:520. doi: 10.3389/fpsyg.2012.00520

- Sweeny TD, Suzuki S, Grabowecky M, Paller KA (2013): Detecting and categorizing fleeting emotions in faces. Emotion 13:76–91. doi: 10.1037/a0029193

- Talairach J, Tournoux P (1988): Co‐planar Stereotaxic Atlas of the Human Brain. Stuttgart: Thieme.

- Vytal K, Hamann S (2010): Neuroimaging support for discrete neural correlates of basic emotions: A voxel‐based meta‐analysis. J Cogn Neurosci 22:2864–2885. doi: 10.1162/jocn.2009.21366

- Wang HF, Friel N, Gosselin F, Schyns PG (2011): Efficient bubbles for visual categorization tasks. Vision Res 51:1318–1323. doi: 10.1016/j.visres.2011.04.007

- Weymar M, Löw A, Öhman A, Hamm AO (2011): The face is more than its parts: Brain dynamics of enhanced spatial attention to schematic threat. Neuroimage 58:946–954. doi: 10.1016/j.neuroimage.2011.06.061

- Williams LM, Palmer D, Liddell BJ, Song L, Gordon E (2006): The when and where of perceiving signals of threat versus non‐threat. Neuroimage 31:458–467. doi: 10.1016/j.neuroimage.2005.12.009

- Willis ML, Palermo R, Burke D, Atkinson CM, McArthur G (2010): Switching associations between facial identity and emotional expression: A behavioural and ERP study. Neuroimage 50:329–339. doi: 10.1016/j.neuroimage.2009.11.071