Abstract

In natural viewing conditions, different stimulus categories such as people, objects, and natural scenes carry relevant affective information that is usually processed simultaneously. But these different signals may not always have the same affective meaning. Using body‐scene compound stimuli, we investigated how the brain processes fearful signals conveyed by either a body in the foreground or scenes in the background and the interaction between foreground body and background scene. The results showed that left and right extrastriate body areas (EBA) responded more to fearful than to neutral bodies. More interestingly, a threatening background scene compared to a neutral one showed increased activity in bilateral EBA and right‐posterior parahippocampal place area (PPA) and decreased activity in right retrosplenial cortex (RSC) and left‐anterior PPA. The emotional scene effect in EBA was only present when the foreground body was neutral and not when the body posture expressed fear (significant emotion‐by‐category interaction effect), consistent with behavioral ratings. The results provide evidence for emotional influence of the background scene on the processing of body expressions. Hum Brain Mapp 35:492–502, 2014. © 2012 Wiley Periodicals, Inc.

Keywords: body, scene, emotion, EBA, PPA

INTRODUCTION

Often when we see that somebody is afraid, we notice at the same time what causes his fear. For example, when we see a person running away, we also notice the car crash or the house on fire. In natural viewing conditions, different stimulus categories carrying affective information, such as people, objects, and backgrounds, may all be relevant and processed together, and these information streams may interact. The brain has a remarkable capacity to detect affective information conveyed by the gist of a scene [Thorpe et al., 1996] as well as the emotional valence displayed by bodily expressions [de Gelder et al., 2004], even with limited awareness [Stienen and de Gelder, 2011; Tamietto et al., 2009; Van den Stock et al., 2009].

In fact, the importance of visual context for improving target detection is now widely acknowledged in computer vision [Yao et al., 2011]. But little is known about how the brain perceives the emotional expression of a person when this is presented in a realistic context and how the information from the person's expression and that from the background scene influence each other, whether or not they have the same affective relevance.

A recent study shows that there is indeed an interaction between both types of information: observers are more accurate in recognizing body expressions when they are emotionally congruent with the affective valence of the background scene [Kret and de Gelder, 2010]. There is evidence that activity in body‐selective regions like the fusiform body area (FBA) [Peelen and Downing, 2005] and extrastriate body area (EBA) [Downing et al., 2001] is modulated by emotional information conveyed by bodily expressions [de Gelder et al., 2010]. In addition to boosting activity in category‐selective brain areas, perceiving body expressions has been reported to involve other brain regions, for instance, the amygdala [Hadjikhani and de Gelder, 2003], cortical and subcortical motor‐related areas [de Gelder et al., 2004], superior temporal sulcus [e.g., Candidi et al., 2011], and periaqueductal gray [van de Riet et al., 2009].

The emotional modulation of scene‐selective areas like the parahippocampal place area (PPA) [Epstein and Kanwisher, 1998], the retrosplenial cortex (RSC) [Bar and Aminoff, 2003], and the transverse occipital sulcus (TOS) [Hasson et al., 2003] has not yet been explored. Studies investigating the neural correlates of emotional scenes have typically compared scenes containing emotional body expressions (e.g., erotic pictures or attacking robbers) with neutral scenes containing household objects, but without human bodies. Therefore, it is unclear to what extent this comparison reflects category‐specific rather than emotional responses. From this perspective, it may not be surprising that emotional scenes activate extrastriate cortices in the region of EBA [Bradley et al., 2003; Keightley et al., 2011; Schafer et al., 2005]. On the other hand, we recently reported that threatening scenes matched on categorical content also activate the EBA [Sinke et al., 2012].

To our knowledge, no study manipulated the valence of bodies in emotional and neutral scenes while systematically controlling for category‐specific features. In the present study, we targeted this issue and investigated how the brain responds to emotional information conveyed by bodies as well as by background scenes and how both types of information interact.

MATERIALS AND METHODS

Participants

Twenty participants [7 males; mean age (SD): 23.4 (3.90)] took part in the fMRI experiment. All participants had normal or corrected‐to‐normal vision and no neurological or psychiatric history. Informed written consent was obtained in accordance with the Declaration of Helsinki, and the study was approved by the local ethics committee.

Body‐Scene Experiment

Pictures of whole‐body expressions with the faces blurred were taken from our own validated database [de Gelder and Van den Stock, 2011a]. Twenty‐four images (12 identities, each with a neutral and a fearful expression) were selected for use in the present study (all recognized accurately above 80% in the pilot study). Scenes of happy, threatening, neutral, sad, or disgusting everyday situations were downloaded from the internet. We selected three familiar scene categories (buildings, cars, and landscapes) that involve the same objects but with different affective significance. Examples of stimuli are a house on fire or a holiday cottage, and a damaged car in an accident or a shiny new convertible. None of the scenes displayed humans or animals. In a pilot study, the scenes were presented one by one for 4,000 ms with a 4,000‐ms interstimulus interval. Participants were instructed to categorize the pictures as accurately and as quickly as possible according to the emotion they induced in the observer (anger, fear, happiness, disgust, sadness, or neutral). On the basis of these results, we selected 24 scenes (12 threatening and 12 neutral) for the present experiment (all recognized correctly above 70%). Each category contained four exemplars with a car, four with a building, and four landscapes. We created scrambled versions of every scene by dividing it into 10,000 (100 × 100) squares and randomly rearranging the squares. Each of the 24 scenes was combined into a compound stimulus in four different combinations (with a fearful male body, a fearful female body, a neutral male body, and a neutral female body), resulting in 96 realistic‐looking compound stimuli. We used the scrambles as controls for the scenes and a triangle as control for the bodies. We presented the triangles with a horizontal baseline and an upward vertex, as one study reported that downward vertices convey threat [Aronoff et al., 1992].
The 24 scrambled scenes were combined once with a fearful body and once with a neutral body, leading to an additional 48 compound stimuli. We also paired every scene with a white triangle (24 intact scenes + 24 scrambled scenes). These scene‐triangle combinations were used as a control condition instead of only the scenes in order to maintain the same task in all conditions (see below) and to ensure that all stimuli had a clear foreground/background structure. This procedure resulted in a total of 192 compound stimuli.
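The scrambling step and the stimulus counts described above can be illustrated with a short sketch. This is not the authors' stimulus‐generation code: the function names `scramble_tiles` and `count_compounds`, the list‐of‐rows image representation, and the requirement that the image sides divide evenly by the grid size are all assumptions made for illustration.

```python
import random


def scramble_tiles(image, grid=100, seed=None):
    """Cut a 2-D image (list of pixel rows) into grid x grid tiles and shuffle them.

    Hypothetical helper: assumes both image sides are divisible by `grid`.
    """
    height, width = len(image), len(image[0])
    if height % grid or width % grid:
        raise ValueError("image sides must be divisible by the grid size")
    th, tw = height // grid, width // grid  # tile height and width in pixels

    # Collect tiles in row-major order; each tile is a list of row slices.
    tiles = [
        [row[c * tw:(c + 1) * tw] for row in image[r * th:(r + 1) * th]]
        for r in range(grid)
        for c in range(grid)
    ]
    random.Random(seed).shuffle(tiles)

    # Reassemble the shuffled tiles into a full-size image.
    out = []
    for r in range(grid):
        band = tiles[r * grid:(r + 1) * grid]
        for y in range(th):
            out.append([px for tile in band for px in tile[y]])
    return out


def count_compounds(n_scenes=24, n_scrambles=24):
    """Return the three stimulus subtotals reported in the text."""
    intact_with_bodies = n_scenes * 4        # fearful/neutral x male/female body
    scrambled_with_bodies = n_scrambles * 2  # one fearful and one neutral body
    with_triangle = n_scenes + n_scrambles   # every scene and scramble once
    return intact_with_bodies, scrambled_with_bodies, with_triangle
```

With the defaults, `count_compounds()` reproduces the subtotals given in the text (96, 48, and 48), which sum to the reported 192 compound stimuli.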

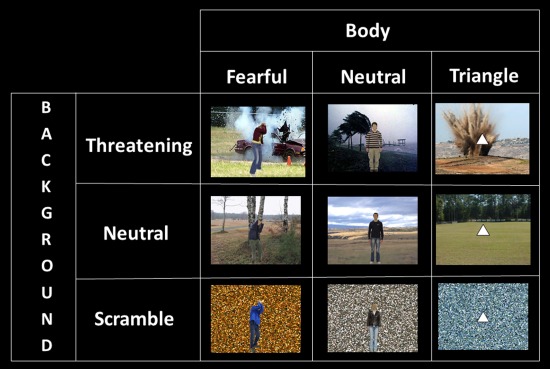

Stimuli were presented in blocks of 9,000 ms, separated by fixation blocks of 15,750 ms. Within a block, eight stimuli were presented for 800 ms with an ISI of 370 ms, during which a gray screen was shown. In fixation blocks, a gray screen with a black fixation cross was presented. We used a 3 (body: fearful, neutral, and triangle) × 3 (scene: threatening, neutral, and scrambled) factorial design (see Fig. 1). Participants were given an oddball detection task and instructed to press the response button when the foreground figure (body or triangle) was shown upside‐down. A run lasted 783 s and consisted of 31 experimental blocks and 32 fixation blocks. The order of the blocks was randomized. In 4 of the 31 blocks (13%), an oddball stimulus occurred, while the remaining 27 blocks comprised three blocks of each of the nine conditions. The experiment consisted of four runs.
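As a consistency check on the timing parameters, the following sketch recomputes the block content, run duration, and number of volumes per run from the stated values. It assumes that the ISI occurs only between stimuli (seven gaps per block), which is not stated explicitly, and it takes the repetition time of 2,250 ms from the acquisition parameters reported in the scanning section. All names are illustrative.

```python
STIM_MS, ISI_MS, STIMS_PER_BLOCK = 800, 370, 8
BLOCK_MS, FIXATION_MS = 9_000, 15_750
N_BLOCKS, N_FIXATIONS, N_ODDBALL_BLOCKS = 31, 32, 4
N_CONDITIONS = 3 * 3  # 3 body levels x 3 scene levels
TR_MS = 2_250         # repetition time of the main experiment


def block_content_ms():
    """Stimulus time plus gaps between stimuli (assumption: no trailing ISI)."""
    return STIMS_PER_BLOCK * STIM_MS + (STIMS_PER_BLOCK - 1) * ISI_MS


def run_duration_ms():
    """Total run length from the block and fixation counts."""
    return N_BLOCKS * BLOCK_MS + N_FIXATIONS * FIXATION_MS


def volumes_per_run():
    """Number of whole volumes that fit into one run."""
    return run_duration_ms() // TR_MS


def blocks_per_condition():
    """Non-oddball blocks divided evenly over the nine conditions."""
    return (N_BLOCKS - N_ODDBALL_BLOCKS) // N_CONDITIONS
```

Under these assumptions the block content is 8,990 ms (about 9,000 ms), the run lasts 783,000 ms, and this corresponds to the 348 volumes per run reported for the acquisition, with three blocks per condition.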

Figure 1.

Schematic overview and stimulus examples of the conditions in the 3 body × 3 background factorial design. [Color figure can be viewed in the online issue, which is available at http://wileyonlinelibrary.com.]

Object Category Localizer

The methods of the object category localizer are described in detail in Van den Stock et al. [2011]. In summary, neutral body postures, neutral faces, buildings, and handheld tools were presented in a pseudo‐randomized blocked design with alternating stimulation (12,000 ms) and fixation blocks (14,000 ms). There were 20 exemplars of each category. Half of the body images and half of the face images were male. There was no overlap between the body identities presented in the main experiment and in the localizer.

Half of the participants were scanned with a parallel version in which the order of the blocks was reversed. Participants performed a one‐back task. The mean number of one back targets per block was 1 (range, 0–2) with an equal distribution among conditions.

fMRI Scan Acquisition and Data Analysis

Brain imaging was performed on a Siemens MAGNETOM Allegra 3T MR head scanner at the Maastricht Brain Imaging Center, Maastricht University.

All participants underwent four experimental runs, in each of which 348 T2*‐weighted BOLD contrast volumes were acquired. On the basis of structural information from a nine‐slice localizer scan, 42 axial slices (slice thickness = 2.5 mm; no gap; inplane resolution = 3.5 × 3.5 mm; matrix size = 64 × 64; FOV = 224 mm) were positioned to cover the whole brain (TE = 25 ms; TR = 2,250 ms; flip angle = 90°). Slices were scanned in an interleaved ascending order. A high‐resolution T1‐weighted anatomical image (voxel size = 1 × 1 × 1 mm) was acquired for each subject using a three‐dimensional magnetization‐prepared rapid acquisition gradient echo sequence (TR = 2,250 ms; TE = 26 ms; matrix size = 256 × 256; 192 slices). Two functional runs were followed by the structural scan, after which the two remaining functional runs were completed.

The localizer scan was run at the end of the session and was optimized for defining category‐selective areas in occipitotemporal cortex. Two hundred sixty‐seven T2*‐weighted BOLD contrast volumes were acquired, each consisting of 28 slices (slice thickness = 2 mm; no gap; inplane resolution = 2 × 2 mm; matrix size = 128 × 128; FOV = 256 mm) that were axially positioned to cover the (ventral) occipitotemporal cortex (TE = 30 ms; TR = 2,000 ms; flip angle = 90°). Slices were scanned in an interleaved ascending order.

Imaging data were analyzed using BrainVoyager QX software (Brain Innovation, Maastricht, the Netherlands). Structural scans were segmented to delineate white matter from gray matter, and we performed cortex‐based intersubject alignment based on the gyral/sulcal pattern of individual brains [Goebel et al., 2006] to maximize anatomical between‐subject alignment. On the basis of this, an average cortical reconstruction was made based on all individual brains. The first two volumes of every functional run were discarded to allow for T1 equilibration. Preprocessing of the functional data included slice scan time correction (cubic spline interpolation), 3D motion correction (trilinear/sinc interpolation), temporal filtering (high‐pass GLM‐Fourier of two sines/cosines), and Gaussian spatial smoothing (6 mm). Functional data were then co‐registered with the anatomical volume and transferred into Talairach space. Analysis of cortical activation included cortex‐based intersubject alignment [Goebel et al., 2006].

The statistical analysis was based on the general linear model, with each condition defined as a predictor plus one for the oddball. As categorical differences are significantly larger than emotional differences, we used a threshold of P < 10⁻⁴ (uncorrected) for the categorical comparisons and a threshold of P < 10⁻³ (uncorrected) for the emotional comparisons. This approach has also been used in other studies examining both categorical and emotional effects [e.g., Peelen et al., 2007].

Behavioral Experiment

After the scanning session, 14 of the participants [5 males; mean age (SD): 26.4 (10.3)] participated in a behavioral experiment. All body–scene stimuli (excluding the stimuli containing the triangle) were randomly presented twice one by one for 800 ms while participants were instructed to categorize the emotion expressed by the body in a two alternative forced choice task (fearful or neutral). The instructions stated to respond as accurately and quickly as possible.

RESULTS

Whole Brain Analysis

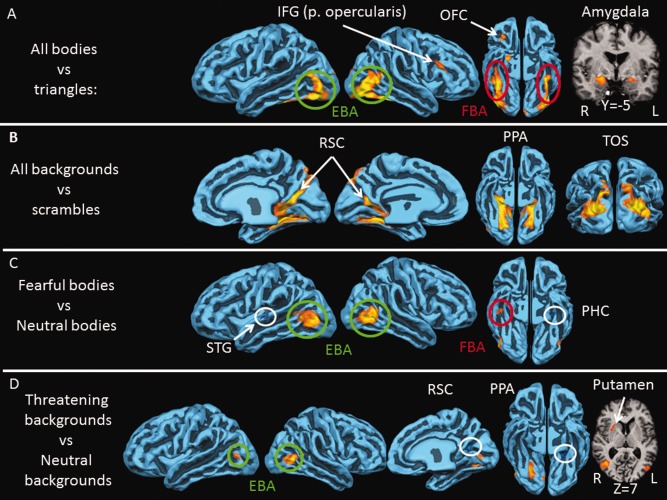

We first examined categorical responses, that is, the regions that respond to bodies and to scenes by comparing each of the two categories (regardless of the emotional information) with its control condition (fearful and neutral bodies vs. triangles; threatening and neutral scenes vs. scrambled scenes). The results are displayed in Figure 2 and Table 1. We do not report negative activations (i.e., activations from the reverse contrast), because they are not of interest for the research question.

Figure 2.

Neural activity for perceiving bodies and backgrounds. A: Brain areas responding more to bodies than to triangles (P < 10⁻⁴): FBA (red), EBA (green), inferior frontal gyrus (IFG), pars opercularis, orbitofrontal cortex (OFC), and amygdala. B: Brain areas responding more to backgrounds than to scrambled images (P < 10⁻⁴): transverse occipital sulcus (TOS), parahippocampal place area (PPA), and retrosplenial cortex (RSC). C: Brain areas responding more to fearful bodies than to neutral bodies (P < 10⁻³): EBA and FBA. The superior temporal gyrus (STG) and parahippocampal cortex (PHC) responded more to neutral than to fearful bodies. D: Brain areas responding more to threatening backgrounds than to neutral backgrounds (P < 10⁻³): EBA, right posterior PPA, and putamen. The right RSC and left anterior PPA responded more to neutral than to threatening backgrounds. Cortical activity is shown on a reconstruction of the average cortically aligned brains of all 20 participants. Gyri are shown in light blue and sulci in dark blue. Negative activation is circled in white. Coordinates refer to TAL space.

Table 1.

Results of the categorical and emotional contrasts

| Region | x | y | z | t | N |
|---|---|---|---|---|---|
| Bodies versus triangles | | | | | |
| RH | | | | | |
| OFC | 28 | 28 | −7 | 6.667 | 32 |
| L‐shape | 43 | −71 | −2 | 12.905 | 1285 |
| Cuneus | 16 | −90 | 16 | 10.926 | 73 |
| IFG | 46 | 26 | 20 | 7.453 | 126 |
| AMG | 20 | −5 | −9 | 7.985 | 1138 |
| Pulvinar | 14 | −26 | 3 | 7.370 | 317 |
| LH | | | | | |
| L‐shape | −38 | −36 | −17 | 11.944 | 581 |
| Cuneus | −6 | −95 | 6 | 8.055 | 78 |
| AMG | −22 | −5 | −9 | 6.207 | 174 |
| Pulvinar | −19 | −26 | 0 | 5.636 | 46 |
| Backgrounds versus scrambles | | | | | |
| RH | | | | | |
| RSC | 18 | −52 | 17 | 12.047 | 557 |
| PPA | 23 | −72 | −6 | 11.777 | 1037 |
| TOS | 43 | −70 | −1 | 13.549 | 600 |
| LH | | | | | |
| RSC | −17 | −54 | 7 | 8.519 | 186 |
| PPA | −26 | −43 | −5 | 13.622 | 699 |
| TOS | −23 | −78 | 20 | 12.903 | 510 |
| Fearful bodies versus neutral bodies | | | | | |
| RH | | | | | |
| EBA | 43 | −71 | −2 | 9.785 | 482 |
| FBA | 42 | −19 | −17 | 5.222 | 54 |
| LH | | | | | |
| EBA | −43 | −75 | 6 | 9.055 | 532 |
| STG | −61 | −19 | 7 | −4.842 | 46 |
| PHC | −35 | −23 | −18 | −5.595 | 94 |
| Cuneus | −9 | −92 | 10 | −4.905 | 22 |
| Threatening backgrounds versus neutral backgrounds | | | | | |
| RH | | | | | |
| EBA | 44 | −70 | 3 | 9.157 | 192 |
| PPA | 25 | −70 | −8 | 7.965 | 163 |
| RSC | 18 | −52 | 17 | −6.246 | 46 |
| LG | 7 | −77 | 1 | 5.967 | 23 |
| Putamen | 26 | 13 | 12 | 5.177 | 441 |
| LH | | | | | |
| EBA | −43 | −71 | 10 | 4.922 | 37 |
| PPA | −21 | −38 | −7 | −5.030 | 83 |

X, Y, and Z refer to Talairach coordinates of the peak voxel/vertex. N refers to the number of voxels/vertices. RH, right hemisphere; LH, left hemisphere; OFC, orbitofrontal cortex; AMG, amygdala; RSC, retrosplenial cortex; PPA, parahippocampal place area; TOS, transverse occipital sulcus; EBA, extrastriate body area; FBA, fusiform body area; STG, superior temporal gyrus; PHC, parahippocampal cortex; LG, lingual gyrus.

Categorical Contrasts

Bodies > triangles

Regions responding more to bodies than to triangles consisted of the bilateral amygdala, pulvinar nuclei of the thalamus, cuneus, an L‐shaped region in occipitotemporal cortex containing EBA [Downing et al., 2001] and FBA [Peelen and Downing, 2005], the right inferior frontal gyrus (IFG), and finally a region in the right orbitofrontal cortex (OFC).

Scenes > scrambled scenes

The scene perception areas comprised the bilateral PPA, RSC, and TOS.

Emotional Contrasts

Second, we examined the effect of emotion. For these comparisons, the statistical threshold was set at P < 10⁻³, uncorrected. Negative activations (i.e., activations from the reverse contrast) are also reported.

Fearful bodies > neutral bodies

Comparing fearful bodies with neutral bodies revealed activity in the bilateral region corresponding to EBA and the right FBA. Neutral bodies activated the left superior temporal gyrus, cuneus, and left anterior parahippocampal cortex (PHC) more than fearful bodies (see the left‐hemisphere coordinates in Table 1).

Threatening scenes > neutral scenes

Surprisingly, comparing threatening with neutral scenes activated the region of EBA. Additionally, the right‐posterior collateral sulcus and putamen were more active during perception of threatening versus neutral scenes, while the left‐anterior collateral sulcus and right retrosplenial cortex (RSC) responded more to neutral than to threatening scenes.

Category × Emotion Interaction

Third, we examined the interaction between category and emotion. We performed a contrast to investigate the additive effect of the combination of a fearful body in a threatening context, controlled for the effect of both categories separately [(fearful body in threatening background – fearful body in neutral background) – (neutral body in threatening background – neutral body in neutral background)]. In addition, we made the reverse comparison [(neutral body in threatening background – neutral body in neutral background) – (fearful body in threatening background – fearful body in neutral background)]. This revealed no significant results at whole‐brain level.
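The interaction contrast described above can be written as a set of weights over the four body × background conditions. The sketch below is illustrative only: the condition labels, the function `contrast_value`, and the beta values are hypothetical and are not taken from the study.

```python
# Weights for (fearful body: threatening - neutral background)
# minus (neutral body: threatening - neutral background).
INTERACTION = {
    "fear_threat": 1,
    "fear_neutral": -1,
    "neutral_threat": -1,
    "neutral_neutral": 1,
}

# The reverse comparison simply flips the sign of every weight.
REVERSE = {name: -weight for name, weight in INTERACTION.items()}


def contrast_value(betas, weights):
    """Weighted sum of condition betas for one voxel, vertex, or ROI."""
    return sum(weights[name] * betas[name] for name in weights)


# Hypothetical betas: the background matters only for the neutral body.
example_betas = {
    "fear_threat": 2.0,
    "fear_neutral": 2.0,
    "neutral_threat": 1.5,
    "neutral_neutral": 1.0,
}
interaction = contrast_value(example_betas, INTERACTION)  # (2.0 - 2.0) - (1.5 - 1.0)
reverse = contrast_value(example_betas, REVERSE)
```

In this made‐up example the interaction contrast is negative and the reverse contrast is positive, which is the direction expected when a threatening background raises the response only for neutral bodies. The weights sum to zero, so any response common to all four conditions cancels out.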

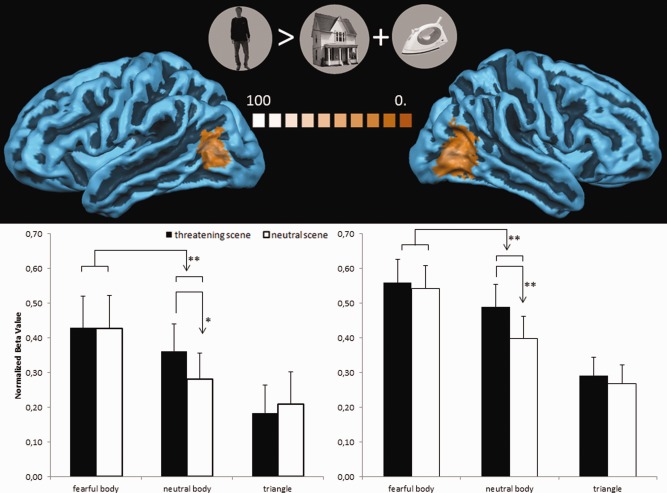

ROI Analysis

In view of the finding that the EBA region responded both to fearful body expressions and to threatening background information, we defined EBA at subject level by contrasting bodies with houses and tools in the localizer (P < 0.05, FDR corrected for multiple comparisons). The right EBA was active in all subjects [mean (SD) Talairach coordinates: 45 (3.4), −66 (4.6), 5 (3.9)], whereas 10 subjects also activated the left EBA [mean (SD) Talairach coordinates: −43 (1.9), −65 (3.7), 9 (3.7)]. For each subject, we extracted the cluster‐averaged normalized beta values in left and right EBA for the conditions of the main experiment. The ROI consisted of the 50 activated vertices around the peak vertex. To investigate the effect of background emotion on the body stimulus, we performed a 2 (body emotion: fear and neutral) × 2 (background emotion: threatening and neutral) repeated‐measures ANOVA. The results are shown in Figure 3 and revealed in the right EBA a main effect of body emotion [F(1,19) = 18.035, P < 0.001], a main effect of background emotion [F(1,19) = 18.179, P < 0.001], and a body × background emotion interaction [F(1,19) = 6.593, P < 0.019]. Post hoc paired‐samples t‐tests between the threatening and neutral background conditions were performed as a function of body condition to follow up on the interaction effect and to investigate the body specificity of the background emotion effect. The results showed that threatening backgrounds trigger more activity than neutral backgrounds, but only when they contain a neutral body [t(19) = 3.819; P < 0.001] and not when they contain a fearful body [t(19) = 1.247; P < 0.228] or a triangle [t(19) = 0.029; P < 0.369].

Figure 3.

Interaction between bodies and background in EBA defined by independent localizer. Top: left EBA and right EBA as defined by an independent localizer (bodies > tools and buildings, stimuli shown in the middle). EBA was defined on subject‐level (FDR‐corrected P < 0.05). The color coding corresponds to the percentage of overlap across subjects. Bottom: histograms showing normalized beta‐values of the main experiment conditions in left and right EBA. Error bars represent 1 SEM. **P < 0.001; *P < 0.03. [Color figure can be viewed in the online issue, which is available at http://wileyonlinelibrary.com.]

The same analysis in the left EBA showed a main effect of body emotion [F(1,9) = 5.805, P < 0.038], whereas the main effect of background emotion [F(1,9) = 4.296, P < 0.068] and the body × background interaction [F(1,9) = 3.613, P < 0.089] were only marginally significant. The post hoc tests showed that threatening backgrounds trigger more activity than neutral backgrounds, but again only when a neutral body was present [t(9) = 2.577; P < 0.030] and not when a fearful body [t(9) = 0.058; P < 0.955] or a triangle [t(9) = −0.537; P < 0.604] was present.
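For readers who want to reproduce this type of ROI analysis on their own beta values, the following sketch shows one way to compute a 2 × 2 repeated‐measures ANOVA and the follow‐up paired t‐tests. It relies on the fact that in a fully within‐subject 2 × 2 design each effect has one degree of freedom, so F(1, n − 1) equals the squared one‐sample t statistic of the per‐subject contrast scores. The function names and the example data are hypothetical; this is not the software or the data used in the study.

```python
from math import sqrt
from statistics import mean, stdev


def one_sample_t(scores):
    """t statistic for testing whether the mean of `scores` differs from zero."""
    n = len(scores)
    return mean(scores) / (stdev(scores) / sqrt(n))


def paired_t(a, b):
    """Paired-samples t statistic, computed on the per-subject differences."""
    return one_sample_t([x - y for x, y in zip(a, b)])


def rm_anova_2x2(data):
    """F values for a 2 x 2 within-subject design.

    `data` holds one tuple per subject:
    (fear_threat, fear_neutral, neutral_threat, neutral_neutral).
    """
    contrasts = {
        "body": [(ft + fn) - (nt + nn) for ft, fn, nt, nn in data],
        "background": [(ft + nt) - (fn + nn) for ft, fn, nt, nn in data],
        "interaction": [(ft - fn) - (nt - nn) for ft, fn, nt, nn in data],
    }
    df = (1, len(data) - 1)
    return {
        name: {"F": one_sample_t(scores) ** 2, "df": df}
        for name, scores in contrasts.items()
    }


# Hypothetical normalized betas for four subjects (illustration only).
example = [
    (1.0, 0.9, 0.8, 0.4),
    (1.2, 1.1, 0.9, 0.6),
    (0.9, 1.0, 0.7, 0.5),
    (1.1, 1.0, 1.0, 0.5),
]
anova = rm_anova_2x2(example)
# Post hoc test: threatening versus neutral background for neutral bodies.
posthoc_neutral = paired_t([s[2] for s in example], [s[3] for s in example])
```

The sketch returns F statistics and degrees of freedom only; P values would have to be obtained from the F and t distributions with a statistics package.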

Behavioral Results

In the behavioral experiment that followed the scanning session, the subjects were instructed to categorize the emotion expressed by the body stimulus. This task was different from the one during scanning, in which subjects performed an orthogonal oddball detection task. We computed the mean accuracies per condition and the median reaction times (RT) of the correct trials of the behavioral experiment. Accuracies were near ceiling (all conditions above 95% correct). A 2 (body emotion: fear and neutral) × 2 (background emotion: threatening and neutral) repeated‐measures ANOVA on the RT data revealed a significant interaction [F(1,13) = 9.634, P < 0.008]. Post hoc paired‐samples t‐tests showed that participants were slower when threatening backgrounds rather than neutral backgrounds were presented, but only when the body expression was neutral [t(13) = 3.050; P < 0.009] and not when it was fearful [t(13) = −1.189; P < 0.256].
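The per‐condition summary measures used here (mean accuracy over all trials, median RT over correct trials only) can be computed as in the sketch below. The trial format, the field names, and the example values are assumptions made for illustration and do not reflect the study's data files.

```python
from collections import defaultdict
from statistics import mean, median


def summarize(trials):
    """Per-condition accuracy and median RT of correct trials.

    `trials` is an iterable of dicts with the hypothetical keys
    'body', 'background', 'correct' (bool), and 'rt_ms'.
    """
    by_condition = defaultdict(list)
    for trial in trials:
        by_condition[(trial["body"], trial["background"])].append(trial)

    summary = {}
    for condition, items in by_condition.items():
        correct_rts = [t["rt_ms"] for t in items if t["correct"]]
        summary[condition] = {
            "accuracy": mean([1.0 if t["correct"] else 0.0 for t in items]),
            # Median RT is undefined when a condition has no correct trials.
            "median_rt_ms": median(correct_rts) if correct_rts else None,
        }
    return summary


# Made-up trials for one subject and one condition.
example_trials = [
    {"body": "neutral", "background": "threatening", "correct": True, "rt_ms": 600},
    {"body": "neutral", "background": "threatening", "correct": True, "rt_ms": 700},
    {"body": "neutral", "background": "threatening", "correct": False, "rt_ms": 900},
    {"body": "neutral", "background": "threatening", "correct": True, "rt_ms": 650},
]
example_summary = summarize(example_trials)
```

Using the median rather than the mean limits the influence of occasional very slow responses, and excluding incorrect trials keeps the RT measure tied to successful categorization.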

DISCUSSION

Our goal was to investigate the processing of bodily expressions presented against matching or mismatching background scenes. Using body‐scene compound stimuli, we investigated how the brain processes fearful signals conveyed by either a body in the foreground or scenes in the background and the interaction between foreground body and background scene. Left and right EBA responded more to fearful than to neutral bodies. More interestingly, a threatening background scene compared to a neutral one showed increased activity in bilateral EBA and right‐posterior PPA and decreased activity in RSC and left‐anterior PPA. The emotional scene effect in EBA was only present when the foreground body was neutral and not when the body posture expressed fear (significant emotion‐by‐category interaction effect), consistent with behavioral ratings.

Cross‐Categorical Bias and Ambiguity Reduction in EBA?

The main result of the present study is that extrastriate body area (EBA) responds to threat signals displayed by the body as well as by the background scene. The latter effect is only present when the compound stimulus contains a neutral body expression and not when it contains a fearful body expression or when no body is presented. This pattern indicates that the effect cannot be explained by a general emotional effect from the background unrelated to the presence of a body. Although the key body × background interaction does not emerge from the whole‐brain analysis, the ROI analysis can be regarded as more sensitive than the whole‐brain analysis, because in the ROI analysis we defined EBA at subject level, thereby minimizing between‐subject variability in the location of functional areas [Nieto‐Castanon and Fedorenko, in press].

To explain the results, we refer to the well‐known perceptual bias effect, whereby the perceptual response on one dimension of the stimulus (the target body) is biased in the direction of another, task‐irrelevant stimulus dimension (the scene). The more ambiguous the target stimulus, the more bias is observed. In other words, the influence of the context on the target stimulus is proportionate to the target's degree of ambiguity [Bertelson and de Gelder, 2004; de Gelder and Bertelson, 2003].

Neutral bodies are emotionally more ambiguous than fearful bodies [e.g., Bannerman et al., 2009]. This makes neutral bodies more susceptible to multiple interpretations. The interpretation of a neutral body posture may therefore at least partly be based on nonbody information sources; in this case, the emotional gist of the background scene. As fearful bodies are less ambiguous than neutral bodies, the former are less open to different interpretations, reducing the influence of other information channels like the background valence. We explicitly investigated this in the behavioral experiment following the scanning session. Body‐background compound stimuli were presented to the participants, and they were instructed to categorize the emotion expressed by the body. The behavioral results indeed show that recognition of neutral (but not fearful) bodies is influenced by the emotional background information. This line of argument is consistent with real‐world situations: when we are unable to readily encode the emotional state of a person, we turn to other information channels like the visual context. For instance, our perception of the emotional state of a man running depends strongly on whether we see him running away from an attacker or running in a race.

The cross‐categorical bias effect between simultaneously presented information sources, one of which is a body expression (whether in the same or in different modalities), is known from multisensory audiovisual perception studies [Van den Stock et al., 2007, 2008b, 2009], where the technique of creating mismatching stimulus compounds has long been used to study perceptual integration [de Gelder and Bertelson, 2003], and it obtains equally in the visual–visual domain. Electrophysiological studies investigating emotional body‐background integration would reveal additional insights into the time course and nature of the underlying mechanism, but we are not aware of any such reports so far. A similar perceptual mechanism has been proposed for facial expressions occurring in the context of bodies and scenes [de Gelder and Van den Stock, 2011b], and, in line with this, ERP studies have shown that the emotional valence of the visual context influences early perceptual analysis of facial expressions [Meeren et al., 2005; Righart and de Gelder, 2006, 2008].

Alternative Explanations

An alternative hypothesis relates to the action component elicited by the body and background stimuli. If threatening backgrounds elicit adaptive action, the affective background effects may be interpreted as motor preparation. Our finding that threatening scenes activate subcortical motor structures like the putamen is in line with this reasoning. It has also been reported previously that the EBA responds both to imagery of motor actions and to performance of motor actions [Astafiev et al., 2004]. However, if threatening backgrounds trigger more action‐related processes in EBA than neutral backgrounds, one would expect increased activity for threatening backgrounds with a fearful compared to a neutral body expression, which is incompatible with our findings. A similar reasoning applies to the hypothesis that the threatening scene effect arises because threatening scenes contain more implicit motion than neutral scenes.

A second alternative explanation of the emotional context effect observed here relates to increased attention for neutral bodies in a threatening background compared to the same bodies in a neutral background. Although the task was directed toward the foreground body figure, it cannot be ruled out that the incongruent body‐background combination results in an automatic attention increase toward the neutral body. The finding that the background has no significant influence when a fearful body or a triangle is in the foreground is not necessarily incompatible with this hypothesis. EBA activity is higher for fearful than for neutral bodies, and so the lack of a significant influence of the background may be explained by a ceiling effect both at the attention level and the brain activity level. Moreover, when a triangle is shown, there is little emotional incongruency between background and foreground. However, this hypothesis is contradicted by the results from the behavioral experiment: if neutral bodies trigger more attention in a threatening compared to a neutral background, one would expect faster categorization of the bodies in a threatening background, which is the opposite pattern of the behavioral data.

A related account of the emotional background effect invokes attentional load [Lavie, 2005]: the attentional demands in the neutral body conditions are lower, leaving more attentional resources available to apprehend the background of the scene. If this were the case, we would expect the opposite result of the one we observed here: considering that EBA is primarily involved in processing the human body shape, diverting attention from the body should reduce EBA activity [Pichon et al., 2012]. In addition, the same attentional load argument can be made for the triangle conditions, and there is no emotional background modulation in EBA when a triangle is present.

Third, the results also challenge the hypothesis that EBA comprises populations of neurons primarily (if not exclusively) processing the body shape rather than coding other information like affective valence or background cues [e.g., Downing and Peelen, 2011]. Our results show that EBA activation is not only modulated by the background valence, but also that this background modulation is only observed for a select category of body postures. This implies that perceiving the same neutral body posture may activate EBA to a different extent, depending on the background valence against which it is presented. This is contradictory to the view that EBA only encodes the fine details of the body posture, as this would result in the same EBA activation for perceiving a particular body stimulus, regardless of the background valence or emotional expression.

Based on the arguments above, namely that there are incompatibilities between our results and the alternative hypotheses, we believe that the data are more in line with the cross‐categorical ambiguity reduction hypothesis [de Gelder and Bertelson, 2003].

Emotional Modulation of Scene Areas

The second main finding concerns the influence of threat represented in the backgrounds. In addition to the activity in EBA, threatening scenes trigger more activity in the posterior part of the collateral sulcus. This area falls in the PPA region that responded more to scenes than to scrambled images and could reflect a subdivision of the PPA along the anterior–posterior direction, with the posterior part involved in processing emotional cues conveyed by background scenes. In addition, the right putamen is more activated during threatening compared to neutral scene perception. The putamen is primarily involved in motor function but has also been associated with threat perception in bodily expressions [de Gelder et al., 2004; Sinke et al., 2010]. The present findings show that the putamen is also involved in threatening scene perception. This may be taken as an indication that the putamen activation for perceiving threatening scenes reflects (preparatory) motor responses, but further evidence is needed to substantiate this. Two regions show increased activation for neutral compared to threatening scenes: the right RSC and left‐anterior PPA. Although RSC and PPA are primarily associated with scene perception, the exact contribution of these areas to navigational and visuospatial functioning is poorly understood [Epstein, 2008]. Moreover, we are not aware of any reports on how RSC and PPA respond to emotional scenes. One hypothesis may be that the increased activation for neutral backgrounds reflects an increase in visuospatial and navigational processing for the neutral scenes as opposed to emotional processing occurring during threatening scene perception.

The Role of the Amygdala

We observed amygdala activity for bodies compared to triangles but not for fearful compared to neutral bodies. Ever since the landmark case study by Adolphs et al. [1994] reporting impaired fear perception in a rare patient with bilateral amygdala damage, emotion perception research has focused on the amygdala, and as a result, the amygdala plays a prominent role in current mainstream thinking about emotions. On the other hand, amygdala activation is not consistently observed at whole‐brain level for emotional versus neutral bodies [e.g., Peelen et al., 2007; van de Riet et al., 2009; Van den Stock et al., 2008a]. In fact, there is also accumulating evidence from face‐perception studies in normal [Fusar‐Poli et al., 2009; Herry et al., 2007; Sander et al., 2003; Santos et al., 2011] and clinical populations [Piech et al., 2011; Terburg et al., 2012], challenging the notion that the amygdala is specifically involved in fear processing. Our results substantiate this alternative view and support the notion that the amygdala is primarily involved in relevance processing including social perception, rather than emotion or fear perception per se.

Generalizability of the Results

The present study used two expressions for each stimulus category: fearful and neutral for the body expressions and threatening and neutral for the background scenes, and we included the neutral condition to contrast with the emotional conditions. We chose fear for bodies and threat for the background because a threatening situation (like a fire or car crash) results in a fearful (and not a threatening) reaction in the bystander. This implies that the congruent conditions (neutral body against neutral background and fearful body against a threatening background) are both realistic and ecologically valid: seeing a person dodging an explosion is more plausible than seeing an aggressor during an explosion.

Although we used a limited number of expressions per category, our interpretation of the results can be equally applied to other affective expressions. We argue that the context effects are a function of the ambiguity of the body expression. This implies that they can also occur for other bodily emotions like anger, sadness, or happiness, provided that they are ambiguous to some extent. However, we have previously reported evidence that angry, happy, and sad body postures are usually less ambiguous than fearful expressions (Experiment 1 [Van den Stock et al., 2009]). An interesting question for future studies might therefore relate to how stimulus intensity (as a measure of ambiguity, with weakly intense expressions more ambiguous than more intense ones) of body expressions relates to their susceptibility to contextual influences. We have investigated this issue previously in a behavioral experiment using faces and body postures. Participants were instructed to categorize the emotion of a face that was morphed on a fear‐happy continuum and that was presented on top of a fearful or happy body stimulus. The results showed that recognition of the facial expression was biased toward the body expression, and this effect was largest when the face was most ambiguous (i.e., around the center of the morph continuum; Experiment 2 [Van den Stock et al., 2009]).

CONCLUSION

Taken together, our results show that emotional information conveyed by bodies and background scenes is primarily processed in categorical areas EBA and PPA, respectively. In addition, the affective valence of the background modulates activity in EBA, but only when a neutral body is in the foreground. The results are currently best explained by the hypothesis that perception of ambiguous body stimuli is influenced by affective information provided by the visual context, as also evidenced in the behavioral data. Furthermore, the data indicate that categorical scene areas show emotional modulation, with activation of the right posterior PPA for threatening scenes and activation of right RSC and left‐anterior PPA for neutral scenes, possibly reflecting emotional as opposed to spatial processing.

Acknowledgments

The authors thank R. Goebel for advice on the design and A. Heinecke from Brain Innovation for advice on the data analysis. They are grateful to two anonymous reviewers for their helpful suggestions.

REFERENCES

- Adolphs R, Tranel D, Damasio H, Damasio A (1994): Impaired recognition of emotion in facial expressions following bilateral damage to the human amygdala. Nature 372:669–672.

- Aronoff J, Woike BA, Hyman LM (1992): Which are the stimuli in facial displays of anger and happiness? Configurational bases of emotion recognition. J Pers Soc Psychol 62:1050–1066.

- Astafiev SV, Stanley CM, Shulman GL, Corbetta M (2004): Extrastriate body area in human occipital cortex responds to the performance of motor actions. Nat Neurosci 7:542–548.

- Bannerman RL, Milders M, de Gelder B, Sahraie A (2009): Orienting to threat: Faster localization of fearful facial expressions and body postures revealed by saccadic eye movements. Proc Biol Sci 276:1635–1641.

- Bar M, Aminoff E (2003): Cortical analysis of visual context. Neuron 38:347–358.

- Bertelson P, de Gelder B (2004): The psychology of multimodal perception. In: Spence C, Driver J, editors. Crossmodal Space and Crossmodal Attention. Oxford: Oxford University Press; pp 151–177.

- Bradley MA, Sabatinelli D, Lang PJ, Fitzsimmons JR, King W, Desai P (2003): Activation of the visual cortex in motivated attention. Behav Neurosci 117:369–380.

- Candidi M, Stienen BM, Aglioti SM, de Gelder B (2011): Event‐related repetitive transcranial magnetic stimulation of posterior superior temporal sulcus improves the detection of threatening postural changes in human bodies. J Neurosci 31:17547–17554.

- de Gelder B, Bertelson P (2003): Multisensory integration, perception and ecological validity. Trends Cogn Sci 7:460–467.

- de Gelder B, Van den Stock J (2011a): The Bodily Expressive Action Stimulus Test (BEAST). Construction and validation of a stimulus basis for measuring perception of whole body expression of emotions. Front Psychol 2:181.

- de Gelder B, Van den Stock J (2011b): Real faces, real emotions: Perceiving facial expressions in naturalistic contexts of voices, bodies and scenes. In: Calder AJ, Rhodes G, Johnson MH, Haxby JV, editors. The Oxford Handbook of Face Perception. New York: Oxford University Press; pp 535–550.

- de Gelder B, Snyder J, Greve D, Gerard G, Hadjikhani N (2004): Fear fosters flight: A mechanism for fear contagion when perceiving emotion expressed by a whole body. Proc Natl Acad Sci USA 101:16701–16706.

- de Gelder B, Van den Stock J, Meeren HK, Sinke CB, Kret ME, Tamietto M (2010): Standing up for the body. Recent progress in uncovering the networks involved in processing bodies and bodily expressions. Neurosci Biobehav Rev 34:513–527.

- Downing PE, Peelen MV (2011): The role of occipitotemporal body‐selective regions in person perception. Cogn Neurosci 2:186–226.

- Downing PE, Jiang Y, Shuman M, Kanwisher N (2001): A cortical area selective for visual processing of the human body. Science 293:2470–2473.

- Epstein RA (2008): Parahippocampal and retrosplenial contributions to human spatial navigation. Trends Cogn Sci 12:388–396.

- Epstein R, Kanwisher N (1998): A cortical representation of the local visual environment. Nature 392:598–601.

- Fusar‐Poli P, Placentino A, Carletti F, Landi P, Allen P, Surguladze S, Benedetti F, Abbamonte M, Gasparotti R, Barale F, Perez J, McGuire P, Politi P (2009): Functional atlas of emotional faces processing: A voxel‐based meta‐analysis of 105 functional magnetic resonance imaging studies. J Psychiatry Neurosci 34:418–432.

- Goebel R, Esposito F, Formisano E (2006): Analysis of functional image analysis contest (FIAC) data with brainvoyager QX: From single‐subject to cortically aligned group general linear model analysis and self‐organizing group independent component analysis. Hum Brain Mapp 27:392–401.

- Hadjikhani N, de Gelder B (2003): Seeing fearful body expressions activates the fusiform cortex and amygdala. Curr Biol 13:2201–2205.

- Hasson U, Harel M, Levy I, Malach R (2003): Large‐scale mirror‐symmetry organization of human occipito‐temporal object areas. Neuron 37:1027–1041.

- Herry C, Bach DR, Esposito F, Di Salle F, Perrig WJ, Scheffler K, Luthi A, Seifritz E (2007): Processing of temporal unpredictability in human and animal amygdala. J Neurosci 27:5958–5966.

- Keightley ML, Chiew KS, Anderson JA, Grady CL (2011): Neural correlates of recognition memory for emotional faces and scenes. Soc Cogn Affect Neurosci 6:24–37.

- Kret M, de Gelder B (2010): Perceiving bodies in a social context. Exp Brain Res 203:169–180.

- Lavie N (2005): Distracted and confused? Selective attention under load. Trends Cogn Sci 9:75–82.

- Meeren HK, van Heijnsbergen CC, de Gelder B (2005): Rapid perceptual integration of facial expression and emotional body language. Proc Natl Acad Sci USA 102:16518–16523.

- Nieto‐Castanon A, Fedorenko E: Subject‐specific functional localizers increase sensitivity and functional resolution of multi‐subject analyses. Neuroimage (in press).

- Peelen MV, Downing PE (2005): Selectivity for the human body in the fusiform gyrus. J Neurophysiol 93:603–608.

- Peelen MV, Atkinson AP, Andersson F, Vuilleumier P (2007): Emotional modulation of body‐selective visual areas. Soc Cogn Affect Neurosci 2:274–283.

- Pichon S, de Gelder B, Grezes J (2012): Threat prompts defensive brain responses independently of attentional control. Cereb Cortex 22:274–285.

- Piech RM, McHugo M, Smith SD, Dukic MS, Van Der Meer J, Abou‐Khalil B, Most SB, Zald DH (2011): Attentional capture by emotional stimuli is preserved in patients with amygdala lesions. Neuropsychologia 49:3314–3319.

- Righart R, de Gelder B (2006): Context influences early perceptual analysis of faces—An electrophysiological study. Cereb Cortex 16:1249–1257.

- Righart R, de Gelder B (2008): Rapid influence of emotional scenes on encoding of facial expressions: An ERP study. Soc Cogn Affect Neurosci 3:270–278.

- Sander D, Grafman J, Zalla T (2003): The human amygdala: An evolved system for relevance detection. Rev Neurosci 14:303–316.

- Santos A, Mier D, Kirsch P, Meyer‐Lindenberg A (2011): Evidence for a general face salience signal in human amygdala. Neuroimage 54:3111–3116.

- Schafer A, Schienle A, Vaitl D (2005): Stimulus type and design influence hemodynamic responses towards visual disgust and fear elicitors. Int J Psychophysiol 57:53–59.

- Sinke CB, Sorger B, Goebel R, de Gelder B (2010): Tease or threat? Judging social interactions from bodily expressions. Neuroimage 49:1717–1727.

- Sinke CB, Van den Stock J, Goebel R, de Gelder B (2012): The constructive nature of affective vision: seeing fearful scenes activates extrastriate body area. PLoS One 7:e38118.

- Stienen BM, de Gelder B (2011): Fear detection and visual awareness in perceiving bodily expressions. Emotion 11:1182–1189.

- Tamietto M, Castelli L, Vighetti S, Perozzo P, Geminiani G, Weiskrantz L, de Gelder B (2009): Unseen facial and bodily expressions trigger fast emotional reactions. Proc Natl Acad Sci USA 106:17661–17666.

- Terburg D, Morgan BE, Montoya ER, Hooge IT, Thornton HB, Hariri AR, Panksepp J, Stein DJ, van Honk J (2012): Hypervigilance for fear after basolateral amygdala damage in humans. Transl Psychiatr 2:e115.

- Thorpe S, Fize D, Marlot C (1996): Speed of processing in the human visual system. Nature 381:520–522.

- van de Riet WA, Grezes J, de Gelder B (2009): Specific and common brain regions involved in the perception of faces and bodies and the representation of their emotional expressions. Soc Neurosci 4:101–120.

- Van den Stock J, Righart R, de Gelder B (2007): Body expressions influence recognition of emotions in the face and voice. Emotion 7:487–494.

- Van den Stock J, Grezes J, de Gelder B (2008a): Human and animal sounds influence recognition of body language. Brain Res 1242:185–190.

- Van den Stock J, van de Riet WA, Righart R, de Gelder B (2008b): Neural correlates of perceiving emotional faces and bodies in developmental prosopagnosia: An event‐related fMRI‐study. PLoS One 3:e3195.

- Van den Stock J, Peretz I, Grezes J, de Gelder B (2009): Instrumental music influences recognition of emotional body language. Brain Topogr 21:216–220.

- Van den Stock J, Tamietto M, Sorger B, Pichon S, Grezes J, de Gelder B (2011): Cortico‐subcortical visual, somatosensory, and motor activations for perceiving dynamic whole‐body emotional expressions with and without striate cortex (V1). Proc Natl Acad Sci USA 108:16188–16193.

- Van den Stock J, de Gelder B, De Winter FL, Van Laere K, Vandenbulcke M (2012): A strange face in the mirror. Face‐selective self‐misidentification in a patient with right lateralized occipito‐temporal hypo‐metabolism. Cortex 48:1088–1090.

- Yao A, Gall J, Van Gool L (2011): Coupled action recognition and pose estimation from multiple views. Int J Comp Vis 33:2188–2202.