Abstract

How the human brain integrates distinct motor features into a unique finalized action remains undefined. Previous models proposed the distinct features of a motor act to be hierarchically organized in separated, but functionally interconnected, cortical areas. Here, we hypothesized that distinct patterns across a wide expanse of cortex may actually subserve a topographically organized coding of different categories of actions that represents, at a higher cognitive level and independently from the distinct motor features, the action and its final aim as a whole. Using functional magnetic resonance imaging and pattern classification approaches on the neural responses of 14 right‐handed individuals passively watching short movies of hand‐performed tool‐mediated, transitive, and meaningful intransitive actions, we were able to discriminate with high accuracy and characterize the category‐specific response patterns. Actions are distinctively coded in distributed and overlapping neural responses within an action‐selective network, comprising frontal, parietal, lateral occipital and ventrotemporal regions. This functional organization, which we named action topography, subserves a higher‐level and more abstract representation of finalized actions and has the capacity to provide unique representations for multiple categories of actions. Hum Brain Mapp 36:3832–3844, 2015. © 2015 The Authors Human Brain Mapping Published by Wiley Periodicals, Inc.

Keywords: action representation, action topography, pattern classification, fMRI, multivariate analysis

INTRODUCTION

It has been proposed that the distinct features of a motor action (e.g., kinematics, effector‐target interaction, target identity) are hierarchically organized in the human brain [Grafton and Hamilton, 2007] and are represented in separated, though functionally interconnected, cortical areas [Kilner, 2011].

At the same time, however, several observations, including the substantial overlap of brain areas activated during both action observation and execution [Macuga and Frey, 2012; Rizzolatti and Luppino, 2001], and the independence of action representation from a specific sensory modality [Ricciardi et al., 2014a, 2014b], along with current models of praxis processing [Petreska et al., 2007; Rothi et al., 1991] and semantics [Gallese and Lakoff, 2005], concur to indicate that, at a higher cognitive level, actions and their final aims are eventually represented as a whole, and not as the mere collection or sequence of single motor features.

Multivariate approaches to the analysis of patterns of neural responses recently suggested that action category representation may actually be based on a distributed functional organization [Ricciardi et al., 2013], similar to what has already been described for the representations of categories of objects and sounds in the ventrotemporal extrastriate [Haxby et al., 2001] and temporal auditory [Staeren et al., 2009] cortex, respectively. As a matter of fact, multivariate methods are particularly suitable for the analysis of brain functional imaging data when different brain areas within a network elaborate distinct fragments of information, allowing for a multidimensional, integrated representation of a complex stimulus with various features [Davis et al., 2014]. Indeed, these approaches can be used to identify which distinct pattern of activity is associated with specific sensory information or a mental state, and how that information is encoded and organized in each brain region [Haxby et al., 2014]. In particular, evidence obtained using multivariate pattern analyses indicates that distinct fronto‐parietal subregions are involved in the functional processing of specific features (including viewpoint, effector‐target interaction, content, and behavioral significance) of different motor gestures, when actions are either recognized (via visual and non‐visual stimuli), covertly imagined or overtly performed [Dinstein et al., 2008; Jastorff et al., 2010; Molenberghs et al., 2012; Oosterhof et al., 2012a, 2012b, 2013, 2010; Ricciardi et al., 2013]. Nonetheless, previous functional studies, which focused primarily on transitive movements and on precise features of motor actions, failed to demonstrate that representations of different categories of actions are truly distributed and overlapping within the whole action‐selective network. These observations relied on the hypothesis that a limited number of brain areas are specialized for representing distinct action features [Beauchamp and Martin, 2007; Goldenberg et al., 2007; Grafton and Hamilton, 2007; Kroliczak and Frey, 2009; Macuga and Frey, 2012; Peeters et al., 2013]. In other words, while the model of a limited number of areas that are specialized for representing well‐defined features of action has been extensively explored, it is not yet fully understood how the higher conceptual representation of the action as a whole emerges at a network level, and to what degree informative contents are actually shared across neighboring and distant regions.

Here, we propose that distinct patterns across a wide expanse of cortex reflect a topographically organized coding of different categories of actions and underlie action recognition, similarly to the model previously reported by Haxby et al. [2001] to explain object category representation in the visual association cortex. In addition, the topographically organized representation of distinct action categories would assimilate previous models of hierarchically and spatially organized processing of simpler features of motor acts [Grafton and Hamilton, 2007] in a unified, distinctive representation of action features and goals.

Specifically, we tested topographically organized representations by measuring neural responses with functional magnetic resonance imaging (fMRI) while participants passively watched movies of three distinct categories of hand‐performed actions (tool‐mediated, distal transitive, and meaningful intransitive) and by applying a multivariate pattern analysis approach. According to this model, the response patterns for a specific action category should extend to a wide expanse of cortex in which information on different action features is represented (distributed), and should maintain their distinctiveness both when the analysis is restricted to those brain areas that hold the most informative content for a specific action category, and when these most informative clusters are excluded, thus limiting the analysis to the less informative regions (overlapping representation). The alternative hypothesis would be that specific brain areas process information related to a well‐defined category of actions, but not (or to a lesser degree) to other categories. In this case, the multivariate classifier would rely on a few, small clusters in limited brain areas (thus, action representation would not be distributed but localized) and would show no discrimination capability when relying only on the clusters less specific for that type of action (that is, it would not be overlapping).

MATERIALS AND METHODS

Subjects

Fourteen right‐handed healthy adults (M/F: 4/10, mean age ± SD: 37 ± 6 years) were enrolled. All subjects received a medical examination, including a brain structural MRI scan, to exclude any disorder that could affect brain structure or function. All participants gave their written informed consent after the study procedures and potential risks had been explained. The study was conducted under protocols approved by the University of Pisa and University of Modena and Reggio Emilia Ethical Committees.

Stimuli

A set of movie clips depicted hand‐performed tool‐mediated (three arguments: agent, object, tool; e.g., sawing), transitive (object‐directed, two arguments: agent, object; e.g., grasping) and meaningful intransitive (non‐object directed, one argument: agent; e.g., thumb up) actions (Fig. 1; see Supporting Information Table S1 and Fig. 3 for a stimulus list). Only the agent's right arm and hand movements were visible, and represented in a third person perspective. To improve generalization of the action categories (i.e., higher level action representation) and to reduce the impact of the fine grained details of each movie clip (e.g., object form, hand kinematics), each action was filmed four times [Haxby et al., 2001], changing the observer perspective (left or right side) and the gender of the agent (male or female arm) (see Supporting Information Table S2 for a low‐level feature description of this set of stimuli). Overall, each participant observed 60 movie clips of this first set.

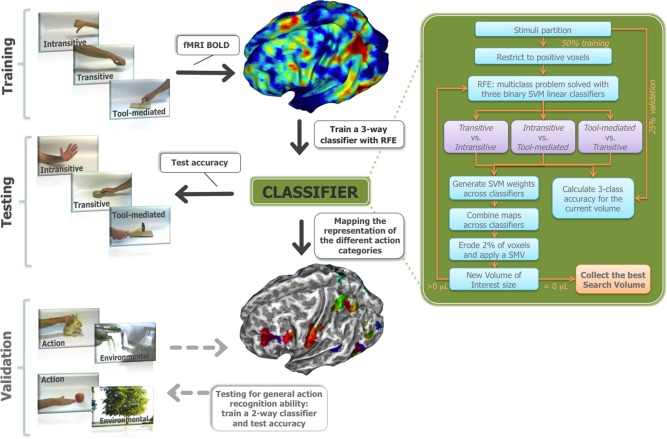

Figure 1.

Methodological workflow. BOLD responses to transitive, intransitive, and tool‐mediated actions from the training set were used in a three‐way classifier. The trained classifier tested a different subset of actions to obtain discrimination accuracy, and isolated the most informative regions. The discrimination ability of this network was subsequently tested on an independent stimuli dataset. Refer to Materials and Methods for further details. [Color figure can be viewed in the online issue, which is available at http://wileyonlinelibrary.com.]

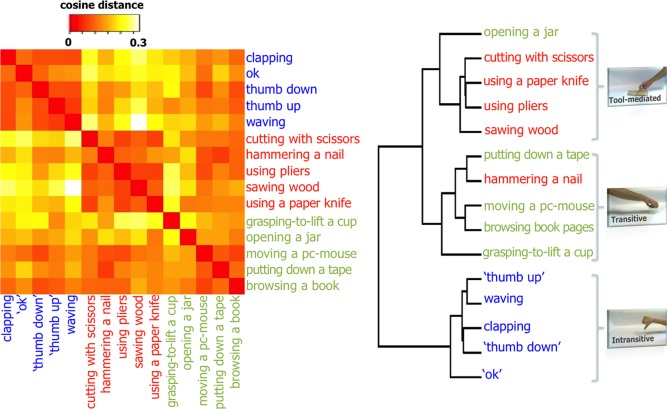

Figure 3.

Specificity of the distributed representations within the action‐selective network. On the left, the heatmap representing the dissimilarity matrix of the different action stimuli and, on the right, the derived hierarchical clustering. Stimuli were depicted in red font for tool‐mediated, in blue font for intransitive and in green font for transitive actions. [Color figure can be viewed in the online issue, which is available at http://wileyonlinelibrary.com.]

Image Acquisition and Experimental Setup

Patterns of response were measured with a six‐run slow event‐related fMRI design (gradient echo echoplanar images, Philips Intera 3T, TR 2.5s, FA: 80°, TE 35 ms, 35 axial slices, 3 mm isovoxel) while participants watched 3s‐long movies that randomly alternated between different types of hand‐performed actions or environmental scenes, with an interstimulus interval of 7s. Movies were presented using in‐house developed software via the IFIS‐SA fMRI System (Invivo Corp, FL USA; visual field: 15°, 7.5′′, 352 × 288 pixels, 60 Hz). High‐resolution T1‐weighted spoiled gradient recall (TR = 9.9 ms, TE = 4.6 ms, 170 sagittal slices, 1 mm isovoxel) images were obtained for each participant to provide detailed brain anatomy.

fMRI Data Analysis

The AFNI package was used to analyze imaging data [Cox, 1996]. All volumes from the different runs were temporally aligned, corrected for head movements, spatially smoothed (Gaussian kernel 5 mm, FWHM) and normalized. Afterwards, a multiple regression analysis was performed. The pattern of response to each stimulus type was modeled with a separate regressor: two stimulus repetitions were randomly collapsed into a unique regressor to improve the quality of the hemodynamic response for each stimulus. As four repetitions of the same stimulus were present, two patterns of response were then obtained for each stimulus. Movement parameters and signal trends were included in the multiple regression analysis as regressors of no interest. The t‐score response patterns of all stimuli were transformed into the Talairach space [Talairach and Tournoux, 1988], and resampled into 2 mm isovoxels for group analysis. To limit the number of voxels, a structural template available in AFNI was used to select voxels representing grey matter [Holmes et al., 1998], and only those voxels with an averaged t‐score response across stimuli and participants greater than zero within the training subset were retained. The resulting t‐score patterns were used with the software SVMlight [Joachims, 1999] as input vectors for the Support Vector Machine (SVM) classifiers [Cortes and Vapnik, 1995; Joachims, 1999].

Classification of the Patterns of Neural Response for the Different Categories of Actions

To discriminate between categories and to isolate the best subset of voxels that contributes to the classification, a multiclass procedure [Hsu and Lin, 2002] and a Recursive Feature Elimination (RFE) algorithm [De Martino et al., 2008; Ricciardi et al., 2013] were adopted to recursively prune uninformative voxels (Fig. 1). In brief, the first set of stimuli with transitive, intransitive, and tool‐mediated actions (420 examples overall) was randomly partitioned across individuals into three subsets (50% of examples for training, 25% for validation of the RFE procedure and 25% for testing). Here, the multiclass problem was decomposed into multiple independent binary classification tasks, using a pairwise strategy, where each pair of categories was trained and tested separately and the results of the three binary classifiers were subsequently combined into a unique three‐way accuracy, using the max‐wins strategy [Hastie and Tibshirani, 1998; Hsu and Lin, 2002]. We decided to adopt such a procedure for two reasons. First, direct algorithmic implementations of multiclass classification are usually computationally expensive or intractable on large datasets [Crammer and Singer, 2001]. Second, using separate binary classifiers we were able to test the distinctiveness of the representation of each class.
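
The max‐wins combination described above can be sketched as follows. This is only an illustration, not the code used in the study: the category labels, the function names, and the tie‐breaking rule (first category in a fixed order) are assumptions introduced here.

```python
from collections import Counter

# Illustrative labels, not identifiers from the original analysis.
CATEGORIES = ("tool-mediated", "transitive", "intransitive")


def max_wins(pairwise_predictions):
    """Return the category with the most pairwise votes for one example.

    pairwise_predictions holds one predicted label per binary classifier.
    Ties are broken by the order of CATEGORIES (an arbitrary assumption).
    """
    votes = Counter(pairwise_predictions)
    return max(CATEGORIES, key=lambda category: votes[category])


def three_way_accuracy(examples):
    """examples: list of (pairwise_predictions, true_label) tuples."""
    correct = sum(max_wins(preds) == truth for preds, truth in examples)
    return correct / len(examples)
```

With three categories, each example receives three votes, so a category wins outright when two of the three binary classifiers select it; a three‐way tie occurs only when each classifier votes for a different category.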

Thus, a three‐way classifier was trained and validated for each RFE iteration. Specifically, in each iteration, a definite number of voxels was pruned until the search volume was empty. To isolate the voxels to be removed, we adopted the following procedure. First, the feature weights of the support vectors of each classifier were estimated so as to generate a discriminative map for each classifier [Lee et al., 2010]. Within each discriminative map, the absolute value of each voxel weight was converted to a z‐score, and the z‐scores were subsequently averaged across maps. After this step, the 2% of features with the lowest z‐scores were discarded. A small volume correction (SVC) with an arbitrary minimum cluster size of 100 voxels, nearest‐neighbor, was performed to remove isolated clusters, so as to reduce the number of iterations during the RFE procedure [Ricciardi et al., 2013]. For each RFE iteration, an accuracy performance was computed on the validation set. Then, comparing the accuracies from all the iterations, the best feature set was selected based on the highest accuracy [Cawley and Talbot, 2010; De Martino et al., 2008; Pereira et al., 2009] (see also Supporting Information Fig. S3). Finally, within the best RFE iteration, the final accuracy of the three‐way classifier was evaluated with the remaining test subset.
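
A single pruning step of this kind might look like the sketch below. It covers only the weight ranking and the 2% removal, not the classifier training or the cluster‐size correction. The function names are hypothetical, and two details are assumptions made here: the z‐score uses the population standard deviation, and at least one voxel is dropped per step so that the loop always terminates.

```python
from statistics import mean, pstdev


def zscore_abs(weights):
    """Z-score the absolute voxel weights of one discriminative map."""
    magnitudes = [abs(w) for w in weights]
    mu, sigma = mean(magnitudes), pstdev(magnitudes)
    if sigma == 0:
        return [0.0] * len(magnitudes)
    return [(m - mu) / sigma for m in magnitudes]


def prune_step(weight_maps, voxel_ids, fraction=0.02):
    """Drop the given fraction of voxels with the lowest mean z-score.

    weight_maps: one list of voxel weights per binary classifier,
    each aligned with voxel_ids.
    """
    z_maps = [zscore_abs(weights) for weights in weight_maps]
    scores = [mean(column) for column in zip(*z_maps)]
    n_drop = max(1, int(len(voxel_ids) * fraction))  # assumption: always drop >= 1
    ranked = sorted(range(len(voxel_ids)), key=lambda i: scores[i])
    dropped = set(ranked[:n_drop])
    return [v for i, v in enumerate(voxel_ids) if i not in dropped]
```

Calling `prune_step` repeatedly, retraining the classifiers on the surviving voxels each time and recording validation accuracy, gives the sequence of feature sets from which the best iteration is chosen.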

Further, to estimate the goodness of the feature (i.e., voxel) selection procedure, a comparable machine learning technique was developed with an independent dataset of distinct actions, as described below (Fig. 1).

Mapping the Representation of the Different Action Categories

To map the voxel activity of the classifier (Fig. 2), the feature weights of the three underlying binary classifiers were averaged according to their function in the recognition of each category, so as to generate a dataset for each action category [Lee et al., 2010]. For instance, the absolute values of the positive feature weights of the classifier tool‐mediated versus transitive were averaged with the absolute values of the negative feature weights of the classifier intransitive vs. tool‐mediated to generate a new dataset with an intensity value for each voxel related to the tool‐mediated action. Then, a specific color channel in the 16‐bit RGB color model was assigned to each dataset (red to tool‐mediated, green to transitive and blue to intransitive) according to the ranked voxel intensities in percentiles. Finally, the datasets were rendered onto a subject cortical surface mesh using the BrainVISA package.
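
The weight averaging described above can be illustrated with a short sketch. The dictionary layout and the function name are hypothetical. As an assumption, a voxel whose weight in a given classifier favors the other category contributes zero to the average for that classifier; the text does not state how such voxels were handled.

```python
def category_map(category, pairwise_weights):
    """Average, per voxel, the weight magnitudes that favor `category`.

    pairwise_weights maps (positive_class, negative_class) to a list of
    voxel weights: positive weights favor the first class, negative
    weights favor the second.
    """
    contributions = []
    for (positive, negative), weights in pairwise_weights.items():
        if category == positive:
            contributions.append([w if w > 0 else 0.0 for w in weights])
        elif category == negative:
            contributions.append([-w if w < 0 else 0.0 for w in weights])
    return [sum(column) / len(column) for column in zip(*contributions)]
```

Ranking the resulting intensities into percentiles then gives one value per voxel for the color channel assigned to that category.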

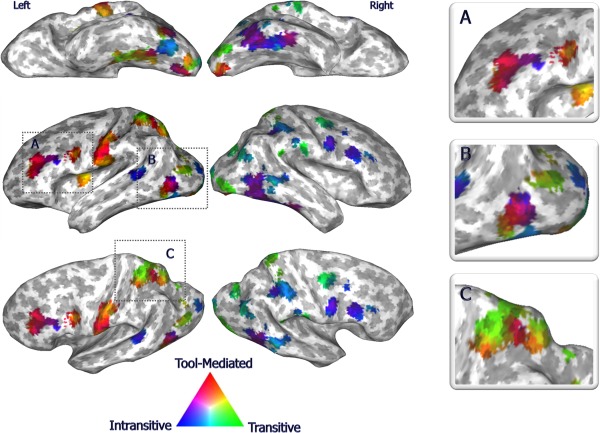

Figure 2.

The action‐selective network. The most informative regions described by the three‐way classifier for tool‐mediated (red), transitive (green) and intransitive (blue) discrimination and projected onto an inflated surface template. Magnified views of left prefrontal, temporo‐occipital and superior parietal regions in boxes A, B, and C, respectively.

Testing for General Action Recognition Ability

Does the classifier‐defined network truly code for actions, or merely rely on distinct features (e.g., engagement of object, agent, or tool) to separate categories? To understand the nature of the enhanced ability to discriminate action features within the action‐sensitive regions selected by the three‐way classifier, an independent dataset is required to confirm the goodness (i.e., reliability) of the most informative voxels [Guyon et al., 2002]. Consequently, a new binary classifier (“action” vs. “non‐action”) was trained and tested using an independent validation set of stimuli. Specifically, a set of movie clips, including “non‐action” movies (i.e., environmental scenes) and three distal transitive actions performed with the right hand and acted upon a definite list of ten animate and ten inanimate objects (“action”, Table S1 in the online Supporting Information), was presented in the same fMRI session, alternated with the first set of action stimuli. All “action” and “non‐action” movies of this second set were filmed twice. Overall, each participant observed 162 movie clips from this second set. The pattern of response to each stimulus type was modeled with a separate regressor in a multiple regression analysis, identically to what has been described in the fMRI Data Analysis section for the other stimuli. Then, all the “non‐action” stimuli and a balanced, randomly downsampled number of “action” stimuli from the independent validation set were divided into two bins (66% of examples for training and 33% for testing). Similarly to the above procedure, a linear binary SVM classifier was created without applying the RFE procedure (Fig. 1).

Action Categories Identification based on the Less Informative Cortical Regions with a Knock‐Out Procedure

Although whole brain classification may suggest category‐specific patterns of response to be distributed and overlapping, higher within‐action category correlations could be due to the information contributed by the regions whose content was more specific for a given action category, with limited information about other categories. To test whether the patterns of response in less informative regions also carry action category‐related information, the discriminative map of the classifier was employed in a “knock‐out” procedure [Carlson et al., 2003; Ricciardi et al., 2013]. First, the voxels of the discriminative map of the best three‐way classifier were removed from the initial search volume, so as to generate a new mask that would retain the less informative regions (Supporting Information Fig. S5). Within this “knock‐out” map, a three‐way classifier was trained and tested to estimate the potential decrease in classification performance related only to this set of voxels. The classifier was built, trained and tested following the same procedure described above, but without applying the RFE technique.

Action Categories Identification based on Cortical Regions that Most Coherently Contributed to the Discrimination of One Specific Action Category

To investigate whether the action category specificity of response extends also to those brain regions that are most informative for a specific action category, a measure of voxel coherence was derived through the mapping of the action category weights of the three‐way classifier. For instance, a voxel that contributed to recognizing tool‐mediated gestures in the comparisons with both intransitive and transitive acts was defined as coherent for the tool‐mediated category. Thus, the volume extracted from the three‐way classifier RFE procedure was partitioned into three subregions, according to their coherence during category discrimination. Within each subregion, specifically related to the discrimination of a defined category, a linear binary SVM classifier was created to discriminate between the other two categories (e.g., between transitive and intransitive gestures within the tool‐mediated subregion). The classifier was built, trained and tested following the same procedure described above, but without applying the RFE technique.
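
One way to read this coherence criterion is sketched below, under the assumption that the sign of a voxel's weight in each pairwise classifier indicates which of the two categories it favors. The function names and data layout are hypothetical. Under this reading a voxel can be coherent for at most one category, and voxels whose three signs favor a different category in each comparison are coherent for none.

```python
from collections import Counter


def coherent_category(weights_by_pair):
    """Return the category favored in both of its comparisons, or None.

    weights_by_pair maps (positive_class, negative_class) to this voxel's
    weight in that binary classifier.
    """
    wins = Counter()
    for (positive, negative), weight in weights_by_pair.items():
        if weight > 0:
            wins[positive] += 1
        elif weight < 0:
            wins[negative] += 1
    for category, count in wins.items():
        if count == 2:
            return category
    return None


def partition_by_coherence(voxels):
    """voxels: dict voxel_id -> weights_by_pair. Returns category -> ids."""
    subregions = {}
    for voxel_id, weights_by_pair in voxels.items():
        category = coherent_category(weights_by_pair)
        if category is not None:
            subregions.setdefault(category, []).append(voxel_id)
    return subregions
```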

Assessing Goodness of Classification Accuracies

Accuracy values were tested as significantly different from chance with permutation tests (n = 1000), randomizing the labels of the examples during training phase, and using one‐tailed rank tests [Pereira et al., 2009] (see also Supporting Information Fig. S4).
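
A label‐permutation test of this kind can be sketched as follows. The `fit_and_score` callback is a hypothetical stand‐in for retraining the classifier on shuffled training labels and scoring it on the untouched test set. The "+1" correction in the p‐value is a common convention and an assumption here, since the exact rank formula is not given in the text.

```python
import random


def null_distribution(train_labels, fit_and_score, n_perm=1000, seed=0):
    """Accuracies obtained after shuffling the training labels n_perm times."""
    rng = random.Random(seed)
    scores = []
    for _ in range(n_perm):
        shuffled = list(train_labels)
        rng.shuffle(shuffled)
        scores.append(fit_and_score(shuffled))
    return scores


def permutation_p_value(observed, null_scores):
    """One-tailed p-value: share of null accuracies at or above the observed one."""
    n_at_least = sum(score >= observed for score in null_scores)
    return (n_at_least + 1) / (len(null_scores) + 1)
```

With 1000 permutations and this convention, the smallest attainable p‐value is 1/1001, reached when no permuted accuracy equals or exceeds the observed one.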

Assessing Specificity of Category Representation

To highlight the specificity of action representations across participants, a heatmap was obtained within the most informative regions of the action representation network (Fig. 3). In brief, BOLD patterns of response to the stimuli were averaged across subjects and compared to each other using cosine distance as a measure of dissimilarity, to obtain a heatmap [Kriegeskorte and Kievit, 2013]. Hierarchical clustering was obtained from the heatmap using the generalized Ward's criterion [Batagelj, 1988]. Only the stimuli of the test set of the three‐way classifier procedure were taken into account to generate dissimilarity matrices, so as to retrieve an unbiased representational space and an unbiased hierarchical clustering.
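
For reference, the cosine distance between two response patterns is one minus the cosine of the angle between them. A minimal sketch of the dissimilarity matrix follows; the function names are illustrative, the patterns are assumed to be non‐zero vectors of equal length, and the hierarchical clustering step is not shown.

```python
import math


def cosine_distance(u, v):
    """1 - cos(angle) between two non-zero response patterns."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return 1.0 - dot / (norm_u * norm_v)


def dissimilarity_matrix(patterns):
    """Pairwise cosine distances between subject-averaged stimulus patterns."""
    n = len(patterns)
    return [[cosine_distance(patterns[i], patterns[j]) for j in range(n)]
            for i in range(n)]
```

The measure ranges from 0 for patterns with the same direction to 2 for opposite patterns, and it ignores overall response amplitude.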

The resulting representational dissimilarity matrix enabled us to determine the category specificity of the distinct action stimuli across participants and, at the same time, to compare the distinctiveness of representation among categories. Theoretically, we would expect the stimuli belonging to the same action category to gather together, but also to be separated from the stimuli of different action categories, that is, to show similar within‐category and dissimilar between‐categories neural patterns.

Action Category‐Specific Neural Responses as Obtained with a “Standard” Univariate Analysis

To perform the univariate analysis, the β‐coefficients from the multiple regression analysis within the initial search volume (Supporting Information Fig. S5) were entered into a two‐way repeated‐measures ANOVA, including “action category” as a fixed factor (with three levels: tool‐mediated, transitive, and meaningful intransitive actions) and subjects as a random factor (P < 0.05). Multiple comparisons correction was performed using AlphaSim from the AFNI package. With this procedure, only clusters with a minimum size of 1,840 μL were significant at P < 0.05. Within the regions that were significant in the ANOVA, post hoc t‐tests were performed to assess the specific effects related to the individual action categories (corrected P < 0.05).

RESULTS

Classification and Mapping of the Patterns of Neural Response for the Different Categories of Actions

The three‐way pattern classifier significantly discriminated (accuracy: 75.2%; chance: 33.3%; P < 0.001) and characterized the distinct patterns of response for each action category within a bilateral action‐selective network, comprising middle (MF) and inferior (IF) frontal, ventral (vPM) and dorsal premotor, inferior (IP) and superior (SP) parietal, lateral occipital (LO) and ventrotemporal regions (Fig. 2, Supporting Information Table S3). Within the three‐way classifier, sensitivity and specificity reached, respectively, 59% and 86% for transitive, 86% and 86% for intransitive, and 79% and 84% for tool‐mediated actions.
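
Per‐category sensitivity and specificity in a three‐way problem are commonly computed one‐versus‐rest from the confusion matrix. The sketch below assumes that convention, which the text does not spell out, and uses hypothetical names; it assumes every category has at least one true and one non‐member example.

```python
def sensitivity_specificity(confusion, labels):
    """One-vs-rest sensitivity and specificity per category.

    confusion[i][j] counts examples of true class i predicted as class j.
    """
    total = sum(sum(row) for row in confusion)
    result = {}
    for k, label in enumerate(labels):
        tp = confusion[k][k]
        fn = sum(confusion[k]) - tp
        fp = sum(row[k] for row in confusion) - tp
        tn = total - tp - fn - fp
        result[label] = (tp / (tp + fn), tn / (tn + fp))
    return result
```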

While all these action‐selective clusters participated in the distributed representation of action categories (Fig. 2 and boxes A‐B‐C), left prefrontal regions, together with a left anterior supramarginal (aSM) and postcentral (PoC) cluster, were maximally informative for tool‐mediated actions, the right MF, IP, and ventrotemporal regions for intransitive gestures, while the bilateral SP, inferior occipital, and right precentral cortex for transitive actions. Additionally, critical nodes of action processing, such as the left vPM (Fig. 2A), left intraparietal (IPS) and SP cortex, represented multiple categories (Fig. 2B).

A “Proneness” to a Broader Action Representation

To demonstrate that the classifier‐defined network mainly codes for action representations and may rely only minimally, if any, on distinct motor features to separate categories, neural responses of these brain areas were tested using an independent stimuli set comprising hand‐performed actions and environmental scenes. Remarkably, the “action” versus “non‐action” discrimination reached an excellent accuracy (94%; chance 50%; P < 0.001), thus indicating that our approach likely defines the most informative cortical areas for action representation. Moreover, a multidimensional scaling procedure was performed to better represent the differences in patterns of neural activity between actions and environmental scenes in these brain areas (see Supporting Information Fig. S6).

Category Identification Based on Patterns Limited to Either the Less or the Most Informative Cortical Regions: Distributed and Overlapping Representations

Although the whole brain classification identified category‐specific patterns that were distributed and overlapping, one could argue that discrimination accuracy could actually be related mainly to the information contained in selective brain areas whose neural content is more specific for a given action category, with limited or null information about other categories. To exclude this possibility, we performed the following tests.

First, we removed the most discriminative areas from the original search volume (Supporting Information Fig. S5). Action categories remained identifiable (accuracy: 55%; chance: 33.3%; P < 0.01). Thus, the distributed patterns of response in less informative regions also carry action category‐related information that is sufficient to discriminate among action categories.

Second, we tested the ability to discriminate between the other two remaining categories within the cortical regions that most coherently contributed to the discrimination of a specific category. Within the tool‐mediated action category regions, transitive versus intransitive categories were also separable (accuracy: 70%; chance: 50%; P < 0.001), as well as tool‐mediated vs. intransitive actions within transitive action areas (accuracy: 71%, P < 0.001). Conversely, within intransitive action category subregions, the classification of other categories was at a chance level (accuracy: 56%; P = n.s.; see also Fig. 3).

Category‐Specific Representations within the Action‐Selective Network

The topographic category‐specificity of these distributed representations across subjects was tested via a representational dissimilarity matrix and a derived hierarchical clustering of the different action stimuli (Fig. 3). Visual inspection confirms the specificity of representation across participants for the distinct action categories: the patterns of neural responses gathered in three distinct clusters that overlap with our original stimulus selection. In addition, consistent with the discrimination accuracies reported above, intransitive gestures showed a more distinctive representation (i.e., a lower within‐ and a higher between‐category distance) than tool‐mediated and transitive actions, which clustered together and also shared a few mislabeled items.

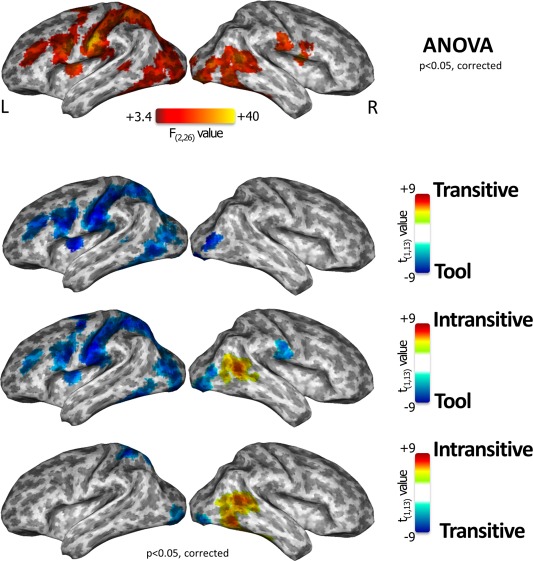

The ANOVA results indicated that a volume of ∼103,000 μL of gray matter retained a significant effect related to the action categories. These results extended mostly over the left hemisphere, comprising middle and inferior frontal, ventral and dorsal premotor, inferior and superior parietal, lateral occipital and ventrotemporal regions (Fig. 4).

Figure 4.

Comparative action category‐specific neural responses of a “standard” univariate analysis. Brain regions showing a significant effect for “action category” were projected onto an inflated surface template, as identified by a univariate repeated measure ANOVA (first row). Post hoc direct comparisons between tool‐mediated, intransitive and transitive action categories have been also reported (second, third, and fourth rows). [Color figure can be viewed in the online issue, which is available at http://wileyonlinelibrary.com.]

Within these regions, the results of the comparison between transitive and tool‐mediated actions were located almost entirely in the left hemisphere and were specific for the tool‐mediated action category (i.e., higher BOLD responses during the tool‐mediated category relative to the transitive one; Fig. 4, second row, blue regions). Moreover, a similar representation of the tool‐mediated category was observed in the contrast with intransitive actions (Fig. 4, third row, blue clusters), although intransitive actions retained higher BOLD activity in the right superior and ventrotemporal areas (Fig. 4, third row, red/yellow clusters). Finally, the contrast between the intransitive and transitive categories showed that the right superior and ventrotemporal areas still maintained a specific effect for intransitive actions (Fig. 4, fourth row, red/yellow clusters), while the transitive actions had a significantly higher response in left superior temporal and bilateral lateral occipital cortex (Fig. 4, fourth row, blue clusters).

Overall, the univariate results indicated that tool‐mediated actions retained a specific representation over a large extent of cortex in the left hemisphere, while the intransitive actions were represented only in temporal cortex of the right hemisphere, and no specific regions were found for transitive actions. Moreover, in our set of stimuli and experimental task of passive action recognition, no evidence for a specific left hemisphere representation for transitive and meaningful intransitive actions was found. Intransitive‐specific regions were shown in the right superior temporal and ventrotemporal regions, while representation of transitive actions relied on limited clusters in left superior parietal and bilateral lateral occipital cortex, and only when compared to intransitive but not to tool‐mediated actions.

DISCUSSION

Action Topography

How the human brain merges different motor features into a higher‐level, distinctive conceptual representation of a finalized action still remains undefined. To date, brain functional studies have focused primarily on univariate approaches to define brain areas that specifically supply discrete features of finalized movements, as action representation in the human brain would rely on hierarchically organized, separate but functionally interconnected, cortical areas.

In the present study, using a novel multivariate approach, we demonstrated that different categories of action are distinctively coded in distributed and overlapping patterns of neural responses: this functional cortical organization, which we named action topography, subserves a higher‐level and more abstract representation of distinct finalized action categories.

Specifically, a three‐way pattern classifier discriminated with high accuracy neural patterns of response among transitive, intransitive, and tool‐mediated actions. Distinct patterns of neural response for each action category were distributed across a brain network that comprises frontal, premotor, parietal, lateral occipital, and ventrotemporal clusters, belonging to well‐known bilateral action‐selective regions [Binkofski and Buccino, 2006; Kroliczak and Frey, 2009]. Therefore, this network retains a topographically organized representation that subserves action category recognition.

According to this model of action topography, the response patterns for a specific action category should possess two characteristics [Haxby et al., 2001]. First, the response pattern of each action category should extend to the whole network, that is, each category relies on a distributed representation. At the same time, the response pattern for a specific action category should maintain its distinctiveness both when the analysis is restricted to those brain areas that hold the most informative content for a specific action category, and when these most informative clusters are excluded, thus limiting the analysis to the less informative regions. That is, each category relies on a neural representation that overlaps with those of other action categories. Indeed, as discussed below, the distinctiveness of within‐action category representation could be related to the information contributed by the regions whose content was more specific for a given action, or a specific action feature (e.g., presence of a manipulable object, hand preshaping, etc.), with limited information about other categories. Consequently, the specificity of these patterns of response has also been demonstrated when removing the most category‐selective clusters from the original search volume, and when investigating whether the action category specificity of response extends also to those brain regions that are most informative for another distinct action category.

Furthermore, the present multivariate approach showed that these category‐specific patterns of neural response are consistent across subjects. The topographic specificity of these distributed representations within action category is also confirmed by the dissimilarity matrix and the derived hierarchical clustering of the different action stimuli (Fig. 3). In addition, consistent with the discrimination accuracies, intransitive gestures showed a more distinctive representation (i.e., a lower within‐ and a higher between‐category distance) than tool‐mediated and transitive actions, likely related to their more “evolved” communicative content [Rizzolatti and Craighero, 2004].
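
As an illustration of the within‐ and between‐category distances mentioned above, the following Python sketch builds a dissimilarity matrix from synthetic response patterns and summarizes it per category. It is a minimal example under stated assumptions, not the procedure used for Fig. 3: the correlation distance (1 minus Pearson's r), the number of stimuli per category, the noise level, and the function names (`dissimilarity_matrix`, `within_between`) are all hypothetical choices made here.

```python
import random
from itertools import combinations
from statistics import mean


def pearson(x, y):
    """Pearson correlation between two equal-length sequences."""
    mx, my = mean(x), mean(y)
    num = sum((a - mx) * (b - my) for a, b in zip(x, y))
    den = (sum((a - mx) ** 2 for a in x) * sum((b - my) ** 2 for b in y)) ** 0.5
    return num / den


def dissimilarity_matrix(patterns):
    """Square matrix of correlation distances (1 - r) between patterns."""
    n = len(patterns)
    return [[1.0 - pearson(patterns[i], patterns[j]) for j in range(n)]
            for i in range(n)]


def within_between(dist, labels):
    """Per category: (mean distance among its own stimuli,
    mean distance between its stimuli and those of other categories)."""
    within, between = {}, {}
    for i, j in combinations(range(len(labels)), 2):
        if labels[i] == labels[j]:
            within.setdefault(labels[i], []).append(dist[i][j])
        else:
            between.setdefault(labels[i], []).append(dist[i][j])
            between.setdefault(labels[j], []).append(dist[i][j])
    return {c: (mean(within[c]), mean(between[c])) for c in within}


# Synthetic stimuli: each category has a prototype pattern plus stimulus noise.
rng = random.Random(0)
n_voxels, n_stimuli = 200, 4  # assumed values, for illustration only
categories = ["tool", "transitive", "intransitive"]
patterns, labels = [], []
for c in categories:
    prototype = [rng.gauss(0, 1) for _ in range(n_voxels)]
    for _ in range(n_stimuli):
        patterns.append([p + rng.gauss(0, 0.5) for p in prototype])
        labels.append(c)

dist = dissimilarity_matrix(patterns)
summary = within_between(dist, labels)
for c, (w, b) in summary.items():
    print(f"{c}: within={w:.2f} between={b:.2f}")
```

A category with a more distinctive representation would show a lower within‐category and a higher between‐category value in this summary; the same matrix could then be passed to any hierarchical clustering routine.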

An Increased Sensitivity in Defining Category‐Selective Regions

In general, as stated above, multivoxel pattern analyses appear to particularly improve the sensitivity towards multidimensional psychological variables, such as the representation of the whole action and its distinct features (e.g., kinematics, effector‐target interaction, target identity), as compared with “more classical” univariate voxel‐wise approaches [Davis et al., 2014; Naselaris et al., 2011]. Indeed, our pattern classification not only localized the most informative clusters, in line with findings from previous neuroimaging studies, but, because of this increased sensitivity, identified category‐specific neural responses that were not detected by the univariate analysis.

Most previous functional studies contrasted neural responses between only two action categories, and consequently identified limited clusters, putatively specialized for a restricted number of actions, that were activated most strongly during the processing of a specific action category [Buxbaum et al., 2006; Frey, 2008; Gallivan et al., 2013].

Consistent with this approach, in the cortical mapping of the action category weights of the three‐way classifier, the left aSM and vPM contained clusters that were highly informative for manipulable objects and tools [Binkofski and Buccino, 2006]. Likewise, superior parietal regions encoded visuomotor transformations of transitive actions [Abdollahi et al., 2013; Valyear et al., 2007], arranged more dorsally and medially than the tool‐mediated representation [Valyear et al., 2007]. Similarly, superior temporal and fusiform areas were more informative for body parts and intransitive (non‐object directed) biological motion, whereas middle temporal and LO cortex were more informative for non‐biological motion and object form implied in transitive (object prehension or interaction) or tool‐mediated (object utilization) actions [Orban et al., 2004; Peelen and Downing, 2007] (Fig. 2C).

However, according to our topographically organized representations, category specificity of responses within the action‐selective network is not restricted to the cortical regions that respond maximally to certain stimuli: all large‐ and small‐amplitude neural responses contribute to convey category‐related information and to build a specific action representation. For instance, our more sensitive methods not only confirmed that tool‐mediated actions rely on a bilateral, though left‐dominant, representation [Binkofski and Buccino, 2006; Kroliczak and Frey, 2009], but also revealed a representation for transitive and intransitive actions in both the left and right action‐selective networks.

As a matter of fact, previous univariate comparisons of transitive versus intransitive or tool‐mediated versus intransitive motor acts often reported a left‐dominant circuit for transitive and tool‐mediated actions and typically failed to define a bilaterally distributed action‐specific network, particularly for transitive and intransitive gestures [Buxbaum, 2001; Buxbaum et al., 2007; Cubelli et al., 2006; Goldenberg et al., 2007; Kroliczak and Frey, 2009; Lewis, 2006]. Nonetheless, consistent with our results, many neuropsychological reports on apraxic patients and a few functional studies in healthy individuals suggest a distributed bilateral network for both transitive and intransitive gestures [De Renzi et al., 1980; Helon and Kroliczak, 2014; Rothi et al., 1991; Streltsova et al., 2010; Villarreal et al., 2008]. Likewise, when applying a “standard” univariate analysis to our own experimental data, we failed to demonstrate a category‐specific, bilateral representation for either transitive or intransitive actions. In particular, the univariate contrasts among the three action categories revealed significant responses mainly in the left action‐sensitive regions. In contrast, a multivariate approach, based on multiclass discrimination, was able to assess specific cortical patterns involved in the representation of different action categories, with higher specificity and in finer detail in both the left and right hemispheres (clearly observable when comparing Figs. 2, 3, and 4). In addition, a multivariate approach makes it possible to identify the most informative cortical areas for each action category while overcoming many methodological constraints of univariate analyses, including the use of predefined statistical thresholds or contrasts.
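
One of several reasons why a pattern‐based analysis can detect information that an amplitude‐based comparison misses can be shown with a toy example. The Python sketch below is an illustration under simplifying assumptions, not a re‐analysis of the present data: the two hypothetical conditions `A` and `B` evoke the same region‐averaged response but opposite spatial patterns, the univariate measure is a region average rather than the voxel‐wise contrast used in this study, and the decoder is a nearest‐centroid correlation rule rather than the classifier used here.

```python
import random
from statistics import mean


def pearson(x, y):
    """Pearson correlation between two equal-length sequences."""
    mx, my = mean(x), mean(y)
    num = sum((a - mx) * (b - my) for a, b in zip(x, y))
    den = (sum((a - mx) ** 2 for a in x) * sum((b - my) ** 2 for b in y)) ** 0.5
    return num / den


rng = random.Random(1)
N_VOXELS, N_TRIALS = 100, 10  # assumed sizes, for illustration only

# Zero-mean spatial pattern: condition A adds it, condition B subtracts it,
# so both conditions share the same average amplitude across the region.
raw = [rng.gauss(0, 1) for _ in range(N_VOXELS)]
offset = mean(raw)
pattern = [p - offset for p in raw]


def simulate_trial(sign, noise_sd=0.5):
    """One trial: common baseline of 1.0, signed pattern, and voxel noise."""
    return [1.0 + sign * p + rng.gauss(0, noise_sd) for p in pattern]


def simulate(n_trials):
    return {"A": [simulate_trial(+1) for _ in range(n_trials)],
            "B": [simulate_trial(-1) for _ in range(n_trials)]}


train, test = simulate(N_TRIALS), simulate(N_TRIALS)

# Region-averaged (univariate) comparison: mean response per condition.
region_mean = {c: mean(mean(trial) for trial in train[c]) for c in train}
univariate_difference = region_mean["A"] - region_mean["B"]

# Pattern-based (multivariate) comparison: assign each test trial to the
# condition whose training centroid it correlates with most strongly.
centroids = {c: [mean(col) for col in zip(*train[c])] for c in train}


def classify(trial):
    return max(centroids, key=lambda c: pearson(trial, centroids[c]))


correct = sum(classify(trial) == c for c in test for trial in test[c])
accuracy = correct / (2 * N_TRIALS)

print(f"region-mean difference A - B: {univariate_difference:.3f}")
print(f"pattern classification accuracy: {accuracy:.2f}")
```

In this construction the region‐averaged difference is close to zero while the spatial patterns are easily separated, which is the kind of situation in which the two families of analysis are expected to diverge.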

While the left hemisphere appears to have a predominant role in action representation, an independent involvement of the right hemisphere in praxis is acknowledged, though the nature of this recruitment is still undefined [Goldenberg, 2013]. Several neuropsychological [De Renzi et al., 1980; Goldenberg, 1996, 1999; Haaland and Flaherty, 1984; Heath et al., 2001; Stamenova et al., 2010] and functional [Harrington et al., 1998; Rao et al., 1997; Streltsova et al., 2010; Weiss et al., 2006] observations appear to indicate that right hemisphere involvement is related to specific features of the gesture (e.g., motor, spatial and temporal complexity) or to other cognitive mechanisms inherent to action representation (e.g., linguistic or social knowledge for intransitive actions). Unfortunately, the multivariate decoding analysis applied to our experimental setup did not allow us to draw any specific conclusion either on the role of a single region within the action‐selective network, or on the distinct functioning of the left or right hemisphere, that could be directly compared to the cortical lesions observed in apraxia. Nonetheless, according to our topographical‐organization model, all these distant and localized pieces of information, which may reflect distinct features of motor acts or specific processing of action representation, are merged at a bilateral network level into a unified, distinctive representation of actions.

Which are the Action Features Represented in a Distributed Pattern of Neural Response?

While multivariate decoding approaches that rely on distributed patterns of neural activation enhance the capability to identify informative brain areas, they do not specifically allow for a clear identification of the individual features, or dimensions encoded in each region. One may wonder whether it is the action categories per se that are represented (and thus isolated), or whether the classifier merely relies on other physical attributes of the stimuli—for example, dynamicity, imageability, onset time, and so forth—or on their specific content—for example, form of manipulable objects toward which the actions are directed (i.e., hands alone vs. graspable objects vs. tools), the number of objects involved in each clip, hand preshaping, and so forth.

Though we cannot completely rule out that one of these features or dimensions may have contributed to drive the whole classification accuracy, several elements make this highly unlikely.

First, from a methodological perspective, each action within the three categories was chosen to be as different from the others as possible, so that they share only the distinctive feature of each specific action category representation, thereby limiting any possible confounds related to stimulus selection or perceptual processing. Furthermore, additional analyses were performed to demonstrate that the low‐level features of our set of stimuli did not impact neural representation in early visual areas or in regions within the action‐selective network (see Supporting Information Figs. S1 and S2). Finally, if a “simpler” object‐based topography had guided our classification, one would expect the most informative areas to be limited to a smaller and more localized set of brain regions, such as the object‐selective ventrotemporal cortex [Bracci and Peelen, 2013; Haxby et al., 2001], which is not the case in the present results.

Second, from a theoretical point of view, our observations demonstrated distinct patterns of brain response for multiple categories of actions in a distributed and overlapping network. These patterns of response (i.e., functional representations) are specific for each action and consistent across participants, both when considering the most informative brain areas for a specific action category and when limiting the analysis to the less informative clusters. Although a final consideration about the dimensionality of action representation cannot be directly inferred from the present decoding analysis [Davis et al., 2014], this multivariate methodology is capable of offering a unique, category‐selective and topographically organized representation of a specific action category. These category‐selective patterns of response reflect a higher level, distinctive representation of “action‐as‐a‐whole”.

In this sense, we believe that action topography includes the representation of the simpler features of motor acts, and integrates previous hierarchically and spatially organized models of action representation. For instance, as discussed above, the distinctiveness of the neural patterns in ventral temporo‐occipital regions may reflect differences in hand‐object interactions, or target object forms, among tool‐mediated, transitive, and meaningful intransitive action categories. Similarly, the specificity of the patterns in superior parietal and ventral premotor areas may convey information about movement selection (e.g., hand preshaping, reaching, etc.). Nonetheless, all these distant and localized pieces of information are at a network level merged into a unified, distinctive representation of action category.

Of note, even if action topography relies on a widely distributed network, the fact that different features of motor acts are incorporated within the “most informative” regions may contribute to explain how even localized lesions are sufficient to cause selective impairments in recognizing specific categories of actions, or in dealing with a particular action feature [Buxbaum et al., 2014; Urgesi et al., 2014].

In this regard, machine learning approaches using multivariate analysis aim at isolating those brain areas that are most informative for discriminating among the categories of selected stimuli. We could therefore expect that particular cortical clusters involved in action representation might have been discarded by our classifier if those voxels (i.e., brain areas), although “action‐selective,” did not convey sufficient information to discriminate tool‐mediated, transitive, and intransitive actions. Indeed, as shown in Supporting Information Figure S5, the final discriminative volume after the RFE procedure loses several portions of precentral and inferior parietal cortex directly involved in motor control and action representation. Even if these areas convey sufficient information to allow discrimination among action categories, none of them appears to be category‐specific.

Ultimately, to strengthen the hypothesis that our classifier‐defined network relies on the most informative cortical areas for action representation, and not merely on single motor features, to distinguish among categories, neural responses were tested using an independent stimulus set comprising hand‐performed actions and “non‐action” stimuli (i.e., environmental scenes). When limited to action‐selective regions, discrimination reached a notable recognition accuracy (94%), well above the performance (about 80%) that our group previously reported for action versus “non‐action” discrimination [Ricciardi et al., 2013].

CONCLUSIONS

Using fMRI in combination with an innovative multiclass pattern discrimination approach (i.e., a three‐way classifier), we were able to identify the distinct patterns of neural response that discriminate among different action categories. This topographical organization subserves a more abstract, conceptual representation of distinct finalized action categories.

According to this hypothesis, action topography assimilates previous models of hierarchically and spatially organized processing of simpler features of motor acts [Grafton and Hamilton, 2007] into a more abstract, distinctive representation of “action‐as‐a‐whole”. Moreover, action topography is consistent with neuropsychological evidence of a “conceptual” representation of action categories [Rothi et al., 1991], matches the functional overlap between action execution and recognition [Macuga and Frey, 2012; Rizzolatti et al., 2001], and agrees with a sensory‐independent representation of action goals [Ricciardi et al., 2009, 2013]. Ultimately, action topography provides this network with the capacity to produce unique representations for multiple categories of actions.

In this perspective, these findings provide a novel view of how the human brain recognizes and represents various motor features into a unique finalized action, similarly to what has already been described for object form and sound category representations [Haxby et al., 2001; Staeren et al., 2009].

Nevertheless, future studies should necessarily explain how these action features are processed, organized and merged into a distinct representation as conveyed by a distributed pattern of neural response.

Moreover, these results have general implications for other research and clinical fields, such as neurorehabilitation and robotics. In fact, action topography indicates that optimal brain–computer interfaces and neuroprostheses should take into account that a satisfactory decoding of a human action depends on the ability to recover different pieces of information topographically distributed across distinct brain areas.

Supporting information

ACKNOWLEDGMENTS

The authors thank Corrado Sinigaglia for comments on an earlier version of the manuscript.

REFERENCES

- Abdollahi RO, Jastorff J, Orban GA (2013): Common and segregated processing of observed actions in human SPL. Cereb Cortex 23:2734–2753.

- Batagelj V (1988): Generalized Ward and related clustering problems. In: Bock HH, editor. Classification and Related Methods of Data Analysis. Amsterdam: North‐Holland; pp 67–74.

- Beauchamp MS, Martin A (2007): Grounding object concepts in perception and action: Evidence from fMRI studies of tools. Cortex 43:461–468.

- Binkofski F, Buccino G (2006): The role of ventral premotor cortex in action execution and action understanding. J Physiol Paris 99:396–405.

- Bracci S, Peelen MV (2013): Body and object effectors: The organization of object representations in high‐level visual cortex reflects body‐object interactions. J Neurosci 33:18247–18258.

- Buxbaum LJ (2001): Ideomotor apraxia: A call to action. Neurocase 7:445–458.

- Buxbaum LJ, Kyle K, Grossman M, Coslett HB (2007): Left inferior parietal representations for skilled hand‐object interactions: Evidence from stroke and corticobasal degeneration. Cortex 43:411–423.

- Buxbaum LJ, Kyle KM, Tang K, Detre JA (2006): Neural substrates of knowledge of hand postures for object grasping and functional object use: Evidence from fMRI. Brain Res 1117:175–185.

- Buxbaum LJ, Shapiro AD, Coslett HB (2014): Critical brain regions for tool‐related and imitative actions: A componential analysis. Brain 137:1971–1985.

- Carlson TA, Schrater P, He S (2003): Patterns of activity in the categorical representations of objects. J Cogn Neurosci 15:704–717.

- Cawley GC, Talbot NLC (2010): On over‐fitting in model selection and subsequent selection bias in performance evaluation. J Machine Learn Res 11:2079–2107.

- Cortes C, Vapnik V (1995): Support‐vector networks. Machine Learn 20:273–297.

- Cox RW (1996): AFNI: Software for analysis and visualization of functional magnetic resonance neuroimages. Comput Biomed Res 29:162–173.

- Crammer K, Singer Y (2001): On the algorithmic implementation of multiclass kernel‐based vector machines. J Machine Learn Res 2:265–292.

- Cubelli R, Bartolo A, Nichelli P, Della Sala S (2006): List effect in apraxia assessment. Neurosci Lett 407:118–120.

- Davis T, LaRocque KF, Mumford JA, Norman KA, Wagner AD, Poldrack RA (2014): What do differences between multi‐voxel and univariate analysis mean? How subject‐, voxel‐, and trial‐level variance impact fMRI analysis. Neuroimage 97:271–283.

- De Martino F, Valente G, Staeren N, Ashburner J, Goebel R, Formisano E (2008): Combining multivariate voxel selection and support vector machines for mapping and classification of fMRI spatial patterns. Neuroimage 43:44–58.

- De Renzi E, Motti F, Nichelli P (1980): Imitating gestures. A quantitative approach to ideomotor apraxia. Arch Neurol 37:6–10.

- Dinstein I, Gardner JL, Jazayeri M, Heeger DJ (2008): Executed and observed movements have different distributed representations in human aIPS. J Neurosci 28:11231–11239.

- Frey SH (2008): Tool use, communicative gesture and cerebral asymmetries in the modern human brain. Philos Trans R Soc Lond B Biol Sci 363:1951–1957.

- Gallese V, Lakoff G (2005): The brain's concepts: The role of the sensory‐motor system in conceptual knowledge. Cogn Neuropsychol 22:455–479.

- Gallivan JP, McLean DA, Valyear KF, Culham JC (2013): Decoding the neural mechanisms of human tool use. Elife 2:e00425.

- Goldenberg G (1996): Defective imitation of gestures in patients with damage in the left or right hemispheres. J Neurol Neurosurg Psychiatry 61:176–180.

- Goldenberg G (1999): Matching and imitation of hand and finger postures in patients with damage in the left or right hemispheres. Neuropsychologia 37:559–566.

- Goldenberg G (2013): Apraxia ‐ The Cognitive Side of Motor Control. New York, Oxford: Oxford University Press.

- Goldenberg G, Hermsdorfer J, Glindemann R, Rorden C, Karnath HO (2007): Pantomime of tool use depends on integrity of left inferior frontal cortex. Cereb Cortex 17:2769–2776.

- Grafton ST, Hamilton AF (2007): Evidence for a distributed hierarchy of action representation in the brain. Hum Mov Sci 26:590–616.

- Guyon I, Weston J, Barnhill S, Vapnik V (2002): Gene selection for cancer classification using support vector machines. Machine Learn 46:389–422.

- Haaland KY, Flaherty D (1984): The different types of limb apraxia errors made by patients with left vs. right hemisphere damage. Brain Cogn 3:370–384.

- Harrington DL, Haaland KY, Knight RT (1998): Cortical networks underlying mechanisms of time perception. J Neurosci 18:1085–1095.

- Hastie T, Tibshirani R (1998): Classification by pairwise coupling. Ann Stats 26:451–471.

- Haxby JV, Gobbini MI, Furey ML, Ishai A, Schouten JL, Pietrini P (2001): Distributed and overlapping representations of faces and objects in ventral temporal cortex. Science 293:2425–2430.

- Haxby JV, Connolly AC, Guntupalli JS (2014): Decoding neural representational spaces using multivariate pattern analysis. Ann Rev Neurosci 37:435–456.

- Heath M, Roy EA, Black SE, Westwood DA (2001): Intransitive limb gestures and apraxia following unilateral stroke. J Clin Exp Neuropsychol 23:628–642.

- Helon H, Kroliczak G (2014): The effects of visual half‐field priming on the categorization of familiar intransitive gestures, tool use pantomimes, and meaningless hand movements. Front Psychol 5:454.

- Holmes CJ, Hoge R, Collins L, Woods R, Toga AW, Evans AC (1998): Enhancement of MR images using registration for signal averaging. J Comput Assist Tomogr 22:324–333.

- Hsu CW, Lin CJ (2002): A comparison of methods for multiclass support vector machines. IEEE Trans Neural Netw 13:415–425.

- Jastorff J, Begliomini C, Fabbri‐Destro M, Rizzolatti G, Orban GA (2010): Coding observed motor acts: Different organizational principles in the parietal and premotor cortex of humans. J Neurophysiol 104:128–140.

- Joachims T (1999): Making large‐scale SVM learning practical. In: Schölkopf B, Burges C, Smola A, editors. Advances in Kernel Methods ‐ Support Vector Learning. Cambridge, MA: MIT Press; pp 41–56.

- Kilner JM (2011): More than one pathway to action understanding. Trends Cogn Sci 15:352–357.

- Kriegeskorte N, Kievit RA (2013): Representational geometry: Integrating cognition, computation, and the brain. Trends Cogn Sci 17:401–412.

- Kroliczak G, Frey SH (2009): A common network in the left cerebral hemisphere represents planning of tool use pantomimes and familiar intransitive gestures at the hand‐independent level. Cereb Cortex 19:2396–2410.

- Lee S, Halder S, Kubler A, Birbaumer N, Sitaram R (2010): Effective functional mapping of fMRI data with support‐vector machines. Hum Brain Mapp 31:1502–1511.

- Lewis JW (2006): Cortical networks related to human use of tools. Neuroscientist 12:211–231.

- Macuga KL, Frey SH (2012): Neural representations involved in observed, imagined, and imitated actions are dissociable and hierarchically organized. Neuroimage 59:2798–2807.

- Molenberghs P, Cunnington R, Mattingley JB (2012): Brain regions with mirror properties: A meta‐analysis of 125 human fMRI studies. Neurosci Biobehav Rev 36:341–349.

- Naselaris T, Kay KN, Nishimoto S, Gallant JL (2011): Encoding and decoding in fMRI. Neuroimage 56:400–410.

- Oosterhof NN, Tipper SP, Downing PE (2012a): Viewpoint (in)dependence of action representations: An MVPA study. J Cogn Neurosci 24:975–989.

- Oosterhof NN, Tipper SP, Downing PE (2012b): Visuo‐motor imagery of specific manual actions: A multi‐variate pattern analysis fMRI study. Neuroimage 63:262–271.

- Oosterhof NN, Tipper SP, Downing PE (2013): Crossmodal and action‐specific: Neuroimaging the human mirror neuron system. Trends Cogn Sci 17:311–318.

- Oosterhof NN, Wiggett AJ, Diedrichsen J, Tipper SP, Downing PE (2010): Surface‐based information mapping reveals crossmodal vision‐action representations in human parietal and occipitotemporal cortex. J Neurophysiol 104:1077–1089.

- Orban GA, Van Essen D, Vanduffel W (2004): Comparative mapping of higher visual areas in monkeys and humans. Trends Cogn Sci 8:315–324.

- Peelen MV, Downing PE (2007): The neural basis of visual body perception. Nat Rev Neurosci 8:636–648.

- Peeters RR, Rizzolatti G, Orban GA (2013): Functional properties of the left parietal tool use region. Neuroimage 78:83–93.

- Pereira F, Mitchell T, Botvinick M (2009): Machine learning classifiers and fMRI: A tutorial overview. Neuroimage 45(1 Suppl):S199–S209.

- Petreska B, Adriani M, Blanke O, Billard AG (2007): Apraxia: A review. Prog Brain Res 164:61–83.

- Rao SM, Harrington DL, Haaland KY, Bobholz JA, Cox RW, Binder JR (1997): Distributed neural systems underlying the timing of movements. J Neurosci 17:5528–5535.

- Ricciardi E, Bonino D, Pellegrini S, Pietrini P (2014a): Mind the blind brain to understand the sighted one! Is there a supramodal cortical functional architecture? Neurosci Biobehav Rev 41:64–77.

- Ricciardi E, Bonino D, Sani L, Vecchi T, Guazzelli M, Haxby JV, Fadiga L, Pietrini P (2009): Do we really need vision? How blind people "see" the actions of others. J Neurosci 29:9719–9724.

- Ricciardi E, Handjaras G, Bonino D, Vecchi T, Fadiga L, Pietrini P (2013): Beyond motor scheme: A supramodal distributed representation in the action‐observation network. PLoS One 8:e58632.

- Ricciardi E, Handjaras G, Pietrini P (2014b): The blind brain: How (lack of) vision shapes the morphological and functional architecture of the human brain. Exp Biol Med (Maywood) 239:1414–1420.

- Rizzolatti G, Craighero L (2004): The mirror‐neuron system. Annu Rev Neurosci 27:169–192.

- Rizzolatti G, Fogassi L, Gallese V (2001): Neurophysiological mechanisms underlying the understanding and imitation of action. Nat Rev Neurosci 2:661–670.

- Rizzolatti G, Luppino G (2001): The cortical motor system. Neuron 31:889–901.

- Rothi LJG, Ochipa C, Heilman KM (1991): A cognitive neuropsychological model of limb praxis. Cogn Neuropsychol 8:443–458.

- Staeren N, Renvall H, De Martino F, Goebel R, Formisano E (2009): Sound categories are represented as distributed patterns in the human auditory cortex. Curr Biol 19:498–502.

- Stamenova V, Roy EA, Black SE (2010): Associations and dissociations of transitive and intransitive gestures in left and right hemisphere stroke patients. Brain Cogn 72:483–490.

- Streltsova A, Berchio C, Gallese V, Umilta MA (2010): Time course and specificity of sensory‐motor alpha modulation during the observation of hand motor acts and gestures: A high density EEG study. Exp Brain Res 205:363–373.

- Talairach J, Tournoux P (1988): Co‐Planar Stereotaxic Atlas of the Human Brain. New York: Thieme Medical Publisher.

- Urgesi C, Candidi M, Avenanti A (2014): Neuroanatomical substrates of action perception and understanding: An anatomic likelihood estimation meta‐analysis of lesion‐symptom mapping studies in brain injured patients. Front Hum Neurosci 8:344.

- Valyear KF, Cavina‐Pratesi C, Stiglick AJ, Culham JC (2007): Does tool‐related fMRI activity within the intraparietal sulcus reflect the plan to grasp? Neuroimage 36(Suppl 2):T94–T108.

- Villarreal M, Fridman EA, Amengual A, Falasco G, Gerschcovich ER, Ulloa ER, Leiguarda RC (2008): The neural substrate of gesture recognition. Neuropsychologia 46:2371–2382.

- Weiss PH, Rahbari NN, Lux S, Pietrzyk U, Noth J, Fink GR (2006): Processing the spatial configuration of complex actions involves right posterior parietal cortex: An fMRI study with clinical implications. Hum Brain Mapp 27:1004–1014.
