Abstract

Linking observed and executable actions appears to be achieved by an action observation network (AON), comprising parietal, premotor, and occipitotemporal cortical regions of the human brain. AON engagement during action observation is thought to aid in effortless, efficient prediction of ongoing movements to support action understanding. Here, we investigate how the AON responds when observing and predicting actions we cannot readily reproduce before and after visual training. During pre‐ and posttraining neuroimaging sessions, participants watched gymnasts and wind‐up toys moving behind an occluder and pressed a button when they expected each agent to reappear. Between scanning sessions, participants visually trained to predict when a subset of stimuli would reappear. Posttraining scanning revealed activation of inferior parietal, superior temporal, and cerebellar cortices when predicting occluded actions compared to perceiving them. Greater activity emerged when predicting untrained compared to trained sequences in occipitotemporal cortices and to a lesser degree, premotor cortices. The occipitotemporal responses when predicting untrained agents showed further specialization, with greater responses within body‐processing regions when predicting gymnasts' movements and in object‐selective cortex when predicting toys' movements. The results suggest that (1) select portions of the AON are recruited to predict the complex movements not easily mapped onto the observer's body and (2) greater recruitment of these AON regions supports prediction of less familiar sequences. We suggest that the findings inform both the premotor model of action prediction and the predictive coding account of AON function. Hum Brain Mapp, 2013. © 2011 Wiley Periodicals, Inc.

Keywords: prediction, perception, action, parietal, premotor, human body, biological motion, fMRI, occipitotemporal

INTRODUCTION

In daily life, we interact with our environment by perceiving, predicting, and responding to other people and objects moving around us. For example, such simple tasks as giving someone a high‐five, stepping onto an escalator, or holding open the door for a friend require behavioral responses based on careful prediction of other bodies and objects in motion. According to a dominant theory of action understanding, we are able to respond to others' actions by simulating their movements within sensorimotor regions of the brain [Gallese and Goldman, 1998; Grèzes and Decety, 2001; Rizzolatti and Sinigaglia, 2010]. In support of the simulation account, numerous studies suggest that we accomplish such efficient prediction of others' actions by drawing upon prior motor experience [Hommel et al., 2001; Jeannerod and Frak, 1999; Knoblich and Flach, 2001; Neal and Kilner, 2010; Prinz and Rapinett, 2008]. However, it is often the case that we are confronted with bodies or objects moving in ways we have never physically experienced, for instance, when watching gymnasts in the Olympics or picking up a child's wind‐up toy as it scoots away. If prior experience guides perception and prediction processes, how is it possible to understand and predict actions that are impossible to perform with one's own body? In the present study, we evaluate the influence of visually based practice on the prediction of complex action sequences with which participants have no prior motor experience, by comparing behavioral performance and neural activity whilst predicting visually trained and untrained actions.

The idea that action perception and production share common representations has a long history [Hommel et al., 2001; James, 1890; Prinz, 1990]. Recent research focused on identifying the neural substrates that might link action perception with production in the human brain has found evidence for an action observation network [AON; Rizzolatti and Sinigaglia, 2010]. The AON comprises parietal and premotor cortical regions, which appear to code observed and executed actions in a common space [Kilner et al., 2009; Oosterhof et al., 2010], as well as occipitotemporal regions responsive to biological motion and human body perception [Caspers et al., 2010; Cross et al., 2009; Gazzola and Keysers, 2009; Grèzes and Decety, 2001; Kilner et al., 2007a]. One intriguing hypothesis put forth as to why sensorimotor brain regions are engaged during action perception is that such activation enables us to smoothly and effortlessly predict the ongoing actions of others [Grush, 2004; Jeannerod and Decety, 1995; Kilner et al., 2004; Prinz, 2006; Schütz‐Bosbach and Prinz, 2007; Wilson and Knoblich, 2005]. Such a hypothesis is intuitively appealing, given how critical prediction is for interacting with other people and the environment, and thus for survival [Sebanz and Knoblich, 2009].

The notion that prior motor experience aids in action prediction has been convincingly demonstrated by a number of behavioral studies, in terms of both spatial [Knoblich and Flach, 2001] and temporal prediction [Graf et al., 2007; Springer et al., 2011]. Although not studied as widely, a handful of experiments provide evidence that observational experience alone also confers benefits on action prediction in a range of animal species, from penguins to nonhuman primates [Chamley, 2003; Subiaul et al., 2004]. Moreover, preliminary evidence with human participants suggests that the sensorimotor regions of the brain might be shaped by observational experience in a similar manner to physical experience [Burke et al., 2010; Cross et al., 2009], thus presenting the possibility that prior experience with an action in either the visual or physical domain can lead to improved action prediction.

To date, research investigating how an observer's prior experience influences prediction has focused almost exclusively on simple, everyday actions and agents that are similar or familiar to the observer [Graf et al., 2007; Prinz and Rapinett, 2008; Stadler et al., 2011]. Returning to the example of watching gymnasts in action or a child's mechanical toy, how is it possible to predict these movements when one has no prior physical experience performing them? One intriguing hypothesis is that the premotor portion of the AON, the same neural region engaged when predicting familiar actions [Schubotz and von Cramon, 2003; Stadler et al., 2011], is also recruited to predict ongoing dynamic events in general [Schubotz, 2007; Wolfensteller et al., 2007]. According to this account, the premotor cortex is recruited to aid in perception and prediction of a much wider variety of dynamic events ranging from complex events performed by humans, such as expert feats of acrobatics, to events far removed from our physical experience, such as the flight of a dragonfly, or the rigid movements of a robot [Schubotz, 2007].

Another possible explanation for how brain regions subserving action understanding and prediction might process unfamiliar actions can be derived from the predictive coding account of the AON, based on empirical Bayes inference [Kilner et al., 2007a, b]. According to this model, the AON functions to minimize prediction error through reciprocal interactions among levels of a cortical hierarchy comprising parietal, premotor, and occipitotemporal regions [Gazzola and Keysers, 2009; Kilner et al., 2007b]. Whenever we observe an agent moving, our prior motor and visual experience informs our expectations about how that agent might move. The comparison between the observer's predictions of the agent's movements (based on prior visuomotor experience) and the observed action generates a prediction error. This prediction error is then used to update our representation of the agent's action. According to predictive coding, the optimal state is minimal prediction error at all levels of the AON, which is achieved when observed actions match with predicted actions as closely as possible [Neal and Kilner, 2010; Schippers and Keysers, 2011]. Thus, it might be expected that observing unfamiliar actions would result in greater prediction error (as evidenced by greater AON activity), while observing familiar actions would generate a more efficient, finely tuned AON response. To date, however, this has not been explicitly tested.
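The error‐correction loop at the heart of this account (predict, compare with the observation, update) can be made concrete with a deliberately minimal sketch. It is not Kilner et al.'s hierarchical model: it assumes a single level, a scalar estimate (here a hypothetical expected occlusion duration in seconds), and a fixed learning rate, and all names and values are illustrative.

```python
from __future__ import annotations


def update_estimate(estimate: float, observed: float,
                    learning_rate: float = 0.3) -> tuple[float, float]:
    """Return (new_estimate, prediction_error) after one observation.

    Illustrative single-level rule (an assumption, not the hierarchical
    AON model): error = observed - predicted, and a fixed fraction of
    that error is used to correct the estimate.
    """
    prediction_error = observed - estimate
    return estimate + learning_rate * prediction_error, prediction_error


def run_trials(initial_estimate: float, observations: list[float],
               learning_rate: float = 0.3) -> list[float]:
    """Return the absolute prediction error on each successive trial."""
    estimate = initial_estimate
    errors = []
    for observed in observations:
        estimate, error = update_estimate(estimate, observed, learning_rate)
        errors.append(abs(error))
    return errors


# Hypothetical observer who expects a 4.0 s occlusion for an agent that
# actually stays hidden for 2.0 s on every trial.
errors = run_trials(initial_estimate=4.0, observations=[2.0] * 5)
```

Under this rule the error shrinks by a factor of (1 − learning_rate) on every repetition, so a repeatedly observed duration comes to generate little error while a novel one starts with a large error; this mirrors, in miniature, the expectation that unfamiliar actions should produce greater prediction error.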

In the present study, we examine how the brain perceives and predicts the motion of human and nonhuman agents and ask whether perceptual experience alone can influence prediction performance. We test this with a series of coordinated functional neuroimaging and behavioral training methods, holding physical experience constant (i.e., participants were naïve to all action sequences before the study and could not physically perform the actions at any stage of the study). We focus on perception and prediction of two classes of actions: complex gymnastic sequences (biological motion) and wind‐up toy sequences (nonbiological motion).¹

With the present study, we investigate a declarative form of temporal prediction, where participants' task is to watch a gymnast or toy move behind an occluding surface and to press a button the moment they expect the gymnast or toy to reappear. First, we measure prediction performance behaviorally across all testing and training sessions. Second, we investigate how perception (when the agent's motion is directly observable) and prediction (when the agent's motion is concealed behind the occluder) of these complex sequences compare at the neural level, in part to determine whether the AON is more active during perception or prediction.

Third, we evaluate which brain regions are engaged when predicting biological compared to nonbiological action sequences. If the AON confers special status to predicting the human body in motion [Buccino et al., 2004; Shimada, 2010; Tai et al., 2004], then we might expect to see greater activation within all or some nodes of this network when predicting gymnasts compared to toys. Such a finding would suggest agent‐specificity of prediction mechanisms, even when all actions are impossible to perform. Alternatively, more general prediction mechanisms that are adaptable to any kind of dynamic event sequence might support a hypothesis of fewer or no differences when predicting gymnasts or toys [Schubotz, 2007; Schubotz and von Cramon, 2004].

Finally, we evaluate whether brain regions within or beyond the AON are responsive to purely visual training, collapsed across agent. Although no single theoretical framework exists upon which we can base hypotheses for these findings, it is nonetheless possible to speculate on possible patterns of findings based on several studies from the action perception literature. Specifically, if frontal components of the AON are responsive to purely visual experience, as prior work has demonstrated for executable actions [Burke et al., 2010; Cross et al., 2009], we would expect to see changes within premotor regions based on the visual training manipulation. However, it is equally plausible that visual experience will lead to changes only within the visual nodes of the AON and not anterior motor regions, because participants have no physical experience or ability to perform the observed actions [Cross et al., 2010]. In this case, occipitotemporal cortices might be the most sensitive to visual experience, in line with a predictive coding model proposed by Kilner et al. [2007b].

METHODS

Participants

Twenty‐four participants were recruited to take part in 4 days of behavioral training and two functional neuroimaging scans (one pretraining scan and one posttraining scan, Fig. 1). All participants were free of neurological or psychiatric diagnoses, and none was taking any medication at the time of the measurements. All participants provided written informed consent and were paid for their participation. They were treated according to the ethical regulations outlined in the Declaration of Helsinki, and the local ethics committee approved all fMRI procedures. Two participants were excluded from the final sample due to excessive motion artifacts in the fMRI data. The final sample comprised 13 men and 9 women (mean age, 26.4 years; range, 23.0–32.8). All participants were strongly right handed as measured by the Edinburgh Handedness Inventory [Oldfield, 1971].

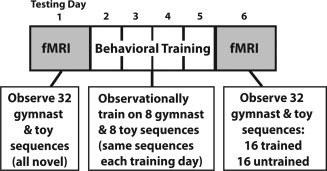

Figure 1.

Schematic depiction of the three phases of this study, chronologically arranged. All participants completed two fMRI sessions and four days of behavioral training, with each training session separated by ∼48 h.

Stimuli

Stimuli comprised 32 videos featuring different agents moving across the screen from left to right. Sixteen videos featured wind‐up toys that moved autonomously across a neutral background. Individual toys were chosen that skittered, hopped, spun, or crept rather than simply moving in a smooth, straight trajectory, in order to make the prediction task more challenging. The remaining 16 videos featured three different expert female gymnasts performing complex gymnastic sequences across a neutral gymnasium space. Video duration ranged from 3.2 to 18.8 s, and average duration did not differ between the wind‐up toy videos (M = 8.36 s) and the gymnastic videos (M = 7.18 s), t = 0.76, P = 0.45. All videos were presented in full color with a resolution of 480 × 270 pixels and a frame rate of 25 frames per second.

From these original 32 videos, 128 new video components were constructed to create the prediction video stimuli. Two prediction videos with occlusions of different lengths were constructed from each original video: one with a short occlusion duration, using a narrow black rectangular occluder measuring 4.2 × 2.3 cm, and one with a long occlusion duration, using a wider occluder measuring 4.2 × 3.4 cm. The left edge of the occluder was placed in the identical spatial location for both the long and short occlusion videos, 4.5 cm from the left edge (or start) of the video. Each agent moved from left to right and spent a variable amount of time moving across the screen before disappearing behind the occluder, ranging from 1.76 to 11.42 s, and stayed behind the occluder for between 0.5 and 8.5 s.

Because participants' task was to predict accurately (via a button press) when each agent should reappear from behind the occluding surface, the videos required further manipulation to make this task possible. Each of the 64 new videos with the occluding black rectangles was edited to artificially lengthen the occluded segment by 3 s beyond the actual time at which the agent should reappear. This meant that if participants were very late in their estimates of how long an agent should be behind the occluder, the task would still continue uninterrupted. Once participants made a button press indicating the point in time they thought the agent should reappear, a second video immediately began playing: the continuation of the first video, with its starting point set to the moment any part of the agent reemerged from behind the occluding surface. This procedure was applied to all 64 videos, so that in each trial, participants experienced one smooth video, wherein the gymnast or toy disappeared behind the occluder (video 1), and as soon as the participant made a button press, the agent reappeared from behind the occluder and continued moving until off screen (video 2).
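The timing logic of a single trial can be summarized in a short sketch that computes how long video 1 runs and how a button press would be scored. The class and field names are hypothetical, the extension of video 1 is assumed to be 3 s (`PADDING_S`), and the example timings are invented.

```python
from dataclasses import dataclass

PADDING_S = 3.0  # assumed length (s) of the artificial extension of video 1


@dataclass
class OcclusionTrial:
    """Timing of one occlusion video; all values in seconds (hypothetical)."""
    time_to_occlusion: float  # video onset until the agent is fully hidden
    true_occlusion: float     # how long the agent really stays hidden

    @property
    def true_reappearance(self) -> float:
        """Time from video onset at which the agent should reappear."""
        return self.time_to_occlusion + self.true_occlusion

    @property
    def video1_length(self) -> float:
        """Video 1 keeps running PADDING_S past the true reappearance time."""
        return self.true_reappearance + PADDING_S

    def press_in_time(self, press_s: float) -> bool:
        """True if a press at press_s falls before video 1 runs out."""
        return press_s <= self.video1_length

    def signed_error(self, press_s: float) -> float:
        """Positive = pressed late (prediction too slow); negative = early."""
        return press_s - self.true_reappearance


trial = OcclusionTrial(time_to_occlusion=4.0, true_occlusion=1.5)
print(trial.video1_length)      # 8.5
print(trial.signed_error(6.0))  # 0.5
```

A press at 6.0 s in this hypothetical trial would switch immediately to video 2, with the agent reemerging at that moment, and would be scored as 0.5 s late.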

Study Design

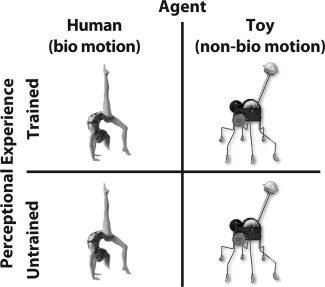

The experimental conditions fall into a 2 (agent type: human or toy) by 2 (observational training experience: trained or untrained) factorial design, illustrated in Figure 2. During the first imaging session, all 32 sequences observed during scanning (one exemplar of each agent/action, with only the wide occluding surface present) were novel to the participants. During the second imaging session, participants viewed eight trained gymnast sequences, eight trained wind‐up toy sequences, eight untrained gymnast sequences, and eight untrained wind‐up toy sequences.

Figure 2.

Two (Agent: Human or Wind‐up Toy) by two (perceptual experience: Trained or Untrained) factorial design of study.

Neuroimaging

Neuroimaging procedure

An event‐related design was used to identify responses to predicting the occluded actions of toys and gymnasts in identical pretraining and posttraining fMRI sessions. During functional imaging, all videos were presented via Psychophysics Toolbox 3 running under Matlab 7.2. In the scanner, participants viewed the videos via a back‐projection system, in which a mirror installed above participants' eyes reflected an LCD projection onto a screen behind the magnet. Participants predicted the same 32 toy and gymnast sequences during the pretraining and posttraining scanning sessions. They were instructed to observe each video, to imagine the movement of the agent continuing behind the black occluding surface, and to press a button held in the right hand at the precise moment the agent should reappear from behind the occluder. As soon as participants pressed the button, the video appeared to continue to play, with the agent reappearing from behind the occluder and continuing off the right side of the screen. No feedback was given during the scanning sessions. Participants completed a total of 160 trials (five instances of each of the 32 stimuli), and stimulus videos were separated by a fixation cross lasting 2–12 s, with durations pseudologarithmically distributed. Because stimulus durations varied, the effective jitter between the onset of each video and the moment an agent disappeared entirely behind the occluder ranged from 2.12 to 8.70 s.
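A trial list with the properties described above (32 stimuli, five repetitions each, fixation intervals of 2–12 s) could be generated as follows. "Pseudologarithmically distributed" is not specified further, so this sketch assumes log‐uniform sampling (short intervals more frequent than long ones) and a shuffled stimulus order; the constants, function names, and seed are illustrative.

```python
import math
import random

N_STIMULI = 32
REPETITIONS = 5
FIX_MIN_S, FIX_MAX_S = 2.0, 12.0


def log_uniform(rng: random.Random, low: float, high: float) -> float:
    """Sample with log(duration) uniform, so short fixations are more common."""
    value = math.exp(rng.uniform(math.log(low), math.log(high)))
    return min(high, max(low, value))  # guard against floating-point rounding


def make_trial_list(seed: int = 0) -> list:
    """Return shuffled (stimulus_id, fixation_s) pairs (assumed scheme)."""
    rng = random.Random(seed)
    stimuli = [s for s in range(N_STIMULI) for _ in range(REPETITIONS)]
    rng.shuffle(stimuli)
    return [(s, log_uniform(rng, FIX_MIN_S, FIX_MAX_S)) for s in stimuli]


trials = make_trial_list()
print(len(trials))  # 160
```

Log‐uniform sampling is one simple way to obtain the many‐short, few‐long pattern often used for event‐related jitter; the exact distribution used in the study is not given beyond the description above.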

Scanning was performed on a 3 Tesla MRI scanner (Siemens TRIO, Germany). For functional measurements, a gradient‐echo EPI sequence was used with TE = 30 ms, flip angle 90°, TR = 2 s, and acquisition bandwidth 100 kHz. The acquisition matrix was 64 × 64 voxels with a FOV of 192 mm, giving an in‐plane resolution of 3 × 3 mm. Twenty‐two axial slices allowing for full‐brain coverage were acquired in an interleaved fashion (slice thickness = 4 mm, interslice gap = 1 mm). Depending on participants' prediction performance and the length of the randomly assigned rest trials, the total number of functional scans collected per participant ranged between 1,080 and 1,305, with an average of 1,174 scans per participant per scanning session. Geometric distortions were characterized by a B0 field‐map scan, consisting of a gradient‐echo readout (32 echoes, interecho time 0.64 ms) with standard 2D phase encoding. The B0 field was obtained by a linear fit to the unwrapped phases of all odd echoes. For registration, T1‐weighted modified driven equilibrium Fourier transform images were obtained before the functional runs. Additionally, a set of T1‐weighted spin‐echo EPI images was acquired with the same geometrical parameters and the same bandwidth as used for the fMRI data. For anatomical data, a T1‐weighted 3D magnetization‐prepared rapid gradient echo sequence was obtained.
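The field‐map step amounts to estimating, for each voxel, the off‐resonance frequency as the slope of phase against echo time divided by 2π. A minimal single‐voxel sketch with NumPy follows; it assumes echo n occurs at n × 0.64 ms, keeps only the odd echoes as described, and uses synthetic phases rather than scanner data. The function name is illustrative.

```python
import numpy as np

N_ECHOES = 32
INTERECHO_S = 0.64e-3  # interecho time from the text (0.64 ms)


def fit_off_resonance(unwrapped_phase: np.ndarray) -> float:
    """Estimate off-resonance (Hz) from one voxel's unwrapped phases (rad).

    Uses only the odd echoes (1st, 3rd, ..., i.e. 0-based indices 0, 2, ...)
    and a least-squares line phase = phi0 + 2*pi*delta_f*TE.
    """
    echo_times = np.arange(1, N_ECHOES + 1) * INTERECHO_S  # assumed: TE_n = n * interecho
    odd = slice(0, None, 2)
    slope, _intercept = np.polyfit(echo_times[odd], unwrapped_phase[odd], deg=1)
    return slope / (2 * np.pi)


# Synthetic voxel: 25 Hz off-resonance plus a constant phase offset.
te = np.arange(1, N_ECHOES + 1) * INTERECHO_S
phase = 0.3 + 2 * np.pi * 25.0 * te
print(round(fit_off_resonance(phase), 3))  # 25.0
```

Because only the slope is used, a constant phase offset (0.3 rad here) or a different first echo time leaves the estimate unchanged.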

Neuroimaging analyses

The neuroimaging analyses were designed to achieve four objectives. First, we wanted to determine which brain regions were more strongly activated when perceiving compared to predicting complex actions that are not readily mapped on to the observer's body, and which brain regions were more strongly activated by prediction compared to perception. Next, we sought to identify regions that demonstrated an interaction in BOLD signal from the pretraining to the posttraining scan session, based on training experience or the type of agent whose movement was being simulated during occlusion. The third set of analyses focused on regions that were sensitive to the main effects and interactions of agent type and training experience within our 2 × 2 factorial design during the prediction phase of the posttraining scan session. Finally, we aimed to further explore the main effects of training during action prediction by evaluating the simple effects of training within each class of agent.

To accomplish these objectives, data from both scan sessions were separately corrected for slice timing, realigned, unwarped, normalized to individual participants' T1‐segmented anatomical scans with a resolution of 3 mm × 3 mm × 3 mm, and spatially smoothed with an 8‐mm (FWHM) Gaussian kernel using SPM8 software. A design matrix was fitted for each scanning session of each participant independently, with eight regressors of interest: events with 0 s duration were modeled for each of the four video types in the factorial design ((to be) trained gymnasts and toys and (to be) untrained gymnasts and toys) at two points in each video, namely when the video began and when the agent was first fully occluded behind the black box. To avoid false positives in direct comparisons, all main effects were evaluated with the conjunction of A > B with A [Nichols et al., 2005]. All neuroimaging analyses were evaluated in whole‐brain analyses at a voxel‐wise threshold of P < 0.001 uncorrected, k = 10 voxels. Corrections for multiple comparisons were performed at the cluster level using P < 0.05, FDR‐corrected [Friston et al., 1993]. In the Results section, we focus on brain regions that reached cluster‐corrected significance (FDR cluster‐corrected P < 0.05) for the main and simple effects and on uncorrected results for the interactions. The tables list all activations significant at the P < 0.001 uncorrected, k = 10 voxels threshold.
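The first‐level model described above (eight zero‐duration event regressors per session) can be sketched in NumPy. This is an approximation, not a reproduction of the SPM8 model: it uses a double‐gamma haemodynamic response with SPM‐like default shapes (6 and 16, undershoot ratio 1/6), samples it once per TR, and rounds onsets to the nearest scan, whereas SPM8 convolves on a finer time grid and adds a constant term per session. Condition names and onsets are hypothetical.

```python
import math
import numpy as np

TR = 2.0  # repetition time in seconds, from the acquisition parameters


def double_gamma_hrf(tr: float = TR, length_s: float = 32.0) -> np.ndarray:
    """Double-gamma HRF sampled every `tr` seconds, normalized to unit sum."""
    t = np.arange(0.0, length_s, tr)

    def gamma_pdf(x: np.ndarray, shape: float) -> np.ndarray:
        # Gamma density with unit scale: x^(k-1) e^(-x) / Gamma(k)
        return x ** (shape - 1) * np.exp(-x) / math.gamma(shape)

    hrf = gamma_pdf(t, 6.0) - gamma_pdf(t, 16.0) / 6.0
    return hrf / hrf.sum()


def build_design(onsets_by_condition: dict, n_scans: int, tr: float = TR) -> np.ndarray:
    """One column per condition: zero-duration sticks convolved with the HRF."""
    hrf = double_gamma_hrf(tr)
    columns = []
    for onsets in onsets_by_condition.values():
        sticks = np.zeros(n_scans)
        for onset in onsets:
            scan = int(round(onset / tr))  # nearest scan to the event onset
            if scan < n_scans:
                sticks[scan] += 1.0
        columns.append(np.convolve(sticks, hrf)[:n_scans])
    return np.column_stack(columns)


# Hypothetical onsets (s) for the eight regressors of interest:
# four video types x two events (video onset, full occlusion).
video_types = ["trained_gymnast", "trained_toy", "untrained_gymnast", "untrained_toy"]
names = [f"{v}_{e}" for v in video_types for e in ("onset", "occluded")]
onsets = {name: [10.0 + 20.0 * i, 120.0 + 20.0 * i] for i, name in enumerate(names)}
X = build_design(onsets, n_scans=150)
print(X.shape)  # (150, 8)
```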

To most clearly illustrate all fMRI findings, t‐images from cluster‐corrected activations only are visualized on a participant‐averaged high‐resolution anatomical scan. Parameter estimates (beta values) were extracted and plotted for visualization purposes only for those regions that reached a significance level of P < 0.05 FDR‐corrected for all main and simple effects and P < 0.001 for the interactions. Anatomical localization of all activations was assigned based on consultation of the Anatomy Toolbox in SPM [Eickhoff et al., 2005, 2006], in combination with the SumsDB online search tool (http://sumsdb.wustl.edu/sums/).
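Extracting parameter estimates for plotting reduces, per participant, to fitting the GLM by least squares and averaging the betas of interest over the voxels of a significant cluster. The sketch below assumes a design matrix `X` (scans × regressors), a data matrix `Y` (scans × voxels), and a boolean cluster mask over voxels; it ignores SPM's high‐pass filtering and temporal autocorrelation modelling, and the data are synthetic.

```python
import numpy as np


def cluster_mean_betas(X: np.ndarray, Y: np.ndarray, cluster_mask: np.ndarray) -> np.ndarray:
    """Return one mean parameter estimate per regressor within a cluster.

    X: (n_scans, n_regressors) design matrix
    Y: (n_scans, n_voxels) BOLD time series
    cluster_mask: (n_voxels,) boolean, True for voxels in the cluster
    """
    betas, *_ = np.linalg.lstsq(X, Y, rcond=None)  # (n_regressors, n_voxels)
    return betas[:, cluster_mask].mean(axis=1)


# Synthetic example: 200 scans, 10 voxels, two regressors plus a constant.
rng = np.random.default_rng(0)
X = np.column_stack([rng.standard_normal(200), rng.standard_normal(200), np.ones(200)])
true_betas = np.array([[2.0] * 5 + [0.0] * 5,  # regressor 1: active in first 5 voxels
                       [0.5] * 10,             # regressor 2: same everywhere
                       [100.0] * 10])          # constant (baseline signal)
Y = X @ true_betas + 0.01 * rng.standard_normal((200, 10))
mask = np.arange(10) < 5  # hypothetical cluster: the first five voxels
estimates = cluster_mean_betas(X, Y, mask)
```

Averaging within the cluster mask yields one value per regressor, which is the kind of quantity plotted as a bar per condition.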

Behavioural training and evaluation

Between the pre‐ and posttraining fMRI sessions, participants came to the behavioral testing laboratory for four perceptual training sessions, each lasting 45 min. Training sessions were separated by ∼48 h (range, 42–54 h). For ease of training, four distinct sets of the experimental stimuli were constructed, so that across participants, different assemblies of eight gymnast and eight toy sequences were trained while the remaining eight gymnast and eight toy sequences were untrained. The four training sets were assembled pseudorandomly, and care was taken to ensure that each set contained videos of a variety of lengths and that video lengths did not differ significantly between sets (all P‐values > 0.80). Participants were randomly assigned to one of the four training sets and practiced the prediction task on the same eight gymnastic sequences and eight toy sequences on each day of training.
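One way to assemble such duration‐balanced training sets is rejection sampling: shuffle, split each agent's 16 videos into trained and untrained halves, and keep the split only if the two halves have similar mean durations. This is a sketch under stated assumptions: the mean‐difference tolerance stands in for the significance test used in the study, and the video identifiers and durations are hypothetical.

```python
import random
from statistics import mean


def balanced_split(durations: dict, n_trained: int, rng: random.Random,
                   tolerance_s: float = 0.25, max_tries: int = 10_000) -> tuple:
    """Split video ids into (trained, untrained) halves of similar mean length.

    Rejection sampling with a mean-difference tolerance (an assumed stand-in
    for the significance test used in the study).
    """
    ids = sorted(durations)
    for _ in range(max_tries):
        rng.shuffle(ids)
        trained, untrained = ids[:n_trained], ids[n_trained:]
        gap = abs(mean(durations[i] for i in trained)
                  - mean(durations[i] for i in untrained))
        if gap < tolerance_s:
            return sorted(trained), sorted(untrained)
    raise RuntimeError("no balanced split found; try a larger tolerance_s")


def make_training_sets(gymnasts: dict, toys: dict, n_sets: int = 4, seed: int = 0) -> list:
    """Build n_sets training sets, each training 8 gymnast and 8 toy videos."""
    rng = random.Random(seed)
    sets = []
    for _ in range(n_sets):
        g_trained, g_untrained = balanced_split(gymnasts, 8, rng)
        t_trained, t_untrained = balanced_split(toys, 8, rng)
        sets.append({"trained": g_trained + t_trained,
                     "untrained": g_untrained + t_untrained})
    return sets


# Hypothetical durations (s); the real stimulus lengths are not listed here.
gymnasts = {f"gym{i:02d}": 3.2 + 0.7 * i for i in range(16)}
toys = {f"toy{i:02d}": 4.0 + 0.9 * i for i in range(16)}
training_sets = make_training_sets(gymnasts, toys)
participant_set = random.Random(42).randrange(len(training_sets))  # random assignment
```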

During each of the four training sessions, participants sat alone in a quiet laboratory testing room and practiced the action prediction task on a desktop computer running Psychophysics Toolbox 3 in Matlab 7.2. As in the fMRI version of the task, participants were instructed to attend closely to each video and to press the space bar on a computer keyboard when they thought the agent should reappear from behind the occluding box; however, during behavioral training, participants also received feedback on their performance (Fig. 3). Feedback consisted of one of four cartoon symbols expressing different valences (red X, neutral face, smiley face, and very enthusiastic smiley face). If participants did not receive the most positive face as feedback, they saw an additional cartoon next to the valenced symbol: a tortoise if their prediction was too slow or a hare if it was too fast. This response scale was carefully explained to participants before behavioral training began, and a printed sheet explaining each of the possible feedback combinations was placed next to the testing computer during the first day of training in case participants wished to consult it. On the third day of training, participants also passively watched each of the 32 videos once with no occluder present, to familiarize them with how each video ran in its natural, unaltered, and unoccluded state.

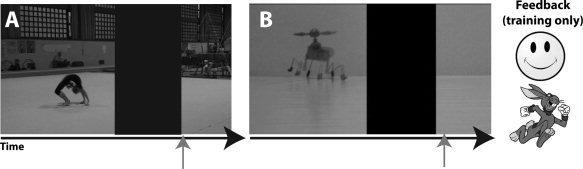

Figure 3.

Example still images from both classes of video stimuli. For both fMRI sessions and the behavioral training sessions, participants' task was to watch videos of expert gymnasts (A) and wind‐up toys (B) moving across the screen from left to right and to press a button at the point in time when they believed the agent should reappear from behind the occluder, indicated by the orange arrows. Upon pressing the button, the agent reappeared and continued moving off screen. Participants then received symbolic feedback concerning the accuracy of their performance (see main text).

To make the training task more difficult and motivating, participants' performance had to become increasingly accurate across the days of training to elicit the same degree of feedback. To receive the most positive feedback on the first day of training, participants had to predict the correct reemergence time within ±0.25 s. If their prediction was within ±0.50 s of the actual reemergence, they received positive feedback; within ±0.75 s, neutral feedback; and if off by more than ±0.75 s, negative feedback. On each successive day of training, the feedback thresholds decreased by 0.05 s per time bin, so that by the fourth and final day of training, the most positive feedback was given when predictions were within ±0.10 s of the actual occlusion time, positive feedback for ≤ ±0.35 s, neutral feedback for ≤ ±0.60 s, and negative feedback for > ±0.60 s.
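The feedback rule can be written as a small function of the training day and the signed prediction error. The bin edges follow the values given above (0.25, 0.50, and 0.75 s on day 1, each shrinking by 0.05 s per day); the function name, the symbol strings, and the treatment of errors falling exactly on a bin edge (counted as the better category) are assumptions.

```python
SYMBOLS = ("very enthusiastic smiley face", "smiley face", "neutral face", "red X")


def feedback(day: int, signed_error_s: float) -> tuple:
    """Return (symbol, speed_cue) for one training trial.

    signed_error_s > 0: button pressed too late (prediction too slow);
    signed_error_s < 0: pressed too early (prediction too fast).
    """
    if not 1 <= day <= 4:
        raise ValueError("training days are numbered 1-4")
    shrink = 0.05 * (day - 1)
    # Round to avoid floating-point drift (e.g. 0.25 - 0.15 -> 0.09999...).
    edges = [round(edge - shrink, 2) for edge in (0.25, 0.50, 0.75)]
    magnitude = abs(signed_error_s)
    if magnitude <= edges[0]:
        return SYMBOLS[0], None  # best feedback: no tortoise/hare cue
    if magnitude <= edges[1]:
        symbol = SYMBOLS[1]
    elif magnitude <= edges[2]:
        symbol = SYMBOLS[2]
    else:
        symbol = SYMBOLS[3]
    speed_cue = "tortoise" if signed_error_s > 0 else "hare"
    return symbol, speed_cue
```

For example, a press 0.2 s late earns the top symbol on day 1 but only the smiley face plus a tortoise on day 4, which is how the shrinking bins make the same feedback progressively harder to obtain.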

Prediction values for each trial, during the pre‐ and posttraining fMRI sessions and during the behavioral training sessions, were calculated as the deviation of the button press from the actual time the gymnast or toy should reappear from behind the occluding surface. A signed mean prediction score was then calculated for each participant for each agent class (positive values indicate prediction slower than reality; negative values indicate prediction faster than reality). Prediction scores for the long and short occlusion trials were collapsed into one value for each of the 4 days of behavioral training. Two sets of analyses were performed on the behavioral prediction data. First, the data were submitted to a 2 (agent type) × 6 (testing day: prescan; training days 1–4; postscan) repeated‐measures ANOVA. For this analysis, only trials from the trained (or to‐be‐trained) categories were analyzed from the fMRI sessions. To test specific effects of the training manipulation, a 2 (agent type) × 2 (training experience) × 2 (scanning session) repeated‐measures ANOVA was run on the prediction data from the fMRI sessions alone.
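The per‐participant scoring described above amounts to grouping trials and averaging signed deviations. In this sketch, the record layout and field names (`participant`, `agent`, `session`, `press_s`, `true_s`) are hypothetical, and the example trials are invented for illustration.

```python
from collections import defaultdict
from statistics import mean


def signed_mean_scores(trials):
    """Mean signed deviation (s) per (participant, agent, session).

    trials: iterable of dicts with keys 'participant', 'agent', 'session',
    'press_s' and 'true_s' (hypothetical field names).
    Positive = prediction slower than reality, negative = faster.
    Long- and short-occlusion trials are pooled, as described above.
    """
    grouped = defaultdict(list)
    for t in trials:
        key = (t["participant"], t["agent"], t["session"])
        grouped[key].append(t["press_s"] - t["true_s"])
    return {key: mean(values) for key, values in grouped.items()}


example = [
    {"participant": 1, "agent": "toy", "session": "day1", "press_s": 6.0, "true_s": 5.0},
    {"participant": 1, "agent": "toy", "session": "day1", "press_s": 4.5, "true_s": 5.0},
    {"participant": 1, "agent": "gymnast", "session": "day1", "press_s": 3.0, "true_s": 3.5},
]
scores = signed_mean_scores(example)
```

Because early and late presses cancel in a signed mean (here 1.0 s late and 0.5 s early average to +0.25 s), the same loop could also accumulate absolute deviations if a measure of trial‐by‐trial precision were needed.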

RESULTS

Behavioral Training Results

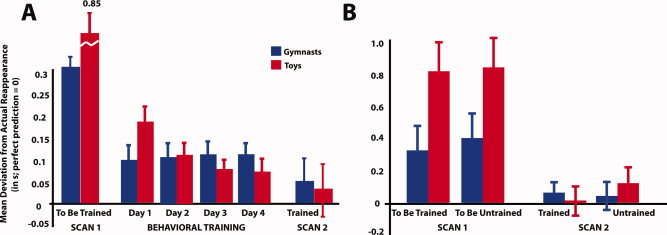

Participants' performance was measured by their ability to predict accurately the time at which the human or wind‐up toy agent should reappear from behind the occluder. The first analysis, quantifying prediction performance for the same stimuli across all 6 days of the experiment, demonstrated a main effect of agent, with participants predicting gymnasts' reappearances more accurately than toys', F(1,21) = 8.97, P = 0.007. A main effect of day also emerged, with participants' prediction performance improving across days, F(1.5,32.2) = 8.72, P = 0.002. In addition, agent interacted with day, with prediction performance improving more markedly for the wind‐up toys than for the gymnasts, F(2.7,57.2) = 16.04, P < 0.001. These results are illustrated in Figure 4A.

Figure 4.

Panel A illustrates behavioural data from the pre‐ and post‐training scanning sessions as well as the four days of visual training wherein participants practiced the prediction task on a subset of stimuli. The bars in Panel A represent participants' performance on the same set of stimuli across all days of the study (i.e., performance on the same 16 stimuli that were untrained and novel during Scan 1 is plotted throughout the course of the whole study). Panel B illustrates participants' performance on the 16 stimuli that were to be trained and the 16 that were to remain untrained during Scan 1, and performance on those same stimuli after training during Scan 2. In both panels, blue bars correspond to performance on gymnast videos, red bars to performance on wind‐up toy videos, and the y‐axis depicts the mean deviation (in seconds) from each agent's actual reappearance from behind the occluder. Error bars represent standard error of the mean. [Color figure can be viewed in the online issue, which is available at wileyonlinelibrary.com.]

The second analysis took into account the effects of training and agent type across scanning sessions. Note that training experience for the pretraining scan is an artificial construct: at this stage, all stimuli are equally novel, and no effects of to‐be‐assigned training category were manifest (P = 0.40). A repeated‐measures ANOVA including the factors scanning session (pretraining vs. posttraining), agent (gymnast vs. toy), and training (trained vs. untrained) revealed a main effect of session, with participants' prediction errors decreasing across scanning sessions, reflecting a general increase in accuracy, F(1,21) = 13.47, P = 0.001. These results are illustrated in Figure 4B. A main effect of agent similar to the one from the first analysis also emerged, whereby participants were generally more accurate at predicting gymnasts' actions than the wind‐up toys', F(1,21) = 24.23, P < 0.001. Finally, an interaction emerged between scanning session and agent, again reflecting greater improvements in prediction between the pretraining and posttraining scan sessions for the wind‐up toys than for the gymnasts, F(1,21) = 40.36, P < 0.001. Importantly, there was no interaction between scanning session and training, providing evidence for a high degree of learning that generalized from the trained to the untrained stimuli. This is further substantiated by an exploratory 2 × 2 repeated‐measures ANOVA run on the behavioral data from the posttraining session only. This analysis revealed no main effect of agent or training and no interaction between them, indicating that prediction for both classes of agent, regardless of training experience, was equivalent by the posttraining fMRI session. Therefore, any effect of agent or training experience that might emerge in the brain data from the posttraining scanning session is not readily attributable to discernible differences in prediction accuracy between classes of stimuli.

Imaging Results

Effects comparing perception and prediction

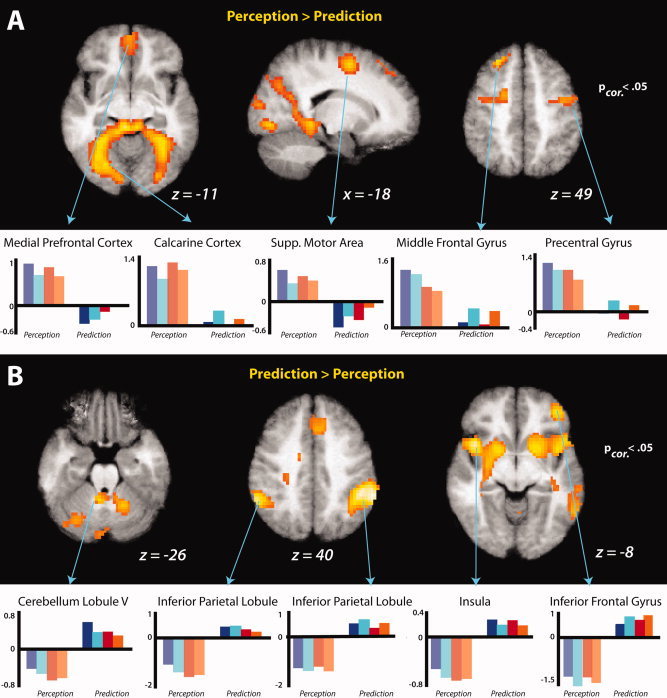

By modeling two time points of interest within each stimulus video, we established perception events (when the gymnast or toy was visibly moving across the screen) and prediction events (when participants were actively imagining the continued movement of the agent behind the occluder). When comparing action perception to action prediction, a network of visual and motor regions was more responsive during perception, including calcarine cortex centered on V2 and bilateral clusters spanning the precentral and superior frontal gyri, corresponding to the frontal eye fields and supplementary motor area (SMA). In addition to these regions, more anterior portions of the frontal lobe showed comparatively greater activation, including a midline activation spreading to bilateral medial prefrontal cortex and a portion of the left middle frontal gyrus. Figure 5A illustrates the peaks of each cluster‐corrected activation, and Table I lists all regions emerging from this contrast. To illustrate this activity more fully, cortical renderings are shown in Figure S1.
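With the eight regressors of interest described in the Methods, the perception versus prediction comparison can be expressed as a contrast vector that weights the four video‐onset regressors positively and the four occlusion regressors negatively. The regressor order below is an assumption (in SPM it follows the order in which conditions are entered), and the t statistic is the standard ordinary least‐squares formula for a single voxel, ignoring temporal autocorrelation; names are illustrative.

```python
import numpy as np

# Assumed column order: four video types x (video onset, full occlusion).
REGRESSORS = [f"{v}_{e}"
              for v in ("trained_gymnast", "trained_toy", "untrained_gymnast", "untrained_toy")
              for e in ("onset", "occluded")]


def perception_vs_prediction_contrast(sign: int = 1) -> np.ndarray:
    """+1 on onset (perception) events, -1 on occlusion (prediction) events.

    sign=-1 gives the reverse contrast, prediction > perception.
    """
    c = np.array([1.0 if name.endswith("_onset") else -1.0 for name in REGRESSORS])
    return sign * c


def contrast_t(X: np.ndarray, y: np.ndarray, c: np.ndarray) -> float:
    """Ordinary least-squares t statistic for contrast c at one voxel."""
    beta, *_ = np.linalg.lstsq(X, y, rcond=None)
    residuals = y - X @ beta
    dof = X.shape[0] - np.linalg.matrix_rank(X)
    sigma2 = residuals @ residuals / dof
    variance = sigma2 * c @ np.linalg.pinv(X.T @ X) @ c
    return float(c @ beta / np.sqrt(variance))
```

Because the weights sum to zero, the contrast compares the two event types without depending on the overall signal level, and flipping its sign simply reverses the direction of the test.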

Figure 5.

Comparison of action perception and prediction during the post‐training fMRI session. Group‐averaged statistical maps (n = 22) show regions more responsive when observing an agent move across space than when imagining the agent's actions after it has moved behind the occluder (A), and regions more responsive when actively imagining the agent's movements in order to predict the precise time of reappearance from behind the occluder (B). Note that during the prediction condition, no movement or agent is visible on the screen. T‐Maps are thresholded at t > 4.01, and the bar graphs illustrate the parameter estimates in clusters that reached P < 0.05, FDR‐corrected, for perception (left side of each plot) and prediction (right side of each plot).

Table I.

Main effects of perceiving a moving agent and predicting the time course of an occluded agent from post‐training scan session

| Anatomical region | BA | MNI x | MNI y | MNI z | Putative functional name | t‐Value | Cluster size | P (corrected) |
|---|---|---|---|---|---|---|---|---|
| (a) Perception > Prediction | | | | | | | | |
| L calcarine cortex | 18 | −9 | −91 | −5 | V2 | 10.2 | 2306 | <0.001 |
| R fusiform gyrus | 37 | 33 | −46 | −17 | | 9.7 | | <0.001 |
| L calcarine gyrus | 18 | −6 | −94 | −11 | V2 | 8.8 | | <0.001 |
| L middle frontal gyrus | 8/9 | −27 | 35 | 49 | MFG | 7.5 | 168 | 0.004 |
| L middle frontal gyrus | 8 | −24 | 29 | 55 | MFG | 7.0 | | 0.004 |
| L superior frontal gyrus | 9 | −9 | 65 | 22 | SFG | 6.0 | | 0.004 |
| L sup. frontal gyrus | 6 | −18 | −1 | 55 | SMA | 6.5 | 221 | 0.001 |
| L inferior frontal gyrus (pars opercularis) | 6 | −42 | 2 | 25 | PMv | 5.9 | | 0.001 |
| L precentral gyrus | 6 | −33 | −7 | 46 | | 5.0 | | 0.001 |
| R precentral gyrus | 4 | 39 | −7 | 46 | FEF | 5.7 | 112 | 0.019 |
| R middle frontal gyrus | 6 | 51 | −4 | 52 | MFG | 5.2 | | 0.019 |
| R precentral gyrus | 4/6 | 48 | −19 | 61 | | 5.0 | | 0.019 |
| R middle cingulate gyrus | 31 | 15 | −22 | 43 | | 5.7 | 29 | 0.331 |
| Medial orbital gyrus | 10 | 0 | 56 | −11 | mPFC | 5.5 | 216 | 0.001 |
| R rectal gyrus | 11 | 3 | 47 | −17 | | 5.2 | | 0.001 |
| L middle cingulate cortex | 24 | −9 | −19 | 37 | | 5.4 | 29 | 0.331 |
| R middle temporal gyrus | 37 | 42 | −64 | 7 | MTG | 4.9 | 40 | 0.217 |
| R middle temporal gyrus | 39 | 54 | −73 | 13 | MTG | 4.6 | | 0.217 |
| R inferior parietal lobule | 2 | 30 | −43 | 52 | IPL | 4.3 | 11 | 0.660 |
| (b) Prediction > Perception | | | | | | | | |
| R inferior parietal lobule | 40 | 57 | −37 | 49 | IPL | 9.0 | 784 | <0.001 |
| R supramarginal gyrus | 7/40 | 48 | −43 | 43 | IPL | 8.9 | | <0.001 |
| R supramarginal gyrus | 40 | 60 | −43 | 40 | IPL | 8.7 | | <0.001 |
| L insula | 52 | −42 | 11 | −8 | | 8.1 | 5077 | <0.001 |
| R sup. temporal gyrus | 22 | 24 | −34 | 10 | STS/STG | 7.2 | | <0.001 |
| L putamen | | −27 | −7 | 1 | | 7.1 | | <0.001 |
| L inferior parietal lobule | 40 | −60 | −49 | 37 | IPL | 7.8 | 284 | <0.001 |
| L inferior parietal lobule | 40 | −48 | −46 | 55 | IPL | 5.5 | | <0.001 |
| L inferior parietal lobule | 2 | −54 | −28 | 49 | S1 | 4.3 | | <0.001 |
| R middle frontal gyrus | 9 | 39 | 38 | 28 | MFG | 6.9 | 557 | <0.001 |
| R inferior frontal gyrus (pars orbitalis) | 11 | 42 | 44 | −5 | IFG | 6.9 | | <0.001 |
| R middle frontal gyrus | 9 | 36 | 44 | 16 | MFG | 6.6 | | <0.001 |
| L cerebellum lobule V | | −6 | −49 | −26 | | 6.4 | 249 | <0.001 |
| R cerebellum lobule VI | | 18 | −55 | −26 | | 5.5 | | <0.001 |
| R cerebellum lobule VI | | 12 | −58 | −17 | | 5.4 | | <0.001 |
| L middle frontal gyrus | 10 | −39 | 47 | 10 | MFG | 5.6 | 60 | 0.077 |
| L intraparietal sulcus | 7 | −21 | −55 | 31 | IPS | 4.9 | 12 | 0.475 |
| L middle occipital gyrus | 18 | −30 | −97 | −5 | | 4.7 | 36 | 0.183 |
| L cerebellum lobule VIIa | | −18 | −91 | −23 | | 4.5 | | 0.183 |
| L lingual gyrus | 18 | −24 | −97 | −17 | | 4.3 | | 0.183 |
| L cerebellum lobule VIIa | | −33 | −73 | −29 | | 4.6 | 62 | 0.077 |
| L cerebellum lobule VIIa | | −45 | −67 | −29 | | 4.5 | | 0.077 |
| R superior occipital gyrus | 17 | 21 | −91 | 7 | V2 | 4.2 | 22 | 0.322 |
| R calcarine gyrus | 17 | 12 | −88 | −2 | V2 | 4.0 | | 0.322 |
| R superior frontal gyrus | 9 | 21 | 59 | 22 | SFG | 3.9 | 20 | 0.324 |
| R superior frontal gyrus | 9 | 21 | 44 | 22 | SFG | 3.7 | | 0.324 |

MNI coordinates of peaks of relative activation within regions more responsive to perceiving a moving agent compared to predicting an occluded agent (a), and more responsive to predicting an occluded agent compared to perceiving a moving agent (b), collapsed across training experience and agent type. Results were calculated at P uncorrected < 0.001, k = 10 voxels and inclusively masked by the perception only or prediction only compared to baseline, respectively. Up to three local maxima are listed when a cluster has multiple peaks more than 8 mm apart. Clusters with a corrected P value below 0.05 are significant at the FDR cluster‐corrected level; a selection of these regions is illustrated in the figures. Abbreviations for brain regions: V2, visual area V2/prestriate cortex; MFG, middle frontal gyrus; SFG, superior frontal gyrus; SMA, supplementary motor area; PMv, ventral premotor cortex; FEF, frontal eye fields; mPFC, medial prefrontal cortex; MTG, middle temporal gyrus; IPL, inferior parietal lobule; STS, superior temporal sulcus; STG, superior temporal gyrus; S1, primary somatosensory cortex; IFG, inferior frontal gyrus; IPS, intraparietal sulcus.
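The listing rule in the table note (up to three local maxima per cluster, each more than 8 mm from the others) can be read as a greedy selection over a cluster's local maxima, strongest first. The Python sketch below is a hypothetical illustration of that rule on made‐up maxima; the function name and input format are assumptions, and it is not the listing code of the analysis package used in the study.

```python
from math import dist


def list_peaks(maxima, max_peaks=3, min_sep_mm=8.0):
    """Greedily keep the strongest local maxima that lie more than min_sep_mm
    from every peak already kept, stopping after max_peaks.

    maxima: iterable of (t_value, (x, y, z)) with coordinates in mm
    (hypothetical input format).
    """
    kept = []
    for t, xyz in sorted(maxima, key=lambda m: m[0], reverse=True):
        if len(kept) == max_peaks:
            break
        if all(dist(xyz, other) > min_sep_mm for _, other in kept):
            kept.append((t, xyz))
    return kept


# Made-up maxima for one cluster (t value, MNI mm).
example = [
    (9.0, (0, 0, 0)),
    (8.0, (3, 3, 3)),    # about 5.2 mm from the first peak, so it is skipped
    (7.0, (12, 0, 0)),
    (6.0, (0, 12, 0)),
    (5.0, (0, 0, 12)),   # would be a fourth peak, so it is not listed
]
print(list_peaks(example))
```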

The inverse contrast revealed regions that were more active during action prediction than during perception. Activations from this contrast appeared within bilateral inferior parietal lobules, the superior temporal gyrus, the cerebellum, and anterior portions of the left middle frontal gyrus that extend into mesial areas (i.e., the supplementary and pre‐supplementary motor areas). The peaks of cluster‐corrected activations from this contrast are illustrated in Figure 5B, and Table I lists all regions (cluster‐corrected and uncorrected) that emerged from this contrast. Beneath the activation maps for both contrasts, parameter estimates (beta values) for prediction and perception in each region are presented for illustration purposes only. To further explore the relationship between perception and prediction, each condition was also evaluated against the implicit baseline. Individual regions emerging from these contrasts are listed in Table SI, and the conjunction of these contrasts, broken down by training experience, is illustrated in Figure 6.

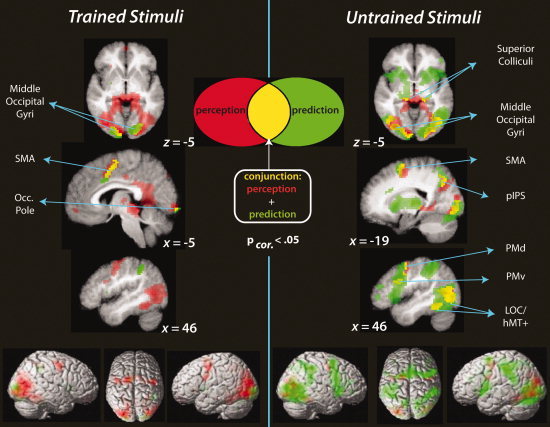

Figure 6.

Combined visualization of perception only (red), prediction only (green), and overlap between perception and prediction (yellow) during the post‐training scan session, separated by trained stimuli and untrained stimuli (left and right panels of the figure, respectively). The conjunction analysis highlights those regions where both the prediction > implicit baseline and perception > implicit baseline contrasts, for trained or untrained stimuli, passed the cluster‐corrected significance threshold of t > 4.5, P < 0.05, FDR‐corrected.
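The color coding in Figure 6 amounts to thresholding two statistical maps and labeling each voxel by which map or maps exceed the threshold; requiring both maps to pass is equivalent to requiring the minimum of the two statistics to pass. A minimal Python sketch on made‐up voxel values follows. The function name is hypothetical, and the sketch omits the cluster‐level FDR step.

```python
def overlap_labels(t_perception, t_prediction, threshold=4.5):
    """Label voxels as in Figure 6: 'both' (yellow), 'perception' (red),
    'prediction' (green), or None when neither map passes the threshold."""
    if len(t_perception) != len(t_prediction):
        raise ValueError("maps must cover the same voxels")
    labels = []
    for t_perc, t_pred in zip(t_perception, t_prediction):
        perc_on = t_perc > threshold
        pred_on = t_pred > threshold
        # perc_on and pred_on  <=>  min(t_perc, t_pred) > threshold (conjunction)
        if perc_on and pred_on:
            labels.append("both")
        elif perc_on:
            labels.append("perception")
        elif pred_on:
            labels.append("prediction")
        else:
            labels.append(None)
    return labels


print(overlap_labels([5.0, 5.0, 1.0, 1.0], [6.0, 2.0, 4.6, 4.5]))
```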

Interactions between scanning session (pre/posttraining) and main factors

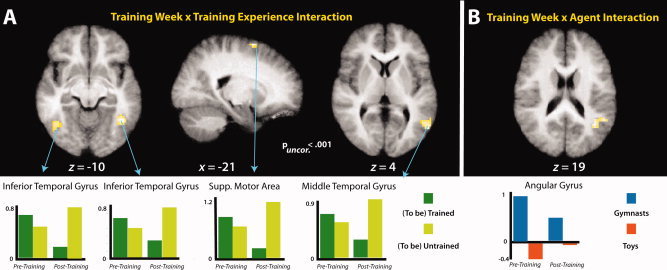

The first statistical interaction tested for brain regions showing a stronger response in the first scanning session when predicting actions that would be trained compared to untrained, and a stronger response in the second scanning session when predicting actions that were untrained compared to trained. This analysis revealed modulation of bilateral inferior temporal gyri, the left supplementary motor area, and the right middle temporal gyrus. The parameter estimates illustrated beneath the neural activations in Figure 7A suggest that the marked differences between untrained and trained sequences in the posttraining scan session drive this effect in each region. This is as anticipated, because all stimuli should have been equally unfamiliar during the pretraining scan session. The inverse interaction did not reveal any suprathreshold activations (see Table II for details of training week by training experience interactions).

Figure 7.

Panel A illustrates the interaction between training week (pretraining; posttraining) and training experience (trained; untrained). Panel B illustrates the interaction between training week (pretraining; posttraining) and agent (gymnasts; toys). T‐Maps are thresholded at t > 3.53. The bar graphs illustrate parameter estimates from all clusters that reached a significance value of p (uncorrected) < 0.001. [Color figure can be viewed in the online issue, which is available at wileyonlinelibrary.com.]

Table II.

Interaction effects from training week by factor analyses during occluded action prediction, from pre‐ and posttraining scan sessions

| Region | BA | MNI x | MNI y | MNI z | Putative functional name | t‐Value | Cluster size | P (corrected) |
|---|---|---|---|---|---|---|---|---|
| (a) W1 To‐be‐trained + W2 Untrained > W1 To‐be‐untrained + W2 Trained | | | | | | | | |
| R inferior temporal gyrus | 37 | 42 | −55 | −11 | LOC | 4.82 | 61 | 0.195 |
| L superior frontal gyrus | 6 | −21 | 5 | 67 | SMA | 4.74 | 10 | 0.776 |
| R middle temporal gyrus | 37 | 57 | −67 | 4 | V5 | 4.51 | 71 | 0.149 |
| R superior temporal gyrus | 22/39 | 57 | −52 | 7 | STS | 3.99 | | 0.149 |
| L inferior temporal gyrus | 37 | −45 | −61 | −8 | LOC | 4.49 | 56 | 0.223 |
| L inferior temporal gyrus | 37 | −54 | −7 | −2 | LOC | 3.69 | | 0.223 |
| (b) W1 To‐be‐untrained + W2 Trained > W1 To‐be‐trained + W2 Untrained | | | | | | | | |
| No suprathreshold regions emerged from this analysis | | | | | | | | |
| (c) W1 Gymnasts + W2 Toys > W1 Toys + W2 Gymnasts | | | | | | | | |
| R angular gyrus/inferior parietal cortex | 39 | 39 | −58 | 19 | | 4.54 | 91 | 0.069 |
| R middle temporal gyrus | 39 | 51 | −52 | 19 | MTG | 4.22 | | 0.069 |
| R middle occipital gyrus | 39/19 | 39 | −64 | 31 | MOG | 4.20 | | 0.069 |
| (d) W1 Toys + W2 Gymnasts > W1 Gymnasts + W2 Toys | | | | | | | | |
| No suprathreshold regions emerged from this analysis | | | | | | | | |

MNI coordinates of peaks of relative activation within regions demonstrating an interaction between training week and one of the main experimental factors of interest. Section (a) lists regions responding to the interaction of training week (pretraining = W1; posttraining = W2) and training experience (trained; untrained), with stronger activations when predicting actions that were going to be trained in week 1 and actions that were untrained in week 2, compared to predicting actions that were going to be untrained in week 1 and those that were trained in week 2. The inverse contrast (b) revealed no suprathreshold regions. Section (c) lists regions that showed a stronger response to predicting gymnasts in week 1 and toys in week 2 than to predicting toys in week 1 and gymnasts in week 2; the inverse contrast (d) revealed no suprathreshold regions. Results were calculated at P uncorrected < 0.001, k = 10 voxels. Up to three local maxima are listed when a cluster has multiple peaks more than 8 mm apart. As no entries were significant at the FDR cluster‐corrected level of P < 0.05, all regions surviving the uncorrected threshold in these interaction analyses are illustrated in the figures in the main text. Abbreviations for brain regions: LOC, lateral occipital complex; SMA, supplementary motor area; V5, visual area V5/MT; STS, superior temporal sulcus; IPC, inferior parietal cortex; MTG, middle temporal gyrus; MOG, middle occipital gyrus.

The next interaction contrasts assessed regions showing an interaction between training week and agent form. One cluster emerged, within the angular gyrus of the inferior parietal cortex. The parameter estimate plot in Figure 7B suggests that during the pretraining scan session this region responded much more strongly when predicting gymnasts than wind‐up toys, whereas during the posttraining session this differentiation between agent forms was attenuated. The inverse interaction revealed no suprathreshold activations (see Table II for details of training week by agent interactions).
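Both training‐week interactions in Table II can be written as contrast weights over the prediction regressors of a session × training × agent design: the Kronecker product of a difference vector [1, −1] for each factor in the interaction and a summing vector [1, 1] for every other factor. The Python sketch below assumes a hypothetical regressor order (session varying slowest, agent fastest) and treats the pretraining "trained" level as the to‐be‐trained stimuli; it illustrates the weighting logic, not the study's actual design matrix.

```python
from itertools import product

# Hypothetical regressor order: session varies slowest, agent fastest.
# In the pretraining session (W1), "trained"/"untrained" mean to-be-trained/to-be-untrained.
FACTORS = {
    "session": ("W1", "W2"),
    "training": ("trained", "untrained"),
    "agent": ("gymnast", "toy"),
}


def kron(*vectors):
    """Kronecker product of 1-D weight vectors (first vector varies slowest)."""
    out = [1]
    for vec in vectors:
        out = [a * b for a in out for b in vec]
    return out


def interaction_weights(*effect_factors):
    """Difference vector [1, -1] for each named factor, summing vector [1, 1] otherwise."""
    unknown = set(effect_factors) - set(FACTORS)
    if unknown:
        raise ValueError(f"unknown factors: {sorted(unknown)}")
    parts = [[1, -1] if name in effect_factors else [1, 1] for name in FACTORS]
    return kron(*parts)


def label(weights):
    """Map each (session, training, agent) condition to its weight."""
    return dict(zip(product(*FACTORS.values()), weights))


# W1 to-be-trained + W2 untrained > W1 to-be-untrained + W2 trained
week_by_training = interaction_weights("session", "training")
# W1 gymnasts + W2 toys > W1 toys + W2 gymnasts
week_by_agent = interaction_weights("session", "agent")

for name, w in (("week x training", week_by_training), ("week x agent", week_by_agent)):
    print(name, label(w))
```

Negating either vector gives the corresponding inverse interaction, sections (b) and (d) of Table II.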

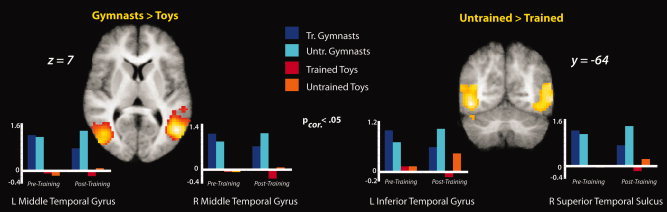

Prediction‐specific main effects

We next examined the main effects of the factorial design using only the prediction events of the posttraining fMRI session. The main effect of agent, comparing prediction of gymnasts with prediction of toys (collapsed across training experience), revealed bilateral clusters spanning the middle and inferior occipitotemporal cortex. The centers of both clusters lie very close to the part of occipitotemporal cortex previously identified as the extrastriate body area (EBA; Fig. 8, Table III). A third cluster more responsive to predicting gymnasts than toys emerged within the left middle occipital gyrus. The inverse contrast (toys > gymnasts) revealed no clusters that reached cluster‐corrected significance; uncorrected results from this contrast are listed in Table III for completeness.

Figure 8.

Imaging results from the main effects of the factorial design during prediction, evaluated during the posttraining scan session. t‐Maps are thresholded at t > 6.0, P < 0.05, FDR‐corrected. Parameter estimates were extracted from each cluster from both the pretraining and posttraining scan sessions and are plotted beneath the t‐maps for illustration purposes only. [Color figure can be viewed in the online issue, which is available at wileyonlinelibrary.com.]

Table III.

Main and simple effects of factorial design during occluded action prediction, from posttraining scan session

| Region | BA | MNI x | MNI y | MNI z | Putative functional name | t‐Value | Cluster size | P (corrected) |
|---|---|---|---|---|---|---|---|---|
| (a) Gymnasts > Toys | | | | | | | | |
| R middle temporal gyrus | 19 | 48 | −64 | 4 | EBA | 12.2 | 856 | <0.001 |
| R sup. temporal gyrus | 22 | 66 | −37 | 22 | STS | 6.6 | | <0.001 |
| R middle temporal gyrus | 19 | 45 | −73 | 22 | MTG | 6.0 | | <0.001 |
| L occipitotemporal cortex | 37/39 | −45 | −70 | 10 | EBA | 8.4 | 545 | <0.001 |
| L inf. occipital gyrus | 19 | −51 | −79 | 7 | IOG | 7.9 | | <0.001 |
| L temporoparietal junction | 40 | −54 | −40 | 25 | TPJ | 4.9 | | <0.001 |
| L mid. occipital gyrus | 18 | −21 | −94 | −11 | MOG | 6.3 | 165 | 0.009 |
| R medial parietal cortex | 19 | 21 | −58 | −8 | | 5.2 | 18 | 0.064 |
| R lingual gyrus | 18 | 21 | −85 | −8 | | 5.2 | 80 | |
| R fusiform gyrus | 37 | 45 | −43 | −20 | | 4.7 | 16 | 0.380 |
| R inferior frontal gyrus (pars triangularis) | 44 | 39 | 20 | 28 | IFG/PMv | 4.3 | 19 | 0.343 |
| R inferior frontal gyrus | 6/44 | 33 | 11 | 23 | IFG | 3.6 | | 0.343 |
| R precentral gyrus | 6 | 39 | 2 | 34 | premotor | 4.2 | 20 | 0.380 |
| L intraparietal sulcus | 7 | −9 | −55 | 61 | IPS | 4.1 | 43 | 0.301 |
| (b) Toys > Gymnasts | | | | | | | | |
| L middle frontal gyrus | 10 | −15 | 44 | 13 | MFG | 4.9 | 27 | 0.482 |
| L superior occipital gyrus | 17 | −9 | −103 | 10 | | 4.9 | 59 | 0.199 |
| L cuneus | 18 | −6 | −91 | 25 | | 4.1 | | 0.199 |
| R anterior hippocampus | 36 | 27 | −10 | −14 | | 4.8 | 14 | 0.684 |
| L rolandic operculum | 22 | −51 | −4 | 10 | STS | 4.7 | 22 | 0.553 |
| L sup. temporal gyrus | 42 | −54 | −13 | 10 | STG | 4.5 | | 0.553 |
| R inferior frontal gyrus | 44 | 57 | 5 | 13 | premotor | 4.6 | 26 | 0.495 |
| R anterior cingulate cortex | 24 | 9 | 44 | 13 | | 4.4 | 10 | 0.758 |
| L insula | 13 | −42 | 8 | 1 | | 4.3 | 20 | 0.584 |
| L middle insula | 13 | −39 | −1 | 10 | | 3.8 | | 0.584 |
| R supramarginal gyrus | 2 | 60 | −13 | 28 | S1 | 4.2 | 11 | 0.739 |
| (c) Trained > Untrained | | | | | | | | |
| No suprathreshold regions emerged from this analysis | | | | | | | | |
| (d) Untrained > Trained | | | | | | | | |
| L inferior temporal gyrus | 37 | −45 | −64 | −8 | LOC | 6.8 | 396 | <0.001 |
| L middle temporal gyrus | 39 | −42 | −67 | 13 | MT+ | 5.1 | | <0.001 |
| L fusiform gyrus | 37 | −45 | −58 | −20 | | 5.0 | | <0.001 |
| R sup. temporal gyrus | 39/22 | 54 | −52 | 7 | STS | 6.7 | 707 | <0.001 |
| R fusiform gyrus | 37 | 42 | −55 | −11 | | 6.2 | | <0.001 |
| R middle temporal gyrus | 37 | 57 | −67 | −2 | ITG | 5.9 | | <0.001 |
| L middle frontal gyrus | 6 | −39 | 8 | 55 | | 5.2 | 39 | 0.350 |
| L precentral gyrus | 4 | −42 | −1 | 55 | | 4.1 | | 0.350 |
| L superior frontal gyrus | 6 | −21 | 5 | 67 | SMA | 4.6 | 21 | 0.580 |
| L middle occipital gyrus | 19 | −36 | −88 | 22 | | 4.4 | 27 | 0.491 |
| L middle occipital gyrus | 19 | −27 | −76 | 28 | | 4.0 | | 0.491 |
| R precentral gyrus | 44 | 57 | 11 | 34 | PMv | 4.3 | 51 | 0.249 |
| L precentral gyrus | 44 | −45 | 5 | 19 | PMv | 4.1 | 49 | 0.264 |
| L precentral gyrus | 6 | −54 | 2 | 25 | | 3.9 | | 0.264 |
| L inferior parietal lobule | 40 | −36 | −34 | 37 | IPL | 4.0 | 78 | 0.119 |
| L inferior parietal lobule | 2 | −45 | −40 | 46 | S1 | 4.0 | | 0.119 |
| L postcentral gyrus | 2 | −33 | −37 | 46 | S1 | 3.9 | | 0.119 |
| (e) Untrained > Trained (gymnasts) | | | | | | | | |
| R middle temporal gyrus | 39 | 60 | −64 | −2 | EBA | 7.1 | 759 | <0.001 |
| R inferior parietal lobule | 40 | 60 | −58 | 19 | IPL | 6.7 | | <0.001 |
| R middle temporal gyrus | 39 | 45 | −55 | 13 | MTG | 6.4 | | <0.001 |
| R precuneus | 5/7 | 9 | −49 | 49 | SPL | 5.3 | 102 | 0.03 |
| R postcentral gyrus | 2 | 27 | −49 | 55 | SPL | 5.2 | | 0.03 |
| R intraparietal sulcus | 2 | 33 | −40 | 52 | IPL | 4.0 | | 0.03 |
| L middle temporal gyrus | 19/39 | −51 | −70 | 13 | EBA | 4.9 | 65 | 0.06 |
| L middle occipital gyrus | 19 | −48 | −70 | −2 | LOC | 3.9 | | 0.06 |
| L inferior parietal lobule | 7/40 | −36 | −46 | 64 | IPL | 4.9 | 23 | 0.302 |
| L middle frontal gyrus | 6 | −33 | 11 | 49 | PMd | 4.9 | 18 | 0.374 |
| L inferior frontal gyrus | 47 | −48 | 20 | −11 | vlPFC | 4.7 | 33 | 0.273 |
| L inferior frontal gyrus | 47 | −42 | 26 | −8 | IFG | 4.2 | | 0.273 |
| L inferior frontal gyrus | 45 | −54 | 20 | 1 | IFG | 3.7 | | 0.273 |
| R superior temporal sulcus | 22 | 39 | −22 | −5 | pSTS | 4.7 | 22 | 0.302 |
| L inferior frontal gyrus | 6 | −54 | −1 | 37 | PMv | 4.7 | 44 | 0.204 |
| R inferior parietal lobule | 40/42 | 54 | −34 | 25 | IPL | 4.5 | 25 | 0.302 |
| R inferior frontal gyrus | 6/44 | 54 | 11 | 37 | PMv | 4.5 | 58 | 0.122 |
| R inferior frontal gyrus | 44 | 57 | 23 | 7 | IFG | 4.2 | | 0.122 |
| R inferior frontal gyrus | 45 | 51 | 20 | 16 | IFG | 3.7 | | 0.122 |
| R anterior insular cortex | 13 | 39 | −4 | −14 | | 4.4 | 24 | 0.302 |
| R sup. temporal gyrus | 22 | 66 | −31 | 7 | pSTS | 4.3 | 11 | 0.541 |
| L fusiform gyrus | 37 | −42 | −40 | −17 | | 4.3 | 39 | 0.226 |
| L fusiform gyrus | 37 | −42 | −55 | −20 | | 4.2 | | 0.226 |
| L precentral sulcus | 6 | −21 | 2 | 64 | SMA | 4.3 | 25 | 0.302 |
| L intraparietal sulcus | 5/7 | −33 | −37 | 49 | IPS | 4.3 | 31 | 0.273 |
| R middle occipital gyrus | 39/19 | 36 | −67 | 31 | | 4.1 | 14 | 0.476 |
| R superior occipital gyrus | 19 | 36 | −76 | 31 | | 3.8 | | |
| L precentral gyrus | 6 | −42 | −4 | 52 | PMd | 4.1 | 11 | 0.541 |
| (f) Untrained > Trained (toys) | | | | | | | | |
| L inferior temporal gyrus | 37 | −42 | −61 | −8 | LOC | 5.5 | 135 | 0.015 |
| R inferior occipital gyrus | 37 | 42 | −46 | −23 | LOC | 4.7 | 44 | 0.264 |
| R fusiform gyrus | 37 | 33 | −40 | −23 | | 4.3 | | 0.264 |
| R inferior temporal gyrus | 37 | 48 | −58 | −14 | LOC | 4.0 | | 0.264 |
| L inferior frontal gyrus | 6 | −48 | 5 | 19 | PMv | 4.0 | 17 | 0.665 |

MNI coordinates of peaks of relative activation within regions responding to the main effects of agent, collapsed across training experience [predicting gymnasts compared to toys (a) and toys compared to gymnasts (b)], the main effects of training, collapsed across agent [predicting trained compared to untrained gymnasts and toys (c), predicting untrained compared to trained gymnasts and toys (d)], and the simple effects of predicting untrained relative to trained gymnasts (e) and toys (f). Results were calculated at P uncorrected < 0.001, k = 10 voxels. Up to three local maxima are listed when a cluster has multiple peaks more than 8 mm apart. Clusters with a corrected P value below 0.05 are significant at the FDR cluster‐corrected level. Only regions that reached cluster‐corrected significance are illustrated in the figures. Abbreviations for brain regions: EBA, extrastriate body area; STS, superior temporal sulcus; MTG, middle temporal gyrus; IOG, inferior occipital gyrus; TPJ, temporoparietal junction; IPS, intraparietal sulcus; IFG, inferior frontal gyrus; PMv, ventral premotor cortex; MFG, middle frontal gyrus; STG, superior temporal gyrus; M1, primary motor cortex; LOC, lateral occipital cortex; MT+, visual area MT; ITG, inferior temporal gyrus; SMA, supplementary motor area; IPL, inferior parietal lobule; PMd, dorsal premotor cortex; vlPFC, ventrolateral prefrontal cortex; pSTS, posterior superior temporal sulcus; S1, primary somatosensory cortex.
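FDR control is commonly implemented with the Benjamini-Hochberg step‐up procedure; in cluster‐level (topological) FDR it is applied to the p values of clusters rather than of individual voxels. The Python sketch below computes Benjamini-Hochberg adjusted p values for hypothetical uncorrected cluster p values (the uncorrected values are not reported in these tables). It shows only the step‐up adjustment and is not the implementation in the analysis package used for this study.

```python
def bh_adjust(pvals):
    """Benjamini-Hochberg adjusted p values, returned in the input order."""
    m = len(pvals)
    order = sorted(range(m), key=lambda i: pvals[i])  # indices, smallest p first
    adjusted = [0.0] * m
    running_min = 1.0
    # Walk from the largest p value down so adjusted values stay monotone.
    for rank in range(m, 0, -1):
        i = order[rank - 1]
        running_min = min(running_min, pvals[i] * m / rank)
        adjusted[i] = running_min
    return adjusted


def significant(pvals, q=0.05):
    """Flag the p values that survive FDR control at level q."""
    return [p_adj <= q for p_adj in bh_adjust(pvals)]


# Hypothetical uncorrected cluster-level p values.
clusters = [0.01, 0.04, 0.03, 0.2]
print(bh_adjust(clusters))
print(significant(clusters))
```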

We then evaluated the main effects of training on prediction, collapsed across both classes of agent. The analysis comparing brain regions more active when predicting trained compared to untrained stimuli did not reveal any suprathreshold clusters of activation. This signifies that no brain regions were more active when imagining those stimuli participants had spent 4 days learning to predict. Given that previous experiments have reported modulation of parietal and premotor components of the AON based on visual experience [Cross et al., 2009], we performed an exploratory ROI analysis to determine whether these regions might show modulation based on visual training when a more targeted approach was used. Two 10 mm spheres were generated based on coordinates reported previously [Cross et al., 2009] in the right premotor cortex (x = 36, y = 6, z = 51) and the left inferior parietal lobule (x = −36, y = −51, z = 36). Even when focusing on regions previously shown to be modulated by visual experience, this exploratory ROI analysis did not reveal any modulation of parietal and premotor components of the AON.

However, the inverse contrast (untrained > trained) revealed two large suprathreshold clusters centered on the left inferior temporal gyrus and the right superior temporal gyrus. These regions were more active when predicting untrained than trained stimuli, consistent with the recruitment of additional neural resources to perform the same task for less familiar stimuli. These findings are depicted in the right panel of Figure 8 and in Table III.
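The exploratory ROI analysis described above averaged parameter estimates within two 10 mm spheres centered on previously reported coordinates. The Python sketch below enumerates the voxel centers of such a sphere and averages a map within it. It assumes (i) that 10 mm denotes the radius, which the text does not specify, (ii) a hypothetical 3 mm isotropic grid with a voxel centered on the peak coordinate, and (iii) a hypothetical `beta_map` dictionary from MNI coordinates to parameter estimates.

```python
from math import ceil

# Centers given in the text (MNI, mm), from Cross et al. (2009).
ROI_CENTERS = {
    "R premotor": (36, 6, 51),
    "L inferior parietal": (-36, -51, 36),
}


def sphere_voxels(center, radius_mm=10.0, voxel_mm=3.0):
    """Voxel centers (mm) on a grid through `center` lying within radius_mm of it."""
    cx, cy, cz = center
    steps = ceil(radius_mm / voxel_mm)
    voxels = []
    for i in range(-steps, steps + 1):
        for j in range(-steps, steps + 1):
            for k in range(-steps, steps + 1):
                dx, dy, dz = i * voxel_mm, j * voxel_mm, k * voxel_mm
                if dx * dx + dy * dy + dz * dz <= radius_mm ** 2:
                    voxels.append((cx + dx, cy + dy, cz + dz))
    return voxels


def roi_mean(beta_map, voxels):
    """Average the parameter estimates of the ROI voxels present in beta_map."""
    values = [beta_map[v] for v in voxels if v in beta_map]
    if not values:
        raise ValueError("no ROI voxels found in the map")
    return sum(values) / len(values)


for name, center in ROI_CENTERS.items():
    print(name, len(sphere_voxels(center)), "voxels")
```

Each ROI would then be summarized by one mean value per participant and condition, which could be compared between trained and untrained stimuli with a paired test.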

Prediction‐specific interactions

Because the prediction‐specific interactions between training experience and agent did not reveal any clusters at the P < 0.001, k = 10 voxel threshold, we explored whether any clusters showed an interaction between these factors at a more lenient threshold of P < 0.01, uncorrected, k = 10 voxels. The first direction of the interaction, (trained gymnasts + untrained toys) > (untrained gymnasts + trained toys), revealed activation within the middle frontal, middle temporal, and posterior cingulate cortices. The inverse direction, (untrained gymnasts + trained toys) > (trained gymnasts + untrained toys), revealed activation within a broader network of regions, including the inferior parietal lobule, middle temporal gyrus, and calcarine sulcus. A complete listing of activations from this exploratory analysis is presented in Table SII.

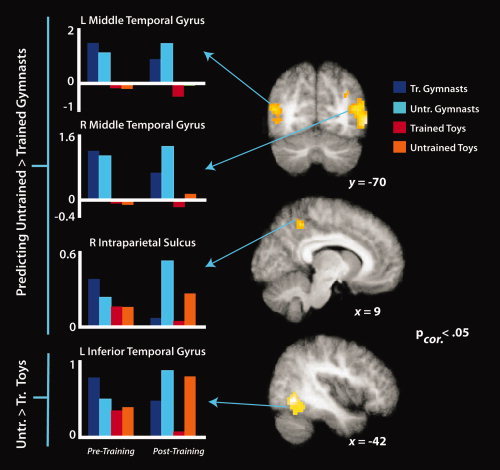

Prediction‐specific simple effects

With the final set of analyses, we examined the main effect of training in greater detail by breaking it down into its constituent simple effects. Our analysis of prediction of untrained compared to trained gymnastic sequences revealed bilateral activation within the middle temporal gyri. In addition, a cluster centered on the right precuneus/superior parietal lobule emerged from this contrast. The complementary contrast for regions more responsive when predicting untrained compared to trained wind‐up toy sequences revealed significant activation within the left inferior temporal gyrus. These findings are illustrated in Figure 9 and Table III.

Figure 9.

Simple effects imaging results for predicting untrained compared to trained gymnastic sequences (top two brains and three plots) and untrained compared to trained wind‐up toy sequences (lower brain and plot), evaluated during the posttraining scan session. t‐Maps are thresholded at t > 4.9, P < 0.06, FDR‐corrected (the left occipitotemporal activation reached marginal cluster‐corrected significance at P = 0.06; all other regions are FDR‐corrected P < 0.05). Parameter estimates were extracted from each cluster from both the pretraining and posttraining scan sessions and are plotted to the left of the t‐maps for illustration purposes only.
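The relation between the main effect of training and its two simple effects can be written out explicitly. Using hypothetical notation, let β(a, t) be the posttraining prediction parameter estimate for agent a (G = gymnast, T = toy) and training condition t (tr = trained, un = untrained). The untrained > trained main effect is then the sum of the two simple effects:

```latex
\begin{aligned}
c_{\text{main}} &= (\beta_{G,\text{un}} + \beta_{T,\text{un}}) - (\beta_{G,\text{tr}} + \beta_{T,\text{tr}}) \\
                &= \underbrace{(\beta_{G,\text{un}} - \beta_{G,\text{tr}})}_{c_{G}}
                 + \underbrace{(\beta_{T,\text{un}} - \beta_{T,\text{tr}})}_{c_{T}}
\end{aligned}
```

A reliable main effect therefore need not imply that both simple effects are individually reliable, which is why the gymnast and toy contrasts are examined separately.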

DISCUSSION

In the present study, we investigated the flexibility of sensorimotor brain regions when observing and predicting action sequences observers have never before physically performed, and the extent to which visually based practice modulates neural responses. Overall, our findings demonstrate greater activation within the AON when predicting compared to perceiving complex action sequences, and recruitment of additional neural resources within body‐ and object‐processing visual regions when predicting unfamiliar compared to familiar human and object sequences, respectively. The consequences and implications of each of these findings are considered in turn.

Perceiving Versus Predicting Complex Actions

When participants viewed gymnasts or wind‐up toys moving across the screen compared to imagining their movements behind an occluder, relatively greater activity emerged within visuomotor regions of the brain, including the frontal eye fields and a part of the calcarine cortex corresponding to V2. This pattern of findings broadly reflects regions involved in visuospatial attention [Corbetta et al., 2008], with activation of the frontal eye fields in particular likely reflecting the current task demands to spatially track the agents' movements [Kelley et al., 2008; Srimal and Curtis, 2008].

When an agent disappeared and participants imagined how the action continued behind the occluding surface, more activation was seen within a brain network comprising bilateral inferior parietal lobule, superior temporal gyrus, inferior frontal gyrus extending up to the supplementary motor area, and the cerebellum. Our finding that predicting complex actions engages parietal, frontal, and temporal nodes of the AON is largely consistent with results from earlier studies that examined prediction of less complex action sequences [e.g., Kilner et al., 2004; Schubotz, 2007; Stadler et al., 2011]. Thus, it appears this network contributes to prediction processes for events ranging from actions that are familiar and readily mapped onto the body [Stadler et al., 2011] to complex sequences performed by human and nonhuman agents, as in the present study, as well as for highly abstract temporal or spatial sequences of events [Schubotz, 2007; Schubotz and von Cramon, 2002, 2004].

The other brain region found to contribute to general prediction processes in the present task is the cerebellum. A likely interpretation of cerebellar activity in the present task is that it organizes the temporal component of perceptual prediction [Diedrichsen et al., 2005; Dreher and Grafman, 2002; O'Reilly et al., 2008]. In a recent study, O'Reilly and colleagues investigated the brain systems involved in a spatial compared to a temporal‐spatial prediction task. They report bilateral cerebellar activation as well as activation within frontal and parietal regions, when the task requires both temporal and spatial prediction [O'Reilly et al., 2008]. They interpret these findings as consistent with the notion that the cerebellum works with sensorimotor cortices to aid in efficient prediction by supplying these regions with temporal information about dynamic events. The present findings implicating AON and cerebellar activation during a task that likely engaged both temporal and spatial predictive processes provide additional support for this idea.

Predicting Complex Movements Before and After Visual Training

One of the benefits conferred by a training study with pre‐ and posttraining neuroimaging measures is the ability to examine how patterns of neural activity change with participants' experience of the stimuli. Two such interactions were examined. The first, between training week and training experience, revealed that bilateral temporal regions as well as the left SMA responded more strongly when predicting untrained compared to trained sequences during the posttraining compared to the pretraining scan session. This pattern within the temporal cortex is consistent with the notion that greater activity within the visual node of the AON is required to support accurate prediction of visually less familiar action sequences [cf. Cross et al., in press]. This idea is discussed in more detail below, in the section on the impact of visual experience on posttraining performance. In contrast, the relatively greater SMA activation observed when predicting untrained sequences in the posttraining session compared to the pretraining session is consistent with the idea that this region is involved in dynamically transforming action‐related internal references during action prediction [Stadler et al., 2011]. The SMA, which is part of the premotor node of the AON, works closely with more lateral elements of the premotor cortex and is believed to be critical in initiating an action simulation [Grush, 2004; Voss et al., 2006]. The fact that this region is more active when simulating untrained actions likely reflects greater demands to internally continue the occluded action's time course, for which observers have less information than for the trained actions. Similar to the result reported by Stadler and colleagues [2011], this area seems to be especially important when perceptual action cues are no longer available.

The other training week contrast evaluated the interaction between training week and agent form (gymnast vs. toy). The activity pattern within the right angular gyrus demonstrated a decreased sensitivity to agent form in the posttraining scan session compared to the pretraining session. The angular gyrus portion of the parietal lobe is associated with the visual processing of others' bodies [Felician et al., 2009], which likely draws upon an observer's own experience of his or her body [Blanke et al., 2002; Spitoni et al., 2010]. A possible interpretation of the present findings is that after training, participants could make more use of their own body as a template for predicting both human and nonhuman agents' actions. However, such an interpretation is speculative at this stage and will require careful further testing in order to be confirmed.

Predicting Human Versus Nonhuman Agents

One feature of action prediction we were interested in probing was the impact of the agents' form and motion on predictive processes. We intentionally chose actions performed by gymnasts and wind‐up toys in order to sidestep confounds of between‐subjects differences in physical or visual familiarity with the action sequences before the experiment. When directly comparing prediction of gymnastic to wind‐up toy sequences, stronger activation emerged within higher‐level visual regions, including bilateral middle temporal gyri, centered on coordinates less than 10 mm from the region identified as the EBA [Cross et al., 2010; Downing et al., 2001, 2006; Peelen and Downing, 2007]. Past neuroimaging and neurostimulation work on this specific region of the occipitotemporal cortex demonstrates stronger responses when participants view contorted postures [Cross et al., 2010] or biomechanically impossible postures [Avikainen et al., 2003] and actions [Costantini et al., 2005]. The present findings complement this literature by demonstrating reliable occipitotemporal activation when watching dynamic actions that are biomechanically possible, but beyond the current physical capacity of the observer.

Impact of Visual Experience on Posttraining Performance

The other feature of complex prediction we aimed to investigate was the role that purely visual experience plays when predicting sequences that are impossible for the observer to perform. Before discussing the imaging results, it is worth noting that our behavioral findings demonstrated no training‐specific effects during the posttraining fMRI session (right‐most bars of Fig. 4B). Although we expected participants to perform the prediction task more accurately for trained than for untrained action sequences during the posttraining scan session, we instead found that the training phase generalized to a large degree to the untrained stimuli. These findings may appear surprising at first, but evidence from the perceptual learning literature supports the notion that such generalization from trained to untrained stimuli in perceptual decision tasks is not uncommon [Fahle and Poggio, 2001] and can be even more pronounced when feedback is given during training [Herzog and Fahle, 1997]. We therefore believe the lack of a main effect of training is due in part to the use of feedback in our training procedure. As mentioned previously, the absence of an effect of visual experience on behavioral prediction performance potentially makes the neuroimaging findings all the more illuminating, because they demonstrate that markedly different patterns of activity are engaged to support equivalent performance when predicting untrained stimuli.

Turning our focus to the imaging findings, a striking feature of the main effect of training is that no brain regions were more active when predicting the familiar, visually trained sequences. Although this finding is consistent with emerging evidence of changes in “neural efficiency” among karate experts [Babiloni et al., 2010] and guitarists [Vogt et al., 2007], it stands in stark contrast to studies in which physical training manipulations were used with expert dance [Calvo‐Merino et al., 2005; Cross et al., 2006] or gymnastic [Munzert et al., 2008; Zentgraf et al., 2005] populations. The dance and gymnastics studies commonly show stronger AON activation when participants observe or imagine sequences that they have physically practiced or with which they have some general physical familiarity. Moreover, several studies investigating the impact of visual learning also demonstrate increased AON activation when observing visually trained sequences [Burke et al., 2010; Cross et al., 2009; Frey and Gerry, 2006]. In each of these prior studies, however, visual experience was always confounded to some degree with physical ability (i.e., even if participants engaged in no physical practice of the observed movements, those movements were still generally within their range of motor and flexibility capabilities). It therefore seems plausible that the discrepancy concerning AON activation and visual experience is related to participants having some degree of relevant physical experience in prior studies and no relevant physical experience in the current investigation.

The inverse contrast, which compared prediction of untrained sequences to those participants had visually trained to predict, revealed activation of bilateral clusters centered on the occipitotemporal node of the AON (left inferior temporal gyrus and right superior temporal gyrus). This finding is consistent with the notion that unfamiliar actions that do not belong to an observer's motor repertoire are processed mainly with respect to their visual properties. Moreover, this result is congruent with the findings reported by Buccino and colleagues [2004], who used fMRI to investigate observation of different mouth actions performed by conspecifics (humans), monkeys, and dogs. They found that actions with which participants have no prior motor experience, such as a dog barking, elicit activation solely within visual regions of the AON, and concluded that a lack of motor experience leads to understanding on a visual basis only. Returning to the present findings, even though participants had no motor experience with any of the actions they were predicting (whether trained or untrained), it seems that the untrained actions required even more visual processing to predict accurately.

Examination of the simple effects of training brings to light the engagement of agent‐specific processing during prediction. When participants were predicting visually untrained compared to trained gymnastic sequences, the regions recruited to support these predictions include bilateral middle temporal gyri, with activations centered on a region likely to be EBA [Downing et al., 2001; Peelen and Downing, 2007], and a cluster situated on the precuneus in the superior parietal lobule. In contrast, the left inferior temporal gyrus, in a cluster likely to be part of the lateral occipital complex (LOC), was recruited when predicting untrained compared to trained wind‐up toy actions.

Our finding that visual regions that selectively process the human body are more responsive when predicting unfamiliar compared to familiar gymnastic sequences is in line with prior work on this area and the representation of complex or impossible postures or actions [Avikainen et al., 2003; Costantini et al., 2005; Cross et al., 2010]. In contrast to the middle temporal activations, the parietal activation from this contrast might reflect increased imagery demands for unfamiliar gymnastic sequences. In particular, this region of the parietal cortex is strongly implicated in imagery tasks (see Footnote 2) [Grèzes and Decety, 2001; Guillot et al., 2009], especially those that involve imagined transformations of the human body [Jackson et al., 2006; Zacks et al., 2002].

The activation within the left inferior temporal gyrus/LOC that emerged while predicting unfamiliar compared to familiar mechanical toy actions is consistent with this visual region's role in object processing [Grill‐Spector et al., 1998, 2001; Malach et al., 1995; Vuilleumier et al., 2002]. Moreover, work comparing the visual analysis of bodies and objects points to a clear division of processing within the extrastriate cortex, with LOC responding more to objects than to bodies and EBA showing the inverse pattern [Spiridon et al., 2006]. Thus, our finding that object‐processing visual regions support prediction of unfamiliar object motion, whereas body‐processing visual regions support prediction of unfamiliar human actions, is consistent with a category‐specific account of how the visual node of the AON contributes to action prediction.

Theoretical Implications

Taken together, the findings from this study further inform both the prediction model of premotor cortex [Schubotz, 2007; Schubotz and von Cramon, 2002, 2004] and the predictive coding model of AON function [Gazzola and Keysers, 2009; Kilner et al., 2007a, b; Neal and Kilner, 2010]. Revisiting the prediction model of premotor cortex, the main premise of this model is that the predictive functions of the premotor cortex are generally exploited for anticipating dynamic events [Schubotz, 2007]. Considerable evidence demonstrates that lateral and medial premotor structures support prediction of both nonbiological events [Schubotz and von Cramon, 2002, 2004; Wolfensteller et al., 2007] and more familiar actions performed by humans [Stadler et al., 2011]. In the present study, we show that premotor structures also support prediction of human and nonhuman agents performing actions participants cannot physically reproduce, but only when the actions are visually unfamiliar/untrained (see Fig. 6 and Table III). A challenge for future studies will be to determine on a finer scale how visual (and motor) familiarity interacts with responses within the different medial and lateral subcomponents of the premotor cortex during action prediction.

Turning our attention to the predictive coding account of AON function, evidence from the main and simple effects of training provides support for this model, which maintains that when an unfamiliar action is observed or imagined, the brain has less prior visual or motor information to inform a prediction of how that action might unfold across time and space, and consequently such predictions are less accurate and more error prone [Gazzola and Keysers, 2009; Kilner et al., 2007a, b; Neal and Kilner, 2010]. Thus, the AON must perform more active computations to generate a prediction of how an unfamiliar action might unfold [Cross et al., in press; Kilner et al., 2007a; Schippers and Keysers, 2011]. The present findings are consistent with this notion: when participants had to predict visually unfamiliar actions, visual (and, to a lesser extent, premotor) regions of the AON responded more robustly than when the actions were familiar. We think that this is likely due to an increased demand on these regions to construct a prediction of an unfamiliar event, as well as an increased error signal driven by a lack of prior visual experience.

In summary, daily life demands that we accurately anticipate the actions of people and objects, not all of which move in a familiar, predictable manner. To investigate how we smoothly interact with such agents, we used a declarative temporal prediction task and showed that when one does not have physical experience with the observed actions, the brain relies upon parietal and visual areas of the AON, as well as the cerebellum, to form accurate predictions of ongoing, unfamiliar actions. Moreover, the brain becomes more efficient in predicting complex actions after visual practice, and less visual analysis is required to support efficient prediction. Therefore, when confronted with a novel situation in daily life that requires accurate prediction (perhaps following traffic signals in a foreign land or plucking your lunch from a sushi conveyor belt?), taking some time to observe before acting could result in more accurate execution of the intended action.

Supporting information

Additional Supporting Information may be found in the online version of this article.

Supporting Information Figure 1.

Supporting Information Table 1.

Supporting Information Table 2.

Acknowledgements

The authors gratefully acknowledge critical feedback on an earlier version of this manuscript by Richard X. Ramsey and two anonymous reviewers, assistance with manuscript preparation by Lauren R. Alpert, fMRI modeling assistance by Jöran Lepsien, the gymnasts at the Turn‐ und Gymnastikclub e. V. Leipzig, and an outstanding team of interns who assisted with various stages of this project, including Carmen Hause, Andreas Wutz, Raphael Schultze‐Kraft, and Jana Strakova.

Footnotes

1. It should be noted that neither class of action featured goal‐directed hand actions, like those typically used in many experiments that have examined the role of the AON in action perception and prediction. Here, we were specifically interested in studying actions for which participants had no prior motor experience, and full‐body actions, where the agent's goal is simply to move from one side of the space to the other, offer a broader range of options for this purpose. Moreover, a number of empirical studies [e.g., Cross et al., 2006, 2009, in press; Lui et al., 2008; Munzert et al., 2008; Zentgraf et al., 2005] and a recent meta‐analysis [Caspers et al., 2010] support the notion that the AON is also strongly engaged by observing nonobject‐directed actions.

2. To be clear on the distinction between prediction and imagery, we contend that imagery, defined as overt or covert access of sensorimotor representations [Grèzes and Decety, 2001], plays a role in simulation processes engaged during action prediction (as in the present study). However, we would argue that imagery does not always serve action prediction processes, and we therefore aim to distinguish between these two terms in our interpretation of prior work on prediction and imagery.

REFERENCES

- Avikainen S, Liuhanen S, Schurmann M, Hari R (2003): Enhanced extrastriate activation during observation of distorted finger postures. J Cogn Neurosci 15(5): 658–663.

- Babiloni C, Marzano N, Infarinato F, Iacoboni M, Rizza G, Aschieri P, Cibelli G, Soricelli A, Eusebi F, Del Percio C (2010): “Neural efficiency” of experts' brain during judgment of actions: A high‐resolution EEG study in elite and amateur karate athletes. Behav Brain Res 207: 466–475.

- Blanke O, Ortigue S, Landis T, Seeck M (2002): Stimulating illusory own‐body perceptions. Nature 419: 269–270.

- Buccino G, Lui F, Canessa N, Patteri I, Lagravinese G, Benuzzi F, Porro CA, Rizzolatti G (2004): Neural circuits involved in the recognition of actions performed by nonconspecifics: An fMRI study. J Cogn Neurosci 16: 114–126.

- Burke CJ, Tobler PN, Baddeley M, Schultz W (2010): Neural mechanisms of observational learning. Proc Natl Acad Sci USA 107: 14431–14436.

- Calvo‐Merino B, Glaser DE, Grezes J, Passingham RE, Haggard P (2005): Action observation and acquired motor skills: An fMRI study with expert dancers. Cereb Cortex 15: 1243–1249.

- Caspers S, Zilles K, Laird AR, Eickhoff SB (2010): ALE meta‐analysis of action observation and imitation in the human brain. Neuroimage 50: 1148–1167.

- Chamley C (2003): Rational Herds: Economic Models of Social Learning. New York: Cambridge University Press.

- Corbetta M, Patel G, Shulman GL (2008): The reorienting system of the human brain: From environment to theory of mind. Neuron 58: 306–324.

- Costantini M, Galati G, Ferretti A, Caulo M, Tartaro A, Romani GL, Aglioti SM (2005): Neural systems underlying observation of humanly impossible movements: An FMRI study. Cereb Cortex 15: 1761–1767.

- Cross ES, Hamilton AF, Grafton ST (2006): Building a motor simulation de novo: Observation of dance by dancers. Neuroimage 31: 1257–1267.