Abstract

Whether attention already exerts its impact at primary sensory levels is still a matter of debate. Particularly in the auditory domain, empirical evidence is scarce. Recently, noninvasive and invasive studies have shown attentional modulations of the auditory Steady‐State Response (aSSR). This evoked oscillatory brain response is of importance to the issue because its main generators have been shown to be located in primary auditory cortex. So far, whether the aSSR is sensitive to the predictive value of a cue preceding a target has not been investigated. Participants in the present study had to indicate to which ear the faster amplitude‐modulated (AM) sound of a compound sound (42 and 19 Hz AM frequencies) was presented. A preceding auditory cue was either informative (75%) or uninformative (50%) with regard to the location of the target. Behaviorally, we could confirm that typical attentional modulations of performance were present when the preceding cue was informative. With regard to the aSSR, we found differences between the informative and uninformative conditions only when the cue/target combination was presented to the right ear. Source analysis indicated that this difference was generated by a reduced 42 Hz aSSR in right primary auditory cortex. Our data and previous data by others show a default tendency of “40 Hz” AM sounds to be processed by the right auditory cortex. We interpret our results as active suppression of this automatic response pattern when attention needs to be allocated to right ear input. Hum Brain Mapp, 2011. © 2011 Wiley‐Liss, Inc.

Keywords: selective attention, auditory cortex, MEG, beamforming

INTRODUCTION

A large body of data shows that attentional resources can be selectively directed to distinct features of a stimulus such as its spatial location. This results in a processing advantage for attended features, expressed on a behavioral level, for example, in reduced reaction times as well as increased accuracy [Fan and Posner, 2004]. Conceptually, the effect of attention can be partly understood as a gain modulation of the sensory brain regions processing the relevant input: neuronal responses are increased for attended features, decreased for ignored features, or both. On a perceptual level, selective attention is known to modulate the representation of basic sensory features of stimuli: In an elegant approach, Carrasco et al. [2004] were able to demonstrate a “boosting” effect of attention on visual stimulus contrast, thus implying a direct influence of attention on stimulus appearance. This suggests that attention could have a direct modulatory effect on primary visual cortical regions. Indeed, attentional modulations of early visual processing areas have been documented, ranging from single‐unit activity [see Reynolds and Desimone, 1999] to a macroscopic systems level as investigated using magneto‐/electro‐encephalography [Keil et al., 2005] and fMRI [Silver et al., 2007].

Despite clear indications from the visual modality that attention influences the earliest stages in the cortical hierarchy, whether such a gain modulation can also be observed in stimulus‐evoked responses from primary auditory cortex is still an issue of ongoing debate. Evidence from neuroimaging studies for attentional modulation of primary auditory cortical regions is mixed, with some studies reporting increases [Jancke et al., 1999], whereas others demonstrate increases only for secondary and association regions. For example, in a recent fMRI study using cortical surface mapping techniques that provide high spatial resolution of the involved fields, Petkov et al. [2004] showed that whereas mesial (primary) auditory cortical regions are equally active whether or not an auditory stimulus is attended, lateral (secondary) auditory cortex becomes activated only when the stimulus is attended. Furthermore, whereas unattended stimuli elicited greater activity in the right hemisphere, enhanced responses were observed in the left auditory cortex for attended stimuli. A different line of evidence comes from invasive electrophysiological measurements. Animal studies, for example, suggest a functional neuronal architecture that allows for rapid adaptation of receptive fields in A1 according to the saliency of the stimulus. Even though a large part of the results stems from the classical conditioning domain [Edeline, 1999], rapid receptive field changes in A1 are also observed during frequency discrimination [Fritz et al., 2003] and detection tasks with complex tones [Fritz et al., 2007b]. By this means, according to Fritz et al. [2007a], attention enhances figure‐ground separation in a filter‐like manner by amplifying responsiveness to salient stimuli and/or reducing responses to irrelevant stimuli already at the primary auditory cortical level.

Intracranial recordings in humans are possible only under very rare circumstances. Recently, Bidet‐Caulet et al. [2007] studied the effect of selectively attending to one of two possible auditory streams in epileptic patients implanted with depth electrodes. The streams were characterized by different carrier and amplitude modulation (AM) frequencies (21 and 29 Hz), the latter driving an evoked oscillatory response of the same frequency (the so‐called Steady‐State Response, SSR; when driven by an auditory stimulus, it is referred to as aSSR). The authors found evidence for attentional modulations of the aSSR, with amplitude enhancements for the relevant stream and suppressions for the irrelevant stream. This pattern was particularly pronounced for the left hemisphere, while results for the right hemisphere were more complex. Furthermore, this study shows with high spatial resolution that the aSSR is an evoked activity generated almost exclusively along Heschl's gyrus [Bidet‐Caulet et al., 2007], including its posteromedial part corresponding to primary auditory cortex [Penhune et al., 1996]. This confirms inferences based on magneto‐encephalographic data analyzed with magnetic source imaging [Pantev et al., 1996; Ross et al., 2000; Weisz et al., 2004]. In general, the aSSR would therefore be an ideal means to noninvasively scrutinize the attentional modifiability of primary auditory cortical activity (similar to work in the visual and somatosensory systems [Giabbiconi et al., 2007]). However, there are only very few reports on this topic. This may be related to the widespread consensus that the aSSR cannot be modulated, which took hold after an initial thorough electroencephalographic (EEG) investigation [Linden et al., 1987] yielded negative results. It took almost 20 years until first evidence emerged in favor of an attentional effect on the aSSR. In a magnetoencephalographic (MEG) study, Ross et al. [2004] made participants count infrequent 30 Hz AM tones among standard tones of 40 Hz AM, and compared this with a control condition in which they counted visual targets. An enhancement of the aSSR was observed 200 to 500 ms following sound onset. Despite monaural presentation to the right ear, amplitudes were overall stronger for the ipsilateral hemisphere. The attention effect, however, was observed exclusively in the left (contralateral) hemisphere. A recent EEG study [Skosnik et al., 2007] indicates that attentional effects may also depend to some extent on the AM frequency, with the strongest effects to be expected around 40 Hz. Using AM frequencies of 45 and 20 Hz, Müller et al. [2009] were able to demonstrate increased left‐hemispheric responses when attention was focused on the right ear or decreased ipsilateral responses when attention was directed to the left ear, but only when the attended AM sound was modulated at 20 Hz and not at 45 Hz. Recently, Okamoto et al. [2010] investigated the influence of bottom–up and top–down modulations on different evoked responses by manipulating listening condition (active vs. distracted) and the signal‐to‐noise level of the stimulus. They could convincingly show that the more “nonprimary” the response (i.e. N1m and the sustained field, SF), the larger the influence of active (i.e. top–down) listening. The aSSRs, on the other hand, faithfully reflected the signal‐to‐noise level, i.e. bottom–up influences. Nevertheless, despite being overall smaller than the N1m and SF effects, small but highly significant top–down modulations could also be observed for the aSSR.

Taken together, recent work appears to revise the conception that the aSSR is not sensitive to attentional modulations. Yet, several open issues remain: (1) For the “40 Hz” aSSR, which is classically held to be a primary auditory cortical response, a spatially selective modulation by attention has never been established [see Müller et al., 2009; Okamoto et al., 2010; Ross et al., 2004]. This issue is of importance, since it allows for comparisons with other modalities, for which such modulations have been demonstrated [e.g. Fuchs et al., 2008; Giabbiconi et al., 2007]. (2) In the auditory domain, the attentional modulation of the aSSR has not been unequivocally localized to primary auditory regions. The standard approach [see Müller et al., 2009; Okamoto et al., in press; Ross et al., 2004] is to fit a few sources to the component of interest and investigate source waveforms projected onto these locations. However, although the main focus of the aSSR is clearly primary auditory cortical in origin, regions outside of auditory cortex (including frontal areas) that are sensitive to 40 Hz AM of a sound have been reported as well [Pastor et al., 2002; Reyes et al., 2004]. If this is indeed the case, then it is not clear to what extent a few seeded single dipoles may also capture some of the distant non‐auditory cortical activity. In the present study, our aim was to address these issues. Using a Posner‐type cueing task [Posner et al., 1980], we manipulated the predictive value of a cue stimulus preceding a target (42 Hz AM tone) in the presence of a distractor (19 Hz AM tone). In one condition, the cue indicated with above‐chance probability the likely location (left/right ear) at which the target would be presented, whereas in a second condition the cue was not informative in this sense. By this means we were able to differentiate top–down (the case when the cue is informative) from bottom–up/general attentional effects (the case when the cue is uninformative) in a spatially selective manner (attend left/right). In contrast to the preceding MEG work on the aSSR, we used a distributed source approach, i.e. we did not restrict the effects of attentional modulation to a priori locations. By applying beamformers [Van Veen et al., 1997], we further tried to reduce the impact of volume conduction on our findings. Even though the focus of the paper lies on the aSSR, we also analyzed and report effects on transient Event Related Fields (ERF). Our results indicate that the aSSR in right primary auditory cortex can be modulated by increasing spatially selective attention using a predictive cue, largely reflected in a decreased response when the target is presented to the ipsilateral ear.

MATERIALS AND METHODS

Participants

Eleven healthy right‐handed volunteers (six females; age range: 24–38 years) were recruited for this experiment. All participants reported normal hearing and no history of previous neurological or psychiatric disorders. Before the beginning of the experiment, participants were acquainted with the MEG and the basic experimental procedure. Furthermore written informed consent was provided. The experiment was approved by the local Ethical Committee.

Procedure and Materials

The participants' task was to indicate to which ear a certain target sound, defined by an AM frequency of 42 Hz, was presented. Simultaneously, a distractor sound with 19 Hz AM was presented to the opposite ear. To aid the perceptual segregation of the sounds, the carrier frequencies (CF) were chosen to be 500 Hz or 1300 Hz. Each AM frequency was combined with each CF to yield four compound sounds overall (800 ms duration; 10 ms linear fading at on‐ and offset): [left ear: 42 Hz AM, 1300 Hz CF; right ear: 19 Hz AM, 500 Hz CF], [left ear: 42 Hz AM, 500 Hz CF; right ear: 19 Hz AM, 1300 Hz CF], [left ear: 19 Hz AM, 1300 Hz CF; right ear: 42 Hz AM, 500 Hz CF] and [left ear: 19 Hz AM, 500 Hz CF; right ear: 42 Hz AM, 1300 Hz CF]. The four possible sounds were created in Matlab and the sequence of presentation within a block was predetermined via lists generated in R (available at: http://www.r-project.org/). The sequence was pseudo‐randomized in the sense that each sound occurred equally often within a block (i.e. 28 times; see below); apart from this restriction, the sequence was random. This means that within one block the carrier frequency presented to a given ear varied in a pseudorandom fashion. We are aware that other aSSR experiments employing a frequency tagging approach [Bidet‐Caulet et al., 2007; Müller et al., 2009] kept the carrier frequency constant for both ears. However, in the present study, we wanted to avoid potential attentional effects on a behavioral as well as neurophysiological level being confounded by a rigid relationship between ear and carrier frequency. Before creating the compound sounds, each separate sound (e.g. left ear: 42 Hz AM, 500 Hz CF) was matched in subjective loudness to a 1,000 Hz calibration sound that had previously been set to 50 dB SL. Sounds were delivered via air‐conducting tubes with ear inserts (Etymotic Research, IL) in the MEG shielded room.
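The construction of the four compound sounds can be illustrated with a short sketch. The original stimuli were created in Matlab; the Python version below is only an approximation under stated assumptions: the audio sampling rate (44.1 kHz), sinusoidal modulation, and a modulation depth of 100% are not given in the text, the loudness matching step is omitted, and the function names `am_tone` and `compound` are hypothetical.

```python
import numpy as np

FS = 44100  # audio sampling rate in Hz (assumed; not stated in the text)

def am_tone(carrier_hz, am_hz, dur=0.8, ramp=0.01, fs=FS):
    """Amplitude-modulated tone with linear on/offset fading."""
    t = np.arange(int(round(dur * fs))) / fs
    # Sinusoidal envelope with 100% modulation depth (assumption)
    envelope = 0.5 * (1.0 - np.cos(2 * np.pi * am_hz * t))
    tone = envelope * np.sin(2 * np.pi * carrier_hz * t)
    n_ramp = int(round(ramp * fs))
    fade = np.ones_like(t)
    fade[:n_ramp] = np.linspace(0.0, 1.0, n_ramp)   # 10 ms linear fade-in
    fade[-n_ramp:] = np.linspace(1.0, 0.0, n_ramp)  # 10 ms linear fade-out
    return tone * fade

def compound(target_ear, target_cf):
    """Stereo array (samples x 2): 42 Hz AM target on one ear, 19 Hz AM distractor on the other."""
    distractor_cf = 1300 if target_cf == 500 else 500
    target = am_tone(target_cf, 42)
    distractor = am_tone(distractor_cf, 19)
    left, right = (target, distractor) if target_ear == "left" else (distractor, target)
    return np.column_stack([left, right])

# The four compound sounds, keyed by (target ear, target carrier frequency in Hz)
sounds = {(ear, cf): compound(ear, cf) for ear in ("left", "right") for cf in (500, 1300)}
```

Keying the sounds by target ear and target carrier makes explicit that ear and carrier frequency are crossed, which is the property the authors rely on to avoid a fixed ear/carrier relationship.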

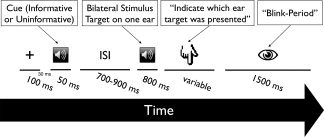

The basic setup of each trial is shown in Figure 1. Each trial began with a brief (100 ms) visual warning stimulus that indicated the beginning of the trial. Thirty milliseconds after offset of the warning stimulus, a brief cue sound (50 ms; 750 Hz) was presented either to the left or the right ear. Following a variable inter‐stimulus interval (ISI; 700, 800 or 900 ms), the compound stimulus containing the target and distractor AM sounds was presented. In order to be able to analyze the aSSR without artefacts caused by the button press, participants were requested to indicate the side on which they perceived the target AM only after sound offset. The participants were not specifically instructed to pay attention to the preceding cue (i.e. they were told to focus on the ear on which they perceived the target sound), but were told prior to the beginning of the actual experiment that, depending on the block (see below), the cue could either indicate the probable location of the target or be entirely unpredictive. They were also asked to blink in an interval after their response, so that relevant periods of the trial were generally not contaminated by blink artefacts. The entire experiment consisted of six blocks with 112 trials each. Each block belonged to one of the two conditions, presented in an alternating manner and counterbalanced across subjects: In one condition (“uninformative”), the ear to which the cue sound was presented (see Fig. 1) stood in random relation to the location of the target (50% on the same ear). In contrast, in the other condition (“informative”), the ear to which the cue was presented was likely to also be the side of the target (75% correspondence). We deliberately chose not to use 100% valid cues in the informative condition for two reasons: Firstly, we attempted to make the task more challenging for the participants, forcing them to pay close attention to the compound sound. Secondly, in addition to showing a behavioral advantage for congruent cue/target pairs in the informative condition as compared with the uninformative condition, we also wanted to assess potential disadvantages induced by an invalid cue in the informative condition. At the beginning of each block, participants were informed about the condition via visual presentation of the word “informative” or “uninformative” on the screen. Conditions were presented in an alternating manner (i.e. A‐B‐A‐B‐A‐B), with the initial condition switching between successive participants (i.e. ∼half of the participants started with the “informative” condition). Before the beginning of the experiment in the MEG, each participant practiced for ∼10 min with a reduced version of the experiment, i.e. without a cue but with visual feedback about the correctness of their response. This was to ensure that participants were well capable of differentiating the sounds and detecting the targets. The entire experimental procedure was controlled using Psyscope X, an open‐source software to design and run psychological experiments [Macwhinney et al., 1997; available at: http://psy.ck.sissa.it/].
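The block structure described above (112 trials, each compound sound 28 times, 75% or 50% cue/target correspondence) can be sketched as follows. The original lists were generated in R; this Python sketch assumes that the correspondence rate was realized exactly within each block and balanced over the four sounds, and that the ISI was drawn independently per trial. Neither detail is stated in the text, and `make_block` is a hypothetical helper.

```python
import random

# (target ear, target carrier frequency in Hz) for the four compound sounds
SOUNDS = [("left", 500), ("left", 1300), ("right", 500), ("right", 1300)]

def make_block(p_congruent, n_per_sound=28, seed=None):
    """Return a shuffled list of trials for one block.

    p_congruent is 0.75 for an "informative" block and 0.5 for an
    "uninformative" block (exact per-block proportions are an assumption).
    """
    rng = random.Random(seed)
    n_congruent = round(p_congruent * n_per_sound)
    trials = []
    for target_ear, target_cf in SOUNDS:
        other_ear = "right" if target_ear == "left" else "left"
        cue_ears = [target_ear] * n_congruent + [other_ear] * (n_per_sound - n_congruent)
        for cue_ear in cue_ears:
            trials.append({
                "cue_ear": cue_ear,
                "target_ear": target_ear,
                "target_cf": target_cf,
                "isi_ms": rng.choice([700, 800, 900]),  # variable cue-target interval
            })
    rng.shuffle(trials)  # random order apart from the equal-count restriction
    return trials

informative_block = make_block(0.75, seed=1)
uninformative_block = make_block(0.50, seed=2)
```

With these settings an informative block contains 84 congruent and 28 incongruent trials, which illustrates why incongruent trials in that condition were too few for the MEG analysis described later.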

Figure 1.

Trial event sequence. A tonal cue preceded a target consisting of a compound stimulus with a 19‐ and 42‐Hz AM tone on either ear. Following the offset of the target, participants had to indicate on which ear they heard the 42 Hz AM sound. Blocks differed with the cue either being informative (75%) or uninformative (50%) with respect to the upcoming target location.

Data Acquisition and Analysis

Magneto‐encephalographic data (480 Hz sampling rate) were recorded continuously using a 275 sensor whole‐head axial gradiometer system (CTF Omega, VSM MedTech, Canada) kept in a magnetically shielded room. Head positions of the individuals relative to the MEG sensors were controlled continuously within a block using three coils placed at three fiducial points (nasion, left and right preauricular points). Head movements did not exceed 1.5 cm within and between blocks.

Epochs of 2 s pre‐ and 2 s poststimulus were extracted from the continuous data stream around cues and compound sounds. In order to remove the DC offset, data were detrended by subtracting the mean amplitude of each epoch from all sampling points within the epoch and subsequently 1 Hz high‐pass filtered. Due to the reduced number of epochs in which target and cue locations were incongruent during the informative condition, only epochs in which target and cue sides were congruent were considered. Epochs were visually inspected for artefacts (critical time window for cues: −0.5 to 0 s relative to cue onset; critical time window for targets: −0.5 to 0.8 s relative to target onset), and contaminated epochs were excluded. Due to the break following each trial, participants were comfortably able to blink, leaving the period of interest mostly unaffected (max. rejection of 10% of trials). In order to ensure that our results are not confounded by differences in signal‐to‐noise ratio, the number of trials was equalized across all cue and target conditions within each participant.

Before estimation of the aSSR, the time series of all epochs were averaged, thus emphasizing activation time‐locked to the AM of the stimulus while suppressing so‐called “induced” brain responses as well as nonbrain‐related noise. Subsequently, the resulting ERF was transformed into the time‐frequency domain using Hanning tapers with a fixed time window length of 500 ms (i.e. a frequency resolution of 2 Hz). This window was shifted from 0.5 s pre‐ to 1 s poststimulus in steps of 2 ms, and power was calculated between 10 and 70 Hz. Finally, power estimates from a −0.4 to −0.1 s precue period were subtracted from the compound‐sound‐related evoked time‐frequency representations. Since the relevant frequencies of interest are clearly defined by the AM frequencies, we extracted temporal profiles for the target‐related 42 Hz and distractor‐related 19 Hz activity. These waveforms were subsequently used for statistical analysis (see below). The 1 to 25 Hz filtered ERF was calculated by averaging single trials from 0.2 s before to 0.9 s after stimulus onset in order to estimate the strength of the transient responses.
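The extraction of a temporal power profile at a single modulation frequency can be sketched as a sliding Hanning‐tapered Fourier projection of the averaged time series. This is a simplified illustration and not the FieldTrip routine used by the authors: the function names are hypothetical, the window is moved in steps of one sample (about 2.1 ms at 480 Hz) rather than exactly 2 ms, and the amplitude scaling is a choice made here.

```python
import numpy as np

def evoked_power(erf, fs, freq, win_s=0.5):
    """Sliding Hanning-tapered power of an averaged time series at one frequency.

    Returns (centers, power): window-center times in seconds relative to the
    first sample, and the squared amplitude of the `freq` component per window.
    """
    n_win = int(round(win_s * fs))          # 500 ms -> 240 samples at 480 Hz
    taper = np.hanning(n_win)
    t = np.arange(n_win) / fs
    kernel = taper * np.exp(-2j * np.pi * freq * t)
    # One row per window position (step of one sample; an approximation of 2 ms)
    windows = np.lib.stride_tricks.sliding_window_view(erf, n_win)
    coef = windows @ kernel
    # Scale so that a pure sinusoid of amplitude A yields approximately A
    amplitude = 2.0 * np.abs(coef) / taper.sum()
    centers = (np.arange(coef.size) + (n_win - 1) / 2.0) / fs
    return centers, amplitude ** 2

def subtract_baseline(power, times, lo, hi):
    """Subtract the mean power within the interval [lo, hi] (in seconds)."""
    mask = (times >= lo) & (times <= hi)
    return power - power[mask].mean()

# Toy example: a 42 Hz oscillation of amplitude 2 sampled at 480 Hz
fs = 480.0
t = np.arange(int(2 * fs)) / fs
erf = 2.0 * np.sin(2 * np.pi * 42 * t)
centers, p42 = evoked_power(erf, fs, 42.0)
_, p19 = evoked_power(erf, fs, 19.0)
```

In the toy example the 42 Hz profile should stay close to the squared amplitude of the simulated oscillation, whereas the 19 Hz profile should remain near zero, which is the separation between target‐ and distractor‐related activity that the frequency tagging relies on.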

In order to obtain more information about the approximate generators of our sensor‐level effects, we carried out source analysis using a time‐domain beamformer [lcmv; Van Veen et al., 1997]. For each participant, an anatomically realistic headmodel was generated based on individual headshapes [Nolte, 2003] and leadfields were calculated for grid points separated by 1 cm. The conventional approach of filtering the data around the modulation frequencies prior to source analysis turned out to be inadequate in our study, since particularly the lower modulation frequency (19 Hz) produced some activity at a harmonic frequency in the vicinity of the more rapid modulation frequency (42 Hz). In order to mitigate this issue, we decided to apply spatial filters derived by the lcmv beamformer to the complex Fourier‐transformed MEG data at 19 and 42 Hz [see Bardouille and Ross, 2008 for a similar approach in which, however, inter‐trial phase‐locking was analyzed]. For this purpose, data epochs were first filtered broadly around the respective modulation frequencies (2–40 Hz for the 19 Hz AM sound; 25–65 Hz for the 42 Hz AM sound). Baseline periods were defined as ranging from −0.6 to 0 s precue. Stimulation periods were set from 0.2 to 0.8 s poststimulus. The data covariance matrix was calculated for baseline and stimulation periods and subsequently averaged. This was to ensure the use of identical filter weights for both periods as well as for all conditions. For each grid point, filter weights were calculated according to Van Veen et al. [1997]:

$$W = \left[H^{T} C^{-1}(x)\, H\right]^{-1} H^{T} C^{-1}(x)$$

where H is the leadfield matrix, x describes the activity as measured by the MEG sensors, and C(x) is the covariance matrix of x. This filter was subsequently multiplied with the complex FFT coefficients calculated at 19 and 42 Hz, respectively, after averaging all trials of a condition separately for the baseline and stimulation period. The amplitude at each grid point was calculated as the modulus of the complex spectral coefficients of source activity. In order to remove the depth bias inherent to beamforming (spatial normalization), we used the estimate of the baseline source activity as described in the next paragraph.
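As an illustration of this step, the standard unit‐gain LCMV weights of Van Veen et al. [1997], W = (HᵀC⁻¹H)⁻¹HᵀC⁻¹, and their application to complex Fourier coefficients can be sketched in a few lines. This is not the FieldTrip implementation used by the authors. The regularization parameter and the way the three dipole orientations are combined into one amplitude (vector norm) are assumptions made here, and both function names are hypothetical.

```python
import numpy as np

def lcmv_weights(H, C, reg=0.0):
    """Unit-gain LCMV weights W = (H^T C^-1 H)^-1 H^T C^-1.

    H: leadfield for one grid point (sensors x 3).
    C: sensor covariance matrix (sensors x sensors), assumed symmetric.
    reg: optional diagonal loading as a fraction of the mean sensor variance
         (an assumption; the text does not mention regularization).
    """
    n = C.shape[0]
    C_reg = C + reg * (np.trace(C) / n) * np.eye(n)
    Cinv_H = np.linalg.solve(C_reg, H)               # C^-1 H
    return np.linalg.solve(H.T @ Cinv_H, Cinv_H.T)   # (H^T C^-1 H)^-1 H^T C^-1

def source_amplitude(W, fourier_coef):
    """Modulus of the projected complex coefficients, combined over orientations.

    Taking the vector norm over the three orientations is an assumption.
    """
    return np.linalg.norm(W @ fourier_coef)

# Toy example with random data standing in for leadfield and MEG recordings
rng = np.random.default_rng(0)
H = rng.standard_normal((20, 3))
data = rng.standard_normal((20, 200))
C = data @ data.T / data.shape[1]
W = lcmv_weights(H, C)
coef = rng.standard_normal(20) + 1j * rng.standard_normal(20)
amp = source_amplitude(W, coef)
```

Because the same covariance matrix enters the weights for baseline and stimulation periods, the identical `W` can be applied to the Fourier coefficients of both periods, mirroring the common‐filter logic described in the text.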

The ERF effect (30–90 ms; see Results) was localized in a more conventional manner using the same time‐domain (lcmv) beamformer as mentioned above. Again, a common spatial filter was created for all conditions by concatenating all available trials (matched in number across conditions; see above). In a first step, time series were low‐pass filtered and the covariance matrix was calculated for a baseline period (−0.4 to 0 s) and the activation period (0 to 0.4 s) and subsequently averaged over both periods. This covariance matrix was used to generate the common spatial filter. In a second step, ERFs were calculated separately for each condition within a 100 ms period centered around the time window of interest (0.01–0.11 s) and a baseline period (−0.2 to −0.1 s). These values were then projected into source space using the common spatial filter and averaged over the respective time period in order to yield a single activation value per voxel. Since beamforming suffers from a characteristic depth bias, the source estimates of both signals (i.e. the aSSR and ERF) were normalized by: (activation − baseline)/baseline.

In order to visualize the data, individual grid points were interpolated onto “pseudo”‐individual MRIs that were created based on an affine transformation of the “headshape” of a MNI template and the individually gained headshape points. This transformation matrix was then applied to the template MRI. Source analysis results were normalized onto a common MNI template brain for later group statistics. All offline treatment of MEG data was carried out using functions supplied by the fieldtrip toolbox (available at: http://fieldtrip.fcdonders.nl/).

For the analysis of behavioral data, we calculated repeated measures ANOVAs for reaction time (RT) and response accuracy. Besides cue side and condition (analogous to above), the behavioral analysis also included congruency, describing whether the target location conformed to the cue location. Significant interaction effects (P ≤ 0.05) were followed up using Student's t‐tests. Statistical analyses were carried out using R (available at: http://www.r-project.org/). Regarding the MEG data, we focused on the contrast between responses to AM sounds that were preceded by an informative cue versus sounds that were preceded by an uninformative cue when cue and target were presented to the same ear (congruent trials). As stated above, due to the greatly reduced number of incongruent trials within the informative condition, the analysis of congruency was dropped for the MEG data. We performed this analysis by applying a Student's t‐test separately for both cue locations (left and right). A nonparametric randomization test was undertaken to control for multiple comparisons [Maris and Oostenveld, 2007], by repeating the test 1,000 times on shuffled data (across conditions) and retaining the largest summed t‐value of a temporospatially coherent cluster (time window entered into the statistic; aSSR: 0.2 to 0.8 s; ERF: 0 to 0.3 s). Empirically observed clusters could then be compared against the distribution gained from the randomization procedure and were considered significant when their probability was below 5%. In the following, the term positive or negative cluster refers to the sign of the t‐value. Since the contrast was always informative versus uninformative and our approach to analyzing aSSRs removes polarity reversals (i.e. by calculating power at the respective frequencies of interest), a negative cluster would mean relatively decreased aSSRs for the informative condition and vice versa for a positive cluster. For the ERF this interpretation is not as straightforward since the time series contains positive and negative peaks, i.e. the specific features of the waveform have to be taken into account. In order to derive probable locations underlying the sensor‐based effects, analogous t‐test contrasts were undertaken for the source solutions. Emergence effects (poststimulus activity versus precue baseline) were masked at P < 0.01, whereas attentional effects were masked at P < 0.05.
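The logic of the cluster‐based randomization test can be sketched for the simplest case of a single channel, where clusters are formed over time only. The analysis in the paper clustered over sensors and time using FieldTrip; the sketch below is a reduced illustration under several assumptions: condition labels are shuffled within participants (equivalent to sign‐flipping the paired differences), the cluster‐forming threshold is the two‐sided 5% critical t‐value for 10 degrees of freedom (2.228), and only the largest absolute cluster is evaluated. All function names are hypothetical.

```python
import numpy as np

def paired_t(diff):
    """Paired t-value per time point; diff has shape (subjects, time)."""
    n = diff.shape[0]
    return diff.mean(axis=0) / (diff.std(axis=0, ddof=1) / np.sqrt(n))

def max_cluster_sum(tvals, thresh):
    """Largest absolute summed t over contiguous same-sign supra-threshold runs."""
    best = 0.0
    run = 0.0
    for t in tvals:
        if abs(t) > thresh and (run == 0.0 or np.sign(t) == np.sign(run)):
            run += t          # extend the current cluster
        elif abs(t) > thresh:
            run = t           # sign change: start a new cluster
        else:
            run = 0.0         # below threshold: close the cluster
        best = max(best, abs(run))
    return best

def cluster_permutation_p(cond_a, cond_b, n_perm=1000, thresh=2.228, seed=0):
    """Monte Carlo p-value of the largest observed cluster (time dimension only)."""
    diff = cond_a - cond_b
    rng = np.random.default_rng(seed)
    observed = max_cluster_sum(paired_t(diff), thresh)
    null = np.empty(n_perm)
    for i in range(n_perm):
        # Swapping condition labels within a participant flips the sign of the difference
        signs = rng.choice([-1.0, 1.0], size=(diff.shape[0], 1))
        null[i] = max_cluster_sum(paired_t(diff * signs), thresh)
    return observed, float(np.mean(null >= observed))
```

Because only the maximum cluster statistic of each randomization enters the reference distribution, a single comparison against that distribution controls the false alarm rate over all time points, which is the rationale given by Maris and Oostenveld [2007].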

RESULTS

Behavior: Reaction‐Time (RT) and Accuracy

Overall, subjects were able to solve the task with ∼80% accuracy, even though there were strong interindividual differences (accuracy ranging from 53% to 92%). Regarding our experimental manipulations, significant effects were obtained for Congruency (F(1,10) = 9.04, P = 0.013) and the Congruency × Information interaction (F(1,10) = 5.01, P = 0.049). These effects are due to responses being ∼10% more accurate when target and cue locations were the same (84%) as opposed to when they differed (75%). The interaction (see Fig. 2 left panel) is due to the differential effect being present only when the cue was informative (87% vs. 69%; t(10) = 2.64, P = 0.025) but not when it was uninformative (81% vs. 80%; t(10) = 1.79, P = 0.104).

Figure 2.

Behavioral results. Effects (mean + SE) are shown separately for trials preceded by left (top panel) or right ear (bottom panel) cue. Each graph depicts the impact of whether the preceding cue was informative or uninformative and whether it corresponded to the upcoming target ear (i.e. 42 Hz AM tone; congruent) or not (incongruent). Accuracy is expressed as percentage of correct responses and RTs as time (in ms) until button press relative to the offset of the compound sound. Reactions were less accurate and prolonged on incongruent trials when the preceding cue was indicative (“informative”) of the probable target side.

Similar effects could be observed for RT, with responses for targets preceded by spatially congruent cues being faster (836 vs. 914 ms; Congruency: F(1,10) = 6.36, P = 0.030). Again, modulatory effects were present only when the cue was informative (821 vs. 979 ms; t(10) = −2.71, P = 0.022) but not when it was uninformative (both 850 ms; t(10) = 0.02, P = 0.99), yielding a significant Congruency × Information interaction (F(1,10) = 6.93, P = 0.025; see Fig. 2 right panel). In contrast to accuracy, an additional Cue Side × Congruency effect (F(1,10) = 16.73, P = 0.002) could be observed, resulting from the fact that congruency effects were present only when stimuli were presented to the right ear (780 vs. 956 ms; t(10) = −3.65, P = 0.004) but not when presented to the left ear (893 vs. 871 ms; t(10) = 0.82, P = 0.434). Despite this, the Cue Side × Congruency × Information interaction was not significant (F(1,10) = 0.79, P = 0.394).

Overall, our behavioral effects support the notion that the experimental manipulation (information content of the cue) affected attentional processes. This was mainly expressed as an increased cost of incongruency (reduced accuracy and prolonged reaction time) in the case of the informative cue.

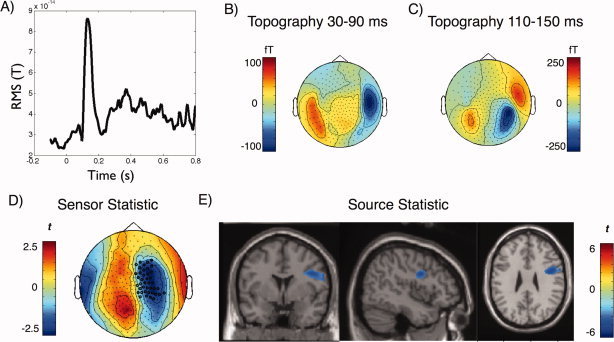

ERF Effects

The compound sound elicited a pronounced P1‐N1‐P2 complex followed by sustained activity for the duration of the sound (see Fig. 3A). Contrasting ERF activity preceded by an informative versus an uninformative cue yielded one significant cluster (P = 0.04) when the target was presented to the left ear (preceded by a left‐sided cue; see Fig. 3D). This effect was temporally constrained to 30 to 90 ms after target sound onset and spatially localized at right frontocentral sensors. Source localization suggests that right frontal regions (inferior frontal gyrus, BA9) underlie this effect (see Fig. 3E). In this region, the compound sound preceded by an informative cue produced less evoked activity than the compound sound preceded by an uninformative cue. No significant cluster could be identified for compound sounds in which the target was presented to the right ear (preceded by a right‐sided cue).

Figure 3.

ERF results. A) Average (RMS) time‐course of the ERF over all conditions (target onset at 0 ms), and corresponding topographies of the B) middle latency and C) N1 periods. D) A significant negative cluster was identified between 30 and 90 ms at right frontocentral sensors when the cue‐target combination was presented to the left ear, indicating a stronger relative negativity when the preceding cue was informative as compared with when it was uninformative. E) Analogous statistical contrast as in D) on source level shows relatively weaker activity in right IFG in the informative condition as compared with the uninformative condition. [Color figure can be viewed in the online issue, which is available at wileyonlinelibrary.com.]

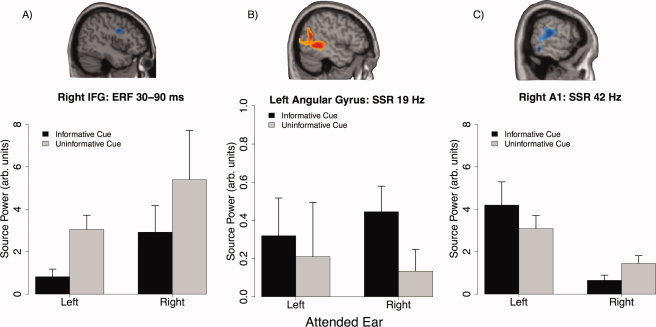

For the right IFG we then extracted individual values (averaged over ROI) for each condition and entered them into a repeated measures ANOVA with side and cue as within‐subject factors. From Figure 7A it can be seen that amplitudes were overall larger when the target sound was presented to the right ear (and the distractor on the left, respectively), yet this difference did not reach significance (F (1,10) = 2.27, P = 0.16). Furthermore compound sounds preceded by uninformative cues went along with significantly stronger right IFG activity as compared with those preceded by an informative cue (cue main effect: F (1,10) = 8.14, P = 0.02). Although in the nonparametric permutation test a significant cluster was only observed when the sounds were presented to the left ear, the ANOVA on the right IFG ROI did not yield a significant cue × side interaction (F (1,10) = 0.04, P = 0.84), suggesting a greater importance of the predictive value of the cue (informative vs. uninformative) rather than the side of sound presentation.

Figure 7.

Summary of effects in ROIs. Average values for the right IFG, left angular gyrus and right primary auditory cortex were extracted for all individuals (shown in top panel) in order to calculate ANOVAs including all factors (see text). Values (“source power”) describe activation values from the beamformer analysis normalized by a precue baseline (see Methods and Results) and therefore have no actual physical units. (A)–(C) depict the average (±standard error) activations for these regions under all conditions. Of particular relevance for this study is (C), showing overall stronger activity in right primary auditory cortex when the 42 Hz AM sound was presented to the left ear, but a stronger attentional (i.e. cue information) modulation when the target was presented to the right (ipsilateral) ear. [Color figure can be viewed in the online issue, which is available at wileyonlinelibrary.com.]

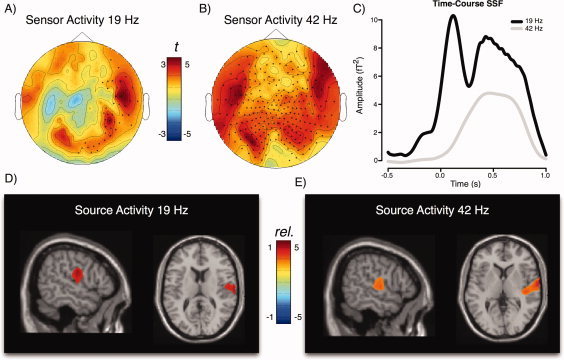

Basic aSSR Characteristics

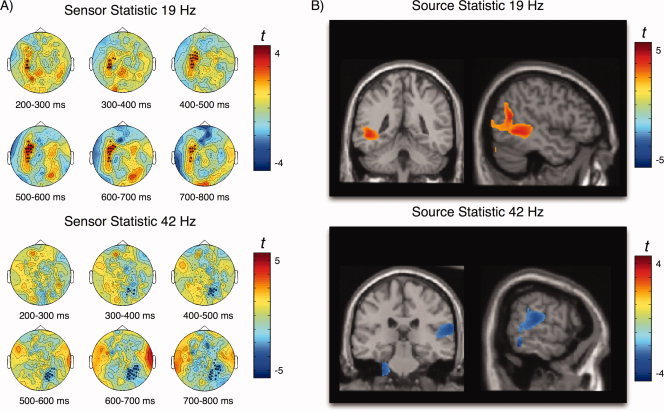

Clear poststimulus evoked activity at 19 and 42 Hz could be observed at the sensor level, leading to significant spatiotemporal clusters for each modulation frequency (see Fig. 4A,B). Overall (i.e. over all conditions) the evoked activity for the 42 Hz modulation appeared to be more pronounced and wide‐spread than for the 19 Hz AM (19 Hz cluster: P = 0.016; 42 Hz cluster: P = 0). The average temporal profiles of the evoked activity in the respective clusters are displayed in Figure 4C, corroborating the impression that robust aSSRs were elicited by the stimuli. The sound with 19 Hz modulation led to two peaks, an early one (∼50–100 ms) corresponding to high‐frequency portions of the transient ERP responses and a later sustained response commencing at about 250 ms and rapidly wearing off following sound offset. The 42 Hz AM sound, however, was only reflected in a later sustained response with a temporal profile similar to that of the lower frequency AM sound. With regards to localization, our beamforming approach indicates the emergence of the respective rhythmic evoked activity primarily in right auditory cortex (see Fig. 4D,E) for both modulation frequencies. The focus of activity mainly originates along Heschl's gyrus, including primary (BA 41, 42) and secondary regions (BA 22). Whereas the 19 Hz aSSR has primary generators on the lateral aspect of Heschl's gyrus, the 42 Hz aSSR appears to have generators in medial portions as well. This part illustrates that our approach to localizing aSSR activity indeed yielded valid results, conforming with standard literature reports on aSSR [Bidet‐Caulet et al., 2007; Ross et al., 2000].

Figure 4.

Basic aSSR characteristics (“Emergence”). Topographic representations of the A) 19 Hz aSSR and B) 42 Hz aSSR. Colors represent statistical (t) values as compared with the precue baseline averaged over 200 to 800 ms postonset of the compound sound. For the 19 Hz AM tone, aSSRs are strongly right lateralized, whereas bilateral responses can be seen for the 42 Hz aSSR. Please note that the topographies do not contain polarity information, since it is lost due to our analysis of the aSSR in the time‐frequency domain. C) Time‐courses of the 19 and 42 Hz aSSR extracted from the time‐frequency representation of the unfiltered evoked field. Sustained aSSR activity starts ∼250 ms poststimulus onset and falls off rapidly after stimulus offset. The 19 Hz AM sound additionally elicited a strong transient response in the P1/N1 time‐window. Results of source localization of the D) 19 Hz and E) 42 Hz aSSR for the 200 to 800 ms period (compared with precue baseline). Colors correspond to relative changes (1 = 100% increase relative to baseline). Figures are masked at P < 0.01 and indicate strongly right‐lateralized responses. The 42 Hz aSSR includes regions of the primary auditory cortex. For the 42 Hz aSSR, an additional source was seen in the left hemisphere at a more liberal masking level, although slightly superior to auditory cortex (see Supp. Info.). [Color figure can be viewed in the online issue, which is available at wileyonlinelibrary.com.]

The sensor topography for the 42 Hz aSSR in Figure 4B suggests a left generator in addition to the one identified in the right auditory cortex. However, at a masking level of P < 0.01 it was barely visible. Setting the masking level to a more liberal P < 0.05, a left hemispheric generator becomes visible that extends into superior temporal regions and is overall slightly posterior to the right hemispheric source, fitting the well‐known left‐right asymmetry of auditory cortex (see Supp. Info., Fig. 1). Yet, the focus of this source is on the central gyrus, somewhat superior to actual auditory cortex. At this stage it is not clear whether this is an actual mislocalization caused, e.g., by the method or by missing individual anatomical information. In contrast to the right auditory cortex generator, the localization of left auditory cortex sources of the aSSR appears less reliable.

aSSR Attention Effects

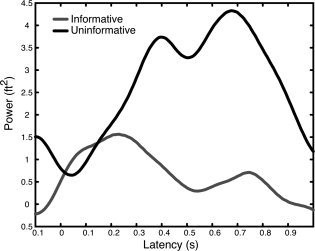

In order to scrutinize the effects of cue information, which behaviorally has been shown to boost performance, we first tested aSSR activation differences on a sensor level by contrasting, within a cueing condition (cue‐target right/left), the informative and uninformative cue. Our nonparametric permutation test yielded a trend level cluster for the 19 Hz (i.e. the non‐target) sound and a significant cluster for the 42 Hz (i.e. the target) sound only when the preceding cue was presented to the right ear. The spatiotemporal evolution of the 19 Hz cluster (P = 0.07; 0.2–0.8 s with maximum ∼0.45–0.75 s) can be taken from Figure 5A (top panel), indicating enhanced 19 Hz aSSR activity over left temporal sensors, i.e. ipsilaterally to the ear in which the 19 Hz AM sound was presented, when it was preceded by an informative cue. Contrasting the informative versus the uninformative trials for right ear target presentation in source space illustrates that the effect is not driven by auditory cortex (see Fig. 4 for comparison) but by areas at the left temporoparieto‐occipital junction (BA39, BA37; see Fig. 5B, top panel; subsequently called “angular gyrus” for simplicity). By contrast, a significant negative cluster (P = 0.007; 0.3–0.8 s with maximum ∼0.6–0.75 s; see Fig. 5A) was identified for the 42 Hz sound at right parietal sensors, indicating a relatively smaller aSSR at sites ipsilateral to the cue when the cue was informative as compared to when it was uninformative. This effect is depicted more clearly in Figure 6, for which we averaged the time series for the 42 Hz aSSR over sensors belonging to the respective significant cluster (see Fig. 5A bottom panel) separately for the two conditions: Whereas a clear aSSR can be observed when the preceding cue was uninformative, starting ∼200 ms following onset of the compound sound (maximum between 400 and 700 ms), the aSSR was suppressed when the preceding cue was informative. 
Again, source space statistics were performed in order to infer generators that may be driving the sensor level effect. Contrary to the 19 Hz effect, the effect for the 42 Hz AM sound had its main focus in superior temporal regions in the vicinity of primary auditory cortex (Fig. 5B, bottom panel; see Fig. 4 for comparison).
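The logic of a cluster-based nonparametric permutation test of the kind cited for these contrasts [Maris and Oostenveld, 2007] can be illustrated with a minimal sketch. It is not the analysis pipeline used in the study: it works on a single time axis rather than on sensors × time, uses sign flipping of within-subject differences as the permutation scheme, and all function names, the synthetic data and the cluster-forming threshold (2.228, the two-sided 5% critical t for 10 degrees of freedom) are assumptions made for illustration.

```python
import math
import random


def paired_t(diffs):
    """One-sample t-value of per-subject differences against zero."""
    n = len(diffs)
    mean = sum(diffs) / n
    var = sum((d - mean) ** 2 for d in diffs) / (n - 1)
    if var == 0:
        # Degenerate case: no variability across subjects.
        return 0.0 if mean == 0 else math.copysign(math.inf, mean)
    return mean / math.sqrt(var / n)


def max_cluster_mass(t_values, threshold):
    """Largest |sum of t| over runs of adjacent same-sign supra-threshold samples."""
    best, run, prev_sign = 0.0, 0.0, 0
    for t in t_values:
        sign = (t > threshold) - (t < -threshold)
        if sign != 0 and sign == prev_sign:
            run += t          # extend the current cluster
        elif sign != 0:
            run = t           # start a new cluster
        else:
            run = 0.0         # sub-threshold sample ends any cluster
        prev_sign = sign
        best = max(best, abs(run))
    return best


def cluster_permutation_test(cond_a, cond_b, threshold=2.228, n_perm=1000, seed=0):
    """cond_a, cond_b: lists (subjects) of lists (time points).

    Returns (observed maximum cluster mass, permutation p-value).
    """
    rng = random.Random(seed)
    diffs = [[a - b for a, b in zip(sa, sb)] for sa, sb in zip(cond_a, cond_b)]
    n_time = len(diffs[0])

    def mass(d):
        t_vals = [paired_t([subj[i] for subj in d]) for i in range(n_time)]
        return max_cluster_mass(t_vals, threshold)

    observed = mass(diffs)
    exceed = 0
    for _ in range(n_perm):
        # Randomly swap condition labels within each subject (sign flip).
        flipped = []
        for subj in diffs:
            s = rng.choice((-1, 1))
            flipped.append([s * x for x in subj])
        if mass(flipped) >= observed:
            exceed += 1
    return observed, (exceed + 1) / (n_perm + 1)


def make_synthetic(n_subjects=11, n_time=40, effect=1.0, seed=1):
    """Hypothetical data: condition A carries an added effect in samples 20-29."""
    rng = random.Random(seed)
    cond_a, cond_b = [], []
    for _ in range(n_subjects):
        cond_a.append([rng.gauss(0, 0.5) + (effect if 20 <= i < 30 else 0.0)
                       for i in range(n_time)])
        cond_b.append([rng.gauss(0, 0.5) for _ in range(n_time)])
    return cond_a, cond_b
```

Because only the maximum cluster statistic of each permutation enters the null distribution, the multiple comparisons over time points are handled by a single test, which is the main motivation for this family of methods.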

Figure 5.

Attentional modulation of aSSR following right ear cue/target presentation. A) Topographies and time course for significant clusters identified on sensor level. Top panel shows the 19 Hz aSSR trend level effect (P = 0.07) over right temporal sensors indicating stronger activity when the cue was informative. The bottom panel shows the significant negative cluster observed for the 42 Hz aSSR at right parietotemporal sensors, reflecting decreased aSSR when the preceding cue was informative as compared with when it was uninformative. B) The same contrast (informative vs. uninformative) at the source level yields significant differences at the left angular gyrus for the 19 Hz aSSR (top panel), whereas for the 42 Hz aSSR the effect is located in right primary auditory cortex (bottom). [Color figure can be viewed in the online issue, which is available at wileyonlinelibrary.com.]

Figure 6.

Forty‐two Hz aSSR time course at right temporal sensors for right ear cue/target presentation. Time series (relative to precue baseline) were averaged for sensors belonging to the negative cluster depicted in Figure 5. A strong aSSR response can be observed when the preceding cue is uninformative. The response is greatly reduced (maximum difference ∼400–700 ms following onset of compound sound) when the preceding cue is informative.

Again, we extracted average ROI activity separately for each AM frequency, in this case left angular gyrus (for the 19 Hz aSSR) and right primary auditory cortex (for the 42 Hz aSSR), in order to test for potential interaction patterns that were not directly tested by the permutation test. The results for the 19 and 42 Hz AM sound are summarized in Figure 7B,C respectively. For the 19 Hz AM sound neither the cue type (informative versus uninformative) nor the side of presentation yielded a significant effect (F (1,10) < 1.1, P > 0.3). Also, no significant cue × side interaction effect was observed (F (1,10) = 0.25, P = 0.62), putatively due to the great interindividual variability when the target sound was presented to the left ear (i.e. the distractor 19 Hz AM sound was contralateral to the ROI). A simple contrast for 19 Hz ROI activity when the 42 Hz AM target was presented to the right (i.e. the 19 Hz AM sound was ipsilateral to the ROI) indicates an enhanced response at a trend level (t(10) = 1.97, P = 0.07) when the preceding cue was informative. In the right primary auditory cortex ROI for the 42 Hz aSSR, the ANOVA yielded a significant effect of side (F (1,10) = 14.01, P = 0.003). This effect stems from an approximately 3.5 times stronger activation when the target sound was presented to the contralateral left ear (M = 3.64, SE = 0.62; right ear presentation: M = 1.05, SE = 0.23). Furthermore, a trend level interaction was found (F (1,10) = 3.91, P = 0.07), indicating an influence of the predictive value of the cue (informative versus uninformative). 
Following up this interaction with simple contrasts shows that, while no significant difference between informative and uninformative could be found when targets were presented to the left (contralateral) ear (t(10) = 1.46, P = 0.17), significantly decreased activity was found for the informative relative to the uninformative condition when targets were presented to the right (ipsilateral) ear (t(10) = −2.80, P = 0.02). The cue main effect on the 42 Hz aSSR did not reach statistical significance (F (1,10) = 0.26, P = 0.61).
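The structure of the 2 × 2 repeated measures ANOVA (side × cue) used for the ROI values can be sketched as follows. In a fully within-subject 2 × 2 design each effect has one numerator degree of freedom, so each F value equals the squared one-sample t statistic of the corresponding per-subject contrast, with n − 1 denominator degrees of freedom (10 for 11 participants, consistent with the F (1,10) values reported above). The function names, dictionary keys and numbers below are hypothetical and serve only as an illustration; they are not the study's data or software.

```python
def contrast_F(scores):
    """F(1, n-1) for a one-df within-subject contrast (squared one-sample t)."""
    n = len(scores)
    mean = sum(scores) / n
    var = sum((s - mean) ** 2 for s in scores) / (n - 1)
    return n * mean ** 2 / var


def rm_anova_2x2(data):
    """data: one dict per subject with keys (side, cue),
    side in {'left', 'right'}, cue in {'inf', 'uninf'} (hypothetical labels).

    Returns the F values for both main effects and the interaction.
    """
    side, cue, inter = [], [], []
    for d in data:
        li, lu = d[("left", "inf")], d[("left", "uninf")]
        ri, ru = d[("right", "inf")], d[("right", "uninf")]
        side.append((li + lu) / 2 - (ri + ru) / 2)   # left minus right
        cue.append((li + ri) / 2 - (lu + ru) / 2)    # informative minus uninformative
        inter.append((li - lu) - (ri - ru))          # cue effect left minus cue effect right
    return {
        "side": contrast_F(side),
        "cue": contrast_F(cue),
        "side_x_cue": contrast_F(inter),
    }


# Made-up values for three hypothetical subjects.
example = [
    {("left", "inf"): 2, ("left", "uninf"): 1, ("right", "inf"): 0, ("right", "uninf"): 0},
    {("left", "inf"): 4, ("left", "uninf"): 2, ("right", "inf"): 1, ("right", "uninf"): 0},
    {("left", "inf"): 3, ("left", "uninf"): 3, ("right", "inf"): 0, ("right", "uninf"): 2},
]
result = rm_anova_2x2(example)
```

The simple contrasts that follow up an interaction are the same computation restricted to one level of the other factor, e.g. the per-subject difference informative minus uninformative for right ear targets only.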

DISCUSSION

The present study is the first to show in humans that selective spatial auditory attention impacts responses in primary auditory cortex as measured with aSSRs in a concurrent dichotic listening situation. However, effects are only observed in right auditory cortical regions, when participants are cued to focus their attention on their right, i.e. ipsilateral, ear. In this case aSSRs at 42 Hz (the target AM) were relatively smaller when the preceding cue was informative as compared to the case when the preceding cue was uninformative with regards to laterality of target presentation. For the same region a small increase of the aSSR could be observed when the target was presented to the left (contralateral) ear and the preceding left‐sided cue was informative (see Fig. 7C). However, this increase failed to reach statistical significance, which could be due to the inability of the rather moderate sample size to capture this effect. Since the number of trials was equalized between conditions for all comparisons within this study, we can exclude trivial explanations such as differences in signal‐to‐noise ratio. Behaviorally, the degree of attention allocated to the input from one ear was modulated by the informational value of the cue that preceded the compound sound. This is particularly reflected in the worsening of performance (both accuracy and RT) in the informative condition when the target ear was incongruent with the cued ear. Such a pattern was not observed within the uninformative condition, in which congruent as well as incongruent cue‐target combinations yielded very similar behavioral patterns. From these results we infer that selective attention was exclusively deployed in the informative condition, including reduced processing resources for the uncued ear. However, regarding RT it was also evident that subjects were on average faster in congruent than incongruent cases when the cue was presented to the right ear. 
There was no such behavioral congruency effect when the cue was presented to the left ear. Nevertheless, whether the cue was informative or not did not differentially influence behavioral outcomes for left and right ear presentation. At this point it should be stated that the delayed response by the participant, i.e. waiting until the offset of the sound (in order to avoid button presses affecting the neuromagnetic data), certainly limits the power to find meaningful RT effects of spatial attention. However, this limitation applies more strongly to missing RT differences between the different factor combinations. In general, the identified RT effects in the current experiment support the effects found on accuracy despite the delayed response task, indicating a very potent experimental manipulation of attention. It can be speculated that the RT effects might have been even more pronounced if the participants had been instructed to respond immediately upon target detection.

Overall, our results on the 42 Hz aSSRs may indicate that the seemingly similar behavioral outcomes with regards to side of target presentation may be mediated by distinct mechanisms: In general the right hemispheric dominance for processing steady‐state AM sounds is a well‐documented finding in the research literature [Ross et al., 2005], implying a default processing of such stimuli by the right auditory cortex. This notion is validated by our investigation of emergence effects, i.e. disregarding the effects of attention (see Fig. 4). With regards to our experimental manipulation, this could mean that processing of a 42 Hz AM sound that is presented to the left ear does not become significantly enhanced by attention as the right hemisphere is already activated by default. However, when the 42 Hz AM target is presented to the right ear, focussing attention by the informative cue leads to a diminution of the putative default activation. This notion is to some extent consistent with fMRI data by Petkov et al. showing that the “default” stimulus‐driven right dominant lateralization pattern can be altered by means of selective attention, particularly by decreasing right hemispheric responses [see Fig. 5 in Petkov et al., 2004]. Even though the overall responsiveness in left auditory cortex increased with attention, Petkov et al. found greater modulations within the right hemisphere within the attention conditions (left vs. right ear presentation). In contrast to this fMRI study, however, which localized attentional modulations primarily to secondary auditory regions, our present results imply the involvement of primary auditory cortex, at least for 42 Hz modulated steady‐state sounds. The hemispheric dominance pattern as well as the involvement of primary auditory cortex may thus be strongly dependent on the type of stimuli and task used.

Our results corroborate and extend recent findings that aSSRs known to be generated mainly in primary auditory cortex can be modulated by attention [Bidet‐Caulet et al., 2007; Muller et al., 2009; Okamoto et al., 2010; Ross et al., 2004; Saupe et al., 2009]. They support a previous report of Bidet‐Caulet et al. [2007] that the effect of selective attention on aSSRs also manifests itself in reductions of responses to unattended input, in their case distinct auditory streams tagged by a modulation frequency. Ipsilateral decreases of aSSR by attention were also demonstrated by Müller et al. [2009], however, only for a 20‐Hz AM target but not for the 45 Hz AM target. Furthermore, this study found effects only for the left hemisphere. However, the present study differs in many respects from the previous one, e.g. by including uninformative cues in addition to the informative ones and by making the 42 Hz AM sound always the target independently of the cued ear. This may explain why the auditory cortex effects for attention were restricted to the 42 Hz aSSR in the present study. Since no auditory cortex effects were seen for the 19 Hz AM distractor, we interpret our results as a suppression of right primary auditory cortex mechanisms specialized in processing the family of “40 Hz” AM sounds. Contrary to what may be expected, however, an informative cue did not significantly increase left auditory cortical 42 Hz responses alongside the right hemispheric reduction. This may be due to the fact that the left auditory cortex was generally not strongly activated by the 42 Hz sound. Even though the topography (see Fig. 4) suggests a source in the left hemisphere, the beamformer could not localize it to the auditory cortex (a left hemisphere source was found superior to the auditory cortex along the central sulcus; see Supplementary Materials). 
However, if a strong attentional effect had been present for the left auditory cortex, we would expect our method to be sensitive enough to reveal it, as it did, e.g., for the left TPJ for the 19 Hz AM tone, which also did not show any significant emergence effect. We therefore assume that the informative cue largely exerted its impact on right primary auditory cortical activation, similar to the pattern shown in Petkov et al. [2004; see above].

Even though not central to the focus of the present study, the nonparametric permutation test employed pointed to some effects putatively generated in nonauditory regions when contrasting the influence of the informative versus the uninformative cue. An early effect between 30 and 90 ms in the ERF data was revealed when cue/targets were presented to the left ear (i.e. distractor presented to the right ear). The latency of the effect overlaps with the classical middle latency responses, which have dominant sources in primary auditory cortex [Pantev et al., 1995]. However, the attentional effect when comparing the informative versus the uninformative condition (Fig. 3D) clearly differed from the sound evoked middle latency response (Fig. 3B) in terms of topography, suggesting a different generator for the attentional effect. Indeed, the beamformer solution suggested mainly right IFG to underlie this effect, with relatively smaller responses when the cue was informative. Evidently, the experiment was not designed to scrutinize the functional role of right IFG, therefore any explanation necessarily remains in the realm of speculation. A possible explanation for the observed effect may be that an informative cue reduced the cognitive effort required to process the upcoming stimulus. Inferior frontal regions have been associated with task difficulty and invested cognitive effort previously [Leshikar et al., 2010; Obleser and Kotz, 2010]. In the spirit of the interpretation outlined above, the effect could be lateralized to left ear sound presentation, since a right ear presentation of 42 Hz AM sounds may require high levels of effort regardless of the predictive value of the cue. 
In this context it is worth mentioning that no auditory cortical middle latency differences were found for the informative versus uninformative condition, which may have been expected from some studies [Woldorff et al., 1993]. This indicates that an informative cue does not influence the earliest primary auditory cortical responses, but that these effects develop over the course of the stimulation, putatively by backprojections from higher auditory fields [Kayser and Logothetis, 2009]. It is important to emphasize here, however, that it is not possible within the current experiment to dissociate target and distractor contributions to the ERF effect, as the ERF constitutes an evoked response to the compound sound. This is in contrast to the aSSR, where target and distractor contributions are “tagged” by the modulation frequency of the stimulus.
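The idea of frequency tagging can be made concrete with a small sketch: if two steady-state components at 19 and 42 Hz are superimposed in one recorded signal, the amplitude of each can be read out separately at its own frequency, whereas a broadband evoked response offers no such separation. The sampling rate, duration, amplitudes and function names below are assumptions chosen for illustration and do not reflect the recording parameters or the time-frequency method of the study.

```python
import cmath
import math


def amplitude_at(signal, freq, fs):
    """Amplitude of the sinusoidal component at `freq` (Hz) via a single-bin DFT."""
    n = len(signal)
    acc = sum(x * cmath.exp(-2j * math.pi * freq * k / fs)
              for k, x in enumerate(signal))
    return 2 * abs(acc) / n


def compound_response(fs=1000, duration=1.0, amp19=1.0, amp42=0.5, phase42=0.7):
    """Hypothetical steady-state signal: sum of a 19 Hz and a 42 Hz component."""
    n = int(fs * duration)
    return [amp19 * math.sin(2 * math.pi * 19 * k / fs)
            + amp42 * math.sin(2 * math.pi * 42 * k / fs + phase42)
            for k in range(n)]


fs = 1000  # assumed sampling rate in Hz
signal = compound_response(fs=fs)
amp19 = amplitude_at(signal, 19, fs)  # contribution tagged at 19 Hz
amp42 = amplitude_at(signal, 42, fs)  # contribution tagged at 42 Hz
```

With a one-second window both frequencies complete a whole number of cycles, so the two components are orthogonal and each estimate is unaffected by the other; with real data, windowing and noise make the separation approximate.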

Apart from the right IFG, another nonauditory cortical region, the left angular gyrus, was selectively more strongly activated for the 19 Hz aSSR when the preceding cue was informative and the target was presented to the right ear (i.e. the 19 Hz AM sound was presented to the left ear). This ipsilateral increase in activity for the distractor sound is conceptually difficult to grasp, since the angular gyrus has been implicated in a wide range of different cognitive functions [e.g. Decety and Lamm, 2007; Lopez et al., 2008; Spierer et al., 2009]. A very speculative idea would be that rather than reflecting an increased processing of the 19 Hz sound in the informative condition, this effect could reflect a reduced processing when the preceding cue was uninformative. Essentially this could be associated with different strategies of solving the task for the different cue conditions: While an informative cue would promote a focusing of attention on the 42 Hz AM sound, leading to reduced responses ipsilateral to the target presentation, processing the combined sound after the uninformative cue could be associated with suppressing the ipsilateral response to the distractor sound. Interestingly, the angular gyrus has been proposed to be a crucial region for processing the spatial location of sounds [Wang et al., 2008]. Functionally this may correspond to a deeper processing of the 19 Hz AM sound, i.e. in order to solve the task under uncertainty (i.e. the uninformative condition) it may be helpful to also locate the 19 Hz AM sound: excluding the location of the distractor sound may be one mechanism that contributes to localizing the target sound. However, in the face of a missing a priori hypothesis for the angular gyrus and the trend level of the effect itself, further studies will be needed to follow up this speculative idea and to confirm its replicability.

Finally, it should be mentioned that even though the focus of the present study was on the neuronal influence of the cue in predicting the probable target location, behaviorally the congruency effects within the informative condition were stronger than the differences between informative and uninformative condition. This may suggest even stronger effects on the aSSRs than the ones obtained from the present analysis. Unfortunately, due to the necessity of equalizing the number of trials when contrasting conditions, the number of trials in the present experiment was too low for the incongruent stimuli within the informative condition. After having established the influence of the predictive value of the cue on aSSRs, an upcoming study should focus on congruency effects within the informative condition only. A further limitation of the study concerns the anatomical interpretation of the 42 Hz aSSR effect. In the absence of individual structural information and realistic headmodels, along with the knowledge of the limitations of localization accuracy of MEG, we cannot claim with certainty that the effects are indeed attributable to changes of activity in primary auditory cortex. Even though attentional influences have been unequivocally demonstrated elsewhere [Bidet‐Caulet et al., 2007], it is theoretically conceivable that primary auditory cortical activity remains unchanged whereas activity outside of primary regions changes. Even though our data are in principle consistent with an interpretation that primary auditory cortical activity has been affected by our experimental manipulation, future intracranial experiments will be needed in order to settle this issue.

CONCLUSION

Our results suggest that behavioral attentional gains yielded by a target‐preceding informative cue can modulate primary auditory cortical responses. This pattern was restricted to right auditory cortex when the target was presented to the right ear. We suggest that right ear target presentation demands a change of the “default” right hemispheric activation pattern for “40 Hz” AM sounds, promoted by selective attention. In this sense attention can be seen as a kind of gain modulator, downregulating activation from actively ignored primary auditory cortical regions, which is consistent with interpretations put forward by electrophysiology, neuroimaging and also neurocomputational modeling [Buia and Tiesinga, 2006].

Supporting information

Additional Supporting Information may be found in the online version of this article.

Supporting Information Figure 1

Acknowledgements

The authors thank Anne Hauswald for helpful discussions.

REFERENCES

- Bardouille T, Ross B (2008): MEG imaging of sensorimotor areas using inter‐trial coherence in vibrotactile steady‐state responses. Neuroimage 42: 323–331.

- Bidet‐Caulet A, Fischer C, Besle J, Aguera PE, Giard MH, Bertrand O (2007): Effects of selective attention on the electrophysiological representation of concurrent sounds in the human auditory cortex. J Neurosci 27: 9252–9261.

- Buia C, Tiesinga P (2006): Attentional modulation of firing rate and synchrony in a model cortical network. J Comput Neurosci 20: 247–264.

- Carrasco M, Ling S, Read S (2004): Attention alters appearance. Nat Neurosci 7: 308–313.

- Decety J, Lamm C (2007): The role of the right temporoparietal junction in social interaction: How low‐level computational processes contribute to meta‐cognition. Neuroscientist 13: 580–593.

- Edeline JM (1999): Learning‐induced physiological plasticity in the thalamo‐cortical sensory systems: A critical evaluation of receptive field plasticity, map changes and their potential mechanisms. Prog Neurobiol 57: 165–224.

- Fan J, Posner M (2004): Human attentional networks. Psychiatr Prax 31(Suppl 2): S210–S214.

- Fritz J, Shamma S, Elhilali M, Klein D (2003): Rapid task‐related plasticity of spectrotemporal receptive fields in primary auditory cortex. Nat Neurosci 6: 1216–1223.

- Fritz JB, Elhilali M, David SV, Shamma SA (2007a): Does attention play a role in dynamic receptive field adaptation to changing acoustic salience in A1? Hear Res 229: 186–203.

- Fritz JB, Elhilali M, Shamma SA (2007b): Adaptive changes in cortical receptive fields induced by attention to complex sounds. J Neurophysiol 98: 2337–2346.

- Fuchs S, Andersen SK, Gruber T, Muller MM (2008): Attentional bias of competitive interactions in neuronal networks of early visual processing in the human brain. Neuroimage 41: 1086–1101.

- Giabbiconi CM, Trujillo‐Barreto NJ, Gruber T, Muller MM (2007): Sustained spatial attention to vibration is mediated in primary somatosensory cortex. Neuroimage 35: 255–262.

- Jancke L, Mirzazade S, Shah NJ (1999): Attention modulates activity in the primary and the secondary auditory cortex: A functional magnetic resonance imaging study in human subjects. Neurosci Lett 266: 125–128.

- Kayser C, Logothetis NK (2009): Directed interactions between auditory and superior temporal cortices and their role in sensory integration. Front Integr Neurosci 3: 7.

- Keil A, Moratti S, Sabatinelli D, Bradley MM, Lang PJ (2005): Additive effects of emotional content and spatial selective attention on electrocortical facilitation. Cereb Cortex 15: 1187–1197.

- Leshikar ED, Gutchess AH, Hebrank AC, Sutton BP, Park DC (2010): The impact of increased relational encoding demands on frontal and hippocampal function in older adults. Cortex 46: 507–521.

- Linden RD, Picton TW, Hamel G, Campbell KB (1987): Human auditory steady‐state evoked potentials during selective attention. Electroencephalogr Clin Neurophysiol 66: 145–159.

- Lopez C, Halje P, Blanke O (2008): Body ownership and embodiment: Vestibular and multisensory mechanisms. Neurophysiol Clin 38: 149–161.

- Maris E, Oostenveld R (2007): Nonparametric statistical testing of EEG‐ and MEG‐data. J Neurosci Methods 164: 177–190.

- Muller N, Schlee W, Hartmann T, Lorenz I, Weisz N (2009): Top‐down modulation of the auditory steady‐state response in a task‐switch paradigm. Front Hum Neurosci 3: 1.

- Nolte G (2003): The magnetic lead field theorem in the quasi‐static approximation and its use for magnetoencephalography forward calculation in realistic volume conductors. Phys Med Biol 48: 3637–3652.

- Obleser J, Kotz SA (2010): Expectancy constraints in degraded speech modulate the language comprehension network. Cereb Cortex 20: 633–640.

- Okamoto H, Stracke H, Bermudez P, Pantev C: Sound processing hierarchy within human auditory cortex. J Cogn Neurosci. DOI:10.1162/jocn.2010.21521.

- Pantev C, Bertrand O, Eulitz C, Verkindt C, Hamson S, Schuierer G, Elbert T (1995): Specific tonotopic organizations of different areas of the human auditory cortex revealed by simultaneous magnetic and electric recordings. Electroencephalogr Clin Neurophysiol 94: 26–40.

- Pantev C, Roberts LE, Elbert T, Ross B, Wienbruch C (1996): Tonotopic organization of the sources of human auditory steady‐state responses. Hear Res 101: 62–74.

- Pastor MA, Artieda J, Arbizu J, Marti‐Climent JM, Penuelas I, Masdeu JC (2002): Activation of human cerebral and cerebellar cortex by auditory stimulation at 40 Hz. J Neurosci 22: 10501–10506.

- Penhune VB, Zatorre RJ, MacDonald JD, Evans AC (1996): Interhemispheric anatomical differences in human primary auditory cortex: Probabilistic mapping and volume measurement from magnetic resonance scans. Cereb Cortex 6: 661–672.

- Petkov CI, Kang X, Alho K, Bertrand O, Yund EW, Woods DL (2004): Attentional modulation of human auditory cortex. Nat Neurosci 7: 658–663.

- Posner MI, Snyder CRR, Davidson JR (1980): Attention and the detection of signals. J Exp Psychol 109: 160–174.

- Reyes SA, Salvi RJ, Burkard RF, Coad ML, Wack DS, Galantowicz PJ, et al. (2004): PET imaging of the 40 Hz auditory steady state response. Hear Res 194: 73–80.

- Reynolds JH, Desimone R (1999): The role of neural mechanisms of attention in solving the binding problem. Neuron 24: 19–29, 111–125.

- Ross B, Borgmann C, Draganova R, Roberts LE, Pantev C (2000): A high‐precision magnetoencephalographic study of human auditory steady‐state responses to amplitude‐modulated tones. J Acoust Soc Am 108: 679–691.

- Ross B, Herdman AT, Pantev C (2005): Right hemispheric laterality of human 40 Hz auditory steady‐state responses. Cereb Cortex 15: 2029–2039.

- Ross B, Picton TW, Herdman AT, Pantev C (2004): The effect of attention on the auditory steady‐state response. Neurol Clin Neurophysiol 2004: 22.

- Saupe K, Schroger E, Andersen SK, Muller MM (2009): Neural mechanisms of intermodal sustained selective attention with concurrently presented auditory and visual stimuli. Front Hum Neurosci 3: 58.

- Silver MA, Ress D, Heeger DJ (2007): Neural correlates of sustained spatial attention in human early visual cortex. J Neurophysiol 97: 229–237.

- Skosnik PD, Krishnan GP, O'Donnell BF (2007): The effect of selective attention on the gamma‐band auditory steady‐state response. Neurosci Lett 420: 223–228.

- Spierer L, Bernasconi F, Grivel J (2009): The temporoparietal junction as a part of the “when” pathway. J Neurosci 29: 8630–8632.

- Van Veen BD, van Drongelen W, Yuchtman M, Suzuki A (1997): Localization of brain electrical activity via linearly constrained minimum variance spatial filtering. IEEE Trans Biomed Eng 44: 867–880.

- Wang WJ, Wu XH, Li L (2008): The dual‐pathway model of auditory signal processing. Neurosci Bull 24: 173–182.

- Weisz N, Keil A, Wienbruch C, Hoffmeister S, Elbert T (2004): One set of sounds, two tonotopic maps: Exploring auditory cortex with amplitude‐modulated tones. Clin Neurophysiol 115: 1249–1258.

- Woldorff MG, Gallen CC, Hampson SA, Hillyard SA, Pantev C, Sobel D, et al. (1993): Modulation of early sensory processing in human auditory cortex during auditory selective attention. Proc Natl Acad Sci USA 90: 8722–8726.
