Abstract

Observing another person being touched activates our own somatosensory system. Whether the primary somatosensory cortex (S1) is also activated during the observation of passive touch, and which subregions of S1 are responsible for self‐ and other‐related observed touch, is currently unclear. In our study, we first aimed to clarify whether observing passive touch without any action component can robustly increase activity in S1. Secondly, we investigated whether S1 activity increases only when touch of others is observed, or also when touch of one's own body is observed. We were particularly interested in which subregions of S1 are responsible for either process. We used functional magnetic resonance imaging at 7 Tesla to measure S1 activity changes while participants observed videos of their own or another's hand, in either egocentric or allocentric perspective, being touched by different pieces of sandpaper. Participants were required to judge the roughness of the different sandpaper surfaces. Our results clearly show that S1 activity does increase in response to observing passive touch, and that these activity changes are localized in posterior but not in anterior parts of S1. Importantly, activity increases in S1 were particularly related to observing another person being touched. Self‐related observed touch, in contrast, caused no significant activity changes within S1. We therefore assume that posterior but not anterior S1 is part of a system for sharing tactile experiences with others. Hum Brain Mapp, 2013. © 2012 Wiley Periodicals, Inc.

Keywords: fMRI, primary somatosensory cortex, self and other, S1, 7 Tesla, observed touch

INTRODUCTION

Observing a spider crawling over the hand of another person may instantaneously evoke a shiver running down your spine. Likewise, seeing somebody being hurt may affect you in the same way as if you experienced the pain yourself. Such anecdotal reports [Bradshaw and Mattingley, 2001; Keysers et al., 2004; Morrison et al., 2004] illustrate that we not only react to direct tactile or painful stimulation, but also show similar bodily responses when such events are only observed. A number of functional magnetic resonance imaging (fMRI) studies have focused on the question of whether observed painful [Avenanti et al., 2005, 2006; Botvinick et al., 2005; Bufalari et al., 2007; Morrison et al., 2004; Singer et al., 2004, 2006] or tactile [Blakemore et al., 2005; Ebisch et al., 2008; Keysers et al., 2004; Schaefer et al., 2009] stimulation triggers activity in the same brain areas as when such events are directly experienced. For the domain of pain, such shared neuronal representations were localized in the bilateral anterior insula, the rostral anterior cingulate cortex, the brainstem, and the cerebellum [Botvinick et al., 2005; Morrison et al., 2004; Singer et al., 2004, 2006]. For the domain of touch, overlapping activity for both felt touch and observed touch was mainly found in voxels of the secondary somatosensory cortex (S2) [Ebisch et al., 2008; Schaefer et al., 2009] and the inferior parietal cortex (IPC) [Ebisch et al., 2008]. Importantly, whereas fMRI studies only offer information about shared brain activations on the order of millimeters, a recent single‐unit recording study provided the first clear evidence of visuotactile bimodal neurons in the monkey IPC that discharge both when the monkey perceives a tactile stimulation and when it observes such a stimulation at or near the equivalent body part of the experimenter [Ishida et al., 2010].

Recent research indicates that the primary somatosensory cortex (S1) may also be part of a system that internally represents observed touch of others. Traditionally, S1 has been assigned the role of a sensory input area that is inherently private in nature [Bufalari et al., 2007] and shows increased neuronal firing rates only when a tactile event is directly experienced [Kaas, 1983] and not when it is merely observed. Evidence for this interpretation is provided by studies that report significant increases in S1 activity only for experienced pain [Morrison et al., 2004; Singer et al., 2004, 2006] and touch [Keysers et al., 2004; Morrison et al., 2004], but not for the mere observation of such events [Jackson et al., 2005; Keysers et al., 2004; Morrison et al., 2004; Singer et al., 2004, 2006]. It is therefore often assumed that only higher order features of observed pain and touch, i.e., affective [Singer et al., 2004] or conceptual [Keysers et al., 2004] components, are corepresented in the observer, but that basic somatosensory features are not commonly shared.

However, this role of S1 has lately been challenged. Recent neuroimaging studies indicate that neuronal activity in S1 can be modulated by the mere observation of touch [Bufalari et al., 2007], and that such activity changes involve areas within S1 that are also active during experienced touch [Blakemore et al., 2005; Ebisch et al., 2008; Pihko et al., 2010; Schaefer et al., 2009]. This suggests a role for S1 in social situations not directly associated with real touch. For instance, in an fMRI study conducted by Blakemore et al. (2005), participants either received tactile stimulation on their own necks and faces while lying in the scanner, or observed objects and humans being touched at corresponding sites. Their task when observing the video sequences was to decide whether the intensity of the observed touch events was “hard,” “medium,” or “soft.” Voxels in contralateral S1 showed overlapping activity for experienced touch and observed human touch, but not for experienced touch and observed object touch. Such results [see also Ebisch et al., 2008; Pihko et al., 2010; Schaefer et al., 2009] indicate that S1 might be particularly responsive to the active observation of tactile events that occur to a human counterpart rather than to objects, and suggest the existence of an area that extracts primary sensory features of observed touch events. This redefines S1 as not only a sensory input area but also an area that potentially has social properties.

However, with regard to the nature of S1 activity during observed touch, two main questions remain unanswered. Firstly, it is still a matter of debate whether increases in S1 activity during observed touch can really be related to observing a tactile event per se [Schaefer et al., 2009], or whether they only occur when observed touch is combined with observed action [Keysers et al., 2010]. The latter account questions the involvement of S1 in a system to specifically represent observed touch and is grounded in a number of studies that indeed showed increased S1 activity in response to observed movement [Avikainen et al., 2002; Dinstein et al., 2007; Filimon et al., 2007; Gazzola and Keysers, 2009; Gazzola et al., 2007; Grezes et al., 2003; Turella et al., 2009] and therefore included S1 within a system for representing observed action. Furthermore, in some previous studies on observed touch, reaching movements of the experimenter were shown [Blakemore et al., 2005; Ebisch et al., 2008; Pihko et al., 2010], which might have triggered the reported S1 activity during observation. In addition, S1 activity has often not been found in fMRI studies on observed passive touch [Keysers et al., 2004; Morrison et al., 2004]. However, one recent study conducted by Schaefer et al. (2009) provides contradictory evidence. In this study, participants made judgments on video sequences of static hands that were either touched or not touched by a paintbrush. They were required to count the number of strokes applied to the hand or to the floor, respectively, and to decide whether this number was equal or unequal to 25. Comparing the touch with the no‐touch conditions, seeing a static hand being touched significantly increased activity in S1, thus providing a clear indication that observing action might not be a mandatory component to find increased S1 activity during observed touch.

Secondly, it is still unclear which subregions of S1 are activated when touch is observed, and whether these are different for self‐ compared to other‐related observed touch. A precise characterization of S1 subregion activity during observed touch is essential for fully understanding how observed touch is represented in the observer's somatosensory system. More precisely, it is a matter of current debate whether only higher order (posterior) parts of S1 (i.e., Areas 1 and 2) are recruited during observed human touch [Keysers et al., 2010], or whether the most primary (anterior) areas of S1 (i.e., Areas 3a and 3b) are also activated when touch is observed [Schaefer et al., 2009]. The former account is supported by a number of studies that report activity peaks for observed touch solely in posterior S1 [Blakemore et al., 2005; Ebisch et al., 2008; Schaefer et al., 2009]. However, a recent fMRI study indicated that anterior S1 is also activated during observed touch, but only when activity changes during observed touch of egocentrically presented hands were directly compared to those occurring during observed touch of allocentrically presented hands [Schaefer et al., 2009]. Because of the well‐known influence of body perspective on self‐referential processing [Chan et al., 2004; Costantini and Haggard, 2007; Saxe et al., 2006; van den Bos and Jeannerod, 2002], it can be argued that in this study, anterior S1 showed selective activity when observed touch was more related to the self than to others.

However, this hypothesis needs further exploration, because it is currently unclear whether this effect would generalize to a situation in which participants actually see their own hand versus another's hand being touched. This distinction is particularly important because of the unique effect that seeing one's own hand has on behavioral [Frassinetti et al., 2008, 2010] and neuronal responses [Devue et al., 2007; Hodzic et al., 2009a, b; Myers and Sowden, 2008]. Current results are additionally difficult to interpret because fMRI designs at 3 Tesla (T) have well‐known limitations with regard to spatial specificity and signal detection power [Gati et al., 1997; Sanchez‐Panchuelo et al., 2010; Scouten et al., 2006; Stringer et al., 2011; Yacoub et al., 2003]. With S1 as region of interest, these restrictions are particularly critical due to the high likelihood of partial volume effects [Fischl and Dale, 2000; Sanchez‐Panchuelo et al., 2010; Scouten et al., 2006; Stringer et al., 2011] and the rather subtle activity changes of S1 in response to observed touch [Blakemore et al., 2005; Keysers et al., 2004; Schaefer et al., 2009]. It is therefore not clear whether the shift in S1 activity to anterior sites when touch is more related to the self would be reproducible using ultra‐high field fMRI at 7T, which offers improvements with respect to the aforementioned limitations [Bandettini, 2009; Stringer et al., 2011; van der Zwaag et al., 2009].

To clarify these issues, we conducted an fMRI study at 7T to investigate (I) whether increases in S1 activity can be related to observed passive touch, (II) to what extent this effect can be modulated by self‐ and other‐related observed touch, and (III) which subregions of S1 display increased activity levels for self‐ and other‐related observed touch, respectively. Similar to the study conducted by Schaefer et al. (2009), we recorded video sequences while we applied touch to participants' passively resting hands without the experimenter being visible. Here, touch was applied via sandpaper samples with different levels of roughness. When watching the video sequences in the scanner, participants were required to decide which of two sandpaper samples had the rougher surface.

To directly test for the effect of self‐ and other‐related observed touch on S1 activity changes, we included not only the factor of hand perspective but also that of hand identity, resulting in experimental conditions where participants observed videos of their own or another person's hand being touched either from egocentric or allocentric perspectives. It was expected that any neuronal differentiation in S1 during self‐ versus other‐related observed touch should be clearly detectable with the inclusion of both experimental factors. Importantly, we were able to circumvent the well‐known limitations of 3T fMRI designs regarding spatial specificity and signal detection power [Gati et al., 1997; Sanchez‐Panchuelo et al., 2010; Scouten et al., 2006; Stringer et al., 2011; Yacoub et al., 2003] by using fMRI at 7T, which provides better spatial resolution, spatial specificity and detection sensitivity as compared with standard 3T imaging [Bandettini, 2009; Stringer et al., 2011; van der Zwaag et al., 2009].

MATERIALS AND METHODS

Participants

Seventeen healthy volunteers (7 men, 10 women; mean age 24.9 years, age range 20–31 years) participated in our study. All were right‐handed (mean Edinburgh Handedness Inventory score: 93.4 [Oldfield, 1971]), had normal or corrected‐to‐normal vision, and had no reported history of neurological, major medical, or psychiatric disorders. The study was approved by the ethics committee of the University of Leipzig, and informed consent was obtained from every subject.

Materials and Design

During scanning, participants observed short video sequences of right hands, which were either touched or not touched by a rectangular piece of aluminum oxide sandpaper (3 cm in width). The content of the video sequences (each 6 s in length) was varied in a 2 × 2 × 2 factorial design, using the factors of touch (observed touch, no‐touch), hand identity (self, other), and hand perspective (egocentric, allocentric) (see Fig. 1A). These video sequences were recorded before the fMRI experiments using the same participants. In the observed touch conditions, the video sequences showed a right hand being passively stroked by a piece of sandpaper moving vertically up and down along the underside of the index finger (one vertical movement/2 s). Contact between the sandpaper and the hand was clearly visible because the skin of the index finger shifted slightly as the sandpaper moved along it. In the no‐touch conditions, a piece of sandpaper was seen moving in the same temporal sequence and direction as in the observed touch videos, but here it did not touch the hand. Instead, it was moved in close proximity to the index finger. Importantly, because both the hand and the piece of sandpaper were observed against a static (white) background in the videos, it was obvious that only the sandpaper, and not the hand, was moving. In the self conditions, the video sequences showed the participant's own hand, whereas in the other conditions, another person's hand was seen. Self/other pairs were matched beforehand in terms of gender, approximate hand size, finger shape, brightness, and color. In the egocentric conditions, hands were presented in a self‐referenced (1st person) perspective, whereas in the allocentric conditions, hands were presented in an other‐referenced (3rd person) perspective.
This resulted in eight different conditions (i.e., self egocentric observed touch, self allocentric observed touch, other egocentric observed touch, other allocentric observed touch, self egocentric no‐touch, self allocentric no‐touch, other egocentric no‐touch, and other allocentric no‐touch).

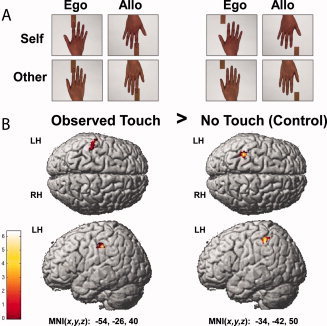

Figure 1.

(A) Video stimuli of an example pair. Different types of video sequences were varied in a 2 × 2 × 2 factorial design with the factors Touch (Observed Touch, No‐Touch), Hand Identity (Self, Other), and Viewing Perspective (Ego = Egocentric, Allo = Allocentric); (B) Activity changes in S1 for the contrast Observed Touch > No‐Touch across experimental conditions superimposed on the MNI reference brain (visualized at P < 0.005 (uncorrected) and masked with left S1).

When they were videotaped, participants were naive to the purpose of the video, and could see neither their own nor the experimenter's hand. Participants were instructed to completely relax their hands and fingers and not to put force on the sandpaper or on the tabletop. All videos were recorded using the same distance and angle between the camera, the piece of sandpaper, and the participant's hand, and a constant illumination level was provided. Video sequences in which the experimenter's hand was visible or sandpaper motion caused overt movements of the participant's finger were not used for the experiment.

Importantly, the pieces of sandpaper depicted varied in their grit values (i.e., P40, P60, P80, P120, P150, and P220) and thus in their level of roughness. Sandpapers with higher grit values are generally perceived as smoother, whereas sandpapers with lower grit values are perceived as rougher [Heller, 1982]. In each experimental condition, participants were shown six different pairs of sandpaper grit values (i.e., P40/P60, P40/P80, P60/P120, P80/P150, P120/P220, and P150/P220) and were required to judge by sight which sample of each pair was rougher (see Procedure).
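Because a lower grit value denotes a coarser surface, the correct answer for each of the six pairs follows directly from the grit numbers. The following is a minimal Python sketch of that scoring rule; the names `GRITS`, `PAIRS`, `rougher`, and `ANSWERS` are hypothetical and chosen only for illustration, and it assumes that each pair is shown once in each order.

```python
# Grit values shown in the videos and the six pairs used in every condition.
GRITS = (40, 60, 80, 120, 150, 220)
PAIRS = ((40, 60), (40, 80), (60, 120), (80, 150), (120, 220), (150, 220))


def rougher(first_grit: int, second_grit: int) -> int:
    """Return 1 if the first sample is rougher, 2 if the second one is.

    Encodes the rule stated above: a lower grit value means a coarser,
    i.e. rougher, surface.
    """
    if first_grit == second_grit:
        raise ValueError("each pair contains two different grit values")
    return 1 if first_grit < second_grit else 2


# Correct answers for every pair in both presentation orders
# (assumption: each pair appears once rougher-first and once smoother-first).
ANSWERS = {}
for a, b in PAIRS:
    ANSWERS[(a, b)] = rougher(a, b)
    ANSWERS[(b, a)] = rougher(b, a)
```

For example, `rougher(220, 150)` returns 2, because the P150 sample shown second is the coarser one.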

PROCEDURE

Hand Recognition Task (Before Scanning)

Before scanning, all participants performed a hand recognition task. In this task, they were tested as to whether they could reliably differentiate between their own hand and another person's hand presented on the screen. In this test, participants were shown pictures of their own hand and another person's hand, either in an egocentric or an allocentric viewing perspective, in a randomized sequence. Each hand picture was shown for 2 seconds, followed by a 2‐second fixation until the next picture was presented. Participants were asked to decide whose hand they saw on the screen as quickly and as accurately as possible. Responses were given via right hand button presses: Half of the participants answered with their right middle finger for their own hand and with their right index finger for the other hand, and the other half responded using the opposite finger to button pairing. Each condition was repeated five times, resulting in 20 trials altogether.
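The hand recognition design (2 identities × 2 perspectives × 5 repetitions, 2 s picture plus 2 s fixation, counterbalanced finger mapping) can be sketched as a trial list generator. This is an illustrative sketch only: the function name and the rule that even‐numbered participants press the middle finger for their own hand are assumptions, not the procedure's actual implementation.

```python
import random

IDENTITIES = ("self", "other")
PERSPECTIVES = ("egocentric", "allocentric")


def hand_recognition_trials(participant_index, repetitions=5, seed=None):
    """Build a randomized trial list for the hand recognition task.

    Four conditions (identity x perspective) x `repetitions` trials; each
    trial is a 2 s picture followed by a 2 s fixation. Hypothetical
    counterbalancing: even-indexed participants press the middle finger for
    their own hand, odd-indexed participants press the index finger.
    """
    rng = random.Random(seed)
    trials = [(i, p) for i in IDENTITIES for p in PERSPECTIVES] * repetitions
    rng.shuffle(trials)
    own_finger, other_finger = (
        ("middle", "index") if participant_index % 2 == 0 else ("index", "middle")
    )
    mapping = {"self": own_finger, "other": other_finger}
    return [
        {"identity": i, "perspective": p, "picture_s": 2.0,
         "fixation_s": 2.0, "correct_finger": mapping[i]}
        for i, p in trials
    ]
```

With the default of five repetitions this yields the 20 trials described above.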

Roughness Estimation Task (Scanning Session)

After completion of the hand recognition task, participants were prepared for the roughness estimation task to be subsequently performed in the scanner. For this purpose, they practiced this task with example stimuli for 3 minutes outside of the scanner room. Example stimuli were composed of the same videos as subsequently used in the scanning session, but were combined differently so that the sequence of videos seen in the training phase did not occur during the scanning session. During this training, participants answered in the same response mode and with the same response device as they did in the subsequent scanning session.

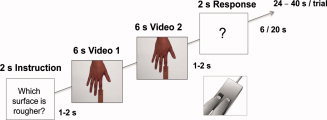

The scanning session consisted of one long run lasting 45 minutes that included all experimental conditions. A block design was applied, and each of the eight experimental conditions was presented 12 times, resulting in 96 trials in total. Each trial started with the same instruction screen (“Which surface is rougher?”) to remind participants of the goal of the task and to signal the onset of a new trial. After 1–2 seconds, participants were shown two video sequences in direct succession that were always from the same experimental condition but presented two different sandpaper samples (for sandpaper combinations, see Materials and Design). After a further 1–2 seconds, participants were asked to indicate via button press which of the two sandpaper samples had the rougher surface (see Fig. 2). Participants had to respond within a comfortable 2‐second time window (forced choice); speeded responses were not required. Half of the participants responded with their left index finger when they thought the first surface was rougher and with their left middle finger when they thought the second surface was rougher; the other half responded in the opposite manner. Half of the trials in each condition started with the rougher surface, and the other half started with the smoother surface. To control for carry‐over effects from one trial to the next, the interval between trials was 6 seconds for three out of four trials and 20 seconds for the remaining trials; interval length was randomized across trials and counterbalanced across conditions. Trials were presented in a pseudorandomized order, arranged so that the trials of each condition had, on average, the same relative temporal position within the experiment.

Figure 2.

Trial structure of scanning session.
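The trial structure described above can be sketched as a schedule generator, which also gives a rough check of the run length. This is a simplified sketch under stated assumptions: each grit pair appears once in each order within a condition, the 6 s/20 s intervals are split 9/3 within every condition, the 1–2 s jitters are replaced by their 1.5 s midpoints, and the paper's additional constraint on the mean temporal position of each condition is not modeled (a plain shuffle is used instead). All names are hypothetical.

```python
import random
from itertools import product

# Eight conditions: touch x identity x perspective.
CONDITIONS = list(product(("touch", "notouch"), ("self", "other"), ("ego", "allo")))
# Six grit pairs, written rougher (lower grit) first.
PAIRS = ((40, 60), (40, 80), (60, 120), (80, 150), (120, 220), (150, 220))


def build_schedule(seed=None):
    """Return 96 trials: 8 conditions x 12 trials (6 pairs x 2 orders)."""
    rng = random.Random(seed)
    trials = []
    for condition in CONDITIONS:
        # Half of the trials start with the rougher sample, half with the smoother one.
        orders = list(PAIRS) + [pair[::-1] for pair in PAIRS]
        # 3 of 4 trials are followed by a 6 s interval, 1 of 4 by a 20 s interval,
        # balanced within each condition (9 x 6 s, 3 x 20 s).
        itis = [6.0] * 9 + [20.0] * 3
        rng.shuffle(itis)
        for grits, iti in zip(orders, itis):
            trials.append({"condition": condition, "grits": grits, "iti_s": iti})
    # Plain shuffle; the extra constraint on each condition's temporal
    # position within the run is not modeled here.
    rng.shuffle(trials)
    return trials


def expected_duration_s(trials):
    """Approximate session length using the midpoints of the 1-2 s jitters."""
    per_trial = 1.5 + 2 * 6.0 + 1.5 + 2.0  # instruction, two videos, delay, response
    return sum(per_trial + trial["iti_s"] for trial in trials)
```

Under these assumptions, `expected_duration_s(build_schedule())` gives 2544 s (about 42 minutes) of trial time, roughly in line with the reported 45‐minute run.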

MRI DATA ACQUISITION

Functional and anatomical MRI data were acquired with a 7T MRI scanner (Magnetom 7T, Siemens Healthcare Sector, Erlangen, Germany) with a 24‐channel NOVA head coil (Nova Medical, Wilmington, MA). Before functional scanning, high‐resolution 3D anatomical T1‐weighted scans were acquired (MP2RAGE, TR = 5.0 s, TE = 2.43 ms, TI1/TI2 = 900 ms/2750 ms, flip angles α1/α2 = 7°/5°) with an isotropic voxel resolution of (0.7 mm)³ [Marques et al., 2010]. The T1‐weighted scans were subsequently used to select 30 axial slices (interleaved slice acquisition, slice thickness = 1.5 mm, no gap) for the functional scans, covering the region of interest (ROI), i.e., bilateral S1 and adjacent areas. Because this slice selection had to cover our ROI for the entire experiment, it was important that participants did not make any significant head movements from this point on. Therefore, to prevent head movements during breaks in the experiment, functional data for each participant were acquired in a single scan run. Functional T2*‐weighted gradient‐echo echo‐planar images of our ROI were then acquired using GRAPPA acceleration (iPAT = 3) [Griswold et al., 2002]. A field of view of 192 × 192 mm² and an imaging matrix of 128 × 128 were used. The functional images had isotropic voxels with an edge length of 1.5 mm. The other sequence parameters were: TR = 1.5 s, TE = 20 ms, flip angle = 90°. For the visual task, participants viewed a projector screen mounted on the receiving coil by means of a small movable mirror adjusted to give the best visibility for each participant. The middle and index fingers of the participant's left hand were placed on the two buttons of a response box. To attenuate scanner noise, participants were provided with earplugs and ear defenders.
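The reported EPI parameters are internally consistent, which a few lines of arithmetic make explicit: a 192 mm field of view over a 128‐voxel matrix gives the stated 1.5 mm in‐plane voxel size, and 30 gapless 1.5 mm slices cover a 45 mm slab. The volume count is not reported in the text; the figure below is only an estimate that assumes one continuous 45‐minute run at TR = 1.5 s and ignores dummy scans. The function name is hypothetical.

```python
def acquisition_summary(fov_mm=192.0, matrix=128, n_slices=30,
                        slice_thickness_mm=1.5, tr_s=1.5, run_min=45.0):
    """Derive geometry and an approximate volume count from the EPI parameters."""
    in_plane_mm = fov_mm / matrix              # 192 mm / 128 voxels = 1.5 mm
    slab_mm = n_slices * slice_thickness_mm    # no slice gap: 30 x 1.5 mm = 45 mm
    # Rough volume count for a 45 min run; dummy scans and the exact run
    # length are not reported, so this is only an estimate.
    n_volumes = round(run_min * 60 / tr_s)
    return {"in_plane_mm": in_plane_mm, "slab_mm": slab_mm, "n_volumes": n_volumes}
```

With the defaults, this returns an in‐plane size of 1.5 mm, a 45 mm slab, and about 1800 volumes.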

fMRI Group Analyses

Data preprocessing and statistical analyses were carried out using SPM8 (Statistical Parametric Mapping, Wellcome Department of Imaging Neuroscience, University College London, London, UK). Slice timing correction was applied to correct for differences in image acquisition time between slices, and realignment was performed to minimize movement artifacts in the time series [Unser et al., 1993a, b]. Normalization to standard MNI space was done using the unified segmentation approach based on image registration and tissue classification [Ashburner and Friston, 2005]. The original isotropic voxel size of 1.5 mm was preserved during this procedure. Data were then smoothed with a Gaussian kernel of 4 mm full‐width at half‐maximum (FWHM) and high‐pass filtered at 0.01 Hz to eliminate slow signal drifts. A general linear model (GLM) was fitted to the data, and t‐maps were created at the individual subject level via a fixed‐effects model. The observation times of the two video sequences in each trial (12 seconds altogether) were modeled as a block and used to compute contrast images by linear combination of parameter estimates. We used t‐tests to calculate the main effect of observed touch by contrasting grouped observed touch conditions against grouped no‐touch conditions (observed touch [self egocentric + other egocentric + self allocentric + other allocentric] > no touch [self egocentric + other egocentric + self allocentric + other allocentric]). Furthermore, we investigated the effect of observed touch individually for all four single conditions (observed touch self egocentric > no‐touch self egocentric, observed touch other egocentric > no‐touch other egocentric, observed touch self allocentric > no‐touch self allocentric, observed touch other allocentric > no‐touch other allocentric).
We also calculated the main effect of perspective on the observed touch versus no‐touch contrast (observed touch > no‐touch [self egocentric + other egocentric] versus observed touch > no‐touch [self allocentric + other allocentric]) and the main effect of identity on the same contrast (observed touch > no‐touch [self egocentric + self allocentric] versus observed touch > no‐touch [other egocentric + other allocentric]), each tested in both directions. Finally, we calculated the interaction effects between perspective and identity on the observed touch versus no‐touch contrasts.
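The contrasts listed above correspond to weight vectors over the eight condition regressors of the 2 × 2 × 2 design. The sketch below builds them with effect coding (+1/−1 per factor level, products for interactions). The regressor order is a hypothetical assumption, since the actual design‐matrix layout is not reported, and overall scaling of the weights (e.g., ±0.25 instead of ±1) does not change the resulting t statistic. Negating a vector gives the opposite direction of a two‐directional test.

```python
from itertools import product

# Hypothetical regressor order (touch x identity x perspective); the actual
# design-matrix column order is not reported.
CONDITIONS = list(product(("touch", "notouch"), ("self", "other"), ("ego", "allo")))


def level(value, positive):
    """Effect coding: +1 for the named level, -1 for the other one."""
    return 1 if value == positive else -1


def contrast(weight):
    """Apply a weight function to every (touch, identity, perspective) cell."""
    return [weight(t, i, p) for t, i, p in CONDITIONS]


# Main effect: all observed-touch cells > all no-touch cells.
main_touch = contrast(lambda t, i, p: level(t, "touch"))
# (touch > no-touch) for egocentric vs. allocentric; negate for the reverse direction.
perspective_on_touch = contrast(lambda t, i, p: level(t, "touch") * level(p, "ego"))
# (touch > no-touch) for self vs. other.
identity_on_touch = contrast(lambda t, i, p: level(t, "touch") * level(i, "self"))
# Identity x perspective interaction on the touch > no-touch contrast.
interaction_on_touch = contrast(
    lambda t, i, p: level(t, "touch") * level(i, "self") * level(p, "ego"))


def single_effect(identity, perspective):
    """Observed touch > no-touch within one identity/perspective cell."""
    return contrast(
        lambda t, i, p: level(t, "touch") if (i, p) == (identity, perspective) else 0)
```

For instance, `single_effect("other", "allo")` places +1 on the other‐allocentric touch regressor and −1 on its no‐touch counterpart, and every vector sums to zero.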

Functional images were masked with anatomical ROIs encompassing the left and right S1. Since we specifically wished to compare activity changes between anterior S1 (i.e., Areas 3a and 3b) and posterior S1 (i.e., Areas 1 and 2), we also created anatomical masks of anterior and posterior S1, respectively. These were used for small volume corrections and for extracting the observed touch versus no‐touch contrast estimates in both subregions. All mask images were defined a priori using the Anatomy Toolbox implemented in SPM [Eickhoff et al., 2005, 2006, 2007; Geyer et al., 1999, 2000; Grefkes et al., 2001]. The threshold for significant clusters and voxels was set at P < 0.05 (FWE‐corrected) with a minimal cluster size of five voxels. Observed touch versus no‐touch contrast estimates of anterior and posterior S1 were compared with one another using Bonferroni‐corrected paired‐sample t‐tests (two‐sided) and were tested against zero using one‐sample t‐tests (two‐sided).
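The subregion comparison above combines two standard tests: a paired‐sample t‐test, which is simply a one‐sample t‐test on the within‐subject differences (anterior minus posterior), and a one‐sample t‐test against zero, with Bonferroni correction multiplying each p‐value by the number of tests. The standard‐library sketch below computes the t statistics, degrees of freedom, and Bonferroni‐adjusted p‐values. It does not compute the p‐values themselves, which require the t distribution (for example, `2 * scipy.stats.t.sf(abs(t), df)`); the function names are hypothetical.

```python
import math
import statistics


def one_sample_t(values, mu=0.0):
    """t statistic and degrees of freedom for H0: mean == mu (two-sided test)."""
    n = len(values)
    if n < 2:
        raise ValueError("need at least two observations")
    se = statistics.stdev(values) / math.sqrt(n)
    return (statistics.mean(values) - mu) / se, n - 1


def paired_t(a, b):
    """Paired-sample t: a one-sample t-test on the within-subject differences."""
    if len(a) != len(b):
        raise ValueError("paired samples must have equal length")
    return one_sample_t([x - y for x, y in zip(a, b)])


def bonferroni(p_values):
    """Bonferroni adjustment: multiply each p by the number of tests, capped at 1."""
    m = len(p_values)
    return [min(1.0, p * m) for p in p_values]
```

Here `a` and `b` would hold one anterior and one posterior contrast estimate per participant, in the same participant order.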

fMRI Single Subject Analyses

In addition to group analyses, functional and anatomical data of all participants were also evaluated separately in single subject analyses. As in the group analyses, slice timing correction, realignment, and normalization were performed using SPM8. In contrast to the group analyses, however, smoothing was not applied to the functional scans, in order to preserve their high spatial resolution (1.5 mm isotropic). The unsmoothed data were used to precisely localize the clusters significantly activated in response to observed touch (main effect and single effects) in each individual subject. To quantify the consistency of activations in response to the main effect of observed touch across subjects, we additionally summed the thresholded observed touch > no‐touch contrast maps of all 14 subjects to create a spatial consistency map. Values in this map ranged from 0 to 14 and indicated the number of subjects in whom a particular voxel was active.
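A spatial consistency map of this kind is a voxel‐wise count over binarized single‐subject maps. The sketch below assumes each subject's thresholded map has already been flattened into a sequence of 0/1 values in a common (normalized) voxel order; reading and thresholding the actual image files is not shown, and the function name is hypothetical.

```python
def consistency_map(binary_maps):
    """Voxel-wise count of subjects whose thresholded map marks the voxel active.

    binary_maps: one flat sequence of 0/1 values per subject, all in the same
    normalized voxel order. Returns a list with values in 0..len(binary_maps).
    """
    if not binary_maps:
        return []
    n_vox = len(binary_maps[0])
    if any(len(m) != n_vox for m in binary_maps):
        raise ValueError("all subject maps must have the same number of voxels")
    # Count, for each voxel, how many subjects show suprathreshold activity there.
    return [sum(int(bool(m[v])) for m in binary_maps) for v in range(n_vox)]
```

With 14 subjects, each voxel receives a value between 0 and 14, matching the range described above.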

Furthermore, we performed a second analysis at the single subject level, using preprocessing identical to the first analysis except that functional data were not normalized into stereotactic space. Here, the high‐resolution T1 images of each participant were used to define the hand area of S1 and the S1 subregions anatomically for each individual subject [Stringer et al., 2011]. The subregions defined in this way could then be used to localize S1 activity changes based on individual brain anatomy. To identify the hand area in S1, we took advantage of the fact that it can easily be localized in anatomical scans: the “hand‐knob” area, that is, the inverted‐omega‐shaped gyrus, is a reliable anatomical marker for the hand area in S1 and is clearly identifiable in coronal and sagittal T1 images [Moore et al., 2000; Sastre‐Janer et al., 1998; White et al., 1997; Yousry et al., 1997]. We specified S1 subregions anatomically using guidelines that link cytoarchitectonic labeling with anatomical descriptions of the subregions [Geyer et al., 1999, 2000; Grefkes et al., 2001; White et al., 1997]. According to these specifications, Areas 3a and 3b are found in the depth of the central sulcus and in the anterior wall of the postcentral gyrus, respectively, whereas Areas 1 and 2 are located at the crown and on the posterior wall of the postcentral gyrus, respectively. The border between anterior and posterior S1 (i.e., between Areas 3a/3b and 1/2) is thus located at the posterior lip of the central sulcus. Importantly, this border shows high consistency across and within subjects [White et al., 1997] and therefore allowed a relatively specific distinction between anterior and posterior S1, although no clear anatomical landmark exists for the exact transition zone between these regions [Geyer et al., 1999].
In both single subject analyses, we report significant voxels at P < 0.001 (uncorrected) that belong to a cluster with a minimum size of 5 voxels. Further, we only included peak voxels located within the hand area of participants (z > 40 for normalized data).
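The single‐subject reporting rules and the anatomical subdivision of S1 described above can be summarized in a small sketch. It assumes that each peak has already been assigned an anatomical position on the subject's T1 image; the `Peak` record, the position labels, and the function names are hypothetical. The z > 40 hand‐area criterion applies only to normalized data, so for native‐space peaks the sketch assumes the hand area has already been delineated anatomically.

```python
from dataclasses import dataclass

# Cytoarchitectonic area by position on the postcentral gyrus, and the
# anterior/posterior split (border at the posterior lip of the central sulcus).
AREA_BY_POSITION = {
    "depth of central sulcus": "3a",
    "anterior wall of postcentral gyrus": "3b",
    "crown of postcentral gyrus": "1",
    "posterior wall of postcentral gyrus": "2",
}
SUBDIVISION = {"3a": "anterior", "3b": "anterior", "1": "posterior", "2": "posterior"}


@dataclass
class Peak:
    z_mm: float          # MNI z coordinate (used for normalized data only)
    p_uncorrected: float
    cluster_voxels: int
    position: str        # anatomical position judged on the subject's T1 image


def reportable(peak, normalized, p_max=0.001, min_voxels=5, min_z=40.0):
    """Apply the reporting criteria: p < 0.001, cluster >= 5 voxels, hand area."""
    if peak.p_uncorrected >= p_max or peak.cluster_voxels < min_voxels:
        return False
    # In native space the hand area is delineated on the T1 image instead,
    # so only normalized peaks are checked against the z > 40 criterion here.
    return peak.z_mm > min_z if normalized else True


def s1_subdivision(peak):
    """Map a peak's anatomical position to 'anterior' or 'posterior' S1."""
    return SUBDIVISION[AREA_BY_POSITION[peak.position]]
```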

RESULTS

Hand Recognition Task (Before Scanning)

Before scanning, we assessed participants' ability to correctly distinguish their own hand from another individual's hand via a speeded hand recognition task. A repeated measures ANOVA with the within‐subject factors identity (self, other) and perspective (egocentric, allocentric) was applied to percentage correct responses (% accuracy) and reaction times (RT). We only included participants in further analyses if they could differentiate their own hand from another's hand in at least 85% of the cases in both the egocentric and the allocentric perspective [n = 14, mean % accuracy egocentric: 99.23% ± 2.7% (SD), mean % accuracy allocentric: 99.23% ± 2.7% (SD)]. Percentage accuracies did not differ between conditions, but there was a significant effect of perspective on participants' reaction times: participants took longer to differentiate self from other in the allocentric than in the egocentric conditions [mean RT egocentric: 864.3 ± 279.6 ms (SD), mean RT allocentric: 926.7 ± 267.5 ms (SD), F(1,13) = 12.1, P < 0.005]. Furthermore, there was a significant interaction between perspective and identity [F(1,13) = 8.1, P < 0.05], indicating that the allocentric perspective slowed responses particularly when participants saw their own hand rather than another person's hand (see Supporting Information Table S1).
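For a fully within‐subject design whose factors each have two levels, every effect in the repeated measures ANOVA has df = (1, n − 1), and its F value equals the square of a one‐sample t statistic computed on a per‐subject contrast score. For the perspective effect, this score is mean allocentric RT minus mean egocentric RT; for the interaction, it is the difference of differences. The sketch below illustrates this standard identity. The cell layout, weight dictionaries, and function names are hypothetical, and it does not reproduce the reported statistics, which would require the raw per‐subject data.

```python
import math
import statistics


def within_effect_F(scores):
    """F(1, n-1) for a 1-df within-subject effect from per-subject contrast scores.

    For two-level within-subject factors (and their interaction), the
    repeated-measures ANOVA F equals the squared one-sample t statistic
    on the per-subject contrast scores.
    """
    n = len(scores)
    t = statistics.mean(scores) / (statistics.stdev(scores) / math.sqrt(n))
    return t * t, (1, n - 1)


def effect_scores(cells, weights):
    """cells: one dict per subject mapping (identity, perspective) -> mean RT."""
    return [sum(w * subj[key] for key, w in weights.items()) for subj in cells]


# Perspective effect: allocentric minus egocentric, averaged over identity.
PERSPECTIVE = {("self", "allo"): 0.5, ("other", "allo"): 0.5,
               ("self", "ego"): -0.5, ("other", "ego"): -0.5}
# Identity x perspective interaction: (self - other) in allo minus (self - other) in ego.
INTERACTION = {("self", "allo"): 1, ("other", "allo"): -1,
               ("self", "ego"): -1, ("other", "ego"): 1}
```

Calling `within_effect_F(effect_scores(cells, PERSPECTIVE))` on 14 subjects would yield an F with df = (1, 13), matching the degrees of freedom reported above.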

Roughness Estimation Task (Scanning Session)

While watching the observed touch and no‐touch videos in the scanner, participants had to decide which of two sandpaper pieces displayed in successive video sequences had the rougher surface. Our inclusion criterion was a minimum of 75% accuracy in this task, to ensure that each participant paid careful attention to the observed touch and no‐touch videos [n = 14, mean % accuracy: 88.9% ± 6.8% (SD)]. A repeated measures ANOVA with the within‐subject factors touch (observed touch, no touch), identity (self, other), and perspective (egocentric, allocentric) on % accuracy was performed to assess differences and interaction effects between conditions. There were no significant main effects or interactions on task accuracy [e.g., F(1,13) = 1.6, P = 0.23 for the factor perspective; F(1,13) = 4.0, P = 0.07 for the interaction between touch and perspective].
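The two behavioral inclusion criteria (at least 85% correct own/other hand recognition in each perspective, and at least 75% correct in the roughness task) can be written as a single predicate. This is an illustrative sketch; the function name and its use of proportions rather than percentages are assumptions.

```python
def include_participant(hand_acc_ego, hand_acc_allo, roughness_acc,
                        hand_min=0.85, roughness_min=0.75):
    """Return True if a participant meets both behavioral inclusion criteria.

    Accuracies are proportions correct (0-1): own/other hand recognition in
    the egocentric and allocentric perspectives, and roughness judgments.
    """
    return (hand_acc_ego >= hand_min and hand_acc_allo >= hand_min
            and roughness_acc >= roughness_min)
```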

fMRI Group Results

fMRI group level statistics were performed with functional data from 14 participants (4 men, 10 women). We first compared activation levels during the no‐touch conditions to baseline activation levels. As a main effect, observing no‐touch videos (compared to baseline) activated significant clusters in left posterior S1. Single‐condition contrasts (no‐touch self egocentric > baseline, no‐touch self allocentric > baseline, no‐touch other egocentric > baseline, no‐touch other allocentric > baseline) revealed increased activity within bilateral posterior S1 (see Table I). When compared directly, the different no‐touch versus baseline conditions did not differ significantly in activation levels. We therefore used all four no‐touch conditions as control conditions to pursue the main aim of our study: to specifically investigate the effect of observed touch on brain activity changes in S1.

Table I.

Neuronal responses in S1 for Observed Touch > No‐Touch and No‐Touch > Baseline in different experimental conditions

| Contrast | Condition | Brain region | MNI location (x, y, z) | Peak t‐value | No. of voxels |
|---|---|---|---|---|---|
| Observed touch > no‐touch | Main effect | L Area 2 | −54, −26, 41 | 4.97 | 53 |
| | | L Area 2 | −34, −42, 50 | 6.26 | 52 |
| | Self egocentric | No significant cluster | | | |
| | Self allocentric | L Area 1 | −62, −20, 40 | 5.74 | 27* |
| | Other egocentric | No significant cluster | | | |
| | Other allocentric | L Area 2 | −44, −44, 62 | 5.66 | 79 |
| | | L Area 2 | −24, −50, 58 | 5.06 | 70 |
| No‐touch > baseline | Main effect | L Area 2 | −28, −48, 50 | 5.77 | 83 |
| | | L Area 2 | −44, −36, 42 | 5.15 | 73 |
| | Self egocentric | R Area 2 | 36, −43, 47 | 7.01 | 136 |
| | | L Area 2 | −27, −49, 52 | 5.92 | 58 |
| | Self allocentric | R Area 2 | 39, −43, 53 | 5.68 | 43 |
| | Other egocentric | L Area 2 | −46, −40, 48 | 7.87 | 79 |
| | | L Area 2 | −28, −48, 50 | 7.78 | 63 |
| | | R Area 2 | 38, −42, 46 | 6.91 | 46 |
| | Other allocentric | R Area 2 | 46, −36, 48 | 6.56 | 46 |
| | | R Area 2 | 32, −46, 53 | 5.84 | 87 |
| | | L Area 2 | −45, −36, 44 | 5.51 | 76 |

P < 0.05 (FWE‐corrected) at cluster level, small volume corrected, k > 5; *only significant at peak voxel level.

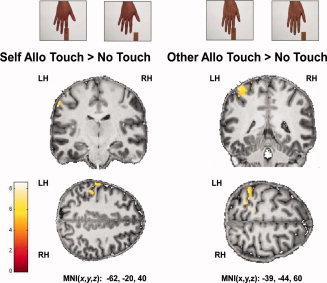

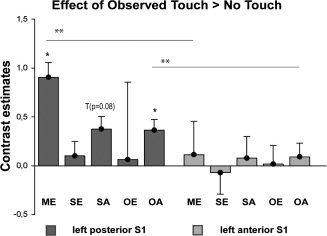

We first compared grouped activity levels of the observed touch conditions to grouped activity levels of the no‐touch conditions. As a main effect, we found two clusters of significantly higher activity during the observed touch conditions than during the no‐touch conditions, both located in left posterior S1 (see Fig. 1 and Table I). There were no significant activity changes in left anterior S1 or in right anterior or posterior S1. In an exploratory analysis (see Discussion), we examined whether this main effect of observed touch would also survive significance thresholds without small volume correction; it did [x = −54, y = −26, z = 41, k = 126, t = 4.97, P < 0.05 (FWE‐corrected)]. We then characterized the effect of observed touch versus no‐touch in each of the four experimental conditions; that is, we calculated the contrast observed touch versus no‐touch separately for the self egocentric, self allocentric, other egocentric, and other allocentric conditions. Significantly greater activity for observed touch versus no‐touch was detected in the allocentric conditions only: for the other allocentric contrast, two clusters in left posterior S1 showed significantly higher activity, and for the self allocentric contrast, one cluster in left posterior S1 showed significantly higher activity (see Fig. 3 and Table I). Notably, in the self allocentric contrast, activity changes were significant only at the peak‐voxel level, not at the cluster level, whereas in the other allocentric contrast, they were also significant at the cluster level. No effect of observed touch versus no‐touch in S1 was detected for the egocentric conditions.

Similarly, when the observed touch versus no‐touch contrast estimates were tested against zero, only the estimates from posterior S1, not those from anterior S1, differed significantly from zero. More precisely, for posterior S1, only the contrast estimates for the main effect of observed touch versus no‐touch and for the other allocentric condition were significantly different from zero [main effect posterior: mean: 0.90 ± 1.26 (SD), t(13) = 2.689, P < 0.05; other allocentric posterior: mean: 0.36 ± 0.51 (SD), t(13) = 2.681, P < 0.05], with an additional trend towards significance for the self allocentric condition [self allocentric posterior: mean: 0.37 ± 0.73 (SD), t(13) = 1.927, P = 0.076] (see Fig. 4).
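The tests against zero are one‐sample t tests, t = mean / (SD / √n), with n − 1 = 13 degrees of freedom. As a rough consistency check, the reported t values can be approximately recovered from the rounded means and SDs given above; small discrepancies are expected because the published summary statistics are rounded. A minimal Python sketch (the helper name `t_from_summary` is hypothetical):

```python
import math


def t_from_summary(mean, sd, n):
    """One-sample t statistic against zero, computed from summary statistics."""
    return mean / (sd / math.sqrt(n))


N = 14  # participants entering the group analysis
reported = {
    # label: (mean, SD, reported t(13)), taken from the text above
    "main effect, posterior S1": (0.90, 1.26, 2.689),
    "other allocentric, posterior S1": (0.36, 0.51, 2.681),
    "self allocentric, posterior S1": (0.37, 0.73, 1.927),
}

for label, (m, sd, t_reported) in reported.items():
    t = t_from_summary(m, sd, N)
    print(f"{label}: t({N - 1}) = {t:.2f} (reported {t_reported})")
```

This prints t values of about 2.67, 2.64, and 1.90, each within 0.05 of the reported value.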

Figure 3.

Activity changes in S1 for the contrasts Self Allo (=Allocentric) Observed Touch > No‐Touch and Other Allo Observed Touch > No‐Touch superimposed on coronal and axial slices of an individual's normalized T1‐image (visualized at P < 0.005 (uncorrected) and masked with left S1).

Figure 4.

Contrast estimates extracted from left posterior (Area 1+2) and left anterior (Area 3a+3b) S1 for the contrast Observed Touch > No‐Touch in different experimental conditions, i.e., for the main effect (ME), and for the self egocentric (SE), self allocentric (SA), other egocentric (OE), and other allocentric (OA) conditions.

We next tested whether the S1 activity changes in response to observed touch reported above differed significantly between experimental conditions when compared directly. We found no significant differences between conditions, either for the main effects (i.e., perspective and identity) or for single effects. Likewise, the three‐way interaction (touch × identity × perspective) revealed no significant activity changes. We also compared the observed touch versus no‐touch contrast estimates between anterior and posterior S1. Contrast estimates were significantly higher for posterior than for anterior S1 for the main effect of observed touch and for other allocentric observed touch [main effect: mean anterior: 0.11 ± 0.56 (SD), mean posterior: 0.90 ± 1.26 (SD), t(13) = −3.050, P < 0.05; other allocentric: mean anterior: 0.09 ± 0.41 (SD), mean posterior: 0.36 ± 0.51 (SD), t(13) = −4.285, P < 0.005, Bonferroni‐corrected]. Anterior and posterior S1 contrast estimates did not differ significantly for the self egocentric, self allocentric, or other egocentric conditions (see Fig. 4).
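The effects compared above can be written as contrast weights over the eight cells of the 2 × 2 × 2 design (touch × identity × perspective), built as Kronecker products of per‐factor weights. The Python sketch below assumes a hypothetical condition order (touch varying slowest, perspective fastest); the actual regressor order of the study's design matrix is not given here, and any nuisance regressors would simply receive zero weights.

```python
from functools import reduce

# Hypothetical condition order, matching the Kronecker products below:
# touch (observed touch, no-touch) x identity (self, other) x perspective (ego, allo)
CONDITIONS = [f"{t}-{i}-{p}"
              for t in ("touch", "notouch")
              for i in ("self", "other")
              for p in ("ego", "allo")]


def kron(a, b):
    """Kronecker product of two weight vectors."""
    return [x * y for x in a for y in b]


def contrast(touch, identity, perspective):
    """Combine per-factor weights into one weight per condition."""
    return reduce(kron, [touch, identity, perspective])


DIFF, POOL = [1, -1], [1, 1]  # contrast vs. pool a factor's two levels

main_effect_touch = contrast(DIFF, POOL, POOL)    # observed touch > no-touch
other_allo_touch = contrast(DIFF, [0, 1], [0, 1])  # other allocentric only
three_way = contrast(DIFF, DIFF, DIFF)             # touch x identity x perspective

for name, w in [("main effect", main_effect_touch),
                ("other allocentric", other_allo_touch),
                ("three-way interaction", three_way)]:
    print(name, dict(zip(CONDITIONS, w)))
```

Each weight vector sums to zero, so only differences between conditions, not overall activation, enter the test.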

fMRI Single Subject Analyses

The analyses of single subject data fulfilled two purposes. First, we aimed to use unsmoothed functional data to track functional activity specifically related to observed touch versus no‐touch in each single participant using automated labeling tools as well as anatomical landmarks. Second, we wished to avoid the misinterpretation of group level statistics resulting from a lack of overlap between single subject activity clusters, which can be due to small cluster size or high individual variability in cluster location. Because we observed a strong left lateralization in the observed touch versus no‐touch contrast in the group level statistics, and to keep analyses within reasonable limits, the single subject analyses were restricted to each individual's left S1 activity changes.

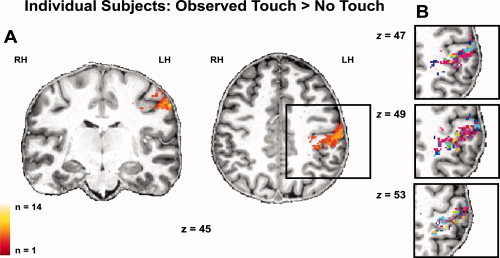

Our analyses demonstrated that 12 out of 14 participants showed a main effect of observed touch in left S1, and that in all but two of them, this effect peaked in posterior parts of S1 (see Fig. 5 and Supporting Information Table S2). The spatial consistency map yielded similar results: the highest values (i.e., voxels activated in many participants) were found in left posterior S1 (see Fig. 5). We further examined whether the clear dominance of allocentrically observed touch and of posterior S1 sites would also be apparent in single‐subject data. For the self allocentric observed touch versus no‐touch contrast, 11 out of 14 participants showed increased activity in left S1, and 10 out of 14 participants showed this effect for the other allocentric contrast; in all except one participant, peak values of these activations were located in left posterior S1. One participant showed peak voxel values in left anterior S1 for the self allocentric comparison. For the egocentric conditions, 8 out of 14 participants showed significant increases during observed touch versus no‐touch in the self egocentric condition, and 8 out of 14 participants also showed this effect in the other egocentric condition. All except one of these activity peaks were again localized in left posterior S1; the remaining peak was located in anterior S1. These analyses confirmed that almost all main activity peaks in S1 during observed touch were located in posterior S1, and that more participants showed an effect for allocentrically than for egocentrically observed touch.
Although the main peaks of activity were located in posterior S1, we also examined whether any significant activity changes in anterior S1 occurred at all when observed touch conditions were compared to no‐touch conditions. At most half of the participants showed any significant activity changes in left anterior S1 in response to observed touch versus no‐touch (n = 3 for self egocentric, n = 2 for self allocentric, n = 7 for other egocentric, and n = 4 for other allocentric).
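A spatial consistency map like the one in Fig. 5A can be computed by counting, for every voxel within the left S1 mask, how many participants' thresholded maps include that voxel. The Python sketch below assumes that each participant's suprathreshold voxels are available as a set of integer voxel coordinates in a common normalized space; the function name and the toy coordinates are hypothetical.

```python
from collections import Counter


def spatial_consistency_map(subject_maps, roi):
    """Count, for every voxel in roi, how many participants activated it.

    subject_maps: one set of (i, j, k) voxel coordinates per participant,
                  e.g. voxels surviving P <= 0.001 (uncorrected).
    roi:          set of (i, j, k) coordinates defining the mask (e.g. left S1).
    """
    counts = Counter()
    for active in subject_maps:
        # Restrict to the mask; using a set counts each participant once per voxel.
        counts.update(set(active) & roi)
    return {voxel: counts[voxel] for voxel in roi}


# Toy example: three hypothetical participants and a three-voxel mask.
roi = {(0, 0, 0), (0, 0, 1), (0, 1, 0)}
subjects = [{(0, 0, 0), (0, 0, 1)}, {(0, 0, 0), (5, 5, 5)}, {(0, 0, 0)}]
consistency = spatial_consistency_map(subjects, roi)
print(sorted(consistency.items()))
```

Here voxel (5, 5, 5) lies outside the mask and is ignored; dividing the counts by the number of participants would give the proportion of participants activating each voxel.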

Figure 5.

Individual activity profiles of all participants' (n = 14) unsmoothed functional data for the main effect of Observed Touch > No‐Touch superimposed on coronal and axial slices of an individual's normalized T1 image (visualized at P ≤ 0.001 (uncorrected) and masked with left S1); (A) Spatial consistency map of individual participants, bright yellow colors represent areas of high overlap, dark orange colors areas of low overlap between participants; (B) Individual participants' activity profiles are shown separately, each color represents active voxels of an individual subject.

DISCUSSION

Our study offers two main findings that are novel with respect to the current literature. First, we provide clear evidence that observed passive touch activates posterior, but not anterior, parts of S1. Second, we demonstrate that increases in S1 activity in response to observed touch versus no‐touch occur specifically during other‐related observed touch, but do not reach significance during self‐related observed touch. Importantly, we did not detect any systematic shift of activity from posterior to anterior sites when observed touch was more clearly related to the self. We therefore conclude that posterior but not anterior S1 may be part of a system for sharing tactile experiences with others.

Methodologically, our approach represents an important advance over standard 3T fMRI designs, which suffer from well‐known limitations in spatial specificity and sensitivity [Bandettini, 2009; Gati et al., 1997; Scouten et al., 2006; Yacoub et al., 2003]. Since S1 is markedly susceptible to partial volume effects [Scouten et al., 2006; Stringer et al., 2011] and shows only small activity changes in response to observed touch [Blakemore et al., 2005; Fitzgibbon et al., 2010; Keysers et al., 2004; Schaefer et al., 2009], such limitations are particularly critical for the question investigated here. Ultra‐high field fMRI at 7T provides high‐resolution functional and anatomical images combined with high sensitivity, without compromising temporal resolution (through the use of parallel imaging), and therefore offers an optimized approach to investigating activity changes in S1 subregions in response to observed touch [Bandettini, 2009; Gati et al., 1997; Sanchez‐Panchuelo et al., 2010; Scouten et al., 2006; Stringer et al., 2011].

Main Effect of Observed Touch

In our study, participants observed pairs of short video sequences showing a static hand being either touched or not touched by different pieces of sandpaper while scanning took place. Their task was always to decide which of the two sandpaper samples had the rougher surface. Our results show that observing hand touch, compared with observing the same hand not being touched, is related to increased activity in left S1, that is, contralateral to the observed hand. Whereas other fMRI studies reporting increased contralateral S1 activity during observed touch relied on small volume corrections [Blakemore et al., 2005; Schaefer et al., 2009], our results reached significance even without them. This strengthens existing evidence that even primary sensory areas such as S1 respond when touch is actively observed. An early indication that S1 plays a role in encoding observed touch was provided by Zhou and Fuster (1997), who recorded discharge rates of single neurons in the anterior parietal cortex of monkeys, roughly corresponding to human S1, during a visuo‐haptic delayed matching‐to‐sample task. Units in this region increased their discharge rates during haptic exploration, but also when surface patterns were only passively observed. Evidence that such findings generalize to humans was provided by an fMRI study by Blakemore et al. (2005), in which participants rated the intensity of tactile stimulation of human necks and faces shown on video, as well as touch of nonbody objects. Increased activity in contralateral S1 was observed when human touch conditions were contrasted with object touch conditions, indicating that S1 responds specifically to the observation of human touch. In a similar vein, Schaefer et al. (2009) demonstrated that increases in S1 activity can be specifically related to seeing a hand being touched versus seeing the same hand not being touched.

However, there are also fMRI studies which did not find any significant increases in S1 activity in response to observed touch [Keysers et al., 2004; Morrison et al., 2004]. In one study, participants observed tactile stimulation of legs while lying in the scanner, which was compared to brain activity changes when nontouched legs were observed [Keysers et al., 2004]. Here, only a trend towards significantly increased S1 activity was detected. In another study, participants observed hands being either touched with a cotton bud or pricked with a needle [Morrison et al., 2004]. Likewise, in this case, no increase in S1 activity in response to observed touch was detected.

We hypothesize that these studies failed to detect an effect of observed touch in S1 mainly because of the limited sensitivity of the methods used [Sanchez‐Panchuelo et al., 2010; Scouten et al., 2006; van der Zwaag et al., 2009]. The effect of observed touch on S1 activity might be subtle enough to escape common detection thresholds in standard 3T fMRI designs [Fitzgibbon et al., 2010]. This is particularly plausible given that most studies that did find increased S1 activity during observed touch applied very narrow small volume corrections to the functional data [Blakemore et al., 2005; Schaefer et al., 2009]. Since sensitivity increases with field strength [Bandettini, 2009; van der Zwaag et al., 2009], this highlights the advantages of fMRI at ultra‐high fields; indeed, the main effect of observed touch was highly significant in our study. As previously noted [Schaefer et al., 2009], it is also possible that the neural representation of the human leg, used to probe responses to observed touch in one study [Keysers et al., 2004], is less easily detectable with fMRI than that of the human hand or face [Blakemore et al., 2005; Schaefer et al., 2009], given the much larger cortical territory representing the hand and face in S1. A third explanation for the divergent findings may be that in both studies reporting negative findings, participants were not given a particular task during scanning, but merely looked passively at the video projection screen. In studies that found increased S1 activity during observed touch, such as the present one, participants were asked to engage with the video sequences by counting the number of strokes [Schaefer et al., 2009], counting the number of videos in which no touch occurred [Ebisch et al., 2008], or rating the intensity [Blakemore et al., 2005] or roughness (this study) of the touch applied.
It has been argued previously that the level of task involvement can affect brain responses to observed tactile events [Gu and Han, 2007; Singer and Frith, 2005]. It may also be noted that in three of the four listed studies that found S1 activity during observed touch [Blakemore et al., 2005; Schaefer et al., 2009; current study], a judgment task was performed while the video sequences were observed, which may also have influenced the degree to which S1 activity was detected. However, the extent to which the task alters S1 activity changes during observed touch is currently unclear and needs further exploration in future studies.

Another account proposes that the failure to find increased S1 activity during observed touch has a more qualitative explanation relating to the observed event itself. It has been argued that whenever S1 activity in response to observed touch is detected, it merely reflects observed hand movement or haptic exploration rather than the mirroring of passive touch [Keysers et al., 2010]. In other words, the effect reflects processing of the action rather than of the sensation. However, in our study, we carefully controlled for possible confounding factors such as action components in the video sequences [Blakemore et al., 2005; Ebisch et al., 2008; Pihko et al., 2010], and only showed videos of a static hand being passively touched by a piece of sandpaper. The experimenter was not visible in any of our video sequences. Also, when participants were videotaped before the actual experiment, they saw neither their own arm nor the arm of the experimenter, were instructed to relax their hands and fingers as much as possible, and were asked not to press actively on the tabletop or on the sandpaper. It is therefore highly unlikely that participants associated the video stimuli directly with human movement [Yoo et al., 2003; Zhou and Fuster, 1997] or active haptic exploration. Nevertheless, we found a clear increase in contralateral S1 activity when comparing observed touch to no‐touch conditions. We therefore conclude that contralateral primary somatosensory cortical areas respond selectively to the observation of passive hand touch compared with observing the same hand not being touched.
Our study thus adds to growing evidence, including evidence from the motor domain [Caetano et al., 2007; Dushanova and Donoghue, 2010], that the interplay between different senses not only affects established multisensory convergence zones, such as the posterior and inferior parietal cortices, but may also involve brain regions previously considered to be specific to sensation. Such findings have lately been termed the “revolution in multisensory research” [Driver and Noesselt, 2008], and indeed challenge our common‐sense view that self‐ and other‐related (i.e., experienced and observed) events are fundamentally different in nature.

Posterior S1 is Active in Response to Observed Touch

One main rationale for the present study was to localize activity changes in S1 in response to observed touch precisely. At the group level, all activity peaks in response to observed touch were clearly assignable to posterior S1, and there were no significant effects at all in anterior S1. Contrast estimates in response to observed touch were also significantly higher in posterior than in anterior S1. Consistent with this, at the single‐subject level, almost all activity peaks in S1 during observed touch were located in posterior S1, and there were only a few significant changes in anterior S1. These results confirm the recently formulated hypothesis of Keysers et al. (2010) that only posterior, and not anterior, parts of S1 are recruited during observed touch. Keysers et al. attributed this to a strong link between neurons in posterior S1 and visual input areas that activate these neurons via backprojections. Our results support this hypothesis by showing that activity changes during observed touch are maximal in posterior S1 and almost absent in anterior S1. Previous studies had already indicated a particularly strong response of posterior S1 during observed touch [Blakemore et al., 2005; Ebisch et al., 2008; Schaefer et al., 2009]. However, to our knowledge, we are the first to validate these assumptions using both automated labeling tools and anatomical definitions at the group and single‐subject levels in an ultra‐high resolution fMRI study.

We can thus be confident that S1 activity during observed touch occurs mainly in posterior parts of S1, whereas experienced touch is represented in both posterior and anterior parts of S1 [Schaefer et al., 2009]. These findings may answer a puzzling question, namely, how confusion about who is being touched could be avoided if the same neuronal substrate were active for observed and felt touch. It seems that anterior parts of S1 are mostly private in nature and show increased activity for experienced touch (see, for example, Keysers et al., 2004; Schaefer et al., 2009; Stringer et al., 2011), whereas posterior parts of S1 respond both when touch is received [Schaefer et al., 2009; Stringer et al., 2011] and when another person is observed being touched, providing an ideal basis for shared representations in the tactile domain.

Reduced Activity during Self‐Related Observed Touch

The second main aim of the present study was to compare S1 activity changes between conditions in which self‐related touch was observed and conditions in which other‐related touch was observed. To this end, we not only showed other individuals' hands in egocentric and allocentric perspectives, as in a previous study [Schaefer et al., 2009], but also tested whether any differences would occur between conditions in which participants saw their own hand or another person's hand being touched. Our functional results generally indicate a strong influence of perspective on S1 activity changes. S1 activity reached significant levels in response to observed touch only when touch was observed in an allocentric perspective, whereas significance vanished completely at the group level when touch of the participant's own or another person's hand was observed in an egocentric perspective. Furthermore, we found a small effect of hand identity: a strong effect of observed touch on S1 activity was only seen when participants observed another person's hand, but not their own hand, being touched in an allocentric perspective. Additionally, a significant difference between anterior and posterior S1 activity was only found for the other allocentric observed touch condition, not for the self allocentric condition. We therefore find a clear preference of S1 activity changes for other‐related observed touch, whereas activity levels were lower when touch was more self‐related. Importantly, neither the group‐level nor the single‐subject analyses provide any indication that S1 activity shifts to more anterior sites when touch is more related to the self.

Although our study is the first fMRI study showing that reduced activity levels in S1 occur when touch of one's own hand is observed, a number of earlier studies using other methods also support this. One such study used somatosensory evoked potentials (SEPs) to investigate the influence of observed pain and (harmless) touch on neuronal activity changes in S1 [Bufalari et al., 2007]. It was shown that the amplitude of the P45 component, supposedly originating in S1, was increased during the observation of pain, but decreased during the observation of touch relative to baseline. Importantly, observed stimulation was shown in a self‐referenced (egocentric) perspective, and thus the decrease of the P45 component was interpreted as a sensory gating effect for self‐related observed touch. In a recent study, Longo et al. (2011) found a similar attenuation of SEPs originating in S1 when participants were looking at their own hands in an egocentric perspective within breaks during a tactile discrimination task [Longo et al., 2011].

Sensory gating mechanisms for observed self‐related events have also been proposed in the motor domain. Using transcranial magnetic stimulation, Schütz‐Bosbach et al. (2006) demonstrated that motor evoked potentials (MEPs) of subjects' hand muscles are slightly attenuated during observation of self‐related actions, but increased during observation of other‐related actions [Schütz‐Bosbach et al., 2009; Schütz‐Bosbach et al., 2006]. This dichotomy in MEPs between observing the self and observing others strongly resembles our present results, and was interpreted as evidence that the neural mechanisms underlying action observation are intrinsically social in nature, because they represent observed action in an agent‐specific rather than an agent‐neutral way [Schütz‐Bosbach et al., 2006]. This is in line with theoretical frameworks which assume that the action mirror neuron system, that is, the system that shows shared activity for experienced and observed action, mainly fulfills social purposes by supporting action understanding, imitation [Gallese et al., 2004; Rizzolatti et al., 2001], and empathic concern [Kaplan and Iacoboni, 2006]. Our results assign similar properties to a system for representing observed touch, and suggest that, across individuals, higher activity in posterior S1 during observed touch is related to higher reactivity when others are observed. This suggestion, which clearly needs further empirical exploration, gains additional support from a study by Blakemore et al. (2005), which showed that people who have a strong tendency to map observed touch onto their own body (i.e., vision‐touch synesthesia) show higher activity than control participants in posterior S1 during observed human touch. Interestingly, vision‐touch synesthesia is also associated with high levels of empathy [Banissy and Ward, 2007; Fitzgibbon et al., 2010].
One might therefore speculate that higher than normal levels of S1 activity are even related to an increased affective response to observed touch of another person, while implicit knowledge of self/other difference is preserved, as traditionally assumed for empathic reactions [Batson et al., 1997; Decety and Jackson, 2004].

However, the study by Schaefer et al. (2009) provides evidence that at first sight seems to contradict our findings. In their study, participants observed short video sequences of self‐referenced (egocentric) and other‐referenced (allocentric) touch while being scanned [Schaefer et al., 2009]. In contrast to our results, their study reported increased S1 activity in response to both allocentric and egocentric observed touch. Furthermore, greater activity changes in anterior S1 were observed when egocentric observed touch was compared to allocentric observed touch, whereas greater changes in posterior S1 were seen for the reverse contrast. This is at odds with our conclusion that activity levels in posterior S1, rather than shifts between anterior and posterior S1, are responsible for representing observed touch, and that the effect of observed touch is specifically related to observing another person being touched. Notably, however, the main peaks of activity for egocentrically observed touch versus no‐touch in their study were also located in posterior S1. That we did not find such topographic activity changes may be explained by the different statistical corrections applied to the functional data. Whereas Schaefer et al. applied post hoc small volume corrections of 5 mm diameter, we used a priori anatomical definitions to restrict statistical tests to anterior and posterior parts of S1. Since our search volumes were thus considerably larger, it is not surprising that significance thresholds for the contrast egocentric observed touch versus no‐touch were not reached in our case. Indeed, when we applied the same statistical correction to the functional data as Schaefer et al., we also found significant activity for the egocentric observed touch versus no‐touch contrast in left posterior S1 (x = −51, y = −26, z = 40, k = 27, t = 4.01).
This illustrates that activation levels in response to self‐related observed touch, and thus the sensory gating effects possibly at work, are relative rather than absolute, and that specific care has to be taken with the statistical tests applied.
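Why a larger search volume makes significance harder to reach can be illustrated with a deliberately simplified calculation. SPM‐style FWE correction relies on random field theory, which accounts for spatial smoothness; the Python sketch below instead uses a plain Bonferroni correction over voxels as a conservative stand‐in. The 1 mm isotropic voxel size and the 5000‐voxel anatomical mask are hypothetical values chosen for illustration, not the parameters of either study.

```python
import math
from statistics import NormalDist


def sphere_voxel_count(diameter_mm, voxel_mm):
    """Number of voxel centres on an isotropic grid that fall within a sphere."""
    radius_sq = (diameter_mm / 2) ** 2
    n = math.ceil(diameter_mm / 2 / voxel_mm)
    return sum(1
               for i in range(-n, n + 1)
               for j in range(-n, n + 1)
               for k in range(-n, n + 1)
               if (i * i + j * j + k * k) * voxel_mm ** 2 <= radius_sq)


def bonferroni_z(alpha, n_tests):
    """One-sided z threshold controlling FWE at alpha over n_tests voxels."""
    return NormalDist().inv_cdf(1 - alpha / n_tests)


sphere_n = sphere_voxel_count(diameter_mm=5.0, voxel_mm=1.0)  # hypothetical grid
mask_n = 5000  # hypothetical size of an anatomical S1 search volume

for label, n in [("5 mm sphere", sphere_n), ("anatomical mask", mask_n)]:
    print(f"{label}: {n} voxels, z threshold {bonferroni_z(0.05, n):.2f}")
```

Under these assumptions the sphere contains 81 voxels and requires z of about 3.2, whereas the larger mask requires about 4.3, so an effect of the same size can pass the small volume correction yet fail the anatomically defined one.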

Whereas the different statistical tests applied in the two studies can thus explain why our results differ with respect to egocentrically observed touch, our results are inconsistent with the assumption that activity shifts spatially towards anterior S1 when touch is more related to the self. We do not find increased activity in anterior S1 in response to self‐ versus other‐related observed touch, even when we lower significance thresholds considerably. We suspect that this discrepancy is due to the lower spatial specificity of standard fMRI designs at 3T compared to those at 7T, as discussed above [Scouten et al., 2006; Stringer et al., 2011], which might have led to a less precise characterization of where exactly significant activity changes were located. However, given that two individuals in our participant group did show main activity peaks in anterior S1 in response to observed touch, although not specifically during self‐related observed touch, no conclusions can yet be drawn as to when exactly anterior S1 might be recruited during observed touch. Our results nevertheless do not support the assumption that activity changes in this region are specifically related to self‐related observed touch.

In conclusion, our study draws on important advances in methodology, design, and analysis to characterize S1 activity in response to observed touch. We demonstrate that fMRI at 7 Tesla offers important advantages when the spatial details of subtle activity changes are the major focus of interest. Using these methods, we were able to characterize precisely the S1 activity changes resulting from the observation of passive touch, to locate them clearly in posterior S1, and to show that they are smaller, but do not shift spatially, when observed touch is self‐related. We therefore hypothesize that a mechanism similar to that for observed action also exists for observed touch, and that these mechanisms are specifically active when others are observed.

Supporting information

Additional Supporting Information may be found in the online version of this article.


REFERENCES

- Ashburner J, Friston KJ (2005): Unified segmentation. Neuroimage 26: 839–851.

- Avenanti A, Bueti D, Galati G, Aglioti SM (2005): Transcranial magnetic stimulation highlights the sensorimotor side of empathy for pain. Nat Neurosci 8: 955–960.

- Avenanti A, Minio‐Paluello I, Bufalari I, Aglioti SM (2006): Stimulus‐driven modulation of motor‐evoked potentials during observation of others' pain. Neuroimage 32: 316–324.

- Avikainen S, Forss N, Hari R (2002): Modulated activation of the human SI and SII cortices during observation of hand actions. Neuroimage 15: 640–646.

- Bandettini PA (2009): What's new in neuroimaging methods? Ann NY Acad Sci 1156: 260–293.

- Banissy MJ, Ward J (2007): Mirror‐touch synesthesia is linked with empathy. Nat Neurosci 10: 815–816.

- Batson CD, Sager K, Garst E, Kang M, Rubchinsky K, Dawson K (1997): Is empathy‐induced helping due to self‐other merging? J Pers Soc Psychol 73: 495–509.

- Blakemore SJ, Bristow D, Bird G, Frith C, Ward J (2005): Somatosensory activations during the observation of touch and a case of vision‐touch synaesthesia. Brain 128: 1571–1583.

- Botvinick M, Jha AP, Bylsma LM, Fabian SA, Solomon PE, Prkachin KM (2005): Viewing facial expressions of pain engages cortical areas involved in the direct experience of pain. Neuroimage 25: 312–319.

- Bradshaw JL, Mattingley JB (2001): Allodynia: A sensory analogue of motor mirror neurons in a hyperaesthetic patient reporting instantaneous discomfort to another's perceived sudden minor injury? J Neurol Neurosurg Psychiatry 70: 135–136.

- Bufalari I, Aprile T, Avenanti A, Di Russo F, Aglioti SM (2007): Empathy for pain and touch in the human somatosensory cortex. Cereb Cortex 17: 2553–2561.

- Caetano G, Jousmaki V, Hari R (2007): Actor's and observer's primary motor cortices stabilize similarly after seen or heard motor actions. Proc Natl Acad Sci USA 104: 9058–9062.

- Chan AW, Peelen MV, Downing PE (2004): The effect of viewpoint on body representation in the extrastriate body area. Neuroreport 15: 2407–2410.

- Costantini M, Haggard P (2007): The rubber hand illusion: Sensitivity and reference frame for body ownership. Conscious Cogn 16: 229–240.

- Decety J, Jackson PL (2004): The functional architecture of human empathy. Behav Cogn Neurosci Rev 3: 71–100.

- Devue C, Collette F, Balteau E, Degueldre C, Luxen A, Maquet P, Bredart S (2007): Here I am: The cortical correlates of visual self‐recognition. Brain Res 1143: 169–182.

- Dinstein I, Hasson U, Rubin N, Heeger DJ (2007): Brain areas selective for both observed and executed movements. J Neurophysiol 98: 1415–1427.

- Driver J, Noesselt T (2008): Multisensory interplay reveals crossmodal influences on ‘sensory‐specific’ brain regions, neural responses, and judgments. Neuron 57: 11–23.

- Dushanova J, Donoghue J (2010): Neurons in primary motor cortex engaged during action observation. Eur J Neurosci 31: 386–398.

- Ebisch SJ, Perrucci MG, Ferretti A, Del Gratta C, Romani GL, Gallese V (2008): The sense of touch: Embodied simulation in a visuotactile mirroring mechanism for observed animate or inanimate touch. J Cogn Neurosci 20: 1611–1623.

- Eickhoff SB, Heim S, Zilles K, Amunts K (2006): Testing anatomically specified hypotheses in functional imaging using cytoarchitectonic maps. Neuroimage 32: 570–582.

- Eickhoff SB, Paus T, Caspers S, Grosbras MH, Evans AC, Zilles K, Amunts K (2007): Assignment of functional activations to probabilistic cytoarchitectonic areas revisited. Neuroimage 36: 511–521.

- Eickhoff SB, Stephan KE, Mohlberg H, Grefkes C, Fink GR, Amunts K, Zilles K (2005): A new SPM toolbox for combining probabilistic cytoarchitectonic maps and functional imaging data. Neuroimage 25: 1325–1335.

- Filimon F, Nelson JD, Hagler DJ, Sereno MI (2007): Human cortical representations for reaching: Mirror neurons for execution, observation, and imagery. Neuroimage 37: 1315–1328.

- Fischl B, Dale AM (2000): Measuring the thickness of the human cerebral cortex from magnetic resonance images. Proc Natl Acad Sci USA 97: 11050–11055.

- Fitzgibbon BM, Giummarra MJ, Georgiou‐Karistianis N, Enticott PG, Bradshaw JL (2010): Shared pain: From empathy to synaesthesia. Neurosci Biobehav Rev 34: 500–512.

- Frassinetti F, Maini M, Benassi M, Avanzi S, Cantagallo A, Farne A (2010): Selective impairment of self body‐parts processing in right brain‐damaged patients. Cortex 46: 322–328.

- Frassinetti F, Maini M, Romualdi S, Galante E, Avanzi S (2008): Is it mine? Hemispheric asymmetries in corporeal self‐recognition. J Cogn Neurosci 20: 1507–1516.

- Gallese V, Keysers C, Rizzolatti G (2004): A unifying view of the basis of social cognition. Trends Cogn Sci 8: 396–403.

- Gati JS, Menon RS, Ugurbil K, Rutt BK (1997): Experimental determination of the BOLD field strength dependence in vessels and tissue. Magn Reson Med 38: 296–302.

- Gazzola V, Keysers C (2009): The observation and execution of actions share motor and somatosensory voxels in all tested subjects: Single‐subject analyses of unsmoothed fMRI data. Cereb Cortex 19: 1239–1255.

- Gazzola V, Rizzolatti G, Wicker B, Keysers C (2007): The anthropomorphic brain: The mirror neuron system responds to human and robotic actions. Neuroimage 35: 1674–1684.

- Geyer S, Schleicher A, Zilles K (1999): Areas 3a, 3b, and 1 of human primary somatosensory cortex. Neuroimage 10: 63–83.

- Geyer S, Schormann T, Mohlberg H, Zilles K (2000): Areas 3a, 3b, and 1 of human primary somatosensory cortex. Part 2. Spatial normalization to standard anatomical space. Neuroimage 11: 684–696.

- Grefkes C, Geyer S, Schormann T, Roland P, Zilles K (2001): Human somatosensory area 2: Observer‐independent cytoarchitectonic mapping, interindividual variability, and population map. Neuroimage 14: 617–631.

- Grezes J, Armony JL, Rowe J, Passingham RE (2003): Activations related to “mirror” and “canonical” neurones in the human brain: An fMRI study. Neuroimage 18: 928–937.

- Griswold MA, Jakob PM, Heidemann RM, Nittka M, Jellus V, Wang J, Kiefer B, Haase A (2002): Generalized autocalibrating partially parallel acquisitions (GRAPPA). Magn Reson Med 47: 1202–1210.

- Gu X, Han S (2007): Attention and reality constraints on the neural processes of empathy for pain. Neuroimage 36: 256–267.

- Heller MA (1982): Visual and tactual texture perception: Intersensory cooperation. Percept Psychophys 31: 339–344.

- Hodzic A, Kaas A, Muckli L, Stirn A, Singer W (2009a): Distinct cortical networks for the detection and identification of human body. Neuroimage 45: 1264–1271.

- Hodzic A, Muckli L, Singer W, Stirn A (2009b): Cortical responses to self and others. Hum Brain Mapp 30: 951–962.

- Ishida H, Nakajima K, Inase M, Murata A (2010): Shared mapping of own and others' bodies in visuotactile bimodal area of monkey parietal cortex. J Cogn Neurosci 22: 83–96.

- Jackson PL, Meltzoff AN, Decety J (2005): How do we perceive the pain of others? A window into the neural processes involved in empathy. Neuroimage 24: 771–779.

- Kaas JH (1983): What, if anything, is SI? Organization of first somatosensory area of cortex. Physiol Rev 63: 206–231.

- Kaplan JT, Iacoboni M (2006): Getting a grip on other minds: Mirror neurons, intention understanding, and cognitive empathy. Soc Neurosci 1: 175–183.

- Keysers C, Kaas JH, Gazzola V (2010): Somatosensation in social perception. Nat Rev Neurosci 11: 417–428.

- Keysers C, Wicker B, Gazzola V, Anton JL, Fogassi L, Gallese V (2004): A touching sight: SII/PV activation during the observation and experience of touch. Neuron 42: 335–346.

- Longo MR, Pernigo S, Haggard P (2011): Vision of the body modulates processing in primary somatosensory cortex. Neurosci Lett 489: 159–163.

- Marques JP, Kober T, Krueger G, van der Zwaag W, Van de Moortele PF, Gruetter R (2010): MP2RAGE, a self bias‐field corrected sequence for improved segmentation and T1‐mapping at high field. Neuroimage 49: 1271–1281.

- Moore CI, Stern CE, Corkin S, Fischl B, Gray AC, Rosen BR, Dale AM (2000): Segregation of somatosensory activation in the human rolandic cortex using fMRI. J Neurophysiol 84: 558–569.

- Morrison I, Lloyd D, di Pellegrino G, Roberts N (2004): Vicarious responses to pain in anterior cingulate cortex: Is empathy a multisensory issue? Cogn Affect Behav Neurosci 4: 270–278.

- Myers A, Sowden PT (2008): Your hand or mine? The extrastriate body area. Neuroimage 42: 1669–1677.

- Oldfield RC (1971): The assessment and analysis of handedness: The Edinburgh inventory. Neuropsychologia 9: 97–113.

- Pihko E, Nangini C, Jousmaki V, Hari R (2010): Observing touch activates human primary somatosensory cortex. Eur J Neurosci 31: 1836–1843.

- Rizzolatti G, Fogassi L, Gallese V (2001): Neurophysiological mechanisms underlying the understanding and imitation of action. Nat Rev Neurosci 2: 661–670.

- Sanchez‐Panchuelo RM, Francis S, Bowtell R, Schluppeck D (2010): Mapping human somatosensory cortex in individual subjects with 7T functional MRI. J Neurophysiol 103: 2544–2556.

- Sastre‐Janer FA, Belin P, Mangin JF, Dormont D, Masure MC, Remy P, Frouin V, Samson Y (1998): Three‐dimensional reconstruction of the human central sulcus reveals a morphological correlate of the hand area. Cereb Cortex 8: 641–647.

- Saxe R, Jamal N, Powell L (2006): My body or yours? The effect of visual perspective on cortical body representations. Cereb Cortex 16: 178–182.

- Schaefer M, Xu B, Flor H, Cohen LG (2009): Effects of different viewing perspectives on somatosensory activations during observation of touch. Hum Brain Mapp 30: 2722–2730.

- Schütz‐Bosbach S, Avenanti A, Aglioti SM, Haggard P (2009): Don't do it! Cortical inhibition and self‐attribution during action observation. J Cogn Neurosci 21: 1215–1227.

- Schütz‐Bosbach S, Mancini B, Aglioti SM, Haggard P (2006): Self and other in the human motor system. Curr Biol 16: 1830–1834.

- Scouten A, Papademetris X, Constable RT (2006): Spatial resolution, signal‐to‐noise ratio, and smoothing in multi‐subject functional MRI studies. Neuroimage 30: 787–793.

- Singer T, Frith C (2005): The painful side of empathy. Nat Neurosci 8: 845–846.

- Singer T, Seymour B, O'Doherty J, Kaube H, Dolan RJ, Frith CD (2004): Empathy for pain involves the affective but not sensory components of pain. Science 303: 1157–1162.

- Singer T, Seymour B, O'Doherty JP, Stephan KE, Dolan RJ, Frith CD (2006): Empathic neural responses are modulated by the perceived fairness of others. Nature 439: 466–469.

- Stringer EA, Chen LM, Friedman RM, Gatenby C, Gore JC (2011): Differentiation of somatosensory cortices by high‐resolution fMRI at 7 T. Neuroimage 54: 1012–1020.

- Turella L, Erb M, Grodd W, Castiello U (2009): Visual features of an observed agent do not modulate human brain activity during action observation. Neuroimage 46: 844–853.

- Unser M, Aldroubi A, Eden M (1993a): B‐spline signal processing: I. Theory. IEEE Trans Signal Process 41: 821–833.

- Unser M, Aldroubi A, Eden M (1993b): B‐spline signal processing: II. Efficient design and applications. IEEE Trans Signal Process 41: 834–848.

- van den Bos E, Jeannerod M (2002): Sense of body and sense of action both contribute to self‐recognition. Cognition 85: 177–187.

- van der Zwaag W, Francis S, Head K, Peters A, Gowland P, Morris P, Bowtell R (2009): fMRI at 1.5, 3 and 7 T: Characterising BOLD signal changes. Neuroimage 47: 1425–1434.

- White LE, Andrews TJ, Hulette C, Richards A, Groelle M, Paydarfar J, Purves D (1997): Structure of the human sensorimotor system. I: Morphology and cytoarchitecture of the central sulcus. Cereb Cortex 7: 18–30.

- Yacoub E, Duong TQ, Van De Moortele PF, Lindquist M, Adriany G, Kim SG, Ugurbil K, Hu X (2003): Spin‐echo fMRI in humans using high spatial resolutions and high magnetic fields. Magn Reson Med 49: 655–664.

- Yoo SS, Freeman DK, McCarthy JJ 3rd, Jolesz FA (2003): Neural substrates of tactile imagery: A functional MRI study. Neuroreport 14: 581–585.

- Yousry TA, Schmid UD, Alkadhi H, Schmidt D, Peraud A, Buettner A, Winkler P (1997): Localization of the motor hand area to a knob on the precentral gyrus. A new landmark. Brain 120 (Pt 1): 141–157.

- Zhou YD, Fuster JM (1997): Neuronal activity of somatosensory cortex in a cross‐modal (visuo‐haptic) memory task. Exp Brain Res 116: 551–555.