Abstract

According to an embodied view of language comprehension, language concepts are grounded in our perceptual systems. Evidence for the idea that concepts are grounded in areas involved in action and perception comes from both behavioral and neuroimaging studies (Glenberg [1997]: Behav Brain Sci 20:1‐55; Barsalou [1999]: Behav Brain Sci 22:577‐660; Pulvermueller [1999]: Behav Brain Sci 22:253‐336; Barsalou et al. [2003]: Trends Cogn Sci 7:84‐91). However, the results from several studies indicate that the activation of information in perception and action areas is not a purely automatic process (Raposo et al. [2009]: Neuropsychologia 47:388‐396; Rueschemeyer et al. [2007]: J Cogn Neurosci 19:855‐865). These findings suggest that embodied representations are flexible. In these studies, flexibility is characterized by the relative presence or absence of activation in our perceptual systems. However, even if the context in which a word is presented does not undermine a motor interpretation, it is possible that the degree to which a modality‐specific region contributes to a representation depends on the context in which conceptual features are retrieved. In the present study, we investigated this issue by presenting word stimuli for which both motor and visual properties (e.g., Tennis ball, Boxing glove) were important in constituting the concept. Consistent with the idea that language representations are flexible and context dependent, we demonstrate that the degree to which a modality‐specific region contributes to a representation changes considerably as a function of context. Hum Brain Mapp 33:2322–2333, 2012. © 2011 Wiley Periodicals, Inc.

Keywords: embodiment, semantics, action, conceptual flexibility

INTRODUCTION

Embodied theories of language hold that language concepts are grounded in brain areas generally dedicated to perception and action. According to a grounded cognition account, understanding a word like hammer entails a reactivation of experiential traces (e.g., a hammer is an object with an elongated handle and is typically moved away from the body) that are stored in modality‐specific brain regions (e.g., visual and motor areas) [Barsalou, 1999, 2008; Glenberg, 1997; Pulvermueller, 1999, 2005]. In the past decade a number of theoretical proposals have been put forth within the general embodied framework [e.g., Barsalou, 1999, 2008; Mahon and Caramazza, 2008; Vigliocco et al., 2004]. These proposals share the notion that sensorimotor experience affects the cognitive architecture of the language system; however, they differ in the role they ascribe to sensorimotor brain areas in representing meaning [for a review, see Meteyard et al., 2010]. On one end of the spectrum are theories which see links between sensorimotor areas and language meaning as epiphenomenal and associative. In other words, word meaning is linked to sensorimotor experience, which can result in motor and perceptual simulation following comprehension; however, these simulation processes are not a reflection of how meaning is represented [e.g., Mahon and Caramazza, 2008]. On the other end of the spectrum are theories which argue that lexical‐semantic representations are stored in sensorimotor brain areas [e.g., Pulvermueller, 2005; Pulvermueller and Fadiga, 2010]. On such theories, sensorimotor activations observed during language processing are more than associative or epiphenomenal; indeed, they reflect how word meaning is stored in the brain. Between the two ends of the spectrum are theories which suggest that word meaning is mediated by initial connections to sensorimotor processes, but which propose that other information is also integral in forming a complete lexical‐semantic representation [e.g., Barsalou, 2008; Simmons et al., 2008; Vigliocco et al., 2004].

There is ample behavioral and neuroimaging evidence for selective involvement of areas involved in action and perception during language processing (see further below); however, the functional contribution of perception and action areas to language processing remains a topic of debate. Proponents of a strong embodied account argue that lexically driven visual and motor activations reflect static semantic representations, which are automatically triggered upon encountering a word. For example, it has been shown that action words used in idiomatic phrases in which no motor meaning is conveyed (e.g., “he kicked the bucket”) still activate the neural motor system [Boulenger et al., 2009]. However, the notion of automaticity contrasts with the general notion that words can be used very flexibly; indeed, this is one of the greatest strengths of the human language system. Moreover, Raposo et al. [2009] provide evidence that contrasts with the results of Boulenger et al. [2009].

Converging evidence for the stance that language comprehension shares mechanisms with perception and action comes from behavioral, electrophysiological and neuroimaging studies. For example, it has been shown that comprehending sentences and single words denoting actions interacts with action execution [Glenberg and Kaschak, 2002; Rueschemeyer et al., 2010; van Dam et al., 2010a]. This suggests that comprehending language about action recruits the same neural resources required for action execution. In addition, behavioral studies have provided evidence that the motor content that is activated during lexical retrieval reflects specific spatial [Rueschemeyer et al., 2010] and effector‐specific information [Scorolli and Borghi, 2007]. In a similar vein, Zwaan et al. [2004] have provided evidence that language comprehension also activates visual representations. In their study, participants listened to sentences and subsequently indicated whether two sequentially presented visual objects were identical or not. Critically, the size of the picture of the first object could be smaller or larger than the size of the second picture, thereby suggesting motion of the object towards or away from the observer. Participants were faster to respond if the direction of the movement implied by the sentence matched the direction suggested by the sequence in which the two pictures were presented. Thus, behavioral data provide evidence for a crucial role of modality‐specific brain regions (e.g., visual and motor systems) in language comprehension.

Neuroimaging studies have provided evidence that comprehension of verbs that entail a motor component [Hauk et al., 2004; Hauk and Pulvermueller, 2004] and of words denoting manipulable objects [Chao and Martin, 2000; Rueschemeyer et al., 2010; Saccuman et al., 2006] activates the cerebral motor system. More specifically, several neuroimaging studies indicate that the processing of action verbs modulates the motor system in an effector‐specific fashion [Aziz‐Zadeh et al., 2006; Hauk et al., 2004; Hauk and Pulvermueller, 2004; Kemmerer et al., 2008; Tettamanti et al., 2005]. In a similar vein, neuroimaging studies have provided evidence that comprehension of words that are semantically related to color activates areas within the visual system that have been linked to color processing [Pulvermueller and Hauk, 2006; Kellenbach et al., 2001; Martin et al., 1995; Simmons et al., 2007]. All the studies reviewed above provide evidence that brain areas generally dedicated to perception and action are involved in the processing of language semantically related to visual and action information.

Neuroimaging work has demonstrated that category‐specific activation can be observed as early as ∼200 ms after word onset [Hauk and Pulvermueller, 2004; Pulvermueller et al., 2000], occurs irrespective of attention to action words [Pulvermueller and Shtyrov, 2006, 2009; Shtyrov et al., 2004], and is also present when action verbs are embedded within abstract sentences like “grasp an idea” [Boulenger et al., 2009]. These findings have been taken as evidence that embodied lexical‐semantic representations are fast, automatic and unaffected by the context. However, in a recent study, Raposo et al. [2009] failed to find motor‐related activation for action verbs that were presented in an idiomatic context (e.g., kick the bucket). These findings strongly challenge the claim that lexical‐semantic representations are automatic and static, and suggest that the activation of meaning attributes of words is a flexible and contextually dependent process [but see also Boulenger et al., 2009]. In a similar vein, Rueschemeyer et al. [2007] showed that the processing of morphologically complex verbs built on motor stems did not differ in its involvement of the motor system from the processing of complex verbs with abstract stems (e.g., the difference between the complex verbs comprehend and consider, in which only the first verb is built on a motor verb stem). A crucial factor for observing activity in motor and premotor regions during action word processing seems to be that the context in which the word is presented supports a motor interpretation and that the word form as a whole conveys a motor meaning. These findings suggest that embodied representations are flexible to some degree. Flexibility is characterized by the relative presence or absence of activation in motor and perceptual brain areas. However, even if activation in perceptual and motor brain regions is present for a given word form, it is possible that the degree to which a modality‐specific sensory region contributes to a representation depends on the relevance of this information for the task at hand.

Hoenig et al. [2008] demonstrated that features that are less relevant in constituting a particular concept are strongly activated when they are directly probed by the task. Verification of a category's less accessible, nondominant feature (i.e., visual features for artifacts and action features for natural categories) elicited relatively greater activation in modality‐specific brain regions than the verification of a highly accessible, dominant feature (i.e., action features for artifacts and visual features for natural categories). The authors discuss the observed differences in activation in terms of priming effects. That is, a non‐dominant feature of a concept is not primed by the attribute probe and therefore requires increased processing capacity in the corresponding modality‐specific region. In other words, they show that the neural signature of a concept differs depending on whether a dominant versus a nondominant feature of the concept has to be retrieved. These findings were taken as evidence that conceptual features contribute to a concept to varying degrees in a flexible context‐dependent manner. The results of Hoenig et al. [2008] clearly demonstrate that if processing demands are low (the easy task of verifying that to cut is an attribute of the object knife) this leads to relatively lower levels of activation in the corresponding modality‐specific region as compared with a situation in which processing demands are high (the difficult task of verifying that elongated is an attribute of the object knife). A question that remains is whether a flexible recruitment of conceptual features can also be demonstrated in a situation in which processing demands are kept constant and the context in which a word is presented is manipulated. That is, can the context in which a word is presented modulate the importance of a given modality for lexical‐semantic representations? Such a within‐word (across context) effect would provide further evidence for the idea of flexible conceptual representations.

In this study, we investigated precisely this issue: do words consistently activate the same visual and/or motor information in all language contexts, or does modality‐specific activation in response to a given word depend on the linguistic context in which a word is presented? To this end we measured changes in the hemodynamic response while participants read words belonging to one of three critical experimental categories (1) action words (i.e., words highly associated with a specific action, such as stapler), (2) color words (i.e., words highly associated with a specific color, such as wedding dress), and (3) action‐color words (i.e., words highly associated with both an action and a color, such as tennis ball or boxing glove). The goals of our study were the following: first we aimed to replicate previous results showing that words associated with actions activate the neural motor network [Aziz‐Zadeh et al., 2006; Hauk et al., 2004; Kemmerer et al., 2008; Tettamanti et al., 2005], while words associated with a visual percept activate relevant parts of the visual processing stream [Simmons et al., 2007; Kellenbach et al., 2001; Martin et al., 1995; Pulvermueller and Hauk, 2006]. Second, we sought to go beyond this replication of previous work to show that words highly associated with more than one experiential feature (i.e., words associated with both an action and a color) activate relevant brain areas in both action and perception pathways. Third, and most interestingly, we investigated whether the strength of modality‐specific activations elicited by word comprehension could be modified by encouraging participants to focus on one property of denoted objects vs. another. In other words, does the strength of activation in motor areas elicited by comprehension of the word tennis ball change depending on whether the participant is thinking about (1) how to use a tennis ball or (2) the appearance of a tennis ball?

EXPERIMENTAL METHODS

Participants

Twenty students of the Radboud University participated in the study, all of whom were right‐handed and between 18 and 24 years of age (M = 20.5, SD = 2.2; 14 females). All participants had normal or corrected‐to‐normal vision and no history of neurological disorders. Beforehand, all participants were informed about the experimental procedures, were given practice trials, and signed informed consent. Afterward, all students were awarded 12.50 Euros for participating. The data from one subject were excluded from analysis because of technical problems during the recording. The experiment was conducted in accordance with the national legislation for the protection of human participants and the Helsinki Declaration of 1975, revised in 2004.

Stimuli

A total of 120 Dutch words were used as experimental stimuli (stimuli with English translations are listed in Table I). One hundred of these constituted our critical experimental stimuli, and 20 constituted catch trials (see below for more information). The 100 critical stimuli belonged to one of four conditions: (1) Action Color word condition, i.e., words denoting objects that are associated with both a specific action and color (e.g., boxing glove, tennis ball), (2) Action word condition, i.e., words denoting objects that are mainly associated with an action (e.g., doorbell, lighter), (3) Color word condition, i.e., words denoting objects that are mainly associated with a color (e.g., rear‐light, buoy), and (4) Abstract word condition, i.e., words denoting abstract concepts (e.g., magic, justice).

Table I.

Experimental stimuli with their English translations

| Action words | English | Color words | English | Action/Color words | English | Abstract words | English | Catch items | English |
|---|---|---|---|---|---|---|---|---|---|
| Aansteker | Lighter | Achterlicht | Rear‐light | Bakpapier | Waxed paper | Beperking | Restriction | Dollarbiljet | Dollar bill |
| Boek | Book | Aluminiumfolie | Aluminium shell | Bezem | Broom | Beroepsgroep | Professional group | Glasbak | Bottle bank |
| Dartpijl | Dart | Asfaltweg | Asphalt road | Bokshandschoen | Boxing glove | Bijzonderheid | Peculiarity | Gras | Grass |
| Deurbel | Doorbell | Astronautenpak | Spaceman suit | Cello | Cello | Breuk | Fraction | Krokodil | Crocodile |
| Drumstel | Drum set | Autoband | Tire | Deegrol | Rolling pin | Commissie | Commission | Legerbroek | Army jeans |
| Haarborstel | Hairbrush | Caravan | Caravan | Deurklink | Door latch | Creativiteit | Creativity | Legerhelm | Army helmet |
| Haarklem | Hairclip | Douchekop | Showerhead | Dobbelsteen | Dice | Criminaliteit | Criminality | Legeruniform | Army uniform |
| Haarspraybus | Can of hair spray | Eurocent | Euro cent | Drumstok | Drum stick | Crisis | Crisis | Pooltafel | Pool table |
| Harp | Harp | Parketvloer | Parquetry | Gitaar | Guitar | Detail | Detail | Regenlaars | Jackboot |
| Hockeystick | Hockey stick | Paspoort | Passport | Lucifer | Match | Economie | Economy | Voetbalveld | Soccer field |
| Knikker | Marble | Piepschuim | Polystyrene foam | Mascara | Mascara | Gerechtigheid | Justice | Gaspedaal | Gas pedal |
| Knop | Button | Piramide | Pyramid | Nagelknipper | Nail clipper | Gigabyte | Gigabyte | Skateboard | Skateboard |
| Kruiwagen | Wheelbarrow | Rails | Rails | Paperclip | Paperclip | Goedkoop | Cheap | Laars | Boot |
| Nietmachine | Stapler | Reddingsboei | Buoy | Pincet | Tweezers | Kwartaal | Quarter | Schaats | Skate |
| Oogschaduw | Eye shadow | Regenpijp | Drainpipe | Prittstift | Glue stick | Maatschappij | Society | Pianopedaal | Piano pedal |
| Paraplu | Umbrella | Rookmelder | Smoke detector | Schroef | Screw | Magie | Magic | Ski | Ski |
| Penseel | Paint brush | Ruitenwisser | Screen wiper | Sleutel | Key | Oorlog | War | Trampoline | Trampoline |
| Perforator | Perforator | Stoeptegel | Paving stone | Spijker | Nail | Percentage | Percentage | Trapper | Pedal |
| Rekenmachine | Calculator | Stopbord | Stop sign | Tennisbal | Tennis ball | Probleem | Problem | Voetbal | Football |
| Schoenveter | Shoe lace | Trein | Train | Toiletpapier | Toilet paper | Spelregel | Rule of the game | Waterfiets | Pedal boat |
| Squashracket | Squash racket | Trouwjurk | Wedding dress | Viool | Violin | Verzekering | Insurance | | |
| Telefoon | Telephone | Ventieldop | Air‐valve | Wasbak | Sink | Welvaart | Prosperity | | |
| Tennisracket | Tennis racket | Verbodsbord | Prohibition sign | Winkelwagen | Shopping trolley | Werkgeheugen | Working memory | | |
| Tondeuse | (Pair) of clippers | Windmolen | Wind mill | Zaag | Saw | Winst | Profit | | |
| Vulpen | Fountain‐pen | Zwaailicht | Flashing light | Zwaard | Sword | Wraakzucht | Revengefulness | | |

The validity and psycholinguistic properties of the experimental stimuli were tested using a prescanning questionnaire. Ten participants who did not take part in the subsequent scanning session were asked to rate critical stimuli on (1) actions associated with the word's referent, (2) colors associated with the word's referent, (3) familiarity (5 point scale, 1 = unfamiliar, 5 = very familiar), (4) comprehensibility (1 = not comprehensible, 5 = very comprehensible) and (5) imageability (1 = very difficult to image, 5 = very easy to image) (see Table II). Importantly, participants consistently indicated that Action‐Color and Action words denoted objects used primarily with the hand/arm, and that Action‐Color and Color words denoted objects highly associated with one specific color. Furthermore, the results of the questionnaire showed that stimuli were matched across conditions with respect to familiarity with the word (Action: M = 4.95, Color: M = 4.98, Action/Color: M = 4.96, Abstract: M = 4.98), F(3,96) = 1.48, P > 0.200, and word meaning (Action: M = 4.99, Color: M = 4.98, Action/Color: M = 4.97, Abstract: M = 4.95), F(3,96) = 1.15, P > 0.300. Imageability, in contrast, differed across conditions (Action: M = 4.90, Color: M = 4.92, Action/Color: M = 4.94, Abstract: M = 3.13), F(3,96) = 241.38, P < 0.050, reflecting the lower ratings for Abstract words. To obtain an objective measure of word frequency, we calculated the mean logarithmic lemma frequency per million for each condition using the CELEX lexical database [Baayen et al., 1993]. This value was 22.3 for the Action words, 4.6 for the Color words, 5.8 for the Action Color words and 42.9 for Abstract words. A one‐way ANOVA indicated that there were no reliable differences in the mean logarithmic lemma frequency per million between any of the experimental conditions, F(3,96) = 2.65, P > 0.050. Thus, stimuli were matched for relevant linguistic parameters, familiarity, comprehensibility, and frequency.
Words were also matched across conditions for length (Action: M = 8.5 letters, Color: M = 9.5 letters, Action Color: M = 7.9 letters, Abstract: M = 9.0 letters), F(3,96) = 1.83, P > 0.100, and for word duration (Action: M = 793, Color: M = 793, Action Color: M = 895, Abstract: M = 872), F(3,96) = 3.88, P > 0.100. Participants confirmed that Action words and Action Color words denoted objects that are highly associated with an action other than a foot action, whereas Color words and Abstract words were not (percentage of yes‐responses: Action: M = 85.6, Color: M = 14.0, Action Color: M = 84.8, Abstract: M = 3.6; all means differed significantly from 50% as indicated by one‐sample t‐tests, all Ps < 0.001). In addition, participants confirmed that if a word was associated with an action, it was consistently rated as a hand/arm action (Action: M = 95.1, Action Color: M = 98.4; means differed significantly from 50% as indicated by one‐sample t‐tests, all Ps < 0.001). Additionally, participants confirmed that Color words and Action Color words denoted objects that are highly associated with a color other than green, whereas Action words and Abstract words were not (percentage of yes‐responses: Action: M = 19.6, Color: M = 79.6, Action Color: M = 74.8, Abstract: M = 2.4; all means differed significantly from 50% as indicated by one‐sample t‐tests, all Ps < 0.005). In addition, participants confirmed that if a word was associated with a color, it was consistently rated as associated with the same specific color across participants (Color: M = 88.1, Action Color: M = 91.0; means differed significantly from 50% as indicated by one‐sample t‐tests, all Ps < 0.001).

Table II.

Mean ratings of the pre‐tests and standard deviations printed in parentheses

| | Action | Action Color | Color | Abstract |
|---|---|---|---|---|
| Length | 8.5 (2.55) | 7.9 (2.57) | 9.5 (2.24) | 9.0 (2.67) |
| Word duration | 793 (134.37) | 895 (225.25) | 793 (217.18) | 872 (217.49) |
| Familiarity | 4.95 (0.08) | 4.96 (0.08) | 4.98 (0.05) | 4.98 (0.05) |
| Log lemma frequency per million | 22.29 (78.06) | 5.75 (10.19) | 4.64 (16.22) | 42.92 (75.61) |
| Word meaning | 4.99 (0.03) | 4.97 (0.06) | 4.98 (0.06) | 4.95 (0.11) |
| Imageability | 4.90 (0.12) | 4.94 (0.09) | 4.92 (0.08) | 3.13 (0.55) |
| Color association (%) | 19.6 (13.06) | 74.8 (15.03) | 79.6 (13.69) | 2.4 (5.23) |
| Action association (%) | 85.6 (12.61) | 84.8 (15.84) | 14.0 (15.28) | 3.6 (7.57) |

All words and questions were presented auditorily by a female voice over MR‐compatible headphones.

After recording, the spoken stimuli were segmented and equalized in amplitude using Cool Edit Pro 2.1 (Syntrillium Software Corporation).

Procedure

The order of stimulus presentation was randomized individually for each participant. All participants heard all experimental stimuli. A single trial lasted 8 s and consisted of the presentation of a single word. Stimuli were presented in mini blocks of five items. In half of the blocks participants had to answer a question that focused on the color attributes of the object, whereas in the other half of the blocks participants had to answer a question that focused on the action attributes of the object. To enhance the temporal resolution of the acquired signal, a variable jitter time of 0, 500, 1,000, or 1,500 ms was included at the beginning of each trial. Following this, the stimulus was introduced by a fixation cross in the center of the screen. The auditory stimulus word was presented 400 ms after the onset of the fixation cross. The fixation cross remained visible during word presentation and until 2,000 ms after word offset. Thereafter, a variable intertrial interval filled the remaining time, so that every trial lasted exactly 8,000 ms while the interstimulus interval was randomly jittered. Participants were instructed to listen to all words carefully and to perform a go/no‐go semantic categorization task, in which go responses should be made only to words denoting objects that were associated with either a green color or a foot action. In this manner, we ensured that participants semantically processed all words (i.e., participants had to comprehend the words to decide not to answer), but critical experimental stimuli were kept free of motor execution artifacts. In 20 trials a fixation cross was presented for the complete length of the trial (i.e., null events). To ensure that our results are not driven by acoustic, phonological or lexical factors, we presented each word twice. Presentation order of a specific word (i.e., in the color‐judgment or action‐judgment condition) was randomized both within and across participants.
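The trial structure described above can be illustrated with a minimal sketch. The timing constants are taken from the text; the function name `trial_timeline`, the dictionary keys, and the example word duration are hypothetical and serve illustration only.

```python
import random

TRIAL_MS = 8000                    # fixed trial length stated in the text
JITTERS_MS = (0, 500, 1000, 1500)  # possible onset jitters stated in the text
WORD_DELAY_MS = 400                # word starts 400 ms after fixation onset
FIX_AFTER_WORD_MS = 2000           # fixation stays on 2,000 ms after word offset


def trial_timeline(word_duration_ms, jitter_ms):
    """Return event times (ms from trial start) for one trial (hypothetical helper)."""
    if jitter_ms not in JITTERS_MS:
        raise ValueError("jitter must be one of %r" % (JITTERS_MS,))
    fixation_on = jitter_ms
    word_on = fixation_on + WORD_DELAY_MS
    word_off = word_on + word_duration_ms
    fixation_off = word_off + FIX_AFTER_WORD_MS
    # The variable intertrial interval fills whatever time is left in the trial.
    iti = TRIAL_MS - fixation_off
    if iti < 0:
        raise ValueError("word is too long to fit into one trial")
    return {
        "fixation_on": fixation_on,
        "word_on": word_on,
        "word_off": word_off,
        "fixation_off": fixation_off,
        "iti": iti,
    }


# Example: an 800 ms word (illustrative duration) with a randomly drawn jitter.
example = trial_timeline(800, random.choice(JITTERS_MS))
```

Because the intertrial interval absorbs the jitter and the word duration, the onset of the word relative to the scanner volumes varies from trial to trial while the total trial length stays constant.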

fMRI Data Acquisition

Functional images were acquired on a Siemens TRIO 3.0 T MRI system (Siemens, Erlangen, Germany) equipped with EPI capabilities, using a birdcage head coil for radio‐frequency transmission and signal reception. BOLD‐sensitive functional images were acquired using a single‐shot gradient EPI sequence (echo time/repetition time = 31/2,000 ms; 31 axial slices in ascending order, voxel size = 3.5 × 3.5 × 3.5). High‐resolution anatomical images were acquired using an MPRAGE sequence (echo time = 3.03, voxel size = 1 × 1 × 1 mm³, 192 sagittal slices, field of view = 256).

fMRI Data Analysis

Functional data were preprocessed and analyzed with SPM5 (Statistical Parametric Mapping, http://www.fil.ion.ucl.ac.uk/spm). Preprocessing involved removing the first three volumes to allow for T1 equilibration effects. Rigid body registration along three translations and three rotations was applied to correct for small head movements. Subsequently, the time series for each voxel was realigned temporally to acquisition of the middle slice (Slice 17) to correct for slice timing acquisition delays. Images were normalized to a standard EPI template centered in Montreal Neurological Institute space and resampled at an isotropic voxel size of 2 mm. The normalized images were smoothed with an isotropic 8‐mm FWHM Gaussian kernel. The ensuing preprocessed fMRI time series were analyzed on a subject‐by‐subject basis using an event‐related approach in the context of the general linear model with regressors for each condition [Action Color (AC), Action (A), Color (C) and Abstract (Abs)] as well as the error trials, catch trials and null trials (Null) convolved with a canonical hemodynamic response function. The parameters from the motion correction algorithm were included in the model as effects of no interest.
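To illustrate what convolving condition regressors with a canonical hemodynamic response function involves, the following is a simplified sketch and not the SPM5 implementation. It assumes a commonly used double‐gamma shape (response peaking near 6 s, undershoot near 16 s, undershoot ratio 1/6) and places events on the scan grid rather than on a finer time grid. The function names are hypothetical; the repetition time of 2 s is taken from the acquisition parameters.

```python
import math

TR_S = 2.0  # repetition time in seconds (from the acquisition parameters)


def gamma_pdf(t, shape):
    """Gamma density with unit scale; zero for non-positive times."""
    if t <= 0:
        return 0.0
    return t ** (shape - 1) * math.exp(-t) / math.gamma(shape)


def double_gamma_hrf(t):
    """Assumed double-gamma HRF: positive response minus a scaled undershoot."""
    return gamma_pdf(t, 6.0) - gamma_pdf(t, 16.0) / 6.0


def make_regressor(onsets_s, n_scans, tr_s=TR_S, hrf_len_s=32.0):
    """Convolve event onsets (in seconds) with the HRF, sampled once per scan."""
    sticks = [0.0] * n_scans
    for onset in onsets_s:
        idx = int(round(onset / tr_s))  # nearest scan (a simplification)
        if 0 <= idx < n_scans:
            sticks[idx] += 1.0
    kernel = [double_gamma_hrf(k * tr_s) for k in range(int(hrf_len_s / tr_s) + 1)]
    regressor = [0.0] * n_scans
    for i, amplitude in enumerate(sticks):
        if amplitude == 0.0:
            continue
        for k, h in enumerate(kernel):
            if i + k < n_scans:
                regressor[i + k] += amplitude * h
    return regressor


# Example: one event at the start of a 20-scan run.
regressor = make_regressor([0.0], 20)
```

One such regressor per condition (AC, A, C, Abs, plus error, catch and null trials) would form the columns of the design matrix, alongside the motion parameters.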

For each participant, five contrast images were generated, representing (1) the main effect of reading the different categories of concrete words (AC ‐ Abs, A ‐ Abs, C ‐ Abs) as well as (2) the effect of context on Action Color words (AC ‐ Abs, both for the Action and Color context). Because individual functional data sets had been aligned to standard stereotactic reference space, a group analysis based on the contrast images could be performed. Single‐participant contrast images were entered into a second‐level random effects analysis for each critical contrast of interest. The group analysis consisted of a one‐sample t test across the contrast images of all subjects that indicated whether observed differences between conditions were significantly distinct from zero. To protect against false‐positive activation, a double threshold was applied, by which only voxels with P < 0.005 (uncorrected) in clusters exceeding 65 voxels were considered, resulting in a corrected threshold of P < 0.05. The 65‐voxel threshold was determined by modeling the entire imaging volume, assuming an individual voxel Type I error, and subsequently smoothing the volume with a Gaussian kernel. To achieve the desired correction for multiple comparisons, we calculated the probability associated with each cluster extent across 1,000 Monte Carlo simulations [Forman et al., 1995; Slotnick et al., 2003].
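The logic of such a cluster‐extent simulation can be sketched as follows. This is a toy illustration and not the procedure actually used: it assumes a small cubic volume, a 3‐tap smoothing kernel in place of a Gaussian kernel of a given FWHM, and face‐connected clusters, so it will not reproduce the 65‐voxel value. All function names and default parameters are hypothetical.

```python
import math
import random
from statistics import NormalDist


def smooth(vol, n):
    """Apply a separable 3-tap kernel along x, y and z (edges are clamped)."""
    for stride in (n * n, n, 1):
        out = [0.0] * len(vol)
        for i in range(len(vol)):
            pos = (i // stride) % n  # coordinate along the current axis
            lo = i - stride if pos > 0 else i
            hi = i + stride if pos < n - 1 else i
            out[i] = 0.25 * vol[lo] + 0.5 * vol[i] + 0.25 * vol[hi]
        vol = out
    return vol


def max_cluster_size(mask, n):
    """Size of the largest face-connected cluster of True voxels."""
    seen = [False] * len(mask)
    best = 0
    for start in range(len(mask)):
        if not mask[start] or seen[start]:
            continue
        seen[start] = True
        stack, size = [start], 0
        while stack:
            i = stack.pop()
            size += 1
            for stride in (n * n, n, 1):
                pos = (i // stride) % n
                for j, inside in ((i - stride, pos > 0), (i + stride, pos < n - 1)):
                    if inside and mask[j] and not seen[j]:
                        seen[j] = True
                        stack.append(j)
        best = max(best, size)
    return best


def cluster_extent_threshold(n=10, voxel_p=0.005, alpha=0.05, n_sims=1000, seed=0):
    """Smallest extent k whose chance probability P(max cluster >= k) is below alpha."""
    rng = random.Random(seed)
    z_cut = NormalDist().inv_cdf(1.0 - voxel_p)
    maxima = []
    for _ in range(n_sims):
        vol = smooth([rng.gauss(0.0, 1.0) for _ in range(n ** 3)], n)
        mu = sum(vol) / len(vol)
        sd = math.sqrt(sum((v - mu) ** 2 for v in vol) / len(vol))
        mask = [(v - mu) / sd > z_cut for v in vol]  # voxelwise threshold on pure noise
        maxima.append(max_cluster_size(mask, n))
    k = 1
    while sum(m >= k for m in maxima) / n_sims >= alpha:
        k += 1
    return k
```

The returned extent depends strongly on volume size and smoothness, which is why the simulation has to model the actual imaging volume and smoothing kernel.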

ROI Analysis

In order to assess whether the activations in motor and color processing areas were modulated by the context, we conducted a region of interest (ROI) analysis. ROIs were selected based upon the second level group results from the contrast Action Color ‐ Abstract (AC ‐ Abs) in the whole brain analysis. This yielded four ROIs: the left fusiform gyrus (FFG), the left posterior intraparietal sulcus (IPS), the left inferior parietal lobule (IPL) and the left middle temporal gyrus (MTG) (see also Table III). Percent signal change values for both word types and contexts (AC and Abs in color and action context) were extracted from all voxels in a given ROI and averaged within each participant. Thus, for each ROI we calculated four percent signal change values per participant. These values were entered as dependent variables in a repeated measures analysis of variance (ANOVA).
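As a minimal sketch of how the four values per ROI and participant could be obtained, the example below averages voxelwise percent signal change within one ROI. The text does not specify how percent signal change was computed, so the definition used here (condition effect relative to a voxelwise baseline signal) is an assumption, and the function name and all numbers are hypothetical.

```python
def roi_percent_signal_change(effect_by_voxel, baseline_by_voxel):
    """Mean percent signal change across the voxels of one ROI.

    Assumed definition: 100 * condition effect / baseline signal per voxel.
    """
    if not effect_by_voxel or len(effect_by_voxel) != len(baseline_by_voxel):
        raise ValueError("need one baseline value per voxel")
    per_voxel = [100.0 * e / b for e, b in zip(effect_by_voxel, baseline_by_voxel)]
    return sum(per_voxel) / len(per_voxel)


# Hypothetical data for one participant and one ROI with two voxels:
# (condition effects, baseline signal) for each Word x Context cell.
cells = {
    ("AC", "action"): ([1.0, 2.0], [100.0, 100.0]),
    ("AC", "color"): ([0.5, 1.5], [100.0, 100.0]),
    ("Abs", "action"): ([0.2, 0.4], [100.0, 100.0]),
    ("Abs", "color"): ([0.2, 0.6], [100.0, 100.0]),
}
values = {cell: roi_percent_signal_change(*data) for cell, data in cells.items()}
```

The resulting dictionary holds one value per cell of the Word by Context design, which is the input format a repeated measures ANOVA requires.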

Table III.

Brain regions showing significantly more activation for action/color, action, and color words than for abstract words (P < 0.005, k > 65)

| Brain regions | Action/Color > Abstract | Action > Abstract | Color > Abstract | ||||||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Z max | Extent (Voxels) | x | y | z | Zmax | Extent (Voxels) | x | y | z | Z max | Extent (Voxels) | x | y | z | |

| Fusiform Gyrus | 3.77 | 180 | −24 | −30 | −28 | 4.25 | 182 | −38 | −26 | −26 | |||||

| −24 | −30 | −26 | |||||||||||||

| Posterior intraparietal sulcus | 3.43 | 310 | −20 | −60 | 44 | 3.34 | 164 | −24 | −64 | 56 | |||||

| Inferior parietal lobule | 3.55 | 435 | −64 | −30 | 38 | ||||||||||

| Middle temporal gyrus | 3.42 | 120 | −54 | −66 | −8 | ||||||||||

The maximum Z scores, the cluster extent (in voxels), and the Montreal Neurological Institute coordinates are reported.

RESULTS

Behavioral Results

The results of the behavioral data show that participants were alert and performing the semantic categorization task accurately (performance rates: Color Word, M = 97.6%, SE = 0.55; Action Word, M = 97.2%, SE = 0.49; Action Color word, M = 98.5%, SE = 0.43; Abstract word, M = 100%, SE = 0). The data from one subject were excluded from analysis because of technical problems during the recording. Thus, results of 19 participants entered the analysis.

Neuroimaging Results

Whole brain analysis

A list of significant activations can be seen in Table III. Whole‐brain analysis revealed several areas to be more strongly activated in response to Action words (e.g., lighter) as compared with Abstract words (e.g., magic). The A‐Abs contrast yielded a significant result in the left posterior intraparietal sulcus (pIPS). Contrasting Color words (e.g., stop sign) with Abstract words yielded a significant result in the left fusiform gyrus (FFG). Finally, several areas were more strongly activated in response to Action Color words (e.g., boxing glove) as compared with Abstract words. The AC‐Abs contrast yielded significant results in left FFG, left pIPS, left inferior parietal lobule (IPL), and left middle temporal gyrus (MTG).

The AC‐Abs contrast in the Action context yielded significant results in the middle occipital gyrus (MOG), posterior IPS, IPL, and middle frontal gyrus (MFG) of the left hemisphere. The AC‐Abs contrast in the Color context did not yield any significant results (Table IV).

Table IV.

Brain regions showing significantly more activation for action color, action, and color words than for abstract words in the action and color contexts (P < 0.005, k > 65)

| Brain regions | Action Color > Abstract (action context) | Action > Abstract (action context) | Color > Abstract (action context) | ||||||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Z max | Extent (Voxels) | x | y | z | Z max | Extent (Voxels) | x | y | z | Z max | Extent (Voxels) | x | y | z | |

| Middle occipital gyrus | 3.38 | 74 | −36 | −72 | 32 | ||||||||||

| Posterior intraparietal sulcus | 3.27 | 238 | −32 | −62 | 50 | ||||||||||

| −18 | −55 | 52 | |||||||||||||

| Inferior parietal lobule | 3.70 | 692 | −60 | −30 | 38 | ||||||||||

| −34 | −40 | 38 | |||||||||||||

| Middle frontal gyrus | 3.56 | 103 | −28 | 10 | 50 | ||||||||||

| Left Hippocampus | 3.96 | 351 | −18 | −34 | 6 | ||||||||||

| Right Hippocampus | 3.36 | 191 | 18 | −32 | 14 | ||||||||||

| 25 | −35 | 4 | |||||||||||||

| Action Color > Abstract (color context) | Action > Abstract (color context) | Color > Abstract (color context) | |||||||||||||

| Z max | Extent (Voxels) | x | y | z | Z max | Extent (Voxels) | x | y | z | Z max | Extent (Voxels) | x | y | z | |

| Fusiform Gyrus | 3.33 | 83 | −34 | −34 | −24 | ||||||||||

| Middle frontal gyrus | 3.56 | 77 | −28 | 20 | 58 | ||||||||||

The maximum Z scores, the cluster extent (in voxels), and the Montreal Neurological Institute (MNI) coordinates are reported.

ROI Analysis

A two‐by‐two repeated measures ANOVA was conducted on the percent signal change values from each ROI. Percent signal change values were interrogated with respect to Word (AC, Abs) and Context (Action, Color).
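The following is a minimal sketch of this kind of analysis, not the authors' actual pipeline (which is not described at this level of detail). It relies on a standard property of 2 × 2 within‐subject designs: each effect has one numerator degree of freedom, so each F(1, n − 1) equals the squared one‐sample t statistic computed on a per‐participant contrast score. The function names, variable names, and data values are hypothetical and were made up for illustration.

```python
from math import sqrt
from statistics import mean, stdev


def one_sample_t(scores):
    """t statistic for H0: mean(scores) == 0, with len(scores) - 1 degrees of freedom."""
    n = len(scores)
    return mean(scores) / (stdev(scores) / sqrt(n))


def paired_t(x, y):
    """Paired-sample t statistic: a one-sample t-test on the pairwise differences."""
    return one_sample_t([a - b for a, b in zip(x, y)])


def rm_anova_2x2(ac_action, ac_color, abs_action, abs_color):
    """F(1, n - 1) for Word, Context, and Word x Context in a 2 x 2 within-subject design.

    Each argument holds one percent-signal-change value per participant.
    Every effect is reduced to a per-participant contrast score, and F = t ** 2.
    """
    rows = list(zip(ac_action, ac_color, abs_action, abs_color))
    contrasts = {
        # Word: AC minus Abs, averaged over contexts
        "word": [(a + b) / 2 - (c + d) / 2 for a, b, c, d in rows],
        # Context: Action minus Color, averaged over word types
        "context": [(a + c) / 2 - (b + d) / 2 for a, b, c, d in rows],
        # Interaction: context effect for AC words minus context effect for Abs words
        "interaction": [(a - b) - (c - d) for a, b, c, d in rows],
    }
    return {name: one_sample_t(scores) ** 2 for name, scores in contrasts.items()}


# Hypothetical percent-signal-change values for five participants in one ROI.
ac_action = [0.31, 0.27, 0.35, 0.22, 0.29]
ac_color = [0.24, 0.25, 0.27, 0.20, 0.21]
abs_action = [0.10, 0.12, 0.08, 0.11, 0.09]
abs_color = [0.11, 0.10, 0.09, 0.12, 0.08]

for effect, f_value in rm_anova_2x2(ac_action, ac_color, abs_action, abs_color).items():
    print(f"{effect}: F(1, {len(ac_action) - 1}) = {f_value:.2f}")

# Post-hoc comparison of AC words across the two contexts.
print(f"AC action vs. color: t({len(ac_action) - 1}) = {paired_t(ac_action, ac_color):.2f}")
```

With 19 participants, as in the present study, the same functions would yield statistics on 1 and 18 degrees of freedom.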

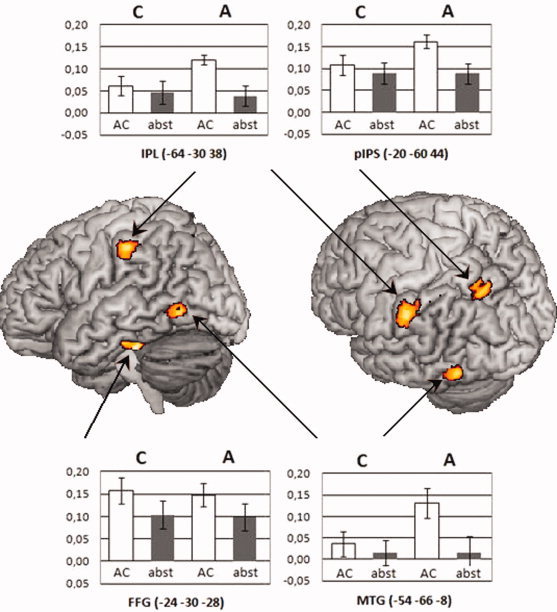

Within the left fusiform gyrus we observed a reliable main effect of Word, F(1,18) = 18.23, P < 0.001, MSE = 0.003, partial η² = 0.503. No reliable main effect of Context or Word × Context interaction was observed (all Ps > 0.5).

Within the pIPS we observed a main effect of Word, F(1,18) = 19.39, P < 0.001, MSE = 0.002, partial η² = 0.519. No reliable main effect of Context or Word × Context interaction was observed (all Ps > 0.2). Additionally, we calculated paired sample t‐tests on the percent signal change values for AC words in the Action and Color contexts. In the pIPS, AC words in the Action context elicited more activation than AC words in the Color context, t(18) = 2.33, P < 0.05.

Within the left IPL we observed a main effect of Word, F(1,18) = 13.17, P < 0.005, MSE = 0.003, partial η² = 0.423. In addition, we observed a reliable Word × Context interaction, F(1,18) = 4.51, P < 0.05, MSE = 0.005, partial η² = 0.200, indicating that the differences in percent signal change values between AC and Abs words were modulated by context. To further explore this interaction, we calculated post‐hoc paired sample t‐tests. AC words (i.e., words that have both action and color semantic features) elicited more activation when the context focused on action features, t(18) = 3.26, P < 0.005. However, the neural response to Abs words (i.e., words without action or color semantic features) was not modulated by context, t(18) < 1.

Within the left MTG we observed a main effect of Word, F(1,18) = 15.86, P < 0.005, MSE = 0.005, partial η² = 0.468, and a marginally significant effect of Context, F(1,18) = 4.23, P = 0.055, MSE = 0.010, partial η² = 0.190. In addition, the Word × Context interaction was marginally significant, F(1,18) = 3.77, P = 0.068, MSE = 0.011, partial η² = 0.173. Subsequently, we calculated post‐hoc paired sample t‐tests. AC words elicited more activation when the context focused on action features, t(18) = 2.84, P < 0.05. However, the neural response to Abs words was not modulated by context, t(18) < 1 (Fig. 1).
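The effect sizes reported alongside each F statistic above are numerically consistent with partial eta squared, which can be recovered from F and its degrees of freedom as (F × df_effect) / (F × df_effect + df_error). This reading is our assumption; the text does not name the formula. The short check below recomputes each value from the reported F(1,18); the function name and dictionary labels are hypothetical.

```python
def partial_eta_squared(f_value, df_effect, df_error):
    """Partial eta squared recovered from an F statistic and its degrees of freedom."""
    return (f_value * df_effect) / (f_value * df_effect + df_error)


# (reported F, reported effect size) for each effect; all tests have df = (1, 18).
reported = {
    "FFG Word": (18.23, 0.503),
    "pIPS Word": (19.39, 0.519),
    "IPL Word": (13.17, 0.423),
    "IPL Word x Context": (4.51, 0.200),
    "MTG Word": (15.86, 0.468),
    "MTG Context": (4.23, 0.190),
    "MTG Word x Context": (3.77, 0.173),
}

for label, (f_value, effect_size) in reported.items():
    recomputed = partial_eta_squared(f_value, 1, 18)
    print(f"{label}: recomputed {recomputed:.3f}, reported {effect_size:.3f}")
```

Each recomputed value agrees with the reported one to three decimal places, which also supports reading the comma in "3,77" as a decimal separator.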

Figure 1.

Differences in BOLD response for Action Color (AC) versus Abstract (Abs) words (P < 0.005, k > 65). Significant differences are seen in the left inferior parietal lobule (IPL), the left posterior intraparietal sulcus (pIPS), the left fusiform gyrus (FFG), and the left middle temporal gyrus (MTG). The region of interest (ROI) analysis revealed a significant interaction of word category and context in the left inferior parietal lobule and a marginally significant interaction in the left middle temporal gyrus.

DISCUSSION

The current experiment was designed to explore whether a word consistently activates specific visual or motor information, or whether the modality‐specific activation in response to a given word depends on the context in which the word is perceived. There are three main results of this study. First, words denoting objects associated with actions elicited activation in the posterior intraparietal sulcus (pIPS), while words denoting objects associated with a color elicited activation in the left fusiform gyrus (FFG). Second, words denoting objects associated with both actions and colors activated the IPL, pIPS, MTG, and FFG of the left hemisphere. Third, modality‐specific activation elicited by words showed some sensitivity to linguistic context. Specifically, within action areas (i.e., left IPL) the BOLD response was greater when participants were instructed to focus on the action performed on a word's referent than when participants were instructed to focus on the object's color. This difference was not seen in response to words with no putative action or color properties; therefore, the results cannot be attributed to the linguistic context per se.

Modality Specific Activations

Our results demonstrate that words that are semantically related to action recruit the motor system, whereas words related to color recruit visual areas. These findings are consistent with previous studies showing that words with motor meanings engage portions of the neural motor system [Desai et al., 2010; Raposo et al., 2009; Rueschemeyer et al., 2007; Tettamanti et al., 2005; van Dam et al., 2010a]. In addition, words semantically related to color information recruit color processing areas within the visual system [Kellenbach et al., 2001; Martin et al., 1995; Pulvermueller and Hauk, 2006; Simmons et al., 2007]. Beyond previous research, the present findings demonstrate that words semantically related to both types of content (action and color) are represented in a distributed network encompassing both visual and motor brain regions; this is a modest but non‐trivial extension of previous observations. In addition, it is among the first demonstrations in adults that auditory word perception (instead of visual perception) recruits visual and motor regions [see James and Maouene, 2009 for a similar result obtained in preschool children]. Taken together, these findings provide evidence that modality‐specific brain regions are involved in the processing of language denoting objects that are semantically related to color and action information.

Context‐Dependent Activations in Modality‐Specific Brain Regions

To investigate the effects of context on modality‐specific activation we focused our analysis on Action‐Color words only (i.e., words with both a putative action and color feature). Specifically, we interrogated the BOLD response elicited by (1) Action‐Color words and (2) abstract words in two different contexts: one in which participants were focused on motor information about the denoted object and one in which participants focused on visual information about the denoted object. In the following we discuss the results of both a whole‐brain analysis and an ROI analysis.

In the whole‐brain analysis, motor areas of the brain (i.e., left pIPS and left IPL) were more strongly responsive to words with a putative action feature (i.e., Action‐color words) than to abstract words only when participants were encouraged to think about the action properties of the denoted object. In other words, while participants focused on how to act upon a denoted object, motor areas were more strongly engaged for Action‐color words than for abstract words; however, while they focused on the appearance of a denoted object, motor areas were not differentially engaged in response to Action‐color and abstract words. Thus, our results provide evidence for flexible and context‐dependent language processing. That is, at the whole‐brain level the BOLD response in modality‐specific brain regions (i.e., motor areas) to a given category of word changes considerably as a function of linguistic context. Specifically, greater activation is observed in brain regions relevant for coding action information (IPL, pIPS) when the linguistic context emphasizes action properties.

In the context highlighting action properties, action‐color words also elicited a greater change in the BOLD signal than abstract words in the middle occipital gyrus (MOG). The peak activation in this extrastriate visual area was somewhat posterior and superior to the extrastriate body area (EBA). Previous research has indicated that, relative to visual processing of objects, visual processing of human body parts (other than faces) engages the EBA [Downing et al., 2001; Downing et al., 2006; Saxe et al., 2006]. Furthermore, in addition to its role in the perception of other people's body parts, the EBA is involved in goal‐directed movements of the observer's own body parts [Astafiev et al., 2004]. These findings suggest that the EBA is a specialized system for processing the visual appearance of the human body. In addition, it may be involved in the execution of visually guided hand movements. Importantly, a recent study by Van Dam et al. [2010b] showed greater activation in this area for verbs with motor meanings versus verbs with abstract meanings. We suggest that the observed middle occipital activation reflects a corresponding increase in the importance of body (body part) knowledge in a context that emphasizes action properties.

To test activity changes related to word category and context, along with their possible interaction, we performed an analysis of percent signal change within four regions of interest (ROIs). These ROIs were selected based on the second‐level group results from the Action Color‐Abstract contrast in the whole‐brain analysis. Results of this analysis show a reliable word category × context interaction within the left inferior parietal lobule (IPL), a region that has been shown to be critical for the representation of action plans and goals [Hamilton and Grafton, 2006]. In a similar vein, a marginally significant word category × context interaction is present within the left middle temporal gyrus (MTG), a region in close proximity to the human motion area [MT+; Rees et al., 2000]. This brain region is implicated in accessing conceptual information about motion attributes [Kable et al., 2005; Saygin et al., 2010]. A recent study by Desai et al. [2010] further demonstrates that the left IPL and MTG showed stronger activation for action verbs embedded in spoken sentences than for matched visual/abstract verbs. Within the pIPS the word category × context interaction failed to reach significance but descriptively showed a similar pattern of results (i.e., AC words elicited more activation when the context focused on action features, whereas the neural response to Abs words was not modulated by context). These findings reinforce the idea that the recruitment of modality‐specific conceptual features depends not only on whether the context emphasizes the corresponding features but also on whether these features are relevant in constituting a particular concept. These findings seem to oppose the idea that lexically driven visual and motor activations reflect static semantic representations that are automatically triggered upon encountering a word [e.g., Pulvermueller, 2005]. In contrast, the results suggest that the activation of action‐related properties is modulated by the relevance of modality‐specific properties in a given context. However, it can still be argued that words in both contexts initially elicit automatic activations in corresponding visual and motor areas, which are then quickly suppressed by task demands. Time‐sensitive measurements (e.g., MEG/EEG) need to be obtained to rule out such a possibility.

In our study we found that a brain region relevant for processing color information (i.e., left FFG) was activated for Action Color words. However, contrary to our hypothesis, we did not observe significantly more activation within the left FFG when Action Color words were presented in a context emphasizing color than in a context emphasizing action. Participants rated words to be equally associated with action and color properties; thus, both types of features are important aspects of the words' referents. Nevertheless, there is a crucial difference between action and color information: action features vary, with different types of actions becoming relevant for the same tool in different sentence (and action) contexts [Masson et al., 2008], while color is a static visual property. Thus, Action Color words refer to objects that can be encountered in different action settings, but not in different colors. In language contexts in which participants were encouraged to focus on the canonical use of an object, action information became particularly relevant, and more brain activation was seen in cortical motor areas. On the other hand, language context did not mediate the color associated with an Action Color word's referent, and thus activation differences were not seen between language contexts. One way to investigate this hypothesis would be to look at activation in the FFG in response to words that denote objects that change color (e.g., leaves) depending on context (e.g., spring vs. autumn), or in response to words denoting objects that are acted upon differently depending on their color (e.g., a green strawberry vs. a red one).

Other factors that might play a role in how the color of an object is represented (and therefore might influence the susceptibility to contextual modulation) are the diagnosticity of color for an object (e.g., identifying toilet paper might critically depend on its white color property) and whether the color provides information about the functionality of the object (e.g., the function of a prohibition sign or flashing light is to attract someone's attention or warn them, and this function is served by the redness of the object).

A last point deserves consideration. It could be argued that the observed effect of context on embodied lexical‐semantic representations merely reflects differences in task requirements. That is, our design required participants to perform either a visual or a motor property verification task. The nature of the task ensured that critical trials were not coupled with a button response; however, the task required participants to pay attention to either action or visual features conveyed by the words. It could thus be argued that action and visual areas were differentially activated in our study because of task requirements. Two aspects of the data render it implausible that our effects can be reduced to an effect of task requirements. First, the greater activations for Action Color, Action, and Color words that we report are all relative to an Abstract word baseline for which the task requirements were completely identical (the only difference being that action and visual information is not relevant in constituting Abstract concepts). Thus, for all contrasts of interest, task requirements are the same and should not contribute to observed differences. Second, the interaction between context (emphasis on visual or motor properties) and category (words semantically related to visual, motor, or both types of content) is hard to explain solely in terms of task demands.

CONCLUSION

In this study, we investigated the neural response to comprehension of words denoting objects semantically related to visual, motor, or both types of information. Our results replicate findings from previous studies showing that words with motor meanings engage portions of the neural motor system and that words semantically related to color information recruit color processing areas. Furthermore, they extend previous research by showing that words semantically related to both types of content (action and color) are represented in a distributed network encompassing the corresponding modality‐specific brain regions. Beyond previous research, we demonstrated an interaction between word category and context. The results showed greater activation in brain regions relevant for coding action (motion) information (IPL, MTG), provided that the context emphasized action properties and that the corresponding features were relevant in constituting the concept. The results indicate (1) that language denoting objects semantically related to visual and motor information elicits activation in corresponding modality‐specific brain regions, and (2) that the recruitment of modality‐specific conceptual features is a flexible process that depends on contextual constraints.

Acknowledgements

The authors thank Paul Gaalman and Pascal de Water for technical support. The authors also thank Alex Brandemeyer, Sybrine Bultena and Rutger Vlek for their help in making the recordings. The authors acknowledge Eelco van Dongen for his help in acquiring the data.

REFERENCES

- Astafiev SV, Stanley C, Shulman G, Corbetta M (2004): Extrastriate body area in human occipital cortex responds to the performance of motor actions. Nat Neurosci 7: 542–548.

- Aziz‐Zadeh L, Wilson SM, Rizzolatti G, Iacoboni M (2006): Congruent embodied representations for visually presented actions and linguistic phrases describing actions. Curr Biol 16: 1818–1823.

- Baayen R, Piepenbrock R, Van Rijn H (1993): The CELEX Lexical Database (CD‐ROM). Philadelphia, PA: Linguistic Data Consortium, University of Pennsylvania.

- Barsalou LW (1999): Perceptual symbol systems. Behav Brain Sci 22: 577–660.

- Barsalou LW (2008): Grounded cognition. Ann Rev Psychol 59: 617–645.

- Barsalou LW, Simmons WK, Barbey AK, Wilson CD (2003): Grounding conceptual knowledge in modality‐specific systems. Trends Cogn Sci 7: 84–91.

- Boulenger V, Hauk O, Pulvermueller F (2009): Grasping ideas with your motor system: Semantic somatotopy in idiom comprehension. Cerebral Cortex 19: 1905–1914.

- Chao LL, Martin A (2000): Representation of manipulable man‐made objects in the dorsal stream. Neuroimage 12: 478–484.

- Desai RH, Binder JR, Conant LL, Seidenberg MS (2010): Activation of sensory‐motor areas in sentence comprehension. Cerebral Cortex 20: 468–478.

- Downing PE, Jiang Y, Shuman M, Kanwisher N (2001): A cortical area selective for visual processing of the human body. Science 293: 2470–2473.

- Downing PE, Chan AWY, Peelen MV, Dodds CM, Kanwisher N (2006): Domain specificity in visual cortex. Cerebral Cortex 16: 1453–1461.

- Forman S, Cohen J, Fitzgerald M, Eddy W, Mintun M, Noll D (1995): Improved assessment of significant activation in functional magnetic resonance imaging (fMRI): Use of a cluster‐size threshold. MRM 33: 637–647.

- Glenberg AM (1997): What memory is for. Behav Brain Sci 20: 1–55.

- Glenberg A, Kaschak M (2002): Grounding language in action. Psychonomic Bull Rev 9: 558–565.

- Hamilton AFC, Grafton ST (2006): Goal representation in human anterior intraparietal sulcus. J Neurosci 26: 1133–1137.

- Hauk O, Johnsrude I, Pulvermueller F (2004): Somatotopic representation of action words in human motor and premotor cortex. Neuron 41: 301–307.

- Hauk O, Pulvermueller F (2004): Neurophysiological distinction of action words in the fronto‐central cortex. Hum Brain Mapp 21: 191–201.

- Hoenig K, Sim E‐J, Bochev V, Herrnberger B, Kiefer M (2008): Conceptual flexibility in the human brain: Dynamic recruitment of semantic maps from visual, motor, and motion‐related areas. J Cogn Neurosci 20: 1799–1814.

- James KH, Maouene J (2009): Auditory verb perception recruits motor systems in the developing brain: An fMRI investigation. Dev Sci F26–F34.

- Kable JW, Kan IP, Wilson A, Thompson‐Schill SL, Chatterjee A (2005): Conceptual representations of action in the lateral temporal cortex. J Cogn Neurosci 17: 1855–1870.

- Kellenbach ML, Brett M, Patterson K (2001): Large, colorful or noisy? Attribute and modality‐specific activations during retrieval of perceptual attribute knowledge. Cogn Affect Behav Neurosci 1: 207–221.

- Kemmerer D, Castillo JG, Talavage T, Patterson S, Wiley C (2008): Neuroanatomical distribution of five semantic components of verbs: Evidence from fMRI. Brain Lang 107: 16–43.

- Mahon BZ, Caramazza A (2008): A critical look at the embodied cognition hypothesis and a new proposal for grounding conceptual content. J Physiol Paris 102: 59–70.

- Martin A, Haxby JV, Lalonde FM, Wiggs CL, Ungerleider LG (1995): Discrete cortical regions associated with knowledge of color and knowledge of action. Science 270: 102–105.

- Masson MEJ, Bub DN, Warren CM (2008): Kicking calculators: Contribution of embodied representations to sentence comprehension. J Memory Lang 59: 256–265.

- Meteyard L, Cuadrado SR, Bahrami B, Vigliocco G (2010): Coming of age: A review of embodiment and neuroscience of semantics. Cortex. doi: 10.1016/j.cortex.2010.11.002.

- Pulvermueller F (1999): Words in the brain's language. Behav Brain Sci 22: 253–336.

- Pulvermueller F (2005): Brain mechanisms linking language and action. Nat Rev Neurosci 6: 576–582.

- Pulvermueller F, Fadiga L (2010): Active perception: Sensorimotor circuits as a cortical basis for language understanding. Nat Rev Neurosci 11: 351–360.

- Pulvermueller F, Härle M, Hummel F (2000): Neurophysiological distinction of verb categories. Neuroreport 11: 2789–2793.

- Pulvermueller F, Hauk O (2006): Category‐specific conceptual processing of color and form in left fronto‐temporal cortex. Cerebral Cortex 16: 1193–1201.

- Pulvermueller F, Shtyrov Y (2006): Language outside the focus of attention: The mismatch negativity as a tool for studying higher cognitive processes. Prog Neurobiol 79: 49–71.

- Pulvermueller F, Shtyrov Y (2009): Spatio‐temporal signatures of large‐scale synfire chains for speech: MEG evidence. Cerebral Cortex 19: 79–88.

- Raposo A, Moss HE, Stamatakis EA, Tyler LK (2009): Modulation of motor and premotor cortices by actions, action words and action sentences. Neuropsychologia 47: 388–396.

- Rees G, Friston K, Koch C (2000): A direct quantitative relationship between the functional properties of human and macaque V5. Nat Neurosci 3: 716–723.

- Rueschemeyer S‐A, Brass M, Friederici AD (2007): Comprehending prehending: Neural correlates of processing verbs with motor stems. J Cogn Neurosci 19: 855–865.

- Rueschemeyer S‐A, Rooij D, Lindemann O, Willems R, Bekkering H (2010): The function of words: Distinct neural correlates for words denoting differently manipulable objects. J Cogn Neurosci 22: 1844–1851.

- Rueschemeyer S‐A, Pfeiffer C, Bekkering H (2010): Body schematics: On the role of the body schema in embodied lexical‐semantic representations. Neuropsychologia 48: 774–781.

- Saccuman MC, Cappa SF, Bates EA, Arevalo A, Della Rosa P, Danna M, Perani D (2006): The impact of semantic reference on word class: An fMRI study of action and object naming. Neuroimage 32: 1865–1878.

- Saxe R, Jamal N, Powell L (2006): My body or yours? The effect of visual perspective on cortical body representations. Cerebral Cortex 16: 178–182.

- Saygin AP, McCullough S, Alac M, Emmorey K (2010): Modulation of BOLD response in motion‐sensitive lateral temporal cortex by real and fictive motion sentences. J Cogn Neurosci 22: 2480–2490.

- Scorolli C, Borghi AM (2007): Sentence comprehension and action: Effector specific modulation of the motor system. Brain Res 1130: 119–124.

- Shtyrov Y, Hauk O, Pulvermueller F (2004): Distributed neuronal networks for encoding category‐specific semantic information: The mismatch negativity to action words. Eur J Neurosci 19: 1083–1092.

- Simmons WK, Ramjee V, Beauchamp MS, McRae K, Martin A, Barsalou LW (2007): A common neural substrate for perceiving and knowing about color. Neuropsychologia 45: 2802–2810.

- Simmons WK, Hamann SB, Harenski CL, Hu XP, Barsalou LW (2008): fMRI evidence for word association and situated simulation in conceptual processing. J Physiol‐Paris 102(1–3): 106–119.

- Slotnick SD, Moo LR, Segal JB, Hart J (2003): Distinct prefrontal cortex activity associated with item memory for visual shapes. Cogn Brain Res 17: 75–82.

- Tettamanti M, Buccino G, Saccuman MC, Gallese V, Danna M, Scifo P, et al. (2005): Listening to action‐related sentences activates fronto‐parietal motor circuits. J Cogn Neurosci 17: 273–281.

- Van Dam WO, Rueschemeyer S‐A, Bekkering H (2010a): How specifically are action verbs represented in the neural motor system: An fMRI study. Neuroimage 53: 1318–1325.

- Van Dam WO, Rueschemeyer S‐A, Lindemann O, Bekkering H (2010b): Context effects in embodied lexical‐semantic processing. Front Psychol 1: 150. doi: 10.3389/fpsyg.2010.00150.

- Vigliocco G, Vinson DP, Lewis W, Garrett M (2004): Representing the meanings of object and action words: The featural and unitary semantic space hypothesis. Cogn Psychol 48: 422–488.

- Zwaan RA, Madden CJ, Yaxley RH, Aveyard ME (2004): Moving words: Dynamic mental representations in language comprehension. Cogn Sci 28: 611–619.